Abstract

This study aims to improve the automatic detection of unwanted emails using advanced machine learning and deep learning methods. Following a review of research from the past five years, a comprehensive combined dataset containing a total of 81,586 email samples was created from seven different spam datasets. Class imbalance was addressed through random oversampling and a class-weighted loss, and the decision threshold was subsequently tuned for deployment. Among classical machine learning solutions, Random Forest (RF) emerged as the most successful method, while Transformer-based deep learning models such as Distilled Bidirectional Encoder Representations from Transformers (DistilBERT) and Robustly Optimized BERT Pretraining Approach (RoBERTa) demonstrated superior performance. The highest test accuracy (99.62%) on the combined static dataset was achieved with a multimodal architecture that combines deep semantic text representations from DistilBERT with structural text features. Beyond this static performance benchmark, the study investigates the critical challenge of concept drift by performing a temporal analysis on datasets from different eras. The results reveal a significant performance degradation in all models when tested on modern spam, highlighting a critical vulnerability of statically trained systems. Notably, the Transformer-based model demonstrated greater robustness against this temporal decay than traditional methods. This study not only offers an effective classification solution but also provides crucial empirical evidence on the necessity of adaptive, continually learning systems for robust spam detection.

1. Introduction

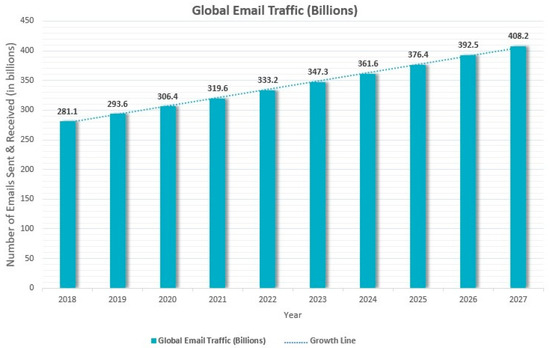

Today, email is at the center of digital interactions as an indispensable part of individual and corporate communication. According to Statista data, the number of emails sent worldwide each day reached approximately 361 billion in 2024, and is expected to exceed 408 billion by 2027 [1]. However, this massive volume of communication also brings with it serious security risks. One of the most common and persistent of these risks is unsolicited commercial messages, commonly known as spam emails. These messages, sent without the user’s consent, often contain various cyber threats such as deceptive advertisements, phishing attacks, and malware distribution. While spam emails accounted for 45.6% of total email traffic at the end of 2023, this percentage rose to 46.8% by the end of 2024 [2]. It is estimated that this percentage will continue to increase in 2027. These percentages indicate that nearly one in every two emails is spam [3].

As shown in Figure 1, email traffic has steadily increased from approximately 281 billion in 2018 to 361 billion in 2024, and is expected to reach 408 billion by 2027. This upward trend highlights the growing scale of the spam problem and emphasizes the need for more advanced and scalable detection systems. The rise in volume also parallels the increasing risk and financial impact of spam-related cyberattacks, reinforcing the urgency of robust filtering and adaptive security models.

Figure 1.

Total daily email traffic worldwide.

The harm caused by spam emails extends beyond cluttering inboxes. For businesses, it leads to lost productivity, bandwidth waste, storage overload, and costly investments in IT security. More critically, spam can result in data breaches, financial losses, and reputational damage. Some studies show that email is the most common medium for phishing and that losses per incident can sometimes reach hundreds of thousands of dollars in SMEs. For example, Carroll et al. (2022) report that one in every 4200 emails sent in 2020 was phishing [4]; Le, Le-Dinh, and Uwizeyemungu (2024) report that cyber incident costs in SMEs ranged from 826 USD to 653,587 USD (average ≈ 25,000 USD) [5].

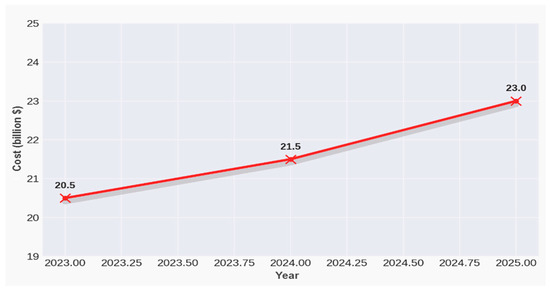

As shown in Figure 2, the damage caused by spam attacks to individual users and companies is increasing yearly. With the rise of these threats, legal regulations aimed at protecting data privacy and user rights have also been tightened worldwide. Regulations such as the General Data Protection Regulation (GDPR) in the European Union and the Personal Data Protection Law (KVKK) in Turkey impose strict rules on the processing of individuals’ personal data and unsolicited communications. These laws significantly restrict the sending of spam and encourage organizations to obtain user consent while developing effective spam filtering mechanisms. Therefore, the automatic and highly accurate detection and blocking of spam emails has evolved from a mere convenience into a legal requirement and a critical component of cybersecurity strategy. Given this legal and technological landscape, researchers and developers have increasingly turned to methods rooted in artificial intelligence, especially natural language processing and machine learning, as viable tools to combat spam more effectively.

Figure 2.

The cost of spam attacks over the last 3 years.

Machine Learning and Natural Language Processing (NLP)-based methods are widely used to detect spam, thanks to their ability to capture complex patterns in text. However, many research papers rely on a limited dataset, which can lead to overfitting and poor generalization to new types of emails. Models developed this way may not generalize across different types and structures of spam or ham emails, and their reported results can give an incomplete picture of real-world performance. Additionally, many studies focus on a specific algorithm or model architecture, without comprehensively comparing different approaches or combining them into more powerful hybrid systems. For example, some studies test only traditional machine learning algorithms, while others focus on variations of a specific deep learning model; neither group systematically compares these two main paradigms or different deep learning architectures within the same experimental framework. These shortcomings can negatively affect the robustness of the developed spam detection systems and their adaptation to different email distributions.

Although many previous studies have reported high accuracy scores, often exceeding 99% on specific datasets, these results have often been obtained on single-source, homogeneous, or relatively small datasets. This reliance on narrow data distributions poses a significant challenge in terms of generalizability, as models often fail to perform robustly when exposed to the diverse and ever-evolving nature of real-world spam. A fundamental limitation in the literature is the lack of studies that validate high-performance models on large-scale, heterogeneous datasets compiled from multiple and diverse sources.

To address these fundamental gaps, this study makes several methodological contributions. The novelty of this work lies not in the invention of a new classification algorithm, but in its rigorous and comprehensive approach to model evaluation, which directly confronts the issues of data homogeneity and limited comparative analysis prevalent in the literature. Specifically, the contributions are threefold:

First, a large-scale, heterogeneous benchmark dataset is constructed by merging seven distinct public corpora. By training and testing on this diverse collection of over 81,000 emails, this study provides a more realistic and challenging environment for assessing the true robustness and generalizability of spam detection models—a critical step toward creating systems that are effective in real-world scenarios.

Second, a comprehensive comparative analysis is conducted across different modeling paradigms, including classical machine learning, deep learning, and state-of-the-art Transformer-based architectures. By evaluating all models under identical data conditions and evaluation protocols, this work serves as a much-needed, comprehensive benchmark for the field.

Third, a significant limitation in existing literature is the predominant focus on static performance, often overlooking the non-stationary nature of spam. Spam tactics are not static; they evolve over time in response to new technologies, social trends, and anti-spam countermeasures. This phenomenon, known as ‘concept drift’, poses a critical threat to the long-term viability of spam detection models. A model achieving high accuracy on a dataset from 2005 may fail catastrophically against the sophisticated phishing and social engineering attacks of 2022. Yet, empirical studies quantifying this performance degradation on large-scale, public email corpora remain scarce.

Therefore, in addition to establishing a robust performance benchmark, this study makes a crucial third contribution by conducting a novel temporal analysis to empirically measure the impact of concept drift on both traditional and Transformer-based models. Through the segmentation of the heterogeneous dataset into ‘classic’ and ‘modern’ eras, models were trained on historical data and their resilience against contemporary spam was evaluated. This analysis not only quantifies the performance decay on a large-scale, heterogeneous corpus but also provides empirical evidence for the mechanisms behind this decay (keyword shift vs. contextual pattern drift), offering a crucial justification for the necessity of adaptive security models.
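The temporal evaluation described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the procedure used in the study: the `Email` record, its `year` field, the cutoff year, and the `predict` callable are all hypothetical names introduced here, and the source does not state where the boundary between the 'classic' and 'modern' eras lies.

```python
from dataclasses import dataclass


@dataclass
class Email:
    text: str
    label: int  # 1 = spam, 0 = ham
    year: int   # hypothetical metadata: year the source corpus was collected


def temporal_split(emails, cutoff_year):
    """Split emails into a 'classic' era (before the cutoff) and a 'modern' era."""
    classic = [e for e in emails if e.year < cutoff_year]
    modern = [e for e in emails if e.year >= cutoff_year]
    return classic, modern


def accuracy(predict, emails):
    """Fraction of emails whose predicted label matches the true label."""
    if not emails:
        return 0.0
    correct = sum(1 for e in emails if predict(e.text) == e.label)
    return correct / len(emails)


def drift_gap(predict, classic_test, modern_test):
    """Accuracy lost when moving from classic-era to modern-era test data."""
    return accuracy(predict, classic_test) - accuracy(predict, modern_test)
```

Here `predict` stands for any model trained only on classic-era data. A positive `drift_gap` indicates that the model performs worse on modern spam than on spam from its own era, which is the degradation the temporal analysis is designed to measure.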

The datasets were transformed into a common structure based solely on the label and text columns, thus creating a combined dataset containing a total of 81,586 email examples that are rich in content, source, and style. The class imbalance observed in the dataset (31,670 spam, 49,916 ham) was addressed using random oversampling of the minority class, with the aim of enabling classification algorithms to learn both classes in a balanced manner.
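As an illustration of random oversampling, the sketch below duplicates randomly chosen minority-class samples until both classes have the same size. It is a plain-Python approximation under assumptions: the function name, the fixed seed, and the choice to balance to exact parity are not taken from the source.

```python
import random


def random_oversample(samples, labels, seed=42):
    """Duplicate randomly chosen minority-class samples until classes are balanced."""
    rng = random.Random(seed)
    by_class = {}
    for x, y in zip(samples, labels):
        by_class.setdefault(y, []).append(x)
    # Every class is grown to the size of the largest class.
    target = max(len(items) for items in by_class.values())
    out_x, out_y = [], []
    for y, items in by_class.items():
        extra = [rng.choice(items) for _ in range(target - len(items))]
        for x in items + extra:
            out_x.append(x)
            out_y.append(y)
    # Shuffle so that duplicated samples are not grouped together.
    order = list(range(len(out_x)))
    rng.shuffle(order)
    return [out_x[i] for i in order], [out_y[i] for i in order]
```

If applied to the full corpus up to parity, the reported class counts would imply adding 49,916 − 31,670 = 18,246 duplicated spam samples. In practice, oversampling is usually restricted to the training split so that duplicated emails cannot appear in both training and test data.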

During the modeling process, a variety of classical machine learning algorithms, including Support Vector Machines (SVM), Random Forest (RF), Naive Bayes (NB), and Logistic Regression (LR), were initially applied and their performances compared. Following these traditional approaches, the focus of the study shifted to more advanced deep learning architectures. In particular, Distilled Bidirectional Encoder Representations from Transformers (DistilBERT) and Robustly Optimized BERT Pretraining Approach (RoBERTa), Transformer-based models that have achieved great success in text classification in recent years, were trained and evaluated to extract deep semantic representations from email texts. To achieve even higher performance than these models provide individually, a multimodal deep learning architecture was developed. This architecture combines text embeddings from the DistilBERT model with structural and statistical numerical features of emails, including text length, word count, and capitalization rate. The goal of this hybrid approach is to achieve stronger classification by considering both the semantic content and the surface-level characteristics of the text. All models were compared using the same combined dataset and evaluation metrics, with the multimodal (DistilBERT + Numerical Features) model achieving the highest test accuracy of 99.62% across the entire dataset.
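The structural side of the multimodal input can be sketched as follows. The three features match those named above, but their exact definitions are assumptions: in particular, capitalization rate is taken here as the share of alphabetic characters that are uppercase, and fusion is shown as plain concatenation, which the source does not confirm.

```python
def structural_features(text):
    """Return [text length, word count, capitalization rate] for one email."""
    length = len(text)
    word_count = len(text.split())
    letters = [c for c in text if c.isalpha()]
    # Capitalization rate: share of alphabetic characters that are uppercase
    # (an assumed definition; the source does not spell it out).
    cap_rate = sum(c.isupper() for c in letters) / len(letters) if letters else 0.0
    return [float(length), float(word_count), cap_rate]


def fuse(text_embedding, text):
    """Concatenate a text embedding with the structural features (assumed fusion)."""
    return list(text_embedding) + structural_features(text)
```

In a full model, `text_embedding` would be the vector produced by DistilBERT for the email, and the fused vector would feed a classification head. Because length and word count are on a very different scale from embedding values, such features are typically standardized before fusion.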

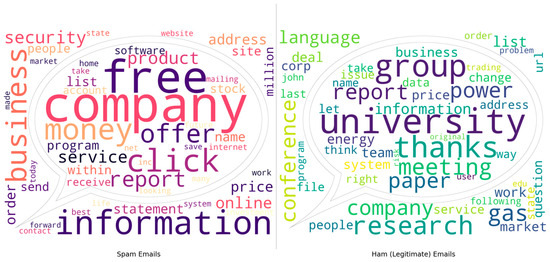

The study did not focus solely on classification performance. It also examined spam and ham email contents at the word level, analyzed frequently used words, and identified class-specific linguistic patterns. In this regard, the study aims to contribute to the field of spam email detection both theoretically and practically by offering data diversity, comprehensive model comparison (including traditional machine learning, different Transformers, and multimodal approaches), and in-depth content analysis. This combination distinguishes the work from studies limited to a single dataset or a narrow set of models.
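A word-level comparison of this kind can be approximated with simple frequency counts. The sketch below is illustrative only: the tokenization rule, the function names, and the ranking by difference in relative frequency are assumptions, not the analysis procedure reported in the study.

```python
import re
from collections import Counter


def word_counts(texts, stopwords=frozenset()):
    """Count lowercase alphabetic tokens across a collection of texts."""
    counts = Counter()
    for text in texts:
        tokens = re.findall(r"[a-z]+", text.lower())
        counts.update(t for t in tokens if t not in stopwords)
    return counts


def class_specific_words(spam_texts, ham_texts, k=5):
    """Top-k spam words ranked by spam-minus-ham relative frequency."""
    spam, ham = word_counts(spam_texts), word_counts(ham_texts)
    spam_total = sum(spam.values()) or 1
    ham_total = sum(ham.values()) or 1
    # A Counter returns 0 for words that never occur in ham.
    diff = {w: spam[w] / spam_total - ham[w] / ham_total for w in spam}
    ranked = sorted(diff, key=lambda w: (-diff[w], w))
    return ranked[:k]
```

Swapping the two arguments of `class_specific_words` gives the words most characteristic of ham instead.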

The structure of the remainder of this paper is outlined as follows: Section 2 presents an extensive review of the literature, encompassing datasets, rule-based techniques, classical machine learning, deep learning, and Transformer-based methods, while also identifying existing gaps and emerging trends. Section 3 details the methodology adopted in this study, including the dataset merging process, preprocessing steps, class balancing techniques, feature extraction methods, and the modeling approaches applied. Section 4 presents the experimental results, offering a comparative analysis of the model performances, an evaluation of practical applicability, and a discussion of observed limitations. Finally, Section 5 summarizes the main findings and proposes recommendations for future research directions.

2. Literature Review

This section presents a concise review of the literature on spam email detection, focusing on both the datasets used and the methods applied. The quality and structure of datasets significantly influence model performance, while detection techniques have evolved from rule-based systems to machine learning, deep learning, and transformer-based language models. By examining recent studies in these areas, this review aims to highlight the progress made and the current research gaps.

2.1. Datasets Used and Access Sources

The datasets used in spam email detection are one of the critical components that directly affect model performance. One of the most commonly used datasets, the SpamAssassin dataset, is provided by the Apache Software Foundation and contains real-world spam and ham email samples. This text-based dataset has been used as a fundamental resource for evaluating classical methods such as NB for many years [6]. Another important resource is the Enron Spam dataset. This dataset, created by processing real corporate email archives belonging to the Enron company, was prepared by Carnegie Mellon University. This dataset, which contains approximately 43,000 emails, stands out for its balanced presentation of both spam and ham content. Additionally, it is of great value for the adaptation of spam filtering systems to real-world conditions because it reflects corporate communication [7]. The Ling-Spam dataset is a small but balanced dataset that evaluates academic and technical content alongside spam emails. It provides effective results in distinguishing between academic email content and spam text and serves as a reference, especially for early spam filtering studies [8]. The SMS Spam dataset is the most frequently used source for testing systems with short text formats. Created by Almeida and Hidalgo, this dataset contains approximately 5500 short messages and has a compact structure for spam classification. The SMS Spam dataset is widely used in spam detection studies in the field of mobile communication [9]. In addition to these, various email datasets accessible through the Kaggle platform are also used for both academic and applied studies. These datasets are generally created, labeled, and shared by users in Comma-Separated Values (CSV) format. In this study, in addition to the SpamAssassin, Enron, Ling-Spam, and SMS Spam datasets mentioned above, two different datasets shared on Kaggle were also brought together. 
These datasets were converted into a common structure by considering only the label and text columns, and then cleaned and balanced to prepare them for model training. The use of multiple datasets allows the model to learn different types of spam content without being tied to a specific source. This increases the generalizability and real-world applicability of the developed classification system. In conclusion, the datasets used in spam email detection research are a decisive factor not only for model performance but also for the validity of the study. The method of combining datasets from different sources is still not widely used in the literature, and each new study in this field contributes unique insights by providing data diversity.

2.2. Rule-Based Systems

The detection of unwanted spam emails has become one of the most important subtopics of digital security since the late 1990s, when the internet began to spread. Early work in this area was generally based on rule-based systems. Methods such as blacklisting certain words or sender addresses, recognizing special character patterns in message headers, or filtering based on content length were frequently used during this period. However, these fixed rules became insufficient over time due to the constantly changing content of spam messages and lost their sustainability because they required manual intervention. To address these shortcomings, machine learning-based automatic classifiers were developed. Studies conducted in the early 2000s demonstrated that statistical learning methods could process email text in a more flexible and adaptable manner.

2.3. Studies Conducted Using Classical Machine Learning Methods

Machine learning algorithms have been quite successful in the field of spam email detection since they were first applied. In these early studies, supervised learning algorithms such as Decision Trees (DT), SVM, NB and LR stood out. These methods primarily work by classifying features extracted from text data, typically making decisions based on term frequency (TF), inverse document frequency (IDF), and specific content structure. Due to their simplicity, short training times, and interpretability, these methods have formed the foundation of many systems for years.
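To make the TF and IDF terms concrete, the following sketch computes unsmoothed TF-IDF weights for a small tokenized corpus. It shows the textbook formulation, weight = (term count / document length) × ln(N / document frequency); libraries often apply smoothed or normalized variants, so the numbers will not necessarily match any particular toolkit or the setup of the studies cited here.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Compute unsmoothed TF-IDF weights for a list of tokenized documents."""
    n_docs = len(docs)
    # Document frequency: number of documents containing each term.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        tf = Counter(doc)
        total = len(doc) or 1
        weights.append({
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in tf.items()
        })
    return weights
```

A term that appears in every document receives a weight of zero, which is why ubiquitous words contribute little to the classifier, while terms concentrated in a few messages receive higher weights.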

One of the most well-known studies in this field was conducted by Metsis and colleagues in 2006. In this study, various NB variants were tested comparatively, and it was reported that the classic NB model offered a fast, lightweight, and highly effective solution for spam detection. In experiments using the SpamAssassin dataset, the model was reported to achieve accuracy rates of up to 96% [10]. Additionally, in another study conducted by Almeida and Hidalgo in 2011, methods such as SVM and NB were evaluated on SMS spam datasets, and these classical models achieved 98% accuracy [11]. These studies are important in demonstrating how effective classical methods can be, especially with small and balanced datasets. However, with the increasing diversity of linguistic structures in spam content and the evolution of attack techniques, the flexibility and adaptability of these traditional methods have begun to fall short over time.

Table 1 presents recent studies that employed classical machine learning methods for spam email detection.

Table 1.

Other studies have been conducted in recent years using classical machine learning methods.

As presented in Table 1, classical machine learning methods continue to demonstrate strong performance, particularly in well-structured and balanced datasets, yielding high accuracy and F1 scores. These approaches commonly leverage algorithms such as SVM, NB, LR and RF, often in conjunction with feature extraction techniques such as TF-IDF. Salihi [12], for example, employed Word2Vec embeddings combined with a Multi-Layer Perceptron (MLP) classifier to detect spam in social media content. However, the reliance on Twitter data, rather than email-based datasets, limits the generalizability of the findings. Dedetürk and Akay [14] applied an LR model enhanced by the Artificial Bee Colony algorithm on spam emails collected from multiple sources, achieving an accuracy exceeding 98%, thereby highlighting the effectiveness of meta-heuristic optimization techniques. Junnarkar et al. [16] conducted a comparative evaluation of various classical models and identified RF as the most effective. Similarly, Rayan [17] proposed a hybrid bagging approach that further improved predictive accuracy. Alsuwit et al. [18] reported comparable performance by benchmarking classical methods against a basic ANN. Nevertheless, a common limitation among these studies is the absence of direct comparisons with deep learning models and the use of domain-specific or relatively small datasets, which may hinder the broader applicability of the results in more complex or linguistically diverse environments.

2.4. Studies Conducted Using Deep Learning Methods

Deep learning methods, developed to overcome the limited pattern recognition capabilities of classical machine learning, have rapidly become widespread in spam email detection, especially since 2015. Unlike classical models, deep learning architectures can extract features directly from data and learn complex structures in language, enabling them to achieve higher accuracy. Among the most commonly used architectures during this period are Long Short-Term Memory (LSTM) and Convolutional Neural Network (CNN). The CNN architecture successfully learns local patterns within text, especially in messages containing short phrases. LSTM, on the other hand, models sequential dependencies and can produce effective results in long texts with contextual meaning, such as emails. In a comprehensive review study published by Jáñez-Martino and colleagues in 2022, it was noted that LSTM-based models achieved Receiver Operating Characteristic Area Under the Curve (ROC-AUC) scores above 0.98 in spam email detection, while CNN models attracted attention with their effective performance on short messages. This study emphasized that deep learning architectures provide a clear advantage over classical methods, especially in data with high linguistic complexity [20]. In addition, in a study conducted by Zavrak and Yılmaz in 2023, features extracted using CNN were transferred to the LSTM layer, and the resulting features were evaluated using an RF classifier to design a hybrid model. This three-stage approach achieved a reported score of 99.2 [21]. Such hybrid systems have made it possible to develop solutions that are both flexible and highly accurate by combining the representational power of deep learning with the decision-making mechanisms of classical methods.
In conclusion, deep learning approaches have paved the way for systems that can better understand and learn the context in spam detection tasks, enabling the development of more robust filters, especially when dealing with large datasets and a wide variety of spam types.

Table 2 highlights recent studies utilizing deep learning methods such as CNN and LSTM in spam detection tasks. These models offer improved performance by learning contextual patterns and language structures directly from the data.

Table 2.

Other studies have been conducted in recent years using deep learning methods.

As highlighted in Table 2, recent studies using deep learning techniques such as CNN, LSTM, and Bidirectional Encoder Representations from Transformers (BERT) have made significant progress in spam detection. These models eliminate the dependence on manual feature engineering by directly learning layered linguistic structures from text. For example, Basyar et al. [22] demonstrated the effectiveness of GRU and LSTM architectures by achieving an accuracy rate of over 99% on the Enron dataset. Others have extended spam detection to multilingual contexts. For example, Siddique et al. [23] contributed to linguistic diversity in this field by working on Urdu datasets. Additionally, researchers such as Nasreen et al. [26] have developed foundational models such as BERT by incorporating new feature selection methods, demonstrating ongoing innovation in this field.

While these models offer exceptional performance and robustness, it is crucial to evaluate their success within context. The reported high accuracy rates are typically demonstrated on specific and sometimes homogeneous datasets (e.g., Enron, Ling-Spam, Spambase). This does not guarantee that the models will effectively generalize to the diverse range of spam tactics and linguistic styles found in more varied, multi-source email corpora. Additionally, as noted, their practical application may be constrained by high computational requirements and the need for large labeled datasets for fine-tuning. This underscores the critical need for studies that not only push the boundaries of accuracy but also validate model robustness and generalizability on larger, more challenging, and heterogeneous benchmarks.

2.5. Transformer and Language Model-Based Studies

In recent years, a significant advancement in spam email detection has been the use of pre-trained language models and Transformer architectures, which have greatly transformed NLP. Transformer architectures can learn sentence context bidirectionally and, thanks to their multi-layered structure, model the complex structure of language powerfully. The most well-known model in this context is BERT.

Meléndez et al. presented a comparative performance analysis of traditional machine learning models and Transformer-based deep learning models in the detection of phishing emails [28]. They compared the results of Transformer-based models such as DistilBERT, BERT, RoBERTa, XLNet, and A Lite BERT (ALBERT) with traditional methods such as LR, SVM, and NB on 119,148 English email samples obtained from various publicly available sources. The results showed that the RoBERTa model achieved the highest performance with an F1-score of 99.51% and an accuracy rate of 99.43%. They also demonstrated that traditional models struggled to detect phishing content, particularly with complex language structures.

Similarly, Chandan et al. compared BERT with traditional machine learning algorithms in spam detection [29]. Their results showed that BERT achieved the highest test accuracy of 98% and outperformed LR, Multivariate NB, SVM, and RF algorithms.

In a 2021 study by AbdulNabi and Yaseen, the BERT model was used for spam email detection and compared with classical methods as well as deep learning architectures such as BiLSTM. In this study, the BERT model achieved 98.67% accuracy and 98.66% F1 score, outperforming all alternative methods [30]. The results of the study showed that BERT not only understands the text but also successfully detects deceptive language patterns, semantic deviations, and expressions that deviate from the context. BERT’s success highlights the importance of focusing not only on the word level but also on the semantic relationships within sentences in spam detection. In addition, the advantage of these models in detecting phishing and fraudulent messages plays a major role in preventing threats that cannot be detected by traditional methods. However, the high computational costs of Transformer architectures make it difficult to use these models directly in every application. As a result, recent research has focused on the use of lighter and more efficient Transformer variants (such as DistilBERT and ALBERT) [31,32]. These models aim to achieve comparable performance with fewer computational resources.

2.6. Trends and Gaps in the Literature

A review of the literature clearly shows that spam email detection methods have evolved over time. Starting with rule-based systems, this evolution has moved on to classical machine learning algorithms, then deep learning architectures, and finally Transformer-based pre-trained language models [3]. This transition process has brought about significant advances not only in the methods used but also in data representation, contextual understanding, and modeling capabilities. Considering the success rates achieved in recent studies, it is observed that Transformer-based models achieve the highest accuracy and F1 scores in spam detection [28,31,33]. However, this situation also brings some challenges.

The training and operation of these models require considerable computational resources. Furthermore, the fact that most studies use English-focused datasets makes it difficult to replicate similar successes in multilingual or low-resource languages, as publicly available and balanced spam datasets for languages such as Turkish and Arabic are quite limited. Another significant challenge is the constantly changing structure of spam messages, especially with the proliferation of deceptive content generated by artificial intelligence. This problem is further compounded by the emergence of adversarial attacks, where spam messages are intentionally manipulated with subtle, human-imperceptible perturbations to bypass detection. Recent studies, such as the work by Ali Owfi et al. [27], have demonstrated that even state-of-the-art models are susceptible to these evasion techniques, highlighting the importance of robust defense mechanisms such as adversarial training [28].

In recent years, there has been growing interest in integrating Explainable Artificial Intelligence (XAI) techniques into spam and phishing detection systems. While Transformer-based models such as BERT and DistilBERT achieve state-of-the-art performance, their decision-making processes often remain opaque, raising concerns about user trust and system transparency. To address this, researchers have explored methods such as SHapley Additive exPlanations (SHAP) and Local Interpretable Model-agnostic Explanations (LIME) to provide both global and local interpretability. For example, Abdellaoui Alaoui et al. [34] demonstrated how SHAP explanations improved transparency in a BERT-based spam classifier, while Shafin et al. [35] proposed an explainable feature selection framework for phishing detection by combining SHAP and LIME. Similarly, SHAP-supported ensemble models have reached high accuracy (≈97–98%) and offered interpretable feature contributions that assist analysts in understanding model predictions [36]. For the hybrid approach, XAI could clarify the relative contributions of semantic Transformer embeddings and structural features, and help explain false positives, thereby improving model reliability. However, due to computational and resource constraints, XAI integration was not included in this study and is explicitly acknowledged as a limitation. Its systematic incorporation remains a priority for future work to further enhance interpretability and user trust.

Consequently, the primary goal of the research community has shifted towards developing systems that not only offer high accuracy but are also robust, updatable, efficient, and capable of real-time operation. In this context, the literature is showing significant progress at both the academic and industrial levels; next-generation AI-based spam filtering systems continue to play a critical role in secure email communication.

This work directly addresses some of the identified research gaps. First, seven heterogeneous, publicly available spam datasets were integrated into a unified corpus. This provides a broader and more representative base for model training, which is essential for improving generalizability beyond single-domain datasets. While the current work focuses on English emails, the diversity and size of the corpus lay the groundwork for future multilingual experiments, especially in low-resource languages such as Turkish and Arabic. Second, the proposed multimodal approach, which combines Transformer-based contextual embeddings from DistilBERT with lightweight structural features, provides a balanced solution that maintains high semantic understanding while improving computational efficiency during both training and inference. Third, the flexibility of the feature set and model architecture enables periodic updates, allowing the system to adapt to evolving spam patterns, including AI-generated deceptive content. Finally, by emphasizing scalability, reproducibility, and real-time applicability, this work bridges the gap between theoretical research and the practical requirements of operational spam detection systems.

3. Methods

3.1. General Approach

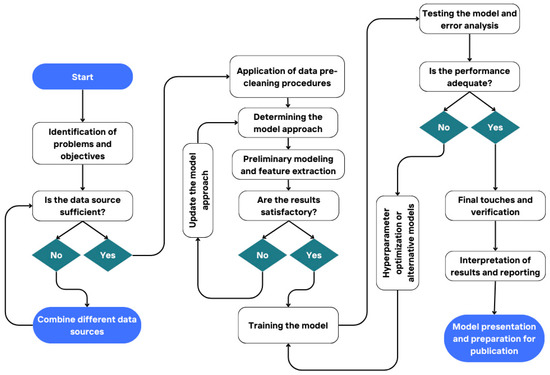

In this study, both deep learning architectures and classical machine learning algorithms were used to detect spam emails. The study consists of four main stages: data collection and integration, preprocessing, modeling, and performance evaluation. Each stage was structured by considering the needs of the field and the existing gaps in the literature. The steps applied throughout this study are visualized in Figure 3. The model development, testing, and evaluation processes were carried out iteratively.

Figure 3.

Process diagram of the study.

Figure 3 illustrates the iterative and structured flow of the spam detection pipeline, including data adequacy check, preprocessing, model selection and training, performance evaluation, and final deployment.

3.2. Datasets

3.2.1. Merging Datasets

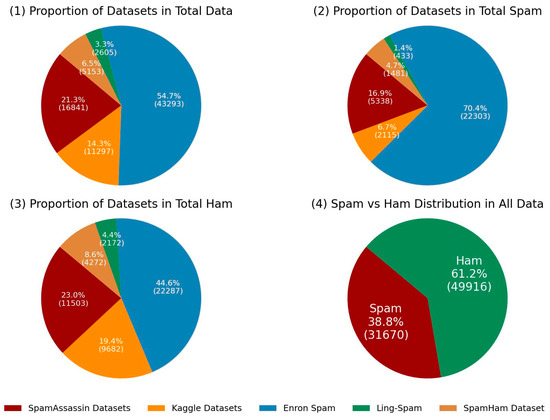

In this study, seven different sources were used to obtain more diverse and representative spam email data without being limited to a single dataset, and the data obtained from these sources were combined. The datasets in question include SpamAssassin (two versions) [6], Enron Spam [7], Ling-Spam [8], two different spam datasets obtained from the Kaggle platform [37], and the spam-ham dataset used in the study “Spam Detection Using Firefly Optimization Technique.” Each dataset was cleaned to include “label” and “text” columns and converted to a common structure. Following this preprocessing step, the datasets were combined to form a dataset consisting of a total of 81,586 email examples. This combined dataset contains 31,670 spam emails and 49,916 ham emails. This not only increased the amount of data but also enabled the model to recognize a wider variety of spam examples. Additionally, this approach made it possible to train the model to learn the general structure of spam rather than being specific to a single source.

As can be seen in the graphs in Figure 4, the Enron Spam dataset is the most dominant dataset in terms of both volume (number of data points) and class balance. With a spam rate of over 70%, it contains the majority of all spam data. The SpamAssassin group also makes a significant contribution with a general rate of 20%. Kaggle datasets are relatively smaller in size, but they have a meaningful percentage in terms of the ham class. The Ling-Spam dataset provides the smallest contribution. The SpamHam dataset, on the other hand, stands out for its balanced structure, especially for SMS-based analyses.

Figure 4.

Ratios of datasets used.

3.2.2. Pre-Processing and Feature Engineering Pipeline

The heterogeneity of the combined datasets necessitated a carefully designed, dual-path pipeline to systematically extract both high-level structural features and deep semantic information. This approach ensures that valuable signals from the original text structure are preserved while a clean version of the text is prepared for semantic analysis.

The process begins with a single, non-destructive step common to both paths: the removal of Hypertext Markup Language (HTML) tags to produce a baseline plain text. From this point, the pipeline diverges into two parallel streams:

The first path is dedicated to Structural Feature Extraction. Using the plain text, four statistical features that often serve as strong indicators of spam are computed: text length (character count), word count, capitalization rate, and the count of punctuation and special characters. This calculation is performed before any further normalization that would otherwise erase this critical information. While the subsequent removal of numbers in the second path might discard some specific cues (e.g., prices or dates), this was a deliberate trade-off to foster a more generalized semantic representation and reduce model complexity.
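As an illustration of the first path, the four structural features can be computed with the Python standard library alone. This is a minimal sketch rather than the authors' implementation: the function name `structural_features`, the definition of the capitalization rate (uppercase letters divided by all letters), and the use of ASCII punctuation as the set of "punctuation and special characters" are assumptions made here for concreteness.

```python
import string


def structural_features(plain_text: str) -> list[float]:
    """Return [char_count, word_count, capitalization_rate, punctuation_count]."""
    char_count = len(plain_text)
    word_count = len(plain_text.split())  # whitespace-delimited words

    letters = [ch for ch in plain_text if ch.isalpha()]
    # Assumption: capitalization rate = uppercase letters / all letters.
    cap_rate = sum(ch.isupper() for ch in letters) / len(letters) if letters else 0.0

    # Assumption: "punctuation and special characters" = ASCII punctuation.
    punct_count = sum(ch in string.punctuation for ch in plain_text)

    return [float(char_count), float(word_count), cap_rate, float(punct_count)]


# Computed on the HTML-stripped text, before lowercasing or symbol removal.
features = structural_features("FREE $$!!! Click now")
```

Because the function runs on the HTML-stripped text before any lowercasing or symbol removal, cues such as capital letters and repeated exclamation marks are still present when they are counted.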

The second path focuses on Semantic Text Normalization to prepare the text for the deep learning models. This is a sequential transformation pipeline that starts with the same plain text. First, all non-alphabetic characters (punctuation, numbers, and symbols) are removed using the regular expression [^a-zA-Z\s]. The text is then converted to lowercase for consistency. Subsequently, common English words with low semantic value are filtered out using the standard English stopword list from the Natural Language Toolkit (NLTK). Finally, the remaining words are reduced to their dictionary root form using the NLTK WordNetLemmatizer. Stating these specific parameter choices supports the methodological transparency and reproducibility of our work.
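The order of the normalization steps can be sketched as follows. To keep the example free of external downloads, a small hypothetical stopword set (`DEMO_STOPWORDS`) stands in for the NLTK English list, and lemmatization is an optional callable; with NLTK and its data installed, `set(stopwords.words("english"))` and `WordNetLemmatizer().lemmatize` could be passed in instead. Replacing matched characters with an empty string, rather than a space, is also an assumption.

```python
import re

NON_ALPHA = re.compile(r"[^a-zA-Z\s]")  # the expression quoted in the text

# Hypothetical stand-in for NLTK's English stopword list.
DEMO_STOPWORDS = {"the", "a", "an", "is", "are", "to", "of", "and",
                  "you", "your", "in", "for"}


def normalize(plain_text, stopwords=DEMO_STOPWORDS, lemmatize=None):
    """Apply the Path 2 steps in order and return the remaining tokens."""
    text = NON_ALPHA.sub("", plain_text)  # 1. drop digits, punctuation, symbols
    text = text.lower()                   # 2. lowercase
    tokens = [t for t in text.split() if t not in stopwords]  # 3. stopwords
    if lemmatize is not None:             # 4. optional lemmatization
        tokens = [lemmatize(t) for t in tokens]
    return tokens


tokens = normalize("You WON $1,000!!! Claim your prize in 24 hours.")
```

In this example the price and the time window disappear from the token sequence, which is the trade-off the first path compensates for by measuring the text before normalization.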

As shown in Table 3, this dual-path methodology yields, from a single original text, two distinct outputs: a vector of structural metrics and a clean sequence of tokens for the model.

Table 3.

The dual-path pre-processing and feature engineering pipeline.

The process detailed in Table 3 culminates in two distinct and complementary representations for each email: a vector of numerical structural features from Path 1, and a normalized sequence of tokens for deep semantic analysis from Path 2. These two components are ultimately fused at the model input layer, forming a holistic representation of the message. By separating the extraction of structural metadata from semantic normalization, the risk of losing valuable information during pre-processing is minimized. This multimodal approach empowers the model to leverage both the overt, structural signals common in spam (e.g., excessive capitalization) and the subtle, context-dependent meanings within the text, providing a clear and reproducible framework for achieving robust classification.

3.2.3. Balancing Process

A natural class imbalance was observed in the combined dataset: 49,916 instances belonged to the ham category, while only 31,670 were labeled as spam. This disproportion may lead learning algorithms to become biased toward the majority class, thereby compromising the overall model effectiveness. To mitigate this imbalance, a random oversampling strategy was employed to equalize the distribution between the two categories. Specifically, additional samples of the minority class (spam emails) were generated by duplicating existing entries at random until the quantity matched that of the ham class. Following this augmentation, all entries were randomly shuffled to form the final balanced dataset. The resulting data was saved as balanced_dataset.csv and utilized during model training. This approach helped reduce bias stemming from uneven class representation and contributed to fairer and more generalizable model outcomes.

3.2.4. Attribute Extraction

In this study, a multifaceted feature extraction process combining classical text representations with modern language models was applied for spam email detection. To make the obtained email texts processable by machine learning models, traditional text vectorization techniques such as CountVectorizer and TF-IDF were first used. With CountVectorizer, each text was represented based on the number of words it contained, resulting in 5000-dimensional sparse vectors based on the 5000 most frequently occurring words in the dataset. These vectors were directly used as input in basic machine learning models.

The TF-IDF method is a weighted word representation technique that makes word frequency meaningful not only within a text but across the entire document collection. In this method, the importance of each word within the text is calculated by considering both its frequency and the number of documents in which it appears. This way, words that are frequently used but not distinctive are represented with lower weight in the model, while distinctive expressions specific to spam messages are highlighted. The representations obtained using TF-IDF were used as an alternative to CountVectorizer in the training of some classical models, and the results were evaluated comparatively.

In addition to traditional feature extraction techniques, DistilBERT, a modern language model, was also used to represent the semantic structure of text in greater depth. Each email was converted into a 768-dimensional contextual vector using this model. DistilBERT is a Transformer-based model that effectively models the relationships between words in context. This allows for a more accurate representation of manipulative patterns, indirect expressions, and deceptive language structures commonly found in spam content.

In addition to the language model representation, four statistical content features were also extracted for each text: the character length of the email, the total number of words, the capitalization rate, and the number of punctuation marks. These numerical features contribute significantly to the model learning process as they reflect the formal characteristics of spam content. For example, the capitalization rate helps the model distinguish “shouting” behavior, while punctuation frequency helps distinguish spam-specific linguistic cues such as emphasis or excitement.

As a result, this study combines classical vectorization techniques (CountVectorizer and TF-IDF) with deep learning-based language representations (DistilBERT) and statistical content features. These features were evaluated in different ways depending on the problem and model: for classical machine learning algorithms, CountVectorizer and TF-IDF representations were used, while for the deep learning model, a 772-dimensional combined feature vector consisting of a 768-dimensional DistilBERT representation and 4 statistical features was used. This multi-modal feature extraction approach enables the model to perform a more comprehensive evaluation not only at the word level but also in terms of meaning, structure, and form.
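The weighting idea behind TF-IDF can be made concrete with a few lines of standard-library Python. The sketch below uses the textbook form, term count multiplied by ln(N / df), on three hypothetical toy documents; it is not necessarily the exact variant used in the experiments (scikit-learn's TfidfVectorizer, for instance, applies a smoothed idf and L2-normalizes each row by default).

```python
import math
from collections import Counter


def tfidf(docs):
    """Textbook TF-IDF: tf(t, d) * ln(N / df(t)) over whitespace tokens."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        tf = Counter(tokens)
        weights.append({t: tf[t] * math.log(n_docs / df[t]) for t in tf})
    return weights


# Hypothetical toy corpus: "hello" occurs in every document.
docs = [
    "hello free prize claim",
    "hello meeting agenda monday",
    "hello claim your free prize",
]
weights = tfidf(docs)
```

Here "hello" appears in every document and therefore receives a weight of zero, while "meeting", which appears in only one document, receives the largest weight per occurrence.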

3.3. Model Architectures and Rationale

This section details the rationale behind the selection of different model architectures and provides a technical description of the final proposed model.

3.3.1. Rationale for Model Selection

Transformer-based language models such as DistilBERT and RoBERTa have proven highly effective for text classification, including spam detection, due to their contextual understanding. DistilBERT is a compact version of BERT that achieves almost the same language comprehension as BERT with far fewer parameters, making it faster and more resource-efficient [38]. Despite being 40% smaller and 60% faster, DistilBERT retains about 95–97% of BERT's performance on language understanding tasks, which explains its strong accuracy in classification while being efficient for deployment [38,39]. RoBERTa, on the other hand, is a robustly optimized BERT variant that benefits from extensive pre-training and improved training strategies (e.g., dynamic masking and larger training data). For example, one study found that RoBERTa achieved 96% accuracy on a combined fake news corpus, higher than both traditional machine learning models and earlier deep networks [40]. This superior performance is attributed to RoBERTa's ability to capture rich contextual relationships between words, significantly enhancing text classification tasks [41,42]. In summary, DistilBERT and RoBERTa are chosen for spam email detection and general text classification because they leverage Transformer architectures to capture nuanced context, yielding state-of-the-art accuracy with improved efficiency.

CNNs have been a popular choice for text classification (including spam filtering) due to their strength in extracting local text patterns. CNN-based models treat text as a one-dimensional sequential signal, using convolution filters to learn n-gram features and salient phrases that are indicative of spam or topic categories. CNNs excel at capturing local interaction information in text: they can detect phrase-level or character-level patterns (e.g., common spam words or sequences) with position invariance [43]. Studies have shown that CNN models “record phrase-level semantics of different lengths” through their local filters and have achieved good results in classification tasks by picking up key features from the text [43]. Because spam emails often contain distinctive token patterns (such as certain keywords or symbol sequences), CNNs are effective in recognizing these local features. In practice, CNN architectures are often combined with pooling layers to abstract the most informative features, making them robust for tasks such as spam detection where specific subsequences (e.g., “FREE $$!!!”) can distinguish spam from legitimate text.

LSTM networks (a type of RNN) are well-suited for text classification problems that require understanding the sequential context of words, such as email spam detection. Unlike CNNs, which focus on local patterns, LSTMs process text as sequences, learning long-range dependencies (e.g., the overall sentence structure or context) which is crucial for capturing the meaning of messages. LSTMs include gating mechanisms (forget, input, and output gates) that overcome the vanishing gradient problem of standard RNNs, enabling them to retain important information over long word distances. This design allows LSTMs to “better capture the connection among context feature words” by filtering out irrelevant details via the forget gate. LSTM-based models have historically performed strongly on text classification, often outperforming simpler sequence models. For instance, bidirectional LSTMs (Bi-LSTMs) that read text in both directions further improve context capture, and have been shown to yield high accuracy in tasks from sentiment analysis to spam filtering [43]. In summary, LSTMs are chosen for their ability to model sequential context in messages and capture long-term dependencies, which is vital for understanding and classifying emails and messages that span multiple words or sentences.

In addition to deep learning models, incorporating structural features of text (also known as stylometric or metadata features) can enhance spam detection. Spam emails and messages often exhibit abnormal stylistic patterns that distinguish them from legitimate correspondence. For example, research has noted that spam messages frequently contain unusual text patterns—excessive use of uppercase letters, a high amount of punctuation, links, or certain “spammy” trigger words not typically found in normal messages [44]. Such features can be quantified, e.g., the text length (number of characters or words), the uppercase ratio (percentage of characters that are uppercase, indicating shouting or emphasis), or the punctuation count (e.g., many exclamation marks or special symbols). These attributes have been used as input to machine learning classifiers in spam detection with success. In fact, a survey of spam detection methods emphasizes that structural attributes like number of non-alphanumeric characters, count of capital letters, average words per sentence, etc., allow classifiers to learn and recognize spam patterns effectively [44].

Each of the above models captures different aspects of the data (semantic context vs. local patterns vs. stylometric cues). Combining Transformer-based text embeddings with additional structural features can yield a multimodal advantage in spam detection and text classification. The intuition is that Transformers such as BERT/DistilBERT or RoBERTa provide a deep understanding of the message content, while structural features provide auxiliary information about the message's format and style. Recent studies have demonstrated the benefit of such hybrid models [44]. Other hybrid approaches in the literature also pair deep neural networks (e.g., CNN or LSTM) with manual features or even graph-based features to improve robustness [45]. The general consensus is that Transformer models plus additional statistical features provide complementary strengths: the Transformer learns complex language patterns, and the structural features catch out-of-the-ordinary characteristics, together yielding a more accurate and explainable spam detection system [44]. This multimodal strategy is especially useful in a methodology aimed at maximizing detection performance: it leverages the state-of-the-art language understanding of Transformers and the human-understandable cues from structural analysis to satisfy both accuracy and interpretability demands in spam email filtering and general text classification.

3.3.2. Architecture of the Proposed Hybrid Model

The model developed in this study was created using a multi-modal deep learning architecture that approaches the task of spam email detection not only linguistically, but also structurally and formally. In the model design, contextual representations obtained from the pre-trained language model DistilBERT are combined with statistical features of each email text, and these two different information sources are merged into a single feature vector. Thus, the model is structured to consider not only what the email says but also how it is said.

The DistilBERT model converts each text into a 768-dimensional vector, enabling it to represent the contextual relationships between words with high accuracy. In addition to these contextual representations, four different statistical features were extracted for each email: text length, number of words, capitalization rate, and number of punctuation marks. These features are known to contribute significantly to distinguishing spam content. These two distinct information sources were then fused into a single input vector for the classification model. Specifically, the 768-dimensional contextual embedding from DistilBERT and the 4-dimensional scaled numerical features were concatenated horizontally, resulting in a final 772-dimensional feature vector for each email example. The combined vector was then provided as input to the deep learning model. For the text inputs to DistilBERT, all sequences were either padded or truncated to a uniform length of 256 tokens to ensure consistent input size for the model.

The model was developed using Python and the Keras library as a Sequential stack of layers. The first hidden layer following the input layer contains 128 neurons and uses the ReLU activation function. This layer is followed by BatchNormalization and 40% Dropout layers, which improve learning stability and reduce the risk of overfitting. The second hidden layer has 64 neurons, and BatchNormalization and Dropout are applied in the same manner. In the output layer, a single neuron with a sigmoid activation function is used, as appropriate for a binary classification problem. With this structure, the model generates a value between 0 and 1 for each email example, interpreted as the probability that the email is spam.

During model training, binary cross-entropy was selected as the loss function, as it is commonly regarded as an effective choice for binary classification tasks. The Adam optimization algorithm was employed, with the learning rate configured at 5 × 10⁻⁵. To reduce the risk of overfitting and unnecessary training cycles, the EarlyStopping strategy was implemented. Within this approach, training was halted and the optimal model weights were restored if the validation loss did not show improvement for three successive epochs. The resulting model seeks to deliver high levels of accuracy, robustness, and generalizability in spam email classification by integrating advanced language representation techniques with the interpretability of traditional structural features.
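To give a sense of how lightweight the classification head is, the layer sizes described above can be turned into a parameter count. The bookkeeping below is a sketch under the assumption of standard Keras defaults, which the text does not spell out: each Dense layer carries a bias vector, and each BatchNormalization layer stores four vectors per feature (scale, offset, moving mean, moving variance). Dropout layers add no parameters. The layer names are illustrative only.

```python
def dense_params(n_in: int, n_out: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def batchnorm_params(n_features: int) -> int:
    """Scale and offset (trainable) plus moving mean and variance."""
    return 4 * n_features


# Layer sizes taken from the description: 772 -> 128 -> 64 -> 1.
layers = [
    ("dense_128_relu", dense_params(772, 128)),
    ("batchnorm_128", batchnorm_params(128)),
    ("dense_64_relu", dense_params(128, 64)),
    ("batchnorm_64", batchnorm_params(64)),
    ("output_sigmoid", dense_params(64, 1)),
]
total_params = sum(count for _, count in layers)

for name, count in layers:
    print(f"{name:>16}: {count:>7,}")
print(f"{'total':>16}: {total_params:>7,}")
```

Under these assumptions the head has roughly 108 thousand parameters, most of them in the first dense layer that consumes the 772-dimensional fused vector.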

3.4. Experimental Setup and Reproducibility Protocols

To ensure transparency, validity, and reproducibility, a rigorous standardized protocol was established for the experiments. This section details the specific configurations for data handling, model training, and the computational environment.

3.4.1. Dataset Preparation, Splitting, and Balancing

All experiments were conducted on a comprehensive dataset created by merging seven distinct public corpora. The handling of this combined dataset followed a strict sequence to prevent any form of data leakage between training and testing phases.

- Initial Splitting: The complete, imbalanced corpus of 81,586 emails was first split into a training set (80% of the data, n = 65,268) and a hold-out test set (20%, n = 16,318). This division was performed using a stratified splitting strategy (stratify = y) based on the email labels (spam/ham). This ensures that the class distribution in the test set accurately reflects the original, real-world distribution of the combined data. The test set was then sequestered and remained entirely unseen until the final model evaluation.

- Handling Class Imbalance: A significant class imbalance was present in the initial training data. To address this, a random oversampling technique was applied, but critically, only to the 80% training partition. This method duplicated instances from the minority class (spam) until its sample size matched that of the majority class (ham). This created a balanced training corpus, which was used for all subsequent model training and validation steps to provide a fair learning basis for all algorithms.

- Validation Set Creation: For deep learning models that benefit from a validation set for hyperparameter tuning and early stopping (specifically, our proposed multimodal model), the balanced training set was further subdivided. 90% of this balanced data was used for a new, final training set, while the remaining 10% was designated as the validation set.

- Reproducibility Seed: To guarantee that all results are fully reproducible, a consistent random seed (random_state = 42) was used for all data splitting, shuffling, and sampling processes.
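The ordering of these steps (split first, oversample only the training partition, then carve out a validation set) can be sketched with the standard library. The helper names `stratified_split` and `oversample_minority` are illustrative and not taken from the study, which reports scikit-learn-style arguments (stratify = y, random_state = 42). Partition sizes here may differ by a sample from the reported ones because of per-class rounding, and a plain slice is assumed for the 90/10 validation split.

```python
import random
from collections import Counter


def stratified_split(labels, test_fraction, rng):
    """Return (train_idx, test_idx), preserving per-class proportions."""
    by_class = {}
    for i, y in enumerate(labels):
        by_class.setdefault(y, []).append(i)
    train_idx, test_idx = [], []
    for y in sorted(by_class):
        idx = by_class[y]
        rng.shuffle(idx)
        n_test = round(test_fraction * len(idx))
        test_idx += idx[:n_test]
        train_idx += idx[n_test:]
    rng.shuffle(train_idx)
    rng.shuffle(test_idx)
    return train_idx, test_idx


def oversample_minority(indices, labels, rng):
    """Duplicate random minority indices until every class matches the largest."""
    by_class = {}
    for i in indices:
        by_class.setdefault(labels[i], []).append(i)
    target = max(len(v) for v in by_class.values())
    balanced = []
    for y in sorted(by_class):
        idx = by_class[y]
        balanced += idx + rng.choices(idx, k=target - len(idx))
    rng.shuffle(balanced)
    return balanced


rng = random.Random(42)                    # fixed seed, as in the protocol
labels = [0] * 49_916 + [1] * 31_670       # 0 = ham, 1 = spam (counts from the text)

train_idx, test_idx = stratified_split(labels, 0.20, rng)   # 1. split first
balanced_train = oversample_minority(train_idx, labels, rng)  # 2. training only
n_val = round(0.10 * len(balanced_train))                   # 3. 90/10 validation
val_idx, fit_idx = balanced_train[:n_val], balanced_train[n_val:]

class_counts = Counter(labels[i] for i in balanced_train)
```

Because oversampling only ever draws from `train_idx`, no duplicated spam message can appear in the hold-out test set, which keeps the test distribution at its original imbalance.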

3.4.2. Model Configurations and Training Parameters

The architectural details and training parameters of the proposed multimodal model are as follows. First, for each email, two sets of features were generated. Structural features (text length, word count, uppercase ratio, punctuation count) were extracted from the semi-raw text prior to any normalization. These were then standardized using StandardScaler. Separately, contextual features were generated by feeding the normalized text into DistilBERT to obtain a 768-dimensional embedding vector (CLS token). These two feature sets were then concatenated to form a final 772-dimensional input vector for the classifier.
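The standardization and fusion step can be illustrated without any machine learning library. In this sketch the z-score parameters are fitted on training rows only, which is an assumption (the text states only that StandardScaler was used), and a zero vector stands in for a real DistilBERT embedding. The helper names and the three feature rows are hypothetical.

```python
from statistics import fmean, pstdev


def fit_scaler(rows):
    """Per-column mean and population standard deviation (as StandardScaler uses)."""
    columns = list(zip(*rows))
    means = [fmean(col) for col in columns]
    stds = [pstdev(col) or 1.0 for col in columns]  # constant columns stay unscaled
    return means, stds


def transform(row, means, stds):
    return [(x - m) / s for x, m, s in zip(row, means, stds)]


def fuse(embedding, scaled_structural):
    """Concatenate the 768-d embedding with the 4 scaled structural features."""
    return list(embedding) + list(scaled_structural)


# Hypothetical structural rows: [chars, words, capitalization rate, punctuation].
train_struct = [
    [20.0, 4.0, 0.42, 5.0],
    [120.0, 25.0, 0.03, 2.0],
    [60.0, 11.0, 0.10, 9.0],
]
means, stds = fit_scaler(train_struct)

embedding = [0.0] * 768  # placeholder for a DistilBERT sentence vector
fused = fuse(embedding, transform(train_struct[0], means, stds))
```

Scaling matters here because raw character counts can be in the hundreds or thousands while embedding components are typically small, so unscaled structural features would dominate the first dense layer.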

The classifier itself comprised two fully connected layers (128 and 64 units, ReLU) regularized with L2 (λ = 0.001), Batch Normalization, and Dropout (rate = 0.4).

All experiments were implemented in Python 3.10, utilizing TensorFlow 2.14 and the Hugging Face Transformers library (version 4.38.2). The experiments were executed within a Google Colaboratory environment, leveraging a single NVIDIA T4 GPU with 16 GB of VRAM.

The hyperparameters for the Transformer-based models were carefully configured as follows:

- Tokenization and Sequence Length: A maximum sequence length of 256 tokens was used for all Transformer inputs, using the DistilBertTokenizerFast and corresponding model classes. Longer texts were truncated, and shorter ones were padded to this length.

- Training Epochs and Strategy: Distinct training strategies were employed:

  - Baseline Transformers (DistilBERT & RoBERTa): These models were fine-tuned for a fixed 2 epochs with a batch size of 16. Preliminary experiments indicated this was an optimal duration to achieve strong performance without significant overfitting.

  - Proposed Multimodal Model: The final hybrid model was trained for a maximum of 15 epochs with a batch size of 32. To optimize training time, DistilBERT embeddings were pre-computed. An EarlyStopping callback monitored the validation loss, halting the training if no improvement was observed for 3 consecutive epochs (patience = 3) and restoring the weights from the best-performing epoch.

- Optimization and Learning Rate: The Adam optimizer was used with a learning rate of 5 × 10⁻⁵ for fine-tuning the Transformer models. The multimodal classifier head also utilized Adam and was regularized with L2 regularization (λ = 0.001), Batch Normalization after each dense layer, and Dropout (rate = 0.4).
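The early-stopping rule used for the multimodal model (patience of 3 epochs, at most 15 epochs, best weights restored) can be expressed as a small framework-independent loop. The function `simulate_early_stopping` and the loss values below are hypothetical; in an actual training run the same logic is typically provided by the framework's early-stopping callback.

```python
def simulate_early_stopping(val_losses, patience=3, max_epochs=15):
    """Replay the stopping rule over per-epoch validation losses.

    Returns (best_epoch, stopped_epoch), both 1-indexed. The weights kept
    at the end are those of best_epoch.
    """
    best_loss = float("inf")
    best_epoch = 0
    stopped_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        stopped_epoch = epoch
        if loss < best_loss:
            # A real training loop would snapshot the model weights here.
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break
    return best_epoch, stopped_epoch


# Hypothetical validation-loss trajectory.
best_epoch, stopped_epoch = simulate_early_stopping(
    [0.120, 0.080, 0.061, 0.064, 0.063, 0.070, 0.050]
)
```

In this trajectory the loss bottoms out at epoch 3, fails to improve for three epochs, and training halts at epoch 6; the lower loss at epoch 7 is never observed, which is the price paid for a short patience window.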

3.4.3. Statistical Analysis Protocol

In this study, uncertainty and significance were reported using the following protocol. Point estimates are accompanied by 95% confidence intervals obtained with a stratified non-parametric bootstrap (2000 resamples) that preserves class proportions. Confidence intervals and pairwise differences for ROC-AUC are calculated using the DeLong method (two-tailed, α = 0.05). The 95% confidence intervals for the Precision–Recall Area Under the Curve (PR-AUC, i.e., Average Precision) are obtained via bootstrap; pairwise differences are checked using a label-stratified permutation test (10,000 permutations) when necessary. McNemar's exact test (two-tailed) is applied to the paired per-sample correctness indicators of two models on the same test set; the Holm–Bonferroni correction is applied for multiple pairwise comparisons. Calibration is assessed with the Expected Calibration Error (ECE, 10 equal-frequency bins) and the Brier score, each with a bootstrap 95% confidence interval. At fixed False Positive Rate (FPR) operating points, True Positive Rate (TPR, i.e., Recall) and Precision are reported with 95% Wilson intervals on the test set. Unless otherwise specified, all intervals are 95%, and all tests are two-tailed.
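Three of the closed-form components of this protocol are short enough to sketch directly: the Wilson score interval, the two-tailed exact McNemar test on discordant pairs, and the Holm–Bonferroni step-down correction. These are textbook formulations written with the standard library; the function names and the example inputs are illustrative, and the study does not state which implementation was used.

```python
import math


def wilson_interval(successes, n, z=1.959964):
    """95% Wilson score interval for a binomial proportion."""
    if n == 0:
        return (0.0, 1.0)
    p = successes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return (max(0.0, center - half), min(1.0, center + half))


def mcnemar_exact(b, c):
    """Two-tailed exact McNemar p-value.

    b = samples only model A classified correctly,
    c = samples only model B classified correctly.
    """
    n = b + c
    if n == 0:
        return 1.0
    tail = sum(math.comb(n, i) for i in range(min(b, c) + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def holm_bonferroni(p_values, alpha=0.05):
    """Return reject/keep flags (in input order) under Holm's step-down rule."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, i in enumerate(order):
        if p_values[i] <= alpha / (m - rank):
            reject[i] = True
        else:
            break  # once one hypothesis is kept, all larger p-values are kept
    return reject


ci = wilson_interval(95, 100)          # e.g. 95 of 100 spam messages caught
p_value = mcnemar_exact(0, 10)         # 10 discordant pairs, all favoring one model
flags = holm_bonferroni([0.01, 0.04, 0.03])
```

The Wilson interval is a natural choice at low-FPR operating points because, unlike the normal approximation, it stays inside [0, 1] and behaves sensibly when the proportion is close to 0 or 1.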

3.4.4. Decision Threshold Tuning for Deployment-Oriented Evaluation

In practical applications such as spam detection, relying on a default classification threshold of 0.5 is often suboptimal. This is due to the asymmetric cost of misclassification: the consequence of incorrectly blocking a legitimate email (a False Positive) is typically far more severe than failing to filter a spam email (a False Negative). A high rate of false positives can erode user trust and lead to the loss of critical correspondence. To align the evaluation with realistic deployment scenarios, where operational constraints prioritize minimizing such errors, a dedicated decision threshold tuning process was performed. The objective was to assess model performance not based on aggregate metrics alone, but under stringent, pre-defined false positive budgets.

The tuning process was conducted using the prediction probabilities generated by the top-performing models (RF, CNN + LSTM + RF, and the proposed multimodal model) on the validation set. It is critical to note that this tuning was performed before the final evaluation on the hold-out test set, thereby preventing any data leakage and ensuring the integrity of our final performance metrics. For each model, a Receiver Operating Characteristic (ROC) curve was generated, plotting the TPR against the FPR across the full range of decision thresholds. From these curves, the specific probability thresholds corresponding to target operational points—fixed FPRs of 0.1%, 0.5%, and 1.0%—were programmatically identified.

These empirically determined thresholds, which are tailored to specific tolerances for false positives, were then applied during the final evaluation phase on the test set predictions. This procedure allowed us to report more practical and interpretable performance metrics, such as the achievable TPR (Recall) and Precision at these fixed, low-FPR operating points. The comprehensive results of this deployment-oriented analysis, including the identified thresholds for each model, are detailed in Section 4.3.1.
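The threshold selection described in this subsection can be sketched as follows: the threshold is chosen from the validation scores of legitimate emails so that the false positive budget is not exceeded, and it is then applied unchanged to the test predictions. The helper names, the strict "score > threshold" decision rule, and all score values are assumptions made for illustration; a full ROC routine would arrive at an equivalent operating point.

```python
import math


def threshold_at_fpr(val_scores, val_labels, target_fpr):
    """Lowest threshold whose validation FPR stays within target_fpr.

    Labels: 0 = ham, 1 = spam. An email is flagged when score > threshold.
    """
    ham_scores = sorted(
        (s for s, y in zip(val_scores, val_labels) if y == 0), reverse=True
    )
    allowed_fp = math.floor(target_fpr * len(ham_scores) + 1e-9)
    if allowed_fp >= len(ham_scores):
        return float("-inf")
    # Only ham scores strictly above this value become false positives.
    return ham_scores[allowed_fp]


def rates_at_threshold(scores, labels, threshold):
    """Return (TPR, FPR, precision) for the rule score > threshold."""
    tp = fp = fn = tn = 0
    for s, y in zip(scores, labels):
        flagged = s > threshold
        if y == 1:
            tp += flagged
            fn += not flagged
        else:
            fp += flagged
            tn += not flagged
    tpr = tp / (tp + fn) if tp + fn else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    return tpr, fpr, precision


# Hypothetical validation scores (10 ham, 10 spam).
val_scores = [0.05, 0.10, 0.15, 0.20, 0.25, 0.30, 0.35, 0.40, 0.45, 0.50,
              0.30, 0.48, 0.60, 0.70, 0.80, 0.90, 0.95, 0.97, 0.98, 0.99]
val_labels = [0] * 10 + [1] * 10
threshold = threshold_at_fpr(val_scores, val_labels, target_fpr=0.10)

# Hypothetical hold-out test scores (10 ham, 5 spam); threshold is reused as-is.
test_scores = [0.02, 0.10, 0.20, 0.33, 0.47, 0.12, 0.08, 0.41, 0.29, 0.15,
               0.40, 0.55, 0.91, 0.99, 0.87]
test_labels = [0] * 10 + [1] * 5
tpr, fpr, precision = rates_at_threshold(test_scores, test_labels, threshold)
```

A 10% budget is used here only so that the toy data contains at least one permitted false positive; budgets such as 0.1% require thousands of validation ham messages before the threshold is meaningfully constrained.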

3.5. Temporal Robustness Analysis

In addition to the static performance benchmark, this study introduces a novel temporal analysis to investigate the impact of concept drift on model performance. This analysis is designed to simulate a more realistic deployment scenario where a model trained on historical data must contend with evolving, contemporary threats. The experimental setup for this specific analysis is detailed below.

3.5.1. Temporal Dataset Segmentation

To facilitate a robust temporal analysis, the combined dataset of 81,586 emails was partitioned into two distinct, non-overlapping eras. This segmentation was not arbitrary; rather, it was data-driven and constrained by the collection periods of the large-scale, publicly available benchmark corpora that constitute the heterogeneous dataset used in this study. The goal was to create two macro-periods representing technologically and tactically different stages of spam evolution.

Classic Era (c. 1998–2006): This subset (~65,287 emails) comprises datasets from the early internet, including the Enron Corpus (collected 2000–2002), Ling-Spam (collected ~2000), and the SpamAssassin Public Corpus (collected 2002–2006). This period largely pre-dates the widespread adoption of smartphones and social media, and its spam is characterized by more direct, text-heavy, keyword-based commercial solicitations and early filter evasion tactics.

Modern Era (c. 2010–2022): This subset (~16,299 emails) is composed of more recent datasets, primarily the Spam Collection and various modern corpora from the Kaggle platform, whose collection dates fall within this period. This era reflects a landscape dominated by mobile-centric communication, sophisticated social engineering, and phishing tactics that often mimic legitimate notifications from online services.

It is critical to note the observational gap between 2007 and 2009 in the timeline considered in this study. This gap does not represent a deliberate exclusion but reflects a documented scarcity of large, publicly available, and consistently labeled email spam corpora from this transitional period. While this limitation prevents a more granular year-over-year analysis, the chosen eras effectively capture two significantly different spam paradigms, allowing for a meaningful investigation into the impact of long-term concept drift on model performance.

3.5.2. Experimental Protocol: Train-in-the-Past, Test-in-the-Future

The core of the temporal analysis adheres to a strict “train-in-the-past, test-in-the-future” protocol to measure model resilience against unseen spam tactics. The protocol was executed for the top-performing classical model (RF) and the best overall model (DistilBERT + Features):

- Training Phase: Each model was trained exclusively on a training partition (80%) of the Classic Era dataset. During this phase, the models had no exposure to any data, linguistic patterns, or vocabulary from the Modern Era.

- Evaluation Phase: The performance of the single trained model was then evaluated on two independent test sets:

  - In-Distribution Evaluation (Baseline): The model was first tested on the hold-out test partition (20%) of the Classic Era dataset. This evaluation establishes the model’s baseline performance within its own temporal distribution.

  - Out-of-Distribution Evaluation (Stress Test): The same model was subsequently tested on the entire Modern Era dataset. This evaluation measures the model’s ability to generalize to future spam tactics and quantifies the performance degradation attributable to concept drift.

This comparative evaluation allows for a direct measurement of performance decay, providing empirical evidence of the models’ temporal robustness (or lack thereof).
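The protocol can be summarized as a short harness: partition by era, train once on the Classic Era training split, and score the same model twice. Everything concrete in the sketch below is hypothetical, including the source tags, the toy records, and the majority-class stand-in for a classifier; the `fit` and `score` callables mark where a real model and metric would be plugged in.

```python
import random
from collections import Counter

# Hypothetical source tags for the corpora assigned to the Classic Era.
CLASSIC_SOURCES = {"enron", "ling_spam", "spamassassin"}


def temporal_splits(records, seed=42, train_fraction=0.8):
    """records: list of (source, text, label). Returns train, classic_test, modern_test."""
    classic = [r for r in records if r[0] in CLASSIC_SOURCES]
    modern = [r for r in records if r[0] not in CLASSIC_SOURCES]
    random.Random(seed).shuffle(classic)
    cut = int(train_fraction * len(classic))
    return classic[:cut], classic[cut:], modern


def run_protocol(records, fit, score, seed=42):
    train, classic_test, modern_test = temporal_splits(records, seed)
    model = fit(train)                        # trained once, Classic Era only
    in_dist = score(model, classic_test)      # baseline
    out_dist = score(model, modern_test)      # stress test on all modern data
    return {"in_distribution": in_dist,
            "out_of_distribution": out_dist,
            "decay": in_dist - out_dist}


# Stand-in "model": always predicts the majority label of its training data.
def fit_majority(train):
    return Counter(label for _, _, label in train).most_common(1)[0][0]


def accuracy(model, rows):
    return sum(model == label for _, _, label in rows) / len(rows)


records = (
    [("enron", f"classic mail {i}", 1) for i in range(10)]
    + [("kaggle", f"modern mail {i}", i % 2) for i in range(4)]
)
result = run_protocol(records, fit_majority, accuracy)
```

The `decay` entry is the quantity of interest: the drop from the in-distribution baseline to the out-of-distribution stress test for one and the same trained model.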

3.6. Comparative Analysis of Alternative Models

Before finalizing the proposed framework, a comprehensive comparative analysis was conducted to evaluate a wide range of modeling approaches. This process was essential for assessing the performance of different architectures on the dataset and for providing an empirical basis for the final model selection.

Crucially, all models discussed in this section were trained and tested on the same unified dataset—created by merging all seven public corpora—and subjected to identical preprocessing steps. This ensures a fair and direct comparison of their capabilities.

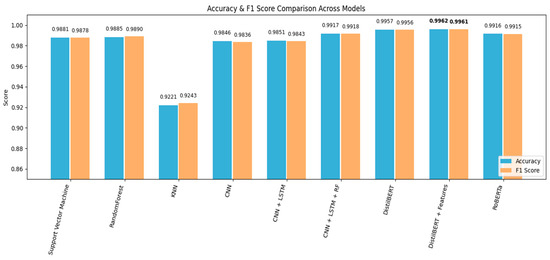

Figure 5 provides a high-level visual summary of the accuracy and F1-score for the key models from each architectural family, illustrating the clear performance hierarchy. The following subsections, supported by the detailed metrics in Table 4, provide a structured discussion of these results.

Figure 5.

Side-by-side comparison of Accuracy and F1-Score for key evaluated models.

Table 4.

Detailed Performance Comparison of All Evaluated Models.

3.6.1. Performance of Classical Machine Learning Models

The first group of experiments involved classical machine learning algorithms, which rely on vectorized text features. As can be seen in the detailed results in Table 4, the RF model yielded the most successful results in this category, with an accuracy of 98.85% and an F1 score of 98.90%. Other models such as SVM also demonstrated strong performance, while NB and KNN yielded lower results. Although effective, the primary limitation of these models is their inability to capture the contextual and sequential nature of language.

3.6.2. Performance of Deep Learning Models

To address the limitations of classical methods, deep learning architectures recognized for their capacity to learn hierarchical and sequential patterns were evaluated. The results show a clear improvement over classical models. Notably, the hybrid model, which combines CNN and LSTM layers and uses an RF classifier in the final stage, achieved the highest success in this group, with an accuracy of 99.17% and an F1 score of 99.18%. This highlights the benefit of hybrid deep learning architectures.

3.6.3. Performance of Transformer-Based Models

The final set of experiments focused on Transformer-based models, which represent the state of the art in natural language understanding. RoBERTa reached an accuracy of 99.16%, and the baseline DistilBERT reached 99.57%.

However, the best result was achieved with the proposed multimodal model, DistilBERT + Features. This approach, which combines contextual text representations with fundamental statistical attributes, produced the most effective outcomes, outperforming all others by reaching a peak accuracy of 99.62% and an F1 score of 99.61%. The success of this model is attributed to its ability to take into account not only the meaning of the words but also the structural and formal characteristics of the message.
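The fusion idea can be illustrated with a short sketch. The specific structural features below (length, uppercase ratio, digit ratio, exclamation marks, URL count) are assumptions chosen for illustration, and the embedding is a placeholder vector standing in for a DistilBERT sentence representation; the fused vector would then be passed to a classification head.

```python
import re


def structural_features(text):
    """Hypothetical surface-level features; the study's exact set may differ."""
    n = max(len(text), 1)  # avoid division by zero on empty messages
    return [
        float(len(text)),                            # message length in characters
        sum(c.isupper() for c in text) / n,          # share of uppercase characters
        sum(c.isdigit() for c in text) / n,          # share of digits
        float(text.count("!")),                      # number of exclamation marks
        float(len(re.findall(r"https?://", text))),  # number of URLs
    ]


def fuse(embedding, text):
    """Concatenate a text embedding with structural features (simple early fusion)."""
    return list(embedding) + structural_features(text)


# Placeholder two-dimensional "embedding"; a real one would come from the encoder.
fused = fuse([0.1, 0.2], "WIN now!! http://x.example")
```

Concatenation is only one possible fusion strategy; in practice the structural features would usually be scaled before being combined with the embedding.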

The comparative analysis presented in Table 4 reveals a broad performance hierarchy: the best Transformer-based models outperform the deep learning architectures, which in turn outperform classical machine learning methods. The ordering is not strict, since RoBERTa (99.16%) falls marginally below the CNN + LSTM + RF hybrid (99.17%). The incremental performance gains at each stage highlight the importance of sophisticated feature representation, moving from simple word counts to complex contextual embeddings. The success of the DistilBERT + Features model supports the central hypothesis that a hybrid approach combining deep language understanding with structural feature analysis provides the most effective solution for spam email detection.

4. Findings and Evaluations

In this study, a comprehensive modeling process for spam email detection was carried out using a large and balanced dataset compiled from various sources. Classical machine learning algorithms, deep learning models, and Transformer-based approaches were all tested. Based on the results obtained, the models that achieved the highest success were identified, and their advantages and limitations were evaluated.

4.1. Performance Benchmark on a Combined Static Dataset

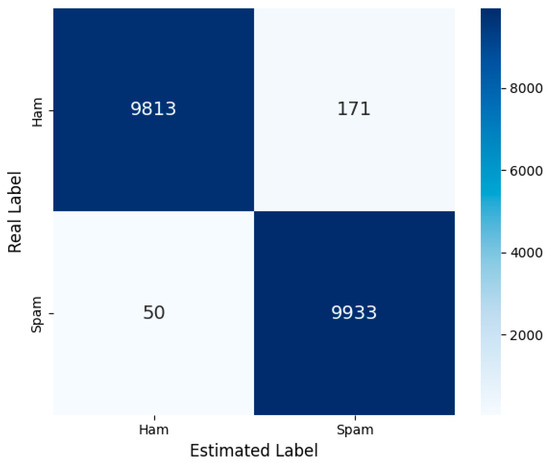

Among classical machine learning algorithms, the RF algorithm stands out with 98.85% accuracy and a high F1 score. The confusion matrix in Figure 6 shows that, while the model classifies spam with high accuracy, it produces more false positive predictions on ham emails than the other models. This indicates that the model has high sensitivity on the spam class but slightly lower specificity on the ham class.

Figure 6.

Random Forest—Confusion Matrix.
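Sensitivity and specificity, as discussed above, follow directly from the four cells of a confusion matrix. The sketch below uses the standard definitions, with spam as the positive class; the counts in the example call are made up for illustration and are not the values in Figure 6.

```python
import math


def confusion_metrics(tp, fp, tn, fn):
    """Derive standard metrics from binary confusion-matrix counts (spam = positive)."""
    sensitivity = tp / (tp + fn)  # share of spam correctly flagged (recall)
    specificity = tn / (tn + fp)  # share of ham correctly passed
    balanced_accuracy = (sensitivity + specificity) / 2
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom > 0 else 0.0
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "balanced_accuracy": balanced_accuracy,
        "mcc": mcc,
    }


# Illustrative counts only: many false positives lower specificity, not sensitivity.
example = confusion_metrics(tp=90, fp=20, tn=80, fn=10)
```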

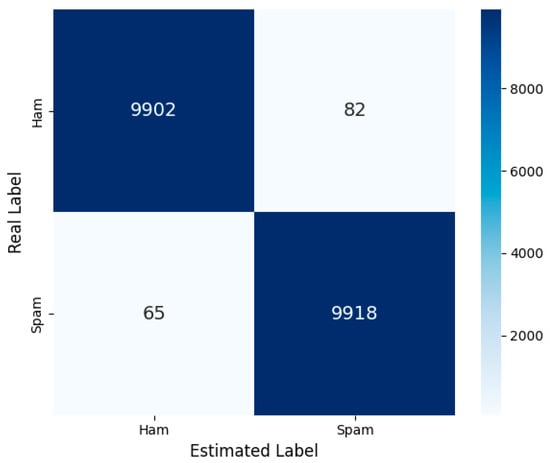

Among the deep learning models, the CNN + LSTM + RF combination stands out with an accuracy of 99.17%. The confusion matrix in Figure 7 shows that this model classifies spam and ham samples more evenly than the RF model. The CNN layer learns local text patterns, the LSTM captures sequential structure, and the RF performs the final classification. This approach combines the strengths of classical and deep learning methods.

Figure 7.

CNN + LSTM + RF—Confusion Matrix.

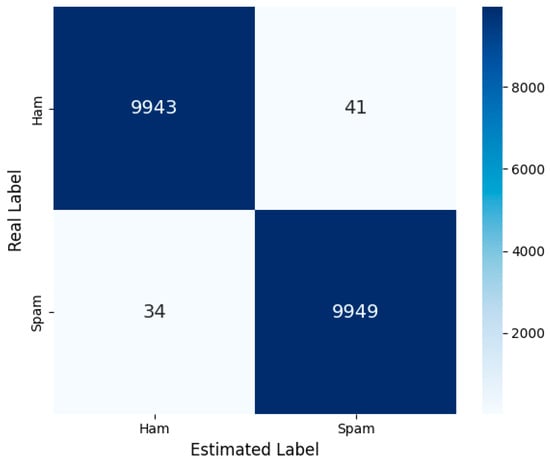

Among Transformer-based models, the highest accuracy (99.62%) was achieved by the multimodal architecture, which adds content-based statistical features to the language representations obtained from DistilBERT. Its success stems not only from DistilBERT's ability to model the semantic content of the text, but also from the additional information provided by structural text features. As seen in the confusion matrix in Figure 8, the number of misclassified examples is lower than for the other models. This result demonstrates the model's capability to base decisions on formal features as well as on meaning. The combination of contextual meaning and surface-level features makes this model the most effective solution.

Figure 8.

MultiModal—Confusion Matrix.

4.2. The Challenge of Concept Drift: A Temporal Performance Analysis

While the static benchmark provides a comprehensive overview of model capabilities on a diverse dataset, it does not capture the critical dimension of time. To address this, a temporal analysis was conducted as described in Section 3.5. The results reveal a significant degradation in performance, providing stark evidence of concept drift.

4.2.1. Quantifying Performance Degradation over Time

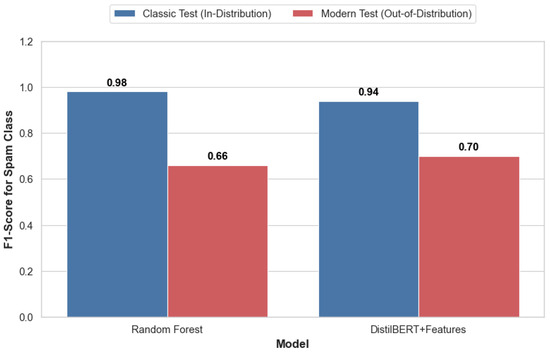

Figure 9 illustrates the performance of the RF and DistilBERT + Features models when trained exclusively on the Classic Era dataset and subsequently tested on both the Classic and Modern Era test sets. A sharp decline in performance is evident for both models when confronted with modern spam.

Figure 9.

F1-score comparison of models trained on historical data (Classic Era) and tested on both historical and contemporary spam (Modern Era).

The RF model, which achieved a high F1-score of 98% on its native Classic Era data, dropped to 66% on the Modern Era data, a loss of 32 percentage points, or roughly 33% in relative terms. More critically, its precision for the spam class fell from 98% to just 50.3%, indicating an unacceptably high rate of false positives in which legitimate emails are incorrectly flagged as spam. The DistilBERT + Features model, while more robust, was not immune: its F1-score for spam detection decreased from 94% to 70%. Although its deeper semantic understanding provided a buffer against temporal decay, this still represents a significant vulnerability.
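The distinction between an absolute drop (in percentage points) and a relative drop (as a share of the starting value) can be checked with a few lines of arithmetic on the F1-scores reported above. The helper name is illustrative.

```python
def f1_drop(before, after):
    """Return (absolute drop in percentage points, relative drop in percent)."""
    absolute = before - after
    relative = 100.0 * absolute / before
    return absolute, relative


rf_abs, rf_rel = f1_drop(98.0, 66.0)  # RF: 32 points, about 32.7% relative
mm_abs, mm_rel = f1_drop(94.0, 70.0)  # DistilBERT + Features: 24 points, about 25.5% relative
```

On both measures the multimodal model loses less than RF, which is consistent with the greater robustness described in the text.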

4.2.2. The Linguistic Evolution of Spam Tactics

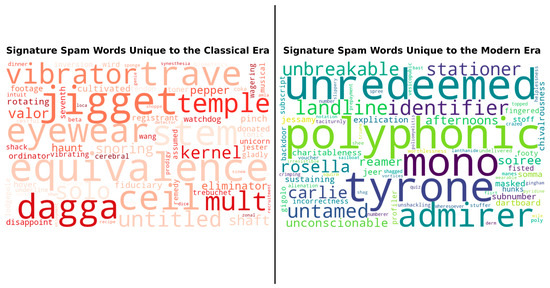

The underlying cause of this performance degradation is a fundamental shift in the language and tactics of spam. Figure 10 visualizes the “signature” keywords that are most characteristic of each era, as determined by a log-odds ratio analysis. It is important to note that this method intentionally suppresses words that are common to both eras (such as ‘free’, ‘money’, ‘click’) to specifically highlight the terms that define the tactical differences between the periods.

Figure 10.

Signature spam keywords unique to the Classic Era versus the Modern Era.
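A log-odds ratio analysis of this kind can be sketched as below. The exact tokenization and smoothing used in the study are not stated here, so whitespace tokenization and add-`alpha` smoothing are assumptions of this sketch. Words that are equally frequent in both eras score close to zero, which is why shared terms do not appear among the signature keywords.

```python
import math
from collections import Counter


def log_odds_ratio(classic_docs, modern_docs, alpha=1.0):
    """Score each word: positive means Modern Era, negative means Classic Era.

    Assumes at least two distinct words overall, so no smoothed
    probability reaches 1.
    """
    classic = Counter(tok for doc in classic_docs for tok in doc.lower().split())
    modern = Counter(tok for doc in modern_docs for tok in doc.lower().split())
    vocab = set(classic) | set(modern)
    n_c, n_m, v = sum(classic.values()), sum(modern.values()), len(vocab)
    scores = {}
    for word in vocab:
        # Smoothed relative frequency of the word in each era.
        p_c = (classic[word] + alpha) / (n_c + alpha * v)
        p_m = (modern[word] + alpha) / (n_m + alpha * v)
        scores[word] = math.log(p_m / (1 - p_m)) - math.log(p_c / (1 - p_c))
    return scores


# Toy corpora for illustration only.
scores = log_odds_ratio(
    ["free money pills", "free pills offer"],
    ["free voucher landline", "free voucher claim"],
)
```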

The Classic Era (left) is defined by terms related to direct commercial offers, financial schemes, and rudimentary filter evasion tactics (e.g., eyewear, vibrator, jigget, equivalence). The spam of this period often resembles blatant product advertisements or uses “word salad” to confuse early statistical filters.

In stark contrast, the Modern Era (right) is characterized by a vocabulary centered on mobile services and psychological manipulation. Signature terms such as polyphonic, landline, and voucher highlight the shift towards mobile-centric scams and fake offers. Furthermore, words such as unredeemed and emotionally charged adjectives (unbreakable, irresistible) signify a move towards more sophisticated social engineering and phishing tactics that mimic legitimate service notifications and exploit user psychology.

4.3. In-Depth Robustness and Generalization Analysis

This section advances beyond headline accuracy metrics in the range of 99–99.6% and positions the results under a single, standardized evaluation protocol, emphasizing the contribution of feature fusion. We compare a broad set of traditional, neural, and Transformer baselines trained and tested with identical preprocessing and metrics. Our emphasis is on reliability and generalization across sources and on deployment-relevant behavior (e.g., stability at low false-positive operating points), rather than on marginal absolute gains that may not be practically meaningful. The multimodal variant, in which DistilBERT embeddings are fused with a lightweight set of structural cues, serves to quantify the value of combining deep semantics with simple text-level regularities. All confidence intervals and p-values reported in this subsection are computed according to the protocol specified in Methods (Statistical Procedures).

4.3.1. Statistical Robustness and Calibration

The point estimates presented in Figure 5 are complemented by reporting statistical uncertainty, threshold-free metrics, paired significance, and probability calibration. Our goal is to characterize robustness and deployment-relevant behavior rather than repeat Accuracy/Precision/Recall/F1 numbers.

Table 5 summarizes model robustness using 95% bootstrap confidence intervals (2000 stratified resamples) and threshold-independent metrics. Under the combined evaluation protocol and the balanced test split (n = 4992), accuracy values converged at ≳0.995. Because of this ceiling effect, the Accuracy/F1 intervals largely overlap, which indicates that the observed differences lie within sampling variability and do not constitute a significant performance gap. The threshold-independent metrics nevertheless show small but consistent distinctions: the Multimodal (DistilBERT + Features) model achieved the highest PR-AUC and ROC-AUC (0.9998/0.9998), as well as the highest Matthews Correlation Coefficient (MCC) (0.9948) and Balanced Accuracy (0.9974), whereas RF and CNN + LSTM + RF fall within a statistically similar range on these metrics. The remainder of this subsection completes the picture with paired significance testing (McNemar) and calibration findings.

Table 5.

Robustness metrics and 95% Confidence Intervals (CIs).
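A stratified bootstrap confidence interval of the kind reported in Table 5 can be sketched as follows. The percentile method and the resampling details are assumptions of this sketch; the study's exact procedure is the one specified in its Methods section.

```python
import random


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def stratified_bootstrap_ci(y_true, y_pred, metric=accuracy,
                            n_boot=2000, level=0.95, seed=0):
    """Percentile bootstrap CI, resampling with replacement within each class."""
    rng = random.Random(seed)
    by_class = {}
    for i, label in enumerate(y_true):
        by_class.setdefault(label, []).append(i)
    stats = []
    for _ in range(n_boot):
        idx = []
        for members in by_class.values():
            # Each resample keeps the original class sizes (stratification).
            idx.extend(rng.choices(members, k=len(members)))
        stats.append(metric([y_true[i] for i in idx], [y_pred[i] for i in idx]))
    stats.sort()
    lower = stats[int((1 - level) / 2 * n_boot)]
    upper = stats[min(n_boot - 1, int((1 + level) / 2 * n_boot))]
    return lower, upper
```

Resampling within each class keeps the class balance fixed across resamples, so the interval reflects sampling variability in the predictions rather than in the class proportions.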

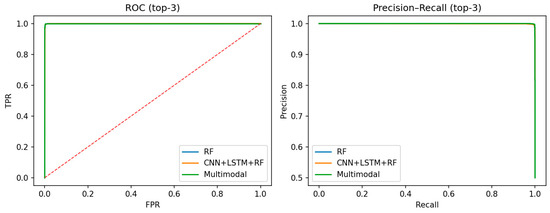

Figure 11 illustrates the ROC curve (left) and the Precision-Recall curve (right) for the three models. The ROC panel emphasizes the operating points at false positive rates of 0.1%, 0.5%, and 1.0%. The PR panel shows the corresponding precision–recall operating points at these thresholds.

Figure 11.

ROC and PR curves with 95% bootstrap bands. The dashed red line in the ROC panel represents the performance of a random classifier.
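The operating points at fixed false positive rates can be obtained by choosing the score threshold from the ham (negative) scores alone and then reading off the spam recall at that threshold. The sketch below assumes higher scores mean "more likely spam" and that a message is flagged when its score is strictly above the threshold; the function name is illustrative.

```python
import math


def tpr_at_fpr(y_true, scores, target_fpr):
    """Return (threshold, TPR) such that the empirical FPR does not exceed target_fpr."""
    neg = sorted((s for t, s in zip(y_true, scores) if t == 0), reverse=True)
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    # Number of ham messages that may be flagged without exceeding the target.
    allowed_fp = math.floor(target_fpr * len(neg) + 1e-9)
    threshold = neg[allowed_fp] if allowed_fp < len(neg) else float("-inf")
    tpr = sum(s > threshold for s in pos) / len(pos)
    return threshold, tpr
```

With a test set of a few thousand ham messages, an FPR of 0.1% corresponds to only a handful of allowed false positives, which is why estimates in this region are sensitive to individual samples.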

The McNemar test was applied to per-sample correctness indicators (1 = correct, 0 = incorrect) computed on the same test samples. Here, b01 is the number of samples for which the contrast model is correct and Multimodal is incorrect, and b10 is the number for which Multimodal is correct and the contrast model is incorrect. In both comparisons the two-tailed p-value exceeds 0.05, so no statistically significant difference is detected on this test split. For Multimodal vs. RF, b10 − b01 = +10; the trend favors Multimodal, but p = 0.076 does not fall below the significance threshold.
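For reference, a paired McNemar test depends only on the two discordant counts. The sketch below implements the exact (binomial) two-sided variant; whether the study used the exact or the chi-square form is not stated here, so this choice is an assumption, and the counts in the example call are hypothetical rather than those of Table 6.

```python
from math import comb


def mcnemar_exact(b01, b10):
    """Two-sided exact McNemar p-value from the discordant pair counts.

    Under the null hypothesis, each discordant pair is equally likely to
    favor either model, so min(b01, b10) follows Binomial(n, 0.5).
    """
    n = b01 + b10
    if n == 0:
        return 1.0  # the models never disagree
    k = min(b01, b10)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical counts for illustration only.
p_value = mcnemar_exact(b01=1, b10=9)
```

Samples that both models classify correctly, or both misclassify, carry no information about which model is better and therefore do not enter the test.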

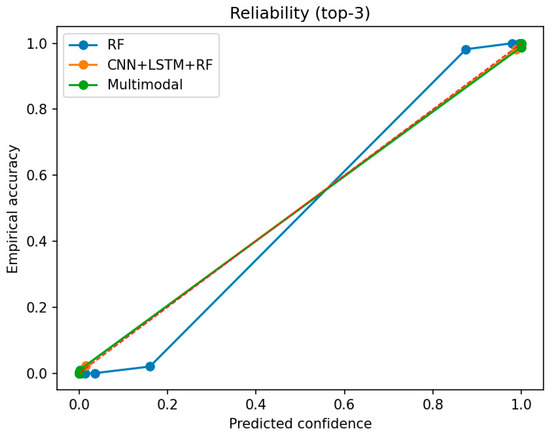

As illustrated in Figure 12, the reliability (calibration) curves indicate that probability estimates are well calibrated across models, with the multimodal approach showing the smallest miscalibration in the low-FPR operating region.

Figure 12.

Reliability (Calibration) curves with 95% bootstrap bands for all models. The dashed diagonal line represents perfect calibration.

The findings in this subsection show that, according to the McNemar analysis in Table 6, there is no statistically significant difference between the top-performing models (two-tailed p-values > 0.05). However, in threshold-independent measurements shown in Table 5 and Figure 11, the Multimodal (DistilBERT + Features) model consistently achieves the highest values and demonstrates superior sensitivity, particularly in the very low false-positive region (FPR = 0.1%).

Table 6.

Paired McNemar test.

In terms of probability quality, deep models are significantly better calibrated. Table 7 summarizes these results using the Expected Calibration Error (ECE) and Brier score. The CNN + LSTM + RF model attains the lowest ECE (0.0020), while the Multimodal model has the lowest Brier score (0.0024). In contrast, the higher ECE and Brier values for the RF model are consistent with its deviation in the reliability curve shown in Figure 12.

Table 7.

Calibration metrics (ECE, Brier) with 95% bootstrap CIs.
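Both calibration metrics can be computed directly from predicted spam probabilities. The sketch below uses the binary, reliability-curve form of ECE with ten equal-width bins; the bin count and the binning scheme are assumptions, since ECE has several variants.

```python
def brier_score(y_true, probs):
    """Mean squared difference between predicted probability and the 0/1 outcome."""
    return sum((p - t) ** 2 for t, p in zip(y_true, probs)) / len(y_true)


def expected_calibration_error(y_true, probs, n_bins=10):
    """Weighted mean gap between average predicted probability and observed spam rate."""
    bins = [[] for _ in range(n_bins)]
    for t, p in zip(y_true, probs):
        idx = min(int(p * n_bins), n_bins - 1)  # p = 1.0 falls in the last bin
        bins[idx].append((t, p))
    n = len(y_true)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_prob = sum(p for _, p in members) / len(members)
        spam_rate = sum(t for t, _ in members) / len(members)
        ece += len(members) / n * abs(mean_prob - spam_rate)
    return ece
```

The two metrics can rank models differently, as in Table 7: the Brier score also rewards sharp, confident predictions, whereas ECE measures only the agreement between predicted probabilities and observed frequencies.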

Although CNN + LSTM + RF attains the lowest ECE, the multimodal model delivers superior sensitivity in the very low-FPR region while maintaining competitive Brier performance. Therefore, the Multimodal approach is preferred as the primary model for field deployment and subsequent experiments, while the other two models are retained as a robust and explanatory basis for comparison.

4.3.2. Cross-Dataset Generalization Analysis

It is critical to confirm that the high success metrics presented in the study stem from the model's ability to generalize to data it has never seen before, rather than from memorizing the distributions of the different corpora in the combined dataset. To this end, a two-stage robustness analysis was performed.

First, to prevent potential data leakage between different datasets, exact duplicate email texts across all corpora were identified and deduplicated prior to merging. The separation of training, validation, and test sets was performed before any oversampling operations.
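The ordering of these steps matters: duplicates are removed first, the split comes second, and oversampling touches only the training portion. A minimal sketch of that ordering follows, simplified to a train/test split (the study also uses a validation set) with hypothetical function and parameter names.

```python
import random


def dedup_split_oversample(samples, test_frac=0.2, seed=0):
    """samples: list of (text, label). Returns (balanced_train, test)."""
    # 1) Drop exact duplicate texts so the same email cannot land in both splits.
    seen, unique = set(), []
    for text, label in samples:
        if text not in seen:
            seen.add(text)
            unique.append((text, label))
    # 2) Split before any oversampling.
    rng = random.Random(seed)
    rng.shuffle(unique)
    n_test = int(len(unique) * test_frac)
    test, train = unique[:n_test], unique[n_test:]
    # 3) Randomly oversample minority classes in the training split only.
    by_label = {}
    for sample in train:
        by_label.setdefault(sample[1], []).append(sample)
    target = max(len(items) for items in by_label.values())
    balanced = []
    for items in by_label.values():
        balanced.extend(items)
        balanced.extend(rng.choices(items, k=target - len(items)))
    return balanced, test
```

If oversampling were applied before the split, copies of the same minority-class email could appear in both training and test data and inflate the reported scores.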

Second, and more importantly, a cross-validation strategy called “Leave-One-Corpus-Out” (LOCO) was applied. In this strategy, the models were trained on a combination of six of the seven datasets and tested on the seventh dataset, which they had never seen before. This process was repeated seven times, with each dataset being excluded in turn. This test measures how well the model can adapt to completely new emails from different sources and structures. The results of this comprehensive test are summarized in Table 8.
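The LOCO loop itself is simple and can be sketched independently of any particular model. In the sketch below, `train_fn` and `eval_fn` are placeholders for the actual training and scoring routines, and the majority-class baseline is included only to make the example runnable.

```python
from collections import Counter


def leave_one_corpus_out(samples_by_corpus, train_fn, eval_fn):
    """Train on all corpora but one, test on the held-out corpus, repeat for each."""
    results = {}
    for held_out in samples_by_corpus:
        train = [sample
                 for name, items in samples_by_corpus.items() if name != held_out
                 for sample in items]
        model = train_fn(train)
        results[held_out] = eval_fn(model, samples_by_corpus[held_out])
    return results


def majority_baseline(train):
    """Toy 'model': always predict the most frequent training label."""
    return Counter(label for _, label in train).most_common(1)[0][0]


def accuracy_on(model, samples):
    return sum(label == model for _, label in samples) / len(samples)
```

With seven corpora this loop trains seven models, and each score reflects performance on a source the model has never seen.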

Table 8.

Cross-Validation Generalization Performance of Models Using the LOCO Method.

The best performance values are marked in bold. Dataset names have been standardized to ensure consistency with the definitions in Section 3.2.1.