Multi-Agent Hierarchical Reinforcement Learning for PTZ Camera Control and Visual Enhancement

Abstract

1. Introduction

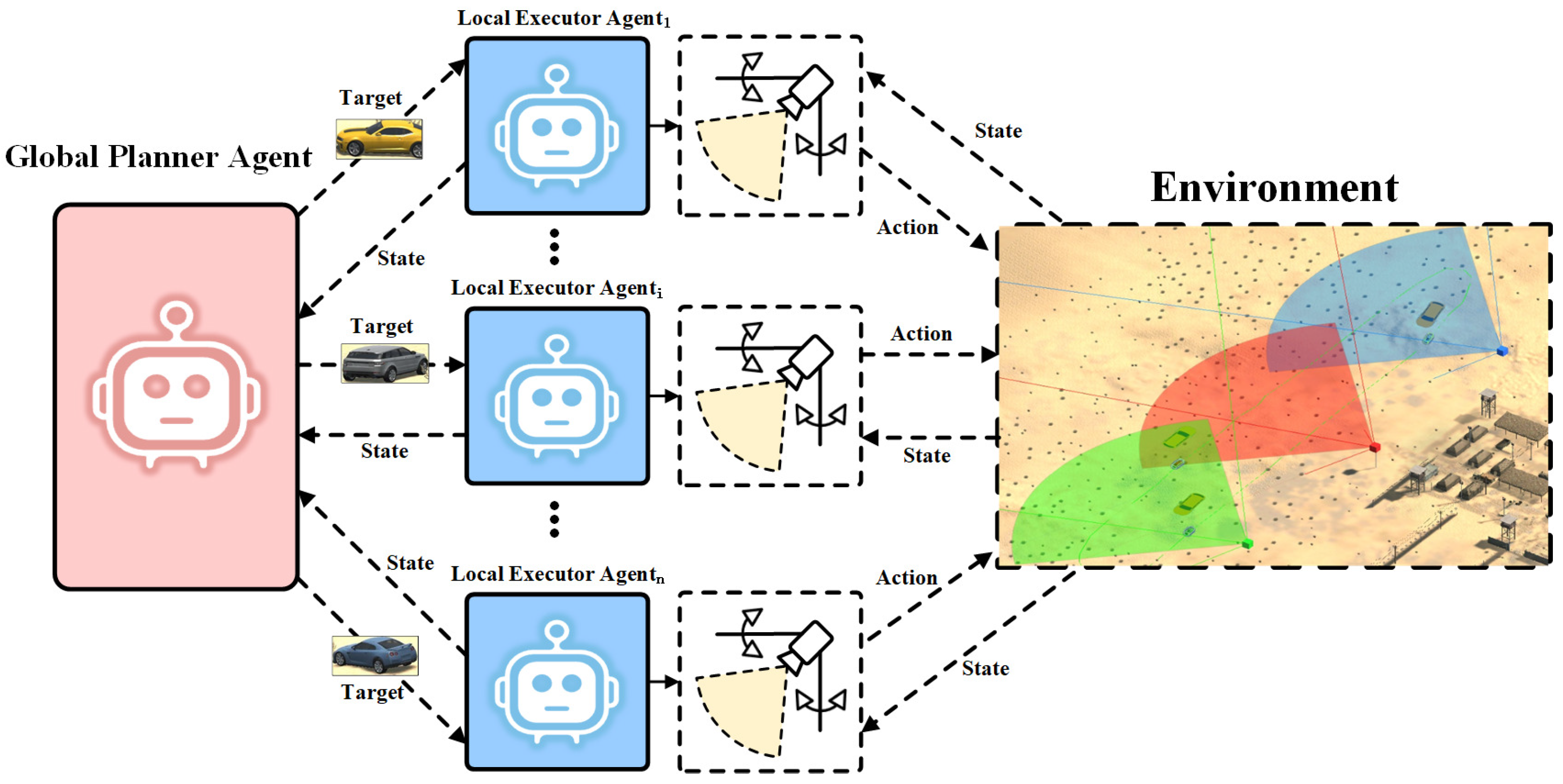

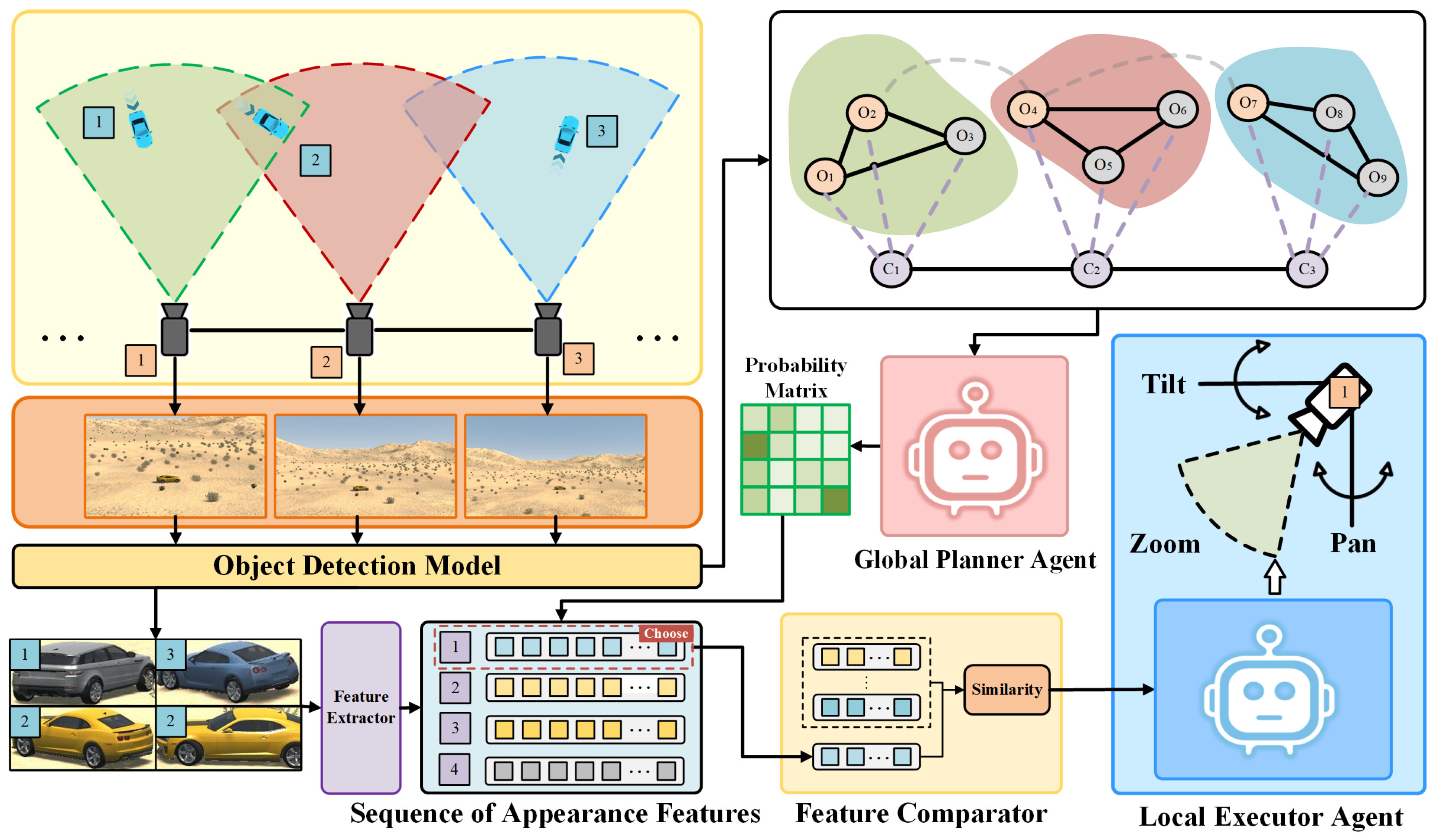

- We design a two-level hierarchical reinforcement learning architecture consisting of a high-level Global Planner Agent and low-level Local Executor Agents. The high-level agent assigns optimal attention targets to each PTZ camera from a global perspective, while the low-level agents autonomously decide on specific control actions to perform visual enhancement, enabling cross-scale, multi-target collaborative perception to improve task execution efficiency.

- To effectively capture the topological relationships between cameras and targets, we propose a graph-based joint state space and introduce a graph neural network model to learn complex structural relationships, extract high-level features, and enhance the learning of inter-node dependencies.

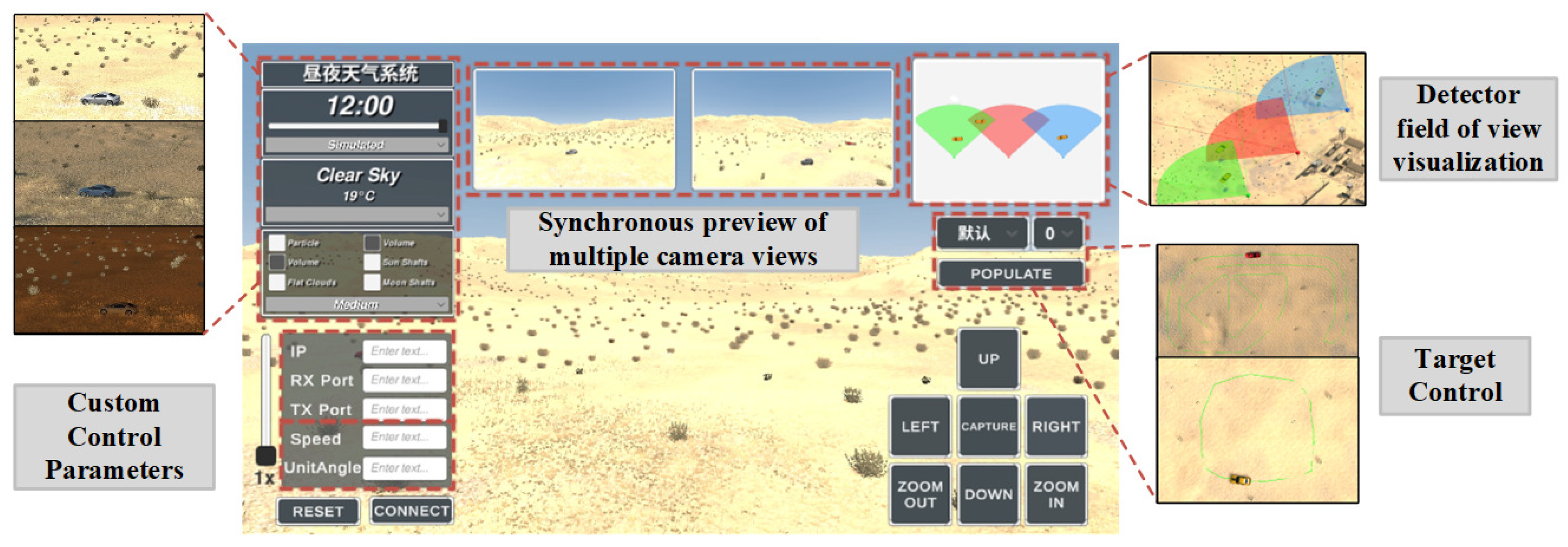

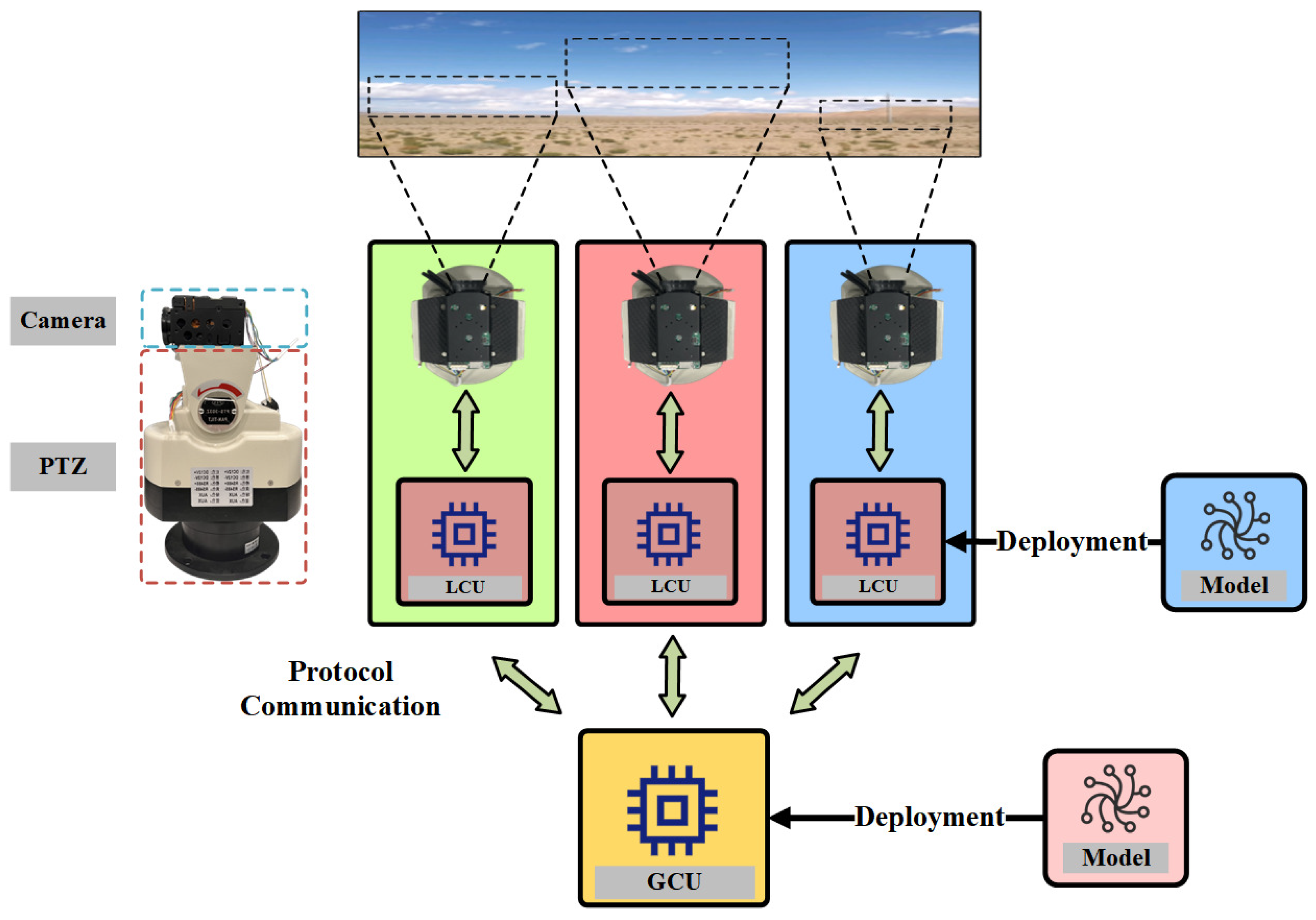

- We develop a simulation environment that mimics real-world scenarios for training and evaluation, and we implement a corresponding hardware interface to validate the transferability and feasibility of the proposed method in real-world applications.

2. Related Work

2.1. Multi-Agent Reinforcement Learning

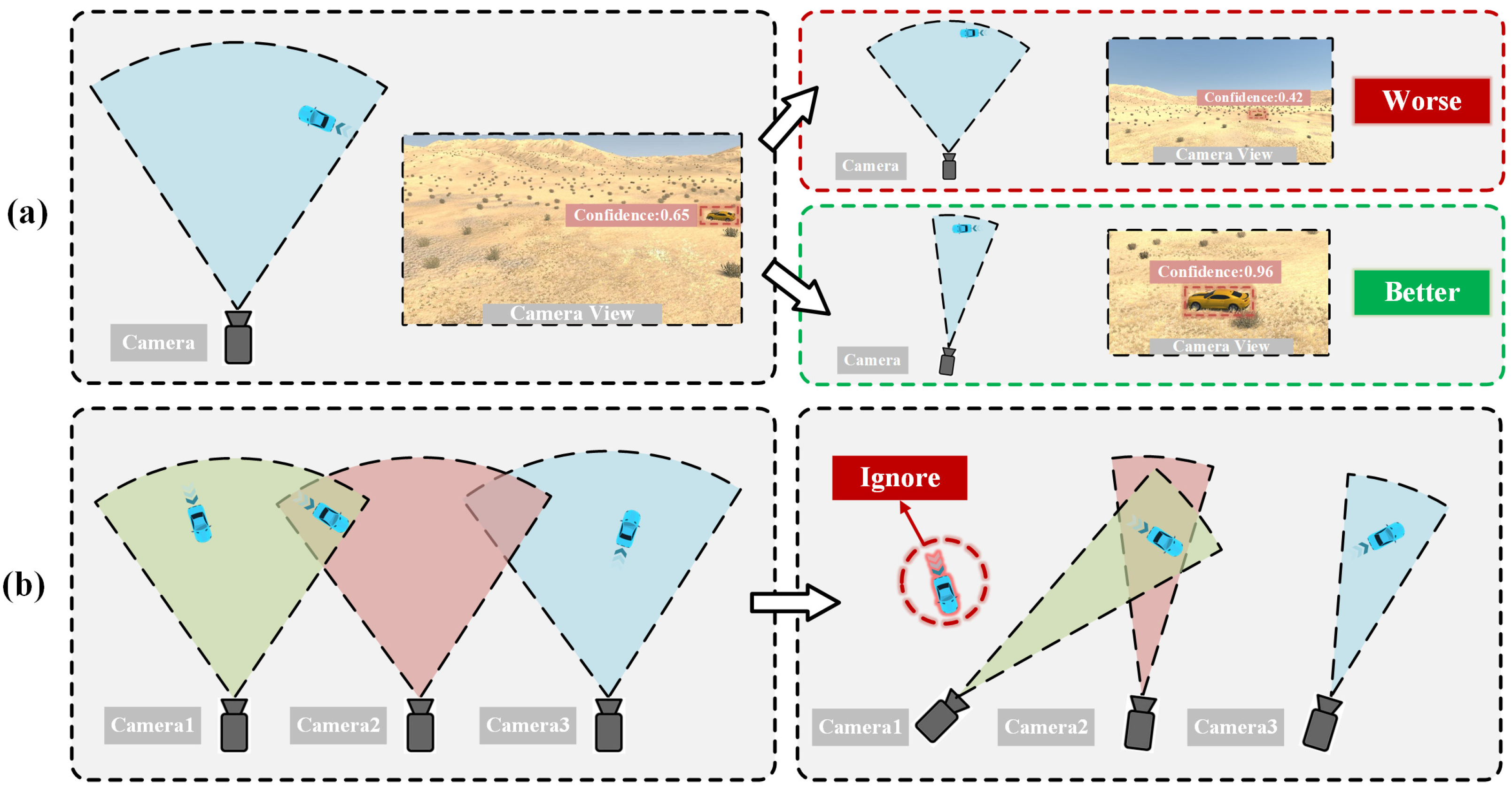

2.2. Reinforcement Learning-Based Autonomous Cooperative Decision-Making for Multiple PTZ Cameras

3. Multi-Agent Cooperative Algorithm for PTZ Cameras Based on Hierarchical Reinforcement Learning

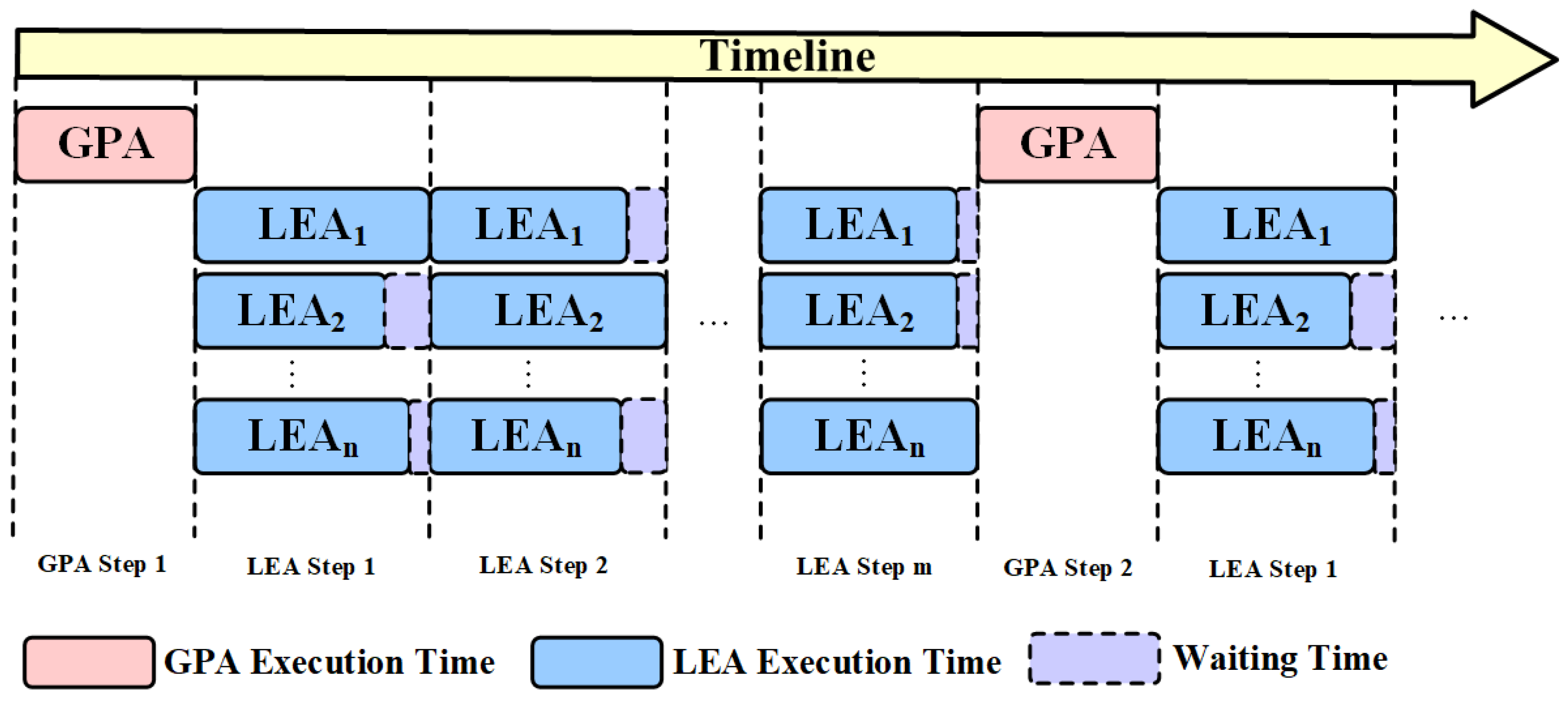

3.1. Overall Framework

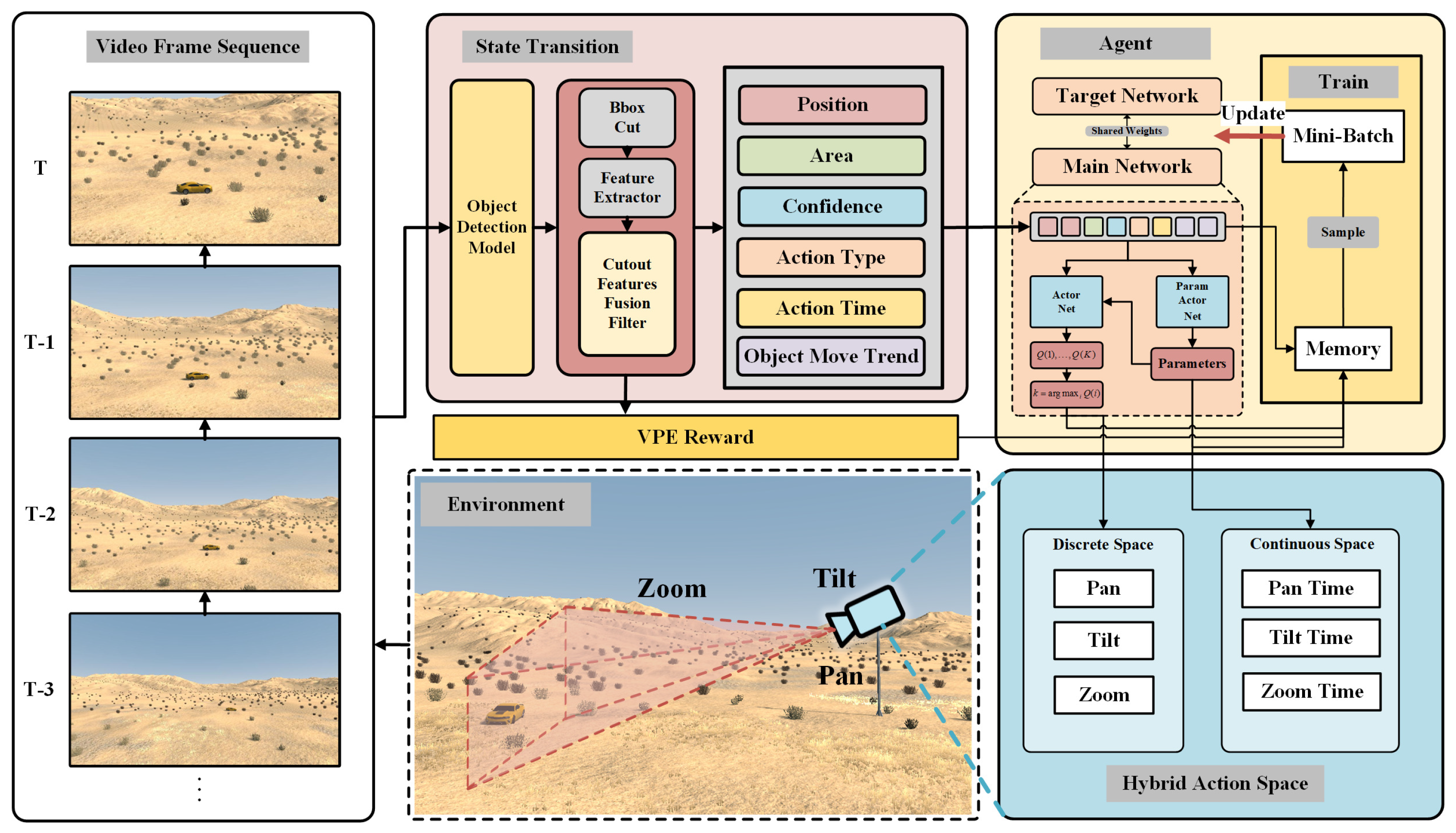

3.2. Local Executor Agent

3.3. Global Planner Agent

3.3.1. Joint Action Space Design

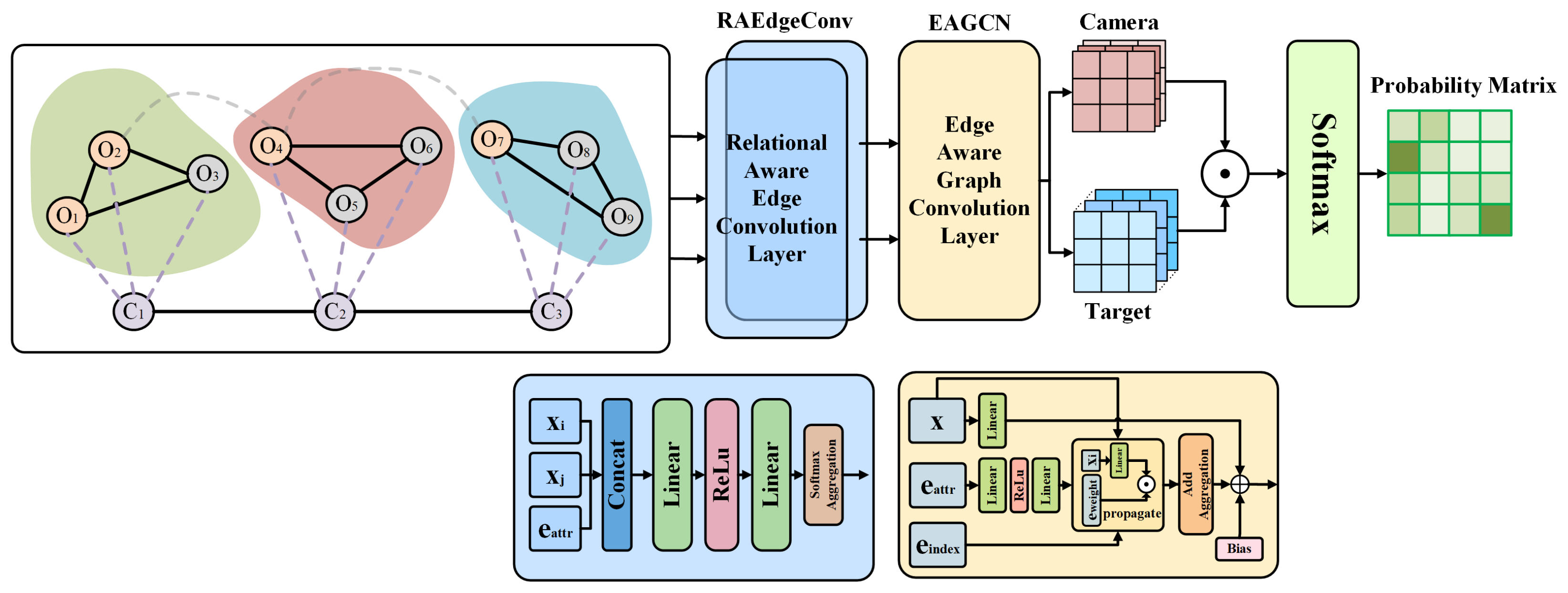

3.3.2. Joint State Space Design

- Intra-camera Target Node Connections: A fully connected structure is applied among all target nodes detected by the same PTZ camera. This configuration captures local spatial correlations and reveals spatial distributions and inter-target relationships. Edge weights are computed as the inverse of the Euclidean distance between target coordinates, such that closer targets have higher weights. This design encourages the model to focus on spatially proximate interactions.

- Camera-to-Target Node Connections: Each camera node is connected to the target nodes it detects. The edge weight is determined based on the relative angular position of the target within the camera’s field of view. This encoding allows the graph structure to incorporate directional information, enhancing the model’s perception of spatial layout between cameras and targets.

- Inter-camera Connections: Fixed-weight edges are established between neighboring PTZ camera nodes to encode adjacency relationships. This connection facilitates the modeling of the camera topology and promotes inter-camera information exchange.

- Cross-camera Target Connections: To improve cooperative perception across PTZ cameras, a special connection strategy is introduced to link targets detected by different cameras. Specifically, the most spatially proximate targets across camera views are connected. This enables cross-regional information sharing, enhancing the system’s environmental awareness. Additionally, when a camera fails to detect any targets, these connections allow it to leverage information from neighboring cameras to support detection and decision-making.
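The four connection rules above can be sketched as a weighted adjacency matrix. This is a minimal illustration, not the authors' implementation: the function name, the `half_fov` parameter, the linear angular weighting for camera-to-target edges, the inverse-distance weight on cross-camera edges, and the choice of linking only the single closest target pair per camera pair are all assumptions. It also assumes target coordinates from different cameras share a common frame.

```python
import itertools
import numpy as np

def build_camera_target_graph(targets, angles, neighbors, half_fov=30.0,
                              camera_edge_weight=1.0, eps=1e-6):
    """Return a symmetric weighted adjacency matrix (cameras first, then targets).

    targets[c] : (n_c, 2) coordinates of targets detected by camera c
    angles[c]  : (n_c,) target bearings in degrees relative to the optical axis
    neighbors  : iterable of (c1, c2) pairs of adjacent cameras
    """
    num_cams = len(targets)
    pts = [np.asarray(t, dtype=float).reshape(-1, 2) for t in targets]
    # offsets[c] is the node index of the first target seen by camera c
    offsets = np.cumsum([num_cams] + [len(p) for p in pts])
    adj = np.zeros((offsets[-1], offsets[-1]))

    def connect(i, j, w):
        adj[i, j] = adj[j, i] = w

    for c in range(num_cams):
        # Intra-camera: fully connected, weight = inverse Euclidean distance
        for a, b in itertools.combinations(range(len(pts[c])), 2):
            d = np.linalg.norm(pts[c][a] - pts[c][b])
            connect(offsets[c] + a, offsets[c] + b, 1.0 / (d + eps))
        # Camera-to-target: weight from angular position (assumed linear falloff)
        for a, ang in enumerate(angles[c]):
            connect(c, offsets[c] + a, max(0.0, 1.0 - abs(ang) / half_fov))

    # Inter-camera: fixed-weight edges between neighboring cameras
    for c1, c2 in neighbors:
        connect(c1, c2, camera_edge_weight)

    # Cross-camera: link the closest target pair for each pair of cameras
    for c1, c2 in itertools.combinations(range(num_cams), 2):
        if len(pts[c1]) == 0 or len(pts[c2]) == 0:
            continue  # a camera without detections contributes no target edges
        dists = np.linalg.norm(pts[c1][:, None, :] - pts[c2][None, :, :], axis=-1)
        a, b = np.unravel_index(np.argmin(dists), dists.shape)
        connect(offsets[c1] + a, offsets[c2] + b, 1.0 / (dists[a, b] + eps))
    return adj

# Two cameras: camera 0 sees two targets, camera 1 sees one.
adj = build_camera_target_graph(
    targets=[[(0.0, 0.0), (3.0, 4.0)], [(6.0, 8.0)]],
    angles=[[0.0, 15.0], [-30.0]],
    neighbors=[(0, 1)],
)
```

Nodes 0 and 1 are the cameras and nodes 2 to 4 are the targets. A camera with no detections keeps only its inter-camera edges, so any information reaching it must come from its neighbors, which matches the fallback role described for that case.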

3.3.3. Reward Function Design

3.3.4. Agent Network Design

4. Experiments

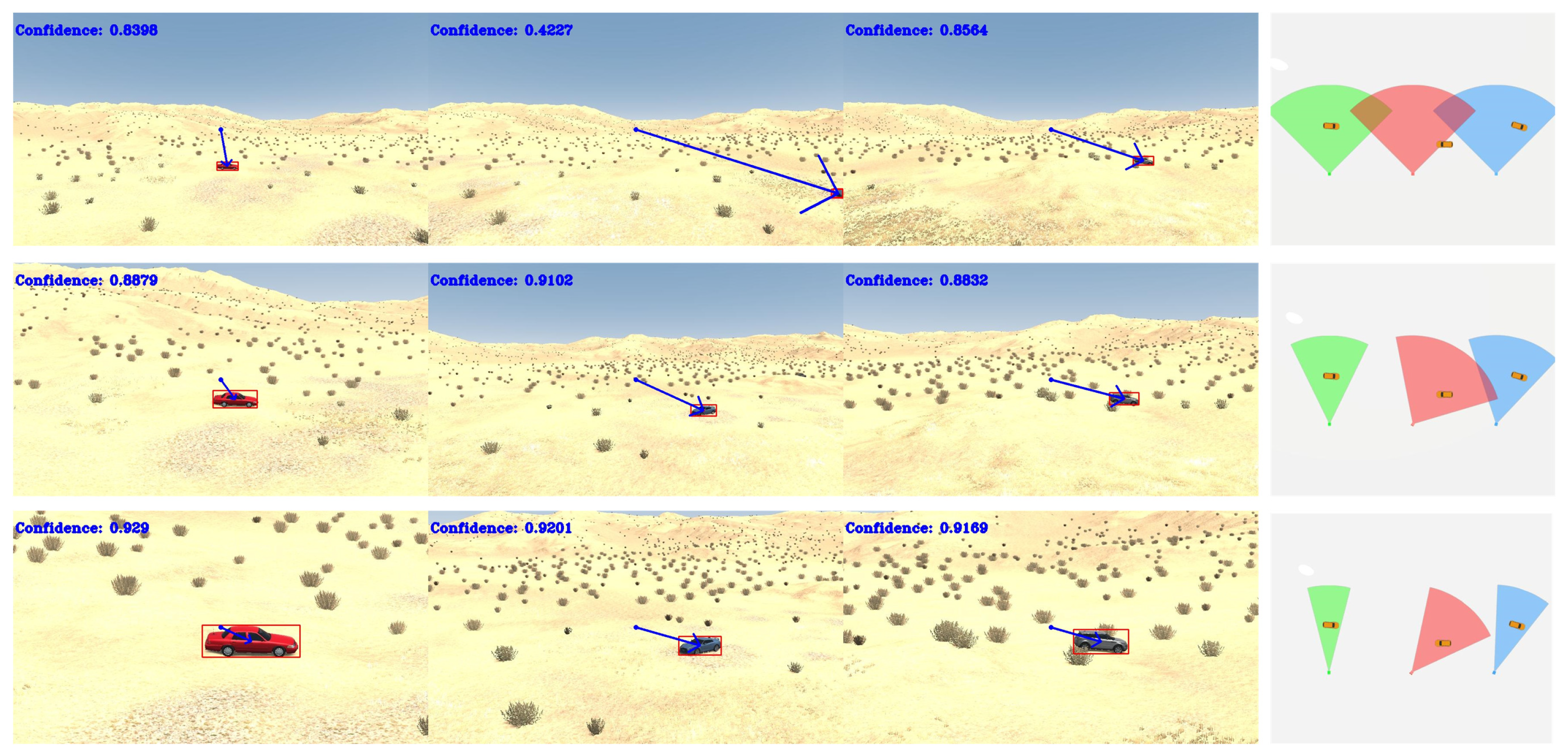

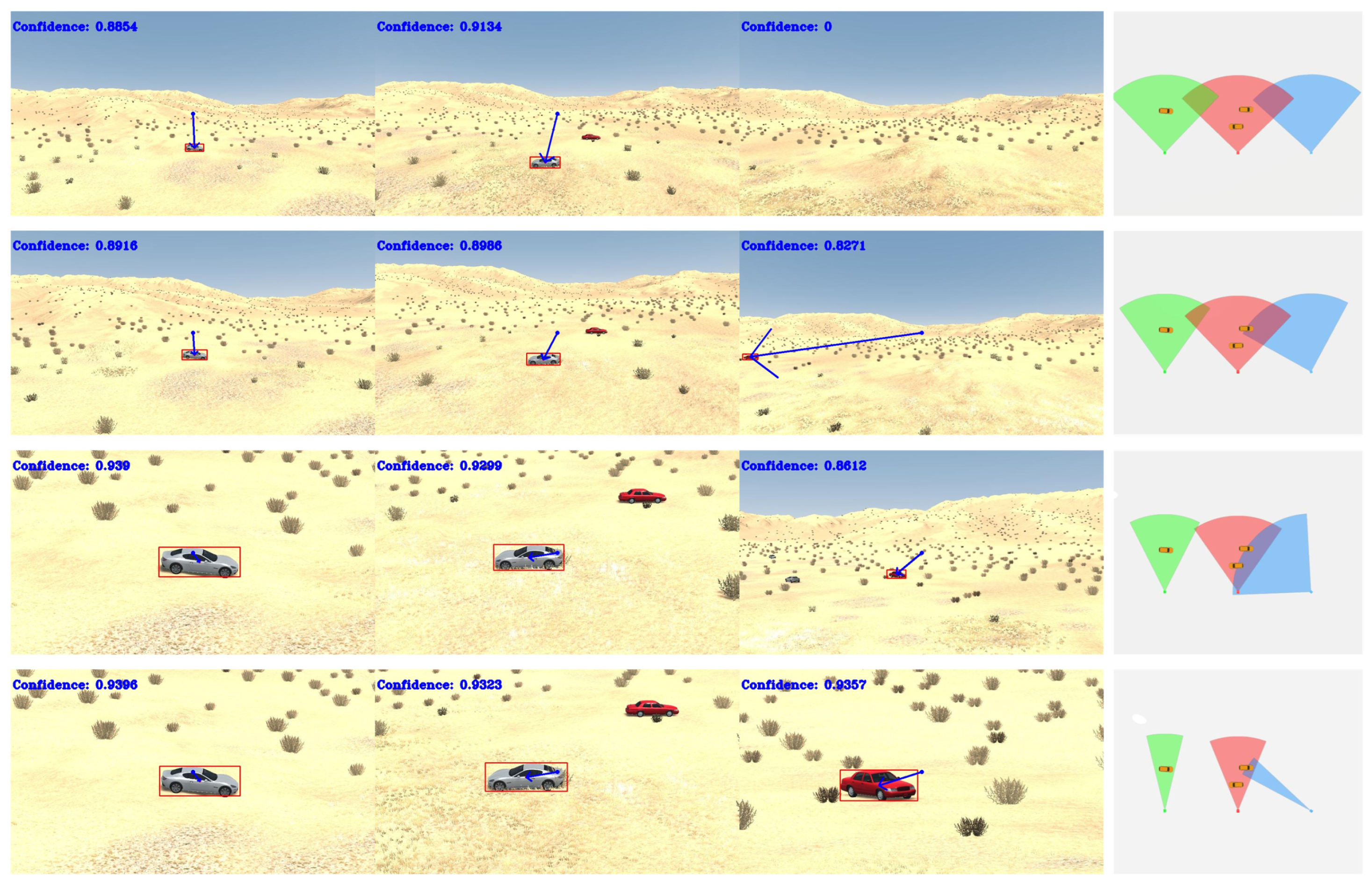

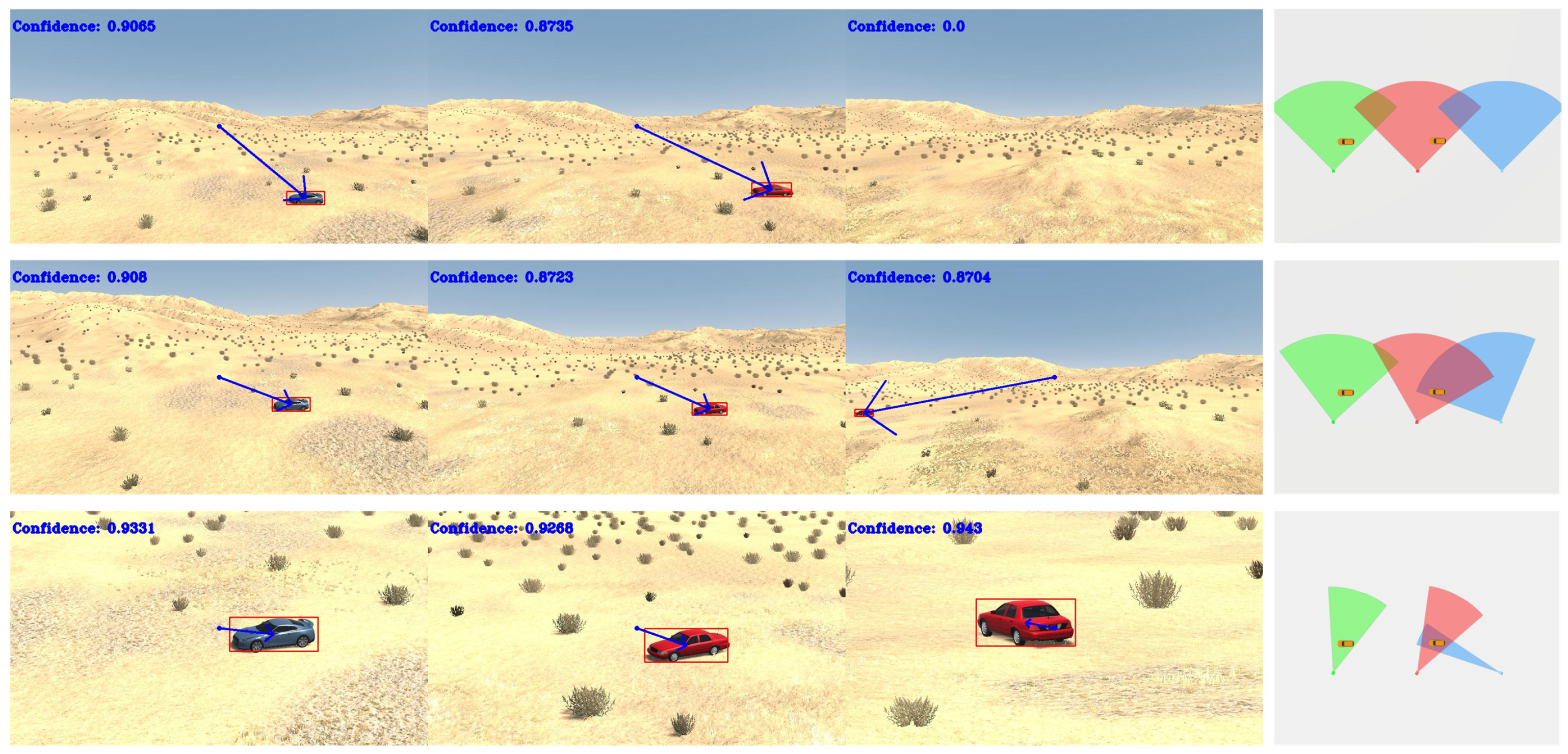

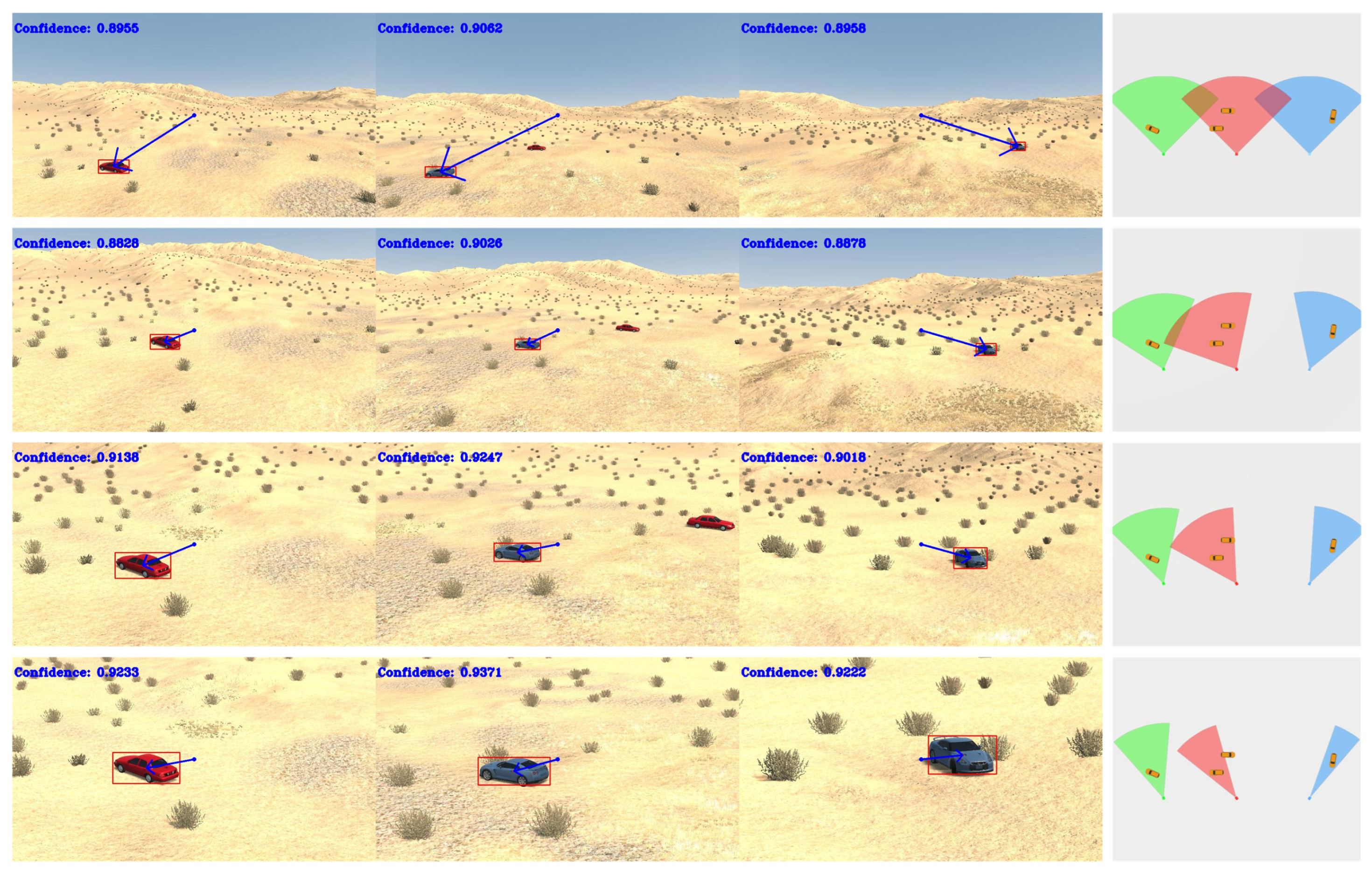

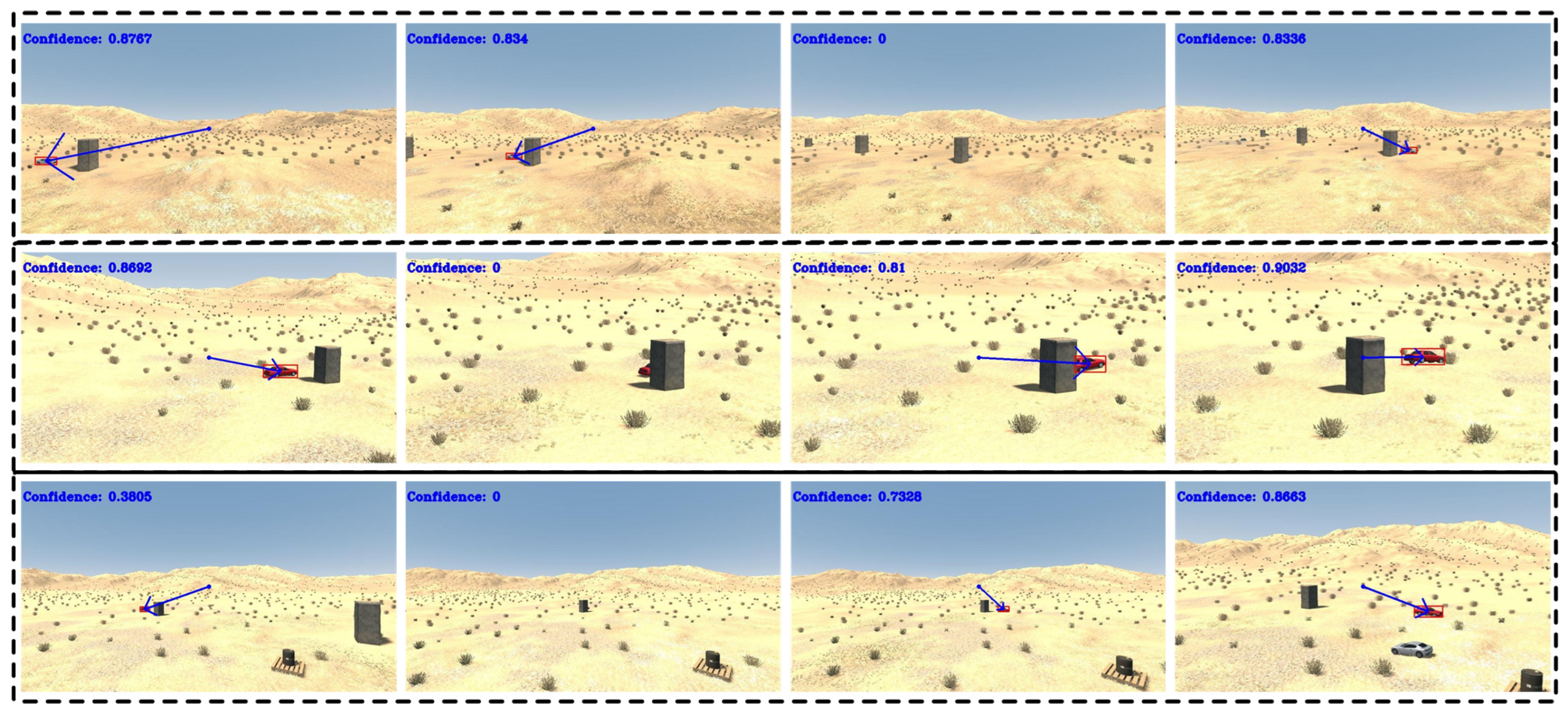

4.1. Simulation Environment

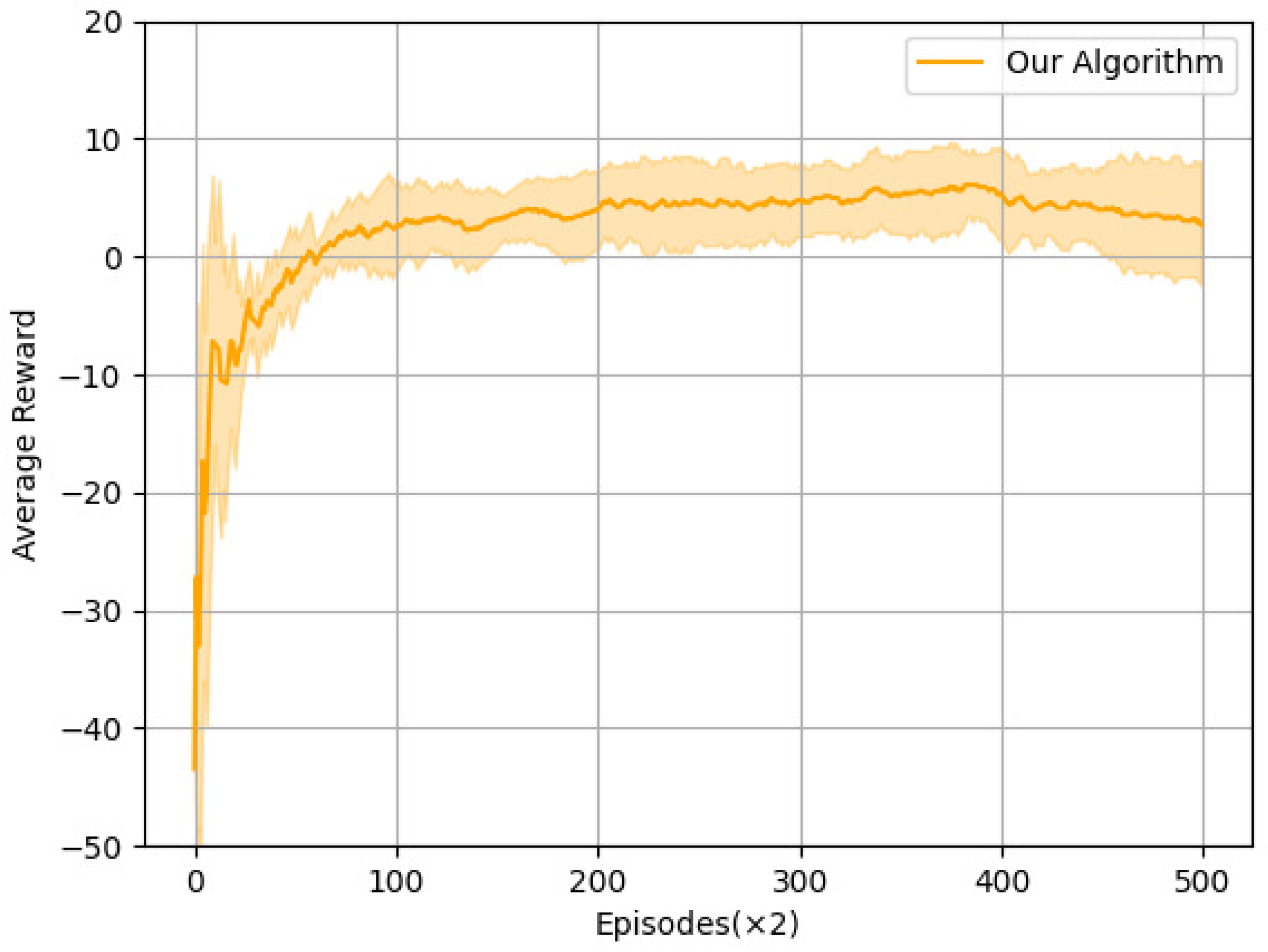

4.2. Training Settings and Results

4.2.1. Training Objective and Loss Function

4.2.2. Episode Initialization and Termination

- The average confidence of all observed targets reaches 0.8 or above for two consecutive steps;

- The total number of steps taken by the agent reaches or exceeds 10.
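The two conditions above can be checked with a small helper. This is a sketch that assumes an episode ends as soon as either condition holds; the function and argument names are hypothetical, while the threshold of 0.8, the two consecutive steps, and the limit of 10 steps come from the list.

```python
def episode_done(avg_confidences, step_count,
                 threshold=0.8, consecutive=2, max_steps=10):
    """Return True when the episode should terminate.

    avg_confidences : per-step average confidence over all observed targets
    step_count      : number of steps the agent has taken so far
    """
    recent = avg_confidences[-consecutive:]
    # Condition 1: average confidence >= threshold for the last `consecutive` steps
    enhanced = len(recent) == consecutive and all(c >= threshold for c in recent)
    # Condition 2: the step budget is used up
    out_of_steps = step_count >= max_steps
    return enhanced or out_of_steps
```

For example, `episode_done([0.50, 0.82, 0.85], step_count=3)` is true because the last two averages both reach 0.8, whereas a single qualifying step is not enough.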

4.3. Evaluation Metric

4.4. Comparative Experiment

4.5. Ablation Experiment

4.5.1. Ablation Study on Network Architecture

4.5.2. Ablation Study on Reward

4.6. Complex Scenario Experiments

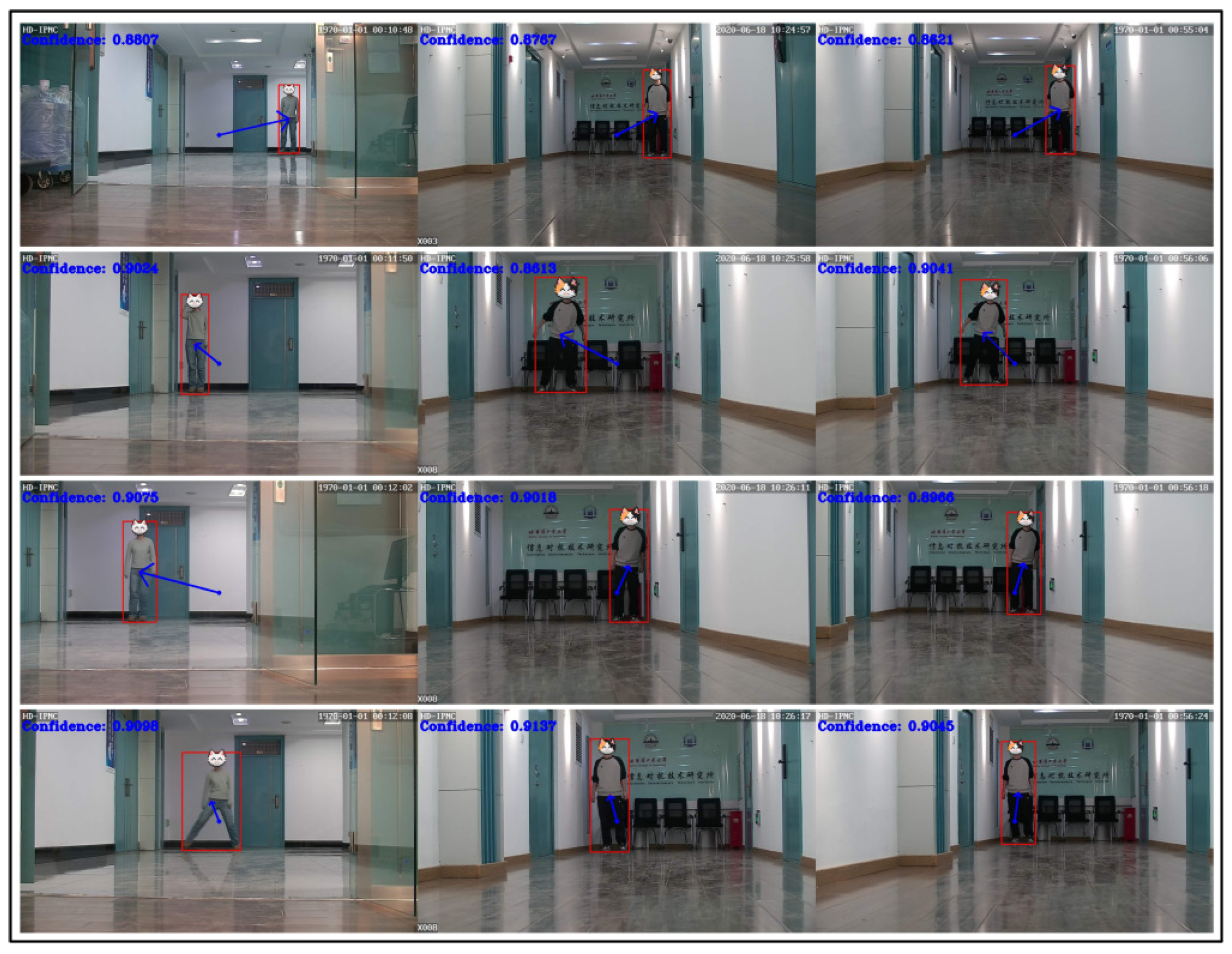

4.7. Hardware Experiment

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Hyperparameter Name | Value |
|---|---|
| Learning Rate | 0.001 |
| Discount Factor | 0.90 |
| Replay Buffer Capacity | 5000 |
| Batch Size | 256 |
| Number of Iterations | 1000 |
| Optimizer | Adam |
| Target Network Update Frequency | 5 |
| ε-Greedy Strategy | linear decay to 0.1 over first 150 episodes |
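The exploration schedule in the last row can be written as a short function. This is a sketch only: the table gives the final value (0.1) and the decay length (150 episodes), but not the starting value, so `eps_start=1.0` is an assumption, as are the function and parameter names.

```python
def epsilon_at(episode, eps_start=1.0, eps_end=0.1, decay_episodes=150):
    """Linearly decay epsilon from eps_start to eps_end, then hold it constant."""
    # Fraction of the decay completed, clamped to [0, 1]
    frac = min(max(episode, 0) / decay_episodes, 1.0)
    return eps_start + frac * (eps_end - eps_start)
```

Under these assumptions the agent explores with probability 0.55 at episode 75 and stays at 0.1 from episode 150 onward.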

| Target Quantity | Method | ACR | VERA | IAC | EAC |
|---|---|---|---|---|---|
| 2 | MADDPG | 91.15% | 16.67% | 0.86 ± 0.01 | 0.45 ± 0.15 |
| 2 | Ours | 99.52% | 95.83% | 0.86 ± 0.02 | 0.90 ± 0.02 |
| 3 | MADDPG | 80.96% | 30.58% | 0.86 ± 0.03 | 0.54 ± 0.12 |
| 3 | Ours | 95.80% | 90.21% | 0.85 ± 0.04 | 0.91 ± 0.03 |
| 4 | MADDPG | 85.33% | 31.03% | 0.87 ± 0.01 | 0.69 ± 0.14 |
| 4 | Ours | 92.12% | 74.14% | 0.86 ± 0.04 | 0.92 ± 0.00 |

| Target Quantity | Architecture | ACR | VERA | IAC | EAC | AS |
|---|---|---|---|---|---|---|
| 2 | MLP | 95.05% | 79.17% | 0.85 ± 0.04 | 0.80 ± 0.18 | 6 |
| 2 | GNN | 99.52% | 95.83% | 0.86 ± 0.02 | 0.90 ± 0.02 | 2 |
| 3 | MLP | 93.39% | 70.64% | 0.86 ± 0.03 | 0.82 ± 0.13 | 6 |
| 3 | GNN | 95.80% | 90.21% | 0.85 ± 0.04 | 0.91 ± 0.03 | 3 |
| 4 | MLP | 92.81% | 65.52% | 0.87 ± 0.03 | 0.90 ± 0.06 | 5 |
| 4 | GNN | 92.12% | 74.14% | 0.86 ± 0.04 | 0.92 ± 0.00 | 2 |

| Target Quantity | | | ACR | VERA | IAC | EAC | AS |
|---|---|---|---|---|---|---|---|
| 2 | ✔ | ✗ | 84.00% | 0% | 0.85 ± 0.04 | 0.00 ± 0.00 | 10 |
| 2 | ✔ | ✔ | 99.52% | 95.83% | 0.86 ± 0.02 | 0.90 ± 0.02 | 2 |
| 3 | ✔ | ✗ | 93.78% | 0% | 0.86 ± 0.03 | 0.00 ± 0.00 | 10 |
| 3 | ✔ | ✔ | 95.80% | 90.21% | 0.85 ± 0.04 | 0.91 ± 0.03 | 3 |
| 4 | ✔ | ✗ | 94.75% | 0% | 0.87 ± 0.02 | 0.00 ± 0.00 | 10 |
| 4 | ✔ | ✔ | 92.12% | 74.14% | 0.86 ± 0.04 | 0.92 ± 0.00 | 2 |

| Target Quantity | ACR | VERA | IAC | EAC |
|---|---|---|---|---|
| 2 | 100.00% | 100.00% | 0.83 ± 0.01 | 0.88 ± 0.03 |
| 3 | 94.38% | 88.10% | 0.84 ± 0.05 | 0.91 ± 0.01 |
| 4 | 89.57% | 66.96% | 0.86 ± 0.03 | 0.91 ± 0.01 |

| Parameter Name | Value |
|---|---|
| Zoom | 30× Optical Zoom |
| Communication Interface | RS485 Interface |
| Access Protocol | ONVIF |
| Operating Temperature | −20 °C to 60 °C |
| Resolution | 1920 × 1080 |

| Parameter Name | Value |
|---|---|
| Horizontal Rotation Angle | ±175° |
| Vertical Rotation Angle | ±35° |
| Rotation Speed | 10°/s |
| Operating Temperature | −25 °C to 50 °C |
| Communication Method | RS485 Half-Duplex Bus |
| Protocol | Pelco-D |
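Since the pan-tilt unit is addressed over RS485 with Pelco-D, a control command reduces to a 7-byte frame: a sync byte `0xFF`, the device address, two command bytes, two data bytes (pan and tilt speed), and a checksum equal to the sum of bytes 2 to 6 modulo 256. The sketch below builds such a frame. The function name, the device address, and the speed value are illustrative assumptions; how the authors' hardware interface issues commands is not described here.

```python
# Direction bits of the second command byte in a standard Pelco-D frame
PAN_RIGHT, PAN_LEFT, TILT_UP, TILT_DOWN = 0x02, 0x04, 0x08, 0x10

def pelco_d_frame(address, command1, command2, data1, data2):
    """Build a 7-byte Pelco-D frame: sync, address, cmd1, cmd2, data1, data2, checksum."""
    body = [address, command1, command2, data1, data2]
    checksum = sum(body) % 256  # sum of bytes 2..6, modulo 256
    return bytes([0xFF] + body + [checksum])

# Pan left at speed 0x20 on the device with (assumed) address 1
frame = pelco_d_frame(address=1, command1=0x00, command2=PAN_LEFT,
                      data1=0x20, data2=0x00)
print(frame.hex())  # ff010004200025
```

Sending the same frame with both command bytes and both data bytes set to zero stops the motion.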


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, Z.; Liu, H.; Fang, H.; Li, J.; Jiang, Y. Multi-Agent Hierarchical Reinforcement Learning for PTZ Camera Control and Visual Enhancement. Electronics 2025, 14, 3825. https://doi.org/10.3390/electronics14193825
