Deep Learning System for Speech Command Recognition

Abstract

1. Introduction

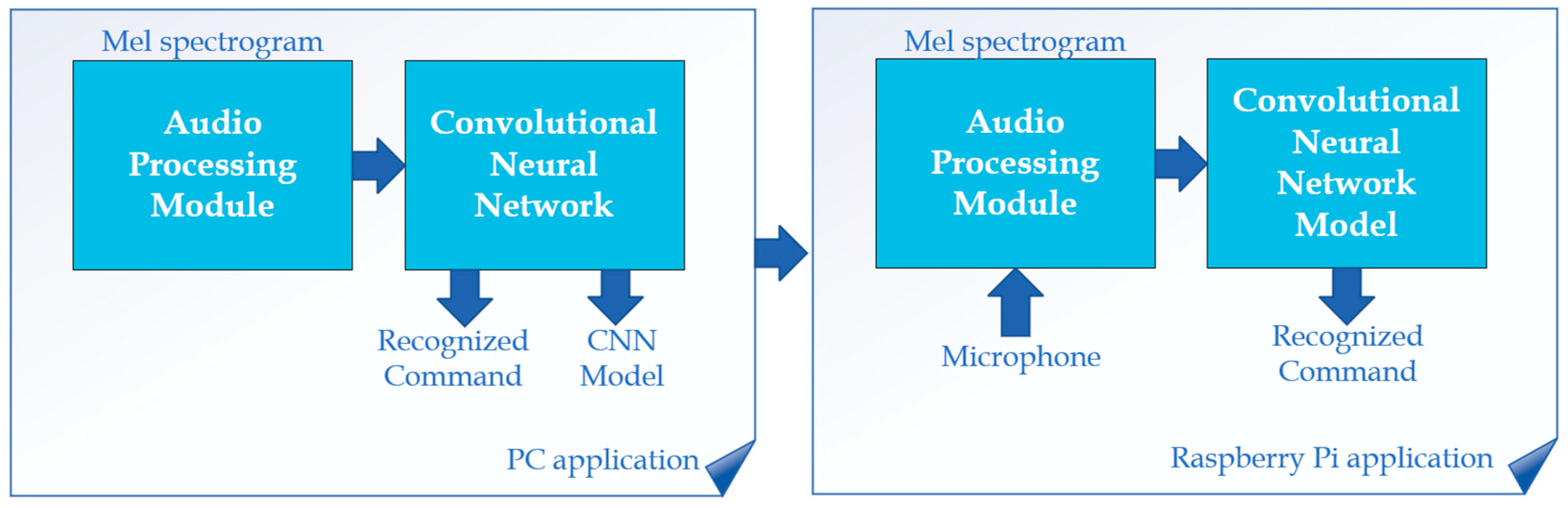

- The implementation of a CNN-based model for embedded real-time speech recognition on a Raspberry Pi 5.

- The integration of Mel spectrograms with a CNN architecture for accurate classification of both standard commands and additional “silence” and “unknown” classes.

- A lightweight and efficient real-time processing pipeline for audio capture, feature extraction, and top-3 prediction suitable for fog and edge devices.

2. Related Work

2.1. Classical Baselines for Keyword Spotting and Audio Classification

2.2. Transfer Learning Approaches

2.3. Attention and Conformer Architectures

2.4. Tiny Models and Efficiency-Focused Baselines

2.5. Noise Robustness and Out-of-Distribution Handling (OOD)

3. Mel Spectrograms in Speech Recognition

4. Convolutional Neural Networks (CNNs)

5. Materials and Methods

- Audio processing module.

- Convolutional neural network.

5.1. Audio Signal Processing

- Loading and preprocessing audio:

- Audio files in WAV format are loaded and converted to mono.

- Signals are resampled to 16 kHz, a standard choice for speech recognition tasks [6].

- Amplitude normalization is applied to ensure consistent volume levels across all samples.
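
The loading steps above can be sketched with NumPy alone. This is a minimal illustration rather than the authors' implementation: the function name `preprocess` is hypothetical, peak normalization is assumed as the form of amplitude normalization (the text does not specify it), and the linear-interpolation resampler is a simple stand-in for the band-limited resampler that a production pipeline would normally use.

```python
import numpy as np

TARGET_SR = 16_000  # sampling rate stated in the text


def preprocess(audio: np.ndarray, sr: int, target_sr: int = TARGET_SR) -> np.ndarray:
    """Convert to mono, resample, and peak-normalize a waveform.

    `audio` has shape (n_samples,) or (n_samples, n_channels).
    """
    x = np.asarray(audio, dtype=np.float64)
    if x.ndim == 2:                        # average the channels -> mono
        x = x.mean(axis=1)
    if sr != target_sr and x.size > 1:     # naive resampler (assumption, no anti-alias filter)
        n_out = int(round(x.size * target_sr / sr))
        t_in = np.arange(x.size) / sr
        t_out = np.arange(n_out) / target_sr
        x = np.interp(t_out, t_in, x)
    peak = np.max(np.abs(x)) if x.size else 0.0
    if peak > 0:                           # scale so the largest sample is +/-1
        x = x / peak
    return x.astype(np.float32)
```

For example, a one-second stereo recording at 44.1 kHz comes out as a mono array of 16,000 samples whose largest absolute value is 1.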

- Mel spectrogram generation:

- The audio is divided into overlapping windows of n_fft = 1024 samples (64 ms at 16 kHz), with a hop length of 256 samples (16 ms), so consecutive windows overlap by 75%.

- Each window is transformed with a discrete Fourier transform to obtain its frequency spectrum; together, these spectra form the Short-Time Fourier Transform (STFT).

- The analyzed frequency range spans a minimum of 20 Hz to a maximum of 8 kHz (the Nyquist frequency at a 16 kHz sampling rate).

- The spectrum is projected onto a bank of 64 triangular filters spaced according to the Mel scale, providing finer resolution at low frequencies and coarser resolution at high frequencies.

- The spectrogram is converted to decibel (dB) scale to enhance contrast and emphasize speech-relevant features.
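
The Mel spectrogram steps above can be sketched in NumPy as follows. The parameter values (16 kHz, n_fft = 1024, hop 256, 64 filters, 20 Hz to 8 kHz) come from the text; everything else is an assumption made for illustration: the Hann window, the centered (reflect-padded) framing, the common Mel formula m = 2595 log10(1 + f/700), and the dB conversion relative to the spectrogram peak with an 80 dB floor. The function names are hypothetical.

```python
import numpy as np

SR, N_FFT, HOP, N_MELS = 16_000, 1024, 256, 64   # values from the text
F_MIN, F_MAX = 20.0, 8_000.0


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=np.float64) / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m, dtype=np.float64) / 2595.0) - 1.0)


def mel_filter_bank(sr=SR, n_fft=N_FFT, n_mels=N_MELS, f_min=F_MIN, f_max=F_MAX):
    """Triangular filters whose edges are equally spaced on the Mel scale."""
    fft_freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)             # (n_fft // 2 + 1,)
    edges = mel_to_hz(np.linspace(hz_to_mel(f_min), hz_to_mel(f_max), n_mels + 2))
    bank = np.zeros((n_mels, fft_freqs.size))
    for i in range(n_mels):
        lo, mid, hi = edges[i], edges[i + 1], edges[i + 2]
        rising = (fft_freqs - lo) / (mid - lo)
        falling = (hi - fft_freqs) / (hi - mid)
        bank[i] = np.clip(np.minimum(rising, falling), 0.0, None)
    return bank


def log_mel_spectrogram(x, n_fft=N_FFT, hop=HOP, top_db=80.0):
    """Return a (n_mels, n_frames) Mel spectrogram in dB relative to its peak."""
    x = np.pad(np.asarray(x, dtype=np.float64), n_fft // 2, mode="reflect")
    n_frames = 1 + (x.size - n_fft) // hop
    idx = np.arange(n_fft)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = x[idx] * np.hanning(n_fft)                         # Hann-windowed frames
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2            # (n_frames, n_fft // 2 + 1)
    mel = mel_filter_bank(n_fft=n_fft) @ power.T                # (n_mels, n_frames)
    db = 10.0 * np.log10(np.maximum(mel, 1e-10))
    db -= db.max()                                              # the peak sits at 0 dB
    return np.maximum(db, -top_db)                              # limit the dynamic range
```

Under these assumptions, a one-second clip of 16,000 samples gives 1 + 16000 // 256 = 63 frames, that is, an array of shape 64 × 63.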

- Padding and fixed-size input:

- Spectrograms are padded to 64 time steps. Clips longer than this are truncated. This ensures a consistent input shape for the CNN.
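
A minimal sketch of the padding and truncation step is given below. The target of 64 time steps is from the text; padding on the right with the lowest dB value of the clip is an assumption (the text does not state the padding value or side), and the name `fix_length` is hypothetical.

```python
import numpy as np

TARGET_FRAMES = 64  # fixed number of time steps stated in the text


def fix_length(spec: np.ndarray, n_frames: int = TARGET_FRAMES, pad_value=None) -> np.ndarray:
    """Pad (on the right) or truncate a (n_mels, time) spectrogram to n_frames columns."""
    if spec.shape[1] >= n_frames:
        return spec[:, :n_frames]                      # truncate long clips
    if pad_value is None:
        # assumption: fill with the quietest value so padding looks like silence
        pad_value = float(spec.min()) if spec.size else 0.0
    pad = n_frames - spec.shape[1]
    return np.pad(spec, ((0, 0), (0, pad)), mode="constant", constant_values=pad_value)
```

With centered framing and a hop of 256 samples, a one-second clip produces 63 frames, so it would receive a single column of padding.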

5.2. Convolutional Neural Network

- Convolutional layers:

- First layer: 16 output channels, 3 × 3 kernel, padding = 1, stride = 1, ReLU activation, 2 × 2 max pooling, 20% dropout.

- Second layer: 32 output channels, same kernel and stride, ReLU, 2 × 2 max pooling, 20% dropout.

- Third layer: 64 output channels, same kernel and stride, ReLU, 2 × 2 max pooling, 20% dropout.

- Fourth layer: 128 output channels, same kernel and stride, ReLU, 2 × 2 max pooling, 20% dropout.

- These convolutional layers learn local patterns in the Mel spectrogram, such as edges, harmonics, and transitions. Batch normalization is applied after each convolution to stabilize training and accelerate convergence. Max pooling reduces the spatial resolution, and dropout regularization helps prevent overfitting.

- Fully connected classifier (ANN head):

- The output of the final convolutional layer is flattened into a 1D vector.

- A hidden layer with 128 neurons applies ReLU activation and 20% dropout.

- The output layer maps these features to the number of classes (10 main commands, plus additional “silence” and “unknown” classes). A softmax activation produces probabilities for each class.
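
The layer listing above can be written as a short PyTorch sketch. The channel counts, kernel size, pooling, dropout rate, hidden size, and the 12 output classes come from the text; the class name `SpeechCommandCNN`, the helper `conv_block`, and the 64 × 64 single-channel input are assumptions. The module returns raw scores (logits) because `nn.CrossEntropyLoss` applies log-softmax internally; softmax is applied explicitly only when probabilities are needed at inference.

```python
import torch
from torch import nn


def conv_block(c_in: int, c_out: int, p_drop: float = 0.2) -> nn.Sequential:
    """3x3 conv (stride 1, padding 1) -> batch norm -> ReLU -> 2x2 max pool -> dropout."""
    return nn.Sequential(
        nn.Conv2d(c_in, c_out, kernel_size=3, stride=1, padding=1),
        nn.BatchNorm2d(c_out),
        nn.ReLU(),
        nn.MaxPool2d(2),
        nn.Dropout(p_drop),
    )


class SpeechCommandCNN(nn.Module):  # hypothetical name
    def __init__(self, n_classes: int = 12, n_mels: int = 64, n_frames: int = 64):
        super().__init__()
        self.features = nn.Sequential(
            conv_block(1, 16), conv_block(16, 32), conv_block(32, 64), conv_block(64, 128)
        )
        # four 2x2 poolings shrink each spatial dimension by a factor of 16
        flat = 128 * (n_mels // 16) * (n_frames // 16)
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Linear(flat, 128),
            nn.ReLU(),
            nn.Dropout(0.2),
            nn.Linear(128, n_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        """x: (batch, 1, n_mels, n_frames) -> logits of shape (batch, n_classes)."""
        return self.classifier(self.features(x))


model = SpeechCommandCNN().eval()
with torch.no_grad():
    probs = torch.softmax(model(torch.randn(2, 1, 64, 64)), dim=1)  # rows sum to 1
```

With a 64 × 64 input this sketch has 361,452 trainable parameters. With 48 to 63 time frames the flattened feature size drops to 128 × 4 × 3 and the count becomes 295,916, which coincides with the parameter count reported for the main model; whether the authors' input actually had that shape is not stated, so the sketch should be read as an illustration of the layer layout only.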

- Dataset handling and augmentation:

- The dataset includes ten main commands, along with “silence” and “unknown” classes. Silence samples are generated from background noise segments and from environmental sound recorded with a microphone, while unknown samples contain words outside the main command set.

- A speaker-independent split ensures that the training and validation sets do not share speakers, which prevents speaker leakage between the sets and gives a realistic measure of generalization to unseen speakers.

- The dataset is split into 70% for training, 15% for validation, and 15% for testing.

- During training, simple augmentations are applied to improve robustness: small random time shifts, additive background noise, and minor amplitude scaling.

- Random seeds are fixed to ensure reproducibility of the splits and augmentations: the seed is 42 for the random, numpy, and torch libraries. The batch size is 16.

- Early stopping ends training after 10 consecutive epochs without improvement in accuracy.
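
The three augmentations named above (time shift, additive background noise, amplitude scaling) can be sketched as follows. Only the seed value 42 comes from the text. The function name `augment` and all ranges (a shift of up to 1600 samples, a signal-to-noise ratio of 10 to 30 dB, a gain of 0.8 to 1.2) are illustrative assumptions, since the text describes the augmentations only as small or minor.

```python
import numpy as np

rng = np.random.default_rng(42)   # seed value from the text


def augment(x, noise, rng, max_shift=1600, snr_db=(10.0, 30.0), gain=(0.8, 1.2)):
    """Random time shift, additive background noise, and amplitude scaling.

    All ranges are assumptions; `noise` must be at least as long as `x`.
    """
    x = np.asarray(x, dtype=np.float32)
    # 1) time shift: move the clip left or right and zero-fill the gap
    shift = int(rng.integers(-max_shift, max_shift + 1))
    shifted = np.zeros_like(x)
    if shift > 0:
        shifted[shift:] = x[:-shift]
    elif shift < 0:
        shifted[:shift] = x[-shift:]
    else:
        shifted[:] = x
    # 2) additive noise: pick a random noise segment and scale it to a random SNR
    start = int(rng.integers(0, len(noise) - len(x) + 1))
    seg = np.asarray(noise[start:start + len(x)], dtype=np.float32)
    p_sig = float(np.mean(shifted ** 2))
    p_noise = float(np.mean(seg ** 2))
    if p_sig > 0 and p_noise > 0:
        target = rng.uniform(*snr_db)
        seg = seg * np.sqrt(p_sig / (p_noise * 10 ** (target / 10)))
        shifted = shifted + seg
    # 3) amplitude scaling by a random gain
    return (shifted * rng.uniform(*gain)).astype(np.float32)
```

Because the generator is seeded, two runs that start from the same seed produce identical augmented clips, which is the property the fixed seed is meant to provide.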

- Training setup:

- The network is trained with cross-entropy loss and the Adam optimizer with a learning rate of 0.001.

- Batch size, number of epochs, learning rate, and random seeds are fixed to ensure reproducibility. Training runs for at most 50 epochs.
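
A minimal PyTorch sketch of this training setup is shown below. The hyperparameters (Adam, learning rate 0.001, cross-entropy loss, 50 epochs, patience of 10 epochs, seed 42) are from the text. Monitoring accuracy on the validation set and restoring the best weights at the end are assumptions, and the helper names `set_seed`, `accuracy`, and `train` are hypothetical.

```python
import random

import numpy as np
import torch
from torch import nn


def set_seed(seed: int = 42) -> None:
    """Seed the three libraries named in the text."""
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)


def accuracy(model, loader) -> float:
    model.eval()
    correct = total = 0
    with torch.no_grad():
        for x, y in loader:
            correct += (model(x).argmax(dim=1) == y).sum().item()
            total += y.numel()
    return correct / max(total, 1)


def train(model, train_loader, val_loader, max_epochs=50, patience=10, lr=1e-3):
    """Adam + cross-entropy with early stopping on validation accuracy (assumed)."""
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    loss_fn = nn.CrossEntropyLoss()
    best_acc, best_state, stale = -1.0, None, 0
    for _ in range(max_epochs):
        model.train()
        for x, y in train_loader:
            optimizer.zero_grad()
            loss_fn(model(x), y).backward()
            optimizer.step()
        acc = accuracy(model, val_loader)
        if acc > best_acc:                 # improvement: remember these weights
            best_acc, stale = acc, 0
            best_state = {k: v.detach().clone() for k, v in model.state_dict().items()}
        else:                              # no improvement: count towards patience
            stale += 1
            if stale >= patience:
                break
    if best_state is not None:
        model.load_state_dict(best_state)  # assumption: keep the best epoch
    return best_acc
```

The data loaders would be built with a batch size of 16, as stated in the text.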

- Operation and evaluation:

- The CNN extracts relevant features from the spectrogram, and the classifier head uses these features to assign probabilities to each class.

- Model performance is evaluated using top-1 and top-3 accuracy, per-class precision, recall, and F1 scores, including macro and micro averages.

- Because the split is speaker-independent, audio from the same speaker never appears in both the training and validation sets, so these metrics reflect performance on unseen speakers.
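
One way to obtain a speaker-independent split is to assign whole speakers, rather than individual clips, to each subset. The sketch below assumes the Speech Commands file naming `<speaker>_nohash_<n>.wav`; the helper names are hypothetical, and clips recorded separately (for example, the additional silence recordings) would need their own grouping key. The 70/15/15 ratios and the seed 42 are from the text.

```python
import random
from collections import defaultdict


def speaker_id(path: str) -> str:
    """Extract the speaker hash from a '<speaker>_nohash_<n>.wav' file name."""
    name = path.replace("\\", "/").rsplit("/", 1)[-1]
    return name.split("_nohash_")[0]


def speaker_split(paths, ratios=(0.70, 0.15, 0.15), seed=42):
    """Assign whole speakers to train/val/test so that no speaker spans two sets."""
    by_speaker = defaultdict(list)
    for p in paths:
        by_speaker[speaker_id(p)].append(p)
    speakers = sorted(by_speaker)              # sort first so the shuffle is reproducible
    random.Random(seed).shuffle(speakers)
    n_train = round(ratios[0] * len(speakers))
    n_val = round(ratios[1] * len(speakers))
    groups = {
        "train": speakers[:n_train],
        "val": speakers[n_train:n_train + n_val],
        "test": speakers[n_train + n_val:],
    }
    return {name: [p for s in ids for p in by_speaker[s]] for name, ids in groups.items()}
```

Note that the ratios apply to the number of speakers, so the share of clips in each subset only approximates 70/15/15 when speakers contribute different numbers of recordings.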

6. Results and Discussion

6.1. Computer Model Results

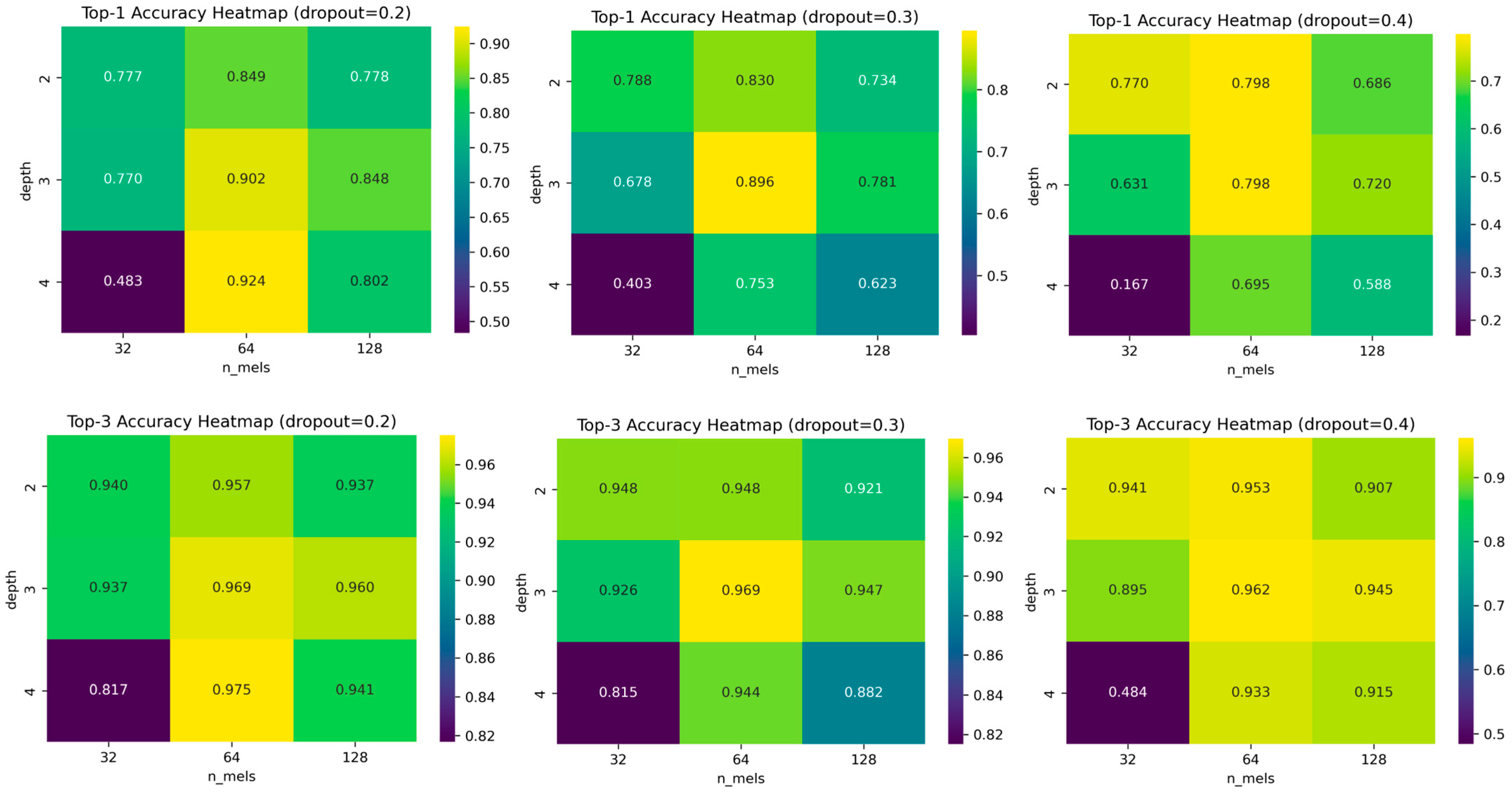

- Top-1 and Top-3 accuracy:

- Top-1 accuracy measures the percentage of test samples for which the highest probability class matches the true label.

- Top-3 accuracy measures the percentage of samples for which the true label is among the three highest probability predictions.

- Per-class precision, recall, and F1-score:

- Precision: proportion of correct predictions among all predictions for a class.

- Recall: proportion of correctly predicted samples of a class among all true samples of that class.

- F1-score: harmonic mean of precision and recall.

- Macro and micro-averages:

- The macro-average is the unweighted mean of the per-class scores, so every class counts equally; the micro-average pools the decisions for all samples, so larger classes contribute more.
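
The metric definitions above translate directly into a few lines of plain Python. The sketch below is illustrative; the function names are hypothetical and the inputs are assumed to be integer class labels and per-sample probability lists.

```python
def top_k_accuracy(probs, labels, k):
    """Share of samples whose true label is among the k highest-probability classes."""
    hits = 0
    for p, y in zip(probs, labels):
        top = sorted(range(len(p)), key=lambda c: p[c], reverse=True)[:k]
        hits += y in top
    return hits / len(labels)


def per_class_report(y_true, y_pred, n_classes):
    """Per-class (precision, recall, F1) plus macro and micro averages."""
    tp, fp, fn = [0] * n_classes, [0] * n_classes, [0] * n_classes
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1      # predicted class gains a false positive
            fn[t] += 1      # true class gains a false negative

    def prf(tp_, fp_, fn_):
        prec = tp_ / (tp_ + fp_) if tp_ + fp_ else 0.0
        rec = tp_ / (tp_ + fn_) if tp_ + fn_ else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        return prec, rec, f1

    per_class = [prf(tp[c], fp[c], fn[c]) for c in range(n_classes)]
    macro = tuple(sum(m[i] for m in per_class) / n_classes for i in range(3))
    micro = prf(sum(tp), sum(fp), sum(fn))   # pooled counts over all classes
    return per_class, macro, micro
```

In single-label multiclass classification every error is one false positive and one false negative, so micro-averaged precision, recall, and F1 all equal the overall accuracy. This is consistent with the three identical micro values (0.9479) in the results table.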

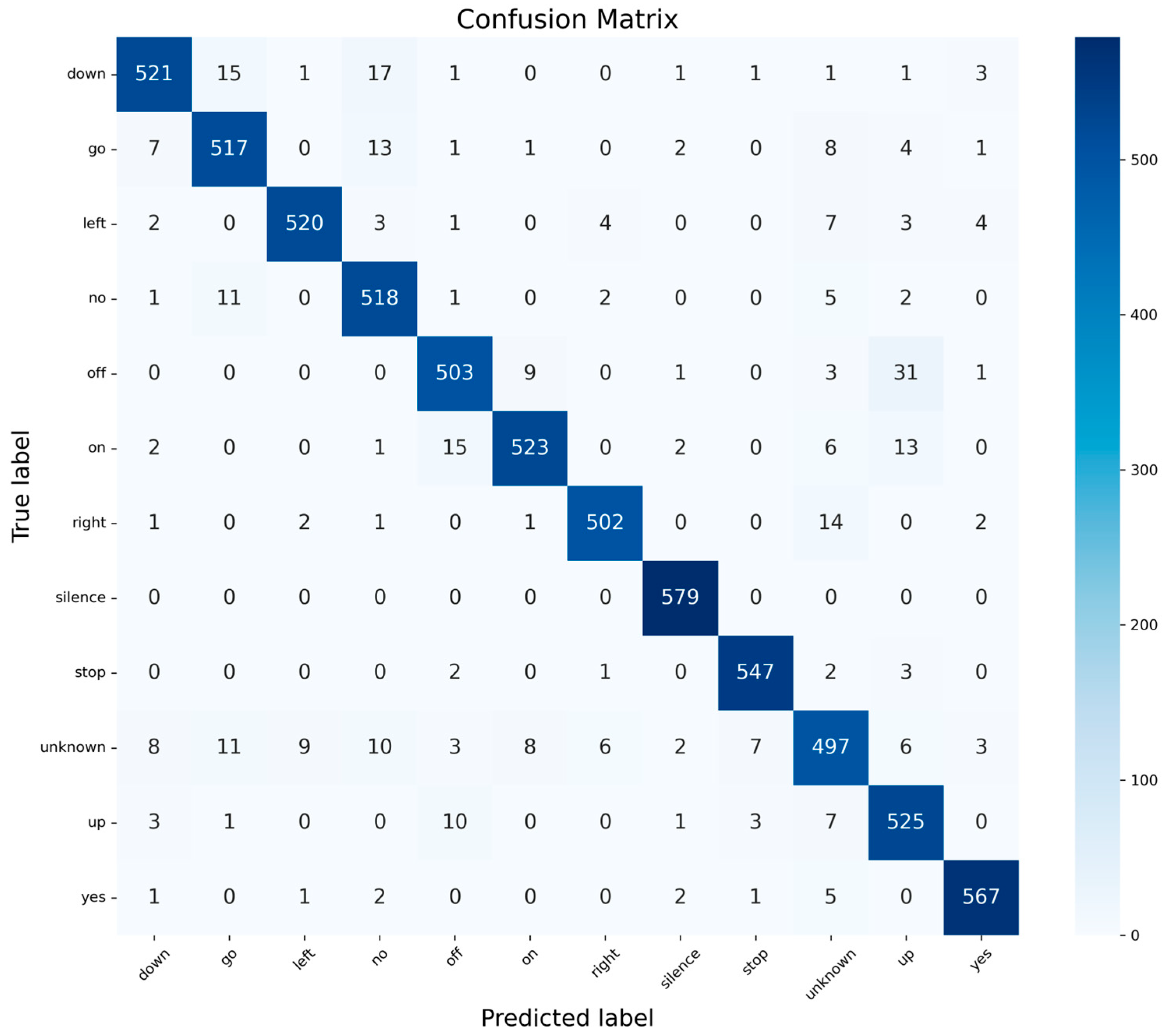

- A confusion matrix is computed to visualize classification performance across all classes.
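
As a brief illustration, a confusion matrix can be accumulated from the true and predicted labels as sketched below. The function names are hypothetical, and the row normalization is an optional convenience that is not mentioned in the text.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """cm[i][j] = number of samples with true class i that were predicted as class j."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def row_normalize(cm):
    """Divide each row by its total so that the diagonal shows per-class recall."""
    return [[v / sum(row) if sum(row) else 0.0 for v in row] for row in cm]
```

Off-diagonal entries show which pairs of classes are confused with each other, which per-class scores alone do not reveal.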

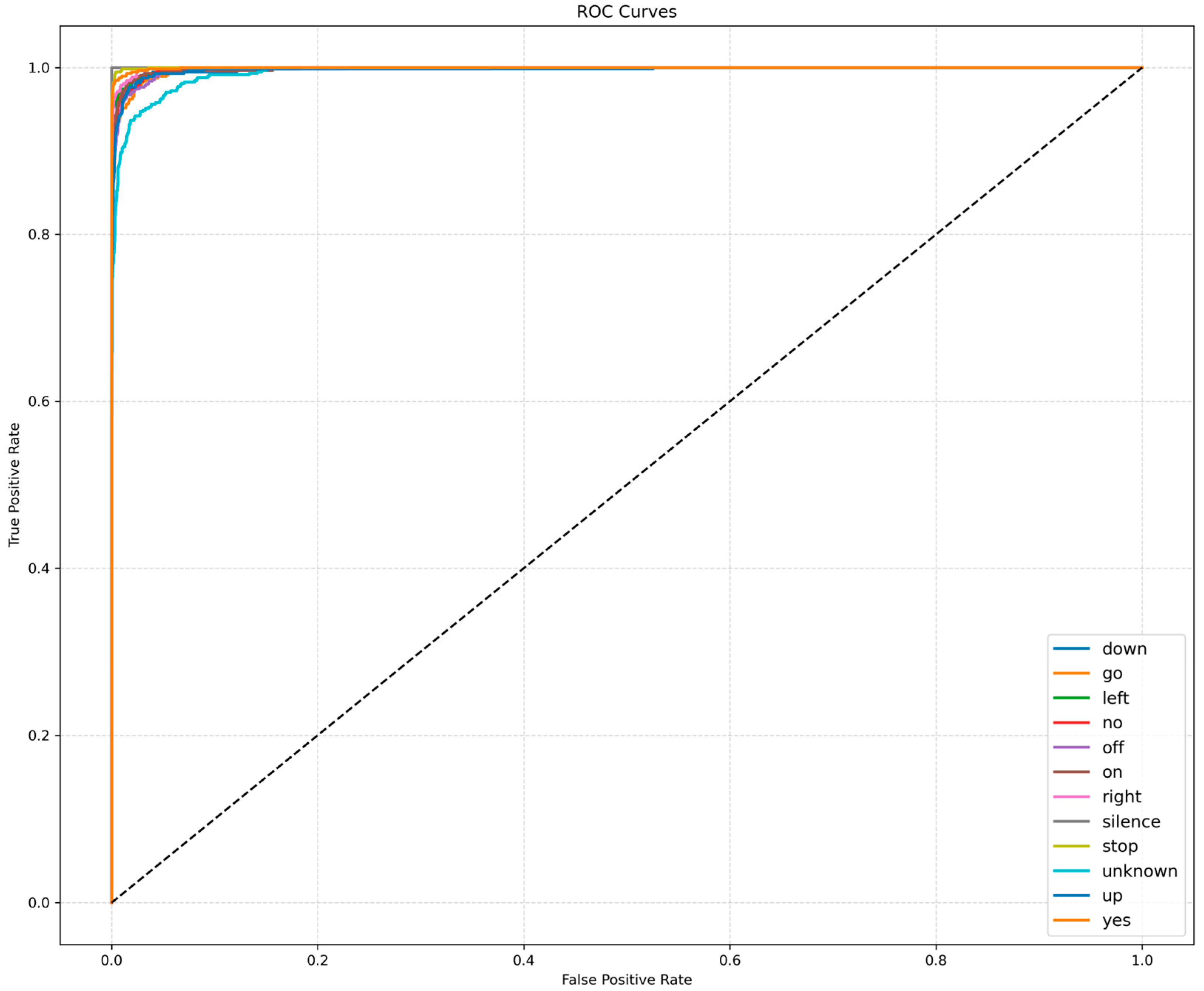

- For binary or one-vs-all tasks, Receiver Operating Characteristic (ROC) curves are computed, and the Area Under the Curve (AUC) quantifies the discriminative ability of the model.
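
A one-vs-all ROC curve and its AUC can be computed without external libraries, as in the following sketch. The function names are hypothetical. The curve is obtained by sorting the samples by the score of the chosen class and sweeping the decision threshold; samples with tied scores are handled as one group.

```python
def roc_curve(scores, is_positive):
    """Return (fpr, tpr) lists obtained by sweeping the decision threshold."""
    pairs = sorted(zip(scores, is_positive), key=lambda sp: -sp[0])
    n_pos = sum(1 for _, pos in pairs if pos)
    n_neg = len(pairs) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("ROC needs at least one positive and one negative sample")
    fpr, tpr, tp, fp = [0.0], [0.0], 0, 0
    for i, (score, pos) in enumerate(pairs):
        tp += bool(pos)
        fp += not pos
        # emit a point only after the last sample of a group of tied scores
        if i + 1 == len(pairs) or pairs[i + 1][0] != score:
            fpr.append(fp / n_neg)
            tpr.append(tp / n_pos)
    return fpr, tpr


def auc(fpr, tpr):
    """Area under the curve by the trapezoidal rule."""
    return sum((fpr[i] - fpr[i - 1]) * (tpr[i] + tpr[i - 1]) / 2 for i in range(1, len(fpr)))


def one_vs_rest_auc(probs, labels, cls):
    """Treat `cls` as the positive class and every other class as negative."""
    scores = [p[cls] for p in probs]
    return auc(*roc_curve(scores, [y == cls for y in labels]))
```

An AUC of 1.0 means that every sample of the class receives a higher score than every sample of the other classes, while 0.5 corresponds to scores that carry no information about the class.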

6.2. Fog Computer Results

- “Start Recording” and “Stop Recording” buttons that start and stop voice recording via the microphone, and a “Play Last” button that plays back the last recorded command.

- Mel spectrogram of the recorded command.

- Top three predictions with probabilities.
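
The top-three display can be produced from the network outputs with a softmax followed by a sort, as sketched below. The function names are hypothetical, and the class order is an assumption taken from the alphabetical listing in the per-class results table; the actual index order used by the model is not stated.

```python
import math

# assumed index order (alphabetical, as in the per-class results table)
CLASSES = ["down", "go", "left", "no", "off", "on", "right",
           "silence", "stop", "unknown", "up", "yes"]


def softmax(logits):
    m = max(logits)                          # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits, k=3, classes=CLASSES):
    """Return the k most probable (label, probability) pairs, best first."""
    probs = softmax(logits)
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    return [(classes[i], probs[i]) for i in order]
```

Showing three candidates instead of one lets the user see how confident the model is and which commands it considers close alternatives.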

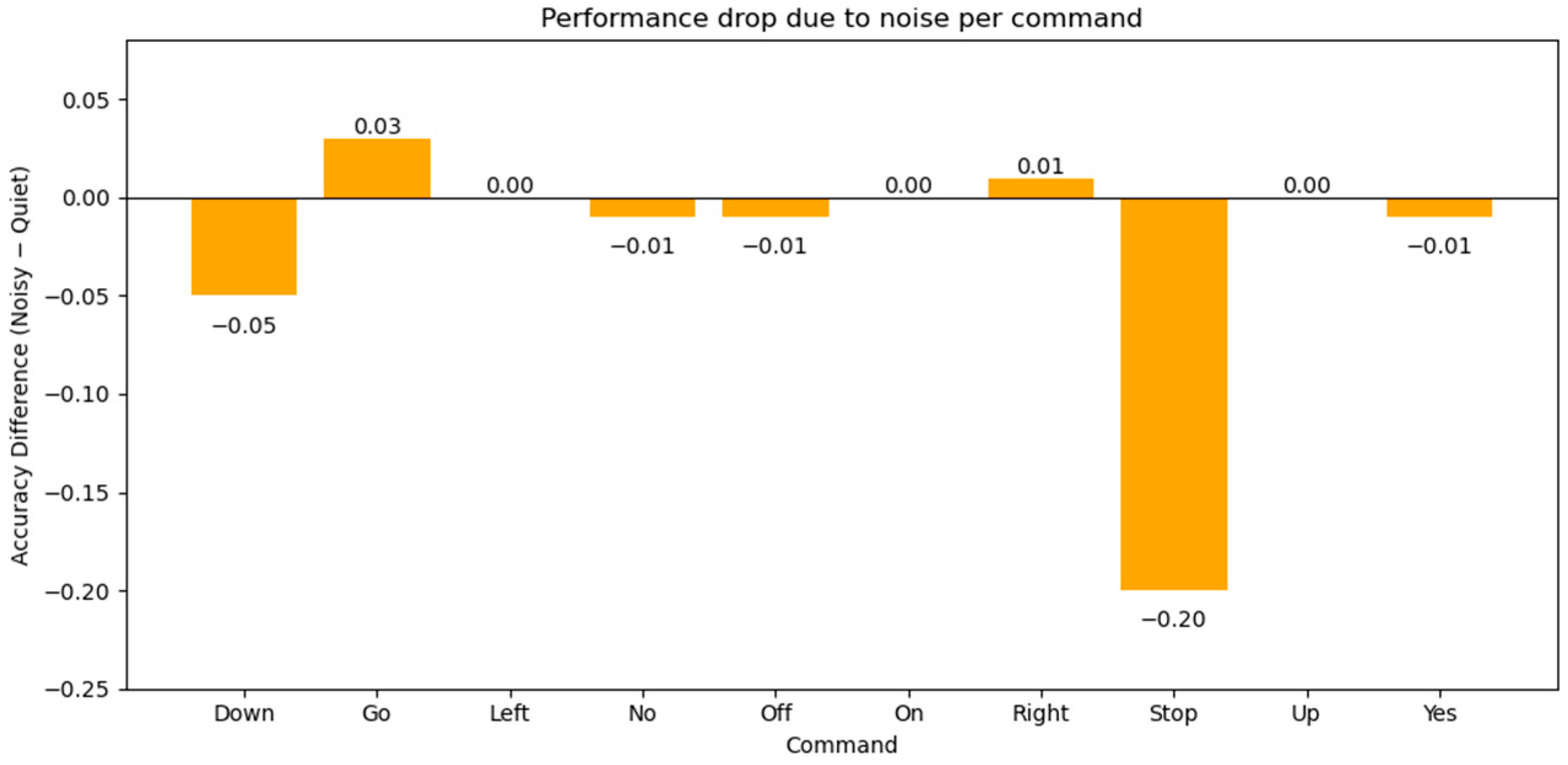

- Quiet environment: Most commands are recognized almost perfectly, with averages of 96–100%. “Go” and “stop” are slightly lower (93% each).

- Noisy environment: Accuracy drops for some commands, particularly “down” (91%) and “stop” (73%), indicating these are more sensitive to background noise. Commands like “on”, “up”, and “yes” remain highly robust (≥99%).

- Overall averages: Across both environments and all participants, per-command accuracy ranges from 83% (“stop”) to 100% (“yes”), and every command except “stop” reaches at least 94%. Performance remains strong under noise, with “stop” being the most challenging command in noisy conditions.

6.3. Comparison with Relevant Models

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Warden, P. Speech Commands: A Public Dataset for Single-Word Speech Recognition. 2017. Available online: http://download.tensorflow.org/data/speech_commands_v0.01.tar.gz (accessed on 10 September 2025).

- Chen, J.; Teo, T.H.; Kok, C.L.; Koh, Y.Y. A Novel Single-Word Speech Recognition on Embedded Systems Using a Convolution Neuron Network with Improved Out-of-Distribution Detection. Electronics 2024, 13, 530.

- Zinemanas, P.; Rocamora, M.; Miron, M.; Font, F.; Serra, X. An Interpretable Deep Learning Model for Automatic Sound Classification. Electronics 2021, 10, 850.

- Sainath, T.N.; Parada, C. Convolutional neural networks for small-footprint keyword spotting. In Proceedings of the Interspeech, Dresden, Germany, 6–10 September 2015; pp. 1478–1482.

- Zhang, Y.; Suda, N.; Lai, L. Hello Edge: Keyword Spotting on Microcontrollers. arXiv 2017, arXiv:1711.07128.

- Warden, P. Speech Commands: A dataset for Limited-Vocabulary Speech Recognition. arXiv 2018, arXiv:1804.03209.

- De Andrade, D.C.; Leo, S.; Viana, M.L.D.S.; Bernkopf, C. A neural attention model for speech command recognition. arXiv 2018, arXiv:1808.08929.

- Wong, A.; Famouri, M.; Pavlova, M.; Surana, S. Tinyspeech: Attention condensers for deep speech recognition neural networks on edge devices. arXiv 2020, arXiv:2008.04245.

- Lin, Z.Q.; Chung, A.G.; Wong, A. Edgespeechnets: Highly efficient deep neural networks for speech recognition on the edge. arXiv 2018, arXiv:1810.08559.

- Kuzminykh, I.; Shevchuk, D.; Shiaeles, S.; Ghita, B. Audio Interval Retrieval Using Convolutional Neural Networks. In Internet of Things, Smart Spaces, and Next Generation Networks and Systems; Galinina, O., Andreev, S., Balandin, S., Koucheryavy, Y., Eds.; NEW2AN 2020, ruSMART 2020; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; Volume 12525.

- Schmid, F.; Koutini, K.; Widmer, G. Dynamic Convolutional Neural Networks as Efficient Pre-Trained Audio Models. IEEE/ACM Trans. Audio Speech Lang. Process. 2024, 32, 2227–2241.

- Baevski, A.; Zhou, H.; Mohamed, A.; Auli, M. wav2vec 2.0: A Framework for Self-Supervised Learning of Speech Representations. arXiv 2020, arXiv:2006.11477.

- Chang, H.-J.; Bhati, S.; Glass, J.; Liu, A.H. USAD: Universal Speech and Audio Representation via Distillation. arXiv 2025, arXiv:2506.18843.

- Shor, J.; Jansen, A.; Maor, R.; Lang, O.; Tuval, O.; Quitry, F.d.C.; Tagliasacchi, M.; Shavitt, I.; Emanuel, D.; Haviv, Y. Towards Learning a Universal Non-Semantic Representation of Speech. In Proceedings of the Interspeech, Shanghai, China, 25–29 October 2020; pp. 140–144.

- Gulati, A.; Qin, J.; Chiu, C.-C.; Parmar, N.; Zhang, Y.; Yu, J.; Han, W.; Wang, S.; Zhang, Z.; Wu, Y.; et al. Conformer: Convolution-augmented Transformer for Speech Recognition. In Proceedings of the Interspeech, Shanghai, China, 25–29 October 2020; pp. 5036–5040.

- Han, W.; Zhang, Z.; Zhang, Y.; Yu, J.; Chiu, C.-C.; Qin, J.; Gulati, A.; Pang, R.; Wu, Y. ContextNet: Improving Convolutional Neural Networks for Automatic Speech Recognition with Global Context. In Proceedings of the Interspeech, Shanghai, China, 25–29 October 2020; pp. 3610–3614.

- Bartoli, P.; Bondini, T.; Veronesi, C.; Giudici, A.; Antonello, N.; Zappa, F. End-to-End Efficiency in Keyword Spotting: A System-Level Approach for Embedded Microcontrollers. arXiv 2025, arXiv:2509.07051.

- Alhashimi, S.A.; Aliedani, A. Embedded Device Keyword Spotting Model with Quantized Convolutional Neural Network. Int. J. Eng. Trends Technol. 2025, 73, 117–123.

- Kadhim, I.J.; Abdulabbas, T.E.; Ali, R.; Hassoon, A.F.; Premaratne, P. Enhanced speech command recognition using convolutional neural networks. J. Eng. Sustain. Dev. 2024, 28, 754–761.

- Pervaiz, A.; Hussain, F.; Israr, H.; Tahir, M.A.; Raja, F.R.; Baloch, N.K.; Ishmanov, F.; Zikria, Y.B. Incorporating Noise Robustness in Speech Command Recognition by Noise Augmentation of Training Data. Sensors 2020, 20, 2326.

- Chen, C.; Hou, N.; Hu, Y.; Shirol, S.; Chng, E.S. Noise-robust speech recognition with 10 minutes unparalleled in-domain data. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 22–27 May 2022; pp. 7717–7721.

- Porjazovski, D.; Moisio, A.; Kurimo, M. Out-of-distribution generalisation in spoken language understanding. In Proceedings of the Interspeech, Kos, Greece, 1–5 September 2024; pp. 807–811.

- Baranger, A.; Maison, L. Evaluating and Improving the Robustness of Speech Command Recognition Models to Noise and Distribution Shifts. arXiv 2025, arXiv:2507.23128.

- Chernyak, B.R.; Segal, Y.; Shrem, Y.; Keshet, J. PatchDSU: Uncertainty Modeling for Out of Distribution Generalization in Keyword Spotting. arXiv 2025, arXiv:2508.03190.

- Rabiner, L.; Juang, B.H. Fundamentals of Speech Recognition; Prentice Hall: Englewood Cliffs, NJ, USA, 1993.

- Jurafsky, D.; Martin, J.H. Speech and Language Processing, 3rd ed. Available online: https://web.stanford.edu/~jurafsky/slp3/ (accessed on 10 September 2025).

- Zhou, Q.; Shan, J.; Ding, W.; Wang, C.; Yuan, S.; Sun, F.; Li, H.; Fang, B. Cough recognition based on Mel-spectrogram and convolutional neural network. Front. Robot. AI 2021, 8, 580080.

- Sharan, R.V.; Berkovsky, S.; Liu, S. Voice Command Recognition Using Biologically Inspired Time-Frequency Representation and Convolutional Neural Networks. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 998–1001.

- Stevens, S.S.; Volkmann, J.; Newman, E.B. A scale for the measurement of the psychological magnitude pitch. J. Acoust. Soc. Am. 1937, 8, 185–190.

- Davis, S.B.; Mermelstein, P. Comparison of parametric representations for monosyllabic word recognition in continuously spoken sentences. IEEE Trans. Acoust. Speech Signal Process. 1980, 28, 357–366.

- Gemmeke, J.F.; Ellis, D.P.W.; Freedman, D.; Jansen, A.; Lawrence, W.; Moore, R.C.; Plakal, M.; Ritter, M. AudioSet: An ontology and human-labeled dataset for audio events. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 776–780.

- O’Shaughnessy, D. Speech Communication: Human and Machine; Addison-Wesley: Boston, MA, USA, 1987.

- Feichtinger, H.G.; Luef, F. Gabor Analysis and Algorithms; Engquist, B., Ed.; Encyclopedia of Applied and Computational Mathematics; Springer: Berlin/Heidelberg, Germany, 2015.

- Mallat, S. A Wavelet Tour of Signal Processing; Academic Press: Cambridge, MA, USA, 1999.

- Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A Survey of Convolutional Neural Networks: Analysis, Applications, and Prospects. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6999–7019.

- Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet Classification with Deep Convolutional Neural Networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1–9.

- Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

- Krichen, M. Convolutional Neural Networks: A Survey. Computers 2023, 12, 151.

- O’Shea, K.; Nash, R. An Introduction to Convolutional Neural Networks. arXiv 2015, arXiv:1511.08458.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Cantiabela, Z.; Pardede, H.F.; Zilvan, V.; Sulandari, W.; Yuwana, R.S.; Supianto, A.A.; Krisnandi, D. Deep learning for robust speech command recognition using convolutional neural networks (CNN). In Proceedings of the 2022 International Conference on Computer, Control, Informatics and Its Applications, Virtually, 22–23 November 2022; pp. 101–105.

- Li, X.; Zhou, Z. Speech command recognition with convolutional neural network. CS229 Stanf. Educ. 2017, 31, 1–6.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Fifine Ampligame A2 Microphone. Available online: https://fifinemicrophone.com/products/fifine-ampligame-a2 (accessed on 10 September 2025).

| Method | Characteristics | Limitations | Advantages of Mel |
|---|---|---|---|
| STFT | Linear frequency resolution, widely used in spectral analysis | Equal weight to all frequencies; does not reflect human auditory perception | Mel emphasizes perceptually relevant low–mid frequency bands |
| Wavelet Transform | Good time–frequency localization, flexible basis | Computationally more expensive; less standardized for speech/audio | Mel is simpler, more efficient, and widely adopted |
| Gabor Transform | Localized time–frequency analysis with Gaussian windows | High computational cost; less efficient on embedded systems | Mel is hardware-friendly and efficient for low-power applications |
| Mel Filter Banks | Perceptually motivated, efficient, widely validated in ASR and sound classification | Lower resolution at high frequencies | Best trade-off between accuracy, perceptual relevance, and efficiency |

| Class | Precision | Recall | F1-Score |
|---|---|---|---|
| Down | 0.95 | 0.93 | 0.94 |
| Go | 0.93 | 0.93 | 0.93 |
| Left | 0.98 | 0.96 | 0.97 |
| No | 0.92 | 0.96 | 0.94 |
| Off | 0.94 | 0.92 | 0.93 |
| On | 0.96 | 0.93 | 0.95 |
| Right | 0.97 | 0.96 | 0.97 |
| Silence | 0.98 | 1.00 | 0.99 |
| Stop | 0.98 | 0.99 | 0.98 |
| Unknown | 0.90 | 0.87 | 0.88 |
| Up | 0.89 | 0.95 | 0.92 |
| Yes | 0.98 | 0.98 | 0.98 |
| Macro avg | 0.95 | 0.95 | 0.95 |
| Weighted avg | 0.95 | 0.95 | 0.95 |
| Micro | 0.9479 | 0.9479 | 0.9479 |
| Macro | 0.9482 | 0.9479 | 0.9479 |

| Parameter | Main Model | Pruned Model | Quantized Model |
|---|---|---|---|
| Parameter count | 295,916 | 295,916 | 97,632 |
| Nonzero parameters | 295,916 | 148,388 | 49,176 |
| Sparsity | 0% | 49.85% | 49.63% |
| Model size | 1.15 MB | 1.15 MB | 0.58 MB |
| FLOPs | 14.38 MFLOPs | 14.38 MFLOPs | 14.18 MFLOPs |
| Single inference latency | 3.04 ms | 3.47 ms | 5.8 ms |
| Throughput | 329.28 samples/s | 288.50 samples/s | 172.53 samples/s |
| Memory footprint | 418.94 MB | 425.23 MB | 429.89 MB |
| CPU utilization | 6.8% | 7% | 7.2% |

| Command | P1 Quiet | P2 Quiet | P3 Quiet | P1 Noisy | P2 Noisy | P3 Noisy | Average Quiet | Average Noisy | Average Overall |
|---|---|---|---|---|---|---|---|---|---|
| Down | 0.96 | 0.98 | 0.94 | 0.97 | 0.99 | 0.78 | 0.96 | 0.91 | 0.94 |
| Go | 0.99 | 0.95 | 0.85 | 0.99 | 0.95 | 0.94 | 0.93 | 0.96 | 0.95 |
| Left | 1.00 | 0.98 | 0.92 | 1.00 | 1.00 | 0.91 | 0.97 | 0.97 | 0.97 |
| No | 0.98 | 0.97 | 0.93 | 0.99 | 0.96 | 0.89 | 0.96 | 0.95 | 0.95 |
| Off | 1.00 | 0.96 | 0.96 | 1.00 | 0.99 | 0.89 | 0.97 | 0.96 | 0.97 |
| On | 0.99 | 1.00 | 0.99 | 0.99 | 1.00 | 0.97 | 0.99 | 0.99 | 0.99 |
| Right | 1.00 | 0.98 | 0.93 | 0.98 | 0.99 | 0.98 | 0.97 | 0.98 | 0.98 |
| Stop | 0.98 | 0.91 | 0.89 | 0.93 | 0.68 | 0.59 | 0.93 | 0.73 | 0.83 |
| Up | 1.00 | 0.99 | 0.99 | 0.99 | 1.00 | 0.99 | 0.99 | 0.99 | 0.99 |
| Yes | 1.00 | 1.00 | 1.00 | 0.99 | 1.00 | 0.99 | 1.00 | 0.99 | 1.00 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Vujičić, D.; Damnjanović, Đ.; Marković, D.; Stamenković, Z. Deep Learning System for Speech Command Recognition. Electronics 2025, 14, 3793. https://doi.org/10.3390/electronics14193793
