Deep-Learning-Based Human Activity Recognition: Eye-Tracking and Video Data for Mental Fatigue Assessment

Abstract

1. Introduction

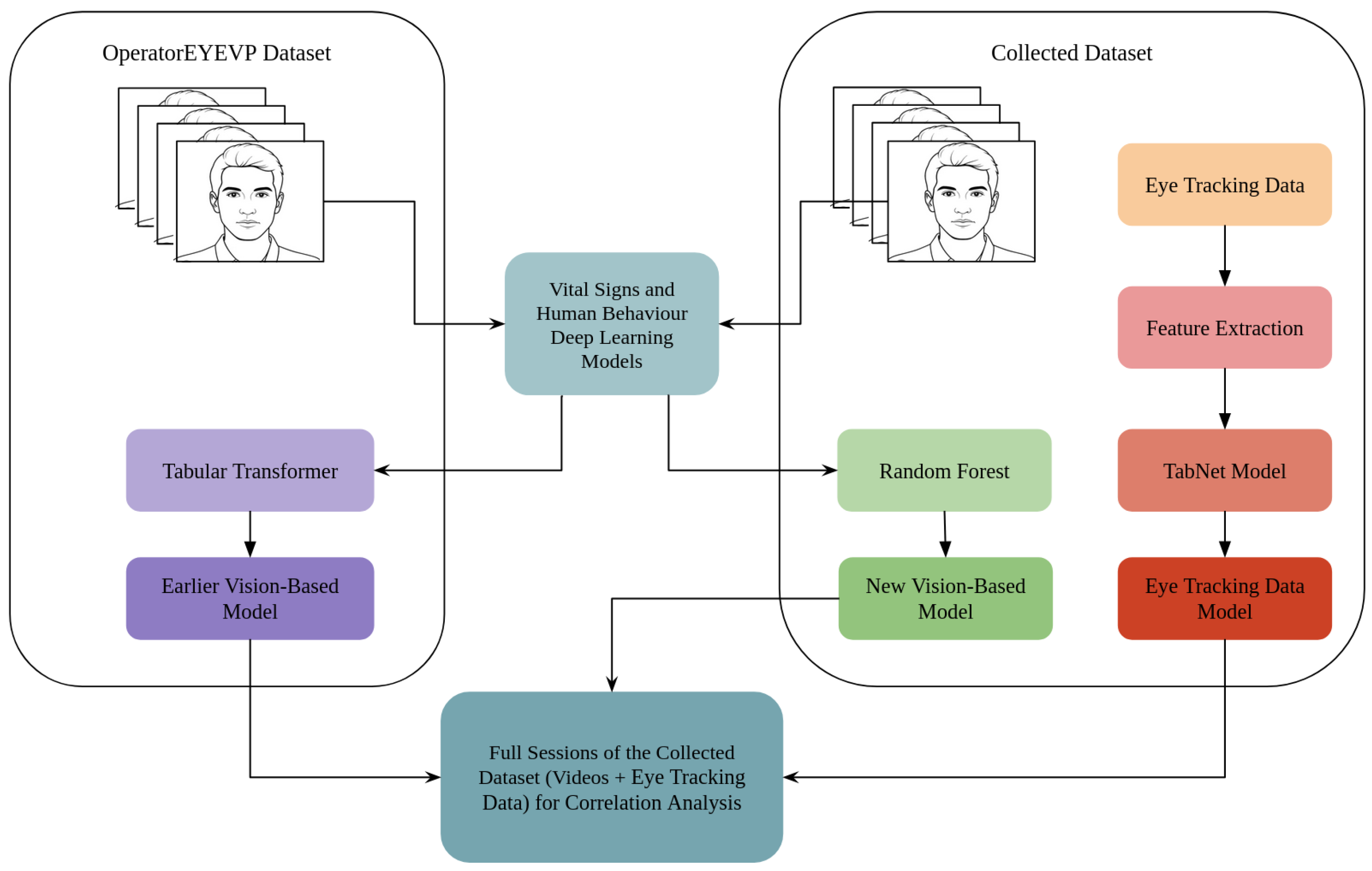

- Cross-Validation of Methodological Robustness: By deploying the earlier-developed vision-based model on a newly collected dataset, we aim to assess its robustness and reproducibility, since the model was trained on another dataset collected under different experimental conditions. This is essential for verifying that the model is not overfitted to a specific protocol, subject group, or recording environment.

- Functional Comparison to Advanced-Devices-Based Models: Our previously developed vision-based model has not yet been compared against real-time fatigue measures obtained with advanced devices. In this study, the availability of eye-tracking-derived fatigue predictions enables a functional comparison between two distinct modalities: eye movement patterns recorded with an advanced device (an eye tracker), and vital signs and human activity indicators estimated from video. This comparison allows us to test convergent validity, that is, whether different definitions and measurement techniques of fatigue produce consistent trends over time.

- Validation of Contactless Alternatives in Practical Use Cases: In real-world applications, contactless monitoring is often preferred because of its scalability and minimal burden on the user. Demonstrating that the vision-based model retains its predictive value on a new dataset and agrees with the eye-tracking model's outputs provides strong evidence for its use as a viable alternative or supplement to advanced-devices-based systems.

- We develop and evaluate a fatigue detection model that uses statistical features extracted from eye-tracking data;

- We implement a vision-based model and assess its performance on a constrained subset of the data to test its robustness and generalizability;

- We conduct a comprehensive correlation analysis to explore the convergence of predictions between the two models and validate their consistency.

2. Literature Review

2.1. Fatigue Estimation via Sensors and Advanced-Device Data

2.2. Fatigue Estimation via Features Estimated Using Computer Vision and Deep Learning

3. Methodology

3.1. Proposed Models for Fatigue Assessment

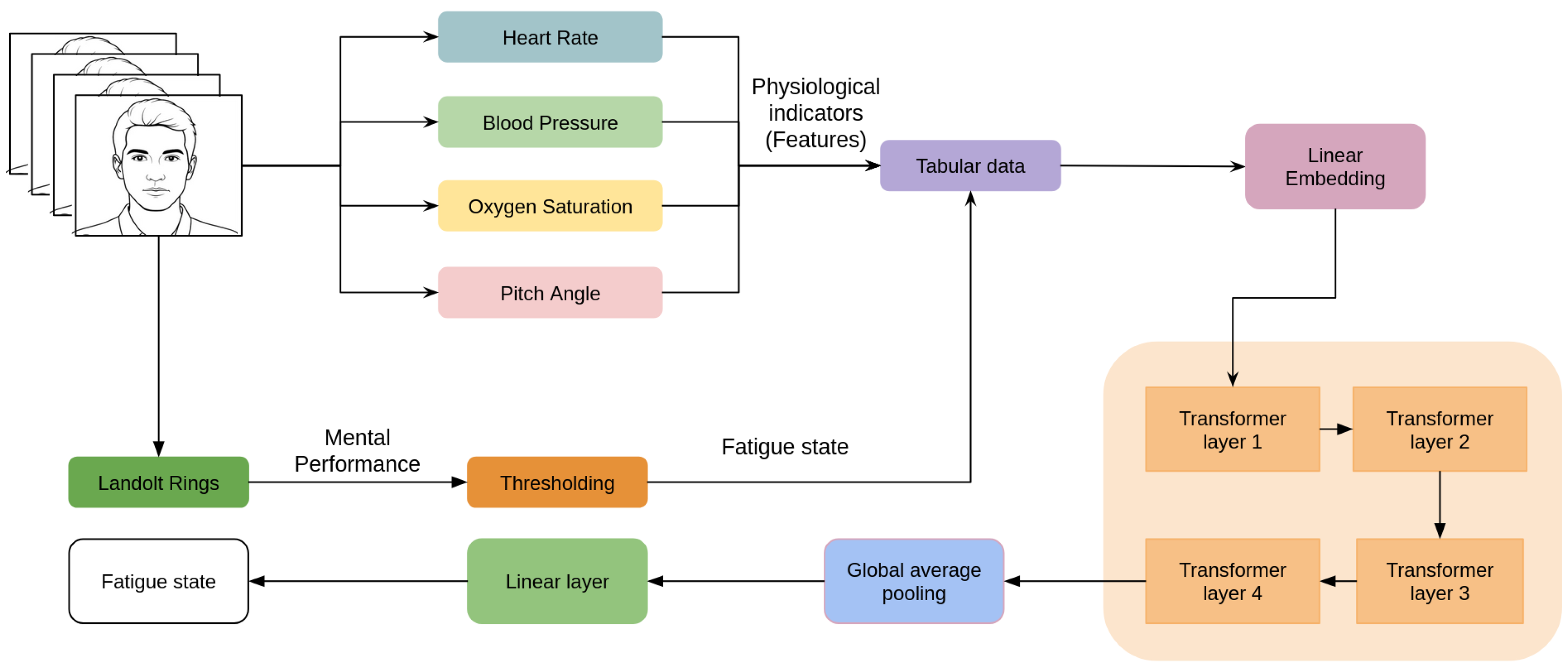

3.2. Earlier-Developed Vision-Based Method

3.2.1. Mental Fatigue Estimation Method Using Vital Signs and Human Activity

3.2.2. Deep Learning Models for Physiological Indicator Estimation

- Respiratory Rate and Breathing Characteristics: The respiratory rate model combines OpenPose for chest keypoint detection with SelFlow [42] for displacement analysis. Signal processing techniques further refine the output, achieving a mean absolute error (MAE) of 1.5 breaths per minute. However, the model's accuracy is limited in dynamic settings, such as when the subject is moving.

- Heart Rate: The heart rate estimation model employs facial region extraction followed by processing with a Vision Transformer [43]. A layered block structure calculates heart rate using a weighted average. While effective overall, the model underperforms for extreme heart rates due to limited training data coverage.

- Blood Pressure: Blood pressure estimation begins by identifying cheek regions in video frames. Features are extracted using EfficientNet and fed into LSTM layers. The model reports MAEs of 11.8 mmHg (systolic) and 10.7 mmHg (diastolic), with accuracies of 89.5% and 86.2%, respectively. The limited skin-tone diversity of the training dataset remains a constraint.

- Oxygen Saturation: The estimation model uses 3DDFA_V2 [44] for face detection, VGG19 for feature extraction, and XGBoost for regression. It achieves MAEs of 1.17% and 0.84% on two datasets. However, the training data contain too few low-saturation samples, which degrades performance in certain clinical cases.

- Head Pose: Head pose is estimated via face detection using YOLO Tiny [45], followed by 3D face reconstruction and landmark tracking to compute Euler angles. The model is effective but limited to head angles below 70°, and its inference is slower than that of other approaches.

- Eye and Mouth States: Eye state detection is based on facial inputs from FaceBoxes, while the mouth state is classified using a modified MobileNet, achieving 95.2% accuracy. Despite high performance, the use of a private dataset for training may limit generalizability.
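The head pose pipeline above ends by computing Euler angles from a reconstructed 3D face. A minimal sketch of that final step follows, assuming the reconstruction yields a 3×3 rotation matrix and assuming a Z-Y-X (yaw, pitch, roll) convention; the original model's convention and code are not described here, and all helper names are hypothetical. The range check mirrors the 70° limit stated above.

```python
import math


def euler_zyx_to_rotation(yaw, pitch, roll):
    """Build R = Rz(yaw) @ Ry(pitch) @ Rx(roll) from angles in degrees (hypothetical helper)."""
    cy, sy = math.cos(math.radians(yaw)), math.sin(math.radians(yaw))
    cp, sp = math.cos(math.radians(pitch)), math.sin(math.radians(pitch))
    cr, sr = math.cos(math.radians(roll)), math.sin(math.radians(roll))
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def rotation_to_euler_zyx(r):
    """Recover (yaw, pitch, roll) in degrees from a 3x3 rotation matrix (Z-Y-X assumed)."""
    cos_pitch = math.hypot(r[0][0], r[1][0])
    if cos_pitch > 1e-6:
        yaw = math.atan2(r[1][0], r[0][0])
        pitch = math.atan2(-r[2][0], cos_pitch)
        roll = math.atan2(r[2][1], r[2][2])
    else:
        # Gimbal lock (pitch near +/-90 deg): yaw and roll are coupled, so fix roll = 0.
        yaw = math.atan2(-r[0][1], r[1][1])
        pitch = math.atan2(-r[2][0], cos_pitch)
        roll = 0.0
    return tuple(math.degrees(a) for a in (yaw, pitch, roll))


def within_working_range(angles, limit_deg=70.0):
    """True when every angle magnitude is below the 70 deg limit stated in the text."""
    return all(abs(a) < limit_deg for a in angles)


if __name__ == "__main__":
    # Round-trip a hypothetical pose: yaw 30, pitch -10, roll 5 degrees.
    angles = rotation_to_euler_zyx(euler_zyx_to_rotation(30.0, -10.0, 5.0))
    print([round(a, 3) for a in angles], within_working_range(angles))
```

In practice the rotation matrix would come from the fitted 3D face model rather than from `euler_zyx_to_rotation`, which is included only so the example can round-trip a known pose.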

3.3. Proposed Methods

3.3.1. Eye-Tracking-Based Method

- Feature-wise attention, allowing the model to focus on the most relevant indicators of fatigue;

- Built-in interpretability, offering insights into which features influence predictions;

- Strong performance on small datasets, which is ideal given our limited sample size;

- Improved generalization, helping the model remain robust across different individuals and sessions.
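The feature-wise attention and built-in interpretability listed above are characteristic of TabNet, which appears in the reference list; this section does not name the model, so treating it as TabNet-like is an assumption. The sketch below shows only the masking idea: sparsemax, the normalization used in TabNet's attentive transformer, turns attention logits into a feature mask in which irrelevant features receive exactly zero weight, which is what makes the selected features inspectable. The feature names, values, and logits are hypothetical, and TabNet's learned transformations and prior scales are omitted.

```python
def sparsemax(logits):
    """Project logits onto the probability simplex; low logits get exactly 0."""
    z_sorted = sorted(logits, reverse=True)
    cumulative = 0.0
    support_size, support_sum = 0, 0.0
    for k, z_k in enumerate(z_sorted, start=1):
        cumulative += z_k
        # z_k stays in the support while 1 + k * z_k exceeds the running sum.
        if 1.0 + k * z_k > cumulative:
            support_size, support_sum = k, cumulative
    tau = (support_sum - 1.0) / support_size  # threshold subtracted from every logit
    return [max(z - tau, 0.0) for z in logits]


def apply_feature_mask(feature_values, logits):
    """Weight each feature by its sparsemax mask entry (a single decision step)."""
    mask = sparsemax(logits)
    masked = [m * x for m, x in zip(mask, feature_values)]
    return mask, masked


if __name__ == "__main__":
    # Hypothetical eye-movement features and attention logits, for illustration only.
    names = ["fixation_duration_mean", "saccade_amplitude_mean",
             "blink_rate", "pupil_diameter_std"]
    values = [0.42, 3.1, 17.0, 0.08]
    logits = [1.2, 0.9, 0.1, -0.5]
    mask, masked = apply_feature_mask(values, logits)
    for name, m in zip(names, mask):
        print(f"{name:>24s}: mask={m:.2f}")
```

Unlike softmax, which gives every feature some weight, sparsemax zeroes out the last two features here, so reading the mask directly shows which inputs a decision step relied on.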

3.3.2. New Vision-Based Method

- Random Forests perform well even with relatively small datasets, which is advantageous given the limited size of our current annotated data.

- The ensemble nature of Random Forests reduces the risk of overfitting, which is particularly important when dealing with noisy or estimated physiological features.

- Compared to transformer-based models, Random Forests are faster to train and computationally cheaper.
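A minimal scikit-learn sketch of training and evaluating such a Random Forest follows. Everything specific in it is an assumption for illustration: the feature names (loosely modeled on the indicators in Section 3.2.2), the synthetic data, the binary fatigued/not-fatigued label, and the hyperparameters are not the study's. Folds are split by subject with `GroupKFold`, an assumed protocol choice that keeps each participant's windows out of the folds used to train on that participant.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GroupKFold, cross_val_score

# Hypothetical per-window features loosely modeled on the indicators in Section 3.2.2.
FEATURES = ["heart_rate", "respiratory_rate", "systolic_bp", "diastolic_bp",
            "spo2", "head_pitch", "eye_closure_ratio", "yawn_rate"]


def make_synthetic_data(n_subjects=10, windows_per_subject=30, seed=0):
    """Synthetic stand-in data: standardized features, binary label, subject ids."""
    rng = np.random.default_rng(seed)
    n = n_subjects * windows_per_subject
    X = rng.normal(size=(n, len(FEATURES)))
    # Label depends on eye closure and yawning plus noise (purely illustrative).
    signal = 0.8 * X[:, 6] + 0.5 * X[:, 7] + rng.normal(scale=0.5, size=n)
    y = (signal > 0).astype(int)
    groups = np.repeat(np.arange(n_subjects), windows_per_subject)
    return X, y, groups


def evaluate_random_forest(X, y, groups, n_splits=5, seed=0):
    """Subject-wise cross-validated F1, then feature importances from a full fit."""
    model = RandomForestClassifier(n_estimators=200, min_samples_leaf=2,
                                   random_state=seed)
    scores = cross_val_score(model, X, y, groups=groups,
                             cv=GroupKFold(n_splits=n_splits), scoring="f1")
    model.fit(X, y)
    return scores, dict(zip(FEATURES, model.feature_importances_))


if __name__ == "__main__":
    X, y, groups = make_synthetic_data()
    scores, importances = evaluate_random_forest(X, y, groups)
    print("F1 per fold:", np.round(scores, 3))
    ranked = sorted(importances.items(), key=lambda kv: kv[1], reverse=True)
    print("Top features:", [name for name, _ in ranked[:3]])
```

Impurity-based `feature_importances_` give a quick ranking but can favor features with many distinct values; permutation importance on held-out folds is a common cross-check.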

3.4. Datasets

3.4.1. OperatorEYEVP Dataset

3.4.2. Collected Dataset

4. Experiments and Results

4.1. Fatigue Detection Using Eye Movement Characteristics

4.2. Vision-Based Fatigue Detection Using Physiological Indicators

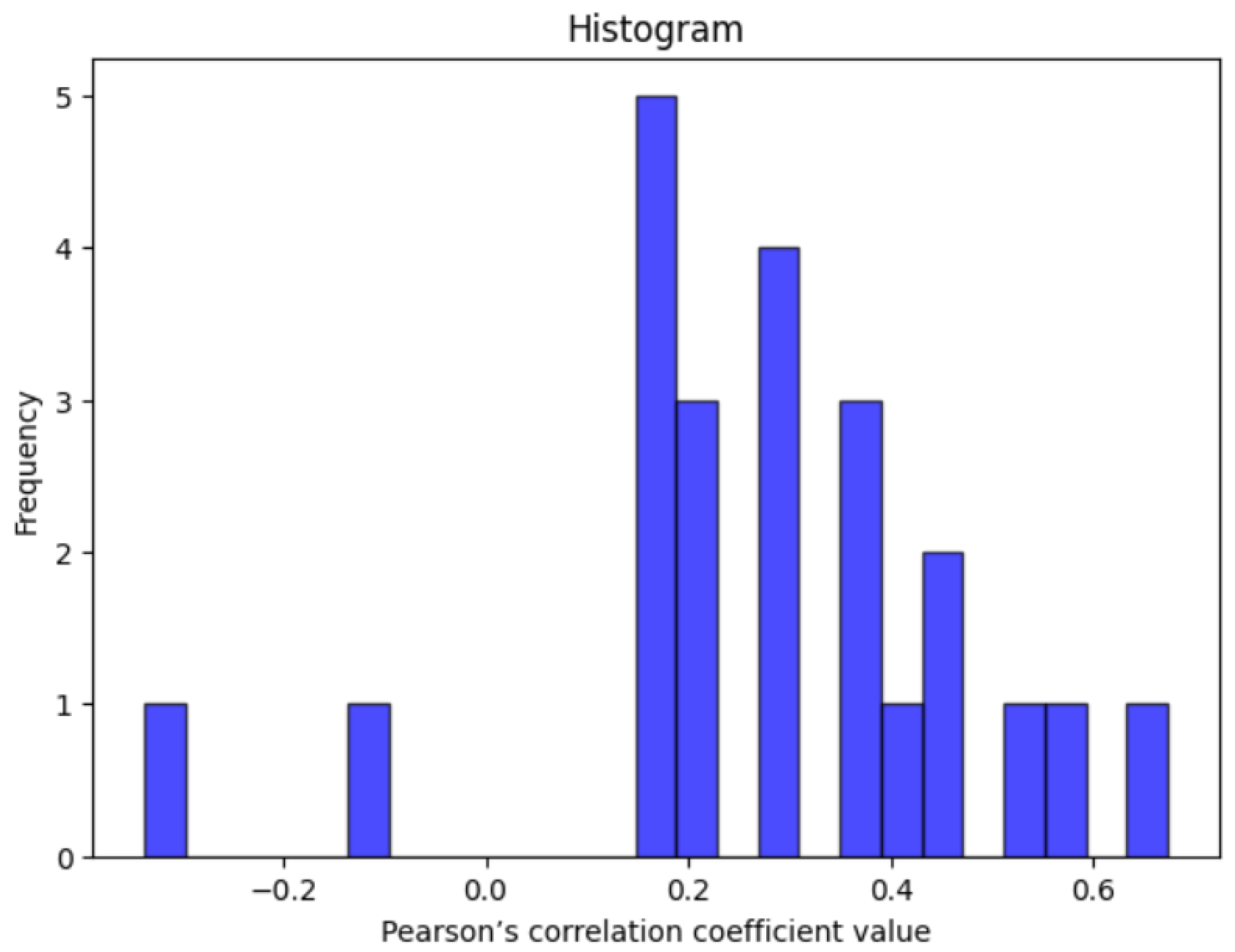

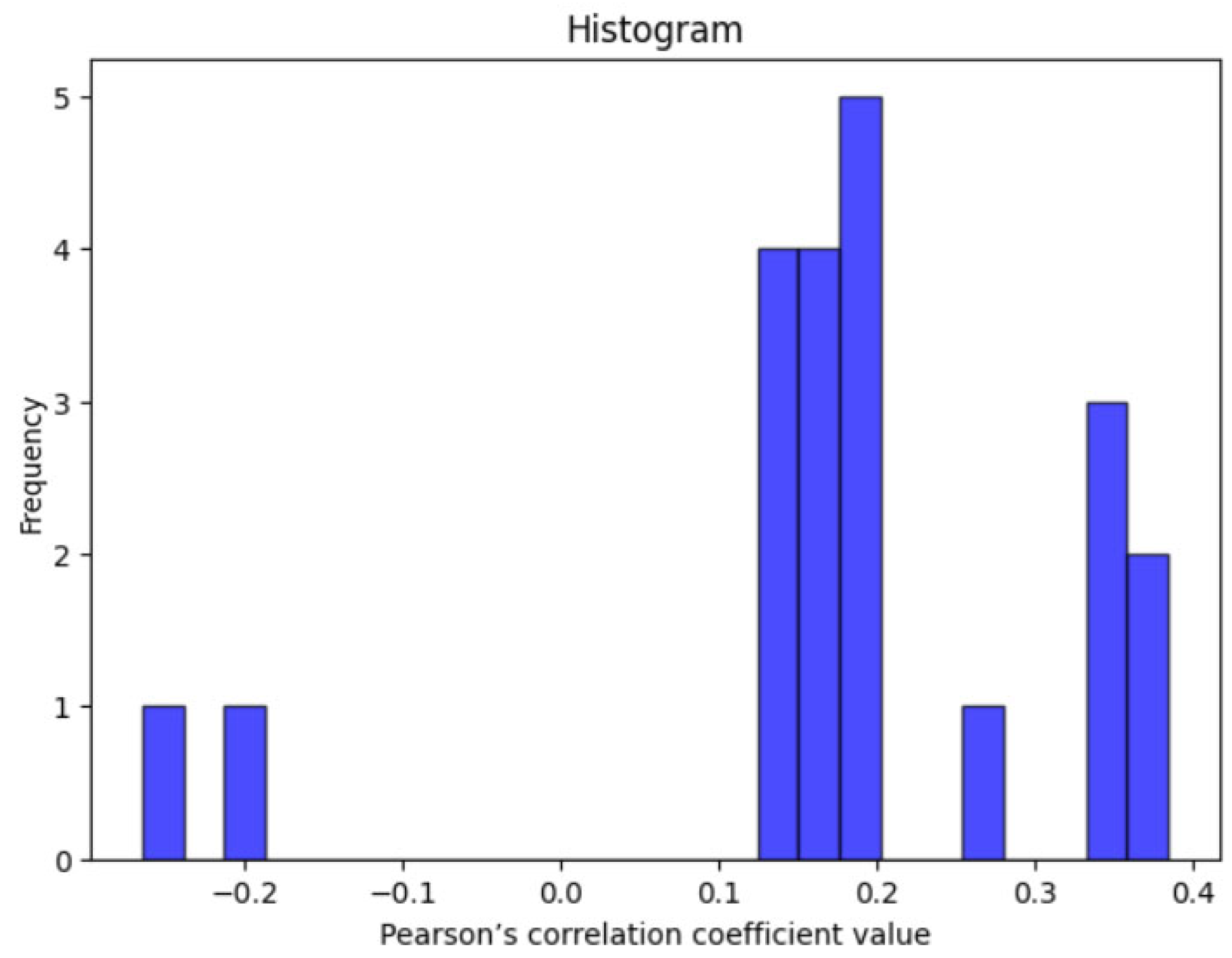

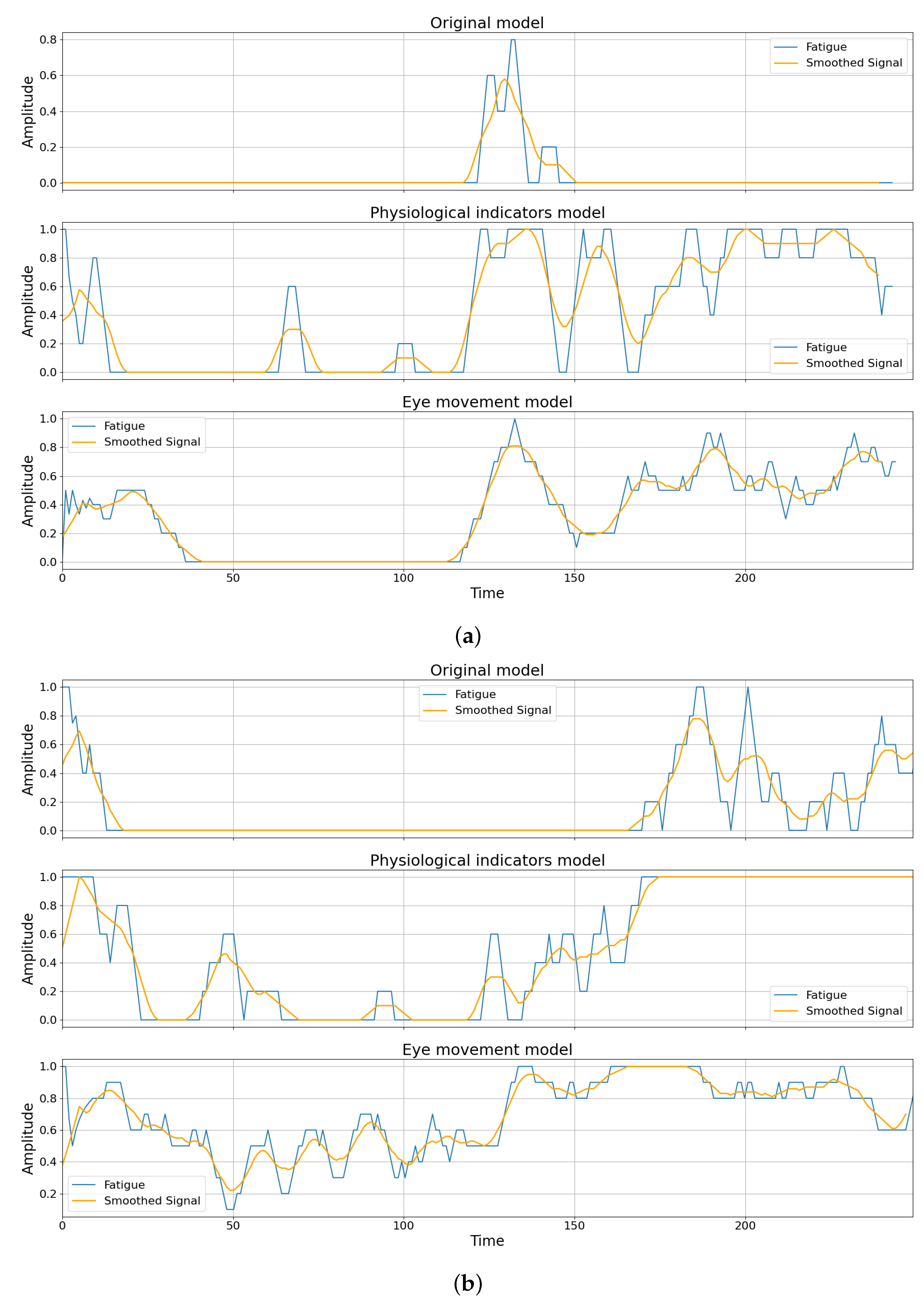

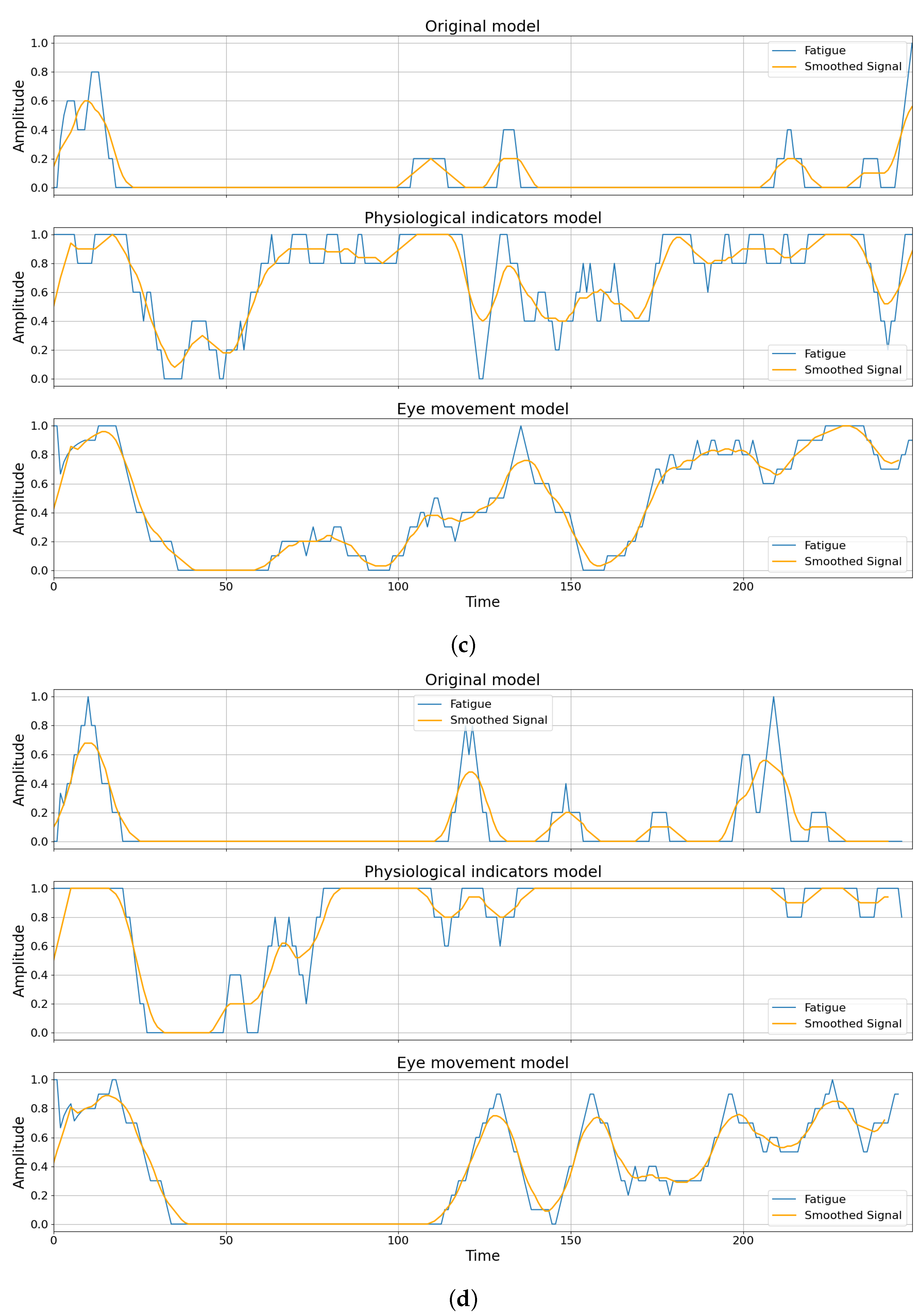

4.3. Correlation Analysis for Validity Check

- Cross-modal agreement: The correlation indicates that fatigue measured from eye movement patterns aligns with fatigue estimated from vital signs and human activity indicators, suggesting an underlying cognitive or physical state of fatigue common to both. This supports the validity of the vision-based physiological inference model.

- Feasibility of low-cost contactless fatigue estimation: The results support the practical feasibility of deploying fatigue detection models based on standard camera input. Given its consistency with a well-established eye-movement-based model, the vision-based model could serve as a scalable surrogate for, or complement to, traditional methods in contexts where sensors or advanced devices are impractical, expensive, or intrusive.
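The cross-modal agreement above rests on correlating the two models' outputs, and the reference list cites Pearson's correlation coefficient. As a minimal sketch, assuming both models emit one fatigue score per aligned time window of the same session, the coefficient r = Σ(x − x̄)(y − ȳ) / √(Σ(x − x̄)² Σ(y − ȳ)²) can be computed as follows. The score values are invented for illustration and are not results from this study.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    dx = [a - mean_x for a in x]
    dy = [b - mean_y for b in y]
    sxy = sum(a * b for a, b in zip(dx, dy))
    sxx = sum(a * a for a in dx)
    syy = sum(b * b for b in dy)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Invented per-window fatigue scores for one session (not study data).
    eye_tracking_scores = [0.20, 0.25, 0.35, 0.40, 0.55, 0.60]
    vision_scores = [0.30, 0.28, 0.40, 0.45, 0.50, 0.62]
    print(f"Pearson r = {pearson_r(eye_tracking_scores, vision_scores):.3f}")
```

For a p-value, `scipy.stats.pearsonr(x, y)` returns the coefficient together with a two-sided p-value; because consecutive windows are autocorrelated, nominal p-values computed on time series can be optimistic.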

5. Discussion

5.1. Model Effectiveness and Cross-Validation Stability

5.2. Cross-Modal Convergence and Correlation Insights

5.3. Limitations and Future Directions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhang, G.; Yau, K.K.; Zhang, X.; Li, Y. Traffic accidents involving fatigue driving and their extent of casualties. Accid. Anal. Prev. 2016, 87, 34–42.

- Hu, X.; Lodewijks, G. Detecting fatigue in car drivers and aircraft pilots by using non-invasive measures: The value of differentiation of sleepiness and mental fatigue. J. Saf. Res. 2020, 72, 173–187.

- Dawson, D.; Searle, A.K.; Paterson, J.L. Look before you (s)leep: Evaluating the use of fatigue detection technologies within a fatigue risk management system for the road transport industry. Sleep Med. Rev. 2014, 18, 141–152.

- Gomer, J.; Walker, A.; Gilles, F.; Duchowski, A. Eye-Tracking in a Dual-Task Design: Investigating Eye-Movements, Mental Workload, and Performance. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2008, 52, 1589–1593.

- Revanur, A.; Dasari, A.; Tucker, C.S.; Jeni, L.A. Instantaneous Physiological Estimation using Video Transformers. arXiv 2022, arXiv:2202.12368.

- Jain, M.; Deb, S.; Subramanyam, A.V. Face video based touchless blood pressure and heart rate estimation. In Proceedings of the 2016 IEEE 18th International Workshop on Multimedia Signal Processing (MMSP), Montreal, QC, Canada, 21–23 September 2016; pp. 1–5.

- Othman, W.; Hamoud, B.; Shilov, N.; Kashevnik, A. Human Operator Mental Fatigue Assessment Based on Video: ML-Driven Approach and Its Application to HFAVD Dataset. Appl. Sci. 2024, 14, 510.

- He, X.; Li, S.; Zhang, H.; Chen, C.; Li, J.; Dragomir, A.; Bezerianos, A.; Wang, H. Towards a nuanced classification of mental fatigue: A comprehensive review of detection techniques and prospective research. Biomed. Signal Process. Control 2026, 111, 108496.

- Wang, L.; Li, H.; Yao, Y.; Han, D.; Yu, C.; Lyu, W.; Wu, H. Smart cushion-based non-invasive mental fatigue assessment of construction equipment operators: A feasible study. Adv. Eng. Inform. 2023, 58, 102134.

- Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. In Human Mental Workload; Advances in Psychology; Hancock, P.A., Meshkati, N., Eds.; Elsevier: Amsterdam, The Netherlands, 1988; Volume 52, pp. 139–183.

- Luo, H.; Lee, P.A.; Clay, I.; Jaggi, M.; De Luca, V. Assessment of Fatigue Using Wearable Sensors: A Pilot Study. Digit. Biomark. 2020, 4, 59–72.

- Meng, J.; Zhao, B.; Ma, Y.; Ji, Y.; Nie, B. Effects of fatigue on the physiological parameters of labor employees. Nat. Hazards 2014, 74, 1127–1140.

- Mehmood, I.; Li, H.; Qarout, Y.; Umer, W.; Anwer, S.; Wu, H.; Hussain, M.; Fordjour Antwi-Afari, M. Deep learning-based construction equipment operators’ mental fatigue classification using wearable EEG sensor data. Adv. Eng. Inform. 2023, 56, 101978.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. In Proceedings of the Advances in Neural Information Processing Systems 27 (NIPS 2014), Montreal, QC, Canada, 8–13 December 2014; Volume 27.

- Khishdari, A.; Mirzahossein, H. Unveiling driver drowsiness: A probabilistic machine learning approach using EEG and heart rate data. Innov. Infrastruct. Solut. 2025, 10, 275.

- Anitha, C.; Venkatesha, M.; Adiga, B.S. A Two Fold Expert System for Yawning Detection. Procedia Comput. Sci. 2016, 92, 63–71.

- Hussain, A.; Saharil, F.; Mokri, R.; Majlis, B. On the use of MEMs accelerometer to detect fatigue department. In Proceedings of the 2004 IEEE International Conference on Semiconductor Electronics, Kuala Lumpur, Malaysia, 7–9 December 2004; p. 5.

- Melo, H.; Nascimento, L.; Takase, E. Mental Fatigue and Heart Rate Variability (HRV): The Time-on-Task Effect. Psychol. Neurosci. 2017, 10, 428–436.

- Csathó, Á.; Van der Linden, D.; Matuz, A. Change in heart rate variability with increasing time-on-task as a marker for mental fatigue: A systematic review. Biol. Psychol. 2024, 185, 108727.

- Matuz, A.; van der Linden, D.; Kisander, Z.; Hernádi, I.; Kázmér, K.; Csathó, Á. Low Physiological Arousal in Mental Fatigue: Analysis of Heart Rate Variability during Time-on-task, Recovery, and Reactivity. bioRxiv 2020.

- Kashevnik, A.; Shchedrin, R.; Kaiser, C.; Stocker, A. Driver Distraction Detection Methods: A Literature Review and Framework. IEEE Access 2021, 9, 60063–60076.

- Zhao, Q.; Nie, B.; Bian, T.; Ma, X.; Sha, L.; Wang, K.; Meng, J. Experimental study on eye movement characteristics of fatigue of selected college students. Res. Sq. 2023.

- Sampei, K.; Ogawa, M.; Torres, C.C.C.; Sato, M.; Miki, N. Mental Fatigue Monitoring Using a Wearable Transparent Eye Detection System. Micromachines 2016, 7, 20.

- Cui, Z.; Sun, H.M.; Yin, R.N.; Gao, L.; Sun, H.B.; Jia, R.S. Real-time detection method of driver fatigue state based on deep learning of face video. Multimed. Tools Appl. 2021, 80, 25495–25515.

- Dua, M.; Shakshi; Singla, R.; Raj, S.; Jangra, A. Deep CNN models-based ensemble approach to driver drowsiness detection. Neural Comput. Appl. 2021, 33, 3155–3168.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the 26th International Conference on Neural Information Processing Systems, NIPS’12, Lake Tahoe, NV, USA, 3–6 December 2012; Volume 1, pp. 1097–1105.

- Schroff, F.; Kalenichenko, D.; Philbin, J. FaceNet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 815–823.

- Ye, M.; Zhang, W.; Cao, P.; Liu, K. Driver Fatigue Detection Based on Residual Channel Attention Network and Head Pose Estimation. Appl. Sci. 2021, 11, 9195.

- Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image Super-Resolution Using Very Deep Residual Channel Attention Networks. arXiv 2018, arXiv:1807.02758.

- Zhan, T.; Xu, C.; Zhang, C.; Zhu, K. Generalized Maximum Likelihood Estimation for Perspective-n-Point Problem. arXiv 2024, arXiv:2408.01945.

- Dey, S.; Chowdhury, S.A.; Sultana, S.; Hossain, M.A.; Dey, M.; Das, S.K. Real Time Driver Fatigue Detection Based on Facial Behaviour along with Machine Learning Approaches. In Proceedings of the 2019 IEEE International Conference on Signal Processing, Information, Communication & Systems (SPICSCON), Dhaka, Bangladesh, 28–30 November 2019; pp. 135–140.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

- Mehmood, I.; Li, H.; Umer, W.; Arsalan, A.; Anwer, S.; Mirza, M.A.; Ma, J.; Antwi-Afari, M.F. Multimodal integration for data-driven classification of mental fatigue during construction equipment operations: Incorporating electroencephalography, electrodermal activity, and video signals. Dev. Built Environ. 2023, 15, 100198.

- Hamoud, B.; Othman, W.; Shilov, N. Analysis of Computer Vision-Based Physiological Indicators for Operator Fatigue Detection. In Proceedings of the 2025 37th Conference of Open Innovations Association (FRUCT), Narvik, Norway, 14–16 May 2025; pp. 47–58.

- Cortes, C.; Vapnik, V. Support Vector Networks. Mach. Learn. 1995, 20, 273–297.

- Hosmer, D.W.; Lemeshow, S. Applied Logistic Regression; Wiley: Hoboken, NJ, USA, 1989.

- Haykin, S. Neural Networks: A Comprehensive Foundation; Prentice Hall PTR: Hoboken, NJ, USA, 1994.

- Quinlan, J.R. Induction of Decision Trees. Mach. Learn. 1986, 1, 81–106.

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the KDD ’16: The 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; Krishnapuram, B., Shah, M., Smola, A.J., Aggarwal, C., Shen, D., Rastogi, R., Eds.; ACM: New York, NY, USA, 2016; pp. 785–794.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Liu, P.; Lyu, M.; King, I.; Xu, J. SelFlow: Self-Supervised Learning of Optical Flow. arXiv 2019, arXiv:1904.09117.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929.

- Guo, J.; Zhu, X.; Yang, Y.; Yang, F.; Lei, Z.; Li, S.Z. Towards Fast, Accurate and Stable 3D Dense Face Alignment. arXiv 2020, arXiv:2009.09960.

- Khokhlov, I.; Davydenko, E.; Osokin, I.; Ryakin, I.; Babaev, A.; Litvinenko, V.; Gorbachev, R. Tiny-YOLO object detection supplemented with geometrical data. In Proceedings of the 2020 IEEE 91st Vehicular Technology Conference (VTC2020-Spring), Antwerp, Belgium, 25–28 May 2020; pp. 1–5.

- Arik, S.Ö.; Pfister, T. TabNet: Attentive Interpretable Tabular Learning. arXiv 2019, arXiv:1908.07442.

- Kovalenko, S.; Mamonov, A.; Kuznetsov, V.; Bulygin, A.; Shoshina, I.; Brak, I.; Kashevnik, A. OperatorEYEVP: Operator Dataset for Fatigue Detection Based on Eye Movements, Heart Rate Data, and Video Information. Sensors 2023, 23, 6197.

- Kashevnik, A.; Kovalenko, S.; Mamonov, A.; Hamoud, B.; Bulygin, A.; Kuznetsov, V.; Shoshina, I.; Brak, I.; Kiselev, G. Intelligent Human Operator Mental Fatigue Assessment Method Based on Gaze Movement Monitoring. Sensors 2024, 24, 6805.

- Sedgwick, P. Pearson’s correlation coefficient. BMJ 2012, 345, e4483.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hamoud, B.; Othman, W.; Shilov, N.; Kashevnik, A. Deep-Learning-Based Human Activity Recognition: Eye-Tracking and Video Data for Mental Fatigue Assessment. Electronics 2025, 14, 3789. https://doi.org/10.3390/electronics14193789