1. Introduction

In recent years, the preservation and documentation of architectural and cultural heritage have become increasingly reliant on digital technologies as these technologies have become more widely available. Conventional 3D modeling techniques such as photogrammetry and laser scanning are widely used, but they often require specialized equipment and extensive manual processing, and they can be limited in terms of scalability and accessibility. A Neural Radiance Field (NeRF), by contrast, uses a neural network to reconstruct a complex three-dimensional scene from two-dimensional images. This use of artificial intelligence (AI) enables the generation of photorealistic 3D scenes from a sparse set of 2D images. By learning a volumetric scene representation that captures how light interacts with the scene, NeRF offers a powerful alternative to traditional methods, particularly in scenarios where access to the site is restricted or where non-invasive documentation is preferred.

Compared to traditional 3D reconstruction methods such as photogrammetry, Structure-from-Motion (SfM), and terrestrial laser scanning (TLS), NeRF offers distinct advantages in visualization and immersive experiences. While laser scanning and photogrammetry provide superior metric accuracy and are thus indispensable for conservation and structural analysis, NeRF excels in generating photorealistic renderings and continuous view synthesis, even under challenging lighting conditions. In terms of efficiency, NeRF requires significant computational resources during training but, once trained, enables smooth real-time visualization, whereas photogrammetry and TLS often involve lengthy preprocessing and meshing stages. Cost considerations also differ: NeRF can be implemented using standard RGB cameras, reducing fieldwork expenses, while TLS requires highly specialized and expensive equipment. Consequently, NeRF is best suited to scenarios that prioritize visualization and accessibility, such as virtual exhibitions, education, and tourism, while traditional methods remain preferable for precise architectural documentation and scientific measurement.

The application of NeRF in the restoration and digital documentation of historical buildings is gaining traction as a powerful alternative to traditional 3D reconstruction methods. NeRF synthesizes novel views from sparse 2D image data by modeling volumetric scenes using neural networks, enabling highly realistic renderings of complex environments [1,2,3,4]. This capacity proves particularly advantageous in cultural heritage contexts, where details such as reflective surfaces, homogeneous textures, and translucent materials often challenge conventional photogrammetric workflows [5,6]. Compared to the traditional SfM and Multi-View Stereo (MVS) techniques, which struggle with textureless or shiny surfaces, NeRF-based approaches (especially Instant-NGP and Nerfacto) demonstrate superior visual fidelity and improved completeness in 3D models [2,7,8,9]. These methods are also more robust when dealing with degraded image inputs or limited viewpoint diversity, conditions frequently encountered in the documentation of endangered or inaccessible heritage sites [10,11,12].

NeRF also significantly enhances operational efficiency. Instant-NGP, for instance, drastically reduces processing time and computational cost while delivering high-resolution outputs [7,9]. NeRF pipelines require minimal manual intervention and can reconstruct complete scenes from overlapping or even sparse image datasets, making them suitable for field conditions where time, budget, or access are constrained [6,13]. Nevertheless, challenges remain, particularly in terms of geometric accuracy. While NeRF excels in visual reconstruction, it may fall short when high metric precision is required, an area where traditional SfM-MVS methods still retain an advantage [5,14]. Hybrid approaches, such as integrating NeRF with GAN-based post-processing or 3D point cloud validation, have shown promise in addressing these limitations and improving structural fidelity [15,16]. Recent advances such as Mip-NeRF have improved anti-aliasing and multiscale fidelity, enhancing the rendering of fine surface details in heritage assets [10,16].

Other approaches, such as Plenoxels, demonstrate that radiance field representations can be achieved without neural networks, offering advantages in interpretability and speed [17,18]. NeRF’s scalability has even extended to city-scale scenes, enabling broader cultural and urban heritage reconstructions [6,9]. Notably, NeRF has also proven effective in reconstructing interior spaces and in managing reconstructions from low-quality or partially available datasets [8,11]. In real-time heritage documentation, NeRF has enabled high-quality reconstructions even in the presence of equipment and lighting limitations [13]. In conclusion, NeRF represents a major advancement in the digital preservation of cultural heritage. It offers enhanced visual realism, reduced operational complexity, and increased geometric robustness through hybridization. As research continues to expand, NeRF stands out as a promising and evolving tool for both the visualization and conservation of historical sites [2].

This study focuses on the digital preservation and virtual restoration of Katolička Porta in Novi Sad through the integration of cutting-edge technologies that combine aerial data acquisition, advanced 3D reconstruction, and innovative texture enhancement methods. A single drone, the DJI Mini 4 Pro Fly More Combo Plus with DJI RC 2, was used to efficiently and comprehensively capture high-resolution aerial images from multiple viewpoints. This approach was especially valuable for documenting hard-to-reach or restricted parts of the monument that are typically difficult to access using conventional methods. The acquired images were then processed using NeRF, a state-of-the-art technique for photorealistic 3D reconstruction.

NeRF enables the synthesis of detailed and visually accurate 3D models by interpreting volumetric scene information from sparse 2D image inputs. The results are highly realistic digital replicas that faithfully represent the monument’s complex surface properties, including reflections, translucency, and fine architectural details. To further enhance the visual quality and interpretive potential of the digital model, virtual texture modification techniques were applied to the NeRF reconstruction. These techniques digitally simulate material aging, weathering effects, and restoration processes, allowing for a non-invasive exploration of different conservation scenarios. By avoiding physical intervention, this method preserves the original fabric of the monument while enabling stakeholders to visualize potential restoration outcomes in a virtual environment. The combination of drone data acquisition with the DJI Mini 4 Pro Fly More Combo Plus with DJI RC 2, NeRF reconstruction, and virtual texture modification results in a robust workflow for heritage documentation and conservation planning. This integrated process is demonstrated in the video documentation of the Katolička Porta study available on YouTube [19]. The video highlights the practical application of these methods, showing how emerging digital technologies can significantly contribute to the preservation and promotion of cultural heritage sites.

The data acquisition for the Katolička Porta project was carried out using a single DJI Mini 4 Pro drone, a high-performance, professional-grade UAV widely used in aerial photography and surveying [20]. Known for its compact foldable design, the Mini 4 Pro combines portability with powerful imaging capabilities, making it well suited to detailed cultural heritage documentation [21]. It features a high-resolution 1-inch CMOS sensor with 20 megapixels, enabling the capture of sharp and detailed images essential for high-quality NeRF reconstruction. The drone’s 84° field of view and 3-axis gimbal stabilization system ensure wide coverage and steady, blur-free shots from multiple perspectives. With an approximate flight time of 34 min, the drone can efficiently cover the monument, including hard-to-reach or complex areas. Its six-directional obstacle avoidance sensors allow safe navigation around architectural structures, while GPS and GLONASS positioning provide geolocation data that support the alignment of images in 3D space (see Table 1). These imaging and flight-stability capabilities support consistent, high-quality data acquisition, which is crucial for training NeRF models. Ultimately, this enables the creation of detailed, photorealistic 3D reconstructions of the heritage site, supporting the digital preservation and virtual restoration efforts for Katolička Porta [22].
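The link between flight altitude and the detail captured in each image can be illustrated with the standard ground sampling distance (GSD) relation for a nadir-looking pinhole camera over flat ground. The minimal Python sketch below uses hypothetical sensor-width, focal-length, image-width, and altitude values that were not reported in this study and are not taken from Table 1. Manufacturers often quote a diagonal field of view, whereas the footprint formula here uses the horizontal angle derived from the sensor width.

```python
import math


def ground_sampling_distance(sensor_width_mm: float, focal_length_mm: float,
                             image_width_px: int, altitude_m: float) -> float:
    """Ground size of one image pixel in metres (nadir view, flat ground)."""
    return (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)


def horizontal_fov_deg(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Horizontal field of view of a pinhole camera, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


def footprint_width_m(altitude_m: float, fov_deg: float) -> float:
    """Width of the ground strip covered by one nadir image, in metres."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


# Hypothetical example values (assumptions, not measurements from this study).
SENSOR_WIDTH_MM = 9.6
FOCAL_LENGTH_MM = 6.7
IMAGE_WIDTH_PX = 4032
ALTITUDE_M = 30.0

gsd = ground_sampling_distance(SENSOR_WIDTH_MM, FOCAL_LENGTH_MM, IMAGE_WIDTH_PX, ALTITUDE_M)
hfov = horizontal_fov_deg(SENSOR_WIDTH_MM, FOCAL_LENGTH_MM)
width = footprint_width_m(ALTITUDE_M, hfov)
print(f"GSD {gsd * 100:.2f} cm/px, HFOV {hfov:.1f} deg, footprint {width:.1f} m")
```

Because the GSD grows linearly with altitude, halving the flight height doubles the ground detail per pixel but also halves the footprint width, so more images are needed to cover the same area.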

2. The History and Cultural Significance of Katolička Porta in Novi Sad

The Katolička Porta stands as a testament to the development of Novi Sad from its earliest days. Throughout history, this space has never formally been designated as a square; rather, it has always served the Roman Catholic Church. Even in the earliest graphic depictions, it is indicated as church property or a courtyard. Consequently, the history of the Katolička Porta is closely linked to that of the Roman Catholic Church, which has always stood at the edge of the courtyard and served as a reference point for its architectural surroundings. Opposite the Petrovaradin Fortress, in the Petrovaradin Trench (Šanac), a Roman Catholic parish has existed since 1702, initially as a branch of the Petrovaradin parish; it was established as an independent parish in 1718. It was during this time that the development of the Katolička Porta area began, as the county administration granted the Roman Catholic parish a large plot for a church, a parish house, and a cemetery. According to historical sources, the first church, closer to a chapel and built of rammed earth, was followed in 1743 by a second church constructed of brick and clay [23,24,25].

A map drawn around 1745 already marks the Katolička Porta, depicted as an irregular polygon. Next to the church stands another religious structure, presumably a burial chapel, while a rectangular building at the opposite end of the courtyard is believed to have served as the rectory. The courtyard itself served as a cemetery [26]. Following a decree issued by Maria Theresa in 1777 that prohibited burials around churches, burials in the Katolička Porta were also banned [27]. The exact time when the cemetery was converted into a square is unknown, but it is assumed to have occurred by the late 18th century, as subsequent maps no longer depict the burial grounds.

The next known representation of the area dates to the mid-19th century. On that map, alongside the church and the courtyard, whose shape remained unchanged, a large building with an inner courtyard is marked. This building, known as the “Iron Man”, was used by the city magistrate during that period (it was damaged during the bombing in 1849 and subsequently reconstructed; the structure that exists today was built in the early 20th century in the Neo-Gothic and Romantic architectural styles) [28]. The cadastral map from 1877 shows that the Katolička Porta retained its shape but was transformed into a park with leaf-shaped pathways. All previously described structures are visible on this map, along with two additional buildings on the northeastern side [29]. The Roman Catholic Church of the Name of Mary, which continues to play a crucial role in shaping both the main city square and the Katolička Porta, was built between 1891 and 1895 in the Neo-Gothic style, following designs by the architect György Molnár [30]. On the 1900 map, the outline of this new church building is visible, and its size inevitably reshaped the square. The 1929 map depicts the same situation: the Katolička Porta is shown as an irregular rectangle, with the previously mentioned buildings and the church as its dominant feature. In the 1930s, the architectural landscape of the square was enhanced, transforming the courtyard into a clearly defined rectangular space that remained true to its original designation as a church courtyard. At that time, much of the open area in the courtyard was covered with vegetation [29]. This layout has been preserved to this day, despite several redesigns. Currently, most of the area is paved, with a fountain located in front of the rectory.

The Katolička Porta is part of the protected cultural and historical unit known as the “Old Core of Novi Sad” [31]. Its architectural framework consists of buildings of varying significance, constructed or renovated between the early 19th and the early 21st centuries. Among them, only the Roman Catholic Parish Office (Rectory), built in the Classicist style with Baroque elements known as the Copf style, enjoys special protection, being designated as a Cultural Property of Great Importance [29]. Built in 1808, the rectory is one of the few structures that survived the bombing of Novi Sad in 1849; a parish house had already existed on the same site since the first half of the 18th century, as indicated by historical maps. In addition to the Roman Catholic Church and the rectory, the square includes several other buildings of great historical significance to the city. These structures serve a variety of functions and embody different architectural styles. Today, the architectural framework of the Katolička Porta consists not only of the Roman Catholic Church of the Name of Mary and the rectory but also of the following buildings, most of which were owned by the Roman Catholic parish at the time of their construction:

- The Cultural Centre, built during the interwar period in a Modernist style. It was owned by the Roman Catholic Church until World War II.
- A mixed-use residential and commercial building locally referred to as “The Vatican.” Designed by architect Daka Popović and commissioned by the Roman Catholic parish, it was built in 1930 in Neo-styles.
- Several residential buildings, also constructed during the interwar period.
- The former “Iron Man” complex, built in the 19th century, which has undergone several modifications. This complex once housed a school and now serves as a residential and commercial property [32].

Figure 1 shows the Katolička Porta in Novi Sad through the ages (area marked in red).

This study is guided by the following research questions:

i. How effective is the NeRF approach, combined with drone-acquired imagery, in producing accurate and photorealistic digital reconstructions of the Katolička Porta?

ii. To what extent can virtual restoration techniques, specifically IP-Adapter and ControlNet, support the simulation of conservation scenarios and the visualization of historical architectural states?

iii. How do cultural heritage professionals perceive the usefulness, credibility, and applicability of such digital visualizations in their conservation practice?

Based on these questions, it is hypothesized that NeRF-based reconstructions, enhanced with AI-assisted restoration methods, will provide (i) highly realistic 3D models of the site, (ii) enhanced interpretive and educational insights, and (iii) substantial practical value for heritage experts, particularly in education, public communication, and conservation planning.

3. Related Works

3.1. NeRF Technology in Cultural Heritage Digitalization

NeRF has revolutionized digital preservation in the field of cultural heritage by enabling photorealistic 3D reconstructions from sparse 2D image data [1,2]. These methods are particularly effective for handling challenging materials such as reflective, translucent, or textureless surfaces, which are common in historical buildings, overcoming the limitations of traditional photogrammetry methods [5,6]. Recent advancements, such as Instant-NGP and Nerfacto, have significantly reduced computational time while improving rendering fidelity, making them highly practical for on-site heritage documentation [2,6,33]. Multiscale representations, such as Mip-NeRF, address aliasing issues and improve the preservation of architectural textures [5,15,34]. Although NeRF excels in visual quality, geometric accuracy remains an area of ongoing research, with hybrid approaches that combine NeRF outputs with traditional SfM and MVS methods showing improvements in metric precision [10,11,35]. Furthermore, studies have demonstrated that NeRF can generate high-quality 3D models even with sparse datasets, making it a valuable tool for challenging documentation scenarios [33,34].

Modern approaches to cultural heritage protection increasingly rely on AI-based technologies to improve accuracy and efficiency. In line with this, one study explores the use of artificial intelligence in architecture and cultural heritage, focusing on NeRF for the 3D representation of heritage buildings; its aim is to enhance restoration and conservation processes by optimizing construction workflows and reducing production time [36]. Additionally, Chenxi Ge examined the application of AI in cultural heritage, taking the entire process of cultural heritage protection as a case study [37].

3.2. Drones in 3D Modeling and Digital Preservation of Historical Monuments

Drones have become an indispensable tool for capturing aerial imagery in the 3D modeling of heritage sites, enabling efficient documentation of complex environments, including areas that are difficult to access through traditional means [8,9]. When combined with NeRF-based reconstruction pipelines, drone imagery allows for the rapid generation of highly detailed 3D models of monuments, even under challenging lighting and environmental conditions [9,10,38]. Real-time reconstruction systems that integrate drone data also enable interactive visualization and monitoring of heritage sites, improving conservation workflows [9,39]. Additionally, advancements in autonomous drone navigation and optimized image acquisition techniques enhance both the spatial coverage and quality of datasets used in NeRF training, leading to more accurate reconstructions [40].

3.3. Applications of Texture Modification and Object Restoration Using Virtual Techniques

Virtual restoration techniques have increasingly complemented NeRF reconstructions, enhancing the realism of textures and correcting geometric inaccuracies through methods such as GAN-based techniques and point cloud refinement [6,10,18]. These approaches facilitate non-invasive exploration of potential restorations and simulate scenarios like material aging or damage, which can assist in conservation decision-making [41]. Innovative voxel-based radiance field techniques offer flexible platforms for interactive texture manipulation and editing without the complexity of neural networks [7,42]. Additionally, the integration of extended reality (XR) with NeRF-based models has enabled immersive, interactive experiences of restored heritage sites, bridging the gap between digital preservation and public engagement [43].

3.4. Comparative Evaluation of NeRF and Traditional 3D Reconstruction Methods

NeRF is often compared with traditional approaches to 3D modeling. One study offers a comparative evaluation of 3D reconstructions produced using artificial intelligence, namely NeRF neural networks, and conventional open-source photogrammetric techniques, including SfM and MVS [44]. The effectiveness of applying NeRF to different image datasets, one of the evaluation criteria, was analyzed in [45]. Condorelli and Perticarini evaluated the advantages and disadvantages of various AI-based algorithms [46], while Basso et al. explored emerging digitization methods based on radiance fields and neural network-driven rendering and learning systems, emphasizing their potential for the understanding and dissemination of cultural heritage [47].

One study compares machine learning and deep learning approaches for large-scale 3D cultural heritage classification. Building on the strengths of each method, it introduces a hybrid architecture called DGCNN-Mod + 3Dfeat, which integrates the advantages of both techniques to enhance the semantic segmentation of cultural heritage point clouds [48]. Another study examines key components of the NeRF framework, including its sampling strategy, positional encoding techniques, network architecture, and the volume rendering process [18], while a further study shows the impact of ISO, shutter speed, and aperture settings on the quality of the resulting 3D reconstructions and identifies the most suitable combination [49].

Regarding the quality of point clouds produced by NeRF, one article compared them with those obtained from four conventional MVS algorithms: two commercial (Agisoft Metashape and Pix4D) and two open-source (Patch-Match and Semi-Global Matching) [50]. Additionally, a review paper examined some of the latest NeRF methods applied to various cultural heritage datasets collected with smartphone videos, touristic approaches, or reflex cameras [2].

4. Materials and Methods

Creating an accurate NeRF model begins with proper video recording, from which specific frames are selected for model training. The number of frames required depends on the scene or object being captured, particularly its size, and typically ranges from 50 to 120. In this case, 174 frames were used (see Figure 2).
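The study does not state how the 174 frames were chosen from the video. One common heuristic, shown here purely as an assumption and not as the authors' procedure, is to split the video into equal windows and keep the sharpest frame of each, scoring sharpness by the variance of a discrete Laplacian. The minimal NumPy sketch below works on grayscale arrays; the function names and the synthetic frames in the usage example are hypothetical.

```python
import numpy as np


def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of a 4-neighbour discrete Laplacian; higher means sharper."""
    g = gray.astype(np.float64)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def select_sharpest_frames(frames: list[np.ndarray], n_select: int) -> list[int]:
    """Split the sequence into n_select equal windows and return the index of
    the sharpest frame in each, so the selection stays spread along the path."""
    if not 0 < n_select <= len(frames):
        raise ValueError("n_select must be between 1 and len(frames)")
    bounds = np.linspace(0, len(frames), n_select + 1).astype(int)
    chosen = []
    for start, end in zip(bounds[:-1], bounds[1:]):
        scores = [laplacian_variance(frames[i]) for i in range(start, end)]
        chosen.append(int(start) + int(np.argmax(scores)))
    return chosen


# Hypothetical frames: random texture and a 2x2 box-blurred copy of it.
rng = np.random.default_rng(0)
sharp = rng.integers(0, 256, size=(64, 64)).astype(np.float64)
blurred = (sharp + np.roll(sharp, 1, 0) + np.roll(sharp, 1, 1)
           + np.roll(sharp, (1, 1), (0, 1))) / 4.0
frames = [blurred, sharp, blurred, blurred, sharp, blurred]
chosen = select_sharpest_frames(frames, n_select=2)
print(chosen)
```

Selecting per window rather than taking the globally sharpest frames keeps the chosen views distributed along the trajectory, which matters because NeRF needs coverage from many viewpoints, not only the sharpest ones.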

Proper recording involves capturing the object (scene or exterior) from various angles, both in terms of height and rotation around the object. The camera movements can be likened to the moon’s orbit around the Earth. Moreover, it is crucial that the object or scene remains static throughout the recording. Any movement in the object, its parts, or variations in lighting can introduce errors in the resulting NeRF model. These errors are often visual and manifest as blurred clouds or “floaters” in the final output.

The configuration of the recording device, whether a drone or camera, is also critical. NeRF technology is highly sensitive to blurry images and changes in lighting conditions. Motion blur occurs when the exposure time is too long relative to the speed of the device, whereas inaccurate focus causes defocus blur. Motion blur becomes more pronounced as the camera speed increases. To mitigate blur, the following adjustments can be implemented:

Decreasing exposure time (i.e., using a faster shutter speed): Helps capture sharper images during movement;

Slowing down the camera movement: Reduces motion blur;

Using a tripod: Ensures the camera remains steady during the shot.
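Why a shorter exposure reduces motion blur can be quantified with a simple estimate: during an exposure of duration t, a camera translating at speed v moves v·t metres, which smears the image by roughly v·t/GSD pixels, where GSD is the ground size of one pixel at the target. The sketch below assumes pure translation parallel to the image plane (gimbal rotation and depth variation in the scene are ignored) and uses hypothetical speed and GSD values.

```python
def motion_blur_px(speed_m_s: float, exposure_s: float, gsd_m: float) -> float:
    """Approximate blur length in pixels for a camera translating during exposure."""
    return speed_m_s * exposure_s / gsd_m


def max_exposure_s(speed_m_s: float, gsd_m: float, max_blur_px: float = 1.0) -> float:
    """Longest exposure that keeps the estimated blur at or below max_blur_px."""
    return max_blur_px * gsd_m / speed_m_s


# Hypothetical values: 2 m/s flight speed, 1 cm ground sampling distance.
SPEED = 2.0
GSD = 0.01
for shutter in (1 / 60, 1 / 250, 1 / 1000):
    print(f"1/{round(1 / shutter)} s -> {motion_blur_px(SPEED, shutter, GSD):.2f} px of blur")
print(f"max exposure for <= 1 px blur: {max_exposure_s(SPEED, GSD):.4f} s")
```

Under these assumptions, 1/60 s produces more than 3 px of blur, whereas 1/1000 s keeps it well below one pixel; in practice a shorter exposure may require a higher ISO, which adds noise.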

Lighting consistency is another crucial factor that can affect model quality. Variations in lighting are commonly caused by:

Automatic exposure and aperture adjustments.

Long recording durations, which may lead to changes in the position of the Sun, affecting shadow angles and light temperature.
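Exposure and lighting drift between frames can be screened for before training. The minimal sketch below, offered as an assumption rather than as part of the study's workflow, flags frames whose mean brightness changes by more than a chosen fraction relative to the previous frame. The function name and the 10% threshold are hypothetical and would need tuning for a real sequence.

```python
import numpy as np


def flag_brightness_jumps(frames: list[np.ndarray], rel_threshold: float = 0.1) -> list[int]:
    """Return indices of frames whose mean brightness differs from the
    previous frame by more than rel_threshold (expressed as a fraction)."""
    means = np.array([float(np.mean(f)) for f in frames])
    # Relative change between consecutive frames; guard against division by zero.
    rel_change = np.abs(np.diff(means)) / np.maximum(means[:-1], 1e-6)
    return [int(i) + 1 for i in np.flatnonzero(rel_change > rel_threshold)]


# Hypothetical uniform frames: the third one is about 27% brighter than the second.
frames = [np.full((4, 4), v) for v in (100.0, 102.0, 130.0, 131.0)]
flagged = flag_brightness_jumps(frames)
print(flagged)
```

Comparing consecutive frames tolerates the slow, gradual brightening of a dawn sequence while still catching abrupt jumps such as those caused by automatic exposure adjustments.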

4.1. Katolička Porta—On-Site Survey and Interpretation

The first challenge encountered was that the location intended for surveying is a pedestrian zone, which is crowded during daytime hours. The site in question is the Katolička Porta in Novi Sad, Serbia, shown in Figure 3. Recording therefore had to be scheduled for a time when few or no pedestrians were present. For this reason, the survey was conducted early in the morning, immediately after dawn, at 6 AM in November 2024. This timing proved effective, as the square was free of pedestrians at that time. Equally important for the quality of the resulting NeRF model was consistent, unchanging lighting, which was also achieved by recording at this time of day. Dawn is characterized by indirect sunlight diffused through the Earth’s atmosphere, beginning when the center of the solar disk reaches 18° below the observer’s horizon. This provided natural diffuse lighting without pronounced or changing shadows, creating ideal conditions for scanning a space of this scale. Each recording cycle lasted approximately one and a half hours, and the recording was repeated over three days. After the recording, the next phase involved exporting frames and selecting those to be used for NeRF model training.

The sketch of the drone trajectory during the capture is given in Figure 4. This figure presents a top-down satellite view (Google Maps location of the Katolička Porta in Novi Sad, Serbia: https://maps.app.goo.gl/E4GhFbZbRzmmYJyMA, accessed on 11 June 2025) of the Katolička Porta in Novi Sad, illustrating the area documented during the NeRF-based data acquisition process. The yellow square marks the broader zone covered by the drone, while the red lines depict the drone’s spiral flight trajectory used to ensure comprehensive spatial coverage of the site. At the center of the marked area, the drone’s initial position is indicated, from which the recording sequence began. This visualization effectively communicates both the spatial context of the cultural heritage site and the methodological approach used to obtain high-quality visual data under optimal lighting conditions.
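A spiral trajectory like the one in Figure 4 can be approximated by waypoints whose radius and altitude change linearly with the angle swept around the starting point. The sketch below is hypothetical: the study does not report radii, altitudes, the number of turns, or the camera heading, and the local east/north coordinate frame centred on the take-off point is an assumption. Converting these offsets to GPS coordinates or to a particular flight app's mission format is not shown.

```python
import math
from dataclasses import dataclass


@dataclass
class Waypoint:
    x_m: float      # east offset from the starting point (assumed local frame)
    y_m: float      # north offset from the starting point
    z_m: float      # altitude above the take-off point
    yaw_deg: float  # camera direction, counter-clockwise from +x (east)


def spiral_waypoints(r_start_m: float, r_end_m: float, z_start_m: float,
                     z_end_m: float, turns: float, n_points: int,
                     look_outward: bool = True) -> list[Waypoint]:
    """Waypoints on a spiral whose radius and altitude change linearly.
    With look_outward=True the camera faces away from the centre (e.g.,
    towards the facades around a square); otherwise it faces the centre."""
    if n_points < 2:
        raise ValueError("n_points must be at least 2")
    waypoints = []
    for i in range(n_points):
        t = i / (n_points - 1)  # progress along the path, from 0 to 1
        angle = 2.0 * math.pi * turns * t
        r = r_start_m + (r_end_m - r_start_m) * t
        x, y = r * math.cos(angle), r * math.sin(angle)
        z = z_start_m + (z_end_m - z_start_m) * t
        heading = angle if look_outward else angle + math.pi
        # Normalize the heading to (-180, 180] degrees.
        yaw = math.degrees(math.atan2(math.sin(heading), math.cos(heading)))
        waypoints.append(Waypoint(x, y, z, yaw))
    return waypoints


# Hypothetical parameters, not the values flown in this study.
path = spiral_waypoints(r_start_m=5.0, r_end_m=40.0, z_start_m=10.0,
                        z_end_m=25.0, turns=3, n_points=60)
print(path[0], path[-1], sep="\n")
```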

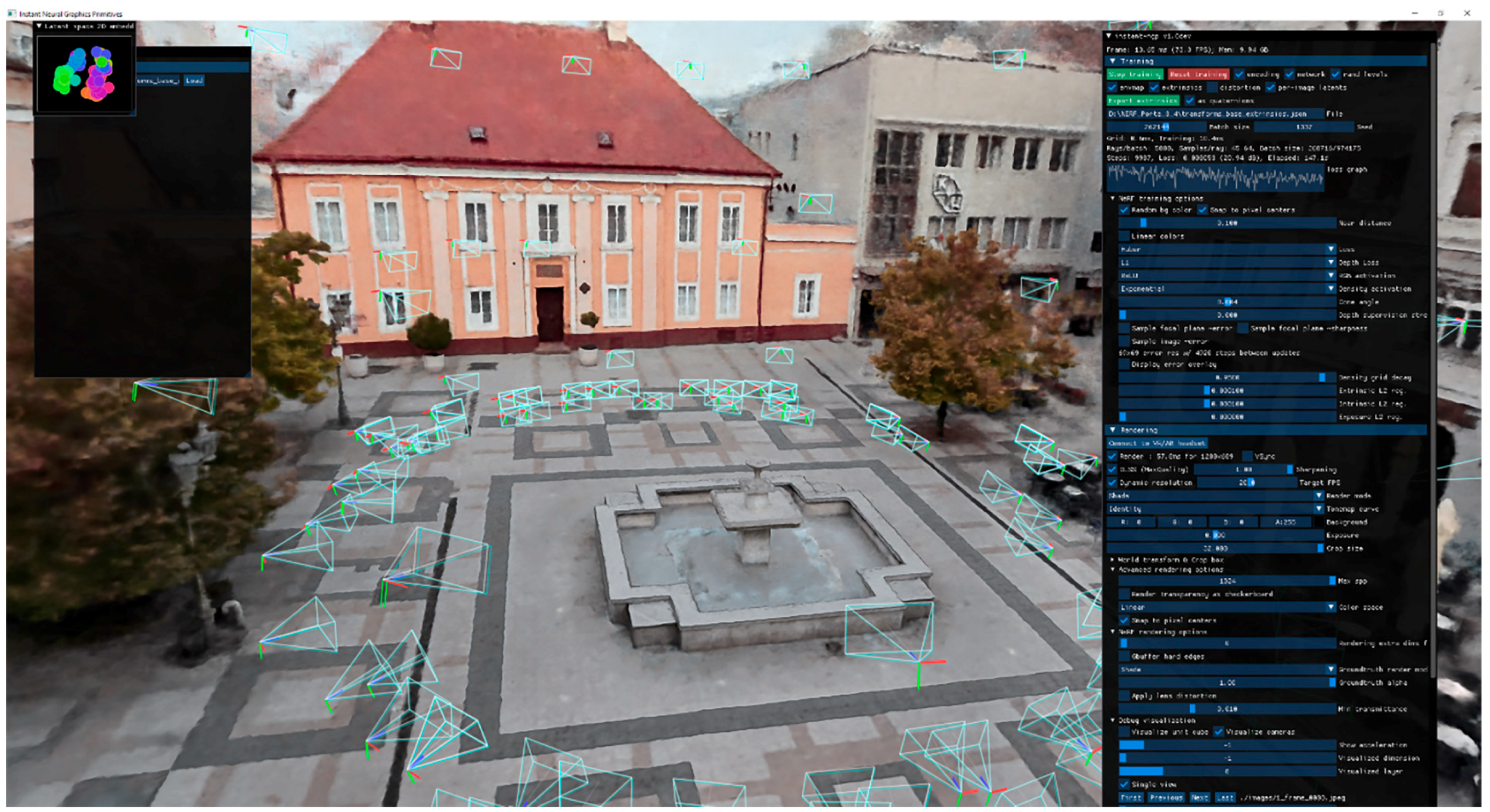

4.2. Three-Dimensional Reconstruction and Visualization Using NeRF

Instant-ngp, an NVIDIA research project implementing four neural graphics primitives (NeRF, signed distance functions (SDFs) [51], neural images [52], and neural volumes [53]), was used for NeRF model training. The model generation took approximately 30 min.

Table 2 shows the specifications of the computer used for this purpose.

The NeRF training process is highly sensitive to dataset quality: training converges reliably only when the dataset is of high quality. The dataset should provide wide-angle and representative views of the scene from multiple perspectives, enabling the model to learn the full 3D structure.

Accurate camera parameters are essential: if the camera positions recorded in the dataset are incorrect, the model cannot properly align the images in 3D space, which results in poor object reconstruction. The colmap2nerf.py script (as well as other tools) extracts these parameters from the images, similar to the COLMAP integration workflow described by Mildenhall et al. [54]. Two scripts, colmap2nerf.py and record3d2nerf.py [55,56], are designed to take training images and prepare them for NeRF training by extracting and aligning camera parameters. If the background is visible in the images behind the object of interest, it can still be reconstructed, but only if a parameter called the AABB scale [55] is set correctly. The parameter aabb_scale controls the size of the Axis-Aligned Bounding Box (AABB) around the object [57]. By increasing the aabb_scale value, the model can include the background as part of the reconstructed scene.

Images should not be affected by motion blur (when the camera moves during the exposure) or defocus blur (when the camera’s focus is not accurate). Both types of blur make it difficult for the model to recognize details in adjacent images.

NeRF models generally show significant improvement within the first few seconds or minutes of training. Experience shows that if a model does not show visible improvement after about 20 s of training, longer training is unlikely to solve the problem. The key to successful training is early convergence: if the model does not start to converge early, this likely indicates a problem with dataset selection or quality, or with the training approach itself. For large scenes, a few minutes of training may be needed to achieve additional sharpness, but most of the convergence happens very quickly, within the first few seconds.

Several problems can prevent a NeRF model from being trained successfully. The first is inaccurate camera positions in the dataset, in terms of both scale and offset; if the camera positions are off, the model will usually produce incorrect reconstructions of the 3D object. Relatively small image sets are another problem: if there are insufficient images in the dataset, the model will not have enough data to analyze the scene from different viewpoints, resulting in poor-quality reconstruction. Incorrectly extracted camera parameters (focal length, orientation, etc.) must also be avoided. If COLMAP [58] (or similar SfM software) fails to extract these parameters correctly, the model will struggle to align and integrate the images in 3D. External tuning, using tools such as COLMAP, may involve manual adjustment of the training data to ensure good quality. While the instant-ngp (instant neural graphics primitives) [55] framework provides tools for training NeRF models, issues such as acquiring multiple images or refining camera pose calculations are beyond the scope of instant-ngp itself.

In summary, NeRF is extremely sensitive to the quality and accuracy of the dataset, as blurry images, incorrect camera positions, and insufficient coverage hinder training and reduce model accuracy. If the model does not improve in the first few seconds or minutes of training, longer training will not bring convergence, which indicates that the dataset probably needs tuning (better scene coverage, precise camera parameters, and sharp images).
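The aabb_scale value discussed above is stored in the transforms.json file produced by colmap2nerf.py. To our understanding of the instant-ngp documentation, it must be a power of two between 1 and 128, and colmap2nerf.py also accepts an --aabb_scale option at conversion time. The minimal sketch below, which is hypothetical and not part of the instant-ngp tooling, validates a chosen value and writes it into an existing transforms.json using only the Python standard library. The file contents in the usage example are hypothetical.

```python
import json
import tempfile
from pathlib import Path

# Powers of two from 1 to 128: the range we understand instant-ngp accepts.
VALID_AABB_SCALES = {2 ** k for k in range(8)}


def set_aabb_scale(transforms_path: Path, aabb_scale: int) -> int:
    """Write aabb_scale into transforms.json and return the number of frames."""
    if aabb_scale not in VALID_AABB_SCALES:
        raise ValueError(f"aabb_scale must be one of {sorted(VALID_AABB_SCALES)}")
    data = json.loads(transforms_path.read_text())
    data["aabb_scale"] = aabb_scale
    transforms_path.write_text(json.dumps(data, indent=2))
    return len(data.get("frames", []))


# Hypothetical minimal transforms.json with a single frame entry.
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "transforms.json"
    path.write_text(json.dumps({"frames": [{"file_path": "images/0001.jpg"}]}))
    n_frames = set_aabb_scale(path, 16)
    stored_scale = json.loads(path.read_text())["aabb_scale"]

print(n_frames, stored_scale)
```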

A prerequisite for NeRF model training is a COLMAP reconstruction (COLMAP is a general-purpose SfM and MVS pipeline) that provides the camera parameters. For this purpose, the colmap2nerf.py script was used, which is also provided on the GitHub page (Version 2.0) [59]. The paper [60] provides a comprehensive overview of SfM and MVS techniques, particularly in forestry applications. The diagram created by its authors outlines the sequential steps involved in transforming a set of images into a detailed 3D model:

Feature Detection: Identifying key points and descriptors in the images.

Keypoint Matching: Establishing correspondences between features across multiple images.

Bundle Adjustment: Refining camera positions and 3D point locations to minimize reconstruction errors.

MVS: Generating a dense 3D point cloud by analyzing multiple image views.
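The keypoint matching step listed above is commonly implemented as nearest-neighbour descriptor matching with Lowe's ratio test, which discards a match when its best candidate is not clearly closer than the second-best one. The NumPy sketch below shows only this core idea with toy descriptors. COLMAP's actual matchers are more elaborate and add geometric verification, and the 0.8 ratio is an assumed, commonly used threshold.

```python
import numpy as np


def ratio_test_matches(desc_a: np.ndarray, desc_b: np.ndarray,
                       ratio: float = 0.8) -> list[tuple[int, int]]:
    """Match each row of desc_a to its nearest row of desc_b, keeping only
    matches that pass the ratio test (desc_b needs at least two rows)."""
    # Pairwise Euclidean distances, shape (len(desc_a), len(desc_b)).
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    order = np.argsort(dists, axis=1)
    rows = np.arange(len(desc_a))
    best, second = order[:, 0], order[:, 1]
    keep = dists[rows, best] < ratio * dists[rows, second]
    return [(int(i), int(best[i])) for i in np.flatnonzero(keep)]


# Toy descriptors: four well-separated features in image B.
desc_b = 10.0 * np.eye(4)
desc_a = np.array([
    desc_b[2] + [0.1, 0.0, 0.0, 0.0],  # clearly matches feature 2
    desc_b[0] + [0.0, 0.1, 0.0, 0.0],  # clearly matches feature 0
    (desc_b[0] + desc_b[1]) / 2.0,     # ambiguous: equidistant from features 0 and 1
])
matches = ratio_test_matches(desc_a, desc_b)
print(matches)
```

The ambiguous descriptor is rejected even though it has a nearest neighbour, which is exactly the behaviour that reduces false correspondences on repetitive architectural elements such as windows or paving patterns.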

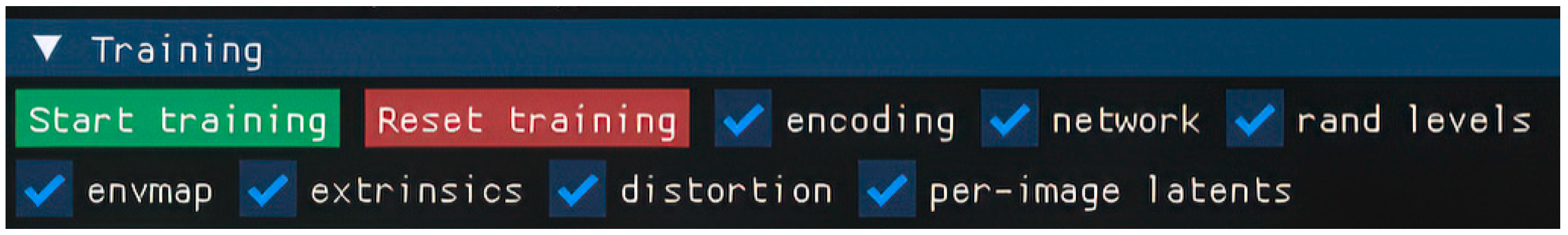

In this study, several key parameters that are disabled by default were enabled to improve the quality of the 3D models during training. Each parameter in Table 3 and Figure 4 contributes to a specific aspect of the 3D modeling process, improving textures, lighting effects, and geometric accuracy while preserving the level of detail from each image used to train the model. With these parameters enabled, the final model is more accurate, realistic, and visually rich. Stochastic variations were introduced into textures to simulate natural surface imperfections, preventing flatness and adding visual complexity. Environment mapping techniques were used to improve lighting within the scene, so that reflections and illumination effects on surfaces such as glass or wet materials are represented realistically [61]. Accurate camera positioning and orientation in 3D space were achieved by incorporating extrinsic parameters, ensuring coherent alignment of objects relative to the camera viewpoint and minimizing errors related to surface mismatches or incorrect orientation [62]. Optical distortions arising during image capture were corrected to refine geometric accuracy and reduce artifacts such as warped lines or bent surfaces [63]. Furthermore, per-image latent variables were integrated to capture the unique visual characteristics of each frame, so that detailed textures and color nuances are preserved and reproduced, resulting in a final reconstruction with higher fidelity and realism [64].
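The distortion correction mentioned above is commonly based on the Brown-Conrady lens model, the same polynomial form used by OpenCV. The sketch below assumes that model with two radial (k1, k2) and two tangential (p1, p2) coefficients applied to normalized image coordinates. The coefficient values are purely illustrative, and the training software used in this study may parameterize distortion differently. Because the model has no closed-form inverse, undistortion is performed by fixed-point iteration.

```python
import numpy as np

def distort(xy, k1, k2, p1, p2):
    """Apply Brown-Conrady radial (k1, k2) and tangential (p1, p2) distortion
    to normalized image coordinates xy of shape (N, 2)."""
    x, y = xy[:, 0], xy[:, 1]
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    x_d = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    y_d = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return np.stack([x_d, y_d], axis=1)

def undistort(xy_d, k1, k2, p1, p2, iterations=20):
    """Invert distort() by fixed-point iteration, starting from the distorted points."""
    xy = xy_d.copy()
    for _ in range(iterations):
        x, y = xy[:, 0], xy[:, 1]
        r2 = x * x + y * y
        radial = 1.0 + k1 * r2 + k2 * r2 * r2
        dx = 2 * p1 * x * y + p2 * (r2 + 2 * x * x)   # tangential offset in x
        dy = p1 * (r2 + 2 * y * y) + 2 * p2 * x * y   # tangential offset in y
        xy = np.stack([(xy_d[:, 0] - dx) / radial, (xy_d[:, 1] - dy) / radial], axis=1)
    return xy
```

For the mild distortion typical of drone cameras, a handful of iterations is usually enough; strongly distorted lenses may need more iterations or a different solver.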

The activation of these parameters was crucial for producing more precise, visually rich, and realistic 3D models. Their combined effect demonstrates a robust and effective methodology for advancing the quality of 3D reconstructions in complex modeling workflows.
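The per-image latent variables described above resemble the appearance embeddings introduced by NeRF in the Wild (NeRF-W). Each training image receives its own learnable vector, which is fed to the color branch so that exposure or lighting differences between frames do not have to be baked into the shared geometry. The toy class below is a hypothetical illustration of that conditioning, with random, untrained weights. It does not reproduce the network of the software used in this study.

```python
import numpy as np

class AppearanceConditionedColor:
    """Toy color head conditioned on a per-image latent vector (appearance embedding)."""

    def __init__(self, num_images, feature_dim, embed_dim, seed=0):
        rng = np.random.default_rng(seed)
        # One latent vector per training image; in a real model these are optimized during training.
        self.embeddings = rng.normal(0.0, 0.1, size=(num_images, embed_dim))
        self.W = rng.normal(0.0, 0.5, size=(3, feature_dim + embed_dim))
        self.b = np.zeros(3)

    def __call__(self, features, image_id):
        # Concatenate shared scene features with the latent of the image being rendered.
        z = np.concatenate([features, self.embeddings[image_id]])
        return 1.0 / (1.0 + np.exp(-(self.W @ z + self.b)))  # sigmoid keeps RGB in (0, 1)
```

The same scene point can therefore receive slightly different colors for different training images, while the geometry stays shared across all of them.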

Figure 5 shows the NeRF training options.

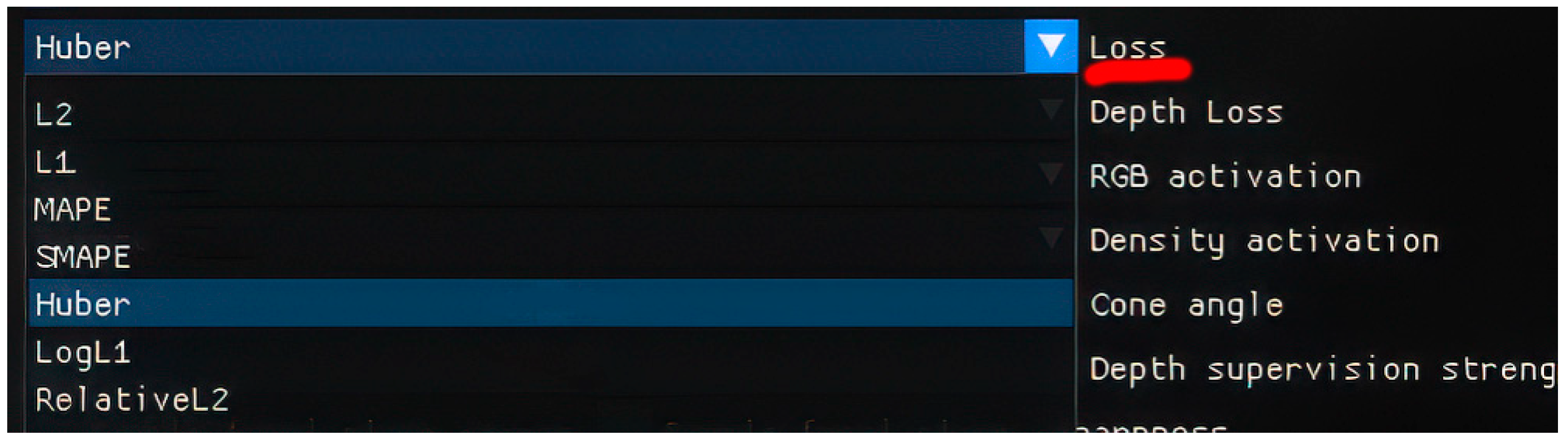

All other parameters are kept at their default settings. Important parameters that affect the quality of the trained model are the activation functions (Figures 6, 7, 8, 9 and 10). Each function has specific applications in different scenarios. The Loss Function [54] in NeRF models measures how far the model's predictions are from the ground-truth pixel values. In NeRF, the Loss Function is typically calculated as the difference between the predicted and actual color and depth values of pixels, evaluated for rays cast from the training camera positions. It is a critical component of training, providing feedback that helps optimize the model parameters.

The main loss functions and output activations are as follows:

Mean Squared Error (MSE) is a basic loss function, which calculates the average squared difference between predicted and actual RGB values for each rendered pixel (camera ray) [65].

The Depth Loss [66, 67] is used in NeRF models to improve the prediction of object depth in the 3D scene. Accurate per-pixel depth is crucial for correctly reconstructing the layout and position of each object in space.

The RGB Activation [68] refers to how the NeRF model predicts RGB values in 3D space. The activation function for colors allows the model to generate realistic colors according to lighting conditions, surface textures, and object geometry.

In NeRF, color activations are typically achieved using functions like the rectified linear unit (ReLU) or Sigmoid [69], which constrain the predicted colors to a valid range (the Sigmoid, for example, maps them to values between 0 and 1), taking into account lighting and environmental effects.

Density activation [10] refers to the prediction of the volume density at each point in a 3D scene. Volume density makes it possible to represent effects such as smoke, fog, or semi-transparent objects. This parameter defines the interaction between light and objects in the scene, making the rendering more realistic. In NeRF, density determines how much light is absorbed or emitted at each point as a camera ray passes through the scene, and its activation (ReLU in the original NeRF) keeps the predicted density non-negative.
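The activations and losses listed above fit together in the volume rendering step. The following sketch follows the original NeRF formulation: sigmoid for color, ReLU for density, and alpha compositing along each ray. Many later implementations swap in softplus or exponential density activations. The depth term is only one simple variant, a squared error against a reference depth (for instance from sparse SfM points). All function names are illustrative.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def render_ray(raw_rgb, raw_density, t_vals):
    """Alpha-composite S samples along one ray (NeRF-style quadrature).

    raw_rgb: (S, 3) unbounded network outputs, raw_density: (S,), t_vals: (S,) sample depths.
    """
    rgb = sigmoid(raw_rgb)                      # RGB activation: colors in (0, 1)
    sigma = np.maximum(raw_density, 0.0)        # density activation: ReLU keeps sigma >= 0
    deltas = np.append(np.diff(t_vals), 1e10)   # spacing to the next sample (last one open-ended)
    alpha = 1.0 - np.exp(-sigma * deltas)       # opacity of each ray segment
    # Transmittance: fraction of light that reaches sample i without being absorbed earlier.
    trans = np.cumprod(np.append(1.0, 1.0 - alpha[:-1] + 1e-10))
    weights = alpha * trans
    color = (weights[:, None] * rgb).sum(axis=0)
    depth = (weights * t_vals).sum()
    return color, depth, weights

def mse_loss(pred_rgb, gt_rgb):
    """Photometric MSE between rendered and ground-truth pixel colors."""
    return float(np.mean((pred_rgb - gt_rgb) ** 2))

def depth_loss(pred_depth, gt_depth):
    """Squared error against a reference depth (one simple depth-supervision variant)."""
    return float(np.mean((pred_depth - gt_depth) ** 2))
```

A ray whose only dense sample lies at depth 2 renders that sample's color and an expected depth of about 2, which is how color and depth supervision both act on the same density field.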

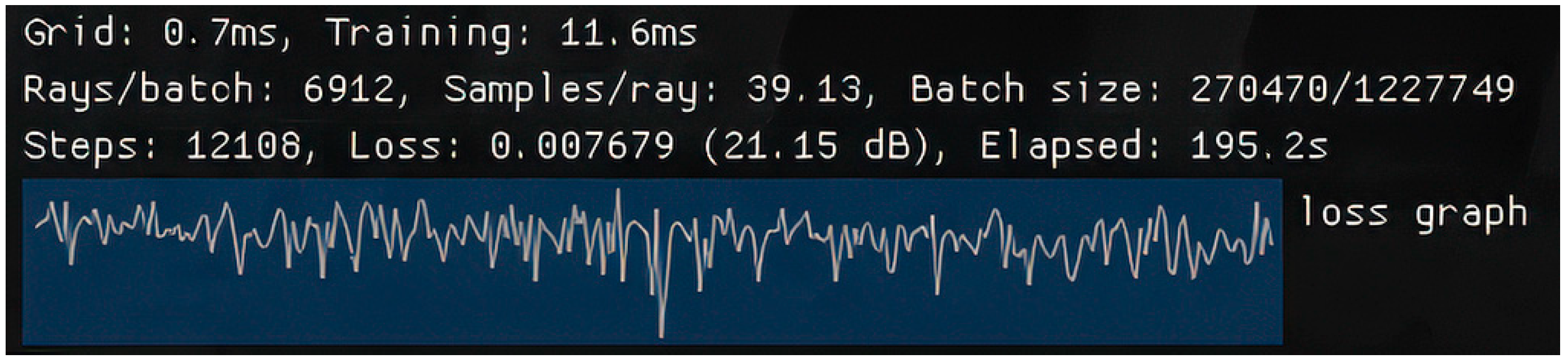

Figure 10 shows some of the basic data related to model training, such as batch size, seed, and the loss graph (Figure 11).

Once model training is completed, virtual cameras are deployed to render a video of the reconstructed scene (Figure 12). These cameras are placed independently of the original drone viewpoints used during data capture and are not constrained by the drone's flight path. Instead, they are strategically positioned to capture the scene from multiple angles and perspectives, allowing for a more comprehensive and visually informative presentation of the reconstruction. The rendered video is then processed using Latent Diffusion Models (LDMs) [70], enabling further refinement and generation of realistic visual outputs based on the reconstructed scene.
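A virtual camera path of the kind described above can be generated independently of the capture trajectory. The sketch assumes a Z-up world and the OpenGL-style camera convention (the camera looks along its local -Z axis) used by several NeRF toolkits. The orbit radius, height, and frame count are free parameters chosen for illustration, and the function names are hypothetical.

```python
import numpy as np

def look_at(eye, target, up=(0.0, 0.0, 1.0)):
    """4x4 camera-to-world matrix (OpenGL convention: the camera looks down its -Z axis)."""
    forward = target - eye
    forward = forward / np.linalg.norm(forward)
    right = np.cross(forward, np.asarray(up))
    right = right / np.linalg.norm(right)
    cam_up = np.cross(right, forward)
    c2w = np.eye(4)
    c2w[:3, 0] = right
    c2w[:3, 1] = cam_up
    c2w[:3, 2] = -forward       # so that the camera's -Z axis points at the target
    c2w[:3, 3] = eye
    return c2w

def orbit_path(center, radius, height, n_frames):
    """Virtual cameras evenly spaced on a circle around `center`, all looking at it."""
    poses = []
    for theta in np.linspace(0.0, 2 * np.pi, n_frames, endpoint=False):
        eye = center + np.array([radius * np.cos(theta), radius * np.sin(theta), height])
        poses.append(look_at(eye, center))
    return np.stack(poses)
```

Other paths (for example a slow dolly along the façade) only require a different way of choosing `eye` and `target`; the look-at construction stays the same.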

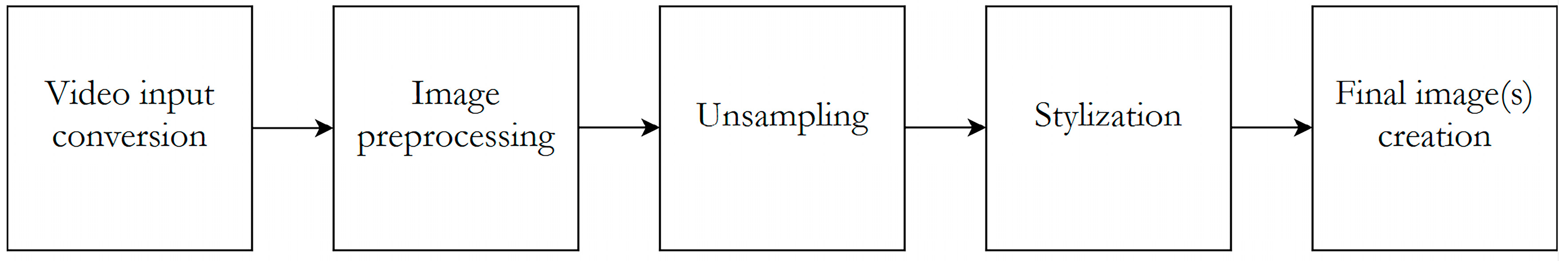

4.3. Data Processing Workflow

To reconstruct the old appearance of the façade (

Figure 13), Latent Diffusion technology was employed in the ComfyUI environment (ComfyUI—

https://github.com/comfyanonymous/ComfyUI, accessed on 11 June 2025). That is an open-source and node-based program that allows users to generate images from a series of text prompts. It consists of the following stages: video input conversion, image preprocessing, unsampling, stylization, and final video creation. LDMs are a class of generative models that produce images by operating in “latent” space rather than directly in pixel space [

69]. This approach enables efficient synthesis of high-quality images while reducing computational requirements compared to earlier diffusion models. Stable Diffusion, one of the most prominent LDMs, has seen significant progress, culminating in the release of Stable Diffusion XL (SDXL), which sets a new standard for high-resolution image generation [

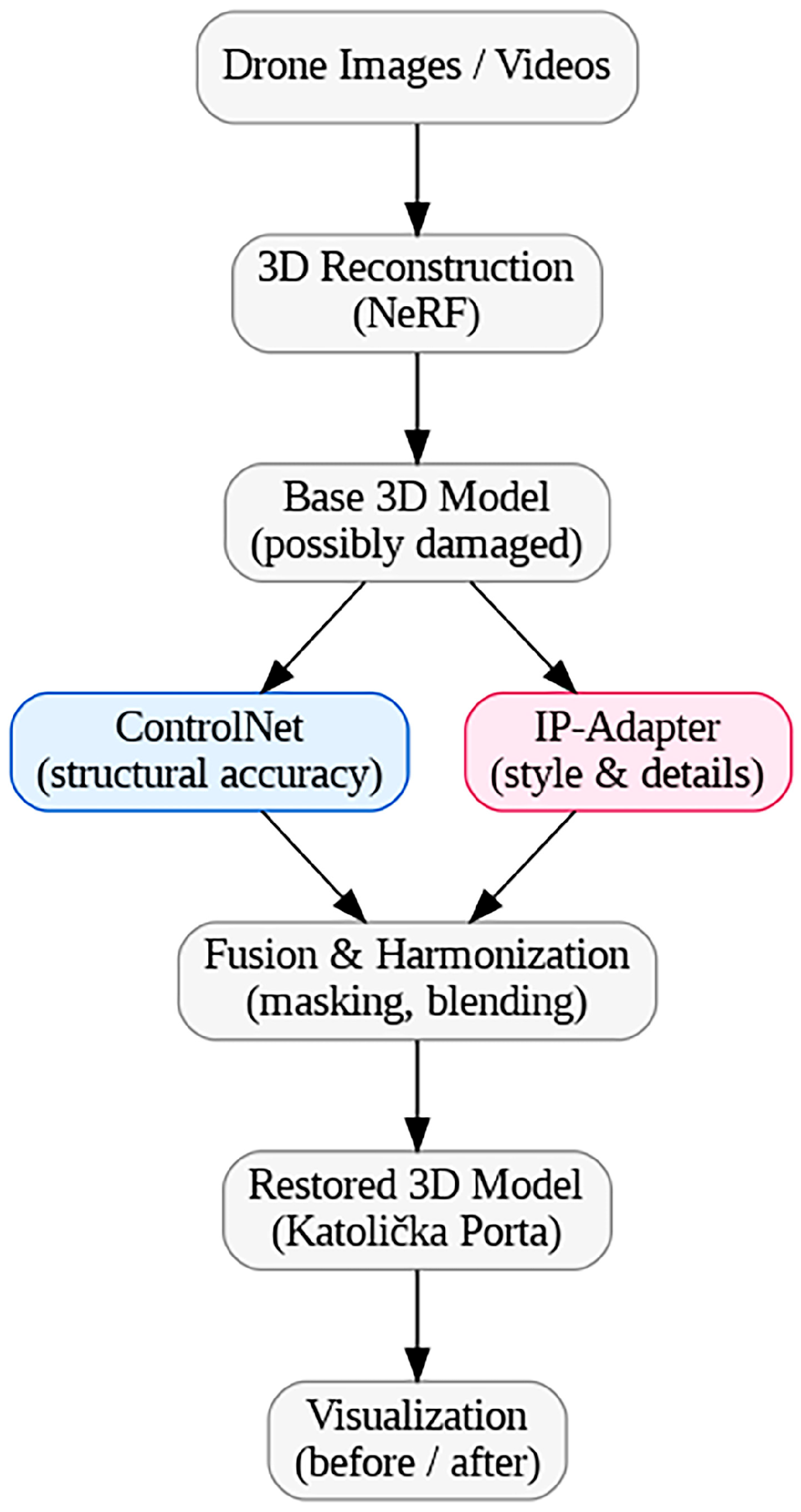

71]. The unsampling method in ComfyUI is a specialized technique designed for video-to-video workflows. It converts input video frames into structured latent noise, which enables precise style transfers while maintaining motion and compositional consistency throughout the sequence. To accurately capture and convey the style and composition of the old façade, IP-Adapter and ControlNet were employed. The following section provides a detailed breakdown of the mechanics and implementation of these techniques.
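To make the efficiency argument concrete, assume the standard Stable Diffusion/SDXL autoencoder, which downsamples each spatial dimension by a factor of 8 and produces 4 latent channels. For a single 1024 × 1024 RGB frame, the number of values the diffusion model has to process shrinks as follows:

```latex
\underbrace{1024 \times 1024 \times 3}_{\text{pixel space}} = 3\,145\,728,
\qquad
\underbrace{\tfrac{1024}{8} \times \tfrac{1024}{8} \times 4}_{\text{latent space}} = 128 \times 128 \times 4 = 65\,536,
\qquad
\frac{3\,145\,728}{65\,536} = 48 .
```

The denoising network therefore operates on roughly 48 times fewer values per frame, which is the main source of the savings over pixel-space diffusion.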

Figure 14 illustrates the virtual restoration workflow applied in this study. High-resolution images and videos of the Katolička Porta were initially captured using a drone and processed with NeRF to obtain a base 3D model. Since the raw reconstruction may contain visual artifacts or signs of degradation, two complementary AI tools were employed for restoration. ControlNet ensured structural accuracy by preserving the geometry and contours of the object, while the IP-Adapter transferred stylistic and material details from reference images. The results from both modules were fused and harmonized to generate a visually coherent output. Finally, the restored 3D model (parts of the façade) was visualized and composited with the original state, allowing for a clear before/after interpretation of the restoration process. The restored 3D model is a virtually reconstructed and digitally enhanced version of the original NeRF model, not a physical reconstruction.

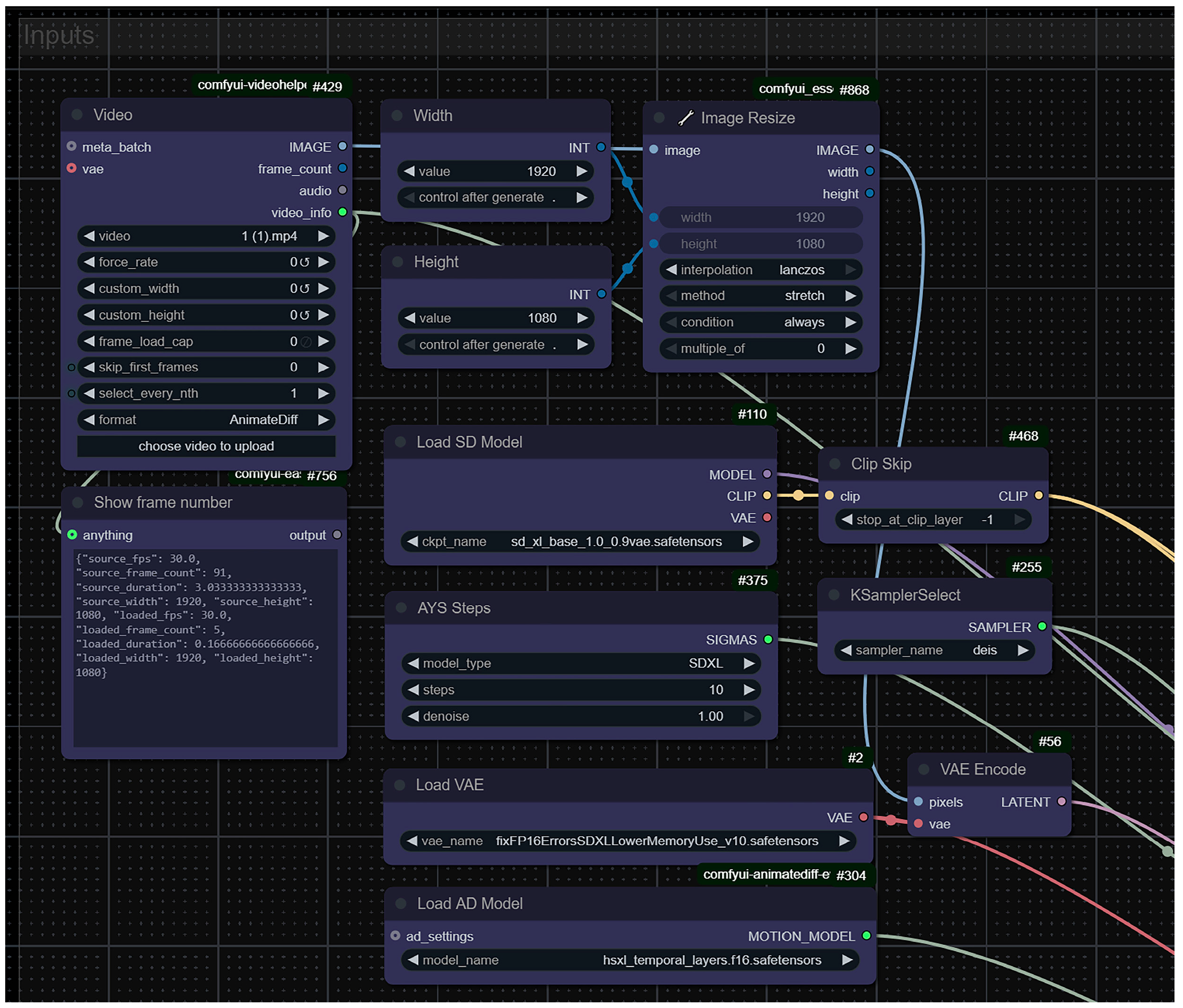

4.3.1. Video Input Conversion

Video frames are encoded into latent space using a Variational Autoencoder (VAE) [72].

Figure 15 illustrates the workflow for loading a video file and converting it into latent space. The Video node loads the video file, while the Width, Height, and Image Resize nodes adjust the resolution to the desired size. The Load SD Model node loads the Latent Diffusion model, and the AYS Steps node (Align Your Steps) generates the noise schedule used in the sampling process. The Load VAE node loads the VAE model, and the Load AD Model node loads the AnimateDiff model. The Clip Skip node defines how many of the final layers of the CLIP text encoder are skipped when encoding the prompt, and the KSampler Select node selects the sampler to be used in the diffusion process. Finally, the VAE Encode node encodes the video frames into the latent space using the loaded VAE model.
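The Width, Height, and Image Resize nodes matter because the VAE reduces each spatial side by a factor of 8, so frame sizes that are multiples of 8 map cleanly onto the latent grid. The hypothetical helper below (not a ComfyUI function) resizes to a target width while keeping the aspect ratio, snaps both sides down to a multiple of 8, and reports the resulting latent batch shape in the (frames, channels, height, width) layout. It assumes a 4-channel VAE of the SD/SDXL type.

```python
def latent_shape(num_frames, width, height, target_width, downsample=8, latent_channels=4):
    """Resize to target_width (keeping the aspect ratio), snap both sides down to a multiple
    of `downsample`, and return ((new_w, new_h), latent_batch_shape)."""
    scale = target_width / width
    new_w = int(round(width * scale)) // downsample * downsample
    new_h = int(round(height * scale)) // downsample * downsample
    shape = (num_frames, latent_channels, new_h // downsample, new_w // downsample)
    return (new_w, new_h), shape
```

For example, 48 frames of 3840 × 2160 drone footage resized to a width of 1024 become 1024 × 576 frames, which the VAE encodes into a latent batch of shape (48, 4, 72, 128).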

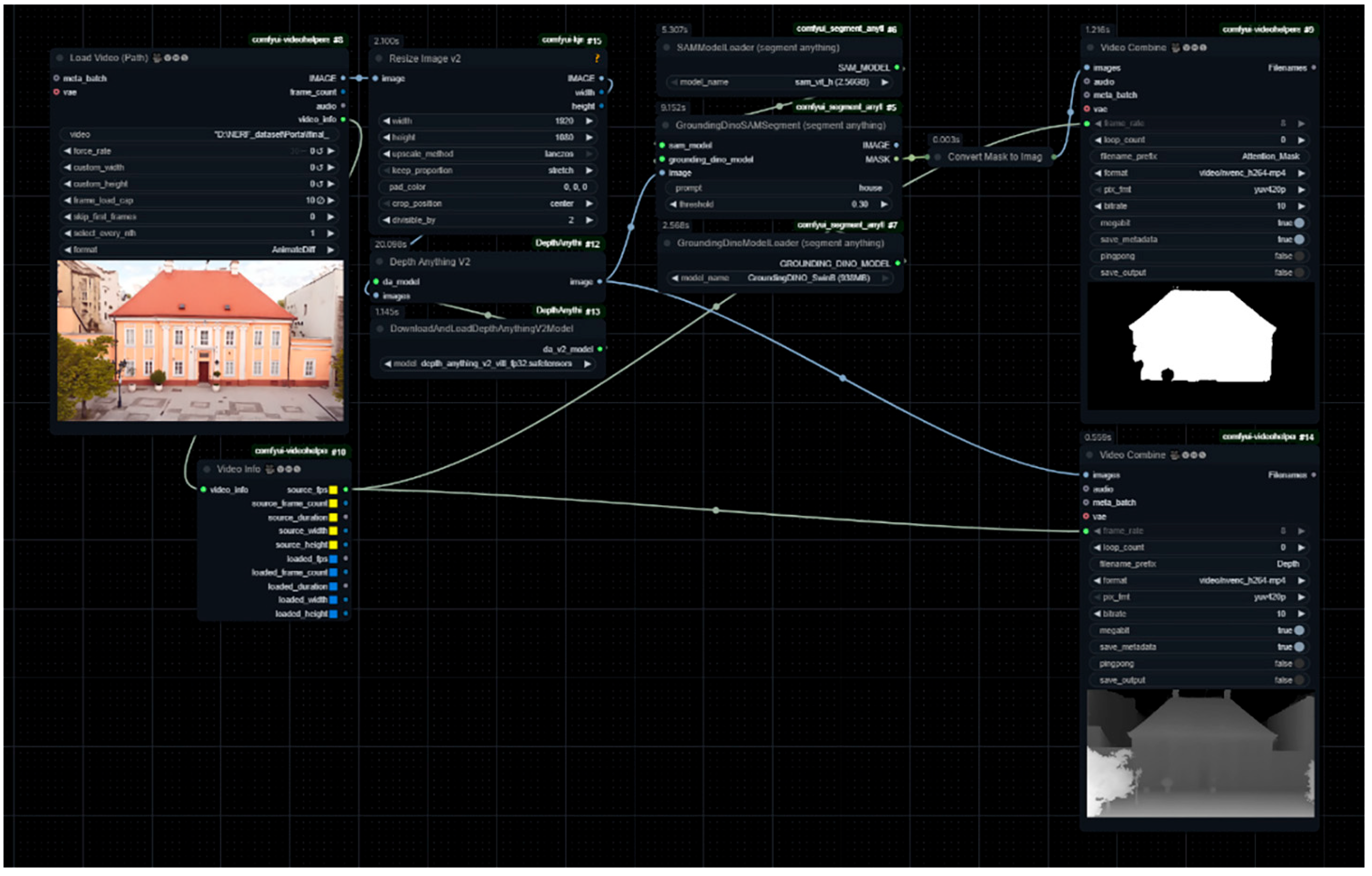

4.3.2. Image Preprocessing

For targeted edits, such as restyling specific objects like roof tiles, SAM-2 segmentation masks are employed to isolate regions of interest [73]. This approach enables the controlled application of a desired style by using an attention mask to restrict the transformation to the specified areas of the image.
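The effect of restricting a transformation to a segmented region can be illustrated with a simple mask-weighted composite. This is a simplified stand-in rather than the attention-masking mechanism itself: it assumes a SAM-2-style mask with values in [0, 1] and blends a stylized result with the original, so that only masked pixels (for example, roof tiles) change. The function name is hypothetical, and the same blend can equally be applied to latents.

```python
import numpy as np

def masked_composite(original, stylized, mask):
    """Keep `stylized` where mask == 1 and `original` where mask == 0; soft values blend.

    original, stylized: (H, W, C) arrays; mask: (H, W) with values in [0, 1].
    """
    m = np.clip(mask, 0.0, 1.0)[..., None]   # broadcast the mask over the channel axis
    return m * stylized + (1.0 - m) * original
```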

More generally, an attention mask [73] specifies which positions a transformer is allowed to attend to. In text encoders, it is primarily used to exclude padding tokens or other special tokens from attention computations; padding tokens are added solely to standardize input lengths for batch processing and should not affect model attention. To optimize processing and minimize memory usage, the attention mask is precomputed ahead of time (Figure 16).
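The padding mask described above works by removing masked positions from the softmax. A minimal NumPy sketch of scaled dot-product attention with such a key mask follows. The function name and array shapes are illustrative assumptions, and at least one position per row must remain unmasked.

```python
import numpy as np

def masked_attention(q, k, v, key_mask):
    """Scaled dot-product attention that ignores padded keys.

    q: (Tq, d); k, v: (Tk, d); key_mask: (Tk,) with True for real tokens, False for padding.
    """
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = np.where(key_mask[None, :], scores, -np.inf)   # padded keys get zero weight
    scores = scores - scores.max(axis=-1, keepdims=True)    # subtract row max for stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights
```

Because the padded positions receive exactly zero weight, the output is identical to running attention over the real tokens alone, which is why the mask can be built once per batch and reused.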

4.3.3. Segmentation and Masking

ControlNet [74] acts as an auxiliary network that works alongside the Stable Diffusion model. While standard Stable Diffusion relies solely on text prompts to guide image generation, ControlNet introduces a secondary conditioning input, referred to as a control map, derived from an input image or other data source. This additional conditioning guides the generation process to adhere to specific structures or features present in the control map, such as depth information, outlines, or human poses.

In this example, ControlNet Depth is applied to maintain geometric consistency, while IP-Adapter injects style and composition references.

Figure 17 illustrates the node for loading a video file (used in this case to obtain the depth map) and the nodes for loading and applying the ControlNet model (Load ControlNet Model and Apply Advanced ControlNet).
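One design detail explains how ControlNet can guide Stable Diffusion without disrupting it. Its features are added to the base network through zero-initialized 1 × 1 convolutions ("zero convolutions"), so an untrained control branch leaves the base model's output unchanged. The toy sketch below illustrates that residual injection on a single feature map. The class name is hypothetical, and the `strength` factor only suggests the role of the strength setting on a ControlNet apply node, not its exact implementation.

```python
import numpy as np

class ZeroConvResidual:
    """1x1 'zero convolution' that injects control features into a base feature map."""

    def __init__(self, channels):
        # Zero-initialized weights: before training, the control branch has no effect.
        self.weight = np.zeros((channels, channels))
        self.bias = np.zeros(channels)

    def __call__(self, base_features, control_features, strength=1.0):
        # base_features, control_features: (C, H, W); a 1x1 conv is a per-pixel channel mix.
        residual = np.einsum("oc,chw->ohw", self.weight, control_features)
        residual = residual + self.bias[:, None, None]
        return base_features + strength * residual
```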

4.3.4. Unsampling

Unlike random noise, unsampling produces structured, representative noise that preserves both the motion and the structural consistency of the original input video. This is achieved by running the denoising process in reverse, that is, by applying forward diffusion steps that incrementally introduce noise according to a predefined schedule. A custom sampler, such as DEIS, is employed with a flipped sigma schedule to invert the usual denoising workflow. For instance, the Flip Sigma node in ComfyUI reverses the noise schedule so that sampling proceeds from low to high noise levels, adding noise step by step and thereby aligning the resulting latent representation with the motion and content of the original video (see Figure 18). The Custom Sigmas node defines the sigma values, FlippedSigmas reverses the schedule, and KSamplerSelect specifies the sampling algorithm to be used.
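The unsampling idea can be sketched with a deterministic ODE sampler. The sketch makes three assumptions. First, it uses a Karras-style sigma schedule with an illustrative range close to the one used by Stable Diffusion models. Second, a first-order Euler step replaces DEIS for brevity. Third, a toy Gaussian denoiser stands in for the diffusion network. Flipping the schedule makes the same update add noise instead of removing it, and because no random noise is drawn, the resulting latent is a deterministic function of the input frame. All names are hypothetical.

```python
import numpy as np

def karras_sigmas(n, sigma_min=0.03, sigma_max=14.6, rho=7.0):
    """Descending noise levels (Karras-style schedule) ending with 0, as used for denoising."""
    ramp = np.linspace(0.0, 1.0, n)
    inv_min, inv_max = sigma_min ** (1 / rho), sigma_max ** (1 / rho)
    sigmas = (inv_max + ramp * (inv_min - inv_max)) ** rho
    return np.append(sigmas, 0.0)

def flip_sigmas(sigmas, eps=1e-4):
    """Reverse the schedule so sampling runs from low to high noise (unsampling).
    A leading 0 is replaced by a small value to avoid dividing by zero."""
    flipped = sigmas[::-1].copy()
    if flipped[0] == 0.0:
        flipped[0] = eps
    return flipped

def euler_ode(x, sigmas, denoise):
    """Euler steps of the probability-flow ODE dx/dsigma = (x - D(x, sigma)) / sigma.
    A descending schedule removes noise; an ascending (flipped) one adds structured noise."""
    for s, s_next in zip(sigmas[:-1], sigmas[1:]):
        d = (x - denoise(x, s)) / s
        x = x + (s_next - s) * d
    return x
```

With the toy Gaussian denoiser used for testing, unsampling simply rescales the input by a common factor greater than one, so the structure of the input survives in the noised latent. With a real network the noise is more complex, but it is still computed deterministically from the source frame.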

Figure 19 illustrates the unsampling workflow, where the image is sampled using a fixed seed specified in the SamplerCustom node (the noise seed parameter). A key parameter in this process is classifier-free guidance (CFG), which determines how strongly the text prompt influences image generation and, therefore, how closely the generated image conforms to the original content. Lower CFG values produce outputs that remain closer to the original image, while higher values amplify the impact of the text prompt, potentially introducing greater deviations from the input.
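The role of the CFG parameter can be written down directly. At each step, the model predicts noise twice, once with the prompt and once without it. In ComfyUI workflows, the negative prompt supplies the second branch. The two predictions are then combined as sketched below; the function name is illustrative.

```python
import numpy as np

def cfg_combine(eps_uncond, eps_cond, cfg_scale):
    """Classifier-free guidance: move from the unconditional (or negative-prompt)
    prediction towards, and beyond, the prompt-conditioned prediction."""
    return eps_uncond + cfg_scale * (eps_cond - eps_uncond)
```

A scale of 1 returns the prompt-conditioned prediction unchanged. Larger values extrapolate further in the direction of the prompt, which is why high CFG values move the output further away from the source frames.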

4.3.5. Stylization

The structured latent noise is fed into a diffusion model (SDXL with AnimateDiff; AnimateDiff, https://animatediff.github.io, accessed on 11 June 2025) [75, 76] together with a textual prompt (Figure 20) and processed through the IP-Adapter (IP-Adapter, https://github.com/tencent-ailab/IP-Adapter, accessed on 11 June 2025) (Figure 21). Since the text prompt influences the final output, it is kept neutral in this case to avoid conflicting with the IP-Adapter over the style transfer, as the desired style was provided via an image rather than text. The IP-Adapter is designed to inject image-based conditioning information into Stable Diffusion models by modifying the cross-attention layers, integrating image features alongside text embeddings. To control where and how the IP-Adapter affects image generation, binary masks define which regions of the output image are influenced by each IP-Adapter input image. This technique, known as the attention masking mechanism, enables selective spatial conditioning during the diffusion process. According to the Hugging Face documentation, these masks are processed by the IPAdapterMaskProcessor, which generates appropriately sized binary masks to spatially guide the adapter's influence throughout generation.
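The IP-Adapter paper describes a decoupled cross-attention. The latent's queries attend separately to text tokens and to image-prompt tokens, and the image result is added with an adjustable weight. The NumPy sketch below illustrates that structure together with a binary region mask that switches the image branch off outside the masked area. It assumes the keys and values are already projected and uses hypothetical function names; it is not the Diffusers implementation.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def attention(q, k, v):
    return softmax(q @ k.T / np.sqrt(q.shape[-1])) @ v

def decoupled_cross_attention(q, k_text, v_text, k_img, v_img, scale=1.0, region_mask=None):
    """Text and image tokens are attended to separately; the image branch is added with `scale`.

    q: (N, d) queries for N spatial positions; region_mask: optional (N,) binary mask that
    limits where the image prompt has any effect.
    """
    out_text = attention(q, k_text, v_text)
    out_img = attention(q, k_img, v_img)
    if region_mask is not None:
        out_img = out_img * region_mask[:, None]   # zero the image branch outside the mask
    return out_text + scale * out_img
```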

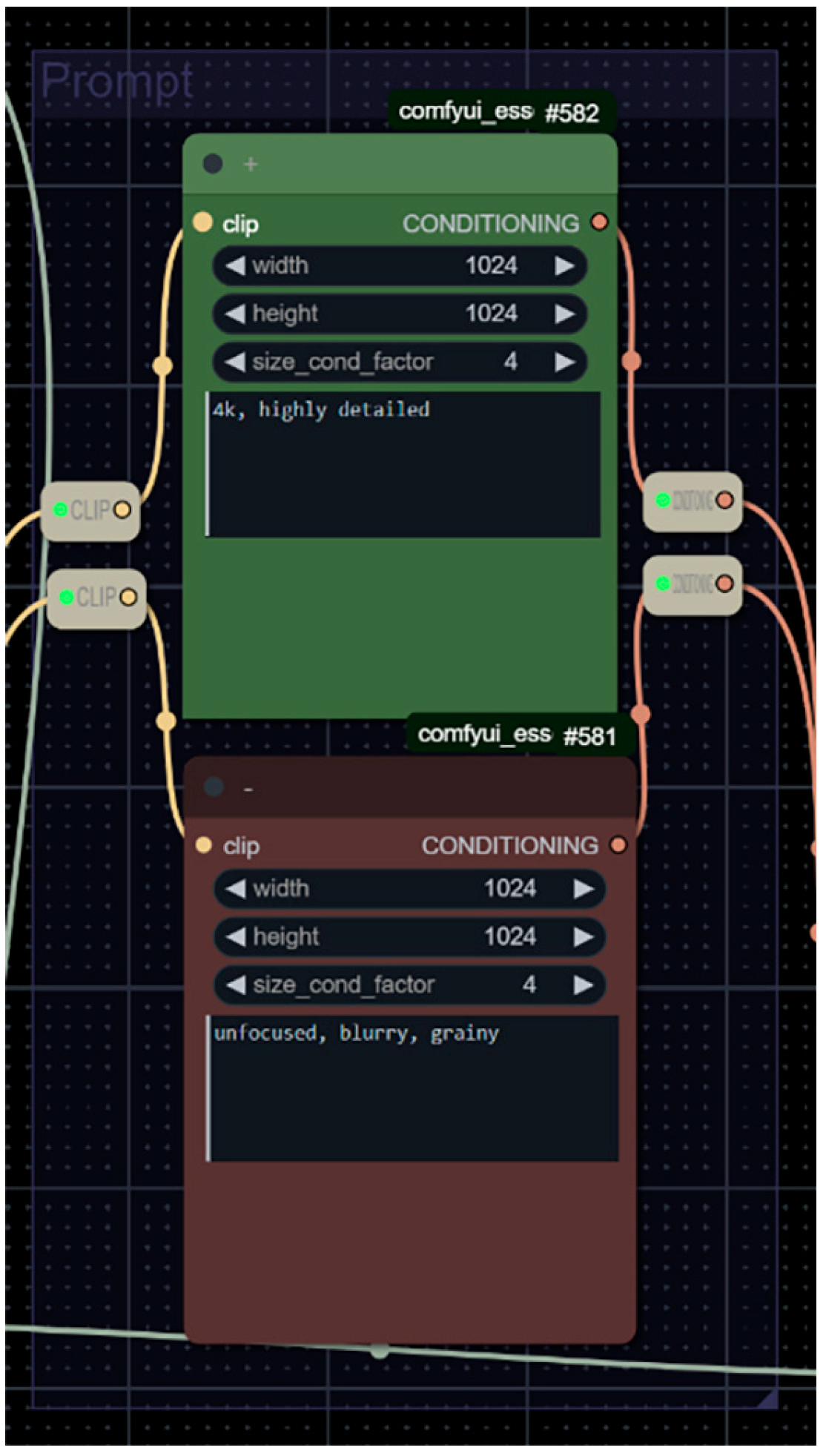

Figure 20 displays the nodes used for encoding a textual prompt: the green node represents a positive prompt (elements intended to appear in the image), while the brown node corresponds to a negative prompt (elements to be excluded from the image).

Figure 21 shows the nodes (color-coded in green) for loading a mask from a video file (Load video node), converting the image into a mask (Convert image to Mask node), and processing the mask (Grow Mask With Blur node). On the right side are the IP-Adapter nodes (color-coded in purple): the Load Clip Vision node loads the CLIP Vision model, the IP-Adapter Model Loader loads the IP-Adapter model, and the IP-Adapter Batch node defines the type of processing performed by the IP-Adapter.
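The Grow Mask With Blur step can be approximated with two elementary operations. The first dilates the binary mask outward by a few pixels. The second softens its edge so that the stylized region blends into its surroundings. The sketch below uses repeated 3 × 3 max filtering for the growth and a separable box blur in place of a Gaussian. The node's actual kernels and parameters may differ, and the function names are hypothetical.

```python
import numpy as np

def grow_mask(mask, pixels):
    """Dilate a mask by `pixels` using repeated 3x3 max filtering (square neighborhood)."""
    m = mask.astype(float)
    for _ in range(pixels):
        h, w = m.shape
        p = np.pad(m, 1, mode="edge")
        m = np.max([p[i:i + h, j:j + w] for i in range(3) for j in range(3)], axis=0)
    return m

def box_blur(mask, radius):
    """Soften mask edges with a separable box blur (a simple stand-in for a Gaussian)."""
    k = 2 * radius + 1
    kernel = np.ones(k) / k
    p = np.pad(mask, radius, mode="edge")
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, p)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)
```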

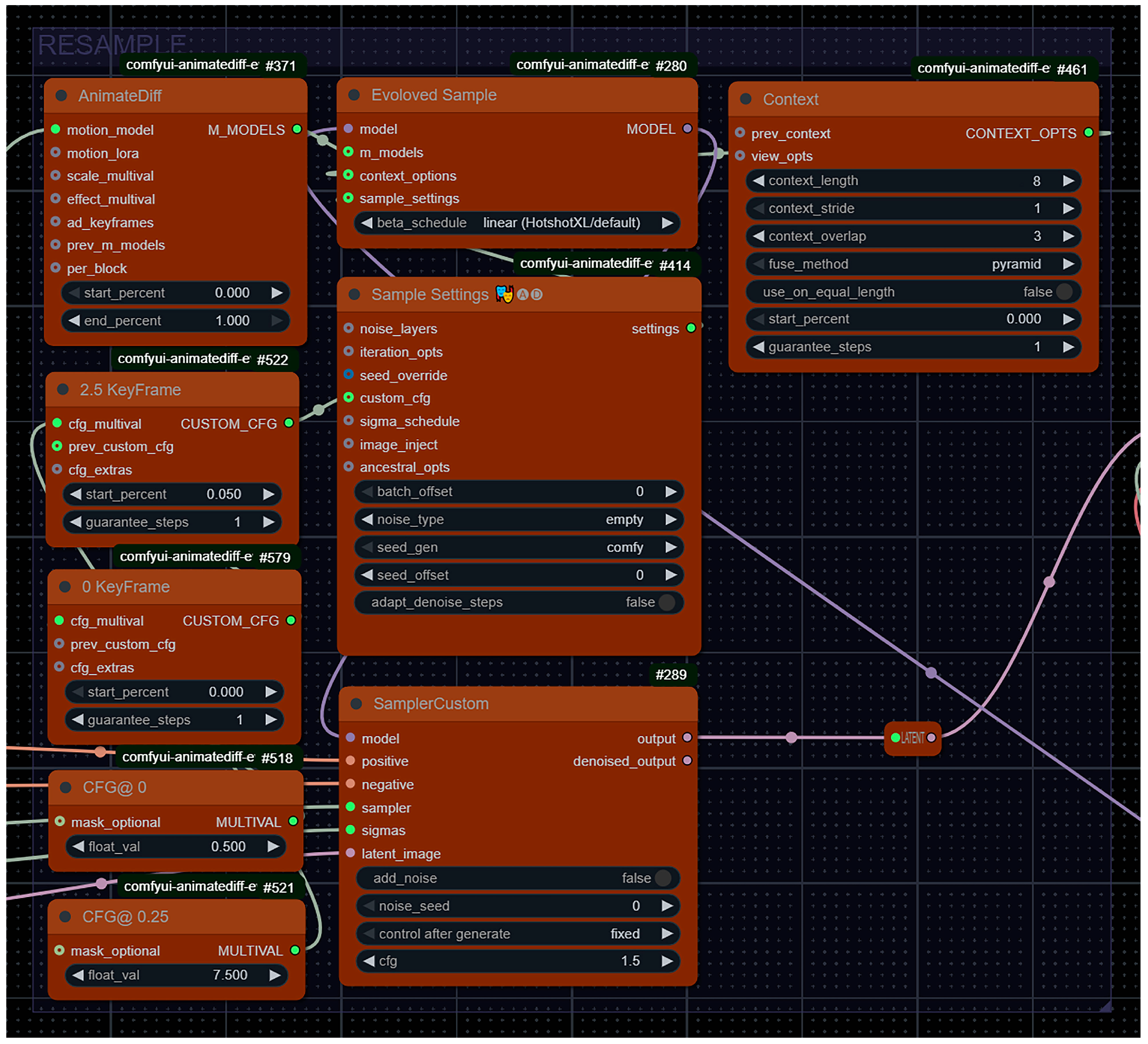

Figure 22 illustrates the resampling workflow, which uses a fixed seed of 0 in the Sampler Custom node. In this process, the classifier-free guidance (CFG) parameter plays a key role, alongside the start_percent parameter within the AnimateDiff nodes. Together, these parameters control the degree of stylization applied to the processed image, a transformation primarily driven by the IP-Adapter.
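Parameters such as start_percent restrict an effect to part of the sampling trajectory. The hypothetical helper below assumes a simple linear mapping from sampling progress to step index. ComfyUI generally converts such percentages to noise levels through the model's schedule, so this is only an approximation that shows the idea. Starting an effect later leaves the early steps, which largely fix the overall composition, untouched.

```python
def active_steps(total_steps, start_percent=0.0, end_percent=1.0):
    """Indices of sampling steps in which an effect is applied, assuming a linear
    mapping from percent of sampling progress to step index (hypothetical helper)."""
    return [i for i in range(total_steps)
            if start_percent <= i / total_steps < end_percent]
```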

4.3.6. Final Video Creation

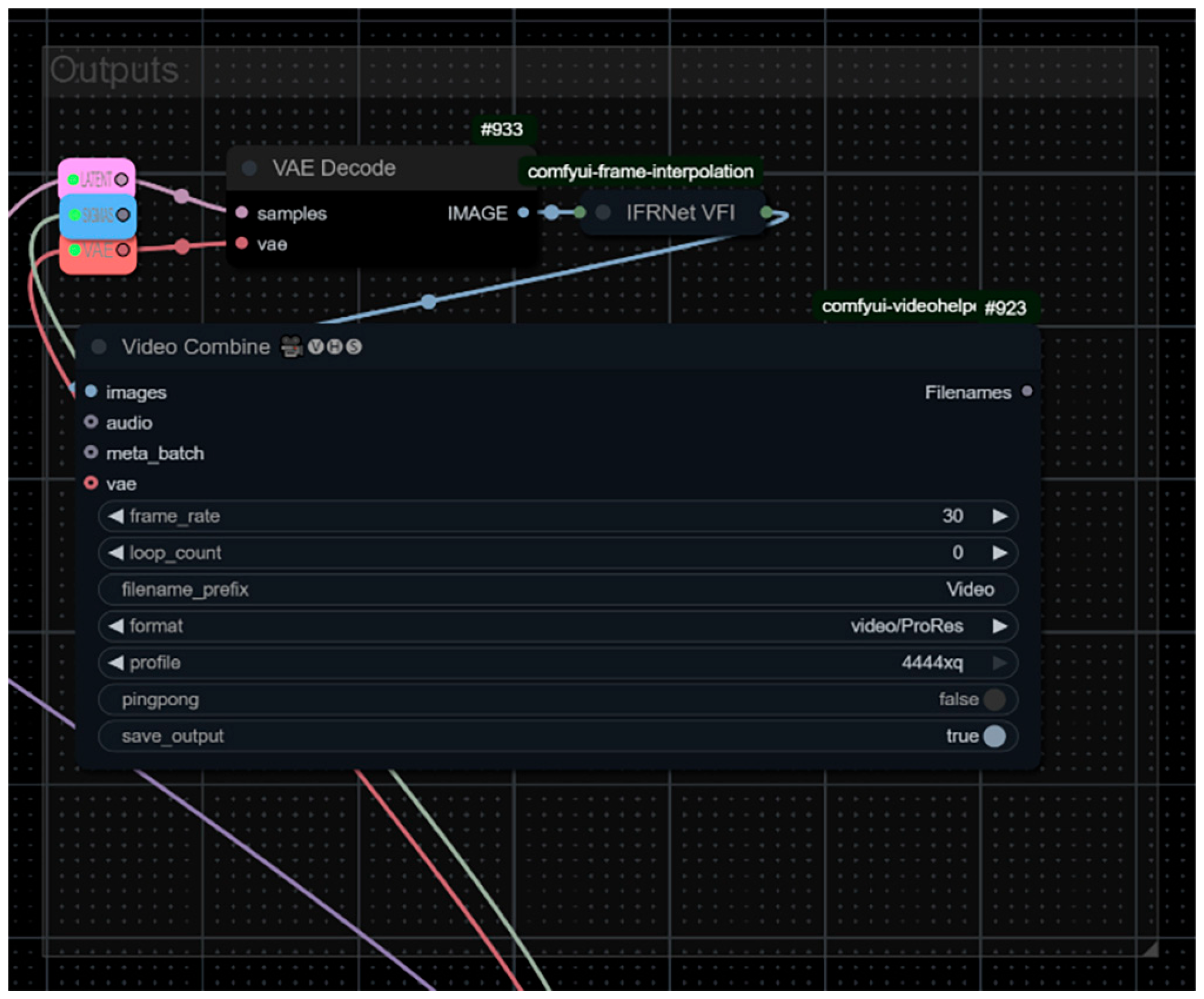

It is essential to convert the image from latent space back to pixel space, as visualization and saving in standard video formats require pixel-based data.

Figure 23 illustrates the conversion of images from latent to pixel space, as well as the node responsible for recording the final video file (Video Combine node). To enhance the smoothness of the output video, frame interpolation was applied using the IFRNet VFI node, effectively doubling the total number of frames.
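Frame interpolation inserts synthesized frames between existing ones. The sketch below inserts one frame between each consecutive pair, so N frames become 2N - 1 (hence "effectively doubling"); to keep the clip's duration, the output frame rate would be doubled as well. A plain linear cross-fade is used here as a stand-in for IFRNet, which estimates motion to synthesize intermediate frames. The function name is hypothetical.

```python
import numpy as np

def interpolate_2x(frames):
    """Insert one in-between frame per consecutive pair (N frames -> 2N - 1).
    Linear cross-fade only; a learned model such as IFRNet would follow the motion instead."""
    out = [frames[0]]
    for a, b in zip(frames[:-1], frames[1:]):
        out.append(0.5 * (a + b))   # synthetic middle frame
        out.append(b)
    return np.stack(out)
```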

4.4. Evaluation of the Digitization of the Katolička Porta and Its Impact on the Protection of Cultural Heritage

Advanced digital technologies, such as 3D scanning, virtual reconstruction, and interactive visualization, offer innovative opportunities for protecting valuable cultural assets while increasing accessibility for a wider audience. The Katolička Porta, as a notable cultural monument, was digitally documented and reconstructed to explore these benefits in practice. To evaluate the effectiveness and impact of this digitization effort, a targeted evaluation was conducted involving professionals directly engaged in cultural heritage protection. Their insights are vital for understanding how such digital interventions can support conservation efforts, enhance educational outreach, and inform the management of heritage sites.

After viewing the video [19], a group of 20 respondents, all employees of a city institution in Novi Sad specializing in cultural heritage protection, participated in a survey. The purpose of the survey was to collect their opinions and experiences regarding the application of new digital technologies in the protection and presentation of cultural heritage. These experts were asked a total of 10 multiple-choice questions, of which only Q9 allowed multiple answers. The aggregated survey results are presented in Table 4.

5. Results

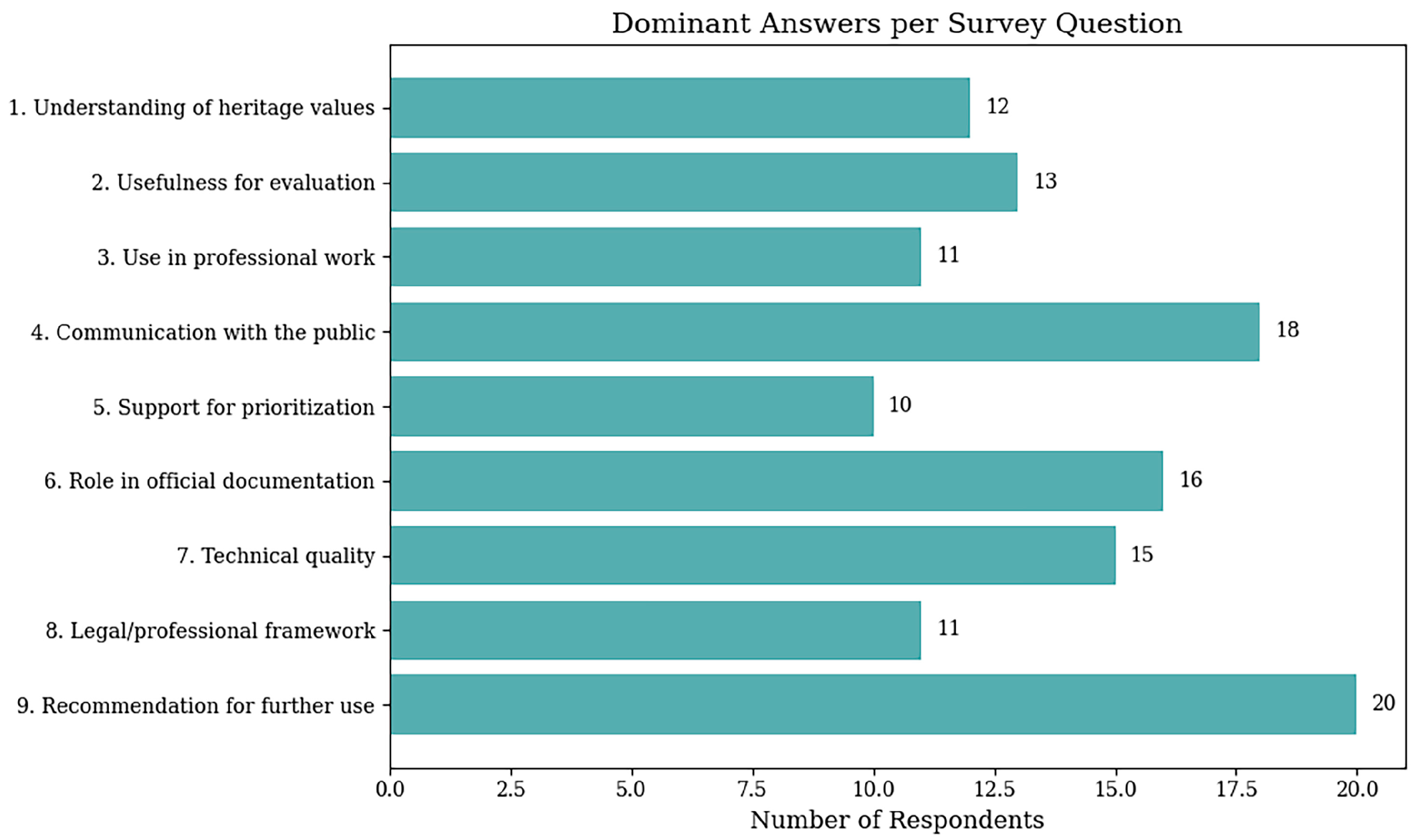

As part of the research, a survey was conducted to evaluate the digital visualization of the Katolička Porta in Novi Sad, targeting professionals in the field of cultural heritage protection (N = 20). The aim was to assess the potential of digital technologies, specifically 3D modeling and visualization, for enhancing the assessment, protection, and presentation of immovable cultural heritage.

The results (Figure 24) show a high level of agreement regarding the usefulness of digital visualization in a professional context:

90% of respondents believe that such visualization significantly aids in understanding the historical and cultural values of the site;

65% rated the video as very useful for evaluating cultural properties.

When asked about practical application, 18 out of 20 participants indicated they would incorporate digital models in their work (35% regularly, 55% occasionally), and all participants unanimously recommended the continued development and deployment of such tools within heritage protection institutions.

A particularly noteworthy finding is that 90% of respondents believe that digital visualizations should be integrated into standard or supplementary documentation in heritage conservation procedures. Regarding quality, 75% rated the technical accuracy and authenticity of the video as very high, indicating that most respondents regarded it as a reliable digital representation.

The most valuable aspects of such visualizations for heritage professionals were identified as follows:

educational potential (16 responses);

improved public communication (5);

pre-intervention visualization (4);

archival value was considered less relevant (1 response).
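Because the question on the most valuable aspects allowed several answers (presumably Q9, the only multiple-answer item), its counts sum to more than the number of respondents and are best read as shares of all 20 participants. The short script below only re-expresses the counts reported above; it adds no new data. In the same way, 35% regular and 55% occasional use correspond to 7 + 11 = 18 of the 20 respondents.

```python
# Counts reported for the multiple-answer question; shares are relative to all
# N = 20 respondents, not to the total number of selections.
q9_counts = {
    "educational potential": 16,
    "improved public communication": 5,
    "pre-intervention visualization": 4,
    "archival value": 1,
}
N_RESPONDENTS = 20

def share_of_respondents(counts, n):
    """Percentage of respondents who selected each option."""
    return {option: 100.0 * c / n for option, c in counts.items()}

shares = share_of_respondents(q9_counts, N_RESPONDENTS)
total_selections = sum(q9_counts.values())  # may exceed n for a multiple-answer question
```

Read this way, educational potential was selected by 80% of respondents, public communication by 25%, pre-intervention visualization by 20%, and archival value by 5%.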

Although fewer than half of the respondents (40%) fully believe that these technologies could directly contribute to improving the legal and professional framework for heritage protection, with a further 55% agreeing partially, all participants unanimously supported their continued application and development.

These findings confirm that the use of advanced digital methods, such as NeRF-based reconstructions, provides multiple benefits for cultural heritage professionals, not only for documentation and analysis but also as effective tools for public engagement and education.

While the findings of this study underscore the strong potential of NeRF and AI-based restoration methods, certain limitations must be acknowledged. The evaluation survey was conducted with a relatively small and homogeneous group of participants (N = 20), all of whom were professionals employed within cultural heritage institutions in Novi Sad. At this stage, the focus was specifically on employees of the Institute for the Protection of Cultural Monuments in the territory of the city of Novi Sad. This limited sample size constrains the generalizability of the results, as it may not fully represent the diversity of perspectives from other regions or professional backgrounds. Future studies should therefore incorporate a larger and more diverse group of stakeholders, including conservation practitioners and members of the general public, to achieve broader validation of the methodology.

6. Discussion

This study set out to address the following three primary research questions: (i) the effectiveness of NeRF in reconstructing the Katolička Porta, (ii) the role of AI-based tools such as IP-Adapter and ControlNet in virtual restoration, and (iii) the reception of these techniques by cultural heritage professionals.

Regarding the first question, the results confirm that NeRF, combined with drone imagery, provides high-fidelity reconstructions of complex architectural features, including reflective and translucent surfaces. The second question was investigated through the integration of AI-based tools, which enabled realistic simulations of façade restoration and material transformations, demonstrating the versatility and flexibility of virtual conservation methods. Finally, survey results directly addressed the third question, showing that 90% of participants recognized the strong educational and communicative value of such models and unanimously supported their adoption within heritage protection institutions. Together, these findings validate the proposed workflow and support the hypothesis that NeRF and AI techniques can significantly enhance both documentation and conservation practice.

This paper presents a structured procedure designed to guide the rapid and high-quality creation of 3D environments using NeRF technology. The data and images used to train NeRF were obtained via drone capture, with careful attention given to critical aspects of scene capture to ensure data usability and enable fast convergence toward a 3D model. Although the procedure requires technical rigor, it has been organized as a practical guide for professionals in cultural institutions who may lack prior expertise in this domain. Consequently, this method holds significant value for practitioners engaged in 3D space digitization and the application of AI for cultural heritage preservation. The historical and cultural significance of the Katolička Porta for the city of Novi Sad is outlined, followed by an explanation of how NeRF technology can be leveraged for the digital documentation of heritage sites. The NeRF reconstruction and the subsequent latent diffusion pipeline are described comprehensively, covering video input conversion, image preprocessing, unsampling, stylization, and final video generation, providing a complete workflow for accurate and visually rich 3D reconstructions.

The approach was evaluated in collaboration with 20 experts specializing in cultural heritage protection. These participants reviewed the outputs and the resulting video, assessing the methodology through a ten-question survey, which enabled the formulation of relevant conclusions regarding the procedure’s effectiveness and applicability. The results indicate a high level of agreement among professionals regarding the importance of advanced visualization tools—particularly three-dimensional reconstructions—for the evaluation, preservation, and presentation of immovable cultural heritage. Notably, 90% of respondents stated that such visualizations significantly contribute to the understanding of a site’s historical and cultural values, affirming their relevance and potential within professional practice. A similarly high percentage supported the integration of 3D models as a standard or complementary element of documentation in conservation and restoration work, reflecting trust in their technical reliability and informational value. Importantly, 90% of respondents also expressed a willingness to incorporate such models into their professional workflows, either on a regular basis (35%) or occasionally (55%). This positive attitude was further reinforced by unanimous agreement on the need for continued development and institutional implementation of these technologies within the heritage protection system. The educational value of digital reconstructions emerged as the most significant benefit (cited in 16 responses), aligning with contemporary trends that emphasize public engagement in cultural heritage interpretation and preservation. Additionally, improved public communication and the ability to preview interventions prior to execution were recognized as key advantages.

However, the relatively low number of participants who acknowledged the archival value of digital tools (only one response) suggests that such technologies are still primarily viewed as auxiliary instruments for interpretation and planning, rather than as replacements for formal documentation in legal or administrative contexts. Furthermore, while fewer than half of the respondents (40%) fully believed that these technologies could directly enhance the legal and institutional framework for heritage protection (a further 55% agreed partially), the strong support for their continued application reflects a growing professional consensus and a gradual shift toward the acceptance of digital methods as legitimate and valuable components of contemporary conservation practices.

Despite these promising results, the study faces certain limitations. The most significant limitation is the small number of survey participants (N = 20), which constrains the generalizability of the findings. Additionally, while the NeRF reconstructions achieved high visual realism, geometric accuracy remains a challenge compared to traditional methods such as SfM and MVS. Furthermore, the application of AI-based tools like IP-Adapter and ControlNet, although effective, requires technical expertise and computational resources that may not be readily available in all heritage institutions. Future work should address these limitations by expanding participant groups, refining hybrid workflows for improved metric accuracy, and developing more user-friendly AI tools tailored to conservation professionals.

Beyond the survey findings, the implementation of NeRF and AI-based methods in this study revealed both technical opportunities and practical challenges. The NeRF workflow demanded careful flight planning and frame extraction to ensure consistent lighting and sufficient coverage. The sensitivity of NeRF to blurred frames or inaccurate camera parameters underscored the importance of rigorous data acquisition protocols. Another limitation was the computational demand, as training required a high-end GPU, posing potential barriers for institutions without comparable hardware. Nevertheless, once trained, the NeRF model delivered highly photorealistic reconstructions, capturing reflective and translucent surfaces more effectively than conventional photogrammetry or SfM-MVS methods.

The application of AI-based tools, particularly IP-Adapter and ControlNet, further expanded the reconstruction process. These tools enabled selective style transfer and restoration simulations, allowing for controlled exploration of conservation scenarios such as façade renewal and material aging. This represents a significant methodological advancement: whereas traditional approaches primarily document current conditions, the AI-enhanced pipeline allows the visualization of potential futures in a non-invasive manner. Such visualizations are not only useful for expert analysis but also highly effective in communication with the public and stakeholders.

Taken together, the integration of NeRF with AI-based restoration tools demonstrates a dual benefit: technical reconstruction with high visual fidelity on the one hand and innovative applications in heritage interpretation, planning, and education on the other. These results confirm that the proposed methodology goes beyond a technical experiment, offering a practical framework with tangible cultural and institutional value.

7. Conclusions

The survey results among cultural heritage experts clearly indicate a high level of acceptance and recognition of the value of digital technologies, particularly three-dimensional visualizations based on methods such as NeRF. The majority of respondents assessed that such models significantly contribute to the understanding, evaluation, and interpretation of cultural assets while also improving communication with the broader public. Although their legal and archival roles are not yet perceived as central, there is a consensus on the need for further institutional implementation and development. As discussed earlier, while these digital reconstructions are widely recognized for their interpretative and communicative value, their formal archival and legal status remains to be fully established. This highlights the importance of future research aimed at assessing the legal and institutional validity of such models in heritage preservation contexts.

While the findings of this study highlight the strong potential of NeRF and AI-based restoration methods, certain limitations must be acknowledged. The evaluation survey involved a relatively small and homogeneous group of participants (N = 20), all of whom are professionals employed within cultural heritage institutions in Novi Sad. This narrow scope limits the generalizability of the results, as it may not fully represent the diversity of perspectives from other regions or professional backgrounds. Future studies should therefore include a larger and more varied group of stakeholders, including conservation practitioners and members of the general public, in order to achieve broader validation of the proposed methodology.

In addition to the survey findings, the creation of the NeRF model of the Katolička Porta in Novi Sad underscored the complexity and technical precision required by such technologies. A particular challenge was the location, situated in a pedestrian zone with high foot traffic, which was successfully addressed by conducting recordings in the early morning hours under natural diffused lighting conditions. These circumstances facilitated the generation of a high-quality model with stable illumination and minimal visual noise—factors that are crucial for the successful training of a NeRF network. The combination of careful planning during the recording process and technical post-processing contributed to the creation of a digital visualization that, according to the respondents, is characterized by high technical accuracy and authenticity.

Therefore, the integration of advanced digital methods such as NeRF in the context of cultural heritage documentation and presentation not only introduces innovative forms of visual interpretation but also holds the potential to transform the paradigm through which cultural assets are perceived, valued, and preserved. When applied with technical precision and methodological rigor, such models can evolve from being auxiliary tools into standard instruments within contemporary conservation practices.

Building upon these findings, future work could proceed along several directions, including the integration of NeRF models into extended reality (XR) environments to enable immersive and interactive experiences that enhance public engagement and educational outreach. Parallel to this, the development of user-friendly interfaces tailored for museums, cultural institutions, and educational platforms could support storytelling and interactive learning. Additionally, automating and optimizing data acquisition and processing workflows would increase efficiency, scalability, and reproducibility, facilitating wider adoption of these methods in both institutional and professional contexts.

Further research is required to evaluate the legal and archival validity of NeRF-generated models within heritage preservation contexts, thereby bridging the current gap between technological innovation and institutional frameworks. Moreover, the integration of NeRF with complementary technologies such as Geographic Information Systems (GIS), Building Information Modeling (BIM), photogrammetry, and LiDAR, as well as applying sensor fusion techniques that combine data from various sources (e.g., RGB imagery, depth sensors, laser scanning), could significantly enhance the geometric precision, richness, and interpretative potential of digital heritage records. Such hybrid approaches position NeRF as a key component in the future of heritage conservation and digital humanities.