Abstract

This study introduces a machine learning–driven extended reality (XR) interaction framework that leverages electroencephalography (EEG) to decode consumer intentions in immersive decision-making tasks, demonstrated through functional food purchasing within a simulated autonomous vehicle setting. Recognizing inherent limitations of traditional “Preference vs. Non-Preference” EEG paradigms for immersive product evaluation, we propose a novel “Rest vs. Intention” classification approach that substantially enhances cognitive signal contrast and improves interpretability. Eight healthy adults performed immersive XR product evaluations within a simulated autonomous driving environment using the Microsoft HoloLens 2 headset (Microsoft Corp., Redmond, WA, USA). Participants assessed 3D-rendered multivitamin supplements systematically varied in intrinsic (ingredient, origin) and extrinsic (color, formulation) attributes. Event-related potentials (ERPs) were extracted from 64-channel EEG recordings, targeting five neurocognitive components: N1 (perceptual attention), P2 (stimulus salience), N2 (conflict monitoring), P3 (decision evaluation), and LPP (motivational relevance). Four ensemble classifiers (Extra Trees, LightGBM, Random Forest, XGBoost) were trained to discriminate cognitive states under both paradigms. The “Rest vs. Intention” approach achieved high cross-validated classification accuracy (up to 97.3% in this sample) and area under the curve (AUC > 0.97). SHAP-based interpretability analysis identified dominant contributions from the N1, P2, and N2 components, consistent with neurophysiological processes of attentional allocation and cognitive control. These findings provide preliminary evidence for the viability of ERP-based intention decoding within a simulated autonomous-vehicle setting.
Our framework serves as an exploratory proof-of-concept foundation for future development of real-time, BCI-enabled in-transit commerce systems, while underscoring the need for larger-scale validation in authentic AV environments and raising important considerations for ethics and privacy in neuromarketing applications.

1. Introduction

In the post-pandemic era, consumer awareness surrounding health and wellness has grown significantly, leading to an increased focus on disease prevention, nutritional self-care, and lifestyle optimization [1,2]. As part of this shift, individuals are seeking tools that support continuous, real-time monitoring of their health status [3]. Among the key behavioral changes is the rising consumption of dietary supplements, which serve as proactive complements to everyday nutrition [4,5,6]. Concurrently, digital transformation in automotive technology has turned vehicles from mere modes of transport into multifunctional spaces that support infotainment, productivity, and even commerce [7]. With the rapid development of autonomous driving technologies, drivers are increasingly disengaged from traditional driving tasks, opening the door to novel in-car experiences such as real-time health tracking and on-the-go product purchasing [8,9,10,11,12]. These trends motivate the need for seamless, intuitive interfaces that enable users to explore and evaluate wellness-related products, including dietary supplements, while in transit.

Motivation (Market and Use Case): As automation increases, the in-vehicle experience is shifting from transport alone to a multifunctional setting for infotainment, productivity, and commerce [7]. In parallel, functional foods and supplements represent a large and fast-growing wellness segment [13,14]. Together, these trends motivate a setting in which passengers can explore and evaluate wellness products during transit, rather than framing autonomous driving merely as a way to “free the hands.”

Motivation (Technical and Human–Machine Interaction): Higher-automation cockpits must ensure mode awareness and low-distraction interaction [15], which makes intention-aware input modalities attractive. EEG has demonstrated feasibility for monitoring cognitive and affective states in vehicular contexts [16] and aligns with intelligent cockpit roadmaps that emphasize adaptive, multimodal interaction [17]. Embedding an EEG–XR interface inside the cockpit therefore addresses concrete human-factors needs for hands-free, low-effort engagement during non-driving tasks. Within this framework, dietary supplement evaluation serves as a representative high-involvement wellness decision domain for testing an EEG–XR interface in a simulated AV context.

Autonomous-vehicle (AV) cockpits introduce design imperatives that go far beyond simply “freeing the hands.” As vehicles transition toward higher levels of automation, human–machine interfaces (HMIs) must ensure driver/passenger mode awareness, manage residual attention demands, and enable safe engagement in non-driving activities, with careful control of interaction modality, workload distribution, and feedback timing [15,18]. Within this context, intention-aware interaction becomes especially relevant: electroencephalography (EEG) has demonstrated feasibility in vehicular environments and provides continuous, fine-grained indices of cognitive and affective states pertinent to automated driving and cockpit safety [16,19,20]. Complementing this, recent advances in infrared few-shot object detection address critical perception challenges in low-visibility conditions, enhancing the robustness of contextual awareness systems essential for adaptive cockpit interfaces [21]. This aligns with current intelligent cockpit roadmaps that emphasize adaptive, multimodal interfaces capable of supporting diverse in-car activities [17].

Scenario alignment in our design: To approximate cockpit constraints within a controlled laboratory XR simulation rather than a generic XR task, our experiment was designed to (i) minimize manual input (HoloLens-based viewing with brief keypad responses), (ii) employ brief, time-locked exposures appropriate for low-distraction interaction windows, and (iii) interleave resting baselines to emulate non-interaction segments (see Section 2 for protocol details). We situate dietary supplement evaluation within this framework because it represents a high-involvement decision domain where reducing manual load and cognitive friction is particularly beneficial. While not a substitute for an operational autonomous vehicle environment, this setup provides an ecologically motivated testbed for preliminary validation of EEG-driven XR interaction concepts in intelligent mobility contexts.

Extended reality (XR), encompassing both augmented reality (AR) and virtual reality (VR), has emerged as a transformative medium for online retail, offering immersive and interactive shopping experiences [22]. Studies grounded in the Technology Acceptance Model (TAM) demonstrate that XR-based retail interfaces significantly enhance perceived ease of use and usefulness, particularly by leveraging vivid imagery, real-time interactivity, and affective engagement [23]. Such systems have been reported to evoke positive emotions that strengthen enjoyment, immersion, and, ultimately, purchase intention. A growing body of research has applied XR interfaces across diverse consumer domains, such as fashion, furniture, food, and tourism, with evidence that virtual try-ons and immersive simulations increase brand affinity and purchasing confidence [24,25,26]. In food retail contexts, VR environments have been used to replicate real-world grocery experiences, and consumer behavior within virtual supermarkets has been shown to closely mirror that observed in physical stores [27,28]. For example, Jonathan et al. [29] found that low-income parents were more likely to choose healthier products across multiple food categories when front-of-package nutritional labeling was made salient in a VR environment.

Despite these advances, the field of XR neuromarketing is constrained by several methodological challenges that limit the ecological validity and interpretability of its findings. First, a heavy reliance on self-reported questionnaires and behavioral observation fails to capture the subconscious, real-time neuroaffective reactions that underpin consumer decision-making [30]. While the adoption of EEG has begun to address this gap by providing direct neural correlates of perception and preference [31,32], extant studies exhibit further limitations. Systematic reviews emphasize that EEG-based neuromarketing has disproportionately focused on stimulus response effects while neglecting broader social, cultural, and situational dimensions of consumer behavior [33,34]. This narrow scope restricts the generalizability of findings to real-world contexts. Furthermore, although frontal alpha asymmetry (FAA) and the late positive potential (LPP) are consistently highlighted as robust neural markers, their predictive validity has proven inconsistent across product categories and task demands. A review of EEG and machine-learning studies underscores that such coarse measures are rarely integrated with complementary physiological modalities or advanced analytics, thereby limiting the precision and robustness of predictive models [35].

To compound these issues, EEG analyses in consumer research often rely on coarse neural metrics. For example, studies have reported changes in broad spectral power bands (e.g., frontal alpha asymmetry) which, while useful, lack the temporal precision to dissect the rapid, sequential stages of cognitive processing involved in product evaluation [30]. Similarly, ERP-based investigations such as those examining food-warning labels often report aggregate components without distinguishing specific subcomponents (e.g., an early P3a vs. a late P3b), thereby conflating novelty detection with evaluative processing [36]. This lack of specificity makes it difficult to map neurological findings onto well-established cognitive models of decision-making.

In addition to these conceptual limitations, issues of ecological validity further undermine the generalizability of XR neuromarketing findings. Many XR neuromarketing experiments still rely on simplified or low-fidelity virtual stimuli—scenarios lacking material textures, realistic lighting, or sensory richness—and are frequently situated in generic laboratory store environments. Such conditions disregard the cognitive load, attentional constraints, and motion dynamics characteristic of real-world decision-making, for example, in the cognitively demanding environment of an autonomous vehicle cockpit [34,37]. Findings generated under such static, decontextualized conditions, therefore, risk misrepresenting the true neurocognitive processes that govern consumer choice.

Finally, EEG as a methodology has well-known technical limitations. While it provides millisecond-level temporal resolution, it suffers from poor spatial resolution, is highly susceptible to ocular and motion artifacts, and is fundamentally limited in its ability to capture subcortical neural processes. These methodological constraints complicate efforts to map electrophysiological activity directly onto well-established cognitive models of decision-making [38].

Taken together, these shortcomings highlight the need for research that unites ecologically valid, high-fidelity XR environments with fine-grained, theory-driven EEG/ERP analyses and multimodal integration strategies. Our study addresses these deficiencies by embedding wellness product evaluation within an AV-aligned XR cockpit simulation and applying component-specific ERP markers that index rapid, sequential stages of electrophysiological processing. In doing so, we extend neuromarketing research beyond static laboratory contexts and provide a framework that is both ecologically motivated and analytically interpretable.

Dietary supplements are distinct from general food items in both psychological and regulatory contexts. They are often classified as high-involvement products [39], where consumers base decisions on a combination of intrinsic attributes (e.g., ingredients, efficacy, formulation) and extrinsic features (e.g., packaging, color, origin) [40]. While product packaging plays a crucial role in eliciting emotional responses, especially in high-involvement decisions, there is limited research investigating how these design cues affect consumer cognition and neural engagement during supplement evaluation. To fill this gap, our study proposes a brain–computer interface (BCI)-enabled XR shopping system that allows consumers to interactively explore dietary supplements in a 3D immersive environment while seated in a simulated autonomous vehicle. EEG signals are used to decode users’ cognitive states during product evaluation, enabling intention-aware interaction with minimal physical effort.

Electroencephalography (EEG) was selected for this study due to its high temporal resolution and non-invasive nature, which are critical for capturing rapid cognitive processes underlying real-time consumer decisions. Compared to functional magnetic resonance imaging (fMRI) [41] or functional near-infrared spectroscopy (fNIRS) [42], EEG enables millisecond-level measurement of transient neural events associated with attention, salience detection, and cognitive control—key elements of decision-making captured via event-related potentials (ERPs). While fMRI offers superior spatial resolution [43], it lacks the temporal specificity needed to resolve these fast processes in interactive XR settings. Likewise, although fNIRS provides portability and moderate spatial resolution [44], its slower hemodynamic response limits suitability for real-time intention decoding. Accordingly, we focus on well-established ERP components—N1, P2, N2, P3, and LPP—which together span perceptual encoding (N1), salience (P2), cognitive control (N2), decision evaluation (P3), and sustained motivational attention (LPP) during goal-directed product evaluation. This choice supports fast, interpretable decoding aligned with the aims of intention-aware interaction in autonomous mobility.

Research Problem and Motivation: While previous EEG-based studies have attempted to classify consumer preference using a “Preference vs. Non-Preference” paradigm, we found that such binary classification often fails to capture strong cognitive activation, especially in XR-based product evaluations involving abstract or subtle stimuli. Low involvement and narrow contrast between the classes may lead to weak ERP signatures, poor class separability, and suboptimal classification performance. Therefore, a novel cognitive framing is needed—one that emphasizes broader intentional engagement rather than narrow subjective preference.

Hypothesis: We hypothesize that merging all goal-directed product evaluation states into a single “Intention” class and contrasting them against “Rest” periods (i.e., non-interaction) will elicit stronger, time-locked ERP contrast—particularly in early/mid-latency components linked to attentional prioritization and cognitive control—thereby improving decoding accuracy and interpretability relative to “Preference vs. Non-Preference.”

Clarification: The objective is not to solve a harder labeling problem but to align neural decoding with AV HMI requirements, where robustness, low distraction, and interpretability are paramount. Preference judgments in brief XR exposures often produce weak, heterogeneous ERPs with narrow class contrast, which undermines reliable cross-subject inference. By targeting volitional engagement (Intention) versus non-engagement (Rest), we elicit stronger, more consistent ERP modulations that are theoretically expected and practically desirable for intelligent-cockpit interaction. In other words, the higher accuracy observed is best understood as the natural outcome of a more neurophysiologically appropriate contrast for in-vehicle use, not as an artifact of model opportunism.

Proposed Approach: To test this hypothesis, we developed a modular EEG–XR framework tailored for dietary supplement decision-making inside a simulated autonomous vehicle. Using 64-channel EEG data, we extracted ERP features (N1, P2, N2, P3, LPP) and trained four ensemble classifiers, Extra Trees (ET), LightGBM (LGBM), Random Forest (RF), and XGBoost (XGB), to decode brain activity under two binary classification settings: (1) Preference vs. Non-Preference and (2) Rest vs. Intention. The products varied in intrinsic and extrinsic attributes, namely functional ingredient, country of origin, packaging color, and formulation type (tablet/jelly). All trials were rendered in immersive XR and synchronized with real-time EEG acquisition.

Key Contributions:

1. We introduce a machine learning–driven XR interaction framework that decodes event-related potentials (ERPs) to enable intention-based decision-making in immersive contexts. The framework is demonstrated through a case study on dietary supplement purchasing within autonomous mobility, bridging cognitive neuroscience with consumer behavior and in-vehicle commerce.

2. We propose a paradigm shift from “Preference vs. Non-Preference” to “Rest vs. Intention”, grounded in ERP theory and supported by empirical findings. This reframing enhances neurophysiological interpretability and robustness in XR settings, rather than pursuing novelty through more complex but less interpretable classification tasks.

3. We empirically show that specific ERP components, particularly P2 and N2, which are associated with salience detection and cognitive control, play central roles in decoding consumer intention under immersive XR conditions.

These contributions are presented as preliminary evidence based on a limited-sample setting and are intended to guide future research at the intersection of machine learning, cognitive neuroscience, and XR interaction.

Findings Preview: Our preliminary results demonstrate that the “Rest vs. Intention” paradigm consistently outperformed the traditional “Preference vs. Non-Preference” classification across all models and tasks. The 10-fold cross-validation accuracy reached up to 97.3% in our controlled experimental sample, and SHAP-based interpretability showed that specific ERP features (e.g., N1, P2, N2) were the primary contributors to intention decoding. These neural patterns align with cognitive processes underlying attentional allocation and cognitive control, providing preliminary evidence for both the decoding potential and the neurophysiological coherence of the proposed paradigm.

Impact and Implications: This study provides an exploratory proof-of-concept foundation for touchless, intention-aware interfaces in autonomous mobility. By aligning EEG-derived ERP features with immersive XR experiences, we demonstrate the feasibility of a scientifically interpretable and methodologically transparent framework for neuroadaptive interaction. Importantly, translation into operational AV systems will require larger, demographically diverse cohorts and validation in real-world driving contexts to confirm scalability and robustness. Within this scope, our findings highlight the potential of cognitive intention decoding to complement existing modalities such as gaze tracking or manual input, while emphasizing that paradigm validation and mechanistic interpretability remain essential prerequisites for any future real-time deployment.

2. Materials and Methods

2.1. Participants

Eight healthy adults (5 male, 3 female; mean age = 23.5 ± 1.5 years) participated in the experiment. All participants provided written informed consent prior to the study. The study protocol was approved by the Institutional Review Board of Jeonju University (approval no. JJIRB-221103-HR-2022-1002), and all experimental procedures were conducted in accordance with the Declaration of Helsinki. Participants wore a Microsoft HoloLens 2 headset (Microsoft Corp., Redmond, WA, USA) throughout the study to view immersive XR-based products.

2.2. Experimental Design and Stimuli

The experiment was designed to analyze the effects of consumers’ preferences for intrinsic attributes (functional ingredients, country of origin) and extrinsic attributes (packaging color, formulation) of health supplements on purchase intention and attention within an XR environment. According to the Korea Health Supplements Association, as of 2024, the number of purchase transactions for major functional ingredients reached approximately 29,535,000 for probiotics and 15,014,000 for multivitamins [45]. In this study, multivitamins—selected for their high preference rate—served as the basis for experimental stimuli, with intrinsic and extrinsic attributes systematically varied.

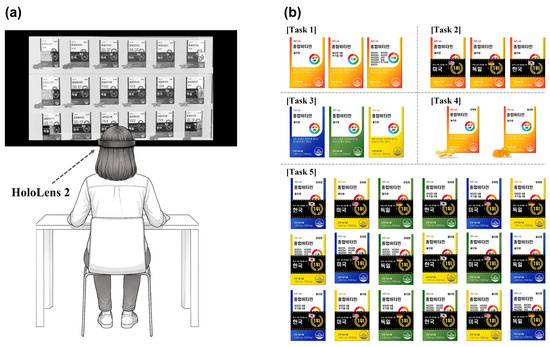

Visual stimuli for the preference analysis were presented to participants via the HoloLens 2 (Figure 1). To ensure participants fully recognized the attribute variations and remained immersed in the XR environment, a virtual exhibition space was provided before starting any tasks (Figure 1b).

Figure 1.

Immersive XR experimental setup and visual stimuli design. (a) Participant wearing a Microsoft HoloLens 2 headset while immersed in a virtual shopping interface rendered in 3D extended reality (XR). The headset displayed dietary supplement products within a simulated autonomous vehicle environment during EEG recording. (b) Visual presentation of product stimuli used across the five experimental tasks. Each task targeted a distinct product attribute: functional ingredient type (Task 1), country of origin (Task 2), packaging color (Task 3), formulation form (Task 4), and combined attributes using a Taguchi L18 orthogonal design (Task 5). Multivitamin packaging was systematically manipulated to assess neural responses to intrinsic and extrinsic product cues under cognitive load in XR conditions.

The study comprised four single-attribute tasks and one interaction task (Table 1). The interaction task was designed by combining the four primary factors—functional ingredients, country of origin, packaging color, and formulation type.

Table 1.

Experimental task design for analyzing supplement attributes.

Attribute Definitions: To ensure interpretability and reproducibility of the experimental design, each attribute was operationalized as follows. Functional Ingredient variations included (1) All-in-One, a multivitamin containing both essential vitamins and key minerals; (2) Blend, a formulation containing 8 vitamins and 3 minerals without additional components; and (3) Single, formulations featuring only one isolated component such as Vitamin C, B12, or Zinc. Country of Origin referred to the declared manufacturing source on the packaging (Korea, USA, Germany), which serves as a known consumer preference cue. Packaging Color (Blue, Green, Yellow) was varied to assess perceptual salience and branding influence. Formulation Type included Tablet and Jelly, representing commonly consumed supplement formats. Task 5 used an L18 Taguchi orthogonal array to systematically combine all four attributes while minimizing stimulus redundancy and cognitive load.

Because a full factorial crossing of the four factors (3 ingredients × 3 origins × 3 colors × 2 formulations) would require 54 distinct products, the Taguchi Design Method [46] was employed to ensure both experimental efficiency and statistical reliability. The Taguchi method uses orthogonal arrays to identify optimal combinations of key factors while minimizing the total number of trials, thus preserving statistical power [47,48]. In the present study, an L18 orthogonal array was used to generate 18 balanced combinations of attribute levels. For clarity, the numerical codes presented in Table 2 correspond directly to the attribute levels defined above (Ingredient: 1 = All-in-One, 2 = Blend, 3 = Single; Origin: 1 = Korea, 2 = USA, 3 = Germany; Color: 1 = Blue, 2 = Green, 3 = Yellow; Formulation Type: 1 = Tablet, 2 = Jelly).

Table 2.

Transposed L18 orthogonal array used in Task 5 for combined attribute evaluation. Columns (T1–T18) represent individual trials, and rows denote product attributes. Numerical codes correspond to attribute categories as follows: Ingredient (1 = All-in-One, 2 = Blend, 3 = Single), Origin (1 = Korea, 2 = USA, 3 = Germany), Packaging Color (1 = Blue, 2 = Green, 3 = Yellow), and Formulation Type (1 = Tablet, 2 = Jelly). This explicit mapping ensures clarity and reproducibility of the experimental design.

Note: To conserve page space and improve layout readability, we present the L18 array in transposed form—displaying attributes as rows and trials as columns. This transformation does not alter the experimental logic but enhances visual compactness for publication.
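As a sketch of why 18 runs suffice, the Python snippet below builds one 18-run mixed-level array for these four factors and checks its pairwise balance. It crosses the two formulation levels with a 3 × 3 design whose third column is (a + b) mod 3, a standard modular construction. This is an illustrative assumption and not necessarily identical to the L18 array reproduced in Table 2 (the textbook Taguchi L18 has one two-level and seven three-level columns, of which only four would be needed here). The names `LEVELS`, `full_factorial`, `mixed_l18`, and `is_orthogonal` are hypothetical.

```python
from collections import Counter
from itertools import combinations, product

# Number of levels per attribute; levels are coded 1..k as in Table 2.
LEVELS = {"Ingredient": 3, "Origin": 3, "Color": 3, "Formulation": 2}


def full_factorial():
    """Every combination of attribute levels (3 * 3 * 3 * 2 = 54 profiles)."""
    names = list(LEVELS)
    return [dict(zip(names, combo))
            for combo in product(*(range(1, k + 1) for k in LEVELS.values()))]


def mixed_l18():
    """18 runs: the 2-level Formulation crossed with a 9-run, 3-column array.

    Over all 9 (a, b) pairs, the columns a, b and (a + b) mod 3 are pairwise
    balanced, so adding the crossed 2-level factor keeps every pair balanced.
    """
    runs = []
    for f in range(2):
        for a in range(3):
            for b in range(3):
                runs.append({"Ingredient": a + 1, "Origin": b + 1,
                             "Color": (a + b) % 3 + 1, "Formulation": f + 1})
    return runs


def is_orthogonal(runs):
    """Strength-2 check: each attribute pair shows all level pairs equally often."""
    for x, y in combinations(LEVELS, 2):
        counts = Counter((r[x], r[y]) for r in runs)
        if len(counts) != LEVELS[x] * LEVELS[y] or len(set(counts.values())) != 1:
            return False
    return True


print(len(full_factorial()), len(mixed_l18()), is_orthogonal(mixed_l18()))  # -> 54 18 True
```

Every pair of attributes then appears in all level combinations equally often (each ingredient-color pair twice, each formulation-ingredient pair three times), which is what allows main effects to be estimated from 18 rather than 54 profiles.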

2.3. Experimental Procedure

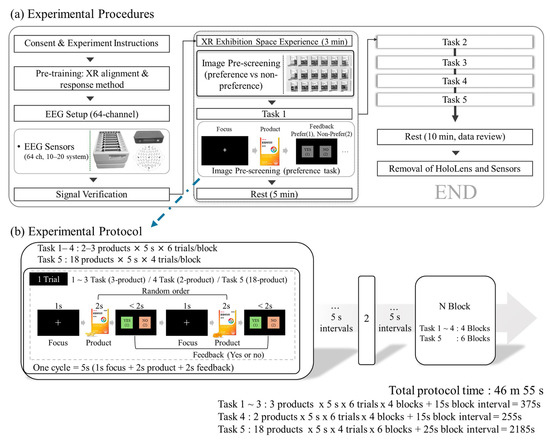

The complete experimental flow is illustrated in Figure 2. After informed consent and EEG setup, participants completed five tasks in the following sequence: Task 1 → Task 2 → Task 3 → Task 4 → Task 5. To approximate the in-cabin conditions of an autonomous vehicle (AV), all tasks were conducted through immersive product exploration using the Microsoft HoloLens 2 in a simulated XR shopping environment. This setup served as a stationary cockpit proxy, enabling controlled and reproducible evaluation of intention decoding while reflecting the low-distraction interaction requirements of future AV infotainment systems.

Figure 2.

Experimental workflow and EEG-XR protocol timeline. (a) Overview of the full experimental procedure, starting with informed consent, pre-training on XR interaction, and EEG setup (64-channel BioSemi ActiveTwo, 10–20 system), followed by immersive product exploration through HoloLens 2 in a simulated XR shopping environment. Participants completed five sequential tasks involving dietary supplement evaluations, with short resting baselines interleaved and a final 10 min rest phase before debriefing. (b) Detailed trial structure and timing for each task. Each trial included a 1 s fixation period, 2 s XR product stimulus, and a maximum 2 s response window for preference input. Tasks 1–4 featured 2 or 3 products per block over 4 blocks, while Task 5 used 18 combined-attribute products across 6 blocks, designed via Taguchi L18 orthogonal array. Block intervals (15–25 s) allowed EEG stabilization between task segments. The total session duration was approximately 46 min and 55 s, encompassing all rest phases and inter-block intervals.

Each trial followed the same structure:

- Fixation cross: 1 s;

- Stimulus display: 2 s;

- Response window: max 2 s for preference input via keypad (1 = “Yes”, 2 = “No”).
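The trial structure can be expressed as an event timeline whose stimulus onsets serve as the time-locking points for epoching. The sketch below is a hypothetical illustration: it assumes that a keypress ends the response window early and that the next fixation starts immediately afterwards; if the window is fixed-length, pass `None` for every trial. The 512 Hz rate is taken from Section 2.4, and the names `Event`, `trial_events`, and `stimulus_onset_samples` are illustrative.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence

FS = 512              # Hz, BioSemi sampling rate (Section 2.4)
FIXATION_S = 1.0      # fixation cross duration
STIMULUS_S = 2.0      # XR product display duration
RESPONSE_MAX_S = 2.0  # upper bound of the keypad response window


@dataclass
class Event:
    onset_s: float
    label: str


def trial_events(rts: Sequence[Optional[float]], start_s: float = 0.0) -> List[Event]:
    """Lay out fixation -> stimulus -> response-window events for consecutive trials.

    rts[i] is the reaction time of trial i, measured from the start of the response
    window; None means the full window elapses. Assumption: the next trial begins
    as soon as the response window closes.
    """
    events: List[Event] = []
    t = start_s
    for rt in rts:
        events.append(Event(t, "fixation"))
        t += FIXATION_S
        events.append(Event(t, "stimulus"))
        t += STIMULUS_S
        events.append(Event(t, "response_window"))
        t += RESPONSE_MAX_S if rt is None else min(rt, RESPONSE_MAX_S)
    return events


def stimulus_onset_samples(events: List[Event], fs: int = FS) -> List[int]:
    """Sample indices of stimulus onsets, i.e., the time-locking points for epochs."""
    return [round(e.onset_s * fs) for e in events if e.label == "stimulus"]


events = trial_events([0.5, None])
print(stimulus_onset_samples(events))  # -> [512, 2304]
```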

The block and trial design were as follows:

- Tasks 1–3: 3 product types × 5 repetitions × 6 trials = 90 s/block × 4 blocks;

- Task 4: 2 product types × 5 repetitions × 6 trials = 60 s/block × 4 blocks;

- Task 5: 18 product types × 5 repetitions × 4 trials = 360 s/block × 6 blocks.

Rest intervals of 15–25 s were included between blocks. The total duration of the experiment was approximately 46 min and 55 s. Resting EEG (baseline) segments were recorded before Task 1, between blocks, and after the final task to ensure signal stability under simulated in-vehicle conditions.

2.4. EEG Data Acquisition

Continuous EEG was recorded using a 64-channel ActiveTwo system (BioSemi B.V., Amsterdam, The Netherlands) with electrodes positioned according to the international 10–20 system. Active Ag/AgCl electrodes were used, with signals routed through the ActiveTwo AD-box for amplification and transmitted via a fiber-optic cable to a USB2 receiver. The system’s default Common Mode Sense (CMS) and Driven Right Leg (DRL) electrodes were used as active referencing and ground, forming a feedback loop for noise reduction. Data were digitized at a sampling rate of 512 Hz. Electrode offsets were maintained below ±20 mV throughout the recording session using a conductive gel (Signa Gel; Parker Laboratories, Fairfield, NJ, USA). Signal quality was monitored online using ActiView v9 software (BioSemi, Amsterdam, The Netherlands).

2.5. EEG Preprocessing

All preprocessing was conducted in MATLAB R2023a (MathWorks Inc., Natick, MA, USA) using custom scripts and functions from the EEGLAB toolbox. The continuous data were first bandpass filtered between 1 and 12 Hz using a fourth-order zero-phase Butterworth filter (24 dB/octave roll-off). This passband was selected a priori on neurophysiological grounds: the 1 Hz high-pass attenuates very low-frequency drift, sweat, and movement artifacts, and the 12 Hz low-pass excludes higher-frequency activity (e.g., EMG and line-related noise) that can obscure component morphology. Together, these cutoffs prioritize the isolation and clear definition of the canonical early (N1, P2) and mid-latency (N2, P3) ERP components relevant to this study, as well as consistency across analyses. The data were then segmented into epochs spanning from −200 ms to +1000 ms relative to stimulus onset, and each epoch was baseline-corrected using the pre-stimulus interval (−200 to 0 ms). Ocular artifacts were corrected using a regression-based algorithm applied to vertical and horizontal electrooculogram (EOG) channels. Automated artifact rejection then removed epochs containing amplitudes exceeding ±100 µV. Finally, channels exhibiting excessive kurtosis (more than 5 standard deviations from the mean) were interpolated using spherical splines.
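A minimal NumPy/SciPy sketch of the filtering, epoching, baseline, and amplitude-rejection steps follows. It is not the authors' MATLAB/EEGLAB code: it assumes data in µV arranged as channels × samples and stimulus onsets given as sample indices at least 200 ms after the recording start and 1 s before its end, and it omits the EOG regression and kurtosis-based channel interpolation. How the stated fourth order maps onto SciPy's `butter` for a band-pass is also an assumption, noted in the docstring.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 512                # Hz (Section 2.4)
BAND = (1.0, 12.0)      # primary passband in Hz
TMIN, TMAX = -0.2, 1.0  # epoch limits in seconds relative to stimulus onset
REJECT_UV = 100.0       # absolute-amplitude rejection threshold in µV


def bandpass(data, band=BAND, fs=FS, order=4):
    """Zero-phase Butterworth band-pass along the last axis (channels x samples).

    Assumption: `order` is the prototype order passed to scipy's butter(), which
    returns a band-pass of order 2 * order; sosfiltfilt runs it forward and backward,
    cancelling phase and squaring the magnitude response.
    """
    sos = butter(order, band, btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, data, axis=-1)


def epoch(data, onsets, fs=FS, tmin=TMIN, tmax=TMAX):
    """Cut (n_epochs, n_channels, n_times) epochs around stimulus-onset samples."""
    start, stop = int(round(tmin * fs)), int(round(tmax * fs))
    return np.stack([data[:, o + start:o + stop] for o in onsets])


def baseline_correct(epochs, fs=FS, tmin=TMIN):
    """Subtract each channel's mean over the pre-stimulus interval (tmin to 0 s)."""
    n_base = int(round(-tmin * fs))
    return epochs - epochs[:, :, :n_base].mean(axis=-1, keepdims=True)


def reject(epochs, threshold=REJECT_UV):
    """Keep epochs whose absolute amplitude never exceeds the threshold."""
    keep = np.abs(epochs).max(axis=(1, 2)) <= threshold
    return epochs[keep], keep
```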

To evaluate the impact of filter settings on ERP morphology and subsequent analysis, an ablation study was performed. In addition to the primary 1–12 Hz bandpass, we applied three alternative filter configurations: a passband with a lower high-pass cutoff (0.1–12 Hz) to retain slow-wave activity, and passbands with a higher low-pass cutoff (0.1–30 Hz and 1–30 Hz) to retain higher-frequency information. These alternative filters may yield higher classification accuracy in a machine learning context, as they pass more spectral content to the classifier. However, this potential gain can come at the cost of analytical specificity, because the broader frequency range admits non-specific neural and non-neural noise that may obscure the components under investigation. The primary analysis therefore employs the 1–12 Hz filter to maintain a tight neurophysiological link to the traditional ERP components of interest. For the ablation analysis, only the filter passband was altered; all other preprocessing steps, epoching, ERP feature definitions, and classification procedures remained identical across conditions.
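To make the ablation contrast concrete, the sketch below computes the zero-phase gain of each candidate passband at three probe frequencies: slow drift (0.5 Hz), a within-band frequency (6 Hz), and a higher-frequency component (20 Hz). It assumes the same Butterworth design for every passband and forward-backward filtering, so the effective gain is the squared single-pass magnitude; the probe frequencies and the names `PASSBANDS` and `zero_phase_gain` are illustrative choices.

```python
import numpy as np
from scipy.signal import butter, sosfreqz

FS = 512  # Hz
PASSBANDS = {
    "1-12 Hz (primary)": (1.0, 12.0),
    "0.1-12 Hz": (0.1, 12.0),
    "0.1-30 Hz": (0.1, 30.0),
    "1-30 Hz": (1.0, 30.0),
}
PROBE_HZ = [0.5, 6.0, 20.0]  # slow drift, in-band activity, higher-frequency content


def zero_phase_gain(band, freqs, fs=FS, order=4):
    """Gain of a forward-backward Butterworth band-pass at the given frequencies (Hz).

    Running the filter twice squares the single-pass magnitude |H(f)|.
    """
    sos = butter(order, band, btype="bandpass", fs=fs, output="sos")
    _, h = sosfreqz(sos, worN=np.asarray(freqs, dtype=float), fs=fs)
    return np.abs(h) ** 2


gains = {name: zero_phase_gain(band, PROBE_HZ) for name, band in PASSBANDS.items()}
for name, g in gains.items():
    print(f"{name:>18}: " + ", ".join(f"{f} Hz={v:.3f}" for f, v in zip(PROBE_HZ, g)))
```

Only the passband changes between conditions, which mirrors the single-variable design of the ablation: the 1–12 Hz filter suppresses both the 0.5 Hz and 20 Hz probes, whereas each alternative lets one of them through.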

2.6. ERP Feature Extraction

From each preprocessed epoch, five canonical ERP components were extracted using channel-averaged windowed means, following time windows adapted from Goto et al. [49]:

- N1 (70–150 ms): Early perceptual attention and initial sensory encoding;

- P2 (130–250 ms): Recognition and stimulus discrimination;

- N2 (130–250 ms): Conflict monitoring and cognitive control;

- P3 (250–400 ms): Decision-making and stimulus evaluation;

- LPP (400–800 ms): Affective and motivational processing.

The mean amplitude of each component was calculated across all 64 EEG channels, resulting in a five-dimensional ERP feature vector per trial (N1, P2, N2, P3, LPP). We adopted all-channel averaging rather than region-restricted extraction (e.g., frontal or central) to reduce dimensionality, minimize overfitting in a modest-sample setting, and avoid imposing region-specific priors that could limit cross-subject generalizability; this choice lets the classifier capture global ERP dynamics while subsequent SHAP-based analyses retain component-level interpretability. Notably, the P2 and N2 were extracted using the same 130–250 ms window. This overlap was a deliberate implementation choice to preserve comparability with prior ERP-based classification studies [49] and to ensure that both positive- and negative-going deflections within this latency range were captured for subsequent analysis. Given our aim of interpretable and cross-subject robust decoding within a modest sample size, we restricted features to canonical ERP components; richer time–frequency and connectivity features are intentionally reserved for follow-on studies focused on real-vehicle deployment, where larger datasets and advanced preprocessing will support their effective integration.
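The extraction step can be sketched as below, assuming epochs shaped (epochs, channels, samples) that start 200 ms before stimulus onset at 512 Hz; the constant and function names are hypothetical. Channels are averaged first, then each component window is averaged, giving the five-dimensional vector per trial. Taken literally, a plain windowed mean over the shared 130–250 ms interval yields identical P2 and N2 values, which the sketch reproduces; separating the two would require a polarity-specific statistic (e.g., a positive or negative peak), which goes beyond what is described here.

```python
import numpy as np

FS = 512     # Hz
TMIN = -0.2  # epoch start (s) relative to stimulus onset
# Component windows in ms, as listed above (P2 and N2 share 130-250 ms).
WINDOWS_MS = {
    "N1": (70, 150),
    "P2": (130, 250),
    "N2": (130, 250),
    "P3": (250, 400),
    "LPP": (400, 800),
}


def window_slice(start_ms, end_ms, fs=FS, tmin=TMIN):
    """Convert a post-stimulus window in ms into a sample slice within the epoch."""
    def to_index(ms):
        return int(round((ms / 1000.0 - tmin) * fs))
    return slice(to_index(start_ms), to_index(end_ms))


def erp_features(epochs):
    """Map (n_epochs, n_channels, n_times) epochs to an (n_epochs, 5) feature matrix.

    Each feature is the mean amplitude over all channels and over one component window.
    """
    grand = epochs.mean(axis=1)  # all-channel average -> (n_epochs, n_times)
    cols = [grand[:, window_slice(*WINDOWS_MS[name])].mean(axis=1) for name in WINDOWS_MS]
    return np.column_stack(cols)  # column order: N1, P2, N2, P3, LPP
```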

2.7. ERP-Based Classification

ERP features extracted from each preprocessed trial were used to classify two distinct binary paradigms:

1. Preference vs. Non-Preference: Classification based on user input (Yes/No) per trial.

2. Rest vs. Intention: Classification of rest trials (baseline) vs. all active decision trials.
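A hypothetical sketch of how the two label sets could be derived from per-trial annotations follows; the `"rest"`/`"active"` and `"yes"`/`"no"` codes and the helper name `make_labels` are assumptions, not the study's actual event coding.

```python
import numpy as np


def make_labels(trial_type, response):
    """Build binary labels for both paradigms from per-trial annotations.

    trial_type: sequence of "rest" or "active" per trial (hypothetical coding).
    response:   sequence of "yes"/"no" for active trials (ignored for rest trials).
    Returns (active_mask, y_pref, y_intent).
    """
    trial_type = np.asarray(trial_type)
    response = np.asarray(response)
    active = trial_type == "active"
    # Paradigm 1: active trials only, labelled by the Yes/No response (1 = preference).
    y_pref = (response[active] == "yes").astype(int)
    # Paradigm 2: all trials; rest = 0, any active decision trial = 1 (responses pooled).
    y_intent = active.astype(int)
    return active, y_pref, y_intent
```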

Four ensemble-based classifiers were selected for their well-documented efficacy with noisy, nonlinearly separable neural data: Random Forest (RF), Extra Trees (ET), XGBoost (XGB), and LightGBM (LGBM). Their robustness and native support for feature importance estimation make them well suited to EEG-based decoding and subsequent interpretability analysis [50].
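For concreteness, the four classifiers can be instantiated with the fixed hyperparameters listed in Section 2.8. This is a sketch: the seed value (42) is a placeholder because the text does not report it, and the boosting models are only built if the optional `xgboost` and `lightgbm` packages are installed.

```python
from sklearn.ensemble import ExtraTreesClassifier, RandomForestClassifier

SEED = 42  # placeholder: a fixed seed is stated in the text, but not its value

# Keyword arguments for the boosting models, following Section 2.8.
XGB_PARAMS = dict(n_estimators=100, max_depth=6, learning_rate=0.1,
                  eval_metric="mlogloss", random_state=SEED)
LGBM_PARAMS = dict(n_estimators=100, num_leaves=31, max_depth=-1,
                   learning_rate=0.1, random_state=SEED)


def make_models():
    """Return the ensembles with fixed hyperparameters (no per-dataset tuning)."""
    models = {
        "RF": RandomForestClassifier(n_estimators=100, max_depth=None,
                                     criterion="gini", random_state=SEED),
        "ET": ExtraTreesClassifier(n_estimators=200, random_state=SEED),
    }
    try:
        from xgboost import XGBClassifier
        models["XGB"] = XGBClassifier(**XGB_PARAMS)
    except ImportError:
        pass  # xgboost not installed; skip this model
    try:
        from lightgbm import LGBMClassifier
        models["LGBM"] = LGBMClassifier(**LGBM_PARAMS)
    except ImportError:
        pass  # lightgbm not installed; skip this model
    return models
```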

To ensure comprehensive and generalizable performance evaluation, two complementary cross-validation schemes were employed:

- 10-fold Stratified Cross-Validation: Applied within subjects to provide an initial, computationally efficient estimate of model performance and to support the comparative ablation analysis across filter settings.

- Leave-One-Subject-Out (LOSO) Cross-Validation: Applied to the primary 1–12 Hz dataset as the most stringent test of generalizability available in this design, namely a model's performance on individuals whose data were never seen during training. Because each test participant is fully held out, this scheme gives a more conservative estimate of cross-subject applicability than within-subject cross-validation and directly tests for overfitting to subject-specific neural signatures.
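The two schemes map directly onto scikit-learn splitters. The sketch below is illustrative: `cv_accuracy` is a hypothetical helper, and shuffling within `StratifiedKFold` is an assumption the text does not state. In the LOSO branch, `groups` holds one subject ID per trial, so every fold tests on a single held-out participant.

```python
import numpy as np
from sklearn.base import clone
from sklearn.model_selection import LeaveOneGroupOut, StratifiedKFold


def cv_accuracy(model, X, y, groups=None, scheme="kfold", seed=42):
    """Mean held-out accuracy under 10-fold stratified CV or LOSO.

    scheme="kfold": StratifiedKFold(10) on the trials passed in.
    scheme="loso":  LeaveOneGroupOut with `groups` = subject IDs per trial.
    """
    if scheme == "kfold":
        splits = StratifiedKFold(n_splits=10, shuffle=True, random_state=seed).split(X, y)
    elif scheme == "loso":
        splits = LeaveOneGroupOut().split(X, y, groups)
    else:
        raise ValueError(f"unknown scheme: {scheme}")
    scores = []
    for train_idx, test_idx in splits:
        fitted = clone(model).fit(X[train_idx], y[train_idx])  # fresh, untuned copy per fold
        scores.append(np.mean(fitted.predict(X[test_idx]) == y[test_idx]))
    return float(np.mean(scores))
```

Cloning the model in every fold keeps the hyperparameters fixed while ensuring that no fitted state leaks from one fold to the next.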

The primary classification analysis was conducted on data preprocessed with the neurophysiologically motivated 1–12 Hz bandpass filter. An ablation analysis evaluating alternative filter passbands (0.1–12 Hz, 0.1–30 Hz, 1–30 Hz) was performed using 10-fold cross-validation on the combined ‘All tasks’ dataset to isolate the effect of frequency content. For all analyses (primary, ablation, and LOSO), no per-dataset or per-variant hyperparameter tuning was performed. This control ensures that performance differences reflect the preprocessed data input (e.g., filter choice) rather than bespoke model optimization, allowing a fair and interpretable comparison. Model performance was evaluated using accuracy, precision, recall, F1-score, and ROC-AUC, and results are reported for each classifier and paradigm.
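A minimal sketch of the five reported metrics for one held-out fold, using scikit-learn. Coding the Intention (or Preference) class as the positive label 1, and averaging each metric over folds, are assumptions about how the reported values were aggregated.

```python
import numpy as np
from sklearn.metrics import (accuracy_score, f1_score, precision_score,
                             recall_score, roc_auc_score)


def fold_metrics(y_true, y_pred, y_score):
    """Five metrics for one held-out fold of a binary task.

    y_score: predicted probability of the positive class,
             e.g. model.predict_proba(X_test)[:, 1].
    """
    return {
        "accuracy": accuracy_score(y_true, y_pred),
        "precision": precision_score(y_true, y_pred, zero_division=0),
        "recall": recall_score(y_true, y_pred, zero_division=0),
        "f1": f1_score(y_true, y_pred, zero_division=0),
        "roc_auc": roc_auc_score(y_true, y_score),
    }


def summarize(per_fold):
    """Average each metric across folds."""
    return {k: float(np.mean([m[k] for m in per_fold])) for k in per_fold[0]}
```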

2.8. Model Interpretability and Feature Importance

To interpret the contribution of individual ERP features to model decisions, we applied SHAP (SHapley Additive exPlanations) using the TreeExplainer module from the SHAP library. SHAP analysis was performed separately for all four classifiers (Random Forest, Extra Trees, XGBoost, and LightGBM) under both classification paradigms (“Preference vs. Non-Preference” and “Rest vs. Intention”). Each classifier was configured with standard, largely default library hyperparameters, held fixed across models and datasets to ensure comparability. Specifically, the Random Forest was implemented with 100 trees, unlimited depth, and the Gini impurity criterion; the Extra Trees classifier used 200 trees with otherwise default settings; XGBoost was configured with 100 trees, a maximum depth of 6, a learning rate of 0.1, and ‘mlogloss’ as the evaluation metric; and LightGBM was configured with 100 trees, 31 leaves, a maximum depth of –1 (no limit), and a learning rate of 0.1. A fixed random seed was applied across all models to ensure reproducibility. Feature importance was quantified by aggregating the absolute SHAP values over all test samples in each 10-fold cross-validation run, enabling paradigm-specific interpretation of how ERP components contributed to classification outcomes.
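The aggregation of absolute SHAP values can be sketched as below. In practice each fold's array would come from `shap.TreeExplainer(model).shap_values(X_test)`; because the returned format differs across model types and SHAP versions, the hypothetical helper `positive_class_shap` normalizes it first. Pooling test samples across folds before averaging is one reading of the aggregation step, assumed here.

```python
import numpy as np

FEATURES = ["N1", "P2", "N2", "P3", "LPP"]


def positive_class_shap(sv):
    """Reduce a TreeExplainer output to an (n_samples, n_features) array.

    Depending on model and SHAP version, shap_values may be a 2-D array,
    a list with one array per class, or a 3-D array (samples, features, classes).
    For a binary task the positive-class attributions are kept.
    """
    if isinstance(sv, list):
        return np.asarray(sv[-1])
    sv = np.asarray(sv)
    return sv[..., -1] if sv.ndim == 3 else sv


def mean_abs_shap(per_fold_shap):
    """Mean |SHAP| per feature, pooled over the test samples of all folds."""
    stacked = np.vstack([positive_class_shap(sv) for sv in per_fold_shap])
    return dict(zip(FEATURES, np.abs(stacked).mean(axis=0)))
```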

3. Results

This section presents the evaluation of our ERP-based cognitive intention decoding framework across two classification paradigms: (1) Preference vs. Non-Preference and (2) Rest vs. Intention. Each paradigm was assessed over five virtual shopping tasks and a combined-task setting using four ensemble classifiers. We report descriptive performance, inferential statistics, SHAP-based interpretability, and a physiological interpretation of the findings. Unless otherwise specified, all primary results were obtained under our predefined 1–12 Hz preprocessing pipeline; band-pass sensitivity is examined explicitly in Section 3.2.

3.1. Dual Classification Framework

The Preference vs. Non-Preference paradigm follows a conventional go/no-go structure, where users indicate subjective product preferences. However, such binary setups often fail to elicit robust neural signatures, especially in XR contexts where realism, duration, and motivational engagement may be limited. To address this limitation, we reframed the task into a binary Rest vs. Intention classification by merging all active decision epochs into a single “intent” class and contrasting them with baseline (non-interacting) periods. This formulation is neurophysiologically more robust, targeting well-documented ERP components—N1, P2, N2, P3, and LPP—linked to perceptual, evaluative, and motor-intentional processes.

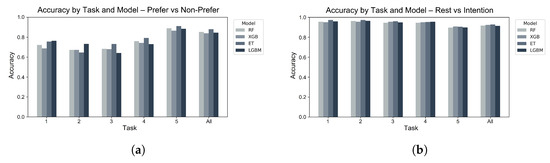

3.2. Classification Accuracy and AUC Performance

Figure 3 presents model-wise classification accuracies across both paradigms. The Preference vs. Non-Preference condition yielded variable performance, with accuracies mostly ranging from 64% to 79.3% across tasks, and peaking at 91.2% in Task 5 using the ET model. In contrast, the Rest vs. Intention paradigm demonstrated consistently high accuracy across all tasks, with most values exceeding 94% and reaching a maximum of 97.3% in Task 2, also achieved by the ET classifier.

Figure 3.

Classification accuracy trends across all five tasks and model types in two decoding paradigms. (a) In the Preference vs. Non-Preference paradigm, performance varied notably by task and model, with higher accuracy in multifeatured product evaluation (Task 5) and cumulative integration (All). (b) In contrast, the Rest vs. Intention paradigm demonstrated uniformly high accuracy across all tasks, indicating enhanced separability of cognitive states associated with intention execution. ET consistently yielded superior or near-optimal performance, suggesting robustness to inter-trial variability in ERP responses. These patterns highlight the potential of intention-based decoding for reliable real-time cognitive inference in XR environments.

To further illustrate task-specific differences, Table 3 reports the classification accuracy for each model and task under both paradigms using 10-fold cross-validation. The “Rest vs. Intention” condition consistently outperformed the “Preference vs. Non-Preference” paradigm across all tasks and classifiers. Notably, in Task 2, the “Rest vs. Intention” condition achieved a peak accuracy of 97.3% with ET, whereas the corresponding “Preference vs. Non-Preference” accuracy was 64.7% for ET and 73.3% for LGBM. These results indicate that the neural contrast between rest and intentional engagement yields more robust and discriminative ERP patterns than preference-based decisions.

Table 3.

Classification accuracy (%) of four ensemble models (ET, LGBM, RF, and XGB) across five XR-based product evaluation tasks and a combined condition (“All”), under two paradigms: “Preference vs. Non-Preference” and “Rest vs. Intention”. Values represent mean classification accuracy over 10-fold cross-validation using ERP features (N1, P2, N2, P3, LPP) extracted from 64-channel EEG preprocessed with a 1–12 Hz band-pass filter. The “Rest vs. Intention” paradigm consistently outperforms the traditional preference-based approach, with the highest single-task accuracy in Task 2 (ET: 97.3%) and the highest combined-condition accuracy of 93.2% (ET, All tasks), consistent with stronger neural separability and more reliable decoding for intention-based classification.

Band-Pass Sensitivity (Ablation)

To assess robustness to the passband while keeping the main pipeline unchanged, we compared four settings (0.1–12 Hz, 1–12 Hz, 1–30 Hz, 0.1–30 Hz) under identical ERP features, models, cross-validation, and All-tasks aggregation. Note that the primary 1–12 Hz setting retains alpha activity within its passband; our aim was not to suppress alpha per se, but to reduce slow drift (<1 Hz) and higher-frequency confounds that can blur component morphology. Table 4 reports Accuracy/F1 for each classifier under both paradigms. The 0.1–30 Hz passband yielded the highest scores across models and paradigms, 1–30 Hz was consistently strong, and 0.1–12 Hz degraded performance relative to 1–12 Hz (notably in Rest vs. Intention). The drop from 1–12 Hz to 0.1–12 Hz is consistent with slow-drift contamination below 1 Hz, and the strength of both 30 Hz variants suggests useful beta-band contributions up to 30 Hz; because 0.1–30 Hz nonetheless scored highest, the effect of the sub-1 Hz band appears to depend on the upper cutoff and should not be over-interpreted. The advantage of 1–12 Hz over 0.1–12 Hz supports our choice of a 1 Hz high-pass cutoff for suppressing low-frequency drift, and the strong performance of the broader passbands suggests that decoding does not hinge on one particular filter setting. We nonetheless retain 1–12 Hz as the primary configuration for its neurophysiological interpretability and for comparability across analyses, accepting that a broader passband (up to 30 Hz) may further improve decoding.

Table 4.

Band-pass ablation across all classifiers (All Tasks, 10-fold stratified CV). Cells show Accuracy/F1. The 1–12 Hz pipeline is used in the main analyses; the other passbands are reported as controlled ablations.

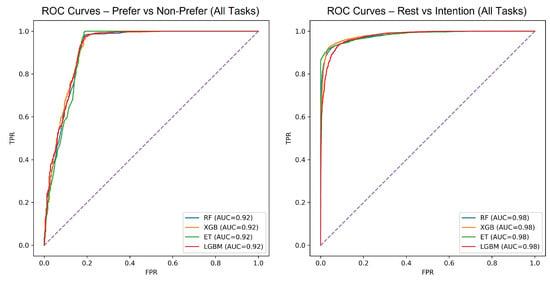

ROC curve analysis (Figure 4) was consistent with these trends. Unless otherwise stated, ROC analyses use the same primary 1–12 Hz preprocessing and All-tasks aggregation. The Preference condition achieved AUC values of approximately 0.92 at best, whereas the Rest vs. Intention condition yielded AUCs of approximately 0.98 across all classifiers, indicating strong class separability in the latter.
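One common way to obtain a single ROC curve per classifier is to pool out-of-fold probabilities from the 10-fold scheme, sketched below with scikit-learn. Whether Figure 4 pooled predictions this way or averaged per-fold curves is not stated, so this is an illustrative option rather than the study's procedure; `oof_roc` is a hypothetical helper.

```python
import numpy as np
from sklearn.metrics import auc, roc_curve
from sklearn.model_selection import StratifiedKFold, cross_val_predict


def oof_roc(model, X, y, seed=42):
    """ROC curve and AUC from out-of-fold probabilities of a 10-fold stratified CV."""
    cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=seed)
    # Each trial's probability comes from the one fold in which it was held out.
    proba = cross_val_predict(model, X, y, cv=cv, method="predict_proba")[:, 1]
    fpr, tpr, _ = roc_curve(y, proba)
    return fpr, tpr, auc(fpr, tpr)
```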

Figure 4.

Receiver operating characteristic (ROC) curves comparing classifier performance across paradigms using combined task data. The left panel (“Preference vs. Non-Preference”) shows moderate classification performance with AUC values around 0.92 across all ensemble models, reflecting partial overlap in ERP features related to subjective preference. The right panel (“Rest vs. Intention”) demonstrates superior signal separability, with AUCs reaching 0.98 across models, indicating highly reliable decoding of intentional mental engagement. These results suggest that volitional cognitive states elicit more distinct neurophysiological signatures than preference-based evaluation, underscoring the potential advantage of intention-driven BCI frameworks in XR applications.

3.3. Inter-Subject Variability (LOSO Analysis)

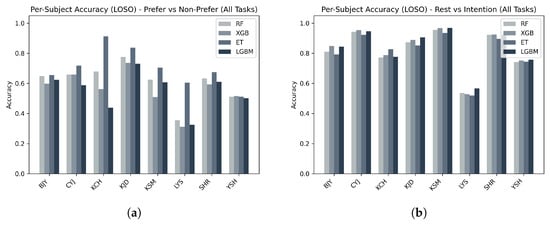

To rigorously evaluate the generalizability of our primary 1–12 Hz pipeline to unseen individuals, the most critical test for a potential BCI, we conducted a leave-one-subject-out (LOSO) analysis. Unless otherwise noted, LOSO results were computed under the same primary preprocessing (1–12 Hz) and All-tasks aggregation as in Table 3, with identical model settings. Figure 5 presents per-subject classification accuracies under both paradigms across all tasks and classifiers. The results revealed clear differences in stability between the two paradigms. In the Preference vs. Non-Preference condition, accuracies varied substantially across individuals: some subjects reached high decoding performance (e.g., up to 91.2% with ET), while others fell to or below chance level, reflecting the heterogeneity of subjective evaluation processes in XR-based product preference. In contrast, the Rest vs. Intention condition exhibited consistently higher and more uniform accuracies across participants, with the best-performing model exceeding 85% accuracy for most subjects and 90% for several. These findings indicate that intention-related ERP components are more reliably expressed and less subject-dependent than preference-related signals, strengthening the case for the proposed paradigm reframing. The high LOSO accuracy achieved under the 1–12 Hz filter further supports its use, showing that the neural features it preserves are not only interpretable but also generalize across participants in our sample.

Figure 5.

Per-subject classification accuracy (LOSO) across all tasks and classifiers in the two decoding paradigms, using the primary 1–12 Hz preprocessing pipeline. (a) In the Preference vs. Non-Preference paradigm, accuracies varied substantially across individuals, with some subjects achieving high performance (e.g., up to 91.2%) while others fell to or below chance level (e.g., ∼32–36%, below the 50% expected from random guessing on a binary task). This variability reflects heterogeneity in subjective evaluation processes and limited robustness of preference-related ERPs in XR settings. (b) In contrast, the Rest vs. Intention paradigm yielded consistently higher accuracies across participants. The best-performing model for most subjects (5 out of 8) exceeded 85% accuracy, and for several subjects (3 out of 8), it surpassed 90%. These results demonstrate that intention-related ERP components preprocessed with the 1–12 Hz filter are more reliably expressed at the individual level, supporting stronger generalizability and stability of intention-based decoding frameworks.
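Whether an individual subject's LOSO accuracy is distinguishable from chance can be checked with a one-sided binomial test, sketched below with SciPy. The trial counts in the usage note are hypothetical, and with imbalanced Yes/No responses the majority-class rate, rather than 0.5, may be the more appropriate `chance` value.

```python
from scipy.stats import binomtest


def above_chance(n_correct, n_trials, chance=0.5, alpha=0.05):
    """One-sided binomial test of a subject's held-out accuracy against chance.

    Returns (p_value, is_significant) for H1: true accuracy > chance.
    """
    result = binomtest(n_correct, n_trials, p=chance, alternative="greater")
    return result.pvalue, result.pvalue < alpha
```

For instance, 30 correct out of 40 hypothetical test trials gives p ≈ 0.001, whereas 14 out of 40 provides no evidence of above-chance decoding.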

3.4. Inferential Statistics and Effect Size Analysis

Despite statistically significant p-values (Table 5) in the Preference condition, effect sizes (Cohen’s d, Cliff’s δ) were weak across all features, indicating marginal group separability. In contrast, the Rest vs. Intention condition yielded very strong significance (p < ) for P2 and N2, with moderate Cliff’s δ values (0.22–0.24), supporting their neurophysiological relevance. The negative values of Cohen’s d reflect the direction of the mean difference between the groups (e.g., lower ERP amplitude in the Rest condition compared to Intention), but the strength of the effect is interpreted based on its absolute magnitude, not the sign. The discrepancy between the extremely small p-values and the modest effect sizes is likely attributable to the very large number of epochs analyzed, which provides high statistical power to detect even subtle effects.

Table 5.

Inferential statistics comparing ERP components across the two classification paradigms. In the “Preference vs. Non-Preference” condition, some components (e.g., N1 and P2) reached statistical significance, but effect sizes were small, indicating limited discriminative power. By contrast, the “Rest vs. Intention” condition showed extremely small p-values for P2 and N2, accompanied by moderate effect sizes (Cliff’s δ = 0.22–0.24), suggesting clearer neural separability for intentional engagement. Because the analysis was restricted to a small, fixed set of five a priori ERP components (N1, P2, N2, P3, LPP), p-values are reported without multiple-comparison correction; interpretation is based primarily on effect sizes (Cohen’s d, Cliff’s δ) to contextualize significance.
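For reference, the two effect sizes reported in Table 5 can be computed as below, using pooled-SD Cohen's d and the pairwise-dominance form of Cliff's δ. These are standard textbook definitions offered as a sketch; the exact variant used for Table 5 is not specified.

```python
import numpy as np


def cohens_d(a, b):
    """Cohen's d with pooled SD; negative when mean(a) < mean(b)."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    na, nb = len(a), len(b)
    pooled = np.sqrt(((na - 1) * a.var(ddof=1) + (nb - 1) * b.var(ddof=1)) / (na + nb - 2))
    return (a.mean() - b.mean()) / pooled


def cliffs_delta(a, b):
    """Cliff's delta = P(a > b) - P(a < b) over all pairs, in [-1, 1]."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    diff = a[:, None] - b[None, :]  # all pairwise differences; O(n*m) memory
    return (np.sum(diff > 0) - np.sum(diff < 0)) / diff.size
```

With `a` holding Rest and `b` holding Intention amplitudes, a negative value indicates lower amplitude in the Rest condition, matching the sign convention described in Section 3.4.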

3.5. SHAP-Based Interpretability and ERP Feature Importance

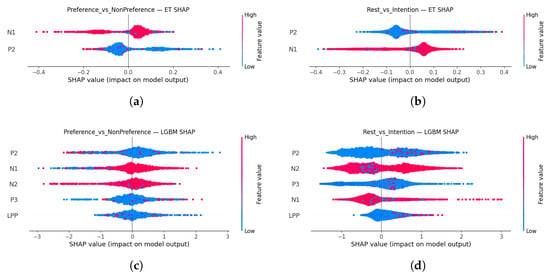

To interpret model-specific decision processes, SHAP beeswarm plots were generated for the top two classifiers—ET and LGBM—under both classification paradigms (Figure 6). These models were selected due to their top-tier performance across tasks and their distinct architectural differences, offering complementary views of feature attribution. We note that SHAP values reflect model-specific attribution rather than causal effects or univariate effect size; features with small SHAP magnitudes (e.g., LPP) should not be over-interpreted.

Figure 6.

SHAP beeswarm plots visualizing the contribution of ERP components to classification decisions for both paradigms using Extra Trees (ET, top row) and Light Gradient Boosting Machine (LGBM, bottom row). In the “Preference vs. Non-Preference” condition, both models emphasize early cognitive components—particularly N1 and P2—reflecting attentional and perceptual differentiation during product evaluation. LGBM shows small, non-zero contributions in N2, P3, and LPP; given their low SHAP magnitudes, we interpret these as weak, task-dependent modulations rather than primary drivers of classification. In the “Rest vs. Intention” condition, P2 emerges as a dominant discriminator across models, aligning with volitional stimulus engagement, while N1 also contributes, consistent with early sensory readiness. ET shows sparse, focused feature usage, while LGBM distributes attribution across all five ERP components—mirroring the depth of feature exploration inherent to each architecture. These interpretability results indicate model reliance primarily on P2 (and N1), with secondary, often minimal, contributions from other components, and illustrate model transparency in BCI-XR decision frameworks. (a) ET-Preference vs. Non-Preference. (b) ET-Rest vs. Intention. (c) LGBM-Preference vs. Non-Preference. (d) LGBM-Rest vs. Intention.

The ET beeswarm plots revealed non-zero SHAP values primarily for two ERP features: P2 and N1. This sparse attribution likely reflects ET’s tree construction process, which samples random feature subsets at each split. In the present configuration, only P2 and N1 were selected by any of the ensemble’s decision trees. Consequently, the other ERP components (N2, P3, LPP) received zero SHAP impact. In contrast, LGBM plots exhibited distributed importance across all five ERP features. This aligns with its gradient boosting strategy, in which weak learners are added sequentially to minimize residual error, thereby integrating weaker but consistent contributors such as N2, P3, and LPP.
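A feature that no tree splits on receives exactly zero TreeExplainer attribution, so the sparsity reported for ET (and RF) can be checked directly by counting split variables across the ensemble. The sketch below is a hypothetical diagnostic using scikit-learn's fitted `tree_.feature` arrays; it assumes the five features are passed in the order N1, P2, N2, P3, LPP.

```python
import numpy as np

FEATURES = ["N1", "P2", "N2", "P3", "LPP"]


def split_feature_counts(forest, feature_names=FEATURES):
    """Count how often each feature is used as a split variable across all trees.

    `forest` is a fitted scikit-learn RandomForestClassifier or ExtraTreesClassifier.
    """
    counts = np.zeros(len(feature_names), dtype=int)
    for tree in forest.estimators_:
        feat = tree.tree_.feature
        feat = feat[feat >= 0]  # leaf nodes carry a negative placeholder index
        counts += np.bincount(feat, minlength=len(feature_names))
    return dict(zip(feature_names, counts.tolist()))
```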

The remaining two models showed consistent patterns. RF, similar to ET, showed a selective impact from N1 and P2, excluding other components entirely. Conversely, XGB resembled LGBM in attributing non-zero but comparatively small SHAP values to all five ERP features, with particularly weak contributions for LPP and greater variability in magnitude. These trends suggest that the boosting models (LGBM, XGB) provide a more inclusive view of cognitive feature relevance, whereas the bagging-style ensembles (RF, ET) emphasize only the strongest discriminators.

From a neurophysiological perspective, the dominant role of P2 and N1 is consistent with their roles in attention and stimulus-driven processing. P2 reflects the early categorization of visual stimuli and their decision-making relevance, while N1 is associated with attentional allocation and perceptual discrimination. The moderate contributions of N2 and P3 observed in LGBM and XGB are consistent with their roles in cognitive control and working-memory updating. LPP is canonically linked to motivational salience and sustained attentional engagement; however, its SHAP contribution was consistently weak across models, so we do not treat LPP as a primary driver of decoding in this dataset. Its role may emerge more prominently in emotionally laden or preference-oriented tasks.

Taken together, the SHAP-based explanations are consistent with the neurocognitive relevance of the ERP features (e.g., the dominance of P2 across all classifiers) and show how distinct components shape classification boundaries in both preference-based and intention-based tasks.

3.6. Physiological Interpretation of Findings

The five ERP components extracted (as detailed in Section 2.6) correspond to distinct neurocognitive operations, whose activation patterns varied between paradigms and influenced model performance.

- N1 (70–150 ms): Early perceptual attention and sensory encoding—showed moderate contributions across classifiers, consistent with its role in orienting to visual stimuli.

- P2 (130–250 ms): Recognition and stimulus discrimination—consistently emerged as the dominant discriminator during decision-driven engagement, particularly in the Rest vs. Intention paradigm.

- N2 (130–250 ms): Conflict monitoring and cognitive control—expressed more strongly in intentional states, aligning with elevated executive demands during goal-directed evaluation.

- P3 (250–400 ms): Context updating and decision evaluation—made moderate, distributed contributions, especially in boosting models.

- LPP (400–800 ms): Canonically linked to motivational salience and sustained evaluative attention, but in our dataset, its SHAP contribution was consistently weak across models, suggesting minimal impact in this task context.

In contrast, the Preference vs. Non-Preference paradigm lacked sufficient neurocognitive contrast, likely due to brief stimulus exposures and lower decisional stakes, which may have produced weaker ERP differences between classes and reduced classifier separation. This was reflected in lower classification accuracy and narrower SHAP attributions, consistent with limited neural differentiation of preference states in this XR setting.

3.7. Summary and Implications

These findings support our core hypothesis: reframing the classification task from “Preference vs. Non-Preference” to “Rest vs. Intention” enhances both the separability of intention-related neural signals and the performance of machine learning classifiers. P2 emerged as a key cognitive marker across SHAP, statistical, and classifier analyses, with N1 prominent in the SHAP attributions and N2 in the inferential statistics. The ET and LGBM models offered complementary interpretability, with ET emphasizing a sparse set of dominant features and LGBM reflecting distributed, graded feature usage. Together, these results provide a preliminary basis for interpretable, real-time brain–computer interfaces tailored for cognitive intent recognition in XR shopping and in-vehicle interaction scenarios. The findings and their implications are further explored in Section 4.

4. Discussion

This study investigated a brain–computer interface (BCI)-enabled extended reality (XR) interaction system tailored for functional food purchasing within a simulated autonomous vehicle (AV) environment. By introducing a novel cognitive paradigm, “Rest vs. Intention”, we demonstrated substantially higher neurophysiological decoding performance for user engagement than traditional preference-based classification. Our findings provide converging evidence that EEG-based ERP features can serve as interpretable neural markers of cognitive intention during immersive, simulated in-vehicle product evaluations.

The observed prominence of the P2 and N2 components, highlighted through statistical testing and, for P2, SHAP-based interpretability, offers preliminary neurophysiological support for our approach. In our limited-sample study, the P2 component (130–250 ms), often linked with salience detection and stimulus categorization [49], showed consistent amplification during decision epochs, suggesting early attentional prioritization of task-relevant stimuli. Similarly, N2 (extracted here in the same 130–250 ms window), typically associated with conflict monitoring and cognitive control [49], appeared more prominent during intention-driven interaction, possibly reflecting elevated executive demands in evaluating product relevance and suppressing competing impulses.

Together, these findings indicate that intention-related neural dynamics are more reliably expressed and classified than subjective preference states. This may reflect not only inherent differences in cognitive salience between the two conditions but also a potential limitation in our task design, in which the XR-based preference scenario may not have evoked sufficiently strong motivational or evaluative processes to drive reliable neural separability. Higher performance under Rest vs. Intention is also expected from ERP theory: intention evokes early salience detection (P2) and conflict/control (N2) responses, which are reliably time-locked and consistently observed. This makes the paradigm theoretically advantageous for future AV interface research, where stable neurocognitive signatures matter more than fine-grained subjective preference. It is important to clarify, however, that the observed performance differences between the two paradigms should not be interpreted as evidence that our modeling approach simply benefits from an easier classification problem. Rather, the “Rest vs. Intention” paradigm was deliberately designed to elicit stronger cognitive contrast and clearer ERP modulation (particularly in the P2 and N2 components), yielding clearer, more interpretable signatures. This property positions the paradigm as an exploratory proof-of-concept for XR intention decoding, demonstrating feasibility under controlled laboratory conditions. While promising for future real-time, cross-subject applications, the paradigm will need to be tested in larger, more diverse cohorts. These findings align with broader trends in functional food consumption and the emergence of AVs as infotainment and commerce spaces. Although our controlled XR setup approximates cockpit-like conditions, the ecological plausibility of the framework remains preliminary and will require validation in authentic AV environments.

Importantly, this framework addresses the rising need for neuroadaptive interfaces in AV settings, where conventional input modalities (e.g., speech, gesture) may be impractical or distracting. As autonomous vehicles evolve into multifunctional digital spaces, users are expected to engage in infotainment, commerce, and wellness activities while commuting. Our system provides an exploratory proof-of-concept demonstration of intention-aware interaction—powered solely by neural signals—suggesting the potential to support product exploration and selection in high-involvement domains like functional food, where consumer decisions are cognitively intensive and context-sensitive [9,10].

Methodologically, our ERP-centric classification approach confers several advantages. First, using interpretable ERP components (N1, P2, N2, P3, LPP) ensures physiological transparency and traceability in model outputs, unlike deep neural embeddings. Second, we intentionally constrained the feature space to canonical ERP amplitudes to maximize physiological interpretability and maintain a compact feature set appropriate for XR–AV scenarios, while reducing overfitting risk under cross-subject evaluation with a modest sample size. While time–frequency (e.g., band-power/ERD–ERS) and connectivity (e.g., coherence, phase-lag-based indices) features can encode complementary dynamics, they are more sensitive to motion/EMG contamination and typically require larger datasets and additional regularization for stable generalization. We therefore position the ERP-only model as a transparent, real-time-feasible baseline upon which richer feature families can be layered (see Section 5). Third, the shift from “Preference vs. Non-Preference” to “Rest vs. Intention” substantially improves class separability, as indicated by our classification results (mean accuracy up to 97.3% in Task 2) and supported by the statistical tests and SHAP attributions. This reframing captures volitional cognitive engagement more effectively than passive affective preference, consistent with fundamental distinctions in neural readiness and goal-oriented processing.

In the broader landscape of consumer neuroscience, our work extends beyond prior EEG studies centered on packaging or branding stimuli presented as 2D images with subjective ratings [30,31,32]. By situating decision tasks in a stationary XR cockpit–proxy that simulates in-vehicle shopping and focusing on health-relevant functional foods (e.g., multivitamins), we increase task realism relative to 2D paradigms, while acknowledging that full ecological validity requires validation in moving AVs. Our paradigm helps bridge affective neuroscience with goal-directed interaction, providing preliminary evidence that intention decoding may inform neuroadaptive commerce use cases.

It should be emphasized that the use of a controlled XR laboratory environment was a deliberate design choice to balance immersion with experimental control. This setting enabled us to isolate intention-related neural signatures under reproducible conditions prior to field validation in moving AVs, where confounds such as motion artifacts, ambient variability, and multitasking pressures are unavoidable. As such, our paradigm should be viewed as a pragmatic, scientifically motivated bridge between conventional laboratory BCI studies and future field-validated neuroadaptive interfaces in real AV environments.

Moreover, our simulation environment expands on previous research using immersive technologies for food-related decisions. Prior studies have demonstrated that virtual and augmented reality can increase consumer engagement and affective immersion in dietary contexts [27,28,29]. By integrating this immersive XR context with neurophysiological data, our system offers both contextual richness and cognitive traceability—paving the way for more naturalistic neuromarketing applications.

To complement these group-level findings, we also examined robustness at the individual level through a per-subject leave-one-subject-out (LOSO) analysis. While overall accuracies were strong, subject-wise results in the Preference vs. Non-Preference paradigm were highly variable, reflecting heterogeneity in subjective evaluation processes. In contrast, the Rest vs. Intention paradigm yielded more consistent accuracies across participants, supporting the advantages of paradigm reframing while underscoring the importance of evaluating generalizability beyond aggregate metrics.

Nonetheless, several limitations warrant discussion. The participant sample (n = 8), although common in ERP-based exploratory studies, restricts generalizability across diverse cognitive, cultural, and demographic profiles. Moreover, the XR simulation, while immersive, is an exploratory laboratory proxy rather than an operational AV environment. Real-world deployment will require larger and more demographically diverse cohorts, as well as validation under authentic transit conditions. Future work should also integrate complementary biosignals, such as eye tracking, electrodermal activity, and heart rate variability, to support multimodal modeling of user states. These extensions would enhance robustness and ecological validity in naturalistic, multitasking environments and, combined with larger and more diverse participant samples in real autonomous vehicle contexts, will be essential for moving this framework from exploratory proof-of-concept models toward deployable systems.

Reported accuracies may nevertheless carry a risk of inflation from overfitting, given the small sample size and within-subject design. To mitigate this risk, we conducted LOSO analyses in addition to stratified 10-fold cross-validation and deliberately restricted the feature space to five canonical ERP components with ensemble tree classifiers (ET, RF, XGB, LGBM) to reduce variance. The LOSO results indicate that the Rest vs. Intention paradigm achieves consistently high accuracies across participants, whereas the Preference vs. Non-Preference task shows greater variability. Taken together with the conservative framing throughout the manuscript, these findings emphasize that the reported accuracies should be interpreted as proof-of-concept feasibility rather than as definitive benchmarks for population-level deployment.

Limitations of ERP-Based Classification: Although ERP features provide interpretable and time-resolved neural markers, they are inherently sensitive to artifacts, including eye blinks, muscle movement, and environmental noise, especially in mobile or AV scenarios. Furthermore, inter-subject variability in ERP morphology and latency can affect generalization unless carefully accounted for through cross-subject validation or personalized calibration. Moreover, ERP components are classically estimated by averaging across many trials, whereas the present features are computed on single trials; single-trial variability may therefore limit rapid, high-throughput interaction unless ensemble smoothing or real-time signal denoising is implemented. In addition, the present framework relies exclusively on ERP-based representations and does not incorporate time–frequency dynamics (e.g., theta, alpha, or beta band power and ERD/ERS measures) or inter-regional connectivity features (e.g., coherence or phase-lag indices). While these modalities may provide complementary information for intention decoding, they also introduce trade-offs in terms of computational latency, susceptibility to motion artifacts, and reduced interpretability, which must be carefully balanced in future extensions of this work.

Looking ahead, future research could extend this exploratory proof-of-concept toward real-time multimodal neuroadaptive interfaces integrated into AV dashboards, enabling passengers to explore products, receive recommendations, and transact via neural intention alone. The convergence of BCI, XR, and AV platforms creates novel opportunities in neurocommerce, especially in healthcare, wellness, and adaptive infotainment. Grounding interaction in neural evidence could enable interfaces to infer not only attention but also cognitive readiness, decision intent, and motivational salience, supporting personalized and ethically informed user experiences. Realizing such experiences will, however, require proactive safeguards to prevent misuse in neuromarketing contexts, to guard against unintended forms of cognitive surveillance, and to ensure informed and revocable consent in shared AV environments. These considerations are expanded on in Sections 5 and 6, underscoring that ethical foresight is not optional but integral to the design of neuroadaptive commerce systems.

Collectively, this study introduces a scientifically rigorous and interpretable BCI–XR framework that provides an exploratory proof-of-concept step toward intention-based neural interfaces in autonomous mobility. While our preliminary results demonstrate promising feasibility in a simulated AV environment, translation into practical systems will require larger and more diverse cohorts, validation under real driving conditions, and proactive integration of ethical safeguards. Within these bounds, the present work lays an early foundation for future research on hands-free, neuroadaptive commerce and infotainment interfaces.

5. Limitations and Future Work

This study introduces an exploratory proof-of-concept BCI framework for XR-based interaction in autonomous vehicles (AVs). While the results are promising, several limitations highlight directions for future work.

To begin with, the participant sample size (n = 8), though typical for exploratory ERP studies, limits generalizability across diverse populations. Our leave-one-subject-out (LOSO) analysis partially mitigated overfitting concerns but cannot substitute for replication in larger, heterogeneous cohorts. Future work should expand sample sizes to provide stable population-level estimates and enable subgroup analyses by demographics such as age and gender.

Furthermore, the study was conducted in a controlled XR laboratory rather than an operational AV. This ensured reproducibility but did not capture motion artifacts, ambient variability, or multitasking pressures present in real vehicles. A critical next step is validation in moving AVs to evaluate signal quality, classifier robustness, and user experience under authentic driving conditions.
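One simple way to quantify the signal-quality question raised above is to compare how many epochs survive a peak-to-peak amplitude criterion in laboratory versus on-road recordings. The following sketch implements that heuristic. The 100 µV threshold is an assumed, commonly used default rather than a value from this study, and motion-rich AV data would likely require more sophisticated correction (e.g., ICA-based methods) in addition to rejection.

```python
def peak_to_peak(channel):
    """Max minus min amplitude of one channel's samples (µV)."""
    return max(channel) - min(channel)


def reject_artifacts(epochs, threshold_uv=100.0):
    """Return (kept_epochs, rejected_indices, rejection_rate).

    Each epoch is a list of channels, each channel a list of samples in µV.
    An epoch is rejected if ANY channel exceeds the peak-to-peak threshold.
    threshold_uv=100.0 is an assumed default, not a value from the study.
    """
    kept, rejected = [], []
    for idx, epoch in enumerate(epochs):
        if any(peak_to_peak(ch) > threshold_uv for ch in epoch):
            rejected.append(idx)
        else:
            kept.append(epoch)
    rate = len(rejected) / len(epochs) if epochs else 0.0
    return kept, rejected, rate


# Hypothetical 2-channel epochs: clean, large motion-like transient, blink-sized deflection.
epochs = [
    [[0.0, 10.0, -10.0], [5.0, -5.0, 0.0]],
    [[0.0, 150.0, -20.0], [1.0, 2.0, 3.0]],
    [[-60.0, 60.0, 0.0], [0.0, 0.0, 0.0]],
]
kept, rejected, rate = reject_artifacts(epochs)
print(rejected, round(rate, 2))
```

Reporting the rejection rate per condition makes the laboratory-to-vehicle comparison explicit, since a classifier that looks robust on the retained epochs may still be unusable if most epochs are discarded.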

Equally important, ethical considerations must be addressed before real-world deployment. Continuous neural monitoring raises questions of privacy, consent, and potential misuse in neuromarketing. Future systems must ensure explicit and revocable consent, transparency in data use, and safeguards such as privacy-preserving processing and fairness-aware algorithms, supported by interdisciplinary governance across neuroscience, HCI, and policy.

In addition, the current framework depends on discrete, time-locked decision epochs. Real-world systems will require continuous, asynchronous monitoring with minimal calibration. Future research should examine latency across the full pipeline (acquisition to classification) and explore adaptive temporal models that enable fluid, real-time decoding.
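The shift from time-locked epochs to continuous, asynchronous decoding can be sketched as a sliding window that re-evaluates the most recent samples at a fixed hop and times each classification step. The class and parameter names below are hypothetical, the stream is a single scalar channel for brevity, and the threshold rule is a stand-in for a trained model. Only classifier latency is timed here; a full-pipeline measurement would also include acquisition, transmission, filtering, and feature extraction.

```python
import time
from collections import deque


class SlidingWindowDecoder:
    """Hypothetical asynchronous decoder for a continuous single-channel stream.

    Every `hop` samples, once `window` samples are buffered, the latest window
    is passed to `classify`, and the classifier's wall-clock time is recorded.
    """

    def __init__(self, window, hop, classify):
        if window <= 0 or hop <= 0:
            raise ValueError("window and hop must be positive")
        self.window = window
        self.hop = hop
        self.classify = classify  # callable: list[float] -> label
        self.buffer = deque(maxlen=window)  # oldest samples drop out automatically
        self.since_last = 0
        self.latencies_s = []

    def push(self, sample):
        """Append one sample; return a label if a decision is due, else None."""
        self.buffer.append(sample)
        self.since_last += 1
        if len(self.buffer) < self.window or self.since_last < self.hop:
            return None
        self.since_last = 0
        start = time.perf_counter()
        label = self.classify(list(self.buffer))
        self.latencies_s.append(time.perf_counter() - start)
        return label


# Placeholder rule standing in for a trained model (NOT the study's classifiers).
def toy_rule(window):
    return "Intention" if sum(window) / len(window) > 0.5 else "Rest"


decoder = SlidingWindowDecoder(window=4, hop=2, classify=toy_rule)
stream = [0, 0, 0, 0, 1, 1, 1, 1, 1, 1]
decisions = [d for d in (decoder.push(x) for x in stream) if d is not None]
print(decisions, len(decoder.latencies_s))
```

The window length trades responsiveness against the amount of evidence per decision, which mirrors the averaging trade-off discussed for ERP features.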

Moreover, our ERP-only feature set prioritized interpretability but excluded time–frequency dynamics, connectivity measures, and peripheral biosignals. Although ERP features performed well in this sample, integrating additional modalities (e.g., band-power metrics, phase-based connectivity, eye tracking, heart-rate variability) could enhance robustness in naturalistic, multitasking environments. Future studies should weigh these extensions against their costs in computation and interpretability.
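As an illustration of the band-power extension mentioned above, the following sketch computes per-band spectral power for one channel with a naive discrete Fourier transform. The band edges (theta 4–8 Hz, alpha 8–13 Hz, beta 13–30 Hz) are conventional values that vary across studies and are assumed here. Production code would typically use an FFT-based estimator such as Welch's method (`scipy.signal.welch`) rather than this O(N²) loop.

```python
import cmath
import math

# Assumed conventional band edges in Hz (lower bound inclusive, upper exclusive).
BANDS_HZ = {"theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}


def band_powers(signal, fs, bands=BANDS_HZ):
    """Sum of squared DFT magnitudes falling inside each band (one channel)."""
    n = len(signal)
    spectrum = []
    for k in range(n // 2 + 1):  # non-negative frequency bins only
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
        spectrum.append((k * fs / n, abs(coeff) ** 2))
    return {
        name: sum(power for freq, power in spectrum if lo <= freq < hi)
        for name, (lo, hi) in bands.items()
    }


# Synthetic 1 s test signal: a pure 10 Hz (alpha-range) sinusoid sampled at 128 Hz.
fs = 128
signal = [math.sin(2 * math.pi * 10 * t / fs) for t in range(fs)]
powers = band_powers(signal, fs)
print(max(powers, key=powers.get))  # alpha
```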

Finally, the study focused on functional food purchasing, an appropriate but narrow in-transit use case. Broader validation is needed across other domains such as retail, infotainment, and safety-critical alerts. Task design should also be refined—for example, by incorporating time pressure or contextual cues—to improve ecological realism and test the generalizability of intention decoding.

6. Ethical Considerations

Beyond technical and methodological challenges, this research raises important ethical considerations that must be addressed before any real-world deployment of neuroadaptive commerce in autonomous vehicles (AVs). While our study was conducted in a controlled laboratory setting with informed consent, the translation of such systems into public environments requires careful governance.

Neuromarketing and consumer autonomy: EEG-based interfaces offer the potential to decode user intention and support hands-free interaction. However, in commercial contexts, these same tools risk being repurposed for neuromarketing strategies that nudge or manipulate purchasing behavior without the passenger’s full awareness. Future systems must be designed to augment user decision-making—by reducing friction or providing accessibility—rather than covertly shaping preferences. Transparency in system goals, limitations, and data use will be essential to preserve consumer autonomy.

Cognitive surveillance and privacy: Continuous monitoring of neural states introduces the risk of cognitive surveillance, where sensitive brain data could be recorded, stored, or misused. In shared AV settings, this risk extends to multiple passengers with different privacy expectations. To mitigate this, neural data should be processed locally and ephemerally, with strict minimization policies ensuring that only task-relevant features are extracted. Safeguards such as privacy-preserving signal processing, on-device inference, and data deletion guarantees are needed to protect user rights.

Informed and revocable consent: Ethical deployment requires that passengers provide explicit, context-specific, and revocable consent for neural monitoring. Unlike conventional sensors, neural data cannot be considered incidental or trivial: it reflects highly personal cognitive and affective states. Interfaces must therefore provide users with clear choices to opt in or out, to pause monitoring, and to revoke consent at any time during transit. In shared vehicles, each passenger’s autonomy must be respected without coercion or default enrollment.
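The consent requirements above, together with the local-processing and deletion safeguards described in the preceding paragraph, can be expressed as a small per-passenger state machine. The sketch below is hypothetical: the states, method names, and in-memory feature store are illustrative assumptions, not a specification from this study. It encodes three rules from the text: no passenger is enrolled by default, monitoring can be paused or revoked at any time, and only derived features (never raw epochs) are retained, with revocation deleting them.

```python
from enum import Enum


class Consent(Enum):
    NOT_ENROLLED = "not_enrolled"  # default state: no monitoring without opt-in
    ACTIVE = "active"
    PAUSED = "paused"
    REVOKED = "revoked"


class ConsentGate:
    """Hypothetical per-passenger consent gate for on-device neural processing."""

    def __init__(self):
        self._state = {}     # passenger_id -> Consent
        self._features = {}  # passenger_id -> list of derived features (no raw EEG)

    def status(self, pid):
        return self._state.get(pid, Consent.NOT_ENROLLED)

    def opt_in(self, pid):
        self._state[pid] = Consent.ACTIVE

    def pause(self, pid):
        if self.status(pid) is Consent.ACTIVE:
            self._state[pid] = Consent.PAUSED

    def resume(self, pid):
        if self.status(pid) is Consent.PAUSED:
            self._state[pid] = Consent.ACTIVE

    def revoke(self, pid):
        self._state[pid] = Consent.REVOKED
        self._features.pop(pid, None)  # delete everything derived for this passenger

    def process(self, pid, raw_epoch, extract_features):
        """Return task-relevant features under active consent, else None.

        The raw epoch is used only inside this call and is not stored by the gate.
        """
        if self.status(pid) is not Consent.ACTIVE:
            return None
        features = extract_features(raw_epoch)
        self._features.setdefault(pid, []).append(features)
        return features

    def stored_count(self, pid):
        return len(self._features.get(pid, []))
```

Because each passenger has an independent state, one passenger's opt-in never enrolls another in a shared vehicle. Python itself cannot guarantee that memory is securely erased, so a deployed system would need platform-level deletion guarantees beyond this logic.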

Fairness and inclusivity: Finally, neuroadaptive systems must be evaluated across diverse demographic groups to avoid bias in decoding accuracy or accessibility. Models trained on narrow samples may systematically underperform for certain populations, raising issues of fairness and exclusion. Addressing this requires larger and more heterogeneous validation cohorts, together with fairness-aware algorithmic design.
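A first step toward the fairness evaluation described above is to report decoding accuracy separately for each demographic group, together with the largest between-group gap. The sketch below does this for hypothetical labels and group tags. The worst-case accuracy gap is only one of several possible disparity measures, and with n = 8 such subgroup estimates would not be meaningful, which is why larger cohorts are needed.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Per-group accuracy for parallel lists of true labels, predictions, and group tags."""
    correct, total = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(per_group):
    """Difference between the best- and worst-served groups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Hypothetical predictions for two demographic groups, "A" and "B".
y_true = [1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0]
groups = ["A", "A", "A", "B", "B", "B"]
per_group = accuracy_by_group(y_true, y_pred, groups)
print(per_group, round(max_accuracy_gap(per_group), 3))
```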

In summary, while our study provides preliminary evidence of the technical feasibility of intention decoding in XR–AV contexts, responsible translation into practice will depend equally on embedding ethical safeguards. Any future development of BCI-enabled commerce must treat transparency, privacy protection, and consumer autonomy as foundational design principles rather than afterthoughts.

7. Conclusions

This study introduces a novel EEG-based cognitive intention decoding framework tailored for extended reality (XR) interaction within autonomous vehicle (AV) environments, with a specific focus on functional food purchasing. By shifting from a traditional “Preference vs. Non-Preference” classification paradigm to a cognitively grounded “Rest vs. Intention” approach, we achieved substantially improved decoding performance and enhanced neurophysiological interpretability.

Leveraging five canonical event-related potential (ERP) components—N1, P2, N2, P3, and LPP—extracted from 64-channel EEG recordings, we trained ensemble classifiers (Extra Trees, LightGBM, Random Forest, XGBoost) to distinguish between cognitive engagement states during immersive product evaluations. The proposed paradigm achieved a peak classification accuracy of 97.3% and AUC scores exceeding 0.98, consistently outperforming the preference-based model, which peaked at 91.2%. SHAP-based model interpretability revealed P2 and N2 as the most influential features, underscoring their roles in attentional salience and cognitive control during intention-driven interaction.
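The component-based feature representation summarized above can be sketched as mean amplitudes within component-specific latency windows. The windows below are typical ranges from the ERP literature and are assumptions for illustration only; the study's actual windows, channels, and any peak-based measures may differ. In a 64-channel setting, the same function would be applied per channel or per region of interest before the features are passed to the classifiers.

```python
import math

# Hypothetical latency windows in ms (typical literature ranges, NOT the study's values).
# Overlapping windows are allowed; each component is summarized independently.
ERP_WINDOWS_MS = {
    "N1": (80, 150),
    "P2": (150, 250),
    "N2": (200, 350),
    "P3": (300, 500),
    "LPP": (500, 800),
}


def erp_features(epoch, fs, tmin_ms, windows=ERP_WINDOWS_MS):
    """Mean amplitude of one channel's epoch within each component window.

    epoch: samples of a baseline-corrected epoch; fs: sampling rate in Hz;
    tmin_ms: time of epoch[0] relative to stimulus onset (e.g., -200).
    Windows are half-open [start, end) in ms; empty windows yield NaN.
    """
    feats = {}
    for name, (start, end) in windows.items():
        vals = [
            x for i, x in enumerate(epoch)
            if start <= tmin_ms + 1000.0 * i / fs < end
        ]
        feats[name] = sum(vals) / len(vals) if vals else math.nan
    return feats


# Synthetic check: at 1000 Hz from -200 ms, each sample's value equals its latency in ms.
fs, tmin_ms = 1000, -200
epoch = [float(tmin_ms + i) for i in range(1000)]
feats = erp_features(epoch, fs, tmin_ms)
print(feats)
```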

These findings support the feasibility of a scientifically grounded and machine-interpretable framework for intention-aware interaction in AVs. Because it does not require overt behavioral input (e.g., touch or speech), the approach shows potential for seamless, cognitively driven interfaces, offering new possibilities for hands-free engagement in infotainment and e-commerce.

Overall, this work contributes an early foundation for future brain–computer interface (BCI)-enabled interaction models in autonomous mobility. The combination of strong classification performance, explainability, and contextual relevance highlights the potential of neuroadaptive XR systems to enhance personalization, safety, and accessibility in next-generation transportation environments. Because it was validated only in a controlled XR laboratory setting, this framework should be regarded as an exploratory proof-of-concept that provides a methodological and neurophysiological starting point for future deployment and validation in operational autonomous vehicles.

Author Contributions

Conceptualization, A.R., M.L. and S.M.; methodology, A.R. and S.M.; software, A.R. and S.M.; validation, A.R., M.S.C. and S.M.; formal analysis, A.R.; investigation, A.R. and M.S.C.; resources, S.M.; data curation, A.R., Y.K. and M.S.C.; writing—original draft preparation, A.R. and M.L.; writing—review and editing, A.R., M.L. and S.M.; visualization, A.R., Y.K. and M.S.C.; supervision, S.M.; project administration, S.M.; funding acquisition, S.M. All authors have read and agreed to the published version of the manuscript.

Funding

This study was partially supported by the National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT) (RS-2024-00340293) and the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (No. RS-2023-00247958, Development of XR Cross-modal Neural Interface with Adaptive Artificial Intelligence).

Institutional Review Board Statement

The study protocol was approved on 24 November 2022 by the Institutional Review Board of Jeonju University (approval no. JJIRB-221103-HR-2022-1002), and all experimental procedures were conducted in accordance with the Declaration of Helsinki. Participants wore a Microsoft HoloLens 2 headset (Microsoft Corp., Redmond, WA, USA) throughout the study to view immersive XR-based products.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Due to institutional and intellectual property restrictions, the source code and data are available from the corresponding authors upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Sgroi, F.; Sciortino, C.; Baviera-Puig, A.; Modica, F. Analyzing Consumer Trends in Functional Foods: A Cluster Analysis Approach. J. Agric. Food Res. 2024, 15, 101041.

- Ponte, L.G.S.; Ribeiro, S.F.; Pereira, J.C.V.; Antunes, A.E.C.; Bezerra, R.M.N.; Da Cunha, D.T. Consumer Perceptions of Functional Foods: A Scoping Review Focusing on Non-Processed Foods. Food Rev. Int. 2025, 41, 1738–1756.

- Woo, J.; Shin, J.; Kim, H.; Moon, H. Which Consumers Are Willing to Pay for Smart Car Healthcare Services? A Discrete Choice Experiment Approach. J. Retail. Consum. Serv. 2022, 69, 103084.

- Korea Health Supplements Association. 2024 Health Functional Foods Market Status and Consumer Survey; Korea Health Supplements Association: Seoul, Republic of Korea, 2024.

- Statista. Total Dietary Supplements Market Size Worldwide from 2016 to 2028 (in Billion U.S. Dollars). 2025. Available online: https://www.statista.com/statistics/828481/total-dietary-supplements-market-size-worldwide/ (accessed on 17 July 2025).

- Ministry of Food and Drug Safety. Food and Drug Statistical Yearbook 2024; Ministry of Food and Drug Safety: Cheongju, Republic of Korea, 2024.

- Jiang, Q.; Deng, L.; Zhang, J. How Does Aesthetic Design Affect Continuance Intention in In-Vehicle Infotainment Systems? An Exploratory Study. Int. J. Hum.-Comput. Interact. 2025, 41, 429–444.

- Fortune Business Insights. Automotive Active Health Monitoring System Market Size, Share & Industry Analysis, By Type (Dashboard, Seat), By Application Type (Blood Pressure, Blood Glucose Level, Pulse, Others), By Market Type (OEM, Aftermarket), and Regional Forecasts, 2024–2032. 2024. Available online: https://www.fortunebusinessinsights.com/ (accessed on 17 July 2025).

- Melders, L.; Smigins, R.; Birkavs, A. Recent Advances in Vehicle Driver Health Monitoring Systems. Sensors 2025, 25, 1812.

- Coppola, R.; Morisio, M. Connected Car: Technologies, Issues, Future Trends. ACM Comput. Surv. 2017, 49, 1–36.

- Pott, J. In-Car Retail: Apps and Services for a Seamless Shopping Experience—Target and Domino’s Lead the Way in In-Car Commerce. EuroShop Magazine, 16 October 2024. Available online: https://www.euroshop-tradefair.com/en/euroshopmag/in-car-retail-apps-and-services-for-a-seamless-shopping-experience (accessed on 17 July 2025).

- Global Industry Analysts, Inc. Automotive Infotainment Systems; Global Industry Analysts, Inc.: Seoul, Republic of Korea, 2025.