Dynamic and Lightweight Detection of Strawberry Diseases Using Enhanced YOLOv10

Abstract

1. Introduction

2. Proposed Method

2.1. Introduction of the CBAM Attention Mechanism

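The channel attention map is computed as

$$M_c(F) = \sigma\big(W_1(W_0(F^c_{avg})) + W_1(W_0(F^c_{max}))\big)$$

where:

- $M_c$: channel attention weights;

- $\sigma$: sigmoid activation function;

- $F^c_{avg}$: the feature descriptor obtained by average pooling over the spatial dimensions;

- $F^c_{max}$: the feature descriptor obtained by max pooling over the spatial dimensions;

- $W_0$: the weight matrix of the first fully connected layer;

- $W_1$: the weight matrix of the second fully connected layer.

The spatial attention map is computed as

$$M_s(F) = \sigma\big(f^{7 \times 7}([F^s_{avg}; F^s_{max}])\big)$$

where:

- $M_s$: spatial attention weights;

- $\sigma$: sigmoid activation function;

- $f^{7 \times 7}$: a convolution operation with a filter size of $7 \times 7$;

- $F^s_{avg}$: the feature map obtained by average pooling along the channel axis;

- $F^s_{max}$: the feature map obtained by max pooling along the channel axis.

The two attention maps are applied in sequence:

$$F' = M_c(F) \otimes F, \qquad F'' = M_s(F') \otimes F'$$

where:

- $F$: input feature map;

- $F'$: the intermediate, channel-refined feature map;

- $F''$: the final feature map obtained after CBAM processing.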
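The definitions above describe CBAM's two sequential steps: channel attention from average- and max-pooled descriptors passed through a shared two-layer MLP, then spatial attention from a convolution over channel-pooled maps. The following is a minimal plain-Python sketch of that forward pass as formulated by Woo et al., not the authors' implementation. Assumptions: feature maps are nested lists of shape C × H × W, biases are omitted, a ReLU follows $W_0$ as in the original CBAM paper, and the toy call uses a 3 × 3 spatial kernel instead of 7 × 7 so the example stays small. The function names (`shared_mlp`, `channel_attention`, `spatial_attention`, `cbam`) are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def shared_mlp(v, w0, w1):
    """W1(ReLU(W0 v)); w0 is (C/r x C), w1 is (C x C/r). Assumption: no biases."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, v))) for row in w0]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w1]


def channel_attention(f, w0, w1):
    """M_c(F): pool each channel over H x W, send both vectors through the same MLP."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in f]
    mx = [max(map(max, ch)) for ch in f]
    a, m = shared_mlp(avg, w0, w1), shared_mlp(mx, w0, w1)
    return [sigmoid(x + y) for x, y in zip(a, m)]


def spatial_attention(f, kernel):
    """M_s(F): pool across channels, then a k x k conv over the 2-map stack [avg; max]."""
    c, h, w = len(f), len(f[0]), len(f[0][0])
    avg = [[sum(f[k][i][j] for k in range(c)) / c for j in range(w)] for i in range(h)]
    mx = [[max(f[k][i][j] for k in range(c)) for j in range(w)] for i in range(h)]
    k = len(kernel[0])
    pad = k // 2  # zero "same" padding keeps H x W (odd k assumed)
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            s = 0.0
            for m, pooled in enumerate((avg, mx)):
                for u in range(k):
                    for v in range(k):
                        y, x = i + u - pad, j + v - pad
                        if 0 <= y < h and 0 <= x < w:
                            s += kernel[m][u][v] * pooled[y][x]
            row.append(sigmoid(s))
        out.append(row)
    return out


def cbam(f, w0, w1, kernel):
    """F' = M_c(F) * F per channel, then F'' = M_s(F') * F' per position."""
    mc = channel_attention(f, w0, w1)
    f1 = [[[mc[c] * val for val in row] for row in ch] for c, ch in enumerate(f)]
    ms = spatial_attention(f1, kernel)
    return [[[ms[i][j] * val for j, val in enumerate(row)] for i, row in enumerate(ch)]
            for ch in f1]


# Toy usage: C=2, H=W=2, reduction ratio 2 (hidden size 1), all-zero weights.
feat = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, -1.0], [2.0, 2.0]]]
w0 = [[0.0, 0.0]]
w1 = [[0.0], [0.0]]
kernel = [[[0.0] * 3 for _ in range(3)] for _ in range(2)]  # 2 x 3 x 3
out = cbam(feat, w0, w1, kernel)
print(out[0][1][1])  # 1.0
```

With all weights at zero both sigmoids return 0.5, so every element is scaled by 0.25, a quick check that the two maps are applied multiplicatively one after the other. In a trained network $W_0$, $W_1$ and the kernel are learned, and a framework implementation would use batched tensor operations rather than Python loops.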

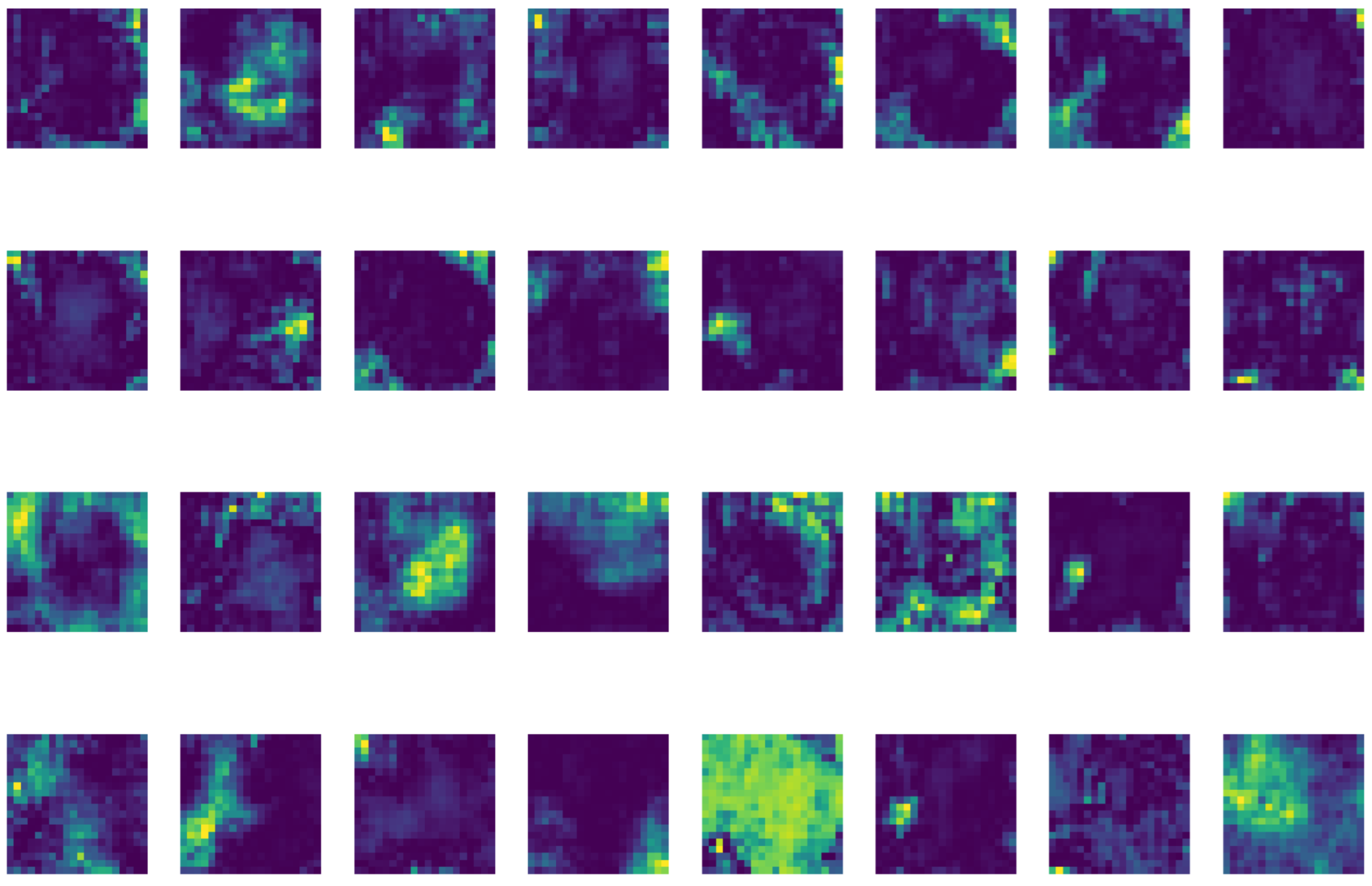

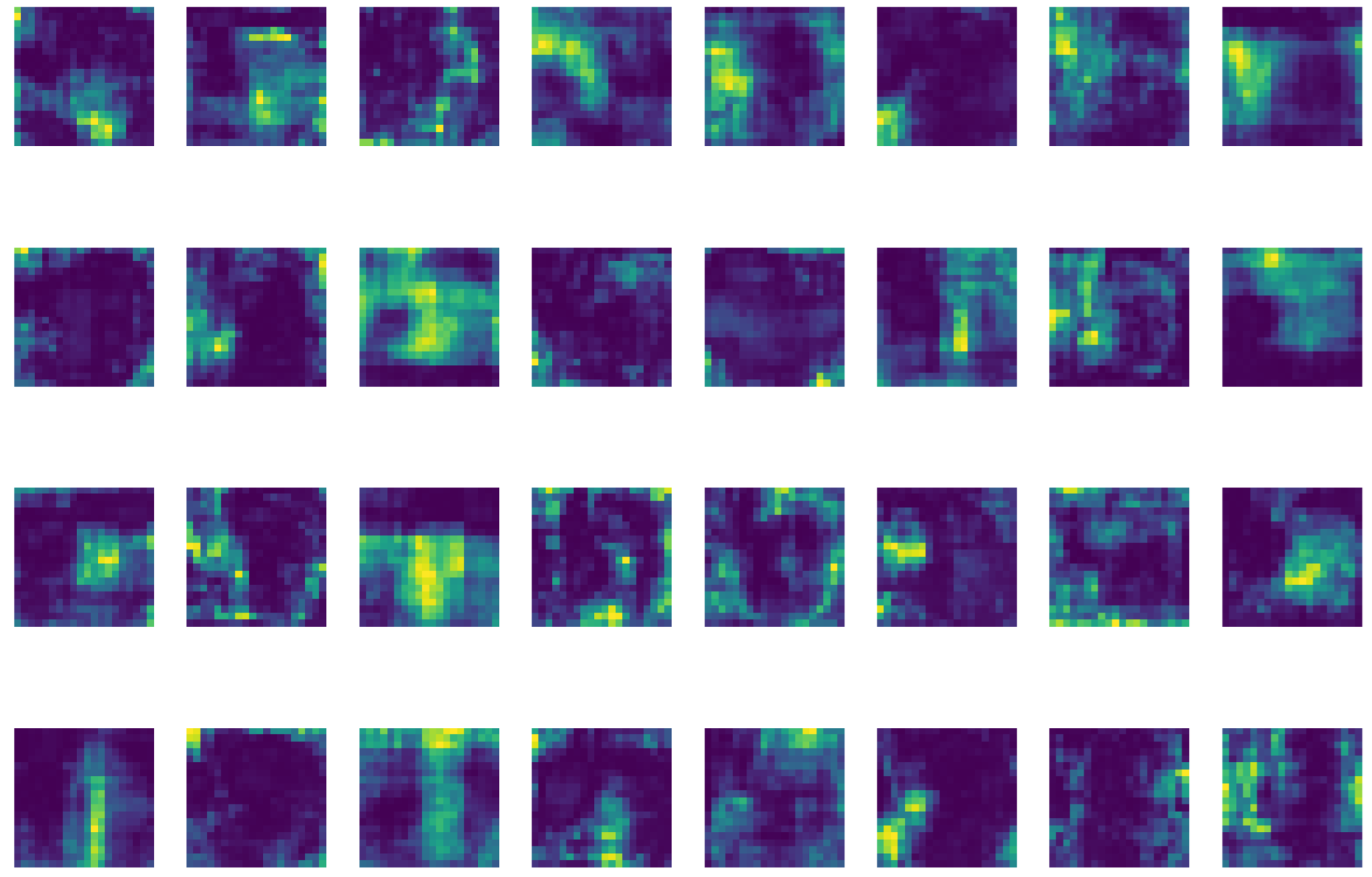

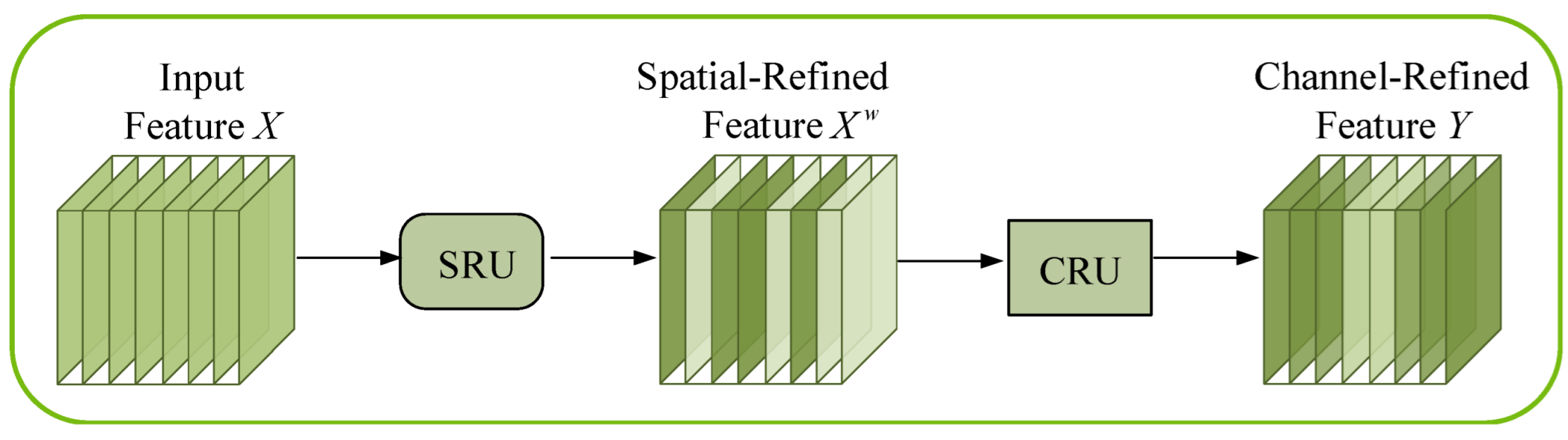

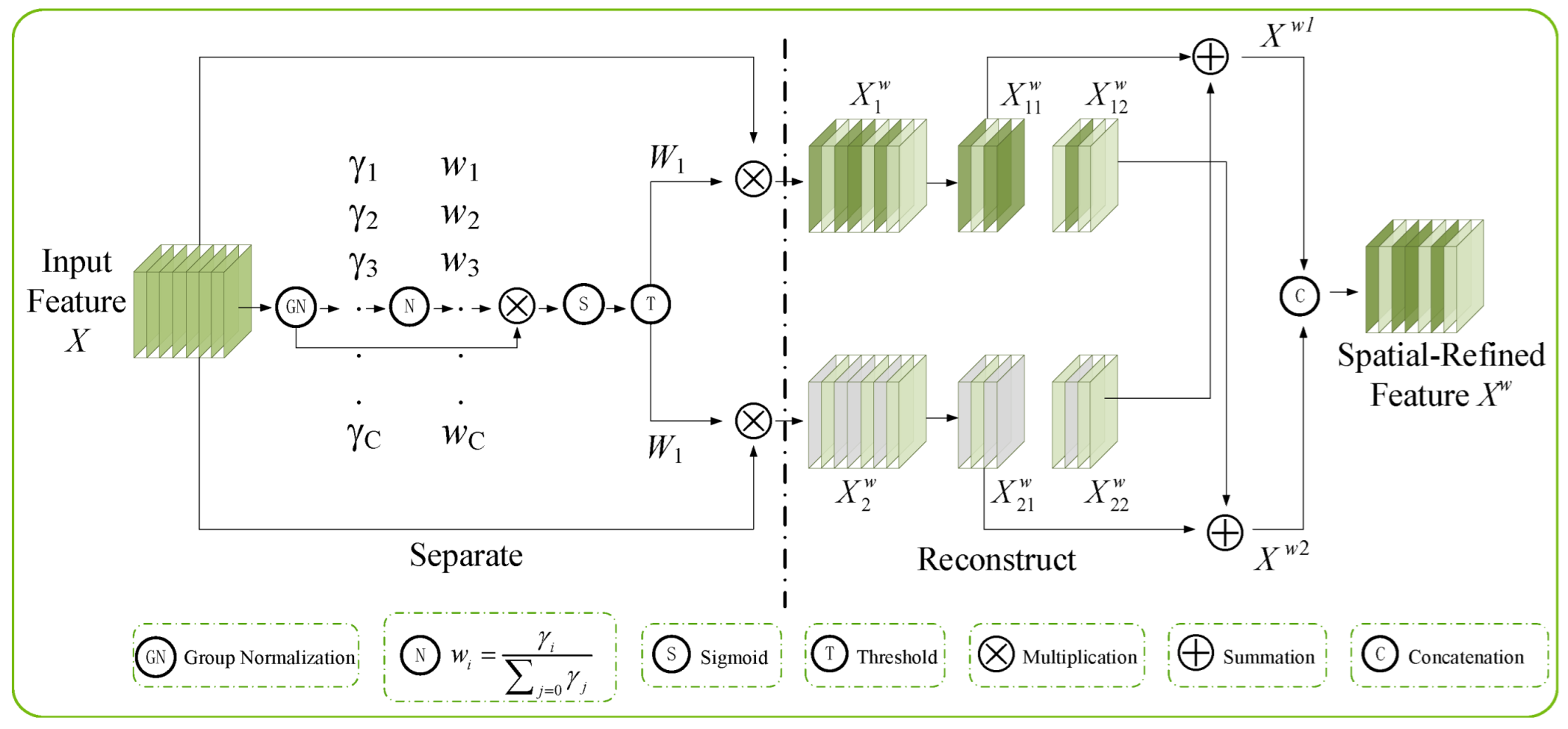

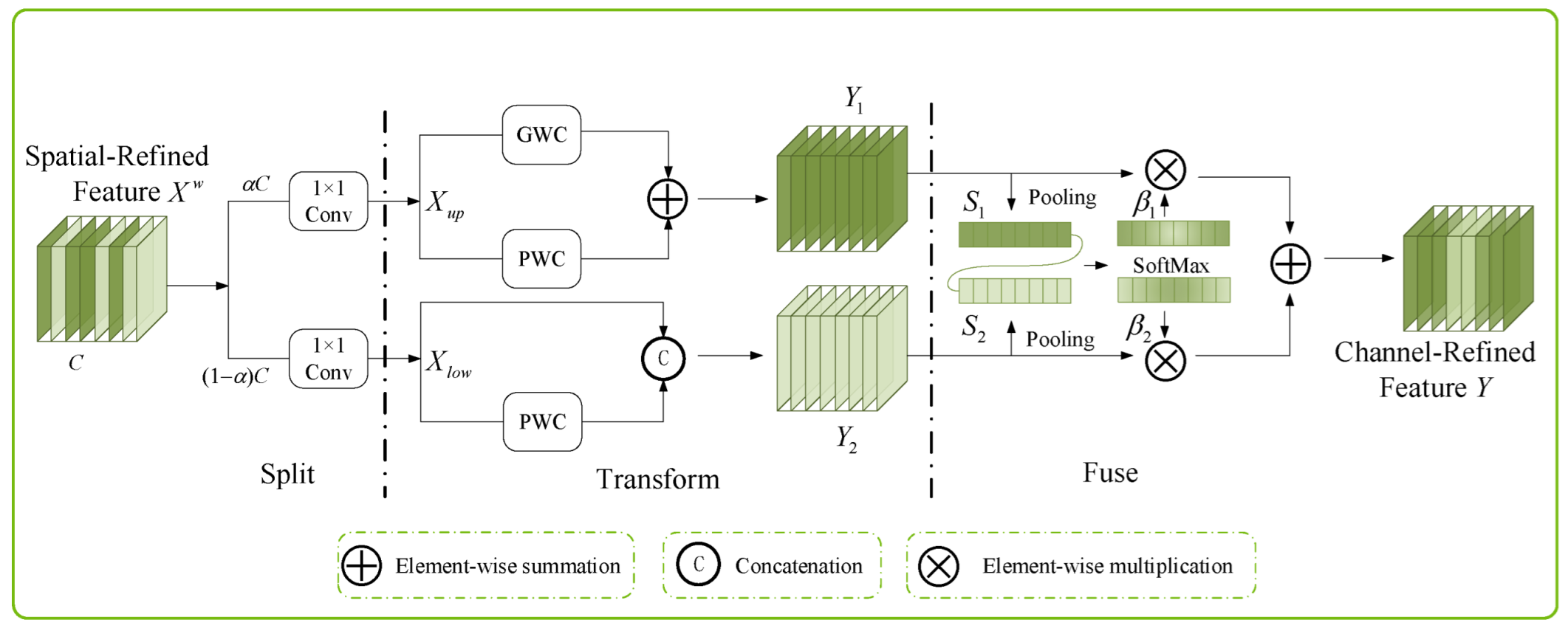

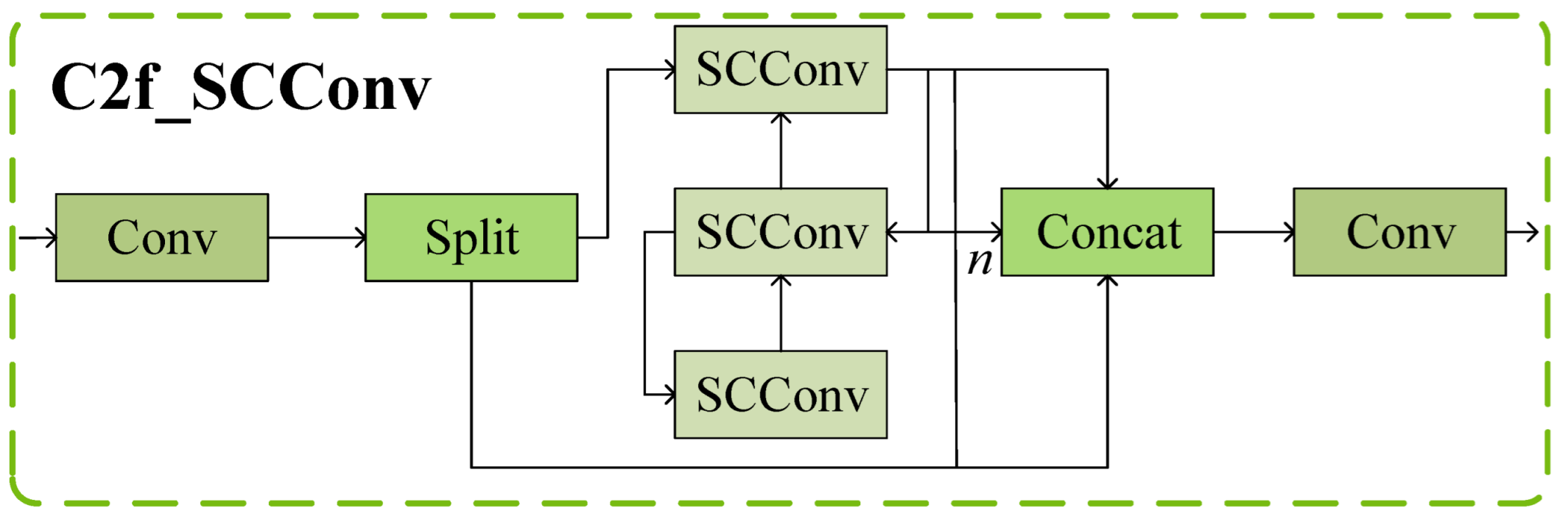

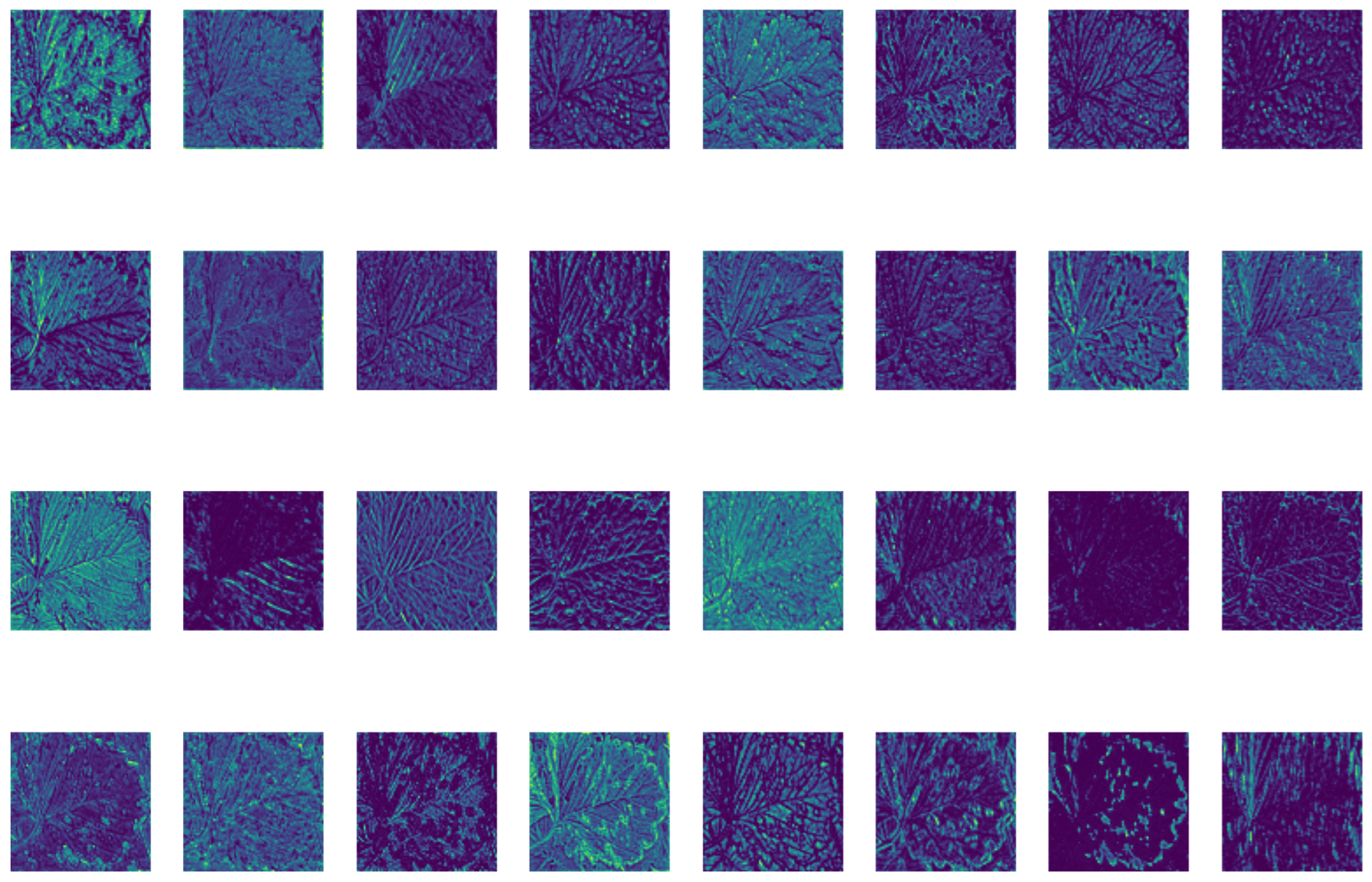

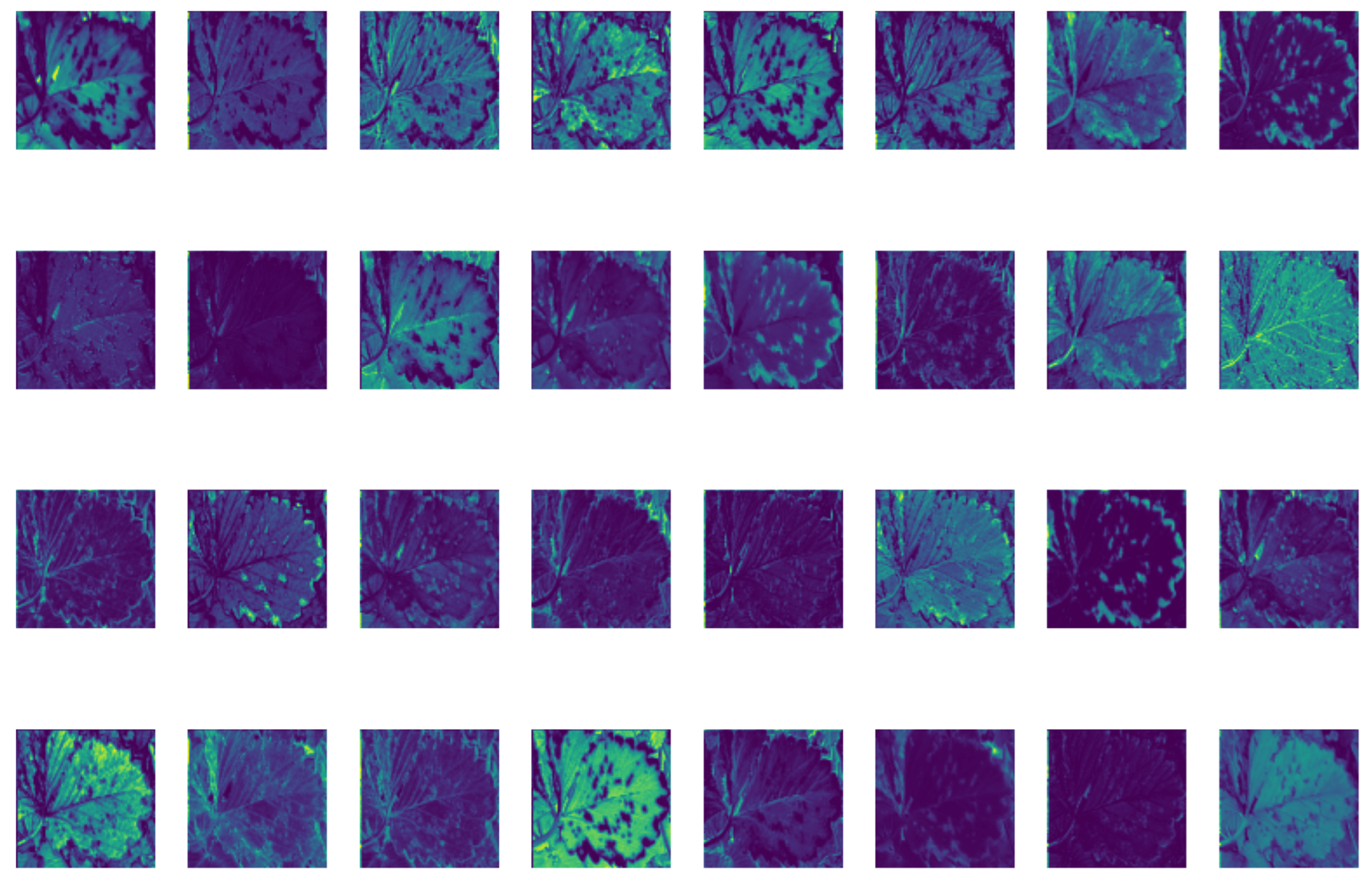

2.2. Integration of the SCConv Module with the C2f Module to Establish the C2f_SCConv Module

2.3. Introducing DySample, an Ultra-Lightweight and Effective Dynamic Upsampler

3. Experiments

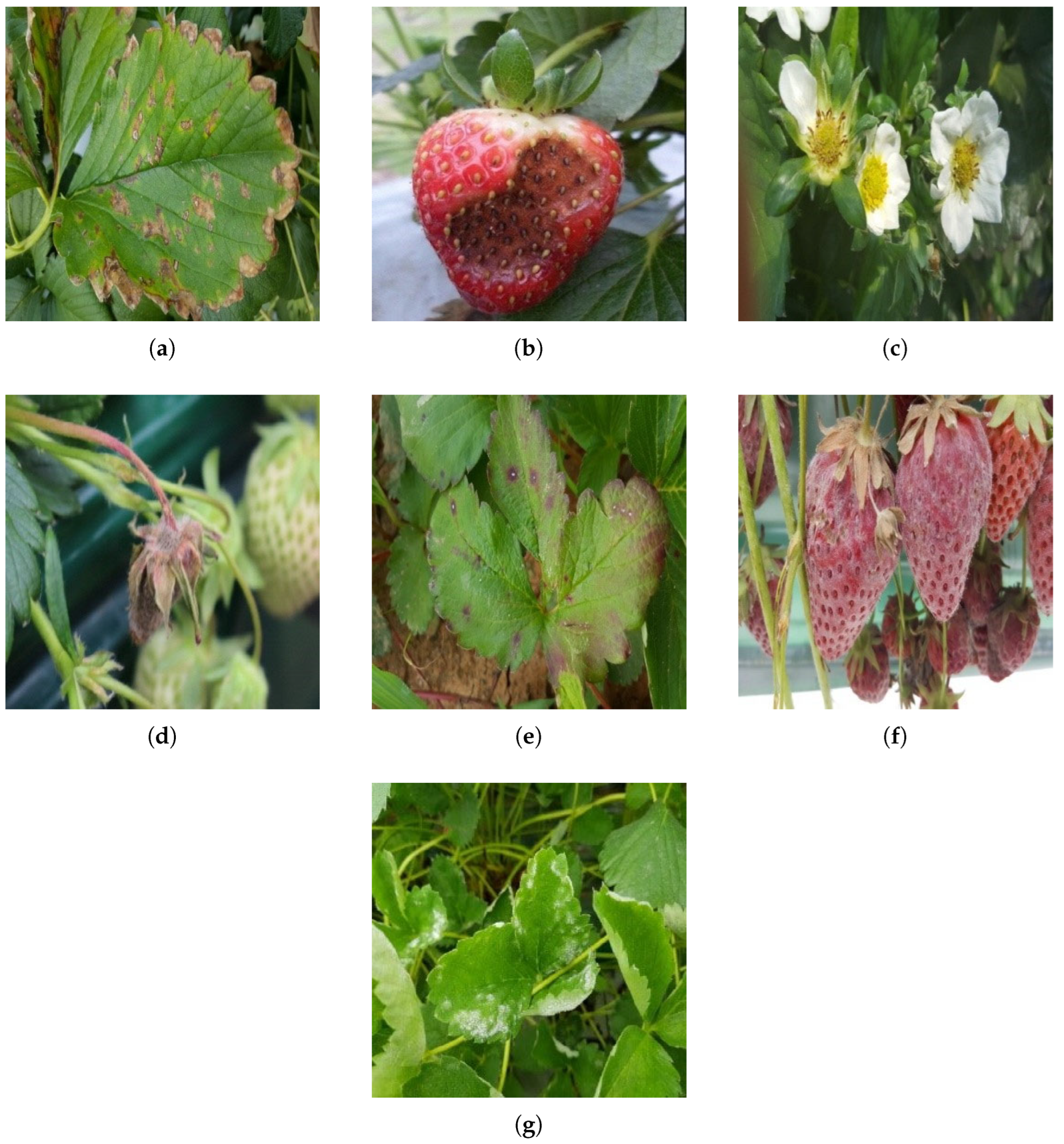

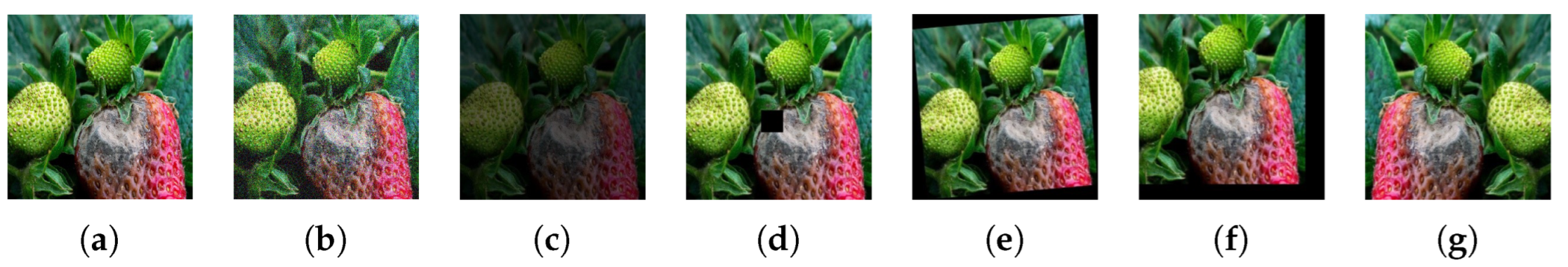

3.1. Dataset

3.2. Experimental Platform and Parameters

3.3. Evaluation Metrics

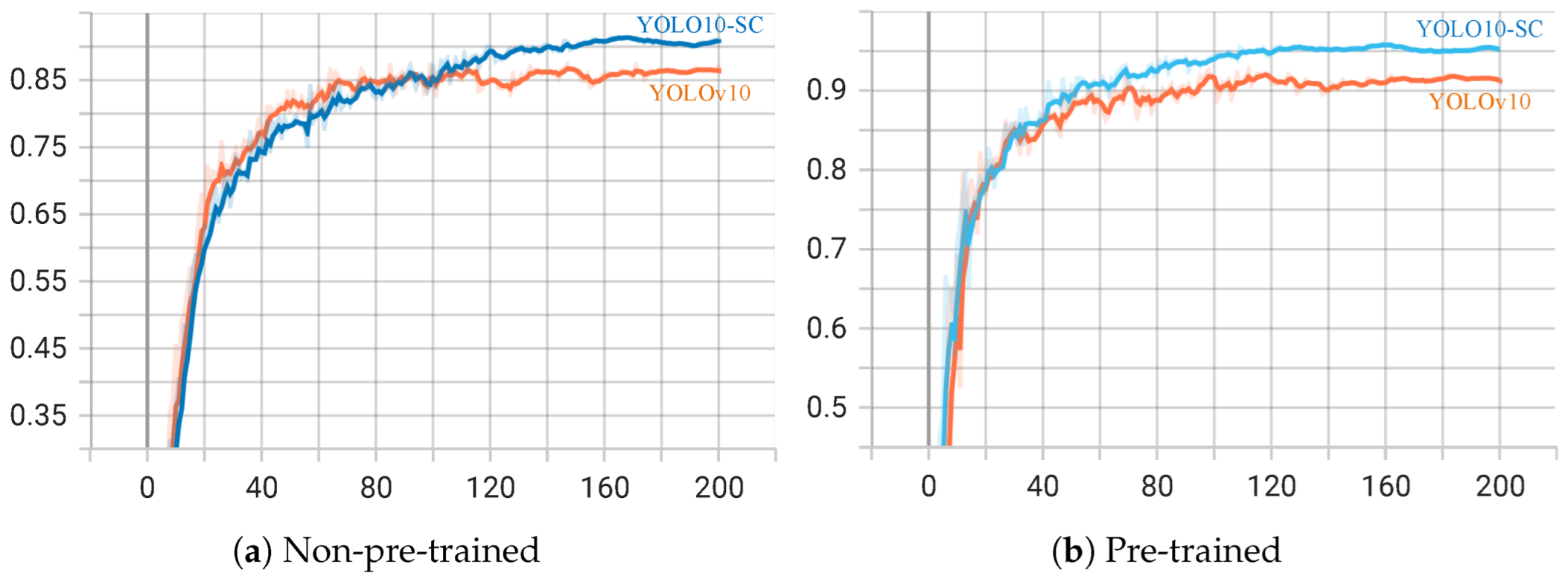

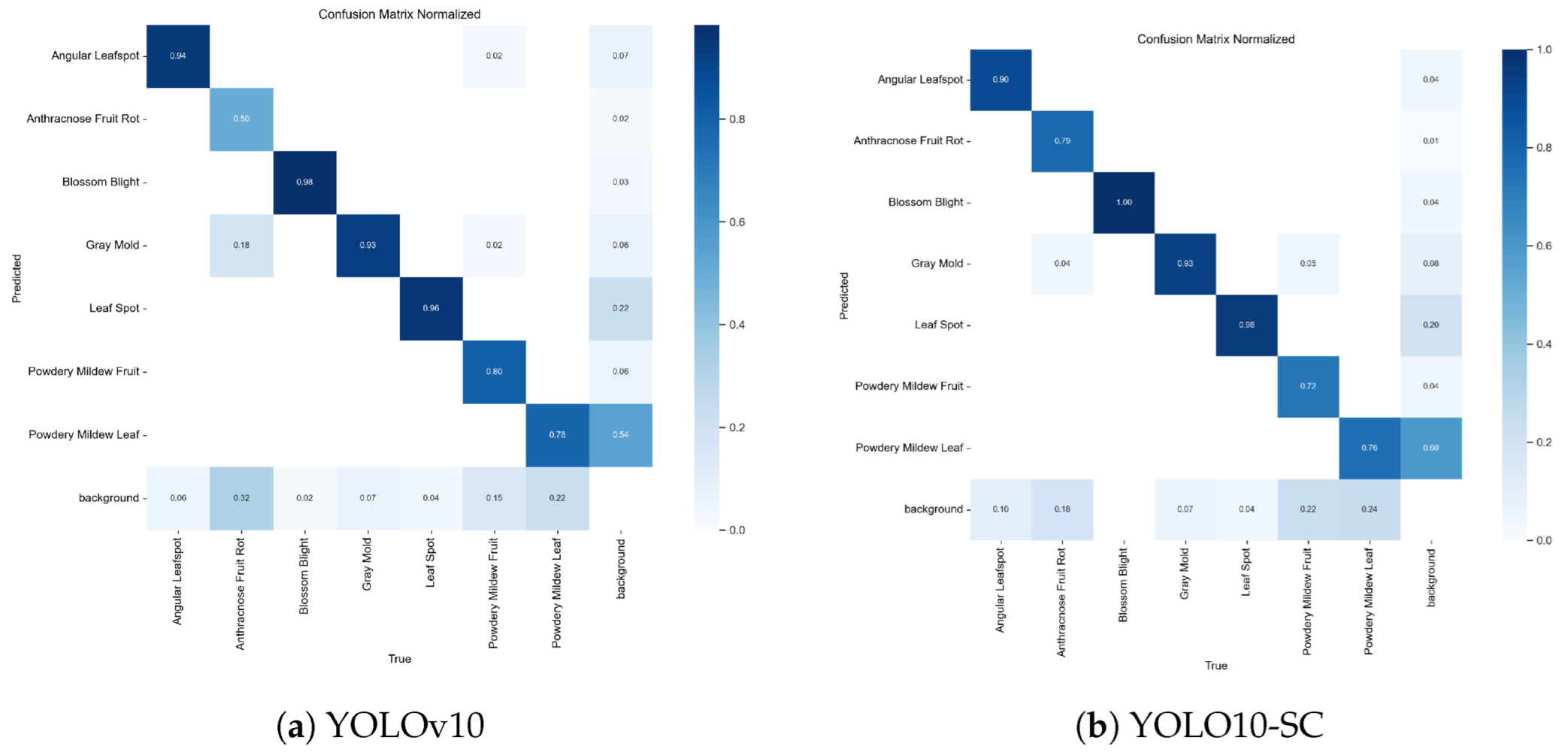

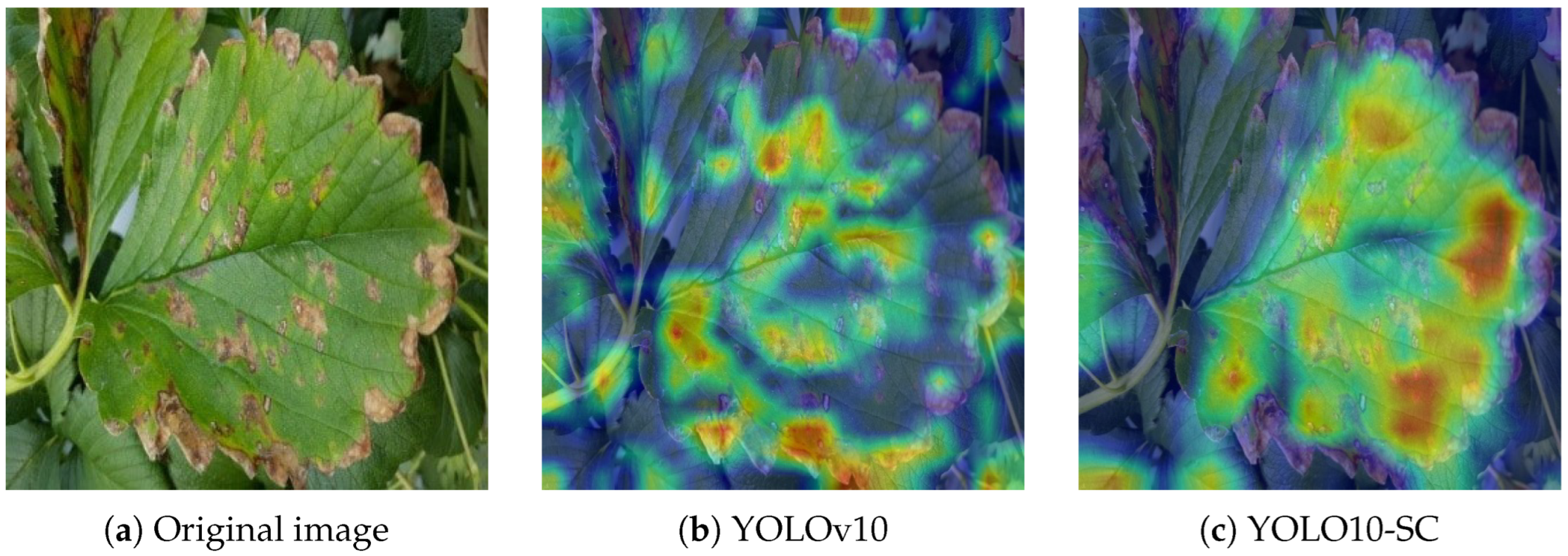

3.4. Comparison Experiment Before and After Improvement

3.5. Ablation Experiment

3.6. Comparison Experiment

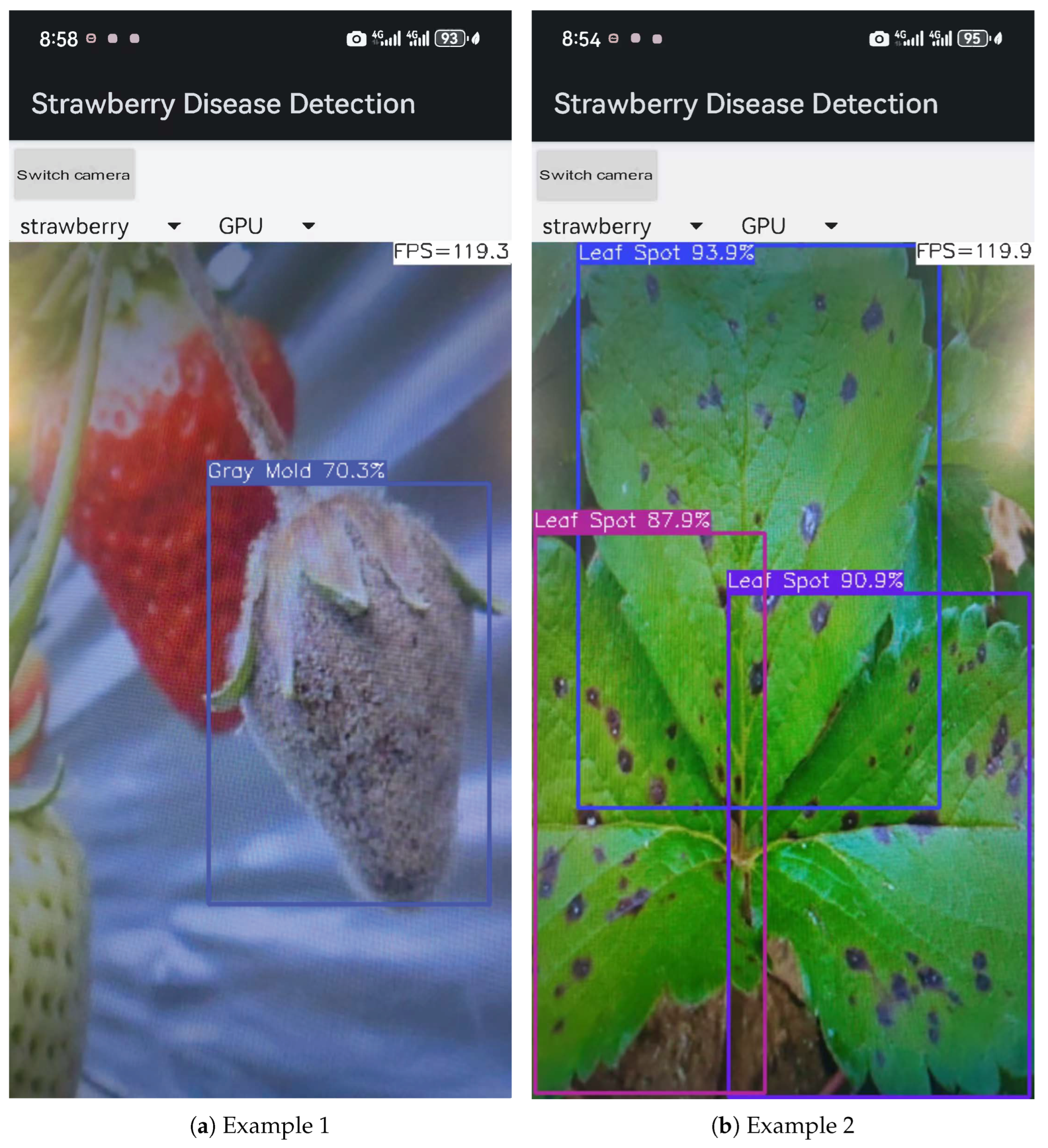

3.7. Strawberry Disease Detection System

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Hernández-Martínez, N.R.; Blanchard, C.; Wells, D.; Salazar-Gutiérrez, M.R. Current state and future perspectives of commercial strawberry production: A review. Sci. Hortic. 2023, 312, 111893.

- Yang, J.-W.; Kim, H.-I. An overview of recent advances in greenhouse strawberry cultivation using deep learning techniques: A review for strawberry practitioners. Agronomy 2024, 14, 34.

- Hazgui, M.; Ghazouani, H.; Barhoumi, W. Genetic programming-based fusion of HOG and LBP features for fully automated texture classification. Vis. Comput. 2022, 38, 457–476.

- Djimeli-Tsajio, A.B.; Thierry, N.; Jean-Pierre, L.T.; Kapche, T.; Nagabhushan, P. Improved detection and identification approach in tomato leaf disease using transformation and combination of transfer learning features. J. Plant Dis. Prot. 2022, 129, 665–674.

- Yang, N.; Qian, Y.; EL-Mesery, H.S.; Zhang, R.; Wang, A.; Tang, J. Rapid detection of rice disease using microscopy image identification based on the synergistic judgment of texture and shape features and decision tree–confusion matrix method. J. Sci. Food Agric. 2019, 99, 6589–6600.

- Li, Z.; Guo, R.; Li, M.; Chen, Y.; Li, G. A review of computer vision technologies for plant phenotyping. Comput. Electron. Agric. 2020, 176, 105672.

- Jia, S.; Gao, H.; Hang, X. Research progress on image recognition technology of crop pests and diseases based on deep learning. Trans. Chin. Soc. Agric. Mach. 2019, 50, 313–317.

- Redmon, J. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Chodey, M.D.; Shariff, C.N. Hybrid deep learning model for in-field pest detection on real-time field monitoring. J. Plant Dis. Prot. 2022, 129, 635–650.

- Gehlot, M.; Gandhi, G.C. “EffiNet-TS”: A deep interpretable architecture using EfficientNet for plant disease detection and visualization. J. Plant Dis. Prot. 2023, 130, 413–430.

- Zhang, K.; Wu, Q.; Chen, Y. Detecting soybean leaf disease from synthetic image using multi-feature fusion Faster R-CNN. Comput. Electron. Agric. 2021, 183, 106064.

- Hu, G.; Yang, X.; Zhang, Y.; Wan, M. Identification of tea leaf diseases by using an improved deep convolutional neural network. Sustain. Comput. Inform. Syst. 2019, 24, 100353.

- Jiang, Y.; Lu, L.; Wan, M.; Hu, G.; Zhang, Y. Detection method for tea leaf blight in natural scene images based on lightweight and efficient LC3Net model. J. Plant Dis. Prot. 2024, 131, 209–225.

- Zhao, S.; Zhao, M.; Qi, L.; Li, D.; Wang, X.; Li, Z.; Hu, M.; Fan, K. Detection of ginkgo biloba seed defects based on feature adaptive learning and nuclear magnetic resonance technology. J. Plant Dis. Prot. 2024, 131, 2111–2124.

- Zhao, S.; Liu, J.; Wu, S. Multiple disease detection method for greenhouse-cultivated strawberry based on multiscale feature fusion Faster R_CNN. Comput. Electron. Agric. 2022, 199, 107176.

- Liu, J.; Wang, X. Early recognition of tomato gray leaf spot disease based on MobileNetv2-YOLOv3 model. Plant Methods 2020, 16, 83.

- Xue, Z.; Xu, R.; Bai, D.; Lin, H. YOLO-Tea: A tea disease detection model improved by YOLOv5. Forests 2023, 14, 415.

- Li, R.; Li, Y.; Qin, W.; Abbas, A.; Li, S.; Ji, R.; Wu, Y.; He, Y.; Yang, J. Lightweight network for corn leaf disease identification based on improved YOLO v8s. Agriculture 2024, 14, 220.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458.

- Jocher, G.; Qiu, J.; Chaurasia, A. Ultralytics YOLO. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 15 September 2024).

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional block attention module. arXiv 2018, arXiv:1807.06521.

- Li, J.; Wen, Y.; He, L. SCConv: Spatial and channel reconstruction convolution for feature redundancy. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 6153–6162.

- Loy, C.C.; Lin, D.; Wang, J.; Chen, K.; Xu, R.; Liu, Z. CARAFE: Content-aware reassembly of features. arXiv 2019, arXiv:1905.02188.

- Lu, H.; Liu, W.; Fu, H.; Cao, Z. FADE: Fusing the assets of decoder and encoder for task-agnostic upsampling. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022.

- Lu, H.; Liu, W.; Ye, Z.; Fu, H.; Liu, Y.; Cao, Z. SAPA: Similarity-aware point affiliation for feature upsampling. Adv. Neural Inf. Process. Syst. 2022, 35, 20889–20901.

- Liu, W.; Lu, H.; Fu, H.; Cao, Z. Learning to upsample by learning to sample. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 6027–6037.

- Afzaal, U.; Bhattarai, B.; Pandeya, Y.R.; Lee, J. An instance segmentation model for strawberry diseases based on Mask R-CNN. Sensors 2021, 21, 6565.

- Wang, P.; Huang, H.; Wang, M. Complex road target detection algorithm based on improved YOLOv5. Comput. Eng. Appl. 2022, 58, 81–92.

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs beat YOLOs on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

- Chien, C.-T.; Ju, R.-Y.; Chou, K.-Y.; Chiang, J.-S. YOLOv8-AM: YOLOv8 based on effective attention mechanisms for pediatric wrist fracture detection. arXiv 2024, arXiv:2402.09329.

- Ju, R.-Y.; Chien, C.-T.; Xieerke, E.; Chiang, J.-S. Pediatric wrist fracture detection using feature context excitation modules in X-ray images. arXiv 2024, arXiv:2410.01031.

- Youwai, S.; Chaiyaphat, A.; Chaipetch, P. YOLO9tr: A lightweight model for pavement damage detection utilizing a generalized efficient layer aggregation network and attention mechanism. J. Real-Time Image Process. 2024, 21, 163.

- Tang, S.; Zhang, S.; Fang, Y. HIC-YOLOv5: Improved YOLOv5 for small object detection. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; pp. 6614–6619.

- Kang, M.; Ting, C.-M.; Ting, F.F.; Phan, R.C.-W. RCS-YOLO: A fast and high-accuracy object detector for brain tumor detection. In International Conference on Medical Image Computing and Computer-Assisted Intervention, Vancouver, BC, Canada, 8–12 October 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 600–610.

- Chen, S.; Liao, Y.; Lin, F.; Huang, B. An improved lightweight YOLOv5 algorithm for detecting strawberry diseases. IEEE Access 2023, 11, 54080–54092.

- Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

- Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-centric real-time object detectors. arXiv 2025, arXiv:2502.12524.

| Disease Category | Angular Leafspot | Anthracnose Fruit Rot | Blossom Blight | Gray Mold | Leaf Spot | Powdery Mildew Fruit | Powdery Mildew Leaf |
|---|---|---|---|---|---|---|---|
| Quantity | 870 | 194 | 416 | 954 | 1230 | 270 | 1066 |
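A few lines of Python make the class balance in the dataset table explicit. The table does not state whether the quantities count images or labelled instances, so this sketch treats them only as the reported numbers; the dictionary is copied from the table and the variable names are illustrative.

```python
# Per-class quantities copied from the dataset table (units as reported there).
counts = {
    "Angular Leafspot": 870,
    "Anthracnose Fruit Rot": 194,
    "Blossom Blight": 416,
    "Gray Mold": 954,
    "Leaf Spot": 1230,
    "Powdery Mildew Fruit": 270,
    "Powdery Mildew Leaf": 1066,
}

total = sum(counts.values())
shares = {name: n / total for name, n in counts.items()}
largest = max(counts, key=counts.get)
smallest = min(counts, key=counts.get)
imbalance = counts[largest] / counts[smallest]  # most frequent vs. least frequent class

print(total)  # 5000
for name, share in sorted(shares.items(), key=lambda kv: -kv[1]):
    print(f"{name:<22} {share:6.1%}")
print(f"{largest} / {smallest}: {imbalance:.1f}x")
```

The quantities sum to 5000, and the largest class (Leaf Spot, 1230) is about 6.3 times the size of the smallest (Anthracnose Fruit Rot, 194, or 3.9% of the total).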

| Configuration Item | Setting |
|---|---|
| Operating system | Windows 11 |
| CPU | 11th Gen Intel(R) Core(TM) i9-11900 @ 2.50 GHz |
| GPU | NVIDIA GeForce RTX 3080 |
| Deep learning framework | PyTorch 2.3.1 |
| Programming language | Python 3.9 |
| GPU memory | 36 GB |
| System memory (RAM) | 32 GB |
| Input image size | 640 × 640 |
| Optimizer | AdamW |
| Learning rate | 0.01 |
| Epochs | 200 |
| Batch size | 32 |

| Model | P | R | mAP50 | F1 | Parameters | GFLOPs | Model Size | FPS |
|---|---|---|---|---|---|---|---|---|
| Before improvement | 0.882 | 0.812 | 0.873 | 0.846 | 2,697,146 | 8.2 | 5.8 | 142.8 |
| After improvement | 0.885 | 0.865 | 0.914 | 0.875 | 2,624,204 | 7.9 | 5.7 | 149 |
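The efficiency columns of the before/after comparison can be expressed as relative changes with a short script. This is a sketch over the reported numbers only; the latency line assumes per-image latency is simply the reciprocal of the reported FPS, which ignores batching and pre/post-processing details the table does not specify.

```python
# Values copied from the before/after comparison table.
baseline = {"mAP50": 0.873, "params": 2_697_146, "gflops": 8.2, "size": 5.8, "fps": 142.8}
improved = {"mAP50": 0.914, "params": 2_624_204, "gflops": 7.9, "size": 5.7, "fps": 149.0}


def relative_change(before, after):
    """Signed relative change; -0.027 means 2.7% smaller."""
    return (after - before) / before


param_drop = baseline["params"] - improved["params"]
print(param_drop)  # 72942
for key in ("params", "gflops", "size", "fps"):
    print(f"{key:>6}: {relative_change(baseline[key], improved[key]):+.1%}")
print(f"mAP50: {improved['mAP50'] - baseline['mAP50']:+.3f} (absolute)")

# Assumption: latency (ms per image) = 1000 / FPS.
for name, row in (("baseline", baseline), ("improved", improved)):
    print(f"{name}: {1000 / row['fps']:.2f} ms/image")
```

On these numbers the parameter count falls by 72,942 (about 2.7%) and GFLOPs by about 3.7%, while FPS rises by about 4.3%, roughly 7.00 ms versus 6.71 ms per image, alongside a 0.041 absolute gain in mAP50.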

| Model | P | R | mAP50 | F1 | Parameters | GFLOPs | Model Size | FPS |
|---|---|---|---|---|---|---|---|---|
| Before improvement | 0.935 | 0.851 | 0.917 | 0.891 | 2,697,146 | 8.2 | 5.8 | 141.1 |
| After improvement | 0.951 | 0.903 | 0.958 | 0.926 | 2,624,204 | 7.9 | 5.7 | 146.5 |

| Model | P | R | mAP50 | F1 |
|---|---|---|---|---|
| Before improvement | 0.587 | 0.402 | 0.436 | 0.477 |
| After improvement | 0.574 | 0.420 | 0.449 | 0.485 |

| Experiment | CBAM | C2f_SCConv | DySample | P | R | mAP50 | F1 |

|---|---|---|---|---|---|---|---|

| A | | | | 0.882 | 0.812 | 0.873 | 0.846 |

| B | √ | 0.883 | 0.843 | 0.896 | 0.863 | ||

| C | √ | 0.928 | 0.806 | 0.899 | 0.863 | ||

| D | √ | 0.915 | 0.838 | 0.892 | 0.875 (0.87480) | ||

| E | √ | √ | 0.901 | 0.845 | 0.901 | 0.872 | |

| F | √ | √ | 0.918 | 0.822 | 0.904 | 0.867 | |

| G | √ | √ | 0.915 | 0.82 | 0.892 | 0.865 | |

| YOLO10-SC (non-pre-trained) | √ | √ | √ | 0.885 | 0.865 | 0.914 | 0.875 (0.87488) |

| YOLO10-SC (pre-trained) | √ | √ | √ | 0.951 | 0.903 | 0.958 | 0.926 |
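F1 is the harmonic mean of precision and recall, F1 = 2PR/(P + R). Recomputing it from the P and R columns of the ablation table is a quick consistency check, and it shows why rows D and YOLO10-SC (non-pre-trained) carry extra decimals: both round to 0.875, and only the unrounded values separate them. The sketch assumes the table's F1 column uses this standard definition; the row labels are copied from the table.

```python
def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


# (P, R, reported F1) copied from the ablation table.
rows = {
    "A": (0.882, 0.812, 0.846),
    "B": (0.883, 0.843, 0.863),
    "C": (0.928, 0.806, 0.863),
    "D": (0.915, 0.838, 0.875),
    "E": (0.901, 0.845, 0.872),
    "F": (0.918, 0.822, 0.867),
    "G": (0.915, 0.82, 0.865),
    "YOLO10-SC (non-pre-trained)": (0.885, 0.865, 0.875),
    "YOLO10-SC (pre-trained)": (0.951, 0.903, 0.926),
}

for name, (p, r, reported) in rows.items():
    f1 = f1_score(p, r)
    assert round(f1, 3) == reported, name  # recomputed value matches the table
    print(f"{name:<28} F1 = {f1:.5f}")
```

The recomputed values are about 0.87481 for D and 0.87489 for YOLO10-SC (non-pre-trained), so the two differ only in the fifth decimal place.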

| Method | P | R | mAP50 | F1 |
|---|---|---|---|---|
| YOLOv5 (2020) | 0.892 | 0.829 | 0.888 | 0.859 |
| RT-DETR (2023) | 0.862 | 0.856 | 0.874 | 0.859 |
| YOLOv7 (2022) | 0.871 | 0.802 | 0.854 | 0.835 |
| YOLOv8 (2023) | 0.878 | 0.843 | 0.888 | 0.860 |
| YOLOv9 (2024) | 0.885 | 0.824 | 0.896 | 0.853 |
| YOLOv8-AM (2024) | 0.842 | 0.808 | 0.869 | 0.825 |
| FCE-YOLOv8 (2024) | 0.843 | 0.823 | 0.873 | 0.833 |
| YOLO9tr (2024) | 0.869 | 0.825 | 0.891 | 0.846 |
| HIC-YOLOv5 (2023) | 0.798 | 0.791 | 0.803 | 0.794 |
| RCS-YOLO (2024) | 0.933 | 0.802 | 0.855 | 0.863 |
| Mask R-CNN (2021) | 0.702 | 0.815 | 0.824 | 0.754 |
| YOLOv11 (2024) | 0.889 | 0.824 | 0.885 | 0.855 |
| YOLOv12 (2025) | 0.880 | 0.823 | 0.892 | 0.851 |
| YOLO10-SC | 0.885 | 0.865 | 0.914 | 0.875 |
| Improved Faster R-CNN (pre-trained) (2022) | - | - | 0.922 | - |
| YOLO-GIC-C (pre-trained) (2023) | 0.933 | 0.903 | 0.947 | 0.918 |
| YOLO10-SC (pre-trained) | 0.951 | 0.903 | 0.958 | 0.926 |
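The comparison table mixes rows marked as pre-trained with rows that are not, so the non-pre-trained YOLO10-SC row is most directly comparable with the unmarked rows. The sketch below finds the strongest such competitor for each metric. The values are copied from the table; treating every unmarked row as trained without pre-training is an assumption based only on the labels.

```python
METRICS = ("P", "R", "mAP50", "F1")

# Comparison-table rows not marked "(pre-trained)": (P, R, mAP50, F1).
others = {
    "YOLOv5": (0.892, 0.829, 0.888, 0.859),
    "RT-DETR": (0.862, 0.856, 0.874, 0.859),
    "YOLOv7": (0.871, 0.802, 0.854, 0.835),
    "YOLOv8": (0.878, 0.843, 0.888, 0.860),
    "YOLOv9": (0.885, 0.824, 0.896, 0.853),
    "YOLOv8-AM": (0.842, 0.808, 0.869, 0.825),
    "FCE-YOLOv8": (0.843, 0.823, 0.873, 0.833),
    "YOLO9tr": (0.869, 0.825, 0.891, 0.846),
    "HIC-YOLOv5": (0.798, 0.791, 0.803, 0.794),
    "RCS-YOLO": (0.933, 0.802, 0.855, 0.863),
    "Mask R-CNN": (0.702, 0.815, 0.824, 0.754),
    "YOLOv11": (0.889, 0.824, 0.885, 0.855),
    "YOLOv12": (0.880, 0.823, 0.892, 0.851),
}
ours = (0.885, 0.865, 0.914, 0.875)  # YOLO10-SC row without the pre-trained label

best_rival = {}
for i, metric in enumerate(METRICS):
    name = max(others, key=lambda k: others[k][i])  # highest score in column i
    best_rival[metric] = (name, others[name][i])
    margin = ours[i] - others[name][i]
    print(f"{metric:<6} best other: {name:<10} {others[name][i]:.3f}  YOLO10-SC margin: {margin:+.3f}")
```

On these rows YOLO10-SC leads on R, mAP50 and F1 (by 0.009 over RT-DETR, 0.018 over YOLOv9 and 0.012 over RCS-YOLO, respectively), while RCS-YOLO has the highest precision (0.933 versus 0.885).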

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jin, H.; Ji, X.; Liu, W. Dynamic and Lightweight Detection of Strawberry Diseases Using Enhanced YOLOv10. Electronics 2025, 14, 3768. https://doi.org/10.3390/electronics14193768
