1. Introduction

Phishing attacks remain one of the most prevalent and serious forms of cybercrime, with adversaries increasingly using deceptive URLs to impersonate trusted brands and obtain sensitive information from unsuspecting users. As phishing tactics mature, detection approaches must be accurate, efficient, and explainable. Existing detection systems have implemented multimodal methods such as HTML parsing, natural language processing (NLP), and image-based logo identification to identify impersonation attacks [1,2,3]. Although this work has shown high detection accuracy, such systems need usability improvements, particularly regarding computational overhead, latency, and extensibility in low-capacity settings such as browser extensions or mobile applications [4].

Recent studies have demonstrated a decisive shift toward lightweight, URL-only models for phishing detection. Unlike multimodal models that rely on webpage content, HTML parsing, natural language processing, and image-based logo detection, URL-only models consider only the semantic and lexical structure of the URL text. This shift has occurred because multimodal models, although they achieve strong predictions, suffer from substantial operational difficulties in practice. They incur large computational costs due to duplicated feature extraction pipelines [5], cannot scale in real-time environments such as browsers or email gateways [6], and introduce significant latency between request and response, which makes them ineffective against zero-day phishing attacks [3]. URL-only models therefore benefit from faster inference, better extensibility, a better fit for low-capacity systems, and independence from language. In this study, we focus on Temporal Convolutional Networks (TCNs) for sequence-based modeling. TCNs combine the sequence-modeling strengths of recurrent neural networks such as RNNs and LSTMs with the stability and parallelism of convolutional networks, which helps stabilize gradient flow; with sufficiently deep stacks of dilated causal convolutions, they can also model longer-range dependencies [7,8].

This project presents a full exploration of real-time phishing detection using a character-level TCNWithAttention model. The model was trained on the GramBeddings dataset, a balanced dataset containing 800,000 phishing and legitimate URLs, and tested on a separate, independent dataset, PhiUSIIL. The model includes an attention mechanism that focuses on the character sequences most indicative of legitimate and phishing URLs, and uses SHAP (Shapley additive explanations) for detailed, explainable output. It was compared with CNN, BiLSTM, and fine-tuned DistilBERT models on F1-score, precision, and inference time. Experimental results show that the TCNWithAttention model achieved better classification performance than CNN, BiLSTM, and BERT, with a test accuracy of 97.54%, and sustained 86% precision on previously unseen phishing URLs.

Unlike CNN, BiLSTM, or Transformer baselines, which emphasize raw accuracy, our TCNWithAttention model links stable and efficient long-range sequence modeling directly with transparent interpretability: the attention mechanism and SHAP attributions together enable both high accuracy and the potential for practical trust in real-time brand protection systems. To our knowledge, this is the first phishing detection framework to integrate TCNs with attention and SHAP for URL-only detection, combining efficiency, accuracy, and interpretability in a deployable real-time system.

The following research questions framed the development and evaluation of this project.

To what extent can a TCN-based architecture accurately and efficiently learn sequential patterns from URL structures to detect phishing in a real-time situation?

How does the TCNWithAttention model compare with established CNN, BiLSTM, and BERT baselines in terms of predictive performance, interpretability, and inference efficiency?

Can attention and SHAP values improve model transparency and thereby support trust in real-time operational cybersecurity settings?

This project has made several important contributions to the field of phishing detection and brand protection. The key contributions of the project are outlined as follows:

Novel Architecture: developed a lightweight TCNWithAttention model that detects phishing URLs from URL sequences alone, addressing the latency and scalability challenges of multimodal systems.

Comprehensive Benchmarking: performed a comprehensive series of experiments comparing our model against CNN, BiLSTM, and BERT baselines demonstrating superior performance while operating in a near real-time fashion on CPU.

Explainability: integrated attention visualizations and SHAP analysis to identify the URL components contributing to the model’s predictions, enhancing trust and usability in a security analyst’s workflow.

Deployable Solution: developed a prototype deployable system using Streamlit that allows real-time classification, batch classification, bilingual support, and analyst feedback integration, bridging the gap between the academic models and industry deployable tools.

The overall structure of this project is organized in the following order.

Section 2 presents a review of the related literature on phishing detection.

Section 3 presents the motivation behind this project.

Section 4 presents the research methodology and summarizes the framework within which the project was conducted.

Section 5 presents the model implementation and system design, including model training and a demonstration of how the system could be deployed.

Section 6 provides evaluation, testing and interpretability data.

Section 7 presents the discussion on deployment and real-life system integration.

Section 8 concludes the project with a summary of key achievements and future work.

2. Literature Review

2.1. Traditional Phishing Detection Techniques

Historically, phishing detection relied on blacklists and rule- or signature-based approaches (e.g., Google Safe Browsing and Spamhaus). These were lightweight and easy to implement, but were inherently reactive, brittle, and easily circumvented by zero-day attacks and obfuscated phishing schemes [9]. Heuristic approaches (lexical rules, domain/WHOIS/hosting signals, and HTML signals) improved coverage but lacked robustness to evasion (typosquatting, homograph substitutions, and scripted redirections) and required constant manual curation and updates [10,11].

Traditional machine learning (ML) methods (SVMs, decision trees, random forests, Naïve Bayes) increased generalizability, but required considerable feature engineering across URLs, HTML, and WHOIS metadata. Additionally, these systems often performed poorly on new distributions, especially in multilingual settings or when the training data was stale or narrowly focused [12,13]. These limitations were a primary factor driving the transition to deep learning (DL)-based models, which accept raw strings and learn discriminative features directly from data [13].

2.2. Deep Learning for URL-Based Phishing Detection

Recent work has increasingly used deep learning (DL) to learn the lexical and stylistic patterns present in URL sequences, reducing the number of manual features and increasing resistance to adversarial construction (e.g., nested subdomains and obfuscated paths) [13]. For example, CNNs (e.g., URLNet) learn local patterns at multiple n-gram scales (token identifiers, brand-spoofing tokens, and suspicious affixes) from character and word embeddings, but are limited in modeling sequential order and long-range dependencies [6]. Although RNNs/BiLSTMs provide good sequential context, they train slowly, parallelize poorly, and have prolonged latency, which makes them less suitable for real-time deployment on CPU [14,15]. Conversely, the semantic modeling of Transformers/BERT is very strong, but the computational cost of inference (e.g., GPU-dominant processing and high memory use) limits deployability at the edge (e.g., browsers, gateways, and mobile) [16]. A compelling alternative is temporal convolutional networks (TCNs), which combine the speed and parallelism of CNNs with long-range temporal modeling through dilated causal convolutions and residual connections, offering speed and stability well suited to character-level URL classification [5]. Recent studies reported that TCN-based detectors outperformed RNN-based detectors and reached accuracy similar to BERT while being several orders of magnitude more efficient [7,8].

2.3. Multimodal and HTML-Based Phishing Detection

Multimodal systems (screenshots/logos/DOM/HTML) such as Phishpedia and KnowPhish have achieved very high accuracy by using rich visual and structural cues [1,2]. However, they suffer from rendering latency, crawler intrusion, and infrastructure overhead, which limit their scalability and real-time deployment (edge plugins, mail gateways, and low-power devices). They are also affected by adversarial HTML obfuscation (JavaScript redirection, DOM morphing, and CSS cloaking) [3,17]. Comparative studies have shown that although multimodal methods can be superior in offline/forensic assessments, URL-only systems are operationally better for inline protection because they are faster and simpler [13].

2.4. The Shift to URL-Only Detection

Recent studies have shown that URL-only models can match or exceed the performance of multimodal methods in operating environments, mainly because they do not need HTML rendering or third-party lookups, allowing for sub-50 ms inference, whereas parsing a page may take ≈800 ms [4,10]. URL-only systems are language-agnostic, resistant to crawler blocking, and supportive of real-time explainability (token-level attributions), which favors deployment in browsers, SWGs, and MTAs. Further empirical evaluation (e.g., GramBeddings) has shown strong generalization to previously unseen URLs, supporting sequence-only modeling at scale [4,11].

2.5. Temporal Convolutional Networks (TCNs)

TCNs use stacked dilated causal convolutions to expand the receptive field exponentially with depth while preserving temporal order. Furthermore, residual connections stabilize gradients so that deeper stacks of convolutional layers can be trained reliably, while enabling fast, parallel training and inference on CPU [5]. In phishing detection, TCNs match or outperform RNNs while remaining competitive with BERT at a fraction of the cost and latency [7,8]. Additionally, TCNs can be integrated with attention mechanisms to accentuate contextual portions of URLs (e.g., deceptive subdomains and brand misuse). The resulting interpretable attention weights may also assist analysts in the triage process [18]. TCNs thus offer complementary strengths in accuracy, efficiency, and transparency, which make them strong candidates for brand protection and other types of live defenses.
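To make the receptive-field growth concrete, the following minimal sketch computes how many input characters one output position can see after a stack of dilated convolutions. The kernel size, the dilation schedule, and the function name `tcn_receptive_field` are illustrative assumptions, not the configuration used in this study.

```python
def tcn_receptive_field(kernel_size, dilations, convs_per_block=2):
    """Receptive field (in characters) of a stack of dilated convolutions.

    Assumes stride 1 and `convs_per_block` convolutions sharing one
    dilation per block (a hypothetical layout for illustration).
    """
    # Each dilated convolution widens the field by (kernel_size - 1) * dilation.
    growth = sum((kernel_size - 1) * d * convs_per_block for d in dilations)
    return 1 + growth


# Hypothetical schedule: dilation doubles with each block.
dilations = [2 ** i for i in range(4)]        # [1, 2, 4, 8]
print(tcn_receptive_field(3, dilations))      # 61
```

Under these assumptions, adding one more block with dilation 16 would roughly double the coverage, which is the sense in which the receptive field grows exponentially with depth.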

2.6. Interpretability in Phishing Detection

There is also an increasing need for explainability in natural language processing models to support operational trust, auditing, and regulatory compliance (i.e., explainable AI/XAI). Attention pooling is now commonly used for this purpose and can provide intuitive “where the model looked” signals along the URL. SHAP complements this with additive, model-agnostic attributions that quantify “how much did each character/token contribute” to a model prediction [19]. Previous work provided explanations through attention, LIME/SHAP, or graph-based methods, but at the cost of either relying on external knowledge bases or requiring lengthy implementation effort [2]. In a URL-only pipeline, attention and SHAP provide fine-grained, direct explanations at very small computational or human cost, allowing analysts to check informative cues in URLs such as brand impersonation tokens, suspicious TLDs, and stuffed subdomains.

2.7. Research Gap and Contributions of the Research Project

Despite advancements, research gaps exist:

Latency and Deployability: Existing multimodal solutions remain too resource intensive for real-time edge applications [1,2].

Lightweight and Interpretable: URL-only models that simultaneously provide speed, accuracy, and explanations suitable for SOC workflows are practically non-existent [5,13].

TCNs not Fully Utilized: Current evidence supports TCNs for longer URLs and patterns, but they are underused compared to LSTMs/BERT [8,10].

Comparative Benchmarking: Most studies are not truly comparative: they rarely evaluate models on the same datasets and tokenization, and they frequently omit inference latency and model size, both of which matter for deployment [11,14].

Zero-Day Robustness: More work is needed on adversarial/obfuscated URL variants (e.g., homoglyphs, brand-mixing subdomains, dubious TLDs) [7].

This work addresses these research gaps by proposing a URL-only, lightweight TCNWithAttention framework; benchmarking it rigorously against CNN, BiLSTM, and BERT; applying attention and SHAP for character-level interpretability; and demonstrating a CPU-only, real-time edge deployment for commercial brand protection.

2.8. Comparative Analysis of Related Work

Existing phishing detection models fall into one of two types: multimodal systems (e.g., Phishpedia and KnowPhish) and URL-only systems. Multimodal systems use HTML, logo images, and screenshots as part of their phishing detection process, often achieving higher benchmark accuracy but incurring higher latency, crawler dependencies, and resource overheads that make real-time scaling difficult. URL-only systems, which use CNNs, BiLSTMs, TCNs, or Transformer variants, reach competitive accuracy with reduced latency and are therefore better suited for use in browsers, secure email gateways, and mobile applications.

Table 1 and Table 2 summarize the comparisons presented by showing the different model features, datasets, performance, and trade-offs.

2.9. Comparative Summary of Research Papers in 2025

Current studies establish a strong trend toward URL-only deep learning models, especially systems that use TCNs for their efficiency, long-range sequence modeling, and stable training. TCNs appear to be the top-performing models, consistently balancing accuracy, speed, and interpretability; they outperform RNNs and match Transformer accuracy at lower resource cost. The tables further indicate that although multimodal models are still useful for forensic or offline analyses, the trend is moving toward URL-only models, particularly TCN-based systems for real-time brand protection and zero-day phishing detection.

As outlined above, the literature in 2025 has converged on URL-only deep learning models for phishing detection, with TCNs the most favorable among them given their long-range dependency modeling and their accuracy, speed, and scalability. Multimodal approaches such as PhishAgent, which use DOM structures or rendered screenshots, suffer from limited scalability and from latency with larger sample sizes or in live environments. In contrast, TCN-based models and, to a lesser degree, BiLSTM-based models consistently achieve competitive accuracy with less overhead. Although attention mechanisms, Transformer variants, and GAN-based approaches are still being introduced, many of these proposals are not operationally feasible because they are computationally expensive or otherwise impractical. Overall, the literature has shifted strongly toward URL-only detection that is lightweight, interpretable, and scalable, which supports this project’s choice of a TCNWithAttention architecture.

3. Motivation

Phishing is still one of the most prevalent and damaging types of cybercrime, and cybercriminals show increasing interest in impersonating brands using sites that are designed to be visually and structurally similar to legitimate domains. Phishing detection has typically relied, in various combinations, on features such as page meta characteristics, HTML structure, logo image analysis, and other methods of full content inspection. These methods can work well, especially in offline contexts, but they are often computationally intensive and cannot be used for real-time identification of phishing sites in tools such as browser-based detection plug-ins or lightweight API services. In addition, modern phishing techniques have begun to use a range of cloaking techniques and may deliberately obfuscate the meta characteristics of a site, which can greatly hinder these traditional approaches [1,2].

Recently, the literature has started to emphasize an alternative, URL-only approach to phishing detection, in which models consider the structure and token patterns of URLs without downloading web page contents. For example, Do et al. (2025) [8] and S. Baskota (2025) [14] reported that sequential neural models can learn useful representations from character-level encodings of URLs and thereby find deceptive patterns indicative of phishing attacks. Other studies, Bozkir et al. (2023) [4] and W. Guo et al. (2025) [10], further reported that URL-based models could achieve efficient real-time processing speeds while maintaining reasonable levels of detection accuracy.

The primary value proposition of this project is to balance accuracy, inference speed, and efficient use of computing resources while ensuring a level of interpretability and transparency in the predictions. This is important for real-time applications, specifically for prevention through browser-based integrations or API-based microservices, where downloading full web content is fundamentally impractical and any significant image processing is infeasible.

While CNNs, BiLSTMs, and Transformers (i.e., BERT) are state-of-the-art deep learning models for classification, they are difficult to deploy in real time because of their large parameter counts, latency, and lack of interpretability [13,16,18]. TCNs, on the other hand, are a viable option. TCNs can learn temporal dependencies in URL sequences, operate at lower latency than recurrent networks, and have a smaller computational footprint than Transformer-based approaches. Moreover, TCNs with attention can extract and visualize the parts of the URL they rely on for a prediction, which helps build trust while explaining the model’s predictions in the context of Black and White Box Explainability for Cybersecurity.

To address the need for real-time phishing detection tools in brand protection, TCNs with attention could serve as a better alternative to more traditional architectures in terms of efficiency, accuracy, generalizability, and explainability. The aim was to develop a URL-only phishing detection framework that is lightweight and fast and that explains how and why a URL was classified as phishing.

4. Research Methodology

This study followed a structured and iterative methodology that progressed from exploration to implementation. The project first examined multimodal detection of logos using image recognition and natural language processing (NLP), but because of the scalability and latency challenges reported in the current literature, it then focused on a lightweight URL-only detection system. The refined methodology examined character-level sequence modeling of URLs, which is consistent with recent advances in phishing detection that emphasize lightweight, deployable models.

This paper initially explored multimodal brand protection modalities encompassing webpage logos, content, and metadata. The literature review and preliminary results indicated that URL-based phishing detection can exceed other methods in scalability, accuracy, and real-time applicability. This produced the primary research question: could lightweight sequence models provide scalable, practical, and interpretable phishing detection?

There were two publicly available datasets used in the study:

GramBeddings Dataset [4]: a balanced dataset of 400,000 phishing and 400,000 non-phishing URLs for character-level sequence modeling. It was used for training and internal validation.

PhiUSIIL Dataset [20]: a benchmark dataset composed of real-world phishing and benign URLs, used solely for external validation and robustness testing of the models.

As a first step in data analysis, the selected datasets underwent preprocessing, including URL cleaning, deduplication, and tokenization. Each URL was encoded as a 128-character fixed-length sequence using a custom character-level tokenizer to ensure a uniform representation of the input data.

A total of four deep learning models were built for comparison purposes:

CNN: A 1D convolutional neural network to serve as a traditional baseline.

BiLSTM: A bidirectional long short-term memory network used to model the sequential nature of URL data.

BERT: A Transformer-based language model fine-tuned to extract contextual features from raw URL text.

TCN with Attention: A residual, dilated convolutional network augmented with an attention pooling layer to improve interpretability and model performance.

All models were trained on GramBeddings and evaluated based on inference speed, scalability, accuracy, precision, recall, and F1-score.

The evaluation compared the four models: Temporal Convolutional Networks (TCN) with Attention, Convolutional Neural Networks (CNN), Bidirectional LSTM (BiLSTM), and BERT. Each model was assessed through internal validation (i.e., the GramBeddings test split) and external evaluation (the PhiUSIIL dataset) using comparative metrics, including confusion matrices, ROC curves with AUC, and classification heat maps, to provide evidence of both in-sample accuracy and out-of-sample generalization.
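The threshold-based metrics named above all derive from the four cells of a binary confusion matrix. The sketch below shows the arithmetic, treating phishing as the positive class; the function name and the example counts are purely illustrative and are not results from this study.

```python
def classification_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall, and F1 from confusion-matrix counts.

    tp/fp/tn/fn are counts with phishing treated as the positive class.
    Ratios with an empty denominator are reported as 0.0 by convention.
    """
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Made-up counts, for illustration only.
print(classification_metrics(tp=90, fp=10, tn=85, fn=15))
```

Reporting precision and recall alongside accuracy matters for the external dataset in particular, because its classes are not balanced in the way the training data is.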

To increase transparency, user trust, and model interpretability, the Temporal Convolutional Network (TCN) model included attention pooling and SHAP (Shapley additive explanations). The attention pooling mechanism produced character-level highlights showing which parts of a URL the model identified as significant to its prediction. SHAP values were also calculated to quantify each feature’s contribution to the model output. The combination allows both researchers and end users to visualize why a URL is classified as phishing or legitimate. The interpretability layer informed model auditing, increased user trust in automated decisions, and provided insights for improvement as the model matures over time.
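As a rough illustration of how attention pooling yields per-character highlights, the sketch below applies a softmax to per-position scores and uses the resulting weights both to pool the features and as an importance signal. It assumes the scores come from some learned scoring layer, which is omitted here; the function name and the toy inputs are hypothetical and do not reproduce the model in this study.

```python
import math


def attention_pool(features, scores):
    """Softmax-weighted pooling over sequence positions.

    features: list of T feature vectors (one per character position).
    scores:   list of T unnormalised relevance scores (assumed to come
              from a learned scoring layer that is not shown here).
    Returns (pooled_vector, weights); the weights sum to 1 and can be
    rendered as a character-level heat map.
    """
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]   # shift for numerical stability
    norm = sum(exps)
    weights = [e / norm for e in exps]
    dim = len(features[0])
    pooled = [sum(w * f[j] for w, f in zip(weights, features))
              for j in range(dim)]
    return pooled, weights


# Toy example: three positions with two-dimensional features.
pooled, weights = attention_pool([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
                                 [0.1, 2.0, 0.3])
print(weights)
```

Positions with larger weights dominate the pooled representation, which is why the same weights can be shown to an analyst as an indication of where the model focused.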

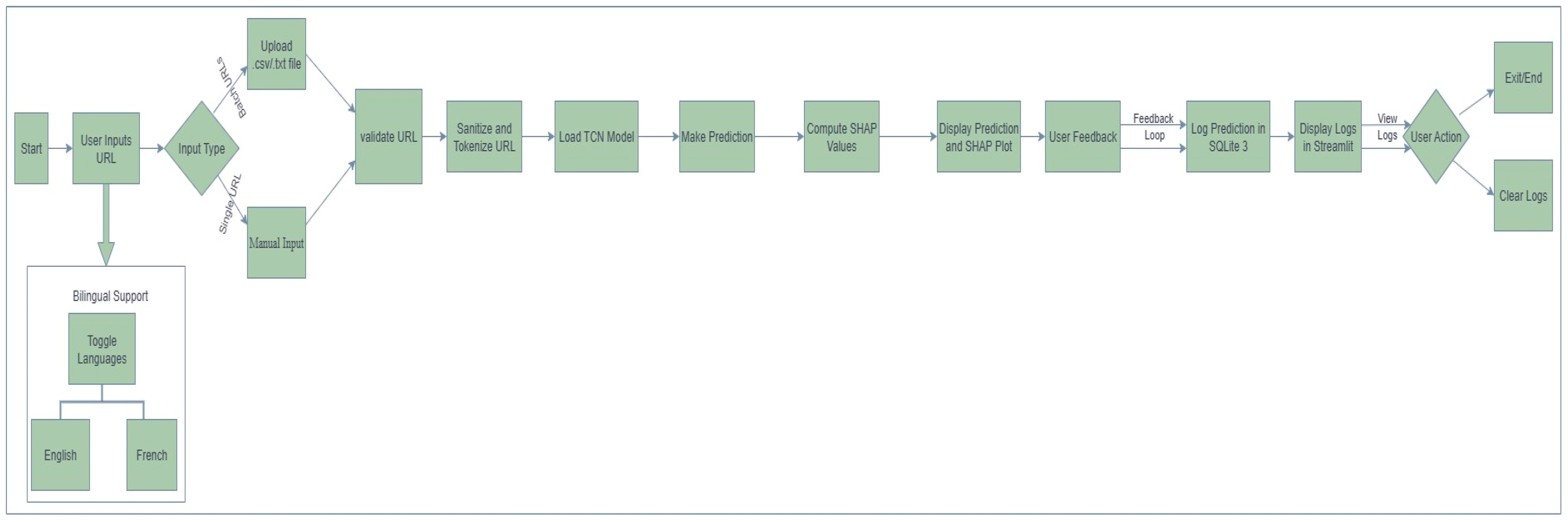

A prototype was implemented in Streamlit to classify a single URL or a batch of URLs in real time. The system included bilingual support (English and French), logging of predictions and any relevant feedback to an SQLite database, and the ability to log and visualize SHAP values. The system was specifically designed and optimized for CPU-only settings, allowing real-time deployment in low-resource or enterprise environments.

The methodology could be expanded in the future with features such as multilingual support, training with internationalized domain names (IDNs), adversarial robustness, and deployment of the model in a web browser. Although these functionalities were not completed in this research study, they form part of our plan for future work.

5. Design and Implementation

5.1. Problem Analysis

Phishing is one of the most widespread and damaging types of cybercrime. Attackers often use various types of deception in URLs to trick users into providing credentials or downloading malware. Conventional blacklist-based approaches do not work against zero-day phishing attacks, and even model-based approaches that use HTML, images, or network analysis have latency and scalability issues. This work presents a lightweight, character-level phishing URL model built with Temporal Convolutional Networks with Attention. The model was trained on the GramBeddings dataset and externally evaluated on the PhiUSIIL dataset, and it is intended to be scalable, interpretable, and deployable in real-time environments.

5.2. Dataset Preparation

5.2.1. GramBeddings URL Dataset

The GramBeddings URL Dataset was selected for model training and evaluation because it is balanced and diverse. The dataset contains 800,000 URLs, split evenly into 400,000 phishing and 400,000 benign URLs [4]. The phishing URLs were collected from PhishTank and OpenPhish between May 2019 and June 2021, combining long-term and periodic sampling with similarity reduction to make the samples more representative. The benign URLs were obtained through layered crawling intended to reduce noise: URLs were initially sampled from Alexa, then iteratively crawled from the 20 most popular websites in 20 countries, and finally randomly selected [4]. This sampling strategy was designed to achieve semantic diversity in the URLs and adequate generalizability across industries and geographies.

The dataset was preprocessed by removing duplicates and reducing domain-level overlap to improve generalization. The URLs were selected specifically for character-level modeling tasks and were released as part of the GramBeddings project [3,4]. Data from the GramBeddings [1] dataset was used for model training and evaluation. The raw data files, train.csv and test.csv, contain URL samples labeled as “Phishing” or “Legitimate”. For consistency, all labels were recoded as binary values (1 indicates phishing and 0 indicates legitimate). Erroneous entries were removed, and a custom cleaning script ensured consistent quoting around URLs. A character-level vocabulary was derived from the training set to encode each URL as a fixed-length (128-character) numeric sequence, with index 0 reserved for padding. The final dataset consisted of a training set of 639,999 samples and a test set of 159,999 samples, for a total of 799,998 samples, with a vocabulary size of 70 characters including padding.

The dataset is also distributionally balanced at several levels of analysis: average URL lengths are about the same for the phishing and benign classes, which reduces potential bias from structural differences. The ratios of generic TLDs (gTLDs) and country-code TLDs (ccTLDs) are also similar across the two classes: the phishing set contains about 60.10% .com domains and 11.82% ccTLDs, while the benign set contains 52.17% .com domains and 12.04% ccTLDs ([X]).

Some linguistic bias also exists: although different languages are represented, the distribution is likely skewed toward English-language domains. However, the dataset benefits from stratified sources across 20 countries with varying domain categories.

5.2.2. PhiUSIIL Phishing URL Dataset

The PhiUSIIL Phishing URL Dataset is an independent phishing dataset from the UCI Machine Learning Repository that contains labeled phishing and legitimate URLs [2]. The dataset was used exclusively for out-of-sample, external evaluation on an unseen real-world benchmark. It contains 235,795 instances, comprising 134,850 legitimate and 100,945 phishing URLs, manually curated from multiple sources and suitable for real-world validation. This independent external dataset was used to evaluate the generalization capability of the previously trained model. For consistency with the GramBeddings format, the labels were inverted: PhiUSIIL’s original encoding (0 = phishing, 1 = benign) was converted to (1 = phishing, 0 = legitimate). Using PhiUSIIL as an independent benchmark allowed robustness to be evaluated, particularly against zero-day phishing URLs and obfuscated structures that are not present in the training data.
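The label inversion described above can be sketched as a one-line mapping. The function name is hypothetical, and the input check is an added safeguard rather than something described in the text.

```python
def to_grambeddings_label(phiusiil_label):
    """Map a PhiUSIIL label (0 = phishing, 1 = benign) to the
    GramBeddings convention (1 = phishing, 0 = legitimate)."""
    if phiusiil_label not in (0, 1):
        raise ValueError(f"unexpected label: {phiusiil_label!r}")
    return 1 - phiusiil_label


print([to_grambeddings_label(y) for y in [0, 1, 1, 0]])  # [1, 0, 0, 1]
```

Applying the mapping once at load time keeps every downstream metric defined with phishing as the positive class for both datasets.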

5.3. Data Encoding and Tokenizing

A custom tokenizer was built to convert characters to numeric indices. URLs were encoded as sequences of character indices and padded to a fixed length of 128. The tokenized tensors were stored in .pt files so that they could be loaded immediately at runtime during training. The vocabulary, char2idx, was saved to a .pkl file for consistency across training, evaluation, and inference. The preprocessed tensors train_X.pt, train_y.pt, test_X.pt, and test_y.pt were created for quick model input.

A fixed maximum sequence length of 128 was chosen for consistency, performance, and computational efficiency. Character-length statistics for the training and evaluation datasets indicated that 95% of URLs in GramBeddings and 93% of URLs in PhiUSIIL were shorter than 128 characters, so truncation affected only a small number of exceptionally long outlier URLs. Very long URLs often contain long, automatically generated query strings or more questionable brand-derived domains. All URLs shorter than 128 characters were padded with a reserved token (index 0), which gives the input a consistent matrix shape. Padding is not detrimental to model learning because the padding token embeddings are set to zero and masked during convolution; the network therefore considers only the character positions that matter.

Rather than using adaptive dilation strategies, the TCN architecture uses fixed dilations at each layer, which allows the receptive field of the model to expand exponentially. This lets the model draw on both short-range and long-range features of a URL; for example, a short token such as “-login” and a longer brand-related fragment such as “secure-amazon”, possibly followed by further malicious features, can both fall within the receptive field.

Although variable-length or adaptive padding was not implemented in this model, the current dilation architecture mitigates the risk of losing critical features when URLs approach the maximum length. As part of future work, this can be enhanced by implementing dynamic sequence models that adaptively adjust sizes or segment longer URLs while still preserving semantic integrity.
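The encoding step can be sketched in plain Python as follows. The `char2idx` name, the length of 128, and the reserved padding index 0 come from the text; the helper names, the sorted ordering of the vocabulary, and the choice to map unseen characters to the padding index are assumptions made only for this illustration.

```python
MAX_LEN = 128   # fixed sequence length stated in the text
PAD_IDX = 0     # index reserved for padding


def build_vocab(urls):
    """Build a character-to-index map from training URLs (indices start at 1)."""
    chars = sorted({ch for url in urls for ch in url})
    return {ch: i + 1 for i, ch in enumerate(chars)}


def encode_url(url, char2idx, max_len=MAX_LEN):
    """Truncate to max_len, map characters to indices, then right-pad.

    Assumption: characters missing from the vocabulary map to PAD_IDX;
    the original tokenizer may handle them differently.
    """
    ids = [char2idx.get(ch, PAD_IDX) for ch in url[:max_len]]
    return ids + [PAD_IDX] * (max_len - len(ids))


# Hypothetical training URLs, for illustration only.
train_urls = ["http://example.com/login", "https://secure-example.org"]
char2idx = build_vocab(train_urls)
encoded = encode_url(train_urls[0], char2idx)
print(len(encoded))  # 128
```

In a pipeline like the one described, the resulting integer lists would then be stacked into tensors and saved, with the same `char2idx` reused at inference time so that indices stay consistent.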

5.4. Model Architecture

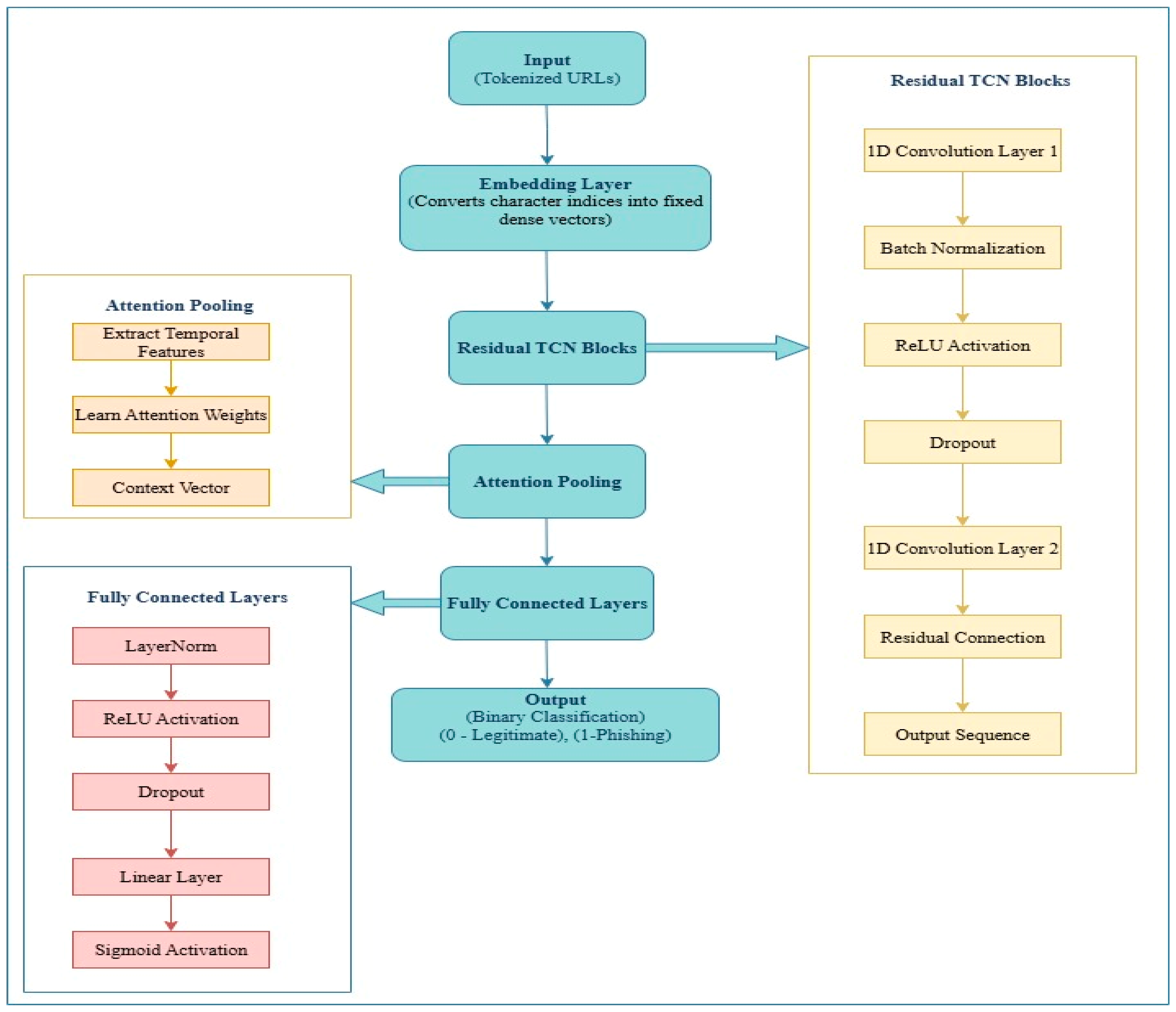

The proposed phishing detection system uses a Temporal Convolutional Network (TCN) architecture with an attention mechanism to process character-level URL sequences. The architecture of the model and the flow of data through its components are shown in Figure 1.

Input: The model accepts tokenized URLs as a sequence of integers which represent the characters in the URL. All the inputs are the same length (padded if necessary).

Embedding layer: The embedding layer maps character indices to dense vectors of a fixed size of 64. This representation lets the model capture syntactic patterns and positions along a URL.

Residual TCN Blocks: These blocks are the core of the Temporal Convolutional Network (TCN). They allow the model to learn long-range sequential dependencies in a causal and hierarchical way. Each residual block contains two 1D convolutional layers with dilated convolution, which lets the kernel cover a wider range of inputs without increasing the kernel size. Padding is set to (kernel_size − 1) × dilation/2 so that the output sequence has the same length as the input; the sequence length is therefore preserved through both convolutional layers. The block also applies batch normalization, which stabilizes training by normalizing the activations along the batch dimension, followed by ReLU activation and dropout for regularization (randomly zeroing activations so that the model does not depend too strongly on particular activations during training). The residual path is handled as follows: if the number of input channels differs from the number of output channels, a 1 × 1 convolution changes the dimensions for the residual addition; otherwise, an identity mapping suffices. Residual connections help the gradient flow through the network and reduce the impact of vanishing gradients, which makes training deeper architectures effective. Stacking blocks with different dilations captures multi-resolution temporal patterns in the character-encoded URL sequence, and each increase in dilation enlarges the receptive field so that long-range dependencies in the sequential input can be captured.
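The padding rule and the growth of the receptive field can be checked with a little arithmetic. The sketch below assumes a kernel size of 3 and dilations of 1, 2, 4, and 8, neither of which is stated in the text; only the padding formula and the two convolutions per block come from the description above.

```python
from typing import List


def same_padding(kernel_size: int, dilation: int) -> int:
    """Padding from the text: (kernel_size - 1) * dilation / 2 (an integer for odd kernels)."""
    return (kernel_size - 1) * dilation // 2


def conv_output_length(length: int, kernel_size: int, dilation: int, padding: int) -> int:
    """Output length of a stride-1 1D convolution with zero padding on both sides."""
    return length + 2 * padding - dilation * (kernel_size - 1)


def receptive_field(kernel_size: int, dilations: List[int], convs_per_block: int = 2) -> int:
    """Each stride-1 dilated convolution widens the receptive field by (kernel_size - 1) * dilation."""
    return 1 + sum(convs_per_block * (kernel_size - 1) * d for d in dilations)


KERNEL_SIZE = 3           # assumed, not stated in the paper
DILATIONS = [1, 2, 4, 8]  # assumed exponential schedule, one value per residual block

for d in DILATIONS:
    p = same_padding(KERNEL_SIZE, d)
    out_len = conv_output_length(128, KERNEL_SIZE, d, p)
    print(f"dilation={d}: padding={p}, output length={out_len}")

print("receptive field:", receptive_field(KERNEL_SIZE, DILATIONS))
```

Under these assumed values, four blocks see 61 character positions at once while every layer keeps the sequence length at 128; adding further blocks with larger dilations would widen the view without changing the kernel size.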

Attention Pooling: This module adds interpretability and focus. Instead of using only the final hidden state, as in RNNs, it weights all time steps in the sequence using learned importance scores. After the TCN layers have extracted the temporal features, attention pooling is performed across the temporal dimension: the module learns an attention score for each time step and produces a context vector as the weighted sum of the sequence features. This allows the model to focus on the regions of the URL that are informative for classification, such as unusual subdomains or brand impersonation. The attention weights are also visualized, alongside SHAP plots, to explain which characters the model relied on.

Fully Connected Layers: To recap the preceding stages, the embedding layer converts each character index to a vector of size embed_dim and handles padding tokens with padding_idx = 0; the TCN stack applies multiple TCN layers with increasing dilation (expanding the receptive field), so that each block sees a wider temporal context; and the attention layer is applied to the output of the last TCN block. The resulting context vector is passed to a fully connected feedforward network consisting of LayerNorm (normalization across features), ReLU activation with dropout for regularization, and a final linear layer that outputs a single scalar score (logit). A sigmoid activation is applied to this logit for the binary classification of phishing vs. legitimate.

5.5. Model Training

The optimized TCN model was implemented in PyTorch and trained using only CPU hardware, demonstrating that it can be deployed under resource constraints. The relevant configurations are presented in Table 3. The model was trained on the GramBeddings dataset with the following hyperparameters.

In every epoch, the model minimized the binary cross-entropy loss using the Adam optimizer. The model was evaluated on the test set at the end of every epoch to track performance metrics. Model checkpoints were saved whenever the F1-score on the validation set improved. The performance metrics calculated during training were accuracy, precision, recall, and F1-score. The checkpoint with the highest F1-score was saved as tcn_best_model.pt.

5.6. Algorithm 1: Phishing Detection with TCNWithAttention

Algorithm 1 describes, step by step, phishing detection using TCNWithAttention.

Input:

URL dataset D = {(url1, label1), (url2, label2), …, (urln, labeln)}

Pretrained character-to-index dictionary char2idx

Max sequence length MAX_LEN

Output:

| Algorithm 1: TCNWithAttention Phishing Detection (D, char2idx, MAX_LEN) |
| --- |
| 1: Initialize model M ← TCNWithAttention() |
| 2: Load tokenizer T ← CharTokenizer(char2idx, MAX_LEN) |
| 3: Initialize optimizer, loss function (Binary Cross Entropy) |
| 4: For each epoch do |
| 5: For each (url_i, label_i) in dataset D do |
| 6: sanitized_url ← Sanitize(url_i) |
| 7: tokenized_seq ← T.encode(sanitized_url) # Pad/truncate |
| 8: X_i ← Embed(tokenized_seq) # Character-level embeddings |
| 9: H_i ← M.TCN(X_i) # Residual dilated TCN blocks |
| 10: c_i ← M.Attention(H_i) # Attention pooling |
| 11: ŷ_i ← Sigmoid(FC(c_i)) # Output prediction |
| 12: loss ← BCE(ŷ_i, label_i) |
| 13: Backpropagate loss and update weights |
| 14: End for |
| 15: End for |

5.7. CNN, BiLSTM, and BERT Baselines

To assess the performance of the proposed phishing detection system, we implemented three additional models to serve as baselines: CNN, BiLSTM, and DistilBERT. Each of these models was trained and evaluated on the same input representation and the same datasets (GramBeddings and PhiUSIIL), with hyperparameters and explainability settings treated in the same way, to make the comparison as fair as possible.

5.7.1. Convolutional Neural Network (CNN)

The CNN model uses URLs as sequences of characters, allowing the model to maintain morphological and structural features of phishing links. The model’s architecture is specified in cnn_model.py and includes the following key layers:

Embedding Layer: Maps the input character indices to a 64-dimensional dense vector space. The vocabulary comprised printable ASCII characters and special tokens, 70 entries in total. This changes the input shape from [batch, seq_len] to [batch, seq_len, embed_dim].

1D Convolutional Blocks: Multiple 1D convolutional layers are stacked to extract local n-gram patterns. The first Conv1D layer contains 128 filters with kernel size three, so it captures character trigrams. Subsequent convolutional layers extract more abstract features of the character sequences. Each convolutional layer is immediately followed by a ReLU activation and a dropout (0.1) layer to improve generalization.

Max Pooling Layer: This layer is applied over the temporal dimension to reduce the overall dimension of the sequence while maintaining only the most important aspects.

Flatten Layer: This layer flattens the 3D tensor to a 2D tensor [batch, features] that can subsequently be used for the fully connected layer.

Fully Connected Layers: A dense layer that collapses the feature space down to 128 is included, followed by a ReLU activation, dropout and a final sigmoid for the classification layer to provide a binary classification output.

5.7.2. Bidirectional Long Short-Term Memory (BiLSTM)

The BiLSTM model, defined in bilstm_model.py, uses both the past and future context of the URL sequences. It is structured as follows:

Embedding Layer: As in the CNN, the embedding layer maps character indices to 64-dimensional vectors.

Bidirectional LSTM Layer: This is a single BiLSTM layer with 128 hidden units for each direction, allowing the model to learn contextual dependencies in the URL sequence both left-to-right and right-to-left. Using both directions is useful here because suspicious patterns of interest, such as mirrored domains or substrings like ‘bad’ embedded within longer substrings, can be located anywhere in the URL.

Global max-pooling and attention mechanism: The LSTM outputs are pooled over the sequence (the original URL length) to extract a fixed-length representation of the URL. A custom attention mechanism was also added so that the model learns how to aggregate the time steps using learned weights.

Fully Connected Layers: The pooled or attention-weighted vector is passed through a fully connected layer with 128 units and ReLU activation, followed by dropout and a sigmoid output layer for binary classification.

5.7.3. DistilBERT Model

A Transformer-based model was built on DistilBERT, a lightweight version of BERT, to assess the utility of pre-trained language models for classifying phishing URLs. The architecture and training logic are defined in the bert_model.py and bert_train.py scripts. This network type requires a GPU and increases training time considerably.

Tokenizer: The URLs are passed into the model as raw strings. We tokenized these raw strings using the HuggingFace DistilBERT tokenizer which uses a subword tokenization scheme with WordPiece vocabulary.

DistilBERT Encoder: The pre-trained DistilBERT model takes tokenized URLs as input and outputs contextualized embeddings of shape [batch, seq_len, hidden_dim], where hidden_dim = 768. For our purposes, we extract the contextualized embedding of the [CLS] token from the last layer of the encoder, which is typically used for classification.

Dropout and Classification head: A dropout layer with a rate of 0.3 regularizes the encoder output, which is then passed to a fully connected layer with one neuron (sigmoid activation), producing a binary classifier.

5.8. Novelty of the Approach

While CNN, BiLSTM, and Transformer-based methods have been widely used in phishing detection [

21], these mostly focus on accuracy and do not recognize both the challenges of interpretability and efficiency. In this work, we proposed the three-way combination of TCN with attention pooling, advancing the state of the art from a conceptual perspective: TCNs support gradient flow stability and modeling longer-range dependencies using dilated convolutions, attention transparently highlights the most informative substrings, and SHAP offers model agnostic confirmation of features identified by the models [

22]. We now have better performance with both accuracy and explainability, enabling real-time analysis, which we demonstrated is not possible with the prior baselines. For example, URLs with structure similar to

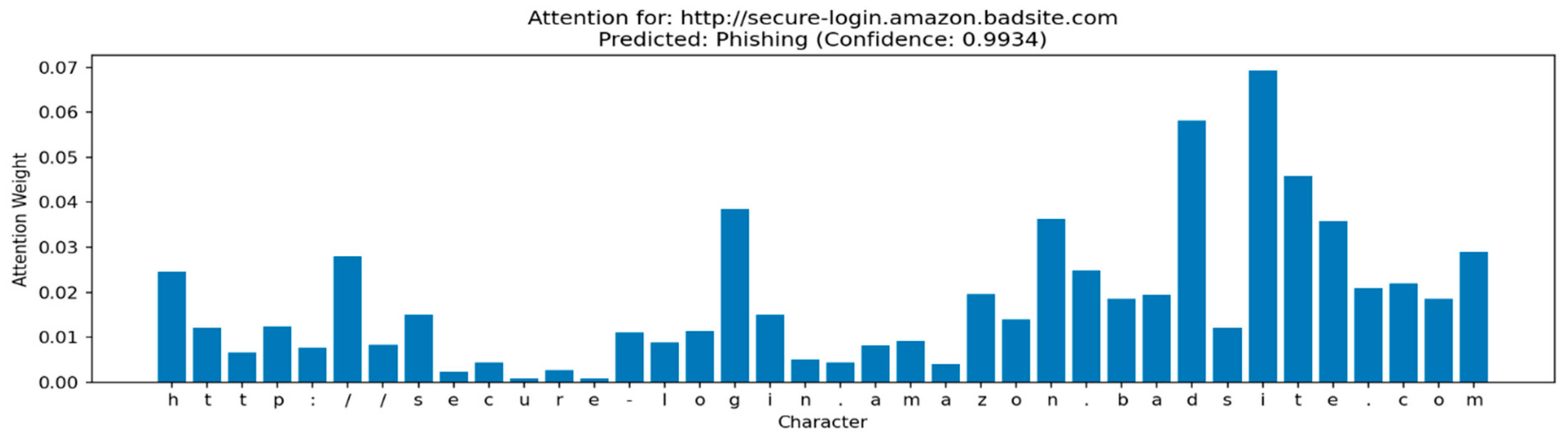

http://secure-login.amazon.badsite.com. As a starting point: CNN and BiLSTM baselines captured some very local features, but a fragmented broad long-range structure, and BERT provided good classification but no interpretability. In contrast, TCNWithAttention resolved this URL and correctly classified it, but it was clear that substrings like “secure,” “login,” and “badsite,” were driving the phishing decision. This can be interpreted as the model being better at uniquely detecting phishing cases with more transparency than mere incremental optimization, but moving in the direction of practical explainable AI for cybersecurity.

5.9. Evaluation Metrics

Accuracy: Accuracy measures the overall correctness of the model by calculating the proportion of total correct predictions out of all predictions made. It is useful when both phishing and legitimate classes are balanced.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where

TP = True Positives (phishing correctly identified).

TN = True Negatives (legitimate correctly identified).

FP = False Positives (legitimate predicted as phishing).

FN = False Negatives (phishing predicted as legitimate).

Precision: Precision indicates how many of the URLs predicted as phishing were actually phishing. It is important when false positives (flagging a legitimate site as phishing) are costly.

$$\text{Precision} = \frac{TP}{TP + FP}$$

Recall (Sensitivity or True Positive Rate): Recall measures the ability of the model to detect phishing URLs correctly out of all actual phishing URLs. It is crucial when false negatives (missed phishing attacks) must be minimized.

$$\text{Recall} = \frac{TP}{TP + FN}$$

F1-score: F1-score is the harmonic mean of precision and recall. It provides a balanced metric that considers both false positives and false negatives. It is helpful when dealing with imbalanced datasets.

$$\text{F1} = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

Train Loss (Binary Cross Entropy Loss): The loss function quantifies the difference between the predicted probabilities and the actual labels during training. Lower loss indicates better model learning. It is used to optimize the model weights during training.

$$\mathcal{L}_{\text{BCE}} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log \hat{y}_i + (1 - y_i) \log (1 - \hat{y}_i) \right]$$

where $N$ is the number of samples, $y_i \in \{0, 1\}$ is the true label of sample $i$, and $\hat{y}_i$ is the predicted phishing probability.

ROC-AUC (Receiver Operating Characteristic, Area Under Curve): ROC-AUC evaluates the model’s ability to distinguish between phishing and legitimate URLs at various threshold settings. It is particularly valuable in imbalanced class settings.

$$\text{ROC-AUC} = \int_{0}^{1} \text{TPR} \; d(\text{FPR}), \qquad \text{TPR} = \frac{TP}{TP + FN}, \quad \text{FPR} = \frac{FP}{FP + TN}$$
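As a worked illustration of the threshold-based metrics and the training loss, the following sketch computes them from confusion-matrix counts and from predicted probabilities. The function names and the example counts are hypothetical and are not taken from the paper's results.

```python
import math
from typing import Dict, List


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def bce_loss(y_true: List[int], y_prob: List[float], eps: float = 1e-12) -> float:
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)


# Hypothetical counts for a test set of 200 URLs.
metrics = classification_metrics(tp=90, tn=95, fp=5, fn=10)
loss = bce_loss([1, 0], [0.9, 0.2])
print(metrics, round(loss, 4))
```

With these illustrative counts, precision (90/95) is higher than recall (90/100), the same kind of asymmetry that matters when false positives on legitimate sites are the more costly error.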

5.10. Explainability Through SHAP and Attention

To improve the interpretability and transparency of the phishing detection model, we incorporated two different processes of explainability into the TCNWithAttention classifier.

5.10.1. Attention Weights Visualization

The TCN was built with an attention pooling layer that assigns attention weights to each character in the input URL in real-time. The attention weights represent the individual character’s importance when predicting the final outcome. For each input sequence $x = [x_1, x_2, \ldots, x_T]$, the attention layer computes a weight vector $\alpha = [\alpha_1, \alpha_2, \ldots, \alpha_T]$, where $\sum_{i=1}^{T} \alpha_i = 1$. The context vector $c$ used for classification is computed as the following:

$$c = \sum_{i=1}^{T} \alpha_i h_i$$

where $h_i$ is the output from the TCN block at position $i$. These attention weights were visually represented in color-coded bar plots to clearly show unusual patterns or behaviors, such as subdomain spoofing and brand name misuse.
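The weighted sum can be illustrated in plain Python. In this sketch the per-position scores are passed in directly and normalized with a softmax; the use of softmax and the way scores would be produced (for example, by a learned linear layer) are assumptions, since the text only requires that the weights sum to one.

```python
import math
from typing import List, Tuple


def attention_pool(features: List[List[float]], scores: List[float]) -> Tuple[List[float], List[float]]:
    """Return (alpha, c), where alpha = softmax(scores) and c = sum_i alpha_i * h_i."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    alpha = [e / total for e in exps]
    dim = len(features[0])
    context = [sum(a * h[k] for a, h in zip(alpha, features)) for k in range(dim)]
    return alpha, context


# Three positions (T = 3) with two-dimensional TCN outputs h_i.
H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
alpha_uniform, c_uniform = attention_pool(H, [0.0, 0.0, 0.0])  # equal scores
alpha_peaked, c_peaked = attention_pool(H, [0.0, 5.0, 0.0])    # position 2 dominates
print(alpha_uniform, c_uniform)
print(alpha_peaked, c_peaked)
```

When one score dominates, the context vector moves toward that position's features, which is what makes the weights usable as a per-character importance plot.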

5.10.2. SHAP Explanation (Shapley Additive Explanations)

SHAP is a mathematical framework for interpreting the predictions of a model by determining the contribution of each input feature, or character in the case of a URL, to the final predicted outcome. For a model $f(x)$, SHAP calculates a Shapley value $\phi_i$ for each feature $i$, such that the prediction $f(x)$ can be decomposed as the following:

$$f(x) = \phi_0 + \sum_{i=1}^{M} \phi_i$$

where

$f(x)$ is the model’s predicted probability for phishing.

$\phi_0$ is the base value (average model output over the training dataset).

$\phi_i$ is the contribution of feature $i$ (i.e., character $i$) to the deviation from the base value.

$M$ is the number of features (characters in the URL).

For character-level inputs, each character’s contribution to the phishing probability was measured using the SHAP Permutation Explainer. The Permutation SHAP algorithm estimates $\phi_i$ by comparing the model’s output when the $i$th feature (character) is present vs. permuted:

$$\phi_i \approx \mathbb{E}_{S \subseteq F \setminus \{i\}} \left[ f(x_{S \cup \{i\}}) - f(x_S) \right]$$

where

$S$ is a subset of all features $F$ excluding $i$,

$f(x_S)$ is the model’s output with only features in $S$,

$f(x_{S \cup \{i\}})$ includes feature $i$,

The expectation is approximated via permutations.
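The idea of approximating the expectation with permutations can be sketched as follows. This is a simplified sampler written for illustration and is not the implementation used by the SHAP library; `toy_model`, the `<pad>` baseline token, and the number of permutations are hypothetical choices.

```python
import random
from typing import Callable, List


def permutation_shapley(
    f: Callable[[List[str]], float],
    x: List[str],
    baseline: List[str],
    n_permutations: int = 200,
    seed: int = 0,
) -> List[float]:
    """Average each feature's marginal contribution over random feature orderings."""
    rng = random.Random(seed)
    n = len(x)
    phi = [0.0] * n
    for _ in range(n_permutations):
        order = list(range(n))
        rng.shuffle(order)
        current = list(baseline)   # start with every feature "absent"
        prev = f(current)
        for i in order:
            current[i] = x[i]      # reveal feature i
            out = f(current)
            phi[i] += out - prev   # marginal contribution given the features revealed so far
            prev = out
    return [p / n_permutations for p in phi]


def toy_model(chars: List[str]) -> float:
    """Hypothetical scorer: a hyphen adds 0.4; 'x' and 'z' together add 0.2."""
    score = 0.1
    if "-" in chars:
        score += 0.4
    if chars[0] == "x" and chars[2] == "z":
        score += 0.2
    return score


x = ["x", "-", "z"]
baseline = ["<pad>"] * 3
phi = permutation_shapley(toy_model, x, baseline)
print(phi, sum(phi), toy_model(x) - toy_model(baseline))
```

The attributions always sum to the difference between the prediction and the baseline output, and the interaction between the first and last characters is shared between them only approximately because of sampling.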

Color-coded heatmaps were used to visualize ϕi for each character xi. Red depicts positive contributions, which push the prediction toward phishing (high-risk characters), and blue depicts negative contributions, which act as legitimate or neutral indicators. SHAP attributions were closely aligned with the attention weights, which supports the model’s internal reasoning and can boost user confidence. This alignment was most evident in phishing URLs, where both methods highlighted risky subdomains and token patterns, while for legitimate URLs both attention and SHAP values concentrated on legitimate URL structures. SHAP and attention attributions were generated and compared for true positives (confirmed phishing), false positives and false negatives (misclassified URLs), and legitimate links. All attributions provided auditable character-level rationales and allowed model behavior to be observed in edge cases.

5.10.3. Confidence Score Interpretation in SHAP and Attention Visualizations

Each URL prediction made by the TCNWithAttention model is coupled with its corresponding confidence score, i.e., the model’s probabilistic confidence as to whether the URL is phishing or benign. This score comes from the model’s sigmoid function, which transforms the raw prediction logit from the model into a value between zero and one:

$$\text{confidence} = \sigma(z) = \frac{1}{1 + e^{-z}}$$

where $z$ is the raw logit produced by the final linear layer.

If confidence > 0.5, the URL is classified as phishing and if confidence ≤ 0.5, the URL is classified as legitimate. This threshold was chosen to ensure balance between false positives and false negatives.
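The decision rule is small enough to state directly in code. The sketch below is illustrative only; the function names are hypothetical, and the numerically stable branch for negative logits is an implementation choice rather than something described in the text.

```python
import math


def confidence(logit: float) -> float:
    """Sigmoid of the raw logit, written to avoid overflow for large negative values."""
    if logit >= 0:
        return 1.0 / (1.0 + math.exp(-logit))
    e = math.exp(logit)
    return e / (1.0 + e)


def classify(logit: float, threshold: float = 0.5) -> str:
    """Phishing if confidence > threshold, otherwise legitimate (ties go to legitimate)."""
    return "phishing" if confidence(logit) > threshold else "legitimate"


for z in (-3.0, 0.0, 2.0):
    print(z, round(confidence(z), 4), classify(z))
```

A logit of exactly zero gives a confidence of 0.5 and is therefore labeled legitimate under the stated rule.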

SHAP Visualization and Confidence: Each character of a URL either raises or lowers the confidence score, which is displayed next to the predicted class in the SHAP plots. Red bars correspond to positive SHAP values, which increase the phishing probability. Blue bars correspond to negative SHAP values, which decrease the phishing probability.

Attention Visualization and Confidence: The attention mechanism of TCNWithAttention assigns weights to each character of a URL, indicating its significance in forming the classification. These attention weights, nonetheless, do not determine the confidence score; rather, they have an indirect effect that aids in concentrating the model’s learning on important parts of the URL such as suspicious tokens or known safe domain patterns.

5.11. Computing Environment and Computing Resource Efficiency

Because only limited hardware resources were available for this work, all experiments were run in a CPU-only environment. The computing environment was based on Windows 11 Pro and consisted of the following:

Processor: Intel(R) Core(TM) i7-8665U CPU @ 1.90 GHz 2.11 GHz (Intel Corporation, Santa Clara, CA, USA).

RAM: 16 GB.

GPU: None (trained on the CPU and hence did not use CUDA).

Python Environment: Python 3.11 (via VS Code and Command Line).

Libraries: PyTorch 2.7.1, Scikit-learn 1.7.0, Pandas 2.3.0, Matplotlib 3.10.3, SHAP 0.48.0, Seaborn 0.13.2, Streamlit 1.47.0

Despite using a lot of CPU resources and no GPU, the Temporal Convolutional Network (TCN) with the attention mechanism was computationally efficient and was usable for real-time detection.

6. Evaluation and Testing

6.1. TCN Model Evaluation

The TCNWithAttention model was trained and evaluated on the balanced GramBeddings dataset, which includes 400,000 phishing URLs and 400,000 legitimate URLs. The model was trained using PyTorch 2.7.1 in a CPU environment, demonstrating compatibility with low-resource devices. Table 4 shows the results on the test set after training:

These results indicate that the model generalizes well across both classes, with a high precision of 99.35%, and is not overfitting. The high precision means that the model flags phishing URLs while maintaining a low false positive rate for legitimate URLs.

6.2. Comparative Analysis of TCN, CNN, BiLSTM, and BERT Models

This section compares four deep learning models for phishing URL detection: TCNWithAttention, CNN, BiLSTM, and DistilBERT. The purpose of the analysis was to assess the capacity of each model to identify whether a URL leads to a phishing website, based purely on the URL structure. Accuracy was assessed, but equal weight was placed on balanced performance across classes and on explainability.

6.2.1. Experimental Setup

All models were trained on the GramBeddings dataset. For character-level models (TCN, CNN, BiLSTM), the URLs were tokenized at the character level so that the models could systematically recognize the structural and obfuscation patterns that phishing attacks frequently apply to URLs. The BERT model was fine-tuned on raw URL strings using pre-trained DistilBERT, which relies on subword tokenization and contextual embeddings. The benchmarking setup for all models is shown in Table 5.

6.2.2. Training and Test Results on GramBeddings

Each model was optimized using binary cross-entropy loss and evaluation was performed with standard metrics including accuracy, precision, recall, F1-score, and a visual heatmap of classification results, with phishing vs. benign class evaluation.

Table 6 below summarizes the performance of each model on the GramBeddings test split.

TCN achieved excellent performance with a high precision of 99.35%, indicating that it is particularly good at avoiding false positives; this aspect is critical for real-time anti-phishing systems. BERT had the best overall performance, with an accuracy of 98.52%, recall of 97.78%, and F1-score of 98.51%, but it is less practical for low-resource or real-time contexts that run on CPU without a GPU. CNN and BiLSTM provided comparable alternatives: BiLSTM had strong recall, and CNN offered good precision and recall at a practical speed. In summary, the comparative study shows that the TCNWithAttention model is practical, has good accuracy for phishing detection, and achieves near state-of-the-art performance with less computational complexity.

6.2.3. Confusion Matrices

Visual heatmaps shown in

Figure 2 were produced for the four models after the test on the GramBeddings dataset:

To assess the capabilities of the deep learning architectures we studied for detecting phishing URLs, we produced confusion matrices for each model trained on the GramBeddings dataset and tested on a test set of 159,999 samples. The confusion matrices reveal the ability of each model to discriminate phishing URLs from legitimate URLs under a balanced test set scenario.

The BERT model had the best overall accuracy of 98.52% and the best F1-score of 98.51%. This indicates that BERT was able to model the raw URL sequences using its deep stack of pretrained Transformer layers. The confusion matrix for BERT showed the lowest false negative rate overall: it correctly classified most of the phishing URLs and misclassified very few of the benign URLs.

The TCNWithAttention model performed slightly less well but was very close to BERT, with an accuracy of 97.54% and an F1-score of 97.51%. Additionally, TCNWithAttention had the benefits of speed, interpretability, and being easier to deploy in practical environments than BERT. The TCN confusion matrix indicated a somewhat higher false negative rate than BERT but a substantially lower false positive rate, a very important metric for any model that is planned to be deployed in the real world. In the field, misclassifying benign or legitimate URLs as phishing may block users from entering otherwise legitimate sites, which can result in a poor user experience and damage to brand trust, especially in online brand protection.

6.2.4. Evaluation with PhiUSIIL Dataset

To assess the generalizability and robustness of the phishing detection models, an external benchmark dataset called PhiUSIIL was used for evaluation. This dataset contained real-world phishing and legitimate URLs that differed from the distribution of the data that the models were trained on. The models evaluated included TCNWithAttention, CNN, BiLSTM, and BERT. The evaluation measured accuracy (overall correctness of predictions), precision (correctly predicted phishing URLs out of all predicted phishing URLs), recall (correctly predicted phishing URLs out of all phishing URLs), and F1-score (the harmonic mean of precision and recall). The area under the ROC curve reflects the separability between classes. Predictions were generated by thresholding the sigmoid output at 0.5, so that each URL was classified as phishing (1) or legitimate (0). For each model, the outputs comprised a results.csv file containing the URL, predicted class, true label, and probability score, as well as a ROC curve with its AUC score.

6.2.5. ROC Curve and AUC Scores

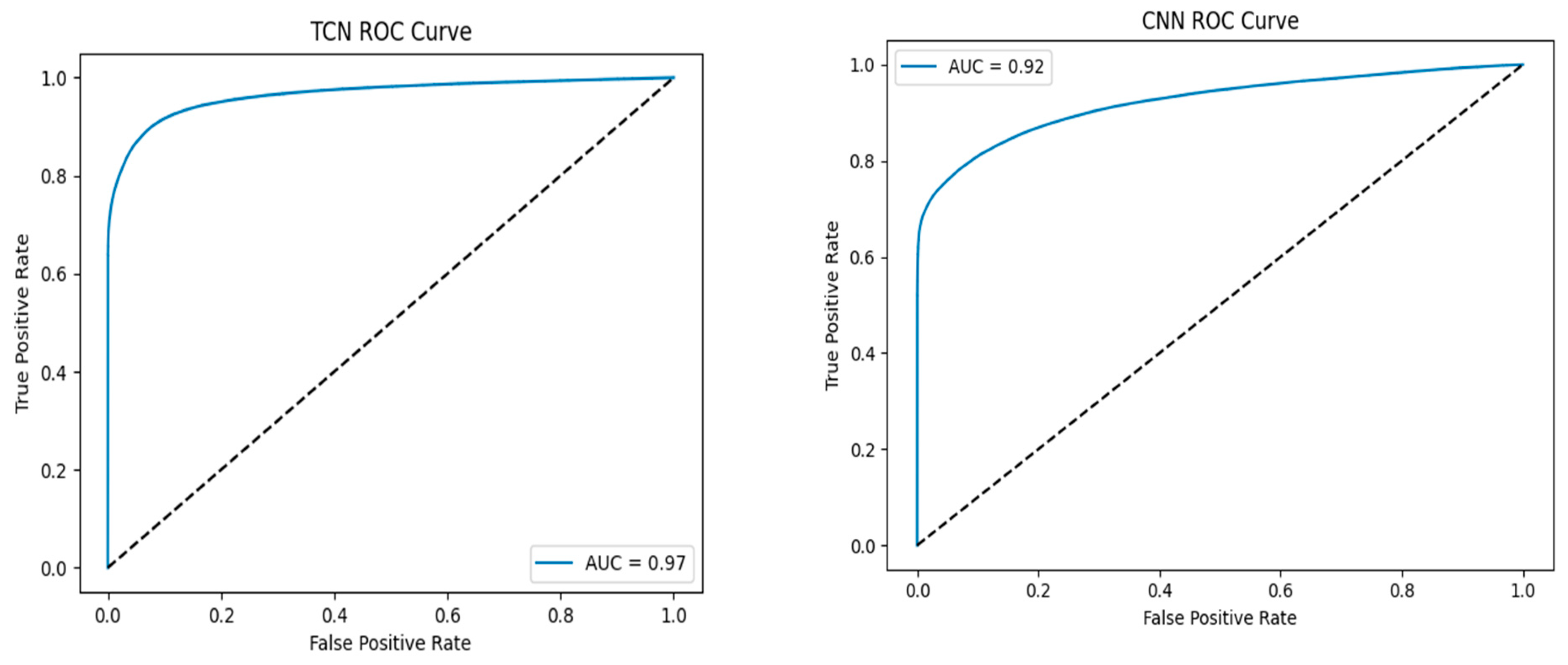

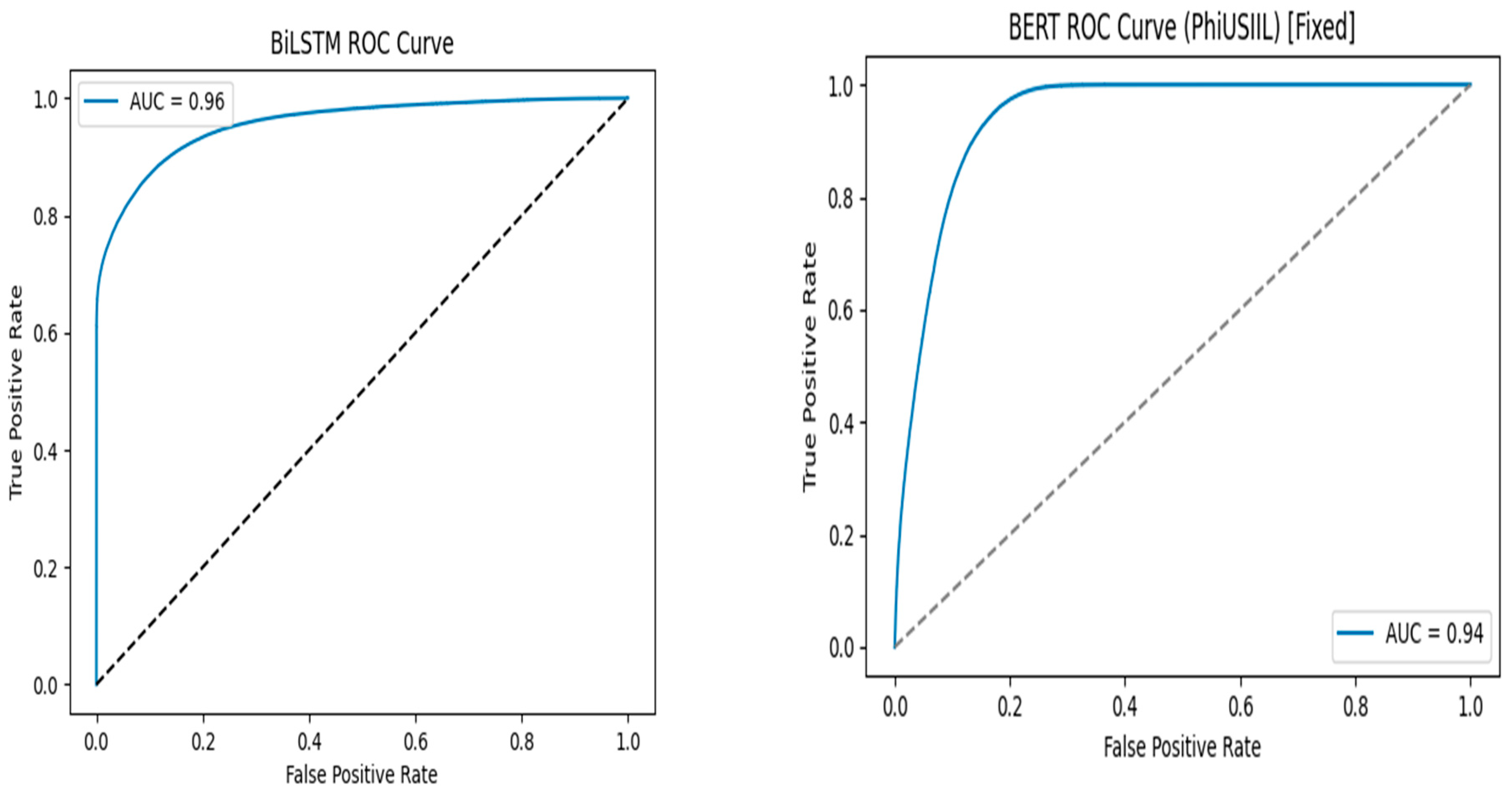

The ROC curves for each model, shown in Figure 3, reinforce these performance results.

This indicates that TCN had the highest separability of phishing and legitimate URLs, but BiLSTM and BERT were close.
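Separability in the AUC sense has a direct interpretation: it is the probability that a randomly chosen phishing URL receives a higher score than a randomly chosen legitimate one. The sketch below computes AUC from that definition; the function name and the four example scores are illustrative and are not values from the paper.

```python
from typing import List


def roc_auc(labels: List[int], scores: List[float]) -> float:
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count as 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Illustrative labels (1 = phishing) and predicted probabilities.
auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
print(auc)
```

This pairwise form is quadratic in the number of samples, so it is suited to small checks; rank-based implementations give the same value on large test sets.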

6.2.6. Comparative Performance Summary

Table 7 below reports the models’ weighted average precision scores on the PhiUSIIL dataset.

The 81% recall observed on PhiUSIIL shows that the TCNWithAttention model preserves detection ability despite the diversity of obfuscation methods used in this dataset. PhiUSIIL contains phishing URLs that use evasion methods common to many areas of cybercrime: token insertion and concatenation, adding distracting words such as secure, login, or brand names to subdomains; use of suspect or unusual TLDs like .xyz or .top instead of conventional .com or .org; character obfuscation through repeated hyphens, odd separators, or mixed-case patterns; brand imitation through subdomain tricks such as paypal.verify-account.com instead of paypal.com; and length or structure manipulation, in which attackers create very long or path-heavy URLs to hide the true domain. Although synthetic adversarial URLs were not added during training, the presence of these naturally occurring obfuscations makes PhiUSIIL a strong benchmark for zero-day attacks. The TCNWithAttention model remained robust to such variations, as shown by its relatively high recall, providing sustained detection across realistic attack surfaces. The robustness of recall is also supported by the high AUC score of 0.97, which shows that phishing and benign URLs remain separable despite obfuscation.

Although BERT had a marginally greater accuracy, the TCN model provided the most reliable and balanced performance overall on the PhiUSIIL dataset, achieving the highest AUC score (0.97), the fewest false positives, and a respectable precision–recall trade-off. Furthermore, because TCN is lightweight (character-level), it provided better generalization and explainability, making it the most robust and efficient model overall for phishing URL detection in the real world.

6.2.7. Discussion of Results

The experimental results demonstrate trade-offs across the architectures and indicate why the TCNWithAttention model is the most appropriate solution for practical real-time phishing detection. Although BERT achieved the highest raw accuracy on the GramBeddings dataset (98.52%), its heavy computational cost and reliance on GPU acceleration limit its viability in real-time or resource-constrained environments (browsers, email gateways, enterprise systems without GPUs). TCNWithAttention achieved 97.54% accuracy, with an F1-score of 97.51%. The reduction in raw performance is small, and given its significantly lower latency, resource usage, and processing time, TCNWithAttention is far more deployable in operational cybersecurity use cases.

The benefits of TCN become prominent when compared to CNN and BiLSTM. CNN’s convolutional filters capture short, localized patterns but struggled with long-range dependencies across URL substrings. For example, CNN had difficulty connecting a brand keyword with the accompanying malicious domain when they were separated by many substrings. BiLSTM models long-range dependencies, but its sequential computation makes it slow, which similarly limits its viability. The likely reason TCNWithAttention performed better than CNN or BiLSTM is that it combines the benefits of CNN (short, localized patterns) and BiLSTM (long-range dependencies) while performing computations in parallel. Dilated convolutions let TCN enlarge its receptive field exponentially through the layers. Together, these properties explain how this performance was achieved with much lower latency than CNN or BiLSTM.

With the external PhiUSIIL dataset, TCN achieved the highest AUC score of 0.97, which can be interpreted as strong separability of the phishing and legitimate classes. This high AUC score indicates that TCN not only learned the desired patterns from the training distribution but also generalized better to unseen real-world phishing URLs. BERT was slightly ahead in overall accuracy, but its AUC of 0.94 indicates that it is less robust to distributional shifts. TCN’s high precision, which minimizes false alarms, and its high AUC, which indicates strong class separability, make it well suited for practical brand protection deployments.

Another unique advantage of TCNWithAttention is its explainability. Integrating attention and SHAP identified which URL substrings affected predictions in a way that was interpretable to users, which was not possible using CNN, BiLSTM or BERT baselines. Explainability helps to build trust with security analysts and facilitate compliance with explainable AI requirements for sensitive areas of research like cybersecurity.

BERT remains an academic upper-bound in raw performance; however, the TCNWithAttention model achieves a better combination of accuracy, efficiency, interpretability, and deployability. This combination of properties is required for real-world brand protection scenarios, where decisions need to be made quickly and be trusted in circumstances with resource limitations.

6.3. Interpretability and Efficiency

To demonstrate the transparency and trustworthiness of the TCNWithAttention model, we augmented the architecture described in Section 5.4 with two complementary interpretability techniques for the classifier: attention visualization and SHAP analysis. The former shows where the model “looks” when classifying a URL, and the latter quantifies “how much” each character affects the final decision of the classifier.

6.3.1. Attention Visualization

Attention pooling normalizes the weight of each character position after temporal features have been extracted by the residual TCN layers. These weights provide the basis for the bar plots in Figure 4 and Figure 5. The x-axes show the character sequence of the URL, while the y-axes show the learned importance (i.e., attention weight). The taller the bar for a character position, the more the classifier relied on that position when classifying the URL as phishing or legitimate. For the phishing URL http://secure-login.amazon.badsite.com (accessed on 11 June 2025), attention was heavily concentrated on “secure,” “login,” and “badsite” (see Figure 4). The first two substrings are security-related words commonly seen in phishing URLs, and the last is a suspicious domain name. In Figure 5, the attention for the legitimate URL www.unb.ca (accessed on 11 June 2025) is mainly focused on the root domain “unb” and the top-level domain “.ca”. The attention weights suggest that the model has learned that legitimate URLs have a consistent and trustworthy brand structure. This demonstrates that attention reveals the model’s temporal focus points, i.e., the specific character positions that the classifier leverages to classify a URL as phishing or legitimate.

6.3.2. SHAP Interpretability

SHAP values were also computed to assess the contributions of each character in the URL to the model’s final prediction. In this way, we could explain individual predictions at a micro level. Let $f(x)$ be the model’s output for input $x$. For feature $i$, the SHAP value $\phi_i$ is defined as the following:

$$\phi_i = \sum_{S \subseteq F \setminus \{i\}} \frac{|S|! \, (|F| - |S| - 1)!}{|F|!} \left[ f(S \cup \{i\}) - f(S) \right]$$

where

$F$ is the set of all features (characters in URL),

$S$ is a subset of features excluding $i$,

$f(S)$ is the model prediction using features in $S$,

$\phi_i$ represents the marginal contribution of feature $i$ across all subsets $S$.

While attention highlights “where” the model focuses, SHAP values highlight “how” each character contributes to the predicted outcome, i.e., phishing or legitimate. Formally, SHAP decomposes the model’s prediction into additive contributions from each feature (character): positive SHAP values increase the likelihood of phishing, while negative SHAP values push the prediction toward legitimate. In practice, this provides very granular explanations. For example, for the same phishing URL, http://secure-login.amazon.badsite.com, SHAP assigns strong positive contributions (red bars in Figure 6) to “secure”, “login”, and “badsite”, confirming that they increase the phishing score. For the legitimate URL www.unb.ca in Figure 7, the brand characters “b” and “a” contributed negatively (blue bars), which lowered the likelihood of phishing, consistent with the safe brand domain.
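To make the Shapley definition concrete, the following is a minimal sketch that computes exact Shapley values by enumerating every subset. It is not the SHAP implementation used in this study: the scoring function `toy_score`, its token weights, and the use of whole tokens instead of characters are all hypothetical choices made for brevity. Exact enumeration is exponential in the number of features, so it is only practical for small examples like this one.

```python
from itertools import combinations
from math import factorial


def shapley_values(features, value_fn):
    """Exact Shapley values by enumerating every subset S of F without i."""
    n = len(features)
    phi = {}
    for i in features:
        others = [j for j in features if j != i]
        total = 0.0
        for size in range(n):
            # Weight |S|! (|F| - |S| - 1)! / |F|! shared by subsets of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of i when added to S.
                total += weight * (value_fn(s | {i}) - value_fn(s))
        phi[i] = total
    return phi


# Hypothetical toy scorer over URL tokens (an assumption, not the paper's model).
TOKEN_SCORE = {"secure": 0.30, "login": 0.25, "amazon": -0.05, "badsite": 0.40}


def toy_score(present):
    score = sum(TOKEN_SCORE[t] for t in present)
    # Interaction term: "secure" and "login" together add extra risk.
    if "secure" in present and "login" in present:
        score += 0.10
    return score


phi = shapley_values(list(TOKEN_SCORE), toy_score)
for token, value in phi.items():
    print(f"{token:8s} {value:+.3f}")
```

In this toy setting the interaction bonus is split equally between “secure” and “login”, and the values sum to the difference between the score of the full token set and the score of the empty set, which is the additivity property that the sign convention in the text relies on.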

6.3.3. From URL to Decision (Phishing Scenario)

Input and Tokenization: The URL string was lower-cased and tokenized at the character level. That is, each character (letter, digit, “.”, “/”, “-”, “:”) was mapped to an integer index. Shorter URLs were padded so that all sequences had the same length for batch processing.
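The tokenization step can be sketched as follows. The vocabulary `ALPHABET`, the reserved `PAD` and `UNK` indices, and the choice of right-padding are assumptions made for illustration; only the 128-character limit is taken from the discussion in Section 6.4.

```python
MAX_LEN = 128  # input limit mentioned in Section 6.4
PAD, UNK = 0, 1  # hypothetical reserved indices for padding and unknown characters

# Hypothetical vocabulary: lowercase letters, digits, and common URL symbols.
ALPHABET = "abcdefghijklmnopqrstuvwxyz0123456789./-:_?=&%#@~+"
CHAR_TO_ID = {ch: idx + 2 for idx, ch in enumerate(ALPHABET)}


def encode_url(url, max_len=MAX_LEN):
    """Lower-case, map characters to integer ids, then truncate or right-pad."""
    ids = [CHAR_TO_ID.get(ch, UNK) for ch in url.lower()[:max_len]]
    return ids + [PAD] * (max_len - len(ids))


encoded = encode_url("http://secure-login.amazon.badsite.com")
print(len(encoded), encoded[:8])
```

Every encoded sequence has the same length, so a batch of URLs can be stacked into a single integer tensor before the embedding layer.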

Embeddings: Each character index was passed through the embedding layer (dim = 64), producing the sequence $\{e_1, e_2, \ldots, e_T\}$ with $e_t \in \mathbb{R}^{64}$. These vectors represent character identity and local syntax (separators such as “-” and “.”) in a continuous space, giving the model structure beyond a sequential list of characters.

Temporal Feature Extraction (TCN): Residual TCN blocks apply 1D dilated causal convolutions to capture patterns at multiple temporal scales. Because their receptive fields are sufficiently large, these blocks can connect character positions that are far apart. The early small-dilation convolutions identify short motifs, for example, “-login”, while later, larger-dilation filters identify longer motifs such as “secure-login.amazon” or chains of suspicious subdomain structure ending with “.badsite.com”. As receptive field intuition, with kernel size $k$ and dilations $\{d_\ell\}$ across $L$ residual blocks (two convolutions per block), the effective receptive field is approximately the following:

$$R \approx 1 + 2\,(k - 1) \sum_{\ell=1}^{L} d_\ell$$

which increases exponentially with depth when dilations double (e.g., 1, 2, 4, 8, …). In practice, this receptive field spans tens of characters in each sequence, and sometimes the full URL window. This enables the model to connect clues that may appear to be separated: for example, a brand name followed later in the string by a recognizable malicious base domain.
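As a worked example of this growth, the sketch below assumes the common approximation R ≈ 1 + 2(k − 1) Σ d for residual blocks with two dilated causal convolutions each. The kernel size of 3 and the number of blocks are hypothetical values chosen for illustration, not the configuration reported in this study.

```python
def receptive_field(kernel_size, dilations, convs_per_block=2):
    """Receptive field, in characters, of stacked dilated causal convolutions."""
    return 1 + convs_per_block * (kernel_size - 1) * sum(dilations)


# Hypothetical configurations: kernel size 3, dilation doubling with each block.
for num_blocks in range(1, 6):
    dilations = [2 ** level for level in range(num_blocks)]
    print(num_blocks, dilations, receptive_field(3, dilations))
```

Under these assumptions, four blocks cover 61 characters and five blocks cover 125, which is close to a 128-character input window.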

Attention Pooling: This is where the model “looks”. The last TCN layer generates hidden states $\{h_1, \ldots, h_T\}$. The attention module maps each hidden state $h_t$ to an unnormalized score $s_t$ and normalizes the scores with softmax:

$$\alpha_t = \frac{\exp(s_t)}{\sum_{j=1}^{T} \exp(s_j)}$$

yielding attention weights $\{\alpha_t\}$ that sum to 1. The context vector is the weighted sum $c = \sum_{t=1}^{T} \alpha_t h_t$. Positions with larger $\alpha_t$ influence the decision more. To interpret the Figure 4 attention bar chart, the x-axis is the character index in the URL and the y-axis is the attention weight, $\alpha_t$. Taller bars mark where the model paid attention. For this URL, the attention weight peaked over the substrings “secure”, “login”, and “badsite”, reflecting learned priors that security buzzwords in subdomains and malicious base domains are important cues of high risk.
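The pooling step can be illustrated with a small sketch in plain Python. The hidden states and unnormalized scores below are made-up numbers, and the scoring function that produces the scores is left out because its exact form is not specified here; only the softmax normalization and the weighted sum follow the description above.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of unnormalized scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states, scores):
    """Return (alpha, c), where c is the attention-weighted sum of hidden states."""
    alpha = softmax(scores)
    dim = len(hidden_states[0])
    context = [
        sum(a * h[d] for a, h in zip(alpha, hidden_states)) for d in range(dim)
    ]
    return alpha, context


# Hypothetical hidden states (T = 4, dimension 2) and unnormalized scores.
hidden = [[0.1, 0.0], [0.9, 0.2], [0.8, 0.1], [0.0, 0.3]]
scores = [0.2, 2.0, 1.5, -1.0]
alpha, context = attention_pool(hidden, scores)
print([round(a, 3) for a in alpha], [round(c, 3) for c in context])
```

The position with the highest score receives the largest weight, so its hidden state dominates the context vector; these weights are the values plotted as bars in the attention charts.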

SHAP: How much each character pushes the decision. While attention shows where the model is attending, SHAP tells us how much each character contributes to the phishing score. For model $f$ and character $i$, the SHAP value $\phi_i$ is the Shapley value defined in Section 6.3.2, so $\phi_i > 0$ pushes toward phishing (red), and $\phi_i < 0$ pushes toward legitimate (blue).

To interpret the Figure 6 SHAP bar chart, the x-axis is the character index and the y-axis is the SHAP value, $\phi_i$. In this instance, the characters corresponding to “secure”, “login”, and “badsite” have large positive SHAP values, confirming their contributions to increasing the probability of phishing. Neutral tokens such as “http”, “:”, and “/” generally show slightly negative or negligible contributions.

Decision Layer (Aggregation to a label): The context vector $c$ passes through the classifier (LayerNorm → ReLU → Dropout → Linear) to produce a logit $z$ and a phishing probability $\hat{y}$:

$$z = \mathrm{Classifier}(c), \qquad \hat{y} = \sigma(z)$$

with $\sigma$ the sigmoid. If $\hat{y} \geq \tau$ (threshold $\tau \approx 0.5$ during evaluation), the URL is labeled phishing. In this case, aligned high attention and positive SHAP on “secure/login/badsite” drive $z$ upward, so $\hat{y}$ exceeds the threshold.
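The decision step can be sketched in plain Python as below. The context vector, linear weights, and bias are hypothetical numbers, and the learnable scale and shift of LayerNorm are omitted for brevity, so this illustrates the order of operations and not the trained classifier.

```python
import math


def layer_norm(vec, eps=1e-5):
    """Normalize to zero mean and unit variance (affine parameters omitted)."""
    mean = sum(vec) / len(vec)
    var = sum((v - mean) ** 2 for v in vec) / len(vec)
    return [(v - mean) / math.sqrt(var + eps) for v in vec]


def classify(context, weights, bias, tau=0.5):
    """LayerNorm -> ReLU -> Dropout (identity at inference) -> Linear -> sigmoid."""
    activated = [max(0.0, v) for v in layer_norm(context)]
    z = sum(w * a for w, a in zip(weights, activated)) + bias
    y_hat = 1.0 / (1.0 + math.exp(-z))
    return z, y_hat, "phishing" if y_hat >= tau else "legitimate"


# Hypothetical context vector and linear-layer parameters.
z, y_hat, label = classify([0.9, 0.1, -0.4, 0.6], [1.2, -0.3, 0.5, 0.8], bias=-0.2)
print(round(z, 3), round(y_hat, 3), label)
```

With a threshold of 0.5, the label depends only on the sign of the logit, since the sigmoid of zero is exactly 0.5.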

Consistency Check (Attention and SHAP): For this case, the positions with the largest attention weights (Figure 4) overlap at the character level with the positions of the larger-magnitude SHAP values (Figure 6). This indicates that where the model focuses and what drives the phishing score agree. This agreement enhances trust: the model is accurate, and its learned representation matches domain knowledge about phishing patterns, namely security-themed subdomains, proximity to brand names, and malicious base domains.

For the legitimate URL www.unb.ca, as shown in Figure 5 and Figure 7, attention highlights the root and TLD (“unb”, “.ca”), and the SHAP contributions there are negative, which decreases the phishing score. This is consistent with the stable brand domain structure that we trust.

To generalize from URL to decision,

Table 8 outlines how the model treats variants of URLs based on the information flow. It highlights where attention and SHAP tend to focus, in addition to whether these components support a phishing or legitimate classification.

As noted in the table, the model consistently identifies suspicious substrings, such as security buzzwords, deceptive subdomains, and malicious base domains, as phishing indicators, and indicates legitimacy when stable root domains and trustworthy TLDs are present. The alignment of attention and SHAP values supports both the interpretability and the operational trustworthiness of the system.

6.3.4. Quantitative Validation of Attention–SHAP Consistency

To quantitatively validate the consistency of attention and SHAP, in addition to the qualitative case studies in Section 6.3.3, we compared normalized attention weights ($\alpha_t$) against absolute SHAP values ($|\phi_t|$) for the test sample.

Correlations: Pearson (r = 0.72) and Spearman (ρ = 0.68) correlations both indicate strong positive alignment; that is, characters with higher attention also tend to have larger SHAP contributions.

Top-k overlap: The top five and top ten tokens indicated by each method had Jaccard similarities of 0.6–0.8, indicating considerable overlap amongst these methods.

Predictive alignment: When attention was used to predict which tokens are “important”, i.e., above-median SHAP, our ROC-AUC was approximately 0.85.

Significant overlap: Permutation tests confirmed that the alignment reported above was statistically significant (p < 0.05).

These statistics indicate alignment and a degree of robustness in the interpretations. Differences did occur occasionally, such as high attention but low SHAP contribution for neutral tokens like the “http://” prefix. This is not instability; it reflects a difference in what each method measures. Attention shows where the model focuses, and SHAP shows how much of the final decision is attributable to each token. It is the combination of these two perspectives that enhances confidence in the trustworthiness of the model.
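For readers who want to reproduce this kind of comparison, the sketch below shows how Pearson correlation, Spearman correlation, and top-k Jaccard overlap could be computed for a single URL. The `attention` and `abs_shap` lists are made-up values, not results from this study, and the ROC-AUC and permutation tests are omitted for brevity.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def ranks(values):
    """Average ranks (1-based), so tied values share the same rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for pos in range(start, end + 1):
            result[order[pos]] = (start + end) / 2 + 1
        start = end + 1
    return result


def spearman(xs, ys):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(ranks(xs), ranks(ys))


def topk_jaccard(xs, ys, k):
    """Jaccard similarity between the top-k index sets of two score lists."""
    top_x = set(sorted(range(len(xs)), key=lambda i: xs[i], reverse=True)[:k])
    top_y = set(sorted(range(len(ys)), key=lambda i: ys[i], reverse=True)[:k])
    return len(top_x & top_y) / len(top_x | top_y)


# Hypothetical per-character scores for one URL (not results from the paper).
attention = [0.02, 0.20, 0.18, 0.05, 0.03, 0.25, 0.22, 0.05]
abs_shap = [0.01, 0.30, 0.22, 0.02, 0.06, 0.35, 0.28, 0.04]
print(round(pearson(attention, abs_shap), 3))
print(round(spearman(attention, abs_shap), 3))
print(topk_jaccard(attention, abs_shap, k=3))
```

Spearman is computed on ranks, so it is less sensitive than Pearson to a few characters with very large attribution values, which is one reason to report both.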

6.3.5. Model Transparency

The quantitative relationship between attention weights and SHAP values shows that the model’s explanations are not only reasonable but also statistically supported. This consistency gives users confidence that the model’s reasoning appears similar regardless of which interpretability method is used. Further, because SHAP and attention are complementary, we were able to show stakeholders both where the model “fixed its gaze” (attention) and how much each token contributed to the classification (SHAP), providing a more robust and accountable interpretive lens. This level of transparency benefits different stakeholders in different ways. Non-technical users are provided with visual plots that highlight suspicious parts of each URL without requiring them to delve into explanations. Cybersecurity analysts benefit from the overlay of attention and character-level SHAP plots, which gives deeper insight into which tokens drove the classification and can be used for forensic validation and incident triage. Regulators and industry practitioners benefit from the assurance of dual validation, through qualitative visualizations and quantitative correlation, which addresses an important consideration for responsible AI in sensitive domains such as cybersecurity. The TCNWithAttention model balances statistical rigor with intuitive visualizations, so its decisions are defensible, understandable, and suitable for practical deployment, which is a necessity in responsible AI.

While the attention and SHAP visualizations offer transparency, we acknowledge the associated security risk: detailed interpretability outputs may expose the model’s heuristics to adversaries for reverse engineering or adversarial crafting. Therefore, interpretability output from the prototype is restricted to analyst-facing environments such as SOC dashboards and is not exposed in public-facing applications or end-user products. In addition, the attention plots and SHAP values are aggregated at the character level and are not available through a real-time system API, decreasing their value for adversarial exploitation. Future extensions may utilize differential privacy mechanisms or provide higher-level hints, such as the relative importance of the subdomain versus the base domain, instead of releasing the actual feature weights. This retains the benefits of explainability for trust and auditability while reducing the attack surface.

6.4. Analysis of False Positives and False Negatives

Although TCNWithAttention achieved relatively high accuracy across the board, the identified misclassification cases provide an opportunity for refinement. False positives consisted of URLs with trusted domain names but unfamiliar or odd paths. For instance, www.amazon.com.security-center.co/login.html (accessed on 11 June 2025) appears to reference “amazon.com”, but the true domain is “security-center.co”. It includes a well-known brand name in a subdomain position, which is very misleading for both users and the model. False negatives occurred with phishing URLs that used benign-appearing subdomains and uncommon patterns to mask their malicious intent: for example, user-login.microsoftonline.auth-secure.site. The words “microsoftonline” and “auth” make this look like a common Microsoft authentication flow, and in the absence of semantic analysis and domain consideration, this type of example remains difficult to detect. Some false negatives occurred when longer URLs exceeded the 128-character maximum input length, with truncation causing the obfuscated tokens farthest downstream in the query string to be skipped. This was observed infrequently, but it does suggest an avenue for improvement: raising the maximum input length, splitting URLs into hierarchical sub-sections such as the domain, path, and query, or adding adaptive receptive fields so that long-tail URL content is ingested in its entirety. These examples illustrate the need for improved modeling of domain relationships and URL semantics. Nevertheless, the model still achieved a low misclassification rate and generalized well to real-world data. In summary, Table 9 identifies the types of errors, their potential causes, and ways to mitigate them.

These examples indicate that the model is highly resilient towards general cases. However, the more semantic edge cases and crafted adversarial URLs present obstacles for the model. These can be mitigated by addressing future considerations, which include adversarial training, leveraging multi-lingual/IDNs, and domain reputation.
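One of the mitigations discussed in this section, splitting URLs into domain, path, and query, can be sketched with the Python standard library. The helper names `split_url` and `truncated_tail` and the long example URL are hypothetical; the sketch only shows how a fixed 128-character window can drop a token at the end of a query string, while a component-wise split keeps it visible.

```python
from urllib.parse import urlsplit

MAX_LEN = 128  # character limit discussed in this section


def split_url(url):
    """Split a URL into host, path, and query so each part can be encoded separately."""
    # urlsplit only recognizes the host when a scheme or a leading "//" is present.
    parts = urlsplit(url if "://" in url else "//" + url)
    return {"host": parts.netloc.lower(), "path": parts.path, "query": parts.query}


def truncated_tail(url, max_len=MAX_LEN):
    """Characters that a fixed-length input window would drop."""
    return url[max_len:]


# Hypothetical long URL: the suspicious token sits at the end of the query string.
long_url = "http://example.com/path?" + "a=1&" * 30 + "redirect=badsite"
print(split_url("www.amazon.com.security-center.co/login.html"))
print(repr(truncated_tail(long_url)))
print(split_url(long_url)["query"].endswith("redirect=badsite"))
```

Encoding each component with its own length budget is one possible design; identifying the registrable domain (for example, “security-center.co”) would additionally require a public suffix list, which is outside the scope of this sketch.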

6.5. Adversarial Robustness and User Awareness