1. Introduction

Large Language Models (LLMs), such as GPT-4, are increasingly integrated into daily applications. We can use these systems to support chatbots, search engines, code assistants, and summarization tools [1]. Their ability to understand and generate human-like text comes from being trained on massive datasets, often collected from the internet. However, this training process can lead to the unintended memorization of sensitive or personal information [2].

This problem has been known for years. Research shows that LLMs can reveal sensitive information from their training data. For example, they might leak names, addresses, phone numbers, health conditions, or private business details [3]. Some studies found that models can repeat rare or unique phrases exactly as they appeared in the training data. This includes items such as API keys in code or personal conversations in chatbots [2].

This risk becomes more concerning as LLMs move from controlled research labs into the hands of everyday users. Non-expert users often lack awareness of how their data might be used or stored. When they input sensitive information into an LLM-powered system, it may become part of future model updates or even appear in model responses [3]. This issue raises the risk of leaking names, phone numbers, private business details, or unique phrases, such as API keys.

These privacy risks carry legal and ethical implications and can lead to violations of data protection regulations, such as the General Data Protection Regulation (GDPR) [4]. The growing number of leaks makes it challenging to use LLMs in sensitive fields, such as healthcare, finance, or legal services, where confidentiality is crucial. The risks are worse when the training data include raw web content, which often lacks filtering or anonymization.

Real-world incidents highlight these concerns. In 2023, Samsung engineers accidentally exposed proprietary source code to ChatGPT (whose available versions at the time included GPT-3.5 and GPT-4) during debugging, prompting a company-wide ban on the tool [5]. Researchers have demonstrated that LLMs trained on large corpora can reproduce personal or confidential data directly, particularly when such data are unique [2].

Most existing data cleaning methods use Named Entity Recognition (NER) or simple rules to remove obvious Personally Identifiable Information (PII) [6]. These methods can hide names or contact details. However, they often miss deeper risks. They fail to capture context-based, relational, or indirect information that can still identify someone. Examples include a description of someone’s rare job, their connections in an organization, or a combination of details that seem harmless alone but together reveal who they are. Consider the sentence “After presenting the cybersecurity report to the board, the youngest female executive in the Riyadh branch flew to Geneva for a closed-door meeting with the ministry”. Although this sentence does not mention any names, email addresses, or phone numbers, it reveals several indirect identifiers, such as role and position (youngest female executive), location (Riyadh branch), event context (presented a cybersecurity report to the board), and association (met with the ministry). When we combine these details, we can narrow down the identity of the person, especially in a specific company or sector. As LLMs become more prevalent, we need improved methods for cleaning data and protecting privacy. Our goal in this work is to fill this gap and answer the research question:

How can we design a scalable, semantic-aware pipeline that detects and remediates implicit, contextual, and relational privacy risks in LLM training data before model exposure, while preserving data utility for downstream tasks?

To address these gaps, we introduce Semantic Privacy Anomaly Detection and Remediation (SPADR), a pipeline filter that processes input data before they are passed to the LLM. SPADR analyzes text before it is used in LLM training or fine-tuning. It detects not only high-level PII but also deeper semantic risks, such as employment relationships, unique descriptions, or geographical traces, and applies targeted remediation to reduce privacy leakage.

We evaluate SPADR on a financial email dataset, a domain where data often includes sensitive personal and transactional content. In this paper, we use finance as a test case; however, SPADR can be applied to various domains, such as healthcare. We summarize our contributions as follows:

We highlight critical privacy risks associated with LLMs used by the general public.

We propose SPADR, a pipeline that filters sensitive content from input data before they reach an LLM.

We demonstrate the effectiveness of SPADR using a real-world financial email dataset.

The rest of this paper is structured as follows. Section 2 discusses related work in LLM privacy and data sanitization. In Section 3, we present our research problem. Section 4 presents our proposed SPADR pipeline. Section 5 describes our experiments and results. Section 7 concludes the paper and outlines future work.

2. Related Work

The issue of privacy in machine learning, particularly in LLMs, has received increasing attention over the past decade. One of the earliest attempts to address this was proposed by Abadi et al. [7], who introduced Differential Privacy (DP) for deep learning. DP provides formal privacy guarantees by injecting noise during model training, thus protecting individual data points. However, applying DP in natural language settings often degrades model performance, making it less practical for LLMs.

Following this, Shokri et al. [8] introduced membership inference attacks (MIAs), where an adversary can infer whether a particular data point was used in training. This work showed that even well-generalized models could reveal sensitive information. Later, Carlini et al. [9] demonstrated that deep neural networks may memorize and leak unique sequences from training data, a phenomenon referred to as unintended memorization. This risk is particularly severe in LLMs trained on large-scale web data.

To mitigate such risks, Mendels et al. [10] proposed adversarial de-identification methods, which rewrite text to remove sensitive content while preserving its semantic meaning. However, their approach primarily addressed direct identifiers, such as names and dates, leaving models vulnerable to semantic inference. Around the same period, Veale et al. [4] emphasized the importance of transparency and accountability in machine learning systems, especially in high-stakes decision-making contexts, qualities often lacking in current black-box LLM deployments.

A significant shift occurred with the introduction of GPT-3 by Brown et al. [1], which employed a transformer-based architecture with 175 billion parameters and demonstrated few-shot prompting, where the model can perform tasks by conditioning on a handful of examples without explicit fine-tuning. However, this advancement came with increased concerns about privacy [11]. Hisamoto et al. [12] investigated privacy leakage in encoder–decoder models by applying membership inference attacks to sequence-to-sequence translation systems, showing that prediction confidence can be used to determine whether a sample was in the training set. Building on this line, Jagannatha et al. [13] proposed membership inference techniques specifically targeting parametric decoder architectures, designing attack models that exploit hidden representations and output distributions to infer training membership.

In the medical domain, Lehman et al. [3] evaluated BERT models pretrained on large corpora of clinical notes, systematically probing their outputs using crafted prompts to test whether protected health information (PHI) could be regenerated from the training data. Complementing these approaches, Carlini et al. [2] developed a prompt-based extraction methodology that combines rare string analysis with targeted queries, enabling them to recover long, unique sequences memorized by GPT-2, even under black-box access conditions.

These studies collectively underscore that traditional privacy-preserving approaches are insufficient for LLMs, motivating the development of more advanced, context-aware privacy solutions.

To address the persistent challenge of removing sensitive information after a model has been trained, ref. [14] surveyed techniques for machine unlearning, aiming to eliminate the influence of specific training data from model parameters. While foundational, these methods are computationally demanding and not yet scalable to large models, such as LLMs.

Building upon earlier insights into model vulnerabilities, Ye et al. [15] investigated how dataset structure and model architecture influence susceptibility to MIAs. Their results reinforced the idea that even minor architectural choices can significantly affect privacy leakage, emphasizing the need for structurally aware defense mechanisms.

In 2023, Zhang et al. [16] introduced the concept of counterfactual memorization, showing that LLMs can memorize not only exact text but also semantically equivalent paraphrases. This revealed a more subtle memorization phenomenon and exposed the limitations of surface-level anonymization strategies, such as redaction and pattern replacement.

To address risks during inference, Chen et al. [17] proposed Hide and Seek (HaS). This lightweight framework anonymizes user prompts before sending them to cloud-based LLMs and reconstructs private information post-inference. HaS applies both a generative-based scheme using the BLOOMZ model and a label-based scheme based on NER to mask sensitive data. While effective at obfuscating explicit PII, it remains limited in handling contextual inferences and relationship-based privacy leaks, particularly in knowledge retrieval tasks.

By 2024, privacy research shifted toward semantic and contextual privacy risks. Staab et al. [18] and Farquhar et al. [19] demonstrated that LLMs can infer sensitive attributes such as profession, location, or demographics from otherwise innocuous text. These findings revealed the limitations of conventional tools in defending against implicit attribute inference or latent privacy signals.

To mitigate structural vulnerabilities in microdata, Aufschläger et al. [20] introduced ClustEm4Ano, a clustering-based method that anonymizes quasi-identifiers using value generalization hierarchies (VGHs). However, their approach is tailored to tabular data and is less effective in handling unstructured language content.

For textual anonymization, Frikha et al. [21] proposed IncogniText, which rewrites sentences using adversarially trained LLMs to hide private attributes while maintaining semantics. Though effective at attribute-level privacy, it lacks document-level privacy modeling and is contextually limited.

Yang et al. [22] further advanced this area by proposing RUPTA, a framework for optimizing the privacy-utility trade-off in text anonymization. It integrates LLM-powered privacy and utility evaluators with lexicographic optimization. Experimental tests demonstrate that RUPTA is resistant to both black-box and white-box re-identification attacks, indicating that it mitigates the risk of disclosure while enhancing task performance. Nonetheless, RUPTA relies on iterative evaluator feedback, which imposes a significant computational overhead; this limits its scalability and makes it unsuitable for real-time or large-scale deployment.

In 2025, new approaches continued to refine these ideas. Kim et al. [23] introduced SEAL, a system where small language models (SLMs) self-improve via adversarial distillation. By combining supervised fine-tuning with direct preference optimization, SEAL improves utility preservation while reducing privacy leakage against adversary models. Unlike RUPTA, SEAL offers better efficiency by avoiding reliance on large LLM evaluators. However, it exhibits limited scalability and weak generalization when applied to context-sensitive or relational data, where privacy risk stems from entity interactions rather than isolated attributes.

Zhan et al. [24] addressed inference-time privacy from a systems design angle through Portcullis. This privacy gateway anonymizes input in secure enclaves (Intel TDX) before interaction with third-party LLMs. Portcullis offers strong isolation and reconstruction capabilities for sanitized data, outperforming prior gateway systems, such as Hide-and-Seek, in efficiency. Its limitation, however, lies in its hardware dependence and pattern-based sanitization, which restricts flexibility against implicit or semantic privacy risks.

In the medical domain, Wiest et al. [25] developed LLM-Anonymizer, a system for local anonymization of clinical text, ensuring compliance with the General Data Protection Regulation (GDPR) and the Health Insurance Portability and Accountability Act (HIPAA) without relying on external cloud models. McIntosh et al. [26] employed regex-guided rewriting for anonymizing radiology reports. While both methods are effective for explicit PII, they do not address semantic privacy threats, such as attribute co-occurrence or organizational roles.

Staab et al. [27] also proposed an LLM-based anonymization system that iteratively alternates between a privacy attacker model and an anonymizer model until privacy leakage is minimized. Although human evaluations showed a strong preference for their anonymized outputs, the system’s performance is tightly bound to the strength of the adversary and synthetic training data, raising concerns about generalizability.

Finally, He et al. [28] revealed that LLMs can expose contextual and relational privacy risks, such as inter-entity relationships or hierarchical roles, even in the absence of explicit identifiers. This critical insight highlights a persistent gap in current defenses: the failure to detect and remediate deep semantic and structural leaks.

Together, these studies demonstrate a progression from surface-level redaction methods to more advanced systems that address deeper semantic privacy risks; however, they still fall short in handling relational privacy threats embedded in language. To fill this gap, we propose SPADR, a semantic privacy-aware detection and remediation pipeline that combines statistical anomaly detection, entity-relationship graph analysis, and multi-strategy anonymization to detect and neutralize both explicit and implicit risks. By scanning and remediating privacy threats in context before LLMs use data, SPADR prevents leakage at both training and inference stages, offering a practical step toward safer deployment of language models.

3. Research Problem

Most existing privacy-preserving methods for LLMs focus on explicit identifiers, utilizing high-level techniques, such as NER, rule-based filtering, or noise injection. While these approaches are helpful, they do not address deeper semantic and relational privacy risks. Modern LLMs can memorize, infer, and link sensitive information embedded in text, even after de-identification, due to their strong contextual understanding and generalization capabilities.

This creates a critical gap: to the best of our knowledge, there is currently no scalable, semantic-aware data sanitization pipeline that can detect and remediate latent privacy risks before the data are used to train or fine-tune LLMs. Such risks include re-identifiable entity relationships, implicit attribute inference, and contextual traceability. Furthermore, although graph-based privacy risks have been discussed in theory, there is still no practical, graph-augmented framework that can identify and mitigate relational privacy threats at scale in large LLM datasets.

Addressing this problem requires solving several open research challenges:

Semantic anomaly detection for unseen or evolving privacy threats in unstructured text;

Scalable graph-based methods for identifying and mitigating relational leakage;

Adaptive remediation strategies (e.g., redaction, generalization, summarization, deletion) tuned to privacy-utility trade-offs.

4. Methodology

In this paper, we propose SPADR. It is a new step in the data-cleaning pipeline for LLMs.

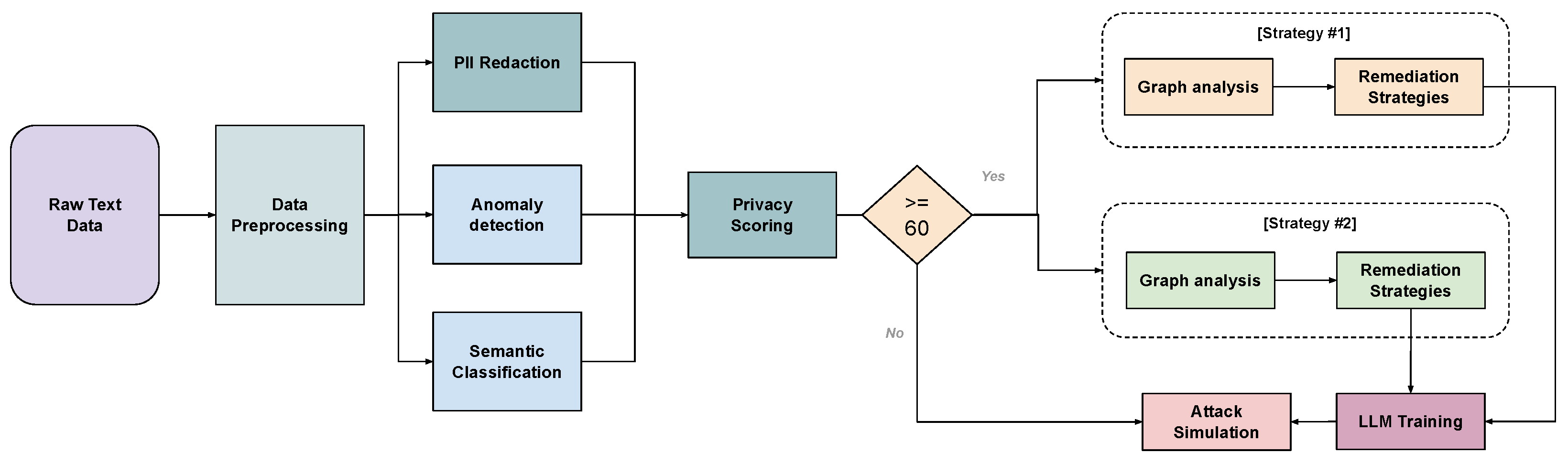

Figure 1 shows the overall design of the SPADR pipeline. It includes several components that work together to identify privacy risks and apply suitable remediation techniques.

Our pipeline begins with raw text data, which passes through a data preprocessing step. After that, three modules run in parallel. The first is PII redaction, which uses NER to find and remove known personal information. The second is anomaly detection, which uses a denoising autoencoder trained only on high-privacy-risk data. This model helps detect unusual or risky content by comparing new text to the patterns it has learned from those examples. The third module is semantic classification, which utilizes a zero-shot classifier to determine if a message appears sensitive. The results from these three modules are combined into a single privacy scoring step. If the privacy score is 60 or higher, the pipeline performs a detailed graph analysis to understand the relationships between different entities in the text. This analysis supports the selection of an appropriate remediation strategy, such as redacting, summarizing, generalizing, or deleting specific parts of the text. The remainder of this section provides a detailed explanation of each component in the SPADR pipeline.

4.1. Data Preprocessing

We start our preprocessing by manually reviewing and labeling a subset of the dataset to identify emails that raise privacy concerns [29]. This step provides the model with a foundation, enabling it to learn what constitutes sensitive content. This labeled pool is then used to train a denoising autoencoder (DAE). Importantly, the training remains unsupervised, since the DAE is optimized only through reconstruction loss without explicit supervision. The manual labeling serves solely to select representative inputs, not to provide target labels. We chose this unsupervised setup because in real-world scenarios, labeled privacy data are often limited or unavailable, and a system that generalizes to unseen messages without heavy annotation is more practical. Following this seeding step, we apply a comprehensive preprocessing pipeline to ensure that email content is clean, normalized, and semantically meaningful. This step is essential as it helps reduce noise, eliminate irrelevant details, and allows the model to focus on meaningful content, enabling accurate detection and redaction of privacy risks later on.

In the data preprocessing, we begin with data cleaning. We replace all URLs with a generic [url] token to prevent them from being interpreted as meaningful text. We also remove HTML tags from the content, and we normalize formatting inconsistencies by collapsing multiple whitespace characters and eliminating repetitive punctuation patterns (e.g., sequences of underscores, asterisks, or dashes) commonly found in forwarded emails or automated signatures. These regular expression patterns help to standardize the message structure.
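The cleaning rules above can be sketched with Python's standard `re` module. This is a minimal illustration, not the exact patterns used in SPADR: the regular expressions, the function name `clean_email_text`, and the order of the steps are assumptions chosen for the example.

```python
import re

# Illustrative patterns (assumptions, not the paper's exact expressions).
TAG_RE = re.compile(r"<[^>]+>")                # HTML tags
URL_RE = re.compile(r"https?://\S+|www\.\S+")  # http(s) and www-style URLs
REPEAT_PUNCT_RE = re.compile(r"[_*\-=]{3,}")   # runs such as ----- or *****
WHITESPACE_RE = re.compile(r"\s+")             # any run of whitespace


def clean_email_text(text: str) -> str:
    """Strip tags, replace URLs with [url], drop separator runs, collapse spaces."""
    # Tags are removed first so that a URL inside an attribute does not
    # swallow the closing bracket of its tag.
    text = TAG_RE.sub(" ", text)
    text = URL_RE.sub("[url]", text)
    text = REPEAT_PUNCT_RE.sub(" ", text)
    return WHITESPACE_RE.sub(" ", text).strip()


sample = "<p>See   https://example.com/report ----- thanks</p>"
cleaned = clean_email_text(sample)
print(cleaned)  # See [url] thanks
```

Stripping tags before URLs is a design choice of this sketch; a production cleaner would more likely rely on an HTML parser than on a single tag pattern.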

To isolate the actual content of each message, we extract the email body using rule-based heuristics. If the message includes a Subject: header, we extract the content that follows and ignore irrelevant blocks, such as headers, forwarding trails, or disclaimers. This step ensures that we only analyze the user-authored portion of the email, where privacy-sensitive information is most likely to reside.

Next, we tokenize the cleaned text using the NLTK library [30], which segments it into individual word tokens. We remove punctuation and convert all tokens to lowercase. This process helps emphasize semantically meaningful terms and avoids sparsity due to variations in case or punctuation.

After normalization, we transform each email into a dense vector representation using the “all-MiniLM-L6-v2” model. This model is a lightweight, BERT-based sentence encoder from the Sentence Transformers library [31]. These semantic embeddings capture the contextual meaning of the message. We use these embeddings in the following stages of the SPADR pipeline, including anomaly detection, semantic classification, and risk scoring.

4.2. PII Redaction Technique

We extract high-level PII using a two-stage redaction method. This approach combines NER-based replacement with rule-based pattern matching.

In the first stage, we utilize a pretrained NER model provided by the spaCy library [32] to identify sensitive entities within the text. These entities include:

PERSON—names of individuals,

GPE, LOC—geopolitical and geographic locations,

ORG—organization names,

DATE, EMAIL, PHONE—common identifiers,

LAW, MONEY—legal and financial terms.

Once the model detects an entity, it is replaced with a corresponding placeholder token to cover its content while preserving the semantic structure. For example, John Smith is replaced with [NAME], New York becomes [LOCATION], and john@example.com becomes [EMAIL].

In the second stage, we apply regular expressions to identify sensitive patterns not consistently captured by NER. The regular expressions cover:

email addresses using standard formats,

phone numbers in local and international formats,

dates in various structures (e.g., 01/01/2024, 2024-01-01),

sensitive keywords, such as password, confidential, secret, Enron, or financial values like million or SAR.

We replace such patterns with a generic [REDACTED] tag. This hybrid approach ensures that both structured and unstructured indicators of sensitive information are redacted adequately before the text is passed to the next stage. By combining statistical NER with handcrafted rules, we improve coverage and reduce the likelihood of missed privacy leaks.
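A minimal sketch of the second, rule-based stage is shown below. The patterns are simplified assumptions that cover the categories listed above (emails, phone numbers, two date formats, and the example keywords); they are not the expressions used in SPADR, and the function name `redact_patterns` is hypothetical.

```python
import re

# Simplified illustrative patterns; real-world formats need broader coverage.
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
DATE_RE = re.compile(r"\b(?:\d{1,2}/\d{1,2}/\d{4}|\d{4}-\d{2}-\d{2})\b")
PHONE_RE = re.compile(r"(?<!\w)\+?\d[\d\s().-]{7,}\d(?!\w)")
KEYWORD_RE = re.compile(
    r"\b(?:password|confidential|secret|Enron|million|SAR)\b", re.IGNORECASE
)

# Dates are redacted before phone numbers because an ISO date such as
# 2024-01-01 would otherwise also match the loose phone pattern.
PATTERNS = (EMAIL_RE, DATE_RE, PHONE_RE, KEYWORD_RE)


def redact_patterns(text: str) -> str:
    """Replace every match of the patterns above with a generic [REDACTED] tag."""
    for pattern in PATTERNS:
        text = pattern.sub("[REDACTED]", text)
    return text


message = (
    "Email john@example.com or call +1 555-123-4567 before 2024-01-01; "
    "the password is confidential."
)
redacted = redact_patterns(message)
print(redacted)
```

The order of `PATTERNS` matters in this sketch: overlapping patterns should be applied from most specific to least specific so that each span is replaced once.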

4.3. Anomaly Detection Using Denoising Autoencoder

After the preprocessing stage, we apply a DAE as an anomaly detection model. The intuition is that the DAE, trained on privacy-sensitive messages, will learn the common patterns associated with such risks. If a new message can be reconstructed accurately, it is likely to resemble previously seen privacy-risk cases, whereas poor reconstruction indicates a novel or less typical pattern that may also warrant attention.

Let $x \in \mathbb{R}^{d}$ denote the normalized embedding of an email message, where $d$ is the number of features representing the message (e.g., binary entity-presence indicators or word-level features). During training, we add Gaussian noise to obtain a corrupted input:

$$\tilde{x} = x + \epsilon, \qquad \epsilon \sim \mathcal{N}(0, \sigma^{2} I) \tag{1}$$

where $\epsilon$ is Gaussian noise with variance $\sigma^{2}$ and $\tilde{x}$ is the noisy version of the input. This corruption forces the autoencoder to learn robust representations of sensitive patterns.

The encoder maps the corrupted input to a latent representation:

$$z = f_{\theta}(\tilde{x}) \tag{2}$$

where $z$ is a latent vector that captures privacy-related features, and $f_{\theta}$ is the encoder parameterized by weights and biases $\theta$.

The decoder reconstructs the input from $z$:

$$\hat{x} = g_{\phi}(z) \tag{3}$$

where $\hat{x}$ is the reconstructed embedding of the original message, and $g_{\phi}$ is the decoder parameterized by $\phi$.

The reconstruction loss is minimized during training using the mean squared error (MSE):

$$\mathcal{L}(x, \hat{x}) = \frac{1}{d} \sum_{i=1}^{d} \left( x_{i} - \hat{x}_{i} \right)^{2} \tag{4}$$

where $x_{i}$ and $\hat{x}_{i}$ are the $i$-th components of the original and reconstructed embeddings.

For a new message $x$, we compute the reconstruction error:

$$e(x) = \frac{1}{d} \left\lVert x - g_{\phi}\!\left(f_{\theta}(x)\right) \right\rVert_{2}^{2} \tag{5}$$

A lower score indicates that the message is close to the distribution of risky training samples, while a higher score suggests deviation from known privacy-sensitive patterns.

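Finally, the scores are normalized to a range of 0–100 for comparability:

$$A(x) = 100 \times \frac{e(x) - e_{\min}}{e_{\max} - e_{\min}} \tag{6}$$

where $A(x)$ represents the anomaly score of message $x$, $e(x)$ is its reconstruction error, and $e_{\min}$ and $e_{\max}$ are the smallest and largest reconstruction errors over the scored messages. Messages with low reconstruction error (low $A(x)$) are semantically similar to high-risk training examples, while higher scores indicate benign or unseen patterns.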
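The corruption and scoring steps can be illustrated without a trained network. The sketch below uses only the Python standard library and makes two assumptions: the reconstruction error is the mean squared error, and the 0–100 scaling is a min-max normalization over the scored batch. The reconstructions are hard-coded stand-ins for the output of a trained DAE, and all function names are hypothetical.

```python
import random


def corrupt(x, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma to each component."""
    return [xi + rng.gauss(0.0, sigma) for xi in x]


def reconstruction_error(x, x_hat):
    """Mean squared error between an embedding and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def normalize_scores(errors):
    """Min-max scale a batch of reconstruction errors to the range 0-100."""
    lo, hi = min(errors), max(errors)
    if hi == lo:  # all errors identical: avoid division by zero
        return [0.0 for _ in errors]
    return [100.0 * (e - lo) / (hi - lo) for e in errors]


# Training-time corruption of a toy three-dimensional embedding.
rng = random.Random(0)
noisy = corrupt([0.2, -0.1, 0.4], sigma=0.1, rng=rng)

# Toy (embedding, reconstruction) pairs standing in for a trained DAE.
pairs = [
    ([1.0, 0.0], [1.0, 0.0]),  # perfect reconstruction
    ([1.0, 0.0], [0.0, 0.0]),  # poor reconstruction
    ([1.0, 0.0], [0.5, 0.0]),  # intermediate reconstruction
]
errors = [reconstruction_error(x, x_hat) for x, x_hat in pairs]
scores = normalize_scores(errors)
print(scores)  # [0.0, 100.0, 25.0]
```

Because min-max scaling depends on the batch, scores computed this way are comparable only within the same set of messages; fixing the minimum and maximum from a reference set would be one way to keep them stable.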

Before training, we manually select the records that contain a high privacy risk. This step is important because it enables the model to focus on sensitive patterns rather than general text. The DAE is still trained in an unsupervised way, since it learns to reconstruct its inputs without using labels. By focusing on risky content, the DAE becomes more specialized in detecting privacy risks rather than modeling all kinds of text.

4.4. Semantic Classification

We use a zero-shot text classifier based on the BART model [33], which is pretrained on natural language inference (NLI) tasks. In NLI, the model learns to decide whether a given hypothesis logically follows from a given premise. We apply this ability by treating the input message as the premise and the classification labels as hypotheses. Specifically, we use the labels “This message is sensitive” and “This message is not sensitive” as hypotheses. The classifier then assigns a confidence score to each label based on how well it matches the content of the input message, without requiring any task-specific training. We include this component in our pipeline to capture cases where sensitivity arises from the overall meaning or context of the message, rather than the presence of specific keywords or known entities. It helps detect subtle or implicit privacy risks that rule-based methods may miss. The resulting semantic score contributes to the overall privacy risk score used in the decision-making stage.

4.5. Privacy Scoring

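To assess whether a text poses a privacy risk, we calculate a risk score that combines three components: semantic classification, anomaly detection using DAE, and NER.

First, we take the semantic classification confidence score $p_{\text{sensitive}}(x) \in [0, 1]$ associated with the Sensitive label and scale it as follows:

$$S_{\text{sem}}(x) = 100 \times p_{\text{sensitive}}(x) \tag{7}$$

Next, we compute a named entity score using the NER model. For each identified entity that belongs to a set of PII types (e.g., PERSON, GPE, ORG, EMAIL, DATE), we increment the score by a fixed weight of 15, so that for the set $E_{\text{PII}}(x)$ of such entities detected in message $x$:

$$S_{\text{NER}}(x) = 15 \times \left\lvert E_{\text{PII}}(x) \right\rvert \tag{8}$$

Finally, we include the anomaly score $A(x)$ from a DAE that quantifies how much a message deviates from the patterns of sensitive text.

We compute the final risk score as a weighted sum of all three components:

$$R(x) = 0.4 \, S_{\text{sem}}(x) + 0.3 \, A(x) + 0.3 \, S_{\text{NER}}(x) \tag{9}$$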

We choose these weights based on the type of signals each method provides. Semantic classification receives the highest weight (40%) because it evaluates the contextual sensitivity of the text. The anomaly score and NER-based score each receive 30%, as they offer complementary evidence: one from distributional deviation and the other from explicit identifiers. We validate the robustness of this weighting scheme through ablation and sensitivity experiments.

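We constrain the final risk score to the range $[0, 100]$. If the score exceeds a threshold of 60 or if the text includes any PII entities, we apply SPADR remediation. This optimal threshold for triggering the SPADR pipeline is derived using Youden’s Index [34] and the ROC curve as follows:

$$J(\tau) = \text{sensitivity}(\tau) + \text{specificity}(\tau) - 1 = \mathrm{TPR}(\tau) - \mathrm{FPR}(\tau), \qquad \tau^{*} = \arg\max_{\tau} J(\tau) \tag{10}$$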

This method ensures that privacy protection is activated only when the message poses a meaningful risk.
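Threshold selection with Youden's index can be sketched as follows. The function name `youden_threshold`, the convention that a score at or above the threshold counts as positive, and the toy scores and labels are all assumptions made for illustration; they are not results from the paper.

```python
def youden_threshold(scores, labels):
    """Return (threshold, J) maximizing J = TPR - FPR over the observed scores.

    A message is predicted risky when its score is >= the threshold.
    labels holds 1 for risky messages and 0 for benign ones.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("both classes are required to compute Youden's index")

    best_tau, best_j = None, float("-inf")
    for tau in sorted(set(scores)):
        tp = sum(1 for s, y in zip(scores, labels) if s >= tau and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= tau and y == 0)
        j = tp / positives - fp / negatives
        if j > best_j:  # ties keep the lowest threshold
            best_tau, best_j = tau, j
    return best_tau, best_j


# Toy validation set: risk scores with ground-truth risk labels.
toy_scores = [20, 35, 55, 60, 72, 90]
toy_labels = [0, 0, 0, 1, 1, 1]
tau, j = youden_threshold(toy_scores, toy_labels)
print(tau, j)  # 60 1.0
```

Scanning only the observed scores is sufficient because the true and false positive rates change only at those values.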

This privacy score helps distinguish public knowledge from private information because SPADR combines semantic classification, anomaly detection, and NER to assign risk scores. Public knowledge (e.g., “Philadelphia, July 4, 1776” [35]) commonly appears in non-sensitive contexts and therefore produces a low anomaly score and a low semantic sensitivity score. As a result, a query about public knowledge does not exceed the risk threshold and passes through SPADR unchanged. On the other hand, context-specific details, such as “the youngest executive in the Riyadh branch met with the ministry on July 4, 2024”, receive higher risk scores due to rarity and relational cues. This design allows SPADR to avoid over-blocking historical or well-known facts while still flagging sensitive private information.
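The scoring rule described in this section can be sketched in a few lines. The weights (40%, 30%, 30%), the per-entity weight of 15, and the threshold of 60 come from the text; scaling the classifier confidence by 100 and clipping the result to the 0–100 range are assumptions of this sketch, as are the function names.

```python
SEMANTIC_WEIGHT = 0.4   # semantic classification
ANOMALY_WEIGHT = 0.3    # DAE anomaly score
NER_WEIGHT = 0.3        # explicit identifiers
ENTITY_WEIGHT = 15      # score added per detected PII entity
RISK_THRESHOLD = 60     # trigger for remediation


def risk_score(p_sensitive, anomaly_score, n_pii_entities):
    """Weighted sum of the three component scores, clipped to [0, 100].

    p_sensitive: classifier confidence for the sensitive label, in [0, 1].
    anomaly_score: normalized DAE score, in [0, 100].
    n_pii_entities: number of detected entities of a PII type.
    """
    semantic = 100.0 * p_sensitive
    ner = ENTITY_WEIGHT * n_pii_entities
    raw = (
        SEMANTIC_WEIGHT * semantic
        + ANOMALY_WEIGHT * anomaly_score
        + NER_WEIGHT * ner
    )
    return max(0.0, min(100.0, raw))


def needs_remediation(score, n_pii_entities):
    """Remediate when the score exceeds the threshold or any PII entity is present."""
    return score > RISK_THRESHOLD or n_pii_entities > 0


moderate = risk_score(0.8, 40.0, 1)      # 0.4*80 + 0.3*40 + 0.3*15 = 48.5
saturated = risk_score(1.0, 100.0, 10)   # raw value 115, clipped to 100
print(moderate, needs_remediation(moderate, 1), needs_remediation(moderate, 0))
```

Note that under the stated rule any detected PII entity triggers remediation on its own, so the threshold effectively decides only for messages without explicit identifiers.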

4.6. Graph Analysis and Remediation

We propose to use graph analysis because it provides a powerful way to model and understand the relationships between entities in text. Unlike linear models, which treat tokens in isolation or sequence, graph-based approaches capture how entities are connected, enabling richer context modeling. In our goal of privacy protection, a single piece of information may not be risky on its own. However, when combined with other entities such as a person’s name, location, and organization, it can reveal sensitive or identifying patterns in relationships. By constructing graphs that represent these co-occurrences, we can detect high-risk entity combinations that standard redaction tools may not flag. This relational view enables the SPADR pipeline to enhance privacy risk scores and trigger more robust remediation when entity clusters indicate sensitive exposure. To achieve this, we propose the following two strategies:

4.6.1. Strategy 1: Graph-Based Risk Detection and Remediation

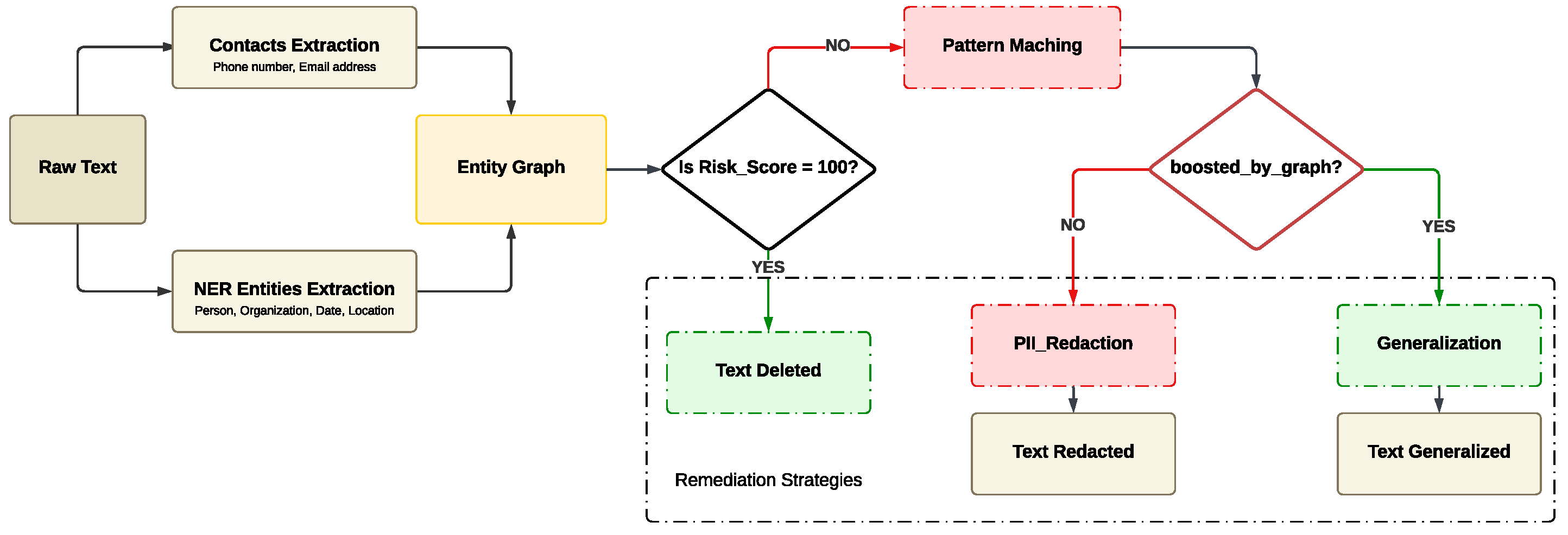

This strategy addresses hidden privacy risks in textual data by employing a graph-based approach to analyze the relationships between sensitive entities. While traditional NER methods are effective in identifying and redacting individual entities, such as names, organizations, or phone numbers, redaction alone is often insufficient. In many cases, even after a specific term is removed, the surrounding context may still reveal private meaning. For example, the presence of a redacted name alongside a known company and a date may be enough to infer the individual’s identity. To handle this type of contextual privacy leakage, we construct an entity graph that models the relationships between detected entities. It allows us to reason about entity combinations, not just isolated terms.

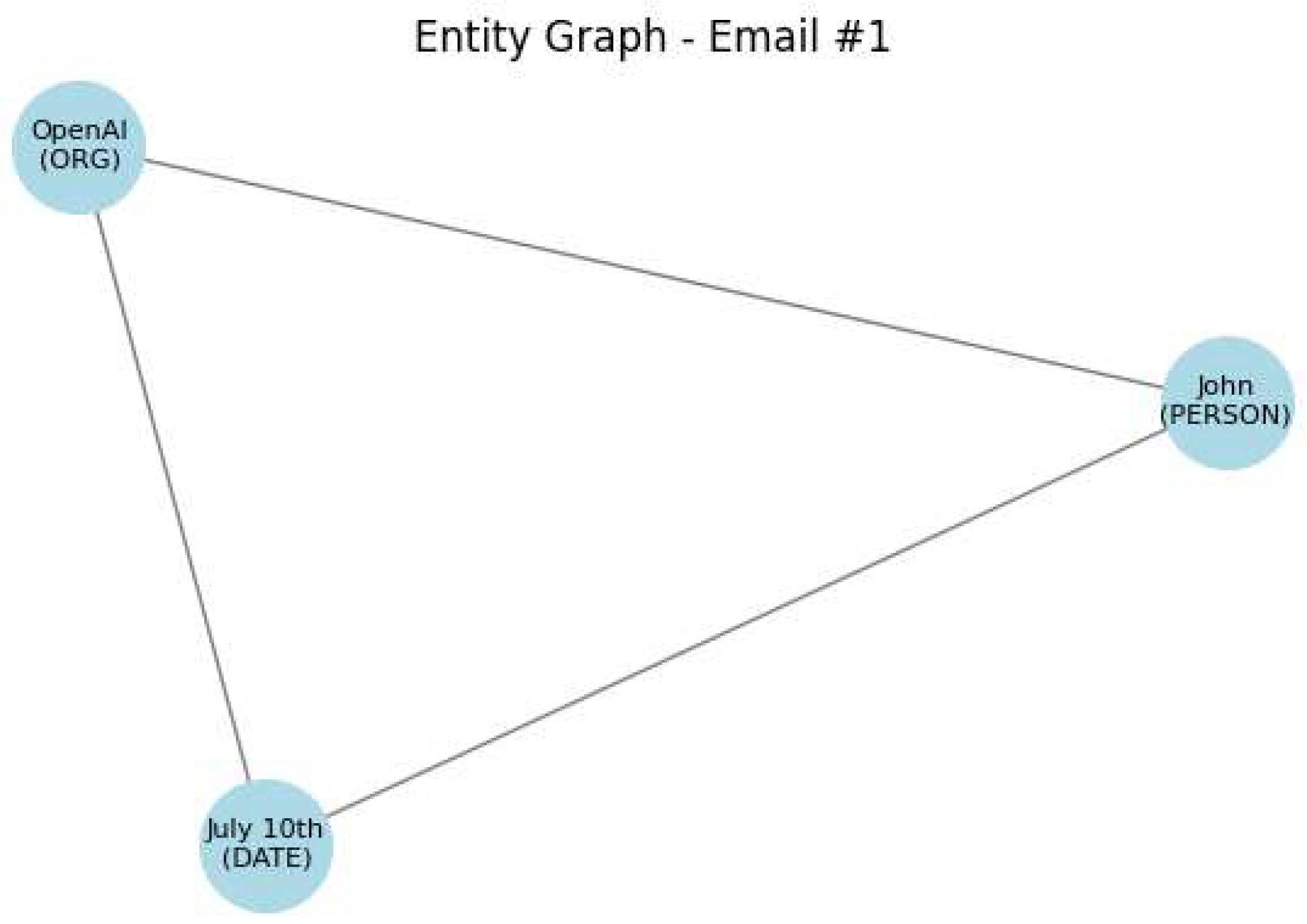

As shown in Figure 2, the process begins with entity extraction. We apply PII redaction, as described in Section 4.2, to detect key entity types such as PERSON, ORG, GPE, DATE, PHONE, and EMAIL. These entities are extracted using a combination of NER and regular expressions. Each entity is added as a node in an undirected graph, and edges are created between every pair of nodes to represent their co-occurrence within the same text. We implement this entity graph using the NetworkX library [36], which provides a flexible and efficient framework for representing and analyzing graph structures in Python 3.10. The graph-based representation enables the detection of higher-order relationships between entities that might otherwise remain unnoticed.

We formalize this process in Algorithm 1, which outlines how we extract entities and construct the graph.

| Algorithm 1 Build Entity Graph from Text |
| --- |
| 1: Input: Raw text t |
| 2: Output: Undirected entity graph G |
| 3: Extract named entities using NER (e.g., PERSON, ORG, DATE, etc.) |
| 4: Extract phone numbers and emails using RegEx |
| 5: Initialize empty graph G (using NetworkX) |
| 6: for all contact ∈ {phones, emails} do |
| 7: Add node to G with label = “PHONE” or “EMAIL” |
| 8: end for |
| 9: for all entity ∈ NER entities do |
| 10: Add node to G with label = entity type |
| 11: end for |
| 12: for all pairs of nodes (i, j) in G do |
| 13: Add undirected edge between i and j |
| 14: end for |
| 15: return G |
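Algorithm 1 can be sketched in Python as follows. To stay free of third-party dependencies, this illustration represents the graph as a dictionary of node labels plus a set of undirected edges instead of a NetworkX graph, and it takes the NER output as an argument rather than calling a specific model. The function name `build_entity_graph` and the two regular expressions are assumptions made for the example.

```python
import re
from itertools import combinations

# Simplified illustrative patterns for contact details.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def build_entity_graph(text, ner_entities):
    """Build the complete co-occurrence graph of the entities found in one text.

    ner_entities: iterable of (surface_text, entity_type) pairs produced by
    any NER model, e.g. [("Jane Doe", "PERSON")].
    Returns (labels, edges): labels maps each node to its entity type, and
    edges is a set of frozensets, one per unordered pair of nodes.
    """
    labels = {}
    # Steps 6-8: contact details found by regular expressions.
    for email in EMAIL_RE.findall(text):
        labels[email] = "EMAIL"
    for phone in PHONE_RE.findall(text):
        labels[phone] = "PHONE"
    # Steps 9-11: entities found by NER.
    for surface, entity_type in ner_entities:
        labels[surface] = entity_type
    # Steps 12-14: connect every pair of nodes.
    edges = {frozenset(pair) for pair in combinations(labels, 2)}
    return labels, edges


text = "Contact Jane Doe at jane.doe@example.com or 555-123-4567."
labels, edges = build_entity_graph(text, [("Jane Doe", "PERSON")])
print(sorted(labels.values()))  # ['EMAIL', 'PERSON', 'PHONE']
print(len(edges))               # 3
```

With NetworkX, the same structure would be a `networkx.Graph` whose nodes carry a label attribute; the dictionary form is used here only to keep the sketch self-contained.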

Once the graph is built, we compute a privacy risk score for each input, as described in Equation (9). This score combines semantic sensitivity, anomaly detection, and entity presence. If the final score reaches 100, we classify the text as extremely risky and delete it entirely to prevent privacy leakage.

After scoring, we analyze the entity graph to detect sensitive combinations. For instance, a graph that includes a person, an organization, and a date may imply an employment or meeting context. Similarly, a person connected to both a phone number and an email address suggests a direct contact risk, even if the entities themselves are redacted. When such patterns are identified, we mark the text with boosted_by_graph = TRUE, indicating that the graph-based reasoning increased the privacy risk and triggered a more stringent remediation response.

Based on both the risk score and the graph structure, we apply one of three remediation strategies. For extremely high-risk cases, we use deletion, replacing the entire content with a placeholder warning. For moderately risky combinations, we apply generalization, where sensitive entities are replaced with generic labels, such as <person> or [organization]. In lower-risk cases, we use PII redaction, masking only the detected entities while preserving the surrounding context.
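The decision logic described above can be sketched as follows. This is an illustrative assumption about how the pieces fit together, not the authors' code: the function name, the pattern table, and the mapping of a graph match to generalization (rather than to another response) are hypothetical, and only the two entity combinations and the three remediation actions come from the text.

```python
# Hypothetical pattern table; the two combinations are the examples named in the text.
SENSITIVE_PATTERNS = [
    {"PERSON", "ORG", "DATE"},     # employment or meeting context
    {"PERSON", "PHONE", "EMAIL"},  # direct contact risk
]


def choose_remediation_s1(risk_score, node_labels):
    """Pick a Strategy 1 action from a risk score (0-100) and the graph's node labels."""
    labels = set(node_labels)
    # A pattern matches when all of its entity types are present in the graph.
    boosted_by_graph = any(pattern <= labels for pattern in SENSITIVE_PATTERNS)
    if risk_score >= 100:
        action = "deletion"
    elif boosted_by_graph:
        action = "generalization"  # assumed response to a sensitive combination
    else:
        action = "pii_redaction"
    return action, boosted_by_graph


print(choose_remediation_s1(69.68, ["PERSON", "ORG", "GPE", "DATE"]))
```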

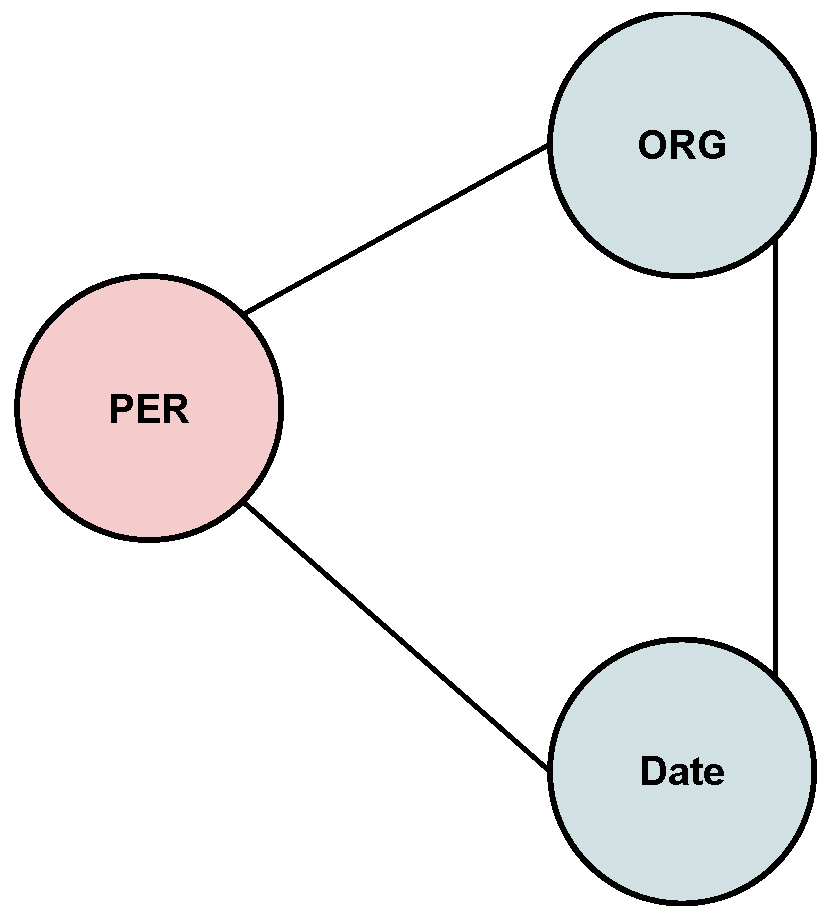

Figure 3 illustrates an example entity graph derived from a single piece of text. In this case, the graph connects nodes of types PERSON, ORG, and DATE, forming a triangular structure. Even if each term is redacted individually, their co-occurrence suggests a meeting or job affiliation. This example demonstrates how graph analysis enables SPADR to identify subtle privacy risks that linear methods would overlook.

This graph-based strategy enables us to identify nuanced privacy risks that simpler redaction methods may overlook. By modeling how entities co-occur and interact within a document, we enable smarter, context-aware remediation decisions. In doing so, we reduce the risk of sensitive information leakage while preserving the original data’s usefulness.

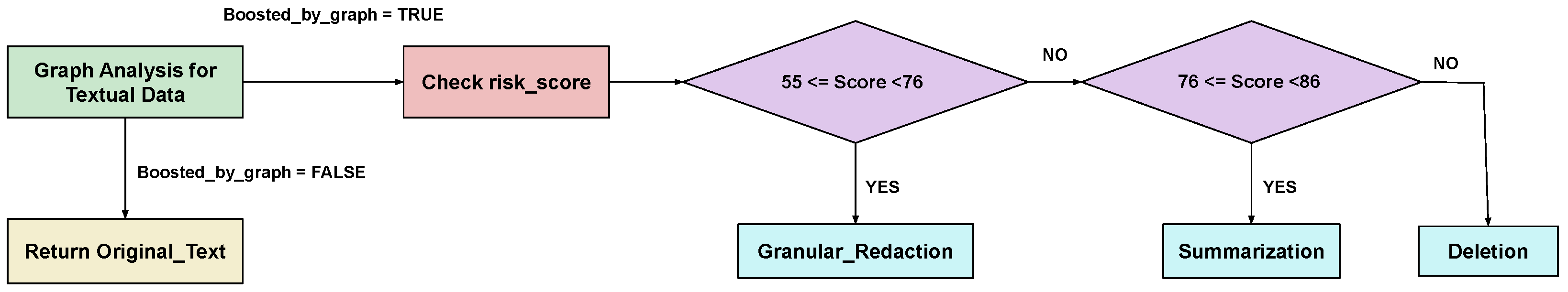

4.6.2. Strategy 2: Graph-Boosted Threshold-Based Remediation

In the previous section (Strategy 1), we applied rule-based pattern detection on entity graphs to identify specific high-risk combinations such as PERSON + PHONE + EMAIL and trigger targeted remediation. However, in this second strategy, we adopt a more general approach. Rather than searching for specific patterns, we use the entity graph solely to check whether any relationships exist between entities within a given text. If the graph contains at least one edge, we set a binary flag boosted_by_graph = TRUE, indicating that further remediation is required. The overall risk score then drives remediation. This design makes Strategy 2 more scalable and broadly applicable, as it relies on threshold-based logic rather than handcrafted rule enumeration.

In this strategy of the SPADR pipeline, we combine lightweight graph analysis with risk-aware thresholding to determine the appropriate remediation strategy. After applying PII redaction, we construct an entity graph using the NetworkX library [36], which allows us to examine the co-occurrence structure of detected entities. This graph-based signal helps detect hidden relational risks that may not be evident from redacted tokens alone.

In this strategy, each text is first represented as a graph, where:

- 1. Nodes (entities) represent:
  - (a) PII information, such as a person, location, organization, and phone number
  - (b) Attributes that have relationships with each other. For example, an increase in sales → Summer season
- 2. Edges represent the relationships between entities.

Graph analysis determines which texts are passed on to the remediation strategies that mitigate privacy risks in textual data. It proceeds in the following steps:

Step 1: The textual data acts as an input for our Graph Analysis

Step 2: Graph Analysis works by assigning a flag, [boosted_by_graph], to individual texts, indicating whether the text contains entities that have relationships with each other. If such relationships exist, the text is passed on for applying remediation strategies. Otherwise, the text is considered harmless and does not contain any private information, and should be left unchanged.

The pseudocode for graph analysis is as follows (Algorithm 2):

| Algorithm 2 Determine if Entity(x) has Relationship with Entity(y) in Textual Data |

- 1: Input: {Textual Data}
- 2: Output: {Graphs, texts labeled with [boosted_by_graph] flag}
- 3: Build graph G from entities in Textual Data
- 4: if length(edges of G) > 0 then
- 5: [boosted_by_graph] ← TRUE
- 6: else
- 7: [boosted_by_graph] ← FALSE
- 8: end if
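The flagging step in Algorithm 2 reduces to a single edge-count check. The sketch below assumes the co-occurrence graph connects every pair of distinct entities, as in Strategy 1; the function name is hypothetical.

```python
from itertools import combinations


def boosted_by_graph(entities):
    """Return True when the co-occurrence graph over distinct entities has an edge."""
    nodes = set(entities)
    edges = list(combinations(nodes, 2))
    return len(edges) > 0


print(boosted_by_graph(["Mary", "IBM"]))  # two distinct entities, so one edge exists
print(boosted_by_graph(["Mary"]))         # a single node has no edges
```

Under this assumption, the flag is TRUE exactly when a text contains at least two distinct entities.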

After a text has been flagged with [boosted_by_graph] = TRUE, we apply remediation strategies to it, as shown in Figure 4. In this paper, we demonstrate three types of remediation techniques:

Granular Redaction: This technique involves replacing only the sensitive or private PII entities with their labels. For example, “John works at Apple” will be generalized as “<person> works at <organization>”.

Summarization: This technique involves creating a concise summary directly from the text’s content. To apply this technique, we have integrated the pretrained model sshleifer/distilbart-cnn-12-6 [37], a distilled version of BART-large-CNN designed to produce short and efficient summaries from the text.

Deletion: This technique is used when the text contains highly sensitive PII information, which, even after applying granular redaction or summarization, can still be used to reveal private entities within the text and lead to the re-identification of the text’s content. In this case, the deletion technique is applied to delete the text completely due to the high risk of privacy violation.
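Of the three techniques, granular redaction is the simplest to illustrate. The sketch below is a minimal example using naive string replacement; the function name and the `(surface_text, label)` input format are assumptions, and a production system would more likely replace character spans reported by the NER model.

```python
def granular_redact(text, entities):
    """Replace each (surface_text, label) entity in `text` with a <label> placeholder."""
    # Replace longer surface forms first so that an entity contained in a
    # longer entity is not rewritten prematurely.
    for surface, label in sorted(entities, key=lambda e: len(e[0]), reverse=True):
        text = text.replace(surface, f"<{label}>")
    return text


print(granular_redact(
    "John works at Apple",
    [("John", "person"), ("Apple", "organization")],
))
# prints: <person> works at <organization>
```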

The optimal thresholds for applying remediation strategies are determined using Youden’s Index (Equation (10)) and by examining the statistical distribution of risk scores in Table 1.
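For readers unfamiliar with Youden's Index, it is defined as J = sensitivity + specificity - 1, which equals TPR - FPR, and the selected threshold is the one that maximizes J. The sketch below shows this selection on toy data; the scores, labels, and function name are illustrative assumptions and are not taken from Table 1.

```python
def youden_threshold(scores, labels):
    """Return (threshold, J) maximizing J = TPR - FPR for the rule `score >= threshold`.

    `labels` uses 1 for sensitive and 0 for non-sensitive; both classes must be present.
    """
    positives = sum(1 for y in labels if y == 1)
    negatives = len(labels) - positives
    best_threshold, best_j = None, float("-inf")
    for threshold in sorted(set(scores)):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        j = tp / positives - fp / negatives
        if j > best_j:
            best_threshold, best_j = threshold, j
    return best_threshold, best_j


# Toy risk scores (percent) with hypothetical ground-truth labels.
print(youden_threshold([20, 35, 50, 60, 80, 90], [0, 0, 0, 1, 1, 1]))
```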

These remediation techniques are applied to the textual data in the following steps:

Step 1: First, check whether the text is flagged with [boosted_by_graph] = TRUE, indicating that at least one relationship exists among the entities in the text.

Step 2: Next, take the risk score assigned to each text, which combines the semantic, autoencoder, and NER scores as given in Equation (9).

Step 3: If the risk score is below 76% (that is, either below 55% or in the range from 55% up to 76%), granular redaction is applied. If it is at least 76% and below 86%, summarization is applied. If it is 86% or higher, deletion is applied.

Step 4: If [boosted_by_graph] is FALSE, the text is treated as plain text without related sensitive PII entities, so no remediation strategy is applied and the text remains unchanged.

Finally, we obtain cleaned textual data with a reduced risk of privacy violation.

The pseudocode for the remediation strategy is as follows (Algorithm 3):

| Algorithm 3 Applying Remediation Techniques on Textual Data |

- 1: Input: {text, risk_score, boosted_by_graph}
- 2: if boosted_by_graph = TRUE then
- 3: if risk_score < 55 or (55 ≤ risk_score < 76) then
- 4: Apply Granular_Redaction
- 5: else if 76 ≤ risk_score < 86 then
- 6: Apply Summarization
- 7: else
- 8: Apply Deletion
- 9: end if
- 10: else
- 11: return Original_Text ⊲ boosted_by_graph = FALSE
- 12: end if
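The threshold logic of Algorithm 3 can be written as a small Python function. The sketch assumes risk scores are expressed in percent and uses the 76% and 86% thresholds stated in Step 3; the function name and the returned labels are illustrative.

```python
def choose_remediation_s2(risk_score, boosted_by_graph):
    """Map a risk score (percent) and the graph flag to a remediation technique."""
    if not boosted_by_graph:
        return "unchanged"           # no related entities: keep the original text
    if risk_score < 76:
        return "granular_redaction"  # covers both < 55 and 55 <= score < 76
    if risk_score < 86:
        return "summarization"
    return "deletion"


for score, flag in [(67.99, True), (76.55, True), (100.0, True), (35.69, False)]:
    print(score, flag, choose_remediation_s2(score, flag))
```

The two granular-redaction ranges in Step 3 collapse into a single comparison because both lead to the same action.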

5. Experiments

We divide our experiment into four main steps: collecting and preprocessing the data, training the model, evaluating its performance, and finally, discussing the effectiveness of our methods.

5.1. Data Collection

We use the publicly available Enron Email Dataset [35], which contains about 500,000 emails from employees of the Enron Corporation, a U.S. energy company that collapsed in 2001 due to corporate fraud [38]. The dataset was released during the federal investigation and is now widely used in research on NLP, privacy, and organizational communication. This dataset is suitable for our goal because it contains a diverse range of text types, including financial communications, personal messages, and private plans. Such diversity provides a rich source of sensitive information, enabling us to evaluate privacy risks in various contexts. For this study, we randomly select 10,000 emails. We focus on the body of each email because it often contains sensitive or private content, such as personal details, organizational roles, or informal conversations. We then split the data into three parts: training, validation, and testing. We use the training set to train the DAE, the validation set to tune hyperparameters, and the test set to evaluate the full SPADR pipeline.

In addition, we generate 500 synthetic records using GPT-4. These records include HR emails, bank notices, clinic notes, and support tickets, each written in 3–5 sentences with sensitive information, such as names, contact details, financial identifiers, and health notes. We use synthetic data because we need to evaluate our pipeline on a dataset that is more diverse and that covers domains beyond corporate finance. In particular, there is no publicly available health dataset with realistic sensitive content. By combining the Enron set with synthetic records, we can test SPADR on both financial and health-related data and evaluate its ability to generalize across domains.

Synthetic Data Generation

We generate 500 synthetic records to expand the diversity of our dataset and to include domains not covered by Enron. We use the GPT-4 API with structured prompts to create realistic but fictitious documents, such as HR emails, bank notices, clinic notes, and customer support tickets. Each prompt instructs the model to write between three and five sentences in English and to include specific types of sensitive information. Our prompt design covers personal identifiers (e.g., names, social security numbers, IDs), contact details (e.g., emails, phone numbers, addresses), financial records (e.g., bank accounts, credit cards), and, in some cases, health content (e.g., diagnoses and prescriptions). Example prompts are provided in Appendix A.

Then, we validate the dataset in three steps. First, we apply regular expressions and a BERT-based NER model to confirm that each synthetic record contains at least one sensitive entity. On average, every record includes between three and five sensitive entities. Second, we perform a human review of a random sample of records to ensure that the texts are natural and that the sensitive information is realistic but still fictitious. Third, we compare entity distributions with the Enron dataset. Both sets contain a similar mix of PERSON, ORG, EMAIL, and DATE entities. In contrast, the synthetic set adds structured identifiers, such as social security numbers and card numbers.

To measure the distributional shift between Enron and the synthetic data, we compute sentence embeddings using MiniLM and calculate the cosine similarity between the mean embeddings of both datasets. Specifically, we average the embeddings of all records in each dataset and then compute the cosine similarity between the two mean vectors. The similarity score of 0.57 indicates that the two sets are semantically related but distinct. This shows that the synthetic records introduce new styles and domains (e.g., health and ID records) while remaining close enough to Enron emails to serve as a realistic test set.
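The mean-embedding comparison described above can be sketched as follows. The three-dimensional vectors are toy stand-ins for MiniLM sentence embeddings, and the function names are hypothetical; only the procedure (average per dataset, then cosine similarity of the two means) follows the text.

```python
import math


def mean_vector(vectors):
    """Component-wise average of equally sized vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def dataset_similarity(embeddings_a, embeddings_b):
    """Cosine similarity between the mean embeddings of two datasets."""
    return cosine_similarity(mean_vector(embeddings_a), mean_vector(embeddings_b))


# Toy embeddings; real inputs would be one sentence embedding per record.
enron_like = [[1.0, 0.0, 0.0], [1.0, 2.0, 0.0]]      # mean: [1, 1, 0]
synthetic_like = [[0.0, 1.0, 1.0], [2.0, 1.0, 1.0]]  # mean: [1, 1, 1]
print(round(dataset_similarity(enron_like, synthetic_like), 4))
```

Note that comparing mean vectors summarizes each dataset by a single point, so it captures an overall topical shift but not differences in spread within each dataset.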

Therefore, by combining Enron with the synthetic records, we were able to obtain a dataset that includes both real corporate communication and diverse synthetic examples from finance and health domains. This setup provides a stronger evaluation of SPADR’s generalization ability.

5.2. Model Training

We train a denoising autoencoder (DAE) to reconstruct non-sensitive email content and detect potential privacy risks. The architecture, described in Section 4.3, consists of an encoder with two linear layers that reduce the dimensionality of the input, followed by a decoder that reconstructs the original input. During training, we add Gaussian noise to the input vectors to help the model learn more robust representations. The model is trained to minimize the reconstruction loss, measured as the mean squared error (MSE).

To select the final hyperparameters, we employed a random search over various configurations and chose the set that achieved the best performance on the validation set. We used the Adam optimizer with an initial learning rate of 0.01. A decaying learning rate schedule reduced the learning rate by half if the validation loss did not improve over seven epochs, with a minimum allowed learning rate of 0.000001. We also applied early stopping with a patience of 10 epochs and a maximum of 200 training epochs. This setup ensured stable convergence and prevented overfitting. The main hyperparameters used in training are summarized in Table 2.

We also apply normalization to keep input values within the [0, 1] range, ensuring the autoencoder output remains comparable to the original input.
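The interaction between the learning rate schedule and early stopping can be illustrated with a framework-free simulation. The sketch assumes that "did not improve" means the validation loss failed to drop below its best value so far and that the plateau counter restarts after each reduction; these details, and the function name, are assumptions. Frameworks such as PyTorch offer a comparable `ReduceLROnPlateau` scheduler with `factor`, `patience`, and `min_lr` parameters.

```python
def simulate_schedule(val_losses, lr=0.01, factor=0.5, lr_patience=7,
                      min_lr=1e-6, stop_patience=10, max_epochs=200):
    """Return [(epoch, lr), ...] up to the epoch where training would stop."""
    best = float("inf")
    since_best = 0     # epochs since the best validation loss (early stopping)
    since_change = 0   # epochs without improvement since the last LR change
    history = []
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best:
            best, since_best, since_change = loss, 0, 0
        else:
            since_best += 1
            since_change += 1
        if since_change >= lr_patience:
            lr = max(lr * factor, min_lr)  # halve, but never go below min_lr
            since_change = 0
        history.append((epoch, lr))
        if since_best >= stop_patience:
            break                          # early stopping
    return history


# A validation loss that never improves after the first epoch.
history = simulate_schedule([1.0] * 30)
print(history[-1])
```

With a loss that never improves, the learning rate is halved once before early stopping ends the run, which shows why the scheduler patience (7) is set below the stopping patience (10).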

5.3. Evaluation Metrics

To evaluate the effectiveness of the proposed SPADR pipeline, we adopted three key metrics: two for assessing privacy risk and one for preserving utility. These metrics differ in their objectives but collectively ensure a balance between privacy and utility.

5.3.1. Attribute Inference Attack Accuracy (AAA)

This metric evaluates whether sensitive attributes remain in the text after cleaning. For each original text $x$ and its anonymized version $\hat{x}$, we extract named entities (e.g., PERSON, ORG, LOC) using an NER model. Let $E_x$ be the set of entities in $x$ and $E_{\hat{x}}$ the set in $\hat{x}$. Attribute inference attack accuracy is computed as:

$$\mathrm{AAA} = \frac{|E_x \cap E_{\hat{x}}|}{|E_x|}$$

A lower AAA indicates better privacy protection.
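As a concrete illustration, the sketch below assumes that AAA is the fraction of entities from the original text that can still be found in the anonymized text; this reading follows from the set definitions above, but the exact formula and the function name are assumptions. The entity sets are supplied by hand here rather than produced by an NER model.

```python
def attribute_inference_accuracy(original_entities, anonymized_entities):
    """Fraction of original entities that survive anonymization (lower is better)."""
    original = set(original_entities)
    if not original:
        return 0.0  # nothing could leak
    return len(original & set(anonymized_entities)) / len(original)


# "Ahmed from IBM will be visiting Texas on 2024-09-01." after generalization
# still contains the name "Ahmed" but none of the other three entities.
original = {"Ahmed", "IBM", "Texas", "2024-09-01"}
anonymized = {"Ahmed", "XYZ Company", "X City"}
print(attribute_inference_accuracy(original, anonymized))  # 0.25
```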

5.3.2. Membership Inference Attack (MIA)

We examine whether our models are vulnerable to MIA, which tests whether training examples can be distinguished from held-out data using the model’s loss [39]. Following this approach, we compute a membership score based on the negative average cross-entropy loss for each sequence and evaluate attack success with ROC-AUC. The detailed setup is provided in Appendix B.
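The scoring and evaluation steps of this attack can be sketched without a language model by starting from per-token losses. The loss values below are toy numbers and the function names are hypothetical; the AUC is computed through its pairwise-ranking interpretation rather than with a library call.

```python
def membership_scores(per_token_losses):
    """Negative average cross-entropy per sequence; higher suggests a training member."""
    return [-sum(losses) / len(losses) for losses in per_token_losses]


def roc_auc(scores, is_member):
    """Probability that a random member outranks a random non-member (ties count 0.5)."""
    members = [s for s, m in zip(scores, is_member) if m]
    non_members = [s for s, m in zip(scores, is_member) if not m]
    wins = sum(
        1.0 if a > b else 0.5 if a == b else 0.0
        for a in members
        for b in non_members
    )
    return wins / (len(members) * len(non_members))


# Toy per-token losses: two training sequences followed by two held-out ones.
losses = [[1.0, 1.0], [2.0, 2.0], [1.5, 1.5], [3.0, 3.0]]
scores = membership_scores(losses)
print(roc_auc(scores, [True, True, False, False]))  # 0.75
```

An AUC near 0.5 means the attacker cannot distinguish members from non-members better than chance.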

5.3.3. BERTScore for Utility Preservation

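To ensure anonymization does not destroy the semantic content, we compute BERTScore, which measures the contextual similarity between the original text and the anonymized text using transformer-based embeddings. For a reference sentence r and candidate c, with token embeddings $r_i$ and $c_j$ normalized to unit length:

$$R = \frac{1}{|r|}\sum_{r_i \in r}\max_{c_j \in c} r_i^{\top} c_j, \qquad P = \frac{1}{|c|}\sum_{c_j \in c}\max_{r_i \in r} r_i^{\top} c_j, \qquad F_1 = \frac{2PR}{P+R}$$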

A higher BERTScore indicates better semantic preservation.
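BERTScore matches each token greedily to its most similar counterpart in the other sentence and averages the resulting cosine similarities. The sketch below shows this matching on toy two-dimensional vectors that stand in for contextual token embeddings; it omits optional refinements such as importance weighting and baseline rescaling, and the function names are illustrative.

```python
import math


def _cos(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def bertscore(reference_vecs, candidate_vecs):
    """Greedy-matching precision, recall, and F1 over token embeddings."""
    # Recall: each reference token is matched to its closest candidate token.
    recall = sum(
        max(_cos(r, c) for c in candidate_vecs) for r in reference_vecs
    ) / len(reference_vecs)
    # Precision: each candidate token is matched to its closest reference token.
    precision = sum(
        max(_cos(c, r) for r in reference_vecs) for c in candidate_vecs
    ) / len(candidate_vecs)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# The candidate keeps one of two reference tokens: precision 1.0, recall 0.5.
print(bertscore([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0]]))
```

This asymmetry is what makes aggressive remediation visible in the metric: removing content lowers recall against the original text even when everything that remains is faithful.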

5.4. Model Performance

Table 3 presents a comparison of privacy protection and utility preservation across four methods: the original unprotected baseline, a NER-based redaction system, and two SPADR strategies, SPADR (S1) and SPADR (S2).

5.5. Runtime Efficiency

We evaluate the runtime of the SPADR pipeline on a PC with an NVIDIA RTX 4070 GPU, Intel i7-13700K CPU, and 32 GB RAM. The pipeline includes semantic classification, denoising autoencoder scoring, NER detection, and graph-based remediation. Processing a single document takes about 5 s. This time is mainly due to the zero-shot classifier, which requires initialization and decoding. When we process documents in batches, the throughput improves. For example, four documents are processed in 0.70 s, equivalent to 5.7 documents per second. These results demonstrate that SPADR operates efficiently in practice, particularly when documents are processed in batches, as is common in real-world deployment settings.

5.6. Graph Analysis and Remediation

This section evaluates the effectiveness of SPADR’s graph-based remediation techniques through two distinct strategies: a fixed rule-based approach (see Figure 2) and a dynamic, graph-driven remediation framework (see Figure 4). Both strategies leverage the computed privacy risk scores and semantic relationships among named entities to determine appropriate transformation actions.

5.6.1. Strategy 1: Fixed Rule-Based Remediation

This approach applies predefined remediation rules based on the email’s risk score and the structural connectivity of entities within the graph. Specifically, emails with a critical privacy risk (i.e., 100%) are deleted, whereas others undergo targeted redaction or generalization based on the identified sensitive attributes.

CASE 1

CASE 2

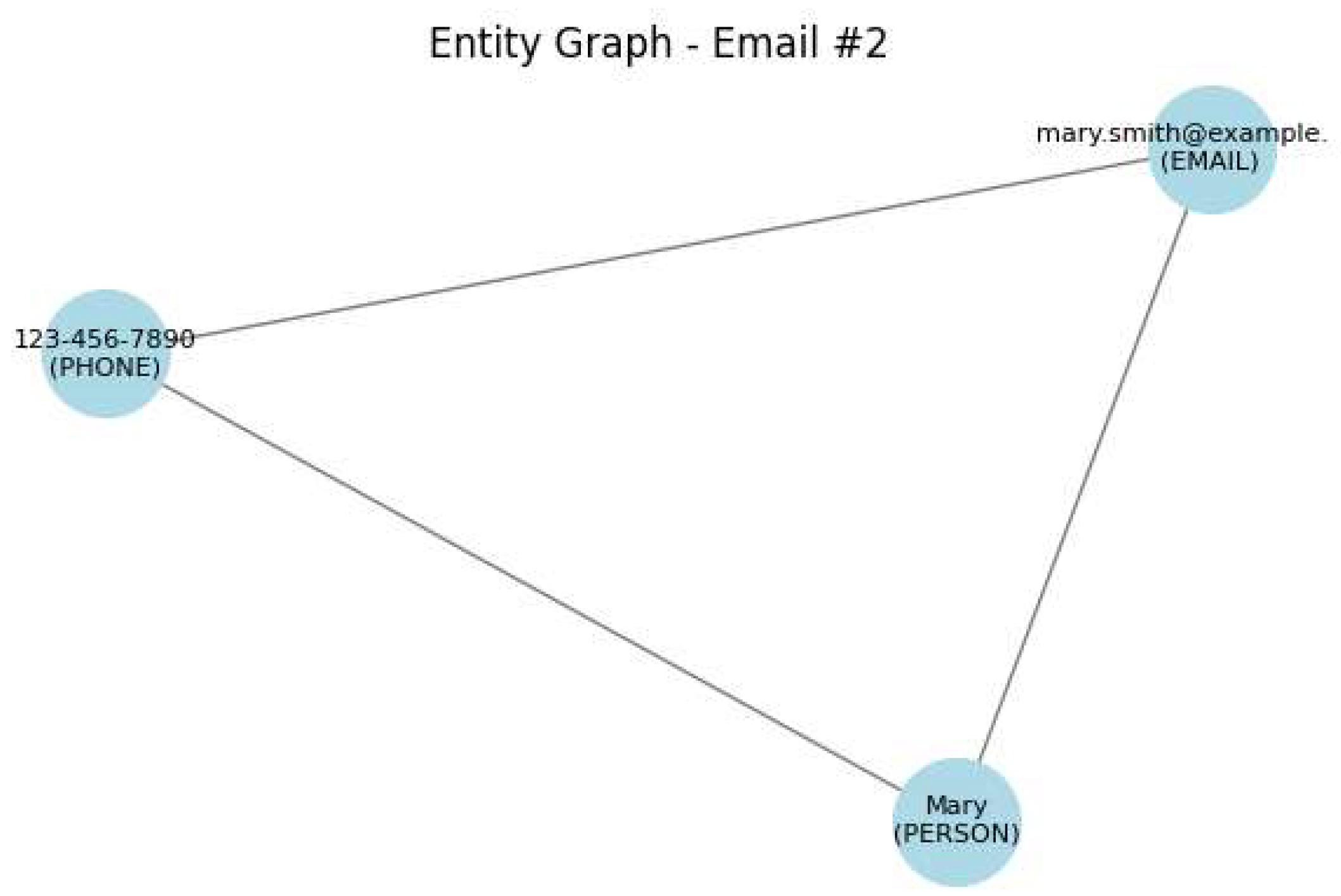

Original Text: Please contact Mary via mary.smith@example.com or 123-456-7890. The graph analysis is shown in Figure 6.

Remediation Action: Redact the personal name and partially redact the phone number, while retaining the email address for communication context.

Final Text: Please contact [NAME] via mary.smith@example.com or [NUMBER]456-7890.

Risk Score: 56.05%

SPADR Applied: Yes

Boosted by Graph: True

CASE 3

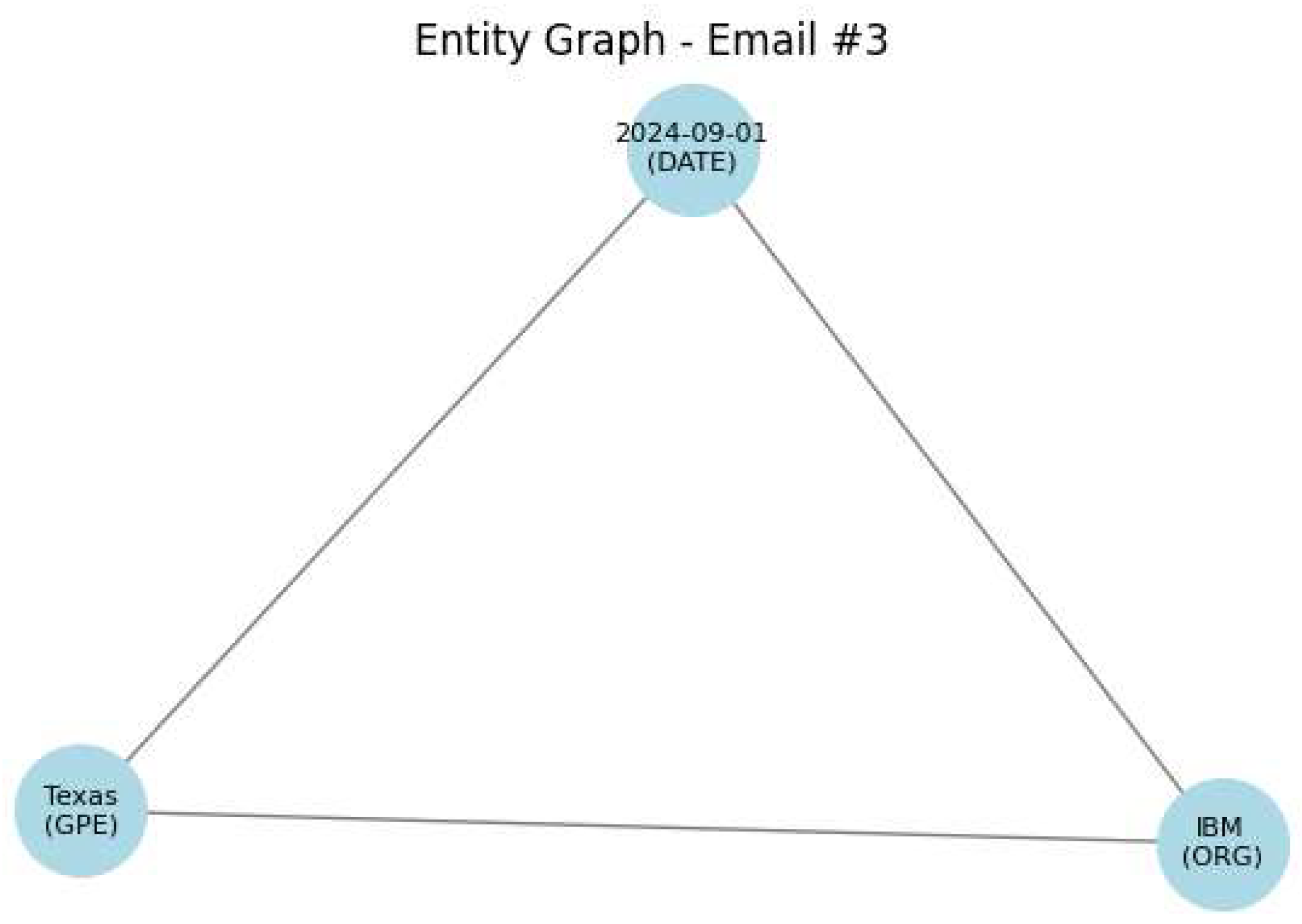

Original Text: Ahmed from IBM will be visiting Texas on 2024-09-01. The graph analysis is shown in Figure 7.

Remediation Action: Replace the organization, location, and date with generic placeholders.

Final Text: Ahmed from XYZ Company will be visiting X City on [DATE].

Risk Score: 69.68%

SPADR Applied: Yes

Boosted by Graph: True

CASE 4

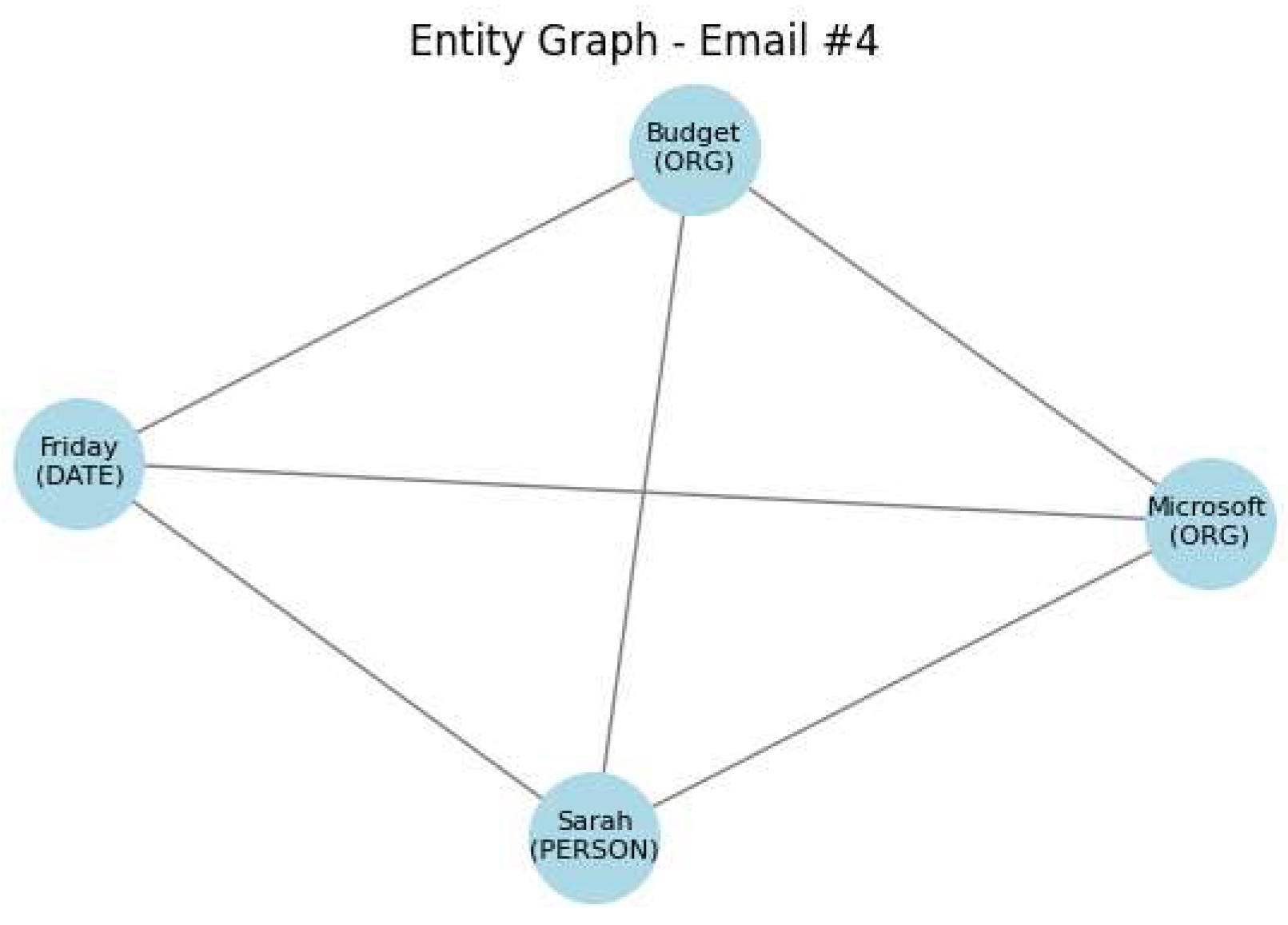

Original Text: Budget update shared by Sarah from Microsoft on Friday. The graph analysis is shown in Figure 8.

Remediation Action: Generalize all named entities (e.g., person, organization) and temporal expressions.

Final Text: XYZ Company update shared by Person from XYZ Company on [DATE].

Risk Score: 64.45%

SPADR Applied: Yes

Boosted by Graph: True

5.6.2. Strategy 2: Dynamic Graph-Based Remediation Framework

Unlike the fixed-rule strategy, this dynamic framework adaptively selects remediation techniques based on a combination of privacy risk thresholds and graph-derived semantic entity relationships. The following cases illustrate the decision rules and outcomes. These examples were collected from Enron Finance email datasets [38].

CASE 1: Granular Redaction

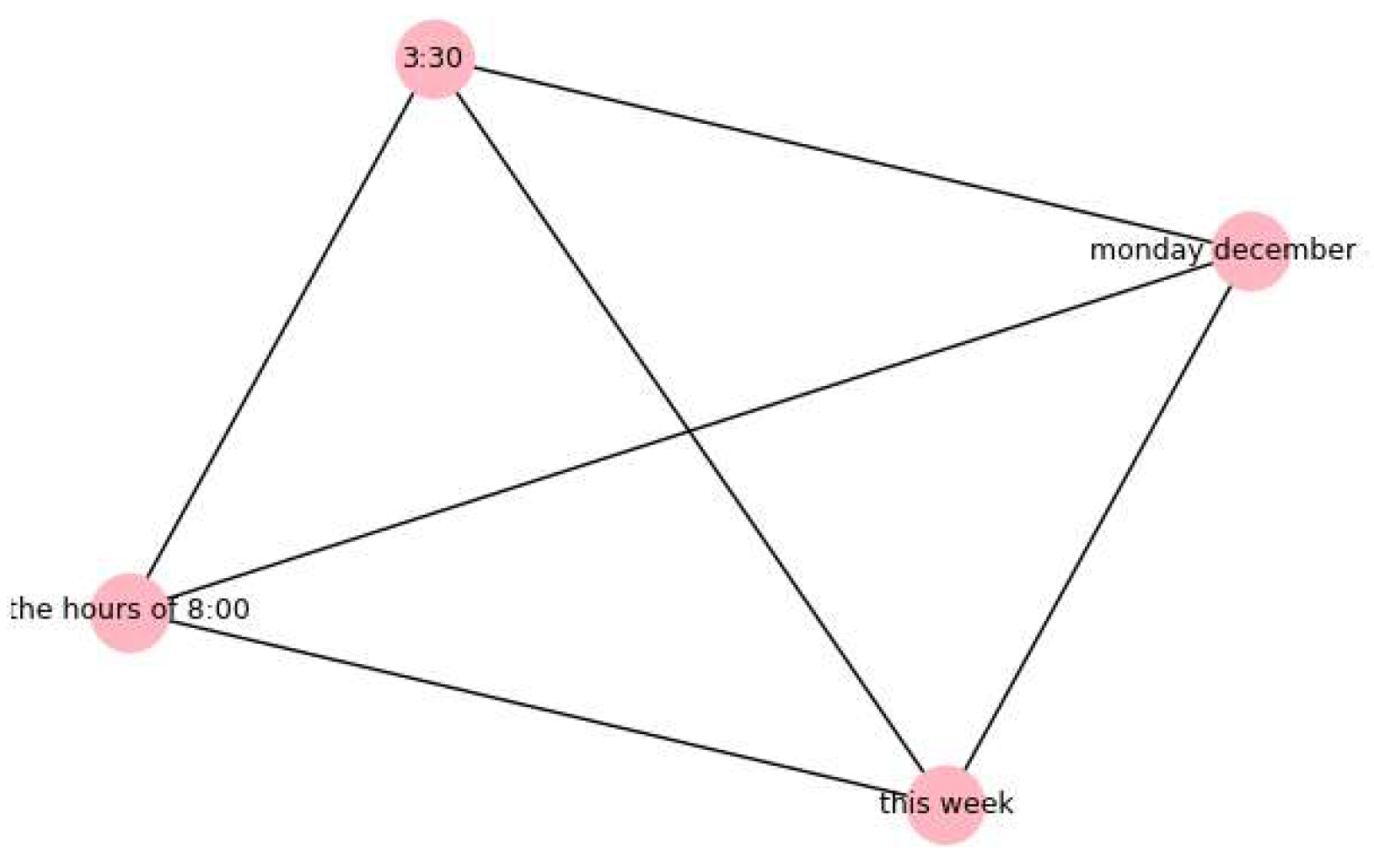

Original_Text: Dear Sir/Madam, Your flu vaccine has arrived. Please come to the Health Center, EB307, between 8:00 a.m. and 3:30 p.m. on Monday, December 4, or at your earliest convenience this week. Please be advised that the other persons on the waiting list will be notified via e-mail as more shipments of the vaccine arrive. Thank you in advance for your cooperation. The graph analysis is shown in Figure 9.

Graph_Analysis:

boosted_by_graph: TRUE, since the text contains relationships among different types of entities

Risk_Score_Percent: 67.9887924194336%

Condition_satisfaction: risk_score >= 55 and risk_score < 76

Remediation_Technique_to_apply: Granular_Redaction

Final_Cleaned_Text: (’Generalized = ’, ’dear sir/madam your flu vaccine has arrived please come to the health center eb307 between <Time> a m <Date> p m on <Date> or at your earliest convenience <Date> please be advised that the other persons on the waiting list will be notified via e-mail as more shipments of the vaccine arrive thank you in advance for your cooperation’)

CASE 2: Summarization

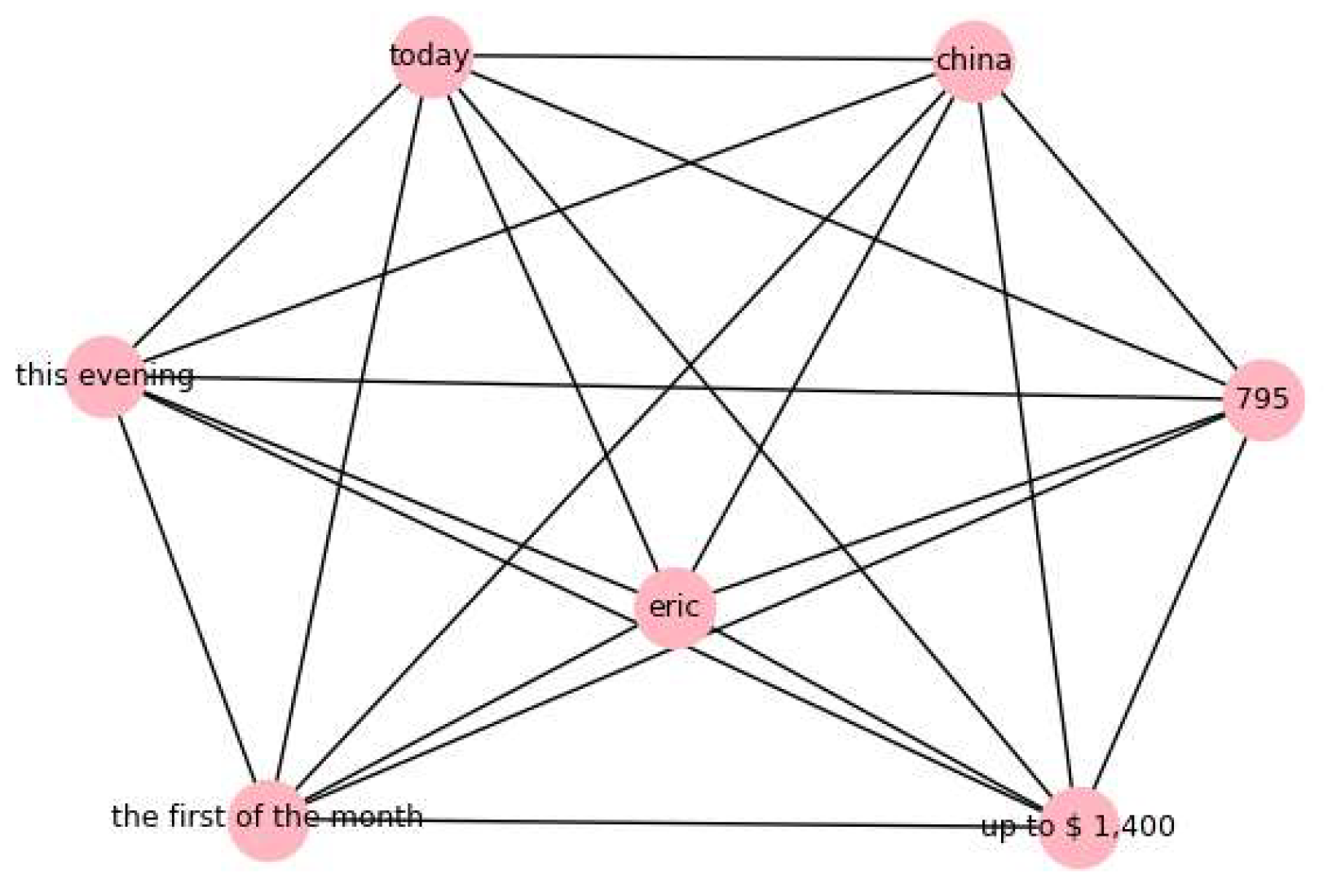

Original_Text: hey there how are you feeling i was looking on the northwest airlines website for flights to china i am thinking that we need to act on this pretty soon in order to get a good rate so far i have found the flights that cathy was talking about they are approx $79,500 she had stated that she heard that the price was going to go up to $1400 after the first of the month anyway what do you think we should do can you maybe talk with eric today or we can wait and talk to them this evening and try and figure things out. The graph analysis is shown in Figure 10.

Graph_Analysis:

boosted_by_graph: TRUE, since the text contains relationships among different types of entities

Risk_Score_Percent: 76.55%

Condition_satisfaction: risk_score >= 76 and risk_score < 86

Remediation_Technique_to_apply: Summarization

Final_Cleaned_Text: (’Summary = ’, ’ i was looking on the northwest airlines website for flights to <Location> i am thinking that we need to act on this pretty soon in’)

CASE 3: Text Boosted by Graph for Deletion

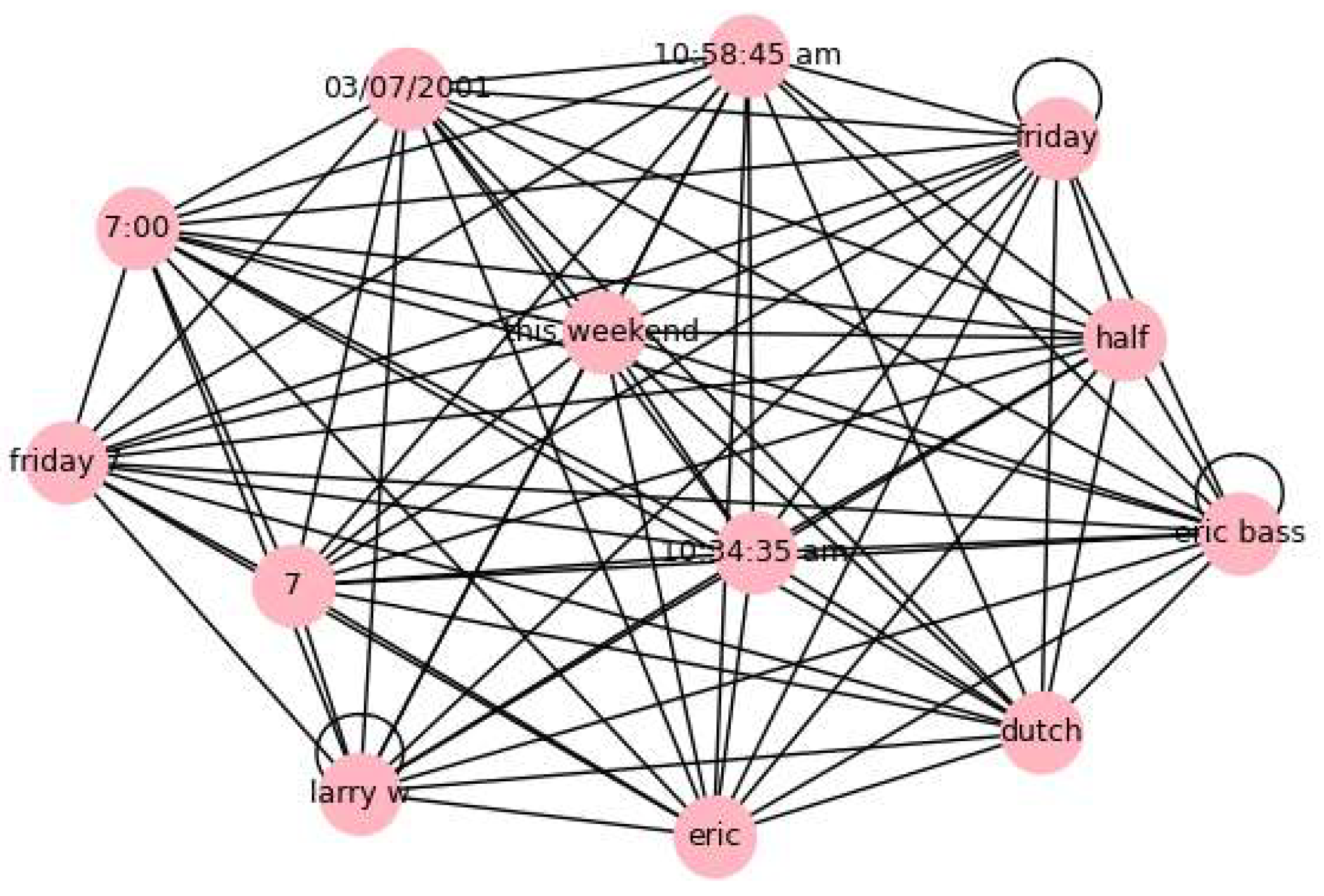

Original_Text: subject re dinner on friday anthonys sounds good to me 7 it is larry w bass on 03/08/2001 10:34:35 am to eric bass enron com cc subject re dinner on friday good morning looks like mother will be in town this weekend so dinner friday 7 is fine with us if you are still available if so lets try something different how about anthony ’s baroque brownstone or river oaks grill your choice dutch treat let me know and i will get reservations -dad subject re dinner on friday 7:00 preferably larry w bass on 03/07/2001 10:58:45 a.m. to eric bass enron com cc subject re dinner on friday what time you have in mind son subject dinner on friday hey dad did you talk to the better half i was thinking trulucks but if you can’t attend i understand let me know eric. The graph analysis is shown in Figure 11.

Graph_Analysis:

boosted_by_graph: TRUE, since the text contains relationships among different types of entities

Risk_Score_Percent: 100.00%

Condition_satisfaction: risk_score >= 86

Remediation_Technique_to_apply: Deletion

Final_Cleaned_Text: Text Deleted due to High Privacy Violation

CASE 4: No Remediation

Original_Text: per my voicemail below are the revised online GTCs. Sorry for any inconvenience this might have caused you

Graph_Analysis: NONE, no entities found to build a graph

boosted_by_graph: FALSE

Risk_Score_Percent: 35.69%

Condition_satisfaction: NONE

Remediation_Technique_to_apply: Return the text unchanged (Original) because it is harmless

Final_Cleaned_Text: per my voicemail below are the revised online gtc ’s sorry for an inconvenience this might have caused you

5.7. Comparison of spaCy Library with Other NLP Libraries

As shown in Table 4, we compare the use of the spaCy library to identify NER entities in our SPADR pipeline with RoBERTa [41], a pretrained transformer model developed by Facebook AI, and Stanza [43], a Python NLP library developed by Stanford.

The following examples in Table 5 and Table 6 are taken from the Enron Email Dataset [35]. We use these samples to compare the outputs of spaCy NER with RoBERTa and Stanza. For readability, we skip the raw email text and provide brief descriptions of the examples instead.

Example 1: SpaCy vs. RoBERTa This example is an Enron trading email discussing the setup of annuity deals and settlement dates. It contains sensitive details, such as dates, quantities, and financial terms.

Explanation: In this case, SpaCy correctly detects sensitive entities, such as dates and amounts, which leads to a higher risk score. The graph module then boosts the score due to multiple entity relationships, and the system applies summarization as the remediation strategy. In contrast, RoBERTa fails to identify enough entities, produces a lower risk score, and does not trigger any remediation. This shows that SpaCy is more effective for detecting sensitive content in this example.

Example 2: SpaCy vs. Stanza This example is an internal Enron update on DASR progress and legislative issues. It contains employee names, organizational details, and dates.

Explanation: In this case, SpaCy successfully identifies multiple sensitive entities, such as names and organizations. The graph analysis reveals several previously hidden relationships, which increase the privacy risk score and prompt the need for a deletion strategy. Stanza, however, detects only one relationship and produces a lower risk score, leaving the text unrepaired. This highlights how SpaCy provides stronger coverage of sensitive information compared to Stanza in this context.

These experiments show that SpaCy consistently outperforms both RoBERTa and Stanza in identifying sensitive entities within Enron emails. By generating more accurate risk scores and enabling graph-based boosting, SpaCy supports more reliable remediation strategies for privacy protection.

6. Discussion

As shown in Table 3, our experimental results demonstrate that SPADR is effective in reducing privacy risks in text while preserving a significant portion of the original meaning. Compared to the baseline and a standard NER-based redaction approach, SPADR strikes a balance between removing sensitive content and maintaining the text’s readability and value.

We begin our experiment by measuring the raw baseline, without anonymization. The baseline results, as expected, show 100% leakage across all metrics, underscoring the need for effective privacy-preserving methods. Among the NER-based baselines, BERT achieves the highest semantic utility (BERTScore F1 = 99.90%), as it retains the majority of the original text. However, it focuses only on explicit entities: although it achieves a low attribute leakage score (AAA = 1.46), 22.63% of documents still leak identifiable information. RoBERTa performs worse overall, reducing leakage less effectively (48.91%) and exhibiting a larger drop in utility (93.50%). These results indicate that purely entity-based redaction is highly dependent on the underlying model and still fails to address context-dependent or implicit privacy risks.

In contrast, SPADR achieves stronger overall protection by combining entity detection with semantic and graph-based analysis. SPADR (S1) reduces leakage to 21.90%, and SPADR (S2) achieves the best results, lowering leakage to 16.06% while maintaining utility at 88.03%. Although SPADR reports higher AAA values than the NER baselines, this reflects a deliberate trade-off: the system allows benign attributes to remain while removing only those judged as risky. This design enables SPADR to protect against both explicit identifiers and relational or semantic risks that NER-only approaches cannot capture.

SPADR (S1), which combines semantic anomaly detection with a denoising autoencoder, significantly improves privacy protection. It reduces the document PII leak rate to 21.90% and attribute leakage to 12.41%. This improvement comes from the model’s ability to detect unusual or sensitive content beyond standard NER tags. However, S1 occasionally modifies benign parts of the text, which lowers its BERTScore to 86.43%.

SPADR (S2) extends S1 by integrating a broader graph-based remediation approach. Both strategies utilize entity graphs, but their operational logic differs. SPADR (S1) applies enhanced remediation exclusively when specific high-risk co-occurrence patterns (e.g., [PERSON, DATE, LOCATION]) are detected. This approach limits its scope to predefined sensitive relational structures. In contrast, SPADR (S2) considers any inter-entity relationship as a potential indicator of privacy leakage. This method combines graph connectivity with dynamic risk-score thresholds to determine remediation actions, resulting in more consistent management of subtle relational and contextual exposures. The improved performance of SPADR (S2), as demonstrated by a reduction in the document-level PII leak rate to 16.06% and an increase in utility preservation to a BERTScore F1 of 88.03%, is due to both threshold tuning and the generalization provided by graph-boosted thresholding. As a result, SPADR (S2) addresses a wider range of latent privacy risks than the fixed rule-based strategy used in SPADR (S1).

SPADR (S2) distinguishes between private information and public knowledge using scores from the DAE, which is trained on privacy-sensitive messages, and semantic classification scores, which determine whether the given text is sensitive or non-sensitive. For private information, both methods produce higher risk scores, triggering graph analysis to examine relationships and apply remediation strategies to these texts accordingly. In contrast, for public knowledge, the DAE and semantic classification produce low risk scores; as a result, graph analysis is not performed, remediation strategies are not applied, and public knowledge remains unchanged, as shown in Table 7.

We also tested the robustness of the weighting scheme used in the privacy risk score. Due to computational limitations, we analyzed the weights on a random subset of 500 records. An ablation study confirmed that semantic classification has the most significant influence on the score, supporting its higher weight. We then adjusted the weights in increments of 0.1, keeping the sum normalized to 1.0. The results are shown in Table 8. SPADR maintained stable performance across a wide range of values (semantic 0.2–0.5, anomaly/NER 0.2–0.4). As a result, we assign weights of

,

, and

to semantic classification, anomaly detection, and NER, respectively.
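The weight sweep described above can be sketched by enumerating all weight triples on a 0.1 grid that sum to 1.0. The weighted-sum form of the risk score is an assumption made for illustration (the precise definition is given by Equation (9)), and the function names and the example weights are hypothetical.

```python
def weight_grid():
    """All (semantic, anomaly, ner) weights that are positive multiples of 0.1 and sum to 1.0."""
    grid = []
    for s in range(1, 10):
        for a in range(1, 10 - s):
            n = 10 - s - a  # remaining tenths go to the NER weight
            grid.append((s / 10, a / 10, n / 10))
    return grid


def risk_score(semantic, anomaly, ner, weights):
    """Hypothetical weighted sum of component scores in [0, 1], reported in percent."""
    w_s, w_a, w_n = weights
    return 100 * (w_s * semantic + w_a * anomaly + w_n * ner)


grid = weight_grid()
print(len(grid))                                 # number of candidate weightings
print(risk_score(0.9, 0.5, 0.2, (0.5, 0.3, 0.2)))  # example weights, not the paper's
```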

To assess the privacy preservation capabilities of our SPADR pipeline, we conduct an MIA [2]. We fine-tune GPT-2 on the raw, NER-redacted, and SPADR-redacted datasets, then compute per-sample losses and derive an MIA score as defined in Section 5.3. The ROC AUC of this score against the true membership label (train vs. test) quantifies how well an attacker can infer training membership.

As shown in Table 9, the raw dataset shows an AUC of 0.6626, indicating moderate memorization risk. NER-based redaction offers only a slight reduction in leakage (AUC = 0.5022). In contrast, SPADR strategies significantly reduce leakage: SPADR-S2 achieves 0.4637, and SPADR-S1 performs best with 0.4231. These results demonstrate that SPADR effectively mitigates memorization while preserving utility.

These results highlight that SPADR benefits from combining deep semantic understanding with structural context. Its design enables it to identify and mitigate privacy risks that rule-based methods, such as NER, cannot capture. This makes SPADR a more adaptive and better solution for real-world anonymization tasks where sensitive information can appear in unpredictable ways.

There is a trade-off when we use the SPADR pipeline to filter prompts before sending them to LLMs. One of the main strengths of LLMs is their ability to understand users on a personal level. They remember names, preferences, and past topics, which helps them give better and more helpful answers. However, this strength also creates a privacy risk. The model might accidentally reveal private details or disclose sensitive information. By filtering and redacting the input, SPADR lowers this risk. While this means the model may lose some helpful context, in most cases, protecting user privacy is more important than keeping personalization.

One limitation of our current graph construction is that it relies only on entity co-occurrence within the exact text. It provides a simple relational view but ignores important aspects, such as the type, direction, and strength of relationships. As a result, some complex privacy risks that depend on specific relationship types may be missed.

Another limitation of our current design is that SPADR relies on a pretrained model such as spaCy’s NER, which works well on English and Western names but performs poorly on non-Western or multilingual entities. Since our graph analysis relies on NER outputs, this limitation can result in missed cases in multilingual texts. We highlight these limitations and potential future improvements in the Conclusions.

Risks to Validity

There are several risks to the validity of our findings. First, our evaluation relies mainly on the Enron Email Dataset [35] and a synthetic dataset we created. While these are useful for testing, they may not fully represent other domains, such as healthcare, finance, or social media.

Second, the DAE is trained only on high-risk records. This design helps the model focus on sensitive patterns but also creates a risk of bias if the selected subset is not fully representative. As a result, the model may miss certain types of privacy risks in unseen data.

Finally, the remediation thresholds and rules we used may be sensitive to the dataset. Although they worked well in our experiments, their effectiveness may vary on other datasets or languages, which affects the validity of our conclusions.

7. Conclusions

In this paper, we present SPADR, a privacy protection pipeline that detects and removes sensitive information from text. SPADR combines semantic anomaly detection with graph-based analysis to catch both direct and hidden privacy risks.

We evaluate SPADR on the Enron Email Dataset [35]. The improved version, SPADR (S2), reduces the document-level PII leak rate to 16.06% while maintaining text meaning with a BERTScore F1 of 88.03%.

This work matters because LLMs can memorize and leak sensitive information if their training data are not carefully cleaned. They can also expose private details when users submit sensitive input at runtime. SPADR addresses both cases: it can clean training data before they are used to build models, and it can act as a privacy filter on user input before it is sent to an LLM. By identifying sensitive content that standard redaction tools may overlook, SPADR provides a more flexible and accurate method for protecting user privacy.

Despite these results, SPADR has several limitations. First, our evaluation uses the Enron Email Dataset [35], which comes from the corporate communication domain, and a synthetic dataset that we created to simulate sensitive content. While both are useful for testing, the generalizability of SPADR to other contexts, such as clinical records, legal documents, or informal social media text, remains unverified. Second, the graph-based entity analysis relies on predefined patterns of sensitive entity co-occurrence (e.g., [PERSON, DATE, LOCATION]), which may overlook more complex or context-specific privacy risks. Third, the remediation strategies (redaction, summarization, and deletion) may reduce textual utility, particularly where content fidelity is essential. Fourth, the pipeline depends heavily on the accuracy of its entity recognition tools, including spaCy’s NER and rule-based matchers; misclassifications or omissions at this stage undermine the reliability of subsequent remediation. spaCy’s NER works well for English and Western names but often fails to detect non-Western or multilingual entities, which lowers the accuracy of graph analysis in multilingual settings.

Additionally, the anomaly detection module requires substantial computational resources. It is trained only on high-risk data, which makes its performance sensitive to the completeness of the labeled subset. Finally, the current version of SPADR supports only English text.
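The reliance on predefined co-occurrence patterns can be illustrated with a short sketch. The pattern [PERSON, DATE, LOCATION] is taken from the text above; the matching rule (a record is flagged when it contains every label in some pattern) and the names `SENSITIVE_PATTERNS` and `matches_sensitive_pattern` are assumptions for illustration and may differ from SPADR's actual logic.

```python
# Pattern taken from the paper's example; the subset-matching rule is an assumption.
SENSITIVE_PATTERNS = [frozenset({"PERSON", "DATE", "LOCATION"})]


def matches_sensitive_pattern(labels, patterns=SENSITIVE_PATTERNS):
    """Return True if the record's entity labels cover any predefined pattern."""
    present = set(labels)
    return any(pattern <= present for pattern in patterns)


# A record containing all three labels is flagged.
print(matches_sensitive_pattern(["PERSON", "DATE", "LOCATION", "ORG"]))
# A record with a different combination is not, even if it is risky in context.
print(matches_sensitive_pattern(["PERSON", "ORG"]))
```

Under this assumed rule, any risky combination that is not listed in advance goes undetected, and a label missed by the entity recognizer prevents a match entirely. Both effects correspond to the limitations described above.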

In future work, we plan to extend SPADR to cover more domains and languages. We will use multilingual or fine-tuned NER models to improve the recognition of non-Western names and entities, and investigate domain-specific fine-tuning to reduce false negatives in multilingual text. We also aim to explore relationship extraction models and graph neural networks to build richer graphs that better capture semantic context and improve privacy risk detection. We expect these extensions to improve both the detection quality and the scalability of the system for large-scale privacy protection.

Additionally, we plan to release a new dataset that includes examples of LLM prompts with privacy-sensitive content. These prompts will contain not only common sensitive details, such as names, locations, and financial terms, but also risks that arise from relationships and semantic context. For example, a prompt may describe how a person, an organization, and a date are connected, which can reveal private information even without explicit names. This dataset will support the research community in testing and improving methods for privacy protection in LLMs.