Real-Time Rice Milling Morphology Detection Using Hybrid Framework of YOLOv8 Instance Segmentation and Oriented Bounding Boxes

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset Preparation

2.2. Sample Preparation

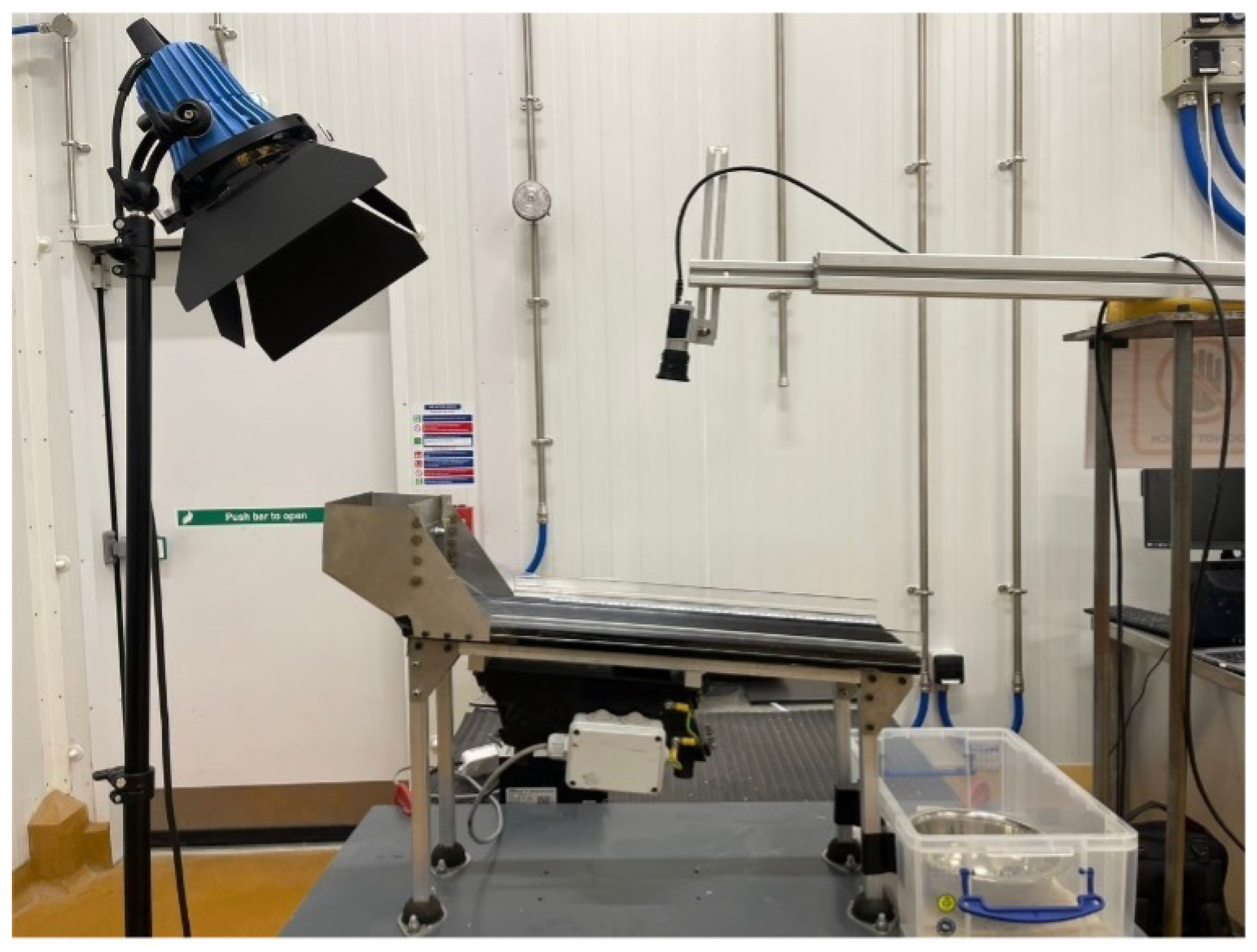

2.3. Image Acquisition

2.4. Annotation Pipeline

2.5. Image Processing Model Architecture

2.5.1. YOLOv8 Instance Segmentation

2.5.2. YOLOv8 Oriented Bounding Box Model

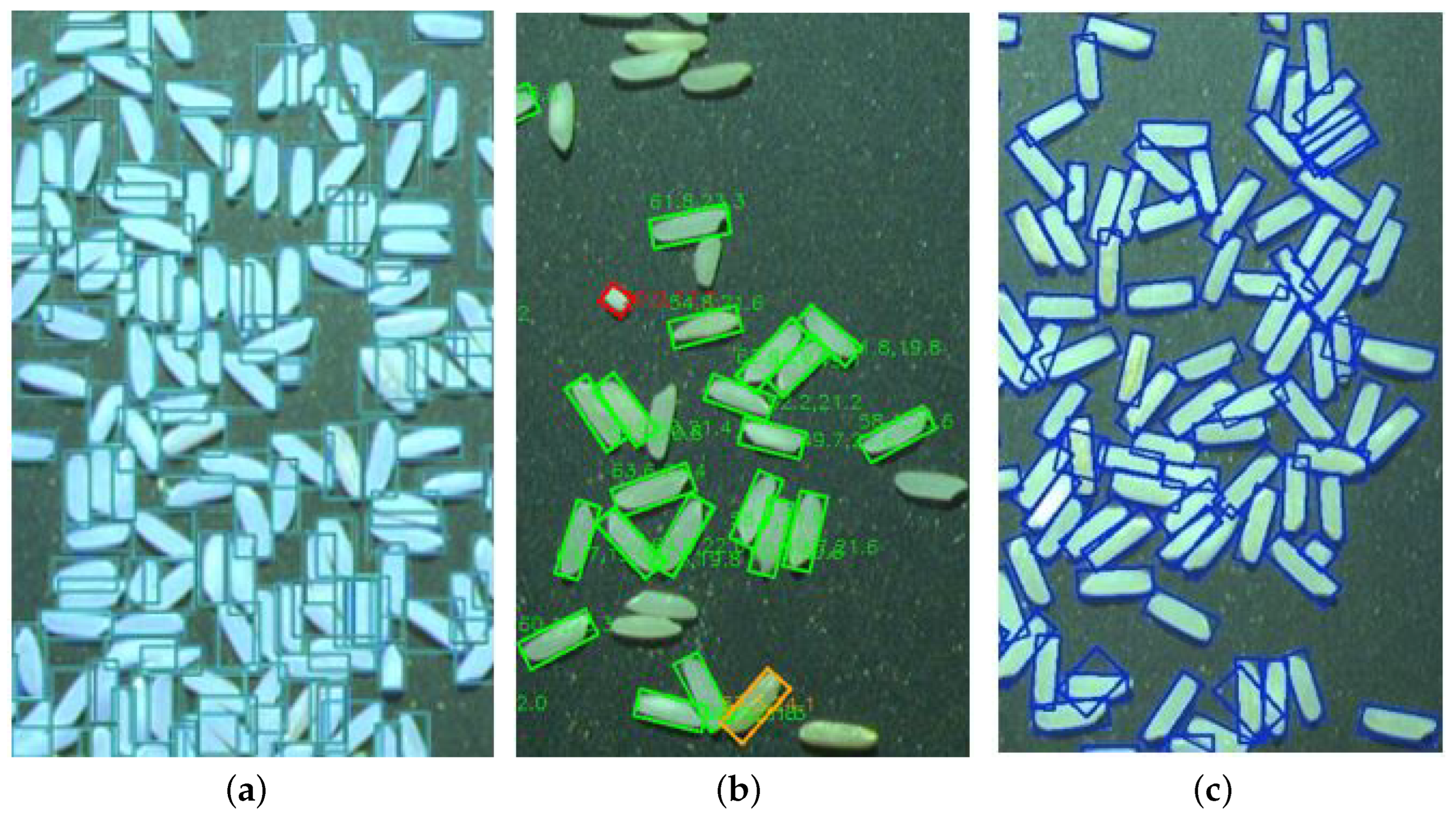

2.5.3. Hybrid Fusion Algorithm Approach

3. Experiments and Results

3.1. Experimental Setup and Evaluation

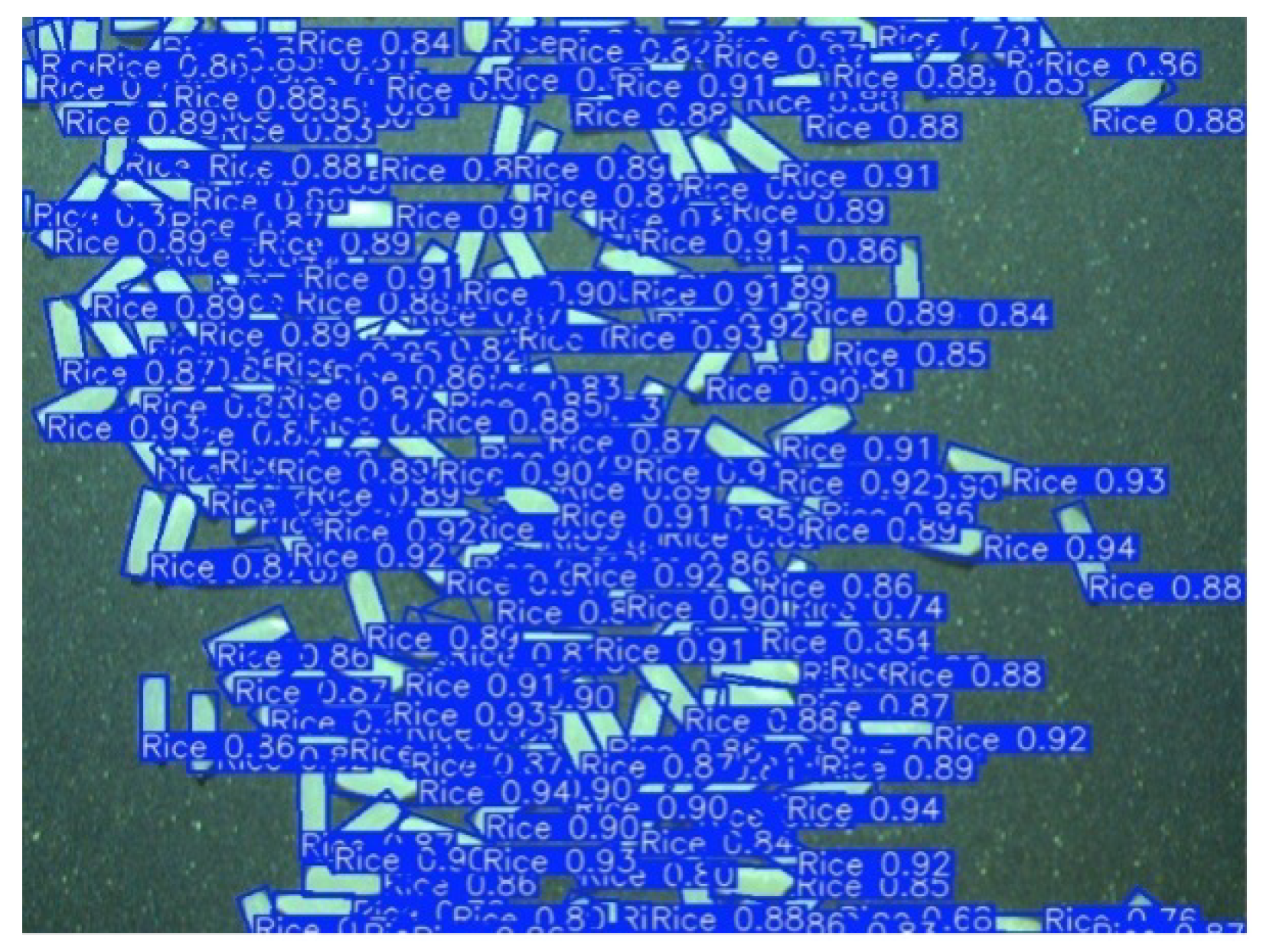

3.1.1. Instance Segmentation Evaluation Metrics

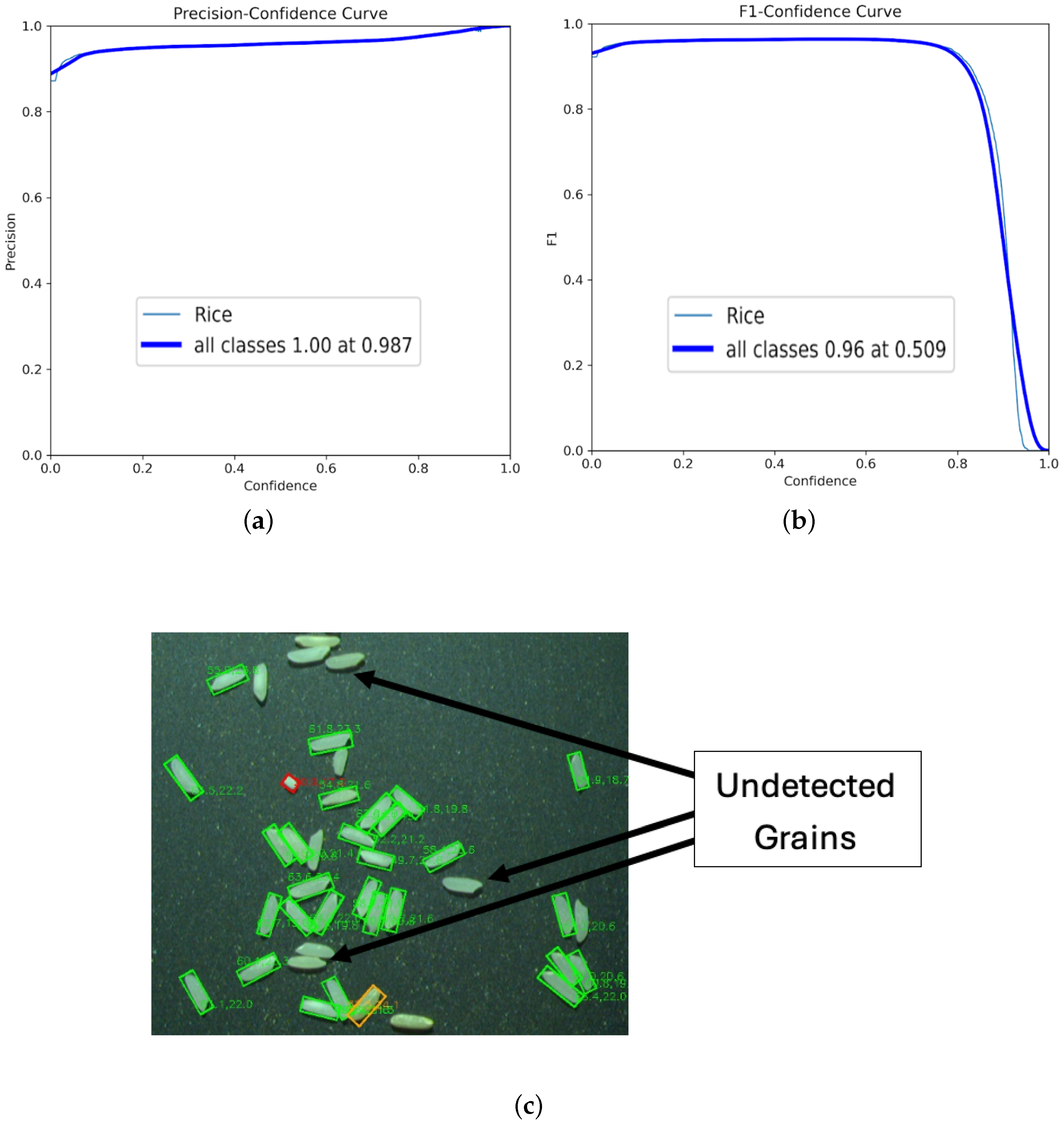

3.1.2. Oriented Bounding Box Evaluation Metrics

3.2. Comparative Analysis of Model Performance

4. Discussion

5. Conclusions and Future Work

5.1. Conclusions

5.2. Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ML | Machine Learning |
| YOLOv8 | You Only Look Once version 8 |
| OBB | Oriented Bounding Box |
| FPS | Frames Per Second |
| mAP | Mean Average Precision |
| MAE | Mean Absolute Error |

| Model | mAP@0.5 | Mask IoU | Size MAE (mm) | Recall (Max) | Precision (Max) | F1-Score (Max) |
|---|---|---|---|---|---|---|
| YOLOv8-Seg | 0.92 | 0.85 | 0.35 | 0.99 | 1.00 (0.984 conf) | 0.99 (0.408 conf) |
| YOLOv8-OBB | 0.88 | – | 0.12 | 0.98 | 1.00 (0.987 conf) | – |
| Proposed Hybrid | 0.94 | 0.86 | 0.10 | 0.995 | 1.00 | 0.995 |
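The differences between the three rows are easier to read as relative changes. The following is a minimal sketch, not part of the original study, that takes the mAP@0.5 and Size MAE values from the table above and computes how the proposed hybrid compares with each single-model baseline. The dictionary keys and the helper names `relative_change` and `compare_to_hybrid` are illustrative assumptions, and the results inherit the rounding of the tabulated values.

```python
# Values copied from the comparison table; key names are illustrative.
RESULTS = {
    "YOLOv8-Seg": {"map50": 0.92, "size_mae_mm": 0.35},
    "YOLOv8-OBB": {"map50": 0.88, "size_mae_mm": 0.12},
    "Proposed Hybrid": {"map50": 0.94, "size_mae_mm": 0.10},
}


def relative_change(baseline: float, value: float) -> float:
    """Return the change from baseline to value as a percentage of baseline."""
    return 100.0 * (value - baseline) / baseline


def compare_to_hybrid(table: dict, hybrid: str = "Proposed Hybrid") -> dict:
    """Compare every baseline row in the table against the hybrid row."""
    reference = table[hybrid]
    comparison = {}
    for name, metrics in table.items():
        if name == hybrid:
            continue
        comparison[name] = {
            # Absolute mAP@0.5 gain, in percentage points.
            "map50_gain_points": round(
                100.0 * (reference["map50"] - metrics["map50"]), 1
            ),
            # Relative change in size error; negative means lower error.
            "size_mae_change_pct": round(
                relative_change(metrics["size_mae_mm"], reference["size_mae_mm"]), 1
            ),
        }
    return comparison


if __name__ == "__main__":
    for model, row in compare_to_hybrid(RESULTS).items():
        print(model, row)
```

With the tabulated values, the hybrid lowers Size MAE by about 71.4% relative to YOLOv8-Seg and about 16.7% relative to YOLOv8-OBB, while gaining 2.0 and 6.0 percentage points of mAP@0.5, respectively.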

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ilo, B.; Rippon, D.; Singh, Y.; Shenfield, A.; Zhang, H. Real-Time Rice Milling Morphology Detection Using Hybrid Framework of YOLOv8 Instance Segmentation and Oriented Bounding Boxes. Electronics 2025, 14, 3691. https://doi.org/10.3390/electronics14183691
