1. Introduction

Image classification has long been a central task in computer vision, driving advancements across diverse applications such as autonomous driving, medical diagnostics [1], and security surveillance [2]. With the emergence of deep learning, convolutional neural networks (CNNs) have become the dominant architecture for achieving state-of-the-art (SOTA) performance. Models such as AlexNet [3], VGG [4], and particularly ResNet [5] have significantly improved classification accuracy by increasing network depth and incorporating architectural innovations like residual connections [5].

However, these accuracy gains come at the cost of higher computational complexity. Deep CNNs require a large number of floating-point operations (FLOPs), which increases training time, memory usage, and energy consumption. This limits their deployment on resource-constrained platforms such as mobile devices and embedded systems [6,7]. Several approaches have been proposed to alleviate this burden: pruning [8], quantization [9], and efficient network designs such as MobileNet [10] and ShuffleNet [11], which employ techniques such as depthwise separable convolutions and grouped convolutions.

Despite these advances, most models still apply convolutions uniformly across the entire image, regardless of local spatial characteristics. In real-world images, however, information is not evenly distributed: high-frequency regions tend to carry richer content than low-frequency regions. This observation motivated prior work that splits convolution computation according to decision masks [12].

In this paper, we propose a frequency-aware, multi-path convolutional architecture that dynamically adapts its operations to the frequency composition of input images. By generating a binary mask that separates high-frequency and low-frequency regions, the model applies full standard convolution to information-rich areas and lightweight convolutions (a bottleneck or a depthwise separable convolution) to smoother regions. This selective approach significantly reduces redundant computation while preserving the features most important for classification. We further enhance this mechanism with a morphological dilation step that captures the contextual neighborhood around high-frequency regions, improving the model’s receptive coverage without excessive computational cost.

Experiments on the CIFAR-10 dataset show that our method reduces computational cost by up to 29% compared with the baseline ResNet model, while keeping classification accuracy comparable to, or only slightly below, that of the baseline. These results highlight the effectiveness of combining frequency-based spatial analysis with efficient convolutional strategies for resource-constrained deep learning.

2. Related Works

Deep convolutional neural networks (CNNs) have led to substantial advances in both image classification and image restoration. In image classification, influential architectures such as VGG [4] and ResNet [5] demonstrated that increasing depth, and adding residual connections, significantly improves performance. However, this comes at the cost of increased computational complexity, which has motivated research into efficient CNN designs, including MobileNet [10] and ShuffleNet [11], which use depthwise separable and group convolutions to reduce FLOPs.

The most closely related work to our approach is ASCNN [12], which introduces an area-specific convolutional strategy for super-resolution by generating a binary decision mask that separates high-frequency and low-frequency regions. High-frequency regions are processed using full-capacity convolutions, while low-frequency areas are handled by lightweight convolutions, effectively reducing FLOPs. Furthermore, morphological dilation is used to expand the receptive context of high-frequency regions to improve restoration quality.

Inspired by ASCNN, our work adapts this frequency-aware design philosophy to image classification. Unlike ASCNN [12], which was developed for single-image super-resolution, our model is designed specifically for classification. We further improve efficiency by incorporating depthwise separable convolutions into the low-frequency path, and we control the size of the dilated mask through the threshold θ. To the best of our knowledge, this is the first work to integrate pixel-wise frequency-aware masking with dynamic convolution routing for image classification. Our work therefore not only adapts ASCNN’s core insight to a new domain but also introduces an additional mechanism that achieves greater FLOP reduction.

3. Proposed Method

This section explains the core components of the proposed method: frequency-based mask generation, the multi-path convolution block, depthwise separable convolution in the low-frequency path, and morphological dilation of the mask.

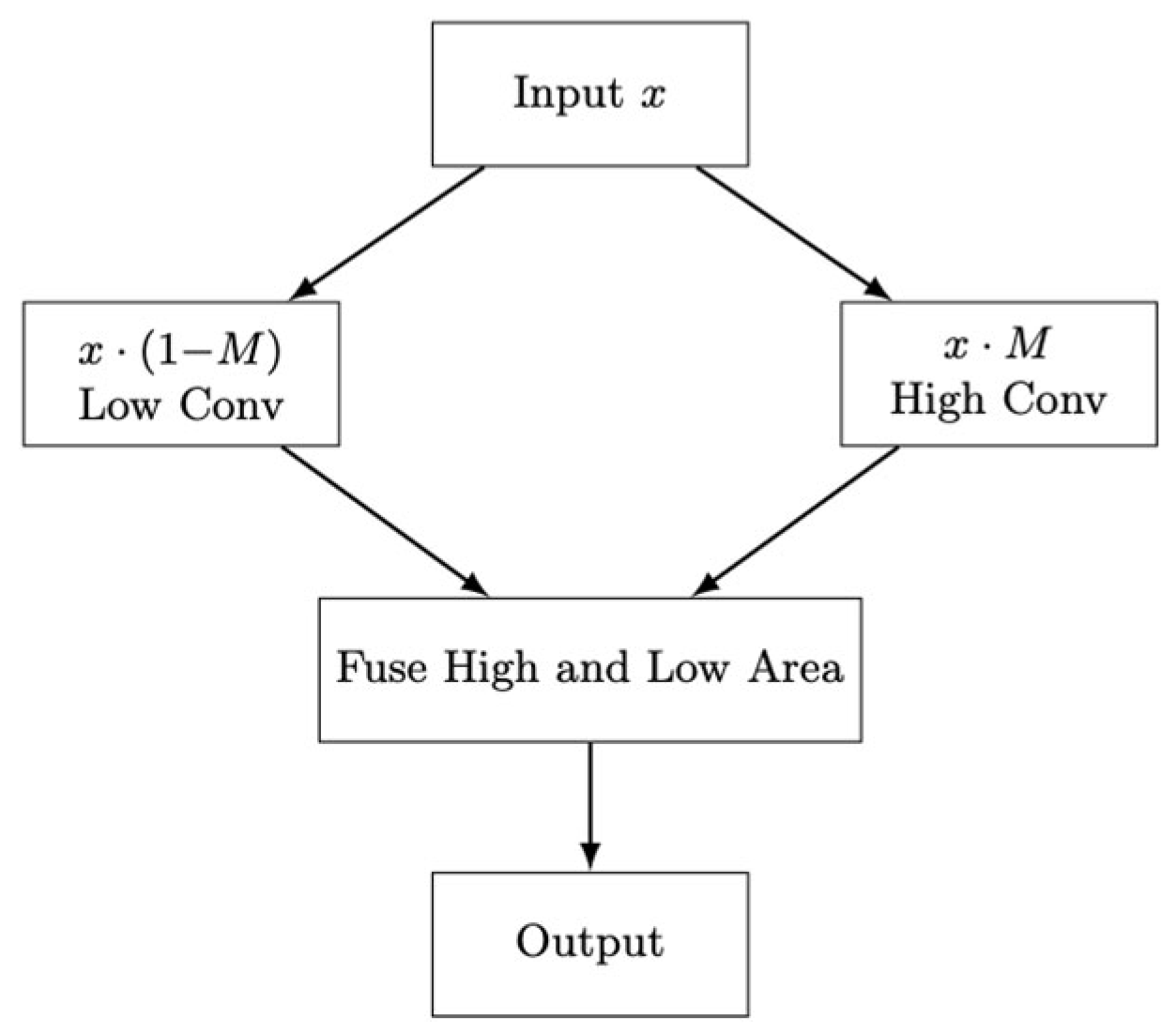

The model adopts a ResNet-style backbone with three residual stages in which the standard residual blocks are replaced by frequency-aware blocks. As illustrated in Figure 1, a binary mask is generated from the input based on local variation and thresholding and is then optionally dilated. This mask splits the feature maps into high- and low-frequency regions, which are processed separately by a standard 3 × 3 convolution and a lightweight 3 × 3 → 1 × 1 bottleneck, respectively. The outputs are fused and passed through batch normalization and activation. After the final stage, global average pooling and a linear layer produce the prediction. The key hyperparameters are the network depth, channel width, threshold θ, reduction ratio r, and dilation size d.

3.1. Frequency-Based Decision Mask

In conventional deep learning-based image classification models, convolution operations are typically applied uniformly across the entire input image or across the feature maps produced by previous layers. However, real-world images contain distinct high-frequency and low-frequency regions, and accounting for this characteristic can make computation more efficient. Because high-frequency regions tend to carry richer information than low-frequency ones, it is advantageous to concentrate computation on those areas. To this end, the proposed method generates a binary mask that separates high-frequency (HF) and low-frequency (LF) regions and guides the convolution process to apply operations selectively according to the spatial frequency characteristics of the input. The mask is generated as follows. First, average pooling is applied to the input image, producing a blurred version that retains mainly the low-frequency components. Then, the absolute difference between the original input and the blurred image is computed as a measure of local variation. A pixel at location (i, j) is considered part of the high-frequency region if this difference exceeds a predefined threshold θ, which serves as a hyperparameter. The resulting binary mask is later used in the convolution operation to distinguish HF from LF regions. Equation (1) compactly defines the mask-generation procedure.

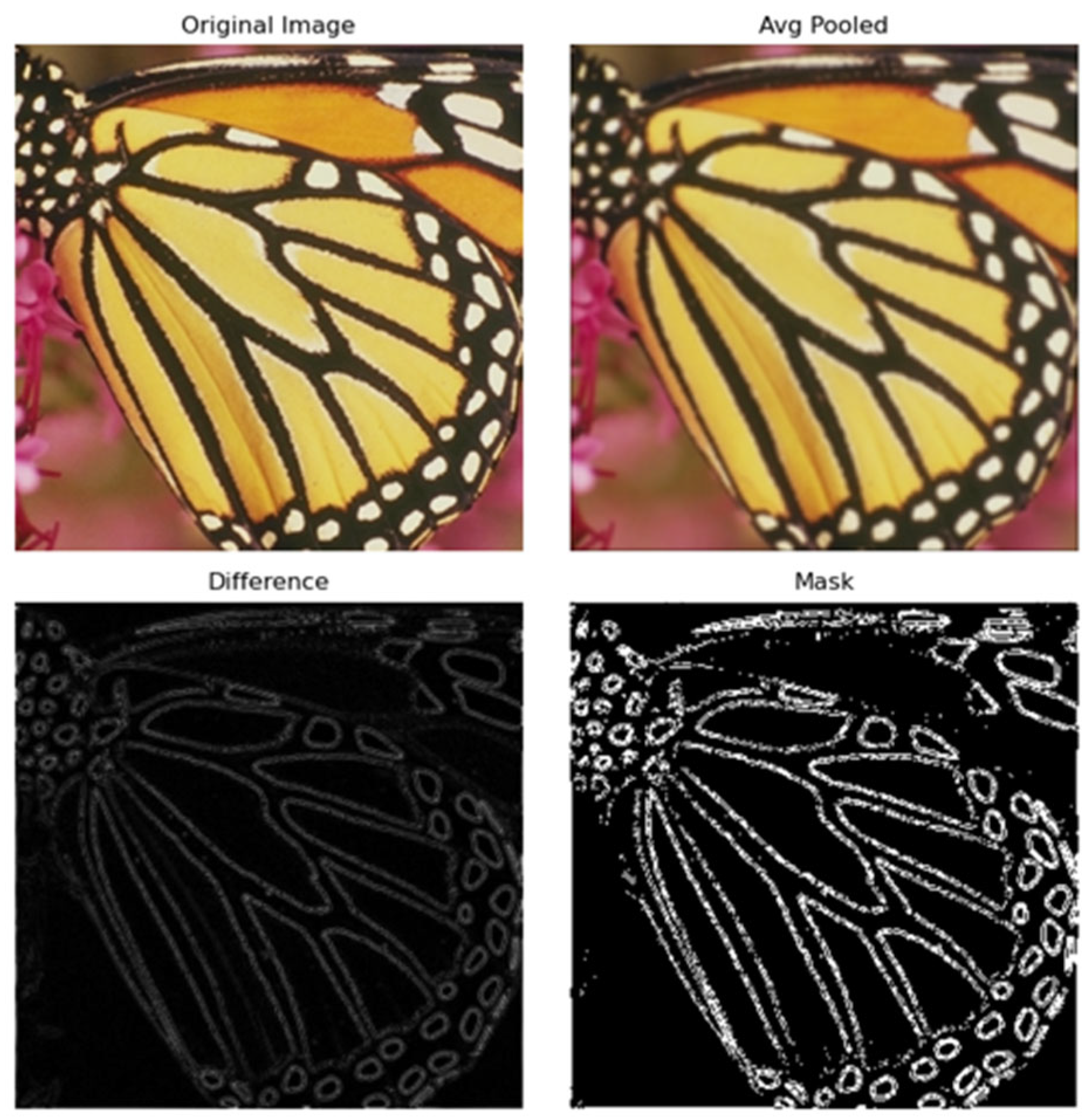
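Equation (1) can be sketched in plain Python as follows. This is a minimal, dependency-free illustration on a single-channel image stored as nested lists, not the authors' implementation. The 3 × 3 pooling window and the border handling (averaging only in-bounds pixels, analogous to `count_include_pad=False` in PyTorch's `avg_pool2d`) are assumptions, since this section does not state them. How a multi-channel input is reduced to a single H × W mask is likewise not specified here, so the sketch works on one channel.

```python
from typing import List

Image = List[List[float]]  # single-channel H x W image (assumption)
Mask = List[List[int]]     # binary H x W mask


def box_blur(img: Image, k: int = 3) -> Image:
    """Stride-1 k x k average pooling; near borders only in-bounds pixels are averaged."""
    h, w, r = len(img), len(img[0]), k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            window = [img[y][x]
                      for y in range(max(0, i - r), min(h, i + r + 1))
                      for x in range(max(0, j - r), min(w, j + r + 1))]
            out[i][j] = sum(window) / len(window)
    return out


def frequency_mask(img: Image, theta: float, k: int = 3) -> Mask:
    """M(i, j) = 1 if |X(i, j) - AvgPool(X)(i, j)| > theta, else 0."""
    blurred = box_blur(img, k)
    return [[1 if abs(img[i][j] - blurred[i][j]) > theta else 0
             for j in range(len(img[0]))]
            for i in range(len(img))]


# A vertical step edge: only the two columns adjacent to the edge are high-frequency.
step = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(4)]
print(frequency_mask(step, theta=0.2))  # every row is [0, 0, 1, 1, 0, 0]
```

Pixels next to the edge differ from their local mean by 1/3, which exceeds θ = 0.2; pixels inside the flat regions match their local mean exactly and stay at 0.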

To illustrate the proposed mask generation process, Figure 2 presents a visual example using a 256 × 256 image of a butterfly. The top row shows the original image and its blurred counterpart obtained via average pooling, which retains primarily low-frequency components. The bottom row visualizes the absolute pixel-wise difference between the original and blurred images, together with the resulting binary mask that identifies high-frequency regions. The binary mask is computed by applying the threshold θ to the difference map: pixels whose local variation exceeds θ are assigned a value of 1 (high-frequency), while all others are set to 0 (low-frequency). As θ increases, the condition for identifying high-frequency regions becomes stricter, so fewer pixels are labeled 1 and the mask becomes sparser. Conversely, decreasing θ relaxes the condition and yields a denser mask with more pixels classified as high-frequency. This frequency-aware masking mechanism enables the model to focus computational resources on the more informative regions of the image, which are typically rich in sharp edges, textures, object boundaries, and fine details. Note that the binary mask M is generated independently for each input image at the beginning of the forward pass; masks are neither pre-computed for the dataset nor shared across images.
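The relationship between θ and mask sparsity described above can be checked with a small sketch. It assumes that local variation is |X − AvgPool(X)| computed with a 3 × 3 window that averages only in-bounds pixels on a single-channel input. The test image and the θ values are illustrative and are not taken from the experiments.

```python
from typing import List


def local_variation(img: List[List[float]], k: int = 3) -> List[List[float]]:
    """|X - AvgPool_k(X)| per pixel; border windows average only in-bounds pixels (assumption)."""
    h, w, r = len(img), len(img[0]), k // 2
    diff = []
    for i in range(h):
        row = []
        for j in range(w):
            window = [img[y][x]
                      for y in range(max(0, i - r), min(h, i + r + 1))
                      for x in range(max(0, j - r), min(w, j + r + 1))]
            row.append(abs(img[i][j] - sum(window) / len(window)))
        diff.append(row)
    return diff


def mask_density(diff: List[List[float]], theta: float) -> float:
    """Fraction of pixels labelled high-frequency (M = 1) at threshold theta."""
    values = [v for row in diff for v in row]
    return sum(1 for v in values if v > theta) / len(values)


# Illustrative image: a smooth ramp on the left, a sharp edge and texture on the right.
img = [[0.0, 0.1, 0.2, 0.3, 1.0, 0.2, 0.9],
       [0.0, 0.1, 0.2, 0.3, 1.0, 0.1, 0.8],
       [0.0, 0.1, 0.2, 0.3, 1.0, 0.3, 0.9]]
diff = local_variation(img)
for theta in (0.0, 0.05, 0.1, 0.2, 0.4):
    print(f"theta={theta:<4}  density={mask_density(diff, theta):.2f}")
```

Because the mask is a strict threshold on a fixed difference map, the density can only stay the same or drop as θ grows: small fluctuations on the ramp drop out first, and only the sharp edge and texture survive at large θ.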

3.2. Multi-Path Convolution Block

To enhance computational efficiency while preserving representational capacity, we propose a multi-path convolutional block that selectively applies different convolution operations based on the frequency characteristics of the input. Specifically, the binary mask $M \in \{0,1\}^{H \times W}$ generated from the input image guides the routing of the computation. The input feature map $X \in \mathbb{R}^{C_{\text{in}} \times H \times W}$ is split into two components. At each spatial location, the model applies a full convolution path $\mathrm{Conv}_{\text{high}}$ if $M = 1$ (i.e., a high-frequency region) and a lightweight convolution path $\mathrm{Conv}_{\text{low}}$ if $M = 0$ (i.e., a low-frequency region). This process is shown in (2), where ⊙ denotes element-wise multiplication.
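The routing in (2) can be sketched as follows. The sketch assumes, consistent with the description, that the input is split as $X_{\text{high}} = X \odot M$ and $X_{\text{low}} = X \odot (1 - M)$, with the H × W mask broadcast over channels, and that the two path outputs are summed. `conv_high` and `conv_low` are hypothetical placeholder callables standing in for the real convolutions. This dense sketch evaluates both paths on the full map, so it illustrates the routing arithmetic rather than the FLOP savings.

```python
from typing import Callable, List

Map = List[List[float]]   # one channel, H x W
Tensor = List[Map]        # C x H x W
Path = Callable[[Tensor], Tensor]


def apply_mask(x: Tensor, m: Map) -> Tensor:
    """Element-wise product X ⊙ M, broadcasting the H x W mask over all channels."""
    return [[[v * mv for v, mv in zip(row, mrow)] for row, mrow in zip(ch, m)] for ch in x]


def add(a: Tensor, b: Tensor) -> Tensor:
    """Element-wise sum of two C x H x W tensors."""
    return [[[u + v for u, v in zip(ra, rb)] for ra, rb in zip(ca, cb)] for ca, cb in zip(a, b)]


def multipath(x: Tensor, m: Map, conv_high: Path, conv_low: Path) -> Tensor:
    """Y = Conv_high(X ⊙ M) + Conv_low(X ⊙ (1 - M)); M may be binary or soft."""
    inverse = [[1.0 - v for v in row] for row in m]
    x_high = apply_mask(x, m)
    x_low = apply_mask(x, inverse)
    return add(conv_high(x_high), conv_low(x_low))


def double(t: Tensor) -> Tensor:
    """Toy 'high-frequency path': scales its input by 2."""
    return [[[2 * v for v in row] for row in ch] for ch in t]


def identity(t: Tensor) -> Tensor:
    """Toy 'low-frequency path': returns its input unchanged."""
    return t


x = [[[1.0, 2.0], [3.0, 4.0]]]   # C = 1, H = W = 2
m = [[1, 0], [0, 1]]             # diagonal pixels are high-frequency
print(multipath(x, m, double, identity))  # [[[2.0, 2.0], [3.0, 8.0]]]
```

Since $X_{\text{high}} + X_{\text{low}} = X$ for any mask with values in [0, 1], every pixel's contribution is divided between the two paths and nothing is lost or counted twice.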

In the high-frequency path, a standard k × k convolution is applied to $X_{\text{high}}$, maintaining the full output dimensionality $C_{\text{out}}$. For the low-frequency path, a computationally efficient strategy is employed: the number of channels is first reduced to $C_{\text{out}}/r$ via a k × k convolution and then restored to $C_{\text{out}}$ using a 1 × 1 convolution, as shown in (3).

Finally, the outputs of both paths are summed. This fusion combines rich spatial detail from high-frequency regions with efficient approximations for low-frequency areas.
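To make the savings of the low-frequency bottleneck in (3) concrete, the per-pixel multiply-accumulate (MAC) counts of the two paths can be compared with a short calculation. The sketch counts only convolution weights (no bias, batch normalization, or mask cost). The block-level estimate assumes each path is evaluated only at the pixels routed to it, which this section does not state explicitly. The channel widths, k = 3, and r = 4 in the example are illustrative values, not the paper's settings.

```python
def full_conv_macs(c_in: int, c_out: int, k: int) -> int:
    """MACs per output pixel of the high-frequency path: one k x k conv, c_in -> c_out."""
    return k * k * c_in * c_out


def low_path_macs(c_in: int, c_out: int, k: int, r: int) -> int:
    """MACs per output pixel of the bottleneck: k x k conv to c_out // r, then 1 x 1 back to c_out."""
    mid = c_out // r
    return k * k * c_in * mid + mid * c_out


def block_macs(h: int, w: int, hf_fraction: float,
               c_in: int, c_out: int, k: int, r: int) -> float:
    """Block cost if each path runs only on its own pixels (assumption)."""
    per_pixel = (hf_fraction * full_conv_macs(c_in, c_out, k)
                 + (1 - hf_fraction) * low_path_macs(c_in, c_out, k, r))
    return h * w * per_pixel


full = full_conv_macs(16, 16, 3)      # 9 * 16 * 16 = 2304
low = low_path_macs(16, 16, 3, r=4)   # 9 * 16 * 4 + 4 * 16 = 640
print(full, low, round(low / full, 3))        # 2304 640 0.278
print(block_macs(32, 32, 0.5, 16, 16, 3, 4))  # 1507328.0
```

With these illustrative settings, a low-frequency pixel costs about 28% of a high-frequency one, so the block's total cost falls roughly linearly as the fraction of pixels marked high-frequency decreases.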

By allocating computational resources conditionally in this manner, the model focuses its capacity on information-rich (high-frequency) regions while remaining efficient in low-frequency areas. Note that the mask M is generated once from the input image and then reused throughout the network. At each stage transition, where the spatial resolution of the feature maps is halved, the mask is down-sampled by a factor of 2 using average pooling. This converts the originally binary mask into a soft mask while preserving its structural information. Inside each block, if the spatial resolution of M does not match that of the feature maps, the mask is resized via nearest-neighbor interpolation. This ensures that M consistently gates the high- and low-frequency paths across all stages.
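The mask bookkeeping described above (2 × 2 average pooling at stage transitions, which turns the binary mask into a soft one, and nearest-neighbor resizing inside blocks) can be sketched as below. The stride-2 pooling window, even spatial sizes, and the floor-based index mapping are assumptions about details the text leaves open. To our understanding, the floor-based mapping matches the default `mode="nearest"` of PyTorch's `torch.nn.functional.interpolate`.

```python
from typing import List

Mask = List[List[float]]


def downsample_mask(m: Mask) -> Mask:
    """2 x 2 average pooling with stride 2: a binary mask becomes a soft mask in [0, 1]."""
    return [[(m[2 * i][2 * j] + m[2 * i][2 * j + 1]
              + m[2 * i + 1][2 * j] + m[2 * i + 1][2 * j + 1]) / 4.0
             for j in range(len(m[0]) // 2)]
            for i in range(len(m) // 2)]


def resize_nearest(m: Mask, out_h: int, out_w: int) -> Mask:
    """Nearest-neighbour resize: output (i, j) copies input (floor(i*H/out_h), floor(j*W/out_w))."""
    in_h, in_w = len(m), len(m[0])
    return [[m[(i * in_h) // out_h][(j * in_w) // out_w] for j in range(out_w)]
            for i in range(out_h)]


binary = [[1, 1, 0, 0],
          [1, 0, 0, 0],
          [0, 0, 1, 1],
          [0, 0, 1, 1]]
soft = downsample_mask(binary)
print(soft)                        # [[0.75, 0.0], [0.0, 1.0]]
print(resize_nearest(soft, 4, 4))  # each soft value repeated over a 2 x 2 block
```

The value 0.75 records that three of the four underlying pixels were high-frequency. This is the structural information that a binary down-sampling would discard.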

3.3. Depthwise Convolution

While the proposed frequency-aware convolution block already reduces computational cost by applying lightweight convolutions to low-frequency regions, we further optimize this design by replacing the standard convolutions in the low-frequency path with depthwise separable convolutions. This adjustment significantly reduces the number of floating-point operations (FLOPs), and the savings are substantial in the early stages, where feature maps are large. In particular, the low-frequency component $X_{\text{low}}$ is processed by a depthwise convolution followed by a pointwise projection. The depthwise convolution performs spatial filtering independently for each input channel, without mixing information across channels. Formally, this operation applies $C_{\text{in}}$ separate k × k kernels to the $C_{\text{in}}$ input channels, one kernel per channel, yielding an intermediate feature map of the same shape, as shown in (5).

Subsequently, a 1 × 1 pointwise convolution aggregates the channels and projects them to the target output dimension $C_{\text{out}}$, as shown in (6).
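Equations (5) and (6) correspond to the following pure-Python sketch of a depthwise separable convolution. It applies one k × k kernel per input channel (no channel mixing) and then a 1 × 1 convolution that mixes channels. As in deep learning frameworks, the "convolution" is implemented as cross-correlation (kernels are not flipped). Stride 1 with zero padding of k // 2 for odd k is an assumption chosen to preserve the spatial size.

```python
from typing import List

Map = List[List[float]]   # H x W
Tensor = List[Map]        # C x H x W


def depthwise_conv(x: Tensor, kernels: List[Map]) -> Tensor:
    """Eq. (5) sketch: channel c is filtered only by kernels[c]; zero padding keeps H x W."""
    out = []
    for ch, ker in zip(x, kernels):
        h, w, k = len(ch), len(ch[0]), len(ker)
        p = k // 2
        res = [[0.0] * w for _ in range(h)]
        for i in range(h):
            for j in range(w):
                acc = 0.0
                for u in range(k):
                    for v in range(k):
                        y, xx = i + u - p, j + v - p
                        if 0 <= y < h and 0 <= xx < w:  # out-of-range taps read zero padding
                            acc += ker[u][v] * ch[y][xx]
                res[i][j] = acc
        out.append(res)
    return out


def pointwise_conv(x: Tensor, weights: List[List[float]]) -> Tensor:
    """Eq. (6) sketch: 1 x 1 conv, out[o] = sum_c weights[o][c] * x[c]; weights is C_out x C_in."""
    h, w = len(x[0]), len(x[0][0])
    return [[[sum(wc * x[c][i][j] for c, wc in enumerate(w_row)) for j in range(w)]
             for i in range(h)]
            for w_row in weights]


x = [[[1, 2, 3], [4, 5, 6], [7, 8, 9]],   # channel 0
     [[1, 1, 1], [1, 1, 1], [1, 1, 1]]]   # channel 1
kernels = [[[0, 0, 0], [0, 1, 0], [0, 0, 0]],   # identity filter for channel 0
           [[1, 1, 1], [1, 1, 1], [1, 1, 1]]]   # 3 x 3 box sum for channel 1
y = pointwise_conv(depthwise_conv(x, kernels), weights=[[1.0, 1.0]])  # C_in = 2 -> C_out = 1
print(y)  # [[[5.0, 8.0, 7.0], [10.0, 14.0, 12.0], [11.0, 14.0, 13.0]]]
```

The depthwise step leaves channel 0 unchanged and turns channel 1 into neighbor counts (4 at corners, 6 on edges, 9 in the center). Only the pointwise step combines the two channels.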

As shown in Equation (4), the final output of the block remains the sum of the high-frequency and low-frequency outputs. By applying depthwise separable convolution only to the low-frequency path, we significantly reduce computational cost without reducing the capacity devoted to high-frequency regions. This is particularly beneficial in deeper stages of the network or when scaling to higher-resolution inputs.
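The size of the saving can be estimated with the standard per-pixel cost argument for depthwise separable convolution. A standard k × k convolution costs $k^2 C_{\text{in}} C_{\text{out}}$ multiply-accumulates per output pixel, whereas depthwise plus pointwise costs $k^2 C_{\text{in}} + C_{\text{in}} C_{\text{out}}$, a ratio of $1/C_{\text{out}} + 1/k^2$. The widths 16, 32, and 64 below are typical of CIFAR-style ResNets and are assumed only for illustration.

```python
def standard_macs(c_in: int, c_out: int, k: int) -> int:
    """Per-pixel MACs of a standard k x k convolution."""
    return k * k * c_in * c_out


def separable_macs(c_in: int, c_out: int, k: int) -> int:
    """Per-pixel MACs of a k x k depthwise conv (c_in kernels) plus a 1 x 1 pointwise conv."""
    return k * k * c_in + c_in * c_out


for width in (16, 32, 64):  # assumed stage widths, with C_in = C_out = width
    ratio = separable_macs(width, width, 3) / standard_macs(width, width, 3)
    print(f"C={width:>2}: ratio={ratio:.3f}  (1/C + 1/9 = {1 / width + 1 / 9:.3f})")
```

The ratio falls from about 0.174 at 16 channels to about 0.127 at 64 channels. The relative saving therefore grows with the channel count, while the absolute saving in a stage also scales with its spatial size.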

3.4. Morphological Dilation

To further refine the binary mask used for frequency-aware convolution, we apply morphological dilation, a technique from image morphology [13], implemented with max pooling. On a binary mask, max pooling with stride 1 over a d × d window is equivalent to standard morphological dilation with a d × d square structuring element. It therefore yields the same effect while being computationally efficient and natively supported in deep learning frameworks such as PyTorch 2.6.0. Specifically, after the high-frequency mask $M \in \{0,1\}^{H \times W}$ is computed from local variation, we update it as shown in (7) whenever the dilation parameter d > 0.
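The update in (7) and the claimed equivalence between max pooling and dilation can be checked with a small sketch. It assumes an odd window size d (as in d ∈ {3, 5, 7}), stride 1, and a window centered on each pixel and clipped at the border. For a non-negative mask, clipping is equivalent to padding with zeros. d = 0 leaves the mask unchanged, matching the d > 0 condition. The second function computes dilation directly from its set definition for comparison.

```python
import random
from typing import List

Mask = List[List[int]]


def dilate_maxpool(m: Mask, d: int) -> Mask:
    """d x d max pooling, stride 1, centred window clipped at the border; no-op when d <= 0."""
    if d <= 0:
        return [row[:] for row in m]
    h, w, r = len(m), len(m[0]), d // 2
    return [[max(m[y][x]
                 for y in range(max(0, i - r), min(h, i + r + 1))
                 for x in range(max(0, j - r), min(w, j + r + 1)))
             for j in range(w)]
            for i in range(h)]


def dilate_set(m: Mask, d: int) -> Mask:
    """Dilation by a d x d square structuring element: each set pixel paints its neighbourhood."""
    h, w, r = len(m), len(m[0]), d // 2
    out = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            if m[i][j]:
                for y in range(max(0, i - r), min(h, i + r + 1)):
                    for x in range(max(0, j - r), min(w, j + r + 1)):
                        out[y][x] = 1
    return out


# A single high-frequency pixel grows into a d x d block as d increases.
point = [[1 if (i, j) == (3, 3) else 0 for j in range(7)] for i in range(7)]
for d in (0, 3, 5, 7):
    print(d, sum(map(sum, dilate_maxpool(point, d))))  # prints 0 1, 3 9, 5 25, 7 49

random.seed(0)
noisy = [[int(random.random() < 0.1) for _ in range(12)] for _ in range(10)]
print(all(dilate_maxpool(noisy, d) == dilate_set(noisy, d) for d in (3, 5, 7)))  # True
```

The pixel counts 1, 9, 25, and 49 show why larger d increases the share of pixels routed to the expensive path.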

This operation expands the high-frequency regions to include their local neighborhoods. Increasing the dilation parameter does not reduce FLOPs, because more pixels are retained as high-frequency. Its purpose is different: it allows the model to consider not only the high-frequency points themselves but also the nearby pixels that help interpret them. In natural images, high-frequency areas such as edges and boundaries often contain critical semantic information. By dilating the mask, we ensure that both the exact high-frequency pixels and their surroundings are processed with full convolution, balancing computational savings against the preservation of important image features. Empirically, we observe that larger dilation values (e.g., d = 7) yield better classification accuracy without significantly increasing computational cost, which suggests that the expanded context around high-frequency zones helps preserve meaningful information. To further clarify the role of dilation in the frequency-aware masking mechanism, Figure 3 visualizes how the binary mask changes as the dilation value increases. As the figure shows, higher dilation values (e.g., d = 5 or d = 7) thicken the high-frequency regions and expand the mask boundaries. The dilated mask thereby includes surrounding pixels that are semantically related to structures such as edges and textures.

4. Experiments

All models were implemented in PyTorch 2.6.0 and trained on a single NVIDIA RTX 3060 GPU, using the CIFAR-10 dataset for both training and testing. We trained with SGD (momentum 0.9), an initial learning rate of 0.1, weight decay of 5 × 10⁻⁴, a cosine-annealing schedule over 150 epochs, and a batch size of 256. Method-level hyperparameters were tuned by grid search over the mask threshold θ and the dilation d ∈ {0, 3, 5, 7}. To mitigate overfitting, we applied random cropping (32 × 32 with padding 4), random horizontal flipping, weight decay, input normalization with CIFAR-10 statistics, and batch normalization.
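For reference, the learning-rate schedule described above can be written out explicitly. The sketch below uses the closed-form cosine-annealing rule with a minimum learning rate of 0 and one step per epoch. Both choices are assumptions, since the text gives only the initial rate (0.1) and the 150-epoch horizon. In PyTorch this setup would typically correspond to `torch.optim.SGD(params, lr=0.1, momentum=0.9, weight_decay=5e-4)` combined with `torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=150)`.

```python
import math


def cosine_lr(epoch: int, base_lr: float = 0.1, total_epochs: int = 150,
              eta_min: float = 0.0) -> float:
    """Cosine annealing: eta_min + (base_lr - eta_min) * (1 + cos(pi * epoch / total_epochs)) / 2."""
    return eta_min + (base_lr - eta_min) * (1 + math.cos(math.pi * epoch / total_epochs)) / 2


for epoch in (0, 30, 75, 120, 150):
    print(f"epoch {epoch:>3}: lr = {cosine_lr(epoch):.4f}")
```

The rate starts at 0.1, passes 0.05 at the halfway point (epoch 75), and decays smoothly to 0 at epoch 150.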

4.1. Effect of Morphological Dilation

To investigate the impact of expanding the high-frequency regions identified by the binary mask, we conducted a series of experiments that incorporate morphological dilation into the frequency-aware convolutional framework. The goal is to improve the contextual understanding of salient regions by extending convolutional coverage beyond the strict high-frequency boundaries, allowing the model to capture local structure more effectively without significantly increasing the computational burden. Models were trained with three dilation settings, d ∈ {3, 5, 7}, while all other components remained fixed. Importantly, the low-frequency path used standard convolutions in this setting; depthwise operations were not applied. Each model was fine-tuned from a common baseline, a ResNet-32 with three residual stages and standard residual blocks (without frequency-aware masking or depthwise convolution) trained on CIFAR-10, which ensures consistent initialization.

The results in Table 1 show that increasing dilation generally improves accuracy across a range of MFLOPs. Specifically, the model with d = 7 achieved a top-1 accuracy of 94.98% at 168 MFLOPs, outperforming the baseline ResNet (94.80% at 181 MFLOPs) while using 13 fewer MFLOPs. Even under a tighter computational budget of 128 MFLOPs (the model highlighted in bold in Table 1), the same model maintained 94.72% accuracy, only 0.08 percentage points below the baseline, with 29% less computation. The reduction rate is calculated as {(181 − 128)/181} × 100% ≈ 29%. This trend indicates that large dilation not only preserves important information along edges and object boundaries but also effectively extends the receptive field of full convolutions, resulting in consistent accuracy improvements at reduced FLOPs.

The MFLOPs reported in Table 1 account only for convolutional layers, so we additionally measured the cost of mask generation. For a batch of 256 images, mask generation requires approximately 9.44 MFLOPs in total (about 0.04 MFLOPs per image). Even this batch total is only about 5% of the 181 MFLOPs of the full model. This marginal contribution confirms that mask generation introduces negligible overhead relative to the dominant cost of the convolutional operations.
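The percentages quoted in this subsection follow directly from the reported numbers, and the short calculation below reproduces them. It assumes, as the comparison implies, that the baseline's 181 MFLOPs refer to one forward pass on a single image. Under that assumption, the 9.44 MFLOPs of mask generation for a 256-image batch correspond to about 0.04 MFLOPs per image.

```python
def reduction_pct(baseline: float, ours: float) -> float:
    """Relative FLOP reduction in percent."""
    return (baseline - ours) / baseline * 100


BASELINE_MFLOPS = 181.0
print(f"d = 7 at 168 MFLOPs: {reduction_pct(BASELINE_MFLOPS, 168):.1f}% fewer FLOPs")  # 7.2%
print(f"d = 7 at 128 MFLOPs: {reduction_pct(BASELINE_MFLOPS, 128):.1f}% fewer FLOPs")  # 29.3%
print(f"accuracy gap at 128 MFLOPs: {94.80 - 94.72:.2f} points")                        # 0.08

mask_batch = 9.44                   # MFLOPs of mask generation for a batch of 256 images
mask_per_image = mask_batch / 256   # ~0.037 MFLOPs (assumes 181 MFLOPs is per image)
print(f"batch total vs. model: {mask_batch / BASELINE_MFLOPS * 100:.1f}%")     # 5.2%
print(f"per image vs. model:   {mask_per_image / BASELINE_MFLOPS * 100:.3f}%")  # 0.020%
```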

While Table 1 shows that larger dilation values consistently improve accuracy by extending the receptive field, Figure 4 reveals that they also steadily increase MFLOPs. Expanding the binary mask at higher dilation levels causes the high-cost convolutions to cover larger image regions, raising computational complexity. To address this, our design adjusts the threshold parameter θ to maintain a balanced mask size, limiting unnecessary growth of the high-frequency area. This prevents the mask from becoming excessively thick and allows the model to capture richer contextual information without proportionally inflating computational cost. Consequently, the proposed approach achieves accuracy gains comparable to those of large-dilation configurations while preserving computational efficiency.

4.2. Effect of Depthwise Convolution

Building on the benefits of dilation, we further explored the effect of applying depthwise separable convolutions to the low-frequency path. Unlike standard convolution, which operates across the spatial and channel dimensions simultaneously, depthwise separable convolution splits this process into a spatially focused depthwise operation followed by a lightweight pointwise projection. This design drastically reduces FLOPs, which is especially beneficial in early network stages, where feature maps are large.

Figure 5 illustrates this advantage: across all threshold settings (θ ∈ {0.016, 0.044, 0.072, 0.1}), models with depthwise convolution consistently require fewer operations than their standard-convolution counterparts. In the figure, the numerical label above each point indicates the top-1 accuracy (%) of that configuration, allowing efficiency and performance to be compared directly. Relative to the standard multi-path model, the depthwise variant reduces MFLOPs by 17.5 on average, a 14.3% decrease in operations, while the average drop in top-1 accuracy is only 0.18%.

These results confirm that depthwise convolution delivers double-digit percentage reductions in FLOPs across a wide range of thresholds, with an average accuracy drop of only 0.18% relative to the standard model. The minimal performance degradation suggests that the reduced inter-channel interaction inherent to depthwise convolution is not harmful in the low-frequency path, where structural details are less critical.

When combined with morphological dilation in the high-frequency path, depthwise convolution forms a complementary mechanism: dilation expands the receptive field to capture contextual detail along edges, while depthwise convolution minimizes computational redundancy in smooth regions. This synergy enables the proposed architecture to maintain competitive top-1 accuracy while significantly lowering computational demand, supporting the use of depthwise convolution as a core element of frequency-aware design for resource-constrained environments.

4.3. Additional Experiments

To further validate the proposed dual-path and depthwise convolution design, we conducted additional experiments on the more challenging CIFAR-100 dataset. For consistency, the dilation parameter was fixed at 7, which Table 1 had identified as the most effective configuration in terms of the accuracy–efficiency trade-off. All models were trained under the same conditions: 200 epochs of SGD with a cosine learning-rate schedule and standard CIFAR augmentations.

The results summarized in Table 2 show that the proposed design consistently reduces FLOPs while maintaining nearly the same accuracy as the baseline ResNet. Here, the baseline is a standard ResNet trained on CIFAR-100 without dual-path routing or depthwise convolutions; it achieves 77.58% top-1 accuracy at 647.72 MFLOPs. Our best-performing dual-path and depthwise variant (Our-546 Model, highlighted in bold in Table 2) reaches 77.53% accuracy with only 546 MFLOPs. This is a 15.7% reduction in FLOPs, computed as (647.72 − 546)/647.72 × 100%, with an accuracy drop of only 0.05 percentage points.

This trend indicates that the dual-path design allows the model to allocate expensive full convolutions only to critical high-frequency regions while using lightweight depthwise operations elsewhere. As a result, the network retains rich representational capacity despite substantial reductions in FLOPs. Importantly, preserving baseline-level accuracy on the more complex CIFAR-100 dataset further highlights the generalizability and robustness of our approach.

We further investigated whether the proposed dual-path and depthwise convolution design provides benefits when applied to a lightweight backbone such as EfficientNet-B0.

As shown in Table 3, the baseline EfficientNet-B0 trained on CIFAR-10 achieves 91.56% top-1 accuracy with 30.76 MFLOPs. With our dual-path routing and depthwise convolution, the resulting model attains 91.52% accuracy while reducing the computational cost to 28.98 MFLOPs. In this setting, the dilation parameter was fixed to d = 7, consistent with the ResNet experiments described above, and the threshold θ was selected by grid search, which identified θ = 0.002 as the most effective configuration. This choice provides a 5.8% FLOP reduction with a negligible accuracy drop of 0.04 percentage points. These results indicate that the proposed design extends effectively to lightweight backbones, supporting its robustness across different network families.
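The CIFAR-100 and EfficientNet-B0 figures can likewise be reproduced from the values of Tables 2 and 3 as quoted in the text.

```python
def reduction_pct(baseline: float, ours: float) -> float:
    """Relative FLOP reduction in percent."""
    return (baseline - ours) / baseline * 100


# (setting, baseline MFLOPs, ours MFLOPs, baseline top-1 %, ours top-1 %) as quoted in the text
results = [
    ("ResNet, CIFAR-100", 647.72, 546.0, 77.58, 77.53),
    ("EfficientNet-B0, CIFAR-10", 30.76, 28.98, 91.56, 91.52),
]
for name, base_f, ours_f, base_acc, ours_acc in results:
    print(f"{name}: {reduction_pct(base_f, ours_f):.1f}% fewer FLOPs, "
          f"{base_acc - ours_acc:.2f}-point accuracy drop")
# ResNet, CIFAR-100: 15.7% fewer FLOPs, 0.05-point accuracy drop
# EfficientNet-B0, CIFAR-10: 5.8% fewer FLOPs, 0.04-point accuracy drop
```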

5. Conclusions

This paper presents a frequency-aware, multi-path convolutional architecture that delivers competitive classification performance while substantially reducing computational cost. By decomposing input features into high- and low-frequency regions via a binary mask, our model dynamically applies full convolutional operations to information-rich areas and lightweight operations to smoother regions. This selective computation strategy preserves accuracy where it matters most while minimizing redundant processing in less informative zones. Morphological dilation enhances the mask’s effectiveness by expanding the spatial coverage of high-frequency regions, thereby capturing valuable contextual information around edges and fine details. Furthermore, incorporating depthwise separable convolutions in the low-frequency path reduces floating-point operations (FLOPs) with minimal loss of accuracy, particularly in the early stages, where feature maps are large.

Unlike conventional ResNet models, the proposed method leverages spatial-frequency priors to reallocate computational resources dynamically, enabling efficient deployment in resource-constrained settings such as edge devices and real-time applications with little loss in classification performance. In our CIFAR-10 experiments, the proposed method reduced FLOPs by 29% compared with the original ResNet at the cost of a slight decrease in accuracy. Replacing standard convolution with depthwise convolution in the low-frequency path achieved a further average reduction of 14.3% relative to the standard multi-path model, with only a 0.18% drop in top-1 accuracy. Overall, our results demonstrate that a principled, frequency-aware design can substantially improve the trade-off between accuracy and efficiency in deep convolutional networks.

Author Contributions

Conceptualization, K.L.; Software, J.B.; Writing—Original Draft Preparation, J.B.; Writing—Review and Editing, Y.J. and K.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data used in this study are publicly available. CIFAR-10 and CIFAR-100 can be accessed at https://www.cs.toronto.edu/~kriz/cifar.html (accessed on 11 April 2024) and are also available via the torchvision.datasets library in PyTorch.

Acknowledgments

This work was supported by the Sungshin Women’s University Research Grant of 2025 (Grant Recipient: Kyujoong Lee).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Li, Y.; Sixou, B.; Peyrin, F. A review of the deep learning methods for medical images super resolution problems. IRBM 2021, 42, 120–133.

2. Rasti, P.; Uiboupin, T.; Escalera, S.; Anbarjafari, G. Convolutional neural network super resolution for face recognition in surveillance monitoring. In Proceedings of the 9th International Conference, Articulated Motion and Deformable Objects, Palma de Mallorca, Spain, 13–15 July 2016; pp. 175–184.

3. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

4. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015.

5. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

6. Han, S.; Pool, J.; Tran, J.; Dally, W. Learning both Weights and Connections for Efficient Neural Network. Adv. Neural Inf. Process. Syst. 2015, 28.

7. Sze, V.; Chen, Y.-H.; Yang, T.-J.; Emer, J.S. Efficient Processing of Deep Neural Networks. Proc. IEEE 2017, 105, 2295–2329.

8. Molchanov, P.; Tyree, S.; Karras, T.; Aila, T.; Kautz, J. Pruning Convolutional Neural Networks for Resource Efficient Transfer Learning. In Proceedings of the 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, 24–26 April 2017.

9. Jacob, B.; Kligys, S.; Chen, B.; Zhu, M.; Tang, M.; Howard, A.; Adam, H.; Kalenichenko, D. Quantization and Training of Neural Networks for Efficient Integer-Arithmetic-Only Inference. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

10. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

11. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

12. Alao, H.; Kim, T.S.; Lee, K. Area-Specific Convolutional Neural Networks for Single Image Super-Resolution. IEEE Access 2022, 10, 104567–104576.

13. Goyal, M. Morphological image processing. Int. J. Comput. Sci. Telecommun. 2011, 2, 59.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).