1. Introduction

Industrial energy system digitalization has accelerated the deployment of smart devices capable of real-time monitoring, control, and communication. These components include Direct Current-to-Direct Current (DC/DC) converters, Alternating Current-to-Direct Current (AC/DC) converters, Electric Vehicle (EV) chargers, energy storage system (ESS) units, and Power Distribution Units (PDUs). They are typically integrated into a shared Low-Voltage Direct Current (LVDC) grid and coordinated by a centralized Energy Management System (EMS). To ensure interoperability, data exchange between the EMS and the devices often relies on industrial protocols such as Modular Digital Bus (MODBUS) over Transmission Control Protocol (TCP). These protocols use a client-server model over Ethernet networks [1].

Each device exposes its internal variables, such as voltage, current, temperature, or state of charge. These variables are stored in predefined memory locations known as MODBUS register maps, which are organized in structured tables, with each parameter assigned a specific address. In this way, the EMS retrieves data by periodically querying these addresses. Such a polling mechanism is simple and robust, but it treats all variables equally, regardless of their importance or urgency. While this structure ensures simplicity and broad compatibility, it lacks any native mechanism for prioritizing critical or time-sensitive information. As a result, communication networks may become congested, and the delivery of important updates to the EMS can be delayed.
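The uniform polling behavior described above can be sketched as follows. This is a minimal illustration only: the register addresses and variable names are hypothetical and are not taken from any device documentation, and the register map is modeled as a plain dictionary rather than an actual MODBUS stack.

```python
# Hypothetical register map: address -> (variable name, current value).
# Addresses and values are illustrative assumptions, not real device data.
REGISTER_MAP = {
    100: ("voltage", 380),
    101: ("current", 12),
    102: ("temperature", 41),
    103: ("state_of_charge", 87),
}


def poll_all(register_map):
    """Read every register once, in address order, as a uniform polling client would."""
    return [(addr, register_map[addr][1]) for addr in sorted(register_map)]


def reads_per_variable(register_map, cycles):
    """Count how often each variable is read over a number of polling cycles."""
    counts = {name: 0 for name, _value in register_map.values()}
    for _ in range(cycles):
        for addr, _value in poll_all(register_map):
            counts[register_map[addr][0]] += 1
    return counts


# Every variable is read equally often, regardless of its importance.
uniform_counts = reads_per_variable(REGISTER_MAP, cycles=5)
```

In this sketch, a slowly changing value and a safety-relevant temperature receive exactly the same number of reads per cycle, which is the behavior the rest of the paper aims to improve on the server side.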

MODBUS/TCP remains one of the most widely adopted protocols in industrial automation due to its simplicity and interoperability with legacy devices. However, it does not provide native mechanisms for prioritization of contextual data across registers or devices. This limitation motivates the lightweight scheduling approach proposed in this work.

To address these constraints, the proposed solution is designed to run entirely on resource-constrained edge gateways, which are commonly used in industrial environments and offer limited computational capabilities compared to cloud or server-based platforms.

Although these field devices continuously generate operational data, not all monitored parameters have the same relevance at all times. In the absence of a prioritization mechanism based on contextual relevance, communication resources may be wasted on low-impact data, while delays in delivering critical updates can compromise system responsiveness. To address this limitation, we propose a lightweight edge-level approach that leverages Machine Learning (ML) to dynamically adjust the transmission frequency of each parameter, optimizing both responsiveness and communication efficiency.

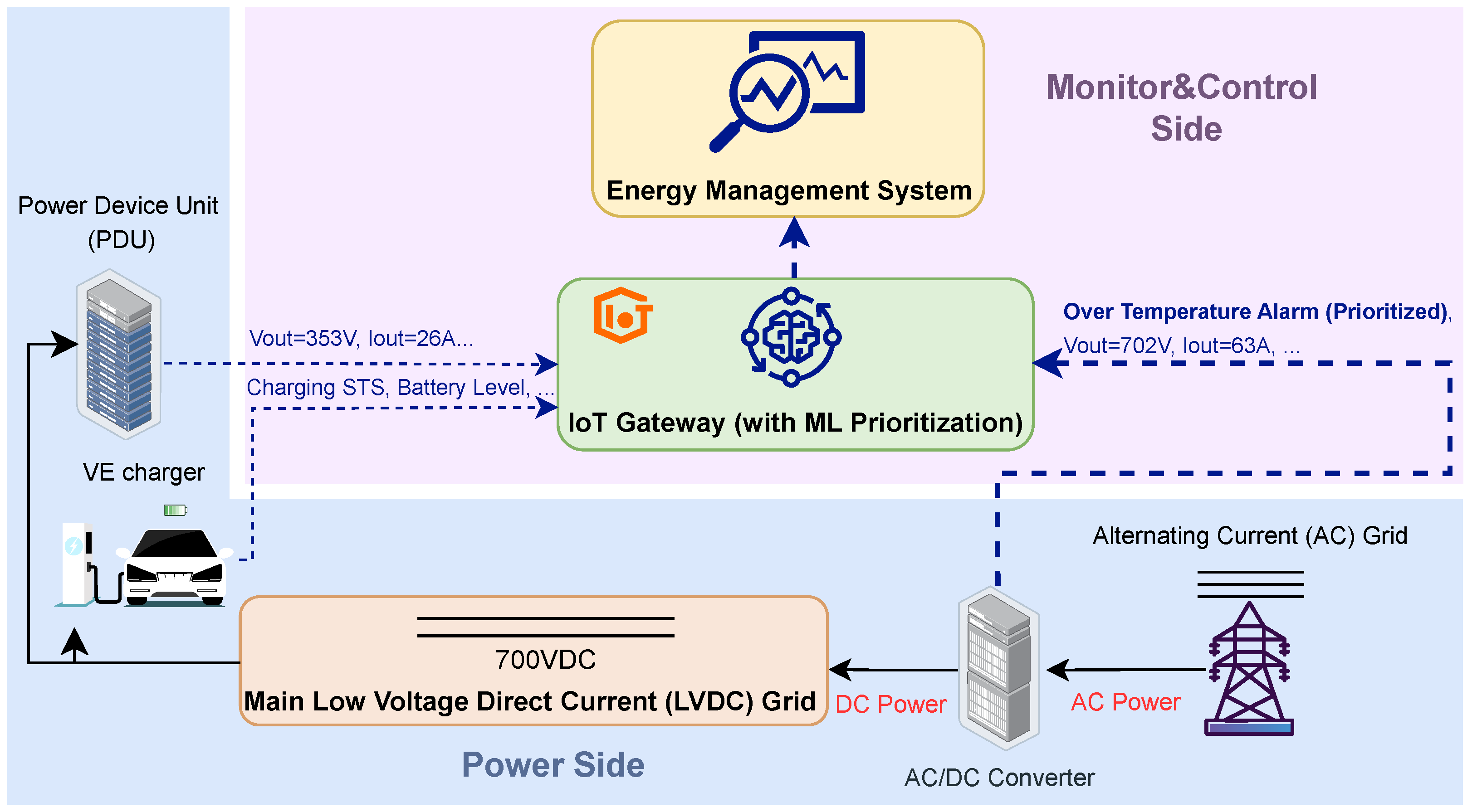

As an overview of the proposed communication concept, Figure 1 illustrates a simplified architecture of an industrial LVDC system. It highlights the interaction between distributed devices such as DC/DC converters, EV chargers, ESS units, and the IoT gateway, which connects the field layer to the EMS via a MODBUS TCP server. The gateway integrates a lightweight ML engine that adjusts the update frequency of each parameter in real time, based on its contextual relevance.

Recent works have applied AI and ML techniques in energy systems, mainly for high-level functions such as demand forecasting, microgrid optimization, and resource scheduling [2,3]. These contributions enhance decision-making at the EMS level. However, they do not address challenges in low-level communication between field devices and supervisory platforms. In particular, the lack of contextual data prioritization mechanisms in industrial protocols such as MODBUS remains an open issue in the implementation of efficient and scalable industrial communication frameworks [1].

Other works have applied ML models for anomaly detection in MODBUS/TCP networks [4]. Such approaches contribute to enhanced cybersecurity by identifying irregular traffic patterns and system behaviors [5]. In particular, a detailed comparison of these methods is presented in [4], emphasizing the importance of integrating contextual information for more effective communication layer processing.

In parallel, the benefits of edge computing have been widely demonstrated in Industrial Internet of Things (IIoT) systems. Local data preprocessing at the gateway level has proven effective in reducing latency and improving responsiveness [6,7]. However, many of these solutions require protocol-level modifications or depend heavily on cloud infrastructure, and this limits their applicability in resource-constrained or legacy industrial environments. To address these limitations, the Reference Architecture Model Edge Computing (RAMEC) [8] was proposed to support the design and classification of edge computing solutions in industrial contexts. It is particularly relevant when working with legacy protocols such as MODBUS/TCP, as it promotes low-latency, autonomous operation without relying on cloud infrastructure. These gaps motivate the design of context-sensitive solutions that can be deployed directly at the edge, without disrupting legacy systems.

To overcome these limitations, we present a lightweight data prioritization mechanism based on AI/ML, fully embedded within an IoT gateway. Unlike other approaches, our solution does not require changes to the existing MODBUS TCP protocol. It also preserves the polling behavior of the EMS. Developed as part of the Horizon Europe-funded SHIFT to Direct Current (SHIFT2DC) project [9], it is evaluated through simulation using real-world signal profiles representative of LVDC environments. The deployment architecture promotes the use of low-end edge devices, such as the Revolution Pi 5 (RevPi5). This device was selected based on the constraints and architecture of the project demonstrators.

Although previous works have addressed improvements in reliability [10], performance, or security for MODBUS-based systems, they tend to focus on general system behavior rather than the real-time interaction between devices and control platforms. Widely used protocols like MODBUS are simple and compatible but lack any built-in way to prioritize the variables that should be updated more frequently, based on their relevance. We found little attention to solutions that enable this kind of dynamic, context-aware prioritization without altering existing infrastructures, a limitation that can hinder responsiveness in practice.

Despite recent advances in ML-at-the-edge for adaptive sampling, event-triggered communication, and microgrid optimization, existing approaches do not address contextual prioritization within MODBUS/TCP over LVDC systems. Current solutions typically optimize generic IoT data flows or rely on protocol-specific modifications. However, they lack protocol-aware mechanisms that prioritize MODBUS registers according to evolving operating conditions. This is the gap that motivates the lightweight prioritization scheme presented in this paper.

Our approach directly targets this gap by adjusting the update rate of each parameter depending on its current importance, all while preserving full protocol compatibility. This aligns with recent trends in gateway design, which aim to improve communication reliability through redundancy [10], and encourage the integration of more intelligent behavior at the edge. This is consistent with recent developments in edge computing, where embedded intelligence is increasingly used to improve responsiveness and efficiency in industrial environments. For instance, ref. [11] describes an edge-based system that applies AI techniques for real-time monitoring and predictive maintenance in manufacturing. These advances show the growing potential of intelligent gateways to support decision-making closer to the data source. They also encourage the integration of context-aware mechanisms in industrial control systems.

In summary, the main contributions of this work are as follows:

The design of a dynamic and resource-efficient ML-based data prioritization method that adapts to signal conditions, fully compatible with standard MODBUS TCP.

Its seamless integration into an edge-level IoT gateway, targeting resource-constrained hardware platforms without requiring modifications to the EMS logic or polling behavior.

A simulation-based evaluation conducted under realistic LVDC grid conditions, as a first step toward deployment in the SHIFT2DC demonstrators.

3. Materials and Methods

This section outlines the design rationale, the Machine Learning model selection, and the system architecture of the proposed approach, as well as the simulation setup used for the validation.

3.1. Problem Definition

In conventional MODBUS TCP communication, all data points are transmitted with equal priority. This uniform treatment leads to an inefficient use of network resources, particularly in distributed energy systems where multiple heterogeneous IoT devices interact continuously with a centralized EMS.

In practical industrial scenarios, such as those considered in the SHIFT2DC project, a wide variety of field-level devices are interconnected via a shared LVDC bus. This setup supports integrated energy management. These devices generate diverse real-time data points, including temperature, voltage, and state of charge, each with a different level of importance for energy management. Without prioritization, the EMS receives all data at a uniform rate. This occurs regardless of the data’s relevance or variability.

This lack of intelligent filtering introduces several challenges:

Network congestion, caused by the continuous transmission of low-impact or slowly changing values.

Delayed delivery of high-priority data, such as temperature measurements or fault signals.

Reduced system responsiveness in time-sensitive scenarios where immediate EMS reaction is required.

The main objective of this work is to integrate an AI/ML-based prioritization strategy within an IoT gateway to optimize MODBUS TCP communication. The goal is to classify each monitored parameter (e.g., temperature, voltage, current) as high or low priority, and to adjust its update frequency in the MODBUS register map accordingly. This ensures that critical information is delivered to the EMS with lower latency, without requiring changes to its polling behavior. This binary prioritization scheme is the result of using a lightweight ML classifier that labels each parameter based on contextual features. Restricting classification to two classes reduces computational complexity, which is crucial for deployment on resource-constrained edge devices like the RevPi5, while ensuring compatibility with the existing MODBUS register structure.

The proposed mechanism reduces bandwidth consumption by lowering the frequency of less relevant data transmissions. At the same time, it also reduces the latency of critical information delivered to the EMS and enhances the efficiency and scalability of MODBUS-based energy systems.

The system is being developed in the context of the SHIFT2DC project, with deployment planned on a real industrial demonstrator involving actual DC/DC converters, IoT gateways, and an EMS. Since hardware integration is ongoing, this paper focuses on simulation-based validation of the proposed priority engine.

3.2. AI Model Selection

To implement real-time data prioritization in the IoT gateway, a set of lightweight ML algorithms was evaluated. The goal is to classify each data point directly on the device as high or low priority, without relying on cloud-based processing. This local approach is essential to reduce latency, maintain system autonomy in case of connectivity issues, and preserve data privacy by avoiding the transmission of sensitive information to external servers.

Additionally, the solution must operate within the memory and processing constraints of edge hardware, such as the RevPi5 (more details: https://revolutionpi.com/shop/en/revpi-connect-5 (accessed 15 July 2025)), an industrial gateway based on the Raspberry Pi Compute Module 5 with a quad-core Arm Cortex-A76 CPU (up to 2.4 GHz), 8 GB of high-speed LPDDR4X RAM, and 32 GB eMMC storage.

Although classified as an edge device, this platform is substantially more capable than ultra-constrained microcontrollers. Consistent with this, the per-sample p95 latencies, which are analyzed in detail later (see Section 4.6.1), were obtained in a Debian 12 Linux Container (LXC). These values remain below 1 ms for the selected Multilayer Perceptron (MLP) model (i.e., less than 0.1% of the 1 Hz processing budget). Thus, the use of Tiny Machine Learning (TinyML)-specific optimizations was not essential for the present scope, as the target platform was a RevPi5-class gateway.

Three lightweight supervised classifiers were considered: Decision Tree (DT), Random Forest (RF), and MLP. A DT is a tree-based model that partitions the input space into hierarchical rules [25], an RF extends this by aggregating multiple randomized trees for higher accuracy [26], and an MLP is a feedforward neural network trained by backpropagation [27].

Although modern ML techniques such as Convolutional Neural Network (CNN), Long Short-Term Memory (LSTM), or Transformer-based architectures deliver excellent performance across many applications, they typically require substantial computational resources and memory. These demands make them unsuitable for deployment in embedded gateways operating under real-time constraints. In contrast, classical algorithms such as DT, RF, and MLP offer a better trade-off between accuracy, interpretability, and efficiency, making them more appropriate for edge-level execution. Their transparent decision logic and ease of interpretation are particularly valuable in industrial environments, where explainability and traceability are essential for validation, debugging, and certification.

To meet deployment constraints, all classifiers were trained for binary classification (high vs. low priority). This choice simplifies the decision logic, lowers computational complexity, and facilitates integration with MODBUS systems.

Considering both the computational constraints and the need for explainability in binary classification, three algorithms were selected for further evaluation:

RF: An ensemble learning method suitable for structured data. It aggregates multiple decision trees to enhance predictive accuracy while retaining a degree of transparency through feature importance measures.

MLP: A feedforward neural network capable of modeling nonlinear relationships and adapting to more complex data patterns. Although it requires more computational resources, its flexibility can be advantageous in environments with high signal variability.

DT: A simple model based on explicit decision rules. Its clarity and low computational footprint make it ideal for deployment on devices with constrained hardware such as the RevPi5.

The input features used for classification were derived specifically from the signals selected for prioritization, namely temperature, voltage, and state of charge.

These features capture various aspects of each signal, such as current value, local variability, short-term trends, and anomaly-like behavior, depending on the physical characteristics of the parameter.

In addition, statistical and temporal indicators such as dynamic variation, frequency of change, and event-like fluctuations were included to characterize dynamic patterns with greater precision. The relevance of each parameter to critical decision-making processes in the EMS was also taken into account. A detailed breakdown of the features used per prioritized signal is presented later in the results section.

The selected model assigns a binary label to each parameter: 1 for high priority and 0 for low priority.

The final model selection is based on a trade-off between classification accuracy, inference time, and the need for transparent decision-making. Although RF and DT models offer fast and explainable predictions suitable for most operational scenarios, the MLP can be advantageous in environments with high data variability and nonlinear dependencies.

To ensure that the selected ML models could effectively learn from the labeled data in all configurations tested, including those with a single high-priority parameter, two strategies were applied to improve the robustness of the training. First, during dataset generation, a minimum ratio of out-of-bounds values was enforced for prioritized parameters. This guarantees that a significant portion of high-priority samples exhibit critical behavior (e.g., threshold violations). Such cases reflect realistic fault or anomaly conditions. Second, all classifiers were trained using the class_weight='balanced' option provided by scikit-learn, allowing the models to compensate for label imbalance without altering the dataset distribution. These measures help maintain consistency across simulations with varying numbers of parameters and priority labels, and are essential to ensure the reproducibility and real-world relevance of the proposed classification framework.
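To illustrate what the balanced weighting does, the sketch below reimplements in plain Python the heuristic documented by scikit-learn for this option, namely n_samples / (n_classes × n_samples_in_class). It is an illustration of the weighting rule only, not the authors' training code, and the 90/10 label split is an assumed example.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * n_samples_in_class)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * n) for cls, n in counts.items()}


# Assumed example: 90 low-priority (0) and 10 high-priority (1) samples.
example_labels = [0] * 90 + [1] * 10
example_weights = balanced_class_weights(example_labels)
# The minority class receives a weight of 5.0, the majority class about 0.56.
```

With these weights, each class contributes the same total weight to the loss, so the rare high-priority samples are not dominated by the far more frequent low-priority ones.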

The training process assumes evenly spaced samples (1 Hz). Subsequent simulations, however, introduce realistic communication jitter to test how transmission delays affect runtime prioritization. Since time-based features are not used, this variability in sampling time does not impact model predictions.

3.3. System Architecture

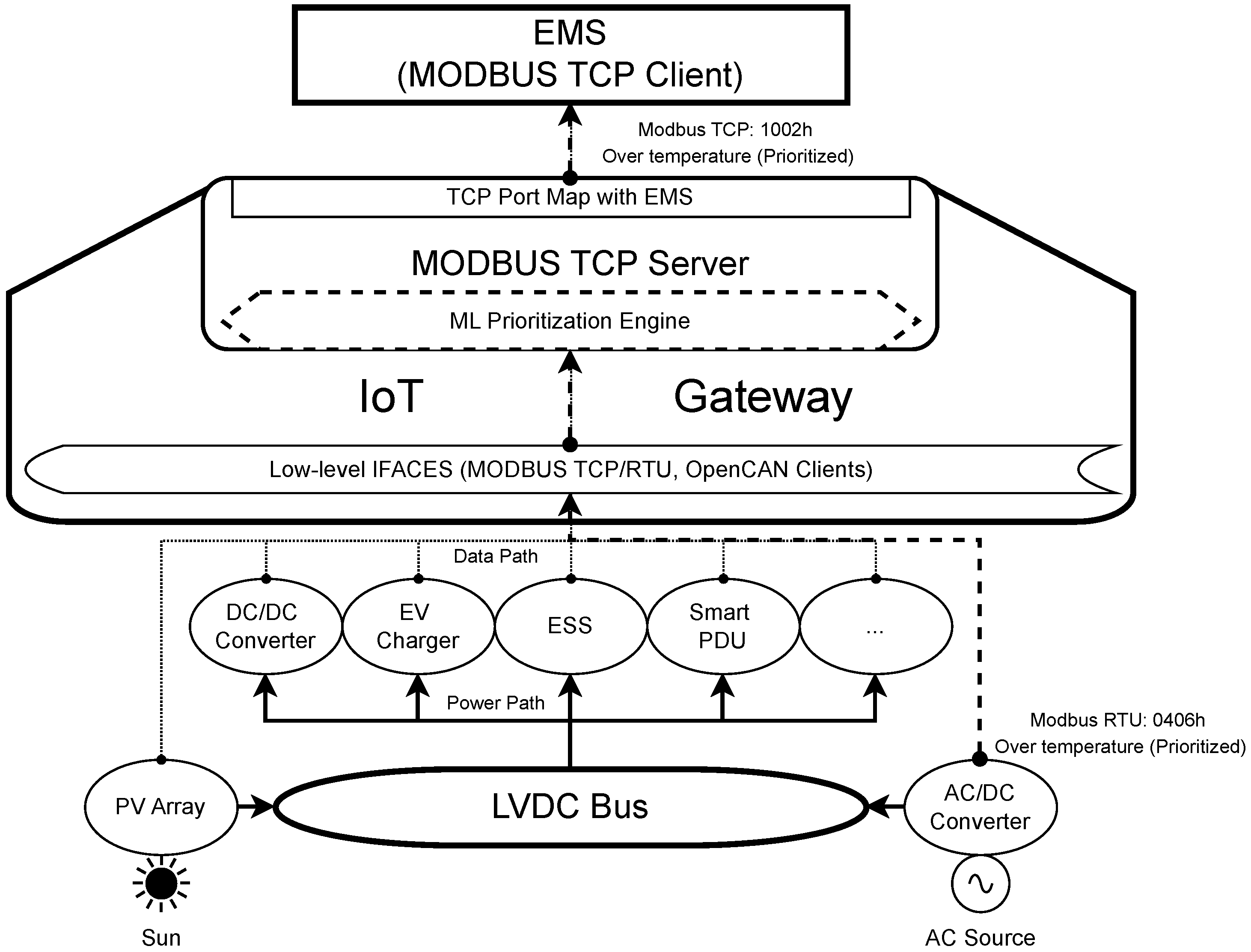

Figure 2 illustrates the system architecture, which integrates an EMS with multiple heterogeneous field devices deployed within an LVDC network, forming a coordinated control and monitoring environment. These include DC/DC converters, Electric Vehicle (EV) chargers, energy storage systems (ESSs), smart PDUs, and intelligent photovoltaic modules. All components are connected to the same LVDC bus and contribute to the overall energy management strategy.

At the core of the system lies an IoT gateway based on the RevPi5 platform. Acting as a MODBUS TCP server, the gateway bridges communication between the high-level EMS (via Ethernet) and field devices (via MODBUS RTU or CAN, depending on the communication interface supported by each device). The gateway maintains a dynamic register map that reflects the latest data received from all connected devices.

To optimize communication efficiency under bandwidth constraints, the gateway incorporates the ML model described in the previous section. This model assigns dynamic priorities to selected parameters, such as temperature, voltage, or state of charge, and adjusts their update rates accordingly within the MODBUS register map. As a result, time-critical data is refreshed more frequently, while less relevant parameters are throttled to reduce network overhead.

This update rate modulation strategy is particularly effective in MODBUS-based systems where the EMS operates as a polling client. Since MODBUS does not support event-driven communication, server-side control of data update frequency offers a compliant and nonintrusive solution. Importantly, this mechanism does not require any modification to the EMS client or to the underlying communication protocol, making it suitable for deployment in legacy industrial environments.
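A minimal sketch of this server-side update rate modulation is given below. It assumes a tick-based loop in which high-priority parameters are refreshed on every tick and low-priority parameters only every N ticks. The class name, the `low_priority_period` setting, and the signal name `iout_acdc` are hypothetical and introduced only for illustration.

```python
class RegisterManager:
    """Refreshes exposed register values at a rate set by a binary priority label."""

    def __init__(self, low_priority_period=10):
        # Hypothetical setting: low-priority values are refreshed every N ticks.
        self.low_priority_period = low_priority_period
        self.exposed = {}  # values currently visible to the polling client
        self.tick_count = 0

    def tick(self, latest, priority):
        """Advance one step.

        `latest` maps parameter name -> fresh value from the field device;
        `priority` maps parameter name -> 0 (low) or 1 (high).
        Returns the names whose exposed value was refreshed on this tick.
        """
        due = self.tick_count % self.low_priority_period == 0
        refreshed = []
        for name, value in latest.items():
            is_high = priority.get(name, 0) == 1
            if is_high or due or name not in self.exposed:
                self.exposed[name] = value
                refreshed.append(name)
        self.tick_count += 1
        return refreshed


def count_refreshes(ticks=20):
    """Run a short demo and count refreshes per parameter."""
    manager = RegisterManager(low_priority_period=10)
    counts = {"temp_acdc": 0, "iout_acdc": 0}
    for t in range(ticks):
        latest = {"temp_acdc": 40 + t, "iout_acdc": 5}
        labels = {"temp_acdc": 1, "iout_acdc": 0}
        for name in manager.tick(latest, labels):
            counts[name] += 1
    return counts, manager.exposed
```

Because the client keeps polling the same addresses at the same rate, only the freshness of the values behind those addresses changes, which is what keeps the approach transparent to the EMS.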

As physical integration with real hardware is still ongoing within the SHIFT2DC project, a simulation environment has been developed to validate the proposed ML-based prioritization strategy. The testbed includes the following:

A Python-based MODBUS TCP server emulating the intelligent gateway.

Synthetic data streams emulating realistic behavior of field devices.

Runtime integration of the ML model to dynamically control parameter prioritization.

Although the simulated EMS client was initially included to mimic polling operations, it is not required for evaluating the prioritization model itself and was omitted during performance analysis.

The architecture details shown in Figure 2 illustrate the internal organization of the gateway, emphasizing the role of the embedded ML-based prioritization engine in managing data flow between field-level devices and the EMS.

Data from heterogeneous field devices connected to the LVDC network is first collected by the data acquisition module. This includes raw sensor readings such as voltage, current, temperature or state of charge, captured at different sampling rates depending on the device type and communication protocol.

A lightweight feature extraction stage processes these signals. It computes statistical and contextual metrics such as signal variance, rate of change, threshold exceedance flags, and relative stability.
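As a hedged illustration of such a stage, the sketch below computes a few of the metrics named above incrementally over a fixed-length window. The window length, the feature names, and the bounds are assumptions made for this example and do not reproduce the exact feature set used in the paper.

```python
from collections import deque
from statistics import mean, pstdev


class RollingFeatures:
    """Incremental (sample-by-sample) features over a fixed-length window."""

    def __init__(self, window=10, lower=None, upper=None):
        self.buffer = deque(maxlen=window)  # oldest samples drop out automatically
        self.lower = lower                  # optional lower physical limit
        self.upper = upper                  # optional upper physical limit

    def update(self, value):
        """Add one sample and return the feature dictionary for it."""
        previous = self.buffer[-1] if self.buffer else value
        self.buffer.append(value)
        out_of_bounds = (
            (self.lower is not None and value < self.lower)
            or (self.upper is not None and value > self.upper)
        )
        return {
            "value": value,
            "diff": value - previous,                         # rate of change per sample
            "roll_mean": mean(self.buffer),                   # short-term average
            "roll_std": pstdev(self.buffer),                  # local variability
            "roll_range": max(self.buffer) - min(self.buffer),
            "out_of_bounds": int(out_of_bounds),              # threshold exceedance flag
        }
```

Keeping only a short buffer per signal bounds both memory use and per-sample cost, which matches the sample-by-sample operation expected on a gateway.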

Formally, the feature extraction stage computes the following indicators for each signal:

The first group above summarizes the continuous statistical descriptors that characterize local dynamics (e.g., trends, variability, and short-term averages). The following group focuses on event-like flags and normalization, which are key to capturing abrupt transitions and to scaling signals with different physical units.

The subset of features actually used to train each individualized classifier is summarized later in Section 4.4, where parameters such as temp_acdc, vout_acdc, and soc_bat are associated with the most physically relevant indicators.

These features capture both the dynamic behavior and the operational relevance of each parameter. The resulting feature vector is then passed to an embedded ML engine, which performs real-time inference using one of the supported models, RF, DT, or MLP. The output is a binary label that indicates whether a given parameter is considered high (1) or low priority (0).

We acknowledge that the present framework adopts a binary prioritization (0 = low, 1 = high) to ensure simplicity and real-time feasibility on resource-constrained gateways. While real LVDC deployments may benefit from multi-level granularity (e.g., critical, high, medium, low), the binary formulation provides a tractable first step that is fully compatible with existing MODBUS register structures. The proposed design could be naturally extended to multi-class classification if required.

This label is used by the MODBUS register manager to adjust the update frequency of each parameter within the register map exposed to the EMS. High-priority data points are refreshed at a higher rate to ensure low-latency delivery. Less relevant values are throttled to optimize bandwidth. This prioritization strategy is implemented entirely within the gateway, preserving compatibility with legacy EMS polling behavior.

Figure 3 illustrates the internal processing workflow of the IoT gateway. It highlights the role of the embedded ML classification logic responsible for the prioritization of dynamic data.

On the left, the data path begins with the acquisition of raw sensor values from field devices. It is followed by a lightweight feature extraction stage that computes key statistical and contextual metrics, such as signal variance, rate of change, threshold exceedance flags, and relative stability. These features capture both the dynamic behavior and the operational relevance of each parameter. The resulting feature vector is then passed to a compact ML classifier, based on RF, DT, or MLP, which assigns a binary priority label to each parameter. The MODBUS register manager then adjusts the update frequency of each monitored value accordingly.

On the right, the control path remains unaffected by the ML logic, ensuring that EMS setpoints are forwarded directly to field devices. This preserves deterministic control behavior while enabling adaptive monitoring.

3.4. Baseline Comparison: Static Rule-Based Prioritization

To contextualize the benefits of the proposed ML-based prioritization strategy, a baseline approach using a static rule-based logic is considered. In this conventional scheme, each monitored parameter, such as temperature, voltage, or state of charge, is assigned a fixed priority level. This assignment is based on engineering heuristics or domain-specific knowledge. High-priority parameters are updated in the MODBUS register map at a higher refresh rate, while low-priority values are updated less frequently.

This manual configuration approach is commonly found in industrial systems, particularly in scenarios where simplicity and predictability are preferred over adaptability. However, it presents notable limitations. Priority levels remain constant throughout system operation, regardless of contextual changes or abnormal signal behaviors. For example, a temperature value that is typically stable may suddenly spike due to an external fault or unexpected thermal event—yet its update frequency would remain unchanged unless manually reconfigured.

Moreover, static prioritization does not scale well with an increasing number of sensors or heterogeneous devices. Maintaining and validating priority rules becomes increasingly complex and error-prone, especially in dynamic environments with variable operating conditions.

In contrast, the proposed ML-based approach continuously evaluates the real-time behavior of each monitored parameter. It extracts statistical and temporal features that capture variability, trend shifts, and anomaly-like patterns. The embedded model then dynamically assigns a binary priority label to each parameter, allowing the system to react autonomously to evolving conditions without requiring user intervention. This ensures that only contextually relevant data is transmitted at high rates, while non-critical values are downsampled, reducing overall network load.

Despite the introduction of a classification layer, the computational overhead introduced by the ML model remains negligible, as demonstrated later in the scenario-based evaluation. The gateway implementation supports real-time execution even on resource-constrained hardware platforms, maintaining deterministic control while enabling adaptive data delivery.

This flexible and self-adjusting mechanism provides a scalable alternative to traditional rule-based schemes, enhancing communication efficiency and robustness in industrial LVDC networks.

3.5. Realistic Signal Generation and Dataset Preparation

To train and evaluate the proposed ML classifiers under conditions that reflect realistic industrial telemetry, a dataset was generated using a custom Python-based simulation framework. The signal generation process emulates the temporal behavior of actual MODBUS-based communication, including parameters with both high-impact and low-variability profiles.

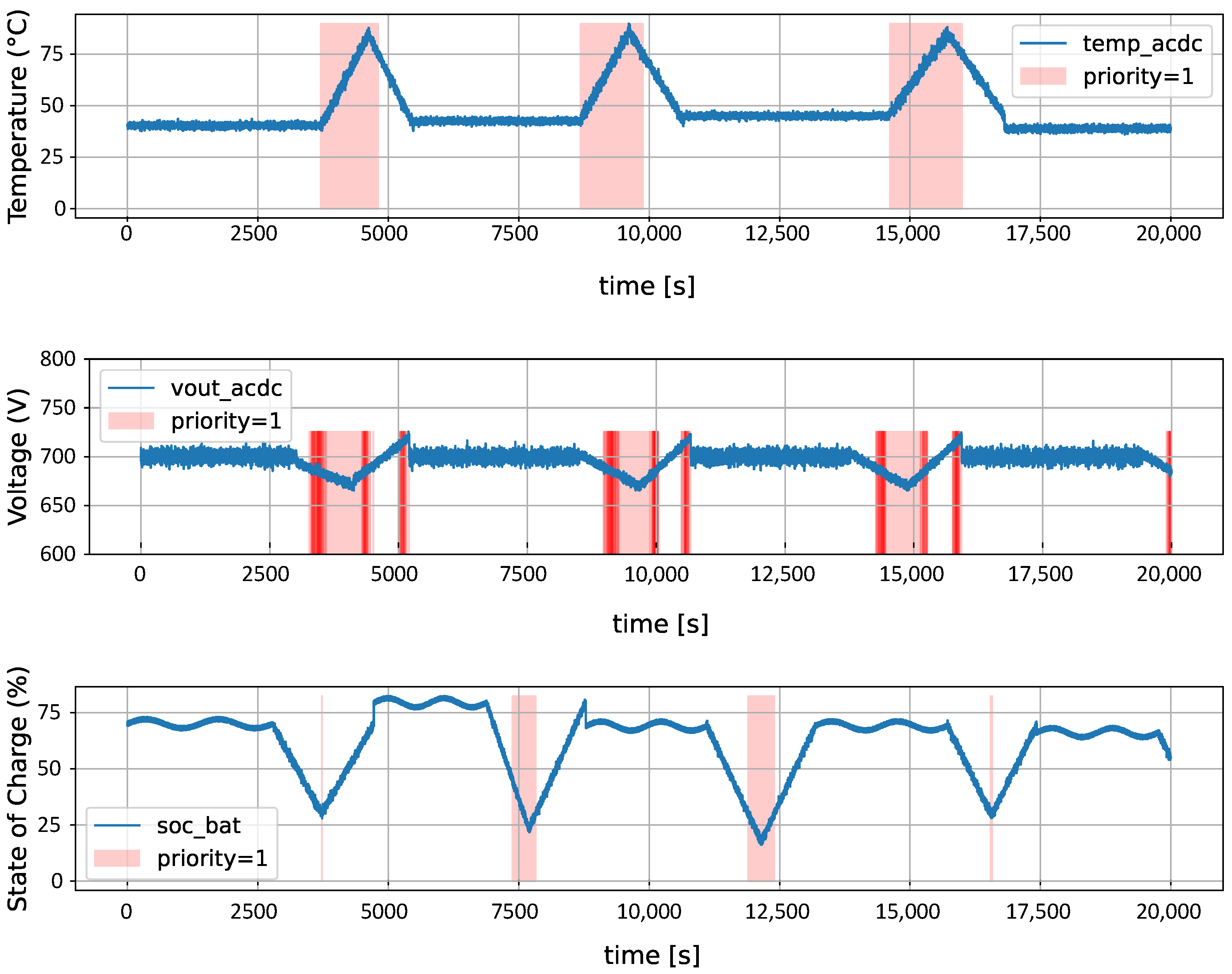

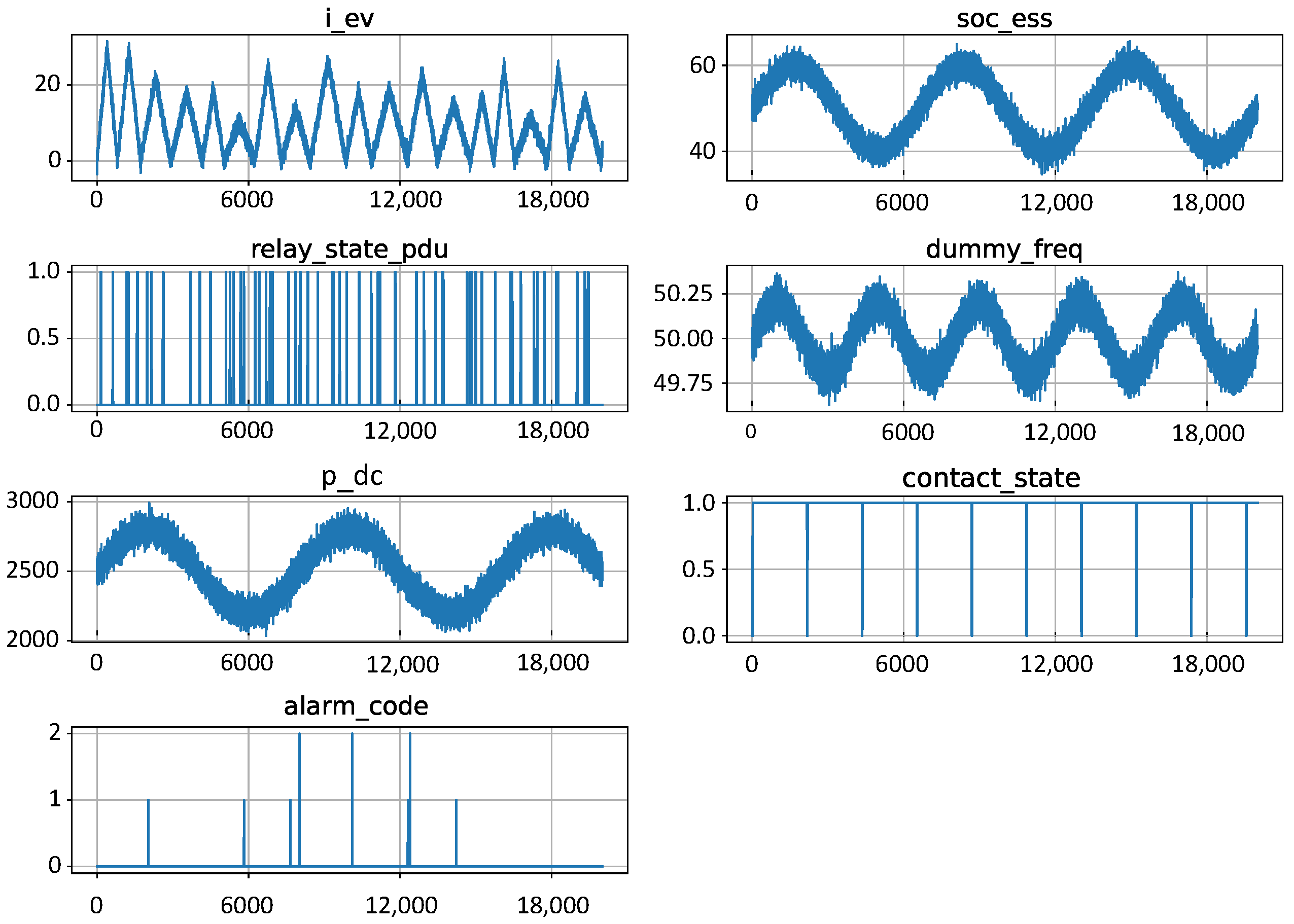

A total of ten representative signals were simulated, covering temperature, voltage, state of charge, current, and discrete status indicators. Among them, three parameters (temp_acdc, vout_acdc, and soc_bat) were designed to exhibit critical dynamic behavior. Specifically, temp_acdc represents the internal temperature of the AC/DC converter, and vout_acdc corresponds to its output voltage. Meanwhile, soc_bat indicates the state of charge of the energy storage system (ESS). Initial rules for labeling priority events (label = 1) combined fixed thresholds and time-domain patterns to reflect domain-informed expectations of abnormal operation.

Signals were first sampled at 1 Hz over a 5.56 h interval, resulting in 20,000 time steps per parameter. Controlled variability in amplitude, slope duration, noise level, and event timing was introduced to enhance realism. However, preliminary training revealed that the initial labeling logic was insufficiently consistent for certain signals. In particular, priority events in temp_acdc and soc_bat lacked clearly learnable patterns, leading to poor generalization and degraded classification performance.
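The kind of generator described here can be sketched as follows. This is an assumed, simplified example for a temperature-like signal: the baseline, noise level, event probability, event length, and slope are hypothetical values chosen for illustration and are not the parameters of the paper's simulation framework.

```python
import random


def generate_temperature(n_samples=20_000, base=45.0, noise_std=0.3,
                         event_prob=0.001, event_len=60, event_slope=0.25,
                         seed=0):
    """Generate a 1 Hz temperature-like trace with occasional sustained rises."""
    rng = random.Random(seed)  # seeded for reproducibility
    samples = []
    offset = 0.0     # deviation from the baseline
    remaining = 0    # samples left in the current event
    for _ in range(n_samples):
        if remaining == 0 and rng.random() < event_prob:
            remaining = event_len        # start a sustained rise
        if remaining > 0:
            offset += event_slope        # ramp up during the event
            remaining -= 1
        else:
            offset *= 0.98               # relax back toward the baseline
        samples.append(base + offset + rng.gauss(0.0, noise_std))
    return samples


trace = generate_temperature()
duration_hours = len(trace) / 3600  # 1 Hz sampling: seconds to hours
```

At 1 Hz, 20,000 samples correspond to 20,000 s, that is, about 5.56 h, which matches the interval stated above.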

To address this, the labeling logic for these signals was refined to reflect more robust event semantics. New rules enforced better alignment between signal behavior (e.g., sustained rises or drops) and priority activation, ensuring that abnormal trends were properly flagged. Subsequently, additional datasets were generated to improve training diversity and address class imbalance.

First, a 100,000-sample dataset was created, increasing the number of priority-labeled instances and aiding convergence. Then, a 200,000-sample version was prepared, preserving signal realism while further improving the frequency of meaningful events. Finally, a 1,000,000-sample dataset was generated using the revised logic. This version became the definitive source for training the final ML models evaluated in this work.

Each dataset was preprocessed using a feature extraction script that computed signal-specific indicators such as absolute and relative differences, rolling statistics (mean, standard deviation, range), and normalized values. These values were derived based on known physical limits. A binary priority_label column was derived for each sample to serve as ground truth.

Training subsets such as 4p_2prio, 6p_1prio, and 10p_2prio were created by selecting subsets of parameters and applying a logical OR across their labels. For example, the 4p_2prio configuration includes four parameters, two of which (temp_acdc and vout_acdc) are labeled as priority. The other configurations follow the same logic, varying in the number of total and prioritized signals. However, following validation tests and error analysis, the study shifted toward individual model training per prioritized parameter, further improving classification consistency and minimizing inter-signal label noise.
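The logical OR used to build the combined label of a subset can be sketched as below. The function name and the four-sample label columns are hypothetical and serve only to show the operation.

```python
def combine_priority_labels(label_columns):
    """Element-wise logical OR across per-parameter label columns (lists of 0/1)."""
    lengths = {len(col) for col in label_columns.values()}
    if len(lengths) != 1:
        raise ValueError("all label columns must have the same length")
    return [int(any(row)) for row in zip(*label_columns.values())]


# Assumed toy example for a configuration with two prioritized parameters.
labels_4p_2prio = combine_priority_labels({
    "temp_acdc": [0, 1, 0, 0],
    "vout_acdc": [0, 0, 1, 0],
})
# labels_4p_2prio == [0, 1, 1, 0]
```

A combined label of 1 no longer indicates which signal triggered it, which is one way to read the inter-signal label noise that motivated the move to one model per prioritized parameter.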

This progressive refinement of the dataset, both in terms of sample volume and label quality, was essential to ensure the learnability and generalization capabilities of the proposed models. The next section describes the training strategy and validation procedure used to assess classifier performance across different parameter configurations and scenarios.

3.6. Model Training and Selection

Each generated dataset was used to train three classification models: RF, DT, and MLP. Two complementary training procedures were applied. First, an 80–20 holdout split was used to obtain initial performance estimates. Each model was evaluated using accuracy, precision, recall, and F1-score metrics. A threshold calibration process was conducted for real-time deployment, where thresholds ranging from 0.10 to 0.90 (in steps of 0.01) were tested, and the threshold maximizing the F1-score was selected for deployment on the RevPi5 gateway.
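The threshold calibration step can be sketched as a simple grid search. The code below is a plain-Python illustration under the stated range and step. The function names are hypothetical, the toy scores are made up, and ties are resolved in favor of the lowest threshold, which is an assumption rather than a documented choice.

```python
def f1_score(y_true, y_pred):
    """F1-score for the positive class (label 1)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def calibrate_threshold(y_true, scores, start=0.10, stop=0.90, step=0.01):
    """Return (threshold, F1) maximizing F1 over the grid start..stop."""
    best_threshold, best_f1 = start, -1.0
    n_steps = round((stop - start) / step)
    for i in range(n_steps + 1):
        threshold = round(start + i * step, 2)  # avoid floating-point drift
        y_pred = [1 if s >= threshold else 0 for s in scores]
        score = f1_score(y_true, y_pred)
        if score > best_f1:                     # keep the first (lowest) maximizer
            best_threshold, best_f1 = threshold, score
    return best_threshold, best_f1


# Toy example: predicted probabilities of the high-priority class.
toy_true = [0, 0, 0, 1, 1]
toy_scores = [0.05, 0.20, 0.35, 0.40, 0.80]
toy_threshold, toy_f1 = calibrate_threshold(toy_true, toy_scores)
```

In practice the scores would be the positive-class probabilities produced by the trained classifier on held-out data, so that the selected threshold is not tuned on the training samples.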

Second, a five-fold stratified cross-validation procedure was conducted to evaluate generalization. All features were reused per fold, and the mean ± standard deviation of each metric was recorded. F1-score values were visualized using comparative bar charts. This dual strategy, combining holdout validation and cross-validation, ensured both robust model selection and repeatable performance evaluation.

Based on these results, individual MLP models trained with up to 1,000,000 samples per prioritized signal were selected as the most suitable option. Although the RF model achieved slightly better scores in certain configurations, the MLP offered significantly superior inference efficiency on the RevPi5 hardware. Its favorable balance between accuracy and computational cost motivated its exclusive adoption as the default inference engine for all subsequent scenario-based simulations.

Latency analysis considered two scenarios. First, inference-only, which measures only classifier prediction time. Second, end-to-end (E2E), which includes the overhead introduced by feature extraction.

The E2E case was evaluated in vectorized (batch) and incremental (sample-by-sample) modes. The latter best reflects real-time operation on embedded gateways. Results under both conditions are presented in Section 4.6.1.
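The difference between inference-only and incremental end-to-end timing can be sketched as follows. The feature extractor and the classifier below are trivial stand-ins (assumptions), not the trained models; only the timing structure is of interest.

```python
import time
from collections import deque


def p95(values):
    """95th percentile using the nearest-rank method."""
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) in integer math
    return ordered[max(rank, 1) - 1]


def extract_features(window):
    """Stand-in feature extractor: last value, rolling mean, window delta."""
    mean = sum(window) / len(window)
    return (window[-1], mean, window[-1] - window[0])


def predict(features):
    """Stand-in classifier (a trained model would be called here)."""
    return int(features[0] > 70.0)


def benchmark_incremental(stream, window=10):
    """Return (p95 inference-only, p95 end-to-end) latency in microseconds."""
    buf = deque(maxlen=window)
    infer_us, e2e_us = [], []
    for x in stream:
        t0 = time.perf_counter()
        buf.append(x)
        feats = extract_features(buf)   # counted only in the E2E figure
        t1 = time.perf_counter()
        predict(feats)                  # counted in both figures
        t2 = time.perf_counter()
        infer_us.append((t2 - t1) * 1e6)
        e2e_us.append((t2 - t0) * 1e6)
    return p95(infer_us), p95(e2e_us)


stream = [40.0 + 0.05 * k for k in range(1000)]
p95_infer, p95_e2e = benchmark_incremental(stream)
print(f"p95 inference-only: {p95_infer:.1f} us, p95 E2E: {p95_e2e:.1f} us")
```

In the vectorized mode, features for the whole trace would be computed in one batch and the total time divided by the number of samples, which amortizes per-call overhead.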

3.7. Simulation Scenarios

To validate the effectiveness of the proposed MLP-based prioritization strategy, a set of targeted simulation scenarios was designed. Each scenario varies in the number of monitored parameters and the number of signals to be prioritized. These configurations reflect representative use cases in industrial ICS environments, where communication load and signal diversity must be balanced in real time.

All simulations were carried out using the final MLP models trained with up to 1,000,000 samples per prioritized signal. For each case, the gateway emulation applied dynamic prioritization using real-time inference. It then compared the output against the static ground-truth labels defined during dataset generation.

All simulations were executed on a Debian 12 (Bookworm) Linux environment running inside an LXC on a Proxmox host. Python 3.11.2 was used together with NumPy [28], Pandas [29], and scikit-learn [30] for dataset generation, feature extraction, and model training. Synthetic signals were generated at a sampling rate of 1 Hz to emulate realistic field-device telemetry, producing datasets of up to 1,000,000 samples per parameter.

The solver relied on the standard scikit-learn implementations of RF, DT, and MLP, with the latter selected for deployment due to its balance of accuracy and inference efficiency. Although the long-term objective is deployment on a RevPi5 gateway in the SHIFT2DC demonstrators, all results reported here were obtained in this controlled Linux-based simulation environment.

The specific simulation scenarios and their detailed configurations are summarized in Table 1, which is presented at the beginning of Section 4.

4. Results

The effectiveness of the proposed ML-based data prioritization mechanism was evaluated through simulation experiments. These were designed to emulate realistic edge-level communication between an EMS and multiple field devices using the MODBUS TCP protocol. Signal traces used for these simulations were based on physically plausible telemetry patterns, incorporating both normal and critical operating conditions. These included temperature, voltage, current, and state of charge measurements, among others.

As previously described in the methodology, multiple datasets were generated with increasing volume and signal complexity to enable robust classifier training. Although the generation process and feature extraction steps were detailed earlier, the present section focuses on the analysis of classification behavior, signal-level labeling dynamics, and model performance across several configurations. The emphasis is on visualizing the labeling logic, comparing the classifier results, and demonstrating how the size of the training dataset affects the generalization capacity of each model.

Although the simulation framework supports the injection of stochastic jitter to emulate industrial network conditions, no timing variations were applied during the final scenario evaluations. This choice is justified by the fact that all ML models were trained using data sampled at a fixed 1 Hz interval, with no temporal jitter introduced during training. Since the classifiers rely solely on signal-derived features and not on time-based indicators, the presence or absence of jitter has no measurable effect on prediction accuracy. Therefore, all scenarios were executed with uniform sampling to ensure consistency, reproducibility, and isolation of model behavior.

Only the MLP-based dynamic prioritization strategy was applied in the evaluated scenarios. As described in Section 3, the final deployment architecture uses one independently trained MLP model per prioritized parameter. Each model was trained using a dedicated feature set specifically designed to capture the dynamic characteristics of its target signal. The use of separate classifiers minimizes inter-signal noise and enhances detection robustness across diverse operating conditions.
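A minimal sketch of the one-model-per-parameter arrangement is given below. The `SignalModel` container, the stand-in scoring functions, and the threshold values are hypothetical; in the actual system each `predict_proba` would wrap a trained MLP and each `extract` would compute the dedicated feature set of that signal.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Sequence


@dataclass
class SignalModel:
    extract: Callable[[Sequence[float]], Sequence[float]]  # per-signal features
    predict_proba: Callable[[Sequence[float]], float]      # stand-in for an MLP
    threshold: float                                       # calibrated cut-off


def classify_cycle(models: Dict[str, SignalModel],
                   history: Dict[str, Sequence[float]]) -> Dict[str, int]:
    """Run every per-signal model once and return its binary priority flag."""
    flags = {}
    for name, model in models.items():
        features = model.extract(history[name])
        flags[name] = int(model.predict_proba(features) >= model.threshold)
    return flags


# Hypothetical stand-ins: each scorer only looks at its own signal.
models = {
    "temp_acdc": SignalModel(
        extract=lambda h: (h[-1], h[-1] - h[0]),
        predict_proba=lambda f: 1.0 if f[0] > 70.0 else 0.0,
        threshold=0.5,
    ),
    "soc_bat": SignalModel(
        extract=lambda h: (h[-1], h[-1] - h[0]),
        predict_proba=lambda f: 1.0 if f[0] < 40.0 else 0.0,
        threshold=0.5,
    ),
}
history = {"temp_acdc": [68.0, 70.5, 72.0], "soc_bat": [55.0, 54.8, 54.5]}
flags = classify_cycle(models, history)
print(flags)
```

Because each model sees only its own history, adding or removing a prioritized parameter does not require retraining the others.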

The following sections analyze classification accuracy, prioritization behavior, and real-time execution metrics across all simulated scenarios. Particular attention is given to the detection of critical signal events, latency of inference, and computational efficiency of the embedded prioritization mechanism.

Table 1 provides a summary of all simulation scenarios evaluated, their main parameters, and links to the corresponding subsections of the results and figures for rapid reference. These scenarios are discussed in order of increasing system complexity and signal concurrency in the following sections.

To contextualize these scenarios, we begin by presenting the signal generation method and the logic used to assign priority labels.

4.1. Synthetic Signal Generation and Priority Labeling

To evaluate the effectiveness of the proposed ML-based prioritization strategy, we generated synthetic training signals using domain-informed dynamics. These signals emulate the expected behavior of monitored MODBUS parameters in a representative LVDC environment, covering both nominal and fault conditions. Three key parameters (temperature and voltage of the AC/DC converter, and the state of charge (SoC) of the main battery) were modeled with realistic patterns and explicit priority labeling rules. The remaining parameters, characterized by low variability, were excluded from the prioritization process.

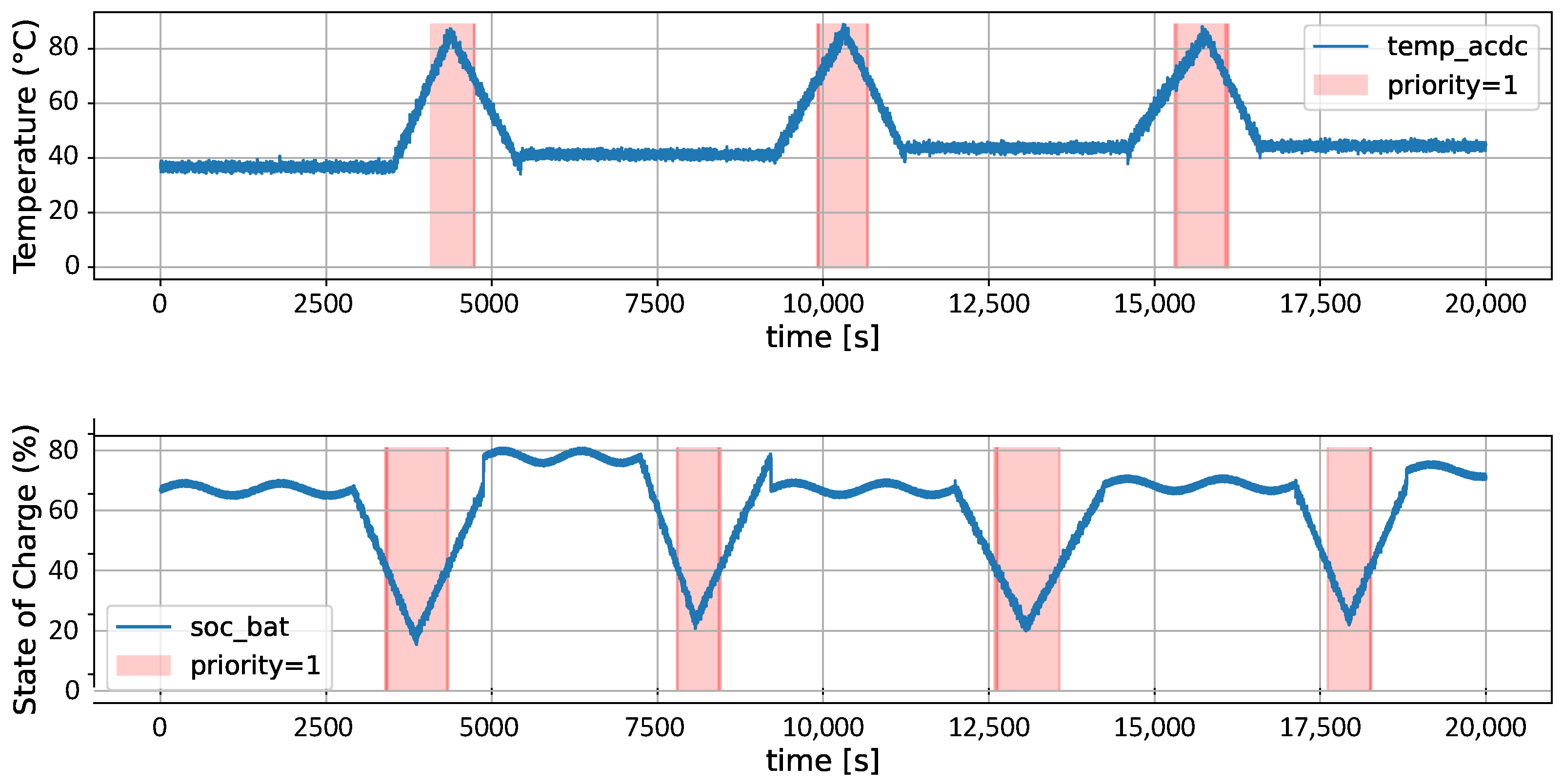

Each signal comprises 20,000 samples recorded at 1 Hz, corresponding to over 5 h of continuous operation. During this period, the evolution of the signal value was used to compute derived features (e.g., rolling average, standard deviation, out-of-range flags). A binary priority label was then assigned for training the classifier.
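The derived features mentioned above can be sketched with a fixed-length sliding window. The window length, the range limits, and the function name are illustrative assumptions rather than the values used in the study.

```python
from collections import deque
from statistics import fmean, pstdev


def rolling_features(samples, window=5, low=None, high=None):
    """Compute per-sample rolling mean, rolling std, and an out-of-range flag."""
    buf = deque(maxlen=window)  # keeps only the most recent `window` samples
    rows = []
    for x in samples:
        buf.append(x)
        out_of_range = (low is not None and x < low) or (high is not None and x > high)
        rows.append({
            "value": x,
            "roll_mean": fmean(buf),
            "roll_std": pstdev(buf),
            "out_of_range": int(out_of_range),
        })
    return rows


# Short temperature-like trace with one excursion above 70 (illustrative).
rows = rolling_features([40.0, 41.0, 42.0, 75.0], window=3, high=70.0)
for row in rows:
    print(row)
```

Since the buffer only ever holds the last few samples, the same routine can run sample by sample on a gateway without storing the full trace.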

Table 2 summarizes the criteria used to assign high-priority labels (label = 1) to each primary parameter. These rules combine static thresholds with temporal dynamics, allowing the detection of both abrupt and gradual events indicative of upcoming critical conditions.

Figure 4 illustrates the resulting time series for the three prioritized parameters: temperature (T) and voltage (V) of the AC/DC converter, and the state of charge (SoC) of the main battery, together with their respective high-priority intervals (red bands).

Note that in this context, the main battery refers to a single storage unit monitored for critical events. In contrast, the state of charge of the energy storage system (ESS), included among the non-prioritized parameters (Figure 5), refers to the aggregate charge level of the entire storage system. Each signal is generated according to the rules described in Table 2, providing representative examples of both nominal and critical operation.

The remaining signals exhibit low-variability behavior and are therefore excluded from the training of priority classifiers. Nonetheless, their inclusion ensures the dataset reflects realistic telemetric scenarios, encompassing typical status, measurement, and communication variables encountered in operational LVDC environments.

Figure 5 shows representative examples.

4.2. Cross-Validation Analysis of Physically Aware Models

After defining physically interpretable criteria for signal prioritization (see

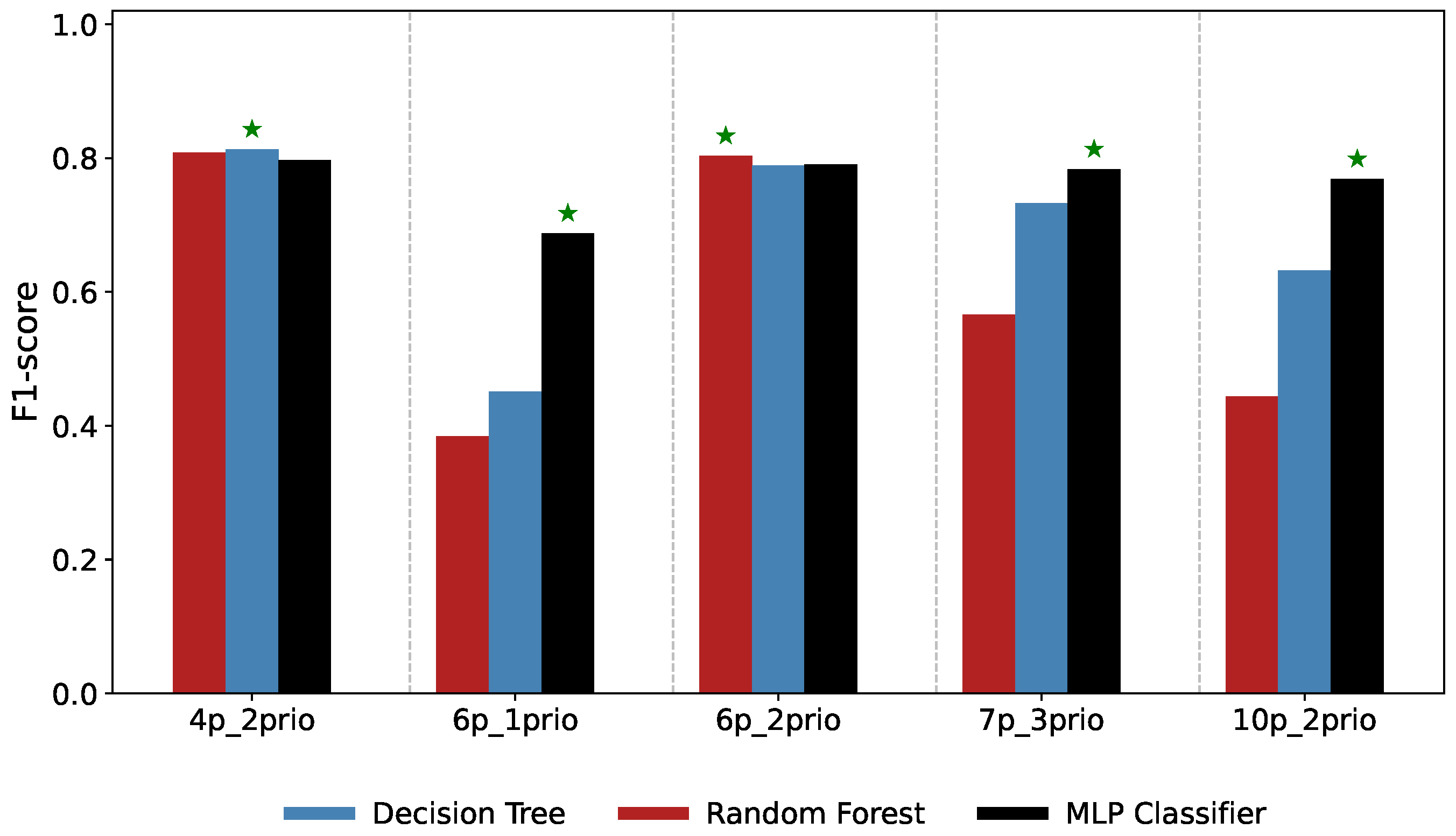

Section 4.5), we evaluated the performance of three ML classifiers (RF, DT, and MLP) across several prioritization configurations. These sets of parameters varied in the total number of monitored parameters (from 4 to 10) and in the number of signals prioritized simultaneously (from 1 to 3).

We performed five-fold stratified cross-validation on realistic datasets containing 200,000 samples to compute accuracy, precision, recall, and the F1-score. The $F_\beta$ score is a weighted harmonic mean of precision ($P$) and recall ($R$), defined as

$$F_\beta = (1 + \beta^2)\,\frac{P \cdot R}{\beta^2 P + R},$$

where $\beta$ controls the relative importance of recall with respect to precision. The F1-score ($\beta = 1$) equally weights precision and recall and is the most relevant case for our evaluation, as it balances false positives (precision) and false negatives (recall) [31].
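For reference, the standard weighted harmonic mean of precision and recall can be evaluated with a few lines of Python. The function name is illustrative; the numerical check uses the precision and recall reported for Scenario A1 later in this section.

```python
def f_beta(precision, recall, beta=1.0):
    """F_beta = (1 + beta^2) * P * R / (beta^2 * P + R); 0.0 if undefined."""
    b2 = beta * beta
    denom = b2 * precision + recall
    return (1 + b2) * precision * recall / denom if denom else 0.0


# Precision and recall reported for temp_acdc in Scenario A1.
f1_a1 = f_beta(0.988, 0.994)
print(f"F1 = {f1_a1:.3f}")

# beta > 1 weights recall more heavily, beta < 1 favors precision.
print(f"F2 = {f_beta(0.988, 0.994, beta=2.0):.3f}")
```

With $\beta = 1$ the expression reduces to $2PR/(P+R)$, which reproduces the F1-score of 0.991 quoted for Scenario A1.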

Table 3 reports the average performance across all folds for each configuration and classifier. The results reflect how each model responds to the increasing complexity of the input and the number of signals simultaneously prioritized. To facilitate comparison, the best F1-score for each configuration is highlighted in bold.

As shown in Figure 6, the MLP classifier achieves the highest F1-score in most configurations, specifically in 4p_2prio, 6p_2prio, and 7p_3prio. This confirms the strong generalization capability of the MLP model when trained on large, physically guided datasets. The capability holds even as the number of monitored signals and simultaneous priorities increases. The DT model performs slightly better than the MLP in 6p_1prio and 10p_2prio, highlighting its potential as a computationally efficient and robust alternative for edge deployments.

Overall, the MLP classifier provides the most balanced trade-off between precision and recall, making it a suitable choice for deployment in industrial embedded environments. The DT classifier also shows consistent performance across all scenarios, with lower complexity. In contrast, the RF classifier generally underperforms in terms of F1-score, primarily due to low precision in multi-signal scenarios, despite its good recall. These results emphasize the importance of selecting the appropriate model according to the application constraints and available edge computing resources.

All classifiers (RF, DT, and MLP) may benefit from adjusting the decision threshold used during inference. The presented results rely on a default threshold of 0.5, but further evaluations revealed that tuning this parameter can significantly improve the balance between precision and recall, especially in imbalanced scenarios or for signals with infrequent priority events. This aspect is explored in more detail in the following subsection.

4.3. Limitations of Multi-Signal Models and Methodological Refinement

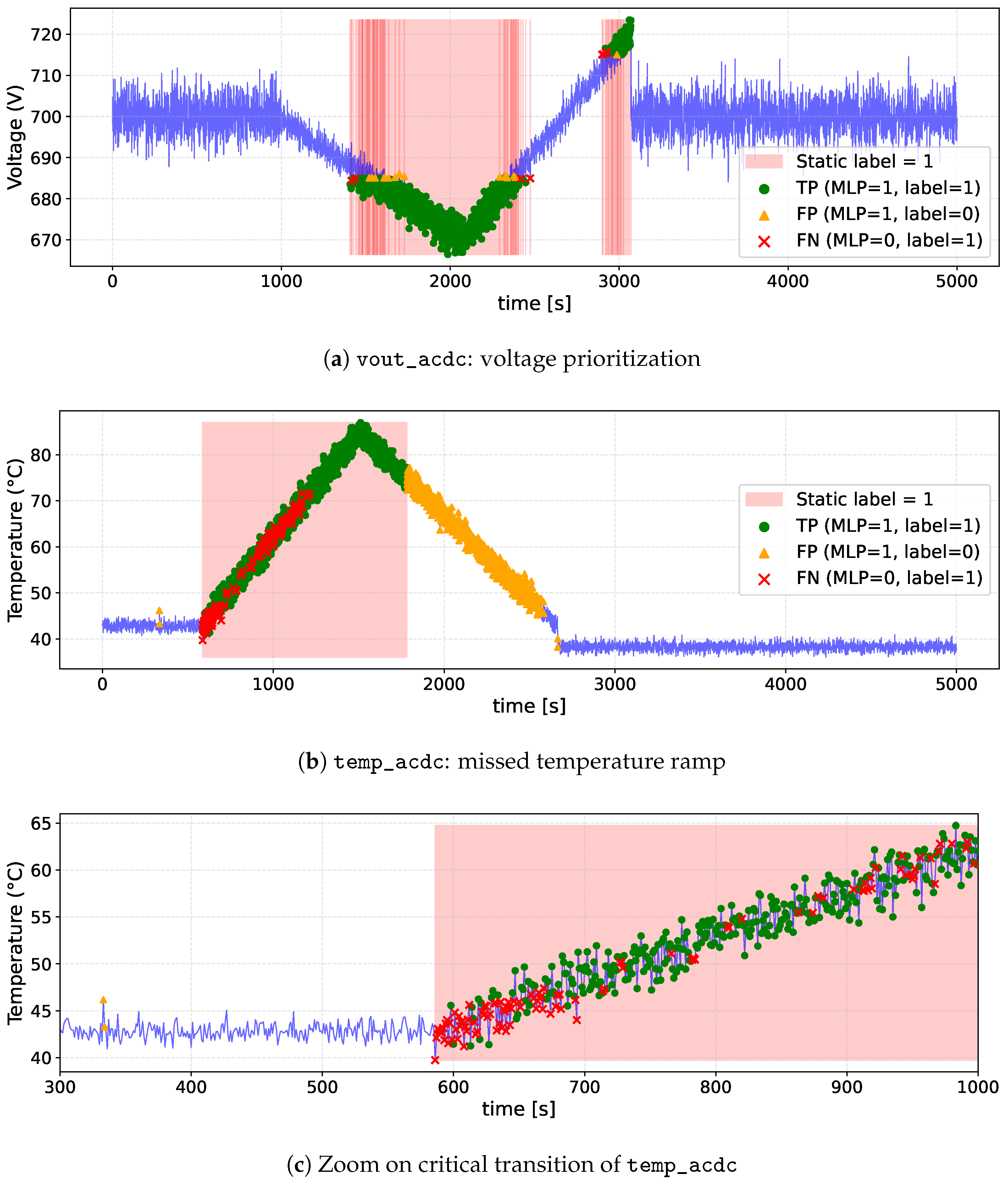

Despite promising average metrics, a detailed analysis of individual outputs revealed important limitations when using multi-signal models. For instance, the MLP trained with multiple prioritized parameters (e.g., configuration 6p_2prio, 200,000 samples) successfully detected abrupt voltage excursions in vout_acdc, but often failed to generalize to more dynamic behaviors, such as rapid temperature ramps or gradual battery discharges.

Figure 7 illustrates three representative views for the multi-signal MLP model: (a) correct prioritization of under- and overvoltage events in vout_acdc; (b) missed detection of a temperature ramp in temp_acdc; and (c) a zoom on the critical transition of (b), where clustered false negatives (red) are observed. In (a), the true positives (green) align with the reference high-priority intervals, while in (b)–(c), the model fails to capture the rapid slope change despite the overall correct detection in the stable regions.

These results suggested that training a single model to handle multiple signals simultaneously introduces ambiguity, especially when signals differ in dynamics and thresholds. While suitable for parameters with sharp transitions like vout_acdc, this approach led to reduced sensitivity in more nuanced cases like temp_acdc and soc_bat.

These limitations highlighted the need for a more tailored approach, motivating the transition from a multi-signal model to dedicated classifiers for each prioritized signal.

To overcome these limitations, we refined the modeling strategy by training a dedicated MLP for each prioritized signal. Each model was trained using tailored feature sets and ground-truth labels that reflected the specific dynamics of the corresponding parameter. Although this individualized approach improved consistency, generalization issues persisted in certain cases. This happened despite using enriched features and expanding the datasets up to 1,000,000 samples.

This outcome highlighted a fundamental limitation: scaling data and features alone cannot resolve poor generalization when the labeling criteria lack physical interpretability. Ultimately, robust performance was only achieved after simplifying and redefining the priority labeling rules using clear, physically grounded thresholds and ramp-based activation logic. The effectiveness of this final methodological refinement is demonstrated in the next section through updated validation metrics and comparative figures.

4.4. Intermediate Approach: Individual Models with Original Priority Criteria

After observing the generalization limitations of multi-signal models, we trained dedicated MLP classifiers for each prioritized parameter (temp_acdc, vout_acdc, and soc_bat). Each model was trained using only the features and labels relevant to its corresponding signal to eliminate interference from unrelated signals.

To support this individualized training strategy, a customized feature set was defined for each parameter based on its physical behavior. While all signals initially shared a common feature pool, further analysis revealed that specific dynamics, such as temperature ramps or battery SoC drops, required tailored features to capture directional trends. As summarized in Table 4, temp_acdc and soc_bat incorporated slope-based indicators and trend flags, while vout_acdc retained a delta-oriented set suitable for its sharper transitions.
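Slope-based indicators and trend flags of the kind listed in Table 4 could be computed as in the sketch below, which fits a least-squares line over a sliding window. The window length and the dead band `eps` are hypothetical values chosen for illustration.

```python
def rolling_slope(values, window=10, dt=1.0):
    """Least-squares slope (units per second) over the last `window` samples."""
    slopes = []
    for i in range(len(values)):
        seg = values[max(0, i - window + 1): i + 1]
        n = len(seg)
        if n < 2:
            slopes.append(0.0)  # slope is undefined for a single sample
            continue
        t_mean = (n - 1) * dt / 2
        y_mean = sum(seg) / n
        num = sum((k * dt - t_mean) * (y - y_mean) for k, y in enumerate(seg))
        den = sum((k * dt - t_mean) ** 2 for k in range(n))
        slopes.append(num / den)
    return slopes


def trend_flags(slopes, eps=0.01):
    """Return (rising, falling) flags, ignoring slopes inside the dead band."""
    return [(int(s > eps), int(s < -eps)) for s in slopes]


# Temperature-like ramp of 0.5 units per sample at 1 Hz (illustrative).
ramp = [40.0 + 0.5 * k for k in range(20)]
slopes = rolling_slope(ramp)
flags = trend_flags(slopes)
print(slopes[-1], flags[-1])
```

A delta-oriented feature, as used for vout_acdc, would instead take the difference between consecutive samples, which reacts faster to step-like excursions.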

To further enhance generalization, the dataset size was increased from 200,000 to 1,000,000 samples. At the same time, engineered features were introduced to capture signal dynamics (e.g., rolling slopes, rising/falling flags). However, while cross-validation metrics improved modestly, real-world simulations still revealed systematic failures in detecting critical events, particularly for temp_acdc and soc_bat.

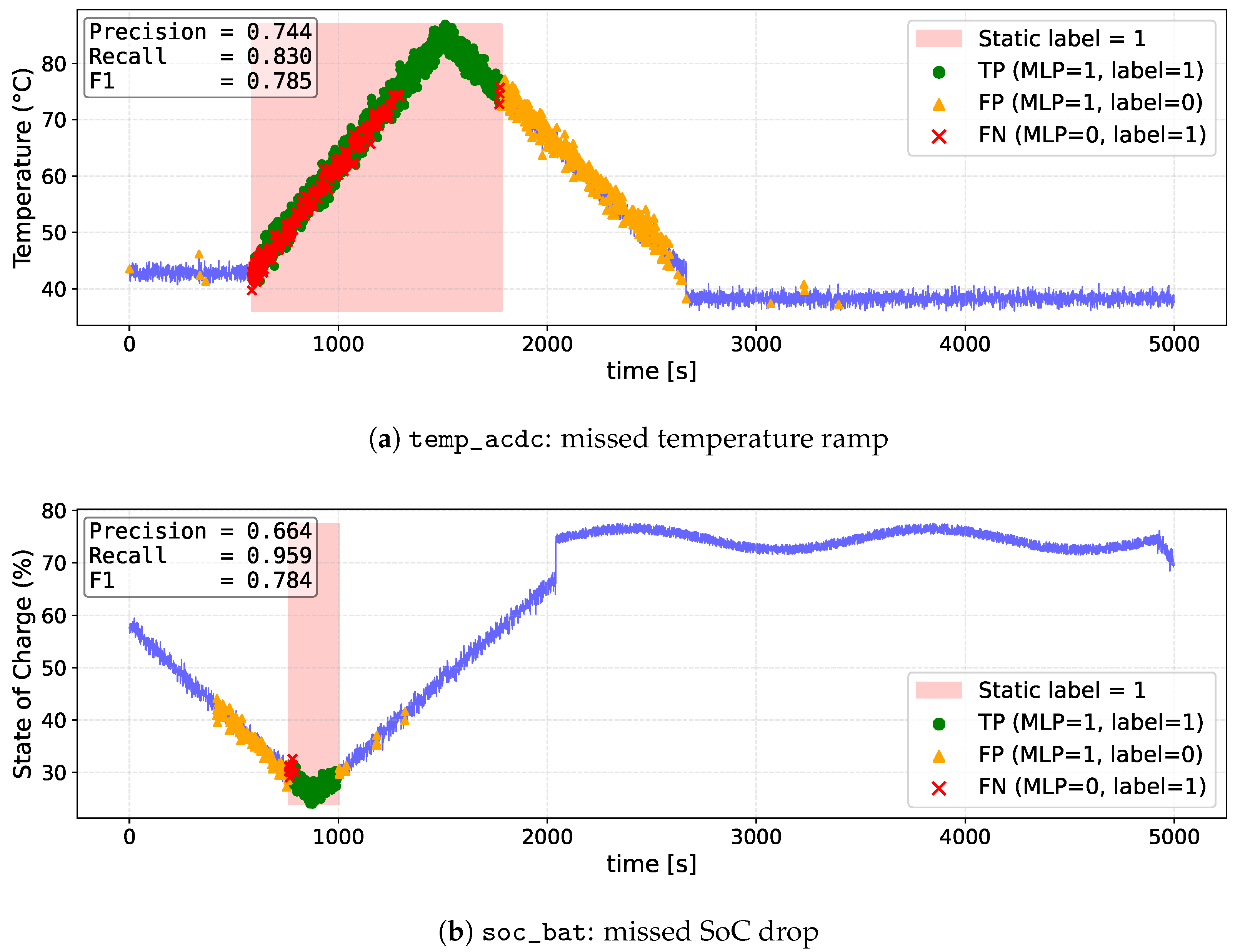

Figure 8 shows representative 5000-sample segments from real signal traces not included in the training set, highlighting persistent generalization gaps.

As shown in Table 5, individualized models achieved higher cross-validation scores after increasing both the size of the training set and the feature set. However, these improvements were insufficient to ensure reliable detection in realistic simulation scenarios.

Figure 8 shows typical missed detections for both temp_acdc and soc_bat, underscoring the need for more generalizable labeling rules.

These findings prompted a final methodological revision. Despite the expanded dataset and the individualized feature sets, the persistent simulation failures observed for temp_acdc and soc_bat confirmed that the root cause of the poor generalization was not model complexity or insufficient data. Instead, the original labeling criteria, although detailed, lacked transferability and failed to reflect physically robust activation patterns.

To resolve this, the labeling logic was fundamentally redefined using simplified, domain-informed thresholds and ramp-based transitions. As demonstrated in the next section, this final refinement significantly improved the precision and reliability of prioritization under realistic conditions.

4.5. Final Strategy: Priority Label Simplification and Feature Alignment

The results of the intermediate approach confirmed that better generalization could not be achieved through individualized feature engineering or larger datasets alone. Despite cross-validation gains, Figure 8 shows that temp_acdc and soc_bat still suffered systematic detection failures under realistic conditions, pointing to shortcomings in the original priority labeling rules rather than model complexity or input features.

To address this, the labeling strategy for temp_acdc and soc_bat was fundamentally revised using simplified and physically meaningful rules based on thresholds and ramp logic informed by domain knowledge.

Table 6 summarizes the revised rules. These updated labels proved far more robust across varied input scenarios and enabled the models to focus on detecting events of real operational relevance.

Figure 9 illustrates how this final labeling strategy was applied to the temperature (T) of the AC/DC converter and the SoC of the main battery during model training. The red regions indicate intervals where the priority label is active and correspond to physically meaningful operational conditions.

This final refinement led to consistent improvements in simulation outcomes and classifier responsiveness, particularly for the parameters that had previously suffered from low recall. The performance of the resulting models will be presented in the next section through scenario-based evaluations.

Table 7 summarizes the key steps in the modeling strategy.

4.6. Scenario-Based Evaluation of Priority Detection and Latency

To assess the deployment performance of the final models, a set of simulation scenarios was designed to replicate realistic industrial signal streams. Each scenario evaluates the classifier’s ability to detect and prioritize critical events across different signals and deployment configurations.

The simulations were executed using a Python-based MODBUS TCP server that replays pre-recorded signal fragments at a 1 Hz sampling rate. The server was originally implemented with support for variable communication jitter, allowing random delays in message delivery. However, experimental analysis revealed that jitter had no significant impact on the classification outcomes: the model processes static snapshots of the signal state at each time step, and feature computation depends on signal evolution rather than inter-arrival times. Fixed-step simulation was therefore used in all final evaluations to ensure consistency and enable fair performance comparison.

Nonetheless, jitter simulation is retained in the script and may be reintroduced in future real-time deployments. This is particularly relevant for tests involving the RevPi5 platform, where actual communication delays and buffering could affect system dynamics and prioritization behavior.
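The reason jitter leaves the classification unchanged can be illustrated with a simplified replay schedule. This sketch does not use a MODBUS library and is not the server script itself; the uniform jitter model and the function name are assumptions.

```python
import random


def replay_schedule(samples, period_s=1.0, jitter_s=0.0, seed=0):
    """Yield (delivery_time_s, value) pairs for a replayed signal fragment."""
    rng = random.Random(seed)
    for k, value in enumerate(samples):
        # Optional random delivery delay on top of the nominal 1 Hz grid.
        delay = rng.uniform(0.0, jitter_s) if jitter_s > 0 else 0.0
        yield k * period_s + delay, value


fragment = [700.0, 700.4, 684.2, 699.8, 716.1]
fixed = list(replay_schedule(fragment))
jittered = list(replay_schedule(fragment, jitter_s=0.2))

# Value-based features see the same sequence in both cases.
same_values = [v for _, v in fixed] == [v for _, v in jittered]
print(same_values)
```

Only features that depend on inter-arrival times would be affected by the perturbed timestamps, which is why jitter may matter again once real buffering and network delays are involved.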

All scenarios apply the individualized MLP classifiers trained using 1,000,000 samples per parameter, each configured with an optimal decision threshold obtained through cross-validation. The MLP architecture was selected for final deployment because it consistently balanced classification performance and low inference latency across all prioritized signals and simulation configurations.

The scenarios are organized as follows:

Scenarios A1–A3: Evaluate the isolated behavior of each individual model (temp_acdc, vout_acdc, and soc_bat) during realistic priority events. For each case, the predicted priority signal is compared to the ground-truth labels, and the average inference delay introduced by the MLP classifier is measured.

Scenario B1: Tests scalability and performance degradation as the number of monitored and prioritized signals increases, simulating concurrent classification across multiple parameters.

To highlight the generalization improvement, we compared the MLP classifier in two settings: (i) multi-signal training with 200,000 samples (temp_acdc and vout_acdc from 6p_2prio, and soc_bat from 7p_3prio), and (ii) individualized training with 1,000,000 samples per signal. Table 8 summarizes the F1-scores from cross-validation in both cases.

These results confirm that the simplified labeling rules (see Table 6) effectively reduced the ambiguity, allowing each per-signal model to generalize robustly. This refinement eliminated the missed-event issue reported in early experiments, particularly for temp_acdc and soc_bat, showing that the gain was not only due to larger training sets but also to better prioritization criteria.

Classical baselines such as Support Vector Machine (SVM), K-Nearest Neighbors (KNN), or Logistic Regression were not retained because the prioritization problem was formulated as binary classification and simpler models already performed very well. In particular, DT and MLP achieved excellent generalization with sub-millisecond inference times, and MLP provided the best trade-off between accuracy and latency, making additional baselines less informative for this study.

All experiments reported in this paper were conducted in simulation. This controlled setup enabled reproducibility and precise evaluation of critical conditions (ramps, noise, hysteresis, jitter) during model development. Hardware-in-the-Loop (HIL) and small-scale validation are already planned within the SHIFT2DC demonstrators, where the trained models will be deployed on RevPi5 gateways connected to real AC/DC converters, ESS units, and EV chargers.

4.6.1. Classifier Latency Comparison (RF, DT, MLP)

Before presenting the scenario-based results, we report a comparative analysis of the three candidate classifiers (RF, DT, MLP) in terms of inference latency. This complements the accuracy-based selection criteria summarized in Table 3 (see also Figure 6), providing the quantitative trade-off between F1-score and latency.

Table 9 reports the 95th-percentile per-sample latency across all prioritized signals. Three conditions are distinguished: inference-only (classifier prediction only), end-to-end vectorized (batch processing, including feature extraction), and end-to-end incremental (streaming, including feature extraction).

The results show that DT achieves the lowest incremental latencies (≈200 µs), while MLP remains within the same order of magnitude (≈270–300 µs). In contrast, RF exhibits millisecond-range latencies (>5 ms), unsuitable for real-time deployment. Furthermore, as the size of the training set increased (from 200 k to 1 M samples), the MLP consistently improved its F1-score and generalization, whereas DT showed limited gains. Since MLP achieved the best trade-off between accuracy and latency (Table 3), it was adopted as the final model.

Inference latencies remained well below 1 ms per sample (Table 9) for the MLP classifier, representing less than 0.1% of the 1 Hz processing budget. As already discussed in Section 3.2, the target platform (RevPi5) is an industrial edge gateway with ample CPU and memory resources, and therefore, TinyML-specific optimizations were not essential for this study. Nonetheless, TinyML implementations remain a promising direction for future work, particularly for deployment on ultra-constrained microcontroller devices.

Beyond these per-model latency results, a theoretical breaking-point analysis was performed for the selected MLP classifier. The latency benchmarks were obtained in a Debian 12 LXC hosted on an Intel Xeon Silver 4214R server (2.4 GHz, 64 GB RAM), which provides significantly more resources than the target RevPi5 edge gateway.

With a measured p95 end-to-end latency of approximately 300 µs per sample (including feature extraction overhead), a single CPU core could in theory accommodate more than 1000 concurrent MLP instances within the 1 s processing budget of the 1 Hz MODBUS/TCP loop. However, considering the more constrained hardware characteristics of RevPi5 and the additional operating system overhead, a conservative estimate in the range of 100–500 concurrent models per core is more realistic. This confirms that the scalability margin of the proposed solution remains comfortably above the requirements of the SHIFT2DC demonstrators.
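The arithmetic behind this estimate can be reproduced with a short calculation. The `derating` factor is a hypothetical parameter introduced here to represent slower hardware and operating system overhead; it is not a measured quantity.

```python
def max_concurrent_models(p95_latency_s, budget_s=1.0, derating=1.0):
    """Number of sequential model evaluations that fit in one polling cycle."""
    return int(budget_s / (p95_latency_s * derating))


P95_E2E_LATENCY_S = 300e-6  # measured p95 end-to-end latency per sample

theoretical = max_concurrent_models(P95_E2E_LATENCY_S)            # 3333
derated = max_concurrent_models(P95_E2E_LATENCY_S, derating=10.0)  # 333

# Share of the 1 s budget used by the three models of the demonstrator case.
utilization = 3 * P95_E2E_LATENCY_S / 1.0

print(theoretical, derated, f"{utilization:.2%}")
```

A derating factor between roughly 7 and 33 maps the theoretical bound of about 3300 models onto the conservative range of 100–500 models per core quoted above.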

In all cases, inference was executed in real time to assess both classification performance and latency.

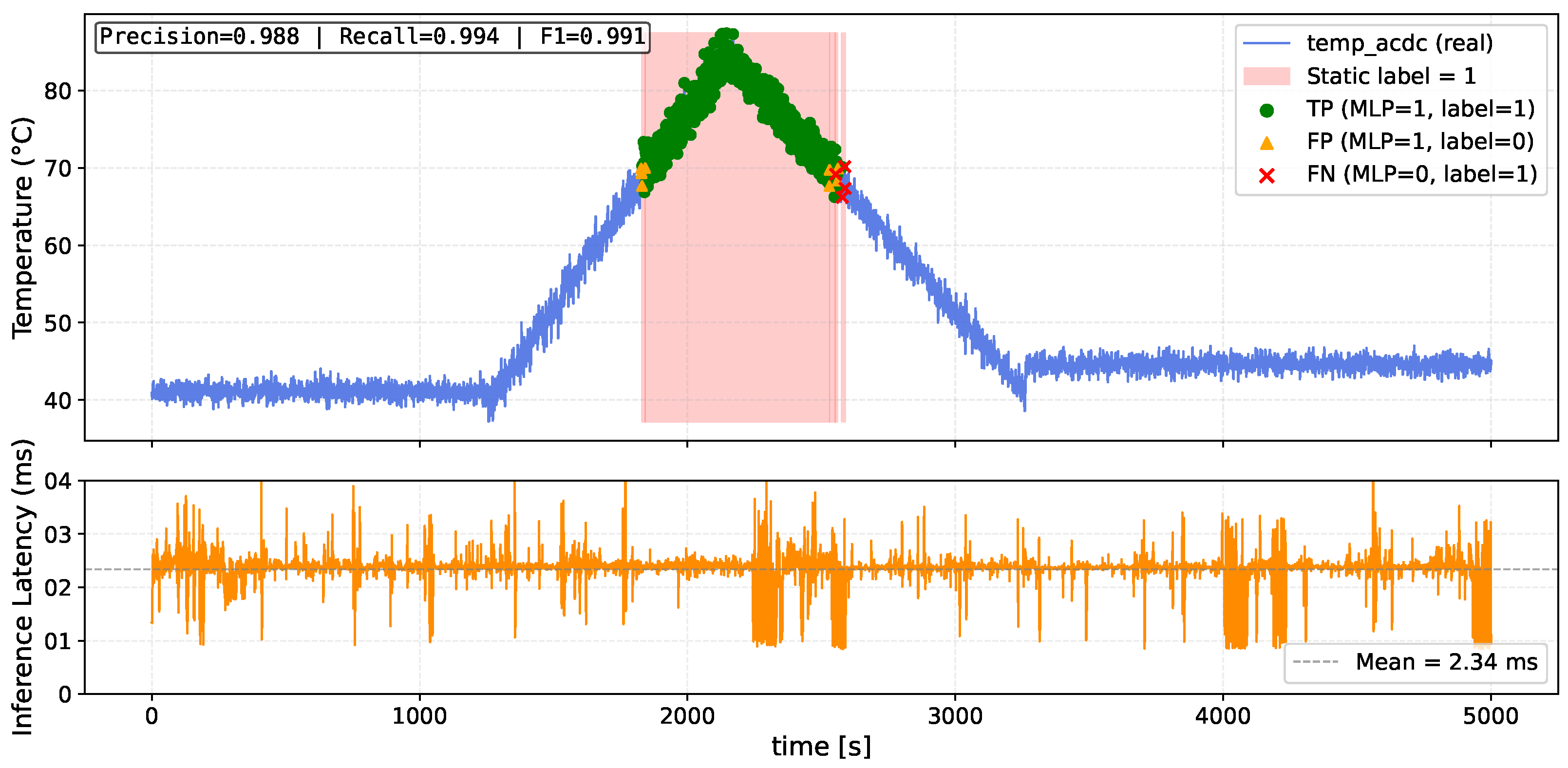

4.6.2. Scenario A1: Prioritization of temp_acdc

Scenario A1 evaluates the detection capability of the individualized MLP classifier for temp_acdc. This parameter exhibits gradual temperature increases, and the simulation tests whether the model can respond promptly to trend crossing thresholds. The corresponding results are shown in Figure 10.

The results confirm that the classifier effectively generalizes to unseen signal segments. As shown in Figure 10, most high-priority events are detected within a few milliseconds of their onset. The MLP model achieved an accuracy of 0.997, F1-score of 0.991, recall of 0.994, and precision of 0.988. The mean inference latency was 2.34 ms, supporting its deployment in edge-based ICS applications.

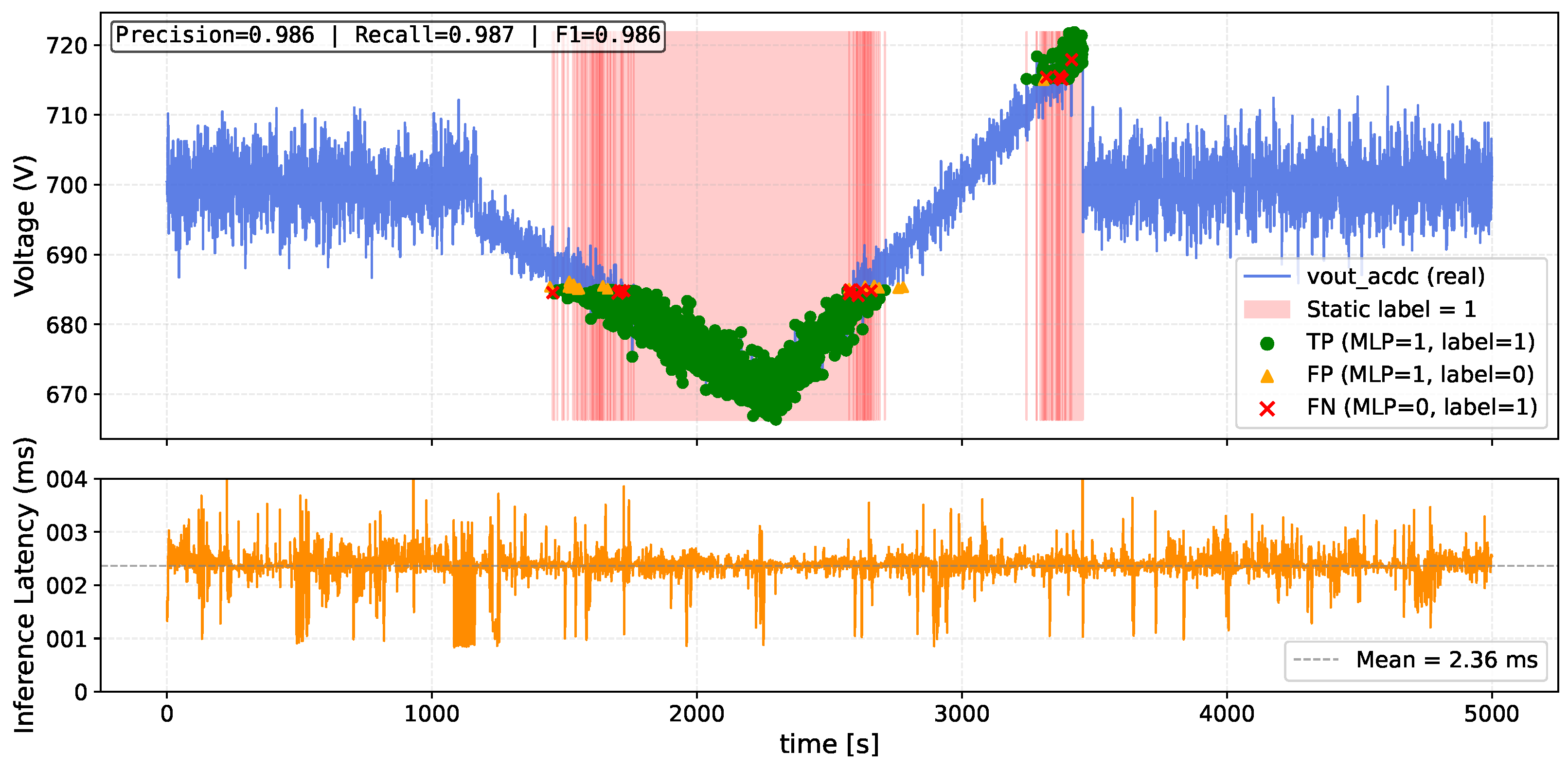

4.6.3. Scenario A2: Prioritization of vout_acdc

Scenario A2 evaluates the detection capability of the individualized MLP classifier for vout_acdc. This parameter exhibits sharp voltage fluctuations, and the simulation tests whether the model can promptly detect overvoltage and undervoltage events. The results, shown in Figure 11, illustrate the classifier’s ability to respond accurately to abrupt anomalies with low latency.

The results confirm that the classifier effectively generalizes to abrupt signal changes. As shown in Figure 11, most priority events are detected with minimal delay. The MLP model achieved an accuracy of 0.994, F1-score of 0.986, recall of 0.987, and precision of 0.986. The mean inference latency was 2.36 ms, supporting its suitability for real-time voltage anomaly detection in edge-level ICS deployments.

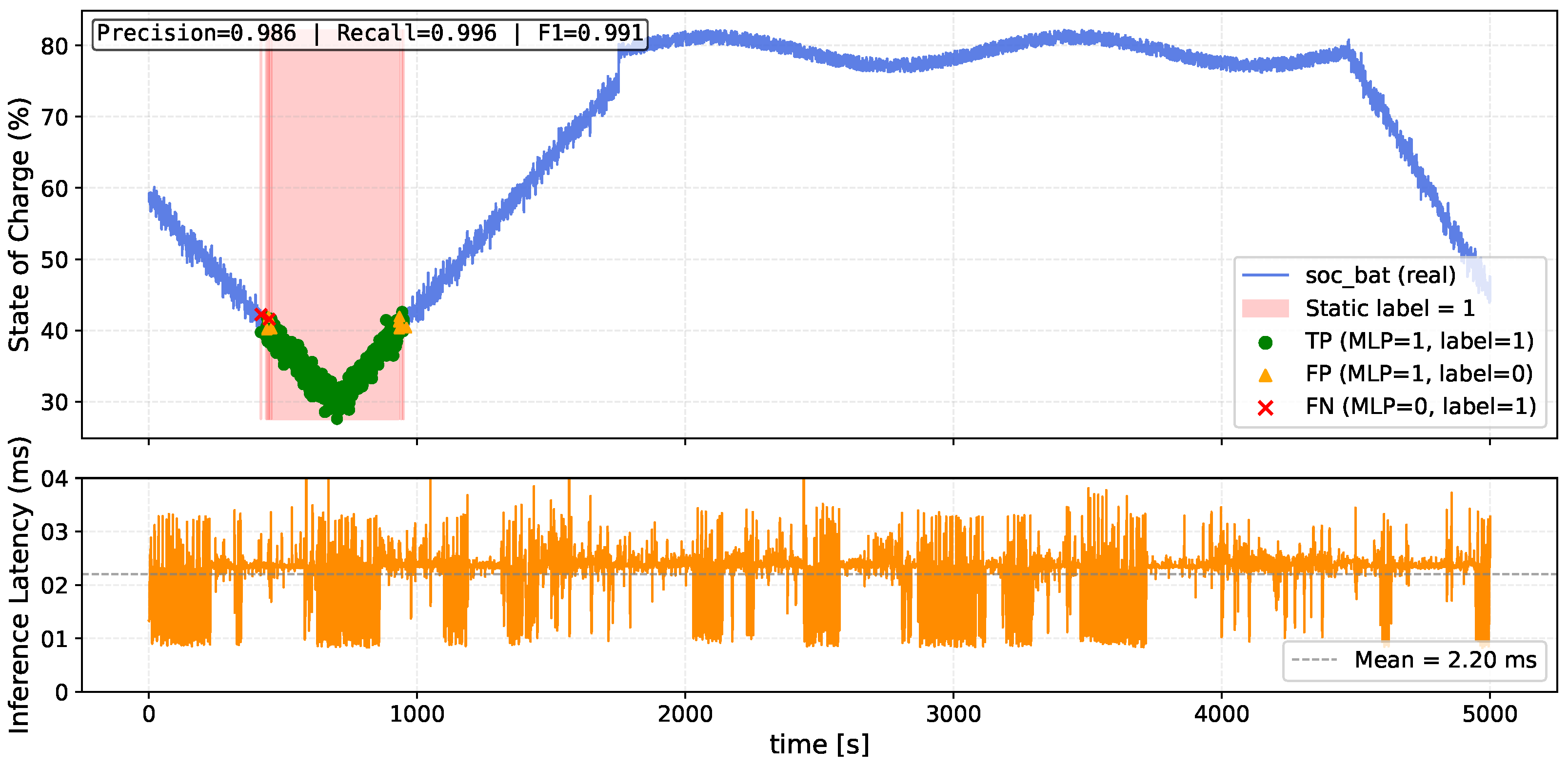

4.6.4. Scenario A3: Prioritization of soc_bat

Scenario A3 evaluates the detection capability of the individualized MLP classifier for soc_bat. This parameter represents the state of charge of the battery, which can decline progressively in real conditions. The model must identify critical low-charge events accurately, especially during gradual depletion periods. Figure 12 shows how the classifier performs under such conditions.

The results confirm the classifier’s robustness in detecting declining SoC conditions. As shown in Figure 12, high-priority events are captured with minimal delay and a low false positive rate. The MLP model achieved an accuracy of 0.998, F1-score of 0.991, recall of 0.996, and precision of 0.986. The average inference latency was 2.20 ms, demonstrating suitability for real-time decision-making in ICS environments.

4.6.5. Scenario B1: Scalability with Multiple Prioritized Signals

Scenario B1 evaluates the system’s scalability as the number of prioritized signals increases from one to three. This simulates deployment conditions where several critical parameters must be monitored and prioritized concurrently at the edge. The objective is to assess how this added complexity affects the inference latency and computational load within the IoT gateway.

All results correspond to the final configuration using per-signal MLP classifiers trained with 1,000,000 samples and physically informed priority labels. A new input dataset of 5000 realistic samples was generated specifically for this evaluation, following the same physical labeling criteria used during training but using previously unseen signal traces. This ensures that all three variants (with one, two, and three prioritized signals) are evaluated under identical yet independent conditions, allowing fair comparison of scalability effects.

Figure 13 shows the total cycle latency for each configuration. When only one signal is prioritized (e.g., temp_acdc), the mean latency per inference cycle remains low at approximately 2.32 ms. With two concurrent prioritized signals (temp_acdc and vout_acdc), latency increases to 3.12 ms, and reaches 3.65 ms with three classifiers (adding soc_bat). The empirical CDF (left) illustrates that even under full load, over 95% of inference cycles complete in less than 5 ms.

This confirms that the use of independent models per parameter enables effective parallelization and scalability without significant latency penalties.

Table 10 summarizes the latency analysis and resource usage metrics for Scenario B1. The total memory footprint increases linearly with the number of active models, reaching 0.20 MB for three signals, which remains well within the available memory of the RevPi5 gateway. Similarly, the mean inference latency per cycle remains below 4 ms in all configurations, indicating that up to three classifiers can operate in real time without impacting the communication responsiveness of the MODBUS server.

These results validate the feasibility of deploying multiple lightweight MLP models in parallel for contextual prioritization in industrial communication gateways. Even under increased computational load, the system maintains real-time performance with sub-5 ms inference cycles and minimal memory usage, making it suitable for embedded deployment on platforms such as the RevPi5.

5. Discussion

The simulation results demonstrate the feasibility and effectiveness of using individualized MLP-based classifiers to dynamically prioritize critical signals in industrial communication scenarios. By leveraging physically informed labeling criteria and models trained with 1,000,000 samples, the proposed approach achieves high accuracy and responsiveness across diverse signal behaviors, including smooth temperature ramps, sharp voltage transitions, and gradual battery discharge patterns.

One key contribution of this work is the shift from multi-signal models to individualized classifiers, which significantly improved generalization and reduced false positives in the presence of heterogeneous signal dynamics. The decision to decouple training per parameter, combined with feature engineering guided by physical intuition, enabled each model to specialize in detecting relevant trends for its target signal. This was especially beneficial for parameters such as temp_acdc and soc_bat, where transient behaviors and slow gradients challenge general-purpose models.

The results from scenarios A1–A3 confirm that these models can anticipate critical conditions with minimal latency (below 2.5 ms on average) and high reliability (F1-scores above 0.98 in all cases). This real-time behavior is particularly valuable in edge-based ICS deployments, where timely prioritization can improve system safety and stability.

In addition, Scenario B1 shows that the proposed approach scales well as the number of prioritized parameters increases. Thanks to the modular structure and low inference latency of each model, the system maintains sub-5 ms cycle times even when monitoring three parameters in parallel. The memory footprint remains minimal (0.20 MB in the worst case), confirming suitability for lightweight IoT gateways such as the RevPi5.

Unlike traditional static prioritization schemes, which rely on fixed rules and require manual configuration, the proposed strategy adapts to changing signal conditions in real time. This dynamic behavior removes the need for predefining thresholds or priorities, making the solution more robust and easier to maintain in evolving industrial environments.

While all experiments were conducted in a simulated environment, the models, platform, and communication protocol were selected with deployment in mind. Compatibility with standard MODBUS TCP polling ensures seamless integration into existing EMS architectures, and the inference engine is lightweight enough to run directly on embedded hardware.

The synthetic datasets were generated using scripts that reproduce typical operating profiles of industrial signals. For instance, temp_acdc combined stable phases (35–45 °C) with Gaussian noise (standard deviation $\sigma$), gradual ramps toward high temperatures (up to 85 °C), and cooldown periods back to baseline. Priority labels were assigned when the threshold was exceeded ($T > 70$ °C) and maintained until recovery with a negative slope, emulating the hysteresis effects observed in real converters. During a ramp, the temperature follows

$$T(t) = T_0 + m\,t + \varepsilon(t), \qquad \varepsilon(t) \sim \mathcal{N}(0, \sigma^2),$$

where $T_0$ is the initial temperature, $m$ the slope of the ramp, and $\varepsilon(t)$ a Gaussian noise term with variance $\sigma^2$.
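A simplified generator for a temp_acdc-like trace, together with the hysteresis labeling, might look as follows. The baseline of 40 °C, the ramp slope, the noise level, and the exact release rule (label cleared once the temperature is no longer above 70 °C while falling) are assumptions made for this sketch; the original generation scripts may differ.

```python
import random


def generate_temp_trace(n_stable=60, t0=40.0, t_peak=85.0, ramp_slope=0.5,
                        sigma=0.3, seed=0):
    """Stable phase, linear ramp T0 + m*t up to the peak, cooldown, stable phase."""
    rng = random.Random(seed)
    clean = [t0] * n_stable
    t = t0
    while t < t_peak:                 # heating ramp
        t += ramp_slope
        clean.append(min(t, t_peak))
    while t > t0:                     # cooldown back to baseline
        t -= ramp_slope
        clean.append(max(t, t0))
    clean += [t0] * n_stable
    noisy = [c + rng.gauss(0.0, sigma) for c in clean]  # additive Gaussian noise
    return clean, noisy


def label_temp(trace, threshold=70.0):
    """Set the label above the threshold; clear it only while the signal falls."""
    labels, active, prev = [], False, trace[0]
    for x in trace:
        if x > threshold:
            active = True
        elif active and x - prev < 0:
            active = False
        labels.append(int(active))
        prev = x
    return labels


clean, noisy = generate_temp_trace()
labels = label_temp(clean)
print(f"{sum(labels)} high-priority samples out of {len(labels)}")
```

Applying `label_temp` to the noisy trace instead of the clean one shows why the falling-slope condition is useful: a sample that dips just below 70 °C while the ramp is still rising does not clear the label.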

Similarly, vout_acdc signals alternated stable nominal voltages around 700 V with abrupt excursions to undervoltage (<685 V) and overvoltage (>715 V), while soc_bat was generated as smooth discharge cycles with sinusoidal ripples and noise, interrupted by recovery phases to emulate recharge events. Priority windows were defined when SoC dropped below 40% and closed upon recovery above 41% with a positive slope. This approach ensured that each synthetic trace reproduced the qualitative dynamics of real processes, while preserving reproducibility and full control for classifier benchmarking.
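The voltage and SoC rules can be sketched in the same way. This assumes that the vout_acdc label simply mirrors the excursion limits of 685 V and 715 V, and that the SoC window closes on the first sample above 41% with a positive sample-to-sample slope; both are interpretations of the description above, not the original code.

```python
def label_vout(trace, low=685.0, high=715.0):
    """Flag undervoltage (< low) and overvoltage (> high) samples."""
    return [int(v < low or v > high) for v in trace]


def label_soc(trace, open_below=40.0, close_above=41.0):
    """Open the priority window below 40%; close it above 41% while rising."""
    labels, active, prev = [], False, trace[0]
    for s in trace:
        if s < open_below:
            active = True
        elif active and s > close_above and s - prev > 0:
            active = False
        labels.append(int(active))
        prev = s
    return labels


vout_labels = label_vout([700.0, 684.0, 700.0, 716.0])
soc_labels = label_soc([45.0, 42.0, 39.5, 39.0, 40.5, 41.5, 43.0])
print(vout_labels, soc_labels)
```

The gap between the opening and closing thresholds keeps the SoC label from toggling when the signal hovers around 40%, which is the hysteresis behavior described in the text.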

6. Conclusions

This work has presented a lightweight and scalable ML-based framework for dynamic signal prioritization in industrial communication gateways operating over LVDC infrastructures. The proposed architecture integrates standard protocols such as MODBUS TCP/RTU and CAN, and is designed for deployment on RevPi5-based gateways connected to heterogeneous field devices including DC/DC converters, EV chargers, ESS units, and smart PDUs.

To address the limitations of traditional rule-based strategies, the prioritization mechanism leverages individualized MLP classifiers trained with physically informed labeling criteria. The models are deployed within a real-time MODBUS server loop, enabling the system to identify and promote contextually relevant signals with low latency and minimal computational overhead.

The methodology included rigorous validation across synthetic and realistic datasets, followed by scenario-based simulations to assess detection capability, latency, and scalability. The results confirm that individualized classifiers trained on 1,000,000 samples achieve F1-scores above 0.98 and maintain inference cycle times below 2.5 ms, even when multiple signals are prioritized in parallel. These properties validate the feasibility of deploying multiple models concurrently on resource-constrained platforms like the RevPi5.

Unlike static prioritization schemes, the proposed approach does not rely on fixed thresholds or predefined signal hierarchies. Instead, it adapts dynamically to signal behavior, reducing configuration effort and improving resilience in evolving Industrial Control System (ICS) environments. The framework remains fully compatible with existing Energy Management System (EMS) architectures and MODBUS polling mechanisms, ensuring seamless integration.

Although this work focused on the MODBUS and CAN protocols, the proposed prioritization framework is protocol-agnostic by design and could be adapted to other widely used industrial communication standards, such as PROFINET, EtherCAT, or IEC 61850. This potential transferability underscores the versatility of the solution for signal management across a broad range of industrial automation and energy management scenarios. Adapting and testing the framework with these protocols is left for future work.

As part of the SHIFT2DC project, future efforts will focus on deploying the proposed prioritization framework on real RevPi5 gateways operating in demonstrator environments. These demonstrators will integrate physical field devices (e.g., AC/DC converters, EV chargers, energy storage systems, and smart PDUs) within a shared LVDC infrastructure to enable Hardware-in-the-Loop validation under realistic conditions such as communication jitter, physical delays, gateway resource contention, and dynamic electrical loads.

Further research will address enhancements such as adaptive thresholds that evolve over time or adjust dynamically to runtime context. This contrasts with the present approach, where thresholds are optimized offline during training and then applied statically during inference. Additional directions include context-sensitive models, online learning, integration with predictive maintenance, and hybrid edge–cloud coordination to enable more responsive and scalable large-scale IIoT deployments.

In addition, extending the framework to event-driven protocols such as Message Queuing Telemetry Transport (MQTT) and Open Platform Communications Unified Architecture (OPC UA) is considered a key step. These protocols would complement the current MODBUS and CAN implementation and enable more efficient communication in heterogeneous industrial environments.

Another relevant direction is the exploration of TinyML-based implementations for ultra-constrained microcontroller platforms, complementing the RevPi5-class gateway results presented in this study.

Finally, while the present scalability analysis relied on latency benchmarks obtained in an LXC container hosted on a server-class platform, future work will include direct benchmarking on physical RevPi5 gateways. This step will validate the extrapolated estimates under realistic deployment conditions, accounting for actual hardware limitations and system-level overhead.