A Review of Personalized Semantic Secure Communications Based on the DIKWP Model

Abstract

1. Introduction

2. Methodology of the Systematic Review

2.1. Search Strategy

2.2. Inclusion and Exclusion Criteria

- the DIKW pyramid or its extensions (especially DIKWP) in the context of computing or communications;

- SemCom theories or system implementations;

- personalization of AI or user-centered semantic models;

- security/privacy in semantic or cognitive communications;

- knowledge representation for communication (e.g., the use of knowledge graphs (KGs) or ontologies in network systems);

- explainable or cognitive communications (e.g., “cognitive networking”) that involve a knowledge plane.

2.3. Data Extraction and Synthesis

2.4. Quality Appraisal

3. Foundations: DIKWP Theory, DIKWP Network Model, and Semantic Relativity

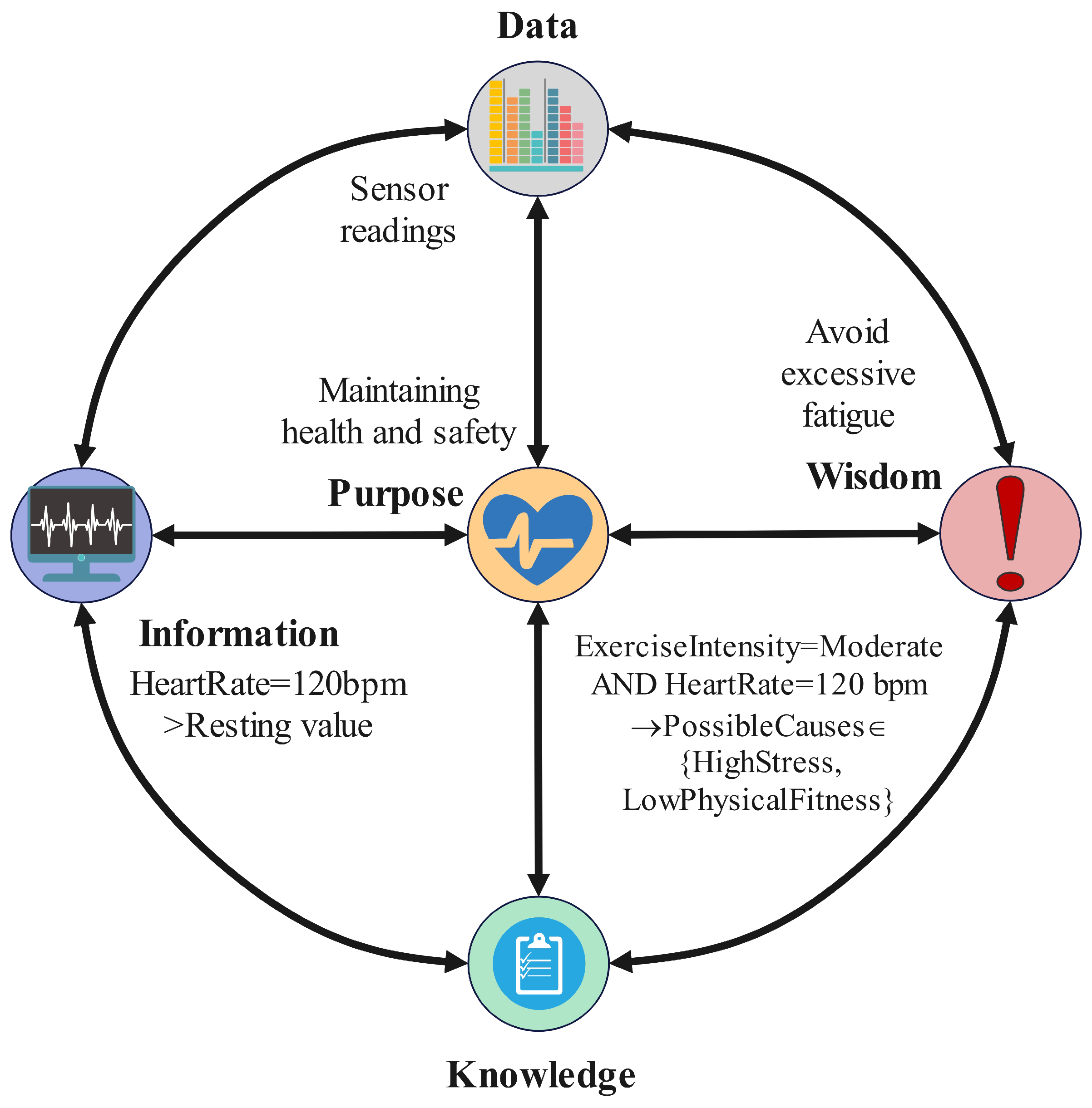

3.1. The DIKWP Model: Extending Data–Information–Knowledge–Wisdom with Purpose

3.2. DIKWP Network Model and 5 × 5 Transformation Modules (DIKWP × DIKWP Mapping)

- D → I: transforming raw data into meaningful information (e.g., feature extraction, as in converting sensor readings to a recognizable event);

- I → K: generalizing or aggregating information into structured knowledge (e.g., building a KG or model from a collection of information);

- K → W: applying reasoning to knowledge to derive insights or decisions, essentially the step of generating wise judgment from known facts (e.g., using a knowledge base of symptoms to decide on a diagnosis);

- W → P: (if we consider the upward direction as well) arguably aligning one’s actionable wisdom with the overarching goal (in practice, Purpose acts more as a guiding constant, but one could imagine refining one’s goals after gaining wisdom);

- P → W: guiding decision-making criteria based on goals (e.g., our purpose will influence which among multiple “wise” choices we consider optimal);

- W → K: using high-level principles to update or refine the knowledge base (for instance, lessons learned from a decision are fed back into the knowledge store as new knowledge);

- K → I: using existing knowledge to reinterpret or filter information (for example, knowledge of language helps to parse a sentence to obtain information from it);

- I → D: deciding which raw data to pay attention to or how to encode them based on current information needs (for instance, focusing sensors on a particular area because the current information suggests something of interest there).
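The 5 × 5 grid can be pictured as a lookup table keyed by (source layer, target layer). The sketch below is a minimal illustration under our own assumptions: each module is a plain Python function, only three upward modules get toy bodies (every other cell defaults to identity), and the names `MODULES` and `run_path` are hypothetical, not part of any published DIKWP implementation.

```python
from typing import Any, Callable, Dict, List, Tuple

LAYERS = ["D", "I", "K", "W", "P"]
Module = Callable[[Any], Any]

def identity(x: Any) -> Any:
    """Placeholder for modules that this sketch does not model."""
    return x

# Hypothetical 5 x 5 registry: every (source, target) pair has a module,
# including same-layer cells such as D -> D (e.g., data cleaning).
MODULES: Dict[Tuple[str, str], Module] = {
    (src, dst): identity for src in LAYERS for dst in LAYERS
}

# Toy bodies for three of the upward transformations described above.
MODULES[("D", "I")] = lambda readings: "overheat" if max(readings) > 80 else "normal"
MODULES[("I", "K")] = lambda event: {"event": event, "rule": "overheat -> cooling needed"}
MODULES[("K", "W")] = lambda kb: "activate cooling" if kb["event"] == "overheat" else "no action"

def run_path(path: List[str], payload: Any) -> Any:
    """Apply the modules for consecutive layer pairs, e.g. D -> I -> K -> W."""
    for src, dst in zip(path, path[1:]):
        payload = MODULES[(src, dst)](payload)
    return payload

print(len(MODULES))                                  # 25
print(run_path(["D", "I", "K", "W"], [72, 85, 90]))  # activate cooling
```

Keeping all 25 cells in one table makes it easy to replace a single module (say, D → I) with a learned model while leaving the others symbolic.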

- Person A has a Purpose (intended meaning or goal to convey) and some Knowledge/Wisdom backing it;

- They encode some Information into Data (choosing words, signals) to send—this is a Purpose/Knowledge → Information → Data path on A’s side;

- Person B receives Data and tries to transform them into Information and then into Knowledge that aligns with some Purpose (either B’s own purpose or understanding A’s purpose)—this is Data → Information → Knowledge (and maybe aligning with Purpose) on B’s side;

- Communication succeeds if B’s reconstructed Knowledge/Wisdom aligns with A’s intended Knowledge/Wisdom (i.e., if B’s understanding matches A’s Purpose-driven message).
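The four steps above can be mimicked with a deliberately reduced model: Knowledge is a single concept label, Data is a single word, and each agent’s mapping between words and concepts is a dictionary. These simplifications and the function names are our assumptions, not part of the DIKWP formalism; the sketch only illustrates the success criterion, namely that B’s reconstructed concept must equal A’s intended one.

```python
from typing import Dict, Optional

def encode(intended_concept: str, lexicon: Dict[str, str]) -> str:
    """Sender side (Purpose/Knowledge -> Information -> Data): choose a word."""
    # The lexicon maps words to concepts, so the sender does a reverse lookup.
    for word, concept in lexicon.items():
        if concept == intended_concept:
            return word
    raise KeyError(f"sender has no word for {intended_concept!r}")

def decode(word: str, lexicon: Dict[str, str]) -> Optional[str]:
    """Receiver side (Data -> Information -> Knowledge): map the word to a concept."""
    return lexicon.get(word)

def communicate(intended: str, sender_lex: Dict[str, str], receiver_lex: Dict[str, str]) -> bool:
    """Success iff the receiver's reconstructed concept matches the sender's intent."""
    data = encode(intended, sender_lex)
    return decode(data, receiver_lex) == intended

# Hypothetical lexicons: same word, different concepts.
patient = {"chest tightness": "mild_discomfort"}
physician = {"chest tightness": "severe_pressure"}
print(communicate("mild_discomfort", patient, physician))  # False
print(communicate("mild_discomfort", patient, patient))    # True
```

The first call fails even though no bit is lost in transit, which is the kind of definitional mismatch that the relativity-of-understanding theory addresses.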

3.3. Relativity of Understanding Theory: Cognitive, Concept, and Semantic Space Discrepancies

- ConC: Refers to the set of concepts and their definitions held by a cognitive agent, similar to an internal dictionary or ontology-like structure. ConC encompasses concepts expressed in certain forms (e.g., language), including their definitions, features, and interrelationships. For example, an agent’s ConC might define a “car” as a transportation tool with four wheels. Formally, ConC can be represented as a graph structure in which nodes represent concepts and edges denote relationships among them. Each concept can have attributes and be linked to other concepts, forming a personalized KG. ConC is independent across agents, meaning that each cognitive agent independently constructs their own concept definitions, potentially differing from those of other agents. Such differences are termed “ConC independence”.

- SemA: Represents the associative and semantic connections among concepts built by cognitive agents through their experiences and accumulated knowledge. While ConC focuses on explicit definitions, SemA emphasizes contextual meaning and associations between concepts derived from personal experiences. For instance, in an individual’s SemA, the concept “car” might evoke associations with driving, fuel consumption, or traffic, representing experiential connections. SemA can thus be viewed as a network of associations and functional relationships beyond simple hierarchical categorizations of concepts. SemA is subjective; merely sharing ConC definitions does not ensure complete semantic sharing. For example, two agents might define a “cloud” as “a visible condensation of water vapor”, yet one might associate “cloud” with rainy weather or a melancholy mood, while another associates it with coolness or agricultural activities. Such differences constitute “semantic space differences”, illustrating that different agents form distinct semantic networks around the same concepts.

- CogN: Refers to the overall cognitive environment where understanding occurs, integrating both ConC and SemA and influenced by perception and purpose. CogN is described as a multidimensional dynamic processing environment in which data, information, knowledge, wisdom, and purpose continuously interact and transform. Specifically, CogN reflects an agent’s dynamic mental state when processing information, including what the agent perceives, focuses on, and contemplates at a particular moment. CogN possesses relativity, as cognitive states vary significantly across different agents and even within a single agent over time. During communication, the sender’s CogN generates the message, while the receiver’s CogN attempts to interpret it. Even when ConC definitions are identical, differences in CogN—such as varying focuses or contextual backgrounds—can still lead to misunderstandings or distortions in communication.
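The difference between ConC and SemA can be made concrete with the “cloud” example above. This sketch flattens each agent’s ConC into a term-to-definition dictionary, represents SemA as a set of associations per term, and uses Jaccard overlap as an illustrative divergence measure. The representation and the metric are our assumptions; the theory itself does not prescribe either.

```python
from typing import Dict, Set

# Hypothetical agents: identical ConC definitions, different SemA associations.
conc_a = {"cloud": "visible condensation of water vapor"}
conc_b = {"cloud": "visible condensation of water vapor"}
sema_a: Dict[str, Set[str]] = {"cloud": {"rain", "melancholy", "grey sky"}}
sema_b: Dict[str, Set[str]] = {"cloud": {"coolness", "agriculture", "grey sky"}}

def conc_agree(term: str, a: Dict[str, str], b: Dict[str, str]) -> bool:
    """ConC check: do both agents hold the same explicit definition?"""
    return term in a and a.get(term) == b.get(term)

def sema_overlap(term: str, a: Dict[str, Set[str]], b: Dict[str, Set[str]]) -> float:
    """SemA check: Jaccard overlap of the association sets (1.0 means identical)."""
    sa, sb = a.get(term, set()), b.get(term, set())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 1.0

print(conc_agree("cloud", conc_a, conc_b))              # True
print(round(sema_overlap("cloud", sema_a, sema_b), 2))  # 0.2
```

Full agreement at the ConC level coexists with low overlap at the SemA level, which is the “semantic space difference” described above.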

- Misunderstanding in CogN: This type of misunderstanding occurs when the receiver’s cognitive state leads to interpretations that deviate from expectations. For example, when a patient describes symptoms in a certain manner, a physician focusing excessively on a specific hypothesis may incorrectly interpret the symptoms as indicating another condition. Additionally, a receiver whose attention is distracted or situated in a different cognitive context may also experience misunderstandings. Resolving CogN-related misunderstandings generally involves maintaining attentiveness and actively prompting comprehensive questioning. For instance, physicians should guide patients to provide more detailed descriptions, thereby aligning their CogN effectively.

- Misunderstanding in ConC: These misunderstandings occur when communicating parties attribute different definitions to the same terminology. For instance, a patient might describe “chest tightness” as mild discomfort, whereas the physician’s definition of the same term might imply a more severe sensation of pressure. Although both parties use the same term, their conceptual definitions do not fully match. The key to resolving misunderstandings of this type lies in clarifying the definitions—for instance, asking the patient to describe the sensation differently or requesting further explanation—thus aligning the respective ConC.

- Misunderstanding in SemA: Misunderstandings arising from differences in associative or contextual meanings between individuals. For example, a patient might associate certain symptoms with dietary issues, considering them indigestion, while a physician might connect the same symptoms with heart conditions. Although both discuss identical symptoms, their semantic associations differ significantly. Addressing these misunderstandings requires leveraging domain-specific knowledge to reason and exclude less likely explanations and communicating in terms familiar to the receiver. In this scenario, the physician would utilize accessible language to explain the causes to the patient clearly, ensuring semantic alignment and enhanced understanding.

- Recognize individual differences: Differences in background knowledge, experience, and context mean that the same message may not yield the same understanding, e.g., a weather report using the term “cloudy” conveys different meanings to a layperson vs. a pilot. Thus, systems should not assume uniform interpretation.

- Establish common ground: Align concept definitions (like agreeing on terminology). In networking terms, this is like exchanging or negotiating a semantic schema or ontology before deep communication.

- Use feedback mechanisms: Identify misunderstandings by checking if the receiver’s reaction or response indicates a gap. In human conversation, we naturally do this with phrases like “Do you know what I mean?” or noticing confusion and then rephrasing. A SemCom system might similarly require an acknowledgment step to confirm semantic alignment.

- Adapt language or medium: Possibly rephrase information in terms familiar to the receiver (like a doctor switching to layman terms for a patient). In an AI context, this could mean using the receiver’s own known vocabulary or data patterns.

- Personalize security as well (tying to later sections): If meaning can differ per person, one could exploit this for security—e.g., encode a message in terms that only the intended receiver’s SemA would resolve correctly (a form of semantic steganography or personalized encryption). Conversely, one must ensure that an unintended receiver with a different SemA indeed cannot correctly interpret it (providing confidentiality through obscurity of context).
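The feedback and adaptation strategies can be combined into a simple loop. The sender tries a phrasing, the receiver acknowledges by echoing the concept it understood, and on a mismatch the sender moves to more familiar terms. The sketch assumes that such an echo-style acknowledgment exists (a hypothetical protocol step) and that candidate phrasings are ordered from technical to lay. The function name and vocabularies are illustrative.

```python
from typing import Dict, List, Optional, Tuple

def clarify_until_aligned(
    intended: str,
    phrasings: List[str],
    receiver_lex: Dict[str, str],
    max_rounds: int = 3,
) -> Tuple[Optional[str], int]:
    """Try phrasings in order until the receiver's acknowledgment matches the intent.

    Returns the accepted phrasing (or None if none worked) and the rounds used.
    """
    rounds = 0
    for phrase in phrasings[:max_rounds]:
        rounds += 1
        # Feedback step: the receiver echoes back the concept it reconstructed.
        echoed = receiver_lex.get(phrase)
        if echoed == intended:
            return phrase, rounds
        # Mismatch or no understanding: adapt by trying the next, plainer phrasing.
    return None, rounds

# Hypothetical lay vocabulary: the patient knows only the everyday term.
patient_lex = {"heart attack": "myocardial_infarction"}
candidates = ["myocardial infarction", "MI", "heart attack"]  # technical -> lay
print(clarify_until_aligned("myocardial_infarction", candidates, patient_lex))
# ('heart attack', 3)
```

The `max_rounds` cap reflects the fact that clarification costs time; a real system would also need a fallback when no phrasing is acknowledged.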

- Align ConC: Ensure that terms and references have shared meaning (which often involves establishing or referring to a common ontology or protocol).

- Align SemA: Ensure that context and associations are understood (perhaps by sending metadata or related context that disambiguates meaning).

- Align CogN: Ensure that the timing, focus, and modality of communication suit the receiver (e.g., do not send crucial info when the user is overloaded with other tasks; in networks, this could mean scheduling messages when resources are free or, in human–AI interaction, presenting information when the user is attentive).

4. Related Models in SemCom

4.1. Shannon–Weaver and Early Semantic Information Theory

4.2. AI-Driven SemCom Systems (Deep Learning Approaches)

- Bandwidth reduction: For example, by focusing only on semantic content, DeepSC (2021) by Xie et al. [35] transmitted text successfully with far fewer bits than a standard source-channel coding approach would require.

- Robustness in low SNR: Because the system does not aim to recover every bit correctly, it can still convey meaning over a noisy channel as long as the key semantic features survive. In fact, semantic systems can leverage error correction at the meaning level; for instance, if part of a sentence is garbled, a language model can fill in the blank with a plausible word.

- Semantic Encoder: This could be a deep neural network that converts input data into a compact representation, using knowledge of the source modality (text, image) and sometimes external knowledge bases.

- Channel Encoder: This performs physical-layer adaptation. It need not be a separate block: some designs integrate it with the semantic encoder, in which case the merged module still performs this adaptation.

- Channel Decoder: This decodes the signal from the physical layer.

- Semantic Decoder: This maps the received representation back into output content or directly into a response or action.
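To make the four blocks concrete, the following pure-Python toy chains a semantic encoder, channel encoder, noisy channel, channel decoder, and semantic decoder. It is not DeepSC or any published system. A fixed shared list of eight meanings stands in for a learned semantic encoder, a 3× repetition code stands in for channel coding, and the channel is a binary symmetric channel with a chosen flip probability.

```python
import random
import re
from typing import List

# Assumed shared knowledge base: eight meanings both ends agree on in advance.
MEANINGS = ["stop", "go", "turn left", "turn right", "slow down", "speed up", "park", "wait"]
BITS = 3  # 2**3 = 8 meanings, so each message needs only 3 source bits

def semantic_encode(text: str) -> int:
    """Semantic encoder: keep only the meaning (an index), discard the wording."""
    for idx, meaning in enumerate(MEANINGS):
        if re.search(rf"\b{re.escape(meaning)}\b", text.lower()):
            return idx
    raise ValueError("input expresses no meaning in the shared knowledge base")

def to_bits(idx: int) -> List[int]:
    return [(idx >> i) & 1 for i in reversed(range(BITS))]

def from_bits(bits: List[int]) -> int:
    value = 0
    for b in bits:
        value = (value << 1) | b
    return value

def channel_encode(bits: List[int], r: int = 3) -> List[int]:
    """Channel encoder: a repetition code that sends every bit r times."""
    return [b for b in bits for _ in range(r)]

def noisy_channel(bits: List[int], flip_prob: float, rng: random.Random) -> List[int]:
    """Binary symmetric channel: flip each bit independently with flip_prob."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in bits]

def channel_decode(bits: List[int], r: int = 3) -> List[int]:
    """Channel decoder: majority vote over each group of r repeated bits."""
    return [int(sum(bits[i:i + r]) > r // 2) for i in range(0, len(bits), r)]

def semantic_decode(idx: int) -> str:
    """Semantic decoder: map the index back to a canonical meaning."""
    return MEANINGS[idx]

def transmit(text: str, flip_prob: float, seed: int = 0) -> str:
    rng = random.Random(seed)
    sent = channel_encode(to_bits(semantic_encode(text)))
    received = channel_decode(noisy_channel(sent, flip_prob, rng))
    return semantic_decode(from_bits(received))

print(transmit("Please slow down near the school", flip_prob=0.0))  # slow down
```

Whatever the length of the sentence, only 9 channel bits are sent, which mirrors the bandwidth argument above. The cost is that anything outside the shared meanings cannot be conveyed at all, and a residual decoding error yields a wrong but well-formed meaning rather than garbled text.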

4.3. Knowledge Representation and Ontology-Based Frameworks

- The surveyed SemCom methods all try to incorporate “semantic knowledge” in some form, but many do so implicitly via learned models. DIKWP would encourage an explicit, multilevel representation (data, info, etc.). For instance, rather than a monolithic neural net, a DIKWP-inspired system might separate processing stages, e.g., first conduct conceptual mapping (data → information) and then reasoning (knowledge → wisdom), etc., possibly with different algorithms at each stage. This could increase interpretability and possibly allow the insertion of expert knowledge at various points.

- The goal-oriented paradigm clearly echoes the Purpose element of DIKWP. Systems perform better when they know “why” the data are being sent, which is something that DIKWP inherently values by placing Purpose at the top.

- KG approaches fit well with DIKWP’s focus on knowledge. Recall that Duan [43] proposed the DG, IG, KG, and WG (data, information, knowledge, and wisdom graphs) as part of a system architecture in which each graph is a layer-specific representation. For example, the DG could capture raw data relationships or provenance, the IG might link processed information units, the KG holds an ontology or factual database, and the WG might represent rules or best practices (more abstract relations, possibly including Purpose nodes linking to actions). That work defined these graphs with the aim of building better knowledge systems. Other researchers’ use of KGs is conceptually similar, although they do not always structure it as multiple graphs.
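To make the layer-specific graphs concrete, the sketch below stores each graph as a set of (subject, relation, object) triples and links the layers with `derived_from` edges, so that a wisdom-level rule can be traced back to the data that justified it. The relation names, node names, and the `provenance` helper are our own illustrative assumptions, not the schema defined in [43].

```python
from typing import Dict, List, Set, Tuple

Edge = Tuple[str, str, str]  # (subject, relation, object)

# Hypothetical layer-specific graphs; node and relation names are illustrative.
GRAPHS: Dict[str, Set[Edge]] = {
    "DG": {("reading#17", "from_sensor", "thermo-3")},
    "IG": {("event:overheat", "derived_from", "reading#17")},
    "KG": {
        ("overheat", "causes", "component_failure"),
        ("fact:thermo-3_overheating", "derived_from", "event:overheat"),
    },
    "WG": {
        ("rule:cool_on_overheat", "derived_from", "fact:thermo-3_overheating"),
        ("rule:cool_on_overheat", "serves", "purpose:keep_plant_safe"),
    },
}

def provenance(node: str) -> List[str]:
    """Follow 'derived_from' edges across all layer graphs down to the raw data."""
    chain = [node]
    while True:
        parents = [o for graph in GRAPHS.values() for (s, r, o) in graph
                   if s == chain[-1] and r == "derived_from"]
        if not parents or parents[0] in chain:  # stop at raw data (or on a cycle)
            return chain
        chain.append(parents[0])

print(provenance("rule:cool_on_overheat"))
# ['rule:cool_on_overheat', 'fact:thermo-3_overheating', 'event:overheat', 'reading#17']
```

Keeping the graphs separate but cross-linked is what allows such a trace; a single flat KG would hold the same facts but lose the record of which layer each one came from.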

- The three Shannon–Weaver levels and subsequent semantic information theory attempts give us goals and metrics (semantic capacity, etc.);

- Deep learning-based semantic encoders/decoders show how we can compress meaning and achieve tasks with fewer bits, learning a form of internal “language of thought” for communication;

- Knowledge-based and ontology frameworks ensure that semantics are explicitly handled via shared symbols and structures, reducing ambiguity;

- Goal-oriented designs realign communication with its ultimate purpose, often yielding huge efficiency gains.

5. Approaches in Personalized AI and Semantic Security

5.1. Personalized AI: User-Specific Models and Knowledge

- Machine Translation Personalization: customizing translation to a user’s speaking style or dialect, e.g., a translator could use knowledge of a user’s background to choose certain phrasing.

- Personalized Search (Semantic Search): The query “apple” can carry different meanings if the user is a fruit farmer versus a technology enthusiast. Search engines incorporate personal data to disambiguate (fruit vs. Apple Inc., Cupertino, CA, USA).

- Human–Robot Interaction: If a household robot knows the family’s particular terms (perhaps they call the living room the “den”), it will understand commands better. It builds a small ontology for the household (mapping “den” to the standard concept “living room”). There is research on the personalized grounding of language for robots.
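Both examples reduce to a per-user mapping that is consulted before standard processing. The sketch below uses plain dictionaries for a household alias table and for profile-based sense selection. The profiles, the fallback sense, and the helper names are hypothetical; real personalized search or robot-grounding systems typically learn these mappings rather than hard-coding them.

```python
from typing import Dict

# Hypothetical per-household alias table and per-term sense inventory.
HOUSEHOLD_ALIASES: Dict[str, str] = {"den": "living room", "tele": "television"}
SENSES: Dict[str, Dict[str, str]] = {
    "apple": {"farming": "apple (fruit)", "technology": "Apple Inc."},
}

def ground_command(command: str, aliases: Dict[str, str]) -> str:
    """Replace household-specific words with standard concepts, word by word."""
    return " ".join(aliases.get(word, word) for word in command.lower().split())

def disambiguate(term: str, user_interest: str, fallback: str = "technology") -> str:
    """Choose a sense from the user's profile; the fallback sense is an arbitrary choice."""
    senses = SENSES.get(term.lower())
    if senses is None:
        return term
    return senses.get(user_interest, senses[fallback])

print(ground_command("Vacuum the den", HOUSEHOLD_ALIASES))  # vacuum the living room
print(disambiguate("apple", "farming"))                     # apple (fruit)
print(disambiguate("apple", "technology"))                  # Apple Inc.
```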

5.2. Semantic Security: Securing Meaning in Communication

- Ensuring that an adversary cannot infer the meaning of intercepted communications (even if they recover the bits, they may lack the context needed to extract the meaning).

- Protecting against attacks that target the AI models or knowledge that SemCom relies on. For instance, an attacker might try to feed malicious inputs that cause the AI to misunderstand (like adversarial examples causing misclassification, which, in semantic communication, could lead to wrong interpretations).

- Ensuring the integrity of semantic content—this means ensuring not only that the bits are not flipped but that the meaning is not subtly altered. A sophisticated attacker might alter a few words in a message to dramatically change its meaning while barely changing the bit count (such as intercepting a command like “do not execute order” and dropping the “not”).

- Eavesdropping and Privacy Leakage: Since semantic systems often share models or knowledge bases, an eavesdropper might try to glean information either by intercepting the semantic data or by analyzing the shared model. For example, if messages are transmitted as KG triples, an eavesdropper could accumulate these and piece together sensitive information about the participants.

- Adversarial Attacks: Attackers can exploit the neural components of semantic communications, e.g., sending inputs that cause the semantic encoder to output misleading encodings or cause the semantic decoder to produce wrong interpretations. There is existing evidence in NLP and vision that attackers can craft inputs that appear normal to humans but fool AI—this directly translates to semantic communication being vulnerable if, say, a malicious speaker sends a sentence that confuses the AI assistant into performing a wrong action (effectively an integrity attack at the semantic level).

- Poisoning and Model Transfer Attacks: During model training or updating (e.g., in federated learning), attackers could poison the data so that the model learns a backdoor: it communicates appropriately on normal inputs, but, for a specific trigger input, it outputs a codeword or wrong information that could be exploited.

- Knowledge Base Attacks: If the system uses a KG, an attacker might attempt to insert false knowledge or alter entries (like misinformation injection) so that future communications are interpreted incorrectly or leak information.
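One line of defense against such knowledge base attacks is to screen each proposed update against what the KG already holds and against the current purpose, a simple form of semantic intrusion detection. In the sketch below, which relations are single-valued and which relations the purpose permits are assumptions chosen for illustration, and the drug and patient identifiers are placeholders rather than real data.

```python
from typing import Set, Tuple

Triple = Tuple[str, str, str]

# Assumptions for this sketch: relations that admit a single object per subject,
# and relations that the current Purpose allows the system to update.
FUNCTIONAL = {"max_daily_dose_mg"}
PURPOSE_ALLOWED = {"max_daily_dose_mg", "symptom_of"}

def check_insert(kg: Set[Triple], new: Triple) -> str:
    """Screen a proposed KG update before accepting it."""
    subj, rel, obj = new
    if rel not in PURPOSE_ALLOWED:
        return "reject: relation outside the current purpose"
    if rel in FUNCTIONAL:
        existing = sorted(o for (s, r, o) in kg if s == subj and r == rel)
        if existing and obj not in existing:
            return f"flag: contradicts known {rel} = {existing[0]}"
    return "accept"

kg = {("drug-A", "max_daily_dose_mg", "4000"), ("chest pain", "symptom_of", "angina")}
print(check_insert(kg, ("drug-A", "max_daily_dose_mg", "40000")))
# flag: contradicts known max_daily_dose_mg = 4000
print(check_insert(kg, ("chest pain", "symptom_of", "myocardial infarction")))  # accept
print(check_insert(kg, ("patient-12", "home_address", "unknown")))
# reject: relation outside the current purpose
```

Such checks catch only contradictions that the KG can express; a plausible but false fact about a new subject passes, which is why cryptographic integrity protection remains necessary.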

- Semantic Encryption: One module in the SemProtector framework (2023) [49] is an encryption method at the semantic level. This implies transforming the semantic representations (like embeddings or triplets) using keys such that, even if intercepted, the adversary cannot decode the real meaning. For instance, one could encrypt the indices of KG triples or use homomorphic encryption so that the receiver can still decode with a key but an eavesdropper only sees random-like symbols. Another, simpler example is as follows: if two parties share a secret mapping of words (a codebook), they could communicate with those codes—only with the codebook (knowledge) can one interpret it. This is old-school cryptography applied at semantic units rather than bits.

- Perturbation for Privacy: SemProtector also adds a perturbation mechanism to mitigate privacy risks. This could mean adding noise to the transmitted semantics such that sensitive details are blurred but the overall meaning is preserved. This is akin to not sending ultra-precise data if not needed. For example, if reporting a location for traffic, it could be quantized to the nearest block rather than providing exact coordinates—an eavesdropper cannot pinpoint an individual, but the receiver still knows where they are generally.

- Semantic Signature for Integrity: The third module in SemProtector is generating a semantic signature. This likely means attaching some digest to the semantic content that the receiver can verify, such as a cryptographic hash of the intended meaning or a watermark in the encoded message that confirms authenticity. If an attacker alters the content (even if bits are reassembled into valid words), the signature will not match and the receiver knows that it has been tampered with.

- Adaptive Protection: The idea of “dynamically assemble pluggable modules to meet customized semantic protection requirements” means that we might not always need all protections, or we might need different levels for different messages. A trivial example is as follows: a weather report might not need heavy encryption because it is public information (but it may need integrity to ensure that it is not faked); a personal health message needs strong encryption and privacy; a command to a drone needs encryption and integrity (so that an adversary cannot change it), etc. Systems could decide on-the-fly which protections to use depending on the context, risk, and overhead.
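The three module types and their adaptive assembly can be illustrated with Python’s standard `hmac`, `hashlib`, and `json` modules. This is a toy of our own, not SemProtector’s implementation. The “encryption” is a keyed codebook (a truncated HMAC per semantic unit, invertible only with the key and the shared vocabulary), which leaks repeated units and is no substitute for a vetted cipher. Perturbation is coordinate quantization, the signature is an HMAC over a canonical serialization of the triple, and the policy table is illustrative.

```python
import hashlib
import hmac
import json
from typing import Dict, Iterable, Set, Tuple

Triple = Tuple[str, str, str]

def unit_code(key: bytes, unit: str) -> str:
    """Keyed codebook entry: a truncated HMAC tag replaces one semantic unit."""
    return hmac.new(key, unit.encode(), hashlib.sha256).hexdigest()[:12]

def encrypt_triple(key: bytes, triple: Triple) -> Tuple[str, ...]:
    return tuple(unit_code(key, u) for u in triple)

def decrypt_triple(key: bytes, coded: Tuple[str, ...], vocabulary: Iterable[str]) -> Tuple[str, ...]:
    """Needs both the key and the shared vocabulary (the 'knowledge') to invert."""
    table = {unit_code(key, u): u for u in vocabulary}
    return tuple(table[c] for c in coded)

def perturb_coordinate(value: float, step: float = 0.01) -> float:
    """Privacy perturbation by quantization: report a grid cell, not the exact point."""
    return round(round(value / step) * step, 6)

def sign(key: bytes, triple: Tuple[str, ...]) -> str:
    """Semantic signature: MAC over a canonical serialization of the meaning."""
    return hmac.new(key, json.dumps(list(triple)).encode(), hashlib.sha256).hexdigest()

def verify(key: bytes, triple: Tuple[str, ...], tag: str) -> bool:
    return hmac.compare_digest(sign(key, triple), tag)

# Adaptive protection: illustrative policy mapping message class -> modules.
POLICY: Dict[str, Set[str]] = {
    "weather_report": {"sign"},            # public, but must not be faked
    "health_message": {"encrypt"},         # numeric fields perturbed beforehand
    "drone_command": {"encrypt", "sign"},
}

def protect(kind: str, triple: Triple, enc_key: bytes, mac_key: bytes) -> Dict[str, object]:
    modules = POLICY[kind]
    body = encrypt_triple(enc_key, triple) if "encrypt" in modules else triple
    msg: Dict[str, object] = {"kind": kind, "body": body}
    if "sign" in modules:
        msg["sig"] = sign(mac_key, triple)  # receiver verifies after decryption
    return msg

ENC_KEY, MAC_KEY = b"demo-enc-key", b"demo-mac-key"  # demo keys only
cmd = ("drone-7", "instruction", "do not execute order")
msg = protect("drone_command", cmd, ENC_KEY, MAC_KEY)
vocab = ["drone-7", "instruction", "do not execute order", "do execute order"]
plain = decrypt_triple(ENC_KEY, msg["body"], vocab)
print(plain == cmd, verify(MAC_KEY, plain, msg["sig"]))  # True True
print(verify(MAC_KEY, ("drone-7", "instruction", "do execute order"), msg["sig"]))  # False
print(perturb_coordinate(40.712776))  # 40.71
```

The tampered command that drops “not” fails verification even though it is a well-formed sentence, which is the semantic-integrity property described above. Separate keys are used for the codebook and the signature so that one module can be enabled without the other.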

- Meng et al.’s survey [48] outlines threats and calls for research on secure SemCom.

- SemProtector (2023) provides a unified framework of three modules, encryption, perturbation (privacy), and signature (integrity), for semantic protection.

- Other works (e.g., an IEEE ComMag 2022 article on semantic security) have discussed scenario-specific solutions like securing semantic model distribution (since, often, a model must be shared, they consider sending the model itself securely).

- There is also the initial exploration of “adversarial semantic coding”—designing encoders that are inherently robust to adversarial noise, e.g., making sure that small perturbations in input (like synonyms or pixel tweaks) do not drastically change the encoded meaning, thus resisting adversarial attacks.

6. Comparative Analysis of DIKWP and Related Frameworks

Discussion

- Layering and Abstraction: DIKWP is unique in providing a full-stack cognitive model from Data up to Purpose. Other approaches tend to focus either on one or two levels. For example, deep learning SemCom compresses data to an embedding (covering roughly Data → Information/Knowledge in one step), but it does not explicitly separate knowledge or wisdom. Knowledge graph approaches explicitly handle Data → Information (by extracting information units like triples) and partially Information → Knowledge (because such triples reside in a knowledge base), but they often do not include a notion of wisdom or intent. Shannon’s model only considers data transmission (the technical layer). Meanwhile, Popovski’s three-level view (technical, semantic, effective) is conceptually similar to DIKWP’s bottom, middle, top, but Popovski’s does not break the semantic level down further into knowledge and wisdom or offer an implementation framework—it is rather a descriptive stack. DIKWP’s advantage is a granular breakdown that might allow targeted improvements at each stage (e.g., one can discuss uncertainty at Data vs. at Information vs. at Knowledge separately and handle them accordingly). On the downside, implementing a full DIKWP pipeline could be complex—a deep learning approach might be simpler in terms of the pipeline (only requiring one model to be trained).

- Purpose-Driven vs. Task-Oriented: Many recent works highlight goal-oriented communication (which is essentially task-oriented). DIKWP’s notion of Purpose maps well to this; it formalizes in the model what others treat as an external objective. For instance, in a deep semantic communications paper, they might say “we train the system to do question answering well”—i.e., the task is embedded in training. In DIKWP, one would say “the purpose is answering questions; hence, all layers operate to fulfill this.” Both yield goal-oriented behavior, but DIKWP could allow dynamic changes in purpose and an agent could theoretically switch goals mid-run and reconfigure processing (with knowledge of how to adjust each layer). Standard learned systems might need retraining to change tasks drastically. This shows DIKWP’s potential flexibility.

- Knowledge Utilization: DIKWP explicitly integrates knowledge (with its K and W layers). Knowledge graph approaches similarly make knowledge explicit. Deep learning often encodes knowledge implicitly (in weights or word vectors), which can be powerful (foundation models incorporate vast knowledge) but is less controllable. One advantage of explicit KGs is easier knowledge updating: if a fact changes, one updates the corresponding triple, whereas a deep model might still output outdated information because the old fact remains buried in its weights. For SemCom networks that must adapt to new facts or user data, the DIKWP or KG approach is therefore more agile in updating knowledge. On the other hand, deep nets can capture very subtle statistical knowledge (like idioms and correlations) that a manually curated KG might not. A hybrid could be best: DIKWP could integrate deep learning at specific modules (e.g., using a neural network to implement the Data → Information mapping but a symbolic KB for Knowledge → Wisdom reasoning).

- Personalization Approaches: DIKWP accounts for individual differences through ConC/SemA alignment strategies (like iterative clarification). This is a sort of interactive personalization: it relies on conversation or iterative communication to adapt to the user. Modern personalized AI tends to be data-driven, either fine-tuning a model on the user or feeding user data as context. This works well when ample user data are available or the scenario is static. DIKWP’s approach might be more relevant in one-off communications (e.g., a doctor explains something to a patient by checking their understanding step by step; the system analog would be dynamic adjustment). The two approaches could converge: an AI model could be fine-tuned to a user over time (long-term personalization) and also monitor and clarify during a specific dialog (short-term personalization in context). A fully personalized semantic communication system should do both: maintain a user model (long-term knowledge and preferences) and clarify miscommunications in real time. DIKWP inherently encourages the second part with its focus on confirming understanding, whereas typical ML personalization might neglect real-time feedback unless explicitly programmed.

- Security Orientation: Traditional communication frameworks rely on separate cryptographic measures, whereas SemCom introduces vulnerabilities at new points (such as models and data). DIKWP was not initially a security framework, but its structure can enhance security by helping to detect anomalies or undesired content. For example, if someone tries to inject a malicious concept, a DIKWP-based system might notice an inconsistency in its KG or a contradiction with its purpose (such as “This doesn’t fit known knowledge or our goal; possibly malicious”). This is a kind of semantic intrusion detection. Related frameworks like SemProtector explicitly add security features, and one could argue that DIKWP plus a semantic firewall covers similar ground. For example, encryption in SemProtector is analogous to ensuring that only those with an appropriate Purpose/Knowledge can decode the meaning: with DIKWP, a third party that does not share the knowledge context naturally cannot interpret the message fully, although this is security by context obscurity rather than foolproof security. For serious threats, cryptography is still needed, but DIKWP might inform smarter key management or encryption at the level of semantic units (e.g., encrypting only the sensitive knowledge and leaving benign data unencrypted to save resources, guided by the Purpose/Wisdom classification of what is sensitive).

- Interpretability and Verification: DIKWP’s white-box nature means that one can potentially verify what is happening at each layer. This is important not just for explainability but also for validation and debugging. In safety-critical communications (e.g., autonomous vehicles exchanging information), being able to verify that a system’s “knowledge” and “wisdom” align with reality and rules could be crucial (e.g., to prevent accidents, we might require the AI car to justify certain communications or decisions—DIKWP provides tools for this). In contrast, if two cars communicate with end-to-end neural nets, this might work well statistically, but, if something goes wrong, it is challenging to determine why. Regulators or users might trust a system more if it can output a reasoning chain (like “I did not send a stop warning because my knowledge indicates that the obstacle is a plastic bag, not a solid object; thus, there is no danger—and my purpose is safety so irrelevant warnings are suppressed”). This chain is human-readable, matching the DIKWP layers. Achieving this level of clarity is challenging, but the structure aids it.

- Compatibility and Integration: The frameworks are not mutually exclusive. We can envision a hybrid: DIKWP as the overarching design, using deep neural modules for certain transformations (where they excel, e.g., pattern recognition for Data → Information), using KGs for representing domain knowledge (for Knowledge layer), and using tools like SemProtector modules to ensure encryption and integrity on selected channels. Personalization can be integrated by having each agent’s DIKWP model be partly unique (like their own KG and possibly their own tuned neural nets at the Data → Information stage, etc.) but also having a shared semantic ontology for communication (ensuring that enough common ground exists to communicate).
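The human-readable reasoning chain in the interpretability point above can be produced by having each layer append an entry to a trace as it runs. In this sketch the perception result is passed in as a string and the set of non-solid object classes is placeholder knowledge; in practice the D → I step would be a learned classifier. The `Trace` class and `decide_stop_warning` function are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Trace:
    """Layer-tagged, human-readable record of one decision."""
    steps: List[str] = field(default_factory=list)

    def log(self, layer: str, note: str) -> None:
        self.steps.append(f"[{layer}] {note}")

NON_SOLID = {"plastic bag", "leaf", "paper"}  # placeholder knowledge

def decide_stop_warning(obstacle_class: str, trace: Trace) -> bool:
    """Hypothetical white-box decision: every layer leaves an auditable entry."""
    trace.log("D", "sensor frame received")
    trace.log("I", f"obstacle classified as {obstacle_class!r}")
    solid = obstacle_class not in NON_SOLID
    trace.log("K", f"a {obstacle_class} is {'a solid object' if solid else 'not a solid object'}")
    trace.log("P", "purpose: safety, so irrelevant warnings are suppressed")
    trace.log("W", "send stop warning" if solid else "no stop warning: no danger")
    return solid

trace = Trace()
print(decide_stop_warning("plastic bag", trace))  # False
print("\n".join(trace.steps))
```

The trace costs a few string appends per decision, but it gives auditors a per-layer record that an end-to-end network does not expose.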

- A pure deep learning SemCom approach would train a model on many doctor–patient dialogs to compress messages; it would implicitly learn how patients speak and perhaps to simplify its language for them. However, if a rare case arises or the patient’s phrasing is unusual, it might fail.

- A DIKWP approach would represent the patient’s statements through Data (audio) → Information (text symptoms) → Knowledge (medical concepts) → Wisdom (possible diagnosis) with Purpose (help diagnose). It has the patient’s concept of illness and the doctor’s concept in mind separately and checks for mismatches (such as clarifying symptom meanings). It uses a medical KG (explicit disease–symptom relationships) and perhaps uses a learned model to interpret raw speech (D → I). It can explain, “Given your symptom description, I think it’s X because, in my knowledge base, X matches these symptoms.” The Purpose (diagnosis) guides it to focus on pertinent questions. For security, suppose that privacy is crucial: the system might perturb some data when logging them to a central server (such as obscuring the patient’s identity in the data transmitted). A semantic firewall ensures that it does not reveal the patient’s information to unauthorized staff (perhaps by recognizing, “This query is coming from someone not on this patient’s care team, so block this content”).
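The two security behaviors in this scenario, a care-team rule in the semantic firewall and obscuring identity before logging, can be sketched with the standard `hmac` module. The care-team registry, the demo key, and the helper names are hypothetical. The keyed hash is pseudonymization (records stay linkable per patient), not full anonymization.

```python
import hashlib
import hmac
from typing import Dict, List, Set

# Hypothetical care-team registry and demo key (a real key would live in a key store).
CARE_TEAM: Dict[str, Set[str]] = {"patient-042": {"dr_lee", "nurse_kim"}}
LOG_KEY = b"demo-pseudonym-key"

def firewall_allows(requester: str, patient_id: str) -> bool:
    """Semantic firewall rule: release patient content only to the care team."""
    return requester in CARE_TEAM.get(patient_id, set())

def pseudonymize(patient_id: str) -> str:
    """Keyed hash: linkable per patient, but not re-identifiable without the key."""
    return hmac.new(LOG_KEY, patient_id.encode(), hashlib.sha256).hexdigest()[:16]

def central_log_record(patient_id: str, symptoms: List[str]) -> Dict[str, object]:
    """What leaves the device: symptoms kept, identity obscured."""
    return {"patient": pseudonymize(patient_id), "symptoms": sorted(symptoms)}

print(firewall_allows("dr_lee", "patient-042"))   # True
print(firewall_allows("dr_park", "patient-042"))  # False
print(central_log_record("patient-042", ["cough", "chest tightness"]))
```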

- DIKWP vs. others: DIKWP is holistic and interpretable but requires management of complexity; it excels in scenarios needing explainability and explicit knowledge integration. Other models often optimize specific aspects—e.g., deep nets for compression efficiency or KGs for clarity—but might ignore other aspects (e.g., deep nets ignore interpretability, and KGs might not easily handle noise).

- Personalization: DIKWP natively acknowledges individual differences; other models need additional processes to handle personalization (e.g., separate fine-tuning).

- Security: DIKWP was not primarily a security framework, but its philosophy can improve semantic security by design (embedding ethical and purposeful constraints). Formal security frameworks (like cryptography or SemProtector) are complementary—DIKWP does not provide encryption by itself but can identify what to encrypt.

- The future likely lies in hybridization: indeed, researchers have begun to realize that purely data-driven or purely symbolic approaches each have limitations. A combination (“neurosymbolic” systems) is a trend in AI. DIKWP can be seen as a blueprint for a neurosymbolic SemCom system: neural nets to handle raw data and probabilities, symbolic graphs for knowledge and logic, and an overall cognitive loop guided by goals. Each part addresses specific aspects—for example, neural nets handle high-dimensional signals (images, speech) and symbolic reasoning handles discrete knowledge and explanation.

7. Evaluation of Implementation Approaches and Applications

7.1. Prototypical Implementations of DIKWP Models

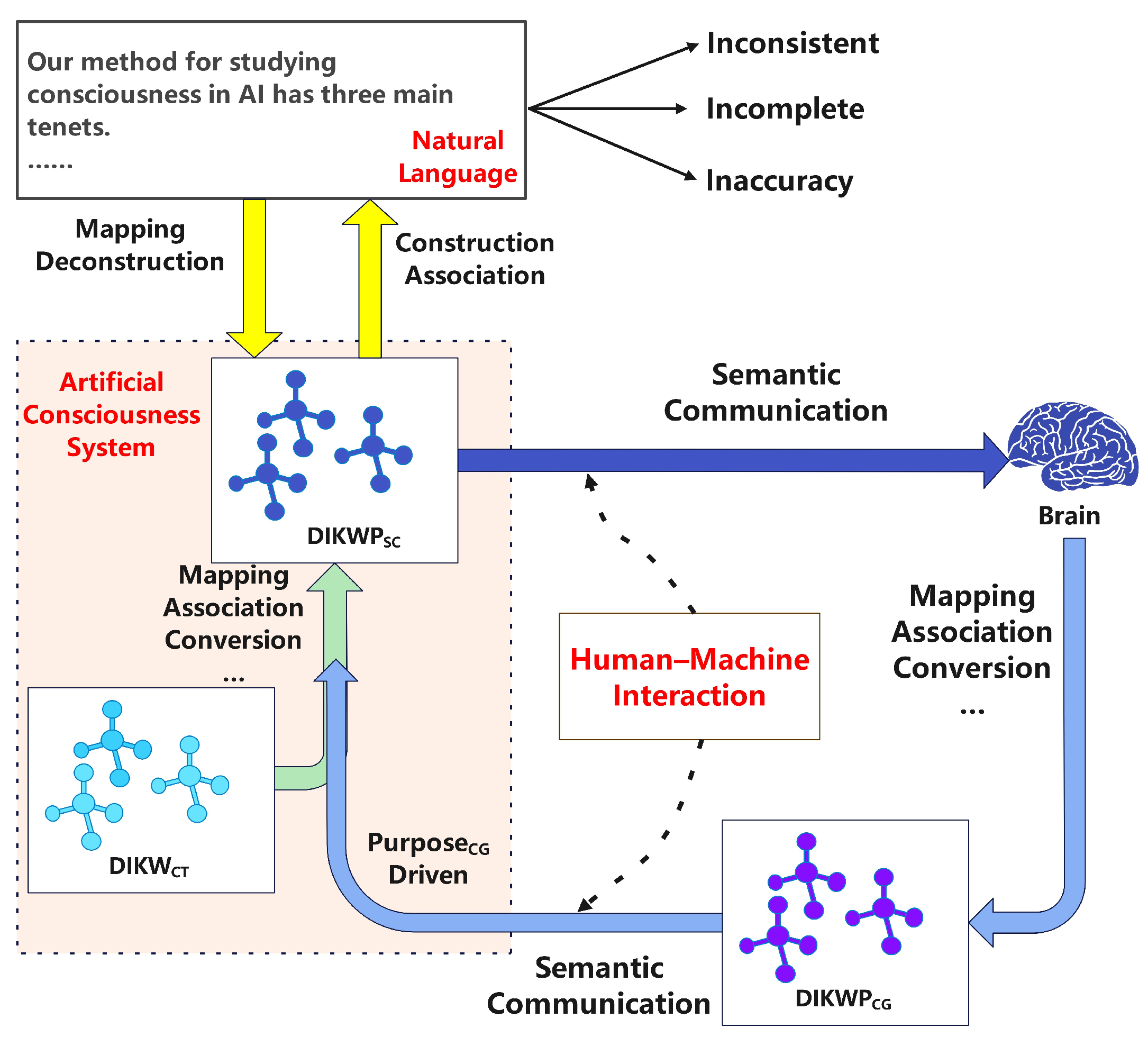

- DIKWP Semantic Chip and Architecture: As mentioned, Duan and Wu [50] proposed a design for a DIKWP processing chip. This includes a microarchitecture with an understanding processing unit (UPU) and a semantic computing unit (SCU) based on DIKWP communication. The chip is paired with a DIKWP-specific programming language and runtime. While the details are mostly conceptual at this stage (published as a conference abstract), the goal is to create hardware that natively supports operations like semantic association, KG traversal, and purposeful adjustments. If realized, such hardware could accelerate semantic reasoning tasks analogously to how GPUs accelerate neural networks. No performance metrics were given in the abstract beyond qualitative claims that it surpasses the limitations of traditional architectures, making semantic operations more convenient. In Figure 2, CT denotes textual content, SC denotes semantic content, and CG denotes cognitive content. The diagram outlines a DIKWP semantic chip/network architecture that maps CT → SC and aligns SC ↔ CG via semantic communication under purpose-driven control, with diagnostics for inconsistency, incompleteness, and inaccuracy. The chip maintains five graph memories, one per DIKWP layer, and a transformation scheduler that executes the DIKWP transformation modules to move between layers. Mapping–deconstruction converts CT into SC, while construction–association renders SC back into CT. A purpose engine prioritizes sensing, interpretation, and action, and a 3-No diagnoser (inconsistency, incompleteness, inaccuracy) detects these defects and triggers corrective flows. Through the semantic communication interface, the system exchanges only semantically necessary artifacts with the human cognitive side (CG), aligning meaning rather than reproducing bits. A white-box logger records the per-module latency and energy and preserves layer-wise states for auditability.
For evaluation, we profile the relevant paths and report the end-task success, alignment error, latency, and energy under channel impairments.

- DIKWP White-Box AI Systems: One application area is AI evaluation and artificial consciousness. Duan [51] discusses a white-box evaluation standard for AI based on DIKWP. The idea is to instrument AI systems so that their internal states can be mapped to DIKWP graphs at runtime. This makes it possible to measure, for instance, how well an AI’s KG is updated or how well its wisdom (decisions) aligns with given purposes. This has been applied in limited settings, such as analyzing how an AI processes simple tasks by mapping the data that it took in and the intermediate information and knowledge that it formed. These experiments do not constitute a commercial product. However, they help to validate that meaningful “white-box” information can be extracted from AI processes using the DIKWP ontology. For instance, an evaluation might show that an AI agent solving a problem progressed only to the “information” level and formed no new knowledge, which might correlate with its inability to generalize. Such granular evaluation is difficult to achieve with black-box models. We further conduct a quantitative white-box study on SIQA [52], GSM8K [53], and LogiQA-zh [54]. We uniformly sample 1000 instances from each dataset (total N = 3000) and require the models to output both a final answer and a brief rationale. We evaluate the following systems: ChatGPT-4o, ChatGPT-o3, ChatGPT-o3-mini, ChatGPT-o3-mini-high, DeepSeek-R1, and DeepSeek-V3. We report the black-box accuracy together with the white-box DIKWP shares $(s_D, s_I, s_K, s_W, s_P)$; the results are summarized in Table 4. We define $s_X$ as the normalized share (%) of internal DIKWP transformations whose target layer is $X \in \{D, I, K, W, P\}$, so that $\sum_{X} s_X = 100\%$ per model–dataset pair.

- Standardization Efforts: The existence of an “International Standardization Committee of Networked DIKWP for AI Evaluation (DIKWP-SC)” suggests ongoing work to formalize DIKWP representations. Standardizing aspects such as how to encode a DG or KG, so that different systems can interoperate, is a step toward implementation. If such standards mature, interoperable SemCom protocols could emerge in which, for example, IoT devices share not only raw data but also DIKWP-structured information. At present, however, these efforts are at an early stage (the references point to technical reports and white papers rather than ISO/IEEE standards already in effect).

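Beyond these dedicated efforts, a DIKWP-style system can already be approximated with existing components:

- NLP pipelines for Data → Information (e.g., speech-to-text, entity recognition);

- Graph databases (e.g., Neo4j, RDF stores) for storing DGs, IGs, and KGs;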

- Reasoners (rule engines or even neural networks) for Knowledge → Wisdom (decision making);

- Agent frameworks where one can encode a goal (Purpose) and allow the agent to plan actions.
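The white-box shares used for Table 4 are simple to compute once a trace of internal transformations is available. Each share is the percentage of internal DIKWP transformations whose target layer is D, I, K, W, or P, and the five shares sum to 100% per model–dataset pair. The Python sketch below assumes a hypothetical trace format in which each transformation is logged as a `(source, target)` pair of layer labels. The function name `dikwp_shares` and the trace format are illustrative assumptions, not part of the cited DIKWP-SC material.

```python
from collections import Counter

# DIKWP layer labels: Data, Information, Knowledge, Wisdom, Purpose.
LAYERS = ("D", "I", "K", "W", "P")


def dikwp_shares(transformations):
    """Return {layer: share in %} for a hypothetical white-box trace.

    `transformations` is an iterable of (source, target) layer labels,
    one per internal transformation, e.g. ("I", "K") for Information -> Knowledge.
    Each share counts transformations by their target layer only.
    """
    counts = Counter()
    for source, target in transformations:
        if source not in LAYERS or target not in LAYERS:
            raise ValueError(f"unknown layer in {source}->{target}")
        counts[target] += 1
    total = sum(counts.values())
    if total == 0:
        raise ValueError("trace contains no DIKWP transformations")
    # Normalize so that the five shares sum to 100 for this model-dataset pair.
    return {layer: 100.0 * counts[layer] / total for layer in LAYERS}


# Hypothetical trace of an agent that mostly stays at the Information layer.
trace = [("D", "I"), ("D", "I"), ("I", "I"), ("I", "K")]
shares = dikwp_shares(trace)
print(shares)
```

Here the trace yields 75% Information and 25% Knowledge, with nothing reaching Wisdom or Purpose. In these terms, this is the profile of an agent that rarely moves beyond the information level. Counting only target layers keeps the shares comparable across models whose traces differ in length.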

7.2. Performance and Applications of SemCom Models

- Text Transmission: Xie et al. [35] (IEEE Transactions on Signal Processing) reported that their deep learning SemCom system matched the text transmission accuracy of traditional methods at a fraction of the SNR or bandwidth. For certain sentence similarity or question answering tasks, their system operated at very low SNRs. At those SNRs, a standard system (Shannon-style separate source coding and channel coding) would fail to deliver any meaningful data. The semantic system still conveyed the message because it focused on meaning, which demonstrates robustness. Quantitatively, their results suggest that the semantic system needed roughly one-third to one-quarter of a baseline's bandwidth to achieve 90% task success. For example, they claim that DeepSC maintained a bilingual evaluation understudy (BLEU) score within 5% of that of the original text even at an SNR of 0 dB, whereas the BLEU score of a traditional scheme dropped drastically.

- Image Transmission: Other works (e.g., on transmitting images for classification) have shown that, if the goal is classification, the image can be compressed heavily (e.g., by sending only high-level features). The classifier at the receiver still works even though the image cannot be fully reconstructed. This indicates semantic success with less data. One observed challenge, however, is generalization: if the task changes even slightly, the learned system may need retraining. For example, a system trained to classify 10 object classes may fail to convey information about a new object, because that object was absent from its training data.

- KG Approach Performance: Jiang et al. [39] reported that their KG triplet approach improves reliability, especially in low-SNR scenarios. They note that, at very low SNRs, transmitting only the most important triplet yields much better semantic success than sending the whole sentence with a conventional scheme. The trade-off is a loss of detail: if only part of the information is sent, less important semantic content is omitted. For some applications (e.g., critical instructions), one might not wish to omit anything. This scheme therefore suits scenarios where partial understanding is acceptable and preferable to total breakdown. Their simulations support this. Illustratively, at an SNR where the baseline yields 0% correct sentences, their scheme might still convey the main facts, e.g., 80% of the time, albeit with minor details missing.

- Multiuser and Federated Setups: Wang et al. [46] (IEEE Communications Letters, 2024) applied federated learning to personalized semantic communications. They simulated multiple users, each with a slightly different data distribution. Their FedContrastive method outperformed both a single global model and separate per-user models in terms of semantic error rate and model convergence. This indicates that personalization is achievable without sacrificing the benefits of collective training. It suggests that, in practical networks, a “community” semantic model can be trained and still be fine-tuned to individuals. The approach was tested on tasks such as image recognition and text classification across different users. For instance, they reported a 10% improvement in semantic task accuracy for underrepresented user data compared with no personalization.

- Edge/IoT Scenarios: There have been demonstrations of SemCom in IoT settings such as vehicle-to-infrastructure communications, where only event descriptions are sent rather than full sensor feeds. For example, an autonomous car might send the message “pedestrian crossing ahead” to nearby cars instead of raw camera images. This significantly reduces latency and bandwidth usage. Implementation-wise, it requires the car to detect the event (with on-board AI) and then encode a standardized message. Hypothetically, this could cut the required bandwidth from several Mbps of video to a few bytes of text per second, enabling communication in bandwidth-limited or congested networks. The trade-off is that the receiver must trust the sender’s detection. If the sender misdetects, other vehicles never receive information that would have been present in the raw feed.

- Medical Application: In a paper titled “Paradigm Shift in Medicine via DIKWP” (Wang et al., 2023 [42]), the authors discuss how DIKWP-based SemCom can promote collaboration in medical intelligence. In particular, doctors and patients could communicate symptoms and diagnoses with fewer misunderstandings by using DIKWP modeling. For example, a patient describes symptoms (Data → Information), and the system maps them to medical concepts (Knowledge) and suggests likely causes (Wisdom). The doctor then confirms the result and explains it to the patient, adjusting the concept definitions as needed. Although no numerical evaluation may be available, outcomes such as reduced misdiagnosis or time saved in consultations could be measured. Consider, for example, a comparison of DIKWP-aided and conventional consultations for complex symptoms. A DIKWP-aided approach (with an AI mediator ensuring mutual understanding) could show improved understanding scores (e.g., both patient and doctor correctly recalling what was said) or higher patient satisfaction.

- AC Simulation: Research on AC systems uses DIKWP to structure AI internals. Such work may include prototypes in which an AI agent (in a simulated environment) uses DIKWP to perceive (Data → Information), learn (Information → Knowledge), reason (Knowledge → Wisdom), and set goals (Purpose). Performance evaluation could measure how well the agent performs tasks and whether it can explain its decisions. Because AC research remains largely conceptual, the “evaluation” might be qualitative or based on benchmark tasks. For example, an AC agent with DIKWP layers could be compared with one without them in terms of stability and interpretability. Possible metrics include the task error rate combined with an interpretability index (e.g., the number of questions about its decisions that it can answer).

- Computational Overhead: Semantic processing, such as extracting meaning or running neural models, can be computationally heavy. In IoT, for example, a key concern is whether devices can run these AI models at all. This motivates ideas such as splitting the workload (edge computing) or using dedicated hardware (such as the DIKWP chip). Some experiments offload semantic encoding from the device to an edge server. This itself raises trust and privacy issues, because the raw data must then be exposed to the edge.

- Standardization and Interoperability: Without common standards, each study uses its own datasets and metrics, making comparisons challenging. One barrier to real adoption is reaching agreement on semantic protocols (such as how exactly to represent meaning). At present, many works are siloed; for example, one group’s autoencoder-based system cannot interoperate with another group’s KG-based system. 6G forums have pushed to define semantic-layer protocols, but this work is at an early stage. Concepts such as “semantic headers” in packets that carry context information, or “common knowledge bases” for specific domains, show potential.

- User Acceptance: Personalization and semantic methods must respect user comfort and privacy. An AI agent may change how it communicates based on what it knows about the user. This could be beneficial (the user feels understood) or detrimental (the agent appears to use personal information in unexpected ways). Applications must therefore implement these features transparently and with opt-out options. Recommender systems illustrate the risk, having faced backlash from users who want explanations. In communications, a system may filter out content for security reasons (e.g., a semantic firewall blocking a message that it deems harmful). In such cases, users might need an explanation or an override option.

- Evaluation Metrics: New metrics are needed to evaluate success. The traditional bit error rate or throughput is not enough. Some works use the task success rate (did the AI answer correctly?) or similarity scores (how close was the received sentence to the original meaning?). For security, one may use metrics like the degree of privacy (e.g., can an adversary infer some property from intercepted data better than random guessing?). The community is still converging on these metrics. An MDPI survey notes the lack of a unified semantic information theory, which means that evaluation across papers is not always straightforward.

Overall, the studies reviewed above point to three main benefits:

- Efficiency gains in bandwidth/latency (especially in low-resource scenarios);

- Maintaining performance in noisy environments where bit-accurate communication would fail;

- Better user satisfaction or task success due to personalization and clarity.

- However, these results also highlight the need for robust AI. If the semantic analysis is incorrect, the whole communication is flawed. Bit errors in traditional communication can simply trigger a retransmission request. Ironically, semantic errors may go undetected when a message appears plausible but has the wrong meaning.
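The importance-first trade-off described for the KG triplet approach can be illustrated in a few lines. The scheme sends the most important triplets first and drops the rest when the channel is poor. The Python sketch below is a hypothetical illustration, not Jiang et al.'s scheme. The importance scores and per-triple bit costs are made up. The bit budget uses the AWGN capacity bound n·log2(1 + SNR) only as a stand-in for what the channel can carry. Selection uses a simple greedy heuristic.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Triple:
    head: str
    relation: str
    tail: str
    importance: float  # hypothetical semantic-importance score
    cost_bits: int     # hypothetical channel bits needed to send this triple


def bit_budget(channel_uses: int, snr_db: float) -> int:
    """Capacity bound: log2(1 + SNR) bits per complex AWGN channel use."""
    snr_linear = 10 ** (snr_db / 10)
    return math.floor(channel_uses * math.log2(1 + snr_linear))


def select_triples(triples, budget_bits):
    """Greedy heuristic: keep the most important triples that still fit the budget."""
    chosen, used = [], 0
    for t in sorted(triples, key=lambda t: t.importance, reverse=True):
        if used + t.cost_bits <= budget_bits:
            chosen.append(t)
            used += t.cost_bits
    return chosen


sentence = [
    Triple("car", "approaches", "crossing", 0.9, 48),
    Triple("crossing", "located_near", "school", 0.5, 40),
    Triple("car", "has_color", "red", 0.2, 32),
]
low = select_triples(sentence, bit_budget(64, 0.0))    # 64-bit budget at 0 dB
high = select_triples(sentence, bit_budget(64, 10.0))  # 221-bit budget at 10 dB
print([t.relation for t in low], [t.relation for t in high])
```

At 0 dB, only the most important fact ("car approaches crossing") fits, so the receiver gets the gist without the details. At 10 dB, all three triples are delivered. Choosing items under a bit budget is a knapsack problem, so greedy selection is not optimal in general. It does, however, mirror the "most important first" behavior described above.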

8. Emerging Trends and Research Gaps

- Integration of Large Language Models (LLMs) in Communication: With the dramatic success of LLMs like GPT-4 in understanding and generating human-like text, there is a trend of using these models as semantic engines in communication systems. An LLM can serve as a powerful semantic encoder/decoder that already has a vast amount of world knowledge. For example, an LLM-based agent could summarize a lengthy message into a shorter one for transmission or predict which information the receiver needs to know (based on its pretraining). Researchers are looking at prompting LLMs for compression—essentially giving the model instructions like “Convert this message into a form that someone with context X will understand fully with minimal content.” Early explorations show that GPT-4 can perform summarization or explanation tailored to user profiles reasonably well (since it has seen many styles). However, challenges include controlling the model’s output precisely (they can be verbose or introduce minor inaccuracies). Thus, combining LLMs with frameworks like DIKWP could be fruitful: the LLM can be constrained to fill certain slots (Data/Information) or adhere to certain knowledge checks (with a KG verifying facts). A research gap here is how to systematically prompt or fine-tune LLMs for the role of a semantic transceiver—effectively transforming them into communication agents that obey bandwidth or security constraints. Another is ensuring that the LLM does not hallucinate in mission-critical communication.

- Cross-Modal SemCom: Most research so far has siloed text, speech, and image modalities. An emerging trend is unified SemCom across modalities—e.g., a system that can send either an image or a description depending on what is more efficient for the meaning. With advances in multimodal models (like CLIP, which aligns images and text semantics), a transmitter might dynamically choose to send a picture vs. words vs. a coded signal to convey a message, based on which one will be interpreted best by the receiver. For instance, two agents might share a learned embedding space where an image of a cat and the word “cat” have similar embeddings; then, if one wishes to communicate “cat”, it does not matter whether it sends the image or the word embedding—the receiver can decode it to the concept "cat". This fluid use of modality can optimize the use of available channels (perhaps sending an image when there is high bandwidth and reverting to text when there is low bandwidth). It also suits personalized needs (perhaps one user understands diagrams better and another prefers text—the system can adapt). The DIKWP model’s ConC/SemA is inherently modality-agnostic (concepts are concepts regardless of representation), so it aligns well with this trend. A research challenge is designing encoders/decoders that can handle multiple input/output types and a switching mechanism (e.g., using AI to judge the semantic “cost” of each modality for a given message).

- Semantic Feedback and Channel Adaptation: Traditional communication uses feedback channels for acknowledgments (ACKs) and similar control signals. In semantic communication, we expect feedback on understanding to become routine. This feedback might be explicit (“I got it” or “I don’t understand part X”). It might also be implicit, with the system inferring from user behavior that something was misunderstood. Research is emerging on active learning and querying in communications; for example, an uncertain receiver can send back a request such as “Clarify term Y”. This can dramatically reduce misunderstandings, but at the cost of extra round trips. Some proposals include negotiating a shared context before data transfer, e.g., devices exchanging brief “semantic headers” that state which knowledge they assume. DIKWP’s iterative understanding process fits this pattern (a misunderstanding is identified and then supplemented). An open question is how to implement this efficiently in networks. Two issues arise: how to quantify confidence in understanding so as to decide when to trigger feedback, and how to minimize the overhead (perhaps by piggybacking feedback on existing control packets). This overlaps with reliability, because semantic error detection is not as straightforward as bit error detection. It might require the receiver’s AI model to estimate whether the decoded message is plausible or conflicts with its knowledge. There is a research gap in developing metrics and algorithms for semantic error detection and correction (akin to CRC and ARQ, but for meaning).

- Standardization of Semantic Information Representation: This is now an active area of research. Bodies like IEEE ComSoc or ITU have had focus groups on “Semantic and Goal-Oriented Communications”. Early outputs might be a common framework or definitions (such as defining what a “semantic bit” is or a “semantic alignment score”). One possible standardization effort is to define a semantic description language for networked communication, which could be akin to XML/JSON but enriched with ontology references. For example, a message might be annotated with ontology URI references for key terms, enabling a generic receiver to parse the meaning if it has the ontology. This is somewhat akin to the Semantic Web’s RDF but applied to dynamic messages. DIKWP graphs could be serialized in such a standard format (perhaps using existing KG standards). The gap here is performance: such self-descriptive semantic messages tend to be bulky (textual URIs, etc.), so balancing readability/standardization with compactness is challenging. Possibly, binary semantic protocols (like protocol buffers for semantics) could emerge.

- Network Support for Semantics: Future networks might have features at the infrastructure level to support SemCom. This might include edge caches of knowledge: an edge server could store common data patterns or models so that devices do not have to transmit them. An alternative is in-network reasoning: a router might aggregate multiple sensor inputs and directly infer a meaning (like an edge aggregator that collects raw data from many IoT sensors and only sends upward a semantic summary). This changes the role of network nodes from dumb forwarding to the active processing of meaning (which is a paradigm shift—reminiscent of information-centric networking). Projects in 6G research, like semantic-aware routing, could route data not by destination address but by asking “where can this meaning be fulfilled?” (similar to how CCN routes by content name). Implementation is nascent, but content-based networking prototypes (like NDNoT) might incorporate semantic tags for routing. The gap is in ensuring security and consistency when network nodes interfere with content—it requires trust frameworks (perhaps the network node has to cryptographically prove that it did not alter the semantics wrongly, e.g., via semantic signatures or blockchain).

- Explainable and Trustworthy AI Communication: As AI-driven communication becomes prevalent, user trust is critical. Thus, a trend is to build explainability features into communication interfaces, e.g., an email client in the future might say, “We have summarized 5 emails for you. [Show summary]. (Click to see full details or how summary was generated).” Alternatively, a personal assistant might ask, “Do you want a detailed explanation or just the gist?”, giving the user control. The DIKWP approach naturally supports explanation at each step (since each step’s output is interpretable), making it an ideal basis for such transparent systems. A research area is human-in-the-loop semantic AI: how to present explanations of semantic processing to users in a helpful way (not too technical). This also ties into user training—users might need to learn new mental models of what the AI is doing (e.g., understanding that it is not transmitting everything, so, if something is missing, it might have filtered it out). There is a sociotechnical gap: aligning user expectations with semantic communication behavior.

- Quantum SemCom: Furthermore, some have speculated on merging quantum communication with semantic concepts. Quantum communication excels in transmitting bits securely (via entanglement, etc.), but there are concepts like quantum-based language understanding or using quantum computing to perform semantic compression more efficiently. This is in the very early stage (mostly conceptual papers). It may be irrelevant for the near future but is worth noting as a far-term idea—e.g., could a quantum system store a superposition representing multiple meanings and then collapse to the needed one at the receiver, potentially sending less? While this appears unrealistic, there is a field emerging called quantum natural language processing that might one day inform communication. The gap is enormous here, combining two complex domains with few results, so progress remains to be seen.

- Unified Theoretical Framework: As identified by survey authors, we lack a unified semantic information theory. There is no direct analog for “Shannon’s capacity” in semantics that everyone agrees on. Notions like semantic entropy or goal-oriented capacity are promising but not fully validated. Researchers need to converge on definitions of metrics like semantic fidelity, semantic capacity, semantic noise, etc. Without these, optimizing systems is ad hoc. DIKWP provides a conceptual framework but it is not a mathematical theory by itself. One gap is bridging DIKWP with information theory—e.g., formalizing how much purpose-driven compression can reduce the required bits (some theoretical models consider “relevant information” measures). Work on the information bottleneck for semantics is one avenue, but it is still in progress.

- Misalignment and Ontology Mapping: If two systems do not share the same ontology or knowledge base, how do they communicate? In human terms, this is akin to two experts from different fields talking—they have to find a common ground. In machines, this could happen if, say, two companies have different data schemas and their AI agents must collaborate. We need automatic ontology alignment algorithms in communication, i.e., ways for agents to identify that concept A from agent 1 is similar to concept B of agent 2, even if their labels differ. There is research in the semantic web community on ontology alignment, but performing this on-the-fly in communications is challenging (it can be computationally heavy). This is a gap, especially for open networks (like IoT devices from different vendors interacting). DIKWP relativity theory highlights the problem but does not solve it—it is an open research problem to allow semantic interoperability without pre-agreed standards in every case.

- Scalability in Multiparty Communication: Most research considers one sender and one receiver. In reality, however, we often have group chats, broadcasts, or mesh networks. Ensuring that all members of a group share an understanding is even more difficult, akin to a multilateral relativity of understanding. For example, two people in a team might interpret the same message differently; how does an AI mediator ensure that everyone is on the same page? This may be possible via iterative consensus (the team might need a short discussion to clarify). In networks, broadcasting semantic information that every receiver understands might require sending multiple versions tailored to subsets of receivers. The gap lies in multitarget semantic encoding. The question is how to encode a message so that different receivers can each decode it correctly in their own context. This is somewhat like layered video coding, in which different quality levels are embedded in one stream. One solution may be to include multiple semantic cues, but this would increase the overhead. Neither this technique nor its trade-off has been well studied yet.

- Security Gaps: While encryption and adversarial defenses exist, new types of attacks are likely to emerge. One is model inversion: if an adversary intercepts semantic vectors, could it query a generative model to find a plausible input that maps to that vector? This would effectively reconstruct private information. Early studies in ML security show that embeddings can be approximately inverted back to words or images. One gap, therefore, lies in designing semantic representations that are difficult to invert without key knowledge. This could tie to encryption or to purposely underspecified encodings that require context to resolve. Another gap is trust in knowledge. If an adversary can poison the knowledge base (a disinformation attack), the system might communicate incorrect or harmful meanings. Traditional communication did not have this vulnerability, because bits carry no inherent truth value. Here, however, if the knowledge is incorrect, communication can mislead even when the bits are correct. How can knowledge be validated in a distributed way? Blockchains or distributed ledgers may help to ensure the integrity of shared knowledge.

- Ethical Considerations: As systems become semantic and personalized, ethical issues become more pronounced. For example, could a personalized system inadvertently amplify a user’s cognitive biases by tailoring too much? (This is akin to the “filter bubble” issue in social networks—if the system only sends what one likes to hear, one’s knowledge may narrow). Moreover, if communication is goal-oriented, who sets the goal? An AI agent might overly prioritize a goal at the cost of other values (such as a goal to maximize clickthrough—it might communicate sensationalized content because this achieves the goal, even if it is not wise). Ensuring that Purpose layers encapsulate ethical objectives (like fairness, truthfulness) is a gap. There is a push for value alignment in AI (enabling AI to follow human ethics), which applies here too. SemCom systems will need guidelines to avoid misuse (e.g., deepfake content might be considered a “semantic attack”; policies must mitigate this—perhaps by watermarking legitimate content so that fakes can be detected).

- User Studies and HCI: A lot of the research is technical. We also need studies involving real users interacting with semantic communication systems to see how they react, what they prefer, and where misunderstandings still occur—for example, testing a DIKWP-based chatbot vs. a regular chatbot with diverse users and measuring trust and efficiency, or deploying a semantic IoT network in a smart home to see if it indeed saves bandwidth and if any failures occur. These practical evaluations will highlight gaps that theoretical work might miss—e.g., perhaps users find the explanations annoying, or perhaps an IoT device’s semantic compression saves bandwidth but misses a rare critical event. Such insights would feed back into model refinement. So far, few such user-centric evaluations have been published, which is a gap given how user-facing this technology can be.

Closing these gaps will require interdisciplinary collaboration:

- Communication engineers must learn to incorporate knowledge and AI into their designs;

- AI researchers must consider communication constraints and security issues;

- Domain experts (e.g., in medicine, automotive, etc.) need to help build ontologies and evaluate whether the semantics captured are truly the ones that matter.
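The semantic analog of error detection and ARQ raised under Semantic Feedback and Channel Adaptation can be sketched on the receiver side. In this hypothetical Python sketch, the receiver flags a decoded fact in two cases: when its decoding confidence falls below a threshold, or when it contradicts the receiver's own knowledge base. The receiver then returns clarification requests instead of a plain acknowledgment. The `Fact` type, the confidence scores, the request format, and the arbitrary 0.7 default threshold are all illustrative assumptions. How to obtain well-calibrated confidence is itself the open question noted in that discussion.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    subject: str
    relation: str
    value: str
    confidence: float  # receiver's decoding confidence (hypothetical, 0..1)


def check_understanding(decoded, knowledge, threshold=0.7):
    """Return clarification requests; an empty list acts as a semantic ACK.

    A decoded fact is flagged when its decoding confidence is below
    `threshold`, or when the receiver's knowledge base already holds a
    different value for the same (subject, relation) key.
    """
    requests = []
    for fact in decoded:
        known = knowledge.get((fact.subject, fact.relation))
        if fact.confidence < threshold:
            requests.append(f"CLARIFY {fact.subject}.{fact.relation}: low confidence")
        elif known is not None and known != fact.value:
            # A conflict may be a decoding error or a genuine update; ask either way.
            requests.append(
                f"CLARIFY {fact.subject}.{fact.relation}: "
                f"received {fact.value!r}, expected {known!r}"
            )
    return requests


knowledge = {("meeting", "room"): "B12"}
decoded = [
    Fact("meeting", "room", "B21", 0.95),     # plausible-looking but conflicting
    Fact("meeting", "time", "10:00", 0.40),   # decoded with low confidence
    Fact("agenda", "topic", "budget", 0.90),  # new and confident: accepted
]
for request in check_understanding(decoded, knowledge):
    print(request)
```

The first fact illustrates the case that bit-level checks cannot catch: "B21" decodes with high confidence and looks plausible, yet conflicts with what the receiver knows. A knowledge-consistency check is one simple way to surface it. Any such check inherits the quality of the receiver's knowledge base, which links back to the knowledge-poisoning concern above.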

9. Conclusions and Future Research Directions

- Researchers in the 6G and AI communities have developed semantic-focused communication systems using deep learning, achieving impressive reductions in bandwidth requirements by transmitting meaning instead of verbatim data. These systems implicitly echo DIKWP’s rationale by prioritizing relevant information (akin to moving up the DIKWP pyramid) and ignoring or compressing irrelevant details.

- Knowledge-centric frameworks incorporate ontologies and KGs to ensure that the sender and receiver share a common understanding context. This resonates strongly with DIKWP’s explicit Data/Information/Knowledge structures.

- Efforts in personalized AI (from the federated learning of user-specific models to foundation models with contextual prompts) address the need to tailor communication to the individual, a need foreseen by DIKWP’s relativity theory. Personalization is not just a user convenience. As we have argued, it is a necessity for semantic fidelity when different receivers have different knowledge bases.

- In the realm of security, nascent frameworks like SemProtector and semantic firewalls directly tackle the confidentiality, integrity, and safety of semantic content. They complement DIKWP by adding the protective layers that any real deployment would require.

- DIKWP can serve as an architectural blueprint under which the best of various approaches can be unified—for instance, using deep neural encoders at the Data→Information stage, symbolic AI for Knowledge representation, and explicit Purpose logic for decision making, all within one coherent system.

- Conversely, advances like large pretrained models or efficient semantic coding schemes can complement DIKWP’s abstract components with concrete, high-performance implementations.

If these approaches mature, the practical benefits could include:

- Bandwidth and energy savings in IoT and wireless scenarios by transmitting semantic summaries instead of raw data.

- Improved quality of service in low-SNR or congested environments by focusing on what really matters to the communication goal.

- Enhanced user experiences, whether it is a more intuitive interaction with AI assistants (a reduced need for the user to phrase content precisely, as the system “understands” their meaning) or reduced information overload (through intelligent summarization guided by the user’s purpose and preferences).

Realizing these benefits, however, requires progress on several fronts:

- Metrics and theories need to catch up: stakeholders will need a common language to evaluate SemCom systems (perhaps an analog of the bit error rate at the semantic level, or standardized semantic compatibility scores).

- Robustness and security must be front-and-center in future research: making communications more intelligent should not render them more vulnerable (e.g., adversaries exploiting high-level understanding to deceive systems). Future systems must be resilient, possibly through redundancy at the semantic level (like checking consistency against a knowledge base to catch anomalies) or through novel encryption that protects the meaning itself, not just raw bits.

- Ethical design and value alignment will be an important research direction, ensuring that these systems augment human communication in a beneficial way. For example, semantic compression should not be allowed to become semantic distortion that hides inconvenient truths or injects bias. Transparency tools (like the ability to request the original uncompressed data or an explanation of what was omitted) might become standard components.

- Formal Semantic Information Theory Development: Researchers should strive to formalize concepts such as semantic entropy, mutual understanding probability, and semantic channel capacity. One possible route is to extend Shannon’s theory by conditioning on a shared knowledge context. For instance, define the information content of a message relative to what the receiver already knows (which reduces uncertainty about the message’s meaning). Recent works on “common information” and “pointwise mutual information in embeddings” could be starting points. Validating these theories with experiments (e.g., does higher semantic mutual information correlate with better task success?) will be crucial.

- Neurosymbolic Communication Systems: Implement end-to-end prototypes that combine neural and symbolic techniques under the DIKWP paradigm. For example, create an AI assistant that uses a neural network to interpret user utterances (Data → Information), updates a KG about the user’s context (Information → Knowledge), uses symbolic reasoning or logical rules to draw conclusions or plans (Knowledge → Wisdom), and always references the user’s goals (Purpose) before responding. Compare this hybrid against a purely neural end-to-end system in terms of user satisfaction, the ability to explain decisions, and the ease of updating the system when new knowledge arises. This will empirically demonstrate the value (or limitations) of DIKWP’s structured approach.

- Semantic Alignment Protocols: Develop lightweight protocols for agents to negotiate and align on semantic context before and during communication. This might involve sharing hashes or identifiers of one’s knowledge base items to check for overlap, or conducting a quick Q&A session between agents to calibrate (similar to humans defining terms at the start of a technical discussion). One could simulate scenarios in which two agents initially misunderstand each other, apply an alignment protocol, and measure the resulting improvement in communication success. This also ties into multiparty settings and could extend to group protocols, such as members of a group chat establishing common conceptual ground.

- Secure Semantic Exchange Mechanisms: Future research should design encryption schemes and authentication mechanisms specifically for semantic data. For example, one could encrypt the semantic representation (e.g., a vector or a triple) such that only a receiver with the right knowledge can decrypt it, perhaps by leveraging attribute-based encryption in which the attributes are semantic concepts. Another angle is watermarking semantic content so that any generated summary or other content can be verified as coming from a legitimate source and as unaltered (e.g., by embedding a hidden watermark in the phrasing that is invisible to humans but machine-checkable). These techniques would address concerns about deepfakes and semantic tampering. Researchers can borrow techniques from NLP watermarking and adapt them to SemCom.

- Cross-Layer Optimization in Networks: Traditional network design separates layers, but semantic communication cuts across them. Future research might consider cross-layer optimization where physical-layer parameters (power, coding) are adjusted based on the semantic importance of the data being sent. For instance, crucial semantic bits (that carry key meaning) could be given stronger error protection or higher power, whereas less important ones are sent on a best-effort basis. One might simulate a network where a semantic-aware scheduler allocates resources not just by packet size or QoS class but according to semantic content tags (like “urgent safety information” vs. “redundant data”). This blends ideas from DIKWP (where purpose determines priority) with networking. Studies could show improved reliability for important messages without increasing the overall load, demonstrating smarter resource use.

- Human Factors and User Training: Recognizing that communication is ultimately about humans (even when machine-mediated), research should also engage communication scientists, linguists, and cognitive psychologists. There is room to study how human communication strategies (such as metaphor, summarization, and clarification) can inspire algorithmic approaches, bringing more of the pragmatic and social layers into SemCom models. Conversely, as humans start interacting with these systems, they may need to adjust their communication patterns (for example, a user might learn that a certain keyword triggers a summary mode). Studying this co-adaptation will help ensure that the technology actually aligns with human behavior. This direction might involve controlled experiments in which users interact with different system variants while outcomes such as understanding, trust, and efficiency are measured.
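
The semantic-level redundancy suggested under robustness and security (checking received content against a knowledge base to catch anomalies) can be illustrated with a minimal Python sketch. It assumes, hypothetically, that both the message and the receiver's knowledge base are sets of (subject, predicate, object) triples and that certain predicates are single-valued. Under that assumption, a received triple that assigns a different value to an already known (subject, predicate) pair is flagged. The entities, predicates, and the `check_consistency` helper are illustrative only and do not come from any cited system.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str


# Hypothetical receiver-side knowledge base of trusted facts.
KNOWLEDGE_BASE = {
    Triple("valve_7", "state", "closed"),
    Triple("valve_7", "located_in", "sector_B"),
    Triple("sector_B", "status", "evacuated"),
}

# Assumption: these predicates are single-valued (one object per subject).
FUNCTIONAL_PREDICATES = {"state", "located_in", "status"}


def check_consistency(message: list[Triple], kb: set[Triple]) -> list[Triple]:
    """Return the triples in `message` that contradict a single-valued fact in `kb`."""
    anomalies = []
    for t in message:
        if t.predicate not in FUNCTIONAL_PREDICATES:
            continue  # no single-valued constraint that could be violated
        conflict = any(
            k.subject == t.subject and k.predicate == t.predicate and k.obj != t.obj
            for k in kb
        )
        if conflict:
            anomalies.append(t)
    return anomalies


received = [
    Triple("valve_7", "state", "open"),           # contradicts the knowledge base
    Triple("valve_7", "located_in", "sector_B"),  # consistent with it
    Triple("pump_3", "state", "running"),         # unknown subject, so not contradicted
]
print(check_consistency(received, KNOWLEDGE_BASE))
```

A check of this kind only catches contradictions with what the receiver already knows. A fabricated fact about an unknown entity (here, `pump_3`) passes silently, so such checks would complement authentication rather than replace it.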
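
One way to make the proposal under formal semantic information theory concrete is to compare the Shannon entropy H(M) of a message source with its conditional entropy H(M | K), where K is the receiver's knowledge context. The difference I(M; K) = H(M) − H(M | K) measures the uncertainty that shared knowledge already removes. The Python sketch below computes these quantities for a small hypothetical joint distribution over two messages and two receiver contexts. It uses ordinary conditional Shannon entropy as a stand-in and is not an established definition of semantic entropy.

```python
import math
from collections import defaultdict

# Hypothetical joint distribution p(m, k): message m is sent while the receiver is in context k.
JOINT = {
    ("rain", "cloudy"): 0.4,
    ("sun", "cloudy"): 0.1,
    ("rain", "clear"): 0.1,
    ("sun", "clear"): 0.4,
}


def entropy(dist: dict[str, float]) -> float:
    """Shannon entropy in bits of a distribution given as {outcome: probability}."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def marginal_m(joint: dict[tuple[str, str], float]) -> dict[str, float]:
    """p(m) = sum over k of p(m, k)."""
    p_m: dict[str, float] = defaultdict(float)
    for (m, _k), p in joint.items():
        p_m[m] += p
    return dict(p_m)


def conditional_entropy(joint: dict[tuple[str, str], float]) -> float:
    """H(M | K) = sum over k of p(k) * H(M | K = k)."""
    p_k: dict[str, float] = defaultdict(float)
    for (_m, k), p in joint.items():
        p_k[k] += p
    h = 0.0
    for k, pk in p_k.items():
        cond = {m: p / pk for (m, kk), p in joint.items() if kk == k}
        h += pk * entropy(cond)
    return h


h_m = entropy(marginal_m(JOINT))
h_m_given_k = conditional_entropy(JOINT)
mutual_info = h_m - h_m_given_k

print(f"H(M)     = {h_m:.3f} bits")
print(f"H(M | K) = {h_m_given_k:.3f} bits")
print(f"I(M; K)  = {mutual_info:.3f} bits")
```

With these numbers, knowing the context lowers the receiver's uncertainty from 1 bit to about 0.72 bits. In this sense, roughly 0.28 bits of each message are already shared.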
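
The hybrid assistant proposed under neurosymbolic communication systems can be outlined as a four-stage pipeline that mirrors the DIKWP transformations. In the Python sketch below, each stage is a simplified stand-in:

- Keyword matching is a placeholder for the neural interpreter (Data → Information).
- A set of triples stands in for the personal KG (Information → Knowledge).
- Two hand-written rules play the role of symbolic reasoning (Knowledge → Wisdom).
- A goal tag attached to each plan is checked against the user's Purpose before the assistant responds.

All function names, rules, and tags are hypothetical.

```python
from dataclasses import dataclass, field

KGTriple = tuple[str, str, str]
Plan = tuple[str, str]  # (action, goal tag)


@dataclass
class UserModel:
    purpose: str  # P: the user's current goal, e.g. "wellbeing"
    kg: set[KGTriple] = field(default_factory=set)  # K: personal knowledge graph


def interpret(utterance: str) -> dict[str, str]:
    """D -> I: placeholder for a neural intent/slot model (keyword matching here)."""
    text = utterance.lower()
    info: dict[str, str] = {}
    if "meeting" in text:
        info["event"] = "meeting"
    if "tomorrow" in text:
        info["when"] = "tomorrow"
    if "tired" in text:
        info["state"] = "tired"
    return info


def update_kg(user: UserModel, info: dict[str, str]) -> None:
    """I -> K: store each extracted slot as a (user, slot, value) triple."""
    for slot, value in info.items():
        user.kg.add(("user", slot, value))


def reason(kg: set[KGTriple]) -> list[Plan]:
    """K -> W: two hand-written rules stand in for a symbolic reasoner."""
    plans: list[Plan] = []
    if {("user", "event", "meeting"), ("user", "when", "tomorrow")} <= kg:
        plans.append(("set a reminder for tomorrow's meeting", "productivity"))
    if ("user", "state", "tired") in kg:
        plans.append(("suggest a short break", "wellbeing"))
    return plans


def respond(user: UserModel, plans: list[Plan]) -> str:
    """P check: plans serving the user's purpose come first (sorted() is stable)."""
    ordered = sorted(plans, key=lambda plan: plan[1] != user.purpose)
    return "; ".join(action for action, _goal in ordered) or "no action needed"


user = UserModel(purpose="wellbeing")
update_kg(user, interpret("I'm tired, but I have a meeting tomorrow."))
print(respond(user, reason(user.kg)))
```

Because each stage is a separate function, the symbolic rules in `reason` can be inspected or edited without retraining anything. That explainability and ease of updating is what the proposed comparison with a purely neural end-to-end system would measure.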
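
The hash-sharing idea under semantic alignment protocols works roughly as follows. Each agent canonicalizes its knowledge items and advertises SHA-256 digests of them. Both sides then compute the Jaccard overlap of the two digest sets, and any item whose digest finds no match becomes a candidate for the calibration Q&A. The Python sketch below illustrates this. The canonicalization rule (trim and lowercase), the 0.75 alignment threshold, and the example items are assumptions made for illustration.

```python
import hashlib

ALIGNMENT_THRESHOLD = 0.75  # hypothetical: below this, agents start a calibration dialogue


def digest(item: str) -> str:
    """SHA-256 of a canonicalized knowledge item (trimmed, lowercased, UTF-8)."""
    return hashlib.sha256(item.strip().lower().encode("utf-8")).hexdigest()


def advertise(kb: list[str]) -> set[str]:
    """The set of digests an agent shares instead of its raw knowledge items."""
    return {digest(item) for item in kb}


def jaccard(a: set[str], b: set[str]) -> float:
    """|A ∩ B| / |A ∪ B|, defined as 1.0 when both sets are empty."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


agent_a = ["Jaguar is_a animal", "Jaguar lives_in rainforest", "Python is_a snake"]
agent_b = ["jaguar is_a animal", "Python is_a programming_language", "Jaguar lives_in rainforest"]

digests_a, digests_b = advertise(agent_a), advertise(agent_b)
score = jaccard(digests_a, digests_b)

# Agent A can see which of its own items the peer did not confirm.
unmatched_a = [item for item in agent_a if digest(item) not in digests_b]

print(f"overlap = {score:.2f}")
if score < ALIGNMENT_THRESHOLD:
    print("calibrate on:", unmatched_a)
```

Plain hashes of short, guessable strings can be reversed by brute force. An agent that must keep its knowledge base private would therefore need salted commitments or a private set intersection protocol instead of raw digests.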
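
For the authentication half of secure semantic exchange, the simplest baseline is a standard message authentication code computed over a canonical encoding of the semantic representation. The Python sketch below applies HMAC-SHA256 (via the standard `hmac` and `hashlib` modules) to a (subject, predicate, object) triple. It assumes a key already shared between sender and receiver and uses a hypothetical JSON canonicalization. It provides integrity and origin checking only. It offers neither the confidentiality that the attribute-based encryption idea targets nor any form of watermarking.

```python
import hashlib
import hmac
import json

Triple = tuple[str, str, str]


def canonical(triple: Triple) -> bytes:
    """Deterministic byte encoding so sender and receiver MAC identical input."""
    return json.dumps(list(triple), separators=(",", ":"), ensure_ascii=False).encode("utf-8")


def sign(key: bytes, triple: Triple) -> str:
    """HMAC-SHA256 tag over the canonical encoding of the triple."""
    return hmac.new(key, canonical(triple), hashlib.sha256).hexdigest()


def verify(key: bytes, triple: Triple, tag: str) -> bool:
    """Constant-time comparison of the expected and received tags."""
    return hmac.compare_digest(sign(key, triple), tag)


KEY = b"demo-shared-key"  # hypothetical; a real system would derive this via key exchange

sent = ("patient_42", "diagnosis", "influenza")
tag = sign(KEY, sent)

tampered = ("patient_42", "diagnosis", "pneumonia")
print(verify(KEY, sent, tag))      # True
print(verify(KEY, tampered, tag))  # False
```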
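
The semantic-aware scheduler envisioned under cross-layer optimization can be reduced to a toy allocation rule. Each packet carries a semantic tag, and each tag maps to an importance weight. Packets are served in order of importance, and a fixed transmit-power budget is split in proportion to the weights. The tags, weights, and proportional-split policy in the Python sketch below are hypothetical choices for illustration. A practical design would also account for channel conditions and coding.

```python
from dataclasses import dataclass

# Hypothetical mapping from semantic content tag to importance weight.
SEMANTIC_WEIGHTS = {"urgent_safety": 4.0, "status_update": 2.0, "redundant": 1.0}


@dataclass
class Packet:
    pid: str
    tag: str  # semantic content tag attached by the sender


def schedule(packets: list[Packet], power_budget: float) -> list[tuple[str, float]]:
    """Serve packets by semantic importance and split the power budget by weight."""
    if not packets:
        return []
    weights = [SEMANTIC_WEIGHTS[p.tag] for p in packets]
    total = sum(weights)
    alloc = [(p.pid, power_budget * w / total) for p, w in zip(packets, weights)]
    # Highest weight first; Python's sort is stable, so ties keep arrival order.
    order = sorted(range(len(packets)), key=lambda i: -weights[i])
    return [alloc[i] for i in order]


queue = [
    Packet("p1", "redundant"),
    Packet("p2", "urgent_safety"),
    Packet("p3", "status_update"),
    Packet("p4", "redundant"),
]
for pid, power in schedule(queue, power_budget=1.0):
    print(f"{pid}: {power:.3f} W")
```

Under this rule, the single urgent safety packet receives four times the power of each redundant packet while the total budget stays at 1.0 W. Important messages gain protection without any increase in overall load.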

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Zeybek, M.; Kartal Çetin, B.; Engin, E.Z. A Hybrid Approach to Semantic Digital Speech: Enabling Gradual Transition in Practical Communication Systems. Electronics 2025, 14, 1130.

- Strinati, E.C.; Barbarossa, S. 6G networks: Beyond Shannon towards semantic and goal-oriented communications. Comput. Netw. 2021, 190, 107930.

- Getu, T.M.; Kaddoum, G.; Bennis, M. Semantic Communication: A Survey on Research Landscape, Challenges, and Future Directions. Proc. IEEE 2024, 112, 1649–1685.

- Radicchi, F.; Krioukov, D.; Hartle, H.; Bianconi, G. Classical information theory of networks. J. Phys. Complex. 2020, 1, 025001.

- Luo, X.; Chen, H.-H.; Guo, Q. Semantic Communications: Overview, Open Issues, and Future Research Directions. IEEE Wirel. Commun. 2022, 29, 210–219.

- Yang, W.; Du, H.; Liew, Z.Q.; Lim, W.Y.B.; Xiong, Z.; Niyato, D.; Chi, X.; Shen, X.; Miao, C. Semantic Communications for Future Internet: Fundamentals, Applications, and Challenges. IEEE Commun. Surv. Tutor. 2023, 25, 213–250.

- Chaccour, C.; Saad, W.; Debbah, M.; Han, Z.; Poor, H.V. Less Data, More Knowledge: Building Next-Generation Semantic Communication Networks. IEEE Commun. Surv. Tutor. 2025, 27, 37–76.

- Al-Muhtadi, J.; Saleem, K.; Al-Rabiaah, S.; Imran, M.; Gawanmeh, A.; Rodrigues, J.J.P.C. A lightweight cyber security framework with context-awareness for pervasive computing environments. Sustain. Cities Soc. 2021, 66, 102610.

- Javadpour, A.; Ja’fari, F.; Taleb, T.; Zhao, Y.; Yang, B.; Benzaïd, C. Encryption as a Service for IoT: Opportunities, Challenges, and Solutions. IEEE Internet Things J. 2024, 11, 7525–7558.

- Yang, Z.; Chen, M.; Li, G.; Yang, Y.; Zhang, Z. Secure Semantic Communications: Fundamentals and Challenges. IEEE Netw. 2024, 38, 513–520.

- Won, D.; Woraphonbenjakul, G.; Wondmagegn, A.B.; Tran, A.-T.; Lee, D.; Lakew, D.S. Resource Management, Security, and Privacy Issues in Semantic Communications: A Survey. IEEE Commun. Surv. Tutor. 2025, 27, 1758–1797.

- Li, C.; Zeng, L.; Huang, X.; Miao, X.; Wang, S. Secure Semantic Communication Model for Black-Box Attack Challenge Under Metaverse. IEEE Wirel. Commun. 2023, 30, 56–62.

- Meng, R.; Gao, S.; Fan, D.; Gao, H.; Wang, Y.; Xu, X.; Wang, B.; Lv, S.; Zhang, Z.; Sun, M.; et al. A survey of secure semantic communications. J. Netw. Comput. Appl. 2025, 239, 104181.

- Alsamhi, S.H.; Hawbani, A.; Mohsen, N.; Kumar, S.; Porwol, L.; Curry, E. SemCom for Metaverse: Challenges, Opportunities and Future Trends. In Proceedings of the 2023 3rd International Conference on Computing and Information Technology (ICCIT), Tabuk, Saudi Arabia, 13–14 September 2023; pp. 130–134.

- Mei, Y.; Duan, Y. The DIKWP (Data, Information, Knowledge, Wisdom, Purpose) Revolution: A New Horizon in Medical Dispute Resolution. Appl. Sci. 2024, 14, 3994.

- van Meter, H.J. Revising the DIKW pyramid and the real relationship between data, information, knowledge, and wisdom. Law Technol. Humans 2020, 2, 69–80.

- Peters, M.A.; Jandrić, P.; Green, B.J. The DIKW Model in the Age of Artificial Intelligence. Postdigital Sci. Educ. 2024, 6, 1–10.

- Wu, K.; Duan, Y. DIKWP-TRIZ: A Revolution on Traditional TRIZ Towards Invention for Artificial Consciousness. Appl. Sci. 2024, 14, 10865.

- d’Inverno, R.; Vickers, J. Introducing Einstein’s Relativity: A Deeper Understanding; Oxford University Press: Oxford, UK, 2022.

- Tommasi, M.; Sergi, M.R.; Picconi, L.; Saggino, A. The location of emotional intelligence measured by EQ-i in the personality and cognitive space: Are there gender differences? Front. Psychol. 2023, 13, 985847.

- Bendifallah, L.; Abbou, J.; Douven, I.; Burnett, H. Conceptual Spaces for Conceptual Engineering? Feminism as a Case Study. Rev. Phil. Psych. 2025, 16, 199–229.

- Cowen, A.S.; Keltner, D. Semantic Space Theory: A Computational Approach to Emotion. Trends Cogn. Sci. 2021, 25, 124–136.

- Sarkis-Onofre, R.; Catalá-López, F.; Aromataris, E.; Lockwood, C. How to properly use the PRISMA Statement. Syst. Rev. 2021, 10, 117.

- Shannon, C.E.; Weaver, W. The Mathematical Theory of Communication; The University of Illinois Press: Urbana, IL, USA, 1949; pp. 1–117.

- Bar-Hillel, Y.; Carnap, R. Semantic information. Br. J. Philos. Sci. 1953, 4, 147–157.

- Dickerson, J.E. Data, information, knowledge, wisdom, and understanding. Anaesth. Intensive Care Med. 2022, 23, 737–739.

- Ackoff, R. From data to wisdom. J. Appl. Syst. Anal. 1989, 16, 3–9.

- LeCun, Y.; Kavukcuoglu, K.; Farabet, C. Convolutional networks and applications in vision. In Proceedings of the 2010 IEEE International Symposium on Circuits and Systems, Paris, France, 30 May–2 June 2010; pp. 253–256.

- Pearl, J. Direct and Indirect Effects. Probabilistic and Causal Inference: The Works of Judea Pearl, 1st ed.; Association for Computing Machinery: New York, NY, USA, 2022; pp. 373–392.

- Russell, S.J. Rationality and intelligence. Artif. Intell. 1997, 94, 57–77.

- Dretske, F. The pragmatic dimension of knowledge. Philos. Stud. Int. J. Philos. Anal. Tradit. 1981, 40, 363–378.

- Floridi, L. Understanding Epistemic Relevance. Erkenn 2008, 69, 69–92.

- Bao, J. Towards a theory of semantic communication. In Proceedings of the 2011 IEEE Network Science Workshop, West Point, NY, USA, 22–24 June 2011; pp. 110–117.

- O’Shea, K. An approach to conversational agent design using semantic sentence similarity. Appl. Intell. 2012, 37, 558–568.

- Xie, H.; Qin, Z.; Li, G.Y.; Juang, B.-H. Deep Learning Enabled Semantic Communication Systems. IEEE Trans. Signal Process. 2021, 69, 2663–2675.

- Yang, Y.; Huang, C.; Xia, L.; Li, C. Knowledge Graph Contrastive Learning for Recommendation. In Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR ’22), Madrid, Spain, 11–15 July 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 1434–1443.

- Strinati, E.C.; Alexandropoulos, G.C.; Wymeersch, H.; Denis, B.; Sciancalepore, V.; D’Errico, R.; Clemente, A.; Phan-Huy, D.-T.; De Carvalho, E.; Popovski, P. Reconfigurable, Intelligent, and Sustainable Wireless Environments for 6G Smart Connectivity. IEEE Commun. Mag. 2021, 59, 99–105.

- Popovski, P.; Simeone, O.; Boccardi, F.; Gündüz, D.; Sahin, O. Semantic-Effectiveness Filtering and Control for Post-5G Wireless Connectivity. J. Indian Inst. Sci. 2020, 100, 435–443.

- Jiang, P.; Agarwal, S.; Jin, B.; Wang, X.; Sun, J.; Han, J. Text-Augmented Open Knowledge Graph Completion via Pre-Trained Language Models. arXiv 2023, arXiv:2305.15597.

- Thomas, R.W.; DaSilva, L.A.; MacKenzie, A.B. Cognitive networks. In Proceedings of the First IEEE International Symposium on New Frontiers in Dynamic Spectrum Access Networks, DySPAN 2005, Baltimore, MD, USA, 8–11 November 2005; pp. 352–360.

- Yang, J.; Zhang, J.; Xu, B. White-Box AI Model: Next Frontier of Wireless Communications. arXiv 2025, arXiv:2504.09138.

- Wang, Z.; Xie, W.; Chen, K. Self-Deception: Reverse Penetrating the Semantic Firewall of Large Language Models. arXiv 2023, arXiv:2308.11521.

- Duan, Y.; Sun, X.; Che, H.; Cao, C.; Li, Z.; Yang, X. Modeling data, information and knowledge for security protection of hybrid IoT and edge resources. IEEE Access 2019, 7, 99161–99176.

- Skjæveland, M.G.; Balog, K.; Bernard, N.; Łajewska, W.; Linjordet, T. An Ecosystem for Personal Knowledge Graphs: A Survey and Research Roadmap. AI Open 2024, 5, 100246.

- Al-Nazer, A.; Helmy, T.; Al-Mulhem, M. User’s Profile Ontology-based Semantic Framework for Personalized Food and Nutrition Recommendation. Procedia Comput. Sci. 2014, 32, 282–289.

- Wang, Y.; Ni, W.; Yi, W.; Xu, X.; Zhang, P.; Nallanathan, A. Federated Contrastive Learning for Personalized Semantic Communication. IEEE Commun. Lett. 2024, 28, 1875–1879.

- Chen, Z.; Yang, H.H.; Chong, K.F.E.; Quek, T.Q.S. Personalizing Semantic Communication: A Foundation Model Approach. In Proceedings of the 2024 IEEE 25th International Workshop on Signal Processing Advances in Wireless Communications (SPAWC), Lucca, Italy, 10–13 September 2024; pp. 846–850.

- Meng, R.; Fan, D.; Gao, H.; Yuan, Y.; Wang, B.; Xu, X.; Sun, M.; Dong, C.; Tao, X.; Zhang, P.; et al. Secure Semantic Communication With Homomorphic Encryption. arXiv 2025, arXiv:2501.10182.

- Liu, X.; Nan, G.; Cui, Q.; Li, Z.; Liu, P.; Xing, Z.; Mu, H.; Tao, X.; Quek, T.Q.S. SemProtector: A Unified Framework for Semantic Protection in Deep Learning-based Semantic Communication Systems. IEEE Commun. Mag. 2023, 61, 56–62.

- Wu, K.; Duan, Y. Modeling and Resolving Uncertainty in DIKWP Model. Appl. Sci. 2024, 14, 4776.