Abstract

Gesture elicitation studies (GES) are a promising method for designing intuitive interaction with mobile robots in urban environments. Traditional gesture elicitation methods rely on predefined commands, which may restrict creativity and adaptability. This study explores referent-free gesture elicitation as a method for discovering natural, user-defined gestures to control a hexapod robot. Through a three-phase user study, we explore gesture diversity, user confidence, and agreement rates across tasks. Results show that referent-free methods foster creativity but present consistency challenges, while referent-based approaches offer better convergence for familiar commands. As a proof of concept, we implemented a subset of gestures on an embedded platform using a stereo vision system and tested live classification with two gestures. This technical extension demonstrates early-stage feasibility and informs future deployments. Our findings contribute a design framework for human-centered gesture interfaces in mobile robotics, especially for dynamic public environments.

1. Introduction

As robotic systems continue to transition from industrial and research domains into public and urban environments, the challenge of designing effective, intuitive, and robust interaction methods becomes increasingly critical. Mobile robots intended for general availability—such as service robots, delivery bots, or assistive platforms—must operate without direct supervision or user training. In these contexts, traditional interfaces such as remote controllers, screens, or manual buttons are often impractical or even counterproductive. Instead, control systems must be embedded, contactless, and universally accessible. This paper contributes to that vision by exploring a gesture-based interface for real-time control of a hexapod robot operating in public urban space.

In high-density or noise-sensitive environments such as city streets, museums, or industrial sites, verbal or touchscreen interfaces may be unavailable, unreliable, or just non-functional. Gesture-based interaction offers several advantages for such contexts: it supports contactless input, mimics natural human behavior, reduces the learning curve, and enhances safety through physical distance. However, designing gesture sets for robot control—especially for public-facing systems—poses challenges related to consistency, intuitiveness, spatial awareness, and user variability [1,2,3,4].

We investigate this problem in the context of hexapod robots, whose six-legged structure provides high terrain adaptability and operational stability. These robots have traditionally been used in specialized applications such as search and rescue or hazardous inspection [5,6], typically under the control of trained operators. With advances in embedded perception, servomotor control, and onboard computation, hexapods are now increasingly feasible for deployment in unstructured and crowded environments by untrained users [7,8,9].

To explore this emerging use case, we designed and built “Spiderbot,” a custom hexapod robot equipped with a stereo vision system and integrated gesture recognition pipeline. Our study addresses two complementary goals. First, we evaluate the efficacy of a referent-free gesture elicitation method, in which users define gestures without being prompted by specific commands. This contrasts with the traditional referent-based elicitation approach, where each task is tied to a predefined robot action, referred to as a referent (e.g., “move forward,” “stop,” or “summon”), and participants are asked to propose gestures corresponding to these referents. Second, we implement and validate a real-time gesture recognition system using a ZED 2i stereo camera and an NVIDIA Jetson Nano. This end-to-end deployment supports practical evaluation of recognition accuracy, inference latency, and live gesture-to-command mapping in a real system.

Our contributions to the field of human–robot interaction (HRI) and embedded robotics are as follows:

- Gesture elicitation: We contribute a comparison between referent-free and referent-based gesture design for mobile robots operating in public, dynamic environments. We analyze agreement rates, spatial awareness, and user preferences.

- System implementation: We present a real-time gesture recognition pipeline based on stereo vision, deep learning, and edge deployment on embedded hardware. Two gestures selected through elicitation were successfully implemented for robot control.

- Design implications: Through proxemic analysis and participant feedback, we offer practical guidelines for designing spatially aware, intuitive gesture interfaces for general-availability robots.

By combining user-driven gesture discovery with technical implementation and evaluation, this paper bridges human-centered design and system-level integration in urban robotic control—a central theme of smart robotics and automation research.

2. Related Work

2.1. Gestural Interaction in Robotics

Gestural interaction enables users to communicate with robotic systems using hand and body movements, providing an intuitive alternative to traditional interfaces such as controllers or touchscreens. Because non-verbal communication plays a key role in human–human interaction [10], leveraging gestures for robotic control is a natural extension, particularly in scenarios where verbal commands are impractical.

Because similar rules apply to HRI, it can be beneficial to draw on research on interpersonal interaction, and in particular on experimental semiotics, which bridges semiotics with cognitive science and experimental psychology to study how sign systems develop, evolve, and function [11]. Two of its findings are especially relevant in this context: efficiency through conventionalization and the usability–learnability tradeoff. The first describes how gestural communication systems rapidly develop conventions that reduce effort while maintaining informativeness. Participants initially use elaborate, iconic gestures but converge on simplified, agreed-upon forms through repeated interaction [12]. The second describes how communication systems evolve to be easier to use (production efficiency and reproduction fidelity) but harder to learn (identification accuracy) for a second generation of naïve participants; in other words, usability trumps learnability [13]. This suggests robots should prioritize gestures that are efficient for repeated users over those immediately intuitive to newcomers. Moreover, experimental semiotics research shows that gestural conventions emerge through repeated calibration [14], which underlines the need for an iterative process of gesture development.

Gesture-based control has been explored in various domains, including industrial and assistive robotics and human–robot collaboration [15,16,17,18,19,20,21,22]. A major focus in this area is improving gesture recognition accuracy and robustness, with recent advancements utilizing deep learning and sensor fusion techniques [15,18,20]. Comparisons between gestural and traditional interfaces, such as graphical user interfaces (GUIs), including augmented reality, or controllers, suggest that gesture-based interaction can enhance usability in hands-free and dynamic environments [23,24,25,26]. However, challenges remain in mitigating issues such as legacy bias, where users default to previously learned gestures, and performance bias, where fatigue affects gesture execution [27,28]. Researchers continue to explore novel approaches, such as interacting through a robot’s end effector [29], body movement mirroring [30], and designing robot-performed gestures to improve human understanding and collaboration [31].

2.2. Gesture Elicitation for Human–Robot Interaction

Gesture elicitation is a widely used method for designing intuitive gestural interfaces, aiming to identify gestures that users naturally associate with specific tasks [32,33,34,35,36]. The method is especially relevant in human–robot interaction (HRI), where the design of intuitive input vocabularies directly influences interaction fluency, user trust, and the system’s ability to integrate into everyday contexts. Gesture elicitation studies typically follow two primary approaches: (1) Referent-based elicitation, where users propose gestures for predefined commands (e.g., “move forward”), facilitating consistency across users; and (2) Referent-free elicitation, which allows users to generate gestures without predefined referents, encouraging creativity and uncovering novel interaction paradigms [37,38,39].

Both methods have their advantages and drawbacks. Referent-based elicitation produces more structured and predictable gestures, making it suitable for refining gesture sets for robotic applications [40,41]. In contrast, referent-free elicitation enables greater diversity, which is beneficial for emerging technologies where predefined gesture sets may not yet exist. However, biases such as legacy bias and performance bias influence gesture selection and must be considered in study design [27,42,43]. The refinement of gesture elicitation methodologies remains an ongoing research challenge, particularly in developing adaptive interfaces that balance consistency and user preference.

2.3. Robotic Systems in Urban Environments

The integration of robots into urban environments presents opportunities for automation in logistics, transportation, and emergency response [44,45]. However, deploying robots in dynamic urban settings introduces challenges related to navigation, obstacle avoidance, and real-time interaction with pedestrians [46].

One major application is Urban Search and Rescue (USAR), where robots navigate complex environments to assist first responders. Advances in deep reinforcement learning have improved robots’ ability to traverse unpredictable terrains [47], while multi-sensor fusion techniques enhance localization and mapping accuracy in densely populated areas [48,49]. These developments help reduce human exposure to hazardous conditions, making robotic deployment in crisis scenarios more effective [50].

Another emerging domain is autonomous delivery robots (ADRs), which provide last-mile delivery solutions in urban areas, reducing congestion and emissions [51]. However, ensuring safe navigation in pedestrian-dense environments remains a significant challenge, requiring real-time adaptation to dynamic obstacles [52]. Haptic feedback and advanced sensing systems are being developed to improve human–robot interaction in public spaces, ensuring safety and usability [53].

Beyond technical challenges, social acceptance is critical for integrating robots into cities. Public perception of robotic systems influences deployment success, with concerns over safety, privacy, and employment displacement requiring careful consideration [54]. The concept of Robot-Friendly Cities suggests designing urban spaces that accommodate robotic systems without disrupting human activities [55]. Urban “living labs” have emerged as a platform for testing robotic technologies in real-world conditions, facilitating collaboration between researchers, policymakers, and citizens to refine deployment strategies [56].

As urban robotics continues to evolve, further research is needed to address real-time navigation challenges, human–robot interaction in public spaces, and ethical concerns related to autonomous systems in urban settings.

3. Methodology

The study involved 52 participants (25F, 27M), each of whom completed one elicitation session: referent-free elicitation (Phase 1: N = 16; Phase 2: N = 20) or referent-based elicitation (Phase 3: N = 16). Participants were aged between 19 and 53 years (mean = 24.8, SD = 5.47).

Participants were recruited from a location with preexisting smart city technologies implemented, which may have influenced their openness to technology and interaction systems. All participants provided informed consent prior to participation. They were asked about previous experience with mobile robots and gesture-based interfaces. Of the 52 participants, 28 had previously interacted with mobile robots, while 34 reported having some familiarity with gesture interfaces. Despite this, no direct correlation was found between familiarity with gesture systems and performance in either the referent-free or referent-based phases.

3.1. Study Design

Phases 1 and 3 took place in a controlled lab environment in which the hexapod robot was centrally positioned. In Phase 1, participants were asked to propose 20 different gestures they considered useful for controlling a mobile robot in an urban environment. The robot was physically present in the lab, placed in front of the participant, but remained turned off during this phase; its role was to act as a visual anchor for the imagined interaction scenario. Participants also provided verbal feedback using the think-aloud method, describing their reasoning while developing and executing gestures. The gesture videos collected in Phase 1 were manually clustered into 37 distinct categories based on syntactic similarity, meaning gestures were grouped according to observable features such as body part used, movement direction, amplitude, repetition, and spatial configuration. This clustering was conducted independently by three researchers, who first reviewed all gestures and proposed initial groupings. Disagreements were resolved through discussion until full consensus was reached on the final set of categories. From these, the 21 most frequently occurring categories were each consolidated into a single representative gesture and selected for inclusion in Phase 2.

Phase 2 consisted of a gesture ranking survey, where participants evaluated the top 21 gestures from Phase 1 by selecting the three most suitable gestures for each action provided by researchers. The survey, administered via the university’s forms platform, included links to video recordings of each gesture hosted on the university’s servers. This allowed participants to view and compare gestures before making their selections. For each referent, they were asked to choose the gestures they found most intuitive or appropriate. No personal data was collected during this phase, and all participants provided informed consent. This stage aimed to determine user preferences and refine the most guessable gestures before moving to a structured elicitation method.

In Phase 3, a referent-based elicitation approach was used, where participants were asked to propose gestures for predefined robot functions (referents). The collected rankings were analyzed using AGATe software, version 2.0 [38,39,57], and the detailed procedure is described in Results.

The experimental setup, shown in the top panel of Figure 1, was used in both Phase 1 and Phase 3. In Phase 1 (referent-free), the robot remained switched off and acted only as a visual anchor while participants invented new gestures. In Phase 3 (referent-based), the same setup was used but the robot additionally performed short motion sequences to illustrate predefined referents. These movements were manually triggered by the researcher via a control pad, ensuring consistent visualization across participants. The rulers visible on the floor were not explained to participants and were later used only by the researchers to approximate participant distance for proxemic analysis. The bottom panel of Figure 1 is a screenshot from the working recognition interface developed after the elicitation phases, during the implementation stage.

Figure 1.

Experiment setup during Phases 1 and 3 (top); gesture detection interface (bottom).

The procedure was divided into these phases in order to compare two approaches to gesture elicitation: the referent-free method, in which gestures were first created freely by participants (Phase 1) and then assigned to a set of actions by a different group (Phase 2), and the traditional referent-based GES method (Phase 3). This structure allowed a direct comparison of the two approaches, highlighting differences in creativity, consistency, and user preferences.

3.2. Referent-Free Elicitation Method

The initial phase of the study used a referent-free gesture elicitation method. Participants were asked to generate gestures for controlling a hexapod robot without being provided with predefined referents. The goal of this phase was to encourage participants to freely create gestures they felt would intuitively map to the robot’s functionalities. Each participant generated up to 20 gestures, a limit chosen in view of the number of referents in the subsequent phases and the potential cognitive load. The gestures covered a broad range of robot actions, including movement, interaction initiation, and task completion.

3.3. Referent-Based Elicitation Method

In Phase 3, participants followed a referent-based GES approach. It involved predefined referents, where participants were presented with specific robot actions and asked to propose corresponding gestures. Unlike the referent-free method, where participants had complete freedom to create any gesture, the GES method directed participants to align their gestures with predefined actions such as “Move Forward,” “Rotate Left,” or “Summon Robot.” The elicited gestures were recorded and analyzed to determine the degree of overlap between participants’ gesture choices. This structured approach allowed for a direct comparison of the participants’ creativity and the consistency of their responses.

3.4. Tasks

During Phase 2, participants selected gestures from a predefined set of 21 gestures (identified in Phase 1) for each task, ranking the three most intuitive ones. In Phase 3, participants created gestures in response to predefined actions, aligning their movements with specific referents. The same set of actions was presented in both the evaluative stage of the referent-free method (Phase 2) and the traditional gesture elicitation (Phase 3), allowing for comparative analysis of gesture creation in structured versus unstructured conditions. The actions were categorized into three main groups: (a) movement-related actions (spatial navigation and basic movement control), (b) action-related tasks (interaction management between the user and the robot, e.g., initiating or ending communication), and (c) abstract functions (less directly tied to everyday experiences and requiring more creative expression).

4. Results

4.1. Referent-Free Gesture Elicitation

In the referent-free phase, a total of 37 unique groups of similar gestures were catalogued. From these, the 21 most commonly occurring gestures were selected for further analysis in Phase 2, where participants ranked them based on their intuitiveness. Agreement Rates (AR) were calculated for each task using the AGATe tool, version 2.0, which quantifies consensus by analyzing the frequency distribution of selected gestures. Specifically, AR reflects the probability that two randomly chosen participants would select the same gesture for a given task. Higher AR values indicate stronger consensus across the participant group. The resulting values revealed varying levels of agreement depending on task type (Table 1). Representative images of the most frequent gestures from both referent-free and referent-based conditions are provided in Appendix A. This visual reference illustrates how participants physically performed the gestures reported in the agreement rate analysis.
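The pairwise reading of AR given above can be illustrated with a short sketch. It counts the participant pairs that chose the same gesture and divides by the number of all possible pairs. This is an illustration of the definition only, not a reproduction of AGATe: it assumes one gesture label per participant per task, whereas how the three ranked selections from Phase 2 were entered into the tool is not specified here. The gesture labels are hypothetical.

```python
from collections import Counter


def agreement_rate(proposals):
    """Agreement rate for one task (referent).

    `proposals` holds one gesture label per participant. The result is the
    share of participant pairs that chose the same gesture.
    """
    n = len(proposals)
    if n < 2:
        raise ValueError("need at least two proposals")
    counts = Counter(proposals)
    # Ordered pairs within each group of identical gestures.
    agreeing_pairs = sum(c * (c - 1) for c in counts.values())
    # Divided by all ordered pairs of participants.
    return agreeing_pairs / (n * (n - 1))


# Hypothetical labels for a single task; NOT data from the study.
example = ["palm_out"] * 5 + ["wave"] * 3 + ["clap"] * 2
print(round(agreement_rate(example), 3))
```

With full consensus the function returns 1.0, and when every participant chooses a different gesture it returns 0.0, which matches the interpretation that higher values indicate stronger consensus.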

Table 1.

Results of agreement rate computations for referent-free and referent-based elicitation.

For the interaction management tasks, such as initiating or ending communication, gesture agreement was more varied. The “initiate interaction” task produced a moderate agreement rate (AR = 0.289), with many participants opting for attention-getting gestures like clapping or waving. In contrast, the “end interaction” task showed lower consensus (AR = 0.174), possibly due to ambiguity around how to signal disengagement with a robot. These results suggest that while users intuitively associate certain actions with initiating contact, signaling closure may be more context-dependent and culturally variable. For the movement-related tasks, such as “backward,” “move left/right,” and “rotate left/right,” agreement rates ranged from 0.105 for “forward” to 0.242 for “backward,” reflecting a moderate level of consensus. Gestures for tasks like summoning a robot (AR = 0.274) and stopping the robot (AR = 0.337) demonstrated higher agreement rates, suggesting that participants found these tasks more intuitive to perform, or the participants had prior experience with technology that might bias the results.

However, the abstract task of “attack” failed to elicit any gesture with a high enough agreement rate, resulting in no consistent gesture for this action. This reflects the difficulty participants encountered when trying to conceptualize less obvious commands in a referent-free context. On average, the agreement rate for the referent-free method was 0.204, highlighting the variety of interpretations participants brought to the tasks.

4.2. Referent-Based Gesture Elicitation

In Phase 3, participants were asked to create gestures for the same set of tasks, but this time using a traditional referent-based method, where they were explicitly given a list of tasks to map gestures to. The first iteration of this phase resulted in 124 unique gestures, which were later grouped and refined in a second iteration.

The highest agreement rates for the referent-based method were observed in tasks such as stopping the robot (AR = 0.383) and backward (AR = 0.242), both of which involved gestures like “pushing away” or using a static arm gesture (Table A1). Notably, the task of summoning a robot had no consensus, resulting in an absence of an elicited gesture.

Compared to the referent-free method, the referent-based elicitation showed lower agreement rates overall, with an average AR of 0.122 in the first iteration and 0.143 in the second (Table 1). Also, as in the referent-free approach, the abstract task of “attack” failed to generate any gestures with sufficient agreement.

To explore potential differences in gesture consensus between elicitation methods, a paired-sample t-test was conducted for tasks that were present in both referent-free and referent-based conditions. Four overlapping tasks were included: backward, stop, initiation of the interaction, and rotate left/right. Results indicated a statistically significant difference in agreement rates between the two methods, with referent-free gestures yielding higher overall agreement. Due to the small number of overlapping tasks and the exploratory nature of the study, this finding should be interpreted with caution and validated in future work with a larger dataset. With that caveat in mind, the result suggests that providing predefined referents did not necessarily lead to more consistent gesture choices.
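For readers unfamiliar with the procedure, the following sketch shows how a paired-sample t statistic is obtained from per-task agreement rates. The input values are placeholders chosen for illustration and are not the study's data; the function name `paired_t` is likewise hypothetical.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Return (t statistic, degrees of freedom) for a paired-sample t-test."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    sd = stdev(diffs)  # sample standard deviation (n - 1 in the denominator)
    if sd == 0:
        raise ValueError("all paired differences are identical")
    n = len(diffs)
    t = mean(diffs) / (sd / math.sqrt(n))
    return t, n - 1


# Placeholder agreement rates for four tasks; NOT the values from the study.
referent_free = [0.30, 0.25, 0.35, 0.20]
referent_based = [0.20, 0.10, 0.30, 0.15]
t_stat, dof = paired_t(referent_free, referent_based)
print(round(t_stat, 2), dof)
```

With four task pairs there are only three degrees of freedom, so the two-tailed critical value at the 0.05 level is about 3.18. This is one reason why a result based on so few tasks calls for cautious interpretation.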

4.3. Comparison of Methods

The referent-based method produced more unique gestures (124) than the referent-free method (37), largely due to the requirement for three gestures per task. This led participants to create variations rather than relying solely on natural movements, complicating analysis. In contrast, the referent-free approach yielded a smaller but more representative set of intuitive gestures, reflecting user comfort with the technology.

However, the referent-free method posed challenges, as many participants struggled to generate 20 gestures in Phase 1, leading to data gaps. Its two-phase structure also required more participants and extended the study timeline. Despite these limitations, it remains valuable for early-stage technology interactions, capturing spontaneous user input without predefined constraints.

4.4. Distance and Gesture Dynamics

In this section, we present the findings related to how participants perceived and navigated their physical space during the gesture elicitation sessions. We gathered insights into how participants adjusted their proximity to the robot, the emotional responses prompted by their gestures, and their overall satisfaction with the process. The analysis revealed several key themes, which are detailed below.

Fear and Caution: A recurring theme among participants was the fear of damaging the robot or hitting it by mistake. Many participants reported hesitating in their movements, particularly when gestures required them to move closer to the robot. This sense of caution significantly affected their confidence in performing certain dynamic gestures, for example when approaching the robot or pointing at the ground.

Proxemics and Distance Awareness: Participants varied in their approach to proximity, with some maintaining distance for clarity while others moved closer for effectiveness. While rulers provided spatial reference, uncertainty remained about the optimal distance for gesture detection. Throughout the sessions, participants consciously adjusted their proximity in response to the robot’s reactions or lack thereof. These variations highlight the importance of considering spatial factors when designing gesture-based interaction systems, particularly in ensuring clear guidelines for optimal gesture detection.

Physical Engagement and Satisfaction: Despite some apprehension, participants reported a general satisfaction with the physical engagement required by the task. They felt more involved in the interaction process, especially when the robot responded to their gestures successfully, reinforcing a sense of achievement and connection with the technology.

Familiarity and Gesture Comfort: Participants felt more at ease with gestures that resembled everyday interactions with humans or animals. Actions such as summoning or stopping the robot were performed more confidently when they mirrored familiar body language. While the opportunity to create novel gestures was appreciated, many preferred movements with real-world analogues. Gestures for directional commands, such as “Move Forward” or “Turn Left,” were often influenced by common human interactions or gaming experiences, leading to more fluid and natural execution. This preference for familiar gestures suggests that leveraging known movement patterns can enhance user confidence and ease of interaction in gesture-based systems. At the same time, whether completely new gestures can be introduced in the face of such strong user habits remains an open question.

Confidence, Uncertainty, and Perceived Aggressiveness: Participants’ confidence in performing gestures varied based on complexity and interpretation. Simple gestures like waving or pointing were executed with ease, while more abstract or dynamic gestures led to uncertainty, as participants questioned whether the robot could correctly interpret their actions. Additionally, some gestures were perceived as overly aggressive or inappropriate, particularly those involving large, forceful movements, most evident in the “Attack” gesture. These findings highlight the need to consider both user confidence and the social perception of gestures when designing gesture-based interactions.

5. Prototype Implementation and Technical Feasibility Test

To demonstrate the technical feasibility of deploying referent-free gestures in an embedded control scenario, we implemented a real-time gesture recognition prototype using a StereoLabs ZED 2i stereo camera and an NVIDIA Jetson Nano. This proof-of-concept system mapped gesture recognition outputs to robot commands on our custom hexapod platform. For this initial deployment, we selected two gesture classes from our elicitation study—pointing gestures (left/right) and leg lifts—based on their high visual separability and agreement scores.

5.1. System Pipeline and Data Processing

The pipeline, from video capture to robot command, was designed for edge execution. RGB-D video streams were recorded using the ZED 2i stereo camera and stored as SVO files. A tool written in Python (version 3.11) was developed to extract frames from these recordings via the ZED SDK (the release for JetPack 5.1.1). Each gesture sequence was manually segmented into discrete examples to serve as training data.

To improve generalization, the dataset was augmented using the ImageDataGenerator class from Keras (version 3.11.3), applying randomized transformations including rotation, translation, shear, zoom, and horizontal flips. This increased robustness to lighting conditions and user variability, which is critical for public deployment scenarios. While temporal modeling methods (e.g., 3D CNNs or RNNs) are common in gesture recognition, we focused on frame-based classification to prioritize real-time inference on constrained embedded hardware.

5.2. Neural Network Architecture

We implemented a compact convolutional neural network (CNN) to classify gesture images in real time. The model was optimized for the limited computational resources of the Jetson Nano. The architecture, presented in Table 2, included the following:

- Two Conv2D layers with 32 and 64 filters, respectively, followed by max pooling;

- Dropout layers to prevent overfitting;

- A dense hidden layer (128 units, ReLU);

- A softmax classification layer with 24 output units for gesture labels (including extended testing classes).

Table 2.

CNN architecture summary.

| Layer | Output Shape | Parameters |
|---|---|---|
| Conv2D | (26, 26, 32) | 320 |
| MaxPooling2D | (13, 13, 32) | 0 |
| Dropout | (13, 13, 32) | 0 |
| Conv2D | (11, 11, 64) | 18,496 |
| MaxPooling2D | (5, 5, 64) | 0 |
| Dropout | (5, 5, 64) | 0 |
| Flatten | (1600) | 0 |
| Dense (ReLU) | (128) | 204,928 |
| Dropout | (128) | 0 |
| Dense (Softmax) | (24) | 3,096 |
| Total parameters | | 226,840 |

The model was intentionally kept compact to ensure compatibility with low-power embedded systems. Larger models or sequence-based approaches will be explored in future iterations. It is worth noting, however, that the gesture set in a final deployment can be expected to be relatively easy to recognize, owing to the natural reduction and conventionalization process that experimental semiotics predicts.
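The output shapes and parameter counts in Table 2 can be cross-checked with a few lines of arithmetic. The sketch below assumes a 28 × 28 single-channel input, 3 × 3 convolution kernels with valid padding and stride 1, and 2 × 2 max pooling. None of these are stated explicitly in the text; they are inferred because they are the settings that reproduce the tabulated values. Dropout and Flatten layers are omitted since they add no parameters.

```python
def conv2d(shape, filters, kernel=3):
    """Valid-padding, stride-1 convolution: returns (output shape, params)."""
    h, w, c = shape
    params = (kernel * kernel * c + 1) * filters  # weights plus one bias per filter
    return (h - kernel + 1, w - kernel + 1, filters), params


def max_pool(shape, size=2):
    """Non-overlapping pooling; odd edges are dropped (floor division)."""
    h, w, c = shape
    return (h // size, w // size, c), 0


def dense(units_in, units_out):
    """Fully connected layer: returns (output size, params)."""
    return units_out, (units_in + 1) * units_out


shape = (28, 28, 1)  # ASSUMED input size, inferred from Table 2
total = 0
shape, p = conv2d(shape, 32)
total += p                              # (26, 26, 32), 320 parameters
shape, _ = max_pool(shape)              # (13, 13, 32)
shape, p = conv2d(shape, 64)
total += p                              # (11, 11, 64), 18,496 parameters
shape, _ = max_pool(shape)              # (5, 5, 64)
flat = shape[0] * shape[1] * shape[2]   # 1600 values after flattening
hidden, p = dense(flat, 128)
total += p                              # 204,928 parameters
_, p = dense(hidden, 24)
total += p                              # 3,096 parameters
print(total)  # 226840
```

Under these assumptions the dense hidden layer accounts for roughly 90% of all parameters, so any further reduction in model size would most likely come from shrinking that layer or the flattened feature map that feeds it.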

5.3. Training and On-Device Deployment

The model was trained for 100 epochs using categorical cross-entropy loss and evaluated on a held-out test set. Final training metrics were as follows:

- Cross-validation accuracy: 31.10%.

- Test accuracy: 30.75%.

- Loss on test set: 2.0414.

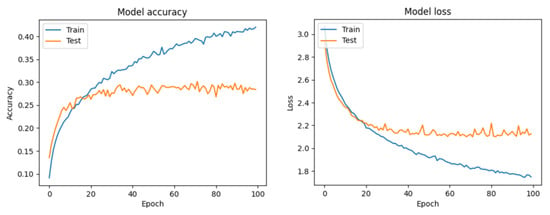

Figure 2 shows the CNN training and test curves. Accuracy stabilized around 30%, reflecting dataset size and task complexity, while the loss curve converged without divergence. This indicates that the model achieved stable though limited learning, which supports its role as a feasibility baseline but not as a performance benchmark. Although the overall accuracy was modest (30.75% on 24 classes), only a subset of three classes (point_left, point_right, leg_lift), corresponding to the two selected gestures, was deployed live. These were chosen for their high visual separability and consistency across participants. In practice, these gestures could be classified with sufficient reliability to drive simple robot commands such as turning or initiating locomotion. The implementation should therefore be understood as an early feasibility demonstration: it was not intended to optimize classification performance across all gesture categories, but rather to validate that gestures elicited with the referent-free method can be reliably deployed in an embedded control system.

Figure 2.

Training accuracy and loss curves for CNN.
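To put the reported metrics in context, they can be compared with what a classifier that guesses uniformly among the 24 classes would achieve. The sketch below assumes balanced classes and a natural-logarithm cross-entropy, which is the usual convention for categorical cross-entropy; the class balance of the actual dataset is not reported here.

```python
import math

NUM_CLASSES = 24        # softmax outputs listed in Table 2
TEST_ACCURACY = 0.3075  # reported test accuracy
TEST_LOSS = 2.0414      # reported categorical cross-entropy on the test set

# Baselines for uniform guessing, ASSUMING balanced classes.
chance_accuracy = 1 / NUM_CLASSES
chance_loss = math.log(NUM_CLASSES)  # cross-entropy of a uniform prediction

print(f"chance accuracy: {chance_accuracy:.4f}")
print(f"accuracy relative to chance: {TEST_ACCURACY / chance_accuracy:.2f}x")
print(f"chance loss: {chance_loss:.4f}")
```

Under these assumptions, chance accuracy is about 4.2% and the chance-level loss is about 3.18, so the reported 30.75% accuracy and 2.04 loss are both clearly better than guessing while remaining far from a deployable 24-class recognizer.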

The trained model was exported to the Jetson Nano and executed using TensorFlow Lite (TensorFlow version 2.15.0) for fast inference. End-to-end latency from frame capture to classification was approximately 110 ms, and the robot responded almost immediately.
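One way the step from classifier output to robot command could look is sketched below. It is not the implementation used on Spiderbot: the class indices, command names, and confidence threshold are all hypothetical, since none of them are listed in the text. The idea is simply that a frame triggers a command only when the most probable class is one of the deployed gestures and its probability is high enough.

```python
# HYPOTHETICAL class indices and command names; the text does not list them.
DEPLOYED = {
    0: ("point_left", "TURN_LEFT"),
    1: ("point_right", "TURN_RIGHT"),
    2: ("leg_lift", "START_WALKING"),
}


def to_command(probabilities, threshold=0.5):
    """Map one softmax output vector to a robot command, or None.

    A command is issued only if the top-scoring class is a deployed gesture
    and its probability reaches the (assumed) confidence threshold.
    """
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    if best not in DEPLOYED or probabilities[best] < threshold:
        return None  # ignore the frame and leave the robot's state unchanged
    return DEPLOYED[best][1]


# Example frame: class 1 (point_right) dominates the 24-way output.
frame = [0.0] * 24
frame[1], frame[5] = 0.8, 0.2
print(to_command(frame))
```

At the reported latency of roughly 110 ms, such a function would be evaluated about nine times per second, so requiring the same command on several consecutive frames before acting would be a natural extension for suppressing isolated misclassifications.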

5.4. Summary and Implications

This implementation serves as an initial feasibility test showing that referent-free gesture designs can be mapped onto real-time embedded control pipelines. It bridges exploratory elicitation with functioning robot interaction and provides a foundation for iterative improvements. Future work will focus on expanding the gesture vocabulary, training with larger and more balanced datasets, and deploying the system in real-world outdoor environments to validate robustness and system usability.

6. Discussion

Looking at the core differences between the two approaches, the referent-free method encouraged greater creativity, allowing participants to generate gestures based on personal intuition rather than fixed commands. This diversity is particularly valuable in the early stages of interface design for unfamiliar or novel systems [39]. It is also consistent with findings from experimental semiotics, which show initial over-specification in novel communication contexts; this over-specification characterizes the exploratory phase and can be reduced and optimized through an iterative approach [12]. However, the referent-free method also introduced challenges related to consistency and cognitive load, especially when participants were asked to produce a large number of gestures without guidance. Despite generating fewer gestures, the referent-based method did not produce significantly higher agreement rates, highlighting that interpretation varies even with predefined prompts. It nevertheless offers better convergence for familiar commands, which directly parallels the usability–learnability tradeoff [32]. In other words, predefined referents essentially pre-solve the conventionalization problem, but potentially at the cost of long-term adaptability.
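For readers unfamiliar with how agreement is quantified, the sketch below computes a per-referent agreement rate in the form formalized by Vatavu and Wobbrock (2015), which appears in the reference list. Whether the study used exactly this measure is an assumption here, and the gesture labels in the example are invented for illustration.

```python
from collections import Counter
from typing import Hashable, Sequence


def agreement_rate(proposals: Sequence[Hashable]) -> float:
    """Agreement rate AR for one referent.

    `proposals` holds one gesture label per participant. AR is the share of
    participant pairs that proposed the same gesture:
    sum_i |P_i| (|P_i| - 1) / (|P| (|P| - 1)).
    """
    n = len(proposals)
    if n < 2:
        raise ValueError("AR needs at least two proposals")
    group_sizes = Counter(proposals).values()
    agreeing_pairs = sum(size * (size - 1) for size in group_sizes)
    return agreeing_pairs / (n * (n - 1))


# Invented example: six participants propose gestures for a "stop" referent.
stop_proposals = [
    "open_palm", "open_palm", "open_palm", "crossed_arms", "fist", "open_palm",
]
print(round(agreement_rate(stop_proposals), 3))
```

AR equals 1 when every participant proposes the same gesture and 0 when no two participants agree, so a wide spread of creative proposals lowers it directly.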

Potential for Hybrid Approaches: One promising avenue for further research is to use the referent-free method as a preliminary stage for eliciting a broad range of creative gestures [38]. The most promising or most frequently occurring gestures could then be tested in a more structured GES phase, where participants are provided with referents and asked to refine or select gestures from the initial set. Our study suggests that this approach is effective and indicates potentially better performance of the referent-free method, which will guide the direction of our future research.
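The hand-off between the two stages could be as simple as counting how often each coded gesture occurred per task in the referent-free phase and carrying the most frequent candidates forward. The sketch below illustrates this under stated assumptions: the function `shortlist_gestures`, the `top_k` cut-off, and the example observations are all hypothetical and do not come from the study data.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple


def shortlist_gestures(
    observations: Iterable[Tuple[str, str]], top_k: int = 2
) -> Dict[str, List[str]]:
    """Return the `top_k` most frequent gestures per task.

    `observations` are (task, gesture) pairs coded from the referent-free
    phase. Gestures with equal counts keep their first-seen order.
    """
    per_task: Dict[str, Counter] = defaultdict(Counter)
    for task, gesture in observations:
        per_task[task][gesture] += 1
    return {
        task: [gesture for gesture, _ in counts.most_common(top_k)]
        for task, counts in per_task.items()
    }


# Invented observations for two tasks.
coded = [
    ("stop", "open_palm"), ("stop", "open_palm"), ("stop", "crossed_arms"),
    ("forward", "wave_toward"), ("forward", "point_ahead"),
    ("forward", "wave_toward"), ("stop", "fist"),
]
print(shortlist_gestures(coded))
```

A frequency cut-off is only one possible selection criterion; confidence ratings or recognizability could be weighed in as well.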

Future studies could also explore whether participants respond differently to hybrid methods based on their familiarity with technology [33]. For example, those with more experience using gesture-based interfaces may benefit from the structured guidance of GES, while less experienced users may thrive in the creative, open-ended environment of referent-free elicitation.

Proxemics and Spatial Calibration in Urban Robot Control: A key outcome of our study was the emergence of proxemic behavior—participants instinctively adjusted their distance from the robot when creating or performing gestures. This behavior was shaped by both emotional factors (e.g., fear of damaging the robot) and pragmatic considerations (e.g., gesture visibility or physical comfort). Participants reported hesitation during close-range interactions, particularly when performing large or dynamic gestures, such as pointing or mimicking a leg lift. These findings underscore the need to explicitly define and calibrate gesture detection zones in real-world deployments.

In public urban environments, maintaining safe and socially acceptable interaction distances is not only a technical constraint but also a usability and safety requirement [36]. Gesture recognition systems must account for variable user proximity while ensuring reliable classification. Our results suggest that feedback mechanisms—such as visual or haptic cues indicating optimal interaction distance—could enhance user confidence and system performance.

Furthermore, the use of rulers in our study was effective in guiding users’ sense of space, but future systems could leverage dynamic depth sensing (from stereo or RGB-D cameras) to auto-calibrate gesture zones per user. This would allow more adaptive and inclusive interactions across diverse body types, movement abilities, and cultural gesture norms. Incorporating proxemic sensitivity into embedded gesture control systems could improve recognition accuracy, prevent misclassifications, and support safer human–robot collaboration in uncontrolled, open environments.
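As a rough illustration of the distance feedback discussed above, the following sketch turns depth samples from the region occupied by the user into a simple cue. It is a sketch under assumptions rather than a description of the prototype: the zone boundaries, the cue names, and the treatment of non-positive depth values as invalid readings are all hypothetical.

```python
from statistics import median
from typing import Iterable

# Hypothetical zone boundaries in metres; the study does not report values.
MIN_DISTANCE_M = 1.0
MAX_DISTANCE_M = 3.0


def distance_cue(depth_samples_m: Iterable[float]) -> str:
    """Turn depth samples from the user's region into a feedback cue.

    Non-positive samples are treated as invalid readings (an assumption
    about the sensor) and ignored. The median keeps single outliers from
    flipping the cue.
    """
    valid = [d for d in depth_samples_m if d > 0]
    if not valid:
        return "no_user"
    distance = median(valid)
    if distance < MIN_DISTANCE_M:
        return "step_back"
    if distance > MAX_DISTANCE_M:
        return "come_closer"
    return "ok"


print(distance_cue([2.0, 2.1, 9.0]))
```

In a per-user calibration scheme, the fixed boundaries could be replaced by values derived from each user's arm span or height as estimated from the same depth data.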

Emotional Responses and User Confidence: Participants’ emotional states significantly shaped their interactions with the robot. Many reported hesitation or uncertainty when performing unfamiliar or abstract gestures—particularly in the referent-free phase, where no predefined commands were available as guidance. Conversely, gestures resembling familiar actions, such as gaming inputs or human-to-human cues, were executed with more confidence. This comfort translated into positive experiences when the robot successfully responded to commands, reinforcing engagement. These results highlight the need for intuitive, culturally grounded gesture sets [34] and suggest that physical or visual feedback from the robot can help reduce uncertainty in future systems.

Technical Validation and Design Implications: To support the transition from gesture elicitation to real-world application, we implemented a lightweight recognition system using embedded hardware and vision-based sensing. While the prototype deployed only a small subset of gestures, the live test confirmed that even with limited accuracy at the full 24-class level, selected gestures could still be recognized and mapped to robot actions in real time. This should be seen as an initial feasibility probe of referent-free gestures in an embedded control setting, not as a claim of full system viability. Participants reported high satisfaction with the system’s responsiveness and intuitiveness, particularly when their gestures reliably triggered visible robot behavior—reinforcing the importance of immediate feedback in gesture-based interaction.

This validation supports the practical relevance of the elicitation results and offers insight into constraints that must be addressed in future deployments, such as model scalability, inference latency, and gesture distinctiveness. Importantly, the prototype exposed how technical limitations may intersect with design factors, such as the need for visually separable gestures, body-position invariance, and proxemic robustness. Future iterations of the system will benefit from improved modeling strategies and expanded gesture coverage, informed directly by this early-stage test.

Practical Use Implications and Author Perspective: Our findings provide actionable insights for real-world deployment of gesture-based control systems in urban robotic platforms. Although participants were not given any predefined use case or target application beyond the general description of a “mobile robot in an urban environment,” many spontaneously proposed plausible scenarios during the elicitation process—particularly in Phase 1. These included urban navigation aids, food or parcel delivery robots, companions or guides for children, and assistive mobility support for older adults or people with disabilities.

Such participant-driven projections reflect high perceived relevance of the interaction model and reinforce the need for adaptable, inclusive control strategies. For example, a robot operating on a university campus, sidewalk, or shopping street could be controlled by gestures to pause, redirect, or initiate task-specific behaviors such as guiding or delivering.

From a design perspective, we advocate for a staged development process: beginning with referent-free elicitation to gather creative and culturally grounded user input, followed by referent-based validation to optimize gesture consistency and technical feasibility. This hybrid approach supports socially embedded robotic systems, where intuitive, naturalistic interactions enhance usability, public trust, and long-term system adoption. From our perspective, the results highlight the importance of engaging end users early in the design of robotic interaction. The diversity of gestures proposed in the referent-free phase reflects the creativity and expectations people bring to new technologies, while the lower agreement rates underline the difficulty of standardizing commands across varied users. We interpret this as evidence that successful gesture sets will likely need both bottom-up user-driven discovery and top-down refinement to ensure usability in public contexts.

7. Limitations

This study represents an early-stage exploration of gesture-based control using referent-free elicitation and embedded implementation. The technical prototype included only a small subset of gesture classes, selected for their visual distinctiveness and agreement scores. Model accuracy was limited by the small training dataset and the computational constraints of the embedded system.

The experimental sessions were conducted in a static lab setting, primarily with young adults with technical backgrounds. This limits ecological validity and generalizability to real-world environments. In public settings, factors such as lighting, occlusion, and crowd density may significantly impact system performance and user behavior.

Moreover, social context is likely to influence interaction dynamics. Participants may alter or suppress gestures when observed by bystanders, especially when actions are large, unconventional, or socially ambiguous. This observer effect was not present in our controlled environment but must be addressed in future field studies.

8. Future Work

Our next phase of research will extend the prototype system to support the full gesture vocabulary derived from the elicitation study. Encouraged by the promising results of this initial implementation, we will retrain the model using a larger and more diverse dataset and investigate spatiotemporal modeling approaches and transfer learning to improve classification accuracy.

Crucially, we aim to deploy the system in a real-world pilot across university campus settings. This will allow us to evaluate interaction quality, gesture recognition robustness, and social acceptability under natural conditions. In particular, we will examine how proxemic behavior and observer effects influence gesture performance and user comfort. These insights will inform the design of socially aware, adaptive robot control systems suitable for deployment in public urban environments. Furthermore, the results may generalize to other environments, for instance to semi-autonomous systems that collaborate with humans in interactive desk-based settings, such as those described in [58].

9. Conclusions

This paper presents a human-centered gesture elicitation study and a proof-of-concept implementation for hexapod robot control in urban contexts. Through a three-phase protocol, we compared referent-free and referent-based methods, demonstrating that referent-free elicitation fosters greater gesture diversity and user creativity, which makes it particularly suitable for early-stage interface design. Referent-based elicitation, while more structured, did not yield significantly higher gesture agreement.

We validated a subset of elicited gestures through a technical prototype that used a compact CNN model deployed on embedded hardware for real-time hexapod robot control. Although overall model accuracy was limited by dataset size and hardware constraints, a small subset of three gestures (point_left, point_right, and leg_lift) was deployed live. These gestures were sufficiently distinct to be recognized and mapped to robot actions, demonstrating early feasibility rather than full viability of integrating user-defined commands with our robotic platform.

From our perspective, these findings highlight the tension between user creativity and the need for standardization in gesture-based interaction. The referent-free phase revealed a wide range of intuitive proposals, reflecting the diversity of how people imagine communicating with robots. At the same time, the relatively low agreement rates underscore that any practical deployment will require iterative refinement and convergence toward a stable gesture set. We see this as evidence that successful gesture design must combine bottom-up discovery with top-down standardization.

In terms of applications, the elicited gestures point toward clear opportunities for real-world use. Participants themselves often imagined functions such as delivery in city streets, guiding or assisting children, or supporting older adults and people with disabilities. Even though our prototype only implemented three gestures in real time, these scenarios illustrate how the broader vocabulary could be integrated into service, assistive, or educational robots operating in public environments.

This proof-of-concept implementation bridges exploratory gesture elicitation with real-time execution, and lays the groundwork for in situ deployment and evaluation. While not yet tested in public environments, proxemic behaviors observed during the elicitation study revealed important spatial patterns that will guide gesture zone calibration and system responsiveness. By connecting early-stage HRI methods with embedded control, this work contributes a complete pipeline for developing contactless, socially aware interaction systems suitable for future urban deployments.

Author Contributions

Conceptualization, N.W., A.W., A.R. and K.G.; methodology, A.W.; software, J.T. and W.K.; validation, N.W. and F.S.; formal analysis, J.T., W.K. and F.S.; investigation, N.W.; resources, N.W.; data curation, J.T. and W.K.; writing—original draft preparation, N.W. and A.W.; writing—review and editing, A.W. and N.W.; visualization, N.W.; supervision, A.R. and K.G.; project administration, N.W.; funding acquisition, N.W. and K.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was co-financed by the Minister of Education and Science from the state budget under the “Studenckie Koła Naukowe Tworzą Innowacje” program. Contract number: SKN/SP/570630/2023. The APC was funded by Lodz University of Technology.

Institutional Review Board Statement

Ethical review and approval were waived for this study due to the non-interventional character of the study, which was conducted with a non-vulnerable group of participants. All participants were fully informed about whether their anonymity was assured, why the research was being conducted, how their data would be used, and whether there were any risks involved in participating.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to privacy reasons.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Gesture Elicitation Results

Table A1.

Elicited gestures during referent-free and referent-based elicitation.

| Final Task Representation | Referent-Free Gesture: Image | Referent-Free Gesture: Description | Referent-Based Gesture: Image | Referent-Based Gesture: Description |
|---|---|---|---|---|
| Backward |  | Quick two-handed push gesture with open palms |  | One-handed pushing motion with open palm |
| End of interaction |  | Arms crossed in front of chest |  | Gesture for this task was not elicited |
| Forward |  | Waving arm toward the robot |  | Gesture for this task was not elicited |
| Initiation of the interaction |  | Repeated hand clapping |  | Repeated hand clapping |
| Move to the left/right |  | Pointing left or right with extended arm |  | Gesture for this task was not elicited |
| Rotate left/right |  | Alternating arm waves to the left or right |  | Drawing circles in the air with pointing finger |
| Stop |  | Static extended arm with open palm facing forward |  | Static extended arm with open palm facing forward |
| Summoning the robot |  | Patting thigh repeatedly with hand |  | Gesture for this task was not elicited |

References

- Halim, I.; Saptari, A.; Perumal, P.A.; Abdullah, Z.; Abdullah, S.; Muhammad, M. A Review on Usability and User Experience of Assistive Social Robots for Older Persons. Int. J. Integr. Eng. 2022, 14, 102–124.

- Prati, E.; Peruzzini, M.; Pellicciari, M.; Raffaeli, R. How to include User eXperience in the design of Human-Robot Interaction. Robot. Comput.-Integr. Manuf. 2021, 68, 102072.

- Huang, G.; Moore, R.K. Freedom comes at a cost?: An exploratory study on affordances’ impact on users’ perception of a social robot. Front. Robot. AI 2024, 11, 1288818.

- Ramaraj, P. Robots that Help Humans Build Better Mental Models of Robots. In Proceedings of the Companion of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, New York, NY, USA, 8–11 March 2021; HRI ’21 Companion. pp. 595–597.

- Bellingham, J.G.; Rajan, K. Robotics in remote and hostile environments. Science 2007, 318, 1098–1102.

- Ding, X.; Wang, Z.; Rovetta, A.; Zhu, J. Locomotion analysis of hexapod robot. Climbing Walk. Robot. 2010, 28, 291–310.

- Brodoline, I. Shaping the Energy Curves of a Servomotor-Based Hexapod Robot. Sci. Rep. 2024, 14, 11675.

- Abhishek, C. Development of Hexapod Robot with Computer Vision. Int. J. Res. Appl. Sci. Eng. Technol. 2021, 9, 1796–1805.

- Chang, Q.; Mei, F. A Bioinspired Gait Transition Model for a Hexapod Robot. J. Robot. 2018, 2018, 2913636.

- De Stefani, E.; De Marco, D. Language, Gesture, and Emotional Communication: An Embodied View of Social Interaction. Front. Psychol. 2019, 10, 2063.

- Galantucci, B. Experimental Semiotics: A New Approach For Studying Communication As A Form Of Joint Action. Top. Cogn. Sci. 2009, 1, 393–410.

- Motamedi, Y.; Schouwstra, M.; Culbertson, J.; Smith, K.; Simon, K. Evolving artificial sign languages in the lab: From improvised gesture to systematic sign. Cognition 2019, 192, 103964.

- Fay, N.; Ellison, T.M. The Cultural Evolution of Human Communication Systems in Different Sized Populations: Usability Trumps Learnability. PLoS ONE 2013, 8, e71781.

- Smith, K.; Tamariz, M.; Kirby, S. Linguistic structure is an evolutionary trade-off between simplicity and expressivity. In Proceedings of the 35th Annual Conference of the Cognitive Science Society, Cognitive Science Society, Austin, TX, USA, 31 July–3 August 2013; pp. 1348–1353.

- Ma, C.; Tan, Q.; Xu, C.; Zhao, J.; Wang, X.; Sun, J. Comparative Analysis on Few Typical Algorithms of Gesture Recognition for Human-robot Interaction. In Proceedings of the 4th International Conference on Computer Science and Application Engineering, Sanya, China, 20–22 October 2020; Association for Computing Machinery: New York, NY, USA, 2020.

- Pomykalski, P.; Woźniak, M.P.; Woźniak, P.W.; Grudzień, K.; Zhao, S.; Romanowski, A. Considering Wake Gestures for Smart Assistant Use. In Proceedings of the Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, New York, NY, USA, 25–30 April 2020; CHI EA ’20. pp. 1–8.

- Laplaza, J.; Romero, R.; Sanfeliu, A.; Garrell, A. Body Gesture Recognition to Control a Social Mobile Robot. In Proceedings of the Companion of the 2023 ACM/IEEE International Conference on Human-Robot Interaction, Stockholm, Sweden, 13–16 March 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 456–460.

- Liu, L.; Yu, C.; Song, S.; Su, Z.; Tapus, A. Human Gesture Recognition with a Flow-based Model for Human Robot Interaction. In Proceedings of the Companion of the 2023 ACM/IEEE International Conference on Human-Robot Interaction, Stockholm, Sweden, 13–16 March 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 548–551.

- Ren, Z.; Meng, J.; Yuan, J. Depth camera based hand gesture recognition and its applications in Human-Computer-Interaction. In Proceedings of the ICICS 2011-8th International Conference on Information, Communications and Signal Processing, Singapore, 13–16 December 2011.

- Gao, Q.; Liu, J.; Ju, Z. Hand gesture recognition using multimodal data fusion and multiscale parallel convolutional neural network for human–robot interaction. Expert Syst. 2021, 38, e12490.

- Fujii, T.; Lee, J.; Okamoto, S. Gesture Recognition System for Human-Robot Interaction and Its Application to Robotic Service Task. Lect. Notes Eng. Comput. Sci. 2014, 2209, 63–68.

- Canal, G.; Escalera, S.; Angulo, C. A real-time Human-Robot Interaction system based on gestures for assistive scenarios. Comput. Vis. Image Underst. 2016, 149, 65–77.

- Chen, J.; Moemeni, A.; Caleb-Solly, P. Comparing a Graphical User Interface, Hand Gestures and Controller in Virtual Reality for Robot Teleoperation. In Proceedings of the Companion of the 2023 ACM/IEEE International Conference on Human-Robot Interaction, Stockholm, Sweden, 13–16 March 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 644–648.

- Nowak, A.; Zhang, Y.; Romanowski, A.; Fjeld, M. Augmented Reality with Industrial Process Tomography: To Support Complex Data Analysis in 3D Space. In Proceedings of the Adjunct Proceedings of the 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2021 ACM International Symposium on Wearable Computers, New York, NY, USA, 21–26 September 2021.

- Walczak, N.; Sobiech, F.; Buczek, A.; Jeanty, M.; Kupiński, K.; Chaniecki, Z.; Romanowski, A.; Grudzień, K. Towards Gestural Interaction with 3D Industrial Measurement Data Using HMD AR. In Digital Interaction and Machine Intelligence; Biele, C., Kacprzyk, J., Kopeć, W., Owsiński, J.W., Romanowski, A., Sikorski, M., Eds.; Springer: Cham, Switzerland, 2023; pp. 213–221.

- Pomboza-Junez, W.G.; Holgado-Terriza, J.A. Gestural Interaction with Mobile Devices: An analysis based on the user’s posture. In Proceedings of the XVII International Conference on Human Computer Interaction. Association for Computing Machinery, Salamanca, Spain, 13–16 September 2016.

- Ruiz, J.; Vogel, D. Soft-Constraints to Reduce Legacy and Performance Bias to Elicit Whole-body Gestures with Low Arm Fatigue. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Republic of Korea, 18–23 April 2015.

- Morris, M.; Danielescu, A.; Drucker, S.; Fisher, D.; Lee, B.; Schraefel, M.; Wobbrock, J. Reducing legacy bias in gesture elicitation studies. Interactions 2014, 21, 40–45.

- Pascher, M.; Saad, A.; Liebers, J.; Heger, R.; Gerken, J.; Schneegass, S.; Gruenefeld, U. Hands-On Robotics: Enabling Communication Through Direct Gesture Control. In Proceedings of the Companion of the 2024 ACM/IEEE International Conference on Human-Robot Interaction. Association for Computing Machinery, Boulder, CO, USA, 11–15 March 2024; pp. 822–827.

- Stoeva, D.; Kriegler, A.; Gelautz, M. Review Paper: Body Movement Mirroring and Synchrony in Human-Robot Interaction. J. Hum.-Robot Interact. 2024, 13, 1–26.

- de Wit, J.; Vogt, P.; Krahmer, E. The Design and Observed Effects of Robot-performed Manual Gestures: A Systematic Review. J. Hum.-Robot Interact. 2023, 12, 1–62.

- Wobbrock, J.O.; Morris, M.R.; Wilson, A.D. User-Defined Gestures for Surface Computing; Association for Computing Machinery: New York, NY, USA, 2009.

- Ruiz, J.; Li, Y.; Lank, E. User-defined motion gestures for mobile interaction. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Vancouver, BC, Canada, 7–12 May 2011.

- Vatavu, R.D. User-defined gestures for free-hand TV control. In Proceedings of the 10th European Conference on Interactive TV and Video, Berlin, Germany, 4–6 July 2012.

- Micire, M.; Desai, M.; Courtemanche, A.; Tsui, K.; Yanco, H. Analysis of natural gestures for controlling robot teams on multi-touch tabletop surfaces. In Proceedings of the ACM International Conference on Interactive Tabletops and Surfaces, Banff, AB, Canada, 23–25 November 2009.

- Chan, E.; Seyed, T.; Stuerzlinger, W.; Yang, X.D.; Maurer, F. User Elicitation on Single-hand Microgestures. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016.

- Heydekorn, J.; Frisch, M.; Dachselt, R. Evaluating a User-Elicited Gesture Set for Interactive Displays; Oldenbourg Wissenschaftsverlag: München, Germany, 2011.

- Vatavu, R.D.; Wobbrock, J. Formalizing Agreement Analysis for Elicitation Studies: New Measures, Significance Test, and Toolkit. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Republic of Korea, 18–23 April 2015.

- Vatavu, R.D.; Wobbrock, J. Between-Subjects Elicitation Studies: Formalization and Tool Support. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016.

- Nacenta, M.A.; Kamber, Y.; Qiang, Y.; Kristensson, P. Memorability of pre-designed and user-defined gesture sets. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Paris, France, 27 April–2 May 2013.

- Morris, M.; Wobbrock, J.; Wilson, A.D. Understanding users’ preferences for surface gestures. In Proceedings of the GI ’10: Graphics Interface 2010, Ottawa, ON, Canada, 31 May–2 June 2010.

- Hoff, L.; Hornecker, E.; Bertel, S. Modifying Gesture Elicitation: Do Kinaesthetic Priming and Increased Production Reduce Legacy Bias? In Proceedings of the TEI ’16: Tenth International Conference on Tangible, Embedded, and Embodied Interaction, Eindhoven, The Netherlands, 14–17 February 2016.

- Henschke, M.; Gedeon, T.; Jones, R. Touchless Gestural Interaction with Wizard-of-Oz: Analysing User Behaviour. In Proceedings of the Annual Meeting of the Australian Special Interest Group for Computer Human Interaction, Parkville, VIC, Australia, 7–10 December 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 207–211.

- Park, E.; del Pobil, A.P.; Kwon, S.J. The Role of Internet of Things (IoT) in Smart Cities: Technology Roadmap-Oriented Approaches. Sustainability 2018, 10, 1388.

- Bunders, D.J.; Varró, K. Problematizing Data-Driven Urban Practices: Insights From Five Dutch ‘Smart Cities’. Cities 2019, 93, 145–152.

- Lee, J. Learning Robust Autonomous Navigation and Locomotion for Wheeled-Legged Robots. Sci. Robot. 2024, 93, 191–200.

- Pan, H. Deep Reinforcement Learning for Flipper Control of Tracked Robots in Urban Rescuing Environments. Remote Sens. 2023, 15, 4616.

- Qingqing, L. Multi Sensor Fusion for Navigation and Mapping in Autonomous Vehicles: Accurate Localization in Urban Environments. arXiv 2021, arXiv:2103.13719.

- Wen, W.; Hsu, L.; Zhang, G. Performance Analysis of NDT-based Graph SLAM for Autonomous Vehicle in Diverse Typical Driving Scenarios of Hong Kong. Sensors 2018, 18, 3928.

- Goldhoorn, A.; Garrell, A.; Alquézar, R.; Sanfeliu, A. Searching and Tracking People in Urban Environments with Static and Dynamic Obstacles. Robot. Auton. Syst. 2017, 98, 147–157.

- Jennings, D.; Figliozzi, M. Study of Sidewalk Autonomous Delivery Robots and Their Potential Impacts on Freight Efficiency and Travel. Transp. Res. Rec. J. Transp. Res. Board. 2019, 2673, 317–326.

- Valdez, A.M.; Cook, M.; Potter, S. Humans and Robots Coping with Crisis–Starship, COVID-19 and Urban Robotics in an Unpredictable World. In Proceedings of the 2021 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Melbourne, Australia, 17–20 October 2021.

- Rakhmatulin, V.; Cabrera, M.A.; Hagos, F.; Oleg, S.; Tirado, J.; Uzhinsky, I.; Tsetserukou, D. CoboGuider: Haptic Potential Fields for Safe Human-Robot Interaction. In Proceedings of the 2021 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Melbourne, Australia, 17–20 October 2021.

- Vekhter, J.; Biswas, J. Responsible Robotics: A Socio-Ethical Addition to Robotics Courses. In Proceedings of the 13th Symposium on Educational Advances in Artificial Intelligence (EAAI-23), AAAI-23, Washington, DC, USA, 11–12 February 2023.

- Loke, S.W. Robot-Friendly Cities. arXiv 2019, arXiv:1910.10258.

- Macrorie, R.; Kovacic, M.; Lockhart, A.; Marvin, S.; While, A. The Role of ‘Urban Living Labs’ in ‘Real-World Testing’ Robotics and Autonomous Systems. In Proceedings of the UKRAS20 Conference: “Robots into the Real World”, University of Lincoln, Lincoln, UK, 17 April 2020.

- Vatavu, R.D.; Wobbrock, J.O. Clarifying Agreement Calculations and Analysis for End-User Elicitation Studies. ACM Trans. Comput.-Hum. Interact. 2022, 29, 1–70.

- Dominiak, J.; Walczak, A.; Sałata, A.J.; Romanowski, A.; Woźniak, P.W. The Office Awakens: Building a Mobile Desk for an Adaptive Workspace with RolliDesk. In Proceedings of the 2025 ACM Designing Interactive Systems Conference, Madeira, Portugal, 5–9 July 2025; DIS ’25. pp. 3635–3646.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).