Abstract

Historic buildings and urban streetscapes face increasing threats from climate change, development, and aging infrastructure, creating a pressing need for accurate and scalable documentation methods. This review assesses the combined use of photogrammetry and unmanned aerial vehicle (UAV) technologies in preserving built cultural heritage. We systematically analyze the end-to-end workflow, from data acquisition using diverse UAV platforms and sensor payloads to the processing of imagery into highly detailed and accurate 3D models in photogrammetry software. Through case studies, including the mapping of ancient Maya sites in the Yucatán Peninsula and the conservation of the Notre Dame Cathedral, the review highlights the accuracy, efficiency, and accessibility offered by this technological synergy, underscoring its significance for heritage conservation, research, and the development of digital twins. Furthermore, it explores how these advancements foster public engagement and virtual accessibility, enabling immersive experiences and enriched educational opportunities. The paper also critically assesses the inherent technical, ethical, and legal challenges associated with this methodology, offering a balanced perspective on its application. By synthesizing the current knowledge, this review proposes future research trajectories and advocates for best practices, aiming to guide heritage professionals in leveraging photogrammetry and UAVs for the effective documentation and safeguarding of global cultural heritage.

1. Introduction

1.1. Background of Cultural Building Heritage

Historic buildings and streetscapes tell the stories of who we are. They are tangible links to the past that capture the architectural skill, artistic values, and social histories of the generations before us. Whether in the fine details of a façade or the layout of an old city street, these places anchor the community’s identity and offer a direct link to its history [1,2]. In this sense, preserved buildings are not static monuments but active classrooms to experience the past [3]. Preserving this heritage is therefore not just about protecting bricks and mortar; it is about sustaining cultural identity and ensuring that future generations can learn from and connect with their history.

However, their most captivating features—from weathered carvings to historic urban layers—also make them fragile. This vulnerability is now amplified. Climate change brings harsher weather that accelerates material decay [4], while the economics of urban growth often sacrifice conservation for new development [5]. These forces, along with aging infrastructure, create a plethora of threats that can lead to the permanent loss of our built heritage.

To counter these risks, we need documentation methods that are both precise and practical. Although traditional surveys remain important, Letellier reminds us that they are often too slow, costly, and difficult to apply on a large scale [6,7]. This limitation has pushed the heritage field toward photogrammetry technologies, which can produce detailed 3D models of entire sites with remarkable speed and accuracy [8,9]. These digital replicas serve as precise records, safeguarding cultural treasures for future generations and providing a basis for research, education, and conservation. Thus, they are becoming essential tools, allowing us to create a lasting digital record that can protect our architectural legacy from the threats of time and change.

1.2. The Rise of Photogrammetry for Cultural Building Heritage

The pressing need for more effective documentation has spurred the adoption of “reality capture”, a term for technologies that create dimensionally accurate 3D models of physical objects and environments [10]. For built cultural heritage, these methods provide an invaluable tool for preservation and analysis, supporting crucial restoration and educational work by capturing detailed digital records of historic structures [11]. Central to this approach is photogrammetry, the science of extracting 3D information from overlapping photographs. As this field has matured, the development of specialized software has become instrumental in streamlining the complex pipeline from image acquisition to a fully realized 3D model.

Beyond geometric documentation, photogrammetry also plays a critical role in the study of building pathology. High-resolution, image-based models allow researchers and conservators to detect and monitor cracks, surface erosion, moisture ingress, and biological growth over time [8]. Because these surveys capture texture and color, as well as shape, they can reveal subtle material changes that conventional inspections may miss. This information supports predictive maintenance, helping stakeholders to prioritize interventions before damage becomes severe.

Leading photogrammetry software includes RealityCapture (RealityScan v2.0) [12], Agisoft Metashape v2.2 [13], and Pix4Dmapper v4.10 [14], alongside open-source alternatives such as Meshroom [15]. RealityCapture is renowned for its high-speed processing and exceptional detail, making it a favorite in professional surveying and VFX [12]. Agisoft Metashape offers a robust feature set suitable for a wide array of applications, from archaeology to construction [13]. Pix4Dmapper is particularly strong in UAV-based mapping and analysis [14]. Meanwhile, Meshroom provides a powerful and accessible entry point into photogrammetry for researchers and hobbyists [15].

At the same time, the hardware for data acquisition has advanced equally rapidly, with unmanned aerial vehicles (UAVs, or drones) becoming essential tools for heritage documentation [16]. UAVs overcome the physical limitations of ground-based surveys, safely accessing high-altitude features and systematically covering large areas in a fraction of the time [17]. Equipped with high-resolution cameras, they can capture both nadir (top-down) and oblique (angled) imagery, providing the comprehensive dataset required for a complete and accurate 3D reconstruction. The combination of UAVs with light detection and ranging (LiDAR) and 3D laser scanning is an emerging trend in aerial data capture [18], and photogrammetry can be integrated with these methods to produce highly precise 3D data.

The combination of UAV-based photogrammetry and related technologies unlocks a highly efficient documentation workflow. UAVs gather rich, overlapping imagery across hard-to-reach areas, while RealityScan processes these photographs into accurate, georeferenced 3D models. In tandem, they streamline all aspects, from broad site surveys to the capture of fine architectural details, offering heritage professionals a fast, scalable way to preserve at-risk structures.

1.3. Aim and Scope

This review provides a focused analysis of the workflow that combines UAVs with photogrammetry technology for the documentation of cultural buildings and street heritage. The primary aim is to move beyond a general overview of photogrammetry and instead to focus on the specific outcomes, advantages, and challenges inherent in this widely adopted technological pairing.

To achieve this, the scope of this paper is defined by four objectives:

- To analyze and detail the complete workflow, from UAV data acquisition to final model generation in photogrammetry software, as it applies specifically to the complex geometries of historic buildings and urban streetscapes;

- To identify the unique contributions that this specific hardware and software combination offers the heritage field, such as enhanced model fidelity, scalability, and efficiency compared to other methods;

- To critically examine the distinct limitations and challenges that arise from this workflow, including issues of data quality, processing bottlenecks, and the practical hurdles in field deployment in heritage contexts;

- To synthesize these findings to propose future research directions and establish a set of best practices for heritage professionals using UAV photogrammetry for documentation and preservation.

2. Review Methodology

2.1. Data Sources and Search Strategy

To support the objectives of this review, we conducted a comprehensive literature search across academic databases with high relevance to cultural heritage, geospatial technologies, and digital documentation. Sources are organized into two categories: databases and registers (DNR) and other methods. The DNR category includes Scopus, Web of Science, IEEE Xplore, and the ACM Digital Library; we also targeted specialist journals in UAVs, photogrammetry, and LiDAR (e.g., Drones, Remote Sensing). Other methods comprise preprint servers (arXiv), vendor and technical documentation for relevant software and hardware, and publications from leading cultural protection organizations.

The search strategy was guided by a targeted set of keywords and Boolean operators to capture the literature [19] at the intersection of UAV technologies, photogrammetric methods, and heritage documentation. The search proceeded in three stages.

The first stage involved a technology overview. Examples of search strings include the following:

- “photogrammetry” AND “algorithm”;

- “photogrammetry” AND “SfM”;

- “photogrammetry” AND “MVS”;

- “photogrammetry” AND “RealityCapture” OR “RealityScan”;

- “photogrammetry” AND “software”;

- “Quixel” AND “RealityCapture” OR “RealityScan”;

- “Unreal” AND “RealityCapture” OR “RealityScan”;

- “UAV photogrammetry” AND “building”;

- “UAV sensors” AND “building”;

- “UAV LiDAR” AND “building”;

- “UAV thermal” AND “building”;

- “UAV” AND “flight plan”;

- “photogrammetry” AND “BIM”.

The second stage targeted specific projects and applications. Examples of search strings include the following:

- “UAV photogrammetry” AND “Maya”;

- “UAV LiDAR” AND “Maya”;

- “UAV photogrammetry” AND “Notre-Dame Cathedral”;

- “UAV LiDAR” AND “Notre-Dame Cathedral”;

- “photogrammetry” AND “virtual reality”;

- “photogrammetry” AND “game”;

- “game” AND “Notre-Dame Cathedral”.

The third stage targeted challenges and limitations. Examples of search strings include the following:

- “UAV photogrammetry” AND “limitation”;

- “UAV sensors” AND “limitation”;

- “Surface-only Capture” AND “limitation”;

- “UAV photogrammetry” AND “cost”;

- “UAV LiDAR” AND “cost”;

- “UAV photogrammetry” AND “privacy”;

- “UAV photogrammetry” AND “copyright”.
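Several of the strings above mix AND with OR (e.g., “photogrammetry” AND “RealityCapture” OR “RealityScan”). Because operator precedence can differ between databases, such strings are easier to reproduce when the OR alternatives are grouped explicitly. The following minimal Python sketch shows one way to compose them; the function name `build_query` and the parenthesized grouping are illustrative assumptions about the intended logic, which the strings themselves do not state.

```python
def build_query(required, alternatives=()):
    """Compose a Boolean search string with explicit grouping.

    required     -- terms that must all appear (joined with AND)
    alternatives -- interchangeable terms, at least one of which must
                    appear (joined with OR inside parentheses)
    """
    parts = [f'"{term}"' for term in required]
    if alternatives:
        group = " OR ".join(f'"{term}"' for term in alternatives)
        parts.append(f"({group})")
    return " AND ".join(parts)


# Assumed reading of the RealityCapture/RealityScan string from stage one.
query = build_query(["photogrammetry"], ["RealityCapture", "RealityScan"])
print(query)  # "photogrammetry" AND ("RealityCapture" OR "RealityScan")
```

Strings with AND only, such as “UAV LiDAR” AND “building”, pass through unchanged when no alternatives are supplied.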

Additionally, secondary search strategies were employed to expand the scope and ensure the inclusion of highly relevant research. This involved the following:

- Backward Searching (Citation Tracking): Examining the bibliographies of key papers identified through the initial search to discover foundational or related studies that might have been missed.

- Forward Searching (Cited By): Utilizing the “cited by” features within databases (e.g., Scopus, Web of Science, Google Scholar) to identify more recent research that has built upon or referenced the foundational works.

2.2. Screening, Inclusion/Exclusion, and Reporting

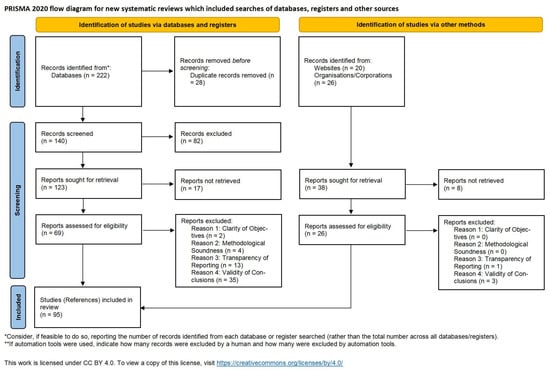

The PRISMA flow diagram provides transparency and supports the reproducibility of the review methodology. The overall search and selection process, including the numbers of records identified, screened, excluded, and retained, is therefore illustrated in a PRISMA-style flow diagram (Appendix A Figure A1).

Screening Process: We included only English-language publications that directly addressed UAVs, photogrammetry, LiDAR, or related digital documentation methods to ensure relevance and quality. Priority was given to peer-reviewed journal articles, conference proceedings, and major software or institutional reports; promotional and non-technical materials were excluded.

Retrieval Process: The titles and abstracts of all retrieved records were screened systematically. Full texts of potentially relevant studies were then reviewed in detail. To reduce bias, the screening and selection were conducted by the first author and cross-checked by a second reviewer drawn from the other authors. Disagreements were resolved through discussion until consensus was achieved.

Guolong has noted the rapid growth in research output on spatial technology for world heritage since 2015 [20]. This review’s literature search included studies published between 2015 and 2025 (inclusive). Some significant theories and findings from earlier periods were also included on a case-by-case basis. This range was selected to reflect the emergence and rapid evolution of UAV and photogrammetry technologies applied in cultural heritage contexts. It also aligns with the timeframe in which RealityScan (first version in 2016 [12]) and other photogrammetric tools became widely accessible and robust enough for professional-grade heritage work.

Eligibility Selection Process: To strengthen the reliability of our findings, we conducted a methodological quality appraisal of all included studies. Because the corpus included peer-reviewed articles, conference papers, and technical reports, we used a narrative appraisal approach informed by the Joanna Briggs Institute (JBI) checklists appropriate to each study design [21]. This flexible yet systematic method allowed us to assess the methodological rigor of each publication and incorporate these assessments into our synthesis. Our appraisal focused on four core criteria to evaluate the trustworthiness, relevance, and potential for bias in each study, as shown in Table 1.

Table 1.

Core criteria in eligibility selection process.

As with the retrieval process, the quality appraisal was performed by the first author and verified by a second reviewer drawn from the other authors. Any discrepancies in the assessment were resolved through discussion to reach a consensus. The outcomes of this quality appraisal, along with important details of each included study, are presented in a comprehensive table in Appendix A Table A1.

3. How to Capture Reality

3.1. Understanding Photogrammetry Technology

3.1.1. Core Functionality

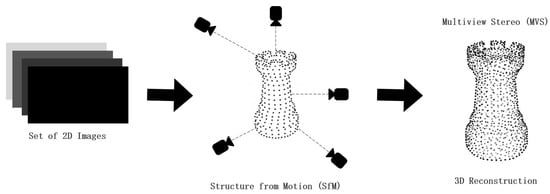

Photogrammetry technology’s core functionality is based on the twin pillars of structure from motion (SfM) and multiview stereo (MVS) [12], the two principal photogrammetric processes to derive 3D geometry from overlapping photographs, as shown in Figure 1. In the SfM stage, the application automatically detects and matches feature points in input images, estimates camera positions and orientations, and generates a sparse point cloud representing the general shape of the object or scene [22]. Based on this, the MVS stage densifies the sparse cloud by estimating depth maps for each view and combining them into a high-density point cloud, before constructing a continuous mesh surface [23]. This two-step pipeline ensures both robustness (through SfM’s global bundle adjustment) and detail (through MVS’s local depth estimation).

Figure 1.

SfM and MVS workflow.
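The quantity that bundle adjustment minimizes in the SfM stage is the reprojection error: the pixel distance between where a feature was observed in an image and where the current camera and point estimates predict it should fall. The Python sketch below computes this error for a single point under an ideal pinhole model with no lens distortion. It is an illustration only, not the routine used by any particular software package, and all numeric values are hypothetical.

```python
import math


def project(point_cam, focal_px, principal_point):
    """Project a 3D point given in camera coordinates (Z pointing forward)
    to pixel coordinates with an ideal pinhole model (no distortion)."""
    x, y, z = point_cam
    cx, cy = principal_point
    return (focal_px * x / z + cx, focal_px * y / z + cy)


def reprojection_error(point_cam, observed_px, focal_px, principal_point):
    """Euclidean distance in pixels between the observed feature location
    and the location predicted from the current 3D estimate."""
    u, v = project(point_cam, focal_px, principal_point)
    return math.hypot(u - observed_px[0], v - observed_px[1])


# Hypothetical values for illustration only.
point_cam = (1.0, 0.5, 10.0)   # metres, in the camera frame
observed = (2101.0, 1549.0)    # matched feature location in pixels
err = reprojection_error(point_cam, observed,
                         focal_px=1000.0, principal_point=(2000.0, 1500.0))
print(f"reprojection error: {err:.2f} px")  # reprojection error: 1.41 px
```

Bundle adjustment sums the squares of such errors over every observation and adjusts all camera poses and 3D points together to reduce the total.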

A key software program in this field is RealityCapture from Epic Games, which has set a high standard for photogrammetric processing [12,24]. Its latest version, rebranded as RealityScan in June 2025, has a reputation for speed and the ability to manage immense datasets [12]. The engine is highly effective in aligning images and reconstructing them into detailed, textured 3D models with impressive fidelity. For these reasons, it has become a go-to tool for heritage professionals who need to produce archival-grade digital models from photographic data.

When compared to other leading photogrammetry software, such as Agisoft Metashape [13] and Pix4Dmapper [14], RealityScan is reported to outperform them when handling more than 1 million images [12], in terms of both speed and memory efficiency. Its GPU-centric architecture offers a significant advantage over CPU-bound competitors, reducing reconstruction times by as much as 50–70% on equivalent hardware configurations [12]. In addition, RealityScan’s automated outlier detection and camera calibration routines require minimal user intervention, making it particularly suitable for large-scale heritage surveys, where a rapid response and ease of use are paramount.

We summarize the key features of RealityScan, a widely used representative of photogrammetry technology, as follows:

- High-speed processing: By taking advantage of GPU acceleration and optimized parallel algorithms, RealityScan dramatically reduces the alignment and reconstruction times, enabling users to process thousands of images in a matter of hours rather than days [12].

- Accuracy and robustness: The integrated bundle adjustment routines minimize the reprojection error on all cameras, producing geometrically precise models even under challenging conditions (e.g., low-texture or repetitive patterns) [12].

- Dense mesh generation: After MVS reconstruction, the software efficiently converts the dense point cloud into a watertight triangular mesh, preserving fine architectural details such as ornamentation and weathering [12].

- High-quality texture mapping: RealityScan projects the original high-resolution images onto the mesh, blending multiple views to produce seamless, photorealistic textures that faithfully reproduce the surface color and material properties [12].

In short, the software’s key features include its remarkable speed in processing large datasets, its high degree of accuracy in geometric reconstruction, its ability to generate dense and detailed meshes, and its sophisticated texture mapping capabilities. These attributes make it particularly well suited for complex projects involving extensive data acquisition, such as those encountered in cultural heritage documentation.

3.1.2. Workflow and Integration Within Epic Ecosystem

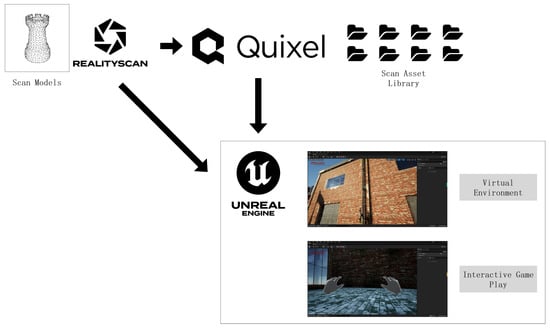

Beyond the advantages described above, RealityScan has another distinctive feature: integration into a standardized game development solution. RealityScan’s versatile output formats enable a fluid workflow and deep integration within the Epic Games ecosystem, as shown in Figure 2. This synergy is particularly beneficial for heritage documentation, allowing the creation of rich and interactive digital experiences.

- Scanning Assets: RealityScan’s output seamlessly complements Quixel, a vast library of high-quality scanned assets. Heritage models can be combined with scanned assets in Quixel Mixer to enrich scenes [25], providing context and detail that might not have been captured or are difficult to replicate. This combination allows for the creation of highly detailed and historically accurate digital environments.

- Game Engine: Direct integration with Unreal Engine allows these detailed, reality-captured models to be imported and used in interactive real-time environments. This is crucial in creating virtual reconstructions of heritage sites, enabling immersive exploration and detailed analysis. The ability to create large-scale, high-fidelity open worlds with Unreal Engine 5’s Nanite technology means that digitized heritage assets can be presented with unprecedented realism [26,27,28].

By combining RealityScan’s accurate photogrammetry with Epic’s real-time engine, creators can build immersive, data-driven reconstructions. These can include interactive guides, realistic lighting, and scalable deployment, all within the Epic ecosystem.

Figure 2.

How heritage object information proceeds from scan to virtual environment via Epic ecosystem.

3.2. UAV Photogrammetry Data Acquisition

3.2.1. Types of UAVs and Sensor Payloads

UAVs employed in photogrammetric surveys are broadly classified into two main categories: multirotor and fixed-wing platforms [29]. Multirotor UAVs, including quadcopters and hexacopters, provide superior maneuverability, stable hovering, and safe operation within confined or complex environments. These attributes make them highly suitable for the high-resolution, detailed mapping of small- to medium-scale areas, such as archaeological sites, the exteriors of buildings, and localized topographic surveys [18,29]. However, their primary limitations are limited flight durations (typically 20–40 min) and lower cruise speeds, restricting the geographical scope of a single mission [18,29]. In contrast, fixed-wing UAVs are more efficient in covering large areas due to their longer flight times (often exceeding one hour) and higher airspeeds [29,30]. Consequently, they are favored for applications such as agricultural monitoring, watershed-scale terrain modeling, and other scenarios that demand broad, continuous aerial coverage. The inherent trade-off is that fixed-wing platforms lack hovering capabilities and require larger open spaces for takeoff and landing, potentially limiting their deployment in urban or densely vegetated terrains [30].

The camera sensor strongly influences photogrammetric quality. Today, most UAV surveys use high-resolution RGB cameras with either full-frame or APS-C sensors and interchangeable lenses. Full-frame sensors gather more light, produce less noise, and offer a greater dynamic range, which translates into sharper images and more dependable feature matching in processing [31]. For a given sensor size and lens, cameras with 20 MP or more also reduce the ground sampling distance (GSD) at a given altitude, so that the digital surface models and orthophotos are crisper [31]. While a fixed-focus, downward-looking mount is the simplest setup, adding a gimbal to counteract pitch and roll is usually beneficial, maintaining even overlap and reducing motion blur. Moreover, when users need the highest precision (e.g., for engineering control surveys or measuring stockpile volumes), swapping in metric or cine-style lenses and a global or mechanical shutter avoids the rolling-shutter “jello” or skew effects common to electronic rolling-shutter CMOS sensors [32].
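The relationship between pixel count, altitude, and GSD can be made concrete with the standard nadir approximation GSD = (sensor width × altitude) / (focal length × image width). The short Python sketch below applies it to two hypothetical cameras that share a full-frame sensor and lens but differ in pixel count. The camera parameters are assumed values for illustration and are not taken from the cited studies.

```python
def ground_sampling_distance_cm(sensor_width_mm, focal_length_mm,
                                altitude_m, image_width_px):
    """Return the GSD in centimetres per pixel for a nadir image over
    flat ground, using the pinhole approximation."""
    gsd_m = (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)
    return gsd_m * 100.0


# Hypothetical full-frame cameras (36 mm sensor width, 35 mm lens) at 50 m.
gsd_24mp = ground_sampling_distance_cm(36.0, 35.0, 50.0, image_width_px=6000)
gsd_43mp = ground_sampling_distance_cm(36.0, 35.0, 50.0, image_width_px=8000)

print(f"6000 px wide image: {gsd_24mp:.2f} cm/px")
print(f"8000 px wide image: {gsd_43mp:.2f} cm/px")
```

With the sensor, lens, and altitude held fixed, the wider image yields the smaller GSD; halving the altitude would halve the GSD for either camera.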

While photogrammetry is powerful, there are scenarios, particularly in vegetated or highly complex terrains, where LiDAR payloads provide complementary advantages [33,34]. LiDAR can be categorized by platform and by signal/return type. Platform categories include airborne (topographic) LiDAR for broad-area surveys; terrestrial laser scanning (TLS) for high-resolution, close-range documentation; and satellite LiDAR, carried on Earth-orbiting satellites, which can survey large terrestrial and atmospheric regions [35]. For UAV applications, the most relevant are lightweight UAV-mounted units and TLS.

UAV-borne LiDAR systems emit laser pulses and measure return times to capture three-dimensional point clouds directly, penetrating gaps in foliage to map underlying ground surfaces and intricate structural details [33]. Such systems typically weigh more and consume more power than standard cameras, and their raw data often require different processing pipelines [34]. Nevertheless, integrating UAV LiDAR with photogrammetric imagery can yield richer datasets: the LiDAR point cloud adds true 3D structure and penetration capabilities, while the high-resolution imagery supplies color information and texture for enhanced visualization and classification [34,36]. In practice, mission planners select between photogrammetry, LiDAR, or a hybrid approach based on the project scale, desired accuracy, terrain complexity, and available payload capacity.
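The time-of-flight principle behind these measurements reduces to range = c × t / 2, where t is the round-trip travel time of the pulse. The Python sketch below converts two returns from a single hypothetical pulse into ranges, illustrating how a first return from the canopy and a last return from the ground are separated. The timing values are invented for illustration, and the vacuum speed of light is used as an approximation for air.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # vacuum value; air is marginally slower


def range_from_time_of_flight(round_trip_s):
    """Convert a round-trip pulse travel time (seconds) to a one-way range
    (metres). The pulse travels out and back, hence the division by two."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


# Hypothetical returns from one pulse fired over forest.
first_return_s = 400e-9   # echo from the canopy top
last_return_s = 534e-9    # echo from the ground through a gap in the foliage

canopy_range = range_from_time_of_flight(first_return_s)
ground_range = range_from_time_of_flight(last_return_s)

print(f"canopy at {canopy_range:.1f} m, ground at {ground_range:.1f} m")
print(f"implied canopy height: {ground_range - canopy_range:.1f} m")
```

Keeping only the last returns is what allows a terrain model to be derived beneath vegetation, subject to enough pulses reaching the ground.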

Apart from photogrammetry and LiDAR, some high-value projects have also used thermal infrared (TIR) sensors on UAVs to acquire radiometric imagery [37,38]. TIR sensors measure the surface brightness temperature rather than visible-spectrum reflectance and therefore provide information complementary to photogrammetric and LiDAR measurements [38]. However, TIR sensors impose specific operational and processing constraints that must be addressed to produce quantitatively useful results. Typical TIR cameras have lower spatial resolutions than visible cameras for a given payload class, and the measured temperatures are affected by sensor calibration, detector warmup and drift, the sensor’s field of view, atmospheric effects, surface emissivity, mixed pixels, and the time of day and sky conditions at acquisition [39].

3.2.2. UAV Flight Planning and Data Acquisition

Effective UAV photogrammetry begins with thorough mission planning, typically conducted in dedicated flight planning software (e.g., Pix4Dcapture, DJI Ground Station Pro, UgCS [40,41,42,43]). As shown in Figure 3, these platforms allow users to define the survey area, set the flight altitude, specify the front and side image overlap, and generate waypoints along predetermined flight lines [44]. Standard flight patterns include nadir passes (vertical), ideal for terrain and orthophoto production, and oblique passes that capture building façades and vertical structures. Combining nadir and oblique imagery significantly enhances the 3D reconstruction of complex features such as rooftops, balconies, and architectural details [45].

Figure 3.

Overview of UAV flight planning and data acquisition.

When surveying buildings, special attention must be paid to image overlap and camera angles to ensure the complete coverage of façades and roof planes. A minimum of 75% frontal and 60% side overlap is common for nadir flights, as shown in Figure 3 [46]; oblique flights often require similar or slightly higher overlap to resolve vertical details [47]. Complex geometries (e.g., turrets, dormers, overhangs) and inaccessible areas (e.g., steep roofs, upper façades) may necessitate additional or tighter flight lines, variable-altitude passes, and manual waypoint adjustments. Ground control points (GCPs) or real-time kinematic (RTK) GNSS can improve the absolute accuracy, particularly in urban settings, where GPS multipath may be problematic [48].
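The overlap percentages translate directly into the distance between exposures and between flight lines once the image footprint on the ground is known. The Python sketch below works through the 75% frontal and 60% side overlap case for a hypothetical 6000 × 4000 px camera flown at a GSD of 1 cm/px, with the long image side oriented across the flight direction. These camera and GSD values are assumptions for illustration, and flight planning software performs the equivalent calculation internally.

```python
def footprint_m(gsd_cm_per_px, pixels):
    """Ground distance in metres covered by one image dimension."""
    return gsd_cm_per_px / 100.0 * pixels


def spacing_m(footprint, overlap):
    """Distance to advance so that consecutive footprints share the given
    fractional overlap (0.75 means 75%)."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in the range [0, 1)")
    return footprint * (1.0 - overlap)


# Hypothetical camera: 6000 x 4000 px at 1 cm/px, long side across-track.
across_track = footprint_m(1.0, 6000)   # image width on the ground
along_track = footprint_m(1.0, 4000)    # image height on the ground

exposure_spacing = spacing_m(along_track, 0.75)   # frontal overlap
line_spacing = spacing_m(across_track, 0.60)      # side overlap

print(f"trigger every {exposure_spacing:.1f} m along each line")
print(f"space flight lines {line_spacing:.1f} m apart")
```

Raising either overlap shortens the corresponding spacing, which increases the image count and flight time for the same survey area.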

Street-scale surveys introduce further constraints. Linear flight corridors must follow street centerlines or parallel offsets to capture façades and road surfaces, while maintaining safe distances from buildings, pedestrians, and vehicles [49]. Image overlap remains critical, but the flight altitude and speed should be balanced against local airspace regulations and the need to avoid moving obstacles [50]. Vertical elements, such as street signs, lamp posts, and trees, are best captured with nadir and shallow oblique passes to support 3D modeling and semantic classification [51]. In pedestrian zones or narrow alleys, manual piloting or hybrid automatic/manual missions may be required to navigate tight turns and ensure comprehensive coverage without intrusion into no-fly or high-traffic zones [49].

3.3. The Synergy: UAV Data Processed by RealityScan

Combining UAV multi-angle imagery with RealityScan’s GPU-powered engine delivers a rapid, seamless workflow and produces detailed 3D outputs, including the following:

- Highly Detailed 3D Models: Through its robust SfM and MVS pipelines, RealityScan reconstructs the captured environment into dense, geometrically precise 3D models. These models preserve intricate architectural details, surface textures, and the overall form of the surveyed objects or areas with remarkable fidelity.

- Textures and Materials: The software seamlessly projects the high-resolution, often color-rich imagery onto the generated 3D mesh. This process creates photorealistic, visually compelling textured meshes that faithfully represent the surface appearance, materials, and colors of the original subject, making them ideal for visualization, virtual tours, and detailed inspection.

Beyond geometric reconstruction, these outputs serve as the foundation for the creation of semantically rich building information models (BIM) through a process known as Scan-to-BIM [52]. This workflow uses computer vision and deep learning algorithms to perform semantic segmentation on the unstructured point clouds or meshes, automatically identifying and classifying architectural elements like walls, floors, windows, and doors [53]. These classified point clusters are then converted into structured, parametric BIM objects, transforming a purely visual model into an intelligent, data-rich asset. While this process is often semi-automated and requires human oversight for validation, it bridges the gap between reality capture and digital design and construction, enabling advanced analysis, facility management, and heritage conservation.

4. Contributions to Cultural Building Heritage

4.1. Unprecedented Detail and Accuracy

We note that modern documentation techniques have elevated heritage preservation beyond traditional photography and architectural drawings. High-resolution laser scanning, photogrammetry, aerial drone platforms, and 3D modeling now enable researchers, conservators, and architects to create comprehensive “as-is” digital archives—rich in detail at the centimeter to millimeter level. The Yucatán Peninsula and Notre Dame Cathedral are high-profile subjects that attract researchers, organizations, and corporations. Therefore, we conducted case studies to review the value of these methods in both contexts.

4.1.1. Case Study: Mapping and Managing Ancient Maya Sites in the Yucatán Peninsula, Mexico

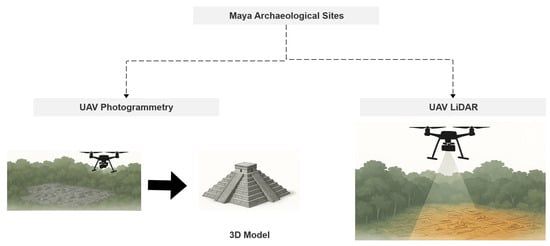

The Yucatán Peninsula of Mexico is home to numerous sprawling ancient Maya archaeological sites, many of which are nestled within dense tropical rainforests [54]. These environments present significant challenges for traditional archaeological survey methods, including limited visibility, difficult terrain, and the risk of damaging delicate archaeological features. UAVs, equipped with both photogrammetric sensors and LiDAR, have emerged as a game changer for the mapping and management of these complex heritage landscapes.

Projects at sites such as Chichén Itzá and Calakmul have extensively utilized UAV technology to overcome these obstacles, as shown in Figure 4. In areas around Chichén Itzá, where dense vegetation obscures ground-level features, UAV-mounted LiDAR has proven invaluable. Geoearth used the YellowScan Explorer LiDAR unit on a Skyfront Perimeter drone to survey approximately 30 km² across Chichén Itzá over just three days [55]. They achieved a point density of 110 pts/m² and digital terrain models with a 30 cm resolution, penetrating the forest canopy to reveal buried structures, causeways, and terraces formerly hidden beneath foliage [55]. Similarly, surveys in Yucatán’s Puuc region mapped water storage features, such as chultuns and aguadas, as well as terracing and stone workshops, illustrating LiDAR’s utility for landscapes beyond monumental architecture [56]. In 2024, Northern Arizona University scholar Luke Auld-Thomas reanalyzed decade-old LiDAR survey data (originally gathered for carbon stock studies) across Campeche, uncovering a “lost” city (Valeriana) with approximately 6674 previously unseen structures, some comparable to Chichén Itzá, in just 50 mi² [57].

Figure 4.

Overview of UAV photogrammetry and LiDAR work in Yucatán Peninsula.

Complementing the LiDAR data, high-resolution UAV photogrammetry has been used at the same site to create detailed 3D models of visible monumental architecture—such as El Castillo and the Great Ball Court—capturing carvings, limestone textures, and architectural forms with exceptional accuracy within the Chichen Itza 3D Atlas project [58]. These models facilitate detailed condition assessments, pinpointing areas of erosion or structural instability and enabling targeted restoration and conservation.

At Calakmul, a UAV-LiDAR survey in the Campeche rainforest revealed hundreds of previously unmapped structures, plazas, and agricultural features under heavy canopies. Hansen’s team conducted broader regional airborne LiDAR surveys in the Mirador–Calakmul Karst Basin, which uncovered over 775 ancient Maya settlements within a 1703 km² region, linked by an extensive network of causeways, underscoring the method’s regional-scale potential [59].

In conclusion, UAVs—when equipped with LiDAR and photogrammetric sensors—have transformed archaeological 3D scanning in the Yucatán. From high-density 3D terrain mapping at Chichén Itzá to regional-scale settlement revelations across Calakmul and beyond, these tools enable non-invasive, comprehensive documentation, critical for conservation, urban planning, tourism, and research into Maya urbanism and environmental adaptation.

4.1.2. Case Study: The Conservation and Digital Recreation of the Notre Dame Cathedral, Paris

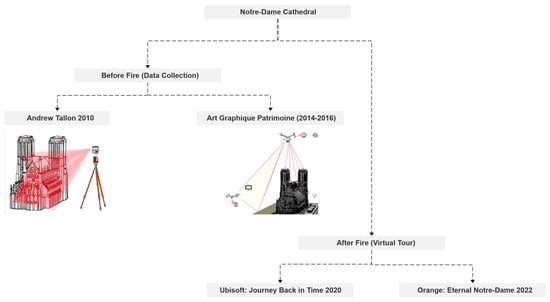

The devastating fire that affected the Notre Dame Cathedral in April 2019 [60] highlighted the critical importance of accurate and comprehensive digital documentation for cultural heritage. Fortunately, years before the disaster, numerous high-resolution photogrammetric and LiDAR surveys had been conducted on the iconic Parisian landmark. These surveys, often carried out for conservation and research purposes, provided an invaluable digital repository of the cathedral’s intricate gothic architecture.

Before the fire, architectural historians and conservationists had already leveraged drone-based photogrammetry and laser scanning to document Notre Dame in unprecedented detail. In 2010, art historian Andrew Tallon deployed a tripod-mounted Leica ScanStation C10 LiDAR scanner, spending 5 days capturing data from approximately 50 positions inside and outside the cathedral [61,62,63]. This meticulous process collected over a billion points, yielding a point cloud model accurate to within 5 millimeters that formed the foundation of a precise digital blueprint of the cathedral [61]. Tallon also combined these scans with high-resolution panoramic photographs to overlay color and texture onto the 3D data [61]. Between 2014 and 2016, Art Graphique Patrimoine (AGP) expanded on this work using UAVs and additional terrestrial scans, assembling a rich billion-point database of the entire structure [64,65]. Their detailed scan of the oak timber roof, known as “the forest”, involved 150 scan locations that captured 3 to 5 billion data points, resulting in an exceptional point density of one to two points per square millimeter [64]. During the fire response, UAVs were also deployed for aerial reconnaissance, thermal assessment, and post-fire structural mapping [66].

These aerial and ground photogrammetric surveys, processed via SfM pipelines, produced high-resolution 3D meshes capturing façade ornamentation, statue sculptures, gargoyles, and stone erosion patterns. Meanwhile, LiDAR point clouds provided accurate geometric data—complete spatial coordinates for every architectural feature and structural element. The combined dataset was integrated within a BIM framework by Autodesk and AGP [64,67], enabling a digital twin that fused geometry, material metadata, temporal documentation, and structural annotations. The immediate aftermath of the fire saw these pre-existing digital datasets become indispensable. They served as the primary reference for the international efforts to assess the damage and plan the reconstruction. Architects and engineers relied heavily on these high-accuracy 3D models to understand the original forms and constructions of the damaged sections, particularly the collapsed spire and roof.

Furthermore, these digital twins enabled researchers and conservationists to virtually explore and analyze the cathedral in its pre-fire state. These digital replicas also powered immersive virtual reality (VR) experiences like Ubisoft’s “Journey Back in Time” [68] and Orange’s “Eternal Notre Dame” [69], where visitors could wander through the cathedral in stereoscopic 3D, viewing the nave, towers, and inaccessible spaces from multiple historical perspectives. Such experiences fostered emotional connections and enabled condition assessments even when the physical site was closed for restoration.

Figure 5 summarizes how digital protection was implemented in the major projects related to the Notre Dame site. The Notre Dame fire serves as both a stark reminder of heritage’s fragility and a powerful testament to the irreplaceable value of integrated photogrammetry, LiDAR, and digital twin technologies for future preservation initiatives.

Figure 5.

Overview of major digital protection projects at Notre Dame Cathedral.

4.2. Public Engagement and Virtual Accessibility

Emerging digital technologies are not only revolutionizing professional conservation, restoration, and monitoring workflows but are also opening up new avenues for public engagement and education. By transforming heritage sites into interactive digital assets, these tools dissolve geographic and physical barriers, inviting global audiences to explore cultural treasures from anywhere in the world [70]. Such accessibility fosters a deeper public connection to heritage, positioning communities as active participants in preservation, rather than passive observers [71].

A core component of this transformation is the creation of highly realistic 3D models. Photogrammetry platforms like RealityScan enable the rapid generation of dense, textured meshes from smartphone imagery, which can then be optimized and imported into game engines such as Unreal Engine [12]. The result is an immersive environment where users can undertake guided virtual tours, engage in augmented-reality sandbox experiences, or navigate gamified narratives that blend play with pedagogy. Industry leaders have already demonstrated the educational power of this approach. CyArk’s MasterWorks: Journey Through History, developed with FarBridge and optimized for Oculus Rift and Gear VR, transforms precise LiDAR and photogrammetry data into fully explorable VR environments [72]. It offers guided tours through four iconic heritage sites (Ayutthaya, Chavín de Huántar, Mesa Verde, and Mount Rushmore), complete with audio narration from experts, interactive artifact collection, and nuanced storytelling that is rooted in cultural and climate contexts. In addition to Journey Back in Time, Ubisoft’s “Discovery Tour” series utilizes the Anvil engine (used in Assassin’s Creed) to deliver historian-curated, combat-free explorations of ancient worlds, offering a structured and engaging learning experience rooted in photogrammetric authenticity [73].

The potential of these technologies also extends to educational applications, where interactive 3D models can significantly promote cultural awareness and facilitate digital storytelling. One notable example is “Re-Live History”, a VR learning experience that reconstructs a Maltese Neolithic hypogeum [74]. Developed in collaboration with heritage experts and tested with middle-school students (ages 11–12), this immersive VR environment blends spatial exploration with representations of prehistoric cultural practices. Evaluation showed strong engagement, authenticity, and improved learning outcomes compared to traditional materials. Such VR stories effectively dissolve geographical barriers, enabling any student or enthusiast with a headset to explore remote archaeological contexts from anywhere. Yueting’s research supports this view: by combining guided navigation, multimodal narration, and visually rich reconstructions, these experiences democratize heritage education, making it accessible, immersive, and emotionally resonant [75]. In doing so, they help to foster deeper cultural connections, support curriculum-aligned learning, and empower users globally to engage with heritage, irrespective of physical constraints.

5. Challenges and Limitations

5.1. Technical Challenges

5.1.1. UAV Data Acquisition Specifics

Collecting high-quality UAV imagery in street heritage environments presents a range of technical challenges. Environmental and operational constraints can degrade both flight performance and the accuracy of downstream photogrammetric processing. Key issues include the following:

- Environmental Factors: Wind gusts can destabilize small multirotor platforms, introducing motion blur or misalignment between frames. Rain or high humidity not only reduces image clarity but also risks water ingress into sensitive electronics [76,77]. Temperature extremes—both hot and cold—affect battery chemistry, reducing the available flight time and potentially triggering automatic safety cut-outs [76].

- Lighting Conditions: Street scenes feature highly variable illumination: sharp contrasts between sunlit façades and deep shadows, glare from glass windows or wet pavement, and rapid changes as clouds pass overhead [78]. These inconsistencies result in uneven feature detection across images, leading to gaps or noise in 3D reconstructions.

- Sensor Limitations: Standard RGB cameras struggle to “see through” dense foliage or deep recesses beneath overhangs. Although LiDAR sensors offer better penetration, they also come with a higher payload weight, power consumption, and cost [79]. Deploying hybrid systems can be challenging on lightweight UAVs.

- Surface-Only Capture Limitations: Photogrammetry typically records surface responses such as visible texture. Therefore, it cannot directly reveal subsurface features, interior structural conditions, or elements hidden behind occluding objects [80].

- Battery Life and Flight Duration: Typical UAV batteries support only short flights, even under ideal conditions [81]. Mapping extensive street corridors or complex heritage districts thus demands multiple batteries and careful mission planning to ensure complete coverage without data gaps.

To tackle these issues in the field, it is necessary to choose rugged, weather-rated UAVs and fly them in the clearest daylight and calmest conditions possible. One can add extra sensors, such as LiDAR or multispectral cameras, to fill in the blind spots that a standard RGB camera cannot handle. One can then rely on smart mission-planning tools that adjust the altitude, speed, and image overlap in flight, based on live telemetry. Altogether, these steps make it significantly easier to capture clean, complete data when surveying heritage streetscapes.
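The link between altitude, overlap, speed, and battery count can be illustrated with a minimal planning sketch. It assumes a nadir-pointing pinhole camera over flat ground, with the long image side across the flight line. The camera values, corridor dimensions, overlaps, and usable battery time below are hypothetical placeholders rather than recommendations from the reviewed studies, and turns, take-off, and landing are ignored.

```python
import math
from dataclasses import dataclass


@dataclass
class Camera:
    """Hypothetical nadir camera; the values used below are placeholders."""
    sensor_width_mm: float
    focal_length_mm: float
    image_width_px: int
    image_height_px: int


def ground_sample_distance(cam, altitude_m):
    """Ground sample distance in metres per pixel (pinhole model, flat ground)."""
    return (cam.sensor_width_mm * altitude_m) / (cam.focal_length_mm * cam.image_width_px)


def plan_corridor(cam, altitude_m, length_m, width_m,
                  forward_overlap, side_overlap, speed_m_s, usable_min_per_battery):
    """Estimate image count, flight time, and batteries for a straight corridor."""
    gsd = ground_sample_distance(cam, altitude_m)
    footprint_across = gsd * cam.image_width_px   # metres, across track
    footprint_along = gsd * cam.image_height_px   # metres, along track
    photo_spacing = footprint_along * (1.0 - forward_overlap)
    line_spacing = footprint_across * (1.0 - side_overlap)
    # Lines are laid from one corridor edge to the other at line_spacing intervals.
    n_lines = math.ceil(width_m / line_spacing) + 1
    photos_per_line = math.ceil(length_m / photo_spacing) + 1
    flight_min = n_lines * length_m / speed_m_s / 60.0  # straight legs only
    return {
        "gsd_m": gsd,
        "n_lines": n_lines,
        "total_images": n_lines * photos_per_line,
        "flight_min": flight_min,
        "batteries": math.ceil(flight_min / usable_min_per_battery),
    }


def motion_blur_px(speed_m_s, exposure_s, gsd_m):
    """Forward-motion blur in pixels accumulated during one exposure."""
    return speed_m_s * exposure_s / gsd_m


cam = Camera(sensor_width_mm=13.2, focal_length_mm=8.8,
             image_width_px=5472, image_height_px=3648)
plan = plan_corridor(cam, altitude_m=40.0, length_m=2000.0, width_m=60.0,
                     forward_overlap=0.8, side_overlap=0.7,
                     speed_m_s=4.0, usable_min_per_battery=18.0)
blur = motion_blur_px(4.0, 1 / 1000, plan["gsd_m"])
print(plan, f"blur={blur:.2f} px")
```

With these placeholder values, the 2 km corridor needs five flight lines and three batteries, which illustrates why battery swaps have to be planned into the mission rather than improvised on site. Raising the altitude reduces both image count and flight time, at the cost of a coarser ground sample distance.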

5.1.2. Photogrammetry Software Processing Specifics

Post-flight processing in software such as RealityScan and Pix4Dmapper introduces its own set of technical challenges, particularly when handling UAV-derived imagery for street heritage projects. Key issues include the following:

- Massive Data Volume: A single mission can yield thousands of high-resolution photographs. Processing such datasets demands substantial RAM (often 64 GB+), powerful GPUs, and high-speed storage (SSDs or RAID arrays) [82]. Long load times and intermediate cache files further increase disk usage and extend the overall processing time.

- Processing Complexity: Insufficient image overlap, repetitive architectural textures (e.g., brick façades), reflective surfaces (glass windows, wet stone), and motion blur from wind-induced UAV shake can confuse feature matching. These factors often manifest as alignment errors, mesh holes, or texture artifacts in the final model [83].

- Learning Curve: Achieving optimal results requires operators to master UAV flight planning (setting overlap, altitude, and camera parameters) as well as RealityScan’s parameters (alignment tolerances, reconstruction modes, texture baking). Inexperienced users can inadvertently degrade model quality or waste compute resources.
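One way to limit alignment errors caused by motion blur is to screen images before they enter the photogrammetry software. The sketch below uses the variance of a discrete Laplacian as a sharpness score, a common heuristic that is not specific to RealityScan or Pix4Dmapper. The synthetic test frames, the helper names, and the threshold are assumptions for illustration; a usable threshold depends on the camera and scene and would need to be calibrated on real survey imagery.

```python
import numpy as np


def laplacian_variance(gray):
    """Variance of a 4-neighbour discrete Laplacian; low values suggest blur."""
    g = np.asarray(gray, dtype=np.float64)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def horizontal_box_blur(gray, k=7):
    """Row-wise box blur, used here only to simulate forward-motion blur."""
    g = np.asarray(gray, dtype=np.float64)
    kernel = np.ones(k) / k
    return np.apply_along_axis(
        lambda row: np.convolve(row, kernel, mode="same"), 1, g)


def flag_blurry(images, threshold):
    """Return the names of images whose sharpness score falls below threshold."""
    return [name for name, img in images.items()
            if laplacian_variance(img) < threshold]


# Synthetic stand-ins for two greyscale frames (hypothetical data).
rng = np.random.default_rng(0)
sharp_frame = rng.integers(0, 256, size=(64, 64)).astype(np.float64)
blurred_frame = horizontal_box_blur(sharp_frame)

frames = {"sharp_frame": sharp_frame, "blurred_frame": blurred_frame}
threshold = 0.5 * laplacian_variance(sharp_frame)  # illustrative threshold only
flagged = flag_blurry(frames, threshold)
print(flagged)
```

Frames flagged in this way can be reviewed manually or excluded before alignment, which also reduces the data volume that has to be processed.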

5.1.3. Cost Considerations

An additional practical consideration is cost: photogrammetry workflows can often be deployed at a relatively low upfront cost (consumer/prosumer drones plus photogrammetry software), while LiDAR-based systems typically require substantially higher hardware and platform investments [18]. Photogrammetry hardware and software options exist across a broad price range, from hobbyist setups to professional subscriptions, which makes them attractive for budget-constrained projects. However, the photogrammetric processing of large image sets can drive up the computing and cloud processing costs [84]. By contrast, UAV LiDAR solutions commonly incur higher capital, maintenance, and operator-training costs (sensor, GNSS/IMU integration and platform). LiDAR does offer operational advantages in certain contexts, especially in terms of the better penetration of vegetation and reduced dependence on ambient lighting. Thus, the choice often becomes a trade-off between the required deliverable (penetration/accuracy/texture fidelity), project budget, and operational complexity. Hybrid workflows (photogrammetry for broad-area, texture-rich mapping and targeted LiDAR for vegetation penetration or engineering-grade surveys) can balance cost and data quality.

5.2. Ethical, Legal, and Social Considerations

5.2.1. Privacy Concerns

Deploying UAVs for street heritage documentation raises privacy challenges. Aerial imaging can inadvertently record identifiable individuals, license plates, private gardens, and other private property details. While UAVs provide valuable perspectives on architecture and urban patterns, they also traverse airspace in ways that may infringe on privacy rights [85].

First, capturing identifiable persons in public or semi-public spaces creates legal risks. Under regulations such as the EU’s General Data Protection Regulation (GDPR) or various US privacy laws, recognizable images constitute personal data [86]. Collecting and processing such footage without consent can incur fines and reputational harm. Even in “public” airspace, individuals may expect privacy in contexts like private courtyards or small gatherings.

Second, because license plates and other vehicle identifiers visible in UAV imagery are treated as personal data under frameworks like the GDPR and various US laws [87], they must be anonymized by pixelating or masking—otherwise, the footage can leave people vulnerable to stalking, identity theft, or other harms.

Third, details of private properties are often captured unintentionally. While landmark owners may welcome documentation, neighboring residents typically do not. Heritage surveys must clearly define flight boundaries to avoid peering over fences or recording beyond public rights of way.

Balancing the legitimate need for comprehensive heritage documentation with these privacy concerns requires a multi-pronged strategy.

- Pre-flight Planning. Conduct a privacy impact assessment to identify sensitive areas. Plan routes to maintain setbacks from private homes and schedule flights when foot traffic is minimal.

- Community Engagement. Notify residents, property owners, and authorities in advance. Secure written permissions and post public notices to allow opt-outs.

- Technical Controls. Use face and license plate detection tools to blur or mask identifiers.

- Data Governance. Restrict raw footage access through role-based controls. Define retention periods after which images containing private details are deleted or permanently anonymized.

- Transparency. Publish a privacy statement with any public release, detailing the collection scope, anonymization measures, and intended uses. Audit flight logs and archives regularly for compliance.
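The technical control listed above (masking identifiers) can be sketched as a pixelation step applied to regions returned by a detector. The detector itself is outside the scope of this sketch: the bounding boxes are assumed to come from a face or license plate detection tool, and the box coordinates, block size, and function name are hypothetical. Where re-identification is a serious concern, replacing the region with a solid fill is the more conservative choice.

```python
import numpy as np


def pixelate_regions(image, boxes, block=8):
    """Return a copy of image with each (x0, y0, x1, y1) box coarsely pixelated."""
    out = np.array(image, copy=True)
    height, width = out.shape[:2]
    for x0, y0, x1, y1 in boxes:
        # Clip the box to the image bounds.
        x0, y0 = max(0, x0), max(0, y0)
        x1, y1 = min(width, x1), min(height, y1)
        for by in range(y0, y1, block):
            for bx in range(x0, x1, block):
                tile = out[by:min(by + block, y1), bx:min(bx + block, x1)]
                # Replace every pixel in the tile with the tile's mean colour.
                tile[...] = tile.mean(axis=(0, 1), keepdims=True)
    return out


# Synthetic RGB frame and one hypothetical detection box.
rng = np.random.default_rng(1)
frame = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)
detections = [(8, 8, 24, 24)]  # assumed detector output in pixel coordinates
masked = pixelate_regions(frame, detections, block=8)
```

The function returns a copy, so the raw frame can be kept under restricted access while only the masked version is released.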

5.2.2. Data Ownership and Copyright

Advances in UAV-based photogrammetry and software like RealityScan have made it straightforward to generate high-fidelity 3D models of heritage sites. However, the question of who owns these models is nuanced, especially when the subject is public property or protected cultural heritage. Four main factors determine ownership and downstream rights:

Source Imagery and Raw Data: Typically, the UAV operator holds the raw aerial imagery under the principle that photographs are protected by copyright from the moment of capture. Ahmad indicated that, if the operator is an employee or contractor, the commissioning entity (e.g., a municipal heritage office) may claim “work for hire” ownership [85]. In the absence of a contract, the photographer retains copyright, including rights over any derivative works created from the images.

EULA and Output Rights: An end-user license agreement (EULA) generally grants the licensee full rights to the output data, subject to payment of any applicable fees [88]. Unlike some SaaS photogrammetry solutions that restrict the commercial use of derived 3D meshes, RealityScan allows the user to exploit, modify, and distribute the results without additional royalties [89]. It is critical to review the specific terms to ensure that there are no clauses requiring attribution or restricting redistribution.

Commissioning Agreements and Institutional Policies: Public heritage projects are often funded by governmental or non-profit bodies that impose open data mandates. The local government’s cultural heritage department might require that all 3D assets be released under a Creative Commons license (CC BY or CC BY–SA) to maximize public access and scholarly reuse [90]. Conversely, a private donor may insist on exclusive commercial rights, limiting sharing to internal or paid licensing channels. Clear contractual language at project inception is therefore essential.

Copyrightability of 3D Models of Public-Domain Subjects: While many historic monuments are themselves in the public domain, the photogrammetric model can still attract copyright if it exhibits sufficient originality, for instance, in the choice of viewpoints, texture editing, or post-processing enhancements. Conversely, purely mechanical reconstructions with no creative embellishment may be deemed “merely factual” and thus uncopyrightable in US jurisdictions [91]. In either case, practitioners should not assume automatic public-domain status without legal counsel.

6. Discussion

The integration of photogrammetry with UAV technology represents a significant leap forward in the documentation and preservation of cultural building heritage. As highlighted throughout this review, this synergy addresses the limitations of traditional survey methods by offering unparalleled efficiency, detail, and accessibility [8,9,16]. The capability to rapidly capture vast amounts of high-resolution imagery from diverse perspectives, even from previously inaccessible vantage points, has revolutionized how we record, analyze, and engage with historic structures.

6.1. Technological Contributions

UAVs have transformed data acquisition by providing stable, high-resolution aerial platforms. Their ability to execute pre-programmed flight plans with precise overlap ensures comprehensive coverage, while the flexibility for manual control allows for the capture of intricate details on complex facades and roofs [44,47]. The evolution of sensor payloads, from high-resolution RGB cameras to complementary LiDAR systems, further enhances the richness and accuracy of the captured data, allowing for the penetration of dense foliage and detailed structural analysis where needed [33,34].

Simultaneously, photogrammetry software, exemplified by RealityScan, has kept pace with these hardware advancements. The sophisticated SfM and MVS algorithms, coupled with powerful GPU acceleration, enable the processing of massive image datasets into highly accurate, textured 3D models [12,22,23]. The seamless integration of photogrammetry outputs with game engines like Unreal Engine further unlocks innovative applications in digital heritage, facilitating immersive virtual reconstructions and interactive educational experiences [25,26].

The case studies from the Yucatán Peninsula and Notre Dame Cathedral vividly illustrate the profound impact of these technologies. In the Yucatán, UAV-LiDAR and photogrammetry have been instrumental in uncovering and mapping vast archaeological landscapes previously hidden by dense rainforests, revealing the scale and complexity of Maya urbanism [55,59]. At Notre Dame, pre-existing digital records proved invaluable in the assessment and planning of its reconstruction following a devastating fire, underscoring the critical role of accurate digital twins for heritage resilience [64,65]. Digital twins can link 3D assets with geographic information systems (GIS), placing buildings and monuments in a broader digital management context [92]. They support integration with geospatial and building datasets, enable multi-user web–GIS access for stakeholders and the public, and make data actionable for conservation workflows, automated alerts, and multidisciplinary collaboration. VR applications can extend public access, improve training, and enhance interpretation and outreach [93]. These examples highlight how advanced documentation methods provide unprecedented detail and accuracy, crucial for conservation, restoration, and scholarly research.

Furthermore, the democratization of these technologies through user-friendly software and more accessible hardware has broadened their application beyond specialist institutions. This accessibility is fostering greater public engagement with and virtual accessibility to cultural heritage. Interactive 3D models and VR experiences allow global audiences to explore heritage sites, enhancing cultural awareness and educational outreach in ways that were previously unimaginable [70,71].

6.2. Technical and Ethical Challenges

Despite the immense progress, several challenges remain. Technical hurdles in data acquisition, such as environmental factors, lighting variability, and battery limitations, necessitate careful planning and execution [76,81]. In terms of processing, managing massive datasets, mitigating alignment errors caused by complex textures or reflective surfaces, and mastering the software’s parameters are critical in achieving optimal results [82,83]. Ethical and legal considerations, including privacy concerns related to aerial imaging and the complexities of data ownership and copyright, require careful navigation through robust planning, community engagement, and transparent data governance [85,86].

6.3. Practical Recommendations

To fully exploit UAV photogrammetry, practitioners should adopt clear, repeatable workflows; combine drone surveys with complementary methods such as LiDAR; and commit to regular training so that teams can keep up with new tools and techniques. Institutions should back this up by funding training and building in-house capacity; setting practical protocols for metadata, storage, backups, and access; and promoting close collaboration between conservators, surveyors, GIS/IT specialists, and decision makers so that digital records are useful, reliable, and reusable over the long term.
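As one possible building block for a storage and backup protocol, a checksum manifest makes it possible to verify that archived survey files have not been altered or corrupted. The sketch below is an illustration only, not a procedure prescribed by the reviewed studies; the folder layout, file names, and manifest fields are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Stream a file through SHA-256 so large images need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root):
    """Map each file's relative path to its size and SHA-256 checksum."""
    root = Path(root)
    return {
        path.relative_to(root).as_posix(): {
            "bytes": path.stat().st_size,
            "sha256": sha256_of(path),
        }
        for path in sorted(root.rglob("*")) if path.is_file()
    }


def changed_files(old, new):
    """Paths from the old manifest that are missing or differ in the new one."""
    return sorted(name for name in old
                  if name not in new or new[name]["sha256"] != old[name]["sha256"])


# Demonstration on a temporary folder standing in for a survey archive.
with tempfile.TemporaryDirectory() as tmp:
    survey = Path(tmp)
    (survey / "images").mkdir()
    (survey / "images" / "IMG_0001.jpg").write_bytes(b"placeholder image bytes")
    (survey / "flight_log.csv").write_text("time,lat,lon\n")
    before = build_manifest(survey)

    # Simulate an unintended modification after archiving.
    (survey / "flight_log.csv").write_text("time,lat,lon\n0,0,0\n")
    after = build_manifest(survey)
    damaged = changed_files(before, after)

print(damaged)
```

Storing such a manifest alongside the raw imagery and re-checking it after each backup or migration gives a simple, tool-independent integrity test.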

6.4. Future Directions

6.4.1. Intangible Heritage

Although this review focuses on tangible heritage, the methods highlighted here could also be explored for capturing the intangible cultural heritage (ICH) [94] of a space [95]. This area remains largely unexplored, as most existing methods rely on standardized video and/or photo capture.

6.4.2. Technological Directions

Looking ahead, future research should focus on developing more robust and automated workflows that can better handle challenging acquisition environments and data processing complexities. The continued integration of AI and machine learning will help to automate feature extraction and matching, enable semantic segmentation and object recognition, support the temporal analysis of performances and rituals, detect anomalies and damage, and give clear measures of model confidence. Moreover, establishing standardized best practices for data management, ethical deployment, and digital preservation will be crucial in maximizing the long-term benefits of UAV and photogrammetry technologies for cultural heritage worldwide. The ongoing evolution of these tools promises to further revolutionize our ability to document, understand, and safeguard our shared global heritage for generations to come.

Author Contributions

Conceptualization, Y.X. and J.S.; Methodology, Y.X. and J.S.; Investigation, Y.X.; Resources, S.Y., C.F., T.H. and J.S.; Writing—original draft preparation, Y.X., S.Y., C.F., T.H. and J.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partly supported by the Philosophy and Social Sciences 2025 Annual Planning Project in Foshan (grant no. 2025-QN11), the 14th Five-Year Planning Project for the Development of Philosophy and Social Sciences in Guangzhou (grant no. 2025GZGJ240), and the Humanities and Social Sciences Planning Fund Project of the Ministry of Education of China (grant no. 23YJA760014).

Data Availability Statement

No new data were created or analyzed in this study.

Acknowledgments

The manuscript’s language was refined with the assistance of two AI-based proofreading tools, ChatGPT (OpenAI) and Writefull, within Overleaf. These tools were used solely for grammar correction, sentence restructuring, and overall readability enhancement, guided by prompts such as “Proofread the following content” and “Proofread only”, as well as Overleaf’s sentence correction suggestions. No content was generated, analyzed, or interpreted by AI. All scientific content, data interpretation, and conclusions remain entirely the original work of the authors.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Figure A1.

Background study flow diagram.

Table A1.

Template data extraction table for included studies (DNR).

| Study ID | Site | Design | Technology | Deliverables | Data Sources | Key Findings | Theme(s) Linked |

|---|---|---|---|---|---|---|---|

| Ahmad et al., 2021 [85] | General | Critical overview | Drones (UAVs) | Analysis of privacy threats from unregulated drones | Literature review | Unregulated drones pose a significant threat to the right to privacy, necessitating a new legal framework. | Privacy; Regulation; Drones |

| Alsadik et al., 2022 [79] | N/A (Simulation) | Simulation | Hybrid acquisition system for UAVs | Simulated performance of a hybrid UAV–sensor system | Simulation data | A hybrid system can optimize data acquisition for UAV platforms. | UAV Technology; Simulation; Data Acquisition |

| Asadzadeh et al., 2022 [34] | N/A (Review) | State-of-the-art review | UAV-based remote sensing | Overview of UAV applications in the petroleum industry and environmental monitoring | Literature review | UAVs are a powerful tool for the petroleum industry and environmental monitoring. | UAV Applications; Remote Sensing; Environmental Monitoring |

| Avrami, 2019 [1] | General | Theoretical framework | Heritage management approaches | Discussion of values in heritage management | Case studies, theoretical analysis | Emphasizes the importance of value-based approaches in heritage management. | Heritage Management; Cultural Values |

| Barbara, 2022 [74] | N/A (Virtual) | Case study | Immersive virtual reality (VR) | VR learning experience for prehistoric intangible cultural heritage | Development and user experience data | VR can provide an immersive and effective way to experience and learn about cultural heritage. | Virtual Reality; Cultural Heritage; Education |

| Bao et al., 2024 [27] | N/A (Software) | Comparative performance analysis | Game engines (Unity, Unreal) | Performance comparison of rendering optimization methods | Benchmarking and performance metrics | Provides a comparative analysis of rendering optimization in different game engines. | Computer Graphics; Software Performance; Game Development |

| Biryukova and Nikonova, 2017 [70] | General | Review and analysis | Digital technologies for cultural heritage | Analysis of the role of digital technologies | Literature review | Digital technologies play a crucial role in the preservation and accessibility of cultural heritage. | Digital Heritage; Cultural Preservation; Technology |

| Boon et al., 2017 [29] | N/A (Case study) | Case study and comparison | Fixed-wing and multirotor UAVs | Comparison of two UAV types for environmental mapping | Field data from case study | The choice between fixed-wing and multirotor UAVs depends on the specific application. | UAV Technology; Environmental Mapping; Remote Sensing |

| Bramer et al., 2018 [19] | N/A (Methodology) | Methodological paper | Systematic literature searching methods | An efficient and complete method for developing literature searches | Methodological description | Proposes a systematic approach to improve the efficiency and completeness of literature searches. | Literature Search; Research Methodology |

| Cai et al., 2025 [51] | N/A (Dataset) | Dataset creation | Drones; semantic segmentation | A varied drone dataset (VDD) for semantic segmentation tasks | Drone imagery | Introduces a new dataset to advance research in semantic segmentation for drone-captured images. | Drones; Computer Vision; Datasets |

| Chen et al., 2024 [2] | China | Methodological paper | Digital cultural heritage | Approach for integrating digital cultural heritage into sustainable education | Literature review | Explores paths for integrating digital cultural heritage into sustainable education to popularize knowledge and improve heritage protection. | Digital Heritage; Education; Cultural Preservation |

| Dai et al., 2023 [83] | N/A (Methodology) | Methodological research | UAV-SfM photogrammetry | Method to improve terrain modeling accuracy | Experimental data; error analysis | Analyzing the spatial structure of errors can enhance the accuracy of terrain models created with UAV-SfM photogrammetry. | UAV Photogrammetry; Terrain Modeling; Error Analysis |

| Dai et al., 2023 [49] | Low-altitude urban environments | Algorithm development | Vision-based UAV self-positioning | A method for UAV self-positioning without GPS | Image data; experimental results | Developed a vision-based method enabling accurate UAV self-positioning in low-altitude urban areas where GPS may be unavailable. | UAV Navigation; Computer Vision; Urban Environments |

| De Fino et al., 2023 [8] | Heritage buildings | Scoping review | Photogrammetry | Review on photogrammetry for heritage building condition assessment | Literature review | Provides a comprehensive overview of how photogrammetry can be used for the condition assessment of heritage buildings from a decision maker’s perspective. | Heritage Management; Photogrammetry; Condition Assessment |

| Enesi et al., 2022 [15] | N/A (Case study) | Case study | Photogrammetry | Quality assessment of 3D reconstruction for small objects | Case study data | Investigates the quality of 3D reconstructions of small objects using photogrammetry. | 3D Reconstruction; Photogrammetry; Quality Assessment |

| Epaud, 2019 [65] | Notre Dame | Historical analysis | N/A (Historical study) | Description of the lost roof structure of Notre Dame | Historical and architectural analysis | Provides a historical account of the medieval timber roof structure of Notre Dame Cathedral that was lost in the 2019 fire. | Architectural History; Cultural Heritage; Notre Dame |

| Fatorić and Seekamp, 2017 [4] | Global | Systematic literature review | N/A (Review) | Review of threats from climate change to cultural heritage | Literature databases | Identifies that cultural heritage is threatened by climate change and highlights research gaps. | Climate Change; Cultural Heritage; Risk Assessment |

| Furukawa and Hernández, 2015 [23] | N/A (Methodology) | Tutorial | Multiview stereo (MVS) | A comprehensive tutorial on MVS for 3D reconstruction | Computer vision literature | Provides a foundational overview and tutorial regarding multiview stereo algorithms used for 3D reconstruction from multiple images. | 3D Reconstruction; Computer Vision; Multiview Stereo |

| Gao, 2024 [7] | China | Visualization study | Digital simulation and reconstruction | Visualization of traditional artistic crafts from the “Kao Gong Ji” text | The “Kao Gong Ji” ancient text | Digital visualization can reproduce ancient craft technology, providing inspiration for modern craft innovation and promoting the integration of traditional crafts with modern technology. | Digital Heritage; Visualization; Traditional Crafts |

| Hansen et al., 2023 [59] | Guatemala – Mirador–Calakmul Basin | Archaeological analysis | LiDAR | Analysis of regional early Maya organization | LiDAR data | LiDAR analyses provide new perspectives on the socioeconomic and political organization of the early Maya civilization. | LiDAR; Archaeology; Maya Civilization |

| Hu and Minner, 2023 [81] | General (Urban environments) | Systematic review | UAVs, 3D city modeling | Review of UAVs and 3D modeling for urban planning and historic preservation | Literature review | Systematically reviews the use of UAVs and 3D city modeling to support urban planning and historic preservation, identifying trends and gaps. | UAVs; 3D Modeling; Urban Planning; Historic Preservation |

| Hurst et al., 2024 [24] | N/A (Lab/research setting) | Technology assessment | Apple’s Object Capture photogrammetry API | Assessment of the API for creating cultural heritage 3D models | 3D models created with the API, quality assessment metrics | Assesses the suitability and quality of Apple’s Object Capture for rapidly creating research-quality 3D models of cultural heritage objects. | 3D Modeling; Photogrammetry; Cultural Heritage; Technology Assessment |

| Jacquot, 2024 [67] | Notre Dame de Paris | Communication and image analysis | N/A (Analysis of images) | Analysis of the images of the “Forest” (roof structure) of Notre Dame | Images, media representations | Analyzes how the “Forest”, the historic timber roof of Notre Dame, is communicated and represented through images. | Notre Dame; Cultural Heritage; Image Analysis; Communication |

| Joosen et al., 2022 [86] | EU | Political analysis | N/A (Rulemaking) | Analysis of EU aviation rulemaking | Policy analysis | Examines how interest groups and national agencies influence the rulemaking of the European Union Aviation Safety Agency (EASA). | Regulation; Aviation Safety; EU Policy |

| Komárek, 2025 [77] | General | Analysis | Drones (UAVs) | Analysis of wind constraints on drone mapping | Literature review, meteorological data | Shows that wind is a major constraint on drone-based environmental mapping, affecting feasibility and data quality. | Drones; Environmental Mapping; Weather Constraints |

| Kong et al., 2022 [50] | N/A (Methodology) | Methodological development | UAV photogrammetry; GCPs | Automatic method for marking ground control points (GCPs) | Experimental data | Proposes an automatic and accurate method for marking GCPs to improve the efficiency and accuracy of UAV photogrammetry. | UAV Photogrammetry; Automation; Ground Control Points |

| Kovanič et al., 2023 [18] | N/A (Review) | Literature review | UAV, photogrammetry, LiDAR | Review of photogrammetric and LiDAR applications of UAVs | Literature review | Provides a comprehensive review of the current applications and developments in UAV-based photogrammetry and LiDAR. | UAV; Photogrammetry; LiDAR; Remote Sensing |

| Letellier, 2007 [6] | General | Guiding principles | Information management | Principles for heritage documentation and conservation | Best practices, case studies | Establishes guiding principles for recording, documentation, and information management for conserving heritage places. | Heritage Conservation; Documentation; Information Management |

| Li et al., 2025 [33] | Urban forests | Systematic review | LiDAR | Review of LiDAR for estimating individual tree aboveground biomass | Empirical studies | Systematically reviews empirical studies on modeling individual tree aboveground biomass in urban forests using LiDAR-derived metrics. | LiDAR; Urban Forestry; Remote Sensing; Biomass Estimation |

| Maboudi et al., 2023 [44] | N/A (Review) | Literature review | UAV, 3D reconstruction | Review of viewpoint and path planning methods for UAV-based 3D reconstruction | Literature review | Reviews and classifies path planning methods for UAVs to optimize the process of 3D reconstruction. | UAV; 3D Reconstruction; Path Planning; Automation |

| Marek, 2022 [91] | General | Legal analysis | Digital cultural heritage | Analysis of intellectual property (IP) issues | Legal frameworks, literature | Examines how intellectual property rights apply to digital cultural heritage. | Digital Heritage; Intellectual Property; Law |

| Masters et al., 2022 [25] | N/A (Virtual) | Experimental study | Immersive virtual reality (VR) | VR application for stress reduction (forest bathing) | User study data | Investigates the effect of biomass levels in a virtual forest on stress reduction in an immersive VR forest bathing application. | Virtual Reality; Health and Wellbeing; Stress Reduction |

| Moyano et al., 2022 [9] | Architectural restoration | Methodological paper | Historical building information modeling (HBIM) | Systematic approach for HBIM generation | Case study/project data | Proposes a systematic approach for generating HBIM models for architectural restoration projects. | HBIM; Architectural Restoration; Digital Heritage |

| Niu et al., 2024 [48] | N/A (Field test) | Accuracy assessment | UAV photogrammetry; RTK | Accuracy assessment of RTK-UAV systems for direct georeferencing | Field measurements, UAV data | RTK-equipped UAVs can achieve centimeter-level accuracy without GCPs, but urban multipath is a challenge. | UAV Photogrammetry; RTK; Georeferencing; Accuracy |

| O’Connor et al., 2017 [31] | Geosciences | Methodological review | Aerial survey cameras | Guidelines for optimizing image data for aerial surveys | Literature review, technical specs | Discusses the importance of sensor size, pixel pitch, GSD, and calibration in optimizing aerial survey imagery. | Aerial Survey; Remote Sensing; Photogrammetry; Data Quality |

| Patrucco et al., 2020 [17] | Built heritage | Data fusion methodology | Thermal imaging, optical sensors | Fused thermal and optical data to support built heritage analyses | Sensor data | Demonstrates the benefits of fusing thermal and optical data to support the analysis and conservation of built heritage. | Data Fusion; Thermal Imaging; Built Heritage |

| Poiron, 2021 [73] | Virtual Ancient Egypt | Case study | Video game (Assassin’s Creed) | “Discovery Tour” educational mode | Game development process | Provides a behind-the-scenes look at the creation of the educational “Discovery Tour”, highlighting its potential for public engagement. | Virtual Heritage; Education; Video Games; Public Engagement |

| Remondino and Rizzi, 2010 [10] | Heritage sites | Review | 3D documentation (photogrammetry, laser scanning) | Review of techniques, problems, and examples in reality-based 3D documentation | Case studies, literature review | Reviews techniques and problems of reality-based 3D documentation, highlighting its importance for heritage conservation. | 3D Documentation; Cultural Heritage; Photogrammetry |

| Ringle et al., 2021 [56] | Yucatán, Mexico | Archaeological survey | LiDAR | LiDAR survey of ancient Maya settlement | LiDAR data | LiDAR survey reveals extensive ancient Maya settlement and landscape modifications in the Puuc region. | LiDAR; Archaeology; Maya Civilization |

| Rocha et al., 2024 [11] | Lisbon, Portugal | Case study | Scan-to-BIM | BIM model for heritage maintenance | Laser scan data, building docs | Demonstrates the application of a Scan-to-BIM approach for the maintenance of historical heritage buildings. | Scan-to-BIM; HBIM; Heritage Maintenance |

| Roders and Van Oers, 2011 [5] | World Heritage cities | Management analysis | N/A (Management frameworks) | Analysis of management practices | Case studies, policy documents | Discusses the challenges in and approaches to managing World Heritage cities, balancing conservation and development. | World Heritage; Urban Management; Heritage Policy |

| Sandron and Tallon, 2020 [62] | Notre Dame Cathedral | Historical monograph | N/A (Historical/architectural analysis) | Comprehensive history of the cathedral | Historical archives, architectural analysis | Provides a detailed historical and architectural account of Notre Dame Cathedral through nine centuries. | Notre Dame; Architectural History; Cultural Heritage |

| Schonberger and Frahm, 2016 [22] | N/A (Methodology) | Algorithmic improvement | Structure from motion (SfM) | An improved and more robust SfM pipeline | Image datasets, algorithm metrics | Presents a revisited and improved structure-from-motion pipeline that is more robust and accurate. | Structure From Motion; 3D Reconstruction; Computer Vision |

| Smith, 2006 [3] | General | Theoretical framework/book | N/A (Heritage studies) | Theoretical framework on the uses of heritage | Theoretical analysis, literature review | Argues that heritage is a cultural process and discourse rather than an inherent quality of objects, shaped by social and political contexts. | Heritage Studies; Cultural Theory; Social Value |

| Themistocleous, 2019 [16] | Cultural heritage and archaeology | Review and book chapter | UAVs | Overview of UAV applications in cultural heritage and archaeology | Literature review, case studies | UAVs are valuable tools for documentation, monitoring, and analysis in cultural heritage and archaeology. | UAVs; Cultural Heritage; Archaeology; Remote Sensing |

| Tu et al., 2021 [45] | Rock formations | Methodological study | UAVs, photogrammetry | Improved method for 3D reconstruction of complex structures | UAV imagery (nadir, oblique, façade) | Combining nadir, oblique, and façade imagery enhances the completeness and accuracy of 3D reconstructions of complex rock formations. | UAV Photogrammetry; 3D Reconstruction; Data Acquisition |

| Wang et al., 2022 [78] | Street scenes (virtual) | Algorithm development | Neural light fields, differentiable rendering | Method for neural light field estimation and virtual object insertion | Image datasets | Develops a neural network approach to estimate light fields, enabling realistic virtual object insertion into street scenes. | Computer Graphics; Neural Rendering; Augmented Reality |

| Wesner and Blevins, 2021 [87] | USA and UK | Comparative legal analysis | Automated license plate readers (ALPRs) | Comparison of privacy policies for ALPRs | Legal documents, policy analysis | Compares US and UK privacy policies for ALPRs, highlighting different approaches to regulating surveillance technology. | Surveillance; Privacy; Regulation; Policy |

| Yan and Du, 2025 [75] | Historical districts | User study and empirical research | Virtual reality (VR) | Analysis of factors influencing tourists’ travel intention from VR experiences | User surveys, experimental data | Investigates how VR-based reconstructions of historical districts influence a tourist’s intention to visit the actual site. | Virtual Reality; Tourism; Cultural Heritage; User Experience |

| Zhang et al., 2022 [36] | Urban environments | Methodological development | LiDAR, photogrammetry, deep learning (U-Net) | A method for 3D urban building extraction | Airborne LiDAR, photogrammetric point clouds | A deep learning model (U-Net) can effectively fuse LiDAR and photogrammetric data to extract 3D urban buildings. | 3D Modeling; Urban Mapping; LiDAR; Deep Learning; Data Fusion |

| Zhou et al., 2020 [32] | N/A (Methodology) | Methodological development | UAV photogrammetry | A two-step method to correct rolling shutter distortion | UAV imagery, experimental data | Proposes a two-step approach that effectively corrects rolling shutter distortion in UAV imagery, improving photogrammetric accuracy. | UAV Photogrammetry; Image Processing; Data Quality |

| Barker et al., 2023 [21] | N/A (Methodology) | Methodological development | N/A (Appraisal tools) | Revised JBI quantitative critical appraisal tools | Development process, expert consultation | Outlines the process and methods for revising the JBI critical appraisal tools to improve their applicability. | Research Methodology; Critical Appraisal; Evidence Synthesis |

| Casini, 2022 [93] | Smart buildings | Review | Extended reality (XR) | A review of XR for smart building operation and maintenance | Literature review | Reviews the application of extended reality technologies in improving the operation and maintenance of smart buildings. | Extended Reality; Smart Buildings; Building Management |

| Doulamis et al., 2017 [95] | Cultural heritage | Book chapter/methodology | 3D Modeling; digitization | Methods for digitizing tangible and intangible cultural heritage | N/A (Methodological description) | Discusses methods for modeling and digitizing both static (tangible) and moving (intangible) aspects of cultural heritage. | Digital Heritage; 3D Modeling; Intangible Heritage |

| Gazagne et al., 2023 [38] | Vietnam | Case study and application | UAVs, thermal infrared (TIR) sensors | Assessment of UAVs for primate monitoring | Field data (UAV imagery) | Demonstrates that UAVs with thermal sensors are effective in monitoring and counting threatened primate species. | UAVs; Thermal Imaging; Wildlife Monitoring; Conservation |

| Gerchow et al., 2025 [37] | N/A (Vegetation) | Methodological development | UAVs, thermal imaging | Enhanced flight planning and calibration methods | Experimental data | Proposes enhanced flight planning and calibration techniques for UAV-based thermal imaging to improve analysis of canopy temperature. | UAVs; Thermal Imaging; Remote Sensing; Vegetation Analysis |

| Koo et al., 2021 [53] | N/A (BIM) | Algorithm development | BIM, deep learning (DNNs) | A method for automatic classification of BIM element subtypes | 3D geometric data | Develops a deep neural network to automatically classify wall and door subtypes in BIM models based on their 3D geometry. | BIM; Deep Learning; Automation; 3D Modeling |

| Lenzerini, 2011 [94] | General | Legal and theoretical analysis | N/A | Analysis of intangible cultural heritage | Legal frameworks, literature | Discusses the concept of intangible cultural heritage as the living culture of people and its significance in international law. | Intangible Heritage; Cultural Policy; Law |

| Meschini et al., 2022 [92] | University campus | System development/case study | Digital twins, BIM, GIS | A BIM-GIS asset management system for a cognitive digital twin | University building data | Presents a framework for creating cognitive digital twins for university asset management by integrating BIM and GIS. | Digital Twin; BIM; GIS; Asset Management |