Microgrid Operation Optimization Strategy Based on CMDP-D3QN-MSRM Algorithm

Abstract

1. Introduction

- (1) Refinement of the functional design of the multi-stage reward mechanism to prevent erroneous outcomes caused by exceptional or edge-case data.

- (2) Replacement of the original BRF in the D3QN algorithm with the improved MSRM to mitigate poor convergence resulting from reward sparsity, thereby enhancing the algorithm’s optimality-seeking capability.

- (3) Utilization of the constructed CMDP to strengthen the algorithm’s ability to handle complex power flow scenarios and improve the operational stability of microgrids.

2. Microgrid Optimization Model

2.1. Microgrid Equipment Modeling

2.1.1. Energy Storage System

2.1.2. Fuel Units

2.1.3. Power Line Losses

2.1.4. Microgrid Bus

2.2. Constraints

2.2.1. AC Power Flow Constraints

2.2.2. Fuel Generator Operating Constraints

2.2.3. Battery Operation Constraints

2.2.4. Microgrid and the Main Grid Interaction Constraints

3. Optimization Model Based on Constrained Markov Decision Process

3.1. State Space

3.2. Action Space

3.3. Transition Probability

3.4. Reward Function

3.4.1. Self-Balancing Rate

3.4.2. Reliability Rate

3.4.3. Operating Costs

3.4.4. Penalty Functions

4. Microgrid Operation Optimization Based on CMDP-D3QN-MSRM Algorithm

4.1. D3QN Algorithm

4.2. Multi-Stage Reward Evaluation Mechanism

| Algorithm 1. Multi-Stage Reward Mechanism |
|---|
| 1: Set penalty reward coefficient |
| 2: for episode in 1 to M do |
| 3: Initialize final reward and step reward |
| 4: for t in 1 to T do |
| 5: Observe state St and action At |
| 6: if External expert intervention is met then |
| 7: is given by expert inputs |
| 8: else |
| 9: is given by DRL |
| 10: end if |
| 11: Calculate step reward at every t: |
| 12: Calculate final reward at every t: |
| 13: if episode terminates t = T then |
| 14: |
| 15: else |
| 16: |
| 17: end if |
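The control flow of Algorithm 1 can be sketched in Python as follows. The reward formulas are missing from the listing above, so `step_reward_fn` and `final_reward_fn` are hypothetical placeholders, as are `policy` and `expert`. The way the two rewards are combined (the final reward is added only at the terminal step t = T) is an assumption made for illustration, not the authors' stated formula.

```python
from typing import Callable, List, Optional


def run_episode(
    states: List[float],
    policy: Callable[[float], int],
    expert: Callable[[float], Optional[int]],
    step_reward_fn: Callable[[float, int], float],
    final_reward_fn: Callable[[List[float]], float],
) -> List[float]:
    """Return the reward assigned at each step of one episode."""
    horizon = len(states)
    step_rewards: List[float] = []
    rewards: List[float] = []
    for t, state in enumerate(states, start=1):
        # Expert intervention overrides the DRL action when it applies;
        # the hypothetical expert returns None when it does not intervene.
        action = expert(state)
        if action is None:
            action = policy(state)
        r_step = step_reward_fn(state, action)
        step_rewards.append(r_step)
        if t == horizon:
            # Assumed combination: the terminal step also gets the final reward.
            rewards.append(r_step + final_reward_fn(step_rewards))
        else:
            rewards.append(r_step)
    return rewards


# Toy usage with made-up states and reward functions.
rewards = run_episode(
    states=[0.2, 0.5, 0.9],
    policy=lambda s: 1,
    expert=lambda s: 0 if s > 0.8 else None,
    step_reward_fn=lambda s, a: -abs(s - 0.5) if a == 1 else -1.0,
    final_reward_fn=lambda rs: 10.0 if sum(rs) > -2.0 else -10.0,
)
```

Keeping the step reward dense and adding the episode-level term only at the end is one way to address the reward sparsity that the contribution list attributes to the baseline reward function.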

4.3. Step Rewards

4.4. Final Reward

4.5. Expert Intervention

5. Example Analysis

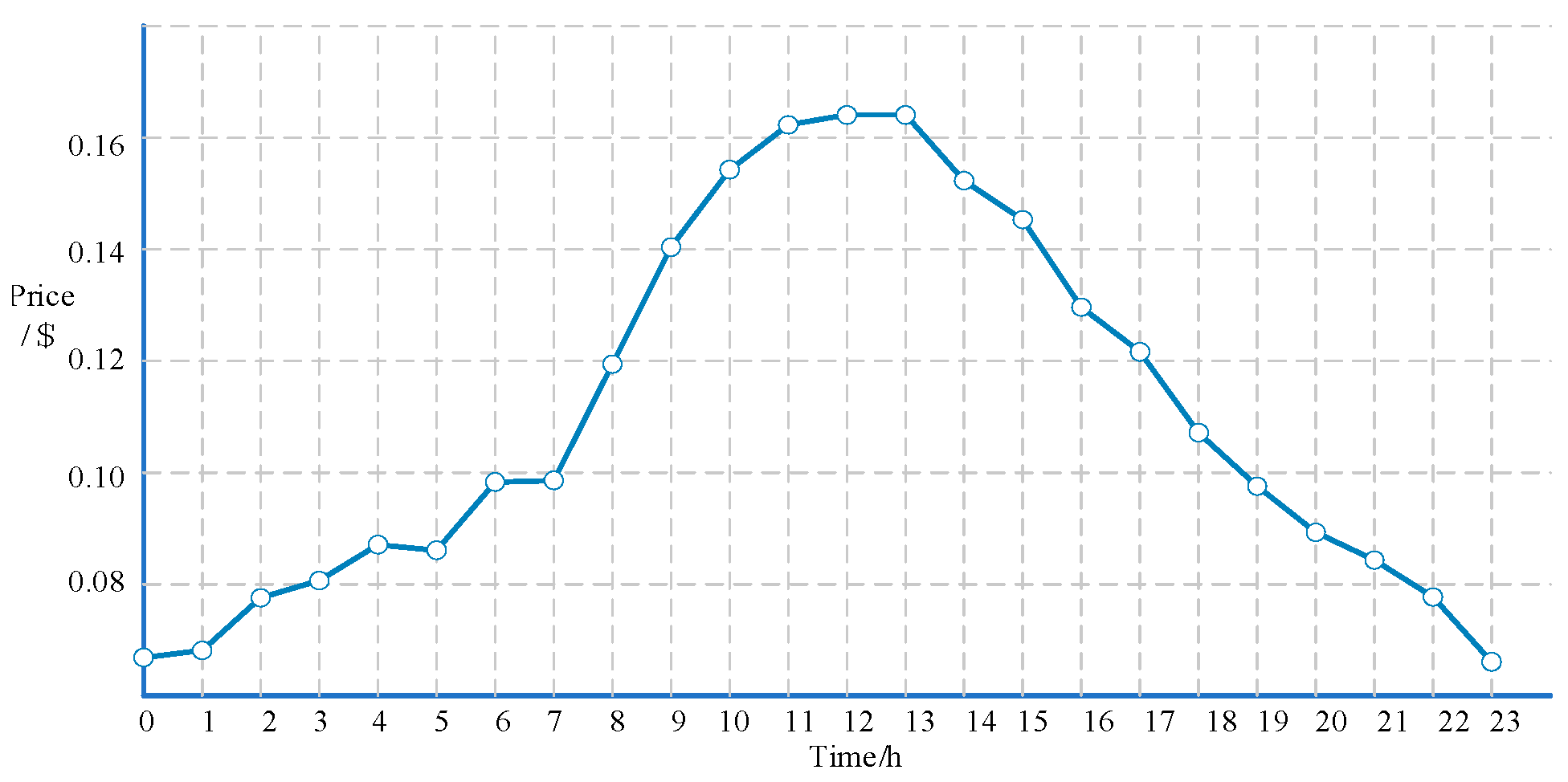

5.1. Simulation Environment Setup

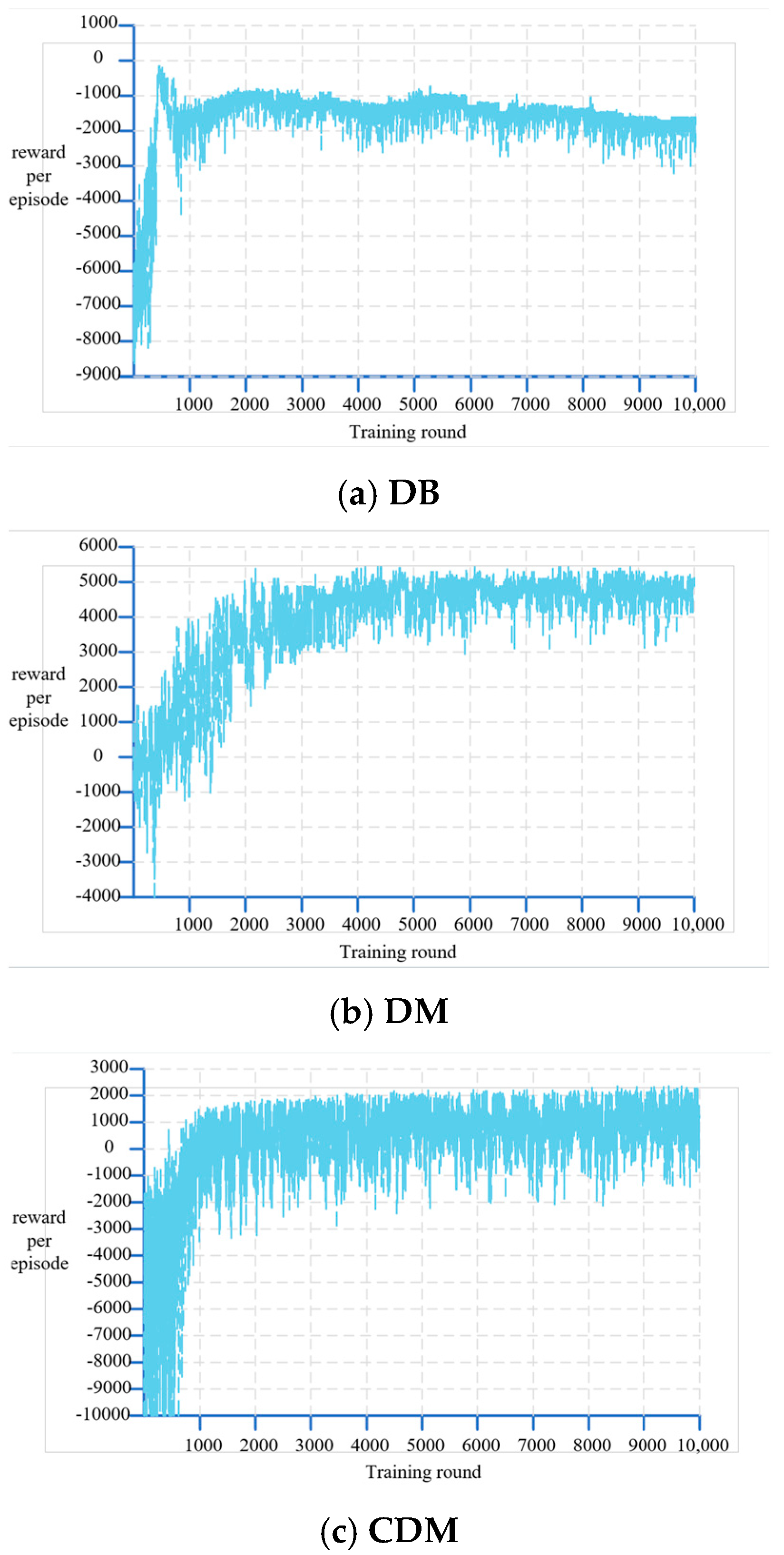

5.2. Analysis of Training Results of Algorithms

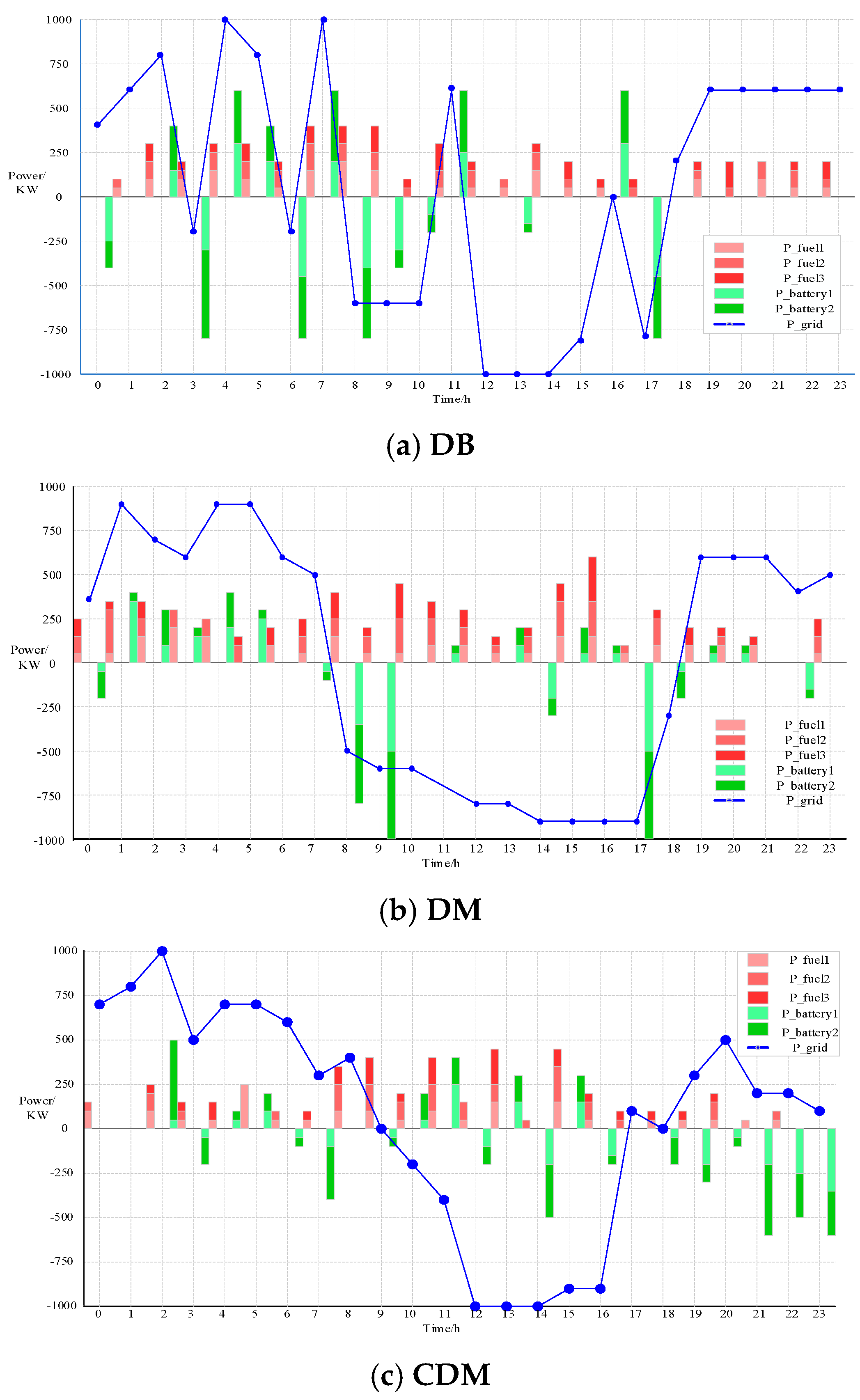

5.3. Analysis of Optimization Results of Algorithms

5.3.1. Constraints of the Running Strategy

5.3.2. Analysis of Operational Strategies Developed by Each Algorithm

6. Conclusions and Future Perspectives

- (1) The CMDP-D3QN-MSRM strategy proposed in this study provides an efficient data-driven tool for addressing the trade-off between “economic efficiency” and “security” in practical microgrid operations. Compared to the D3QN algorithm, this method resolves the issue of sparse rewards and reduces the microgrid’s operating costs by 16.5%. It also mitigates operational risks arising from complex power flow, with voltage fluctuations reduced by over 40%. This offers a feasible technical pathway for the autonomous and intelligent operation of microgrids.

- (2) Although this study validated the proposed algorithm only on a standard test microgrid, the algorithm shows strong potential for scalability. For larger microgrid networks, the core framework requires no fundamental changes; only the state space dimensionality and constraint conditions need to be adjusted accordingly.

- (1) The training in this study was based on a fixed historical data environment. Future work will explore online learning mechanisms that let the agent adapt its strategy through continuous interaction with the real microgrid environment, so that it can cope with unknown load variations or equipment aging and ultimately be deployed in real time.

- (2) To further enhance the global optimality of the scheduling scheme, we plan to investigate a hybrid framework integrating DRL with meta-heuristic algorithms. For instance, DRL could be employed for rapid real-time decision making, while meta-heuristic algorithms could be used for refined cost optimization over longer timescales, with the two working in concert. We also plan to further refine the algorithm by comparing it with more methods (such as those in references [10,13,17]).

- (3) This study employs heuristically designed penalty functions. It therefore lacks a formal proof of CMDP constraint satisfaction and relies on manual coefficient adjustment, which reduces its versatility. Subsequent research will use the Lagrangian relaxation method to address constraint satisfaction and eliminate the reliance on manual parameter tuning.
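As a rough illustration of the Lagrangian relaxation idea named in the last item, the fixed penalty coefficient can be replaced by a multiplier that is adjusted from observed constraint violations. The sketch below shows one common primal-dual form; it is not the authors' method, and the function names, the learning rate, and the cost limit are all hypothetical.

```python
def lagrangian_reward(reward: float, cost: float, lam: float) -> float:
    """Penalized reward r - lambda * c, replacing a hand-tuned penalty term."""
    return reward - lam * cost


def update_multiplier(lam: float, avg_cost: float, limit: float, lr: float = 0.05) -> float:
    """Dual ascent step: raise lambda when the average constraint cost
    exceeds the limit, lower it otherwise, and keep it non-negative."""
    return max(0.0, lam + lr * (avg_cost - limit))


# Toy run: made-up average constraint costs observed over four training rounds.
lam = 0.0
for avg_cost in [2.0, 1.5, 1.0, 0.5]:
    lam = update_multiplier(lam, avg_cost, limit=1.0)
```

The multiplier grows while the constraint is violated and shrinks once the policy becomes feasible, which is what removes the need to pick the penalty weight by hand.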

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| D3QN | Dueling Double Deep Q-Network |
| MSRM | Multi-Stage Reward Mechanism |
| CMDP | Constrained Markov Decision Process |
| BRF | Baseline Reward Function |
| DB | D3QN-BRF |
| DM | D3QN-MSRM |
| CDM | CMDP-D3QN-MSRM |

Appendix A

| Parameter | Symbol | Description |
|---|---|---|
| State of Charge | SOC | State of charge of the energy storage system, representing the battery’s remaining energy percentage. |
| Charge Power | | Charging power of the energy storage system during time period t. |
| Discharge Power | | Discharging power of the energy storage system during time period t. |
| Total Line Loss | | Total active power loss in the AC lines of the microgrid during time period t. |
| Fuel Engine Power | | Output power of the fuel engine during time period t. |
| Photovoltaic Power | | Power generated by the photovoltaic system during time period t. |
| Wind Power | | Output power of the wind power generation system during time period t. |
| Main-Grid Exchange Power | | Power exchanged between the microgrid and the main grid during time period t (positive for purchasing, negative for selling). |
| Self-Balancing Rate | | Ratio of the microgrid’s internal distributed energy resources meeting its own load demand over a defined period. |
| Reliability Rate | | Ratio of total generation to total demand, indicating the system’s ability to provide stable and continuous power supply. |

References

- National Energy Administration. Transcript of the National Energy Administration’s Q1 2020 Online Press Conference (2020-03-06); National Energy Administration: Beijing, China, 2 May 2021. [Google Scholar]

- Lasseter, R.H.; Paigi, P. Microgrid: A conceptual solution. In Proceedings of the IEEE 35th Annual Power Electronics Specialists Conference, Aachen, Germany, 20–25 June 2004; pp. 4285–4290. [Google Scholar]

- Anglani, N.; Oriti, G.; Colombini, M. Optimized energy management system to reduce fuel consumption in remote military microgrids. IEEE Trans. Ind. Appl. 2017, 53, 5777–5785. [Google Scholar] [CrossRef]

- Qi, Y.; Shang, X.; Nie, J.; Huo, X.; Wu, B.; Su, W. Operation optimization of combined heat and cold power type microgrid based on improved multi-objective gray wolf algorithm. Electr. Meas. Instrum. 2022, 59, 12–19. [Google Scholar]

- Feng, L.; Cai, Z.; Wang, Y.; Liu, P. Power fluctuation smoothing strategy for microgrid load-storage coordinated contact line taking into account load storage characteristics. Autom. Electr. Power Syst. 2017, 41, 22–28. [Google Scholar]

- Zhu, J.; Liu, Y.; Xu, L.; Jiang, Z.; Ma, C. Robust economic scheduling of cogeneration-type microgrids considering wind power consumption a few days ago. Autom. Electr. Power Syst. 2019, 3, 40–51. [Google Scholar]

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533. [Google Scholar] [CrossRef]

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484–489. [Google Scholar] [CrossRef] [PubMed]

- Kober, J.; Bagnell, J.A.; Peters, J. Reinforcement learning in robotics: A survey. Int. J. Robot. Res. 2013, 32, 1238–1274. [Google Scholar] [CrossRef]

- Meysam, G.; Arman, F.; Mohammad, S.; Laurendeau, E.; Al-Haddad, K. Data-Driven Switching Control Technique Based on Deep Reinforcement Learning for Packed E-Cell as Smart EV Charger. IEEE Trans. Transp. Electrif. 2024, 11, 3194–3203. [Google Scholar]

- Liu, J.; Chen, J.; Wang, X.; Zeng, J.; Huang, Q. Research on micro-energy grid management and optimization strategies based on deep reinforcement learning. Power Syst. Technol. 2020, 44, 3794–3803. [Google Scholar]

- Li, H.; Shen, B.; Yang, Y.; Pei, W.; Lv, X.; Han, Y. Microgrid energy management and optimization strategy based on improved competitive deep Q-network algorithm. Autom. Electr. Power Syst. 2022, 46, 29–42. [Google Scholar]

- Akarne, Y.; Essadki, A.; Nasser, T.; Laghridat, H.; El Bhiri, B. Enhanced power optimization of photovoltaic system in a grid-connected AC microgrid under variable atmospheric conditions using PSO-MPPT technique. In Proceedings of the 2023 4th International Conference on Clean and Green Energy Engineering (CGEE), Ankara, Turkiye, 26–28 August 2023; pp. 19–24. [Google Scholar]

- Lu, R.; Hong, S.H.; Yu, M. Demand response for home energy management using reinforcement learning and artificial neural network. IEEE Trans. Smart Grid 2019, 10, 6629–6639. [Google Scholar] [CrossRef]

- Kim, S.; Lim, H. Reinforcement learning based energy management algorithm for smart energy buildings. Energies 2018, 11, 2010. [Google Scholar] [CrossRef]

- Foruzan, E.; Soh, L.K.; Asgarpoor, S. Reinforcement learning approach for optimal distributed energy management in a microgrid. IEEE Trans. Power Syst. 2018, 33, 5749–5758. [Google Scholar] [CrossRef]

- Feng, C.; Zhang, Y.; Wen, F.; Ye, C.; Zhang, Y. Microgrid energy management strategy based on deep expectation Q-network algorithm. Autom. Electr. Power Syst. 2022, 46, 14–22. [Google Scholar]

- Goh, H.W.; Huang, Y.; Lim, C.S.; Zhang, D.; Liu, H.; Dai, W.; Kurniawan, T.A.; Rahman, S. An Assessment of Multistage Reward Function Design for Deep Reinforcement Learning-Based Microgrid Energy Management. IEEE Trans. Smart Grid 2022, 13, 4300–4311. [Google Scholar] [CrossRef]

- Bui, V.H.; Hussain, A.; Kim, H.M. Q-learning based operation strategy for community battery energy storage system in microgrid system. Energies 2019, 12, 1789. [Google Scholar] [CrossRef]

- Xu, X.; Jia, Y.W.; Xu, Y.; Xu, Z.; Chai, S.; Lai, C.S. A multi-agent reinforcement learning-based data-driven method for home energy management. IEEE Trans. Smart Grid 2020, 11, 3201–3211. [Google Scholar] [CrossRef]

- El, H.R.; Kalathil, D.; Xie, L. Fully decentralized reinforcement learning-based control of photovoltaics in distribution grids for joint provision of real and reactive power. IEEE Open Access J. Power Energy 2021, 8, 175–185. [Google Scholar] [CrossRef]

- Zhang, Q.; Dehghanpour, K.; Wang, Z.; Qiu, F.; Zhao, D. Multi-agent safe policy learning for power management of networked microgrids. IEEE Trans. Smart Grid 2021, 12, 1048–1062. [Google Scholar] [CrossRef]

- Zhang, Y.; Wang, X.; Wang, J.; Zhang, Y. Deep reinforcement learning based volt-VAR optimization in smart distribution systems. IEEE Trans. Smart Grid 2021, 12, 361–371. [Google Scholar] [CrossRef]

- Yu, H.; Lin, S.; Zhu, J.; Chen, H. Deep reinforcement learning-based online optimization of microgrids. Electr. Meas. Instrum. 2024, 61, 9–14. [Google Scholar]

- Ji, Y.; Wang, J. Deep reinforcement learning-based online optimal scheduling for microgrids. J. Control Decis. 2022, 37, 1675–1684. [Google Scholar]

- Huang, Y.; Wei, G.; Wang, Y. V-D D3QN: The variant of double deep Q-learning network with dueling architecture. In Proceedings of the 37th Chinese Control Conference, Wuhan, China, 25–27 July 2018; pp. 558–563. [Google Scholar]

- California ISO. Open Access Same-Time Information System (OASIS). (2020-05-20) [2021-02-26]. Available online: https://www.caiso.com/systems-applications/portals-applications/open-access-same-time-information-system-oasis (accessed on 5 September 2024).

| Parameter | Value |
|---|---|
| Scheduling duration (T) | 24 |
| Batch size | 128 |
| Learning rate (L) | 0.001 |
| Initial, final, decay rates of | 1, 0.01, 0.995 |
| Discount factor () | 0.97 |
| Target network update frequency | 480 |
| Experience replay pool | 24,000 |
| Converter efficiencies () | 0.85, 0.9, 0.9, 0.9 |
| Penalty coefficient (β) | 1000 |
| Resistivity () | |
| Length between each node (l) | 500 m |
| Standard voltage (V) | 10 kV |
| Standard current (I) | 580 A |
| Fuel cost factors () | 0.00015, 1.057, 0.4712 |
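To make the exploration settings concrete, the sketch below assumes that the "Initial, final, decay rates" row describes an ε-greedy exploration rate with multiplicative decay. The symbol is missing from the table, and whether the decay is applied per step or per episode is not stated, so both points are assumptions. The constant and function names are hypothetical.

```python
import math

# Values taken from the parameter table; their interpretation as an
# epsilon-greedy schedule is an assumption.
EPS_INITIAL = 1.0
EPS_FINAL = 0.01
EPS_DECAY = 0.995


def epsilon_at(n: int) -> float:
    """Exploration rate after n decay applications, clipped at the floor."""
    return max(EPS_FINAL, EPS_INITIAL * EPS_DECAY ** n)


# Number of decay applications needed before the floor value is reached.
steps_to_floor = math.ceil(math.log(EPS_FINAL / EPS_INITIAL) / math.log(EPS_DECAY))
```

Under these assumptions, `steps_to_floor` gives a rough sense of how long the agent keeps exploring before its behavior becomes almost purely greedy.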

| Algorithm | Average Rewards | Standard Deviation | 95% Confidence Interval |
|---|---|---|---|
| DB | −1731.4 | 7.36 | (−1738.6, −1724.3) |
| DM | 4904.6 | 9.75 | (4894.5, 4914.8) |
| CDM | 1012.5 | 5.9 | (1005.1, 1019.9) |

| Comparison Value | Algorithm | Power Balance | Bus 1 | Bus 2 | Bus 3 | Bus 4 | Bus 5 | Bus 6 | Bus 7 | Bus 8 | Bus 9 | Bus 10 | Bus 11 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Standard deviation | DB | 0.159 | 0.006 | 0.011 | 0.003 | 0.031 | 0.011 | 0.051 | 0.009 | 0.013 | 0.046 | 0.042 | 0.069 |
| | DM | 0.073 | 0.006 | 0.009 | 0.002 | 0.03 | 0.01 | 0.048 | 0.008 | 0.013 | 0.043 | 0.039 | 0.066 |
| | CDM | 0.061 | 0.006 | 0.009 | 0.001 | 0.029 | 0.012 | 0.035 | 0.005 | 0.003 | 0.026 | 0.020 | 0.025 |
| Maximum value | DB | 1.619 | 1.008 | 1.016 | 1.002 | 1.031 | 1.02 | 1.085 | 0.996 | 1.013 | 1.067 | 1.062 | 1.097 |
| | DM | 1.141 | 1.007 | 1.017 | 1.001 | 1.033 | 1.018 | 1.011 | 1.015 | 0.995 | 1.052 | 1.067 | 1.097 |
| | CDM | 1.194 | 1.002 | 1.016 | 1.001 | 1.035 | 1.017 | 1.018 | 1.001 | 1.001 | 1.033 | 1.047 | 1.053 |
| Minimum value | DB | 0.906 | 0.994 | 0.981 | 0.991 | 0.96 | 0.992 | 0.953 | 0.981 | 0.978 | 1.016 | 1.012 | 1.038 |
| | DM | 0.91 | 0.991 | 0.986 | 0.996 | 0.954 | 0.99 | 0.93 | 0.989 | 0.981 | 0.942 | 1.011 | 1.013 |
| | CDM | 0.941 | 0.991 | 0.991 | 0.997 | 0.966 | 0.985 | 0.932 | 0.99 | 0.994 | 0.967 | 0.995 | 0.998 |
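One simple way to read the standard-deviation rows of the table above is to compute the relative change from DB to CDM for each column. The sketch below does only that. It is not a re-derivation of any aggregate figure reported in the paper, since the source does not state how such figures were computed, and the helper name `relative_change` is hypothetical.

```python
# Standard deviations copied from the DB and CDM rows of the table.
std_db = [0.159, 0.006, 0.011, 0.003, 0.031, 0.011,
          0.051, 0.009, 0.013, 0.046, 0.042, 0.069]
std_cdm = [0.061, 0.006, 0.009, 0.001, 0.029, 0.012,
           0.035, 0.005, 0.003, 0.026, 0.020, 0.025]
labels = ["Power Balance"] + [f"Bus {i}" for i in range(1, 12)]


def relative_change(base: float, new: float) -> float:
    """Percentage change from base to new; negative means a reduction."""
    return (new - base) / base * 100.0


changes = {
    label: relative_change(db, cdm)
    for label, db, cdm in zip(labels, std_db, std_cdm)
}

for label, pct in changes.items():
    print(f"{label:>13}: {pct:+.1f}%")
```

The per-column view shows that the change is not uniform: some buses see a large reduction under CDM, Bus 1 is unchanged, and Bus 5 is slightly higher.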

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kang, J.; Zeng, Y.; Wei, Q. Microgrid Operation Optimization Strategy Based on CMDP-D3QN-MSRM Algorithm. Electronics 2025, 14, 3654. https://doi.org/10.3390/electronics14183654
