A Dynamic Bridge Architecture for Efficient Interoperability Between AUTOSAR Adaptive and ROS2

Abstract

1. Introduction

2. Background and Related Works

2.1. ROS2 (Robot Operating System 2)

2.2. AUTOSAR Adaptive Platform

2.3. DDS (Data Distribution Service)

2.4. SOME/IP (Scalable Service-Oriented MiddlewarE over IP)

2.5. Related Works

3. Dynamic SOME/IP-DDS Bridge Architecture and Implementation

3.1. Dynamic SOME/IP-DDS Bridge Architecture

3.1.1. Discovery Manager

- SOME/IP Handler: This component detects events like “Offer Service” and “Service Request” through the SOME/IP-SD protocol to gather information about currently available SOME/IP services.

- DDS Handler: This component monitors the Global Data Space of DDS to identify active DDS participants (communication entities).

- Discovery Synchronizer: Each handler sends its detected results to the Discovery Synchronizer, which cross-references the identified SOME/IP services and DDS participant data based on mapping rules specified in a pre-configured bridge configuration file.
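The cross-referencing performed by the Discovery Synchronizer can be illustrated with a minimal Python sketch. It is not the authors' implementation: `MappingRule`, `DiscoverySynchronizer`, and the method names are hypothetical, and DDS discovery is reduced to topic names for brevity.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class MappingRule:
    """One bridge-configuration entry (hypothetical, simplified)."""
    service_id: int
    instance_id: int
    topic: str


@dataclass
class DiscoverySynchronizer:
    """Cross-references SOME/IP services and DDS topics against mapping rules."""
    rules: list
    seen_services: set = field(default_factory=set)
    seen_topics: set = field(default_factory=set)
    registered: set = field(default_factory=set)

    def on_someip_service(self, service_id, instance_id):
        """Called by the SOME/IP Handler when a service is detected."""
        self.seen_services.add((service_id, instance_id))
        return self._match()

    def on_dds_topic(self, topic):
        """Called by the DDS Handler when a participant's topic is detected."""
        self.seen_topics.add(topic)
        return self._match()

    def _match(self):
        """Return rules whose two sides are both present and not yet registered."""
        newly_matched = []
        for rule in self.rules:
            both_seen = ((rule.service_id, rule.instance_id) in self.seen_services
                         and rule.topic in self.seen_topics)
            if both_seen and rule not in self.registered:
                self.registered.add(rule)
                newly_matched.append(rule)
        return newly_matched
```

Each returned rule would correspond to one registration request sent to the Bridge Manager; a rule is reported only once, even if further discovery events arrive for it.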

3.1.2. Bridge Manager

- Handling Registration Requests: When a registration request is received from the Discovery Manager, the Bridge Manager first sets up an internal message queue for data exchange between the two platforms. It then instructs the Message Router to establish the required SOME/IP Endpoint (Server/Client) and DDS Endpoint (Publisher/Subscriber) for communication.

- Handling Deletion Requests: Upon receiving a deletion request, the Bridge Manager terminates the Endpoint objects associated with the specific communication and releases all related resources, such as the allocated message queue, to enhance system efficiency.
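The two request types can be sketched as a small registry. This is an illustrative Python sketch under stated assumptions: the class layout, the connection key, and the queue size are hypothetical, and the endpoints are represented by role names rather than real vsomeip or Fast DDS objects.

```python
import queue


class BridgeManager:
    """Tracks one message queue and one endpoint pair per bridged connection."""

    def __init__(self, queue_size=64):
        self.queue_size = queue_size  # Assumed bound; the paper does not state one.
        self.connections = {}

    def register(self, key, someip_role, dds_role):
        """Create the queue first, then record which endpoints are required."""
        if key in self.connections:
            return self.connections[key]
        connection = {
            "queue": queue.Queue(maxsize=self.queue_size),
            "someip_endpoint": someip_role,  # "client" or "server"
            "dds_endpoint": dds_role,        # "publisher" or "subscriber"
        }
        self.connections[key] = connection
        return connection

    def delete(self, key):
        """Drop the endpoints and the queue; return True if anything was released."""
        return self.connections.pop(key, None) is not None
```

Keying the registry by the matched pair makes a repeated registration request idempotent and lets a deletion request release exactly the resources that belong to one data path.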

3.1.3. Message Router

- SOME/IP Server/Client: Acts as the communication endpoint for the SOME/IP domain. It is initialized upon request from the Bridge Manager to transmit and receive messages, facilitating data exchange with the DDS side via the internal message queue.

- DDS Publisher/Subscriber: Functions as the communication endpoint for the DDS domain. It is created based on the settings of the QoS Manager and exchanges data with the SOME/IP side through the internal message queue.

- Data Converter: This component converts the byte stream data obtained from SOME/IP messages into the message format utilized by ROS2 (or DDS) and can also perform the reverse conversion.

- QoS Manager: Serves as the authority for QoS policy enforcement. It validates discovered requirements against the mandatory QoS profile defined in the JSON configuration file. If the profiles are fully compatible, the QoS Manager authorizes the creation of the corresponding DDS Publisher or Subscriber; otherwise, it reports a failure to the Bridge Manager, which aborts the connection setup and records the error. This pre-emptive validation ensures that runtime QoS policy conflicts are prevented by design, as incompatible communication paths are never established in the first place. This fail-safe mechanism prioritizes predictability and safety over runtime adaptation, which is essential in automotive systems. The strict enforcement of QoS policy also reflects the fact that AUTOSAR SOME/IP does not provide a standardized protocol for dynamic QoS negotiation.
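The pre-emptive validation can be sketched as follows. The sketch assumes that "fully compatible" follows the DDS requested-versus-offered rule for Reliability, Durability, and Deadline; the paper does not spell out the exact check, and the function names and dictionary layout are hypothetical.

```python
# Higher rank means a stronger guarantee.
RELIABILITY_RANK = {"BEST_EFFORT": 0, "RELIABLE": 1}
DURABILITY_RANK = {"VOLATILE": 0, "TRANSIENT_LOCAL": 1}


def qos_mismatches(offered, requested):
    """List the policies where the offered level is weaker than the requested one."""
    problems = []
    if RELIABILITY_RANK[offered["reliability"]] < RELIABILITY_RANK[requested["reliability"]]:
        problems.append("reliability")
    if DURABILITY_RANK[offered["durability"]] < DURABILITY_RANK[requested["durability"]]:
        problems.append("durability")
    # A writer that promises a longer deadline period than the reader requests
    # cannot satisfy that reader.
    if offered["deadline_ms"] > requested["deadline_ms"]:
        problems.append("deadline")
    return problems


def authorize(offered, requested):
    """Return (True, []) when the endpoint may be created, else (False, reasons)."""
    problems = qos_mismatches(offered, requested)
    return (not problems, problems)


profile = {"reliability": "RELIABLE", "durability": "VOLATILE", "deadline_ms": 100}
request = {"reliability": "RELIABLE", "durability": "TRANSIENT_LOCAL", "deadline_ms": 50}
print(authorize(profile, request))  # (False, ['durability', 'deadline'])
```

In this sketch a rejected request yields the list of offending policies, which is the kind of information the Bridge Manager could record when it aborts the connection setup.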

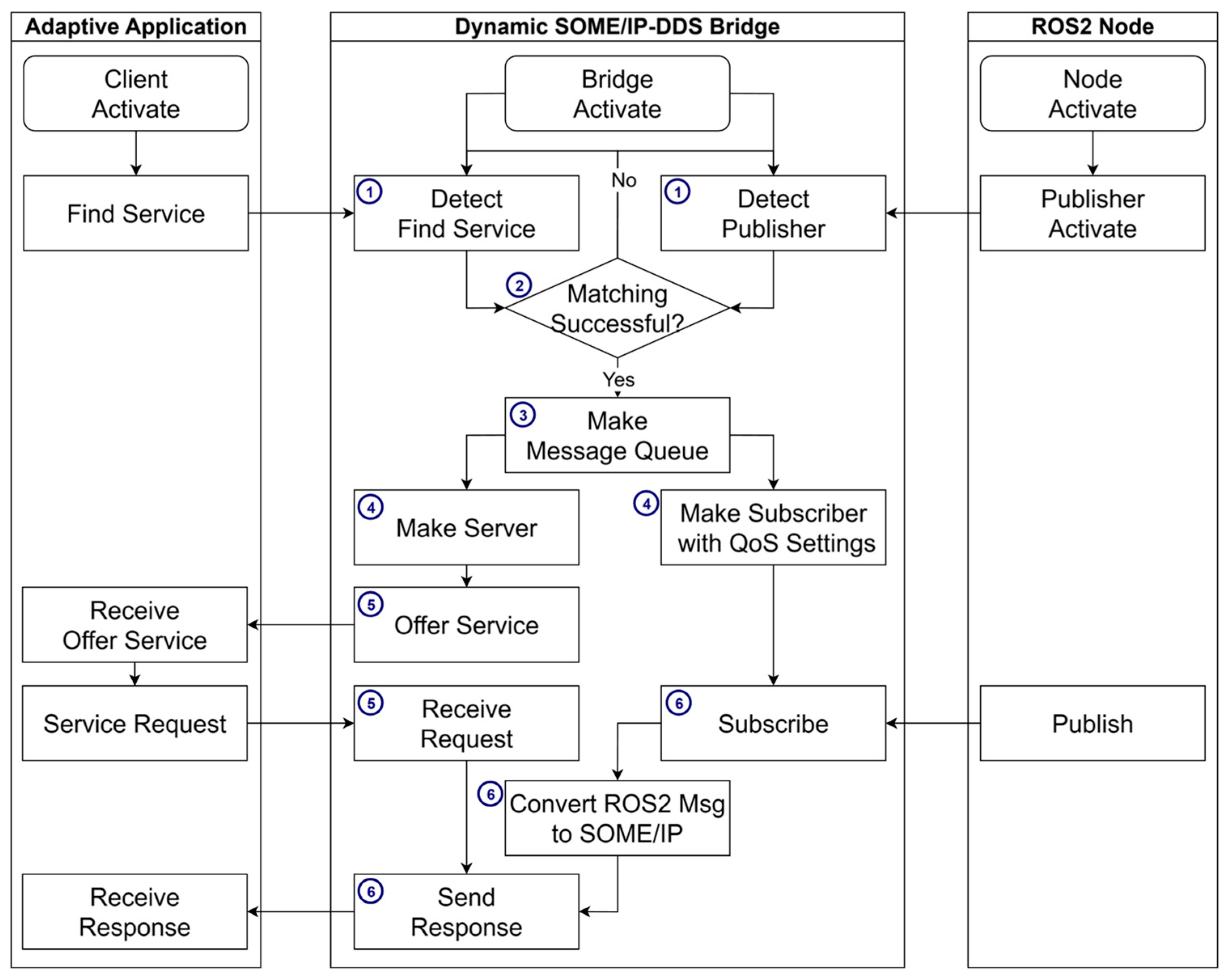

3.2. Dynamic SOME/IP-DDS Bridge Workflow

3.2.1. Adaptive Application to ROS2 Node

- Event Detection and Waiting: The bridge waits to receive an “Offer Service” event from SOME/IP-SD and a “Subscriber registration” event from DDS Discovery. The matching process begins asynchronously, even if only one of the two events is detected.

- Service Matching: The bridge checks for the counterpart (Service or Subscriber) needed for interconnection based on the detected event. If the necessary counterpart is not yet established—such as when only an “Offer Service” from the Adaptive Application is detected—it waits for the registration of the corresponding ROS2 node’s Subscriber. Conversely, if only the Subscriber from the ROS2 node is recognized, the bridge promptly multicasts a “Find Service” message via SOME/IP-SD to locate the service and awaits a response. Once both the Service and Subscriber are confirmed and successfully matched, the process moves on to setting up the data path.

- Message Queue Creation: Utilizing the matched information, an internal message queue is established to facilitate data transmission between the two endpoints. This queue acts as a buffer for asynchronous data exchange between the two communication protocols.

- Communication Endpoint (Client/Publisher) Creation: Endpoints suitable for each communication protocol are generated for data exchange. A Client for SOME/IP communication and a Publisher for DDS communication are created. Importantly, the DDS Publisher is configured to align with the QoS policy required by the ROS2 node’s Subscriber to ensure high-quality data transmission.

- Service Request: The created SOME/IP Client sends a “Service Request” to the Server of the Adaptive Application. This request initiates a session through which actual data can be transmitted.

- Message Subscribe, Convert, and Publish: In response to the “Service Request”, the bridge receives the SOME/IP message sent by the Adaptive Application’s Server. It subsequently converts this message into the ROS2 message type required by the ROS2 node’s Subscriber. Finally, the bridge publishes the converted ROS2 message to the appropriate topic using the previously established DDS Publisher, adhering to the defined QoS policy settings and ensuring reliable delivery to the ROS2 node’s Subscriber.
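The event detection and matching steps of this direction can be sketched as a small state tracker. This is a hedged Python illustration, not the bridge's code: the class, method, and enum names are hypothetical, and the actions are returned as values instead of triggering real SOME/IP-SD or DDS calls.

```python
from enum import Enum


class Action(Enum):
    WAIT_FOR_SUBSCRIBER = "wait for the ROS2 Subscriber to register"
    SEND_FIND_SERVICE = "multicast Find Service via SOME/IP-SD"
    SETUP_DATA_PATH = "create the queue, the Client, and the Publisher"


class AaToRos2Matcher:
    """Tracks the two discovery events for one mapping rule."""

    def __init__(self):
        self.service_offered = False
        self.subscriber_seen = False

    def on_offer_service(self):
        """An Offer Service event arrived from the Adaptive Application."""
        self.service_offered = True
        return self._next_action()

    def on_subscriber_registered(self):
        """A Subscriber registration arrived from DDS Discovery."""
        self.subscriber_seen = True
        return self._next_action()

    def _next_action(self):
        if self.service_offered and self.subscriber_seen:
            return Action.SETUP_DATA_PATH
        if self.service_offered:
            return Action.WAIT_FOR_SUBSCRIBER
        return Action.SEND_FIND_SERVICE
```

Whichever event arrives first, the matcher reaches `SETUP_DATA_PATH` only once both sides are known, which mirrors the asynchronous matching described in the steps above.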

3.2.2. ROS2 Node to Adaptive Application

- Event Detection and Waiting: The bridge persistently monitors for a “Publisher registration” event broadcast via DDS Discovery and a “Find Service” event sent through SOME/IP-SD. The matching process commences asynchronously, even if only one of the two events is detected.

- Service Matching: Upon detecting an event, the bridge verifies the existence of its counterpart (either Publisher or Client) for interconnection. If the needed counterpart is not present—for example, if only a “Find Service” event from the Adaptive Application is detected—the bridge will wait for the corresponding ROS2 node’s Publisher to register. Conversely, if only the ROS2 node’s Publisher is detected, the bridge immediately multicasts an “Offer Service” message via SOME/IP-SD and awaits a “Service Request.” Once both the Client and Publisher are successfully matched, the process advances to the subsequent step of establishing the data path.

- Message Queue Creation: An internal message queue is established based on the matched information to facilitate data transmission between the two endpoints. This queue acts as a buffer for asynchronous data transfers between the two communication protocols.

- Communication Endpoint Creation: Appropriate endpoints for each communication protocol are created to enable data exchange. A Server is established for SOME/IP communication, while a Subscriber is set up for DDS communication. Importantly, the DDS Subscriber is configured to align with the QoS policy of the ROS2 node’s Publisher to ensure high-quality data subscription.

- Offer Service and Service Request: The created SOME/IP Server transmits an “Offer Service” event to the Client within the Adaptive Application, signaling the availability of the service. Upon receiving this, the Client of the Adaptive Application sends a “Service Request” to the bridge’s Server to initiate data reception.

- Message Subscribe, Convert, and Respond: The bridge’s DDS Subscriber receives the messages published by the ROS2 node’s Publisher and converts them into a SOME/IP data format that the Client of the Adaptive Application can interpret. The converted data is then delivered to the Client via the SOME/IP Server.
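The final convert-and-deliver step can be sketched in Python as below. The 16-byte header layout follows the SOME/IP protocol specification (big-endian Message ID, Length, Request ID, and four one-byte fields); everything else is an assumption made for illustration: the function names, the single float32 payload, its big-endian encoding, and the example IDs are hypothetical.

```python
import queue
import struct

SOMEIP_PROTOCOL_VERSION = 0x01
MESSAGE_TYPE_NOTIFICATION = 0x02
RETURN_CODE_OK = 0x00


def to_someip_notification(service_id, event_id, session_id, payload,
                           interface_version=1):
    """Wrap an already serialized payload in a 16-byte SOME/IP header."""
    length = 8 + len(payload)  # Length counts every byte after the Length field.
    header = struct.pack(
        ">HHIHHBBBB",
        service_id, event_id, length,
        0x0000, session_id,  # Client ID left at zero in this sketch.
        SOMEIP_PROTOCOL_VERSION, interface_version,
        MESSAGE_TYPE_NOTIFICATION, RETURN_CODE_OK,
    )
    return header + payload


def relay_once(inbox, service_id, event_id, session_id):
    """Take one ROS2-side sample from the queue and return the SOME/IP bytes."""
    speed_mps = inbox.get_nowait()
    payload = struct.pack(">f", speed_mps)  # Assumed payload: one float32.
    return to_someip_notification(service_id, event_id, session_id, payload)
```

In a real bridge the DDS Subscriber callback would fill `inbox`, and the returned bytes would be handed to the SOME/IP Server for delivery to the subscribed Client.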

3.2.3. Remove Connection

- Remove Communication Endpoints: Upon confirmation of disconnection, the first step is to eliminate the communication endpoints utilized for that data path. This involves removing the SOME/IP Server or Client and the DDS Publisher or Subscriber, which were created based on the direction of data flow.

- Remove Message Queue: After both endpoints are removed, the internal message queue that acted as a buffer between them is deleted from memory, and its resources are released.

- Return to Waiting State: Once the resource release process is finalized, the bridge transitions back to a waiting state, ready to receive new connection events.
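The ordering of these three steps can be made explicit with a short Python sketch. The `Connection` class, the state names, and the endpoint labels are hypothetical; real endpoints would also need to be stopped through their middleware APIs before being dropped.

```python
class Connection:
    """Holds the resources of one data path (hypothetical, simplified)."""

    def __init__(self, endpoints, message_queue):
        self.endpoints = list(endpoints)
        self.message_queue = message_queue
        self.state = "CONNECTED"


def remove_connection(conn):
    """Release resources in the order described above and return the steps taken."""
    steps = []
    # Step 1: remove the endpoints so nothing can touch the queue afterwards.
    while conn.endpoints:
        steps.append(f"remove endpoint: {conn.endpoints.pop()}")
    # Step 2: drop the queue only after both endpoints are gone.
    conn.message_queue = None
    steps.append("remove message queue")
    # Step 3: return to the waiting state.
    conn.state = "WAITING"
    steps.append("return to waiting state")
    return steps
```

Removing the endpoints before the queue avoids a window in which an endpoint could still read from or write to a buffer that no longer exists.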

3.3. Dynamic SOME/IP-DDS Bridge Implementation

- When the “Type” is Event, it specifies a rule for mapping SOME/IP’s one-way Publish/Subscribe communication (Event) to a DDS topic.

- When the “Type” is Method, it establishes a rule for mapping the two-way Request/Response communication (Method) to a Remote Procedure Call (RPC) service, utilizing a pair of DDS topics.

- When the “Type” is Field, it sets a rule for relaying state-based data (Field). The rule is a composite mapping: value-change notifications follow the Event pattern, and value access through the Getter/Setter follows the Method pattern.
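A mapping rule and a check of its required parameters might look like the following Python sketch. The parameter names and their scopes are taken from the paper's parameter table; the flat JSON layout, the hexadecimal string encoding, and all concrete values (IDs, topic, message type) are assumptions made only for illustration.

```python
import json

# Required parameters per rule type, following the Scope column of the parameter table.
COMMON = {"Type", "someip.serviceID", "someip.instanceID", "dds.qos"}
ONE_WAY = {"dds.topic", "someip.eventType", "dds.messageType"}
RPC = {"dds.requestTopic", "someip.requestType", "dds.requestMessageType",
       "dds.responseTopic", "someip.responseType", "dds.responseMessageType"}
REQUIRED = {
    "Event": COMMON | ONE_WAY | {"someip.eventgroupID", "someip.eventID"},
    "Method": COMMON | RPC | {"someip.methodID"},
    "Field": COMMON | ONE_WAY | RPC | {"someip.fieldID"},
}


def missing_parameters(rule):
    """Return the sorted parameter names a rule lacks for its declared Type."""
    return sorted(REQUIRED[rule["Type"]] - set(rule))


# Hypothetical Event rule; every value below is a placeholder.
EXAMPLE = json.loads("""
{
  "Type": "Event",
  "someip.serviceID": "0x1234",
  "someip.instanceID": "0x0001",
  "someip.eventgroupID": "0x0001",
  "someip.eventID": "0x8001",
  "someip.eventType": "float32",
  "dds.topic": "/vehicle/speed",
  "dds.messageType": "std_msgs/msg/Float32",
  "dds.qos": {"reliability": "RELIABLE", "durability": "VOLATILE"}
}
""")

print(missing_parameters(EXAMPLE))  # []
```

Validating a rule when the configuration file is loaded lets the bridge reject an incomplete mapping before any discovery event is processed.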

4. Validation

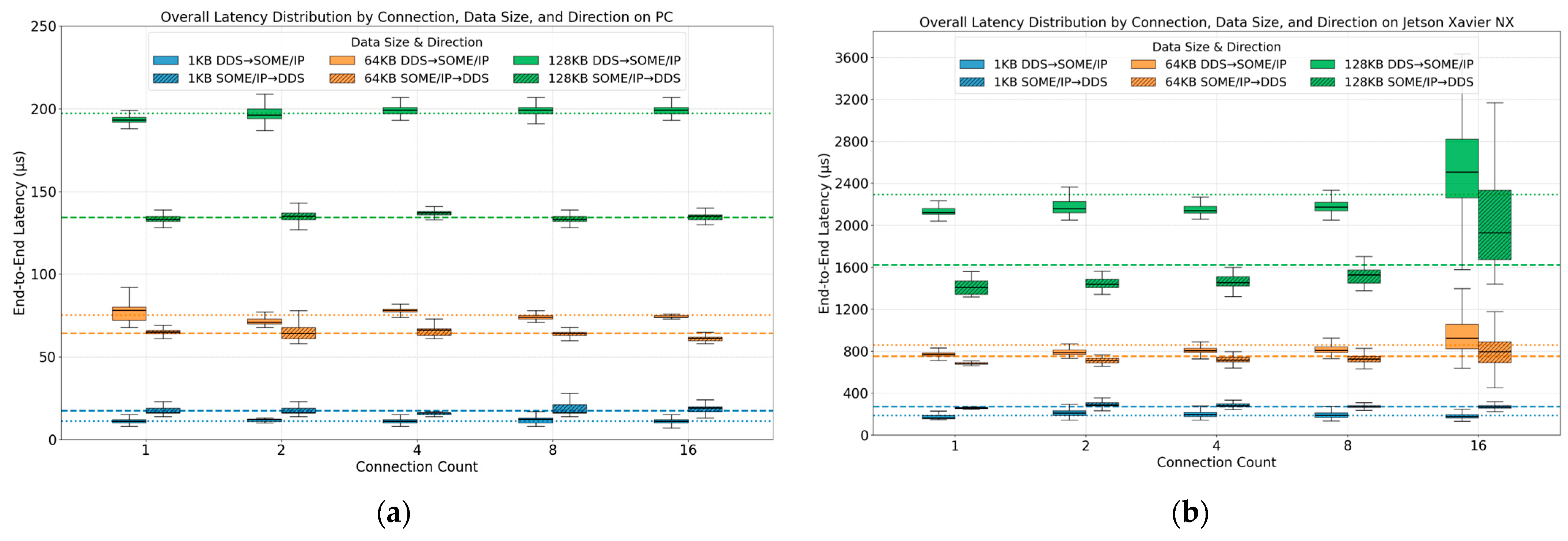

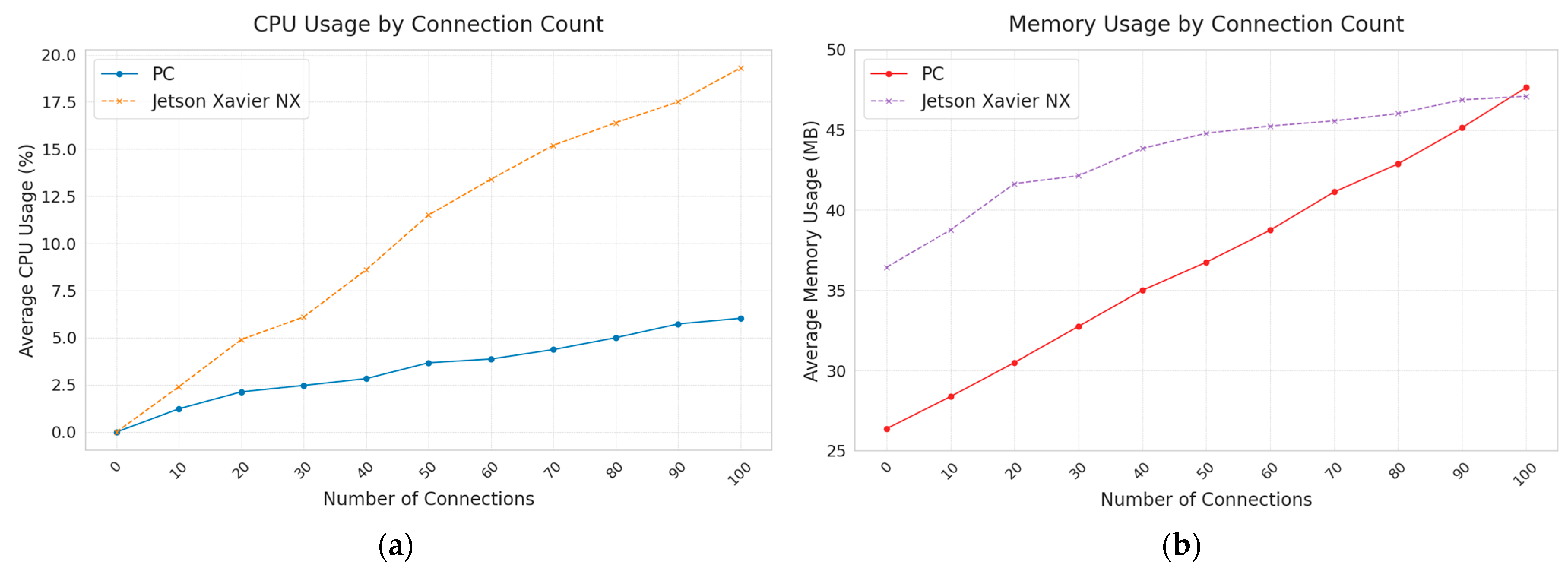

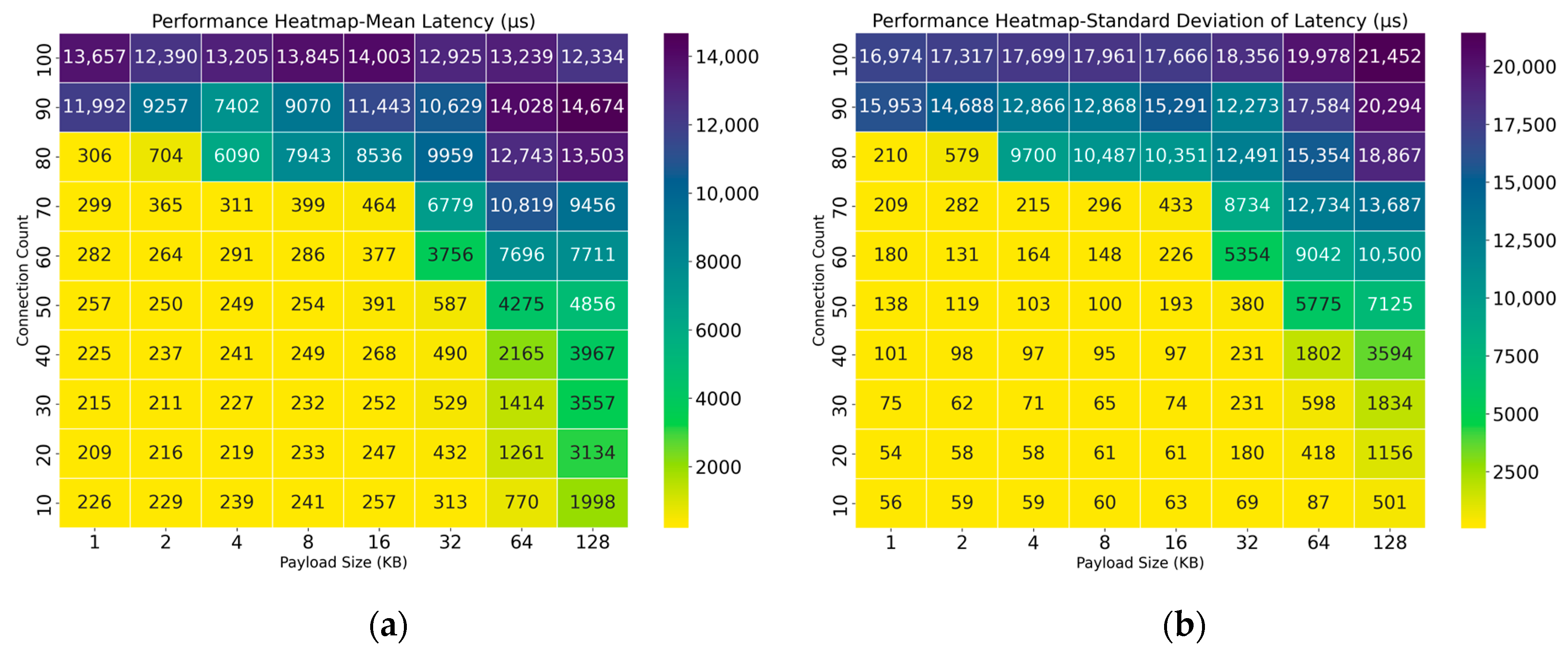

4.1. Performance Evaluation of the Dynamic SOME/IP-DDS Bridge

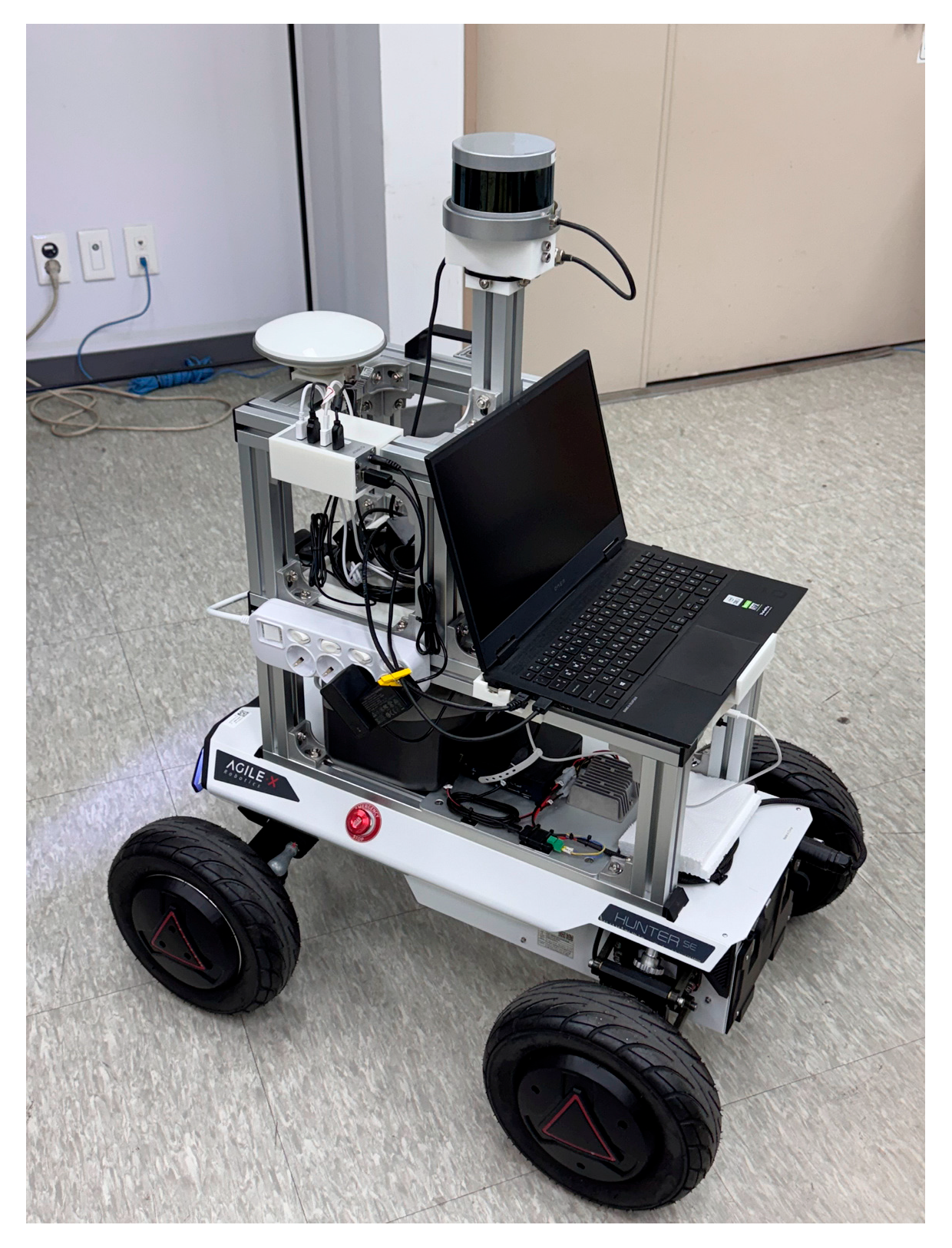

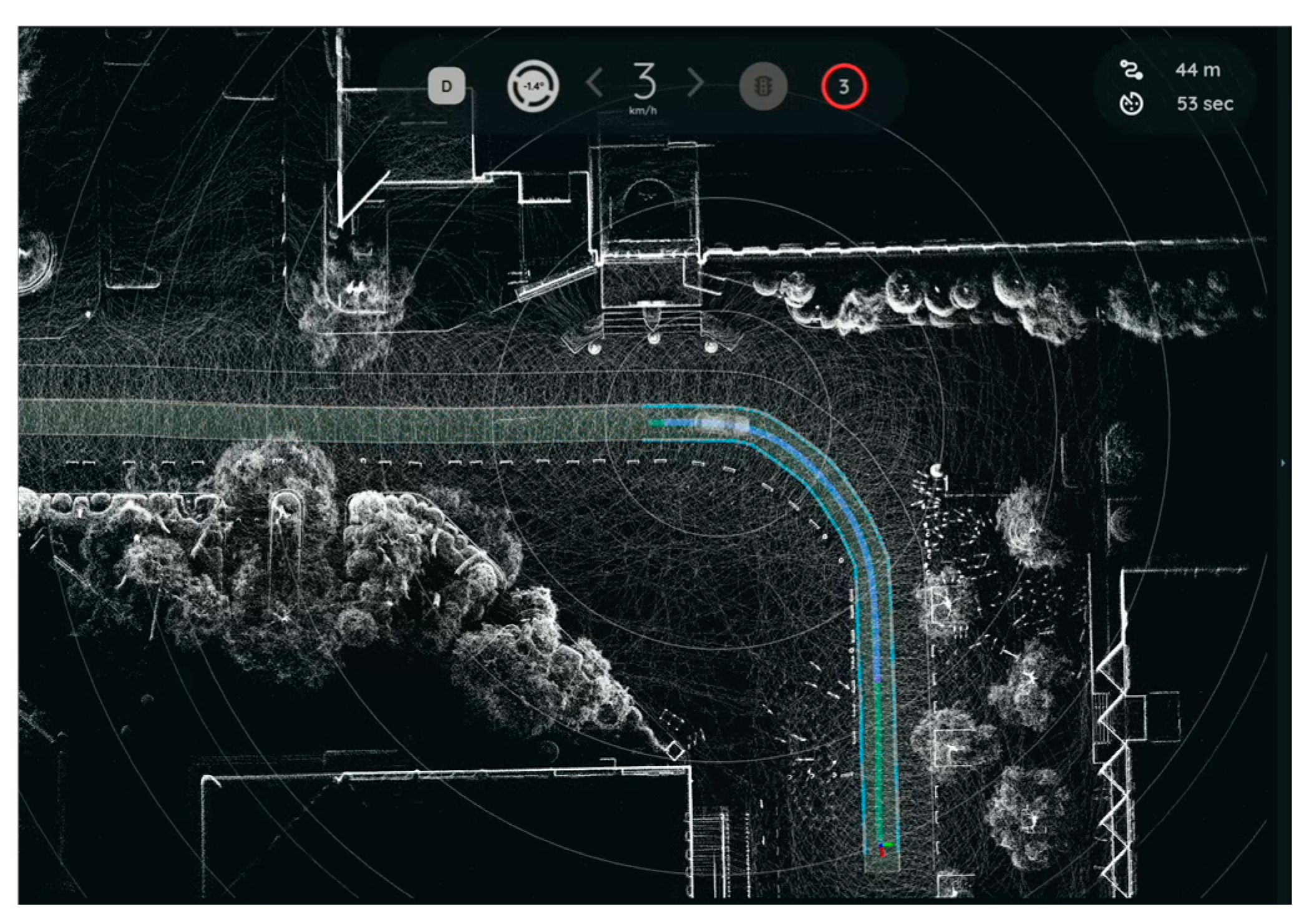

4.2. Autonomous Driving Test in the Real World Using the Dynamic SOME/IP-DDS Bridge

4.2.1. Test Environment and Scenario

4.2.2. Scenario Validation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Liu, Z.; Zhang, W.; Zhao, F. Impact, Challenges and Prospect of Software-Defined Vehicles. Automot. Innov. 2022, 5, 180–194.

2. Navale, V.M.; Williams, K.; Lagospiris, A.; Schaffert, M.; Schweiker, M.A. (R)evolution of E/E Architectures. SAE Int. J. Passeng. Cars-Electron. Electr. Syst. 2015, 8, 282–288.

3. AUTOSAR Adaptive. Available online: https://www.autosar.org/standards/adaptive-platform (accessed on 7 August 2025).

4. Shajahan, M.A.; Richardson, N.; Dhameliya, N.; Patel, B.; Anumandla, S.K.; Yarlagadda, V.K. AUTOSAR Classic vs. AUTOSAR Adaptive: A Comparative Analysis in Stack Development. Eng. Int. 2019, 7, 161–178.

5. ROS Open Robotics. Available online: https://www.ros.org (accessed on 7 August 2025).

6. Autoware. Available online: https://autoware.org (accessed on 7 August 2025).

7. Al-Batati, A.S.; Koubaa, A.; Abdelkader, M. ROS 2 Key Challenges and Advances: A Survey of ROS 2 Research, Libraries, and Applications. Preprint 2024.

8. Ji, Y.; Huang, Y.; Yang, M.; Leng, H.; Ren, L.; Liu, H.; Chen, Y. Physics-informed deep learning for virtual rail train trajectory following control. Reliab. Eng. Syst. Saf. 2025, 261, 111092.

9. Hong, D.; Moon, C. Autonomous Driving System Architecture with Integrated ROS2 and Adaptive AUTOSAR. Electronics 2024, 13, 1303.

10. Iwakami, R.; Peng, B.; Hanyu, H.; Ishigooka, T.; Azumi, T. AUTOSAR AP and ROS 2 Collaboration Framework. In Proceedings of the 2024 27th Euromicro Conference on Digital System Design (DSD), Paris, France, 28–30 August 2024; pp. 319–326.

11. DDS Specification, Version 1.4. Available online: https://www.omg.org/spec/DDS/1.4 (accessed on 7 August 2025).

12. An, K.; Gokhale, A.; Schmidt, D.; Tambe, S.; Pazandak, P.; Pardo-Castellote, G. Content-based filtering discovery protocol (CFDP): Scalable and efficient OMG DDS discovery protocol. In Proceedings of the 8th ACM International Conference on Distributed Event-Based Systems (DEBS '14), Association for Computing Machinery, New York, NY, USA, 26–29 May 2014; pp. 130–141.

13. ROS2 Documentation. Version Kilted. Available online: https://docs.ros.org/en/kilted/index.html (accessed on 7 August 2025).

14. AUTOSAR, R24-11. SOME/IP Protocol Specification. Available online: https://www.autosar.org/fileadmin/standards/R24-11/FO/AUTOSAR_FO_PRS_SOMEIPProtocol.pdf (accessed on 7 August 2025).

15. Object Management Group (OMG). Data Distribution Service (DDS). Available online: https://www.omg.org/omg-dds-portal (accessed on 7 August 2025).

16. Scalable Service-Oriented MiddlewarE over IP (SOME/IP). Available online: https://some-ip.com (accessed on 7 August 2025).

17. AUTOSAR, R24-11. SOME/IP Service Discovery Protocol Specification. Available online: https://www.autosar.org/fileadmin/standards/R24-11/FO/AUTOSAR_FO_PRS_SOMEIPServiceDiscoveryProtocol.pdf (accessed on 7 August 2025).

18. Henle, J.; Stoffel, M.; Schindewolf, M.; Nägele, A.T.; Sax, E. Architecture platforms for future vehicles: A comparison of ROS2 and Adaptive AUTOSAR. In Proceedings of the 2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC), Macau, China, 8–12 October 2022; pp. 3095–3102.

19. Ioana, A.; Korodi, A.; Silea, I. Automotive IoT Ethernet-Based Communication Technologies Applied in a V2X Context via a Multi-Protocol Gateway. Sensors 2022, 22, 6382.

20. Cakır, M.; Häckel, T.; Reider, S.; Meyer, P.; Korf, F.; Schmidt, T.C. A QoS Aware Approach to Service-Oriented Communication in Future Automotive Networks. In Proceedings of the 2019 IEEE Vehicular Networking Conference (VNC), Los Angeles, CA, USA, 4–6 December 2019; pp. 1–8.

21. Cho, Y.; Lee, S.; Yang, J.; Cho, J. Integration of AUTOSAR Adaptive Platform with ROS2 through Network Binding. In Proceedings of the 2024 IEEE 13th Global Conference on Consumer Electronics (GCCE), Kitakyushu, Japan, 29 October–1 November 2024; pp. 1175–1176.

22. AUTOSAR, R23-11. Specification of Manifest. Available online: https://www.autosar.org/fileadmin/standards/R23-11/AP/AUTOSAR_AP_TPS_ManifestSpecification.pdf (accessed on 7 August 2025).

23. Autoware, v.0.45.1. Defining Temporal Performance Metrics on Components. Available online: https://autowarefoundation.github.io/autoware-documentation/main/how-to-guides/others/defining-temporal-performance-metrics (accessed on 7 August 2025).

| No. | Study | Deployment Model | SOME/IP-SD | DDS Discovery | Discovery-Level Synchronization | Resource Management | DDS QoS Policy |
|---|---|---|---|---|---|---|---|
| 1 | D. Hong et al. (2024) [9] | ROS2 bridge node (ARISA) | Supported | Implicit via ROS2 | SOME/IP-SD only | NR | NR |
| 2 | R. Iwakami et al. (2024) [10] | ROS2 node (DDS-SOME/IP converter) | Supported | Implicit via ROS2 | SOME/IP-SD only | NR | NR |
| 3 | Y. Cho et al. (2024) [21] | AUTOSAR application (network binding with ROS2) | NR | NR | NR | NR | NR |
| 4 | Proposed (this work) | Dynamic bridge (Standalone Application) | Supported | Supported | Cross-domain | Supported | Strict enforcement |

| Parameter Name | Scope | Description |
|---|---|---|
| Type | All | Specifies the type of mapping rule (one of Event, Method, or Field). |
| someip.serviceID | All | The unique ID of the target SOME/IP service to be mapped. |
| someip.instanceID | All | The ID of the specific instance providing the service. |
| dds.qos | All | The QoS profiles to be applied to the DDS communication. |
| someip.eventgroupID | Event | The ID of the event group containing the event to be subscribed to. |
| someip.eventID | Event | The ID of the specific event to be mapped. |
| someip.methodID | Method | The ID of the specific method to be mapped. |
| someip.fieldID | Field | The ID of the specific field to be mapped. |
| dds.topic | Event, Field | The DDS topic for publishing one-way data (Event, Field Notification). |
| someip.eventType | Event, Field | The data type of the Event message. |
| dds.messageType | Event, Field | The message type to be used in the one-way data publishing topic. |
| dds.requestTopic | Method, Field | The DDS topic for RPC (Method, Field Setter/Getter) requests. |
| someip.requestType | Method, Field | The data type of the request message. |
| dds.requestMessageType | Method, Field | The message type to be used in the request topic. |
| dds.responseTopic | Method, Field | The DDS topic for RPC (Method, Field Setter/Getter) responses. |
| someip.responseType | Method, Field | The data type of the response message. |
| dds.responseMessageType | Method, Field | The message type to be used in the response topic. |

| QoS Name | Description |
|---|---|
| History | Specifies the method and size for storing received or sent data. |
| Reliability | Prioritizes reliability by preventing data loss, comparable to TCP (Reliable), or prioritizes communication speed, comparable to UDP (Best Effort). |
| Durability | Determines whether to provide past data to subscribers who join later. |
| Deadline | Triggers an event if data transmission or reception does not occur within a specific time. |
| Lifespan | Treats data as valid only for a defined period; data older than that period is discarded. |
| Liveliness | Periodically or explicitly checks the active status of a node or topic. |

| Category | Item | Specification |
|---|---|---|
| PC | Processor | Intel® Core i7-10700K CPU @ 3.8 GHz (Intel, Santa Clara, CA, USA) |
| PC | Memory | 32 GB |
| PC | OS | Ubuntu 22.04 LTS |
| PC | DDS | Fast DDS v3.2.2 |
| PC | SOME/IP | vsomeip v3.5.6 |
| Jetson Xavier NX | Processor | 6-core NVIDIA Carmel ARM® v8.2 64-bit CPU (NVIDIA, Santa Clara, CA, USA), 6 MB L2 + 4 MB L3 |
| Jetson Xavier NX | Memory | 8 GB |
| Jetson Xavier NX | OS | Ubuntu 20.04 |
| Jetson Xavier NX | DDS | Fast DDS v3.2.2 |
| Jetson Xavier NX | SOME/IP | vsomeip v3.5.6 |

| Platform and Direction | Stage | 1 KB | 2 KB | 4 KB | 8 KB | 16 KB | 32 KB | 64 KB | 128 KB | 256 KB |
|---|---|---|---|---|---|---|---|---|---|---|
| PC, DDS to SOME/IP | DDS Subscribe | 9.90 | 10.41 | 11.53 | 8.74 | 11.02 | 8.79 | 4.34 | 2.82 | 2.26 |
| PC, DDS to SOME/IP | Data Convert | 17.23 | 19.42 | 20.38 | 17.54 | 20.00 | 21.60 | 11.83 | 23.54 | 26.52 |
| PC, DDS to SOME/IP | Queue | 30.99 | 32.43 | 26.03 | 28.44 | 24.86 | 18.93 | 15.90 | 10.39 | 8.36 |
| PC, DDS to SOME/IP | SOME/IP Publish | 41.88 | 37.74 | 42.06 | 45.28 | 44.12 | 50.68 | 67.93 | 63.25 | 62.86 |
| PC, DDS to SOME/IP | Total | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| PC, SOME/IP to DDS | SOME/IP Subscribe | 0.56 | 1.33 | 2.19 | 4.54 | 3.78 | 5.01 | 4.39 | 4.13 | 3.68 |
| PC, SOME/IP to DDS | Queue | 18.32 | 18.32 | 18.21 | 18.74 | 16.28 | 13.85 | 9.30 | 6.92 | 6.54 |
| PC, SOME/IP to DDS | Data Convert | 12.36 | 12.36 | 13.89 | 13.18 | 15.57 | 19.08 | 15.51 | 12.66 | 11.44 |
| PC, SOME/IP to DDS | DDS Publish | 68.76 | 67.99 | 65.71 | 63.54 | 64.37 | 62.06 | 70.80 | 76.29 | 78.34 |
| PC, SOME/IP to DDS | Total | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| Jetson, DDS to SOME/IP | SOME/IP Subscribe | 7.21 | 8.24 | 7.95 | 5.46 | 5.96 | 5.17 | 3.78 | 2.06 | 1.53 |
| Jetson, DDS to SOME/IP | Queue | 29.80 | 49.79 | 19.61 | 24.17 | 18.91 | 20.81 | 17.22 | 27.48 | 30.11 |
| Jetson, DDS to SOME/IP | Data Convert | 16.70 | 16.24 | 7.95 | 7.46 | 7.06 | 6.42 | 9.19 | 5.88 | 4.44 |
| Jetson, DDS to SOME/IP | DDS Publish | 46.29 | 25.73 | 64.50 | 62.90 | 68.07 | 67.60 | 69.81 | 64.88 | 63.92 |
| Jetson, DDS to SOME/IP | Total | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| Jetson, SOME/IP to DDS | SOME/IP Subscribe | 1.92 | 2.09 | 2.10 | 2.37 | 3.41 | 2.82 | 2.26 | 1.93 | 1.58 |
| Jetson, SOME/IP to DDS | Queue | 23.19 | 17.79 | 21.46 | 17.09 | 16.21 | 15.78 | 10.65 | 5.83 | 3.97 |
| Jetson, SOME/IP to DDS | Data Convert | 17.71 | 17.66 | 16.71 | 18.46 | 18.06 | 16.26 | 13.06 | 8.36 | 6.98 |
| Jetson, SOME/IP to DDS | DDS Publish | 57.18 | 62.46 | 59.73 | 62.08 | 62.32 | 65.14 | 74.02 | 83.87 | 87.47 |
| Jetson, SOME/IP to DDS | Total | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |

| Category | Item | Specification |
|---|---|---|
| H.W. (Platform) | UGV | Agile X Hunter SE (Agilex Robotics, Shenzhen, China) |
| H.W. (Platform) | Sensor Processing Board | Jetson Xavier NX 8 GB (NVIDIA) |
| H.W. (Platform) | Laptop | OMEN 15-EK0013DX (HP, Palo Alto, CA, USA) |
| H.W. (Platform) | Ethernet Switch | ipTIME H6005 mini (EFM Networks, Yongin, Republic of Korea) |
| H.W. (Sensors) | LiDAR | Velodyne VLP-16 (Mapix Technologies, Edinburgh, UK) |
| H.W. (Sensors) | GPS | u-blox ZED-F9P RTK (U-blox, Thalwil, Switzerland) |
| S.W. (Sensor Processing Board) | OS | Ubuntu 20.04 |
| S.W. (Sensor Processing Board) | SOME/IP | vsomeip v3.5.6 |
| S.W. (Laptop) | OS | Ubuntu 22.04 |
| S.W. (Laptop) | DDS | Fast DDS v3.2.2 |
| S.W. (Laptop) | SOME/IP | vsomeip v3.5.6 |
| S.W. (Laptop) | ROS | ROS2 Humble |
| S.W. (Laptop) | Autoware | Autoware v0.45.1 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, S.; Choi, H.; Lee, S.; Kim, M.; Shin, H.; Moon, C. A Dynamic Bridge Architecture for Efficient Interoperability Between AUTOSAR Adaptive and ROS2. Electronics 2025, 14, 3635. https://doi.org/10.3390/electronics14183635
