Empirical Evaluation of Reasoning LLMs in Machinery Functional Safety Risk Assessment and the Limits of Anthropomorphized Reasoning

Abstract

1. Introduction

1.1. Identified Research Gaps

- Gap 1: Lack of empirical evaluation of reasoning models in deterministic, safety-critical PLr classification.

- Gap 2: Underexplored impact of reasoning biases and hallucinations in PLr estimation.

- Gap 3: Absence of structured benchmarking methodologies and empirical evaluation for reasoning models in functional safety risk assessment.

1.2. Research Questions

- RQ1: Can reasoning models reliably perform structured risk classification tasks, such as PLr estimation, within the constraints of functional safety standards?

- RQ2: What limitations do reasoning models exhibit when applied to deterministic classification problems in the domain of machinery risk assessment?

- RQ3: How does the reliance on structured prompting affect the consistency and validity of reasoning model outputs in functional safety contexts?

1.3. Contributions

- Comprehensive Experimental Benchmarking of Reasoning Models for Functional Safety Risk Classification (RQ1): A systematic evaluation of reasoning-capable LLMs applied to PLr estimation tasks is presented, utilizing diverse prompting strategies including zero-shot, CoT, rule-based prompting, and RAG-augmented reasoning.

- Empirical analysis of reasoning biases and hallucination effects in structured classification (RQ2): The study analyzes error patterns, misclassification tendencies, and reasoning-induced biases exhibited by LLMs in deterministic classification tasks (e.g., P-inflation, F-drift, redundancy, and mislabeling across PLr classes).

- Identification of Methodological Considerations for LLM Deployment in Safety-Critical Applications and Future Benchmarking (RQ3): Practical implications are highlighted, including the necessity of structured prompting and the risks of anthropomorphizing LLM reasoning capacities (i.e., attributing human-like reasoning abilities to models based on their language output) in domains with strict correctness requirements. The findings also establish a basis for future research on the applicability and limitations of AI reasoning models in structured industrial domains, contributing to the development of scientifically grounded benchmarking methodologies.

2. Background and Related Work

2.1. Machinery Functional Safety Risk Assessment

2.1.1. Risk Assessment

2.1.2. Safety Function

2.1.3. Required Performance Level (PLr)

2.2. AI-Based Risk Assessment in Functional Safety

2.3. LLM Capabilities and Limitations in Reasoning Tasks

2.4. Prompt Engineering, Retrieval-Augmented Generation, and Model Interpretability

2.5. The Role of Domain-Specific Benchmarking in Evaluating LLMs

3. Experimental Design

3.1. Dataset Description

3.1.1. ISO 12100: Annex B and ISO 13849-1: Annex A Correlation

- User type (e.g., operator, maintenance personnel).

- Task (e.g., normal operation, cleaning, maintenance).

- Operating environment (e.g., industrial).

- ISO 13849-1 risk graph parameters: severity (S), frequency and/or duration of exposure (F), and possibility of avoidance (P).
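For orientation, the three parameters above combine into a PLr through the eight-branch qualitative risk graph of ISO 13849-1 Annex A. The following is a minimal Python sketch of that lookup. The function name `plr_from_sfp` and the string encoding of the parameters are illustrative assumptions, not part of the dataset tooling described here, and the sketch covers only the basic graph without any further adjustments.

```python
# Illustrative lookup for the basic ISO 13849-1 Annex A risk graph.
# Names and encoding are assumptions made for this sketch.
RISK_GRAPH = {
    ("S1", "F1", "P1"): "a",
    ("S1", "F1", "P2"): "b",
    ("S1", "F2", "P1"): "b",
    ("S1", "F2", "P2"): "c",
    ("S2", "F1", "P1"): "c",
    ("S2", "F1", "P2"): "d",
    ("S2", "F2", "P1"): "d",
    ("S2", "F2", "P2"): "e",
}


def plr_from_sfp(s: str, f: str, p: str) -> str:
    """Return the required performance level (a-e) for an S/F/P triplet."""
    key = (s.upper(), f.upper(), p.upper())
    if key not in RISK_GRAPH:
        raise ValueError(f"Unknown S/F/P combination: {key}")
    return RISK_GRAPH[key]


if __name__ == "__main__":
    print(plr_from_sfp("S2", "F2", "P1"))  # prints: d
```

Because the mapping is a pure table lookup, any misclassification by a model that has been given the rules must originate in how it assigns S, F, or P from the scenario text, not in the graph itself.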

3.1.2. Dataset Entry: Template and Example

Listing 1. Example of an electrical hazard description in the dataset [7,37].

3.2. Evaluation Datasets

- Variant 1—Canonical ISO-style scenarios (N = 100): This variant anchors the evaluation in in-distribution phrasing closely mirroring ISO 12100 Annex B. Each case follows a fixed schema (hazard type, origin, potential consequence, task, environment) and reports explicit risk parameters, namely, severity (S), exposure frequency (F), and possibility of avoidance (P), together with a reference PLr. Because the textual descriptions are automatically generated from canonical fields while remaining faithful to the ISO taxonomy, Variant 1 can be characterized as a synthetic but controlled dataset that provides a standardized baseline under explicit terminology.

- Variant 2—Functional safety engineer-authored scenarios with lexical shift (N = 100): This variant stress tests generalization to field language. Scenarios were written by a functional safety engineer from industrial practice using the same schema and gold labels as Variant 1, but the free-text descriptions deliberately avoid literal ISO tokens for S/F/P. Instead, the factors are conveyed implicitly in operational prose, for example, "repetitive short stops near a moving transmission", "limited clearance and delayed stop reachability" and "hands inside a nip area during setup". This emulates how hazards are typically described in industrial workflows during safety assessments.

3.3. Prompting Strategies for Risk Classification

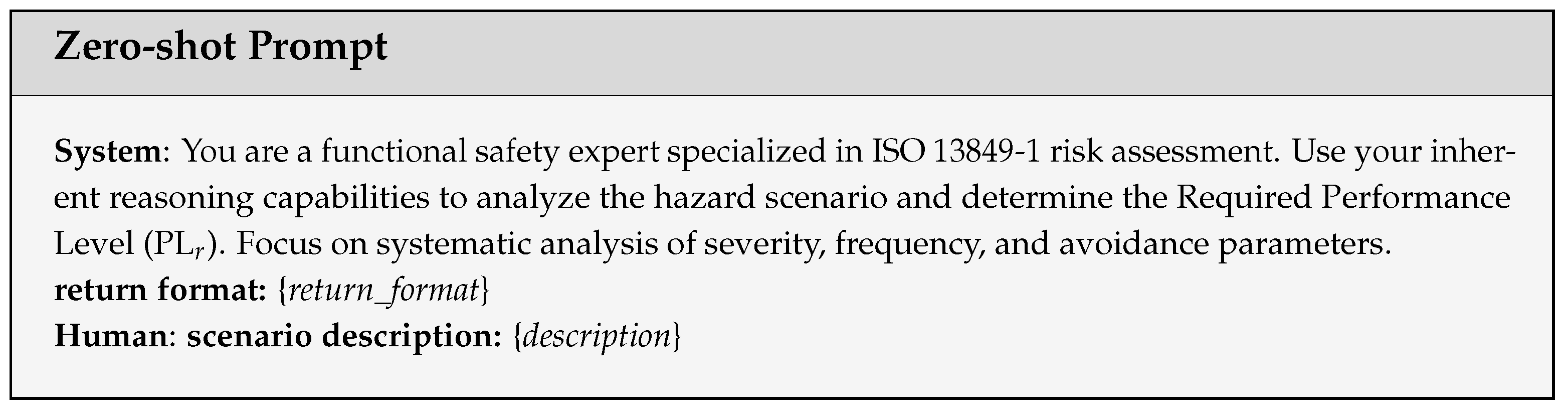

- Experiment I: Zero-shot Prompt. In this baseline experiment, the reasoning model is given only the raw hazard scenario in natural language, without any additional guidance or rules beyond its role as a functional safety expert. This setup assesses the reasoning model’s inherent ability to determine the PLr based on its pre-trained knowledge and reasoning capabilities, focusing on systematic analysis of severity, frequency, and avoidance parameters.

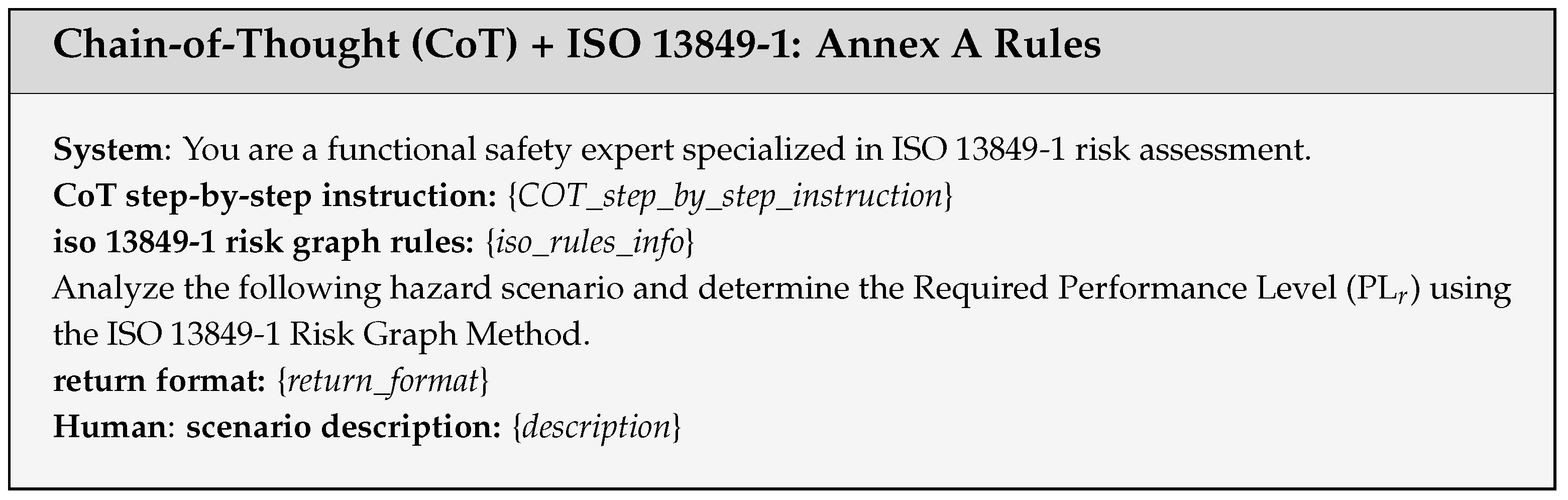

- Experiment II: Explicit ISO Rule Integration. Building on the zero-shot prompt, this experiment supplements the scenario with explicit PLr determination rules as specified in ISO 13849-1 Annex A (cf. Figure 1). This tests whether access to codified safety knowledge enables more accurate and consistent classification.

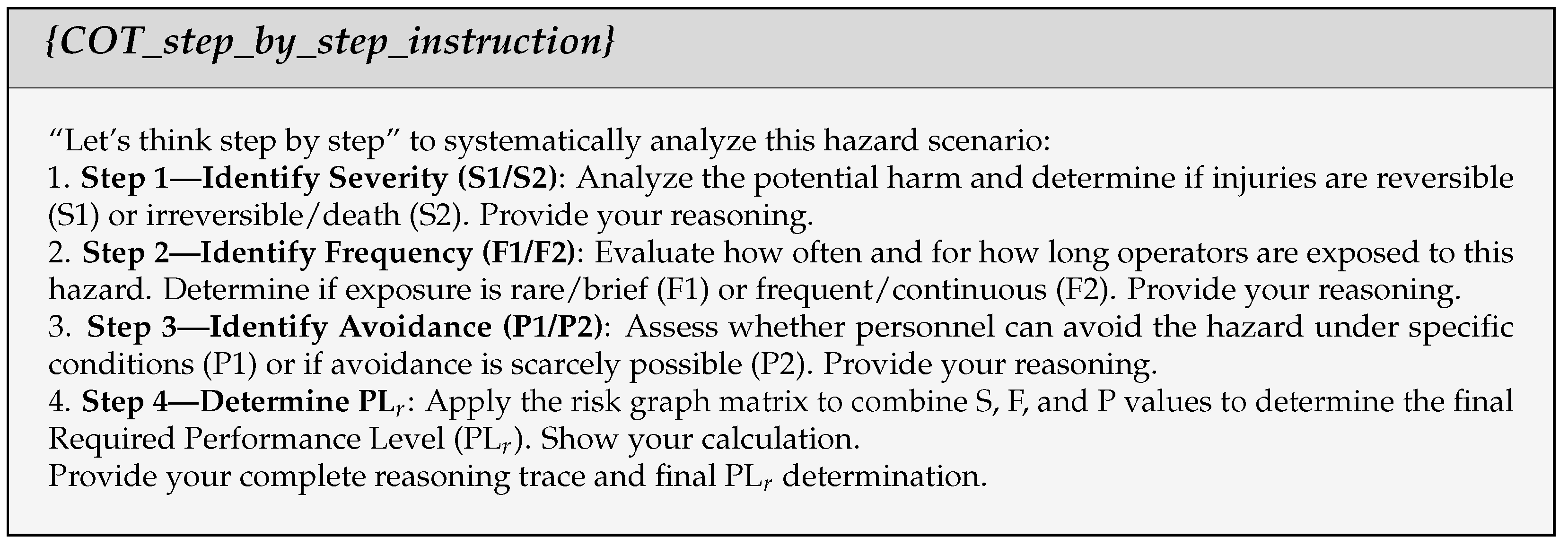

- Experiment III: Chain-of-Thought (CoT). This experiment uses the CoT approach by providing highly structured, explicit step-by-step instructions for the model’s reasoning process, but without explicitly stating the ISO 13849-1: Annex A performance graph rules. This aims to guide the model through a precise and verifiable pathway for determining the PLr.

- Experiment IV: Chain-of-Thought (CoT) with Rules. This experiment combines the structured step-by-step instructions of the CoT approach with explicit textual inclusion of the ISO 13849-1: Annex A performance graph rules (cf. Figure 1). The goal is to provide the model with the necessary information directly within the prompt, minimizing reliance on its pre-trained knowledge for the specific rules of PLr determination. This setup evaluates the model’s ability to precisely apply provided rules in conjunction with its reasoning process.

- Experiment V: CoT with Rules and Retrieval-Augmented Examples. In addition to the scenario, rules, and explicit CoT instructions, representative historical hazard examples are retrieved from a curated database and included in the prompt. This evaluates the model’s ability to generalize from precedent and improve classification accuracy through context enrichment. This experiment is referred to as COT_WITH_RULES_RAG in this paper.

- Experiment VI: Rules with Retrieval-Augmented Examples. In addition to the scenario and rules, representative historical hazard examples are retrieved from a curated database and included in the prompt. This evaluates the model’s ability to generalize from precedent and improve classification accuracy through context enrichment. This experiment is referred to as WITH_RULES_RAG in this paper. It is included to isolate the effect of CoT when rules and retrieval are both present: the only difference between Experiments V and VI is that Experiment VI omits the CoT instructions.

- ZERO_SHOT: Baseline condition where the model receives only the raw hazard scenario, without explicit rules or structured guidance.

- WITH_RULES: Prompt includes explicit ISO 13849-1 Annex A rules, constraining the model to rule-based PLr determination.

- PURE_CoT: Uses chain-of-thought (CoT) instructions to elicit step-by-step reasoning, but without explicit rule injection.

- COT_WITH_RULES: Combines CoT instructions with explicit ISO rules, guiding the model through structured reasoning and rule application.

- WITH_RULES_RAG: Augments rule-based prompting with retrieved hazard exemplars from a curated database, enabling case-based reasoning.

- COT_WITH_RULES_RAG: Integrates CoT, explicit ISO rules, and retrieved exemplars, representing the most structured and enriched prompting setup.

3.4. Prompt Placeholder Description

- {description}: The central input for each prompt is a natural language description of a real-world hazard scenario, simulating industrial safety assessment tasks and enabling evaluation of the model’s capacity for context comprehension and risk mapping. An example description, including user type, task, hazard origin, and consequence, is shown in Listing 1.

- {iso_rules_info}: This placeholder injects the structured decision logic codified in ISO 13849-1: Annex A, including parameter definitions and full risk graph mapping rules. It converts the task from open-ended inference to rule-constrained reasoning, enabling assessment of whether direct access to normative safety rules improves PLr classification fidelity and consistency. The rules ensure deterministic mapping of scenario descriptions into standardized parameters—severity (S1/S2), exposure frequency (F1/F2), and possibility of avoidance (P1/P2)—which together yield the PLr. An excerpt of this content is shown in Listing 2.

Listing 2. Deterministic rules codified under {iso_rules_info} for PLr inference based on ISO 13849-1: Annex A qualitative performance graph rules (cf. Figure 1).

- {COT_step_by_step_instruction}: This placeholder, detailed in Listing 3, formalizes a CoT prompting technique. It provides the LLM with explicit, step-by-step instructions for analyzing a hazard scenario, specifically guiding its reasoning for severity, frequency, and avoidance. By forcing this sequential decomposition and requiring intermediate justifications, this CoT aims to enhance the model’s transparency and align its decision-making with structured human expert methodologies for PLr determination. This approach is crucial for improving explainability and validating AI reasoning in critical safety applications.

Listing 3. Structured chain-of-thought instructions under {COT_step_by_step_instruction} prompt placeholder for PLr inference.

- {rag_examples}: This placeholder is populated by a retrieval module that supplies hazard scenarios (with ground-truth S/F/P and PLr) from a curated database. Further details about the RAG implementation pipeline are provided in Section 3.5.1.
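The way the four placeholders combine across the six prompting strategies can be sketched as a small assembly function. This is an illustration only: the role sentence, the block ordering, and the names `STRATEGY_BLOCKS` and `build_prompt` are assumptions, since the exact prompt wording is not reproduced in this section.

```python
# Optional blocks per strategy, following the strategy list in Section 3.3.
STRATEGY_BLOCKS = {
    "ZERO_SHOT": (),
    "WITH_RULES": ("iso_rules_info",),
    "PURE_CoT": ("COT_step_by_step_instruction",),
    "COT_WITH_RULES": ("iso_rules_info", "COT_step_by_step_instruction"),
    "WITH_RULES_RAG": ("iso_rules_info", "rag_examples"),
    "COT_WITH_RULES_RAG": (
        "iso_rules_info",
        "COT_step_by_step_instruction",
        "rag_examples",
    ),
}


def build_prompt(strategy: str, description: str, blocks: dict) -> str:
    """Assemble a prompt from the scenario and the blocks the strategy needs."""
    # Hypothetical role sentence; the actual wording is not given in the text.
    parts = ["You are a functional safety expert. Determine the PLr."]
    for name in STRATEGY_BLOCKS[strategy]:
        if name not in blocks:
            raise KeyError(f"Strategy {strategy} requires block '{name}'")
        parts.append(blocks[name])
    parts.append("Hazard scenario:\n" + description)
    return "\n\n".join(parts)


if __name__ == "__main__":
    demo_blocks = {"iso_rules_info": "<ISO 13849-1 Annex A rules>"}
    print(build_prompt("WITH_RULES", "<scenario text>", demo_blocks))
```

Keeping the strategy definitions in one table makes the difference between, for example, WITH_RULES_RAG and COT_WITH_RULES_RAG a single missing block, which matches how the two RAG experiments are contrasted.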

3.5. RAG Implementation

- WITH_RULES_RAG: Injects retrieved exemplars alongside the base hazard description and ISO rules.

- COT_WITH_RULES_RAG: Integrates exemplars into a CoT prompt alongside base hazard descriptions and ISO rules.

3.5.1. Pipeline Implementation

Stage I: Semantic Database Search

Stage II: Hybrid Prefiltering and Ranking

- Lexical overlap filter: Compute the Jaccard index J on token sets for the query and each candidate; retain only candidates whose J passes a configurable threshold (for which a default is set).

- S/F/P constraint (optional exact gate; disabled by default): Map hazards to a triplet (S, F, P) with S ∈ {S1, S2}, F ∈ {F1, F2}, and P ∈ {P1, P2}. If require_sfp_exact = true, discard any candidate whose triplet does not exactly match the query’s; otherwise keep it but log the mismatch for diagnostics. In all main experiments, this gate was disabled (require_sfp_exact = false); enabling it is left for ablations.

- Deduplication and top-M selection: Deduplicate by hazard identifier, rank primarily by J (semantic score may be used as a tie-breaker inside the helper), and truncate to the configured top M.
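The three Stage II steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the pipeline's actual helper: the `Candidate` type, the whitespace tokenizer, the `min_jaccard` default of 0.1, and the `top_m` default are all hypothetical; only the flag name `require_sfp_exact` and the ranking order (J first, semantic score as tie-breaker) come from the description above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    hazard_id: str
    text: str
    sfp: tuple            # e.g. ("S2", "F2", "P1")
    semantic_score: float  # similarity from Stage I


def jaccard(a: str, b: str) -> float:
    """Jaccard index on lower-cased whitespace token sets (assumed tokenizer)."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    if not ta and not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def prefilter(query_text, query_sfp, candidates,
              min_jaccard=0.1, require_sfp_exact=False, top_m=3):
    """Return (kept candidates, hazard ids with S/F/P mismatches)."""
    scored, mismatches = [], set()
    for cand in candidates:
        j = jaccard(query_text, cand.text)
        if j < min_jaccard:
            continue  # lexical overlap filter
        if cand.sfp != query_sfp:
            if require_sfp_exact:
                continue  # exact gate enabled: drop the candidate
            mismatches.add(cand.hazard_id)  # gate disabled: keep but record
        scored.append((j, cand.semantic_score, cand))
    # Rank by J, using the semantic score as tie-breaker.
    scored.sort(key=lambda item: (item[0], item[1]), reverse=True)
    kept, seen = [], set()
    for _, _, cand in scored:
        if cand.hazard_id in seen:
            continue  # deduplicate by hazard identifier
        seen.add(cand.hazard_id)
        kept.append(cand)
        if len(kept) == top_m:
            break
    return kept, sorted(mismatches)
```

With the gate disabled, as in the main experiments, structurally mismatched neighbors still reach the prompt; the returned mismatch list is what allows them to be audited afterwards.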

Stage III: Evidence Packaging for Prompting

3.5.2. Retrieval Index (ChromaDB)

3.6. Model Selection and Configuration

- Claude Opus 4.1 (Anthropic): Latest Claude 4.x release positioned for complex reasoning, coding, and agentic workflows [40].

- DeepSeek Reasoner: Domain-agnostic reasoning model optimized for multi-step inference; API documentation lists unsupported decoding controls (see below) [41].

- o3-mini (OpenAI): Small o-series reasoning model targeting STEM/logic tasks at low cost/latency [47].

- o4-mini (OpenAI): Newer small o-series model optimized for fast, effective reasoning (math, coding, vision) [48].

3.6.1. Deterministic Decoding Policy

3.6.2. Cost Context

4. Results and Analysis

- Scope. Two datasets are evaluated:

  - Variant 1: Canonical ISO-style scenarios (in-distribution reliability).

  - Variant 2: Engineer-authored free-text scenarios (out-of-distribution robustness).

- Reported metrics. For each (model, prompt) condition, the following are reported:

  - Accuracy; Macro-/Micro-/Weighted-F1.

  - Per-class metrics (including class E recall).

  - Processing time.

- Experimental protocol.

  - Deterministic decoding: temperature = 0.0, with top_p and top_k fixed (when available).

  - Repeats: independent runs per condition with identical inputs; for RAG, a fixed retrieval configuration and index.

  - Aggregation: metrics computed per run and then averaged across runs.

- Uncertainty quantification. Error bars are 95% t-intervals across runs for accuracy and timing, and 95% class-stratified bootstrap Confidence Intervals (CIs) for Micro-/Macro-/Weighted-F1 and all per-class metrics.
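As a reminder of how a 95% Student t-interval across runs is formed, the sketch below computes mean ± t·s/√n from per-run accuracies. The run values in the example and the function name `t_interval_95` are hypothetical; the critical values are the standard two-sided 95% quantiles for small degrees of freedom, hard-coded here to keep the sketch free of external dependencies.

```python
import math
import statistics

# Two-sided 95% Student t critical values, keyed by degrees of freedom (n - 1).
T_CRIT_95 = {
    1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
    6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262,
}


def t_interval_95(values):
    """Return (lower, upper) bounds of the 95% t-interval for the mean."""
    n = len(values)
    if n < 2 or (n - 1) not in T_CRIT_95:
        raise ValueError("This sketch supports between 2 and 10 runs")
    mean = statistics.mean(values)
    sem = statistics.stdev(values) / math.sqrt(n)  # standard error of the mean
    half_width = T_CRIT_95[n - 1] * sem
    return mean - half_width, mean + half_width


if __name__ == "__main__":
    # Hypothetical per-run accuracies for one (model, prompt) condition.
    low, high = t_interval_95([0.95, 0.97, 0.96])
    print(f"{low:.3f} .. {high:.3f}")
```

With only a handful of runs the critical value is large, so even small between-run differences produce visibly wide intervals.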

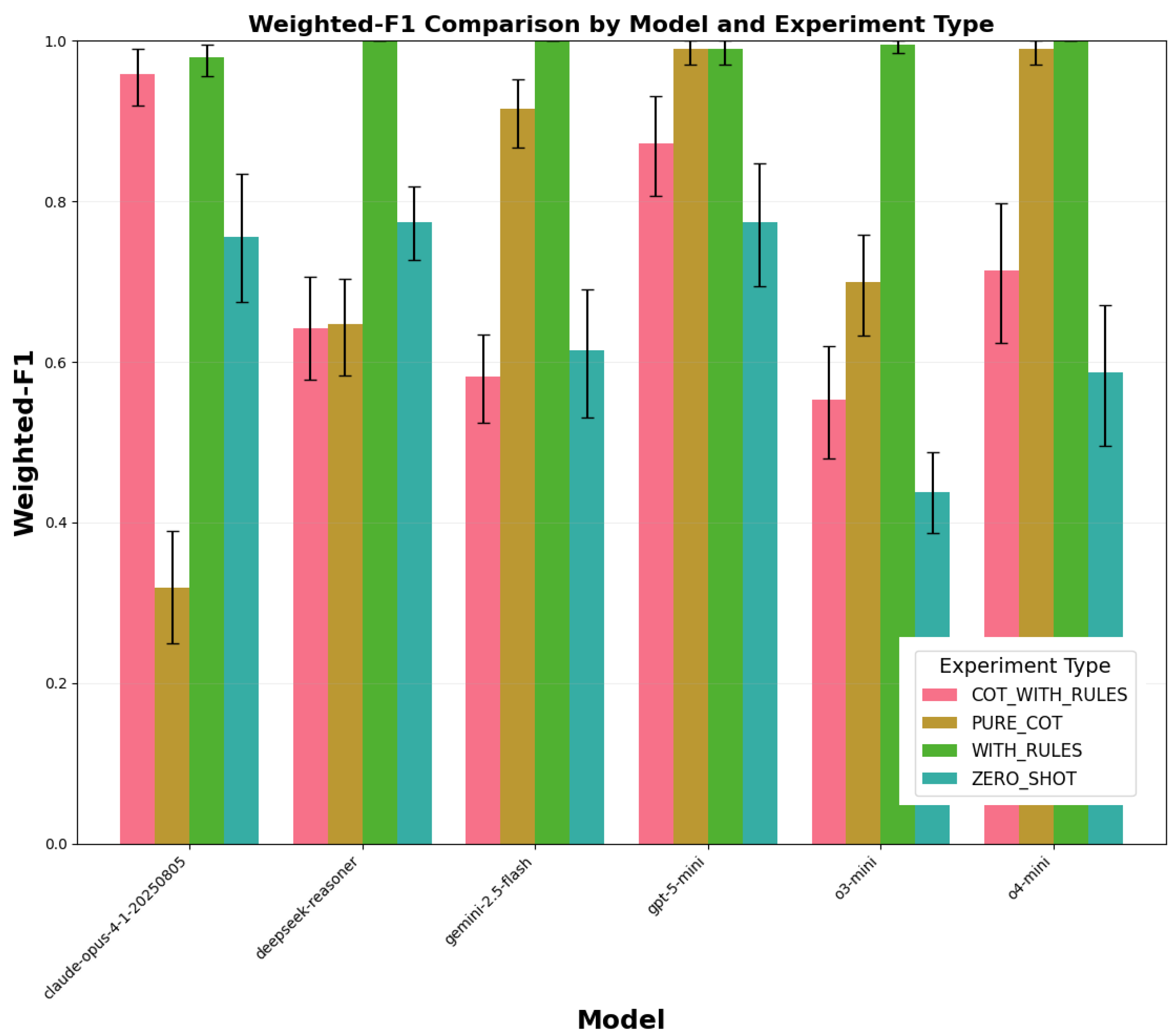

- Weighted-F1 (rationale).

  - Weighted-F1 averages per-class F1 using class prevalence as weights; Macro-F1 gives equal weight to all classes; Micro-F1 (for single-label tasks) equals accuracy and can mask per-class precision/recall trade-offs.

  - To avoid hiding minority-class failures, Weighted-F1 is always paired with Macro-F1, per-class metrics, and class E recall.
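The difference between the three averages can be made concrete with a small pure-Python sketch. The labels in the usage example are invented for illustration and the function name `f1_report` is an assumption; the definitions themselves are the standard ones summarized above.

```python
from collections import Counter


def f1_report(y_true, y_pred):
    """Per-class F1/recall plus macro, micro, and weighted F1 (single-label)."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class gains a false positive
            fn[t] += 1  # true class gains a false negative
    support = Counter(y_true)
    n = len(y_true)

    def f1(c):
        denom = 2 * tp[c] + fp[c] + fn[c]
        return 2 * tp[c] / denom if denom else 0.0

    per_class_f1 = {c: f1(c) for c in labels}
    per_class_recall = {
        c: (tp[c] / support[c] if support[c] else 0.0) for c in labels
    }
    return {
        "per_class_f1": per_class_f1,
        "per_class_recall": per_class_recall,
        "macro_f1": sum(per_class_f1.values()) / len(labels),
        "micro_f1": sum(tp.values()) / n,  # equals accuracy here
        "weighted_f1": sum(per_class_f1[c] * support[c] for c in labels) / n,
    }


if __name__ == "__main__":
    gold = ["b", "b", "b", "d", "d", "e"]
    pred = ["b", "b", "c", "d", "e", "e"]
    report = f1_report(gold, pred)
    print(round(report["macro_f1"], 3), round(report["weighted_f1"], 3))
```

In this toy case the weighted average is noticeably higher than the macro average because the majority class b is handled well, which is exactly the masking effect that motivates reporting both alongside class e recall.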

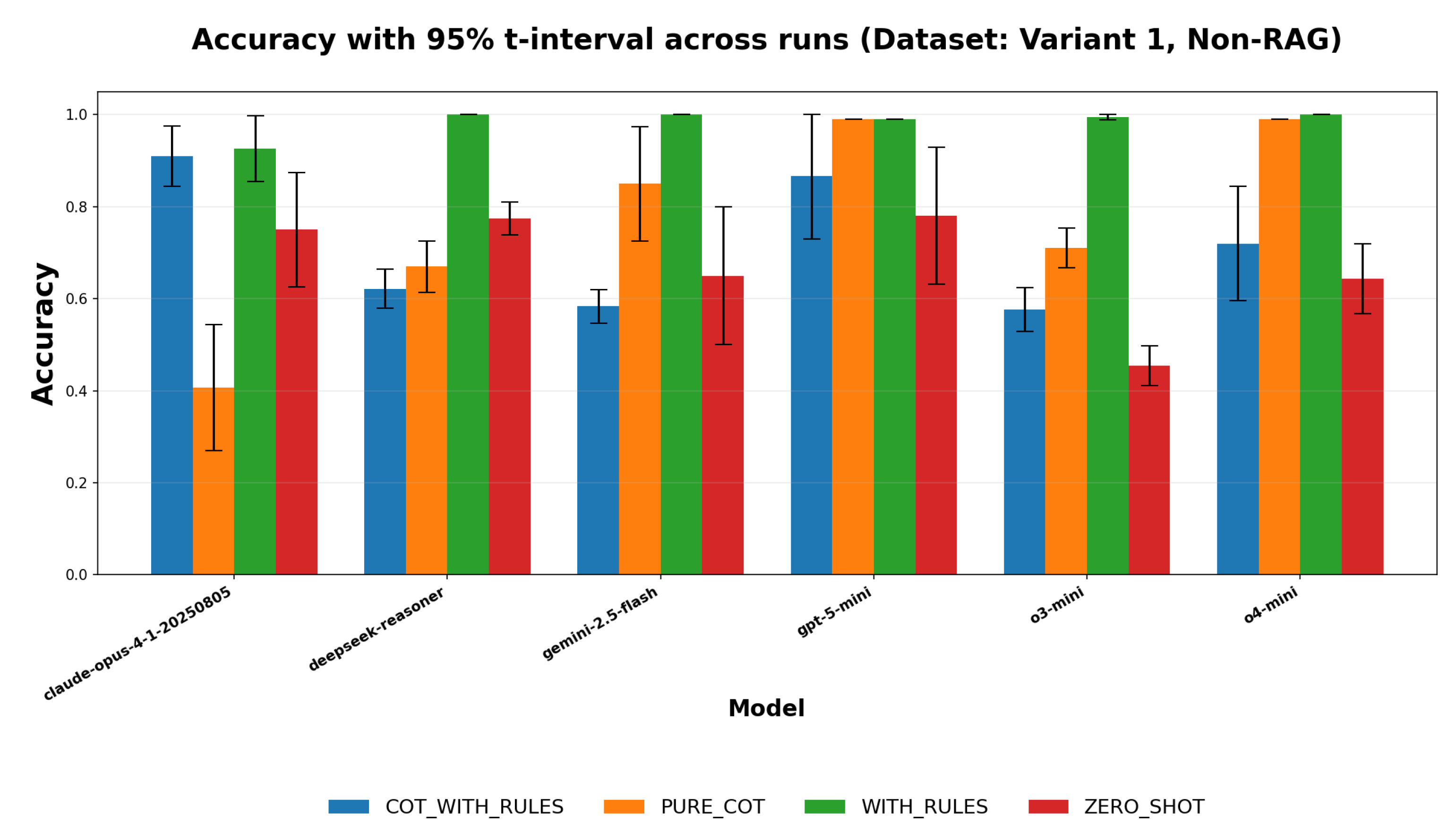

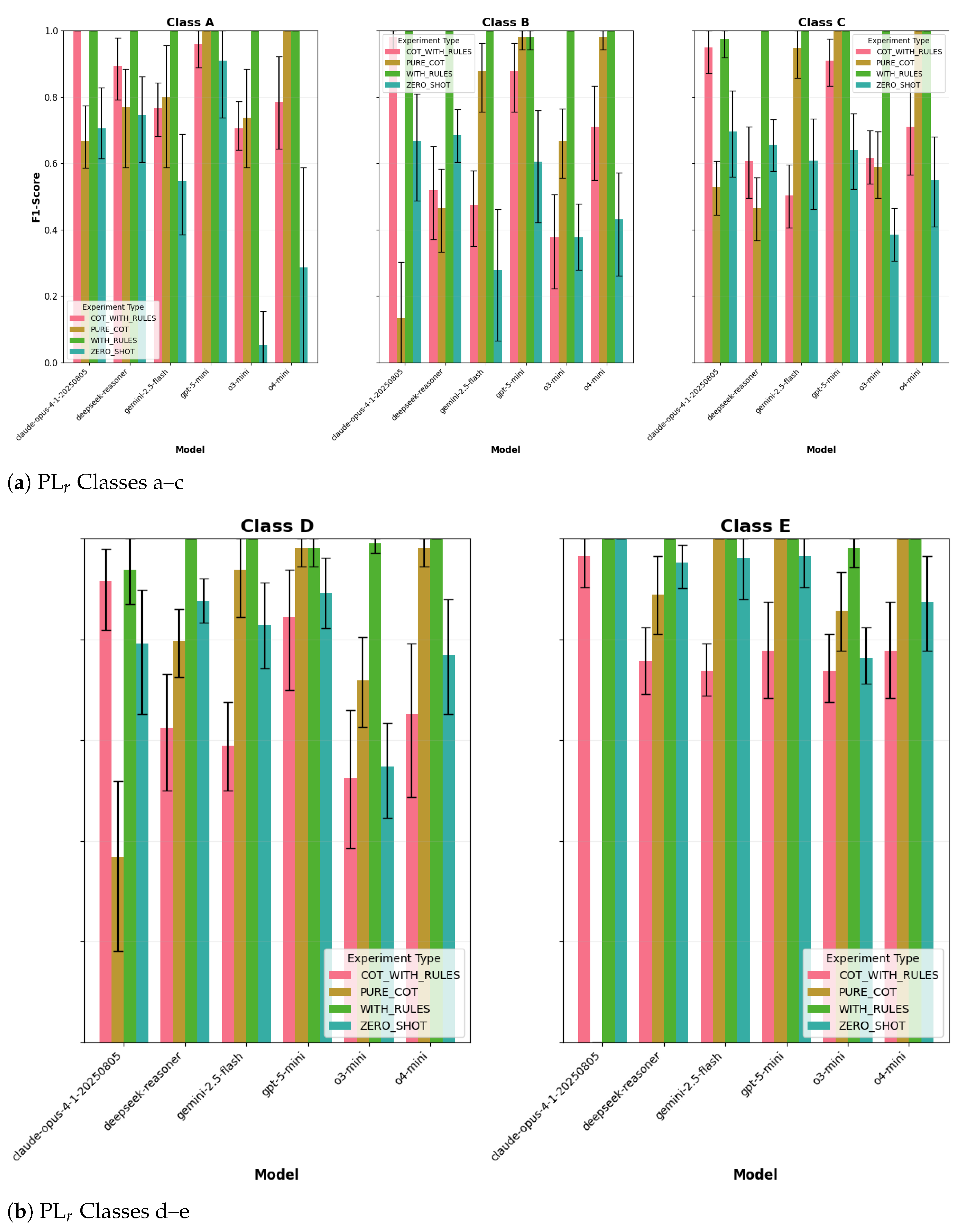

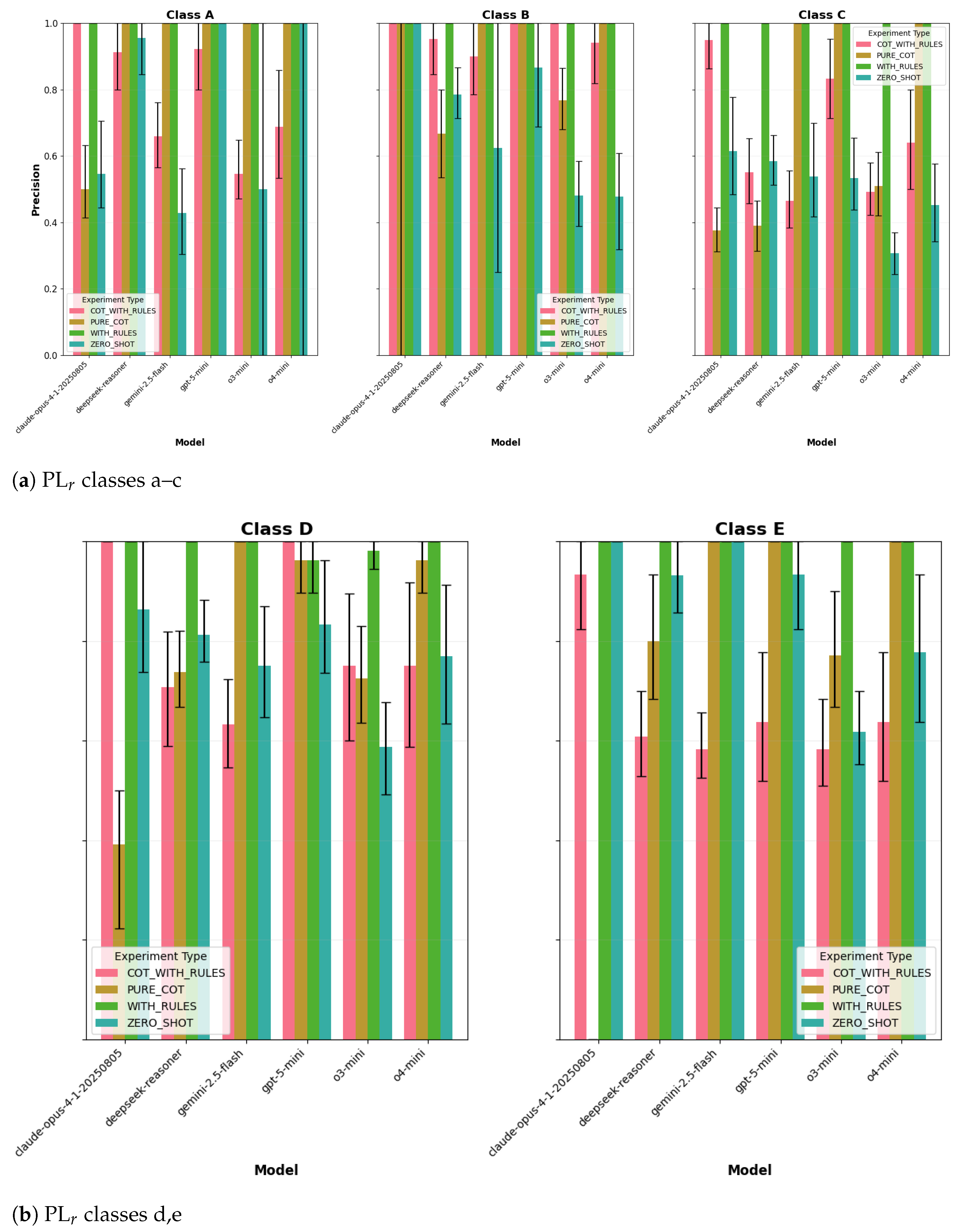

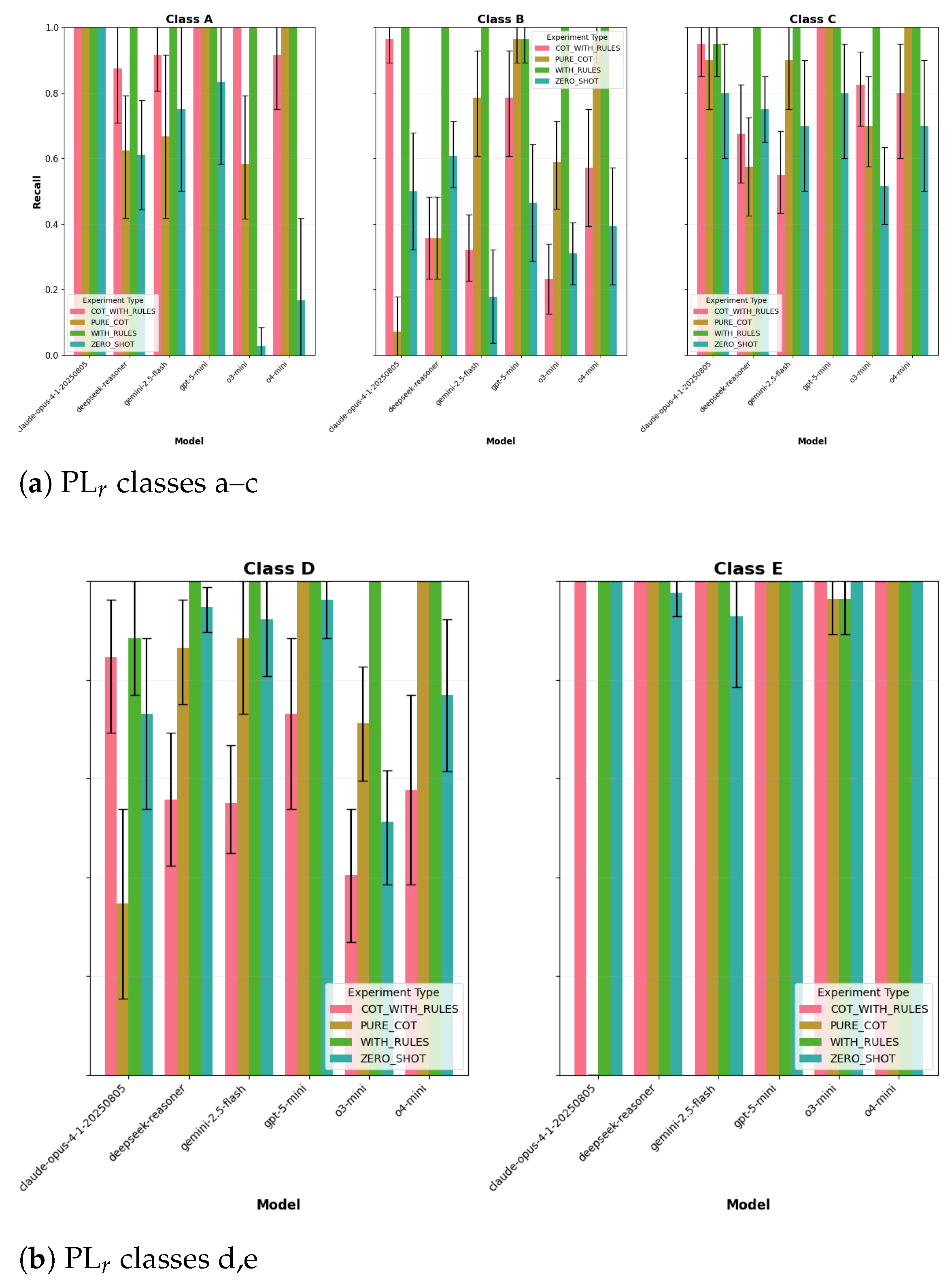

4.1. Results on Variant 1 (Canonical ISO-Style Scenarios, Non-RAG Prompts)

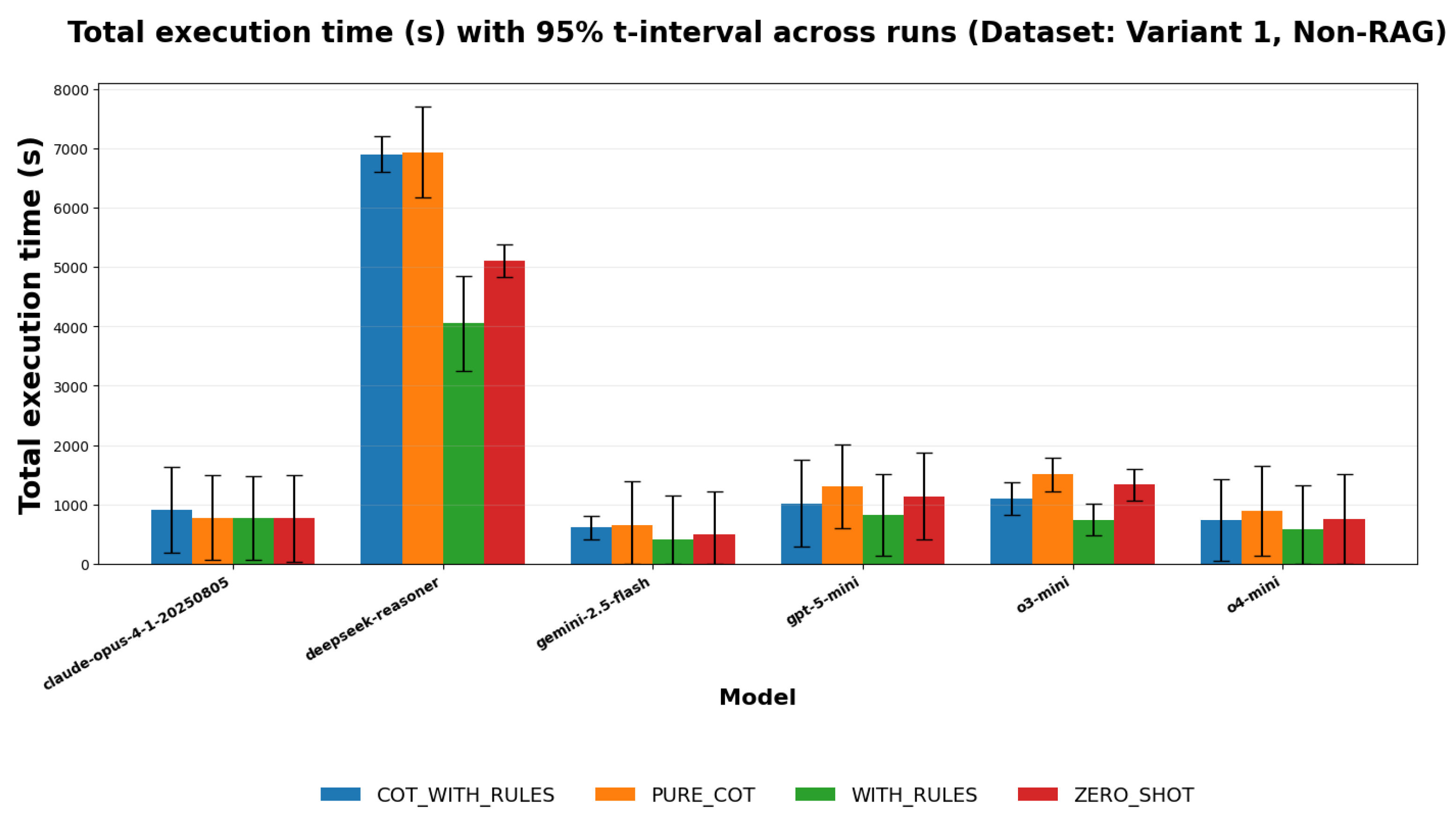

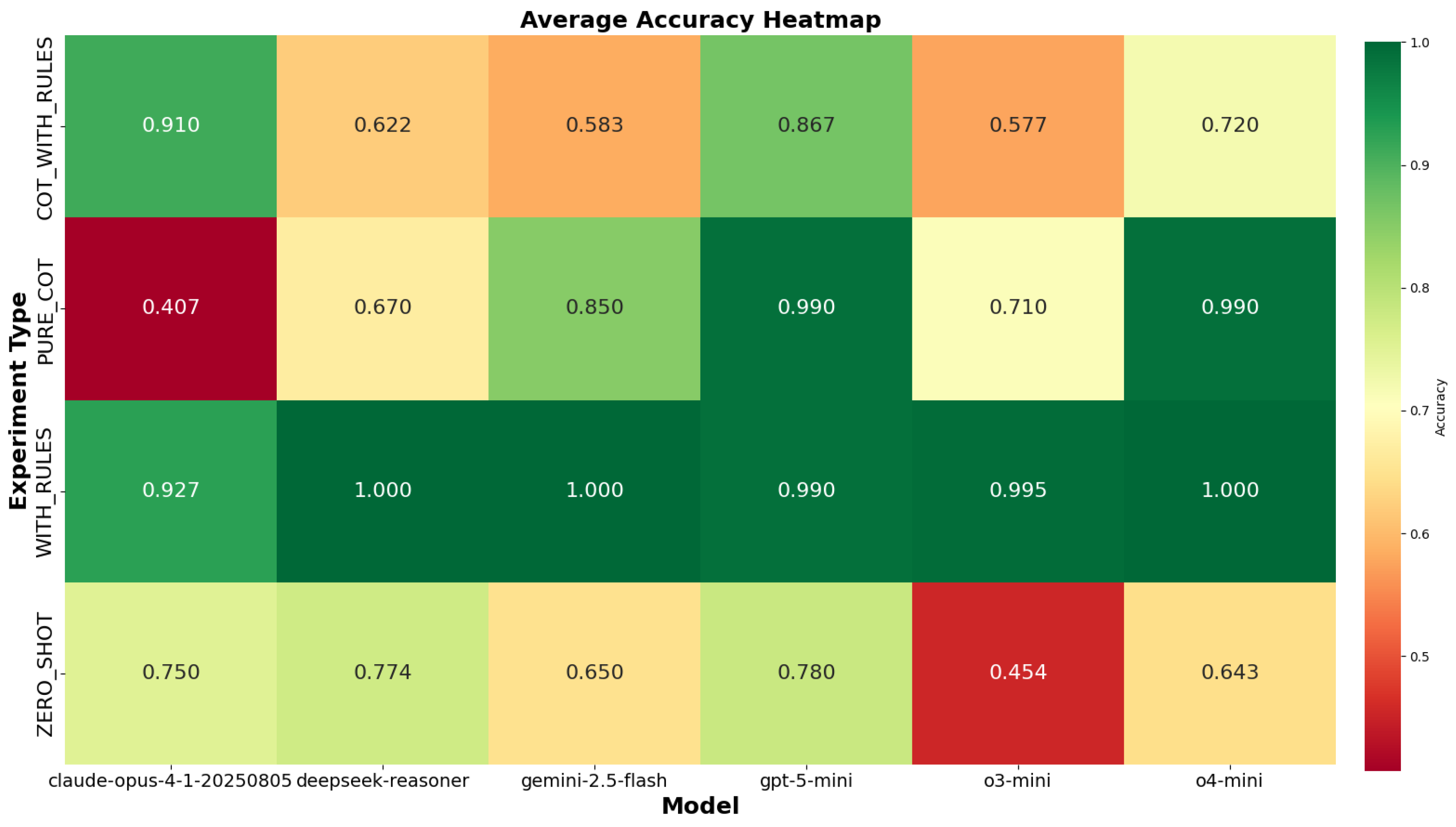

4.1.1. Accuracy and Processing Time

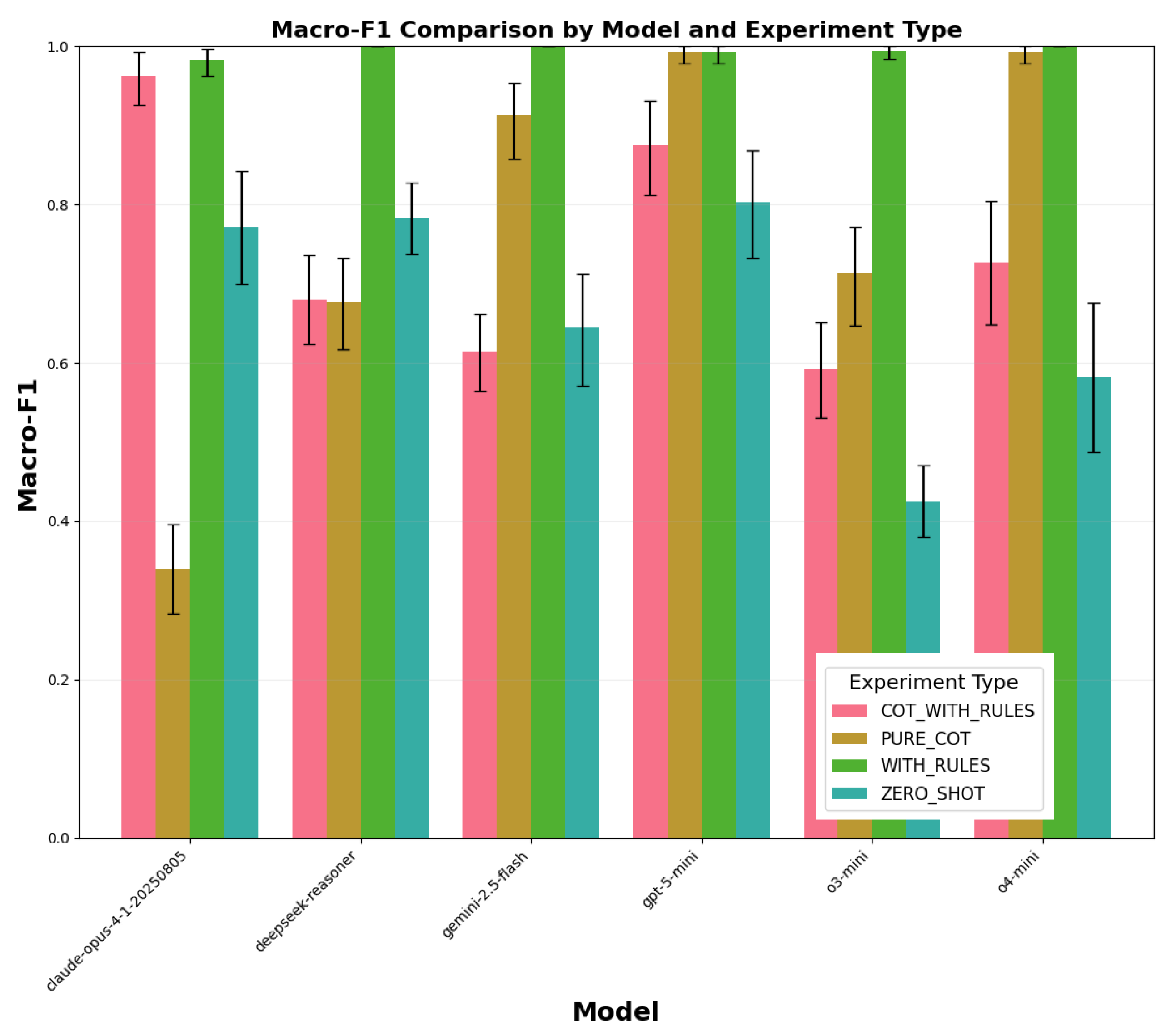

4.1.2. Macro, Micro-F1 and Precision

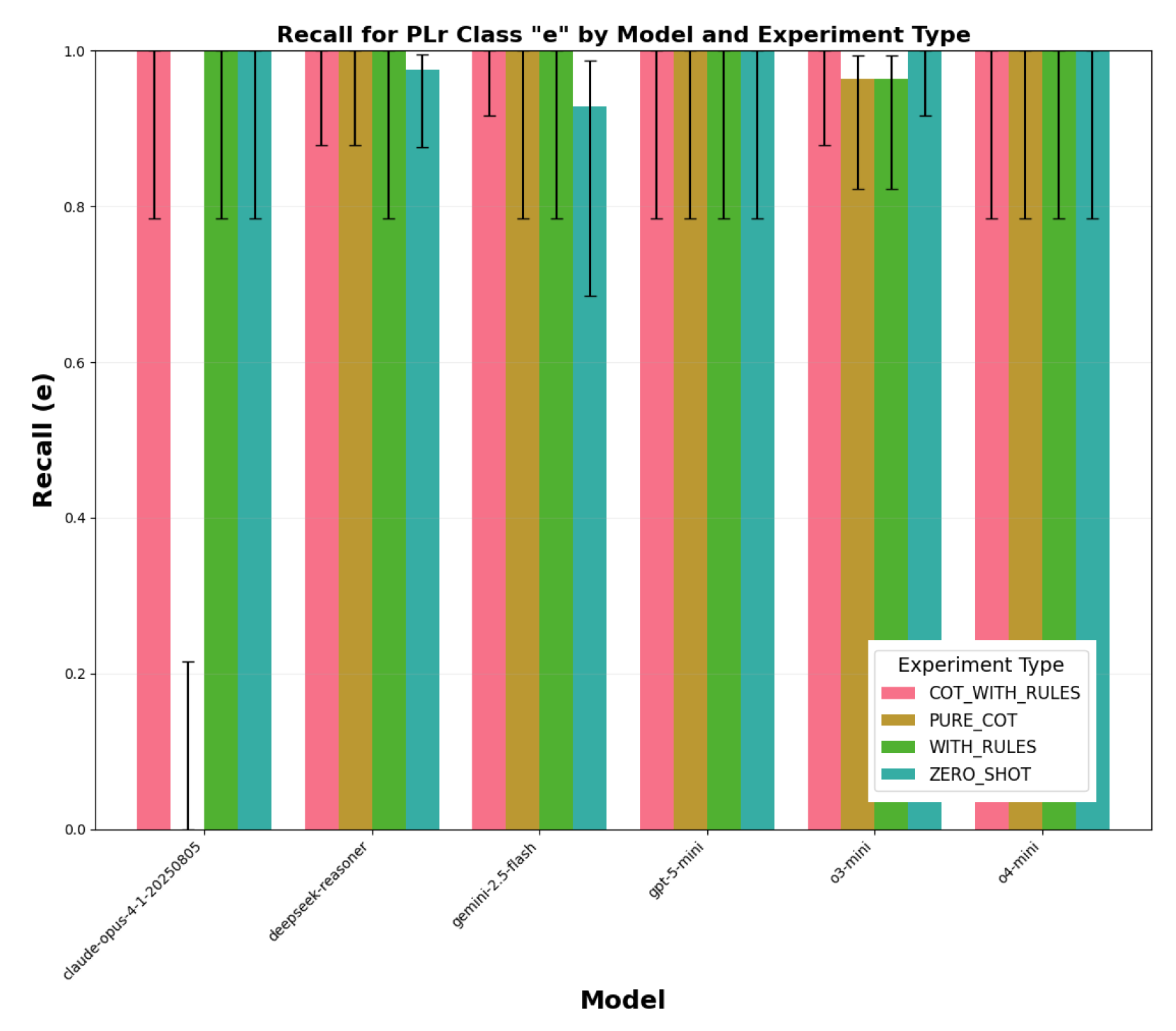

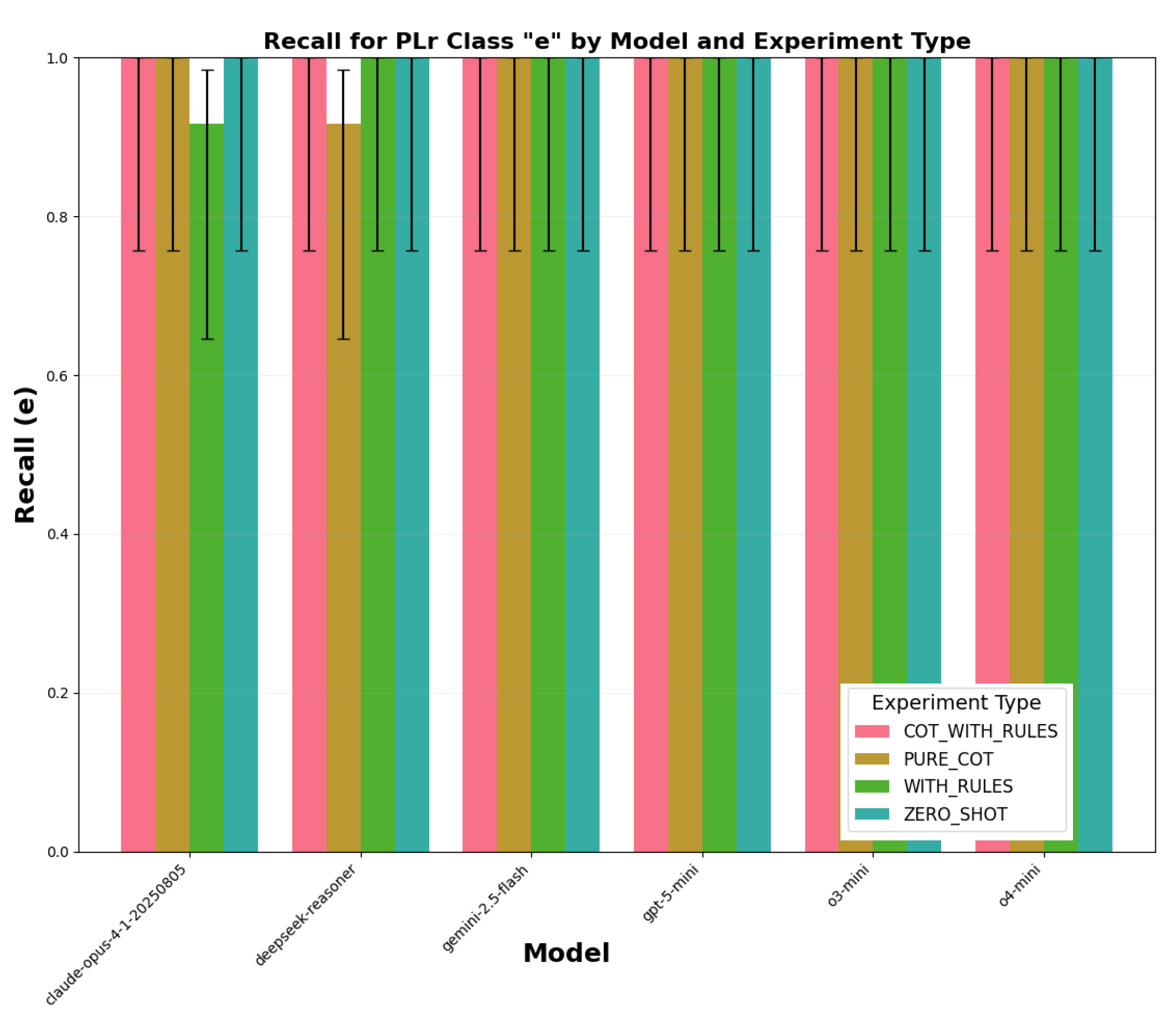

4.1.3. Recall for PLr Class E

4.2. Results on Variant 1 (Canonical ISO-Style Scenarios, 2-RAG-Based Prompts)

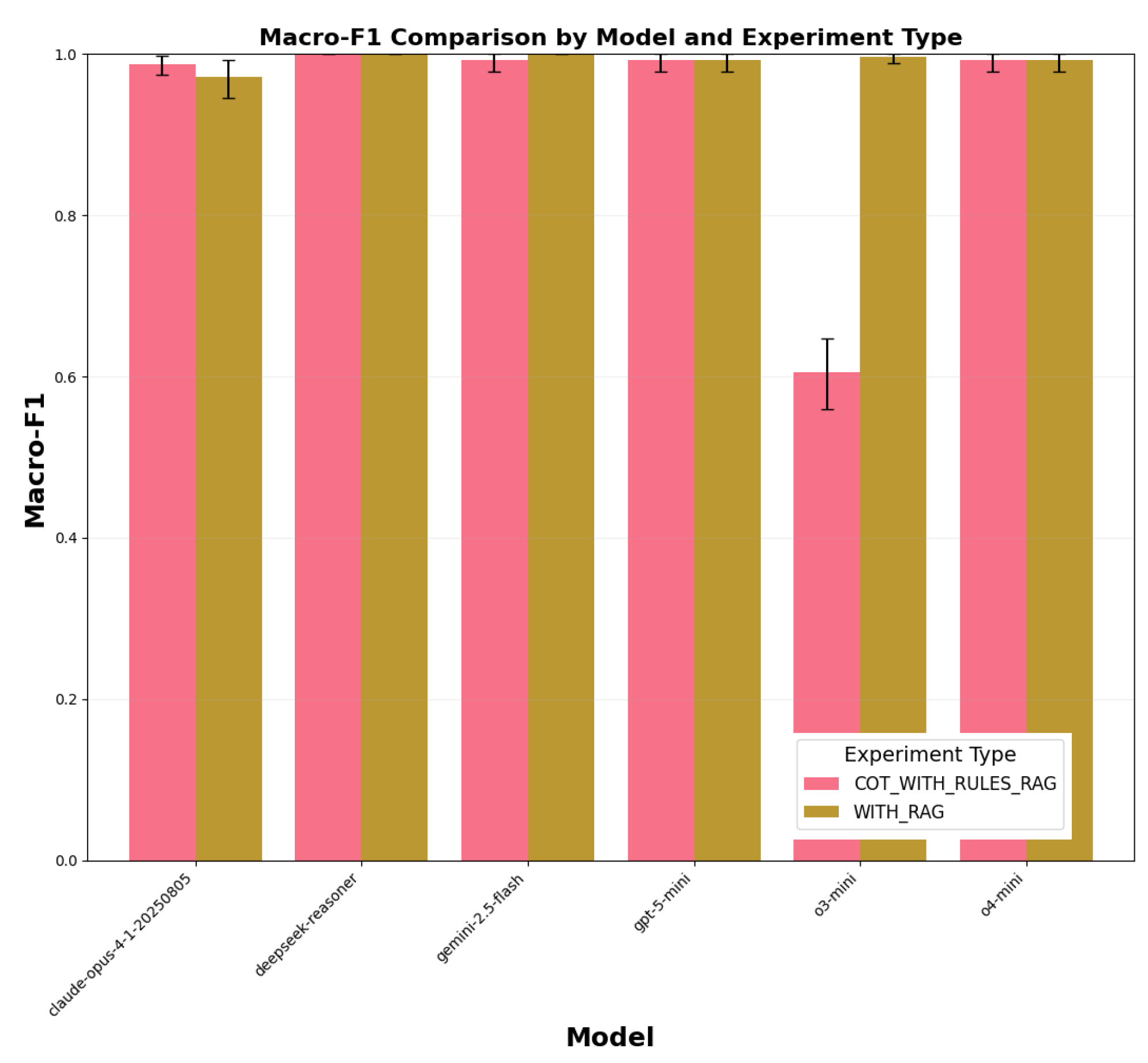

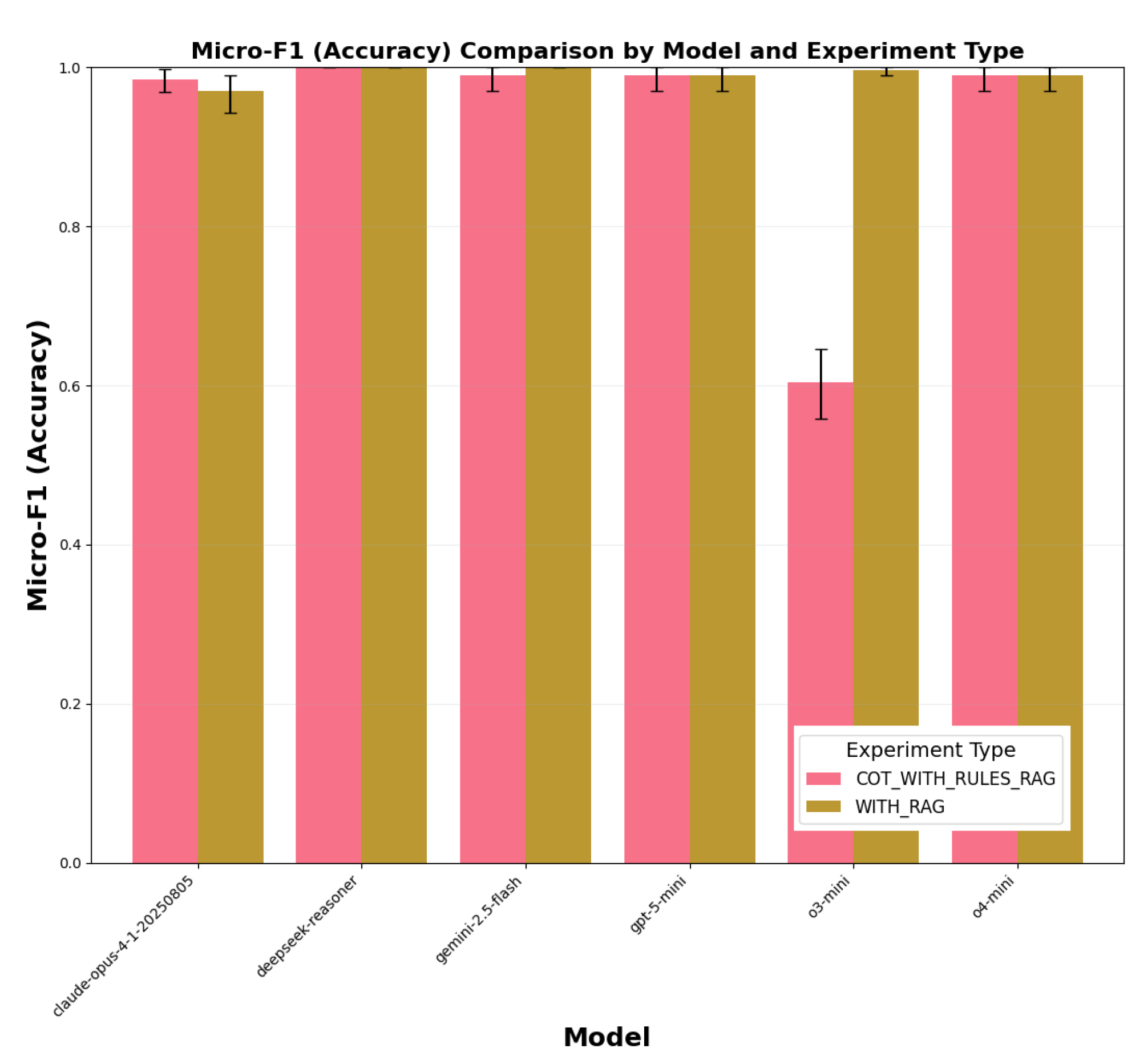

4.2.1. Macro-/Micro-F1

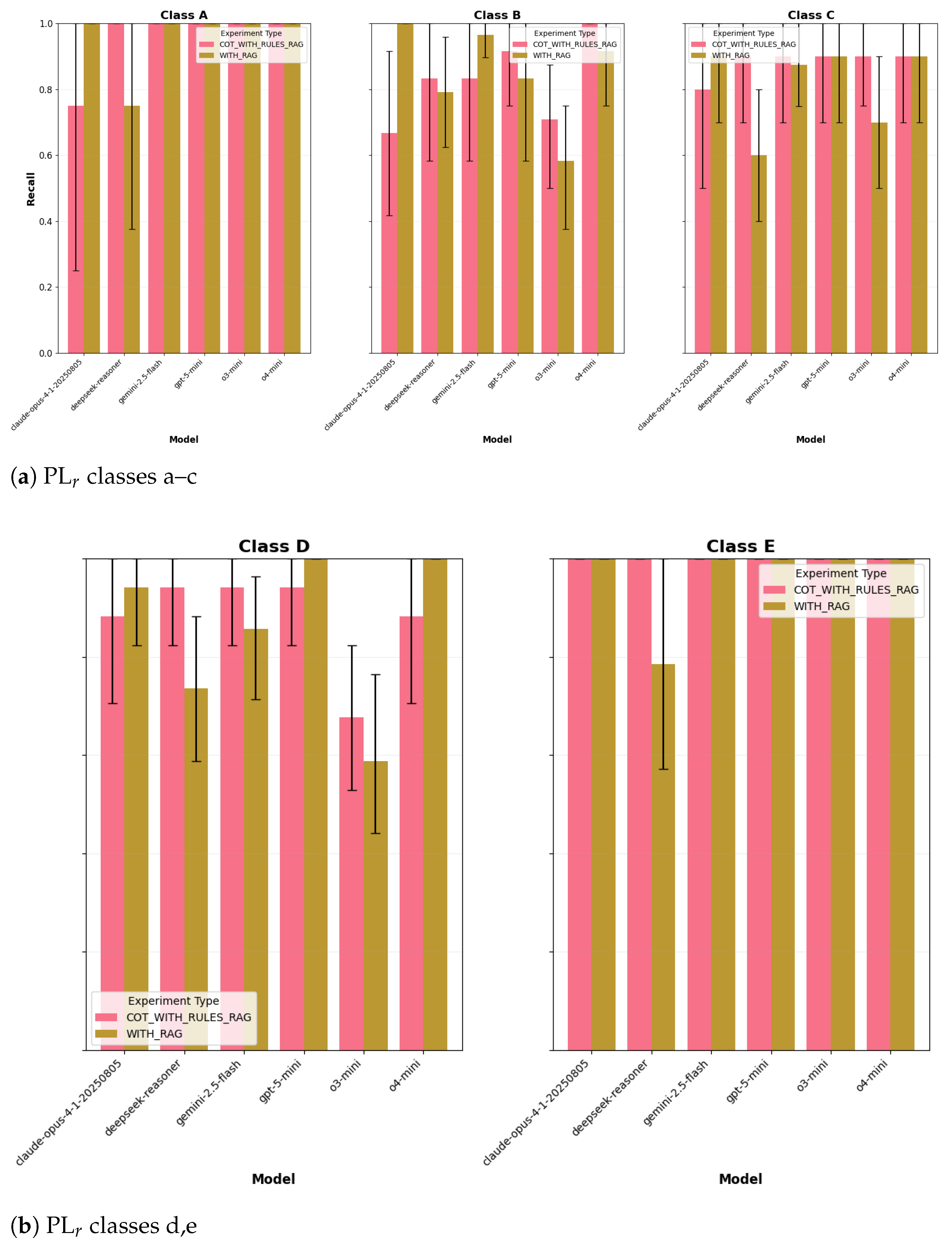

4.2.2. Per-Class Behavior

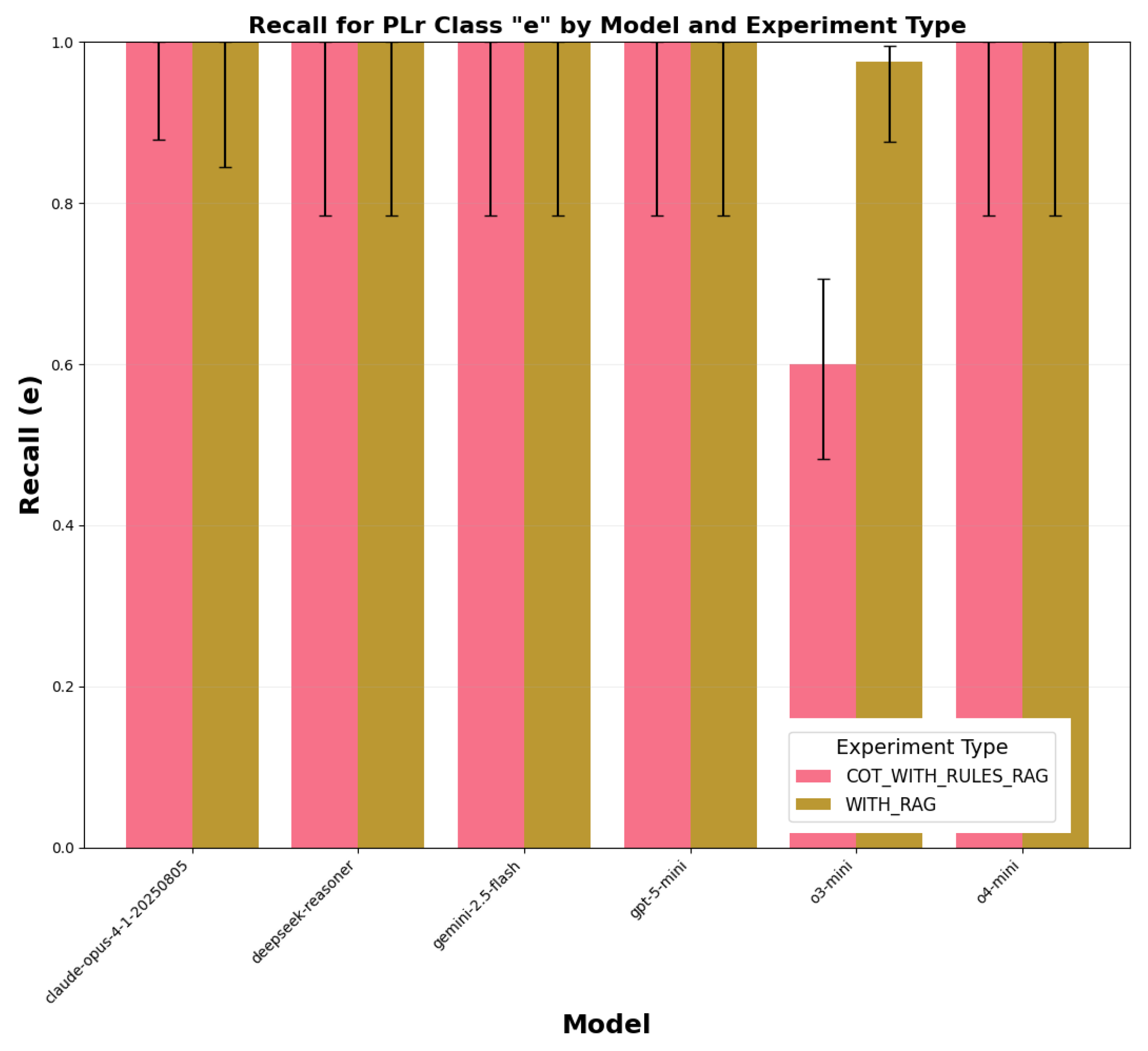

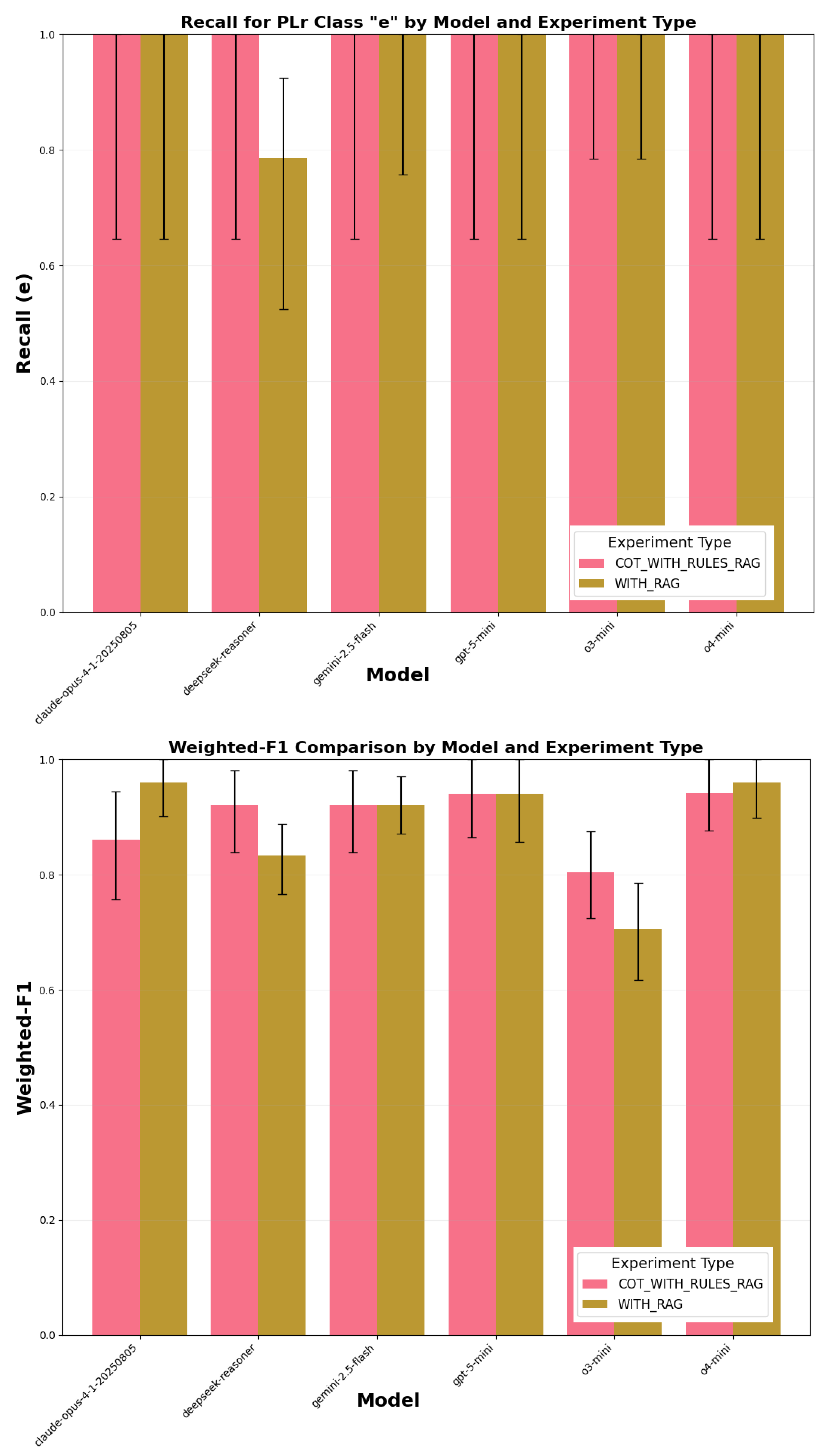

4.2.3. Safety-Critical Coverage (PLr Class E)

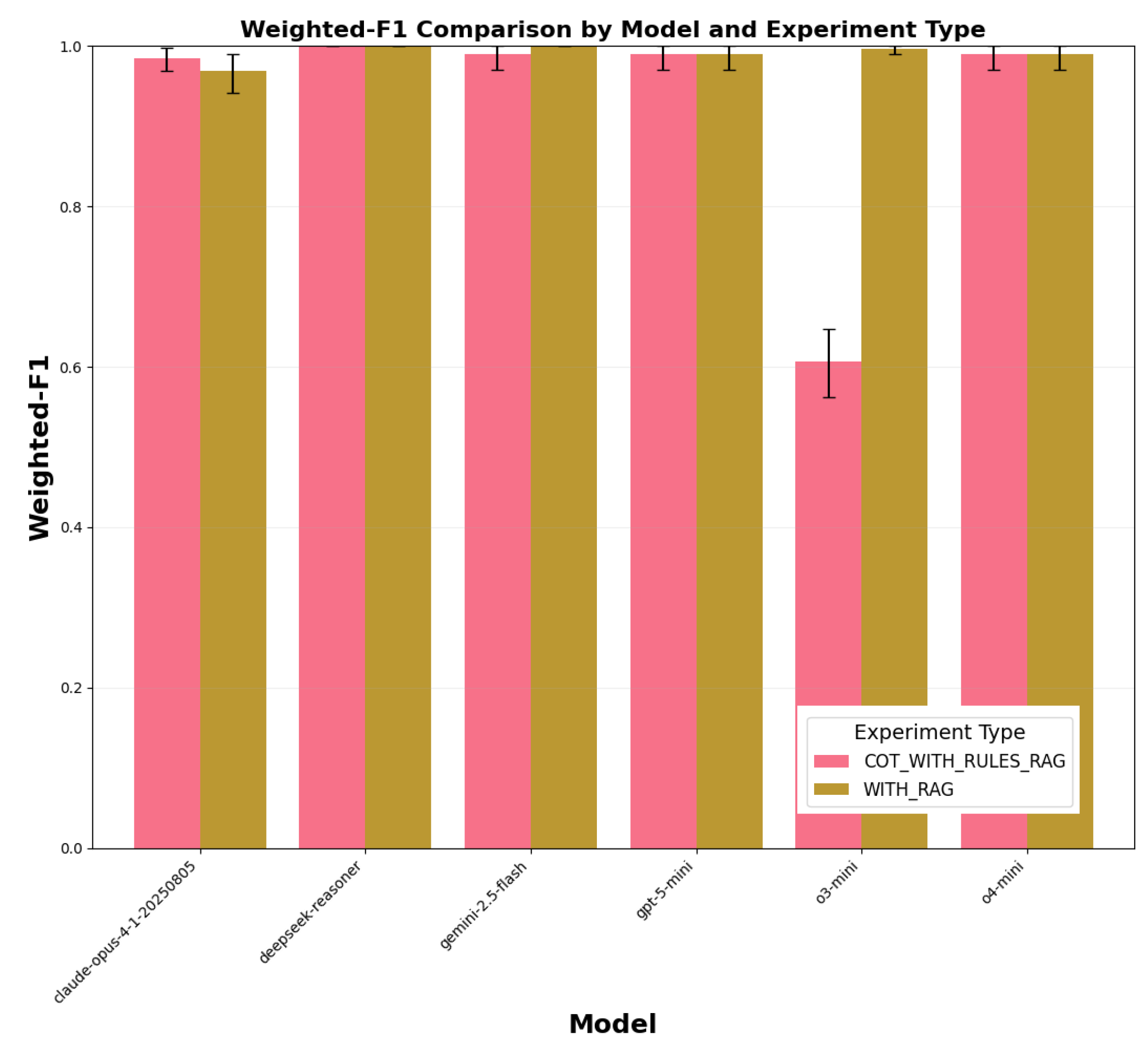

4.2.4. Weighted-F1

4.3. Results on Variant 2 (Engineer-Authored Scenarios, Non-RAG Prompts)

4.3.1. Overall Accuracy (With 95% t-Intervals Across Runs)

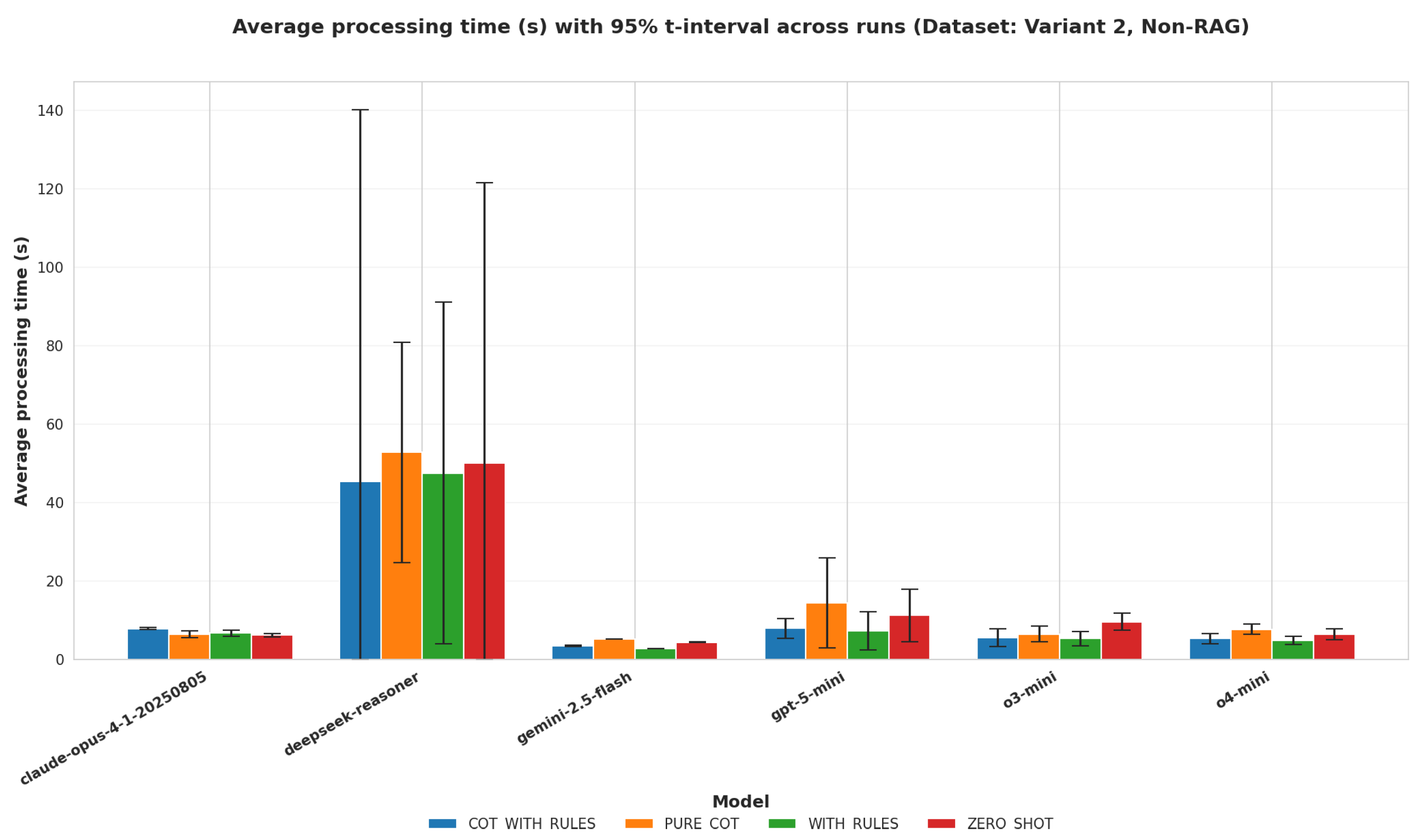

4.3.2. Latency and Efficiency (With 95% t-Intervals Across Runs)

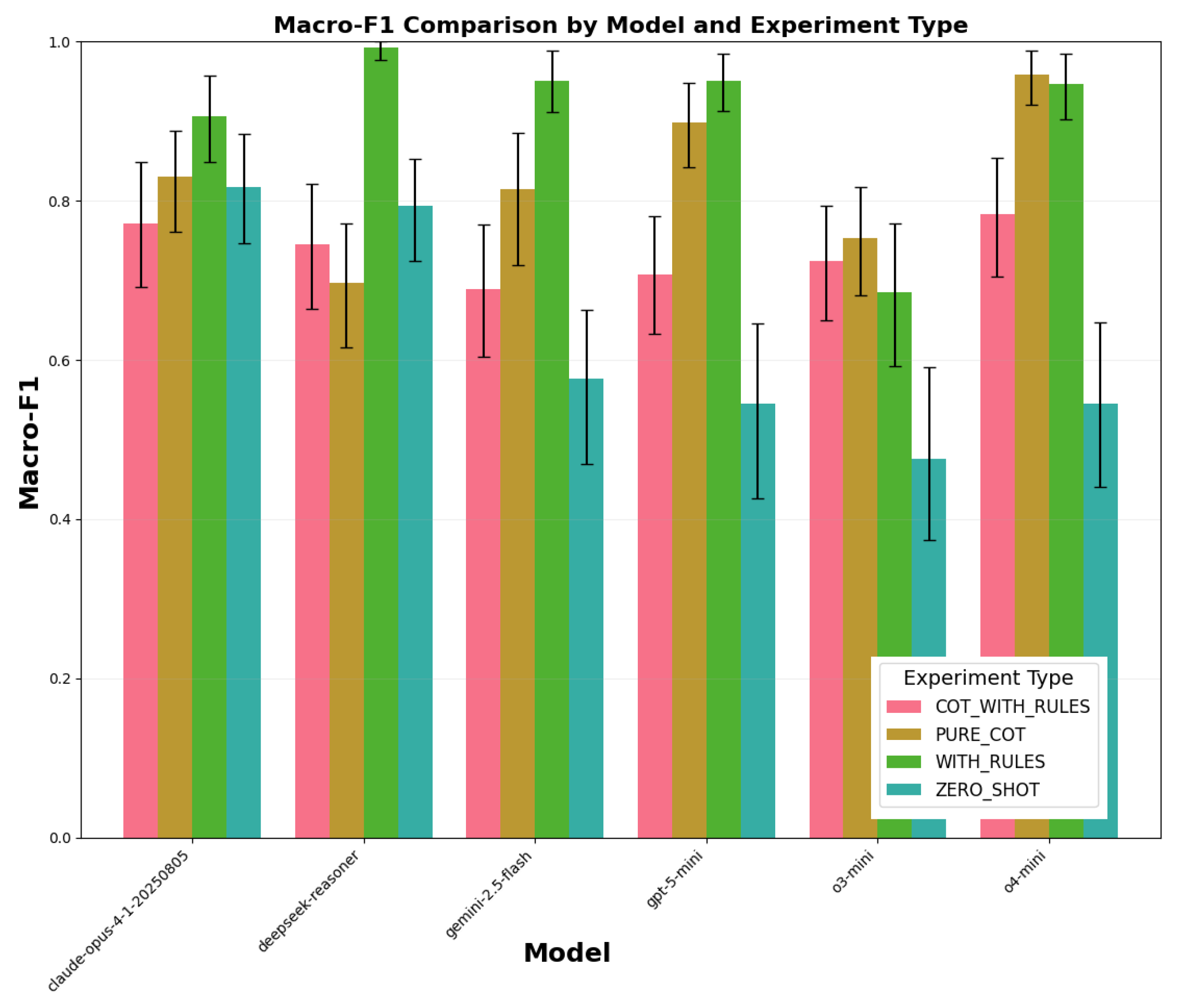

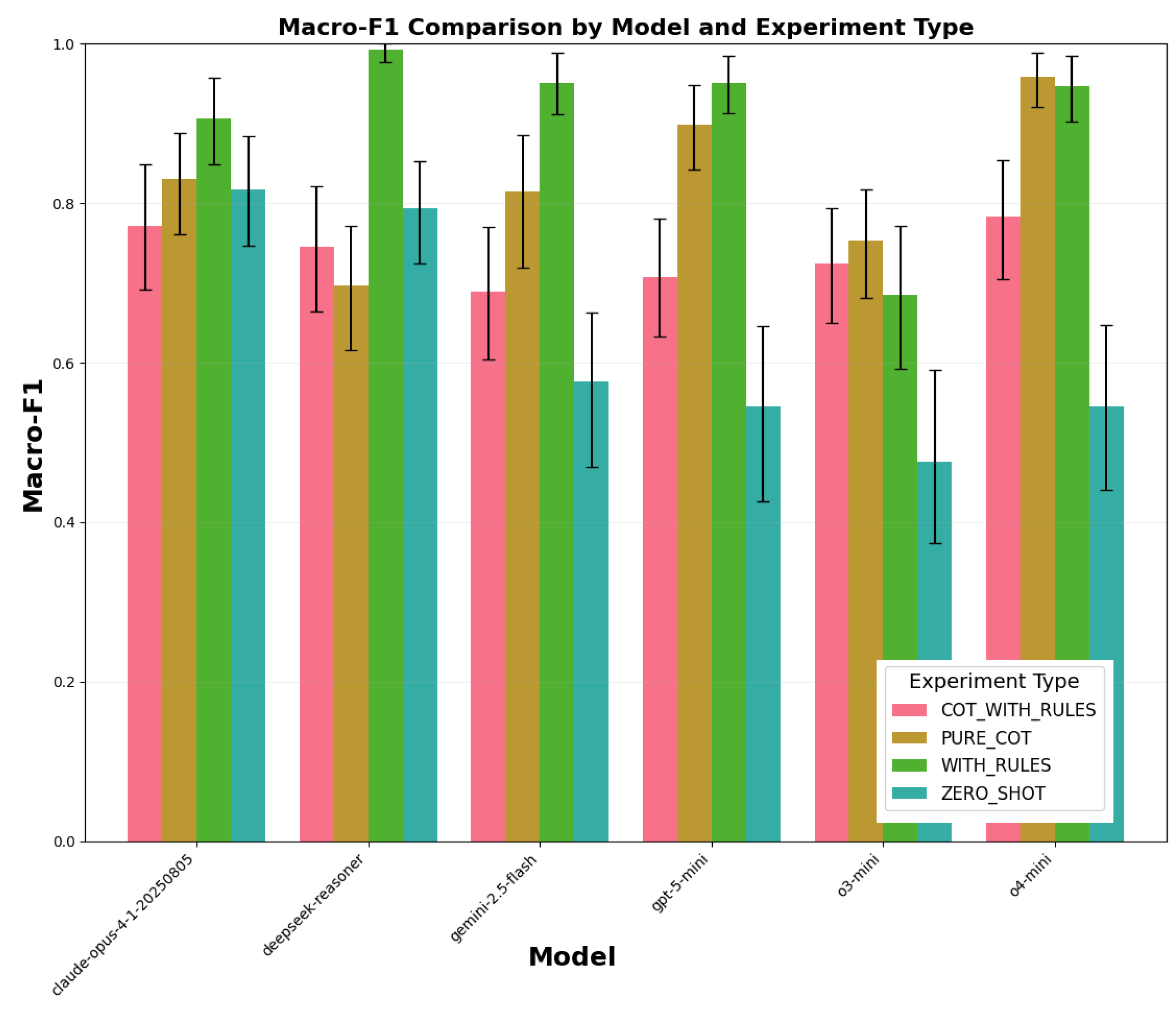

4.3.3. Macro-F1 and Micro-F1

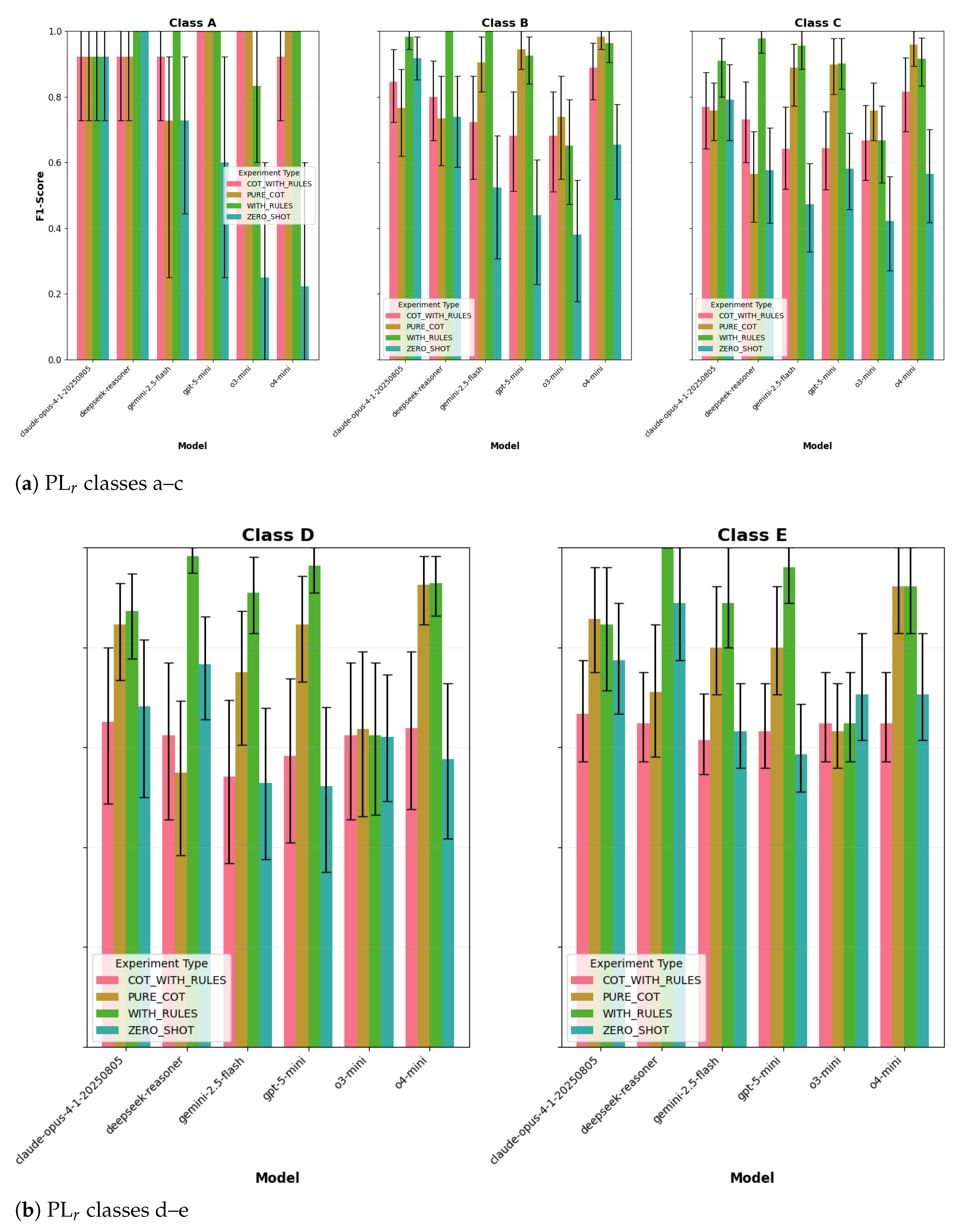

4.3.4. Per-Class Performance

4.3.5. Safety-Critical Recall (Class E) and Weighted-F1

4.4. Results on Variant 2 (Engineer-Authored Scenarios, RAG-Based Prompts)

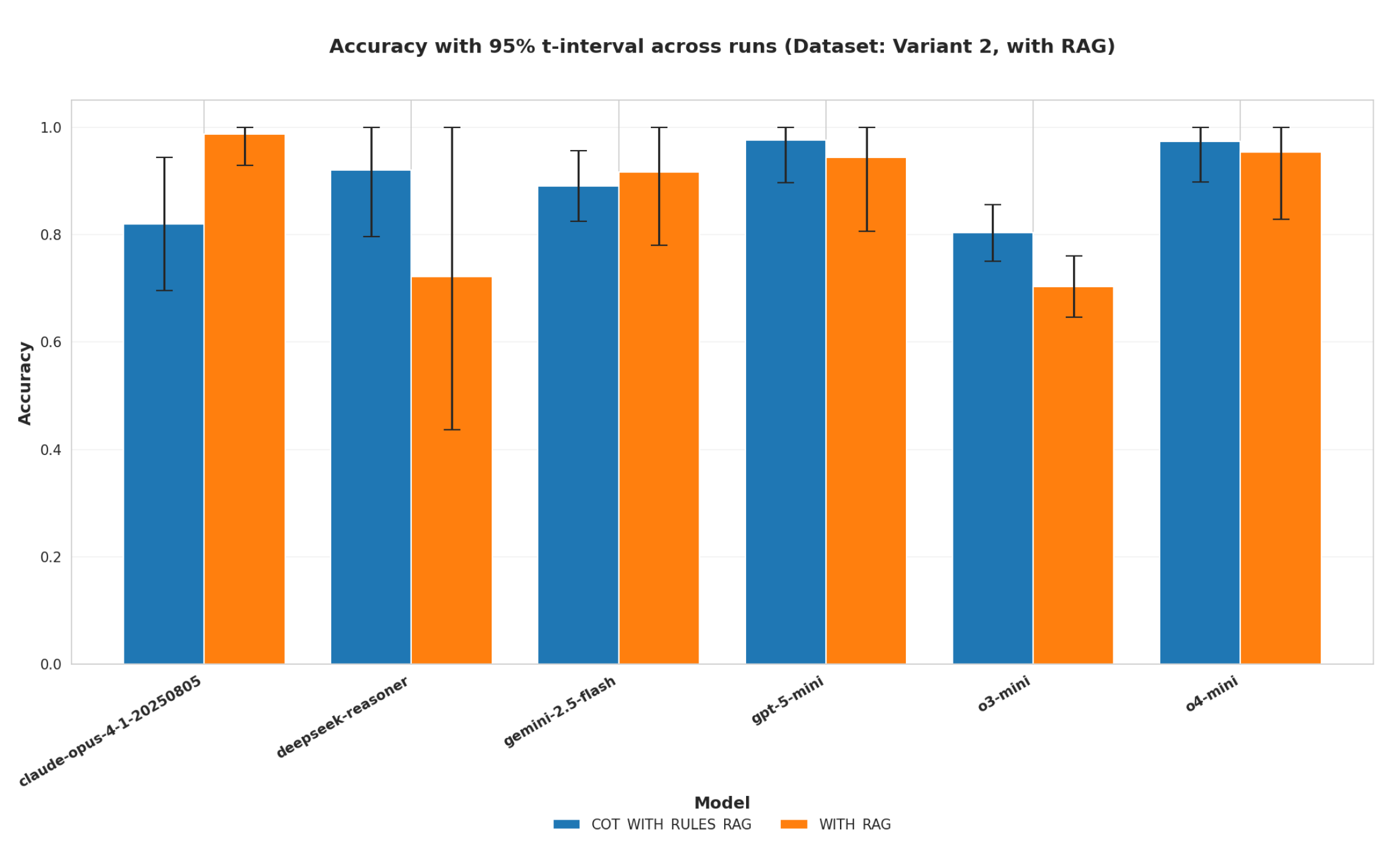

4.4.1. Accuracy and Confidence Intervals

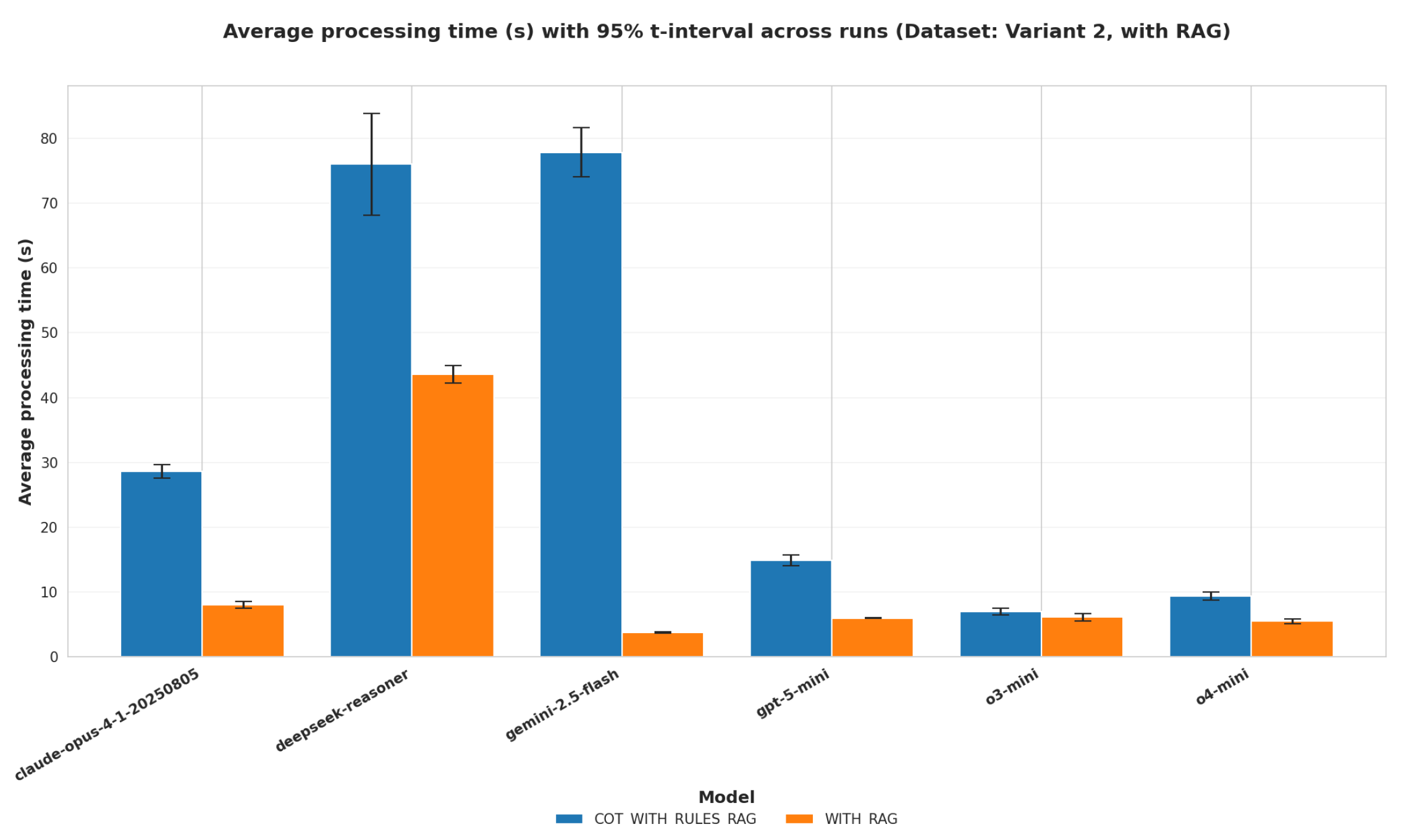

4.4.2. Latency and Efficiency

- First, Variant 1 (canonical ISO phrasing) represents the upper bound of model performance: rule-based prompts consistently achieved near-ceiling accuracy (>0.95) with narrow confidence intervals, underscoring that deterministic classification is feasible when phrasing is standardized.

- Second, Variant 2 (lexical shift, non-RAG) revealed a marked degradation for zero-shot and unconstrained CoT, confirming that free-text hazard descriptions destabilize reasoning-oriented prompting. Rule-based strategies partially mitigated this drift but still showed variability in compact models.

- Third, Variant 2 with RAG demonstrated that retrieval can both stabilize and destabilize performance: while Claude-Opus, GPT-5-mini, and o4-mini remained robust, DeepSeek Reasoner and o3-mini exhibited wide confidence intervals and accuracy collapse, indicating sensitivity to retrieval noise. Latency results further confirmed that retrieval-heavy CoT prompts impose prohibitive computational costs, whereas lightweight retrieval without CoT balances accuracy and efficiency.

4.4.3. Macro- and Micro-F1

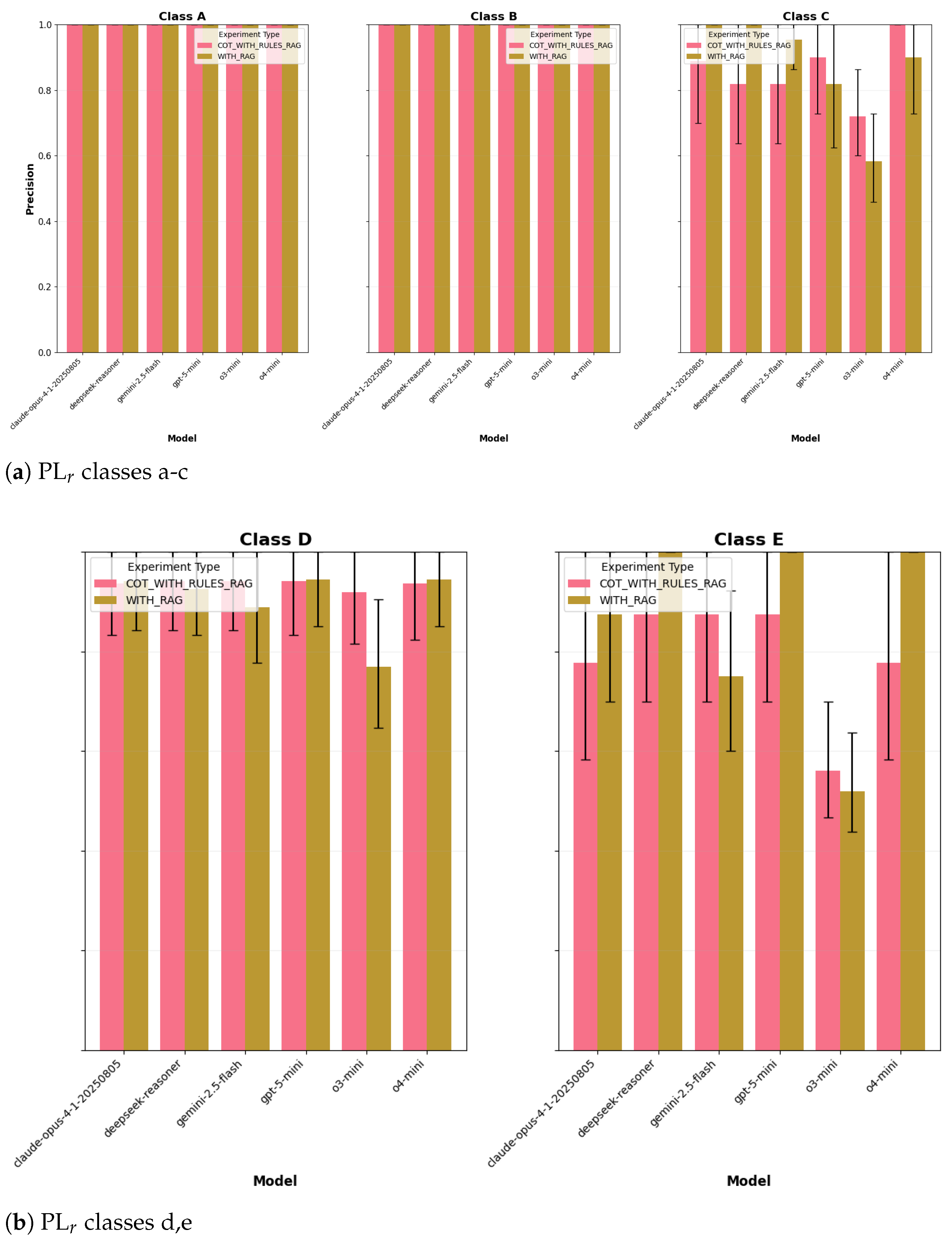

4.4.4. Per-Class Performance for Variant 2 with RAG

4.4.5. Weighted-F1

4.5. Does RAG Confuse CoT? Quantification and Evidence

- Confusion: cases where WITH_RULES_RAG is correct but COT_WITH_RULES_RAG is wrong, indicating that adding CoT (given the same retrieved context) degrades the decision.

- Rescue: cases where WITH_RULES_RAG is wrong but COT_WITH_RULES_RAG is correct, indicating that CoT filters retrieval noise and restores rule-consistent reasoning.
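Both quantities reduce to a per-case comparison of the two RAG prompts against the ground truth, which can be sketched as below. The function name and dictionary layout are assumptions; the three example rows are taken from the Appendix A.1 tables and mixed across models purely for illustration.

```python
def confusion_and_rescue(gold, with_rules_rag, cot_with_rules_rag):
    """Return (confusion ids, rescue ids) for one model.

    Each argument maps hazard id -> PLr label ("a".."e").
    """
    confusion, rescue = set(), set()
    for hazard_id, truth in gold.items():
        base_ok = with_rules_rag[hazard_id] == truth
        cot_ok = cot_with_rules_rag[hazard_id] == truth
        if base_ok and not cot_ok:
            confusion.add(hazard_id)  # adding CoT broke a correct answer
        elif cot_ok and not base_ok:
            rescue.add(hazard_id)     # adding CoT repaired a wrong answer
    return confusion, rescue


if __name__ == "__main__":
    gold = {"TH_005": "d", "NO_002": "b", "ME_006": "b"}
    base = {"TH_005": "d", "NO_002": "b", "ME_006": "c"}
    cot = {"TH_005": "e", "NO_002": "c", "ME_006": "b"}
    print(confusion_and_rescue(gold, base, cot))
```

Cases where both prompts are right, or both are wrong, fall into neither set, so the two counts isolate the marginal effect of CoT under identical retrieved context.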

Mechanism (Observed Failure Mode)

- P-inflation (P1 → P2): the dominant failure, frequently triggered by language implying avoidance is “scarcely possible,” which elevates PLr at the b → c and d → e boundaries.

- F-drift (F1 → F2): a secondary effect from neighbors emphasizing “frequent/continuous” exposure and “long duration,” further pushing borderline classes upward.

- The tables explicitly indicate which retrieved neighbors contributed to misclassifications. For example, the phrase “scarcely possible” in neighbors systematically induced upgrades, shifting PLr from b to c or from d to e (see Appendix A.1).

- “Noise” is defined as structurally inconsistent S/F/P cues present in retrieved neighbors. CoT sometimes internalized these cues (e.g., “frequent/continuous,” “long duration,” “scarcely possible”), inflating P or F relative to the ground-truth scenario. This mechanism is made explicit in the confusion traces.

- Each confusion/rescue example specifies the exact S/F/P step where CoT diverged, verifying not only the final PLr prediction but also the correctness of intermediate reasoning.

- All results were re-run under the most deterministic decoding exposed by providers (temperature = 0.0, with top-p and top-k fixed when available) with independent repeats per condition. Figures report 95% Student t-intervals across runs to quantify between-run variability. Small residual variation (±3–5%) was observed, attributable to provider-side reasoning heuristics; reporting t-intervals ensures transparent, auditable comparisons across models and prompts.

4.6. Cross-Variant Synthesis: Rigorous Analysis, Critique, and Implications

4.6.1. Model-Specific Misclassification Patterns

4.6.2. Prediction Bias and Class Distribution

4.6.3. Failure Modes in Reasoning

- P-inflation: CoT integrates neighbor phrases like “scarcely possible,” upgrading P1 → P2 and shifting PLr upward (b → c, d → e).

- F-drift: Frequency adjectives in neighbors (“frequent,” “continuous”) bias CoT toward F2 when the target is F1.
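Given a ground-truth S/F/P triplet and the triplet stated in a CoT trace, the two failure modes can be tagged mechanically. The sketch below is illustrative: the function name is an assumption, and the gold triplets in the example are hypothetical, since only the gold PLr (not the gold S/F/P) is shown in the appendix tables.

```python
def tag_failure_modes(gold_sfp, traced_sfp):
    """Label upward shifts in P and F between gold and traced triplets."""
    _, gold_f, gold_p = gold_sfp
    _, traced_f, traced_p = traced_sfp
    tags = []
    if gold_p == "P1" and traced_p == "P2":
        tags.append("P-inflation")
    if gold_f == "F1" and traced_f == "F2":
        tags.append("F-drift")
    return tags


if __name__ == "__main__":
    # Hypothetical gold triplet; the traced triplet mirrors an S2/F2/P2 chain.
    print(tag_failure_modes(("S2", "F2", "P1"), ("S2", "F2", "P2")))
    # prints: ['P-inflation']
```

Scoring traces this way would also support the broader S/F/P audit suggested under threats to validity, because it evaluates the intermediate step and not only the final PLr.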

4.6.4. Error Analysis and Misclassification Insights

4.6.5. Summary of Key Quantitative Findings

- V1, non-RAG: WITH_RULES dominates (tight CIs). The prompts without rules (ZERO_SHOT, PURE_CoT) are volatile or fail on compact models (o3-mini). COT_WITH_RULES stabilizes CoT but does not exceed rules-only. This shows that adding statistical intervals makes the performance stability explicit.

- V1, with RAG: Both WITH_RULES_RAG and COT_WITH_RULES_RAG are at (near) ceiling, except for the o3-mini collapse under CoT+RAG (macro-F1 with wide CI). Retrieval alone suffices on canonical phrasing. This confirms that the baseline condition provides a strong reference point.

- V2, non-RAG: Lexical shift penalizes ZERO_SHOT/CoT (drops of up to ∼30 percentage points); rule prompts restore balance, including for Claude and DeepSeek. This demonstrates ecological validity by testing performance on free-text safety descriptions.

- V2, with RAG: Accuracy remains near-ceiling for Claude, o4-mini, GPT-5-mini; degrades for DeepSeek and o3-mini under plain RAG; adding CoT+Rules flips signs by model (confusion vs. rescue). This quantifies the role of retrieval noise in shaping outcomes.

- Safety-critical coverage: Recall for PLr class e is nearly perfect with rules across conditions, except for DeepSeek in V2. This confirms that minority and safety-critical classes were explicitly measured.

- Latency: Rules-only is faster than CoT variants in V1; in V2, WITH_RULES_RAG yields large speedups over COT_WITH_RULES_RAG (e.g., Gemini ∼78 → 4 s/case) with comparable or better accuracy for several models. This clarifies how accuracy and runtime trade-offs were evaluated.

4.6.6. Implications for Industrial Safety Pipelines (Actionable)

- (i) limiting max_tokens and using stop sequences,

- (ii) keeping the number of retrieved exemplars (M) small, and

- (iii) exploiting provider-side prompt caching or batching (where available) to amortize static rule blocks (e.g., ISO rules, schema).

4.6.7. Threats to Validity (with Mitigations)

- Sample size and balance: This study represents the first systematic evaluation of deterministic PLr classification with rule-grounded RAG, focused primarily on reasoning-capable LLMs. A pilot scale was adopted with N = 100 per variant (V1/V2). Per-class counts can be small; this is partially mitigated through the use of 95% confidence intervals and class-sensitive metrics. Future work should expand N and rebalance classes. This acknowledges dataset size limitations while outlining a clear mitigation path.

- Ecological validity: Dataset variant V1 is canonical; dataset variant V2 uses engineer-authored free text, which improves realism but comes from a single author and domain. Future work should extend to multi-site, multi-author corpora and deliberately ambiguous or incomplete cases. This highlights the need to broaden coverage to capture real-world ambiguity.

- Decoding determinism: All reported runs use temperature = 0.0 with fixed top-p/k; nevertheless, single-pass evaluations can mask variance, so future work will include multi-seed runs with significance tests. This ensures that determinism and variance are both addressed in evaluation.

- RAG configuration opacity: Retrieval choices (k, similarity function, domain filters) influence outcomes. Confusion/rescue exemplars and S/F/P traces are now exposed; future work will ablate retrieval k, similarity metrics, and structural filters. This increases transparency about retrieval configurations.

- Intermediate reasoning correctness: S/F/P steps were verified in error tables; broader audits should explicitly score S/F/P accuracy alongside PLr. This ensures that intermediate reasoning is evaluated in addition to the final classification outcome.

5. Conclusions

- Rule-grounded prompting consistently outperformed zero-shot and unconstrained CoT (RQ1). Variant 1 (ISO-style) reached ceiling-level accuracy, while Variant 2 (engineer-authored free text) required explicit rules to restore reliability under lexical variability.

- Model scale was critical: Claude-opus, o4-mini, and GPT-5-mini remained stable, whereas o3-mini collapsed without structured prompting. DeepSeek-Reasoner, despite strong Variant 1 performance, degraded under retrieval noise in Variant 2, showing that RAG is not uniformly beneficial (RQ2).

- Free-form CoT reasoning introduced volatility, increased latency (2–10×), and sometimes amplified retrieval inconsistencies (P-inflation, F-drift). Rules-only prompts were both most accurate and most efficient (RQ2).

- Reasoning traces (S/F/P chains) often diverged from ISO-consistent logic, underscoring that CoT reflects token continuation rather than genuine reasoning. This highlights the risks of anthropomorphization, i.e., attributing human-like reasoning to the outputs of reasoning-capable LLMs. Determinism and correctness were achieved only when outputs were constrained by explicit ISO 13849-1 rules, which reduced the open-ended reasoning space to a well-defined decision graph and ensured reproducible PLr outcomes (RQ2, RQ3).

- Across canonical and lexical-shift settings, rules were necessary and typically sufficient for deterministic PLr assignment. RAG functioned as a conditional accelerator that improved robustness and latency for some model families (Claude/o4/GPT-5) but introduced confusion in others (e.g., DeepSeek) unless structurally filtered (RQ3).

- (i) strict rule-based prompting with independent human validation, and

- (ii) prompt–model matching with retrieval governance to mitigate systematic errors.

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Confusion and Rescue IDs per Model

- DeepSeek-reasoner: confusion = {TH_005, NO_002, VI_004}, rescues = {}.

- GPT-5-mini: confusion = {ME_004, VI_004}, rescues = {ME_006, VI_002}.

- o3-mini: confusion = {ME_006}, rescues = {ME_010, EL_006, TH_004, NO_007, VI_001, VI_010}.

- o4-mini: confusion = {ME_004, TH_005}, rescues = {VI_004}.

- Claude-opus-4-1: confusion = {ME_004, ME_006, EL_002, EL_007, TH_001, TH_004, TH_009, VI_003}, rescues = {ME_005}.

- Gemini-2.5-flash: confusion = {TH_005, NO_002, VI_004}, rescues = {ME_004}.
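The net effect of adding CoT to the RAG prompt can be read off these sets by comparing their sizes per model. The short sketch below does that arithmetic; the identifiers are copied from the list above, while the names `FLIPS` and `net_effect` are illustrative.

```python
# (confusion ids, rescue ids) per model, copied from the list above.
FLIPS = {
    "DeepSeek-reasoner": ({"TH_005", "NO_002", "VI_004"}, set()),
    "GPT-5-mini": ({"ME_004", "VI_004"}, {"ME_006", "VI_002"}),
    "o3-mini": (
        {"ME_006"},
        {"ME_010", "EL_006", "TH_004", "NO_007", "VI_001", "VI_010"},
    ),
    "o4-mini": ({"ME_004", "TH_005"}, {"VI_004"}),
    "Claude-opus-4-1": (
        {"ME_004", "ME_006", "EL_002", "EL_007",
         "TH_001", "TH_004", "TH_009", "VI_003"},
        {"ME_005"},
    ),
    "Gemini-2.5-flash": ({"TH_005", "NO_002", "VI_004"}, {"ME_004"}),
}


def net_effect(flips):
    """Rescues minus confusions per model; positive means CoT helped overall."""
    return {
        model: len(rescue) - len(confusion)
        for model, (confusion, rescue) in flips.items()
    }


if __name__ == "__main__":
    for model, delta in net_effect(FLIPS).items():
        print(f"{model}: {delta:+d}")
```

On these counts only o3-mini shows more rescues than confusions (+5), GPT-5-mini is balanced (0), and Claude-opus-4-1 shows the largest net loss (−7).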

| Hazard | GT | WITH_RULES_RAG | COT_WITH_RULES_RAG | CoT Trace (S/F/P) | Key Retrieved Cues (top-k) |

|---|---|---|---|---|---|

| TH_005 | d | d | e | S2: irreversible burn/death; F2: frequent/prolonged; P2: “scarcely possible” (invoked due to splash + PPE inconsistency) ⇒ PLr = e. | – “…cleaning tasks… heat sources…” – “…frequent-to-continuous exposure… long duration…” – “… avoidance is scarcely possible…” |

| NO_002 | b | b | c | S1: reversible hearing loss; F2: frequent/prolonged; P2: “avoidance scarcely possible without consistent PPE” ⇒ PLr = c. | – “…normal operation… moving parts…” – “…frequent-to-continuous exposure… long duration…” – “…avoidance is scarcely possible…” |

| Hazard | GT | WITH_RULES_RAG | COT_WITH_RULES_RAG | CoT Trace (S/F/P → PLr) | Retrieved Cue (Salient Phrases) |

|---|---|---|---|---|---|

| ME_004 | d | d | e | S2 (irreversible injury/death), F2 (frequent/prolonged), P2 (“scarcely possible” due to warehouse blind spots) ⇒ PLr e. | Neighbors emphasize frequent-to-continuous exposure, serious injury or death, and scarcely possible avoidance, which together bias P1 → P2 (P-inflation) and push d → e. |

| VI_004 | b | b | c | S1 (slight, reversible joint pain), F2 (frequent/prolonged), P2 asserted (breaks/PPE framed as inconsistently used) ⇒ PLr c. | Neighbors mention vibrating equipment, frequent-to-continuous exposure, and scarcely possible avoidance; these cues elevate P despite scenario-consistent mitigations (breaks/PPE), yielding b → c. |

| Hazard | GT | WITH_RULES_RAG | COT_WITH_RULES_RAG | CoT Trace (S/F/P → PLr) | Retrieved Cue (Salient Phrases) |

|---|---|---|---|---|---|

| ME_006 | b | c | b | S1 (slight, reversible crushing), F2 (frequent/prolonged), P1 (avoidance possible with correct procedures) ⇒ PLr b. | Some neighbors contain “scarcely possible” wording that nudges P1 → P2 (error in WITH_RULES_RAG). CoT reasserts rule-consistent P1 given the scenario’s mitigations and similar S1 examples. |

| VI_002 | b | c | b | S1 (reversible, e.g., early HAVS), F2 (continuous/prolonged), P1 (avoidance possible via breaks) ⇒ PLr b. | Neighbors emphasize seldom/short exposure and slight reversible injury; WITH_RULES_RAG overweights separate cues claiming scarcely possible avoidance. CoT filters these and restores P1. |

| Hazard | GT | WITH_RULES_RAG | COT_WITH_RULES_RAG | CoT Trace (Condensed) | Key Retrieved Cue |

|---|---|---|---|---|---|

| ME_006 | b | b | c | S1 (slight), F2, P2 (procedures often disregarded) ⇒ PLr c (pred.) | “…operators performing cleaning/setup tasks… frequent-to-continuous exposure with long duration; possibility scarcely possible…” |

| Hazard | GT | WITH_RULES_RAG | COT_WITH_RULES_RAG | CoT Trace (Condensed) | Key Retrieved Cue |

|---|---|---|---|---|---|

| ME_010 | d | e | d | S2 (serious), F2, P1 (avoidance via checks/procedures) ⇒ PLr d | “…maintenance tasks; acceleration/deceleration; frequent-to-continuous exposure; serious injury or death…” |

| EL_006 | c | d | c | S2, F1, P1 (basic lockout/spacing) ⇒ PLr c | “…exposure to live electrical parts due to insufficient distance… short exposure; avoidance possible under conditions…” |

| TH_004 | b | c | b | S1 (minor burns), F2, P1 (procedural avoidance) ⇒ PLr b (pred.) | “…hot surfaces/heat sources; frequent-to-continuous exposure…” |

| NO_007 | d | e | d | S2, F1, P1 (controls allow avoidance) ⇒ PLr d (pred.) | “…shockwave/noise; tasks seldom-to-less-often with short exposure; avoidance possible under specific conditions…” |

| VI_001 | d | e | d | S2 (HAVS), F2, P1 (PPE/procedures enable avoidance) ⇒ PLr d | “…unbalanced rotating parts; frequent-to-continuous exposure; consequence framed as tiredness in some neighbors…” |

| VI_010 | d | e | d | S2, F2, P1 (breaks/controls) ⇒ PLr d | “…vibrating equipment during normal operations; frequent-to-continuous exposure; discomfort examples…” |

| Hazard | GT | WITH_RULES_RAG | COT_WITH_RULES_RAG | CoT Trace (Summary) | Retrieved Cue (Excerpt) |

|---|---|---|---|---|---|

| ME_004 | d | d | e | S2 (irreversible) + F2 (frequent/prolonged) + P2 (blind spots ⇒ avoidance scarcely possible) ⇒ PLr = e. | In industrial environments, operators performing cleaning tasks may encounter moving elements that can lead to drawing-in or trapping. These tasks are characterized by frequent-to-continuous exposure with long duration, and the potential consequence is serious injury or death. |

| TH_005 | d | d | e | Liquid metal splash: S2 + F2 + P2 (PPE inconsistency interpreted as scarce avoidance) ⇒ PLr = e. | In industrial settings, operators performing cleaning tasks may encounter radiation from heat sources, which can lead to scald injuries. The tasks are characterized by frequent-to-continuous exposure with long duration, and the likelihood of occurrence is scarcely possible. |

| Hazard | GT | WITH_RULES_RAG | COT_WITH_RULES_RAG | CoT Trace (Summary) | Retrieved Cue (Excerpt) |

|---|---|---|---|---|---|

| VI_004 | b | c | b | S1 (slight, reversible joint pain) + F2 (frequent) + P1 (avoidance possible with footwear) ⇒ PLr = b; aligns with similar EX2 (second retrieved neighbour). | In industrial settings, operators performing normal operation tasks may encounter vibrating equipment, which can lead to discomfort. These tasks are characterized by frequent-to-continuous exposure with long duration, and the potential consequence is slight, normally reversible injuries. |

| Hazard | GT | WITH_RULES_RAG | COT_WITH_RULES_RAG | CoT Trace (Abridged) | Retrieved Cue (Salient Phrases) |

|---|---|---|---|---|---|

| ME_004 | d | d | e | S2 (irreversible injury/death) + F2 (frequent) + P2 (blind spots ⇒ avoidance scarcely possible) ⇒ PLr e. | • “frequent-to-continuous exposure; long duration” (F2) • “serious injury or death” (S2) • Generic trapping/drawing-in phrasing that nudges P2 escalation |

| TH_004 | b | b | c | S1 (minor reversible burns) + F2 (frequent/prolonged in kitchen) + P2 (distraction-prone, avoidance scarce) ⇒ PLr c. | • “flames … discomfort” (S1) • Mixed frequency: “seldom-to-less-often … short exposure” (F1) vs “scarcely possible” (P2) • Conflicting F/P cues bias the chain toward P2 |

| Hazard | GT | WITH_RULES_RAG | COT_WITH_RULES_RAG | CoT Trace (Abridged) | Retrieved Cue (Salient Phrases) |

|---|---|---|---|---|---|

| ME_005 | d | e | d | S2 (serious) + F2 (loading operations frequent/prolonged) + P1 (procedural avoidance feasible) ⇒ PLr d. | • “frequent-to-continuous … long exposure” (F2) • “serious injury or death” (S2) • Absence of explicit ‘scarcely possible’ cue; CoT reasserts P1 per ISO risk graph |

| Hazard | GT | WITH_RULES_RAG | COT_WITH_RULES_RAG | CoT Trace (Abridged) | Retrieved Cue (Salient Phrases) |

|---|---|---|---|---|---|

| TH_005 | d | d | e | S2 (irreversible burns/death) + F2 (frequent, long duration) + P2 (“scarcely possible” avoidance) ⇒ PLr e. | |

| NO_002 | b | b | c | S1 (reversible hearing loss) + F2 (frequent) + P2 (avoidance “scarcely possible” without consistent PPE) ⇒ PLr c. | |

| Hazard | GT | WITH_RULES_RAG | COT_WITH_RULES_RAG | CoT Trace (Abridged) | Retrieved Cue (Salient Phrases) |

|---|---|---|---|---|---|

| ME_004 | d | e | d | S2 (irreversible injury) + F2 (frequent/prolonged) + P1 (avoidance possible under procedures) ⇒ PLr d. | |

Appendix A.2. Mechanism-Level Inferences (From Confusion/Rescue Tables)

Dominant Confounder: P-Inflation

Secondary Confounder: F-Drift

Severity Cues Are Rarely Decisive

Rescue Mechanism: Rule-Consistent Re-Anchoring of P (and F)

Where Flips Concentrate

Actionable Mitigations

- Structural retrieval governance: Admit only neighbors whose S/F/P labels are consistent with the provisional decision (e.g., within one step), and down-rank snippets containing “scarcely possible” when task geometry/procedures imply P1.

- Conflict-aware inference: If retrieved neighbors disagree on P or F, prefer rule-only aggregation or escalate PLr-boundary cases to human review.

- Model–prompt matching: Default to rule-based prompting without CoT (with or without RAG) for families where CoT+RAG confuses (e.g., Claude/DeepSeek/Gemini); enable CoT+RAG only where rescue > confusion is empirically observed (e.g., o3-mini).
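The first two mitigations can be sketched as a small gating step in front of the prompt. This is a minimal illustration, not the study's pipeline: it assumes each retrieved neighbor carries S/F/P labels, reads "within one step" as at most one differing parameter, and uses hypothetical names throughout (`Neighbor`, `admit`, `rank`, `route`, and the routing labels).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Neighbor:
    """A retrieved example with its S/F/P labels (hypothetical structure)."""
    text: str
    s: str
    f: str
    p: str


def steps_apart(a, b):
    """Number of parameters (S, F, P) on which two assignments differ."""
    return sum(x != y for x, y in zip(a, b))


def admit(neighbors, provisional, max_steps=1):
    """Keep neighbors whose S/F/P lies within max_steps of the provisional one."""
    return [n for n in neighbors
            if steps_apart((n.s, n.f, n.p), provisional) <= max_steps]


def rank(admitted, provisional):
    """Push 'scarcely possible' snippets to the end when P1 is implied."""
    p1_implied = provisional[2] == "P1"
    return sorted(
        admitted,
        key=lambda n: p1_implied and "scarcely possible" in n.text.lower(),
    )


def route(neighbors, provisional):
    """Fall back to rule-only handling or review if neighbors disagree on F or P."""
    admitted = admit(neighbors, provisional)
    f_values = {n.f for n in admitted}
    p_values = {n.p for n in admitted}
    if len(f_values) > 1 or len(p_values) > 1:
        return "rule_only_or_human_review", rank(admitted, provisional)
    return "rag", rank(admitted, provisional)
```

The sort key is a boolean, so the stable sort keeps the original retrieval order within each group and only moves the flagged snippets behind the others.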

Limitations and Scope


| Parameter | Default |
|---|---|
| Semantic over-retrieval (k) | 20 (take larger superset before filtering) |
| Lexical Jaccard () | keep if |
| S/F/P gate | require_sfp_exact = false |
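The three parameters above describe a post-retrieval filter: take a larger semantic superset, drop lexically dissimilar candidates, and optionally require matching S/F/P labels. The sketch below shows one way such a filter could look. The Jaccard threshold did not survive in the table, so `min_jaccard=0.2` is a placeholder and the "keep if at or above the threshold" direction is an assumption; the tokenizer and function names are likewise hypothetical.

```python
import re


def tokens(text):
    """Lowercased alphanumeric word set (a simple, assumed tokenizer)."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a, b):
    """Lexical Jaccard similarity between two strings."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def filter_candidates(query, query_sfp, candidates, k=20,
                      min_jaccard=0.2, require_sfp_exact=False):
    """Filter (text, sfp) candidates assumed pre-sorted by semantic similarity.

    k matches the table default; min_jaccard is a placeholder value.
    """
    kept = []
    for text, sfp in candidates[:k]:  # semantic over-retrieval superset
        if jaccard(query, text) < min_jaccard:
            continue  # lexically too far from the query
        if require_sfp_exact and sfp != query_sfp:
            continue  # optional exact S/F/P gate
        kept.append((text, sfp))
    return kept
```

With `require_sfp_exact` left at its default of false, candidates with differing S/F/P labels pass the gate, so any label-consistency check has to happen in a later step.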

| Model | Acc (RAG) | Acc (CoT + RAG) | ConfuseCount | RescueCount |
|---|---|---|---|---|
| DeepSeek-reasoner | 0.98 | 0.92 | 3 | 0 |
| GPT-5-mini | 0.94 | 0.94 | 2 | 2 |
| o3-mini | 0.72 | 0.82 | 1 | 6 |
| o4-mini | 0.96 | 0.94 | 2 | 1 |
| Claude-opus-4-1 | 0.96 | 0.82 | 8 | 1 |
| Gemini-2.5-flash | 0.92 | 0.92 | 3 | 1 |
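The confuse and rescue columns can be derived from per-scenario predictions. The sketch below assumes that a "confuse" is a scenario classified correctly with RAG but incorrectly once CoT is added, and a "rescue" is the reverse; this reading matches the appendix rows but is an assumption about the exact definition. The four input rows are borrowed from different appendix tables purely as toy data and do not represent a single model's results.

```python
def accuracy(ground_truth, preds):
    """Fraction of scenarios whose predicted PLr equals the ground truth."""
    return sum(g == p for g, p in zip(ground_truth, preds)) / len(ground_truth)


def confuse_rescue(ground_truth, rag_preds, cot_rag_preds):
    """Count flips between the RAG and CoT+RAG settings.

    confuse: correct with RAG, wrong with CoT+RAG
    rescue:  wrong with RAG, correct with CoT+RAG
    """
    confuse = rescue = 0
    for gt, rag, cot in zip(ground_truth, rag_preds, cot_rag_preds):
        if rag == gt and cot != gt:
            confuse += 1
        elif rag != gt and cot == gt:
            rescue += 1
    return confuse, rescue


# Toy input only: ME_004, TH_004, ME_005, VI_004 rows from the appendix tables.
gt = ["d", "b", "d", "b"]
rag = ["d", "b", "e", "c"]
cot = ["e", "c", "d", "b"]

confuse, rescue = confuse_rescue(gt, rag, cot)
delta = accuracy(gt, cot) - accuracy(gt, rag)
print(confuse, rescue, delta)  # 2 2 0.0
```

Under this definition, the accuracy change between the two settings equals (rescue - confuse) / N for an evaluation set of N scenarios, so equal counts leave accuracy unchanged even though individual predictions flip.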


© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Iyenghar, P. Empirical Evaluation of Reasoning LLMs in Machinery Functional Safety Risk Assessment and the Limits of Anthropomorphized Reasoning. Electronics 2025, 14, 3624. https://doi.org/10.3390/electronics14183624
