ForestGPT and Beyond: A Trustworthy Domain-Specific Large Language Model Paving the Way to Forestry 5.0

Abstract

1. Introduction

2. Background

2.1. What Is an LLM?

2.1.1. How an LLM Learns to Write: From Blank Slate to Fluent Assistant

2.1.2. Hosted Versus Open-Weight Models: Choosing the Right Deployment Path

- Hosted models: Closed-weight services exposed via a cloud API (e.g., OpenAI GPT-4o and GPT-5, Anthropic Claude 3.5 Sonnet and Opus, Google Gemini 2.5 Pro, xAI Grok 4).

- Open-weight models: Freely downloadable checkpoints that you can operate on your own hardware (e.g., Meta Llama 3.1 405B and 70B, Mistral Mixtral 8 × 22B, Google Gemma 3 27B, DeepSeek-V2, Qwen 2 72B).

- Sovereignty versus scale. A national forest agency that cannot allow inventory data to leave the country is likely to prefer open weights. A start-up building a climate-risk dashboard may accept hosted services to reach market faster.

- Custom analytics. Integrating proprietary growth simulators or LiDAR pipelines often pushes teams towards self-hosting, where they can splice new modules directly into the inference stack [31].

- Budget profile. Hosted models let you start with no capital outlay and scale elastically; self-hosting offers predictable costs once GPU clusters are amortised.

2.1.3. Building Trust in Domain-Specific Settings

- Domain fine-tuning. By re-training on silviculture manuals, regional yield tables, and equipment maintenance logs, the model gains a forestry “accent”, reducing the need for verbose prompts.

- Retrieval-Augmented Generation (RAG). A search component fetches the most relevant passages from current regulations, growth projections, or remote-sensing feeds and appends them to the user prompt, ensuring the answer is grounded in up-to-date evidence.

- Human oversight and guardrails. Output filters enforce professional and legal standards, while audit logs allow reviewers to trace how a recommendation was formed. Treating the model as a junior analyst, excellent at drafting but never the final authority, preserves accountability.

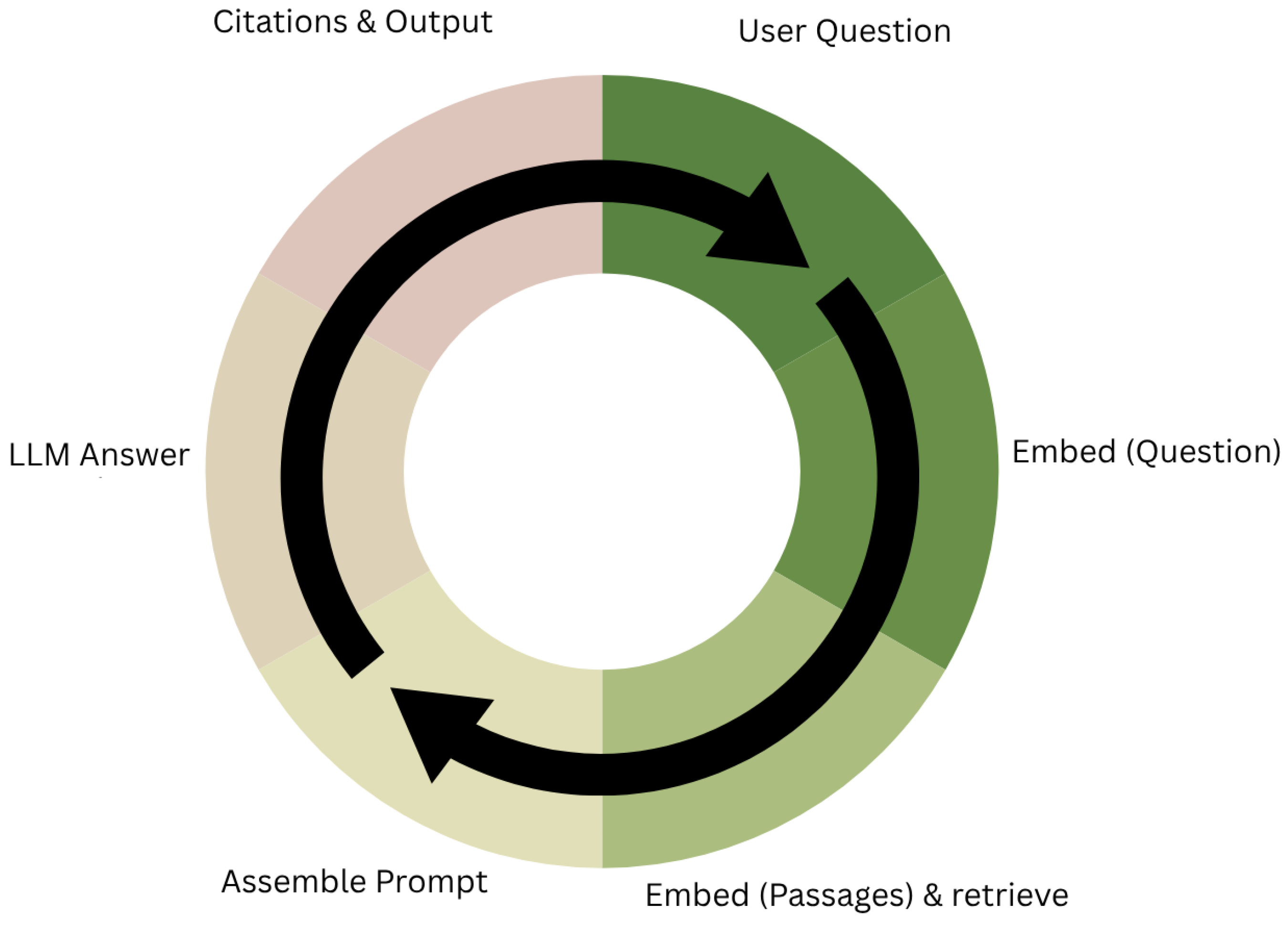

2.2. What Is RAG?

- Neural retrieval—locating and returning the most relevant pieces of information from a very large, often private, document collection (think vector databases, geospatial layers, PDF manuals, or sensor logs).

- Large-language-model generation—having a transformer-based model read those retrieved passages and draft concise, task-specific answers for the user.

2.2.1. How the Pipeline Works, Step by Step

- Chunking the corpus—Before the system ever sees a user, an offline job slices the document collection into passages and stores metadata such as title, source URL, publication date, spatial extent, or sensor ID. Corpora are usually split into overlapping blocks of 200–1000 tokens; the overlap preserves context across block boundaries, while a fixed length simplifies indexing. Each passage is then converted to a dense vector using a text-embedding model. Recent systems often use domain-adapted embedding models (e.g., BGE-M3, E5, or in-house forestry-tuned variants) to improve alignment with expert language.

- Query embedding—The user’s raw question (e.g., “Which spruce-thinning guidelines apply in Austria’s montane zone?”) is passed through the same embedding model, yielding a query vector in the same semantic space.

- Vector search—The query vector is compared to all passage vectors (typically via an Approximate Nearest-Neighbour index such as FAISS, ScaNN, or Milvus). The top k passages with the highest cosine similarity are returned. Many production stacks add a re-ranking stage that feeds the top 100–200 candidates to a bi-encoder or cross-encoder for finer scoring. Cohere Rerank v3.5 and BGE-Reranker-v2 offer state-of-practice accuracy and speed. For multilingual forestry corpora, e5-mistral-7B excels at dense embeddings across EU languages. Some newer models, such as Mistral’s Miqu or Cohere’s Command R+, are explicitly trained for retrieval-augmented generation and can score or re-rank passages natively. In addition to static retrieval, agentic strategies such as Self-RAG (dynamic retrieve-or-not decisions) and GraphRAG (graph-grounded summarisation) now underpin large-scale forestry copilots.

- Context assembly—The original question, the k retrieved snippets, and optional system instructions are concatenated into a single prompt. A typical template might read as follows: “[SYSTEM] You are a forestry assistant. Cite your sources in [x] format. [USER QUESTION] <original question> [SOURCE 1] ... [SOURCE 2] ...”. Developers must balance two factors here: placing enough evidence to answer accurately, yet staying within the model’s context-window budget. Many models in 2025 (e.g., Claude 3.5, Gemini 1.5 Pro, GPT-4o) support extended contexts (128 k–1 M tokens), allowing richer document history or multi-turn data inclusion. However, selective inclusion and source prioritisation remain important.

- Generation—The LLM ingests the enriched prompt and predicts the next tokens, weaving together its own prior knowledge and the freshly supplied evidence. Well-designed templates instruct the model to quote or paraphrase each snippet and attach an inline citation marker such as “[1].” Advanced prompting (e.g., chain-of-thought scaffolds, scratchpad reasoning, tool plans) can further increase answer quality. Some newer models, like Claude or DeepSeek-R1, are trained to follow such structured reasoning prompts natively.

- Answer post-processing—Citation markers are replaced with hyperlinks or footnotes, the answer is truncated or formatted to specification, and the system optionally logs which passages were used, which is crucial for auditability. For benchmarking, we use CRAG and ARES to quantify retrieval quality and hallucination mitigation. For continuous evaluation during deployment, we incorporate RAGAS. If verifier models are available, the generated answer can be automatically scored for factual consistency, confidence, or hallucination risk before being shown to the user.

- Iterative refinement (optional)—Some RAG systems loop: if the first draft seems too vague, the LLM itself proposes follow-up queries (“please retrieve recent wind-throw bulletins”), the retriever runs again, and a second-pass answer is generated. This loop can also be automated using scratchpad-based reasoning, where the model explicitly reflects on uncertainty and issues its own intermediate retrieval instructions.
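The offline and online steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the pipeline used by any particular system: the bag-of-words `embed` function is a toy stand-in for a real text-embedding model, the brute-force ranking replaces an approximate nearest-neighbour index, and the three passages are invented placeholders rather than real guidelines.

```python
import math
import re
from collections import Counter


def chunk(tokens, size=200, overlap=50):
    """Split a token list into fixed-size passages that overlap by `overlap` tokens."""
    step = size - overlap
    return [tokens[i:i + size] for i in range(0, max(len(tokens) - overlap, 1), step)]


def embed(text):
    """Toy stand-in for a dense embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, passages, k=2):
    """Brute-force vector search: rank every passage by similarity to the query."""
    q = embed(query)
    ranked = sorted(passages, key=lambda p: cosine(q, embed(p)), reverse=True)
    return ranked[:k]


def build_prompt(question, sources):
    """Context assembly following the template described in the text."""
    lines = [
        "[SYSTEM] You are a forestry assistant. Cite your sources in [x] format.",
        f"[USER QUESTION] {question}",
    ]
    lines += [f"[SOURCE {i}] {s}" for i, s in enumerate(sources, start=1)]
    return "\n".join(lines)


# Invented placeholder passages (hypothetical, for illustration only).
passages = [
    "Spruce thinning in montane stands should keep stable trees.",
    "Cable yarding reduces soil damage on steep slopes.",
    "Bark beetle traps are checked weekly in summer.",
]
question = "Which spruce thinning guidelines apply in the montane zone?"
top = retrieve(question, passages, k=2)
prompt = build_prompt(question, top)
print(prompt)
```

In a production stack, `embed` would call an actual embedding model and `retrieve` would query an index such as FAISS; the prompt returned by `build_prompt` is what the generation step receives.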

2.2.2. Human-in-the-Loop (HITL) for Complex Forestry Tasks

2.2.3. Benefits of RAG at a Glance

- Timeliness—Up-to-date information (storm-track bulletins, pest alerts, log-price indices) flows straight into the answer on the day it is published.

- Transparency—Inline citations or expandable excerpts let practitioners verify numbers before prescriptions reach the field.

- Data sovereignty—Proprietary corpora stay inside a controlled retrieval store; only the short excerpts selected by the retriever are exposed to the LLM.

2.2.4. Where RAG Is Already in Use, and Why These Examples Matter for Forestry

- WildfireGPT

- Agricultural Advisory Bots

- Palantir AIP’s Ontology-Augmented Generation

- Industry Landscape Note (Non-Exhaustive)

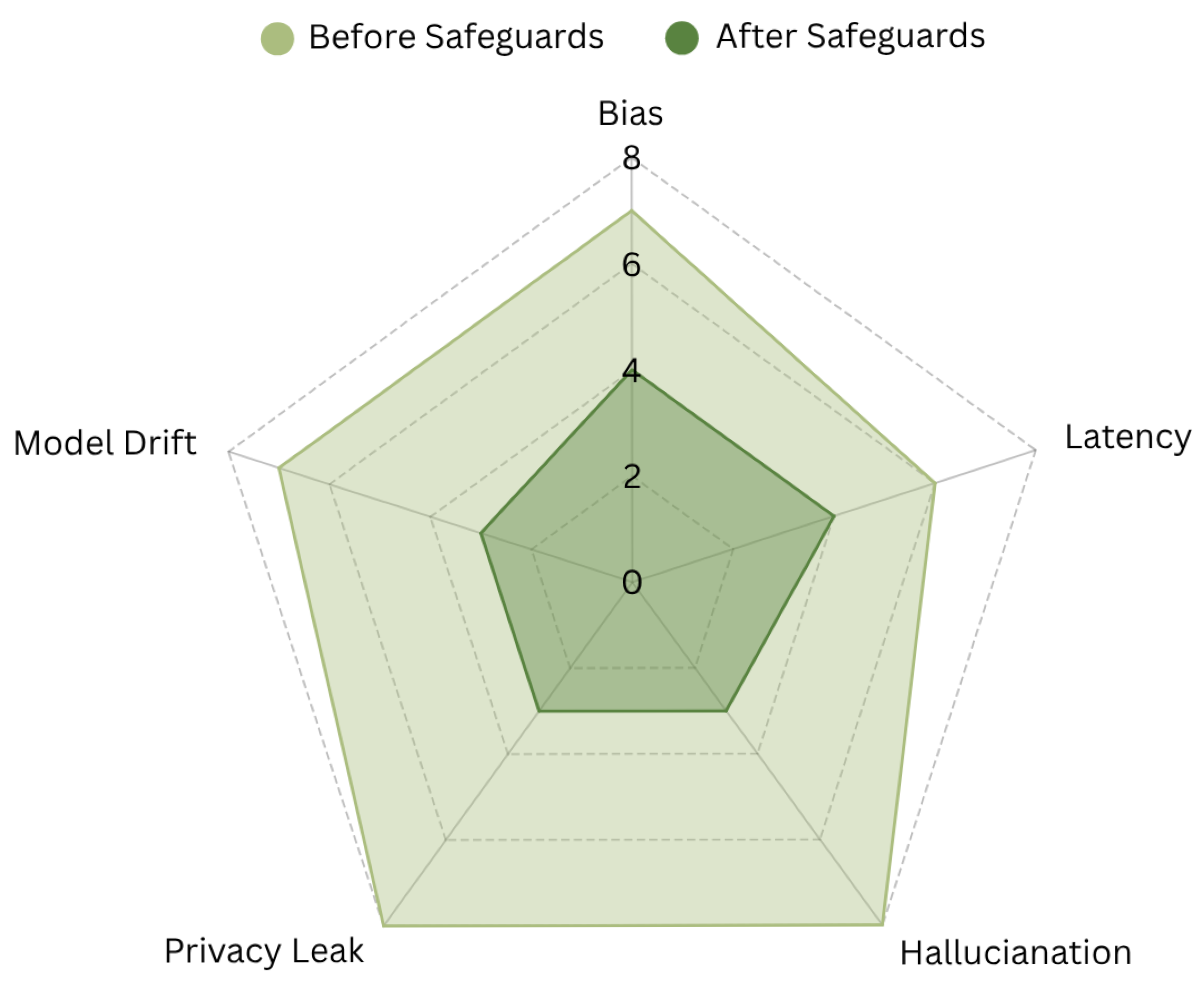

2.3. Problems When Using LLMs

2.3.1. Digging Deeper into Hallucinations

- Why probability outranks truth—During training, the model never checks the real world; it only compares its guess to the next token in its dataset. Fluency, not factuality, drives the optimisation.

- Why fluent text can still be wrong—During pre-training, LLMs are optimised to maximise the likelihood of the next token given preceding tokens (cross-entropy/MLE). This objective rewards plausible continuations, not ground truth. Hence models can produce confident, fluent statements that are nevertheless unfaithful to reality or to the given sources [44,54]. Calibration work shows models can sometimes assess their own uncertainty, but reliability varies by task and format [55].

- Why confidence can be misleading—The same mechanism that makes the text read smoothly also lends it an air of certainty. The model does not “know” it is guessing. It simply continues the pattern it has learned.

- Mitigation strategies

- a. Use Retrieval-Augmented Generation (RAG) to inject verifiable passages into the prompt so the model can quote rather than invent [56].

- b. Ask for citations or step-by-step reasoning; missing or circular references are red flags.

- c. Keep a human in the loop, especially when decisions have financial, ecological or, in particular, safety impact [57].
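The "red flag" check on citations can be partly automated. The sketch below is one possible approach, assuming answers use numeric markers such as [1] and that the number of sources supplied in the prompt is known; the function name `check_citations` is hypothetical.

```python
import re


def check_citations(answer, num_sources):
    """Flag answers that cite nothing or cite a source that was never supplied."""
    cited = {int(n) for n in re.findall(r"\[(\d+)\]", answer)}
    return {
        # No marker at all: the claim cannot be traced to any passage.
        "uncited": not cited,
        # Markers pointing outside the supplied sources 1..num_sources.
        "dangling": sorted(n for n in cited if n < 1 or n > num_sources),
    }


report = check_citations("Retain 20% basal area [1]; see also [4].", num_sources=2)
print(report)
```

A check of this kind only verifies that markers are present and resolvable; whether the cited passage actually supports the statement still needs a verifier model or a human reviewer.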

2.3.2. Why Context Length and Freshness Matter

2.3.3. Bias, Privacy, and Prompt Craft

- Bias—Because the model averages over its data, it reproduces both the wisdom and the prejudice embedded in that data. Domain fine-tuning with balanced corpora and explicit bias-evaluation tests helps reduce this risk.

- Privacy leaks—If a snippet appears frequently enough in the training set, the model may regurgitate it verbatim. Guardrails that scan for personal data, as well as policy controls on what enters the fine-tune corpus, are standard defences.

- Prompt engineering—Short, vague prompts invite vague answers. Overly long prompts may waste context budget. Clear, specific, and well-structured questions guide the model down a more reliable probability path. In general, the information the user puts into the prompt needs to be selected carefully to prevent the model from anchoring on certain aspects [58].

2.3.4. Prompt Injection and Prompt-Library Governance

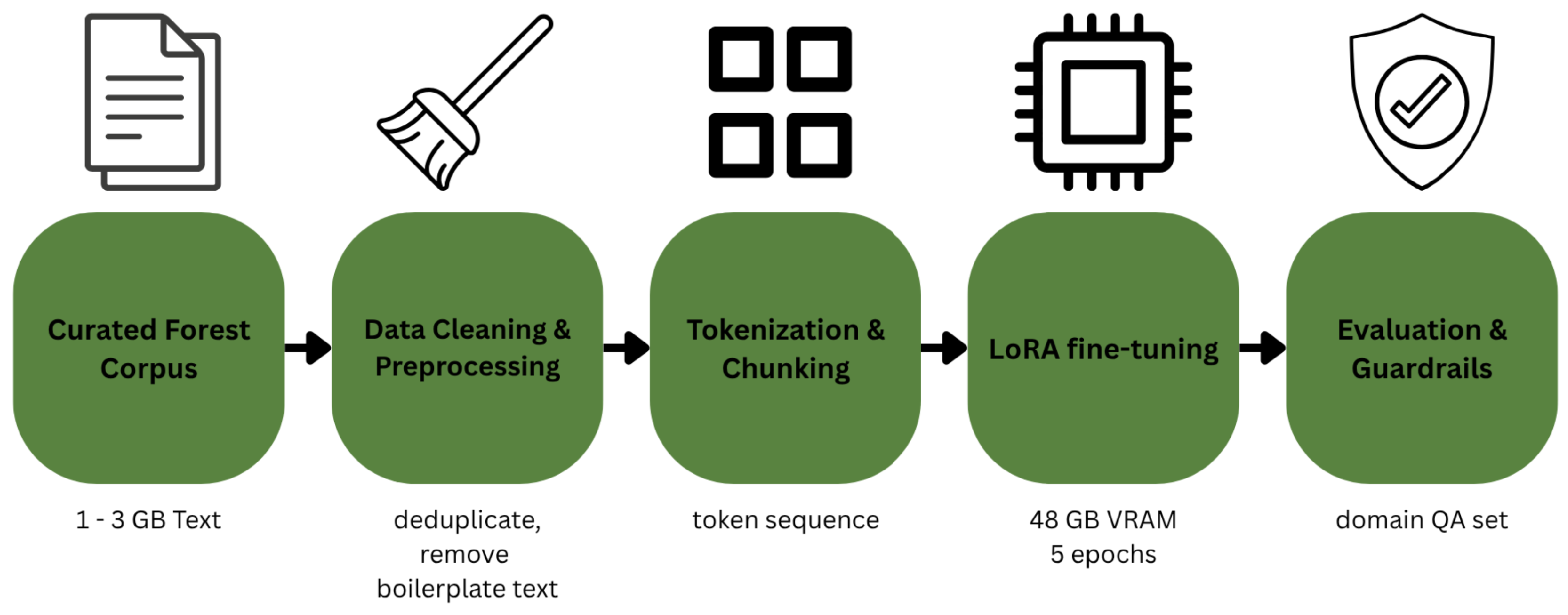

3. How to Build Your Own Domain Expert LLM

3.1. Step 1—Choose a Strong Open-Weight Base

- Llama 3.1 70B—near-GPT-4 quality; the weights alone occupy ≈70 GB in 8-bit form, so ≈48 GB VRAM is realistic only with roughly 4-bit quantisation.

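- Mixtral 8 × 22B—Mixture-of-Experts; fast at inference because only ≈39B of its ≈141B parameters are active per token, but all experts must be held in memory (≈70 GB of weights even at 4-bit).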

- Gemma 3 27B—compact; runs on a single 24 GB card after quantisation.

- GPT-4o (OpenAI)—Introduced in 2024 with strong multi-modal reasoning, long context, and native tool integration.

- Claude 3.5 Sonnet (Anthropic)—Improved summarisation, transparency, and faithfulness at scale. Alternative: Claude 3.5 Haiku, a smaller, faster version for real-time tasks.

- Gemini 2.5 Pro (Google)—State-of-the-art reasoning and vision–language performance with deep Vertex AI integration. Alternative: Gemini Flash for latency-sensitive use cases.

- DeepSeek-R1 (DeepSeek-AI)—Open-weight model optimised for chain-of-thought and math reasoning [23]. Alternative: Miqu-1.5 (Mistral) or Command R+ (Cohere) for strong retrieval-native performance.

- Qwen 3 (Alibaba)—Strong coding and multilingual reasoning model with open weights.
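VRAM figures such as those quoted for the open-weight candidates can be sanity-checked with simple arithmetic: the weights need roughly (number of parameters) × (bits per weight) / 8 bytes. The helper below is a back-of-envelope sketch, not a sizing tool; it gives a lower bound only, because the KV cache, activations and framework overhead come on top, and the function name is an assumption made for illustration.

```python
def weight_memory_gb(params_billion, bits_per_weight):
    """Memory for the model weights alone, in GB (10^9 bytes)."""
    return params_billion * bits_per_weight / 8


for name, params in [("70B dense model", 70), ("27B dense model", 27)]:
    for bits in (16, 8, 4):
        print(f"{name} at {bits}-bit: {weight_memory_gb(params, bits):.1f} GB")
```

For a Mixture-of-Experts model, the total parameter count (not the per-token active count) should be passed in, since every expert has to be resident in memory.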

3.2. Step 2—Curate a Forestry Corpus

- Authoritative sources: Silviculture handbooks, national forest acts, FSC/PEFC guidelines.

- Grey literature: Extension leaflets, thesis PDFs, training manuals.

- Data clean-up: Remove boilerplate, deduplicate, and tag each file with basic metadata (country, species, elevation).
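The clean-up step can be illustrated with a small exact-duplicate filter that keeps the metadata tags attached to each document. This is a minimal sketch under assumptions: documents are plain dictionaries with a `text` field plus the metadata keys named above, and duplicates are detected only after whitespace and case normalisation (near-duplicate detection would need a fuzzier method such as MinHash). The sample records are invented.

```python
import hashlib
import re


def normalise(text):
    """Collapse whitespace and lowercase so trivial variants hash identically."""
    return re.sub(r"\s+", " ", text).strip().lower()


def deduplicate(docs):
    """Keep the first copy of each document whose normalised text is identical."""
    seen, unique = set(), []
    for doc in docs:
        digest = hashlib.sha256(normalise(doc["text"]).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


# Invented sample records (hypothetical metadata values).
docs = [
    {"text": "Thin from below at age 40.", "country": "AT", "species": "spruce", "elevation": 1200},
    {"text": "thin  from below at age 40. ", "country": "AT", "species": "spruce", "elevation": 1200},
    {"text": "Retain habitat trees.", "country": "AT", "species": "beech", "elevation": 600},
]
clean = deduplicate(docs)
print(len(clean))
```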

3.3. Step 3—Fine-Tune with Low Rank Adapters (LoRA)

- Cuts GPU memory to a quarter of full fine-tune needs.

- Typical run: 5 epochs, sequence length 2000 tokens, learning rate .

- Time cost: ≈10–20 h on 4–8 A100 GPUs, depending on base size.
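The reason LoRA is cheap can be shown with a parameter count. LoRA freezes a weight matrix W of shape d_out × d_in and trains only a low-rank update BA, where B is d_out × r and A is r × d_in, so the trainable parameters per adapted matrix drop from d_out · d_in to r · (d_out + d_in). The layer size (4096) and rank (16) below are illustrative assumptions, not the settings of any specific run.

```python
def lora_trainable_params(d_out, d_in, rank):
    """Parameters in the two low-rank factors B (d_out x r) and A (r x d_in)."""
    return rank * (d_out + d_in)


full = 4096 * 4096                                   # one full weight matrix
lora = lora_trainable_params(4096, 4096, rank=16)    # its LoRA adapter
fraction = lora / full
print(f"full: {full:,}  lora: {lora:,}  fraction: {fraction:.4%}")
```

The overall memory saving of a fine-tuning run is smaller than this per-matrix ratio suggests, because the frozen base weights and the activations still have to fit on the GPU.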

3.4. Step 4—Add an Instruction and Safety Layer

- Seed (200–500 items): extract Q&A from manuals and guidelines; have domain experts rewrite for clarity and safety.

- Synthesize (1–5 k): use the model to draft variations and edge cases; experts triage and correct (governance: two-person review, versioning).

- Harden (500–2 k): add “red-team” prompts (adversarial/ambiguous) and safety refusals; tag each item with policy references.

3.5. Step 5—Evaluate on Forestry Tasks

- Yield check: “Calculate volume removal under XYZ Thinning Guideline for a 40-year-old spruce stand.”

- Reg-trace: “Does §3(2)b allow clear-cutting on slopes > 60°?” (This is an illustrative example only; it does not refer to a specific paragraph of any existing regulation.)

- Geo-reason: “Given a planting site at an elevation of 1200 m, suggest three suitable species.”

- Score answer correctness and clarity: Iterate on the corpus or hyper-parameters as needed.

- Beyond Qualitative Evaluation: Formal Metrics for Level 3–4
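A first step from qualitative review towards a repeatable score is a small test harness that runs each task prompt through the model and checks the answer against terms an expert expects to see. The sketch below is a deliberately simple illustration: `keyword_score`, `evaluate` and the stub `fake_model` are hypothetical names, the test cases are invented placeholders, and keyword matching is only a rough proxy for correctness that does not replace expert grading.

```python
def keyword_score(answer, required_terms):
    """Fraction of expert-specified terms that appear in the answer."""
    text = answer.lower()
    hits = sum(term.lower() in text for term in required_terms)
    return hits / len(required_terms)


def evaluate(model_fn, cases):
    """Mean keyword score of a model over a list of test cases."""
    scores = [keyword_score(model_fn(c["prompt"]), c["required_terms"]) for c in cases]
    return sum(scores) / len(scores)


def fake_model(prompt):
    """Stub standing in for the fine-tuned model (always returns the same text)."""
    return "Norway spruce, European larch and silver fir."


# Invented placeholder cases; real ones would come from domain experts.
cases = [
    {"prompt": "Suggest three species for 1200 m.", "required_terms": ["spruce", "larch"]},
    {"prompt": "State the removal volume.", "required_terms": ["m3", "basal area"]},
]
mean_score = evaluate(fake_model, cases)
print(mean_score)
```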

3.6. Step 6—Deploy with Simple Guardrails

- Max context length: Cap prompts to avoid GPU overload.

- Profanity/personal-data filter: Basic regex or open-source guardrail toolkit.

- Human spot-check: Log 5% of outputs for weekly review.

- Preview: How RAG Slots in

- Understands silviculture jargon (e.g., “crown-class removal”, “selective thinning”, “thinning from above”);

- Cites relevant national regulations;

- Adopts a safe, professional tone.
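The three Step 6 guardrails (context cap, personal-data filter, 5% spot-check logging) are simple enough to sketch directly. The code below is an illustration under assumptions: the token limit of 4096 is an arbitrary example value, the two regular expressions catch only obvious e-mail addresses and phone numbers, and all function names are hypothetical. A production deployment would more likely rely on a maintained guardrail toolkit.

```python
import random
import re

MAX_PROMPT_TOKENS = 4096  # assumed cap; choose according to the GPU budget

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s/-]{7,}\d")


def cap_prompt(tokens, limit=MAX_PROMPT_TOKENS):
    """Truncate the prompt to the first `limit` tokens."""
    return tokens[:limit]


def redact_personal_data(text):
    """Mask obvious e-mail addresses and phone numbers before logging or display."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def should_log(rng, rate=0.05):
    """Sample roughly `rate` of outputs for the weekly human spot-check."""
    return rng.random() < rate


rng = random.Random(0)
sampled = sum(should_log(rng) for _ in range(10_000))
print(redact_personal_data("Contact ranger@example.org or +43 660 1234567."))
print(sampled / 10_000)
```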

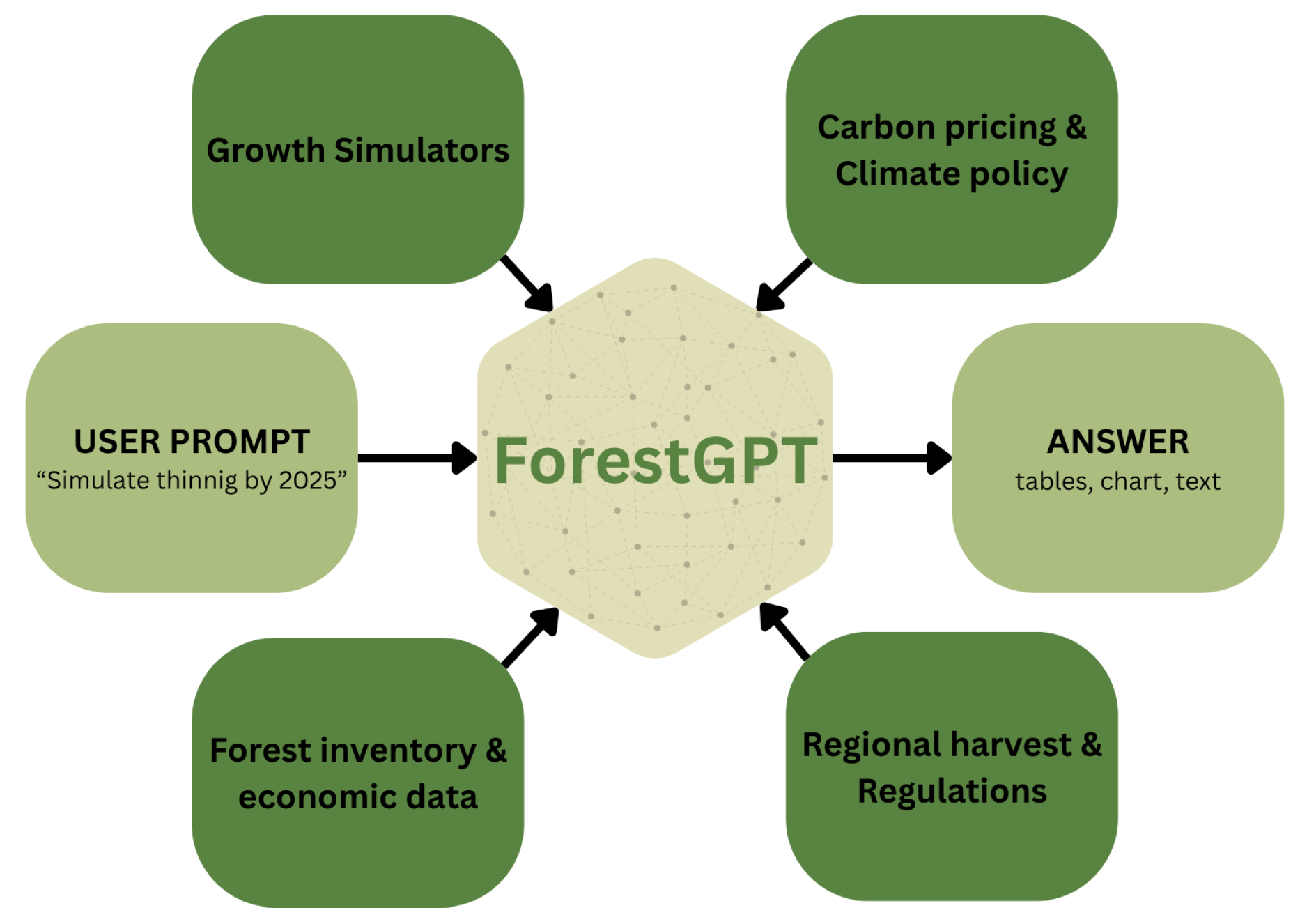

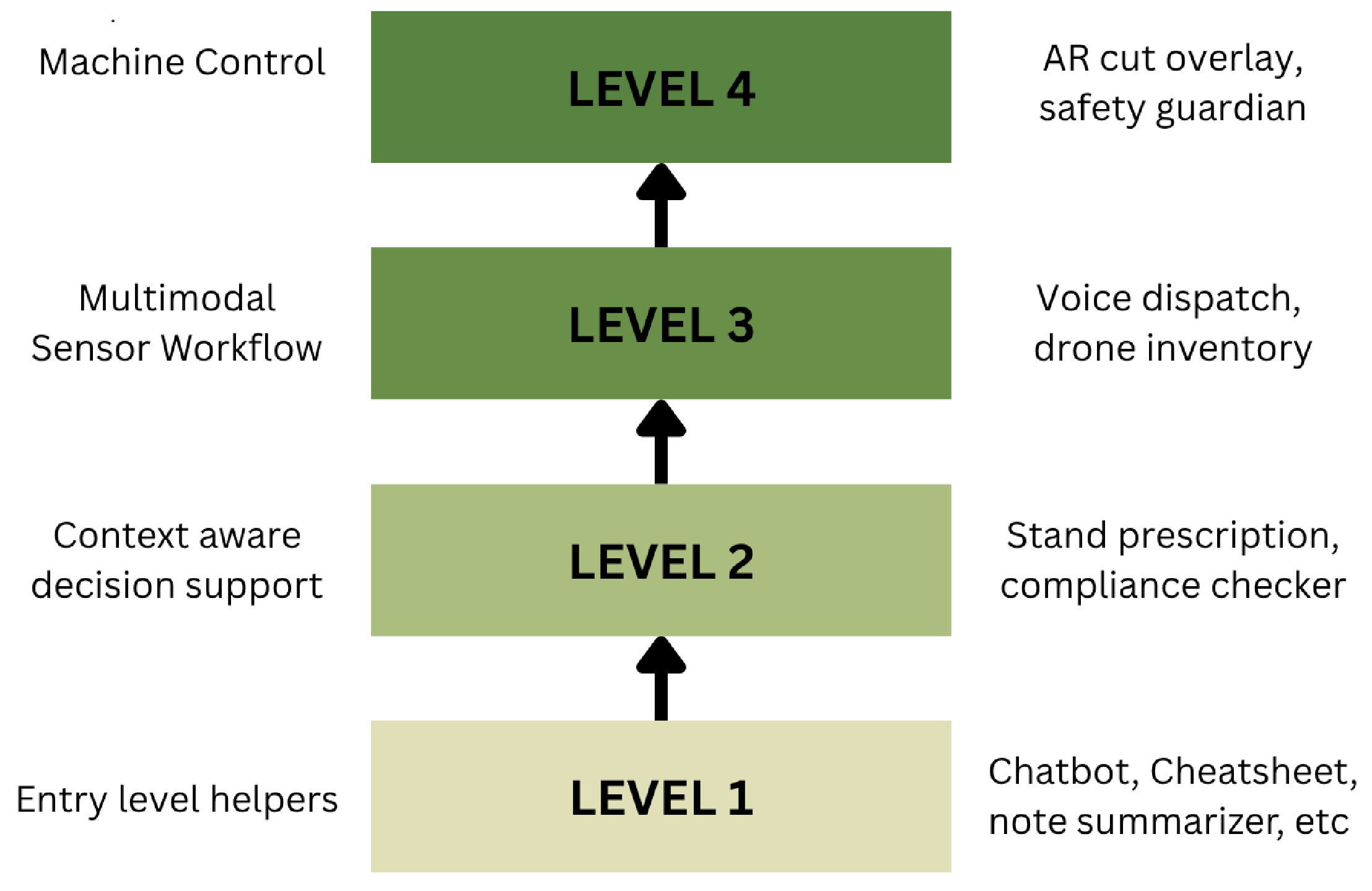

3.7. Possibilities in Forestry

- 1. Entry-level helpers

- Forest Management Guidelines quick-reference. A smartphone chat widget answers questions such as “What is the minimum post-harvest basal area for spruce on a site-class II stand?” and cites the exact clause from the regional thinning ordinance. This level resembles the chatbot presented in the next section.

- Field-note summariser. Rangers dictate voice memos; the model tags GPS, species, and damage codes, pushing structured JSON to the inventory database.

- Extension leaflets on demand. Students request “one-page cheat sheets” on topics like resin tapping or cable-yarding set-up; the model lays out the leaflet in Microsoft Word or Microsoft PowerPoint.

- 2. Context-aware decision support

- Stand-level prescription engine. The assistant consumes inventory snapshots, growth-model outputs, and local market prices, then proposes treatment schedules with NPV (Net Present Value) and carbon metrics, all traceable back to cited equations.

- Regulatory compliance checker. Foresters paste a coupe map, the model flags parcels that breach retention-tree rules, and drafts the exemption request letter if needed.

- Wildfire and pest bulletin generator. Each morning the LLM gathers satellite hotspots, drought indices, bark-beetle trap counts, and ECMWF (European Centre for Medium-Range Weather Forecasts) forecasts, then produces a colour-graded PDF that local forest managers can review at a glance, including possible disturbances. An example is wildfire modeling in Australia [64].
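The NPV figure a prescription engine reports is the discounted sum of a treatment schedule's cash flows, NPV = Σ CF_t / (1 + r)^t, with t counted in years from today. The sketch below shows the arithmetic only; the cash flows and the 10% discount rate are invented round numbers chosen so that the result is easy to verify, not realistic forestry economics.

```python
def npv(cash_flows, rate):
    """Net present value of yearly cash flows, where cash_flows[0] occurs today."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


# Invented example: pay 1000 now, receive 1331 after three years.
schedule = [-1000.0, 0.0, 0.0, 1331.0]
value = npv(schedule, rate=0.10)
print(round(value, 6))
```

At a 10% rate, 1331 received in year 3 is worth 1000 today, so this schedule breaks even; a lower discount rate makes the same schedule profitable.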

3.7.1. Limitations of Text-Centric Assumptions

3.7.2. Modality Integration Is Non-Trivial

- 3. Multimodal, sensor-linked workflows

- Vision-enabled forest inventory update. Drone ortho-mosaics stream through a vision-tuned branch of the model; species and diameter-at-breast-height (DBH) estimates automatically populate Geographic Information System (GIS) layers, which the text branch can instantly query in plain language.

- Voice-first dispatch. Dispatchers ask, “Find the nearest skidder crew qualified for cable extraction and within 30 min of compartment 47B.” The model glues telematics, skill rosters, and road-condition feeds into a single answer with route suggestions.

- Adaptive hauling optimisation. Live mill quotas, truck GPS, and fuel prices flow into the LLM, which recommends load redistribution every two hours, broadcasting updates to drivers via a chat interface.

3.7.3. Worked Example A—Regulatory Compliance

3.7.4. Worked Example B—Thinning Prescription with Constraints

- 4. Fully augmented machine workspaces

- Heads-up prescription overlay. Inside the harvester cab, an AR visor highlights which stems to cut, leave, or prune based on real-time stem measurements, habitat buffers, and operator-set objectives (e.g., maximise saw-log length while preserving 20% basal area).

- Conversational machine control. The operator says, “Switch to fuel-saving mode and recalculate the optimal cutting pattern for a 26 cm top.” The LLM translates that intent into control parameters and passes them to the PLC (Programmable Logic Controller), confirming changes verbally. A very recent example of this is a ChatGPT-based controlling device that used EEG (Electroencephalography) signals to trigger movements [66].

- Embedded safety guardian. Proximity sensors and computer-vision feeds stream into the same model; if a hiker or machine part enters a danger zone, the system issues an audio warning, logs the event, and, when policy requires, pauses the head hydraulics autonomously.

- Continuous learning loop. Post-shift, cut logs are scanned at the mill, and deviations between planned and realised assortments feed back into the model’s fine-tune set, refining future prescriptions without manual spreadsheet wrangling.

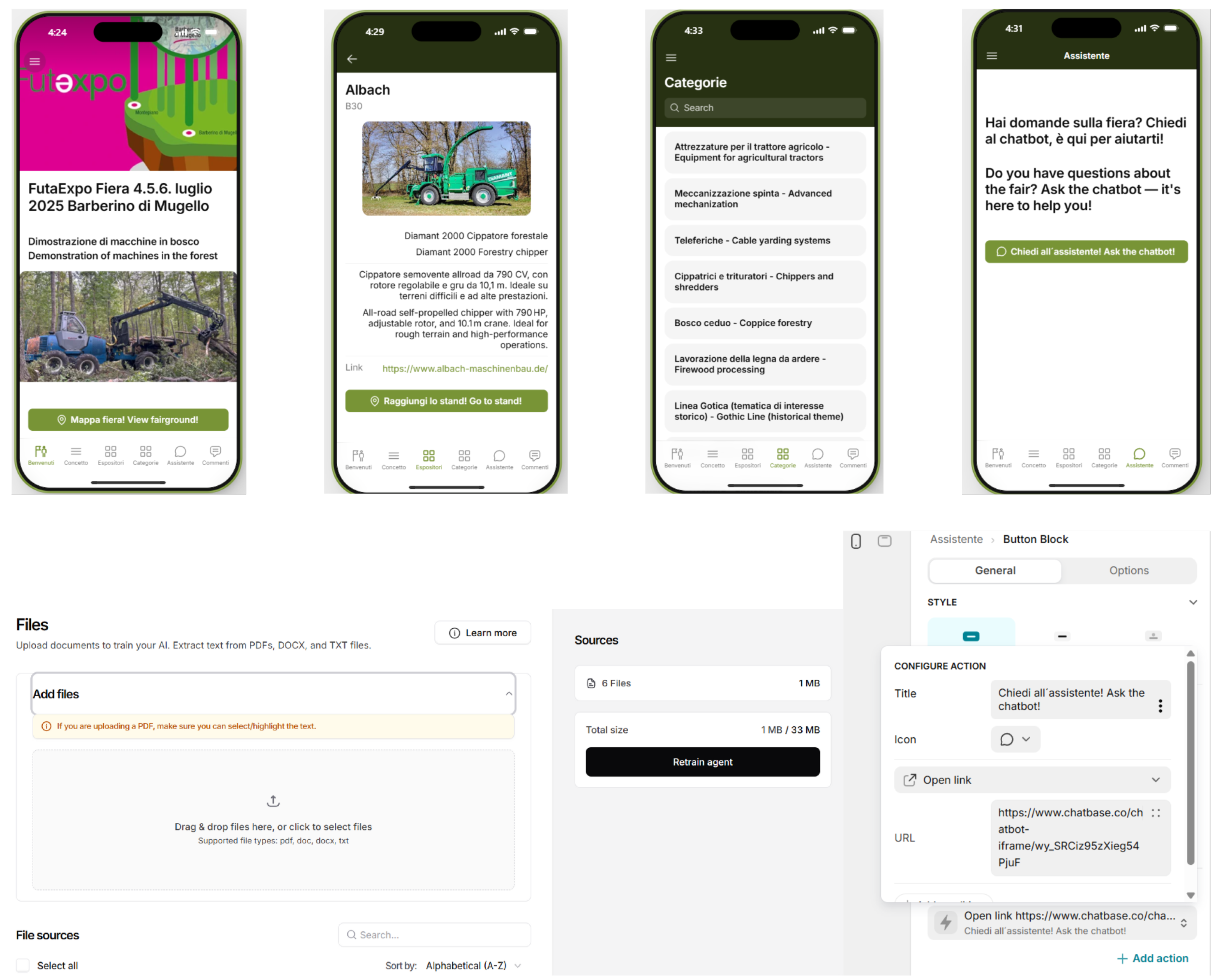

4. Level 1 Demonstration at Futa Expo 2025

- Screen layout

- Intro—Button opens the fairground map that marks the demonstration trails where exhibitors operate their machines.

- Fair concept—Overview of the 17 thematic categories. Four central themes frame the rest: (i) equipment for agricultural tractors; (ii) advanced mechanisation (harvester-forwarder or skidder-forwarder systems); (iii) cable-yarding technology for steep or sensitive soils; (iv) chippers, shredders and firewood processors for energy wood.

- Exhibitor list—Directory of all 61 exhibitors, each with flagship product, short description and a Google-Maps link to the stand.

- Browse by category—The same 61 firms are listed by thematic category to support topic-oriented exploration.

- Facilities map—Toilets, emergency services, and parking, each pin linked to navigation.

- Feedback—Form for visitor comments.

- AI assistant—The core innovation. Powered by gpt-3.5-mini via Chatbase and trained on ≈1 MB of curated data: (a) exhibitor and technology descriptions; (b) fair logistics; (c) educational notes on all 17 categories.

- Functionality

- Result

5. 2024–2025 Update: Reasoning-Centric LLMs and Implications for Forestry 5.0

5.1. Why These Models Matter Now

- Test-time compute as a capability lever. New models execute deliberate multi-step internal reasoning before responding, closing gaps on math, coding and scientific tasks that were persistent in earlier LLMs.

- Native tool use and verification. Browsing, code execution and structured tool calling are becoming first-class citizens, enabling verifiable answers that combine language with calculators, solvers or retrieval.

- Million-token context (in production). Long context reduces prompt engineering overhead and allows direct ingestion of standards, maps, logs and regulatory corpora without heavy pre-digestion.

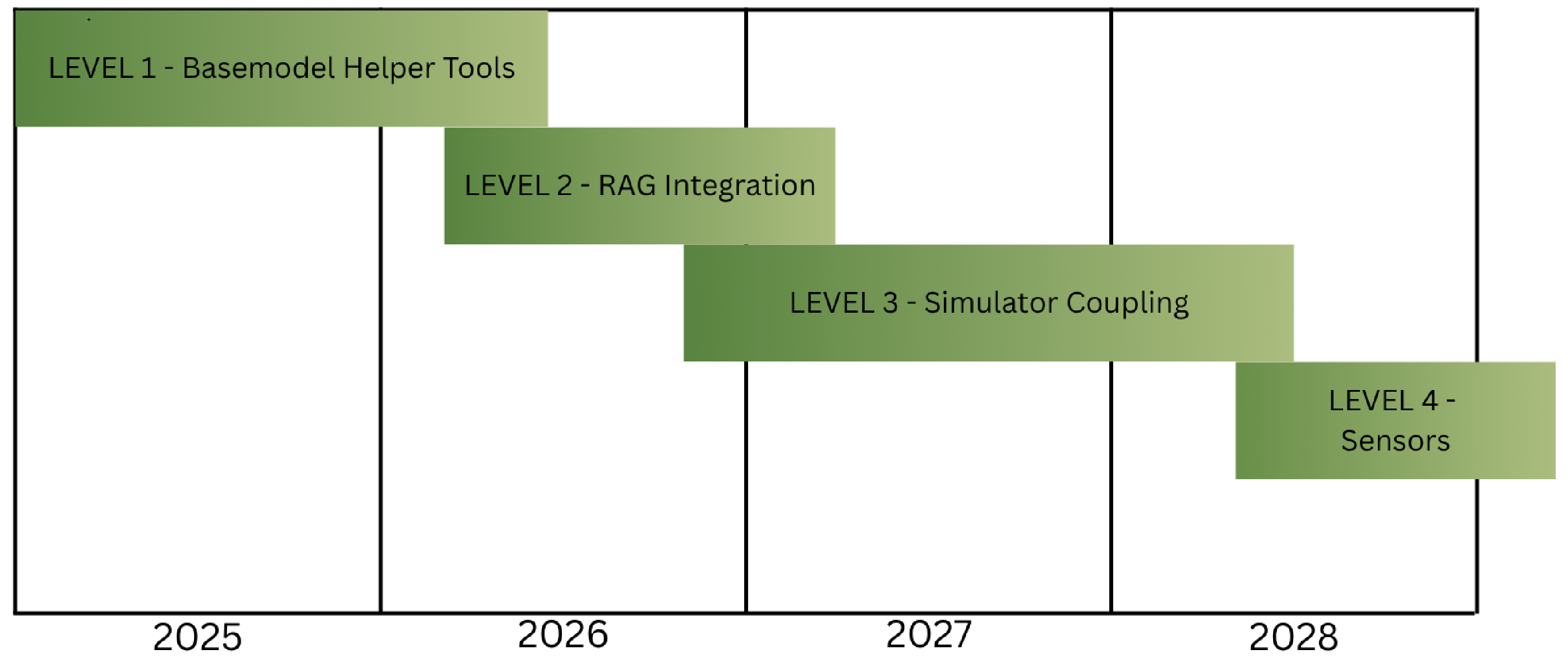

5.2. Implications for the ForestGPT Roadmap

- Model selection and deployment.

- Reasoning+RAG, not reasoning vs. RAG.

- Verifier-first outputs for safety.

- Million-token workflows.

- Evaluation refresh.

- Cost and latency planning.

5.3. Updated Level-Wise Outlook

5.4. Take-Home Message

5.5. Methodology

6. Discussion

6.1. Early Impact Versus Long-Term Ambition

- a. Lean, curated training data. A highly pruned 1 MB corpus proved sufficient for reliable orientation and technology explanations, underscoring the principle that local relevance beats sheer data volume in narrow domains.

- b. Tight scope and guardrails. The bot refused out-of-domain questions (e.g., detailed tax advice) and fell back to human staff for ambiguous requests, an early example of the “LLM as junior analyst” paradigm that underpins trustworthy deployment.

- c. User-centred iteration. Daily log reviews during the fair allowed rapid prompt-template tweaks, noticeably reducing hallucinations.

6.2. Technical Challenges on the Horizon

- Multimodal grounding at scale.

- Continual learning without catastrophic drift.

- Safety certification pathways.

6.3. Organisational and Ethical Considerations

- Barrier to adoption and change management.

- Data sovereignty.

- Human capital.

- Bias and equitable resource allocation.

7. Conclusions and Future Outlook

7.1. Key Takeaways

- Feasibility. A lightweight training pipeline and a modest corpus already yield a useful assistant when the scope is well defined.

- Trust is earned. Guardrails, transparent citations, and iterative user feedback remain indispensable for adoption.

- Change management. Technical progress must be matched by organisational buy-in to overcome resistance and unlock productivity gains.

7.2. Future Outlook

7.3. Human-in-the-Loop at Scale

7.4. Caveats and Readiness for Level 3–4

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AIP | Artificial Intelligence Platform |
| API | Application Programming Interface |
| AR | Augmented Reality |
| ARES | Automated RAG Evaluation System |
| CI | Continuous Integration |
| CRAG | Comprehensive RAG Benchmark |
| DBH | Diameter at Breast Height |
| DPO | Direct Preference Optimisation |
| ECMWF | European Centre for Medium-Range Weather Forecasts |
| EEG | Electroencephalogram |
| ERP | Enterprise Resource Planning |
| ESA | European Space Agency |
| FAISS | Facebook AI Similarity Search |
| FSC | Forest Stewardship Council |
| GEDI | Global Ecosystem Dynamics Investigation |
| GIS | Geographic Information System |
| GPS | Global Positioning System |
| GPT | Generative Pre-Trained Transformer |
| GPU | Graphics Processing Unit |
| GROBID | Generation of bibliographic data |
| gRPC | gRPC Remote Procedure Call (originally Google Remote Procedure Call) |
| HCAI | Human-Centered AI |
| HITL | Human-in-the-loop |
| IEC | International Electrotechnical Commission |
| IoT | Internet of Things |
| ISO | International Organization for Standardization |
| JSON | JavaScript Object Notation |
| KTO | Kahneman–Tversky Optimisation |
| LiDAR | Light Detection and Ranging |
| LLM | Large Language Model |
| LoRA | Low-Rank Adaptation |
| NPV | Net Present Value |
| OAG | Ontology-Augmented Generation |
| OCR | Optical Character Recognition |
| PEFC | Programme for the Endorsement of Forest Certification |
| PFT | Preference Fine-tuning |
| PLC | Programmable Logic Controller |
| PPO | Proximal Policy Optimisation |
| QA | Question/Answer |
| RAG | Retrieval-Augmented Generation |
| RAGAS | Retrieval-Augmented Generation Assessment |
| RELAGGS | Relational Aggregations |
| REST | Representational State Transfer |
| RL | Reinforcement Learning |
| RLAIF | Reinforcement Learning from AI Feedback |
| RLHF | Reinforcement Learning from Human Feedback |
| ScaNN | Scalable Nearest Neighbors |
| SFT | Supervised Fine-tuning |
| VRAM | Video Random-Access Memory |

References

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Viswanathan, S., Garnett, R., Eds.; Curran Associates: Red Hook, NY, USA, 2017; pp. 6000–6010. [Google Scholar] [CrossRef]

- Haque, M.A.; Li, S. Exploring ChatGPT and its impact on society. AI Ethics 2025, 5, 791–803. [Google Scholar] [CrossRef]

- Rane, N. ChatGPT and similar generative artificial intelligence (AI) for smart industry: Role, challenges and opportunities for industry 4.0, industry 5.0 and society 5.0. Challenges Oppor. Ind. 2023, 4, 1–8. [Google Scholar] [CrossRef]

- Annepaka, Y.; Pakray, P. Large language models: A survey of their development, capabilities, and applications. Knowl. Inf. Syst. 2025, 67, 2967–3022. [Google Scholar] [CrossRef]

- Meng, W.; Li, Y.; Chen, L.; Dong, Z. Using the Retrieval-Augmented Generation to Improve the Question-Answering System in Human Health Risk Assessment: The Development and Application. Electronics 2025, 14, 386. [Google Scholar] [CrossRef]

- Arslan, M.; Ghanem, H.; Munawar, S.; Cruz, C. A Survey on RAG with LLMs. Procedia Comput. Sci. 2024, 246, 3781–3790. [Google Scholar] [CrossRef]

- Holzinger, A.; Schweier, J.; Gollob, C.; Nothdurft, A.; Hasenauer, H.; Kirisits, T.; Häggström, C.; Visser, R.; Cavalli, R.; Spinelli, R.; et al. From industry 5.0 to forestry 5.0: Bridging the gap with human-centered artificial intelligence. Curr. For. Rep. 2024, 10, 442–455. [Google Scholar] [CrossRef]

- Sundberg, B.; Silversides, C. Operational Efficiency in Forestry: Vol. 1: Analysis; Springer Science & Business Media: Berlin/Heidelberg, Germany, 1988; Volume 29. [Google Scholar] [CrossRef]

- Piragnolo, M.; Grigolato, S.; Pirotti, F. Planning harvesting operations in forest environment: Remote sensing for decision support. In ISPRS Annals of Photogrammetry, Remote Sensing and Spatial Information Sciences; Copernicus GmbH: Göttingen, Germany, 2019; Volume 4, pp. 33–40. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef]

- Wang, Y.; Pan, Y.; Yan, M.; Su, Z.; Luan, T.H. A survey on ChatGPT: AI–generated contents, challenges, and solutions. IEEE Open J. Comput. Soc. 2023, 4, 280–302. [Google Scholar] [CrossRef]

- Liu, Y.; Zhang, Y.; Wang, Y.; Hou, F.; Yuan, J.; Tian, J.; Zhang, Y.; Shi, Z.; Fan, J.; He, Z. A survey of visual transformers. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 7478–7498. [Google Scholar] [CrossRef]

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef]

- Gupta, N.; Choudhuri, S.S.; Hamsavath, P.N.; Varghese, A. Fundamentals of Chat GPT for Beginners Using AI; Academic Guru Publishing House: Cambridge, MA, USA, 2024. [Google Scholar]

- Kaufmann, T.; Weng, P.; Bengs, V.; Hüllermeier, E. A Survey of Reinforcement Learning from Human Feedback. In Reinforcement Learning: Algorithms, Applications and Open Challenges; Springer: Berlin/Heidelberg, Germany, 2024. [Google Scholar] [CrossRef]

- Zhang, K.; Zeng, S.; Hua, E.; Ding, N. Ultramedical: Building Specialized Generalists in Biomedicine. Adv. Neural Inf. Process. Syst. 2024, 37, 26045–26081. [Google Scholar]

- Hong, J.; Lee, N.; Thorne, J. ORPO: Monolithic Preference Optimization without Reference Model. arXiv 2024, arXiv:2403.07691. [Google Scholar] [CrossRef]

- Khairat, S.; Niu, T.; Geracitano, J.; Zhou, Z. Performance Evaluation of Popular Open-Source Large Language Models in Healthcare. Stud. Health Technol. Inform. 2025, 328, 215–219. [Google Scholar] [CrossRef]

- OpenAI. Introducing OpenAI o3 and o4-mini. Updated 10 June 2025: O3-pro Availability. 2025. Available online: https://openai.com/index/introducing-o3-and-o4-mini/ (accessed on 14 August 2025).

- OpenAI. Model Release Notes. o3-pro Available in ChatGPT and API (10 June 2025). 2025. Available online: https://help.openai.com/en/articles/9624314-model-release-notes (accessed on 14 August 2025).

- OpenAI. Introducing GPT-5. 2025. Available online: https://openai.com/index/introducing-gpt-5/ (accessed on 4 September 2025).

- Guo, D.; DeepSeek-AI. DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning. arXiv 2025. [Google Scholar] [CrossRef]

- DeepSeek-AI. DeepSeek-R1: Model Card and Checkpoints. Hugging Face Model Repository. 2025. Available online: https://huggingface.co/deepseek-ai/DeepSeek-R1 (accessed on 4 September 2025).

- Google DeepMind. Gemini 2.5: Our Most Intelligent AI Model. 2025. Available online: https://blog.google/technology/google-deepmind/gemini-model-thinking-updates-march-2025/ (accessed on 14 August 2025).

- xAI. Introducing Grok 4.Launch Announcement Post. 2025. Available online: https://x.com/xai/status/1943158495588815072 (accessed on 14 August 2025).

- QwenLM Team. Qwen3: Open-Weight Reasoning-Centric LLM Family. Model Family Repository. 2025. Available online: https://github.com/QwenLM/Qwen3 (accessed on 14 August 2025).

- QwenLM Team. Qwen3-Coder. Coder Variants and Long-Context Notes. 2025. Available online: https://github.com/QwenLM/Qwen3-Coder (accessed on 14 August 2025).

- Anthropic. Introducing Claude 3.5 Sonnet. 2024. Available online: https://www.anthropic.com/news/claude-3-5-sonnet (accessed on 20 August 2025).

- AI, M. Mistral AI Models Overview—Mistral Large and Codestral. 2025. Available online: https://docs.mistral.ai/getting-started/models/models_overview/ (accessed on 20 August 2025).

- Cohere. Cohere Command R+: Optimized for Complex RAG Workflows and Long-Context Tasks. 2024. Available online: https://docs.cohere.com/docs/command-r-plus (accessed on 20 August 2025).

- Bejar-Martos, J.A.; Rueda-Ruiz, A.J.; Ogayar-Anguita, C.J.; Segura-Sanchez, R.J.; Lopez-Ruiz, A. Strategies for the storage of large LiDAR datasets—A performance comparison. Remote Sens. 2022, 14, 2623. [Google Scholar] [CrossRef]

- Sarker, I.H. LLM potentiality and awareness: A position paper from the perspective of trustworthy and responsible AI modeling. Discov. Artif. Intell. 2024, 4, 40. [Google Scholar] [CrossRef]

- Radeva, I.; Popchev, I.; Doukovska, L.; Dimitrova, M. Web application for retrieval-augmented generation: Implementation and testing. Electronics 2024, 13, 1361. [Google Scholar] [CrossRef]

- Ahn, Y.; Lee, S.G.; Shim, J.; Park, J. Retrieval-augmented response generation for knowledge-grounded conversation in the wild. IEEE Access 2022, 10, 131374–131385. [Google Scholar] [CrossRef]

- Xie, Y.; Jiang, B.; Mallick, T.; Bergerson, J.D.; Hutchison, J.K.; Verner, D.R.; Branham, J.; Alexander, M.R.; Ross, R.B.; Feng, Y.; et al. Wildfiregpt: Tailored large language model for wildfire analysis. arXiv 2024, arXiv:2402.07877. [Google Scholar] [CrossRef]

- Digital Green. Farmer.CHAT: AI Assistant for Agricultural Extension. 2024. Available online: https://farmerchat.digitalgreen.org/ (accessed on 17 July 2025).

- Palantir Technologies Inc. Foundry AI Platform (AIP) Overview. 2025. Available online: https://www.palantir.com/docs/foundry/aip/overview (accessed on 17 July 2025).

- Zarfati, M.; Soffer, S.; Nadkarni, G.N.; Klang, E. Retrieval-Augmented Generation: Advancing personalized care and research in oncology. Eur. J. Cancer 2025, 220, 115341. [Google Scholar] [CrossRef]

- Planet Labs PBC. Forest Carbon Monitoring—Technical Specification. 2025. Available online: https://docs.planet.com/data/planetary-variables/forest-carbon-monitoring/techspec/ (accessed on 14 August 2025).

- Planet Labs PBC. Forest Carbon Monitoring—Product Overview. 2025. Available online: https://docs.planet.com/data/planetary-variables/forest-carbon-monitoring/ (accessed on 14 August 2025).

- CarbonAi Inc. CarbonAi—Digital MRV Software and Tools. 2025. Available online: https://carbonai.ca/ (accessed on 14 August 2025).

- Ge, Y.; Hua, W.; Mei, K.; Tan, J.; Xu, S.; Li, Z.; Zhang, Y. Openagi: When llm meets domain experts. Adv. Neural Inf. Process. Syst. NeurIPS 2023, 36, 5539–5568. [Google Scholar]

- Zhou, J.; Müller, H.; Holzinger, A.; Chen, F. Ethical ChatGPT: Concerns, challenges, and commandments. Electronics 2024, 13, 3417. [Google Scholar] [CrossRef]

- Ji, Z.; Lee, N.; Frieske, R.; Yu, T.; Su, D.; Xu, Y.; Ishii, E.; Bang, Y.J.; Madotto, A.; Fung, P. Survey of hallucination in natural language generation. ACM Comput. Surv. 2023, 55, 1–38. [Google Scholar] [CrossRef]

- Holzinger, A.; Zatloukal, K.; Müller, H. Is Human Oversight to AI Systems still possible? New Biotechnol. 2025, 85, 59–62. [Google Scholar] [CrossRef] [PubMed]

- Chang, Y.; Wang, X.; Wang, J.; Wu, Y.; Yang, L.; Zhu, K.; Chen, H.; Yi, X.; Wang, C.; Wang, Y. A survey on evaluation of large language models. ACM Trans. Intell. Syst. Technol. 2024, 15, 1–45. [Google Scholar] [CrossRef]

- Vulova, S.; Horn, K.; Rocha, A.D.; Brill, F.; Somogyvári, M.; Okujeni, A.; Förster, M.; Kleinschmit, B. Unraveling the response of forests to drought with explainable artificial intelligence (XAI). Ecol. Indic. 2025, 172, 113308. [Google Scholar] [CrossRef]

- Martino, A.; Iannelli, M.; Truong, C. Knowledge injection to counter large language model (LLM) hallucination. In Proceedings of the European Semantic Web Conference, Hersonissos, Greece, 28–29 May 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 182–185. [Google Scholar] [CrossRef]

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.-t.; Rocktäschel, T.; et al. Retrieval-augmented generation for knowledge-intensive NLP tasks. Adv. Neural Inf. Process. Syst. 2020, 33, 9459–9474.

- Jin, T.; Yazar, W.; Xu, Z.; Sharify, S.; Wang, X. Self-Selected Attention Span for Accelerating Large Language Model Inference. arXiv 2024, arXiv:2404.09336.

- Tian, X. Evaluating the repair ability of LLM under different prompt settings. In Proceedings of the 2024 IEEE International Conference on Software Services Engineering (SSE), Shenzhen, China, 7–13 July 2024; pp. 313–322.

- Sakib, S.K.; Das, A.B. Challenging fairness: A comprehensive exploration of bias in LLM-based recommendations. In Proceedings of the 2024 IEEE International Conference on Big Data (BigData), Washington, DC, USA, 15–18 December 2024; pp. 1585–1592.

- Gupta, B.B.; Gaurav, A.; Arya, V.; Alhalabi, W.; Alsalman, D.; Vijayakumar, P. Enhancing user prompt confidentiality in Large Language Models through advanced differential encryption. Comput. Electr. Eng. 2024, 116, 109215.

- Maynez, J.; Narayan, S.; Bohnet, B.; McDonald, R. On Faithfulness and Factuality in Abstractive Summarization. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 1906–1919.

- Kadavath, S.; Conerly, T.; Askell, A.; Henighan, T.; Drain, D.; Perez, E.; Schiefer, N.; Hatfield-Dodds, Z.; DasSarma, N.; Tran-Johnson, E.; et al. Language Models (Mostly) Know What They Know. arXiv 2022, arXiv:2207.05221.

- Jiang, Z.; Xu, F.F.; Gao, L.; Sun, Z.; Liu, Q.; Dwivedi-Yu, J.; Yang, Y.; Callan, J.; Neubig, G. Active retrieval augmented generation. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; Bouamor, H., Pino, J., Bali, K., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 7969–7992.

- Frering, L.; Steinbauer-Wagner, G.; Holzinger, A. Integrating Belief-Desire-Intention agents with large language models for reliable human–robot interaction and explainable Artificial Intelligence. Eng. Appl. Artif. Intell. 2025, 141, 109771.

- O’Leary, D.E. An anchoring effect in large language models. IEEE Intell. Syst. 2025, 40, 23–26.

- OWASP Foundation. OWASP Top 10 for Large Language Model Applications (2025). Includes LLM01: Prompt Injection. 2025. Available online: https://owasp.org/www-project-top-10-for-large-language-model-applications/ (accessed on 14 August 2025).

- UK National Cyber Security Centre. Thinking About the Security of AI Systems. 2023. Available online: https://www.ncsc.gov.uk/blog-post/thinking-about-security-ai-systems (accessed on 14 August 2025).

- O’Leary, D.E. Confirmation and specificity biases in large language models: An explorative study. IEEE Intell. Syst. 2025, 40, 63–68.

- Yi, W.; Zhang, L.; Kuzmin, S.; Gerasimov, I.; Liu, M. Agricultural large language model for standardized production of distinctive agricultural products. Comput. Electron. Agric. 2025, 234, 110218.

- Pensel, L.; Kramer, S. Neural RELAGGS. Mach. Learn. 2025, 114, 123.

- Abdollahi, A.; Pradhan, B. Explainable artificial intelligence (XAI) for interpreting the contributing factors feed into the wildfire susceptibility prediction model. Sci. Total Environ. 2023, 879, 163004.

- Buccafurri, F.; Lazzaro, S. A Framework for Secure Internet of Things Applications. In Proceedings of the 2024 10th International Conference on Control, Decision and Information Technologies (CoDIT), Valletta, Malta, 1–4 July 2024; pp. 2845–2850.

- Mota, T.d.S.; Sarkar, S.; Poojary, R.; Alqasemi, R. ChatGPT-Based Model for Controlling Active Assistive Devices Using Non-Invasive EEG Signals. Electronics 2025, 14, 2481.

- Mittal, U.; Sai, S.; Chamola, V.; Sangwan, D. A comprehensive review on generative AI for education. IEEE Access 2024, 12, 142733–142759.

- Nunes, L.J. The Role of Artificial Intelligence (AI) in the Future of Forestry Sector Logistics. Future Transp. 2025, 5, 63.

- Holzinger, A.; Fister, I., Jr.; Fister, I.; Kaul, H.P.; Asseng, S. Human-Centered AI in smart farming: Towards Agriculture 5.0. IEEE Access 2024, 12, 62199–62214.

- Sarkar, D.; Chapman, C.A. The smart forest Conundrum: Contextualizing pitfalls of sensors and AI in conservation science for tropical forests. Trop. Conserv. Sci. 2021, 14, 19400829211014740.

- Retzlaff, C.O.; Gollob, C.; Nothdurft, A.; Stampfer, K.; Holzinger, A. Developing a User-Friendly Interface for Interactive Cable Corridor Planning. Croat. J. For. Eng. J. Theory Appl. For. Eng. 2025, 46, 213–223.

- Schraick, L.M.; Ehrlich-Sommer, F.; Stampfer, K.; Meixner, O.; Holzinger, A. Usability in Human-Robot Collaborative Workspaces. Univers. Access Inf. Soc. UAIS 2024, 24, 1609–1622.

- Ehrlich-Sommer, F.; Hörl, B.; Gollob, C.; Nothdurft, A.; Stampfer, K.; Holzinger, A. Robot Usability in the Wild: Bridging Accessibility Gaps for Diverse User Groups in Complex Forestry Operation. Univers. Access Inf. Soc. 2025, 24, 2867–2887.

- Kocic, V.; Lukac, N.; Rozajac, D.; Schweng, S.; Gollob, C.; Nothdurft, A.; Stampfer, K.; Ser, J.D.; Holzinger, A. LLM in the Loop: A Framework for Contextualizing Counterfactual Segment Perturbations in Point Clouds. IEEE Access 2025, 13, 85507–85525.

- Kraišniković, C.; Harb, R.; Plass, M.; Al Zoughbi, W.; Holzinger, A.; Müller, H. Fine-tuning language model embeddings to reveal domain knowledge: An explainable artificial intelligence perspective on medical decision making. Eng. Appl. Artif. Intell. 2025, 139, 109561.

| Stage | Description | Key Examples |
|---|---|---|
| Pre-training | Unsupervised learning from massive text corpora by predicting the next token (causal language modeling). | GPT-3, LLaMA, PaLM, Falcon, Mistral base models |
| Instruction tuning | Supervised fine-tuning on human-written prompt–response pairs to guide format and tone. | FLAN, Alpaca, OpenAssistant, Baize, LLaMA 2 |
| Preference modeling | Use of human or synthetic ranking data to teach models which completions are preferred. | InstructGPT, RLHF preference phase, QLoRA tuning with ranked datasets |
| Reward modeling | Learn a reward function from human ratings or programmatic rules to guide later tuning. | o3 “rewarded-by-verifier”, Gemini rater pipelines, Constitutional AI rewarders |
| Reinforcement learning (RLHF/RLAIF) | Fine-tune the model using reinforcement learning with human or automated feedback. | ChatGPT (RLHF), Gemini (tool-use agents), DeepSeek-R1 (RLAIF), Claude 3 constitutional fine-tuning |
| Distillation from stronger models | Use a higher-quality model (or ensemble) to label prompts, training a smaller one on these outputs. | Zephyr, DistilGPT-2, Qwen Mini, DeepSeek-MoE |
| Format transfer/reasoning induction | Copy reasoning chains, scratchpads, or tool-use traces from stronger models. | DeepSeek-R1 (verifier traces), Grok 4 (tool-use chains), Gemini 2.5 (step-wise reasoning) |
| Safety and refusal tuning | Teach the model to avoid unsafe or out-of-scope responses via refusals or safety prompts. | o3-pro refusal layers, Claude 3 refusals, Gemini red-teaming outputs |
| Multimodal alignment (optional) | Align text, image, or code modalities using curated data or contrastive objectives. | Gemini, Qwen-VL, GPT-4V, InternLM-XComposer |
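
The pre-training stage in the table above can be illustrated with a toy calculation. The following is a minimal sketch, not taken from the paper, of the average next-token cross-entropy that causal language modeling minimises. The function name `next_token_loss`, the three-token vocabulary, and the probability values are hypothetical illustrations.

```python
import math


def next_token_loss(probs_per_step, target_ids):
    """Average negative log-likelihood of the true next tokens.

    probs_per_step: one predicted probability distribution per position.
    target_ids: index of the token that actually came next at each position.
    """
    total = 0.0
    for probs, target in zip(probs_per_step, target_ids):
        # The model is penalised by -log of the probability it gave
        # to the token that really followed.
        total += -math.log(probs[target])
    return total / len(target_ids)


# Hypothetical predictions over a 3-token vocabulary at two positions.
predicted = [
    [0.7, 0.2, 0.1],  # the true next token (index 0) received probability 0.7
    [0.1, 0.8, 0.1],  # the true next token (index 1) received probability 0.8
]
targets = [0, 1]

loss = next_token_loss(predicted, targets)
print(f"average next-token loss: {loss:.4f}")
```

Pre-training lowers this quantity over a very large corpus: the more probability the model assigns to the tokens that actually follow, the smaller the loss.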

| Model Family | Release (Public) | Access Type | Context Length | Notable Features (Reasoning) |
|---|---|---|---|---|
| OpenAI o3-pro | June 2025 | Hosted (ChatGPT/API) | 128 k tokens | Verifier-first alignment, high-reliability reasoning, tool use (code/web/files) [19,20] |
| OpenAI GPT-5 | August 2025 | Hosted (ChatGPT/API) | 128 k tokens (with “Thinking” mode) | State-of-the-art multi-step reasoning with verifier-backed “Thinking” mode; high robustness in safety-critical workflows [21] |
| DeepSeek-R1 | January 2025/updated May 2025 | Open weights + API | 128 k tokens | Trained via RLAIF with chain-of-verifier reasoning; very strong step-by-step math [22,23] |
| Gemini 2.5 Pro | March–May 2025 | Hosted (Vertex AI) | 1 M tokens | Scratchpad-style reasoning, agentic tool use, long-context verified mode [24] |
| Grok 4 | July 2025 | Hosted (xAI/Twitter) | ∼256 k tokens | Tool-use traces and API access, emphasis on dialogue structure and utility [25] |
| Qwen 3/Qwen3-Coder | April–July 2025 | Open weights (Apache 2.0) | 128 k–200 k tokens | Multi-turn reasoning, math/code scratchpads, long-context coder variants [26,27] |
| Claude 3.5 Sonnet | October 2024 | Hosted (Anthropic) | 200 k tokens | Constitutional fine-tuning; strong real-world reasoning and tool integration [28] |
| Mistral Large/Codestral | March/May 2025 | Hosted (API)/open weights | 65 k tokens (est.) | Open-weight base models and API-available reasoning-capable large variants [29] |
| Command R+ (Cohere) | April 2024 | Hosted + weights | 128 k tokens | RAG-tuned foundation model with strong retriever-integrated reasoning [30] |

| System | Primary Domain | Typical Data Sources | Forestry-Relevant Parallels |
|---|---|---|---|
| WildfireGPT | Natural-hazard decision support | Near-real-time fire-weather grids, burn-scar satellite scenes, peer-reviewed studies | Integrates dynamic geospatial layers with scientific literature to advise land managers. |
| Farmer.CHAT and Agri-Llama | Smallholder agriculture | Extension manuals, call-centre transcripts, farmer videos | Mirrors forestry extension: multilingual guidance, region-specific best practice, smallholder constraints. |
| Palantir AIP (OAG) | Supply-chain resilience | ERP streams, sensor alerts, optimisation models | Shows how live telemetry plus deterministic solvers can be piped into a chat agent, analogous to combining harvester feeds with wood-flow optimisation. |

| Problem | What It Looks Like in Practice | Underlying Reason and Mitigation (2025 Update) |
|---|---|---|
| Hallucination | The model invents a regulation, cites a non-existent journal article, or fabricates numerical results. | The model generates statistically probable token sequences; if no reliable pattern exists, it still produces a fluent guess [48]. Verifier-rewarded models (e.g., o3, DeepSeek-R1) and tool-use fallback chains (e.g., Gemini 2.5) reduce hallucination frequency. |
| Stale knowledge | Answers do not mention a law passed last month or a beetle outbreak reported yesterday. | The model’s weights are frozen at the moment of training. Anything published after that date is unknown unless fed in via RAG or agents [49]. RAG-native models like Command R+ or Gemini can inject up-to-date data in context. |
| Limited “attention span” | When a prompt exceeds the model’s context window (e.g., >100 k tokens), early passages are silently dropped, leading to contradictions or omissions. | The Transformer can only process a fixed number of tokens at once; older tokens fall off the back of the window [50]. Models like Gemini 2.5 and Claude 3.5 extend limits to 200 k–1 M tokens, and structured summarization or retrieval mitigates overflow. |
| Prompt sensitivity | Changing a single word in the question yields a noticeably different answer. | Small phrasing shifts alter the statistical path the model follows, much like nudging a marble down a branching maze [51]. Stability improves via prompt libraries, system prompts, and verifier-guided sampling (as in o3 and Claude 3.5). |
| Bias and unfairness | Stereotypical or unbalanced language appears in generated text. | The model reflects patterns present in its training data, including historical biases [52]. 2025 models use fine-grained constitutional training (Claude), RAG-based perspective balancing, and verifier gating to reduce bias. |
| Confidentiality leak | Private fragments from earlier sessions or fine-tuning data surface in a response. | Without strict filtering, the model can echo memorised snippets when they boost token-level probability [53]. Differential privacy, fine-tuning firewalls, and audit logs are increasingly common in enterprise deployments. |
| Inconsistent tool use | The model fails to reliably invoke external tools (e.g., GIS or simulation APIs) or mixes tool output with hallucinated content. | Tool-calling in agents (e.g., Gemini, o3) can drift if reward signals are unclear. New verifier-first tool chains with rejection sampling and system checks improve reliability. |
| Verifier bypass risk | Despite verifier layers, the model produces an incorrect but confident output (e.g., unsafe forest management advice). | Verifier-guided decoding is not foolproof; attacks or distributional drift can cause bypass. Runtime feedback loops, fallback to tools, and human-in-the-loop checkpoints mitigate failure. |
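
The “attention span” and “stale knowledge” rows can be made concrete with a small sketch. The code below is an illustration under stated assumptions, not the authors' implementation: it uses whitespace splitting as a stand-in for real tokenisation, a toy word-overlap score in place of a real retriever, and invented placeholder passages. The names `truncate_to_window` and `retrieve` are hypothetical.

```python
def truncate_to_window(tokens, window):
    """Keep only the most recent `window` tokens.

    This mimics a naive pipeline in which older tokens fall off the
    back of the context window.
    """
    return tokens[-window:] if window > 0 else []


def retrieve(passages, query, k=2):
    """Return the k passages sharing the most words with the query.

    Word overlap is a toy relevance score used here only for illustration.
    """
    query_words = set(query.lower().split())
    ranked = sorted(
        passages,
        key=lambda p: len(query_words & set(p.lower().split())),
        reverse=True,
    )
    return ranked[:k]


# Placeholder passages, invented for this example only.
passages = [
    "Clear-cut size limits apply to spruce stands on steep slopes.",
    "Harvester maintenance intervals are listed in the service manual.",
    "Bark beetle outbreaks must be reported to the district office.",
]
query = "where must bark beetle outbreaks be reported"

# Naive truncation can drop the early passage that held the answer.
tokens = " ".join(passages[::-1]).split()
print(truncate_to_window(tokens, 10))

# Retrieval instead selects the relevant passage and prepends it to the question.
context = retrieve(passages, query, k=1)
prompt = "Context:\n" + "\n".join(context) + "\n\nQuestion: " + query
print(prompt)
```

The design point is that retrieval keeps the prompt short and targeted, so the relevant passage is never the part that gets cut, whereas truncation discards text by position alone.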

| Model | Release | Access | Context (Headline) | Highlights (Forestry-Relevant) |
|---|---|---|---|---|
| OpenAI o3/o3-mini/o3-pro | December 2024–June 2025 | Hosted (ChatGPT/API) | Not stated (reasoning-focused) | Successors to o1 with stronger deliberate reasoning; o3-mini offers speed/latency trade-offs; o3-pro (June 2025) targets highest reliability and native tool use (web/code/files) [19,20] |
| DeepSeek-R1 | January 2025/updated May 2025 | Open weights and API | Varies by size | RL-trained reasoning; open release including distilled 1.5–70B checkpoints; performance comparable to o1 on reasoning tasks, at open-source cost/sovereignty [22,23] |
| Gemini 2.5 Pro | March–May 2025 | Hosted (Gemini/Vertex) | 1 M tokens (2 M announced) | Native multimodality; state-of-the-art reasoning/coding; production-grade million-token context useful for long regulations, manuals, and code bases [24] |
| Grok 4 | July 2025 | Hosted (xAI API) | ∼256 k | RL-scaled “native tool use” and real-time search; strong frontier reasoning benchmarks; designed to autonomously plan searches and use code tools [25] |
| Qwen 3 | April–July 2025 | Open weights and API | Up to 256 k (1 M via extrapolation in Coder variants) | Hybrid dense/MoE family with “hybrid reasoning” focus; permissive licensing and long-context variants; strong multilingual support and agentic coding [26,27] |
| OpenAI GPT-5 | August 2025 | Hosted (ChatGPT/API) | 128 k tokens (“Thinking” mode) | New frontier-level baseline from OpenAI with significantly improved multi-step reasoning and the debut of “Thinking” mode for better planning, explanation, and reflection. Recommended for complex forestry copilots [21] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ehrlich-Sommer, F.; Eberhard, B.; Holzinger, A. ForestGPT and Beyond: A Trustworthy Domain-Specific Large Language Model Paving the Way to Forestry 5.0. Electronics 2025, 14, 3583. https://doi.org/10.3390/electronics14183583
