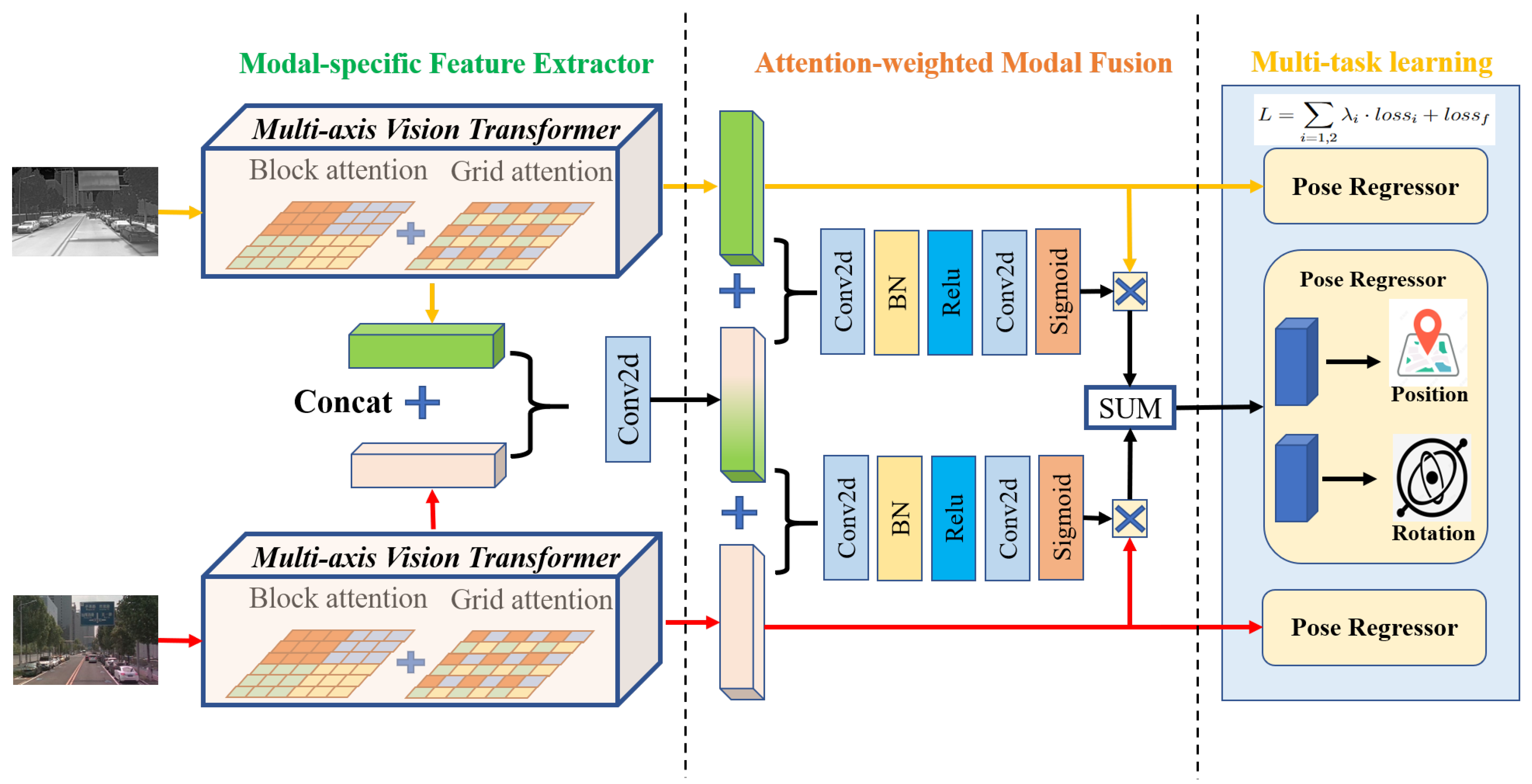

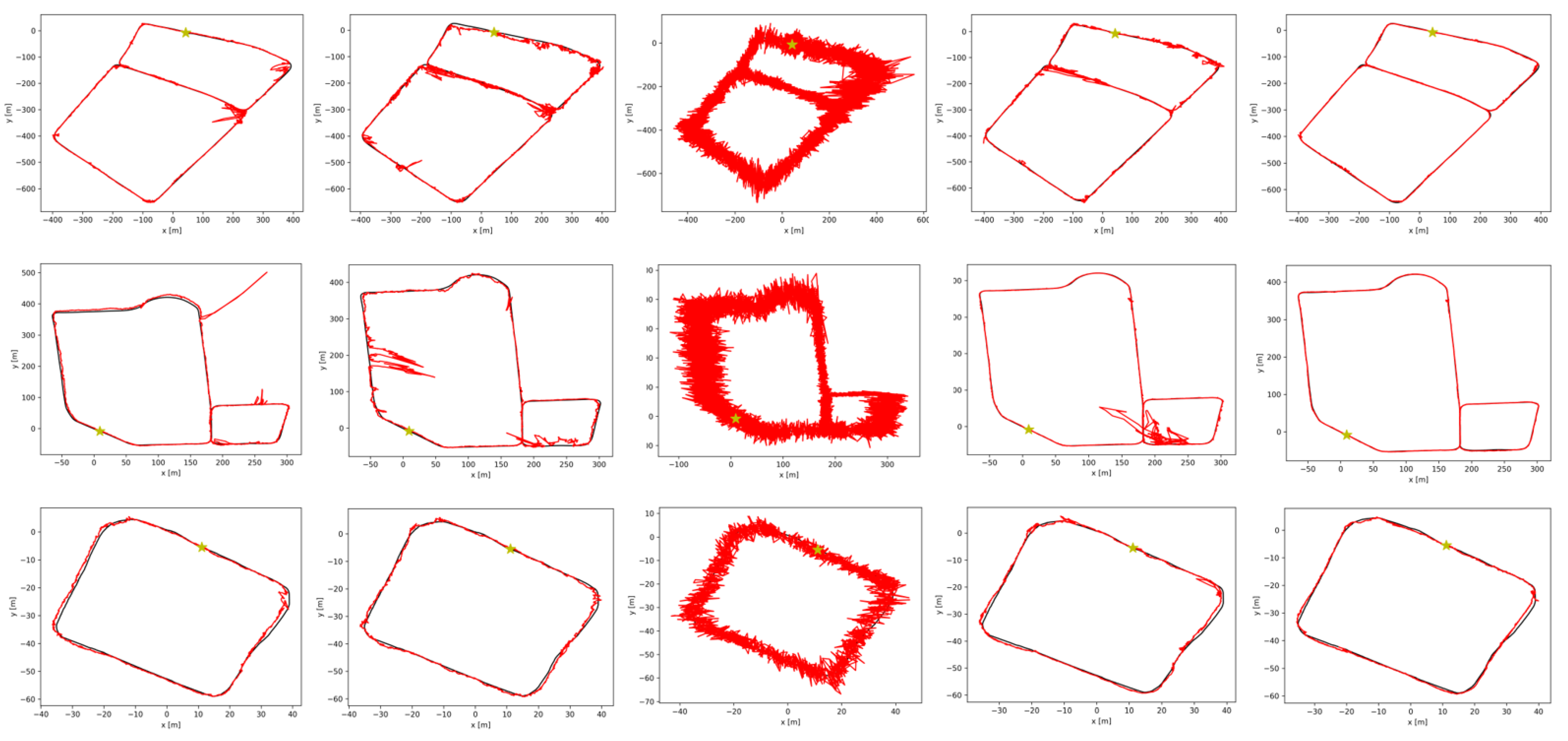

Our proposed VIFPE, a novel end-to-end multimodal learning framework that fuses visible and infrared images for pose estimation, is illustrated in Figure 1. It employs two modality-specific feature encoders to extract features from the visible and infrared images, which are subsequently fused by a modality-aware fusion module. As shown in Figure 1, every pose regressor consists of two Multi-Layer Perceptrons (MLPs) that predict the 6-Degree-of-Freedom (6DoF) pose, comprising position and orientation. The pose regressor in the second row predicts the 6DoF pose from the fused features of the two modalities, while the other two regressors estimate the pose directly from the single-modal features without fusion. The loss function jointly optimizes pose estimation across these three outputs, and the output of the MLPs in the second row is used as the final 6DoF prediction. The problem can be formally formulated as follows:

$$(\hat{p}, \hat{q}) = R\big(F\big(E_{ir}(I_{ir}),\; E_{rgb}(I_{rgb})\big)\big)$$

Here, $(\hat{p}, \hat{q})$ denote the final outputs of the model, where $\hat{p}$ represents the 3D position coordinates and $\hat{q}$ represents the 3D orientation; $I_{ir}$ and $I_{rgb}$ are the inputs, where $I_{ir}$ represents the infrared-image input, with dimensions of 224 × 224 × 3, and $I_{rgb}$ represents the RGB image, with identical dimensions of 224 × 224 × 3; $E_{ir}$ and $E_{rgb}$ represent the MaxViT-based feature extraction networks for modality-specific feature encoding; $F$ represents the feature fusion module integrating the multimodal features; and $R$ represents the pose estimation module (specifically, the MLP block in the second row in Figure 1), which directly predicts the 6DoF pose $(\hat{p}, \hat{q})$.

3.1. Modality-Specific Feature Extractor

To extract visual features, we utilize Multi-axis Vision Transformer (MaxViT) for both visible and infrared images. MaxViT integrates convolutional layers and transformer-based attention mechanisms, balancing local and global feature extraction. Compared to traditional convolutional neural networks (CNNs) like those in AtLoc and PoseNet, MaxViT provides stronger global context modeling while maintaining computational efficiency. The core component of MaxViT, Multi-Axis Self-Attention (MaxSA), employs block attention (focusing on local regions) and grid attention (capturing global dependencies) to enhance feature representation. This structured attention mechanism mitigates the computational overhead of full self-attention. Each MaxViT block consists of an MBConv layer, block attention, and grid attention. The MBConv layer, comprising depth-wise convolution (DWConv) and a Squeeze-and-Excitation (SE) module, enhances channel representation while reducing parameters.
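The two partitions that MaxSA relies on can be sketched in NumPy as follows. This is a minimal illustration rather than the authors' implementation: the function names, the `(H, W, C)` layout, and the use of a single size `q` for both the window and the grid are assumptions made for the example. In both cases attention would be computed along axis 1 of the returned array.

```python
import numpy as np

def block_partition(x, q):
    """Split an (H, W, C) map into non-overlapping q x q windows.

    Returns shape (H//q * W//q, q*q, C). Axis 1 holds the q*q neighbouring
    tokens of one local window (local mixing).
    """
    H, W, C = x.shape
    assert H % q == 0 and W % q == 0, "H and W must be divisible by q"
    x = x.reshape(H // q, q, W // q, q, C)
    x = x.transpose(0, 2, 1, 3, 4)          # (H//q, W//q, q, q, C)
    return x.reshape((H // q) * (W // q), q * q, C)

def grid_partition(x, q):
    """Split an (H, W, C) map with a uniform q x q grid.

    Returns shape (H//q * W//q, q*q, C). Axis 1 holds q*q tokens spaced
    H//q rows and W//q columns apart, so each group spans the whole map
    (sparse global mixing).
    """
    H, W, C = x.shape
    assert H % q == 0 and W % q == 0, "H and W must be divisible by q"
    x = x.reshape(q, H // q, q, W // q, C)
    x = x.transpose(1, 3, 0, 2, 4)          # (H//q, W//q, q, q, C)
    return x.reshape((H // q) * (W // q), q * q, C)

# Toy 8 x 8 single-channel map whose values are the flattened pixel indices.
x = np.arange(64).reshape(8, 8, 1)
blocks = block_partition(x, 2)
grids = grid_partition(x, 2)
print(blocks[0, :, 0])  # adjacent pixels of the top-left window
print(grids[0, :, 0])   # pixels spread across the whole map
```

Each attention call then operates on only `q*q` tokens instead of all `H*W` tokens, which is where the saving over full self-attention comes from.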

The MBConv stage can be written as

$$X_{ir} = \mathrm{MBConv}(I_{ir}), \qquad X_{rgb} = \mathrm{MBConv}(I_{rgb})$$

In this context, $I_{ir}$ and $I_{rgb}$ are inputs, where $I_{ir}$ represents the infrared-image input, $I_{rgb}$ stands for the RGB input, and $X_{ir}$ and $X_{rgb}$ denote the feature maps output by the MBConv layer, serving as inputs to the MaxSA module. Taking $X_{ir} \in \mathbb{R}^{H \times W \times C}$ as an illustrative example, block attention within the MaxSA module decomposes the input into a tensor of shape $\left(\frac{H}{q} \times \frac{W}{q},\; q \times q,\; C\right)$, so that attention is computed within each q × q window rather than across the entire input. Conversely, grid attention partitions the input into a tensor of shape $\left(q \times q,\; \frac{H}{q} \times \frac{W}{q},\; C\right)$, enabling grid-based computation of global attention. This approach fosters comprehensive interaction between local and global attention, producing multi-level expressive features while avoiding the quadratic growth in computational complexity of full self-attention. Hence, a complete MaxViT block comprises MBConv and MaxSA, combining the advantages of convolutional modules, multi-scale attention, and residual connections. The formula for this structure can be expressed as follows:

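$$Y_{ir} = \mathrm{MaxSA}\big(\mathrm{MBConv}(I_{ir})\big), \qquad Y_{rgb} = \mathrm{MaxSA}\big(\mathrm{MBConv}(I_{rgb})\big)$$

In this context, $Y_{ir}$ and $Y_{rgb}$ denote the feature maps after passing through a single MaxViT block. Consequently, we denote $F_{ir}$ and $F_{rgb}$ as the feature maps after four consecutive MaxViT blocks. Therefore, during the feature extraction phase, the process of extracting image features from the two modalities can be expressed as follows:

$$F_{ir} = E_{ir}(I_{ir}), \qquad F_{rgb} = E_{rgb}(I_{rgb})$$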

3.2. Attention-Weighted Modal Fusion (AWMF)

As depicted in the figure, after modal-specific feature extraction, we obtain feature representations for two modalities. In this subsection, we explore the Attention-Weighted Modal Fusion Module (AWMF), which calculates the importance of the features obtained from each modality in the previous stage to derive individual modal weights. This, in turn, allows for the weighted summation of features from both modalities.

By directly concatenating the outputs of the previous stage, $F_{ir}$ and $F_{rgb}$, along the channel dimension and then reducing the channel dimension back to its original size with a convolutional layer, we obtain a mixed feature $F_{mix}$ that encapsulates information from both modalities. Simple convolutional operations, however, are insufficient for effectively fusing these two features, and the result often contains a significant amount of redundancy and interference. Therefore, instead of directly using $F_{mix}$ for pose regression, we post-process it with an attention weight calculation module. This module yields the weight of each modality in the fusion process, enabling adaptive feature fusion and more accurate pose estimation. We will also provide a visual explanation of the effectiveness of this module. In terms of input and output, the AWMF module takes the concatenation of $F_{ir}$ and $F_{rgb}$ as the input and ultimately produces the weighted fused feature $F_{fuse}$. Specifically, the attention weight calculation module processes each input pair of $F_{mix}$ and $F_m$ to obtain the corresponding weight map $W_m$ for each $m \in \{ir, rgb\}$:

$$W_m = \sigma\big(\mathrm{Conv}\big(\mathrm{Concat}(F_{mix}, F_m)\big)\big)$$

Here, $\sigma$ represents the sigmoid function, and $\mathrm{Conv}$ denotes a 2D convolutional layer with a 3 × 3 kernel. Each convolutional layer is followed by batch normalization and a Leaky ReLU activation function. By outputting attention maps, this module models how the uni-modal features are distributed within the multimodal fused features. Subsequently, we perform a weighted summation of the weights and features of the two modalities to obtain the fused feature map:

$$F_{fuse} = W_{ir} * F_{ir} + W_{rgb} * F_{rgb} \tag{9}$$

We leverage the AWMF module to compute the weights of the features from the different modalities during fusion, and then perform a weighted summation of these features to obtain more discriminative fused features. In Equation (9), we perform an element-wise multiplication (denoted by *) between each weight map $W_m$ and the corresponding feature map $F_m$. This allows the complementary advantages of the different modalities to be exploited. Because the structure is streamlined and scales well, supporting an additional modality only requires adding its features and weight map to the weighted summation. This facilitates the integration of more features and holds great potential for future work on fusing further modalities, such as text, point clouds, and audio.
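The weighted summation described above can be sketched in NumPy as follows. This is a simplified illustration under stated assumptions, not the authors' module: the 3 × 3 convolutions with batch normalization and Leaky ReLU are replaced by plain per-pixel linear projections (equivalent to 1 × 1 convolutions), and the names `awmf_fuse`, `proj_mix`, `proj_ir`, and `proj_rgb` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def awmf_fuse(f_ir, f_rgb, proj_mix, proj_ir, proj_rgb):
    """Attention-weighted fusion of two (H, W, C) feature maps.

    proj_mix : (2C, C) projection reducing the concatenated features to C channels.
    proj_ir, proj_rgb : (2C, C) projections producing per-modality weight logits.
    All three stand in for the convolutional layers of the real module (assumption).
    """
    # Mixed feature: concatenate along channels, then reduce back to C channels.
    f_mix = np.concatenate([f_ir, f_rgb], axis=-1) @ proj_mix
    # One weight map per modality, computed from the (mixed, modality) pair.
    w_ir = sigmoid(np.concatenate([f_mix, f_ir], axis=-1) @ proj_ir)
    w_rgb = sigmoid(np.concatenate([f_mix, f_rgb], axis=-1) @ proj_rgb)
    # Element-wise weighted summation of the two modality features.
    fused = w_ir * f_ir + w_rgb * f_rgb
    return fused, w_ir, w_rgb

rng = np.random.default_rng(0)
H, W, C = 7, 7, 16
f_ir, f_rgb = rng.normal(size=(H, W, C)), rng.normal(size=(H, W, C))
projs = [rng.normal(scale=0.1, size=(2 * C, C)) for _ in range(3)]
fused, w_ir, w_rgb = awmf_fuse(f_ir, f_rgb, *projs)
print(fused.shape, float(w_ir.mean()), float(w_rgb.mean()))
```

Extending the sketch to a third modality would mean computing one more weight map and adding one more term to the sum, which mirrors the scalability argument in the text.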

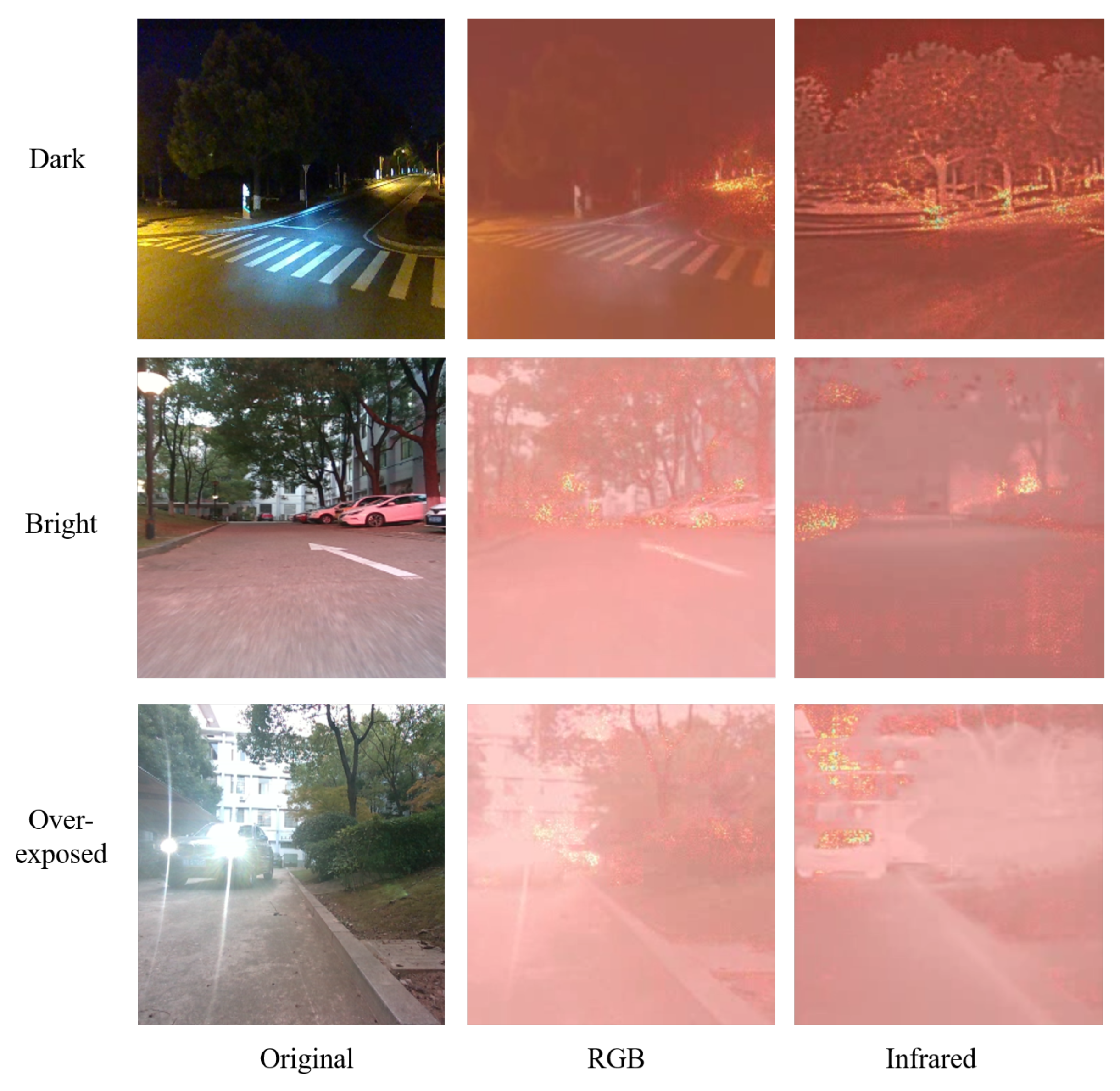

3.3. Multi-Task Learning Strategy for Multimodal Pose Estimation

Visible images often exhibit rich textures but are highly susceptible to variations in illumination. Conversely, infrared images are insensitive to changes in illumination but lack the rich textural information of visible images. We therefore devise a fusion learning strategy that includes loss functions for the individual modalities and for the fused modality, as well as a loss for collaborative learning between the fused and individual modalities. This allows the parameters of the fused branch to be optimized while the parameters of the individual modalities are refined at the same time. Consequently, the parameters of the three models are learned collaboratively and the information from the two modalities is integrated in a complementary way. During feature extraction, the parameters of the two modality-specific feature extraction networks can be mutually optimized. We therefore attach a pose regressor to each modality after the feature extraction stage, so that a loss function can be defined for each individual modality. The pose regressors are both two-layer MLPs (Multi-Layer Perceptrons), represented as

$$(\hat{p}_m, \hat{q}_m) = \mathrm{MLP}_m(F_m), \qquad m \in \{ir, rgb\},$$

where $(\hat{p}_m, \hat{q}_m)$ represents the 3D position and 3D orientation (quaternion) regressed from the single-modal feature $F_m$. Accordingly, the pose predicted by the MLPs from the fused feature $F_{fuse}$ can be described as

$$(\hat{p}_f, \hat{q}_f) = \mathrm{MLP}_f(F_{fuse}),$$

where $(\hat{p}_f, \hat{q}_f)$ represents the 6DoF pose regressed from the fused feature $F_{fuse}$. To simplify the computation, the pose is expressed in relative coordinates, with the starting point of the trajectory serving as the origin.
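A pose regressor of this kind can be sketched in NumPy as below. The sketch is illustrative only: the global average pooling, the hidden width, the ReLU activation, the single 7-dimensional output (3 for position, 4 for the quaternion), and the name `pose_regressor` are all assumptions, since the text specifies only that each regressor is a two-layer MLP.

```python
import numpy as np

def pose_regressor(feature, params):
    """Two-layer MLP head mapping an (H, W, C) feature map to a pose.

    params = (w1, b1, w2, b2) with w1: (C, hidden), w2: (hidden, 7).
    Returns (position[3], unit quaternion[4]).
    """
    w1, b1, w2, b2 = params
    pooled = feature.mean(axis=(0, 1))            # global average pool -> (C,)
    hidden = np.maximum(pooled @ w1 + b1, 0.0)    # first layer + ReLU (assumed)
    out = hidden @ w2 + b2                        # second layer -> (7,)
    position, quat = out[:3], out[3:]
    quat = quat / (np.linalg.norm(quat) + 1e-12)  # normalise to a unit quaternion
    return position, quat

rng = np.random.default_rng(0)
C, hidden = 16, 32
params = (rng.normal(size=(C, hidden)), np.zeros(hidden),
          rng.normal(size=(hidden, 7)), np.zeros(7))
position, quat = pose_regressor(rng.normal(size=(7, 7, C)), params)
print(position.shape, quat.shape)
```

The same head could be instantiated three times, once per single-modal feature and once for the fused feature, each with its own parameters.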

In Bayesian modeling, homoscedastic uncertainty is a task-dependent aleatoric uncertainty: it does not vary with the input data but varies between tasks. Our model can be regarded as a multi-task learning model that outputs pose information from different modalities. In multi-task learning, the uncertainty associated with the tasks reflects their relative confidence, which in turn indicates the uncertainty inherent in the regression tasks themselves. Ref. [38] proposes using homoscedastic uncertainty to weight the different tasks in the loss function. By maximizing a Gaussian likelihood with task-dependent uncertainty, the translation and rotation outputs can be treated as two tasks within a multi-task loss function. Building on this foundation, we extend the design to treat the three regression outputs as three distinct tasks within a multi-task loss function. For single-modal outputs, we define $f^{W}(x)$ as the model output with input $x$ and weights $W$. Based on this definition, we propose the following probabilistic model:

$$p\big(y \mid f^{W}(x)\big) = \mathcal{N}\big(f^{W}(x),\, \sigma^{2}\big)$$

We define the likelihood function as a Gaussian distribution whose mean is the pose output of the model with a uni-modal input. The symbol $\sigma$ represents the observation noise. For the position and orientation outputs of the model, $y_1$ and $y_2$, we define the likelihood function for multiple outputs as their factorization, in which $f^{W}(x)$ serves as the sufficient statistic:

$$p\big(y_1, y_2 \mid f^{W}(x)\big) = p\big(y_1 \mid f^{W}(x)\big)\, p\big(y_2 \mid f^{W}(x)\big)$$

In Maximum Likelihood Estimation (MLE), we take the logarithm of the likelihood and maximize the resulting log-likelihood of the model. For the Gaussian likelihood, the log-likelihood takes the following form:

$$\log p\big(y \mid f^{W}(x)\big) \propto -\frac{1}{2\sigma^{2}} \big\| y - f^{W}(x) \big\|^{2} - \log \sigma$$

The symbol $\sigma$ denotes the magnitude of the noise observed in the output; we maximize this log-likelihood with respect to the weights $W$ and the noise parameter $\sigma$. Given that the uni-modal output consists of two results, position and orientation, which are treated as two separate tasks, we obtain the following multi-task log-likelihood:

$$\log p\big(y_1, y_2 \mid f^{W}(x)\big) \propto -\frac{1}{2\sigma_1^{2}} \big\| y_1 - f^{W}(x) \big\|^{2} - \frac{1}{2\sigma_2^{2}} \big\| y_2 - f^{W}(x) \big\|^{2} - \log \sigma_1 \sigma_2$$

Therefore, the loss function can be defined as

$$\mathcal{L}(W, \sigma_1, \sigma_2) = \frac{1}{2\sigma_1^{2}} \mathcal{L}_1(W) + \frac{1}{2\sigma_2^{2}} \mathcal{L}_2(W) + \log \sigma_1 \sigma_2$$

In this context, $\mathcal{L}_i(W) = \big\| y_i - f^{W}(x) \big\|^{2}$ for $i \in \{1, 2\}$, where it can be observed from the formula that, when minimizing this loss function, the weights of the position and orientation terms of the single-task output decrease as the corresponding noise parameter $\sigma_i$ increases, and vice versa. To simplify the expression, we define

and

, and use

instead of

. And we introduce

and

into the formula, the loss function for the single-modal multi-task output is expressed as

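During the training process, the loss function for the two uni-modal training tasks can be represented by the following equation:

$$\mathcal{L}_m = \big\| p - \hat{p}_m \big\|\, e^{-\beta} + \beta + \big\| \log q - \log \hat{q}_m \big\|\, e^{-\gamma} + \gamma, \qquad m \in \{ir, rgb\},$$

where $p$ and $q$ are the ground-truth position and orientation, and $\beta$ and $\gamma$ are learnable parameters used to determine the weightings of translation and rotation, respectively. In calculating the loss function, we use the logarithm of the unit quaternion $q = (u, \mathbf{v})$, denoted as $\log q$, where $u$ is the real part and $\mathbf{v}$ is the imaginary part, to describe continuous changes in orientation more accurately. To ensure a unique solution for the quaternion during rotation, we constrain all quaternions to lie within the same hemisphere. Hence, we have

$$\log q = \begin{cases} \dfrac{\mathbf{v}}{\|\mathbf{v}\|} \cos^{-1} u, & \text{if } \|\mathbf{v}\| \neq 0 \\ \mathbf{0}, & \text{otherwise} \end{cases}$$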

Thus, the features obtained after fusing the two modal data are passed through a pose regressor to generate the fused pose output. The loss function optimized during this process is similar to that of the single-modal case, and is expressed as follows:

Therefore, the loss function required for our collaborative learning strategy can be expressed as the weighted sum of three loss functions, as shown below:

where

is a learnable hyper-parameter used to adjust the weights of the single-modal and fused-modal loss functions, which are both initially set to 0.5. From the design of the fusion loss function mentioned above, it can be observed that the role of the modal-specific

is to facilitate the optimization of the parameters of the respective modal-specific feature extractor, thereby promoting the optimization of the fused-modal

, and vice versa. This forms a mutually reinforcing effect on optimization. The final output of the model can be described as

where

stands for the final pose prediction. Overall, the multimodal collaborative learning strategy not only enhances feature extraction for each single modality but also optimizes the regression results of the fused modality. Each single modality can thus fully leverage its own data characteristics, while the fused modality integrates their complementary information, achieving collaborative optimization and more accurate pose estimation. Refer to Appendix A for details of the multi-task learning strategy.
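The loss components discussed in this subsection can be sketched in NumPy as follows. This is a hedged illustration rather than the authors' code: the L1 norm, the parameter names `beta` and `gamma`, and in particular the form of `collaborative_loss` (a single weight `lam` shared by the two single-modal losses, with `1 - lam` on the fused loss) are assumptions made for the example.

```python
import numpy as np

def log_quaternion(q):
    """Log map of a unit quaternion q = (u, v): v / ||v|| * arccos(u), or 0 if v = 0."""
    q = np.asarray(q, dtype=float)
    q = q / np.linalg.norm(q)
    if q[0] < 0:                      # keep every quaternion in the same hemisphere
        q = -q
    u, v = q[0], q[1:]
    norm_v = np.linalg.norm(v)
    if norm_v < 1e-12:
        return np.zeros(3)
    return v / norm_v * np.arccos(np.clip(u, -1.0, 1.0))

def pose_loss(p_true, q_true, p_pred, q_pred, beta, gamma):
    """Uncertainty-weighted pose loss; beta and gamma would be learned in training."""
    l_pos = np.abs(np.asarray(p_true) - np.asarray(p_pred)).sum()       # L1 (assumed)
    l_rot = np.abs(log_quaternion(q_true) - log_quaternion(q_pred)).sum()
    return l_pos * np.exp(-beta) + beta + l_rot * np.exp(-gamma) + gamma

def collaborative_loss(loss_ir, loss_rgb, loss_fused, lam=0.5):
    """Hypothetical weighted sum of the two single-modal losses and the fused loss."""
    return lam * (loss_ir + loss_rgb) + (1.0 - lam) * loss_fused

# A 90-degree rotation about the x axis, and a slightly perturbed prediction.
q_true = np.array([np.cos(np.pi / 4), np.sin(np.pi / 4), 0.0, 0.0])
q_pred = np.array([np.cos(np.pi / 4 + 0.05), np.sin(np.pi / 4 + 0.05), 0.0, 0.0])
p_true, p_pred = np.array([1.0, 2.0, 0.5]), np.array([1.1, 1.9, 0.5])
single = pose_loss(p_true, q_true, p_pred, q_pred, beta=0.0, gamma=-3.0)
print(single, collaborative_loss(single, single, single))
```

Because `exp(-beta)` and `exp(-gamma)` scale the error terms while `beta` and `gamma` are added as penalties, a larger learned uncertainty down-weights the corresponding term, matching the behaviour described for the noise parameters.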