Abstract

To improve the accuracy of water surface debris detection under complex backgrounds and strong reflections, this paper proposes a lightweight improved object detection algorithm based on YOLOv8n. Because shallow features are the most sensitive to low-level visual interference such as water surface reflections, the C2f_RFAConv module is adopted to enhance the model’s robustness in reflection-affected regions. The Four-Detect-Adaptively Spatial Feature Fusion (ASFF) module improves the model’s perception of objects at different scales, especially small objects. To limit the computational cost introduced by these new components, the lightweight Slim-neck structure is adopted. The Minimum Point Distance Intersection over Union (MPDIoU) loss function improves localization accuracy by directly minimizing the distances between the corresponding corner points of the predicted and ground truth bounding boxes. Experiments on a publicly available water surface debris dataset from the Roboflow Universe platform show that the proposed method achieves 94.5% mAP@0.5 and 58.6% mAP@0.5:0.95, relative improvements of 2.27% and 5.21% over the original YOLOv8n model.

1. Introduction

As environmental pollution issues become increasingly severe, floating garbage in water bodies is causing growing damage to ecosystems [1], not only affecting the stability of aquatic ecosystems but also posing potential threats to shipping safety, water-based operations, and tourism activities. Currently, the cleanup of floating debris on water surfaces primarily relies on manual operations, which suffer from issues such as low efficiency, high costs, and poor real-time performance. To achieve efficient and cost-effective cleanup methods, image-processing-based automatic detection techniques have emerged as a key technology. These methods analyze collected images to automatically identify the location and category of targets, significantly enhancing real-time performance and accuracy of detection.

In recent years, the rapid development of deep learning has driven the widespread application of object detection technology, giving rise to a variety of detection frameworks, including Faster R-CNN [2], SSD [3], and the YOLO series [4]. These methods have been widely applied in practical scenarios such as industrial quality inspection and traffic monitoring, demonstrating excellent performance and application value. Yang Huapeng et al. [5] used the Faster R-CNN method to effectively identify six common objects on the water surface, enhancing the target recognition capabilities of unmanned boats in complex water environments. However, this method has limited detection capabilities for small objects and involves high computational complexity. To address computational resource constraints and detection accuracy requirements in practical deployments, Henar et al. [6] designed an edge temperature monitoring system based on a multi-task convolutional neural network, significantly improving the system’s operational efficiency and energy consumption performance on embedded platforms such as PCs and Jetson.

Among these, the YOLO [7] series, as a representative of single-stage detection, has performed exceptionally well in edge scenarios due to its end-to-end, high-speed, and flexible deployment characteristics. In recent years, it has also been gradually applied to water surface target detection for identifying floating debris, obstacles, and vessels. However, due to issues such as strong reflections, water wave disturbances, dense targets, and irregular occlusions in water surface scenarios, traditional YOLO models still face challenges in terms of accuracy and robustness [8].

To address these issues, researchers have conducted extensive studies from the perspectives of model accuracy, structural lightweighting, and environmental adaptability. For example, Liu Tao et al. [9] improved the anchor box settings and feature fusion structure of YOLOv3, significantly enhancing the accuracy of sea surface target detection. However, limitations remain in terms of anchor box dependency and target scale adaptability. Bochkovskiy et al. [10] proposed YOLOv4, a real-time object detection system that integrates multiple detection innovations from the preceding two years. It achieved outstanding performance on the MS COCO dataset and became a mainstream detection framework. However, this method still has a certain false positive rate in complex water surface backgrounds and exhibits unstable recognition performance for unstructured targets such as floating debris. To balance detection accuracy and model size, the MSA-YOLOv5 model proposed by Park Jong-chan et al. [11] achieved an average accuracy of 98.3% on the VHR-10 dataset with only 1.795 million parameters, making it suitable for small object detection in real-time applications in resource-constrained environments. Huang Chengwen et al. [12] replaced the C3 structure in YOLOv5 with a Transformer encoder and introduced a small object detection layer and CBAM module, achieving higher accuracy in water surface object detection, but this increased model complexity and impaired real-time performance. YOLOv8 [13], as one of the important updated versions in this series, introduces an anchor-free design and a modular structure, balancing detection performance and engineering deployment flexibility. Based on YOLOv8, Zhang Haozhi et al. [14] proposed an improved lightweight water obstacle detection algorithm, achieving real-time and accurate detection of water obstacles. Wang Jie et al. [15] proposed an improved algorithm, YOLOv8-MSS. Experimental results on the public dataset FloW-Img showed that this algorithm improved detection accuracy by 5% and 2.6% compared to the original model, further enhancing the model’s accuracy and practicality while maintaining real-time performance.

Therefore, this paper proposes an improved object detection algorithm based on YOLOv8, aiming to enhance its detection accuracy and stability in complex water surface environments. Our contributions are as follows:

- (1) Adding the RFAConv (receptive-field attention convolution) spatial attention mechanism to the backbone network enables the model to better handle details and complex patterns in detection images, effectively addressing missed and false detections.

- (2) To improve detection accuracy for small objects, the original three-layer detection head is replaced with a four-layer ASFF module to achieve multi-scale feature fusion. Combined with the DFL strategy, this improves the model’s accuracy and stability in complex water surface scenes.

- (3) To avoid a decrease in inference speed and an increase in model size caused by the introduction of additional modules, this study adopted the Slim-neck architecture, which consists of the GSConv (Ghost-Shuffle Convolution) and VoV-GSCSP (VoVNet-based Ghost-Shuffle Cross Stage Partial) modules in the neck structure.

- (4) Applying the MPDIoU (Minimum Point Distance Intersection over Union) loss function to the bounding box regression task replaces the traditional CIoU method, improving localization accuracy and convergence effects with a more stable geometric metric.

2. Method

2.1. YOLOv8 Algorithm

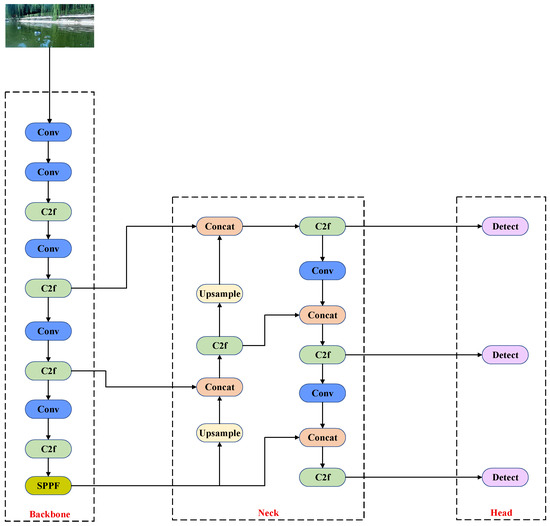

YOLOv8 [16] is the eighth-generation core version of the YOLO series released by Ultralytics in early 2023. As shown in Figure 1, the overall architecture of YOLOv8 consists of three parts: the backbone network, the neck network, and the detection head. Building on the architectural advantages of YOLOv5 [17], this version has undergone systematic optimization and upgrades in model structure, training strategies, and inference efficiency. Although later releases such as YOLOv12 represent more recent research progress in this series, YOLOv8 continues to be widely applied in object detection due to its lightweight design, high-precision detection performance, and excellent engineering scalability, and has become one of the key foundational architectures for secondary development. To adapt to different platform conditions and application requirements, YOLOv8 offers five scaled variants: YOLOv8n (nano), YOLOv8s (small), YOLOv8m (medium), YOLOv8l (large), and YOLOv8x (extra-large). These variants incrementally increase in network depth and width, covering scenarios that range from lightweight rapid deployment to high-precision applications with ample computing power, and thus trade model complexity against detection performance. Among these, YOLOv8n is more suitable for resource-constrained real-time deployment environments, while YOLOv8x excels in accuracy and is ideal for high-performance applications with ample computational resources.

Figure 1.

YOLOv8 architecture diagram.

Figure 2 illustrates the key structural details of each module. The Conv (Convolutional Block) is the most basic building block in YOLOv8, typically consisting of a standard convolution (Conv2d), batch normalization, and an activation function, used to extract local spatial features from images and perform channel mapping. The C2f (Cross Stage Partial with two convolution layers and feature fusion) module is structurally simpler than the CSP (Cross Stage Partial Network) in YOLOv5, using a dual-convolution branch to achieve cross-stage feature fusion, thereby enhancing semantic modeling capabilities while reducing parameter counts and computational overhead. The SPPF (Spatial Pyramid Pooling-Fast) module is a lightweight design based on SPP (Spatial Pyramid Pooling), which extracts multi-scale features by applying several max pooling operations in sequence and concatenating their outputs. This not only enlarges the receptive field and improves context awareness but also avoids redundant computations, thereby improving inference efficiency.
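The efficiency argument for SPPF can be checked with a small pure-Python sketch. It assumes stride-1 max pooling with a window of 5 and out-of-range positions ignored (the kernel size is an assumption based on the commonly used YOLOv8 configuration, and the 1-D signal and function names are purely illustrative). Chaining the same small window reproduces the larger windows that SPP would compute separately, which is where the saving comes from.

```python
def max_pool_1d(x, k):
    """Stride-1 max pooling that keeps the input length; out-of-range positions are ignored."""
    r = k // 2
    n = len(x)
    return [max(x[max(0, i - r):min(n, i + r + 1)]) for i in range(n)]


def sppf_1d(x, k=5):
    """Return the four branches that SPPF concatenates: the input plus three chained poolings."""
    p1 = max_pool_1d(x, k)
    p2 = max_pool_1d(p1, k)
    p3 = max_pool_1d(p2, k)
    return [x, p1, p2, p3]


signal = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8, 9, 7, 9, 3]
branches = sppf_1d(signal)

# Two chained 5-wide pools cover a 9-wide window; three cover a 13-wide window.
same_as_9 = branches[2] == max_pool_1d(signal, 9)
same_as_13 = branches[3] == max_pool_1d(signal, 13)
print(same_as_9, same_as_13)  # True True
```

In two dimensions the same identity holds per axis, so three small poolings stand in for the 5, 9, and 13 windows of the parallel SPP design.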

Figure 2.

Main module expansion details. In Figure 2, h denotes the height of the feature map, w denotes the width of the feature map, and c denotes the number of channels in the feature map (c_in and c_out denote the number of input channels and output channels, respectively).

2.2. Overview of the Improved Algorithm

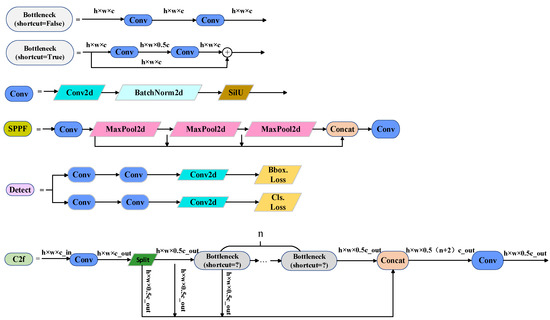

Given the dual requirements of real-time performance and lightweight architecture for water surface object detection tasks, this paper selects YOLOv8n as the base architecture for improvement. This model features a small parameter count and fast inference speed, enabling it to maintain detection accuracy while effectively reducing computational overhead. It is suitable for resource-constrained practical applications and provides a solid performance foundation for subsequent structural optimizations. Within this framework, we propose an improved YOLOv8n model, whose structure is shown in Figure 3. In the backbone network, the C2f module in the first layer is replaced with C2f_RFAConv to enhance feature modeling for reflective regions and lay the foundation for small object detection. The detection head is upgraded to a four-branch structure by adding an extra small object detection layer, P2, and is combined with the Four-Detect-ASFF multi-scale feature fusion module to achieve more precise small object detection. In the neck network, the C2f module is replaced with the VoV-GSCSP module, and GSConv is used instead of standard convolutions to improve feature fusion efficiency while controlling model size, thereby avoiding a significant increase in parameter count and computational complexity from the added modules.

Figure 3.

Improved YOLOv8 structure diagram.

2.3. RFAConv Module

Zhang et al. [18] proposed RFAConv (Receptive-Field Attention Convolution), a novel spatial attention mechanism whose core idea is to deeply integrate spatial attention with convolution operations, thereby enhancing the ability of convolutional neural networks (CNNs) to model spatial features. This mechanism uses attention maps guided by receptive field size to adaptively weight the importance of different spatial positions. When processing high-brightness regions, this enables the network to suppress response shifts in overexposed areas caused by mirror reflections and to strengthen edge semantic expression, thereby reducing missed and false detections.

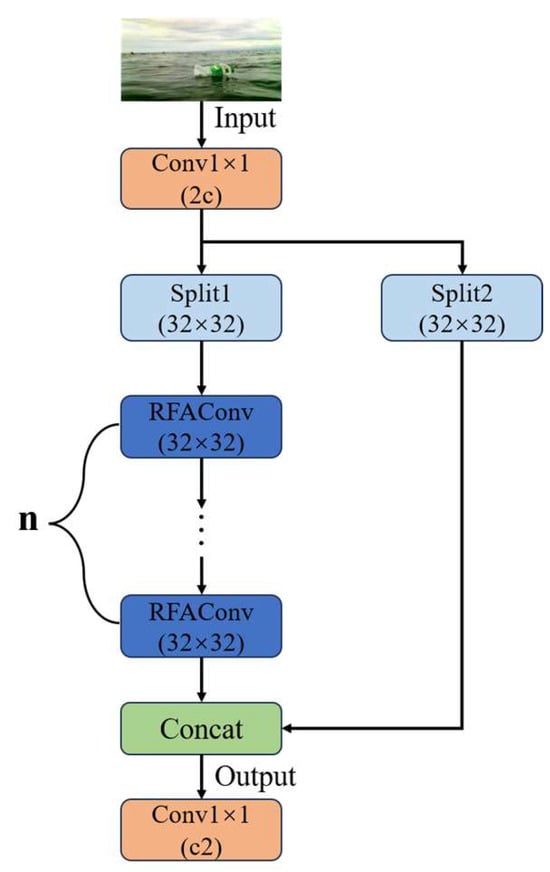

In water surface waste detection scenarios, small objects account for a large proportion, and under conditions of strong reflections and complex backgrounds, their fine-grained features are often weakened during deep feature propagation, leading to missed detections or inaccurate localization. To enhance the characterization of small objects at the early stage of feature extraction and to mitigate the impact of reflection interference, the original C2f module in the first layer of the backbone network is replaced with a C2f_RFAConv module. While retaining the efficient feature splitting and fusion advantages of C2f, this module incorporates the spatial feature enhancement mechanism of RFAConv, enabling shallow features to integrate both local details and global contextual information. As a result, it improves small object detection accuracy and robustness against reflection interference prior to multi-scale feature fusion.

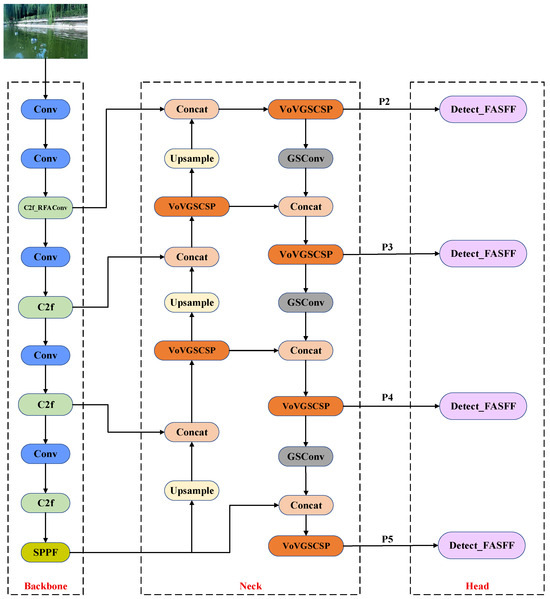

The block diagram of module C2f_RFAConv is shown in Figure 4. Its operation process can be divided into three stages: channel compression and division, multi-layer residual enhancement, and feature fusion and output. Its structural process can be expressed as follows:

Figure 4.

Structure of C2f_RFAConv.

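Given an input feature map $X \in \mathbb{R}^{B \times C_{in} \times H \times W}$, it is first passed through a $1 \times 1$ convolution to reduce the channel dimension to $2c$, i.e.:

$$Y = \mathrm{Conv}_{1 \times 1}(X), \quad Y \in \mathbb{R}^{B \times 2c \times H \times W}$$

In the above equation, $Y$ represents the feature map after channel compression, $\mathrm{Conv}_{1 \times 1}$ represents the $1 \times 1$ convolution operation, $\mathbb{R}$ is the set of real numbers, $B$ represents the batch size, $c$ represents the number of intermediate channels, and $H$ and $W$ represent the spatial dimensions (height and width) of the feature map.

Subsequently, $Y$ is split along the channel dimension into two parts, namely the first branch feature $Y_1$ and the second branch feature $Y_2$, each with $c$ channels, which are used for the main path connection and the subsequent residual computation:

$$[Y_1, Y_2] = \mathrm{Split}(Y), \quad Y_1, Y_2 \in \mathbb{R}^{B \times c \times H \times W}$$

Taking $Y_2$ as the initial input, $n$ successive operations are performed, with each iteration defined as follows:

$$Y_{i+2} = M_i(Y_{i+1}), \quad i = 1, 2, \ldots, n$$

where $M_i$ denotes the $i$-th module, which consists of a convolution followed by an RFAConv module, with residual connections introduced when the specified conditions are satisfied.

The initial branch and the residual-enhanced features are concatenated to form the fused feature $F$, i.e.:

$$F = \mathrm{Concat}(Y_1, Y_2, \ldots, Y_{n+2}), \quad F \in \mathbb{R}^{B \times (n+2)c \times H \times W}$$

Finally, a $1 \times 1$ convolution is applied to restore the channel dimension to $C_{out}$:

$$Z = \mathrm{Conv}_{1 \times 1}(F), \quad Z \in \mathbb{R}^{B \times C_{out} \times H \times W}$$

In this equation, $n$ denotes the number of residual enhancement layers, $Z$ represents the final output feature of the module, and $C_{out}$ indicates the number of output channels.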
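To make the channel bookkeeping of the three stages concrete (compression and division, residual enhancement, fusion and output), the following sketch traces tensor shapes only. It is not the authors' implementation: the function name, the expansion ratio `e = 0.5` (the usual C2f default), and the example sizes are assumptions chosen for illustration.

```python
def c2f_rfaconv_shapes(b, c_in, c_out, h, w, n=1, e=0.5):
    """Trace (batch, channels, height, width) through a C2f-style block."""
    c = int(c_out * e)                           # intermediate channels per branch
    shapes = {"input": (b, c_in, h, w)}
    shapes["compressed"] = (b, 2 * c, h, w)      # after the first 1x1 convolution
    shapes["branches"] = [(b, c, h, w)] * 2      # channel split into two equal parts
    shapes["enhanced"] = [(b, c, h, w)] * n      # each enhancement layer keeps c channels
    shapes["fused"] = (b, (n + 2) * c, h, w)     # concat of both branches and n outputs
    shapes["output"] = (b, c_out, h, w)          # after the final 1x1 convolution
    return shapes


# Illustrative sizes: 32 input and output channels on a 160 x 160 map, one enhancement layer.
example = c2f_rfaconv_shapes(b=1, c_in=32, c_out=32, h=160, w=160, n=1)
for stage, shape in example.items():
    print(stage, shape)
```

With these example sizes the fused tensor has 48 channels before the final convolution maps it back to 32, so the cost of the enhancement layers scales with `c` rather than with the full channel width.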

2.4. Four-Detect-ASFF Module

In multi-scale object detection, shallow features contain rich spatial details that facilitate precise localization of small objects, while deep features possess stronger semantic representation capabilities, making them suitable for recognizing large objects. Traditional feature fusion methods (e.g., FPN [19], PAN [20]) can achieve semantic enhancement and information transfer to a certain extent; however, their fusion schemes are fixed and lack dynamic modeling capabilities, making it difficult to adapt to different object scales.

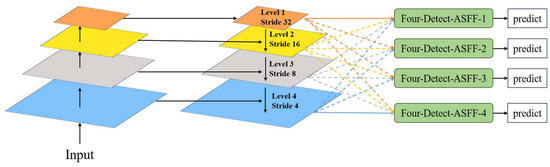

To address the aforementioned issues, this study integrates an adaptive feature fusion module based on four detection branches. This module extends the core concept of Adaptively Spatial Feature Fusion (ASFF) proposed by Liu et al. [21] by introducing an additional P2 branch specifically designed for small object detection. The P2 branch directly exploits high-resolution, low-level semantic features from the backbone network to capture more fine-grained spatial information, thereby enhancing the perception of small and complex-shaped objects.

The working principle of Four-Detect-ASFF is shown in Figure 5. This module establishes a fully connected cross-scale information interaction pathway between multi-scale feature maps (Level 1–Level 4). First, feature maps from different levels are aligned and re-calibrated through an adaptive spatial weighting mechanism to ensure spatial consistency during cross-scale information fusion. Subsequently, a hierarchical enhancement mechanism is introduced to reinforce the complementary relationship between the detailed information contained in shallow-level features and the semantic information in high-level features. After fusion, each level of features enters an independent prediction branch to achieve fine-grained detection of objects at different scales.

Figure 5.

Four-Detect-ASFF working principle diagram.

In each Four-Detect-ASFF module, shallow high-resolution features are processed by downsampling, while deep low-resolution features are aligned to the target scale by upsampling. Subsequently, each feature map is processed through a dedicated convolution layer for channel alignment and fed into a lightweight attention branch to obtain the fusion weight for each path. These weights are normalized using the Softmax function and applied in a weighted summation to achieve dynamic feature fusion, effectively alleviating the issues of information redundancy or loss commonly seen in traditional fusion methods. The fused features at each scale are then passed to independent prediction branches to achieve refined multi-scale object detection.

For the $l$-th output layer, the calculation method for its fusion features $F^{l}$ (where $l \in \{1, 2, 3, 4\}$) can be expressed as follows:

$$F^{l} = \mathrm{Conv}\left(\sum_{i=1}^{4} w_{i}^{l} \cdot \mathrm{Resize}_{i \rightarrow l}\left(X^{i}\right)\right)$$

where $X^{i}$ denotes the input feature map of the $i$-th layer, with $i \in \{1, 2, 3, 4\}$ corresponding to the four input scales (P2, P3, P4, and P5), with strides of 4, 8, 16, and 32, respectively; $\mathrm{Resize}_{i \rightarrow l}(\cdot)$ denotes transforming the spatial resolution of the feature map at layer $i$ to the same size as layer $l$; $w_{i}^{l}$ is the weight parameter, corresponding to the attention weights of input $X^{i}$ in the fusion at layer $l$, satisfying the normalization constraint $\sum_{i=1}^{4} w_{i}^{l} = 1$; and $\mathrm{Conv}(\cdot)$ denotes the standard convolution layer after fusion, used for further feature extraction and channel integration.
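The Softmax-normalized weighted summation can be illustrated at a single spatial position. The sketch below assumes the four inputs have already been resized and channel-aligned, and it omits the convolution applied after fusion; the function names, logits, and feature values are hypothetical and only serve to show the arithmetic.

```python
import math

# Strides of the four pyramid levels named in the text.
STRIDES = {"P2": 4, "P3": 8, "P4": 16, "P5": 32}


def resize_factor(src, dst):
    """Spatial scale needed to bring level `src` to the resolution of `dst`
    (greater than 1 means upsampling, less than 1 means downsampling)."""
    return STRIDES[src] / STRIDES[dst]


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [v / total for v in exps]


def fuse_at_position(features, logits):
    """features: one channel vector per level at the same position;
    logits: one attention logit per level."""
    weights = softmax(logits)
    n_channels = len(features[0])
    fused = [sum(w * f[c] for w, f in zip(weights, features)) for c in range(n_channels)]
    return fused, weights


features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]  # P2, P3, P4, P5
fused, weights = fuse_at_position(features, logits=[0.0, 0.0, 0.0, 0.0])
print(weights)                     # equal logits give equal weights
print(fused)
print(resize_factor("P5", "P2"))   # P5 must be upsampled 8x to match P2
```

Because the weights are learned per position, a location dominated by a small object can lean on the P2 branch while a location covered by a large object leans on P4 or P5.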

2.5. Slim-Neck Module

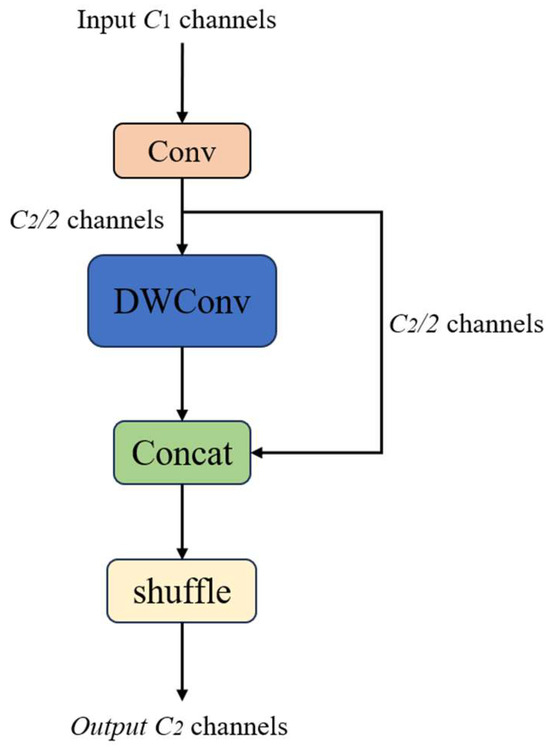

The Slim-neck module based on GSConv was proposed by Li et al. [22]. Its main innovation is to combine the lightweight convolution idea of GSConv with Ghost feature generation and Channel Shuffle technology, which reduces redundant computations and parameter counts while retaining key feature expression capabilities. It also integrates the multi-branch feature aggregation of VoV-GSCSP with the cross-stage fusion mechanism of CSP, achieving efficient multi-scale feature interaction and information completion. This method aims to reduce the computational overhead of the neck network and improve the model’s inference speed.

The module structure of GSConv is shown in Figure 6. It primarily alleviates the loss of semantic information caused by feature map spatial compression (i.e., reduction in width and height) and channel expansion by parallel concatenation of standard convolution downsampling and depth-wise separable convolution (DWConv). Standard convolution is mainly responsible for extracting local spatial features, while DWConv enhances the expressive power between channels while maintaining a low number of parameters. The synergistic effect of the two not only improves the integrity and expressive richness of features but also further optimizes the feature propagation path, helping to retain more key semantic information in a lightweight structure, thereby providing stronger support for subsequent feature fusion and object detection.
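A rough parameter count shows where the savings of a GSConv-style layer come from. The sketch assumes that half of the output channels come from a standard convolution and the other half from a depth-wise convolution applied to that first half; the depth-wise kernel size of 5 is an assumption, biases and normalization layers are ignored, and the shuffle shown is the generic interleaving form, which may differ from the exact permutation used in a particular implementation.

```python
def conv_params(c_in, c_out, k):
    """Weights of a standard k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def gsconv_params(c_in, c_out, k, dw_k=5):
    """Weights of a GSConv-style layer: dense conv to half the channels,
    then a depth-wise conv that produces the other half."""
    half = c_out // 2
    dense = conv_params(c_in, half, k)
    depthwise = half * dw_k * dw_k   # one dw_k x dw_k filter per channel
    return dense + depthwise


def channel_shuffle(channels, groups=2):
    """Interleave channels from `groups` equal blocks so the blocks mix."""
    per_group = len(channels) // groups
    return [channels[g * per_group + i] for i in range(per_group) for g in range(groups)]


standard = conv_params(128, 128, 3)
gs = gsconv_params(128, 128, 3)
print(standard, gs, round(gs / standard, 3))
print(channel_shuffle(["s0", "s1", "d0", "d1"]))  # ['s0', 'd0', 's1', 'd1']
```

Under these assumptions the layer needs roughly half the weights of the standard convolution, and the shuffle places dense and depth-wise channels next to each other so later layers see both.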

Figure 6.

The architecture of the GSConv module.

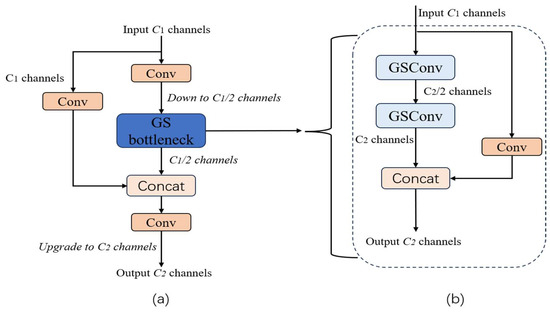

In Figure 7, VoV-GSCSP integrates the GS bottleneck module with channel splitting and reorganization strategies to enable efficient information flow and feature interaction, thereby reducing inter-layer dependency and redundant transmission. This design improves inference speed and computational efficiency while maintaining detection accuracy, making it particularly suitable for deployment on unmanned surface vehicles (USVs) with limited space and computing resources. Specifically, Figure 7a illustrates the overall framework of VoV-GSCSP: the input features are first processed by a convolutional layer, then extracted and compressed through the GSConv-based bottleneck structure (GS bottleneck), followed by feature concatenation and convolutional output. Figure 7b presents the details of the GS bottleneck, where a two-stage GSConv is applied to process features in parallel, with subsequent channel alignment and fusion. Combined with the Cross Stage Partial (CSP) structure, this design reduces computational cost and enhances cross-channel feature interaction, thereby achieving model lightweighting.

Figure 7.

(a) Module structure diagram of VoV-GSCSP. (b) GS bottleneck structure diagram.

2.6. Loss Function Improvement

The regression loss function is a key factor in the training and optimization process of object detection. The YOLOv8 model uses the Complete Intersection over Union (CIoU) as the default boundary regression loss function, which comprehensively considers three factors: overlapping area, center point distance, and width-to-height ratio difference, to enhance regression performance.

The expression for CIoU is as follows:

$$L_{CIoU} = 1 - IoU + \frac{\rho^{2}(b, b^{gt})}{c^{2}} + \alpha v \tag{7}$$

In Equation (7), $\rho(b, b^{gt})$ is the Euclidean distance between the center point of the predicted box $b$ and the center point of the true box $b^{gt}$, $c$ represents the diagonal length of the minimum bounding box containing the predicted box and the true box, $\alpha$ is a balancing weight parameter that dynamically adjusts the influence of the width and height terms on the total loss based on the Intersection over Union (IoU) value, and $v$ is the aspect ratio penalty term.
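A minimal sketch of the CIoU loss for a single pair of boxes is given below, using the standard definitions of the aspect ratio term and its balancing weight. The `(x1, y1, x2, y2)` box format, the function names, and the small `eps` guard are assumptions for illustration, not details taken from the experiments.

```python
import math


def iou_xyxy(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def ciou_loss(pred, gt, eps=1e-9):
    iou = iou_xyxy(pred, gt)
    # Squared distance between the two box centers.
    rho2 = ((pred[0] + pred[2]) / 2 - (gt[0] + gt[2]) / 2) ** 2 \
         + ((pred[1] + pred[3]) / 2 - (gt[1] + gt[3]) / 2) ** 2
    # Squared diagonal of the smallest box enclosing both boxes.
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    c2 = cw ** 2 + ch ** 2 + eps
    # Aspect ratio penalty v and its balancing weight alpha.
    pw, ph = pred[2] - pred[0], pred[3] - pred[1]
    gw, gh = gt[2] - gt[0], gt[3] - gt[1]
    v = (4 / math.pi ** 2) * (math.atan(gw / (gh + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / (1 - iou + v + eps)
    return 1 - iou + rho2 / c2 + alpha * v


gt_box = (0, 0, 10, 10)
near = ciou_loss((20, 20, 30, 30), gt_box)
far = ciou_loss((100, 100, 110, 110), gt_box)
print(round(near, 3), round(far, 3))
```

Because the center distance is divided by the enclosing diagonal, this term stays below 1 however far apart the boxes are, so the loss changes only slowly for distant predictions.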

However, since the center distance term of CIoU is a smooth function, when the prediction box is far from the target, the gradient of this term tends to saturate and cannot provide strong regression guidance. In the task of detecting floating garbage on water surfaces, the model’s initial predictions often exhibit significant positional deviations due to reflections, water ripples, or interference from floating objects. In such cases, CIoU struggles to drive the prediction box to converge rapidly toward the target area, which impairs convergence speed and detection accuracy.

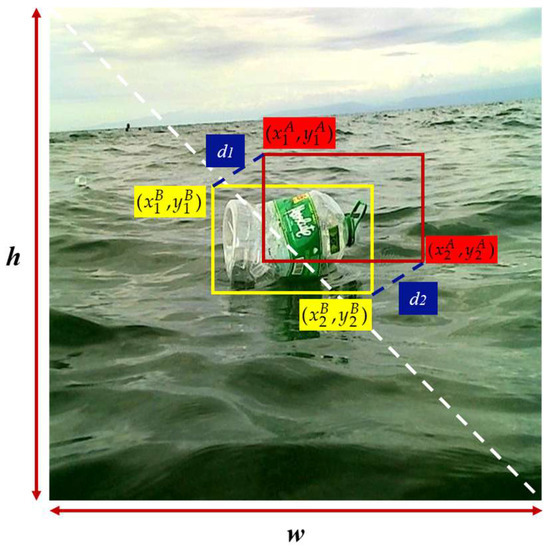

To alleviate the above issues, this paper adopts the Minimum Point Distance Intersection over Union (MPDIoU) proposed in [23] as the bounding box regression loss in place of CIoU. This method penalizes the Euclidean distances between the corresponding top-left and bottom-right corner points of the predicted box and the true box, providing an effective gradient direction even when the predicted box does not overlap with the target and thereby strengthening the constraint on boundary positions. Compared to traditional IoU-based losses, MPDIoU provides stable and clear optimization signals while the predicted box still deviates from the target, making it particularly suitable for addressing target boundary blurring caused by reflective interference and the inaccurate initial localization of small targets. This helps the model focus on the true target region earlier in training, thereby improving regression accuracy and convergence speed.

Figure 8 shows the geometric diagram of MPDIoU, where the red box represents the predicted box A, the yellow box represents the true target box B, and the blue dashed line indicates the minimum Euclidean distance between the boundary points of the two boxes. Let $(x_1^A, y_1^A)$ and $(x_2^A, y_2^A)$ denote the coordinates of the top-left and bottom-right corners of box A, respectively, and $(x_1^B, y_1^B)$ and $(x_2^B, y_2^B)$ denote those of box B. The terms $d_1^2$ and $d_2^2$ denote the squared Euclidean distances between the top-left and bottom-right corners of boxes A and B, respectively, used to measure the minimum offset between the two boxes at their boundary positions; $w$ and $h$ denote the width and height of the input image.

Figure 8.

MPDIoU geometric diagram.

The MPDIoU loss function, represented as $L_{MPDIoU}$, is formulated as follows:

$$d_1^2 = (x_1^B - x_1^A)^2 + (y_1^B - y_1^A)^2$$

$$d_2^2 = (x_2^B - x_2^A)^2 + (y_2^B - y_2^A)^2$$

$$MPDIoU = IoU - \frac{d_1^2}{w^2 + h^2} - \frac{d_2^2}{w^2 + h^2}$$

$$L_{MPDIoU} = 1 - MPDIoU$$

In the equation, $IoU$ represents the IoU between the ground truth box and the predicted box, and $MPDIoU$ denotes the IoU based on the minimum point-to-point distance.
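The corner-distance formulation can be sketched in a few lines for a single pair of boxes. The `(x1, y1, x2, y2)` box format, the function names, and the 640 × 640 image size used in the example are assumptions for illustration.

```python
def iou_xyxy(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def mpdiou_loss(pred, gt, img_w, img_h):
    iou = iou_xyxy(pred, gt)
    d1_sq = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2   # top-left corners
    d2_sq = (pred[2] - gt[2]) ** 2 + (pred[3] - gt[3]) ** 2   # bottom-right corners
    norm = img_w ** 2 + img_h ** 2                            # squared image diagonal
    mpdiou = iou - d1_sq / norm - d2_sq / norm
    return 1.0 - mpdiou


gt_box = (0, 0, 10, 10)
perfect = mpdiou_loss(gt_box, gt_box, 640, 640)
near = mpdiou_loss((20, 20, 30, 30), gt_box, 640, 640)
far = mpdiou_loss((100, 100, 110, 110), gt_box, 640, 640)
print(perfect, near, far)
```

Neither the near nor the far prediction overlaps the target, so the IoU is zero in both cases, yet the loss still grows with the corner offsets and therefore keeps supplying a direction for regression.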

3. Experiments and Analysis

3.1. Dataset and Experimental Setup

To validate the effectiveness of the proposed method, experiments were conducted using a water-surface floating garbage detection dataset from the Roboflow Universe platform. This platform integrates a variety of high-quality computer vision resources, offering substantial sample diversity and precise annotation standards. The dataset contains approximately 3600 images, with representative examples shown in Figure 9. It encompasses typical aquatic environments such as lakes, rivers, and oceans, and exhibits challenging characteristics including significant variations in illumination, strong surface reflections, and complex wave patterns, making it both representative and demanding. The images cover common types of floating debris, such as plastic bottles, aluminum cans, wooden blocks, and plastic bags, thereby realistically reflecting typical water pollution scenarios and enhancing the model’s adaptability and applicability in complex environments. To ensure effective model training and reliable evaluation, the dataset was divided into training, validation, and test sets in an 8:1:1 ratio, enabling a comprehensive assessment of the model’s accuracy, robustness, and generalization performance in multi-class object detection tasks.
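An 8:1:1 split of this kind can be produced with a seeded shuffle, as in the sketch below. The file names, the seed, and the exact count of 3600 are placeholders (the dataset is only described as containing approximately 3600 images), and the function name is hypothetical.

```python
import random


def split_dataset(items, ratios=(8, 1, 1), seed=0):
    """Shuffle reproducibly and split into train, validation, and test lists."""
    items = list(items)
    random.Random(seed).shuffle(items)
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]      # remainder goes to the test set
    return train, val, test


names = [f"img_{i:04d}.jpg" for i in range(3600)]
train, val, test = split_dataset(names)
print(len(train), len(val), len(test))  # 2880 360 360
```

Fixing the seed keeps the three subsets identical across runs, so that ablation results are compared on the same test images.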

Figure 9.

Sample images from the dataset.

The experimental environment in this study was established on a laptop equipped with an i7-12650H processor and 8 GB of RAM. The detailed specifications of other key parameters are provided in Table 1.

Table 1.

Experimental key parameters.

3.2. Evaluation Metrics

This article uses mean Average Precision (mAP) as the main evaluation metric, which is calculated as follows:

$$AP = \int_{0}^{1} P(R)\, dR$$

$$mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

In the above equation, $AP$ represents the target detection accuracy of a single category; $mAP$ represents the average value of $AP$ over multiple categories; $R$ represents the recall rate, i.e., the ability to retrieve targets; $N$ represents the total number of all categories; $P$ represents the precision, i.e., the probability that the model correctly detects category $i$.
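In practice the area under the precision-recall curve is computed numerically. The sketch below uses all-point interpolation, which is one common approximation and not necessarily the scheme used by the evaluation toolkit in these experiments; the function names and the precision-recall points are illustrative.

```python
def average_precision(recalls, precisions):
    """Area under the precision-recall curve (all-point interpolation).
    `recalls` must be sorted in increasing order."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum the rectangles between consecutive recall values.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(per_class_ap):
    """Average the per-category AP values."""
    return sum(per_class_ap) / len(per_class_ap)


ap_a = average_precision([0.5, 1.0], [1.0, 0.5])
ap_b = average_precision([0.25], [1.0])
print(ap_a, ap_b, mean_average_precision([ap_a, ap_b]))  # 0.75 0.25 0.5
```

mAP@0.5 applies this calculation with detections matched at an IoU threshold of 0.5, while mAP@0.5:0.95 averages the result over thresholds from 0.5 to 0.95.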

Additionally, this paper uses GFLOPs (Giga Floating Point Operations) to measure the computational complexity of the model. This metric reflects the number of floating-point operations required by the model to process a single image. A higher value indicates greater computational complexity, placing higher demands on hardware resources (such as GPUs); conversely, a lower GFLOPs value indicates a lighter-weight model, making it more suitable for deployment on resource-constrained devices such as mobile or embedded systems. The calculation formula is as follows:

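$$GFLOPs = \frac{2 \times H_{out} \times W_{out} \times C_{out} \times C_{in} \times K_h \times K_w}{10^{9}}$$

In the above equation, $H_{out}$ and $W_{out}$ represent the height and width of the output feature map, $C_{out}$ represents the number of output channels, $C_{in}$ represents the number of input channels, and $K_h$ and $K_w$ represent the height and width of the convolution kernel; the factor of 2 counts each multiply-accumulate as two floating-point operations.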
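For a single convolution layer the count can be worked out directly. The sketch assumes that each multiply-accumulate is counted as two floating-point operations and ignores bias, normalization, and activation costs; the function names and layer sizes are illustrative.

```python
def conv_flops(h_out, w_out, c_in, c_out, k_h, k_w, ops_per_mac=2):
    """Floating-point operations of one standard convolution layer."""
    macs = h_out * w_out * c_out * c_in * k_h * k_w
    return ops_per_mac * macs


def to_gflops(flops):
    """Convert a raw operation count to giga operations."""
    return flops / 1e9


# Example: 3 x 3 convolution, 3 input channels, 16 output channels, 320 x 320 output map.
layer = conv_flops(320, 320, c_in=3, c_out=16, k_h=3, k_w=3)
print(layer, to_gflops(layer))

# Doubling the output resolution in both dimensions quadruples the cost.
print(conv_flops(640, 640, 3, 16, 3, 3) // layer)  # 4
```

The quadratic dependence on output resolution is why layers operating on high-resolution feature maps dominate the total even when their channel counts are small.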

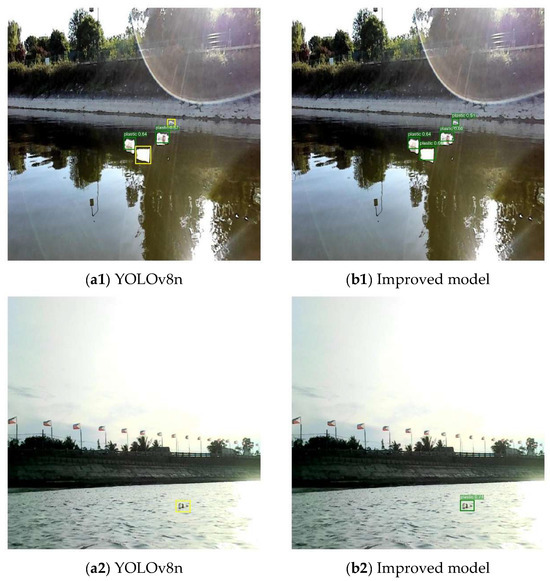

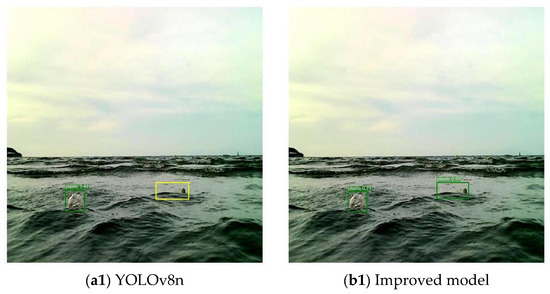

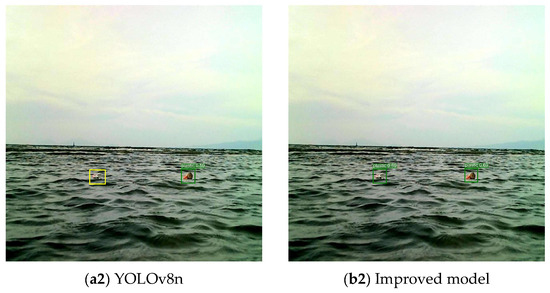

3.3. Visualization Analysis

To provide a more intuitive demonstration of the performance of the proposed improved model, representative images from the test set were selected for a comparative evaluation against the YOLOv8n model. The comparison results are presented in Figures 10 and 11, where green boxes represent correctly detected targets and yellow boxes denote missed detections. In both figures, panels (a1,a2) show the detection results of YOLOv8n, while panels (b1,b2) show the detection results of the proposed model.

Figure 10.

Interference resistance comparison.

Figure 11.

Comparison of detection performance when obstructed by water waves.

In Figure 10(a1,a2), due to factors such as water surface reflections and the target being at a distance, the YOLOv8n model exhibited obvious false negatives; in contrast, in Figure 10(b1,b2), the model proposed in this paper successfully detected these targets with high detection difficulty.

In Figure 11, even though the water waves partially obstruct the target, the model proposed in this article can still accurately identify and locate the target.

3.4. Ablation Experiment

As shown in Table 2, this study conducted a total of sixteen ablation experiments. In the table, “√” indicates that the module was used, while “-” indicates that it was not used. Experiment 1 used the original YOLOv8n as the baseline model, with mAP@0.5 and mAP@0.5:0.95 of 0.924 and 0.557, respectively, and GFLOPs of 8.1. After introducing RFAConv alone (Experiment 2), mAP@0.5 improved to 0.935 and mAP@0.5:0.95 improved to 0.569, indicating that this module effectively enhances spatial feature modeling. After replacing the detection head with Four-Detect-ASFF (Experiment 3), mAP@0.5:0.95 improved more markedly to 0.586, but GFLOPs increased to 15.4, indicating that the accuracy gain comes with additional computational overhead. After introducing the Slim-neck module (Experiment 4), computational complexity decreased while detection accuracy remained stable, with GFLOPs falling from 8.1 to 7.3, highlighting its lightweight advantage. Using the MPDIoU loss function (Experiment 5) yielded stable gains in bounding box regression accuracy, with mAP@0.5 improving to 0.939 while computational complexity remained unchanged. Further analysis shows that the improvements from individual modules are limited, whereas combinations of modules exhibit clear complementary advantages. For example, the combinations of RFAConv and Four-Detect-ASFF (Experiments 6, 12, and 13) delivered outstanding accuracy improvements, with Experiment 13 achieving the highest detection performance (mAP@0.5 = 0.945, mAP@0.5:0.95 = 0.588). However, its GFLOPs increased to 15.4, nearly double that of the baseline model. This primarily stems from the additional computational overhead of the Four-Detect-ASFF detection head, particularly the convolutional operations on the high-resolution P2 branch, which significantly increase inference complexity. Experiment 16 shows that introducing Slim-neck reduces GFLOPs from 15.4 to 14.2 (a decrease of approximately 7.8%) while maintaining nearly identical detection accuracy (mAP@0.5 = 0.945, mAP@0.5:0.95 = 0.586), indicating that Slim-neck effectively alleviates the computational burden imposed by Four-Detect-ASFF. In summary, when all four modules are integrated (Experiment 16), the model lowers computational complexity relative to Experiment 13 while maintaining high accuracy, achieving a favorable balance between detection performance and lightweight design. This makes it better suited for practical deployment in resource-constrained scenarios.
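The headline percentages can be reproduced from the reported values. The helper below computes relative change against the starting value; its name is hypothetical and the inputs are the baseline (Experiment 1) and full-model (Experiment 16) figures quoted in the text.

```python
def relative_change(old, new):
    """Percentage change from `old` to `new`, relative to `old`."""
    return (new - old) / old * 100.0


map50_gain = relative_change(0.924, 0.945)      # mAP@0.5, baseline to full model
map5095_gain = relative_change(0.557, 0.586)    # mAP@0.5:0.95, baseline to full model
asff_cost_ratio = 15.4 / 8.1                    # GFLOPs with Four-Detect-ASFF vs. baseline

print(round(map50_gain, 2))       # 2.27
print(round(map5095_gain, 2))     # 5.21
print(round(asff_cost_ratio, 2))  # 1.9
```

These are relative gains; in absolute terms the improvements are 2.1 and 2.9 percentage points, respectively.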

Table 2.

Ablation experiment results.

3.5. Comparison of Different Loss Functions

To evaluate the effectiveness of MPDIoU, it was compared with CIoU, DIoU [24], WIoU [25], EIoU [26], and GIoU [27]. As shown in Table 3, the YOLOv8n model using the MPDIoU loss function achieves relative improvements of 1.62% and 4.13% in mAP@0.5 and mAP@0.5:0.95, respectively, over CIoU’s 0.924 and 0.557, demonstrating superior detection accuracy. DIoU, EIoU, and GIoU also outperform CIoU, but their improvements are smaller than that of MPDIoU, while WIoU yields relatively lower results.

Table 3.

Comparison of results of different loss functions.

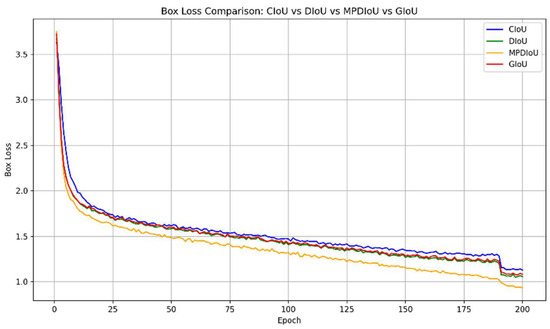

As shown in Table 3, DIoU and MPDIoU achieve similar mAP@0.5 and mAP@0.5:0.95 values. To further verify the advantage of MPDIoU, the box loss curves of the CIoU, DIoU, GIoU, and MPDIoU loss functions were therefore compared (see Figure 12). The results show that the MPDIoU loss decreases faster than the DIoU and CIoU losses in the early stages of training and remains lower throughout the entire training process. This indicates that MPDIoU provides a more stable regression signal and accelerates convergence, making it better suited to detecting small targets on water surfaces and targets with blurred boundaries.

Figure 12.

Comparison of loss functions.
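
To make the corner-distance penalty concrete, the following minimal Python sketch computes an MPDIoU-style loss for a single pair of boxes. It assumes the formulation given in the MPDIoU reference [23]: the IoU minus the squared distances between the two top-left corners and between the two bottom-right corners, each normalized by the squared diagonal of the input image. The function names, the `(x1, y1, x2, y2)` box layout, and the `eps` term are assumptions made for illustration; this is not the training code used in the experiments.

```python
def mpdiou(pred, target, img_w, img_h, eps=1e-9):
    """MPDIoU for two boxes given as (x1, y1, x2, y2) in pixels."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target

    # Standard IoU term.
    inter_w = max(0.0, min(px2, tx2) - max(px1, tx1))
    inter_h = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = inter_w * inter_h
    area_p = (px2 - px1) * (py2 - py1)
    area_t = (tx2 - tx1) * (ty2 - ty1)
    iou = inter / (area_p + area_t - inter + eps)

    # Squared distances between matching corners
    # (top-left to top-left, bottom-right to bottom-right).
    d1_sq = (px1 - tx1) ** 2 + (py1 - ty1) ** 2
    d2_sq = (px2 - tx2) ** 2 + (py2 - ty2) ** 2

    # Assumed normalization: squared diagonal of the input image.
    norm = img_w ** 2 + img_h ** 2
    return iou - d1_sq / norm - d2_sq / norm


def mpdiou_loss(pred, target, img_w, img_h):
    """Loss form: 1 - MPDIoU (0 for a perfect match)."""
    return 1.0 - mpdiou(pred, target, img_w, img_h)


if __name__ == "__main__":
    # Hypothetical boxes on a 640 x 640 input.
    print(mpdiou_loss((10, 10, 50, 50), (20, 20, 60, 60), 640, 640))
```

Because the corner-distance terms remain non-zero when the boxes do not overlap, the loss still distinguishes a near miss from a distant one. This is consistent with the faster early convergence described above, although the sketch itself does not demonstrate training behavior.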

3.6. Comparison of Different Models

3.6.1. YOLO Model Comparison Experiment

To evaluate both detection performance and deployment efficiency, several YOLO series models were compared with the improved model proposed in this paper in terms of mAP, GFLOPs, and model size. As shown in Table 4, YOLOv7 achieves the highest mAP@0.5 and mAP@0.5:0.95, but its computational overhead and model size are large, making it unsuitable for lightweight deployment. YOLOv5s is more accurate than the original YOLOv8n, but it falls short of the improved YOLOv8n proposed in this paper in terms of model size and inference speed. Although the improved YOLOv8n model is 2.41 MB larger than the original version, its mAP@0.5 and mAP@0.5:0.95 rise to 0.945 and 0.586, respectively, achieving a good balance between accuracy and efficiency. It is particularly suitable for water surface target detection tasks with resource constraints and high real-time requirements.

Table 4.

Detection performance comparison of different models.

3.6.2. Comparison with Other Algorithms

To evaluate the improved algorithm on water surface object detection, it was compared with mainstream detection algorithms: YOLOv8n-MSS [15], the Transformer-based RT-DETR [28], and Faster R-CNN. The results are shown in Table 5. The proposed method achieves 0.945 on mAP@0.5 and 0.586 on mAP@0.5:0.95, outperforming the comparison models on both metrics. Compared to YOLOv8n-MSS, RT-DETR, and Faster R-CNN, mAP@0.5 improved by 1.4%, 2.7%, and 7.8%, respectively, and mAP@0.5:0.95 improved by 2.3%, 0.9%, and 32.2%, respectively. The proposed model has a size of 8.38 MB, slightly larger than YOLOv8n-MSS, but the added size is accompanied by better accuracy and recall. It is also much smaller than RT-DETR-R50 and Faster R-CNN, so it remains lightweight and efficient to deploy while maintaining high accuracy and recall.

Table 5.

Comparison with other algorithms.

4. Conclusions

Because the water surface environment is highly complex, object detection on it faces multiple challenges. First, strong lighting and mirror-like reflections create bright areas on the water surface that interfere with the model’s ability to identify object edges and textures. In the improved model proposed in this paper, the C2f module in the first layer of the backbone network is therefore replaced with the C2f_RFAConv module, enhancing the model’s ability to represent spatial structure and focus on key regions. Second, because floating garbage on water surfaces is typically small, covers few pixels, and is easily missed at long distances or under low-resolution conditions, the detection head is replaced with the Four-Detect-ASFF module to strengthen multi-scale feature fusion and thereby improve small object detection. Third, the Slim-neck module is adopted in the neck structure to reduce computational complexity and keep the model lightweight. Finally, because continuously changing water waves can distort target shapes or cause local occlusion, weakening the model’s ability to perceive target boundaries, the original CIoU loss is replaced with MPDIoU to improve the accuracy and convergence stability of bounding box regression. Experimental results show that the model achieves 94.5% and 58.6% on mAP@0.5 and mAP@0.5:0.95, respectively, representing relative improvements of 2.27% and 5.21% over the original YOLOv8n.

5. Future Work

This study has several limitations. First, the robustness of the algorithm under different water environments, lighting conditions, and weather scenarios still requires further validation. Second, although the lightweight design of the model improves inference speed, it may still face computational challenges on extremely resource-constrained embedded platforms. Finally, the scale and diversity of the dataset remain insufficient, which limits the model’s applicability in more complex and diverse water surface environments.

In future research, we will further expand the categories and labeling system of the dataset to achieve more refined target annotation, thereby enhancing the model’s adaptability and generalization capabilities across diverse types of surface debris. Additionally, we will focus on further optimizing the model’s lightweight design to reduce computational and storage overhead, enabling more efficient real-time deployment on resource-constrained embedded devices and unmanned surface vehicle platforms. Concurrently, we plan to explore integrating temporal information with video object detection methods to enhance detection stability in dynamic water waves and continuous scenes, thereby providing more practical solutions for intelligent water environment monitoring and cleanup.

Author Contributions

Conceptualization, R.X.; Methodology, W.Z.; Validation, R.X.; Formal analysis, W.Z.; Investigation, W.Z.; Resources, R.X.; Data curation, R.X.; Writing—original draft, R.X.; Writing—review & editing, W.Z.; Funding acquisition, W.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Honingh, D.; Van Emmerik, T.; Uijttewaal, W.; Kardhana, H.; Hoes, O.; Van De Giesen, N. Urban River Water Level Increase through Plastic Waste Accumulation at a Rack Structure. Front. Earth Sci. 2020, 8, 28.

2. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

3. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision – ECCV 2016, Lecture Notes in Computer Science; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2016; Volume 9905, pp. 21–37.

4. Hussain, M. YOLO-v1 to YOLO-v8, the Rise of YOLO and Its Complementary Nature toward Digital Manufacturing and Industrial Defect Detection. Machines 2023, 11, 677.

5. Peng, Y.; Yan, Y.; Feng, B.; Gao, X. Recognition and Classification of Water Surface Targets Based on Deep Learning. J. Phys. Conf. Ser. 2021, 1820, 012166.

6. Canilang, H.M.O.; Caliwag, A.C.; Camacho, J.R.C.; Lim, W.; Maier, M. Edge TMS: Optimized Real-Time Temperature Monitoring Systems Deployed on Edge AI Devices. IEEE Internet Things J. 2023, 11, 2490–2506.

7. Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of Yolo algorithm developments. Procedia Comput. Sci. 2022, 199, 1066–1073.

8. Liu, Z.; Zhang, Y.; Yu, X.; Yuan, C. Unmanned Surface Vehicles: An Overview of Developments and Challenges. Annu. Rev. Control 2016, 41, 71–93.

9. Liu, T.; Pang, B.; Ai, S.; Sun, X. Study on Visual Detection Algorithm of Sea Surface Targets Based on Improved YOLOv3. Sensors 2020, 20, 7263.

10. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

11. Park, J.-C.; Kim, M.; Kim, G.-W. MSA-YOLOv5: An Improved Light-Weight YOLOv5 Model for Small Object Detection. In Proceedings of the 2024 15th International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 16–18 October 2024; pp. 658–663.

12. Huang, C.; Zhu, Y.; Wang, J.; He, X. Water Surface Target Detection Algorithm for Unmanned Cleaning Ship Based on Improved YOLO V. In Proceedings of the 2022 International Conference on Cyber-Physical Social Intelligence (ICCSI), Nanjing, China, 18–21 November 2022; pp. 386–391.

13. Sohan, M.; Sai Ram, T.; Reddy, R.; Venkata, C. A review on yolov8 and its advancements. In International Conference on Data Intelligence and Cognitive Informatics; Springer: Berlin/Heidelberg, Germany, 2024; pp. 529–545.

14. Zhang, H.; Yuan, C.; Wang, K.; Wang, Y.; Liu, Q.; Shi, J. Improved YOLOv8-Based Obstacle Detection Algorithm for Waterborne Transportation. J. Phys. Conf. Ser. 2025, 2999, 012008.

15. Wang, J.; Zhao, H. Improved YOLOv8 Algorithm for Water Surface Object Detection. Sensors 2024, 24, 5059.

16. Swathi, Y.; Challa, M. YOLOv8: Advancements and innovations in object detection. In International Conference on Smart Computing and Communication; Springer Nature: Singapore, 2024; pp. 1–13.

17. Jocher, G.; Stoken, A.; Borovec, J.; NanoCode012, C.; Changyu, L.; Laughing, H. Ultralytics/Yolov5: v3.0. 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 22 May 2025).

18. Zhang, X.; Liu, C.; Yang, D.; Song, T.; Ye, Y.; Li, K.; Song, Y. RFAConv: Innovating Spatial Attention and Standard Convolutional Operation. arXiv 2023, arXiv:2304.03198.

19. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

20. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

21. Liu, S.; Huang, D. Receptive field block net for accurate and fast object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 385–400.

22. Li, H.; Li, J.; Wei, H.; Liu, Z.; Zhan, Z.; Ren, Q. Slim-neck by GSConv: A lightweight-design for real-time detector architectures. J. Real-Time Image Process. 2024, 21, 62.

23. Siliang, M.; Yong, X. MPDIoU: A Loss for Efficient and Accurate Bounding Box Regression. arXiv 2023, arXiv:2307.07662.

24. Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), New York, NY, USA, 7–12 February 2020.

25. Cho, Y.J. Weighted Intersection over Union (wIoU): A New Evaluation Metric for Image Segmentation. arXiv 2021, arXiv:2107.09858.

26. Zhang, Y.F.; Ren, W.; Zhang, Z.; Jia, Z.; Wang, L.; Tan, T. Focal and efficient IOU loss for accurate bounding box regression. Neurocomputing 2022, 506, 146–157.

27. Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 658–666.

28. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. Detrs Beat Yolos on Real-Time Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 16–21 June 2024; pp. 16965–16974.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).