Scaling Entity Resolution with K-Means: A Review of Partitioning Techniques

Abstract

1. Introduction

- Historical Context: We trace the evolution of K-Means from its early use as a straightforward clustering tool for blocking to its modern role as a core partitioning engine in state-of-the-art approximate nearest neighbor (ANN) indexes such as the Inverted File (IVF) system.

- Architectural Analysis: We dissect the architecture of leading partition-based ANN systems, focusing on the K-Means-driven design of Google’s SCANN and contrasting it with the alternative graph-based paradigm exemplified by HNSW.

- Survey of the Cutting Edge: We analyze the limitations of static indexes in dynamic environments and provide a survey of the emerging field of adaptive indexing, which represents the next frontier in scalable vector search.

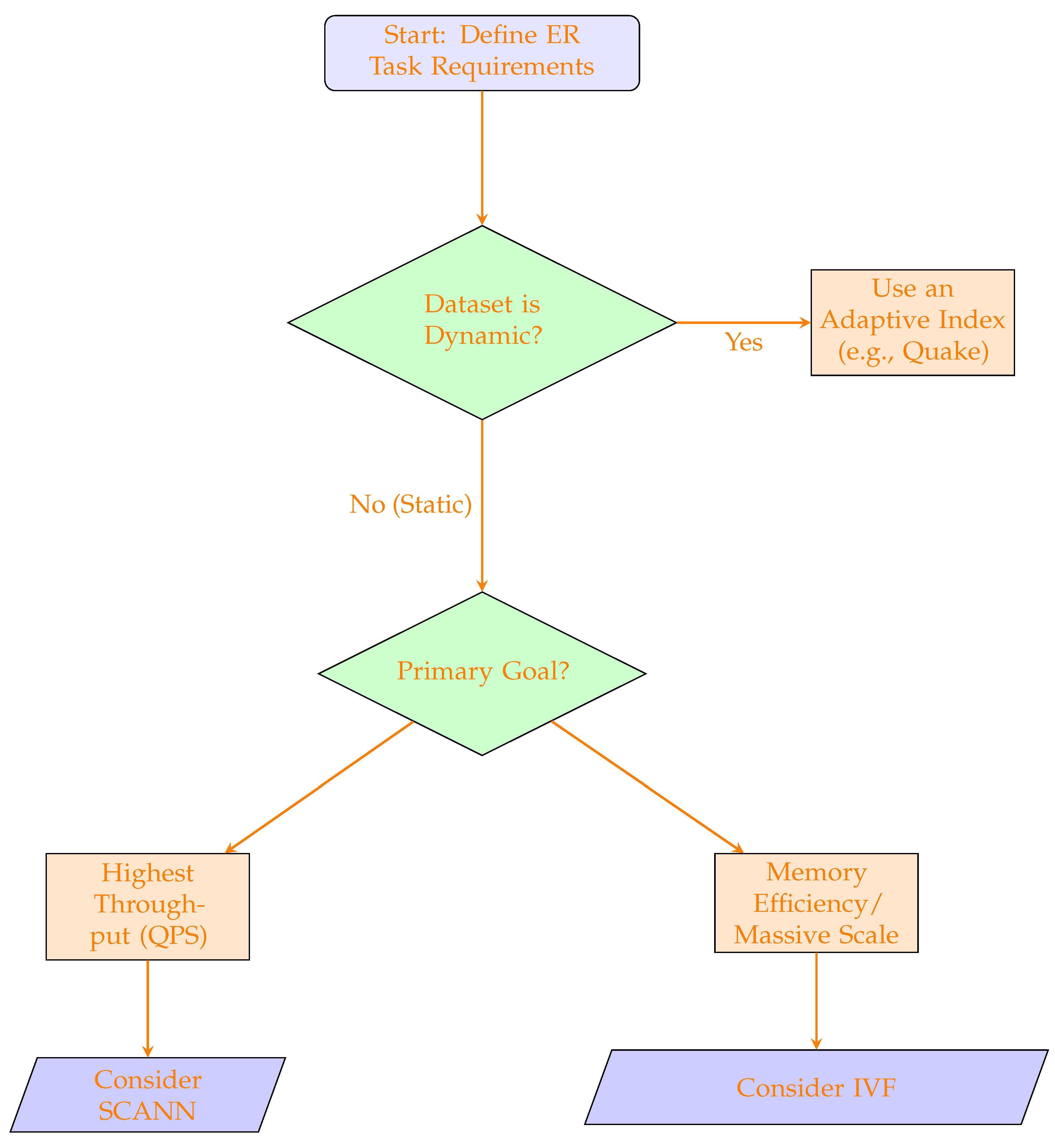

- A Framework for Practitioners: By synthesizing the literature, we clarify the trade-offs between different approaches and provide a structured framework, including a decision flowchart, to help researchers and practitioners select and optimize a partitioning strategy suited to their specific ER task.

2. Background and Related Work

2.1. The ER Workflow: From Blocking to Matching

2.2. A Brief Taxonomy of Blocking and Matching Methods

2.3. Foundational Partitioning with K-Means

3. The Evolution and Adaptation of K-Means for ER

4. Vector Quantization for Efficient ANN Search

4.1. The Sub-Optimality of Traditional Quantization

4.2. Product Quantization (PQ)

5. A Foundational Partitioned Index: The Inverted File System (IVF)

- Coarse Search: The query vector is compared only against the k centroids to rank the partitions by proximity. Because k is typically orders of magnitude smaller than the total number of vectors, this step is very fast.

- Fine-Grained Search: The system selects the ‘nprobe’ nearest centroids from that ranking, where ‘nprobe’ is a tunable parameter. An exhaustive search is then performed *only* on the vectors contained in the inverted lists of these few partitions [49].
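The two-stage lookup described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the Faiss implementation: the function names (`sq_dist`, `nearest`, `build_ivf`, `ivf_search`), the random centroid initialisation, the fixed number of Lloyd iterations, and the use of squared Euclidean distance are all choices made here for illustration only.

```python
import random

def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest(vec, centroids):
    """Index of the centroid closest to `vec`."""
    return min(range(len(centroids)), key=lambda c: sq_dist(vec, centroids[c]))

def build_ivf(vectors, k, n_iter=10, seed=0):
    """Run a few Lloyd (K-Means) iterations, then build one inverted list per centroid."""
    rng = random.Random(seed)
    # Assumption: plain random initialisation from the data (not k-means++).
    centroids = [list(v) for v in rng.sample(vectors, k)]
    for _ in range(n_iter):
        cells = [[] for _ in range(k)]
        for v in vectors:
            cells[nearest(v, centroids)].append(v)
        for c, members in enumerate(cells):
            if members:  # an empty cell keeps its previous centroid
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    # Each inverted list stores the ids of the vectors assigned to that centroid.
    inverted_lists = [[] for _ in range(k)]
    for idx, v in enumerate(vectors):
        inverted_lists[nearest(v, centroids)].append(idx)
    return centroids, inverted_lists

def ivf_search(query, vectors, centroids, inverted_lists, nprobe=2, top_k=5):
    """Return the ids of the (approximate) top_k nearest vectors to `query`."""
    # Coarse search: rank the k centroids by distance to the query.
    ranked = sorted(range(len(centroids)), key=lambda c: sq_dist(query, centroids[c]))
    probed = ranked[:nprobe]
    # Fine-grained search: exhaustive scan of the probed inverted lists only.
    candidates = [i for c in probed for i in inverted_lists[c]]
    candidates.sort(key=lambda i: sq_dist(query, vectors[i]))
    return candidates[:top_k]

# Toy usage on synthetic data (hypothetical sizes chosen for illustration).
demo_rng = random.Random(1)
data = [[demo_rng.gauss(0, 1) for _ in range(8)] for _ in range(200)]
centroids, lists = build_ivf(data, k=8)
print(ivf_search(data[3], data, centroids, lists, nprobe=2))
```

In this sketch, raising `nprobe` scans more partitions and so trades speed for recall; setting `nprobe` equal to k degenerates into an exhaustive search over all vectors.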

6. State-of-the-Art Partition-Based Blocking: Google’s SCANN

7. An Alternative Paradigm: Graph-Based Indexing with HNSW

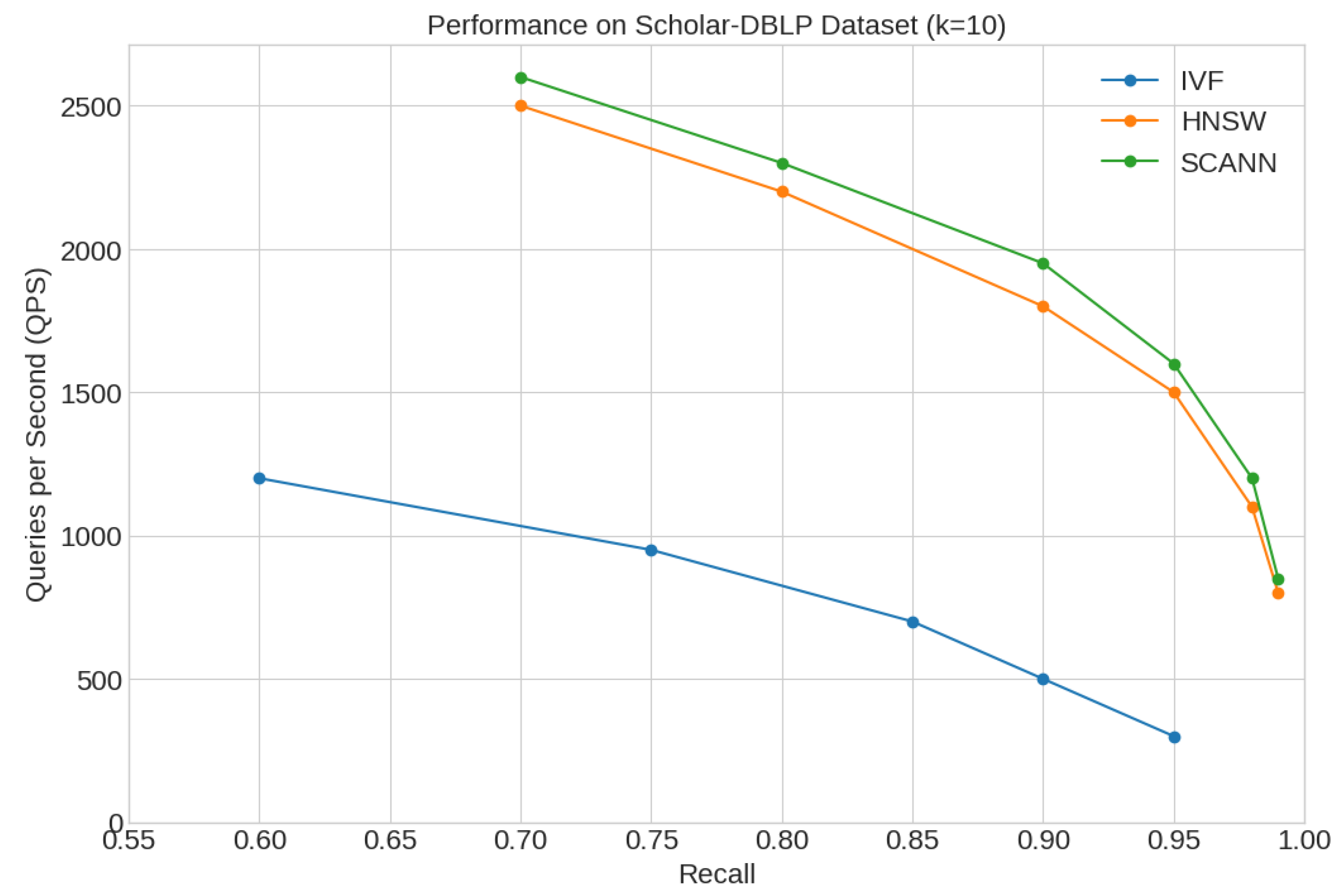

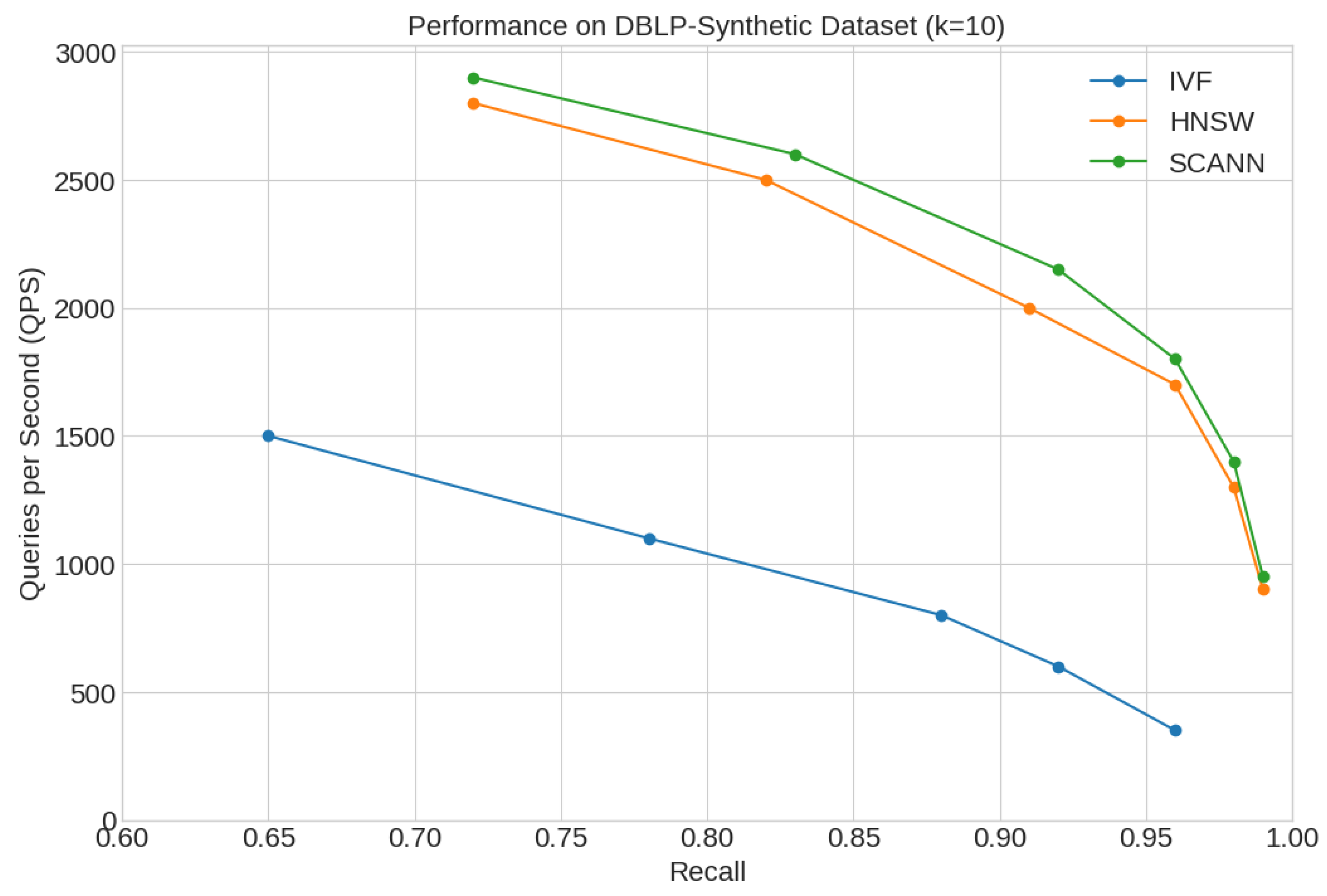

8. Comparative Analysis of Indexing Paradigms

9. Shortcomings of Existing Approaches and the Next Frontier in Adaptive Indexing

9.1. Limitations of Graph-Based Indexes

9.2. Limitations of Partitioned Indexes

9.3. Limitations of Early Termination Methods

9.4. The Next Frontier: Adaptive Indexing with Quake

10. Illustrative Performance Benchmark

11. A Practitioner’s Framework for Method Selection

12. Conclusions and Future Directions

12.1. Opportunities in Hardware Acceleration

12.2. Ethical Considerations and Bias

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Papadakis, G.; Tsekouras, L.; Thanos, E.; Giannopoulos, G.; Koubarakis, M.; Palpanas, T. Blocking and filtering techniques for entity resolution: A survey. ACM Comput. Surv. 2020, 53, 1–38.

2. Elmagarmid, A.K.; Ipeirotis, P.G.; Verykios, V.S. Duplicate record detection: A survey. IEEE Trans. Knowl. Data Eng. 2007, 19, 1–16.

3. Christen, P. Data Matching: Concepts and Techniques for Record Linkage, Entity Resolution, and Duplicate Detection; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012.

4. Hernandez, M.A.; Stolfo, S.J. The merge/purge problem for large databases. In Proceedings of the 1995 ACM SIGMOD International Conference on Management of Data, San Jose, CA, USA, 22–25 May 1995; pp. 127–138.

5. Papadakis, G.; Koutras, N.; Koubarakis, M.; Palpanas, T. A comparative analysis of blocking methods for entity resolution. Inf. Syst. 2016, 57, 1–22.

6. McCallum, A.; Nigam, K.; Ungar, L.H. Efficient clustering of high-dimensional data sets with application to reference matching. In Proceedings of the Sixth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Boston, MA, USA, 20–23 August 2000; pp. 169–178.

7. Papadakis, G.; Ioannou, E.; Palpanas, T.; Niederee, C.; Nejdl, W. Beyond keyword search: Discovering entities in the web of data. In Proceedings of the 20th International Conference on World Wide Web, Hyderabad, India, 28 March–1 April 2011; pp. 583–592.

8. Papadakis, G.; Koubarakis, M.; Palpanas, T. A survey of blocking and filtering methods for entity resolution. arXiv 2013, arXiv:1305.1581.

9. Thirumuruganathan, S.; Galhotra, V.; Mussmann, S.; Gummadi, A.; Das, G. DeepBlocker: A deep learning based blocker for entity resolution. In Proceedings of the 2021 International Conference on Management of Data, Virtual Event, China, 20–25 June 2021; pp. 1639–1652.

10. Ebraheem, M.; Thirumuruganathan, S.; Joty, S.; Ouzzani, M.; Tang, N. Distributed representations of tuples for entity resolution. PVLDB 2018, 11, 1454–1467.

11. Indyk, P.; Motwani, R. Approximate nearest neighbors: Towards removing the curse of dimensionality. In Proceedings of the Thirtieth Annual ACM Symposium on Theory of Computing, Dallas, TX, USA, 24–26 May 1998; pp. 604–613.

12. Wang, J.; Shen, H.T.; Song, J.; Ji, J. Hashing for similarity search: A survey. arXiv 2014, arXiv:1408.2927.

13. Zeakis, A.; Papadakis, G.; Skoutas, D.; Koubarakis, M. Pre-trained Embeddings for Entity Resolution: An Experimental Analysis. PVLDB 2023, 16, 2225–2238.

14. Guo, R.; Kumar, S.; Choromanski, K.; Simcha, D. Quantization based fast inner product search. In Proceedings of the 19th International Conference on Artificial Intelligence and Statistics, Cadiz, Spain, 9–11 May 2016; pp. 482–490.

15. Charikar, M.S. Similarity estimation techniques from rounding algorithms. In Proceedings of the Thirty-Fourth Annual ACM Symposium on Theory of Computing, Montréal, QC, Canada, 19–21 May 2002; pp. 380–388.

16. Karapiperis, D.; Verykios, V.S. An LSH-based Blocking Approach with a Homomorphic Matching Technique for Privacy-Preserving Record Linkage. IEEE Trans. Knowl. Data Eng. 2015, 27, 909–921.

17. Shen, J.; Li, P.; Wang, Y.; Wang, Y.; Zhang, C. Neural-LSH for deep learning based blocking. In Proceedings of the 2022 International Conference on Management of Data, Philadelphia, PA, USA, 12–17 June 2022; pp. 1923–1936.

18. Shen, J.; Li, P.; Wang, Y.; Wang, Y.; Zhang, C. Neural-LSH for deep learning based blocking: Extended version. arXiv 2023, arXiv:2303.04543.

19. Karapiperis, D.; Tjortjis, C.; Verykios, V.S. LSBlock: A Hybrid Blocking System Combining Lexical and Semantic Similarity Search for Record Linkage. In Proceedings of the 29th European Conference on Advances in Databases and Information Systems (ADBIS), Tampere, Finland, 23–26 September 2025.

20. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In International Conference on Machine Learning; PMLR: New York, NY, USA, 2021.

21. Waleffe, R.; Mohoney, J.; Rekatsinas, T.; Venkataraman, S. MariusGNN: Resource-efficient out-of-core training of graph neural networks. In Proceedings of the ACM SIGOPS European Conference on Computer Systems (EuroSys), Rome, Italy, 8–12 May 2023.

22. Chen, Q.; Zhao, B.; Wang, H.; Li, M.; Liu, C.; Li, Z.; Yang, M.; Wang, J. SPANN: Highly-efficient Billion-scale Approximate Nearest Neighbor Search. arXiv 2021, arXiv:2111.08566.

23. Baranchuk, D.; Douze, M.; Upadhyay, Y.; Yalniz, I.Z. DeDrift: Robust Similarity Search under Content Drift. arXiv 2023, arXiv:2308.02752.

24. Subramanya, S.J.; Devvrit; Kadekodi, R.; Krishnaswamy, R.; Simhadri, H.V. DiskANN: Fast Accurate Billion-Point Nearest Neighbor Search on a Single Node; Curran Associates Inc.: Red Hook, NY, USA, 2019.

25. Xu, Y.; Liang, H.; Li, J.; Xu, S.; Chen, Q.; Zhang, Q.; Li, C.; Yang, Z.; Yang, F.; Yang, Y.; et al. SPFresh: Incremental In-Place Update for Billion-Scale Vector Search. In Proceedings of the 29th Symposium on Operating Systems Principles, Koblenz, Germany, 23–26 October 2023; pp. 545–561.

26. Mohoney, J.; Sarda, D.; Tang, M.; Chowdhury, S.R.; Pacaci, A.; Ilyas, I.F.; Rekatsinas, T.; Venkataraman, S. Quake: Adaptive Indexing for Vector Search. arXiv 2025, arXiv:2506.03437.

27. Maciejewski, J.; Nikoletos, K.; Papadakis, G.; Velegrakis, Y. Progressive entity matching: A design space exploration. Proc. ACM Manag. Data 2025, 3, 65.

28. Papadakis, G.; Skoutas, D.; Palpanas, T.; Koubarakis, M. A survey of entity resolution in the web of data. In The Semantic Web: Semantics and Big Data; Springer: Berlin/Heidelberg, Germany, 2013; pp. 15–30.

29. Whang, S.E.; Benjelloun, O.; Garcia-Molina, H. Generic entity resolution with negative rules. VLDB J. 2009, 18, 1261–1277.

30. Mudgal, S.; Li, H.; Rekatsinas, T.; Doan, A.; Park, Y.; Krishnan, G.; Deep, R.; Arcaute, E.; Raghavendra, V. Deep learning for entity matching: A design space exploration. In Proceedings of the SIGMOD/PODS ’18: International Conference on Management of Data, Houston, TX, USA, 10–15 June 2018; pp. 19–34.

31. Li, Y.; Li, J.; Suhara, Y.; Doan, A.; Tan, W. Deep entity matching with pre-trained language models. PVLDB 2021, 14, 50–60.

32. Papadakis, G.; Ioannou, E.; Palpanas, T.; Niederee, C.; Nejdl, W. A blocking framework for entity resolution in highly heterogeneous information spaces. IEEE Trans. Knowl. Data Eng. 2011, 25, 1665–1682.

33. Efthymiou, V.; Papadakis, G.; Stefanidis, K. MinoanER: A representative-based approach to progressive entity resolution. In Proceedings of the 20th International Conference on Extending Database Technology (EDBT), Venice, Italy, 21–24 March 2017; pp. 25–36.

34. Skoutas, D.; Alexiou, G.; Papadakis, G.; Thanos, E.; Koubarakis, M. Lightweight and effective meta-blocking for entity resolution. Inf. Syst. 2022, 107, 101899.

35. Wang, R.; Li, Y.; Wang, J. Sudowoodo: Contrastive self-supervised learning for multi-purpose data integration and preparation. arXiv 2022, arXiv:2207.04122.

36. Chen, R.; Shen, Y.; Zhang, D. GNEM: A Generic One-to-Set Neural Entity Matching Framework. In Proceedings of the Web Conference 2021, Ljubljana, Slovenia, 19–23 April 2021; pp. 1686–1694.

37. Zhang, W.; Wei, H.; Sisman, B.; Dong, X.L.; Faloutsos, C.; Page, D. AutoBlock: A hands-off blocking framework for entity matching. In Proceedings of the WSDM ’20: The Thirteenth ACM International Conference on Web Search and Data Mining, Houston, TX, USA, 3–7 February 2020; pp. 744–752.

38. Brinkmann, A.; Shraga, R.; Bizer, C. SC-Block: Supervised Contrastive Blocking within Entity Resolution Pipelines. arXiv 2023, arXiv:2303.03132.

39. Cohen, W.W.; Ravikumar, P.; Fienberg, S.E. A comparison of string distance metrics for name-matching tasks. In Proceedings of the IIJ-03: Proceedings of the IJCAI-03 Workshop on Information Integration on the Web, Acapulco, Mexico, 9–10 August 2003; pp. 73–78.

40. Jain, A.K.; Murty, M.N.; Flynn, P.J. Data clustering: A review. ACM Comput. Surv. 1999, 31, 264–323.

41. Koudas, N.; Sarawagi, S.; Srivastava, D. Record linkage: Similarity measures and algorithms. In Proceedings of the 2006 ACM SIGMOD International Conference on Management of Data, Chicago, IL, USA, 27–29 June 2006; pp. 802–803.

42. Bahmani, B.; Moseley, B.; Vattani, A.; Kumar, R.; Vassilvitskii, S. Scalable k-means++. In Proceedings of the VLDB Endowment, Istanbul, Turkey, 27–31 August 2012; Volume 5, pp. 622–633.

43. Gimenez, P.; Soru, T.; Marx, E.; Ngomo, A.C.N. Entity resolution with language models: A survey. arXiv 2023, arXiv:2305.10687.

44. Wang, X. A fast exact k-nearest neighbors algorithm for high dimensional search using k-means clustering and triangle inequality. In Proceedings of the 2011 IEEE 11th International Conference on Data Mining, Vancouver, BC, Canada, 11–14 December 2011; pp. 794–803.

45. Jegou, H.; Douze, M.; Schmid, C. Product quantization for nearest neighbor search. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 117–128.

46. Lloyd, S. Least squares quantization in PCM. IEEE Trans. Inf. Theory 1982, 28, 129–137.

47. Gong, Y.; Lazebnik, S.; Gordo, A.; Perronnin, F. Iterative quantization: A procrustean approach to learning binary codes for large-scale image retrieval. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2916–2929.

48. Babenko, A.; Lempitsky, V. Additive quantization for extreme vector compression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 931–938.

49. Johnson, J.; Douze, M.; Jégou, H. Billion-scale similarity search with GPUs. arXiv 2017, arXiv:1702.08734.

50. Malkov, Y.A.; Yashunin, D.A. Efficient and robust approximate nearest neighbor search using hierarchical navigable small world graphs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 42, 824–836.

51. Xiao, W.; Zhan, Y.; Xi, R.; Hou, M.; Liao, J. Enhancing HNSW index for real-time updates: Addressing unreachable points and performance degradation. arXiv 2024, arXiv:2407.07871.

52. Li, C.; Zhang, N.; Andersen, D.G.; He, Y. Improving approximate nearest neighbor search through learned adaptive early termination. In Proceedings of the 2020 ACM SIGMOD International Conference on Management of Data, Portland, OR, USA, 14–19 June 2020; pp. 2539–2554.

53. Zhang, Z.; Jin, C.; Tang, L.; Liu, X.; Jin, X. Fast, approximate vector queries on very large unstructured datasets. In Proceedings of the 20th USENIX Symposium on Networked Systems Design and Implementation (NSDI 23), Boston, MA, USA, 17–19 April 2023; pp. 995–1011.

54. Li, M.; Wang, Z.; Liu, C. A Self-Learning Framework for Partition Management in Dynamic Vector Search. In Proceedings of the ACM SIGMOD International Conference on Management of Data, Berlin, Germany, 22–27 June 2025.

| Characteristic | IVF (Faiss) | SCANN | HNSW |
|---|---|---|---|
| Paradigm | Partition-Based | Partition-Based | Graph-Based |
| Index Mechanism | K-Means partitions (Voronoi cells) with an inverted file structure. | K-Means-like partitioning combined with score-aware anisotropic vector quantization. | Multi-layered proximity graph (Navigable Small World). |
| Memory Cost | Low to Moderate. Highly tunable based on codebook size (PQ). | Moderate. Generally lower than HNSW for similar performance points. | High. The entire graph structure, including all edges, must be stored in memory. |
| Update Handling | Efficient. Inserting/deleting a vector only requires updating a specific partition’s list. | Efficient. Similar to IVF, updates are localized to partitions. | Inefficient/Costly. Updates may require expensive rewiring of graph edges across multiple layers. |
| Ideal ER Scenario | Very large-scale datasets where memory efficiency and reasonable throughput are critical. A strong, scalable baseline. | High-throughput applications on large, static datasets where maximizing the number of queries resolved is the primary goal. | Applications requiring the absolute highest recall at low latency, on static or infrequently updated datasets. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Karapiperis, D.; Verykios, V.S. Scaling Entity Resolution with K-Means: A Review of Partitioning Techniques. Electronics 2025, 14, 3605. https://doi.org/10.3390/electronics14183605
