1. Introduction and Background

In the ever-evolving landscape of cybersecurity, the proliferation of internet-connected devices has ushered in unprecedented levels of data exchange alongside a corresponding surge in cyber threats. As the Internet of Things (IoT) continues to expand, so does the potential for malicious activity across sectors ranging from healthcare to logistics [1,2]. In 2023, the number of IoT-connected devices was estimated to have surpassed 17 billion, and this number is expected to rise significantly in the coming years [3]. As a result, the ability to monitor and recognize malicious activity and cyberattacks in real time is imperative to cybersecurity efforts [1].

This research contributes to the ongoing discourse on cybersecurity by providing insights into the efficacy of machine learning (ML) approaches in identifying and categorizing cyber threats. By comparing the performance of Support Vector Machine (SVM) models under different preprocessing techniques, this study aims to determine the impact of feature reduction methods on classification accuracy and efficiency. Specifically, this paper aims to evaluate the effectiveness of SVMs in predicting and classifying cyberattacks in both binary and multi-classification scenarios, while also reducing the preprocessing and evaluation time required for classification. To achieve this, the effects of three different data preprocessing techniques on the performance of binary and multi-class SVMs are compared. In the first technique, the data is minimally preprocessed; in the second technique, the data undergoes minimal preprocessing followed by Principal Component Analysis (PCA); and in the third technique, the data undergoes minimal preprocessing followed by Linear Discriminant Analysis (LDA). Given the large size of the datasets, GPU integration and MapReduce techniques were employed to attempt to minimize preprocessing and evaluation time. Performance of all three models was compared using CPUs and GPUs, with and without the MapReduce environment.

The uniqueness of this work is further enhanced by the use of Zeek connection log data. Zeek is an open-source network-monitoring tool that provides the raw network data [4]. Two modern, newly created Zeek datasets, UWF-ZeekData22 [1,5] and UWF-ZeekDataFall22 [5,6], are used in this work to identify connections that lead to adversarial tactics [1,5]. These datasets are labeled using the MITRE Adversarial Tactics, Techniques, and Common Knowledge (ATT&CK) framework [7], which serves as a publicly available resource detailing adversary tactics and techniques employed to achieve specific objectives [1,7]. Finally, in this paper, classification is performed at the tactic level; that is, the correct classification of the Reconnaissance, Discovery, Defense Evasion, Privilege Escalation, and Resource Development tactics is explored. To the best of the authors' knowledge, classification at the tactic level has not been attempted in any previous work.

To handle the large amount of data, both datasets are stored in Hadoop, a Big Data framework built around a distributed file system. The Hadoop Distributed File System (HDFS) is scalable and fault-tolerant, and allows for the storage and processing of Big Data across a cluster of computers [8]. To perform the machine learning classification, Apache Spark 3.5.0 was used. Apache Spark is an open-source, in-memory cluster computing framework that can be used in conjunction with Hadoop and is designed to process and analyze large datasets in a fast, scalable, and fault-tolerant manner [9]. For preprocessing and classification, several ML libraries from Apache Spark were used.

The remainder of this paper is organized as follows:

Section 2 provides an overview of the related works in the field of cybersecurity and ML;

Section 3 provides an outline of the datasets used in this research;

Section 4 describes the preprocessing techniques used, including PCA and LDA;

Section 5 provides an overview of the PCA and LDA algorithms;

Section 6 discusses the SVM model, as well as training and testing techniques;

Section 7 describes the experimental setup as well as the hardware and software used;

Section 8 details the results and findings; and, finally,

Section 9 offers conclusions drawn from this study.

2. Related Works

As organizations call for more focus on securing their data from relevant threats and on analyzing and developing effective countermeasures, the topic of Intrusion Detection Systems (IDSs) has become relatively popular [10,11,12,13,14,15,16]. Numerous studies have investigated utilizing SVMs as a prediction model for classifying cyberattacks and improving IDS performance. Many papers use one of the most applicable datasets in information security research, the KDD99 Cup dataset [10,11,12,13], and its subsequent dataset, NSL-KDD [12,14,16], to experiment with IDSs and improve classification methods.

Utilizing the KDD99 dataset, various studies have compared multiple ML algorithms, including K-Nearest Neighbor, J48, Naïve Bayes (NB), Random Forest (RF), Nearest Neighbors Generalized, and Voting Features Interval, as well as preprocessing techniques such as PCA, while utilizing SVMs. LDA and various kernels were also tested [10,11,12,13,17].

Other studies used different datasets, such as the NSL-KDD, UNSW-NB15, and CIC-IDS2017, to compare various ML algorithms and preprocessing techniques. Feature selection techniques besides PCA and LDA were also used, such as information gain or Genetic Algorithms. These studies found that decreasing the number of features often resulted in improved accuracy, but that SVM models often performed poorly compared to other models. However, it was shown that the linear SVM had advantages in training time and precision over the nonlinear SVM [14,15,16,18]. Additionally, one study comparing PCA, LDA, and Autoencoder-based feature extraction methods across multiple IDS datasets, including UNSW-NB15 and CSE-CIC-IDS2018, found that no single method consistently outperformed the others. It highlighted that the effectiveness of each feature extraction technique was highly dataset dependent and that, in many cases, LDA underperformed relative to PCA and Autoencoders when used with various classifiers [19].

Several studies also sought to use feature reduction techniques in conjunction with SVM models, including multi-class SVM models. These studies observed that accuracy often remained similar, or sometimes improved, when LDA or PCA was used. Combining feature reduction techniques with different ML algorithms, such as Logistic Regression, also showed improvements in computational time versus standard SVM models. Minimal performance loss was observed while reducing computational overhead, resulting in speedups to algorithm performance [20,21,22]. Another study reinforced the importance of feature reduction for improving classifier performance by applying Singular Value Decomposition (SVD) as a feature reduction technique before classification on the NSL-KDD dataset. It showed that reducing dimensionality prior to applying machine learning models led to improved detection accuracy and reduced computational load, particularly when used with SVM classifiers [23].

Yet other studies sought to either test the effectiveness of different kernels on one- and two-class SVMs, or improve performance by modifying the Gaussian kernel. The two-class SVM model, along with the modified Gaussian kernel, was found to increase accuracy, sometimes significantly, when compared to traditional SVM methods. The modified kernel also resulted in reduced training and testing times. The two-class SVM model, however, performed poorly on certain datasets, suggesting that SVMs may serve as a complement to existing IDSs rather than a complete replacement [24,25].

A few studies sought to utilize MapReduce to run ML algorithms for IDSs. MapReduce runs programs in parallel across a cluster. These studies found that utilizing MapReduce techniques was effective for performing parallel computing on large datasets, resulting in decreased runtime. Accuracy and other statistical metrics remained unchanged, as using MapReduce does not affect the statistical performance of the algorithms themselves [26,27].

This paper expands on research already completed on IDSs by using SVM as an IDS. It seeks to compare the results of minimal preprocessing (MP) as well as MP coupled with feature reduction techniques such as PCA and LDA, while also testing the speed performance of the three techniques when built using a parallel MapReduce framework. Performance of the techniques is measured using the binomial as well as multinomial SVM classifiers.

3. Datasets

Two Zeek Connection (Conn) Log MITRE ATT&CK framework labeled datasets, UWF-ZeekData22 [

1,

5], and its later counterpart and UWF-ZeekDataFall22 [

5,

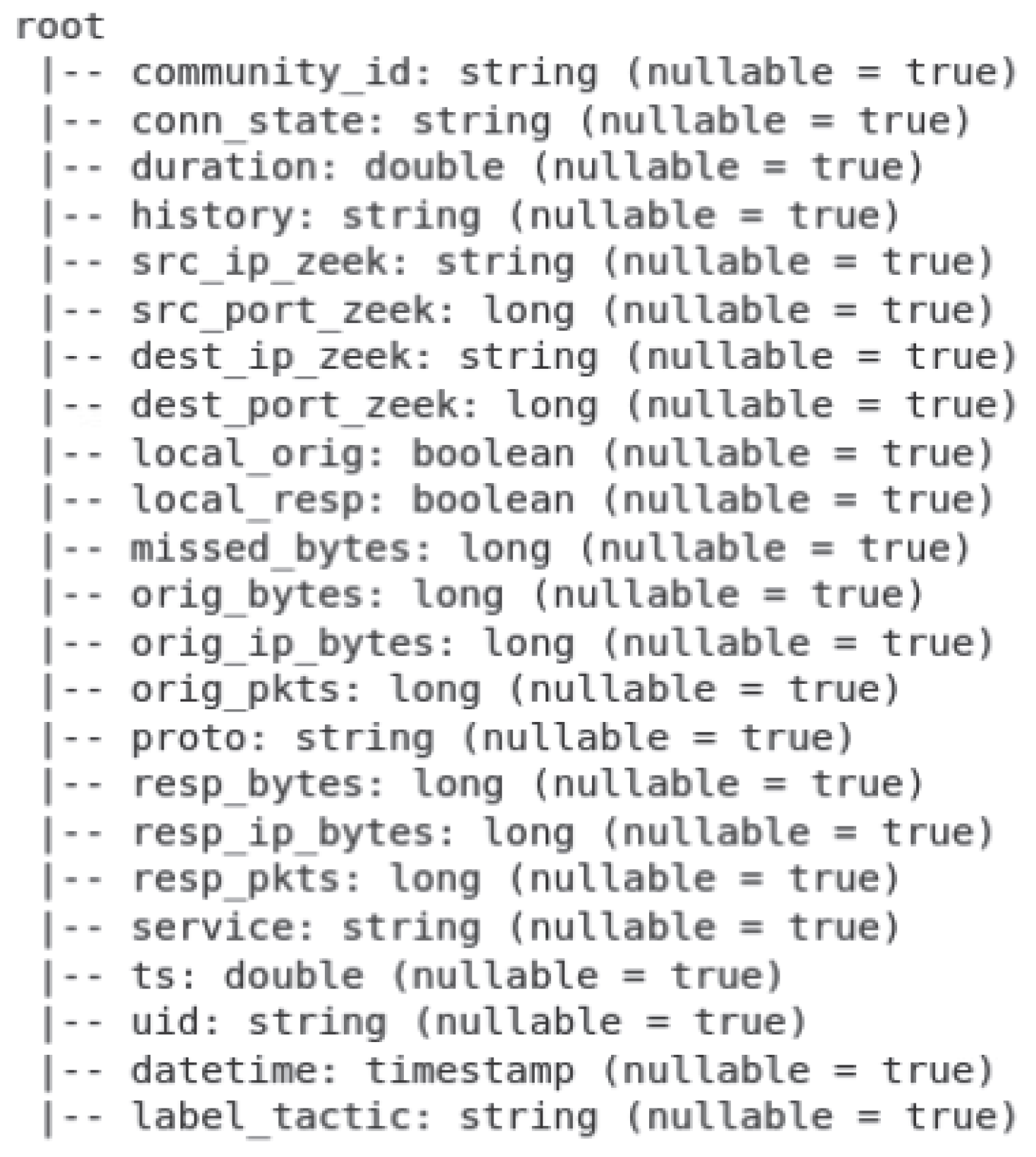

6], generated using the Cyber Range at The University of West Florida (UWF), were used for this analysis. Features used in this analysis are presented here in

Figure 1.

3.1. Overview of the UWF-ZeekData22 Dataset

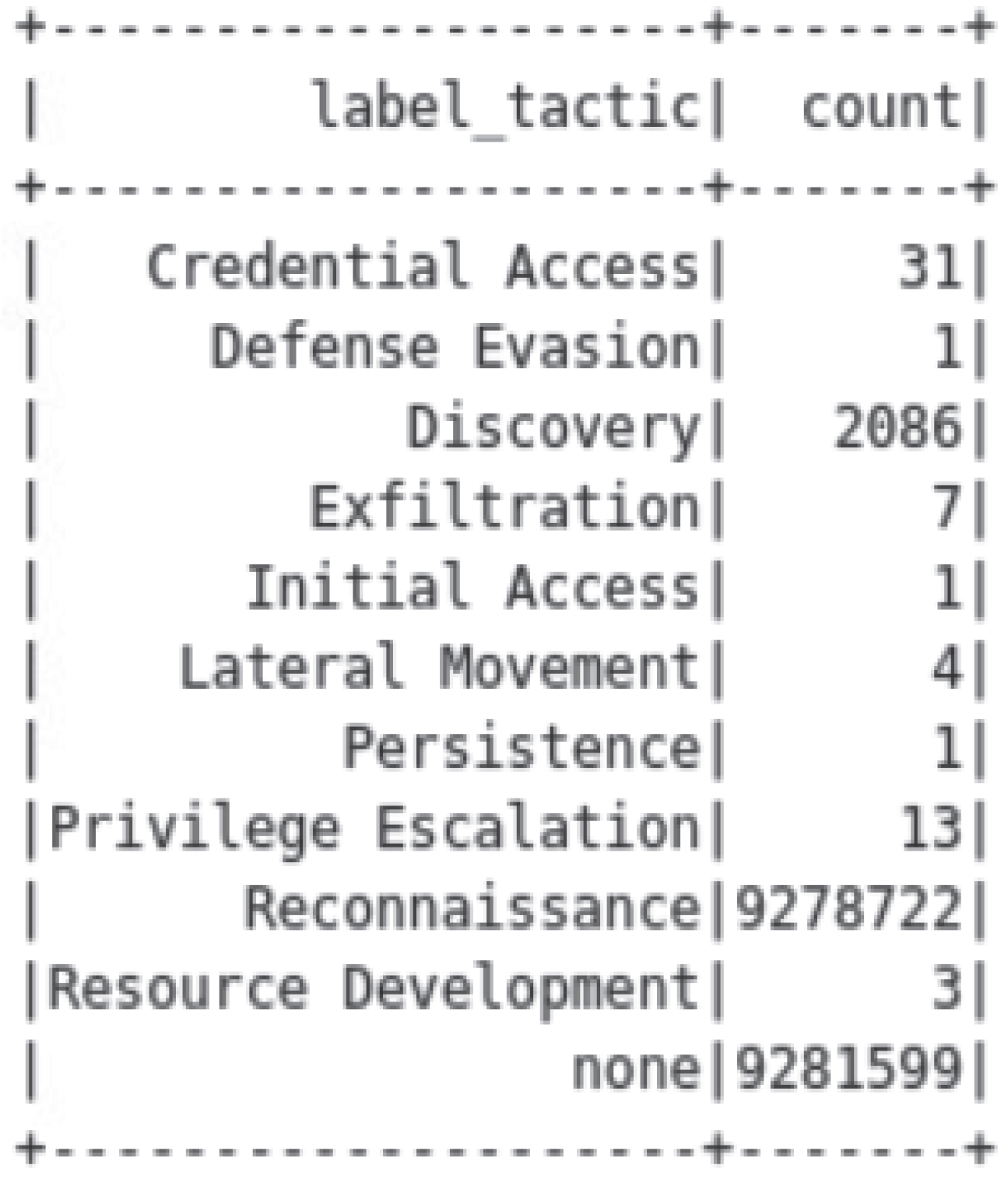

This dataset has 9,280,869 attack records and 9,281,599 benign records. Figure 2 presents a breakdown of the data by MITRE ATT&CK tactics. There were 9,278,722 instances of Reconnaissance activity, 2086 instances of Discovery activity, and very few instances of other adversary tactics in this dataset; hence, only Reconnaissance and Discovery were used for this analysis. Reconnaissance tactics are active or passive tactics that are used to gather information to plan future operations [28,29]. Discovery tactics are used to gain knowledge about the system [28,30].

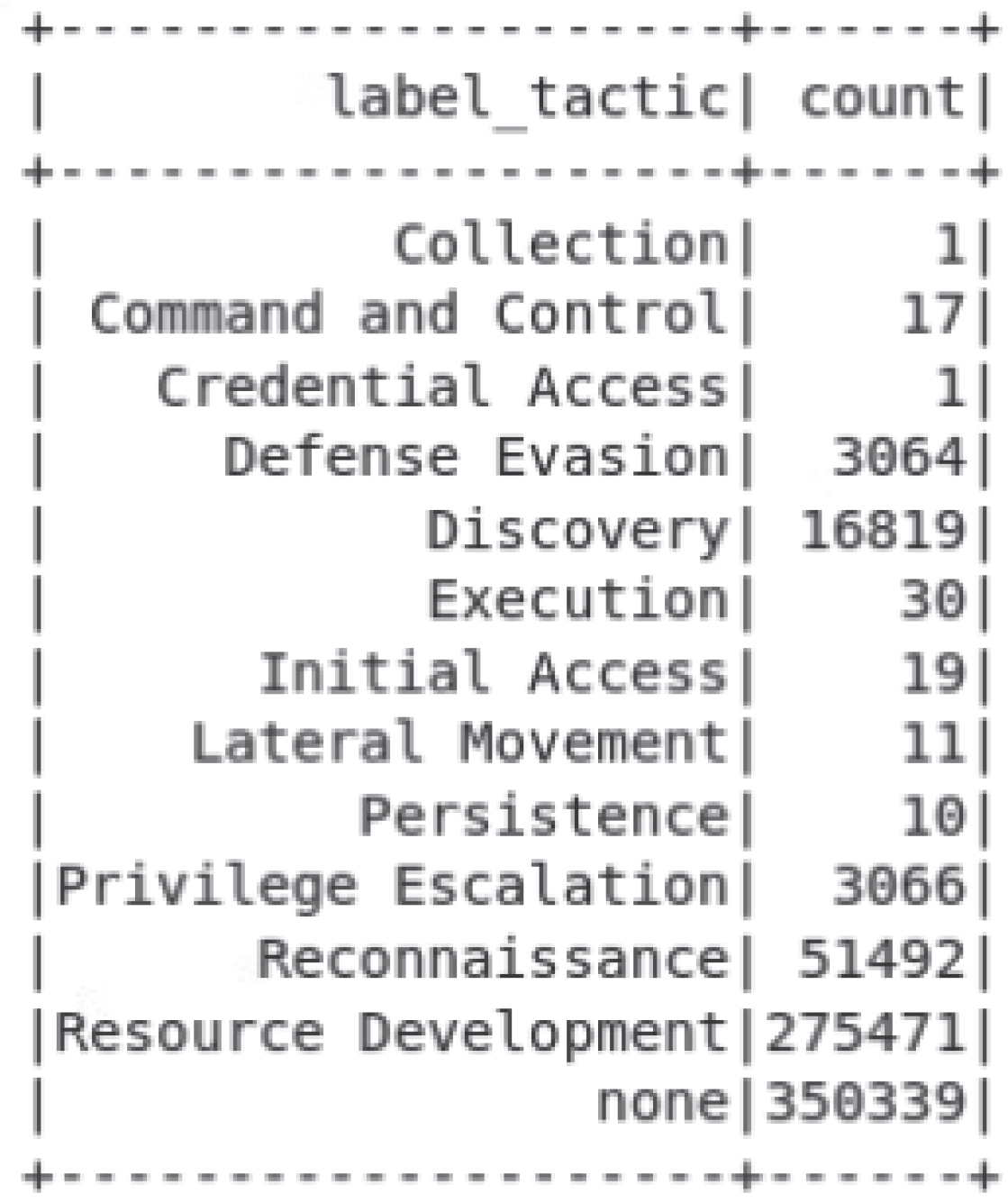

3.2. Overview of UWF-ZeekDataFall22 Dataset

This dataset, created in 2023, has 350,001 attack records and 350,339 benign records [6]. The breakdown of the attack tactics in the UWF-ZeekDataFall22 [6] dataset is presented in Figure 3. There were 275,471 instances of Resource Development activity, followed by 51,492 instances of Reconnaissance, 16,819 instances of Discovery, 3064 instances of Defense Evasion, 3066 instances of Privilege Escalation, and very few instances of other adversary tactics in this dataset, so only the Resource Development, Reconnaissance, Discovery, Defense Evasion, and Privilege Escalation tactics were used for this analysis. Resource Development tactics try to establish resources to support operations [6,31], Defense Evasion tactics are used to avoid detection [6,32], and Privilege Escalation tactics are used by adversaries to gain higher-level permissions on a system or network [6,33].

3.3. Sample Selection

For binary classification, one tactic and the benign data were used. For multinomial classification, to ensure all models tested were trained on a sufficient set of samples, classes containing minimal instances were dropped. Hence, from the UWF-ZeekData22 dataset, all instances of the Credential Access, Defense Evasion, Exfiltration, Initial Access, Lateral Movement, Persistence, and Resource Development tactics were dropped. Similarly, from the UWF-ZeekDataFall22 dataset, all instances of the Collection, Command and Control, Credential Access, Execution, Initial Access, Lateral Movement, and Persistence tactics were dropped. Since the number of dropped attack records is extremely small relative to the size of these datasets, dropping them is unlikely to introduce meaningful bias into the data.

4. Data Preprocessing

4.1. Dropping Features

The “uid” feature, which in Zeek is a unique identifier assigned to each connection, was excluded from both datasets. Because the uid serves only as a key for distinguishing individual records, it lacks informative value: each value is unique, so no commonality or useful variance is present that could be leveraged by ML algorithms like SVM. Hence, this feature would not contribute to understanding the underlying patterns or relationships in the datasets, and retaining it would only introduce unnecessary overhead, resulting in performance degradation.

Hence, in UWF-ZeekData22 [1,5], all other features were retained. The UWF-ZeekDataFall22 dataset [5,6], however, had two additional features, label_binary and label_technique, and these were dropped to keep the datasets consistent.

4.2. Preprocessing Through Data Imputation

To maintain the qualities of the original raw network data, the following methods of data imputation were used on both datasets.

4.2.1. Replacing Null Values with Averages

The null values were replaced with the mean values of their respective features in order to preserve the distribution. PySpark natively supports an Imputer class [33], which was utilized to calculate the mean of each numerical feature and replace null values.
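As a minimal sketch of mean imputation, assuming a single numeric column held in a Python list with None marking nulls (the function name and sample values are illustrative and are not taken from the datasets or from the PySpark pipeline itself):

```python
from statistics import fmean


def impute_mean(column):
    """Replace None entries with the mean of the observed (non-null) values."""
    observed = [v for v in column if v is not None]
    if not observed:
        raise ValueError("column has no observed values to average")
    mean = fmean(observed)
    return [mean if v is None else v for v in column]


# Hypothetical feature column with two missing entries.
duration = [0.5, None, 1.5, 4.0, None]
imputed = impute_mean(duration)
```

Only the observed values enter the mean, so the imputed entries do not shift the column's average.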

4.2.2. Normalization

Values were normalized because both PCA and LDA benefit from normalized data. Numerical features were rescaled to a comparable range between 0 and 1. Because PCA maximizes variance and LDA optimizes the ratio of between-class to within-class variance, features with larger magnitudes could otherwise dominate the calculations regardless of their actual relevance [34]. Min-max scaling is a standard method for transforming features into a bounded range so that all features contribute equally to the analysis. Note that, in PySpark, min-max scaling to [0, 1] is provided by MinMaxScaler, whereas StandardScaler rescales each feature to unit standard deviation (and, optionally, zero mean). To perform the normalization, PySpark’s StandardScaler [32] was used. Under min-max scaling, each value $x$ of a feature is transformed as follows:

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

where $x_{\min}$ and $x_{\max}$ are the minimum and maximum values of that feature.
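As a minimal sketch of min-max rescaling to the range [0, 1], assuming a single numeric column held in a Python list (the function name, the handling of constant columns, and the sample values are assumptions made for illustration):

```python
def min_max_scale(column):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(column), max(column)
    if hi == lo:
        # Constant feature: the formula is undefined, so 0.0 is used here
        # as an illustrative convention.
        return [0.0 for _ in column]
    span = hi - lo
    return [(v - lo) / span for v in column]


# Hypothetical byte counts for four connections.
orig_bytes = [0, 50, 100, 200]
scaled = min_max_scale(orig_bytes)
```

Because the minimum and maximum define the output range, a single extreme value compresses all other values toward 0, which is worth keeping in mind for heavy-tailed network features.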

4.2.3. StringIndexer

The Spark implementation of SVM cannot natively handle string values, so StringIndexer [31] was used on both datasets. The StringIndexer maps each unique string to an individual numerical value that can be processed by the SVM algorithm.
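The mapping from strings to numeric indices can be sketched in plain Python as follows. The ordering rule (most frequent label receives index 0) is assumed here to mirror the default frequency-descending behavior of Spark's StringIndexer; the alphabetical tie-break and the sample protocol values are illustrative assumptions.

```python
from collections import Counter


def fit_string_index(values):
    """Build a label -> index mapping, most frequent label first."""
    counts = Counter(values)
    # Sort by descending frequency; ties are broken alphabetically so the
    # mapping is deterministic.
    ordered = sorted(counts, key=lambda s: (-counts[s], s))
    return {label: float(i) for i, label in enumerate(ordered)}


# Hypothetical values of a categorical feature such as the protocol.
proto = ["tcp", "udp", "tcp", "icmp", "tcp", "udp"]
mapping = fit_string_index(proto)
indexed = [mapping[p] for p in proto]
```

The resulting indices are arbitrary codes rather than quantities, so a linear model treats them as ordered even though the underlying categories are not.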

4.3. Dimensionality Reduction

4.3.1. Principal Component Analysis

After data imputation, PySpark’s PCA module [35] was applied to the data to transform, or essentially compress, the raw data into fewer features, known as principal components, derived from the covariance matrix of the original features.

4.3.2. Linear Discriminant Analysis

As Spark and PySpark do not natively support Linear Discriminant Analysis, scikit-learn’s LinearDiscriminantAnalysis class was used to perform a supervised transformation of the data into a smaller feature space whose dimensionality is bounded by the number of classes.

5. Feature Reduction Techniques

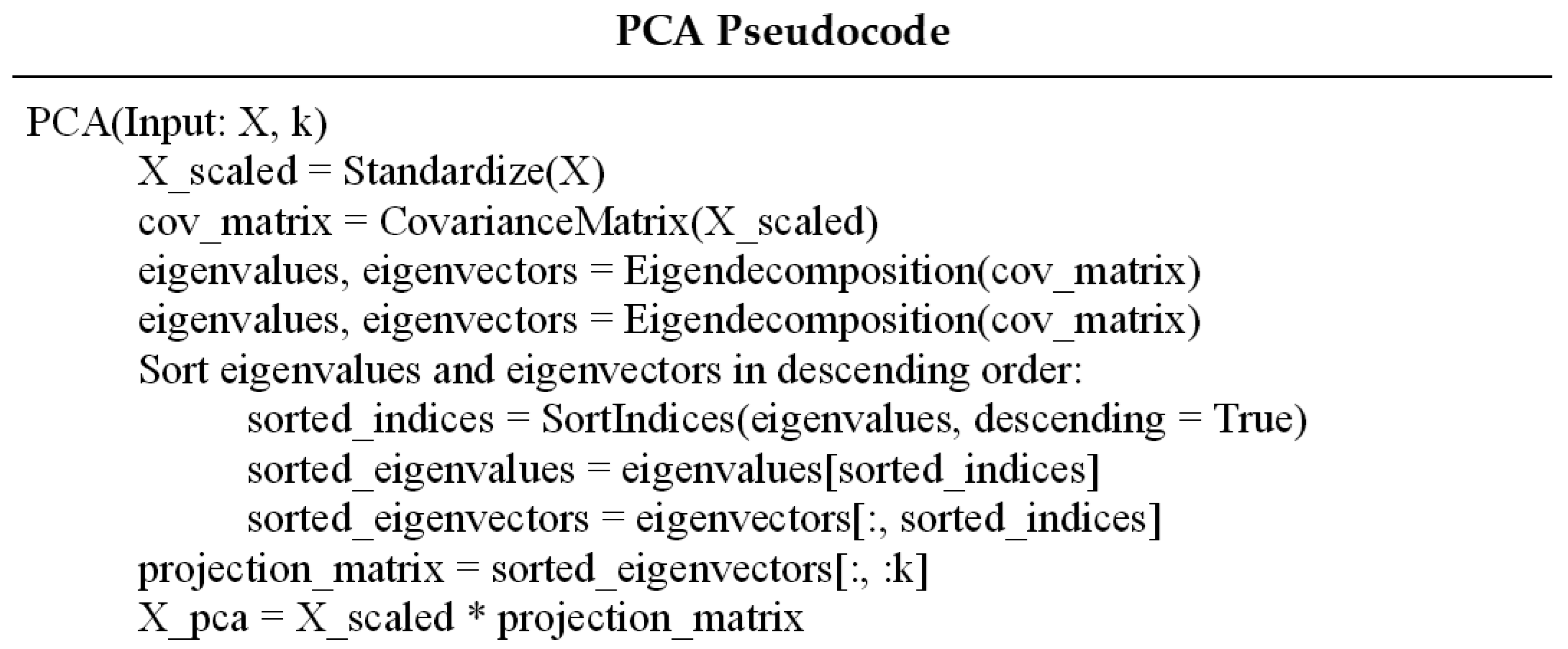

5.1. Principal Component Analysis

Principal Component Analysis (PCA) is a widely used unsupervised approach that reduces the dimensionality of data by finding the directions of maximum variance in the data [17,36]. PCA replaces correlated, redundant features with a smaller set of uncorrelated components in a new space, known as the principal space, which makes the structure of the data easier to see. As a result, the complexity of the overall dataset is also reduced, which significantly reduces computation on Big Data [36]. PCA can be applied with the steps shown in the pseudocode presented in Figure 4 [17,37].
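For reference, the standard PCA computation can be summarized as follows. This is the textbook formulation rather than a transcription of Figure 4, and the symbols are introduced here for illustration: $X \in \mathbb{R}^{n \times d}$ is the mean-centered data matrix, $\Sigma$ its covariance matrix, and $W_k$ the matrix of the $k$ leading eigenvectors.

```latex
\begin{aligned}
\Sigma &= \frac{1}{n-1}\, X^{\top} X, \\
\Sigma\, v_j &= \lambda_j\, v_j, \qquad \lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_d \ge 0, \\
Z &= X\, W_k, \qquad W_k = [\, v_1 \;\; v_2 \;\; \cdots \;\; v_k \,].
\end{aligned}
```

The fraction of the total variance retained by the first $k$ components is $\sum_{j=1}^{k} \lambda_j \big/ \sum_{j=1}^{d} \lambda_j$.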

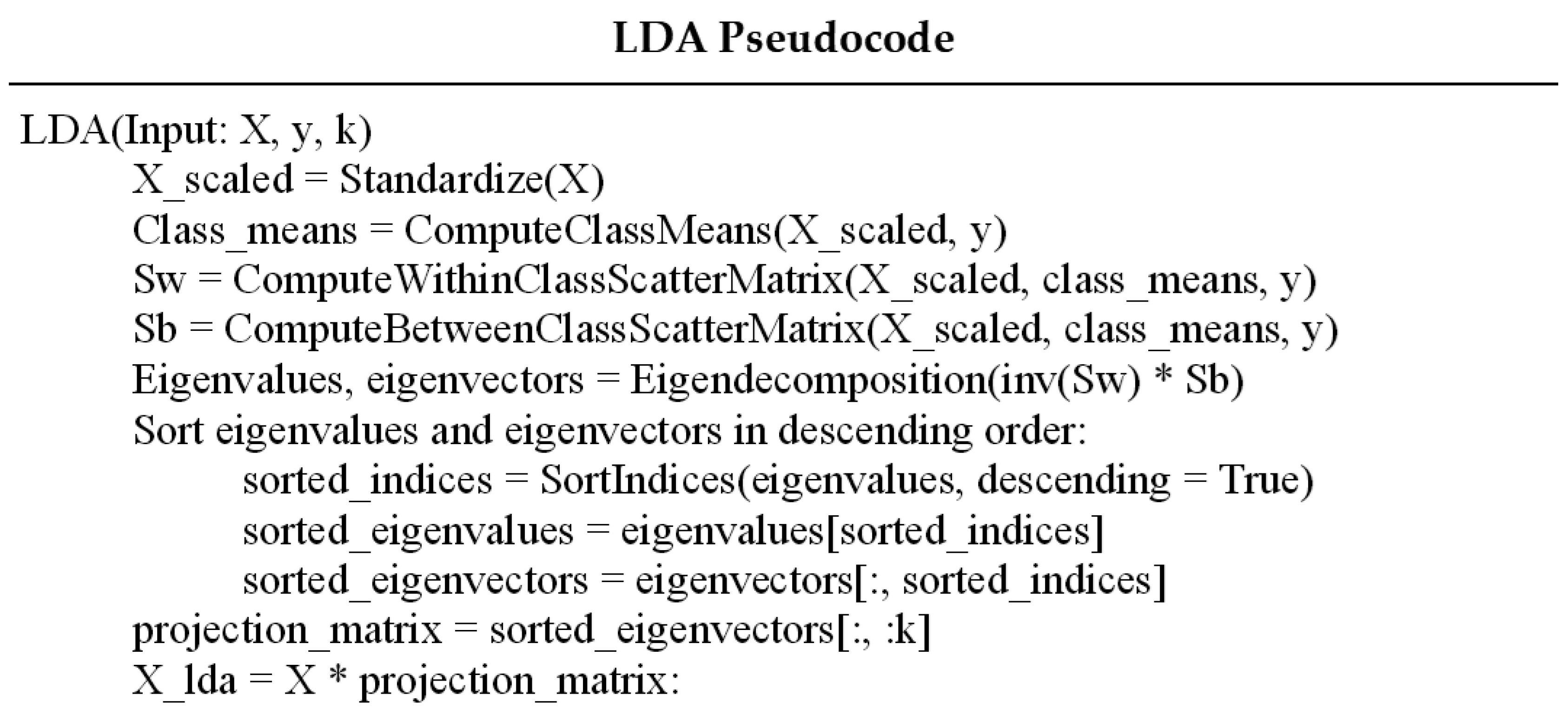

5.2. Linear Discriminant Analysis

Linear Discriminant Analysis (LDA) is a commonly used supervised statistical method designed to reduce the dimensionality of a dataset [22,38]. The n-dimensional dataset is projected onto a smaller k-dimensional subspace (where k < n), while retaining relevant class-discrimination information. LDA maximizes the ratio of the between-class variance to the within-class variance, which maximizes the separability between classes [38]. The pseudocode to perform LDA can be seen in Figure 5. To transform the dataset using LDA, the following steps are performed [37].

Normalize the dataset by subtracting the mean and dividing by the standard deviation for each feature:

$$z = \frac{x - \mu_x}{\sigma_x}$$

where $\mu_x$ is the sample mean of a feature column, and $\sigma_x$ is the standard deviation.

Compute the mean vector for each class:

$$m_i = \frac{1}{n_i} \sum_{x \in D_i} x$$

where $n_i$ is the number of samples in class $i$, and $D_i$ is the set of samples belonging to class $i$.

Calculate the within-class scatter matrix:

$$S_W = \sum_{i=1}^{c} \sum_{x \in D_i} (x - m_i)(x - m_i)^{\top}$$

Calculate the between-class scatter matrix:

$$S_B = \sum_{i=1}^{c} n_i\, (m_i - m)(m_i - m)^{\top}$$

where $m$ is the overall mean from all $c$ classes.

Compute the eigenvectors and eigenvalues of $S_W^{-1} S_B$ by solving the generalized eigenvalue problem:

$$S_B\, w = \lambda\, S_W\, w$$

Choose the $k$ eigenvectors that correspond to the $k$ largest eigenvalues, where $k$ is the desired dimensionality of the new feature subspace. (Note: if the dataset contains $c$ classes, the maximum value of $k$ is $c - 1$.)

Construct a projection matrix $W$ from the $k$ chosen eigenvectors, sorted by eigenvalue.

Create a new $k$-dimensional feature space by transforming the original data $X$ using the projection matrix:

$$X' = X W$$
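The steps above can be sketched for the simplest case of two classes and two features. With two classes, the between-class scatter matrix has rank one, so the single discriminant direction is proportional to $S_W^{-1}(m_1 - m_0)$ and no general eigen-solver is needed. The sketch below relies on that shortcut, skips the standardization step, and uses made-up sample points; all function names are illustrative.

```python
def mean_vector(rows):
    """Per-feature mean of a list of equally sized samples."""
    n = len(rows)
    return [sum(r[j] for r in rows) / n for j in range(len(rows[0]))]


def scatter(rows, mean):
    """2x2 scatter matrix: sum over samples of (x - m)(x - m)^T."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for r in rows:
        d = [r[0] - mean[0], r[1] - mean[1]]
        for a in range(2):
            for b in range(2):
                s[a][b] += d[a] * d[b]
    return s


def fisher_direction(class0, class1):
    """Discriminant direction w = S_W^{-1} (m1 - m0) for two 2-D classes."""
    m0, m1 = mean_vector(class0), mean_vector(class1)
    s0, s1 = scatter(class0, m0), scatter(class1, m1)
    # Within-class scatter is the sum of the per-class scatter matrices.
    sw = [[s0[a][b] + s1[a][b] for b in range(2)] for a in range(2)]
    det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]
    if det == 0:
        raise ValueError("within-class scatter matrix is singular")
    inv = [[sw[1][1] / det, -sw[0][1] / det],
           [-sw[1][0] / det, sw[0][0] / det]]
    diff = [m1[0] - m0[0], m1[1] - m0[1]]
    return [inv[0][0] * diff[0] + inv[0][1] * diff[1],
            inv[1][0] * diff[0] + inv[1][1] * diff[1]]


def project(rows, w):
    """Project 2-D samples onto the 1-D discriminant axis."""
    return [r[0] * w[0] + r[1] * w[1] for r in rows]


# Hypothetical two-feature samples for a benign and an attack class.
benign = [(1.0, 2.0), (2.0, 3.0), (3.0, 3.0), (2.0, 1.0)]
attack = [(6.0, 5.0), (7.0, 8.0), (8.0, 6.0), (7.0, 6.0)]
w = fisher_direction(benign, attack)
z_benign = project(benign, w)
z_attack = project(attack, w)
```

On these sample points the two classes do not overlap along the projected axis, which is the behavior the single discriminant (k = 1) is meant to provide in the binary case.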

6. Modeling

6.1. Support Vector Machines

Support Vector Machines (SVMs) are powerful ML algorithms that can handle both linear and nonlinear classification and regression tasks [39]. The SVM algorithm works by finding the hyperplane that maximizes the margin between classes while simultaneously minimizing classification errors [40]. Using a kernel function, SVMs are able to implicitly map data into higher-dimensional feature spaces where nonlinear relationships become linear, which allows for complex decision boundaries without the need for explicit computation in the higher-dimensional space [39,40]. SVMs also allow for the choice of kernel function, such as the polynomial or radial basis function kernel, which increases their versatility [40].
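The trade-off between a wide margin and few classification errors is commonly written as the soft-margin optimization problem below. This is the standard textbook formulation, given here for orientation; the symbols are not taken from the source: $x_i$ are the training samples, $y_i \in \{-1, +1\}$ their labels, $\xi_i$ the slack variables, and $C > 0$ the regularization parameter.

```latex
\min_{w,\, b,\, \xi} \;\; \frac{1}{2}\lVert w \rVert^{2} + C \sum_{i=1}^{n} \xi_i
\quad \text{subject to} \quad
y_i \left( w^{\top} x_i + b \right) \ge 1 - \xi_i, \qquad \xi_i \ge 0, \quad i = 1, \dots, n .
```

Minimizing $\lVert w \rVert$ widens the margin, whose width is $2 / \lVert w \rVert$, while the second term penalizes samples that fall inside the margin or on the wrong side of the hyperplane.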

6.1.1. Binary Classification

In the UWF-ZeekData22 [1,5] dataset, binary classification was used for the Reconnaissance and Discovery tactics. In the UWF-ZeekDataFall22 [5,6] dataset, binary classification was used for the Reconnaissance, Discovery, Defense Evasion, Privilege Escalation, and Resource Development tactics.

6.1.2. Multinomial Classification

The standard PySpark implementation of the SVM model is not capable of supporting multinomial classification. To complete the multinomial classification tasks, the OneVsRest model was used to extend the SVM model’s capabilities and allow for multinomial classification.
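The one-vs-rest reduction can be sketched as follows: one binary scorer is kept per class, and a sample is assigned to the class whose scorer returns the largest value. The linear scorers and their hand-picked weights below are hypothetical stand-ins for trained binary SVMs and are not fitted to any data.

```python
def linear_scorer(w, b):
    """Return a function computing the linear decision value w . x + b."""
    return lambda x: sum(wi * xi for wi, xi in zip(w, x)) + b


def one_vs_rest_predict(x, scorers):
    """Pick the label whose binary scorer gives the highest decision value."""
    return max(scorers, key=lambda label: scorers[label](x))


# Hypothetical per-class scorers (weights chosen by hand for illustration).
scorers = {
    "benign": linear_scorer([-1.0, -1.0], 1.0),
    "Reconnaissance": linear_scorer([1.0, 0.0], -0.5),
    "Discovery": linear_scorer([0.0, 1.0], -0.5),
}

pred_a = one_vs_rest_predict([0.1, 0.1], scorers)
pred_b = one_vs_rest_predict([2.0, 0.2], scorers)
pred_c = one_vs_rest_predict([0.2, 2.0], scorers)
```

With c classes this scheme trains c binary models, so training cost grows roughly linearly with the number of tactics included.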

In the UWF-ZeekData22 dataset [1,5], multinomial classification was performed using the Reconnaissance and Discovery tactics together versus the benign data. In the UWF-ZeekDataFall22 dataset [5,6], multinomial classification was performed using the Reconnaissance, Discovery, Defense Evasion, Privilege Escalation, and Resource Development tactics together versus the benign data.

6.2. Parameter Selection

Several parameters in the PySpark implementation of SVM were adjusted in an attempt to optimize performance and reliability on the large datasets used. Table 1 outlines these parameters. All other values were left as default.

6.3. MapReduce

Although PySpark provides operations such as map() and reduce() that conceptually align with the MapReduce paradigm [41], further steps were taken to apply MapReduce explicitly, so that the data would be distributed and processed in parallel. MapReduce is a framework for executing parallelizable algorithms, such as LDA, across Big Data. MapReduce has two functions, map and reduce. Map applies a given function to each element of a sequence of values, and reduce combines all the elements of a sequence using a binary operation.

In this work, several Map functions were used to calculate the class means, within-class scatter matrices, and between-class scatter matrices, and also in the computation of the eigenvalues and eigenvectors. Broadcast variables were used for distributed processing in block sizes of 33.2 MiB using the DAG scheduler. Broadcast variables benefit data-intensive applications by caching read-only data on each worker, which minimizes data transfer overhead, avoids repeated network communication, and hence reduces latency.
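As a minimal, single-machine sketch of how class means can be expressed in map and reduce terms, the example below uses Python's built-in map and functools.reduce. It illustrates the paradigm only and is not the distributed implementation used in this work; the record layout, function names, and sample values are assumptions.

```python
from functools import reduce


def map_record(record):
    """Map step: emit (label, (feature_vector, count)) for one record."""
    label, features = record
    return (label, (list(features), 1))


def combine(acc, item):
    """Reduce step: add a mapped record into the per-class running totals."""
    label, (vec, count) = item
    if label in acc:
        total, n = acc[label]
        acc[label] = ([a + b for a, b in zip(total, vec)], n + count)
    else:
        acc[label] = (vec, count)
    return acc


# Hypothetical labeled records: (class label, feature vector).
records = [
    ("benign", (1.0, 2.0)),
    ("attack", (5.0, 6.0)),
    ("benign", (3.0, 4.0)),
    ("attack", (7.0, 10.0)),
]

mapped = map(map_record, records)
totals = reduce(combine, mapped, {})
class_means = {
    label: [s / n for s in total] for label, (total, n) in totals.items()
}
```

Because sums and counts are associative, partial totals computed on separate partitions can be merged in any order, which is what makes this computation suitable for parallel execution.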

7. Experimental Setup

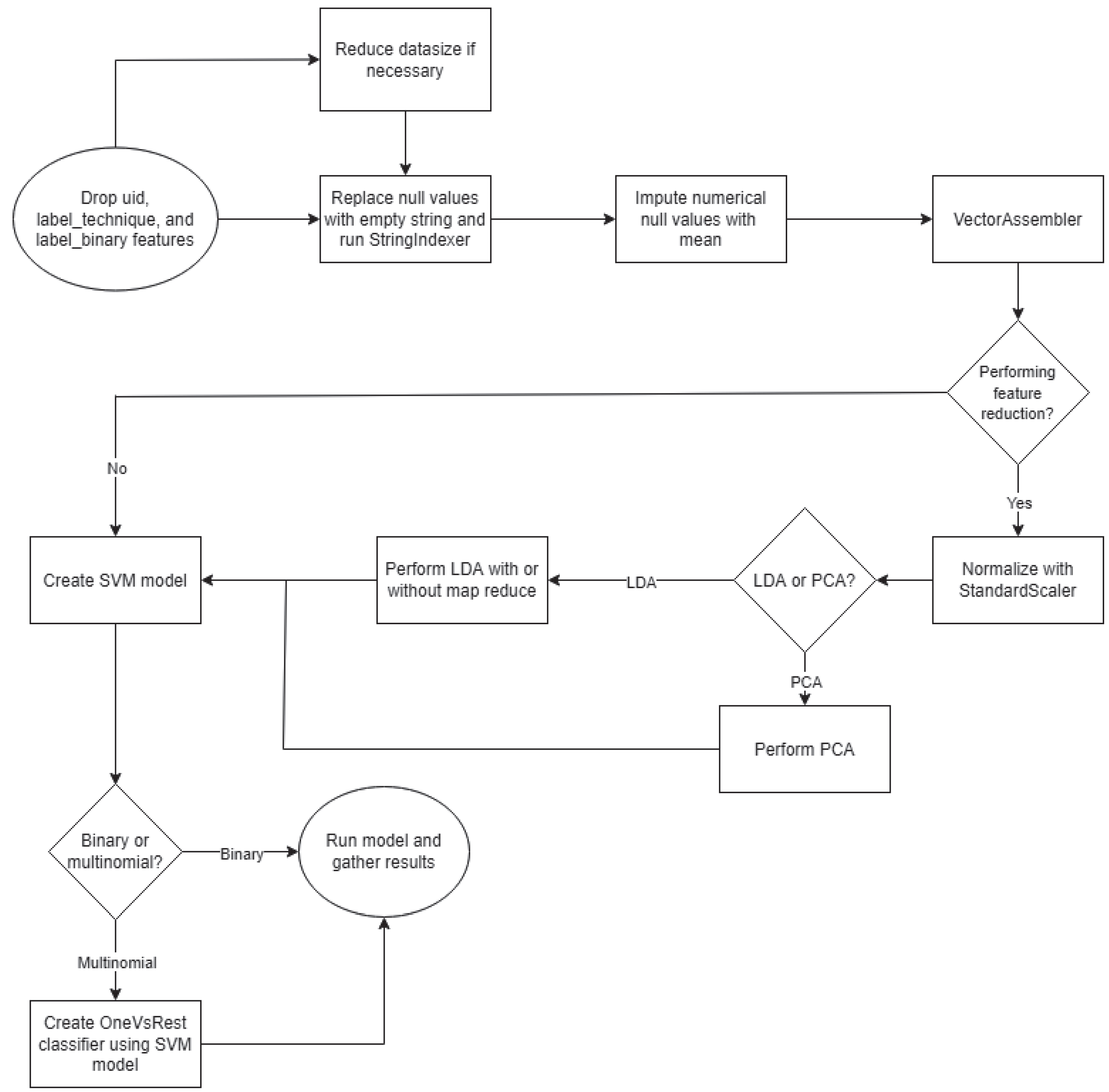

All datasets were split into a 70:30 ratio for training and testing the SVM classifier. Using the UWF-ZeekData22 dataset, for the binomial classification of the Reconnaissance class, stratified sampling was used with a seed of 42. All instances of the Discovery class were retained for both binomial and multinomial classification due to the small number of samples in this class. For the UWF-ZeekDataFall22 dataset, a random split was performed using a seed of 42 for all the tactics.
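A seeded, stratified 70:30 split can be sketched in plain Python as follows. This only illustrates the idea of preserving class proportions with a fixed seed; it is not the Spark routine used in the experiments, and the function name and toy labels are assumptions.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction=0.7, seed=42):
    """Return (train_indices, test_indices), splitting each class separately."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train, test = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)  # seeded shuffle keeps the split reproducible
        cut = round(len(indices) * train_fraction)
        train.extend(indices[:cut])
        test.extend(indices[cut:])
    return train, test


# Toy label column with two balanced classes.
labels = ["benign"] * 10 + ["Reconnaissance"] * 10
train_idx, test_idx = stratified_split(labels)
```

Splitting each class separately keeps rare tactics represented in both partitions, which a purely random split does not guarantee.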

For both datasets, after removing the features uid, label_technique, and label_binary, replacing null values, and running the string features through a StringIndexer, the experimental flow diverges, as shown in Figure 6, which presents the experimental setup. In the cases where feature reduction was to be used, the datasets were normalized before LDA or PCA was performed. Binary classification was performed on individual classes, and multinomial classification was performed on all classes simultaneously.

7.1. Computing Environment

In terms of computing resources, the University of West Florida’s Hadoop cluster was used. The physical cluster consists of six Dell PowerEdge R750 servers with 128 logical cores, 1 TB of memory, 84 TB of SSD storage, and two NVIDIA Tesla T4 GPUs with 16 GB of GDDR6. The logical Hadoop/Spark cluster comprises one Hadoop NameNode/Spark Master (64 logical cores, 512 GB of memory, 1 TB SSD storage) and three Hadoop DataNodes/Spark Workers (64 logical cores, 512 GB of memory, 40 TB SSD storage). Spark 3.5.0 and Hadoop 3.3.1 were used for the environment.

7.2. Spark Configuration Parameters

The configuration parameters, as shown in Table 2, were utilized during the creation of the Spark session. These parameters dictate the available resources that were allocated for the algorithms.

7.3. Setup Parameters

The configuration settings, resource settings, and total allocations selected are presented in Table 3, and the Spark resource settings and total allocation are presented in Table 4.

7.4. CPUs vs. GPUs

CPUs are designed for general-purpose computing tasks. They excel at handling a wide variety of operations, including those requiring complex logic and sequential processing. However, their architecture, which typically includes a few powerful cores, limits their efficiency in tasks that can be parallelized. UWF’s Virtual Machines (VMs) had 4 CPUs with 8 GB of memory, 1 GB active memory, and 32 GB of hard disk space.

GPUs, originally developed for rendering graphics, are optimized for parallel processing. They consist of thousands of smaller, efficient cores capable of handling multiple tasks simultaneously. This architecture makes GPUs particularly well-suited for the matrix and vector operations common in ML algorithms.

Advantages of GPUs over CPUs [43,44]

Parallel Processing: GPUs can perform many operations concurrently, leading to significant speedups in training times for large datasets and complex models.

Throughput: The high throughput of GPUs allows for processing large volumes of data more efficiently than CPUs.

Energy Efficiency: For certain workloads, GPUs can offer better performance per watt compared to CPUs, making them more energy-efficient for large-scale computations.

Dataset Size: Large datasets benefit from GPU acceleration, as the ability to process multiple data points simultaneously can lead to faster convergence during training.

In Apache Spark, both CPUs and GPUs can be utilized for data processing. By default, Spark operates on CPUs. However, with the integration of GPU acceleration frameworks like the RAPIDS Accelerator for Apache Spark [44], certain operations can be offloaded to GPUs, enhancing performance for specific workloads.

8. Results and Analysis

In the first section, the evaluation metrics are presented, and then the results and analysis are presented. First, the results for UWF-ZeekData22 are presented, followed by the results and analysis for UWF-ZeekDataFall22. For each dataset, in addition to binomial and multinomial statistical classification results, a comparison has been made of CPU vs. GPU vs. MapReduce timings using minimal preprocessing and PCA and LDA preprocessing. The PCA variance, as well as the LDA explained variance, has also been presented.

8.1. Evaluation Metrics

Results were collected for accuracy, precision, recall, F-measure, false-positive rate (FPR), area under the receiver operating characteristic curve (AUROC), training/test times in seconds, and preprocessing time in seconds. These metrics are defined as follows:

Accuracy is the ratio of correct classifications (true positives, TP, and true negatives, TN) to the total number of classifications [45]. Mathematically, it can be expressed as

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where FP and FN denote the false positives and false negatives, respectively.

Precision, often referred to as confidence, is the ratio of correctly predicted positive cases to total predicted positive cases [45,46]. Mathematically, precision is defined as

$$\text{Precision} = \frac{TP}{TP + FP}$$

Recall, also known as sensitivity or the true-positive rate (TPR), represents the ability of a classifier to identify all positive samples. It is defined as the proportion of TP cases correctly predicted out of all real positive (RP) cases [45,47]. Mathematically, it is defined as

$$\text{Recall} = \frac{TP}{TP + FN} = \frac{TP}{RP}$$

The F1 score or F-measure is a metric that combines precision and recall into a single value. It represents the harmonic mean of precision and recall, and serves as an overall measure of the test’s accuracy [48]. Mathematically, it is defined as

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

The false-positive rate is the proportion of negative instances that are incorrectly predicted to be positive [49]. Mathematically, the false-positive rate is defined as follows:

$$\text{FPR} = \frac{FP}{FP + TN}$$

The Area under the Receiver Operating Characteristic (ROC) curve is another measure of the overall performance of a classifier. ROC curves depict the trade-offs between TPR and FPR. They are two-dimensional graphs of classifiers, where the TPR is on the y-axis and the FPR is on the x-axis [49].

The AUROC represents the percentage of the area under the ROC curve relative to the entire area of the graph. It quantifies a classifier’s ability to discriminate between positive and negative instances [49].

Preprocessing time is the duration of time required (in seconds) for an algorithm to complete its preprocessing steps. An algorithm is considered more efficient than another if it completes its preprocessing steps more quickly, assuming all other factors are equal.

Training and testing time are the duration of time required (in seconds) for an algorithm to complete its training and testing processes, respectively. An algorithm is considered more efficient than another if it completes its calculations more quickly, assuming all other factors are equal.
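The count-based metrics above can be computed directly from the four confusion-matrix counts. The sketch below uses made-up counts rather than any result from this study, and it assumes every denominator is nonzero (at least one predicted positive, one actual positive, and one actual negative).

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute accuracy, precision, recall, F1, and FPR from confusion counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "fpr": fp / (fp + tn),
    }


# Hypothetical confusion counts for a binary attack-vs-benign classifier.
m = classification_metrics(tp=90, tn=95, fp=5, fn=10)
```

On heavily imbalanced classes, accuracy alone can look high even when recall on the rare class is poor, which is why the metrics are reported together.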

8.2. Results

In PCA, k values can be as high as the number of features in the dataset; however, often, this number of principal components is not necessary. In both the UWF-ZeekData22 and UWF-ZeekDataFall22 datasets, PCA was run with k values of 2, 3, 5, 10, and 11.

In LDA, the max k value that can be chosen is equal to the number of classes minus one. In the binary case, this limited the choice of k to 1. In the multinomial case, in the UWF-ZeekData22 dataset, a total of three classes exist (Reconnaissance, Discovery, and benign), allowing for k values up to 2. Both k equal to 1 and 2 were tested on this dataset.

On the UWF-ZeekDataFall22 dataset, there are a total of six classes (Reconnaissance, Discovery, Defense Evasion, Privilege Escalation, Resource Development, and benign), permitting k values of up to 5. However, k values equal to 1, 2, 3, and 4 were tested on this dataset.
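The upper bound on k for LDA can be checked with a one-line helper. The feature count of 20 used below is a placeholder chosen for illustration and is not the number of features in either dataset; the bound min(classes − 1, features) is the usual constraint for LDA as a dimensionality reducer.

```python
def max_lda_components(n_classes, n_features):
    """Largest number of discriminants LDA can produce."""
    if n_classes < 2:
        raise ValueError("LDA needs at least two classes")
    return min(n_classes - 1, n_features)


N_FEATURES = 20  # placeholder value, not taken from the datasets

k_binary = max_lda_components(2, N_FEATURES)     # one tactic vs. benign
k_zeek22 = max_lda_components(3, N_FEATURES)     # three classes
k_zeekfall22 = max_lda_components(6, N_FEATURES)  # six classes
```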

For each dataset, the results for SVM with minimal preprocessing are presented first, followed by the results for SVM with minimal preprocessing plus PCA, and then by the results for minimal preprocessing plus LDA. Minimal preprocessing consisted of the following: dropping features that lack informative value, dealing with null values, normalizing the appropriate features, and using the string indexer where appropriate.

8.2.1. UWF-ZeekData22 Results

SVM classifier results for the UWF-ZeekData22 dataset for the Reconnaissance, Discovery, and multinomial data with minimal preprocessing are presented in Table 5. The Reconnaissance and Discovery results are binary classifications, that is, Reconnaissance vs. benign data and, likewise, Discovery vs. benign data.

Since LDA requires significant in-memory processing and the UWF-ZeekData22 dataset is a very large dataset with over 18 million samples, this caused out-of-memory issues when running LDA with the scikit-learn implementation on UWF’s VMs. To mitigate this issue, the size of the datasets used for classification was reduced. This reduction varied between 85% and 97% of the none (benign data) and Reconnaissance classes, and a 0–10% reduction in the Discovery class. Though the reduction was necessary only for LDA, to keep the results uniform, the reduced datasets were used for all the classification runs of this dataset. The last column presents the percentage of the reduction in the dataset.

Since the full dataset could not be run for LDA using UWF-ZeekData22, and a reduced dataset was used, this motivated the use of MapReduce (presented later in this work), so that the full dataset could be run in the MapReduce Big Data environment. Hence, all MapReduce runs were performed using the full datasets.

From Table 5, it can be noted that accuracy, precision, recall, and the F-measure all remained high, with values achieving greater than 99%. All cases achieved a very high AUROC score, and all cases maintained low false-positive rates, with the Reconnaissance tactic having the highest FPR.

SVM classifier results for the UWF-ZeekData22 dataset for the binary classification of Reconnaissance (Recon) and Discovery (Disc), and multinomial (multi) classification, using PCA with k values of 2, 3, 5, and 10, are presented in Table 6.

From Table 6, it can be noted that, although accuracy, precision, recall, and F-measure all remained high on average, with values greater than 97%, the multinomial classification results were slightly lower than the binary classification results. The Reconnaissance and multinomial data achieved higher AUROC scores than the Discovery tactic. Most cases had relatively low false-positive rates, with Discovery having zero false positives. Overall, for all three cases (binary Reconnaissance, binary Discovery, and multinomial), k = 10 performed slightly better.

Table 7 presents the PCA variance and cumulative variance for UWF-ZeekData22. From Table 7, it can be noted that a significant portion of the variance was captured within the first seven principal components for both the binomial and multinomial cases, with cumulative variance exceeding 75%. In the classification results, while models on average performed as well or slightly better with ten principal components, the gains were often minimal when compared to using five components.
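Cumulative variance figures of this kind are obtained by sorting the eigenvalues of the covariance matrix and accumulating their share of the total. The sketch below uses made-up eigenvalues, not the values behind Table 7, and the helper names are illustrative.

```python
from itertools import accumulate


def cumulative_variance_ratio(eigenvalues):
    """Cumulative share of total variance, largest eigenvalue first."""
    total = sum(eigenvalues)
    ordered = sorted(eigenvalues, reverse=True)
    return [running / total for running in accumulate(ordered)]


def smallest_k(eigenvalues, threshold):
    """Smallest number of components whose cumulative share meets the threshold."""
    for k, ratio in enumerate(cumulative_variance_ratio(eigenvalues), start=1):
        if ratio >= threshold:
            return k
    return len(eigenvalues)


# Hypothetical eigenvalues of a covariance matrix with five features.
eigenvalues = [4.0, 2.0, 1.0, 0.5, 0.5]
ratios = cumulative_variance_ratio(eigenvalues)
k75 = smallest_k(eigenvalues, 0.75)
```

A helper such as smallest_k offers one way to choose k from a variance target instead of testing a fixed list of values.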

SVM classifier results for the UWF-ZeekData22 dataset for the binary classification of Reconnaissance and Discovery, and multinomial classification, using LDA with k values of 1 and 2, are presented in Table 8.

From Table 8, it can be noted that accuracy, precision, recall, and the F-measure all remained high with values achieving greater than 99%. All cases retained a high AUROC score. The false-positive rates were also low for each case.

Table 9 presents the LDA explained variance and cumulative variance for UWF-ZeekData22. From Table 9, it can be seen that for the binomial data, the single linear discriminant captured 100% of the between-class variance for all tactics. In the multinomial case, the first linear discriminant captured 99.82% of the explained variance, with the remaining linear discriminants contributing only minimally to the total variance. Including the second linear discriminant in preprocessing prior to running the SVM model had almost no effect on classification performance.

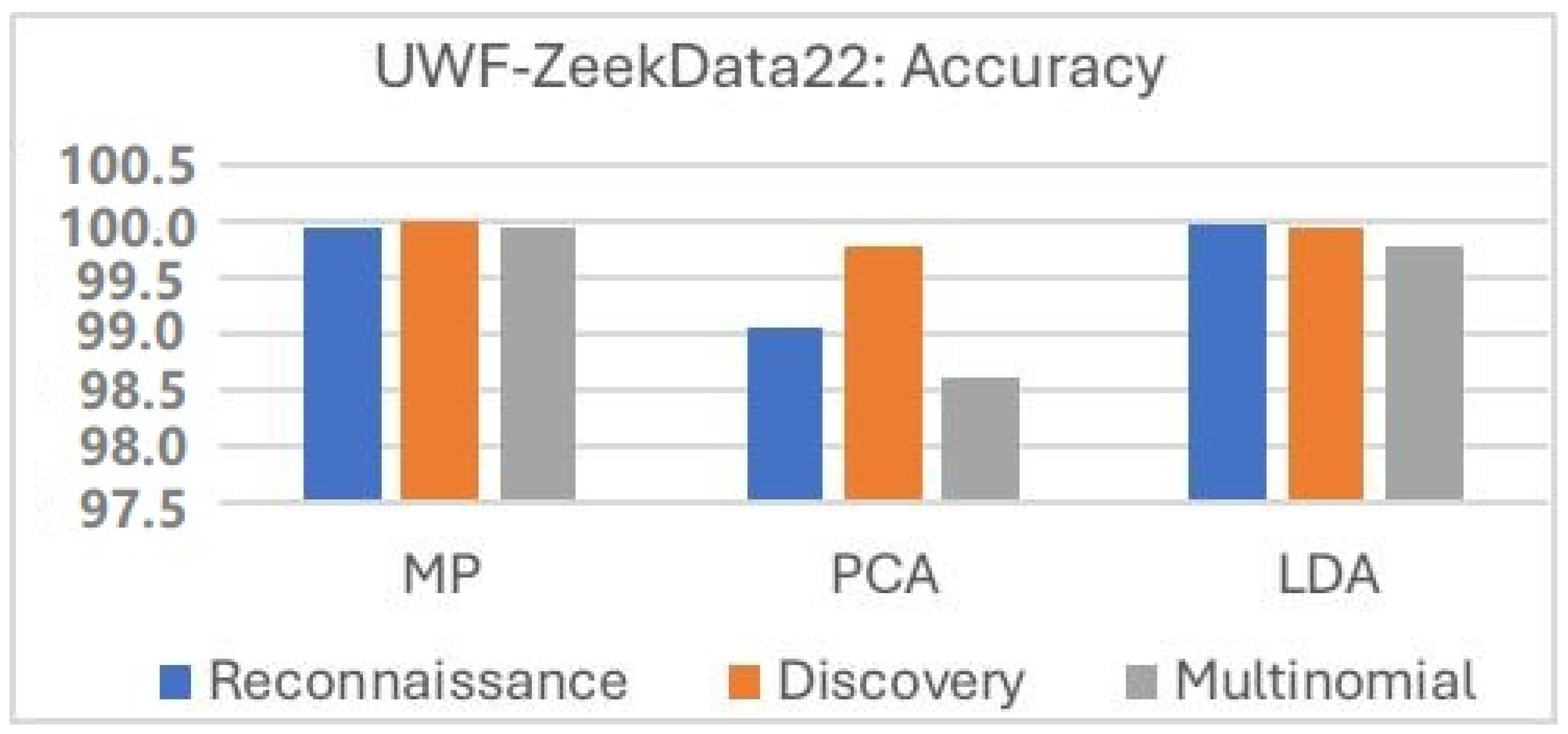

Figure 7 compares the accuracy of the three preprocessing techniques: minimal preprocessing, minimal preprocessing plus PCA, and minimal preprocessing plus LDA. Minimal preprocessing had the highest average accuracy, while PCA had the lowest. With PCA, the multinomial data had the lowest performance.

8.2.2. UWF-ZeekDataFall22 Results

SVM classifier results for the UWF-ZeekDataFall22 dataset for the binary classification of Reconnaissance, Discovery, Defense Evasion, Privilege Escalation, and Resource Development, and multinomial classification, with minimal preprocessing, are presented in Table 10. Since this dataset was smaller, no dataset reduction was necessary.

From Table 10, it can be noted that accuracy, precision, recall, and F-measure all remained high with values achieving greater than 99%. All cases retained a high AUROC score. False-positive rates were low or close to zero for each case.

SVM classifier results for the UWF-ZeekDataFall22 dataset for the binary classification of Reconnaissance, Discovery, Defense Evasion (Def Evas), Privilege Escalation (Priv Esc), and Resource Development (Res Dev), and multinomial classification, using PCA with k values of 2, 3, 5, and 10, are presented in Table 11.

From Table 11, it can be noted that the results of the multinomial classification were, on average, lower than the results of the binary classifiers. Though Defense Evasion and Privilege Escalation had the same results for k = 5 and k = 10, in most cases, k = 10 gave the best results. Resource Development was the only tactic where the results of k = 5 were slightly better than k = 10.

Table 12 presents the PCA variance and cumulative variance for UWF-ZeekDataFall22. From Table 12, it can be noted that a significant portion of the variance was captured within the first five principal components for both the binomial and multinomial cases, with cumulative variance exceeding 75%. In the classification results, while models on average performed as well or slightly better with ten principal components, the gains were often minimal when compared to using five components. The exception is the Reconnaissance tactic, which saw substantial performance increases with ten components. However, this improvement could be due to overfitting.

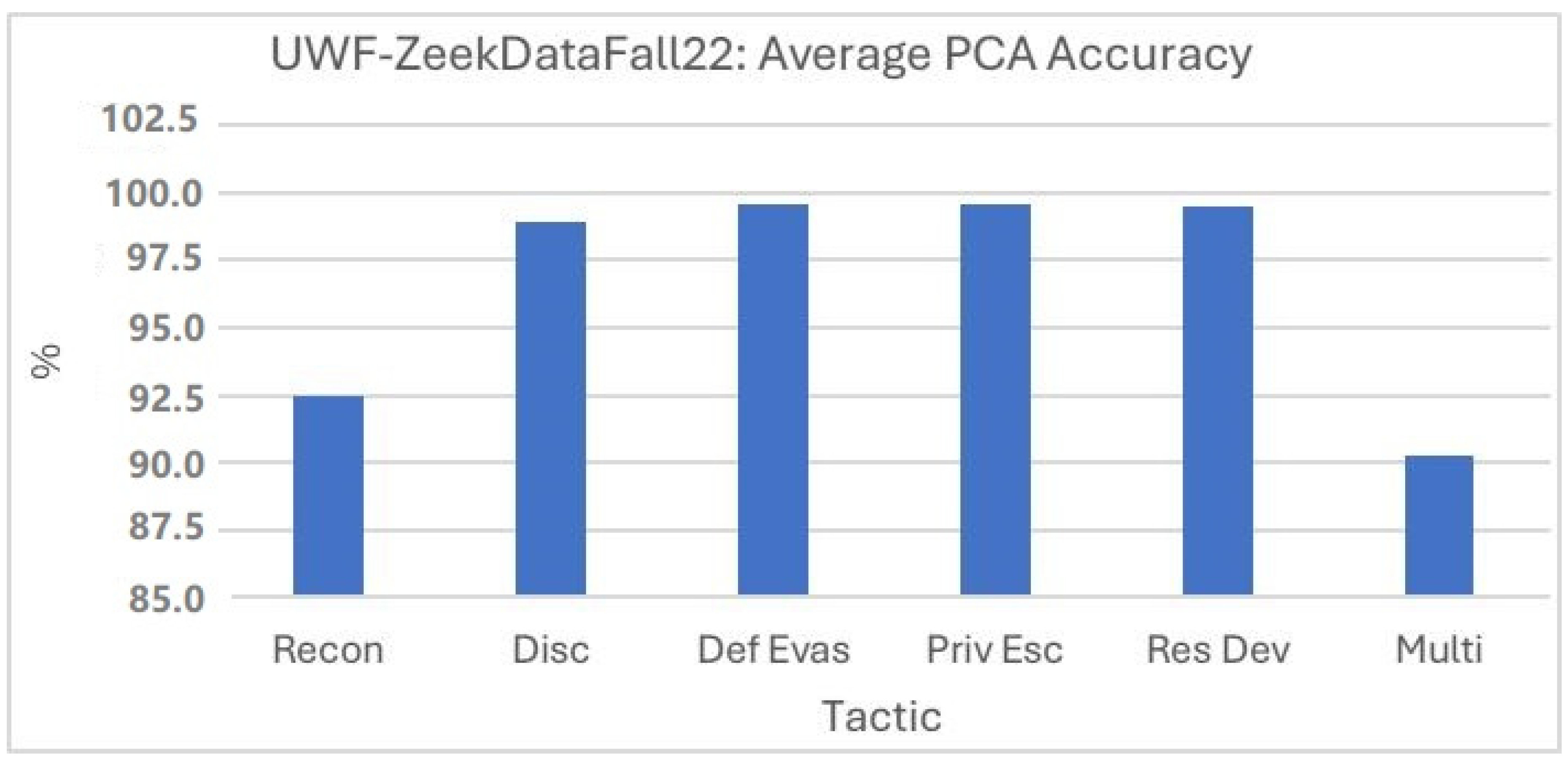

As can be seen from Figure 8, using PCA, the average accuracy of the multinomial classification is lower than that of the binary classifiers. Of the binary classifiers, Reconnaissance had the lowest accuracy. Though we focused only on accuracy in Figure 8, from Table 11, we can see that the other statistical measures had a similar trend. The FPR was also the highest for the multinomial classification, and the second highest for Reconnaissance, but Defense Evasion and Privilege Escalation had an FPR of zero.
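In Table 11, the Defense Evasion and Privilege Escalation rows for k = 2 and k = 3 pair an FPR of zero with an AUROC of 50%. One possible reading, offered here as an assumption rather than a confirmed diagnosis, is that a model on heavily imbalanced data labels every record as benign. The sketch below computes class-weighted metrics for such a model. The class counts are hypothetical, chosen so that about 99.14% of records are benign, and weighted averaging of the per-class scores is also an assumption.

```python
def always_benign_metrics(n_benign, n_attack):
    """Weighted metrics (in %) for a model that predicts 'benign' for every record."""
    total = n_benign + n_attack
    w_benign, w_attack = n_benign / total, n_attack / total

    # Benign class: every benign record is recovered, but the missed attacks
    # are also labeled benign, which lowers the benign precision.
    p_benign, r_benign = n_benign / total, 1.0
    f_benign = 2 * p_benign * r_benign / (p_benign + r_benign)
    # Attack class: never predicted, so precision, recall, and F are taken as 0.
    p_attack = r_attack = f_attack = 0.0

    fpr = 0.0       # no benign record is flagged as an attack
    tpr = r_attack  # no attack is detected
    return {
        "accuracy": 100 * w_benign,
        "precision": 100 * (w_benign * p_benign + w_attack * p_attack),
        "recall": 100 * (w_benign * r_benign + w_attack * r_attack),
        "f_measure": 100 * (w_benign * f_benign + w_attack * f_attack),
        "fpr": 100 * fpr,
        "auroc": 100 * (tpr + (1.0 - fpr)) / 2,  # area under a one-point ROC curve
    }


# Hypothetical class counts: about 99.14% benign, 0.86% attack.
result = {k: round(v, 2) for k, v in always_benign_metrics(99137, 863).items()}
print(result)
```

Under these assumptions the rounded values match the Defense Evasion row for k = 2 (99.14, 98.28, 99.14, 98.71, 0, 50), which illustrates why a zero FPR alone does not indicate a useful detector and why AUROC is reported alongside it.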

SVM classifier results for the UWF-ZeekDataFall22 dataset for the binary classification of Reconnaissance, Discovery, Defense Evasion, Privilege Escalation, and Resource Development, and multinomial classification, with LDA, performed with k values of 1, 2, 3, and 4, are presented in Table 13.

From Table 13, it can be noted that accuracy, precision, recall, and the F-measure all achieved 100% for the binomial data. For the multinomial classification, scores increased as k increased, with minimal improvement after k = 3. All cases retained a high AUROC score. False-positive rates were low or zero for each case. For UWF-ZeekDataFall22, LDA preprocessing performed the best, with 100% accuracy, precision, recall, F-measure, and AUROC, and an FPR of zero.

Table 14 presents the LDA explained variance and cumulative variance for UWF-ZeekDataFall22. From Table 14, it can be noted that for the binomial data, the single linear discriminant captured 100% of the between-class variance for all tactics. In the multinomial case, the first linear discriminant captured 99.55% of the explained variance, with the remaining linear discriminants contributing only minimally to the total variance. However, despite the small additional variance captured by the second linear discriminant, including it in the preprocessing prior to running the SVM model resulted in moderate improvements in classification performance.

8.2.3. Summarizing Statistical Results

Comparing the statistical results of minimal preprocessing, PCA and LDA, it can be noted that LDA had the highest accuracy, precision, recall, F-measure and AUROC and lowest FPR for all the binary classifiers. Minimal preprocessing performed the best in terms of multinomial classification, followed by LDA. LDA also had the highest explained variance.

8.2.4. Comparing CPU and GPU Results

In Apache Spark, both CPUs and GPUs can be utilized for data processing. By default, Spark operates on CPUs. However, with the integration of the GPU acceleration framework, the RAPIDS Accelerator for Apache Spark, certain operations can be offloaded to GPUs, enhancing performance for specific workloads. In this section, we compare the results of CPUs and GPUs with and without MapReduce.
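As an illustration of how the CPU and GPU runs might differ only in configuration, the sketch below builds a Spark property dictionary from the values in Table 3 and optionally adds the RAPIDS Accelerator plugin settings. The function name, the GPU resource amounts (one GPU per executor shared by two tasks), and the application name in the usage comment are assumptions. The RAPIDS Accelerator jar is also assumed to be available on the cluster.

```python
def build_spark_conf(use_gpu):
    """Return Spark properties as a dict; the base values mirror Table 3."""
    conf = {
        "spark.driver.cores": "2",
        "spark.driver.memory": "10g",
        "spark.executor.instances": "16",
        "spark.executor.cores": "2",
        "spark.executor.memory": "25g",
        "spark.sql.shuffle.partitions": "180",
    }
    if use_gpu:
        conf.update({
            # Load the RAPIDS Accelerator SQL plugin and enable GPU execution.
            "spark.plugins": "com.nvidia.spark.SQLPlugin",
            "spark.rapids.sql.enabled": "true",
            # Assumed GPU scheduling: one GPU per executor, shared by two tasks.
            "spark.executor.resource.gpu.amount": "1",
            "spark.task.resource.gpu.amount": "0.5",
        })
    return conf


cpu_conf = build_spark_conf(use_gpu=False)
gpu_conf = build_spark_conf(use_gpu=True)

# Usage with PySpark (not executed here):
#   from pyspark.sql import SparkSession
#   builder = SparkSession.builder.appName("svm-timing")  # hypothetical name
#   for key, value in gpu_conf.items():
#       builder = builder.config(key, value)
#   spark = builder.getOrCreate()
```

Keeping the CPU and GPU settings in one function makes it easier to confirm that the two runs differ only in the accelerator-related properties.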

The UWF-ZeekData22 Dataset

Timing results for the UWF-ZeekData22 dataset for the binary classification of Reconnaissance and Discovery, and multinomial classification, with minimal preprocessing, are presented in Table 15.

From Table 15, it can be observed that minimal preprocessing and training times were on the order of several minutes, with multinomial classification having the longest training time. Testing time remained low for each case. Training times are related to the size of the dataset being trained. GPU training and testing were both faster than CPU training and testing in all cases.
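Because the timing tables report preprocessing, training, and testing separately, each phase has to be timed on its own. The sketch below shows one way such per-phase wall-clock timing could be collected. It is not the authors' harness, and the three workloads are trivial stand-ins. In Spark, each timed block would also need to end with an action (for example a count) so that lazy evaluation does not shift work into a later phase.

```python
import time
from contextlib import contextmanager

timings = {}


@contextmanager
def timed(phase):
    """Record the wall-clock duration of the enclosed block under `phase`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[phase] = time.perf_counter() - start


# Stand-in workloads; real runs would call the preprocessing, fit, and
# transform steps of the pipeline here.
with timed("preprocessing"):
    data = [x * 0.5 for x in range(1000)]
with timed("training"):
    threshold = sum(data) / len(data)
with timed("testing"):
    predictions = [x > threshold for x in data]

for phase, seconds in timings.items():
    print(f"{phase}: {seconds:.6f} s")
```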

Timing results for the UWF-ZeekData22 dataset for the binary classification of Reconnaissance and Discovery, and multinomial classification, using PCA with k values of 2, 3, 5, and 10, are presented in Table 16.

From Table 16, it can be observed that PCA preprocessing and training times were on the order of several minutes, with the multinomial data, on average, having the highest preprocessing and training times. Training and testing times are related to the size of the datasets being trained or tested. GPU training and testing times were both lower than CPU training and testing times in all cases.

Timing results for the UWF-ZeekData22 dataset for the binary classification of Reconnaissance and Discovery, and multinomial classification, with LDA (without MapReduce) performed with k values of 1 and 2, are presented in Table 17.

From Table 17, it can be observed that LDA preprocessing without MapReduce took a long time, on average longer than minimal preprocessing or PCA. However, training times were significantly reduced when compared to the minimal preprocessing or PCA preprocessing techniques. GPU training and testing were both faster than CPU training and testing in all cases.

Timings for the UWF-ZeekData22 dataset for the binary classification of Reconnaissance and Discovery, and multinomial classification, with LDA performed on MapReduce, with k values of 1 and 2, are presented in Table 18. All MapReduce runs used the full dataset.

From Table 18, it can be noted that LDA preprocessing with MapReduce took a long time. In fact, though Reconnaissance LDA preprocessing with MapReduce was quicker than without MapReduce, the Discovery and multinomial data took longer with MapReduce than without MapReduce. LDA preprocessing, with or without MapReduce, took longer than PCA preprocessing, which took longer than minimal preprocessing. GPU training and testing were both faster than CPU training and testing in all cases.

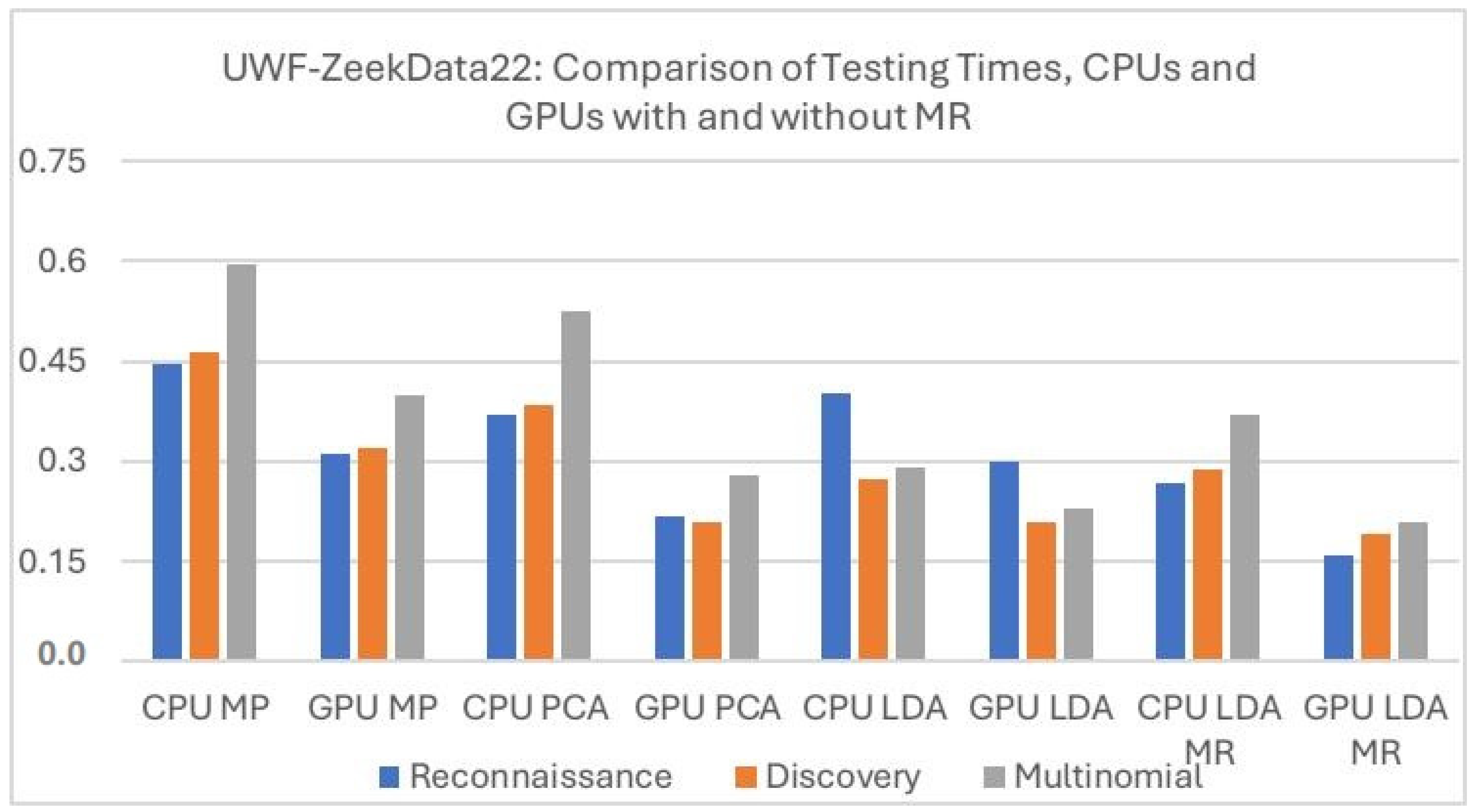

Figure 9 compares the testing times for CPUs and GPUs with and without MapReduce for the UWF-ZeekData22 dataset. The performance of PCA GPU and LDA GPU was very close, but the MapReduce LDA GPU performed better than both. Of the three preprocessing techniques, minimal preprocessing had the longest testing times, with both CPUs and GPUs. Comparing only the CPUs, LDA performed the best. Comparing only the GPUs, LDA GPU with MapReduce performed the best, followed by LDA GPU. Hence, overall, LDA had the lowest testing times. In all cases, CPU testing took longer than GPU testing, and, in all cases, the multinomial data took the longest to run.

The UWF-ZeekDataFall22 Dataset

Timings for the UWF-ZeekDataFall22 dataset for the binary classification of Reconnaissance, Discovery, Defense Evasion, Privilege Escalation, and Resource Development, and multinomial classification, with minimal preprocessing, are presented in Table 19.

From Table 19, it can be observed that training times were on the order of one to several minutes, with the multinomial classification having the longest training time. GPU training and testing times were lower than CPU training and testing times in all cases. Training times are correlated with the size of the dataset being trained.

Timing results for the UWF-ZeekDataFall22 dataset for the binary classification of Reconnaissance, Discovery, Defense Evasion, Privilege Escalation, and Resource Development, and multinomial classification, with PCA, performed with k values of 2, 3, 5, and 10, are presented in Table 20.

From Table 20, it can be observed that training times were on the order of several minutes, with the multinomial data having the longest training times. The k value has little correlation with training time. Training times are correlated with the size of the dataset being trained. GPU training and testing times were lower than CPU training and testing times in all cases.

Timing results for the UWF-ZeekDataFall22 dataset for the binary classification of Reconnaissance, Discovery, Defense Evasion, Privilege Escalation, and Resource Development, and multinomial classification, with LDA, without MapReduce, with k values of 1, 2, 3, and 4, are presented in Table 21.

From Table 21, it can be seen that preprocessing took the majority of the time, with the training time somewhat reduced when compared to minimal preprocessing (Table 19) and significantly reduced when compared to PCA preprocessing (Table 20). GPU training and testing times were lower than CPU training and testing times in all cases. Minimal preprocessing had the lowest preprocessing times when compared to PCA and LDA preprocessing.

Timing results for the UWF-ZeekDataFall22 dataset for the binary classification of Reconnaissance, Discovery, Defense Evasion, Privilege Escalation, and Resource Development, and multinomial classification, with LDA and MapReduce, with k values of 1, 2, 3, and 4, are presented in Table 22. All MapReduce runs used the full dataset.

From Table 22, it can be observed that preprocessing took the majority of the time, with the training time being somewhat reduced when compared to minimal preprocessing (Table 19) and significantly reduced when compared to PCA preprocessing (Table 20). In general, preprocessing times were higher than when MapReduce was not used; that is, MapReduce did not reduce preprocessing times. GPU MR training and testing times were lower than CPU MR training and testing times in all cases.

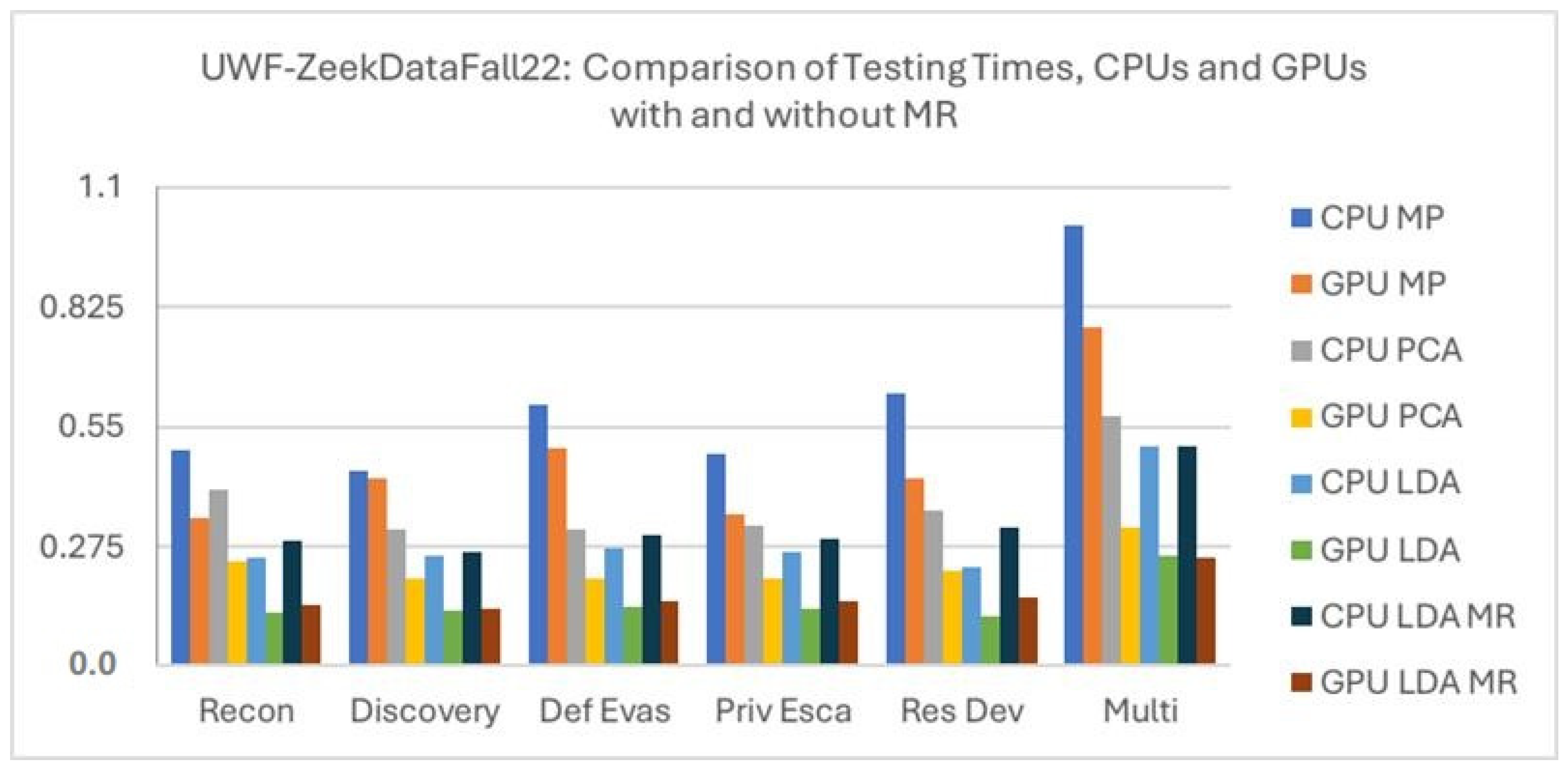

Figure 10 compares the testing times for CPUs and GPUs, with and without MapReduce, for the UWF-ZeekDataFall22 dataset. In all cases, CPU testing took longer than GPU testing. LDA GPU performed the best, with the lowest testing times, even better than the MR GPU LDA. The testing times were the highest with the multinomial data. Of the three preprocessing techniques, minimal preprocessing had the longest testing times, and LDA had the shortest.

9. Conclusions

This paper aimed to explore the results of various preprocessing techniques before using an SVM classifier on the MITRE ATT&CK® labeled UWF-ZeekData22 and UWF-ZeekDataFall22 datasets. It compared the statistical effectiveness of three preprocessing techniques, minimal preprocessing (MP), PCA, and LDA, as well as the time efficiency associated with the preprocessing, training, and testing of the SVM models.

In terms of statistical measures, most models achieved greater than 90% on all metrics, with many models achieving greater than 99%. In some cases, 100% was achieved. Specifically, using UWF-ZeekData22, minimal preprocessing achieved the highest results on average, with LDA being close behind, performing very well in almost all statistical metrics across most tactics. Using UWF-ZeekDataFall22, however, LDA performed slightly better than minimal preprocessing. Hence, in summary, the overall statistical performance of minimal preprocessing and LDA was about the same, while PCA had a slightly lower statistical performance. Also, with all three preprocessing techniques, multinomial results were generally slightly lower than binomial results.

Of the three preprocessing techniques compared, LDA performed the best in terms of having the lowest training as well as testing times. Minimal preprocessing had the highest testing times. GPU training and testing times were significantly lower in all cases. LDA GPU performed the best in terms of training and testing times, followed by MapReduce GPU LDA.

LDA significantly reduced training time at the expense of significantly increased preprocessing time. PCA results were less consistent and more dependent upon the choice of k, and did not result in increases to statistical metrics or reduced preprocessing or training times when compared to minimal preprocessing. It can also be noted that, for binomial data, LDA’s single linear discriminant captured 100% of the between-class variance for all tactics. In the multinomial case, the first linear discriminant captured 99.82% of the explained variance for UWF-ZeekData22 and 99.55% for UWF-ZeekDataFall22.

Multinomial data also had the highest preprocessing times, as well as the highest training and testing times in all cases.

Finally, it can be concluded that binomial LDA, on average, performed the best in terms of statistical measures as well as timing efficiency using GPUs or MapReduce GPUs.

Author Contributions

Conceptualization, S.S.B., S.C.B., D.M. and C.E.; methodology, S.S.B., C.E., R.A. and S.S.; software, C.E., R.A. and S.S.; validation, S.S.B., S.C.B. and D.M.; formal analysis, C.E., R.A. and S.S.; investigation, C.E., R.A. and S.S.; resources, S.S.B., S.C.B. and D.M.; data curation, C.E., R.A. and S.S.; writing—original draft preparation, S.S.B., C.E., R.A. and S.S.; writing—review and editing, S.S.B., S.C.B., C.E., R.A., S.S. and D.M.; visualization, C.E., R.A. and S.S.; supervision, S.S.B., S.C.B. and D.M.; project administration, S.S.B., S.C.B. and D.M.; funding acquisition, S.S.B., S.C.B. and D.M. All authors have read and agreed to the published version of the manuscript.

Funding

The research was funded by the US National Center for Academic Excellence in Cybersecurity (NCAE), 2021 NCAE-C-002: Cyber Research Innovation Grant Program, Grant Number: H98230-21-1-0170. This research was also partially supported by the Askew Institute at the University of West Florida, USA.

Data Availability Statement

The datasets are available at datasets.uwf.edu (accessed on 1 September 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Bagui, S.S.; Mink, D.; Bagui, S.C.; Tirthankar, G.; McElroy, T.; Paredes, E.; Khasnavis, N.; Plenkers, R. Detecting Reconnaissance and Discovery Tactics from the MITRE ATT&CK Framework in Zeek Conn Logs Using Spark’s Machine Learning in the Big Data Framework. Sensors 2022, 22, 7999.
- Huong, T.T.; Bac, T.P.; Long, D.M.; Thang, B.D.; Binh, N.T.; Luong, T.D.; Phuc, T.K. LocKedge: Low-Complexity Cyberattack Detection in IoT Edge Computing. IEEE Access 2021, 9, 29696–29710.
- Pozzebon, A. Edge and Fog Computing for the Internet of Things. Future Internet 2024, 16, 101.
- About Zeek—Book of Zeek. Available online: https://docs.zeek.org/en/master/about.html (accessed on 15 September 2024).
- University of West Florida. 2022. Available online: https://datasets.uwf.edu/ (accessed on 2 August 2025).
- Bagui, S.S.; Mink, D.; Bagui, S.C.; Madhyala, P.; Uppal, N.; McElroy, T.; Plenkers, R.; Elam, M.; Prayaga, S. Introducing the UWF-ZeekDataFall22 Dataset to Classify Attack Tactics from Zeek Conn Logs Using Spark’s Machine Learning in a Big Data Framework. Electronics 2023, 12, 5039.
- MITRE ATT&CK. Available online: https://attack.mitre.org/ (accessed on 10 September 2024).
- Bagui, S.; Spratlin, S. A Review of Data Mining Algorithms on Hadoop’s MapReduce. Int. J. Data Sci. 2018, 3, 146–149.
- Apache Spark—Unified Engine for Large-Scale Data Analytics. Available online: https://spark.apache.org/ (accessed on 1 August 2024).
- Nskh, P.; Varma, M.N.; Naik, R.R. Principle component analysis based intrusion detection system using support vector machine. In Proceedings of the 2016 IEEE International Conference on Recent Trends in Electronics, Information & Communication Technology (RTEICT), Bangalore, India, 20–21 May 2016; pp. 1344–1350.
- Chabathula, K.J.; Jaidhar, C.D.; Kumara, M.A. Comparative study of Principal Component Analysis based Intrusion Detection approach using machine learning algorithms. In Proceedings of the 2015 3rd International Conference on Signal Processing, Communication and Networking (ICSCN), Chennai, India, 26–28 March 2015; pp. 1–6.
- Ibrahimi, K.; Ouaddane, M. Management of intrusion detection systems based-KDD99: Analysis with LDA and PCA. In Proceedings of the 2017 International Conference on Wireless Networks and Mobile Communications (WINCOM), Rabat, Morocco, 1–4 November 2017; pp. 130–135.
- Saad, A.A.; Khalid, C.; Mohamed, J. Network intrusion detection system based on Direct LDA. In Proceedings of the 2015 Third World Conference on Complex Systems (WCCS), Marrakech, Morocco, 23–25 November 2015; pp. 1–6.
- Amin, Z.; Kabir, A. A Performance Analysis of Machine Learning Models for Attack Prediction using Different Feature Selection Techniques. In Proceedings of the 2022 IEEE/ACIS 7th International Conference on Big Data, Cloud Computing, and Data Science (BCD), Danang, Vietnam, 4–6 August 2022; pp. 130–135.
- Tait, K.; Khan, J.S.; Alqahtani, F.; Shah, A.A.; Khan, F.A.; Rehman, M.U.; Boulila, W.; Ahmad, J. Intrusion Detection using Machine Learning Techniques: An Experimental Comparison. In Proceedings of the 2021 International Congress of Advanced Technology and Engineering (ICOTEN), Taiz, Yemen, 4–5 July 2021; pp. 1–10.
- Shah, S.; Muhuri, P.S.; Yuan, X.; Roy, K.; Chatterjee, P. Implementing a network intrusion detection system using semi-supervised support vector machine and random forest. In Proceedings of the ACM Southeast Conference (ACM SE ’21), Virtual Event, 15–17 April 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 180–184.
- Almiah, M.A.; Almomani, O.; Alsaaidah, A.; Al-Otaibi, S.; Bani-Hani, N.; Hwaitat, A.; Al-Zahrani, A.; Lufti, A.; Awad, A.B.; Aldhyani, T.H.H. Performance Investigation of Principal Component Analysis for Intrusion Detection System Using Different Support Vector Machine Kernels. Electronics 2022, 11, 3571.
- Zou, H.; Jin, Z. Comparative Study of Big Data Classification Algorithm Based on SVM. In Proceedings of the 2018 Cross Strait Quad-Regional Radio Science and Wireless Technology Conference (CSQRWC), Xuzhou, China, 21–24 July 2018; pp. 1–3.
- Sarhan, M.; Layeghy, S.; Moustafa, N.; Gallagher, M.; Portmann, M. Feature Extraction for Machine-Learning-based Intrusion Detection in IoT Networks. arXiv 2021, arXiv:2108.12722.
- Gatea, M.J.; Hameed, S.M. An Internet of Things Botnet Detection Model Using Regression Analysis and Linear Discrimination Analysis. Iraqi J. Sci. 2022, 63, 4534–4546.
- Mishra, A.; Cheng, A.; Zhang, Y. Intrusion detection using principal component analysis and support vector machines. In Proceedings of the 2020 IEEE 16th International Conference on Control & Automation (ICCA), Singapore, 9–11 October 2020.
- Subba, B.; Biswas, S.; Karmakar, S. Intrusion detection systems using linear discriminant analysis and logistic regression. In Proceedings of the 2015 Annual IEEE India Conference (INDICON), New Delhi, India, 17–20 December 2015.
- Moradibaad, A.; Mashed, R.J. Use Dimensionality Reduction and SVM Methods to Increase the Penetration Rate of Computer Networks. arXiv 2018, arXiv:1812.03173.
- Singh, S.; Agrawal, S.; Rizvi, M.; Thakur, R.S. Improved support vector machine for cyber attack detection. In Proceedings of the World Congress on Engineering and Computer Science, San Francisco, CA, USA, 19–21 October 2011; Volume 1. Available online: https://www.researchgate.net/publication/277636116_Improved_Support_Vector_Machine_for_Cyber_attack_Detection (accessed on 2 August 2025).
- Ghanem, K.; Aparicio-Navarro, F.J.; Kyriakopoulos, K.G.; Lambotharan, S.; Chambers, J.A. Support vector machine for network intrusion and cyber-attack detection. In Proceedings of the 2017 Sensor Signal Processing for Defence Conference (SSPD), London, UK, 6–7 December 2017.
- Asif, M.; Abbas, S.; Khan, M.A.; Fatima, A.; Khan, M.A.; Lee, S. MapReduce based intelligent model for intrusion detection using machine learning technique. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 9723–9731.
- Csaba, B. Processing Intrusion Data with Machine Learning and MapReduce. Acad. Appl. Res. Mil. Public Manag. Sci. 2022, 16, 37–52.
- Bagui, S.S.; Mink, D.; Bagui, S.C.; Ghosh, T.; Plenkers, R.; McElroy, T.; Dulaney, S.; Shabanali, S. Introducing UWF-ZeekData22: A Comprehensive Network Traffic Dataset Based on the MITRE ATT&CK Framework. Data 2023, 8, 18.
- Reconnaissance. Available online: https://attack.mitre.org/tactics/TA0043/ (accessed on 2 August 2025).
- Discovery. Available online: https://attack.mitre.org/tactics/TA0007/ (accessed on 2 August 2025).
- Resource Development. Available online: https://attack.mitre.org/tactics/TA0042/ (accessed on 2 August 2025).
- Defense Evasion. Available online: https://attack.mitre.org/tactics/TA0005/ (accessed on 2 August 2025).
- Privilege Escalation. Available online: https://attack.mitre.org/tactics/TA0004/ (accessed on 2 August 2025).
- Jolliffe, I.T.; Cadima, J. Principal component analysis: A review and recent developments. Philos. Trans. A Math. Phys. Eng. Sci. 2016, 374, 20150202.
- Apache Spark. PCA—Pyspark Overview. 2024. Available online: https://spark.apache.org/docs/3.5.1/api/python/index.html (accessed on 2 August 2025).
- Karamizadeh, S.; Abdullah, S.M.; Manaf, A.A.; Zamani, M.; Hooman, A. An Overview of Principal Component Analysis. J. Signal Inf. Process. 2020, 4, 173–175.
- Yuxi, L.; Raschka, S.; Mirjalili, V. Machine Learning with PyTorch and Scikit-Learn: Develop Machine Learning and Deep Learning Models with Python; Packt Publishing: Birmingham, UK, 2022; pp. 120–121, 139–148, 154–163.
- Tharwat, A.; Gaber, T.; Ibrahim, A.; Hassanien, A.E. Linear Discriminant Analysis: A Detailed Tutorial. AI Commun. 2017, 30, 169–190.
- Kecman, V. Support Vector Machines—An Introduction. In Support Vector Machines: Theory and Applications; Springer: Berlin/Heidelberg, Germany, 2005; pp. 1–47.
- Meyer, D. Support Vector Machines. R News 2001, 1, 23–26. Available online: https://journal.r-project.org/articles/RN-2001-025/RN-2001-025.pdf (accessed on 2 August 2025).
- Guller, M. Big Data Analytics with Spark: A Practitioner’s Guide to Using Spark for Large Scale Data Analysis; Apress: New York, NY, USA, 2015.
- PureStorage Blog. CPU vs. GPU for Machine Learning; PureStorage Blog: Santa Clara, CA, USA, 2025; Available online: https://blog.purestorage.com/purely-educational/cpu-vs-gpu-for-machine-learning/ (accessed on 2 August 2025).
- NVIDIA Run:ai Software. Available online: https://www.nvidia.com/en-us/software/run-ai/ (accessed on 1 August 2024).
- Spark RAPIDS User Guide. Available online: https://docs.nvidia.com/spark-rapids/user-guide/latest/ (accessed on 1 August 2024).
- Accuracy Score, Scikit-Learn. Available online: https://scikit-learn.org/stable/modules/model_evaluation.html#accuracy-score (accessed on 28 April 2024).
- Precision Score. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.metrics.precision_score.html#sklearn.metrics.precision_score (accessed on 28 April 2024).
- Recall Score. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.metrics.recall_score.html#sklearn.metrics.recall_score (accessed on 28 April 2024).
- Sasaki, Y. The Truth of the F-Measure, Teach Tutor Mater. 2007, pp. 1–5. Available online: https://www.researchgate.net/publication/268185911_The_truth_of_the_F-measure (accessed on 2 August 2025).
- Powers, D.M. Evaluation: From Precision, Recall and F-Factor to ROC, Informedness, Markedness & Correlation. arXiv 2020, arXiv:2010.16061.

Figure 1. Common features in Zeek datasets.

Figure 2. Counts of MITRE ATT&CK tactics in UWF-ZeekData22.

Figure 3. Counts of MITRE ATT&CK tactics in UWF-ZeekDataFall22.

Figure 4. Pseudocode for the Principal Component Analysis algorithm.

Figure 5. Pseudocode for the Linear Discriminant Analysis algorithm.

Figure 6. Flowchart of preprocessing steps and algorithm execution.

Figure 7. UWF-ZeekData22: comparison of preprocessing techniques.

Figure 8. UWF-ZeekDataFall22: comparison of PCA preprocessing.

Figure 9. UWF-ZeekData22: comparing testing times for CPUs and GPUs with and without MapReduce.

Figure 10. UWF-ZeekDataFall22: comparison of testing times.

Table 1. Parameters specified for SVM implemented in PySpark.

| Algorithm | Parameters Used |
|---|---|
| Support Vector Machine | featuresCol: str = 'features', labelCol: str = 'label_tactic', maxIter: int = 10, regParam: float = 0.0, fitIntercept: bool = True, threshold: float = 0.00001 |

Table 2. Spark configuration parameters [1, 42].

| Configuration | Spark Property | Description |
|---|---|---|
| Driver Cores | spark.driver.cores | Number of cores allocated for the driver process when operating in cluster mode |
| Driver Memory | spark.driver.memory | Memory allocated for the driver process, where the Spark context is initialized |
| Executor Cores | spark.executor.cores | Number of cores allocated to each executor |
| Executor Memory | spark.executor.memory | Amount of memory assigned to each executor process |
| Executor Instances | spark.executor.instances, spark.dynamicAllocation.minExecutors, spark.dynamicAllocation.maxExecutors | Initial count of executors running when dynamic allocation is enabled, with defined upper and lower bounds for the total executors |
| Shuffle Partitions | spark.sql.shuffle.partitions | Default number of partitions used during data shuffling for joins or aggregations |

Table 3. Spark configuration settings.

| Config Settings | Value |
|---|---|
| Driver Cores | 2 |
| Driver Memory | 10 g |
| Executor Instances | 16 |
| Executor Cores | 2 |
| Executor Memory | 25 g |
| Shuffle Partitions | 180 |

Table 4. Spark resource settings and total allocation.

| Resources | Total Allocation |
|---|---|
| Driver Cores | 2 |
| Driver Memory | 10 g |
| Executor Total Cores | 32 |
| Executor Total Memory | 400 g |
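The executor totals in Table 4 follow from the per-executor settings in Table 3. As a quick arithmetic check (the variable names are illustrative only):

```python
# Per-executor settings from Table 3.
EXECUTOR_INSTANCES = 16
EXECUTOR_CORES = 2
EXECUTOR_MEMORY_GB = 25

# Totals reported in Table 4.
total_cores = EXECUTOR_INSTANCES * EXECUTOR_CORES
total_memory_gb = EXECUTOR_INSTANCES * EXECUTOR_MEMORY_GB

print(f"Executor total cores: {total_cores}")
print(f"Executor total memory: {total_memory_gb} g")
```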

Table 5. UWF-ZeekData22: SVM with minimal preprocessing.

| Tactic | Accuracy (%) | Precision (%) | Recall (%) | F-Measure (%) | FPR (%) | AUROC (%) | Dataset Reduction (%) |
|---|---|---|---|---|---|---|---|
| Reconnaissance | 99.93 | 99.93 | 99.93 | 99.92 | 0.14 | 99.92 | 97.5 |
| Discovery | 99.99 | 99.99 | 99.99 | 99.99 | 0.00 | 99.99 | 0 |
| Multinomial | 99.94 | 99.94 | 99.92 | 99.94 | 0.03 | 99.93 | 97 |

Table 6. UWF-ZeekData22: SVM with PCA.

| k | Tactic | Accuracy (%) | Precision (%) | Recall (%) | F-Measure (%) | FPR (%) | AUROC (%) | Dataset Reduction (%) |
|---|---|---|---|---|---|---|---|---|
| 2 | Recon | 97.90 | 97.90 | 97.90 | 97.90 | 2.55 | 97.90 | 97.5 |
| 3 | Recon | 98.15 | 98.16 | 98.16 | 98.16 | 2.45 | 98.16 | 97.5 |
| 5 | Recon | 97.99 | 98.01 | 97.99 | 98.99 | 3.06 | 97.99 | 97.5 |
| 10 | Recon | 99.04 | 99.04 | 99.04 | 99.04 | 1.14 | 99.04 | 97.5 |
| 2 | Disc | 99.78 | 99.57 | 99.78 | 99.67 | 0 | 49.99 | 10 |
| 3 | Disc | 99.98 | 99.57 | 99.98 | 99.67 | 0 | 49.99 | 10 |
| 5 | Disc | 99.78 | 99.61 | 99.78 | 99.67 | 0 | 50.25 | 10 |
| 10 | Disc | 99.98 | 99.97 | 99.98 | 99.97 | 0 | 95.14 | 10 |
| 2 | Multi | 97.56 | 97.36 | 97.57 | 97.46 | 2.96 | 97.57 | 95 |
| 3 | Multi | 97.84 | 97.64 | 97.84 | 97.734 | 2.66 | 97.84 | 95 |
| 5 | Multi | 97.46 | 97.25 | 97.43 | 97.32 | 3.78 | 97.43 | 95 |
| 10 | Multi | 98.64 | 98.42 | 98.64 | 98.539 | 1.51 | 98.66 | 95 |

Table 7. UWF-ZeekData22: PCA variance and cumulative variance.

| PC | Recon Var. (%) | Recon Cum. Var. (%) | Disc Var. (%) | Disc Cum. Var. (%) | Multi Var. (%) | Multi Cum. Var. (%) |
|---|---|---|---|---|---|---|
| 1 | 26.16 | 26.16 | 20.82 | 20.82 | 26.16 | 26.16 |
| 2 | 20.96 | 47.12 | 15.11 | 35.93 | 20.94 | 47.10 |
| 3 | 10.08 | 57.20 | 14.37 | 50.30 | 10.11 | 57.21 |
| 4 | 6.95 | 64.15 | 8.57 | 58.87 | 6.93 | 64.14 |
| 5 | 6.46 | 70.61 | 7.81 | 66.68 | 6.42 | 70.56 |
| 6 | 5.21 | 75.82 | 5.53 | 72.21 | 5.19 | 75.75 |
| 7 | 4.56 | 80.38 | 4.69 | 76.90 | 4.55 | 80.30 |
| 8 | 3.82 | 84.20 | 4.38 | 81.28 | 3.82 | 84.12 |
| 9 | 3.41 | 87.61 | 3.81 | 85.09 | 3.41 | 87.53 |
| 10 | 2.80 | 90.41 | 3.60 | 88.69 | 2.86 | 90.39 |

Table 8. UWF-ZeekData22: SVM with LDA.

| k | Tactic | Accuracy (%) | Precision (%) | Recall (%) | F-Measure (%) | FPR (%) | AUROC (%) | Dataset Reduction (%) |
|---|---|---|---|---|---|---|---|---|
| 1 | Recon | 99.96 | 99.96 | 99.96 | 99.96 | 0.07 | 99.96 | 92.5 |
| 1 | Disc | 99.94 | 99.94 | 99.94 | 99.94 | 0.06 | 99.07 | 5 |
| 1 | Multi | 99.76 | 99.55 | 99.76 | 99.65 | 0.03 | 99.77 | 97 |
| 2 | Multi | 99.75 | 99.54 | 99.75 | 99.65 | 0.03 | 99.77 | 97 |

Table 9. UWF-ZeekData22: LDA explained variance and cumulative variance.

| Tactic | LDA (k = 1) Explained Var. (%) | Cum. Var. (%) | LDA (k = 2) Explained Var. (%) | Cum. Var. (%) |
|---|---|---|---|---|
| Recon | 100.00 | 100.00 | - | - |
| Disc | 100.00 | 100.00 | - | - |
| Multi | 99.82 | 99.82 | 0.18 | 100.00 |

Table 10.

UWF-ZeekDataFall22: SVM with minimal preprocessing.

| k | Tactic | Accuracy (%) | Precision (%) | Recall (%) | F-Measure (%) | FPR (%) | AUROC (%) |
|---|---|---|---|---|---|---|---|
| 1 | Reconnaissance | 99.96 | 99.96 | 99.96 | 99.96 | 0.07 | 99.96 |
| 1 | Discovery | 99.94 | 99.94 | 99.94 | 99.94 | 0.06 | 99.07 |
| 1 | Multinomial | 99.76 | 99.55 | 99.76 | 99.65 | 0.03 | 99.77 |
| 2 | Multinomial | 99.75 | 99.54 | 99.75 | 99.65 | 0.03 | 99.77 |

Table 11.

UWF-ZeekDataFall22: results with PCA preprocessing.

| k | Tactic | Accuracy (%) | Precision (%) | Recall (%) | F-Measure (%) | FPR (%) | AUROC (%) |
|---|---|---|---|---|---|---|---|
| 2 | Recon | 87.27 | 76.17 | 87.28 | 81.34 | 0 | 50 |
| 3 | Recon | 92.69 | 92.23 | 92.69 | 92.33 | 2.72 | 79.24 |
| 5 | Recon | 90.01 | 90.96 | 90.01 | 87.06 | 0.02 | 60.79 |
| 10 | Recon | 99.98 | 99.98 | 99.98 | 99.98 | 0.02 | 99.98 |
| | Averages | 92.49 | 89.84 | 92.49 | 90.18 | 0.69 | 72.50 |
| 2 | Disc | 97.44 | 98.35 | 97.44 | 97.70 | 2.68 | 98.65 |
| 3 | Disc | 98.50 | 98.86 | 98.50 | 98.60 | 1.57 | 99.18 |
| 5 | Disc | 99.55 | 99.59 | 99.55 | 99.56 | 0.47 | 99.73 |
| 10 | Disc | 99.99 | 99.99 | 99.99 | 99.99 | 0.001 | 99.99 |
| | Averages | 98.87 | 99.20 | 98.87 | 98.96 | 1.18 | 99.39 |
| 2 | Def Evas | 99.14 | 98.28 | 99.14 | 98.71 | 0 | 50 |
| 3 | Def Evas | 99.14 | 98.28 | 99.14 | 98.71 | 0 | 50 |
| 5 | Def Evas | 100 | 100 | 100 | 100 | 0 | 100 |
| 10 | Def Evas | 100 | 100 | 100 | 100 | 0 | 100 |
| | Averages | 99.57 | 99.14 | 99.57 | 99.36 | 0 | 75 |
| 2 | Priv Esc | 99.14 | 98.28 | 99.14 | 98.71 | 0 | 50 |
| 3 | Priv Esc | 99.14 | 98.28 | 99.13 | 98.71 | 0 | 50 |
| 5 | Priv Esc | 100 | 100 | 100 | 100 | 0 | 100 |
| 10 | Priv Esc | 100 | 100 | 100 | 100 | 0 | 100 |
| | Averages | 99.57 | 99.14 | 99.57 | 99.35 | 0 | 75 |
| 2 | Res Dev | 98.23 | 98.30 | 98.23 | 98.23 | 0.004 | 98.42 |
| 3 | Res Dev | 99.64 | 99.64 | 99.64 | 99.64 | 0.59 | 99.67 |
| 5 | Res Dev | 99.95 | 99.95 | 99.945 | 99.95 | 0.06 | 99.95 |
| 10 | Res Dev | 99.93 | 99.93 | 99.93 | 99.93 | 0.09 | 99.93 |
| | Averages | 99.44 | 99.45 | 99.44 | 99.44 | 0.19 | 99.49 |
| 2 | Multi | 87.21 | 78.00 | 87.21 | 82.33 | 4.46 | 90.95 |
| 3 | Multi | 90.47 | 84.32 | 90.47 | 87.04 | 0.43 | 91.55 |
| 5 | Multi | 91.31 | 90.34 | 91.31 | 87.98 | 0.06 | 92.44 |
| 10 | Multi | 92.07 | 90.55 | 92.07 | 90.62 | 0.09 | 93.54 |
| | Averages | 90.26 | 85.80 | 90.26 | 86.99 | 1.25 | 92.12 |
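
Several rows of Table 11 (for example Recon at k = 2, and Def Evas and Priv Esc at k = 2 and 3) pair an AUROC of 50% and an FPR of 0% with high accuracy. One reading consistent with these figures, offered here as an arithmetic observation rather than a claim made by the study, is that the classifier predicts only the majority class at those settings. This assumes the precision, recall, and F-measure columns are support-weighted averages. The sketch below uses a hypothetical helper, `majority_only_metrics`, to compute what such a classifier would score for a majority-class fraction `p`:

```python
def majority_only_metrics(p):
    """Support-weighted metrics (in %) for a classifier that always predicts
    the majority class; p is the majority-class fraction of the test set."""
    accuracy = p
    recall = p                        # recall is 1 for the majority class, 0 for the other
    precision = p * p                 # precision is p for the majority class, 0 for the other
    f_measure = 2 * p * p / (1 + p)   # majority-class F1 is 2p / (1 + p), weighted by p
    return tuple(round(100 * m, 2) for m in (accuracy, precision, recall, f_measure))


# Assumption: p is taken from the reported Def Evas accuracy at k = 2 (99.14%).
def_evas_k2 = majority_only_metrics(0.9914)
```

With `p = 0.9914` the helper gives precision and F-measure values within about 0.01 percentage points of the reported 98.28% and 98.71%, which is within the rounding of `p` itself.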

Table 12.

UWF-ZeekDataFall22: PCA variance and cumulative variance.

| PC | Recon Var. (%) | Recon Cum. Var. (%) | Disc Var. (%) | Disc Cum. Var. (%) | Def Evas Var. (%) | Def Evas Cum. Var. (%) | Priv Esc Var. (%) | Priv Esc Cum. Var. (%) | Res Dev Var. (%) | Res Dev Cum. Var. (%) | Multi Var. (%) | Multi Cum. Var. (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 32.86 | 32.86 | 33.26 | 33.26 | 33.37 | 33.37 | 33.38 | 33.38 | 33.10 | 33.10 | 32.21 | 32.21 |
| 2 | 21.86 | 54.72 | 24.55 | 57.81 | 20.93 | 54.3 | 20.94 | 54.32 | 26.26 | 59.36 | 24.47 | 56.68 |
| 3 | 8.45 | 63.17 | 9.36 | 67.17 | 8.83 | 63.13 | 8.83 | 63.15 | 12.05 | 71.41 | 12.74 | 69.42 |
| 4 | 7.06 | 70.23 | 6.08 | 73.25 | 7.52 | 70.65 | 7.52 | 70.67 | 6.94 | 78.35 | 6.84 | 76.26 |
| 5 | 5.56 | 75.79 | 4.38 | 77.63 | 5.31 | 75.96 | 5.31 | 75.98 | 4.19 | 82.54 | 4.06 | 80.32 |
| 6 | 3.98 | 79.77 | 3.81 | 81.44 | 4.09 | 80.05 | 4.09 | 80.07 | 3.36 | 85.9 | 3.51 | 83.83 |
| 7 | 3.54 | 83.31 | 3.51 | 84.95 | 3.67 | 83.72 | 3.67 | 83.74 | 2.89 | 88.79 | 3.26 | 87.09 |
| 8 | 3.34 | 86.65 | 2.90 | 87.85 | 2.99 | 86.71 | 2.99 | 86.73 | 2.85 | 91.64 | 2.98 | 90.07 |
| 9 | 2.94 | 89.59 | 2.76 | 90.61 | 2.90 | 89.61 | 2.90 | 89.63 | 2.04 | 93.68 | 2.07 | 92.14 |
| 10 | 2.39 | 91.98 | 2.66 | 93.27 | 2.83 | 92.44 | 2.83 | 92.46 | 2.00 | 95.68 | 1.97 | 94.11 |

Table 13.

UWF-ZeekDataFall22: results with LDA preprocessing.

| k | Tactic | Accuracy (%) | Precision (%) | Recall (%) | F-Measure (%) | FPR (%) | AUROC (%) |
|---|---|---|---|---|---|---|---|
| 1 | Recon | 100 | 100 | 100 | 100 | 0 | 100 |
| 1 | Disc | 100 | 100 | 100 | 100 | 0 | 100 |
| 1 | Def Evas | 100 | 100 | 100 | 100 | 0 | 100 |
| 1 | Priv Esc | 100 | 100 | 100 | 100 | 0 | 100 |
| 1 | Res Dev | 100 | 100 | 100 | 100 | 0 | 100 |
| 1 | Multi | 89.44 | 81.11 | 89.44 | 84.78 | 0 | 100 |
| 2 | Multi | 96.68 | 94.36 | 96.68 | 95.34 | 0.001 | 99.99 |
| 3 | Multi | 97.83 | 97.32 | 97.83 | 97.49 | 0.005 | 99.99 |
| 4 | Multi | 97.83 | 97.33 | 97.83 | 97.49 | 0.006 | 99.99 |
| 5 | Multi | 97.83 | 97.33 | 97.83 | 97.49 | 0.008 | 99.99 |

Table 14.

UWF-ZeekDataFall22: LDA explained variance and cumulative variance.

| Tactic | LD1 Expl. Var. (%) | Cum. Var. (%) | LD2 Expl. Var. (%) | Cum. Var. (%) | LD3 Expl. Var. (%) | Cum. Var. (%) | LD4 Expl. Var. (%) | Cum. Var. (%) | LD5 Expl. Var. (%) | Cum. Var. (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Multi | 99.55 | 99.55 | 0.41 | 99.96 | 0.04 | 100.00 | 0.00 | 100.00 | 0.00 | 100.00 |
| Recon | 100.00 | 100.00 | - | - | - | - | - | - | - | - |
| Disc | 100.00 | 100.00 | - | - | - | - | - | - | - | - |
| Def Evas | 100.00 | 100.00 | - | - | - | - | - | - | - | - |
| Priv Esc | 100.00 | 100.00 | - | - | - | - | - | - | - | - |
| Res Dev | 100.00 | 100.00 | - | - | - | - | - | - | - | - |
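
Table 14 lists a single discriminant for each binary task and five for the multinomial task. This matches the general property that LDA yields at most min(d, C − 1) discriminant directions for d features and C classes. A small sketch follows. The feature count of 18 is a placeholder. The class counts (two for a binary task, six for the multinomial task, that is, five tactics plus benign traffic) are assumptions inferred from the table rather than stated in it.

```python
def max_lda_components(n_features: int, n_classes: int) -> int:
    """Upper bound on the number of LDA discriminant directions."""
    return min(n_features, n_classes - 1)


N_FEATURES = 18  # placeholder; the real feature count is not given in this section

binary = max_lda_components(N_FEATURES, n_classes=2)       # one tactic vs. everything else
multinomial = max_lda_components(N_FEATURES, n_classes=6)  # assumed: five tactics + benign
```

Under these assumptions `binary` is 1 and `multinomial` is 5, which lines up with the LD1 to LD5 columns populated in the table.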

Table 15.

UWF-ZeekData22: timings (in seconds) with minimal preprocessing, CPU vs. GPU.

| Tactic | Minimal Preprocessing | CPU Training | GPU Training | CPU Testing | GPU Testing |
|---|---|---|---|---|---|
| Reconnaissance | 47.85 | 110.04 | 58.25 | 0.45 | 0.31 |
| Discovery | 60.04 | 102.19 | 57.21 | 0.46 | 0.32 |
| Multinomial | 60.55 | 280.82 | 160.34 | 0.60 | 0.40 |
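
A convenient way to read Table 15 is as a speedup ratio, CPU time divided by GPU time. The following sketch (variable names are illustrative) computes it for the training columns:

```python
# (CPU training, GPU training) in seconds, copied from Table 15.
training_s = {
    "Reconnaissance": (110.04, 58.25),
    "Discovery": (102.19, 57.21),
    "Multinomial": (280.82, 160.34),
}

# A speedup above 1 means the GPU run was faster.
speedup = {tactic: cpu / gpu for tactic, (cpu, gpu) in training_s.items()}

for tactic, ratio in speedup.items():
    print(f"{tactic}: {ratio:.2f}x")
```

On these figures GPU training is between about 1.75 and 1.9 times faster than CPU training for all three tasks.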

Table 16.

UWF-ZeekData22: timings (in seconds) with PCA, CPU vs. GPU.

| k | Tactic | Minimal Preprocessing | CPU Training | GPU Training | CPU Testing | GPU Testing |
|---|---|---|---|---|---|---|
| 2 | Reconnaissance | 102.97 | 146.92 | 89.71 | 0.39 | 0.21 |
| 3 | Reconnaissance | 103.28 | 152.56 | 90.01 | 0.39 | 0.22 |
| 5 | Reconnaissance | 109.21 | 150.16 | 80.98 | 0.48 | 0.25 |
| 10 | Reconnaissance | 104.18 | 148.81 | 81.23 | 0.37 | 0.19 |
| 2 | Discovery | 109.30 | 320.41 | 178.76 | 0.41 | 0.21 |
| 3 | Discovery | 106.02 | 301.83 | 167.32 | 0.41 | 0.23 |
| 5 | Discovery | 104.18 | 285.68 | 154.21 | 0.42 | 0.22 |
| 10 | Discovery | 109.75 | 298.21 | 149.11 | 0.38 | 0.2 |
| 2 | Multinomial | 107.46 | 339.83 | 176.19 | 0.47 | 0.21 |
| 3 | Multinomial | 132.52 | 403.01 | 210.98 | 0.54 | 0.31 |
| 5 | Multinomial | 102.79 | 271.49 | 145.21 | 0.50 | 0.32 |
| 10 | Multinomial | 104.05 | 282.63 | 153.24 | 0.53 | 0.31 |

Table 17.

UWF-ZeekData22: timings (in seconds) with LDA, CPU vs. GPU, without MapReduce.

| k | Tactic | LDA Preprocessing | CPU Training | GPU Training | CPU Testing | GPU Testing |
|---|---|---|---|---|---|---|
| 1 | Reconnaissance | 167.97 | 23.28 | 16.31 | 0.40 | 0.3 |
| 1 | Discovery | 89.10 | 18.65 | 12.33 | 0.27 | 0.21 |
| 1 | Multinomial | 117.83 | 30.59 | 18.29 | 0.29 | 0.23 |
| 2 | Multinomial | 118.82 | 23.83 | 13.97 | 0.33 | 0.26 |

Table 18.

UWF-ZeekData22: timings (in seconds) with LDA, CPU vs. GPU, with MapReduce.

| k | Tactic | LDA Preprocessing | CPU Training | GPU Training | CPU Testing | GPU Testing |
|---|---|---|---|---|---|---|
| 1 | Reconnaissance | 134.34 | 16.03 | 12.01 | 0.27 | 0.16 |
| 1 | Discovery | 121.40 | 18.25 | 10.13 | 0.29 | 0.19 |
| 1 | Multinomial | 138.21 | 35.44 | 19.43 | 0.37 | 0.21 |
| 2 | Multinomial | 145.07 | 28.32 | 20.21 | 0.42 | 0.25 |
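
Because the MapReduce runs differ from the non-MapReduce runs in both preprocessing and training time, Tables 17 and 18 are easiest to compare on end-to-end time. The sketch below sums LDA preprocessing, GPU training, and GPU testing for two rows of each table. It assumes the three stages run back to back so that their times add, which the tables do not state.

```python
# (LDA preprocessing, GPU training, GPU testing) in seconds, k = 1.
without_mr = {  # Table 17
    "Reconnaissance": (167.97, 16.31, 0.30),
    "Discovery": (89.10, 12.33, 0.21),
}
with_mr = {  # Table 18
    "Reconnaissance": (134.34, 12.01, 0.16),
    "Discovery": (121.40, 10.13, 0.19),
}


def total(stages):
    """End-to-end time in seconds, assuming the stages run sequentially."""
    return round(sum(stages), 2)


for tactic in without_mr:
    print(tactic, total(without_mr[tactic]), total(with_mr[tactic]))
```

Under that assumption the Reconnaissance total drops from 184.58 s to 146.51 s with MapReduce, while the Discovery total rises from 101.64 s to 131.72 s, so the effect is not uniform across tactics.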

Table 19.

UWF-ZeekDataFall22: timings (in seconds) with minimal preprocessing, CPUs vs. GPUs.

| Tactic | Preprocessing | CPU Training | GPU Training | CPU Testing | GPU Testing |
|---|---|---|---|---|---|
| Reconnaissance | 38.26 | 143.06 | 89.32 | 0.50 | 0.34 |
| Discovery | 34.75 | 74.46 | 43.31 | 0.45 | 0.43 |
| Defense Evasion | 35.39 | 77.36 | 45.22 | 0.6 | 0.50 |
| Privilege Escalation | 36.03 | 72.30 | 43.33 | 0.49 | 0.35 |
| Resource Development | 31.99 | 183.30 | 101.78 | 0.63 | 0.43 |
| Multinomial | 40.91 | 734.60 | 450.57 | 1.01 | 0.78 |

Table 20.

UWF-ZeekDataFall22: timing results (in seconds) with PCA, CPU vs. GPU.

| k | Tactic | Preprocessing | CPU Training | GPU Training | CPU Testing | GPU Testing |
|---|---|---|---|---|---|---|
| 2 | Reconnaissance | 49.65 | 325.02 | 180.91 | 0.30 | 0.22 |
| 3 | Reconnaissance | 55.45 | 127.01 | 90.23 | 0.39 | 0.31 |
| 5 | Reconnaissance | 54.95 | 124.90 | 87.45 | 0.38 | 0.23 |
| 10 | Reconnaissance | 50.70 | 120.84 | 95.61 | 0.41 | 0.24 |
| 2 | Discovery | 58.28 | 157.41 | 90.87 | 0.36 | 0.20 |
| 3 | Discovery | 49.73 | 110.05 | 70.81 | 0.28 | 0.18 |
| 5 | Discovery | 52.52 | 104.06 | 68.90 | 0.27 | 0.18 |
| 10 | Discovery | 48.82 | 114.01 | 76.32 | 0.31 | 0.20 |
| 2 | Defense Evasion | 45.52 | 142.23 | 83.44 | 0.39 | 0.24 |
| 3 | Defense Evasion | 48.37 | 149.94 | 89.43 | 0.44 | 0.27 |
| 5 | Defense Evasion | 54.23 | 132.18 | 94.55 | 0.39 | 0.25 |
| 10 | Defense Evasion | 48.82 | 114.01 | 78.90 | 0.31 | 0.20 |
| 2 | Privilege Escalation | 53.16 | 141.69 | 82.98 | 0.31 | 0.20 |
| 3 | Privilege Escalation | 54.51 | 142.89 | 84.56 | 0.39 | 0.24 |
| 5 | Privilege Escalation | 58.38 | 124.57 | 78.34 | 0.38 | 0.22 |
| 10 | Privilege Escalation | 54.44 | 65.24 | 50.12 | 0.32 | 0.20 |
| 2 | Resource Development | 73.87 | 217.72 | 149.54 | 0.44 | 0.31 |
| 3 | Resource Development | 69.89 | 191.16 | 120.76 | 0.40 | 0.28 |
| 5 | Resource Development | 69.69 | 202.51 | 156.32 | 0.47 | 0.33 |
| 10 | Resource Development | 61.97 | 204.99 | 144.45 | 0.36 | 0.22 |
| 2 | Multinomial | 81.62 | 1630.58 | 1200.23 | 0.96 | 0.71 |
| 3 | Multinomial | 79.74 | 965.02 | 721.34 | 0.76 | 0.65 |
| 5 | Multinomial | 84.46 | 907.64 | 701.45 | 0.77 | 0.69 |
| 10 | Multinomial | 84.16 | 772.88 | 561.90 | 0.58 | 0.32 |

Table 21.

UWF-ZeekDataFall22: timing results (in seconds) with LDA, CPU vs. GPU (without MapReduce).

| k | Tactic | Preprocessing | CPU Training | GPU Training | CPU Testing | GPU Testing |
|---|---|---|---|---|---|---|
| 1 | Reconnaissance | 69.28 | 38.33 | 19.17 | 0.25 | 0.13 |
| 1 | Discovery | 66.82 | 13.05 | 6.52 | 0.26 | 0.13 |
| 1 | Defense Evasion | 67.20 | 13.49 | 6.74 | 0.27 | 0.13 |
| 1 | Privilege Escalation | 66.19 | 11.47 | 5.74 | 0.26 | 0.13 |
| 1 | Resource Development | 91.94 | 12.19 | 6.10 | 0.23 | 0.11 |
| 1 | Multinomial | 85.97 | 86.02 | 43.01 | 0.55 | 0.27 |
| 2 | Multinomial | 204.73 | 61.29 | 30.64 | 0.68 | 0.34 |
| 3 | Multinomial | 97.92 | 52.68 | 26.34 | 0.58 | 0.29 |
| 4 | Multinomial | 99.87 | 48.29 | 24.14 | 0.50 | 0.25 |

Table 22.

UWF-ZeekDataFall22: timing results (in seconds) with LDA, CPU vs. GPU with MapReduce (MR).

| k | Tactic | Preprocessing | CPU MR Training | GPU MR Training | CPU MR Testing | GPU MR Testing |
|---|---|---|---|---|---|---|
| 1 | Recon | 90.21 | 38.29 | 19.15 | 0.27 | 0.14 |
| 1 | Disc | 88.91 | 13.32 | 6.66 | 0.26 | 0.13 |
| 1 | Def Evas | 88.80 | 12.72 | 6.36 | 0.30 | 0.15 |
| 1 | Priv Esc | 85.48 | 12.37 | 6.19 | 0.29 | 0.15 |
| 1 | Res Dev | 130.66 | 16.08 | 8.04 | 0.32 | 0.16 |