Evaluating Filter, Wrapper, and Embedded Feature Selection Approaches for Encrypted Video Traffic Classification

Abstract

1. Introduction

- The identification of traffic generated by YouTube, Netflix, and Amazon Prime streaming services;

- A comparison of three families of feature selection methods: filter, wrapper, and embedded;

- The use of algorithms not previously applied to this task, namely Weighted KMeans (WKMeans), Sequential Forward Selection (SFS), and LassoNet.

2. Related Works

2.1. Statistical and Shallow ML

2.2. Deep ML

3. Background

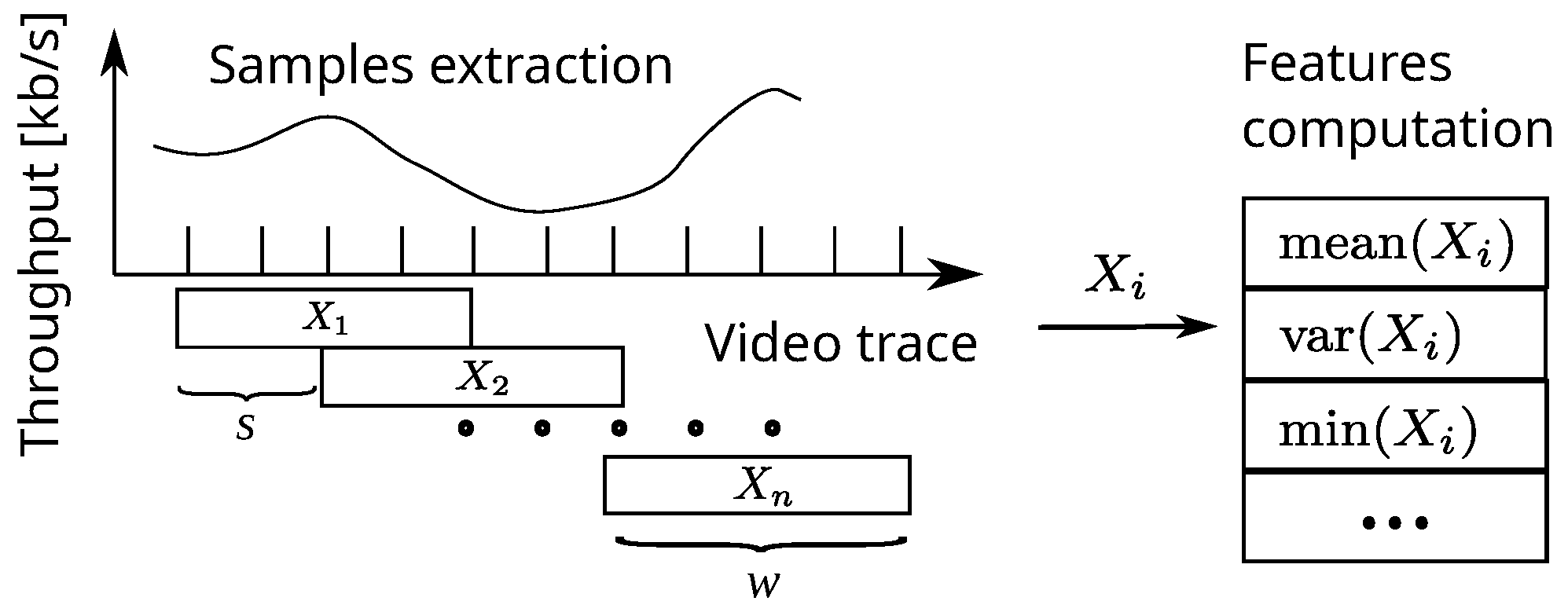

3.1. Feature Extraction

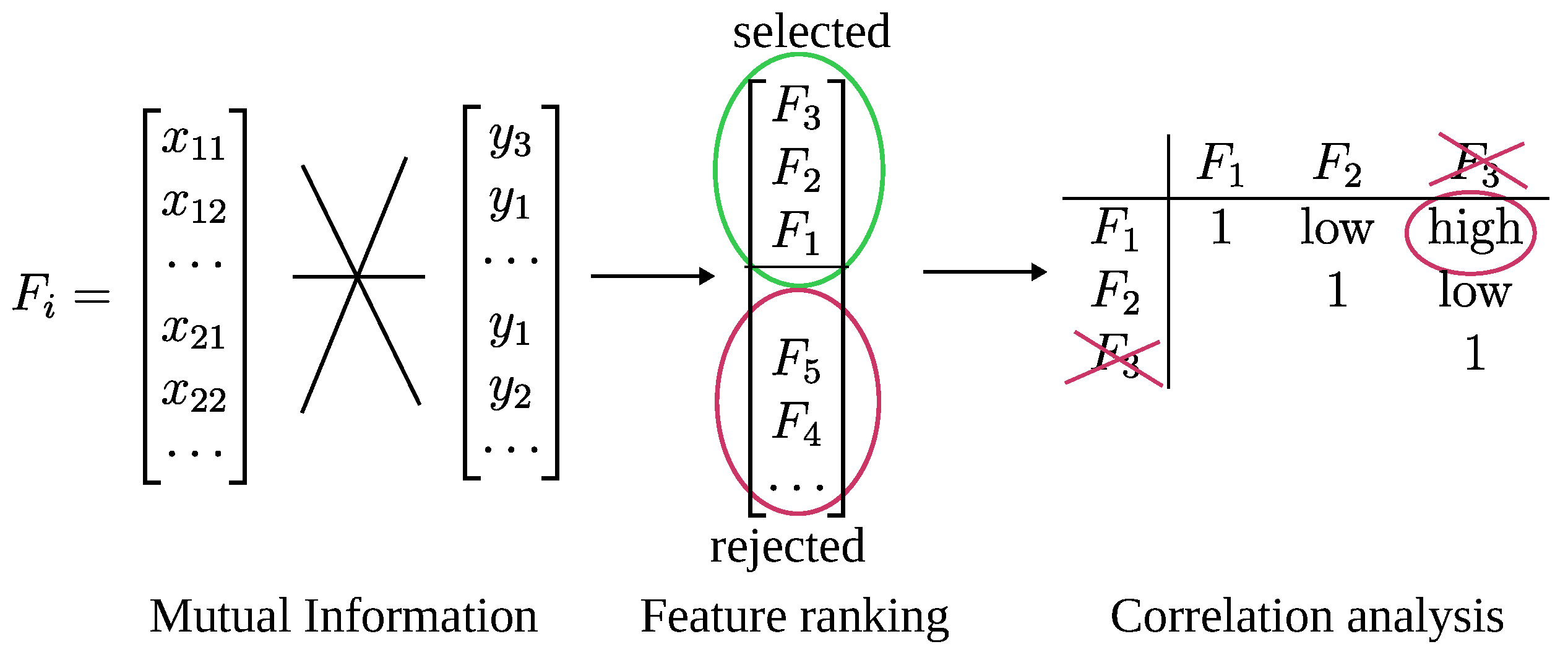

3.2. Feature Selection

3.2.1. Filter Methods
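
As an illustration of the filter idea, the following minimal sketch ranks features by their mutual information with the class label, independently of any classifier. It is not the implementation used in the experiments: the function names, the toy rows, and the assumption that features are already discretized are all hypothetical (continuous traffic features would first need binning).

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Mutual information (in nats) between two discrete sequences."""
    n = len(xs)
    px = Counter(xs)            # marginal counts of feature values
    py = Counter(ys)            # marginal counts of labels
    pxy = Counter(zip(xs, ys))  # joint counts
    mi = 0.0
    for (x, y), c in pxy.items():
        # p(x, y) * log( p(x, y) / (p(x) * p(y)) ), written with counts
        mi += (c / n) * math.log(c * n / (px[x] * py[y]))
    return mi


def rank_features(rows, labels):
    """Return (feature indices by decreasing MI, per-feature MI scores)."""
    n_features = len(rows[0])
    scores = [
        mutual_information([r[j] for r in rows], labels)
        for j in range(n_features)
    ]
    order = sorted(range(n_features), key=lambda j: scores[j], reverse=True)
    return order, scores


# Hypothetical toy data: feature 0 follows the label, feature 1 does not.
rows = [("high", "a"), ("high", "b"), ("low", "a"), ("low", "b")]
labels = ["1080p", "1080p", "720p", "720p"]

order, scores = rank_features(rows, labels)
print(order, scores)
```

A filter of this kind would keep the top-ranked features and discard the rest; because each feature is scored on its own, interactions between features are not taken into account.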

3.2.2. Wrapper Methods
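
The greedy loop behind Sequential Forward Selection can be sketched as follows. This is a simplified illustration under stated assumptions, not the configuration evaluated in the paper: the scoring function (training accuracy of a nearest-centroid classifier), the function names, and the toy data are hypothetical stand-ins for whatever model the wrapper is built around.

```python
def centroid_accuracy(rows, labels, features):
    """Training accuracy of a nearest-centroid classifier on `features`."""
    sums, counts = {}, {}
    for r, y in zip(rows, labels):
        acc = sums.setdefault(y, [0.0] * len(features))
        for k, j in enumerate(features):
            acc[k] += r[j]
        counts[y] = counts.get(y, 0) + 1
    centroids = {y: [s / counts[y] for s in acc] for y, acc in sums.items()}

    def sq_dist(r, c):
        # Squared Euclidean distance restricted to the chosen features
        return sum((r[j] - c[k]) ** 2 for k, j in enumerate(features))

    correct = 0
    for r, y in zip(rows, labels):
        pred = min(centroids, key=lambda lab: sq_dist(r, centroids[lab]))
        correct += pred == y
    return correct / len(rows)


def sequential_forward_selection(rows, labels, k, score=centroid_accuracy):
    """Greedily add the feature that most improves `score` until k are chosen."""
    selected = []
    remaining = list(range(len(rows[0])))
    while remaining and len(selected) < k:
        best = max(remaining, key=lambda j: score(rows, labels, selected + [j]))
        selected.append(best)
        remaining.remove(best)
    return selected


# Hypothetical toy data: only feature 1 separates the two classes.
rows = [
    (5.0, 0.1, 1.0),
    (1.0, 0.2, 3.0),
    (4.0, 0.9, 2.0),
    (2.0, 1.0, 4.0),
]
labels = ["720p", "720p", "1080p", "1080p"]

print(sequential_forward_selection(rows, labels, k=1))
```

Each step re-evaluates the model once per remaining feature, which is why wrapper methods tend to cost more than filters as the number of candidate features grows.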

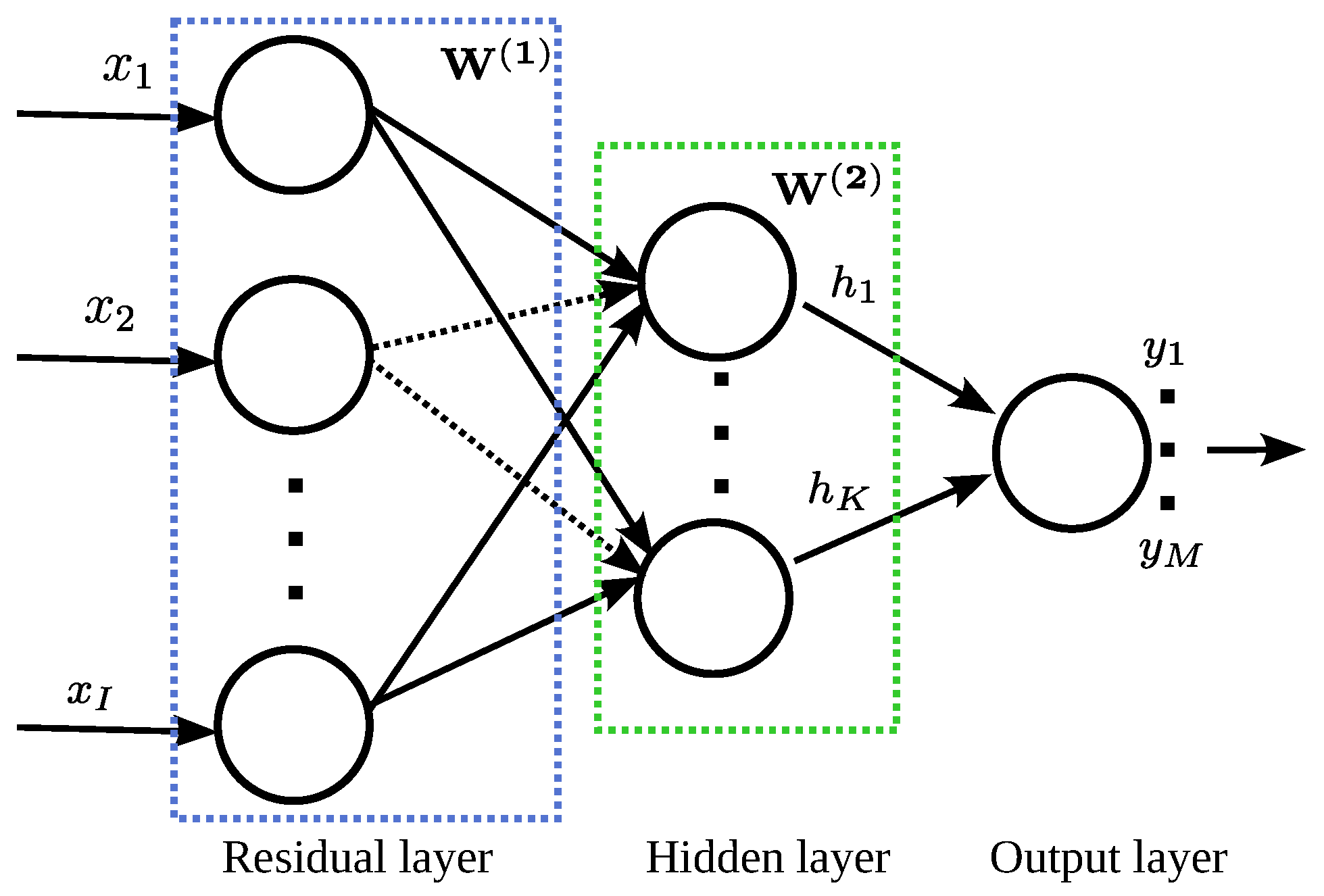

3.2.3. Embedded Method—LassoNet

3.3. Representation Learning-Based Approach

4. Experiment and Its Results

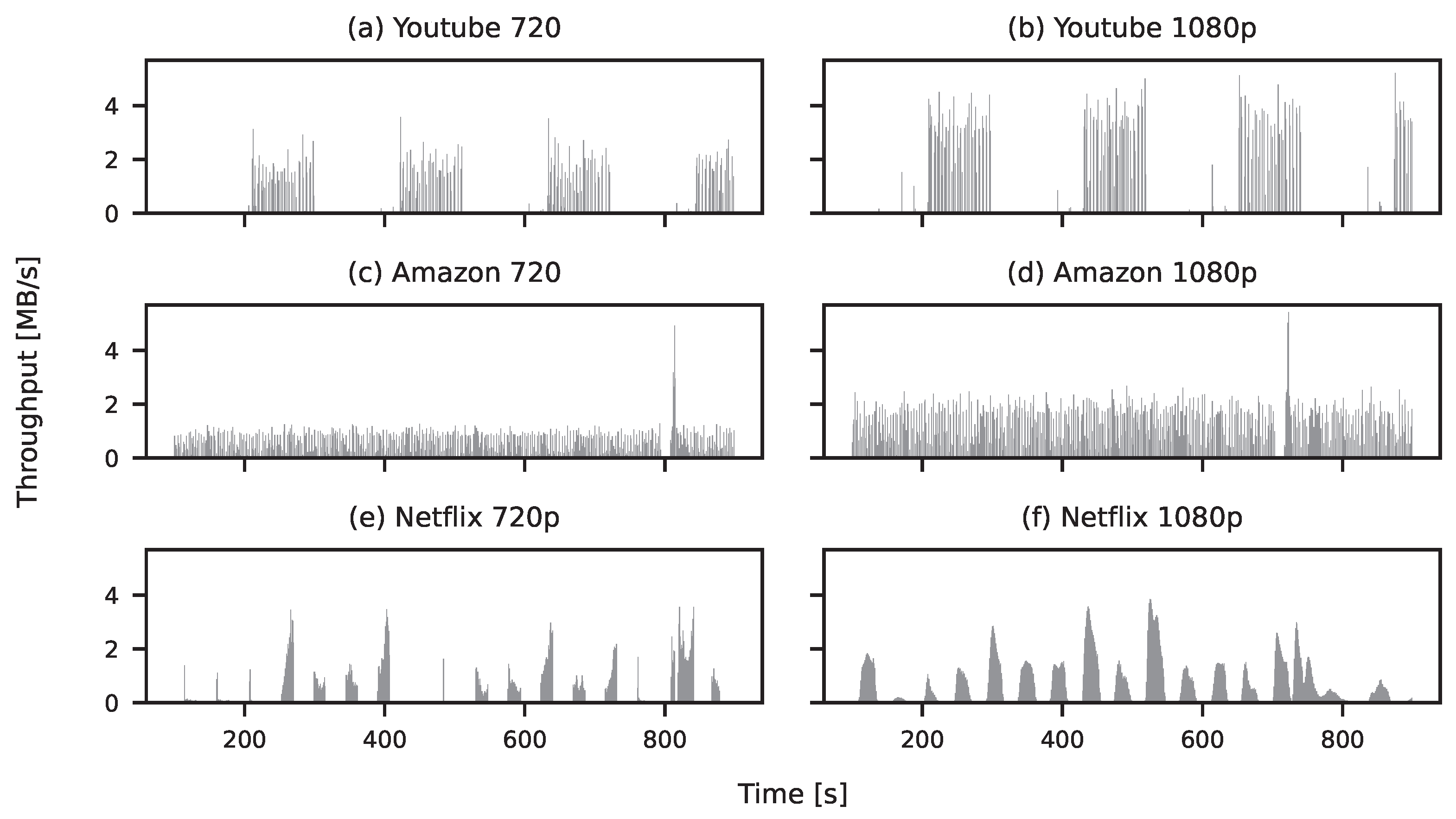

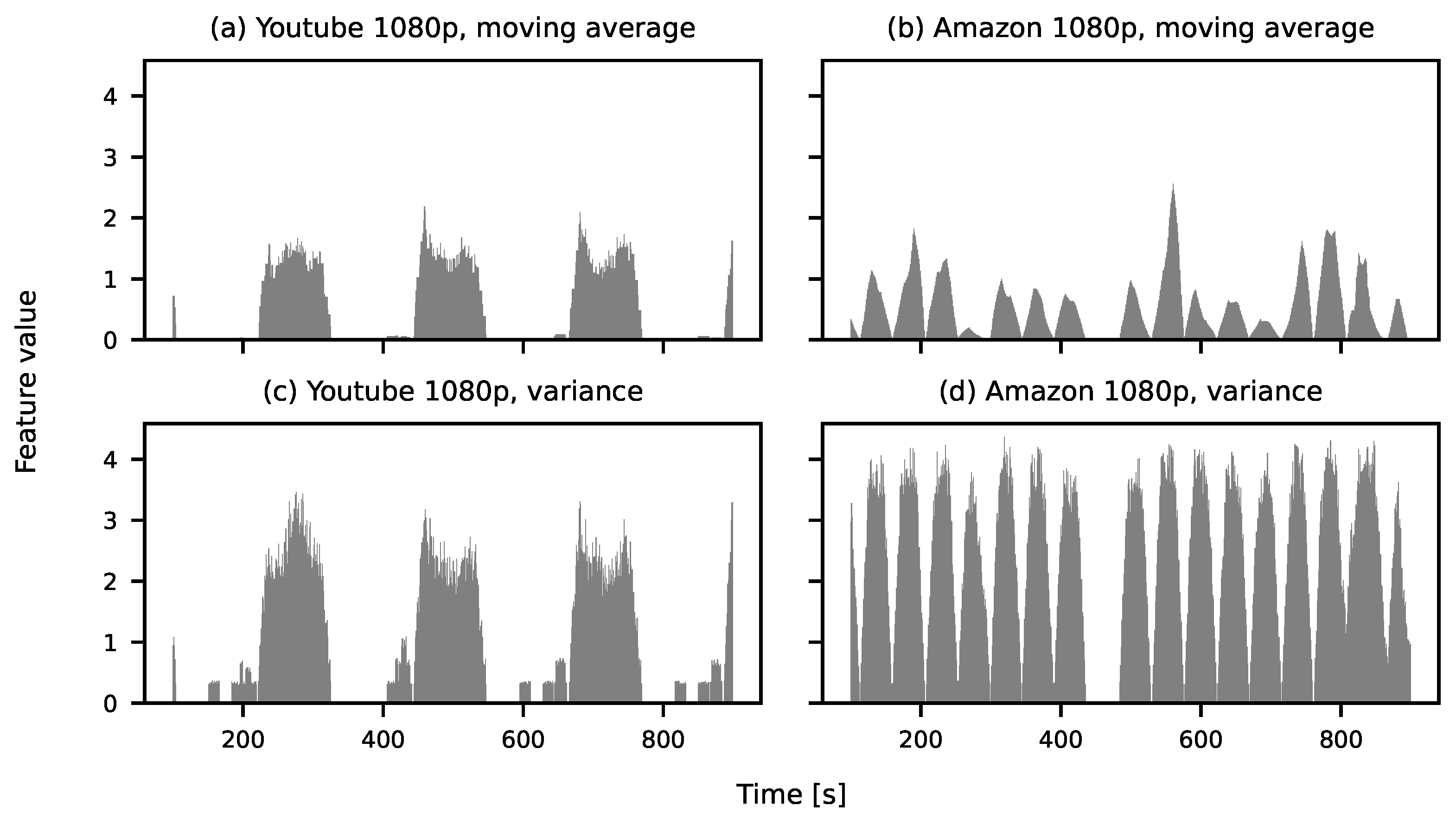

4.1. Characteristics of Network Traffic

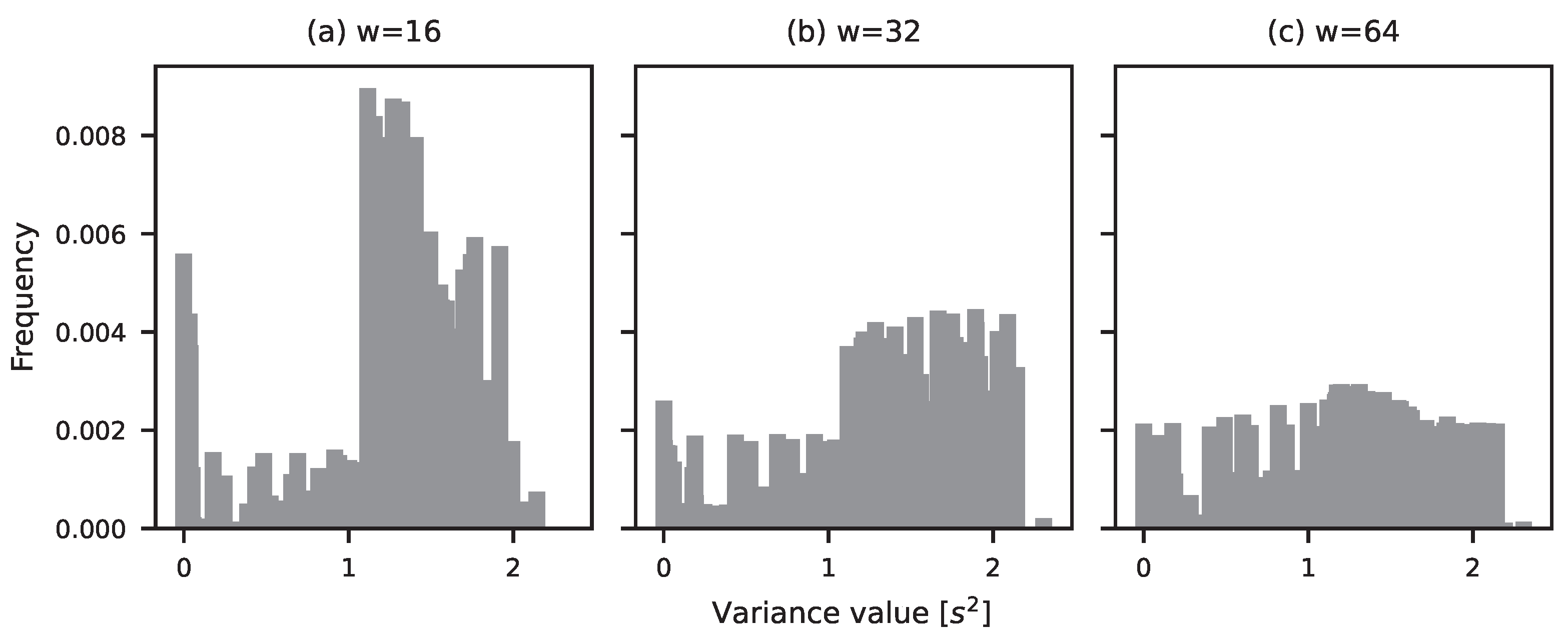

4.2. Extracted Features

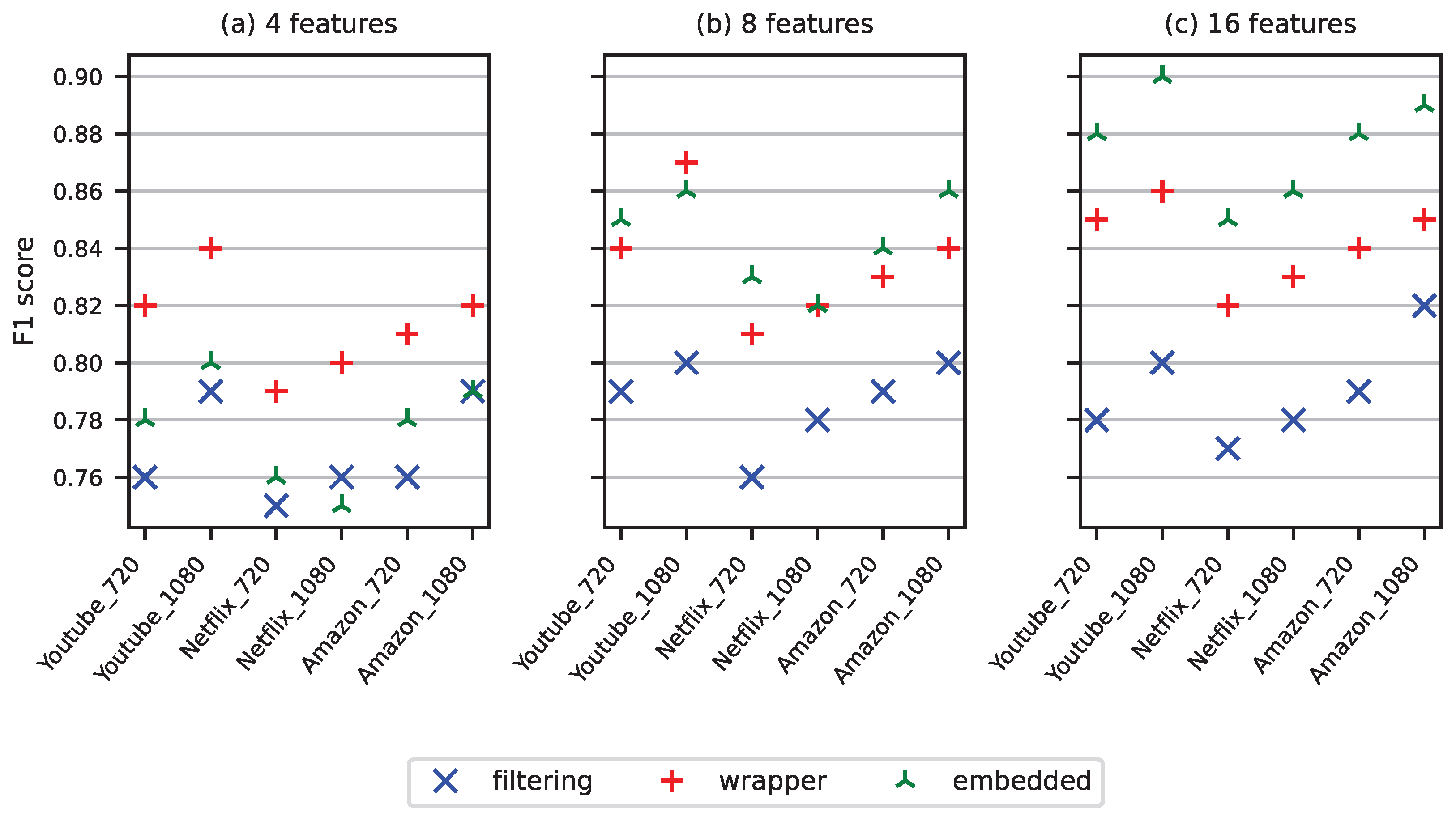

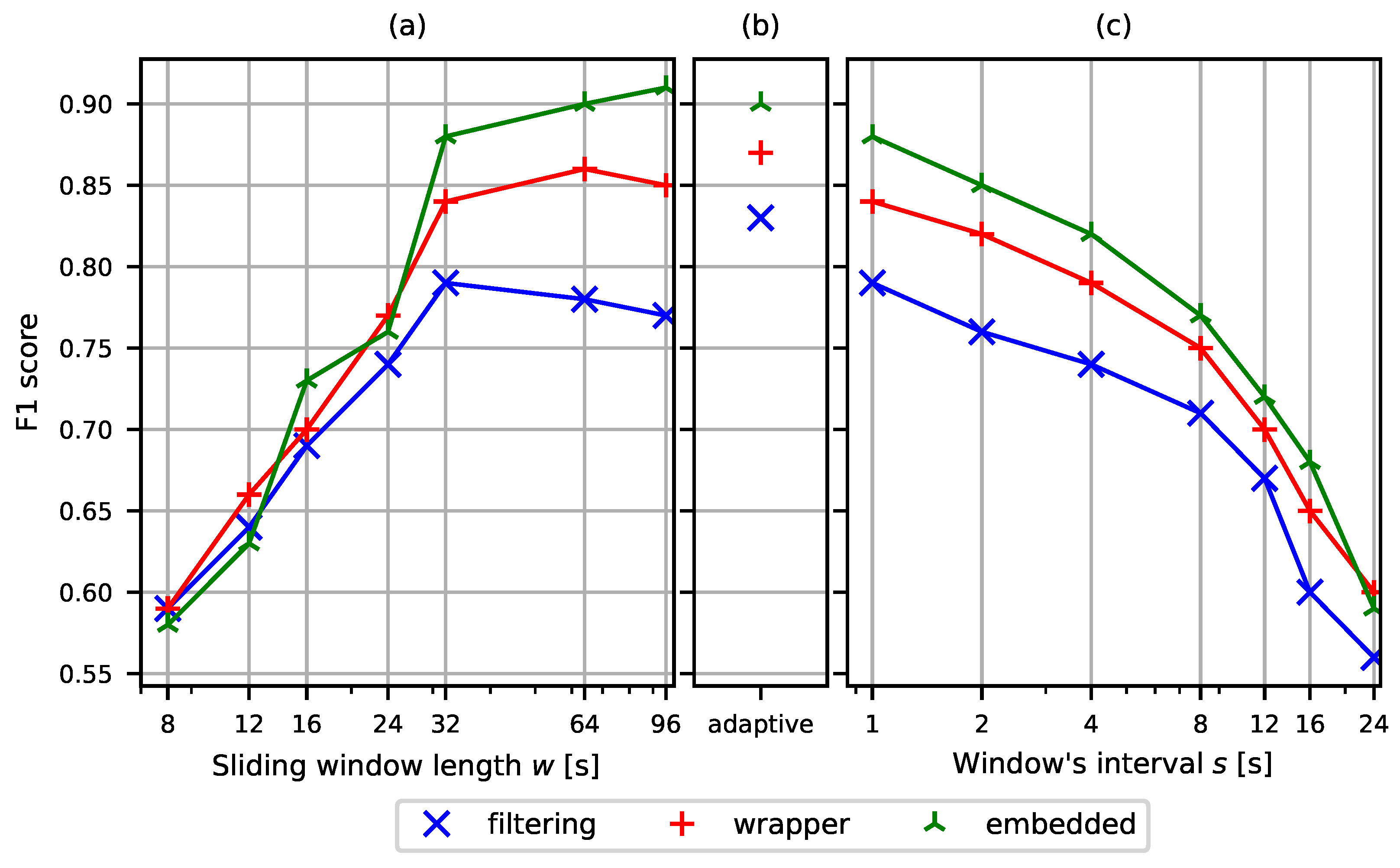

4.3. Clustering Performance

4.4. Discussion

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Activity | Resolution | Average (MB/s) | Variance (MB/s) |
|---|---|---|---|
| YouTube | 720p | 0.32 | 0.45 |
| YouTube | 1080p | 0.62 | 1.73 |
| Netflix | 720p | 0.41 | 0.58 |
| Netflix | 1080p | 0.61 | 0.69 |
| Amazon | 720p | 0.41 | 0.20 |
| Amazon | 1080p | 0.84 | 0.64 |

| Method | Measure (per video trace) | Youtube_720 | Youtube_1080 | Netflix_720 | Netflix_1080 | Amazon_720 | Amazon_1080 |
|---|---|---|---|---|---|---|---|
| Filter: mutual information | F1 score | 0.79 | 0.80 | 0.76 | 0.78 | 0.79 | 0.80 |
| Filter: mutual information | precision | 0.71 | 0.73 | 0.78 | 0.84 | 0.73 | 0.72 |
| Filter: mutual information | recall | 0.89 | 0.88 | 0.74 | 0.73 | 0.86 | 0.90 |
| Filter: Chi-Square test | F1 score | 0.77 | 0.78 | 0.72 | 0.74 | 0.77 | 0.78 |
| Filter: Chi-Square test | precision | 0.69 | 0.71 | 0.76 | 0.78 | 0.71 | 0.71 |
| Filter: Chi-Square test | recall | 0.86 | 0.86 | 0.69 | 0.70 | 0.85 | 0.86 |
| Wrapper: Weighted KMeans | F1 score | 0.84 | 0.87 | 0.81 | 0.82 | 0.83 | 0.84 |
| Wrapper: Weighted KMeans | precision | 0.80 | 0.83 | 0.84 | 0.84 | 0.78 | 0.81 |
| Wrapper: Weighted KMeans | recall | 0.89 | 0.91 | 0.78 | 0.80 | 0.89 | 0.87 |
| Wrapper: Sequential Forward Selection | F1 score | 0.83 | 0.86 | 0.82 | 0.83 | 0.84 | 0.85 |
| Wrapper: Sequential Forward Selection | precision | 0.79 | 0.85 | 0.86 | 0.83 | 0.81 | 0.83 |
| Wrapper: Sequential Forward Selection | recall | 0.88 | 0.87 | 0.79 | 0.83 | 0.88 | 0.88 |
| Embedded: LassoNet | F1 score | 0.85 | 0.86 | 0.83 | 0.82 | 0.84 | 0.86 |
| Embedded: LassoNet | precision | 0.80 | 0.79 | 0.89 | 0.86 | 0.80 | 0.82 |
| Embedded: LassoNet | recall | 0.91 | 0.95 | 0.78 | 0.78 | 0.88 | 0.91 |
| Autoencoder + SVM | F1 score | 0.82 | 0.82 | 0.79 | 0.78 | 0.81 | 0.83 |
| Autoencoder + SVM | precision | 0.76 | 0.75 | 0.82 | 0.77 | 0.73 | 0.76 |
| Autoencoder + SVM | recall | 0.89 | 0.90 | 0.76 | 0.79 | 0.91 | 0.92 |
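
The three measures in the table above are linked: assuming the F1 score is the usual harmonic mean of precision and recall, it can be recomputed from the other two rows. The short check below uses two cells copied from the table; the helper name `f1_score` is only illustrative, and because the published precision and recall are rounded, a recomputed value may differ in the last digit for some cells.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Filter: mutual information, Youtube_720 (precision 0.71, recall 0.89)
f1_filter = f1_score(0.71, 0.89)

# Embedded: LassoNet, Youtube_1080 (precision 0.79, recall 0.95)
f1_lassonet = f1_score(0.79, 0.95)

print(round(f1_filter, 2), round(f1_lassonet, 2))
```

Both recomputed values agree with the F1 scores reported for those cells (0.79 and 0.86).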

| Method | Relative Execution Time |
|---|---|
| Filter (MI) | <1% |
| Wrapper (Weighted KMeans) | ≈62% |
| Embedded (LassoNet) | ≈38% |


© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Biernacki, A. Evaluating Filter, Wrapper, and Embedded Feature Selection Approaches for Encrypted Video Traffic Classification. Electronics 2025, 14, 3587. https://doi.org/10.3390/electronics14183587
