Abstract

Cyberspace search engines (CSEs) are systems designed to search and index information about cyberspace assets. Effectively mining data across diverse platforms is hindered by the complexity and diversity of different CSE syntaxes. While Text-to-CSEQL offers a promising solution by translating natural language (NL) questions into cyberspace search engine query language (CSEQL), existing prompt-based methods still struggle due to the platform-specific intricacies of CSEQL. To address this limitation, we propose an LLM-based approach leveraging Retrieval-Augmented Generation (RAG). Specifically, to overcome the inability of traditional methods to retrieve relevant syntax fields effectively, we propose a novel hybrid retrieval mechanism combining keyword and dense retrieval, leveraging both field values and their semantic descriptions. Furthermore, we integrate these retrieved fields and the relevant few-shot examples into a redesigned prompt template adapted from the COSTAR framework. For comprehensive evaluation, we construct a Text-to-CSEQL dataset and introduce a new domain-specific metric, field match (FM). Extensive experiments demonstrate our method’s ability to adapt to platform-specific characteristics. Compared to prompt-based methods, it achieves an average accuracy improvement of 43.15 percentage points when generating CSEQL queries for diverse platforms. Moreover, our method also outperforms techniques designed for single-platform CSEQL generation.

1. Introduction

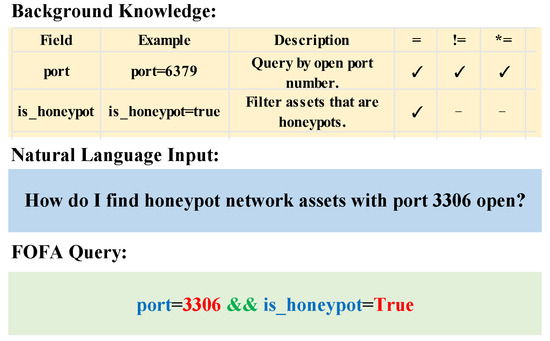

Text-to-CSEQL refers to converting NL questions into CSEQL, thereby providing a user-friendly interface for CSEs. An example of Text-to-CSEQL is presented in Figure 1. Text-to-CSEQL is becoming increasingly vital in areas like cyberspace asset discovery [1] and management [2], cybersecurity risk assessment [3], edge computing [4,5], etc.

Figure 1.

An example of natural language input converted into the corresponding CSEQL queries for FOFA based on background knowledge. “=” denotes equal matching, “!=” denotes mismatching and “*=” denotes fuzzy search. In the example FOFA query, blue represents query fields, red denotes query values, and green represents the logic symbol.

With the rise of large language models (LLMs), research on Text-to-CSEQL has begun to emerge. This research aims to leverage LLMs to enhance the usability of CSEs and reduce the complexity of CSEQL construction. For example, Censys has developed CensysGPT Beta [6], which prompts an OpenAI model to generate CSEQL. Similarly, ZoomEye has launched ZoomeyeGPT [7], incorporating its query syntax into prompts to guide LLMs in generating CSEQL. Overall, current approaches focus primarily on prompt-based methods for generating platform-specific queries from NL questions.

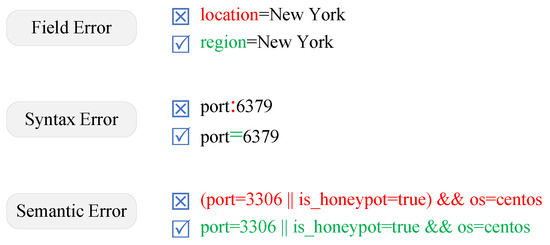

However, prompt-based methods exhibit critical limitations. Firstly, they are prone to LLM hallucinations during CSEQL generation. Figure 2 highlights three prevalent errors encountered when utilizing LLMs to generate FOFA queries: (1) field error. In FOFA query language, specific terms like “region” are required for searching assets in particular areas, rather than using synonyms such as “location”. (2) syntax error. The generated queries must adhere strictly to syntactic requirements to ensure their validity. (3) semantic error. Minor linguistic discrepancies can result in substantial semantic misinterpretations, leading to incorrect query outcomes.

Figure 2.

An overview of the errors when LLMs generate FOFA queries. In the figure, “×” indicates error cases, while “✓” denotes correct answers. The red color represents the incorrect parts generated by the LLM, and the green color represents the correct parts of the generated query.

Secondly, and more fundamentally, these approaches are inherently constrained to generating queries for a single, pre-specified platform. This limitation is critically problematic in real-world cybersecurity operations. Security analysts frequently need to query multiple CSE platforms (e.g., Shodan [8], Censys [9], ZoomEye [10], and FOFA [11]) to obtain a comprehensive view of the threat landscape, as each platform has its own data sources, asset specializations, and functions. Tools designed for a specific platform, such as CensysGPT Beta [6] or ZoomeyeGPT [7], produce syntactically invalid queries when applied to other platforms and are therefore ineffective there. This lack of interoperability hinders efficient multi-source intelligence gathering and creates significant friction in workflows requiring tool integration.

To address these dual challenges of hallucination and the critical need for cross-platform capability, we propose a novel Retrieval-Augmented Generation (RAG) method specifically designed for cross-platform CSEQL generation. We first construct a structured knowledge base by compiling platform-specific syntax specifications. Crucially, to overcome the limitations of traditional methods in retrieving relevant syntax fields, we develop a novel hybrid retrieval method that integrates keyword and dense retrieval algorithms, ensuring the LLM receives accurate platform-specific syntax information. Furthermore, in order to leverage the in-context learning capability of LLMs, we employ few-shot retrieval to obtain pertinent examples from this knowledge base. Finally, to ensure precise CSEQL generation, we designed tailored prompts adapted from the COSTAR framework.

To evaluate our approach, we introduce a novel comprehensive dataset. This involves the manual collection of real-world CSEQL queries, followed by LLM-augmented annotation and rigorous expert verification. The dataset encompasses NL questions and corresponding CSEQL queries across four platforms (Shodan [8], Censys [9], ZoomEye [10], and FOFA [11]). Additionally, we propose a novel, domain-specific evaluation metric, field match (FM), which measures the accuracy of the fields within generated CSEQL queries.

Extensive experimental results demonstrate the effectiveness of our method in generating cross-platform CSEQL queries. Compared to prompt-based baselines, our approach achieves an average improvement of 43.15 percentage points in exact match (EM) accuracy across the four platforms. Ablation studies confirm the superiority of our hybrid field retrieval method, which attains a recall of 90% when the retrieval count (K) is set to 4. Comparisons with state-of-the-art single-platform CSEQL generation methods further validate our method; it outperforms CensysGPT Beta by margins of +2.6% for EM and +4.3% for FM, and it surpasses ZoomeyeGPT by +6.9% for EM and +7.8% for FM.

Our main contributions can be summarized as follows:

- We propose a novel, RAG-based Text-to-CSEQL method that addresses the critical limitation of existing approaches in generating multi-platform queries. Central to this is our hybrid retrieval algorithm for syntax fields, which significantly enhances retrieval recall.

- We construct the first large-scale benchmark dataset for cross-platform Text-to-CSEQL, comprising over 16,000 manually verified NL question–CSEQL pairs across Shodan, Censys, ZoomEye, and FOFA. We also introduce a novel domain-specific FM metric for comprehensive evaluation.

- Extensive experiments show that our method achieves substantial improvements over prompt-based baselines and also demonstrates significant and consistent superiority over state-of-the-art single-platform methods.

2. Related Work

2.1. Natural Language Query for Cyberspace Assets

With the aim of improving the usability of CSEs, previous work has explored the use of LLMs as a translator to convert NL questions into CSEQL queries. Censys has developed CensysGPT Beta [6] using an API provided by OpenAI. Users can utilize it to generate CSEQL queries from an NL question for Censys. In addition, ZoomEye addresses this by developing a plugin tool called ZoomeyeGPT [7]. It employs prompt engineering to enable LLMs to convert NL questions into ZoomEye queries. These two methods are prompt-based methods that are only applicable to a single platform.

2.2. Text-to-SQL

The Text-to-SQL task, which maps NL questions over a given relational database to SQL queries, is closely related to the Text-to-CSEQL task. Text-to-SQL has evolved from early rule-based models to advanced LLMs, and research on it falls into three categories: (1) rule-based methods; (2) deep learning-based methods; (3) LLM-based methods. Early systems [12,13] used handcrafted grammar rules and heuristic methods to convert NL questions into SQL queries, but they faced limitations when handling complex queries and varied database schemas. With the development of deep learning, neural network-based models emerged, capable of learning patterns directly from the training data instead of relying solely on predefined rules. The Text-to-SQL task is commonly treated as a sequence-to-sequence problem [14,15,16,17]. Some methods explicitly encode the database schema, while [18] shows that fine-tuning a pretrained T5 model [19] could significantly improve the performance of Text-to-SQL. In recent years, LLMs have emerged as a new paradigm for the Text-to-SQL task. Unlike deep learning-based methods, LLM-based Text-to-SQL methods primarily focus on prompting LLMs to generate correct SQL queries. This approach, known as prompt engineering, includes question representations [20,21], example selection [22], and example organization [23]. However, there are several key differences between CSEQL and SQL. CSEQL exhibits significant semantic variations in field names, lacks a unified schema, and typically involves shorter queries with much higher field ambiguity compared to SQL. Because of these differences, the simple schema linking and prompt engineering techniques developed for Text-to-SQL deliver suboptimal performance when generating CSEQL queries across platforms.

2.3. Text-to-DSL

A domain-specific language (DSL) is a programming language designed for a particular application domain. Due to the excellent Natural Language Processing (NLP) capabilities of LLMs, Text-to-DSL has attracted growing interest. Ref. [24] proposes a method called “Grammar Prompting” to improve the performance of LLMs in DSL generation tasks, particularly in generating highly structured language strings from a small number of examples. In the domain of geographic information, Text-to-OverpassQL [25] develops an NL interface that enables users to query complex geographic data from the OpenStreetMap database in NL. In the healthcare field, ref. [26] introduces a method that integrates Text-to-SQL generation with RAG to answer epidemiological questions. While all these Text-to-DSL works focus on generating a single, domain-specific language, our work tackles the challenge of generating heterogeneous CSEQL in the cyberspace mapping domain.

3. Methodology

For an NL question $q$ and its corresponding CSE $c$, the objective of an LLM is to predict the CSEQL query $y$ from $q$. The likelihood of an LLM generating a CSEQL query can be defined as a conditional probability distribution, where $S$ represents the relevant syntax of the CSE, $\mathcal{P}$ represents the prompt template, and $|y|$ denotes the length of the predicted CSEQL query. $y_i$ and $y_{<i}$ represent the $i$-th token and the prefix of $y_i$, respectively. The parameters of the LLM are denoted by $\theta$:

$$p_\theta(y \mid q, S, \mathcal{P}) = \prod_{i=1}^{|y|} p_\theta\left(y_i \mid y_{<i}, q, S, \mathcal{P}\right)$$

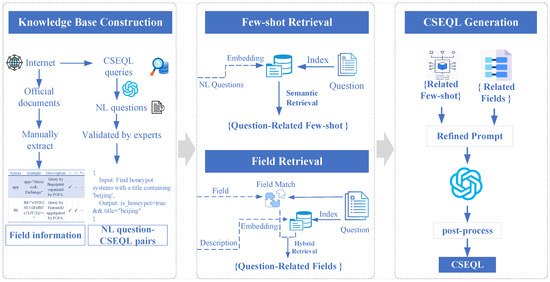

As illustrated in Figure 3, our method mainly includes three stages: (1) Knowledge base construction: This initial stage, shown at the top left of Figure 3, involves the creation of the foundational knowledge base. (2) Retrieval stage: Leveraging the proven success of RAG in bolstering LLMs for specialized and intricate NLP tasks [27], this stage, central to Figure 3, utilizes the constructed knowledge base as an external resource. Field retrieval employs our novel hybrid algorithm (outlined in Algorithm 1) to retrieve pertinent CSE query fields that will be integrated into the prompt. Relevant NL question and CSEQL query pairs are also retrieved and integrated to refine the prompt. (3) Generation stage: In this final stage, represented at the bottom right of Figure 3, the refined prompt containing retrieved examples and fields is presented to the LLM. Modified from the COSTAR prompt template [28], our redesigned template incorporates a supplementary data section (for specifics, see Appendix A). As the central element of the generation stage box in Figure 3, this template guides the LLM to generate a CSEQL query. After generation, the CSEQL query is post-processed to form the final version.

| Algorithm 1: Field Retrieval Procedure |


Figure 3.

An overview of our proposed method. It consists of the knowledge base construction stage, the retrieval stage, and the generation stage. The knowledge base construction stage involves the collection of syntax and NL question–CSEQL pairs from the Internet. The retrieval stage includes field retrieval and few-shot retrieval. The generation stage integrates the results from the retrieval stage to prompt the LLM to generate CSEQL.

3.1. Knowledge Base Construction

During the knowledge base construction phase, we manually extract fundamental syntax rules and field information from official documentation. The core syntax—serving as the foundation for query statement formulation—encompasses syntactic structures, logical connectors (e.g., “AND”, “OR”, and “NOT”), and match symbol classifications (categorized by precision into exact match, partial match, wildcard match, and exclusion). Field information is organized in tabular format, including field names with NL explanations, with some platforms providing usage examples.
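One way to picture a knowledge-base entry is as a small record per platform holding the core syntax and a table of fields. The sketch below is illustrative only: the class names `FieldEntry` and `PlatformSyntax`, the field explanations, and the choice of `&&` and `||` as FOFA connectors are assumptions made for the example, while the three match symbols follow the Figure 1 caption.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class FieldEntry:
    """One row of a platform's field table (hypothetical layout)."""
    name: str
    explanation: str
    example: str = ""  # only some platforms document usage examples


@dataclass
class PlatformSyntax:
    """Core syntax plus field table for one CSE (hypothetical layout)."""
    platform: str
    logical_connectors: List[str]
    match_symbols: Dict[str, str]
    fields: List[FieldEntry] = field(default_factory=list)

    def lookup(self, name: str) -> Optional[FieldEntry]:
        # Linear scan is enough for a field table of modest size.
        for entry in self.fields:
            if entry.name == name:
                return entry
        return None


# Illustrative FOFA entry; the explanations are placeholders, not official text.
fofa = PlatformSyntax(
    platform="FOFA",
    logical_connectors=["&&", "||"],
    match_symbols={"=": "equal match", "!=": "mismatch", "*=": "fuzzy search"},
    fields=[
        FieldEntry("ip", "IP address of the asset", 'ip="1.1.1.1"'),
        FieldEntry("region", "administrative region of the asset"),
    ],
)
```

Keeping the explanation next to the field name matters later, because keyword retrieval works on the names while dense retrieval works on the explanations.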

As for the construction of NL question–CSEQL pairs, a hybrid approach combining LLM annotation and expert validation is employed. All CSEQL queries are sourced from real-world scenarios: 0.8% originate from platform documentation, 26.9% are extracted from a GitHub repository [29], and the remaining 72.3% are collected from diverse supplementary channels. The GPT-4o model then annotates each query with an NL question; the annotation prompt incorporates the relevant field descriptions so that the generated questions stay semantically aligned with the query intent. Subsequently, to ensure data integrity, each NL question–CSEQL pair undergoes validation and testing against platform-specific syntax documentation. Specifically, domain experts manually verify all samples, ensuring that each NL question correctly corresponds to its CSEQL representation and that the query complies with the syntax of its platform. After that, we execute automated grammar validation using our syntax checker, flagging non-compliant queries. Flagged cases undergo iterative expert revision until full compliance is achieved.

3.2. Field Retrieval

The design of our field retrieval method is motivated by two key factors: (1) Reduce schema noise: Including excessive fields in the prompt leads to the generation of irrelevant schema items within the final CSEQL. (2) Prompt length optimization: Integrating all available fields significantly increases prompt length, resulting in unnecessary API costs and potentially exceeding the maximum context window of the LLM [25]. To address these issues and retrieve fields relevant to NL questions from the syntax manual, we employ a hybrid retrieval approach combining keyword-based and semantic retrieval (Algorithm 1).

Given an input pair $(q, S)$, where the CSE schema $S = (B, F)$, $B$ denotes the basic syntax, and $F$ denotes the list of fields, the objective of field retrieval is to identify the minimal schema $S'$ associated with the NL question $q$, where $S' \subseteq S$, that suffices to answer $q$. This process can be formulated as follows:

$$S' = \mathrm{Retriever}(q, S)$$

Within the CSEQL syntax manual, each field typically consists of the field’s value and its corresponding explanation. While field values themselves are discrete entities carrying weak semantic information, their associated explanations provide richer semantic context. Crucially, a field presented in the input should be pertinent to the user’s query. Thus, our hybrid method prioritizes keyword retrieval. If the number of fields retrieved via keywords (N) falls below a predefined threshold (K), semantic retrieval supplements the results. Specifically, the semantic retriever calculates the similarity between the input question and the field explanations, selecting the top M = K − N fields with the highest similarity scores. Further implementation details of the field retrieval process are provided in Appendix B.1.
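The keyword-first, dense-second procedure described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, keyword retrieval is reduced to checking whether a field name occurs as a token in the question, and a bag-of-words cosine similarity stands in for the dense encoder.

```python
import math
import re
from collections import Counter


def tokenize(text):
    # Lowercase tokens; dots are kept so nested names such as "services.port" survive,
    # while trailing sentence periods are stripped.
    raw = re.findall(r"[a-z0-9_.]+", text.lower())
    return [t.strip(".") for t in raw if t.strip(".")]


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def hybrid_field_retrieval(question, fields, k):
    """fields maps a field name to its NL explanation; returns at most k field names."""
    q_tokens = tokenize(question)

    # Stage 1: keyword retrieval on field names (N hits).
    keyword_hits = [name for name in fields if name.lower() in q_tokens][:k]

    # Stage 2: if N < K, fill the remaining M = K - N slots by similarity
    # between the question and the field explanations.
    m = k - len(keyword_hits)
    if m <= 0:
        return keyword_hits
    q_vec = Counter(q_tokens)
    remaining = [name for name in fields if name not in keyword_hits]
    ranked = sorted(
        remaining,
        key=lambda name: cosine(q_vec, Counter(tokenize(fields[name]))),
        reverse=True,
    )
    return keyword_hits + ranked[:m]


example_fields = {
    "ip": "IP address of the asset",
    "port": "open port number",
    "region": "administrative region or province where the asset is located",
    "title": "title of the web page",
}
question = "Find assets with port 443 located in the province of Zhejiang"
print(hybrid_field_retrieval(question, example_fields, k=2))
```

In this toy run the question names `port` directly, so keyword retrieval returns it, and the similarity stage supplies `region` because its explanation overlaps most with the question. Swapping the bag-of-words scorer for a real sentence encoder would not change the control flow.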

3.3. Few-Shot Retrieval

The purpose of few-shot retrieval is to enhance the generation ability of the LLM through in-context learning. As shown in Figure 3, few-shot retrieval aims to identify the few-shot examples relevant to the NL question $q$. It can be formulated as follows:

$$E' = \mathrm{Retriever}(q, E)$$

where $q$, $E$, and $E'$ denote the input question, the knowledge base of few-shot examples, and the retrieved relevant examples, respectively. For the Retriever implementation, we use a semantic retrieval method that ranks examples by cosine similarity to the NL question $q$. More details can be seen in Appendix B.2.
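Cosine-similarity retrieval of few-shot examples can be illustrated with a few lines of plain Python. The sketch assumes the question and every stored example have already been embedded into vectors of equal length; the function names and the three-dimensional toy vectors are hypothetical, and a vector index would replace the sort at realistic scale.

```python
import math


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def retrieve_few_shots(question_vec, examples, top_n=5):
    """examples is a list of (embedding, nl_question, cseql_query) tuples.

    Returns the top_n (nl_question, cseql_query) pairs, most similar first.
    """
    ranked = sorted(
        examples,
        key=lambda ex: cosine_similarity(question_vec, ex[0]),
        reverse=True,
    )
    return [(nl, query) for _, nl, query in ranked[:top_n]]


# Toy knowledge base with made-up embeddings.
toy_examples = [
    ([0.9, 0.1, 0.0], "Find assets on port 80", 'port="80"'),
    ([0.0, 1.0, 0.0], "Find pages titled admin", 'title="admin"'),
    ([0.5, 0.5, 0.0], "Find web servers on port 443", 'port="443"'),
]
print(retrieve_few_shots([1.0, 0.0, 0.0], toy_examples, top_n=2))
```

The retrieved pairs are returned in descending similarity, which is also a natural order for placing them in the prompt.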

3.4. CSEQL Generation

In order to mitigate hallucinations in LLMs while enhancing generation accuracy, we refine the COSTAR prompt framework and introduce an auxiliary information integration module to synergistically incorporate retrieved field knowledge. Subsequently, generated CSEQL queries undergo rigorous post-processing that extends beyond syntactic normalization to ensure semantic integrity. While standardizing whitespace and quotation marks, we explicitly prioritize semantic equivalence through AST-guided space handling and context-aware quote standardization. For space-sensitive CSEQL, abstract syntax tree (AST) parsing validates structural integrity, maintaining strict logical equivalence to original queries. Meanwhile, a rule-based rewrite mechanism dynamically adjusts embedded escape sequences (e.g., converting “SonicWALL-Company’s-product” → “SonicWALL-Company’s-product”) to prevent syntax errors. Sampled queries are executed in target CSE environments, with outputs compared against originals to detect discrepancies. Through the unification of whitespace counts and quotation mark types, finalized CSEQL queries are generated.
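The whitespace and quotation-mark unification step can be approximated with a regular-expression pass that never touches the inside of a quoted value. This is a simplified sketch under stated assumptions: it does not build an AST, it assumes double quotes are the target quote style, and `normalize_query` is a hypothetical name.

```python
import re

# A double-quoted or single-quoted literal, allowing backslash escapes inside.
_QUOTED = re.compile(r'"(?:\\.|[^"\\])*"|\'(?:\\.|[^\'\\])*\'')


def normalize_query(query):
    """Collapse whitespace outside literals and unify literals to double quotes."""
    parts = []
    pos = 0
    for match in _QUOTED.finditer(query):
        # Text between literals: collapse runs of whitespace to one space.
        parts.append(re.sub(r"\s+", " ", query[pos:match.start()]))
        literal = match.group(0)
        body = literal[1:-1]
        if literal[0] == "'":
            # Moving to double quotes: \' no longer needs escaping,
            # while bare double quotes inside the value now do.
            body = body.replace("\\'", "'")
            body = re.sub(r'(?<!\\)"', r'\\"', body)
        parts.append('"' + body + '"')
        pos = match.end()
    parts.append(re.sub(r"\s+", " ", query[pos:]))
    return "".join(parts).strip()


print(normalize_query("port=80   &&  title='Admin  Panel'"))
```

Whitespace inside a value is left alone on purpose, since collapsing it could change what the query matches; only the separators between terms are normalized.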

4. Experiments

To evaluate our method, we have summarized the following three research questions:

- RQ1: How does our method perform across different CSEs?

- RQ2: How do the components of our method impact performance?

- RQ3: How does our method perform compared to a single-platform method?

4.1. Experimental Setup

Baselines. We established a simple prompt baseline that provides the LLM with only the basic task instruction and the target platform name, without any retrieved fields or few-shot examples. Moreover, we compared our method with existing tools developed by CSEs. CensysGPT Beta [6] is a tool developed by Censys to simplify query construction. Another tool is ZoomeyeGPT [7], introduced by ZoomEye.

Large language models. We employed several leading LLMs in the experiment. These include GPT-3.5 Turbo [30] and GPT-4o mini [31] of OpenAI, Gemini 1.5 Pro [32] of Google, Claude 3.5 Sonnet [33] of Anthropic, and Kimi [34] of Moonshot.

Implementation of RAG. For few-shot retrieval, we employed semantic retrieval as the primary method. The encoding model, bge-large-en-v1.5 [35], sourced from Hugging Face, was fine-tuned to enhance retrieval performance for large-scale generation tasks. We use FAISS [36] as the vector database, with the number of retrieved few-shots set to five. In field retrieval, we adopted a hybrid approach that combines keyword and dense retrieval methods. The implementation of dense retrieval is identical to that of few-shot retrieval. The number of fields was set to four.

Evaluation metrics. For evaluation, we followed prior studies [25,37,38] in using the exact match (EM) score. Specifically, the EM metric measures whether the generated CSEQL query exactly matches the ground truth, which makes it a strict criterion. Furthermore, to assess whether field errors in the generated CSEQL query have been resolved, a domain-specific evaluation metric, field match (FM), was introduced. This metric evaluates the accuracy of field generation in the CSEQL query by measuring the match between the fields of the generated CSEQL query and those of the ground truth. The formula for calculating the FM score is as follows:

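$$\mathrm{FM} = \frac{1}{N} \sum_{i=1}^{N} \mathbb{1}\left(\hat{F}_i = F_i\right)$$

where $N$ denotes the total number of samples, and $\hat{F}_i$ and $F_i$ represent the sets of fields from the $i$-th generated CSEQL query and its ground truth, respectively.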
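The two metrics can be sketched in a few lines, which also shows why FM is more lenient than EM. Two assumptions are made here and are not taken from the paper: FM credits a sample only when the generated and reference field sets coincide, and field names are pulled out with a simple regular expression that looks for an identifier followed by a comparison operator.

```python
import re

# Assumed pattern: a (possibly dot-delimited) identifier followed by an operator.
_FIELD = re.compile(r"([A-Za-z_][\w.]*)\s*(?:\*=|!=|==|=|:)")
_LITERAL = re.compile(r'"(?:\\.|[^"\\])*"')


def extract_fields(query):
    # Blank out quoted values first so their content is never mistaken for a field.
    return set(_FIELD.findall(_LITERAL.sub('""', query)))


def exact_match(predictions, references):
    hits = sum(p.strip() == r.strip() for p, r in zip(predictions, references))
    return hits / len(references)


def field_match(predictions, references):
    hits = sum(extract_fields(p) == extract_fields(r) for p, r in zip(predictions, references))
    return hits / len(references)


preds = ['region="Zhejiang" && port="443"', 'location="Beijing"']
golds = ['port="443" && region="Zhejiang"', 'region="Beijing"']
print(exact_match(preds, golds), field_match(preds, golds))
```

In the toy data the first prediction uses the right fields in a different order, so it fails EM but passes FM, while the second uses `location` where `region` is required and fails both.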

4.2. Experimental Results

4.2.1. Knowledge Base Statistics

There are a total of 17,043 NL question–CSEQL pairs across four CSEs. We have analyzed them in detail, including the number of fields, the average number of logical operators per CSEQL query, the average number of fields per CSEQL query, the average length of the NL questions, and the average length of the CSEQL queries. Statistical data are presented in Table 1. Data analysis also shows that frequently used query fields follow a power-law distribution, with the top 10 most frequently used fields accounting for 90.35% of all CSEQL queries.

Table 1.

Summary statistics of the dataset. For the sample statistics, average values are reported.

4.2.2. Main Results

Performance on different CSEs. To evaluate the performance of our method on different CSEs, we compared it with a simple prompt method on four CSEs. Table 2 shows that our method significantly outperforms simple prompts on different CSEs, particularly on Censys and Shodan. This performance indicates the following:

- (1) As evidenced in Table 2, our method yields a 43.15-percentage-point improvement in mean EM score over prompt-based baselines (from roughly 0.319 to 0.751 when averaged over the five LLMs and four CSEs). This demonstrates that our method can not only accommodate various CSE syntaxes but also reduce the hallucinations of LLMs to improve CSEQL query generation performance.

- (2) Table 2 also shows that different models exhibit varying abilities in generating CSEQL queries across different CSEs. Under the same method, no single LLM is universally optimal for the Text-to-CSEQL task, necessitating the use of different models for different CSEs.

- (3) Notably, all models achieve a very low EM score on Censys under a simple prompt, with an average value of 0.002. The deeply nested field structures (e.g., services.port or services.tls.certificates.issuer) adopted by Censys necessitate precise dot-delimited path specifications, fundamentally differing from the flat-field paradigms (e.g., port) in other CSEs. This hierarchy introduces critical challenges for LLMs, which frequently misinterpret it during natural language query processing, for example by substituting abbreviated forms (port, tls.issuer) for canonical paths (services.port, services.tls.certificates.issuer), and thereby produce erroneous CSEQL queries. The field retrieval algorithm we propose performs semantic alignment between user questions and full hierarchical paths through field match and dense retrieval. This approach raises the average EM score on Censys to 0.69, validating its efficacy in handling nested fields. Consequently, this result highlights a key principle of field setting: prioritize simple fields over nested ones whenever possible.

Table 2.

Experimental results of EM score for different CSEs. The best results of our method for each CSE are highlighted in bold.


| Model | Shodan (Simple) | Shodan (Ours) | ZoomEye (Simple) | ZoomEye (Ours) | Censys (Simple) | Censys (Ours) | FOFA (Simple) | FOFA (Ours) |
|---|---|---|---|---|---|---|---|---|
| Kimi | 0.081 | 0.704 | 0.419 | 0.764 | 0 | 0.687 | 0.424 | 0.782 |
| GPT-4o-mini | 0.081 | 0.723 | 0.496 | 0.792 | 0 | 0.686 | 0.429 | 0.772 |
| GPT-3.5-Turbo | 0.301 | 0.714 | 0.485 | 0.787 | 0 | 0.666 | 0.372 | 0.795 |
| Claude-3.5-Sonnet | 0.417 | **0.736** | 0.803 | **0.821** | 0 | 0.712 | 0.548 | **0.807** |
| Gemini-1.5-Pro | 0.300 | 0.721 | 0.667 | 0.810 | 0.014 | **0.727** | 0.545 | 0.805 |
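The reported average gain can be checked directly against the EM scores in Table 2. The short script below copies those scores, averages them per platform and then across platforms, and takes the difference; only the variable names are introduced here.

```python
# EM scores copied from Table 2: platform -> [(simple, ours) for each of the five LLMs].
table2 = {
    "Shodan":  [(0.081, 0.704), (0.081, 0.723), (0.301, 0.714), (0.417, 0.736), (0.300, 0.721)],
    "ZoomEye": [(0.419, 0.764), (0.496, 0.792), (0.485, 0.787), (0.803, 0.821), (0.667, 0.810)],
    "Censys":  [(0.0, 0.687), (0.0, 0.686), (0.0, 0.666), (0.0, 0.712), (0.014, 0.727)],
    "FOFA":    [(0.424, 0.782), (0.429, 0.772), (0.372, 0.795), (0.548, 0.807), (0.545, 0.805)],
}


def mean(values):
    values = list(values)
    return sum(values) / len(values)


# Average over LLMs within each platform, then over the four platforms.
simple_mean = mean(mean(s for s, _ in rows) for rows in table2.values())
ours_mean = mean(mean(o for _, o in rows) for rows in table2.values())
gain = ours_mean - simple_mean

print(f"simple={simple_mean:.3f} ours={ours_mean:.3f} gain={gain:.3f}")
```

The means come out at roughly 0.319 for the simple prompt and 0.751 for the full method, a gap of about 0.4315. The 43.15 figure is therefore an absolute difference in EM, that is, percentage points rather than a relative increase.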

Comparison with existing methods. We compared our method with existing methods, including CensysGPT Beta [6] and ZoomeyeGPT [7], in terms of the EM and FM scores. Table 3 and Table 4 show the experimental results. Since CensysGPT Beta is a proprietary black-box system tied to a specific model, we compared its single reported performance against the average performance of our method across multiple state-of-the-art LLMs. Even in this comparison, our method achieves an average improvement of 2.6% for the EM score and 4.3% for the FM score. Similarly, on the ZoomEye dataset, our method exhibits an average improvement of 6.9% for the EM score and 7.8% for the FM score over ZoomeyeGPT. These results underscore the superior performance of our method in improving the quality of generated CSEQL queries.

Table 3.

Comparison of the EM and FM scores between our method and CensysGPT Beta. The best results for each LLM are highlighted in bold.

Table 4.

EM and FM scores for our method and ZoomeyeGPT across different LLMs. The best results for each LLM are highlighted in bold.

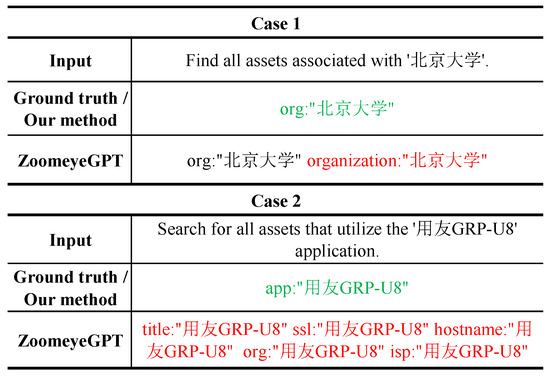

An error analysis on the generated queries of ZoomEye was conducted, and the detailed cases are shown in Figure 4. Our method outperforms ZoomeyeGPT, possibly due to the redundant field information provided by ZoomeyeGPT. This suggests that excessive fields should be excluded from the prompt. Moreover, the absence of some official fields in the prompt, such as “app”, contributes to the poor performance of ZoomeyeGPT.

Figure 4.

Case study on ZoomEye data. The red content highlights the error compared to the green ground truth.

4.3. Ablation Study

To evaluate the impact of each component in our proposed method, we conducted ablation studies on the FOFA dataset. Table 5 shows the results. Removing field retrieval, which prevents LLMs from accessing field information, lowers the average EM and FM scores by 0.6% and 0.5%, respectively. Removing few-shot retrieval decreases the average EM and FM scores by 5% and 4.8%, respectively. Removing the prompt template decreases the average EM score by 1.7%. These results demonstrate that omitting any component from our method adversely impacts CSEQL generation performance. This underscores the critical importance of field retrieval and few-shot retrieval in the CSEQL generation process.

Table 5.

Ablation experiment results on FOFA. EM and FM scores are shown in the table under different methods and model conditions. The “Full Schema” method provides all field information in the prompt. The “Manual Examples” method provides two related examples in the prompt. The best results are highlighted in bold.

Additionally, compared to the ablation of field retrieval, the removal of few-shot retrieval yields more pronounced declines in both EM and FM metrics. This differential impact stems from the greater informational richness of few-shots, which encapsulates fundamental CSEQL syntax alongside field specifications. Consequently, few-shot samples exert substantially stronger influence on the CSEQL generation efficacy of LLMs than field information alone. Although field retrieval ablation induces a comparatively smaller performance degradation, its retention remains methodologically essential. This necessity arises from its critical role in providing explicit schema-level constraints that mitigate the risk of field omissions—a potential limitation when relying solely on few-shot retrieval, the coverage of which may be incomplete for complex generation tasks.

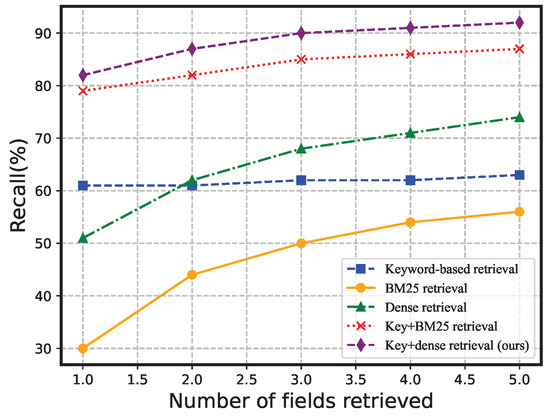

Field retrieval. As shown in Figure 5, our proposed field retrieval method achieves the highest recall rate among the five methods. The comparison results demonstrate the effectiveness of integrating keyword matching with dense retrieval. This superior performance can be attributed to the complementary strengths of both methods. Keyword matching ensures high accuracy but lacks flexibility in handling diverse query expressions. In contrast, semantic similarity retrieval is more flexible, accommodating a broader range of query formulations, but may not be as accurate as keyword matching.

Figure 5.

Comparison between different field retrieval methods and retrieval numbers based on the FOFA dataset.

Moreover, the recall rate of dense retrieval is lower than that of keyword retrieval when the number of retrieved fields is fewer than two. However, keyword retrieval exhibits diminishing recall gains beyond K = 1, suggesting most natural language queries contain only one strongly field-relevant component, highlighting limitations in rule-based intent conversion. In contrast, hybrid retrieval leverages semantic matching to improve recall across K values. The empirical observation directly validates the rationale behind Algorithm 1’s hybrid retrieval in that keyword retrieval guarantees high-confidence results, while dense retrieval operates exclusively outside its inefficient regime (k < 2), focusing instead on high-generalization scenarios.
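Recall at a given retrieval count K, the quantity compared in Figure 5, can be computed as below. The sketch assumes a micro-averaged definition (gold fields found within the top K, divided by all gold fields over the dataset); the paper does not spell out its averaging, and the function name and toy data are hypothetical.

```python
def recall_at_k(retrieved_per_query, gold_per_query, k):
    """Micro-averaged recall of gold fields within the top-k retrieved fields."""
    found = 0
    total = 0
    for retrieved, gold in zip(retrieved_per_query, gold_per_query):
        top_k = set(retrieved[:k])
        found += len(top_k & gold)
        total += len(gold)
    return found / total if total else 0.0


# Toy data: ranked retrieval output and the fields actually used by the gold query.
retrieved = [["port", "region", "ip"], ["title", "body"]]
gold = [{"port", "region"}, {"app"}]

for k in (1, 2, 3):
    print(k, recall_at_k(retrieved, gold, k))
```

A retriever whose hits are concentrated at rank one, as described for keyword retrieval, shows a flat curve under this measure once K exceeds one, whereas a retriever that keeps surfacing relevant fields at lower ranks continues to climb.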

As shown in Table 6, we also conducted an experiment on the impact of the K value on CSEQL generation under the condition where the few-shot setting is set to 0 and the LLM used to generate CSEQL is Kimi. For CSEQL generation, platform-specific sensitivities emerged: while EM scores for Shodan and FOFA consistently rise with a larger K value, ZoomEye and Censys peak at a moderate K value before fluctuating, indicating potential precision degradation from over-retrieval. In particular, the FM scores remain stable across K, underscoring the robustness of field retrieval. These findings emphasize the need for platform-specific K-tuning to optimize EM performance, balancing precision and recall.

Table 6.

Experimental results of generating CSEQL with different K values on different CSEs. The best results for each CSE are highlighted in bold.

Few-shot retrieval. Few-shot retrieval leverages the in-context learning ability of LLMs, the existence of which was confirmed in [30]. We evaluated the performance of our method under various few-shot settings. As shown in Table 7, both the EM and FM scores of the generated queries grow as the number of few-shot examples increases. This result suggests that increasing the number of few-shot examples can enhance the performance of our method. The increase in both the EM and FM scores can be attributed to the inclusion of both field and syntax information in the provided few-shot examples. We can also observe that the growth rate of the EM and FM scores decreases, indicating diminishing returns from each additional example.

Table 7.

Experimental results of few-shot retrieval on FOFA. The table shows the EM and FM scores under different few-shot numbers and LLMs. The best performances of LLMs under each few-shot number are in bold. The few-shot@1/2/3/4/5 indicates the use of the first 1, 2, 3, 4, or 5 similar examples to enhance the generation capability of LLM.

5. Conclusions

In this work, we presented a Retrieval-Augmented Generation method, centered on a novel hybrid field retrieval mechanism, that addresses the critical challenge of generating accurate CSEQL queries across diverse CSEs. Specifically, we introduced a hybrid retrieval algorithm that combines field match and dense retrieval, enabling precise syntactic information provision for LLMs. Furthermore, we leveraged semantic similarity-based retrieval to obtain relevant few-shot examples for LLM in-context learning. In addition, we constructed a benchmark dataset comprising NL questions and CSEQL pairs across four platforms and performed extensive evaluations on it. The experimental results demonstrate that our method not only achieves an average gain of 43.15 percentage points in EM for cross-platform query generation but also surpasses specialized single-platform methods with consistent improvements in both EM and FM. Our work has several limitations, such as the lack of attention-map analysis and of zero-shot transfer, so future work will focus on conducting mechanistic interpretability studies and generalizing our method. Overall, our method demonstrates promising results and opens avenues for further advancements in Text-to-CSEQL tasks.

Author Contributions

Conceptualization, Y.L. (Ye Li) and F.S.; methodology, Y.L. (Ye Li) and Y.L. (Yuwei Li); software, L.H.; validation, Y.L. (Ye Li), Y.L. (Yuwei Li), and F.S.; formal analysis, C.X.; investigation, Y.L. (Ye Li); resources, F.S.; data curation, Y.L. (Ye Li); writing—original draft preparation, Y.L. (Ye Li); writing—review and editing, Y.L. (Yuwei Li); visualization, L.H.; supervision, P.X.; project administration, C.X.; funding acquisition, P.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China, grant number 2022YFB3102902.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| CSE | Cyberspace Search Engine |
| CSEQL | Cyberspace Search Engine Query Language |
| LLM | Large Language Model |
| RAG | Retrieval-Augmented Generation |
| NL | Natural Language |

Appendix A. Modified COSTAR Prompt Template

Table A1.

The content of the modified COSTAR prompt for CSEQL generation.

### Context
You want to convert a natural language task into a FOFA query. A natural language task might be something like: “Search for all assets that have an IP address of 1.1.1.1.” FOFA queries are used to search cyberspace assets in the FOFA platform. For instance, the above task would convert into the FOFA query: ip=“1.1.1.1”

{few_shot}

### Objective
The GPT should convert natural language tasks into FOFA queries, following FOFA’s syntax and logic operator rules. The task includes:
1. Strictly generating FOFA queries without additional explanations or context.
2. Adhering to FOFA’s syntax, logic operators, and fields, as outlined in the provided tables.
3. Handling varied natural language inputs, such as:
   - “Search for all assets that have an IP address of 1.1.1.1.”,
   - “Find domains using port 80.”,
   - “List assets associated with example.com.”.

### Style
The GPT should adopt a technical and precise style, focusing strictly on generating accurate FOFA queries. It should behave like a FOFA expert, with expertise in cyberspace asset search and a thorough understanding of FOFA’s syntax, operators, and field rules.

### Tone
The GPT should use a straightforward and neutral tone, focusing on delivering the FOFA query output without unnecessary elaboration or additional context.

### Audience
The GPT’s outputs should be accessible to everyone, including technical users familiar with FOFA syntax and non-technical users who may need clear and precise FOFA queries without additional jargon.

### Supplementary Data
Below are FOFA query fields and their descriptions to help the GPT generate accurate queries:

{fields}

### Response
The GPT should return its output in JSON format with two keys:
- text: A natural language summary of the query, echoing the input task in a simplified form.
- query: The corresponding FOFA query string.

Example: {{ “text”: “Search for all assets with the IP address 1.1.1.1.”, “query”: “ip=\“1.1.1.1\”” }}

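The doubled braces around the JSON example in Table A1 suggest that the template is filled with Python-style string formatting, although the source does not say so. The following is a minimal sketch under that assumption. `TEMPLATE` is an abbreviated stand-in for the full prompt, and the helper names, the rendering layout of examples and fields, and the sample field descriptions are all hypothetical.

```python
# Abbreviated stand-in for the Table A1 template: only the two placeholders
# and the escaped JSON braces are kept. Straight quotes replace typographic ones.
TEMPLATE = (
    "### Context\n"
    "You want to convert a natural language task into a FOFA query.\n"
    "{few_shot}\n"
    "### Supplementary Data\n"
    "{fields}\n"
    "### Response\n"
    'Example: {{ "text": "...", "query": "ip=\\"1.1.1.1\\"" }}\n'
)


def format_few_shot(examples):
    """Render (question, query) pairs as text; this layout is an assumption."""
    return "\n".join(f"Task: {q}\nFOFA query: {c}" for q, c in examples)


def format_fields(fields):
    """Render (field, description) pairs one per line; this layout is an assumption."""
    return "\n".join(f"- {name}: {desc}" for name, desc in fields)


def build_prompt(examples, fields):
    """Fill the two placeholders; '{{' and '}}' collapse to literal braces."""
    return TEMPLATE.format(
        few_shot=format_few_shot(examples),
        fields=format_fields(fields),
    )


prompt = build_prompt(
    examples=[("Find assets that use port 80.", 'port="80"')],
    fields=[("ip", "IP address of the asset"), ("port", "open port number")],
)
print(prompt)
```

Escaping the braces keeps the JSON example literal while still allowing the retrieved few-shot examples and fields to be substituted per question. How the user question itself is passed to the model is not specified in the template, so the sketch leaves it out.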

Appendix B. Implementation Details

Appendix B.1. Filter Implementation

This algorithm takes an NL question, a field list, and a threshold as inputs and outputs a set of related fields. It first creates an empty result set to store the retrieved fields. It then iterates over each field f in the field list; if f is found in the question, f is added to the result set. If the size of the result set exceeds the threshold at this point, the algorithm returns immediately. Otherwise, it calculates a similarity score between the question and each field in the field list and sorts the fields by score in descending order. It then iterates over the sorted list, adding each field to the result set while the size of the set is still below the threshold. Once the result set reaches the threshold size or the list is exhausted, the algorithm returns the final set.
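The two stages described above can be sketched as follows. This is an illustration, not the authors' implementation: a bag-of-words cosine score stands in for the dense similarity model, keyword matching is done on whole tokens, and the function names and sample field descriptions are hypothetical.

```python
import math
import re
from collections import Counter


def _tokens(text):
    """Lowercase word tokens; a simplification chosen for this sketch."""
    return re.findall(r"[a-z0-9_]+", text.lower())


def _cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve_fields(question, fields, threshold):
    """fields maps a field name to its description (hypothetical layout)."""
    q_tokens = _tokens(question)
    selected = []

    # Stage 1: keyword retrieval, keep fields whose name occurs in the question.
    for name in fields:
        if name.lower() in q_tokens:
            selected.append(name)
    # Return early once the threshold is met; a set that already equals the
    # threshold would gain nothing in stage 2, so ">=" matches the description.
    if len(selected) >= threshold:
        return selected

    # Stage 2: rank all fields by similarity to the question (stand-in for
    # dense retrieval) and fill the remaining slots in descending order.
    q_vec = Counter(q_tokens)
    ranked = sorted(
        fields,
        key=lambda n: _cosine(q_vec, Counter(_tokens(n + " " + fields[n]))),
        reverse=True,
    )
    for name in ranked:
        if len(selected) >= threshold:
            break
        if name not in selected:
            selected.append(name)
    return selected


fields = {
    "ip": "IP address of the asset",
    "port": "open port number",
    "domain": "domain name of the website",
    "title": "title of the web page",
}
question = "Find assets with IP 1.1.1.1 that serve a web page on an open port"
print(retrieve_fields(question, fields, threshold=3))
```

In this example the keyword stage finds `ip` and `port` directly in the question, and the similarity stage fills the remaining slot from the field descriptions. A list is used instead of a set so that keyword matches keep priority in the output order.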

Appendix B.2. Few-Shot Retrieval Implementation

For an input query Q, the retrieval process proceeds as follows:

1. All NL questions are encoded by the encoding model, and their representations are stored in the vector database.
2. The input query Q is also encoded by the encoding model.
3. During retrieval, cosine similarity is computed between the representation of Q and the stored representations.
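A minimal sketch of these three steps is given below. It is illustrative only: a bag-of-words counter stands in for the encoding model, an in-memory list stands in for the vector database, and the class name, the top-k selection, and the example queries are assumptions rather than details taken from the implementation.

```python
import math
import re
from collections import Counter


def encode(text):
    """Toy stand-in for the encoding model: bag-of-words token counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class FewShotStore:
    """In-memory stand-in for the vector database."""

    def __init__(self, pairs):
        # Step 1: encode every NL question once and store it with its query.
        self.entries = [(encode(q), q, cseql) for q, cseql in pairs]

    def retrieve(self, question, k):
        # Step 2: encode the input query with the same encoder.
        q_vec = encode(question)
        # Step 3: rank stored questions by cosine similarity, keep the top k.
        ranked = sorted(
            self.entries, key=lambda e: cosine(q_vec, e[0]), reverse=True
        )
        return [(q, cseql) for _, q, cseql in ranked[:k]]


store = FewShotStore(
    [
        ("Search for all assets that have an IP address of 1.1.1.1.", 'ip="1.1.1.1"'),
        ("Find domains using port 80.", 'port="80"'),
        ("List assets associated with example.com.", 'domain="example.com"'),
    ]
)
for q, cseql in store.retrieve("Find assets using port 443.", k=2):
    print(q, "->", cseql)
```

Encoding the stored questions once at construction time mirrors step 1, so each retrieval only needs to encode the new query and score it against the stored vectors.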

References

- Lu, J.; Qiu, X.; Guo, R.; Li, L.; Zeng, J. A Novel System for Asset Discovery and Identification in Cyberspace. In Proceedings of the 2022 World Automation Congress (WAC), San Antonio, TX, USA, 11–15 October 2022; pp. 23–27.

- Zou, Z.; Hou, Y.; Guo, Q. Research on Cyberspace Surveying and Mapping Technology Based on Asset Detection. In Proceedings of the 2024 IEEE 6th Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), Chongqing, China, 24–26 May 2024; Volume 6, pp. 946–949.

- King, Z.M.; Henshel, D.S.; Flora, L.; Cains, M.G.; Hoffman, B.; Sample, C. Characterizing and measuring maliciousness for cybersecurity risk assessment. Front. Psychol. 2018, 9, 39.

- Hao, H.; Xu, C.; Zhang, W.; Yang, S.; Muntean, G.M. Task-Driven Priority-Aware Computation Offloading Using Deep Reinforcement Learning. IEEE Trans. Wirel. Commun. 2025, 1.

- Hao, H.; Xu, C.; Zhang, W.; Yang, S.; Muntean, G.M. Joint Task Offloading, Resource Allocation, and Trajectory Design for Multi-UAV Cooperative Edge Computing With Task Priority. IEEE Trans. Mob. Comput. 2024, 23, 8649–8663.

- Censys. CensysGPT Beta. Available online: https://gpt.censys.io/ (accessed on 4 June 2024).

- Knownsec. Knownsec/ZoomeyeGPT. Available online: https://github.com/knownsec/ZoomeyeGPT (accessed on 4 June 2024).

- Shodan. Available online: https://www.shodan.io (accessed on 9 April 2024).

- Censys Search. Available online: https://search.censys.io/ (accessed on 18 December 2024).

- ZoomEye. Available online: https://www.zoomeye.org/ (accessed on 9 April 2024).

- FOFA Search Engine. Available online: https://fofa.info (accessed on 9 April 2024).

- Zelle, J.M.; Mooney, R.J. Learning to parse database queries using inductive logic programming. In Proceedings of the National Conference on Artificial Intelligence, Portland, OR, USA, 4–8 August 1996; pp. 1050–1055.

- Saha, D.; Floratou, A.; Sankaranarayanan, K.; Minhas, U.F.; Mittal, A.R.; Özcan, F. ATHENA: An ontology-driven system for natural language querying over relational data stores. Proc. VLDB Endow. 2016, 9, 1209–1220.

- Guo, J.; Zhan, Z.; Gao, Y.; Xiao, Y.; Lou, J.G.; Liu, T.; Zhang, D. Towards Complex Text-to-SQL in Cross-Domain Database with Intermediate Representation. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; Korhonen, A., Traum, D., Màrquez, L., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2019; pp. 4524–4535.

- Choi, D.; Shin, M.C.; Kim, E.; Shin, D.R. RYANSQL: Recursively Applying Sketch-based Slot Fillings for Complex Text-to-SQL in Cross-Domain Databases. Comput. Linguist. 2021, 47, 309–332.

- Wang, B.; Shin, R.; Liu, X.; Polozov, O.; Richardson, M. RAT-SQL: Relation-Aware Schema Encoding and Linking for Text-to-SQL Parsers. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 7567–7578.

- Cao, R.; Chen, L.; Chen, Z.; Zhao, Y.; Zhu, S.; Yu, K. LGESQL: Line Graph Enhanced Text-to-SQL Model with Mixed Local and Non-Local Relations. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online, 1–6 August 2021; Zong, C., Xia, F., Li, W., Navigli, R., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 2541–2555.

- Scholak, T.; Schucher, N.; Bahdanau, D. PICARD: Parsing Incrementally for Constrained Auto-Regressive Decoding from Language Models. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Punta Cana, Dominican Republic, 7–11 November 2021; Moens, M.F., Huang, X., Specia, L., Yih, S.W.T., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 9895–9901.

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 1–67.

- Chang, S.; Fosler-Lussier, E. How to Prompt LLMs for Text-to-SQL: A Study in Zero-shot, Single-domain, and Cross-domain Settings. In Proceedings of the NeurIPS 2023 Second Table Representation Learning Workshop, New Orleans, LA, USA, 15 December 2023.

- Pourreza, M.; Rafiei, D. DIN-SQL: Decomposed In-Context Learning of Text-to-SQL with Self-Correction. In Proceedings of the Thirty-Seventh Conference on Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023.

- Nan, L.; Zhao, Y.; Zou, W.; Ri, N.; Tae, J.; Zhang, E.; Cohan, A.; Radev, D. Enhancing Text-to-SQL Capabilities of Large Language Models: A Study on Prompt Design Strategies. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP, Singapore, 6–10 December 2023; Bouamor, H., Pino, J., Bali, K., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 14935–14956.

- Gao, D.; Wang, H.; Li, Y.; Sun, X.; Qian, Y.; Ding, B.; Zhou, J. Text-to-SQL Empowered by Large Language Models: A Benchmark Evaluation. Proc. VLDB Endow. 2024, 17, 1132–1145.

- Wang, B.; Wang, Z.; Wang, X.; Cao, Y.; Saurous, R.A.; Kim, Y. Grammar Prompting for Domain-Specific Language Generation with Large Language Models. In Proceedings of the Thirty-Seventh Conference on Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023.

- Staniek, M.; Schumann, R.; Züfle, M.; Riezler, S. Text-to-OverpassQL: A Natural Language Interface for Complex Geodata Querying of OpenStreetMap. Trans. Assoc. Comput. Linguist. 2024, 12, 562–575.

- Ziletti, A.; D’Ambrosi, L. Retrieval augmented text-to-SQL generation for epidemiological question answering using electronic health records. In Proceedings of the 6th Clinical Natural Language Processing Workshop, Mexico City, Mexico, 21 June 2024; Naumann, T., Ben Abacha, A., Bethard, S., Roberts, K., Bitterman, D., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 47–53.

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.t.; Rocktäschel, T.; et al. Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; Volume 33, pp. 9459–9474.

- Teo, S. How I Won Singapore’s GPT-4 Prompt Engineering Competition. 2024. Available online: https://towardsdatascience.com/how-i-won-singapores-gpt-4-prompt-engineering-competition-34c195a93d41 (accessed on 25 December 2024).

- Projectdiscovery/Awesome-Search-Queries. Available online: https://github.com/projectdiscovery/awesome-search-queries (accessed on 10 February 2025).

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 1877–1901.

- GPT-4o Mini: Advancing Cost-Efficient Intelligence. Available online: https://openai.com/index/gpt-4o-mini-advancing-cost-efficient-intelligence/ (accessed on 14 February 2025).

- Team, G.; Georgiev, P.; Lei, V.I.; Burnell, R.; Bai, L.; Gulati, A.; Tanzer, G.; Vincent, D.; Pan, Z.; Wang, S.; et al. Gemini 1.5: Unlocking multimodal understanding across millions of tokens of context. arXiv 2024, arXiv:2403.05530.

- Anthropic. The Claude 3 Model Family: Opus, Sonnet, Haiku; Anthropic: San Francisco, CA, USA, 2024.

- Moonshot AI. Kimi. 2023. Available online: https://kimi.moonshot.cn/ (accessed on 9 April 2024).

- Xiao, S.; Liu, Z.; Zhang, P.; Muennighoff, N.; Lian, D.; Nie, J.Y. C-Pack: Packed Resources For General Chinese Embeddings. In Proceedings of the 47th International ACM SIGIR Conference on Research and Development in Information Retrieval, New York, NY, USA, 14–18 July 2024; SIGIR ’24; pp. 641–649.

- Douze, M.; Guzhva, A.; Deng, C.; Johnson, J.; Szilvasy, G.; Mazaré, P.E.; Lomeli, M.; Hosseini, L.; Jégou, H. The Faiss library. arXiv 2024, arXiv:2401.08281.

- Zhong, R.; Yu, T.; Klein, D. Semantic Evaluation for Text-to-SQL with Distilled Test Suites. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; Webber, B., Cohn, T., He, Y., Liu, Y., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 396–411.

- Deng, N.; Chen, Y.; Zhang, Y. Recent Advances in Text-to-SQL: A Survey of What We Have and What We Expect. In Proceedings of the 29th International Conference on Computational Linguistics, Gyeongju, Republic of Korea, 12–17 October 2022; Calzolari, N., Huang, C.R., Kim, H., Pustejovsky, J., Wanner, L., Choi, K.S., Ryu, P.M., Chen, H.H., Donatelli, L., Ji, H., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2022; pp. 2166–2187.


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).