1. Introduction

Oral health is a fundamental component of overall well-being, influencing not only physical health but also psychological and social aspects of life [1, 2, 3]. Dental care and tooth loss are critical indicators of oral health status, reflecting not only the progression of oral disease but also broader systemic, behavioral, and sociodemographic determinants. Beyond impairing essential functions like chewing and speaking, tooth loss can negatively impact nutrition, self-esteem, and overall quality of life [4, 5]. Marked disparities by age, race, income, and geography highlight the need for targeted public health interventions [2, 6].

The causes of tooth loss are multifactorial (primarily linked to periodontal disease and dental caries) but are strongly influenced by behavioral, socioeconomic, and access factors [7, 8]. Traditional regression-based studies have reported associations between such factors and tooth loss [9], but they are limited in capturing complex interactions, non-linear relationships, and heterogeneity. Machine learning (ML) approaches can address these gaps by identifying latent patterns across large datasets, improving prediction and interpretability [10, 11, 12].

According to the Global Burden of Disease Study, untreated dental caries remains the most prevalent oral health condition globally, affecting over 2.4 billion people [

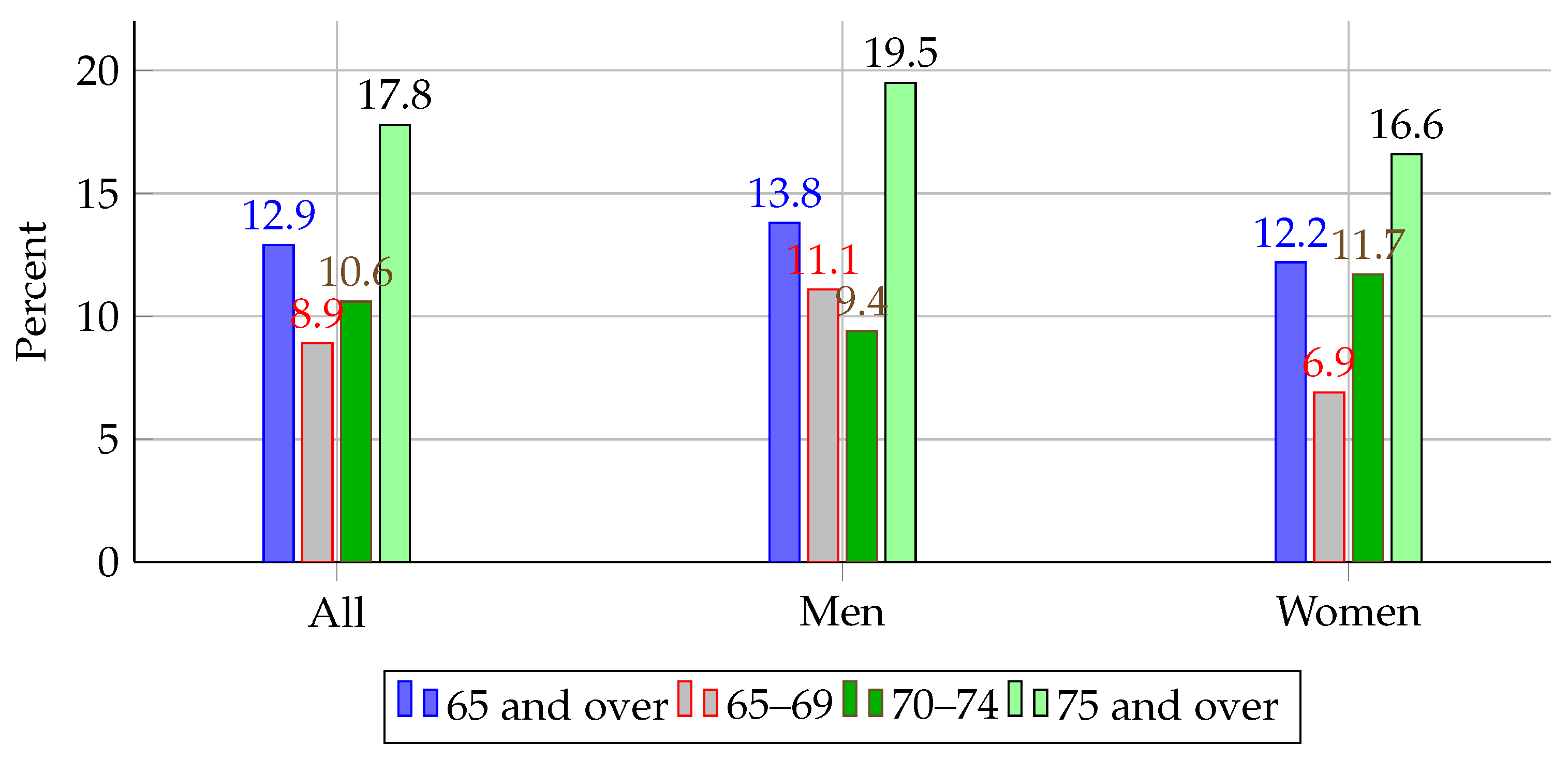

13]. While not all carious lesions lead to tooth loss, caries is recognized as one of the primary precursors when left untreated, particularly in populations with limited access to dental care. This highlights the global burden of preventable oral diseases and the potential impact of early detection and intervention strategies. In the U.S., recent data from the National Health and Nutrition Examination Survey (NHANES) reported that 12.9% of adults aged 65 and older had lost all their natural teeth between 2015 and 2018, with edentulism disproportionately affecting men aged 75 and above (19.5%) [

14].

Figure 1 illustrates these disparities across age and sex groups, further emphasizing the urgency for scalable, equitable solutions.

The Behavioral Risk Factor Surveillance System (BRFSS) offers such an opportunity. As a nationally representative survey of over 400,000 adults annually, it captures rich information on dental outcomes, health behaviors, and social determinants—making it well suited for ML-driven analysis of tooth loss.

In this study, we aim to develop and evaluate machine learning models using BRFSS 2022 data to classify both the presence and severity of tooth loss and to identify key predictors.

Our contributions are threefold: (1) we evaluate ten machine learning models for binary and multiclass classification of tooth loss using a nationally representative dataset; (2) we identify and interpret behavioral, demographic, and socioeconomic predictors; and (3) we demonstrate the potential of ML models to enhance oral health surveillance and inform equitable prevention strategies.

Together, these contributions offer new insights into the social epidemiology of tooth loss and demonstrate how machine learning can augment traditional methods in oral health surveillance.

2. Related Work

Machine learning (ML) has emerged as a powerful tool in oral healthcare, offering the ability to capture complex, nonlinear relationships across clinical and behavioral datasets. Prior studies have demonstrated ML applications for dental caries detection [15], periodontal disease classification [16], and oral health risk prediction [17], often leveraging structured datasets such as NHANES, BRFSS, and electronic health records [18, 19, 20, 21].

Specific to tooth loss prediction, multiple studies have used ML models on national-scale datasets and institutional EHRs. Elani et al. [22] applied gradient-boosted trees on NHANES data, achieving AUCs above 0.80. Hasuike et al. [23] reviewed existing models and confirmed the superior performance of ensemble methods such as random forest and XGBoost. Follow-up studies using institution-specific cohorts highlighted limitations in fairness and generalizability [24, 25]. Ravidà et al. [26] showed that ML-based prognostic systems outperformed conventional periodontal indices, particularly in moderate-risk populations. Other studies integrated socioeconomic variables (such as education, income, and smoking habits) into predictive models, enhancing interpretability and fairness [27, 28, 29].

Despite these advances, important gaps remain. Many studies rely on relatively small or localized datasets that limit generalizability [24, 25]. While NHANES offers breadth, it lacks recent data and behavioral nuance. Only a few works have rigorously analyzed subgroup fairness, despite its growing importance in healthcare ML [27, 28]. Challenges in interpretability also persist, particularly for high-performing ensemble methods, hindering clinical trust and adoption [12, 26].

In light of these limitations, our study builds on prior work by (1) leveraging the most recent BRFSS 2022 dataset with over 370,000 respondents to enhance generalizability, (2) systematically comparing ten classifiers, including interpretable and state-of-the-art boosting methods, using a unified evaluation framework, and (3) conducting feature importance and subgroup analyses to address fairness and equity in oral health surveillance.

3. Materials and Methods

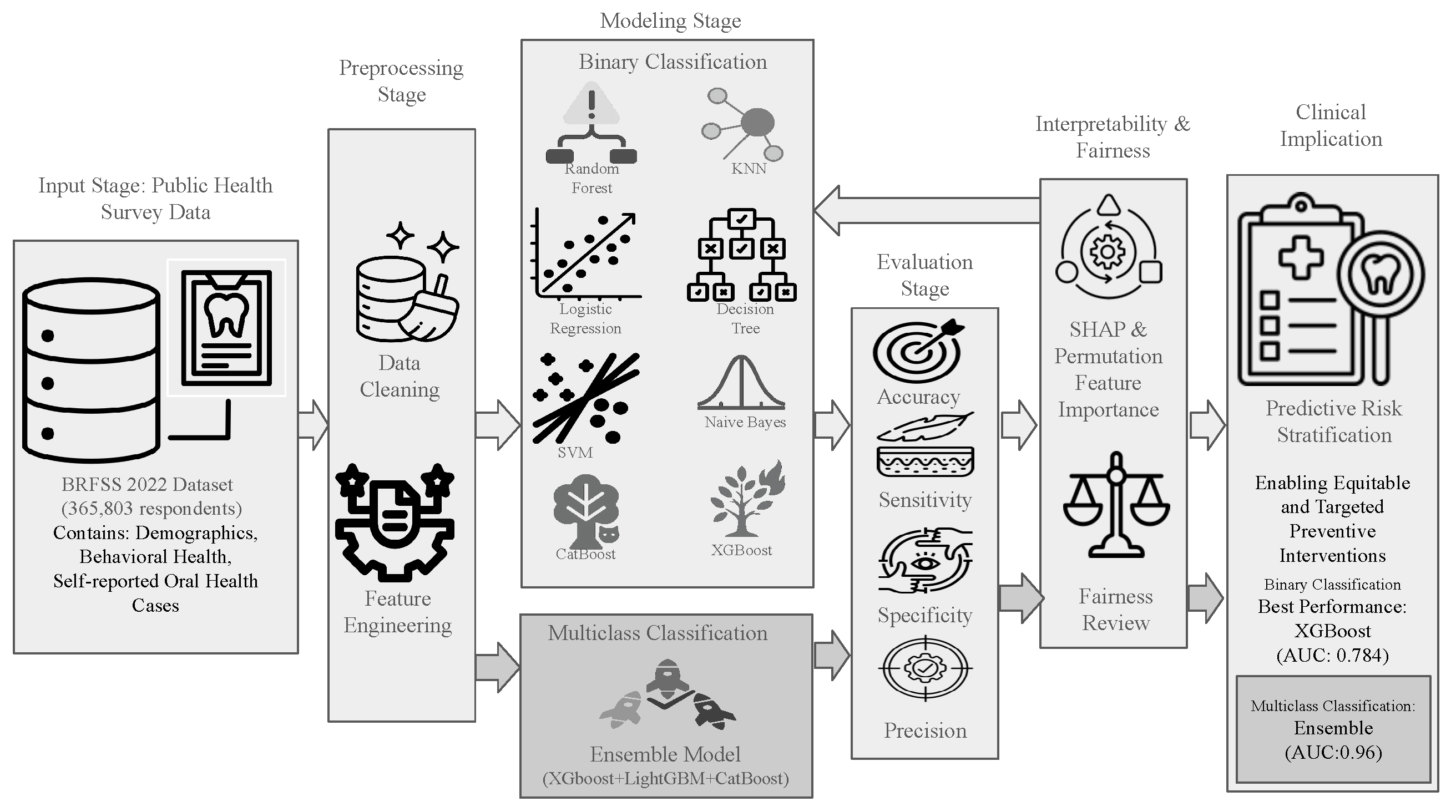

The end-to-end workflow for predicting tooth loss from the 2022 BRFSS LLCP survey comprises the following: binary and multiclass classification formulations; a preprocessing stage for data cleaning, imputation, and encoding; a modeling stage for algorithm selection and training; an evaluation stage for performance assessment on held-out data; and a model interpretability and feature attribution stage using SHAP and permutation methods to identify key predictors. The overall workflow for both tasks is depicted in Figure 2.

3.1. Data Source

The 2022 Behavioral Risk Factor Surveillance System (BRFSS) is a cross-sectional, telephone-based survey conducted by the Centers for Disease Control and Prevention (CDC) to monitor health-related risk behaviors, chronic health conditions, and the use of preventive services among U.S. adults. The survey employs random-digit dialing and stratified sampling to ensure national and state-level representativeness. This study relies on self-reported tooth loss from BRFSS. While self-reports can introduce recall or misclassification bias, multiple validation studies show high agreement between self-reported and clinically examined tooth counts and edentulism (e.g., kappa ≈ 0.93; correct classification ≈ 96%) and strong correlations with clinical counts [30]. Accordingly, BRFSS oral-health indicators are widely used for population surveillance by the CDC [31]. After preprocessing and cleaning, our final modeling dataset included approximately 365,000 respondents and 37 input features. These features spanned six key domains: demographic (e.g., age, sex, race/ethnicity), behavioral (e.g., smoking, physical activity, alcohol use), socioeconomic (e.g., income, education, employment), health status (e.g., diabetes, BMI), dental care utilization (e.g., frequency of dental visits, insurance coverage), and composite indices (e.g., Health Risk Index, Care Access Score). The outcome variable was derived from the BRFSS item querying the number of permanent teeth removed due to decay or gum disease.

3.2. Classification Tasks: Binary and Multiclass Formulations

This study investigates two complementary supervised learning tasks using the 2022 BRFSS dataset, aiming to leverage ML for scalable, population-level prediction of tooth loss outcomes. The first task is a binary classification problem that predicts whether an individual has experienced any permanent tooth loss. The second task is a multiclass classification problem designed to assess the severity of tooth loss by categorizing individuals into one of four clinically meaningful groups based on the number of teeth removed.

Both tasks were designed to address different but related clinical and public health questions. The binary task enables coarse-grained risk stratification for early screening, while the multiclass task allows for stratifying tooth loss severity; its intended use is to assist in identifying at-risk populations and guiding preventive or restorative care strategies at a broader public health level. Despite differences in prediction granularity, both tasks share a unified machine learning pipeline—starting with common data preprocessing, feature engineering, and encoding strategies—followed by model training, hyperparameter tuning, evaluation, and interpretability analysis.

A central advantage of this dual-task framework is that it allows for a comparative analysis of algorithmic performance across different problem granularities using the same input features and survey population. It also allows us to assess whether predictors behave differently depending on the formulation of the target outcome. The 2022 BRFSS Landline–Cell-Phone Combined (LLCP) [6] file is a large, state-based probability survey of U.S. adults that captures demographic, health-status, behavioral-risk, and preventive-service measures. In this study, we focus on a subset of key predictors (sex, age group, race, education, smoking status, alcohol use, dental-visit history, diabetes status, state of residence, income, marital status, employment status, health insurance coverage, transportation barriers, and self-reported general health) alongside the outcome RemoveTeeth, which records permanent teeth removed due to decay or gum disease. Table 1 lists each variable’s coded values and human-readable definitions (e.g., how “1 = Current smoker” versus “3 = Never smoked” correspond to _SMOKER3), ensuring transparency when discussing tooth-loss prevalence and its associations with demographic and behavioral factors.

3.3. Preprocessing Stage

The preprocessing stage begins with data cleaning, which removes duplicates, handles invalid codes and missing values, and standardizes variable formats. It then proceeds to feature engineering, in which new predictors (such as polynomial transforms, interaction terms, and composite risk scores) are constructed to enhance model performance.

3.3.1. Data Cleaning

To ensure data quality and model reliability, we applied structured inclusion and exclusion criteria during preprocessing. We retained only respondents with valid and interpretable responses across key variables. As part of the preprocessing pipeline for the proposed system, all non-informative and out-of-scope response codes were removed, such that every record fed into our models carried only clear, valid measurements, specifically the following:

Lastdentalvisit (LASTDEN4): Retained only codes 1–4 (visits within the past three years) and dropped codes 5–6 (older than three years), as well as “Don’t know,” “Never visited,” and “Refused” responses (7, 8, 9).

Diabetes, Education, Smoking, Drinking, Marital status, Employment status: Dropped all “Refused” entries (code 9).

Age group (_AGEG5YR): Excluded any code outside the defined five-year bands (dropped code 14).

RemoveTeeth: Excluded “Don’t know” and “Missing” codes (7, 9).

State (_STATE): Limited to the 50 U.S. states (dropped codes 77, 99).

Health insurance, Transportation barriers, General health: Removed “Don’t know,” “Refused,” or special missing codes (7, 77, 99).

After replacing these invalid codes with NaN, we dropped any row containing at least one such entry. This filtering yielded a cleaned dataset of 365,803 adult records, each with complete, interpretable values across all selected predictors and the outcome.
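The cleaning rule described above (mark invalid codes as missing, then drop incomplete rows) can be sketched roughly as follows. This is a minimal illustration rather than the study's actual code: the toy DataFrame, the `INVALID_CODES` mapping, and the `DIABETES` column name are assumptions that mirror only a few of the listed rules, and real column names and code sets should be taken from the BRFSS codebook.

```python
import numpy as np
import pandas as pd

# Hypothetical mapping: column -> codes treated as invalid (mirrors a few rules above).
INVALID_CODES = {
    "LASTDEN4": [5, 6, 7, 8, 9],
    "_AGEG5YR": [14],
    "RemoveTeeth": [7, 9],
    "DIABETES": [9],  # hypothetical column name for the diabetes item
}


def clean_survey(df: pd.DataFrame, invalid_codes: dict) -> pd.DataFrame:
    """Replace invalid codes with NaN, then drop rows missing any screened column."""
    cleaned = df.copy()
    for column, codes in invalid_codes.items():
        # Series.where keeps values where the condition holds and writes NaN elsewhere.
        cleaned[column] = cleaned[column].where(~cleaned[column].isin(codes), np.nan)
    return cleaned.dropna(subset=list(invalid_codes)).reset_index(drop=True)


# Toy records: only the first row has valid codes in every screened column.
toy = pd.DataFrame(
    {
        "LASTDEN4": [1, 2, 7, 3],
        "_AGEG5YR": [5, 14, 6, 9],
        "RemoveTeeth": [1, 2, 3, 9],
        "DIABETES": [1, 3, 3, 1],
    }
)
cleaned = clean_survey(toy, INVALID_CODES)
```

Listwise deletion of this kind keeps every retained record fully interpretable, at the cost of discarding any respondent with a single invalid answer.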

The primary response variable for both classification tasks was derived from the BRFSS item “How many of your permanent teeth have been removed because of tooth decay or gum disease?” Although originally recorded as an ordinal count (0–31, 96 = all), we recast it into two distinct formats to address different modeling goals. First, for early risk detection, we created a binary target indicating whether a respondent had lost any permanent teeth. Second, to capture severity, we defined four ordered classes reflecting increasing tooth loss.

For the binary classification task, the objective was to predict whether an individual had experienced any tooth loss. The responses were binarized as follows:

0—“None”: No permanent teeth removed.

1—“1 to 5,” “6 or more,” or “All”: One or more permanent teeth removed.

This formulation supports early risk identification by distinguishing between total retention versus any degree of tooth loss.

For the multiclass classification task, a more granular target encoding was applied to capture tooth loss severity. Four ordinal categories were constructed:

Class 0: No teeth removed.

Class 1: 1 to 5 teeth removed.

Class 2: 6 or more teeth removed.

Class 3: All teeth removed.
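The two target encodings can be illustrated with a small sketch. The category labels used as dictionary keys (`"none"`, `"1-5"`, `"6+"`, `"all"`) are hypothetical stand-ins for the survey's response options, not the raw BRFSS codes.

```python
# Hypothetical labels for the four response categories described above.
SEVERITY_CLASS = {"none": 0, "1-5": 1, "6+": 2, "all": 3}


def encode_targets(category: str) -> tuple[int, int]:
    """Return (binary_target, multiclass_target) for one response category."""
    severity = SEVERITY_CLASS[category]
    binary = int(severity > 0)  # 1 if any permanent tooth was removed
    return binary, severity


encoded = [encode_targets(c) for c in ["none", "1-5", "6+", "all"]]
```

Deriving the binary label from the ordinal class keeps the two tasks consistent: a respondent is positive in the binary task exactly when the severity class is at least 1.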

This four-class scheme (none, 1–5 teeth removed, 6 or more but not all, and all teeth removed) reflects both clinical progression and epidemiological thresholds used in oral health surveillance. The 1–5 range represents early or moderate tooth loss, which may be functionally less debilitating and more amenable to preventive care. The “6 or more” category captures more advanced tooth loss, often associated with periodontal disease or systemic factors. Complete tooth loss (edentulism) is a distinct clinical state with major implications for nutrition, prosthodontic need, and quality of life. The categories follow the response options of the CDC/BRFSS permanent tooth-loss item (RMVTETH4) and support meaningful stratification for public health planning. This scheme is standard in state and national oral-health surveillance and underlies CDC indicators such as “Lost 6 or More Teeth,” enabling comparability across years and geographies [32, 33].

All predictor variables were then transformed to ensure compatibility with diverse machine-learning algorithms. Numeric and ordinal features (e.g., Age, Income, Lastdentalvisit) were retained in their native form or label-encoded when appropriate. Categorical predictors were encoded either via:

"Label encoding" for tree-based models that leverage ordinal relationships without assuming equidistance, or

"One-hot encoding" for algorithms sensitive to numeric ordering (e.g., Logistic Regression, SVM).

To accommodate distance-based classifiers (such as k-nearest neighbors and SVM), continuous features were standardized using either z-score normalization or Min–Max scaling. Ensemble methods (random forest, XGBoost, CatBoost), which are inherently scale-invariant, used the raw (unscaled) inputs.
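For the models that need one-hot encoding and scaling, the preprocessing described above might look like the following sketch built on scikit-learn's `ColumnTransformer`. The column names and toy values are illustrative assumptions, and z-score scaling is shown as one of the two scaling options mentioned.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Toy frame; column names and values are illustrative only.
X = pd.DataFrame(
    {
        "marital": ["married", "single", "married", "widowed"],
        "employment": ["employed", "retired", "employed", "retired"],
        "bmi": [22.0, 31.5, 27.0, 24.5],
    }
)

preprocess = ColumnTransformer(
    transformers=[
        # One-hot encode nominal predictors for linear and distance-based models.
        ("onehot", OneHotEncoder(handle_unknown="ignore"), ["marital", "employment"]),
        # Z-score standardization of the continuous predictor.
        ("zscore", StandardScaler(), ["bmi"]),
    ],
    sparse_threshold=0.0,  # always return a dense array
)

X_encoded = preprocess.fit_transform(X)
```

In practice the transformer would be fitted on the training portion only and then applied to held-out data, so that scaling statistics do not leak from the test set.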

3.3.2. Feature Engineering

To improve model performance and capture latent interactions not explicitly modeled by raw BRFSS variables, several domain-informed engineered features were constructed. The engineered features were developed based on a combination of domain expertise, existing public health literature, and preliminary exploratory analysis of the BRFSS dataset. These transformations aimed to enhance the expressiveness of the feature space and the models’ ability to learn complex relationships associated with tooth loss risk. Among these, we developed two composite indices: a Health Risk Index and a Care Access Score. These indices aggregate variables such as smoking status, diabetes diagnosis, dental insurance coverage, and frequency of preventive visits. Heuristic weights were assigned to reflect the relative contribution of each component, guided by evidence from prior public health and epidemiological studies [

6,

9]. This aggregation approach enhances interpretability, reduces dimensionality, and allows the model to leverage multifaceted behavioral and systemic factors more effectively.

1. Polynomial Terms:

Age is one of the strongest predictors of oral health outcomes, but its relationship with tooth loss is rarely linear. To account for potential nonlinear effects of aging, we introduced second- and third-degree polynomial terms:

$$\text{Age}^2, \qquad \text{Age}^3$$

These terms allow models to better capture the curvature associated with increased tooth loss risk in older populations, particularly the acceleration of edentulism risk in the 65+ cohort.

2. Socioeconomic Interaction Terms:

The interaction between demographic variables often modifies health outcomes. For example, the impact of income on oral health may vary by education level, and vice versa. To reflect these interdependencies, we created cross-terms such as EDUCA_INCOME, which combines education and income.

These features enable the models to capture compound socioeconomic effects that could influence dental care utilization, preventive habits, and long-term oral health behaviors.

3. Composite Health Risk Index:

Recognizing the multidimensional nature of behavioral risk, we synthesized multiple health-related features into a single composite index to represent overall health risk:

The weights were heuristically assigned to reflect the relative contributions of each factor based on existing literature. This index captures the cumulative burden of chronic disease and lifestyle-related risk factors that are strongly associated with tooth loss progression.

4. Care Access Index:

Access to consistent and affordable care plays a critical role in both preventive and reactive dental interventions. To quantify this dimension, we constructed a binary index reflecting favorable care access conditions:

This feature indicates whether an individual is likely to have both routine access to a primary care provider and financial ability to seek care when needed, both of which are known to affect dental visit frequency and treatment continuity.

5. Task-Specific Feature Selection Strategies:

Given the differing complexity and modeling needs of the binary and multiclass classification tasks, we employed task-specific feature selection strategies to balance predictive power, model generalization, and interpretability.

For the binary classification task, feature selection was performed using the SelectKBest method based on ANOVA F-statistics. This filter-based approach evaluated each candidate predictor individually against the binarized tooth loss outcome, selecting features that exhibited the strongest statistical associations. SelectKBest was chosen for its ability to identify predictive features while reducing dimensionality, thereby enhancing model stability and interpretability. Thus, a total of 17 features were retained for the binary classification models for the next stage.

In contrast, for the multiclass classification task, the feature selection strategy combined both statistical filtering and domain-informed feature engineering. Initially, variables were screened based on their absolute Pearson correlation ($|r|$) with the encoded multiclass tooth loss target, and features whose $|r|$ met the retention threshold were retained (Table 2). In addition to these high-correlation variables, engineered features, such as polynomial transformations of age, socioeconomic interaction terms (e.g., EDUCA_INCOME), and composite indices capturing health risk and care access, were included to better capture nonlinearities and interaction effects inherent in the tooth loss progression continuum. Thus, in total, 25 features were retained for the multiclass classification models for the next stage.

All final feature subsets were subjected to multicollinearity diagnostics using correlation matrices and variance inflation factor (VIF) analysis. Redundant predictors were removed to ensure parsimony and improve interpretability across all models. To avoid data leakage, feature selection was performed exclusively on the training folds after the stratified split, and never on the test set. This ensured that model evaluation remained unbiased and that the test data were used only for final performance assessment.
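The engineered features described in this subsection can be sketched as follows. Every column name other than `EDUCA_INCOME`, the product form of the cross-term, the components of each index, and in particular the weights are placeholders chosen for illustration; they are assumptions and do not reproduce the study's heuristic weights or exact definitions.

```python
import pandas as pd


def add_engineered_features(df: pd.DataFrame) -> pd.DataFrame:
    """Add polynomial, interaction, and composite features (illustrative forms only)."""
    out = df.copy()
    # Polynomial age terms (second and third degree).
    out["AGE_SQ"] = out["AGE"] ** 2
    out["AGE_CU"] = out["AGE"] ** 3
    # Socioeconomic cross-term: product of the two ordinal codes (assumed form).
    out["EDUCA_INCOME"] = out["EDUCA"] * out["INCOME"]
    # Composite health risk index with placeholder weights (NOT the paper's weights).
    out["HEALTH_RISK_INDEX"] = 0.5 * out["SMOKER"] + 0.5 * out["DIABETES"]
    # Binary care access flag: has a regular provider AND reports no cost barrier.
    out["CARE_ACCESS"] = ((out["HAS_DOCTOR"] == 1) & (out["COST_BARRIER"] == 0)).astype(int)
    return out


# Two toy respondents with hypothetical ordinal and binary codes.
toy = pd.DataFrame(
    {
        "AGE": [3, 10],
        "EDUCA": [4, 2],
        "INCOME": [5, 1],
        "SMOKER": [1, 1],
        "DIABETES": [0, 1],
        "HAS_DOCTOR": [1, 0],
        "COST_BARRIER": [0, 1],
    }
)
features = add_engineered_features(toy)
```

Because tree-based learners can approximate such transforms on their own, these features matter most for the linear and distance-based models; the composite indices mainly serve interpretability.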

3.4. Modeling Stage

Both tasks used supervised learning frameworks with 80/20 train-test splits. To ensure robust and unbiased model evaluation, we employed stratified 5-fold cross-validation during both hyperparameter optimization and performance assessment. Therefore, the performance metrics reported in Section 4 represent averages across the five folds rather than results from a single train-test split. This approach reduced variance in model estimates and provided more reliable generalization performance.

3.4.1. Binary Classification Modeling Stage

For the binary classification task, we adopted a model-specific feature selection and evaluation framework to ensure optimal alignment between each machine learning algorithm and its most relevant predictors. Specifically, we implemented a two-stage pipeline. First, we applied the SelectKBest method with ANOVA F-statistics independently for each model to retain a subset of predictive features that showed statistically significant associations with tooth loss. Second, these features were used collectively for model training, ensuring that each classifier leveraged multiple relevant predictors (17 in the binary task). Permutation importance was applied subsequently during the interpretability stage to rank predictors, whereas ANOVA-based SelectKBest was used exclusively for feature selection on the training data.

Using the selected features, we trained binary classifiers for eight widely used algorithms: logistic regression, random forest, SVM, KNN, decision tree, naive Bayes, CatBoost, and XGBoost.

Each model was trained and evaluated using a stratified 80/20 train–test split to preserve class balance across tooth loss categories. Hyperparameters for ensemble-based models (e.g., random forest, CatBoost, XGBoost) were optimized using randomized search over predefined parameter grids with 5-fold cross-validation. For gradient-boosting algorithms, early stopping was employed to prevent overfitting.
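A minimal sketch of the two-stage idea (ANOVA-based selection followed by a classifier, evaluated with stratified 5-fold cross-validation) is shown below. The data are synthetic, logistic regression stands in for any of the eight classifiers, and the sample size and random seeds are assumptions; only `k=17` and the 37 input features are taken from the text.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score, train_test_split
from sklearn.pipeline import Pipeline

# Synthetic stand-in for the cleaned survey data (37 candidate features).
X, y = make_classification(n_samples=600, n_features=37, n_informative=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Putting SelectKBest inside the pipeline refits the selection on each training fold,
# so held-out folds never influence which features are chosen.
pipeline = Pipeline(
    [
        ("select", SelectKBest(score_func=f_classif, k=17)),
        ("clf", LogisticRegression(max_iter=1000)),
    ]
)

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
cv_auc = cross_val_score(pipeline, X_train, y_train, cv=cv, scoring="roc_auc")

pipeline.fit(X_train, y_train)
n_selected = int(pipeline.named_steps["select"].get_support().sum())
```

Wrapping selection and classifier in a single pipeline is one straightforward way to honor the requirement that feature selection be performed on training folds only.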

Although the binary classification task exhibited a mild class imbalance (approximately 55% prevalence of tooth loss), we opted not to apply synthetic resampling techniques such as SMOTE or ADASYN. Instead, we leveraged class weighting strategies supported by various algorithms (e.g., logistic regression, SVM, XGBoost, and CatBoost) to mitigate imbalance during model training. This approach preserved the original data distribution and avoided the potential introduction of noise from synthetic samples, which can be detrimental in large-scale datasets with subtle class boundaries. Additionally, to ensure a robust evaluation under imbalance, model performance was assessed using the threshold-independent AUC together with sensitivity and precision, rather than relying solely on accuracy.
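To make the class-weighting idea concrete, the sketch below computes weights with the common "balanced" heuristic, weight_c = n_samples / (n_classes × n_c), which is what scikit-learn applies for `class_weight="balanced"`. The text does not state which weighting scheme was used, so this choice is an assumption, and the counts are illustrative values that merely match a 55% prevalence.

```python
def balanced_class_weights(class_counts: dict[int, int]) -> dict[int, float]:
    """Compute weight_c = n_samples / (n_classes * n_c) for each class."""
    n_samples = sum(class_counts.values())
    n_classes = len(class_counts)
    return {label: n_samples / (n_classes * count) for label, count in class_counts.items()}


# Illustrative counts with 55% positives (not the actual BRFSS counts).
weights = balanced_class_weights({0: 450, 1: 550})
```

With a 45/55 split the weights come out near 1.11 and 0.91, which shows how mild the correction is for an imbalance of this size.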

This selective modeling strategy enabled us to compare the predictive capacity of diverse algorithms using consistent evaluation criteria while maintaining model simplicity and interpretability.

3.4.2. Multiclass Classification Modeling Stage

To model the ordinal severity of tooth loss across four classes, we implemented a gradient boosting–based ensemble learning framework, comprising XGBoost, LightGBM, and CatBoost classifiers. Each model was trained within a resampling-aware pipeline using the adaptive synthetic sampling (ADASYN) method to mitigate class imbalance during training. Deep learning algorithms were not included in this study, as the BRFSS dataset primarily consists of structured, tabular data with categorical and ordinal variables. In such settings, tree-based ensemble methods such as XGBoost, CatBoost, and LightGBM have consistently outperformed deep neural networks in both accuracy and interpretability [

34]. Additionally, ensemble models offer faster training times, better handling of missing data, and built-in mechanisms for class imbalance—all of which align well with the scale and structure of the BRFSS data. Given the public health context of our application, we prioritized interpretable models that offer clear insights into feature importance, which is less straightforward in deep learning models.

1. Pipeline Architecture:

For each gradient-boosting algorithm, we constructed a dedicated ImbPipeline, placing ADASYN as the first step and the classifier as the second. This design ensures that oversampling is performed within each fold of cross-validation, thus preventing data leakage and maintaining the integrity of performance evaluation. The pipelines were as follows:

XGBoost Pipeline: includes ADASYN and xgb.XGBClassifier with eval_metric='logloss' and the label encoder disabled.

LightGBM Pipeline: combines ADASYN with lgb.LGBMClassifier.

CatBoost Pipeline: integrates ADASYN with CatBoostClassifier, configured to run silently with a fixed random seed.

2. Hyperparameter Tuning:

Each pipeline underwent hyperparameter optimization using GridSearchCV with 5-fold cross-validation. The multiclass One-vs-Rest AUC (macro-averaged) was used as the scoring metric to ensure balanced performance across all four tooth loss categories.

The search grids were deliberately constrained to small, high-performing hyperparameter spaces to reduce overfitting risk and expedite computation. The final grids used for tuning are summarized in Table 3.

The best estimator for each model was selected based on the highest macro-averaged AUC during cross-validation. These tuned models were subsequently used for ensemble construction.

3. Ensemble Construction:

The optimized base models were combined using a soft-voting ensemble implemented via VotingClassifier. In this approach, the predicted probabilities from each classifier are averaged, and the class with the highest mean probability is selected as the final classification. This method improves predictive robustness by leveraging the diverse strengths of each model:

XGBoost captures complex interactions and is efficient with sparse features.

LightGBM is highly efficient with large-scale data and leaf-wise growth.

CatBoost natively handles categorical variables and combats overfitting via ordered boosting.

The ensemble was trained on the full training set and evaluated on a stratified holdout set using macro-averaged metrics, confusion matrix analysis, and SHAP-based interpretability.

This ensemble-based architecture ensured stability, reduced variance, and improved the model’s capacity to generalize across varying severities of tooth loss.
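The tuning and soft-voting steps can be sketched with scikit-learn alone. To keep the example self-contained, scikit-learn estimators stand in for XGBoost, LightGBM, and CatBoost, the ADASYN step is omitted, and the data, grids, and seeds are assumptions; only the macro-averaged One-vs-Rest AUC scoring, the 5-fold cross-validation, and the soft-voting combination follow the description above.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier, VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, StratifiedKFold, train_test_split

# Synthetic four-class problem standing in for the severity target.
X, y = make_classification(
    n_samples=400, n_features=10, n_informative=6, n_classes=4,
    n_clusters_per_class=1, random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# Stand-in base learners with deliberately small grids (assumed values).
candidates = {
    "gb": (GradientBoostingClassifier(random_state=0), {"n_estimators": [20, 40]}),
    "rf": (RandomForestClassifier(random_state=0), {"n_estimators": [50, 100]}),
    "lr": (LogisticRegression(max_iter=1000), {"C": [0.1, 1.0]}),
}

tuned = []
for name, (estimator, grid) in candidates.items():
    # "roc_auc_ovr" is the macro-averaged One-vs-Rest AUC scorer.
    search = GridSearchCV(estimator, grid, scoring="roc_auc_ovr", cv=cv)
    search.fit(X_train, y_train)
    tuned.append((name, search.best_estimator_))

# Soft voting averages the predicted class probabilities of the tuned models.
ensemble = VotingClassifier(estimators=tuned, voting="soft")
ensemble.fit(X_train, y_train)
proba = ensemble.predict_proba(X_test)
```

In the setup described above, each base learner would instead be an imbalanced-learn pipeline with ADASYN as its first step, so that oversampling happens inside every cross-validation fold.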

3.5. Evaluation Stage

A consistent and rigorous evaluation framework was applied across both the binary and multiclass classification tasks to assess model performance from multiple perspectives. Five key classification metrics were used: accuracy, precision, sensitivity (recall), specificity, and area under the receiver operating characteristic curve (AUC). Each metric is briefly described below, along with its mathematical definition.

1. Accuracy (Overall Correctness):

Accuracy measures the proportion of total predictions that were correctly classified.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where:

$TP$: true positive;

$TN$: true negative;

$FP$: false positive;

$FN$: false negative.

2. Precision (Positive Predictive Value):

Precision measures the proportion of positive identifications that were actually correct.

$$\text{Precision} = \frac{TP}{TP + FP}$$

3. Sensitivity or Recall (True Positive Rate):

Sensitivity evaluates the model’s ability to correctly identify positive instances.

$$\text{Sensitivity} = \frac{TP}{TP + FN}$$

4. Specificity (True Negative Rate):

Specificity assesses the model’s ability to correctly identify negative instances.

$$\text{Specificity} = \frac{TN}{TN + FP}$$

5. Area Under the ROC Curve (AUC):

The ROC curve plots the true positive rate (sensitivity) against the false positive rate ($1 - \text{Specificity}$) at various threshold settings. The AUC summarizes the overall ability of the model to discriminate between classes.

$$\text{AUC} = \int_{0}^{1} \mathrm{TPR}(\mathrm{FPR}) \, d(\mathrm{FPR})$$

where TPR is the true positive rate as a function of FPR.

Metric Application to Binary and Multiclass Tasks

For the binary classification task, all metrics were computed based on the standard binary confusion matrix. The AUC was calculated directly using the conventional two-class ROC formulation.

For the multiclass classification task, the One-vs-Rest (OvR) strategy was applied. In OvR, a separate ROC curve is computed for each class against all others, and macro-averaging was used to calculate a single summary AUC across all classes. This approach ensures that all classes are treated equally, regardless of imbalance.
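The five metrics and the macro-averaged One-vs-Rest AUC can be checked on small inputs with a dependency-free sketch. The function names and toy inputs are illustrative; the AUC is computed through its rank interpretation (the probability that a randomly chosen positive receives a higher score than a randomly chosen negative, with ties counted as one half), which equals the area under the ROC curve.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, sensitivity, and specificity from 0/1 labels.

    Assumes every denominator is non-zero (both classes occur and are predicted).
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": tp / (tp + fp),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


def auc_score(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def macro_ovr_auc(y_true, proba, classes):
    """Unweighted mean of one-vs-rest AUCs; proba[i][k] is P(class k) for sample i."""
    per_class = []
    for k, label in enumerate(classes):
        is_label = [1 if t == label else 0 for t in y_true]
        per_class.append(auc_score(is_label, [row[k] for row in proba]))
    return sum(per_class) / len(per_class)


metrics = binary_metrics([1, 1, 1, 0, 0, 0, 1, 0], [1, 1, 0, 0, 0, 1, 1, 0])
auc = auc_score([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
toy_proba = [[0.7, 0.2, 0.1], [0.2, 0.6, 0.2], [0.1, 0.3, 0.6], [0.3, 0.5, 0.2]]
macro_auc = macro_ovr_auc([0, 1, 2, 1], toy_proba, classes=[0, 1, 2])
```

Because the macro average weights each class equally, a rare class such as complete tooth loss contributes as much to the summary AUC as the most common class.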

3.6. Model Interpretability and Feature Attribution

In addition to quantitative performance evaluation, we conducted an interpretability analysis to understand how the trained models arrived at their predictions and identify the most influential features contributing to tooth loss classification.

Two complementary methods were used:

1. Permutation Importance:

We computed permutation importance scores for each feature by measuring the drop in model performance (specifically, AUC) when that feature’s values were randomly permuted across the test set. This permutation breaks the statistical association between the feature and the target, thereby isolating its unique predictive contribution. Features that, when shuffled, caused a greater decrease in model performance were interpreted as more important. This method provides a model-agnostic, global ranking of feature relevance and was applied to all classifiers in both the binary and multiclass tasks.

2. SHAP Analysis:

To further dissect the decision-making behavior of the models, we generated SHAP (Shapley Additive Explanations) values for selected test instances. SHAP assigns each feature a contribution value toward the final prediction, based on cooperative game theory principles. Unlike permutation importance, SHAP provides both global and local interpretability:

Global SHAP Values: average absolute SHAP values were computed for each feature across the dataset to determine its overall influence on predictions.

Local SHAP Values: for individual predictions, SHAP decomposes the model output into additive contributions from each input feature, providing detailed, instance-specific explanations.

This two-pronged interpretability approach allowed us to validate model transparency, confirm alignment with known clinical risk factors (e.g., smoking, age, income, dental visits), and identify population-specific predictors of misclassification or prediction uncertainty.
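Permutation importance with AUC as the scoring metric can be sketched using scikit-learn's `permutation_importance`. The synthetic data, in which only the first feature drives the outcome, and the logistic regression model are assumptions made to keep the example small; SHAP values are not shown here.

```python
import numpy as np
from sklearn.inspection import permutation_importance
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 3))
# Synthetic target driven by feature 0 only; features 1 and 2 are pure noise.
y = (X[:, 0] + 0.3 * rng.normal(size=1000) > 0).astype(int)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)
model = LogisticRegression().fit(X_train, y_train)

# Shuffle each feature on the test set and record the resulting drop in AUC.
result = permutation_importance(
    model, X_test, y_test, scoring="roc_auc", n_repeats=10, random_state=0
)
ranking = np.argsort(result.importances_mean)[::-1]  # most important first
```

Shuffling the informative feature pushes the AUC toward chance, while shuffling a noise feature leaves it almost unchanged, which is the behavior the ranking relies on.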

Implementation Details

All experiments, modeling, and evaluation pipelines were developed using Python version 3.10, ensuring compatibility with the latest stable machine learning and data science libraries. The key libraries and packages used are shown in Table 4.

4. Results

The Results section is structured as follows. Feature importance for the binary task (any versus no tooth loss) is examined first, with accompanying model performance metrics (accuracy, precision, recall, specificity, and AUC). This is followed by the multiclass analysis across the four severity classes (none, 1–5, 6 or more, and all teeth removed), again reporting both the most influential predictors and classification results. Finally, SHAP-based interpretability offers a global and local view of how individual features drive predictions.

4.1. Task 1: Binary Classification of Tooth Loss

We begin by identifying the top predictors of any tooth loss using univariate ANOVA (SelectKBest) for feature selection on the training data. Permutation importance was subsequently applied in the interpretability stage to provide a model-agnostic ranking of predictor influence across classifiers. The features retained via SelectKBest were then used as inputs to a suite of machine-learning classifiers (logistic regression, random forest, SVM, KNN, decision tree, naive Bayes, CatBoost, XGBoost). For each model, we report five key performance metrics (accuracy, precision, recall (sensitivity), specificity, and ROC AUC) to allow direct comparison of predictive power and trade-offs.

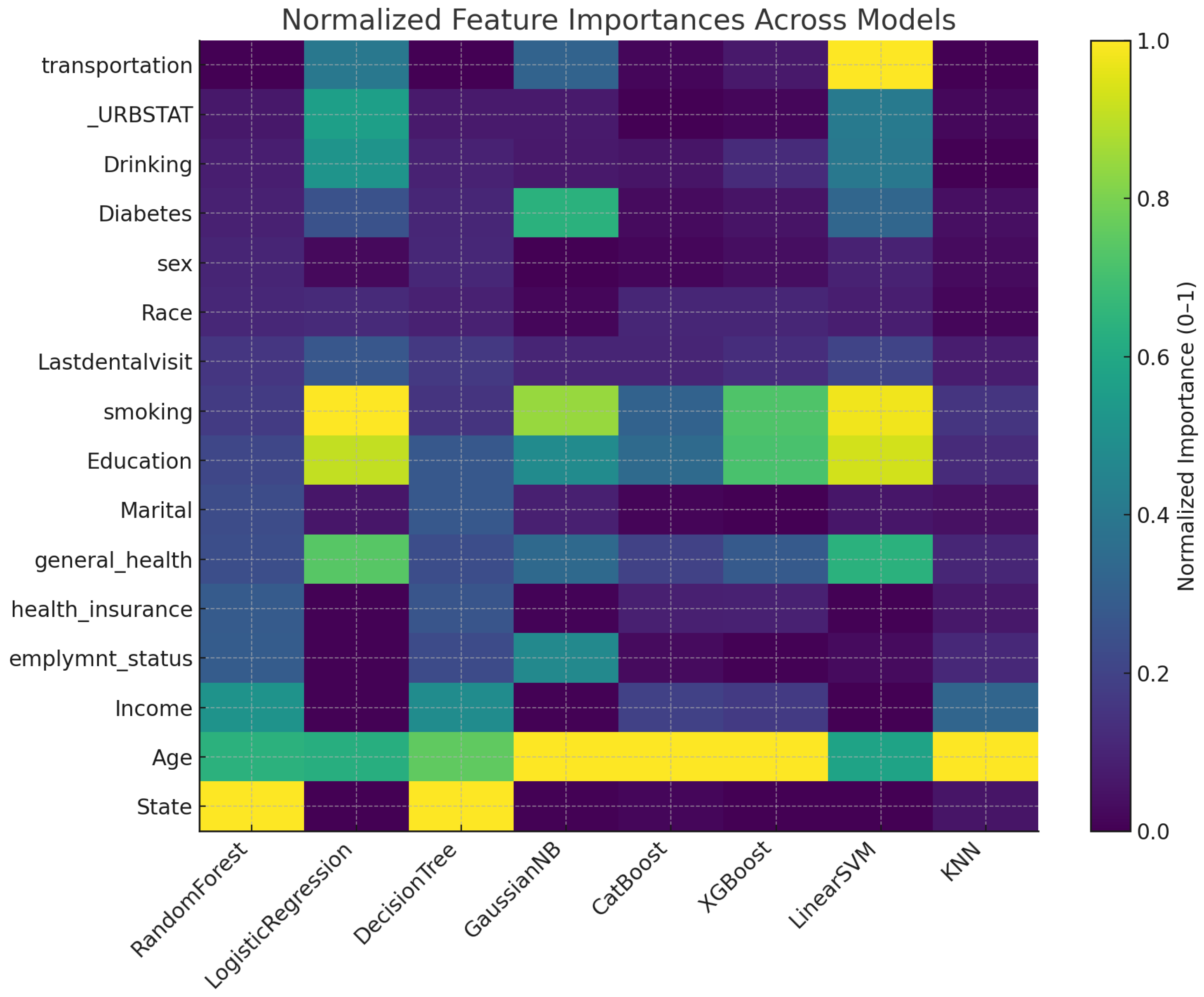

4.1.1. Feature Importance

Figure 3 displays a heatmap of each predictor’s relative importance after min–max scaling to the [0, 1] range for all models. Darker shades indicate features that a given algorithm relied on most heavily. Notice that Age is consistently among the top predictors across all eight methods, whereas features such as sex and _URBSTAT remain near zero in most models. Tree-based learners (random forest, CatBoost, XGBoost) and distance-based KNN place greater emphasis on smoking, education, and general_health, while linear models (logistic regression, linear SVM) distribute importance more evenly but still highlight smoking and general_health. This visualization underscores both the common and model-specific drivers of severe tooth-loss prediction.
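Because raw importance scores live on different scales for different algorithms, a per-model rescaling is needed before they can share one heatmap. The sketch below shows min–max scaling applied within each model, assuming the conventional [0, 1] target range. The importance values and model keys are hypothetical placeholders, not the values behind Figure 3.

```python
def min_max_scale(values):
    """Map a list of numbers linearly onto [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant input carries no ranking
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical raw importances on model-specific scales.
raw_importance = {
    "random_forest": {"Age": 0.40, "smoking": 0.25, "sex": 0.05},
    "logistic_regression": {"Age": 1.8, "smoking": 1.1, "sex": 0.2},
}

# Scale within each model so rows of the heatmap are comparable.
scaled = {
    model: dict(zip(imp.keys(), min_max_scale(list(imp.values()))))
    for model, imp in raw_importance.items()
}
```

Scaling within each model preserves the ranking of features for that model but discards absolute magnitudes, so the heatmap supports comparisons of relative emphasis only.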

4.1.2. Model Performance

Table 5 presents accuracy, precision, recall (sensitivity), specificity, and AUC for eight classifiers on an 80/20 stratified split of the cleaned BRFSS 2022 data. Notably, the boosted ensembles, CatBoost (accuracy 0.711, precision 0.693, recall 0.677, specificity 0.740, AUC 0.786) and XGBoost (accuracy 0.714, precision 0.693, recall 0.675, specificity 0.741, AUC 0.786), achieve the highest discrimination and overall accuracy. Logistic regression follows closely (accuracy 0.705, AUC 0.773), balancing interpretability with strong performance. Random forest (accuracy 0.688, AUC 0.750) and naive Bayes (accuracy 0.677, AUC 0.742) deliver robust results, while simpler models—KNN (accuracy 0.665, AUC 0.712), SVM (accuracy 0.648, AUC 0.660), and decision tree (accuracy 0.616, AUC 0.612)—show lower efficacy. These outcomes highlight the superior predictive power of gradient-boosting methods for binary tooth-loss classification.
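The four threshold-based metrics in Table 5 all derive from the same confusion-matrix counts, which the following sketch makes explicit. The counts used here are hypothetical and were chosen only to give round numbers; they are not the study's confusion matrix.

```python
def safe_div(numerator, denominator):
    """Return NaN instead of raising when a denominator is zero."""
    return numerator / denominator if denominator else float("nan")

def binary_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall (sensitivity), and specificity."""
    return {
        "accuracy": safe_div(tp + tn, tp + fp + tn + fn),
        "precision": safe_div(tp, tp + fp),    # of predicted positives, share correct
        "recall": safe_div(tp, tp + fn),       # of true positives, share found
        "specificity": safe_div(tn, tn + fp),  # of true negatives, share found
    }

# Hypothetical counts for a test split of 200 respondents.
metrics = binary_metrics(tp=68, fp=26, tn=74, fn=32)
```

ROC AUC is not included because it is computed from the ranked predicted probabilities rather than from a single thresholded confusion matrix.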

4.2. Task 2: Multiclass Classification of Tooth Loss

Here, we first perform feature selection via Pearson correlation and then enrich the feature set with domain-informed engineered variables. A custom ensemble of gradient-boosting learners (XGBoost, CatBoost, LightGBM) is trained to predict one of four ordered severity classes (none, 1–5, 6+, all removed). We present the overall accuracy and multiclass ROC AUC, followed by class-wise precision and recall. Finally, we conduct sub-analyses to explore how key socioeconomic and demographic factors—education, employment status, state of residence, age group, income bracket—relate to severity outcomes, illuminating underlying patterns in the data.
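The text does not specify how the three gradient-boosting learners are combined. One common choice is soft voting, in which the predicted class probabilities are averaged and the highest-scoring class is selected. The sketch below assumes that scheme purely for illustration; the function name, equal weights, and probability vectors are hypothetical.

```python
def soft_vote(prob_sets, weights=None):
    """Average class-probability vectors from several models.

    prob_sets: one probability vector per model for a single respondent.
    weights:   optional per-model weights (equal weighting by default).
    Returns the averaged vector and the index of the predicted class.
    """
    if weights is None:
        weights = [1.0] * len(prob_sets)
    total = sum(weights)
    n_classes = len(prob_sets[0])
    avg = [
        sum(w * p[c] for w, p in zip(weights, prob_sets)) / total
        for c in range(n_classes)
    ]
    predicted = max(range(n_classes), key=avg.__getitem__)
    return avg, predicted

# Hypothetical outputs for classes (none, 1-5, 6+, all removed).
xgb_probs = [0.1, 0.5, 0.3, 0.1]
cat_probs = [0.2, 0.3, 0.4, 0.1]
lgbm_probs = [0.1, 0.3, 0.5, 0.1]

avg, predicted = soft_vote([xgb_probs, cat_probs, lgbm_probs])
```

Averaging probabilities rather than hard labels lets a confident model outweigh two uncertain ones, which matters most for borderline cases between adjacent severity classes.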

4.2.1. Feature Importance

Figure 4 shows that the location indicator (_STATE) is the strongest predictor (importance ≈ 91.7), followed closely by alcohol-use frequency (ALCDAY4 ≈ 87.7) and age (AGE_VALUE ≈ 79.7). Composite risk measures (HEALTH_RISK, EDUCA_INCOME) and clinical factors (DIABETE4, GENHLTH) occupy the mid-range. Behavioral and access variables (SMOKDAY2, EDUCA, MEDCOST1, PERSDOC3, CARE_ACCESS, INCOME3) contribute moderately, while sociodemographic and interaction terms (URBSTAT, SMOKE100, AGE_INCOME, EXERANY2) have a lower influence. Polynomial age terms (AGE_SQUARED, AGE_CUBIC) register minimal importance, indicating most predictive power is captured by the linear age effect.
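The engineered variable names suggest polynomial and interaction terms. A minimal sketch of how such features could be derived is given below, under the assumption that AGE_SQUARED and AGE_CUBIC are powers of AGE_VALUE and that AGE_INCOME and EDUCA_INCOME are simple products. These definitions are inferred from the names only. HEALTH_RISK and CARE_ACCESS are omitted because their construction is not described in this section.

```python
def add_engineered_features(record):
    """Return a copy of one respondent record with derived columns added.

    Assumed definitions (inferred from the variable names):
      AGE_SQUARED  = AGE_VALUE ** 2
      AGE_CUBIC    = AGE_VALUE ** 3
      AGE_INCOME   = AGE_VALUE * INCOME3
      EDUCA_INCOME = EDUCA * INCOME3
    """
    out = dict(record)
    age, income, educa = record["AGE_VALUE"], record["INCOME3"], record["EDUCA"]
    out["AGE_SQUARED"] = age ** 2
    out["AGE_CUBIC"] = age ** 3
    out["AGE_INCOME"] = age * income
    out["EDUCA_INCOME"] = educa * income
    return out

# Hypothetical respondent using BRFSS-style coded values.
example = add_engineered_features({"AGE_VALUE": 60, "INCOME3": 5, "EDUCA": 4})
```

Tree-based learners can approximate smooth non-linear age effects through repeated splits on the raw variable, which is one plausible reason the polynomial terms add little importance.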

4.2.2. Model Performance

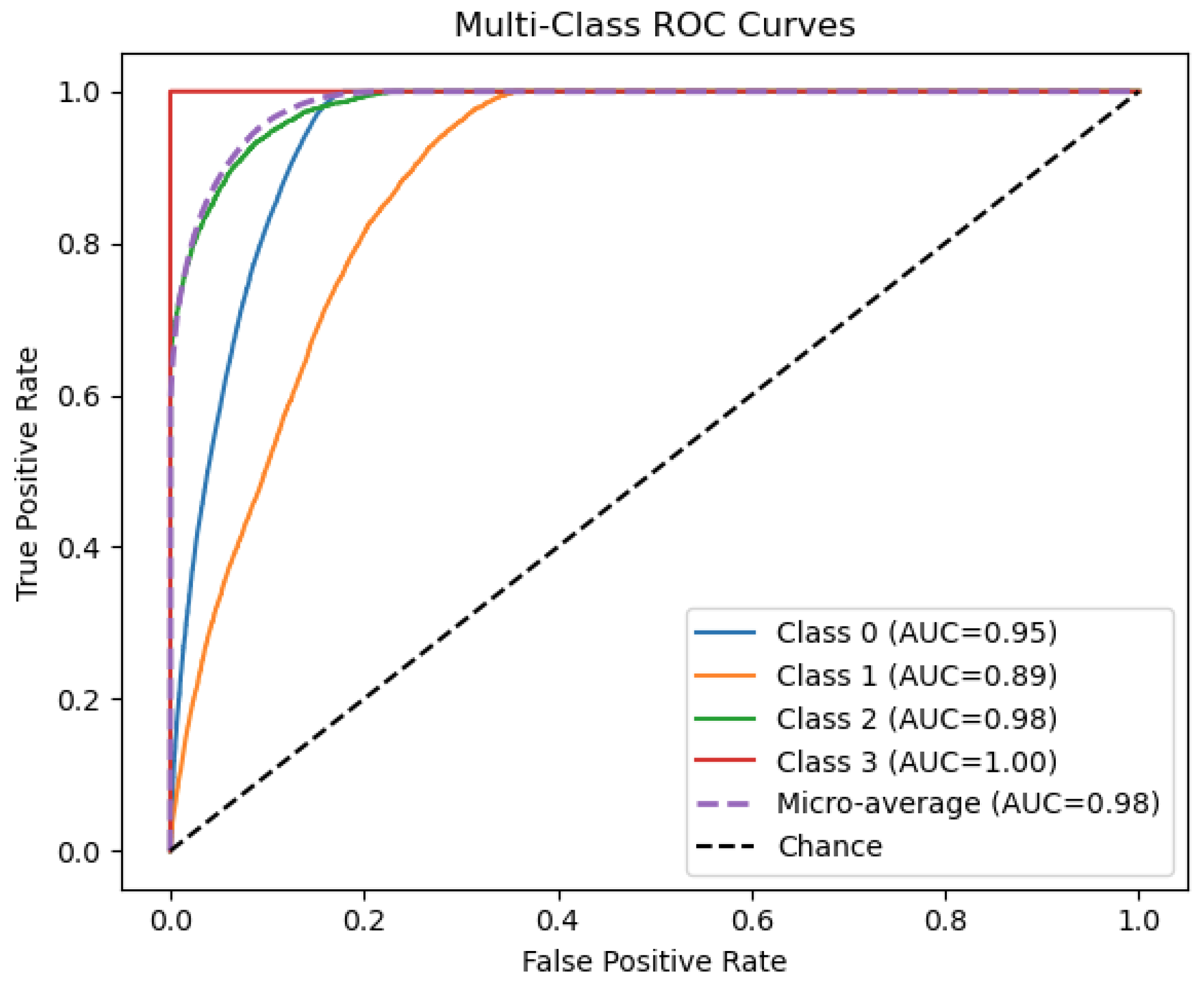

Figure 5 illustrates the discriminative ability of our ensemble model across four outcome categories. Class 3 achieves perfect separation (AUC = 1.00), while Classes 0 and 2 also show excellent performance (AUC = 0.95 and 0.98, respectively). Class 1 is the most challenging to distinguish (AUC = 0.89), indicating room for improvement on that subgroup. The micro-average ROC (AUC = 0.98) confirms that, overall, the classifier maintains very high sensitivity and specificity when aggregating across all classes.
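Per-class and averaged AUCs of this kind are usually computed one-vs-rest. The sketch below uses the rank interpretation of AUC (the probability that a randomly chosen positive is scored above a randomly chosen negative) and shows how micro- and macro-averages differ. The probability vectors are hypothetical and perfectly separable by construction.

```python
def roc_auc(scores, labels):
    """AUC as P(score of a positive > score of a negative); ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg
    )
    return wins / (len(pos) * len(neg))

def one_vs_rest_auc(probs, y_true, n_classes):
    """Per-class, micro-averaged, and macro-averaged one-vs-rest AUC."""
    per_class = {
        c: roc_auc([p[c] for p in probs], [1 if y == c else 0 for y in y_true])
        for c in range(n_classes)
    }
    # Micro-average: pool every (respondent, class) pair into one binary task.
    flat_scores = [p[c] for p in probs for c in range(n_classes)]
    flat_labels = [1 if y == c else 0 for y in y_true for c in range(n_classes)]
    micro = roc_auc(flat_scores, flat_labels)
    # Macro-average: unweighted mean of the per-class AUCs.
    macro = sum(per_class.values()) / n_classes
    return per_class, micro, macro

# Hypothetical predicted probabilities for four respondents, classes 0-3.
probs = [
    [0.70, 0.20, 0.05, 0.05],
    [0.30, 0.50, 0.10, 0.10],
    [0.10, 0.30, 0.50, 0.10],
    [0.05, 0.05, 0.10, 0.80],
]
y_true = [0, 1, 2, 3]

per_class, micro, macro = one_vs_rest_auc(probs, y_true, n_classes=4)
```

The micro-average is dominated by the many easy negative pairs when classes are imbalanced, so it can sit well above the weakest per-class AUC.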

4.3. SHAP Analysis

SHAP values provide a unified measure of each feature’s contribution to individual predictions, enabling both global insight into model behavior and local understanding of specific cases.

4.3.1. Geographic and Demographic Patterns

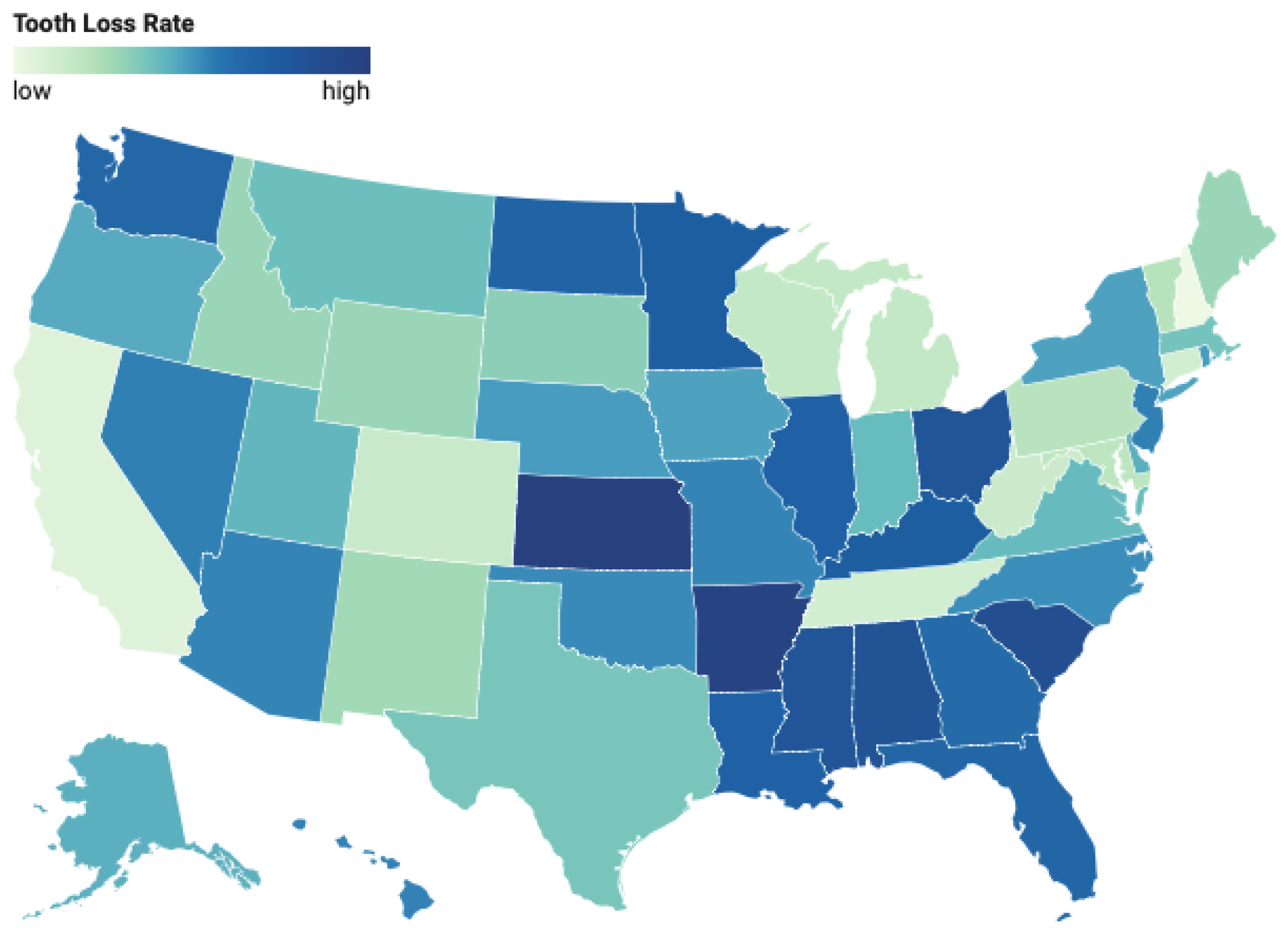

Figure 6 illustrates the geographic variation in tooth loss prevalence across U.S. states. As seen in the choropleth, states in the Appalachian region—particularly West Virginia, Kentucky, and Tennessee—as well as parts of the Deep South, exhibit significantly higher tooth loss rates. Conversely, states in the Northeast (e.g., Massachusetts, Connecticut) and the West (e.g., California, Colorado) report comparatively lower prevalence rates. These disparities remain evident even after adjusting for socioeconomic and behavioral variables, underscoring the potential influence of region-specific healthcare access, preventive care infrastructure, and cultural health practices.

Figure 7 illustrates that the prevalence of severe tooth loss increases markedly across successive age brackets. Among adults aged 18–29, fewer than 2 percent have lost six or more teeth; this proportion rises to approximately 5 percent in the 30–39 group, 8 percent in 40–49, and 17 percent in 50–59. By ages 60–69, nearly one quarter (24 percent) report severe tooth loss, climbing further to 28 percent in those aged 70–79, and exceeding one third (35 percent) in the 80+ cohort. This clear upward trend underscores age as a strong predictor of tooth-loss severity.

4.3.2. Behavioral Risk Factors

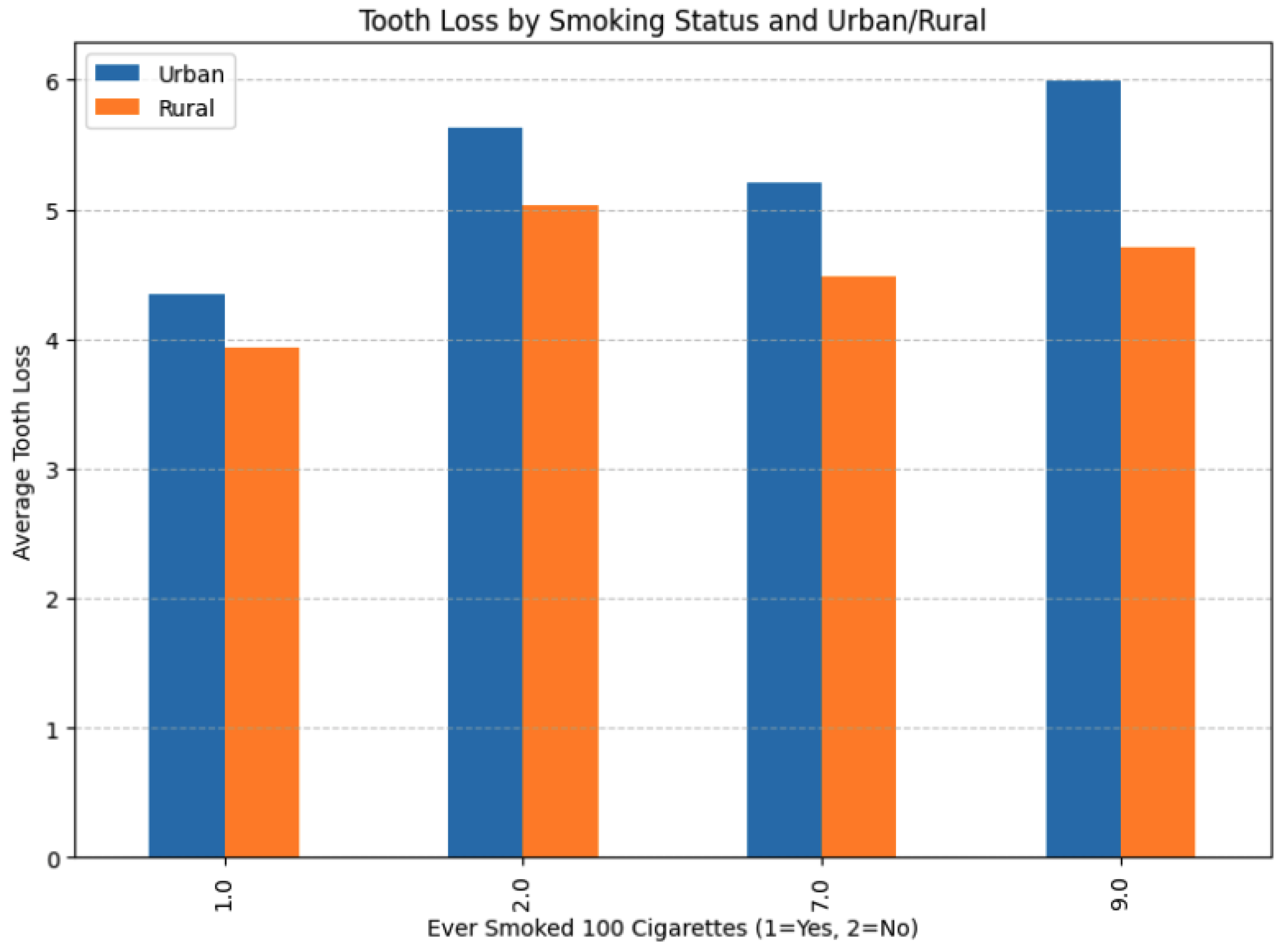

Figure 8 presents the average tooth-loss count across four smoking-status categories (1 = ever smoked 100 cigarettes; 2 = never; 7 and 9 are imputed) for urban (blue) and rural (orange) respondents. Smoking emerged as the strongest behavioral predictor of tooth loss. Among daily smokers, 70.0% had experienced any permanent tooth removal, versus 57.2% of occasional smokers and 58.2% of former smokers (those who reported ≥100 lifetime cigarettes but no current smoking). When restricting to severe tooth loss (≥ 6 teeth removed or all teeth), the gradient sharpened: 30.2% of daily smokers had severe loss compared to 18.5% of occasional smokers and 22.7% of former smokers.

Regular physical activity exerted a protective effect across the lifespan. In the 65+ cohort, 59.1% of respondents who reported exercise in the past 30 days had experienced tooth loss, whereas 69.9% of non-exercisers did so. Diabetes status also strongly predicted tooth loss, with the association intensifying with age: in those aged ≥65, 74.3% of diabetics had tooth loss compared to 62.9% of non-diabetics and 69.8% of individuals with pre-diabetes. Moreover, the combination of multiple risk factors—smoking, inactivity, and diabetes—was synergistic: among seniors, the tooth-loss rate was 86.3% for individuals with all three risk factors versus 51.2% for those with none, a 35.1 percentage-point difference.
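Stratified prevalences and percentage-point gaps of the kind reported above can be computed as in the following sketch. The records are synthetic, the field names are hypothetical, and the calculation is unweighted. Whether the reported figures incorporate BRFSS survey weights is not stated in this section.

```python
from collections import defaultdict

def prevalence_by_group(records, group_key, outcome_key):
    """Percentage of records with the outcome, per level of group_key."""
    counts = defaultdict(lambda: [0, 0])  # group -> [cases, total]
    for r in records:
        counts[r[group_key]][0] += int(r[outcome_key])
        counts[r[group_key]][1] += 1
    return {g: 100.0 * cases / total for g, (cases, total) in counts.items()}

def percentage_point_gap(rate_a, rate_b):
    """Absolute difference between two percentages, to one decimal place."""
    return round(rate_a - rate_b, 1)

# Synthetic records: number of risk factors present and tooth-loss indicator.
records = [
    {"n_risk_factors": 3, "tooth_loss": 1},
    {"n_risk_factors": 3, "tooth_loss": 1},
    {"n_risk_factors": 3, "tooth_loss": 1},
    {"n_risk_factors": 3, "tooth_loss": 0},
    {"n_risk_factors": 0, "tooth_loss": 1},
    {"n_risk_factors": 0, "tooth_loss": 0},
]
rates = prevalence_by_group(records, "n_risk_factors", "tooth_loss")

# Gap between the two rates quoted in the text for seniors.
reported_gap = percentage_point_gap(86.3, 51.2)
```

A percentage-point gap is an absolute difference. The corresponding relative difference (86.3 / 51.2, roughly 1.7 times) conveys a different sense of effect size and should be reported separately if needed.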

4.3.3. Socioeconomic Factors

Figure 9 presents two heatmaps illustrating the distribution of severe tooth loss (defined as the removal of six or more teeth) across dimensions of age, income, and urbanicity. In the left panel, the prevalence of severe tooth loss is plotted by age group and urbanicity. A clear gradient emerges: as age increases, the proportion of individuals with severe tooth loss rises steadily in both rural and urban settings. However, rural populations consistently exhibit higher prevalence rates across all age strata. Notably, in the oldest age group (group 13), the prevalence reaches 26% in rural areas compared to 19% in urban areas, highlighting a substantial urban-rural disparity.

The right panel of Figure 9 explores severe tooth loss in relation to income level and urbanicity. Here, a pronounced inverse relationship between income and tooth loss is evident. Among the lowest income groups, the rural population exhibits notably higher tooth loss prevalence, peaking at 32% in income group 2, versus 26% among their urban counterparts. As income increases, this disparity narrows, with tooth loss prevalence dropping below 5% in the highest income brackets for both urban and rural residents.

Education and income exhibited strong, inverse associations with tooth loss. In the 65+ age group, 79.1% of respondents with less than a high-school education reported tooth loss versus 53.4% of college graduates (a 25.7 percentage-point gap). Similarly, lower income brackets corresponded to higher loss prevalence, and although partially mediated by health behaviors and access, the income gradient remained significant after multivariable adjustment.

Healthcare access barriers further exacerbated disparities. Among respondents reporting “fair” general health, 66.7% of those unable to see a doctor due to cost had tooth loss, compared to 53.2% of those without cost constraints. The relationship with provider continuity was more nuanced: individuals with multiple healthcare providers initially appeared to have higher tooth-loss rates, but this reflected confounding by age and comorbidities. After adjustment, having a single, consistent provider was associated with lower tooth-loss prevalence, underscoring the value of coordinated care.

Collectively, these geographic, behavioral, and socioeconomic patterns highlight the multifactorial nature of tooth loss and point to targeted intervention opportunities—from tobacco cessation and exercise promotion to improving rural dental access and mitigating financial barriers to care.

5. Discussion

This study investigated predictors of tooth loss using the 2022 BRFSS dataset through two complementary machine learning frameworks: binary classification (any tooth loss vs. none) and multiclass classification (severity of tooth loss across four categories). The large, nationally representative dataset, combined with advanced modeling and interpretability techniques, provides important insights into the complex interplay of demographic, socioeconomic, behavioral, and geographic factors influencing oral health outcomes.

5.1. Binary Classification Insights

In the binary task, gradient boosting models—specifically XGBoost, gradient boosting classifier, and CatBoost—consistently outperformed baseline algorithms across evaluation metrics. Feature importance analyses revealed that age, education level, income, smoking behavior, and diabetes status were the strongest predictors of tooth loss.

The dominant influence of smoking on tooth loss was particularly notable, aligning with biological mechanisms such as reduced blood flow, impaired immune response, and heightened inflammation in periodontal tissues. Daily smoking, compared to occasional or former smoking, showed significantly higher tooth loss prevalence, reinforcing smoking cessation as a public health priority.

Socioeconomic factors emerged as powerful determinants. Lower education and income levels were strongly associated with increased tooth loss risk, underscoring systemic disparities in access to preventive dental care, health literacy, and health-promoting behaviors. These findings emphasize the need for targeted interventions in socioeconomically disadvantaged populations.

5.2. Multiclass Classification Insights

The multiclass ensemble model demonstrated strong overall predictive performance, achieving a test accuracy of 86.9% and a macro-averaged ROC AUC of 0.98. Class-specific ROC curves indicated excellent discriminative ability, with the model achieving near-perfect performance for individuals with no tooth loss (Class 0; AUC = 0.95) and those who had lost all their teeth (Class 3; AUC = 1.00). Intermediate severity levels posed slightly more classification challenges: Class 1 (1–5 teeth removed) had the lowest AUC (0.89), while Class 2 (6 or more teeth removed, but not all) showed high separability (AUC = 0.98).

The comparatively lower classification performance for Class 1 (1–5 teeth removed) in the multiclass model can be attributed to several factors. First, this group likely exhibits substantial heterogeneity in both behavioral and clinical profiles, making it more difficult for the model to distinguish from adjacent categories. Individuals in this class may range from those with minor carious lesions to those with early-stage periodontal disease, resulting in overlapping feature distributions with both Class 0 (no tooth loss) and Class 2 (6 or more teeth removed). Additionally, the subjective nature of self-reporting may lead to misclassification or rounding, particularly between 5 and 6 teeth removed, which could further blur the decision boundary. This challenge highlights the need for more granular and objective measures of dental health, as well as improved feature representations to better capture the nuances of intermediate severity levels.

An analysis of the confusion matrix revealed that misclassifications most commonly occurred between adjacent severity levels, suggesting that the models captured the ordinal nature of disease progression to a reasonable extent. This validates the feasibility of using survey-based ML approaches for fine-grained oral health risk stratification. To contextualize our findings, Table 6 presents a comparative overview of selected peer-reviewed studies that have employed machine learning techniques for tooth loss prediction or periodontal disease diagnosis. These studies vary considerably in data modalities, model architectures, and evaluation strategies, providing a broad landscape for benchmarking our model’s performance and applicability. Unlike prior studies focused on radiographic image analysis (e.g., Sunnetci et al. [35], Lee et al. [36]), our approach exploits structured survey data, achieving competitive AUCs without requiring specialized imaging inputs. In contrast to smartphone-based self-assessment systems [37], our models scale to hundreds of thousands of records and reveal both behavioral and socioeconomic drivers of tooth loss at the population level.
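The observation that errors concentrate between adjacent severity levels can be quantified directly from a confusion matrix, as in the sketch below. The matrix is hypothetical and is not the one obtained in this study; rows are true classes and columns are predicted classes, ordered from no loss to all teeth removed.

```python
def adjacent_error_share(confusion):
    """Fraction of misclassifications that land in a neighbouring class.

    confusion[i][j] is the count of true class i predicted as class j,
    with classes listed in their natural severity order.
    """
    errors = 0
    adjacent = 0
    for i, row in enumerate(confusion):
        for j, count in enumerate(row):
            if i != j:
                errors += count
                if abs(i - j) == 1:
                    adjacent += count
    return adjacent / errors if errors else 0.0

# Hypothetical 4x4 confusion matrix (classes: none, 1-5, 6+, all removed).
confusion = [
    [90, 8, 2, 0],
    [10, 70, 18, 2],
    [1, 12, 85, 2],
    [0, 0, 3, 97],
]
share = adjacent_error_share(confusion)
```

A share close to 1 indicates that the classifier respects the ordinal structure of the outcome even though it was trained with nominal class labels.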

5.3. Interpretability and SHAP Analysis

Permutation importance and SHAP analyses provided granular insights into the contribution of individual features:

Smoking: This study presents several key findings regarding predictors of tooth loss and their complex interrelationships. First, our results confirm the paramount importance of smoking as a risk factor for tooth loss, consistent with previous research linking smoking to periodontal disease and accelerated tooth loss. The dose–response relationship observed—with daily smokers showing higher tooth loss rates than occasional smokers—aligns with biological mechanisms of tobacco’s effects on periodontal tissues, including reduced blood flow, altered immune response, and increased inflammatory markers.

Exercise as a protective factor: Regular physical activity emerged as a novel and consistent protective factor against tooth loss. Among adults aged 65 and older, the prevalence of tooth loss was substantially lower among those who reported exercising in the past 30 days (59.1%) compared to their non-exercising counterparts (69.9%). This association remained robust even after adjusting for smoking status, diabetes, education, and income level. These findings suggest that the systemic benefits of exercise—such as reduced inflammation, enhanced glycemic control, and improved vascular function—may extend to oral health by mitigating risk pathways for periodontal disease and tooth loss.

Urban–Rural Disparities: In addition to individual-level determinants, the analysis revealed marked geographic disparities in tooth loss prevalence. Rural populations consistently exhibited higher rates of tooth loss compared to urban counterparts, even after controlling for demographic and behavioral covariates. This suggests the influence of structural and environmental barriers—including the limited availability of dental care providers, inadequate transportation infrastructure, and variability in Medicaid dental coverage across states. Furthermore, after adjusting for age and comorbidity burden, having a consistent healthcare provider—whether medical or dental—was associated with reduced tooth loss, underscoring the importance of integrated, continuous care in promoting long-term oral health outcomes.

Healthcare Provider Access: The association between healthcare provider status and tooth loss revealed nuanced patterns. While individuals with multiple providers initially appeared at higher risk, this relationship was largely confounded by age and comorbidity burden. After adjustment, having a single, consistent provider—whether medical or dental—was associated with reduced tooth loss, highlighting the protective role of continuous, coordinated care.

Compounding Effects of Geography and Socioeconomics: Heatmap analyses underscored the intersecting impact of geographic and socioeconomic factors on tooth loss severity. Rural residence, low income, and advanced age emerged as significant compounding risk factors. The urban–rural tooth loss disparity persisted even after controlling for behavioral and socioeconomic variables, suggesting additional structural influences such as provider availability, water fluoridation policies, and cultural attitudes toward preventive dental care.

Diabetes strongly influenced tooth loss risk, particularly among older adults, reaffirming its role as a major systemic risk factor for periodontal disease. It also exacerbates chronic inflammation and impairs tissue repair, accelerating periodontal breakdown and bone loss.

The synergistic effect of multiple risk factors (e.g., smoking, diabetes, no exercise) was particularly striking. Individuals exposed to all three showed disproportionately elevated tooth loss rates compared to those with isolated exposures, suggesting cumulative biological and behavioral impacts.

Several limitations must be acknowledged:

Self-Reported Data: All BRFSS measures were self-reported, introducing potential recall and reporting biases, particularly around sensitive behaviors such as smoking, income, and oral health history.

Cross-Sectional Design: The cross-sectional nature of the data precludes causal inference. Longitudinal studies are needed to validate temporal associations between risk factors and tooth loss progression.

Missing Clinical Variables: Important oral health behaviors (e.g., brushing frequency, flossing habits, dietary sugar intake) and clinical parameters (e.g., periodontal probing depths) were not available, potentially limiting model performance.

Sample Imbalance and Representativeness: Although BRFSS applies survey weights to improve generalizability, certain vulnerable populations (e.g., non-English speakers, institutionalized adults) may remain underrepresented.

Despite these limitations, this study offers significant advancements:

The use of a large, diverse, and nationally representative dataset capturing broad sociodemographic and behavioral variables.

The application of advanced ML pipelines, including class balancing (ADASYN), cross-validation, hyperparameter tuning, and ensemble learning.

The integration of interpretability methods (permutation importance, SHAP) to enhance transparency and model trustworthiness.

The identification of novel protective factors (e.g., exercise) and systemic disparities (urban–rural gaps) in tooth loss risk.

Overall, our model demonstrates competitive predictive power relative to image-based systems while offering improved scalability and public health relevance through its reliance on accessible, non-clinical survey data.

6. Conclusions and Future Work

In this work, we developed and evaluated both binary and multi-class machine-learning frameworks to predict tooth-loss patterns in U.S. adults using the 2022 BRFSS LLCP dataset. By training a diverse suite of models—including logistic regression, decision trees, random forests, gradient boosting, AdaBoost, CatBoost, and XGBoost—we demonstrated that ensemble approaches can robustly identify individuals at risk for any tooth loss, as well as stratify the severity of loss across multiple categories. Across both tasks, demographic and socioeconomic factors (age, education, income) and behavioral risk factors (smoking, diabetes, physical activity) consistently emerged as the strongest predictors, reaffirming longstanding public-health evidence and highlighting novel protective associations with regular exercise. Moreover, our multi-class analysis revealed that severe tooth-loss cases are exceptionally well discriminated, while mild-loss phenotypes remain more challenging to distinguish—an insight that points to opportunities for model refinement and additional feature collection.

The geographic and urban–rural disparities uncovered in our study underscore the importance of structural determinants in shaping oral health. Even after adjustments for individual risk profiles, residents of Appalachian and rural regions exhibited disproportionately high tooth-loss rates, calling for targeted interventions such as teledentistry, mobile clinics, and expanded public insurance coverage in underserved areas.

Despite these advances, our study is constrained by the cross-sectional design of the BRFSS and its reliance on self-reported measures, which limits causal inference and introduces potential biases. Key variables—such as smoking status, dental visit frequency, and diabetes diagnosis—are particularly susceptible to recall errors and social desirability bias, potentially leading to underreporting or misclassification. These inaccuracies can reduce input fidelity and, in turn, influence model performance. In addition, the BRFSS lacks clinically verified oral health measures such as periodontal probing depths, plaque indices, and radiographic findings. This omission restricts the model’s ability to capture subclinical disease progression or differentiate between underlying causes of tooth loss, thereby limiting its clinical generalizability. Moreover, as no external validation was performed in this study, the generalizability of the models beyond the BRFSS population remains untested. While the survey’s standardized methodology and large sample size help mitigate some sources of error, future research should prioritize the use of clinically validated data—such as electronic health records or dental examination datasets—to enhance predictive robustness and granularity. To further improve temporal validity and public health utility, longitudinal datasets like NHANES may be leveraged. Moreover, incorporating environmental factors (e.g., community water fluoridation) and adopting fairness-aware modeling techniques will be essential to improve model performance among underrepresented subgroups. Finally, we advocate for the integration of these predictive frameworks into community health programs and clinical decision support systems, where they can guide personalized prevention strategies and equitable resource allocation.

In summary, we developed interpretable machine learning models for classifying both the presence and severity of tooth loss using nationally representative BRFSS 2022 data. Our findings demonstrate the potential of ensemble-based models to identify key behavioral, demographic, and systemic risk factors associated with tooth loss in U.S. adults. While the models showed strong performance across multiple evaluation metrics, the absence of clinical validation and reliance on self-reported survey data limit their immediate applicability in clinical settings. Therefore, these results should be viewed as preliminary and hypothesis-generating. Future work should explore external validation using electronic health records, integrate clinical oral health variables, and assess the models’ utility in real-world public health and clinical decision-making contexts.

Author Contributions

Conceptualization, S.S. and V.G.; methodology, S.S.; software, S.S.; validation, S.S., G.V. and V.G.; formal analysis, S.S.; investigation, S.S.; resources, V.G. and C.K.; data curation, S.S.; writing—original draft preparation, S.S.; writing—review and editing, G.V., V.G. and C.K.; visualization, S.S.; supervision, V.G. and C.K.; project administration, V.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable. This study did not involve any experiments with human or animal subjects.

Informed Consent Statement

Not applicable. This study did not involve human participants.

Data Availability Statement

Acknowledgments

During the preparation of this manuscript, the authors used Mendeley for bibliography management and article selection. ChatGPT was used to perform basic operations for keyword counting and table generation. Gemini was used to generate BibTeX entries from web URLs. The authors have reviewed and edited the output and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Petersen, P.E.; Bourgeois, D.; Ogawa, H.; Estupinan-Day, S.; Ndiaye, C. The global burden of oral diseases and risks to oral health. Bull. World Health Organ. 2005, 83, 661–669.

- Peres, M.A.; Macpherson, L.M.D.; Weyant, R.J.; Daly, B.; Venturelli, R.; Mathur, M.; Listl, S.; Celeste, R.K.; Guarnizo-Herreño, C.C.; Kearns, C.; et al. Oral diseases: A global public health challenge. Lancet 2019, 394, 249–260.

- Sanders, A.E.; Slade, G.D.; Turrell, G.; Spencer, A.J.; Marcenes, W. Does Psychological Stress Mediate Social Deprivation in Tooth Loss? J. Dent. Res. 2007, 86, 1166–1170.

- Gerritsen, A.E.; Allen, P.F.; Witter, D.J.; Bronkhorst, E.M.; Creugers, N.H. Tooth loss and oral health-related quality of life: A systematic review and meta-analysis. Health Qual. Life Outcomes 2010, 8, 126.

- Sanders, A.E.; Slade, G.D.; Lim, S.; Reisine, S.T. Impact of oral disease on quality of life in the US and Australian populations. Community Dent. Oral Epidemiol. 2009, 37, 171–181.

- Centers for Disease Control and Prevention. Disparities in Oral Health. 2022. Available online: https://www.cdc.gov/oral-health/health-equity/index.html (accessed on 15 April 2025).

- Taiwo, O.A.; Alabi, O.A.; Yusuf, O.M.; Ololo, O.; Olawole, W.O.; Adeyemo, W.I. Reasons and pattern of tooth extraction among patients presenting at a Nigerian semi-rural specialist hospital. Niger. Q. J. Hosp. Med. 2012, 22, 200–204.

- Sanders, A.; Cardel, M.; Laniado, N.; Kaste, L.; Finlayson, T.; Perreira, K.; Sotres-Alvarez, D. Diet quality and dental caries in the Hispanic Community Health Study/Study of Latinos. J. Public Health Dent. 2020, 80, 140–149.

- Singh, A.; Peres, M.A.; Watt, R.G. The Relationship between Income and Oral Health: A Critical Review. J. Dent. Res. 2019, 98, 853–860.

- Patel, J.; Su, C.; Tellez, M. Developing and testing a prediction model for periodontal disease using machine learning and big electronic dental record data. Front. Artif. Intell. 2022, 5, 979525.

- Polizzi, A.; Quinzi, V.; Lo Giudice, A.; Marzo, G.; Leonardi, R.; Isola, G. Accuracy of Artificial Intelligence Models in the Prediction of Periodontitis: A Systematic Review. JDR Clin. Transl. Res. 2024, 9, 312–324.

- Huang, C.; Wang, J.; Wang, S.; Zhang, Y. A review of deep learning in dentistry. Neurocomputing 2023, 554, 126629.

- GBD 2017 Disease and Injury Incidence and Prevalence Collaborators. Global, regional, and national incidence, prevalence, and years lived with disability for 354 diseases and injuries for 195 countries and territories, 1990–2017: A systematic analysis for the Global Burden of Disease Study 2017. Lancet 2018, 392, 1789–1858.

- Fleming, E.; Afful, J.; Griffin, S.O. Prevalence of Tooth Loss Among Older Adults: United States, 2015–2018; NCHS Data Brief No. 368; National Center for Health Statistics: Hyattsville, MD, USA, 2020.

- Dashti, M.; Londono, J.; Ghasemi, S.; Zare, N.; Samman, M.; Ashi, H.; Amirzade-Iranaq, M.H.; Khosraviani, F.; Sabeti, M.; Khurshid, Z. Comparative analysis of deep learning algorithms for dental caries detection and prediction from radiographic images: A comprehensive umbrella review. PeerJ Comput. Sci. 2024, 10, e2371.

- Alharbi, S.S.; Alhasson, H.F. Exploring the Applications of Artificial Intelligence in Dental Image Detection: A Systematic Review. Diagnostics 2024, 14, 2442.

- Beak, W.; Park, J.; Ji, S. Data-driven prediction model for periodontal disease based on correlational feature analysis and clinical validation. Heliyon 2024, 10, e32496.

- Lin, H.; Chen, J.; Hu, Y.; Li, W. Embracing technological revolution: A panorama of machine learning in dentistry. Med. Oral Patol. Oral Y Cir. Bucal 2024, 29, e742–e749.

- Bichu, Y.M.; Zou, B.; Chaudhari, P.K.; Adel, S.M.; Vaiid, N. Artificial Intelligence Applications in Orthodontics. In Artificial Intelligence for Oral Health Care: Applications and Future Prospects; Schwendicke, F., Chaudhari, P.K., Dhingra, K., Uribe, S.E., Hamdan, M., Eds.; Springer Nature: Cham, Switzerland, 2025; pp. 81–97.

- Wu, Y.; Ning, L.; Tu, Y.; Huang, C.; Huang, N.; Chen, Y.; Chang, P. Salivary biomarker combination prediction model for the diagnosis of periodontitis in a Taiwanese population. J. Formos. Med. Assoc. 2018, 117, 841–848.

- Shujaat, S. Automated Machine Learning in Dentistry: A Narrative Review of Applications, Challenges, and Future Directions. Diagnostics 2025, 15, 273.

- Elani, H.W.; Batista, A.F.; Thomson, W.M.; Kawachi, I.; Chiavegatto Filho, A.D. Predictors of tooth loss: A machine learning approach. PLoS ONE 2021, 16, e0252873.

- Hasuike, A.; Watanabe, T.; Wakuda, S.; Kogure, K.; Yanagiya, R.; Byrd, K.M.; Sato, S. Machine Learning in Predicting Tooth Loss: A Systematic Review and Risk of Bias Assessment. J. Pers. Med. 2022, 12, 1682.

- Panda, N.; Satapathy, S.; Bhuyan, S.; Bhuyan, R. Impact of Machine Learning and Prediction Models in the Diagnosis of Oral Health Conditions. Int. J. Stat. Med. Res. 2023, 12, 51–57.

- Lee, C.T.; Zhang, K.; Li, W.; Tang, K.; Ling, Y.; Walji, M.F.; Jiang, X. Identifying predictors of tooth loss using a rule-based machine learning approach: A retrospective study at university-setting clinics. J. Periodontol. 2023, 94, 1231–1242.

- Ravidà, A.; Wang, H.H.; Giannobile, W.V.; Wang, H.L. Exploring the accuracy of tooth loss prediction between a clinical periodontal prognostic system and a machine learning prognostic model. J. Clin. Periodontol. 2023, 50, 123–132.

- Cooray, U.; Watt, R.G.; Tsakos, G.; Heilmann, A.; Hariyama, M.; Yamamoto, T.; Kuruppuarachchige, I.; Kondo, K.; Osaka, K.; Aida, J. Importance of socioeconomic factors in predicting tooth loss among older adults in Japan: Evidence from a machine learning analysis. Soc. Sci. Med. 2021, 291, 114486.

- Schuch, H.S.; Furtado, M.; Silva, G.F.d.S.; Kawachi, I.; Chiavegatto Filho, A.D.; Elani, H.W. Fairness of Machine Learning Algorithms for Predicting Foregone Preventive Dental Care for Adults. JAMA Netw. Open 2023, 6, e2341625.

- Tiwari, T.; Tranby, E.; Thakkar-Samtani, M.; Frantsve-Hawley, J. Determinants of Tooth Loss in a Medicaid Adult Population. JDR Clin. Transl. Res. 2021, 7, 289–297.

- Høvik, H.; Kolberg, M.; Gjøra, L.; Nymoen, L.C.; Skudutyte-Rysstad, R.; Hove, L.H.; Sun, Y.Q.; Fagerhaug, T.N. The validity of self-reported number of teeth and edentulousness among Norwegian older adults, the HUNT Study. BMC Oral Health 2022, 22, 82.

- Pierannunzi, C.; Hu, S.S.; Balluz, L. A systematic review of publications assessing reliability and validity of the Behavioral Risk Factor Surveillance System (BRFSS), 2004–2011. BMC Med. Res. Methodol. 2013, 13, 49.

- Jiang, Y.; Okoro, C.A.; Oh, J.; Fuller, D.L. Sociodemographic and Health-Related Risk Factors Associated with Tooth Loss Among Adults in Rhode Island. Prev. Chronic Dis. 2013, 10, E45.

- Wiener, R.C.; Shen, C.W.; Sambamoorthi, U.; Findley, P.A. Rural Veterans’ dental utilization, Behavioral Risk Factor Surveillance Survey, 2014. J. Public Health Dent. 2017, 77, 383–392.

- Shwartz-Ziv, R.; Armon, A. Tabular data: Deep learning is not all you need. Inf. Fusion 2022, 81, 84–90.

- Sunnetci, K.M.; Ulukaya, S.; Alkan, A. Periodontal bone loss detection based on hybrid deep learning and machine learning models with a user-friendly application. Biomed. Signal Process. Control 2022, 77, 103844.

- Lee, C.T.; Kabir, T.; Nelson, J.; Sheng, S.; Meng, H.W.; Van Dyke, T.E.; Walji, M.F.; Jiang, X.; Shams, S. Use of the deep learning approach to measure alveolar bone level. J. Clin. Periodontol. 2022, 49, 260–269.

- Liang, Y.; Fan, H.W.; Fang, Z.; Miao, L.; Li, W.; Zhang, X.; Sun, W.; Wang, K.; He, L.; Chen, X.A. OralCam: Enabling Self-Examination and Awareness of Oral Health Using a Smartphone Camera. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; Association for Computing Machinery: New York, NY, USA, 2020. CHI ’20. pp. 1–13.

- Alotaibi, G.; Awawdeh, M.; Farook, F.F.; Aljohani, M.; Aldhafiri, R.M.; Aldhoayan, M. Artificial intelligence (AI) diagnostic tools: Utilizing a convolutional neural network (CNN) to assess periodontal bone level radiographically—A retrospective study. BMC Oral Health 2022, 22, 399.

Figure 1.

Prevalence of complete tooth loss among adults aged 65 and over by sex and age group in the U.S. (2015–2018). Source: NHANES [14].

Figure 2.

Overview of the machine learning pipeline developed for predicting tooth loss outcomes using the BRFSS 2022 dataset. The pipeline integrates data cleaning, task-specific feature selection strategies (correlation-based filtering for binary classification and extended engineered features for multiclass classification), model training, hyperparameter tuning via cross-validation, and final ensemble construction using a soft-voting classifier (XGBoost, LightGBM, CatBoost). Separate workflows were developed for binary (tooth loss vs. no tooth loss) and multiclass (severity of tooth loss) prediction tasks. Evaluation encompassed accuracy, AUC, confusion matrices, feature importance analysis (permutation and SHAP), and fairness assessment across demographic subgroups.
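
The soft-voting step named in this caption can be illustrated with a minimal sketch. It assumes each base model has already produced per-class probabilities; the function name `soft_vote` and the probability values are hypothetical and are not taken from the study's code.

```python
from typing import List, Sequence


def soft_vote(prob_sets: Sequence[Sequence[Sequence[float]]]) -> List[int]:
    """Average class probabilities across models, then take the argmax per sample."""
    n_models = len(prob_sets)
    n_samples = len(prob_sets[0])
    n_classes = len(prob_sets[0][0])
    predictions = []
    for i in range(n_samples):
        # Mean probability of each class over all base models for sample i
        avg = [sum(model[i][c] for model in prob_sets) / n_models
               for c in range(n_classes)]
        predictions.append(max(range(n_classes), key=avg.__getitem__))
    return predictions


# Hypothetical outputs of three base models (2 samples, 4 severity classes)
model_a = [[0.6, 0.2, 0.1, 0.1], [0.1, 0.5, 0.3, 0.1]]
model_b = [[0.5, 0.3, 0.1, 0.1], [0.2, 0.3, 0.4, 0.1]]
model_c = [[0.4, 0.4, 0.1, 0.1], [0.1, 0.3, 0.5, 0.1]]

labels = soft_vote([model_a, model_b, model_c])
print(labels)
```

In the second sample one model favors class 1, but the averaged probabilities favor class 2, which is the behavior that distinguishes soft voting from a single model's prediction.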

Figure 3.

Min-max normalized feature importance (0-1) across eight classification algorithms: random forest, logistic regression, decision tree, GaussianNB, CatBoost, XGBoost, Linear SVM, and KNN.
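
As a rough illustration of the min-max scaling mentioned in this caption, the sketch below rescales one model's raw importance scores to the 0 to 1 range. The feature names and raw values are hypothetical, and the handling of the all-equal case is an assumption.

```python
from typing import Dict


def min_max_normalize(scores: Dict[str, float]) -> Dict[str, float]:
    """Rescale importance scores so the smallest maps to 0 and the largest to 1."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        # Assumption: if every score is identical, report 0 for all features
        return {name: 0.0 for name in scores}
    return {name: (value - lo) / (hi - lo) for name, value in scores.items()}


# Hypothetical raw importances from a single classifier
raw = {"age": 0.40, "education": 0.25, "smoking": 0.20, "income": 0.10}
normalized = min_max_normalize(raw)
print(normalized)
```

Scaling each algorithm's scores separately in this way puts importances from different model families on a common axis, at the cost of discarding their absolute magnitudes.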

Figure 4.

Full set of features ranked by average importance score across ensemble models for predicting severe tooth loss.

Figure 5.

Multi-class ROC curves for the ensemble classifier on the test set. Each solid line shows the one-vs-rest ROC curve for a single class, with the corresponding area under the curve (AUC) in parentheses: Class 0 (0.95), Class 1 (0.89), Class 2 (0.98), and Class 3 (1.00). The purple dashed curve is the micro-average ROC (AUC = 0.98) over all classes, and the black dashed diagonal denotes random-chance performance.

Figure 6.

Choropleth map of tooth loss prevalence rates by U.S. state, derived from BRFSS 2022 data. Darker shades indicate higher prevalence.

Figure 7.

Proportion of respondents reporting severe tooth loss (six or more missing teeth) by age group.

Figure 8.

Mean number of missing teeth by smoking status code (1, 2, 7, 9) and urban versus rural residence.

Figure 9.

Heatmaps illustrating the proportion of individuals with severe tooth loss (≥6 teeth removed) by age group and income level across urban and rural populations. Left: tooth loss by age and urbanicity. Right: tooth loss by income and urbanicity.

Table 1.

BRFSS 2022 LLCP variable code mapping.

| Variable | Value Codes and Definitions |
|---|---|
| LASTDEN4 | 1 = Within past year; 2 = 1 to <2 years ago; 3 = 2 to <3 years ago; 4 = 3 to <4 years ago; 5 = 4 to <5 years ago; 6 = ≥5 years ago; 7 = Do not know/Not sure; 8 = Never visited; 9 = Refused. |
| DIABETE4 | 1 = Yes; 2 = No; 3 = Borderline/Pre-diabetes; 4 = No, pre-diabetes; 7 = Do not know; 9 = Refused. |
| SEXVAR | 1 = Male; 2 = Female. |
| _AGEG5YR | 1 = 18–24; 2 = 25–29; 3 = 30–34; 4 = 35–39; 5 = 40–44; 6 = 45–49; 7 = 50–54; 8 = 55–59; 9 = 60–64; 10 = 65–69; 11 = 70–74; 12 = 75–79; 13 = ≥80. |
| _RACEPR1 | 1 = White; 2 = Black/African American; 3 = American Indian/Alaska Native; 4 = Asian; 5 = Native Hawaiian/Pacific Islander; 6 = Other/Multiple. |
| EDUCA | 1 = ≤8th grade; 2 = 9–11th grade; 3 = High school graduate/GED; 4 = Some college/technical school; 5 = College graduate; 7 = Do not know; 9 = Refused. |
| _SMOKER3 | 1 = Current smoker; 2 = Former smoker; 3 = Never smoked; 7 = Do not know; 9 = Refused. |
| DRNKANY6 | 1 = Yes (any alcohol in past 30 days); 2 = No; 7 = Do not know; 9 = Refused. |
| RMVTETH4 | 1 = 1–5 teeth removed; 2 = 6 or more, but not all; 3 = All; 7 = Do not know/Not sure; 8 = No permanent teeth removed; 9 = Refused. |
| _STATE | 01 = Alabama; …; 56 = Wyoming; 66, 72, 78 = U.S. territories. |
| INCOME3 | 1 = ≤ $10,000; 2 = $10,000–15,000; 3 = $15,000–20,000; 4 = $20,000–25,000; 5 = $25,000–35,000; 6 = $35,000–50,000; 7 = $50,000–75,000; 8 = ≥ $75,000; 9 = Refused; 99 = Do not know. |
| MARITAL | 1 = Married; 2 = Divorced; 3 = Widowed; 4 = Separated; 5 = Never married; 8 = Unmarried couple; 9 = Refused. |
| _URBSTAT | 1 = Metro, central city; 2 = Metro, suburban; 3 = Nonmetro; 9 = Missing/Refused. |
| EMPLOY1 | 1 = Employed for wages; 2 = Self-employed; 3 = Out of work ≥1 yr; 4 = Out of work <1 yr; 5 = Homemaker; 6 = Student; 7 = Retired; 8 = Unable to work; 9 = Refused. |
| PRIMINSR | 1 = Yes; 2 = No; 7 = Do not know; 9 = Refused. |
| SDHTRNSP | 1 = Yes (barrier); 2 = No; 7 = Do not know; 9 = Refused. |
| GENHLTH | 1 = Excellent; 2 = Very good; 3 = Good; 4 = Fair; 5 = Poor; 7 = Do not know; 9 = Refused. |
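
One way the RMVTETH4 codes in Table 1 might be turned into the binary and multiclass targets is sketched below. The class numbering (0 to 3) and the choice to treat codes 7 and 9 as missing are assumptions made for illustration; the study's own encoding may differ.

```python
from typing import Optional

# RMVTETH4 codes as listed in Table 1, mapped to an assumed severity scale:
# 8 (no teeth removed) -> 0, 1 (1-5 removed) -> 1, 2 (6 or more) -> 2, 3 (all) -> 3
SEVERITY_CLASS = {8: 0, 1: 1, 2: 2, 3: 3}


def multiclass_target(code: int) -> Optional[int]:
    """Return the severity class, or None for 'do not know' (7) and 'refused' (9)."""
    return SEVERITY_CLASS.get(code)


def binary_target(code: int) -> Optional[int]:
    """Return 1 for any tooth loss, 0 for none, None when the answer is missing."""
    severity = multiclass_target(code)
    return None if severity is None else int(severity > 0)


# Hypothetical raw survey responses
responses = [8, 1, 3, 7, 2, 9]
print([multiclass_target(r) for r in responses])
print([binary_target(r) for r in responses])
```

Rows that map to `None` would typically be dropped or imputed before model training.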

Table 2.

Top features based on Pearson correlation for multiclass classification, grouped across four columns for compact presentation.

| Feature | Correlation | Feature | Correlation |
|---|---|---|---|
| _ALTETH3 | 0.326 | _AGE65YR | 0.143 |
| _AGE_G | 0.243 | _SMOKGRP | 0.140 |
| _AGE80 | 0.242 | _YRSSMOK | 0.131 |
| COLGSEX1 | 0.209 | _EDUCAG | 0.122 |
| _HCVU652 | 0.152 | HAVARTH4 | 0.115 |
| _DRDXAR2 | 0.152 | _INCOMG1 | 0.108 |
| HADMAM | 0.107 | _EXTETH3 | 0.103 |
| SMOKE100 | 0.102 | CRVCLCNC | 0.101 |

Table 3.

Hyperparameter search grids used for each base model.

| Model | n_Estimators/Iterations | Depth/Leaves | Learning Rate |
|---|---|---|---|
| XGBoost | 200 | max_depth = 6 | 0.1 |
| LightGBM | 200 | num_leaves = 31 | 0.1 |
| CatBoost | 200 | depth = 6 | 0.1 |

Table 4.

Libraries and Packages Used in the Study.

| Library | Purpose |
|---|---|
| scikit-learn | Model development (logistic regression, decision tree, random forest, SVM, KNN, naive Bayes); preprocessing; model evaluation |
| xgboost | Implementation of XGBoost classifiers for both binary and multiclass tasks |
| catboost | CatBoost classifier for handling categorical features with minimal preprocessing |
| lightgbm | LightGBM classifier for efficient gradient boosting on large datasets |
| imblearn | Application of ADASYN for class imbalance mitigation inside cross-validation folds |
| shap | Model interpretability using SHAP (Shapley Additive Explanations) values |
| matplotlib | Static plotting for evaluation curves (ROC, precision-recall, confusion matrices) |
| seaborn | Enhanced visualization and heatmap generation for correlation matrices and feature importances |

Table 5.

Binary classification performance metrics.

| Model | Accuracy | Precision | Recall | Specificity | AUC |
|---|---|---|---|---|---|
| Logistic Regression | 0.705 | 0.688 | 0.666 | 0.739 | 0.773 |
| Random Forest | 0.688 | 0.663 | 0.664 | 0.708 | 0.750 |
| Support Vector Machine | 0.648 | 0.689 | 0.668 | 0.682 | 0.660 |
| K-Nearest Neighbors | 0.665 | 0.648 | 0.608 | 0.715 | 0.712 |
| Decision Tree | 0.616 | 0.588 | 0.576 | 0.651 | 0.612 |
| Naive Bayes | 0.677 | 0.670 | 0.597 | 0.746 | 0.742 |
| CatBoost | 0.711 | 0.693 | 0.677 | 0.740 | 0.786 |
| XGBoost | 0.714 | 0.693 | 0.675 | 0.741 | 0.786 |
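
For readers less familiar with the columns of Table 5, the sketch below shows how accuracy, precision, recall, and specificity follow from the four cells of a binary confusion matrix. The counts are hypothetical and are not the study's results; AUC is omitted because it requires ranked probability scores rather than counts.

```python
from typing import Dict


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Compute threshold-based metrics from confusion-matrix counts."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": tp / (tp + fp),      # share of predicted positives that are correct
        "recall": tp / (tp + fn),         # share of actual positives that are found
        "specificity": tn / (tn + fp),    # share of actual negatives that are found
    }


# Hypothetical counts for a test set of 200 respondents
metrics = binary_metrics(tp=60, fp=20, tn=80, fn=40)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

This sketch does not guard against empty denominators, so it assumes every class is both present in the data and predicted at least once.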

Table 6.

Comparison of this study with selected machine learning models in tooth loss prediction and periodontal assessment.

| Study | Dataset | Sample Size | ML Models Used | Best AUC / Accuracy | Key Predictors | Limitations |
|---|---|---|---|---|---|---|
| Sunnetci et al. (2022) [35] | 1432 tagged radiographic images | 1432 | AlexNet, SqueezeNet + SVM, KNN, NB, EfficientNetB5 | Accuracy: 81.49%, AUC: 0.88 | Periodontal bone loss features from 2D radiographs | 2D imaging limits depth; lack of standardization; underrepresentation in literature |
| Liang et al. (2020) [37] | Smartphone images (OralCam) | 3182 annotated images | CNN (custom architecture) | Sensitivity: 0.787 | Annotated oral photos + user-reported data | Image quality issues; poor lighting/focus; self-reported variability |
| Lee et al. (2022) [36] | UTHealth Clinical Radiographs | 693 (train), 644 (test) | U-Net + ResNet (Segmentation + Classification) | AUC: 0.89–0.90, Accuracy: 0.85 | Alveolar bone loss stages from periapical images | Does not detect vertical defects or replace charting |
| Alotaibi et al. (2022) [38] | ROMEXIS periapical radiographs | 1724 | VGG-16 CNN | Accuracy: 73%, Severity Classification: 59% | Alveolar bone loss via CNN | No clinical validation; 2D-only; imbalance in severity classes |
| This Study: Binary Classification (2025) | BRFSS 2022 | 365,803 | XGBoost, RF, LR, CatBoost, SVM, DT, KNN, GB | AUC: 0.786, Accuracy: 71.4% | Age, education, smoking, income | Cross-sectional; lacks clinical variables |