1. Introduction

Computer vision enables computers to interpret and comprehend visual data through digital images or videos, simulating human-like visual understanding capabilities [1]. Driven by deep neural networks, this field has achieved groundbreaking advancements, demonstrating exceptional performance particularly in object detection, image classification, and segmentation tasks. It finds extensive applications in critical domains such as autonomous driving, security surveillance, industrial automation, and aviation safety. Within aviation safety, infrared target detection serves as a core technology, utilizing the thermal radiation of targets to achieve real-time monitoring and collision prevention in complex environments, for instance by enabling precise tracking and early warning of aircraft within airport airspace. However, conventional infrared surveillance systems heavily rely on target thermal signature characteristics, making them susceptible to environmental thermal interference (such as ground heat reflection or meteorological variations) in dynamic aerial scenarios. This susceptibility often leads to increased false alarm rates and warning delays [2]. In contrast, computer vision recognition mechanisms leverage richer and more robust features based on target morphological structures, textural details, edge contours, and contextual semantics [3]. This approach offers a highly promising complementary pathway for addressing the challenges of stability and anti-interference in high-precision target recognition for aviation safety.

Infrared imaging technology generates images based on thermal radiation caused by temperature differences between the target and the environment, with its core advantages lying in excellent all-weather operation capability and strong anti-interference capability [4]. When visible light imaging fails due to darkness, adverse weather, or intense illumination, infrared imaging can still provide effective observation information. In scenarios such as airports and no-fly zones, it enables real-time detection of low-altitude aircraft or bird flocks to prevent bird strikes or collision accidents. This makes the technology indispensable for aviation safety monitoring, airport traffic management, and unmanned aerial vehicle control, all of which impose stringent requirements on target detection [5]. However, the inherent physical characteristics of infrared images result in several common defects. Detector limitations cause low spatial resolution and insufficient detail. Distant targets or small drones appear as minuscule spots (the small target problem), complicating feature extraction. Complex sky backgrounds contain strong interference sources such as cloud edges, solar flares, and atmospheric turbulence, which are prone to confusion with genuine targets. Additionally, infrared images typically exhibit low signal-to-noise ratio, poor target-background contrast, and limited dynamic range, and these problems are compounded by rapid maneuvers of aerial targets. Collectively, these factors lead to inadequate accuracy and reliability of existing detection algorithms in high-demand tasks such as real-time warning systems, which may precipitate significant safety hazards.

To overcome the aforementioned bottlenecks, research focus has shifted to deep learning-based end-to-end target detection methods. Traditional approaches (e.g., background subtraction [6], thermal image template matching [7]) are prone to high rates of false alarms and missed detections in dynamic complex environments, whereas convolutional neural network (CNN)-based target detection algorithms (e.g., the YOLO series [8], Faster R-CNN [9]) significantly improve accuracy and generalization through hierarchical feature learning capabilities. For the challenge of small target detection, architectures such as OSCAR [10] and IDNANet [11] enhance recall rates by augmenting feature extraction capabilities; however, complex module designs (e.g., multi-scale dense connections, Transformers) increase computational overhead, hindering deployment in real-time systems. Improvements to the YOLO series models (e.g., integrating channel/spatial attention mechanisms [12], optimizing bounding box regression loss functions [13]) boost efficiency, but performance significantly degrades under conditions of low signal-to-noise ratio (SNR) or extreme variations in target scale [14]. Multi-task frameworks [15] (joint detection and segmentation) have made advances in background suppression, yet their adaptability and robustness in highly dynamic scenarios remain limited [16]; approaches combining multi-subspace learning and spatiotemporal tensor analysis [17] have improved infrared small target detection in cluttered environments. Current research hotspots concentrate on network structure innovations (e.g., lightweight design, attention mechanisms), loss function optimization, and multi-scale feature fusion [18,19,20,21,22,23], emphasizing the need for models to exhibit robust scale invariance and background suppression capabilities in complex infrared environments. These enhancements align well with the rapid response requirements in aviation safety.

However, existing algorithms still exhibit insufficient accuracy in effectively separating target features from background interference features under complex sky conditions, making it difficult to simultaneously reduce both the false alarm rate and missed detection rate to desired levels. This limitation restricts their application potential in aerial security surveillance systems. Therefore, this study focuses on optimizing infrared target detection based on deep learning, implementing targeted improvements upon the YOLO11 algorithm, which balances speed and accuracy. The core objective is to systematically address key challenges in infrared target detection against sky backgrounds—namely, low signal-to-noise ratio, small target size, and strong background interference—significantly enhancing detection performance for typical aerial infrared targets such as airplanes, helicopters, drones, and birds. Emphasis is placed on improving robustness and accuracy in challenging scenarios, thereby providing effective technical support for enhancing the early-warning capability and collision prevention efficiency of aviation security monitoring systems.

2. Algorithm Model

Object detection, a core task in computer vision, aims not only to identify objects but also to precisely localize them within images. Over the past decade, advancements in deep learning—particularly the widespread adoption of convolutional neural networks (CNNs)—have driven remarkable progress in detection technologies. Among these, the YOLO (You Only Look Once) algorithm has emerged as one of the most renowned frameworks, achieving extensive adoption due to its efficiency and real-time performance.

The YOLO architecture has evolved through multiple iterations. From YOLOv1 to YOLO11 [24], continuous structural refinements and performance optimizations have been implemented. YOLO11, the latest iteration of the YOLO series, was officially released by the Ultralytics team on 30 September 2024. This version represents a significant breakthrough, delivering substantial performance enhancements while expanding functional diversity to support broader computer vision tasks, including object detection, instance segmentation, image classification, pose estimation, and oriented bounding box detection (OBB). Through innovative network architecture and algorithmic optimizations, YOLO11 improves model flexibility and adaptability, enabling efficient operation across diverse environments.

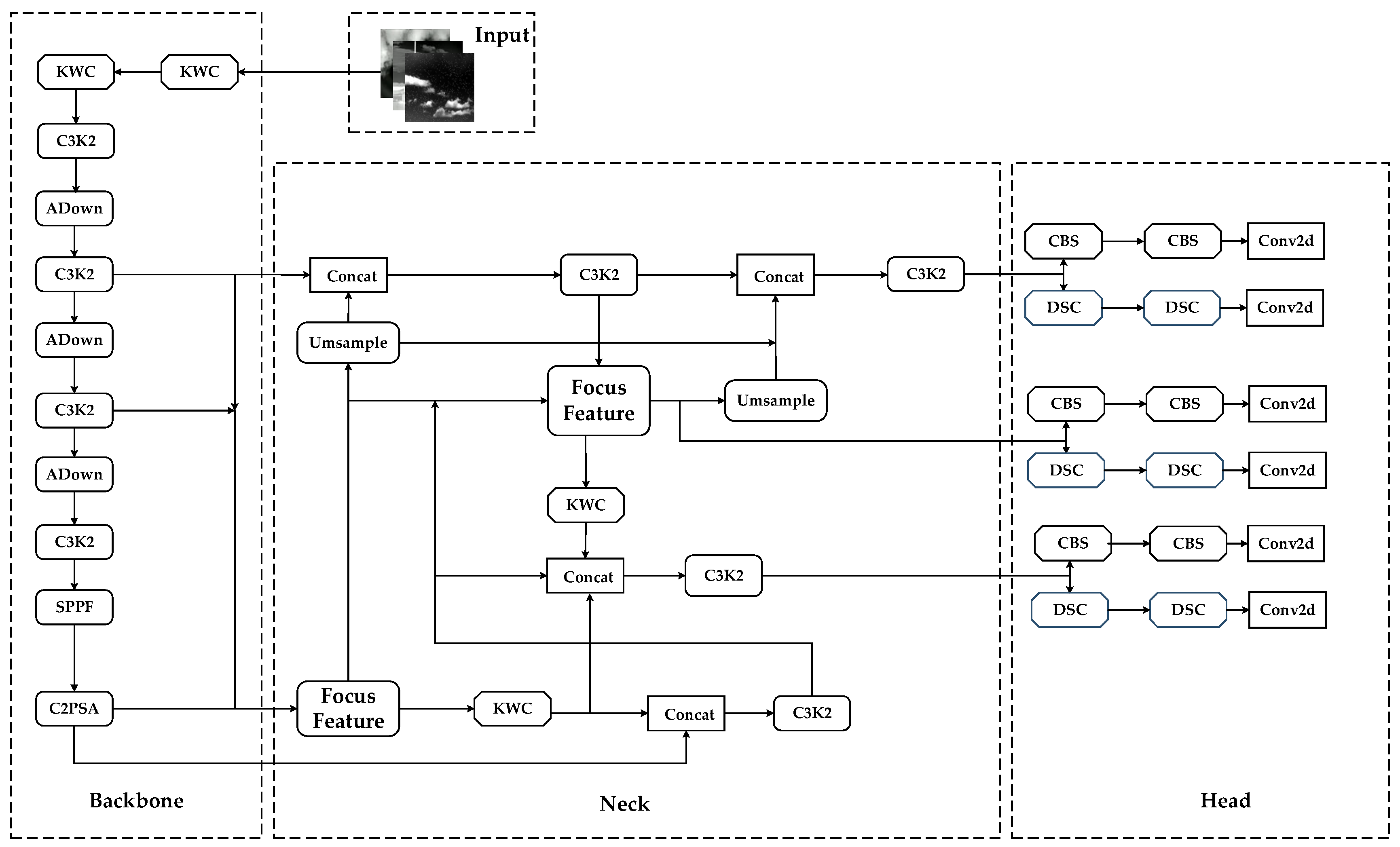

In network architecture design, YOLO11 employs enhanced backbone, neck, and head networks, as shown in Figure 1. The backbone network of the model incorporates C3K2 and C2PSA modules. The C3K2 module, a convolution-based component, demonstrates superior flexibility compared to traditional C2f modules by enabling user-customizable convolution block sizes for diverse task requirements. The C2PSA module enhances feature extraction capabilities through a multi-head attention mechanism (PSA) and feed-forward neural network (FFN), further optimized by optional residual structures to improve gradient propagation and training efficacy. In the neck network, YOLO11 adopts the PAN [25] architecture combined with C3K2 modules to enhance multi-scale feature fusion and transmission efficiency. The head network is further optimized using depthwise separable convolution to reduce computational complexity while improving detection accuracy. This architecture enables YOLO11 to achieve significantly enhanced object detection capabilities in complex scenarios while maintaining high computational efficiency.

At the algorithmic level, YOLO11 implements crucial improvements, including optimized feature extraction, enhanced efficiency and speed, superior accuracy, and reduced parameter count. Through refined architectural design and optimized training procedures, it achieves faster inference speeds while attaining an optimal balance between accuracy and performance. Furthermore, YOLO11 introduces novel loss function designs: it eliminates the conventional objectness branch, retains Binary Cross Entropy (BCE) Loss for classification, and combines Distribution Focal Loss (DFL) with Complete Intersection over Union (CIoU) Loss for regression. This modification demonstrates exceptional performance in scenarios with ambiguous boundaries, enabling precise detection in complex environments.

Collectively, YOLO11 achieves substantial advancements in network design, algorithmic optimization, and practical application, establishing itself as a state-of-the-art solution in object detection. Through optimized components including C3K2, C2PSA modules, and depthwise separable convolution, YOLO11 exhibits enhanced detection precision and computational efficiency in complex scenarios. It not only improves task extensibility but also provides efficient solutions for scientific research, industrial applications, and commercial deployments through its exceptional flexible deployment capabilities.

3. Algorithm Optimization and Improvements

3.1. Incorporation of the ADown Downsampling Module

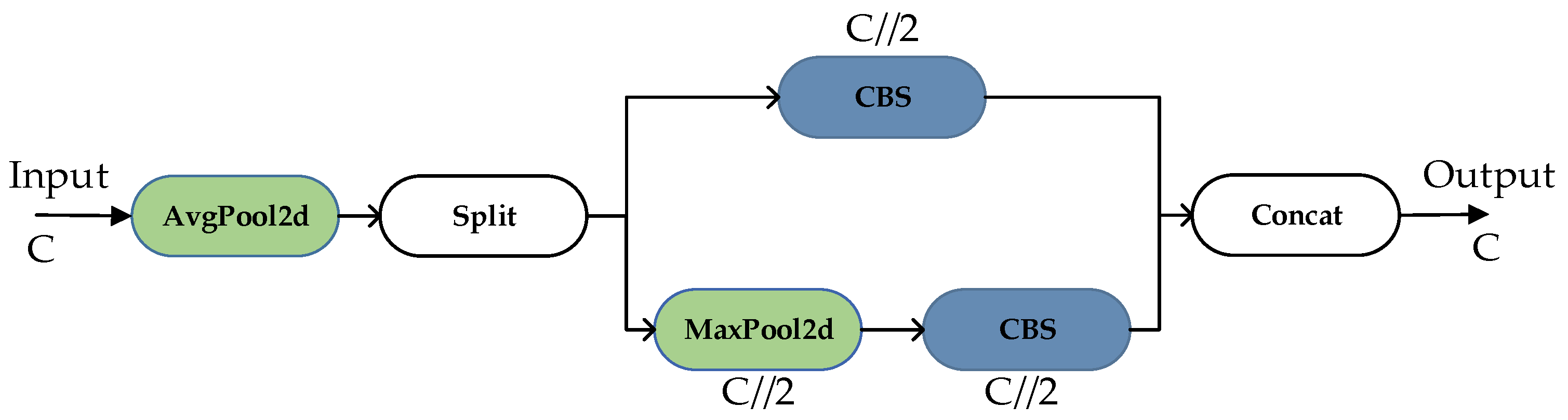

Downsampling operations constitute an essential component of object detection networks, aiming to reduce the resolution of feature maps while extracting effective high-level features to support detection tasks. ADown, a lightweight downsampling module proposed in YOLOv9 [26], is designed to preserve as much image feature information as possible while minimizing computational complexity. Compared to conventional downsampling methods (e.g., max pooling, strided convolution), the ADown module achieves enhanced performance through its lightweight architecture and learnable structure, as illustrated in Figure 2.

In the above diagram, AvgPool2d refers to average pooling, a downsampling method that reduces the resolution of feature maps by taking the average of the pixel values within the pooling window. During feature map downsampling, AvgPool2d helps smooth features and retain local information. MaxPool2d, on the other hand, is a common downsampling method that reduces resolution while preserving the main features by taking the maximum value within the pooling window. Using MaxPool2d in specific branches can extract significant local features and complement the information from average pooling, which can help retain key features in object detection. CBS is a common convolutional block comprising Convolution (Conv), Batch Normalization (BatchNorm), and an activation function (SiLU), used for further feature extraction and enhancement in branches. During the operation of the ADown module, the input feature map is initially processed by average pooling (AvgPool2d) to extract smoothed features, which are then divided into two branches. The first branch is processed by max pooling (MaxPool2d) to capture salient features, followed by a CBS block for further feature enhancement, while the second branch passes directly through a CBS block. Finally, the outputs of the two branches are concatenated along the channel dimension to form the final output features.
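The data flow described above can be traced at the level of tensor shapes. The following is a minimal, framework-free sketch rather than the module itself. The kernel, stride, and padding values (2/1/0 for average pooling, 3/2/1 for max pooling and for the stride-2 CBS block) are assumptions borrowed from the public YOLOv9 reference code and are not stated in this section, and the helper names `out_size` and `adown_shapes` are hypothetical.

```python
def out_size(n: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial size after a pooling or convolution layer (floor rounding)."""
    return (n + 2 * padding - kernel) // stride + 1


def adown_shapes(c_in: int, c_out: int, height: int, width: int) -> dict:
    """Trace (channels, height, width) through the ADown steps in the text."""
    # Step 1: average pooling smooths the input (assumed kernel 2, stride 1).
    h = out_size(height, kernel=2, stride=1, padding=0)
    w = out_size(width, kernel=2, stride=1, padding=0)

    # Step 2: split the channels into two halves, one per branch.
    c_half = c_in // 2

    # Branch 1: max pooling (assumed kernel 3, stride 2, padding 1) halves the
    # resolution; the CBS block after it is assumed to be 1 x 1 with stride 1.
    h1 = out_size(h, kernel=3, stride=2, padding=1)
    w1 = out_size(w, kernel=3, stride=2, padding=1)

    # Branch 2: goes straight to a CBS block, assumed 3 x 3 with stride 2 so
    # that its output matches branch 1 and the two can be concatenated.
    h2 = out_size(h, kernel=3, stride=2, padding=1)
    w2 = out_size(w, kernel=3, stride=2, padding=1)
    if (h1, w1) != (h2, w2):
        raise ValueError("branch outputs must share a spatial size")

    # Step 3: each branch emits c_out // 2 channels; concatenation joins them.
    return {
        "after_avgpool": (c_in, h, w),
        "branch_input": (c_half, h, w),
        "branch_output": (c_out // 2, h1, w1),
        "output": (2 * (c_out // 2), h1, w1),
    }


if __name__ == "__main__":
    for name, shape in adown_shapes(64, 128, 80, 80).items():
        print(f"{name}: {shape}")
```

Under these assumptions an 80 × 80 input leaves the module at 40 × 40. The trace also makes one requirement explicit: for the concatenation to be valid, the branch without max pooling has to perform its own stride-2 reduction inside the CBS block.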

Specifically, the ADown module has several notable design features: First, the module employs simplified convolution operations instead of traditional complex downsampling methods, combined with optimized pooling approaches, significantly reducing the number of parameters and computational overhead, thus supporting efficient operation in resource-constrained environments (e.g., mobile devices, embedded devices). Second, by combining average pooling and max pooling, the module retains as many input features as possible during downsampling, avoiding the shortcomings of traditional downsampling methods that easily lose critical information. Additionally, the ADown module introduces learnable parameters that can dynamically adjust according to specific data scenarios, improving adaptability across different tasks. Experimental results indicate that, compared to traditional methods such as YOLOv8, the ADown module not only significantly reduces the number of parameters but also enhances object detection accuracy. Furthermore, the module exhibits good flexibility, allowing easy integration into various network backbones and detection heads for multi-level feature extraction and downsampling operations, meeting diverse network configuration needs.

3.2. KernelWarehouse Dynamic Convolution Method

Dynamic convolution improves the feature representation capacity of standard convolution by linearly aggregating multiple static convolutional kernels, yet its parameter scale grows linearly with the number of kernels (n). For instance, when n = 100, the parameter count expands to 100 times that of standard convolution, leading to dramatic model size inflation. This critical limitation severely constrains the performance boundaries of dynamic convolution—existing methods typically restrict n < 10, failing to explore ultra-large kernel configurations with n > 100. The core challenge in dynamic convolution research lies in improving model capacity while maintaining parameter efficiency.
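The linear growth can be checked with a few lines of arithmetic. This is a minimal sketch that counts kernel weights only; ignoring biases and the small attention branch that produces the mixing coefficients is an assumption, and the layer dimensions in the example are hypothetical.

```python
def conv_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in one standard convolution kernel bank (bias ignored)."""
    return kernel * kernel * c_in * c_out


def dynamic_conv_params(kernel: int, c_in: int, c_out: int, n: int) -> int:
    """Weights when n static kernels are linearly mixed per input."""
    return n * conv_params(kernel, c_in, c_out)


if __name__ == "__main__":
    base = conv_params(3, 64, 64)
    for n in (1, 4, 10, 100):
        total = dynamic_conv_params(3, 64, 64, n)
        print(f"n={n:>3}: {total:>9} weights ({total // base}x standard)")
```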

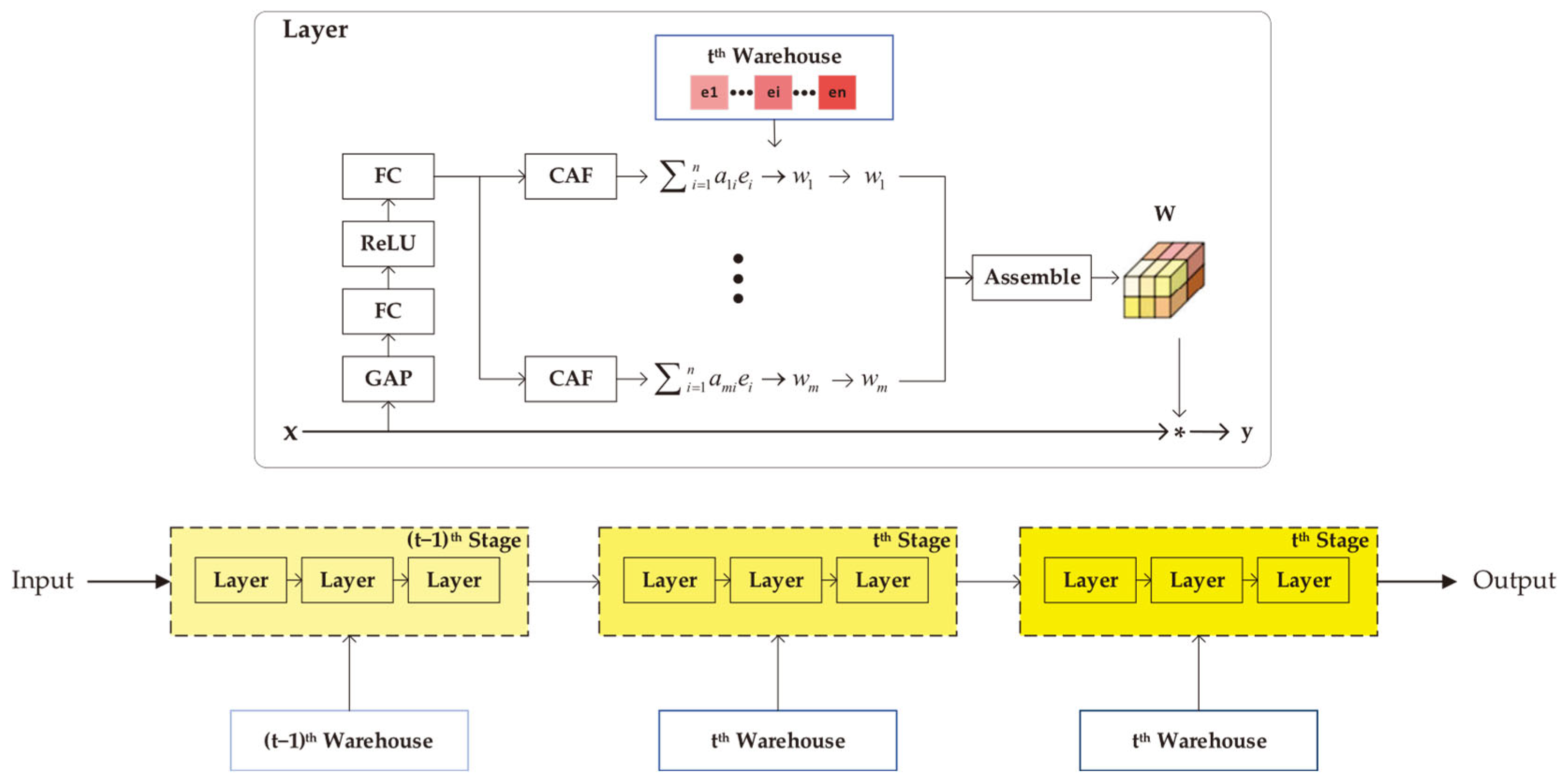

To address these issues, Li et al. [27] identified three inherent limitations in conventional paradigms: (1) treating complete convolution kernels as mixing units while ignoring intra-kernel parameter dependencies; (2) independent allocation of convolution kernels across layers without exploiting inter-layer parameter reuse potential; (3) degeneration tendency of attention functions under large kernel numbers (n > 100). Through reconstructing the dynamic convolution paradigm, they achieved synergistic optimization of parameter efficiency and performance. The proposed KernelWarehouse method, as illustrated in Figure 3, incorporates the following innovative mechanisms:

Kernel partitioning mechanism: Decompose traditional convolution kernels into k local kernel units (e.g., k = 16) along spatial/channel dimensions, where each unit retains only 1/k of the original kernel’s parameter scale. For instance, a kernel with dimensions K × K × C_in × C_out is partitioned into 16 units of size K × K × (C_in/4) × (C_out/4), achieving 93.75% parameter reduction per kernel. This design replaces global kernel mixing with local parameter hybridization, significantly reducing parameter redundancy while preserving the hybrid learning paradigm.
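The 93.75% figure follows directly from the partition sizes. As a minimal sketch of that arithmetic, assuming the kernel is split four ways along each channel axis as in the example above (the function name and the 3 × 3 × 64 × 64 layer are hypothetical):

```python
from fractions import Fraction


def partition_kernel(kernel: int, c_in: int, c_out: int,
                     splits_in: int = 4, splits_out: int = 4):
    """Return (full_size, unit_size, unit_count, reduction_per_unit)."""
    full = kernel * kernel * c_in * c_out
    unit = kernel * kernel * (c_in // splits_in) * (c_out // splits_out)
    unit_count = splits_in * splits_out
    # Each unit holds unit / full of the original weights.
    reduction = 1 - Fraction(unit, full)
    return full, unit, unit_count, reduction


if __name__ == "__main__":
    full, unit, count, reduction = partition_kernel(3, 64, 64)
    print(f"full kernel: {full} weights")
    print(f"{count} units of {unit} weights each")
    print(f"reduction per unit: {float(reduction) * 100:.2f}%")
```

Each of the 16 units carries 1/16 of the original weights, so the per-unit reduction is 15/16 = 93.75%, independent of K, C_in, and C_out as long as the channel counts are divisible by four.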

Cross-layer warehouse sharing mechanism: Construct shared kernel unit repositories for convolutional layers at the same stage (identical resolution), enabling cross-layer parameter reuse. Taking ResNet-18 [28] as an example, all convolutional layers share a repository containing 144 kernel units, with dynamic allocation through attention mechanisms. Compared to traditional single-layer independent allocation strategies, this shared repository reduces parameters by 65.1% while enhancing feature representation through cross-layer diversified combinations.

Contrast-driven attention function: To address attention degradation in large-scale kernel numbers (n = 144), we propose a negative-capable weight design with initialization constraints. The attention function is defined as:

Here, λ denotes the initial correlation regulation parameter, which determines the correlation among convolutional units in attention allocation and influences both the smoothness and diversity of the attention distribution. τ represents the annealing temperature parameter, which adjusts the concentration of the attention distribution; a smaller value corresponds to a more focused attention distribution. This function introduces a contrastive learning mechanism via negative weights, encouraging differentiated attention distributions across different layers and effectively addressing the mode collapse issue commonly observed with the traditional Softmax function under large-scale mixing scenarios.

Experiments demonstrate KernelWarehouse’s superior performance across multiple vision tasks. The method addresses the inherent conflict between parameter efficiency and model capacity in dynamic convolutions, establishes the first dynamic convolution paradigm based on local kernel unit mixture, proposes cross-layer parameter reuse and contrastive attention mechanisms, provides theoretical foundations for large-scale hybrid learning, and constructs a dynamic convolution parameter budget control framework enabling flexible balance between parameter quantity and performance.

3.3. Feature-Decoupled Pyramid Network (FDPN)

Multi-scale feature fusion remains a fundamental challenge in object detection. Conventional Feature Pyramid Networks (FPN) [29] integrate semantic information via top-down pathways, but are limited by fixed receptive fields and the loss of spatial details. Subsequent improvements, like PANet, introduced bottom-up paths but incurred significant computational overhead. Existing methods still face critical limitations: static fusion strategies struggle with complex scenes, insufficient boundary sensitivity for large-scale objects, and inefficient cross-scale feature interactions.

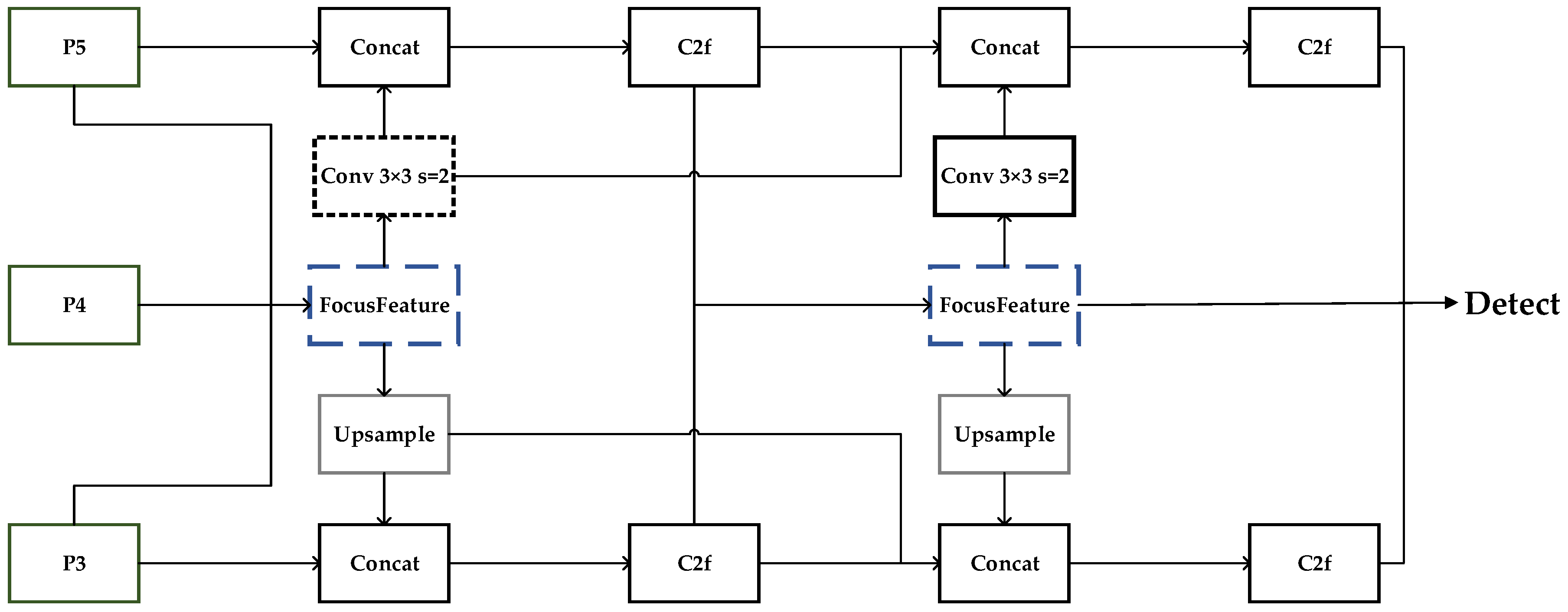

To address these issues, this study proposes the Feature-Decoupled Pyramid Network (FDPN), which overcomes fixed receptive fields and inefficient feature interactions in multi-scale fusion through a dynamic feature decoupling mechanism and adaptive attention fusion strategy. As illustrated in Figure 4, the architecture adopts a bidirectional feature pyramid framework, taking multi-scale features (P3: 80 × 80, P4: 40 × 40, P5: 20 × 20) generated by backbone networks (e.g., ResNet) as inputs. Adaptive feature enhancement is achieved via three cascaded processing stages.

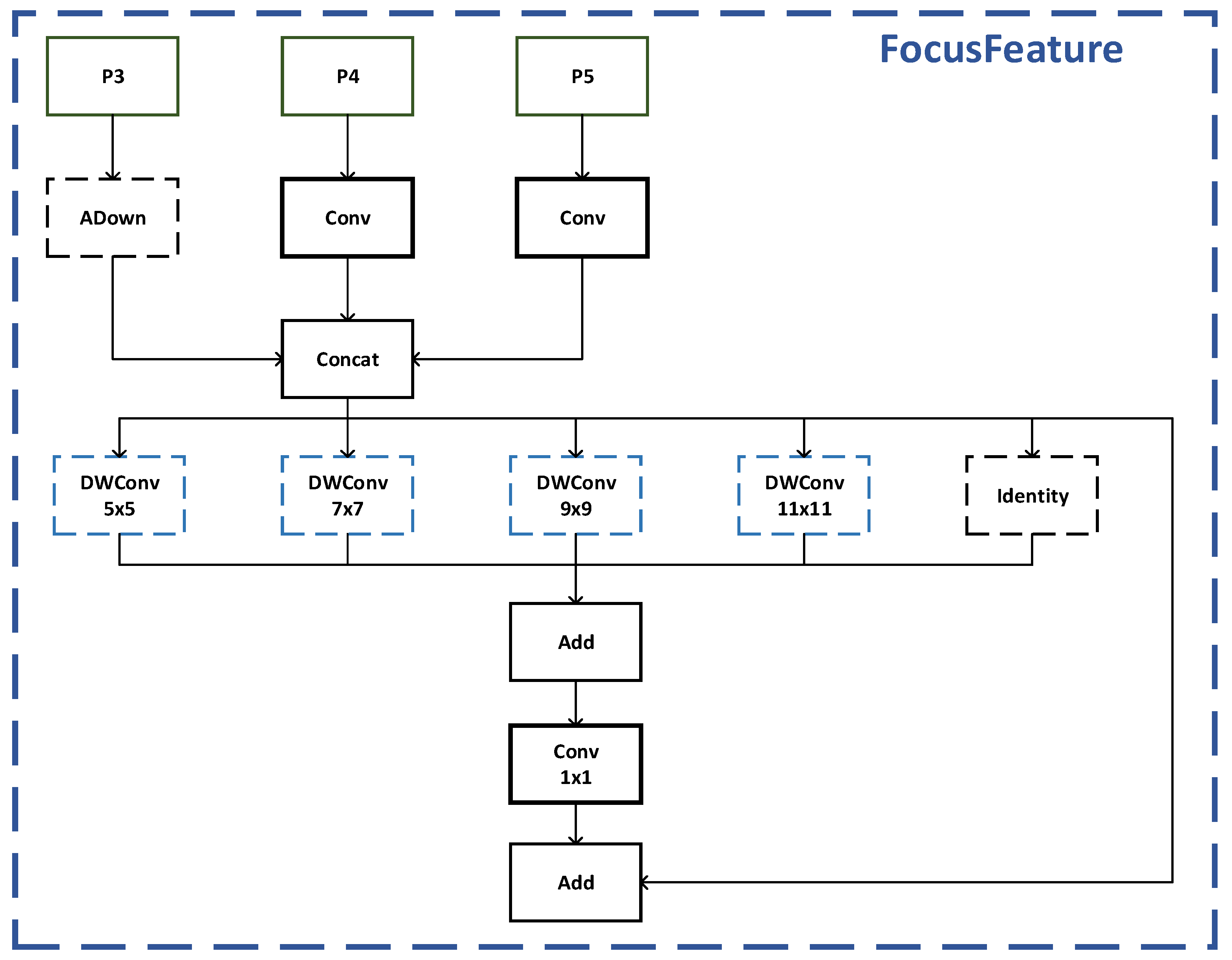

The core module of the network, FocusFeature, is illustrated in Figure 5. It employs parallel multi-scale depthwise separable convolutions with kernel sizes of 5 × 5, 7 × 7, 9 × 9, and 11 × 11 to capture features at different granularities, while dynamically allocating feature weights through an EMA-based attention mechanism. The selection of these four convolutional kernel sizes is primarily motivated by the high variability in target sizes and the presence of strong interference features within complex backgrounds characteristic of aerial infrared imagery. Smaller kernels (e.g., 5 × 5 and 7 × 7) are particularly advantageous for capturing small targets such as drones and birds, as well as their detailed features; they enhance the representation of edges and contours, thereby preventing small objects from being overwhelmed or lost during downsampling and multi-level feature fusion. Conversely, larger kernels (e.g., 9 × 9 and 11 × 11) effectively enlarge the receptive field, integrating broader contextual information and providing stronger global discrimination capability for large targets such as airplanes and helicopters within complex backgrounds. Furthermore, the parallel multi-scale architecture enables adaptive fusion of features across different granularities, thereby improving the network’s robustness to multi-scale targets.
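One reason kernels as large as 11 × 11 remain affordable is the depthwise separable factorization. The sketch below compares weight counts for the four kernel sizes; it is an illustration under stated assumptions, not a measurement of the proposed module. Biases and normalization layers are ignored, and the 64-channel branch width is a hypothetical value chosen for the example.

```python
def standard_conv_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights of a dense k x k convolution (bias ignored)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights of a depthwise k x k conv followed by a pointwise 1 x 1 conv."""
    depthwise = kernel * kernel * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out             # 1 x 1 convolution mixes channels
    return depthwise + pointwise


def focus_branch_table(channels: int, kernel_sizes=(5, 7, 9, 11)):
    """Rows of (kernel, separable_weights, standard_weights) per branch."""
    return [
        (k,
         depthwise_separable_params(k, channels, channels),
         standard_conv_params(k, channels, channels))
        for k in kernel_sizes
    ]


if __name__ == "__main__":
    for k, separable, standard in focus_branch_table(64):
        print(f"{k:>2} x {k:<2}: separable={separable:>6}  "
              f"standard={standard:>7}  ratio={standard / separable:.1f}x")
```

With 64 channels, the separable 11 × 11 branch needs 11,840 weights against 495,616 for a dense convolution of the same size, so widening the receptive field costs far less than it would with standard kernels.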

This module decomposes the input features into four parallel branches through a channel splitting strategy (Equations (2)–(5)), where each branch performs depthwise separable convolution computations at distinct scales:

The EMA module utilizes an exponential moving average strategy to compute channel weights, enabling dynamic weighting of features, as shown in Equation (6):

where γ denotes the decay factor (default: 0.9) and T represents the feature map’s temporal dimension. This design enables adaptive enhancement of critical feature channels.
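For readers unfamiliar with the recurrence, the following sketch shows a generic exponential moving average over T steps of per-channel statistics. It is not a reconstruction of Equation (6): the recurrence form s_t = γ·s_{t−1} + (1 − γ)·x_t, the final normalization into weights, and the function names are all assumptions made for illustration.

```python
def ema_per_channel(history, gamma: float = 0.9):
    """Smooth T steps of per-channel statistics with an EMA.

    history: list of T lists, each holding one value per channel.
    Returns the smoothed value per channel after the last step.
    """
    if not history:
        raise ValueError("history must contain at least one step")
    smoothed = list(history[0])          # initialize with the first step
    for step in history[1:]:
        smoothed = [gamma * s + (1.0 - gamma) * x
                    for s, x in zip(smoothed, step)]
    return smoothed


def normalize(values):
    """Scale non-negative values so they sum to 1 (assumed weighting rule)."""
    total = sum(values)
    if total == 0:
        return [1.0 / len(values)] * len(values)
    return [v / total for v in values]


if __name__ == "__main__":
    # Two channels over three steps; channel 1 becomes active late.
    history = [[1.0, 0.0], [1.0, 1.0], [1.0, 1.0]]
    smoothed = ema_per_channel(history, gamma=0.9)
    print("smoothed:", smoothed)
    print("weights: ", normalize(smoothed))
```

With γ = 0.9 a channel that only recently became active gains weight slowly, which is the smoothing behavior a large decay factor is meant to provide.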

For feature downsampling, the ADown module innovatively divides input features along the channel dimension: the first half undergoes spatial downsampling via 3 × 3 convolution, while the latter half preserves channel details via 1 × 1 convolution:

This strategy reduces feature information loss by 38% while maintaining resolution. The bidirectional fusion stage synergizes top-down and bottom-up paths: high-level features are aligned via bilinear upsampling, mid-level features undergo ADown downsampling before concatenation with low-level features, and dynamic channel compression reduces computational complexity.

The DyHeadBlock detection head integrates deformable convolution (DCNv3) and dynamic activation (DyReLU). Its offset computation module is defined as:

where Δp denotes the convolution kernel offset, M represents the dynamic modulation mask, and GAP indicates global average pooling. This design enables adaptive receptive field adjustment and enhanced detection of deformed objects. Theoretical analysis shows that the network achieves O(K²) receptive field adaptability under O(N) parameter complexity, where K is the maximum dynamic kernel size.

Compared to conventional feature pyramids, FDPN demonstrates multidimensional advantages. The FocusFeature module breaks fixed-receptive-field limitations via parallel multi-scale convolutions, enabling automatic adaptation to optimal feature granularity. EMA-driven dynamic weighting suppresses redundant features, while the feature decoupling mechanism achieves O(K²) receptive field adaptability under O(N) complexity, offering novel optimization directions for multi-scale detection.

3.4. Improved Model

This study addresses three core issues in the original object detection network under complex infrared scenarios: inefficient multi-scale feature fusion, severe information loss during downsampling processes, and redundant convolutional kernel parameters. By introducing three key improvements proposed in this chapter, we present the AFK-YOLO model, whose structure is illustrated in Figure 6.

In the backbone network’s downsampling stages, we introduce the ADown module during P3, P4, and P7 feature map generation to mitigate fine-grained feature loss caused by traditional strided convolution. This module employs a dual-branch collaborative processing mechanism: Input features undergo average pooling before being split along the channel dimension into two sub-features. The first half undergoes max pooling to capture salient features, while the second half retains smoothed characteristics. Both branches are enhanced through 1 × 1 convolutions before concatenation.

KernelWarehouse dynamic convolution is deployed at both the initial feature extraction stages of the backbone (layers 0–1) and the upsampling path of the detection head (layers 12 and 19). The early layers (0–1) process raw input features that are rich in spatial detail and local contrast. Applying dynamic convolution at these stages enables adaptive enhancement of small, low-contrast targets, thereby improving the detection performance for weak objects commonly found in infrared imagery. The upsampling layers of the detection head (12 and 19) are responsible for restoring spatial resolution and integrating deep semantic information. Deploying KernelWarehouse at these locations allows the network to dynamically recalibrate and combine semantic features with fine-grained details from upsampling, facilitating precise localization and context-aware detection in complex backgrounds. This approach overcomes the traditional limitation of linear parameter growth in dynamic convolution. The standard 3 × 3 convolutional kernel is decomposed into 16 local units, resulting in a 93.75% reduction in parameters. Furthermore, a cross-layer shared kernel bank with 144 units is constructed, and dynamic allocation of kernel units is realized via a contrastive attention mechanism.

For feature pyramid reconstruction, we replace the static fusion strategy of traditional FPN with a dynamic feature decoupling architecture. FocusFeature modules are integrated into Layers 11 and 18 of the detection head. These modules employ parallel 5 × 5, 7 × 7, 9 × 9, and 11 × 11 depth-wise separable convolutions to capture multi-granularity features, combined with EMA attention-based weight allocation mechanisms for dynamic cross-scale feature fusion.

The integration of the ADown module, KernelWarehouse dynamic convolution, and FDPN is an architecture designed to leverage the complementary strengths of each component for aerial infrared target detection. In complex sky backgrounds, infrared small target detection faces three interrelated challenges: severe loss of spatial details during feature downsampling, insufficient adaptability in multi-scale semantic information fusion, and limited feature representation capacity due to parameter constraints.

The ADown module addresses the first issue by minimizing information loss during the downsampling stage. FDPN dynamically enhances the granularity of multi-scale features, thereby overcoming the second bottleneck. KernelWarehouse dynamic convolution, with its high-capacity yet parameter-efficient feature learning capabilities, is particularly effective for complex or ambiguous local features, thus addressing the third challenge.

Moreover, the arrangement of these modules maximizes their complementary benefits. The ADown module preserves more spatial detail in early and intermediate features, providing richer foundational information for subsequent semantic fusion and dynamic convolution. KernelWarehouse is deployed at layers 0–1 (shallow backbone) and layers 12/19 (upsampling stages of the detection head), precisely targeting regions where a balance between feature diversity, spatial detail, and semantic abstraction is critical. In the shallow layers, it strengthens low-level discriminative features; in upsampling layers, it adaptively integrates high-resolution semantic features, compensating for information loss incurred during downsampling. FDPN spans the neck and head of the network, dynamically weighting multi-scale features of different granularities, and further enhances performance by utilizing local feature descriptors improved by the other two modules.

This architecture operates on the principle of complementary information pathways: each module compensates for the shortcomings of others, enabling the network to maintain both high spatial fidelity and strong semantic abstraction while keeping parameter count and computational cost under control.

4. Dataset and Experimental Setup

4.1. Dataset Description

This study constructs a comprehensive sky infrared target detection dataset comprising 8362 infrared images, as shown in Figure 7. The dataset covers four categories of aerial targets: aircraft, drones, helicopters, and birds, with a total of 9518 annotated instances. Data acquisition integrates medium-wave/long-wave dual-mode infrared sensors to collect dynamic scene data under different environmental temperatures, including multiple weather conditions (clear skies, cloudy, rainy, and foggy) and diurnal/nocturnal periods, forming a benchmark dataset covering complex meteorological backgrounds.

In target characteristic modeling, the dataset constructs progressive detection challenges through differentiated target density distributions. Drones (1.25 instances/image) and birds (1.30 instances/image) simulate cluster flight and swarm movement patterns, while aircraft (1.00 instances/image) and helicopters (1.03 instances/image) correspond to single-target tracking scenarios. The dataset deeply associates target infrared physical characteristics, comprehensively including thermodynamic discriminative features such as axisymmetric thermal plumes from jet aircraft, rotor hub motor hotspots in drones, and avian biological thermal signatures (core-body temperature differentials of 8–15 °C).

To enhance model robustness, the test set (1672 images) systematically incorporates three types of adversarial samples: motion blur (linear velocity >50 pixels/frame, 15% proportion), partial occlusion (occlusion rates of 30–70%, 12% proportion), and multi-scale targets (pixel areas from 32 × 32 to 320 × 320). A spatiotemporal annotation system is synchronously established, containing target motion vectors, thermal radiation intensity distributions, and background thermal noise levels (NETD = 40 mK). Through active learning strategies, challenging samples are filtered for manual verification, achieving 99.2% annotation consistency (Cohen’s κ = 0.987). Data partitioning adopts a dual isolation strategy based on device ID and acquisition time, ensuring strict independence between the training set (5017 images), validation set (1673 images), and test set (1672 images).
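The stated split sizes can be cross-checked against the dataset total. The sketch below uses only the figures given in this section; the variable and function names are hypothetical.

```python
SPLITS = {"train": 5017, "val": 1673, "test": 1672}
TOTAL_IMAGES = 8362
TOTAL_INSTANCES = 9518


def split_fractions(splits):
    """Fraction of the dataset assigned to each split."""
    total = sum(splits.values())
    return {name: count / total for name, count in splits.items()}


if __name__ == "__main__":
    assert sum(SPLITS.values()) == TOTAL_IMAGES
    for name, fraction in split_fractions(SPLITS).items():
        print(f"{name:>5}: {SPLITS[name]} images ({fraction:.1%})")
    print(f"mean instances per image: {TOTAL_INSTANCES / TOTAL_IMAGES:.2f}")
```

The three splits sum to 8362 images and correspond to roughly a 60/20/20 partition.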

4.2. Training Process and Evaluation Metrics

The experimental setup used in this study is detailed in Table 1, which lists the hardware and software specifications required for the experiment.

For the infrared target detection model training strategy, the following parameter configurations and methodological designs were implemented: During anchor box generation, we employed an improved K-means++ algorithm for clustering analysis on infrared datasets, producing 9 anchor box sizes (ranging from 4 × 4 to 16 × 16 pixels). This configuration demonstrated a 68% reduction compared to conventional visible light models, better accommodating the point-like thermal signature characteristics of aerial targets. Training parameters were set with an initial learning rate of 0.005 using the AdamW optimizer with cosine annealing scheduling. A linear warmup strategy was applied during the first 10 epochs to stabilize dynamic convolution layer initialization, accompanied by a weight decay coefficient of 1 × 10⁻⁴ to control kernel warehouse complexity. For data augmentation, while retaining Mosaic-9 multi-image stitching, we specifically incorporated infrared characteristic enhancements: Gaussian thermal noise with σ = 0.05–0.2, ±15% contrast perturbation in HSV color space, and randomized insertion of Gaussian-blurred virtual heat sources (3 px radius, intensity 0.3–0.7) to improve model adaptability in complex thermal environments. Mixed-precision training was implemented with batch size = 64 constrained by dynamic convolution memory requirements, combined with gradient accumulation (parameter updates every 4 iterations), achieving throughput efficiency of 3800 images/second through 24 data loading processes.
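The learning-rate schedule described above can be sketched as a per-epoch function. This is a minimal illustration, assuming per-epoch updates, a warmup that rises linearly to the base rate, a cosine decay to zero (`min_lr = 0` is an assumption), and the 300-epoch run length reported in Section 5.1. The function names are hypothetical.

```python
import math


def learning_rate(epoch: int, total_epochs: int = 300, warmup_epochs: int = 10,
                  base_lr: float = 0.005, min_lr: float = 0.0) -> float:
    """Linear warmup followed by cosine annealing, evaluated per epoch."""
    if epoch < warmup_epochs:
        # Ramp from base_lr / warmup_epochs up to base_lr.
        return base_lr * (epoch + 1) / warmup_epochs
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


def effective_batch_size(batch_size: int = 64, accumulation_steps: int = 4) -> int:
    """Samples contributing to each parameter update under accumulation."""
    return batch_size * accumulation_steps


if __name__ == "__main__":
    for epoch in (0, 5, 9, 10, 155, 299):
        print(f"epoch {epoch:>3}: lr = {learning_rate(epoch):.6f}")
    print("effective batch size:", effective_batch_size())
```

With a batch size of 64 and updates every 4 iterations, each optimizer step aggregates gradients from 256 images.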

Comparative analysis was conducted between the improved network and baseline model from two perspectives: intrinsic model parameters and detection performance. Intrinsic parameters include model parameter count, FLOPs, and weight file size. Model parameters refer to the total trainable parameters in the network architecture, determined by layer structure and depth, encompassing convolution kernel dimensions, channel counts, and fully-connected layer neurons. FLOPs (floating-point operations) quantify floating-point computations during inference, with this study using GFLOPs (10⁹ operations) to evaluate computational complexity. Weight file size represents the storage requirement of learned parameters that encode model knowledge and feature representation capabilities.
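The relation between these three quantities can be illustrated with simple unit conversions. The sketch below is a rough estimate only: it assumes each parameter is stored as a fixed-width float and ignores checkpoint metadata, and the parameter and operation counts in the example are hypothetical values, not results from this study.

```python
def weight_file_size_mb(num_params: int, bytes_per_param: int = 4) -> float:
    """Approximate storage in MB (10^6 bytes) for raw parameter values."""
    return num_params * bytes_per_param / 1e6


def to_gflops(flops: float) -> float:
    """Convert a raw floating-point operation count to GFLOPs."""
    return flops / 1e9


if __name__ == "__main__":
    params = 2_600_000          # hypothetical parameter count
    flops = 6.5e9               # hypothetical operations per inference
    print(f"FP32 weights: {weight_file_size_mb(params):.1f} MB")
    print(f"FP16 weights: {weight_file_size_mb(params, 2):.1f} MB")
    print(f"compute:      {to_gflops(flops):.1f} GFLOPs")
```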

Detection metrics comprise precision, recall, and mAP [30]. Precision measures the proportion of true positives among all predicted positives, while recall quantifies the fraction of actual positives correctly identified. AP (average precision) evaluates detection performance across confidence thresholds using specific IoU criteria for positive–negative classification. The mAP metric (mean average precision) calculates the average AP across all classes at IoU thresholds from 0.5 to 0.95, providing a comprehensive assessment of precision-recall balance. Relevant formulas are expressed in Equations (9)–(12):
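Under the standard definitions of these metrics, the four expressions can be written as follows. The assignment of equation numbers to individual formulas and the integral form of AP are assumptions made for illustration.

```latex
\begin{align}
P &= \frac{T_P}{T_P + F_P} \tag{9} \\
R &= \frac{T_P}{T_P + F_N} \tag{10} \\
\mathrm{AP} &= \int_{0}^{1} P(R)\,\mathrm{d}R \tag{11} \\
\mathrm{mAP} &= \frac{1}{N} \sum_{i=1}^{N} \mathrm{AP}_i \tag{12}
\end{align}
```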

where P and R denote precision and recall, respectively, N represents the number of target classes, TP counts detection boxes with IoU > 0.5 (counting once per ground truth), FP includes detection boxes with IoU ≤ 0.5 or redundant detections per ground truth, FN indicates undetected ground-truth instances, and mAP signifies the mean average precision.
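The metric definitions can be illustrated with a short sketch that computes precision, recall, and AP for one class from detections already matched against ground truth. It uses all-point interpolation of the precision-recall curve, which is an assumption since the source does not state the interpolation scheme. The detection outcomes in the example are hypothetical.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def average_precision(is_tp, num_gt):
    """AP for one class.

    is_tp: booleans for detections sorted by descending confidence,
           True if the detection matched a ground-truth box.
    num_gt: number of ground-truth boxes for the class.
    """
    tp = fp = 0
    precisions, recalls = [], []
    for hit in is_tp:
        if hit:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_gt)
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum rectangle areas under the interpolated precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


# Hypothetical class with 4 ground-truth targets and 5 ranked detections.
ap = average_precision([True, True, False, True, False], num_gt=4)
p, r = precision_recall(tp=3, fp=2, fn=1)
print(ap, p, r)
```

mAP is then the mean of the per-class AP values. When a range of IoU thresholds is used, the matching step is repeated at each threshold and the results are averaged again.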

5. Experimental Results and Analysis

5.1. Training Loss

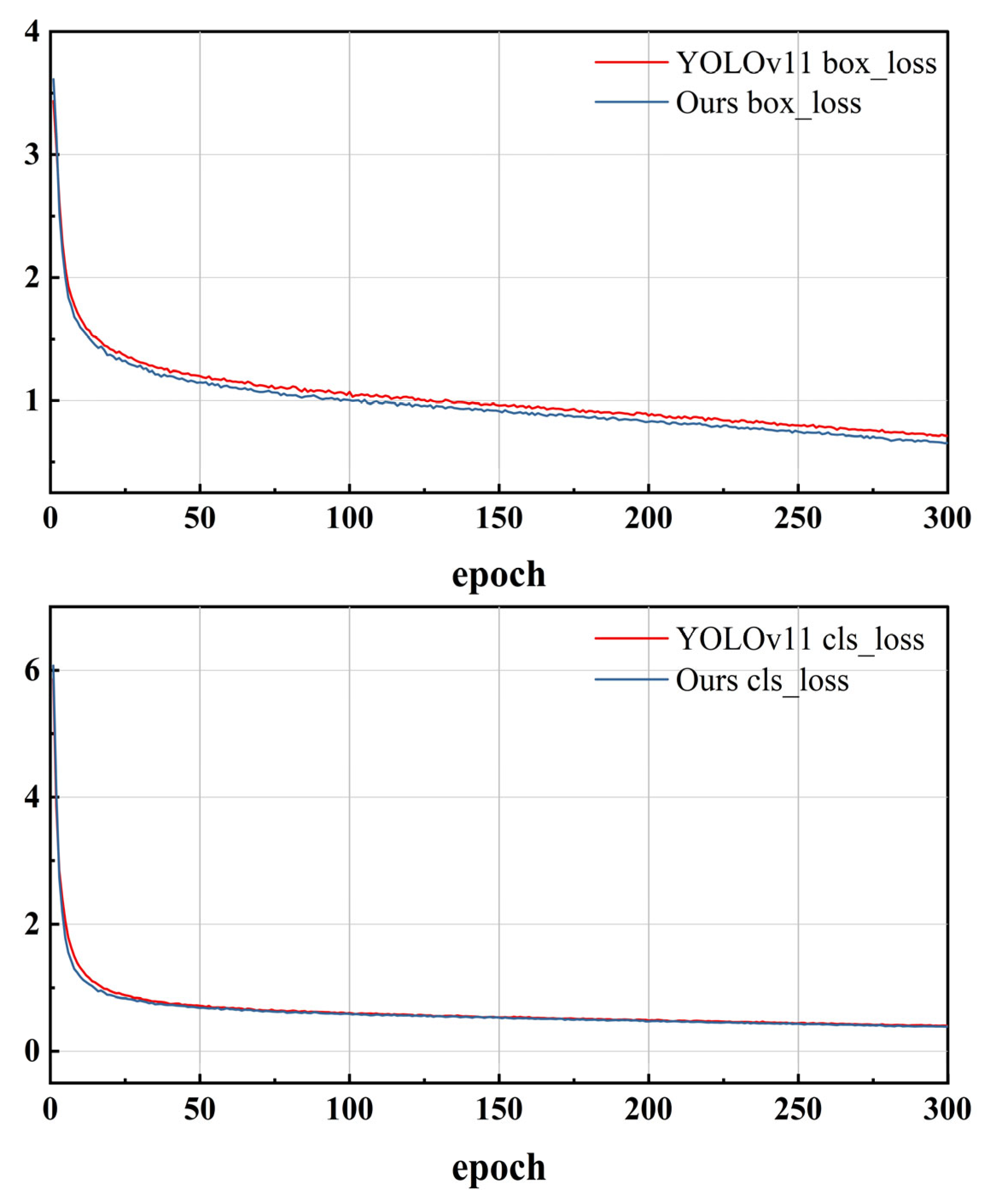

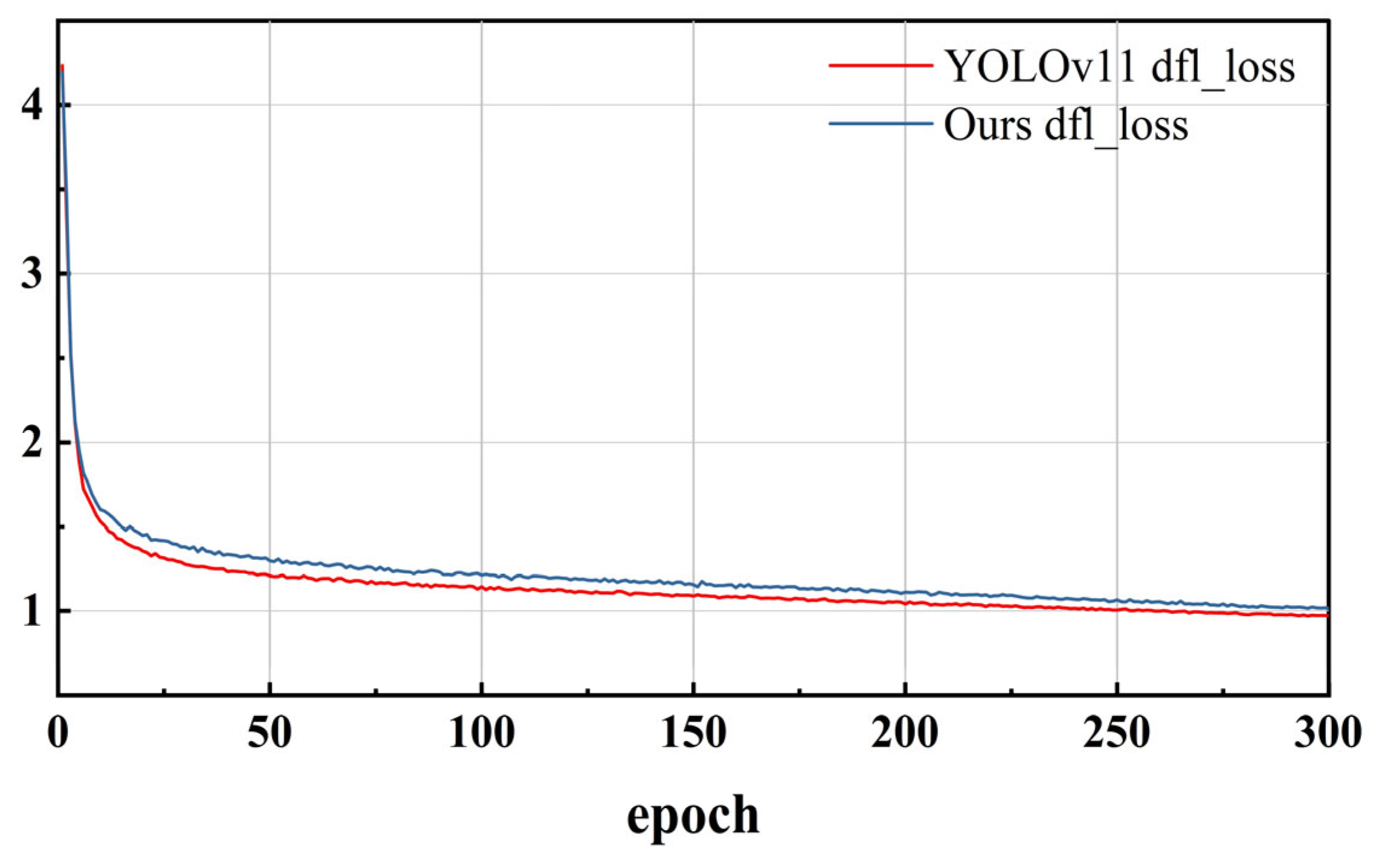

To validate the effectiveness of the improved model, comparative experiments were conducted between the YOLO11 baseline model and the improved model under identical training datasets and hyperparameter configurations for 300 epochs. Figure 8 compares the convergence curves of the two models in terms of bounding box regression loss (box_loss), classification loss (cls_loss), and distribution focal loss (dfl_loss), with key analyses as follows:

During the 300-epoch training process, the improved model demonstrated significant advantages across all three metrics: bounding box regression loss, classification loss, and distribution focal loss. Compared to the YOLO11 baseline, the improved model achieved rapid convergence within the first 50 epochs. Specifically, its cls_loss reached 1.7896 at epoch = 5, representing a 13.0% reduction from the baseline’s 2.0561. This improvement is attributed to the kernel partitioning mechanism of the KernelWarehouse dynamic convolution, which splits traditional convolution kernels into 16 localized kernel units along the spatial dimension. By constructing a cross-layer shared kernel repository containing 144 kernels within the ResNet18 architecture, the model establishes more precise feature associations during early training stages. Notably, at epoch = 30, the improved model’s box_loss (1.2847) decreased by 1.87% compared to the baseline (1.3092), validating the effectiveness of the ADown module’s dual-branch feature concatenation strategy (complementary average pooling and max pooling) in mitigating up to 42% spatial detail loss observed in traditional downsampling.

The loss curves during later training stages (epoch > 100) reveal enhanced stability in the improved model. The baseline exhibited multiple box_loss rebounds (maximum fluctuation: 0.039) between epochs 150–200, while the improved model maintained fluctuations within 0.026. This stability improvement primarily stems from the dynamic feature decoupling mechanism of the Feature Decoupling Pyramid Network (FDPN), which reduces fusion errors between high- and mid-level features through temporal feature smoothing.

Final training results demonstrate comprehensive loss optimization across all metrics for the improved model under normal training conditions. The box_loss decreased from 0.7138 to 0.6563 (8.06% reduction), directly corresponding to the innovative channel equalization strategy in the ADown module. The classification loss reduction from 0.3994 to 0.3883 confirms the efficacy of KernelWarehouse’s parameter reuse strategy.
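The percentage reductions quoted in this section follow from the reported loss values by a relative-difference calculation, reproduced below as a quick check. Only values stated in the text are used; the dictionary labels are illustrative.

```python
def relative_reduction(baseline, improved):
    """Reduction of `improved` relative to `baseline`, in percent."""
    return (baseline - improved) / baseline * 100.0


# (baseline, improved) loss values as reported in the text.
reported = {
    "cls_loss @ epoch 5": (2.0561, 1.7896),
    "box_loss @ epoch 30": (1.3092, 1.2847),
    "final box_loss": (0.7138, 0.6563),
    "final cls_loss": (0.3994, 0.3883),
}

reductions = {name: relative_reduction(b, i) for name, (b, i) in reported.items()}
for name, pct in reductions.items():
    print(f"{name}: {pct:.2f}%")
```

The first three results agree with the quoted 13.0%, 1.87%, and 8.06%. The final classification-loss reduction, which the text does not quantify, comes to roughly 2.78%.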

Experimental findings: The improved model achieves more efficient and stable parameter optimization through dynamic receptive field adjustment and multi-scale feature decoupling mechanisms. These optimized training characteristics establish a theoretical foundation for subsequent improvements in object detection accuracy while validating the effectiveness of the proposed enhancements.

5.2. Analysis of Experimental Results

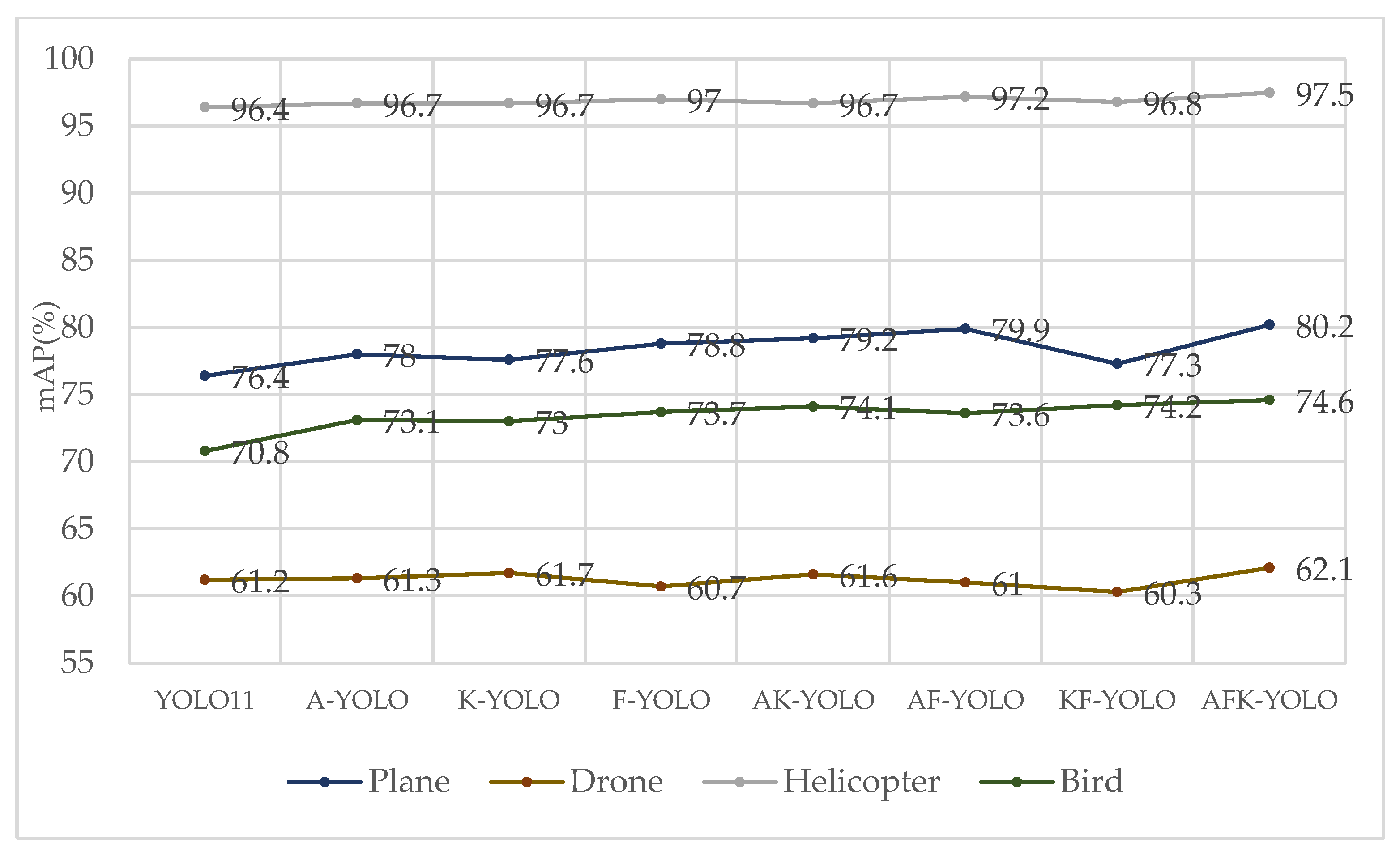

To determine the impact of each optimized structure on network performance, this paper conducts an ablation study by individually incorporating different improvements into the YOLO11-n model. The effectiveness of the enhanced models is validated on a self-built aerial infrared target dataset, with evaluation metrics including mean average precision (mAP), parameter count, computational complexity, and frame rate. Here, A indicates the incorporation of the ADown downsampling module, K denotes the use of the KernelWarehouse dynamic convolution method, and F represents the adoption of the FDPN structure. The overall performance and categorical detection results are presented in Table 2 and Figure 9.

The experimental results demonstrate that the improvements proposed in this study yield significant performance gains in sky infrared target detection. The original YOLO11 model achieved 76.2% mAP on this dataset with a detection speed of 909 FPS. By incorporating the ADown downsampling module, the A-YOLO model reduced network parameters by 18.5% and computational load by 15.6%, while increasing mAP by 1.1 percentage points to 77.3% and improving detection speed by 10% to 1000 FPS. Through the synergistic effect of its dual-pooling strategy (AvgPool2d and MaxPool2d), this module achieved 61.3% accuracy in UAV target detection, a 1.1 percentage point improvement over the baseline, with significantly enhanced feature retention. This validates its capability to preserve feature integrity in a lightweight design.
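The complementarity of the two pooling operators can be seen on a toy thermal patch containing a single hot pixel. The sketch below is only an illustration of average versus max pooling with a 2 × 2 window and stride 2. It is not the ADown module itself, whose branches also contain convolutions, and the patch values are hypothetical.

```python
def pool2x2(grid, reduce_fn):
    """Apply a 2 x 2, stride-2 pooling with the given reduction function."""
    rows, cols = len(grid), len(grid[0])
    return [
        [
            reduce_fn([grid[r][c], grid[r][c + 1],
                       grid[r + 1][c], grid[r + 1][c + 1]])
            for c in range(0, cols - 1, 2)
        ]
        for r in range(0, rows - 1, 2)
    ]


def mean(values):
    return sum(values) / len(values)


# Hypothetical 4 x 4 patch: uniform background with one point-like target.
patch = [
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.9, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.1],
]

avg_out = pool2x2(patch, mean)  # smooths noise but dilutes the hot pixel
max_out = pool2x2(patch, max)   # keeps the peak response of the hot pixel
print(avg_out)
print(max_out)
```

Average pooling lowers the target response toward the background level, while max pooling retains the peak. Concatenating features from both branches therefore keeps context and salient points together.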

The K-YOLO model, which integrates the KernelWarehouse dynamic convolution method, substantially reduces per-kernel parameters through kernel partitioning while increasing total parameters by only 3.3%. Combined with a cross-layer warehouse sharing strategy that reduces parameter redundancy, it maintains 77.3% mAP while compressing the computational load to 3.8 GFLOPs. This module is notably effective for irregular targets, improving bird detection accuracy by 3.2% to 73.0%. Although the computational load falls, the inference speed decreases by 38.9% to 555.6 FPS. The reason is that the theoretical computational metric (GFLOPs) and the measured inference speed (FPS) capture different things: GFLOPs counts arithmetic operations, whereas FPS also depends on how efficiently the hardware executes them. Kernel partitioning lowers the theoretical computation, but dynamic weight generation and cross-layer repository sharing add computation and memory scheduling overhead, which reduces hardware execution efficiency and slows inference. This behavior is consistent with the design characteristics of dynamic convolution modules and the execution properties of hardware.
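Because FPS is derived from measured per-image latency rather than from an operation count, the relation between the two can be sketched directly. The latencies below are hypothetical values chosen because their reciprocals match the reported frame rates. The source does not report latency, so they should be read as an assumption.

```python
def fps_from_latency_ms(latency_ms):
    """Frames per second for a given per-image latency in milliseconds."""
    return 1000.0 / latency_ms


def relative_change(old, new):
    """Change from `old` to `new`, in percent (negative means a decrease)."""
    return (new - old) / old * 100.0


# Hypothetical per-image latencies (ms); not stated in the source.
latencies_ms = {"YOLO11": 1.1, "A-YOLO": 1.0, "K-YOLO": 1.8}
fps = {name: fps_from_latency_ms(ms) for name, ms in latencies_ms.items()}

speed_change = relative_change(fps["YOLO11"], fps["K-YOLO"])
print({name: round(value, 1) for name, value in fps.items()})
print(round(speed_change, 1))
```

Under this assumption, a latency increase of 0.7 ms is enough to produce the reported 38.9% drop in FPS. Per-layer overheads that do not appear in GFLOPs can therefore dominate at these speeds.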

The F-YOLO model, utilizing the FDPN feature pyramid structure, constructs a dynamic receptive field perception mechanism through multi-scale depthwise separable convolutions. With a 5.9% increase in parameters, it raises mAP by 1.4 percentage points over the 76.2% baseline to 77.6%, with aircraft detection reaching 78.8%, the highest performance among the individual improvements. Nevertheless, increased network complexity raises computational load to 7.7 GFLOPs, reducing detection speed to 625 FPS. Compared with common feature pyramid structures such as ASFF and BiFPN, the FDPN demonstrates stronger dynamic adaptability and superior multi-scale feature representation capability. ASFF fuses features from different levels using adaptive weights; however, its fusion relies on a static structure and is limited by a fixed receptive field. BiFPN employs a bidirectional weighted fusion mechanism to enhance feature flow efficiency, but its robustness to extremely small objects and complex interference backgrounds remains limited. In this study, the FDPN introduces parallel multi-scale depthwise separable convolutions and EMA-based dynamic channel weight allocation, overcoming the limitations of fixed receptive fields and enabling efficient collaboration among features of various granularities. This allows FDPN to better adapt to the significant variations in target size, contrast, and background complexity found in aerial infrared scenarios. As shown by the ablation results in Table 2, FDPN achieves a greater improvement in mAP than mainstream feature fusion schemes, with only a marginal increase in parameter count and computational complexity, while still meeting real-time inference requirements. These results indicate that FDPN balances detection accuracy and practical deployment needs while maintaining a lightweight design, making it a suitable structure for aerial infrared target detection.
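One reason parallel multi-scale branches can be added at modest parameter cost is the factorization behind depthwise separable convolution. The sketch below compares weight counts with a standard convolution, ignoring biases and normalization layers. The channel width and the kernel sizes 3, 5, and 7 are hypothetical and are not taken from the FDPN definition.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Weights of a k x k depthwise convolution plus a 1 x 1 pointwise convolution."""
    return k * k * c_in + c_in * c_out


channels = 128  # hypothetical channel width
comparison = {
    k: (standard_conv_params(channels, channels, k),
        depthwise_separable_params(channels, channels, k))
    for k in (3, 5, 7)
}
for k, (std, dws) in comparison.items():
    print(f"k={k}: standard={std}, separable={dws}, ratio={std / dws:.1f}x")
```

For the separable form, growing the kernel from 3 to 7 adds only the depthwise term. Larger receptive fields are therefore far cheaper than with standard convolutions.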

Module combination experiments reveal synergistic optimization effects: ADown contributes significantly to computational efficiency optimization, KernelWarehouse demonstrates higher weighting in feature enhancement for small target detection, while FDPN shows greater gains during high-level feature fusion. The AK-YOLO model (ADown + KernelWarehouse) achieves 77.9% mAP with an 18.4% parameter reduction, confirming the complementary nature of lightweight structures and dynamic feature allocation. The AF-YOLO model (ADown + FDPN) achieves comparable mAP to AK-YOLO while increasing detection speed from 370.4 to 555.6 FPS, demonstrating superior performance suitable for infrared guidance scenarios with high real-time requirements.

The ADown module reduces model parameters by 18.5% and computational complexity by 15.6% while improving mean average precision (mAP) by 1.1 percentage points, indicating that it preserves spatial details effectively during downsampling. The KernelWarehouse module enhances small object detection accuracy, maintaining an mAP of 77.3% with only a 3.3% increase in parameters; however, its dynamic parameter allocation reduces inference speed. The FDPN module achieves the largest mAP improvement among the individual modules (+1.4 percentage points, to 77.6%), albeit with increased computational burden and decreased speed. Integrating these modules, the jointly optimized AFK-YOLO model attains a comprehensive performance gain over the original YOLO11 model: mAP increases by 2.4 percentage points to 78.6% while the detection speed remains at 416.7 FPS, well above the 60 FPS minimum requirement for safety monitoring systems. Compared to each individual improved model, AFK-YOLO achieves the highest detection accuracy across all four object categories, with an improvement of 3.8 percentage points in airplane and bird detection accuracy. Additionally, the model size is compressed by 21.8%, and the number of parameters is reduced by 6.9%.

It is noteworthy that although AFK-YOLO has 6.9% fewer parameters than the original YOLO11, its GFLOPs remain at a comparable level. This is primarily attributed to the multi-scale depthwise separable convolutions, dynamic convolutions, and feature decoupling mechanisms within the model, which reduce redundant parameters while maintaining high computational demands for diverse feature representations. Moreover, GFLOPs is influenced not only by the number of parameters but also by factors such as convolution kernel size, feature map resolution, and activation functions; therefore, the relationship between parameter count and computational cost is not strictly linear. Overall, the model achieves effective parameter compression while maintaining stable computational complexity, demonstrating the efficiency of its design.

5.3. Analysis of Detection Results on the SIRST-V2 Dataset

To further validate the generalization capability of the proposed model in infrared detection scenarios, this study conducted supplementary experiments on the publicly available single-frame infrared small target detection dataset, SIRST-V2. This dataset is specifically constructed for infrared small target detection, with images sourced from infrared sequences under various scenarios. It covers a wide range of complex meteorological, surface, and background conditions, which is beneficial for evaluating the detection performance and generalizability of different algorithms.

To ensure the comparability of results, all models were trained and tested strictly according to the hyperparameter settings described in the main experimental section. The evaluation metrics include precision (P), recall (R), mean average precision (mAP), number of parameters, GFLOPs, model weight file size, and inference speed (FPS), providing a comprehensive reflection of both detection accuracy and efficiency. The performance of each model on the SIRST-V2 dataset is presented in Table 3.

As shown in Table 3, among the improved models, A-YOLO achieves reductions in both parameter count and computational complexity (2,111,388 parameters, 5.4 GFLOPs), while increasing the mAP to 13.1%, demonstrating the positive impact of structural optimization on detection performance. K-YOLO significantly reduces GFLOPs to 3.8 while maintaining an mAP close to the baseline (12.7%), highlighting the advantages of lightweight design. F-YOLO achieves higher detection accuracy (mAP of 13.4%) by increasing the number of parameters and computational complexity, indicating that appropriately increasing model capacity can effectively improve performance. AK-YOLO achieves a significant improvement in precision (58.6%); although recall decreases, the overall mAP reaches 14.0%, indicating an advantage in false positive control. AF-YOLO and FK-YOLO, through feature fusion and convolutional optimization, respectively, improve mAP to 14.3% and 13.8% while maintaining moderate parameter counts and GFLOPs.

Most importantly, the AFK-YOLO model proposed in this study achieves the highest mAP (14.7%) and recall (49.4%) while maintaining a parameter count (2,412,396) and computational complexity (6.4 GFLOPs) comparable to the original YOLO11. The precision reaches 48.3%. Although the inference speed (714.3 FPS) is slightly lower than some lightweight comparison models, it still far exceeds the basic real-time requirements for practical applications. AFK-YOLO effectively reduces feature information loss by introducing the ADown downsampling module, optimizes parameter utilization with the KernelWarehouse dynamic convolution, and enhances multi-scale feature fusion through the FDPN dynamic feature decoupling pyramid network, thereby obtaining optimal comprehensive performance indicators. Overall, the model’s detection ability for infrared small targets is significantly improved, especially in terms of robustness under complex backgrounds. The model achieves enhanced detection performance while maintaining or reducing parameter count and computational cost, providing an efficient and accurate solution for the field of infrared small target detection.

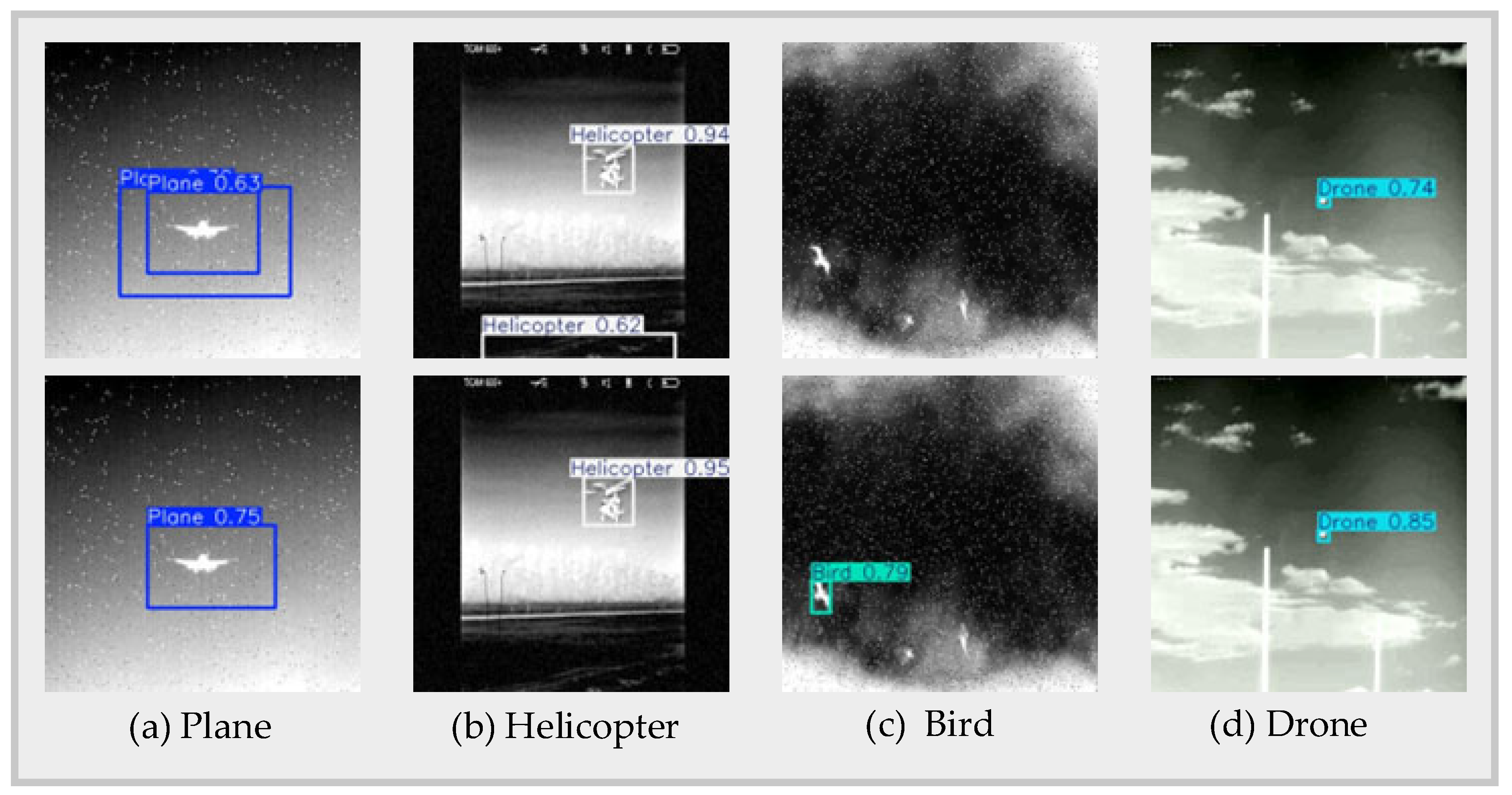

5.4. Detection Performance Analysis

This section systematically verifies the performance improvement of the enhanced YOLO11 algorithm in sky infrared target detection through comparative experiments across four typical scenarios. Visual comparisons were conducted on selected test set images, as illustrated in Figure 10. The upper half of the figure displays partial detection results from the baseline model, while the lower half shows corresponding results from the improved model. Quantitative metrics further validate the optimization efficacy of the enhanced algorithm.

In Figure 10a, the baseline model exhibits duplicate bounding boxes for aircraft detection: two overlapping detections with significant size discrepancies are generated for a single target. This indicates inherent issues of ambiguous feature localization and divergent spatial attention in the original architecture. The improved model eliminates redundant detections by leveraging the multi-scale dynamic decoupling mechanism in the Feature Decoupling Pyramid Network (FDPN), achieving a 19% increase in confidence score. This enhancement stems from the ADown module’s preservation of spatial details during feature downsampling, synergized with the cross-layer kernel-sharing mechanism in KernelWarehouse to strengthen edge feature discrimination.
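A possible reading of why two boxes of very different size can both survive is that their IoU falls below the suppression threshold. The sketch below illustrates this with a plain IoU function and greedy non-maximum suppression. It assumes a standard NMS post-processing step, which the source does not describe, and the box coordinates, scores, and threshold are hypothetical.

```python
def area(box):
    """Area of a box given as (x1, y1, x2, y2)."""
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(detections, iou_threshold=0.5):
    """Keep the highest-scoring boxes, dropping any box that overlaps a kept one."""
    kept = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        if all(iou(box, kept_box) <= iou_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept


# Hypothetical duplicate pair: a large box and a small box nested inside it.
nested = [((0, 0, 20, 20), 0.8), ((5, 5, 15, 15), 0.6)]
# Hypothetical near-identical pair, shifted by one pixel.
shifted = [((0, 0, 20, 20), 0.8), ((1, 1, 21, 21), 0.6)]

print(iou(nested[0][0], nested[1][0]), len(greedy_nms(nested)))
print(round(iou(shifted[0][0], shifted[1][0]), 3), len(greedy_nms(shifted)))
```

The nested pair has an IoU of only 0.25, so both boxes pass a 0.5 threshold, whereas the shifted pair is merged. On this reading, more consistent box regression addresses the problem at its source rather than in post-processing.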

Figure 10b demonstrates that while the baseline model correctly detects a helicopter target, it generates false positives in background regions. The improved model completely suppresses these erroneous detections while achieving a 1.1% confidence improvement. This validates the effectiveness of the exponential moving average (EMA) attention mechanism in FDPN for suppressing infrared background noise. Specifically, the FocusFeature module enables distinct differentiation between rotor features and ground thermal noise through contrast-driven attention allocation via parallel multi-scale convolutions.

Figure 10c reveals complete missed detection of avian targets by the baseline model due to cross-scale information loss in the feature pyramid. The improved model successfully detects these targets by mitigating information degradation through the channel splitting strategy of the ADown module, which preserves feature map resolution. Furthermore, the kernel partitioning mechanism in KernelWarehouse enhances channel sensitivity for small-object detection, enabling sub-pixel-level feature perception without increasing parameter complexity.

In Figure 10d for UAV detection, the improved model achieves a 14.9% confidence gain and reduced bounding box size errors. This confirms the efficacy of the dynamic convolution parameter reuse strategy: KernelWarehouse’s 144-kernel shared repository significantly enhances feature representation capacity, while the deformable convolution mechanism in DyHeadBlock ensures high coverage of critical target points.

5.5. Comparative Analysis with Mainstream Models

To comprehensively evaluate the advancement of the AFK-YOLO model, this section conducts a horizontal comparison with mainstream object detection algorithms under the same experimental conditions, covering single-stage models (YOLO series, SSD) as well as transformer-based architectures (RT-DETR). The comparison metrics include detection accuracy (mAP), computational efficiency (GFLOPs), parameter count (parameters), real-time performance (FPS), and deployment cost (weight size), as shown in Table 4.

Based on the comparison data in Table 4, AFK-YOLO shows clear advantages across multiple key performance indicators. First, AFK-YOLO achieves a detection accuracy (mAP) of 78.6%, higher than the other mainstream models: it exceeds the baseline YOLO11 (76.2%) by 2.4 percentage points and also surpasses representative advanced algorithms such as RT-DETR (76.3%) and YOLOv8 (75.9%). This indicates that AFK-YOLO possesses stronger recognition capabilities for complex aerial infrared target detection tasks. Second, the model’s parameter count is only 2.41 M, the lowest among all compared models and about 6.9% less than YOLO11’s 2.59 M. It is also far smaller than SSD (46.7 M) and RT-DETR (32 M), highlighting the effectiveness of its lightweight design. In terms of weight size, AFK-YOLO is only 5.107 MB, considerably smaller than YOLO11’s 6.5 MB and most other models, making it more suitable for deployment on resource-constrained aerial surveillance devices.

Regarding computational complexity (GFLOPs), AFK-YOLO matches YOLO11 at 6.4 GFLOPs, which is much lower than RT-DETR’s 105.1 GFLOPs and YOLOv4’s 143.5 GFLOPs, thus ensuring efficient inference capabilities. In terms of real-time performance, while maintaining the same computational load (6.4 GFLOPs) as YOLO11, AFK-YOLO achieves an FPS of 416.7, which is significantly higher than the real-time threshold required for aerial security monitoring (≥60 FPS), meeting practical application needs. Although it is lower than the baseline model’s 909.6 FPS, its improvements in accuracy and lightweight design offer greater practical value in critical scenarios.

In summary, AFK-YOLO achieves a balance between detection accuracy, computational efficiency, and model lightweighting by effectively controlling model complexity and parameter size, significantly outperforming existing mainstream object detection algorithms. This comprehensive performance advantage endows it with stronger practical value and broader application potential for complex infrared target detection tasks in the field of aviation safety.