1. Introduction

Image super-resolution (SR) seeks to overcome the inherent limitations of optical imaging systems by reconstructing high-resolution (HR) images from their corresponding low-resolution (LR) versions [1]. As a prominent research topic in computer vision (CV), SR has found significant applications in various domains. These include enhancing detail in CT scans for medical diagnostics [2], overcoming resolution limitations in satellite remote sensing [3], and improving the accuracy of facial recognition in security surveillance systems [4]. Although SR techniques have made considerable progress, significant challenges remain, primarily (1) the inherent conflict in jointly optimizing global image structure and local details, which makes it difficult to achieve long-range spatial consistency and local texture fidelity simultaneously; and (2) the trade-off between pixel fidelity and perceptual quality. Specifically, reconstruction methods driven primarily by Mean Squared Error (MSE) often produce overly smooth results, while complex textures are prone to artifacts.

Traditional interpolation-based super-resolution methods (e.g., Bilinear interpolation [5], Bicubic interpolation [6], NEDI [7], and Contourlet-based interpolation [8]) were widely adopted due to their high computational efficiency and ease of implementation. However, they fundamentally rely on simple mathematical formulas for pixel filling and lack the ability to reconstruct realistic textures and details. This inherent limitation leads to significant drawbacks: the upscaled images often suffer from noticeable detail blurring and over-smoothing, alongside aliasing artifacts around edge regions. Consequently, they fail to meet the demand for SR results of high visual quality.
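As a minimal illustration of what "pixel filling by a simple formula" means, the sketch below upscales a single-channel image by bilinear interpolation in plain Python. The function name `bilinear_upscale` and the pixel-centre alignment convention are assumptions made for this example and are not taken from the cited works.

```python
def bilinear_upscale(img, scale):
    """Upscale a 2D list of pixel values by an integer factor using bilinear interpolation."""
    h, w = len(img), len(img[0])
    out = []
    for i in range(h * scale):
        # Map the output pixel centre back into input coordinates and clamp to the image.
        y = min(max((i + 0.5) / scale - 0.5, 0.0), h - 1)
        y0 = int(y)
        y1 = min(y0 + 1, h - 1)
        dy = y - y0
        row = []
        for j in range(w * scale):
            x = min(max((j + 0.5) / scale - 0.5, 0.0), w - 1)
            x0 = int(x)
            x1 = min(x0 + 1, w - 1)
            dx = x - x0
            # Blend the four nearest input pixels; no new detail is synthesized.
            top = img[y0][x0] * (1 - dx) + img[y0][x1] * dx
            bottom = img[y1][x0] * (1 - dx) + img[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out


edge = [[0, 100]]                      # a sharp two-pixel edge
upscaled = bilinear_upscale(edge, 2)   # the edge becomes a smooth ramp
```

Every output value is a weighted average of existing pixels, which is why a sharp edge turns into a ramp and why such methods cannot recover textures that are absent from the LR input.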

Convolutional neural networks (CNNs) ushered in a new era of data-driven approaches, providing novel solutions for SR. SRCNN [9] pioneered an end-to-end mapping framework using a three-layer convolutional network, effectively overcoming the reliance on hand-crafted features inherent in traditional methods. However, it suffered from a limited receptive field and low computational efficiency. To expand the receptive field, VDSR [10] employed a 20-layer residual structure, enhancing reconstruction accuracy. However, its requirement for pre-interpolated input significantly increased memory consumption. Targeting high-frequency information recovery, RCAN [11] incorporated channel attention mechanisms into a residual-in-residual (RIR) structure. This design prioritized the enhancement of edge details and mitigated network degradation. However, its large parameter count impedes practical deployment. The SAN model [12] introduced a second-order attention mechanism, modeling long-range dependencies among channels through covariance normalization. This significantly enhanced feature representation capabilities and addressed the difficulty of capturing global correlations in traditional CNNs. Unfortunately, the computation of higher-order statistics led to a substantial increase in computational complexity. Its extension, HAN [13], constructed a multi-scale hierarchical attention structure. This progressively fused local details and global context across both spatial and channel dimensions, effectively improving cross-scale feature consistency and alleviating edge blurring. However, the redundancy introduced by its cascaded modules resulted in slow model convergence. Collectively, while these CNN-based methods have progressively addressed key challenges such as receptive field limitations, computational efficiency bottlenecks, and high-frequency detail modeling, they still commonly face persistent issues, including the accuracy-efficiency trade-off and limited multi-scale generalization capability.

The emergence of the generative adversarial network (GAN) [14] opened a new direction for image generation and image SR. The SRGAN model [15] pioneered the introduction of GANs into the SR domain. By leveraging perceptual loss and adversarial training, it could generate photo-realistic textures rich in detail, effectively addressing the overly smooth results typical of traditional methods. However, it was prone to producing artifacts and exhibited relatively low Peak Signal-to-Noise Ratio (PSNR) values. Its enhanced version, ESRGAN [16], incorporated Residual-in-Residual Dense Blocks (RRDBs) and a relativistic discriminator, greatly improving visual realism. Nevertheless, it grappled with training instability and high-frequency noise. Targeting practical application challenges, Real-ESRGAN [17] employed higher-order degradation modeling and a discriminator with spectral normalization. This effectively addressed complex blurring and noise in real-world images, albeit demanding extensive training with synthetic data. USRNet [18] embedded prior knowledge into the iterative GAN training process through unfolding optimization, achieving interpretable blind SR. However, this came with high computational costs due to iterative refinement. FeMaSR [19] introduced a feature matching mechanism to constrain the generation process, significantly enhancing identity consistency in face SR. Yet, it struggled to generalize effectively to natural scenes. Jia et al. proposed a generative adversarial network model specifically for super-resolution of retinal fundus images [20]. Its key feature is the introduction of a “vascular structure prior”. However, its SR performance is highly dependent on the accuracy of the pre-trained vascular segmentation network. If the prior knowledge of vascular structure is inaccurate or noisy, it may mislead the super-resolution process and introduce artifacts. While current GAN-based methods have achieved breakthroughs in perceptual quality, they still commonly face challenges such as the risk of mode collapse and weak generalization to real-world scenarios.

In 2021, the Vision Transformer (ViT) model [21] was first proposed for image classification tasks. The IPT model [22] pioneered the adaptation of the ViT architecture for SR tasks. By leveraging multi-head self-attention to model global dependencies, it effectively addressed the long-range structural reconstruction problem inherent in CNNs caused by their limited receptive fields. However, it suffered from an enormous parameter count and demanded massive training data. Swin-IR [23] introduced a shifted window mechanism, extracting features within local windows to reduce computational complexity. This approach balanced global modeling with efficiency. Nevertheless, it exhibited sensitivity to rotational variations and demonstrated insufficient recovery of high-frequency details. Restormer [24] employed channel-wise self-attention combined with a gating mechanism. This design significantly reduced computational overhead and enhanced cross-channel interactions. However, it showed limited generalization capability for motion blur artifacts. The HiT-SR model [25] adopts a hierarchical architecture and processes image features at multiple scales (from coarse to fine), unlike the standard Transformer, which operates at a single scale. However, challenges remain when dealing with extremely fine texture details. STGAN [26] is a remote sensing image reconstruction model based on reference super-resolution, integrating a generative adversarial network and a self-attention mechanism (based on Swin Transformer). Its core objective is to enhance the details of LR images using reference images to achieve high-quality super-resolution reconstruction. STGAN relies heavily on the quality and similarity of the reference images. If the quality of the reference image fluctuates (such as inconsistent resolution), the robustness of the model may decline.

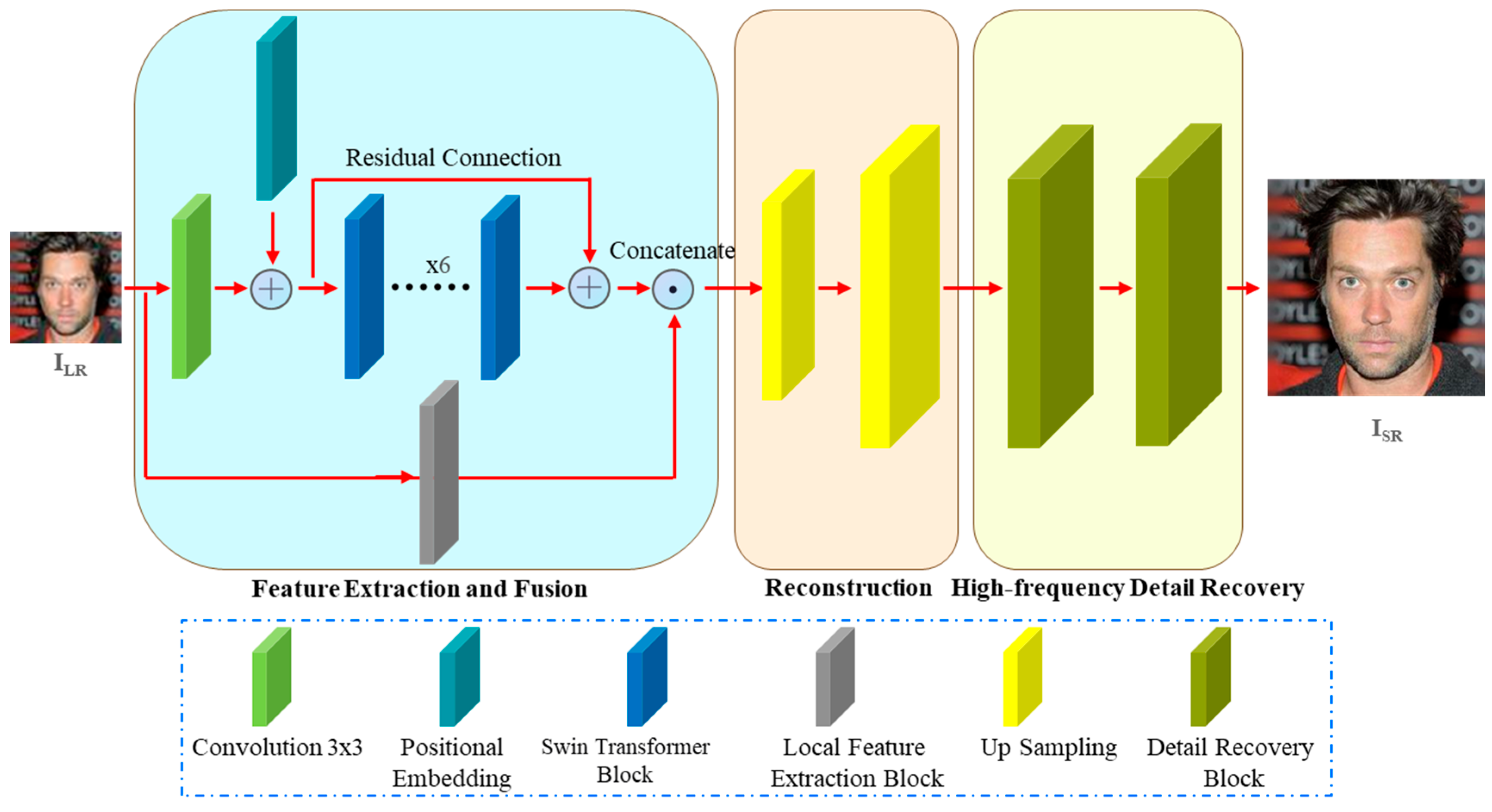

Given the complementary strengths of Transformers and GANs, we argue that their integration offers a promising solution to the aforementioned challenges. Transformers excel at capturing long-range dependencies through self-attention mechanisms, thereby enhancing global structural consistency. In contrast, GANs are particularly powerful in generating high-frequency details and photo-realistic textures through adversarial training. By combining these two paradigms, our approach aims to simultaneously achieve superior structural coherence and enhanced perceptual quality, effectively addressing the trade-offs between pixel-level accuracy and visual realism. Thus, we introduce SwinT-SRGAN, a novel GAN model that synergistically integrates Transformer and traditional CNN architectures. In this study, we contribute the following:

- (1) A dual-path feature fusion generator architecture is proposed. The window-based attention module of the Transformer performs global modeling to address long-range dependencies in image features. These globally enhanced features are then explicitly fused with the output of the Local Feature Extraction Block (LFEB), significantly enhancing structural consistency and detail fidelity.

- (2) An end-to-end high-frequency Detail Recovery Block (DRB) is introduced. This dedicated module specifically targets the restoration of crucial high-frequency details often lost during the SR process.

- (3) A triple-branch multi-scale discriminator is designed. This discriminator provides hierarchical adversarial supervision spanning from global structure to local texture, effectively guiding the generator to produce high-quality images with coherent details at multiple scales.

- (4) A dynamic scheduling strategy for a six-component loss function is proposed. This strategy adaptively balances pixel fidelity, perceptual quality, and high-frequency constraints throughout the training process.

This paper is structured as follows: Section 2 covers foundational studies in related works, Section 3 elaborates on architectural enhancements of our proposed model, Section 4 evaluates performance through comprehensive experiments, and Section 5 summarizes conclusions.

4. Experiments

4.1. Datasets

Two publicly available datasets were used for model validation. CelebA-HQ is a dataset of high-quality face images designed for CV research, comprising 30,000 images at different resolutions. Flickr2K contains 2650 images covering diverse themes, including natural scenes, people, and architecture, and is primarily employed for research. We selected 8000 images from CelebA-HQ and 2600 images from Flickr2K as the experimental datasets. Each dataset was partitioned into a training set and a testing set at a 9:1 ratio. Due to hardware constraints and training time considerations, images with resolutions of 64 × 64 and 256 × 256 were selected for the SR experiments.
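The 9:1 partition can be sketched as follows. This is a minimal example under stated assumptions: the file names, the fixed random seed, and the helper name `split_dataset` are hypothetical, and the text does not say whether the split was random or sequential.

```python
import random


def split_dataset(paths, train_ratio=0.9, seed=0):
    """Shuffle file paths reproducibly and split them into train and test lists."""
    shuffled = list(paths)
    random.Random(seed).shuffle(shuffled)
    n_train = int(round(len(shuffled) * train_ratio))
    return shuffled[:n_train], shuffled[n_train:]


# Hypothetical file names standing in for the selected subsets.
celeba = [f"celeba_hq/{i:05d}.png" for i in range(8000)]
flickr = [f"flickr2k/{i:04d}.png" for i in range(2600)]

celeba_train, celeba_test = split_dataset(celeba)
flickr_train, flickr_test = split_dataset(flickr)
print(len(celeba_train), len(celeba_test))  # 7200 800
print(len(flickr_train), len(flickr_test))  # 2340 260
```

With the subset sizes given above, the split yields 7200/800 images for CelebA-HQ and 2340/260 images for Flickr2K.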

4.2. Metrics

To comprehensively evaluate the quality of image SR reconstruction, this study employs three complementary evaluation metrics: PSNR, SSIM, and LPIPS [30]. These metrics provide quantitative assessment from three distinct dimensions: pixel-level fidelity, structural similarity, and perceptual quality, respectively.

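PSNR measures the pixel-level error between the reconstructed image and the original HR image, reflecting the signal-to-noise ratio level. It is expressed in decibels (dB), where higher values indicate superior reconstruction quality. The mathematical expression is as follows:

$$\mathrm{PSNR} = 10 \cdot \log_{10}\left(\frac{\mathrm{MAX}^2}{\mathrm{MSE}}\right)$$

where $\mathrm{MAX}$ represents the maximum possible pixel value, and MSE is defined as follows:

$$\mathrm{MSE} = \frac{1}{HW}\sum_{i=1}^{H}\sum_{j=1}^{W}\left(I^{HR}(i,j) - I^{SR}(i,j)\right)^2$$

where $H$ and $W$ represent the image’s height and width, respectively, and $I^{HR}$ and $I^{SR}$ denote the original HR image and the reconstructed image.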
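A minimal sketch of the PSNR computation on a single-channel image follows. The 8-bit maximum value of 255 and the function names are assumptions for illustration; the evaluation code used in the experiments is not shown in the text.

```python
import math


def mse(hr, sr):
    """Mean squared error between two equally sized 2D lists of pixel values."""
    h, w = len(hr), len(hr[0])
    total = sum((hr[i][j] - sr[i][j]) ** 2 for i in range(h) for j in range(w))
    return total / (h * w)


def psnr(hr, sr, max_val=255.0):
    """PSNR in dB; returns infinity when the two images are identical."""
    err = mse(hr, sr)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(max_val ** 2 / err)


hr = [[100, 110], [120, 130]]
sr = [[101, 109], [122, 128]]
print(mse(hr, sr))  # 2.5
print(round(psnr(hr, sr), 2))
```

For this toy pair the squared errors are 1, 1, 4, and 4, so the MSE is 2.5 and the PSNR is roughly 44 dB.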

SSIM evaluates the similarity between images across three different dimensions: luminance, contrast, and structure. Its value falls between 0 and 1, inclusive, where values nearer 1 indicate better structural fidelity. The mathematical expression is given by the following:

$$\mathrm{SSIM}(x, y) = \left[l(x, y)\right]^{\alpha} \cdot \left[c(x, y)\right]^{\beta} \cdot \left[s(x, y)\right]^{\gamma}$$

where $l(x, y)$, $c(x, y)$, and $s(x, y)$ represent the luminance comparison, contrast comparison, and structure comparison functions, respectively, and $\alpha$, $\beta$, and $\gamma$ are parameters typically set to 1 by default.
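With all three exponents set to 1, and with the commonly used choice of setting the structure constant to half the contrast constant, the product of the three comparison functions collapses into a single closed form. The sketch below evaluates that form from global image statistics. This is a simplification: standard implementations average SSIM over local windows. The stabilizing constants (0.01 and 0.03 times the dynamic range, squared) are the conventional defaults and are assumed here rather than taken from the text.

```python
def ssim_global(x, y, max_val=255.0):
    """Single-window SSIM computed from global means, variances, and covariance."""
    c1 = (0.01 * max_val) ** 2  # stabilizes the luminance term
    c2 = (0.03 * max_val) ** 2  # stabilizes the contrast/structure term
    xs = [v for row in x for v in row]
    ys = [v for row in y for v in row]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((v - mean_x) ** 2 for v in xs) / n
    var_y = sum((v - mean_y) ** 2 for v in ys) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(xs, ys)) / n
    numerator = (2 * mean_x * mean_y + c1) * (2 * cov + c2)
    denominator = (mean_x ** 2 + mean_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


img = [[10, 20], [30, 40]]
flipped = [[40, 30], [20, 10]]
same_score = ssim_global(img, img)       # identical images give 1
diff_score = ssim_global(img, flipped)   # reversed structure scores lower
```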

LPIPS is a similarity metric based on deep feature space distance, designed to approximate human visual perception of image differences. Its value falls between 0 and 1, inclusive; lower values indicate higher perceptual quality (0 indicates perfect perceptual similarity). The mathematical expression is defined as follows:

$$\mathrm{LPIPS}(x, y) = \sum_{l} \frac{1}{H_l W_l} \sum_{h, w} \left\| w_l \odot \left( \phi_l(x)_{hw} - \phi_l(y)_{hw} \right) \right\|_2^2$$

where $\phi_l$ denotes the feature map from the l-th layer of a pretrained CNN (with spatial size $H_l \times W_l$), $w_l$ is a learnable channel weight vector, and $\odot$ represents the channel-wise weighting operation.
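The structure of the LPIPS distance can be sketched on precomputed feature maps. In the example below, the feature values and channel weights are toy numbers, not outputs of a pretrained network, and the unit normalization of each feature vector along the channel axis follows the usual LPIPS formulation, which is assumed here.

```python
import math


def unit_normalize(vec, eps=1e-10):
    """Scale a channel vector to unit length (eps avoids division by zero)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / (norm + eps) for v in vec]


def lpips_distance(feats_x, feats_y, weights):
    """Sum over layers of the spatially averaged, channel-weighted squared feature difference.

    feats_x, feats_y: one H x W x C nested list per layer.
    weights: one length-C list of channel weights per layer.
    """
    total = 0.0
    for fx, fy, w in zip(feats_x, feats_y, weights):
        height, width = len(fx), len(fx[0])
        layer_sum = 0.0
        for i in range(height):
            for j in range(width):
                a = unit_normalize(fx[i][j])
                b = unit_normalize(fy[i][j])
                layer_sum += sum((wc * (ac - bc)) ** 2 for wc, ac, bc in zip(w, a, b))
        total += layer_sum / (height * width)
    return total


# One toy layer with a 1 x 2 spatial grid and 2 channels.
feat_a = [[[[1.0, 0.0], [0.0, 1.0]]]]
feat_b = [[[[0.0, 1.0], [0.0, 1.0]]]]
channel_weights = [[1.0, 1.0]]
d_same = lpips_distance(feat_a, feat_a, channel_weights)
d_diff = lpips_distance(feat_a, feat_b, channel_weights)
```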

All quantitative results are obtained from three independent experiments.

4.3. Experiment Settings

A physical server with eight NVIDIA RTX 3090 GPUs was used for the experiments, running Ubuntu 20.04 with PyTorch 2.2.0. Training was conducted using data parallelism, with the generator’s learning rate adjusted via a cosine annealing strategy. During data preprocessing, channel-wise mean and standard deviation (std) values were calculated separately for the CelebA-HQ and Flickr2K datasets. Additional hyperparameters are listed in Table 1, where the weights of the Content Loss, Perceptual Loss, and Edge Loss follow linear scheduling rules.

The Content Loss weight is scheduled as a function of epoch and E, where epoch denotes the current training epoch number, and E represents the total number of training epochs (1000 epochs). During the initial 300 epochs, this weight maintains a high value (>0.8) to ensure the generator rapidly converges to a reasonable pixel-level solution. The weight of Content Loss is progressively reduced over the training process. This prevents the L1 norm from dominating the optimization, thereby mitigating the loss of textural details while avoiding excessive pixel smoothing.

The Perceptual Loss weight follows the opposite trend. During the initial training phase, it is kept relatively low. This avoids premature optimization guided by deep semantic features, which could distort the generator’s learning direction. As training progresses, the weight is progressively increased. This allows it to work synergistically with the diminishing Content Loss, thereby preserving the overall reconstruction quality without degradation.

The Edge Loss weight is scheduled in the same manner. Edge Loss is predominantly dedicated to edge formation during the early training stage. As optimization advances, its focus shifts to fine-grained refinement at the microscopic scale, such as recovering textures like wrinkles and hair strands.
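A linear weight schedule of the kind described above can be sketched as follows. The endpoint values are hypothetical placeholders chosen only to satisfy the stated behaviour (Content Loss weight above 0.8 for the first 300 of 1000 epochs, Perceptual Loss weight rising over training); the actual values are those listed in Table 1, which are not reproduced here.

```python
def linear_weight(epoch, total_epochs, start, end):
    """Linearly interpolate a loss weight from `start` at epoch 0 to `end` at the final epoch."""
    t = min(max(epoch / total_epochs, 0.0), 1.0)
    return start + (end - start) * t


E = 1000                         # total number of training epochs, as stated in the text
CONTENT_ENDPOINTS = (1.0, 0.4)   # hypothetical: decays over training
PERCEPTUAL_ENDPOINTS = (0.1, 1.0)  # hypothetical: grows over training

for epoch in (0, 300, 1000):
    content_w = linear_weight(epoch, E, *CONTENT_ENDPOINTS)
    perceptual_w = linear_weight(epoch, E, *PERCEPTUAL_ENDPOINTS)
    print(epoch, round(content_w, 3), round(perceptual_w, 3))
```

Under these assumed endpoints the content weight is still 0.82 at epoch 300, so pixel-level supervision dominates early training, while the perceptual weight takes over gradually.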

Training takes approximately 35 h on the CelebA-HQ dataset and 9 h 15 min on the Flickr2K dataset.
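For reference, the cosine annealing strategy applied to the generator's learning rate in this subsection is commonly written in the closed form sketched below. The initial and minimum learning rates are hypothetical values for illustration, and the example evaluates the formula directly rather than calling a framework scheduler.

```python
import math


def cosine_annealing_lr(epoch, total_epochs, lr_max, lr_min=0.0):
    """Learning rate that decays from lr_max to lr_min along half a cosine period."""
    t = min(max(epoch, 0), total_epochs)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * t / total_epochs))


LR_MAX = 1e-4  # hypothetical initial learning rate
LR_MIN = 1e-6  # hypothetical final learning rate
schedule = [cosine_annealing_lr(e, 1000, LR_MAX, LR_MIN) for e in range(0, 1001, 250)]
```

The rate falls slowly at first, fastest around the midpoint, and flattens again near the end of training.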

4.4. Comparative Experiments

To assess the image SR performance of the SwinT-SRGAN model, qualitative and quantitative experiments were first conducted on the CelebA-HQ dataset. The models selected for comparison include SRGAN, ESRGAN, SAN, HAN, Real-ESRGAN, Swin-IR, and Omni-IR [31]. These are all representative models of different types of methods from recent years. Among these, SRGAN, ESRGAN, and Real-ESRGAN belong to the category of traditional GAN-based models. SAN and HAN represent typical SR models based on attention mechanisms, while Swin-IR serves as a Transformer-based approach for SR. Omni-IR, in contrast, is a lightweight SR model. As illustrated in Figure 8, the magnified views of the mouth region are displayed in the upper-right corner of all resulting images.

In Figure 8, we focus on the overall structure of the teeth after super-resolution and on whether the interdental gaps are clear. As evidenced by Figure 8, SRGAN, ESRGAN, and Real-ESRGAN exhibit discernible artifacts and boundary blurring in critical facial regions (e.g., teeth, lips), accompanied by geometric distortions in the teeth. While SAN and HAN demonstrate superior structural preservation overall, their reconstructed teeth suffer from over-smoothing, resulting in a loss of interdental gaps that is inconsistent with anatomical reality. Swin-IR and Omni-IR perform well in maintaining the global image structure; however, they lack sufficient detail in high-frequency regions, indicating potential for further optimization. Critically, our proposed SwinT-SRGAN model outperforms all seven comparative models in both global structural fidelity and high-frequency detail reproduction, achieving results closest to the original HR ground truth. Comparative analysis of features such as interdental gap clarity and lip contour geometry reveals SwinT-SRGAN’s superior capability in high-frequency detail reconstruction. The model achieves sub-pixel-level edge reconstruction while effectively suppressing geometric distortions.

To ensure a scientific comparison, SwinT-SRGAN was quantitatively compared to the seven baseline models. Table 2 displays the relevant PSNR, SSIM, and LPIPS measurements. Among the baselines, SRGAN exhibits the poorest performance values. As the pioneering GAN-based SR model, it established a new paradigm for image SR; however, its relatively simplistic architecture results in suboptimal performance. Building upon SRGAN, ESRGAN incorporates Residual-in-Residual Dense Blocks to enhance feature reuse capability. Real-ESRGAN primarily focuses on degradation modeling and employs a U-Net structure. Consequently, it demonstrates limited improvement in image SR performance for the current experimental setup. Both SAN and HAN are designed to transcend the limitations of basic channel attention mechanisms. The SAN model delves into complex statistical relationships between channels, while the HAN model achieves multi-level feature fusion. These approaches significantly elevate the upper bound of image reconstruction quality, yielding quantitative metrics superior to the first three GAN models. The Swin-IR model leverages the long-range modeling capability of the Transformer architecture to further advance SR performance, achieving highly competitive results across PSNR, SSIM, and LPIPS metrics. Utilizing an innovative Omni-Scale Aggregation (OSA) mechanism, Omni-IR accomplishes image SR with exceptionally low computational overhead, albeit at the expense of less prominent quantitative scores.

Our proposed approach enhances the generator architecture by introducing a dual-path Transformer-CNN parallel feature extraction module, coupled with a multi-scale fusion discriminator network. Combined with a refined model training strategy, this comprehensive design achieves very good quantitative results across all evaluated metrics compared to the baseline models.

To further validate the generalization capability of the SwinT-SRGAN model for SR across diverse datasets, a cross-dataset experiment was conducted on the Flickr2K dataset. Experimental parameters retained identical configurations to those described in Section 4.3. Evaluation metrics remained consistent with those used for CelebA-HQ (PSNR, SSIM, and LPIPS). Qualitative comparative results and quantitative results are shown in Figure 9 and Table 3, respectively.

Figure 9 presents a comparative visualization of SR results generated by different models on the Flickr2K dataset. To accentuate disparities in high-frequency detail recovery capabilities (e.g., textures, edges), a key region rich in intricate details is magnified in the upper-right corner of each image. We will focus on the magnified areas to reflect the advantages and disadvantages of each super-resolution algorithm. The super-resolved outputs from SRGAN, ESRGAN, and Real-ESRGAN exhibit pronounced artifacts and blurring in critical regions such as the eyes. While SAN and HAN leverage attention fusion mechanisms to recover more accurate textural structures in certain scenarios, the coherence of their restored details can be inconsistent. This limitation manifests as distortions and artifacts, particularly noticeable in high-frequency textures like facial features. The Swin-IR model demonstrates robustness in reconstructing regular structures; however, its restored details occasionally exhibit unnatural characteristics. Omni-IR, positioned as a practical lightweight model, delivers suboptimal performance for image SR tasks on smaller datasets like Flickr2K. Crucially, the superior high-frequency detail recovery capability demonstrated by the SwinT-SRGAN model on the Flickr2K dataset strongly corroborates the qualitative observations previously noted in the CelebA-HQ facial dataset analysis.

Comparison of the quantitative metrics (PSNR, SSIM, LPIPS) in Table 3 and Table 2 reveals a consistent trend: all evaluated models, including the proposed SwinT-SRGAN, exhibit lower performance on the Flickr2K dataset than on the CelebA-HQ dataset. In-depth analysis reveals that the disparity in training dataset scale is a critical factor underlying this cross-dataset performance gap. The powerful feature representation capabilities of deep learning models, particularly complex high-performance SR architectures (e.g., GAN-based, Transformer-based models), are highly dependent on large-scale and diverse training data. Notwithstanding this limitation, the data in Table 3 demonstrate that the SwinT-SRGAN model still achieves superior SR reconstruction quality compared to the other baseline models.

Collectively, the qualitative and quantitative experiments conducted on both CelebA-HQ and Flickr2K prove that our model excels in restoring intricate high-frequency features (e.g., teeth, eyes) and suppressing image artifacts relative to the comparative models. This superior and consistent performance across diverse datasets provides compelling evidence for the robust generalization capability inherent in the designed generator architecture of the proposed model. Its effectiveness in capturing and learning essential image priors enables high-quality SR reconstruction across varied and complex visual content.

4.5. Ablation Experiments of the Models

To confirm the efficacy of the core blocks within our proposed generator architecture, an ablation study on the generator was designed. Under fixed training settings (CelebA-HQ, loss functions, number of iterations) and a unified discriminator structure, the following four key modules were successively removed from the full model for comparative analysis: Positional Embedding (PE) module, Local Feature Extraction Block (LFEB), Residual Connection (RC) structure, and Detail Recovery Block (DRB). The construction methods for each variant model are as follows:

w/o PE: PE is removed; feature maps are directly input into subsequent modules;

w/o LFEB: LFEB is removed, and the corresponding concatenation operation is omitted;

w/o RC: The residual connection path is eliminated, retaining only the forward propagation of the main branch;

w/o DRB: DRB is removed; deep features are directly upsampled for output;

Full Model: The complete model incorporating all four modules.

Figure 10 and Table 4 display the comparative visualization and quantitative findings of the generator ablation experiments, respectively.

Figure 10 presents a comparative visualization of SR results on facial images, with a magnified focus on the teeth and lip details in each output. Critical observations from the ablation study include the following:

w/o DRB: Removal of DRB results in significantly blurred tooth edges and adhesion of adjacent interdental gaps, compromising the geometric separation of individual teeth. The absence of the DRB demonstrably weakens the model’s high-frequency detail synthesis capability.

w/o LFEB: Elimination of LFEB introduces blocky artifacts on the tooth surfaces and produces deviations in natural gloss.

w/o PE: Removal of the PE module causes blurring of the boundaries between the central incisors and lateral incisors.

w/o RC: Ablation of the residual connection path leads to the near-complete disappearance of interdental gaps in the lateral teeth.

As presented in Table 4, the contributions of each core generator block to SR performance were systematically evaluated. On the CelebA-HQ test set, the full model (Ours) demonstrates comprehensive superiority over all variants where individual modules were ablated. The full model achieves the optimal overall performance: PSNR 26.49 dB, SSIM 77.70%, LPIPS 21.52. The removal of any single module consistently degrades all three metrics, confirming the indispensable synergistic role of each component in achieving high-quality SR. Crucially, the removal of DRB resulted in the most severe performance degradation: PSNR decreased significantly by 0.88 dB (25.61 vs. 26.49), SSIM dropped by 2.08 percentage points (75.62% vs. 77.70%), and LPIPS worsened by 0.71 (22.23 vs. 21.52). This quantitative evidence underscores the critical role of DRB in high-frequency detail reconstruction, aligning with the observed interdental gap adhesion in Figure 10. The absence of the other three components (RC, PE, LFEB) also negatively impacted SR performance to varying degrees. Considering the PSNR metric as an example, the magnitude of performance degradation is ranked as follows: DRB removal > RC removal > PE removal > LFEB removal.
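The degradation figures quoted for the DRB ablation can be checked directly from the reported values. The snippet below uses only the numbers stated in the paragraph above; the dictionary layout is an illustrative choice.

```python
# Reported CelebA-HQ results: full model vs. the variant without DRB.
full_model = {"PSNR": 26.49, "SSIM": 77.70, "LPIPS": 21.52}
without_drb = {"PSNR": 25.61, "SSIM": 75.62, "LPIPS": 22.23}

# Signed change caused by removing DRB (negative PSNR/SSIM and positive LPIPS are all worse).
delta = {name: round(without_drb[name] - full_model[name], 2) for name in full_model}
print(delta)  # {'PSNR': -0.88, 'SSIM': -2.08, 'LPIPS': 0.71}
```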

Similarly, to validate the necessity of the discriminator’s multi-branch design, a single-branch variant model (1 branch) was constructed. Comparative experiments were conducted using a fixed generator structure (Full Model) and an identical training strategy.

Figure 11 presents the comparative results, featuring magnified views of the mouth region in the upper-right corner. Close inspection reveals visible degradation in the images super-resolved by the single-branch variant.

The three-branch discriminator design significantly enhances the generator’s reconstruction accuracy of image features through multi-scale adversarial supervision (global-local-edge). Conversely, the single-branch variant fails to provide hierarchical discriminative signals, leading to unacceptable image degradation, including structural distortion and edge blurring.

As presented in Table 5, on the CelebA-HQ test set, the three-branch discriminator (Ours) achieves significant improvements across all evaluation metrics compared to the single-branch variant (1 branch). The three-branch design effectively constrains macro-structural integrity through global semantic supervision, substantially mitigating image distortion. This is quantitatively evidenced by an increase of 0.58 dB in PSNR and a 0.73 percentage point improvement in SSIM. Furthermore, a corresponding improvement in LPIPS underscores its contribution to perceptual quality.

4.6. Ablation Experiments of Swin Transformer Block

Swin Transformer Block (STB) within the generator utilizes a hybrid configuration of SW-MSA and W-MSA. To investigate the contribution of this hybrid design, we conducted an ablation study comparing three variants:

Exclusively SW-MSA: Two consecutive SW-MSA modules (no W-MSA);

Exclusively W-MSA: Two consecutive W-MSA modules (no SW-MSA);

Full Model: The original alternating arrangement of SW-MSA and W-MSA.

Comparative visual results are presented in Figure 12, while the corresponding quantitative analysis is provided in Table 6.

The experimental results demonstrate that the hybrid utilization of both window attention mechanisms within the STB leads to significant improvements across PSNR, SSIM, and LPIPS. Crucially, the two variants in which one attention type was removed (Exclusively SW-MSA and Exclusively W-MSA) perform similarly to each other, indicating a strong complementary relationship between SW-MSA and W-MSA. Specifically:

Exclusively W-MSA (no SW-MSA): Removal of SW-MSA results in blurring of the tooth-lip boundary. The fixed window partitioning inherent to W-MSA isolates information across windows, violating the biological continuity principle of dental tissue structures.

Exclusively SW-MSA (no W-MSA): Utilizing solely SW-MSA within the STB introduces salt-and-pepper noise near tooth roots. The global shifting operation characteristic of SW-MSA induces misalignment artifacts, disrupting the stability of local features.

The cascaded/alternating integration of both attention mechanisms within the STB facilitates cross-scale fusion of image features, thereby maximizing the SR performance.
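The complementarity of the two attention types comes from how pixels are grouped into windows. The sketch below labels each pixel of a small grid with its window index, first under regular partitioning (as in W-MSA) and then after a cyclic shift of half a window (as in SW-MSA). The grid size, window size, and function name are illustrative assumptions, and the attention masks that Swin-style implementations apply to wrapped-around windows are omitted.

```python
def window_ids(size, window, shift=0):
    """Label each pixel of a size x size grid with the index of the attention window it falls in.

    With shift > 0 the grid is cyclically shifted before partitioning,
    mimicking shifted-window attention.
    """
    per_row = size // window
    ids = [[0] * size for _ in range(size)]
    for i in range(size):
        for j in range(size):
            si = (i - shift) % size
            sj = (j - shift) % size
            ids[i][j] = (si // window) * per_row + (sj // window)
    return ids


regular = window_ids(8, 4)           # W-MSA style partition
shifted = window_ids(8, 4, shift=2)  # SW-MSA style partition

# Pixels (3, 3) and (4, 4) lie on opposite sides of a regular window boundary.
print(regular[3][3] == regular[4][4])  # False: they cannot attend to each other
print(shifted[3][3] == shifted[4][4])  # True: the shifted partition connects them
```

Neighbouring pixels that are isolated by the fixed partition share a window once the grid is shifted, which is the cross-window exchange that the exclusively W-MSA variant lacks.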

Furthermore, to investigate the impact of attention module order within the generator’s STB, we conducted an order reversal experiment: placing SW-MSA before W-MSA. Experiments were performed on the CelebA-HQ test set, with focused analysis on visual quality differences in critical regions such as the mouth, eyes, and ears (as illustrated in Figure 13). Key observations are as follows:

Blunting of the lip apex in super-resolved outputs;

Subtle canthus details at the eye corners;

Blurred internal contours of the ear structure.

This performance degradation stems from the reversed order: performing shifted window attention first disrupts the inherent local structure. The resulting cross-window information leakage induces local distortion within the image. Quantitative data in Table 7 confirm that reversing the attention order causes synchronous degradation across all metrics, with the most pronounced decrease observed in SSIM (0.48 percentage point reduction). Consequently, the sequential order W-MSA → SW-MSA within the STB is non-interchangeable. This design implements a progressive reconstruction strategy (prioritizing local detail refinement, followed by cross-window correction), thereby achieving superior high-frequency fidelity.

4.7. Ablation Experiments of Generator Loss Functions

The generator employs six distinct loss functions to constrain model training: Adversarial Loss, Content Loss, Edge Loss, Frequency Loss, Gradient Loss, and Perceptual Loss. Among these, Adversarial Loss serves as the cornerstone of the adversarial training between the generator and discriminator and is indispensable. With the Adversarial Loss always retained, comparative experiments were conducted by removing one of the remaining loss functions at a time. Analysis focused on detailed discrepancies in high-frequency regions (e.g., the mouth), with magnified views displayed in the upper-right corner, as illustrated in Figure 14.

Key observations include the following:

w/o Content Loss: Removal of the Content Loss results in excessive smoothness due to the lack of pixel-level constraints, manifesting as global blurring of the teeth region.

w/o Edge Loss: The Edge Loss specifically targets the constraint of image edges and contours, aiming to ensure generated images exhibit sharp and well-defined outlines (e.g., lip contours, tooth boundaries). Its removal prompts the model to produce overly smoothed outputs, compromising critical edge information that defines object shape and structure. This leads to an overly “fleshy” appearance or ill-defined boundaries in the lip region.

Similarly, the removal of each of the other three loss functions (Frequency Loss, Gradient Loss, Perceptual Loss) resulted in distinct forms of degradation in the super-resolved images.

Quantitative analysis in Table 8 further corroborates that the synergistic effect of multiple loss functions comprehensively enhances super-resolved image quality, particularly excelling at the level of human visual perception. The Content Loss exerts the most significant impact on PSNR, with its removal causing a substantial decrease of 0.96 dB. Without the constraint of the Edge Loss, blurred tooth and lip contours manifest, resulting in the most pronounced SSIM degradation (a 2.2 percentage point reduction). The Gradient Loss plays a crucial role for LPIPS, as its absence leads to a marked increase of 3.5 in the LPIPS value. Therefore, the loss function ablation experiments validate the unique contribution of each individual loss component. The removal of any single loss function induces specific, observable quality degradation in the generated SR images. Only the synergistic interplay of all loss functions enables the achievement of optimal performance across all objective metrics.
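The ablation protocol above can be expressed as a weighted sum in which one term is dropped at a time while the adversarial term is always kept. In the sketch below, the per-term loss values and the uniform weights are toy numbers, and the function name `generator_loss` is hypothetical; the individual loss definitions are those given in Section 3 and are not reimplemented here.

```python
LOSS_NAMES = ("adversarial", "content", "edge", "frequency", "gradient", "perceptual")


def generator_loss(terms, weights, drop=None):
    """Weighted sum of the generator loss terms, optionally ablating one non-adversarial term."""
    if drop == "adversarial":
        raise ValueError("the adversarial term is kept in every ablation variant")
    return sum(weights[name] * terms[name] for name in LOSS_NAMES if name != drop)


# Toy per-term values for a single training step (not measured data).
terms = {"adversarial": 0.5, "content": 0.2, "edge": 0.1,
         "frequency": 0.05, "gradient": 0.08, "perceptual": 0.3}
weights = {name: 1.0 for name in LOSS_NAMES}

full_loss = generator_loss(terms, weights)
no_edge_loss = generator_loss(terms, weights, drop="edge")
```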

4.8. Experiments on the Validity of Dynamic Weights of Loss Functions

To validate the dynamic loss weighting strategy, comparative experiments were conducted against a fixed-weight baseline.

As demonstrated in Figure 15, the dynamic weighting strategy exhibits superior detail reconstruction:

The lower lip maintains a textured and nuanced contour, avoiding a blurred or swollen appearance.

The inner contours of the eye corners and ears remain relatively distinct.

Data presented in Table 9 demonstrate improvements across all three metrics. Both qualitative visual comparisons and quantitative experimental results confirm that the dynamic weighting strategy significantly outperforms the fixed-weight method in preserving both structural authenticity (as measured by PSNR/SSIM) and perceptual quality (as measured by LPIPS). This core advantage stems from the precise alignment of weight adjustment with the demands of visual feature reconstruction.