Cycle-Iterative Image Dehazing Based on Noise Evolution

Abstract

1. Introduction

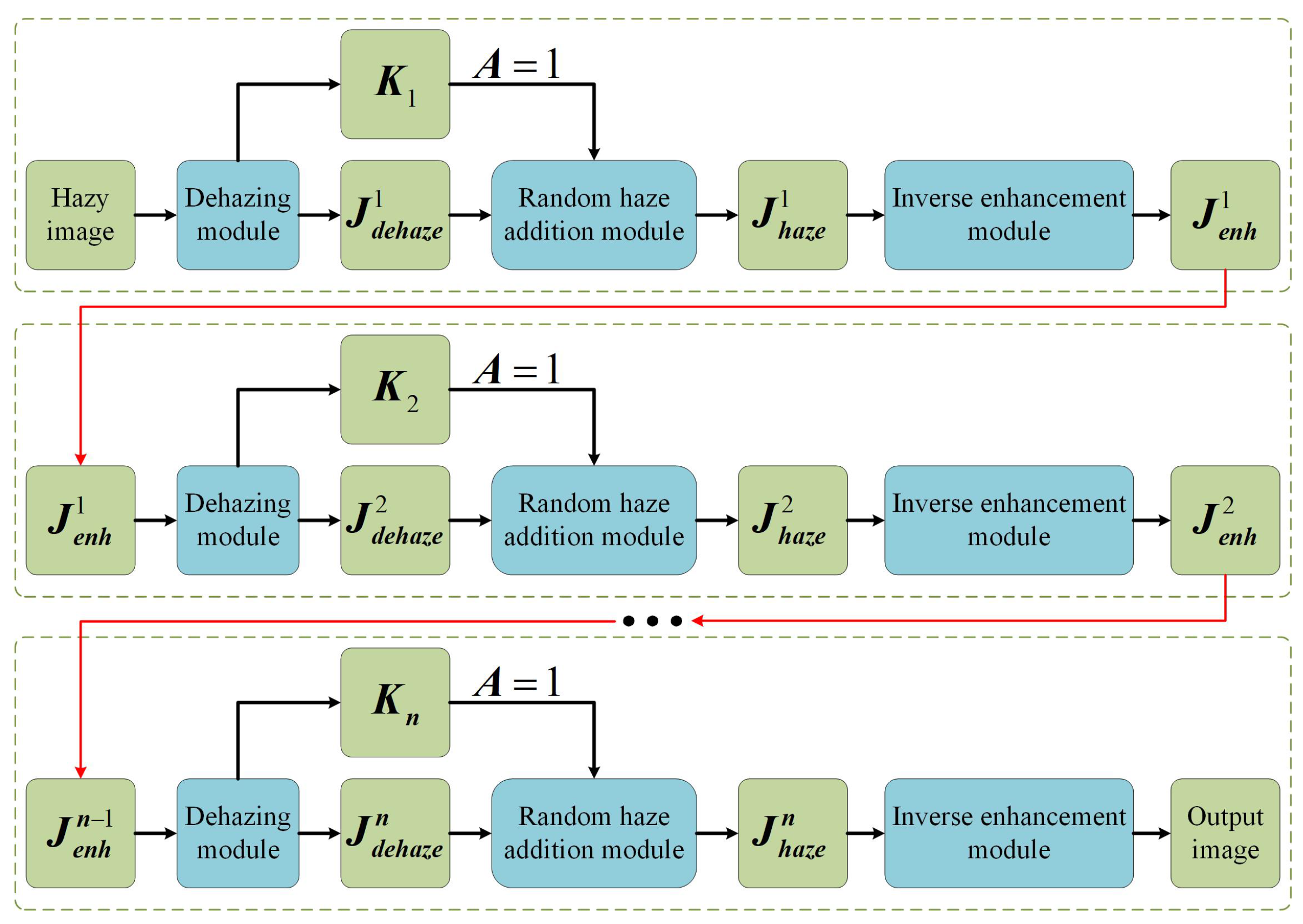

- To the best of our knowledge, this is the first dehazing framework grounded in the concept of noise evolution. The approach enables the virtualization of haze data during processing and facilitates the extraction of richer, more informative priors from both the atmospheric scattering model (ASM) and the Retinex model. By integrating these models within a dynamic iterative cycle, the framework improves both the interpretability and the effectiveness of haze removal.

- To address the limited sample diversity and completeness of existing symmetric datasets, we introduce a random haze addition module. The module simulates haze and applies it to the existing dataset, expanding the sample space with virtual haze variations. This enriches the training data and improves the robustness of our approach on both real-world images and their virtual counterparts.

- Existing algorithms primarily focus on haze-related depth features or overlook the Retinex model. We therefore propose an inverse enhancement module that uses a reverse strategy to extract and refine depth features related to illumination, which addresses the issues of over-dehazing and under-dehazing. Incorporating illumination-driven enhancement yields a more balanced and accurate dehazing process than methods that do not account for these cases.

2. Related Works

2.1. Retinex-Based Image Dehazing

2.2. Atmospheric Scattering Model-Based Image Dehazing

2.3. Learning-Based Image Dehazing

2.4. Iterative Image Dehazing and Enhancement

3. Cycle-Iterative Image Dehazing Based on Noise Evolution

3.1. Overall Network Architecture

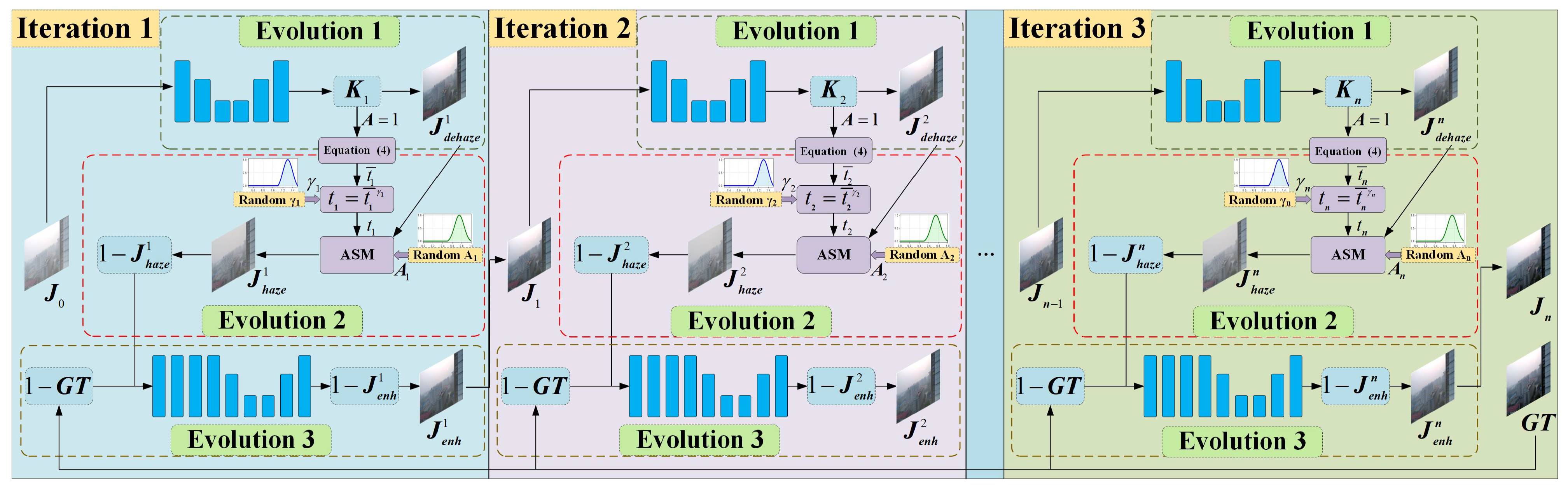

3.2. ASM-Based Dehazing Module (Evolution 1)

3.3. Random Haze Addition Module (Evolution 2)

- The first strategy applies a statistically guided linear normalization to the initial transmission map. Specifically, it is formulated as

  $$\hat{t} = \alpha\, t + \beta, \tag{5}$$

  where $\alpha$ and $\beta$ denote the scaling coefficient and offset term in the linear transformation, respectively. These parameters are derived from the statistical distribution of $t$. By applying the linear transformation in Equation (5), the distribution of $t$ is normalized to $[0, 1]$ as follows:

  $$\hat{t} = \frac{t - t_{\min}}{t_{\max} - t_{\min}},$$

  where $t_{\max}$ and $t_{\min}$ denote the maximum and minimum values of the coarse transmission map $t$, respectively. To reduce the influence of outliers, the 5th and 95th percentiles are used in place of the global extrema during experiments. This linear transformation maps the original values into the standard interval $[0, 1]$, ensuring the physical plausibility of the generated transmission map.

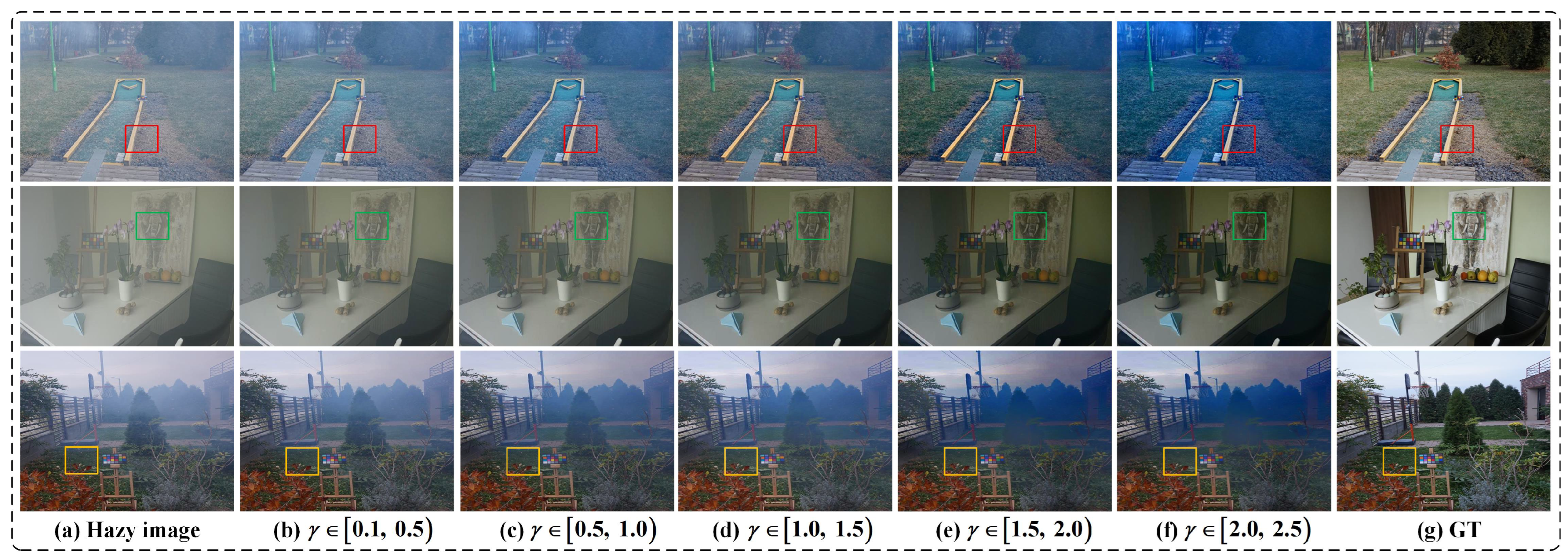

- The other strategy uses gamma correction [8] to perform a nonlinear adjustment. To refine the transmission map $t$, the gamma value is randomly sampled within the range [1.1, 1.5]. This range is chosen to balance low- and high-transmission regions and to avoid excessive correction. Introducing randomness into the gamma sampling simulates the non-uniformity of haze in natural environments, which enhances the realism and diversity of the synthesized hazy images. Mathematically, the transformation is given by

  $$t_i = t^{\gamma_i},$$

  where $\gamma_i$ denotes the randomly selected gamma value in the $i$-th iteration. Subsequently, the adjusted transmission map and a randomly generated atmospheric light are substituted into Equation (2) to synthesize a hazy image. The second stage of each iteration not only facilitates effective collaboration with the subsequent enhancement module but also diversifies the training data through controlled noise introduction. For clarity, the architecture of the haze addition module is illustrated on the right side of Figure 3. As the second stage of the proposed noise evolution framework, the random haze addition module systematically introduces synthetic haze, i.e., controlled noise, into the output of the preceding dehazing stage. This expands the dataset beyond the original samples, which may contain non-uniform haze cover, and thereby improves the robustness and generalization of our method.
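The two transmission adjustment strategies and the subsequent haze synthesis can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: the function names are hypothetical, the clipping to [0, 1] after percentile-based normalization is an assumption, and Equation (2) is assumed to be the standard atmospheric scattering model $I = J\,t + A\,(1 - t)$, which is not reproduced in this section.

```python
import numpy as np


def normalize_transmission(t, lo_pct=5.0, hi_pct=95.0, eps=1e-6):
    """Linear normalization t_hat = alpha * t + beta (first strategy).

    The 5th/95th percentiles stand in for the global min/max, as described
    in the text. Clipping to [0, 1] is an assumption: values outside the
    percentile band would otherwise fall outside the unit interval.
    """
    t_min, t_max = np.percentile(t, [lo_pct, hi_pct])
    alpha = 1.0 / max(t_max - t_min, eps)  # scaling coefficient
    beta = -t_min * alpha                  # offset term
    return np.clip(alpha * t + beta, 0.0, 1.0)


def gamma_adjust(t, rng, gamma_range=(1.1, 1.5)):
    """Nonlinear adjustment t_i = t ** gamma_i (second strategy).

    gamma_i is drawn uniformly from gamma_range once per call.
    """
    gamma = rng.uniform(*gamma_range)
    return np.power(t, gamma), gamma


def add_haze(J, t, A):
    """Synthesize a hazy image with the assumed ASM: I = J * t + A * (1 - t).

    J: clean image, shape (H, W, 3), values in [0, 1].
    t: transmission map, shape (H, W), values in [0, 1].
    A: atmospheric light, scalar or shape (3,).
    """
    t3 = t[..., None]  # broadcast the transmission over colour channels
    return J * t3 + A * (1.0 - t3)
```

For t in [0, 1] and a gamma above 1, the adjusted transmission is never larger than the input, so the synthesized image is at least as hazy as one produced with the unadjusted map. The sampling range for the atmospheric light is not stated in this section, so callers of this sketch have to choose it themselves.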

3.4. Retinex-Based Inverse Enhancement Module (Evolution 3)

3.5. Loss Function

4. Experiment Results Analysis

4.1. Experiment Settings

4.2. Dataset and Benchmark

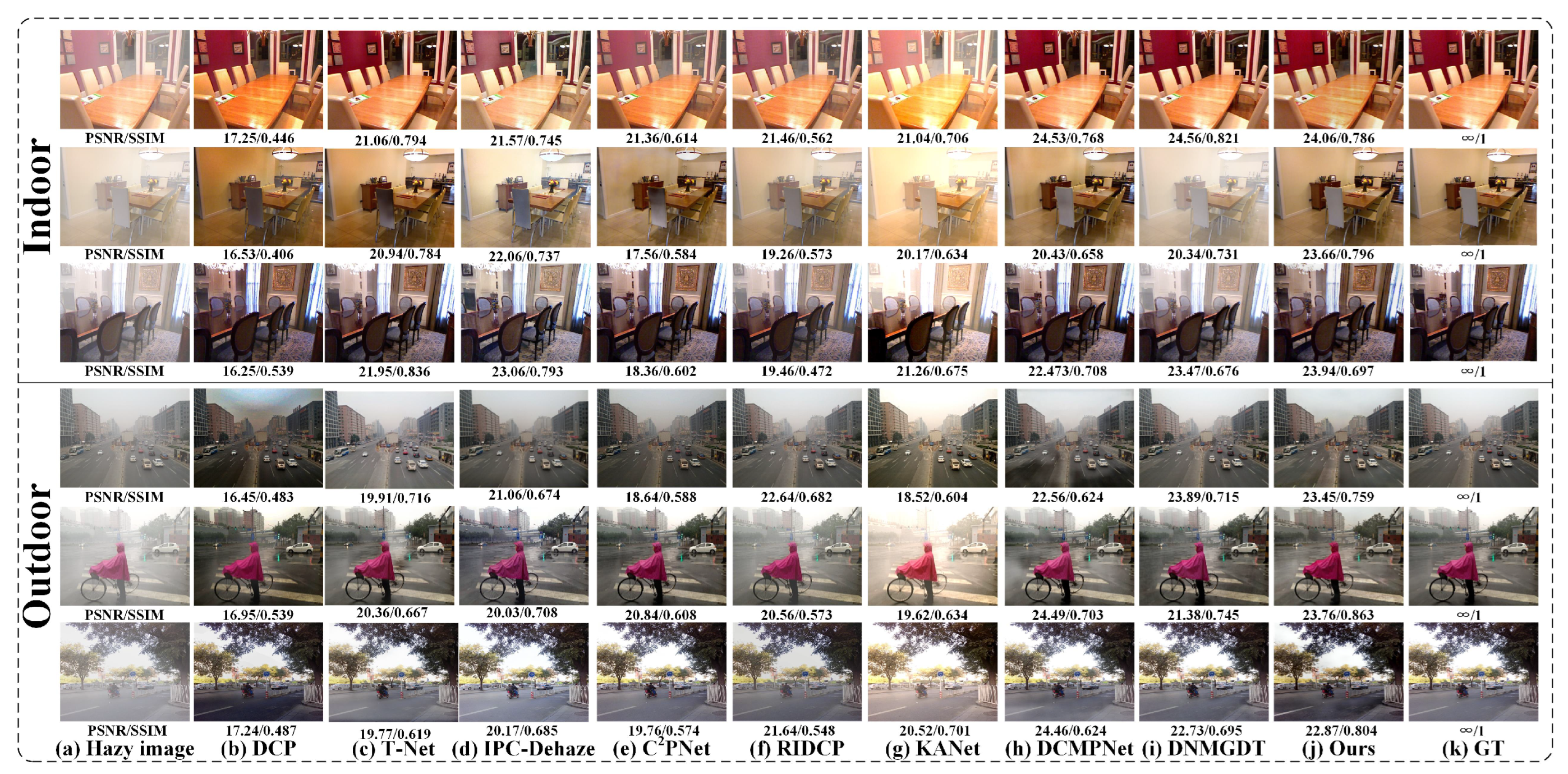

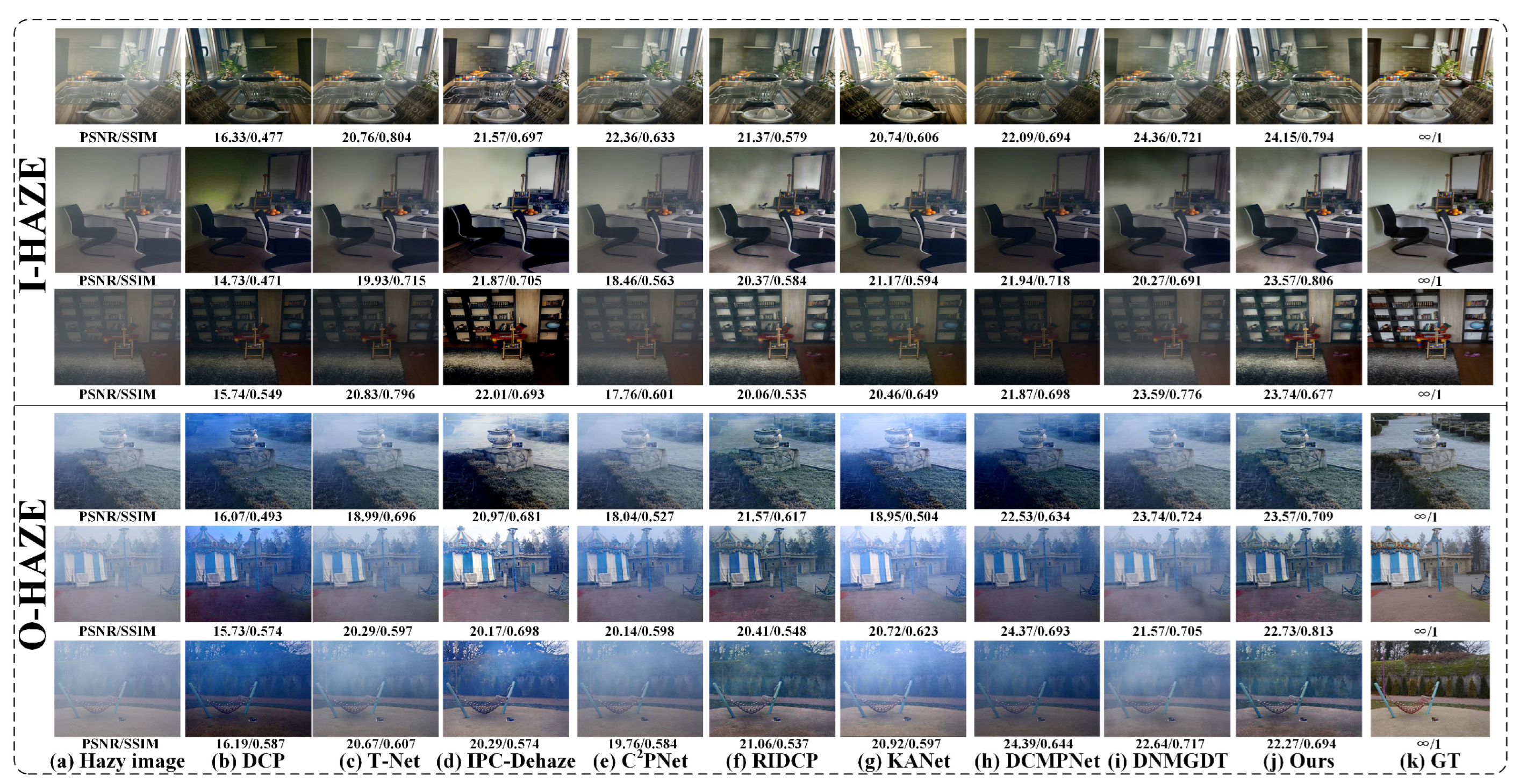

4.3. Experiment on Synthetic Datasets

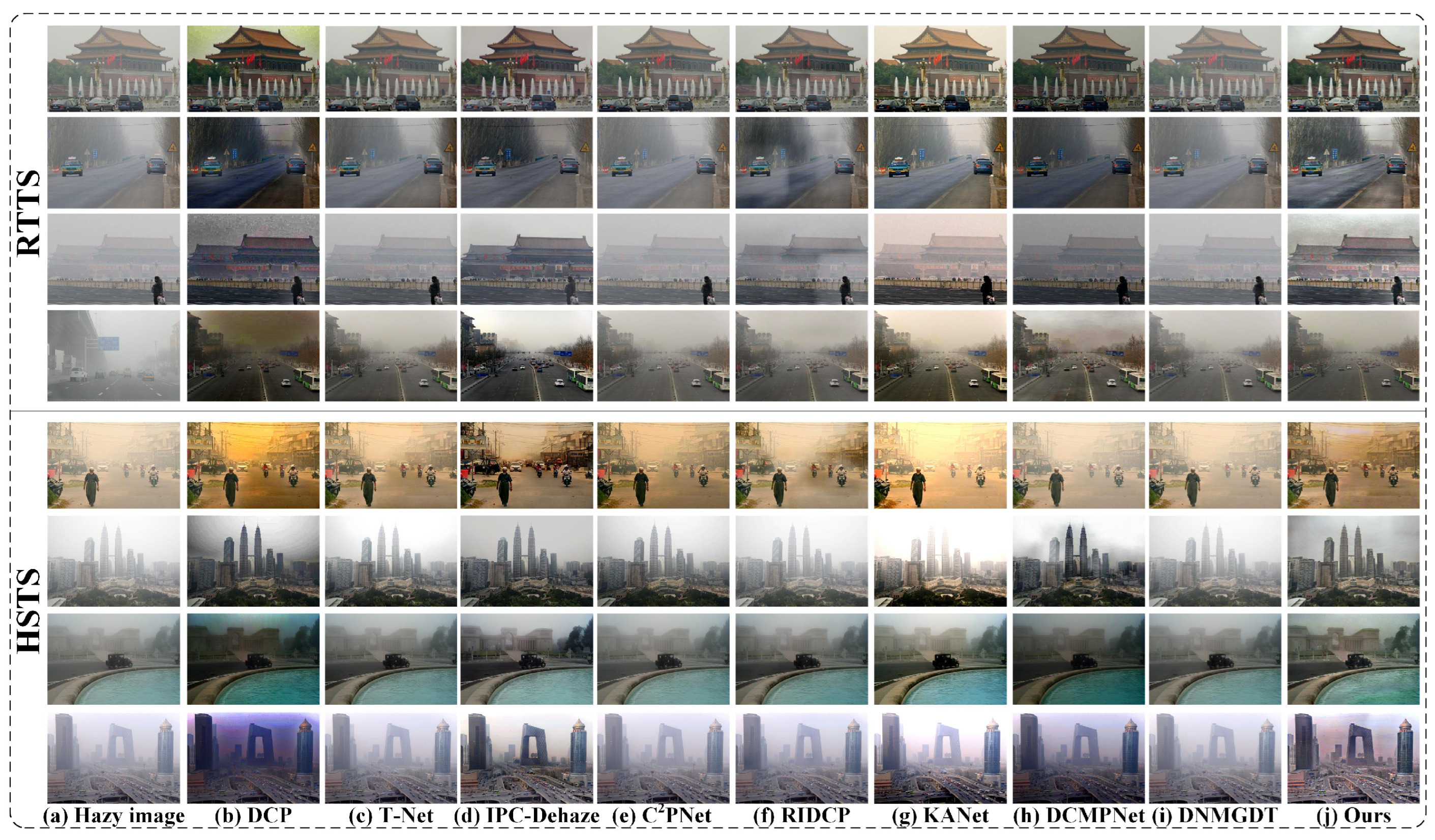

4.4. Experiment on Real-World Datasets

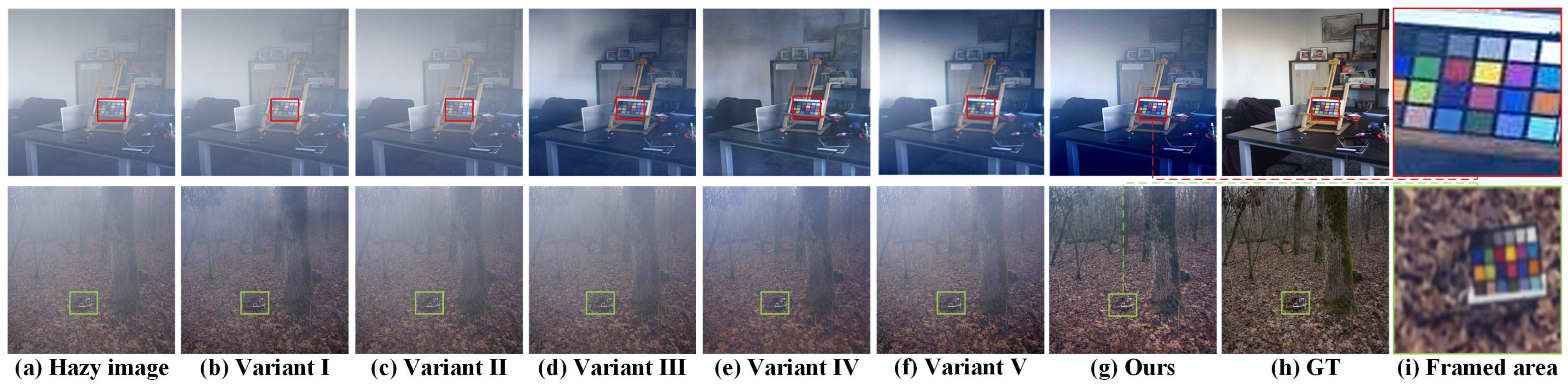

4.5. Ablation Experiments

- Variant I: Both the inverse enhancement module and the random haze addition module are removed.

- Variant II: The random haze addition module is removed, while the dehazing and inverse enhancement modules are retained.

- Variant III: The complete network structure is preserved, but the haze addition module adopts a linear transformation strategy to calibrate the initial transmission map, replacing the gamma correction method.

- Variant IV: The random haze addition module uses the gamma correction strategy, but the transmission-related parameter is fixed to 1, and the atmospheric light value is set to 0.85. This variant examines the model’s robustness and generalization ability under fixed parameter settings.
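The four variants can be summarized as a small configuration table. The sketch below is only an illustrative encoding: the class and field names are hypothetical, Variant IV's "transmission-related parameter fixed to 1" is interpreted here as a gamma value of 1 (an assumption), and the gamma range for the full model is taken from Section 3.3.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class AblationConfig:
    """Hypothetical switches describing one ablation variant."""
    use_haze_addition: bool
    use_inverse_enhancement: bool
    transmission_strategy: Optional[str] = None  # "linear", "gamma", or None
    gamma_range: Optional[Tuple[float, float]] = None
    fixed_atmospheric_light: Optional[float] = None  # None means random


VARIANTS = {
    # Dehazing module only.
    "Variant I": AblationConfig(False, False),
    # Dehazing + inverse enhancement, no haze addition.
    "Variant II": AblationConfig(False, True),
    # Full network, linear normalization instead of gamma correction.
    "Variant III": AblationConfig(True, True, "linear"),
    # Gamma strategy with fixed parameters (gamma = 1 is an assumption).
    "Variant IV": AblationConfig(
        True, True, "gamma",
        gamma_range=(1.0, 1.0),
        fixed_atmospheric_light=0.85,
    ),
    # Full model with random gamma in [1.1, 1.5].
    "Proposed Method": AblationConfig(
        True, True, "gamma", gamma_range=(1.1, 1.5)
    ),
}
```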

4.6. Performance Test on Different Gamma Value Ranges

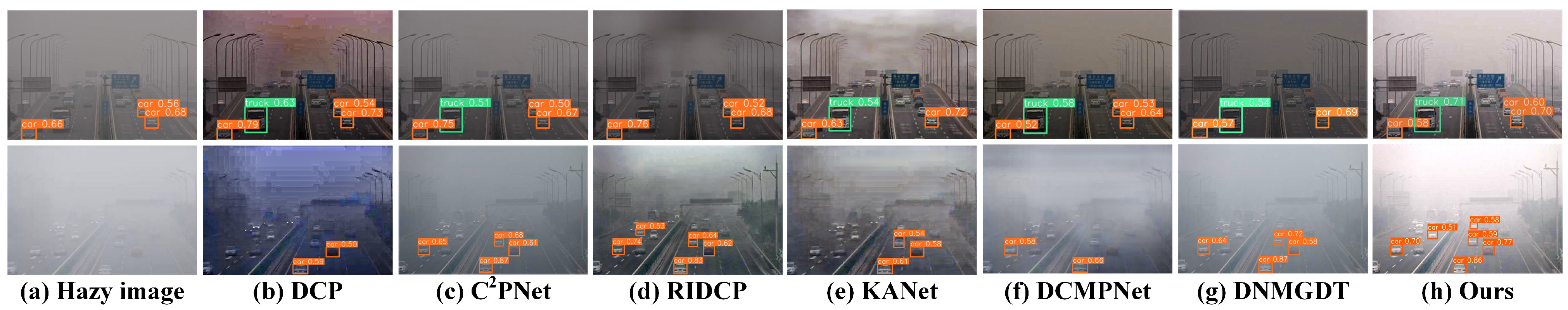

4.7. Impact of Algorithm on Object Detection Performance

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Yang, L.; Zhao, H.; Li, H.; Qiao, L.; Yang, Z.; Li, X. GCSTG: Generating Class-Confusion-Aware Samples With a Tree-Structure Graph for Few-Shot Object Detection. IEEE Trans. Image Process. 2025, 34, 772–784.

2. Feng, K.Y.; Gong, M.; Pan, K.; Zhao, H.; Wu, Y.; Sheng, K. Model sparsification for communication-efficient multi-party learning via contrastive distillation in image classification. IEEE Trans. Emerg. Top. Comput. Intell. 2023, 8, 150–163.

3. Ketcham, D.J.; Lowe, R.W.; Weber, J.W. Image enhancement techniques for cockpit displays. Technical report. 1974.

4. Buchsbaum, G. A spatial processor model for object colour perception. J. Frankl. Inst. 1980, 310, 1–26.

5. Jobson, D.J.; Rahman, Z.u.; Woodell, G.A. Properties and performance of a center/surround retinex. IEEE Trans. Image Process. 1997, 6, 451–462.

6. He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

7. Zhu, Q.; Mai, J.; Shao, L. A fast single image haze removal algorithm using color attenuation prior. IEEE Trans. Image Process. 2015, 24, 3522–3533.

8. Ju, M.; Ding, C.; Guo, Y.J.; Zhang, D. IDGCP: Image dehazing based on gamma correction prior. IEEE Trans. Image Process. 2019, 29, 3104–3118.

9. Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

10. Guo, X.; Yang, Y.; Wang, C.; Ma, J. Image dehazing via enhancement, restoration, and fusion: A survey. Inf. Fusion 2022, 86, 146–170.

11. Land, E.H. The retinex theory of color vision. Sci. Am. 1977, 237, 108–129.

12. Jobson, D.J.; Rahman, Z.u.; Woodell, G.A. A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 1997, 6, 965–976.

13. Zhang, Y.; Guo, X.; Ma, J.; Liu, W.; Zhang, J. Beyond brightening low-light images. Int. J. Comput. Vis. 2021, 129, 1013–1037.

14. Yi, X.; Xu, H.; Zhang, H.; Tang, L.; Ma, J. Diff-Retinex++: Retinex-Driven Reinforced Diffusion Model for Low-Light Image Enhancement. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 6823–6841.

15. Fan, G.; Yao, Z.; Chen, G.Y.; Su, J.N.; Gan, M. IniRetinex: Rethinking Retinex-type Low-Light Image Enhancer via Initialization Perspective. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 2834–2842.

16. Narasimhan, S.G.; Nayar, S.K. Vision and the atmosphere. Int. J. Comput. Vis. 2002, 48, 233–254.

17. Ju, M.; Ding, C.; Guo, C.A.; Ren, W.; Tao, D. IDRLP: Image dehazing using region line prior. IEEE Trans. Image Process. 2021, 30, 9043–9057.

18. Ju, M.; Ding, C.; Ren, W.; Yang, Y.; Zhang, D.; Guo, Y.J. IDE: Image dehazing and exposure using an enhanced atmospheric scattering model. IEEE Trans. Image Process. 2021, 30, 2180–2192.

19. Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.H. Single image dehazing via multi-scale convolutional neural networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 154–169.

20. Li, T.; Liu, Y.; Ren, W.; Shiri, B.; Lin, W. Single Image Dehazing Using Fuzzy Region Segmentation and Haze Density Decomposition. IEEE Trans. Circuits Syst. Video Technol. 2025; early access.

21. Liu, H.; Hu, H.M.; Jiang, Y.; Liu, Y. PEIE: Physics Embedded Illumination Estimation for Adaptive Dehazing. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 5469–5477.

22. Zhang, Y.; Zhou, S.; Li, H. Depth information assisted collaborative mutual promotion network for single image dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–24 June 2024; pp. 2846–2855.

23. Su, Y.; Wang, N.; Cui, Z.; Cai, Y.; He, C.; Li, A. Real scene single image dehazing network with multi-prior guidance and domain transfer. IEEE Trans. Multimed. 2025; early access.

24. Cheng, D.; Li, Y.; Zhang, D.; Wang, N.; Sun, J.; Gao, X. Progressive negative enhancing contrastive learning for image dehazing and beyond. IEEE Trans. Multimed. 2024, 26, 8783–8798.

25. Fang, W.; Fan, J.; Zheng, Y.; Weng, J.; Tai, Y.; Li, J. Guided real image dehazing using ycbcr color space. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 2906–2914.

26. Fu, J.; Liu, S.; Liu, Z.; Guo, C.L.; Park, H.; Wu, R.; Wang, G.; Li, C. Iterative Predictor-Critic Code Decoding for Real-World Image Dehazing. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 12700–12709.

27. Zheng, L.; Li, Y.; Zhang, K.; Luo, W. T-net: Deep stacked scale-iteration network for image dehazing. IEEE Trans. Multimed. 2022, 25, 6794–6807.

28. Sun, X.; Wang, L.; Wang, C.; Jin, Y.; Lam, K.m.; Su, Z.; Yang, Y.; Pan, J. Adapting Large VLMs with Iterative and Manual Instructions for Generative Low-light Enhancement. arXiv 2025, arXiv:2507.18064.

29. Liang, Z.; Li, C.; Zhou, S.; Feng, R.; Loy, C.C. Iterative prompt learning for unsupervised backlit image enhancement. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 4–6 October 2023; pp. 8094–8103.

30. Liu, C.; Wu, F.; Wang, X. EFINet: Restoration for low-light images via enhancement-fusion iterative network. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 8486–8499.

31. Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. Aod-net: All-in-one dehazing network. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4770–4778.

32. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

33. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

34. Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep retinex decomposition for low-light enhancement. arXiv 2018, arXiv:1808.04560.

35. Li, B.; Ren, W.; Fu, D.; Tao, D.; Feng, D.; Zeng, W.; Wang, Z. Benchmarking single-image dehazing and beyond. IEEE Trans. Image Process. 2018, 28, 492–505.

36. Ancuti, C.O.; Ancuti, C.; Timofte, R.; De Vleeschouwer, C. O-haze: A dehazing benchmark with real hazy and haze-free outdoor images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 754–762.

37. Ancuti, C.; Ancuti, C.O.; Timofte, R.; De Vleeschouwer, C. I-HAZE: A dehazing benchmark with real hazy and haze-free indoor images. In Proceedings of the International Conference on Advanced Concepts for Intelligent Vision Systems, Poitiers, France, 24–27 September 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 620–631.

38. Zheng, Y.; Zhan, J.; He, S.; Dong, J.; Du, Y. Curricular contrastive regularization for physics-aware single image dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 5785–5794.

39. Wu, R.Q.; Duan, Z.P.; Guo, C.L.; Chai, Z.; Li, C. Ridcp: Revitalizing real image dehazing via high-quality codebook priors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 22282–22291.

40. Feng, Y.; Ma, L.; Meng, X.; Zhou, F.; Liu, R.; Su, Z. Advancing real-world image dehazing: Perspective, modules, and training. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 9303–9320.

41. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. Yolov10: Real-time end-to-end object detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

| Method | Indoor PSNR (dB) | Indoor SSIM | Outdoor PSNR (dB) | Outdoor SSIM |
|---|---|---|---|---|
| DCP | 17.13 | 0.446 | 16.62 | 0.392 |
| T-Net | 21.79 | 0.813 | 19.03 | 0.674 |
| IPC-Dehaze | 22.87 | 0.793 | 20.06 | 0.694 |
| C2PNet | 21.32 | 0.605 | 17.35 | 0.525 |
| RIDCP | 22.54 | 0.542 | 17.26 | 0.461 |
| KANet | 23.22 | 0.725 | 19.18 | 0.618 |
| DCMPNet | 24.53 | 0.756 | 21.25 | 0.750 |
| DNMGDT | 24.55 | 0.873 | 21.12 | 0.757 |
| Proposed Method | 23.83 | 0.834 | 20.23 | 0.702 |

| Method | I-HAZE PSNR (dB) | I-HAZE SSIM | O-HAZE PSNR (dB) | O-HAZE SSIM |
|---|---|---|---|---|
| DCP | 16.33 | 0.477 | 16.03 | 0.407 |
| T-Net | 20.13 | 0.803 | 19.23 | 0.594 |
| IPC-Dehaze | 22.09 | 0.699 | 19.97 | 0.673 |
| C2PNet | 19.79 | 0.577 | 19.05 | 0.563 |
| RIDCP | 20.71 | 0.537 | 19.52 | 0.515 |
| KANet | 22.17 | 0.725 | 18.89 | 0.598 |
| DCMPNet | 23.29 | 0.773 | 21.25 | 0.714 |
| DNMGDT | 23.55 | 0.786 | 21.17 | 0.710 |
| Proposed Method | 23.83 | 0.834 | 21.23 | 0.702 |

| Method | Publication | Platform | Time (s) |
|---|---|---|---|
| DCP | CVPR’09 | MATLAB | 0.3276 |
| T-Net | TMM’22 | PyTorch | 0.297 |
| IPC-Dehaze | CVPR’25 | PyTorch | 0.0393 |
| C2PNet | CVPR’23 | PyTorch | 0.0284 |
| RIDCP | CVPR’23 | PyTorch | 0.0541 |
| KANet | TPAMI’24 | PyTorch | 0.0326 |
| DCMPNet | CVPR’24 | PyTorch | 0.0380 |
| DNMGDT | TMM’25 | PyTorch | 0.0367 |
| Proposed Method | – | PyTorch | 0.0276 |

| Method | Indoor PSNR (dB) | Indoor SSIM | Outdoor PSNR (dB) | Outdoor SSIM |
|---|---|---|---|---|
| Variant I | 18.13 | 0.546 | 16.62 | 0.592 |
| Variant II | 21.32 | 0.605 | 18.35 | 0.525 |
| Variant III | 21.45 | 0.674 | 18.26 | 0.653 |
| Variant IV | 22.56 | 0.765 | 19.36 | 0.674 |
| Proposed Method | 23.74 | 0.834 | 21.05 | 0.702 |

| Gamma Ranges | PSNR (dB) | SSIM |
|---|---|---|
| | 18.61 | 0.513 |
| | 21.32 | 0.627 |
| | 22.97 | 0.723 |
| | 21.51 | 0.677 |
| | 20.98 | 0.691 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, G.; Wang, H.; Ju, M. Cycle-Iterative Image Dehazing Based on Noise Evolution. Electronics 2025, 14, 3392. https://doi.org/10.3390/electronics14173392
