1. Introduction

Docker is a lightweight OS-level virtualization technology that was originally built on LXC. Its latest version is Docker 28.3.3, and it has become an important part of modern computing infrastructure. Its cross-platform support lets it run on Windows 10 (64-bit, Home/Pro/Enterprise/Education 22H2 build 19045 or later), Windows 11 (64-bit, Home/Pro/Enterprise/Education 22H2 or later), macOS 10.15 (Catalina) or newer (with macOS 11+ recommended), and Linux distributions such as Ubuntu 18.04 LTS or newer, CentOS 7 or newer, Debian 9 or newer, and Fedora 32 or newer. It is widely used in DevOps, PaaS, and IoT. Currently, millions of Docker containers run on cloud platforms like AWS, Azure, Alibaba Cloud, and Tencent Cloud.

Container environments have limited resources such as computation power, storage, and network bandwidth. This makes it hard to run traditional security software or hardware inside a container. As a result, the apps and data in a container are very vulnerable. If a container is compromised, the attacker can damage data both in the container and on the host machine. The attacker can also move laterally within the cloud environment, causing serious problems.

The integrity measurement mechanism (IMM) is a key technology for trusted computing. It offers a different layered defense than traditional security tools like firewalls, intrusion detection systems, or antivirus software. Firewalls use complex rules to filter network traffic. Intrusion detection systems monitor running programs in real time. Antivirus software uses large signature databases to find malicious code.

In contrast, an IMM checks that files have not been tampered with by unauthorized users. Its main advantage is simplicity: it performs only basic integrity checks and does not need complex rule sets or large signature databases. For this reason, IMMs are widely used in security-critical areas such as enterprise networks, military networks, and critical infrastructures where high reliability and low management costs are required.

Existing IMMs have developed alongside computing methods. Hardware–software designs form the core of trusted computing. Hardware security tools like Trusted Platform Modules (TPMs) [1], Intel SGX [2], and ARM TrustZone [3] establish isolated trust roots. Virtualization-layer tools like vTPMs [4] extend these capabilities to multi-VM environments.

However, these tools have major limits in containerized ecosystems:

TPMs and trusted execution environments (TEEs) struggle with resource-limited containers. This is because their isolation models are inflexible and they have limited support from hypervisors [5, 6].

Virtualization-focused IMMs (like Container-IMA [6]) are tied to heavy message-digest algorithms (MDAs) such as SHA-256. These algorithms require hashing entire files and carrying out cryptographic work, which leads to high computational costs [6, 7].

Software-based methods, such as kernel-level isolation [8] and blockchain-based verification [9], make this problem worse. While they do not depend on hardware, they either create complex key management [10] or have latency issues [11]. This makes them unsuitable for containers with dynamic lifecycles (running, stopped, paused).

The core challenge is balancing strong security with lightweight operation in container environments that have limited resources and short lifespans. Existing solutions do not solve this.

To resolve this, we propose two novel metadata-driven IMMs: the Overlay2 IMM and the Btrfs IMM. These tools use the built-in metadata structures of the Overlay2 union filesystem and Btrfs (which is based on B-tree). Instead of using MDA computations, they redefine integrity checks as a lightweight metadata comparison process. This design offers three key improvements over previous work:

Combining filesystem metadata analysis with container integrity verification: Unlike tools that depend on a TEE [5, 12] or vTPMs tied to hypervisors [4], our IMMs use a lightweight isolation method that does not need hashing or specific hardware. They run external verification, so malware inside the container cannot undermine them. This creates a security layer that works regardless of the container’s running state, strengthening protection against insider threats without needing special hardware.

Efficiency from using metadata: By using metadata checks instead of hashing entire files, we reduce time complexity from O(n) (MDA-based full-file processing) to O(1) (metadata checks). We also eliminate the need to store large file data. This solves the performance–security balance problem that troubles MDA-based IMMs [6, 13], making our tools suitable for resource-limited edge containers.

Lifecycle-independent verification: Our IMMs use metadata that stay the same no matter the container’s state (running, stopped, or paused). This ensures consistent integrity verification across all operational modes, unlike dynamic measurement tools [14] that depend on the container’s runtime context.

The rest of the paper is organized as follows:

Section 2 reviews related work on container security and integrity measurement techniques.

Section 3 explains the basic technologies that support our proposed IMMs, including Overlay2, Btrfs, and metadata-based verification rules.

Section 4 gives a detailed technical description of the Overlay2 IMM and Btrfs IMM, including a description of their structure and how they work.

Section 5 analyzes the time complexity of the algorithms, comparing them with traditional MDA-based approaches.

Section 6 documents experimental results on performance and scalability.

Section 7 presents conclusions and future research directions.

2. Related Work

Integrity measurement mechanisms (IMMs) ensure system integrity through verification using hashes. They include hardware–software designs and work in many different computing environments. To make this clearer, we group existing IMMs by their key technical foundations: hardware security tools, virtualization-layer tools, system software-based methods, and new decentralized technologies. We also explicitly show how well they work in both non-virtualized and virtualized environments.

Additionally, we look at how IMMs are being used in more areas across different fields. In these areas, unique constraints (e.g., limited resources or the need to balance privacy and security) lead to special innovations. This classification avoids overlapping groups, highlights critical trade-offs, and provides a basis for comparison with our proposed method.

2.1. IMMs Based on Hardware Security Tools

Hardware-backed security components such as specialized cryptoprocessors and processor-level security extensions act as trusted roots for measurement. These tools are widely used in both non-virtualized and virtualized environments, but they work differently in each.

2.1.1. Physical Security Chips (TPM/TCM)

In non-virtualized environments, physical Trusted Platform Modules (TPMs) [1] and Trusted Cryptography Modules (TCMs) [5] serve as trusted roots. Sailer et al. [15] used TPMs to implement runtime integrity measurement for system components via the Integrity Measurement Architecture (IMA). Yang and Penglin [7] extended TPMs to ensure control-flow integrity, restricting program behavior to predefined patterns. For cloud data, Shen et al. [16] used TPM-generated codes to verify off-chain data consistency, reducing the risks from relying on a single central system.

In virtualized environments, virtual TPMs (vTPMs), which are supported by hypervisors, extend this approach to environments with multiple VMs. Velten and Stumpf [4] proposed shared vTPMs, allowing security services to run at the same time across multiple VMs. Du et al. [17] improved vTPM-based VM integrity measurement by adding self-modification rules, reducing unnecessary checks.

However, both physical TPMs and vTPMs have limitations:

2.1.2. Trusted Execution Environments (TEEs)

Processor-level security extensions, such as ARM TrustZone [3] and Intel SGX [2], create isolated areas (TEEs) for sensitive tasks. These TEEs support IMMs in both non-virtualized and virtualized environments.

Non-virtualized use cases: Zhang et al. [18] used TrustZone to protect off-chip memory integrity, while Zhang et al. [19] ran critical integrity checks in TrustZone’s secure area to separate untrusted running environments. Song et al.’s TZ-IMA [12] split cryptographic tasks between TrustZone’s normal and secure areas, resulting in only 5% extra performance cost on ARMv8 development boards. For remote attestation, Kucab and Michal [20] employed SGX to verify VM filesystem integrity throughout its lifecycle, bypassing OS-level vulnerabilities.

Virtualized use cases: Duan et al. [5] strengthened TEE security in virtualized setups by measuring hypervisor kernels using TEEs, fixing issues with TEE–virtualization compatibility. However, TEEs add significant performance costs (such as slow switches between secure and normal areas [12]) and it is hard to integrate them closely with containers due to their inflexible isolation limits.

2.2. IMMs Based on Virtualization-Layer Tools

In virtualized ecosystems (like VMs and containers), IMMs must handle resource sharing managed by hypervisors. This has led to special tools focused directly on the virtualization layer.

Hypervisor-level monitoring tools include Zhou et al.’s HyperSpector [21], a UEFI-based tool that checks the integrity of Virtual Machine Monitors (VMMs) dynamically; Lin et al.’s method [22], which uses address translation to track changes in VM memory during operation; and, for keeping hypervisor code secure, Gu et al.’s Outlier [23], which uses anomaly detection to reduce extra work, but only protects against threats specific to hypervisors.

Container-focused solutions prioritize checking the integrity of images and running containers. Luo et al.’s Container-IMA [6] splits measurement logs to enhance privacy, but it still relies on hashing entire files, which is computationally expensive [13]. Li’s RIMM [24] reduces extra work using copy-on-write techniques but does not protect against tampering with metadata. These tools are tightly linked to a container system like Docker, which limits how well they work with other virtualization tools.

2.3. IMMs Based on System-Software Methods

Non-virtualized environments often use OS or kernel-level tools to ensure integrity without special hardware. Liu et al. [25] designed an IoT system that combines trusted computing and real-time monitoring. Dong et al.’s KIMS [8] uses secure isolation to protect the kernel’s integrity. Zhang et al. [10] used encryption to safeguard critical files, but this adds extra work for managing keys.

Integrity during boot and load times is handled by hybrid tools: Ling et al.’s hybrid boot [26] establishes trust roots during initialization, and Zhang’s dynamic measurement [14] matches expected and real system states to check integrity while running. However, these software-based tools depend on the OS or kernel being trustworthy. This makes them vulnerable to attacks by users with high-level privileges.

2.4. IMMs Based on New Decentralized Technologies

Blockchain and decentralized protocols reduce over-reliance on a single trusted source in both virtualized and non-virtualized environments, but they also introduce new trade-offs.

Non-virtualized scenarios: Shen et al. [27] used blockchain to build query tools that cannot be tampered with, while Xiaodong et al.’s decentralized fog audits [28] mitigate key management flaws in traditional IMMs.

Virtualized scenarios: Blockchain enables trust in containers without a central authority. Xu et al. [9] verified container image signatures via blockchain, and De Benedictis et al. [29] applied remote attestation for end-to-end cloud software integrity. However, blockchain’s consensus overhead leads to latency issues [11], limiting its applicability to real-time systems.

2.5. IMMs in Cross-Domain Applications

Beyond traditional environments, IMMs are becoming more important in distributed systems. These systems include edge computing, federated learning (FL), and multimedia processing. Each of these areas has unique constraints: limited resources in edge computing, the need to balance privacy and integrity in FL, and the need to preserve structural integrity in multimedia processing.

Edge computing: Edge environments have limited resources, so they need lightweight but reliable integrity checks. TCDA [30] employs combinatorial auctions with critical-value pricing to ensure truthful resource allocation. It also handles localization limits and budget balance. However, it struggles with double dependencies when offloading tasks. MEC-P [31] introduces third-party auditors for dynamic data integrity verification. It uses cryptography to avoid privacy leaks, but it slows down when handling large volumes of data.

Federated learning: FL systems are distributed, so they need to balance model integrity with data privacy. Incentive systems [32] that use game theory (such as Shapley value and Stackelberg games) encourage honest data sharing. Blockchain and differential privacy are combined to secure transaction logs and data aggregation, but this adds extra complexity. Quantum-resistant cryptography [32] is suggested to protect integrity from future quantum threats, but it is still mostly experimental.

Multimedia processing: Special solutions are needed to keep media (like images) structurally intact while removing noise or watermarks. PSLNet [33], a self-supervised learning network, uses parallel designs and mixed loss functions for this, but it requires a lot of computing power. Model integrity verification [33] uses checksums, watermarking, and runtime behavior analysis. However, it struggles with tampering that is context-aware (such as altering visual meaning to trick the system).

Across all these areas, one main challenge remains: balancing security guarantees with each domain’s specific constraints (like resource shortages in edge computing, privacy needs in FL, and structural integrity in multimedia). Existing IMMs rely on heavy cryptography or rigid trust models, so they cannot solve this conflict effectively.

2.6. Limitations of Existing IMMs

Despite advancements across different technical tools and fields, current IMMs have several major weaknesses:

Efficiency vs. security trade-offs: They rely on message-digest algorithms (MDAs) [13, 34] and full-file hashing, which require a lot of time and storage space. This conflicts with the limited resources in edge computing [30, 31] and the real-time needs of multimedia processing [33].

Compatibility issues: Hardware tools (like TPMs and TEEs) do not work well with container environments or cross-domain systems [5, 6]. Meanwhile, solutions designed specifically for FL or edge computing [30, 32] cannot easily work with other systems.

Extra work and complexity: Blockchain causes delays [9], TEEs have high costs when switching between contexts [12], and FL’s game theory-based systems are overly complex [32], all of which make scaling difficult. Software-based methods [5, 15] also remain vulnerable to attacks by users with high-level privileges.

Domain-specific weaknesses: Cross-domain IMMs struggle with context-related challenges. For example, edge computing faces dependencies in task offloading [30], FL needs to balance privacy and integrity [32], and multimedia processing deals with structural tampering [33]. These issues arise because their measurement models are inflexible.

Our proposed metadata-driven IMM fixes these problems. It uses built-in filesystem metadata (like those from Overlay2 or Btrfs) to separate the measurement process from the system’s running states. By avoiding full-file processing and cryptographic redundancy, it achieves lightweight verification. This method works in many different environments—from virtualized containers to cross-domain edge or FL systems—without reducing security.

3. Technical Background

3.1. Docker and Container Images

Docker is a form of OS-level virtualization that allows containers running on the same host to share the host OS kernel. Compared with hardware-level VMs, containers have small image footprints, fast boot times, and low overhead, and can be deployed rapidly. These features make containers especially attractive for deployment on low-power hardware such as edge servers and embedded devices [32].

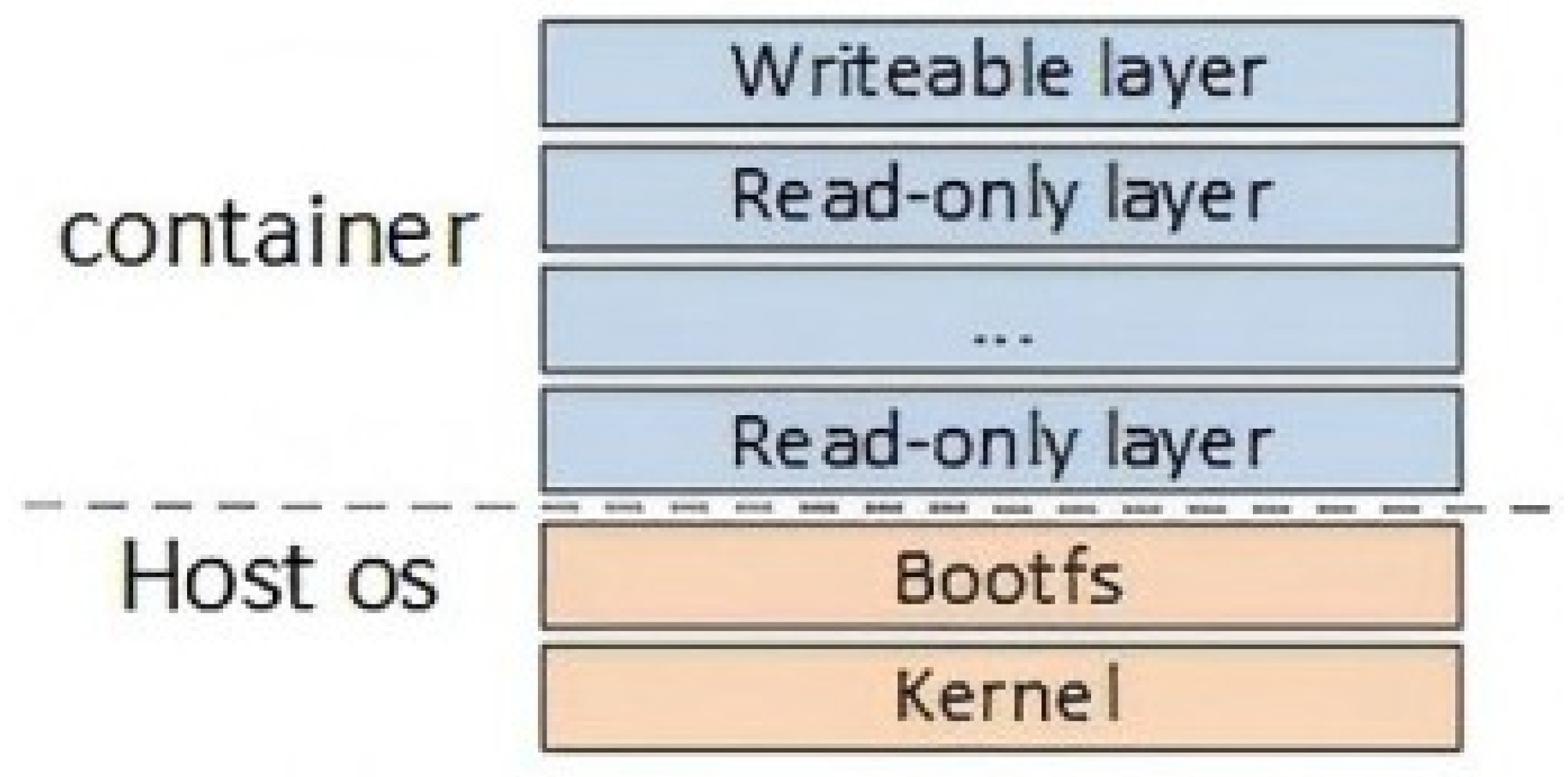

Docker uses a layered image structure, the conceptual organization of which is depicted in Figure 1. The lower layers are read-only and may be shared between containers, whereas the top-most layer (which is writable) holds changed files from lower layers plus new content. The layered structure enables efficient storage and distribution of containerized applications. As summarized in Table 1, Docker supports a variety of storage drivers and underlying filesystems, each with different characteristics and metadata structures for different workload needs. Backing filesystems can be divided into two types based on how they deal with modified data.

File-level copy systems: When a file is modified in such systems, the original file is copied from the read-only lower layer to the writable upper layer. The changes are made in this upper layer. As a result, the upper layer must store entire modified files. These systems work well for workloads where reading is more common than writing. Examples include Overlay2 and AUFS filesystems. They are useful when keeping data unchangeable and allowing shared access for reading is important.

Block-level redirect-on-write systems: These systems handle file updates at the block level. Only the changed blocks are written to the upper layer. The file indices are then updated to point to blocks in both the lower and upper layers. This reduces the storage needed in the upper layer because only the changes (deltas) are stored. These systems are appropriate for write-intensive workloads. Examples include device-mapper, Btrfs, and ZFS.

3.2. Overlay2 Filesystem

Overlay2 is a modern, high-performance union filesystem that works at the file level. It enables efficient layered storage for container environments. A union filesystem like Overlay2 does not provide its own physical storage. Instead, it uses underlying base filesystems (such as ext4 or XFS) to store data. It combines multiple host directories into a single virtual filesystem structure.

Figure 2 shows Overlay2’s architecture.

In simple terms, Overlay2 uses two separate directory layers: a read-only lower layer (lowerdir) and a writable upper layer (upperdir). Imagine a setup where Dir1 (the lower layer) has files File1, File2, and File3, and Dir2 (the upper layer) has File2 and File4. Overlay2 merges these layers into one combined view (mergeddir) with the following rules:

Files whose names appear in only one layer (like File1 and File3 in Dir1 or File4 in Dir2) are all accessible in the merged view.

For files with the same names (like File2 in both layers), the upper-layer file takes priority. This effectively hides the version in the lower layer.
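The two merge rules above can be illustrated with a toy model. This is only a sketch of the name-resolution logic, assuming each layer is represented as a plain dictionary; the function `merged_view` is hypothetical, and the sketch ignores whiteout entries and directories, which real Overlay2 also handles.

```python
def merged_view(lower: dict[str, str], upper: dict[str, str]) -> dict[str, str]:
    """Return the merged view of two layers; upper-layer entries win."""
    merged = dict(lower)   # start from the read-only lower layer
    merged.update(upper)   # same-named upper files hide the lower copies
    return merged


# Layers from the example in the text: file name -> layer that supplies it
dir1 = {"File1": "lower", "File2": "lower", "File3": "lower"}
dir2 = {"File2": "upper", "File4": "upper"}

view = merged_view(dir1, dir2)
for name in sorted(view):
    print(name, "from", view[name])
```

In this model, File2 resolves to the upper layer while File1, File3, and File4 each resolve to the only layer that contains them.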

Docker uses Overlay2 to build container images by stacking a writable runtime layer on top of read-only base layers (for example, from Docker Hub). In Figure 2, “merged” is the filesystem the container actually sees. The upperdir contains all changes made at runtime, such as edited files or new content. The lowerdir combines multiple read-only base layers, so shared parts of images can be reused across different containers. This reduces storage overhead and allows images to be deployed quickly. Overlay2 is well-suited for read-heavy container workloads.

3.3. Btrfs Filesystem

Btrfs is a new-generation block-level filesystem designed to meet the various storage needs of apps running on phones, servers, and personal computers. It is the default filesystem in some popular Linux distributions. It supports features like subvolumes, snapshots, dynamic inode allocation, and data compression.

Figure 3 shows Btrfs’s architecture.

At its core, Btrfs lets you create subvolumes—essentially logical partitions within the filesystem—and layer snapshots of those subvolumes on top of one another. At first, a subvolume and its snapshot share the same block storage. If a file in a lower-layer snapshot is modified, the upper-layer snapshot uses a copy-on-write (COW) method. It only stores the changed blocks and updates the file’s metadata to point to both the original and new blocks.

Each subvolume and snapshot has its own metadata, including a starting generation number, current generation number, and a structure tree that tracks block storage. Any change to a subvolume or snapshot increases its current generation number and updates its structure tree.
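The role of generation numbers can be illustrated with a toy model. The class below is not Btrfs and does not use any Btrfs API; `ToySubvolume` and its methods are hypothetical names that only mimic the behavior described above, where every change increases the current generation and a baseline generation can later be used to list what changed.

```python
class ToySubvolume:
    """Toy model of a subvolume with a generation counter (not real Btrfs)."""

    def __init__(self, files):
        self.generation = 1
        # Every file starts at the generation in which the subvolume was created.
        self.file_gen = {path: self.generation for path in files}

    def write(self, path):
        """Any change bumps the current generation and stamps the file with it."""
        self.generation += 1
        self.file_gen[path] = self.generation

    def changed_since(self, start_gen):
        """Return files whose last change is newer than start_gen."""
        return sorted(p for p, g in self.file_gen.items() if g > start_gen)


vol = ToySubvolume(["/etc/passwd", "/bin/sh", "/app/config.yml"])
start_gen = vol.generation      # baseline recorded here
vol.write("/app/config.yml")    # a later modification
print(vol.changed_since(start_gen))
```

Only the file written after the baseline is reported, without reading any file content.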

For Docker, Btrfs uses its snapshot and subvolume features to build container images as a hierarchy of read-only and writable layers. As shown in Figure 3, what the container sees as its filesystem is a writable snapshot (the writable layer). This sits on top of read-only layers made from unchangeable snapshots and subvolumes of base images.

This block-level COW design reduces the storage overhead by sharing unchanged blocks across layers. Btrfs is well-suited for container workloads that involve a lot of writing. Generation numbers and structure trees ensure that image building and runtime changes are atomic and consistent.

4. Design Overview

This section covers the operational context, integrity measurement methods, and prototype architecture.

4.1. Operational Context

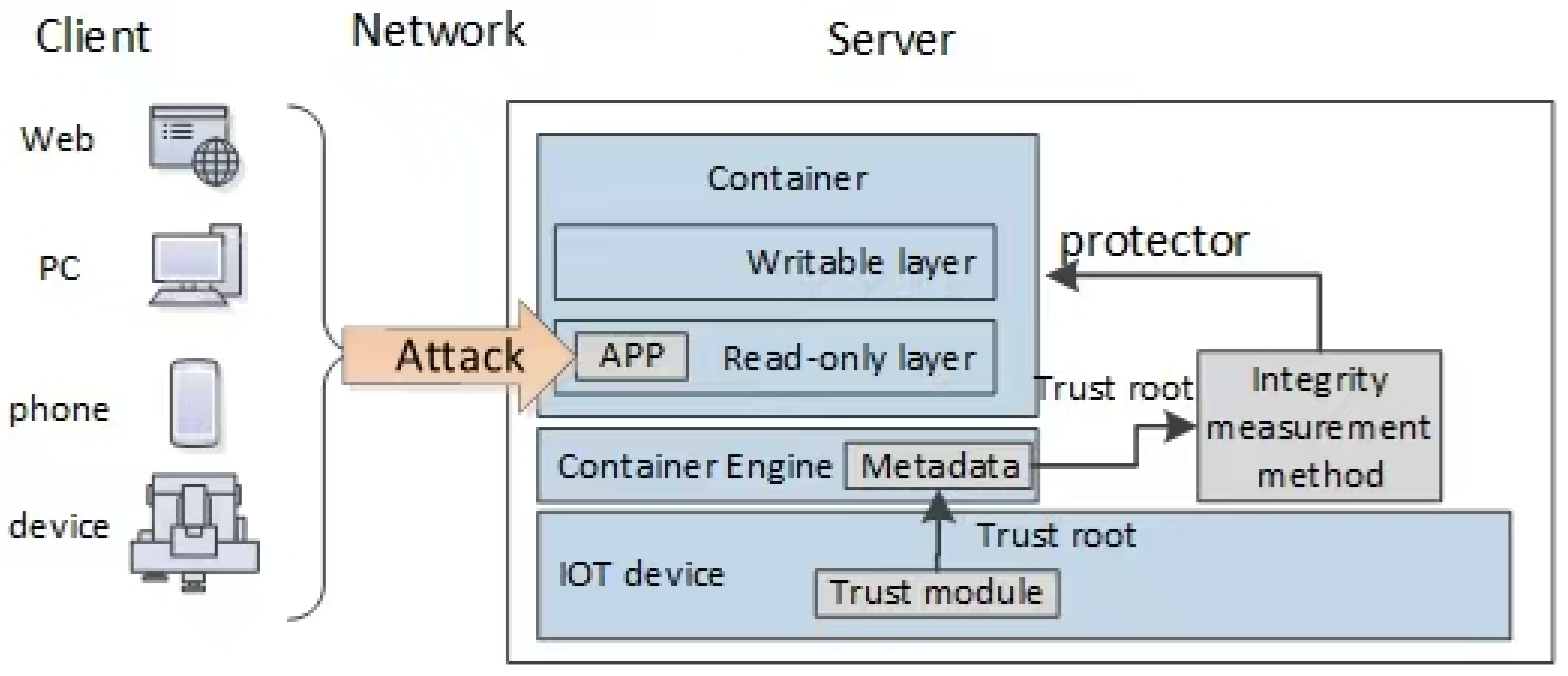

The proposed metadata-driven IMMs are used in scenarios where containerized apps need protection (see Figure 4). These scenarios include resource-constrained environments such as edge servers and embedded devices. In such environments, multiple containers share the host OS kernel and rely on layered filesystems (Overlay2 or Btrfs) to store data efficiently.

This section refines the threat model by explicitly defining attacker capabilities, privilege escalation pathways, and the consequences of metadata compromise.

4.1.1. Threat Model

Attackers are grouped by their access level and ability to exploit vulnerabilities. They use increasingly advanced tactics to bypass integrity measurements:

Container-Level Attackers: These attackers initially take over a container through network intrusions (for example, exploiting unpatched flaws in web services) or by inserting malicious code through compromised images. Their initial privileges are restricted to the container’s writable layer. This means they can only carry out the following actions:

- (1) Modify files in the writable layer (such as changing app settings or injecting malware).

- (2) Try to gain higher privileges by exploiting container escape flaws (e.g., CVE-2021-41190, a Docker vulnerability that lets attackers break out using mishandled file descriptors).

- (3) Tamper with processes inside the container to evade detection (e.g., disabling monitoring tools). However, they cannot directly access metadata on the host.

Host-Privileged Attackers: These attackers gain root access to the host OS by exploiting privilege escalation flaws. Examples include kernel vulnerabilities like CVE-2022-0847 or misconfigured Docker daemon permissions. With root access, they can carry out the following actions:

- (1) Tamper with Docker daemon configurations (e.g., daemon.json) to redirect where containers store data, avoiding IMM monitoring.

- (2) Tamper with filesystem configurations.

Such tampering can cause the system to run an incorrect instruction or misinterpret configuration, posing serious security risks [31].

4.1.2. Defense Strategy

Metadata-driven IMMs are deployed outside containers. Their job is to verify that files in read-only layers have not been tampered with. Any deviation from the original file state indicates a potential security breach.

The integrity measurement method relies on trust in the filesystem’s metadata. These metadata are protected by a trust module (like a TPM) in the IoT device. Because trust can be passed along a chain, this module provides the foundation of trust for the metadata-driven IMMs.

We also employ other technical measures, such as configuring Cgroup access permissions, to enhance container isolation and improve the basic security of containers and filesystems. However, if the metadata themselves are altered, the algorithm faces the risk of failure.

4.2. Integrity Measurement Methodologies

The proposed IMMs work with two main components and two phases. The components comprise the following:

Integrity criteria (rules that define what counts as tampering);

Baseline records (data that capture the original state of files).

The two phases comprise the following:

Baseline acquisition (collecting the original state data);

Integrity validation (checking if files have been tampered with).

4.2.1. Overlay2-Based IMM (Overlay2 IMM)

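Core Mechanism: The Overlay2 IMM exploits Overlay2’s layered structure (a read-only lowerdir and a writable upperdir merged into a mergeddir) to detect tampering by checking whether file entries are present in these layers.

- (1) Integrity Criteria

A file is considered tampered with if either of the following applies:

The file no longer exists in the merged layer (it has been deleted).

The file exists in the writable layer (it has been modified and therefore copied up).

Technical Notes: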

Whiteout Mechanism: Overlay2 uses special files (like whiteout and opaque entries) in the writable layer. These files hide files or folders from lower layers, making it look like they have been deleted from the read-only snapshots.

Copy-Up Operation: When a file in the read-only layer is modified, it is copied to the writable layer. This keeps the lower layers unchangeable while allowing writes in the container’s writable layer.

- (2) Baseline Records

Baseline records consist of the paths to the upperdir (writable layer) and mergeddir (merged view) for the target container. These paths are obtained using docker inspect. Each container has its own baseline records, so all files in a container share the same baseline.

- (3) Algorithm

The Overlay2 IMM follows a two-step algorithm, detailed in Algorithms 1 and 2. These algorithms are based on how filesystem layers work and how integrity verification is performed.

Acquisition: Extract the paths for the writableLayer and mergeLayer from the docker inspect output (Algorithm 1).

Validation: For each file in fileList (Algorithm 2), perform the following actions:

Check if it exists in the mergeLayer. If not, mark it as tampered.

Check if it exists in the writableLayer. If yes, mark it as tampered.

To help understand the algorithms below, we define the variables and formal symbols involved in the Overlay2 IMM in Table 2. These definitions clarify the technical roles and meaning of each variable, ensuring they match the algorithmic logic and workflows detailed in the following sections.

| Algorithm 1. Integrity Information Acquisition Stage in Overlay2 IMM |

Input: Container name (containerName)

Output: Writable layer path (writableLayer), merged layer path (mergeLayer)

1 Retrieve container metadata using docker inspect ${containerName} to obtain containerInfo;

2 writableLayer = analyse(containerInfo); //extract the upperdir path

3 mergeLayer = analyse(containerInfo); //extract the mergeddir path

4 return writableLayer; mergeLayer; |
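A minimal Python sketch of Algorithm 1 follows. It assumes the overlay2 storage driver, for which `docker inspect` reports the layer paths under `GraphDriver.Data` as `UpperDir` and `MergedDir`; the function names and the sample JSON (including the placeholder `LAYER` directory) are illustrative assumptions, not the prototype's code.

```python
import json
import subprocess


def parse_overlay2_paths(inspect_json: str) -> tuple[str, str]:
    """Extract (writableLayer, mergeLayer) from `docker inspect` JSON output."""
    info = json.loads(inspect_json)[0]   # docker inspect prints a JSON array
    data = info["GraphDriver"]["Data"]
    return data["UpperDir"], data["MergedDir"]


def acquire_baseline(container_name: str) -> tuple[str, str]:
    """Run docker inspect for one container and parse the result."""
    out = subprocess.run(
        ["docker", "inspect", container_name],
        check=True, capture_output=True, text=True,
    ).stdout
    return parse_overlay2_paths(out)


# Illustrative input only; real paths contain a long layer ID instead of LAYER.
sample = json.dumps([{
    "GraphDriver": {
        "Name": "overlay2",
        "Data": {
            "UpperDir": "/var/lib/docker/overlay2/LAYER/diff",
            "MergedDir": "/var/lib/docker/overlay2/LAYER/merged",
        },
    }
}])
writable_layer, merge_layer = parse_overlay2_paths(sample)
print(writable_layer, merge_layer)
```

Keeping the parsing step separate from the `docker` call lets the extraction logic be checked without a running Docker daemon.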

| Algorithm 2. Integrity Validation Stage in Overlay2 IMM |

Input: List of files to check (fileList), writableLayer, mergeLayer

Output: List of tampered files (tamperedFileList)

1 for i = 0;i <Length(fileList);i++ do

2 //(1) Check for deletions in the merged layer;

3 pathDocker = fileList[i];

4 pathSys = mergeLayer + pathDocker;

5 isExist = Exist(pathSys);

6 if isExist != True then

7 tamperedFileList.append(pathDocker);

8 continue;

9 end

10 //(2) Check for modifications in the writable layer;

11 pathSys = writableLayer + pathDocker;

12 isExist = Exist(pathSys);

13 if isExist == True then

14 tamperedFileList.append(pathDocker);

15 end

16 end

17 return tamperedFileList; |
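Algorithm 2 can be sketched in Python as follows. This is a hedged illustration rather than the prototype itself: the function name `validate_overlay2` is an assumption, and the self-check uses temporary directories as stand-ins for the real merged and writable layers.

```python
import os
import tempfile


def validate_overlay2(file_list, writable_layer, merge_layer):
    """Return the container paths from file_list that look tampered with."""
    tampered = []
    for path_docker in file_list:
        # Strip the leading "/" so os.path.join does not discard the layer root.
        rel = path_docker.lstrip("/")
        # (1) Deleted: the file is no longer visible in the merged view.
        if not os.path.lexists(os.path.join(merge_layer, rel)):
            tampered.append(path_docker)
            continue
        # (2) Modified: a copy of the file exists in the writable layer.
        if os.path.lexists(os.path.join(writable_layer, rel)):
            tampered.append(path_docker)
    return tampered


# Self-check with temporary directories in place of real layers.
with tempfile.TemporaryDirectory() as root:
    merged = os.path.join(root, "merged")
    upper = os.path.join(root, "diff")
    for d in (merged, upper):
        os.makedirs(os.path.join(d, "etc"))
    for name in ("passwd", "hosts"):
        open(os.path.join(merged, "etc", name), "w").close()
    open(os.path.join(upper, "etc", "hosts"), "w").close()  # simulates a copy-up
    result = validate_overlay2(
        ["/etc/passwd", "/etc/hosts", "/etc/shadow"], upper, merged
    )
print(result)
```

Each file costs at most two path lookups, and no file content is read.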

4.2.2. Btrfs-Based IMM

Core Mechanism: It uses Btrfs’s generation numbers (which track changes to subvolumes) to find altered files without hashing their entire content.

- (1) Integrity Criteria

A file is considered tampered with if it appears in the list of files modified since the baseline generation (startGen). This list is generated using the command btrfs subvolume find-new.

- (2) Baseline Records

Baseline records comprise the subvolume path (where the container is stored) and startGen (the initial generation number). These are obtained using btrfs subvolume show. Notably, this information applies to the whole container, not individual files, so all files in a container share the same baseline.

- (3) Algorithm

Table 3 gives the definition of variables and formal symbols involved in the Btrfs IMM. This IMM works in two stages: acquiring integrity measurement information (Algorithm 3) and verifying integrity (Algorithm 4).

Acquisition: Extract the subvolume path (from Docker’s layerdb) and startGen (Algorithm 3).

Validation (Algorithm 4): perform the following actions:

Use btrfs subvolume find-new to generate a list of files modified since the baseline. This is achieved by comparing startGen to the current generation.

Mark any file in fileList that appears in this list as tampered with.

| Algorithm 3. Integrity Information Acquisition Stage in Btrfs IMM |

Input: Container name (containerName)

Output: Subvolume path (subvolumePath) and subvolume start generation (startGen)

1 Retrieve container metadata (containerInfo) via the docker inspect command using containerName;

2 Extract the container ID from containerInfo;

3 Retrieve the subvolume path from /var/lib/docker/image/btrfs/layerdb/mounts/containerID/mount-id;

4 Obtain startGen using the btrfs subvolume show command;

5 return startGen,subvolumePath; |
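The last step of Algorithm 3 can be sketched as a small parser. The sketch assumes that `btrfs subvolume show` prints `Key: value` lines that include `Generation` and `Gen at creation`; the sample text is abbreviated and illustrative, and treating `Gen at creation` as startGen is an assumption of this example rather than something the algorithm specifies.

```python
import subprocess


def parse_subvolume_show(text: str) -> dict[str, str]:
    """Turn 'Key: value' lines into a dictionary; other lines are skipped."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def acquire_start_gen(subvolume_path: str, field: str = "Gen at creation") -> int:
    """Run `btrfs subvolume show` and return one generation field as an int."""
    out = subprocess.run(
        ["btrfs", "subvolume", "show", subvolume_path],
        check=True, capture_output=True, text=True,
    ).stdout
    return int(parse_subvolume_show(out)[field])


# Abbreviated, illustrative output (values are made up for the example).
sample = """\
    Name:               abc123
    Subvolume ID:       260
    Generation:         57
    Gen at creation:    42
"""
fields = parse_subvolume_show(sample)
start_gen = int(fields["Gen at creation"])
print(start_gen)
```

If the baseline should instead be the generation at the moment of acquisition, the `field` argument can be set to `"Generation"`.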

| Algorithm 4. Integrity Verification Stage in Btrfs IMM |

Input: containerName, startGen, subvolumePath, and a list of files to check (fileList)

Output: List of tampered files (tamperedFileList)

1 //(1) Changed Files Identification;

2 commandResult = output of btrfs subvolume find-new for subvolumePath and startGen;

3 lineCount = getLineNumber(commandResult);

4 for i = 0;i <lineCount;i++ do

5 line = readLine(commandResult);

6 filePath = getfilePath(line);

7 ChangedFileList.append(filePath);

8 end

9 //(2) Tampered Files Detection;

10 length = Length(fileList);

11 for i = 0;i <length;i++ do

12 if fileList[i] in ChangedFileList then

13 tamperedFileList.append(fileList[i]);

14 end

15 end

16 return tamperedFileList; |
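Algorithm 4 can be sketched in Python as below. The parser assumes that each `btrfs subvolume find-new` result line starts with `inode`, ends with a flags token followed by a path relative to the subvolume, and that the output closes with a `transid marker` line; this line format and the sample text are assumptions to verify against the installed btrfs-progs version. The function names are hypothetical.

```python
def parse_find_new(output: str) -> set[str]:
    """Collect file paths from find-new style output lines."""
    changed = set()
    for line in output.splitlines():
        if not line.startswith("inode "):
            continue  # skips the trailing marker line and blank lines
        _, sep, tail = line.partition(" flags ")
        if not sep:
            continue
        _flags, _, path = tail.partition(" ")  # drop the flags token, keep the path
        if path:
            changed.add(path)
    return changed


def validate_btrfs(file_list, find_new_output):
    """Return entries of file_list that appear among the changed files."""
    changed = parse_find_new(find_new_output)
    return [p for p in file_list if p.lstrip("/") in changed]


# Illustrative output; the numbers are made up for the example.
sample = (
    "inode 261 file offset 0 len 4096 disk start 0 offset 0 gen 58 flags INLINE etc/hosts\n"
    "inode 270 file offset 0 len 8192 disk start 13631488 offset 0 gen 59 flags NONE app/config.yml\n"
    "transid marker was 59\n"
)
result = validate_btrfs(["/etc/passwd", "/etc/hosts", "/app/config.yml"], sample)
print(result)
```

Storing the changed paths in a set keeps the membership test for each entry of the file list at constant expected cost.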

4.3. Prototype Architecture

To put our proposed metadata-driven IMMs (the Overlay2 IMM and the Btrfs IMM) into practice, we designed a modular prototype system. It validates feasibility in real-world container environments. This architecture follows the technical rules for using filesystem metadata (Section 3). It also fits the needs of resource-constrained edge devices and industrial servers (Section 4.1). Additionally, it can be extended to work with orchestration platforms like Kubernetes (detailed in Section 4.3.3). The following sections explain the prototype’s core modules and their interactions.

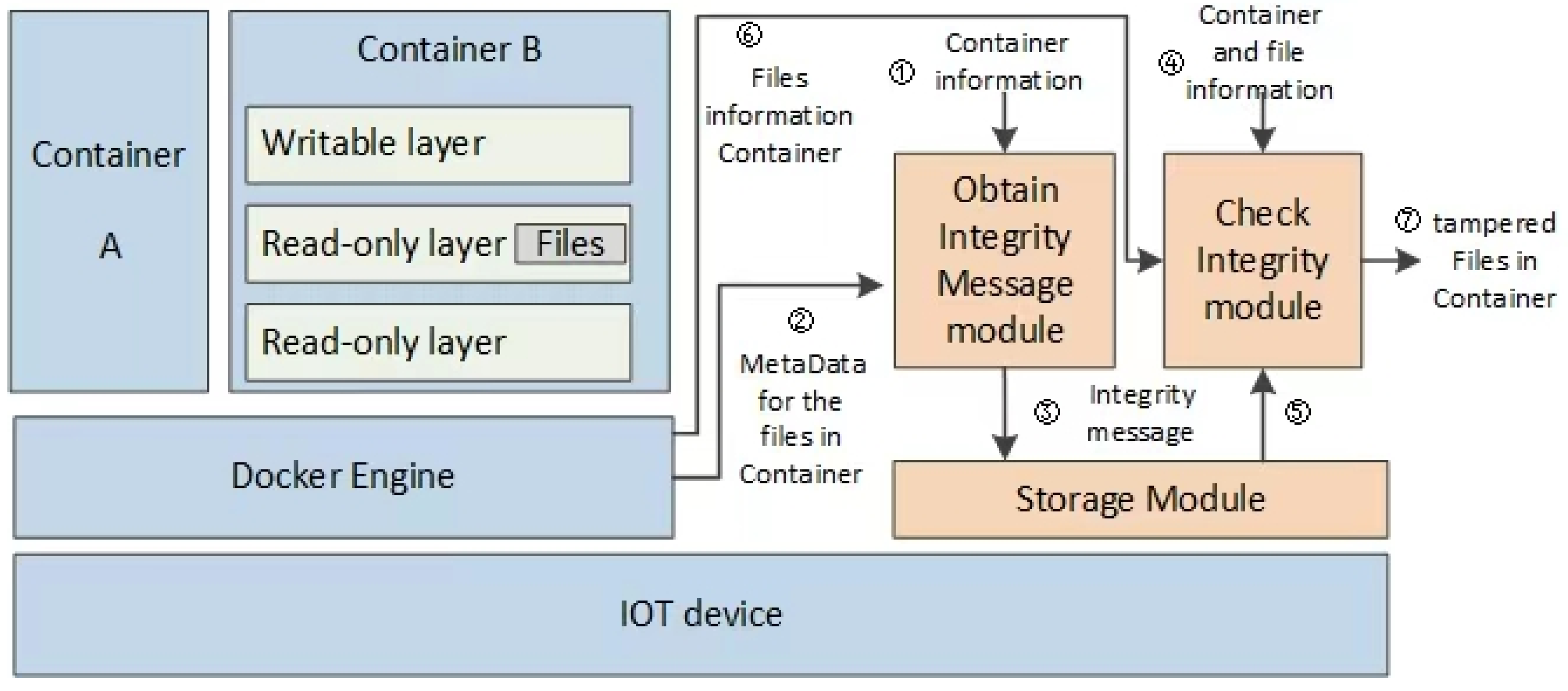

4.3.1. Basic Architecture

The prototype system includes three core modules, as illustrated in Figure 5. All are deployed outside containers to avoid detection by intra-container malicious actors. These modules work together to enable secure, lightweight integrity measurement. They are the Integrity Information Acquisition Module, the Storage Module, and the Integrity Verification Module.

- (1) Integrity Information Acquisition Module

This module retrieves baseline integrity information by implementing Algorithms 1 and 3 (for the Overlay2 and Btrfs IMMs, respectively). It takes container metadata (①) as input and extracts critical filesystem metadata (②) from container images. Examples include writable/merged layer paths (for Overlay2) or subvolume generation numbers (for Btrfs). The output (③) is stored in the Storage Module, where it serves as the reference state for subsequent integrity checks.

- (2)

Storage Module

This module stores all data critical to integrity (③). This includes container metadata (such as IDs and layer configurations), target file lists, and baseline information (like generation numbers and layer paths). It ensures that reference states—taken from the container’s filesystem structure—are stored permanently.

- (3)

Integrity Verification Module

This module performs real-time validation by comparing current filesystem metadata against baseline information. Given the container and file information (④), it executes Algorithms 2, 4, and A1 to retrieve stored integrity references (⑤) and current file states (⑥); check for discrepancies (e.g., file absence in merged layers, unexpected modifications in writable layers, or generation number mismatches); and generate reports on tampered files.
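The interaction of the three modules can be sketched for the Overlay2 case as follows. This is an illustrative outline, not the prototype's code: the class and function names are hypothetical, an in-memory dictionary stands in for the Storage Module, and the check rule (a file is flagged if it is absent from the merged layer or present in the writable layer) is inferred from the description above. The only external detail relied on is that `docker inspect` reports `GraphDriver.Data.UpperDir` and `GraphDriver.Data.MergedDir` for the overlay2 driver.

```python
import os
from dataclasses import dataclass, field


@dataclass
class Baseline:
    upper_dir: str   # writable layer path (GraphDriver.Data.UpperDir)
    merged_dir: str  # merged view path (GraphDriver.Data.MergedDir)


@dataclass
class Storage:
    """Storage Module stand-in: in-memory maps keyed by container name."""
    baselines: dict = field(default_factory=dict)
    file_lists: dict = field(default_factory=dict)


def acquire(inspect_entry: dict, files: list, store: Storage) -> None:
    """Acquisition Module: keep the layer paths from one inspect entry."""
    data = inspect_entry["GraphDriver"]["Data"]
    name = inspect_entry["Name"].lstrip("/")
    store.baselines[name] = Baseline(data["UpperDir"], data["MergedDir"])
    store.file_lists[name] = list(files)


def verify(name: str, store: Storage) -> list:
    """Verification Module: flag files missing from merged or present in upper."""
    base = store.baselines[name]
    tampered = []
    for path in store.file_lists[name]:
        rel = path.lstrip("/")
        missing = not os.path.lexists(os.path.join(base.merged_dir, rel))
        rewritten = os.path.lexists(os.path.join(base.upper_dir, rel))
        if missing or rewritten:
            tampered.append(path)
    return tampered
```

Each check is a path lookup on the host, so no file content is read and nothing runs inside the container.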

The prototype architecture has key advantages:

Enhanced Security: The system runs entirely outside the container. This means its components cannot be detected or interfered with by malware inside the container. This design reduces risks from insider threats or a compromised container.

State-Agnostic Integrity Checks: The system can verify file integrity no matter the container’s state (running, stopped, or paused). This is because it relies on the container’s immutable image structure (like read-only layers or subvolume snapshots), not temporary running states (unlike TEE-based methods [34]). This ensures consistent, reliable checks throughout the container’s lifecycle.

Modular Design: Separation of the acquisition, verification, and storage modules makes the system easy to extend. It can integrate additional filesystem backends (such as ZFS or APFS) or measurement algorithms without changing the core structure.

Lightweight Verification: Instead of computing hashes, the system analyzes filesystem metadata. This eliminates the time and space overhead of processing entire files. This contrasts with MDA-dependent tools like Container-IMA [6], which still rely on cryptographic hashing.

This prototype demonstrates the feasibility of metadata-driven IMMs for container environments. It provides a robust foundation for securing containerized apps against unauthorized modifications, while addressing key limitations of hardware-focused trust mechanisms.

4.3.2. Compatibility with Different Filesystem and Container Versions

Metadata-driven IMMs rely on the stability of filesystem-specific metadata structures (e.g., Overlay2 layer paths, Btrfs generation numbers) and consistent Docker API outputs. However, variations in filesystem versions and Docker engine releases can introduce discrepancies, potentially undermining these assumptions. Key considerations and mitigations include the following:

Overlay2 implementations vary across Linux kernel versions (e.g., kernel 5.4 vs. 6.1) in handling whiteout files and layer naming conventions. Btrfs updates (e.g., v5.15 vs. v6.0) may alter generation number increment logic for subvolumes. The structure of the docker inspect output also differs between Docker v20.10 and v24.0. However, these differences do not affect the core workflow; only the version-specific metadata parsing needs to be adapted.

4.3.3. Integration with Kubernetes (k8s) and Handling of Cross-Node Migration

The proposed metadata-driven IMMs can be integrated with Kubernetes to enhance container security in orchestrated environments, with key implementations focused on alignment with Kubernetes’ native workflows:

- (1) Storage Integration: Integrity-related metadata (e.g., Overlay2 layer paths, Btrfs generation numbers) can be stored in etcd, Kubernetes’ distributed key-value store. This ensures cross-node consistency of baseline information, enabling accessible reference data for integrity checks across the cluster.

- (2) Orchestration Layer Integration: During container deployment and migration, the system can use Kubernetes’ node inspection capabilities to validate the target node’s filesystem compatibility (e.g., support for Overlay2 or Btrfs). For migrations, metadata consistency is maintained by re-acquiring baseline information (via Algorithms 1 and 3) on the target node post-filesystem migration, addressing potential discrepancies in layer naming or subvolume identifiers across nodes.

- (3) Algorithm Integration: The integrity information acquisition and verification modules can be embedded into Kubernetes nodes, operating as lightweight daemons. These modules interface with the kubelet to trigger integrity checks during critical lifecycle events (e.g., pod startup, post-migration), ensuring seamless integration with node-level operations.

- (4) Migration Integration: Cross-node pod migration involves two critical steps, both integrated with the IMM workflow:

After migrating the container’s filesystem to the target pod, the system needs to immediately re-acquire metadata (e.g., updated layer paths or generation numbers) using the same logic as the initial acquisition stage.

Before starting the migrated container, the verification module validates the newly acquired metadata against the stored baseline (from etcd), ensuring alignment with the target node’s filesystem structure.

This integration leverages Kubernetes’ native components to maintain metadata usability across nodes, with potential inconsistencies during migration addressed by re-executing metadata acquisition algorithms (Section 4.2), ensuring the IMMs remain effective in orchestrated environments.

4.4. Limitations and Mitigation Strategies

While the prototype architecture demonstrates strong security and efficiency in controlled settings, it faces inherent limitations in real-world deployments. These challenges primarily manifest in two key scenarios: dynamic operational environments (e.g., container migration across nodes) and targeted filesystem-level attacks by privileged adversaries. Below, we detail these limitations and the corresponding mitigation strategies.

4.4.1. Challenges in Dynamic Environments and Mitigations

In dynamic environments, container migration across nodes introduces unique challenges for metadata-driven IMMs. The core issue stems from cross-node metadata inconsistency: when containers migrate, differences in filesystem layer paths (for Overlay2) or subvolume identifiers (for Btrfs) between source and target nodes can render precomputed baseline metadata invalid.

Additionally, re-acquiring metadata from the target node post-migration introduces latency, creating a potential security window. To address these issues, we can implement a metadata re-acquisition module that fetches container metadata immediately after migration and before startup. Integrated with Docker’s migration API, this module can ensure the detection baseline remains valid. We can also conduct targeted integrity checks on the target server’s filesystem prior to startup: for Btrfs, periodic checksum verification via btrfs scrub (cross-referenced with TPM-signed baselines); for Overlay2, real-time monitoring of critical metadata directories (e.g., /var/lib/docker/overlay2/*) via Linux’s fanotify API, triggering alerts and TPM revalidation on unauthorized modifications.

4.4.2. Mitigating Filesystem-Level Compromise via Trusted Anchors

Another critical vulnerability lies in filesystem-level compromise by host-privileged adversaries. These attackers, having gained root access to the host OS, can tamper with filesystem configurations or metadata, directly undermining the integrity of measurement results. To mitigate this, we propose two complementary safeguards:

- (1) TPM-Backed Metadata Anchoring: Critical filesystem metadata (e.g., Overlay2 layer root hashes, Btrfs subvolume checksum logs) will be cryptographically signed using a TPM and stored in a read-only partition. During IMM validation, our prototype will first verify this TPM signature to ensure the metadata themselves have not been tampered with, adding a trust root below the filesystem layer. TPMs for edge devices (e.g., Raspberry Pi4B) cost USD 3–32, accounting for 2–5% of total hardware costs, while those for industrial edge servers range from USD 35 to USD 149, or <1% of their total cost. These costs are economically feasible given the security benefits.

- (2) Filesystem-Specific Integrity Checks: Built-in filesystem mechanisms are leveraged to detect tampering. For Btrfs, periodic btrfs scrub runs validate data/metadata checksums, with results cross-checked against the TPM-signed baseline. For Overlay2, Linux’s fanotify API monitors write operations to critical metadata directories (e.g., /var/lib/docker/overlay2/*), triggering alerts and TPM re-verification when unauthorized modifications are detected.
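The verify-before-trust flow behind metadata anchoring can be sketched as follows. This is only an illustration of the control flow: an HMAC-SHA256 tag computed with a software key stands in for the TPM signature, which is a deliberate simplification and offers none of the hardware protection a TPM provides. The function names and the record layout are hypothetical.

```python
import hashlib
import hmac
import json


def seal(metadata: dict, key: bytes) -> dict:
    """Serialize metadata canonically and attach an authentication tag."""
    payload = json.dumps(metadata, sort_keys=True).encode()
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"payload": payload.decode(), "tag": tag}


def open_sealed(record: dict, key: bytes) -> dict:
    """Return the metadata only if the tag still matches; raise otherwise."""
    payload = record["payload"].encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    if not hmac.compare_digest(expected, record["tag"]):
        raise ValueError("baseline metadata failed authentication")
    return json.loads(payload)
```

In this sketch the verification module would call `open_sealed` before every integrity check and abort if it raises, so a baseline altered on disk is never used as a reference.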

5. Computing Complexity Analysis

This section analyzes the computational complexity of the two proposed metadata-driven IMMs, comparing them with the MDA-based MD5 IMM and SHA256 IMM and with the Diff IMM described in Appendix A.

We assess computational complexity based on CPU resource consumption; the lower the complexity, the better. Let n denote the number of files being measured, and let Li represent the length of the i-th file in the list.

5.1. Computational Complexity in the Integrity Information Acquisition Stage

Table 4 summarizes the computational complexity of different IMMs during the integrity information acquisition phase.

MDA-Based IMMs (MD5 IMM, SHA256 IMM): These tools use message-digest algorithms (MDAs) to create cryptographic hashes of files. Their complexity matches that of the MDA: for a single file of length L, the complexity is O(L). For n files of length L, the total complexity is O(nL). This complexity depends directly on the amount of data being processed and grows as more data are handled.

Overlay2 IMM and Btrfs IMM: These metadata-driven tools fetch static configuration parameters from Docker and filesystem metadata (such as layer paths or generation numbers). Their operations consist of parsing structured metadata (like the JSON output from docker inspect) and accessing specific filesystem paths. These operations have a time complexity of O(1) (constant time lookups) and involve lightweight parsing. As a result, their complexity does not depend on the number of files or their sizes.

Diff IMM: This tool does not need a separate stage to collect integrity information. Instead, it relies on comparing files incrementally during verification. Consequently, its complexity in this stage is O(0), showing that there is no computational overhead to collect baseline information.

Key Observations:

MDA-based IMMs have complexity that grows linearly with the total amount of file data, because they must hash entire file contents. This makes them computationally heavy when dealing with large datasets.

Metadata-driven IMMs (Overlay2 IMM, Btrfs IMM) achieve constant complexity by leveraging filesystem metadata and the container configuration, avoiding data-intensive operations.

The Diff IMM improves the acquisition stage by skipping baseline collection, but this may introduce higher complexity in the verification stage (analyzed in Section 5.2).

This analysis shows that metadata-driven approaches significantly reduce computational overhead during the integrity information acquisition stage compared to traditional hash-based methods. This makes them more suitable for container environments with limited resources.
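The O(nL) cost of the hash-based baseline can be made concrete with a short sketch. It is an illustration only, assuming chunked reading with Python's `hashlib`; the function name and the byte counter are hypothetical and are included to show that the work done equals the total number of bytes in the measured files.

```python
import hashlib


def hash_baseline(paths, algorithm="sha256", chunk_size=1 << 20):
    """Hash every file in chunks; return (digests, total bytes read)."""
    digests = {}
    bytes_read = 0
    for path in paths:
        h = hashlib.new(algorithm)
        with open(path, "rb") as fh:
            # Every byte of every file passes through the digest function.
            while chunk := fh.read(chunk_size):
                h.update(chunk)
                bytes_read += len(chunk)
        digests[path] = h.hexdigest()
    return digests, bytes_read
```

The returned byte count grows with both the number of files and their lengths, whereas the metadata-driven acquisition described above reads a fixed set of configuration fields per container.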

5.2. Computational Complexity in the Integrity Verification Stage

Table 5 summarizes the analysis of the computational complexity of various IMMs during the integrity verification phase.

For MDA-based IMMs (MD5 IMM, SHA256 IMM), verification requires computing cryptographic hashes of files and comparing them to precomputed message digests. The process is similar to the integrity information acquisition stage. Therefore, the computational complexity of the integrity verification stage matches that of the acquisition stage.

In contrast, metadata-driven IMMs (Overlay2 IMM, Btrfs IMM) leverage filesystem metadata and structural features to detect tampering.

For the Overlay2 IMM, verification only requires determining whether a file path exists in the writable or merged layers. Thus, the overall verification complexity for the Overlay2 IMM is O(n∙c), where c denotes the constant cost of each metadata query.

For the Diff IMM and Btrfs IMM, verification involves checking whether a file path is in the list of changed files (for example, via docker diff or btrfs subvolume find-new). Let D represent the time needed to obtain the names of all changed files between versions, and m stand for the number of changed files. Their complexity is therefore O(D + n∙m).
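The n∙m term comes from the cross-referencing step: each of the n monitored paths is compared against up to m changed paths. The sketch below counts these comparisons for a naive list scan. It is an illustration with a hypothetical function name, not the measured implementation.

```python
def count_comparisons(file_list, changed_list):
    """Naive cross-referencing; returns (tampered, number of comparisons)."""
    tampered = []
    comparisons = 0
    for path in file_list:
        for changed in changed_list:
            comparisons += 1
            if path == changed:
                tampered.append(path)
                break  # stop scanning once the path is found
    return tampered, comparisons
```

In the worst case, when no monitored file has changed, the scan performs exactly n∙m comparisons. If the changed paths are held in a hash set instead of a list, each lookup takes constant expected time, which would reduce the cross-referencing cost to O(m + n) on top of D.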

This analysis shows that metadata-driven approaches have consistently lower complexity in the long run compared to MDA-based methods. This is especially true for large datasets or containers with numerous files.

In conclusion, metadata-driven IMMs have much lower computational complexity in both stages than hash-based approaches. This makes them better suited for real-time integrity monitoring in resource-constrained container environments.

6. Experimental Analysis

6.1. Experimental Setup

6.1.1. Configuration of Experimental Environment

This section describes the experimental setup, including the edge server and the Raspberry Pi4B. The hardware and software settings of both devices are detailed in Table 6 and Table 7, respectively. These setups provide a standard way to repeat and compare experimental results.

6.1.2. Dataset

IMM performance depends on file length, not content. Therefore, we created a custom dataset of files with different lengths and random contents. The dataset has three groups of file sizes: [0.1 MB, 0.3 MB, 0.5 MB, 0.7 MB, 0.9 MB], [1 MB, 3 MB, 5 MB, 7 MB, 9 MB], and [10 MB, 30 MB, 50 MB, 70 MB, 90 MB].
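A dataset of this shape could be generated with a short script such as the one below. This is a sketch under assumptions that the paper does not state: 1 MB is taken as 1024 × 1024 bytes, contents come from `os.urandom`, and the file naming scheme is hypothetical.

```python
import os

# The three size groups listed above, in MB.
GROUPS_MB = [
    [0.1, 0.3, 0.5, 0.7, 0.9],
    [1, 3, 5, 7, 9],
    [10, 30, 50, 70, 90],
]


def make_dataset(out_dir, groups_mb=GROUPS_MB, unit=1024 * 1024):
    """Write one random-content file per listed size; return {path: size}."""
    os.makedirs(out_dir, exist_ok=True)
    created = {}
    for g, sizes in enumerate(groups_mb):
        for size_mb in sizes:
            n_bytes = round(size_mb * unit)
            path = os.path.join(out_dir, f"group{g}_{size_mb}MB.bin")
            with open(path, "wb") as fh:
                fh.write(os.urandom(n_bytes))
            created[path] = n_bytes
    return created
```

Passing a smaller `unit` produces a scaled-down dataset with the same size ratios, which is convenient for a quick dry run.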

6.1.3. Evaluation Schema

We compared the proposed IMMs in three important aspects: time taken to collect integrity information, storage space needed for information, and time taken to check integrity.

Time complexity is a key measure of efficiency; the less time an algorithm takes, the more efficient it is. Storage consumption shows how well resources are used; less storage indicates better resource use.

In each aspect, we compared our metadata-based IMMs with the MD5 IMM, SHA256 IMM, and Diff IMM. These comparisons show the pros and cons of each IMM clearly.

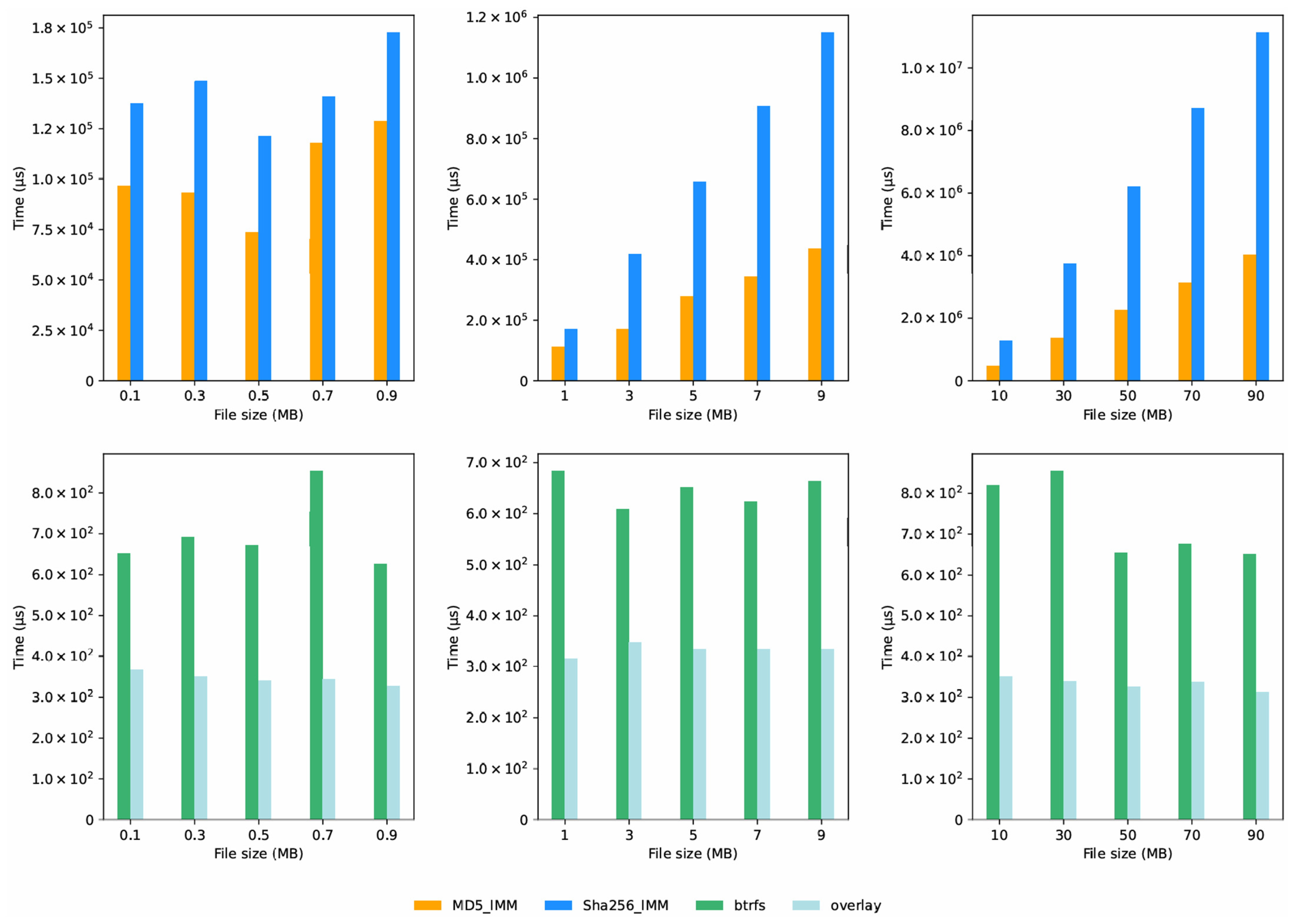

6.2. Integrity Information Acquisition Time

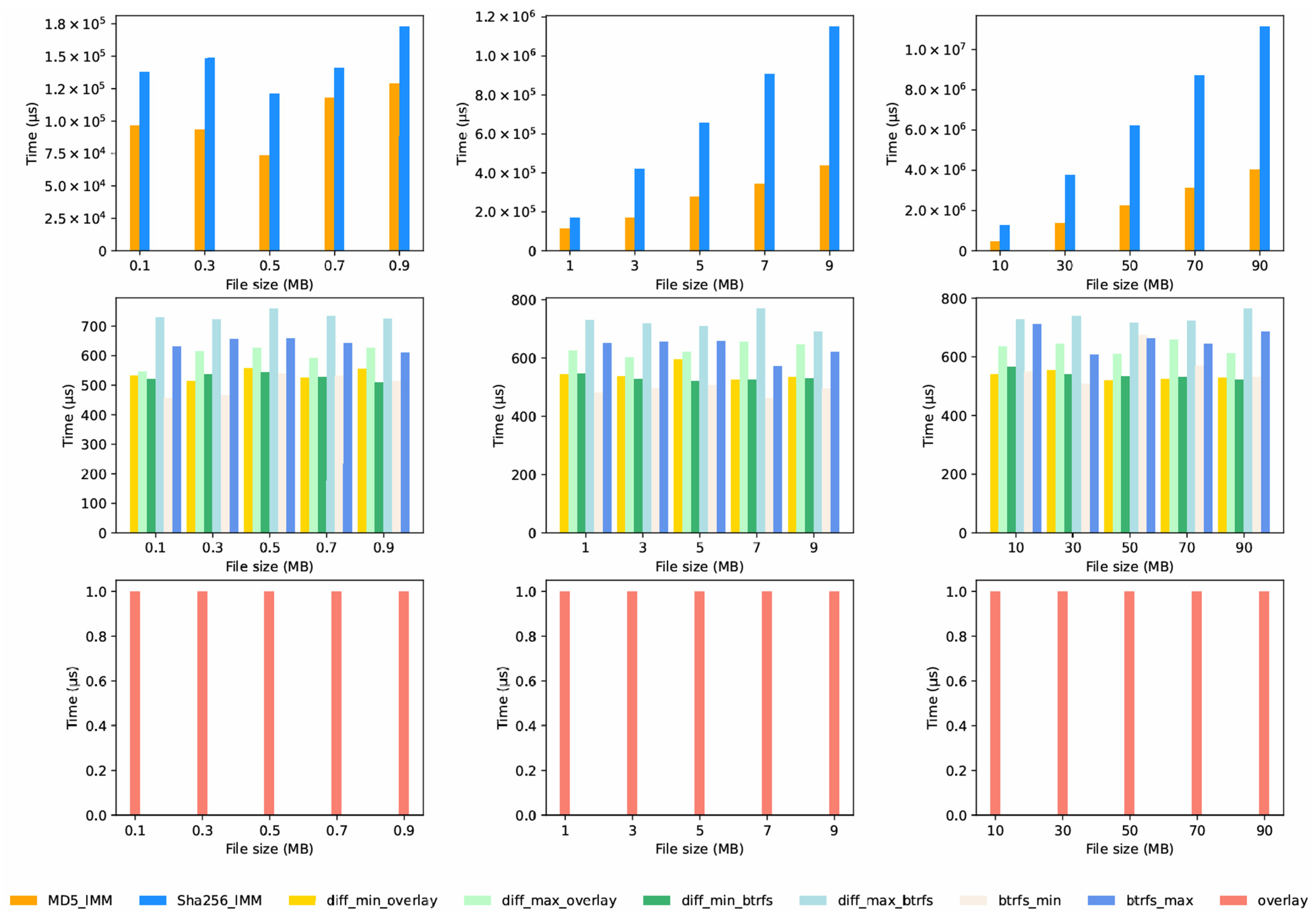

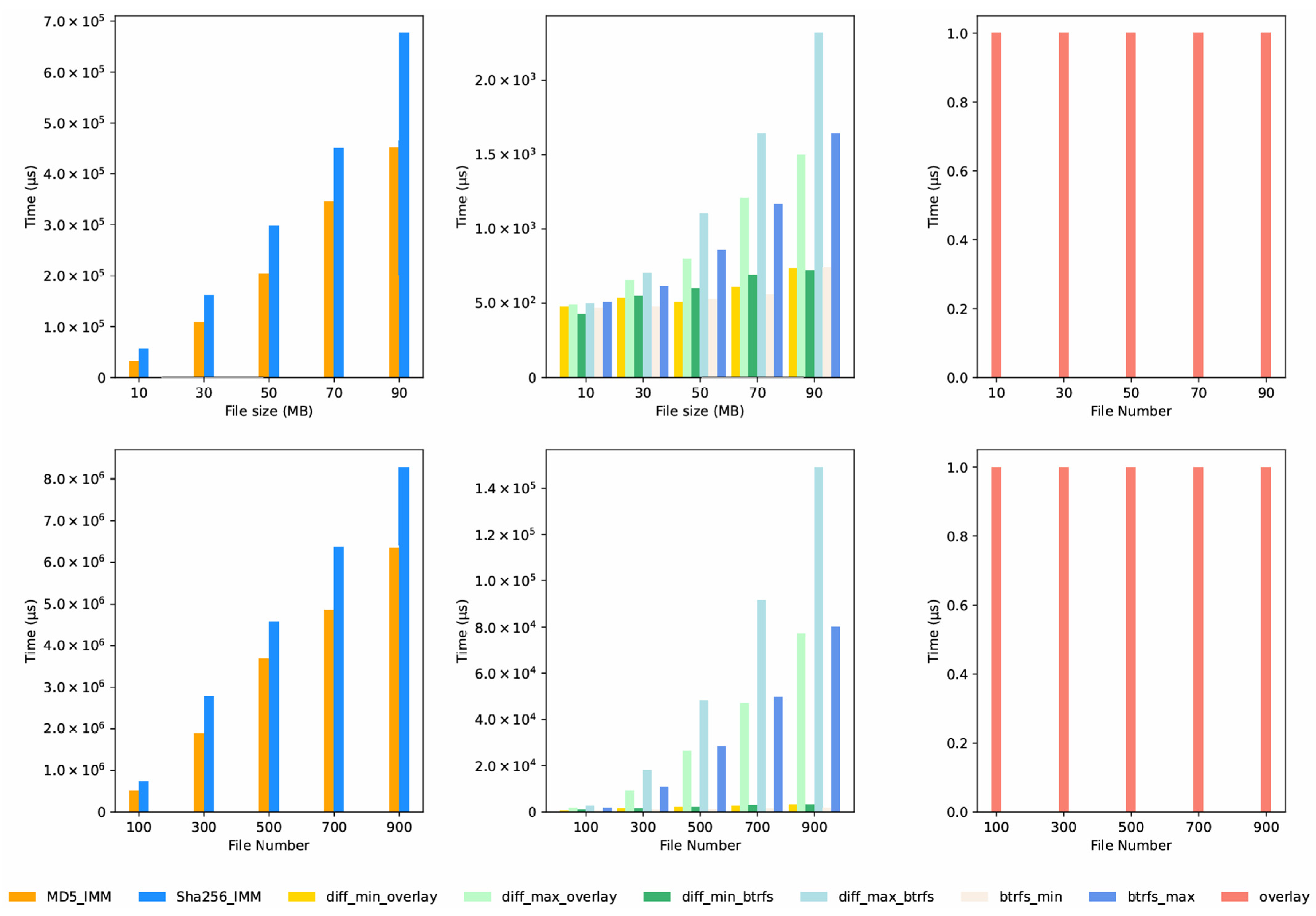

We ran an experiment to collect integrity information for 30 files of different lengths. The results are shown in Figure 6 and Figure 7, and we made several significant observations:

Firstly, the same algorithm takes very different amounts of time on different platforms. The edge server performs better than the Raspberry Pi4B. This is because the edge server has stronger hardware, such as higher-performance processors and quicker memory access.

Secondly, for the same file length and platform, the average time to collect integrity information follows this order: Diff IMM < Overlay2 IMM < Btrfs IMM < MD5 IMM < SHA256 IMM.

MD5 IMM and SHA256 IMM: The time recorded for both these IMMs is the time to process one file. It depends mostly on how long their respective cryptographic algorithms (MD5 or SHA256) take to run. Both algorithms process data one step at a time, so their execution time increases steadily as the file length grows. Since MD5 is less complex than SHA256, the MD5 IMM takes slightly less time than the SHA256 IMM.

Overlay2 IMM and Btrfs IMM: As explained in Algorithms 1 and 3, these metadata-based IMMs work by accessing and parsing filesystem metadata, not by processing entire file content. Therefore, their execution time remains relatively stable, no matter how many files there are or how long they are.

Diff IMM: This IMM takes no time to collect integrity information. Unlike other IMMs, it does not need a separate step to gather information—this is what makes it different.

6.3. Integrity Verification Time

IMMs run their integrity verification at regular intervals. This section compares the time consumption in two scenarios: when the number of files is fixed, but their lengths vary; and when the file length is constant, but the number of files changes.

6.3.1. Varying File Lengths with a Fixed Number of Files

We used the dataset described in

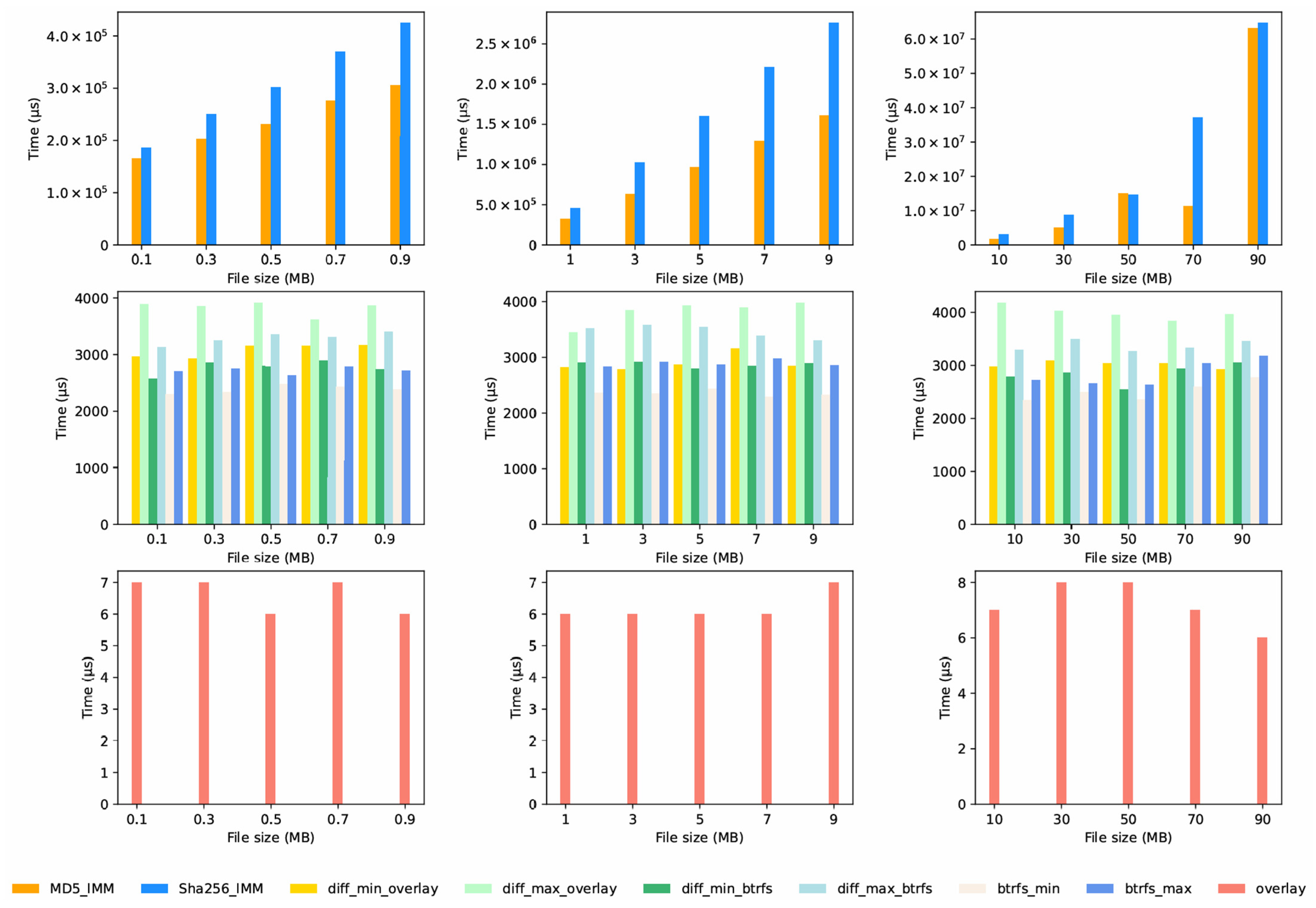

Section 6.1.3, with the number of files set to 30. The results, shown in

Figure 8 and

Figure 9, lead to the following conclusions:

Time Order: For the same file sizes and platforms, the average time for integrity verification follows this order: Overlay2 IMM < Btrfs IMM ≈ Diff IMM < MD5 IMM < SHA256 IMM.

Platform Performance Differences: The same algorithm takes very different times on different platforms. The edge server runs faster than the Raspberry Pi4B, thanks to its better hardware.

MD5 IMM and SHA256 IMM: Their integrity verification works the same way as described in Section 6.2. This is because they use the same algorithms for both integrity information acquisition and file integrity verification.

Overlay2 IMM: As detailed in Algorithm 2, its integrity verification involves obtaining integrity information and the file list from the database, then checking if files exist in merged or writable layers. The Overlay2 IMM has the lowest computational complexity and uses the fewest data, so it runs the fastest.

Btrfs IMM and Diff IMM: As shown in Algorithms 4 and A1, their verification time involves obtaining integrity information and the file list from the database, retrieving the list of changed files in the container, and checking if files are in that changed list. The time depends on how many files were changed; it is shortest when no files are changed and longest when all files are changed. The Btrfs IMM takes slightly less time than the Diff IMM.

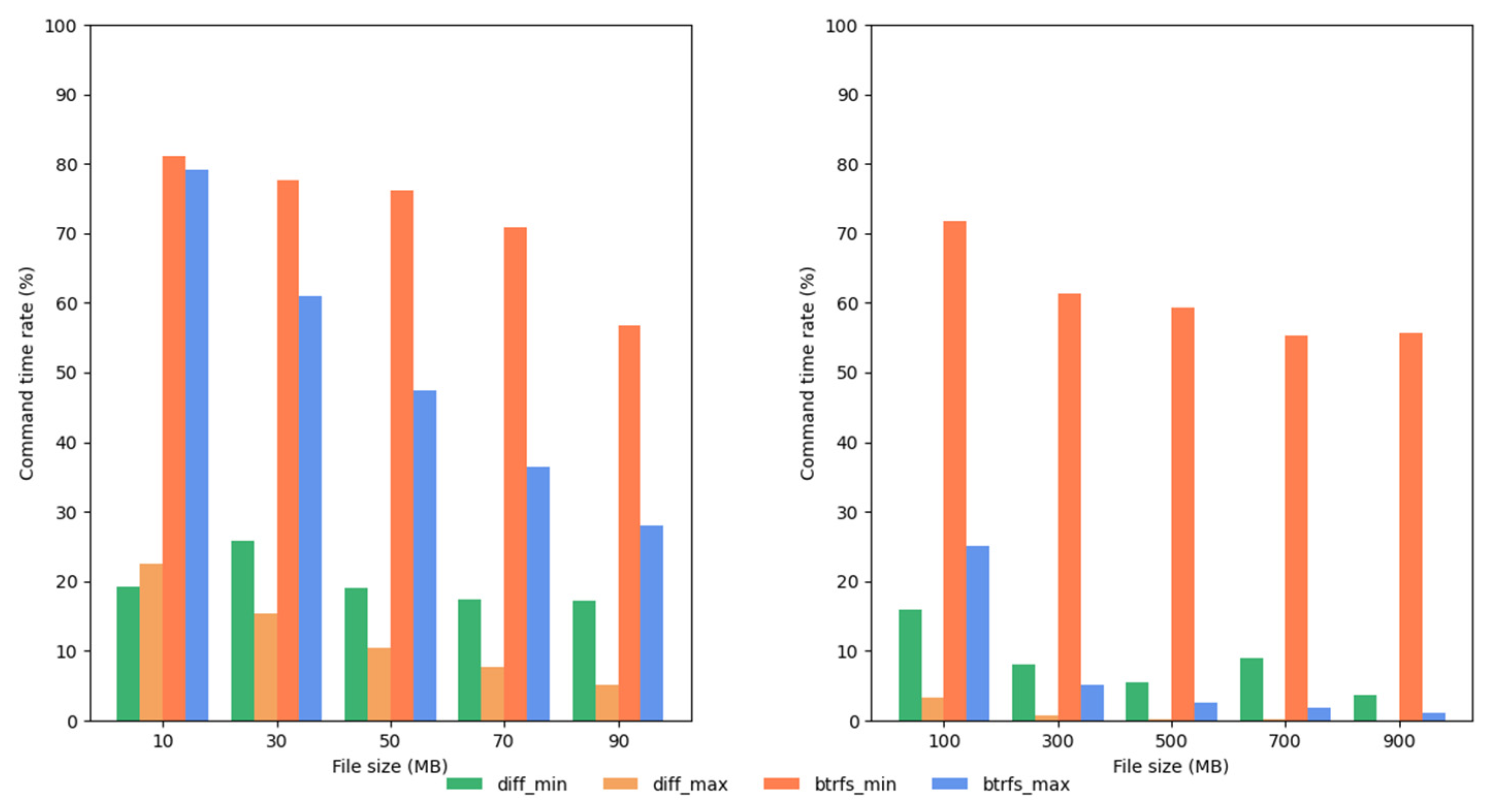

We also analyzed the ratio of the command execution time to the total time in the Btrfs IMM and Diff IMM. The results, shown in Figure 10 and Figure 11, lead to the following observations:

Command Time on the Same Platform: On the same platform, the diff command consumes less time than the btrfs command.

Command Time across Platforms: On the Raspberry Pi4B, running either the diff or btrfs command takes about 4–5 times longer than on the Intel platform.

Time Variation on the Same Platform: On the same platform, the difference between the shortest and longest execution times for the Btrfs IMM and Diff IMM is minimal, mainly due to the relatively small number of files.

File Size and Command Time: When the file size increases, the command execution time barely changes. This is because the commands read the file’s data structure, which is not affected by the file length.

Command Proportion of Total Time: The diff command makes up a larger part of the total time than the btrfs command. This is because (a) complexity depends on how many files were modified; (b) diff detects changed files across all layers; (c) btrfs obtains changed files from just one layer.

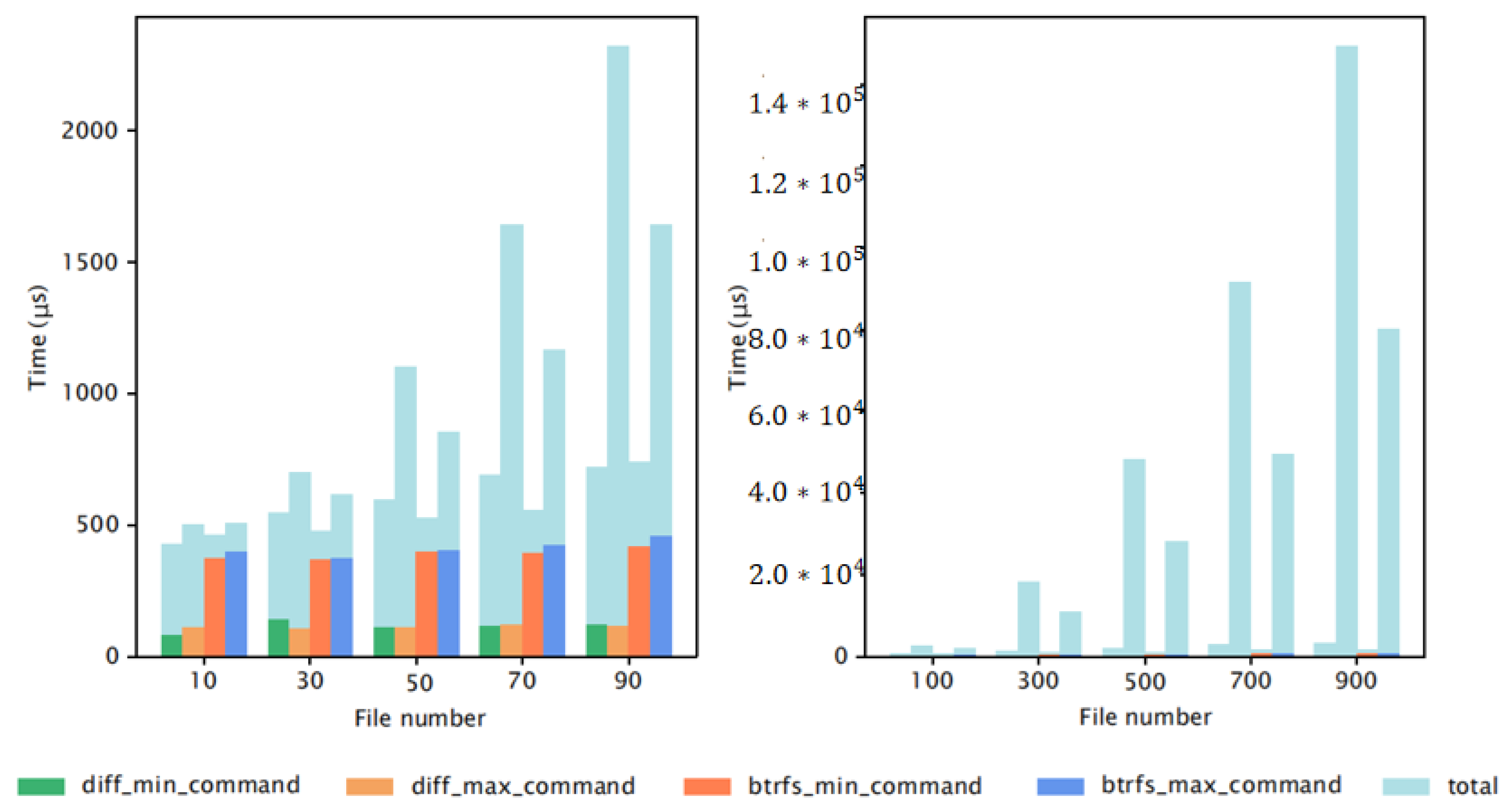

6.3.2. Fixed File Length and Variable Numbers of Files

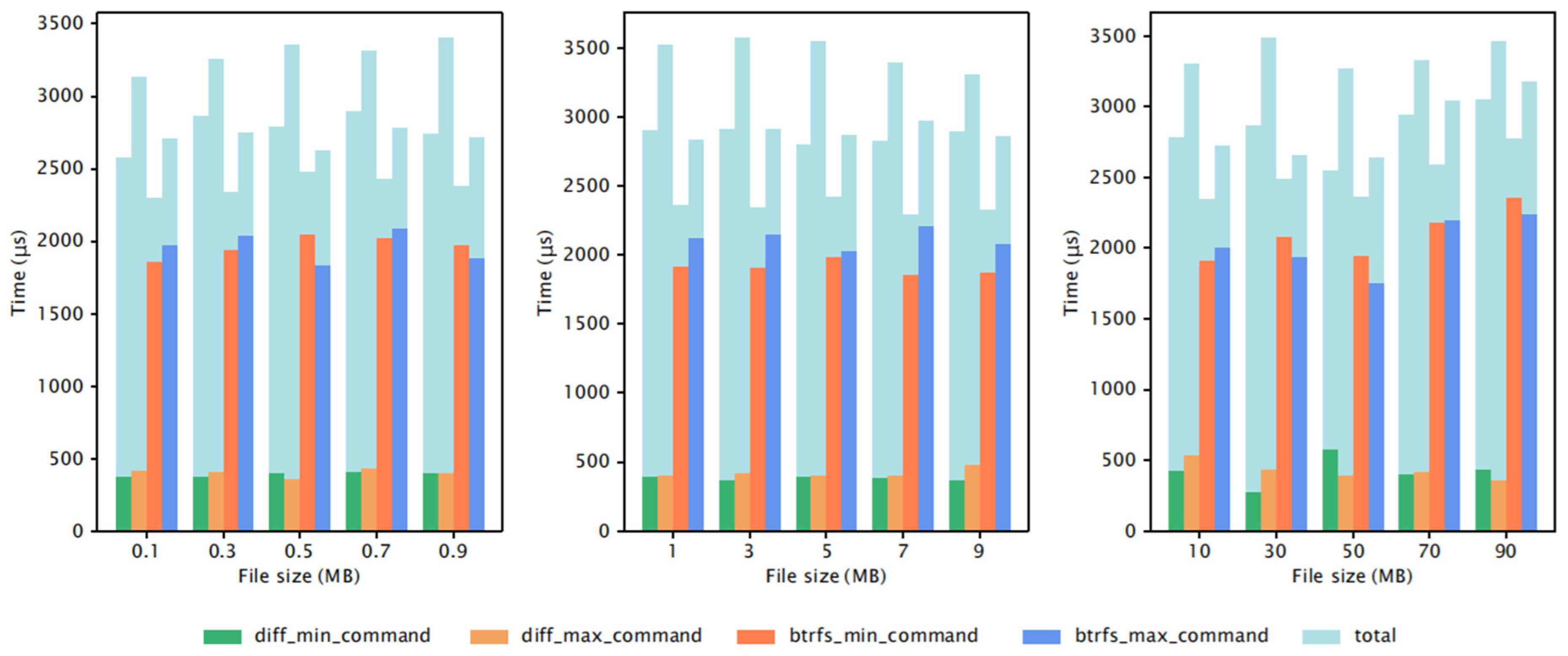

We chose two fixed file lengths (5 MB and 1 MB) and tested different numbers of files: [10, 30, 50, 70, 90] and [100, 300, 500, 700, 900]. The results of the integrity verification time analysis are depicted in Figure 12 and Figure 13, leading to the following conclusions:

When file lengths and the experimental platform are kept the same, the average integrity verification time for different IMMs follows a consistent order: Overlay2 IMM < Btrfs IMM ≈ Diff IMM < MD5 IMM < SHA256 IMM. This order matches the natural computational characteristics of each tool.

Platform-Dependent Performance: The same algorithm takes very different amounts of time on different platforms. The edge server performs better than the Raspberry Pi4B because of differences in hardware parameters, such as processing power, memory bandwidth, and storage read/write capabilities.

MD5 IMM, SHA256 IMM, and Overlay2 IMM: The average time for these IMMs to verify integrity does not change much when the number of files increases. These algorithms process files one after another, so the overall time goes up with more files, but the average time per file stays the same.

Btrfs IMM and Diff IMM: The integrity verification algorithms for these IMMs (Algorithms 4 and A1) have a complexity of O(D + n·m), as derived in Section 5.2. As the number of files (n) increases, the overall time to check integrity rises sharply.

Moreover, we analyzed how the overall integrity checking time relates to command execution time, using 1 MB files and different numbers of files. We focused on the Btrfs IMM and Diff IMM, and the results are shown in Figure 14 and Figure 15. This led to the following conclusions:

Command Time on the Same Platform: On a single platform, the diff command takes slightly less time than the btrfs command. This is because the commands work differently and are implemented in ways that fit their respective IMMs.

Command Time across Platforms: On the Raspberry Pi4B, both the diff and btrfs commands take about 4–5 times longer to run than on the Intel-based edge server. This shows how hardware design affects command performance.

Number of Files and Command Time: Command execution time does not change much when more files are added. This is because the commands work with filesystem data structures, which are not affected by the total number of files. Accessing these structures takes roughly the same amount of time regardless of the number of files.

Furthermore, we analyzed the ratio of command time to overall integrity checking time, using 1 MB files and different numbers of files. We focused on the Btrfs IMM and Diff IMM, and the results are shown in Figure 16 and Figure 17. This led to the following conclusions:

The diff command’s time makes up a larger share of the total verification time than the btrfs command’s time. Three reasons explain this: (a) both IMMs’ complexity depends heavily on how many files were changed; (b) diff checks for changed files across all filesystem layers, which can involve more work; (c) btrfs obtains changed files from just one layer, making it less complex than diff.

As the number of files increases, the command time stays mostly the same, but the overall integrity checking time goes up. Therefore, the share of command time in the total time gradually decreases.
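The decreasing share can be made explicit with a simple cost model. This is an illustrative simplification rather than a fitted result: $T_{\mathrm{cmd}}$ (the command execution time, treated as constant in $n$ as observed above) and $t$ (an average per-file cross-referencing cost) are symbols introduced here for the sketch.

$$
r(n) = \frac{T_{\mathrm{cmd}}}{T_{\mathrm{cmd}} + n\,t}, \qquad r(n+1) < r(n) \ \text{ for } t > 0, \qquad \lim_{n \to \infty} r(n) = 0 .
$$

Under this model the command's share of the total time shrinks monotonically as files are added, which matches the trend described above. A per-file cost that itself grows with the number of changed files would make the share fall even faster.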

7. Conclusions

This study proposes two new metadata-based IMMs for containers and develops a prototype system. The evaluation of the proposed algorithms and the experimental results are summarized in Table 8. When compared with traditional MDA-based IMMs, the proposed metadata-based IMMs show several advantages.

Enhanced Efficiency: Metadata-based IMMs significantly improve computational efficiency by reducing both space and time complexity. By using filesystem metadata instead of hashing entire file contents, these tools minimize resource consumption, making them more suitable for edge and cloud environments with limited resources.

Superior Security: Since they are placed outside the container, metadata-based IMMs remain invisible to malware operating inside the container. This design effectively reduces the risk of interference or bypass by insider threats, providing better security than IMMs that run inside containers.

State-Independent Integrity Verification: These IMMs can verify file integrity regardless of the container’s state (e.g., running, paused, or stopped). They rely on metadata from container images, ensuring consistent and reliable integrity verification throughout the container’s lifecycle.

We have discussed how the system might work with Kubernetes, including how it could fit into orchestrated workflows. We have also outlined a possible way to keep metadata consistent during cross-node pod migration, which would involve dynamic re-acquisition of metadata and baseline alignment. This theoretical framework suggests the IMMs could remain effective in distributed edge clusters without interfering with normal orchestration. However, this integration still needs to be implemented and tested in future work.

Limitations of the current design include its focus on container-internal threats, which means scenarios involving host-level compromise fall outside the immediate scope. Additionally, while TPM-backed metadata anchoring mitigates some filesystem-level risks, full host-side defense requires deeper integration with kernel-level security modules.

Future work will address these gaps by extending the IMM framework to include host-side metadata integrity checks, enabling detection of post-escape tampering. We also plan to optimize cross-filesystem adaptability (e.g., supporting ZFS) and enhance Kubernetes integration with automated metadata synchronization policies. These extensions would strengthen the mechanism’s applicability in large-scale industrial edge deployments.

Author Contributions

Methodology, S.-P.L. and J.-P.Z.; Software, S.-P.L.; Validation, L.Z. and S.-P.L.; Formal analysis, J.-P.Z.; Investigation, J.-P.Z.; Resources, G.-J.Q.; Writing—original draft, L.Z.; Writing—review & editing, L.Z.; Supervision, Y.-A.T.; Project administration, Y.-A.T.; Funding acquisition, L.Z. and G.-J.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the National Natural Science Foundation of China under grant no. 61802210 and the Academic Research Projects of Beijing Union University under grant no. ZK10202403.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

Author Jingpu Zhang was employed by the company SpaceWill Info. Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A

Appendix A.1. Diff-Based IMM

The Diff-based IMM uses the docker diff command to perform integrity verification, taking advantage of Docker’s built-in management features. The docker diff command lists changes to files between a container and its base image, generating an output with two columns:

Change Type: Denoted by A (addition), C (change), or D (deletion).

File Path: The absolute path of the changed file.

A file is considered tampered with if its path appears in the docker diff output. Unlike metadata-based or hash-based IMMs, the Diff-based IMM does not require an integrity information acquisition stage, as it directly uses runtime diff results. The integrity verification algorithm (Algorithm A1) is defined as follows:

| Algorithm A1. integrity verification stage in diff IMM |

Input: containerName, filePathList

Output: tamperedFileList

1 //(1) acquisition of changed files;

2 commandResult = diff command by containerName;

3 length = getLineNumber(commandResult);

4 for i = 0;i <length;i++ do

5 line = readLine(commandResult);

6 changeType, filePath = Divide(line);

7 ChangedFileList.append(filePath);

8 end

9 //(2) cross-referencing stage;

10 length = Length(filePathList);

11 for i = 0;i <length;i++ do

12 if filePathList[i] in ChangedFileList then

13 tamperedFileList.append(filePathList[i]);

14 else

15 skip;

16 end

17 end

18 return tamperedFileList; |
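Algorithm A1 can be sketched in Python as follows. This is a minimal illustration, not the authors' code: it assumes each line of the `docker diff` output holds a change-type letter, a space, and an absolute path, and the function names are hypothetical. The helper that invokes the Docker CLI needs a Docker host, so the parsing and cross-referencing functions take the command output as plain text and can be exercised without one.

```python
import subprocess


def run_docker_diff(container_name: str) -> str:
    """Return the raw output of `docker diff` (requires a Docker host)."""
    result = subprocess.run(
        ["docker", "diff", container_name],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def parse_diff(command_result: str) -> dict:
    """Stage 1: map each changed path to its change-type letter."""
    changes = {}
    for line in command_result.splitlines():
        change_type, _, file_path = line.strip().partition(" ")
        if file_path:
            changes[file_path] = change_type
    return changes


def verify_with_diff(file_path_list, command_result):
    """Stage 2: report monitored paths that appear in the diff output."""
    changes = parse_diff(command_result)
    return [p for p in file_path_list if p in changes]
```

Because no baseline is stored, every periodic check would start with a fresh call to `run_docker_diff`, which is the repeated cost noted under Technical Rationale.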

Technical Rationale

Stateless Operation: The Diff IMM avoids storing baseline integrity information. Instead, it uses real-time diff queries. This eliminates the overhead of information acquisition but requires docker diff to be run repeatedly for periodic checks.

Change Detection Semantics: The docker diff command captures all filesystem modifications visible at the container layer, including whiteout deletions in Overlay2 or subvolume changes in Btrfs.

References

- TCG. Tpm 1.2 Main Specification. Available online: https://trustedcomputinggroup.org/resource/tpm-main-specification/ (accessed on 11 July 2024).

- Zheng, W.; Wu, Y.; Wu, X.; Feng, C.; Sui, Y.; Luo, X.; Zhou, Y. A survey of intel sgx and its applications. Front. Comput. Sci. 2021, 15, 153808. [Google Scholar] [CrossRef]

- Zhou, Y.; An, H.; Wang, G.; Sun, L. Uefi based operating system integrity measurement method. Comput. Sci. Appl. 2017, 7, 310–319. [Google Scholar]

- Michael, V.; Frederic, S. Secure and privacy-aware multiplexing of hardware-protected tpm integrity measurements among virtual machines. International Conf. Inf. Secur. Cryptol. 2012, 7839, 324–336. [Google Scholar] [CrossRef]

- GB/T 38638-2020; Information Security Technology: Trusted Computing Architecture of Trusted Computing. China National Standardization Administration Committee: Beijing, China, 2020.

- Luo, W.; Shen, Q.; Xia, Y.; Wu, Z. Container-IMA: A privacy-preserving integrity measurement architecture for containers. In Proceedings of the 22nd International Symposium on Research in Attacks, Intrusions and Defenses (RAID), Chaoyang District, Beijing, China, 25–28 September 2019; pp. 487–500. [Google Scholar]

- Yang, P.; Tao, L.; Wang, H. Rttv: A dynamic cfi measurement tool based on tpm. IET Inf. Secur. 2018, 12, 438–444. [Google Scholar] [CrossRef]

- Dong, S.; Xiong, Y.; Huang, W.; Ma, L. Kims: Kernel integrity measuring system based on trustzone. In Proceedings of the 2020 6th International Conference on Big Data Computing and Communications (BIGCOM), Deqing, China, 24–25 July 2020; pp. 103–107. [Google Scholar] [CrossRef]

- Xu, Q.; Jin, C.; Rasid, M.F.B.M.; Veeravalli, B.; Aung, K.M.M. Blockchain-based decentralized content trust for docker images. Multimed. Tools Appl. 2018, 77, 18223–18248. [Google Scholar] [CrossRef]

- Zhang, X.; Qi, L.; Ma, X.; Liu, W.; Sun, L.; Li, X.; Duan, B.; Zhang, S.; Che, X. Semantic integrity measurement of industrial control embedded devices based on national secret algorithm. In Proceedings of the 2023 IEEE/CIC International Conference on Communications in China (ICCC Workshops), Dalian, China, 10–12 August 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Shi, L.; Wang, T.; Xiong, Z.; Wang, Z.; Liu, Y.; Li, J. Blockchain-Aided Decentralized Trust Management of Edge Computing: Toward Reliable Off-Chain and On-Chain Trust. IEEE Netw. 2024, 38, 182–188. [Google Scholar] [CrossRef]

- Song, L.; Ding, Y.; Dong, P.; Yong, G.; Wang, C. Tz-ima: Supporting integrity measurement for applications with arm trustzone. Inf. Commun. Secur. 2020, 13407, 342–358. [Google Scholar] [CrossRef]

- Bohling, F.; Mueller, T.; Eckel, M.; Lindemann, J. Subverting linux’ integrity measurement architecture. In Proceedings of the 15th International Conference on Availability, Reliability and Security, Virtual Event, Ireland, 25–28 August 2020; pp. 1–20. [Google Scholar] [CrossRef]

- Zhang, C.; Xu, C.; Cheng, W.; Xia, Y.; Liu, Y. A dynamic trust chain measurement method based on mixed granularity of call sequence. In Proceedings of the 2023 8th International Conference on Signal and Image Processing (ICSIP), Wuxi, China, 8–10 July 2023; pp. 629–633. [Google Scholar] [CrossRef]

- Sailer, R.; Zhang, X.; Jaeger, T.; Van Doorn, L. Design and implementation of a tcg-based integrity measurement architecture. In Proceedings of the 13th USENIX Security Symposium, San Diego, CA, USA, 9–13 August 2004; Volume 13, pp. 223–238. [Google Scholar]

- Shen, W.; Yu, J.; Xia, H.; Zhang, H.; Lu, X.; Hao, R. Light-weight and privacy-preserving secure cloud auditing scheme for group users via the third party medium. J. Netw. Comput. Appl. 2017, 82, 56–64. [Google Scholar] [CrossRef]

- Du, R.; Pan, W.; Tian, J. Dynamic integrity measurement model based on vtpm. China Commun. 2018, 15, 88–99. [Google Scholar] [CrossRef]

- Zhang, M.; Zhang, Q.; Zhao, S.; Shi, Z.; Guan, Y. Softme: A software-based memory protection approach for tee system to resist physical attacks. Secur. Commun. Netw. 2019, 2019, 8690853.

- Zhang, Y.; Feng, D.; Qin, Y.; Yang, B. A trust-zone based trusted code execution with strong security requirements. J. Comput. Res. Dev. 2015, 52, 2224–2238.

- Kucab, M.; Boryło, P.; Chołda, P. Remote attestation and integrity measurements with intel sgx for virtual machines. Comput. Secur. 2021, 106, 102300.

- Zhou, Y.; Xie, X.; Li, Z. Hyperspector: A uefi based vmm dynamic trusted monitoring base scheme. Softw. Guide 2016, 15, 154–157.

- Lin, J.; Liu, C.; Fang, B. Ivirt: Integrity measurement mechanism of running environment based on virtual machine introspection. Chin. J. Comput. 2015, 38, 191–203.

- Gu, J.; Ma, Y.; Zheng, B.; Weng, C. Outlier: Enabling effective measurement of hypervisor code integrity with group detection. IEEE Trans. Dependable Secur. Comput. 2022, 19, 3686–3698.

- Li, S.; Xiao, L.; Ruan, L.; Su, S. A novel integrity measurement method based on copy-on-write for region in virtual machine. Future Gener. Comput. Syst. 2019, 97, 714–726.

- Liu, W.; Niu, H.; Luo, W.; Deng, W.; Wu, H.; Dai, S.; Qiao, Z.; Feng, W. Research on technology of embedded system security protection component. In Proceedings of the 2020 IEEE International Conference on Advances in Electrical Engineering and Computer Applications (AEECA), Dalian, China, 25–27 August 2020; pp. 21–27.

- Ling, Z.; Yan, H.; Shao, X.; Luo, J.; Xu, Y.; Pearson, B.; Fu, X. Secure boot, trusted boot and remote attestation for arm trustzone-based iot nodes. J. Syst. Archit. 2021, 119, 102240.

- Shen, T.; Li, H.; Bai, F.; Gong, B. Blockchain-assisted integrity attestation of distributed dependency iot services. IEEE Syst. J. 2023, 17, 6169–6180.

- Yang, X.; Wang, X.; Li, X.; Hang, Z.; Caifen, W. Decentralized integrity auditing scheme for cloud data based on blockchain and edge computing. J. Electron. Inf. Technol. 2023, 45, 3759–3766.

- De Benedictis, M.; Jacquin, L.; Pedone, I.; Atzeni, A.; Lioy, A. A novel architecture to virtualise a hardware-bound trusted platform module. Future Gener. Comput. Syst. 2024, 150, 21–36.

- Ma, L.; Wang, X.; Wang, X.; Wang, L.; Shi, Y.; Huang, M. TCDA: Truthful Combinatorial Double Auctions for Mobile Edge Computing in Industrial Internet of Things. IEEE Trans. Mob. Comput. 2021, 21, 4125–4138.

- Zhao, L.; Sun, L.; Hawbani, A.; Liu, Z.; Tang, X.; Xu, L. Dual Dependency-Awared Collaborative Service Caching and Task Offloading in Vehicular Edge Computing. IEEE Trans. Mob. Comput. 2025, 1–15.

- Zeng, R.; Zeng, C.; Wang, X.; Li, B.; Chu, X. Incentive Mechanisms in Federated Learning and A Game-Theoretical Approach. IEEE Netw. 2022, 36, 229–235.

- Tian, C.; Zheng, M.; Li, B.; Zhang, Y.; Zhang, S.; Zhang, D. Perceptive Self-Supervised Learning Network for Noisy Image Watermark Removal. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 7069–7079.

- Duan, J.; Cai, M.; Xu, K. Dual-mode integrity measurement system based on virtualized tee. J. Phys. Conf. Ser. 2021, 1955, 012079.

Figure 1. The logical structure of the container.

Figure 2. The logical structure of Overlay2 and the relationship between Docker and Overlay2.

Figure 3. The logical structure of Btrfs and the relationship between Docker and Btrfs.

Figure 4. The scenario with IMMs based on metadata.

Figure 5. The architecture of the prototype.

Figure 6. The time used in the integrity information acquisition stage on the Intel platform.

Figure 7. The time used in the integrity information acquisition stage on the Pi4B platform.

Figure 8. The integrity verification time on the Intel platform with a fixed number of files.

Figure 9. The integrity verification time on the Pi4B platform with a fixed number of files.

Figure 10. Command execution time in the integrity verification stage on the Intel platform with a fixed number of files.

Figure 11. Command execution time in the integrity verification stage on the Pi4B platform with a fixed number of files.

Figure 12. The integrity verification time on the Intel platform with fixed-length files.

Figure 13. The integrity verification time on the Pi4B platform with fixed-length files.

Figure 14. Command execution time in the integrity verification stage on the Intel platform with fixed-length files.

Figure 15. Command execution time in the integrity verification stage on the Pi4B platform with fixed-length files.

Figure 16. Command-to-total-time ratio on the Intel platform (fixed length).

Figure 17. Command-to-total-time ratio on the Pi4B platform (fixed length).

Table 1. Storage drivers and their backing filesystems.

| Storage Driver | Backing Filesystems | Type |
|---|---|---|
| Overlay, Overlay2 | xfs, ext4 | file level |
| fuse-overlayfs | any filesystem | file level |
| aufs | xfs, ext4 | file level |
| vfs | any filesystem | file level |
| devicemapper | direct-lvm | block level |
| Btrfs | Btrfs | block level |
| ZFS | ZFS | block level |

Table 2. Definitions of variables and symbols in Overlay2 IMM algorithms.

| Algorithm | Variable/Symbol | Meaning |
|---|---|---|
| Algorithm 1 (Baseline Acquisition) | containerName | Name of the target container for acquiring baseline integrity information. |
| | containerInfo | Container metadata retrieved via docker inspect, containing Overlay2 layer configurations and mount points. |
| | writableLayer | Path to Overlay2’s writable layer (upperdir), where modified read-only layer files are stored. |
| | mergeLayer | Path to Overlay2’s merged layer (mergedir), presenting the unified view of read-only and writable layers. |
| Algorithm 2 (Integrity Validation) | fileList | List of files to be checked for integrity within the container. |
| | pathDocker | Container-relative path of a file in fileList. |
| | pathSys | Host filesystem path mapped from pathDocker (via Overlay2’s mount logic). |
| | tamperedFileList | List of files flagged as tampered (absent from mergeLayer or present in writableLayer). |
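
The variables in Table 2 can be tied together in a short sketch. The Python below is only an illustration of the rule stated for tamperedFileList (a file is flagged if it is present in the writable layer or absent from the merged layer); it is not the prototype's C++ code. The function names are hypothetical, and the sketch assumes that the `docker inspect` JSON exposes the two paths as `GraphDriver.Data.UpperDir` and `GraphDriver.Data.MergedDir` and that the merged view is currently mounted on the host.

```python
import json
import os


def parse_overlay2_layers(inspect_json):
    """Return (writableLayer, mergeLayer) from `docker inspect` JSON text.

    Assumption: the container uses the overlay2 driver, so the paths are
    found under GraphDriver.Data as UpperDir and MergedDir.
    """
    container_info = json.loads(inspect_json)
    # `docker inspect` prints a JSON array with one object per container.
    if isinstance(container_info, list):
        container_info = container_info[0]
    data = container_info["GraphDriver"]["Data"]
    return data["UpperDir"], data["MergedDir"]


def find_tampered_overlay2(file_list, writable_layer, merge_layer):
    """Return the container-relative paths in file_list flagged as tampered.

    A file is flagged when it exists in the writable layer (it was modified,
    created, or replaced by a whiteout entry) or when it is missing from the
    merged view (it was deleted).
    """
    tampered_file_list = []
    for path_docker in file_list:
        # Map the container-relative path onto the two host directories.
        rel = path_docker.lstrip("/")
        # lexists() also reports whiteout entries and dangling symlinks.
        in_writable = os.path.lexists(os.path.join(writable_layer, rel))
        in_merged = os.path.lexists(os.path.join(merge_layer, rel))
        if in_writable or not in_merged:
            tampered_file_list.append(path_docker)
    return tampered_file_list
```

Each file costs two existence checks on the host and no file content is read, which is consistent with the small per-file verification data listed for the Overlay2 IMM in Table 8.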

Table 3. Definitions of variables and symbols in Btrfs IMM algorithms.

| Stage | Variable/Symbol | Meaning |
|---|---|---|
| Baseline Acquisition (Algorithm 3) | containerName | Name of the target container for acquiring baseline integrity information. |
| | containerInfo | Container metadata retrieved via docker inspect, used to extract the unique containerID. |
| | containerID | Unique identifier of the container, extracted from containerInfo. |
| | subvolumePath | Path to the Btrfs subvolume associated with the container (from Docker’s layerdb). |
| | startGen | Initial generation number of the Btrfs subvolume (baseline for change tracking, via btrfs subvolume show). |
| Integrity Validation (Algorithm 4) | fileList | List of files to be checked for integrity within the container. |
| | commandResult | Output of btrfs subvolume find-new, listing files modified since startGen. |
| | ChangedFileList | List of modified files parsed from commandResult. |
| | filePath | Path of a modified file extracted from commandResult. |
| | tamperedFileList | List of files in fileList that appear in ChangedFileList (flagged as tampered). |
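
The validation-stage variables in Table 3 can be illustrated with a small parser. The Python below is a sketch rather than the prototype's C++ implementation, and its function names are hypothetical. It assumes that each change record printed by `btrfs subvolume find-new` begins with `inode`, that the path follows the single token after the word `flags`, that paths are relative to the subvolume root, and that the trailing `transid marker` line carries no path.

```python
def parse_find_new(command_result):
    """Return ChangedFileList: unique paths listed in find-new output."""
    changed_file_list = []
    seen = set()
    for line in command_result.splitlines():
        # Skip the trailing "transid marker was N" line and blank lines.
        if not line.startswith("inode "):
            continue
        _, sep, rest = line.partition(" flags ")
        if not sep:
            continue
        # The token after "flags" is the flag string; the remainder is the
        # path, which may itself contain spaces.
        _flags, _, file_path = rest.partition(" ")
        if file_path and file_path not in seen:
            seen.add(file_path)
            changed_file_list.append(file_path)
    return changed_file_list


def find_tampered_btrfs(file_list, command_result):
    """Return the entries of file_list that appear in ChangedFileList."""
    changed = set(parse_find_new(command_result))
    # fileList holds container-relative paths with a leading slash, whereas
    # find-new paths are assumed to be relative to the subvolume root.
    return [path for path in file_list if path.lstrip("/") in changed]
```

A file with several changed extents produces several records, so the parser deduplicates paths before the membership test against fileList.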

Table 4. The computational complexity in the integrity information acquisition stage.

| Method | Computing Complexity |
|---|---|
| MDA IMM | ) |
| Overlay2 IMM | O(1) |
| Btrfs IMM | O(1) |
| Diff IMM | O(0) |

Table 5. The computational complexity in the integrity verification stage.

| Method | Computing Complexity |
|---|---|
| MDA IMM | ) |
| Overlay2 IMM | O(n·c) |
| Btrfs IMM | O(D + n·m) |
| Diff IMM | O(D + n·m) |

Table 6. The configuration of the edge server.

| | Classification | Content |
|---|---|---|
| Hardware | CPU | Intel(R) Core(TM) i5-9400 2.90 GHz |
| | Memory | 8 GB DDR4 2666 MT/s |
| | Disk | ASint AS606 128 GB |
| Software | OS | Ubuntu 20.04 Desktop LTS |
| | Docker | version 20.10.7 |
| | Filesystem | ext4 Overlay2/Btrfs |
| | Database | SQLite3 |
| | Programming language | C++ |

Table 7. The configuration of the Raspberry Pi4B.

| | Classification | Content |
|---|---|---|
| Hardware | CPU | ARM Cortex-A72 1.5 GHz |
| | Memory | 4 GB DDR4 |
| | Disk | microSD 64 GB |
| Software | OS | Ubuntu 20.04 Desktop LTS |
| | Docker | version 20.10.7 |
| | Filesystem | ext4 Overlay2/Btrfs |
| | Database | SQLite3 |
| | Programming language | C++ |

Table 8. Summary of methods.

| Method | Overlay2 IMM | Diff IMM | Btrfs IMM | MDA IMM |
|---|---|---|---|---|
| Metadata | merge layer, writable layer | diff command | Btrfs tree, subvolume | message digest |
| Location | outside container | outside container | outside container | in container |
| Status | any | any | any | running |
| Integrity information | merge layer, writable layer (for one container) | none | subvolume name, start generation (for one container) | message digest (for one file) |
| Data for obtaining integrity information | configuration length (for one container) | none | configuration length (for one container) | file size |
| Data for checking integrity | 2 * sizeof(int) | the length of changed file path | the length of changed file path | file size |
| Filesystem | Overlay2 | any | Btrfs | any |
| Time | fastest | middle | middle | slowest |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).