A Retrieval-Augmented Generation Method for Question Answering on Airworthiness Regulations

Abstract

1. Introduction

- Constructing a civil aviation airworthiness certification QA dataset: Developing an LLM-based three-stage “generation–enhancement–correction” framework that produces 4688 high-quality questions in multiple-choice, fill-in-the-blank, and true/false formats.

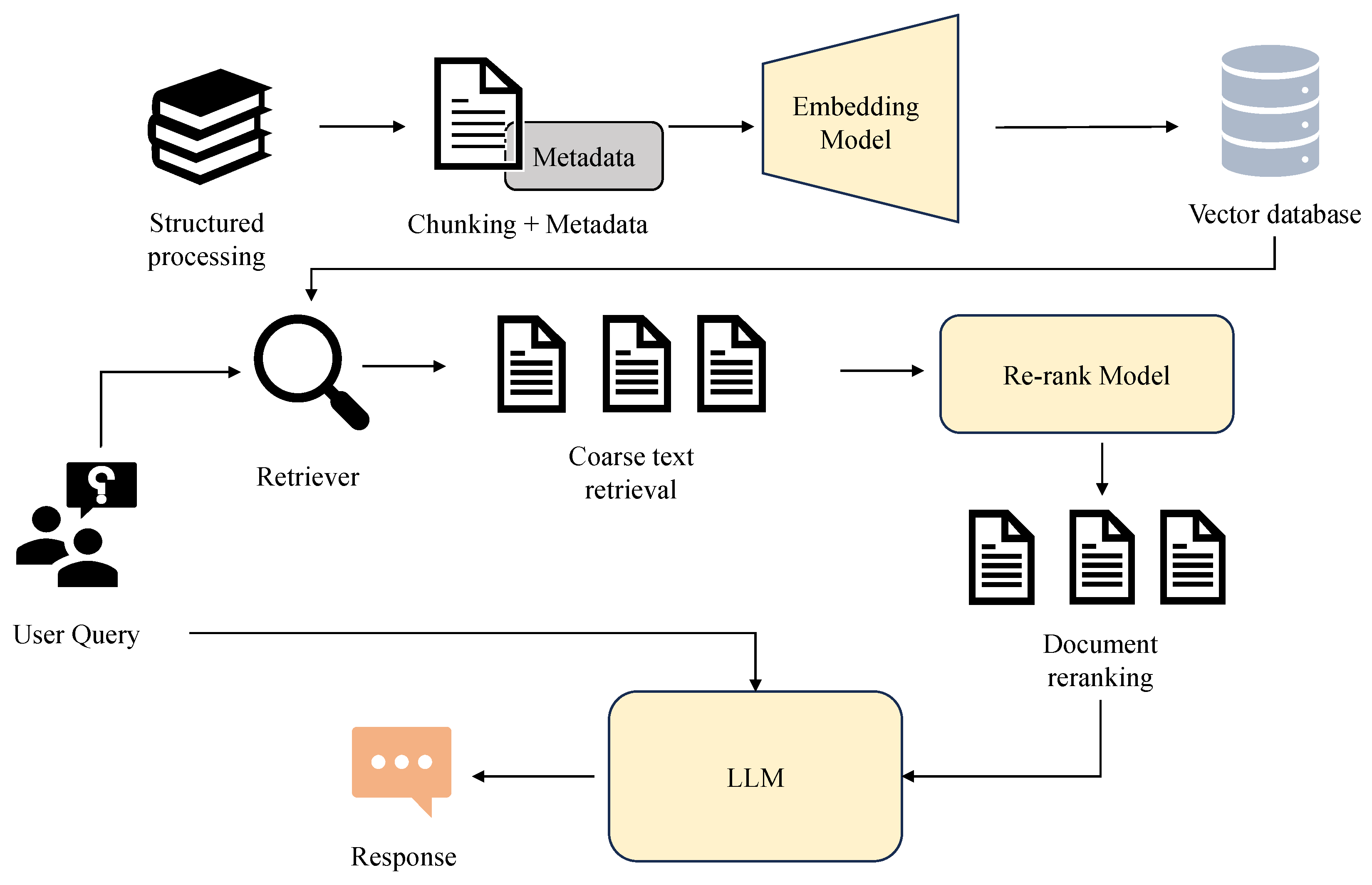

- Designing a hierarchical retrieval pipeline for certification regulations: Incorporating a two-stage “semantic retrieval–cross-encoder re-ranking” mechanism into the RAG framework, balancing retrieval efficiency and re-ranking accuracy.

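- Effectiveness validation: Experiments on the self-constructed dataset show substantial improvements over baseline methods in both answer accuracy and clause retrieval; for example, with Qwen2-7B, multiple-choice accuracy rises from 0.5724 (vanilla RAG) to 0.8747 and retrieval F1 from 0.3758 to 0.7140.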

2. Related Work

2.1. Large Language Models

2.2. Retrieval-Augmented Generation

2.3. Question Answering

3. Materials and Methods

3.1. Dataset

3.1.1. Stage One: Initial Question Generation

3.1.2. Stage Two: Semantic Enhancement and Reverse Tracing

3.1.3. Stage Three: Manual Correction

3.2. RAG Framework

3.2.1. Data Processing
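
The ablation study credits metadata-aware Markdown chunking (“+Metadata & MdChunk”) with the largest single gain, but the procedure itself is not described in this extract. The following is a minimal sketch, assuming the regulation text has already been converted to Markdown with one heading per clause and that clause IDs follow the “AC-25.1457” pattern seen in the example questions. `md_chunk`, `Chunk`, `CLAUSE_ID`, and `HEADING` are hypothetical names, not the authors' code.

```python
import re
from dataclasses import dataclass, field
from typing import List

# Hypothetical clause-ID pattern, modelled on references such as "AC-25.1457".
CLAUSE_ID = re.compile(r"\bAC-\d+\.\d+\b")
# Markdown ATX heading: one to six '#' characters, whitespace, then the title.
HEADING = re.compile(r"^#{1,6}\s+(.*)$")


@dataclass
class Chunk:
    text: str
    metadata: dict = field(default_factory=dict)


def md_chunk(markdown: str) -> List[Chunk]:
    """Split Markdown at headings; each chunk is one section plus its metadata.

    Text appearing before the first heading is discarded (an assumption).
    """
    chunks: List[Chunk] = []
    heading, body = None, []

    def flush() -> None:
        # Emit the section collected so far, if it has a heading and body text.
        text = "\n".join(body).strip()
        if heading is not None and text:
            match = CLAUSE_ID.search(heading)
            chunks.append(Chunk(
                text=f"{heading}\n{text}",  # prepend the title so it is embedded too
                metadata={"heading": heading,
                          "clause_id": match.group(0) if match else None},
            ))

    for line in markdown.splitlines():
        m = HEADING.match(line)
        if m:
            flush()  # close the previous section before starting a new one
            heading, body = m.group(1).strip(), []
        else:
            body.append(line)
    flush()  # close the final section
    return chunks
```

Prepending the heading to each chunk's text is one common way to let the embedding model see the clause title; keeping the clause ID in the metadata is what would allow retrieved chunks to be compared against gold clause references when scoring retrieval.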

3.2.2. Retriever

Stage One: Semantic Vector Encoding and Embedding Matching

Stage Two: Cross-Encoder Re-Ranking
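
The two retrieval stages named above (semantic embedding matching, then cross-encoder re-ranking) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: embeddings are passed in as plain vectors, the shortlist size `n_candidates` is hypothetical, `top_k=3` mirrors the Top-k value in the experiment settings, and `toy_cross_score` is a hypothetical token-overlap stand-in for a real cross-encoder.

```python
import math
from typing import Callable, List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two dense vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def two_stage_retrieve(
    query: str,
    query_vec: Sequence[float],
    passages: List[str],
    passage_vecs: List[Sequence[float]],
    cross_score: Callable[[str, str], float],
    n_candidates: int = 10,  # hypothetical shortlist size
    top_k: int = 3,          # mirrors the Top-k value in the settings table
) -> List[int]:
    """Return indices of the top_k passages after embedding recall and re-ranking."""
    # Stage one: cheap vector similarity over every passage, keep a shortlist.
    by_similarity = sorted(
        range(len(passages)),
        key=lambda i: cosine(query_vec, passage_vecs[i]),
        reverse=True,
    )
    shortlist = by_similarity[:n_candidates]
    # Stage two: score each (query, passage) pair jointly; only the shortlist pays this cost.
    reranked = sorted(shortlist, key=lambda i: cross_score(query, passages[i]), reverse=True)
    return reranked[:top_k]


def toy_cross_score(query: str, passage: str) -> float:
    """Hypothetical stand-in for a cross-encoder: number of shared lowercase tokens."""
    return float(len(set(query.lower().split()) & set(passage.lower().split())))
```

In a real pipeline, the vectors would come from an embedding model and `cross_score` from a cross-encoder (for instance, the `predict` method of the sentence-transformers `CrossEncoder` class, which scores query/passage pairs). The split keeps the expensive pairwise model off the full corpus, which is the efficiency/accuracy trade-off described in the Introduction.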

3.2.3. Generator

3.2.4. Metrics

- (1) Accuracy: Accuracy measures how closely the answers generated by the question answering system agree with the reference answers. It is the key metric for the task of understanding airworthiness certification specifications and is computed as follows:

- (2) Recall, Precision, and F1 Score: In the task of understanding civil aviation airworthiness certification specifications, the ability of the retrieval module to locate the correct regulatory provisions is a critical factor in evaluating the performance of the RAG model. The relevant metrics are defined as follows:

- (3) Mean Reciprocal Rank (MRR): This metric evaluates the system’s ability to rank the correct regulatory provisions higher in the retrieved list. The calculation formula is defined as follows:
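
The formulas themselves are missing from this extract. The sketch below uses the standard definitions, assuming that accuracy is a case- and whitespace-insensitive exact match over all questions, that precision, recall, and F1 are micro-averaged over the clause IDs retrieved for each question, and that MRR uses the rank of the first gold clause in each retrieved list (contributing 0 when none is retrieved). The paper may define relevance or aggregate differently; all function names are illustrative.

```python
from typing import List, Sequence, Set, Tuple


def _norm(text: str) -> str:
    # Case- and whitespace-insensitive comparison (an assumption).
    return " ".join(text.lower().split())


def accuracy(predictions: Sequence[str], references: Sequence[str]) -> float:
    """Share of questions whose predicted answer matches the reference answer."""
    correct = sum(_norm(p) == _norm(r) for p, r in zip(predictions, references))
    return correct / len(references)


def precision_recall_f1(retrieved: Sequence[List[str]],
                        gold: Sequence[Set[str]]) -> Tuple[float, float, float]:
    """Micro-averaged precision, recall, and F1 of retrieved clause IDs."""
    hits = sum(len(set(r) & g) for r, g in zip(retrieved, gold))
    n_retrieved = sum(len(r) for r in retrieved)
    n_gold = sum(len(g) for g in gold)
    p = hits / n_retrieved if n_retrieved else 0.0
    r = hits / n_gold if n_gold else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0  # harmonic mean of p and r
    return p, r, f1


def mean_reciprocal_rank(retrieved: Sequence[List[str]], gold: Sequence[Set[str]]) -> float:
    """Mean over questions of 1/rank of the first gold clause (0 if none retrieved)."""
    total = 0.0
    for ranked, g in zip(retrieved, gold):
        for rank, clause in enumerate(ranked, start=1):
            if clause in g:
                total += 1.0 / rank
                break  # only the first relevant hit counts
    return total / len(retrieved)
```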

4. Experiment

4.1. Dataset

4.2. Experiment Settings

4.3. Experimental Results

4.3.1. Benchmark Testing

| Model | LLM | Choice Accuracy | Fill-in Accuracy | Judgement Accuracy | Precision | Recall | F1 Score | MRR |
|---|---|---|---|---|---|---|---|---|
| Vanilla RAG | Qwen2-7B | 0.5724 | 0.1394 | 0.5058 | 0.3215 | 0.4522 | 0.3758 | 0.4108 |
| ColBERT | Qwen2-7B | 0.6831 | 0.2166 | 0.7657 | 0.4944 | 0.6636 | 0.5666 | 0.5508 |
| FiD | Qwen2-7B | 0.8418 | 0.2457 | 0.7879 | 0.4337 | 0.6014 | 0.5039 | 0.5508 |
| Ours | ChatGLM3-6B | 0.7886 | 0.2552 | 0.7621 | 0.5783 | 0.7369 | 0.6480 | 0.6594 |
| Ours | Baichuan2-7B | 0.8151 | 0.2670 | 0.8012 | 0.6137 | 0.7423 | 0.6719 | 0.5836 |
| Ours | Qwen2-7B | 0.8747 | 0.3418 | 0.8376 | 0.6422 | 0.8041 | 0.7140 | 0.7148 |
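
As a sanity check on the benchmark table, F1 should be the harmonic mean of precision and recall, F1 = 2PR/(P + R). The snippet below recomputes it from the transcribed Precision and Recall columns; every row agrees with the reported F1 to within 1e-4, which is consistent with all values being rounded to four decimals. `BENCHMARK` and `f1_deviations` are illustrative names.

```python
# (precision, recall, reported F1) transcribed from the benchmark table.
BENCHMARK = {
    ("Vanilla RAG", "Qwen2-7B"): (0.3215, 0.4522, 0.3758),
    ("ColBERT", "Qwen2-7B"): (0.4944, 0.6636, 0.5666),
    ("FiD", "Qwen2-7B"): (0.4337, 0.6014, 0.5039),
    ("Ours", "ChatGLM3-6B"): (0.5783, 0.7369, 0.6480),
    ("Ours", "Baichuan2-7B"): (0.6137, 0.7423, 0.6719),
    ("Ours", "Qwen2-7B"): (0.6422, 0.8041, 0.7140),
}


def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def f1_deviations(table: dict) -> dict:
    """Absolute gap between recomputed and reported F1 for each row."""
    return {row: abs(f1(p, r) - reported) for row, (p, r, reported) in table.items()}


if __name__ == "__main__":
    for (model, llm), gap in f1_deviations(BENCHMARK).items():
        print(f"{model:12s} {llm:13s} |computed - reported| = {gap:.1e}")
```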

4.3.2. Ablation Study

4.4. Generalization Experiments

5. Discussion

5.1. Discussion and Summary

5.2. Case Analysis

5.3. Limitations and Future Directions

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. Available online: https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf (accessed on 20 July 2025).

- Zhang, B.; Yang, H.; Zhou, T.; Babar, M.A.; Liu, X.Y. Enhancing Financial Sentiment Analysis via Retrieval Augmented Large Language Models. In Proceedings of the Fourth ACM International Conference on AI in Finance (ICAIF ’23), New York, NY, USA, 27–29 November 2023; ACM: New York, NY, USA, 2023; pp. 349–356.

- Zakka, C.; Shad, R.; Chaurasia, A.; Dalal, A.R.; Kim, J.L.; Moor, M.; Fong, R.; Phillips, C.; Alexander, K.; Ashley, E.; et al. Almanac—Retrieval-Augmented Language Models for Clinical Medicine. NEJM AI 2024, 1, AIoa2300068.

- Yepes, A.J.; You, Y.; Milczek, J.; Laverde, S.; Li, R. Financial Report Chunking for Effective Retrieval Augmented Generation. arXiv 2024, arXiv:2402.05131.

- Reizinger, P.; Ujváry, S.; Mészáros, A.; Kerekes, A.; Brendel, W.; Huszár, F. Position: Understanding LLMs Requires More than Statistical Generalization. arXiv 2024, arXiv:2405.01964.

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.; Rocktäschel, T.; et al. Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. Adv. Neural Inf. Process. Syst. 2020, 33, 9459–9474.

- Kumar, Y.; Marttinen, P. Improving Medical Multi-Modal Contrastive Learning with Expert Annotations. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Cham, Switzerland, 2024; pp. 468–486.

- Wiratunga, N.; Abeyratne, R.; Jayawardena, L.; Martin, K.; Massie, S.; Nkisi-Orji, I.; Weerasinghe, R.; et al. CBR-RAG: Case-Based Reasoning for Retrieval Augmented Generation in LLMs for Legal Question Answering. In Proceedings of the International Conference on Case-Based Reasoning Research and Development, Merida, Mexico, 1–4 July 2024; Springer: Cham, Switzerland, 2024; pp. 445–460.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models Are Few-Shot Learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Hu, E.J.; Shen, Y.; Wallis, P.; Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. In Proceedings of the Tenth International Conference on Learning Representations (ICLR 2022), Virtual, 25–29 April 2022; pp. 1–3.

- Pozzi, A.; Incremona, A.; Tessera, D.; Toti, D. Mitigating Exposure Bias in Large Language Model Distillation: An Imitation Learning Approach. Neural Comput. Appl. 2025, 37, 12013–12029.

- Ekin, S. Prompt Engineering for ChatGPT: A Quick Guide to Techniques, Tips, and Best Practices. Authorea Preprints. 2023. Available online: https://www.researchgate.net/publication/370554061_Prompt_Engineering_For_ChatGPT_A_Quick_Guide_To_Techniques_Tips_And_Best_Practices (accessed on 20 July 2025).

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Ichter, B.; Xia, F.; Chi, E.; Le, Q.; Zhou, D. Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. Adv. Neural Inf. Process. Syst. 2022, 35, 24824–24837.

- Yu, D.; Zhu, C.; Fang, Y.; Yu, W.; Wang, S.; Xu, Y.; Ren, X.; Yang, Y.; Zeng, M. KG-FiD: Infusing Knowledge Graph in Fusion-in-Decoder for Open-Domain Question Answering. arXiv 2021, arXiv:2110.04330.

- Wang, Y.; Ren, R.; Li, J.; Zhao, W.X.; Liu, J.; Wen, J.-R. REAR: A Relevance-Aware Retrieval-Augmented Framework for Open-Domain Question Answering. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP 2024), Singapore, 6–10 November 2024; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 4213–4225. Available online: https://aclanthology.org/2024.emnlp-main.321.pdf (accessed on 25 July 2025).

- Lyu, Y.; Li, Z.; Niu, S.; Xiong, F.; Tang, B.; Wang, W.; Wu, H.; Liu, H.; Xu, T.; Chen, E. CRUD-RAG: A Comprehensive Chinese Benchmark for Retrieval-Augmented Generation of Large Language Models. ACM Trans. Inf. Syst. 2024, 43, 1–32.

- Magesh, V.; Surani, F.; Dahl, M.; Suzgun, M.; Manning, C.D.; Ho, D.E. Hallucination-Free? Assessing the Reliability of Leading AI Legal Research Tools. J. Empir. Leg. Stud. 2025, 22, 216–242.

- Wang, C.; Long, Q.; Xiao, M.; Cai, X.; Wu, C.; Meng, Z.; Wang, X.; Zhou, Y. BioRAG: A RAG-LLM Framework for Biological Question Reasoning. arXiv 2024, arXiv:2408.01107.

- Li, H.; Chen, Y.; Hu, Y.; Ai, Q.; Chen, J.; Yang, X.; Yang, J.; Wu, Y.; Liu, Z.; Liu, Y. LexRAG: Benchmarking Retrieval-Augmented Generation in Multi-Turn Legal Consultation Conversation. In Proceedings of the 48th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR ’25), Washington, DC, USA, 13–17 July 2025; ACM: New York, NY, USA, 2025; pp. 3606–3615.

- Möller, T.; Reina, A.; Jayakumar, R.; Pietsch, M. COVID-QA: A Question Answering Dataset for COVID-19. In Proceedings of the 1st Workshop on NLP for COVID-19 at ACL 2020, Virtual, 9 July 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020.

- Vasantharajan, C. SciRAG: A Retrieval-Focused Fine-Tuning Strategy for Scientific Documents. Ph.D. Thesis, McMaster University, Hamilton, ON, Canada, 2025.

- Joshi, M.; Choi, E.; Weld, D.S.; Zettlemoyer, L. TriviaQA: A Large Scale Distantly Supervised Challenge Dataset for Reading Comprehension. arXiv 2017, arXiv:1705.03551.

- Zhao, Y.; Shi, J.; Chen, F.; Druckmann, S.; Mackey, L.; Linderman, S. Informed Correctors for Discrete Diffusion Models. arXiv 2024, arXiv:2407.21243.

- Rajpurkar, P.; Zhang, J.; Lopyrev, K.; Liang, P. SQuAD: 100,000+ Questions for Machine Comprehension of Text. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing (EMNLP 2016), Austin, TX, USA, 1–4 November 2016; Association for Computational Linguistics: Stroudsburg, PA, USA, 2016; pp. 2383–2392.

- He, Y.; Li, S.; Liu, J.; Tan, Y.; Wang, W.; Huang, H.; Bu, X.; Guo, H.; Hu, C.; Zheng, B.; et al. Chinese SimpleQA: A Chinese Factuality Evaluation for Large Language Models. arXiv 2024, arXiv:2411.07140.

- Deng, Y.; Zhang, W.; Xu, W.; Shen, Y.; Lam, W. Nonfactoid Question Answering as Query-Focused Summarization with Graph-Enhanced Multihop Inference. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 11231–11245.

- Zhang, W.; Zhou, Z.; Zheng, Z.; Gao, C.; Cui, J.; Li, Y.; Chen, X.; Zhang, X.-P. Open3DVQA: A Benchmark for Comprehensive Spatial Reasoning with Multimodal Large Language Model in Open Space. arXiv 2025, arXiv:2502.03091.

- Zhou, Z.; Wang, R.; Wu, Z. Daily-Omni: Towards Audio-Visual Reasoning with Temporal Alignment across Modalities. arXiv 2025, arXiv:2502.01952.

- Clark, J.H.; Choi, E.; Collins, M.; Garrette, D.; Kwiatkowski, T.; Nikolaev, V.; Palomaki, J. TyDi QA: A Benchmark for Information-Seeking Question Answering in Typologically Diverse Languages. Trans. Assoc. Comput. Linguist. 2020, 8, 454–470.

- Vilares, D.; Gómez-Rodríguez, C. HEAD-QA: A Healthcare Dataset for Complex Reasoning. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019.

- Manes, I.; Ronn, N.; Cohen, D.; Ber, R.I.; Horowitz-Kugler, Z.; Stanovsky, G. K-QA: A Real-World Medical Q&A Benchmark. arXiv 2024, arXiv:2401.17218.

- Lai, V.D.; Krumdick, M.; Lovering, C.; Reddy, V.; Schmidt, C.; Tanner, C. SEC-QA: A Systematic Evaluation Corpus for Financial QA. arXiv 2024, arXiv:2406.14394.

- Guo, D.; Yang, D.; Zhang, H.; Song, J.; Zhang, R.; Xu, R.; Zhu, Q.; Ma, S.; Wang, P.; Bi, X.; et al. DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning. arXiv 2025, arXiv:2501.12948.

- Hendrycks, D.; Burns, C.; Basart, S.; Zou, A.; Mazeika, M.; Song, D.; Steinhardt, J. Measuring Massive Multitask Language Understanding. arXiv 2021, arXiv:2009.03300.

- Liu, Z. SecQA: A Concise Question-Answering Dataset for Evaluating Large Language Models in Computer Security. arXiv 2023, arXiv:2310.12281.

| Question Type | Count | Answer Distribution |
|---|---|---|
| Multiple-Choice | 2119 | A/B/C/D: 530/530/530/529 |
| Fill-in-the-Blank | 1442 | - |
| True/False | 1127 | True/False: 563/564 |

| Question Type | Question | Options/Answer | Reference |
|---|---|---|---|
| Multiple-Choice | After a crash impact, within how much time should the cockpit voice recorder’s erase function cease operation? | A. Within 5 min B. Within 10 min C. Within 15 min D. Within 20 min Answer: B | AC-25.1457 Cockpit Voice Recorder The cockpit voice recorder must cease operation and disable all erase functions within 10 min after a crash impact. |
| Multiple-Choice | In the electrical distribution system design documentation, which characteristic of the bus bar should be given special attention? | A. Temperature variation range B. Voltage variation range C. Current variation frequency D. Resistance variation curve Answer: B | AC-25.1355 Electrical Systems Attention should be given to the voltage variation range of the bus bar. |
| Fill-in-the-Blank | In the design process based on statistical analysis of material strength properties, the probability of structural failure caused by ____ can be minimized. | Answer: Material Variability | AC-25.613 Material Strength Properties and Design Values This can minimize the probability of failure caused by material variability. |
| True/False | In the accelerate–stop braking transition process, a 2 s period is considered part of the transition phase. | Answer: False | AC-25.109 Accelerate–Stop Distance The 2 s period is for distance calculation and is not part of the braking transition process. |

| Item | Configuration |
|---|---|
| Operating System | Ubuntu 20.04 |
| GPU | 3 × NVIDIA Tesla T4 (NVIDIA Corporation, Santa Clara, CA, USA) |
| CUDA Version | 12.2 |
| PyTorch Version | 2.6.0 |
| Top-k | 3 |
| Temperature | 0.3 |

| Model | Choice | Fill-in-the-Blank | Judgement | Overall |
|---|---|---|---|---|
| Vanilla RAG | 0.5724 | 0.1394 | 0.5058 | 0.4232 |
| +Metadata & MdChunk | 0.7918 | 0.2572 | 0.6859 | 0.6019 |
| +Hybrid Retriever | 0.8339 | 0.3058 | 0.8066 | 0.6649 |
| +Re-rank | 0.8747 | 0.3418 | 0.8376 | 0.7019 |
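
The “Overall” column is not defined in this extract. It is, however, reproduced to four decimals by weighting each per-type accuracy by the question counts listed earlier (2119 multiple-choice, 1442 fill-in-the-blank, 1127 true/false; 4688 in total), whereas an unweighted mean of the three columns would not match (0.4059 rather than 0.4232 for Vanilla RAG). The check below is our reconstruction, not a formula stated by the authors; `COUNTS`, `ABLATION`, and `weighted_overall` are illustrative names.

```python
# Question counts per type, from the dataset statistics table.
COUNTS = {"choice": 2119, "fill": 1442, "judgement": 1127}

# (choice, fill-in, judgement, reported overall) from the ablation table.
ABLATION = {
    "Vanilla RAG": (0.5724, 0.1394, 0.5058, 0.4232),
    "+Metadata & MdChunk": (0.7918, 0.2572, 0.6859, 0.6019),
    "+Hybrid Retriever": (0.8339, 0.3058, 0.8066, 0.6649),
    "+Re-rank": (0.8747, 0.3418, 0.8376, 0.7019),
}


def weighted_overall(choice: float, fill: float, judgement: float,
                     counts: dict = COUNTS) -> float:
    """Accuracy over all questions, i.e. per-type accuracy weighted by question count."""
    total = sum(counts.values())
    return (choice * counts["choice"]
            + fill * counts["fill"]
            + judgement * counts["judgement"]) / total


if __name__ == "__main__":
    for name, (c, f, j, reported) in ABLATION.items():
        print(f"{name:22s} weighted={weighted_overall(c, f, j):.4f} reported={reported:.4f}")
```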

| Dataset | Domain | QA Type | Accuracy (%) |
|---|---|---|---|
| MMLU-Econometrics | Finance | Choice | 54 |
| MMLU-Us_foreign_policy | Policy | Choice | 89 |
| SecQA | Cybersecurity | Choice | 92 |

| Element | Content |
|---|---|
| Question | 8000 feet is the maximum cabin pressure altitude limit mandated by the International Civil Aviation Organization. |
| Reference Answer | False |
| Model Prediction | True |
| Retrieved Passage (excerpt) | “… under normal operating conditions, the aircraft shall maintain a cabin pressure altitude not exceeding 2438 m (8000 feet) at its maximum operating altitude …” |

| Element | Content |
|---|---|
| Question | Fatigue tests of windshields and window panels must account for the effects of ____ and the life factor. |
| Reference Answer | load amplification factor |
| Model Prediction | load factor |
| Retrieved Passage (excerpt) | “… in fatigue tests of windshields and window panels considering the load amplification factor and the life factor, the durability of the metal parts of the installation structure must be taken into account …” |
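
The second case hinges on how fill-in-the-blank answers are scored. The scoring rule is not given in this extract; assuming a normalized exact match, the prediction “load factor” is simply wrong, whereas a lenient bag-of-tokens F1 (similar in spirit to the SQuAD evaluation script) would award it 0.8 partial credit. `normalize`, `exact_match`, and `token_f1` are illustrative helpers, not the authors' evaluation code.

```python
import string
from collections import Counter


def normalize(answer: str) -> str:
    """Lowercase, drop ASCII punctuation, and collapse whitespace."""
    answer = answer.lower().translate(str.maketrans("", "", string.punctuation))
    return " ".join(answer.split())


def exact_match(prediction: str, reference: str) -> bool:
    """Strict scoring (assumed): the normalized strings must be identical."""
    return normalize(prediction) == normalize(reference)


def token_f1(prediction: str, reference: str) -> float:
    """Lenient bag-of-tokens F1, shown only for contrast with exact match."""
    pred, ref = normalize(prediction).split(), normalize(reference).split()
    common = sum((Counter(pred) & Counter(ref)).values())
    if common == 0:
        return 0.0
    p, r = common / len(pred), common / len(ref)
    return 2 * p * r / (p + r)
```

Under exact match, omitting the qualifier “amplification” changes the regulatory term and the answer counts as incorrect, which fits the strictness one would expect when answers must match certification wording.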

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zheng, T.; Shen, S.; Zeng, C. A Retrieval-Augmented Generation Method for Question Answering on Airworthiness Regulations. Electronics 2025, 14, 3314. https://doi.org/10.3390/electronics14163314
