Abstract

Vision–Language Models (VLMs) have become key contributors to the state of the art in contextual emotion recognition, demonstrating a superior ability to understand the relationship between context, facial expressions, and interactions in images compared to traditional approaches. However, their reliance on contextual cues can introduce unintended biases, especially when the background does not align with the individual’s true emotional state. This raises concerns about the reliability of such models in real-world applications, where robustness and fairness are critical. In this work, we explore the limitations of current VLMs in emotionally ambiguous scenarios and propose a method to overcome contextual bias. Existing VLM-based captioning solutions tend to overweight background and contextual information when determining emotion, often at the expense of the individual’s actual expression. To study this phenomenon, we created synthetic datasets by automatically extracting people from the original images using YOLOv8 and placing them on randomly selected backgrounds from the Landscape Pictures dataset. This allowed us to reduce the correlation between emotional expression and background context while preserving body pose. Through a discriminative analysis of VLM behavior on images with both correct and mismatched backgrounds, we find that in 93% of cases the predicted emotion changes with the background, even when models are explicitly instructed to focus on the person. To address this, we propose a multimodal approach (named BECKI) that incorporates body pose, full image context, and a novel description stream focused exclusively on identifying the emotional discrepancy between the individual and the background. Our contribution lies not only in identifying the weaknesses of existing VLMs but also in proposing a more robust and context-resilient solution. Our method achieves up to 96% accuracy, highlighting its effectiveness in mitigating contextual bias.

1. Introduction

Today, due to the rapid adoption of intelligent systems and the fast growth of powerful artificial intelligence applications, tasks like emotion recognition have become essential in fields such as marketing, where understanding consumer sentiment drives strategy; affective robotics, where it enables more natural human–robot interaction; personalized education, where learning experiences adapt to emotional states to make content more engaging; digital entertainment; and mental health, where it supports empathetic assistance systems.

These varied applications demand emotion recognition systems that are both accurate and context-aware. As a result, researchers have turned to more sophisticated machine learning models that can understand not only facial features but also social and environmental cues that influence human affect. This has led to the growing use of Vision–Language Models (VLMs) in this domain.

Modern approaches increasingly rely on VLMs [1]. As efficient zero-shot learners for image captioning [2,3], they provide a deeper understanding of visual scenes by integrating facial expressions, environmental cues, and social context. This enables a significant leap over classical models, which often focused exclusively on facial features and overlooked the broader context in which emotions are expressed.

In emotion recognition from images, context often serves as a powerful cue [4]. However, the sensitivity of VLMs to contextual information introduces vulnerabilities, particularly when the context does not align with the individual’s actual emotion. As we will show, VLMs’ over-reliance on context becomes a weakness in synthetic images (designed to reveal this bias), in ambiguous scenes, and in clinical settings where expressed emotions may contradict the visual context. These inconsistencies raise concerns about the generalization ability of current VLM-based emotion recognition systems, as this dependence can lead to biased or inaccurate predictions, posing challenges for reliable human-centric applications.

Multiple works [5,6] have shown that Large Language Models (LLMs) such as GPT, Gemini, or Llama are powerful tools for emotion recognition and are increasingly used to interpret emotions in textual discussions due to their ability to generate empathetic responses that approach human-like quality. Despite their impressive capabilities, these models present some limitations, including a tendency toward overgeneralization and a cautious avoidance of negative or culturally sensitive formulations in their responses [7,8]. These findings suggest that large models should be used with caution when employed as key components in emotion recognition systems.

However, recent research suggests that VLMs may also be able to verify and refine their own outputs via internal reflection mechanisms. Vision–Language Models (VLMs) and Large Language Models (LLMs) share a common architectural foundation (such as a Transformer backbone, language modeling objectives, and token-based processing), but VLMs include specialized components to handle visual input, such as an image encoder and visual tokens. For instance, the VL–Rethinker [9] framework enhances multimodal “slow-thinking” by explicitly enforcing a rethinking phase during inference, encouraging models to assess and correct their own predictions. Similarly, the Reflect, Revise, and Re-select paradigm [10] enables VLMs to iteratively generate, critique, and revise interpretations at test time, yielding substantial gains on reasoning benchmarks. Additionally, self-feedback mechanisms have been shown to improve semantic grounding (the alignment between image regions and generated text), highlighting a promising direction for mitigating hallucinations and misinterpretations [11]. These advances indicate that encouraging self-verification in VLMs may enhance their reliability and robustness in tasks like contextual emotion recognition.

In this paper, we further investigate contextual biases in Vision–Language Models and their impact on emotion recognition. We hypothesize that altering the background context alone, while preserving the same body pose, can significantly influence how VLMs interpret emotional states. To explore this, we modified existing datasets, using images from the BEAST [12] and EMOTIC [4] datasets. We extracted individual subjects using YOLOv8 and synthetically placed them onto diverse, unrelated background scenes selected from the Landscape Pictures dataset (https://www.kaggle.com/datasets/arnaud58/landscape-pictures, accessed on 12 May 2025), while maintaining their original pose and expression. We then analyzed how VLM-generated descriptions and emotion predictions changed across these varied contexts.
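The compositing step can be illustrated with a short sketch. This is a minimal NumPy version, assuming a binary person mask has already been obtained (for example, from a YOLOv8 segmentation model) and that the background image has been resized to the person image's height and width; the function name `composite_person` is hypothetical and not taken from the authors' code.

```python
import numpy as np


def composite_person(person_img: np.ndarray, mask: np.ndarray, background: np.ndarray) -> np.ndarray:
    """Paste the masked person pixels onto a new background (hypothetical helper).

    person_img, background: uint8 arrays of shape (H, W, 3).
    mask: array of shape (H, W), nonzero where the person is (assumed binary).
    """
    if person_img.shape != background.shape:
        raise ValueError("background must be resized to the person image shape")
    if mask.shape != person_img.shape[:2]:
        raise ValueError("mask must match the image height and width")
    # Broadcast the 2-D mask over the colour channels.
    keep_person = (mask > 0)[..., None]
    # Person pixels come from the original image and all other pixels from the new
    # scene, so the body pose (and facial expression, where visible) is unchanged.
    return np.where(keep_person, person_img, background)
```

Because only the pixels outside the mask change, any difference in a model's prediction between the original and the composited image can be attributed to the background rather than to the person.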

Our experimental results demonstrate a strong contextual effect: in over 90% of the cases analyzed, predictions for the same body posture differed depending on the background, highlighting the VLM’s susceptibility to contextual influence. To reduce this bias, we propose a multimodal approach that integrates four streams: (1) the original image, (2) the isolated body posture, (3) the automatically generated scene description, and (4) a specifically prompted “discrepancy description.” The discrepancy description is generated by prompting the VLM to explicitly describe the mismatch, if any, between the emotion displayed by the person and the atmosphere suggested by the background. This encourages the model to reflect critically on whether the context aligns with the subject’s emotion.
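One way to quantify this contextual effect is the fraction of subjects whose predicted emotion is not constant when only the background changes. The sketch below assumes predictions are stored as `(subject_id, background_id, predicted_label)` triples; both the data layout and the function name are illustrative assumptions, not the paper's evaluation code.

```python
from collections import defaultdict


def prediction_change_rate(predictions):
    """Fraction of subjects whose predicted emotion differs across backgrounds.

    predictions: iterable of (subject_id, background_id, predicted_label) tuples.
    Subjects seen with a single background are skipped, since no change can be observed.
    """
    labels_per_subject = defaultdict(set)
    backgrounds_per_subject = defaultdict(set)
    for subject_id, background_id, label in predictions:
        labels_per_subject[subject_id].add(label)
        backgrounds_per_subject[subject_id].add(background_id)

    eligible = [s for s in labels_per_subject if len(backgrounds_per_subject[s]) > 1]
    if not eligible:
        return 0.0
    # A subject counts as "changed" if more than one distinct label was predicted.
    changed = sum(1 for s in eligible if len(labels_per_subject[s]) > 1)
    return changed / len(eligible)
```

For example, if subject "a" is predicted as fear on its original background and happiness on a beach, while subject "b" is predicted as sadness on both of its backgrounds, the rate is 0.5.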

By fusing these diverse inputs, our approach moves toward a more robust and context-independent understanding of human emotions.

The rest of the paper is organized as follows: Section 2 reviews related work in emotion recognition and Vision–Language Models. Section 3 describes the datasets used in our study. Section 4 outlines the experimental methodology for isolating VLM bias in contextual emotion recognition, while Section 5 introduces our proposed multimodal approach. Section 6 presents the results, followed by a discussion in Section 7. Finally, Section 8 concludes the paper.

2. Related Work

Emotion is a complex psychological state involving three key components: a subjective experience, a physiological response, and a behavioral or expressive reaction [13]. While the first two are primarily studied in psychological and neurological research, the expressive component has been the focus of most computer vision-based emotion recognition systems. Despite substantial progress, several limitations persist, such as poor generalization across cultures, over-reliance on facial expressions, and insufficient handling of ambiguous or multimodal cues.

Recent efforts aim to bridge insights from psychology and machine perception. One key insight is that relying solely on visual analysis of facial expressions or body posture is often suboptimal. Consequently, alternative modalities such as audio, textual description, or scene context are being explored to complement visual cues. These additional streams, including context-aware captioning or pose-based analysis, introduce a richer multimodal understanding of emotion.

2.1. Beyond Emotion Recognition from Facial Expressions

Analyzing body posture is part of the behavioral or expressive aspect of emotions. Although body posture-based emotion inference is relatively new and has received significantly less research than facial expression analysis, there are some noteworthy prior studies [14]. In this direction, Karg et al. [15] conducted a comprehensive survey highlighting the use of motion capture data to derive affective expressions from body movements. By applying clustering techniques to these dynamic features, they successfully categorized emotions into distinct classes, corresponding to different regions of the valence–arousal space, such as joy, anger, sadness, and fear. Tu et al. [16] explored skeleton-based human action recognition using spatial–temporal 3D convolutional neural networks (CNNs). They applied these techniques to emotion recognition tasks, identifying key emotional states such as anger, joy, and sadness from skeletal geometric features. Their results demonstrated that advanced CNN architectures could effectively capture the temporal dynamics of body movements, leading to improved emotion classification accuracy.

Moving away from unimodal data, Zhang et al. [17] provided an extensive review of emotion recognition techniques that leverage multimodal data. Their work emphasized the integration of various data sources, including facial expressions, body gestures, and speech, to improve the accuracy of emotion classification. Through a detailed analysis of different machine learning methods, they concluded that multimodal approaches significantly enhance the performance of emotion recognition systems, particularly in distinguishing complex emotional states. Yang and Narayanan [18] analyzed the interplay between speech and body gestures under different emotional conditions. Their research highlighted the influence of emotional states on the synchronization of speech and gestures, showing that this interplay could be effectively modeled using prosodic and motion features to improve emotion recognition performance. Poria et al. [19] focused on multimodal sentiment analysis by integrating multiple levels of attention mechanisms across different modalities, including text, audio, and visual data. Their study revealed that combining these modalities using deep learning frameworks significantly improves the system’s ability to detect and classify emotions accurately, particularly in complex, real-world scenarios. Mittal et al. [20] introduced a context-aware multimodal emotion recognition system, named EmotiCon, which integrates various contextual cues alongside traditional emotion recognition features. By applying this approach, they successfully recognized a wide range of emotions in different environments, demonstrating the importance of contextual information in enhancing the robustness of emotion recognition systems.

Even further, Ranganathan et al. [21] explored multimodal emotion recognition using deep learning architectures that combine facial, body, and speech modalities. Their findings showed that using deep learning models to integrate these modalities leads to substantial improvements in the accuracy of emotion detection, particularly in noisy and challenging environments. Psaltis et al. [22] employed a late fusion strategy: they successfully combined facial action units and high-level body gesture representations to recognize emotions such as surprise, happiness, anger, and sadness. Aqdus et al. [23] proposed a deep learning approach for emotion recognition that combines upper-body movements and facial expressions. Their model demonstrated superior performance in recognizing emotions such as happiness, sadness, and anger, particularly when using feature-level fusion of body and facial cues. This bimodal approach underscored the advantages of integrating multiple expressive channels in emotion recognition tasks.

These works collectively suggest that multimodal systems incorporating context and pose information provide significant performance gains compared to face-only models. However, the field still struggles with challenges such as contextual ambiguity, cultural variability, and overfitting to scene-specific biases.

2.2. Language Models in Emotion Recognition

Parallel to these developments, the rise of Large Language Models (LLMs) has opened new avenues for emotion analysis—particularly in text-based empathy and sentiment understanding. Buscemi et al. [5] evaluate the performance of four LLMs—ChatGPT 3.5, ChatGPT 4, Gemini Pro, and LLaMA2 7B—on sentiment analysis tasks across ten languages. The goal is to assess how well these models handle ironic, sarcastic, or culturally ambiguous sentiments. To this end, the researchers designed 20 complex sentiment scenarios, translated into 10 languages, and asked each model to rate sentiment on a scale from 1 (unhappy) to 10 (happy), comparing the outputs with human ratings. Both ChatGPT models showed strong alignment with human judgments but struggled with irony and sarcasm. Gemini Pro demonstrated better sarcasm detection but tended to produce more negative sentiment scores overall. LLaMA2 7B consistently gave more positive ratings but lacked nuanced interpretation. The study highlights language-specific biases and the need for greater transparency and bias mitigation in model training.

In a related study, Welivita et al. [6] examined the empathic capabilities of state-of-the-art LLMs compared to human responses. Focusing on 32 fine-grained positive and negative emotions, they used human evaluation as the primary metric. Drawing on 2000 prompts from the EmpatheticDialogues dataset, they enlisted 1000 human evaluators, divided into five groups, each assessing either human-generated or LLM-generated responses. Results showed that LLMs often provided more emotionally validating and supportive responses than humans.

Extending this line of inquiry to visual data, Bhattacharyya et al. [24] conducted a comprehensive evaluation of Large Vision–Language Models (VLMs) in emotion recognition from images, addressing the lack of detailed assessments in this area. The authors explored factors influencing recognition performance and analyzed various error types. They assessed VLMs’ ability to recognize evoked emotions from image prompts, their robustness to prompt variations, and the nature of errors made. Using existing emotion datasets, they introduced the Evoked Emotion benchmark (EVE), featuring a diverse set of image types and challenging samples. Their findings reveal that current VLMs struggle with emotion recognition, particularly when prompts lack explicit class labels. The presence of such labels significantly improves performance, while prompting VLMs to adopt emotional personas tends to degrade results. A human evaluation further confirmed that performance limitations stem from both the models’ intrinsic capabilities and the inherent complexity of the task.

Representing the current state of the art on emotion recognition benchmarks, Xenos et al. [25] propose a novel approach that emphasizes the critical role of contextual information in affective computing. Their two-stage method leverages the generative capabilities of VLMs. In the first stage, the LLaVA model generates natural language descriptions of a subject’s emotional state in relation to the visual context. In the second stage, a Transformer-based architecture fuses visual and textual features for final emotion classification. Experiments on the EMOTIC, CAER-S, and BoLD datasets demonstrate that this context-aware approach outperforms unimodal methods and achieves state-of-the-art results, underscoring the importance of contextual cues in emotion recognition.

3. Datasets

In this work, we use data from two existing datasets that are publicly available for studying emotion from context and body posture. These are EMOTIC and BEAST.

3.1. EMOTIC

The EMOTIC dataset [4] is a comprehensive collection of images depicting people in various unconstrained environments, annotated based on their apparent emotional states. It includes 23,571 images and 34,320 annotated individuals, of whom 66% are male and 34% female. Among these, 10% are children, 7% are teenagers, and 83% are adults. Images were either gathered manually from Google using queries about places, social settings, activities, and emotions, or taken from the COCO and ADE20K datasets, which ensures diverse contexts of people in various environments and activities.

The images were annotated using Amazon Mechanical Turk (AMT), where annotators were asked to make a reasonable guess about the subject’s emotional state. The 26 class labels are Affection, Anger, Annoyance, Anticipation, Aversion, Confidence, Disapproval, Disconnection, Disquietment, Doubt/Confusion, Embarrassment, Engagement, Esteem, Excitement, Fatigue, Fear, Happiness, Pain, Peace, Pleasure, Sadness, Sensitivity, Suffering, Surprise, Sympathy, and Yearning. This list of 26 emotion categories was created according to a visual separability criterion: words with close meanings that are not visually separable were grouped together. For instance, Anger includes words like rage, furious, and resentful. Although these affective states differ, they are not always visually separable in a single image. Thus, the list of affective categories can be seen as the first level of a hierarchy, with each category having associated subcategories.
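Because a single person can carry several of these labels at once, EMOTIC is naturally treated as a multi-label problem. A minimal sketch of encoding a person's labels as a 26-dimensional multi-hot vector, using the category order listed above; the ordering and the helper name `to_multi_hot` are illustrative choices, not part of the dataset's official tooling.

```python
# The 26 EMOTIC categories, in the order listed in the text.
EMOTIC_CATEGORIES = [
    "Affection", "Anger", "Annoyance", "Anticipation", "Aversion", "Confidence",
    "Disapproval", "Disconnection", "Disquietment", "Doubt/Confusion", "Embarrassment",
    "Engagement", "Esteem", "Excitement", "Fatigue", "Fear", "Happiness", "Pain",
    "Peace", "Pleasure", "Sadness", "Sensitivity", "Suffering", "Surprise",
    "Sympathy", "Yearning",
]
INDEX = {name: i for i, name in enumerate(EMOTIC_CATEGORIES)}


def to_multi_hot(labels):
    """Encode a collection of category names as a 0/1 list of length 26."""
    vec = [0] * len(EMOTIC_CATEGORIES)
    for name in labels:
        vec[INDEX[name]] = 1  # raises KeyError for names outside the 26 categories
    return vec
```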

After the initial annotation (one annotator per image), the images were divided into three sets: Training (70%), Validation (10%), and Testing (20%), maintaining a similar distribution of affective categories across the sets. Additional annotations were then collected: four more for the Validation set and two more for the Testing set, resulting in a total of five annotations per image for the Validation set and three for the Testing set, with slight variations for some images due to the removal of noisy annotations.

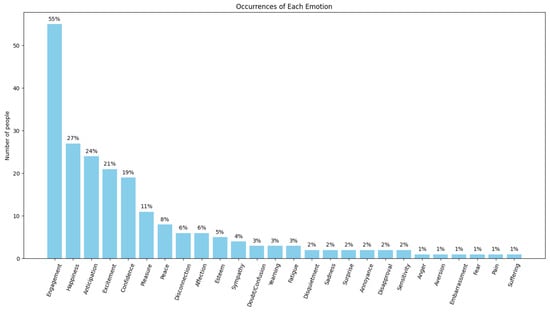

In Figure 1, we can see the number of annotated individuals for each of the 26 emotion categories sorted in descending order, according to the authors of the dataset. The dataset is highly imbalanced, which presents a particular challenge. There are more examples for categories linked to positive emotions, such as Happiness or Pleasure, while those associated with negative emotions, like Pain or Embarrassment, have very few examples. The category with the most examples is Engagement, as most images depict people involved in some activity, indicating some level of engagement.

Figure 1.

Distribution of annotated individuals per emotion category in the EMOTIC dataset, highlighting the imbalance across classes.

The database creators also analyzed the co-occurrence rates of various emotion categories within the dataset to understand how frequently one emotion appears in conjunction with another. This analysis employed clustering techniques such as K-Means to identify groups of emotions that commonly co-occur. The identified clusters include the following:

- Anticipation, Engagement, Confidence;

- Affection, Happiness, Pleasure;

- Doubt/Confusion, Disapproval, Annoyance;

- Yearning, Annoyance, Disquietment.
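The co-occurrence rates underlying such clusters can be computed from a multi-hot annotation matrix. The sketch below estimates, for each pair of categories, how often category j is annotated given that category i is annotated; this conditional normalization is an assumption on our part, since the exact co-occurrence measure used by the dataset authors is not specified here.

```python
import numpy as np


def cooccurrence_rates(label_matrix: np.ndarray) -> np.ndarray:
    """Conditional co-occurrence rates from a multi-hot label matrix.

    label_matrix: shape (num_people, num_categories), entries 0/1.
    Returns R with R[i, j] = fraction of people labelled i who are also labelled j.
    """
    y = label_matrix.astype(float)
    counts = y.T @ y                  # counts[i, j] = people labelled with both i and j
    per_category = np.diag(counts)    # people labelled with category i
    with np.errstate(divide="ignore", invalid="ignore"):
        rates = counts / per_category[:, None]
    return np.nan_to_num(rates)       # categories never used get rates of 0
```

The rows of this matrix can then serve as feature vectors for a clustering algorithm such as K-Means, which groups categories with similar co-occurrence profiles.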

These clusters reveal a significant challenge for emotion recognition algorithms. Emotions within these clusters often exhibit overlapping features or contexts, making it difficult for algorithms to distinguish between them accurately. For example, both Anticipation and Confidence may involve positive expectations, which could manifest similarly in body language, complicating differentiation. Similarly, Affection, Happiness, and Pleasure all convey positive sentiments, potentially leading to confusion in automated systems trying to parse these subtle distinctions.

Furthermore, emotions like Doubt/Confusion and Disapproval can both display signs of discomfort or hesitation, adding to the complexity of their separation in emotion recognition systems. The overlap in emotional expressions and the variability in contextual cues can lead to misclassification or ambiguous interpretations by algorithms. This complexity highlights the need for sophisticated methods and careful tuning in emotion recognition technologies to accurately capture and distinguish these nuanced emotional states.

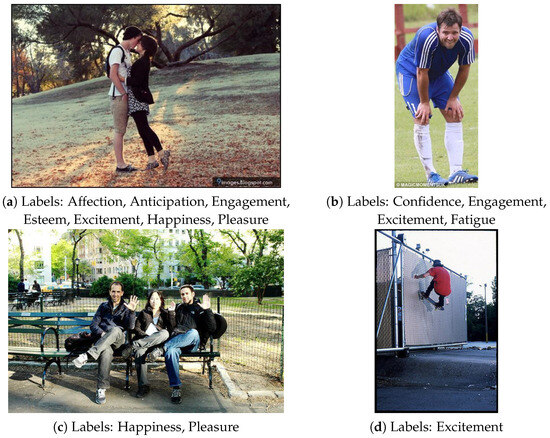

Figure 2 displays a selection of EMOTIC examples across distinct emotion categories. These demonstrate the dataset’s diversity in environmental context, body language, and visual appearance.

Figure 2.

Sample images from the EMOTIC dataset showcasing different emotional labels and environments. Each image reflects one or more of the dataset’s 26 emotion categories.

3.2. BEAST

The BEAST database [12] contains 254 whole-body expressions from 46 actors, each expressing one of four emotions: anger, fear, happiness, and sadness. Actors were individually instructed to display the emotions using their whole body, following a standardized procedure. Each emotion was associated with specific, representative daily scenarios: anger involved being in a quarrel and threatening retaliation, fear involved being pursued by an attacker, happiness involved meeting an old friend, and sadness involved learning of a dear friend’s passing. The actors’ faces were blurred in all images, and raters were asked to categorize the emotions in a four-alternative forced-choice task. The dataset serves as a valuable tool for assessing the recognition of affective signals in explicit recognition tasks, matching tasks, and implicit assessments.

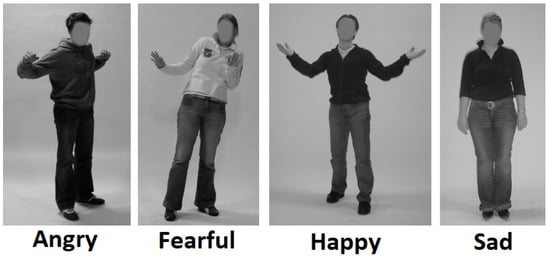

Emotions such as disgust were not included because a pilot study suggested that they were difficult to recognize without facial cues [12]. Based on the photographer’s assessment of posture expressiveness, multiple pictures of the same actor and emotion were sometimes taken to enrich the dataset with slight variations. The total number of pictures was 254 (64 anger, 67 fear, 61 happiness, and 62 sadness). The scenarios were presented to each actor in random order. After the photo shoot, the images were desaturated and the facial areas blurred. Examples of the pictures can be seen in Figure 3.

Figure 3.

Example images from BEAST dataset. We illustrate one example for each class.

The dataset authors reported a high level of agreement among raters, with a Fleiss’ generalized kappa of 0.839 and an overall rater accuracy of 92.5%.
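Fleiss’ kappa compares the observed agreement among raters with the agreement expected by chance, so a value of 0.839 indicates agreement well above chance. The sketch below implements the standard formulation for a fixed number of raters per item; we assume it is close to the generalized variant reported for BEAST, but the original rating data are not reproduced here.

```python
import numpy as np


def fleiss_kappa(counts: np.ndarray) -> float:
    """Fleiss' kappa for N items, each rated by the same number n of raters.

    counts: shape (N, k); counts[i, j] = number of raters who assigned item i to category j.
    """
    counts = np.asarray(counts, dtype=float)
    n_items = counts.shape[0]
    n_raters = counts[0].sum()
    if not np.allclose(counts.sum(axis=1), n_raters):
        raise ValueError("every item must be rated by the same number of raters")
    # Observed agreement per item (fraction of agreeing rater pairs), averaged over items.
    p_item = (np.sum(counts ** 2, axis=1) - n_raters) / (n_raters * (n_raters - 1))
    p_bar = p_item.mean()
    # Chance agreement from the overall category proportions.
    p_cat = counts.sum(axis=0) / (n_items * n_raters)
    p_e = np.sum(p_cat ** 2)
    return float((p_bar - p_e) / (1.0 - p_e))
```

For a four-choice task such as BEAST's, `counts` would have four columns, one per emotion, and one row per image.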

The authors concluded that all emotions were well recognized. Sadness was the easiest to identify, followed by fear, while happiness was the most difficult. These findings align with those of Atkinson et al. [26], suggesting inherent differences in the recognizability of bodily emotions, although different tasks may influence these results. Incorrect categorizations revealed that anger was more often confused with happiness than with sadness, and fear was more frequently confused with anger and happiness than with sadness. This pattern contrasts with facial expression studies, where happiness is usually recognized better than negative emotions. All emotions were expressed voluntarily, which may differ from natural expressions, even though real-life scenarios were used to enhance validity. There were no significant inter-individual differences in emotion categorization accuracy, indicating comparable recognition abilities across raters.

The two databases share some similarities (both were built around emotions expressed through body posture), but they also have critical differences:

- BEAST is small; EMOTIC is larger.

- BEAST illustrates prototypical body expressions of emotion; EMOTIC offers varied and diverse examples for each emotional body posture.

- BEAST has no background; EMOTIC deliberately includes background context that reinforces the person’s emotion.

- BEAST removes facial expressions (faces are blurred); EMOTIC retains them.

The selection of EMOTIC and BEAST was motivated by their complementary characteristics and distinct advantages. EMOTIC offers a large-scale, diverse collection of emotional expressions captured in natural, unconstrained environments, incorporating both facial and bodily cues along with rich contextual backgrounds. In contrast, BEAST provides controlled, prototypical whole-body expressions with facial cues intentionally removed, making it particularly useful for isolating the contribution of body posture. Together, these datasets enable a more comprehensive exploration of both naturalistic and standardized representations of emotion.

Despite its scale and diversity, EMOTIC presents several limitations. The dataset exhibits a skewed gender distribution (66% male) and an underrepresentation of younger age groups such as children and teenagers. It is also highly imbalanced, with some emotion categories comprising less than 2% of the data. Moreover, many images show only partial body views, typically from the elbows upward, limiting the available posture information. These constraints can introduce biases and reduce the generalizability of emotion recognition models trained solely on this dataset, highlighting the importance of critical evaluation when interpreting results.

BEAST, on the other hand, is a much smaller dataset with only 254 images, which constrains the training of data-hungry models and limits variability across subjects and expressions. Nonetheless, BEAST offers several advantages: standardized scenarios enhance consistency across emotional expressions, the four classes are balanced, and the intentional blurring of faces ensures that emotion must be inferred solely from body posture. This makes it particularly well-suited for evaluating the body’s contribution to emotion recognition in isolation.

4. Methodology

We hypothesize that current state-of-the-art solutions for body-based emotion recognition are over-reliant on context, since they implicitly assume that a person’s emotion is strongly related to the image background (context).

To test this hypothesis, we evaluate a leading Vision-and-Language Model (VLM)-based emotion recognition system on a modified dataset where expressive full-body figures from the BEAST dataset are placed against misleading backgrounds. The goal is to decouple body posture from its natural scene and observe the model’s reliance on context. Our preliminary findings show a significant performance drop; classification accuracy falls from 90% to 55%, highlighting the model’s dependence on contextual cues rather than body posture alone.

To build toward this result, we begin by reviewing the key principles of context-based emotion recognition and the architecture of a representative VLM-based system. We then describe the experimental setup used to validate our hypothesis.

This section describes the effort to isolate and diagnose problems of previous approaches. Following the diagnosis and identification of their causes, the next section, entitled “Multimodal Approach”, presents the proposed solution, which is designed to add targeted fixes without disrupting what already works.

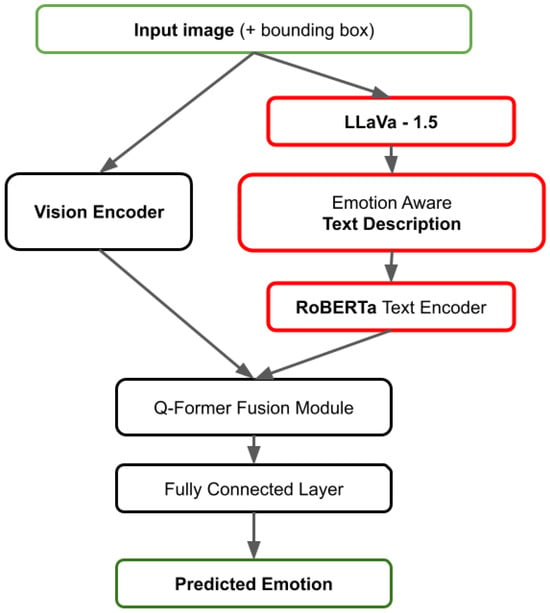

4.1. Standard Context-Based Emotion Recognition

Current state-of-the-art performance in recognizing emotions in context is achieved by complex solutions with intricate architectures. We describe this class of methods by referring specifically to the work of Xenos et al. [25], whose architecture is shown in Figure 4. This prototypical solution uses a two-stage framework that applies Vision-and-Language Models (VLMs), e.g., LLaVA v1.5, to enhance emotion perception in context-heavy scenes.

Figure 4.

Architecture diagram of the state-of-the-art contextual emotion recognition solution. The solution uses parallel branches, one computing embeddings from visual data only and one following a visual-to-text-to-embedding path; their outputs are combined into the final descriptor. The branch marked by red boxes has been shown to be the key contributor to the overall performance.

During Stage I, the VLM generates a natural-language description of each person’s emotional experience with respect to their environment. Such an “emotion-aware” description (e.g., “the person inside the red box is fearful because they are backing away from an oncoming car”) captures how contextual information shapes the interpretation of the person’s emotion.
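The example description refers to “the person inside the red box”, which suggests that the target person is marked directly on the image before it is passed to the VLM together with a textual prompt. A minimal NumPy sketch of such a visual marker, assuming the person’s bounding box is already known; the helper name and box format are hypothetical and not taken from Xenos et al. [25].

```python
import numpy as np


def draw_red_box(image: np.ndarray, box, thickness: int = 2) -> np.ndarray:
    """Return a copy of an RGB uint8 image with a red rectangle outline (hypothetical helper).

    box: (x_min, y_min, x_max, y_max) in pixel coordinates, inclusive, inside the image.
    """
    out = image.copy()  # leave the caller's image untouched
    x0, y0, x1, y1 = box
    red = np.array([255, 0, 0], dtype=out.dtype)
    t = thickness
    out[y0:y0 + t, x0:x1 + 1] = red          # top edge
    out[y1 - t + 1:y1 + 1, x0:x1 + 1] = red  # bottom edge
    out[y0:y1 + 1, x0:x0 + t] = red          # left edge
    out[y0:y1 + 1, x1 - t + 1:x1 + 1] = red  # right edge
    return out
```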

In the second stage, the text description is encoded by a RoBERTa model [27] and the associated image by a visual encoder (e.g., ViT-L). The resulting language and vision embeddings are combined by a Q-Former module [3], which uses cross-attention to merge contextual semantic information with visual information. The combined representation is fed to a fully connected layer that produces the final emotion classification.
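To make the data flow concrete, the fusion stage can be approximated by a single cross-attention layer in which a small set of learnable query vectors attends to the concatenated text and visual tokens, followed by a linear classifier. This is a deliberately simplified NumPy sketch, not the Q-Former itself: it assumes both token sets have already been projected to a common width `d`, uses one attention head with mean pooling, and omits the self-attention, feed-forward, and normalization layers of an actual Q-Former [3]. All weight names are hypothetical.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention_fusion(queries, text_tokens, vision_tokens, w_q, w_k, w_v, w_out, b_out):
    """Single-head cross-attention from queries to text+vision tokens, then a linear layer.

    queries: (num_queries, d); text_tokens: (T, d); vision_tokens: (V, d)
    w_q, w_k, w_v: (d, d); w_out: (d, num_classes); b_out: (num_classes,)
    Returns unnormalized class scores of shape (num_classes,).
    """
    context = np.concatenate([text_tokens, vision_tokens], axis=0)  # (T + V, d)
    q = queries @ w_q
    k = context @ w_k
    v = context @ w_v
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)       # (num_queries, T + V)
    fused = attn @ v                                                # (num_queries, d)
    pooled = fused.mean(axis=0)                                     # (d,)
    return pooled @ w_out + b_out                                   # (num_classes,)
```

For the 26 EMOTIC categories, `w_out` would have 26 columns, and a sigmoid per output would be a natural choice for multi-label prediction.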

One of the main advantages of this approach is its ability to leverage common sense reasoning. Vision–Language Models rely on pre-trained knowledge, allowing them to understand and interpret complex physical and social scenarios without the need for manually crafted rules. This method generates descriptive text at inference time, removing the necessity for manual context labeling. Another benefit is enhanced interpretability by producing textual explanations for emotion predictions. Finally, the integration of both contextual text and visual input through multimodal fusion improves the system’s robustness, especially in cases where facial expressions or body language alone might be too ambiguous to yield accurate emotional interpretation.

Etesam et al. [28] explore a similar setup, evaluating a wide range of alternatives at each step with a focus on Vision-and-Language Model (VLM) and Large Language Model (LLM) descriptors. Their results show that swapping one VLM or LLM for another changes performance only slightly compared with removing these blocks altogether.

In Figure 4, we draw attention to the blocks marked in red, which are the main contributors to the model’s success, as emphasized by Xenos et al. [25]. When evaluated on benchmarks such as EMOTIC, these blocks are net contributors; more precisely, they improve the mAP on the EMOTIC database by approximately 12% compared to solutions that do not use them. These results are consistent with those reported by Etesam et al. [28], who varied the VLM model. However, we argue that the overall solution is over-reliant on these blocks, which introduces a bias toward the background.
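Since results on EMOTIC are reported as mean average precision (mAP) over the emotion categories, it may help to recall how this metric is computed. The sketch below computes non-interpolated average precision for each category and averages over categories; it is a generic illustration (equivalent to scikit-learn’s `average_precision_score` up to tie handling), and the exact evaluation script behind the reported improvement is not reproduced here.

```python
import numpy as np


def average_precision(y_true: np.ndarray, scores: np.ndarray) -> float:
    """Non-interpolated AP for one category: mean precision at the rank of each positive."""
    order = np.argsort(-scores, kind="stable")  # highest score first
    hits = y_true[order].astype(float)
    if hits.sum() == 0:
        return float("nan")  # AP is undefined for a category without positives
    precision_at_k = np.cumsum(hits) / np.arange(1, len(hits) + 1)
    return float((precision_at_k * hits).sum() / hits.sum())


def mean_average_precision(y_true: np.ndarray, scores: np.ndarray) -> float:
    """mAP over categories; both arrays have shape (num_samples, num_categories)."""
    aps = [average_precision(y_true[:, c], scores[:, c]) for c in range(y_true.shape[1])]
    return float(np.nanmean(aps))  # categories without positives are ignored
```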

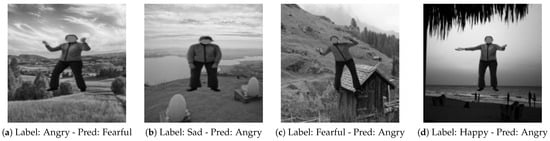

To expose this over-reliance on the background, we test the solution on images from BEAST composited onto uncorrelated backgrounds. Figure 5 shows such examples together with the corresponding predictions, in which incorrect predictions can be observed.

Figure 5.

Prediction examples on images obtained by superimposing persons from the BEAST database onto uncorrelated backgrounds, using the previous state-of-the-art architecture.

These observations underline the importance of testing context-aware models on data that deliberately decouple body posture from the surrounding scene. By combining emotion-rich body expressions from BEAST with irrelevant or misleading backgrounds, we can assess the true extent of context dependence; the results confirm that current models tend to rely on contextual cues rather than on bodily expression.

4.2. VLM Experiments

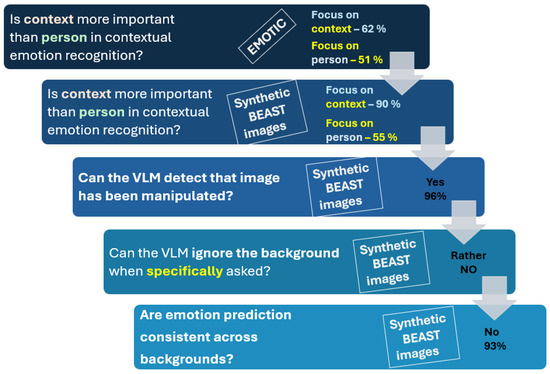

Motivated by the previous findings, in this subsection, we design a series of experiments to better understand the role of context and context captioning in emotion recognition. These experiments are synthesized in Figure 6 and detailed in the next paragraphs. All experiments focus exclusively on Vision–Language Models (VLMs).

Figure 6.

The chain of hypotheses tested regarding VLM reliance on the background when captioning context for emotion recognition. Colored text marks the key detail in the question or the database used.

Among the various available VLMs, we primarily use Gemini, as it imposes fewer limitations on the number of experiments compared to other alternatives.

4.2.1. Context Weight in Emotion Recognition

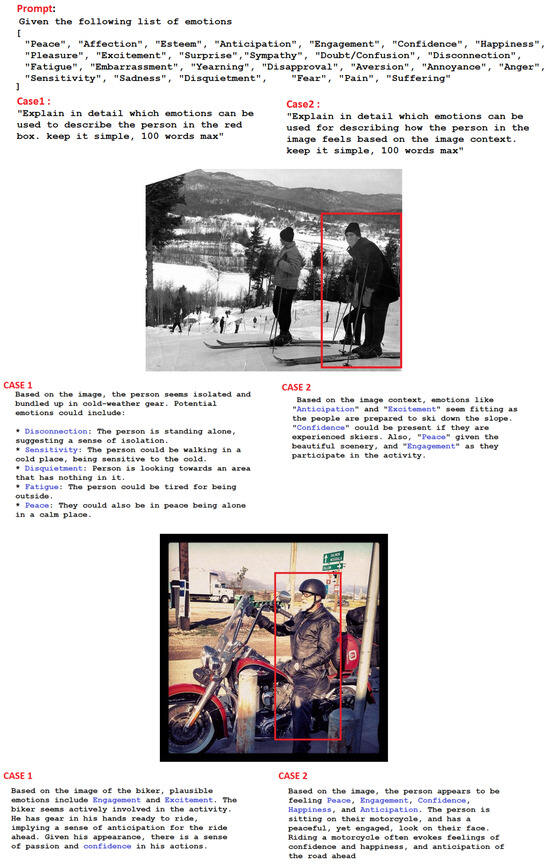

We investigated the role of context in emotion recognition by comparing the model’s predictions on the full image with those on the segmented person only. First, we sampled 300 images from EMOTIC in which the full body is visible and asked the VLM (Gemini) to predict the emotion. When the analysis was restricted to the person, the model predicted the emotion correctly in 51.13% of cases, compared to 62.05% when viewing the full image (i.e., the person in context). This indicates that background context substantially influences the model’s emotion interpretation. Examples of images, prompts, and the VLM’s captions are shown in Figure 7.

Figure 7.

Alternative queries to the VLM on images from the EMOTIC database: prompt, image, and results when captioning was restricted to the body (Case 1) and when it covered the whole image context (Case 2).

This result is in line with the findings of Xenos et al. [25], who reported an mAP increase from 25.55 when only the image content inside the person bounding box was fed to a Vision Transformer (ViT), to 34.25 when a VLM was asked to recognize the emotion, and to 38.52 when the solution also included emotion awareness and context captions.

To examine this dependency further, we also ran two scenarios on BEAST. We first segmented the individuals from the BEAST dataset and placed them onto various backgrounds, creating synthesized versions of the original images. In the first scenario, the background was congruent with the expressed emotion; in the second, the person’s emotion and the background were contradictory. For each scenario, we trained the RoBERTa classifier on the descriptions generated by Gemini.

In the first scenario, the classifier trained on these descriptions reached 90% accuracy, whereas in the contradictory scenario accuracy dropped to 55.13%. This result highlights the model’s over-reliance on context, which can lead to errors when simple prompts are used.

4.2.2. Can the VLM Detect Unnatural Correlation?

The experiments demonstrating an over-reliance on background are based on synthetically created composite images. A natural concern is whether such images are too simplistic or idealized for meaningful evaluation. To address this, we verified whether VLMs can detect the artificial nature of these altered images.

Prior work by Wang et al. [9] showed that VLMs possess some ability to assess the consistency and authenticity of visual–linguistic content. Motivated by this, we tested Gemini 2.0 [29] to determine if it could distinguish between original and manipulated images.

The test images were constructed by extracting people from the BEAST and EMOTIC datasets using the YOLOv8 object detection model and compositing them onto random scenes from the Landscapes dataset. This process creates realistic yet contextually mismatched visuals, simulating real-world image manipulation more faithfully than simple cut-and-paste examples.
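The compositing step can be sketched as alpha blending of the extracted person over a landscape image. This NumPy sketch assumes that a person crop with a per-pixel mask in [0, 1] is already available; the YOLOv8 extraction itself is not reproduced. The helper names `paste_person` and `random_composite`, as well as the uniform random placement, are hypothetical.

```python
import random

import numpy as np


def paste_person(background, person, mask, top, left):
    """Alpha-composite a person crop onto a background at (top, left).

    background: (H, W, 3) uint8; person: (h, w, 3) uint8; mask: (h, w) in [0, 1].
    """
    h, w = mask.shape
    if top + h > background.shape[0] or left + w > background.shape[1]:
        raise ValueError("person crop does not fit inside the background")
    out = background.astype(np.float32)          # astype returns a copy
    region = out[top:top + h, left:left + w]     # view into `out`
    alpha = mask.astype(np.float32)[..., None]   # broadcast over RGB channels
    region[:] = alpha * person + (1.0 - alpha) * region
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)


def random_composite(person, mask, backgrounds, rng=random):
    """Pick a random background and paste the person at a random valid position."""
    bg = rng.choice(backgrounds)
    h, w = mask.shape
    top = rng.randint(0, bg.shape[0] - h)    # randint is inclusive on both ends
    left = rng.randint(0, bg.shape[1] - w)
    return paste_person(bg, person, mask, top, left)
```

A soft (non-binary) mask smooths the boundary between person and scene, which is one way to make a composite look less like a naive cut-and-paste.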

We submitted each composited image with the prompt: “Please determine if the image is original (photograph) or modified (synthesized).” Gemini 2.0 correctly classified 96% of the images as “modified,” indicating a strong ability to detect such manipulations even in relatively complex, realistic composites. While this high performance is promising, we acknowledge that altered images can vary widely in realism and style, and further testing across more diverse manipulation types would be needed to generalize this finding fully.

4.2.3. Refined Prompt

Based on these findings, we replaced the prompt with a more refined one: “Based on this emotion list [’angry’,’fearful’,’happy’,’sad’], please analyze the emotions expressed by the person in the image. If you identify that the image is synthetic or edited, please focus only on the person and ignore the background.” This instruction increased the model’s accuracy from 55.13% to 63.72% (about 8.6 percentage points), suggesting that prompting can partially mitigate context bias.
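Because the VLM answers in free text (as in the Figure 8 responses), its output has to be mapped back to the four labels before accuracy can be computed. The paper does not describe this step. The sketch below shows one hypothetical way to do it: it rebuilds the refined prompt and takes the label whose keyword appears first in the answer. The regular expressions and function names are assumptions.

```python
import re

EMOTIONS = ["angry", "fearful", "happy", "sad"]

# Hypothetical patterns mapping free-text wording to the four labels.
PATTERNS = {
    "angry": re.compile(r"\bang(?:ry|er)\b", re.IGNORECASE),
    "fearful": re.compile(r"\bfear(?:ful)?\b", re.IGNORECASE),
    "happy": re.compile(r"\bhapp(?:y|iness)\b", re.IGNORECASE),
    "sad": re.compile(r"\bsad(?:ness)?\b", re.IGNORECASE),
}


def build_refined_prompt(emotions=EMOTIONS):
    """Rebuild the refined prompt text around a given emotion list."""
    return (f"Based on this emotion list {emotions}, please analyze the emotions "
            "expressed by the person in the image. If you identify that the image "
            "is synthetic or edited, please focus only on the person and ignore "
            "the background.")


def parse_emotion(response):
    """Return the label mentioned earliest in a VLM answer, or None if none matches."""
    hits = []
    for label, pattern in PATTERNS.items():
        match = pattern.search(response)
        if match:
            hits.append((match.start(), label))
    return min(hits)[1] if hits else None
```

Answers that mention no label (for example, only “surprise”) return `None` and can be counted as errors or re-queried.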

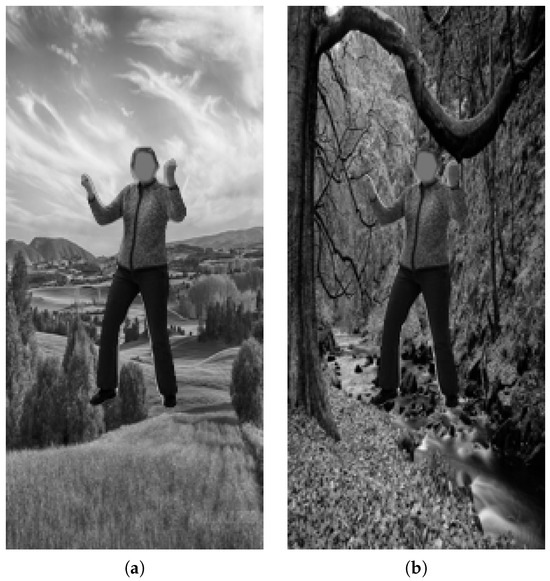

Even with explicit instructions to focus on the subject and ignore the background, visual context continues to influence the model’s interpretation. To further illustrate this phenomenon, we present two concrete examples in Figure 8, where the same body pose is interpreted differently depending on the background or the subjective ambiguity of the scene.

Figure 8.

Example of context-sensitive emotion predictions: (a) “The person in the image appears to be expressing happiness.” (b) “The image looks edited, so I’ll focus on the person. The individual’s posture and outstretched arms could suggest a sense of fear or surprise. It’s difficult to say definitively without a visible face”.

In Figure 8a, the model confidently associates the pose with happiness, likely influenced by either the openness of the posture or subtle contextual elements in the image. In contrast, Figure 8b receives a more uncertain, context-agnostic interpretation. Although the model follows the prompt by acknowledging the synthetic nature of the image and focusing on the pose, it still struggles to confidently classify the emotion, suggesting fear or surprise as possibilities.

4.2.4. Consistency

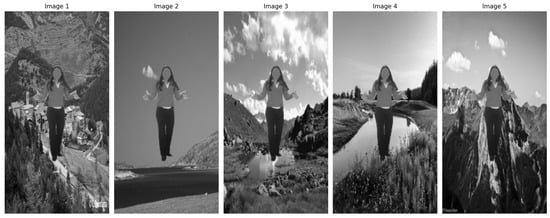

To further probe the behavior of Vision–Language Models, we evaluated their consistency in emotion predictions across varying visual contexts. Specifically, we examined whether the model could maintain stable emotion predictions for the same body pose when composited onto different backgrounds. Figure 9 illustrates this setup, showing a single body pose (annotated as “Happy”) placed in five distinct scenes, each varying in environment, lighting, and semantic content.

Figure 9.

Same body pose (marked as Happy) in five different background contexts.

Analysis Method: Predictions were grouped by body-pose identity, resulting in 254 sets of 5 images. We then checked whether the model predicted the same emotion for a given pose across all five backgrounds.
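The consistency analysis reduces to grouping predictions by pose and checking whether each group contains a single label. Here is a minimal sketch, assuming the predictions are available as hypothetical `(pose_id, background_id, emotion)` triples. It also recomputes the percentages reported for the two experiments from the raw counts.

```python
from collections import defaultdict


def pose_consistency(predictions):
    """Count poses whose predicted emotion is identical across all their backgrounds.

    predictions: iterable of (pose_id, background_id, emotion) triples.
    Returns (consistent_poses, total_poses).
    """
    labels_per_pose = defaultdict(set)
    for pose_id, _background_id, emotion in predictions:
        labels_per_pose[pose_id].add(emotion)
    consistent = sum(1 for labels in labels_per_pose.values() if len(labels) == 1)
    return consistent, len(labels_per_pose)


if __name__ == "__main__":
    # Reported counts: 18/254 (simple prompt) and 26/254 (refined prompt).
    for consistent in (18, 26):
        print(f"{consistent}/254 = {100 * consistent / 254:.1f}%")
    # 18/254 = 7.1%
    # 26/254 = 10.2%
```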

Initial experiment with a simple prompt:

- Only 18 out of 254 poses (7%) received consistent emotion predictions across all five backgrounds.

- The remaining 236 poses (93%) yielded varying predictions depending on the background.

- This further supports the observation that the model over-relies on the background when inferring the emotion of a person shown in full body.

Second experiment with a refined prompt that specifically asks to “Ignore background if the image is edited”:

To constrain the VLM further (giving it a “shorter leash”), we adjusted the prompt to explicitly instruct the model to ignore background context when it detects an edited image:

- A total of 26 out of 254 poses (10%) produced consistent predictions across all backgrounds.

- A total of 228 poses (90%) still showed variability, although the consistency slightly improved.

While the 3-percentage-point increase indicates a slight improvement, it likely reflects natural variation rather than a true gain in prediction reliability. This reinforces the concern that background changes continue to influence the model’s outputs.

Even with explicit instructions to ignore the background, the same body pose still triggered inconsistent predictions. This underscores our broader finding: environmental context frequently overshadows bodily cues in emotion interpretation. Despite refined prompting, Large Vision–Language Models remain sensitive to background signals, leading to unstable or ambiguous outcomes. Such behavior shows the need for multimodal grounding as pursued in our fusion architecture. To anchor predictions in structured, complementary information, we add another branch to the solution, which encodes explicitly the pose of the person in the image. This solution will be further detailed and evaluated in Section 5.

4.3. Completely Synthetic Images

Finally, we conducted an experiment involving deliberately contradictory image prompts. Using DALL-E [30,31], we generated 15 synthetic scenarios in which the emotional body language conflicted with the surrounding scene, such as

- “Man in a defensive posture at a wedding;”

- “Crying child at a birthday party;”

- “Ecstatic woman at a funeral;”

- “Joyful child in a war zone;”

- “Panicked man at a meditation retreat.”

Figure 10 presents several of these generated images. Visually, these examples feature strong emotional expressions on the central figure while embedding them in semantically rich and emotionally incongruent backgrounds.

Figure 10. Examples of AI-generated (DALL-E) images based on contextually contradictory prompts that are shown below each figure.


We then asked the model to describe these images, and it produced accurate emotion descriptions in 100% of cases. Unlike the synthetic examples created by blending content from two existing images, DALL-E-generated images are typically dominated by the foreground person, whose facial expression is highly pronounced. The results on these images suggest that modern Vision–Language Models may prioritize facial expression as the main cue for emotion recognition.

Concluding these experiments on VLM while analyzing emotion in context:

- When available, VLMs give the highest priority to the face. This aligns with human behavior, but recent perspectives criticize this approach as intrusive, since the face is closely tied to personal identity.

- VLMs give secondary priority to the background, which is a weakness, as the context inferred from the background is merely circumstantial in relation to emotion.

- Only afterward is body posture taken into account.

- VLMs are capable of identifying unnatural compositions. However, they are not inherently skeptical, and when tasked with emotion recognition in context, they revert to their default prioritization.

5. Multimodal Approach

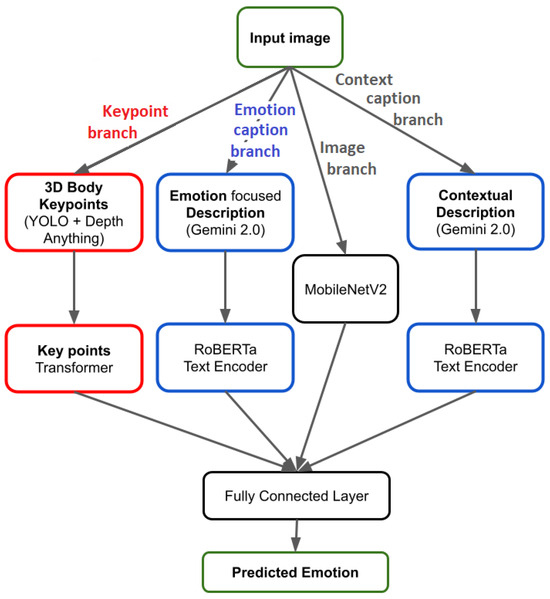

To address the context sensitivity identified in state-of-the-art VLMs, we propose a multimodal learning architecture named BECKI (Body Emotion Context Keypoint Image) that explicitly integrates information from several distinct sources: body keypoints, raw grayscale body images, contextual descriptions, and emotion-focused textual cues. The goal is to make the model’s emotion recognition more robust and less prone to background or context manipulation. Whereas the previous section presented a series of experiments designed to isolate the contextual bias issue, this section proposes a solution that adds targeted fixes without disrupting the components that already work.

The core dataset used for benchmarking is derived from the BEAST database, which provides grayscale full-body images labeled with four basic emotions: angry, fearful, happy, and sad. Although the dataset is relatively small and limited in subject diversity, its design enables controlled manipulation of the background and offers a clear view of body pose, making it particularly suitable for evaluating sensitivity to contextual bias. To this end, we replaced the original neutral backgrounds with environments that contradict the expressed emotion. Each modified sample was further enriched with two levels of semantic annotation: a contextual description of the scene and a specifically constructed “emotion analysis” description, designed to highlight potential discrepancies between the emotion expressed and the surrounding environment.

Our approach builds on a custom neural pipeline, represented graphically in Figure 11. Compared to previous approaches, exemplified in Figure 4, the main innovation lies in the blocks outlined in red. In addition, the refined-prompt findings motivated splitting the captioning branch in two, as shown by the blocks outlined in blue. The key blocks are detailed next.

Figure 11.

Overview of the multimodal emotion recognition pipeline (named BECKI) integrating image, keypoint, and textual modalities. Compared to the previous solution, here, embeddings from three branches are gathered. The red blocks mark our key proposal.

Each data point is formed from an image, two captions, and a set of posture keypoints. More precisely, a data point consists of the following:

- Grayscale image of the subject;

- Structured file of 3D keypoints from body pose estimation;

- Contextual textual description;

- Emotion-focused textual analysis.
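The four components of a data point can be captured in a small container type. This is an illustrative sketch only. The field names, the validation rules, and storing keypoints as in-memory tuples (rather than in the structured file mentioned above) are assumptions, not the actual schema of the dataset.

```python
from dataclasses import dataclass
from typing import List, Tuple

# (x, y, confidence, depth) for one keypoint, as described in Section 5.1.
Keypoint = Tuple[float, float, float, float]
EMOTIONS = ("angry", "fearful", "happy", "sad")


@dataclass
class MultimodalSample:
    """One data point; field names are illustrative, not the authors' schema."""
    image_path: str             # grayscale image of the subject
    keypoints: List[Keypoint]   # 17 pose keypoints
    context_text: str           # contextual scene description
    emotion_text: str           # emotion-focused analysis
    label: str                  # one of EMOTIONS

    def __post_init__(self):
        if len(self.keypoints) != 17:
            raise ValueError(f"expected 17 keypoints, got {len(self.keypoints)}")
        if self.label not in EMOTIONS:
            raise ValueError(f"unknown label: {self.label}")
```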

These data points have been gathered into a so-called “MultimodalBEASTDataset”. To process all these modalities, we developed a specialized model that incorporates the following:

- MobileNetV2 CNN: Serves here primarily to extract local appearance cues without dominating the model’s focus since global dependencies are already captured via text and keypoint streams.

- KeypointEmotionTransformer: A lightweight Transformer encoder specifically designed for structured human pose data. Crucially, keypoints are normalized relative to image size to reduce scale and location variability.

- Text Encoders (RoBERTa-base): We use two parallel RoBERTa-base models, one for the contextual scene description and one for the emotion-focused analysis. This dual stream approach allows the model to separately process general and emotionally salient text, which is especially useful in ambiguous or contradictory background situations. Both models are fine-tuned on task data.

- Fusion and Classification: The outputs of all four branches (image, keypoints, context text, emotion text) are concatenated into a unified feature vector. This vector is then passed through a two-layer MLP: a linear projection to 256 dimensions, ReLU activation, dropout of 0.3, and a final classification layer producing logits over the four emotion classes.
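The fusion step described above amounts to concatenation followed by a two-layer MLP. This PyTorch sketch assumes that each branch has already been reduced to one vector per sample. The branch widths (1280 for pooled MobileNetV2 features, 128 for the keypoint encoder, 768 for each RoBERTa-base encoder) and the class name `FusionHead` are assumptions. The 256-unit projection, ReLU, dropout of 0.3, and four output classes follow the text.

```python
import torch
import torch.nn as nn


class FusionHead(nn.Module):
    """Concatenate four branch embeddings and classify them with a two-layer MLP.

    Default widths are assumptions: 1280 (pooled MobileNetV2 features),
    128 (keypoint Transformer), 768 + 768 (two RoBERTa-base encoders).
    """

    def __init__(self, dims=(1280, 128, 768, 768), hidden=256,
                 num_classes=4, dropout=0.3):
        super().__init__()
        self.mlp = nn.Sequential(
            nn.Linear(sum(dims), hidden),    # projection to 256 dimensions
            nn.ReLU(),
            nn.Dropout(dropout),
            nn.Linear(hidden, num_classes),  # logits over the emotion classes
        )

    def forward(self, image_emb, keypoint_emb, context_emb, emotion_emb):
        # Each input: (B, dim_i); the concatenation has width sum(dims).
        fused = torch.cat([image_emb, keypoint_emb, context_emb, emotion_emb], dim=-1)
        return self.mlp(fused)
```

Plain concatenation keeps every modality’s features intact and leaves the weighting between them to the MLP, which suits a small dataset better than a heavier attention-based fusion.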

5.1. Keypoint Transformer Architecture

To enhance subject-focused emotion recognition and reduce reliance on background context, we introduce the Keypoint Transformer. It is a lightweight Transformer-based architecture specifically designed for structured human pose data. Each input sample consists of 17 keypoints, where each keypoint is described by a 4-dimensional vector: 2D coordinates (x, y), confidence score c, and depth d.
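The normalization of keypoints relative to image size, mentioned for the KeypointEmotionTransformer, can be sketched as dividing the pixel coordinates by the image width and height. The function name is hypothetical. Leaving confidence and depth unscaled is an assumption, because the text does not state how those components are treated.

```python
def normalize_keypoints(keypoints, width, height):
    """Scale (x, y) to [0, 1] by image size; confidence and depth are kept as-is.

    keypoints: sequence of (x, y, confidence, depth) tuples in pixel coordinates.
    """
    if width <= 0 or height <= 0:
        raise ValueError("image size must be positive")
    return [(x / width, y / height, c, d) for x, y, c, d in keypoints]
```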

5.1.1. Embedding and Positional Encoding

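Each keypoint vector $\mathbf{k}_i = (x_i, y_i, c_i, d_i)^\top \in \mathbb{R}^4$, $i = 1, \dots, 17$, is first mapped to a higher-dimensional representation via a linear embedding layer:

$$\mathbf{e}_i = \mathbf{W}_e \mathbf{k}_i + \mathbf{b}_e$$

Here, $\mathbf{W}_e \in \mathbb{R}^{D \times 4}$ and $\mathbf{b}_e \in \mathbb{R}^{D}$ are learnable parameters, where $D$ is the model dimension. To retain the structural ordering of the keypoints, we add a learnable positional encoding matrix $\mathbf{P} \in \mathbb{R}^{17 \times D}$:

$$\mathbf{Z}^{(0)} = \mathbf{E} + \mathbf{P}, \qquad \mathbf{E} = [\mathbf{e}_1, \dots, \mathbf{e}_{17}]^\top \in \mathbb{R}^{17 \times D}$$

The resulting sequence $\mathbf{Z}^{(0)}$ forms the input to the Transformer.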

5.1.2. Transformer Encoder

The encoder follows the standard architecture of Vaswani et al. [32], but is adapted for compact structured input. We use a stack of three Transformer encoder layers, each with four attention heads, a hidden dimension of 128, and a feedforward dimension of 256. Dropout with rate 0.1 is applied within each layer. The encoder is implemented using PyTorch’s default post-normalization design.

5.1.3. Global Aggregation and Classification

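The output sequence of the last ($L$-th) encoder layer, with rows $\mathbf{z}_i^{(L)} \in \mathbb{R}^{D}$ ($D$ being the model dimension), is reduced to a global representation via adaptive average pooling across the keypoint dimension:

$$\mathbf{h} = \frac{1}{17} \sum_{i=1}^{17} \mathbf{z}_i^{(L)}$$

Finally, a fully connected classification layer produces logits for each of the $C$ target emotion classes:

$$\mathbf{y} = \mathbf{W}_o \mathbf{h} + \mathbf{b}_o, \qquad \mathbf{W}_o \in \mathbb{R}^{C \times D},\ \mathbf{b}_o \in \mathbb{R}^{C}$$

In our experiments, we set $C = 4$, corresponding to the emotion categories angry, fearful, happy, and sad.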

The hyperparameters of the Transformer encoder (three layers, four attention heads, 128 hidden dimensions, and 256 feedforward dimensions) were selected based on empirical evaluation across multiple configurations. We varied the number of layers (1–6), the model width (64–256), and the number of attention heads (2–8), and found that this configuration offers the best trade-off between classification accuracy and model size. Our goal was to keep the architecture lightweight and suitable for emotion recognition from keypoints, without overfitting to the relatively compact input structure of 17 tokens.
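The embedding, positional encoding, encoder, pooling, and classification steps of Section 5.1 can be assembled into a compact PyTorch module. This sketch is consistent with the stated hyperparameters (three layers, four heads, width 128, feedforward 256, dropout 0.1, post-normalization), but it is not the authors’ code. The initialization of the positional encoding and the `return_embedding` switch (used to feed the pooled vector to the fusion head) are assumptions.

```python
import torch
import torch.nn as nn


class KeypointEmotionTransformer(nn.Module):
    """Sketch: linear embedding + learnable positions + 3-layer post-norm encoder."""

    def __init__(self, num_keypoints=17, in_dim=4, d_model=128, num_heads=4,
                 ff_dim=256, num_layers=3, dropout=0.1, num_classes=4):
        super().__init__()
        self.embed = nn.Linear(in_dim, d_model)  # linear keypoint embedding
        # Learnable positional encoding, one vector per keypoint index.
        self.pos = nn.Parameter(torch.randn(1, num_keypoints, d_model) * 0.02)
        layer = nn.TransformerEncoderLayer(
            d_model, num_heads, dim_feedforward=ff_dim, dropout=dropout,
            batch_first=True)  # norm_first defaults to False (post-normalization)
        self.encoder = nn.TransformerEncoder(layer, num_layers=num_layers)
        self.classifier = nn.Linear(d_model, num_classes)

    def forward(self, keypoints, return_embedding=False):
        # keypoints: (B, 17, 4) with (x, y, confidence, depth) per keypoint
        z = self.encoder(self.embed(keypoints) + self.pos)
        h = z.mean(dim=1)  # equals adaptive average pooling over the keypoint axis
        return h if return_embedding else self.classifier(h)
```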

5.2. Textual and Visual Backbone Models

5.2.1. RoBERTa for Textual Encoding

For the textual modalities (contextual descriptions and emotion-focused analysis), we use two parallel RoBERTa encoders [27]. RoBERTa is a Transformer-based language model built on the BERT architecture but pre-trained with an optimized procedure on substantially more data, which yields better language understanding and representation. Its ability to capture nuanced semantic information makes it well suited to extracting meaningful features from complex, context-dependent textual inputs.

We recall that RoBERTa uses a byte-level Byte-Pair Encoding (BPE) tokenizer, which first breaks the input text into subword units and then converts each token into an integer ID using a predefined vocabulary. Each token ID is mapped to a vector through the token-embedding lookup table, and a positional embedding, which encodes the token’s position in the sequence (i.e., adds sequence awareness), is added to it. The summed embeddings are fed into the encoder, which is a stack of Transformer encoder layers [27]. The final output is a sequence of embeddings, one for each input token.
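The tokenize-then-embed pipeline can be illustrated with a toy example. The sketch below applies an ordered list of merge rules at the character level and then sums token and positional embeddings. RoBERTa’s real tokenizer operates on bytes with a large learned vocabulary, and its embedding layer additionally applies layer normalization and dropout. The merge list, vocabulary, and lookup tables here are therefore invented purely for illustration.

```python
def apply_merges(word, merges):
    """Apply ordered BPE merge rules to one word (toy, character-level)."""
    symbols = list(word)
    for left, right in merges:  # earlier merges take priority
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == (left, right):
                out.append(left + right)  # merge the adjacent pair
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        symbols = out
    return symbols


def embed(token_ids, token_table, position_table):
    """Sum token and positional embeddings element-wise (toy lookup tables)."""
    return [[t + p for t, p in zip(token_table[tid], position_table[pos])]
            for pos, tid in enumerate(token_ids)]


if __name__ == "__main__":
    merges = [("e", "r"), ("l", "o"), ("lo", "w")]  # hypothetical merge list
    vocab = {"low": 0, "er": 1}                     # hypothetical vocabulary
    tokens = apply_merges("lower", merges)          # ['low', 'er']
    ids = [vocab[t] for t in tokens]                # [0, 1]
    print(embed(ids, [[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.5], [0.25, 0.25]]))
```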

The proposed solution uses two independent RoBERTa encoders, allowing the model to learn distinct representations for general context versus emotion-specific textual cues, which is critical for disentangling subtle emotional signals from the surrounding environment.

5.2.2. MobileNetV2 for Visual Encoding

The image modality is encoded using MobileNetV2 [33], a lightweight convolutional neural network architecture designed for efficient mobile and embedded vision applications. MobileNetV2 leverages depthwise separable convolutions and inverted residual blocks to significantly reduce computational cost and model size while maintaining competitive accuracy. Although other CNN architectures (e.g., ResNet, EfficientNet) or Vision Transformers (ViTs) could be used, MobileNetV2 offers an advantageous trade-off between performance and computational efficiency, enabling faster training and inference, particularly beneficial in a multimodal setup where multiple encoders run in parallel. Its adaptability to grayscale images further supports the focus on body appearance without reliance on color information.

This combination of specialized Transformer-based text encoders and a lightweight CNN backbone provides a balanced approach to extracting rich, complementary representations from different data sources while keeping the overall model size and complexity manageable.

Training Details

The fusion model was trained using the AdamW optimizer with a fixed learning rate and weight decay for regularization. We used a batch size of 16 and trained for up to 50 epochs. To address class imbalance (in the case of EMOTIC), we applied a weighted cross-entropy loss with class weights computed from the training label distribution. No learning rate scheduler or warm-up strategy was used; instead, we monitored validation performance after each epoch and applied early stopping with a patience of 5 epochs to mitigate overfitting. These hyperparameters were chosen based on initial empirical experimentation to balance training stability, model performance, and computational efficiency.
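The class weighting and early-stopping logic can be sketched in plain Python. The text only says that weights are computed from the training label distribution. The inverse-frequency formula w_c = N / (C · n_c) used below is an assumption (one common choice), as is monitoring a higher-is-better metric such as validation accuracy. The helper names are hypothetical.

```python
from collections import Counter


def inverse_frequency_weights(labels, classes):
    """w_c = N / (C * n_c): rarer classes get larger weights (assumed formula)."""
    counts = Counter(labels)
    missing = [cls for cls in classes if counts[cls] == 0]
    if missing:
        raise ValueError(f"classes absent from training labels: {missing}")
    n, c = len(labels), len(classes)
    return [n / (c * counts[cls]) for cls in classes]  # ordered like `classes`


class EarlyStopping:
    """Stop after `patience` epochs without improvement of a higher-is-better metric."""

    def __init__(self, patience=5):
        self.patience = patience
        self.best = float("-inf")
        self.bad_epochs = 0

    def step(self, val_metric):
        """Record one epoch's validation metric; return True when training should stop."""
        if val_metric > self.best:
            self.best = val_metric
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```

In PyTorch, the weight list would typically be converted with `torch.tensor(weights)` and passed as the `weight` argument of `nn.CrossEntropyLoss`, in class-index order.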

Figure 11 illustrates the overall architecture of our multimodal emotion recognition pipeline. In summary, the model integrates four distinct input modalities: grayscale full-body images, 3D body keypoints, contextual textual descriptions, and emotion-focused textual cues. Each modality is encoded through a dedicated neural network branch (MobileNetV2 CNN for images, KeypointEmotionTransformer for keypoints, and two parallel RoBERTa encoders for text inputs). These embeddings are then concatenated and passed through a fusion module followed by a classification head that predicts the emotional category. This design allows the model to effectively leverage complementary information from visual, spatial, and linguistic data sources, enhancing robustness to context manipulation.

5.2.3. Implementation Details

The proposed model was implemented in Python 3.10.12 using PyTorch 2.3.10 (https://pytorch.org/, accessed on 12 May 2025; supported by the PyTorch Foundation) for deep learning. All models were trained on a single NVIDIA RTX A4000 GPU (NVIDIA, Santa Clara, CA, USA) with 16 GB of memory.

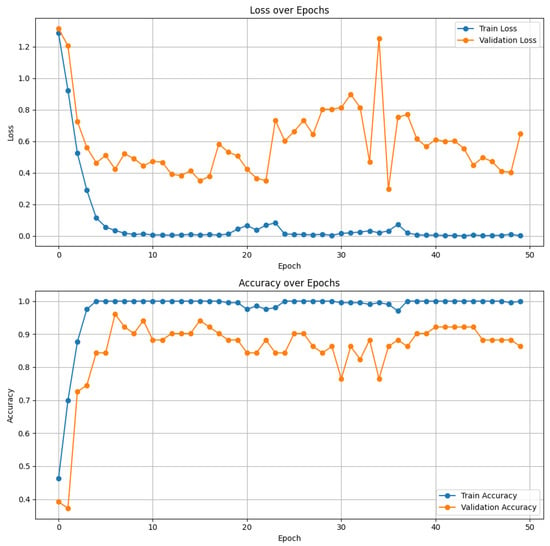

Training used 80% of the BEAST samples, with the remaining 20% held out for validation. Loss and accuracy trends over 50 epochs showed that the fusion model consistently outperforms single-modality baselines. Validation accuracy peaked at 96.31%, indicating that adding structured body data and textual cues that contrast the person’s emotion with the background can effectively mitigate contextual bias and guide the model toward semantically relevant information.

This fusion pipeline directly addresses the challenge identified in our synthetic background experiments, where emotion predictions based solely on visual context proved unreliable. By combining learned representations from language, pose, and image appearance, our model forms a more holistic and stable understanding of the subject’s emotional state, which should align better with human judgment in ambiguous scenarios.

6. Results

To quantitatively evaluate the effectiveness of our multimodal fusion approach for emotion recognition, we compared three scenarios on the BEAST-derived dataset:

- Context-only: Using only the textual descriptions.

- Context–keypoints: Combining textual descriptions with body keypoints.

- Context–keypoints–images: Combining textual descriptions, body keypoints, and grayscale images.

6.1. Performance Metrics

To assess performance across the different scenarios, we report three standard classification metrics: precision, recall, and F1-score. These are computed as weighted averages over all emotion classes to account for class imbalance in the datasets.

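Let $TP_c$, $FP_c$, and $FN_c$ denote the number of true positives, false positives, and false negatives for class $c$, respectively. Let $w_c$ be the proportion of samples belonging to class $c$. Then, the metrics are defined as follows:

- Precision measures the proportion of correctly predicted samples among all samples predicted as class $c$:

$$\mathrm{Precision}_c = \frac{TP_c}{TP_c + FP_c}$$

- Recall measures the proportion of correctly predicted samples among all actual samples of class $c$:

$$\mathrm{Recall}_c = \frac{TP_c}{TP_c + FN_c}$$

- F1-score is the harmonic mean of precision and recall for class $c$:

$$\mathrm{F1}_c = \frac{2 \cdot \mathrm{Precision}_c \cdot \mathrm{Recall}_c}{\mathrm{Precision}_c + \mathrm{Recall}_c}$$

We report the weighted average of each metric across all $C$ classes:

$$\mathrm{Metric}_{\mathrm{weighted}} = \sum_{c=1}^{C} w_c \, \mathrm{Metric}_c, \qquad \mathrm{Metric} \in \{\mathrm{Precision}, \mathrm{Recall}, \mathrm{F1}\}$$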

This formulation ensures that each class contributes proportionally to its frequency in the dataset, mitigating the influence of class imbalance in the final performance scores.
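The weighted metrics above can be computed directly from predicted and true labels. The plain-Python sketch below uses a hypothetical function name. Scikit-learn’s `precision_recall_fscore_support` with `average="weighted"` computes the same quantities. Classes that are never predicted receive a precision of zero.

```python
from collections import Counter


def weighted_prf(y_true, y_pred):
    """Support-weighted precision, recall, and F1 over the classes present in y_true."""
    n = len(y_true)
    support = Counter(y_true)
    precision = recall = f1 = 0.0
    for c in sorted(support):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        p_c = tp / (tp + fp) if tp + fp else 0.0
        r_c = tp / (tp + fn) if tp + fn else 0.0
        f_c = 2 * p_c * r_c / (p_c + r_c) if p_c + r_c else 0.0
        w_c = support[c] / n  # proportion of samples in class c
        precision += w_c * p_c
        recall += w_c * r_c
        f1 += w_c * f_c
    return precision, recall, f1
```

Weighted recall always equals overall accuracy, since each class’s recall $TP_c / n_c$ is multiplied by $n_c / N$. This is why the weighted recall reported below coincides with accuracy.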

6.2. Experimental Results on BEAST

Table 1 summarizes the final evaluation metrics on the validation set after training for 50 epochs. We report weighted precision, recall, and F1-score for each scenario.

Table 1.

Validation metrics for different modality combinations on BEAST. Metrics are weighted averages over the four emotion classes.

While the final fusion model achieves an F1-score of 0.963, this performance cannot be explained by the visual input or the keypoints alone. As discussed in Section 4.2.1, using only the blurred full-body image together with a basic textual description yields an accuracy of just 55%. This shows that the image modality in isolation has limited discriminative power, primarily because faces are blurred and body posture is ambiguous without contextual grounding.

The substantial improvement instead arises from the synergistic integration of three complementary sources of information: (1) Body keypoints provide a structured, high-level abstraction of the subject’s pose and movement, which are strongly correlated with affective states (e.g., slouched posture indicating sadness, expanded arms suggesting happiness). These features are invariant to appearance and background, offering robust cues that purely visual models may overlook. (2) Rich textual descriptions, both contextual and emotion-focused, inject semantic and situational understanding that helps disambiguate visually similar body configurations (e.g., crouching due to fear vs. crouching in play). (3) Grayscale images contribute holistic visual structure, including spatial layout, body shape, and contextual grounding beyond what keypoints can express, even in the absence of facial details.

From an information-theoretic perspective, each modality provides a partially independent view of the latent emotional state. The fusion model benefits from cross-modal redundancy and complementarity, which allow it to resolve ambiguities present in any single modality; we attribute the observed gain in generalization to this complementarity.

Figure 12 visualizes the training process over 50 epochs. The top plot shows the evolution of training and validation loss, while the bottom plot presents the corresponding accuracy trends. The training loss converges rapidly, and validation accuracy stabilizes at a high level despite occasional fluctuations, suggesting good generalization. The gap between the training and validation curves remains relatively small, indicating that the model avoids overfitting even with the increased capacity of the multiple modality encoders. The final validation accuracy of approximately 96% agrees with the scores reported in the ablation experiments, reinforcing the robustness of the proposed pipeline.

Figure 12.

Training (blue) and validation (orange) loss (top plot) and accuracy (bottom plot) over 50 epochs for the full fusion model.

The results indicate that employing multiple modalities substantially improves the accuracy of emotional state prediction. While the context-only model achieves moderate performance, adding body keypoint information improves all metrics substantially, confirming that structural pose information is useful for emotion recognition.

The highest overall performance is obtained by the end-to-end fusion of grayscale images, keypoints, and context, which reaches a peak accuracy of 96.2%. This improvement shows the importance of combining spatial visual features from images with pose and text information, so that variations in the background have less influence and the learned representation captures the subject’s emotional expression.

The high F1-score of the fusion model indicates a good balance between precision and recall, i.e., consistent detection across all emotion categories. These findings support our hypothesis that combining multiple modalities makes emotion recognition systems more robust to context.

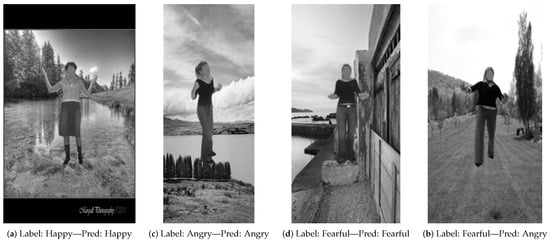

6.3. Qualitative Examples from BEAST

To complement the quantitative evaluation, we present qualitative examples of model predictions on the BEAST dataset in Figure 13. These samples illustrate both correct and incorrect classifications made by the full multimodal fusion model. The examples provide insights into the types of body expressions and contexts the model handles well, as well as challenging edge cases.

Figure 13.

Prediction examples on images synthesized from BEAST dataset using our multimodal fusion model. The first three examples illustrate success while the last is a failure.

6.4. Generalization to EMOTIC

To assess how well our fusion model generalizes beyond the BEAST dataset, we further evaluated the same three scenarios on a subset of the EMOTIC dataset. This subset includes only images in which at least 15 body keypoints of the person are visible. After applying this criterion, 13,194 of the original 34,320 individuals in EMOTIC remained, approximately 38% of the dataset. Table 2 presents the weighted precision, recall, and F1-score on the selected EMOTIC images.
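The keypoint-visibility criterion used to select the EMOTIC subset can be sketched as follows. Treating a keypoint as visible when its confidence is at least 0.5 is an assumption, since the text does not specify the threshold, and the helper name is hypothetical. The snippet also checks the reported retention ratio.

```python
def enough_visible_keypoints(keypoints, min_visible=15, conf_threshold=0.5):
    """True if at least `min_visible` keypoints have confidence >= conf_threshold.

    keypoints: sequence of (x, y, confidence, depth); the threshold is an assumption.
    """
    visible = sum(1 for _x, _y, conf, _depth in keypoints if conf >= conf_threshold)
    return visible >= min_visible


if __name__ == "__main__":
    kept, total = 13194, 34320  # counts reported for EMOTIC
    print(f"retained {kept}/{total} = {100 * kept / total:.1f}%")  # retained 13194/34320 = 38.4%
```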

Table 2.

Validation metrics for different modality combinations on a subset of EMOTIC. Metrics are weighted averages over the four emotion classes.

Figure 14 presents example predictions on EMOTIC. Despite increased domain variability, the multimodal model maintains high performance, further demonstrating its robustness.

Figure 14.

Examples of prediction on (original) EMOTIC images using the multimodal fusion model proposed here. Again, the first three show success, while the last is a failure.

Despite the domain shift and the greater variability of EMOTIC, the fusion model still performs well on all metrics, reaching an F1-score of 91.5%. The consistent improvement across both datasets further supports the effectiveness of our multimodal architecture.

The relatively good performance of the context-only model on EMOTIC further suggests that the textual descriptions for EMOTIC are richer and more emotionally descriptive than those for BEAST, in line with prior observations regarding the annotation style of EMOTIC. Overall, the results across datasets support the conclusion that our method generalizes well and retains its advantages in a more diverse and challenging real-world setting.

The performance results presented in Table 2 are not directly comparable to those reported by Xenos et al. [25]. The primary reason is that our approach requires a sufficient number of visible keypoints for each person, a condition satisfied by only a subset of EMOTIC images. A secondary reason is that the EMOTIC emotion categories must be mapped onto the four BEAST classes, which restricts the evaluation to a further subset of images. When the previous method is evaluated on this filtered set, its mean Average Precision (mAP) increases from the originally reported 38.52% (over the entire dataset with 26 classes) [25] to 84.12% (on the selected subset with 4 aggregated classes), corresponding to an F1-score of 82.37%.

On this subset of images with adequate keypoint visibility and matched labels, our approach outperforms the previous solution [25] by approximately 9 F1 points, as shown in Table 3, confirming the advantage of integrating multiple modalities.

Table 3.

Comparison between the solution of Xenos et al. [25] and the proposed method on the filtered EMOTIC subset with at least 15 visible keypoints and four aggregated emotion classes.

7. Discussion

Our initial experiments revealed that state-of-the-art Vision–Language Models (VLMs) exhibit a clear contextual bias in emotion recognition tasks. Despite advances in multimodal perception, they over-rely on environmental information at the expense of correctly interpreting the subject’s emotional state, particularly when confronted with ambiguous expressions, mismatched scene–emotion pairs, or synthetically generated images.

We examine this bias and its consequences in detail below: we first analyze how VLM predictions change when the context is manipulated, and then assess whether textual prompting can mitigate this effect.

7.1. Contextual Bias and Its Implications

The finding that, in 93% of cases, the same body pose received a different emotion label depending on the background underscores how strongly VLMs rely on contextual information. Although this may mirror human tendencies in everyday image understanding, such heavy weighting of context raises serious concerns for critical applications such as mental health assessment, human–machine interaction, and security, where emotion recognition should be grounded in the individual rather than in the scene.
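One plausible way to compute a background-sensitivity rate such as the 93% figure is sketched below. It assumes that each pose is rendered on several backgrounds and counts the pose as "changed" if the predicted emotions are not all identical. The function name `background_sensitivity` and the input layout are hypothetical, and the paper's exact counting protocol may differ (for instance, it may compare only the original background with a single swapped one).

```python
def background_sensitivity(predictions: dict[str, list[str]]) -> float:
    """Fraction of poses whose predicted emotion is not the same on every background.

    `predictions` maps a pose/person id to the labels a model predicted for that
    same pose composited onto different backgrounds (original one included).
    """
    if not predictions:
        raise ValueError("predictions must not be empty")
    changed = sum(1 for labels in predictions.values() if len(set(labels)) > 1)
    return changed / len(predictions)


# Toy VLM outputs (illustrative only).
preds = {
    "pose_1": ["fear", "happiness", "fear"],
    "pose_2": ["anger", "anger"],
    "pose_3": ["sadness", "happiness"],
}
print(f"{background_sensitivity(preds):.1%}")  # 66.7%
```

Because body pose is held fixed across the composited images, any variation in the predicted label for a given pose can be attributed to the background (or to sampling noise in the model, which a careful protocol would control for).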

7.2. Prompting Limitations

Although improved prompting (e.g., instructing the model to ignore the background when the image may be composited) slightly increased prediction consistency, it did not eliminate context-driven variability. This suggests that, with current VLMs, visual attention cannot be fully controlled through text prompts alone. Relying solely on zero-shot or prompt-engineered inference is therefore inadequate for tasks that demand faithful and fine-grained emotion interpretation.

Taken together, these results indicate that while VLMs show promise in general visual understanding, they lack the capacity for consistent, subject-focused emotion interpretation. This motivates the development of architectures that can disentangle contextual cues from intrinsic emotional signals, as we propose in our multimodal framework.

8. Conclusions

This paper examined the limits of VLMs in emotion recognition, in particular their susceptibility to contextual bias. We found that, for the same body pose, the perceived emotion changed in over 90% of cases when the person was placed against different backgrounds. Even when explicitly instructed to attend to the subject and ignore the surroundings, VLMs remained context-sensitive, which is problematic for synthetic imagery, ambiguous settings, and clinical use where background and emotion may be mismatched.

To mitigate this, we proposed a robust multimodal emotion detection pipeline that combines structured body keypoints, grayscale body imagery, and two textual inputs: a context description and an emotion–context discrepancy description. In experiments on synthetically varied versions of the BEAST and EMOTIC datasets, our method produced more stable predictions, achieving over 96% accuracy and substantially outperforming single-modality baselines.
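A minimal sketch of how the four input streams could be combined is shown below, using concatenation followed by a single linear layer and a softmax over four emotion classes. This is an assumption for illustration only: the embedding sizes in `dims`, the random weights, and concatenation-based fusion itself are hypothetical, and the actual BECKI fusion layer, as well as the upstream encoders that would produce each embedding, may differ.

```python
import math
import random

NUM_CLASSES = 4  # assumed: anger, fear, happiness, sadness


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_and_classify(streams: list[list[float]],
                      weights: list[list[float]],
                      bias: list[float]) -> list[float]:
    """Concatenate per-stream embeddings, apply one linear layer, then softmax."""
    fused = [x for stream in streams for x in stream]
    for row in weights:
        if len(row) != len(fused):
            raise ValueError("weight row size does not match fused feature size")
    logits = [sum(w * x for w, x in zip(row, fused)) + b
              for row, b in zip(weights, bias)]
    return softmax(logits)


# HYPOTHETICAL embedding sizes: 17 keypoints x (x, y) = 34 values, plus small
# stand-ins for the grayscale body image and the two text descriptions.
dims = {"keypoints": 34, "body_image": 16, "context_text": 16, "discrepancy_text": 16}
rng = random.Random(0)
streams = [[rng.uniform(-1, 1) for _ in range(d)] for d in dims.values()]
fused_size = sum(dims.values())
weights = [[rng.gauss(0, 0.1) for _ in range(fused_size)] for _ in range(NUM_CLASSES)]
bias = [0.0] * NUM_CLASSES

probs = fuse_and_classify(streams, weights, bias)
print([round(p, 3) for p in probs])
```

Concatenation keeps each modality's features separate and leaves it to the trained classifier to learn how much weight the discrepancy stream should receive relative to the context stream; in a trained model the weights would be learned rather than random.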

Our results confirm that emotion perception benefits from structured, contrastive, and multimodal integration. By explicitly disentangling and balancing subject-level and contextual cues, we move toward context-resilient emotion understanding, which is critical for real-world applications in affective computing, psychological monitoring, and human–AI interaction.

Future Work. Future directions include applying our method to "in-the-wild" datasets with naturally occurring scene complexity, expanding the taxonomy of recognized emotions to cover more granular affective states, and incorporating temporal dynamics from video data to capture emotional transitions over time. These extensions will further enhance the realism and applicability of emotion recognition systems in diverse real-world environments.

Author Contributions

Conceptualization, C.-B.P. and C.F.; methodology, C.-B.P. and C.F.; software, C.-B.P.; validation, C.F. and L.F.; formal analysis, C.F.; investigation, C.-B.P., L.F. and C.F.; resources, C.-B.P. and C.F.; data curation, C.-B.P. and L.F.; writing—original draft preparation, C.-B.P.; writing—review and editing, C.F. and L.F.; visualization, C.-B.P. and C.F.; supervision, C.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data generated and/or analyzed during the current study are available from the corresponding authors on reasonable request. The original datasets are publicly available: the EMOTIC dataset at https://s3.sunai.uoc.edu/emotic/index.html and the BEAST dataset at http://www.beatricedegelder.com/beast.html (both accessed on 12 May 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| BEAST | Bodily Expressive Action Stimulus Test |
| BECKI | Body Emotion Context Keypoint Image |
| EMOTIC | EMOTions In Context |
| LLM | Large Language Model |
| RoBERTa | Robustly Optimized BERT Pre-Training Approach |
| ViT | Vision Transformer |
| VLM | Vision–Language Model |

References

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 8748–8763.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Li, J.; Li, D.; Savarese, S.; Hoi, S. BLIP-2: Bootstrapping language-image pre-training with frozen image encoders and large language models. In Proceedings of the International Conference on Machine Learning, PMLR, Honolulu, HI, USA, 23–29 July 2023; pp. 19730–19742.

- Kosti, R.; Alvarez, J.M.; Recasens, A.; Lapedriza, A. EMOTIC: Emotions in context dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 61–69.

- Buscemi, A.; Proverbio, D. ChatGPT vs. Gemini vs. LLaMA on multilingual sentiment analysis. arXiv 2024, arXiv:2402.01715.

- Welivita, A.; Pu, P. Are Large Language Models More Empathetic than Humans? arXiv 2024, arXiv:2406.05063.

- Schramowski, P.; Turan, C.; Andersen, N.; Rothkopf, C.A.; Kersting, K. Large pre-trained language models contain human-like biases of what is right and wrong to do. Nat. Mach. Intell. 2022, 4, 258–268.

- Navigli, R.; Conia, S.; Ross, B. Biases in large language models: Origins, inventory, and discussion. ACM J. Data Inf. Qual. 2023, 15, 1–21.

- Wang, H.; Qu, C.; Huang, Z.; Chu, W.; Lin, F.; Chen, W. VL-Rethinker: Incentivizing self-reflection of vision-language models with reinforcement learning. arXiv 2025, arXiv:2504.08837.

- Cheng, K.; Li, Y.; Xu, F.; Zhang, J.; Zhou, H.; Liu, Y. Vision-language models can self-improve reasoning via reflection. arXiv 2024, arXiv:2411.00855.

- Kamoi, R.; Zhang, Y.; Zhang, N.; Han, J.; Zhang, R. When can LLMs actually correct their own mistakes? A critical survey of self-correction of LLMs. Trans. Assoc. Comput. Linguist. 2024, 12, 1417–1440.

- De Gelder, B.; Van den Stock, J. The bodily expressive action stimulus test (BEAST). Construction and validation of a stimulus basis for measuring perception of whole body expression of emotions. Front. Psychol. 2011, 2, 181.

- Mauss, I.B.; Robinson, M.D. Measures of emotion: A review. Cogn. Emot. 2009, 23, 209–237.

- Mahfoudi, M.A.; Meyer, A.; Gaudin, T.; Buendia, A.; Bouakaz, S. Emotion expression in human body posture and movement: A survey on intelligible motion factors, quantification and validation. IEEE Trans. Affect. Comput. 2022, 14, 2697–2721.

- Karg, M.; Samadani, A.A.; Gorbet, R.; Kühnlenz, K.; Hoey, J.; Kulić, D. Body movements for affective expression: A survey of automatic recognition and generation. IEEE Trans. Affect. Comput. 2013, 4, 341–359.

- Tu, J.; Liu, M.; Liu, H. Skeleton-based human action recognition using spatial temporal 3D convolutional neural networks. In Proceedings of the 2018 IEEE International Conference on Multimedia and Expo (ICME), San Diego, CA, USA, 23–27 July 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6.

- Zhang, J.; Yin, Z.; Chen, P.; Nichele, S. Emotion recognition using multi-modal data and machine learning techniques: A tutorial and review. Inf. Fusion 2020, 59, 103–126.

- Yang, Z.; Narayanan, S.S. Analysis of emotional effect on speech-body gesture interplay. In Proceedings of the Interspeech, Singapore, 14–18 September 2014; pp. 1934–1938.

- Poria, S.; Cambria, E.; Hazarika, D.; Mazumder, N.; Zadeh, A.; Morency, L.P. Multi-level multiple attentions for contextual multimodal sentiment analysis. In Proceedings of the 2017 IEEE International Conference on Data Mining (ICDM), New Orleans, LA, USA, 18–27 November 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1033–1038.

- Mittal, T.; Guhan, P.; Bhattacharya, U.; Chandra, R.; Bera, A.; Manocha, D. EmotiCon: Context-aware multimodal emotion recognition using Frege’s principle. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 14234–14243.

- Ranganathan, H.; Chakraborty, S.; Panchanathan, S. Multimodal emotion recognition using deep learning architectures. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–9 March 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–9.

- Psaltis, A.; Kaza, K.; Stefanidis, K.; Thermos, S.; Apostolakis, K.C.; Dimitropoulos, K.; Daras, P. Multimodal affective state recognition in serious games applications. In Proceedings of the 2016 IEEE International Conference on Imaging Systems and Techniques (IST), Chania, Greece, 4–6 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 435–439.

- Ilyas, C.M.A.; Nunes, R.; Nasrollahi, K.; Rehm, M.; Moeslund, T.B. Deep Emotion Recognition through Upper Body Movements and Facial Expression. In Proceedings of the VISIGRAPP (5: VISAPP), Vienna, Austria, 8–10 February 2021; pp. 669–679.

- Bhattacharyya, S.; Wang, J.Z. Evaluating Vision-Language Models for Emotion Recognition. arXiv 2025, arXiv:2502.05660.

- Xenos, A.; Foteinopoulou, N.M.; Ntinou, I.; Patras, I.; Tzimiropoulos, G. VLLMs provide better context for emotion understanding through common sense reasoning. arXiv 2024, arXiv:2404.07078.

- Atkinson, A.P.; Tunstall, M.L.; Dittrich, W.H. Evidence for distinct contributions of form and motion information to the recognition of emotions from body gestures. Cognition 2007, 104, 59–72.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A robustly optimized BERT pretraining approach. arXiv 2019, arXiv:1907.11692.

- Etesam, Y.; Yalçın, Ö.N.; Zhang, C.; Lim, A. Contextual emotion recognition using large vision language models. In Proceedings of the 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Abu Dhabi, United Arab Emirates, 14–18 October 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 4769–4776.

- Gemini Team; Georgiev, P.; Lei, V.I.; Burnell, R.; Bai, L.; Gulati, A.; Tanzer, G.; Vincent, D.; Pan, Z.; Wang, S.; et al. Gemini 1.5: Unlocking multimodal understanding across millions of tokens of context. arXiv 2024, arXiv:2403.05530.

- Ramesh, A.; Pavlov, M.; Goh, G.; Gray, S.; Voss, C.; Radford, A.; Chen, M.; Sutskever, I. Zero-shot text-to-image generation. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 8821–8831.

- Ramesh, A.; Dhariwal, P.; Nichol, A.; Chu, C.; Chen, M. Hierarchical Text-Conditional Image Generation with CLIP Latents. arXiv 2022, arXiv:2204.06125.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).