Optimization of Non-Occupied Pixels in Point Cloud Video Based on V-PCC and Joint Control of Bitrate for Geometric–Attribute Graph Coding

Abstract

1. Introduction

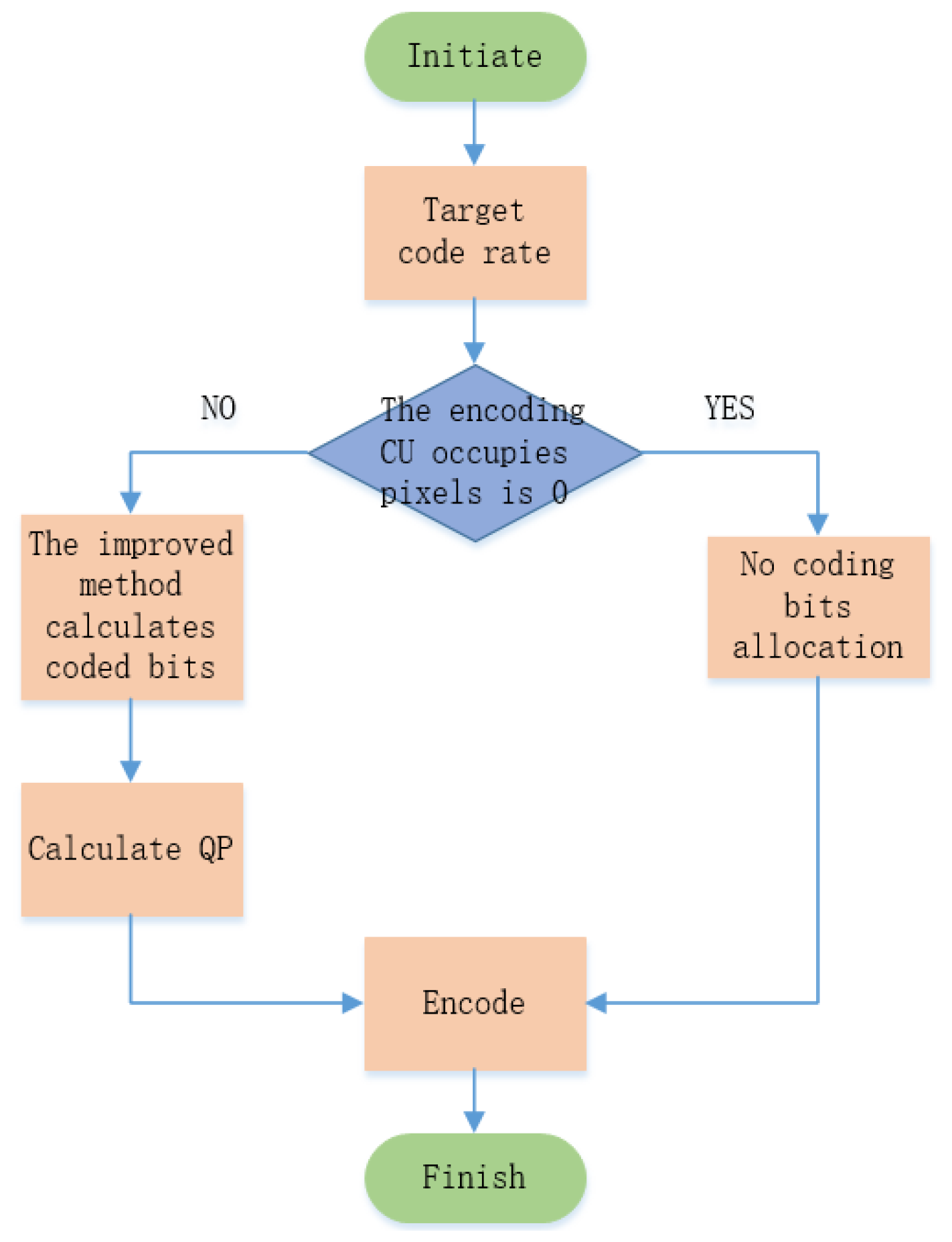

- For the geometric graph, this paper considers both whether a coding block contains unoccupied pixels and the proportion of its pixels that are occupied. By establishing how the bitrate and distortion of the geometric graph relate to the geometric distortion of the reconstructed point cloud, an improved bitrate weight allocation algorithm for geometric coding units is designed. In addition, no bitrate is allocated to coding blocks that consist entirely of unoccupied pixels, which saves encoding bits.

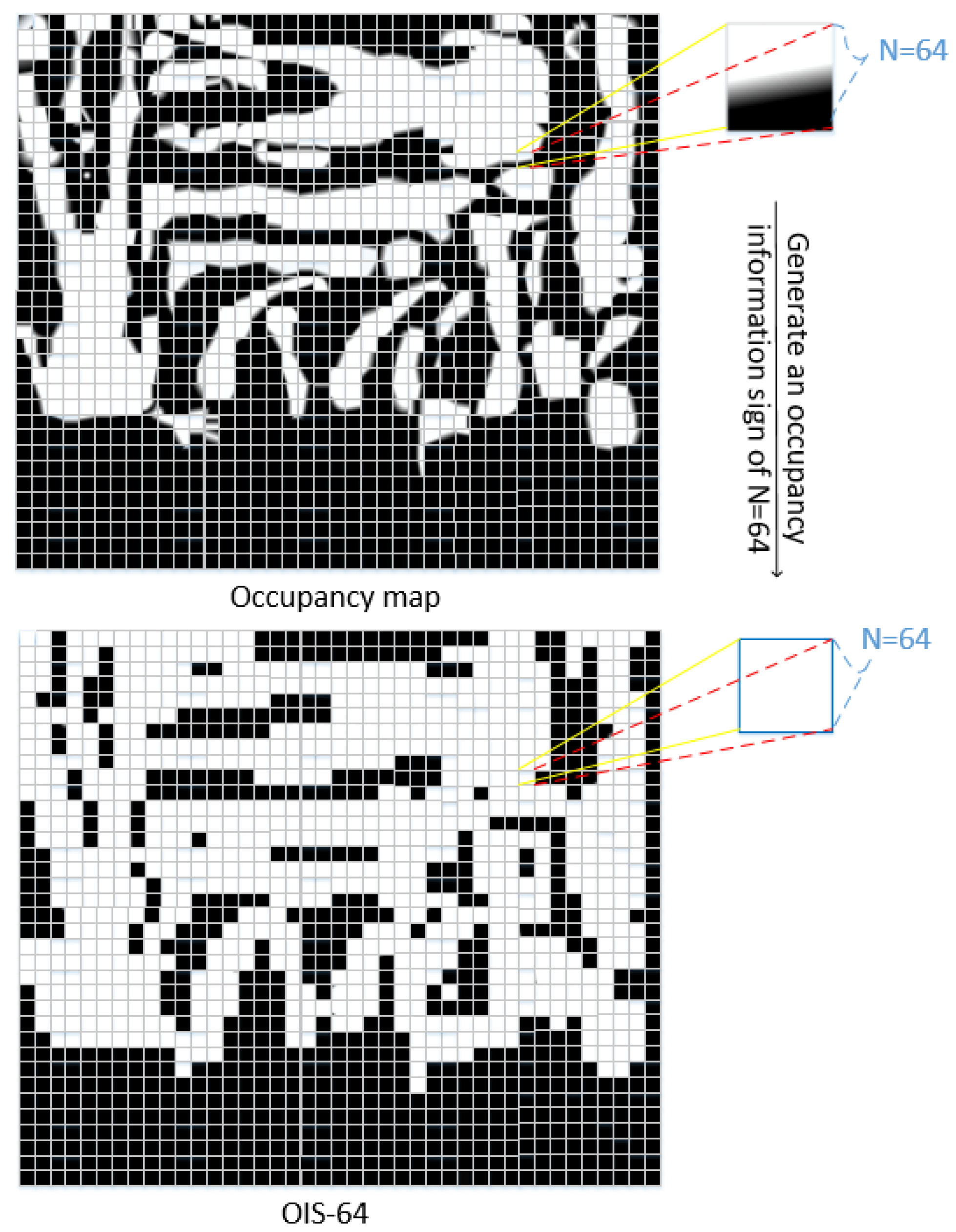

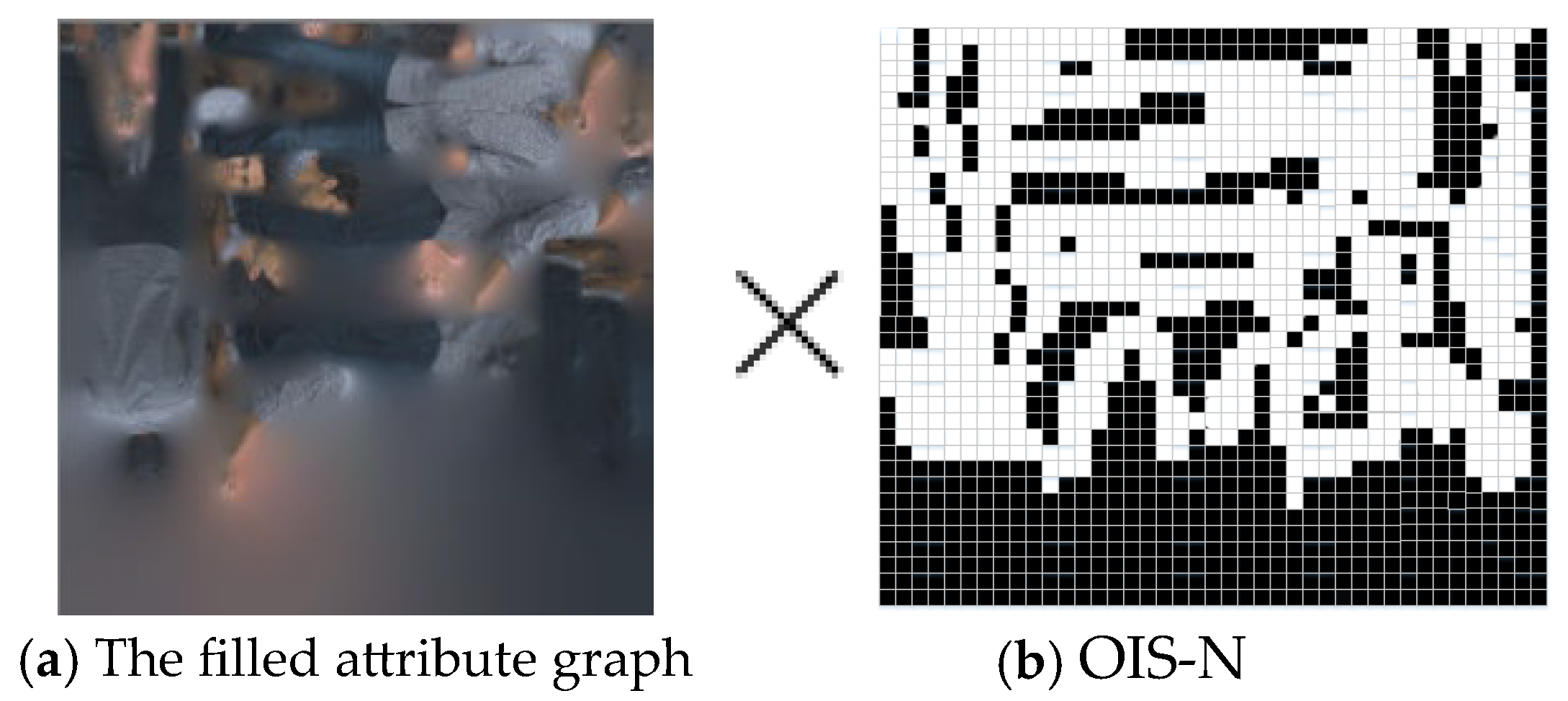

- For the attribute graph, the occupancy graph is used to derive a block-based representation of occupancy information. An occupancy information sign, OIS-N, is generated to nullify the invalid non-occupied pixel information of the attribute graph, and on this basis an improved algorithm for the attribute encoding input pixels is designed. Because control is applied at the encoder input, non-occupied pixels no longer waste bitrate resources, which further reduces the bitrate.
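The block-level occupancy idea behind OIS-N can be illustrated with a minimal sketch. It rests on assumptions that are not taken from the paper: the occupancy graph is a 0/1 grid, an N×N block is flagged as occupied (flag 1) when it contains at least one occupied pixel, and "nullifying" is modeled by overwriting every pixel of a fully non-occupied block with a constant fill value (128, a hypothetical choice for 8-bit samples) so that the encoder spends very few bits on it. The function names `ois_flags` and `void_unoccupied` are illustrative only.

```python
from typing import List

Grid = List[List[int]]


def ois_flags(occupancy: Grid, n: int) -> Grid:
    """One flag per N x N block: 1 if the block holds any occupied pixel, else 0.

    Partial blocks at the right and bottom borders are included.
    """
    h, w = len(occupancy), len(occupancy[0])
    rows, cols = (h + n - 1) // n, (w + n - 1) // n
    flags = [[0] * cols for _ in range(rows)]
    for y in range(h):
        for x in range(w):
            if occupancy[y][x]:
                flags[y // n][x // n] = 1
    return flags


def void_unoccupied(attribute: Grid, occupancy: Grid, n: int, fill: int = 128) -> Grid:
    """Return a copy of the attribute image in which every pixel of a
    fully non-occupied N x N block is replaced by a constant fill value."""
    flags = ois_flags(occupancy, n)
    out = [row[:] for row in attribute]  # leave the input image untouched
    for y, row in enumerate(out):
        for x in range(len(row)):
            if flags[y // n][x // n] == 0:
                row[x] = fill
    return out


occ = [[1, 0, 0, 0],
       [0, 0, 0, 0],
       [0, 0, 0, 0],
       [0, 0, 0, 1]]
print(ois_flags(occ, 2))  # [[1, 0], [0, 1]]
```

Filling whole blocks with one constant keeps them trivially predictable; whether the paper voids whole blocks or individual pixels inside occupied blocks is not stated here.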

2. Related Work

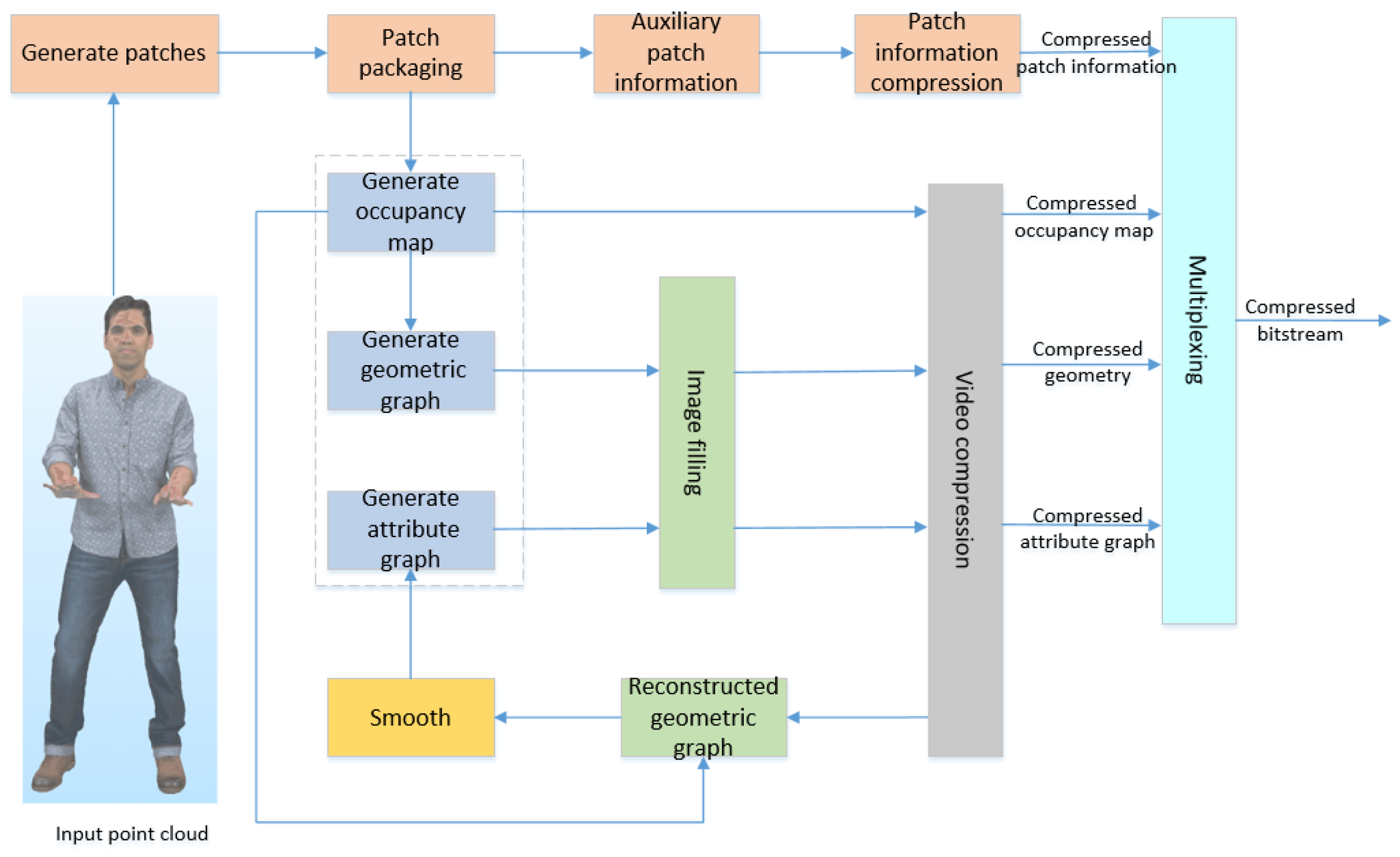

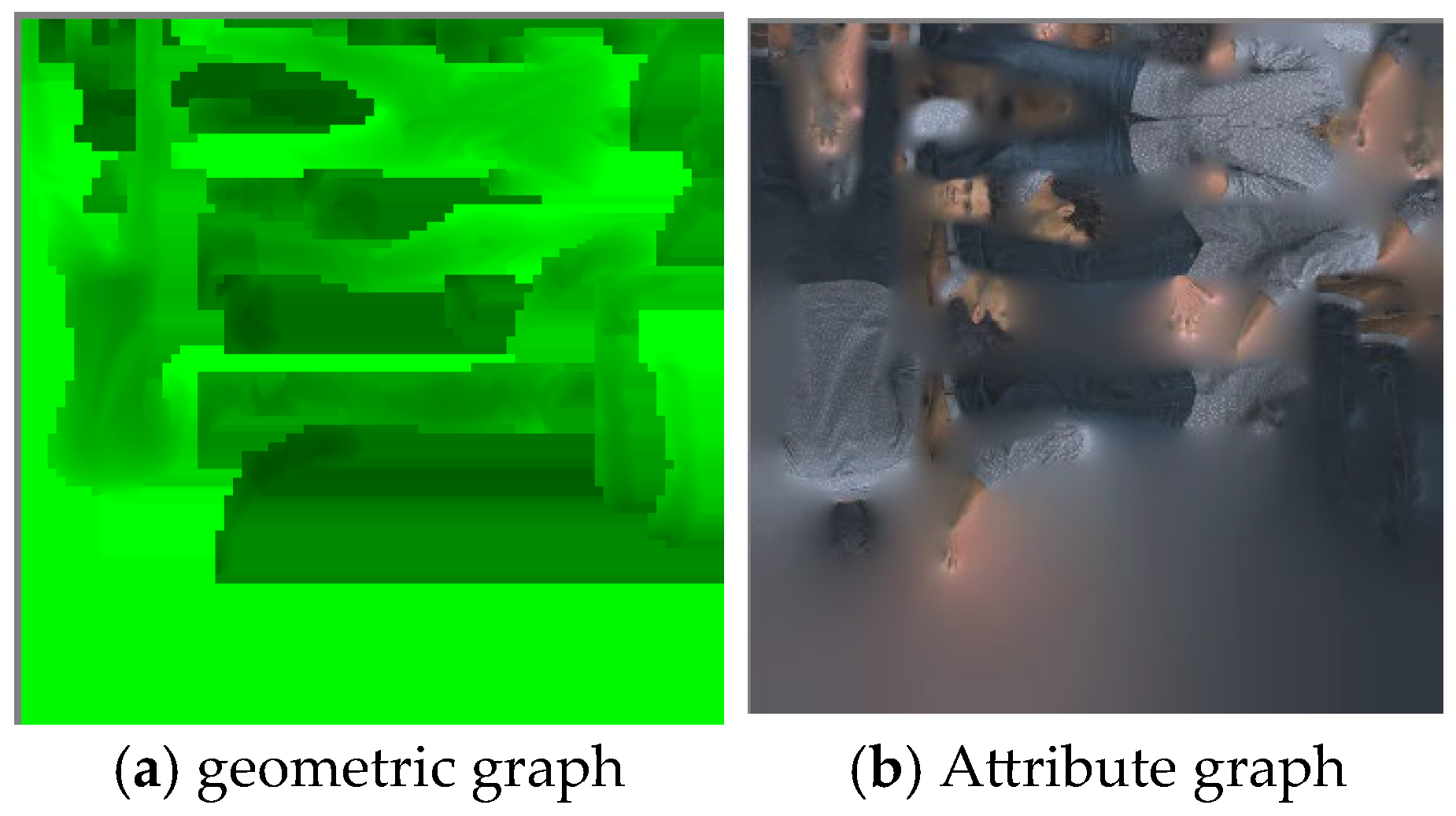

2.1. V-PCC Coding Method

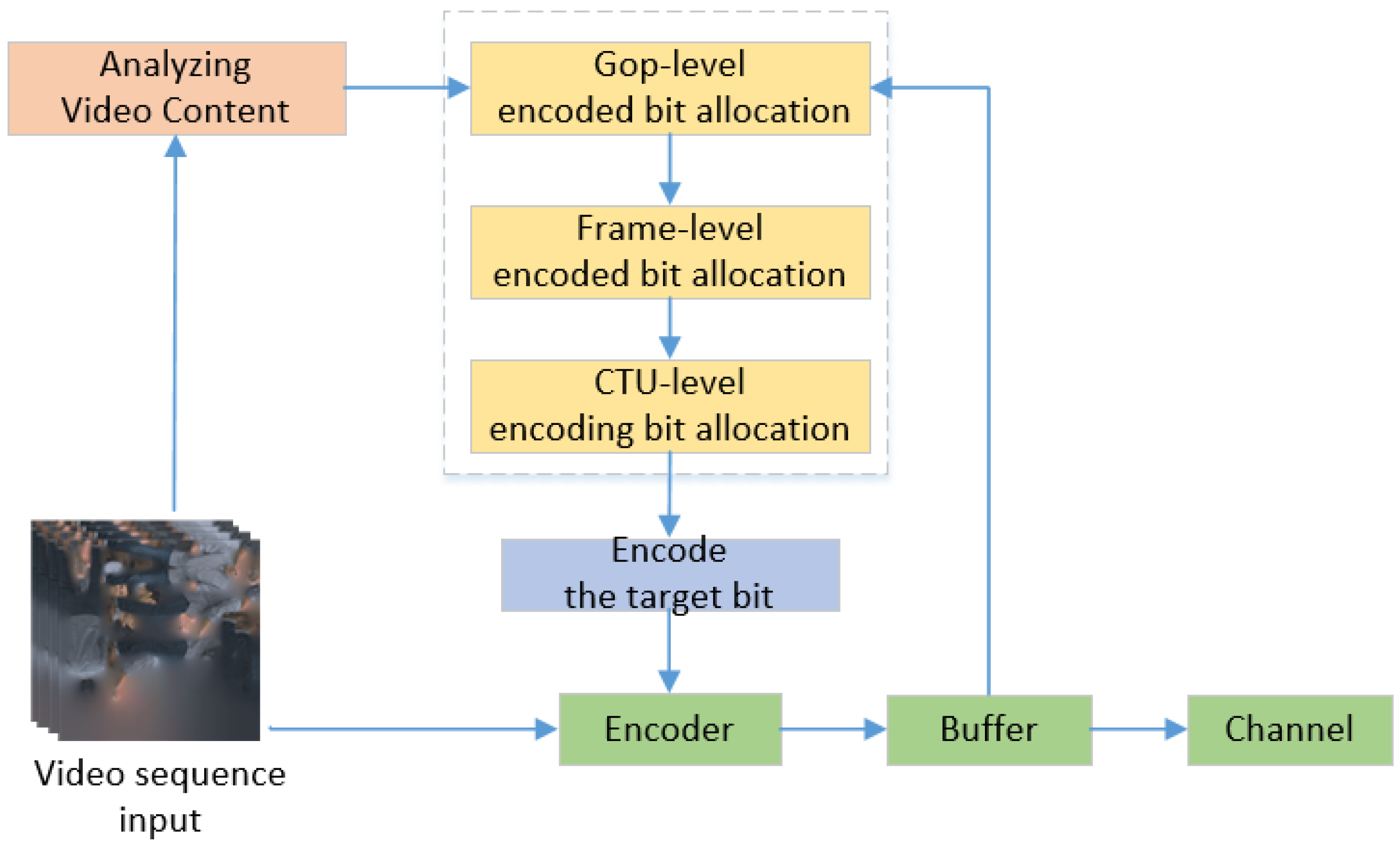

2.2. Bitrate Control Technology

2.3. Research on Coding Control

3. The Proposed Method

3.1. Optimization of Geometric Graph Encoding Based on Occupied Pixels

3.1.1. Principle of Bitrate Control

3.1.2. Optimization Ideas for Geometric Graph Coding

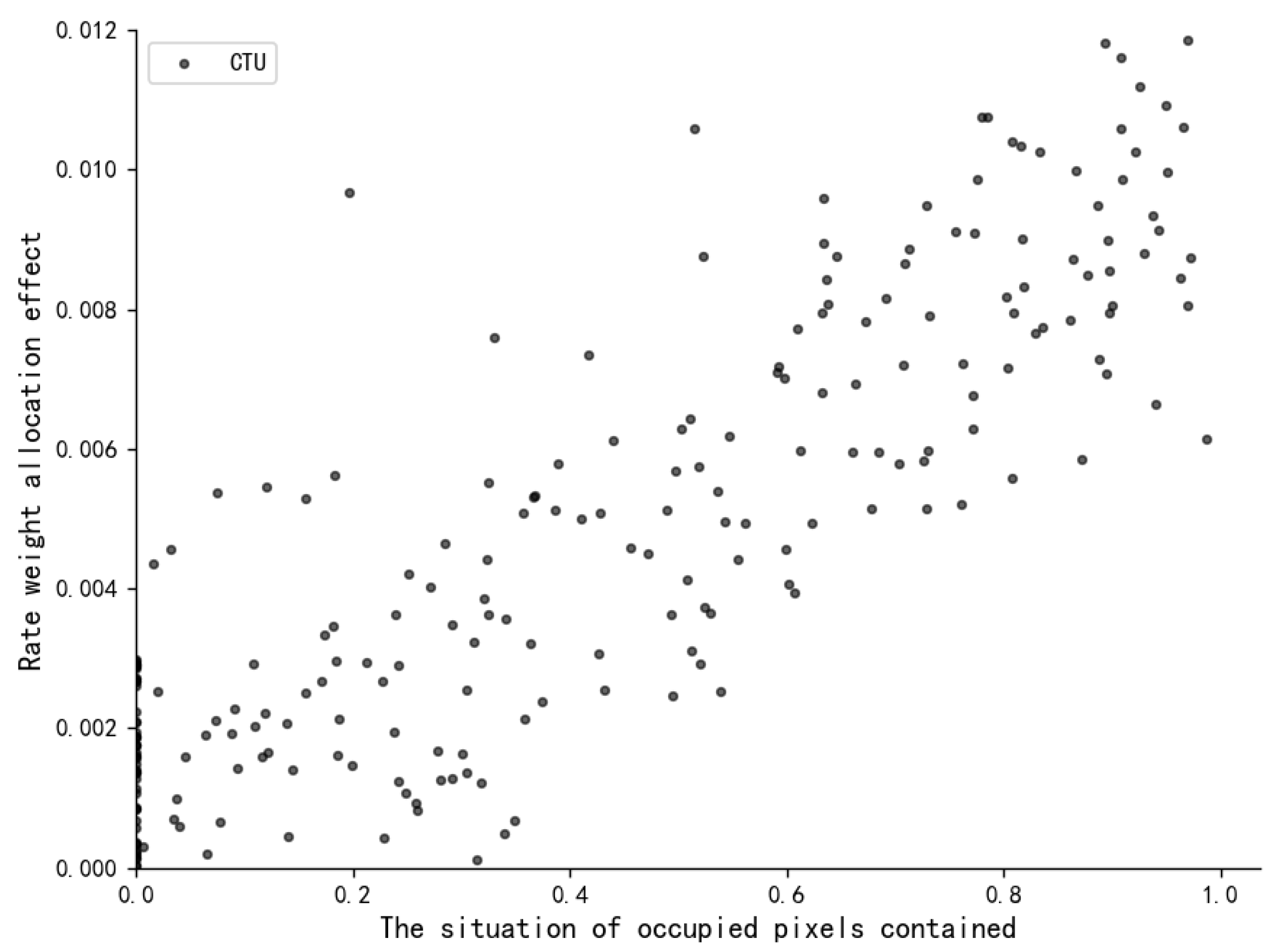

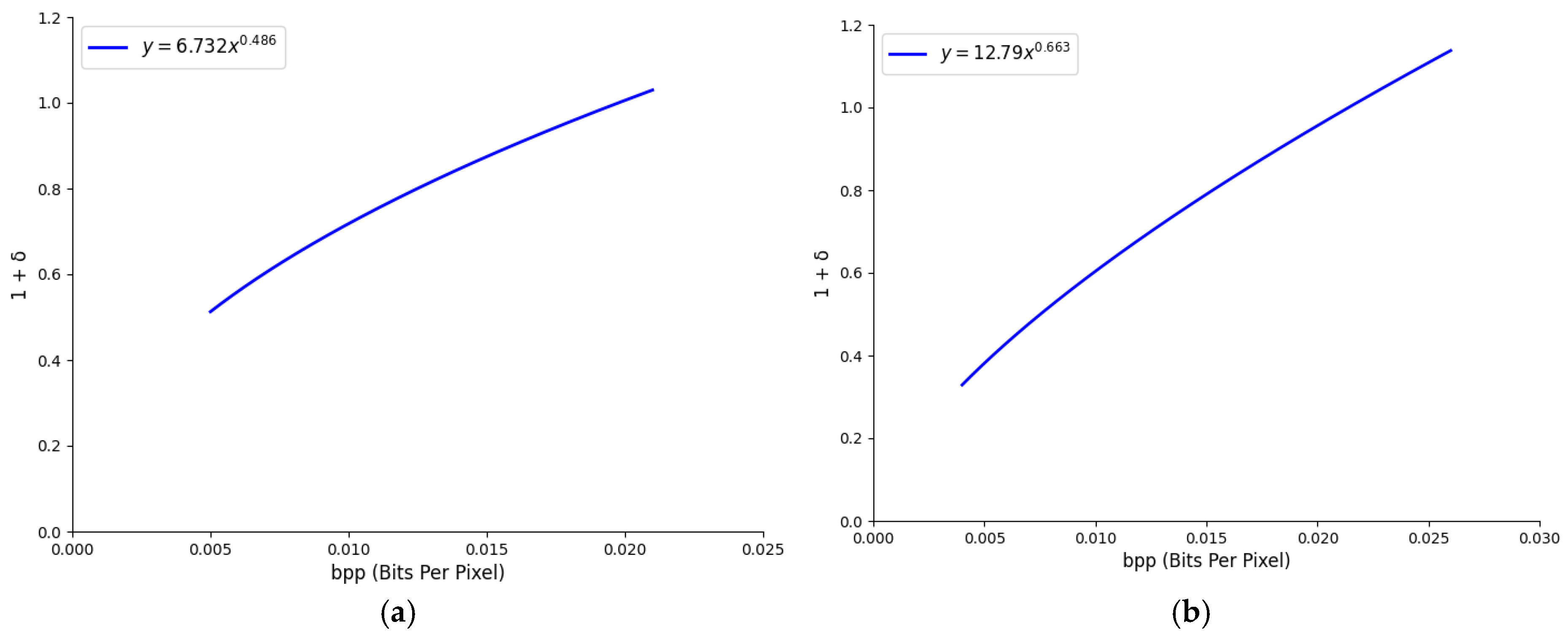

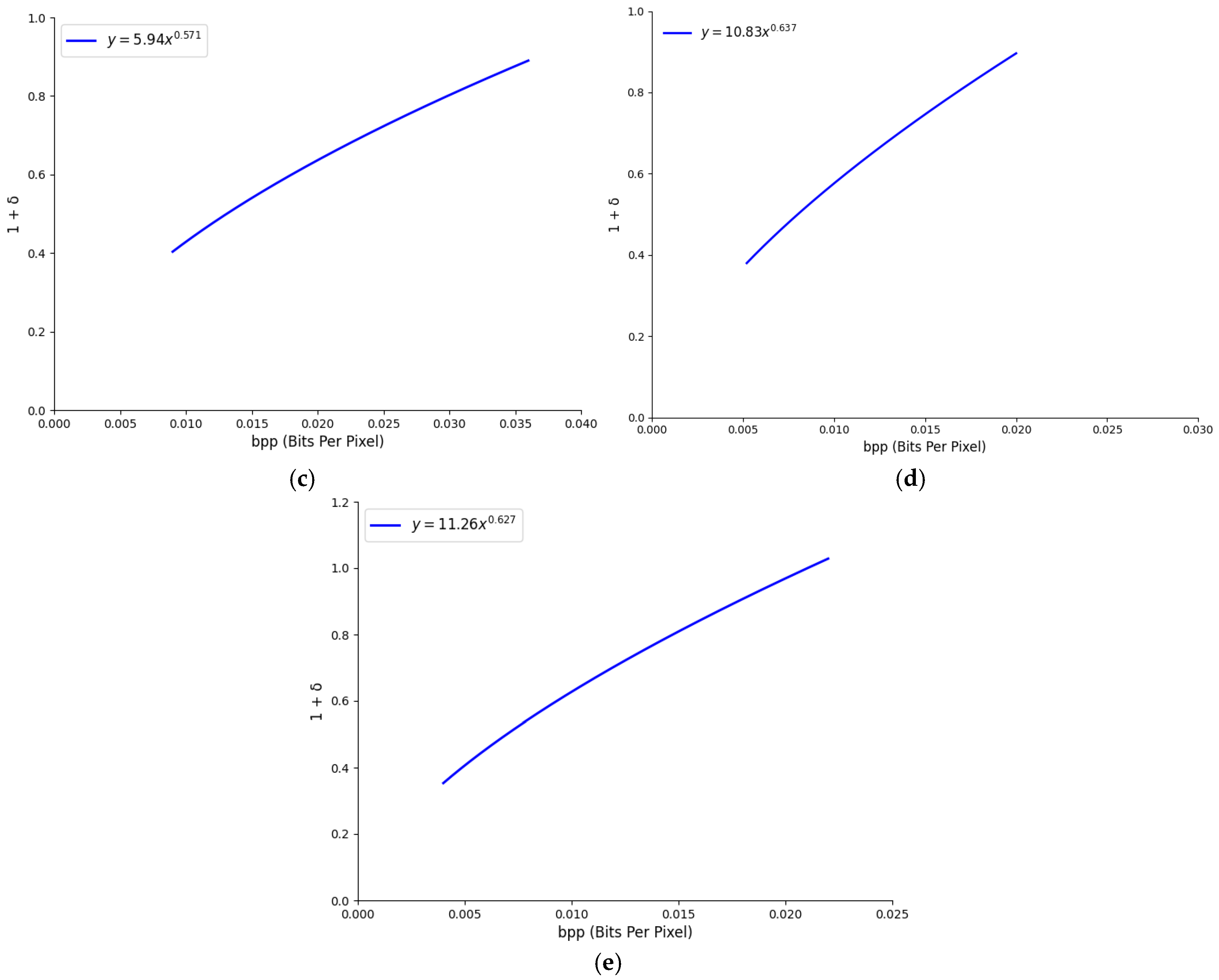

3.1.3. R-λ Optimization Based on Occupied Pixels

3.2. Optimization of Attribute Graph Encoding Based on Occupied Pixels

3.2.1. Obtain the Occupancy Information Sign OIS-N

3.2.2. Attribute Graph Encoding Input Pixel Improvement Algorithm

4. Experiments and Discussion

4.1. Experimental Setup

4.2. Obtain Appropriate Encoding Parameters

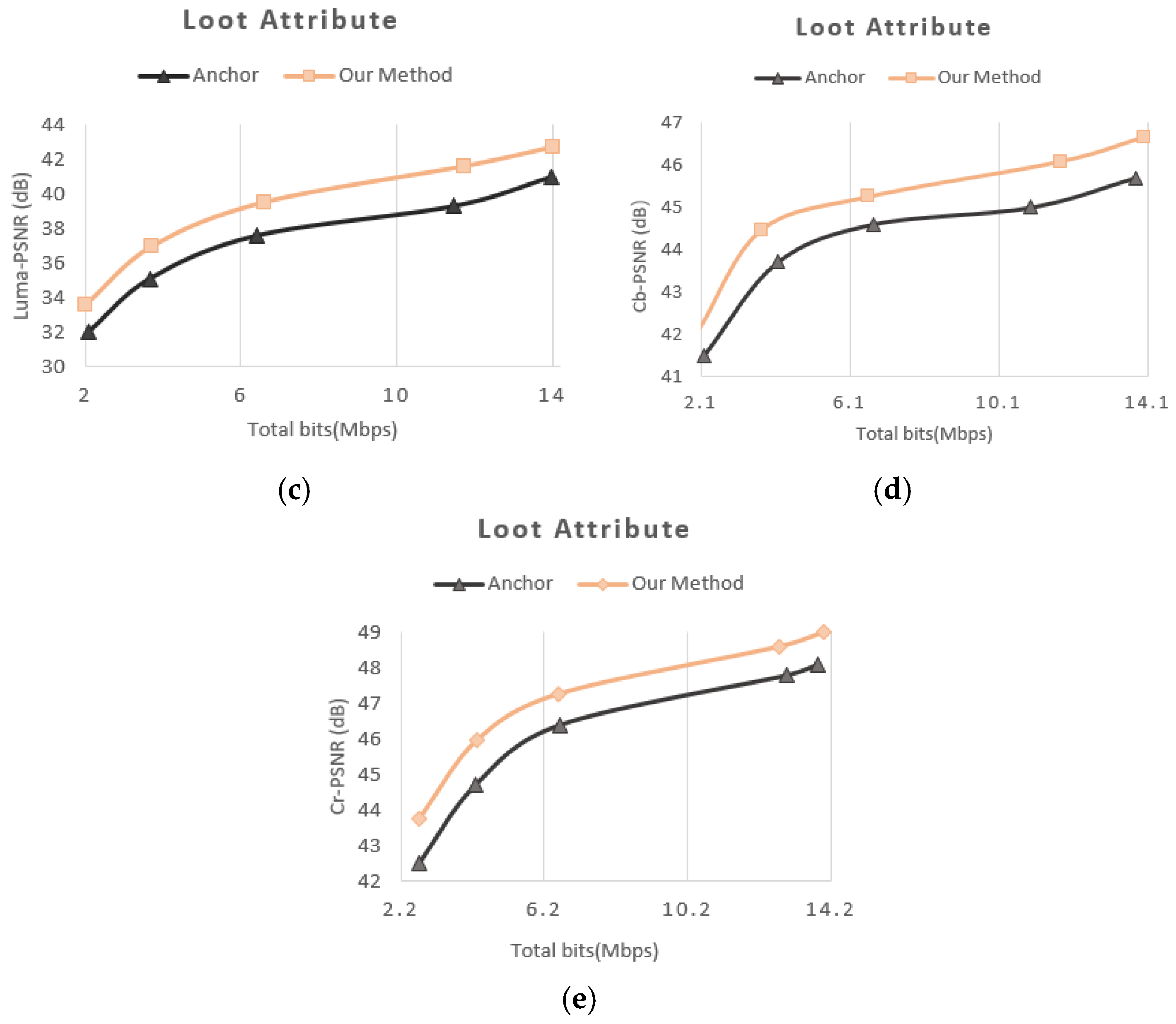

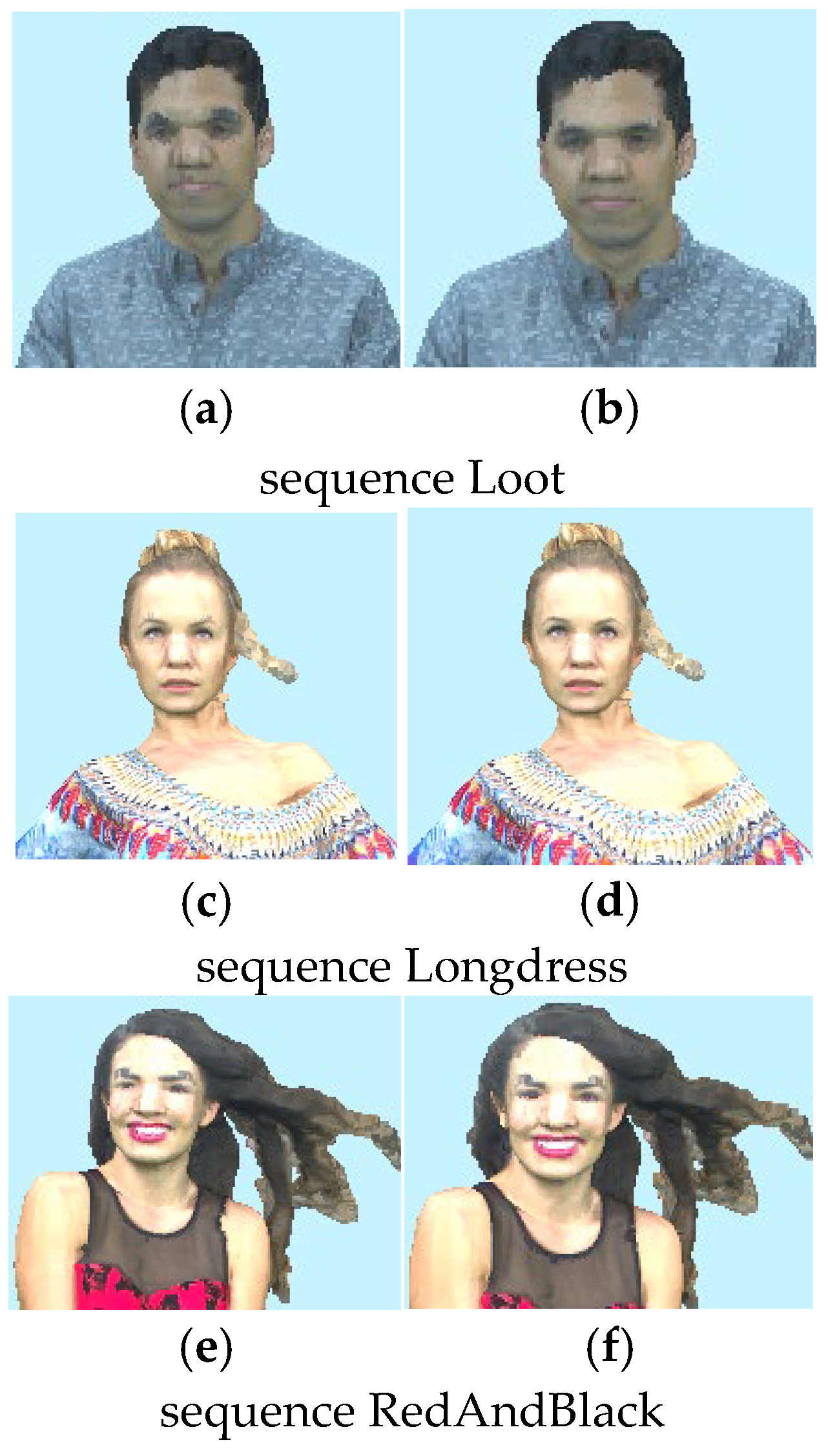

4.3. Overall Experimental Results and Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| V-PCC | Video-based Point Cloud Compression |
| G-PCC | Geometry-based Point Cloud Compression |
| H.264/AVC | Advanced Video Coding |
| H.265/HEVC | High Efficiency Video Coding |
| H.266/VVC | Versatile Video Coding |
| CTU | Coding Tree Unit |
| OIS | Occupancy Information Flag |


| Step | Description |
|---|---|
| 1. Input parameters | Obtain the current frame-level target bits, the bits used to encode the header information, the bits already used for the current frame, the prediction distortion D of the current coding block, the frame prediction error Dp, the number of occupied pixels Np in the frame, and the number N of occupied pixels in the current CTU. |
| 2. Determine the pixel occupancy of the current CU | If N = 0, no encoding bits are allocated: set QP to 51 and proceed to Step 7. Otherwise, proceed to Step 3 for R-λ optimization based on occupied pixels. |
| 3. R-λ optimization based on occupied pixels | Calculate the occupied-pixel distortion according to Formula (6), then obtain the bit allocation weight of the occupied block from Formula (5). Finally, calculate the bitrate weight improvement allocation coefficient by Formula (8). |
| 4. Obtain the occupied-pixel distortion impact factor (combined with experimentally fitted parameters) | Collect statistics based on Formulas (13) and (14) and use them to fit Formula (18). The values of the two parameters are then obtained from the fitting results, which determines the occupied-pixel distortion impact factor. |
| 5. Calculate the optimized bitrate allocation | Substitute the updated bitrate weight improvement allocation coefficient into Formula (1) and calculate the optimized encoding bit allocation. |
| 6. Calculate the quantization parameter QP | Obtain the encoding parameter λ from the actual bits, then calculate the quantization parameter QP from λ using the λ–QP relationship. |
| 7. Perform coding | Encode the current block with the optimized QP value. |
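The control flow of the seven steps above can be sketched as follows, under two loud assumptions. First, each CTU's weight is simply its share of the frame's occupied pixels, N/Np, a hypothetical stand-in for Formulas (5), (6), (8) and (18), which are not reproduced here. Second, λ and QP follow the HM-style R-λ model, λ = α·bpp^β and QP = 4.2005·ln λ + 13.7122, with HM's default initial values α = 3.2003 and β = −1.367 assumed. Only the empty-CTU rule of Step 2 (no bits, QP 51) is taken directly from the table; `allocate_ctu_bits` and `qp_from_bits` are illustrative names.

```python
import math
from typing import List, Tuple

# Assumed HM-style R-lambda parameters (default initial alpha and beta).
ALPHA, BETA = 3.2003, -1.367
QP_SKIP = 51  # Step 2: CTUs without occupied pixels get no bits and the maximum QP


def qp_from_bits(bits: float, pixels: int) -> int:
    """Step 6: bits -> bpp -> lambda -> QP, clipped to the HEVC range [0, 51]."""
    bpp = bits / pixels
    lam = ALPHA * bpp ** BETA  # lambda = alpha * bpp^beta
    qp = math.floor(4.2005 * math.log(lam) + 13.7122 + 0.5)
    return max(0, min(51, qp))


def allocate_ctu_bits(frame_target: float, header_bits: float,
                      occupied: List[int], ctu_pixels: int) -> List[Tuple[float, int]]:
    """Return (bits, QP) for each CTU.

    `occupied` holds the occupied-pixel count N of each CTU. The weight N / Np
    is a hypothetical stand-in for the paper's weighting formulas.
    """
    budget = max(frame_target - header_bits, 0.0)
    n_p = sum(occupied)  # Np: occupied pixels in the whole frame
    plan = []
    for n in occupied:
        if n == 0 or budget == 0:
            plan.append((0.0, QP_SKIP))  # Step 2: skip fully unoccupied CTUs
            continue
        bits = budget * n / n_p  # Steps 3-5 (simplified weighting)
        plan.append((bits, qp_from_bits(bits, ctu_pixels)))
    return plan


print(allocate_ctu_bits(10_000, 1_000, [0, 1024, 3072], 64 * 64))
```

Because the empty CTU receives no share of the budget, the bits it would otherwise have consumed flow to the occupied CTUs, which then receive lower QPs.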

| Bitrate | Geometric QP | Attribute QP |
|---|---|---|
| r5 | 16 | 22 |
| r4 | 20 | 27 |
| r3 | 24 | 32 |
| r2 | 28 | 37 |
| r1 | 32 | 42 |

| Metric | Component | N = 64 | N = 32 | N = 16 | N = 8 |
|---|---|---|---|---|---|
| Geom.BD-GeomRate | D1 | 0.0 | 0.0 | 0.0 | 0.0 |
| | D2 | 0.0 | 0.0 | 0.0 | 0.0 |
| Geom.BD-TotalRate | D1 | −0.7 | 0.0 | 1.6 | 5.7 |
| | D2 | −0.8 | −0.1 | 1.3 | 4.9 |
| End-to-End BD-AttrRate | Luma | −1.6 | 0.7 | 5.1 | 14.5 |
| | Cb | −2.1 | 0.9 | 8.4 | 29.4 |
| | Cr | −3.0 | 0.3 | 5.6 | 22.7 |
| End-to-End BD-TotalRate | Luma | −0.9 | −0.1 | 1.3 | 5.3 |
| | Cb | −1.3 | 0.0 | 3.7 | 14.8 |
| | Cr | −1.8 | −0.4 | 1.6 | 10.6 |
| ∆T | | −5.07 | −5.73 | −7.19 | −6.45 |
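To put the granularity parameter N in perspective, it helps to count how many N×N blocks, and therefore how many per-block occupancy decisions, one frame contains. The sketch assumes a hypothetical 1280×1280 frame and that OIS-N is evaluated once per block; the paper's exact signaling is not shown here. It only illustrates that halving N quadruples the number of blocks; it does not explain the measured rate changes.

```python
import math


def block_count(width: int, height: int, n: int) -> int:
    """Number of N x N blocks needed to tile a width x height frame (partial border blocks count)."""
    return math.ceil(width / n) * math.ceil(height / n)


for n in (64, 32, 16, 8):
    # Hypothetical 1280 x 1280 frame
    print(f"N = {n:2d}: {block_count(1280, 1280, n)} blocks")
```

For this frame size the counts are 400, 1600, 6400 and 25600 blocks for N = 64, 32, 16 and 8.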

| Metric | Component | Loot | RedAndBlack | Soldier | Queen | Longdress | Average |
|---|---|---|---|---|---|---|---|
| Geom.BD-GeomRate | D1 | −22.7 | −13.6 | −18.9 | −11.3 | −12.1 | −15.67 |
| | D2 | −23.5 | −14.7 | −19.3 | −12.4 | −13.5 | −16.68 |
| Geom.BD-TotalRate | D1 | −39.8 | −24.1 | −43.6 | −21.6 | −20.3 | −29.88 |
| | D2 | −41.2 | −26.4 | −46.2 | −22.3 | −21.4 | −31.50 |
| End-to-End BD-AttrRate | Luma | −3.6 | −4.2 | −8.1 | −2.8 | −3.2 | −4.38 |
| | Cb | −0.3 | −1.1 | −0.9 | −0.5 | −0.6 | −0.68 |
| | Cr | −1.7 | −3.4 | −1.5 | −1.0 | −1.1 | −1.74 |
| End-to-End BD-TotalRate | Luma | −6.0 | −5.3 | −7.7 | −3.6 | −4.9 | −5.50 |
| | Cb | −3.4 | −2.6 | −2.4 | −2.2 | −2.7 | −2.66 |
| | Cr | −4.1 | −3.8 | −2.6 | −3.0 | −3.2 | −3.34 |
| ∆T | | −4.76 | −5.12 | −6.92 | −5.74 | −6.41 | −5.79 |
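The results are reported as Bjøntegaard delta rates (BD-rate), where a negative value means fewer bits at equal quality. For readers unfamiliar with the metric, the following sketch computes it in the classic cubic-fit form (VCEG-M33): fit log-rate as a cubic polynomial of PSNR for each rate-distortion curve, integrate both fits over the overlapping PSNR interval, and convert the mean log-rate gap into a percentage. The paper does not state which BD-rate tool it used, and point cloud evaluation tools may use a different interpolation, so this is an illustration rather than a reproduction of the table values; the function names are hypothetical.

```python
import math
from typing import List, Sequence


def _polyfit3(x: Sequence[float], y: Sequence[float]) -> List[float]:
    """Least-squares cubic fit y ~ c0 + c1*x + c2*x^2 + c3*x^3 via the normal equations."""
    n = 4
    a = [[sum(xi ** (i + j) for xi in x) for j in range(n)] for i in range(n)]
    b = [sum(yi * xi ** i for xi, yi in zip(x, y)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= f * a[col][c]
            b[r] -= f * b[col]
    coef = [0.0] * n
    for r in range(n - 1, -1, -1):
        coef[r] = (b[r] - sum(a[r][c] * coef[c] for c in range(r + 1, n))) / a[r][r]
    return coef


def _integral(coef: Sequence[float], lo: float, hi: float) -> float:
    """Definite integral of the polynomial sum(c_k * x^k) from lo to hi."""
    def antideriv(t: float) -> float:
        return sum(c * t ** (k + 1) / (k + 1) for k, c in enumerate(coef))
    return antideriv(hi) - antideriv(lo)


def bd_rate(rate_a, psnr_a, rate_t, psnr_t) -> float:
    """BD-rate (%) of the test curve against the anchor; negative means bit savings."""
    # Center PSNR values to keep the normal equations well conditioned.
    shift = (sum(psnr_a) + sum(psnr_t)) / (len(psnr_a) + len(psnr_t))
    xa = [p - shift for p in psnr_a]
    xt = [p - shift for p in psnr_t]
    ca = _polyfit3(xa, [math.log(r) for r in rate_a])
    ct = _polyfit3(xt, [math.log(r) for r in rate_t])
    lo, hi = max(min(xa), min(xt)), min(max(xa), max(xt))
    if hi <= lo:
        raise ValueError("PSNR ranges do not overlap")
    mean_gap = (_integral(ct, lo, hi) - _integral(ca, lo, hi)) / (hi - lo)
    return (math.exp(mean_gap) - 1.0) * 100.0


rates = [100.0, 200.0, 400.0, 800.0, 1600.0]
psnr = [30.0, 33.0, 36.0, 39.0, 42.0]
print(f"{bd_rate(rates, psnr, [0.9 * r for r in rates], psnr):.2f} %")  # -10.00 %
```

A test curve that needs 90% of the anchor's bits at every quality level yields a BD-rate of −10%, which is how the negative entries in the tables should be read.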

| Metric | Component | Loot | RedAndBlack | Soldier | Queen | Longdress | Average |
|---|---|---|---|---|---|---|---|
| Geom.BD-GeomRate | D1 | 0.13 | −0.37 | −0.08 | 2.05 | −1.44 | 0.06 |
| | D2 | 0.75 | 0.10 | 0.16 | 2.74 | −0.52 | 0.65 |
| Geom.BD-TotalRate | D1 | 0.22 | −0.68 | −0.15 | 3.62 | −2.03 | 0.20 |
| | D2 | 0.94 | 0.21 | 0.39 | 4.37 | −0.93 | 1.00 |
| End-to-End BD-AttrRate | Luma | −0.21 | −0.14 | 0.11 | 0.31 | −0.40 | −0.07 |
| | Cb | −0.16 | −0.20 | 0.45 | −0.00 | −0.63 | −0.11 |
| | Cr | −1.22 | −0.07 | −0.30 | −0.84 | −0.37 | −0.56 |
| End-to-End BD-TotalRate | Luma | −0.64 | −0.37 | 0.35 | 0.66 | −0.74 | −0.15 |
| | Cb | −0.60 | −0.52 | 1.12 | −0.13 | −1.05 | −0.24 |
| | Cr | −2.65 | 0.13 | −0.81 | −1.37 | −0.62 | −1.06 |

| Metric | Component | Loot | RedAndBlack | Soldier | Queen | Longdress | Average |
|---|---|---|---|---|---|---|---|
| Geom.BD-GeomRate | D1 | −0.03 | 0.12 | −0.01 | 0.00 | 0.01 | 0.02 |
| | D2 | −0.04 | 0.20 | 0.02 | −0.01 | 0.00 | 0.03 |
| Geom.BD-TotalRate | D1 | −0.11 | 0.26 | 0.00 | 0.00 | 0.00 | 0.03 |
| | D2 | −0.14 | 0.32 | 0.00 | 0.01 | 0.00 | 0.04 |
| End-to-End BD-AttrRate | Luma | −1.22 | −1.18 | −0.61 | −0.34 | −1.22 | −0.91 |
| | Cb | −1.63 | −2.23 | −0.96 | −0.75 | −1.40 | −1.39 |
| | Cr | −1.71 | −2.52 | −0.88 | −0.66 | −1.57 | −1.47 |
| End-to-End BD-TotalRate | Luma | −3.15 | −3.23 | −1.10 | −0.72 | −2.61 | −2.16 |
| | Cb | −2.77 | −4.32 | −1.57 | −1.38 | −2.54 | −2.52 |
| | Cr | −2.80 | −4.46 | −1.43 | −1.04 | −2.76 | −2.50 |

| Metric | Component | Loot | RedAndBlack | Soldier | Queen | Longdress | Average |
|---|---|---|---|---|---|---|---|
| Geom.BD-TotalRate | D1 | −5.13 | −7.08 | 20.94 | −11.16 | −43.92 | −9.27 |
| | D2 | −3.20 | −3.16 | 23.48 | −9.24 | −40.18 | −6.46 |
| End-to-End BD-TotalRate | Luma | 1.58 | 1.88 | −0.77 | 2.15 | 4.36 | 1.84 |
| | Cb | 1.67 | 2.07 | −3.83 | 3.07 | 8.73 | 2.34 |
| | Cr | 2.82 | 3.01 | −3.91 | 2.79 | 8.54 | 2.65 |
| Overall.BD-TotalRate | | −4.77 | −6.24 | 18.62 | −10.51 | −40.73 | −8.73 |

| Metric | Component | Loot | RedAndBlack | Soldier | Queen | Longdress | Average |
|---|---|---|---|---|---|---|---|
| Geom.BD-TotalRate | D1 | −0.34 | 0.43 | −0.95 | −0.49 | −0.79 | −0.43 |
| | D2 | −1.64 | 0.45 | −3.13 | −0.14 | −1.65 | −1.22 |
| End-to-End BD-TotalRate | Luma | −7.80 | −5.10 | −5.87 | −0.21 | −3.85 | −4.57 |
| | Cb | −10.96 | −5.11 | −6.10 | 1.75 | −3.32 | −4.75 |
| | Cr | −10.96 | −5.53 | −5.48 | 0.08 | −3.89 | −5.16 |

| Metric | Component | Loot | RedAndBlack | Soldier | Queen | Longdress | Average |
|---|---|---|---|---|---|---|---|
| Geom.BD-TotalRate | D1 | −1.55 | −0.62 | −0.42 | −2.04 | −1.26 | −1.18 |
| | D2 | −3.93 | −1.87 | −4.21 | −1.93 | −3.35 | −3.06 |
| End-to-End BD-TotalRate | Luma | −8.76 | −6.19 | −7.07 | 0.32 | −5.35 | −5.41 |
| | Cb | −12.94 | −7.53 | −7.61 | 0.44 | −6.73 | −6.87 |
| | Cr | −12.75 | −7.27 | −7.36 | −0.36 | −7.07 | −6.96 |

| Rate | Metric | Method | Loot | RedAndBlack | Soldier | Queen | Longdress | Average | Ebr (%) |
|---|---|---|---|---|---|---|---|---|---|
| r1 | Encoding bits | Anchor | 884,379 | 1,752,416 | 2,153,842 | 1,695,473 | 3,048,637 | 1,906,949.4 | 94.26 |
| | | Our Method | 857,164 | 1,631,473 | 2,071,936 | 1,532,078 | 2,894,605 | 1,797,451.2 | |
| r2 | | Anchor | 1,973,657 | 2,917,834 | 3,978,514 | 2,784,506 | 6,013,756 | 3,533,653.4 | 95.18 |
| | | Our Method | 1,742,832 | 2,763,185 | 3,841,029 | 2,537,891 | 5,931,581 | 3,363,303.6 | |
| r3 | | Anchor | 3,752,618 | 4,896,771 | 8,707,536 | 5,731,084 | 13,756,492 | 7,368,900.2 | 92.27 |
| | | Our Method | 3,543,265 | 4,653,217 | 8,342,793 | 5,527,603 | 11,930,784 | 6,799,532.4 | |
| r4 | | Anchor | 7,135,672 | 9,437,865 | 16,075,369 | 8,760,294 | 23,185,946 | 12,919,029.2 | 95.77 |
| | | Our Method | 6,951,763 | 8,746,592 | 15,427,937 | 8,631,507 | 22,106,357 | 12,372,831.2 | |
| r5 | | Anchor | 14,031,792 | 16,356,219 | 28,943,556 | 15,796,803 | 42,180,976 | 23,461,869.2 | 94.13 |
| | | Our Method | 12,157,329 | 15,478,366 | 27,459,630 | 14,337,964 | 40,985,783 | 22,083,814.4 | |
| Average | | | | | | | | | 94.32 |
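The Ebr column agrees with the ratio of the proposed method's average encoding bits to the anchor's average at each rate point, and the Average row with the mean of the five per-rate ratios. This is our reading of the numbers; the definition of Ebr is not given in this excerpt. The helper name `ebr` is hypothetical, and the bit counts are copied from the r1 and r3 rows of the table.

```python
from statistics import mean


def ebr(anchor_bits, method_bits):
    """Ratio (%) of the method's average encoding bits to the anchor's average."""
    return 100.0 * mean(method_bits) / mean(anchor_bits)


ANCHOR_R1 = [884_379, 1_752_416, 2_153_842, 1_695_473, 3_048_637]
OURS_R1 = [857_164, 1_631_473, 2_071_936, 1_532_078, 2_894_605]
ANCHOR_R3 = [3_752_618, 4_896_771, 8_707_536, 5_731_084, 13_756_492]
OURS_R3 = [3_543_265, 4_653_217, 8_342_793, 5_527_603, 11_930_784]

print(f"r1: {ebr(ANCHOR_R1, OURS_R1):.2f}")  # r1: 94.26
print(f"r3: {ebr(ANCHOR_R3, OURS_R3):.2f}")  # r3: 92.27
print(f"average: {mean([94.26, 95.18, 92.27, 95.77, 94.13]):.2f}")  # average: 94.32
```

Read this way, an Ebr of about 94% means the proposed method uses roughly 6% fewer encoding bits than the anchor on average.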

| Point Cloud Sequence | Loot | RedAndBlack | Soldier | Queen | Longdress | Average |
|---|---|---|---|---|---|---|
| -D1 | 0.74 | 0.51 | 1.05 | 0.60 | 0.48 | 0.676 |
| -D2 | 0.97 | 0.63 | 1.27 | 0.87 | 0.57 | 0.862 |
| -Luma | 2.02 | 1.75 | 2.16 | 1.83 | 2.33 | 2.018 |
| -Cb | 1.14 | 0.76 | 0.81 | 0.92 | 1.07 | 0.940 |
| -Cr | 1.30 | 1.62 | 0.89 | 0.75 | 1.24 | 1.160 |

| Point Cloud Sequence | Loot | RedAndBlack | Soldier | Queen | Longdress | Average |
|---|---|---|---|---|---|---|
| r1 | 1.31 | 3.67 | 2.85 | 2.04 | 1.73 | 2.32 |
| r2 | 12.64 | 10.93 | 12.77 | 11.72 | 13.26 | 12.26 |
| r3 | 16.15 | 15.48 | 18.61 | 16.93 | 17.49 | 16.93 |
| r4 | 18.37 | 18.94 | 20.13 | 19.45 | 21.10 | 19.60 |
| r5 | 21.72 | 24.31 | 25.07 | 22.76 | 26.75 | 24.12 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, F.; Jia, J.; Zhang, Q. Optimization of Non-Occupied Pixels in Point Cloud Video Based on V-PCC and Joint Control of Bitrate for Geometric–Attribute Graph Coding. Electronics 2025, 14, 3287. https://doi.org/10.3390/electronics14163287
