FPGA Chip Design of Sensors for Emotion Detection Based on Consecutive Facial Images by Combining CNN and LSTM

Abstract

1. Introduction

1.1. Field-Programmable Gate Array

- A. Reconfigurability: FPGAs are reconfigurable [5]. Their digital logic circuits are defined through programming, so developers can repeatedly redesign the FPGA’s functions according to application requirements.

- B. High parallel processing capability: FPGAs have multiple independent logic circuits and data paths that can run in parallel, enabling them to process multiple tasks concurrently and hence provide high-performance computing power.

- C. Low latency and high-frequency operation: Because an FPGA’s logic circuits are composed of gate arrays and can be heavily optimized, FPGAs achieve low latency and high-frequency operation. This makes them well suited to applications requiring high-speed processing.

- D. Customizability: FPGAs are highly flexible and can be designed and optimized according to application requirements. This includes the design of logic circuits, data paths, memory, and interfaces.

- E. Software and hardware co-design: FPGAs allow software and hardware to be co-designed on a single chip [6], which improves system integration and performance.

- F. Suitable for rapid development and testing: FPGAs have a short development cycle, so developers can build and test designs quickly [7].

1.2. Experimental Protocol

2. Related Works

2.1. CLDNN Model Architecture

2.2. Consecutive Facial Emotion Recognition (CFER)

3. Facial Emotion Recognition Methods and Parameter Setting

3.1. CLDNN Model

3.2. Experimental Environment for Model Training on PC

3.3. Consecutive Facial Emotion Recognition

3.3.1. Databases

RAVDESS

BAUM-1s

eNTERFACE’05

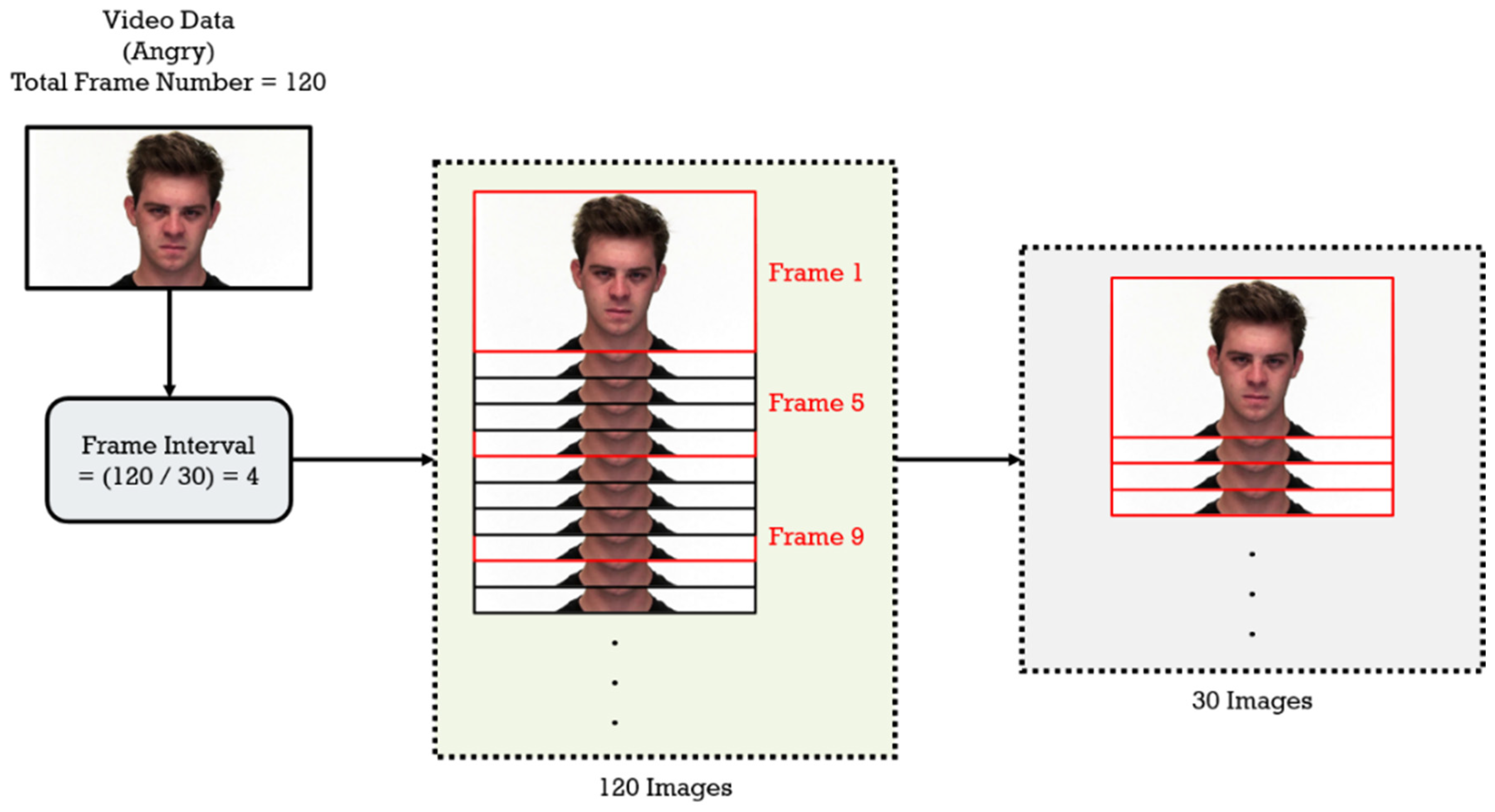

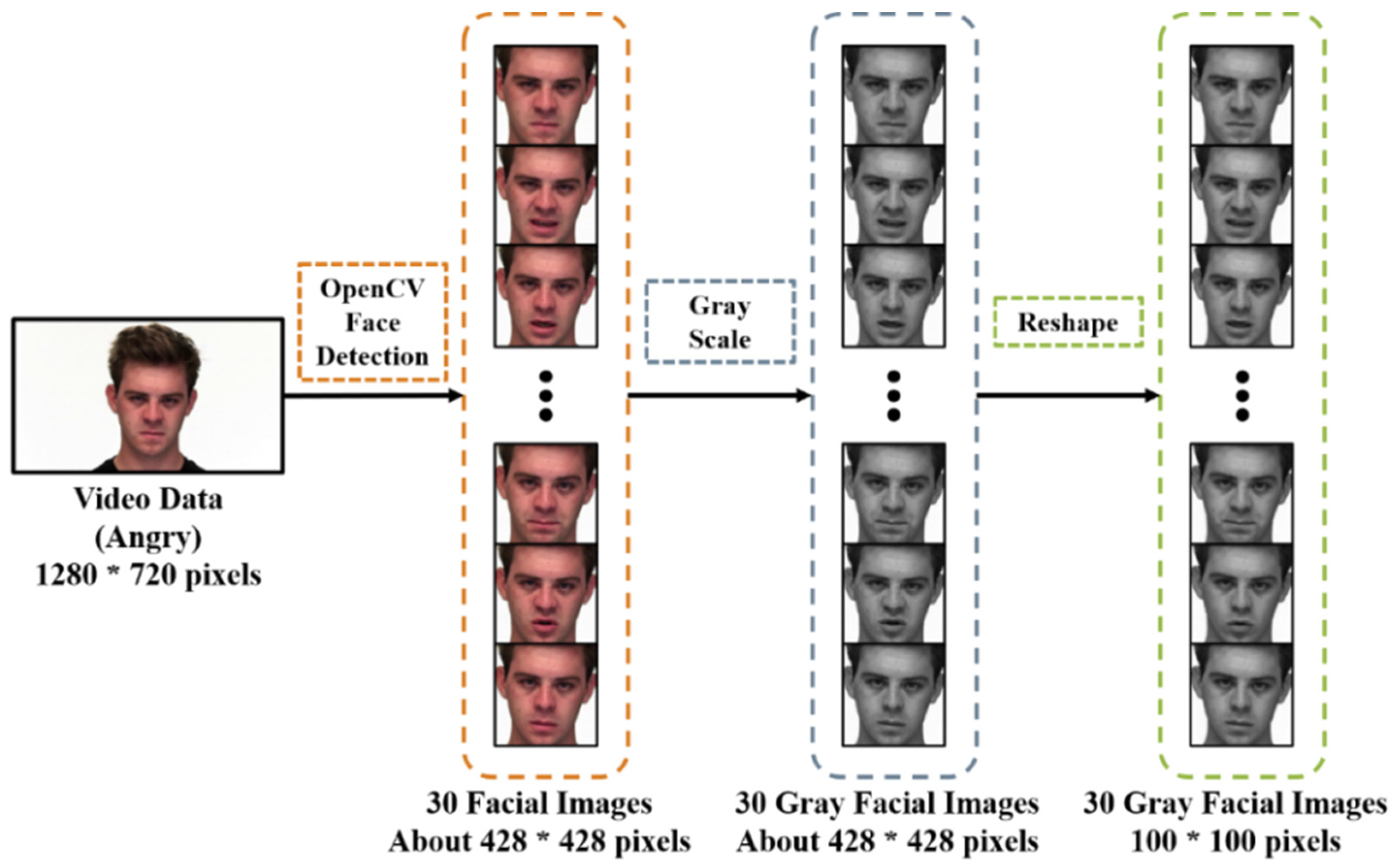

3.3.2. Pre-Processing

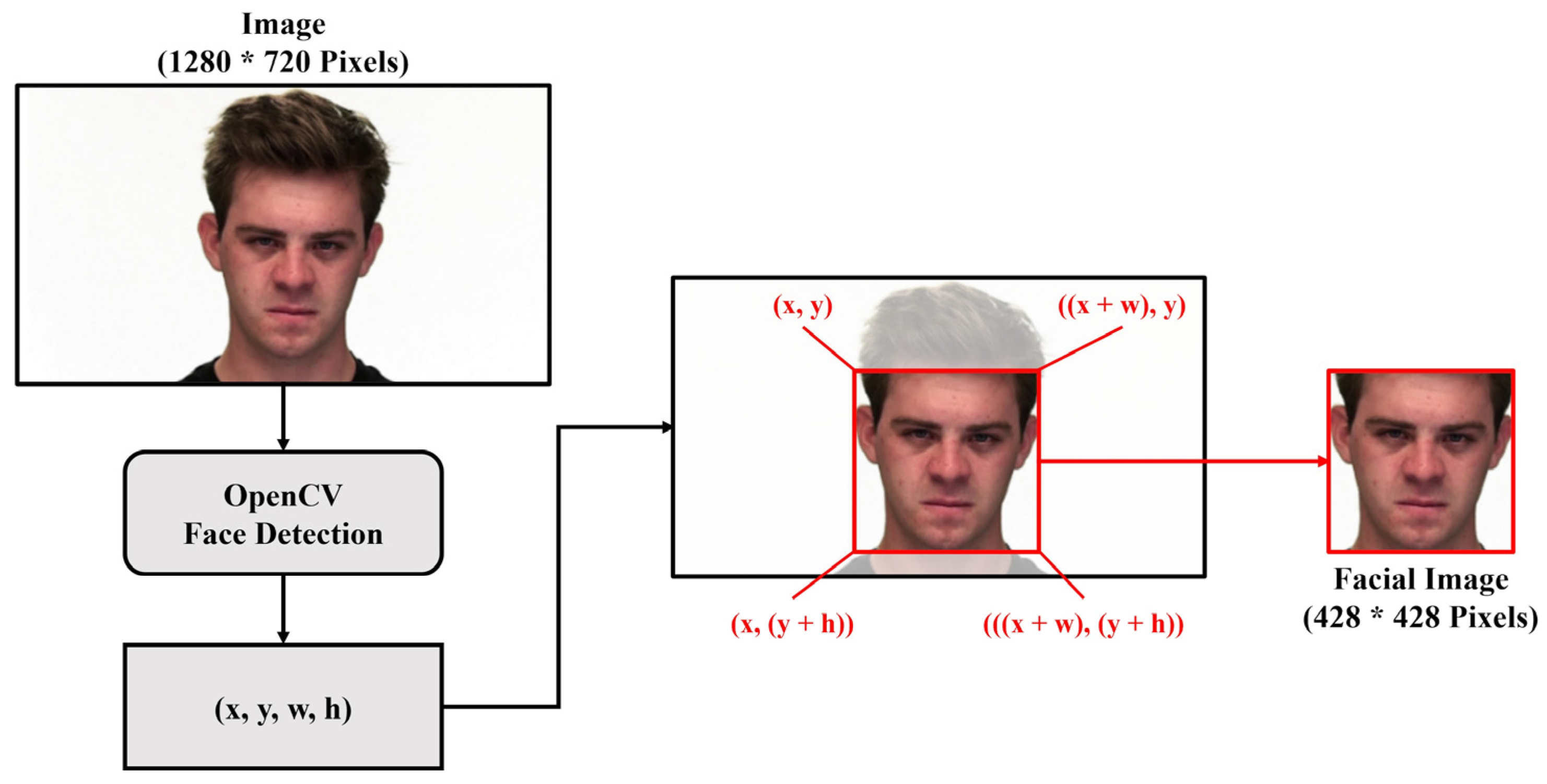

Facial Detection

Grayscale Conversion

Resize

3.3.3. Experiments

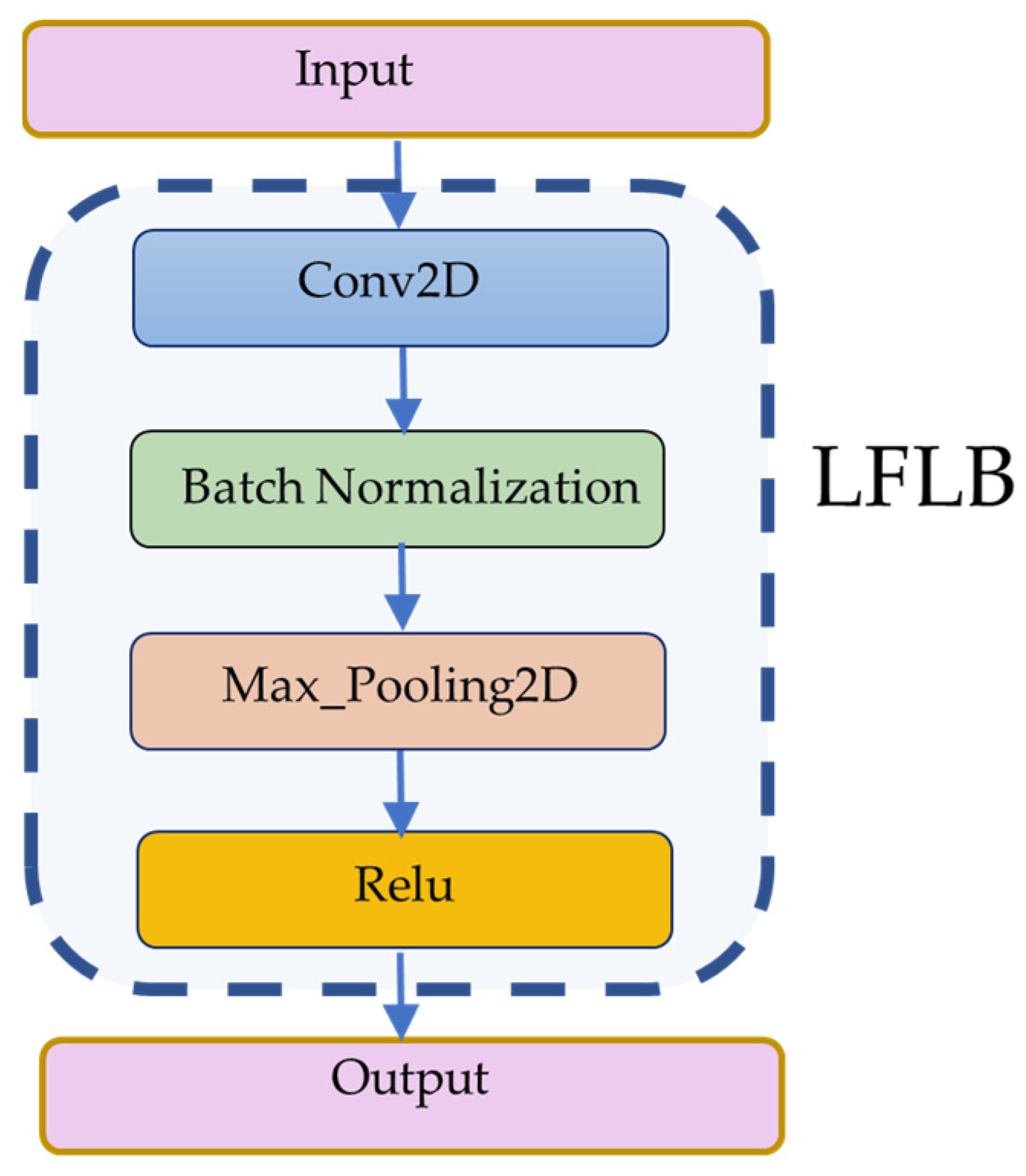

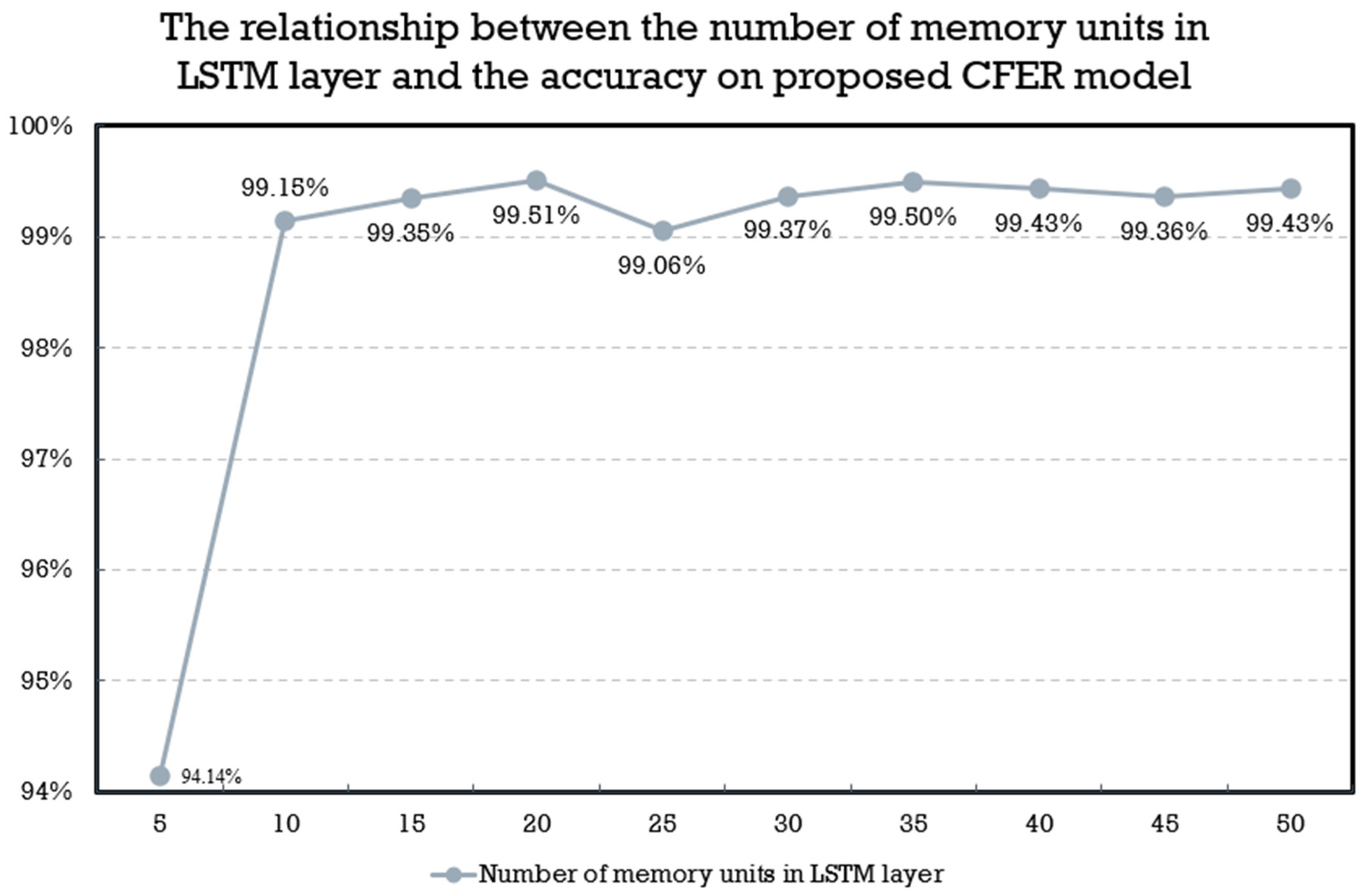

Local Feature Learning Block (LFLB)

Training Process and Parameters

4. Experimental Results

4.1. Experiments on RAVDESS Database

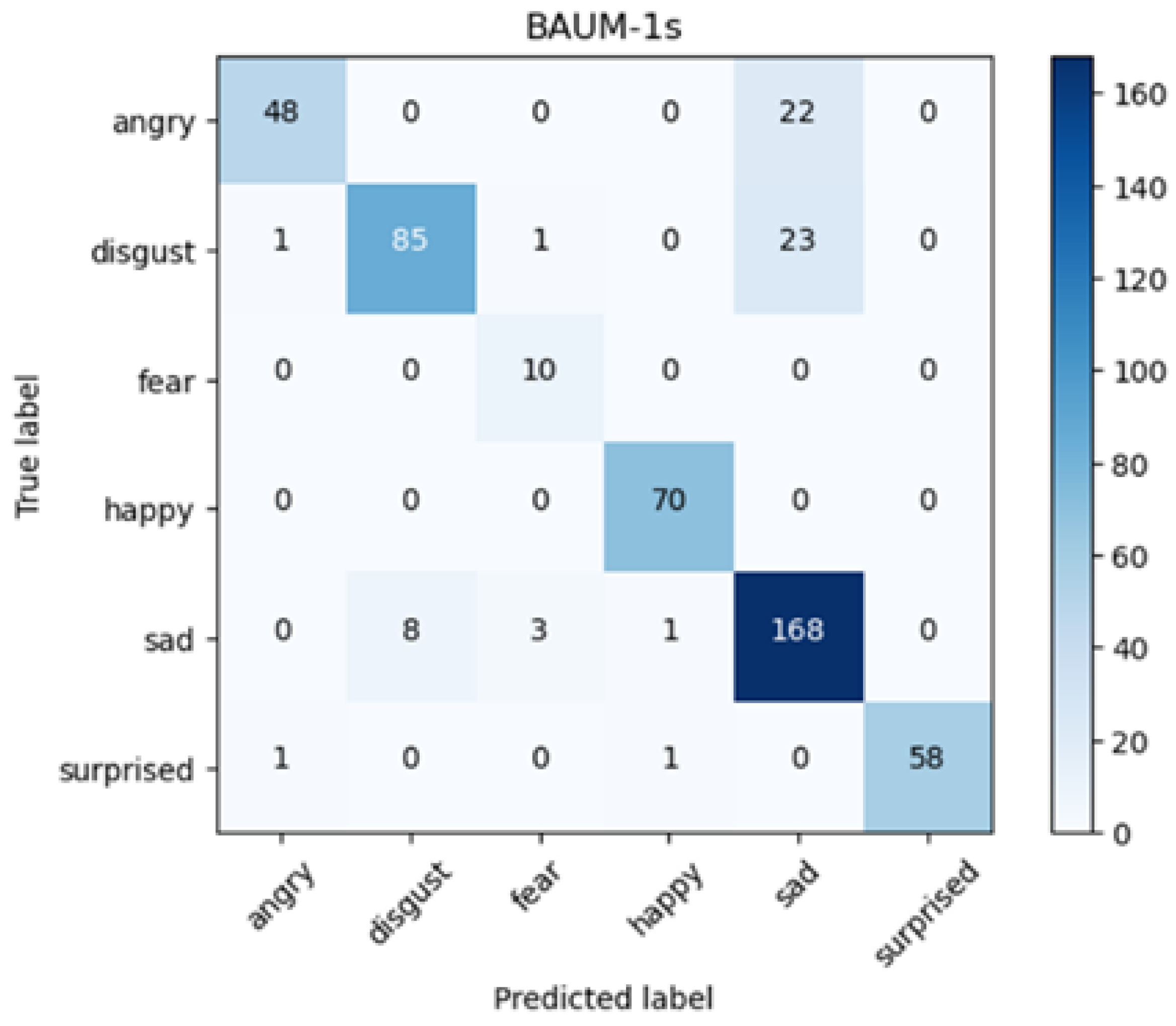

4.2. Experiments on BAUM-1s Database

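- Lower recall for the angry (0.6857) and disgust (0.7727) classes, because samples of these classes are misclassified as other emotions.

- Lower precision (0.7887) and accuracy (0.8860) for the sad class, because many samples of other classes are incorrectly predicted as sad.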

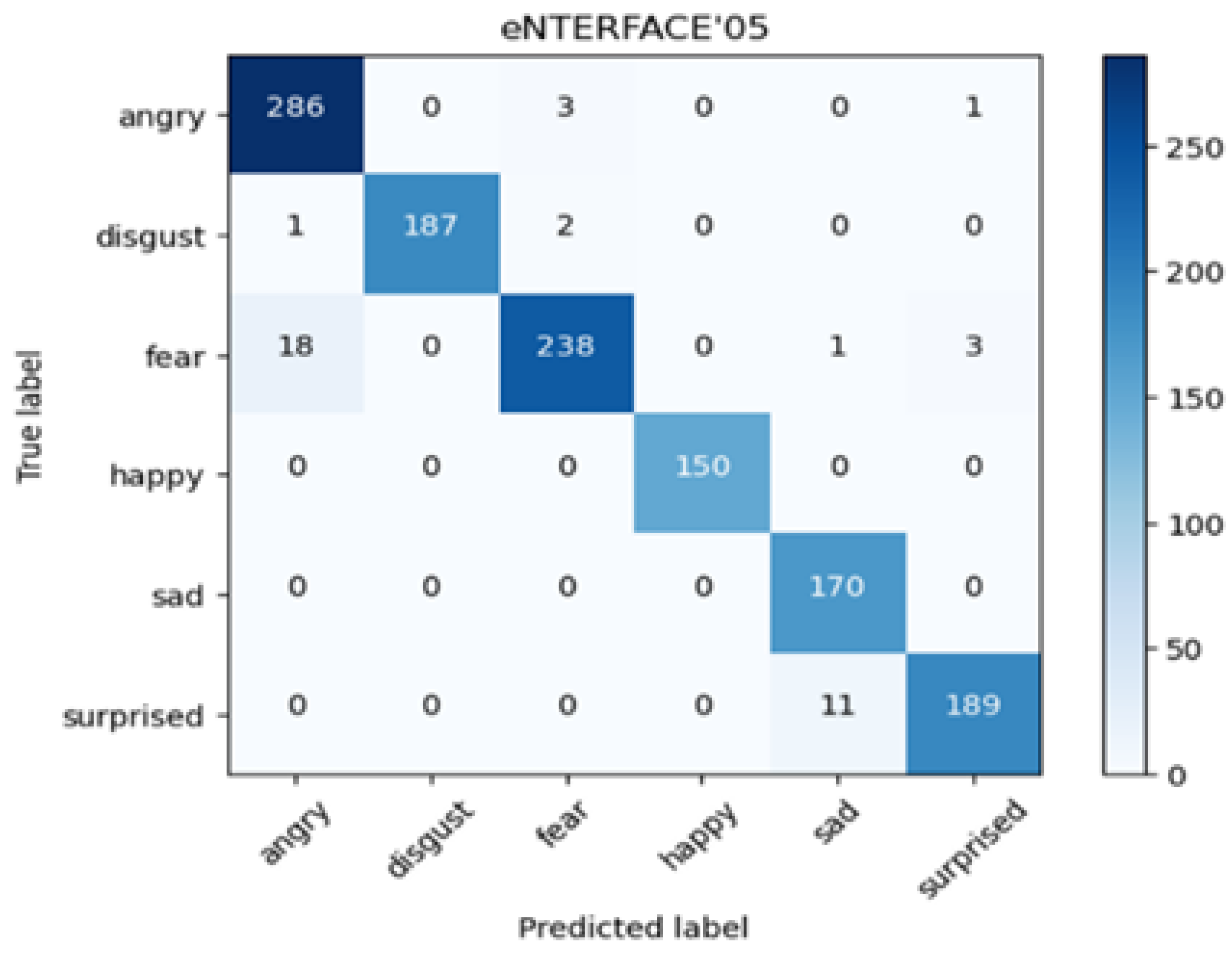

4.3. Experiments on eNTERFACE’05 Database

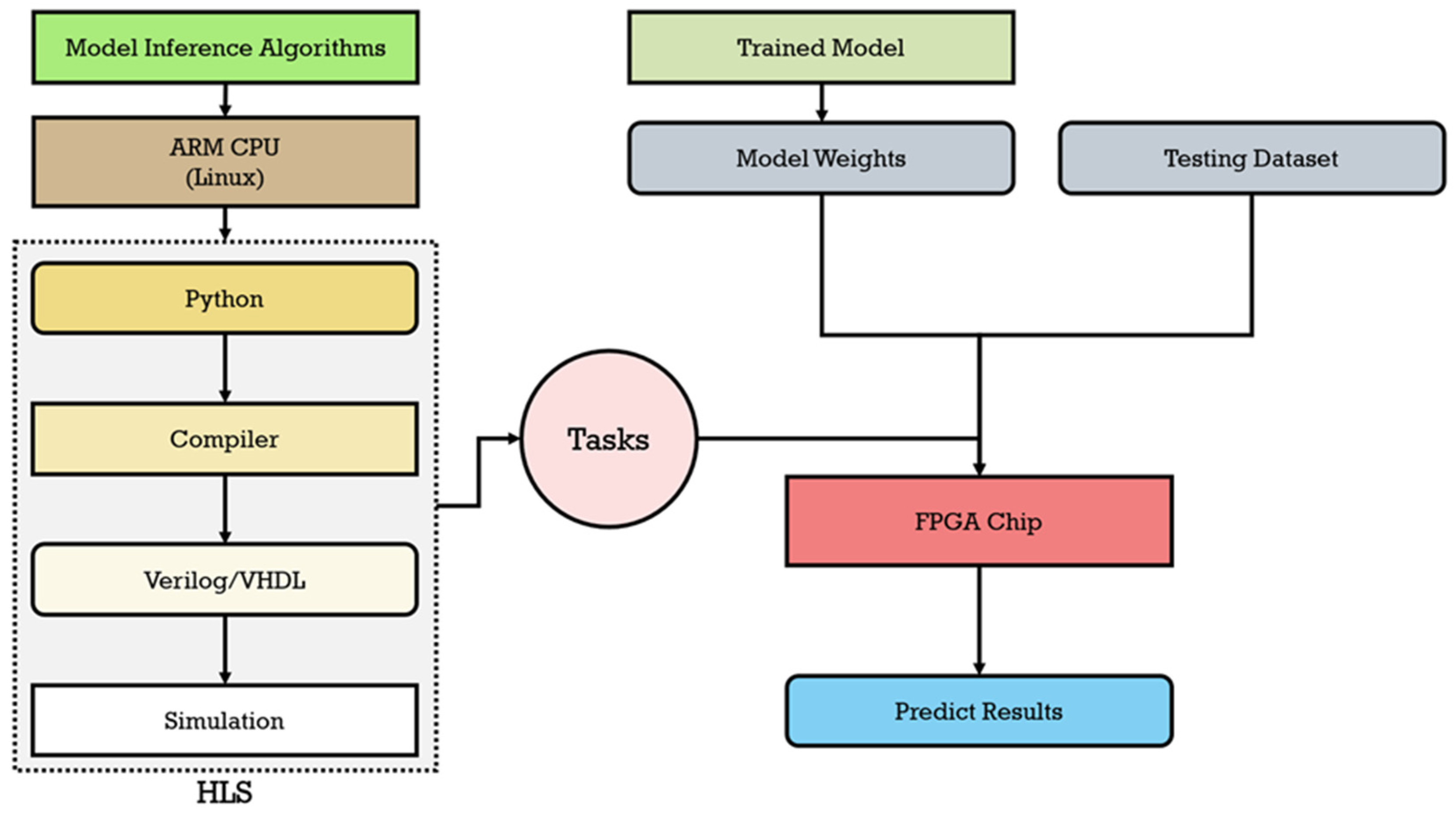

5. Model Inference and Experiments for FPGA Implementation

- A. Download the required Linux image file and cross-compiler, both of which must be suitable for the ARM architecture.

- B. Connect to the DE10-Standard FPGA via JTAG and open the corresponding project file in the Quartus Prime software.

- C. Use the Quartus Prime software to download the Linux image file to the FPGA.

- D. Configure the FPGA’s boot settings to boot the Linux system from the SD card.

- E. Add the downloaded cross-compiler to the system environment variables and compile the required applications or kernel modules, such as the OpenCV and Python libraries.

- F. Copy the generated binary files (.bin) to the SD card and insert the card into the FPGA board. The Linux system can then be started.

5.1. Model Inference Algorithms

5.1.1. Inference of CNN

| Algorithm 1: Convolution | |
|---|---|
| Input: input, filters, kernel_size, strides, weights. Output: feature_map | |
| 1 | kernel, bias = weights |
| 2 | pad_height = kernel_size // 2 |
| 3 | pad_width = kernel_size // 2 |
| 4 | padded_image = zero_padding(input, (pad_height, pad_width), 0) |
| 5 | for h in rows of input do |
| 6 | for w in columns of input do |
| 7 | convolved[h][w] = dot(padded_image[h : h + kernel_size, w : w + kernel_size], kernel) + bias |
| 8 | feature_map = maximum(0, convolved) |
| 9 | return feature_map |
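
Algorithm 1 can be sketched in NumPy as follows. This is a minimal illustration rather than the implementation used on the FPGA: it assumes a single-channel (grayscale) input, an odd kernel size, and a kernel array of shape (k, k, n_filters). The function name `conv2d_relu` and its argument layout are hypothetical. As in Keras, the kernel is applied without flipping (cross-correlation).

```python
import numpy as np

def conv2d_relu(image, kernel, bias, stride=1):
    """Zero-padded ('same') 2D convolution followed by ReLU.

    image:  (H, W) array, single channel (assumption for this sketch)
    kernel: (k, k, n_filters) array with odd k
    bias:   (n_filters,) array
    """
    k = kernel.shape[0]
    pad = k // 2
    # Zero padding so that a stride-1 output keeps the input size.
    padded = np.pad(image, ((pad, pad), (pad, pad)), mode="constant")
    out_h = (image.shape[0] - 1) // stride + 1
    out_w = (image.shape[1] - 1) // stride + 1
    convolved = np.zeros((out_h, out_w, kernel.shape[2]))
    for h in range(out_h):
        for w in range(out_w):
            top, left = h * stride, w * stride
            window = padded[top:top + k, left:left + k]
            # Contract the k x k window against all filters at once.
            convolved[h, w] = np.tensordot(window, kernel, axes=([0, 1], [0, 1])) + bias
    # ReLU activation.
    return np.maximum(0, convolved)
```

The two nested loops visit every output position, which is consistent with the convolution layers dominating the measured execution time on the FPGA.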

5.1.2. Inference of LSTM

| Algorithm 2: LSTM | |
|---|---|
| Input: input, weights, units. Output: hidden_state | |
| 1 | W, U, b = weights |
| 2 | W_i, W_f, W_c, W_o = W |
| 3 | U_i, U_f, U_c, U_o = U |
| 4 | b_i, b_f, b_c, b_o = b |
| 5 | hidden_state = cell_state = zeros_array(shape = (1, units)) |
| 6 | for element in input do |
| 7 | input_gate = sigmoid(dot(element, W_i) + dot(hidden_state, U_i) + b_i) |
| 8 | forget_gate = sigmoid(dot(element, W_f) + dot(hidden_state, U_f) + b_f) |
| 9 | cell_candidate_gate = tanh(dot(element, W_c) + dot(hidden_state, U_c) + b_c) |
| 10 | cell_state = forget_gate × cell_state + input_gate × cell_candidate_gate |
| 11 | output_gate = sigmoid(dot(element, W_o) + dot(hidden_state, U_o) + b_o) |
| 12 | hidden_state = output_gate × tanh(cell_state) |
| 13 | return hidden_state |
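
The recurrence in Algorithm 2 can be written in NumPy as below. This is a sketch under stated assumptions, not the authors' code: it assumes the weights follow the Keras LSTM layout, that is, an input kernel of shape (D, 4 × units), a recurrent kernel of shape (units, 4 × units), and a bias of length 4 × units, each split in the gate order input, forget, cell candidate, output. The names `lstm_forward`, `W`, `U`, and `b` are illustrative.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_forward(sequence, W, U, b, units):
    """Run an LSTM over a (T, D) sequence and return the final hidden state.

    W: (D, 4 * units) input kernel, U: (units, 4 * units) recurrent kernel,
    b: (4 * units,) bias. Gate order i, f, c, o is an assumption (Keras layout).
    """
    W_i, W_f, W_c, W_o = np.split(W, 4, axis=1)
    U_i, U_f, U_c, U_o = np.split(U, 4, axis=1)
    b_i, b_f, b_c, b_o = np.split(b, 4)
    hidden_state = np.zeros((1, units))
    cell_state = np.zeros((1, units))
    for element in sequence:
        x = element.reshape(1, -1)
        input_gate = sigmoid(x @ W_i + hidden_state @ U_i + b_i)
        forget_gate = sigmoid(x @ W_f + hidden_state @ U_f + b_f)
        cell_candidate = np.tanh(x @ W_c + hidden_state @ U_c + b_c)
        # Keep part of the old cell state and add the gated candidate.
        cell_state = forget_gate * cell_state + input_gate * cell_candidate
        output_gate = sigmoid(x @ W_o + hidden_state @ U_o + b_o)
        hidden_state = output_gate * np.tanh(cell_state)
    return hidden_state
```

Only the final hidden state is returned, which is then passed to the batch normalization and dense layers.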

5.1.3. Inference of Batch Normalization

| Algorithm 3: BatchNormalization | |
|---|---|
| Input: input, weights. Output: normalized | |
| 1 | gamma, beta, mean, variance = weights |
| 2 | normalized = gamma × (input − mean)/sqrt(variance + 1 × 10⁻³) + beta |
| 3 | return normalized |
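
Algorithm 3 maps directly onto a few NumPy operations. The sketch below assumes the four weight arrays are the learned scale and offset plus the moving mean and variance stored at training time. The function name `batch_norm_inference` is hypothetical, and the default `epsilon` of 1e-3 matches the constant in Algorithm 3.

```python
import numpy as np

def batch_norm_inference(x, gamma, beta, moving_mean, moving_variance, epsilon=1e-3):
    """Inference-time batch normalization using stored statistics.

    No batch statistics are computed here; the stored mean and variance
    are applied element-wise (with NumPy broadcasting over the last axis).
    """
    return gamma * (x - moving_mean) / np.sqrt(moving_variance + epsilon) + beta
```

Because the operation is a per-element scale and shift, it costs very little compared with convolution.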

5.2. Experimental Results on FPGA Chip

- Base Clock: 2.9 GHz (up to 4.8 GHz Turbo).

- 8 cores/16 threads.

- Peak MIPS: approximately 160,000–200,000 or more.

- Average runtime for proposed model on a video: 0.45 s.

- Dual-core ARM Cortex-A9 @ ~925 MHz.

- Theoretical MIPS: ~2000–2500 (combined).

- DSP Block Usage: ~70% (for parallel multipliers).

- Memory Usage: ~50% (for weights and intermediate data).

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Matsugu, M.; Mori, K.; Mitari, Y.; Kaneda, Y. Subject Independent Facial Expression Recognition with Robust Face Detection using a Convolutional Neural Network. Neural Netw. 2003, 16, 555–559.

2. Pramerdorfer, C.; Kampel, M. Facial Expression Recognition using Convolutional Neural Networks: State of the Art. arXiv 2016, arXiv:1612.02903.

3. Ayadi, M.E.; Kamel, M.S.; Karray, F. Survey on Speech Emotion Recognition: Features, Classification Schemes, and Databases. Pattern Recognit. 2011, 44, 572–587.

4. Khalil, R.A.; Jones, E.; Babar, M.I.; Jan, T.; Zafar, M.H.; Alhussain, T. Speech Emotion Recognition Using Deep Learning Techniques: A Review. IEEE Access 2019, 7, 117327–117345.

5. Hauck, S.; DeHon, A. Reconfigurable Computing: The Theory and Practice of FPGA-Based Computation; Morgan Kaufmann: San Francisco, CA, USA, 2007; ISBN 9780080556017.

6. Pellerin, D.; Thibault, S. Practical FPGA Programming in C; Prentice Hall Press: Upper Saddle River, NJ, USA, 2005; ISBN 9780131543188.

7. Kilts, S. Advanced FPGA Design: Architecture, Implementation, and Optimization; Wiley-IEEE Press: New York, NY, USA, 2007; ISBN 9780470054376.

8. Sainath, T.N.; Vinyals, O.; Senior, A.; Sak, H. Convolutional Long Short-Term Memory Fully Connected Deep Neural Networks. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, QLD, Australia, 19–24 April 2015.

9. Ryumina, E.; Karpov, A. Facial Expression Recognition using Distance Importance Scores Between Facial Landmarks. In Proceedings of the 30th International Conference on Computer Graphics and Machine Vision (GraphiCon 2020), St. Petersburg, Russia, 22–25 September 2020; pp. 1–10.

10. Sagonas, C.; Tzimiropoulos, G.; Zafeiriou, S.; Pantic, M. 300 Faces in-the-Wild Challenge: The first facial landmark localization Challenge. In Proceedings of the 2013 IEEE International Conference on Computer Vision Workshops, Sydney, NSW, Australia, 2–8 December 2013; pp. 397–403.

11. Ma, F.; Zhang, W.; Li, Y.; Huang, S.L.; Zhang, L. Learning Better Representations for Audio-Visual Emotion Recognition with Common Information. Appl. Sci. 2020, 10, 7239.

12. Zhang, K.; Zhang, Z.; Li, Z.; Qiao, Y. Joint Face Detection and Alignment using Multitask Cascaded Convolutional Networks. IEEE Signal Process. Lett. 2016, 23, 1499–1503.

13. Jaratrotkamjorn, A.; Choksuriwong, A. Bimodal Emotion Recognition using Deep Belief Network. In Proceedings of the 2019 23rd International Computer Science and Engineering Conference (ICSEC), Phuket, Thailand, 30 October–1 November 2019; pp. 103–109.

14. Chen, Z.Q.; Pan, S.T. Integration of Speech and Consecutive Facial Image for Emotion Recognition Based on Deep Learning. Master’s Thesis, National University of Kaohsiung, Kaohsiung, Taiwan, 2021.

15. Li, S.; Deng, W.; Du, J. Facial Emotion Recognition Using Deep Learning: A Survey. IEEE Trans. Affect. Comput. 2023, 14, 1234–1256.

16. Wang, K.; Peng, X.; Qiao, Y. Emotion Recognition in the Wild Using Multi-Task Learning and Self-Supervised Features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 5678–5687.

17. Zhang, Y.; Wang, C.; Ling, H. Cross-Domain Facial Emotion Recognition with Adaptive Graph Convolutional Networks. IEEE Trans. Image Process. 2024, 33, 1123–1135.

18. Chen, L.; Liu, Z.; Sun, M. Efficient Emotion Recognition from Low-Resolution Images Using Attention Mechanisms. In Proceedings of the ACM International Conference on Multimedia (ACM MM), Ottawa, ON, Canada, 29 October–3 November 2023; pp. 2345–2354.

19. Gupta, A.; Narayan, S.; Patel, V. Explaining Facial Emotion Recognition Models via Vision-Language Pretraining. Nat. Mach. Intell. 2024, 6, 45–59.

20. Zhao, R.; Elgammal, A. Dynamic Facial Emotion Recognition Using Spatio-Temporal 3D Networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 9876–9885.

21. Livingstone, S.R.; Russo, F.A. The Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS): A Dynamic, Multimodal Set of Facial and Vocal Expressions in North American English. PLoS ONE 2018, 13, e0196391.

22. Dataset BAUM-1. Available online: https://archive.ics.uci.edu/dataset/473/baum+1 (accessed on 10 July 2023).

23. Dataset eNTERFACE’05. Available online: https://enterface.net/enterface05/emotion.html?utm_source=chatgpt.com (accessed on 5 May 2023).

24. Adeshina, S.O.; Ibrahim, H.; Teoh, S.S.; Hoo, S.C. Custom Face Classification Model for Classroom using Haar-like and LBP Features with Their Performance Comparisons. Electronics 2021, 10, 102.

25. Wu, H.; Cao, Y.; Wei, H.; Tian, Z. Face Recognition based on Haar Like and Euclidean Distance. J. Phys. Conf. Ser. 2021, 1813, 012036.

26. Gutter, S.; Hung, J.; Liu, C.; Wechsler, H. Comparative Performance Evaluation of Gray-Scale and Color Information for Face Recognition Tasks; Springer: Heidelberg/Berlin, Germany, 2021; ISBN 9783540453444.

27. Bhattacharya, S.; Kyal, C.; Routray, A. Simplified Face Quality Assessment (SFQA). Pattern Recognit. Lett. 2021, 147, 108–114.

28. Khandelwal, A.; Ramya, R.S.; Ayushi, S.; Bhumika, R.; Adhoksh, P.; Jhawar, K.; Shah, A.; Venugopal, K.R. Tropical Cyclone Tracking and Forecasting Using BiGRU [TCTFB]. Res. Sq. 2022.

29. Pan, B.; Hirota, K.; Jia, Z.; Zhao, L.; Jin, X.; Dai, Y. Multimodal Emotion Recognition Based on Feature Selection and Extreme Learning Machine in Video Clips. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 1903–1917.

30. Tiwari, P.; Rathod, H.; Thakkar, S.; Darji, A. Multimodal Emotion Recognition Using SDA-LDA Algorithm in Video Clips. J. Ambient. Intell. Humaniz. Comput. 2021, 14, 6585–6602.

31. Available online: https://www.terasic.com.tw/cgi-bin/page/archive.pl?Language=English&CategoryNo=205&No=1176 (accessed on 1 June 2022).

32. Zarzycki, K.; Ławrynczuk, M. LSTM and GRU Neural Networks as Models of Dynamical Processes used in Predictive Control: A Comparison of Models Developed for Two Chemical Reactors. Sensors 2021, 21, 5625.

| Model | Execution Time on FPGA | Accuracy |
|---|---|---|
| CNN + LSTM + DNN | 11.70 s | 99.51% |
| CNN + GRU + DNN | 11.67 s | 97.86% |

| Category | Specification |

|---|---|

| CPU | Intel® Core™ i7-10700 CPU 2.90 GHz Manufacturer: Intel Corporation, Santa Clara, CA, USA |

| GPU | NVIDIA GeForce RTX 3090 32 GB Manufacturer: NVIDIA Corporation, Santa Clara, CA, USA |

| IDE (Integrated Development Environment) | Jupyter notebook (Python 3.7.6) |

| Deep learning frameworks | TensorFlow 2.9.1, Keras 2.9.0 |

| Label | Number of Data | Proportion |

|---|---|---|

| Angry | 376 | 15.33% |

| Calm | 376 | 15.33% |

| Disgust | 192 | 7.83% |

| Fear | 376 | 15.33% |

| Happy | 376 | 15.33% |

| Neutral | 188 | 7.67% |

| Sad | 376 | 15.33% |

| Surprised | 192 | 7.83% |

| Total | 2452 | 100% |

| Label | Number of Data | Proportion |

|---|---|---|

| Angry | 59 | 10.85% |

| Disgust | 86 | 15.81% |

| Fear | 38 | 6.99% |

| Happy | 179 | 32.90% |

| Sad | 139 | 25.55% |

| Surprised | 43 | 7.90% |

| Total | 544 | 100% |

| Label | Number of Data | Proportion |

|---|---|---|

| Angry | 211 | 16.71% |

| Disgust | 211 | 16.71% |

| Fear | 211 | 16.71% |

| Happy | 208 | 16.47% |

| Sad | 211 | 16.71% |

| Surprised | 211 | 16.71% |

| Total | 1263 | 100% |

| Number of LFLBs | Number of Local Features | Accuracy |

|---|---|---|

| 3 | 2704 | 28.89% |

| 4 | 784 | 52.96% |

| 5 | 256 | 88.58% |

| 6 | 64 | 99.51% |

| 7 | 16 | 32.68% |
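
The "Number of Local Features" column above can be reproduced with a short calculation. The sketch below rests on assumptions that are not stated in this section: a 100 × 100 input image, stride-1 convolutions that preserve the spatial size, and stride-2 pooling with "same" padding, so each LFLB halves the side length (rounding up). With 16 filters per block these assumptions reproduce every value in the table. The function name `local_feature_count` is hypothetical.

```python
import math

def local_feature_count(num_lflbs, input_size=100, filters=16):
    """Flattened feature count after a stack of LFLBs.

    Assumes (hypothetically) a square input_size x input_size image and
    that each LFLB halves the side length, rounding up.
    """
    size = input_size
    for _ in range(num_lflbs):
        size = math.ceil(size / 2)  # stride-2 pooling with 'same' padding
    return size * size * filters

for n in range(3, 8):
    print(n, local_feature_count(n))
```

Under these assumptions, six LFLBs reduce each frame to a 2 × 2 × 16 map, that is, 64 features per frame passed to the LSTM.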

| Block | Layers | Parameters |
|---|---|---|
| LFLB 1 | Conv2d (Input), Batch_normalization, Max_pooling2d, ReLU | Conv2d: Filters = 16, Kernel_size = 5, Strides = 1; Max_pooling2d: Pool_size = 5, Strides = 2 |
| LFLB 2 | Conv2d, Batch_normalization, Max_pooling2d, ReLU | Conv2d: Filters = 16, Kernel_size = 5, Strides = 1; Max_pooling2d: Pool_size = 5, Strides = 2 |
| LFLB 3 | Conv2d, Batch_normalization, Max_pooling2d, ReLU | Conv2d: Filters = 16, Kernel_size = 5, Strides = 1; Max_pooling2d: Pool_size = 5, Strides = 2 |
| LFLB 4 | Conv2d, Batch_normalization, Max_pooling2d, ReLU | Conv2d: Filters = 16, Kernel_size = 5, Strides = 1; Max_pooling2d: Pool_size = 5, Strides = 2 |
| LFLB 5 | Conv2d, Batch_normalization, Max_pooling2d, ReLU | Conv2d: Filters = 16, Kernel_size = 3, Strides = 1; Max_pooling2d: Pool_size = 3, Strides = 2 |
| LFLB 6 | Conv2d, Batch_normalization, Max_pooling2d, ReLU | Conv2d: Filters = 16, Kernel_size = 3, Strides = 1; Max_pooling2d: Pool_size = 3, Strides = 2 |
| Concatenation | | Packages every 30 image features into a consecutive facial image feature sequence |
| Flatten | | |
| Reshape | | |
| LSTM | | Unit = 20 |
| Batch_normalization | | |
| Dense (Output) | | Unit = 8, Activation = “softmax” |

| Fold | Training Loss | Training Acc | Validation Loss | Validation Acc | Testing Loss | Testing Acc |
|---|---|---|---|---|---|---|
| Fold 1 | 0.0308 | 1.0000 | 0.0749 | 1.0000 | 0.4998 | 0.9919 |
| Fold 2 | 0.0366 | 1.0000 | 0.0745 | 1.0000 | 0.4517 | 1.0000 |
| Fold 3 | 0.0192 | 1.0000 | 0.0415 | 1.0000 | 0.1363 | 1.0000 |
| Fold 4 | 0.0206 | 1.0000 | 0.0428 | 1.0000 | 0.2667 | 0.9959 |
| Fold 5 | 0.0369 | 1.0000 | 0.0593 | 1.0000 | 0.2978 | 0.9919 |
| Fold 6 | 0.0310 | 1.0000 | 0.0703 | 1.0000 | 0.4527 | 0.9959 |
| Fold 7 | 0.0179 | 1.0000 | 0.0382 | 1.0000 | 0.1913 | 0.9959 |
| Fold 8 | 0.0118 | 1.0000 | 0.0206 | 1.0000 | 0.0459 | 0.9959 |
| Fold 9 | 0.0225 | 1.0000 | 0.0378 | 1.0000 | 0.1757 | 0.9919 |
| Fold 10 | 0.0348 | 1.0000 | 0.0769 | 1.0000 | 0.2238 | 0.9919 |
| Average | 0.0262 | 1.0000 | 0.0536 | 1.0000 | 0.2741 | 0.9951 |
| Variance | 0.000145785 | 0 | 0.000398173 | 0 | 0.022792131 | 1.01707 × 10⁻⁵ |

| Label | Accuracy | Precision | Recall | F1-Score |

|---|---|---|---|---|

| Angry | 0.9992 | 0.9967 | 0.9967 | 0.9967 |

| Calm | 0.9967 | 0.9949 | 0.9850 | 0.9899 |

| Disgust | 1.0000 | 1.0000 | 1.0000 | 1.0000 |

| Fear | 0.9992 | 0.9960 | 1.0000 | 0.9980 |

| Happy | 0.9996 | 0.9976 | 1.0000 | 0.9988 |

| Neutral | 0.9976 | 0.9559 | 1.0000 | 0.9774 |

| Sad | 0.9992 | 1.0000 | 0.9917 | 0.9959 |

| Surprised | 0.9988 | 1.0000 | 0.9875 | 0.9937 |

| Average | 0.9988 | 0.9926 | 0.9951 | 0.9938 |

| Method | Classes | Accuracy |

|---|---|---|

| E. Ryumina et al. [9] | 8 | 98.90% |

| F. Ma et al. [11] | 6 | 95.49% |

| A. Jaratrotkamjorn et al. [13] | 8 | 96.53% |

| Z. Q. Chen et al. [14] | 7 | 94% |

| Proposed model | 8 | 99.51% |

| Fold | Training Loss | Training Acc | Validation Loss | Validation Acc | Testing Loss | Testing Acc |
|---|---|---|---|---|---|---|
| Fold 1 | 0.0505 | 0.9137 | 0.9556 | 0.9327 | 0.5868 | 0.8600 |
| Fold 2 | 0.0623 | 0.9951 | 0.2346 | 0.9405 | 0.8079 | 0.8600 |
| Fold 3 | 0.0852 | 0.9764 | 0.5582 | 0.9428 | 0.6573 | 0.8800 |
| Fold 4 | 0.0705 | 0.9553 | 0.4763 | 0.9053 | 0.4489 | 0.8600 |
| Fold 5 | 0.0792 | 0.9202 | 0.8127 | 0.9492 | 0.5791 | 0.8400 |
| Fold 6 | 0.0801 | 0.9015 | 0.6274 | 0.9266 | 0.4527 | 0.8600 |
| Fold 7 | 0.0928 | 0.9589 | 0.3468 | 0.9134 | 0.4802 | 0.9200 |
| Fold 8 | 0.0893 | 0.9668 | 0.7501 | 0.9431 | 0.6054 | 0.9000 |
| Fold 9 | 0.0934 | 0.9015 | 0.4307 | 0.9519 | 0.4205 | 0.9400 |
| Fold 10 | 0.0683 | 0.9907 | 0.6919 | 0.9203 | 0.6596 | 0.8600 |
| Average | 0.0772 | 0.9480 | 0.5884 | 0.9326 | 0.5698 | 0.8780 |
| Standard Deviation | 0.0141 | 0.0360 | 0.2225 | 0.0157 | 0.1214 | 0.0320 |

| Label | Accuracy | Precision | Recall | F1-Score |

|---|---|---|---|---|

| Angry | 0.9520 | 0.9600 | 0.6857 | 0.8000 |

| Disgust | 0.9340 | 0.9140 | 0.7727 | 0.8374 |

| Fear | 0.9920 | 0.7143 | 1.0000 | 0.8333 |

| Happy | 0.9960 | 0.9722 | 1.0000 | 0.9859 |

| Sad | 0.8860 | 0.7887 | 0.9333 | 0.8550 |

| Surprised | 0.9960 | 1.0000 | 0.9667 | 0.9831 |

| Average | 0.9593 | 0.8915 | 0.8931 | 0.8825 |
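
The F1-Score column in the per-class table above is the harmonic mean of precision and recall. A minimal sketch of that calculation is given below; the helper name `f1_score` is hypothetical, and the input values are copied from the table. Small differences in the fourth decimal place are expected because the table's precision and recall are already rounded.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# (precision, recall) pairs copied from the BAUM-1s per-class table.
per_class = {
    "Angry": (0.9600, 0.6857),
    "Disgust": (0.9140, 0.7727),
    "Fear": (0.7143, 1.0000),
    "Happy": (0.9722, 1.0000),
    "Sad": (0.7887, 0.9333),
    "Surprised": (1.0000, 0.9667),
}

for label, (p, r) in per_class.items():
    print(f"{label:<10s} F1 = {f1_score(p, r):.4f}")
```

The harmonic mean penalizes an imbalance between the two metrics, which is why the angry class, with high precision but low recall, has the lowest F1-score.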

| Paper | Classes | Accuracy |

|---|---|---|

| F. Ma et al. [11] | 6 | 64.05% |

| B. Pan et al. [29] | 6 | 55.38% |

| P. Tiwari et al. [30] | 8 | 77.95% |

| Proposed model | 6 | 87.80% |

| Fold | Training Loss | Training Acc | Validation Loss | Validation Acc | Testing Loss | Testing Acc |
|---|---|---|---|---|---|---|
| Fold 1 | 0.0437 | 0.9752 | 0.2978 | 0.9563 | 0.4727 | 0.9603 |
| Fold 2 | 0.0389 | 0.9747 | 0.1925 | 0.9632 | 0.2063 | 0.9683 |
| Fold 3 | 0.0471 | 0.9968 | 0.3194 | 0.9491 | 0.2167 | 0.9762 |
| Fold 4 | 0.0423 | 0.9604 | 0.3751 | 0.9578 | 0.4335 | 0.9603 |
| Fold 5 | 0.0312 | 0.9823 | 0.3530 | 0.9684 | 0.5808 | 0.9603 |
| Fold 6 | 0.0430 | 0.9521 | 0.2496 | 0.9467 | 0.3345 | 0.9524 |
| Fold 7 | 0.0488 | 0.9816 | 0.1693 | 0.9546 | 0.4768 | 0.9683 |
| Fold 8 | 0.0495 | 0.9873 | 0.3847 | 0.9619 | 0.3880 | 0.9762 |
| Fold 9 | 0.0456 | 0.9768 | 0.2319 | 0.9443 | 0.2920 | 0.9841 |
| Fold 10 | 0.0345 | 0.9765 | 0.3890 | 0.9691 | 0.4589 | 0.9762 |
| Average | 0.0424 | 0.9763 | 0.2962 | 0.9571 | 0.3860 | 0.9682 |
| Variance | 3.62 × 10⁻⁵ | 0.000160 | 0.0066 | 7.50 × 10⁻⁵ | 0.0149 | 9.79 × 10⁻⁵ |

| Label | Accuracy | Precision | Recall | F1-Score |

|---|---|---|---|---|

| Angry | 0.9817 | 0.9377 | 0.9862 | 0.9613 |

| Disgust | 0.9976 | 1.0000 | 0.9842 | 0.9920 |

| Fear | 0.9786 | 0.9794 | 0.9154 | 0.9463 |

| Happy | 1.0000 | 1.0000 | 1.0000 | 1.0000 |

| Sad | 0.9905 | 0.9341 | 1.0000 | 0.9659 |

| Surprised | 0.9881 | 0.9793 | 0.9450 | 0.9618 |

| Average | 0.9894 | 0.9718 | 0.9718 | 0.9712 |

| Paper | Classes | Accuracy |

|---|---|---|

| F. Ma et al. [11] | 6 | 80.52% |

| B. Pan et al. [29] | 6 | 86.65% |

| P. Tiwari et al. [30] | 7 | 61.58% |

| Proposed model | 6 | 96.82% |

| Fold | Accuracy (%) | Execution Time (sec) |
|---|---|---|
| Fold 1 | 99.19 | 11.19 |
| Fold 2 | 100.00 | 12.04 |
| Fold 3 | 100.00 | 12.15 |
| Fold 4 | 99.59 | 11.24 |
| Fold 5 | 99.19 | 11.20 |
| Fold 6 | 99.59 | 12.17 |
| Fold 7 | 99.59 | 12.06 |
| Fold 8 | 99.59 | 11.45 |
| Fold 9 | 99.19 | 11.92 |
| Fold 10 | 99.19 | 11.59 |
| Average | 99.51 | 11.70 |
| Standard Deviation | 0.318915 | 0.410053 |
| Variance | 0.1017 | 0.1681 |
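
The spread statistics for the ten folds can be recomputed from the per-fold values in the table. The sketch below uses Python's standard `statistics` module, whose `variance` and `stdev` functions apply the sample (n − 1) definitions. The use of the sample definition is an assumption, although it reproduces the reported figures. The list names are illustrative.

```python
import statistics

# Per-fold FPGA results copied from the table above.
accuracies = [99.19, 100.00, 100.00, 99.59, 99.19, 99.59, 99.59, 99.59, 99.19, 99.19]
exec_times = [11.19, 12.04, 12.15, 11.24, 11.20, 12.17, 12.06, 11.45, 11.92, 11.59]

acc_variance = statistics.variance(accuracies)   # sample variance, in %^2
acc_stdev = statistics.stdev(accuracies)         # sample standard deviation, in %
time_variance = statistics.variance(exec_times)  # sample variance, in s^2
time_stdev = statistics.stdev(exec_times)        # sample standard deviation, in s

print(f"accuracy: variance={acc_variance:.4f}, stdev={acc_stdev:.6f}")
print(f"time:     variance={time_variance:.4f}, stdev={time_stdev:.6f}")
```

With the values listed, the execution-time standard deviation comes out near 0.41 s and its variance near 0.168 s², while the accuracy standard deviation is near 0.32 percentage points.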

| Layer | Execution Time (sec) | Proportion (%) |

|---|---|---|

| Conv2D_1 | 1.3649 | 11.66 |

| Batch_Normalization_1 | 0.0008 | Less than 0.01 |

| Max_Pooling2D_1 | 0.8733 | 7.46 |

| Conv2D_2 | 6.9281 | 59.21 |

| Batch_Normalization_2 | 0.0010 | Less than 0.01 |

| Max_Pooling2D_2 | 0.2260 | 1.93 |

| Conv2D_3 | 1.5648 | 13.37 |

| Batch_Normalization_3 | 0.0009 | Less than 0.01 |

| Max_Pooling2D_3 | 0.0755 | 0.64 |

| Conv2D_4 | 0.4721 | 4.03 |

| Batch_Normalization_4 | 0.0006 | Less than 0.01 |

| Max_Pooling2D_4 | 0.0386 | 0.32 |

| Conv2D_5 | 0.0468 | 0.40 |

| Batch_Normalization_5 | 0.0006 | Less than 0.01 |

| Max_Pooling2D_5 | 0.0248 | 0.21 |

| Conv2D_6 | 0.0322 | 0.27 |

| Batch_Normalization_6 | 0.0005 | Less than 0.01 |

| Max_Pooling2D_6 | 0.0221 | 0.18 |

| LSTM | 0.0071 | 0.06 |

| Batch_Normalization_7 | 0.0000 | Less than 0.01 |

| Dense(Softmax) | 0.0002 | Less than 0.01 |

| Total | 11.70 | 100% |
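
To see which layer type dominates the FPGA runtime, the per-layer times above can be grouped and expressed as a share of the reported total. The grouping and the names `layer_times` and `share_percent` are illustrative, and the times are copied from the table.

```python
# Per-layer FPGA execution times in seconds, grouped by layer type.
layer_times = {
    "Conv2D": [1.3649, 6.9281, 1.5648, 0.4721, 0.0468, 0.0322],
    "Batch_Normalization": [0.0008, 0.0010, 0.0009, 0.0006, 0.0006, 0.0005, 0.0000],
    "Max_Pooling2D": [0.8733, 0.2260, 0.0755, 0.0386, 0.0248, 0.0221],
    "LSTM": [0.0071],
    "Dense": [0.0002],
}
TOTAL_SECONDS = 11.70  # total reported in the table

# Share of the total runtime per layer type, in percent.
share_percent = {
    name: 100.0 * sum(times) / TOTAL_SECONDS for name, times in layer_times.items()
}

for name, pct in sorted(share_percent.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<20s} {pct:6.2f} %")
```

The six convolution layers together account for roughly 89% of the total and pooling for about 11%, so the LSTM, batch normalization, and dense layers contribute a negligible share.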

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pan, S.-T.; Wu, H.-J. FPGA Chip Design of Sensors for Emotion Detection Based on Consecutive Facial Images by Combining CNN and LSTM. Electronics 2025, 14, 3250. https://doi.org/10.3390/electronics14163250
