SN-YOLO: A Rotation Detection Method for Tomato Harvest in Greenhouses

Abstract

1. Introduction

- (1) We redesign the StarNet backbone as StarNet’ to improve feature representation under complex backgrounds. StarNet’ enhances robustness through dual-branch expansion, weighted fusion, and DropPath regularization.

- (2) We develop a Color-Prior Spatial-Channel Attention (CPSCA) module. By introducing red-channel priors, CPSCA improves robustness against color interference and lighting variation.

- (3) We design a multi-level attention fusion strategy. CPSCA is embedded at shallow and deep layers to suppress background noise and enhance semantic consistency.

- (4) We introduce oriented bounding boxes (OBBs) into the detection framework. OBBs improve localization accuracy under arbitrary tomato orientations and dense greenhouse layouts.
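To make contribution (4) concrete, the sketch below converts one oriented bounding box into its four corner points. The parameterization `(cx, cy, w, h, theta)`, the use of radians, and the function names are assumptions made for illustration; this section does not state the angle convention used by SN-YOLO.

```python
import math


def obb_corners(cx, cy, w, h, theta):
    """Return the four corners of a rotated box as (x, y) tuples.

    Assumed convention: (cx, cy) is the box center, w and h are the side
    lengths, and theta is the rotation in radians about the center.
    """
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    # Corner offsets of the unrotated box, relative to its center.
    offsets = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    # Rotate each offset by theta, then translate to the center.
    return [
        (cx + dx * cos_t - dy * sin_t, cy + dx * sin_t + dy * cos_t)
        for dx, dy in offsets
    ]


def polygon_area(points):
    """Shoelace formula; used here to check that rotation preserves area."""
    total = 0.0
    for i, (x1, y1) in enumerate(points):
        x2, y2 = points[(i + 1) % len(points)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


if __name__ == "__main__":
    box = obb_corners(10.0, 20.0, 4.0, 2.0, math.radians(30))
    print(box)
    print(polygon_area(box))
```

Because rotation does not change the box area, `polygon_area` of the corners should equal `w * h` for any angle, which gives a quick sanity check when converting between box formats.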

2. Related Work

3. Methods

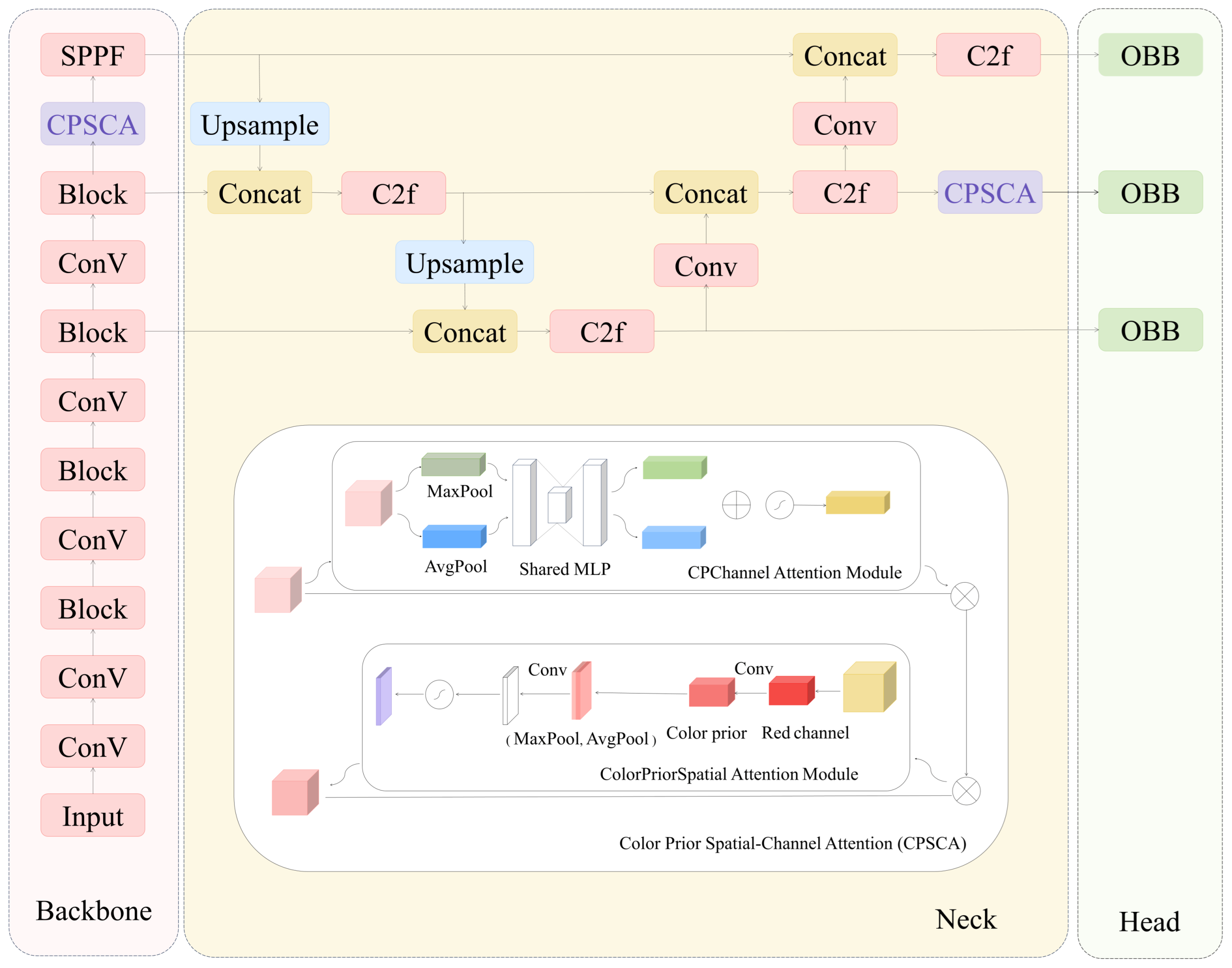

3.1. SN-YOLO Network Structure

Algorithm 1: Feature Extraction and Fusion Pipeline of SN-YOLO

Input: Input image x

Output: Feature maps

1. Stem // Initial feature extraction
2. StarNet’ // Backbone feature maps
3. CPSCA // Attention enhancement
4. SPPF // Deep semantic feature
5. Upsample + Concat → C2f // Top-down fusion
6. Upsample + Concat → C2f // Top-down fusion
7. Downsample + Concat → C2f + CPSCA // Bottom-up fusion
8. Downsample + Concat → C2f // Bottom-up fusion
9. return OBB_Detector // Rotated object detection

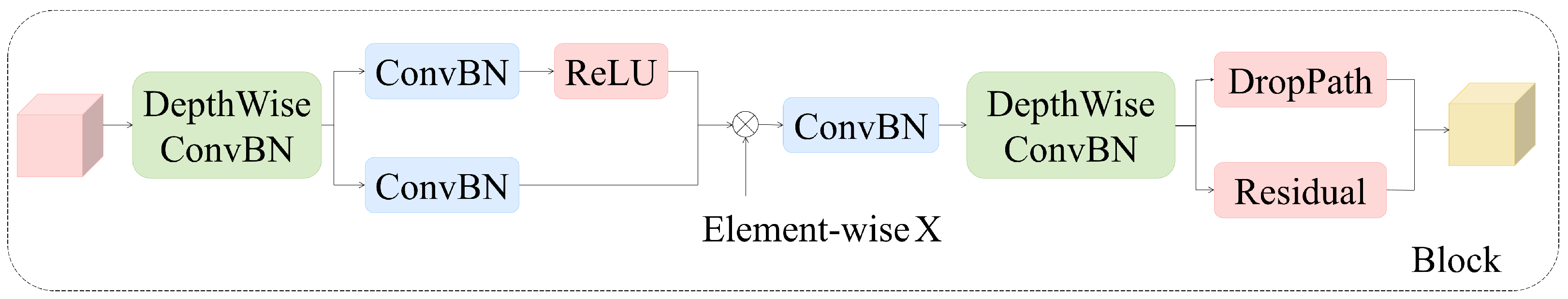

3.2. Backbone Network: StarNet’

3.2.1. Overall Architecture

3.2.2. Comparison with Original StarNet

3.3. Attention Modules and Multi-Level Fusion

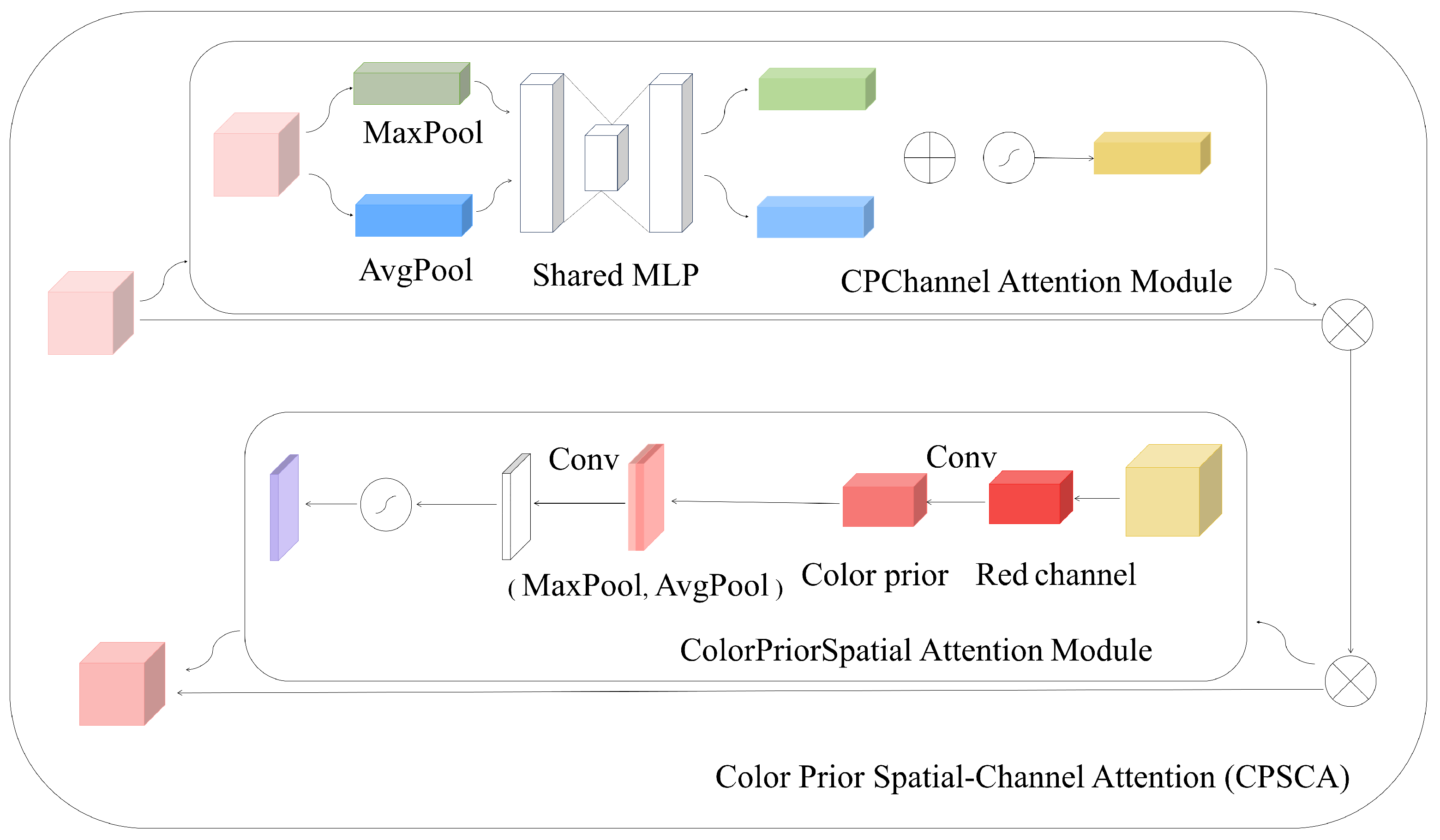

3.3.1. Color-Prior Spatial-Channel Attention (CPSCA) Module

- (1) Calculation process of the channel attention stage

- (2) Color-prior spatial attention calculation

- (3) The CPSCA module combines channel attention and spatial color-prior attention
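The three stages listed above can be illustrated with a deliberately simplified sketch. It is not the authors' formulation: the equations for CPSCA are not available in this section, so the channel gate (sigmoid of the global average), the redness measure `r - (g + b) / 2`, and all function names are assumptions chosen only to show how a channel gate and a red-channel spatial prior could be combined.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def channel_gates(feat):
    """One gate per channel from global average pooling (assumed form).

    feat is a list of C channels, each an H x W list of lists.
    """
    gates = []
    for channel in feat:
        total = sum(sum(row) for row in channel)
        count = len(channel) * len(channel[0])
        gates.append(sigmoid(total / count))
    return gates


def red_prior_map(rgb):
    """Spatial gate in (0, 1) that is larger where red dominates (assumed form).

    rgb is an H x W list of (r, g, b) tuples with values in [0, 1], already
    resized to the feature-map resolution.
    """
    return [[sigmoid(r - 0.5 * (g + b)) for (r, g, b) in row] for row in rgb]


def cpsca_like(feat, rgb):
    """Apply the channel gate, then the red-prior spatial gate, to every value."""
    gates = channel_gates(feat)
    prior = red_prior_map(rgb)
    return [
        [[v * gate * prior[i][j] for j, v in enumerate(row)]
         for i, row in enumerate(channel)]
        for gate, channel in zip(gates, feat)
    ]


if __name__ == "__main__":
    feat = [[[1.0, 1.0]]]                          # 1 channel, 1 x 2 feature map
    rgb = [[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]]     # a red pixel and a green pixel
    print(cpsca_like(feat, rgb))
```

In this toy input both positions carry the same feature value, so any difference in the output comes from the color prior alone: the response at the red pixel is kept more strongly than at the green one.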

3.3.2. Low-Level Feature Filtering Based on Color-Prior Attention

3.3.3. Cross-Scale Attention-Driven High-Level Semantic Alignment

3.3.4. Attention-Guided Multi-Level Fusion Strategy

4. Results and Analysis

4.1. Environmental Settings

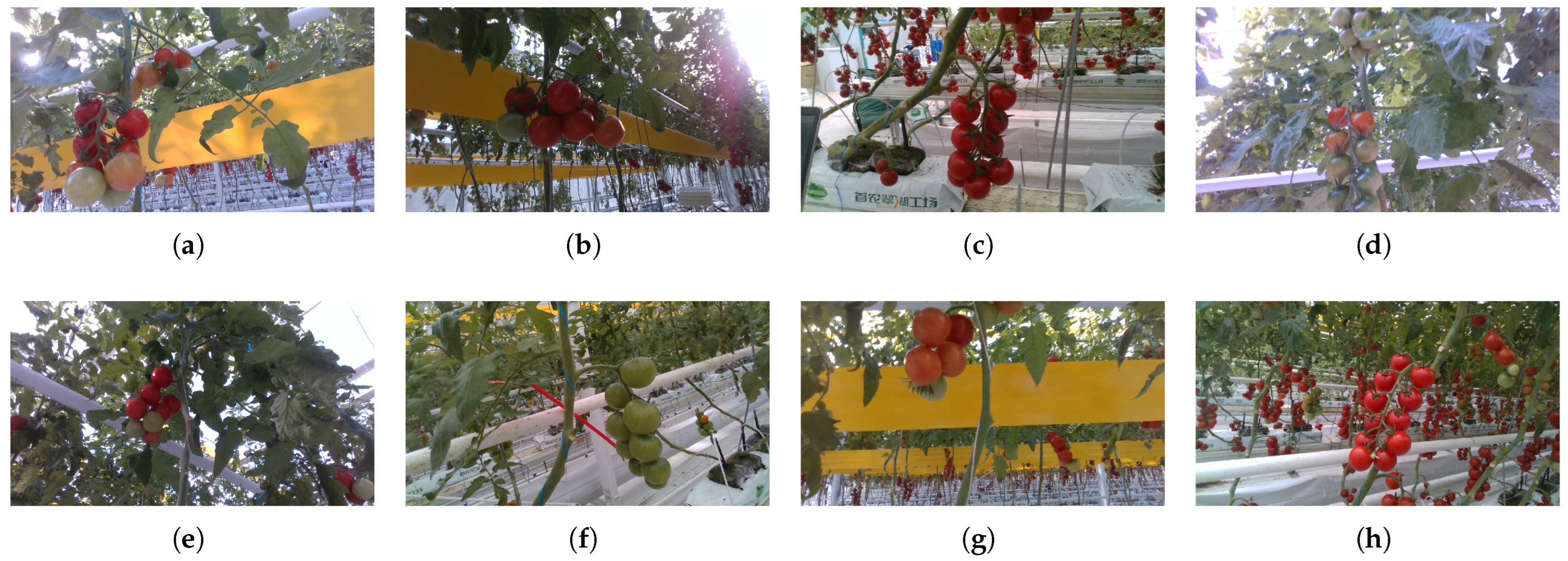

4.2. Experimental Dataset

4.2.1. Dataset Source

4.2.2. Dataset Sample Description

4.2.3. Dataset Splitting

4.3. Model Evaluation Metrics
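The result tables in this paper report precision, recall, and F1-score. As a reminder of how these three quantities relate, the sketch below computes them from detection counts using the standard definitions. The counts in the usage example are hypothetical and are not taken from the paper's experiments.

```python
def precision_recall_f1(tp, fp, fn):
    """Standard detection metrics from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    # Hypothetical counts: 90 correct detections, 10 false alarms, 30 misses.
    p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
    print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```

For oriented boxes, whether a detection counts as a true positive depends on the IoU between rotated boxes, which is what the 0.5 threshold in mAP@0.5 refers to.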

4.4. Training and Testing Results

4.5. Comparison Experiment of Different Color Priors

4.6. Comparison of Different Models

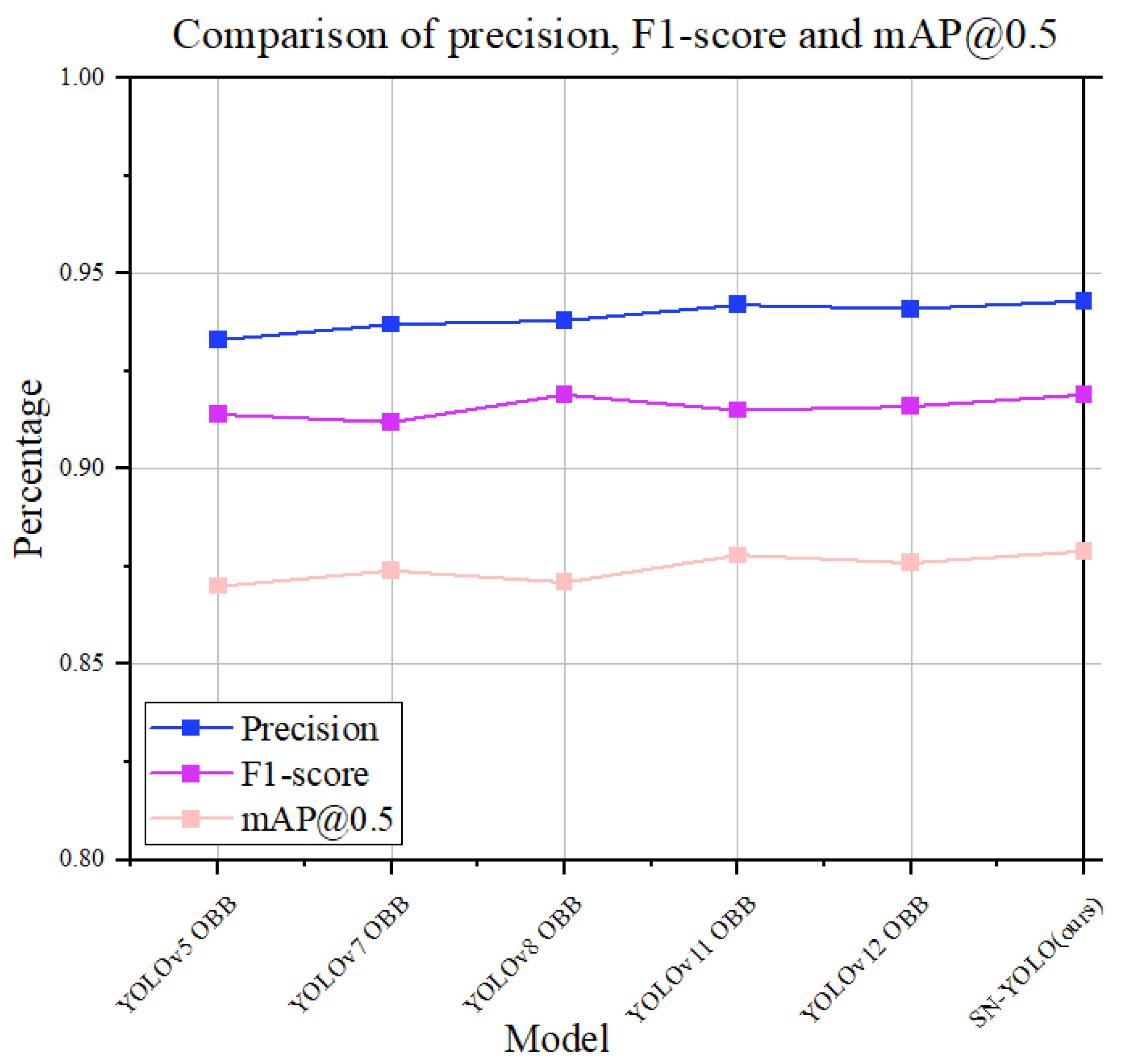

4.7. Comparison of Different Backbones

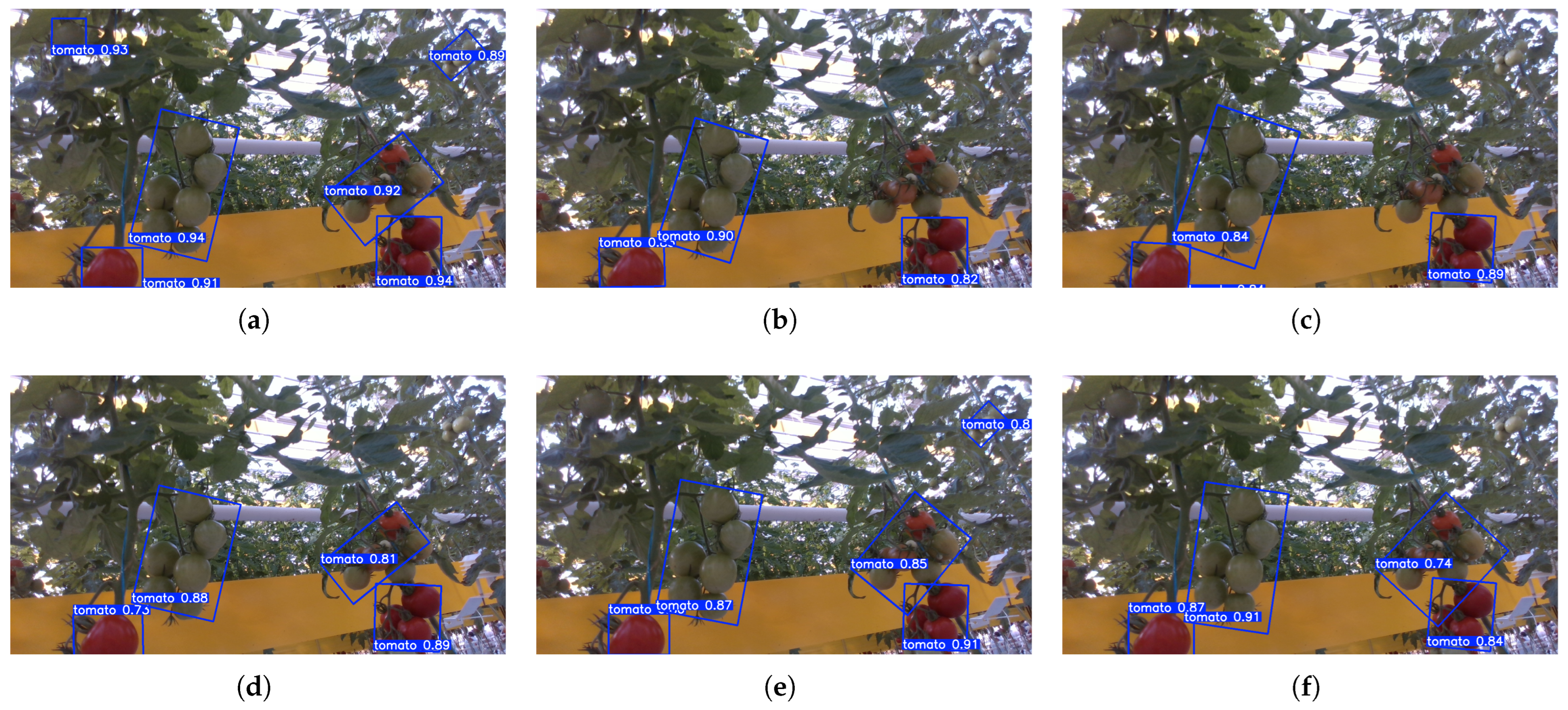

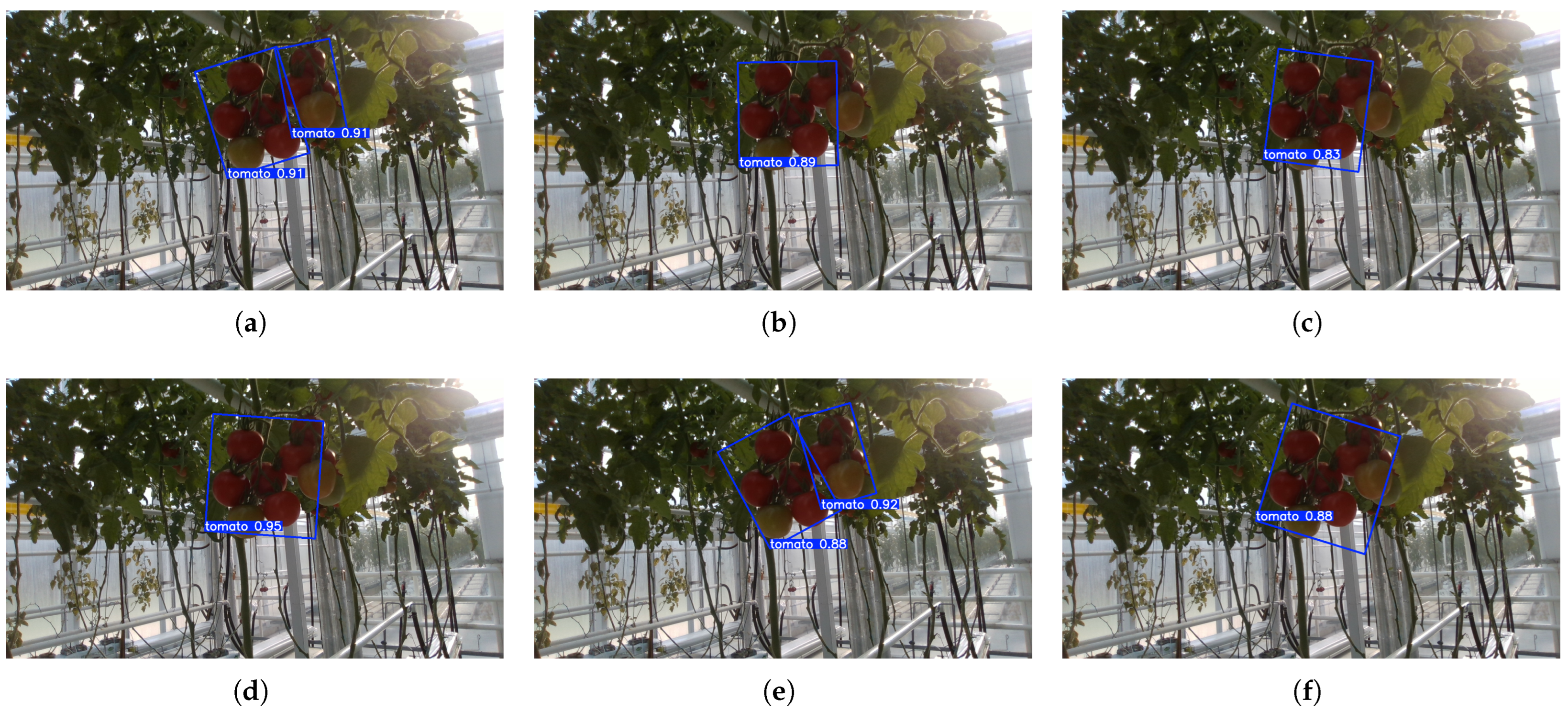

4.8. Display of Visual Results

4.9. Ablation Experiment

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Sa, I.; Ge, Z.; Dayoub, F.; Upcroft, B.; Perez, T.; McCool, C. DeepFruits: A fruit detection system using deep neural networks. Sensors 2016, 16, 1222.

- Bac, C.; Hemming, J.; van Henten, E. Harvesting robots for high-value crops: State-of-the-art review and challenges ahead. J. Field Robot. 2014, 31, 888–911.

- Lin, T.; Li, J.; Xie, Q.; Wang, B.; Zhang, Y. Fruit detection and localization for robotic harvesting in orchards: A review. Comput. Electron. Agric. 2020, 176, 105634.

- Koirala, A.; Walsh, K.B.; Wang, Z.; McCarthy, C. Deep learning for real-time fruit detection and orchard mapping on an autonomous robot platform. ISPRS J. Photogramm. Remote Sens. 2019, 146, 24–35.

- Liu, J.; Liu, Z. The vision-based target recognition, localization, and control for harvesting robots: A review. Int. J. Precis. Eng. Manuf. 2024, 25, 409–428.

- Schertz, C.; Brown, G. Basic considerations in mechanizing fruit harvests. Trans. ASAE 1968, 11, 343–345.

- Xiao, F.; Wang, H.; Li, Y.; Cao, Y.; Lv, X.; Xu, G. A novel shape analysis method for citrus recognition under natural scenes. Agronomy 2023, 13, 639.

- Hannan, M.; Burks, T.; Bulanon, D. A machine vision algorithm for fruit shape analysis using curvature and edge detection techniques. Trans. ASABE 2009, 52, 1747–1756.

- Arefi, A.; Motlagh, A.; Mollazade, K.; Teimourlou, R. Recognition and localization of ripen tomato based on machine vision. Aust. J. Crop Sci. 2011, 5, 1144–1149.

- Bargoti, S.; Underwood, J. Deep fruit detection in orchards. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3626–3633.

- Chu, P.; Liu, J.; Liu, Z.; Liu, J. O2RNet: A Novel Fruit Detection Network Based on Occluded-to-Robust Reasoning. arXiv 2023, arXiv:2303.04884.

- Nejati, M.; Seyednasrollah, B.; Lee, R.; McCool, C.; Lehnert, C.; Perez, T.; Tow, P. Semantic segmentation of kiwifruit for yield estimation. arXiv 2020, arXiv:2006.11729.

- Wang, K.; Li, Z.; Su, A.; Wang, Z. Oriented object detection in optical remote sensing images: A survey. arXiv 2023, arXiv:2302.10473.

- Wen, L.; Cheng, Y.; Fang, Y.; Li, X. A comprehensive survey of oriented object detection in remote sensing images. Expert Syst. Appl. 2023, 224, 119960.

- Fu, Y.; Wang, Z.; Zheng, H.; Yin, X.; Fu, W.; Gu, Y. Integrated detection of coconut clusters and oriented leaves using improved YOLOv8n-obb for robotic harvesting. Comput. Electron. Agric. 2025, 231, 109979.

- Whittaker, D.; Miles, G.; Mitchell, O.; Gaultney, L. Fruit location in a partially occluded image. Trans. ASAE 1987, 30, 591–596.

- Zheng, X.; Zhao, J.; Liu, M. Tomato Recognition and Localization Technology Based on Binocular Stereo Vision. Comput. Eng. 2004, 171, 155–156.

- Xiang, R.; Ying, Y.; Jiang, H.; Rao, X.; Peng, Y. Recognition of Overlapping Tomatoes Based on Edge Curvature Analysis. Trans. Chin. Soc. Agric. Mach. 2012, 43, 157–162.

- Feng, Q.; Cheng, W.; Yang, Q.; Xun, Y.; Wang, X. Recognition and Localization Method for Overlapping Tomato Fruits Based on Line-Structured Light Vision. J. China Agric. Univ. 2015, 20, 100–106.

- Ma, C.; Zhang, X.; Li, Y.; Lin, S.; Xiao, D.; Zhang, L. Recognition of Immature Tomatoes Based on Saliency Detection and Improved Hough Transform Method. Trans. Chin. Soc. Agric. Eng. 2016, 32, 219–226.

- Liu, C.; Lai, N.; Bi, X. Spherical Fruit Recognition and Localization Algorithm Based on Depth Images. Trans. Chin. Soc. Agric. Mach. 2022, 53, 228–235.

- Chen, X.; Yang, S.X. A practical solution for ripe tomato recognition and localisation. J. Real-Time Image Process. 2013, 8, 35–51.

- Zhao, Y.; Gong, L.; Zhou, B.; Huang, Y.; Niu, Q.; Liu, C. Research on Non-color-coded Target Recognition Algorithm for Tomato Picking Robot. Trans. Chin. Soc. Agric. Mach. 2016, 47, 1–7.

- Li, H.; Zhang, M.; Gao, Y.; Li, M.; Ji, Y. Machine Vision Detection Method for Green-Mature Tomatoes in Greenhouses. Trans. Chin. Soc. Agric. Eng. 2017, 33.

- Moallem, P.; Serajoddin, A.; Pourghassem, H. Computer vision-based apple grading for golden delicious apples based on surface features. Inf. Process. Agric. 2017, 4, 33–40.

- Yue, Y.; Sun, B.; Wang, H.; Zhao, H. Tomato Fruit Detection Based on Cascaded Convolutional Neural Network. Sci. Technol. Eng. 2021, 21, 2387–2391.

- Zhao, B.; Liu, S.; Zhang, W.; Zhu, L.; Han, Z.; Feng, X.; Wang, R. Lightweight Transformer Architecture Optimization for Cherry Tomato Harvesting Recognition. Trans. Chin. Soc. Agric. Mach. 2024, 55, 62–71, 105.

- Zhao, F.; Zuo, G.; Gu, S.; Ren, X.; Tao, X. Lightweight Detection Model for Greenhouse Tomatoes Based on Improved YOLO v5s. Jiangsu Agric. Sci. 2024, 52, 200–209.

- Miao, R.; Li, Z.; Wu, J. Lightweight Cherry Tomato Ripeness Detection Method Based on Improved YOLO v7. Trans. Chin. Soc. Agric. Mach. 2023, 54, 225–233.

- Guo, K.; Yang, J.; Shen, C.; Wang, L.; Chen, Z. StarNet: Exploiting Star-Convex Polygons for Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 13014–13023.

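| Author | Method | Advantages | Limitations |
|---|---|---|---|
| Whittaker et al. [16] | D-Hough transform | Simple implementation; effective in controlled environments | Poor robustness under lighting variation, occlusion, and cluttered background |
| Zheng et al. [17] | Color thresholding | Simple implementation; effective in controlled environments | Poor robustness under lighting variation, occlusion, and cluttered background |
| Xiang et al. [18] | Curvature regression | Simple implementation; effective in controlled environments | Poor robustness under lighting variation, occlusion, and cluttered background |
| Feng et al. [19] | Color difference model | Simple implementation; effective in controlled environments | Poor robustness under lighting variation, occlusion, and cluttered background |
| Ma et al. [20] | Saliency and Hough | Simple implementation; effective in controlled environments | Poor robustness under lighting variation, occlusion, and cluttered background |
| Liu et al. [21] | Morphological features | Simple implementation; effective in controlled environments | Poor robustness under lighting variation, occlusion, and cluttered background |
| Chen et al. [22] | ROI and edge detector | Better generalization; flexible feature learning | High computational cost; unstable in complex scenes |
| Zhao et al. [23] | AdaBoost | Better generalization; flexible feature learning | High computational cost; unstable in complex scenes |
| Li et al. [24] | Bayesian classifier | Better generalization; flexible feature learning | High computational cost; unstable in complex scenes |
| Moallem et al. [25] | SVM | Better generalization; flexible feature learning | High computational cost; unstable in complex scenes |
| Yue et al. [26] | Cascade R-CNN | Strong feature learning; robust to occlusion and lighting changes | Still challenged by extreme occlusion and clutter; robustness remains limited |
| Zhao et al. [27] | EfficientViT | Strong feature learning; robust to occlusion and lighting changes | Still challenged by extreme occlusion and clutter; robustness remains limited |
| Zhao et al. [28] | YOLOv5 | Strong feature learning; robust to occlusion and lighting changes | Still challenged by extreme occlusion and clutter; robustness remains limited |
| Miao et al. [29] | YOLOv7 | Strong feature learning; robust to occlusion and lighting changes | Still challenged by extreme occlusion and clutter; robustness remains limited |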

| Aspect | Original StarNet | Proposed StarNet’ |
|---|---|---|
| Network structure | Depthwise separable conv + standard residuals | Dual-branch expansion + enhanced residuals |
| Feature extraction | Single path | Dual 1 × 1 conv paths with weighted fusion |
| Fusion strategy | Addition or concatenation | Learnable weight-based fusion |
| Regularization strategy | None | DropPath regularization |
| Gradient flow | Basic residual connections | More stable via enhanced residuals |
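Two of the StarNet’ changes in the table above, learnable weighted fusion of two branches and DropPath on the residual branch, can be sketched in a few lines. This is an illustration under assumptions, not the authors' implementation: the softmax normalization of the fusion weights, the vector-valued features, and every function name here are hypothetical. DropPath follows its usual definition (stochastic depth): during training the branch is dropped with probability `drop_prob` and otherwise rescaled by `1 / (1 - drop_prob)`; at inference it is the identity.

```python
import math
import random


def softmax(logits):
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_fusion(branch_a, branch_b, logits):
    """Blend two branch outputs with weights that sum to 1 (assumed softmax form)."""
    w_a, w_b = softmax(logits)
    return [w_a * a + w_b * b for a, b in zip(branch_a, branch_b)]


def drop_path(branch, drop_prob, training, rng=random):
    """Stochastic depth: randomly zero the whole branch during training."""
    if not training or drop_prob == 0.0:
        return list(branch)
    keep_prob = 1.0 - drop_prob
    if rng.random() >= keep_prob:
        return [0.0] * len(branch)
    # Rescale so the expected value matches the inference-time output.
    return [v / keep_prob for v in branch]


def dual_branch_block(x, branch_a, branch_b, logits,
                      drop_prob=0.1, training=False, rng=random):
    """Residual block: x + DropPath(weighted fusion of two branches)."""
    fused = weighted_fusion(branch_a(x), branch_b(x), logits)
    fused = drop_path(fused, drop_prob, training, rng)
    return [xi + fi for xi, fi in zip(x, fused)]


if __name__ == "__main__":
    double = lambda v: [2.0 * t for t in v]   # stand-in for one 1 x 1 conv path
    zero = lambda v: [0.0 for _ in v]         # stand-in for the other path
    print(dual_branch_block([1.0, 2.0], double, zero, logits=[0.0, 0.0]))
```

When a branch is dropped, the block reduces to the identity mapping through the residual connection, which is why DropPath acts as a regularizer without breaking gradient flow.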

| Parameter Label | Selected Configuration |
|---|---|
| Number of epochs | 300 |
| Image dimensions | 640 × 640 |
| Batch size | 64 |
| Optimizer | AdamW |
| Learning rate | 0.002 |
| Momentum | 0.9 |
| Weight decay | 0.0005 |
| Warmup epochs | 3.0 |
| Warmup momentum | 0.8 |
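The settings above can be written as a plain configuration dictionary. The sketch also shows one plausible reading of the warmup rows: momentum rises linearly from the warmup value to the final value over the warmup epochs, as is common in YOLO-style training code. The linear schedule, the dictionary keys, and `steps_per_epoch` are assumptions; the table does not say how warmup is applied or how many iterations make up an epoch.

```python
# Values copied from the training-configuration table; key names are illustrative.
TRAIN_CONFIG = {
    "epochs": 300,
    "image_size": 640,
    "batch_size": 64,
    "optimizer": "AdamW",
    "learning_rate": 0.002,
    "momentum": 0.9,
    "weight_decay": 0.0005,
    "warmup_epochs": 3.0,
    "warmup_momentum": 0.8,
}


def linear_warmup(step, warmup_steps, start, end):
    """Interpolate linearly from start to end, then hold end."""
    if warmup_steps <= 0 or step >= warmup_steps:
        return end
    return start + (end - start) * step / warmup_steps


def momentum_at(step, steps_per_epoch, cfg=TRAIN_CONFIG):
    """Momentum at a given iteration under the assumed linear warmup."""
    warmup_steps = int(cfg["warmup_epochs"] * steps_per_epoch)
    return linear_warmup(step, warmup_steps, cfg["warmup_momentum"], cfg["momentum"])


if __name__ == "__main__":
    steps_per_epoch = 100  # hypothetical; depends on dataset size and batch size
    for step in (0, 150, 300, 1000):
        print(step, round(momentum_at(step, steps_per_epoch), 4))
```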

| Color Prior | Precision | F1-Score | mAP@0.5 | mAP@0.5:0.95 | Parameters |
|---|---|---|---|---|---|
| None | 91.7% | 90.5% | 86.1% | 68.6% | 3.92M |
| Green | 92.1% | 91.1% | 86.6% | 68.9% | 4.21M |
| Blue | 91.9% | 90.9% | 86.3% | 68.3% | 4.05M |
| RGB mean prior | 89.9% | 90.6% | 86.2% | 68.7% | 4.37M |
| Red | 92.8% | 91.3% | 86.8% | 69.2% | 4.3M |

| Model | Precision | F1-Score | mAP@0.5 | mAP@0.5:0.95 | Parameters | Inference Speed |
|---|---|---|---|---|---|---|
| YOLOv5 OBB | 93.5% | 91.4% | 87.0% | 69.5% | 3.51M | 5.8 ms |
| YOLOv7 OBB | 93.7% | 91.2% | 87.4% | 69.4% | 3.97M | 7.5 ms |
| YOLOv8 OBB | 93.3% | 91.5% | 87.1% | 69.6% | 3.91M | 9.3 ms |
| YOLOv11 OBB | 94.2% | 91.5% | 87.8% | 69.7% | 3.58M | 6.2 ms |
| YOLOv12 OBB | 94.1% | 91.6% | 87.6% | 69.7% | 3.73M | 5.7 ms |
| SN-YOLO (ours) | 94.3% | 91.9% | 87.9% | 69.9% | 3.63M | 7.5 ms |

| Model | Precision | F1-Score | mAP@0.5 | Inference Speed | FLOPs |
|---|---|---|---|---|---|
| YOLOv8 OBB-Res2Net | 93.7% | 91.6% | 87.2% | 8.3 ms | 17.2G |
| YOLOv8 OBB-HRNet | 94.0% | 91.3% | 87.5% | 9.2 ms | 18.5G |
| YOLOv8 OBB-FPN-ResNet50 | 93.7% | 90.9% | 87.0% | 7.4 ms | 15.8G |
| YOLOv8 OBB-RepVGG | 93.6% | 91.0% | 87.3% | 7.1 ms | 15.3G |
| SN-YOLO (ours) | 94.3% | 91.9% | 87.9% | 7.5 ms | 16.4G |

| Model | Precision | Recall | mAP@0.5 | Inference Speed | FLOPs |
|---|---|---|---|---|---|
| YOLOv8 OBB | 93.3% | 90.5% | 87.1% | 9.3 ms | 14.3G |
| StarNet’ in backbone | 93.1% | 90.6% | 87.0% | 6.4 ms | 12.7G |
| CPSCA in backbone | 92.8% | 89.8% | 87.3% | 7.1 ms | 20.3G |
| CPSCA in neck | 93.6% | 90.3% | 87.4% | 9.9 ms | 14.5G |
| SN-YOLO (ours) | 94.3% | 91.4% | 87.9% | 7.5 ms | 16.4G |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, J.; Yu, R.; Yang, M.; Che, W.; Ning, Y.; Zhan, Y. SN-YOLO: A Rotation Detection Method for Tomato Harvest in Greenhouses. Electronics 2025, 14, 3243. https://doi.org/10.3390/electronics14163243
