Abstract

As Industrial Control Systems (ICSs) increasingly adopt cloud–edge collaborative architectures, they face escalating risks from complex cross-domain cyber threats. To address this challenge, we propose a novel threat assessment framework specifically designed for cloud–edge-integrated ICSs. Our approach systematically identifies and evaluates security risks across cyber and physical boundaries by building a structured dataset of ICS assets, attack entry points, techniques, and impacts. We introduce a unique set of evaluation indicators spanning four key dimensions—system modules, attack paths, attack methods, and potential impacts—providing a holistic view of cyber threats. Through simulation experiments on a representative ICS scenario inspired by real-world attacks, we demonstrate the framework’s effectiveness in detecting vulnerabilities and quantifying security posture improvements. Our results underscore the framework’s practical utility in guiding targeted defense strategies and its potential to advance research on cloud–edge ICS security. This work not only fills gaps in the existing methodologies but also provides new insights and tools applicable to sectors such as smart grids, intelligent manufacturing, and critical infrastructure protection.

1. Introduction

Industrial Control Systems (ICSs) have evolved dramatically since their inception in the 1960s, when highly automated production processes first saw widespread industrial use [1]. With the advent of initiatives like smart manufacturing and Industry 4.0, modern ICSs have transformed into complex cyber–physical systems (CPSs) that tightly couple physical processes with digital control, greatly improving efficiency and responsiveness [2,3]. In parallel, advances in computing have fostered new architectures for ICSs. Cloud–edge collaboration has emerged as a promising paradigm that combines the powerful data processing capabilities of cloud computing with the low-latency responsiveness of edge computing [4,5]. This integration provides higher operational efficiency and real-time data processing and has begun to gain attention in domains such as manufacturing ICSs [6], smart power grids [7], and intelligent transportation systems [8]. An ICS is no longer just a collection of automated devices but an intelligent network that can monitor and analyze data across an entire product lifecycle in real time.

However, the adoption of cloud–edge-integrated architectures also introduces new security challenges. In cloud–edge collaborative environments, ICSs face cross-domain attacks that span cyber and physical domains and often involve multiple layers of the system. Several high-profile cyber–physical attacks have illustrated the complexity and multi-dimensional nature of such threats. For example, the Stuxnet worm of 2010 leveraged both cyber exploits and physical sabotage to infiltrate an air-gapped ICS network, making it one of the first globally recognized cross-domain ICS attacks [9]. Another case is the 2015 Ukraine power grid cyberattack, where attackers remotely compromised control systems and caused a widespread blackout [10]. Similarly, the 2017 NotPetya malware spread from IT networks to disrupt industrial operations worldwide, resulting in significant physical and economic damage [lika2018notpetya]. These incidents highlight the vulnerability of ICSs to Advanced Persistent Threats (APTs) that traverse multiple domains (IT networks, control networks, and physical equipment), combining cyber techniques, physical disruption, and even social engineering.

Traditional cybersecurity assessment methods struggle to fully address such multi-faceted, cross-domain threats. Extensive research has been conducted on ICS security evaluation using tools like attack graphs (formal models that map potential sequences of exploits across system components), attack trees (hierarchical diagrams that decompose attacker goals into subgoals), and Bayesian networks (probabilistic graphical models capturing dependencies and uncertainties in attack scenarios) [11,12,13]. These approaches provide valuable insights into network vulnerabilities and attack probabilities. However, relatively few studies focus specifically on cross-domain attacks in cloud–edge scenarios, where the dynamic, distributed architecture and real-time requirements of cloud–edge ICSs render many existing methods insufficient. Earlier work did not deeply explore the risks arising from interactions across different layers and domains in a cloud–edge-integrated ICS. For instance, conventional models cannot evaluate dynamic cross-layer attack paths or the cascading effects of attacks that move between the cyber (information) domain and the physical domain.

In this context, this paper proposes a comprehensive framework and methodology to fill the research gap and provide a systematic solution for enhancing ICS security in cloud–edge collaborative scenarios. The main contributions are as follows:

1. Systematic Framework Construction: We systematically construct a cross-domain attack evaluation framework for cloud–edge collaborative ICS by detailed collection and classification of system assets, cross-domain attack entry points, attack methods, and attack impacts. This includes identifying various types of ICS assets, categorizing potential attack entry points, surveying attack techniques/tools, and classifying attack impacts. These preparatory steps establish the necessary data foundation and basic structure for subsequent attack evaluation.

2. Cross-Domain Attack Indicator System: We develop a novel cross-domain attack evaluation indicator system tailored to cloud–edge ICS scenarios. This indicator system accounts for the unique characteristics of cross-domain attacks in ICS and covers four key dimensions: system modules, attack paths, attack methods, and their potential impacts. It provides a standardized theoretical basis and methodology for identifying, evaluating, and defending against cross-domain attacks.

3. Simulation-Based Validation: We conduct simulation experiments under a representative ICS topology to test the practical effectiveness of the proposed evaluation method. The experiments verify the validity and feasibility of the approach in identifying weak points of the system and improving overall security.

2. Related Work

To address the escalating security threats in Industrial Control Systems (ICSs), especially in the context of cloud–edge collaboration, various attack assessment approaches have been proposed. A summary of representative works and their comparative characteristics is provided in Table 1. We categorize the existing research into four classes: static structural modeling, dynamic probabilistic analysis, cross-domain propagation modeling, and cloud–edge-tailored evaluation frameworks.

Table 1. Summary of ICS threat assessment literature.

(1) Static Structural Assessment. Early studies primarily relied on fixed attack structures such as attack trees and attack graphs to identify vulnerabilities. Wang et al. [12] employed attack–defense trees to quantify risk in ICS environments, capturing attacker and defender behavior in a static hierarchy. Zhou et al. [11] further extended attack-graph-based methods by introducing correlation among vulnerabilities to dynamically generate exploit paths. Zhu et al. [14] proposed a risk evaluation model for distribution cyber–physical systems by simulating static attack paths combined with system fault analysis. While insightful, these models do not adapt to dynamic topologies or temporal propagation, which limits their application in evolving ICS environments.

(2) Dynamic Risk Inference and Bayesian Modeling. To better capture uncertainty in ICS security, probabilistic approaches have been developed. Zhang et al. [15] constructed a Bayesian network to estimate the probability of sensor and actuator compromise, enabling physical-level risk prediction. Fu et al. [16] integrated fuzzy logic with Bayesian networks to assess cross-domain risk propagation in distribution systems. Huang et al. [17] proposed an association analysis method based on system state variables and security events, revealing probabilistic correlations among risk factors. Jin et al. [18] explored cloud–fog architectures for autonomous industrial CPS, emphasizing dynamic 3C (communication–computation–control) coupling and security-aware automation strategies. These works provide valuable insights but generally assume fixed data sources and are limited in modeling multi-layer interactions.

(3) Cross-Domain Attack Propagation Models. To address attack transitions between IT and OT domains, several studies have proposed integrated propagation frameworks. Liu et al. [19] combined colored Petri nets with Bayesian game theory to evaluate cyber–physical risk in power grid control systems, modeling both attacker strategies and system responses. Tan and Li [20] used attack graph models augmented by reinforcement learning to simulate attack progression across domains. Cao et al. [21] presented a deep-learning-based spatiotemporal detection framework (FedDynST) for ICS DDoS detection in cloud–edge settings, which inherently models cross-domain behavior via federated temporal features.

(4) Cloud–Edge Collaborative Evaluation. Despite the above progress, only limited work explicitly addresses the structural dynamics introduced by cloud–edge collaboration in ICSs. Liu et al. [22] analyzed cyber contingencies in active distribution networks using a co-simulation approach but did not focus on the cloud–edge architecture. Li et al. [23] proposed an optimization-based framework to assess worst-case attack strategies under resource constraints. Zheng et al. [24] surveyed cybersecurity solutions for edge-integrated IIoT and ICS networks, identifying specific risks in edge–cloud communication and recommending layered mitigation techniques. Wu et al. [25] proposed a federated-learning-based real-time threat scoring model for edge-enabled ICS, improving detection latency and decentralization robustness. However, none of these frameworks fully models the cloud–edge-specific deployment layers or accounts for real-time adaptive changes in such hybrid architectures. Our proposed method fills this gap by integrating multi-source indicators (entry points, propagation paths, method complexity, and impact types) into a unified scoring system suitable for cloud–edge ICS risk assessment.

3. Methodology

3.1. Framework Overview

Figure 1 presents our end-to-end framework for evaluating cross-domain attacks in a cloud–edge–field ICS. Unlike traditional cloud-only security assessments—which typically treat edge and field devices as passive data sources, assume unlimited compute and persistent connectivity, and apply homogeneous controls—our design explicitly captures the heterogeneity, resource constraints, intermittent connectivity, and physical accessibility of edge nodes, as well as the strict timing and safety requirements of field controllers. By integrating telemetry and configuration data across all three tiers (cloud, edge, and field), we can model multi-layer attack paths that span different domains and dynamically adjust risk estimates as conditions evolve.

Figure 1. Our proposed framework for cross-domain attack evaluation in a cloud–edge collaborative ICS.

- Cloud Layer: centralized analytics, long-term data storage, containerized services.

- Edge Layer: distributed compute nodes deployed near physical processes, often with constrained resources and minimal hardening.

- Field Layer: programmable logic controllers (PLCs), sensors, actuators, and associated industrial protocols.

By integrating telemetry and configuration data from all three layers, we achieve a unified cross-domain view of attacker capabilities and system vulnerabilities. This multi-phase framework comprises the following:

1. Data Collection and Threat Modeling: inventory of assets and mapping of known ICS techniques.

2. Indicator Quantification: computation of 16 tailored metrics across four risk dimensions.

3. Score Aggregation and Analysis: synthesis into a composite risk score for benchmarking and “what-if” exploration.

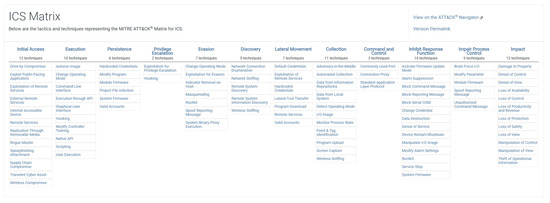

In Phase 1, we focus on assembling a detailed dataset and threat model for an Industrial Control System (ICS) within a cloud–edge architecture. The process begins with a systematic inventory of the ICS assets, which is derived from our private dataset. This dataset provides comprehensive details about the hardware, software, and network assets across the environment, forming the foundation for the entire evaluation. These assets are catalogued to identify key components and their attributes, such as entry points, attack methods, and potential impacts. One crucial aspect of this phase is identifying and categorizing cross-domain attack entry points. These are points where an attacker could breach the IT network to affect OT devices, or vice versa. By following the MITRE ATT&CK for ICS framework (see Figure A1), we map out known attack methods, tools, and the corresponding impacts on system operations and safety. This provides us with a thorough understanding of how an attacker might exploit various components in the cloud–edge architecture. This dataset and attack model, which merge detailed asset information with structured threat data, form the cornerstone for the subsequent development of attack indicators and risk evaluation.

In Phase 2, we develop and compute a set of evaluation indicators across four dimensions: (a) system module metrics, (b) attack path metrics, (c) attack method metrics, and (d) attack impact metrics. These indicators quantitatively characterize different aspects of cross-domain threats. The details of each indicator and their calculation methods are provided in Section 3.2. In developing our indicator set, we deliberately selected 16 metrics to ensure both theoretical rigor and practical relevance across the cloud–edge–field architecture. First, we drew on an extensive literature review of risk-quantification techniques in industrial contexts, namely dependency metrics that capture cascading failures in multi-component systems [26], exposure-surface scoring methods for networked services [27], and probabilistic models of detection avoidance [28], to inform our measures of module importance, interface exposure, and stealth potential. However, conventional frameworks typically assume either cloud-only or on-premises deployments and thus overlook the distinct threats introduced by edge computing. In particular, edge nodes often operate with minimal security hardening in physically accessible locations, rendering them susceptible to tampering, intermittent connectivity, and rapid reconfiguration. To address these gaps, we devised novel indicators, such as Security Posture Deviation and Segmentation Effectiveness, that explicitly account for the resource constraints and physical coupling of edge devices. Finally, we ensured that each metric produces a normalized, interpretable score so that operators overseeing hundreds of distributed assets can rapidly compare and prioritize security investments. By combining well-established dependency and exposure measures with edge-specific risk factors and by structuring all 16 indicators into four coherent dimensions, our framework advances beyond traditional ICS assessments to deliver a unified, actionable portrait of cross-domain vulnerability.

In the final phase, the various indicator values are aggregated to produce overall scores, which facilitate analysis of the system’s security posture. Specifically, we derive a composite score for each dimension (System Module Score, Attack Path Score, Attack Method Score, and Attack Impact Score), which are then combined into a single overall attack evaluation score for the ICS. This overall score provides a high-level quantitative measure of the cross-domain attack risk facing the system. By examining the contributions of each dimension, system defenders can pinpoint critical weaknesses (e.g., a particular module with high risk, or an attack path that is especially vulnerable and stealthy) and prioritize mitigation strategies.

3.2. Indicator Definition and Calculation

In this section, we define the evaluation indicators for each of the four dimensions and present their calculation formulas. The indicator definitions take into account the unique features of cloud–edge-integrated ICS and cross-domain attack behavior.

3.2.1. System Module Indicators

This dimension evaluates the scope and significance of system components affected by the attack. We define four indicators for system modules:

1. Functional Dependency Score (FDS): This indicator measures the degree to which other modules in the system depend on module $i$ for correct operation. Rather than relying on a subjective importance rating, $\mathrm{FDS}_i$ is computed by analyzing the system’s functional dependency graph. A module that supports many downstream components or processes has a higher $\mathrm{FDS}_i$, indicating that its compromise could cause cascading failures. Let $D_i$ be the number of modules directly dependent on module $i$ and $D_{\max}$ the maximum such value across all modules:

$$\mathrm{FDS}_i = \frac{D_i}{D_{\max}}$$

2. Exposure Level (EL): $\mathrm{EL}_i$ reflects the number and type of interfaces through which module $i$ communicates with other internal or external components, representing its potential attack surface. Interfaces may include APIs, network ports, or wireless connections. A module with many exposed endpoints or Internet-facing services has a higher risk of being targeted. Let $I_i$ be the number of such interfaces, normalized by the maximum observed value $I_{\max}$:

$$\mathrm{EL}_i = \frac{I_i}{I_{\max}}$$

3. Security Posture Deviation (SPD): $\mathrm{SPD}_i$ quantifies how much module $i$ diverges from the recommended security configuration baseline. This includes outdated patches, misconfigurations, or policy violations. It is derived from audit data or security scans. A higher $\mathrm{SPD}_i$ means the module has more security gaps. Let $V_i$ represent a normalized deviation score based on violations and $V_{\max}$ be the worst-case deviation:

$$\mathrm{SPD}_i = \frac{V_i}{V_{\max}}$$

4. Segmentation Effectiveness (SE): $\mathrm{SE}_i$ evaluates how well module $i$ is isolated from the rest of the system, based on logical or physical segmentation. Modules within high-trust or tightly segmented zones will have higher values, limiting lateral movement. This score is obtained via architectural analysis (e.g., network design and VLAN separation). Let $S_i \in [0, 1]$ be the assigned isolation score:

$$\mathrm{SE}_i = S_i$$

These indicators provide a balanced view of each module’s role and security posture: $\mathrm{FDS}_i$ captures operational dependency, $\mathrm{EL}_i$ the exposure to threats, $\mathrm{SPD}_i$ the internal configuration state, and $\mathrm{SE}_i$ the isolation strength. A module with high dependency, high exposure, poor configuration, and weak segmentation represents a critical vulnerability.

We define the overall System Module Score (SMS) to summarize these risks across all n modules by integrating the indicators into a weighted risk expression:

where a higher SMS indicates greater systemic vulnerability in the module layer.
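As a rough illustration of how the four module indicators might be computed and combined, the following Python sketch applies the max-normalization described above (each raw count divided by the largest observed value). The aggregation used here is an assumption made for illustration only: an equal-weight mean of FDS, EL, SPD, and (1 − SE), averaged over all modules. The `Module` fields and function names are hypothetical and are not taken from the framework itself.

```python
from dataclasses import dataclass


@dataclass
class Module:
    name: str
    dependents: int    # modules that directly depend on this one
    interfaces: int    # exposed APIs, network ports, wireless links
    deviation: float   # audit-derived deviation from the security baseline
    isolation: float   # assigned isolation score in [0, 1]


def normalise(value: float, maximum: float) -> float:
    """Divide by the largest observed value; return 0 if that maximum is 0."""
    return value / maximum if maximum > 0 else 0.0


def module_indicators(modules: list[Module]) -> dict[str, dict[str, float]]:
    """Compute FDS, EL, SPD and SE for every module."""
    d_max = max(m.dependents for m in modules)
    i_max = max(m.interfaces for m in modules)
    v_max = max(m.deviation for m in modules)
    return {
        m.name: {
            "FDS": normalise(m.dependents, d_max),
            "EL": normalise(m.interfaces, i_max),
            "SPD": normalise(m.deviation, v_max),
            "SE": m.isolation,
        }
        for m in modules
    }


def system_module_score(indicators: dict[str, dict[str, float]]) -> float:
    """ASSUMED aggregation: equal-weight mean of FDS, EL, SPD and (1 - SE)."""
    per_module = [
        (v["FDS"] + v["EL"] + v["SPD"] + (1.0 - v["SE"])) / 4.0
        for v in indicators.values()
    ]
    return sum(per_module) / len(per_module)


modules = [
    Module("historian", dependents=4, interfaces=8, deviation=0.6, isolation=0.5),
    Module("plc", dependents=2, interfaces=2, deviation=0.3, isolation=1.0),
]
indicators = module_indicators(modules)
sms = system_module_score(indicators)
```

Segmentation enters as (1 − SE) in this sketch so that stronger isolation lowers the score, in keeping with the statement that a higher SMS indicates greater vulnerability; other weightings would fit the same description.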

3.2.2. Attack Path Indicators

For the attack paths dimension, we consider metrics that characterize the nature of each possible attack path an adversary might take through the system (as represented in the attack graph). We define four indicators for an attack path j:

1. Initial Access Barrier (IAB): $\mathrm{IAB}_j$ represents the difficulty an attacker faces in gaining the initial foothold required to begin traversing path $j$. This includes required credentials, network reachability, and exploit availability. A higher $\mathrm{IAB}_j$ means that starting the path requires more effort or privilege. Let $B_j \in [0, 1]$ be a normalized score reflecting access barriers:

$$\mathrm{IAB}_j = B_j$$

2. Traversal Span (TS): $\mathrm{TS}_j$ measures how broadly path $j$ stretches across different zones, layers, or security domains. It captures lateral movement capability and the scope of compromise. Let $Z_j$ be the number of unique zones traversed, normalized by $Z$, the maximum across all paths:

$$\mathrm{TS}_j = \frac{Z_j}{Z}$$

3. Detection Evasion Potential (DEP): $\mathrm{DEP}_j$ indicates how likely it is that attacks along path $j$ will evade detection. We assess the residual undetected risk $u_i$ at each node $i$ based on sensor coverage, logging, and response latency. Averaging across the set $P_j$ of nodes on path $j$ yields the following:

$$\mathrm{DEP}_j = \frac{1}{|P_j|} \sum_{i \in P_j} u_i$$

4. Operational Impact Potential (OIP): $\mathrm{OIP}_j$ estimates the damage to operations if path $j$ is successfully exploited. This considers whether the path leads to critical process disruption, safety risks, or data loss. Let $O_j \in [0, 1]$ be a normalized score derived from threat impact analysis:

$$\mathrm{OIP}_j = O_j$$

Together, these indicators provide insight into path feasibility ($\mathrm{IAB}_j$), scope ($\mathrm{TS}_j$), stealth ($\mathrm{DEP}_j$), and impact ($\mathrm{OIP}_j$). A low-barrier, wide-spanning, undetectable path with high impact represents the highest threat.

The overall Attack Path Score (APS) summarizes the severity of all M attack paths:

which captures the ratio between attack benefits and required effort across paths.
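To make the benefit-to-effort idea concrete, the sketch below computes the per-path indicators and one possible ratio-style aggregate. The exact form is an assumption: here the "benefit" of a path is taken as the mean of TS, DEP, and OIP, the "effort" as IAB, and the score as the average ratio over all paths. The `AttackPath` fields, the `eps` guard against division by zero, and the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AttackPath:
    name: str
    access_barrier: float         # IAB_j, normalised score
    zones: list[str]              # zone of each hop along the path, in order
    undetected_risk: list[float]  # residual undetected risk per node, in [0, 1]
    impact: float                 # OIP_j, normalised score


def traversal_span(path: AttackPath, max_zones: int) -> float:
    """Unique zones on the path divided by the maximum over all paths."""
    return len(set(path.zones)) / max_zones


def detection_evasion(path: AttackPath) -> float:
    """Average residual undetected risk across the nodes of the path."""
    return sum(path.undetected_risk) / len(path.undetected_risk)


def attack_path_score(paths: list[AttackPath], eps: float = 1e-6) -> float:
    """ASSUMED aggregation: mean over paths of benefit / effort."""
    z_max = max(len(set(p.zones)) for p in paths)
    ratios = []
    for p in paths:
        benefit = (traversal_span(p, z_max) + detection_evasion(p) + p.impact) / 3.0
        effort = max(p.access_barrier, eps)  # avoid dividing by zero
        ratios.append(benefit / effort)
    return sum(ratios) / len(ratios)


paths = [
    AttackPath("phishing", 0.5, ["it", "edge", "field"], [0.6, 0.4, 0.8], 0.9),
    AttackPath("hmi-malware", 1.0, ["edge", "field", "field"], [0.2, 0.4], 0.3),
]
aps = attack_path_score(paths)
```

Under this assumed form the result is not bounded by 1, since a very low access barrier inflates the ratio; a deployment that needs a 0–1 score would have to rescale it.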

3.2.3. Attack Method Indicators

For the attack methods dimension, we evaluate the techniques that an adversary employs at various stages of the attack. We define four indicators to characterize each attack method k used in cross-domain scenarios:

1. Adversarial Sophistication (AS): $\mathrm{AS}_k$ assesses the novelty and advancement of method $k$ based on whether it is typically associated with advanced persistent threats (APTs), novel exploits, or automation. A higher $\mathrm{AS}_k$ implies the use of modern, often less detectable techniques.

2. Resource Requirement (RR): $\mathrm{RR}_k$ measures the effort, tools, and expertise needed to execute method $k$. This includes programming knowledge, availability of exploit kits, or hardware requirements. A higher score means fewer attackers are capable of using it.

3. Target Tailoring (TT): $\mathrm{TT}_k$ evaluates how specifically the method is designed for the victim system. Custom-built payloads or exploits tailored to particular ICS platforms yield high scores, signaling targeted intent and reconnaissance.

4. Detection Resistance (DR): $\mathrm{DR}_k$ reflects the inherent stealthiness of method $k$, considering whether it uses encrypted channels, fileless execution, or blending with legitimate processes. A higher $\mathrm{DR}_k$ indicates higher evasion capability.

These indicators ensure a comprehensive evaluation of the attacker’s means from multiple perspectives: adversarial sophistication (how novel and advanced the method is), resource demand (the level of skill and tooling required), target tailoring (the degree of customization to the victim system), and detection resistance (the stealthiness of the method). By analyzing attack methods through these metrics, defenders can better understand the threat landscape and prioritize their responses accordingly—for instance, by improving detection mechanisms for stealthy, tailored attacks or focusing mitigation efforts on techniques requiring minimal attacker resources.

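Suppose the total number of distinct attack methods considered is $K$. We can compute average values for each of the four metrics across all methods:

$$\overline{\mathrm{AS}} = \frac{1}{K}\sum_{k=1}^{K}\mathrm{AS}_k, \quad \overline{\mathrm{RR}} = \frac{1}{K}\sum_{k=1}^{K}\mathrm{RR}_k, \quad \overline{\mathrm{TT}} = \frac{1}{K}\sum_{k=1}^{K}\mathrm{TT}_k, \quad \overline{\mathrm{DR}} = \frac{1}{K}\sum_{k=1}^{K}\mathrm{DR}_k$$

Finally, we define an overall Attack Method Score (AMS) by combining these four aspects. One simple approach is to take the arithmetic mean:

$$\mathrm{AMS} = \frac{\overline{\mathrm{AS}} + \overline{\mathrm{RR}} + \overline{\mathrm{TT}} + \overline{\mathrm{DR}}}{4}$$

resulting in a normalized 0–1 score that represents the cumulative threat posed by the attack techniques. Higher values of AMS correspond to more advanced, targeted, and stealthy methods that may require prioritized attention in system defenses.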
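The averaging step and the arithmetic-mean aggregation described above can be sketched in a few lines of Python. The dictionary layout and the sample scores are hypothetical; only the two-stage mean follows the text.

```python
from statistics import fmean

METRICS = ("AS", "RR", "TT", "DR")


def attack_method_score(methods: list[dict[str, float]]) -> float:
    """Average each metric over the K methods, then average the four means."""
    averages = [fmean([m[metric] for m in methods]) for metric in METRICS]
    return fmean(averages)


# Two illustrative methods, each scored in [0, 1] on the four metrics.
methods = [
    {"AS": 0.8, "RR": 0.6, "TT": 1.0, "DR": 0.4},
    {"AS": 0.4, "RR": 0.2, "TT": 0.5, "DR": 0.6},
]
ams = attack_method_score(methods)
```

Because every input lies in [0, 1], the result stays in the same range, which matches the normalized score described in the text.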

3.2.4. Attack Impact Indicators

Finally, for the attack impact dimension, we categorize and quantify the potential consequences that cross-domain attacks could inflict on the industrial system. Drawing inspiration from the impact taxonomy in frameworks like MITRE ATT&CK [29], we classify attack impacts into the following categories:

- Loss of Availability (LA)—reduction in or loss of system availability, causing downtime.

- Loss of Productivity and Revenue (LPR)—direct impact on production output and financial losses due to disruption.

- Loss and Denial of Control (LDC)—attacker gains or denies control of the system, e.g., via disabling control commands or performing unauthorized control actions (akin to Denial-of-Service or command hijacking).

- Manipulation of Control (MC)—attacker issues malicious or altered control commands, causing the system to operate unsafely or incorrectly.

- Loss of Safety (LS)—compromised safety mechanisms, potentially endangering human operators or equipment (e.g., disabling alarms or safety interlocks).

- Loss of Protection (LP)—disabling or bypassing security protections, such as encryption, authentication, or physical security measures.

- Damage to Property (DP)—physical destruction or damage to equipment, facilities, or products as a result of the attack.

- Theft of Operational Information (TOI)—exfiltration of sensitive operational data, such as proprietary process recipes, engineering drawings, or system configurations.

For clarity, Table 2 shows how these specific impacts are grouped into broader impact categories affecting different aspects of the system. Each category addresses a dimension of potential damage: availability, control, safety/protection, and integrity of physical or informational assets.

Table 2. Impact categories, specific indicators, and calculation methods.

Each of the eight specific impacts (LA, LPR, LDC, MC, LS, LP, DP, and TOI) can be viewed as an indicator on its own. We assess each impact type in terms of severity for the given scenario. To quantify the impact severity, we adopt a qualitative scoring approach: each impact is assigned a severity level from 0 to 4 (five levels), which is then mapped to a numerical score from 0 to 1 in equal increments. For example, an impact assessed as negligible would be level 0 (score 0), minor impact level 1 (score 0.25), moderate impact level 2 (score 0.5), serious impact level 3 (score 0.75), and catastrophic impact level 4 (score 1). The scoring criteria can be customized for each impact type. As an illustration, consider the Damage to Property (DP) impact: one could define the levels in terms of estimated financial loss in dollars. For instance,

where the thresholds (USD $10^4$, USD $10^5$, etc.) are chosen as example ranges for property damage costs. Similar piecewise definitions can be established for other impacts (e.g., downtime duration for LA, safety incident severity for LS, etc.).
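The five-level severity mapping lends itself to a small lookup routine. The sketch below assumes decade-spaced thresholds for Damage to Property; only the USD 10^4 and 10^5 values are named in the text, so the 10^6 and 10^7 thresholds, as well as the function names, are illustrative assumptions.

```python
from bisect import bisect_right

# ASSUMPTION: decade-spaced thresholds; only 1e4 and 1e5 appear in the text.
DP_THRESHOLDS_USD = (1e4, 1e5, 1e6, 1e7)


def severity_level(value: float, thresholds: tuple[float, ...]) -> int:
    """Return level 0-4: the number of thresholds that `value` meets or exceeds."""
    return bisect_right(thresholds, value)


def level_to_score(level: int, max_level: int = 4) -> float:
    """Map a severity level to a score in [0, 1] in equal increments."""
    return level / max_level


def damage_to_property_score(loss_usd: float) -> float:
    return level_to_score(severity_level(loss_usd, DP_THRESHOLDS_USD))


example_scores = [damage_to_property_score(x) for x in (5_000, 10_000, 250_000, 2e7)]
```

The same two helper functions can serve the other impact types by swapping in a different threshold tuple, for example downtime durations for LA.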

By covering impacts from the cyber layer (information theft) to the physical layer (equipment damage), the evaluation system achieves a comprehensive view of potential damages. Our impact categorization is informed by the MITRE ATT&CK impact metrics [29], ensuring that the evaluation method is grounded in a well-vetted taxonomy and remains systematic and scientific. The outcome of this impact assessment is a clear quantitative analysis of attack consequences that can aid system managers in understanding worst-case scenarios.

Once each of the eight impact indicators is assigned a score (between 0 and 1), we compute the overall Attack Impact Score (AIS) as the average of these values:

$$\mathrm{AIS} = \frac{\mathrm{LA} + \mathrm{LPR} + \mathrm{LDC} + \mathrm{MC} + \mathrm{LS} + \mathrm{LP} + \mathrm{DP} + \mathrm{TOI}}{8}$$

where each term is the score for that impact type. This yields a single figure representing the overall severity of impacts an attacker could cause, on average, given the scenario.
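A minimal sketch of this averaging step follows. The function name, the validation of missing entries, and the sample scores are illustrative assumptions; the eight impact types and the unweighted mean come from the text.

```python
IMPACT_TYPES = ("LA", "LPR", "LDC", "MC", "LS", "LP", "DP", "TOI")


def attack_impact_score(impact_scores: dict[str, float]) -> float:
    """Unweighted mean of the eight impact scores, each expected in [0, 1]."""
    missing = [t for t in IMPACT_TYPES if t not in impact_scores]
    if missing:
        raise ValueError(f"missing impact scores: {missing}")
    return sum(impact_scores[t] for t in IMPACT_TYPES) / len(IMPACT_TYPES)


# Illustrative severity scores on the 0, 0.25, 0.5, 0.75, 1 scale.
impacts = {
    "LA": 0.75, "LPR": 0.5, "LDC": 0.75, "MC": 0.5,
    "LS": 0.25, "LP": 0.5, "DP": 0.25, "TOI": 0.5,
}
ais = attack_impact_score(impacts)
```

Rejecting incomplete inputs keeps a forgotten impact type from silently lowering the average.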

3.3. Overall Evaluation Score

Based on the computed scores for each dimension (modules, paths, methods, and impacts), we derive a final aggregated metric, the Attack Evaluation Score (or simply Score), for the system. We define it as

$$\mathrm{Score} = \mathrm{SMS} + \mathrm{APS} + \mathrm{AMS} + \mathrm{AIS}$$

summing the System Module Score, Attack Path Score, Attack Method Score, and Attack Impact Score. This overall score encapsulates the comprehensive risk level of cross-domain attacks on the cloud–edge-integrated ICS. Higher values indicate a more insecure system (greater combined risk across all dimensions). In practice, this score can be used as a benchmark to compare different system configurations or to track security improvements over time (for instance, after implementing mitigations, one would expect the score to decrease).

It is worth noting that while we use an unweighted sum here for simplicity, the framework could be extended by applying weighting factors to each component of the score if certain dimensions are considered more critical in a given context. For this study, we treat them equally to emphasize a balanced consideration of all aspects.
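The unweighted sum and the weighted extension mentioned above can be expressed with a single function. The parameter names and the example values are hypothetical; with the default weights the function reduces to the plain sum used in this study.

```python
def overall_score(
    sms: float,
    aps: float,
    ams: float,
    ais: float,
    weights: tuple[float, float, float, float] = (1.0, 1.0, 1.0, 1.0),
) -> float:
    """Weighted sum of the four dimension scores; default weights give the plain sum."""
    return sum(w * s for w, s in zip(weights, (sms, aps, ams, ais)))


# Illustrative dimension scores, not results from the experiments.
baseline = overall_score(0.5, 1.0, 0.25, 0.5)
module_heavy = overall_score(0.5, 1.0, 0.25, 0.5, weights=(2.0, 1.0, 1.0, 1.0))
```

Scores computed with different weight vectors are not directly comparable, so any benchmarking across configurations should hold the weights fixed.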

4. Experiments and Results

After constructing the cross-domain attack evaluation method, we validated its effectiveness and practicality through a repeatable digital-twin testbed. All scenarios and attacks described below are, therefore, fully deployable by third parties who reproduce the released testbed images and scripts.

4.1. Experimental Scenario Design

To thoroughly exercise the evaluation framework, we designed a test scenario inspired by real-world security incidents. In particular, we base our simulation on the 2015 Ukraine power grid cyberattack, which exemplified a coordinated multi-stage, cross-domain attack. This scenario was chosen because it fully reflects the complexity and multi-dimensional nature of cross-domain attacks, involving network intrusions, operational technology manipulations, and physical consequences.

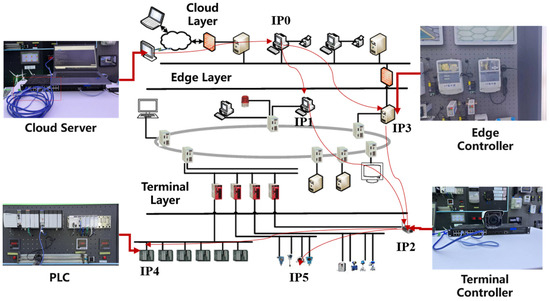

The simulated ICS environment (shown in Figure 2) is a representative cloud–edge collaborative architecture. It includes a cloud layer for high-level data analytics and decision support, an edge layer comprising edge controllers and gateways that interface with field equipment, and a terminal device layer containing the on-site controllers (PLCs and RTUs), sensors, and actuators on the factory floor or power grid substation. This architecture captures the typical multi-layer structure of modern ICS deployments that leverage cloud and edge computing. We carefully designed the network topology and communication patterns to reflect realistic configurations (for instance, how edge devices communicate with both cloud services and local controllers and how corporate IT networks connect to control networks under certain constraints). The attack graph for this scenario includes multiple entry points (e.g., phishing entry via corporate network, direct malware on an HMI workstation, etc.) and multiple known vulnerabilities that an attacker could exploit at various stages.

Figure 2. Topology of our simulated ICS environment.

The details of our simulated ICS environment setting are as follows (Figure 2):

- Cloud layer: a three-node Kubernetes 1.30 cluster (each node: 4 vCPU @ 2.4 GHz, 16 GB RAM, 100 GB SSD) running analytic microservices and a TimescaleDB historian.

- Edge layer: two ARM-based gateways (Raspberry Pi 4B, 4 GB RAM) equipped with Modbus and OPC UA protocol adapters and an NVIDIA Jetson Nano (128 CUDA cores, 4 GB RAM) for on-premises inference.

- Field/terminal layer: three OpenPLC v3 virtual controllers (compiled for x86), emulating Siemens S7-1200-class PLCs, each interfaced with a MiniCPS physics model of a power-distribution feeder; sensors/actuators exchange data via Modbus/TCP at 100 ms scan cycles.

We ran multiple iterative trials of the simulation to ensure stability, reliability, and reproducibility of the results. Each experimental scenario was executed 30 times under independent random seeds, influencing factors such as traffic timing and attacker decision branches. By repeating the experiment several times and averaging the results, we reduce the impact of random factors or simulation noise on the evaluation metrics and obtain statistically rigorous estimates. We report the mean and 95% confidence intervals using bootstrapping with 2000 resamples.
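The reporting procedure can be illustrated with a short bootstrap routine. The text does not state which bootstrap variant was used, so the sketch below assumes the percentile method; the function name, the fixed seed, and the synthetic scores are hypothetical.

```python
import random
from statistics import fmean


def bootstrap_ci(
    samples: list[float],
    n_resamples: int = 2000,
    confidence: float = 0.95,
    seed: int = 0,
) -> tuple[float, float, float]:
    """Return (mean, lower, upper) using the percentile bootstrap of the mean."""
    rng = random.Random(seed)
    n = len(samples)
    # Resample with replacement and record the mean of each resample.
    means = sorted(fmean(rng.choices(samples, k=n)) for _ in range(n_resamples))
    alpha = (1.0 - confidence) / 2.0
    lower = means[round(alpha * n_resamples)]
    upper = means[round((1.0 - alpha) * n_resamples) - 1]
    return fmean(samples), lower, upper


# 30 synthetic per-run scores standing in for the repeated trials.
scores = [2.0 + 0.01 * (i % 7) for i in range(30)]
mean, lower, upper = bootstrap_ci(scores)
half_width = (upper - lower) / 2.0
```

The half-width computed this way is the quantity that would be compared against a stability threshold such as the one reported for the overall score.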

During the simulation, data on system state, attack progression, and detection events were collected dynamically, feeding into the calculation of the evaluation indicators in real time. This emulates how, in a real deployment, security monitoring and data acquisition would continuously provide input to our evaluation algorithm. Across all repetitions, the maximum confidence interval half-width observed for the overall score is 0.04, indicating high stability and reliability of the results. Furthermore, to ensure reproducibility and avoid environment-induced variability, we fixed the software versions and dependencies used in our experiments, preventing version skew and ensuring that identical experiments yield consistent results over time.

4.2. Results and Analysis

To systematically quantify the security gains and trade-offs offered by distinct defense mechanisms in our cloud–edge ICS deployment, we defined four experimental scenarios to evaluate the impact of targeted mitigations on overall risk posture:

- Scenario A (Baseline): The system operates with default settings (PLC firmware v3.4.5, flat network topology, no VLAN/ACL rules), representing the unprotected risk posture.

- Scenario B (Targeted Patch at IP1): The vendor hotfix is deployed on the PLC at IP1 (firmware upgraded to v3.4.6 via our CI/CD pipeline), closing the known exploit while leaving the original flat network topology unchanged.

- Scenario C (Micro-Segmentation of IP2): The edge controller at IP2 is strictly isolated by migrating its interface into a dedicated VLAN (VLAN 42), applying ACLs that block all IT-subnet ingress, and placing a deny-by-default stateful firewall on Modbus/TCP port 502, effectively severing lateral attack pathways.

- Scenario D (Combined Defenses): Both the IP1 firmware patch (v3.4.6) and the IP2 micro-segmentation are applied, removing the easy entry vector and reinforcing the critical pivot to demonstrate compounded security improvements.
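The deny-by-default policy used for IP2 can be illustrated with a small rule evaluator. This is a sketch under stated assumptions: the subnets `10.0.0.0/24` (IT) and `10.42.0.0/24` (VLAN 42) and the names `Rule` and `decide` are hypothetical, and connection-state tracking (the stateful part of the firewall) is not modeled.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Rule:
    src: str                 # source CIDR block
    dst_port: Optional[int]  # None matches any destination port
    action: str              # "allow" or "deny"


# Hypothetical addressing; the actual subnets are not given in the text.
IT_SUBNET = "10.0.0.0/24"
VLAN42_SUBNET = "10.42.0.0/24"

RULES = [
    Rule(src=IT_SUBNET, dst_port=None, action="deny"),      # block all IT-subnet ingress
    Rule(src=VLAN42_SUBNET, dst_port=502, action="allow"),  # Modbus/TCP within VLAN 42
]


def decide(src_ip: str, dst_port: int, rules=RULES) -> str:
    """Return the action of the first matching rule; unmatched traffic is denied."""
    addr = ip_address(src_ip)
    for rule in rules:
        port_matches = rule.dst_port is None or rule.dst_port == dst_port
        if addr in ip_network(rule.src) and port_matches:
            return rule.action
    return "deny"  # deny-by-default


print(decide("10.0.0.7", 502))   # IT host trying to reach Modbus/TCP
print(decide("10.42.0.5", 502))  # VLAN 42 host using Modbus/TCP
print(decide("10.42.0.5", 22))   # VLAN 42 host on a port with no allow rule
```

Listing only the traffic that is explicitly permitted is what makes the IP2 pivot hard to reach from the IT side: any flow without a matching allow rule falls through to the final deny.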

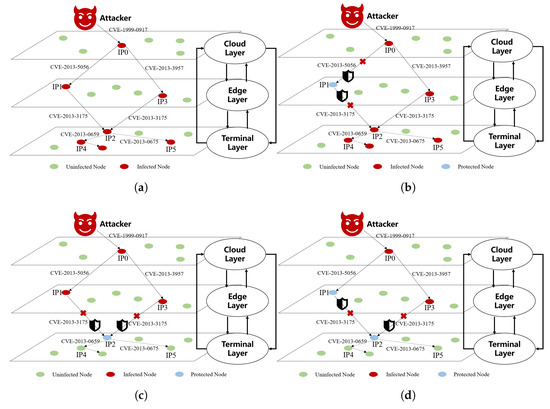

For each of the four scenarios, we applied our evaluation framework to compute the security scores for the system under the simulated attack. The inputs to the evaluation included the system topology (Figure 2) and the constructed cross-domain attack graph (Figure 3). Figure 3 presents a layered view of the attack, showing the entry points the attacker uses, the specific vulnerabilities exploited, and the resulting attack paths through the system under each scenario. The layered visualization shows how the attacker moves through the cloud–edge collaborative environment and highlights cross-domain transitions between the IT network, cloud services, edge layer, and physical control layer.

Figure 3.

Attack graphs under different scenarios in the simulated ICS environment. Subfigures illustrate the attack surfaces under four configurations: (a) Scenario A—Baseline. (b) Scenario B—Mitigation at IP1. (c) Scenario C—Mitigation at IP2. (d) Scenario D—Both Mitigations.
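How mitigations prune an attack graph can be sketched with a toy model. The graph below is hypothetical: apart from IP1 and IP2, the node names, the edges, and the mitigation labels `patch_ip1` and `segment_ip2` are assumptions, not the topology of Figure 3. A mitigation here deletes an edge outright, whereas the framework's scores treat mitigations as raising attack difficulty, so the path counts are not comparable to the Attack Path Score.

```python
# Each edge maps to the mitigation that removes it (None = always present).
EDGES = {
    ("phishing", "corp_it"): None,
    ("corp_it", "IP1"): "patch_ip1",    # known exploit, closed by the firmware hotfix
    ("hmi_malware", "hmi"): None,
    ("hmi", "IP1"): None,               # e.g. credential reuse, unaffected by patching
    ("IP1", "IP2"): "segment_ip2",      # lateral move, blocked by VLAN/ACL isolation
    ("corp_it", "IP2"): "segment_ip2",
    ("corp_it", "cloud"): None,
    ("cloud", "IP2"): None,             # assumed residual cloud-to-edge channel
    ("IP2", "field_plc"): None,
}

SCENARIOS = {
    "A": set(),
    "B": {"patch_ip1"},
    "C": {"segment_ip2"},
    "D": {"patch_ip1", "segment_ip2"},
}


def attack_paths(edges, active_mitigations, sources, target):
    """Enumerate simple paths from any source to target over the unmitigated edges."""
    adjacency = {}
    for (u, v), mitigation in edges.items():
        if mitigation not in active_mitigations:
            adjacency.setdefault(u, []).append(v)

    paths = []

    def dfs(node, path):
        if node == target:
            paths.append(path)
            return
        for nxt in adjacency.get(node, []):
            if nxt not in path:  # keep paths simple (no revisits)
                dfs(nxt, path + [nxt])

    for source in sources:
        dfs(source, [source])
    return paths


for name, mitigations in SCENARIOS.items():
    found = attack_paths(EDGES, mitigations, ["phishing", "hmi_malware"], "field_plc")
    print(f"Scenario {name}: {len(found)} path(s) to field_plc")
```

In this toy graph, patching alone leaves most routes to the target intact, while isolating the pivot removes every route except the assumed cloud channel.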

Based on the indicators and formulas defined in Section 3.2, we calculated the score contributions for each dimension. Table 3 summarizes the security evaluation results for each scenario across the four dimensions (System Module, Attack Path, Attack Method, and Attack Impact) and the overall cross-domain attack score. The scores reflect the system’s risk exposure in each scenario, with lower values indicating a more secure posture.

Table 3.

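Comparison of security evaluation scores under different scenarios.

| Scenario | System Module | Attack Path | Attack Method | Attack Impact | Overall |
|---|---|---|---|---|---|
| A (Baseline) | 0.41 | 1.00 | 0.90 | 0.73 | 3.04 |
| B (Patch IP1) | 0.30 | 1.00 | 0.60 | 0.73 | 2.63 |
| C (Isolate IP2) | 0.30 | 0.60 | 0.80 | 0.60 | 2.30 |
| D (Both Mitigations) | 0.21 | 0.50 | 0.40 | 0.40 | 1.51 |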

As expected, Scenario A (Baseline) exhibits the highest risk across all dimensions, yielding an overall score of 3.04. With no safeguards in place, the System Module Score is relatively high (0.41) due to unpatched vulnerabilities and lack of isolation, the Attack Path Score is 1.00 (indicating an accessible multi-step attack chain), the Attack Method Score is 0.90 (common exploits are readily applicable), and the Attack Impact Score is 0.73 (significant potential damage if the attack succeeds). This baseline highlights the system’s weak points—notably, the vulnerability at IP1 and the pivotal role of IP2 in the attack chain—which contribute to an elevated cross-domain attack risk.

In Scenario B (Patch IP1), patching the known vulnerability at node IP1 strengthens that node’s security, lowering the System Module Score from the baseline 0.41 to 0.30. Eliminating the easy exploit also forces the attacker to consider more complex or less direct attack methods, which reduces the Attack Method Score from 0.90 to 0.60. These changes result in a moderate improvement in the overall score (down to 2.63). However, because the network topology and IP2’s status are unchanged in this scenario, the Attack Path Score remains at 1.00 and the Attack Impact Score stays at 0.73: an attacker who gains a foothold at IP1 by other means (e.g., an alternative exploit or credential theft) could still traverse to IP2 and inflict an impact similar to that in the baseline. Thus, patching IP1 alone addresses the specific vulnerability and improves the security posture to a degree, but significant risk remains via the critical IP2 pathway.

In Scenario C (Isolate IP2), only the isolation measure is applied at node IP2, while IP1’s vulnerability is left unpatched. Here, we observe a substantial reduction in the Attack Path Score (from 1.00 down to 0.60) because isolating IP2 erects a barrier in the attack chain—the attacker’s path is curtailed, and crossing into the protected segment becomes significantly more difficult. The isolation also limits the potential spread and consequences of an attack, lowering the Attack Impact Score to 0.60 (versus 0.73 baseline) since a breach is less likely to reach critical assets beyond IP2. The System Module Score (0.30) is slightly improved relative to baseline, thanks to the increased isolation degree of that important module (even though IP1 remains vulnerable). Notably, the Attack Method Score (0.80) in Scenario C is still relatively high because the initial exploit on IP1 is still available to the adversary—the attacker does not need a more sophisticated method to compromise IP1. Overall, Scenario C achieves an improved overall score of 2.30, which is a greater risk reduction than Scenario B. This underscores the effectiveness of isolating the key node (IP2) in disrupting cross-domain attack paths and containing potential damage, even though one vulnerability (IP1) remains open.

Scenario D (Both Mitigations) yields the best security outcomes by combining the two defenses. In this scenario, the System Module Score drops to 0.21, the lowest of all cases, indicating that the system’s inherent resilience is greatly improved when IP1’s vulnerability is patched and IP2 is isolated. The Attack Path Score is reduced to 0.50—the attack graph is effectively broken; the path to the target is highly restricted by the IP2 isolation (requiring elevated privileges or extraordinary steps to bypass) and the initial entry is no longer trivial. Likewise, the Attack Method Score declines to 0.40 because the attacker must now resort to significantly more complex and less certain exploits at every stage (the previously available exploit at IP1 is gone, and additional specialized techniques would be needed to penetrate the isolated IP2). The Attack Impact Score also falls to 0.40, reflecting the fact that with the attack unable to reach its final target layer, the worst-case impact is substantially mitigated. Consequently, the overall cross-domain attack score in Scenario D improves to 1.51, which is roughly a 50% reduction in risk compared to the baseline scenario. This dramatic improvement confirms that addressing both identified weaknesses in tandem provides a compounded security benefit: the patch at IP1 removes an easy entry point, and the isolation of IP2 protects the critical pivot in the attack chain. Together, these measures reinforce each other and provide the strongest security posture, drastically reducing the system’s vulnerability to cross-domain attacks.

In summary, the comparative evaluation across the four scenarios demonstrates the value of targeted defenses in a cloud–edge ICS. Scenario B (patching IP1) reduced the System Module and Attack Method Scores but left the Attack Path and Attack Impact Scores unchanged, lowering the overall score from 3.04 to 2.63 (about 13.5%). Scenario C (isolating IP2) proved more effective at severing attacker pathways and containing damage, lowering the overall score to 2.30 (about 24.3%). The combined mitigations in Scenario D achieved the most substantial reduction, to 1.51 (about 50.3% below baseline), by simultaneously eliminating the easy exploit at IP1 and fortifying the critical pivot IP2. These results illustrate our framework’s ability to quantify security gains from specific countermeasures and to guide defenders toward the most impactful interventions.
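The arithmetic behind these comparisons can be checked with a short script. In every scenario the reported overall score equals the sum of the four dimension scores; treating the aggregation as a plain unweighted sum is an assumption inferred from the reported numbers, since the formulas of Section 3.2 are not restated here. The names `overall` and `reduction_pct` are illustrative.

```python
# Dimension scores as reported in the text:
# (System Module, Attack Path, Attack Method, Attack Impact)
SCORES = {
    "A": (0.41, 1.00, 0.90, 0.73),
    "B": (0.30, 1.00, 0.60, 0.73),
    "C": (0.30, 0.60, 0.80, 0.60),
    "D": (0.21, 0.50, 0.40, 0.40),
}
REPORTED_OVERALL = {"A": 3.04, "B": 2.63, "C": 2.30, "D": 1.51}


def overall(dimension_scores):
    """Assumed aggregation: unweighted sum of the four dimension scores."""
    return round(sum(dimension_scores), 2)


def reduction_pct(baseline, score):
    """Relative risk reduction with respect to the baseline, in percent."""
    return 100.0 * (baseline - score) / baseline


baseline = overall(SCORES["A"])
for name, dims in SCORES.items():
    total = overall(dims)
    consistent = abs(total - REPORTED_OVERALL[name]) < 1e-9
    print(f"Scenario {name}: overall={total:.2f}  "
          f"matches reported={consistent}  "
          f"reduction vs. A={reduction_pct(baseline, total):.1f}%")
```

Because lower scores indicate a more secure posture, a larger percentage reduction corresponds to a stronger mitigation.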

5. Conclusions and Future Work

In this paper, we presented a comprehensive framework for assessing cross-domain threats in cloud–edge-integrated Industrial Control Systems. The framework systematically combines data collection, multi-dimensional indicator analysis, and scoring to evaluate the risk posed by attacks that span cyber and physical domains. By introducing a novel set of 16 evaluation indicators across system modules, attack paths, attack methods, and impacts, our approach addresses the limitations of traditional methods in dynamic, distributed cloud–edge environments.

Through a simulated case study based on a real-world attack scenario, we demonstrated that the framework can effectively identify critical vulnerabilities and attack pathways in an ICS. The evaluation results provided both a quantitative security score and qualitative insights into the system’s weak points, thereby enabling targeted defensive measures. After applying mitigations, the framework could validate an increase in overall security, highlighting its utility not just in assessment but also in iterative improvement of ICS defenses.

This work offers theoretical and practical guidance for the secure deployment of cloud–edge technologies in industrial settings. For practitioners, the indicator system and scoring method can be used as a tool to continuously monitor and assess an ICS as it evolves, ensuring that emerging cross-domain risks are recognized promptly. For researchers, our framework fills a gap by blending cyber and physical security analysis in a unified model and suggests several avenues for future work. One direction is to further refine the weighting and aggregation techniques for the indicators, potentially incorporating machine learning to adapt weights based on historical incident data. Another is to expand the framework to consider proactive defense measures (e.g., how quickly an attack could be quarantined once detected). Moreover, while our simulation was illustrative, testing the framework on more extensive real or emulated environments (such as digital twins of industrial facilities) would provide deeper validation.

Looking ahead, quantum computing introduces novel threat vectors and defensive opportunities for ICS security. Future investigations may explore quantum-enabled attack scenarios—such as accelerated key search and quantum-enhanced cryptanalysis—and evaluate quantum-resistant cryptographic primitives, including lattice-based and hash-based schemes tailored for resource-constrained edge devices. Moreover, the design of hybrid classical–quantum key management architectures could be examined to balance performance and long-term confidentiality in cloud–edge communications.

Advances in machine learning and automated defense mechanisms offer pathways to enhance the framework’s adaptability and responsiveness. Subsequent work might apply supervised and reinforcement learning to historical incident archives and live telemetry data, enabling dynamic indicator weighting and threshold tuning. The incorporation of explainable AI techniques could facilitate transparent risk assessments, while research into real-time mitigation strategies—such as automated micro-segmentation, adaptive traffic control, and rapid quarantine triggers—could inform best practices for maintaining operational continuity under attack.

To ensure practical applicability at scale, the framework could be validated through deployment on large-scale digital twins and pilot studies with industry partners. Such efforts would assess performance under high-throughput conditions, characterize scoring latency, and identify integration challenges. Additionally, further exploration of privacy-preserving data sharing methods—such as secure multi-party computation and homomorphic encryption—and blockchain-based provenance tracking for firmware updates may strengthen supply chain and data integrity. Finally, extending safety and resilience metrics to incorporate human factors and recovery objectives could yield a more holistic understanding of system robustness and post-incident restoration capabilities.

Author Contributions

Conceptualization, L.Z. and X.S.; methodology, L.Z.; software, L.Z.; validation, L.Z. and X.S.; formal analysis, L.Z. and Y.W.; investigation, L.Z., Y.W. and C.C.; resources, X.S.; data curation, Y.W.; writing—original draft preparation, L.Z. and Y.W.; writing—review and editing, L.Z., Y.W., C.C. and X.S.; visualization, L.Z. and Y.W.; supervision, X.S.; project administration, X.S.; funding acquisition, X.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key R&D Program of China (2022YFB3104300).

Data Availability Statement

Data are available on request from the authors.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Figure A1 (available at https://attack.mitre.org/matrices/ics/ (accessed on 10 July 2025)) shows the MITRE ATT&CK ICS Matrix, which categorizes adversarial tactics and techniques specific to industrial control systems.

Figure A1.

MITRE ATT&CK ICS Matrix.

References

- Stouffer, K.; Falco, J.; Scarfone, K. Guide to industrial control systems (ICS) security. NIST Spec. Publ. 2011, 800, 16.

- Lee, E.A. Cyber physical systems: Design challenges. In Proceedings of the 2008 11th IEEE International Symposium on Object and Component-Oriented Real-Time Distributed Computing (ISORC), Orlando, FL, USA, 5–7 May 2008; pp. 363–369.

- Rajkumar, R.; Lee, I.; Sha, L.; Stankovic, J. Cyber-physical systems: The next computing revolution. In Proceedings of the 47th Design Automation Conference, Anaheim, CA, USA, 13–18 June 2010; pp. 731–736.

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge computing: Vision and challenges. IEEE Internet Things J. 2016, 3, 637–646.

- Bonomi, F.; Milito, R.; Zhu, J.; Addepalli, S. Fog computing and its role in the internet of things. In Proceedings of the First Edition of the MCC Workshop on Mobile Cloud Computing, Helsinki, Finland, 17 August 2012; pp. 13–16.

- Wang, K.; Wang, Y.; Sun, Y.; Guo, S.; Wu, J. Green industrial Internet of Things architecture: An energy-efficient perspective. IEEE Commun. Mag. 2016, 54, 48–54.

- Samie, F.; Bauer, L.; Henkel, J. Edge computing for smart grid: An overview on architectures and solutions. In IoT for Smart Grids: Design Challenges and Paradigms; Springer: Cham, Switzerland, 2018; pp. 21–42.

- Arthurs, P.; Gillam, L.; Krause, P.; Wang, N.; Halder, K.; Mouzakitis, A. A taxonomy and survey of edge cloud computing for intelligent transportation systems and connected vehicles. IEEE Trans. Intell. Transp. Syst. 2021, 23, 6206–6221.

- Langner, R. Stuxnet: Dissecting a cyberwarfare weapon. IEEE Secur. Priv. 2011, 9, 49–51.

- Case, D.U. Analysis of the cyber attack on the Ukrainian power grid. Electr. Inf. Shar. Anal. Cent. (E-ISAC) 2016, 388, 3.

- Zhou, Y.; Zhang, Z.; Zhao, K.; Zhang, Z. A Novel Dynamic Vulnerability Assessment Method for Industrial Control System Based on Vulnerability Correlation Attack Graph. Comput. Electr. Eng. 2024, 119, 109482.

- Wang, S.; Ding, L.; Sui, H.; Gu, Z. Cybersecurity Risk Assessment Method of ICS Based on Attack-Defense Tree Model. J. Intell. Fuzzy Syst. 2021, 40, 10475–10488.

- Zhang, Q.; Zhou, C.; Tian, Y.C.; Xiong, N.; Qin, Y.; Hu, B. A fuzzy probability Bayesian network approach for dynamic cybersecurity risk assessment in industrial control systems. IEEE Trans. Ind. Inform. 2017, 14, 2497–2506.

- Zhu, M.; Li, Y.; Xu, W. A Quantitative Risk Assessment Model for Distribution Cyber-Physical System under Cyber Attack. IEEE Trans. Smart Grid 2022, 13, 545–555.

- Huang, K.; Zhou, C.; Tian, Y.-C.; Yang, S.; Qin, Y. Assessing the Physical Impact of Cyberattacks on Industrial Cyber-Physical Systems. IEEE Trans. Ind. Electron. 2018, 65, 8153–8162.

- Fu, Y.; Wang, X.; Liu, J.; Zhang, R. Cross-Space Risk Assessment of Cyber-Physical Distribution System under Integrated Attack Based on Fuzzy Bayesian Networks. IEEE Trans. Ind. Inform. 2021, 17, 6423–6433.

- Huang, L.; Zhang, X.; Luo, C. Association Analysis-Based Cybersecurity Risk Assessment for Industrial Control Systems. IEEE Access 2020, 8, 131479–131490.

- Jin, J.; Pang, Z.; Kua, J.; Zhu, Q.; Johansson, K.H.; Marchenko, N.; Cavalcanti, D. Cloud–Fog Automation: The New Paradigm towards Autonomous Industrial Cyber–Physical Systems. arXiv 2025, arXiv:2504.04908.

- Liu, Y.; Zhang, J.; Huang, Z.; Zhang, P. Cyber-Physical Risk Assessment of Power Grid Control Systems Based on Colored Petri Nets and Bayesian Games. J. Electr. Comput. Eng. 2024, 2024, 1–15.

- Tan, R.; Li, M. Analyzing the Impact of Cross-Domain Attacks on Industrial Control Systems Using Attack Graph Models. SSRN Preprint, Posted 15 December 2024, 18p. Available online: https://ssrn.com/abstract=5056771 (accessed on 12 July 2025).

- Cao, Z.; Liu, B.; Gao, D.; Zhou, D.; Han, X.; Cao, J. A Dynamic Spatiotemporal Deep Learning Solution for Cloud–Edge Collaborative Industrial Control System Distributed Denial of Service Attack Detection. Electronics 2025, 14, 1843.

- Liu, Y.; Chen, T.; Zhang, P. Operational Risk Evaluation of Active Distribution Networks Considering Cyber Contingencies. IEEE Trans. Smart Grid 2019, 10, 5003–5013.

- Li, H.; Zhao, J.; Wu, C.; Sun, Y. An Integrated Cyber-Physical Risk Assessment Framework for Worst-Case Attacks in Industrial Control Systems. arXiv 2023, arXiv:2304.07363.

- Zheng, T.; Kumar, I.; Inam, R.; Naz, N.; Elmirghani, J.M. Cybersecurity Solutions for Industrial Internet of Things–Edge: A Comprehensive Survey. Sensors 2025, 25, 213.

- Wu, Y.; Song, T.; Zhou, M.; Fang, J. Federated Real-Time Threat Scoring in Cloud–Edge Collaborative ICS: A Lightweight Anomaly Detection Approach. Electronics 2025, 14, 2050.

- Baybulatov, A.; Promyslov, V. Industrial Control System Availability Assessment with a Metric Based on Delay and Dependency. IFAC-PapersOnLine 2021, 54, 472–476.

- Ross, R.S. Guide for Conducting Risk Assessments 2012. Special Publication (NIST SP) 800-30 Rev 1, National Institute of Standards and Technology, Gaithersburg, MD, USA, September 2012 (Updated January 2020). Available online: https://nvlpubs.nist.gov/nistpubs/Legacy/SP/nistspecialpublication800-30r1.pdf (accessed on 8 July 2025).

- Wang, S.; Ko, R.K.; Bai, G.; Dong, N.; Choi, T.; Zhang, Y. Evasion attack and defense on machine learning models in cyber-physical systems: A survey. IEEE Commun. Surv. Tutorials 2023, 26, 930–966.

- Strom, B.E.; Applebaum, A.; Miller, D.P.; Nickels, K.C.; Pennington, A.G.; Thomas, C.B. MITRE ATT&CK: Design and philosophy. In Technical Report; The MITRE Corporation: Bedford, MA, USA, 2018.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).