Substation Inspection Image Dehazing Method Based on Decomposed Convolution and Adaptive Fusion

Abstract

1. Introduction

- A new substation image dehazing method, SDCNet, is proposed as an efficient end-to-end architecture. SDCNet outperforms existing methods while using fewer parameters and lower computational overhead.

- A Decomposed Convolution Enhancement Module (DCEM) is designed to extract rich spatial features while avoiding additional parameters and computational costs. The module can serve as a plug-in to improve the performance of both CNN and Transformer architectures.

- An Adaptive Fusion Module (AFM) is designed to integrate features from the encoder and decoder while preserving key feature information.

- A large-scale substation hazy image dataset is constructed, providing strong data support for future research.

2. Related Work

2.1. Prior-Based Image Dehazing Methods

2.2. CNN-Based Image Dehazing Methods

2.3. Transformer-Based Image Dehazing Methods

3. Materials and Methods

3.1. Decomposed Convolution Enhancement Module

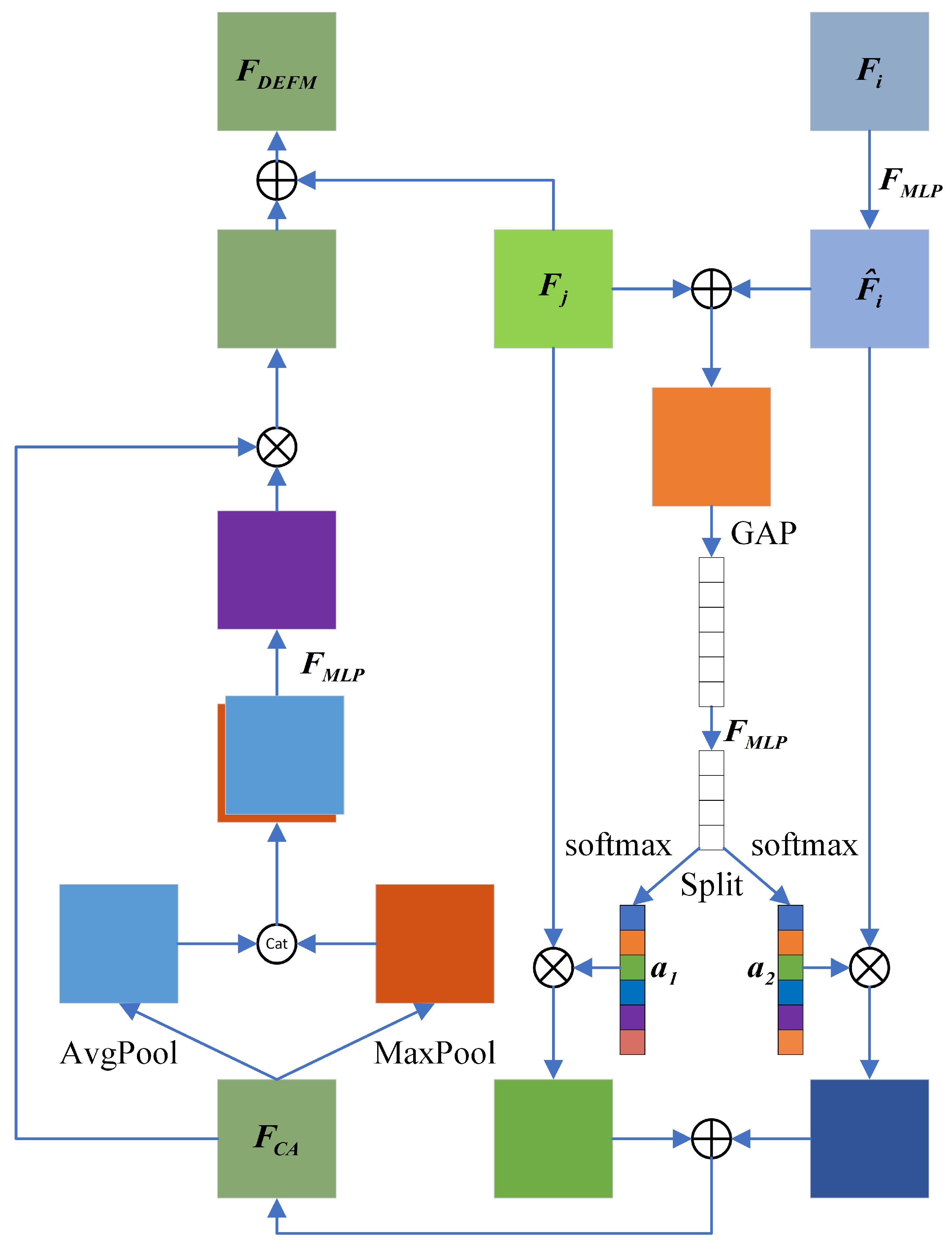

3.2. Adaptive Fusion Module

3.3. Loss Function

4. Experimental Results and Analysis

4.1. Dataset and Pre-Processing

4.2. Experimental Settings and Training Details

4.3. Evaluation Metrics

4.4. Experimental Results

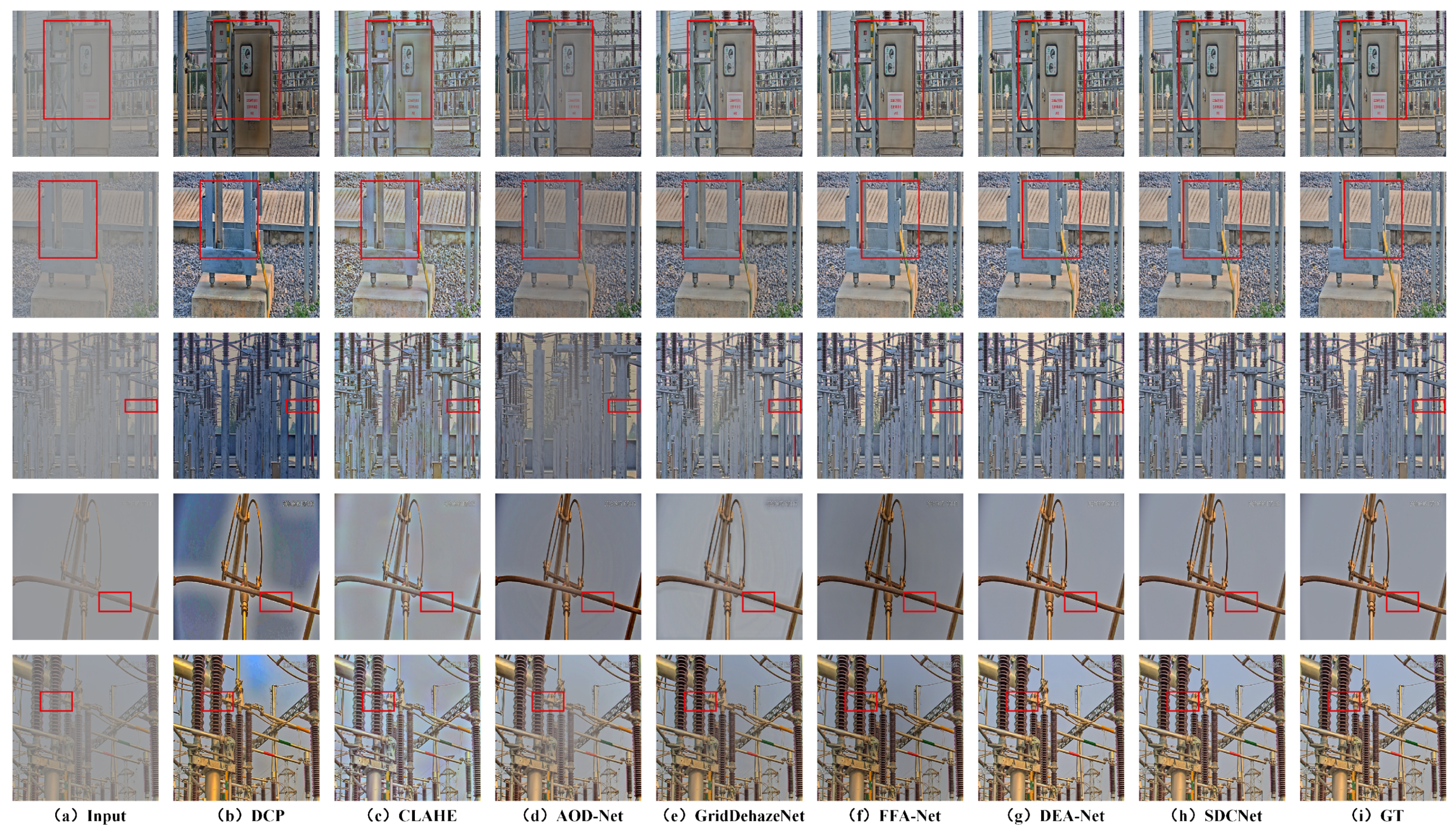

4.4.1. Test Results on MIIS

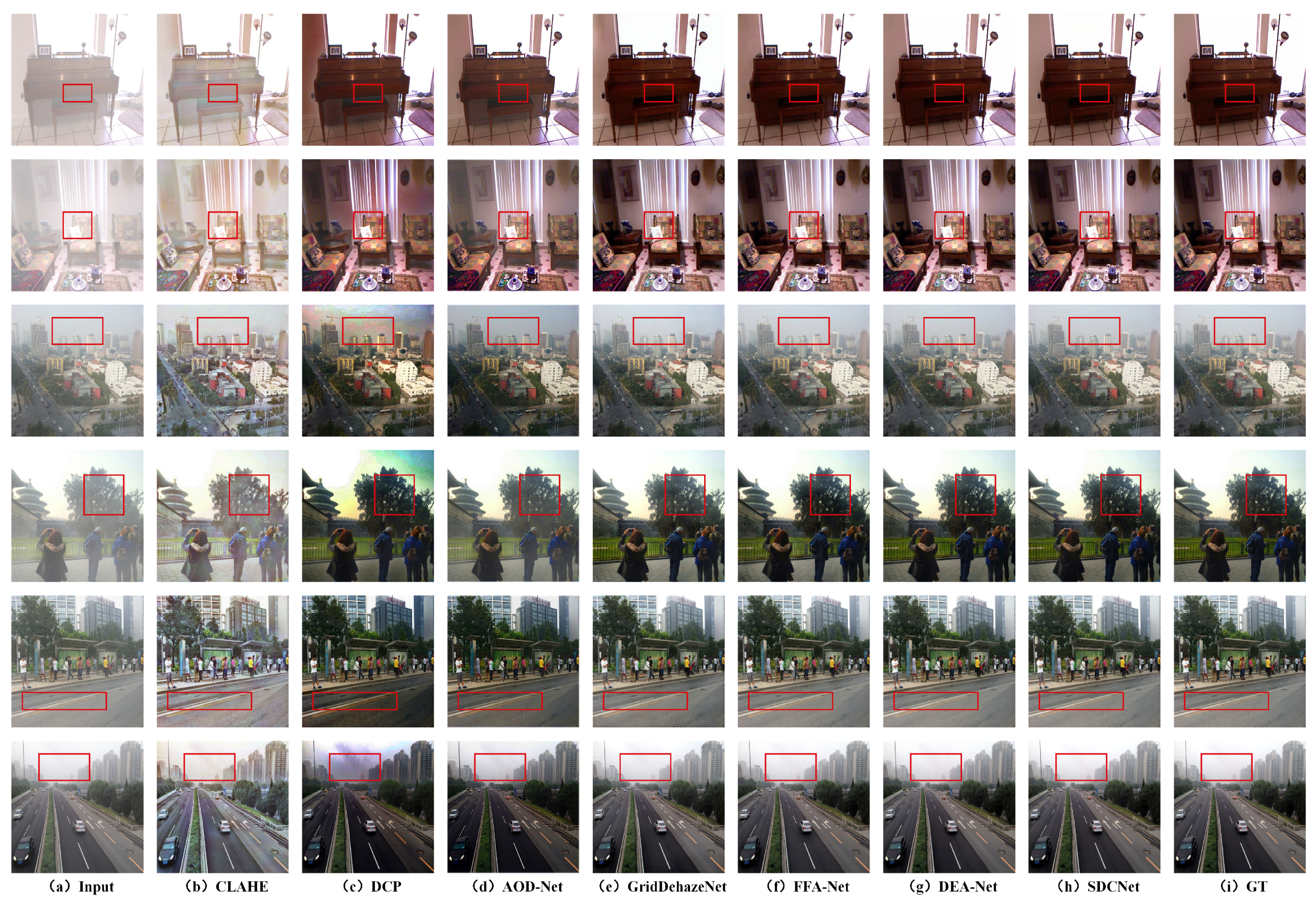

4.4.2. Test Results on RESIDE

4.4.3. Parameter Comparison

4.5. Ablation Experiments

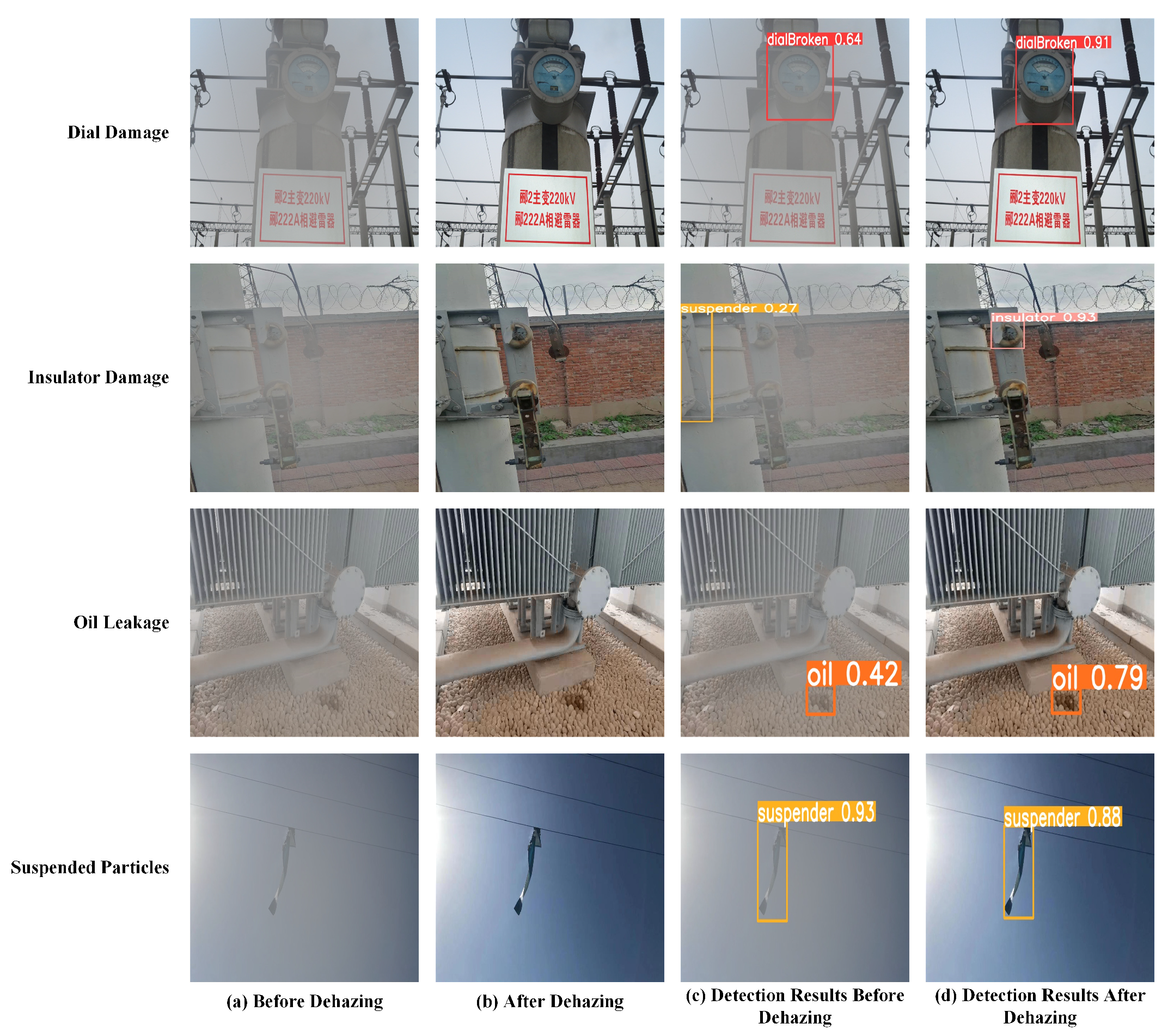

4.6. Application

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Huang, S.; Wu, Z.S.; Ren, Z.G.; Liu, H.; Gui, Y. Research Review on Intelligent Inspection Robots for Power Grids. Electr. Meas. Instrum. 2020, 57, 26–38. (In Chinese)

- Dai, H.; Cui, Z.W.; Xie, Z.Y.; Lan, X. Research on Inspection Image Enhancement Algorithm under Complex Weather Conditions. Mech. Des. Manuf. Eng. 2021, 50, 105–109. (In Chinese)

- Zhu, B.B.; Fan, S.S. Recognition Method of Pointer Instruments in Rainy and Foggy Environment of Substation. Prog. Laser Optoelectron. 2021, 58, 221–230.

- Tan, Y.X.; Fan, S.S.; Wang, Z.Y. Global and Local Contrast Adaptive Enhancement Methods for Low-Quality Substation Equipment Infrared Thermal Images. IEEE Trans. Instrum. Meas. 2023, 73, 5005417.

- Bai, W.R.; Zhang, X.; Zhu, X.Q.; Liu, J.; Cheng, Q.; Zhao, Y.; Shao, J. Power Inspection Image Enhancement Based on E-FCNN. Electr. Power China 2021, 54, 179–185. (In Chinese)

- Chen, X.; Fan, S.S. Meter Image Enhancement Method in High Light Substation Based on Improved CycleGAN. In Proceedings of the Third International Conference on Optics and Image Processing, Hangzhou, China, 14–16 April 2023.

- Zhou, J.; Tian, Z.X.; Wang, M.Y. Haze Removal for Inspection Images Using a Diffusion Model with External Attention. Electron. Meas. Technol. 2024, 47, 144–152.

- Wang, Z.; Jing, M.; Shi, J.; Chen, T.; Liu, W.; Fan, R. A Single Image Dehazing Algorithm with Improved CBAM Mechanism and Detail Recovery. Electron. Meas. Technol. 2023, 46, 161–168. (In Chinese)

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

- Zhu, Q.; Mai, J.; Shao, L. Single image dehazing using color attenuation prior. In Proceedings of the BMVC, Nottingham, UK, 1–5 September 2014; pp. 1674–1682.

- Berman, D.; Avidan, S. Non-local image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1674–1682.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. Dehazenet: An end-to-end system for single image haze removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.

- Qin, X.; Wang, Z.; Bai, Y.; Xie, X.; Jia, H. FFA-Net: Feature fusion attention network for single image dehazing. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11908–11915.

- Wu, H.; Qu, Y.; Lin, S.; Zhou, J.; Qiao, R.; Zhang, Z.; Xie, Y.; Ma, L. Contrastive learning for compact single image dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 10551–10560.

- Lu, L.P.; Xiong, Q.; Xu, B.; Chu, D. MixDehazeNet: Mix Structure Block for Image Dehazing Network. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; pp. 1–10.

- Chen, Z.; He, Z.; Lu, Z.M. DEA-Net: Single Image Dehazing Based on Detail-Enhanced Convolution and Content-Guided Attention. IEEE Trans. Image Process. 2024, 33, 1002–1015.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Song, Y.; He, Z.; Qian, H.; Du, X. Vision transformers for single image dehazing. IEEE Trans. Image Process. 2023, 32, 1927–1941.

- Li, B.; Ren, W.; Fu, D.; Tao, D.; Feng, D.; Zeng, W.; Wang, Z. Benchmarking single-image dehazing and beyond. IEEE Trans. Image Process. 2018, 28, 492–505.

- Guo, C.L.; Yan, Q.; Anwar, S.; Cong, R.; Ren, W.; Li, C. Image Dehazing Transformer with Transmission-Aware 3D Position Embedding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 5812–5820.

- Qiu, Y.; Zhang, K.; Wang, C.; Luo, W.; Li, H.; Jin, Z. Mb-TaylorFormer: Multi-Branch Efficient Transformer Expanded by Taylor Formula for Image Dehazing. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 12802–12813.

- Hong, M.; Liu, J.; Li, C.; Qu, Y. Uncertainty-driven dehazing network. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 22 February–1 March 2022; Volume 36, pp. 906–913.

- Ding, X.; Zhang, X.; Han, J.; Ding, G. Scaling up your kernels to 31x31: Revisiting large kernel design in CNNs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 11963–11975.

- Luo, P.; Xiao, G.; Gao, X.; Wu, S. LKD-Net: Large Kernel Convolution Network for Single Image Dehazing. In Proceedings of the 2023 IEEE International Conference on Multimedia and Expo (ICME), Brisbane, Australia, 10–14 July 2023; pp. 1601–1606.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. arXiv 2017, arXiv:1711.05101.

- Khalid, R.; Rehman, S.; Riaz, F.; Hassan, A. Enhanced Dynamic Quadrant Histogram Equalization Plateau Limit for Image Contrast Enhancement. In Proceedings of the Fifth International Conference on Digital Information and Communication Technology and its Applications (DICTAP), Beirut, Lebanon, 29 April–1 May 2015; pp. 86–91.

- Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. An All-in-One Network for Dehazing and Beyond. arXiv 2017, arXiv:1707.06543.

- Liu, X.; Ma, Y.; Shi, Z.; Chen, J. GridDehazeNet: Attention-Based Multi-Scale Network for Image Dehazing. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 7314–7323.

- Chen, D.; He, M.; Fan, Q.; Liao, J.; Zhang, L.; Hou, D.; Yuan, L.; Hua, G. Gated Context Aggregation Network for Image Dehazing and Deraining. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–11 January 2019; pp. 1375–1383.

- Dong, H.; Pan, J.; Xiang, L.; Hu, Z.; Zhang, X.; Wang, F.; Yang, M.H. Multi-Scale Boosted Dehazing Network with Dense Feature Fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 24 June 2020; pp. 2157–2167.

| Methods | PSNR/dB | SSIM | FSIM | Entropy | PIQE |
|---|---|---|---|---|---|
| DCP | 18.702 | 0.924 | 0.925 | 2.354 | 61.647 |
| CLAHE | 21.338 | 0.926 | 0.942 | 3.652 | 60.208 |
| AOD-Net | 23.06 | 0.969 | 0.945 | 3.681 | 59.255 |
| GridDehazeNet | 32.163 | 0.984 | 0.962 | 4.823 | 56.296 |
| FFA-Net | 36.395 | 0.989 | 0.968 | 4.322 | 56.254 |
| DEA-Net | 39.163 | 0.992 | 0.971 | 4.365 | 54.239 |
| SDCNet | 43.216 | 0.998 | 0.973 | 4.521 | 54.401 |
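
For readers unfamiliar with the PSNR column, the following is a minimal sketch of the conventional PSNR definition, 10·log10(MAX² / MSE). It is not taken from the paper: the function name `psnr`, the flat pixel-sequence input, and the assumption that pixel values are normalized to [0, 1] (`max_value=1.0`) are illustrative choices, and the toy data are not from any dataset used here.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], restored: Sequence[float],
         max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences.

    Assumption: pixel values lie in [0, max_value]; the paper's actual
    normalization is not stated in this section.
    """
    if len(reference) != len(restored) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    # Mean squared error over all pixels.
    mse = sum((r - d) ** 2 for r, d in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy example: a 4-pixel "image" with a uniform error of 0.01 per pixel,
# so MSE is about 1e-4 and PSNR is about 40 dB.
clean = [0.20, 0.40, 0.60, 0.80]
dehazed = [0.21, 0.41, 0.61, 0.81]
print(round(psnr(clean, dehazed), 2))
```

Because PSNR is logarithmic in the error, the roughly 4 dB gap between DEA-Net and SDCNet in the table corresponds to a mean squared error about 2.5 times smaller under this definition.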

| Methods | SOTS Indoor (PSNR/dB) | SOTS Indoor (SSIM) | SOTS Outdoor (PSNR/dB) | SOTS Outdoor (SSIM) |
|---|---|---|---|---|
| CLAHE | 12.34 | 0.703 | 15.69 | 0.801 |
| DCP | 16.62 | 0.818 | 19.13 | 0.815 |
| AOD-Net | 19.06 | 0.850 | 20.29 | 0.877 |
| GridDehazeNet | 32.16 | 0.984 | 30.86 | 0.982 |
| FFA-Net | 36.39 | 0.989 | 33.57 | 0.984 |
| DEA-Net | 41.31 | 0.994 | 36.59 | 0.989 |
| SDCNet | 41.36 | 0.995 | 36.39 | 0.990 |

| Evaluation | GCANet | GridDehazeNet | MSBDN | FFA-Net | SDCNet |
|---|---|---|---|---|---|
| PSNR/dB | 30.482 | 32.163 | 33.672 | 36.395 | 43.216 |
| SSIM | 0.976 | 0.984 | 0.985 | 0.989 | 0.998 |
| Number of parameters | 702818 | 958051 | 31353061 | 832825 | 374822 |
| Running time (s) | 0.09076 | 0.17248 | 1.03280 | 0.13791 | 0.10451 |
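
To make the parameter comparison easier to read, the short sketch below expresses each model's size as a multiple of SDCNet's. The parameter counts are copied from the table above; the dictionary `params` and the helper `relative_size` are hypothetical names introduced only for this illustration.

```python
# Parameter counts copied from the comparison table above.
params = {
    "GCANet": 702_818,
    "GridDehazeNet": 958_051,
    "MSBDN": 31_353_061,
    "FFA-Net": 832_825,
    "SDCNet": 374_822,
}


def relative_size(model: str, baseline: str = "SDCNet") -> float:
    """Return how many times larger `model` is than `baseline`, by parameter count."""
    return params[model] / params[baseline]


for name in params:
    if name != "SDCNet":
        print(f"{name}: {relative_size(name):.2f}x the parameters of SDCNet")
```

By this measure every compared network is larger than SDCNet, with MSBDN more than 80 times larger. The running-time row tells a slightly different story: SDCNet (0.10451 s) is faster than GridDehazeNet, MSBDN, and FFA-Net but slower than GCANet (0.09076 s).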

| Methods | DCEM | AFM | CRM | PSNR (dB) | SSIM | #Param | FLOPs |
|---|---|---|---|---|---|---|---|
| Baseline | – | – | – | 35.231 | 0.981 | 0.3533 M | 3.3867 G |
| Baseline | ✓ | – | – | 39.315 | 0.991 | 0.3734 M | 3.7060 G |
| Baseline | ✓ | ✓ | – | 41.189 | 0.995 | 0.3748 M | 3.7164 G |
| Baseline | ✓ | – | ✓ | 42.178 | 0.998 | 0.3748 M | 3.7164 G |
| Baseline | ✓ | ✓ | ✓ | 43.216 | 0.998 | 0.3748 M | 3.7164 G |
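
The ablation rows can be summarized as gains and overheads relative to the baseline. The sketch below does only that arithmetic on the values in the table above; the row labels such as `+DCEM` and the helper `summarize` are hypothetical names chosen for this illustration.

```python
# (configuration, PSNR in dB, parameters in millions, FLOPs in G),
# copied from the ablation table above.
rows = [
    ("Baseline", 35.231, 0.3533, 3.3867),
    ("+DCEM", 39.315, 0.3734, 3.7060),
    ("+DCEM+AFM", 41.189, 0.3748, 3.7164),
    ("+DCEM+CRM", 42.178, 0.3748, 3.7164),
    ("+DCEM+AFM+CRM", 43.216, 0.3748, 3.7164),
]

_, base_psnr, base_params, base_flops = rows[0]


def summarize(row):
    """PSNR gain (dB) and relative parameter/FLOPs overhead (%) versus the baseline."""
    name, psnr_db, n_params, flops = row
    return {
        "name": name,
        "psnr_gain_db": round(psnr_db - base_psnr, 3),
        "param_overhead_pct": round(100 * (n_params - base_params) / base_params, 2),
        "flops_overhead_pct": round(100 * (flops - base_flops) / base_flops, 2),
    }


for row in rows[1:]:
    print(summarize(row))
```

Read this way, the full configuration gains about 8 dB PSNR over the baseline for roughly 6% more parameters and 10% more FLOPs, and DCEM alone accounts for about 4 dB of that gain.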

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jiang, L.; Yuan, S.; Mao, W.; Li, M.; Feng, A.; Bao, H. Substation Inspection Image Dehazing Method Based on Decomposed Convolution and Adaptive Fusion. Electronics 2025, 14, 3245. https://doi.org/10.3390/electronics14163245
