SOUTY: A Voice Identity-Preserving Mobile Application for Arabic-Speaking Amyotrophic Lateral Sclerosis Patients Using Eye-Tracking and Speech Synthesis

Abstract

1. Introduction

2. Related Work

2.1. Eye-Tracking and Gaze-Based Control

2.2. Speech Recognition and Audiovisual Monitoring

2.3. Multimodal Assistive Systems and BCI Integration

2.4. Arabic-Language Assistive Technologies

2.5. Design Motivation: Addressing Gaps Through SOUTY

- Predominant focus on users of English and other Western languages.

- Lack of dialect-specific Arabic voice banks.

- Dependence on synthetic speech, which weakens the emotional connection between users and their listeners.

- Limited offline operation, making tools less accessible in low-connectivity settings.

- A fully Arabic-language interface and phrase set designed for Saudi users;

- A pre-recorded Arabic voice bank from an ALS patient, enabling personalized and culturally resonant speech;

- Offline functionality that allows uninterrupted use without internet access;

- A lightweight, gaze-only interface deployable on mainstream iOS devices.

3. Methodology

3.1. Methodological Framework

- Requirement Analysis: We conducted a focused literature review and consulted with domain experts—including speech-language pathologists and HCI specialists—to understand the needs of Arabic-speaking ALS patients and the limitations of existing AAC systems.

- System Design and Prototyping: Based on initial requirements, we developed interaction flows, a modular system architecture, and interface wireframes. Design emphasis was placed on gaze-based interaction, cultural and linguistic relevance, offline operability, and personalization via voice banking.

- Implementation: The prototype was developed using Swift and Xcode for iOS, employing Apple’s ARKit and AVFoundation frameworks. All system components (eye-tracking, phrase selector, audio output, keyboard input, and database management) were built modularly for extensibility and testability.

- Validation and Iteration: SOUTY was evaluated through qualitative expert interviews, an online public awareness survey, and internal usability walkthroughs. Feedback from these evaluations informed refinements to the UI, audio engine, phrase bank, and calibration process. The expert interview sample (n = 2) was deemed sufficient for initial feedback because the two experts represent the key domains of speech-language pathology and HCI. The public survey sample (n = 236) was recruited through social media and academic channels using convenience sampling. The questionnaire was pilot-tested internally with 10 participants to ensure clarity, and descriptive statistics were used to summarize readiness and awareness.

3.2. Ethical Considerations

4. System Design and Architecture

4.1. Design Objectives

- Provide a gaze-controlled communication platform for ALS patients who have lost the ability to speak.

- Support Arabic as the primary language with culturally relevant phrase sets.

- Enable speech playback using a real recorded Arabic voice instead of synthetic speech.

- Run offline on iOS devices without requiring network access or external hardware.

- Offer both predefined phrases and custom sentence construction through a gaze-controlled keyboard.

- Prioritize high-utility phrases (e.g., caregiver interactions, daily needs, pain, or discomfort) to reduce time-to-speech and ensure rapid communication in common scenarios.

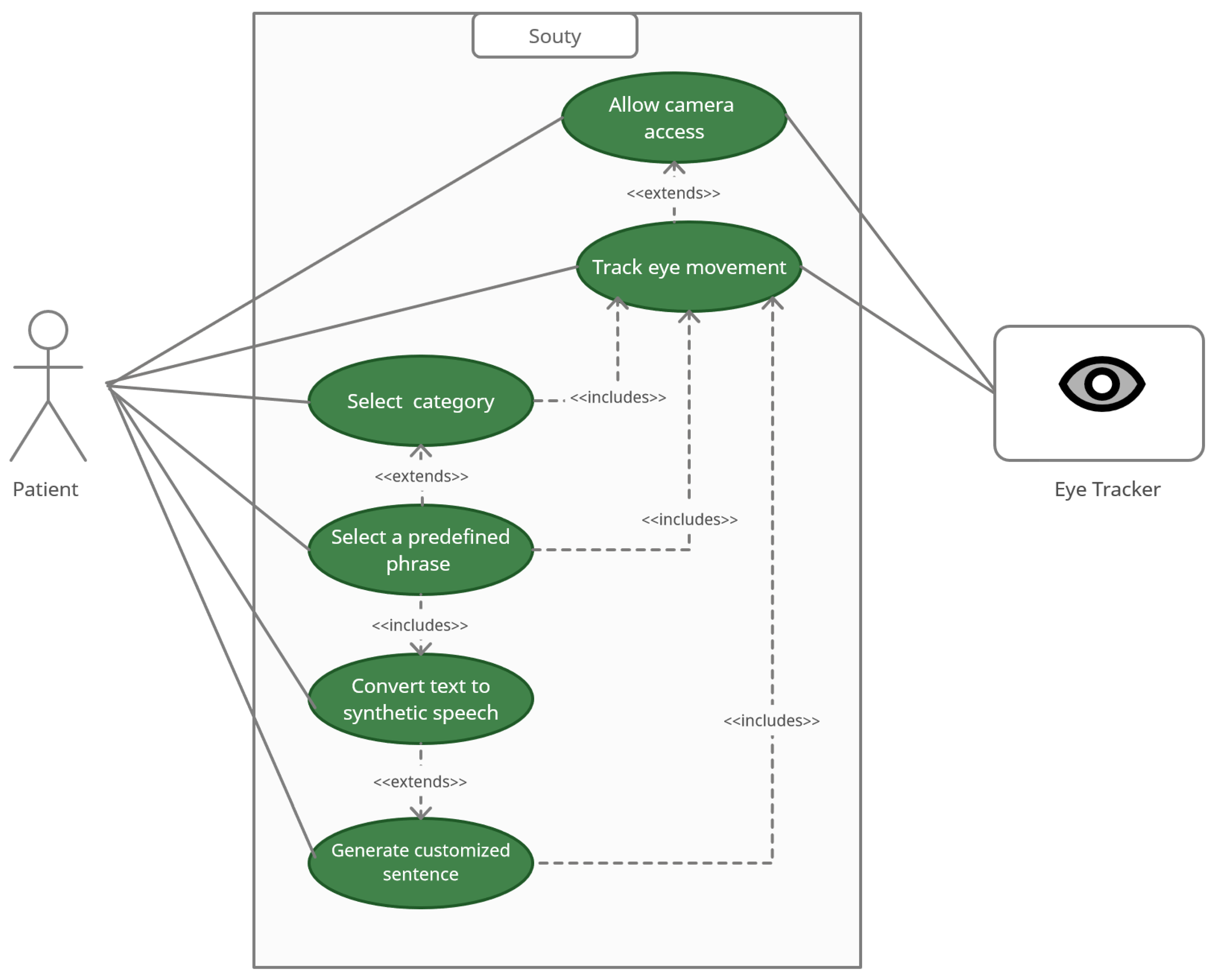

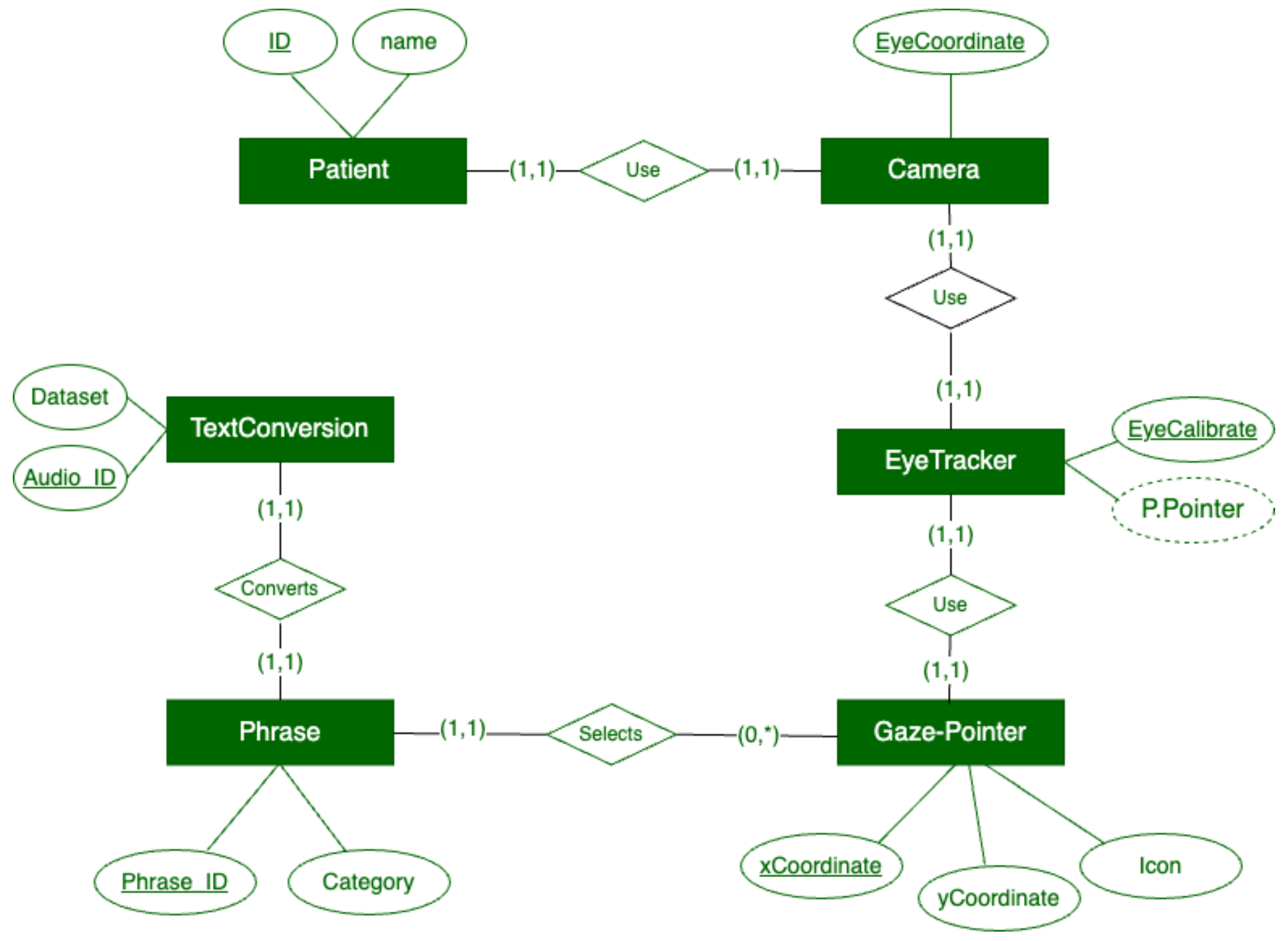

4.2. System Architecture

- Eye-Tracking Module: Utilizes the front-facing camera and ARKit to track gaze and detect dwell-time-based selections.

- Phrase Selector: Displays categorized, gaze-selectable Arabic phrases.

- Custom Keyboard: Allows the user to construct new phrases using an on-screen Arabic keyboard, also controlled by gaze.

- Audio Output Module: Maps selected phrases to audio files and plays them using AVFoundation.

- Voice Bank Repository: Stores pre-recorded WAV files, enabling natural speech output based on phrase selection.

- Logic Controller: Manages interaction flow, calibrates gaze input, and routes user selections to the appropriate output handler.
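The module boundaries above can be illustrated with a small, platform-independent Swift sketch. It assumes the Eye-Tracking Module has already turned a completed dwell into a discrete selection. The type names (`Selection`, `VoiceBankRepository`, `AudioOutput`, `LogicController`, `RecordingAudioOutput`) and the clip file name are hypothetical, and `AudioOutput` stands in for the AVFoundation-backed Audio Output Module so that the routing logic can be exercised without a device.

```swift
// Hypothetical sketch of the module boundaries in Section 4.2.
// Names are illustrative; they are not taken from the SOUTY source code.

/// A discrete selection emitted by the gaze layer once a dwell completes.
enum Selection: Equatable {
    case phrase(id: Int)   // a tile in the Phrase Selector
    case key(Character)    // a key on the gaze-controlled Arabic keyboard
}

/// Voice Bank Repository: maps phrase IDs to pre-recorded WAV file names.
struct VoiceBankRepository {
    let clips: [Int: String]
    func audioFile(for phraseID: Int) -> String? { clips[phraseID] }
}

/// Audio Output Module boundary; on iOS an implementation would wrap AVFoundation.
protocol AudioOutput {
    func play(file: String)
}

/// Logic Controller: routes each selection to the appropriate output handler.
final class LogicController {
    private let voiceBank: VoiceBankRepository
    private let audio: AudioOutput
    private(set) var typedMessage = ""   // text composed on the keyboard

    init(voiceBank: VoiceBankRepository, audio: AudioOutput) {
        self.voiceBank = voiceBank
        self.audio = audio
    }

    /// Handles one selection; returns the file that was played, if any.
    @discardableResult
    func handle(_ selection: Selection) -> String? {
        switch selection {
        case .phrase(let id):
            guard let file = voiceBank.audioFile(for: id) else { return nil }
            audio.play(file: file)
            return file
        case .key(let character):
            typedMessage.append(character)
            return nil
        }
    }
}

/// Test double that records playback requests instead of producing sound.
final class RecordingAudioOutput: AudioOutput {
    private(set) var played: [String] = []
    func play(file: String) { played.append(file) }
}

// Usage: one predefined phrase, then one keyboard letter.
let audio = RecordingAudioOutput()
let controller = LogicController(
    voiceBank: VoiceBankRepository(clips: [1: "need_help.wav"]),
    audio: audio
)
controller.handle(.phrase(id: 1))
controller.handle(.key("م"))
```

Keeping audio output behind a protocol is one way to make the Logic Controller unit-testable in isolation, in the spirit of the modular, testable design described in Section 3.1.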

4.3. User Interface (UI) Design

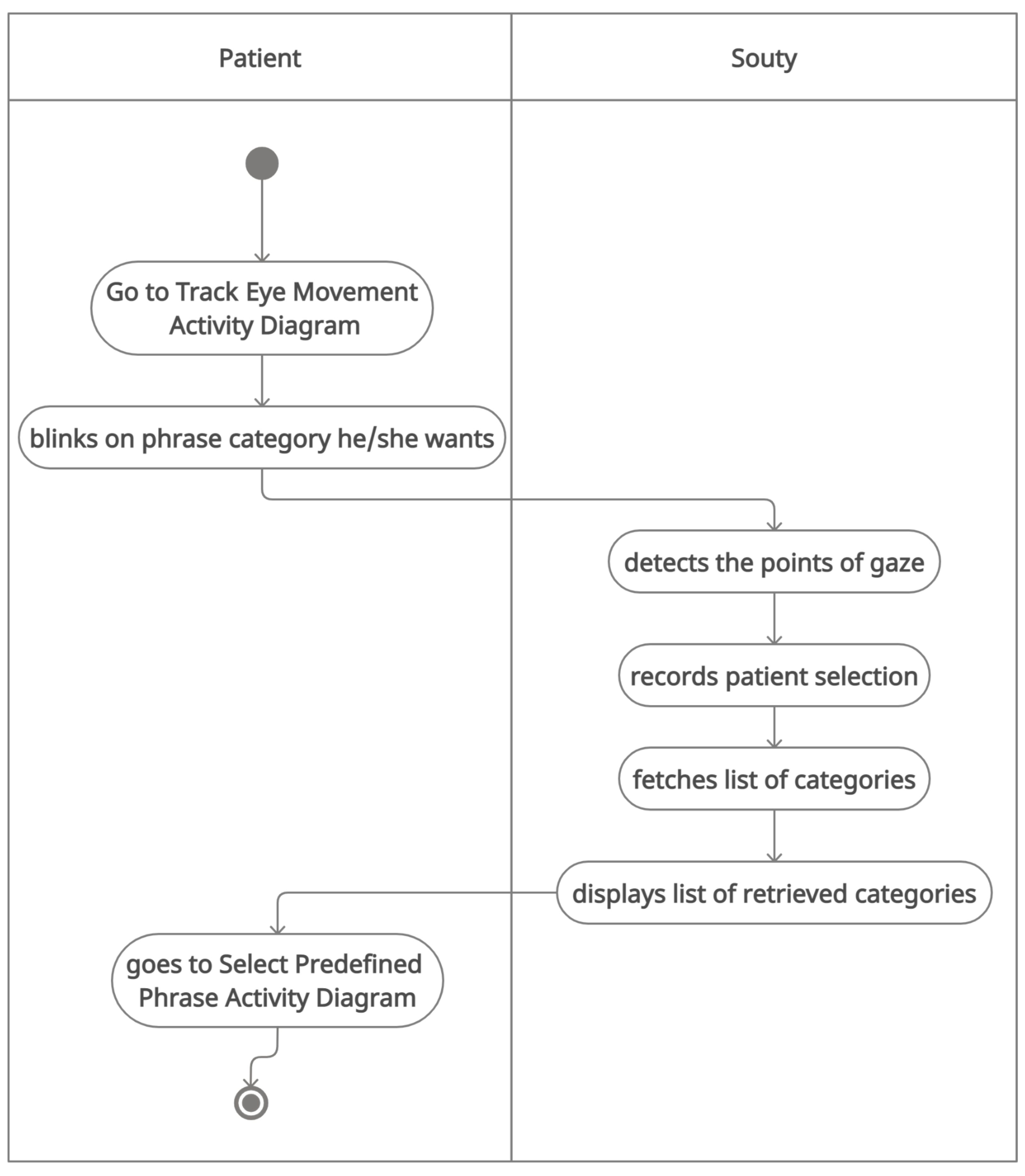

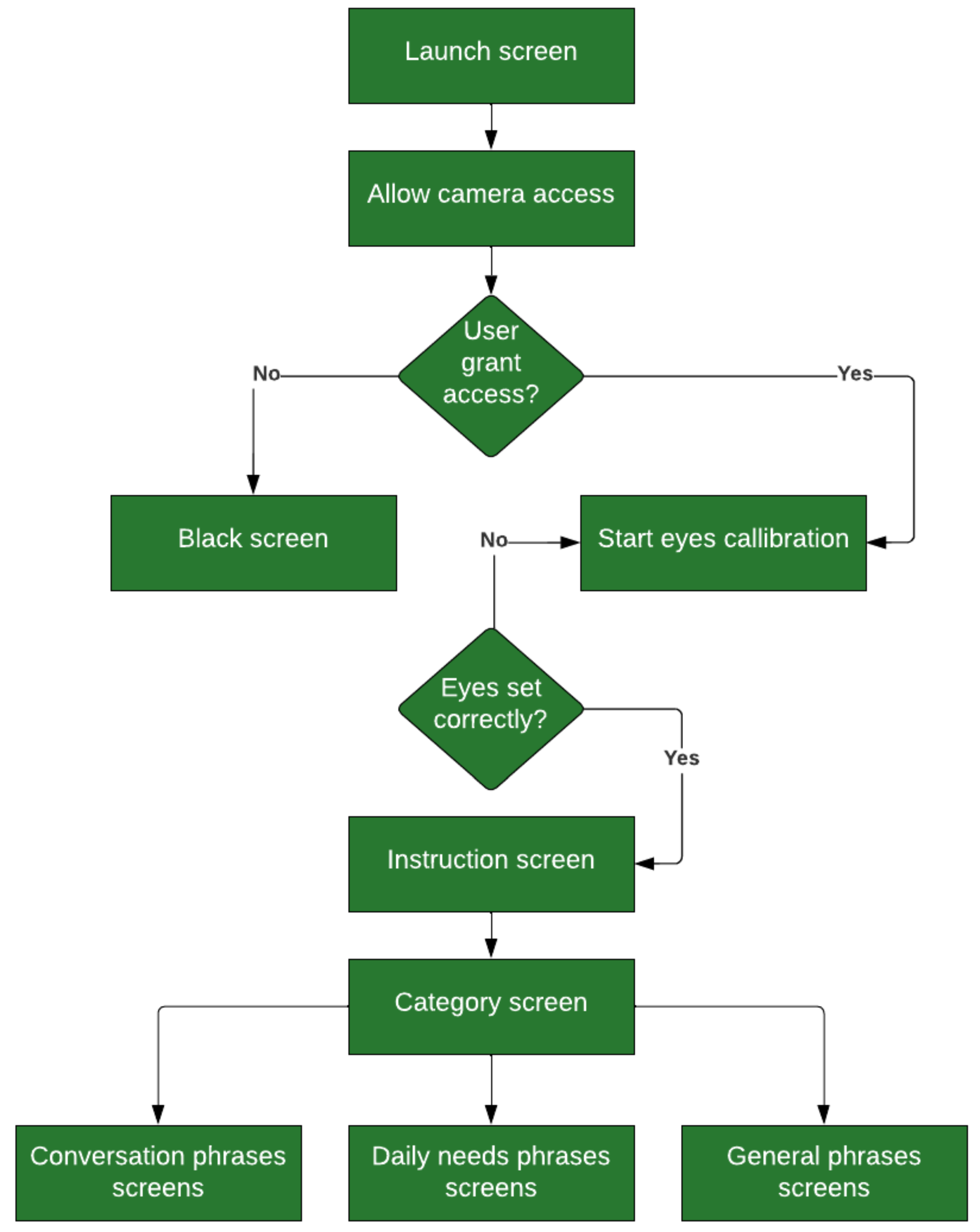

4.4. System Logic and Workflow

5. System Implementation

5.1. Platform and Tools

- ARKit: ARKit was used to track facial geometry through TrueDepth camera input. Specifically, ARKit’s ARFaceAnchor and eye transform data were used to estimate the user’s gaze direction and map it to on-screen coordinates. Gaze estimation was refined using device orientation and screen layout context to ensure robust interaction during natural head movement.

- AVFoundation: For precise playback of WAV files corresponding to selected phrases.

- UIKit: For rendering gaze-interactive RTL UI components and managing views.

- SQLite: For lightweight local database management of phrase categories and associated audio paths.
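The gaze-to-screen mapping described for ARKit reduces, at its core, to intersecting an eye ray with the screen plane. The following is a minimal sketch of that geometry only, assuming the eye position and look direction have already been expressed in a device-centred frame in metres. In ARKit, obtaining them would involve composing the face anchor's `transform` with its `leftEyeTransform`/`rightEyeTransform` and the inverse of the camera transform; that step, the offset between the camera and the screen centre, smoothing, and calibration are all omitted. `GazeRay`, `ScreenGeometry`, `screenPoint`, and the screen dimensions are hypothetical placeholders, not measurements of a specific device.

```swift
// Hypothetical sketch: map an eye ray to on-screen coordinates.
// Frame convention (assumed): origin at screen centre, +x right, +y up,
// +z out of the screen toward the user; all lengths in metres.

struct GazeRay {
    var origin: SIMD3<Double>     // eye position
    var direction: SIMD3<Double>  // look direction (need not be unit length)
}

struct ScreenGeometry {
    var physicalSize: SIMD2<Double>  // visible display width and height in metres
    var pointSize: SIMD2<Double>     // same display in UIKit points
}

/// Intersects the ray with the screen plane z = 0 and returns the hit point in
/// top-left-origin points, or nil if the ray misses the display.
func screenPoint(for ray: GazeRay, on screen: ScreenGeometry) -> SIMD2<Double>? {
    let dz = ray.direction.z
    guard dz < 0, ray.origin.z > 0 else { return nil }  // eye in front, looking at screen
    let t = -ray.origin.z / dz                          // ray parameter where z = 0
    let hit = ray.origin + t * ray.direction            // metres, centre origin
    let scale = screen.pointSize / screen.physicalSize  // points per metre, per axis
    let x = (hit.x + screen.physicalSize.x / 2) * scale.x
    let y = (screen.physicalSize.y / 2 - hit.y) * scale.y  // flip y: UIKit grows downward
    guard (0...screen.pointSize.x).contains(x),
          (0...screen.pointSize.y).contains(y) else { return nil }
    return SIMD2(x, y)
}

// Usage with placeholder screen dimensions (not a real device specification).
let screen = ScreenGeometry(physicalSize: SIMD2(0.07, 0.15), pointSize: SIMD2(390, 844))
let straightAhead = GazeRay(origin: SIMD3(0, 0, 0.3), direction: SIMD3(0, 0, -1))
let centre = screenPoint(for: straightAhead, on: screen)
let awayFromScreen = GazeRay(origin: SIMD3(0, 0, 0.3), direction: SIMD3(0, 0, 1))
```

The resulting point would then be hit-tested against the interactive screen zones; a production version would typically also average both eyes and filter jitter before doing so.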

5.2. Modular Software Components

- Eye Tracking Module: Captures user gaze vectors using ARKit’s face anchors. Coordinates are mapped to interactive screen zones.

- UI Manager: Manages RTL interface layouts, tile highlighting, keyboard display, and gaze-based dwell timers.

- Audio Output Module: Interfaces with AVFoundation to load and play voice recordings.

- Phrase Manager: Interfaces with SQLite to retrieve phrases and audio mappings based on user selections.

- Voice Bank Repository: Contains WAV files recorded in Saudi Arabic by a real ALS patient, mapped to corresponding semantic phrases.
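The UI Manager's gaze-based dwell timers are not specified in detail here, so the following is a sketch under stated assumptions: a selection fires once when the gaze stays on the same target for `dwellDuration` seconds (the 1.0 s below is an arbitrary placeholder), the timer restarts whenever the gaze moves to another target or off all targets, and the same target cannot fire again until the gaze leaves it. `DwellSelector` and its methods are hypothetical names.

```swift
/// Hypothetical dwell-time selector for gaze input. Fires once when the gaze
/// stays on the same target for at least `dwellDuration` seconds.
struct DwellSelector<Target: Hashable> {
    let dwellDuration: Double   // seconds; an assumed, user-tunable threshold
    private var current: Target? = nil
    private var enteredAt = 0.0
    private var fired = false

    init(dwellDuration: Double) {
        self.dwellDuration = dwellDuration
    }

    /// Feeds one gaze sample: the target under the gaze point (nil if none)
    /// and a timestamp in seconds. Returns the target when a dwell completes.
    mutating func update(target: Target?, at time: Double) -> Target? {
        if target != current {
            // Gaze moved to a different target or off all targets: restart.
            current = target
            enteredAt = time
            fired = false
            return nil
        }
        guard let dwelling = target, !fired,
              time - enteredAt >= dwellDuration else { return nil }
        fired = true  // the gaze must leave this target before it can fire again
        return dwelling
    }

    /// Dwell progress in [0, 1], e.g. to drive a filling highlight on the tile.
    func progress(at time: Double) -> Double {
        guard current != nil else { return 0 }
        if fired { return 1 }
        return min(max((time - enteredAt) / dwellDuration, 0), 1)
    }
}

// Usage: gaze rests on "help" long enough to fire, then briefly on "pain".
var selector = DwellSelector<String>(dwellDuration: 1.0)
var selections: [String] = []
let samples: [(String?, Double)] = [
    ("help", 0.0), ("help", 0.5), ("help", 1.0), ("help", 1.5),
    (nil, 1.6), ("pain", 1.7), ("pain", 2.2),
]
for (target, time) in samples {
    if let selected = selector.update(target: target, at: time) {
        selections.append(selected)
    }
}
```

Requiring the gaze to leave a tile before it can fire again is one common guard against repeated accidental selections; making `dwellDuration` configurable is also one way to support the dwell-time tuning mentioned in Section 8.2.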

5.3. Calibration and Interaction Flow

5.4. Database and Audio Playback Integration

5.5. System Screen Flow

6. System Testing

6.1. Unit Testing

6.2. Integration Testing

6.3. Performance Testing

6.4. Summary of Technical Testing

7. Evaluation and Results

7.1. Expert Interviews

7.2. Public Awareness Survey

7.3. Usability Walkthroughs

- Granting camera access and completing gaze calibration;

- Navigating to and selecting a predefined phrase category;

- Playing a pre-recorded audio phrase;

- Constructing a custom message using the on-screen Arabic keyboard.

7.4. Qualitative Effectiveness Evaluation (Internal Walkthroughs)

8. Discussion

8.1. Novelty and Differentiators

- Arabic-first design: Unlike most gaze-based AAC tools that support English or generic Latin scripts, SOUTY was developed entirely in Arabic, featuring a user interface, a keyboard, and phrase categories specifically tailored to the Arabic script and cultural context. This design addresses the needs of underserved speakers of one of the most widely spoken languages in the world.

- Real voice bank: SOUTY uses a dataset of pre-recorded Arabic phrases by an ALS patient, preserving speaker identity and emotional expressiveness. This approach offers a more natural alternative to synthesized voices and resonates with users on a personal level.

- Offline operation: All functionalities—gaze tracking, phrase navigation, and audio playback—work without requiring internet access. This enhances privacy, lowers cost, and makes the app usable in home care and rural settings.

- Localized phrase design: Phrase categories were curated based on needs identified by medical experts and families of ALS patients. Categories such as “Daily Needs,” “General Phrases,” and “Conversations” reflect everyday communication scenarios. Internal walkthroughs confirmed that frequently used phrases—such as “I need help,” “I am in pain,” and “Please call someone”—could be selected and vocalized in under 5 s, validating the system’s suitability for urgent or high-frequency communication needs.
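The under-5 s figure can be related to the interaction parameters with a simple additive model. This is an illustrative estimate, not a reported measurement. It assumes that reaching a predefined phrase takes $n$ dwell selections (for example, $n = 2$: a category tile, then the phrase tile), each costing a gaze-shift time $t_{\text{move}}$ plus the dwell threshold $t_{\text{dwell}}$, followed by the playback latency $t_{\text{play}}$, which performance testing bounded at under 0.3 s:

$$
T_{\text{speech}} \approx n\,\bigl(t_{\text{move}} + t_{\text{dwell}}\bigr) + t_{\text{play}}
$$

With $n = 2$ and $t_{\text{play}} < 0.3$ s, a sufficient condition for $T_{\text{speech}} < 5$ s is

$$
t_{\text{move}} + t_{\text{dwell}} < \frac{5 - 0.3}{2}\ \text{s} = 2.35\ \text{s}.
$$

For instance, a hypothetical 1 s dwell threshold would leave up to 1.35 s per selection for moving the gaze to the next tile.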

8.2. Design Trade-Offs

- Voice customization vs. scalability: Using pre-recorded audio provides authenticity but limits the ability to scale across dialects or genders. A future version may require hybrid models that combine recorded anchors with synthetic speech.

- Hardware dependence: SOUTY currently supports only iOS devices with front-facing cameras. Expanding to Android will require platform-specific calibration and UI adaptations.

- User fatigue: Extended use of eye-tracking can be tiring for some users. While dwell-time tuning helps, additional input modes (e.g., blink or facial gesture recognition) may be beneficial in future versions.

8.3. Comparison with Commercial Applications

8.4. Ethical and Cultural Considerations

8.5. Lessons Learned

9. Conclusions and Future Work

Future Directions

- Cross-platform support: Extend SOUTY to Android devices and the web to reach a wider audience, including users of low-cost smartphones and tablets.

- Dynamic TTS integration: Explore hybrid models that combine pre-recorded anchors with Arabic deep learning-based TTS to offer real-time sentence flexibility while preserving voice identity.

- Voice diversity: Expand the voice bank to include female and child voices, allowing users to select a suitable voice or to record and preserve their own voice in the early stages after an ALS diagnosis.

- Custom phrase creation: Enable users or caregivers to add custom phrases, favorite phrases, and shortcut screens (e.g., for emergencies).

- Clinical validation: Conduct longitudinal trials with ALS patients and caregivers to evaluate performance, satisfaction, and real-world health outcomes.

- Internationalization: Collaborate with global accessibility networks and health organizations such as the World Health Organization (WHO) to localize SOUTY for other Arabic dialects and regions.

- Scalable deployment: Extend SOUTY to support secure multi-user profiles on shared devices (e.g., in clinics or long-term care facilities), with optional cloud-based synchronization for larger voice datasets.
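The hybrid direction under Dynamic TTS integration (and in the scalability trade-off of Section 8.2) could take many forms. One simple possibility, sketched here as an assumption rather than a planned design, is a greedy longest-match planner: it covers as much of a typed message as possible with recorded voice-bank phrases and hands only the leftover words to a synthesizer (for example `AVSpeechSynthesizer` or an Arabic neural TTS model, neither of which is shown). `SpeechSegment`, `planUtterance`, `maxPhraseWords`, and the clip file names are hypothetical. Matching is on exact, whitespace-separated text, so a real system would also need Arabic text normalization (e.g., of hamza and diacritic variants).

```swift
/// One piece of a hybrid utterance.
enum SpeechSegment: Equatable {
    case recorded(file: String)     // clip from the patient's voice bank
    case synthesized(text: String)  // leftover words for a TTS engine
}

/// Hypothetical greedy longest-match planner: prefers the longest recorded
/// phrase starting at each word; unmatched words are grouped for synthesis.
func planUtterance(_ message: String,
                   voiceBank: [String: String],
                   maxPhraseWords: Int = 6) -> [SpeechSegment] {
    let words = message.split(separator: " ").map(String.init)
    var segments: [SpeechSegment] = []
    var pending: [String] = []  // consecutive words with no recording yet
    var i = 0

    func flushPending() {
        guard !pending.isEmpty else { return }
        segments.append(.synthesized(text: pending.joined(separator: " ")))
        pending.removeAll()
    }

    while i < words.count {
        var matchedLength = 0
        // Try the longest span first so whole recorded phrases beat fragments.
        for length in stride(from: min(maxPhraseWords, words.count - i), through: 1, by: -1) {
            let span = words[i..<(i + length)].joined(separator: " ")
            if let file = voiceBank[span] {
                flushPending()
                segments.append(.recorded(file: file))
                matchedLength = length
                break
            }
        }
        if matchedLength == 0 {
            pending.append(words[i])
            i += 1
        } else {
            i += matchedLength
        }
    }
    flushPending()
    return segments
}

// Usage: "I need help adjusting the pillow now"; only "I need help" and
// "now" exist as recordings in this toy voice bank.
let voiceBank = ["أحتاج مساعدة": "need_help.wav", "الآن": "now.wav"]
let plan = planUtterance("أحتاج مساعدة في تعديل الوسادة الآن", voiceBank: voiceBank)
```

Preferring the longest recorded span keeps as much of the utterance as possible in the patient's own voice; the trade-off is audible switching between recorded and synthetic segments, which a real system would need to evaluate with users.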

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AAC | Augmentative and Alternative Communication |
| AI | Artificial Intelligence |
| ALS | Amyotrophic Lateral Sclerosis |
| BCI | Brain–Computer Interface |
| CETI | Communication Effectiveness Index |
| ERD | Entity-Relationship Diagram |
| HCI | Human–Computer Interaction |
| iOS | iPhone Operating System |
| LLM | Large Language Model |
| RTL | Right-to-Left |
| SOUTY | Voice Identity Preserving Arabic AAC Application |
| SUS | System Usability Scale |
| TTS | Text-to-Speech |
| UI | User Interface |
| UCD | User-Centered Design |

References

- Londral, A. Assistive Technologies for Communication Empower Patients with ALS to Generate and Self-Report Health Data. Front. Neurol. 2022, 13, 867567.

- Mehta, P.; Raymond, J.; Nair, T.; Han, M.; Berry, J.; Punjani, R.; Larson, T.; Mohidul, S.; Horton, D.K. Amyotrophic lateral sclerosis estimated prevalence cases from 2022 to 2030, data from the National ALS Registry. Amyotroph. Lateral Scler. Front. Degener. 2025, 26, 290–295.

- Arthur, K.C.; Calvo, A.; Price, T.R.; Geiger, J.T.; Chiò, A.; Traynor, B.J. Projected increase in amyotrophic lateral sclerosis from 2015 to 2040. Nat. Commun. 2016, 7, 12408.

- Abuzinadah, A.R.; AlShareef, A.A.; AlKutbi, A.; Bamaga, A.K.; Alshehri, A.; Algahtani, H.; Cupler, E.; Alanazy, M.H. Amyotrophic lateral sclerosis care in Saudi Arabia: A survey of providers’ perceptions. Brain Behav. 2020, 10, e01795.

- UNESCO. World Arabic Language Day. 2024. Available online: https://www.unesco.org/en/world-arabic-language-day (accessed on 10 July 2025).

- World Population Review. Arabic Speaking Countries 2025. 2025. Available online: https://worldpopulationreview.com/country-rankings/arabic-speaking-countries (accessed on 10 July 2025).

- Edughele, H.O.; Zhang, Y.; Muhammad-Sukki, F.; Vien, Q.T.; Morris-Cafiero, H.; Opoku Agyeman, M. Eye-Tracking Assistive Technologies for Individuals With Amyotrophic Lateral Sclerosis. IEEE Access 2022, 10, 41952–41972.

- Bonanno, M.; Saracino, B.; Ciancarelli, I.; Panza, G.; Manuli, A.; Morone, G.; Calabrò, R.S. Assistive Technologies for Individuals with a Disability from a Neurological Condition: A Narrative Review on the Multimodal Integration. Healthcare 2025, 13, 1580.

- Fischer-Janzen, A.; Wendt, T.M.; Van Laerhoven, K. A scoping review of gaze and eye tracking-based control methods for assistive robotic arms. Front. Robot. AI 2024, 11, 1326670.

- Cave, R.; Bloch, S. The use of speech recognition technology by people living with amyotrophic lateral sclerosis: A scoping review. Disabil. Rehabil. Assist. Technol. 2023, 18, 1043–1055.

- Neumann, M.; Roesler, O.; Liscombe, J.; Kothare, H.; Suendermann-Oeft, D.; Pautler, D.; Navar, I.; Anvar, A.; Kumm, J.; Norel, R.; et al. Investigating the Utility of Multimodal Conversational Technology and Audiovisual Analytic Measures for the Assessment and Monitoring of Amyotrophic Lateral Sclerosis at Scale. arXiv 2021, arXiv:2104.07310.

- Hoyle, A.C.; Stevenson, R.; Leonhardt, M.; Gillett, T.; Martinez-Hernandez, U.; Gompertz, N.; Clarke, C.; Cazzola, D.; Metcalfe, B.W. Exploring the ‘EarSwitch’ Concept: A Novel Ear-Based Control Method for Assistive Technology. J. NeuroEngineering Rehabil. 2024, 21, 210.

- Kew, S.Y.N.; Mok, S.Y.; Goh, C.H. Machine learning and brain-computer interface approaches in prognosis and individualized care strategies for individuals with amyotrophic lateral sclerosis: A systematic review. MethodsX 2024, 13, 102765.

- Zhou, S.; Armstrong, M.; Barbareschi, G.; Ajioka, T.; Hu, Z.; Ando, R.; Yoshifuji, K.; Muto, M.; Minamizawa, K. Augmented Body Communicator: Enhancing daily body expression for people with upper limb limitations through LLM and a robotic arm. arXiv 2025, arXiv:2505.05832.

- Hawkeye Labs, Inc. Hawkeye Labs, Inc.–Apple App Store Developer Profile. 2025. Available online: https://apps.apple.com/us/developer/hawkeye-labs-inc/id1439231626 (accessed on 10 July 2025).

- Vocable AAC (Voiceitt Inc.). Vocable AAC (Version—); Augmentative and Alternative Communication App. 2025. Available online: https://apps.apple.com/us/app/vocable-aac/id1497040547 (accessed on 10 July 2025).

- EyeTech Digital Systems, Inc. EyeTech Digital Systems—Eye Tracking and Speech-Generating Devices. 2025. Available online: https://eyetechds.com/ (accessed on 10 July 2025).

- Tobii AB. Tobii—Eye Tracking and Attention Computing. 2025. Available online: https://www.tobii.com/ (accessed on 10 July 2025).

- Benabid Najjar, A.; Al-Wabil, A.; Hosny, M.; Alrashed, W.; Alrubaian, A. Usability Evaluation of Optimized Single-Pointer Arabic Keyboards Using Eye Tracking. Adv. Hum. Comput. Interact. 2021, 2021, 6657155.

- Ning, Y.; He, S.; Wu, Z.; Xing, C.; Zhang, L.J. A Review of Deep Learning Based Speech Synthesis. Appl. Sci. 2019, 9, 4050.

- ISO 9241-11:2018; Ergonomics of Human-System Interaction—Part 11: Usability: Definitions and Concepts. International Organization for Standardization: Geneva, Switzerland, 2018.

| Module | Test Action | Result |
|---|---|---|
| Eye Tracking Engine | Eye coordinates detection | Pass |
| Phrase Selector | Gaze selection and highlight | Pass |
| Audio Playback Handler | WAV playback via AVFoundation | Pass |
| Custom Keyboard | Dwell-based character input | Pass |
| Database Access | Phrase retrieval via SQLite | Pass |

| Metric | Observed Value |
|---|---|
| CPU Usage | 28% (steady during gaze tracking and playback) |
| Memory Consumption | 132 MB |
| App Launch Time | 1.2 s |
| Audio Playback Latency | <0.3 s |

| Task No. | Description |
|---|---|
| 1 | Launch app and allow camera access |
| 2 | Complete gaze calibration |
| 3 | Navigate to phrase category |
| 4 | Select and play phrase |
| 5 | Compose message with keyboard |

| Participant | All Tasks Completed | Notes |
|---|---|---|
| P1 | Yes | Smooth tracking |
| P2 | Yes | Needed calibration repeat |
| P3 | Yes | Natural voice output appreciated |
| P4 | Yes | Suggested more phrase icons |
| P5 | Yes | Gaze accuracy was high |

| Feature | Hawkeye Access | Vocable AAC | SOUTY |
|---|---|---|---|
| Language Support | English only | English only | Arabic (Saudi dialect) |
| Offline Mode | Partial | Yes | Fully supported |
| TTS Source | Synthetic voice | Synthetic voice | Human voice bank |
| Device Platform | iOS only | iOS only | iOS only |
| Phrase Customization | Limited | Moderate | Predefined + Keyboard |
| User Interface | Western-centric | English phrases | Arabic UI and flow |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alsalamah, H.A.; Alhabrdi, L.; Alsebayel, M.; Almisned, A.; Alhadlaq, D.; Albadrani, L.S.; Alsalamah, S.M.; AlSalamah, S. SOUTY: A Voice Identity-Preserving Mobile Application for Arabic-Speaking Amyotrophic Lateral Sclerosis Patients Using Eye-Tracking and Speech Synthesis. Electronics 2025, 14, 3235. https://doi.org/10.3390/electronics14163235

Chicago/Turabian Style: Alsalamah, Hessah A., Leena Alhabrdi, May Alsebayel, Aljawhara Almisned, Deema Alhadlaq, Loody S. Albadrani, Seetah M. Alsalamah, and Shada AlSalamah. 2025. "SOUTY: A Voice Identity-Preserving Mobile Application for Arabic-Speaking Amyotrophic Lateral Sclerosis Patients Using Eye-Tracking and Speech Synthesis" Electronics 14, no. 16: 3235. https://doi.org/10.3390/electronics14163235
