Multi-Source Heterogeneous Data Fusion Algorithm for Vessel Trajectories in Canal Scenarios

Abstract

1. Introduction

- (1)

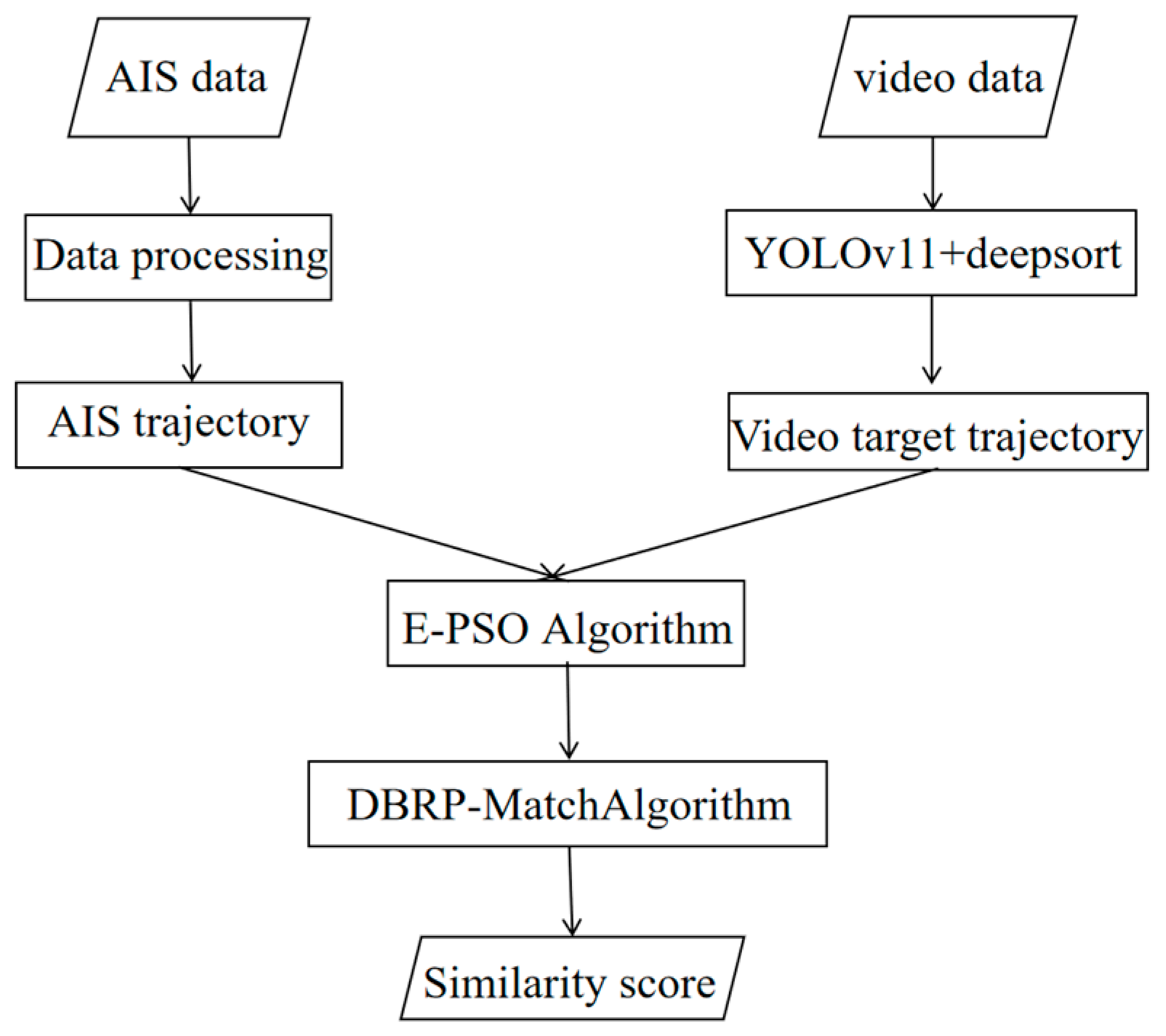

- This paper addresses the limitations of spatial coordinate transformation algorithms across heterogeneous coordinate systems and the insufficient correction of trajectory information at multiple granularities. Prior to trajectory matching, this study employed the Enhanced Particle Swarm Optimization (E-PSO) algorithm to perform optimal rigid transformation correction on Automatic Identification System (AIS) data, thereby mitigating the degradation of the matching precision caused by coordinate transformation errors.

- (2) Traditional trajectory-matching algorithms rely solely on either local point-to-point alignment or contour-based analysis of multi-source features. To overcome this limitation, this study proposes a trajectory-matching algorithm for asynchronous multi-source data that incorporates a distance-based reward–penalty mechanism. By comparing distances between local sampling points and applying dynamic rewards and penalties, the method improves both local sensitivity and global optimization capability when processing information of varying granularity in complex multi-source scenarios. It accounts for global trajectory shape similarity as well as multi-scale matching and inference for locally discrete points. Building on this work, the SSGDA framework is developed to adaptively balance local and global search priorities, thereby improving the practical performance of trajectory matching on information of varying granularity.

2. Related Work

2.1. Multi-Target Detection and Tracking

2.2. Trajectory Matching

2.3. Comparative Analysis of Various Multi-Source Fusion Frameworks

3. Methods

3.1. Trajectory Extraction Based on AIS Data

3.1.1. AIS Data Processing

3.1.2. AIS Coordinate Transformation

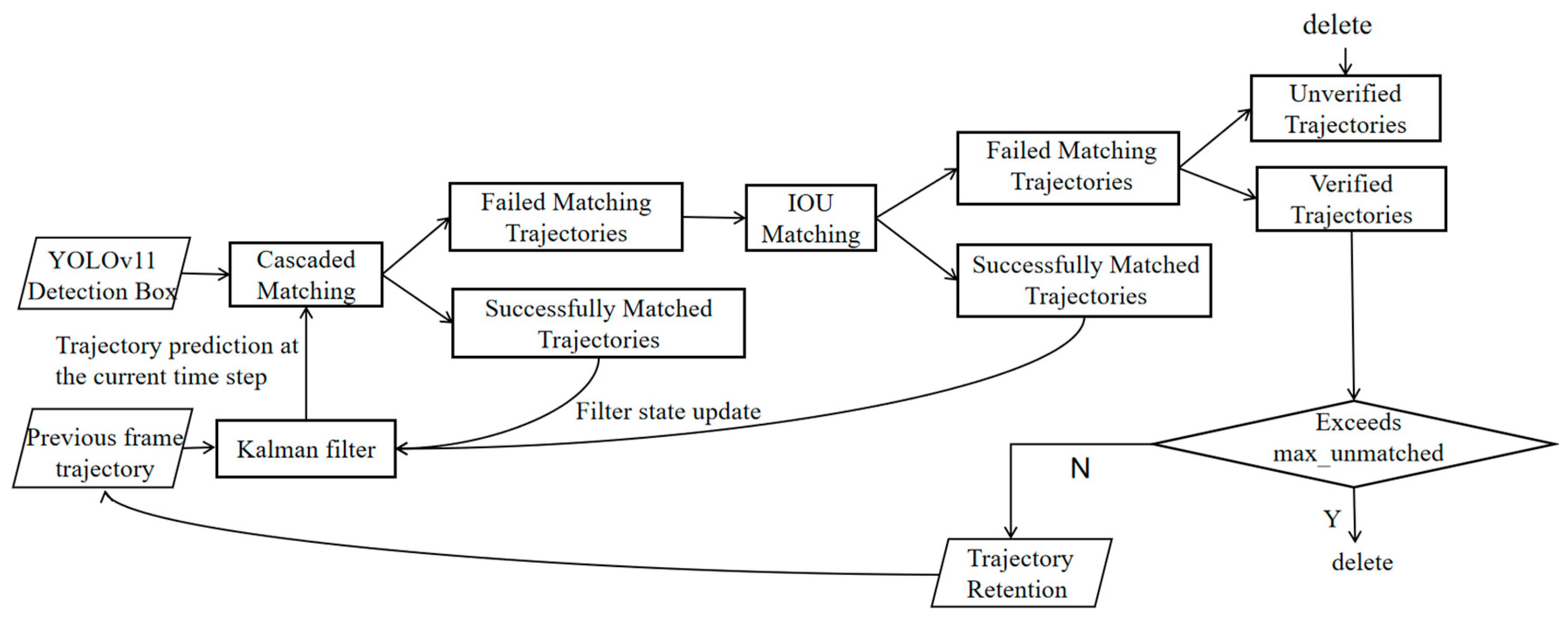

3.2. Video-Based Trajectory Extraction

- (1) Kalman filter

- (2) Feature extraction

- (3) Hungarian algorithm
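The interplay of the three steps above can be illustrated with a minimal sketch. It is not the tracker used in the paper: the constant-velocity motion model, the noise values `q` and `r`, and the class and function names are all assumptions made for illustration. Appearance feature extraction is omitted, so the association cost is purely positional, and the minimum-cost assignment (the problem the Hungarian algorithm solves) is found here by brute force, which is only practical for a handful of targets.

```python
import math
from itertools import permutations


class ConstantVelocityKF:
    """1-D constant-velocity Kalman filter with state (position, velocity)."""

    def __init__(self, pos, q=0.01, r=1.0):
        self.x = [pos, 0.0]                    # state estimate
        self.P = [[1.0, 0.0], [0.0, 1.0]]      # state covariance
        self.q, self.r = q, r                  # process / measurement noise (assumed values)

    def predict(self, dt=1.0):
        p, v = self.x
        (a, b), (c, d) = self.P
        self.x = [p + dt * v, v]
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]] and Q = q * I
        self.P = [[a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
                  [c + dt * d, d + self.q]]
        return self.x[0]

    def update(self, z):
        (a, b), (c, d) = self.P
        s = a + self.r                         # innovation variance, H = [1, 0]
        k0, k1 = a / s, c / s                  # Kalman gain
        y = z - self.x[0]                      # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]


def associate(predicted, detections):
    """Minimum-cost one-to-one assignment; needs len(predicted) <= len(detections)."""
    cost = [[math.dist(p, z) for z in detections] for p in predicted]
    best = min(permutations(range(len(detections)), len(predicted)),
               key=lambda perm: sum(cost[i][j] for i, j in enumerate(perm)))
    return list(enumerate(best))               # (track index, detection index) pairs


# Two hypothetical tracks, each with one filter per image axis.
tracks = [(ConstantVelocityKF(0.0), ConstantVelocityKF(0.0)),
          (ConstantVelocityKF(10.0), ConstantVelocityKF(0.0))]
detections = [(10.5, 0.2), (0.4, -0.1)]        # detections arrive in arbitrary order

predicted = [(kx.predict(), ky.predict()) for kx, ky in tracks]
matches = associate(predicted, detections)
for trk, det in matches:
    kx, ky = tracks[trk]
    kx.update(detections[det][0])
    ky.update(detections[det][1])
```

In a full tracker the cost matrix would typically blend this positional term with an appearance distance from the feature-extraction step, and a polynomial-time solver would replace the brute-force search.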

3.3. Trajectory Fusion of Multi-Source Heterogeneous Vessel Data

3.3.1. Enhanced Particle Swarm Optimization

| Algorithm 1. Rigid transformation of the E-PSO algorithm | |
|---|---|
| Input: original trajectory, PSO parameters, sparsity regularization parameter | |
| Output: optimal transformation parameters; FinalTrajectory ← final transformed trajectory | |
| 1: | Initialization: Maximum number of particles , Particle parameters , (particle’s best position), (global best particle position) |
| 2: | for in 0, 1, 2, …, |
| 3: | |
| 4: | Construct , using cubic spline interpolation |
| 5: | Compute normalized curvature: . Generate downsampling sequence using: |
| 6: | Iterative update: |
| 7: | while < K do |
| 8: | for each ∈ {1, 2, 3, …, n} do |
| 9: | |
| 10: | |
| 11: | update and |
| 12: | end |
| 13: | delta = |
| 14: | if delta < |
| 15: | no_improve = no_improve + 1 |
| 16: | end |
| 17: | Return T, FinalTrajectory |
| 18: | End |
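The iterative part of Algorithm 1 (particle updates, personal and global bests, and the `delta` / `no_improve` stopping rule) can be sketched as follows. This is a plain PSO under stated assumptions, not the paper's E-PSO: the cost function (mean nearest-point distance), the search ranges, the coefficients `w`, `c1`, `c2`, and the `patience` limit are all hypothetical, and the spline interpolation, curvature-based downsampling, and sparsity regularization steps are left out.

```python
import math
import random


def transform(points, theta, tx, ty):
    """Apply a 2-D rigid transformation (rotation by theta, then translation)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def mean_nearest_distance(src, ref):
    """Assumed alignment cost: mean distance from each src point to its nearest ref point."""
    return sum(min(math.dist(p, q) for q in ref) for p in src) / len(src)


def pso_rigid(src, ref, n_particles=30, max_iter=100, w=0.7, c1=1.5, c2=1.5,
              tol=1e-6, patience=15, seed=0):
    rng = random.Random(seed)

    def cost(prm):
        return mean_nearest_distance(transform(src, *prm), ref)

    # The first particle is the identity, so the result is never worse than no correction.
    pos = [[0.0, 0.0, 0.0]] + [
        [rng.uniform(-math.pi / 6, math.pi / 6), rng.uniform(-5, 5), rng.uniform(-5, 5)]
        for _ in range(n_particles - 1)]
    vel = [[0.0] * 3 for _ in pos]
    pbest = [p[:] for p in pos]
    pbest_cost = [cost(p) for p in pos]
    g = min(range(len(pos)), key=pbest_cost.__getitem__)
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]

    no_improve = 0
    for _ in range(max_iter):
        prev = gbest_cost
        for i in range(len(pos)):
            for d in range(3):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            c_i = cost(pos[i])
            if c_i < pbest_cost[i]:
                pbest[i], pbest_cost[i] = pos[i][:], c_i
                if c_i < gbest_cost:
                    gbest, gbest_cost = pos[i][:], c_i
        delta = prev - gbest_cost                  # improvement of the global best
        no_improve = no_improve + 1 if delta < tol else 0
        if no_improve >= patience:                 # stop after repeated stagnation
            break
    return tuple(gbest), gbest_cost


# Synthetic example: a reference (video) track and a misaligned copy standing in for AIS.
ref = [(float(i), 0.05 * i * i) for i in range(15)]
src = transform(ref, 0.1, 2.0, -1.0)
params, best_cost = pso_rigid(src, ref)
final_trajectory = transform(src, *params)
```

Seeding the swarm with the identity transformation is a design choice for the sketch: it guarantees that the corrected trajectory is at least as close to the reference as the uncorrected one under the chosen cost.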

3.3.2. Trajectory-Matching Algorithm (DBRP-Match)

- (1) Dynamic distance penalty mechanism:

- (2) Time penalty mechanism:

- (3) Dynamic reward mechanism:

| Algorithm 2. DBRP-Match Trajectory-Matching Algorithm | |
|---|---|
| Input: a set of video trajectory points; a set of AIS trajectory points; a matching tolerance distance threshold | |
| Output: similarity score | |
| 1: | : |
| Set | |
| 2: | : |
| 3: | : |
| 4: | |
| 5: | |
| 6: | |
| 7: | else |
| 8: | |
| 9: | ← minimum total cost of trajectory matching |
| 10: | |
| # Compute the normalized trajectory similarity | |
| 11: | Return similarity |
| 12: | End |
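The overall shape of Algorithm 2 (a per-pair cost that depends on a tolerance threshold, a minimum total matching cost, and a normalized similarity) can be sketched with a dynamic-programming alignment. Every formula below is an assumption made for illustration, since the paper's expressions are not reproduced here: pairs within the tolerance `eps` get a discounted cost (standing in for the reward), pairs beyond it get a quadratically growing cost (standing in for the dynamic distance penalty), a term proportional to the timestamp gap stands in for the time penalty, and the names `pair_cost`, `dbrp_similarity`, `discount`, and `time_weight` are hypothetical.

```python
import math


def pair_cost(p, q, eps, discount=0.5, time_weight=0.1):
    """Cost of matching two (t, x, y) samples under the assumed reward/penalty rules."""
    (tp, xp, yp), (tq, xq, yq) = p, q
    ratio = math.hypot(xp - xq, yp - yq) / eps
    # Within tolerance: discounted cost (reward). Beyond it: penalty grows quadratically.
    spatial = discount * ratio if ratio <= 1.0 else ratio ** 2
    return spatial + time_weight * abs(tp - tq)


def dbrp_similarity(video, ais, eps, **kwargs):
    """Normalized similarity in (0, 1] from the minimum total alignment cost."""
    n, m = len(video), len(ais)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            c = pair_cost(video[i - 1], ais[j - 1], eps, **kwargs)
            # One sample may align with several samples of the other source,
            # which accommodates the different sampling rates of video and AIS.
            D[i][j] = c + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return 1.0 / (1.0 + D[n][m] / (n + m))


# Synthetic example: a dense video track and two sparser AIS tracks.
video = [(t, float(t), 0.0) for t in range(10)]
ais_same = [(t, t + 0.2, 0.1) for t in range(0, 10, 2)]    # same vessel, small offset
ais_other = [(t, t + 0.2, 8.0) for t in range(0, 10, 2)]   # a different vessel

sim_same = dbrp_similarity(video, ais_same, eps=2.0)
sim_other = dbrp_similarity(video, ais_other, eps=2.0)
```

With these assumed costs the nearby AIS track scores far higher than the distant one, and a trajectory compared with itself scores exactly 1.0.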

4. Results

4.1. Evaluation Metrics

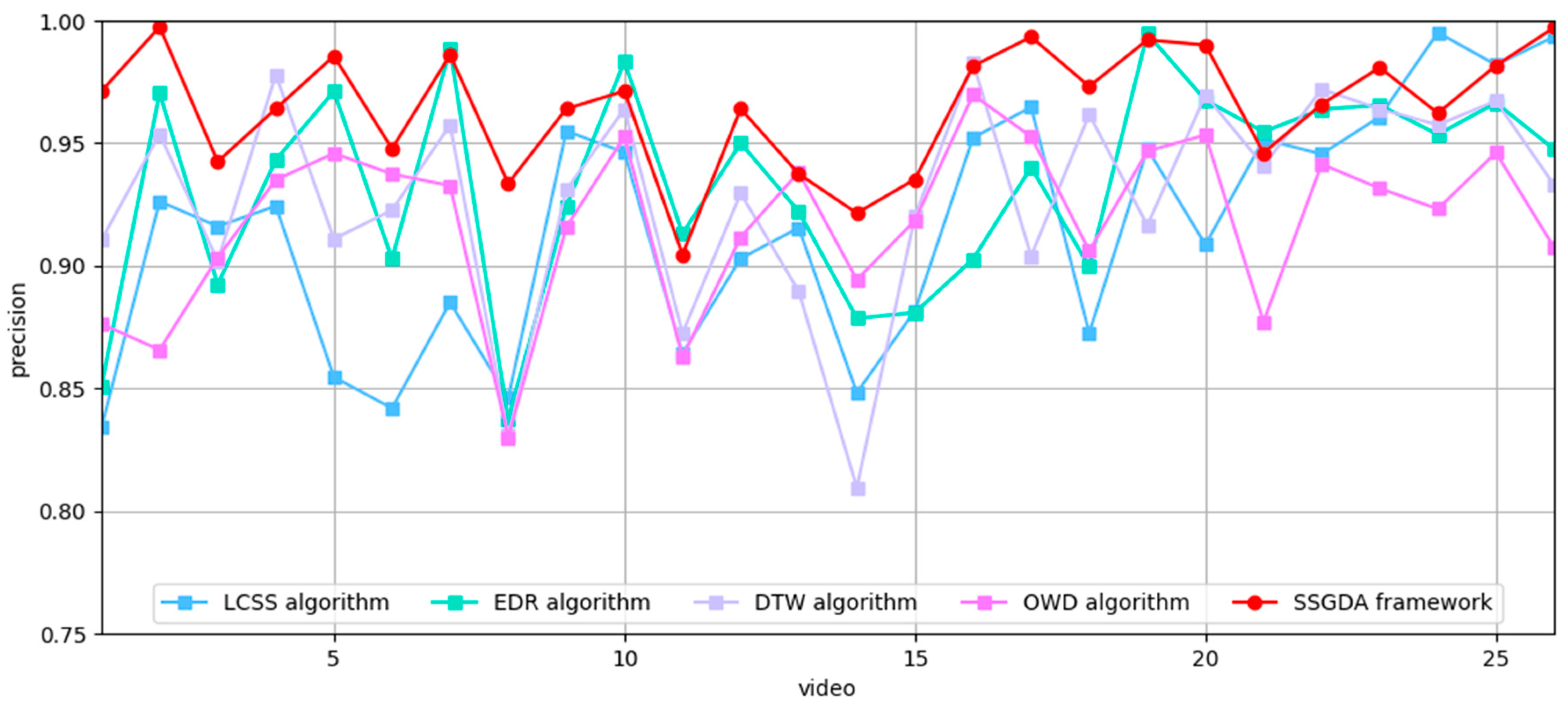

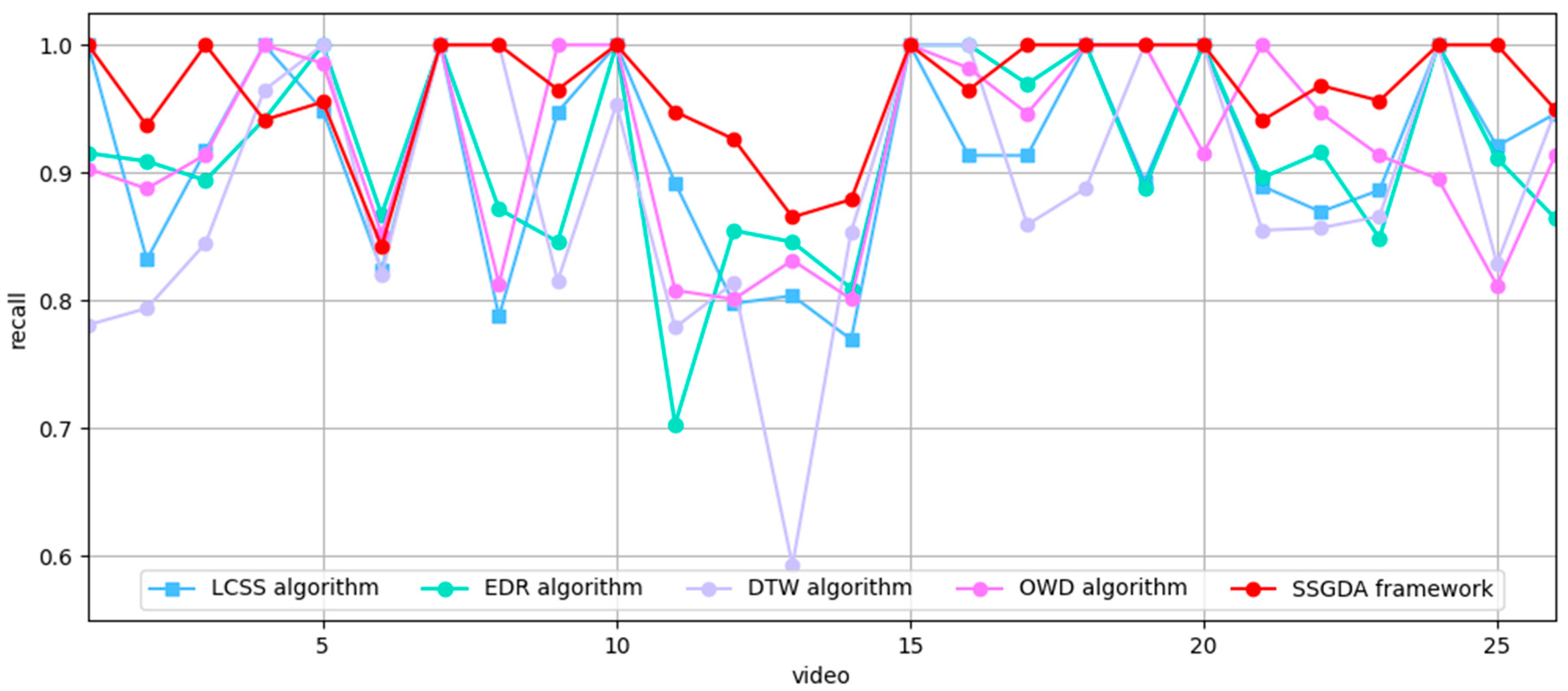

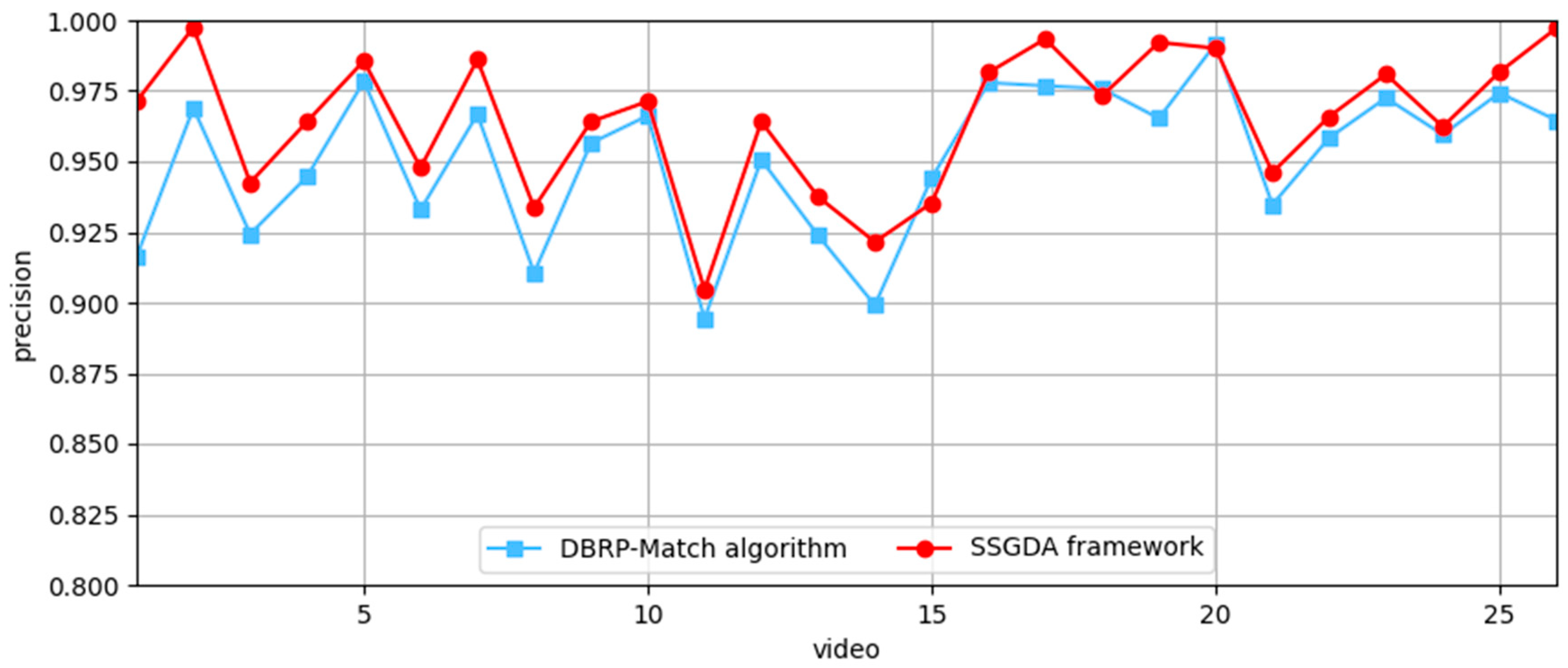

4.2. Comparison of SSGDA with Other Algorithms on the FVessel Dataset

4.3. Ablation Experiments

4.4. Trajectory Visualization Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ding, H.; Weng, J.; Shi, K. Real-time assessment of ship collision risk using image processing techniques. Appl. Ocean Res. 2024, 153, 104241.

- Tang, N.; Wang, X.; Gao, S.; Ai, B.; Li, B.; Shang, H. Collaborative ship scheduling decision model for green tide salvage based on evolutionary population dynamics. Ocean Eng. 2024, 304, 117796.

- Hao, G.; Xiao, W.; Huang, L.; Chen, J.; Zhang, K.; Chen, Y. The Analysis of Intelligent Functions Required for Inland Ships. J. Mar. Sci. Eng. 2024, 12, 836.

- Hu, D.; Chen, L.; Fang, H.; Fang, Z.; Li, T.; Gao, Y. Spatio-temporal trajectory similarity measures: A comprehensive survey and quantitative study. IEEE Trans. Knowl. Data Eng. 2023, 36, 2191–2212.

- Chen, G.; Liu, Z.; Yu, G.; Liang, J.; Hemanth, J. A new view of multisensor data fusion: Research on generalized fusion. Math. Probl. Eng. 2021, 2021, 5471242.

- Guo, Z.; Yin, C.; Zeng, W.; Tan, X.; Bao, J. Data-driven method for detecting flight trajectory deviation anomaly. J. Aerosp. Inf. Syst. 2022, 19, 799–810.

- Haoyan, W.; Yuangang, L.; Shaohua, L.; Bo, L.; Zongyi, H. A path increment map matching method for high-frequency trajectory. IEEE Trans. Intell. Transp. Syst. 2023, 24, 10948–10962.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Vu, T.; Jang, H.; Pham, T.X.; Yoo, C. Cascade RPN: Delving into high-quality region proposal network with adaptive convolution. In Proceedings of the 33rd Conference on Neural Information Processing Systems (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019; Advances in Neural Information Processing Systems 32, Volume 2 of 20. Curran Associates, Inc.: New York, NY, USA, 2020; pp. 1421–1431.

- Dewi, C.; Chen, R.-C.; Yu, H. Weight analysis for various prohibitory sign detection and recognition using deep learning. Multimedia Tools Appl. 2020, 79, 32897–32915.

- Bai, D.; Sun, Y.; Tao, B.; Tong, X.; Xu, M.; Jiang, G.; Chen, B.; Cao, Y.; Sun, N.; Li, Z. Improved single shot multibox detector target detection method based on deep feature fusion. Concurr. Comput. Pract. Exp. 2021, 34, e6614.

- Liu, H.; Wu, W. Interacting multiple model (IMM) fifth-degree spherical simplex-radial cubature Kalman filter for maneuvering target tracking. Sensors 2017, 17, 1374.

- Ubeda-Medina, L.; Garcia-Fernandez, A.F.; Grajal, J. Adaptive auxiliary particle filter for track-before-detect with multiple targets. IEEE Trans. Aerosp. Electron. Syst. 2017, 53, 2317–2330.

- Zhang, Y.; Sun, P.; Jiang, Y.; Yu, D.; Weng, F.; Yuan, Z.; Luo, P.; Liu, W.; Wang, X. Bytetrack: Multi-object tracking by associating every detection box. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–24 October 2022; Springer: Cham, Switzerland; pp. 1–21.

- Cao, J.; Pang, J.; Weng, X.; Khirodkar, R.; Kitani, K. Observation-centric sort: Rethinking sort for robust multi-object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 9686–9696.

- Wu, B.; Liu, C.; Jiang, F.; Li, J.; Yang, Z. Dynamic identification and automatic counting of the number of passing fish species based on the improved DeepSORT algorithm. Front. Environ. Sci. 2023, 11, 1059217.

- Chen, X.; Yan, B.; Zhu, J.; Wang, D.; Yang, X.; Lu, H. Transformer tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8126–8135.

- Wang, Y.; Kitani, K.; Weng, X. Joint object detection and multi-object tracking with graph neural networks. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 13708–13715.

- Yi, C.; Xu, B.; Chen, J.; Chen, Q.; Zhang, L. An improved YOLOX model for detecting strip surface defects. Steel Res. Int. 2022, 93, 2200505.

- Qin, X.; Yu, C.; Liu, B.; Zhang, Z. YOLO8-FASG: A high-accuracy fish identification method for underwater robotic system. IEEE Access 2024, 12, 73354–73362.

- Huang, Y.; Wang, D.; Wu, B.; An, D. NST-YOLO11: ViT Merged Model with Neuron Attention for Arbitrary-Oriented Ship Detection in SAR Images. Remote Sens. 2024, 16, 4760.

- Chen, M.; Yu, L.; Zhi, C.; Sun, R.; Zhu, S.; Gao, Z.; Ke, Z.; Zhu, M.; Zhang, Y. Improved faster R-CNN for fabric defect detection based on Gabor filter with Genetic Algorithm optimization. Comput. Ind. 2022, 134, 103551.

- Zhang, Z.; Liu, Y.; Zhou, Z.; Yang, G.; Wu, Q.M.J. Efficient object detector via dynamic prior and dynamic feature fusion. Comput. J. 2024, 67, 3196–3206.

- Wang, J.; Zhang, X.; Gao, G.; Lv, Y.; Li, Q.; Li, Z.; Wang, C.; Chen, G. Open pose mask R-CNN network for individual cattle recognition. IEEE Access 2023, 11, 113752–113768.

- Shi, H.; Ning, J.; Fu, Y.; Ni, J. Improved MDNet tracking with fast feature extraction and efficient multiple domain training. Signal Image Video Process. 2020, 15, 121–128.

- Xiang, S.; Zhang, T.; Jiang, S.; Han, Y.; Zhang, Y.; Guo, X.; Yu, L.; Shi, Y.; Hao, Y. Spiking siamfc++: Deep spiking neural network for object tracking. Nonlinear Dyn. 2024, 112, 8417–8429.

- Choi, W.; Cho, J.; Lee, S.; Jung, Y. Fast constrained dynamic time warping for similarity measure of time series data. IEEE Access 2020, 8, 222841–222858.

- Han, T.; Peng, Q.; Zhu, Z.; Shen, Y.; Huang, H.; Abid, N.N. A pattern representation of stock time series based on DTW. Phys. A Stat. Mech. Its Appl. 2020, 550, 124161.

- Khan, R.; Ali, I.; Altowaijri, S.M.; Zakarya, M.; Rahman, A.U.; Ahmedy, I.; Khan, A.; Gani, A. LCSS-based algorithm for computing multivariate data set similarity: A case study of real-time WSN data. Sensors 2019, 19, 166.

- Soleimani, G.; Abessi, M. DLCSS: A new similarity measure for time series data mining. Eng. Appl. Artif. Intell. 2020, 92, 103664.

- Han, K.; Xu, Y.; Deng, Z.; Fu, J. DFF-EDR: An indoor fingerprint location technology using dynamic fusion features of channel state information and improved edit distance on real sequence. China Commun. 2021, 18, 40–63.

- Koide, S.; Xiao, C.; Ishikawa, Y. Fast subtrajectory similarity search in road networks under weighted edit distance constraints. arXiv 2020, arXiv:2006.05564.

- Har-Peled, S.; Raichel, B. The Fréchet distance revisited and extended. ACM Trans. Algorithms 2014, 10, 1–22.

- Lin, B.; Su, J. One way distance: For shape based similarity search of moving object trajectories. GeoInformatica 2007, 12, 117–142.

- Bang, Y.; Kim, J.; Yu, K. An improved map-matching technique based on the fréchet distance approach for pedestrian navigation services. Sensors 2016, 16, 1768.

- Wen, J.; Liu, H.; Li, J. PTDS CenterTrack: Pedestrian tracking in dense scenes with re-identification and feature enhancement. Mach. Vis. Appl. 2024, 35, 54.

- Yu, Q.; Hu, F.; Ye, Z.; Chen, C.; Sun, L.; Luo, Y. High-frequency trajectory map matching algorithm based on road network topology. IEEE Trans. Intell. Transp. Syst. 2022, 23, 17530–17545.

- Wu, Z.; Chen, G.; Gan, Y.; Wang, L.; Pu, J. MVFusion: Multi-view 3D object detection with semantic-aligned radar and camera fusion. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 2766–2773.

- Li, Y.; Zeng, K.; Shen, T. CenterTransFuser: Radar point cloud and visual information fusion for 3D object detection. EURASIP J. Adv. Signal Process. 2023, 2023, 7.

- Nigmatzyanov, A.; Ferrer, G.; Tsetserukou, D. CBILR: Camera Bi-directional LiDAR-Radar Fusion for Robust Perception in Autonomous Driving. In Proceedings of the International Conference on Computational Optimization, Innopolis, Russia, 14 June 2024.

- Singh, K.K.; Kumar, S.; Dixit, P.; Bajpai, M.K. Kalman filter based short term prediction model for COVID-19 spread. Appl. Intell. 2020, 51, 2714–2726.

- Iwami, K.; Ikehata, S.; Aizawa, K. Scale drift correction of camera geo-localization using geo-tagged images. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Zhang, Z. Flexible camera calibration by viewing a plane from unknown orientations. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; IEEE: New York, NY, USA, 1999; Volume 1, pp. 666–673.

- Li, J.; Xu, X.; Jiang, Z.; Jiang, B. Adaptive Kalman Filter for Real-Time Visual Object Tracking Based on Autocovariance Least Square Estimation. Appl. Sci. 2024, 14, 1045.

- Guo, Y.; Liu, R.W.; Qu, J.; Lu, Y.; Zhu, F.; Lv, Y. Asynchronous trajectory matching-based multimodal maritime data fusion for vessel traffic surveillance in inland waterways. IEEE Trans. Intell. Transp. Syst. 2023, 24, 12779–12792.

| Framework Name | Data Source Types | Fusion Approach | Application Domains |
|---|---|---|---|
| MVFusion [38] | Video + Radar | Cross-attention and feature fusion | Autonomous driving |
| CenterTransFuser [39] | Video + Radar | Cross-modal, cross-multiple attention, and joint cross-multiple attention | Autonomous driving |
| CBILR [40] | LiDAR, Radar, and Video | Bidirectional pre-fusion + BEV space fusion | Autonomous driving |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, J.; Wang, M.; Kan, R.; Xiong, Z. Multi-Source Heterogeneous Data Fusion Algorithm for Vessel Trajectories in Canal Scenarios. Electronics 2025, 14, 3223. https://doi.org/10.3390/electronics14163223
