DK-SMF: Domain Knowledge-Driven Semantic Modeling Framework for Service Robots

Abstract

1. Introduction

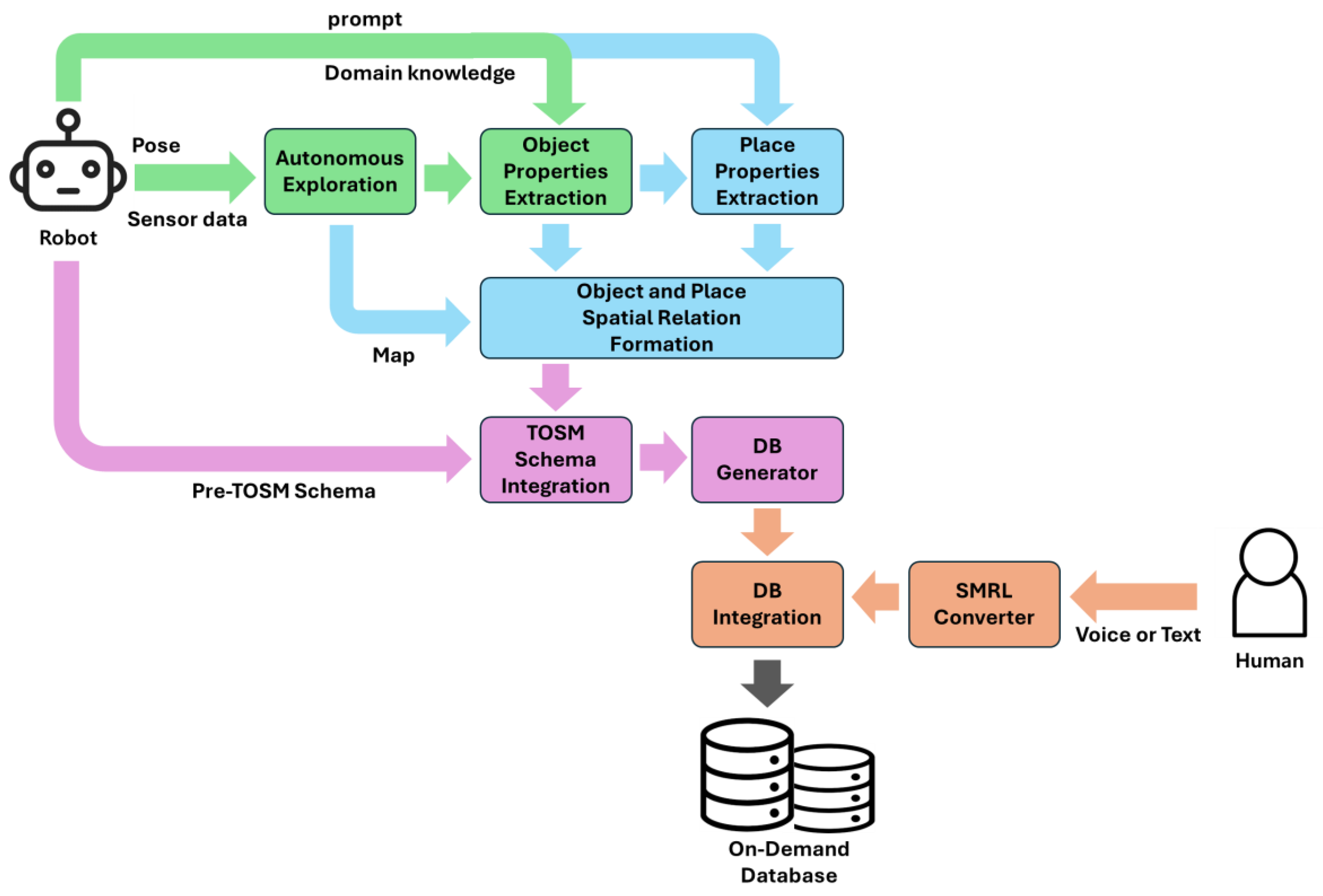

- We define a new set of TOSM properties, adding implicit properties suited to service robots and DK-SMF.

- The extraction of semantic information about objects and places during environmental modeling can be fully automated using zero-shot VLMs and LLMs.

- The framework leverages domain knowledge to make zero-shot semantic modeling robust.

- The framework integrates the extracted semantic information into a structured semantic database.

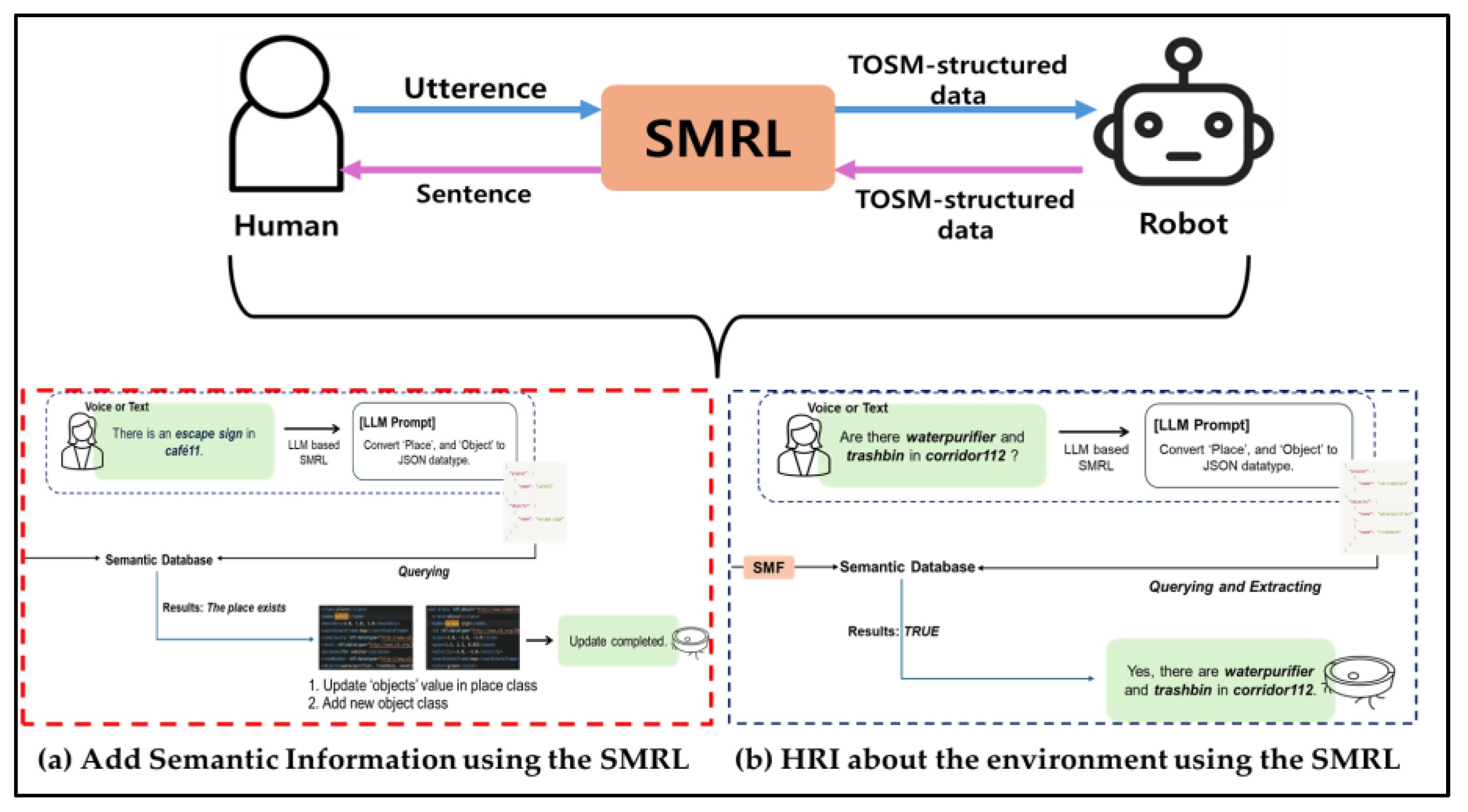

- Through the Semantic Modeling Robot Language (SMRL), humans can participate directly in environmental modeling, update environmental information, and interact with robots.

- A semantic map is built by integrating semantic information through the semantic database.

2. Related Work

3. Domain Knowledge-Driven Semantic Modeling Framework

3.1. Definition of TOSM-Based Properties for Service Robots

3.2. TOSM Properties-Based Object Semantic Information Extraction

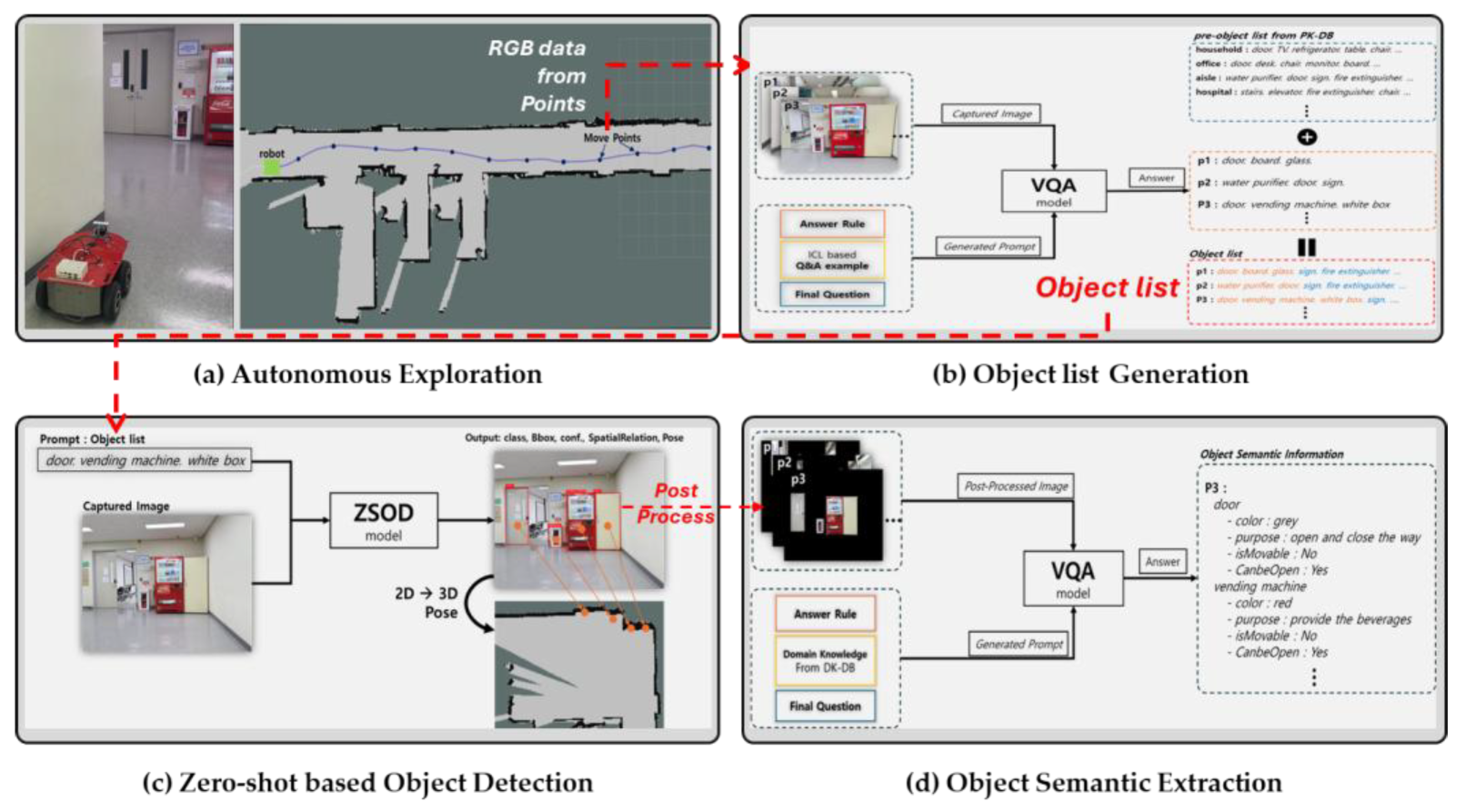

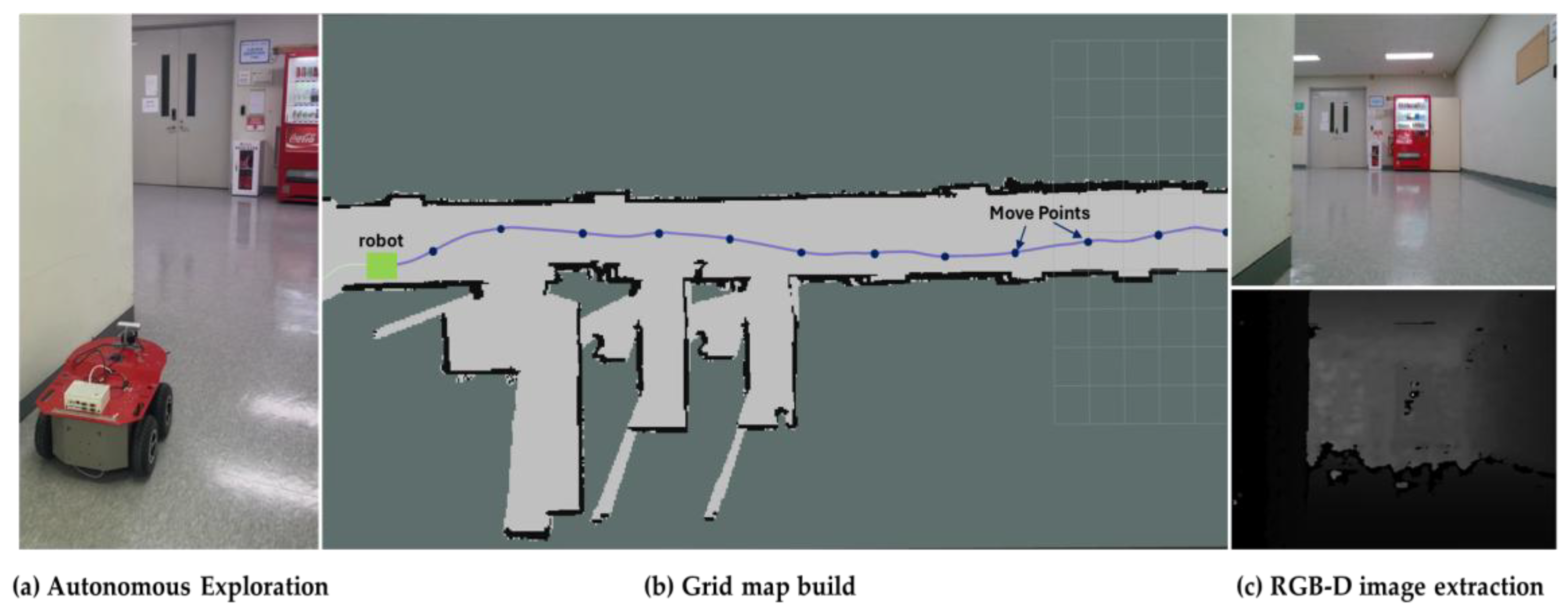

3.2.1. Autonomous Exploration

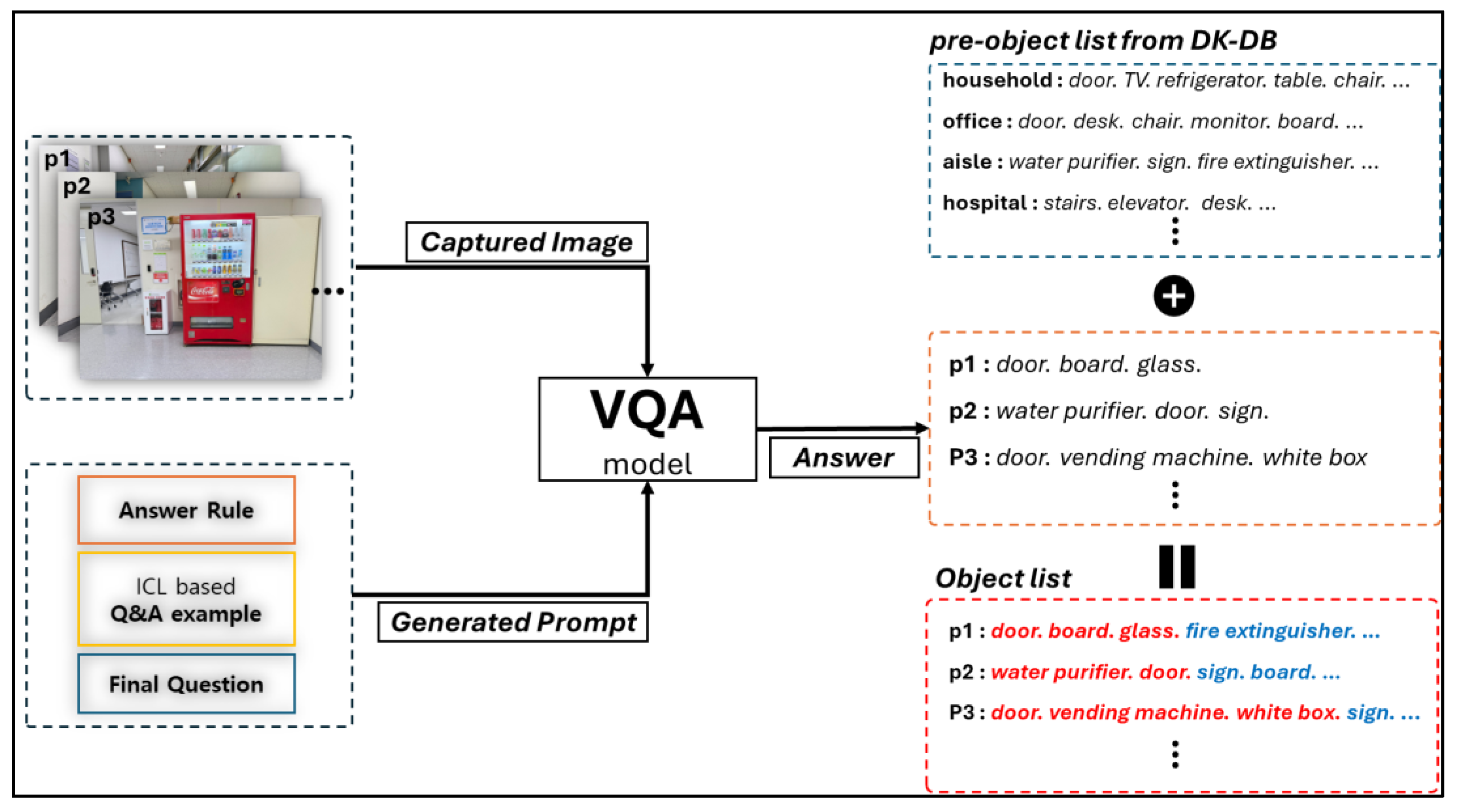

3.2.2. Object List Generation

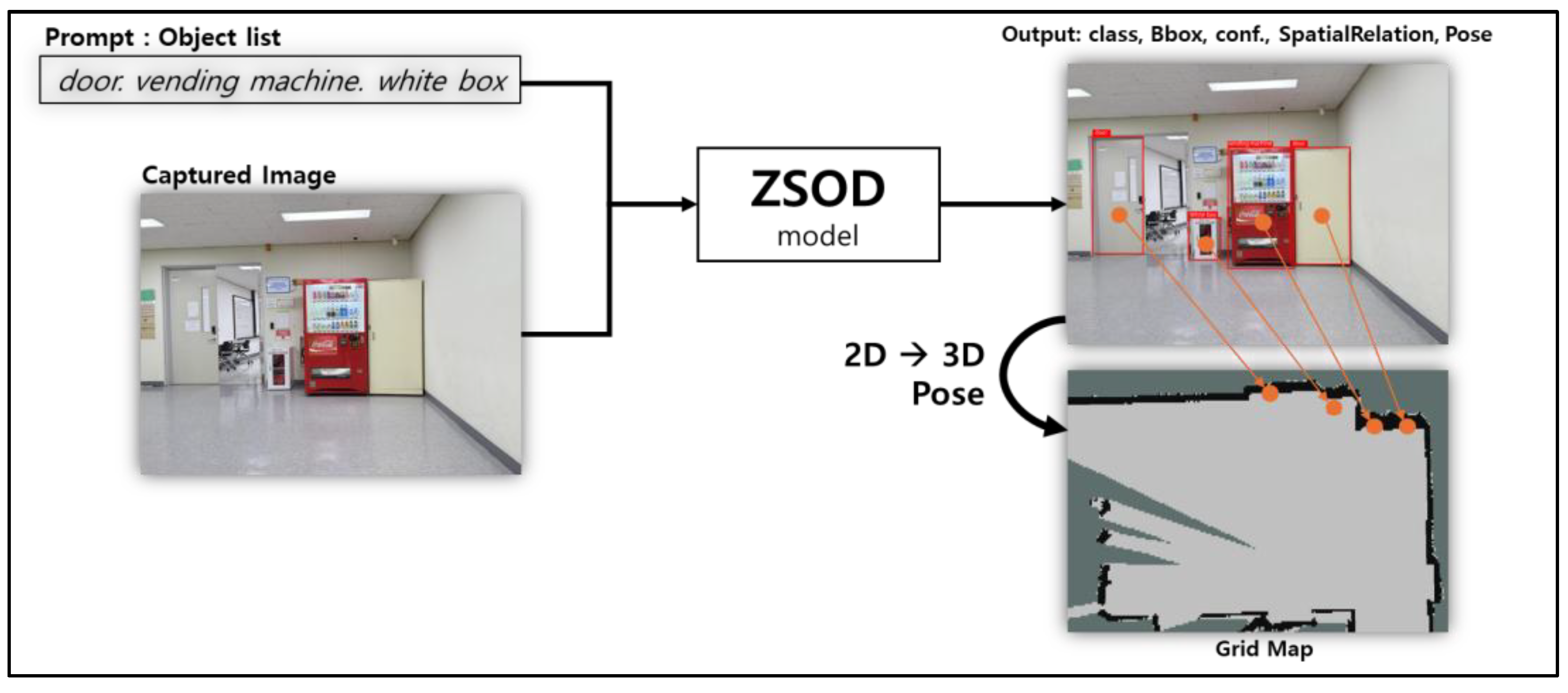

3.2.3. Zero-Shot-Based Object Detection

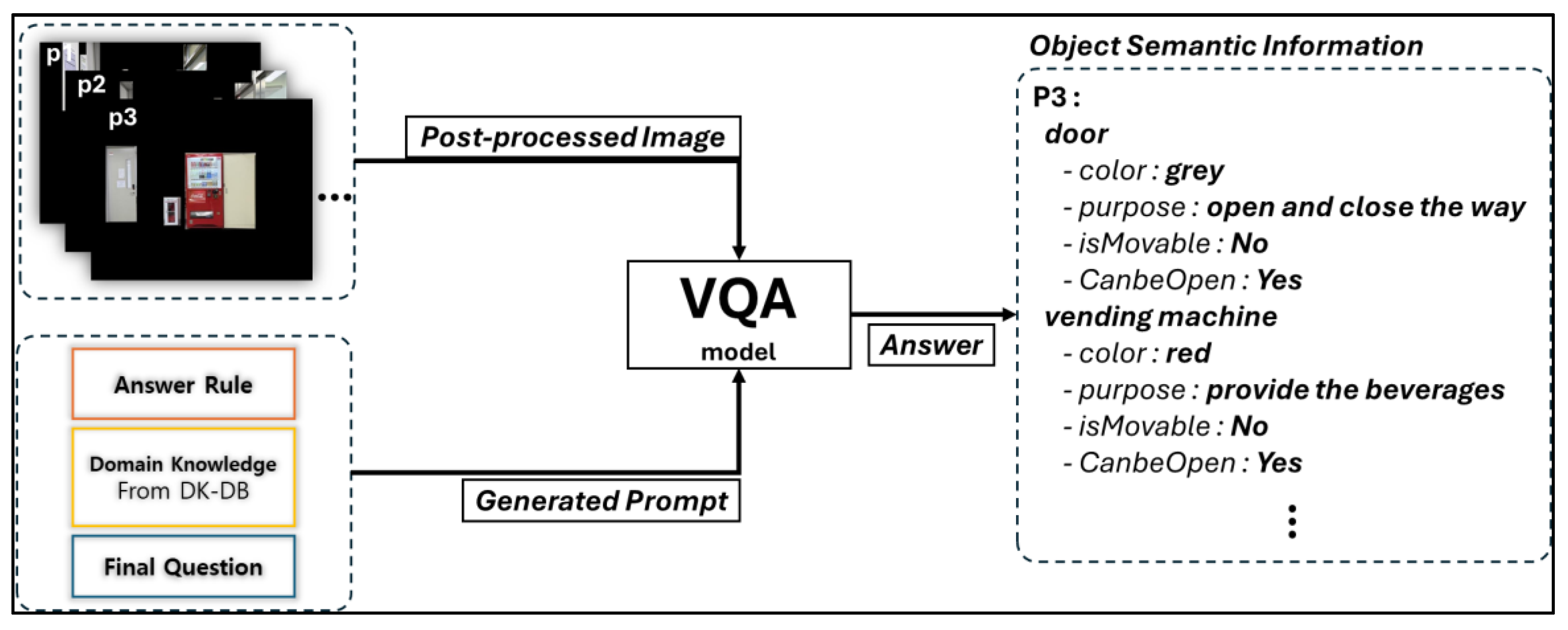

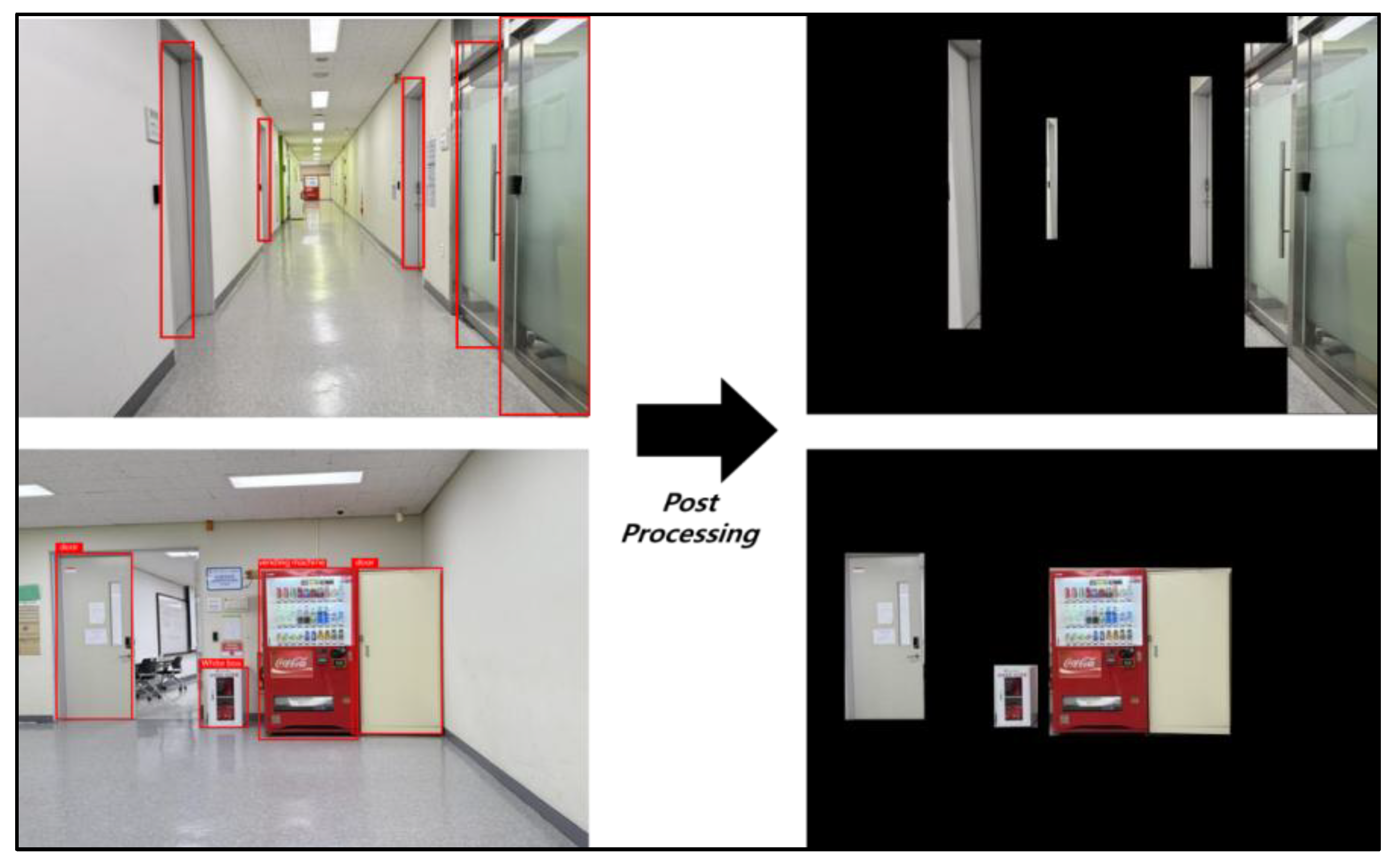

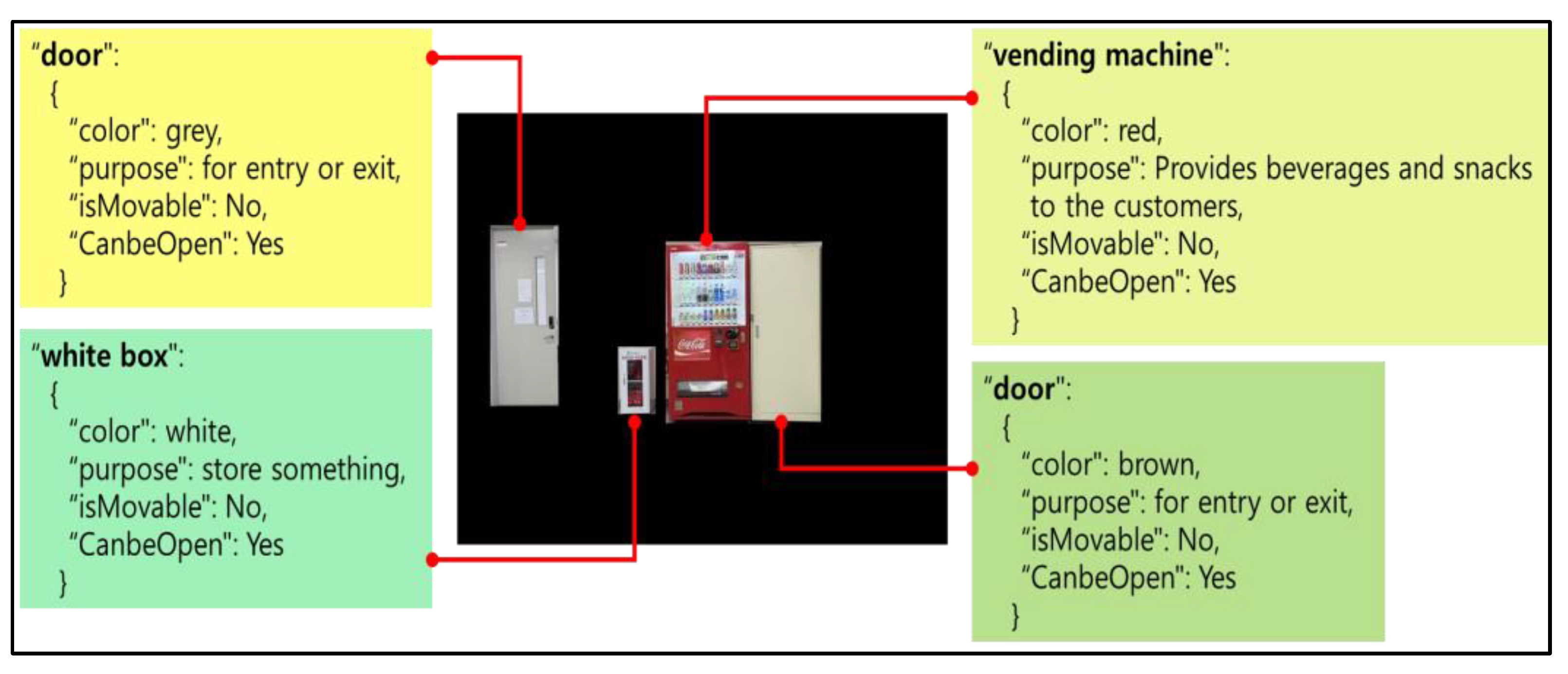

3.2.4. Object Semantic Information Extraction

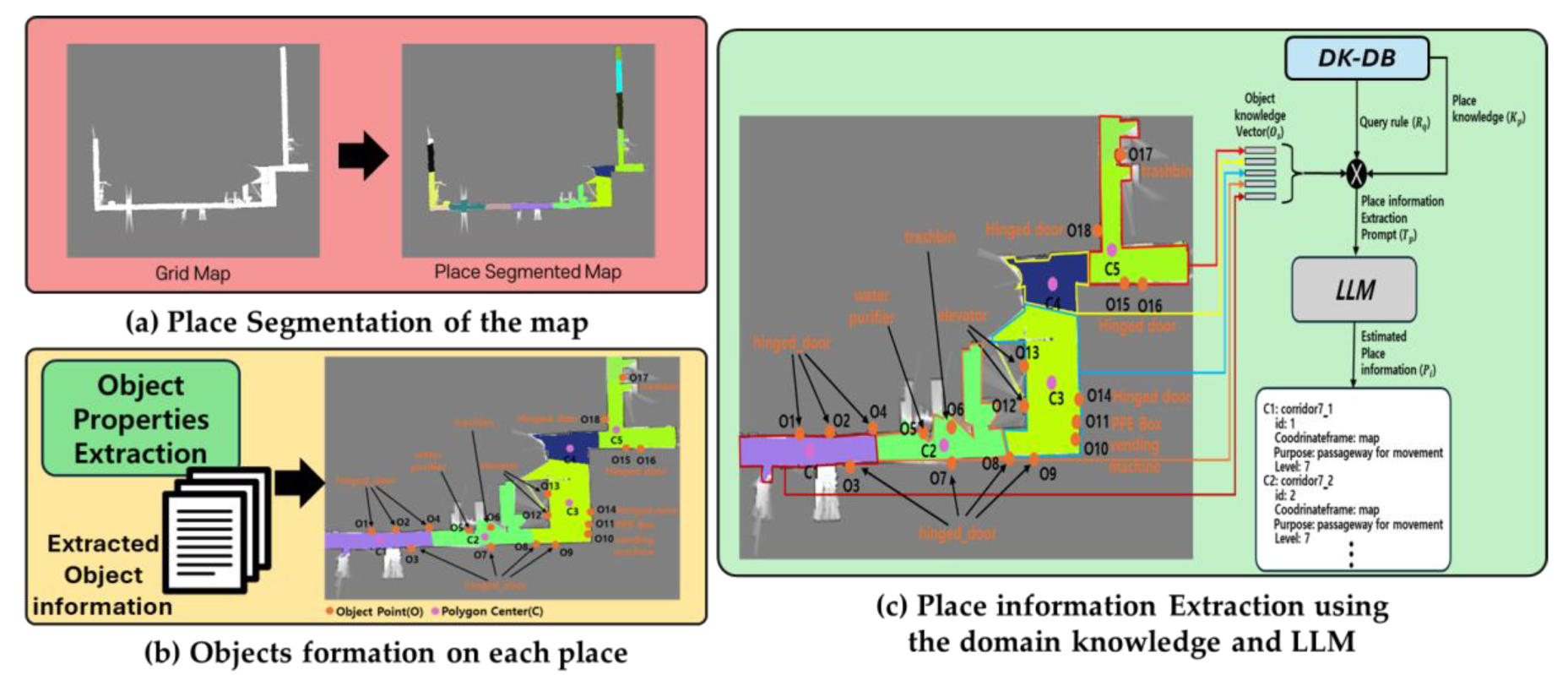

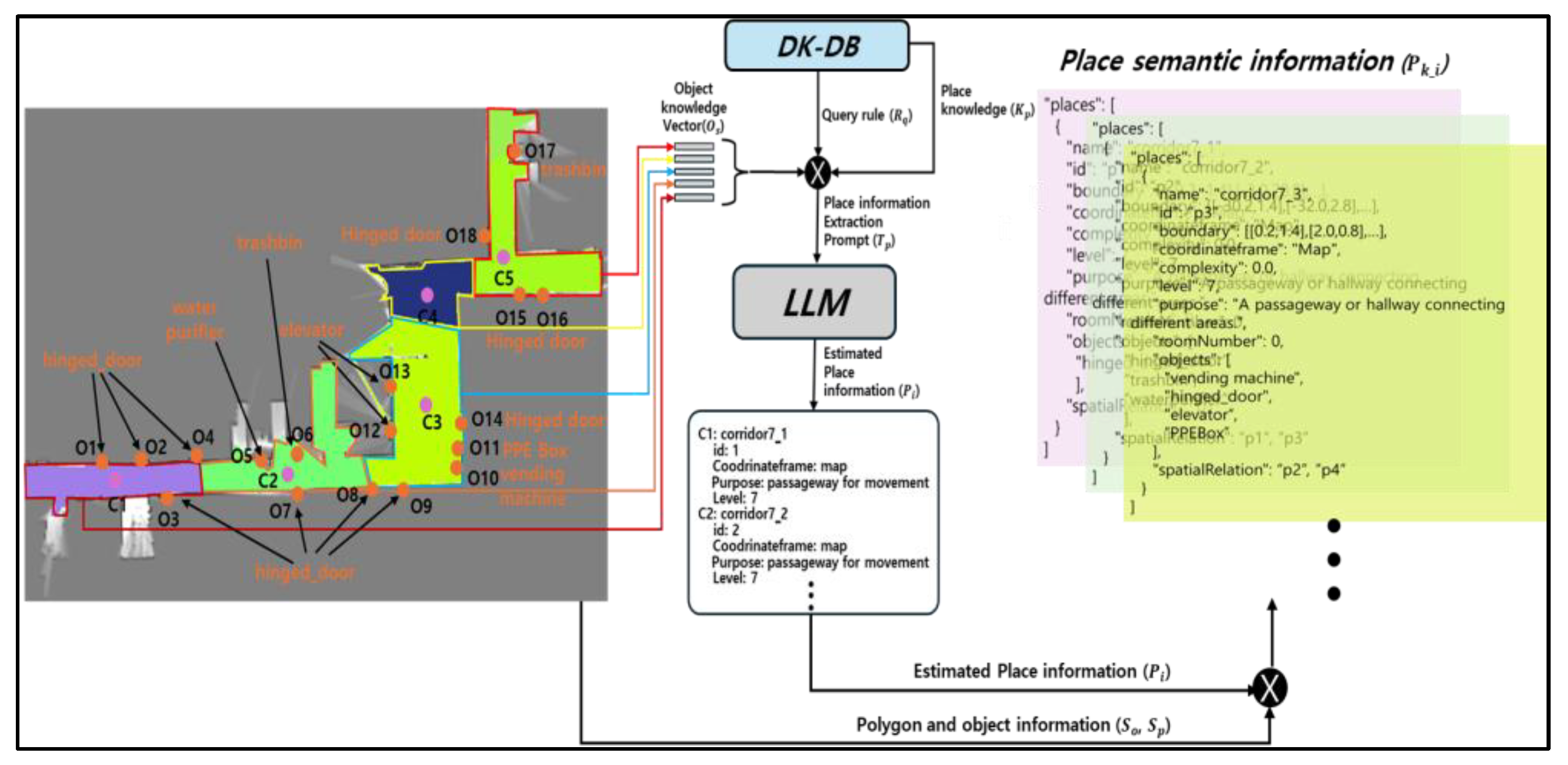

3.3. TOSM Properties-Based Place Semantic Information Extraction

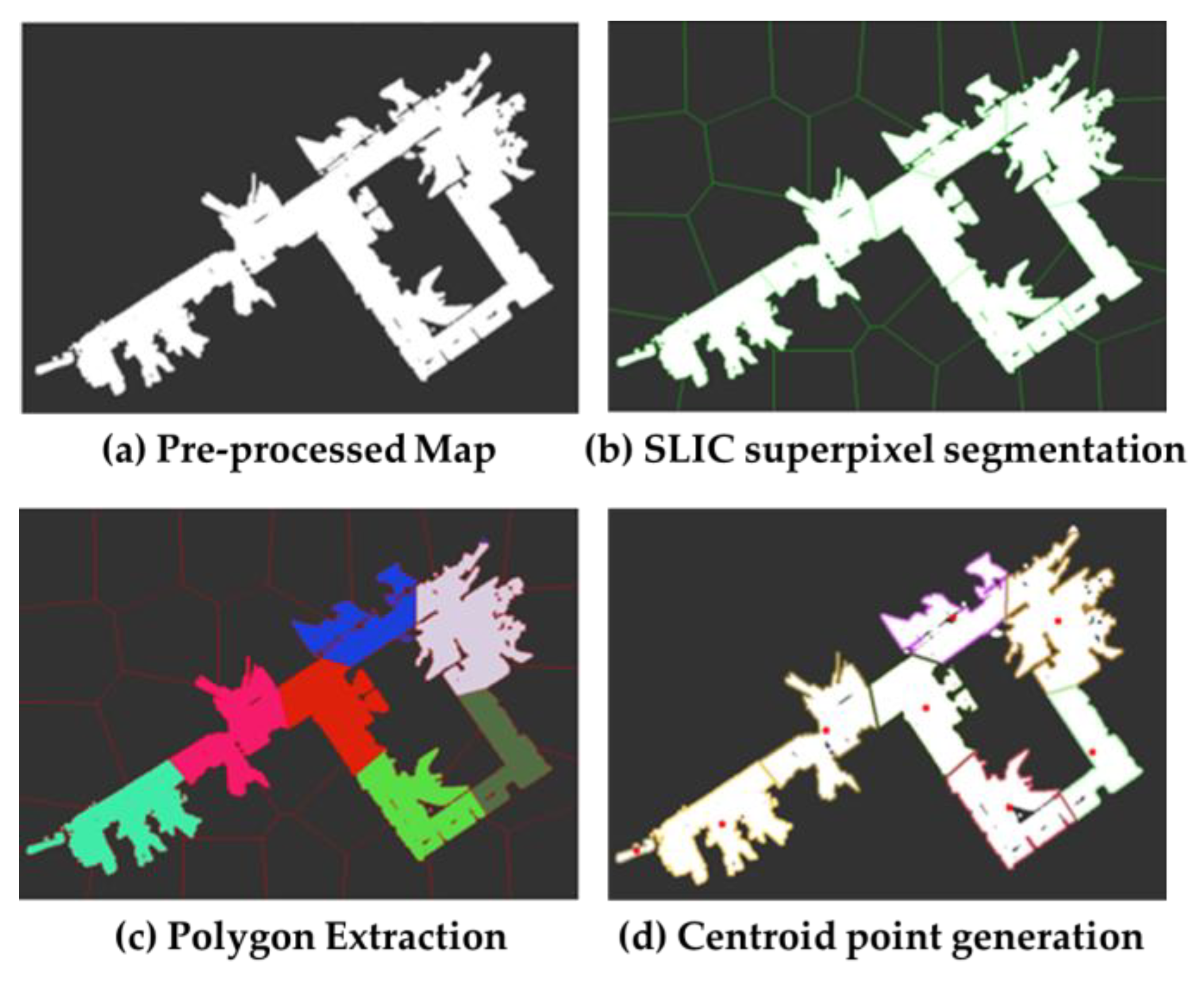

3.3.1. Place Segmentation of the Map

3.3.2. Place Semantic Information Extraction

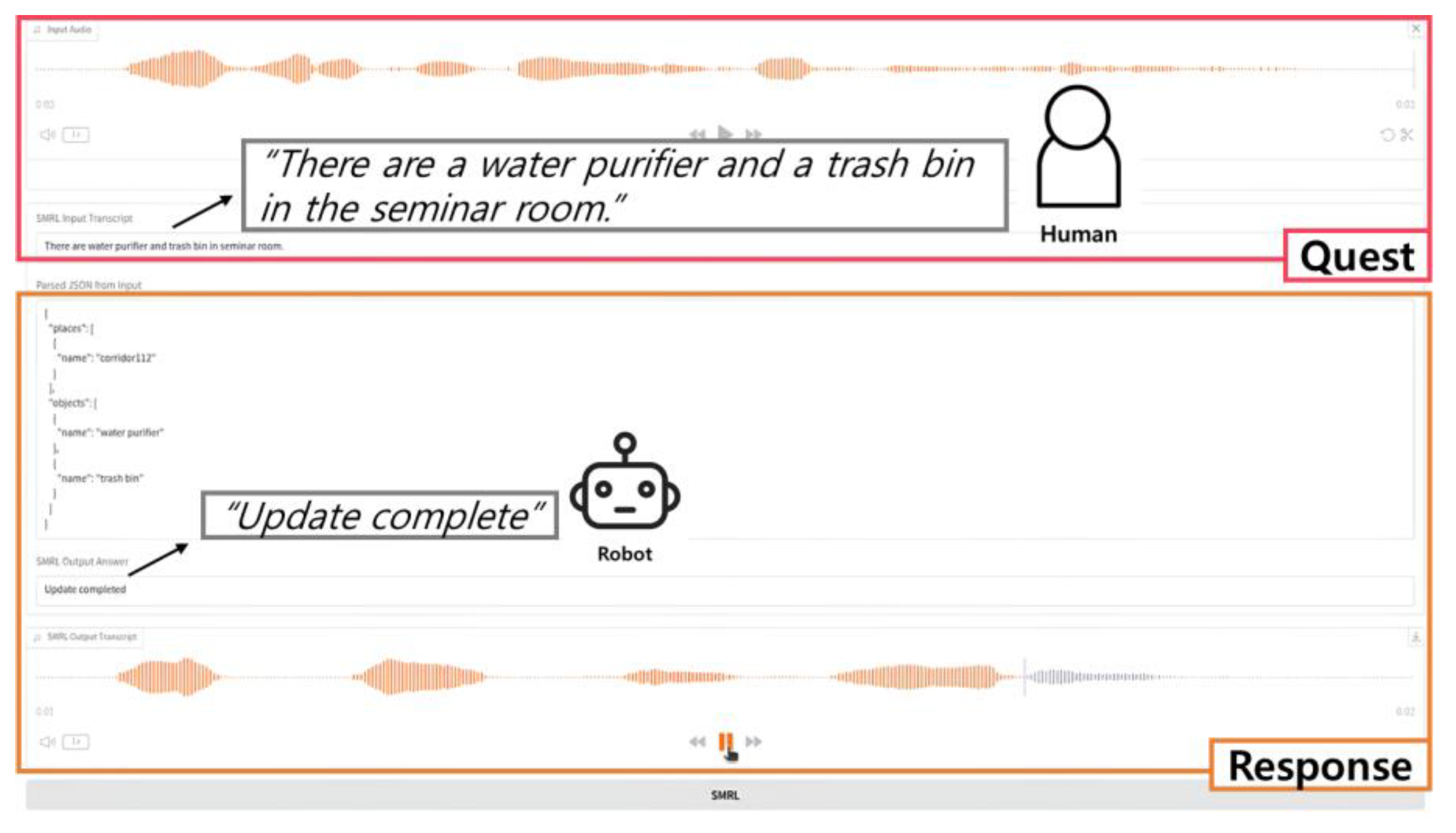

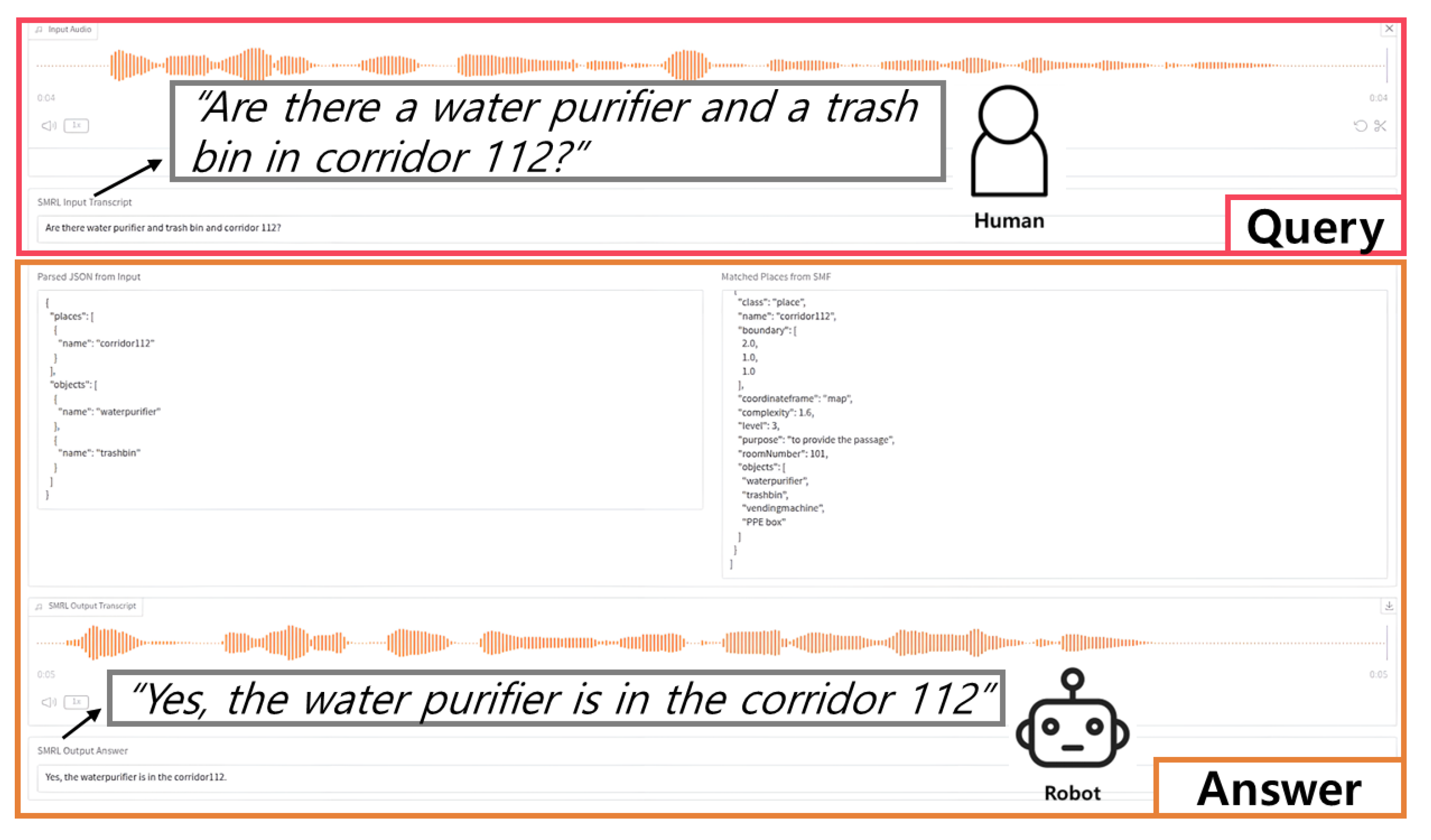

3.4. Semantic Modeling Robot Language

3.5. Semantic Database Generation and Map Representation

4. Experiments

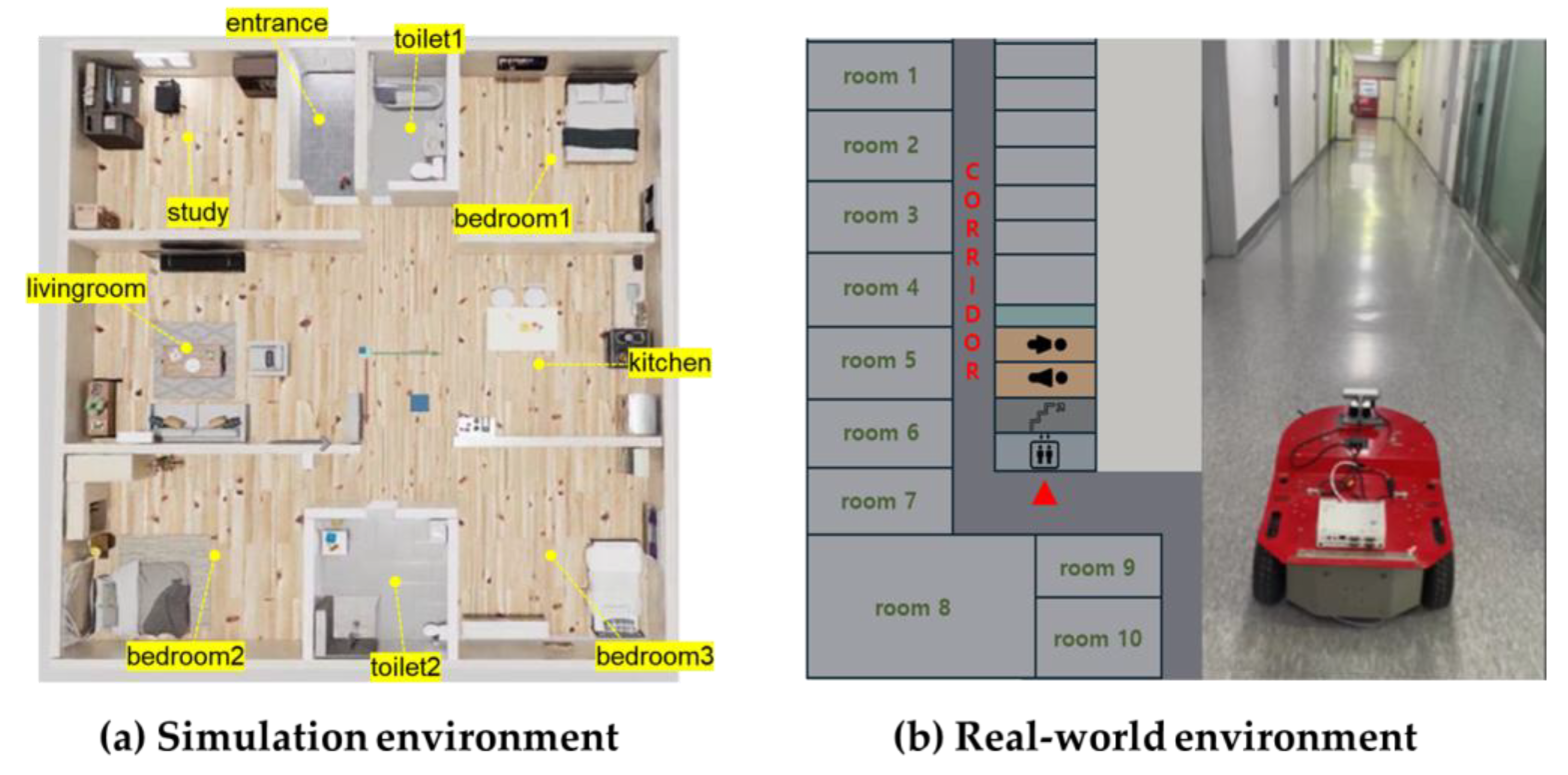

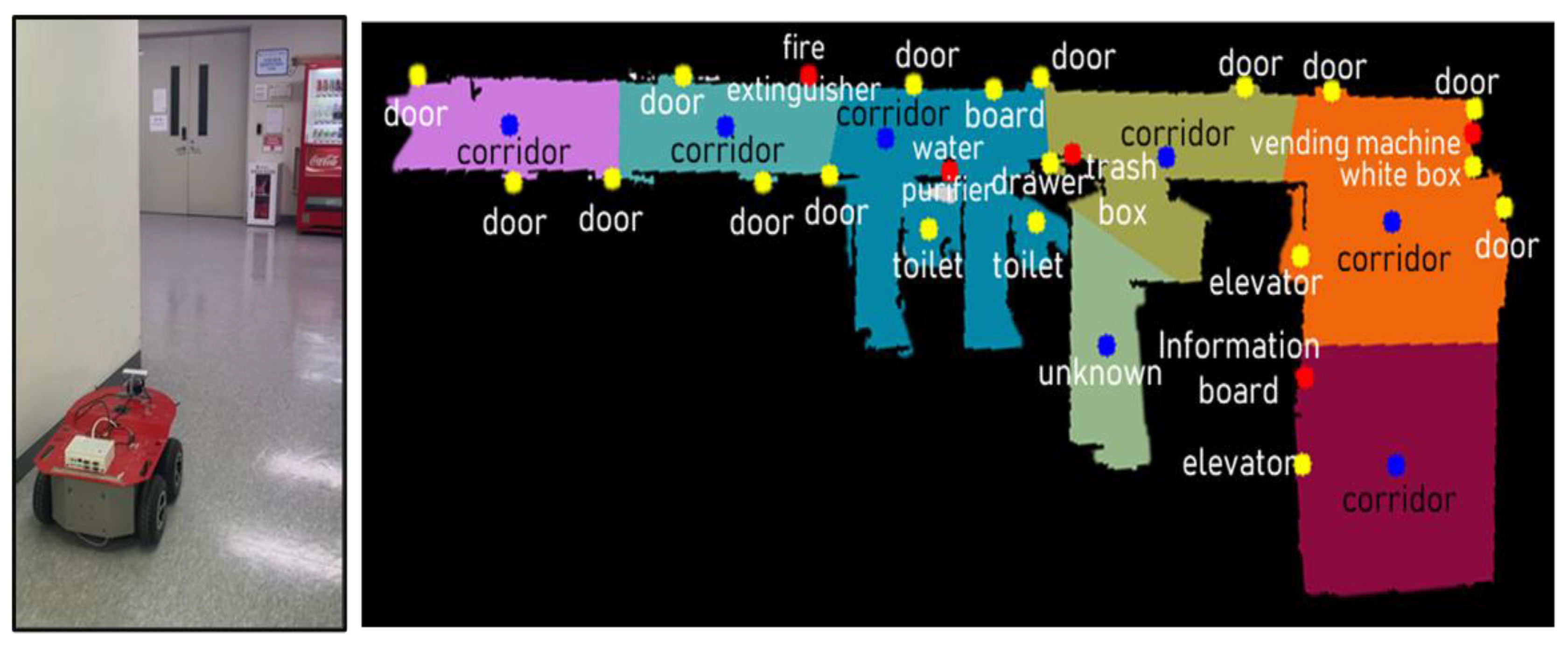

4.1. Experimental Environments and Setup

4.2. Experimental Scenario and Design

4.3. Experimental Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Deeken, H.; Wiemann, T.; Hertzberg, J. Grounding semantic maps in spatial databases. Robot. Auton. Syst. 2018, 105, 146–165.

2. Hassan, M.U.; Nawaz, M.I.; Iqbal, J. Towards autonomous cleaning of photovoltaic modules: Design and realization of a robotic cleaner. In Proceedings of the 2017 First International Conference on Latest Trends in Electrical Engineering and Computing Technologies (INTELLECT), Karachi, Pakistan, 15–16 November 2017; pp. 1–6.

3. Islam, R.U.; Iqbal, J.; Manzoor, S.; Khalid, A.; Khan, S. An autonomous image-guided robotic system simulating industrial applications. In Proceedings of the 2012 7th International Conference on System of Systems Engineering (SoSE), Genova, Italy, 16–19 July 2012; pp. 344–349.

4. Joo, S.-H.; Manzoor, S.; Rocha, Y.G.; Bae, S.-H.; Lee, K.-H.; Kuc, T.-Y.; Kim, M. Autonomous navigation framework for intelligent robots based on a semantic environment modeling. Appl. Sci. 2020, 10, 3219.

5. Joo, S.; Bae, S.; Choi, J.; Park, H.; Lee, S.; You, S.; Uhm, T.; Moon, J.; Kuc, T. A flexible semantic ontological model framework and its application to robotic navigation in large dynamic environments. Electronics 2022, 11, 2420.

6. Wang, Y.; Yao, R.; Zhao, K.; Wu, P.; Chen, W. Robotics Classification of Domain Knowledge Based on a Knowledge Graph for Home Service Robot Applications. Appl. Sci. 2024, 14, 11553.

7. Zhang, X.; Altaweel, Z.; Hayamizu, Y.; Ding, Y.; Amiri, S.; Yang, H.; Kaminski, A.; Esselink, C.; Zhang, S. DKPrompt: Domain knowledge prompting vision-language models for open-world planning. arXiv 2024, arXiv:2406.17659.

8. Cao, W.; Yao, X.; Xu, Z.; Liu, Y.; Pan, Y.; Ming, Z. A Survey of Zero-Shot Object Detection. Big Data Min. Anal. 2025, 8, 726–750.

9. Liu, S.; Zeng, Z.; Ren, T.; Li, F.; Zhang, H.; Yang, J.; Jiang, Q.; Li, C.; Yang, J.; Su, H. Grounding DINO: Marrying DINO with grounded pre-training for open-set object detection. In Proceedings of the European Conference on Computer Vision, Milano, Italy, 29 September–4 October 2024; pp. 38–55.

10. Antol, S.; Agrawal, A.; Lu, J.; Mitchell, M.; Batra, D.; Zitnick, C.L.; Parikh, D. VQA: Visual question answering. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2425–2433.

11. Zhang, J.; Huang, J.; Jin, S.; Lu, S. Vision-language models for vision tasks: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 5625–5644.

12. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

13. Antoniou, G.; Harmelen, F.v. Web ontology language: OWL. In Handbook on Ontologies; Springer: Berlin/Heidelberg, Germany, 2009; pp. 91–110.

14. Bommasani, R.; Hudson, D.A.; Adeli, E.; Altman, R.; Arora, S.; von Arx, S.; Bernstein, M.S.; Bohg, J.; Bosselut, A.; Brunskill, E. On the opportunities and risks of foundation models. arXiv 2021, arXiv:2108.07258.

15. Firoozi, R.; Tucker, J.; Tian, S.; Majumdar, A.; Sun, J.; Liu, W.; Zhu, Y.; Song, S.; Kapoor, A.; Hausman, K. Foundation models in robotics: Applications, challenges, and the future. Int. J. Robot. Res. 2025, 44, 701–739.

16. Caron, M.; Touvron, H.; Misra, I.; Jégou, H.; Mairal, J.; Bojanowski, P.; Joulin, A. Emerging properties in self-supervised vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 9650–9660.

17. Hong, J.; Choi, R.; Leonard, J.J. Learning from Feedback: Semantic Enhancement for Object SLAM Using Foundation Models. arXiv 2024, arXiv:2411.06752.

18. Li, B.; Cai, Z.; Li, Y.-F.; Reid, I.; Rezatofighi, H. Hier-SLAM: Scaling-up Semantics in SLAM with a Hierarchically Categorical Gaussian Splatting. arXiv 2024, arXiv:2409.12518.

19. Ciria, A.; Schillaci, G.; Pezzulo, G.; Hafner, V.V.; Lara, B. Predictive processing in cognitive robotics: A review. Neural Comput. 2021, 33, 1402–1432.

20. Mon-Williams, R.; Li, G.; Long, R.; Du, W.; Lucas, C.G. Embodied large language models enable robots to complete complex tasks in unpredictable environments. Nat. Mach. Intell. 2025, 7, 592–601.

21. Hughes, N.; Chang, Y.; Carlone, L. Hydra: A real-time spatial perception system for 3D scene graph construction and optimization. arXiv 2022, arXiv:2201.13360.

22. Zhang, L.; Hao, X.; Xu, Q.; Zhang, Q.; Zhang, X.; Wang, P.; Zhang, J.; Wang, Z.; Zhang, S.; Xu, R. MapNav: A Novel Memory Representation via Annotated Semantic Maps for VLM-based Vision-and-Language Navigation. arXiv 2025, arXiv:2502.13451.

23. Rosinol, A.; Violette, A.; Abate, M.; Hughes, N.; Chang, Y.; Shi, J.; Gupta, A.; Carlone, L. Kimera: From SLAM to spatial perception with 3D dynamic scene graphs. Int. J. Robot. Res. 2021, 40, 1510–1546.

24. Liu, Q.; Wen, Y.; Han, J.; Xu, C.; Xu, H.; Liang, X. Open-world semantic segmentation via contrasting and clustering vision-language embedding. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 275–292.

25. Gadre, S.Y.; Wortsman, M.; Ilharco, G.; Schmidt, L.; Song, S. CoWs on pasture: Baselines and benchmarks for language-driven zero-shot object navigation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 23171–23181.

26. Chen, B.; Xia, F.; Ichter, B.; Rao, K.; Gopalakrishnan, K.; Ryoo, M.S.; Stone, A.; Kappler, D. Open-vocabulary queryable scene representations for real world planning. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 11509–11522.

27. Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.-Y. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 4015–4026.

28. Huang, C.; Mees, O.; Zeng, A.; Burgard, W. Visual language maps for robot navigation. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 10608–10615.

29. Mehan, Y.; Gupta, K.; Jayanti, R.; Govil, A.; Garg, S.; Krishna, M. QueSTMaps: Queryable Semantic Topological Maps for 3D Scene Understanding. In Proceedings of the 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Abu Dhabi, United Arab Emirates, 14–18 October 2024; pp. 13311–13317.

30. Allu, S.H.; Kadosh, I.; Summers, T.; Xiang, Y. Autonomous Exploration and Semantic Updating of Large-Scale Indoor Environments with Mobile Robots. arXiv 2024, arXiv:2409.15493.

31. Jatavallabhula, K.M.; Kuwajerwala, A.; Gu, Q.; Omama, M.; Chen, T.; Maalouf, A.; Li, S.; Iyer, G.; Saryazdi, S.; Keetha, N. ConceptFusion: Open-set multimodal 3D mapping. arXiv 2023, arXiv:2302.07241.

32. Kim, T.; Min, B.-C. Semantic Layering in Room Segmentation via LLMs. In Proceedings of the 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Abu Dhabi, United Arab Emirates, 14–18 October 2024; pp. 9831–9838.

33. Yamauchi, B. A frontier-based approach for autonomous exploration. In Proceedings of the 1997 IEEE International Symposium on Computational Intelligence in Robotics and Automation CIRA'97: 'Towards New Computational Principles for Robotics and Automation', Monterey, CA, USA, 10–11 June 1997; pp. 146–151.

34. Zhang, C.; Han, D.; Qiao, Y.; Kim, J.U.; Bae, S.-H.; Lee, S.; Hong, C.S. Faster segment anything: Towards lightweight SAM for mobile applications. arXiv 2023, arXiv:2306.14289.

35. Batra, D.; Gokaslan, A.; Kembhavi, A.; Maksymets, O.; Mottaghi, R.; Savva, M.; Toshev, A.; Wijmans, E. ObjectNav revisited: On evaluation of embodied agents navigating to objects. arXiv 2020, arXiv:2006.13171.

36. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning (ICML), Virtual Conference, 18–24 July 2021; pp. 8748–8763.

37. Minderer, M.; Gritsenko, A.; Stone, A.; Neumann, M.; Weissenborn, D.; Dosovitskiy, A.; Mahendran, A.; Arnab, A.; Dehghani, M.; Shen, Z. Simple open-vocabulary object detection. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 728–755.

38. Kamath, A.; Singh, M.; LeCun, Y.; Synnaeve, G.; Misra, I.; Carion, N. MDETR-modulated detection for end-to-end multi-modal understanding. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 1780–1790.

39. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 580–587.

40. Bormann, R.; Jordan, F.; Li, W.; Hampp, J.; Hägele, M. Room segmentation: Survey, implementation, and analysis. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1019–1026.

41. Luperto, M.; Kucner, T.P.; Tassi, A.; Magnusson, M.; Amigoni, F. Robust structure identification and room segmentation of cluttered indoor environments from occupancy grid maps. IEEE Robot. Autom. Lett. 2022, 7, 7974–7981.

42. Zhou, X.; Girdhar, R.; Joulin, A.; Krahenbuhl, P.; Misra, I. Detecting Twenty-thousand Classes using Image-level Supervision. arXiv 2022, arXiv:2201.02605.

43. Kolve, E.; Mottaghi, R.; Han, W.; VanderBilt, E.; Weihs, L.; Herrasti, A.; Deitke, M.; Ehsani, K.; Gordon, D.; Zhu, Y.; et al. AI2-THOR: An Interactive 3D Environment for Visual AI. arXiv 2017, arXiv:1712.05474.

44. Deitke, M.; VanderBilt, E.; Herrasti, A.; Weihs, L.; Salvador, J.; Ehsani, K.; Han, W.; Kolve, E.; Farhadi, A.; Kembhavi, A.; et al. ProcTHOR: Large-Scale Embodied AI Using Procedural Generation. arXiv 2022, arXiv:2206.06994.

45. Manzoor, S.; Rocha, Y.G.; Joo, S.-H.; Bae, S.-H.; Kim, E.-J.; Joo, K.-J.; Kuc, T.-Y. Ontology-Based Knowledge Representation in Robotic Systems: A Survey Oriented toward Applications. Appl. Sci. 2021, 11, 4324.

46. Ardón, P.; Pairet, É.; Lohan, K.S.; Ramamoorthy, S.; Petrick, R.P.A. Affordances in Robotic Tasks - A Survey. arXiv 2020, arXiv:2004.07400.

47. Ahn, M.; Brohan, A.; Brown, N.; Chebotar, Y.; Cortes, O.; David, B.; Finn, C.; Gopalakrishnan, K.; Hausman, K.; Herzog, A.; et al. Do As I Can, Not As I Say: Grounding Language in Robotic Affordances. In Proceedings of the Conference on Robot Learning, Auckland, New Zealand, 14–18 December 2022.

48. Grisetti, G.; Stachniss, C.; Burgard, W. Improved Techniques for Grid Mapping With Rao-Blackwellized Particle Filters. IEEE Trans. Robot. 2007, 23, 34–46.

49. Zhang, H.-y.; Lin, W.-m.; Chen, A.-x. Path Planning for the Mobile Robot: A Review. Symmetry 2018, 10, 450.

50. Fox, D.; Burgard, W.; Thrun, S. The dynamic window approach to collision avoidance. IEEE Robot. Autom. Mag. 1997, 4, 23–33.

51. Borji, A.; Cheng, M.-M.; Jiang, H.; Li, J. Salient object detection: A survey. Comput. Vis. Media 2014, 5, 117–150.

52. Dong, Q.; Li, L.; Dai, D.; Zheng, C.; Wu, Z.; Chang, B.; Sun, X.; Xu, J.; Li, L.; Sui, Z. A Survey on In-context Learning. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, United Arab Emirates, 7–11 December 2022.

53. Bai, S.; Chen, K.; Liu, X.; Wang, J.; Ge, W.; Song, S.; Dang, K.; Wang, P.; Wang, S.; Tang, J.; et al. Qwen2.5-VL Technical Report. arXiv 2025, arXiv:2502.13923.

54. Li, J.; Li, D.; Xiong, C.; Hoi, S.C.H. BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation. In Proceedings of the International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022.

55. Liu, H.; Li, C.; Wu, Q.; Lee, Y.J. Visual Instruction Tuning. arXiv 2023, arXiv:2304.08485.

56. Lin, T.-Y.; Maire, M.; Belongie, S.J.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014.

57. Gupta, A.; Dollár, P.; Girshick, R.B. LVIS: A Dataset for Large Vocabulary Instance Segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 5351–5359.

58. Weller, O.; Marone, M.; Weir, N.; Lawrie, D.J.; Khashabi, D.; Durme, B.V. “According to …”: Prompting Language Models Improves Quoting from Pre-Training Data. In Proceedings of the Conference of the European Chapter of the Association for Computational Linguistics, Dubrovnik, Croatia, 2–6 May 2023.

59. Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

60. Radford, A.; Narasimhan, K. Improving Language Understanding by Generative Pre-Training. 2018.

61. Gemini Team; Anil, R.; Borgeaud, S.; Alayrac, J.-B.; Yu, J.; Soricut, R.; Schalkwyk, J.; Dai, A.M.; Hauth, A.; Millican, K. Gemini: A family of highly capable multimodal models. arXiv 2023, arXiv:2312.11805.

62. Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.-A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F. LLaMA: Open and efficient foundation language models. arXiv 2023, arXiv:2302.13971.

63. World Wide Web Consortium. RDF 1.1 Turtle: Terse RDF triple language. 2014.

64. Zhou, Z.; Song, J.; Xie, X.; Shu, Z.; Ma, L.; Liu, D.; Yin, J.; See, S. Towards building AI-CPS with NVIDIA Isaac Sim: An industrial benchmark and case study for robotics manipulation. In Proceedings of the 46th International Conference on Software Engineering: Software Engineering in Practice, Lisbon, Portugal, 14–20 April 2024; pp. 263–274.

| Name | Autonomous Exploration | Semantic Knowledge Extraction Method | DK Usage | Semantic Knowledge Extraction (Robot) | Semantic Knowledge Extraction (Object) | Semantic Knowledge Extraction (Place) | Modeling by People |
|---|---|---|---|---|---|---|---|
| Hydra [21] | - | closed-set labeling | - | ○ | ● | ○ | - |
| MapNav [22] | ✓ | closed-set labeling | ✓ | ○ | ● | ○ | - |
| Kimera [23] | - | closed-set labeling | - | ● | ● | ○ | - |
| Allu et al. [30] | ✓ | ZSOD, Mask generation | - | ○ | ● | ○ | - |
| CoWs [25] | ✓ | ZSOD, VLE | - | ○ | ● | ○ | - |
| NLMap [26] | ✓ | ZSOD, VLE | - | ○ | ● | ○ | - |
| ConceptFusion [31] | - | ZSOD, VLE, Mask generation | - | ○ | ● | ○ | - |
| VLMaps [28] | - | Mask generation, VLE | - | ○ | ● | ○ | - |
| QueSTMaps [29] | - | Mask generation, VLE | - | ○ | ● | ● | - |
| SeLRoS [32] | - | ZSOD, LLMs | - | ○ | ● | ◉ | - |
| DK-SMF (ours) | ✓ | ZSOD, VQA, LLMs | ✓ | ◉ | ◉ | ◉ | ✓ |

| Type | Module/Stage | Function/Description | Input | Output |
|---|---|---|---|---|
| Object | Autonomous exploration | Frontier-based exploration: building a map and collecting sensor data | scan, robot pose | 2D map, sensor data, waypoints |
| Object | Object list generation | Object list prompt generation using the VQA model | image, prompt | object list |
| Object | Zero-shot object detection | Zero-shot object detection in images | 2D map, sensor data, object list (prompt) | class, Bbox, conf., pose, spatialRelation |
| Object | Object semantic information extraction | Object semantic information extraction using the VQA model | post-processed image, prompt | object semantic information |
| Place | Place segmentation | SLIC superpixel-based room segmentation | 2D map | polygon |
| Place | Place semantic information extraction | Place semantic information extraction using the LLMs | object list in each polygon | place semantic information |
| Etc. | SMRL | Environment modeling by user utterance | utterance (voice or text) | semantic information |
| Etc. | Semantic DB integration | Store all extracted semantic information | object and place semantic information | semantic DB |

| Category | TOSM Datatype Property | DataType | Example |
|---|---|---|---|
| Symbol | name | string | “Clean Robot” |
| Symbol | ID | int | 1 |
| Explicit | size | floatArray [width, length, height, weight] | [0.31, 0.31, 0.1, 4.13] (1) |
| Explicit | pose | floatArray [x, y, z, theta] | [0.5, 0.5, 0, 0.352] (2) |
| Explicit | velocity | floatArray [linear, angular] | [1.2, 0] (3) |
| Explicit | sensor | dict., map | “lidar: spec., imu: spec., camera: spec., encoder: spec., …” |
| Explicit | battery | floatArray [voltage, ampere, capacity] | [24.0, 5.0, 3200] (4) |
| Explicit | coordinateFrame | string | “map” |
| Implicit | affordance | string | “vacuum, water clean” |
| Implicit | purpose | string | “home cleaning” |
| Implicit | current state | string | “move” |
| Implicit | environment | string | “house” |

| Category | TOSM Datatype Property | DataType | Example |
|---|---|---|---|
| Symbol | name | string | “refrigerator” |
| Symbol | ID | int | 1 |
| Explicit | size | floatArray [width, length, height] | [0.5, 0.5, 1.5] (1) |
| Explicit | pose | floatArray [x, y, z, theta] | [1.5, −12.5, 0, 0] (2) |
| Explicit | velocity | floatArray [x, y, z] | [0, 0, 0] (3) |
| Explicit | color | string | “silver” |
| Explicit | coordinateFrame | string | “house map” |
| Implicit | purpose | string | “food storage device” |
| Implicit | isKeyObject | boolean | “Y” |
| Implicit | isPrimeObject | boolean | “N” |
| Implicit | isMovable | boolean | “N” |
| Implicit | isOpen | boolean | “N” |
| Implicit | canBeOpen | boolean | “Y” |
| Implicit | spatialRelation | dict., map | “isleftto: dining desk, isrightto: chair” |
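As a minimal sketch of how the object properties in the table above could be held in code, the following Python dataclass mirrors the property names, datatypes, and example values from the table. The class name `ObjectTOSM`, the helper `yn`, the snake_case field spellings, and the conversion of the table's "Y"/"N" notation to Python booleans are assumptions made for illustration; the framework's actual storage format is not shown in this section.

```python
from dataclasses import dataclass, field, asdict
from typing import Dict, List


@dataclass
class ObjectTOSM:
    """Hypothetical container mirroring the object property table."""
    # Symbol properties
    name: str
    id: int
    # Explicit properties
    size: List[float]          # [width, length, height]
    pose: List[float]          # [x, y, z, theta]
    velocity: List[float]      # [x, y, z]
    color: str
    coordinate_frame: str
    # Implicit properties
    purpose: str
    is_key_object: bool
    is_prime_object: bool
    is_movable: bool
    is_open: bool
    can_be_open: bool
    spatial_relation: Dict[str, str] = field(default_factory=dict)


def yn(flag: str) -> bool:
    """Convert the table's "Y"/"N" notation to a boolean (assumed mapping)."""
    return flag.strip().upper() == "Y"


# Example values copied from the table's "refrigerator" row set.
refrigerator = ObjectTOSM(
    name="refrigerator", id=1,
    size=[0.5, 0.5, 1.5], pose=[1.5, -12.5, 0.0, 0.0], velocity=[0.0, 0.0, 0.0],
    color="silver", coordinate_frame="house map",
    purpose="food storage device",
    is_key_object=yn("Y"), is_prime_object=yn("N"), is_movable=yn("N"),
    is_open=yn("N"), can_be_open=yn("Y"),
    spatial_relation={"isleftto": "dining desk", "isrightto": "chair"},
)

record = asdict(refrigerator)  # plain dict, convenient for serialization
```

Keeping the three property groups in one flat record is only one possible layout; nesting them under `symbol`, `explicit`, and `implicit` keys would follow the table's grouping more literally.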

| Category | TOSM Datatype Property | DataType | Example |
|---|---|---|---|
| Symbol | name | string | “kitchen” |
| Symbol | ID | int | 3 |
| Explicit | boundary | polygon | [(2.0, 1.0), (1.2, −0.5), …] |
| Explicit | coordinateFrame | string | “house map” |
| Implicit | complexity | float | 1.6 |
| Implicit | level | int (floor) | “7” |
| Implicit | purpose | string | “place to prepare food” |
| Implicit | roomNumber | int | “0” |
| Implicit | isInsideOf | stringArray | [“object1”, “object2”, “object3”] |
| Implicit | spatialRelation | dict., map | “isleftto: 2, isrightto: 4” |
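The place table stores a polygon `boundary` and an `isInsideOf` list of objects, and the module table lists the "object list in each polygon" as the input to place semantic information extraction. One plausible way to build that list is a point-in-polygon test on each object's (x, y) position. The sketch below uses standard ray casting; the function names, the rectangular polygons, and the object coordinates are all hypothetical, and this section does not confirm that the framework uses this particular test.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def point_in_polygon(p: Point, polygon: Sequence[Point]) -> bool:
    """Ray casting: toggle 'inside' for each edge crossed by a ray going in +x from p."""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge straddle the horizontal line through p?
        if (y1 > y) != (y2 > y):
            # x coordinate where the edge crosses that horizontal line
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def objects_per_place(
    places: Dict[str, Sequence[Point]],
    objects: Dict[str, Point],
) -> Dict[str, List[str]]:
    """Assign each object to the first place whose polygon contains it."""
    result: Dict[str, List[str]] = {name: [] for name in places}
    for obj_name, xy in objects.items():
        for place_name, poly in places.items():
            if point_in_polygon(xy, poly):
                result[place_name].append(obj_name)
                break  # objects outside every polygon stay unassigned
    return result


# Illustrative data only: two adjacent rectangular places and three objects.
places = {
    "kitchen": [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0)],
    "living room": [(4.0, 0.0), (9.0, 0.0), (9.0, 3.0), (4.0, 3.0)],
}
objects = {
    "refrigerator": (1.5, 2.0),
    "sofa": (6.0, 1.0),
    "plant": (12.0, 1.0),  # lies outside both polygons
}
grouped = objects_per_place(places, objects)
```

Each per-place list in `grouped` could then populate `isInsideOf` or be formatted into an LLM prompt. Points exactly on a shared boundary are resolved by whichever polygon the test accepts first, which a real system would likely need to handle more carefully.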

| Environment | Moving Interval (m) | Mapped Area (m²) | Free Space Area (m²) | Points |
|---|---|---|---|---|
| household (simulation) | 1.2 | 127.31 | 123.49 | 23 |
| corridor (real-world) | 2.0 | 148.14 | 142.21 | 15 |

| Environment | Object Detection Accuracy (%) | Object Semantic Accuracy (%) | Object Type | Object Semantic Data |
|---|---|---|---|---|
| household (simulation) | 78.1 | 83.33 | 15 | 30 |
| corridor (real-world) | 81.36 | 87.51 | 11 | 24 |

| Environment | Place Semantic Accuracy (%) | Place Type | Place Semantic Data |
|---|---|---|---|
| household (simulation) | 85.0 | 6 | 11 |
| corridor (real-world) | 90.5 | 2 | 7 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Joo, K.; Jeong, Y.; Kwon, S.; Jeong, M.; Kim, H.; Kuc, T. DK-SMF: Domain Knowledge-Driven Semantic Modeling Framework for Service Robots. Electronics 2025, 14, 3197. https://doi.org/10.3390/electronics14163197
