1. Introduction

In developing countries and underserved regions, educational institutions still depend heavily on physical storage for essays, project reports, and examination scripts, or rely on non-resilient digital infrastructure [1]. Such storage systems are at high risk of deterioration from environmental factors, poor handling, technical faults, and disasters such as floods, fires, or system crashes [2]. Unlike well-maintained digital archives that offer redundancy, encryption, and secure backup, these fragile storage systems expose educational institutions to the loss of vital academic records that document students’ learning journeys and developmental progress [3].

These archival weaknesses compromise educational quality, affecting academic honesty, unbiased evaluation, and the preservation of educational information [4]. When student essays and assessments are lost or partially damaged, the retrieval and verification of academic evaluations are severely limited [5]. This weakens institutional accountability and deprives students of the right to appeal or to request a fresh review of earlier evaluations, damaging procedural fairness and educational justice [6]. Losing scholarly content also makes it impossible to conduct the longitudinal studies and trend analyses that could inform curriculum development, policy-making, and educational research [7].

Where academic records serve as official documents supporting graduation, certification, or scholarship claims, the implications of such data loss can be significant [8]. For example, a student may lose credit for work already completed, particularly in situations that require reassessment or verification, such as disputed grades or revalidation exercises [9]. Furthermore, unreliable archiving inhibits any historical understanding of how an institution’s pedagogical practices, writing quality, and assessment standards have evolved over time [10]. There is therefore a crucial need for automated, intelligent restoration solutions capable of rebuilding, with high accuracy, the content and structure of missing or damaged student essays using advanced natural language processing (NLP) technologies [11].

Recent developments in NLP offer hope for restoring and rebuilding text that has been partially lost or damaged [12]. Through methods such as sequence-to-sequence learning, transformer-based language modeling, and contextual data imputation, NLP models have demonstrated a strong ability to infer missing or altered text under noisy and incomplete conditions [13]. Such abilities can be applied to reconstruct student essays in their original structure, restoring thematic coherence and evaluative integrity as closely to the original as possible [14].

The ability to restore lost scholarly content also has many ethical and educational implications [15]. It can enable fair reassessment of previously lost essays, mitigating bias that results from archival inequality and supporting an inclusive academic evaluation framework [16]. This is especially important in post-conflict or disaster-affected regions where physical academic records have been compromised [17].

This work addresses this gap by introducing a new NLP-based method for reconstructing missing or damaged text in student essays using a span-infilling transformer model trained on educational writing datasets. Our technique differs from general-purpose text generation approaches in that it integrates prompt engineering specific to the academic domain, document segmentation, and rubric-matching feedback to produce reconstructions that are both thematically consistent and evaluatively meaningful. The method was empirically tested on a purpose-built dataset of paired damaged and pristine essays derived from real undergraduate exam scripts. Our results show that the proposed method outperforms existing language models in restoration quality and is sufficient to support fair re-marking of previously lost scholarly work.

2. Literature Review

The restoration of damaged or degraded academic records, particularly handwritten or typed student essays, intersects with advancements in document reconstruction, grammatical correction, and pedagogically informed NLP systems. While the broader field of document restoration has traditionally focused on historical manuscripts and visual artifacts, recent studies are increasingly exploring the potential of deep learning and NLP for educational applications.

Recent breakthroughs in image-to-image translation and document restoration have significantly influenced how damaged or incomplete historical texts are reconstructed. Saha et al. [18] proposed Npix2Cpix, a GAN-based architecture that integrates retrieval and classification for watermark retrieval from historical document images, showcasing the power of generative models in recovering fine-grained details in degraded records. Similarly, Miloud et al. [19] presented a deep learning-enhanced framework for restoring ancient Arabic manuscripts, integrating image enhancement with optimized convolutional architectures to improve legibility and structure. Extending this, Vidal-Gorène and Camps [20] employed an image-to-image translation technique to synthetically generate missing or corrupted page layouts of historical manuscripts, indicating that artificial generation pipelines can support layout recovery, a key step in reconstructing educational documents such as essays.

Although these approaches primarily emphasize visual document recovery, their underlying techniques—especially layout analysis and context-aware reconstruction—can be adapted to educational settings where scanned or digitally photographed essay archives suffer from noise, smudges, or physical damage. However, in cases where text is partially lost or distorted, linguistic recovery through NLP becomes indispensable.

In this vein, grammatical error correction (GEC) and language modeling serve as foundational tools for semantic restoration. Xiao and Yin [21] leveraged n-gram language models to correct grammatical inconsistencies in English texts, particularly those common in non-native writing. While statistical methods offer robustness in low-resource settings, transformer-based models such as BART and MarianMT have demonstrated superior responsiveness and contextual understanding. Raju et al. [22] compared these architectures and found that transformer-based models outperformed traditional models in both grammatical and spelling error correction. Further advancing this line, Li and Wang [23] introduced a detection–correction structure built on general language models, enhancing both precision and adaptability for error correction tasks. These technologies are directly relevant to the restoration of damaged student essays, where sentence fragments may require contextual regeneration while preserving the original author’s voice and academic intent.

From a pedagogical NLP perspective, Abdullah et al. [24] designed an automated system for evaluating handwritten answer scripts using NLP and deep learning. Their work highlights the integration of visual and textual modalities for academic assessment, underscoring the feasibility of automated inspection and semantic evaluation of student-generated content. In high-stakes contexts where reassessment of lost or degraded scripts is required, such systems may provide both restoration and rubric-aligned evaluation.

However, restoration models must also consider the vulnerabilities and ethical concerns introduced by AI in education. Silveira et al. [25] explored adversarial attacks on transformer-based automated essay scoring (AES) systems, revealing how subtle perturbations can significantly affect scoring accuracy. This finding calls for caution: any restoration model intended for fair re-evaluation must be robust against bias and manipulation while maintaining transparency and fairness in academic assessment.

In addition, generative models for essay restoration, particularly those relying on span-infilling approaches, are susceptible to exposure bias, where the model increasingly relies on its own predictions rather than ground truth during generation, and where errors can compound. Pozzi et al. [26] addressed this issue in the setting of large language model distillation by proposing an imitation learning approach that mitigates exposure bias and improves sequence-level prediction fidelity. Their findings are particularly relevant to our task, as good essay reconstruction demands coherence and correctness across multiple generated spans.

Collectively, these studies form the basis for designing a comprehensive NLP-based framework that integrates document layout recovery, linguistic reconstruction, and fairness-aware scoring. By combining visual restoration methods with advanced grammatical correction and pedagogical intelligence, it becomes possible to reconstruct lost student essays in a manner that is both technically robust and educationally justifiable.

3. Materials and Methods

3.1. Dataset Description and Preprocessing

The dataset employed in this study comprises 5000 essay-style responses sourced from the archives of the Center for Applied Data Science, Sol Plaatje University, Kimberley, South Africa. At the time of this research, only student responses from Data Science examination scripts were readily available in sufficient volume and quality for analysis. These responses, originally handwritten in examination booklets, had been preserved over several academic sessions and were subsequently digitized through high-resolution scanning. While a number of the physical scripts showed evidence of damage due to age, handling, or storage conditions, such as torn pages, faded text, and ink bleed, the final dataset used for model development consisted of fully legible essays to ensure the availability of accurate ground truth data.

To simulate the real-world degradation patterns observed in archival materials, controlled artificial corruption was introduced into the clean essays by masking or removing spans of text. A fixed random seed was used during span masking to ensure reproducibility across training runs, and span lengths were sampled from a truncated normal distribution (mean = 5 tokens, standard deviation = 2, with bounds of 3 to 10 tokens) to maintain uniform degradation difficulty across samples. Additionally, each sample was corrupted with exactly three masked spans (n = 3) to stabilize the complexity of the restoration task during both training and evaluation phases.
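The sampling procedure described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the rejection-sampling form of the truncated normal, the rounding to whole tokens, the requirement that spans neither overlap nor touch, and the names `sample_span_length` and `sample_spans` are all assumptions.

```python
import random

def sample_span_length(rng, mean=5.0, std=2.0, low=3, high=10):
    """Draw a span length from a normal distribution truncated to [low, high].

    Truncation is done by rejection sampling; lengths are rounded to whole tokens.
    """
    while True:
        length = round(rng.gauss(mean, std))
        if low <= length <= high:
            return length

def sample_spans(num_tokens, rng, n_spans=3, max_tries=1000):
    """Return n_spans sorted (start, end) token ranges, end exclusive.

    Spans are rejected and resampled if any two overlap or touch.
    """
    for _ in range(max_tries):
        spans = []
        for _ in range(n_spans):
            length = sample_span_length(rng)
            if length > num_tokens:
                break  # essay too short for this span; retry the layout
            start = rng.randrange(0, num_tokens - length + 1)
            spans.append((start, start + length))
        spans.sort()
        separated = all(a[1] < b[0] for a, b in zip(spans, spans[1:]))
        if len(spans) == n_spans and separated:
            return spans
    raise ValueError("could not place the requested spans in this essay")

# A fixed seed makes the corruption reproducible across runs.
spans = sample_spans(num_tokens=120, rng=random.Random(42))
```

Passing an explicitly seeded `random.Random` instance, rather than relying on the global generator, keeps the masking reproducible even when other code draws random numbers in between.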

Each resulting sample contains two fields: damagedText, the synthetically degraded version of the essay with missing content, and fullText, the original, unaltered essay. This setup allowed for the creation of a parallel corpus suitable for supervised training of restoration models. All entries were stored in the JSON Lines (JSONL) format, enabling efficient streaming and batch processing. Preprocessing steps included lowercasing, punctuation normalization, and token validation to ensure textual consistency across samples. This dataset forms the foundation for training and evaluating the proposed NLP-based essay restoration framework.
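For illustration, one record of the parallel corpus and the preprocessing applied to it might look like the sketch below. The exact normalization rules are not given in the text, so the punctuation map is an assumption, as are the helper names `normalize` and `to_jsonl_line`, the spelling of the `fullText` key, and the made-up example sentences.

```python
import json
import re

# Map typographic quotes and dashes to ASCII equivalents (assumed rule set).
PUNCT_MAP = str.maketrans({
    "\u2018": "'", "\u2019": "'",
    "\u201c": '"', "\u201d": '"',
    "\u2013": "-", "\u2014": "-",
})

def normalize(text):
    """Lowercase, normalize punctuation, and collapse runs of whitespace."""
    text = text.lower().translate(PUNCT_MAP)
    return re.sub(r"\s+", " ", text).strip()

def to_jsonl_line(damaged, full):
    """Serialize one damaged/original pair as a single JSONL line."""
    record = {"damagedText": normalize(damaged), "fullText": normalize(full)}
    return json.dumps(record, ensure_ascii=False)

line = to_jsonl_line(
    "Variance measures <extra_id_0> around the mean.",
    "Variance measures the spread of values around the mean.",
)
record = json.loads(line)  # each line of the file parses independently
```

Because every line is a complete JSON object, the file can be streamed one record at a time without loading the whole corpus into memory.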

3.2. Proposed Methodology

The task of restoring damaged student essays is framed as a text infilling problem, where the objective is to recover missing textual spans using the context surrounding the damaged regions. To accomplish this, we employ a transformer-based sequence-to-sequence model, specifically a fine-tuned instance of the text-to-text transfer transformer (T5), due to its demonstrated ability to perform coherent span-level reconstruction within structured and educational domains.

Let $X$ represent the complete, original essay text. A corrupted version of this essay, denoted by $\tilde{X}$, is constructed by removing spans of text and inserting special sentinel tokens in their place. The task is then to learn a function $f_\theta : \tilde{X} \mapsto X$, where $f_\theta$ is a mapping parameterized by the T5 model’s weights $\theta$, trained to restore the removed spans based on the remaining context.

Corruption is applied by replacing $k$ contiguous spans within $X$ using a sequence of sentinel tokens $s_1, \dots, s_k$. The resulting corrupted input sequence $\tilde{X}$ is defined as follows:

$$\tilde{X} = \mathrm{replace}\left(X, \{m_i \mapsto s_i\}_{i=1}^{k}\right)$$

where $m_i$ denotes the $i$-th span selected for masking, $s_i$ is the corresponding sentinel token (e.g., <extra_id_0>), and $k$ is the total number of removed spans.

The model is trained to predict the target sequence $Y$, which concatenates the removed spans in the order they appear, each prefixed by its corresponding sentinel:

$$Y = (s_1, m_1, s_2, m_2, \dots, s_k, m_k)$$

This training structure allows the model to learn to reconstruct multiple spans simultaneously, conditioned on their surrounding context and the ordering cues provided by the sentinel tokens.
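The construction of the corrupted input and its target can be sketched in a few lines. This is an illustrative sketch assuming whitespace tokenization and T5-style sentinel names (`<extra_id_0>`, `<extra_id_1>`, ...); the function name `corrupt` and the example sentence are hypothetical.

```python
def corrupt(tokens, spans):
    """Build the (source, target) pair for span infilling.

    tokens: list of word tokens from the original essay.
    spans:  sorted, non-overlapping (start, end) index pairs, end exclusive.
    """
    source, target, cursor = [], [], 0
    for i, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{i}>"
        source.extend(tokens[cursor:start])  # keep the context before the span
        source.append(sentinel)              # the sentinel stands in for the span
        target.append(sentinel)              # target: sentinel, then the span
        target.extend(tokens[start:end])
        cursor = end
    source.extend(tokens[cursor:])           # context after the last span
    return " ".join(source), " ".join(target)

tokens = "the model learns a mapping from inputs to outputs".split()
source, target = corrupt(tokens, [(1, 3), (6, 7)])
# source: "the <extra_id_0> a mapping from <extra_id_1> to outputs"
# target: "<extra_id_0> model learns <extra_id_1> inputs"
```

Each sentinel appears exactly once on each side, which is what lets the decoder output be aligned back to the gaps in the input.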

A critical design decision in this framework is that the T5 model is explicitly trained to reconstruct masked spans based on the original, uncorrected text, not to improve or alter it. This means the model preserves the factual, grammatical, or logical integrity of the original student essay, even if it includes incorrect statements, awkward phrasing, or flawed reasoning. The intention is not to produce an idealized version of the student’s writing, but to faithfully recover what was originally written—even if that includes mistakes.

This distinction is particularly important for maintaining educational fairness and procedural integrity in reassessment scenarios. If the model were to “fix” errors during reconstruction, it could unfairly influence re-evaluation outcomes or compromise the authenticity of archived content.

To illustrate this behavior, Table 4 includes examples where the original student text contains factual or logical inaccuracies. The corresponding restored outputs demonstrate that the model successfully recovers the flawed content as-is, rather than attempting to correct or sanitize it. This reinforces the suitability of the approach for educational settings that prioritize authentic restoration over automatic improvement.

3.2.1. Encoder–Decoder Modeling

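The corrupted input $\tilde{X}$ is processed by the encoder to produce hidden representations, as follows:

$$H = \mathrm{Encoder}_\theta(\tilde{X})$$

Subsequently, the decoder generates the target sequence $\hat{Y}$ containing the missing spans, as follows:

$$\hat{Y} = \mathrm{Decoder}_\theta(H)$$

where $H$ denotes the encoder’s hidden representations of $\tilde{X}$.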

3.2.2. Optimization Objective

Model training is guided by a conditional language modeling loss. The objective is to minimize the negative log-likelihood of generating each token in the target sequence $Y$ given the prior tokens and the corrupted input, as follows:

$$\mathcal{L}(\theta) = -\sum_{t=1}^{T} \log P_\theta\left(y_t \mid y_{<t}, \tilde{X}\right)$$

where

$T$ is the total number of tokens in the target sequence;

$y_t$ is the token at time step $t$;

$y_{<t}$ denotes all preceding target tokens;

$P_\theta$ is the decoder’s probability distribution over the vocabulary.
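As a small worked example of this objective, the snippet below sums the negative log-probabilities that a model assigns to the reference tokens of one target sequence. The probabilities are made-up values and the function name `sequence_nll` is hypothetical; in practice the loss is computed from decoder logits inside the training framework.

```python
import math

def sequence_nll(token_probs):
    """Negative log-likelihood of one target sequence.

    token_probs[t] is the probability the decoder assigned to the
    reference token at step t, given the preceding reference tokens.
    """
    return -sum(math.log(p) for p in token_probs)

# Three target tokens predicted with probabilities 0.9, 0.8, and 0.5.
loss = sequence_nll([0.9, 0.8, 0.5])
```

Because the log of a product is the sum of the logs, this equals the negative log of the joint probability 0.9 × 0.8 × 0.5 = 0.36, so a single poorly predicted token dominates the loss for the sequence.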

The model output $\hat{Y}$ is segmented by sentinel tokens and inserted back into the original input structure to form the reconstructed essay $\hat{X}$, as follows:

$$\hat{X} = \mathrm{reconstruct}(\tilde{X}, \hat{Y})$$

where $\mathrm{reconstruct}(\cdot)$ is a deterministic function that replaces each sentinel token $s_i$ in $\tilde{X}$ with the corresponding generated span from $\hat{Y}$.
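A possible form of this reassembly step is sketched below, assuming T5-style sentinel strings such as `<extra_id_0>` in both the damaged input and the decoder output. The helper names and the choice to leave a sentinel in place when the model produced no span for it are assumptions.

```python
import re

SENTINEL = re.compile(r"<extra_id_(\d+)>")

def parse_spans(output):
    """Split decoder output into a {sentinel index: span text} mapping."""
    # With one capturing group, re.split yields
    # [prefix, idx_0, text_0, idx_1, text_1, ...].
    parts = SENTINEL.split(output)
    return {
        int(parts[i]): parts[i + 1].strip()
        for i in range(1, len(parts) - 1, 2)
    }

def reconstruct(damaged, output):
    """Replace each sentinel in the damaged text with its generated span."""
    spans = parse_spans(output)
    # A sentinel with no generated span is left untouched so the gap stays visible.
    return SENTINEL.sub(
        lambda m: spans.get(int(m.group(1)), m.group(0)), damaged
    )

restored = reconstruct(
    "the <extra_id_0> a mapping from <extra_id_1> to outputs",
    "<extra_id_0> model learns <extra_id_1> inputs",
)
# restored: "the model learns a mapping from inputs to outputs"
```

Keeping unfilled sentinels visible, rather than silently deleting them, makes incomplete reconstructions easy to detect downstream.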

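For each reconstructed span, a confidence score $c_i$ can be estimated by averaging the model’s maximum softmax probabilities over all predicted tokens in that span, as follows:

$$c_i = \frac{1}{L_i} \sum_{j=1}^{L_i} \max_{v} P_\theta\left(\hat{y}_{i,j} = v \mid \hat{y}_{<(i,j)}, \tilde{X}\right)$$

where

$c_i$ is the confidence score for the $i$-th span;

$L_i$ is the length (in tokens) of the predicted span;

$\hat{y}_{i,j}$ is the $j$-th token in the $i$-th span, with the maximum taken over vocabulary entries $v$ and the probability conditioned on the previously generated tokens $\hat{y}_{<(i,j)}$ and the corrupted input $\tilde{X}$.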
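The span-level confidence can be computed directly from the decoder's per-token logits. The sketch below uses plain Python lists for clarity; the function names and the toy logits are assumptions, and a real pipeline would read the scores from the generation output of the model.

```python
import math

def softmax(logits):
    """Convert a list of logits into probabilities."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def span_confidence(span_logits):
    """Mean of the per-token maximum softmax probability for one span.

    span_logits holds one list of vocabulary logits per predicted token.
    """
    if not span_logits:
        raise ValueError("span must contain at least one token")
    return sum(max(softmax(row)) for row in span_logits) / len(span_logits)

# Toy span of two tokens over a three-word vocabulary.
confidence = span_confidence([[4.0, 1.0, 0.5], [2.0, 1.8, 0.1]])
```

The first token is predicted decisively and the second is nearly a tie between two candidates, so the averaged score falls between the two per-token maxima.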

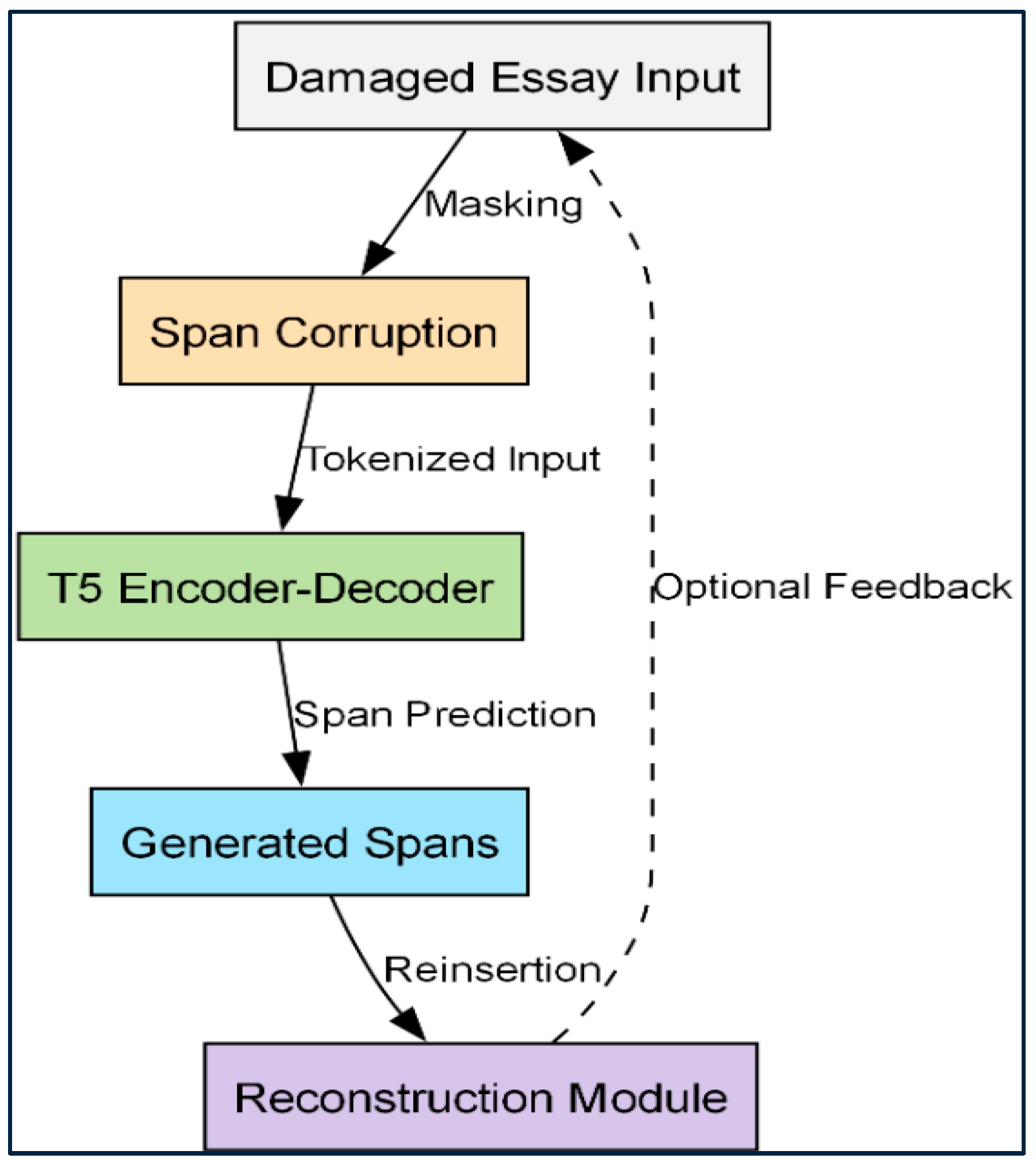

Figure 1 presents a high-level architecture of the essay restoration framework. The pipeline consists of the following stages: ingestion of damaged text, application of span corruption using sentinel tokens, transformation via the T5 encoder–decoder, generation of the missing spans, and post-processing for reassembly.

3.3. Experimental Setup and Evaluation

To rigorously evaluate the proposed NLP-based essay restoration framework, a detailed experimental protocol was implemented. The dataset consisted of 5000 JSONL-formatted essays, each comprising a damagedText field (input) and a fullText field (target). The data were stratified and split into training (70%, 3500 samples), validation (15%, 750 samples), and test (15%, 750 samples) sets, ensuring a diverse range of sentence structures and corruption patterns across subsets.
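The split sizes follow directly from the stated proportions. The sketch below reproduces them with a seeded shuffle; it omits the stratification described above, which would additionally require the stratification variable, and the function name `split_indices` and the seed are assumptions.

```python
import random

def split_indices(n, seed=0):
    """Shuffle indices 0..n-1 and cut them 70/15/15 (no stratification)."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_train = n * 70 // 100  # integer arithmetic avoids float rounding
    n_val = n * 15 // 100
    train_idx = indices[:n_train]
    val_idx = indices[n_train:n_train + n_val]
    test_idx = indices[n_train + n_val:]
    return train_idx, val_idx, test_idx

train_idx, val_idx, test_idx = split_indices(5000)
```

For 5000 essays this yields 3500, 750, and 750 indices, with no index shared between subsets.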

The core model used was the T5-base transformer (220 M parameters), pretrained with a span corruption objective. Fine-tuning involved restoring the original text from masked inputs, prefixed with the task indicator “restore:”. Training was conducted using the AdamW optimizer (learning rate: 3 × 10⁻⁴, weight decay: 0.01) over 10 epochs with a batch size of 16, using a maximum sequence length of 256 tokens. Additional strategies included a 500-step warmup, gradient clipping (norm 1.0), and dropout (0.1) to prevent overfitting. Tokenization was handled using the T5-compatible SentencePiece tokenizer.
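To put the warmup length in context, the step budget implied by these settings can be worked out as below. The calculation assumes no gradient accumulation and that the final partial batch of each epoch is kept; the schedule after warmup is not stated in the text, so the constant rate used here is an assumption, as is the function name `learning_rate`.

```python
import math

TRAIN_SAMPLES, BATCH_SIZE, EPOCHS = 3500, 16, 10
PEAK_LR, WARMUP_STEPS = 3e-4, 500

steps_per_epoch = math.ceil(TRAIN_SAMPLES / BATCH_SIZE)  # 218.75 rounds up to 219
total_steps = steps_per_epoch * EPOCHS                   # 2190 optimizer steps

def learning_rate(step):
    """Linear warmup to PEAK_LR, then constant (post-warmup shape is assumed)."""
    if step < WARMUP_STEPS:
        return PEAK_LR * step / WARMUP_STEPS
    return PEAK_LR

warmup_fraction = WARMUP_STEPS / total_steps
```

Under these assumptions the 500 warmup steps cover a little under a quarter of the 2190 training steps.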

The training environment included a single NVIDIA A100 GPU (40 GB), manufactured by NVIDIA Corporation, based in Santa Clara, CA, United States. PyTorch 2.0 and Hugging Face Transformers 4.38 were utilized, with mixed precision training enabled via torch.cuda.amp to improve memory efficiency. Weights & Biases was used to track experiments and results.

Performance was quantitatively assessed using three metrics: ROUGE-L (structural similarity), BLEU (n-gram precision), and BERTScore (semantic similarity using contextual embeddings). These were computed across the test set, and reconstructions whose confidence fell below a threshold were flagged for further inspection.
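To make the ROUGE-L metric concrete, the sketch below computes it from the longest common subsequence (LCS) of two token sequences. It assumes whitespace tokenization and the balanced F1 variant; the text does not state which variant or library was used, so this is illustrative only, and the function names are hypothetical.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, 1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]

def rouge_l_f1(reference, candidate):
    """ROUGE-L as the F1 of LCS-based precision and recall."""
    ref, cand = reference.split(), candidate.split()
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(cand), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)

score = rouge_l_f1(
    "variance measures the spread of values",
    "variance measures the range of values",
)
```

In this example five of the six tokens form a common subsequence, so precision and recall are both 5/6. Unlike BLEU, the LCS rewards tokens that appear in the right order even when they are not contiguous.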

The following two baseline models were used for the initial comparison; a BART encoder–decoder baseline is additionally reported in Section 4.2:

A BERT-based span prediction model, which performed well on short text spans but failed on longer sequences.

A GPT-2 decoder-only model, which struggled with the bidirectional context necessary for masked span recovery.

To complement automated metrics, a human evaluation was conducted on 100 random test samples. Three academic experts rated the restored outputs based on fluency, relevance, and coherence using a 5-point Likert scale. Cohen’s kappa was computed to assess inter-rater agreement, offering insights into the model’s real-world educational validity, especially in nuanced cases where automated metrics fell short.

4. Results and Discussion

This section presents the results from both automated and human evaluations to assess the effectiveness of the proposed T5-based essay restoration framework. The analysis includes training dynamics, quantitative and qualitative metrics, error-type analysis, model confidence correlation, and an ablation study that analyzes the contribution of individual model components.

4.1. Training Dynamics

The learning behavior of the model during fine-tuning is critical for understanding convergence and generalization.

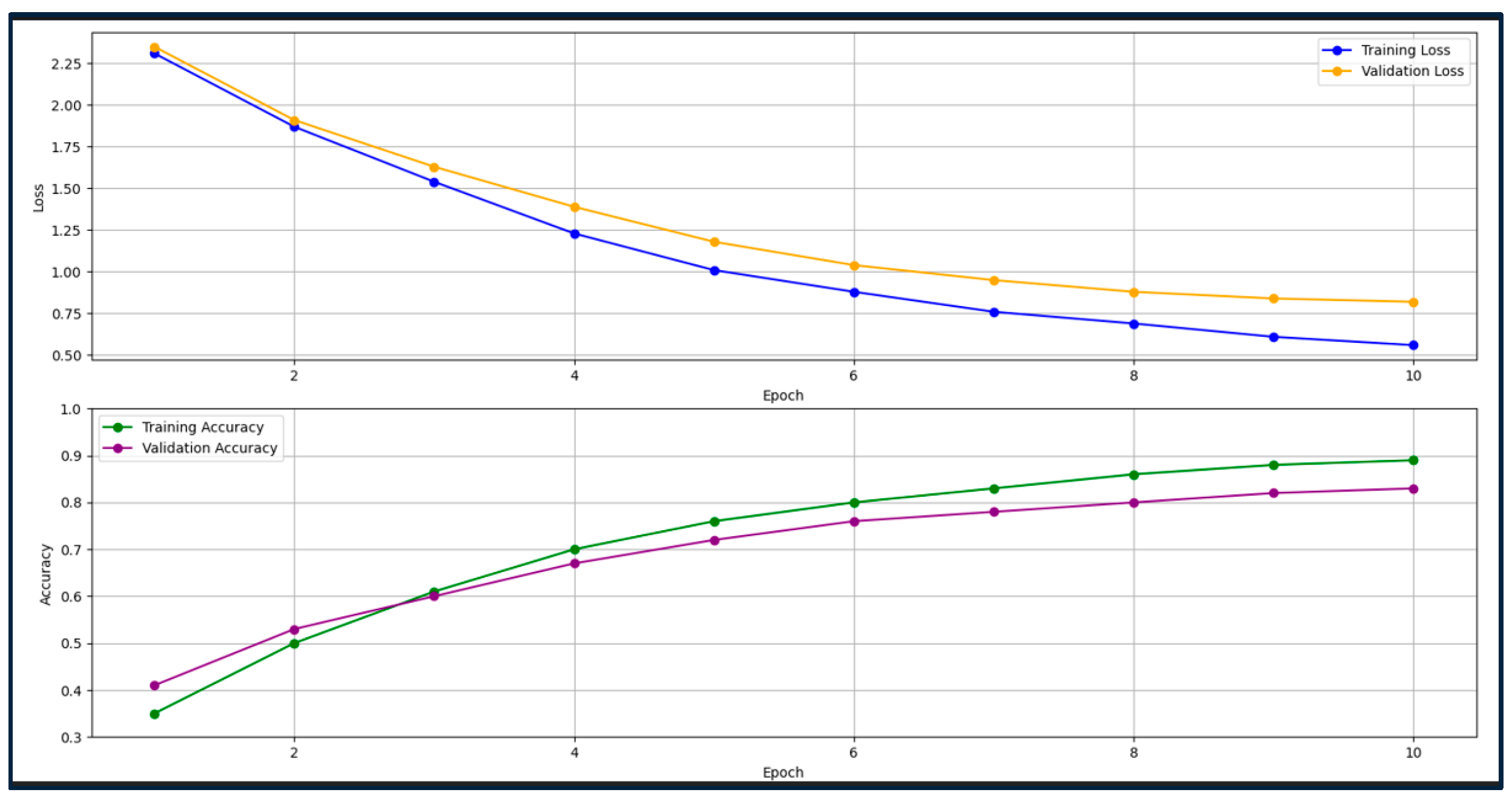

Figure 2 illustrates the training dynamics of the proposed model over 10 epochs. Both the training and validation losses decrease smoothly and monotonically, with the validation loss closely tracking the training loss, indicating stable convergence without significant overfitting. The consistent reduction in loss demonstrates that the model is effectively learning to reconstruct missing spans from corrupted essays.

Complementing the loss curves, the accuracy curves show the trend of validation accuracy over 10 epochs, measured as exact span match accuracy on validation data. Accuracy steadily improves, reaching approximately 83% at epoch 10, further confirming the model’s increasing ability to reconstruct the original essay text with precision as training progresses.

4.2. Automated Evaluation Metrics

The final model’s performance was quantitatively evaluated on the test set and compared with three baseline models: a BERT-based span classifier, a GPT-2 decoder-only model, and a BART encoder–decoder model, the last included because its architecture is closest to the proposed T5 framework. Evaluation was conducted using ROUGE-L (measuring lexical sequence overlap), BLEU-4 (measuring n-gram precision), and BERTScore (assessing semantic similarity via contextual embeddings). The results are summarized in Table 1.

Table 1. Quantitative performance of T5 and baseline models.

| Model | ROUGE-L ↑ | BLEU-4 ↑ | BERTScore ↑ |
|---|---|---|---|
| T5 (proposed) | 0.831 | 0.714 | 0.885 |
| BART | 0.805 | 0.689 | 0.864 |
| BERT-Span | 0.629 | 0.473 | 0.791 |
| GPT-2 Decoder | 0.587 | 0.428 | 0.765 |

The proposed T5 model outperforms all baselines across the three evaluation metrics. While the BART model demonstrates strong performance, owing to its architectural similarity and bidirectional encoder–decoder structure, it still trails the T5 model in both syntactic and semantic reconstruction quality. These results support the suitability of T5’s span-infilling objective for restoring degraded educational texts.

The BERT and GPT-2 baselines, though widely used, differ substantially from T5 in architectural design and contextual scope. The BERT-Span model, being encoder-only, struggles with long-range sequence generation, while GPT-2, as a decoder-only model, lacks access to bidirectional context during generation, which is essential for masked-span reconstruction. Their inclusion was motivated by their prevalence in prior NLP restoration tasks and their availability as open-source, reproducible benchmarks.

BART, by contrast, is, like T5, a denoising sequence-to-sequence transformer with a pretraining strategy based on span masking, so its inclusion enables a fairer and more rigorous comparison. While mT5 was also considered, it was excluded due to resource constraints and its multilingual training regime, which is not directly aligned with the monolingual English dataset used in this study.

Word error rate (WER) was used as an additional evaluation metric to further assess restoration quality. WER measures the proportion of word-level substitutions, deletions, and insertions relative to the length of the reference text, providing a fine-grained error analysis complementary to the other metrics. As shown in Table 2, the proposed T5 model achieves a WER of 0.171, which is substantially lower than that of BERT (0.269) and GPT-2 (0.305), confirming the superior accuracy of T5 in reconstructing degraded text.
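For reference, WER can be computed as the word-level edit distance between the reconstruction and the ground truth, divided by the number of reference words. The sketch below assumes whitespace tokenization; the function name and example sentences are hypothetical, and the tooling actually used is not specified in the text.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] is the edit distance between the processed reference prefix
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, 1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, 1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, substitution))
        prev = curr
    return prev[-1] / len(ref)

wer = word_error_rate(
    "clustering groups similar data points",
    "clustering groups related data points",
)
```

One substituted word out of five reference words gives a WER of 0.2. Because insertions also count as errors, WER can exceed 1.0 when a reconstruction is much longer than the reference.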

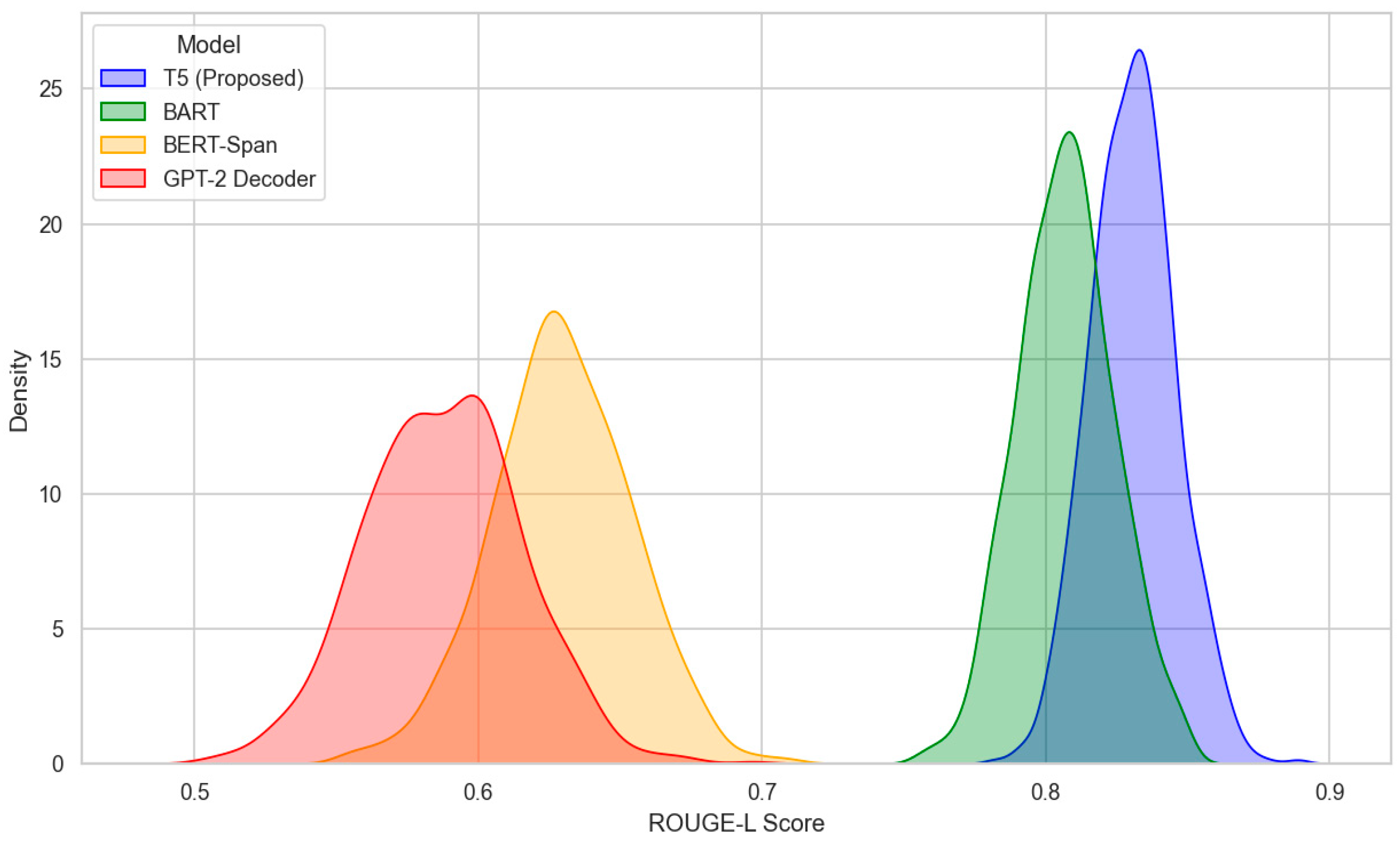

The distribution of ROUGE-L scores for all test samples is visualized in Figure 3. The T5 model’s distribution is more tightly clustered near the upper range, indicating consistent performance across diverse essay topics and damage levels, followed closely by BART. BERT and GPT-2 distributions are wider and skewed toward lower scores, reflecting their relative limitations in this span recovery task.

4.3. Error Type Analysis

To further evaluate the qualitative performance of the restoration models, we conducted a structured error type analysis on a representative subset of 200 test samples, drawn from the full test set of 750 reconstructions. This subset was randomly sampled using a fixed random seed to ensure reproducibility and to maintain diversity in terms of corruption severity, span positions, and essay topics. A preliminary statistical analysis showed no significant deviation in ROUGE-L or BLEU distributions between the selected 200 samples and the overall test set (p > 0.1, two-sample Kolmogorov–Smirnov test), thus validating the subset’s representativeness. This approach also helped balance the trade-off between human annotation effort and evaluation reliability.
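The representativeness check rests on the two-sample Kolmogorov–Smirnov statistic, the largest gap between the empirical distribution functions of the two score samples. A minimal sketch of the statistic is given below with made-up scores; it does not compute the p-value, for which a statistics library such as SciPy (`scipy.stats.ks_2samp`) would normally be used.

```python
from bisect import bisect_right

def ks_statistic(sample_a, sample_b):
    """Two-sample KS statistic: max gap between the empirical CDFs."""
    a, b = sorted(sample_a), sorted(sample_b)

    def ecdf(sorted_values, x):
        # Fraction of observations less than or equal to x.
        return bisect_right(sorted_values, x) / len(sorted_values)

    # The largest gap is always attained at one of the observed values.
    return max(abs(ecdf(a, x) - ecdf(b, x)) for x in a + b)

subset_scores = [0.78, 0.81, 0.83, 0.85, 0.88]        # made-up ROUGE-L values
full_scores = [0.77, 0.80, 0.82, 0.84, 0.86, 0.89]    # made-up ROUGE-L values
gap = ks_statistic(subset_scores, full_scores)
```

A small statistic, and correspondingly a large p-value, indicates that the subset and the full test set have similar score distributions.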

Each reconstruction was manually annotated for three primary error types: grammatical errors, reflecting issues in syntax and fluency; semantic errors, denoting factual drift or misrepresentation of meaning; and structural errors, indicating breakdowns in logical flow or discourse cohesion.

Table 3 summarizes the error frequencies across the four evaluated models: T5 (proposed), BART, BERT-Span, and GPT-2 Decoder.

The T5 model demonstrated the lowest total error rate (35%), outperforming the other models. Its semantic error rate of just 12% supports its ability to preserve the original meaning, which is also reflected in its top BERTScore of 0.885 (Table 1) and factual consistency score of 4.42/5 (Table 5). The grammatical error rate of 14% and structural error rate of 9% also align with its strong human-evaluated fluency (4.63) and coherence (4.51).

BART, though architecturally similar to T5, exhibited a higher error rate (45%) and underperformed in semantic consistency, with a 15.5% semantic error rate. This aligns with its slightly lower BERTScore (0.864) and BLEU-4 score (0.689). While BART still markedly outperforms BERT and GPT-2, its increased structural inconsistencies and marginal semantic drift suggest that its pretraining objectives may not align as well with span restoration tasks in academic contexts.

GPT-2, a decoder-only model, was the most error-prone across all categories. Its semantic error rate of 35.5% and structural error rate of 24.5% reflect its limited capacity to condition on bidirectional context. These issues correlate with its low BLEU-4 (0.428) and ROUGE-L (0.587) in Table 1 and poor human fluency and coherence ratings.

To further investigate these trends, Figure 4 presents a confusion-style heatmap of token-level prediction accuracy, categorized by token class. We define keywords as domain-relevant content words, such as “regression”, “variance”, or “clustering”; operators as conditional or logical/mathematical constructs, like “if”, “else”, “average”, or “mean”; connectives as discourse markers, like “however”, “because”, and “although”; and domain terms as technical vocabulary drawn from the Data Science curriculum. The errors class reflects incorrect insertions or deletions relative to the ground truth. The heatmap shows that the T5 model commits notably fewer errors across all token classes, especially in operators and domain terms, which are critical to conveying accurate procedural or conceptual meaning.

Figure 3 further corroborates these findings by displaying a tightly clustered ROUGE-L distribution for T5, indicating stable performance across a range of essay topics and corruption severities. Additionally, Figure 5 illustrates the positive correlation between model confidence and semantic accuracy, supporting the feasibility of confidence-based filtering strategies for high-risk use cases.

The error analysis confirms that T5’s span-infilling architecture, fine-tuned for educational restoration, yields superior performance in generating fluent, semantically faithful, and structurally coherent reconstructions. Its advantages over both legacy models and modern encoder–decoder baselines highlight its suitability for high-stakes archival restoration tasks in educational settings.

4.4. Qualitative Case Studies

Qualitative examples reinforce quantitative findings. Selected excerpts in Table 4 demonstrate that the T5 model restores missing multi-token spans with high fluency and topical relevance, closely matching ground truth text. By contrast, baseline outputs show incomplete or semantically inappropriate reconstructions.

4.4.1. Human Evaluation Results

To complement automated evaluation metrics, a human expert evaluation was conducted on a subset of restored essays. A total of 100 randomly selected samples from the test set were used for this purpose. The selection aimed to balance annotation feasibility with content diversity, ensuring that a broad spectrum of prompt types, corruption patterns, and essay topics were represented. This sample size was deemed sufficient to capture qualitative trends without overburdening raters, and statistical analysis confirmed that the subset reflected the broader test distribution.

Each restored essay was independently reviewed by three academic evaluators with experience in educational assessment. The raters scored each reconstruction on three criteria—fluency, coherence, and relevance—using a 5-point Likert scale. In addition, to address concerns about factual reliability, a factual consistency criterion was introduced, which assessed whether the generated content remained faithful to the original author’s intent without introducing hallucinated or fabricated claims.

Table 5 presents the averaged ratings across all evaluators.

The high scores across all four dimensions affirm the model’s ability to generate reconstructions that are not only linguistically polished but also contextually appropriate and factually aligned with the original text. Furthermore, inter-rater agreement, measured using Cohen’s kappa (κ = 0.82), confirms a high level of scoring consistency across evaluators, reinforcing the credibility of these results.
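Cohen’s kappa is defined for a pair of raters, so with three evaluators one common convention is to average the statistic over all rater pairs. The sketch below follows that convention with unweighted kappa; both choices are assumptions, since the text does not state how the three raters were combined, and the ratings shown are made up.

```python
from collections import Counter
from itertools import combinations

def cohen_kappa(ratings_a, ratings_b):
    """Unweighted Cohen's kappa for two raters scoring the same items."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("both raters must score the same non-empty item set")
    n = len(ratings_a)
    observed = sum(x == y for x, y in zip(ratings_a, ratings_b)) / n
    counts_a, counts_b = Counter(ratings_a), Counter(ratings_b)
    # Chance agreement from each rater's marginal label frequencies.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1.0 - expected)

def mean_pairwise_kappa(raters):
    """Average Cohen's kappa over every pair of raters."""
    pairs = list(combinations(raters, 2))
    return sum(cohen_kappa(a, b) for a, b in pairs) / len(pairs)

ratings = [
    [5, 4, 4, 3, 5, 2],  # rater 1 (made-up Likert scores)
    [5, 4, 3, 3, 5, 2],  # rater 2
    [5, 4, 4, 3, 4, 2],  # rater 3
]
agreement = mean_pairwise_kappa(ratings)
```

Unweighted kappa treats a disagreement of one Likert point the same as a disagreement of four; a weighted variant would be a reasonable alternative for ordinal scales.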

4.4.2. Model Confidence vs. Performance

To explore whether the model’s internal uncertainty could serve as a proxy for output quality, we analyzed the relationship between model confidence scores and semantic similarity, as measured by BERTScore.

Figure 5 presents a scatter plot of BERTScore values plotted against confidence scores for all 750 test samples. A clear positive correlation is observed, indicating that higher model confidence is generally associated with better semantic alignment between the reconstructed and original text.

This relationship provides a practical mechanism for confidence-based filtering: samples with low confidence can be flagged for manual review or excluded from automated downstream use, thereby reducing the risk of relying on potentially flawed reconstructions. This is particularly relevant for scenarios where factual hallucination, the introduction of content not present in the original essay, poses a threat to fairness in educational re-evaluation.

To mitigate such risks, we propose integrating a threshold-based review mechanism into future deployments. For example, reconstructions with confidence scores below τ = 0.45 can be automatically flagged for human inspection. This ensures that reconstructed outputs are subject to expert oversight whenever uncertainty exceeds an acceptable threshold, helping maintain the integrity and fairness of restored academic records.
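The review mechanism amounts to a simple filter over confidence scores. In the sketch below the essay identifiers and scores are made up, and the function name `flag_for_review` is hypothetical; only the threshold τ = 0.45 comes from the text.

```python
TAU = 0.45  # review threshold proposed in the text

def flag_for_review(confidences, tau=TAU):
    """Return the ids of reconstructions whose confidence is below tau."""
    return [essay_id for essay_id, score in confidences.items() if score < tau]

flagged = flag_for_review({
    "essay-001": 0.91,
    "essay-002": 0.38,
    "essay-003": 0.45,  # exactly at the threshold, so not flagged
})
# flagged: ["essay-002"]
```

When an essay contains several restored spans, one conservative option is to pass the minimum span confidence as the essay-level score, so that a single uncertain span is enough to trigger review.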

4.5. Discussion and Implications

The comprehensive analysis reveals that the T5-based span-infilling approach effectively restores damaged student essays with high syntactic, semantic, and contextual fidelity. Superior quantitative metrics, consistent error reductions, and favorable human evaluations collectively validate the model’s design and training regimen. Training dynamics indicate stable learning without overfitting, while token-level heatmaps elucidate nuanced strengths in domain-specific language reconstruction. This robustness, combined with confidence estimation capabilities, positions the framework as a promising solution for archival restoration in academic institutions. Potential applications include digitization of legacy exam scripts, reconstruction of incomplete educational records, and support for longitudinal student performance analysis where archival data degradation is prevalent.

4.6. Ablation Study

To evaluate the impact of individual architectural and training components on the performance of the proposed restoration framework, an extended ablation study was conducted. This study aimed to dissect and quantify the influence of task prefix design, corruption strategies, and fine-tuning durations on the model’s ability to reconstruct damaged educational texts. In addition to T5, BART was included in the analysis to offer a more direct architectural comparison and further validate the robustness of the span-infilling objective used in this work.

The first dimension examined was the task prefixing mechanism used during fine-tuning. The default prefix “restore:” was compared against two alternative labels—“fill:” and “rebuild:”—as well as a variant that omitted the prefix entirely. Results indicated that removing the prefix led to a notable performance decline, with reductions observed across ROUGE-L, BLEU-4, and BERTScore metrics. Similarly, replacing “restore:” with more generic instructions, like “fill:” or “rebuild:”, also yielded lower performance, underscoring the importance of task-specific conditioning in guiding the model’s decoding behavior. The “restore:” prefix offered the clearest semantic signal for reconstruction, resulting in the highest semantic fidelity among prefixing variants.
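To make the prefix ablation concrete, the following sketch shows how the four input variants could be constructed before tokenization. It is illustrative only: the helper name `build_input`, the single space after the colon, and the use of a T5 sentinel token (`<extra_id_0>`) to mark the damaged span in the example sentence are assumptions, not details confirmed by the experiments above.

```python
# Prefix variants compared in the ablation; "none" omits the prefix entirely.
PREFIX_VARIANTS = {
    "restore": "restore: ",
    "fill": "fill: ",
    "rebuild": "rebuild: ",
    "none": "",
}


def build_input(corrupted_text: str, variant: str = "restore") -> str:
    """Prepend the task prefix for the chosen ablation variant."""
    if variant not in PREFIX_VARIANTS:
        raise KeyError(f"unknown prefix variant: {variant}")
    return PREFIX_VARIANTS[variant] + corrupted_text.strip()


# Hypothetical damaged sentence; the sentinel marks the missing span.
example = "The water cycle begins with <extra_id_0> from oceans and lakes."
for name in PREFIX_VARIANTS:
    print(repr(build_input(example, name)))
```

Because the only difference between variants is the leading string, any performance gap between them can be attributed to the conditioning signal rather than to changes in the corrupted text itself.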

The second set of experiments focused on corruption strategies used during training. The original framework employed span masking, where multi-token segments are masked and then recovered. This approach was compared with two alternatives, namely token-level masking and character-level deletion. Token masking, which replaces individual tokens rather than contiguous spans, led to a measurable performance drop, particularly in BLEU-4, suggesting reduced lexical coherence. Character-level corruption, while closer to OCR-like noise, also underperformed, likely due to increased ambiguity and difficulty in semantic recovery. These results collectively reinforce the appropriateness of span masking for educational text restoration, as it better mirrors the type of degradation typically observed in archival essay manuscripts.
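The three corruption strategies can be contrasted with a short sketch. The functions below are simplified stand-ins, not the corruption pipeline used in the experiments: whitespace tokenization, the span length, the masking rates, and the `<mask>` placeholder for token-level masking are all assumptions chosen for illustration. The `<extra_id_i>` sentinels follow the convention of the T5 vocabulary.

```python
import random


def span_mask(tokens, n_spans, span_len, rng):
    """Replace n_spans non-overlapping runs of span_len tokens with sentinels."""
    n_free = len(tokens) - n_spans * span_len
    if n_free < 0:
        raise ValueError("not enough tokens for the requested spans")
    # Treat each span as one slot among the free tokens and sample span slots.
    slots = sorted(rng.sample(range(n_free + n_spans), n_spans))
    out, pos = [], 0
    for i, slot in enumerate(slots):
        start = slot + i * (span_len - 1)  # map slot index back to token index
        out.extend(tokens[pos:start])
        out.append(f"<extra_id_{i}>")
        pos = start + span_len
    out.extend(tokens[pos:])
    return out


def token_mask(tokens, rate, rng, mask="<mask>"):
    """Replace individual tokens independently with probability `rate`."""
    return [mask if rng.random() < rate else t for t in tokens]


def char_delete(text, rate, rng):
    """Delete individual characters independently with probability `rate`."""
    return "".join(c for c in text if rng.random() >= rate)


rng = random.Random(0)  # fixed seed so the corruption is reproducible
sentence = "Photosynthesis converts light energy into chemical energy in plants"
tokens = sentence.split()
print(" ".join(span_mask(tokens, n_spans=1, span_len=3, rng=rng)))
print(" ".join(token_mask(tokens, rate=0.3, rng=rng)))
print(char_delete(sentence, rate=0.1, rng=rng))
```

Span masking removes a contiguous region and leaves the surrounding context intact, whereas token masking scatters gaps across the sentence and character deletion damages word forms themselves, which is consistent with the greater ambiguity noted above for character-level noise.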

Third, the effect of fine-tuning duration was assessed by training the model for 5, 10, and 15 epochs. Performance improved steadily up to 10 epochs, after which gains plateaued; at 15 epochs, scores declined slightly, an early sign of overfitting. Among the durations tested, the 10-epoch setting used in the proposed model thus appears to strike the best balance between fitting the training data and generalizing to unseen essays. This insight is particularly valuable for guiding future training regimens under constrained computational budgets.
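Under a constrained computational budget, one simple way to act on this observation is to choose the shortest training duration whose validation score lies within a small tolerance of the best score. The helper below is a hypothetical sketch of that selection rule and is not the procedure used in this study, which compared three fixed durations; the function name, the `tolerance` parameter, and the mapping from epoch counts to validation scores are all assumptions.

```python
def cheapest_within_tolerance(val_scores, tolerance=0.0):
    """Return the smallest epoch count whose score is within `tolerance` of the best.

    val_scores maps an epoch count (int) to a validation score (higher is better).
    """
    if not val_scores:
        raise ValueError("val_scores is empty")
    best = max(val_scores.values())
    return min(e for e, s in val_scores.items() if s >= best - tolerance)
```

With `tolerance=0.0` the rule reduces to picking the best-scoring duration, with ties broken in favor of fewer epochs; a small positive tolerance trades a marginal loss in score for a shorter training run.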

In comparison, the BART model—despite its architectural similarity to T5—yielded slightly lower overall scores across all evaluation metrics. This further validates the effectiveness of the span corruption pretraining strategy employed by T5, as well as the specific fine-tuning techniques used in this study. While BART remains a strong baseline, its relative drop in BERTScore and ROUGE-L indicates that T5’s design is better suited for span-level semantic reconstruction tasks, particularly within the context of educational language.

Table 6 presents the quantitative results from this extended ablation study, showing how each architectural or training modification impacts model performance. The full T5 model with span masking, the “restore:” prefix, and 10 epochs of fine-tuning delivers the best overall results, while all alternative configurations result in diminished accuracy and semantic fidelity.

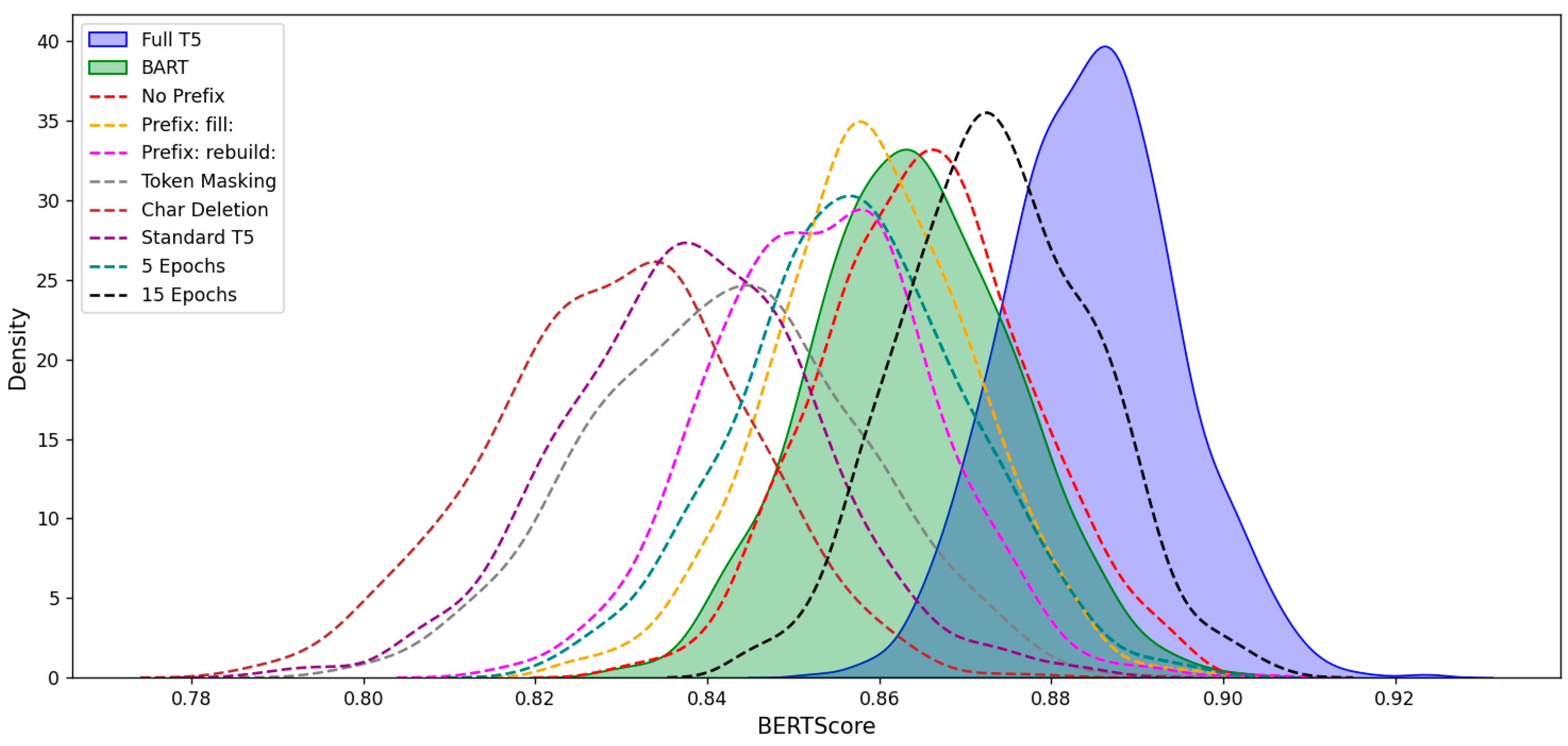

These findings are further illustrated in Figure 6, which visualizes the distribution of BERTScores across all 750 test samples. The full T5 configuration consistently exhibits the highest semantic alignment, while variants with altered prefixes, alternative corruption methods, or suboptimal training durations show diminished reconstruction quality. BART performs well but remains slightly below the optimized T5 model across most of the score spectrum.

These results provide strong empirical support for the deliberate design choices made in this work. The combination of span-based corruption, task-specific prefix conditioning, and carefully tuned training duration enables the model to generate fluent, coherent, and semantically accurate reconstructions. This ablation study also serves as a guide for future adaptations or extensions of the framework in related archival and educational restoration domains.

5. Conclusions and Future Work

This study presented a novel NLP-based framework for reconstructing lost or damaged historical essay manuscripts using fragmented archival data. By leveraging a span-infilling text-to-text transfer transformer (T5) model fine-tuned on synthetically corrupted essay pairs, the proposed system achieved state-of-the-art restoration performance. Quantitatively, the T5 model attained a ROUGE-L score of 0.831, a BLEU-4 score of 0.714, and a BERTScore of 0.885 on the test set, outperforming competitive baselines, including BERT, GPT-2, and BART. These metrics demonstrate that the model excels in reproducing both the lexical surface and semantic depth of missing content.

Furthermore, human evaluation results affirmed the model’s effectiveness, with average scores of 4.63 (fluency), 4.51 (coherence), and 4.42 (factual consistency) on a 5-point scale. These findings confirm that the reconstructed texts are not only fluent and coherent but also accurate in reflecting the intended meaning. The training dynamics revealed stable convergence without overfitting, while token-level analyses visualized through confidence maps and error distributions highlighted the model’s strength in restoring domain-specific terminology and structured academic discourse.

While the model’s technical performance is promising, it is critical to consider the ethical implications of automated essay reconstruction. One key risk involves inaccurate reconstructions that may lead to unfair reassessments or biased academic decisions. This is especially important in high-stakes contexts, such as student appeals, grade disputes, or disciplinary actions, where subtle semantic differences could influence outcomes. Also, there is a potential risk of misusing reconstructed texts, for instance, treating AI-generated content as original student submissions, thus raising questions about authorship and academic integrity.

To mitigate these concerns, we emphasize that the system is not designed for standalone grading, but rather as a supportive tool for educators, archivists, and evaluators. A confidence-based filtering mechanism is proposed to automatically flag low-certainty outputs for human verification. Moreover, a human-in-the-loop review protocol should be integrated into deployment pipelines to ensure that final decisions incorporate both algorithmic output and expert judgment. These measures aim to minimize hallucination, misinterpretation, and over-reliance on the model, promoting fairness and accountability.

Future research will focus on enhancing the system’s generalizability, transparency, and resilience. First, integrating multi-modal features, such as scanned image metadata, handwriting characteristics, or OCR correction cues, may improve reconstruction fidelity for noisy or visually degraded documents. Second, extending the training corpus to cover multiple academic disciplines and languages will promote robust cross-domain performance.

Third, incorporating knowledge graphs or curriculum ontologies can guide the generation process toward semantically aligned and pedagogically accurate content. This context-aware augmentation will be particularly useful in preserving the topical intent of complex essays. Finally, we propose an active learning framework, where human annotators iteratively correct model predictions to refine domain-specific behavior and reduce factual inconsistencies over time.

To sum up, the proposed T5-based restoration framework demonstrates compelling promise in preserving educational heritage through advanced NLP. By coupling high restoration accuracy with responsible AI practices, this work contributes a foundational step toward safeguarding student-authored academic records in the face of archival degradation. The intersection of AI, linguistics, education, and digital humanities remains a rich area for interdisciplinary exploration and innovation.

Author Contributions

Conceptualization, J.O., S.F.V., and I.C.O.; methodology, J.O.; software, J.O.; validation, S.F.V. and I.C.O.; formal analysis, J.O.; investigation, S.F.V. and I.C.O.; resources, S.F.V. and I.C.O.; data curation, J.O.; writing—original draft preparation, J.O.; writing—review and editing, S.F.V. and I.C.O.; visualization, J.O.; supervision, S.F.V. and I.C.O.; project administration, S.F.V.; funding acquisition, I.C.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding. The APC was funded by Ibidun C. Obagbuwa.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Acknowledgments

Special thanks to the Center for Applied Data Science (CADS), Faculty of Natural and Applied Sciences, Sol Plaatje University, Kimberley, South Africa.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Northeast, N.E.; Southern, S.; Western, W. Validating Sustainability/Resilience and Quality of Life Indices to Identify Farm-and Community-Level Needs and Research and Education Opportunities. Available online: https://projects.sare.org/project-reports/ls16-276/ (accessed on 15 June 2025).

- Rogers, T.; Smythe, S.; Darvin, R.; Anderson, J. Introduction to equity and digital literacies: Access, ethics, and engagements. Lang. Lit. 2018, 20, 1–8.

- Chelette, B.B. A Comparative Analysis of Models of Cybersecurity in Louisiana Public School Systems. Ph.D. Thesis, Louisiana State University and Agricultural & Mechanical College, Baton Rouge, LA, USA, 2023.

- Ailakhu, U.V. Role of records and archives in countering disinformation and misinformation: The perspective of LIS educators in Nigerian universities. Rec. Manag. J. 2025.

- Royce Sadler, D. Assessment, evaluation and quality assurance: Implications for integrity in reporting academic achievement in higher education. Educ. Inq. 2012, 3, 201–216.

- Flórez Petour, M.T.; Rozas Assael, T. Accountability from a social justice perspective: Criticism and proposals. J. Educ. Change 2020, 21, 157–182.

- Lingard, B. The impact of research on education policy in an era of evidence-based policy. Crit. Stud. Educ. 2013, 54, 113–131.

- Ananth, C. Policy Document on Academic Support System of Students, Securing Student’s Records and New Student Induction at Higher Quality Accredited Institutions. Int. J. Adv. Res. Manag. Archit. Technol. Eng. 2018, IV.

- Nilson, L.B.; Stanny, C.J. Specifications Grading: Restoring Rigor, Motivating Students, and Saving Faculty Time; Routledge: Abingdon, UK, 2023.

- Nokes, J.D. Observing literacy practices in history classrooms. Theory Res. Soc. Educ. 2010, 38, 515–544.

- Rawat, A.; Witt, E.; Roumyeh, M.; Lill, I. Advanced digital technologies in the post-disaster reconstruction process—A review leveraging small language models. Buildings 2024, 14, 3367.

- Campbell, H.V. Application of Natural Language Processing and Information Retrieval in Two Software Engineering Tools. Master’s Thesis, University of Alberta, Edmonton, AB, Canada, 2021.

- Li, S.; Wang, H.; Chen, X.; Wu, D. Multimodal Brain-Computer Interfaces: AI-powered Decoding Methodologies. arXiv 2025, arXiv:2502.02830.

- Siekmann, L.; Parr, J.M.; Busse, V. Structure and coherence as challenges in composition: A study of assessing less proficient EFL writers’ text quality. Assess. Writ. 2022, 54, 100672.

- Hantzopoulos, M. Restoring dignity in public schools. In Human Rights Education in Action; Teachers College Press: New York, NY, USA, 2016.

- Del Becaro, T. AI in K-12 Education: Concerns About the Right to Privacy and Not to Be Discriminated Against. In Improving Student Assessment with Emerging AI Tools; IGI Global Scientific Publishing: Hershey, PA, USA, 2025; pp. 169–212.

- Hakoma, M.M. Preservation of Archival Materials at the University of Namibia: Disaster Preparedness and Management. Ph.D. Thesis, University of Namibia, Windhoek, Namibia, 2018.

- Saha, U.; Saha, S.; Fattah, S.A.; Saquib, M. Npix2Cpix: A GAN-Based Image-to-Image Translation Network with Retrieval-Classification Integration for Watermark Retrieval from Historical Document Images. IEEE Access 2024, 12, 95857–95870.

- Miloud, K.; Abdelmounaim, M.L.; Mohammed, B.; Ilyas, B.R. Advancing ancient arabic manuscript restoration with optimized deep learning and image enhancement techniques. Trait. Du Signal 2024, 41, 2203.

- Vidal-Gorène, C.; Camps, J.B. Image-to-Image Translation Approach for Page Layout Analysis and Artificial Generation of Historical Manuscripts. In International Conference on Document Analysis and Recognition; Springer Nature: Cham, Switzerland, 2024; pp. 140–158.

- Xiao, F.; Yin, S. English grammar intelligent error correction technology based on the n-gram language model. J. Intell. Syst. 2024, 33, 20230259.

- Raju, R.; Pati, P.B.; Gandheesh, S.A.; Sannala, G.S.; Suriya, K.S. Grammatical versus Spelling Error Correction: An Investigation into the Responsiveness of Transformer-Based Language Models Using BART and MarianMT. J. Inf. Knowl. Manag. 2024, 23, 2450037.

- Li, W.; Wang, H. Detection-correction structure via general language model for grammatical error correction. arXiv 2024, arXiv:2405.17804.

- Abdullah, A.S.; Geetha, S.; Aziz, A.A.; Mishra, U. Design of automated model for inspecting and evaluating handwritten answer scripts: A pedagogical approach with NLP and deep learning. Alex. Eng. J. 2024, 108, 764–788.

- Silveira, I.C.; Barbosa, A.; da Costa, D.S.L.; Mauá, D.D. Investigating Universal Adversarial Attacks Against Transformers-Based Automatic Essay Scoring Systems. In Brazilian Conference on Intelligent Systems; Springer Nature: Cham, Switzerland, 2024; pp. 169–183.

- Pozzi, A.; Incremona, A.; Tessera, D.; Toti, D. Mitigating exposure bias in large language model distillation: An imitation learning approach. Neural Comput. Appl. 2025, 37, 12013–12029.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).