Abstract

In this paper, a mobile-based deep learning approach for multi-object Aiwen mango grade classification, MDLMAGC, is proposed to identify the quality grade of Aiwen mangoes instantly on smart mobile devices. New Aiwen mango training images can be uploaded to a cloud database for model retraining to improve model accuracy. With MDLMAGC, the labor cost of manual identification can be reduced and the grade of Aiwen mangoes can be classified accurately. Different grades of Aiwen mangoes can thus be matched with corresponding sales strategies to achieve the most effective sales benefits.

1. Introduction

Aiwen mango is one of Taiwan’s most valuable agricultural products, renowned for its rich flavor and vibrant color. Accurate quality grading is essential for ensuring market competitiveness, meeting export standards, and optimizing pricing strategies. Based on our literature review, there are currently no mobile applications designed specifically for Aiwen mango grade recognition, and most grading is still performed manually. Manual classification of Aiwen mangoes requires significant human resources, which can lead to increased grading errors due to human fatigue and subjective judgment. Furthermore, the lack of AI-based, mobile-compatible solutions for Aiwen mango grading limits the efficiency and scalability of the quality control process [1,2,3,4,5].

Although several AI- and computer vision-based grading systems have been developed for mango classification, most of these focus on other mango varieties, such as Himsagar or those cultivated in greenhouse environments. These approaches often rely on internal quality indicators (e.g., soluble solids, acidity) or are trained using non-Taiwanese datasets, which makes them unsuitable for Aiwen mangoes grown in Taiwan’s open-field conditions. Unlike other mango varieties, the quality evaluation of Aiwen mangoes primarily depends on external features such as shape, color, symmetry, and the presence of defects.

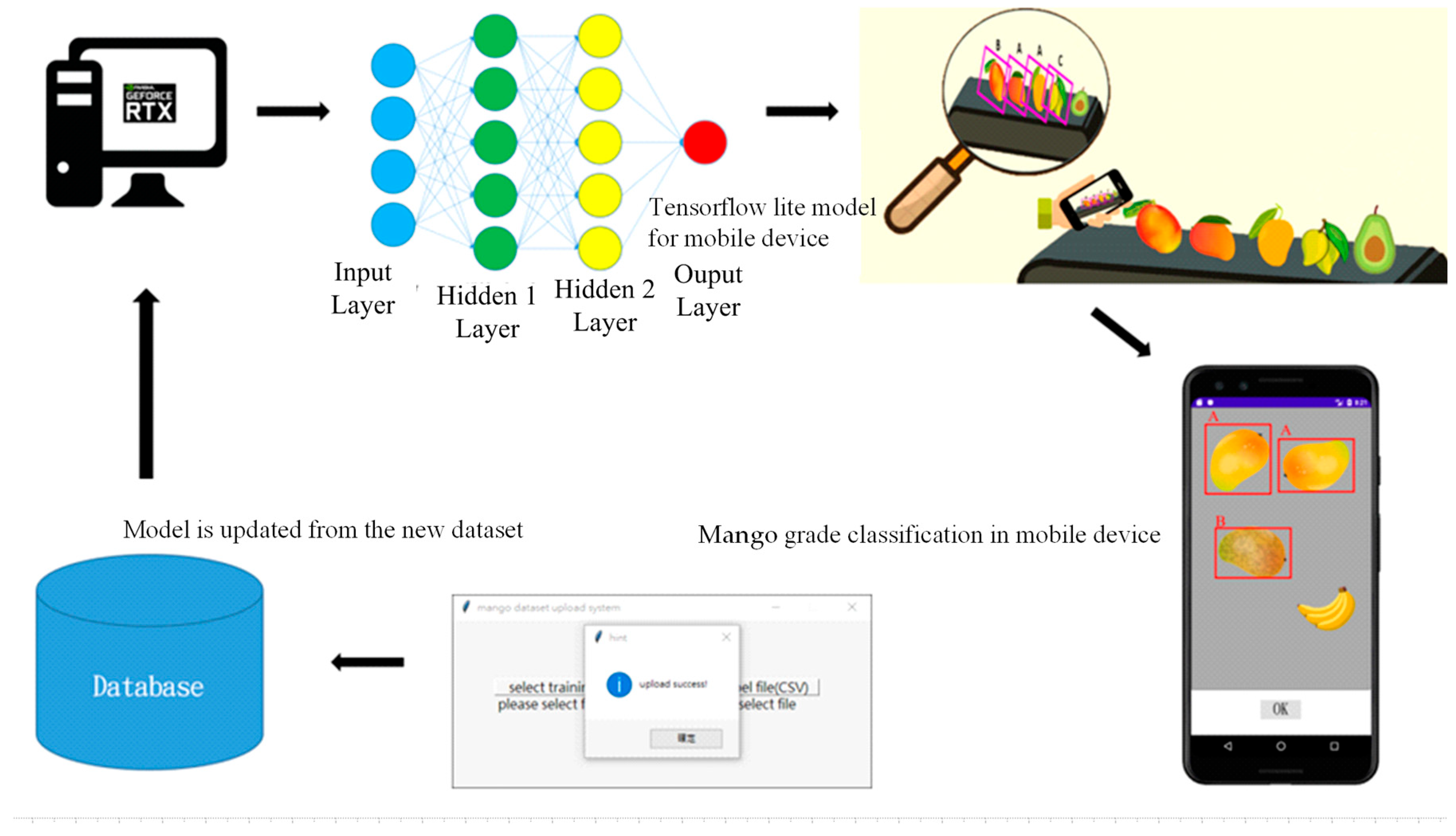

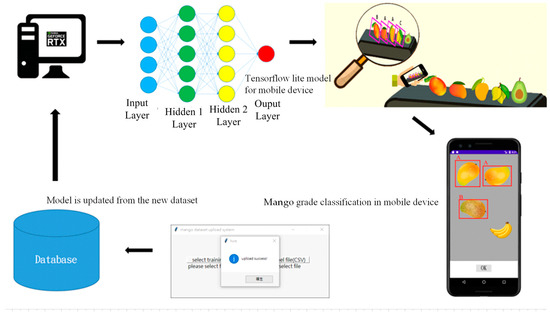

To address these limitations, this paper proposed a mobile-based deep learning approach for multi-object Aiwen mango grade classification, MDLMAGC, specifically tailored for mobile devices. The core of the system is based on the YOLOv4 object detection framework, chosen for its balance between speed and accuracy on resource-constrained devices. The system is deployed as a mobile application, allowing users to perform real-time grading of multiple mangoes using their smartphones. In addition, the application integrates a cloud-based database that enables continuous improvement through user-uploaded images and automatic model retraining. The architecture of MDLMAGC is shown in Figure 1.

Figure 1.

System architecture of MDLMAGC.

This study aims not only to automate the grading process but also to enhance the adaptability and accuracy of AI systems in agricultural contexts by incorporating region-specific data and user feedback mechanisms. The main contributions of this paper are as follows:

- Design of MDLMAGC: A mobile-friendly deep learning application using YOLOv4 to perform multi-object grade classification of Aiwen mangoes in real-time.

- Localized Dataset and Training: Integration of Taiwan-specific Aiwen mango datasets, enabling accurate grading based on domestic standards.

- Cloud-based Continuous Learning: Implementation of a Firebase-based cloud system that supports expert uploads and automatic retraining to improve classification performance over time.

- Deployment Optimization: Conversion of the YOLOv4 model into TensorFlow Lite format for seamless deployment on Android devices without sacrificing performance.

- Comprehensive Evaluation: Detailed experimentation on hyperparameter tuning (e.g., input size, confidence thresholds, activation functions) to optimize recognition accuracy on mobile devices.

The proposed system provides a scalable and efficient solution for improving the accuracy and efficiency of Aiwen mango grading, with significant implications for agricultural automation and smart farming in Taiwan. The rest of the paper is organized as follows. Section 2 presents the related work. MDLMAGC is described in Section 3. Section 4 presents the experimental results. Section 5 discusses the results. Section 6 concludes the paper.

2. Related Work

In [6], the authors studied Aiwen (Irwin) mangoes grown by forcing cultivation in plastic greenhouses in Korea and divided the fruits into five maturity stages, S1 to S5, based on the green-color ratio of the peel. Physical and physiological qualities were also analyzed, including changes in hardness, sugar content, acidity, respiration rate, and ethylene release. The experimental results showed that edible quality is best at stage S5, while stages S3 and S4 are suitable for storage. These findings can help determine the best harvest time in order to improve quality, satisfy consumer preferences, and increase economic benefits. However, that study focused on forced cultivation in Korean greenhouses, a growing environment that differs significantly from Taiwan’s open-air cultivation, so the fruit ripening mechanism and quality performance also differ. In addition, maturity was evaluated by the green-color ratio of the peel, whereas Taiwan’s Aiwen mango grading is based on fruit weight, coloring, fruit shape, pests and diseases, and mechanical damage. Hence, neither the evaluation indicators nor the application scenario of [6] can be applied directly to the grading of Taiwan’s Aiwen mangoes.

In [7], an improved deep belief network, IDBN, combining computer vision and neutrosophic logic, was proposed for the non-destructive, automated estimation of mango quality indicators and grade classification. IDBN proceeds in four steps. Firstly, the mango image is preprocessed by denoising and contrast enhancement. Secondly, the image is converted to the neutrosophic domain to handle uncertain information. Then, characteristics highly related to quality, such as soluble solids (SSC), fruit firmness, and acidity, are extracted. Finally, the IDBN model is used to predict the total soluble solids (TSSs) and perform quality grading. Hence, IDBN could effectively improve the accuracy and efficiency of mango quality inspection. However, that work focuses on training and analysis of specific mango varieties and uses TSSs, TAC, and hardness as quality indicators, whereas the quality indicators of Aiwen mangoes are mainly based on appearance, color, fruit shape symmetry, and defect size. Hence, the model proposed in [7] cannot be directly applied to the grade classification of Aiwen mangoes. In addition, Aiwen mangoes have regional characteristics. The model in [7] was not trained on image data or samples from Taiwan, so it cannot capture the differences caused by the local cultivation environment.

In [8], the authors improved the accuracy of mango ripeness grading using machine learning, deep learning, and transfer learning techniques. Since manual classification is prone to errors, they sought a more effective method by comparing different models, such as Gaussian Naive Bayes, Support Vector Machine, Gradient Boosting, Random Forest, and K-Nearest Neighbors. That work used a dataset of about 975 images of Himsagar mangoes and applied image processing techniques to analyze visual features such as skin color. The convolutional neural network (CNN) model outperformed traditional machine learning methods and VGG16 in most cases, and the gradient boosting model combined with the CNN achieved the highest accuracy of 96.28%. However, this model was trained mainly on Indian mango varieties, which differ significantly from Aiwen mangoes in appearance. Moreover, it mainly considered indicators such as soluble solids, hardness, and total acid content, whereas the quality assessment of Aiwen mangoes is mainly based on moderate maturity, uniform fruit shape, good color, and freedom from diseases and pests. Therefore, the model proposed in [8] could not accurately identify the quality characteristics of Aiwen mangoes.

In [9], the authors proposed a fruit-grading method based on deep learning that integrates the EfficientNet-B7, InceptionResNetV2, and GoogLeNet models. After preprocessing steps such as background removal and segmentation, the spatial, texture, and color features of fruits are extracted and fused to improve classification accuracy. Finally, a long short-term memory (LSTM) network classifies the fruits into three categories: TYPE-I (high quality), TYPE-II (good), and TYPE-III (poor). However, that work addressed the classification and grading of various fruits and did not report experiments or data on Aiwen mangoes in Taiwan.

In summary, several studies have explored AI-based fruit-quality-grading systems using deep learning and computer vision techniques. For instance, in [6], the authors analyzed Irwin mango ripeness in greenhouse conditions based on peel coloration and physiological parameters such as firmness and acidity. Although effective in controlled environments, their grading criteria do not align with Taiwan’s open-air cultivated Aiwen mangoes, which are graded primarily based on external features such as color uniformity, shape, and defect detection.

In [7], the authors introduced an improved Deep Belief Network (IDBN) with neutrosophic logic to estimate internal mango quality indices like total soluble solids (TSSs). While promising for biochemical prediction, the method lacks compatibility with appearance-based grading standards.

Other works applied various machine learning and deep learning models, including CNNs and traditional classifiers, to a Himsagar mango dataset [8]. Similarly, in [9], the authors combined EfficientNet-B7, InceptionResNetV2, and GoogLeNet for general fruit grading. However, these approaches often target different mango varieties, use non-local datasets, and neglect the regional grading standards specific to Aiwen mangoes.

The shared limitations of previous studies are listed in Table 1. Therefore, a mobile-based deep learning approach for multi-object Aiwen mango grade classification, MDLMAGC, is proposed in this paper to address the above issues.

Table 1.

Comparison of prior methods and MDLMAGC.

3. Materials and Methods

YOLOv4 is designed to balance real-time speed and accuracy in practical applications and runs stably on mid-range and low-end GPUs, which makes it practical to deploy at agricultural sites such as orchards or packaging yards. In contrast, since YOLOv8 and YOLOv10 pursue higher accuracy, they use deeper and more complex backbones, so their hardware requirements are higher than those of YOLOv4. In addition, YOLOv4 has broad open-source community support, with sufficient documentation and mature tools, such as the Darknet framework or conversion to TensorRT, as well as successful cases in agricultural and industrial applications. YOLOv8 and YOLOv10, by comparison, are tied to the Ultralytics framework. Although their modular design has improved, the degree of open source and deployment freedom is lower, which limits certain application scenarios. In agricultural automation scenarios such as a handheld device for automatic mango grading, operational efficiency and stability of the model are usually more important than extreme accuracy. Hence, in this paper, YOLOv4 was used as the deep learning model.

Unlike other papers that focus on model development, MDLMAGC builds on the existing YOLOv4 model. Through data preprocessing and hyperparameter adjustment, a deep learning model suitable for Taiwanese Aiwen mango grade image recognition was developed and then converted into a lightweight model that can be deployed on smart mobile devices, such as Android devices. In addition, this paper uses Firebase as the cloud database so that experts can upload Aiwen mango grade images at any time to enrich the Aiwen mango grade dataset. The system can then automatically retrain when it receives new data to improve the accuracy of Aiwen mango grade image recognition.

3.1. Dataset Pre-Processing

The mango dataset is provided by ITRI’s AIdea artificial intelligence co-creation platform. This dataset is compiled by Taiwan agricultural experts. It includes complete quality grading standards and contains up to 45,000 records. An annotation consistency check process was added before the dataset was used to train the neural network model. The annotation method involved using a labeling tool to select the image and record the coordinates of the four points of the selection box. These coordinates were then converted into a format suitable for YOLOv4. After making the coordinate file and image file names the same, all the data were split into 40,000 training samples and 5000 test samples. After specifying the path, the neural network model was trained.
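The coordinate conversion described above can be illustrated with a minimal sketch. It assumes the labeling tool stores four corner points in pixels and that the target is the Darknet-style YOLO label line (class index followed by the normalized box center, width, and height). The function name `corners_to_yolo` and the class index mapping (for example 0 for grade A) are hypothetical and are not taken from the original tool chain.

```python
def corners_to_yolo(points, img_w, img_h, class_id):
    """Convert four (x, y) corner points in pixels to one YOLO label line.

    The output format is: class_id x_center y_center width height,
    with all four box values normalized to the range [0, 1].
    """
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)

    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Example: a 200 x 200 pixel box inside a 400 x 400 image, class index 0 (assumed).
corners = [(100, 50), (300, 50), (300, 250), (100, 250)]
label_line = corners_to_yolo(corners, img_w=400, img_h=400, class_id=0)
print(label_line)  # 0 0.500000 0.375000 0.500000 0.500000
```

Each label line would be written to a text file that shares its base name with the image file, which matches the naming step described in the paragraph above.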

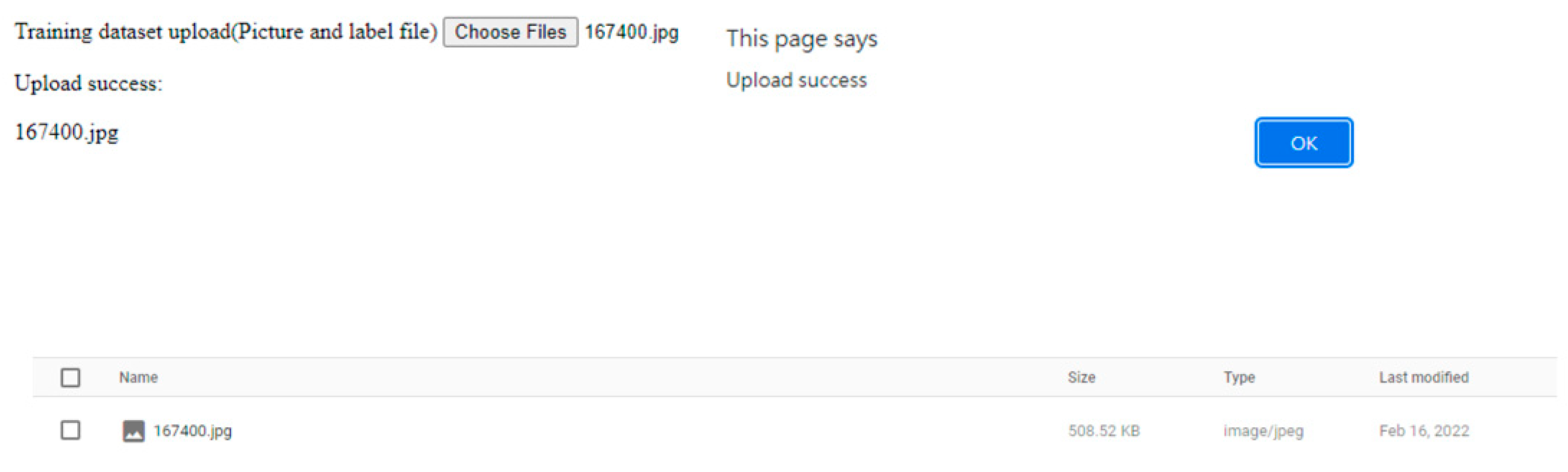

The accuracy of neural network model identification will vary with different datasets. More mango datasets are needed to train better neural network models. Before a new picture is added to the database as part of a new dataset, it is classified by MDLMAGC. If the predicted value is the same as the labeled value, this picture is included in the dataset. Hence, this paper provides a webpage where agricultural experts can upload the mango datasets produced by the preliminary work. It provides the ability to upload new datasets to the cloud database to allow the system to automatically train and generate new models based on the newly added datasets to improve recognition accuracy, as shown in Figure 2.
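The consistency check applied to newly uploaded pictures can be sketched as follows. This is only an illustration of the rule stated above (keep a picture when the model's predicted grade equals the expert's label). The `predict` callable stands in for the deployed MDLMAGC model, and all file names and grades are made-up examples.

```python
def filter_consistent(samples, predict):
    """Split uploaded samples by whether the model agrees with the expert label.

    samples: iterable of (image_id, expert_label) pairs.
    predict: callable mapping image_id to a predicted grade (hypothetical model stub).
    Returns (accepted, rejected) lists of (image_id, expert_label) pairs.
    """
    accepted, rejected = [], []
    for image_id, label in samples:
        if predict(image_id) == label:
            accepted.append((image_id, label))
        else:
            rejected.append((image_id, label))
    return accepted, rejected


# Hypothetical stand-in for model predictions on three uploaded images.
fake_predictions = {"m1.jpg": "A", "m2.jpg": "B", "m3.jpg": "C"}
uploads = [("m1.jpg", "A"), ("m2.jpg", "C"), ("m3.jpg", "C")]

accepted, rejected = filter_consistent(uploads, fake_predictions.get)
print(accepted)  # [('m1.jpg', 'A'), ('m3.jpg', 'C')]
print(rejected)  # [('m2.jpg', 'C')]
```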

Figure 2.

New updated dataset.

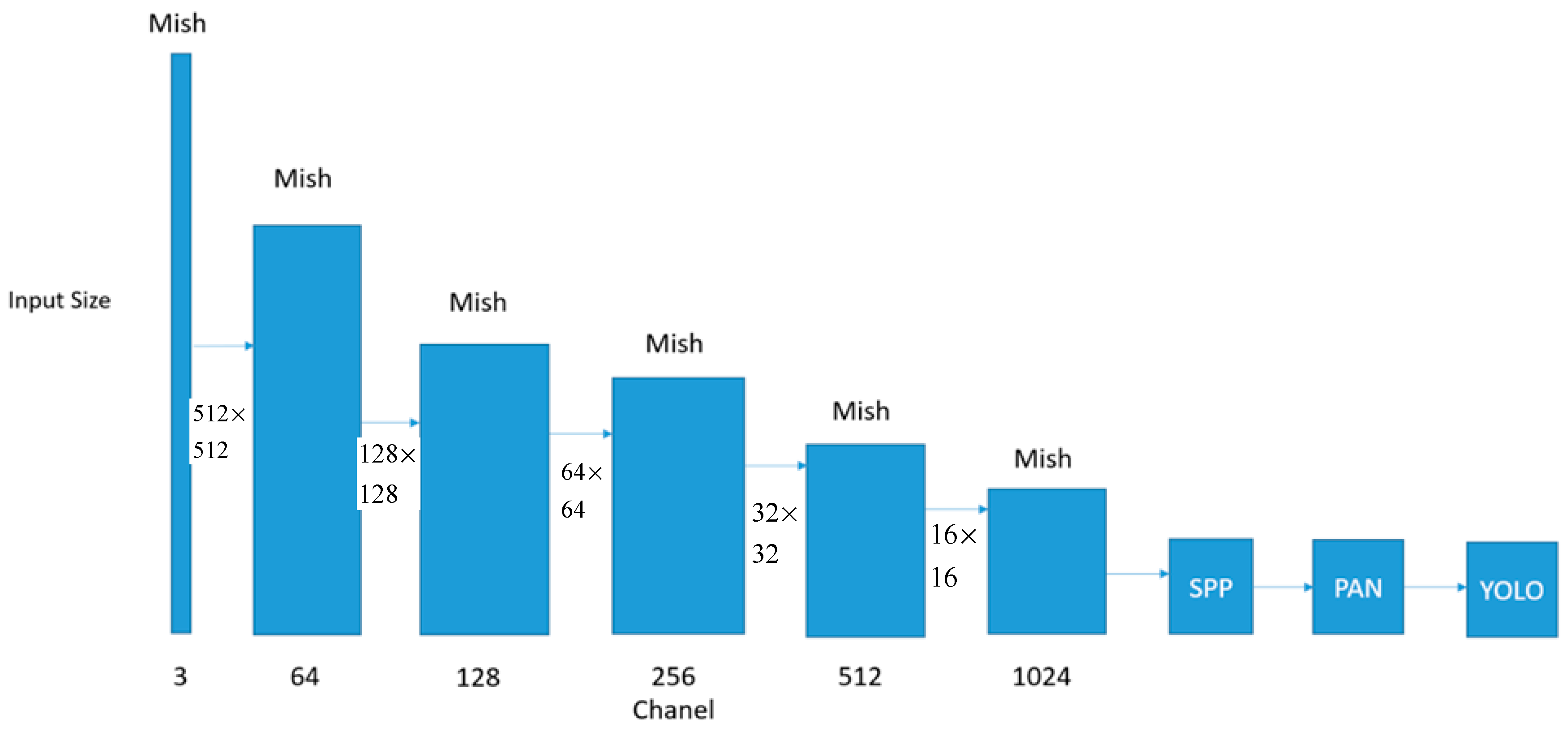

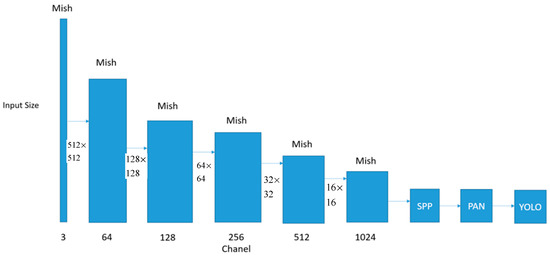

3.2. Model Training

In this paper, YOLOv4 was used as the model for Aiwen mango grade recognition, as shown in Figure 3. Since YOLOv4 is a one-stage detection neural network model, its recognition speed can be faster than that of a two-stage detection model, and its training efficiency can also be better. Moreover, YOLOv4 can reduce the computing burden on mobile devices and shorten the time required to generate new models.

Figure 3.

Architecture of YOLOv4.

The purpose of an activation function in a neural network model is to transform the weighted sum output by each neuron. The output value is limited to a specific range, or values outside a threshold are changed or discarded. This can reduce the amount of value transfer between layers and the amount of computation during training [10]. The ReLU (Rectified Linear Unit) function is the most classic and most commonly used activation function, because it helps alleviate the gradient vanishing problem [11,12]. Since ReLU sets all negative inputs to 0, some neurons can stop responding after multiple layers, which is the neuron disappearance problem. To solve this problem, Leaky ReLU replaces the zero output for negative inputs with a small linear slope, so negative values are retained in reduced form and the neuron disappearance problem is mitigated [13]. Mish is a further development that also modifies the zero output of ReLU for negative inputs. Unlike Leaky ReLU, Mish is a smooth curve: it retains small negative outputs for inputs slightly below 0, and its output approaches 0 as the input becomes more negative. Therefore, Mish can be smoother than ReLU in terms of gradient preservation [14].
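To make the difference between the three activation functions concrete, the following scalar sketch evaluates them on a few inputs. It uses the standard definitions, with Mish written as x · tanh(softplus(x)). The negative slope `alpha = 0.1` for Leaky ReLU is an assumed value for illustration and is not stated in this paper.

```python
import math


def relu(x):
    """ReLU: negative inputs are set to 0."""
    return max(0.0, x)


def leaky_relu(x, alpha=0.1):
    """Leaky ReLU: negative inputs are scaled by a small slope (alpha is assumed)."""
    return x if x > 0 else alpha * x


def softplus(x):
    """Numerically stable softplus: log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x):
    """Mish: x * tanh(softplus(x)), a smooth curve that keeps small negative outputs."""
    return x * math.tanh(softplus(x))


for value in (-20.0, -1.0, 0.0, 5.0):
    print(f"x={value:6.1f}  relu={relu(value):8.4f}  "
          f"leaky={leaky_relu(value):8.4f}  mish={mish(value):8.4f}")
```

For an input of −1, ReLU returns 0, Leaky ReLU returns −0.1, and Mish returns about −0.30; for an input of −20, the Mish output is already very close to 0.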

3.3. Tensorflow Lite for Android Studio

TensorFlow Lite is a lightweight inference framework designed specifically for terminal devices, and it integrates closely with Android Studio, which was used in this project [15]. However, a YOLOv4 model cannot be deployed directly on mobile devices. Therefore, the trained YOLOv4 model must be converted into a TensorFlow Lite model for mobile deployment. In this paper, the YOLOv4-to-TensorFlow Lite software tool [15] was used to convert the YOLOv4 model to a TensorFlow Lite model for Android Studio.

3.4. App Design

After opening the app, the initial screen displays the user login and registration page. Registered users can log in to their account and access the main functions of the app. Unregistered users can go to the registration page to complete the registration. The main functional interface includes taking photos, querying records, settings, and information. After the photo button is clicked, the app activates a pre-loaded neural network model, which processes images from the phone’s built-in camera and displays the recognition results on the screen. After the OK button is selected, the recognition results can be uploaded to the cloud database. Users can then query the data stored in the cloud database. The data in the cloud database can also be added, deleted, modified, and visualized using pie charts. On the settings page, users can customize the font, color, icons, and other parameters in the app.

The TensorFlow Lite model, converted from YOLOv4, is pre-installed in the app developed with Android Studio. When a new model is generated, the app must be updated. To address this issue, a version number of the current project app is assigned to each release. For example, the current version is set to v1.0. When a new model is added to the app, the version number is updated to v1.1. The updated version number is compared with that of the user-side app. If the user-side version is outdated, a pop-up window appears on the initial login page to notify the user that a new version is available for update.
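The version comparison can be sketched as below. This is an illustrative sketch rather than the app's actual code: it assumes version tags of the form `v<major>.<minor>` as in the example above, and the function names are hypothetical. Comparing numeric tuples instead of raw strings avoids treating v1.10 as older than v1.9.

```python
def parse_version(tag):
    """Turn a tag such as 'v1.10' into a tuple of integers, e.g. (1, 10)."""
    return tuple(int(part) for part in tag.lstrip("vV").split("."))


def needs_update(installed, latest):
    """Return True when the user-side version is older than the released one."""
    return parse_version(installed) < parse_version(latest)


print(needs_update("v1.0", "v1.1"))   # True: show the update pop-up
print(needs_update("v1.1", "v1.1"))   # False: already up to date
print(needs_update("v1.9", "v1.10"))  # True: numeric, not alphabetical, comparison
```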

3.5. Cloud Database

This paper selects Firebase as the cloud database [16] to store basic user account information and the mango data. The data table formats are shown in Table 2 and Table 3. The user can query the historical data of mango grade identification at any time through the app. To protect personal information, the user account is not displayed in the app and is only used to log in to the system.

Table 2.

Mango data format.

Table 3.

User account format.

4. Experimental Results

The parameters in YOLOv4 will affect the final identification results of the model. Hence, it is necessary to update and adjust the parameters for different datasets. The main adjustment items include non-maximum suppression (NMS), input size, confidence, and activation function. NMSThreshold is defined as the threshold value of non-maximum suppression. Confthreshold is defined as the threshold value of confidence. The model was trained on the training dataset with tuning hyperparameters, such as NMSthreshold and Confthreshold, on the validation dataset. The performance was evaluated on the test dataset only once.

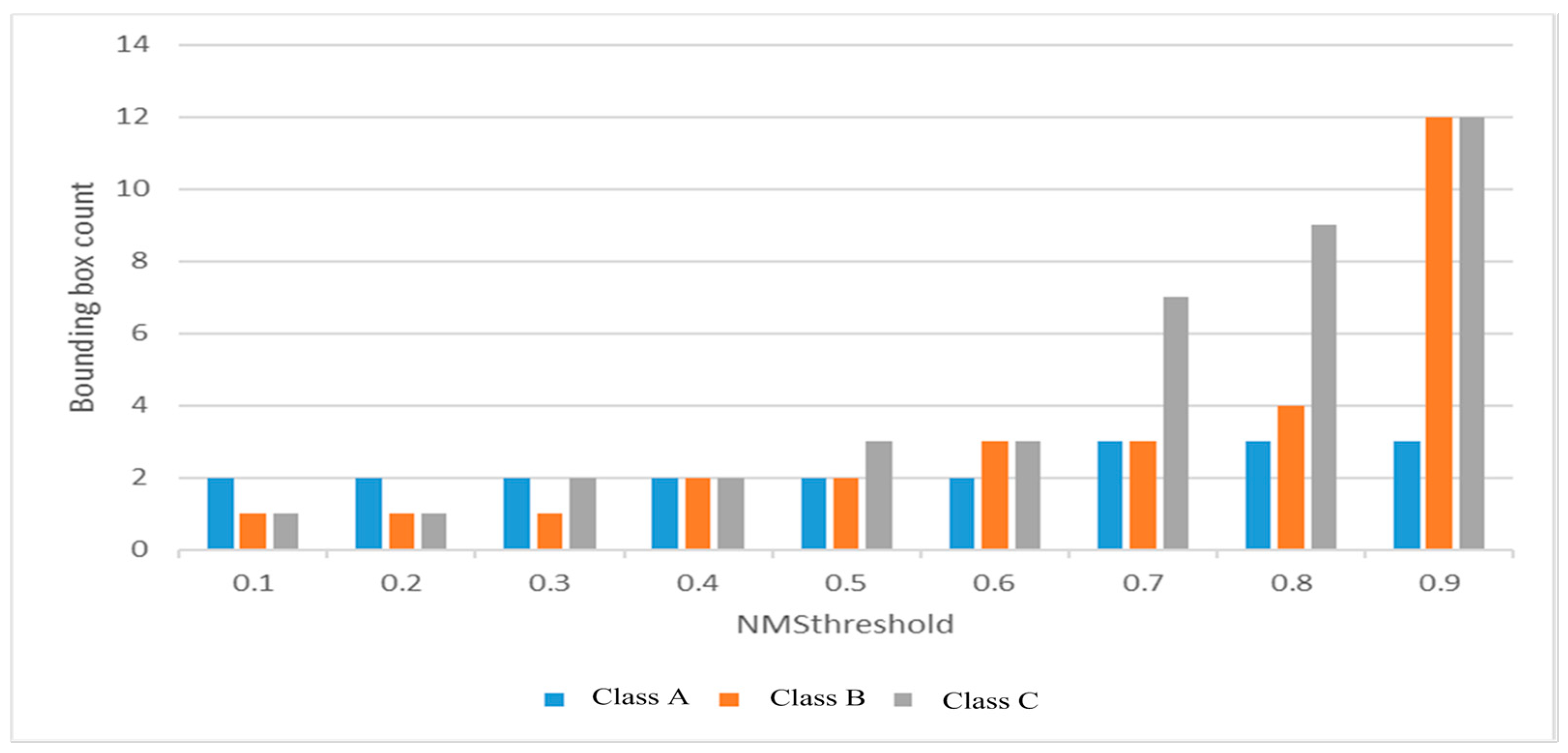

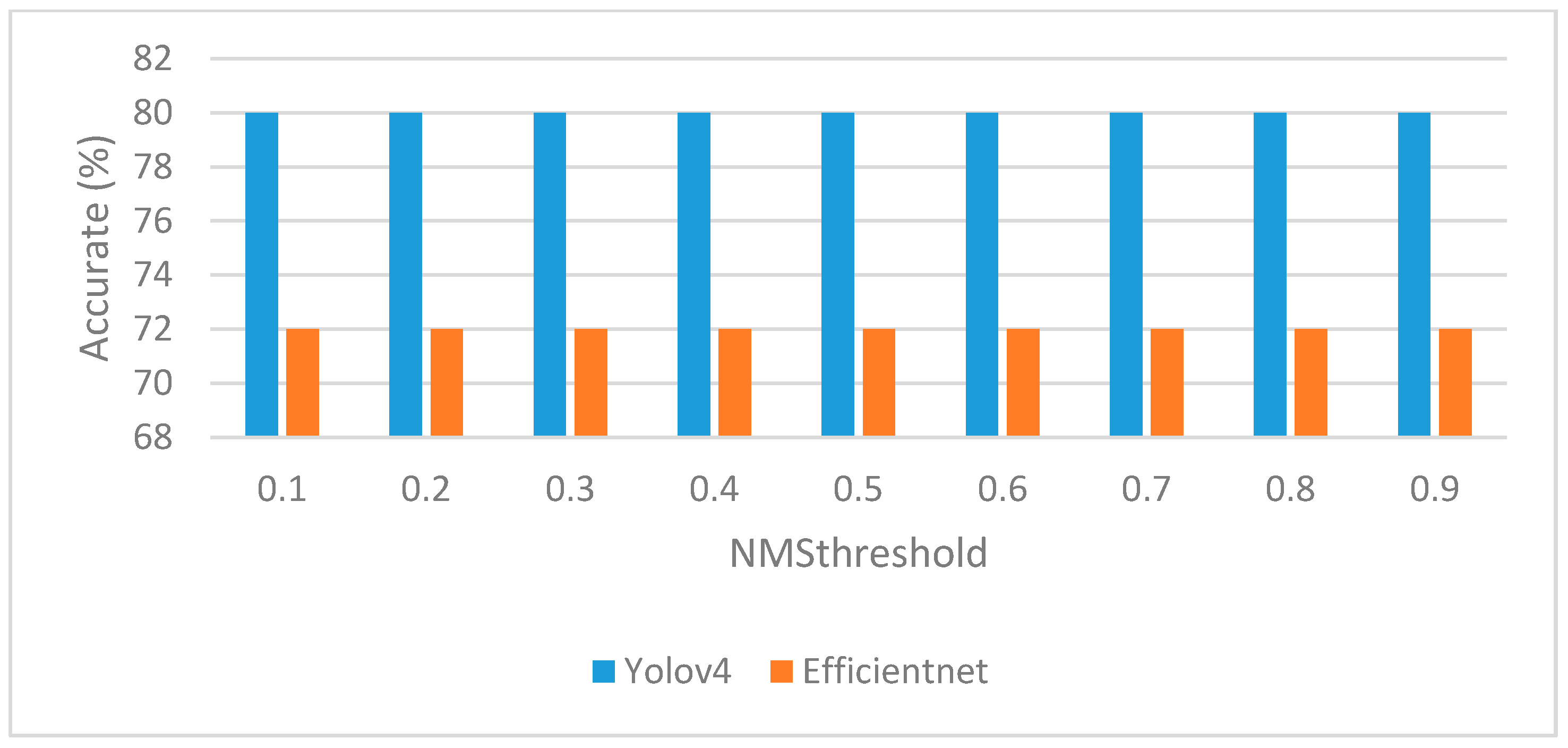

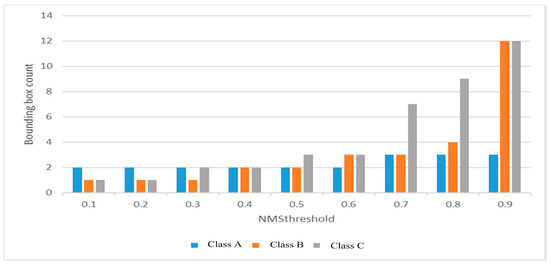

4.1. Non-Maximum Suppression

NMSThreshold is the threshold value of non-maximum suppression. When NMSThreshold is increased, multiple object boxes are more likely to appear for the same mango, as shown in Figure 4. Conversely, when NMSThreshold is reduced, duplicate object boxes can be avoided, as shown in Figure 5. However, if NMSThreshold is set too low, an object box that should appear may be suppressed, so that the object is not recognized. In Figure 6, test set image A contains two mangoes; test set image B shows a single mango in the center; and test set image C shows a single mango not in the center. To prevent too many object selection boxes from appearing and causing visual confusion, a lower NMSThreshold is recommended. Therefore, in subsequent experiments, NMSThreshold was set to 0.1. Figure 7 shows the recognition accuracy at different NMSThreshold values.
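The effect of NMSThreshold described above can be reproduced with a small, self-contained sketch of greedy non-maximum suppression. It is a generic textbook version, not the implementation used in the app, and the boxes and scores are made-up values. A box is dropped when its intersection over union (IoU) with an already kept, higher-scoring box exceeds the threshold.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(detections, nms_threshold):
    """Greedy NMS over a list of (box, score) pairs; returns the kept pairs."""
    kept = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        # Keep the box only if it does not overlap too much with any kept box.
        if all(iou(box, kept_box) <= nms_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept


detections = [
    ((10, 10, 110, 110), 0.90),    # first mango
    ((20, 20, 120, 120), 0.75),    # duplicate box on the first mango (IoU about 0.68)
    ((200, 200, 300, 300), 0.80),  # second mango, no overlap
]

print(len(nms(detections, 0.8)))  # 3: the duplicate box survives
print(len(nms(detections, 0.1)))  # 2: the duplicate box is suppressed
```

With a very low threshold, two genuinely different mangoes whose boxes overlap slightly could also be merged into one detection, which is the failure case mentioned in the paragraph above.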

Figure 4.

Mango detection (NMSThreshold = 0.8).

Figure 5.

Mango detection (NMSThreshold = 0.1 or Confthreshold = 0.1).

Figure 6.

NMSthreshold for bounding box count statistics.

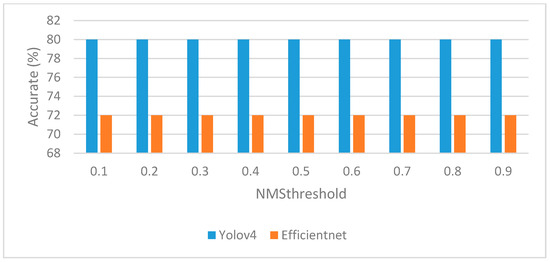

Figure 7.

Impact of identification accuracy for different NMSthreshold values.

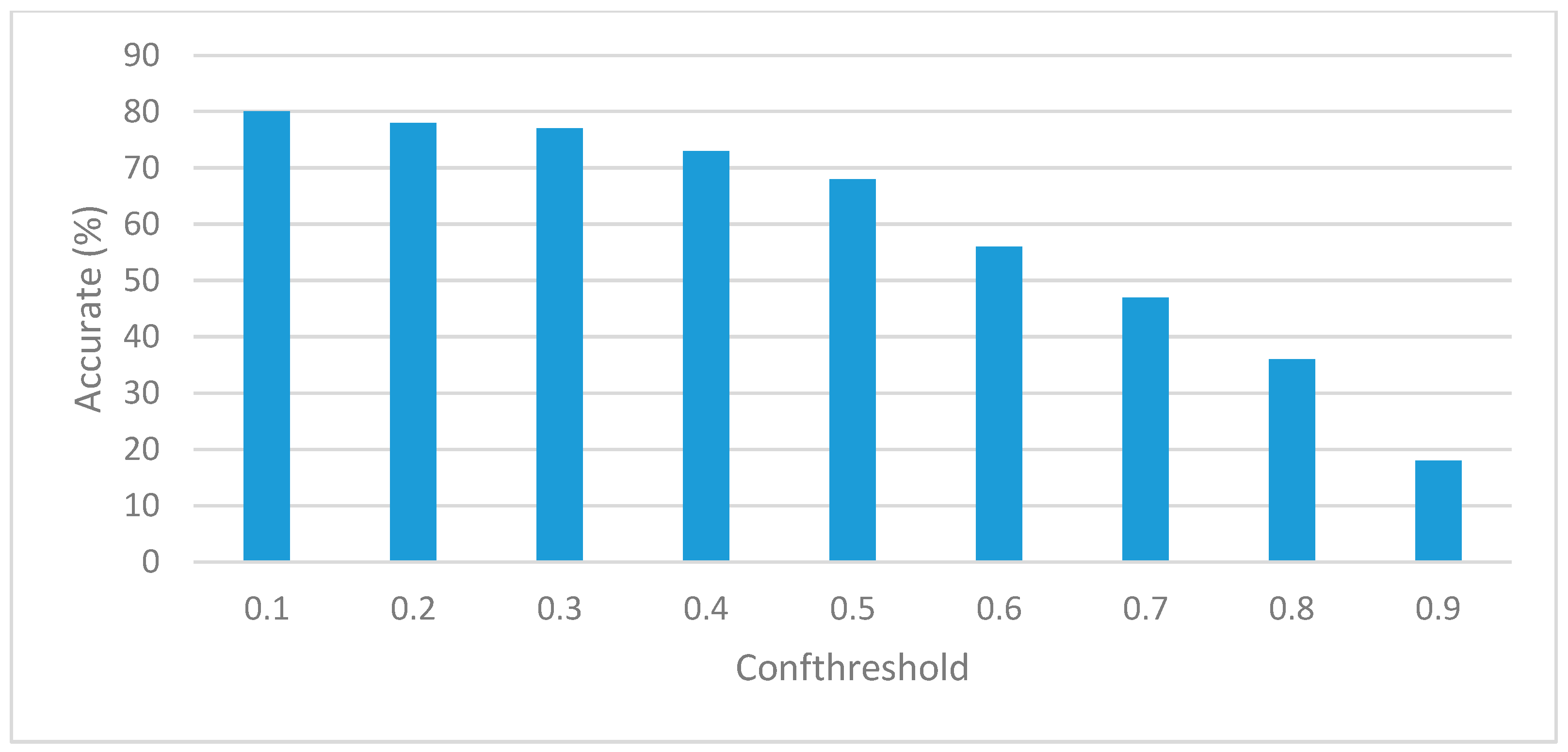

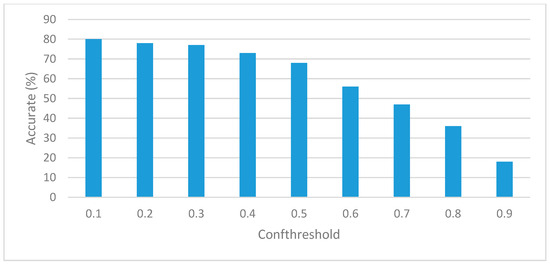

4.2. Confidence

A, B, and C denote the quality levels. The number next to each indicates the corresponding confidence level. The confidence level shows the probability of recognizing the corresponding level. Confthreshold is defined as the threshold value of confidence. If Confthreshold is set to 0.9, only objects with a confidence level greater than 0.9 will be recognized and displayed, as shown in Figure 5 and Figure 8.

Figure 8.

Mango detection (Confthreshold = 0.9).

Confthreshold can be adjusted to set the minimum confidence required for the model to assign a label to an object. Only objects with confidence greater than Confthreshold are selected. Figure 9 shows the recognition accuracy at different Confthreshold values.
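The filtering rule can be shown with a short sketch. The detections below are made-up values, and the dictionary layout is an assumption for illustration only. The sketch shows why a high Confthreshold leaves fewer mangoes labeled.

```python
def filter_by_confidence(detections, conf_threshold):
    """Keep only detections whose confidence is greater than the threshold."""
    return [d for d in detections if d["confidence"] > conf_threshold]


# Hypothetical detections for one image containing three mangoes.
detections = [
    {"grade": "A", "confidence": 0.95},
    {"grade": "B", "confidence": 0.62},
    {"grade": "C", "confidence": 0.18},
]

print(len(filter_by_confidence(detections, 0.9)))  # 1: only the grade A mango is shown
print(len(filter_by_confidence(detections, 0.1)))  # 3: all three mangoes are shown
```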

Figure 9.

Impact of identification accuracy for different Confthreshold values.

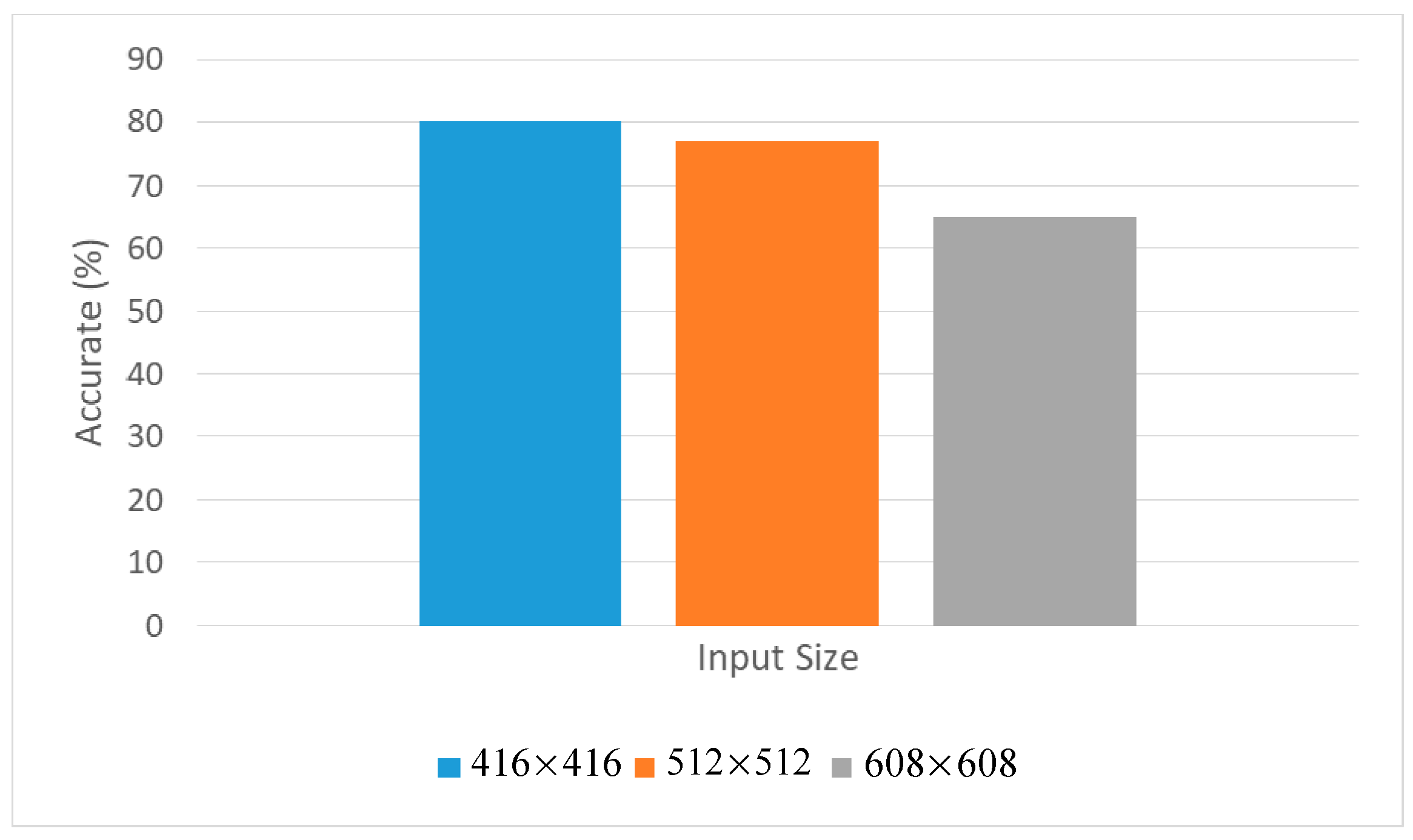

From Figure 5, Figure 8 and Figure 9, it can be observed that recognition accuracy decreases as the Confthreshold value increases. Even when the input image size is different, a high Confthreshold results in a recognition accuracy as low as 18%. Figure 10 shows that recognition accuracy is the best when Confthreshold is set to 0.1. Therefore, in subsequent experiments, Confthreshold is fixed at 0.1.

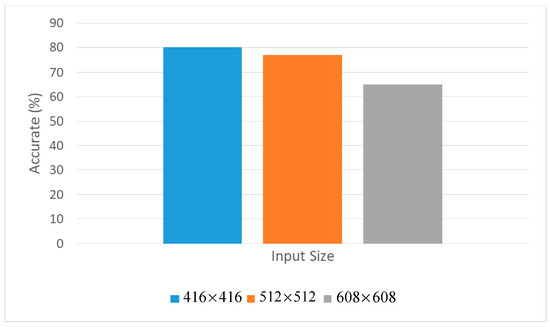

Figure 10.

Recognition accuracy with different input size.

4.3. Input Size

In addition to NMSthreshold and Confthreshold, recognition accuracy was also evaluated using different image input sizes. Three standard YOLO input sizes were compared, with the stride preset to 1 and with Confthreshold and NMSthreshold both set to 0.1, as shown in Figure 10. The results indicate that the smaller the input size, the higher the recognition accuracy. Hence, 416 × 416 was used as the input size in this paper.

4.4. Activation Function

Since the activation function affects the outputs generated by the convolutional neural network, selecting a suitable activation function is important for improving the recognition accuracy of the model. Hence, ReLU, Leaky ReLU, and Mish were selected for performance evaluation in this paper. The experimental results show that the recognition accuracy is 63% for ReLU, 63% for Leaky ReLU, and 80% for Mish. Precision, Recall, and F1-Score for Mish were 84%, 81%, and 82%, respectively. Precision, Recall, and F1-Score for Leaky ReLU were 82%, 63%, and 70%, respectively. Precision, Recall, and F1-Score for ReLU were 59%, 60%, and 56%, respectively. The confusion matrices for ReLU, Leaky ReLU, and Mish are presented in Table 4, Table 5 and Table 6. Based on these results, Mish was selected as the activation function in this paper.

Table 4.

Confusion matrix for ReLU.

Table 5.

Confusion matrix for Leaky ReLU.

Table 6.

Confusion matrix for Mish.
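For readers who want to recompute such metrics from a confusion matrix, the following sketch shows the standard per-class definitions of precision, recall, and F1-score together with overall accuracy. The matrix `toy` is a made-up example and does not reproduce Tables 4 to 6, and the paper does not state how per-class values were averaged, so no averaging is applied here.

```python
def classification_metrics(matrix, labels):
    """Compute accuracy and per-class (precision, recall, F1).

    matrix[i][j] is the number of samples of true class i predicted as class j.
    """
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(labels)))
    report = {}
    for i, label in enumerate(labels):
        true_positive = matrix[i][i]
        predicted = sum(row[i] for row in matrix)  # column sum
        actual = sum(matrix[i])                    # row sum
        precision = true_positive / predicted if predicted else 0.0
        recall = true_positive / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        report[label] = (precision, recall, f1)
    return correct / total, report


# Made-up 3-class confusion matrix for grades A, B, and C (10 samples per class).
toy = [
    [8, 2, 0],
    [1, 7, 2],
    [0, 3, 7],
]
accuracy, report = classification_metrics(toy, ["A", "B", "C"])
print(f"accuracy = {accuracy:.3f}")
for grade, (p, r, f1) in report.items():
    print(f"grade {grade}: precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```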

5. Discussion

Neural network models can be divided into many types based on their design principles. EfficientNet is a newer generation of neural network architecture extended from MobileNet [17] and, like YOLOv4, offers fast speed, low computational complexity, and high accuracy. Therefore, EfficientNet is used as the main comparison for MDLMAGC.

Before classifying the grade of Aiwen mangoes, it is necessary to confirm whether there is a mango image in the picture or video in order to generate the bounding box of the mango. Since the threshold value of non-maximum suppression (NMSthreshold) may affect the number of bounding boxes, the number of bounding boxes at different NMSthreshold values was evaluated in our experiments, where NMSthreshold ranged from 0.1 to 0.9 increasing by 0.1 each time.

The experimental results showed that no redundant bounding boxes were generated when NMSthreshold was set to 0.1. In addition, the threshold value of confidence (Confthreshold) and the input size affect the accuracy of mango recognition. Confthreshold was varied from 0.1 to 0.9 in steps of 0.1, and three input sizes were tested. Consistent with Section 4.2, the best accuracy was achieved when Confthreshold was set to 0.1. The best accuracy was also achieved when the input size was set to 416 × 416. Hence, the NMSthreshold, Confthreshold, and input size of MDLMAGC were set to 0.1, 0.1, and 416 × 416, respectively, for the comparison with EfficientNet. The experimental results showed that the accuracy of MDLMAGC reached up to 80%, whereas the accuracy of EfficientNet reached only up to 72%. The accuracy of MDLMAGC is thus 8 percentage points higher than that of EfficientNet, a relative improvement of about 11%. These results indicate that the proposed MDLMAGC can be applied to Aiwen mango grade image classification.

On a lower-end device such as the Asus Zenfone 6 (CPU: Snapdragon 855), the inference time is about 1.4 s, which shows that inference latency remains acceptable on lower-end devices. With the Snapdragon 8 Gen 3 processor, as used in the Samsung S24, Samsung Z Fold6, Samsung Z Flip6, Asus Zenfone 11 Ultra, and Sony Xperia 1 VI, the inference time is reduced to 0.59 s. These results indirectly suggest that MDLMAGC does not cause significant power consumption on mobile devices with limited computing power. Hence, issues related to power consumption and dynamic computing allocation are not addressed further in this paper.

Model uncertainty was also assessed. In category A, the maximum, minimum, and average values of confidence are 100%, 48%, and 72%, respectively. In category B, the maximum, minimum, and average values of confidence are 79%, 31%, and 67%, respectively. In category C, the maximum, minimum, and average values of confidence are 100%, 21%, and 53%, respectively. In category A, the maximum, minimum, and average values of accuracy rate are 93%, 71%, and 88%, respectively. In category B, the maximum, minimum, and average values of accuracy rate are 91%, 67%, and 74%, respectively. In category C, the maximum, minimum, and average values of accuracy rate are 93%, 52%, and 78%, respectively.

Moreover, in the dataset, the number of samples in category A is 28,585. The number of samples in category B is 11,140. The number of samples in category C is 5275. Category C represents severely damaged mangoes. A total of 1000 severely damaged samples were selected from category C. When a total of 45,000 images of all categories was used for training and testing, the accuracy rate was 80%. However, the accuracy rate decreased to 78% after removing 1000 severely damaged samples. The experimental results showed that the robustness of the model could be improved after supplementing difficult examples.

In this paper, the dataset is from AI CUP 2020, available at https://aidea-web.tw/aicup_mango (accessed on 1 September 2021), certified and provided by BIIC Lab (Behavioral Informatics and Interaction Computation Lab) and Wacker International Taiwan. Since mango grading requires expert identification, the general public cannot correctly label the grade of mangoes photographed under extreme lighting conditions; such photos must be taken and labeled by experts. However, the current Aiwen mango dataset does not include images taken under extreme lighting conditions. Hence, reflections or shadows on mango peels may interfere with the extraction of color features and prevent defect features from being highlighted, which limits generalization to natural field environments.

6. Conclusions

This paper aimed to solve the problem that Aiwen mangoes in Taiwan are currently graded mainly by hand, which is time-consuming and prone to errors. Because existing image recognition technology cannot be directly applied to Aiwen mangoes in Taiwan due to regional differences and different grading standards, a mobile-based deep learning approach for multi-object Aiwen mango grade classification (MDLMAGC) is proposed to achieve instant and accurate grading on smart mobile devices. Since YOLOv4 has been proven to be practical in agricultural and industrial applications and suitable for deployment on mobile devices, it was selected as the core deep learning model in this paper.

To improve the recognition accuracy of the model for Aiwen mangoes in Taiwan, the parameters were tuned in detail. The experimental results showed that redundant object selection boxes could be removed and the best accuracy could be achieved when the threshold value of non-maximum suppression (NMSThreshold) is set to 0.1, the threshold value of confidence (Confthreshold) is set to 0.1, the input size is set to 416 × 416, and Mish is selected as the activation function. In the experimental results, the accuracy of MDLMAGC reached up to 80% for the grading of Aiwen mangoes, whereas the accuracy of EfficientNet was only 72%. The accuracy of MDLMAGC is thus 8 percentage points higher than that of EfficientNet, a relative improvement of about 11%. This indicates that the proposed MDLMAGC can be applied to the grading of Aiwen mangoes. In addition, a cloud database (Firebase) was built to allow agricultural experts to upload new Aiwen mango image data for automatic model retraining, so that the recognition accuracy can be improved continuously. The experimental results showed that MDLMAGC provides a feasible and efficient automated solution that can reduce the labor cost of manual grading of Aiwen mangoes and improve grading accuracy. It helps users formulate more effective sales strategies based on different grades of mangoes to achieve the best economic benefits. In the future, we will add mango images from different seasons, such as the rainy season and the dry season, to the cloud database, together with agricultural standards and specifications for mango grading, to further demonstrate the application value in agriculture.

Author Contributions

Conceptualization, Y.-C.W. and S.-W.C.; methodology, Y.-C.W.; software, H.-W.H.; validation, Y.-C.W. and H.-W.H.; formal analysis, Y.-C.W. and H.-W.H.; investigation, Y.-C.W. and S.-W.C.; resources, Y.-C.W. and S.-W.C.; data curation, Y.-C.W. and S.-W.C.; writing—original draft preparation, Y.-C.W.; writing—review and editing, Y.-C.W.; visualization, H.-W.H.; supervision, Y.-C.W. and S.-W.C.; project administration, Y.-C.W.; funding acquisition, none. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Science and Technology Council (NSTC), Taiwan, R.O.C., under Grant Numbers NSTC 114-2221-E-029-021, NSTC 112-2622-E-029-008, and NSTC 112-2221-E-029-020.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The authors would like to express their sincere gratitude to the National Science and Technology Council (NSTC), Taiwan, R.O.C., for supporting this research under Grant Numbers NSTC 114-2221-E-029-021, NSTC 112-2622-E-029-008, and NSTC 112-2221-E-029-020.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Tai, N.D.; Lin, W.C.; Trieu, N.M.; Thinh, N.T. Development of a Mango-Grading and -Sorting System Based on External Features, Using Machine Learning Algorithms. Agronomy 2024, 14, 831.

- Tripathi, P.; Sharma, S. Improving Mango Classification Accuracy with Enhanced Image Preprocessing and Neural Networks. Afr. J. Biomed. Res. 2024, 27, 539–561.

- Mulukalla, S.S.R.; Kadari, P.R.; Rathod, K.S. External Features Based Grading of Mangoes Using Deep Learning. Int. J. Adv. Res. Comput. Commun. Eng. 2025, 14, 229–234.

- News of Taiwan Ministry of Agriculture. Available online: https://www.moa.gov.tw/theme_data.php?theme=news&sub_theme=agri&id=7624 (accessed on 1 September 2021).

- Agricultural publications of Taiwan Ministry of Agriculture. Available online: https://www.moa.gov.tw/ws.php?id=2505139 (accessed on 1 September 2021).

- Wijethunga, W.M.U.D.; Shin, M.H.; Jayasooriya, L.S.H.; Kim, G.H.; Park, K.M.; Cheon, M.G.; Choi, S.W.; Kim, H.L.; Kim, J.G. Evaluation of Fruit Quality Characteristics in Irwin Mango Grown via Forcing Cultivation in a Plastic Facility. Hortic. Sci. Technol. 2023, 41, 617–633.

- Tripathi, M.K.; Shivendra. Improved Deep Belief Network for Estimating Mango Quality Indices and Grading: A Computer Vision-Based Neutrosophic Approach. Netw. Comput. Neural Syst. 2024, 35, 249–277.

- Sikder, S.; Islam, M.S.; Islam, M.; Reza, S. Improving Mango Ripeness Grading Accuracy: A Comprehensive Analysis of Deep Learning, Traditional Machine Learning, and Transfer Learning Techniques. Mach. Learn. Appl. 2025, 19, 100619.

- Patil, S.B.; Patil, J.B.; Patil, S. Fruit Gradation Using Deep Learning: Fusion of EfficientNet-B7, InceptionResNetV2, and GoogLeNet. Afr. J. Biomed. Res. 2024, 27, 14639–14645.

- Nwankpa, C.E.; Ijomah, W.; Gachagan, A.; Marshall, S. Activation Functions: Comparison of Trends in Practice and Research for Deep Learning. arXiv 2018, arXiv:1811.03378.

- Agarap, A.F.M. Deep Learning Using Rectified Linear Units (ReLU). arXiv 2019, arXiv:1803.08375.

- Le, Q.V.; Jaitly, N.; Hinton, G.E. A Simple Way to Initialize Recurrent Networks of Rectified Linear Units. arXiv 2015, arXiv:1504.00941.

- Xu, B.; Wang, N.; Chen, T.; Li, M. Empirical Evaluation of Rectified Activations in Convolution Network. arXiv 2015, arXiv:1505.00853.

- Misra, D. Mish: A Self Regularized Non-Monotonic Activation Function. arXiv 2019, arXiv:1908.08681.

- Tensorflow-yolov4-tflite. Available online: https://github.com/hunglc007/tensorflow-yolov4-tflite (accessed on 1 September 2021).

- Mehta, B.M.; Madhani, N.; Patwardhan, R. Firebase: A Platform for your Web and Mobile Applications. Int. J. Adv. Res. Comput. Commun. Eng. 2017, 6, 45–52.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).