Abstract

Federated Clustering (FL clustering) aims to discover latent knowledge in multi-source distributed data through clustering algorithms while preserving data privacy. Federated learning is categorized into horizontal and vertical federated learning according to how the data are partitioned. Horizontal federated learning applies to scenarios with overlapping feature spaces but different sample IDs across parties. Vertical federated learning facilitates cross-institutional feature complementarity and is particularly suited to scenarios with highly overlapping sample IDs yet significantly divergent features. As a classic clustering algorithm, k-means has seen extensive improvements and applications in horizontal federated learning. However, its application in vertical federated learning remains insufficiently explored, with room for improvement in privacy protection and communication efficiency. In addition, client feature imbalance may lead to biased clustering results. To improve communication efficiency, this paper introduces Product Quantization (PQ) to compress high-dimensional data into low-dimensional codes by generating local codebooks. Because PQ itself relies on k-means, local training preserves data structure while avoiding the privacy risk of traditional PQ methods, which require server-side data reconstruction and may therefore leak data distributions. To enhance privacy without compromising performance, Multidimensional Scaling (MDS) maps codebook cluster centers into distance-preserving indices. Only these indices are uploaded to the server, eliminating the need for data reconstruction. The server executes k-means on the indices to maximize intra-group similarity and inter-group divergence. This scheme retains the original codebooks locally for strict privacy protection. The nested application of PQ and MDS significantly reduces communication volume and frequency while alleviating the clustering bias caused by client feature dimension imbalance. Validation on the MNIST dataset confirms that the approach maintains k-means clustering performance while meeting federated learning requirements for privacy and efficiency.

1. Introduction

Federated learning (FL) is a distributed machine learning approach whose core concept involves multiple clients (e.g., mobile devices or enterprises) collaboratively training a shared global model without sharing local data [1]. Federated learning clustering integrates federated learning and clustering algorithms to mine latent knowledge from multi-source distributed data while ensuring data privacy protection [2]. Federated learning faces multiple challenges including data heterogeneity [3,4], communication bottlenecks [1,5], and privacy protection [6].

Federated learning is categorized into horizontal federated learning (HFL) [7] and vertical federated learning (VFL) [8] based on data distribution patterns. In horizontal federated learning, participating parties share identical features but possess distinct sample IDs [9]. In vertical federated learning, different clients own distinct feature sets while sharing identical sample IDs. Horizontal federated learning finds widespread application in edge computing and IoT collaboration [10,11]. Vertical federated learning’s value lies in cross-institutional feature complementarity, which is particularly suitable for scenarios with highly overlapping sample IDs yet significantly divergent features, such as in healthcare [12], finance [13], and other industries.

Current research on federated clustering algorithms predominantly focuses on the horizontal federated domain, with relatively limited exploration in vertical federated settings. Compared to horizontal federated learning, vertical federated learning relies more heavily on high-frequency encrypted interactions and high-dimensional feature transmission [14], imposing greater demands on communication. Simultaneously, vertical federated learning requires multi-party collaboration to compute loss functions and gradients. Due to features being dispersed across different participants, it necessitates reliance on Secure Multi-Party Computation (MPC), homomorphic encryption (HE), and other technologies to achieve joint training under privacy protection, all incurring substantial computational costs [15].

In 2020, Avishek et al. proposed an iterative federated clustering algorithm, which achieves efficient clustering by alternately estimating user identities and optimizing model parameters [16]. This method holds significant reference value for feature alignment. In 2023, Zitao Li et al. proposed a differentially private k-means clustering algorithm based on the Flajolet–Martin (FM) technique [17]. By aggregating differentially private cluster centers and membership information of local data on an untrusted central server, they constructed a weighted grid as a summary of the global dataset and generated global centers by executing the k-means algorithm on it. Subsequently, in [18], they introduced FM sketches to encode local data and estimate cross-party marginal distributions under differential privacy constraints, thereby constructing a global Markov Random Field (MRF) model to generate high-quality synthetic data. Federico [19] proposed a vertical federated k-means algorithm based on homomorphic encryption and differential privacy protection, demonstrating its superior clustering performance (e.g., k-means loss and clustering accuracy) over traditional privacy-preserving k-means algorithms while maintaining the same privacy level. Li et al. [20] proposed a vertical federated density peaks clustering algorithm based on a hybrid encryption framework. Building upon the merged distance matrix, they introduced a more effective clustering method under nonlinear mapping, enhancing density peaks clustering (DPC) performance while addressing privacy protection issues in vertical federated learning (VFL). Duan et al. [21] introduced communication models for federated learning and three state-of-the-art algorithms, and proposed a k-median clustering optimization algorithm. Huang et al. [22] conducted an in-depth analysis of vertical federated clustering mechanisms, proposing a privacy attack model targeting vertical federated k-means clustering. Their findings revealed that raw data can be reconstructed in vertical federated learning, causing data privacy leakage.

This study addresses the privacy protection issues of k-means clustering in vertical federated learning scenarios by proposing a federated clustering framework based on lightweight multi-stage dimensionality reduction. It primarily resolves three major challenges: privacy constraints, communication bottlenecks, and feature imbalance problems.

Our main contributions are as follows:

- (1) We propose a multi-stage dimensionality reduction framework for vertical federated clustering based on Product Quantization (PQ) and Multidimensional Scaling (MDS). Locally, feature compression and codebook generation reduce data volume, while PQ-quantized parameters inherently provide noise injection effects that enhance privacy. We introduce one-dimensional MDS embedding to map the clustering centers in the codebook into distance-preserving indices. As a result, no raw codebook is uploaded, the server performs no data reconstruction, and the risk of leaking data distributions through reconstruction is eliminated.

- (2) The multi-stage dimensionality reduction mechanism significantly reduces transmitted data volume and communication frequency while ensuring clustering accuracy, thereby improving communication efficiency.

- (3) The combination of PQ dimensionality reduction and MDS embedding mitigates clustering bias caused by feature imbalance in vertical federated learning.

- (4) Extensive experiments on the MNIST dataset validate that our algorithm satisfies federated learning privacy requirements while preserving clustering accuracy.

The structure of our paper is arranged as follows: Section 2 discusses existing clustering algorithms, challenges in VFL, and theoretical analyses of various dimensionality reduction techniques; Section 3 details our algorithmic design; Section 4 presents the experimental process and results analysis conducted to validate our algorithm; finally, Section 5 concludes the paper.

2. Related Work

2.1. K-Means Clustering Algorithm

k-means is an unsupervised learning algorithm (Algorithm 1) that aims to partition n data objects into k clusters (k < n), such that similarity within the same cluster is maximized while similarity between different clusters is minimized [23].

The core principle involves iteratively optimizing cluster centroid positions to minimize the sum of squared errors (SSE) within clusters:

$$\mathrm{SSE} = \sum_{i=1}^{k} \sum_{x \in C_i} \lVert x - \mu_i \rVert^2$$

where $\mu_i$ is the centroid of cluster $C_i$.

k-means exhibits linear time complexity growth [24], making it suitable for large-scale data while being simple, intuitive, and highly interpretable. However, it has several limitations: sensitivity to initial values [25], requirement of preset k-value, susceptibility to noise, and restrictions on cluster shapes. Its core challenges (k-value selection, sensitivity to initial centroids) can be mitigated through methods like the elbow method, silhouette coefficient, and k-means++.

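| Algorithm 1 k-means [23] algorithm steps. |

Input: Dataset $X = \{x_1, \dots, x_n\}$, number of clusters K, maximum iterations T. Output: Partitioning result of K clusters. Steps:

- (1) Select K initial centroids from the dataset.

- (2) Assign each data point to the cluster of its nearest centroid.

- (3) Update each centroid to the mean of the points assigned to it.

- (4) Repeat steps (2) and (3) until the centroids no longer change or T iterations are reached.

|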
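The assign-and-update loop of k-means can be sketched in a few lines of NumPy. This is a minimal illustration rather than the implementation used in the paper: the function name `kmeans`, the `seed` parameter, and the k-means++-style seeding (one of the mitigations for initial-value sensitivity mentioned above) are assumptions made for the example.

```python
import numpy as np

def kmeans(X, k, max_iter=100, seed=0):
    """Lloyd's algorithm with k-means++-style seeding; returns (centroids, labels, sse)."""
    rng = np.random.default_rng(seed)
    # Seeding (assumed choice): first centroid uniformly at random, the rest
    # with probability proportional to the squared distance to the nearest centroid.
    centroids = [X[rng.integers(len(X))]]
    for _ in range(1, k):
        d2 = np.min([((X - c) ** 2).sum(axis=1) for c in centroids], axis=0)
        centroids.append(X[rng.choice(len(X), p=d2 / d2.sum())])
    centroids = np.array(centroids, dtype=float)

    for _ in range(max_iter):
        # Assignment step: nearest centroid by squared Euclidean distance.
        dist2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dist2.argmin(axis=1)
        # Update step: mean of each cluster (an empty cluster keeps its centroid).
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                        else centroids[j] for j in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new

    # Final assignment and within-cluster sum of squared errors.
    dist2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    labels = dist2.argmin(axis=1)
    sse = float(dist2[np.arange(len(X)), labels].sum())
    return centroids, labels, sse

# Two well-separated synthetic blobs.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0, 0.1, (50, 2)), rng.normal(5, 0.1, (50, 2))])
centroids, labels, sse = kmeans(X, k=2)
```

The returned `sse` is the quantity the algorithm minimizes, so it can be compared across different values of k, for instance when applying the elbow method.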

2.2. Vertical Federated Learning

Horizontal federated learning involves clients sharing the same feature space but having different sample spaces. Vertical federated learning, in contrast, deals with datasets sharing the same sample space but possessing different feature spaces. Vertical federated learning demonstrates broad application prospects across multiple fields such as healthcare, finance, government affairs, and agriculture. In the medical domain, different institutions (e.g., hospitals and insurance companies) may possess partial patient data but cannot directly share this information. Vertical federated learning enables data collaboration among these institutions while safeguarding patient privacy. For example, the 2022 paper [26] explored federated learning applications in healthcare, covering optimized algorithms, data distribution, privacy protection, and model architecture. Vertical federated learning finds significant applications in the financial sector. Within finance, data silos may exist among banks and financial institutions—for instance, banks hold customer transaction records while insurers possess customer health data. Through vertical federated learning, these entities can collaboratively train models without sharing raw data, enhancing accuracy in risk assessment and credit scoring. The 2023 paper [27] detailed such financial applications and proposed multiple privacy-preserving and security mechanisms. Dwarampudi et al. explored federated learning in smart agricultural systems in their paper [28], highlighting how it leverages machine learning capabilities while resolving data privacy concerns. The study emphasized that vertical federated learning can provide localized recommendations for specific agricultural conditions by integrating data from different geographical regions. This approach can also extend to edge computing scenarios such as poultry farming [29].

The vertical federated learning process comprises two stages: encrypted entity alignment via Private Set Intersection (PSI) [30] and model training.

During the encrypted entity alignment stage, participants hash user IDs (e.g., using SHA-256) or encrypt them with RSA. They then perform secure intersection: exchanging encrypted IDs and comparing them through blinding and re-encryption techniques to avoid exposing non-overlapping samples. Ultimately, participants only learn the intersection ID list, with raw feature data remaining undisclosed [30].
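The data flow of the alignment stage can be illustrated with salted SHA-256 digests. This is only a sketch of what the parties end up with (the list of shared IDs); it is not a secure PSI protocol, since plain hashing of low-entropy IDs is open to dictionary attacks and the blinding and re-encryption steps described above are omitted. The function names and the shared `salt` are hypothetical.

```python
import hashlib

def hash_ids(ids, salt):
    """Map SHA-256 digest -> original ID for one party's ID list."""
    return {hashlib.sha256((salt + i).encode("utf-8")).hexdigest(): i for i in ids}

def align(ids_a, ids_b, salt="shared-salt"):
    """Return the sorted IDs held by both parties, compared through digests only."""
    digests_a = hash_ids(ids_a, salt)
    digests_b = hash_ids(ids_b, salt)
    common = digests_a.keys() & digests_b.keys()
    return sorted(digests_a[h] for h in common)

party_a = ["u01", "u02", "u03", "u05"]
party_b = ["u02", "u03", "u04", "u05"]
shared = align(party_a, party_b)   # IDs present at both parties
```

After alignment, each party keeps only the rows for `shared`, in the same order, so that row i refers to the same user everywhere.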

In the model training phase, different participants own distinct feature spaces, while labels may be held by one party. To ensure privacy, encryption techniques (e.g., asymmetric encryption or homomorphic encryption) are typically employed during communication to transmit model updates or gradients, preventing data leakage. The central server or coordinator aggregates encrypted model updates from data providers securely [31].

Communication costs in vertical federated learning often become bottlenecks for training efficiency, particularly due to network heterogeneity among parties and the large volume of encrypted data. Coordinating communications incurs additional overhead. It is critical to minimize communication overhead without compromising model performance. As participant data dimensions increase, transmitting high-dimensional feature vectors significantly escalates communication burdens, requiring compression mechanisms to reduce bandwidth usage [32].

Privacy risks in vertical federated learning primarily stem from data distribution characteristics and multi-party data interactions. Sharing identical sample IDs across participants enables attackers to reconstruct user profiles through correlation analysis. During training, transmitting gradients or intermediate results may leak raw data distributions—for example, malicious participants could infer user scale or distribution during PSI. Model training requires collaborative computation of loss functions and gradients across parties. With features dispersed among participants, Secure Multi-Party Computation (MPC) and homomorphic encryption (HE) [31] are essential for privacy-preserving joint training, incurring substantial computational costs for security.

Two common approaches address privacy-security challenges: reducing communication volume (data quantity and transmission frequency) or transforming data. Reducing transmission volume and frequency simultaneously enhances communication efficiency.

Numerous approaches reduce communication volume, such as compression techniques including quantization, sparsification, model pruning, knowledge distillation, and embedding compression [33], which decrease transmitted model parameters. Quantization compresses gradients by reducing numerical precision, while sparsification transmits only critical gradient components while discarding insignificant ones. Communication strategies may also be optimized—for example, clients performing multiple local updates before server communication, or randomly selecting subsets of clients per round. Alternatively, constructing virtual datasets for unified server-side training reduces communication frequency.
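As a rough illustration of the two compression ideas named above, the following sketch applies uniform 8-bit quantization and top-k sparsification to a synthetic vector. The function names and the min/step encoding are assumptions for the example and do not correspond to any particular federated learning library.

```python
import numpy as np

def quantize_uniform(g, bits=8):
    """Uniformly quantize g to 2**bits levels; returns codes plus (lo, step) for decoding."""
    lo, hi = float(g.min()), float(g.max())
    levels = 2 ** bits - 1
    step = (hi - lo) / levels if hi > lo else 1.0
    dtype = np.uint8 if bits <= 8 else np.uint16
    codes = np.round((g - lo) / step).astype(dtype)
    return codes, lo, step

def dequantize(codes, lo, step):
    """Map integer codes back to approximate real values."""
    return lo + codes.astype(np.float64) * step

def sparsify_topk(g, k):
    """Keep only the k largest-magnitude entries; returns their indices and values."""
    idx = np.argpartition(np.abs(g), -k)[-k:]
    return idx, g[idx]

rng = np.random.default_rng(0)
g = rng.normal(size=1000)                    # stand-in for a gradient vector
codes, lo, step = quantize_uniform(g, bits=8)
g_hat = dequantize(codes, lo, step)          # per-entry error is at most step / 2
idx, vals = sparsify_topk(g, k=50)           # 50 of 1000 entries are transmitted
```

Quantization shrinks every entry to 8 bits, whereas sparsification sends fewer entries at full precision; the two are often combined.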

Data processing methods involve homomorphic encryption, secure multi-party computation, differential privacy, random masking, etc. Current solutions face limitations: fully homomorphic encryption offers theoretical security but suffers from slow speeds; partially homomorphic variants are more practical yet provide compromised protection [34]. Differential privacy sacrifices model accuracy through noise injection [35].

Vertical federated learning faces an additional challenge: when participants (clients) exhibit significant disparities in feature quantities (e.g., one party’s feature dimension dA ≫ dB), this substantially impacts model performance, training efficiency, and privacy-security. The high-dimensional feature party carries greater information volume, potentially causing the model to over-rely on its features and drowning out the contributions of the low-dimensional party. In secure aggregation, the party with fewer features is vulnerable to statistical correlation attacks by the high-dimensional party. The computational/communication overhead of the high-dimensional party A far exceeds that of B, becoming a system bottleneck. Existing solutions to this problem include mapping features from different clients into a unified space and employing dual regularization (local consistency + complementary consistency) to reduce distribution discrepancies [36]. Other methods prioritize adjusting client weights based on feature importance; for instance, the FedFSA algorithm dynamically weights the loss function according to feature quality [37]. Constraining the feature norms of local model outputs can also prevent high-dimensional clients from dominating updates and enhance generalization capability [37]. Embedding compression serves as another effective strategy, using PCA or sparse coding to reduce dimensions of high-dimensional embedding vectors (e.g., from 100-dimensional to 20-dimensional) [38]. Adopting asynchronous updating mechanisms can alleviate this issue by allowing low-dimensional clients to submit updates more frequently, reducing waiting time [39].

This research focuses on implementing the k-means clustering algorithm within vertical federated learning. The core challenge lies in collaboratively computing the distances between sample points (to centroids) and determining cluster assignments for samples, as well as updating centroids, all while ensuring participant data remains local (privacy protection). We propose using the Product Quantization (PQ) process instead of local model training. This serves a dual purpose: firstly, it leverages PQ’s inherent column-wise k-means clustering to replace the original global dimension k-means clustering; secondly, it utilizes PQ to compress data dimensionality and transmitted parameters. The objective of clustering algorithms is to partition a dataset into groups (clusters) based on inherent similarities, achieving high intra-cluster similarity and low inter-cluster similarity. Given that clustering relies on distance features, as long as the index can accurately represent the distance relationships among the cluster centers within the codebook, the codebook itself can remain local. Only the index needs uploading to the server for subsequent clustering, thereby minimizing transmitted data volume. Consequently, we designed a PQ- and MDS-based multi-stage dimensionality reduction framework specifically for vertical federated scenarios, achieving the dual objectives of privacy preservation and communication efficiency. Adopting a multi-stage dimensionality reduction strategy (as opposed to a single-step direct reduction) is crucial for another key reason: significant disparities in feature dimensions across clients. Direct global dimensionality reduction could disproportionately favor clients with higher feature dimensions, leading to inaccurate results. Our framework effectively balances the impact of features with varying dimensions and avoids such bias through secondary dimensionality reduction in subspace.

2.3. PQ Quantization Technique

Product Quantization (PQ) [40] is a technique for compressing high-dimensional vector data and for approximate nearest neighbor search. Its fundamental principle is to decompose the high-dimensional vector space into the Cartesian product of multiple low-dimensional subspaces and then quantize each subspace separately. In PQ, the original vector is divided into multiple subvectors. Each subspace is clustered with the k-means algorithm to generate a codebook, and each subvector is replaced by its nearest centroid, thereby achieving efficient compression.

Current applications of quantization techniques in federated learning primarily focus on communication efficiency optimization, storage optimization, computational acceleration, personalized training, and enhanced privacy protection. For instance, [41] indicates that quantization can reduce communication costs by two orders of magnitude. Quantized compressed models require less device storage, accelerate computation, and suit edge deployment. Product Quantization (PQ) can mitigate data heterogeneity issues in federated learning (Optimized Product Quantization), for example by having clients generate personalized codebooks based on local data for server usage. PQ-quantized parameters inherently provide noise injection effects. For example, the medical federated learning framework FL-TTE adds Gaussian noise ($\sigma$ = 1.0) to quantized hazard ratio parameters, increasing model bias by only 0.5% while meeting privacy requirements [42].

However, traditional PQ techniques primarily emphasize data compression, and a critical step involves uploading the codebook to the server for data reconstruction. Although reconstructed data exhibits reduced accuracy compared to raw data, it still risks exposing the original data distribution. This research focuses on implementing clustering algorithms in vertical federated learning—where clustering is a classification problem rather than regression. Consequently, the server requires no data reconstruction. Capitalizing on clustering’s reliance on distance features, we innovatively re-encode the codebook indices to ensure they represent distance relationships among cluster centers within the codebook. This enables retaining the codebook locally while uploading only indices to the server for clustering, maximally preserving local data and reducing computational overhead on the server side.

The core process of Product Quantization (PQ) is as follows:

- (1) Space Decomposition: Decompose a vector of dimension D into M subspaces, each with dimension D/M (requiring D mod M = 0). Mathematical expression: the original vector $v \in \mathbb{R}^{D}$ is partitioned into M subvectors $v_i \in \mathbb{R}^{D/M}$, with each subvector quantized independently.

- (2) Subspace Quantization: Perform k-means clustering on each subspace to generate a codebook containing k cluster centers.

- (3) Encoding Representation: The original vector is represented by the combination of indices of the nearest cluster centers of its subvectors. For example, a 128-dimensional vector can be compressed into eight 8-bit indices (requiring only 64 bits of storage, a reduction of about 97% relative to 16-bit floating-point storage and about 98% relative to 32-bit storage).
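The three steps above can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: the helper names (`kmeans`, `pq_train`, `pq_encode`, `pq_decode`), the random initialization, and the toy sizes (D = 16, M = 4, K = 16) are chosen only for the example.

```python
import numpy as np

def kmeans(X, k, iters=25, seed=0):
    """Plain Lloyd iterations; returns the k centroids."""
    rng = np.random.default_rng(seed)
    C = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    for _ in range(iters):
        labels = ((X[:, None] - C[None]) ** 2).sum(-1).argmin(1)
        for j in range(k):
            if np.any(labels == j):
                C[j] = X[labels == j].mean(0)
    return C

def pq_train(X, M, K):
    """Step (1) and (2): split the D columns into M blocks, fit one K-centroid codebook per block."""
    assert X.shape[1] % M == 0, "D must be divisible by M"
    return [kmeans(sub, K, seed=i) for i, sub in enumerate(np.split(X, M, axis=1))]

def pq_encode(X, codebooks):
    """Step (3): replace each subvector by the index of its nearest centroid."""
    subs = np.split(X, len(codebooks), axis=1)
    codes = [((s[:, None] - C[None]) ** 2).sum(-1).argmin(1)
             for s, C in zip(subs, codebooks)]
    return np.stack(codes, axis=1).astype(np.uint8)

def pq_decode(codes, codebooks):
    """Approximate reconstruction: look up each index in its codebook."""
    return np.hstack([C[codes[:, i]] for i, C in enumerate(codebooks)])

rng = np.random.default_rng(0)
X = rng.normal(size=(500, 16))
codebooks = pq_train(X, M=4, K=16)
codes = pq_encode(X, codebooks)      # shape (500, 4): four small indices per vector
X_hat = pq_decode(codes, codebooks)
mse = float(((X - X_hat) ** 2).mean())
```

Each vector is stored as M small integers instead of D floating-point values. `pq_decode` is included only to measure the quantization error; a scheme that keeps the codebooks local would not run it on the server.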

The advantages of PQ quantization are as follows: The codebook in PQ is a collection of multi-stage cluster centers, decomposing high-dimensional problems into low-dimensional subproblems through space partitioning; by utilizing Cartesian product combinations, it covers an enormous search space with minimal storage, achieving high compression ratios and rapid approximate computations through index mapping. This characteristic enables PQ to significantly reduce storage and computational overhead while maintaining acceptable accuracy loss [43].

The loss in PQ quantization stems from the destruction of correlations due to subspace partitioning and information truncation from discrete quantization [44]. Mathematically, it manifests as follows:

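- (1) Reconstruction Error: Each subspace generates K centroids via k-means ($C_i = \{c_{i,1}, \dots, c_{i,K}\}$). Subvector $v_i$ is quantized to its nearest centroid $q_i(v_i)$, with reconstruction error:

$$e_i = \lVert v_i - q_i(v_i) \rVert^2$$

This error decreases as K increases.

- (2) Subspace Partitioning Error: A D-dimensional vector is partitioned into M subspaces (each of dimension d = D/M). An original vector $v \in \mathbb{R}^{D}$ is partitioned as $v = [v_1, v_2, \dots, v_M]$. Independent quantization across subspaces destroys interdimensional correlations, and the lower bound of the reconstruction error for $v$ is as follows:

$$\lVert v - \hat{v} \rVert^2 \ge \sum_{i=1}^{M} \min_{c \in C_i} \lVert v_i - c \rVert^2$$

where $\hat{v}$ is the reconstruction of $v$ from the codebooks, with equality when every subvector is assigned to its nearest centroid. When subspace partitioning does not align with the principal components of the data distribution (e.g., without PCA preprocessing), the error increases significantly.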

2.4. MDS Mapping Method

To ensure a mapping relationship between indices and cluster centers in the codebook, multiple mathematical methods can map point sets in n-dimensional space to one-dimensional values while preserving original distance relationships as much as possible. Examples include PCA projection [45], one-dimensional MDS embedding [46], random projections, and kernel PCA. PCA is simpler but preserves variance rather than pairwise distances. The one-dimensional MDS embedding method suits small datasets by directly optimizing distance preservation. We adopt the one-dimensional MDS embedding algorithm in Algorithm 2.

Classical one-dimensional MDS embedding maps points in high-dimensional space to a one-dimensional line, ensuring that Euclidean distances (absolute differences) between projected points approximate the original distances as closely as possible. It essentially solves for the optimal linear projection direction through eigenvalue decomposition.

Core ideas:

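Given m n-dimensional points $x_1, \dots, x_m \in \mathbb{R}^{n}$, the goal is to find a mapping as follows:

$$f: \mathbb{R}^{n} \to \mathbb{R}, \quad y_i = f(x_i)$$

where the one-dimensional distance satisfies the following:

$$|y_i - y_j| \approx d_{ij} = \lVert x_i - x_j \rVert$$

Optimization Objective: Minimize the stress function:

$$\mathrm{Stress}(y) = \sum_{i<j} \left( |y_i - y_j| - d_{ij} \right)^2$$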

Loss Analysis:

During one-dimensional embedding, the loss is primarily caused by distance distortion, which can be strictly quantified using the stress function.

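The Johnson–Lindenstrauss lemma guarantees low-distortion embeddings only when the target dimension is on the order of $\log m / \varepsilon^2$, so embedding m points from high-dimensional space into one dimension generally distorts distances:

$$(1 - \varepsilon)\, \lVert x_i - x_j \rVert \le |y_i - y_j| \le (1 + \varepsilon)\, \lVert x_i - x_j \rVert$$

where $\varepsilon$ is determined by the intrinsic dimensionality of the data.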

Theoretical Lower Bound of Loss: For any dataset, the minimum achievable stress for one-dimensional embedding satisfies the following:

where $\lambda_i$ are the eigenvalues of the double-centered matrix $B$.

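| Algorithm 2 MDS [46] one-dimensional embedding algorithm steps. |

Input: m × n data matrix X, distance metric (default: Euclidean distance). Output: One-dimensional coordinate vector $y \in \mathbb{R}^{m}$. Steps:

- (1) Compute the squared distance matrix $D^{(2)}$ with entries $d_{ij}^2 = \lVert x_i - x_j \rVert^2$.

- (2) Double-center it: $B = -\tfrac{1}{2} J D^{(2)} J$, where $J = I - \tfrac{1}{m} \mathbf{1}\mathbf{1}^{\top}$.

- (3) Compute the largest eigenvalue $\lambda_1$ of $B$ and its eigenvector $u_1$.

- (4) Return $y = \sqrt{\lambda_1}\, u_1$.

|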
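Classical one-dimensional MDS by eigendecomposition of the double-centered matrix can be sketched as below. The function names `mds_1d` and `stress` are assumptions for the example, and the raw (unnormalized) stress is used; the test points are placed on a straight line, a case in which a one-dimensional embedding loses nothing.

```python
import numpy as np

def mds_1d(X):
    """Classical (Torgerson) MDS of the rows of X onto a single coordinate."""
    m = X.shape[0]
    # Squared Euclidean distance matrix (clipped at 0 against round-off).
    sq = (X ** 2).sum(axis=1)
    D2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    # Double centering: B = -1/2 * J D2 J with J = I - (1/m) 1 1^T.
    J = np.eye(m) - np.ones((m, m)) / m
    B = -0.5 * J @ D2 @ J
    # eigh returns eigenvalues in ascending order; the last one is the largest.
    w, V = np.linalg.eigh(B)
    return np.sqrt(max(w[-1], 0.0)) * V[:, -1]

def stress(X, y):
    """Raw stress: sum over pairs of (|y_i - y_j| - d_ij)^2."""
    d = np.sqrt(((X[:, None] - X[None]) ** 2).sum(-1))
    e = np.abs(y[:, None] - y[None])
    iu = np.triu_indices(len(X), k=1)
    return float(((e - d)[iu] ** 2).sum())

# Four points on a line in R^2 (direction (0.6, 0.8) has unit length).
t = np.array([0.0, 1.0, 3.0, 7.0])
X = np.outer(t, np.array([0.6, 0.8]))
y = mds_1d(X)
```

The sign of `y` is arbitrary because an eigenvector is defined only up to sign; only the pairwise differences `|y_i - y_j|` are meaningful.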

2.5. Baseline

The DP-VFC algorithm (Algorithm 3) originates from [17]. Algorithm idea: DP-VFC extends classical k-means (which minimizes the within-cluster sum of squared errors, SSE) to a multi-party vertical federated setting under $(\varepsilon, \delta)$-differential privacy. Assuming S participants each hold non-overlapping feature blocks of the same set of users, the global objective remains minimizing the SSE.

DP-VFC comprises four steps:

- (1) Each participant locally runs differentially private k-means with its local privacy budget to obtain noisy centers and records the cluster membership indices.

- (2) Each participant encodes these membership sets using differentially private Flajolet–Martin partition sketches (DPFMPSs) and uploads them, together with the local centers, to the server in one transmission without disclosing individual user affiliations.

- (3) The server takes the Cartesian product of all local centers to form a candidate global sample grid, and uses the uploaded sketches to estimate the intersection cardinality of members at each grid point under the same budget, using the inclusion–exclusion principle or a two-phase iterative method.

- (4) The server executes noise-free weighted k-means on this set of “weighted pseudo-samples,” iteratively updating centers until convergence, and outputs k global cluster centers satisfying $(\varepsilon, \delta)$-differential privacy within a single communication round.

Thus concludes the iteration formula.

The DP-VFC algorithm approximately minimizes the global SSE without disclosing either individual samples or cluster membership relationships.

| Algorithm 3 DP-VFC [17] algorithm steps. |

|

3. Method

The essence of clustering is grouping data points based on similarity. As long as compressed data preserves the “proximity relationships among data points” (i.e., distant samples remain separated and close samples remain aggregated), clustering on compressed data remains effective. Motivated by this principle, we design a multi-level dimensionality-reduction-based clustering framework for vertical federated learning that jointly accounts for feature imbalance, communication overhead, and privacy protection.

This framework is implemented based on PQ quantization and MDS techniques. After privacy-preserving alignment across multiple clients, Product Quantization replaces traditional local model training. Algorithmically, PQ relies on k-means clustering, compressing high-dimensional features into low-dimensional codes to significantly reduce communication overhead. Regarding privacy protection design, directly uploading codebooks (cluster center sets) risks exposing original data distributions. We observe that clustering effectiveness depends heavily on the relative distances between cluster centers. Thus, we employ the MDS one-dimensional embedding algorithm so that the uploaded indices preserve these distances.

The server executes a one-shot clustering algorithm on the distance-preserving indices to obtain the clustering structure, achieving maximized intra-group similarity and maximized inter-group divergence.

This approach preserves raw codebook privacy locally while maintaining distance relationships essential for clustering through indices, ensuring algorithmic performance and further enhancing communication efficiency.

The adoption of multi-stage dimensionality reduction instead of direct dimensionality reduction is partially due to significant disparities in feature counts across clients. Direct reduction would bias clustering results toward certain features under such imbalances.

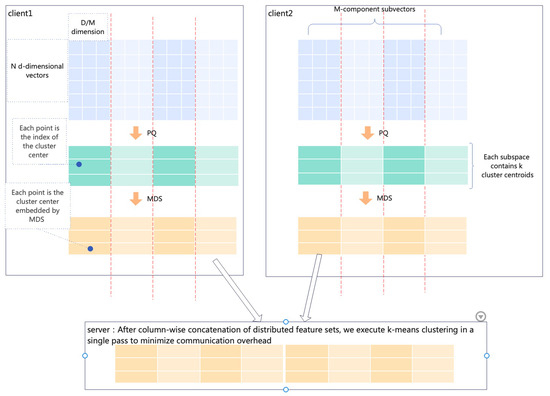

Figure 1 illustrates the data flow diagram of the entire algorithm. Locally on the client side, the total data dimension is D-dimensional. The original data is partitioned into M groups of subvectors, each with a dimension of D/M. After performing PQ quantization on them, a codebook is generated. At this stage, original data values are converted into indices within the codebook. Subsequently, the MDS one-dimensional embedding algorithm is executed on the cluster centers in this codebook to obtain remapped values. These values replace the original indices in the data. Finally, these local indices are uploaded to the server side. After aligning all data by columns on the server side, the k-means clustering algorithm is executed to obtain global cluster centers.

Figure 1. Data flow diagram.

3.1. Algorithm Design

Suppose there is a federated learning framework with m clients. The global dataset is $D$ with global dimension dim, distributed across clients. $D_g$ denotes the dataset of the g-th client, where data points in $D_g$ are $dim_g$-dimensional vectors, so that $\sum_{g=1}^{m} dim_g = dim$. Define sub_dim as the subspace dimensionality in PQ quantization, and sub_k as the number of cluster centers per subspace in PQ quantization. $C_g$ represents the codebook of the g-th client, $C_g^{i}$ denotes the cluster center set of the i-th subspace for the g-th client, $B_g$ is the data index set of the g-th client, and $B$ is the global data index set.

The algorithm mainly consists of the following steps:

- 1. Encrypted entity alignment. Select common samples: extract the samples shared by all parties’ datasets.

- 2. Local initialization and training of the PQ quantizer:

- (1) Pad dimensions: Determine whether the local dimension dim is divisible by sub_dim. If it is not, pad the dimensions. First, calculate the number of dimensions p to pad:

$$p = sub\_dim - (dim \bmod sub\_dim)$$

Then, pad the original vector by appending p zeros to its end.

- (2) Generate subspace codebooks based on training data.

- 3. Secondary mapping: Perform secondary mapping on the cluster centers in the subspace codebooks using the MDS one-dimensional embedding algorithm, then use the normalized mapped values as codebook indices.

- 4. Data transmission: Codebooks are stored locally. Only the indices are transferred to the server side.

- 5. Server-side global cluster center aggregation: Execute the k-means algorithm on the indices uploaded by clients at the server side to obtain abstract global cluster centers. When using the abstract cluster centers, apply the same mapping operation to local data, then compute distances to the global abstract cluster centers to determine the true cluster assignments. The algorithm requires only one round of communication.
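The padding rule in step 2 can be written as a small helper. The name `pad_to_multiple` is hypothetical, and the extra `% sub_dim` simply makes p equal to 0 when dim is already divisible, so that the same formula covers both cases.

```python
import numpy as np

def pad_to_multiple(X, sub_dim):
    """Append zero columns so that the feature dimension is divisible by sub_dim."""
    dim = X.shape[1]
    p = (sub_dim - dim % sub_dim) % sub_dim      # p = 0 when dim is already divisible
    padded = np.hstack([X, np.zeros((X.shape[0], p), dtype=X.dtype)])
    return padded, p

X = np.arange(20, dtype=float).reshape(2, 10)    # 2 samples, dim = 10
padded, p = pad_to_multiple(X, sub_dim=4)        # 10 mod 4 = 2, so p = 2
subvectors = np.split(padded, padded.shape[1] // 4, axis=1)
```

With dim = 10 and sub_dim = 4, two zero columns are appended and each sample splits into three 4-dimensional subvectors.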

Table 1 explains the symbols used:

Table 1. Symbol explanation.

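Algorithm 4 pseudocode:

Algorithm 4. Proposed algorithm

Input: Distributed dataset $D = \{D_1, \dots, D_m\}$, where $D_g$ is the data of the g-th client; number of clusters k; maximum iterations T.

Output: Global cluster centers.

Steps: the five steps enumerated above (entity alignment, local PQ training, secondary mapping, data transmission, and server-side aggregation).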

3.2. Cluster Accuracy Analysis

The performance guarantee of the algorithm stems from the multi-stage dimensionality reduction framework preserving critical information required for clustering tasks—namely, the relative similarity (distance structure) between data points.

3.2.1. PQ Quantization

PQ quantization reduces dimensionality via subspace decomposition. Its core principle is distributing high-dimensional quantization errors across low-dimensional subspaces, thereby controlling overall information loss and preserving fundamental similarity for subsequent clustering.
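As a minimal sketch of this subspace decomposition, the code below trains one k-means codebook per subspace and replaces each subvector with the index of its nearest centroid. The helper names `kmeans` and `pq_encode`, the farthest-point initialization, and the toy data are assumptions made for illustration, not the implementation used in the experiments.

```python
import math


def kmeans(points, k, iters=20):
    """Lloyd's k-means with a deterministic farthest-point initialization."""
    centers = [list(points[0])]
    while len(centers) < k:
        # Next seed: the point farthest from its nearest already-chosen center.
        far = max(points, key=lambda p: min(math.dist(p, c) for c in centers))
        centers.append(list(far))

    def assign():
        return [min(range(k), key=lambda j: math.dist(p, centers[j])) for p in points]

    for _ in range(iters):
        labels = assign()
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its previous center
                centers[j] = [sum(col) / len(members) for col in zip(*members)]
    return centers, assign()


def pq_encode(data, sub_dim, sub_k):
    """Train one codebook per subspace; replace each subvector by its centroid index."""
    n_sub = len(data[0]) // sub_dim  # assumes the dimension was already padded
    codebooks, codes = [], [[] for _ in data]
    for s in range(n_sub):
        sub = [row[s * sub_dim:(s + 1) * sub_dim] for row in data]
        centers, labels = kmeans(sub, sub_k)
        codebooks.append(centers)
        for row_codes, lab in zip(codes, labels):
            row_codes.append(lab)
    return codebooks, codes


# Four 4-dimensional toy samples, split into two 2-dimensional subspaces.
data = [[0, 0, 10, 10], [0, 1, 10, 11], [5, 5, 0, 0], [5, 6, 0, 1]]
codebooks, codes = pq_encode(data, sub_dim=2, sub_k=2)
```

Each sample is now described by one index per subspace, and the codebooks hold the centroids those indices refer to.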

As shown in the error analysis of PQ quantization in Section 2, when the codebook size per subspace (sub_k) is sufficiently large, the quantization error in each subspace converges to the inherent noise level (e.g., data variance) of that subspace. Consequently, the total error is bounded. As the number of subspaces increases (i.e., as the subspace dimensionality sub_dim decreases), errors are distributed more uniformly, avoiding the error explosion caused by the “curse of dimensionality” in high-dimensional spaces.

Clustering relies on the property that “similar data points are closer in space”. For any two raw data points $x$, $y$, if $x$ and $y$ are similar ($\|x - y\|$ small), their corresponding subvectors $x^{(i)}$, $y^{(i)}$ should also be similar ($\|x^{(i)} - y^{(i)}\|$ small) across subspaces. With bounded PQ quantization errors, the quantized distance satisfies the following:

$$\bigl|\, \|q(x) - q(y)\| - \|x - y\| \,\bigr| \le 2\epsilon$$

(here $q(\cdot)$ denotes the PQ quantizer and $\epsilon$ denotes the maximum quantization error of a single vector).

When $\epsilon \ll \|x - y\|$, the trend of $\|q(x) - q(y)\|$ aligns with $\|x - y\|$; that is, quantized distances between similar data points remain smaller than those between dissimilar points, preserving the fundamental similarity required for clustering.
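The distance-preservation argument can be checked numerically. If every vector is quantized with error at most ε, the triangle inequality implies that the quantized distance between any two points differs from their original distance by at most 2ε. The sketch below uses a toy quantizer that snaps each coordinate to the nearest integer, as a stand-in for a trained PQ codebook; the quantizer and the random data are assumptions made for illustration.

```python
import math
import random


def quantize(v):
    """Toy quantizer: snap each coordinate to the nearest integer (stand-in codebook)."""
    return [float(round(c)) for c in v]


rng = random.Random(0)
points = [[rng.uniform(0, 10) for _ in range(4)] for _ in range(50)]

# Largest quantization error of any single vector (the epsilon of the bound).
eps = max(math.dist(p, quantize(p)) for p in points)

# Largest change in pairwise distance caused by quantization.
worst_gap = max(
    abs(math.dist(quantize(x), quantize(y)) - math.dist(x, y))
    for x in points
    for y in points
)
```

By construction, `worst_gap` never exceeds `2 * eps`, whichever quantizer is substituted.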

3.2.2. MDS Mapping

Multidimensional Scaling (MDS) seeks to preserve the original pairwise distance relationships in a low-dimensional embedding. Because clustering (especially k-means) is driven by relative distances, as long as the distance ordering after the MDS mapping is consistent with that of the original space, clustering accuracy can be maintained. As shown in Section 2, in MDS the stress function attains its minimum when the mapping uses the eigenvector associated with the largest eigenvalue; equivalently, the squared error between the one-dimensional mapped distances and the original distances is minimized. In this case, pairs that are close in the original space remain close in one dimension, and pairs that are far remain far, thereby preserving the global distance structure.

Assume that in the original codebook, $c_i, c_j$ belong to the same class (small $d(c_i, c_j)$) and $c_i, c_k$ belong to different classes (large $d(c_i, c_k)$). Because the MDS stress is minimized, the mapping preserves this relation with high probability, i.e., the mapped distances satisfy $|z_i - z_j| < |z_i - z_k|$, where $z_i$ is the one-dimensional image of $c_i$, so intra-class distances remain smaller than inter-class distances.

Even under a one-dimensional mapping, as long as this “intra-class near, inter-class far” trend is maintained, clustering will correctly group the points. The advantage of a one-dimensional embedding is that it suppresses high-dimensional noise while compressing global distance relations into a simple numerical ordering, which is well suited for cross-party concatenation and global clustering.
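A one-dimensional classical MDS embedding of codebook centroids can be sketched as follows. The function name `mds_1d` is hypothetical, and power iteration is used here only to keep the example dependency-free; a library eigensolver would normally take its place. The subsequent normalization of the mapped values is omitted.

```python
import math


def mds_1d(points, iters=200):
    """Classical MDS to one dimension via power iteration (illustrative sketch)."""
    n = len(points)
    d2 = [[math.dist(p, q) ** 2 for q in points] for p in points]
    row = [sum(r) / n for r in d2]
    total = sum(row) / n
    # Double-centred matrix B = -1/2 * J * D^2 * J, with J the centring matrix.
    b = [[-0.5 * (d2[i][j] - row[i] - row[j] + total) for j in range(n)]
         for i in range(n)]
    v = [float(i + 1) for i in range(n)]  # deterministic, non-constant start vector
    lam = 0.0
    for _ in range(iters):
        w = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
        lam = math.sqrt(sum(x * x for x in w))  # converges to the largest eigenvalue
        if lam == 0.0:
            return [0.0] * n  # all points coincide
        v = [x / lam for x in w]
    # Coordinates are the top eigenvector scaled by sqrt(eigenvalue).
    return [math.sqrt(lam) * x for x in v]


# Three collinear toy centroids: their pairwise distances are 1, 2 and 3.
z = mds_1d([[0.0, 0.0], [1.0, 0.0], [3.0, 0.0]])
```

For collinear centroids the embedding reproduces the pairwise distances exactly (up to sign and translation); for general centroids only the dominant direction of variation is retained.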

3.2.3. Effectiveness of the Two-Stage Joint Compression

In this framework, although the errors introduced by PQ and by MDS are not independent, their accumulated effect does not destroy the distance structure required for clustering.

(1) Bound of the error-propagation chain:

i. PQ: quantizes the original vector $x$ to $x'$ with a bounded reconstruction error $\|x - x'\| \le \epsilon_{PQ}$.

ii. MDS: maps the codebook centroids to a one-dimensional embedding $z$ with a bounded distance distortion $\delta_{MDS}$, where $\delta_{MDS}$ is small due to stress minimization.

The joint effect on clustering appears as the probability of similarity inversion (i.e., pairs that were similar becoming dissimilar, or vice versa). By theoretical analysis, when $\epsilon_{PQ}$ and $\delta_{MDS}$ are both smaller than the gap between the maximum intra-class distance and the minimum inter-class distance in the original data, the probability of such inversions tends to zero, and the clustering result on the compressed data coincides with that on the original data.

(2) Consistency between the multi-stage compression and the clustering objective: The k-means algorithm seeks to minimize the within-cluster sum of squared errors (SSE), denoted as follows:

$$J = \sum_{j=1}^{k} \sum_{x \in S_j} \|x - \mu_j\|^2,$$

where $S_j$ is the j-th cluster and $\mu_j$ is its center. After PQ quantization, the corresponding SSE $J'$ differs from $J$ by the total quantization distortion. Similarly, when applying MDS to the PQ centroids, the resulting SSE $J''$ deviates from $J'$ by the distance-preservation error of the embedding. Because both the PQ and MDS errors can be made arbitrarily small (by choosing a sufficiently large codebook size and by minimizing the MDS stress), the optimal cluster centers obtained on the fully compressed data (minimizer of $J''$) will coincide closely with those of the original problem (minimizer of $J$). In other words, the two-stage compressed representation still admits an approximately optimal k-means solution.
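To see why a gap condition of the kind stated in item (1) suffices, consider the PQ stage alone. The following sketch assumes that every vector is quantized with error at most $\epsilon$; the symbols $q(\cdot)$ (the quantizer), $d_{\text{intra}}$ (the maximum intra-class distance) and $d_{\text{inter}}$ (the minimum inter-class distance) are introduced here for illustration. For $x, y$ in the same class and $w$ in a different class, the triangle inequality gives:

```latex
\begin{aligned}
\|q(x) - q(y)\| &\le \|x - y\| + 2\epsilon \le d_{\text{intra}} + 2\epsilon, \\
\|q(x) - q(w)\| &\ge \|x - w\| - 2\epsilon \ge d_{\text{inter}} - 2\epsilon, \\
\text{hence}\quad \|q(x) - q(y)\| &< \|q(x) - q(w)\|
\quad \text{whenever} \quad 4\epsilon < d_{\text{inter}} - d_{\text{intra}}.
\end{aligned}
```

This is a conservative sufficient condition under the stated assumption; it shows that no similarity inversion can occur once the quantization error is small relative to the class gap.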

Overall, PQ controls the quantization error by decomposing the feature space into low-dimensional subspaces, thereby preserving local similarity among data points. MDS then preserves the global distance structure by embedding codebook centroids into one dimension. Because both PQ and MDS introduce bounded distortions that are compatible with the clustering criterion—“intra-cluster points remain closer than inter-cluster points”—the two-stage compression can guarantee that, for sufficiently large codebook size and sufficiently small MDS stress, clustering on the compressed data closely approximates the result on the original data.

3.3. Hyperparameter Selection and Trade-Offs

In our framework, PQ quantization granularity is controlled by the subspace dimension (sub_dim) and the number of codebook centroids per subspace (sub_k).

A smaller sub_dim (more subspaces) disperses quantization errors more evenly but increases the number of codebooks, raising both computational and communication costs. A larger sub_k yields higher quantization fidelity (smaller error) and thus better global clustering performance, but reduces the compression ratio and increases privacy risk and communication overhead.

In our experiments, we evaluated combinations with sub_dim ∈ {1, 2, 4, 8} and sub_k ∈ {10, 64, 128, 256} to quantify performance under varying PQ granularities.
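The effect of sub_dim on the upload size can be made concrete with a small calculation. It assumes, following the scheme described in Section 3.1, that each subspace of a sample is represented by a single scalar (the one-dimensional MDS value of its centroid) and that codebooks stay on the client. The function names are hypothetical.

```python
import math


def values_per_sample(local_dim, sub_dim):
    """Scalars uploaded per sample: one value per (zero-padded) subspace."""
    return math.ceil(local_dim / sub_dim)


def codebook_entries(local_dim, sub_dim, sub_k):
    """Centroid coordinates kept locally: subspaces x sub_k centroids x sub_dim."""
    return values_per_sample(local_dim, sub_dim) * sub_k * sub_dim


# A client holding 392 features, under the subspace dimensions listed above.
upload = {sub_dim: values_per_sample(392, sub_dim) for sub_dim in (1, 2, 4, 8)}
```

Under these assumptions, raising sub_dim from 1 to 8 reduces the per-sample upload from 392 values to 49, while the locally stored codebook grows with sub_k.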

3.4. Impact of Dimension Padding

Padding dimensions may introduce additional information or noise. Because our solution pads with zeros, the padded dimensions contribute nothing to the original data’s features, yet they increase computational and storage demands: although the padded values are zeros, the distance calculations in each subspace quantization must still process them. This cost remains acceptable relative to the data compression benefits of PQ quantization.

However, dimension padding may disrupt natural correlations between subspaces. High-dimensional features in real data often exhibit local correlations (e.g., adjacent dimensions in image features). When padded dimensions are irrelevant to the original data, they may break intrinsic intra-subspace correlations. Consequently, the quantized indices fail to accurately reflect the original structure, thereby increasing quantization error.

3.5. Comparison with Other Dimensionality-Reduction Methods

Consistency with the clustering objective: PCA excels at preserving global linear variance but does not directly preserve pairwise distances, which can distort the “near-dense, far-sparse” structure that clustering exploits. MDS explicitly preserves both local and global distance relationships, making it more effective for similarity-based clustering.

Information loss vs. communication cost: One-dimensional MDS retains only the principal distance variations, incurring higher information loss. Two-dimensional MDS or PCA can capture more complex relationships but multiply the communication cost and risk leaking additional distributional information. Thus, for vertical federated clustering under strict privacy and communication constraints, our PQ and 1D MDS two-stage approach strikes a balanced trade-off between fidelity, privacy, and efficiency.

3.6. Privacy Enhancement

The privacy protection mechanism of this algorithm derives from two aspects. First, PQ quantization reduces the dimensionality of the raw data and replaces it with codes, so the raw data is never uploaded directly to the server. Second, the PQ codebooks are retained locally, which avoids leaking the original data distributions.

Additionally, to defend against attacks such as membership inference, differential privacy noise can be incorporated. Differential privacy provides a rigorous framework that limits how much analytical results can reveal about any individual. By adding noise to data or intermediate results, it prevents attackers from confidently determining whether any individual is present in the dataset. According to the serial and parallel composition theorems of differential privacy [47], we have the following:

1. Serial Composition: For a given dataset $D$, assume there exist randomized algorithms $M_1, \dots, M_n$ with privacy budgets $\epsilon_1, \dots, \epsilon_n$, respectively. The composed algorithm $M(M_1(D), \dots, M_n(D))$ provides $\left(\sum_{i=1}^{n} \epsilon_i\right)$-DP protection. That is, for the same dataset, applying a series of differentially private algorithms sequentially provides protection equivalent to the sum of the privacy budgets.

2. Parallel Composition: For disjoint datasets $D_1, \dots, D_n$, assume there exist randomized algorithms $M_1, \dots, M_n$ with privacy budgets $\epsilon_1, \dots, \epsilon_n$, respectively. The composed algorithm $M(M_1(D_1), \dots, M_n(D_n))$ provides $\left(\max_i \epsilon_i\right)$-DP protection. That is, for disjoint datasets, applying different differentially private algorithms separately in parallel provides privacy protection equivalent to the maximum privacy budget among the composed algorithms.

Adding differential privacy noise to raw data or codebooks can effectively enhance privacy protection.
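A minimal sketch of how such noise could be added to a codebook centroid is given below, using the standard Laplace mechanism together with the two composition rules. The function names, the sensitivity value, and the budgets are assumptions made for illustration. In practice the L1 sensitivity must be derived from the data range and the release mechanism.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the scaled difference of two Exp(1) draws."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def perturb_centroid(centroid, sensitivity, epsilon, rng):
    """Laplace mechanism: per-coordinate noise with scale sensitivity / epsilon.

    `sensitivity` is the assumed L1 sensitivity of the released vector.
    """
    scale = sensitivity / epsilon
    return [c + laplace_noise(scale, rng) for c in centroid]


def serial_budget(epsilons):
    """Serial composition on the same dataset: budgets add up."""
    return sum(epsilons)


def parallel_budget(epsilons):
    """Parallel composition on disjoint datasets: the largest budget dominates."""
    return max(epsilons)


rng = random.Random(0)
noisy = perturb_centroid([0.2, 0.7], sensitivity=1.0, epsilon=0.5, rng=rng)
```

A smaller epsilon yields a larger noise scale and therefore stronger protection at the cost of greater distortion of the centroids.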

4. Experiments and Results

4.1. Experimental Settings

4.1.1. Dataset

The MNIST dataset is adopted. Its feature columns are split according to the client feature ratios, and the resulting feature subsets are distributed to the different clients.

4.1.2. Parameter Settings

- Total number of clients: 2;

- Total number of client features: 784;

- Client feature ratios: (1:1), (1:6), (1:13);

- PQ quantization subspace dimensions: 1, 2, 4, 8;

- Number of PQ quantization cluster centers per subspace: 10, 64, 128, 256.
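For reference, the number of feature columns each client holds under these ratios follows from simple arithmetic. The helper below is a hypothetical illustration of that split.

```python
def split_sizes(total_features, ratio):
    """Number of feature columns per client for an integer ratio such as (1, 13)."""
    unit, remainder = divmod(total_features, sum(ratio))
    if remainder:
        raise ValueError("ratio does not divide the feature count evenly")
    return [r * unit for r in ratio]


sizes = {ratio: split_sizes(784, ratio) for ratio in [(1, 1), (1, 6), (1, 13)]}
```

With 784 features, the three ratios correspond to 392/392, 112/672 and 56/728 columns per client.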

4.1.3. Evaluation Metrics

The evaluation employs two metrics: Normalized Mutual Information (NMI) and the Adjusted Rand Index (ARI).
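To make the second metric concrete, the sketch below computes the ARI from the contingency counts of two labelings using the standard pair-counting formula. It is an illustrative, dependency-free version that assumes at least two samples, not the evaluation code used in the experiments.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_true, labels_pred):
    """Adjusted Rand Index from pair counts (assumes at least two samples)."""
    n = len(labels_true)
    pairs = Counter(zip(labels_true, labels_pred))   # contingency table cells
    index = sum(comb(c, 2) for c in pairs.values())
    sum_a = sum(comb(c, 2) for c in Counter(labels_true).values())
    sum_b = sum(comb(c, 2) for c in Counter(labels_pred).values())
    expected = sum_a * sum_b / comb(n, 2)            # chance-level agreement
    max_index = (sum_a + sum_b) / 2
    if max_index == expected:                        # degenerate: trivial partitions
        return 1.0
    return (index - expected) / (max_index - expected)


same = adjusted_rand_index([0, 0, 1, 1], [1, 1, 0, 0])    # same partition, renamed labels
crossed = adjusted_rand_index([0, 0, 1, 1], [0, 1, 0, 1])  # partitions that cut across each other
```

The ARI is invariant to label permutations, which matters here because the cluster indices returned by the server carry no intrinsic meaning.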

4.2. Performance Analysis

Centralized data clustering serves as the baseline for validation.

4.2.1. Comparison Between Proposed Method and Centralized k-Means

This experiment involves two clients, each holding 392 feature dimensions (a 1:1 ratio). As shown in Table 2, the proposed algorithm achieves slightly higher average NMI and ARI values than centralized k-means across the tested subspace dimensions and cluster center counts, indicating that it effectively extracts the features of the original data. When the subspace dimension is 1, which is equivalent to performing k-means on each data column and replacing values with cluster centers, PQ loss is minimized, and the results confirm superior performance in this scenario. Performance improves with fewer cluster centers per subspace, partly because the sparse MNIST dataset can be effectively represented by fewer clusters.

Table 2. Algorithm performance comparison.

4.2.2. Impact of MDS on Algorithm Performance

To evaluate the impact of MDS, we compare against two alternative implementations without MDS: one clusters the codebook indices directly at the server, and the other reconstructs the data from the PQ codes at the server. In terms of privacy, the ranking is as follows: direct index clustering > proposed method > data reconstruction.

As Table 2 shows, the performance ranking is as follows: proposed method ≥ data reconstruction > direct index clustering. When the subspace dimension is 1, direct index clustering performs comparably to the other methods. However, its performance declines sharply as the PQ subspace dimension increases, indicating that raw indices do not sufficiently capture the distance features of the data. The proposed method captures these distance features better, yielding slightly superior performance over data reconstruction.

4.2.3. Impact of Client Feature Quantity on Performance

As Table 3 shows, by varying the feature-partition ratios among clients (1:1, 1:6, and 1:13), our algorithm consistently achieves NMI scores of approximately 0.5 and ARI scores of approximately 0.4, significantly outperforming the baseline. Moreover, its performance remains stable within a narrow range, indicating that the relative number of features held by each client has minimal effect on clustering quality and that the method effectively overcomes feature-dimension imbalance.

Table 3. Experimental results: impact of client feature quantity on performance.

5. Conclusions

Based on the characteristics of clustering algorithms, this study transforms the core contradiction of federated clustering—“data utility vs. privacy preservation vs. communication efficiency”—into a verifiable distance-preserving optimization problem, providing a secure clustering implementation framework for vertical federated learning. The proposed algorithm can address communication challenges in vertical federated learning while preserving privacy.

To address three core challenges of k-means clustering in vertical federated learning (inadequate privacy protection, excessive communication overhead, and feature dimension imbalance), this paper proposes a multi-level dimensionality reduction federated clustering framework that integrates Product Quantization (PQ) and Multidimensional Scaling (MDS). Original high-dimensional features are compressed into low-dimensional codes via PQ, and the codebooks are further reduced through a one-dimensional MDS embedding, which improves communication efficiency. Privacy protection is achieved through the following: (1) dimensionality reduction, which lowers data precision and transmission volume, and (2) mapping sensitive codebooks to distance-preserving indices via the MDS embedding and transmitting only these indices to the server. The original codebooks and feature data remain exclusively on local clients.

Experimental results show that our algorithm significantly outperforms the baseline algorithm DP-VFC across the tested scenarios and marginally surpasses centralized k-means on MNIST. This indicates that, while performing dimensionality reduction and compression, our algorithm preserves the distance characteristics of the data. Varying the ratio of feature dimensions across clients validates the stability of the algorithm. We conclude that, in federated settings, our approach enhances communication efficiency and privacy while reducing sensitivity to how features are distributed across clients.

Nevertheless, this study has certain limitations. Currently, uniform quantization granularity is adopted during data quantization. To better handle heterogeneous data, personalized granularity warrants further investigation—for example, allowing participants to set parameters based on their local data characteristics. The trade-off between privacy protection and compression loss also requires deeper exploration. Although single-round communication improves efficiency, critical information loss in client-uploaded statistical features (due to noise or compression) cannot be corrected through multiple rounds of interaction. This is especially so in extreme heterogeneity scenarios, as single-pass compression may cause irreversible deviation in global clustering, leading to an accuracy–efficiency trade-off dilemma. Consequently, single-round communication imposes exceptionally high requirements on local data training and compression quality. Subsequent research will address these aspects theoretically and experimentally.

Beyond clustering applications, the multi-stage dimensionality reduction framework for vertical federated learning can be readily extended to other machine learning algorithms, such as replacing global aggregation mechanisms. Future work may explore optimization and integration of multi-layered privacy-preserving techniques, including the fusion of secure multi-party computation (MPC) with homomorphic encryption (HE), as well as multi-tier privacy protection policies.

Author Contributions

Methodology, J.W.; software, J.W. and J.Z.; validation, J.Z. and X.C.; investigation, X.C.; writing—original draft preparation, J.W.; writing—review and editing, J.W. and J.Z.; visualization, X.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 20–22 April 2017; PMLR: Cambridge, MA, USA, 2017; pp. 1273–1282.

2. Stallmann, M.; Wilbik, A. Towards Federated Clustering: A Federated Fuzzy c-Means Algorithm (FFCM). arXiv 2022, arXiv:2201.07316.

3. Chen, M.; Shlezinger, N.; Poor, H.V.; Eldar, Y.C.; Cui, S. Communication-efficient federated learning. Proc. Natl. Acad. Sci. USA 2021, 118, e2024789118.

4. Zhu, H.; Xu, J.; Liu, S.; Jin, Y. Federated learning on non-IID data: A survey. Neurocomputing 2021, 465, 371–390.

5. Zhou, X.; Yang, G. Communication-efficient and privacy-preserving large-scale federated learning counteracting heterogeneity. Inf. Sci. 2024, 661, 120167.

6. Mohammadi, N.; Bai, J.; Fan, Q.; Song, Y.; Yi, Y.; Liu, L. Differential privacy meets federated learning under communication constraints. IEEE Internet Things J. 2021, 9, 22204–22219.

7. Kairouz, P.; McMahan, H.B.; Avent, B.; Bellet, A.; Bennis, M.; Bhagoji, A.N.; Bonawitz, K.; Charles, Z.; Cormode, G.; Cummings, R.; et al. Advances and open problems in federated learning. Found. Trends® Mach. Learn. 2021, 14, 1–210.

8. Wei, K.; Li, J.; Ma, C.; Ding, M.; Wei, S.; Wu, F.; Chen, G.; Ranbaduge, T. Vertical federated learning: Challenges, methodologies and experiments. arXiv 2022, arXiv:2202.04309.

9. Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated machine learning: Concept and applications. ACM Trans. Intell. Syst. Technol. (TIST) 2019, 10, 1–19.

10. Li, J.; Wei, H.; Liu, J.; Liu, W. FSLEdge: An energy-aware edge intelligence framework based on Federated Split Learning for Industrial Internet of Things. Expert Syst. Appl. 2024, 255, 124564.

11. Khan, L.U.; Pandey, S.R.; Tran, N.H.; Saad, W.; Han, Z.; Nguyen, M.N.; Hong, C.S. Federated learning for edge networks: Resource optimization and incentive mechanism. IEEE Commun. Mag. 2020, 58, 88–93.

12. Rieke, N.; Hancox, J.; Li, W.; Milletari, F.; Roth, H.R.; Albarqouni, S.; Bakas, S.; Galtier, M.N.; Landman, B.A.; Maier-Hein, K.; et al. The future of digital health with federated learning. NPJ Digit. Med. 2020, 3, 119.

13. Wu, Z.; Hou, J.; He, B. Vertibench: Advancing feature distribution diversity in vertical federated learning benchmarks. arXiv 2023, arXiv:2307.02040.

14. Khan, A.; ten Thij, M.; Wilbik, A. Communication-efficient vertical federated learning. Algorithms 2022, 15, 273.

15. Cheng, K.; Fan, T.; Jin, Y.; Liu, Y.; Chen, T.; Papadopoulos, D.; Yang, Q. Secureboost: A lossless federated learning framework. IEEE Intell. Syst. 2021, 36, 87–98.

16. Ghosh, A.; Chung, J.; Yin, D.; Ramchandran, K. An Efficient Framework for Clustered Federated Learning. Adv. Neural Inf. Process. Syst. 2020, 33, 19586–19597.

17. Li, Z.; Wang, T.; Li, N. Differentially private vertical federated clustering. arXiv 2022, arXiv:2208.01700.

18. Zhao, F.; Li, Z.; Ren, X.; Ding, B.; Yang, S.; Li, Y. VertiMRF: Differentially Private Vertical Federated Data Synthesis. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Barcelona, Spain, 25–29 August 2024; pp. 4431–4442.

19. Mazzone, F.; Brown, T.; Kerschbaum, F.; Wilson, K.H.; Everts, M.; Hahn, F.; Peter, A. Privacy-Preserving Vertical K-Means Clustering. arXiv 2025, arXiv:2504.07578.

20. Li, C.; Ding, S.; Xu, X.; Guo, L.; Ding, L.; Wu, X. Vertical Federated Density Peaks Clustering under Nonlinear Mapping. IEEE Trans. Knowl. Data Eng. 2024, 37, 1004–1017.

21. Duan, Q.; Lu, Z. Edge Cloud Computing and Federated–Split Learning in Internet of Things. Future Internet 2024, 16, 227.

22. Huang, Y.; Huo, Z.; Fan, Y. DRA: A data reconstruction attack on vertical federated k-means clustering. Expert Syst. Appl. 2024, 250, 123807.

23. Ahmed, M.; Seraj, R.; Islam, S.M.S. The k-means algorithm: A comprehensive survey and performance evaluation. Electronics 2020, 9, 1295.

24. Han, J.; Kamber, M. Data Mining: Concepts and Techniques; Morgan Kaufmann: San Francisco, CA, USA, 2006.

25. Mary, S.S.; Selvi, T. A study of K-means and cure clustering algorithms. Int. J. Eng. Res. Technol. 2014, 3, 1985–1987.

26. Hwang, H.; Yang, S.; Kim, D.; Dua, R.; Kim, J.Y.; Yang, E.; Choi, E. Towards the practical utility of federated learning in the medical domain. In Proceedings of the Conference on Health, Inference, and Learning, Cambridge, MA, USA, 22–24 June 2023; PMLR: Cambridge, MA, USA, 2023; pp. 163–181.

27. Luo, Y.; Lu, Z.; Yin, X.; Lu, S.; Weng, Y. Application research of vertical federated learning technology in banking risk control model strategy. In Proceedings of the 2023 IEEE Intl Conf on Parallel & Distributed Processing with Applications, Big Data & Cloud Computing, Sustainable Computing & Communications, Social Computing & Networking (ISPA/BDCloud/SocialCom/SustainCom), Wuhan, China, 21–24 December 2023; IEEE: New York, NY, USA, 2023; pp. 545–552.

28. Dwarampudi, A.; Yogi, M. Application of federated learning for smart agriculture system. Int. J. Inform. Technol. Comput. Eng. (IJITC) 2024, ISSN 2455-5290.

29. Jiang, D.; Wang, H.; Li, T.; Gouda, M.A.; Zhou, B. Real-time tracker of chicken for poultry based on attention mechanism-enhanced YOLO-Chicken algorithm. Comput. Electron. Agric. 2025, 237, 110640.

30. Gao, Y.; Xie, Y.; Deng, H.; Zhu, Z.; Zhang, Y. A Privacy-preserving Data Alignment Framework for Vertical Federated Learning. J. Electron. Inf. Technol. 2024, 46, 3419–3427.

31. Yang, L.; Chai, D.; Zhang, J.; Jin, Y.; Wang, L.; Liu, H.; Tian, H.; Xu, Q.; Chen, K. A survey on vertical federated learning: From a layered perspective. arXiv 2023, arXiv:2304.01829.

32. Liu, Y.; Kang, Y.; Zou, T.; Pu, Y.; He, Y.; Ye, X.; Ouyang, Y.; Zhang, Y.Q.; Yang, Q. Vertical federated learning: Concepts, advances, and challenges. IEEE Trans. Knowl. Data Eng. 2024, 36, 3615–3634.

33. Zhao, Z.; Mao, Y.; Liu, Y.; Song, L.; Ouyang, Y.; Chen, X.; Ding, W. Towards efficient communications in federated learning: A contemporary survey. J. Frankl. Inst. 2023, 360, 8669–8703.

34. Yang, H.; Liu, H.; Yuan, X.; Wu, K.; Ni, W.; Zhang, J.A.; Liu, R.P. Synergizing Intelligence and Privacy: A Review of Integrating Internet of Things, Large Language Models, and Federated Learning in Advanced Networked Systems. Appl. Sci. 2025, 15, 6587.

35. Zhang, C.; Li, S. State-of-the-art approaches to enhancing privacy preservation of machine learning datasets: A survey. arXiv 2024, arXiv:2404.16847.

36. Qi, Z.; Meng, L.; Li, Z.; Hu, H.; Meng, X. Cross-Silo Feature Space Alignment for Federated Learning on Clients with Imbalanced Data. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025.

37. Hu, K.; Xiang, L.; Tang, P.; Qiu, W. Feature norm regularized federated learning: Utilizing data disparities for model performance gains. In Proceedings of the Thirty-Third International Joint Conference on Artificial Intelligence, Jeju Island, Republic of Korea, 3–9 August 2024; pp. 4136–4146.

38. Aramian, A. Managing Feature Diversity: Evaluating Global Model Reliability in Federated Learning for Intrusion Detection Systems in IoT. Eng. Technol. 2024, 39.

39. Johnson, A. A Survey of Recent Advances for Tackling Data Heterogeneity in Federated Learning. Preprints 2025.

40. Jegou, H.; Douze, M.; Schmid, C. Product quantization for nearest neighbor search. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 117–128.

41. Konečný, J.; McMahan, H.B.; Yu, F.X.; Richtárik, P.; Suresh, A.T.; Bacon, D. Federated learning: Strategies for improving communication efficiency. arXiv 2016, arXiv:1610.05492.

42. Yue, K.; Jin, R.; Wong, C.W.; Baron, D.; Dai, H. Gradient obfuscation gives a false sense of security in federated learning. In Proceedings of the 32nd USENIX Security Symposium (USENIX Security 23), Anaheim, CA, USA, 9–11 August 2023; pp. 6381–6398.

43. Ge, T.; He, K.; Ke, Q.; Sun, J. Optimized product quantization. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 744–755.

44. Xiao, S.; Liu, Z.; Shao, Y.; Lian, D.; Xie, X. Matching-oriented product quantization for ad-hoc retrieval. arXiv 2021, arXiv:2104.07858.

45. Deisenroth, M.P.; Faisal, A.A.; Ong, C.S. Mathematics for Machine Learning; Cambridge University Press: Cambridge, UK, 2020.

46. Izenman, A.J. Linear Dimensionality Reduction; Springer: New York, NY, USA, 2013.

47. Vadhan, S.; Zhang, W. Concurrent Composition Theorems for Differential Privacy. In Proceedings of the 55th Annual ACM Symposium on Theory of Computing, Orlando, FL, USA, 20–23 June 2023.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).