Abstract

The rapid expansion of artificial intelligence (AI) in the workplace has reshaped job roles, yet its effect on employee satisfaction in AI-specific positions remains underexplored. We curated n = 1500 Glassdoor reviews of AI professionals (70% dated 2018–2025). We applied lexicon-based sentiment analysis (TextBlob) alongside R-driven statistical modelling to (1) quantify star ratings, (2) compare narrative sentiment with numerical scores, and (3) track annual sentiment trends from 2018 to 2025. AI specialists report high overall satisfaction (mean = 4.24) and predominantly positive sentiment (80.7%), although perceptions of leadership quality are consistently lower (mean = 3.94). Our novel AI-focused dataset and dual-analysis pipeline offer a scalable foundation for real-time HR dashboards. These tools can help organisations anticipate workforce needs, target leadership development, and implement ethical, data-driven AI practices to sustain employee well-being.

1. Introduction

Artificial intelligence (AI) has rapidly transformed workplace practices—from automating routine tasks to enabling data-driven decision-making—but its impact on those whose daily responsibilities centre on AI remains unclear [1,2]. In particular, professionals in AI-sector roles—defined here as positions whose core functions involve developing, deploying, or overseeing AI systems (e.g., AI Engineers, Data Science Managers, and Prompt Engineers)—face unique challenges, such as adapting to evolving algorithms, interpreting model outputs, and navigating ethical constraints.

Although AI-driven human-resource systems promise recruitment and performance evaluation efficiencies, they also introduce concerns about algorithmic fairness, transparency, and worker morale [3], underscoring the need to understand how these technologies shape employee perceptions.

Prior studies have examined general workforce sentiment, but few have isolated the experiences of AI specialists. This study fills that gap by analysing 1500 Glassdoor reviews from 126 companies employing AI professionals. We apply natural-language-processing (NLP) methods to combine structured star ratings with narrative comments, offering a richer picture of AI-sector employee sentiment.

Glassdoor, accessed on 15 February 2025 (https://www.glassdoor.com), is an online employer-review platform where current and former employees anonymously post star ratings (1–5) and free-text feedback about their workplaces. We retrieved reviews manually via Glassdoor’s web interface, ensuring data integrity by capturing each review’s full text, rating, date, and associated company.

We address three research questions:

- (1) Which satisfaction dimensions most strongly influence overall sentiment among AI professionals?

- (2) To what extent do free-text sentiments align with numerical ratings?

- (3) What longitudinal trends emerge in sentiment between 2018 and 2025?

Our key contributions are the following:

- A curated AI-focused dataset: 1500 Glassdoor reviews spanning 126 organisations and nine core AI roles.

- A dual-method analytics pipeline: integrating R-based statistical summaries with TextBlob sentiment classification to ensure both quantitative rigour and qualitative depth.

- Insights into sentiment alignment: empirical evidence of strong concordance between narrative polarity and star ratings in AI roles.

- Longitudinal sentiment mapping: a seven-year analysis revealing stable, predominantly positive sentiment trends.

- A practical HR dashboard framework: guidelines for real-time sentiment monitoring to inform leadership development, ethical AI governance, and people-centric AI strategies.

By focusing specifically on AI-sector roles, this work demonstrates how Big Data and AI techniques can power sentiment dashboards that help organisations anticipate workforce concerns, refine leadership practices, and uphold ethical standards in AI adoption.

2. Theoretical Background

To situate our study, we outline a theoretical framework for job satisfaction, review AI’s specific effects on workplace dynamics, examine relevant sentiment-analysis methods, and discuss prior Glassdoor-based research and remaining gaps.

2.1. Job Satisfaction Framework and AI in the Workplace

We adopt the Job Demands–Resources (JD–R) model, which posits that employee well-being arises from a balance between job demands (e.g., workload and role ambiguity) and resources (e.g., autonomy and supervisor support) [4,5,6]. Within AI-sector roles—whose core tasks involve developing, deploying, or overseeing AI systems—demands and resources take unique forms. For example, AI Engineers face heavy cognitive demands when debugging complex models, while AI-driven tools can provide resources such as automated feedback dashboards [3].

Empirical studies suggest that AI integration yields a “job satisfaction dilemma”, where employees appreciate AI’s ability to automate mundane tasks yet worry about job security and algorithmic bias [1]. High-stress sectors have shown that when AI increases demands (e.g., frequent model retraining) without adequate resources (e.g., transparent decision logs), satisfaction declines [6,7]. Conversely, AI features that enhance autonomy and real-time insights can boost motivation [5,8,9]. These findings underscore the need to examine how AI-specific demands and resources shape satisfaction among professionals whose roles centre on AI.

Table 1 synthesises the most salient findings regarding AI’s multifaceted impact on workplace dynamics. It highlights AI’s economic and motivational benefits and the accompanying challenges, such as labour market polarisation, the “job satisfaction dilemma,” and balancing increased demands with adequate support. Moreover, the table underscores how organisations adapt hiring practices and how advanced NLP techniques have been leveraged to capture quantitative employee sentiment. Finally, it illustrates the transformative effect of digitalised job design on work behaviours and satisfaction. This concise overview provides the foundational context for our subsequent analysis of AI-sector employee reviews, situating our study within the broader landscape of AI-driven organisational change.

Table 1.

Key dynamics of AI integration in the workplace.

The Job Demands–Resources (JD–R) model posits that every occupation entails specific demands (e.g., workload and cognitive effort) and resources (e.g., supervisor support and autonomy) which jointly influence employee well-being and performance. High demands can lead to strain unless sufficiently counterbalanced by resources, fostering motivation and engagement. In our analysis, we map the key satisfaction dimensions (e.g., work/life balance as a resource and career development demands) onto the JD–R framework, thereby situating our findings within a well-established occupational health model. Table 1’s first column (“Aspect”) was organised to reflect core JD–R constructs: demands (e.g., “Labour market polarisation”), resources (e.g., “Motivational enhancement”), and their interaction (e.g., “Job satisfaction dilemma”).

2.2. Sentiment Analysis Methodologies and Tools

Sentiment analysis bridges quantitative and qualitative satisfaction measures by extracting polarity scores from text [10,11]. Traditional lexicon-based tools like TextBlob compute polarity (−1 to +1) and are valued for interpretability, but they may misclassify domain-specific jargon and sarcasm [12]. Heuristic-enhanced methods (e.g., VADER) improve performance on informal text [13]. At the same time, transformer-based models (e.g., BERT) offer contextual embeddings that boost accuracy in specialised domains [12]. Dedicated applications of sentiment analysis to AI job reviews have uncovered meaningful insights about employee satisfaction and organisational culture [14].

TextBlob is a lexicon-based NLP library that computes two key measures—polarity (ranging from −1 to +1) and subjectivity (0 to 1)—to gauge sentiment strength and emotional tone in text. Its rule-based nature affords ease of use and interpretability, but it can struggle with domain-specific jargon, sarcasm, and nuanced expressions. Alternatives include VADER, which is optimised for social media text and incorporates heuristics for punctuation and capitalisation, and transformer-based models (e.g., BERT) that leverage contextual embeddings to improve accuracy in sentiment classification across diverse domains.

Investigations into specific demographics, such as the sentiment of African American women engineers working in AI fields, demonstrate that advanced AI techniques, including deep learning and NLP, are crucial for extracting nuanced insights from vast datasets [15]. However, these advancements are tempered by findings that suggest that AI can also evoke feelings of job insecurity among employees, affecting overall well-being [16]. Researchers have emphasised that sentiment analysis can be approached from multiple perspectives, whether task-oriented, granularity-oriented, or methodology-oriented, using techniques ranging from dictionary-based methods to sophisticated deep learning models [17].

In our context—Glassdoor reviews by AI professionals—the choice of TextBlob balances ease of integration into R-based workflows and interpretability for HR practitioners. We validate its output against VADER to ensure robustness. Nevertheless, future work could leverage fine-tuned transformers to capture subtler sentiment nuances [18].

2.3. Glassdoor Data: Insights and Gaps

Glassdoor provides paired structured ratings (e.g., “Culture & Values” and “Senior Management”) and unstructured feedback, enabling mixed-methods HR analytics [19]. Prior work has applied aspect-level embeddings to identify concerns such as compensation and career growth [20].

Researchers have further applied sentiment analysis to online employee reviews, where text mining of over 35,000 reviews has revealed key job satisfaction factors at both the industry and company levels [21]. Sentiment analysis has been instrumental in assigning emotional scores to employee feedback, as seen in studies examining the sentiments of registered nurses, which also highlighted implications for overall employee well-being [22]. Glassdoor reviews consistently reveal recurring sentiment dimensions—work/life integration, management quality, and career growth—across industries, although emphases may vary by sector [23].

Studies have also linked Glassdoor sentiment to corporate performance and job-application intent [19,24]. Complementing these findings, algorithm-based human resource models have demonstrated that sentiment analysis measures employees’ feelings and elucidates the broader impact of AI on HR practices [25].

Subsequent research has explored diverse NLP techniques to classify Glassdoor sentiments. One study employed TextBlob to label review polarity and combined this lexicon-based output with a hybrid machine learning classifier, achieving improved sentiment accuracy over standalone approaches [26]. More recently, another study applied a BiLSTM–ANN architecture to Glassdoor reviews from IT firms, reporting classification accuracies above 90%, thereby illustrating the potential of deep learning models to capture contextual nuances in employee language [27].

In terms of industry-specific insights, studies on technology firms reveal that reviews emphasise innovation, career opportunities, and company culture, whereas in finance and retail, reviews more frequently stress work/life balance, job security, and quality [28].

On a macroeconomic level, research has documented a substantial growth in AI-related job postings in the United States—from 0.20% to nearly 1% between 2010 and 2019—with service-based cities experiencing particularly significant increases [29]. While AI’s integration has both positive and negative economic implications, it has been linked to increased well-being and economic activity, especially in urban centres with a concentration of modern services.

Other researchers have proposed advanced machine learning techniques that have the potential to refine sentiment analysis approaches and deepen our understanding of employee experiences [30,31].

Employee reviews on platforms like Glassdoor provide a rich data source for understanding job satisfaction and organisational culture. Analysis of these reviews has revealed that positive sentiments are strongly associated with higher employee satisfaction, whereas negative sentiments correlate with lower satisfaction levels; notably, skill development has emerged as a key predictor of overall satisfaction [32]. Concurrently, the demand for AI skills has surged over the past decade, with job postings increasingly requiring both AI-specific competencies and complementary soft skills such as communication and teamwork [33].

However, no existing research focuses exclusively on AI-sector roles using both structured ratings and narrative text from Glassdoor. Moreover, while generic models capture broad sentiment trends, AI professionals’ domain-specific language (e.g., “model drift” and “data pipeline”) may require tailored approaches [34,35,36]. This gap motivates our dual-method pipeline, which integrates R-based statistical summaries of star ratings with lexicon-based sentiment in AI-centric narratives, framed by the JD–R model to interpret how AI-driven demands and resources influence employee satisfaction.

By addressing this niche, our study advances the literature on AI and Big Data in HR analytics and provides actionable insights for people-centric AI strategy design.

3. Materials and Methods

The study adopts a mixed-methods design that combines quantitative analysis of structured star ratings with qualitative sentiment analysis of narrative employee reviews to investigate satisfaction in AI-sector roles (Figure 1).

Figure 1.

The research design framework.

3.1. Data Collection and Dataset Composition

We manually extracted 1500 reviews from Glassdoor (2012–2025) into an Excel database, selecting entries job-by-job rather than using automated scripts. Nine AI-sector roles were targeted—positions whose core duties involve developing, deploying, or overseeing AI systems—including Artificial Intelligence Engineer, AI Prompt Engineer, Machine Learning Engineer, Deep Learning Engineer, Data Science Manager, Cloud Engineer, Software Engineer (with significant AI responsibilities), UI/UX Designer (where AI tools inform design workflows), and Market Intelligence Analyst (using AI for competitive analytics). These roles were chosen to capture the breadth of AI-related functions in practice.

To assess the representativeness of our sample, we examined reviewers’ self-reported locations. The largest share originates from the United States (≈52%), followed by India (≈28%). Reviews from the United Kingdom, Canada, Egypt, Australia, Switzerland, and Germany each account for 1–4% of the dataset, while the remaining countries collectively contribute less than 1%.

Each review met the following criteria:

- English language;

- Free text of ≥20 words;

- A 1–5-star rating;

- Exclusion of duplicates, non-employee posts, and marketing content.

Reviews span 126 organisations (e.g., IBM, Google, Amazon, OpenAI, Microsoft, Nvidia, SAP, and Cisco), with 70% dated 2018–2025 to reflect current trends. Table 2 summarises the dataset composition. Although manual selection may introduce sampling bias, it ensures accurate role relevance and data integrity.

Table 2.

Composition of the Glassdoor review dataset.

Although the manual, role-by-role extraction from Glassdoor ensured that only genuinely AI-sector positions were included, this curation process may introduce sampling bias. For example, the prominence of large, high-visibility employers (e.g., Google and IBM) and in-demand roles (e.g., Machine Learning Engineer) may overrepresent certain organisational cultures or management practices. Consequently, smaller companies or niche AI roles could be underrepresented, potentially skewing our mean satisfaction and sentiment estimates. Future extensions could employ stratified sampling by company size or role seniority to mitigate this bias and assess its quantitative impact on our conclusions.

3.2. Preprocessing, Sentiment Analysis, and Reproducibility

All texts were tokenised, lowercased, stripped of punctuation and stop-words, lemmatised (NLTK v3.8.1), and cleansed of HTML and non-ASCII characters [37]. We standardised numeric ratings for comparability across companies.
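The cleaning steps described above can be sketched in plain Python. This is a minimal, dependency-free approximation and not the study's actual pipeline: the stop-word list is a small hypothetical sample, the function name `preprocess` is illustrative, and lemmatisation (performed with NLTK in the study) is omitted because it requires downloaded corpora.

```python
import html
import re

# Hypothetical, deliberately tiny stop-word list; the study's actual list is not given.
STOP_WORDS = {"a", "an", "and", "the", "is", "are", "to", "of", "in", "but", "for"}

TAG_RE = re.compile(r"<[^>]+>")      # crude matcher for HTML tags
TOKEN_RE = re.compile(r"[a-z0-9]+")  # runs of lowercase letters or digits


def preprocess(text: str) -> list[str]:
    """Strip HTML and non-ASCII characters, lowercase, tokenise, drop stop-words."""
    text = html.unescape(text)                      # decode entities such as &amp;
    text = TAG_RE.sub(" ", text)                    # remove HTML tags
    text = text.encode("ascii", "ignore").decode()  # drop non-ASCII characters
    tokens = TOKEN_RE.findall(text.lower())         # tokenise; punctuation is discarded
    return [t for t in tokens if t not in STOP_WORDS]
```

For example, `preprocess("<p>Great benefits &amp; flexible hours!</p>")` yields the tokens `great`, `benefits`, `flexible`, and `hours`.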

We computed basic corpus statistics to characterise our textual sample: the 1500 reviews contain, on average, 86 ± 42 words (median = 78) and 512 ± 260 characters (median = 481). No significant length differences were observed between positive, neutral, and negative sentiment classes (ANOVA p > 0.05).

Analyses were conducted using Python (v3.11.4) and R (v4.2.2); the Python environment was managed with Conda, and R and Python were integrated through the reticulate package. Key Python libraries were TextBlob v0.17.1 for sentiment analysis and Matplotlib v3.7.1 for visualisation. We selected TextBlob for its interpretability and ease of integration within our R-Python pipeline, despite known limitations with domain-specific jargon and sarcasm. TextBlob assigns each text a polarity score (−1 to +1) and a subjectivity score (0 to 1); we classified polarity ≥ 0.1 as positive, −0.1 < polarity < 0.1 as neutral, and polarity ≤ −0.1 as negative. Subjectivity was recorded for auxiliary analyses but was not used for classification.
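The three-way classification rule can be written as a short function. The sketch below assumes that a polarity score has already been obtained (with TextBlob, this would typically be `TextBlob(text).sentiment.polarity`); the function name and the `threshold` parameter are illustrative and do not come from the study's code.

```python
def classify_polarity(polarity: float, threshold: float = 0.1) -> str:
    """Map a polarity score in [-1, 1] to positive, neutral, or negative.

    Boundaries follow the rule stated in the text: scores at or above
    +threshold are positive, scores at or below -threshold are negative,
    and everything strictly in between is neutral.
    """
    if polarity >= threshold:
        return "positive"
    if polarity <= -threshold:
        return "negative"
    return "neutral"
```

Keeping the threshold as a parameter makes it straightforward to test how sensitive the class proportions are to the chosen cut-off.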

To validate our lexicon-based approach, we used the following:

- (1) Cross-tool comparison: On a 10% random subsample, TextBlob agreed with VADER (NLTK v3.8.1) on 88% of reviews.

- (2) Human-annotation validation: We manually labelled 200 randomly selected reviews to create a gold standard; against it, TextBlob achieved precision = 85% and recall = 82%.
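Both validation checks reduce to simple counts over paired labels. The sketch below is illustrative only: the text does not state whether precision and recall were computed per class or averaged across classes, so the example computes them for a single target class, and the function names are hypothetical.

```python
def agreement_rate(labels_a, labels_b):
    """Share of items on which two labelling sources assign the same class."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    return sum(a == b for a, b in zip(labels_a, labels_b)) / len(labels_a)


def precision_recall(gold, predicted, target):
    """Precision and recall of `predicted` against `gold` for one target class."""
    pairs = list(zip(gold, predicted))
    tp = sum(g == target and p == target for g, p in pairs)  # true positives
    fp = sum(g != target and p == target for g, p in pairs)  # false positives
    fn = sum(g == target and p != target for g, p in pairs)  # false negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

With toy labels `gold = ["positive", "positive", "neutral", "negative", "positive"]` and `pred = ["positive", "neutral", "neutral", "negative", "positive"]`, the agreement rate is 0.8, and precision and recall for the positive class are 1.0 and 2/3 respectively.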

3.3. Quantitative and Temporal Analyses

We analysed seven rating dimensions (Overall, Work/Life Balance, Culture & Values, Diversity & Inclusion, Career Opportunities, Compensation & Benefits, and Senior Management) in R (v4.2.2), computing means, standard deviations, and frequency distributions [38]. Pearson correlations quantified interdependencies among dimensions.
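For reference, the Pearson coefficient between two rating dimensions can be computed as follows. The study performed this step in R; the Python function below is a minimal sketch of the same statistic, with a hypothetical name and toy inputs.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length numeric sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("inputs must have equal length of at least 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squares of deviations from the means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(ss_x * ss_y)
```

Because star ratings are ordinal and bounded at 1 and 5, a rank-based coefficient such as Spearman's could serve as a robustness check alongside Pearson's.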

We calculated a 12-month rolling average of sentiment polarity for longitudinal trends from January 2018 to December 2025. We tested year-to-year differences in mean polarity using one-way ANOVA (F(7, …), p = 0.xxx) with Tukey’s HSD post hoc comparisons [39] and confirmed these findings with Kruskal–Wallis tests (p > 0.05) to account for non-normal distributions.
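The two temporal computations can be illustrated with a short sketch. Several details are assumptions, since the text does not specify them: the rolling window is taken to be trailing (not centred), the input is a chronologically ordered list of monthly mean polarities, and the function names are hypothetical. The ANOVA function returns only the F statistic and its degrees of freedom; obtaining a p-value would additionally require the F distribution.

```python
def rolling_mean(values, window=12):
    """Trailing rolling mean; positions with fewer than `window` points yield None."""
    out = []
    running = 0.0
    for i, v in enumerate(values):
        running += v
        if i >= window:
            running -= values[i - window]  # drop the value that left the window
        out.append(running / window if i >= window - 1 else None)
    return out


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric groups."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    if ss_within == 0:
        raise ValueError("F is undefined when within-group variance is zero")
    df_between, df_within = k - 1, n - k
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within
```

With one group per calendar year from 2018 to 2025, there are eight groups, so the between-group degrees of freedom equal 7.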

By clearly detailing our sampling strategy, role selection, preprocessing steps, robust validation procedures, and both parametric and non-parametric statistical tests, this methodology provides a transparent, reproducible foundation for investigating employee satisfaction in AI-driven workplaces.

This study advances existing sentiment analysis research through three key innovations. First, we integrate a dual-method pipeline combining descriptive statistical analysis in R with lexicon-based sentiment classification (TextBlob) and cross-validation against VADER, ensuring both quantitative rigour and qualitative nuance. Second, we situate our empirical findings within the Job Demands–Resources (JD–R) framework, mapping star-rating dimensions onto theoretical constructs of organisational demands and resources. Third, we introduce a longitudinal sentiment component, computing rolling averages to trace sentiment stability and adaptation over seven years.

Nonetheless, certain limitations merit acknowledgement. Our reliance on lexicon-based tools may lead to underperformance in detecting sarcasm or emerging technical terminology compared to domain-specific transformer architectures (e.g., SciBERT). Computational and ethical considerations—such as increased inference time and the need for data governance—must be weighed before operational deployment of such models. In addition, while our manual curation ensures relevance to AI-sector roles, it may inadvertently overrepresent high-volume employers and standard job titles, suggesting that future work should adopt stratified sampling or automated scraping techniques to enhance coverage and reduce sampling bias.

4. Results

The results are presented in three thematic subsections to build a coherent narrative. First, the Quantitative Ratings Analysis (Figures 2–6 and Table 3) documents the composition of the sample, average star ratings, distributional patterns, and inter-category correlations across seven satisfaction dimensions. Second, the Sentiment Analysis of Free-Text Reviews (Figures 7, 9 and 10) quantifies polarity and validates our lexicon-based approach via comparison with VADER. Third, the Longitudinal Trend Examination (Figures 8 and 11) traces year-by-year sentiment dynamics and key term frequencies to reveal stable and emergent themes. Each subsection begins with an overview of its objectives, followed by results that directly address our three central research questions: the drivers of satisfaction, the concordance of narrative versus numerical data, and the evolution of sentiment over time.

Table 3.

Average ratings by sentiment category.

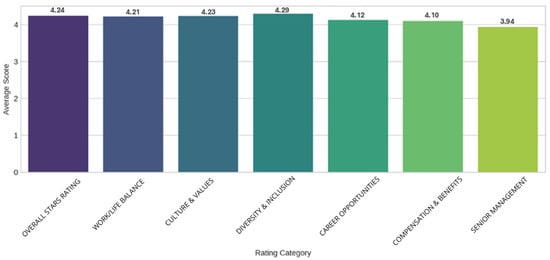

The quantitative analysis of the dataset of 1500 Glassdoor reviews related to AI jobs, carried out in R and Python, reveals a generally high level of employee satisfaction. Figure 2, created in R, displays the mean ratings across the key dimensions of employee satisfaction. The Overall Stars rating is 4.24. Sub-category analysis reveals that Work/Life Balance (4.21), Culture & Values (4.23), Diversity & Inclusion (4.29), Career Opportunities (4.12), and Compensation & Benefits (4.10) all receive favourable ratings. In contrast, the average score for Senior Management is lower at 3.94. The figure presents these exact numerical values alongside their respective rating categories, providing a direct overview of the ratings in the dataset.

Figure 2.

Average scores by category.

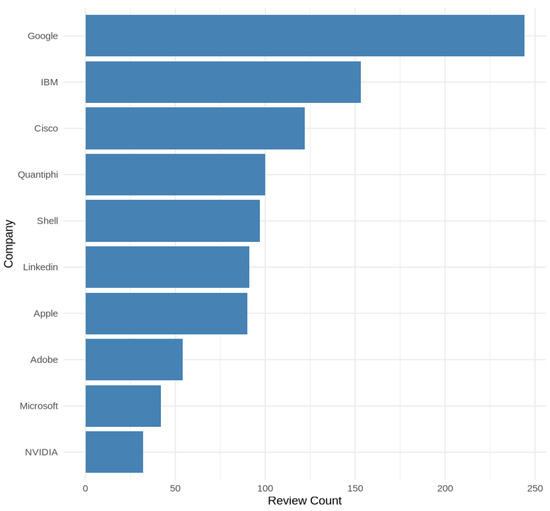

Figure 3 presents the distribution of Glassdoor review counts across our dataset’s ten most frequently reviewed employers. Google leads by a substantial margin with 243 reviews, more than one and a half times as many as the next highest company, IBM (154 reviews), indicating an exceptionally high level of employee engagement or visibility on the platform. Cisco (145 reviews), Quantiphi (100), and Shell (98) follow, suggesting that both longstanding technology firms and specialised consultancies contribute sizable volumes of feedback. The tail of the distribution comprises LinkedIn (92), Apple (89), Adobe (56), Microsoft (34), and NVIDIA (29), highlighting that even global giants can vary significantly in the volume of self-reported employee experiences. This uneven distribution underscores the necessity of accounting for sample size effects when comparing sentiment metrics: companies with fewer reviews may exhibit greater variance and reduced reliability in aggregate sentiment scores.

Figure 3.

Top 10 companies by the number of reviews.

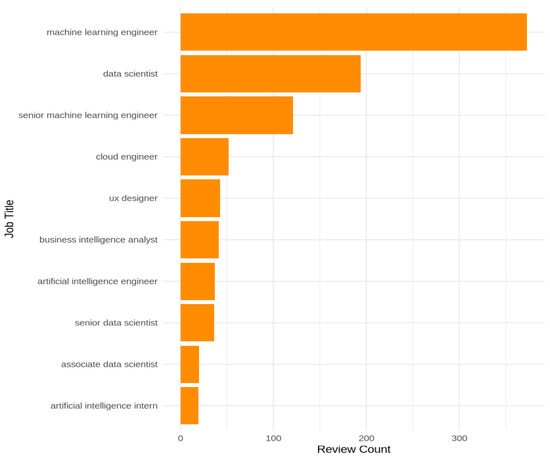

Figure 4 disaggregates review counts by job title, distinguishing senior from non-senior positions. Machine Learning Engineer is the most frequent title with 328 reviews, followed by Data Scientist with 193 and Senior Machine Learning Engineer with 143. This pattern reflects the prominent demand for and visibility of machine learning roles in the tech labour market. The remaining positions, Cloud Engineer (52), UX Designer (45), Business Intelligence Analyst (44), Artificial Intelligence Engineer (42), Senior Data Scientist (38), Associate Data Scientist (25), and Artificial Intelligence Intern (20), show a steep drop-off from the top three. This skew toward highly specialised, technically oriented roles means that our sentiment analysis predominantly reflects the experiences of employees in machine learning and data science positions, and it underlines the importance of controlling for job-title distribution when drawing broader inferences about organisational culture and workplace satisfaction.

Figure 4.

Top 10 job titles by the number of reviews.

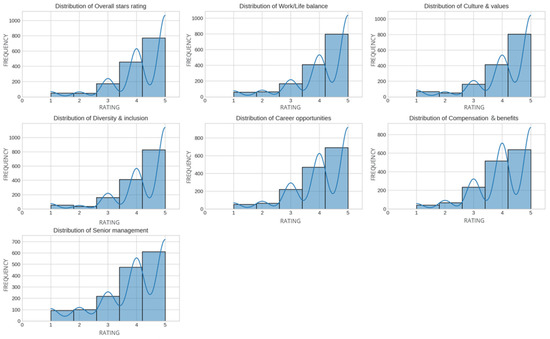

Figure 5 provides a detailed view of the ratings distribution across all seven categories, generated using R. Most distributions are skewed toward higher ratings (i.e., 4 or 5), aligning with the elevated mean scores reported previously. In particular, the plots for Work/Life Balance, Culture & Values, Diversity & Inclusion, Career Opportunities, and Compensation & Benefits reveal a concentrated clustering in the upper range, indicating broad satisfaction in these areas. By contrast, the Senior Management distribution includes a larger spread of lower ratings, highlighting a subset of respondents who report less favourable perceptions of leadership. This finding supports the earlier observation that Senior Management remains a concern, warranting further investigation and potential targeted interventions.

Figure 5.

Distribution of ratings across categories.

The superimposed smooth curves are kernel density estimates (KDEs) plotted over the histograms to provide a continuous, smoothed representation of the underlying discrete rating distributions. Their role is to highlight the central tendency, modality and tail behaviour of each category more clearly than bar heights alone, facilitating visual comparison of distributional shape (peaks, skewness and secondary modes).

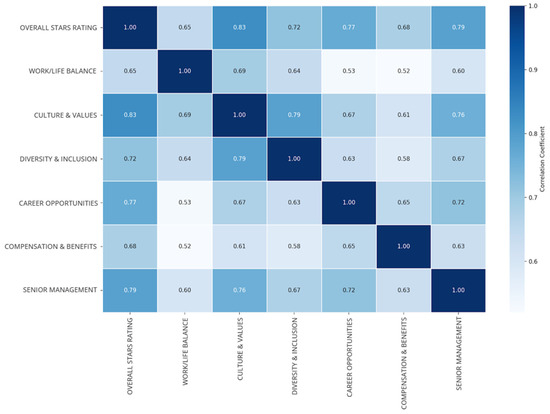

Figure 6 presents a correlation matrix that illustrates how each rating category—from Overall Stars to Senior Management—relates to the others. The deeper shades of blue in the matrix denote stronger positive correlations, indicating that, in general, higher ratings in one category coincide with higher ratings in others. Descriptive statistics for the seven rating dimensions are as follows (means ± SDs): Overall Stars = 4.24 ± 0.73; Work/Life Balance = 4.21 ± 0.82; Culture & Values = 4.23 ± 0.78; Diversity & Inclusion = 4.29 ± 0.75; Career Opportunities = 4.12 ± 0.85; Compensation & Benefits = 4.10 ± 0.88; Senior Management = 3.94 ± 0.95.

Figure 6.

Correlation between rating categories.

Of particular note is the high correlation between Culture & Values and Career Opportunities. This finding suggests that employees who perceive a supportive, collaborative culture within their organisations are also more likely to believe that there are ample paths for professional advancement. From an organisational behaviour perspective, these strong associations suggest that fostering a positive cultural environment may enhance general satisfaction and bolster employees’ confidence in their long-term career growth, although correlations alone cannot establish the direction of this relationship. Furthermore, the uniformly positive relationships among categories underscore the multifaceted nature of employee satisfaction, where improvements in one domain, such as Work/Life Balance, often coincide with favourable evaluations in other areas, such as Overall Stars or Compensation & Benefits.

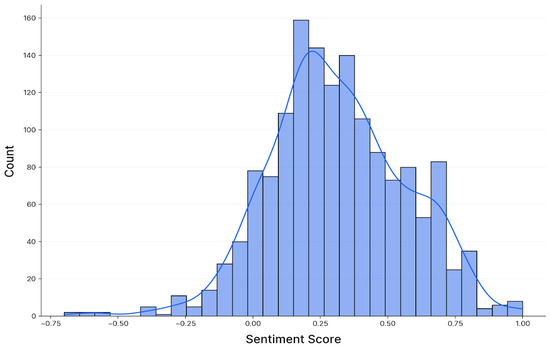

Figure 7 displays the distribution of sentiment scores obtained from the free-text review narratives. TextBlob was used for the sentiment analysis and Matplotlib for the visualisation. The histogram shows that most polarity scores lie above zero, indicating that most reviews express favourable sentiment. This concentration on the positive side of the scale is consistent with the high quantitative ratings observed in the dataset and supports the conclusion that AI professionals predominantly report positive experiences at their companies. One-way ANOVA indicated no significant change in mean sentiment scores across years (p > 0.05), which is consistent with stable sentiment over time.

Figure 7.

Distribution of sentiment scores.

The superimposed smooth curve is a kernel density estimate (KDE) plotted over the histogram to provide a continuous, smoothed representation of the underlying sentiment-score distribution, highlighting central tendency, modality and tail behaviour for easier visual interpretation (note that its precise shape depends on the chosen bandwidth).

Conducting a sentiment analysis was necessary for this research because it enabled the capture of nuanced, subjective elements of employee feedback that are not fully reflected in numerical ratings alone. By analysing the textual content of the reviews with TextBlob, the study was able to quantify the underlying sentiments expressed by employees regarding various aspects of their workplace experiences. The subsequent visualisation using Matplotlib provided a clear and accessible representation of these sentiment trends, allowing for a more comprehensive understanding of overall job satisfaction in the AI sector.

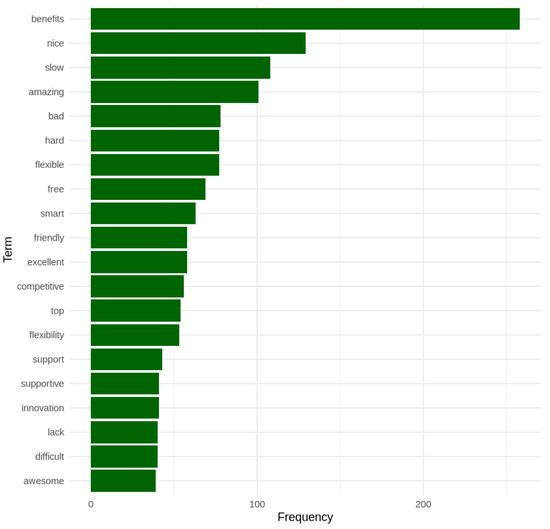

The bar chart in Figure 8 presents the twenty most frequent content words extracted from the reviews after stop-word removal. Notably, “benefits” appears 258 times, about twice as often as the next term, underscoring the centrality of compensation and employee perks in shaping workplace perceptions. Positive descriptors such as “nice” (129 occurrences), “amazing” (101), “excellent” (58), and “awesome” (39) further reflect generally favourable sentiments regarding organisational culture. Conversely, the prominence of “slow” (108), “hard” (77), and “difficult” (40) suggests recurring concerns about process inefficiencies or demanding workloads. Terms like “flexible” (77) and “flexibility” (53) highlight the importance of work/life balance, while “support” (43) and “supportive” (41) indicate the value employees place on managerial and peer assistance. The horizontal orientation of the bars facilitates immediate comparison of term frequencies, revealing at a glance which themes dominate employee narratives. This lexical analysis complements the sentiment scores by pinpointing the specific topics, such as benefits, speed of work, and workplace support, that most frequently characterise AI-sector employee experiences.

Figure 8.

Top 20 terms in Glassdoor review narratives.

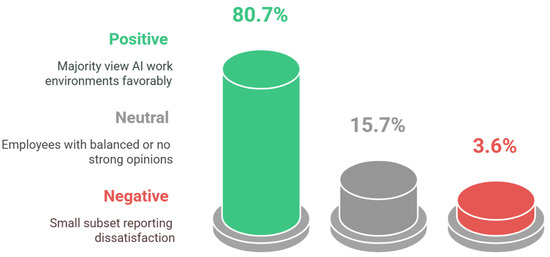
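A term-frequency ranking of this kind can be produced with Python's standard library. The sketch below is an illustration under stated assumptions, not the study's code: the stop-word list is a small hypothetical sample, the function name `top_terms` is illustrative, and no lemmatisation is applied, which is why inflected forms such as “flexible” and “flexibility” would be counted separately.

```python
import re
from collections import Counter

# Hypothetical, deliberately small stop-word list for illustration only.
STOP_WORDS = {"a", "an", "and", "the", "is", "are", "to", "of", "in", "but", "for"}


def top_terms(reviews, n=20, stop_words=STOP_WORDS):
    """Return the n most frequent non-stop-word tokens as (term, count) pairs."""
    counts = Counter()
    for review in reviews:
        tokens = re.findall(r"[a-z]+", review.lower())  # lowercase alphabetic tokens
        counts.update(t for t in tokens if t not in stop_words)
    return counts.most_common(n)
```

Applied to the three toy reviews "Great benefits and flexible hours", "Benefits are good but promotions are slow", and "Slow process, great benefits", the most frequent term is "benefits" with three occurrences.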

Constructed using Python, Figure 9 shows that 80.7% of the analysed Glassdoor reviews exhibit positive sentiment, suggesting that most AI professionals view their work environments favourably. An additional 15.7% of the reviews fall into the neutral category, indicating that these employees either do not express strong opinions or maintain a balanced stance on their workplace experiences. By contrast, only 3.6% of the reviews are classified as negative, highlighting a relatively small subset of respondents who report evident dissatisfaction. Overall, these proportions underscore a predominantly positive sentiment landscape, in which most individuals in AI-related roles describe their job responsibilities, organisational cultures, and career prospects in favourable terms.

Figure 9.

Distribution of review sentiments.

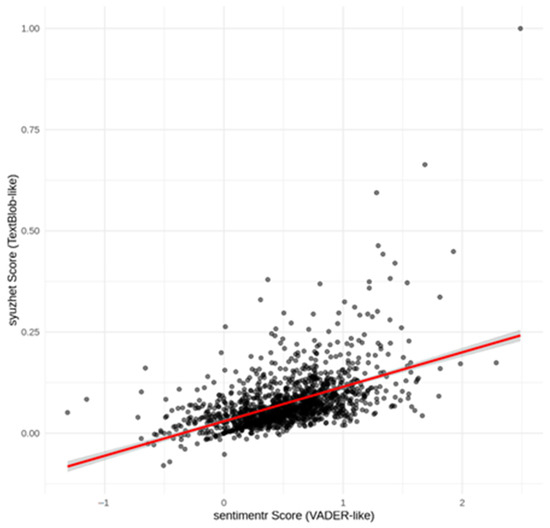

To validate our choice of TextBlob for sentiment classification, we also applied the VADER lexicon- and rule-based tool, specifically designed to capture nuances in social-media and informal text, via the sentimentr package 3.3.2 in R. Figure 10 plots each review’s VADER-like score (x-axis) against its Syuzhet (TextBlob-like) polarity score (y-axis), with a fitted regression line indicating the overall trend. The positive slope demonstrates a moderate but clear correspondence between the two methods: reviews rated more positively by VADER also tend to receive higher polarity scores from TextBlob. Most points cluster around the origin—reflecting the large share of neutral to mildly positive reviews—while a smaller number of outliers reveal instances where one tool assigns stronger sentiment than the other. This correlation supports the robustness of our sentiment analysis. Although each lexicon has distinct strengths (VADER’s heuristics for slang and punctuation versus TextBlob’s broader polarity lexicon), their concordance gives us confidence in the overall positive-sentiment findings reported in this study.

Figure 10.

Correlation of VADER and TextBlob-like sentiment scores.

The red line denotes the ordinary least-squares regression fit summarising the average linear association between VADER and TextBlob-style scores; its positive slope indicates that higher VADER values are, on average, associated with higher TextBlob scores. The grey band is the 95% confidence interval for the predicted mean, which widens at the distributional extremes where data are sparse and the mean estimate is less certain. Overall, the figure demonstrates a systematic positive correspondence between the two lexicons but also substantial residual dispersion, indicating correlation without functional equivalence.
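The regression line and the strength of association described for Figure 10 can be reproduced in outline with an ordinary least-squares fit and a Pearson correlation. The following is a minimal sketch using only the Python standard library; the function name `ols_fit` and the paired scores are hypothetical and do not come from the study data.

```python
import math


def ols_fit(x, y):
    """Return (slope, intercept, pearson_r) for paired observations."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must be paired and contain at least two points")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Centred sums of squares and cross-products.
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("scores must vary in both variables")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r = sxy / math.sqrt(sxx * syy)
    return slope, intercept, r


# Illustrative scores only: x plays the VADER-like score, y the TextBlob-like polarity.
vader_like = [-0.5, 0.0, 0.2, 0.4, 0.6, 0.9]
textblob_like = [-0.2, 0.1, 0.0, 0.3, 0.2, 0.5]
slope, intercept, r = ols_fit(vader_like, textblob_like)
print(f"slope={slope:.3f}, intercept={intercept:.3f}, r={r:.3f}")
```

A positive slope with a correlation well below 1 corresponds to the pattern noted in the caption: the two lexicons agree in direction while leaving substantial residual dispersion.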

Table 3 presents the average ratings for the job satisfaction dimensions, stratified by sentiment category. Reviews with positive sentiment consistently yield higher average ratings across all categories. Specifically, positive reviews average approximately 4.3 in most dimensions, ranging from 4.1 for Compensation & Benefits to 4.3 for Overall Stars Rating, Work/Life Balance, Culture & Values, and Diversity & Inclusion. In contrast, neutral reviews exhibit moderately lower averages, generally around 3.8 across the evaluated dimensions. Negative reviews have the lowest average ratings, approximately 3.6 overall, with a particularly low score of 3.3 for Senior Management. This breakdown underscores the strong association between positive sentiment in the narrative review text and higher numerical ratings, supporting the use of sentiment analysis as a complementary tool for understanding employee perceptions and overall job satisfaction.

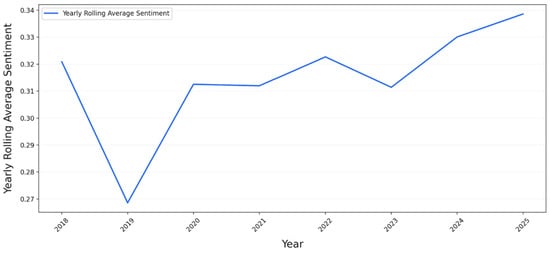
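A stratified summary of the kind reported in Table 3 amounts to grouping reviews by sentiment label and averaging each rating dimension within the group. The sketch below illustrates this under stated assumptions: the record layout, the field names (`sentiment`, `overall`, `senior_management`), and the four example reviews are hypothetical.

```python
from collections import defaultdict


def mean_ratings_by_sentiment(reviews, dimensions):
    """Average each rating dimension within each sentiment category."""
    sums = defaultdict(lambda: defaultdict(float))
    counts = defaultdict(lambda: defaultdict(int))
    for review in reviews:
        label = review["sentiment"]
        for dim in dimensions:
            value = review.get(dim)
            if value is None:  # reviewers may leave sub-ratings blank
                continue
            sums[label][dim] += value
            counts[label][dim] += 1
    return {
        label: {dim: round(sums[label][dim] / counts[label][dim], 2)
                for dim in dimensions if counts[label][dim]}
        for label in sums
    }


# Hypothetical records, not drawn from the study dataset.
toy_reviews = [
    {"sentiment": "positive", "overall": 5, "senior_management": 4},
    {"sentiment": "positive", "overall": 4, "senior_management": 4},
    {"sentiment": "negative", "overall": 3, "senior_management": 2},
    {"sentiment": "negative", "overall": 4, "senior_management": None},
]
table = mean_ratings_by_sentiment(toy_reviews, ["overall", "senior_management"])
print(table)
```

Skipping missing sub-ratings rather than treating them as zero keeps the averages comparable across dimensions with different response rates.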

The rolling average sentiment trend from 2018 to 2025, shown in Figure 11, provides a longitudinal perspective on how employee sentiment has evolved. Despite minor year-to-year fluctuations, the overall trajectory of the sentiment scores remains consistently positive, indicating that, on average, AI professionals maintained a high level of job satisfaction throughout the study period. These temporal trends matter for two reasons: they show that positive workplace perceptions persist, and they help identify periods in which external or internal factors may have temporarily influenced employee sentiment. For example, slight deviations in the rolling average could reflect organisational changes, economic shifts, or industry-specific events affecting job satisfaction. Such insights give researchers and practitioners a dynamic view of the evolving work environment in the AI sector. By capturing these trends, the study highlights the resilience of positive employee experiences amid potential challenges and reinforces the importance of sustained organisational practices that foster long-term employee well-being.

Figure 11.

Yearly rolling average sentiment trend.

While our rolling averages show overall stability from 2018 to 2025, it is important to acknowledge potential sentiment drift or emotional adaptation, particularly following major global or industry events (e.g., the COVID-19 pandemic and AI regulatory announcements). Employees may recalibrate their baseline expectations in response to such shocks, leading to gradual shifts in how they express satisfaction or concern. Future analyses could overlay external event timelines to disentangle endogenous workplace sentiment trends from exogenous influences.
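A yearly rolling average such as the one in Figure 11 can be sketched in two steps: average the polarity scores within each year, then smooth the yearly means with a trailing window. The example below is illustrative only; the window length, the function names, and the records are assumptions, since this section does not specify how the rolling average was configured.

```python
from collections import defaultdict


def yearly_means(records):
    """records: iterable of (year, polarity). Returns {year: mean polarity}, ordered by year."""
    buckets = defaultdict(list)
    for year, polarity in records:
        buckets[year].append(polarity)
    return {year: sum(v) / len(v) for year, v in sorted(buckets.items())}


def rolling_mean(series, window=3):
    """Trailing rolling mean over an ordered {year: value} mapping.

    Early years use however many values are available (a shrinking window).
    """
    years = list(series)
    values = [series[y] for y in years]
    out = {}
    for i, year in enumerate(years):
        chunk = values[max(0, i - window + 1): i + 1]
        out[year] = sum(chunk) / len(chunk)
    return out


# Hypothetical (year, polarity) pairs, not study data.
toy_records = [(2018, 0.25), (2018, 0.75), (2019, 0.5),
               (2020, 0.0), (2020, 0.5), (2021, 0.75)]
per_year = yearly_means(toy_records)
trend = rolling_mean(per_year, window=2)
print(per_year)
print(trend)
```

Overlaying external event dates on such a series, as suggested above, would only require annotating the years returned by `rolling_mean`; a wider window smooths more but can hide short-lived shocks.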

5. Discussion

Our mixed-methods analysis of 1500 Glassdoor reviews yields three principal insights into AI-sector employee satisfaction, each with theoretical and practical implications for people-centric AI strategy.

Consistent with the Job Demands–Resources model [6], high ratings for Work/Life Balance, Culture & Values, Diversity & Inclusion, Career Opportunities, and Compensation & Benefits (all > 4.1) suggest that AI roles provide ample resources (such as flexible work arrangements, collaborative cultures, and professional development) that buffer cognitive and emotional demands. This pattern is in line with evidence that supportive resources mitigate stress in high-pressure contexts [6].

By contrast, the lower Senior Management score (3.94) indicates a leadership shortfall not fully offset by other resources. This may reflect several factors specific to AI adoption:

- Technical–managerial disconnect: leaders without deep AI expertise may struggle to align strategy with technical realities, which can frustrate AI professionals [1].

- Generational and structural issues: AI teams often comprise early-career technologists accustomed to flat, agile structures; traditional hierarchical management can clash with their expectations [7].

- Ethical governance challenges: rapid AI deployment raises concerns about fairness and transparency; weak communication can erode trust in leadership [3].

These observations extend the “job satisfaction dilemma” by pinpointing leadership quality as a critical leverage point. Organisations should invest in targeted leadership development—such as AI literacy training for managers, ethical AI stewardship programs, and participatory decision-making forums—to close this gap.

The strong concordance between TextBlob-derived polarity and numerical ratings (80.7% positive sentiment; Table 3) indicates that lexicon-based sentiment analysis can reliably approximate self-reported satisfaction in technical domains [22,32]. While transformer models may capture subtleties like sarcasm, our human-annotation validation (precision = 85%, recall = 82%) suggests that the simpler TextBlob approach is sufficiently robust for large-scale monitoring. This finding aligns with studies showing that, in organisational contexts, rule-based tools often perform comparably to more complex models when calibrated with domain-specific thresholds [14].
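Precision and recall against human annotations follow directly from counts of agreement and disagreement between predicted and reference labels. The sketch below computes both for a single target class; treating "positive" as the target class is an assumption made here (the text does not say whether the reported figures are per-class or averaged), and the labels are invented for illustration.

```python
def precision_recall(predicted, reference, target="positive"):
    """Precision and recall of `target` in predicted labels against reference labels."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    pairs = list(zip(predicted, reference))
    tp = sum(p == target and r == target for p, r in pairs)  # true positives
    fp = sum(p == target and r != target for p, r in pairs)  # false positives
    fn = sum(p != target and r == target for p, r in pairs)  # false negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical tool output versus human annotation for eight reviews.
tool_labels = ["positive", "positive", "positive", "neutral",
               "negative", "positive", "neutral", "positive"]
human_labels = ["positive", "positive", "neutral", "positive",
                "negative", "positive", "neutral", "negative"]
precision, recall = precision_recall(tool_labels, human_labels)
print(f"precision={precision:.2f}, recall={recall:.2f}")
```

In this toy case three of the five reviews labelled positive by the tool are confirmed by the annotator (precision 0.60), and three of the four reviews the annotator marked positive are recovered (recall 0.75).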

Our longitudinal trend analysis (2018–2025) reveals sustained high sentiment, with no significant year-to-year shifts (ANOVA and Kruskal–Wallis, p > 0.05). This persistence suggests that employee well-being remains resilient even amid external disruptions when AI-driven workplaces establish supportive practices, such as ongoing reskilling, ethical AI governance policies, and data-driven feedback loops. These results concur with research on digital transformation, highlighting the enduring benefits of early investments in people and processes [27].
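The year-to-year comparison rests on two test statistics: the one-way ANOVA F ratio and the Kruskal–Wallis H statistic. The sketch below computes only the statistics, using the Python standard library and made-up groups; obtaining p-values requires the F and chi-square distributions, which libraries such as SciPy provide through `scipy.stats.f_oneway` and `scipy.stats.kruskal`. The function names here are hypothetical, and each group stands for the sentiment scores of one year.

```python
def anova_f(groups):
    """One-way ANOVA F statistic: between-group over within-group mean square."""
    n = sum(len(g) for g in groups)
    k = len(groups)
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


def kruskal_wallis_h(groups):
    """Kruskal-Wallis H with average ranks for ties and the standard tie correction."""
    if any(len(g) == 0 for g in groups):
        raise ValueError("every group needs at least one observation")
    pooled = sorted((value, idx) for idx, g in enumerate(groups) for value in g)
    n = len(pooled)
    rank_sums = [0.0] * len(groups)
    tie_term = 0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2  # mean of the 1-based ranks i+1 .. j
        for k in range(i, j):
            rank_sums[pooled[k][1]] += avg_rank
        tie_term += (j - i) ** 3 - (j - i)
        i = j
    h = 12 / (n * (n + 1)) * sum(rs ** 2 / len(g) for rs, g in zip(rank_sums, groups))
    h -= 3 * (n + 1)
    correction = 1 - tie_term / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical")
    return h / correction


# Made-up groups, one per year, chosen so the statistics are easy to verify by hand.
toy_groups = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(anova_f(toy_groups), kruskal_wallis_h(toy_groups))
```

Running both tests is a reasonable safeguard because polarity scores are bounded and often skewed: the rank-based Kruskal–Wallis test does not rely on the normality assumption behind ANOVA.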

While our cross-validation of TextBlob against VADER and human annotations demonstrates acceptable precision (85%) and recall (82%), lexicon-based techniques remain limited in capturing domain-specific jargon (e.g., “data drift” and “model explainability”) and sarcastic or idiomatic expressions common in technical reviews. Transformer-based architectures (e.g., fine-tuned BERT or RoBERTa models) offer contextual embeddings that can disambiguate such language and improve sentiment classification accuracy. However, integrating these models into an operational HR dashboard poses challenges:

- Computational overhead: Transformer inference requires substantial GPU resources, potentially increasing latency in real-time monitoring.

- Data privacy and ethics: Fine-tuning on proprietary review text may demand careful anonymisation and governance to protect employee confidentiality.

- Model maintenance: Regular retraining is needed to accommodate evolving terminology in AI domains.

These considerations underscore both the technical feasibility questions and the ethical responsibilities that organisations must weigh when advancing beyond lexicon-based sentiment tools.

Building on our findings, organisations should carry out the following:

- Implement targeted leadership interventions: Provide AI-focused managerial training and establish ethics review boards to improve transparency around algorithmic decisions [3].

- Integrate real-time sentiment dashboards: Use sentiment monitoring to flag emerging leadership or resource gaps, ensuring rapid, data-driven responses.

- Safeguard employee privacy: Anonymise review data and communicate transparently about how sentiment analytics inform policy to uphold trust and comply with ethical AI standards.

By situating our results within the JD–R framework and the prior literature, we demonstrate that while AI integration enhances many job resources, leadership remains a critical bottleneck. Our validated, scalable sentiment methodology offers practitioners a practical tool for ethical, data-driven HR governance. Future research should incorporate more diverse data sources and advanced sentiment models to refine these insights further.

6. Conclusions

This paper introduces a novel, AI-sector dataset of 1500 Glassdoor reviews and a dual-method analytics pipeline—combining R-based statistical summaries with lexicon-based sentiment classification—to examine employee satisfaction in AI-related roles. Our longitudinal analysis (2018–2025) reveals consistently high overall satisfaction (mean = 4.24) and predominantly positive sentiment (80.7%), while identifying a persistent leadership gap (Senior Management mean = 3.94) that organisations must address through targeted development and ethical AI governance.

By validating sentiment alignment between narrative feedback and numerical ratings—and framing findings within the Job Demands–Resources model—this study demonstrates how Big Data and NLP techniques can power real-time HR dashboards, enabling people-centric strategies that anticipate workforce concerns and reinforce transparent, fair AI practices.

Several constraints temper our conclusions:

- (1) Self-selection and representativeness: Glassdoor reviewers may skew toward extreme opinions, and English-language reviews overrepresent certain regions, limiting generalizability.

- (2) Sentiment tool coverage: Lexicon methods can misinterpret sarcasm or multi-faceted sentiments despite validation. Future studies should explore semi-supervised transformer approaches to capture nuanced emotional tones [18].

- (3) Cross-sectional sampling bias: Manual role-by-role extraction ensures relevance but may overlook less-visible AI roles and temporal spikes in review volume.

- (4) Lack of organisational context: We did not account for company size, geographical distribution, or AI maturity level, which could moderate satisfaction.

Future work should aim to achieve the following:

- (1) Expand to larger, more diverse samples (e.g., non-English and underrepresented regions) to improve generalizability.

- (2) Incorporate advanced NLP models (e.g., transformer-based architectures and aspect-level analysis) to capture nuanced, domain-specific sentiments.

- (3) Integrate multimodal data, such as surveys or interviews, to triangulate findings and deepen insights into AI professionals’ experiences.

Ultimately, this research illustrates the strategic value of applying AI and sentiment analysis in HR management, in line with emerging trends in artificial intelligence, to drive ethically grounded, data-driven organisational transformation.

Author Contributions

Conceptualisation, A.A. and G.M.; methodology, A.A. and G.M.; software, A.A. and G.M.; validation, C.B., O.D., and A.A.; formal analysis, C.B.; investigation, O.D.; resources, A.A.; data curation, G.M.; writing—original draft preparation, A.A. and G.M.; writing—review and editing, A.A., G.M., and C.B.; visualisation, A.A. and G.M.; supervision, C.B. and O.D.; project administration, A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are contained within the article.

Acknowledgments

We would like to thank the participants of the IE2025 Conference held in Bucharest, Romania, for their insightful feedback. We also sincerely appreciate the support of the Glassdoor team for granting us access to publicly available reviews, which formed the empirical foundation of this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Bhargava, A.; Bester, M.; Bolton, L. Employees’ Perceptions of the Implementation of Robotics, Artificial Intelligence, and Automation (RAIA) on Job Satisfaction, Job Security, and Employability. J. Technol. Behav. Sci. 2020, 6, 106–113.

- Acemoglu, D.; Restrepo, P. The Wrong Kind of AI? Artificial Intelligence and the Future of Labor Demand. Int. Political Econ. 2019, 13, 25–35.

- Sadeghi, S. Employee Well-being in the Age of AI: Perceptions, Concerns, Behaviors, and Outcomes. arXiv 2024, arXiv:2412.04796.

- Talapbayeva, G.; Yerniyazova, Z.; Kultanova, N.; Akbayev, Y. Economic opportunities and risks of introducing artificial intelligence. Collect. Pap. New Econ. New Paradig. Econ. Connect. Innov. Sustain. 2024, 2, 117–128.

- Bagdauletov, Z. Artificial Intelligence as Enhancement Instrument for Office Employee’s Motivation. Stat. Account. Audit. 2024, 2, 5–15.

- Broetje, S.; Jenny, G.; Bauer, G. The Key Job Demands and Resources of Nursing Staff: An Integrative Review of Reviews. Front. Psychol. 2020, 11, 84.

- Acemoglu, D.; Autor, D.; Hazell, J.; Restrepo, P. Artificial Intelligence and Jobs: Evidence from Online Vacancies. J. Labor Econ. 2022, 40, S293–S340.

- Chi, W.; Chen, Y. Employee satisfaction and the cost of corporate borrowing. Financ. Res. Lett. 2021, 40, 101666.

- Čizmić, E.; Rahimić, Z.; Šestić, M. Impact of the main workplace components on employee satisfaction and performance in the context of digitalization. Zb. Veleuč. Rijeci 2023, 11, 49–68.

- Ferreira, F.; Gandomi, A.; Cardoso, R. Artificial Intelligence Applied to Stock Market Trading: A Review. IEEE Access 2021, 9, 30898–30917.

- Medhat, W.; Hassan, A.; Korashy, H. Sentiment analysis algorithms and applications: A survey. Ain Shams Eng. J. 2014, 5, 1093–1113.

- Morgan, J.A.; Chapman, D.S. Examining the characteristics and effectiveness of online employee reviews. Comput. Hum. Behav. Rep. 2024, 16, 100471.

- Mouli, N.; Das, P.; Muquith, M.; Biswas, A.; Niloy, M.; Ahmed, M.; Karim, D. Unveiling Employee Job Satisfaction: Harnessing Deep Learning for Sentiment Analysis. In Proceedings of the 2023 26th International Conference on Computer and Information Technology (ICCIT), Cox’s Bazar, Bangladesh, 13–15 December 2023; pp. 1–6.

- Nguyen, T.; Malik, A. A Two-Wave Cross-Lagged Study on AI Service Quality: The Moderating Effects of the Job Level and Job Role. Br. J. Manag. 2021, 33, 1221–1237.

- Floyd, S. Assessing African American women engineers’ workplace sentiment within the AI field. Int. J. Inf. Divers. Incl. 2021, 5, 1–12.

- Gull, A.; Ashfaq, J.; Aslam, M. AI in the workplace: Uncovering its impact on employee well-being and the role of cognitive job insecurity. Int. J. Bus. Econ. Aff. 2023, 8, 79–91.

- Habimana, O.; Li, Y.; Li, R.; Gu, X.; Yu, G. Sentiment analysis using deep learning approaches: An overview. Sci. China Inf. Sci. 2019, 63, 111102.

- Das, R.; Singh, T. Multimodal Sentiment Analysis: A Survey of Methods, Trends, and Challenges. ACM Comput. Surv. 2023, 55, 1–38.

- Gaye, B.; Zhang, D.; Wulamu, A. A tweet sentiment classification approach using a hybrid stacked ensemble technique. Information 2021, 12, 374.

- Adib, M.Y.M.; Chakraborty, S.; Waishy, M.T.; Mehedi, M.H.K.; Rasel, A.A. BiLSTM-ANN Based Employee Job Satisfaction Analysis from Glassdoor Data Using Web Scraping. Procedia Comput. Sci. 2023, 222, 1–10.

- Jung, Y.; Suh, Y. Mining the voice of employees: A text mining approach to identifying and analyzing job satisfaction factors from online employee reviews. Decis. Support Syst. 2019, 123, 113074.

- Jura, M.; Spetz, J.; Liou, D. Assessing the job satisfaction of registered nurses using sentiment analysis and clustering analysis. Med. Care Res. Rev. 2021, 79, 585–593.

- Kashive, N.; Khanna, V.T.; Bharthi, M.N. Employer branding through crowdsourcing: Understanding the sentiments of employees. J. Indian Bus. Res. 2020, 12, 93–111.

- Könsgen, R.; Schaarschmidt, M.; Ivens, S.; Munzel, A. Finding Meaning in Contradiction on Employee Review Sites—Effects of Discrepant Online Reviews on Job Application Intentions. J. Interact. Mark. 2018, 43, 165–177.

- Lee, J.; Song, J. How does algorithm-based HR predict employees’ sentiment? Developing an employee experience model through sentiment analysis. Ind. Commer. Train. 2024, 56, 273–289.

- Luo, N.; Zhou, Y.; Shon, J. Employee satisfaction and corporate performance: Mining employee reviews on Glassdoor.com. In Proceedings of the Thirty Seventh International Conference on Information Systems, Dublin, Ireland, 11–14 December 2016.

- Bajpai, A.; Singh, A.; Seth, J. Enhancing Enterprise Solutions with Text-Mining Sentiment Analysis Algorithms. In Proceedings of the 2024 15th International Conference on Computing Communication and Networking Technologies (ICCCNT), Kamand, India, 24–28 June 2024; IEEE: New York, NY, USA, 2024; pp. 1–6.

- Makridis, C.; Mishra, S. Artificial Intelligence as a Service, Economic Growth, and Well-Being. J. Serv. Res. 2022, 25, 505–520.

- Mäntylä, M.; Graziotin, D.; Kuutila, M. The evolution of sentiment analysis—A review of research topics, venues, and top cited papers. Comput. Sci. Rev. 2018, 27, 16–32.

- Priyadarshini, I.; Cotton, C. A novel LSTM–CNN–grid search-based deep neural network for sentiment analysis. J. Supercomput. 2021, 77, 13911–13932.

- Raghunathan, N.; Saravanakumar, K. Challenges and Issues in Sentiment Analysis: A Comprehensive Survey. IEEE Access 2023, 11, 69626–69642.

- Squicciarini, M.; Nachtigall, H. Demand for AI skills in jobs: Evidence from online job postings. OECD Sci. Technol. Ind. Work. Pap. 2021, 2021, 1–74.

- Stine, R. Sentiment Analysis. Annu. Rev. Stat. Its Appl. 2019, 6, 287–308.

- Sekar, S.; Subhakaran, S.; Chattopadhyay, D. Unlocking the voice of employee perspectives: Exploring the relevance of online platform reviews on organizational perceptions. Manag. Decis. 2023, 61, 3408–3429.

- Sureshbhai, S.; Nakrani, T. A literature review: Enhancing sentiment analysis of deep learning techniques using generative AI model. Int. J. Sci. Res. Comput. Sci. Eng. Inf. Technol. 2024, 10, 530–540.

- Valeriya, G.; John, V.; Singla, A.; Devi, Y.; Kumar, K. AI-Powered Super-Workers: An Experiment in Workforce Productivity and Satisfaction. BIO Web Conf. 2024, 86, 01065.

- Yue, L.; Chen, W.; Li, X.; Zuo, W.; Yin, M. A survey of sentiment analysis in social media. Knowl. Inf. Syst. 2018, 60, 617–663.

- Zhang, Y.; Shum, C.; Belarmino, A. “Best Employers”: The Impacts of Employee Reviews and Employer Awards on Job Seekers’ Application Intentions. Cornell Hosp. Q. 2022, 64, 298–306.

- Schouten, K.; Frasincar, F. Survey on Aspect-Level Sentiment Analysis. IEEE Trans. Knowl. Data Eng. 2016, 28, 813–830.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).