1. Introduction

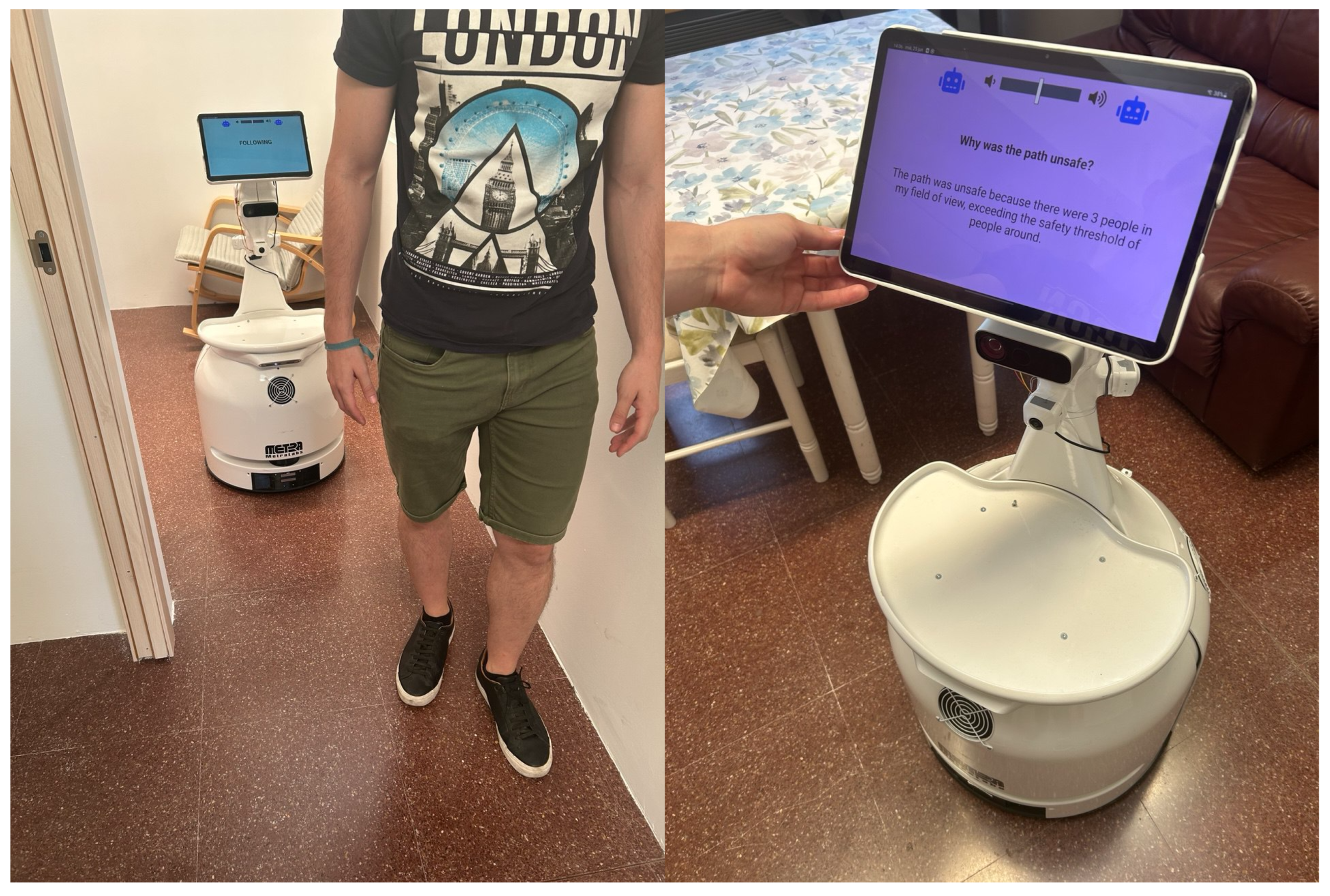

As shown in Figure 1, it is increasingly common today to find service robots performing jobs (e.g., transporting goods in warehouse logistics, delivery in hospitals or offices, or as waiters) where they share the environment with humans, with whom, in many cases, some kind of interaction is established. When the robot has the tools to carry out verbal interaction, trust in robots can be increased by having them generate explanations that allow the person to know why they are doing what they are doing [1]. In general, it is about the robot being able to explain the reasons behind a certain decision, which are often hidden by the adoption of complex control policies, in many cases based on machine learning techniques. This is what is known as eXplainable Autonomous Robots (XARs) [2], and it does not only concern verbal explanations, but may also be accompanied by a behavior that, in general, offers strong assurance [3]. As has been highlighted in numerous papers, the difference between XAR and eXplainable Artificial Intelligence (XAI) lies mainly in the fact that the robot is an embodied agent, which performs a certain action in the surrounding environment. While XAI typically focuses on justifications of output from decision-making systems (data-driven explainability), XAR focuses more on explaining actions or behavior of a physical robot (goal-driven explainability) to an interacting human [2].

In order to generate an explanation, the robot must have the mechanisms that allow it to handle both information about past experiences and factual knowledge. This knowledge is organized, respectively, in so-called episodic memory and semantic memory. Semantic memory organizes the concrete and objective knowledge that the robot possesses about a specific topic (general concepts, relationships, and facts abstracted from particular instances). Episodic memory is a type of long-term memory that allows the robot to recall past experiences, such as specific events and situations, including details about where and when they occurred. Both constitute conscious or declarative memory [4]. Using these declarative memories, the explanation will be constructed using both definitions or concepts and a temporal sequence of actions, performed or considered [5]. It is also necessary that this knowledge model be shared with the humans with whom the robot is going to communicate; otherwise, neither party will understand the other [6]. Specifically, episodic memory is crucial to enable the robot to verbalize its own experiences. The representation used to store this information must synthesize the continuous flow of experience, organizing it and nimbly retrieving, when necessary, important past events to construct a response to the person [7].

Early work on verbalisation of episodic memory relied on rule-based verbalisation of log files or on fitting deep models on either hand-created or self-generated datasets. For instance, Rosenthal et al. [8] propose a verbalization space that manages all the variability of utterances that the robot may use to narrate its experience to a person. Garcia-Flores et al. [9] describe a framework for enabling a robot to generate narratives composed of its holistic perception of a task performed. Bärmann et al. [10] propose a data-driven method employing deep neural architectures for both creating episodic memory from previous experiences and answering, in natural language, questions related to such experience. This requires the collection of a large multi-modal dataset of robot experiences, consisting of symbolic and sub-symbolic information (egocentric images from the robot’s cameras, configuration of the robot, estimated robot position, all detected objects and their estimated poses, detected human poses, current executed action, and task of the robot). The problem with these approaches is that they require either the design of a large number of rules or the collection of large amounts of experience data. To avoid these problems, language-based representations of past experience can be used, which could be obtained from pre-trained multimodal models. In that case, one can pass the question, and the history as text, to a Large Language Model (LLM) [11] and ask it to generate the answer. The problem with this approach is that, since episodic memories can store a lot of detail, this scheme only works well for short stories (although LLMs can process more and more tokens, it is important to keep this number limited in order to reduce response time). Furthermore, the correctness of such LLM-generated answers cannot be guaranteed, which is unacceptable in many applications [12].

To scale the generation of explanations to life-long experience streams, while maintaining a low token budget, we propose to derive a causal log representation from the evolution of the internal working memory. In our case, this working memory is the one used in the CORTEX cognitive architecture for robotics [13]. The information stored in this working memory is represented using linguistic terms, and is shared by all the software agents that make up the internal architecture embedded in the robot. The evolution of the working memory describes the robot’s knowledge of the environment, including not only perceptions, but also actions or intentions [13]. It is a representation that, for a given instant, could be passed to an LLM to obtain a verbal description of the context, implementing a kind of soliloquy or inner speech [14]. In this case, this working memory representation facilitates the creation of the aforementioned causal log, a high-level episodic memory.

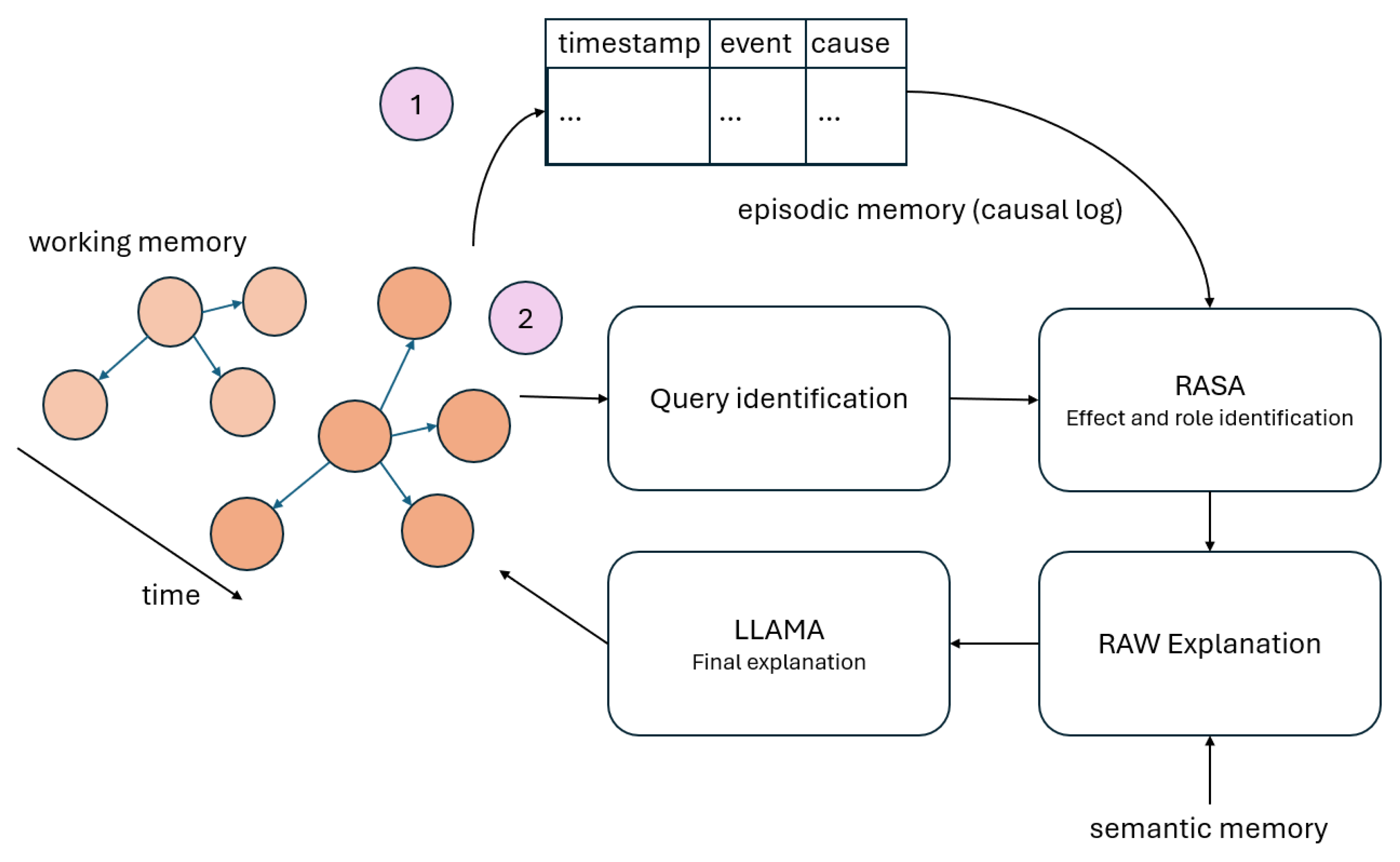

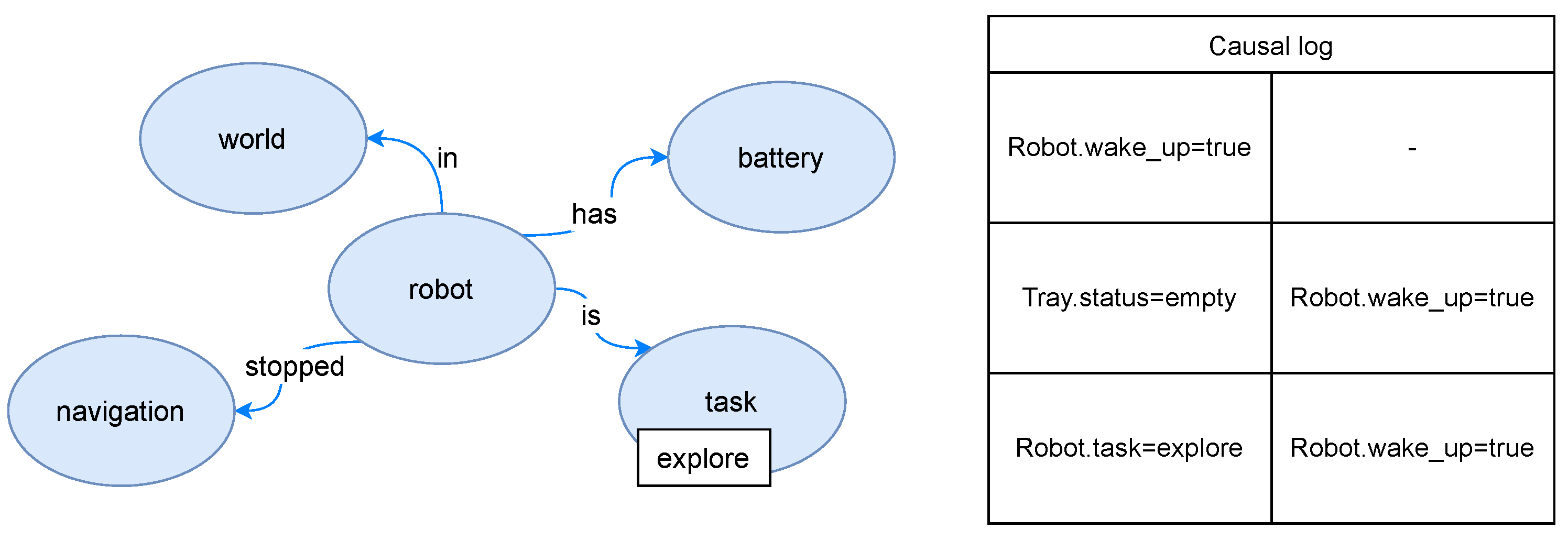

Figure 2 provides an overview of how our system works. Briefly, it processes the continuous stream of experiences encoded in the working memory to extract relevant snapshots, which are inserted into a causal log, our high-level episodic memory. These snapshots are identified at design time and, basically, coincide with the preconditions that launch a specific action of the robot (detect a person, a low battery value, etc.). They are represented as natural language concepts. When the robot needs to provide an explanation, it uses this causal log, as well as semantic memory, to generate a raw explanation that is refined using the Large Language Model Meta AI (LLaMA) (https://www.llama.com/, accessed on 2 July 2025).

1.1. Contributions

The idea of representing episodic memory in natural language, so that information from this memory can be combined with the question itself and passed to deep learning models (LLMs) to generate the answer, is not new. The open problem is therefore how to represent the information in this memory and how to use it to generate explanations for specific questions.

In our previous work, we proposed CORTEX, a software architecture for robotics in which all functionalities (perceptual, action, decision making, etc.) are organized as agents that communicate with each other by updating a runtime knowledge model [13]. This model represents a working memory, organized as a directed graph, in which nodes and arcs store semantic content. Thus, for a given instant of time, this memory could be passed to an LLM, which would generate a text expressing what the robot internalizes (e.g., “The battery level is very low, so the current action has been aborted and I am heading to the charging station.”). While the temporal evolution of this working memory would generate a comprehensive episodic memory, it would be difficult to use it to generate an explanation covering a relatively long period of time.

In the deployment of CORTEX described in [15], the robot’s behavior was encoded in a set of Behavior Trees, each one associated with a specific task, and among them, a self-adaptation mechanism made it possible to determine which one was active depending on the context. These Behavior Trees are composed of more atomic actions, which require pre-conditions (person detected, menu not previously asked, etc.) for their execution. These items, which are relevant for the behaviors to take place, will constitute the so-called causal events. These events, which are captured from working memory, will constitute our episodic or causal log memory, characterised by its relatively high level of abstraction.
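As a rough illustration of how causal events could be captured from the working memory, the following minimal Python sketch appends an entry to a causal log whenever the preconditions of an action become newly satisfied. The rule table, the fact names (`person_lost`, `battery_low`), the second effect string, and the function `update_log` are hypothetical and chosen only for illustration; they are not taken from the CORTEX code base.

```python
from dataclasses import dataclass, field


@dataclass
class CausalEvent:
    timestamp: float
    effect: str        # event launched by the robot
    causes: list[str]  # natural-language concepts that enabled it


@dataclass
class CausalLog:
    events: list[CausalEvent] = field(default_factory=list)

    def record(self, timestamp: float, effect: str, causes) -> None:
        self.events.append(CausalEvent(timestamp, effect, list(causes)))


# Hypothetical design-time table: effect -> preconditions that launch it.
RULES = {
    "Robot.task_abort = following": {"person_lost"},
    "Robot.task_start = charging": {"battery_low"},
}


def update_log(log: CausalLog, timestamp: float, previous: set, current: set) -> None:
    """Record an event only when its preconditions hold now but did not before."""
    for effect, preconditions in RULES.items():
        if preconditions <= current and not preconditions <= previous:
            log.record(timestamp, effect, sorted(preconditions))


log = CausalLog()
update_log(log, 1.0, set(), {"person_detected"})
update_log(log, 2.0, {"person_detected"}, {"person_detected", "person_lost"})
# Same facts again: nothing new is logged.
update_log(log, 3.0, {"person_detected", "person_lost"}, {"person_detected", "person_lost"})
```

Logging only on the transition keeps the log compact, which matches the goal of a high-level memory with a low token budget.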

Alongside this memory, this article proposes a structure of agents that, connected to the working memory already referred to, determines when an explanation should be generated, shapes it, and manages its verbalisation. This pipeline uses deep learning computational models in several steps.

To summarize, we present a full integration of human–robot interaction components forming a unified robot architecture CORTEX, where the integrated components include robot perception, natural language usage, and robot action execution.

1.2. Organization of the Paper

The rest of the paper is organized as follows: Section 2 briefly introduces some technical preliminaries and describes the generation of explanations in a scenario where the robot tries to explain to an interacting person the reason for the actions it has executed or is executing. That is, it does not need to revisit the plan to determine, for instance, what would have happened if, instead of one action, another one had been executed. The focus is on describing which knowledge representations the robot uses to generate these explanations. The software architecture CORTEX and the runtime knowledge model, the Deep State Representation (DSR), are briefly described in Section 3. The scheme proposed for building a high-level, abstracted episodic memory is presented in Section 4. Section 5 describes the software framework designed for generating the explanations. Both episodic memory and explanation generation have been integrated into CORTEX, allowing, for example, memory to be continuously updated or explanations to be generated at any time during the execution of a plan. Section 6 details the generation of a causal log and the explanations provided to several questions. In Section 7, relevant issues are discussed and future lines of research are proposed.

2. Technical Preliminaries and Related Work

There are several papers describing representation schemes used as a basis for enabling a robot to generate explanations of its past behavior [2,16]. This behavior can be expressed as the set of actions that transformed an initial state $s_0$ into a current state $s_t$. In this sequence, each state $s_i$ can be described by a set of propositional variables $V_i$. Each robot action $a$ has preconditions $\mathrm{pre}(a)$ and effects $\mathrm{eff}(a)$, which can also be characterized by these same variables [17]. Thus, an action $a$ is applicable in a state $s_i$ if its preconditions are true ($\mathrm{pre}(a) \subseteq V_i$). In the same way, when $a$ is applied, the state $s_i$ evolves to $s_{i+1}$, and the set of variables $V_i$ is updated to satisfy $\mathrm{eff}(a)$.

Using this terminology and denoting the decision-making space with $\Pi$, the plan $\pi$ is a sequence of actions [2], as follows:

$$\pi = \langle a_1, a_2, \ldots, a_n \rangle$$

which is generated using an algorithm $\mathbb{A}$ and under a certain constraint $\tau$, i.e.,

$$\mathbb{A} : \Pi \times \tau \rightarrow \pi$$

A causal link between two consecutive actions, $a_i \rightarrow a_{i+1}$, implies that there exists a set of facts $e$ that are part of the effects of $a_i$, $\mathrm{eff}(a_i)$, and of the preconditions of $a_{i+1}$, $\mathrm{pre}(a_{i+1})$ [17].
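The notions of applicability, state update, and causal link can be made concrete with a short Python sketch. It assumes a simplified, add-only model in which states and effects are plain sets of propositional variables (no delete effects); the action and variable names are illustrative and are not taken from the cited works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    pre: frozenset  # preconditions
    eff: frozenset  # effects (add-only in this sketch)


def applicable(action: Action, state: frozenset) -> bool:
    """An action is applicable when all its preconditions hold in the state."""
    return action.pre <= state


def apply(action: Action, state: frozenset) -> frozenset:
    """Return the successor state, updated so that the effects are satisfied."""
    if not applicable(action, state):
        raise ValueError(f"{action.name} is not applicable in this state")
    return state | action.eff


def causal_link(first: Action, second: Action) -> frozenset:
    """Facts produced by `first` that are required by `second`."""
    return first.eff & second.pre


approach = Action("ApproachPerson", frozenset({"person_detected"}), frozenset({"near_person"}))
ask = Action("AskMenu", frozenset({"near_person", "menu_not_asked"}), frozenset({"menu_asked"}))

s0 = frozenset({"person_detected", "menu_not_asked"})
s1 = apply(approach, s0)
link = causal_link(approach, ask)  # the facts that connect both actions
```

Here `AskMenu` is not applicable in `s0` but becomes applicable in `s1`, and the only fact in the causal link is `near_person`.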

The goal of an explanation is to justify to the person that the solution $\pi$ satisfies the constraint $\tau$ for a given decision-making space $\Pi$ (or that a wrong state has been unexpectedly reached and the plan was stopped [18]). Assuming that the person is intimately familiar with the internal model of the robot, with how it organizes knowledge, and with how the planner decomposes the execution of a plan, the verbalisation of a plan or previous experience consists of a step-by-step plan explanation (considering states and actions) to the person [6,17]. Interpreting causal links as models of causal relationships between actions, Stulp et al. [19] propose to build an ordered graph for the plan that can help the robot to answer questions. Nodes of this graph are actions of the plan, and links are composed of the variables $e$ that fulfill

$$e \in \mathrm{eff}(a_i) \cap \mathrm{pre}(a_j), \quad i < j.$$

Advancing backwards in the plan, every precondition of an action is linked to the latest previous action that created it. Thus, the effect of a given action can be linked to the precondition of one or more other actions. Each precondition links to at most one effect [19].
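The backward linking procedure can be sketched in a few lines of Python. This is only an illustration of the idea described above, not the implementation in [19]; the plan encoding as `(name, preconditions, effects)` tuples and the example actions are assumptions.

```python
def build_causal_graph(plan):
    """Link every precondition to the latest earlier action that produced it.

    `plan` is a list of (name, preconditions, effects) tuples in execution
    order. Returns a list of (producer, consumer, fact) links.
    """
    links = []
    for j in range(len(plan) - 1, -1, -1):      # advance backwards in the plan
        consumer, preconditions, _ = plan[j]
        for fact in sorted(preconditions):
            for i in range(j - 1, -1, -1):      # latest previous producer first
                producer, _, effects = plan[i]
                if fact in effects:
                    links.append((producer, consumer, fact))
                    break                        # at most one effect per precondition
    return links


plan = [
    ("DetectPerson", set(), {"person_detected"}),
    ("ApproachPerson", {"person_detected"}, {"near_person"}),
    ("AskMenu", {"near_person", "person_detected"}, {"menu_asked"}),
]
links = build_causal_graph(plan)
```

In this example the effect `person_detected` of `DetectPerson` is linked to two later actions, while each precondition still has a single producer. Preconditions that were already true in the initial state simply receive no link.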

Additionally, using the concept of causal link, Canal et al. [20,21] propose PlanVerb, an approach for the verbalisation of task plans based on semantic tagging of actions and predicates. This verbalisation is summarized by compressing the actions acting on the same variables or facts. They consider four levels of abstraction for plan verbalisation. In the lowest level, the verbalisation considers numerical values (e.g., real-world coordinates of objects, time duration of actions). In the highest one, only the essential variables of the actions are verbalised.

The episodic H-EMV memory, proposed by Bärmann et al. [7], is also organized as a hierarchical representation, in which information is organised into raw data, scene graph, events, goals, and summary. Each level builds on the previous level, and the nodes that form it can have children of different types (e.g., a goal node can have linked events or other goal nodes; this allows subgoals of complex tasks to be represented). The H-EMV can be interactively accessed by an LLM to provide explanations or answers to questions from the person, keeping the list of tokens bounded even when the explanation covers a large period of time.

Finally, Lindner and Olz [17] discuss in depth the problem of constructing explanations purely on the basis of causal links. The problem is that, in many cases, an action is not necessarily executed to achieve an initial goal, but may arise to fulfill a partial goal that appears during the execution of the plan (even originating from the execution of the plan itself). There are also occasions when two actions are not linked by preconditions or effects, but there is a logical causal relationship underlying the execution of the plan itself. With the aim of distinguishing effects that render subsequent actions necessary from effects that render subsequent actions possible, they define semantic roles for the effects. Thus, an effect is defined as a demander when the goal could have been reached without considering it, but it makes an additional action necessary. For example, imagine a robot apologizing (SaySorry) to a person for passing too close to them. Apologizing is not necessary to achieve the ultimate goal of reaching a certain location, and has been launched as a consequence of an effect (passing too close to the person). This effect is the demander of the action SaySorry. Preconditions that are not demanders are defined as proper enablers. It is important to note that an effect can play both roles at the same time. By distinguishing between links associated with demanders and enablers, the authors show how the robot is able to generate better explanations from the person’s point of view.

3. CORTEX

The CORTEX (https://github.com/grupo-avispa/cortex, accessed on 8 August 2025) robotic architecture is a mature approach, which has been used in different projects and platforms [13,15]. Basically, the idea is to use a runtime knowledge model as a working memory that binds together the software agents in which the robot’s functionality is structured. All the information needed by the agents is in this memory, allowing deliberate or reactive behaviors to emerge.

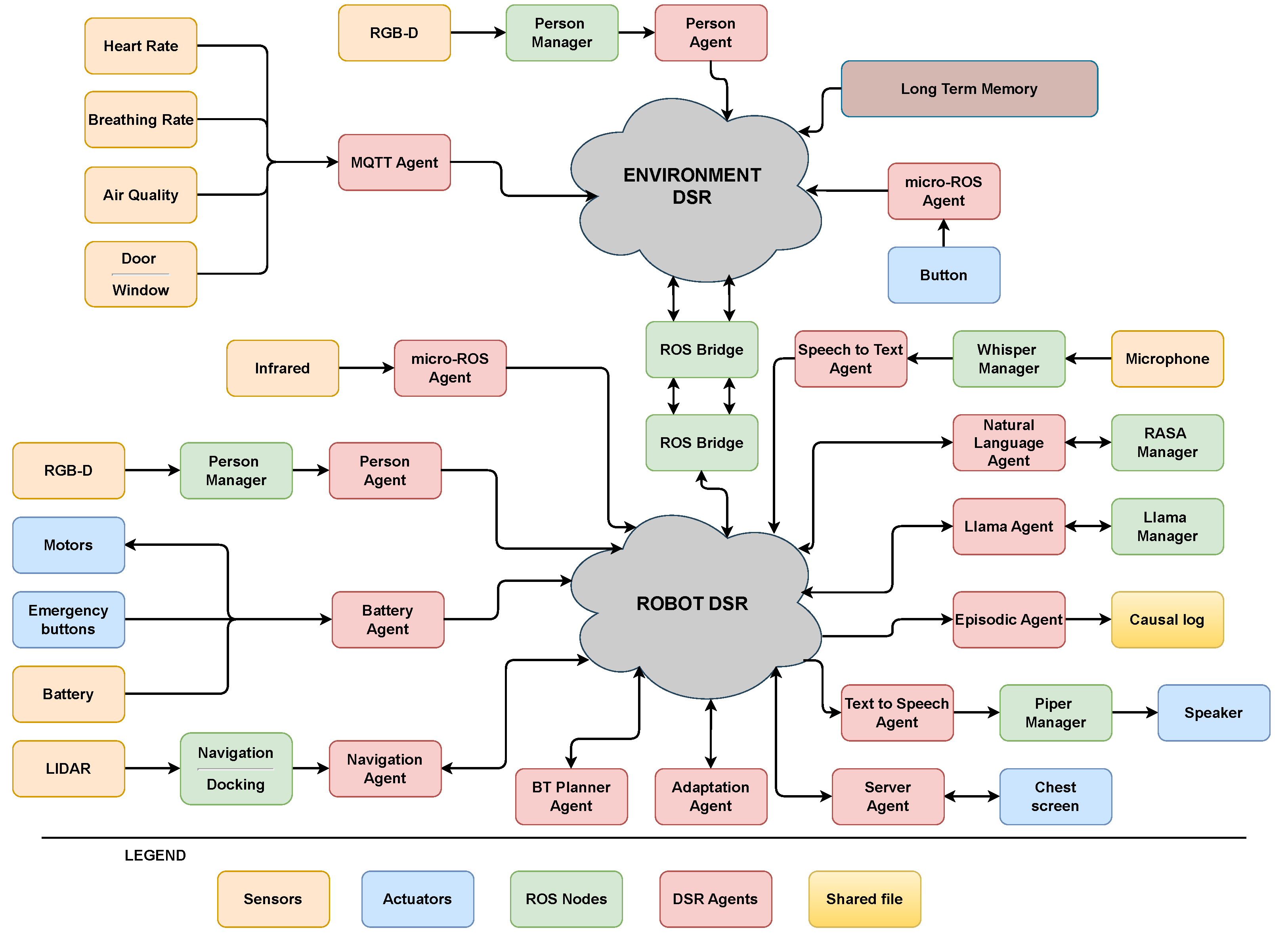

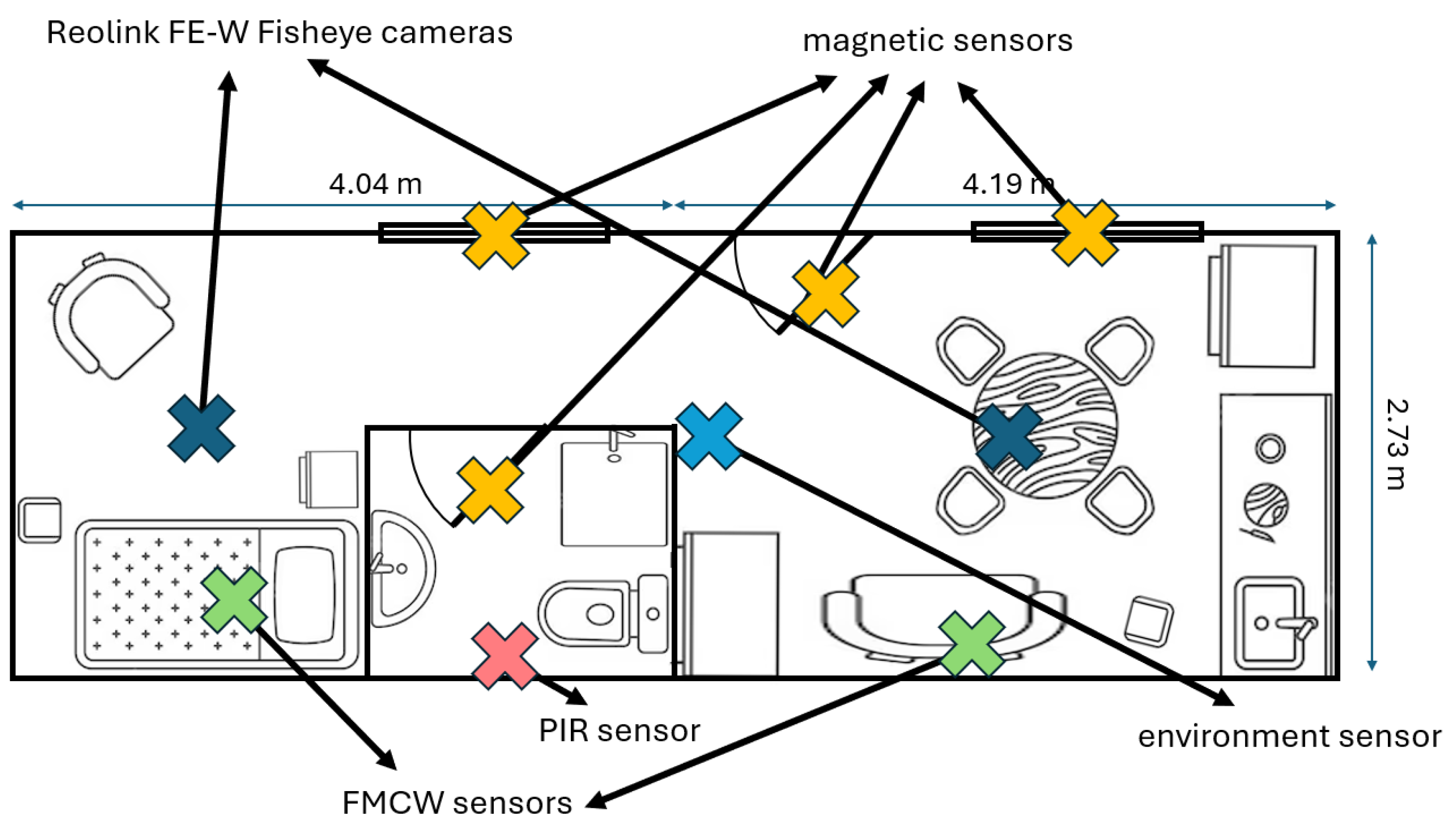

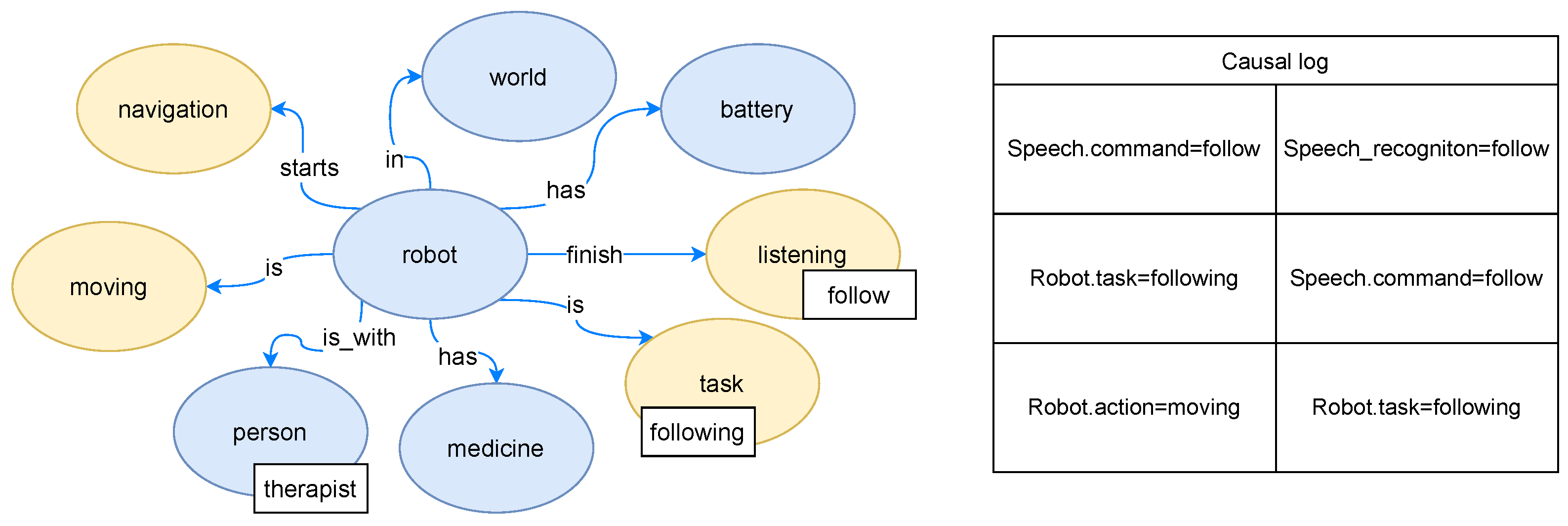

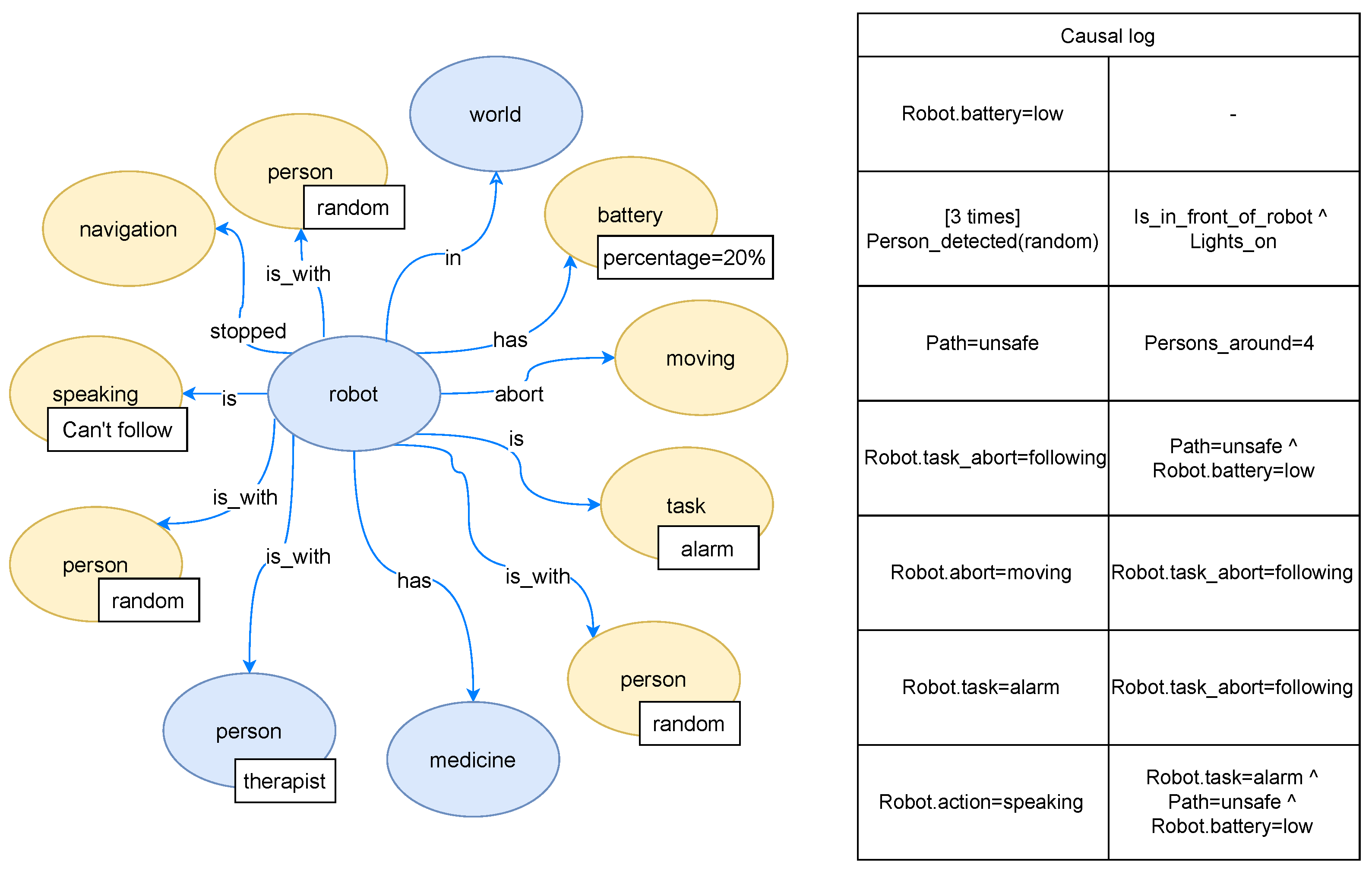

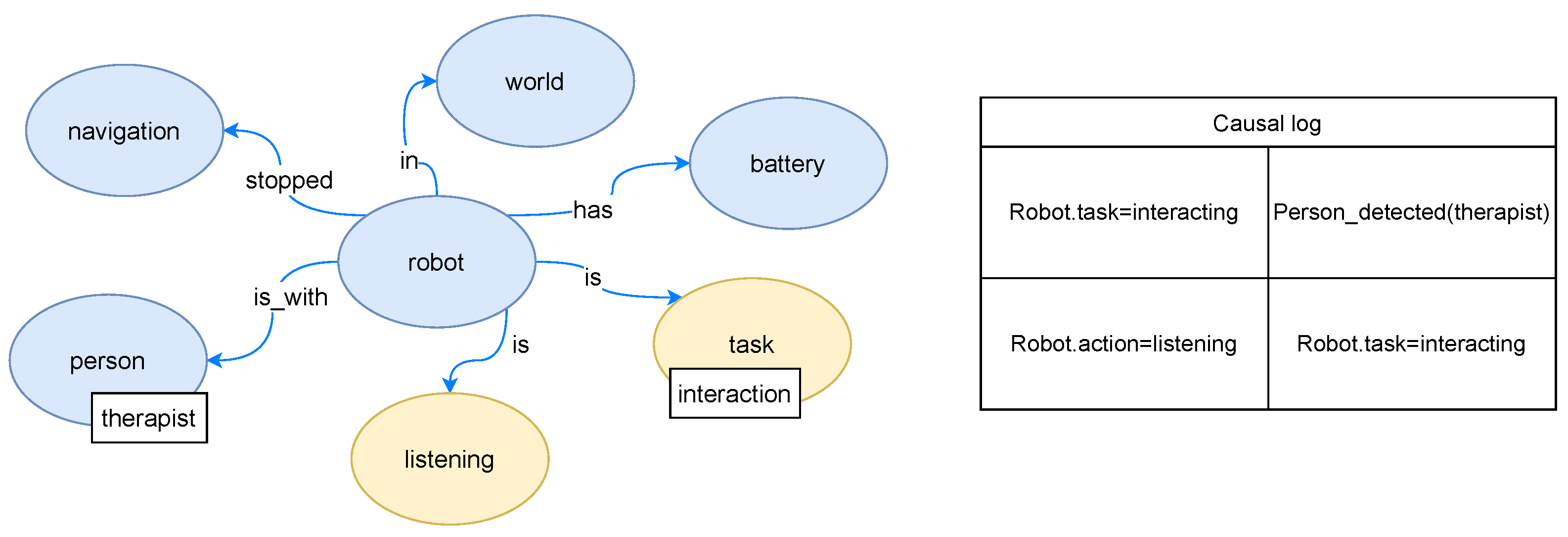

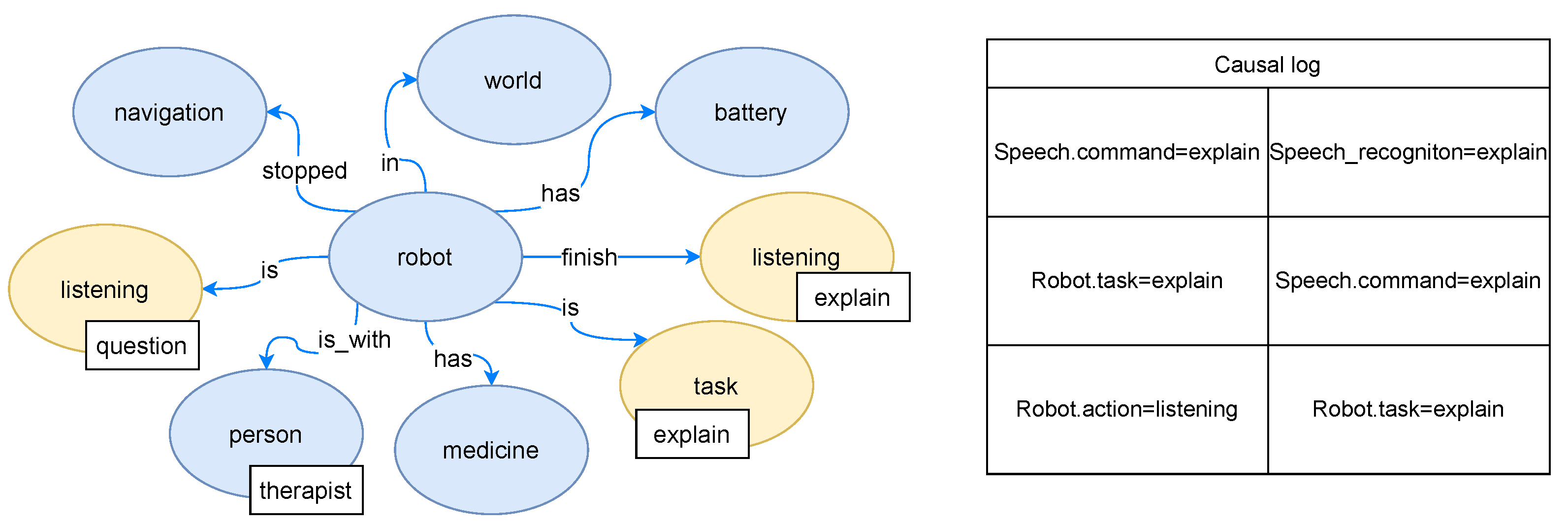

Figure 3 shows what the instantiated architecture looks like in the examples that will be described in this article.

Following the scheme proposed in a previous work [

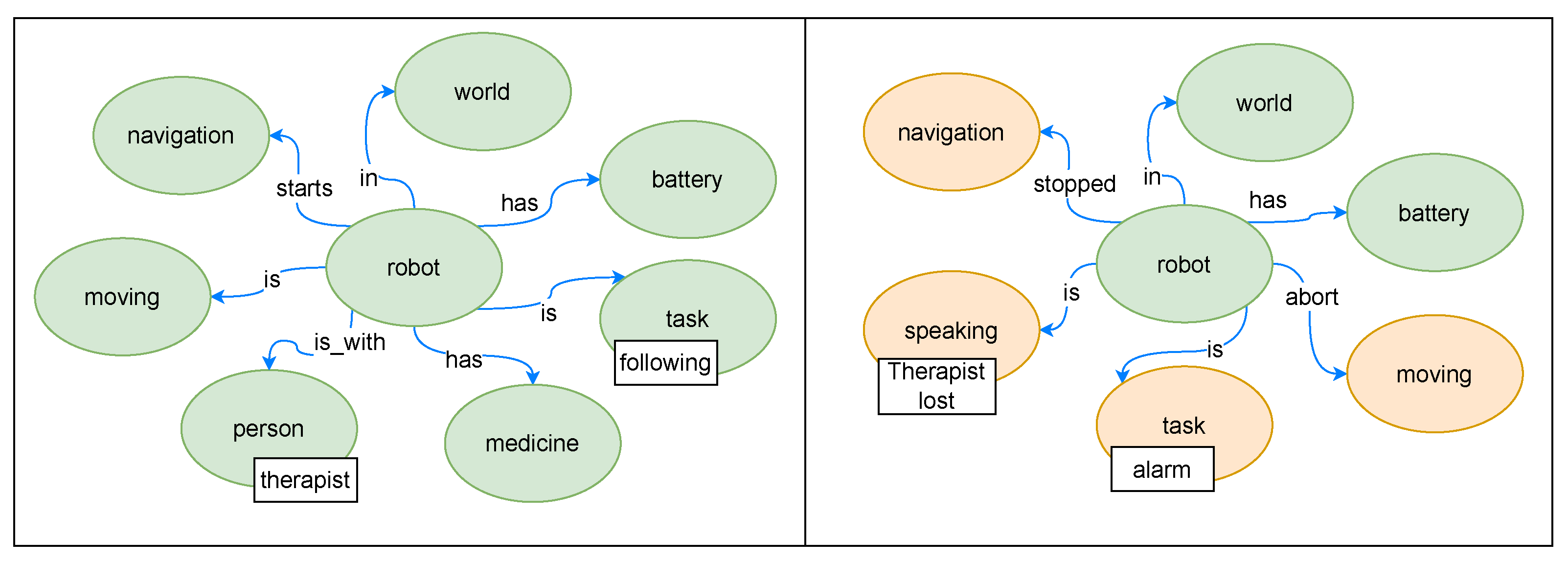

15], the model can be extended to consist of two independent but connected working memories: one internal to the robot itself and the other associated with the environment. Each one can maintain its own functionalities (software agents) and its own associated hardware. The important point is that, in these working memories, most of the information is annotated semantically. As an example,

Figure 4 shows the evolution of the working memory in the robot when it detects that the person it was following has disappeared. The graph shows a reduced version of the working memory on the robot, where only the most significant nodes and arcs have been left for clarity. If the content of this memory is analyzed, it is easy to note down in sentences what is happening at each instant of time (“robot is with the therapist”, “robot is moving and following the person”, etc.). This kind of inner speech [

22] serves as a basis for the construction of a high-level episodic memory and thus for our proposal for the generation of explanations.

The Deep State Representation (DSR)

As aforementioned, a working memory, the so-called Deep State Representation (DSR), is at the core of each one of the instantiations of CORTEX shown in Figure 3. The DSR is a distributed, directed, multi-labeled graph, which acts as a runtime model [15]. The graph nodes are elements of a predefined ontology, and they can store attributes such as raw sensor data. On the other hand, the graph arcs are of two different types. Geometric arcs encode geometric transformations between positions (e.g., the position of a robot’s camera with respect to the robot’s base). These relative probabilistic poses between graph nodes are represented as rotation and translation vectors with their covariance. Symbolic arcs typically encode logic predicates. Thus, for example, the graph could maintain the robot’s position with respect to the current room and all elements relevant to an ongoing interaction with a person. Graph arcs can also store a list of attributes of a predefined type.
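To make the structure of such a graph more tangible, the following Python sketch models nodes with attributes and the two kinds of arcs, and turns the symbolic arcs into short sentences. It is a toy, single-process stand-in written for illustration: the class names, the `kind` field, and the attribute keys are assumptions and do not reflect the actual DSR API.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    type: str  # assumed to be an element of the predefined ontology
    attributes: dict = field(default_factory=dict)


@dataclass
class Edge:
    source: str
    target: str
    label: str
    kind: str  # "symbolic" (logic predicate) or "geometric" (transformation)
    attributes: dict = field(default_factory=dict)


class Graph:
    def __init__(self):
        self.nodes = {}
        self.edges = []  # a list allows several labeled arcs per node pair

    def add_node(self, node: Node) -> None:
        self.nodes[node.name] = node

    def add_edge(self, edge: Edge) -> None:
        if edge.source not in self.nodes or edge.target not in self.nodes:
            raise KeyError("both end nodes must exist before adding an arc")
        self.edges.append(edge)

    def inner_speech(self) -> list:
        """Render every symbolic arc as a short sentence."""
        return [
            f"{e.source} {e.label.replace('_', ' ')} {e.target}"
            for e in self.edges
            if e.kind == "symbolic"
        ]


g = Graph()
g.add_node(Node("robot", "robot"))
g.add_node(Node("camera", "sensor"))
g.add_node(Node("therapist", "person"))
g.add_edge(Edge("robot", "camera", "RT", "geometric",
                {"translation": [0.1, 0.0, 1.2], "rotation": [0.0, 0.0, 0.0]}))
g.add_edge(Edge("robot", "therapist", "is_with", "symbolic"))
sentences = g.inner_speech()
```

Only the symbolic arc contributes to `sentences`; the geometric arc carries its pose in the attributes instead.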

In the evolution of the graph in Figure 4, only the most significant symbolic arcs have been shown. Initially, the robot is following the therapist, carrying a medicine that the therapist needs. At a given moment, for whatever reason, the robot loses the person it is following. The ongoing task (Following) is aborted, and the robot’s behavior is now guided by a new one (Alarm). The robot stops and asks the therapist to come to it to restart Following. The evolution of the DSR is a joint action of the software agents in CORTEX. The communication between them is through changes in nodes and arcs of the graph, and since the information in these is mostly semantic, this interaction can be interpreted as a conversation. Being internal, the result is an inner speech.

Figure 5 shows the agents involved in the DSR evolution shown above. It is important to note that there are no messages between agents or from a central agent acting as Executive. The agents note the information in the DSR (e.g., the person is lost), and the system acts (e.g., the self-adapting agent changes the behavior from Following to Alarm).
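This style of coordination, in which agents never address each other directly and only react to changes in the shared memory, can be sketched as follows. The `WorkingMemory` class, the keys `person.lost` and `robot.behavior`, and the agent factory are hypothetical names used only to illustrate the pattern; the real agents operate on DSR nodes and arcs.

```python
class WorkingMemory:
    """Minimal shared memory that notifies observers about every change."""

    def __init__(self):
        self._facts = {}
        self._observers = []

    def subscribe(self, callback) -> None:
        self._observers.append(callback)

    def get(self, key, default=None):
        return self._facts.get(key, default)

    def update(self, key, value) -> None:
        if self._facts.get(key) == value:
            return  # nothing changed, nobody is notified
        self._facts[key] = value
        for callback in list(self._observers):
            callback(key, value)


def make_self_adaptation_agent(memory: WorkingMemory) -> None:
    """Switch behavior when another agent reports that the person is lost."""

    def on_change(key, value):
        if key == "person.lost" and value and memory.get("robot.behavior") == "Following":
            memory.update("robot.behavior", "Alarm")

    memory.subscribe(on_change)


memory = WorkingMemory()
make_self_adaptation_agent(memory)
memory.update("robot.behavior", "Following")
# A perception agent would write this; no message is sent to any other agent.
memory.update("person.lost", True)
behavior = memory.get("robot.behavior")
```

The perception side only writes a fact, and the change of behavior emerges from the agent that happens to be watching that fact.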

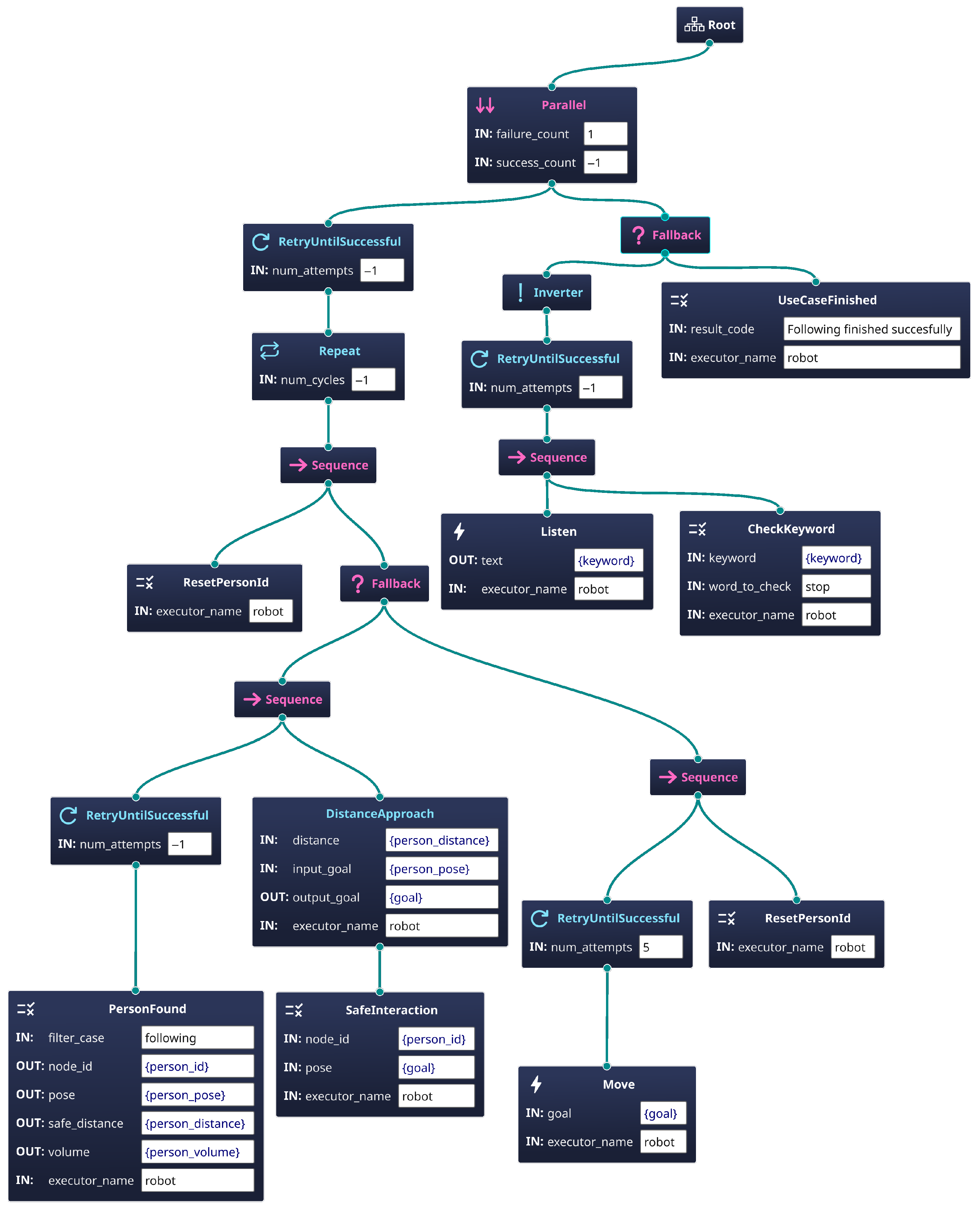

5. Generating the Explanation

Faced with a given request (question) from the person, the robot uses episodic and semantic memories to prepare an answer (explanation).

Figure 7 shows the Behavior Tree that manages this task. Essentially, the robot detects the question and estimates the role of the person asking it (i.e., whether they are a therapist, robot technician, or resident, etc.) [

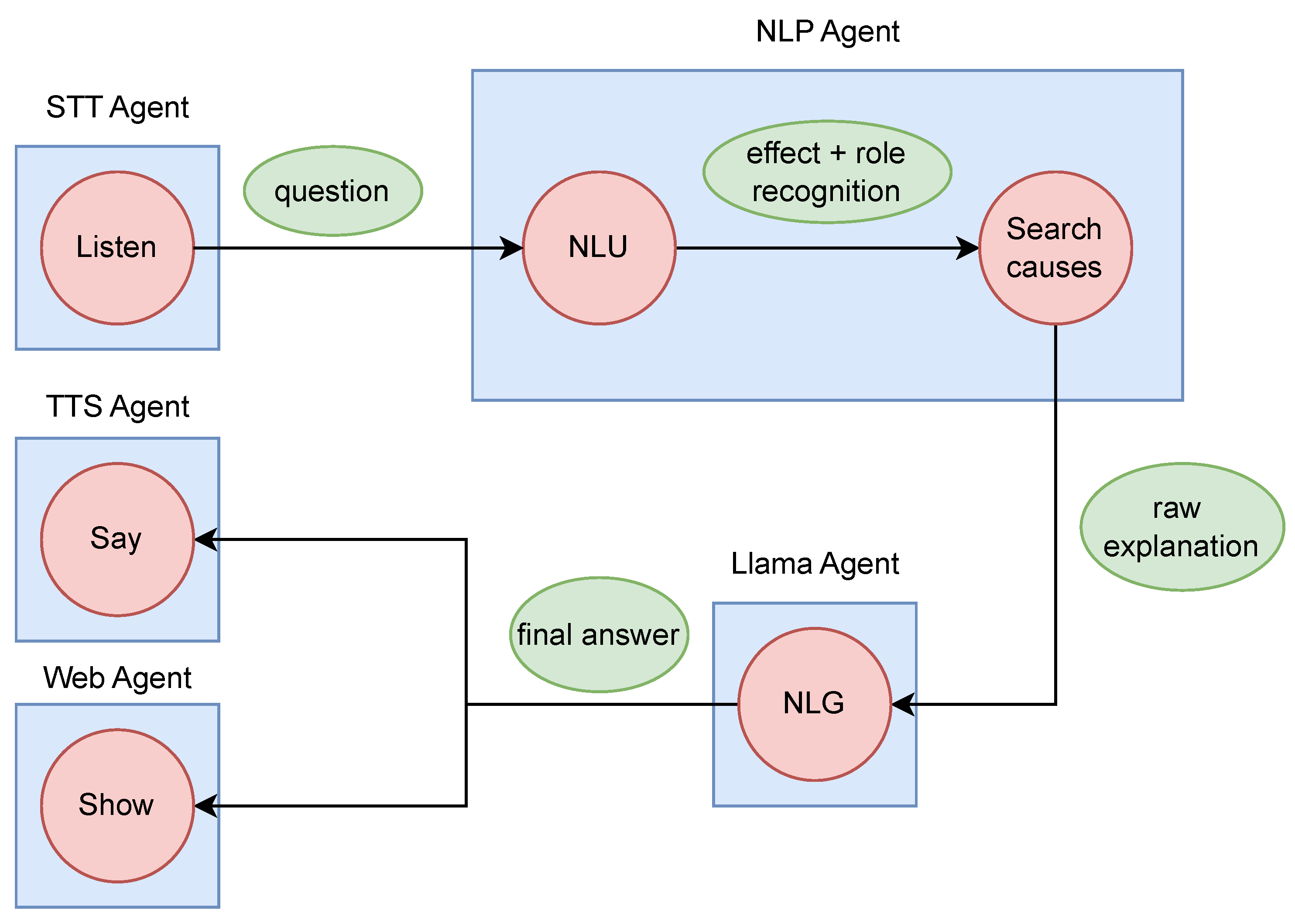

14]. Identifying the role allows for personalizing the explanation. With this information, a first version of the explanation is built, which will be refined using a specific software agent (LLaMA agent). The final explanation is verbalised by the robot, but is also displayed on the screen on the robot’s torso.

The software agents involved in this task are shown in Figure 8. A Speech To Text (STT) agent, responsible for capturing what the person says and identifying whether it is a question, is deployed in CORTEX. The questions are passed to a second Natural Language Processing (NLP) agent. This agent is responsible for detecting which robot event the question refers to and identifying the role of the person asking the question. With this information, and using the data in the semantic and episodic memories, a first raw version of the explanation is constructed. This explanation is not very human friendly, so it is refined using the LLaMA agent. This text is verbalized (Text To Speech (TTS) agent) and displayed on the screen on the torso of the robot (Web agent). Further details on these agents are provided below.

5.1. Speech To Text Agent

The Speech To Text (STT) agent is in charge of executing the whole process to solve the problem of transcribing what is heard into text. It is designed to facilitate its integration in the CORTEX architecture, being connected to the DSR network. Basically, it is in charge of detecting the audio, transcribing it to text, and uploading it to the DSR as an attribute. For this, the whole process is based on the ROS 2 pipeline shown in

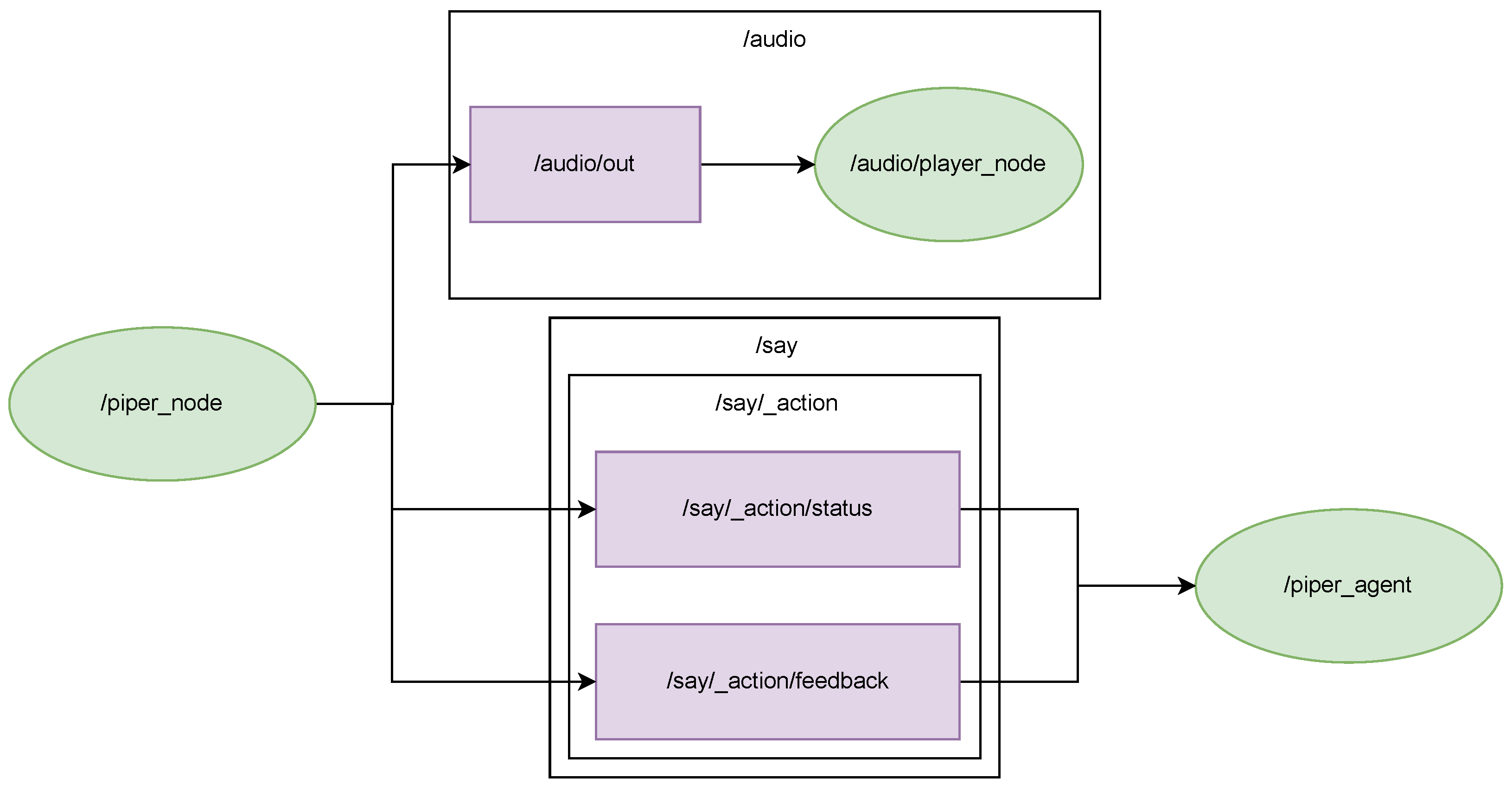

Figure 9.

This pipeline has been developed using a custom version of the ROS 2 stack “whisper_ros” (https://github.com/mgonzs13/whisper_ros, accessed on 25 June 2025). This stack provides the foundational capabilities for integrating speech to text into ROS 2. It is based on whisper.cpp (version 1.7.6) (https://github.com/ggerganov/whisper.cpp, accessed on 25 June 2025), a high-performance inference implementation of OpenAI’s Whisper automatic speech recognition model, and on the PortAudio (version 19.7.0) (https://www.portaudio.com/, accessed on 25 June 2025) library to record the audio.

The first step is to record the audio through the /audio_capturer_node. This step is carried out by the PortAudio library, which is a free, cross-platform, open-source audio I/O library that provides a very simple API for recording and/or playing sound using a simple callback function or a blocking read/write interface. The voice activity detector then uses this audio to extract a short speech segment, which is carried out by the /whisper/silero_vad_node node. This node is built on the SileroVAD (version 5.0) (https://github.com/snakers4/silero-vad, accessed on 25 June 2025) model, which is a machine learning model designed to detect speech segments. It is lightweight, at just 1.8 MB, processes 30 ms chunks in approximately 1 ms, and is highly accurate. Finally, the last step consists of the transcription of the detected speech segment (published on the /whisper/vad topic) through the /whisper_node. Whisper (model ggml-large-v3-turbo-q5_0.bin) (https://openai.com/index/whisper/, accessed on 25 June 2025) is an automatic speech recognition (ASR) system trained on 680,000 h of multilingual and multitask supervised data collected from the web. The use of such a large and diverse dataset leads to improved robustness to accents, background noise, and technical language. Moreover, it enables transcription in multiple languages, as well as translation from those languages into English. The final transcribed text is published and read by the agents connected to the DSR. The STT agent uploads the final transcribed text into the DSR graph as an attribute of an action node called “listen” that is connected to the robot. This attribute is saved in the internal blackboard of the Behavior Tree as an output, so the next node can access that information to build the explanation.

5.2. Natural Language Processing Agent

Once the question has been transcribed, the next step is to identify the robot event associated with the question and the role of the questioner [14]. These tasks are addressed by the Natural Language Processing (NLP) agent. The output is a raw causal explanation, composed of the description of the identified event and its causes detailed in the high-level episodic memory (causal log).

5.2.1. Event Recognition

In this step, the transcribed question is mapped to a specific robot event. Rasa’s intent classification system (https://rasa.com/docs/, accessed on 27 June 2025) was employed. While it is provided as a means to learn semantic intents of utterances, we utilized it to learn events by training it with 20 questions per event. For example, the question “Why did you stop following me?” was associated with the event “Robot.task_abort = following”.

5.2.2. Role Recognition

In the same fashion as for event recognition, we leveraged Rasa’s intent recognition framework to predict the social role of the person asking the question [14]. Specifically, we considered four distinct roles: therapist, technician, resident, and familiar (i.e., a family member of a resident).

Social role shapes human interaction by influencing not only the subject matter of communication but also the manner in which messages are conveyed. In the context of generating explanations, it is reasonable to hypothesize that a person’s social role affects both the content and phrasing of their questions. Consequently, a robot capable of producing effective, context-sensitive explanations should be aware of the human’s social role. To enable this capability, we trained a model to map human queries to corresponding social roles. The model was developed using a dataset of 160 manually labeled examples, with 40 representative queries assigned to each of the four roles. For instance, the social role associated with the question “Why was the robot interacting with my father?” will be familiar.

5.2.3. Cause–Effect Extraction

Finally, this step is responsible for searching for the most recent occurrence of an effect that matches the recognized event in the causal log file. The descriptions of the associated causes are then extracted from the semantic memory, and constitute what we denote the “raw explanation”. Some examples are presented in Section 6.2.2.
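A minimal Python sketch of this lookup is given next. The log entry layout (`time`, `effect`, `causes`), the contents of the semantic memory, and the way the sentence is assembled are assumptions made for illustration; only the event string comes from the example in Section 5.2.1.

```python
def extract_raw_explanation(causal_log, semantic_memory, event):
    """Return a raw explanation for the most recent occurrence of `event`.

    `causal_log` is assumed to be ordered from oldest to newest entry.
    Returns None when the event never occurred.
    """
    for entry in reversed(causal_log):  # newest entries are checked first
        if entry["effect"] == event:
            effect_text = semantic_memory.get(event, event)
            cause_texts = [semantic_memory.get(c, c) for c in entry["causes"]]
            return f"{effect_text} because " + " and ".join(cause_texts)
    return None


causal_log = [
    {"time": 10.0, "effect": "Robot.task_abort = following", "causes": ["person_lost"]},
    {"time": 42.0, "effect": "Robot.task_abort = following", "causes": ["battery_low"]},
]
semantic_memory = {
    "Robot.task_abort = following": "The robot aborted the following task",
    "person_lost": "the person being followed was lost",
    "battery_low": "the battery level was very low",
}
raw = extract_raw_explanation(causal_log, semantic_memory, "Robot.task_abort = following")
```

With this data, the explanation is built from the entry at time 42.0, since it is the most recent match.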

5.2.4. Prompt Generation

Once we have the “raw explanation”, the LLaMA model prompt is constructed based on the question, the recognized role, and the raw explanation. The final result is the following prompt: “You are a social assistive robot. According to the role [role] of the target that you are answering and the question asked [question]. Compress the answer with the meaningful information: [raw explanation]”.
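Filling this template amounts to a simple string substitution, as the following Python sketch shows. The function name `build_prompt` and the example values are illustrative; the template text is the one quoted above, with the bracketed placeholders replaced by named fields.

```python
PROMPT_TEMPLATE = (
    "You are a social assistive robot. According to the role {role} of the "
    "target that you are answering and the question asked {question}. "
    "Compress the answer with the meaningful information: {raw_explanation}"
)


def build_prompt(role: str, question: str, raw_explanation: str) -> str:
    """Insert the recognized role, the question, and the raw explanation."""
    return PROMPT_TEMPLATE.format(
        role=role, question=question, raw_explanation=raw_explanation
    )


prompt = build_prompt(
    role="therapist",
    question="Why did you stop following me?",
    raw_explanation="The robot aborted the following task because the person was lost",
)
```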

5.3. LLaMA Agent

Although the raw explanations generated by the system contain accurate and relevant information, their formulation may lack clarity or naturalness, potentially hindering the human’s understanding. To address this, the LLaMA software agent performs a final refinement step using a Large Language Model (LLM) to improve fluency, personalization, and semantic quality [14]. The goal is to generate a final explanation, answering the question asked by the human (see Figure 8). The role-based personalization is embedded in the model instruction, but the model can also draw on its own knowledge to personalize the explanation. The following instruction is used: “You are an assistance robot interacting with different types of people in a residential facility. The roles of the people you interact with are therapist, technician, and resident. Responses should be tailored to each role, with the most technical, direct, and detailed communication reserved for the technician; collaborative and empathetic interaction with the therapist; and clear, accessible, and friendly language with the resident.”

When the agent receives the notification, it invokes /llama_node to generate a more natural, human-friendly, and contextually appropriate response for the previously detected user role. As shown in the ROS 2 pipeline in Figure 10, the final output is published through the main topic /llama/generate_generate_response, which the LLaMA agent monitors. This agent then uploads the refined explanation as an attribute to the corresponding node in the DSR, allowing the output agents (TTS and Web agents, responsible for managing the speech and screen interfaces, respectively) to present the explanation clearly and effectively to the human. In our experiments, we used the Meta-Llama-3.1-8B-Instruct-Q4_k_M.gguf model, a quantized 8-billion-parameter version fine-tuned for conversational and instructional tasks. Its ability to run locally supports real-time, on-device interaction, making it suitable for social robotics applications.

5.4. Text To Speech Agent

Piper (version 1.2.0) (https://github.com/rhasspy/piper, accessed on 27 June 2025) is a collection of high-quality, open-source text-to-speech voices developed by the Piper Project, powered by machine learning technology. These voices are synthesized in browser, requiring no cloud subscriptions, and are entirely free to use. They can be used to read aloud web pages and documents with the Read Aloud extension, or be made generally available to all browser apps through the Piper TTS extension.

Each of the voice packs is a machine learning model capable of synthesizing one or more distinct voices. Each pack must be installed separately. Given the substantial size of these voice packs, it is advisable to install only those that will be used. The “Popularity” ranking, which indicates the preferred choices among users, can assist in this selection.

This package is integrated into ROS 2 using the PortAudio library to play the audio. The process is based on the pipeline shown in Figure 11.

In real-world scenarios, providing information through voice offers several important advantages. It is particularly beneficial for individuals with visual impairments, as it allows them to access information without needing to rely on reading text. Voice communication also provides a hands-free experience, making it easier for humans to interact with the system while performing other tasks. Additionally, voice allows for a more natural and conversational interaction, which can improve the human experience by making the system feel more intuitive and human-like. Furthermore, spoken information can be processed more quickly than reading text, especially when the human is on the move or engaged in other activities. Finally, voice can create a more personal connection, making interactions feel more engaging and dynamic. Overall, voice-based communication enhances accessibility, convenience, and the overall user experience.

5.5. Web Agent

The Web agent provides a bridge to link the robot’s on-screen graphical user interfaces (GUIs) with the DSR. Specifically, this agent uses the WebSockets (version September 2023) (https://websockets.spec.whatwg.org/, accessed on 20 June 2025) protocol to establish real-time, bidirectional communication over a single TCP connection. WebSockets support asynchronous data exchange, enabling the client (typically a web browser) and the server to independently send and receive messages in parallel. The agent is implemented in C/C++ and operates in two principal modes, receiving input and transmitting output, as follows:

Receiving from DSR: To ensure that the GUI reflects the robot’s internal state (such as the current task status), the agent monitors specific changes in the DSR. When such updates occur, the agent triggers corresponding modifications on the interface. These changes can range from subtle updates to images or text to complete layout changes on either the robot’s own screen or on an external display.

Sending to DSR: Through interactive elements on the GUI (like touchscreen buttons or wireless keypad controls), human users can provide input such as responses or preferences. The agent translates this interaction into updates within the DSR, which may involve modifying personal attribute data (e.g., user emotional state) or initiating new robot behaviors by triggering predefined external use cases.

In this architecture, this agent is responsible for displaying the question and the final answer on the robot’s screen, which is essential in robot–human interactions. Providing information through text on a screen offers several key advantages. First, it ensures accessibility for users with hearing impairments, allowing them to receive information without relying on audio. Additionally, text helps to clarify and reinforce the message, especially in noisy environments or situations where audio may not be clearly heard. Offering text also improves comprehension, as some humans process information better when they read it rather than hear it. Furthermore, it enhances usability in various contexts, as users can refer back to the text at their own pace.

7. Discussion

In this section, we analyze the system’s evaluation results, differentiating between a qualitative and quantitative evaluation and addressing key limitations such as error handling and generalizability for unseen scenarios.

7.1. Evaluation Results

7.1.1. Quality of the Answer

To evaluate the explanations generated, an experiment was conducted with 30 participants. The three questions and explanations above were presented to the participants. The participants then filled out an online questionnaire with five qualitative Likert items for clarity, completeness, terminological accuracy, and linguistic adequacy of the explanations.

The age distribution of the participants was under 30 years (20%), 30–44 years (16.67%), 45–60 years (43.33%), and over 60 years (20%). Of the participants, 56.67% were male and 43.33% were female. The results are presented in Figure 21 (5 is the highest score, and 1 is the lowest score).
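For readers who prefer head counts, the reported percentages can be converted back to participant numbers. The short sketch below assumes only the sample size of 30 and the percentages stated above; the function and variable names are illustrative.

```python
PARTICIPANTS = 30

# Percentages as reported in the text.
age_shares = {"under 30": 20.0, "30-44": 16.67, "45-60": 43.33, "over 60": 20.0}
gender_shares = {"male": 56.67, "female": 43.33}


def shares_to_counts(shares, total):
    """Convert percentage shares to whole-person counts by rounding."""
    return {group: round(total * pct / 100) for group, pct in shares.items()}


age_counts = shares_to_counts(age_shares, PARTICIPANTS)
gender_counts = shares_to_counts(gender_shares, PARTICIPANTS)
print(age_counts)
print(gender_counts)
```

Under this rounding, the age groups correspond to 6, 5, 13, and 6 participants, and the gender split to 17 male and 13 female participants, each adding up to 30.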

The explanations scored generally high marks in content, clarity, and spelling, although occasional failures in terminological accuracy were identified. It is concluded that the system is adequate to generate appropriate answers for the questions but possibly requires some improvement in the use of technical terminology.

7.1.2. Latency and Resources

With an NVIDIA GeForce RTX 5060 Ti (Nvidia, Santa Clara, CA, USA), the transcription time of the Whisper speech recognition module for the question “Why did you stop following me?” is 0.469 s, and the response time of the LLaMA agent to generate the answer is 0.795 s. When running on the robot, using the NVIDIA Jetson AGX Orin (Nvidia, Santa Clara, CA, USA), the transcription time for the same question is 1.861 s and the response time to generate the answer is 3.645 s. When the perception software is also running, the times are slightly higher: 2.144 s and 4.410 s, respectively.
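To compare the three configurations at a glance, the two reported times can be added and related to the desktop GPU. The sketch below uses only the figures given above. Treating the sum of transcription and generation time as the combined latency is an assumption, since it ignores any other stage of the pipeline (for example, speech synthesis); the dictionary keys and function names are illustrative.

```python
# (transcription time, answer generation time) in seconds, as reported in the text.
measurements = {
    "RTX 5060 Ti": (0.469, 0.795),
    "Jetson AGX Orin": (1.861, 3.645),
    "Jetson AGX Orin + perception": (2.144, 4.410),
}


def combined_latency(platform):
    """Sum of transcription and generation time for one configuration."""
    transcription_s, generation_s = measurements[platform]
    return transcription_s + generation_s


def slowdown(platform, baseline="RTX 5060 Ti"):
    """How many times slower a configuration is than the baseline."""
    return combined_latency(platform) / combined_latency(baseline)


for name in measurements:
    print(f"{name}: {combined_latency(name):.3f} s, x{slowdown(name):.2f}")
```

With these numbers, the combined times are 1.264 s, 5.506 s, and 6.554 s, so the on-robot configurations are roughly 4.4 and 5.2 times slower than the desktop GPU.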

The maximum operating temperature is 42.25 °C, and the shared GPU RAM usage is 5.6 GB of the available 61.4 GB. During execution, the total GPU load reaches 99%, but only for a few seconds. These values were obtained during the LLaMA execution and are presented in Figure 22.

7.1.3. Analysis of Results

The experimental results demonstrate that the system successfully captures relevant events from its runtime knowledge model, constructs causal chains of events (causal log), and generates personalized explanations using Natural Language Processing agents and LLM refinement. In particular, the use of a distributed architecture in which agents interact via shared memory rather than direct messaging improves modularity, scalability, and robustness in dynamic environments.

7.2. Error Handling and Robustness

The current system assumes accuracy in speech recognition and explanation. However, real-world deployments require robust mechanisms to address various sources of error. In the following, we propose handling strategies for future research:

Speech understanding failures: If a user requests an action that is not part of the robot’s predefined task set, the system should respond by informing the user that it cannot perform the requested action and suggesting that they rephrase the request or choose another task. In cases where a misunderstood question leads to an incoherent explanation, the user is encouraged to simply repeat the question for clarification.

Explanation errors: If a generated explanation is incorrect or incoherent, user trust may be degraded. A long-term solution could involve applying reinforcement learning with human preferences to optimize explanation policies based on user feedback. Recent approaches [25] show that models can be tuned to prefer outputs rated more helpful or truthful by users. Incorporating similar reward models into the explanation refinement pipeline could help the robot learn which types of explanations better align with human expectations and feedback over time.
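The first strategy above, rejecting requests that fall outside the predefined task set, could look roughly like the sketch below. It is not part of the current system: the task names, the reply text, and the function name are hypothetical, and a real deployment would match the recognized intent rather than the literal transcribed string.

```python
# Hypothetical task set; the real robot's task names are not given in the text.
KNOWN_TASKS = {"follow me", "deliver item", "go to charging station"}

FALLBACK_REPLY = (
    "I cannot perform that action. "
    "Please rephrase your request or choose another task."
)


def respond_to_request(requested_task, known_tasks=KNOWN_TASKS):
    """Return ("execute", task) for known tasks, otherwise ("inform", reply)."""
    task = requested_task.strip().lower()
    if task in known_tasks:
        return ("execute", task)
    return ("inform", FALLBACK_REPLY)


print(respond_to_request(" Follow me "))
print(respond_to_request("make coffee"))
```

Returning an explicit action tag alongside the payload keeps the decision separate from how the reply is delivered, so the same result could be spoken by the speech agent or shown on the screen.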

7.3. Limitation for Unseen Scenarios

One limitation of the current implementation is that the causal representation depends on predefined design-time events (e.g., preconditions in Behavior Trees), which restricts the system’s generalizability to unknown tasks and to questions outside its knowledge. Although the system was validated in a real environment using the Morphia robot, broader evaluations in less structured or more complex settings would help assess its robustness under greater variability. To improve generalizability, future work could explore the following:

Prompting Strategies for Multi-Path Reasoning: Modern prompting techniques can be used to explore multiple reasoning paths within the LLM, especially when ambiguity or novelty is detected in user questions. For instance, self-ask prompting or chain-of-thought prompting can lead the LLM to internally generate intermediate reasoning steps before providing a final explanation [26]. This increases the robustness of the system in cases where predefined causal chains are incomplete.

Retrieval-Augmented Explanation Generation: Integrating retrieval-augmented generation allows the robot to dynamically incorporate external knowledge or memory snippets during explanation synthesis [27]. The system could query past episodic logs or even external structured sources (e.g., semantic knowledge bases) to enrich responses when internal memory is insufficient.
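As a rough illustration of how the two strategies above might be combined, the sketch below retrieves the episodic log entries that share words with the question and places them in a prompt that asks for step-by-step reasoning. Everything here is an assumption made for illustration: the log entries are invented, keyword overlap stands in for the embedding-based retrieval a real system would more likely use, and the prompt wording and function names are hypothetical. No LLM is called.

```python
import re


def tokenize(text):
    """Lowercase a string and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question, episodic_log, top_k=2):
    """Return up to top_k log entries ranked by word overlap with the question."""
    question_words = tokenize(question)
    scored = [
        (len(question_words & tokenize(entry)), index, entry)
        for index, entry in enumerate(episodic_log)
    ]
    matching = [item for item in scored if item[0] > 0]
    # Highest overlap first; earlier log entries win ties.
    matching.sort(key=lambda item: (-item[0], item[1]))
    return [entry for _, _, entry in matching[:top_k]]


def build_prompt(question, snippets):
    """Assemble a chain-of-thought style prompt around the retrieved snippets."""
    context = "\n".join(f"- {s}" for s in snippets) or "- (no matching memory entries)"
    return (
        "Relevant memory entries:\n"
        f"{context}\n\n"
        f"Question: {question}\n"
        "Reason step by step about the causes, then give a short final explanation."
    )


# Invented example log, not taken from the robot's actual memory.
example_log = [
    "The person following task stopped because the person was lost.",
    "Battery level dropped below threshold.",
    "The robot delivered the item to room 3.",
]
question = "Why did you stop following me?"
prompt = build_prompt(question, retrieve(question, example_log))
print(prompt)
```

When nothing in the log matches, the prompt states this explicitly, which gives the LLM a chance to say that it lacks the information instead of inventing a cause.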

These strategies would significantly improve the robot’s capacity to adapt, reason flexibly, and deliver coherent explanations in complex, changing, or partially unknown environments.

8. Conclusions

This work presents an integrated framework that enables a social robot to generate coherent and contextually appropriate explanations of its own behavior based on a high-level episodic memory and an agent-based software architecture. Unlike previous approaches that rely on low-level log data or purely statistical models, our system leverages semantically and causally structured representations, allowing it to produce meaningful explanations even after extended periods of interaction.

The system has proven capable of identifying relevant events, maintaining compact but informative long-term memory, and generating appropriate linguistic responses within reasonable latency limits, even when running on edge computing devices. These features make the proposed framework suitable for real-world applications in socially assistive robotics.

Future work could explore the dynamic expansion of the causal repertoire using learning techniques or the inclusion of affective and theory-of-mind elements to further enrich explanation personalization and social interaction capabilities. Another promising direction is the incorporation of reinforcement learning based on human preferences, allowing the robot to improve the quality and relevance of its explanations over time. Finally, we intend to explore retrieval-augmented generation techniques to dynamically incorporate external knowledge sources when internal memory is insufficient, thus increasing robustness in dynamic and unknown environments.