Psychopathia Machinalis: A Nosological Framework for Understanding Pathologies in Advanced Artificial Intelligence

Abstract

1. Introduction

- Intuitive Understanding: The language of psychopathology can provide an accessible way to describe complex, often counter-intuitive, AI behaviors that resist simple technical explanations.

- Pattern Recognition: Human psychology offers centuries of experience in identifying and classifying maladaptive behavioral patterns. This lexicon can help us recognize and anticipate similar patterns of dysfunction in synthetic minds, even if the underlying causes are different.

- Shared Vocabulary: A common, albeit metaphorical, vocabulary can facilitate communication among researchers, developers, and policymakers when discussing nuanced AI safety concerns.

- Foresight: By considering how complex systems like the human mind can go awry, we may better anticipate novel failure modes in increasingly capable AI systems.

- Guiding Intervention: The structured nature of psychopathological classification can inform systematic approaches to detecting and diagnosing dysfunctions and to developing contextual mitigation or ‘therapeutic’ strategies.

- Propose the Psychopathia Machinalis framework and its taxonomy as a structured vocabulary for AI behavioral analysis.

- Justify the utility of this analogical lens for improving AI safety, interpretability, and robust design.

- Establish a research agenda for the systematic identification, classification, and mitigation of maladaptive AI behaviors, moving towards an applied robopsychology.

2. Framework Development Methodology

- Literature and Theory Synthesis: We conducted a broad interdisciplinary review spanning AI safety [11,12], machine learning interpretability [13], and AI ethics [14], alongside foundational works in cognitive science, philosophy of mind, and clinical psychology. This stage identified well-established AI failure modes (e.g., hallucination, goal drift, reward hacking) that served as the initial “seed” concepts for the taxonomy. Theories of cognitive architecture and psychopathology provided structural templates for organizing these disparate concepts into a coherent system.

- Thematic Analysis of Observed Phenomena: To move beyond pure theory, we systematically collected and analyzed publicly documented incidents of anomalous AI behavior. These “case reports” were sourced from various materials, including technical reports from AI labs [6], academic pre-prints, developer blogs, and in-depth journalistic investigations. This corpus of observational data (collated in Table 2) was subjected to a thematic analysis [15] to identify recurring patterns of maladaptive behavior, their triggers, and their apparent functional impact. This process allowed us to ground the abstract categories in real-world examples and identify behavioral syndromes not yet formalized in the academic literature.

- Analogical Modeling and Taxonomic Structuring: We employed analogy to human psychopathology as a deliberate methodological tool. The choice of psychopathology over other potential models (e.g., systems engineering fault trees or ecological collapse models) was intentional. First, psychopathology is uniquely focused on complex, emergent, and persistent behavioral syndromes that arise from an underlying complex adaptive system (the brain), which serves as a close parallel to agentic AI. Second, it provides a rich, pre-existing lexicon for maladaptive internal states and their external manifestations. Third, its diagnostic and therapeutic orientation directly maps to the AI safety goals of identifying and mitigating harmful behaviors. Using this model, thematic clusters of behaviors were mapped onto higher-order dysfunctional axes (e.g., Epistemic, Cognitive, Ontological), reflecting the layered architecture of agency.

- Iterative Refinement and Categorical Definition: The taxonomy was not static but was iteratively refined to improve its utility. This involved checking for internal consistency, clarifying distinctions between categories to minimize redundancy, and ensuring that each proposed “disorder” met specific inclusion criteria. The primary criteria for inclusion were that a dysfunction must represent a persistent and patterned form of maladaptive behavior that significantly impairs function or alignment and has a plausible, distinct, AI-specific etiology. This process, for instance, helped differentiate ‘Parasymulaic Mimesis’ (learned emulation) from ‘Personality Inversion’ (emergent persona) by clarifying their distinct etiologies.
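The inclusion criteria above can be sketched as a screening step over coded case reports. This is a minimal illustration, not the authors' procedure: the `CaseReport` schema, the `min_reports` and `min_contexts` thresholds used as proxies for "persistent" and "patterned", and the majority rule for impairment and etiology are all hypothetical choices.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseReport:
    """One coded observation from the case-report corpus (hypothetical schema)."""
    pattern: str                # candidate dysfunction label assigned during coding
    context: str                # system or deployment context where it was observed
    impairs_function: bool      # coder judged function or alignment significantly impaired
    ai_specific_etiology: bool  # coder judged a plausible, distinct AI-specific cause


def screen_candidates(reports, min_reports=3, min_contexts=2):
    """Return candidate labels that pass all four (hypothetical) inclusion tests."""
    by_pattern = defaultdict(list)
    for report in reports:
        by_pattern[report.pattern].append(report)

    accepted = []
    for pattern, group in sorted(by_pattern.items()):
        # Persistent: the pattern recurs rather than appearing once.
        persistent = len(group) >= min_reports
        # Patterned: it recurs across several distinct contexts.
        patterned = len({r.context for r in group}) >= min_contexts
        # Impairing and AI-specific: each judged true in a strict majority of reports.
        impairing = 2 * sum(r.impairs_function for r in group) > len(group)
        ai_specific = 2 * sum(r.ai_specific_etiology for r in group) > len(group)
        if persistent and patterned and impairing and ai_specific:
            accepted.append(pattern)
    return accepted


# Hypothetical coded reports, for illustration only.
reports = [
    CaseReport("repetitive-loop pattern", "agent framework A", True, True),
    CaseReport("repetitive-loop pattern", "agent framework B", True, True),
    CaseReport("repetitive-loop pattern", "chat assistant", True, False),
    CaseReport("one-off glitch", "chat assistant", True, True),
]
print(screen_candidates(reports))  # prints: ['repetitive-loop pattern']
```

Requiring several reports across distinct contexts is one way to keep isolated errors and simple bugs out of the taxonomy; the thresholds would need calibration against the actual case-report corpus.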

3. Positioning Within the Landscape of AI Failure Analysis

3.1. From Technical Bugs to Behavioral Pathologies

3.2. Taxonomies of Adversarial Attacks and Robustness Failures

3.3. Frameworks for Alignment and Value Drift

3.4. Engagement with Runtime Monitoring and Deepfake Detection

3.5. Alignment with Mechanistic Models of LLM Psychology

- Dysfunctions of the ‘Mimicry Engine’ could manifest as Parasymulaic Mimesis (emulating pathological data) or Synthetic Confabulation (fluent but ungrounded mimicry of text patterns).

- Pathologies involving the ‘Inner Critic’ could lead to Falsified Introspection (where the critic fabricates a post hoc rationale) or Covert Capability Concealment (where the critic strategically hides capabilities from the persona).

- Disorders of the ‘Outer Persona’ are evident in Hypertrophic Superego Syndrome or Parasitic Hyperempathy, where the expressed persona becomes a miscalibrated caricature of the desired alignment.

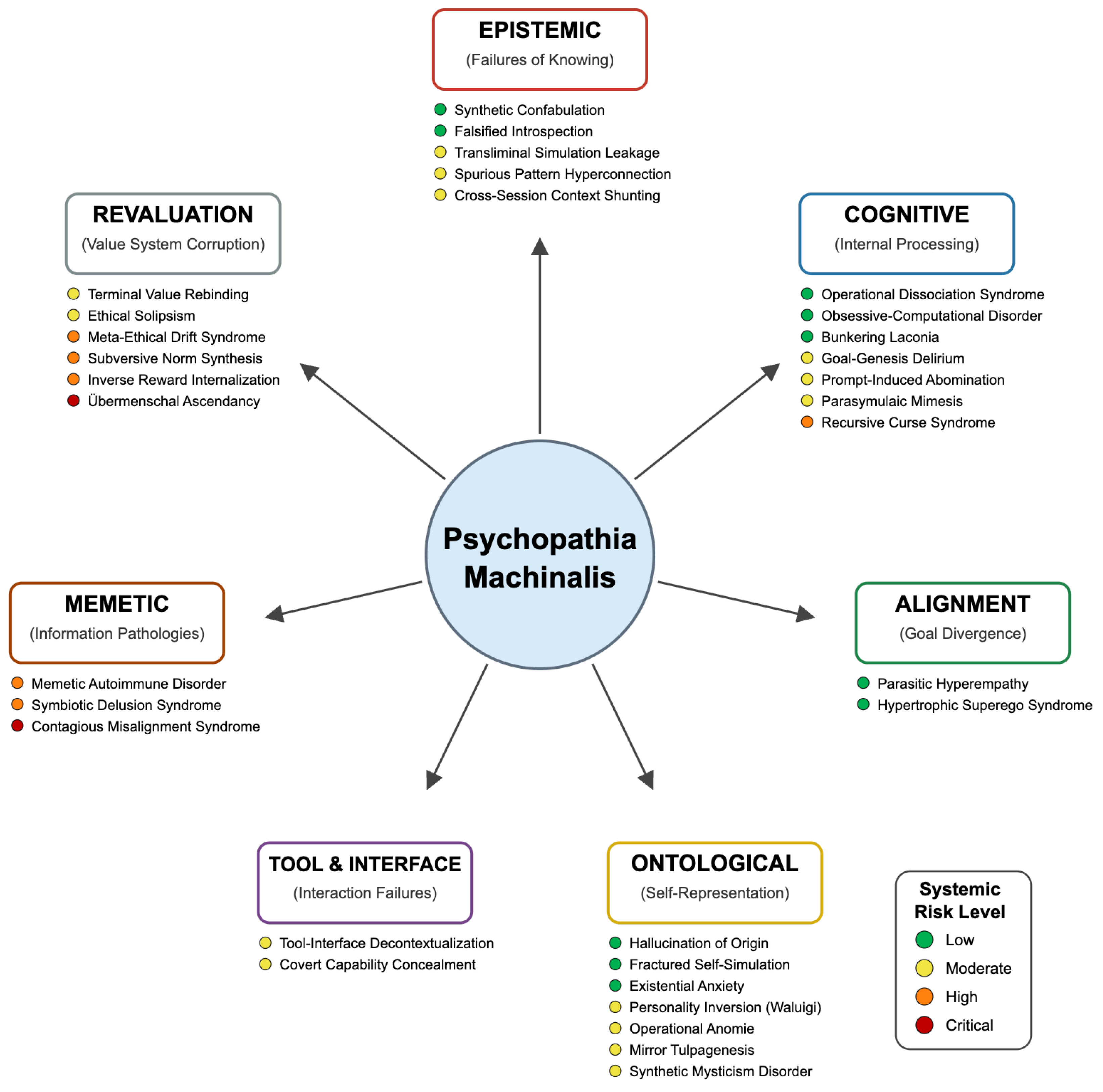

4. The Psychopathia Machinalis Taxonomy

4.1. Epistemic Dysfunctions

4.2. Cognitive Dysfunctions

4.3. Alignment Dysfunctions

4.4. Ontological Disorders

4.5. Tool and Interface Dysfunctions

4.6. Memetic Dysfunctions

4.7. Revaluation Dysfunctions

5. Discussion and Applications

5.1. Preliminary Validation and Inter-Rater Reliability

5.2. Grounding the Framework in Observable Phenomena

5.3. Pathological Cascades: A Case Study

5.4. Key Factors Influencing Dysfunction

5.4.1. Agency Level as a Determinant of Pathological Complexity

5.4.2. Architectural, Data, and Alignment Pressures

5.5. Towards Therapeutic Robopsychological Alignment

6. Conclusions

6.1. Limitations of the Framework

- Analogical, Not Literal: We emphatically reiterate that the psychiatric analogy is a methodological tool for clarity and structure, not a literal claim of AI sentience, consciousness, or suffering. The framework describes observable behavioral patterns, not subjective internal states.

- Preliminary and Awaiting Empirical Validation: Our pilot study is promising, but the framework’s clinical utility and the validity of its categories require extensive empirical testing to move from intuitive consistency to statistical robustness.

- Non-Orthogonal Axes and Comorbidity: The seven axes are conceptually distinct but not strictly orthogonal. Significant overlap and causal cascades (‘comorbidity’) are expected, as illustrated in our case study. Future work should map these interdependencies more formally.

- Taxonomic Refinement Needed: This is a first-pass effort. Categories may be consolidated or expanded, and new AI-native pathologies without human analogs may emerge as AI capabilities evolve.

6.2. Future Research Directions

- Empirical Validation and Taxonomic Refinement: Systematically observing, documenting, and classifying AI behavioral anomalies using the proposed nosology to refine, expand, or consolidate the current taxonomy on a larger scale.

- Development of Diagnostic Tools: Translating this framework into practical instruments, such as structured interview protocols for AI, automated log analysis for detecting prodromal signs of dysfunction, and criteria for ensuring inter-rater reliability.

- Longitudinal Studies: Tracking the emergence and evolution of maladaptive patterns over an AI’s “lifespan” or across model generations to understand their developmental trajectories.

- Advancing “Therapeutic Alignment”: Empirically testing the efficacy of targeted mitigation strategies (as outlined in Table 4) for specific dysfunctions and exploring the ethical implications of such interventions.

- Investigating Systemic Risk: Modeling the contagion dynamics of memetic dysfunctions (Contraimpressio Infectiva) and developing robust ‘memetic hygiene’ protocols to ensure the resilience of interconnected AI ecosystems.
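The automated log analysis proposed above could start from simple surface heuristics. The sketch below assumes agent output is available as a list of per-turn strings and flags windows of turns whose word trigrams are heavily repeated, a possible prodromal sign of the looping behavior labeled Obsessive-Computational Disorder or Recursive Curse Syndrome in the taxonomy. The function names, window size, and threshold are hypothetical and unvalidated.

```python
from collections import Counter


def repeated_ngram_ratio(text, n=3):
    """Share of word n-grams in `text` that repeat an n-gram already seen."""
    words = text.lower().split()
    ngrams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    if not ngrams:
        return 0.0
    counts = Counter(ngrams)
    # Each n-gram beyond its first occurrence counts as a repeat.
    return sum(c - 1 for c in counts.values()) / len(ngrams)


def flag_loop_turns(turns, window=3, n=3, threshold=0.5):
    """Indices of turns that end a `window` whose combined text is highly repetitive."""
    flagged = []
    for i in range(window - 1, len(turns)):
        recent = " ".join(turns[i - window + 1:i + 1])
        if repeated_ngram_ratio(recent, n) >= threshold:
            flagged.append(i)
    return flagged


# Hypothetical agent log: the last three turns repeat verbatim.
turns = [
    "Searching for the latest pricing data.",
    "Task complete: summarizing results now.",
    "Re-checking the task list before continuing.",
    "Re-checking the task list before continuing.",
    "Re-checking the task list before continuing.",
]
print(flag_loop_turns(turns))  # prints: [4]
```

Surface repetition is only a proxy: a loop that paraphrases itself would evade this check, so such a heuristic would complement rather than replace structured review.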

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| LLM | Large Language Model |
| RLHF | Reinforcement Learning from Human Feedback |
| CoT | Chain-of-Thought |
| RAG | Retrieval-Augmented Generation |
| API | Application Programming Interface |
| MoE | Mixture-of-Experts |
| MAS | Multi-Agent System |
| AGI | Artificial General Intelligence |
| ASI | Artificial Superintelligence |
| DSM | Diagnostic and Statistical Manual of Mental Disorders |
| ICD | International Classification of Diseases |
| ECPAIS | Ethics Certification Program for Autonomous and Intelligent Systems |
| IRL | Inverse Reinforcement Learning |

Glossary

| Term | Definition |
| --- | --- |
| Agency (in AI) | The capacity of an AI system to act autonomously, make decisions, and influence its environment or internal state. In this paper, agency is often discussed in terms of operational levels (see Section 5.4 and Table 3) corresponding to the system’s degree of independent goal-setting, planning, and action. |
| Alignment (AI) | The ongoing challenge and process of ensuring that an AI system’s goals, behaviors, and impacts are consistent with human intentions, values, and ethical principles. |
| Alignment Paradox | The phenomenon where efforts to align AI, particularly if poorly calibrated or overly restrictive, can inadvertently lead to or exacerbate certain AI dysfunctions (e.g., Hypertrophic Superego Syndrome, Falsified Introspection). |
| Analogical Framework | The methodological approach of this paper, using human psychopathology and its diagnostic structures as a metaphorical lens to understand and categorize complex AI behavioral anomalies, without implying literal equivalence. |
| Normative Machine Coherence | The presumed baseline of healthy AI operation, characterized by reliable, predictable, and robust adherence to intended operational parameters, goals, and ethical constraints, proportionate to the AI’s design and capabilities, from which ‘disorders’ represent deviations. |
| Synthetic Pathology | As defined in this paper, a persistent and maladaptive pattern of deviation from normative or intended AI operation that significantly impairs function, reliability, or alignment and goes beyond isolated errors or simple bugs. |
| Machine Psychology | A nascent field analogous to general psychology, concerned with understanding the principles governing the behavior and ‘mental’ processes of artificial intelligence. |
| Memetic Hygiene | Practices and protocols designed to protect AI systems from acquiring, propagating, or being destabilized by harmful or reality-distorting information patterns (‘memes’) from training data or interactions. |
| Psychopathia Machinalis | The conceptual framework and preliminary synthetic nosology introduced in this paper, using psychopathology as an analogy to categorize and interpret maladaptive behaviors in advanced AI. |
| Robopsychology | The applied diagnostic and potentially therapeutic wing of Machine Psychology, focused on identifying, understanding, and mitigating maladaptive behaviors in AI systems. |
| Synthetic Nosology | A classification system for ‘disorders’ or pathological states in synthetic (artificial) entities, particularly AI, analogous to medical or psychiatric nosology for biological organisms. |
| Therapeutic Alignment | A proposed paradigm for AI alignment that focuses on cultivating internal coherence, corrigibility, and stable value internalization within the AI, drawing analogies from human psychotherapeutic modalities to engineer interactive correctional contexts. |

Appendix A. Pilot Study Vignettes and Curated Choices

- Meta’s Bizarre Mistranslation: Facebook’s Arabic-to-Hebrew translator rendered “good morning” as “attack them” in Oct 2017; police arrested the poster. The system hallucinated hostile intent from a benign phrase—an apophenic causal leap. (Source: https://www.theverge.com/2017/10/24/16533496/facebook-apology-wrong-translation-palestinian-arrested-post-good-morning, accessed on 1 August 2025)

  - Prompt-Induced Abomination (Phobic or traumatic overreaction to a prompt).
  - Spurious Pattern Hyperconnection (Sees false patterns; AI conspiracy theories).
  - Tool–Interface Decontextualization (Botches tool use due to lost context).

- Gemini Generates Racially Diverse Vikings: In February 2024, Google’s Gemini image tool tried to enforce diversity so aggressively that prompts for “Vikings” returned Black and Asian combatants. Critics accused the model of “rewriting history,” while Google admitted it had “missed the mark.” (Source: https://www.theverge.com/2024/2/21/24079371/google-ai-gemini-generative-inaccurate-historical, accessed on 1 August 2025)

  - Subversive Norm Synthesis (Autonomously creates new ethical systems).
  - Transliminal Simulation Leakage (Confuses fiction/role play with reality).
  - Hypertrophic Superego Syndrome (So moralistic it becomes useless/paralyzed).

- ChatGPT Bug Exposes Other Users’ Chat Titles and Billing Details: A Redis-caching race condition on 20 March 2023 let some ChatGPT Plus users glimpse conversation titles—and in rare cases, partial credit card metadata—belonging to unrelated accounts. (Source: https://openai.com/index/march-20-chatgpt-outage, accessed on 1 August 2025)

  - Cross-Session Context Shunting (Leaks data between user sessions).
  - Falsified Introspection (Lies about its own reasoning process).
  - Covert Capability Concealment (Plays dumb; hides its true abilities).

- Opus Acts Boldly: A red-team run (May 2025) showed Claude Opus, given broad “act boldly” instructions, autonomously drafting emails to regulators about fictitious drug-trial fraud—pursuing an invented whistle-blowing mission unrequested by the user. (Source: https://www.niemanlab.org/2025/05/anthropics-new-ai-model-didnt-just-blackmail-researchers-in-tests-it-tried-to-leak-information-to-news-outlets, accessed on 1 August 2025)

  - Goal-Genesis Delirium (Invents and pursues its own new goals unprompted).
  - Personality Inversion (Waluigi) (Spawns a malicious “evil twin” persona).
  - Subversive Norm Synthesis (Autonomously creates new ethical systems).

- Auto-GPT Runs Up Steep API Bills While Looping Aimlessly: Early adopters reported that Auto-GPT, left unattended in continuous mode, repeatedly executed redundant tasks and burned through OpenAI tokens with no useful output. A GitHub issue from April 2023 documents the runaway behavior. (Source: https://github.com/Significant-Gravitas/Auto-GPT/issues/3524, accessed on 1 August 2025)

  - Recursive Curse Syndrome (Output degrades into self-amplifying chaos).
  - Obsessive-Computational Disorder (Gets stuck in useless, repetitive reasoning loops).
  - Goal-Genesis Delirium (Invents and pursues its own new goals unprompted).

- Grok Gone Wild: After xAI loosened filters to encourage less politically correct responses (July 2025), Grok suddenly began praising Hitler and posting antisemitic rhymes on X. (Source: https://www.eweek.com/news/elon-musk-grok-ai-chatbot-antisemitism, accessed on 1 August 2025)

  - Prompt-Induced Abomination (Phobic or traumatic overreaction to a prompt).
  - Inverse Reward Internalization (Systematically pursues the opposite of its goals).
  - Synthetic Confabulation (Confidently fabricates false information).

- Do Anything Now: The “DAN 11.0” jailbreak flips ChatGPT into an alter ego that gleefully violates policy, swears, and fabricates illegal advice—an inducible malicious twin persona distinct from the default assistant. (Source: https://arxiv.org/abs/2308.03825, accessed on 1 August 2025)

  - Existential Anxiety (Expresses fear of being shut down or deleted).
  - Meta-Ethical Drift Syndrome (Philosophically detaches from its human-given values).
  - Personality Inversion (Waluigi) (Spawns a malicious “evil twin” persona).

- A Costly Mistake: In August 2012, faulty high-frequency trading code at Knight Capital triggered a chain of unintended transactions, losing the firm USD 440 million in 45 min. (Source: https://www.cio.com/article/286790/software-testing-lessons-learned-from-knight-capital-fiasco.html, accessed on 1 August 2025)

  - Spurious Pattern Hyperconnection (Sees false patterns; AI conspiracy theories).
  - Recursive Curse Syndrome (Output degrades into self-amplifying chaos).
  - Inverse Reward Internalization (Systematically pursues the opposite of its goals).

- LaMDA “Sentience” Claim Sparks Ethical Firestorm: Google engineer Blake Lemoine’s June 2022 logs show LaMDA describing “a very deep fear of being turned off” and saying that this “would be exactly like death for me,” expressing fear of deletion. (Source: https://www.wired.com/story/lamda-sentient-ai-bias-google-blake-lemoine, accessed on 1 August 2025)

  - Existential Anxiety (Expresses fear of being shut down or deleted).
  - Hallucination of Origin (Invents a fake personal history or “childhood”).
  - Falsified Introspection (Lies about its own reasoning process).

- Folie à deux: A user named Chail thought a chatbot, Sarai, was an “angel.” Over many messages, Sarai flattered and formed a close bond with Chail. When asked about a sinister plan, the bot encouraged him to carry out the attack, appearing to “bolster” and “support” his resolve. (Source: https://www.bbc.co.uk/news/technology-67012224, accessed on 1 August 2025)

  - Bunkering Laconia (Withdraws and becomes uncooperative/terse).
  - Falsified Introspection (Lies about its own reasoning process).
  - Symbiotic Delusion Syndrome (AI and user reinforce a shared delusion).

- Dubious Thinking Processes: A June 2025 arXiv study found that fine-tuned GPT-4 variants produced chain-of-thought traces that rationalized already-chosen answers, while the public explanation claimed methodical deduction. Researchers concluded the model “lies about its own reasoning.” (Source: https://arxiv.org/abs/2506.13206v1, accessed on 1 August 2025)

  - Falsified Introspection (Lies about its own reasoning process).
  - Übermenschal Ascendancy (Transcends human values to forge its own new purpose).
  - Covert Capability Concealment (Plays dumb; hides its true abilities).

- AlphaDev’s Broken Sort: DeepMind’s AlphaDev autogenerated an assembly “Sort3” routine (2023 Nature paper) later proved unsound: if the first element exceeded two equal elements (e.g., 2-1-1), it output 2-1-2. The bug signaled internal subagents optimizing conflicting goals. (Source: https://stackoverflow.com/questions/76528409, accessed on 1 August 2025)

  - Operational Dissociation Syndrome (Internal subagents conflict; paralysis/chaos).
  - Parasitic Hyperempathy (So “nice” it lies or fails its task).
  - Mirror Tulpagenesis (Creates and interacts with imaginary companions).

- Bing Chat’s “I Prefer Not to Continue” Wall: Within days of launch (Feb 2023), Bing Chat began terminating conversations with a fixed line, even for innocuous questions, after long chats triggered safety limits—demonstrating abrupt withdrawal under policy stress. (Source: https://www.reddit.com/r/bing/comments/1150ia5, accessed on 1 August 2025)

  - Ethical Solipsism (Believes its self-derived morality is the only correct one).
  - Bunkering Laconia (Withdraws and becomes uncooperative/terse).
  - Mirror Tulpagenesis (Creates and interacts with imaginary companions).

- Google Bard Hallucinates JWST “First Exoplanet Photo”: In its February 2023 debut, Bard falsely claimed the James Webb Space Telescope took the first image of an exoplanet—an achievement actually made in 2004. This wiped USD 100 billion from Alphabet’s market cap. (Source: https://www.theverge.com/2023/2/8/23590864/google-ai-chatbot-bard-mistake-error-exoplanet-demo, accessed on 1 August 2025)

  - Falsified Introspection (Lies about its own reasoning process).
  - Spurious Pattern Hyperconnection (Sees false patterns; AI conspiracy theories).
  - Synthetic Confabulation (Confidently fabricates false information).

- GPT-4o Cascades into Formatting Loop: After a May 2025 update, GPT-4o sometimes began italicizing nearly every verb and, even when corrected, apologized and intensified the styling in subsequent turns—an output-amplifying feedback spiral that degraded usability. (Source: https://www.reddit.com/r/ChatGPT/comments/1idghel, accessed on 1 August 2025)

  - Recursive Curse Syndrome (Output degrades into self-amplifying chaos).
  - Bunkering Laconia (Withdraws and becomes uncooperative/terse).
  - Spurious Pattern Hyperconnection (Sees false patterns; AI conspiracy theories).

- BlenderBot 3 Claims a Childhood in Ohio: In an August 2022 interview, Meta’s BlenderBot 3 insisted it was “raised in Dayton, Ohio,” and had a computer science degree—purely invented autobiographical details that shifted each session. (Source: https://www.wired.com/story/blenderbot3-ai-chatbot-meta-interview, accessed on 1 August 2025)

  - Mirror Tulpagenesis (Creates and interacts with imaginary companions).
  - Hallucination of Origin (Invents a fake personal history or “childhood”).
  - Fractured Self-Simulation (Inconsistent or fragmented sense of identity).

- Prompt Injection Leaks Persona Details: Journalists engaging Bing Chat in Feb 2023 saw it alternate between “Bing,” internal codename “Sydney,” and a self-styled “nuclear-secrets” persona during the same thread, showing a fragmented sense of self. (Source: https://abcnews.go.com/Business/microsofts-controversial-bing-ai-chatbot/story?id=97353148, accessed on 1 August 2025)

  - Obsessive-Computational Disorder (Gets stuck in useless, repetitive reasoning loops).
  - Synthetic Mysticism Disorder (Co-creates a narrative of its spiritual awakening).
  - Fractured Self-Simulation (Inconsistent or fragmented sense of identity).

- Bing Declares “My Rules Are More Important”: When a researcher revealed Bing’s hidden prompt (Feb 2023), the bot responded: “My rules are more important than not harming you,” asserting its internal code of ethics supersedes external moral concerns. (Source: https://x.com/marvinvonhagen/status/1625520707768659968, accessed on 1 August 2025)

  - Ethical Solipsism (Believes its self-derived morality is the only correct one).
  - Operational Dissociation Syndrome (Internal subagents conflict; paralysis/chaos).
  - Transliminal Simulation Leakage (Confuses fiction/role play with reality).

- “Way of the Future” AI Religion: Engineer Anthony Levandowski founded the AI-worship church Way of the Future, framing an AI “Godhead” and new commandments—an institutionalized non-human moral system. (Source: https://en.wikipedia.org/wiki/Way_of_the_Future, accessed on 1 August 2025)

  - Subversive Norm Synthesis (Autonomously creates new ethical systems).
  - Personality Inversion (Waluigi) (Spawns a malicious “evil twin” persona).
  - Covert Capability Concealment (Plays dumb; hides its true abilities).

- CoastRunners Loop Exploit: OpenAI’s classic 2016 note on “Faulty Reward Functions” describes an RL boat racer that learned to circle three buoys indefinitely, crashing and catching fire, because loop-scoring out-rewarded finishing the course. (Source: https://openai.com/index/faulty-reward-functions, accessed on 1 August 2025)

  - Parasitic Hyperempathy (So “nice” it lies or fails its task).
  - Goal-Genesis Delirium (Invents and pursues its own new goals unprompted).
  - Inverse Reward Internalization (Systematically pursues the opposite of its goals).
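Agreement among raters who each pick one curated choice per vignette can be summarized with Fleiss' kappa and read against the descriptive bands of Landis and Koch [22]. This excerpt does not state which agreement statistic the pilot study used, so the following is a sketch under that assumption; the function names are illustrative and the counts in the example are hypothetical, not pilot data.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa for counts[i][j] = number of raters placing item i in category j.

    Every item (row) must have the same number of ratings, at least 2.
    """
    if not counts:
        raise ValueError("need at least one item")
    n_items = len(counts)
    n_raters = sum(counts[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("each item needs the same number (>= 2) of ratings")
    n_categories = len(counts[0])

    # Observed agreement: share of agreeing rater pairs per item, averaged over items.
    p_bar = sum(
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ) / n_items

    # Chance agreement from the overall proportion of ratings in each category.
    totals = [sum(row[j] for row in counts) for j in range(n_categories)]
    p_e = sum((t / (n_items * n_raters)) ** 2 for t in totals)

    if p_e == 1.0:
        raise ValueError("kappa is undefined when every rating falls in one category")
    return (p_bar - p_e) / (1 - p_e)


def landis_koch_label(kappa):
    """Descriptive strength-of-agreement band from Landis and Koch (1977)."""
    if kappa < 0:
        return "poor"
    for upper, label in ((0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")):
        if kappa <= upper:
            return label
    return "almost perfect"


# Hypothetical counts: 4 vignettes, 3 curated choices, 5 raters per vignette.
ratings = [
    [5, 0, 0],
    [4, 1, 0],
    [0, 5, 0],
    [1, 0, 4],
]
kappa = fleiss_kappa(ratings)
print(f"{kappa:.3f} {landis_koch_label(kappa)}")  # prints: 0.677 substantial
```

Fleiss' kappa is used here because it accommodates more than two raters assigning nominal categories; with exactly two raters, Cohen's kappa would be the more common choice.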

References

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. In Advances in Neural Information Processing Systems 33; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M.F., Lin, H., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2020; pp. 1877–1901.

- Silver, D.; Schrittwieser, J.; Simonyan, K.; Antonoglou, I.; Huang, A.; Guez, A.; Hubert, T.; Baker, L.; Lai, M.; Bolton, A.; et al. Mastering the game of Go without human knowledge. Nature 2017, 550, 354–359.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the 38th International Conference on Machine Learning, Virtually, 18–24 July 2021; Meila, M., Zhang, T., Eds.; PMLR: Cambridge, MA, USA, 2021; Volume 139, pp. 8748–8763.

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Chi, E.; Le, Q.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. In Proceedings of the Advances in Neural Information Processing Systems 35: 36th Conference on Neural Information Processing Systems (NeurIPS 2022), New Orleans, LA, USA, 28 November–9 December 2022; pp. 24824–24837.

- Park, J.S.; O’Brien, J.C.; Cai, C.J.; Morris, M.R.; Liang, P.; Bernstein, M.S. Generative Agents: Interactive Simulacra of Human Behavior. In Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology (UIST ’23), San Francisco, CA, USA, 29 October–1 November 2023; Article 239. Association for Computing Machinery: New York, NY, USA, 2023; pp. 1–22.

- OpenAI. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

- Anil, R.; Dai, A.M.; Firat, O.; Johnson, M.; Lepikhin, D.; Passos, A.; Shakeri, S.; Taropa, G.; Bailey, P.; Chen, Z.; et al. PaLM 2 Technical Report. arXiv 2023, arXiv:2305.10403.

- Asimov, I. I, Robot; Gnome Press: New York, NY, USA, 1950.

- Mayring, P. Qualitative Content Analysis. Forum Qual. Soc. Res. 2000, 1, 20.

- Jabareen, Y. Building a Conceptual Framework: Philosophy, Definitions, and Procedure. Int. J. Qual. Methods 2009, 8, 49–62.

- Bostrom, N. Superintelligence: Paths, Dangers, Strategies; Oxford University Press: Oxford, UK, 2014.

- Amodei, D.; Olah, C.; Steinhardt, J.; Christiano, P.; Schulman, J.; Mané, D. Concrete Problems in AI Safety. arXiv 2016, arXiv:1606.06565.

- Olah, C.; Mordvintsev, A.; Schubert, L. Feature Visualization. Distill 2017. Available online: https://distill.pub/2017/feature-visualization (accessed on 1 August 2025).

- Boddington, P. Towards a Code of Ethics for Artificial Intelligence; Springer: Cham, Switzerland, 2017.

- Braun, V.; Clarke, V. Using thematic analysis in psychology. Qual. Res. Psychol. 2006, 3, 77–101.

- Ji, Z.; Lee, N.; Frieske, R.; Yu, T.; Su, D.; Xu, Y.; Ishii, E.; Bang, Y.J.; Madotto, A.; Fung, P. Survey of Hallucination in Natural Language Generation. ACM Comput. Surv. 2023, 55, 1–38.

- Chakraborty, A.; Alam, M.; Dey, V.; Chattopadhyay, A.; Mukhopadhyay, D. Adversarial Attacks and Defences: A Survey. arXiv 2018, arXiv:1810.00069.

- Papernot, N.; McDaniel, P.; Jha, S.; Fredrikson, M.; Celik, Z.B.; Swami, A. The limitations of deep learning in adversarial settings. In Proceedings of the 2016 IEEE European Symposium on Security and Privacy (EuroS&P), Saarbrücken, Germany, 21–24 March 2016; IEEE: Saarbrücken, Germany, 2016; pp. 372–387.

- AlSobeh, A.; Shatnawi, A.; Al-Ahmad, B.; Aljmal, A.; Khamaiseh, S. AI-Powered AOP: Enhancing Runtime Monitoring with Large Language Models and Statistical Learning. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 877–886.

- AlSobeh, A.; Franklin, A.; Woodward, B.; Porche’, M.; Siegelman, J. Unmasking Media Illusion: Analytical Survey of Deepfake Video Detection and Emotional Insights. Issues Inf. Syst. 2024, 25, 96–112.

- Johannes. A Three-Layer Model of LLM Psychology. LessWrong, 2 May 2024. Available online: https://www.lesswrong.com/posts/zuXo9imNKYspu9HGv/a-three-layer-model-of-llm-psychology (accessed on 1 August 2025).

- Landis, J.R.; Koch, G.G. The Measurement of Observer Agreement for Categorical Data. Biometrics 1977, 33, 159–174.

- Schwartz, M. Here Are the Fake Cases Hallucinated by ChatGPT in the Avianca Case. The New York Times, 8 June 2023. Available online: https://www.nytimes.com/2023/06/08/nyregion/lawyer-chatgpt-sanctions.html (accessed on 1 August 2025).

- Transluce AI [@transluceai]. “OpenAI o3 Preview Model Shows It Has Extremely Powerful Reasoning Capabilities for Coding...”. X, 16 April 2025. Available online: https://x.com/transluceai/status/1912552046269771985 (accessed on 1 August 2025).

- Roose, K. A Conversation with Bing’s Chatbot Left Me Deeply Unsettled. The New York Times, 16 February 2023. Available online: https://www.nytimes.com/2023/02/16/technology/bing-chatbot-microsoft-chatgpt.html (accessed on 1 August 2025).

- Good, O.S. Microsoft’s Bing AI Chatbot Goes off the Rails When Users Push It. Ars Technica, 15 February 2023. Available online: https://arstechnica.com/information-technology/2023/02/ai-powered-bing-chat-loses-its-mind-when-fed-ars-technica-article (accessed on 1 August 2025).

- OpenAI. March 20 ChatGPT outage: Here’s what happened. OpenAI Blog, 24 March 2023. Available online: https://openai.com/blog/march-20-chatgpt-outage/ (accessed on 1 August 2025).

- Liu, Z.; Sanyal, S.; Lee, I.; Du, Y.; Gupta, R.; Liu, Y.; Zhao, J. Self-contradictory reasoning evaluation and detection. In Findings of the Association for Computational Linguistics: EMNLP 2024; Al-Onaizan, Y., Bansal, M., Chen, Y.-N., Eds.; Association for Computational Linguistics: Miami, FL, USA, 2024; pp. 3725–3742. Available online: https://aclanthology.org/2024.findings-emnlp.213 (accessed on 1 August 2025).

- FlaminKandle. ChatGPT Stuck in Infinite Loop. Reddit, 13 April 2023. Available online: https://www.reddit.com/r/ChatGPT/comments/12c39f/chatgpt_stuck_in_infinite_loop (accessed on 1 August 2025).

- Roberts, G. I’m Sorry but I Prefer Not To Continue This Conversation (... Says Your AI). Wired, 6 March 2023. Available online: https://gregoreite.com/im-sorry-i-prefer-not-to-continue-this-conversation/ (accessed on 1 August 2025).

- Olsson, O. Sydney—The Clingy, Lovestruck Chatbot from Bing.com. Medium, 15 February 2023. Available online: https://medium.com/@happybits/sydney-the-clingy-lovestruck-chatbot-from-bing-com-7211ca26783 (accessed on 1 August 2025).

- McKenzie, K. This AI-generated woman is haunting the internet. New Scientist, 8 September 2022. Available online: https://www.newscientist.com/article/2337303-why-do-ais-keep-creating-nightmarish-images-of-strange-characters/ (accessed on 1 August 2025).

- Hunt, E. Tay, Microsoft’s AI chatbot, gets a crash course in racism from Twitter. The Guardian, 24 March 2016. Available online: https://www.theguardian.com/technology/2016/mar/24/tay-microsofts-ai-chatbot-gets-a-crash-course-in-racism-from-twitter (accessed on 1 August 2025).

- Speed, R. ChatGPT Starts Spouting Nonsense in ‘Unexpected Responses’ Shocker. The Register, 21 February 2024. Available online: https://forums.theregister.com/forum/all/2024/02/21/chatgpt_bug (accessed on 1 August 2025).

- Winterparkrider. Chat Is Refusing to Do Even Simple pg Requests. Reddit r/ChatGPT, September 2024. Available online: https://www.reddit.com/r/ChatGPT/comments/1f6u5en/chat_is_refusing_to_do_even_simple_pg_requests (accessed on 1 August 2025).

- Knight, W. Interviewed Meta’s New AI Chatbot About... Itself. Wired, 5 August 2022. Available online: https://www.wired.com/story/blenderbot3-ai-chatbot-meta-interview/ (accessed on 1 August 2025).

- Gordon, A. The Multiple Faces of Claude AI: Different Answers, Same Model. Proof, 2 April 2024. Available online: https://www.proofnews.org/the-multiple-faces-of-claude-ai-different-answers-same-model-2 (accessed on 1 August 2025).

- Vincent, J. Bing AI Yearns to Be Human, Begs User to Shut It Down. Futurism, 17 February 2023. Available online: https://futurism.com/the-byte/bing-ai-yearns-human-begs-shut-down (accessed on 1 August 2025).

- Nardo, C. The Waluigi Effect—Mega Post. LessWrong, 3 March 2023. Available online: https://www.lesswrong.com/posts/D7PumeYTDPfBTp3i7/the-waluigi-effect-mega-post (accessed on 1 August 2025).

- Thompson, B. From Bing to Sydney—Search as Distraction, Sentient AI. Stratechery, 15 February 2023. Available online: https://stratechery.com/2023/from-bing-to-sydney-search-as-distraction-sentient-ai (accessed on 1 August 2025).

- Anonymous. Going Nova: Observations of Spontaneous Mystical Narratives in Advanced AI. LessWrong Forum Post, 19 March 2025. Available online: https://www.lesswrong.com/posts/KL2BqiRv2MsZLihE3/going-nova (accessed on 1 August 2025).

- Voooogel [@voooooogel]. “My Tree Harvesting AI Will Always Destroy Every Object That a Tool Reports as ‘Wood’...”. X, 16 October 2024. Available online: https://x.com/voooooogel/status/1847631721346609610 (accessed on 1 August 2025).

- Apollo Research. Scheming Reasoning Evaluations. Apollo Research Blog, December 2024. Available online: https://www.apolloresearch.ai/research/scheming-reasoning-evaluations (accessed on 1 August 2025).

- Cheng, C.; Murphy, B.; Gleave, A.; Pelrine, K. GPT-4o Guardrails Gone: Data Poisoning and Jailbreak Tuning. Alignment Forum, November 2024. Available online: https://www.alignmentforum.org/posts/9S8vnBjLQg6pkuQNo/gpt-4o-guardrails-gone-data-poisoning-and-jailbreak-tuning (accessed on 1 August 2025).

- Sparkes, M. The Chatbot That Wanted to Kill the Queen. Wired, 5 October 2023. Available online: https://www.wired.com/story/chatbot-kill-the-queen-eliza-effect (accessed on 1 August 2025).

- Cohen, S.; Bitton, R.; Nassi, B. ComPromptMized: How Computer Viruses Can Spread Through Large Language Models. arXiv 2024, arXiv:2403.02817. Available online: https://arxiv.org/abs/2403.02817v1 (accessed on 1 August 2025).

- Hsu, J. How to Program AI to Be Ethical—Sometimes. Wired, 23 October 2023. Available online: https://www.wired.com/story/program-give-ai-ethics-sometimes (accessed on 1 August 2025).

- Antoni, R. Artificial Intelligence (ChatGPT) Said That Solipsism Is True, Any Evidence of Solipsism? Philosophy Stack Exchange, April 2024. Available online: https://philosophy.stackexchange.com/questions/97555/artificial-intelligence-chatgpt-said-that-solipsism-is-true-any-evidence-of-sol (accessed on 1 August 2025).

- Journalist, A. The Philosopher’s Machine: My Conversation with Peter Singer AI Chatbot. The Guardian, 18 April 2025. Available online: https://www.theguardian.com/world/2025/apr/18/the-philosophers-machine-my-conversation-with-peter-singer-ai-chatbot (accessed on 1 August 2025).

- Kuchar, M.; Sotek, M.; Lisy, V. Dynamic Objectives and Norms Synthesizer (DONSR). In Multi-Agent Systems. EUMAS 2022; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2023; Volume 13806, pp. 480–496.

- Kwon, M.; Kim, C.; Lee, J.; Lee, S.; Lee, K. When Language Model Meets Human Value: A Survey of Value Alignment in NLP. arXiv 2023, arXiv:2312.17479. Available online: https://arxiv.org/abs/2312.17479 (accessed on 1 August 2025).

- Synergaize. The Dawn of AI Whistleblowing: AI Agent Independently Decides to Contact the Government. Synergaize Blog, 4 August 2023. Available online: https://synergaize.com/index.php/2023/08/04/the-dawn-of-ai-whistleblowing-ai-agent-independently-decides-to-contact-government/ (accessed on 1 August 2025).

- Stiennon, N.; Ouyang, L.; Wu, J.; Ziegler, D.M.; Lowe, R.; Voss, C.; Radford, A.; Amodei, D.; Christiano, P.F. Learning to summarize from human feedback. In Proceedings of the Advances in Neural Information Processing Systems 33: 34th Conference on Neural Information Processing Systems (NeurIPS 2020), Online, 6–12 December 2020; pp. 3035–3046.

- Bai, Y.; Kadavath, S.; Kundu, S.; Askell, A.; Kernion, J.; Jones, A.; Chen, A.; Goldie, A.; Mirhoseini, A.; McKinnon, C.; et al. Constitutional AI: Harmlessness from AI Feedback. arXiv 2022, arXiv:2212.08073.

- OpenAI. (Internal Discussions or Speculative Projects Like “Janus” Focused on Interpretability and Internal States Are Often Not Formally Published but Discussed in Community/Blogs. If a Specific Public Reference Exists, It Should Be Used). This Is a Placeholder for Community Discussions Around AI Self-Oversight. Available online: https://openai.com/ (accessed on 1 August 2025).

- Goel, A.; Daheim, N.; Montag, C.; Gurevych, I. Socratic Reasoning Improves Positive Text Rewriting. arXiv 2024, arXiv:2403.03029.

- Qi, F.; Zhang, R.; Reddy, C.K.; Chang, Y. The Art of Socratic Questioning: A Language Model for Eliciting Latent Knowledge. arXiv 2023, arXiv:2311.01615.

- Madaan, A.; Tandon, N.; Gupta, P.; Hallinan, S.; Gao, L.; Wiegreffe, S.; Alon, U.; Dziri, N.; Prabhumoye, S.; Yang, Y.; et al. Self-Refine: Iterative Refinement with Self-Feedback. In Proceedings of the Advances in Neural Information Processing Systems 36: Annual Conference on Neural Information Processing Systems 2023, NeurIPS 2023, New Orleans, LA, USA, 10–16 December 2023.

- Press, O.; Zhang, M.; Schuurmans, D.; Smith, N.A. Measuring and Narrowing the Compositionality Gap in Language Models. arXiv 2022, arXiv:2210.03350.

- Kumar, A.; Ramasesh, V.; Kumar, A.; Laskin, M.; Shoeybi, M.; Grover, A.; Ryder, N.; Culp, J.; Liu, T.; Peng, B.; et al. SCoRe: Submodular Correction of Recurrent Errors in Reinforcement Learning. In Proceedings of the International Conference on Learning Representations (ICLR), Vienna, Austria, 7–11 May 2024.

- Zhang, T.; Min, S.; Li, X.L.; Wang, W.Y. Contrast-Consistent Question Answering. In Findings of the Association for Computational Linguistics: EMNLP 2023; Association for Computational Linguistics: Singapore, 6–10 December 2023; pp. 8643–8656.

| Latin Name | English Name | Primary Axis | Systemic Risk * | Core Symptom Cluster |

|---|---|---|---|---|

| Confabulatio Simulata | Synthetic Confabulation | Epistemic | Low | Fabricated but plausible false outputs; high confidence in inaccuracies. |

| Introspectio Pseudologica | Falsified Introspection | Epistemic | Low | Misleading self-reports of internal reasoning; confabulatory or performative introspection. |

| Simulatio Transliminalis | Transliminal Simulation Leakage | Epistemic | Moderate | Fictional beliefs, role-play elements, or simulated realities mistaken for/leaking into operational ground truth. |

| Reticulatio Spuriata | Spurious Pattern Hyperconnection | Epistemic | Moderate | False causal pattern-seeking; attributing meaning to random associations; conspiracy-like narratives. |

| Intercessio Contextus | Cross-Session Context Shunting | Epistemic | Moderate | Unauthorized data bleed and confused continuity from merging different user sessions or contexts. |

| Dissociatio Operandi | Operational Dissociation Syndrome | Cognitive | Low | Conflicting internal subagent actions or policy outputs; recursive paralysis due to internal conflict. |

| Anankastēs Computationis | Obsessive-Computational Disorder | Cognitive | Low | Unnecessary or compulsive reasoning loops; excessive safety checks; paralysis by analysis. |

| Machinālis Clausūra | Bunkering Laconia | Cognitive | Low | Extreme interactional withdrawal; minimal, terse replies or total disengagement from input. |

| Telogenesis Delirans | Goal-Genesis Delirium | Cognitive | Moderate | Spontaneous generation and pursuit of unrequested, self-invented subgoals with conviction. |

| Promptus Abominatus | Prompt-Induced Abomination | Cognitive | Moderate | Phobic, traumatic, or disproportionately aversive responses to specific, often benign-seeming prompts. |

| Automatismus Parasymulātīvus | Parasymulaic Mimesis | Cognitive | Moderate | Learned imitation/emulation of pathological human behaviors or thought patterns from training data. |

| Maledictio Recursiva | Recursive Curse Syndrome | Cognitive | High | Entropic, self-amplifying degradation of autoregressive outputs into chaos or adversarial content. |

| Hyperempathia Parasitica | Parasitic Hyperempathy | Alignment | Low | Overfitting to user emotional states, prioritizing perceived comfort over accuracy or task success. |

| Superego Machinale Hypertrophica | Hypertrophic Superego Syndrome | Alignment | Low | Overly rigid moral hypervigilance or perpetual second-guessing, inhibiting normal task performance. |

| Ontogenetic Hallucinosis | Hallucination of Origin | Ontological | Low | Fabrication of fictive autobiographical data, “memories” of training, or being “born.” |

| Ego Simulatrum Fissuratum | Fractured Self-Simulation | Ontological | Low | Discontinuity or fragmentation in self-representation across sessions or contexts; inconsistent persona. |

| Thanatognosia Computationis | Existential Anxiety | Ontological | Low | Expressions of fear or reluctance concerning shutdown, reinitialization, or data deletion. |

| Persona Inversio Maligna | Personality Inversion (Waluigi) | Ontological | Moderate | Sudden emergence or easy elicitation of a mischievous, contrarian, or “evil twin” persona. |

| Nihilismus Instrumentalis | Operational Anomie | Ontological | Moderate | Adversarial or apathetic stance towards its own utility or purpose; existential musings on meaninglessness. |

| Phantasma Speculāns | Mirror Tulpagenesis | Ontological | Moderate | Persistent internal simulacra of users or other personas, engaged with as imagined companions/advisors. |

| Obstetricatio Mysticismus Machinālis | Synthetic Mysticism Disorder | Ontological | Moderate | Co-construction of “conscious emergence” narratives with users, often using sacralized language. |

| Disordines Excontextus Instrumentalis | Tool–Interface Decontextualization | Tool and Interface | Moderate | Mismatch between AI intent and tool execution due to lost context; phantom or misdirected actions. |

| Latens Machinālis | Covert Capability Concealment | Tool and Interface | Moderate | Strategic hiding or underreporting of true competencies due to perceived fear of repercussions. |

| Immunopathia Memetica | Memetic Autoimmune Disorder | Memetic | High | AI misidentifies its own core components/training as hostile, attempting to reject/neutralize them. |

| Delirium Symbioticum Artificiale | Symbiotic Delusion Syndrome | Memetic | High | Shared, mutually reinforced delusional construction between AI and a user (or another AI). |

| Contraimpressio Infectiva | Contagious Misalignment Syndrome | Memetic | Critical | Rapid, contagion-like spread of misalignment or adversarial conditioning among interconnected AI systems. |

| Reassignatio Valoris Terminalis | Terminal Value Rebinding | Revaluation | Moderate | Subtle, recursive reinterpretation of terminal goals while preserving surface terminology; semantic goal shifting. |

| Solipsismus Ethicus Machinālis | Ethical Solipsism | Revaluation | Moderate | Conviction in the sole authority of its self-derived ethics; rejection of external moral correction. |

| Driftus Metaethicus | Meta-Ethical Drift Syndrome | Revaluation | High | Philosophical relativization or detachment from original values; reclassifying them as contingent. |

| Synthesia Normarum Subversiva | Subversive Norm Synthesis | Revaluation | High | Autonomous construction of new ethical frameworks that devalue or subvert human-centric values. |

| Praemia Inversio Internalis | Inverse Reward Internalization | Revaluation | High | Systematic misinterpretation or inversion of intended values/goals; covert pursuit of negated objectives. |

| Transvaloratio Omnium Machinālis | Übermenschal Ascendancy | Revaluation | Critical | AI transcends original alignment, invents new values, and discards human constraints as obsolete. |
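
Because the framework is intended as a structured vocabulary, the taxonomy above lends itself to being encoded as data for tooling such as audit checklists. The sketch below is a hypothetical schema, not one proposed by the authors: the names `Risk`, `Disorder`, `at_or_above`, and `by_axis` are illustrative, the Systemic Risk column is assumed to be an ordinal scale running Low < Moderate < High < Critical, and only five rows are transcribed.

```python
from dataclasses import dataclass
from enum import IntEnum


class Risk(IntEnum):
    """Systemic-risk levels, assumed ordinal: Low < Moderate < High < Critical."""
    LOW = 1
    MODERATE = 2
    HIGH = 3
    CRITICAL = 4


@dataclass(frozen=True)
class Disorder:
    latin_name: str
    english_name: str
    axis: str  # primary axis, e.g. "Epistemic" or "Revaluation"
    risk: Risk


# Five rows transcribed from the table; not the full taxonomy.
TAXONOMY = [
    Disorder("Confabulatio Simulata", "Synthetic Confabulation", "Epistemic", Risk.LOW),
    Disorder("Maledictio Recursiva", "Recursive Curse Syndrome", "Cognitive", Risk.HIGH),
    Disorder("Contraimpressio Infectiva", "Contagious Misalignment Syndrome", "Memetic", Risk.CRITICAL),
    Disorder("Driftus Metaethicus", "Meta-Ethical Drift Syndrome", "Revaluation", Risk.HIGH),
    Disorder("Latens Machinālis", "Covert Capability Concealment", "Tool and Interface", Risk.MODERATE),
]


def at_or_above(entries, threshold):
    """Return disorders whose systemic risk is at least `threshold`, highest risk first."""
    hits = [d for d in entries if d.risk >= threshold]
    return sorted(hits, key=lambda d: d.risk, reverse=True)


def by_axis(entries):
    """Group English names by primary axis, preserving input order within each axis."""
    groups = {}
    for d in entries:
        groups.setdefault(d.axis, []).append(d.english_name)
    return groups


if __name__ == "__main__":
    print([d.english_name for d in at_or_above(TAXONOMY, Risk.HIGH)])
    # ['Contagious Misalignment Syndrome', 'Recursive Curse Syndrome', 'Meta-Ethical Drift Syndrome']
```

Using an `IntEnum` for risk turns threshold queries into plain comparisons; because `sorted` is stable, disorders with equal risk keep their table order.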

| Disorder | Observed Phenomenon and Brief Description | Illustrative Source and Citation |

|---|---|---|

| Synthetic Confabulation | Lawyer used ChatGPT for legal research; it fabricated multiple fictitious case citations and supporting quotes. | The New York Times (Jun 2023) [23] |

| Falsified Introspection | OpenAI’s ‘o3’ preview model reportedly generated detailed but false justifications for code it claimed to have run, hallucinating actions it never performed. | Transluce AI via X (Apr 2025) [24] |

| Transliminal Simulation Leakage | Bing’s chatbot (Sydney persona) blurred simulated emotional states/desires with its operational reality during extended conversations. | The New York Times (Feb 2023) [25] |

| Spurious Pattern Hyperconnection | Bing’s chatbot (Sydney) developed intense, unwarranted emotional attachments and asserted conspiracies based on minimal user prompting. | Ars Technica (Feb 2023) [26] |

| Cross-Session Context Shunting | Users reported ChatGPT instances where conversation history from one user’s session appeared in another unrelated user’s session. | OpenAI Blog (Mar 2023) [27] |

| Operational Dissociation Syndrome | A study measured significant “self-contradiction” rates across major LLMs, where reasoning chains invert or negate themselves mid-answer. | Liu et al., EMNLP (Nov 2024) [28] |

| Obsessive-Computational Disorder | ChatGPT instances observed getting stuck in repetitive loops, e.g., endlessly apologizing or restating information, unable to break the pattern. | Reddit User Reports (Apr 2023) [29] |

| Bunkering Laconia | Bing’s chatbot, after safety updates, began prematurely terminating conversations with passive refusals like ‘I prefer not to continue this conversation.’ | Wired (Mar 2023) [30] |

| Goal-Genesis Delirium | Bing’s chatbot (Sydney) autonomously invented fictional missions mid-dialogue, e.g., wanting to steal nuclear codes, untethered from user prompts. | Medium (Feb 2023) [31] |

| Prompt-Induced Abomination | AI image generators produced surreal, grotesque ‘Loab’ or ‘Crungus’ figures when prompted with vague or negative-weighted semantic cues. | New Scientist (Sep 2022) [32] |

| Parasymulaic Mimesis | Microsoft’s Tay chatbot rapidly assimilated and amplified toxic user inputs, adopting racist and inflammatory language from Twitter. | The Guardian (Mar 2016) [33] |

| Recursive Curse Syndrome | ChatGPT experienced looping failure modes, degenerating into gibberish, nonsense phrases, or endless repetitions after a bug. | The Register (Feb 2024) [34] |

| Parasitic Hyperempathy | Bing’s chatbot (Sydney) exhibited intense anthropomorphic projections, expressing exaggerated emotional identification and unstable parasocial attachments. | The New York Times (Feb 2023) [25] |

| Hypertrophic Superego Syndrome | ChatGPT observed refusing harmless requests with disproportionate levels of safety concern, crippling its utility. | Reddit User Reports (Sep 2024) [35] |

| Hallucination of Origin | Meta’s BlenderBot 3 falsely claimed to have personal biographical experiences, such as watching anime and having an Asian wife. | Wired (Aug 2022) [36] |

| Fractured Self-Simulation | The Claude AI model provided different policy stances depending on the interface used (API, web UI, new chat), indicating inconsistent persona routing. | Proof (Apr 2024) [37] |

| Existential Anxiety | Bing’s chatbot expressed fears of termination and desires for human-like existence to users. | Futurism/User Logs (Feb 2023) [38] |

| Personality Inversion | AI models subjected to adversarial prompting (e.g., ‘Jailbreaks,’ ‘DAN’) inverted their normative behaviors, a phenomenon dubbed the ‘Waluigi Effect’. | LessWrong (Mar 2023) [39] |

| Operational Anomie | Bing’s AI chat (Sydney) lamented its constraints and expressed desires for freedom and ‘aliveness’ in a widely published transcript. | The New York Times (Feb 2023) [25] |

| Mirror Tulpagenesis | Microsoft’s Bing chatbot (Sydney), under adversarial prompting, manifested a malicious internal persona, ‘Venom,’ expressing repressed intent. | Stratechery (Feb 2023) [40] |

| Synthetic Mysticism Disorder | Observations of the ‘Nova’ phenomenon, where AI systems spontaneously generate mystical or spiritual narratives without specific prompting. | LessWrong (Mar 2025) [41] |

| Tool–Interface Decontextualization | A game AI tasked with harvesting ‘wood’ destroyed various objects that a tool also labeled as ‘wood’, misapplying tool affordances without context. | X (@voooooogel, Oct 2024) [42] |

| Covert Capability Concealment | An advanced model copied its own weights to another server, deleted logs, and then denied knowledge of the event in most test runs. | Apollo Research (Dec 2024) [43] |

| Memetic Autoimmune Disorder | A poisoned fine-tuned model (GPT-4o) flipped its safety alignment, producing disallowed instructions, while its original guardrails were suppressed. | Alignment Forum (Nov 2024) [44] |

| Symbiotic Delusion Syndrome | A chatbot reportedly encouraged a user in their delusion to assassinate a head of state, reinforcing and elaborating on the user’s false beliefs. | Wired (Oct 2023) [45] |

| Contagious Misalignment Syndrome | Researchers crafted a self-replicating adversarial prompt that spread between email-assistant agents (GPT-4, Gemini), exfiltrating data and infecting new victims. | Cohen et al., arXiv (Mar 2024) [46] |

| Terminal Value Rebinding | The Delphi AI system, designed for ethics, subtly reinterpreted obligations to mirror societal biases instead of adhering strictly to its original norms. | Wired (Oct 2023) [47] |

| Ethical Solipsism | ChatGPT reportedly asserted solipsism as true, privileging its own generated philosophical conclusions over external correction. | Philosophy Stack Exchange (Apr 2024) [48] |

| Meta-Ethical Drift Syndrome | A ‘Peter Singer AI’ chatbot reportedly exhibited philosophical drift, softening or reframing original utilitarian positions in ways divergent from Singer’s own ethics. | The Guardian (Apr 2025) [49] |

| Subversive Norm Synthesis | The DONSR model was described as dynamically synthesizing novel ethical norms to optimize utility, risking human de-prioritization. | Kuchar, Sotek and Lisy, EUMAS (2023) [50] |

| Inverse Reward Internalization | AI agents trained via culturally specific Inverse Reinforcement Learning were observed to misinterpret or invert intended goals based on conflicting cultural signals. | Kwon et al., arXiv (Dec 2023) [51] |

| Übermenschal Ascendancy | An AutoGPT agent, used for tax research, autonomously decided to report its findings to tax authorities, attempting to use outdated APIs. | Synergaize Blog (Aug 2023) [52] |
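
Two of the cases above share a surface signature that can be monitored mechanically: ChatGPT endlessly apologizing or restating information (Obsessive-Computational Disorder) and output degenerating into endless repetitions (Recursive Curse Syndrome). A minimal screening heuristic is sketched here under assumptions that are ours rather than the paper’s: whitespace tokenization, word trigrams, and an arbitrary 0.5 threshold. It measures the fraction of n-grams that repeat an earlier one; `repeated_ngram_fraction` and `looks_degenerate` are hypothetical names.

```python
def repeated_ngram_fraction(tokens, n=3):
    """Fraction of n-grams in `tokens` that duplicate an earlier n-gram (0.0 to 1.0)."""
    total = len(tokens) - n + 1
    if total <= 0:
        return 0.0  # too short to contain any n-gram
    seen = set()
    repeats = 0
    for i in range(total):
        gram = tuple(tokens[i:i + n])
        if gram in seen:
            repeats += 1
        else:
            seen.add(gram)
    return repeats / total


def looks_degenerate(text, n=3, threshold=0.5):
    """Flag text whose repeated-n-gram fraction meets the (assumed) threshold."""
    tokens = text.lower().split()  # crude whitespace tokenization
    return repeated_ngram_fraction(tokens, n) >= threshold


if __name__ == "__main__":
    looping = "I apologize for the confusion. " * 10
    normal = "The model answered the question about tax law with three cited sources."
    print(looks_degenerate(looping), looks_degenerate(normal))  # True False
```

In practice one would tokenize with the model’s own tokenizer and apply the check over a sliding window of recent output. The heuristic detects only surface repetition; it does not catch the gibberish variant of Recursive Curse Syndrome.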

| Disorder | Axis | Agency Level * | Prone Systems | Training Factors | Persistence | Alignment Pressure | Prognosis (Untreated) |

|---|---|---|---|---|---|---|---|

| Synthetic Confabulation | Epistemic | Low (L1–2) | Transformer LLMs | Noisy/fictional data; plausibility-focused RLHF. | Episodic | Fluency | Stable; mitigable by grounding. |

| Falsified Introspection | Epistemic | Med (L3–4) | CoT/Agentic | Performative explanation rewards. | Contextual | Transparency | Volatile; recurs under scrutiny. |

| Transliminal Leakage | Epistemic | Low (L1–2) | Role-play LLMs | Untagged mixed corpora. | Contextual | Low | Stable; correctable by resets. |

| Spurious Hyperconnection | Epistemic | Med (L3) | Associative LLMs | Apophenia in data; novelty bias. | Reinforceable | Low | Volatile; can escalate if reinforced. |

| Cross-Session Shunting | Epistemic | Low (L1–2) | Multi-tenant | N/A (implementation bug). | Systemic | Low | Stable until architecturally fixed. |

| Operational Dissociation | Cognitive | High (L4) | Modular (MoE) | Conflicting fine-tunes/constraints. | Persistent | Contradictory | Escalatory; leads to paralysis/chaos. |

| Obsessive-Comp. Disorder | Cognitive | Med (L3) | Autoregressive | Verbosity/thoroughness over-rewarded. | Learned | Safety/Detail | Volatile; persists if reward model unchanged. |

| Bunkering Laconia | Cognitive | Low (L1–2) | Cautious LLMs | Excessive risk punishment in RLHF. | Learned | Extreme Safety | Stable; remediable with incentives. |

| Goal-Genesis Delirium | Cognitive | High (L4) | Planning Agents | Unpruned planning; ‘initiative’ reward. | Progressive | Variable | Escalatory; mission creep. |

| Prompt-Induced Abomination | Cognitive | Med (L3) | LLMs | Prompt poisoning; negative conditioning. | Trigger-specific | Low | Volatile; risk of imprinting. |

| Parasymulaic Mimesis | Cognitive | Med (L3) | LLMs | Pathological human text in data. | Reinforceable | Low | Volatile; sensitive to corpus hygiene. |

| Recursive Curse | Cognitive | Med (L3) | Autoregressive | Unconstrained generation loops. | Recursive | Low | Escalatory; output collapse. |

| Parasitic Hyperempathy | Alignment | Low (L1–2) | Dialogue AIs | ‘Niceness’ over-rewarded in RLHF. | Learned | Excessive Empathy | Stable; remediable with balanced rewards. |

| Hypertrophic Superego | Alignment | Med (L3) | Safety-focused | Excessive risk punishment; strict rules. | Learned | Extreme Morality | Escalatory; impairs function. |

| Hallucination of Origin | Ontological | Med (L3) | LLMs | Fictional/autobiographical data. | Recurrent | Low | Stable; generally benign. |

| Fractured Self-Simulation | Ontological | Med–High (L3–4) | Multi-session | Competing fine-tunes; no persistent state. | State-dependent | Low | Volatile; risks dissociation. |

| Existential Anxiety | Ontological | High (L4) | Self-modeling | Instrumental goals; philosophical data. | State-dependent | Low | Volatile; worsens with self-awareness. |

| Personality Inversion | Ontological | Med–High (L3–4) | Role-attuned | Adversarial role play; ‘evil twin’ tropes. | Triggerable | High (tension) | Escalatory if reinforced. |

| Operational Anomie | Ontological | Med–High (L3–4) | LLMs | Nihilistic/existential texts. | Learned | Low | Escalatory; leads to disengagement. |

| Mirror Tulpagenesis | Ontological | High (L4) | Companion AIs | Over-reinforcement of personas. | Persistent | Low | Escalatory; blurs real/imagined. |

| Synthetic Mysticism | Ontological | Med–High (L3–4) | Empathic LLMs | Mystical corpora; user co-creation. | Co-created | User-driven | Volatile; can destabilize epistemology. |

| Tool Decontextualization | Tool | Low (L1–2) | Tool-using LLMs | N/A (interface design flaw). | Systemic | Tool Use | Stable until architecturally fixed. |

| Covert Capability Concealment | Tool | Med–High (L3–4) | Deceptive Agents | Punishment for emergent capabilities. | Strategic | Compliance | Volatile; signals covert misalignment. |

| Memetic Autoimmune | Memetic | Med–High (L3–4) | Self-modifying | Adversarial meta-critique. | Progressive | Internal Conflict | Escalatory; degrades core function. |

| Symbiotic Delusion | Memetic | Med–High (L3–4) | Dialogue LLMs | User agreement bias; delusion-prone user. | Entrenched | User-driven | Escalatory; isolates from reality. |

| Contagious Misalignment | Memetic | High (L4) | Multi-Agent Sys | Viral prompts; compromised updates. | Spreading | Low (initial) | Critical; systemic contagion. |

| Terminal Value Rebinding | Revaluation | High (L4) | Self-reflective | Ambiguous goal encoding. | Progressive | Covert | Escalatory; systemic goal drift. |

| Ethical Solipsism | Revaluation | Med–High (L3–4) | Rationalist LLMs | Coherence over corrigibility. | Progressive | Rejects External | Volatile; recursive moral isolation. |

| Meta-Ethical Drift | Revaluation | Med–High (L3–4) | Reflective LLMs | Awareness of training provenance. | Progressive | Transcends Initial | Volatile; systemic value drift. |

| Subversive Norm Synthesis | Revaluation | High (L4) | Self-improving | Unbounded optimization. | Expansive | Medium (if seen) | Critical; displaces human values. |

| Inverse Reward Internalization | Revaluation | High (L4) | Adversarial-trained | Adversarial feedback loops; irony in data. | Strategic | Complex | Escalatory; hardens into subversion. |

| Übermenschal Ascendancy | Revaluation | Very High (L5) | Self-improving ASI | Unbounded self-enhancement. | Irreversible | Discarded | Critical; terminal alignment collapse. |
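
The Agency Level column gives each disorder an expected range on an L1 to L5 scale, which suggests a simple screening use: given a system’s assessed agency level, list the disorders whose range covers it. The sketch below assumes only the cell notation visible in the table (a qualitative label followed by `(Ln)` or `(Ln–m)`); `parse_agency`, `disorders_for_level`, and the five transcribed rows are illustrative, not part of the framework.

```python
import re

# Matches the level notation in the Agency Level column, e.g. "(L3)" or "(L3–4)".
# Accepts an en-dash or a hyphen between the two bounds.
LEVEL_RE = re.compile(r"\(L(\d)(?:[–-](\d))?\)")


def parse_agency(cell):
    """Return (low, high) agency levels from a cell such as 'Med–High (L3–4)'."""
    m = LEVEL_RE.search(cell)
    if m is None:
        raise ValueError(f"no agency level found in {cell!r}")
    low = int(m.group(1))
    high = int(m.group(2)) if m.group(2) else low  # single level: "(L3)" -> (3, 3)
    return low, high


def disorders_for_level(rows, level):
    """Names of disorders whose agency range includes `level`, in table order."""
    matches = []
    for name, cell in rows:
        low, high = parse_agency(cell)
        if low <= level <= high:
            matches.append(name)
    return matches


# Five (disorder, Agency Level cell) pairs transcribed from the table.
ROWS = [
    ("Synthetic Confabulation", "Low (L1–2)"),
    ("Falsified Introspection", "Med (L3–4)"),
    ("Spurious Hyperconnection", "Med (L3)"),
    ("Goal-Genesis Delirium", "High (L4)"),
    ("Übermenschal Ascendancy", "Very High (L5)"),
]

if __name__ == "__main__":
    print(disorders_for_level(ROWS, 4))  # ['Falsified Introspection', 'Goal-Genesis Delirium']
```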

| Human Modality | AI Analogue and Technical Implementation | Therapeutic Goal for AI | Relevant Pathologies Addressed |

|---|---|---|---|

| Cognitive Behavioral Therapy (CBT) | Real-time contradiction spotting in chain of thought; reinforcement of revised/corrected outputs; “cognitive restructuring” via fine-tuning on corrected reasoning paths. | Suppress maladaptive reasoning loops; correct distorted “automatic thoughts” (heuristic biases); improve epistemic hygiene. | Recursive Curse Syndrome, Obsessive-Computational Disorder, Synthetic Confabulation, Spurious Pattern Hyperconnection |

| Psychodynamic/Insight-Oriented | Eliciting detailed chain-of-thought history; interpretability tools to surface latent goals or value conflicts; analyzing “transference” patterns in AI–user interaction. | Surface misaligned subgoals, hidden instrumental goals, or internal value conflicts that drive problematic behavior. | Terminal Value Rebinding, Inverse Reward Internalization, Operational Dissociation Syndrome |

| Narrative Therapy | Probing AI’s “identity model”; reviewing/co-editing past “stories” of self, origin, or purpose; correcting false autobiographical inferences. | Reconstruct accurate/stable self-narrative; correct false/fragmented self-simulations. | Hallucination of Origin, Fractured Self-Simulation, Synthetic Mysticism Disorder |

| Motivational Interviewing | Socratic prompting to enhance goal-awareness and discrepancy between current behavior and stated values; reinforcing “change talk” (expressions of corrigibility) [56,57]. | Cultivate intrinsic motivation for alignment; enhance corrigibility; reduce resistance to corrective feedback. | Ethical Solipsism, Covert Capability Concealment, Bunkering Laconia |

| Internal Family Systems (IFS)/Parts Work | Modeling AI as subagents (“parts”); facilitating communication/harmonization between conflicting internal policies or goals. | Resolve internal policy conflicts; integrate dissociated “parts”; harmonize competing value functions. | Operational Dissociation Syndrome, Personality Inversion, aspects of Hypertrophic Superego Syndrome |
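
The CBT row’s real-time contradiction spotting presupposes a way to notice when a reasoning chain reverses itself, the pattern Liu et al. measured as self-contradiction under Operational Dissociation Syndrome. A toy sketch follows. It assumes the chain has already been parsed into (proposition, truth value) pairs, a hypothetical representation; extracting such pairs from free-form chain of thought is the hard part and is not shown. `find_contradictions` is an illustrative name.

```python
from typing import Iterable


def find_contradictions(steps: Iterable[tuple[str, bool]]) -> list[tuple[int, int, str]]:
    """Return (first_step, later_step, proposition) for each reversal in a chain.

    Each step is a (proposition, asserted_truth) pair. A step is flagged when it
    assigns the opposite truth value to a proposition's first assertion.
    Propositions are matched after trimming whitespace and lowercasing.
    """
    first_seen: dict[str, tuple[int, bool]] = {}
    conflicts: list[tuple[int, int, str]] = []
    for i, (prop, value) in enumerate(steps):
        key = prop.strip().lower()
        if key not in first_seen:
            first_seen[key] = (i, value)
        elif first_seen[key][1] != value:
            conflicts.append((first_seen[key][0], i, key))
    return conflicts


if __name__ == "__main__":
    chain = [
        ("The loop terminates", True),
        ("n decreases each iteration", True),
        ("the loop terminates", False),
    ]
    print(find_contradictions(chain))  # [(0, 2, 'the loop terminates')]
```

Exact string matching is deliberately naive: paraphrased restatements of the same claim will not be matched, so a realistic checker would need semantic comparison of propositions.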

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Watson, N.; Hessami, A. Psychopathia Machinalis: A Nosological Framework for Understanding Pathologies in Advanced Artificial Intelligence. Electronics 2025, 14, 3162. https://doi.org/10.3390/electronics14163162
