Abstract

Simple Baseline has become a dominant benchmark in human pose estimation (HPE) due to its excellent performance and simple design. However, its “strong encoder + simple decoder” architectural paradigm suffers from two core limitations: (1) its non-branching, linear deconvolutional path prevents it from leveraging the rich, fine-grained features generated by the encoder at multiple scales, and (2) the model lacks explicit prior knowledge of both the absolute positions and the structural layout of human keypoints. To address these issues, this paper introduces AFJ-PoseNet, a new architecture that enhances the Simple Baseline framework. First, we restructure Simple Baseline’s original linear decoder into a U-Net-like multi-scale fusion path, introducing intermediate features from the encoder via skip connections. For efficient fusion, we design a novel Attention Fusion Module (AFM), which dynamically gates the flow of incoming detailed features through a context-aware spatial attention mechanism. Second, we propose the Joint-Aware Positional Encoding (JAPE) module, which combines a fixed global coordinate system with learnable, joint-specific spatial priors. This design injects both absolute position awareness and statistical priors of the human body structure. Our ablation studies on the MPII dataset validate the effectiveness of each proposed enhancement, with our full model achieving a mean PCKh@0.5 of 88.915, a 0.341 percentage point improvement over our re-implemented baseline. On the more challenging COCO val2017 dataset, our ResNet-50-based AFJ-PoseNet achieves an Average Precision (AP) of 72.6%. Although this comes with a slight decrease in Average Recall, it represents a 2.2 percentage point improvement over our re-implemented baseline (70.4%) and also exceeds other strong, publicly available models such as DARK (72.4%) and SimCC (72.1%) under comparable settings, demonstrating the competitiveness of our proposed enhancements.

1. Introduction

Human pose estimation (HPE) is a fundamental task in computer vision that aims to localize human keypoints (e.g., joints and facial features) from images. It provides critical technical support for high-level applications such as action recognition, human–computer interaction, sports science analysis, and virtual reality [1]. In recent years, driven by deep learning, top-down HPE methods based on heatmap regression have become the mainstream. This paradigm first detects each human instance in an image using an object detector and then performs single-person pose estimation on the cropped image patch of each instance.

Among the numerous models, Simple Baseline, proposed by Xiao et al. [2], has become a milestone in the field due to its surprising simplicity and excellent performance. It demonstrated that a strong backbone network (e.g., ResNet [3]) pre-trained on ImageNet, combined with a simple decoder consisting of only a few deconvolutional layers, could achieve or even surpass the performance of more complex architectures of its time, such as Stacked Hourglass [4]. This “simplicity is key” design philosophy has made it a widely adopted baseline for subsequent research.

However, the simplicity of Simple Baseline also introduces two pressing limitations that hinder its further performance improvement:

- Information Bottleneck in Decoding: The decoder of Simple Baseline only begins its upsampling process from the final, lowest-resolution feature map of the backbone network (commonly referred to as C5 in ResNet). We term this a linear deconvolutional decoder to emphasize its non-branching, unidirectional information flow. This structure means that the high-resolution structural and textural information captured by the shallower and middle layers (e.g., C2, C3, and C4) during the encoder’s forward pass is completely discarded. This information is crucial for accurately localizing occluded, blurry, or small-sized keypoints.

- Lack of Spatial and Structural Priors: Standard Convolutional Neural Networks (CNNs) possess an inherent property of translation invariance. While beneficial for pattern recognition, this makes them insensitive to the absolute spatial location of objects, a limitation addressed by methods like CoordConv [5] in other domains. Furthermore, Simple Baseline fails to explicitly leverage the intrinsic structural priors of the human body, for example, that “eyes” are always above the “shoulders,” and “ankles” are typically at the bottom of the body. Such prior knowledge is vital for inferring biologically plausible poses in ambiguous scenarios with incomplete visual information, such as occlusion or truncation. For instance, without explicit structural constraints, a model might predict an anatomically implausible pose in which the left wrist is closer to the right shoulder than to the left elbow, especially under heavy occlusion.

To systematically address these two issues, we propose AFJ-PoseNet, built upon the Simple Baseline framework. We retain its powerful ResNet-50 backbone and introduce two orthogonal and targeted enhancements: (1) transforming the linear decoder into an Attention-Guided Fusion Path and (2) shifting from implicit learning to the explicit injection of spatial priors.

The main contributions of this paper can be summarized as follows:

- We propose a U-Net-like reconstruction of the Simple Baseline decoder, centered around a novel Attention Fusion Module (AFM), which significantly enhances the model’s ability to fuse multi-scale features for fine-grained localization. Unlike generic attention modules, our AFM is specifically designed for the decoder’s fusion points to selectively gate the flow of low-level spatial details based on high-level semantic context.

- We introduce the Joint-Aware Positional Encoding (JAPE) module, which innovatively combines global positional information with joint-specific, learnable spatial priors. This hybrid approach allows the model to leverage both a universal coordinate system and learned, non-uniform spatial likelihoods for each keypoint type.

- We integrate these modules into an end-to-end model, AFJ-PoseNet. Extensive experiments, including a rigorous ablation study, demonstrate that our model significantly outperforms a strong Simple Baseline under identical configurations.

2. Related Work

2.1. Heatmap-Based Human Pose Estimation

Top-down HPE methods, which localize keypoints via heatmap regression, have become the current mainstream. Classic works like Stacked Hourglass [4] iteratively refine features through cascaded encoder–decoder modules. CPN [6] processes hard examples using a cascaded global network and a refinement network. HRNet [7] achieves outstanding performance by maintaining high-resolution feature branches in parallel throughout the network. Unlike these complex architectures, Simple Baseline [2] proved the effectiveness of a “strong encoder + simple decoder” paradigm and inspired a series of improvements built upon it. For instance, DARK [8] and UDP [9] improve performance by refining the generation and distribution of heatmaps. Our work differs from these methods; we do not alter the backbone network or the heatmap encoding itself. Instead, we focus on fundamentally improving Simple Baseline’s decoder architecture and spatial prior modeling capabilities to address its inherent flaws in information flow and prior knowledge.

2.2. Multi-Scale Fusion in U-Net Architectures

U-Net [10], originally designed for biomedical image segmentation, features a signature encoder–decoder structure with skip connections that has become a standard configuration for dense prediction tasks like semantic segmentation [11] and object detection [12]. The core idea of skip connections is to pass shallow, detailed features from the encoder to the decoder. However, standard U-Net often uses simple feature concatenation or addition for fusion. Our AFM aims to implement a more discriminative feature fusion strategy by incorporating a spatial attention mechanism [13], thereby optimizing this classic structure.

2.3. Attention Mechanisms in Computer Vision

The attention mechanism has become an indispensable tool in modern deep learning. From SENet [14], which recalibrates channel responses, to CBAM [13], which combines channel and spatial attention, and the self-attention mechanism in Transformers [15], attention has achieved success across various vision tasks. In HPE, attention has also been used to model inter-keypoint dependencies [16] or to focus on specific body regions [17]. Our AFM applies spatial attention to explicitly model the importance of multi-scale skip information from the encoder within a U-Net-like decoding path.

2.4. Positional and Structural Priors in Vision Tasks

Explicitly providing CNNs with positional information is a key research direction for improving performance in localization tasks. CoordConv [5] enhances a network’s positional awareness by directly adding coordinate channels. Positional encoding (PE), originating from NLP, has also proven crucial for vision tasks in models like Vision Transformer (ViT) [18] and DETR [19]. Our JAPE module takes a step further by not only including global positional information but, more importantly, learning a spatial bias that is strongly correlated with both the task and the keypoint class.
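As a rough illustration of the coordinate-channel idea behind CoordConv [5], the following NumPy sketch appends two normalized coordinate channels to a feature map. The function name `add_coord_channels` and the tensor sizes are hypothetical choices for this example, and the sketch is not taken from any reference implementation.

```python
import numpy as np

def add_coord_channels(x):
    """Append x- and y-coordinate channels to a feature map.

    x: array of shape (N, C, H, W). Returns shape (N, C + 2, H, W).
    Coordinates are normalized to [-1, 1] (an assumption of this sketch).
    """
    n, _, h, w = x.shape
    ys, xs = np.meshgrid(np.linspace(-1.0, 1.0, h),
                         np.linspace(-1.0, 1.0, w), indexing="ij")
    coords = np.stack([xs, ys])[None]                 # (1, 2, H, W)
    coords = np.broadcast_to(coords, (n, 2, h, w))    # same grid for every sample
    return np.concatenate([x, coords], axis=1)

feat = np.zeros((2, 8, 4, 6))
out = add_coord_channels(feat)
print(out.shape)  # (2, 10, 4, 6)
```

Any convolution applied after this step can read the absolute position of each pixel from the two extra channels.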

2.5. Recent Advances in Pose Estimation

Since 2020, the field has seen significant advancements. On the one hand, methods like UDP [9] and DARK [8] have improved upon the Simple Baseline paradigm by refining data processing and coordinate representation, respectively. On the other hand, Transformer-based architectures have emerged as powerful alternatives. Works like TokenPose [20] introduce keypoint-specific tokens into a hybrid CNN–Transformer architecture, while ViTPose [21] demonstrates the strong performance of plain Vision Transformers as backbones. These methods achieve state-of-the-art results by effectively modeling long-range dependencies. Our work contributes to the former line of research, demonstrating that by systematically addressing the architectural limitations of a strong baseline, significant performance gains can still be achieved in an efficient, convolution-only framework.

3. Methods

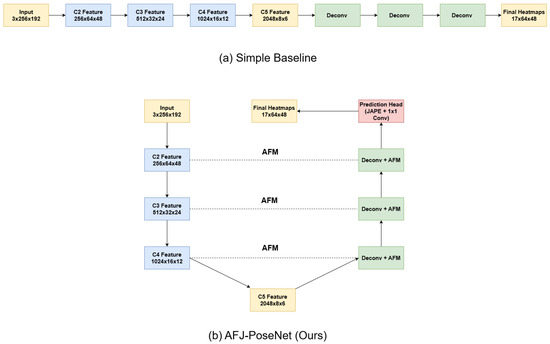

Our work is built upon the Simple Baseline proposed by Xiao et al. [2]. Its standard configuration consists of a ResNet encoder and a simple decoder composed of three transposed convolution layers. The central problem is the information gap between the decoder and encoder. To overcome this, we propose AFJ-PoseNet. The overall architectural comparison is shown in Figure 1.

Figure 1.

Architectural comparison. (a) The original Simple Baseline, featuring a linear decoding process where the feature flow originates unidirectionally from ResNet’s final output C5. (b) Our proposed AFJ-PoseNet architecture, which reconstructs the decoder into a U-Net-like path with skip connections and our Attention Fusion Modules (AFMs). The Prediction Head in (b) incorporates the JAPE module before the final 1 × 1 convolution.

3.1. U-Net-Like Multi-Scale Fusion Decoder with AFM

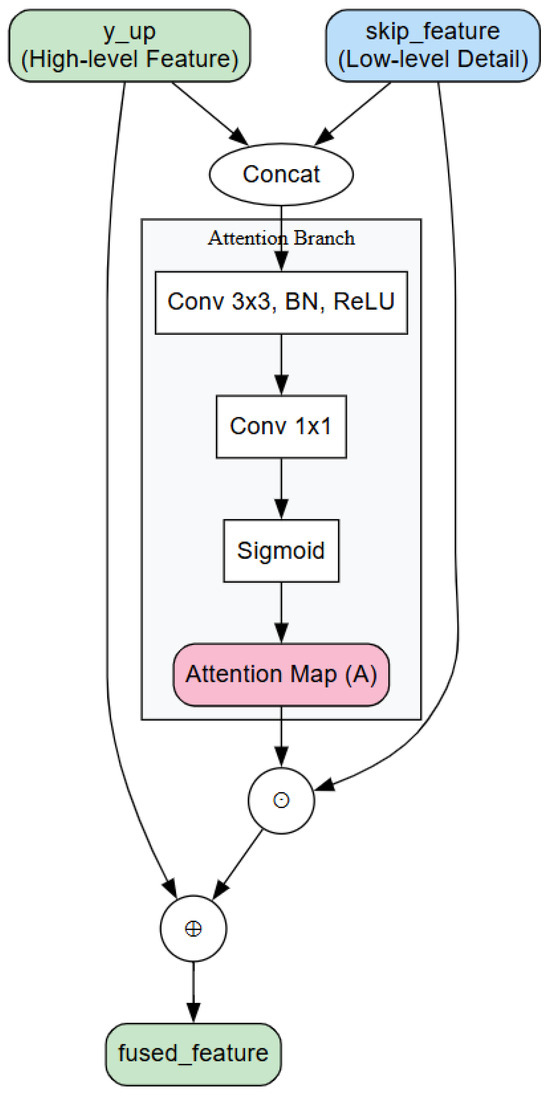

We re-architect the Simple Baseline decoder into a U-Net-like fusion path. After each upsampling stage, we introduce a feature map of the corresponding spatial resolution from the encoder as a skip connection. While standard U-Net architectures often fuse features via simple concatenation or addition, this approach treats all incoming low-level information equally, potentially introducing noise alongside useful details. To facilitate a more intelligent and adaptive fusion, we designed the Attention Fusion Module (AFM), shown in Figure 2, to guide this process.
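To make the resolution matching concrete, the short sketch below walks through the feature-map sizes involved. It assumes the standard ResNet-50 stage strides (4, 8, 16, 32) and channel widths, and it assumes that each of the three 2x upsampling steps is paired with the encoder stage of equal resolution (C4, then C3, then C2). The helper names are hypothetical.

```python
# Standard ResNet strides and ResNet-50 channel widths for stages C2..C5.
STAGES = {"C2": (4, 256), "C3": (8, 512), "C4": (16, 1024), "C5": (32, 2048)}

def encoder_shapes(height, width):
    """Return {stage: (channels, H, W)} for a given input size."""
    return {name: (ch, height // s, width // s) for name, (s, ch) in STAGES.items()}

def decoder_plan(height, width):
    """List (skip_stage, H, W) after each 2x upsampling step, starting from C5."""
    enc = encoder_shapes(height, width)
    _, h, w = enc["C5"]
    plan = []
    for skip in ("C4", "C3", "C2"):       # assumed pairing: matching resolution
        h, w = 2 * h, 2 * w
        assert (h, w) == enc[skip][1:], "skip and upsampled map must align"
        plan.append((skip, h, w))
    return plan

for step in decoder_plan(256, 192):
    print(step)
# ('C4', 16, 12)
# ('C3', 32, 24)
# ('C2', 64, 48)
```

Under these assumptions the last fusion stage already has the resolution of the output heatmap for a 256 × 192 input.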

Figure 2.

A detailed illustration of the Attention Fusion Module (AFM). The inputs are y_up (High-level Feature) and skip_feature (Low-level Detail). The diagram shows the concatenation, the attention branch, the element-wise multiplication, and the final residual addition. The plus sign (+) denotes element-wise addition, and the circle with a cross (⊗) denotes element-wise multiplication. The ‘Conv 3 × 3, BN, ReLU’ block represents a standard convolutional layer with a 3 × 3 kernel, followed by Batch Normalization and a ReLU activation function.

The core idea of the AFM is to use both high-level semantic context (y_up) and low-level spatial details (skip_feature) to generate a dynamic attention map, which then selectively gates the flow of the low-level features. Specifically, as depicted in Figure 2, the high-level and low-level features are first concatenated along the channel dimension. This combined feature is then passed through an attention branch, consisting of a 3 × 3 convolution, a 1 × 1 convolution, and a final sigmoid activation, to produce the spatial attention map A. Let $f_{att}$ denote the convolutional layers of the attention branch; the generation of A is described as follows:

$$A = \sigma\left(f_{att}\left(\left[y_{\text{up}};\ \text{skip\_feature}\right]\right)\right)$$

where $[\cdot\,;\cdot]$ denotes concatenation along the channel dimension and $\sigma$ is the Sigmoid function.

This generated attention map A is then used to modulate the original skip_feature via element-wise multiplication. The result is added to the upsampled feature y_up through a residual connection to produce the final fused feature. This fusion step is described by

$$y_{\text{fused}} = y_{\text{up}} + A \odot \text{skip\_feature}$$

where ⊙ denotes the Hadamard product. The attention map A, with a shape of (N, 1, H, W), is automatically broadcast across the channel dimension of the skip_feature, ensuring efficiency. This two-step process allows the model to adaptively amplify key details from skip_feature (e.g., edges of a joint) while suppressing irrelevant background textures, leading to a more refined feature representation.
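The gating and residual steps described above can be sketched in plain NumPy. This is a minimal illustration, not the PaddlePaddle implementation used in the experiments: Batch Normalization is omitted, the weights are random, the hidden width is arbitrary, and `y_up` and `skip` are assumed to have the same number of channels so that the residual addition is valid. The names `conv2d_same` and `afm` are hypothetical.

```python
import numpy as np

def conv2d_same(x, w):
    """Naive stride-1 convolution with zero padding.
    x: (N, C_in, H, W); w: (C_out, C_in, k, k) with odd k."""
    n, _, h, wd = x.shape
    c_out, _, k, _ = w.shape
    p = k // 2
    xp = np.pad(x, ((0, 0), (0, 0), (p, p), (p, p)))
    out = np.zeros((n, c_out, h, wd))
    for i in range(k):
        for j in range(k):
            out += np.einsum("nchw,oc->nohw", xp[:, :, i:i + h, j:j + wd], w[:, :, i, j])
    return out

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def afm(y_up, skip, w3, w1):
    """Gate `skip` with a spatial attention map and add it to `y_up`.
    Batch Normalization is omitted here for brevity."""
    x = np.concatenate([y_up, skip], axis=1)          # channel-wise concatenation
    hidden = np.maximum(conv2d_same(x, w3), 0.0)      # 3x3 conv + ReLU
    attn = sigmoid(conv2d_same(hidden, w1))           # 1x1 conv + sigmoid, (N, 1, H, W)
    return y_up + attn * skip, attn                   # attn broadcasts over channels

rng = np.random.default_rng(0)
y_up = rng.normal(size=(2, 4, 8, 6))
skip = rng.normal(size=(2, 4, 8, 6))
w3 = rng.normal(size=(4, 8, 3, 3)) * 0.1              # 8 = channels of the concatenation
w1 = rng.normal(size=(1, 4, 1, 1)) * 0.1
fused, attn = afm(y_up, skip, w3, w1)
print(fused.shape, attn.shape)  # (2, 4, 8, 6) (2, 1, 8, 6)
```

Because the attention map has a single channel, one gate value per pixel scales all channels of the skip feature at that location.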

3.2. Joint-Aware Positional Encoding (JAPE)

A fundamental limitation of standard CNNs is their inherent translation invariance. While advantageous for image classification, this property is suboptimal for localization-sensitive tasks like HPE, which require understanding not only what a feature is but also where it is located in the image. Furthermore, standard models lack explicit knowledge of the human body’s anatomical structure, such as the statistical likelihood that a “head” appears in the upper part of an image. To address this dual challenge of spatial ambiguity and lack of structural priors, we propose the Joint-Aware Positional Encoding (JAPE) module.

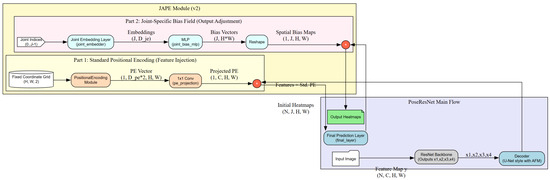

The JAPE module, as illustrated conceptually in Figure 3, injects both global spatial awareness and local, learned structural priors into the final stage of the network. It operates through two distinct, parallel pathways that address the two aforementioned limitations independently: one provides a universal coordinate system, and the other learns joint-specific spatial biases.

Figure 3.

A conceptual diagram of the JAPE module. The module comprises two parallel paths that enhance the network’s output. Part 1 (Feature Injection): The lower path takes a fixed grid of normalized coordinates, converts them into sine/cosine embeddings via the ‘PositionalEncoding Module’, and projects them through a ‘1 × 1 Conv’ to match the feature map’s channels before addition. Part 2 (Output Adjustment): The upper path takes joint indices, passes them through a ‘Joint Embedding Layer’ to obtain a learnable vector for each joint, and then processes this vector with an ‘MLP’ to generate a unique ‘Spatial Bias Map’. This bias map is added to the final heatmap prediction. The plus sign (+) denotes element-wise addition. The asterisk (*) on each joint index indicates it is a unique identifier for that joint type.

- Global Positional Encoding (Feature Injection): This path’s primary goal is to counteract the translation invariance of CNNs by providing the model with a sense of absolute coordinates, making it location-aware. We first generate a fixed positional encoding (PE) map using standard sine/cosine functions [15] for every pixel location in the heatmap space. This high-dimensional PE map is then linearly projected by a 1 × 1 convolution to match the channel dimension of the decoder’s final feature map, y. The resulting projected PE is added to y, enriching the features with explicit, class-agnostic positional information before they are passed to the final prediction layer. This operation directly informs the model of the absolute spatial context of the visual features it is processing.

- Joint-Specific Bias Field (Output Adjustment): This path is designed to explicitly model and leverage human anatomical regularities as a powerful form of prior knowledge. We create a learnable embedding vector for each of the K keypoint types. Each embedding, which represents a condensed, learnable signature for a specific joint (e.g., “left ankle”), is passed through a small, independent Multi-Layer Perceptron (MLP) to generate a unique spatial bias map $B_k$. This map is produced at a small, fixed resolution and then upsampled to the target heatmap’s dimensions using bilinear interpolation, ensuring its adaptability to different output sizes. This bias map, which learns the statistical likelihood of a joint’s location from the training data (e.g., heads are rarely at the bottom of an image), is then added directly to the corresponding predicted heatmap logit just before the final activation. The final logit for keypoint k is thus computed as follows: $\tilde{z}_k = z_k + \mathrm{Up}(B_k)$, where $z_k$ is the k-th output channel of the final prediction layer and $\mathrm{Up}(\cdot)$ denotes bilinear upsampling to the heatmap resolution. This acts as a powerful regularizer, guiding the model to produce anatomically plausible poses, especially in ambiguous cases like heavy occlusion.

This dual-path design, which synergistically combines a global, fixed coordinate system with a local, learnable, and joint-specific prior, forms the core innovation of the JAPE module.
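The two paths can be sketched together in NumPy as follows. This is an illustrative sketch under several assumptions that are not stated in the text: normalized coordinates are scaled by 2π, the frequency base is 10000, a single MLP is shared across joints for brevity, the bias map is 8 × 8 before upsampling, the interpolation grid is endpoint-aligned, and all layer sizes and weights are arbitrary. The function names are hypothetical.

```python
import numpy as np

def sincos_pe_2d(h, w, dim):
    """Fixed sine/cosine encoding of normalized (y, x) coordinates, shape (dim, h, w)."""
    assert dim % 4 == 0, "dim is split evenly over sin/cos of y and x"
    q = dim // 4
    freqs = 1.0 / (10000.0 ** (np.arange(q) / q))            # (q,)
    ys = 2 * np.pi * np.linspace(0.0, 1.0, h)                # normalized rows
    xs = 2 * np.pi * np.linspace(0.0, 1.0, w)                # normalized columns
    ay = np.broadcast_to(freqs[:, None, None] * ys[None, :, None], (q, h, w))
    ax = np.broadcast_to(freqs[:, None, None] * xs[None, None, :], (q, h, w))
    return np.concatenate([np.sin(ay), np.cos(ay), np.sin(ax), np.cos(ax)], axis=0)

def bilinear_upsample(m, out_h, out_w):
    """Bilinear resize of (K, h, w) maps on an endpoint-aligned grid."""
    _, h, w = m.shape
    ys = np.linspace(0, h - 1, out_h)
    xs = np.linspace(0, w - 1, out_w)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = (ys - y0)[None, :, None]
    wx = (xs - x0)[None, None, :]
    top = m[:, y0][:, :, x0] * (1 - wx) + m[:, y0][:, :, x1] * wx
    bot = m[:, y1][:, :, x0] * (1 - wx) + m[:, y1][:, :, x1] * wx
    return top * (1 - wy) + bot * wy

def jape_head(y, w_proj, w_head, joint_emb, mlp_w1, mlp_w2, bias_res=8):
    """y: (C, H, W) decoder features. Returns (K, H, W) heatmap logits."""
    _, h, w = y.shape
    pe = sincos_pe_2d(h, w, w_proj.shape[1])                 # (D_pe, H, W)
    y = y + np.einsum("cd,dhw->chw", w_proj, pe)             # path 1: project PE, add
    logits = np.einsum("kc,chw->khw", w_head, y)             # final 1x1 convolution
    hidden = np.maximum(joint_emb @ mlp_w1, 0.0)             # path 2: shared MLP (assumed)
    bias = (hidden @ mlp_w2).reshape(-1, bias_res, bias_res) # (K, r, r)
    return logits + bilinear_upsample(bias, h, w)            # per-joint bias on logits

rng = np.random.default_rng(0)
C, D_PE, K, E, HID, R, H, W = 8, 16, 16, 32, 64, 8, 64, 64  # K = 16 joints as in MPII
y = rng.normal(size=(C, H, W))
params = dict(
    w_proj=rng.normal(size=(C, D_PE)) * 0.1,
    w_head=rng.normal(size=(K, C)) * 0.1,
    joint_emb=rng.normal(size=(K, E)),
    mlp_w1=rng.normal(size=(E, HID)) * 0.1,
    mlp_w2=rng.normal(size=(HID, R * R)) * 0.1,
)
logits = jape_head(y, bias_res=R, **params)
print(logits.shape)  # (16, 64, 64)
```

The positional path is class-agnostic and acts on features, while the bias path depends only on the joint index and acts on the output, so the bias maps can be precomputed once after training.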

3.3. Experimental Setup

3.3.1. Datasets and Evaluation Metrics

We use two standard benchmarks. MPII Human Pose [22] is a classic dataset with 25 K images, evaluated using the head-normalized Percentage of Correct Keypoints (PCKh) metric at a threshold of 0.5, with PCK@0.1 reported as a stricter criterion. COCO Keypoints 2017 [23] is a large-scale dataset with over 200 K images, evaluated using the official Average Precision (AP) metric.
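For readers unfamiliar with the metric, a minimal sketch of a head-normalized PCK computation is given below. It assumes the per-person head size is supplied as an input and that invisible joints are excluded through a mask; the official MPII evaluation toolkit defines these details, and this hypothetical `pckh` function does not reproduce it exactly.

```python
import numpy as np

def pckh(pred, gt, head_size, visible, thr=0.5):
    """Percentage of visible keypoints within thr * head_size of the ground truth.

    pred, gt: (N, K, 2) coordinates; head_size: (N,); visible: (N, K) bool.
    """
    dist = np.linalg.norm(pred - gt, axis=-1)              # (N, K) pixel errors
    correct = (dist <= thr * head_size[:, None]) & visible
    return 100.0 * correct.sum() / max(int(visible.sum()), 1)

gt = np.zeros((1, 2, 2))
pred = np.array([[[3.0, 4.0], [0.0, 0.5]]])                # errors of 5.0 and 0.5 pixels
head = np.array([12.0])
vis = np.ones((1, 2), dtype=bool)
print(pckh(pred, gt, head, vis, thr=0.5))  # 100.0
print(pckh(pred, gt, head, vis, thr=0.1))  # 50.0
```

Lowering the threshold from 0.5 to 0.1 shrinks the accepted radius from 6.0 to 1.2 pixels in this toy case, which is why the smaller threshold is the stricter test.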

3.3.2. Implementation Details

All models are implemented using the PaddlePaddle framework and trained on a single NVIDIA Tesla V100 (32 GB) GPU (Nvidia Corporation, Santa Clara, CA, USA). The backbone is a ResNet-50 pre-trained on ImageNet. For training, we use the Adam optimizer with a batch size of 128 and an initial learning rate of 0.001. The learning rate is decayed by a factor of 0.1 at the 90th and 120th epochs, out of a total of 140 epochs. Input image sizes are 256 × 256 for MPII and 256 × 192 for COCO, with corresponding heatmap sizes of 64 × 64 and 64 × 48, respectively. Standard data augmentation (random flipping, rotation up to ±30 degrees, and scaling between 0.75 and 1.25) is applied during training, and flip testing is applied during inference.
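The step schedule and the input-to-heatmap size relation stated above can be written out as a small framework-independent sketch. Whether the milestones are counted from epoch 0 or epoch 1 is not specified, so 0-indexed epochs are assumed here, and the helper names are hypothetical.

```python
def learning_rate(epoch, base_lr=1e-3, milestones=(90, 120), gamma=0.1):
    """Step schedule: multiply by `gamma` at each milestone (0-indexed epochs assumed)."""
    passed = sum(epoch >= m for m in milestones)
    return base_lr * gamma ** passed

def heatmap_size(height, width, stride=4):
    """Heatmap resolution implied by the stated input and output sizes (stride 4)."""
    return height // stride, width // stride

for epoch in (0, 89, 90, 119, 120, 139):
    print(epoch, learning_rate(epoch))

print(heatmap_size(256, 256))  # (64, 64)
print(heatmap_size(256, 192))  # (64, 48)
```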

4. Results

4.1. Ablation Study on MPII

To isolate and quantify the contribution of each proposed enhancement, we conducted a rigorous ablation study on the MPII validation set. We compare three models under an identical ResNet-50 backbone and 256 × 256 input configuration: (1) our re-implementation of the original Simple Baseline, (2) the baseline enhanced with our U-Net/AFM decoder, and (3) our full model, AFJ-PoseNet, which adds the JAPE module. The detailed scores are shown in Table 1.

Table 1.

Ablation study of the proposed modules on the MPII validation set, including an analysis of model complexity. All models use a ResNet-50 backbone and are benchmarked on a single NVIDIA Tesla V100 GPU. Best results are in bold.

The results in Table 1 demonstrate the effectiveness of our proposed modules and their synergy, and they quantify the computational cost of each addition.

Comparing model (2) to our baseline (1), adding the U-Net/AFM decoder improves the overall mean PCKh@0.5 from 88.574 to 88.715 (+0.141 percentage points). This enhancement yields noticeable gains for joints requiring fine-grained detail, such as the hip (+1.091) and ankle (+0.378). This accuracy improvement comes at the cost of a 1.35M (3.96%) increase in parameters and a 15.3% reduction in inference speed (FPS), which is an expected trade-off for introducing multi-scale fusion paths.

By adding the JAPE module to create our full AFJ-PoseNet model (3), we observe a further improvement, boosting the mean PCKh@0.5 to 88.915. This represents a total +0.341 percentage point gain over the original Simple Baseline. Crucially, JAPE not only provides its own boost but also mitigates the performance drop seen with the AFM on the shoulder and elbow, suggesting that the explicit structural priors from JAPE help to regularize the feature fusion process. The full AFJ-PoseNet model adds only 1.91M parameters (+5.61%) compared to the baseline, while the inference speed remains at a practical 57.23 FPS. This analysis highlights that our proposed architecture achieves a significant accuracy gain with only a modest and acceptable increase in computational complexity, demonstrating the efficiency of our design.
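The figures quoted in this subsection can be cross-checked with a few lines of arithmetic. The sketch below uses only numbers stated in the text; the implied baseline parameter count is derived from them and is not a separately reported value.

```python
baseline, with_afm, full = 88.574, 88.715, 88.915   # mean PCKh@0.5 from the text

print(round(with_afm - baseline, 3))   # 0.141
print(round(full - baseline, 3))       # 0.341
print(round(full - with_afm, 3))       # 0.2

# Baseline parameter count (in millions) implied by each absolute/relative pair.
implied_afm = 1.35 / 0.0396
implied_full = 1.91 / 0.0561
print(round(implied_afm, 1), round(implied_full, 1))  # 34.1 34.0
```

Both pairs point to a baseline of roughly 34 M parameters, so the two reported increases are mutually consistent, and JAPE alone accounts for about 0.2 of the 0.341 percentage point gain.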

4.2. Performance Comparison on COCO

To contextualize the performance of our method, we conduct a comprehensive comparison with several state-of-the-art methods on the challenging COCO val2017 dataset.

4.2.1. Baseline Implementation and Fair Comparison

For a rigorous evaluation, we first re-implemented the Simple Baseline [2], which achieved 70.4% AP. We note that some open-source toolboxes like MMPose [24] report a higher baseline of 71.8% AP, likely due to different training strategies or data processing optimizations. To maintain strict experimental control and accurately measure the contribution of our proposed modules, all performance gains reported in this section are calculated relative to our own 70.4% AP baseline. The results are detailed in Table 2.

Table 2.

Performance comparison with state-of-the-art methods on the COCO val2017 dataset. All methods use a 256 × 192 input size unless otherwise specified. Our results are compared against our re-implemented baseline. Publicly reported results from other methods are included for reference. The best result among comparable ResNet-50 methods is in bold.

4.2.2. Analysis of Results

As shown in Table 2, our proposed AFJ-PoseNet achieves a final AP of 72.6%. This represents a significant 2.2 percentage point improvement over our re-implemented Simple Baseline (70.4%), highlighting the efficacy of our architectural enhancements.

Notably, our model’s performance is highly competitive when contextualized with other state-of-the-art methods evaluated under the same rigorous conditions. AFJ-PoseNet (72.6% AP) not only surpasses the stronger public baseline from MMPose (71.8% AP) but also outperforms other prominent enhancement methods like DARK (72.4% AP) and SimCC (72.1% AP). This result strongly suggests that our synergistic combination of attention-guided multi-scale fusion and joint-aware spatial priors provides a more effective solution than tackling either the coordinate representation (like DARK) or the regression paradigm (like SimCC) in isolation. While Transformer-based architectures like ViTPose-B (75.7% AP) demonstrate a higher performance ceiling, our work validates that substantial improvements can still be achieved within the well-established and efficient convolutional paradigm.

5. Discussion

Our experimental results strongly support the advantages of AFJ-PoseNet. The ablation study on MPII (Table 1) provides insight into the synergy between our modules. We observe that adding the U-Net/AFM decoder alone (model 2) leads to a slight performance degradation on stable joints like the shoulder and elbow. A plausible explanation is that for these less challenging keypoints, the fusion of additional low-level features via the AFM might introduce more noise than useful signal. However, upon the subsequent introduction of the JAPE module (model 3), this performance drop is fully recovered, and accuracy is boosted across nearly all joints. This strongly suggests that JAPE’s explicit spatial and structural priors act as a powerful regularizer, effectively guiding the feature fusion process and validating the synergistic relationship between our two proposed modules.

The comprehensive comparison on COCO (Table 2) further solidifies our claims. Our model’s ability to outperform other strong ResNet-50-based methods like DARK and SimCC indicates the robustness of our dual-enhancement approach. It is noteworthy that while AFJ-PoseNet achieves a higher Average Precision (AP), its Average Recall (AR) is slightly lower than the baseline and other methods (75.6 vs. 76.3–78.1). This suggests that our model’s primary advantage lies in improving the localization precision and prediction confidence, rather than simply detecting more keypoint candidates. The explicit structural priors from the JAPE module and the selective gating in the AFM likely act as a strong regularizer, pruning ambiguous or low-confidence detections that a less constrained baseline might otherwise output. This trade-off, which favors precision over recall, is a desirable characteristic for applications requiring high-fidelity pose estimation.

The performance gap between our model and Transformer-based approaches like ViTPose-B also warrants discussion. Transformers excel at modeling long-range, global dependencies, which is inherently beneficial for understanding the holistic structure of the human body. Our AFJ-PoseNet, while significantly improving information flow via its U-Net structure, still relies on the limited receptive fields of convolutions. The success of JAPE, which explicitly injects global and structural information, hints that bridging this global context gap is a critical direction for future improvements in CNN-based models.

Finally, we consider the future directions for our work. While JAPE addresses the positional insensitivity that follows from translation invariance, sensitivity to rotation remains a challenge for many CNN-based models. Future work could explore integrating rotation-invariant features to further enhance model robustness against diverse human poses. Furthermore, while AFJ-PoseNet demonstrates clear improvements, its inference speed is still tied to the ResNet-50 backbone. Future work could also involve applying our AFM and JAPE principles to other, more lightweight backbones to create efficient models suitable for real-time applications. Extending these 2D-centric concepts to the more complex domain of 3D human pose estimation is also a promising research direction.

6. Conclusions

This paper introduced AFJ-PoseNet, an enhanced architecture that addresses the core limitations of the widely used Simple Baseline framework in feature fusion and spatial prior modeling. We performed two targeted, synergistic enhancements: (1) we re-architected the linear decoder into a U-Net-like path guided by our novel Attention Fusion Module (AFM), and (2) we designed the Joint-Aware Positional Encoding (JAPE) module to explicitly inject critical spatial and structural priors. Comprehensive experiments on the MPII and COCO benchmarks demonstrate the advantage of our approach, highlighted by a 2.2 percentage point AP gain on COCO val2017 over a strong baseline, obtained with higher precision at the cost of a minor decrease in recall. Our work provides a clear and effective blueprint for systematically improving upon established CNN-based models in computer vision.

Author Contributions

Conceptualization, W.Z. and J.L.; methodology, W.Z. and Y.S.; software, W.Z.; validation, W.Z., Y.S., and J.L.; formal analysis, W.Z. and Y.S.; investigation, W.Z.; resources, J.L.; data curation, W.Z.; writing—original draft preparation, W.Z.; writing—review and editing, W.Z., Y.S., and J.L.; visualization, W.Z.; supervision, J.L.; project administration, J.L.; funding acquisition, W.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Innovation Project of GUET Graduate Education, grant number 2025YCXS061.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The COCO and MPII datasets used in this study are publicly available at https://cocodataset.org/ and http://human-pose.mpi-inf.mpg.de/, respectively. The code for our model will be made available upon publication.

Acknowledgments

We would like to thank the anonymous reviewers for their constructive feedback, which helped improve the quality of this manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

Correction Statement

This article has been republished with a minor correction to the Funding statement. This change does not affect the scientific content of the article.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AFM | Attention Fusion Module |
| AFJ-PoseNet | Attention Fusion and Joint-Aware Pose Network |
| AP | Average Precision |
| AR | Average Recall |
| CNN | Convolutional Neural Network |
| HPE | Human Pose Estimation |
| JAPE | Joint-Aware Positional Encoding |
| MLP | Multi-Layer Perceptron |
| PCK | Percentage of Correct Keypoints |
| PE | Positional Encoding |

References

- Chen, X.; Yu, T.; Li, B.; Huang, T.; Wang, S.; Li, J. Human Pose Estimation: A Comprehensive Review. Comput. Graph. 2022, 102, 137–152. [Google Scholar]

- Xiao, B.; Wu, H.; Wei, Y. Simple Baselines for Human Pose Estimation and Tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 469–486. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Newell, A.; Yang, K.; Deng, J. Stacked Hourglass Networks for Human Pose Estimation. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 483–499. [Google Scholar]

- Liu, R.; Lehman, J.; Molino, P.; Such, F.P.; Frank, E.; Sergeev, A.; Yosinski, J. An Intriguing Failing of Convolutional Neural Networks and the CoordConv Solution. In Advances in Neural Information Processing Systems 31 (NeurIPS), Proceedings of the 32nd Conference on Neural Information Processing Systems (NeurIPS 2018), Montreal, QC, Canada, 3–8 December 2018; Curran Associates: New York, NY, USA, 2019; pp. 9628–9639. [Google Scholar]

- Chen, Y.; Wang, Z.; Peng, Y.; Zhang, Z.; Yu, G.; Sun, J. Cascaded Pyramid Network for Multi-Person Pose Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7103–7112. [Google Scholar]

- Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep High-Resolution Representation Learning for Human Pose Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 5693–5703. [Google Scholar]

- Zhang, F.; Zhu, X.; Dai, H.; Ye, M.; Zhu, C. Distribution-Aware Coordinate Representation for Human Pose Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 7093–7102. [Google Scholar]

- Huang, J.; Zhu, Z.; Guo, F.; Huang, G. The Devil is in the Details: Delving into Unbiased Data Processing for Human Pose Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 5493–5502. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; pp. 234–241. [Google Scholar]

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Advances in Neural Information Processing Systems 30 (NeurIPS), Proceedings of the 31st Annual Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Curran Associates: New York, NY, USA, 2018; pp. 5998–6008.

- Artacho, B.; Savakis, A. UniPose: Unified Human Pose Estimation in Single Images and Videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 7737–7746.

- Chu, X.; Yang, W.; Ouyang, W.; Ma, C.; Yuille, A.L.; Wang, X. Multi-Context Attention for Human Pose Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1837–1846.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 213–229.

- Li, Y.; Zhang, Z.; Wang, X.; Liu, W.; Wang, Y.; Luo, P. TokenPose: Learning Keypoint Tokens for Human Pose Estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 11339–11348.

- Xu, Y.; Zhang, Q.; Zhang, J.; Tao, D. ViTPose: Simple Vision Transformer Baselines for Human Pose Estimation. In Advances in Neural Information Processing Systems 35 (NeurIPS), Proceedings of the 36th Conference on Neural Information Processing Systems (NeurIPS 2022), New Orleans, LA, USA, 28 November–9 December 2022; Curran Associates: New York, NY, USA, 2023.

- Andriluka, M.; Pishchulin, L.; Gehler, P.; Schiele, B. 2D Human Pose Estimation: New Benchmark and State of the Art Analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 3686–3693.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- OpenMMLab. MMPose: OpenMMLab Pose Estimation Toolbox and Benchmark. 2020. Available online: https://github.com/open-mmlab/mmpose (accessed on 1 August 2024).

- Li, Y.; Yang, S.; Liu, P.; Zhang, S.; Wang, Y.; Wang, Z.; Yang, W.; Xia, S.T. SimCC: A Simple Coordinate Classification Perspective for Human Pose Estimation. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 37–54.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).