Decision Tree Pruning with Privacy-Preserving Strategies

Abstract

1. Introduction

2. Related Works

2.1. Pruning-Based Misclassification Error Rate (Minimum Error)

2.2. Pruning-Based Structural Complexity

2.3. Pruning-Based Multiple Metrics

2.4. Pruning with Weighting (Cost-Sensitive)

2.5. Hybrid Pruning

2.6. Other Pruning

2.7. Privacy Pruning

Computational Complexity in Privacy Preservation

3. Proposed Model

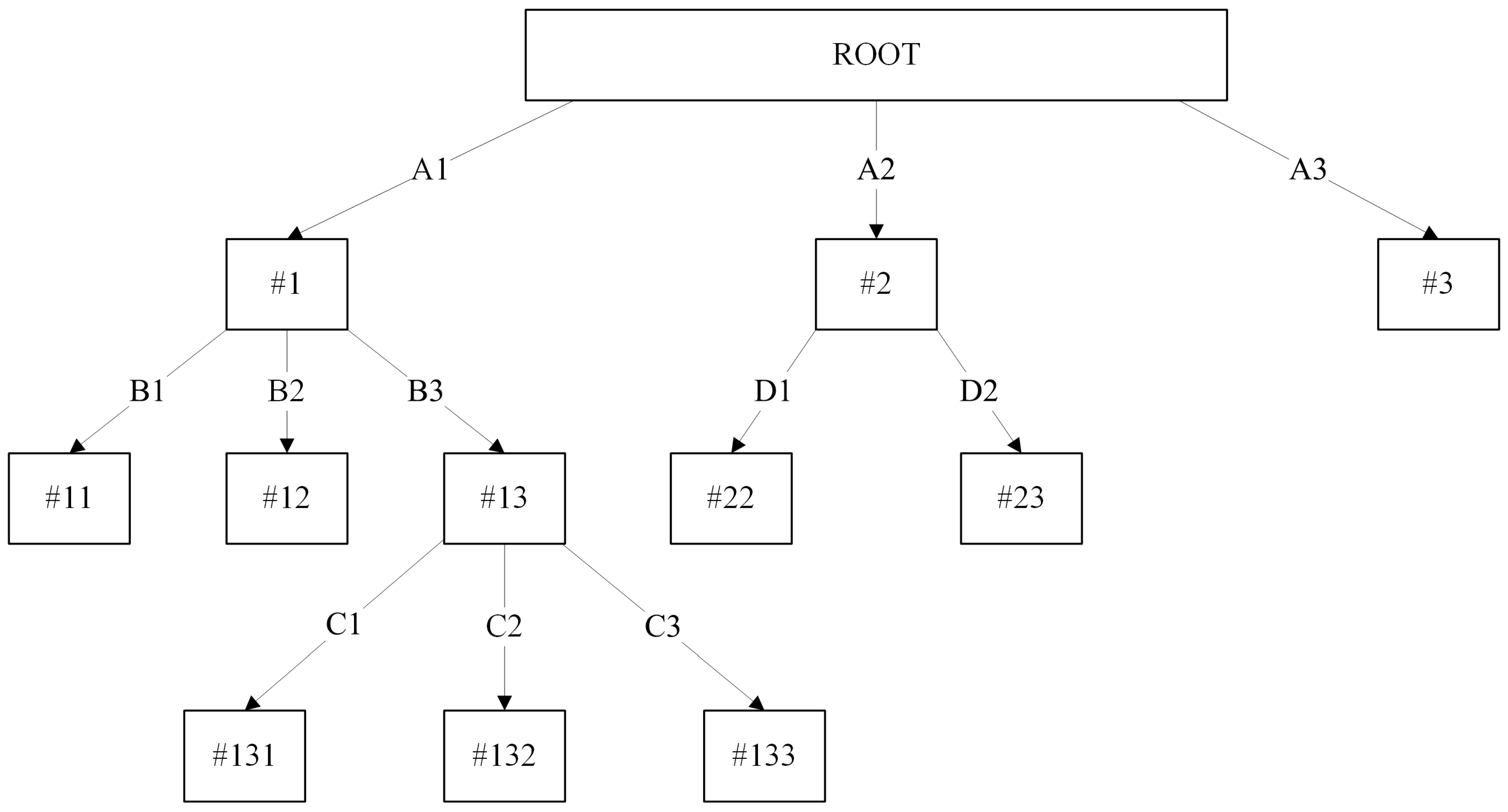

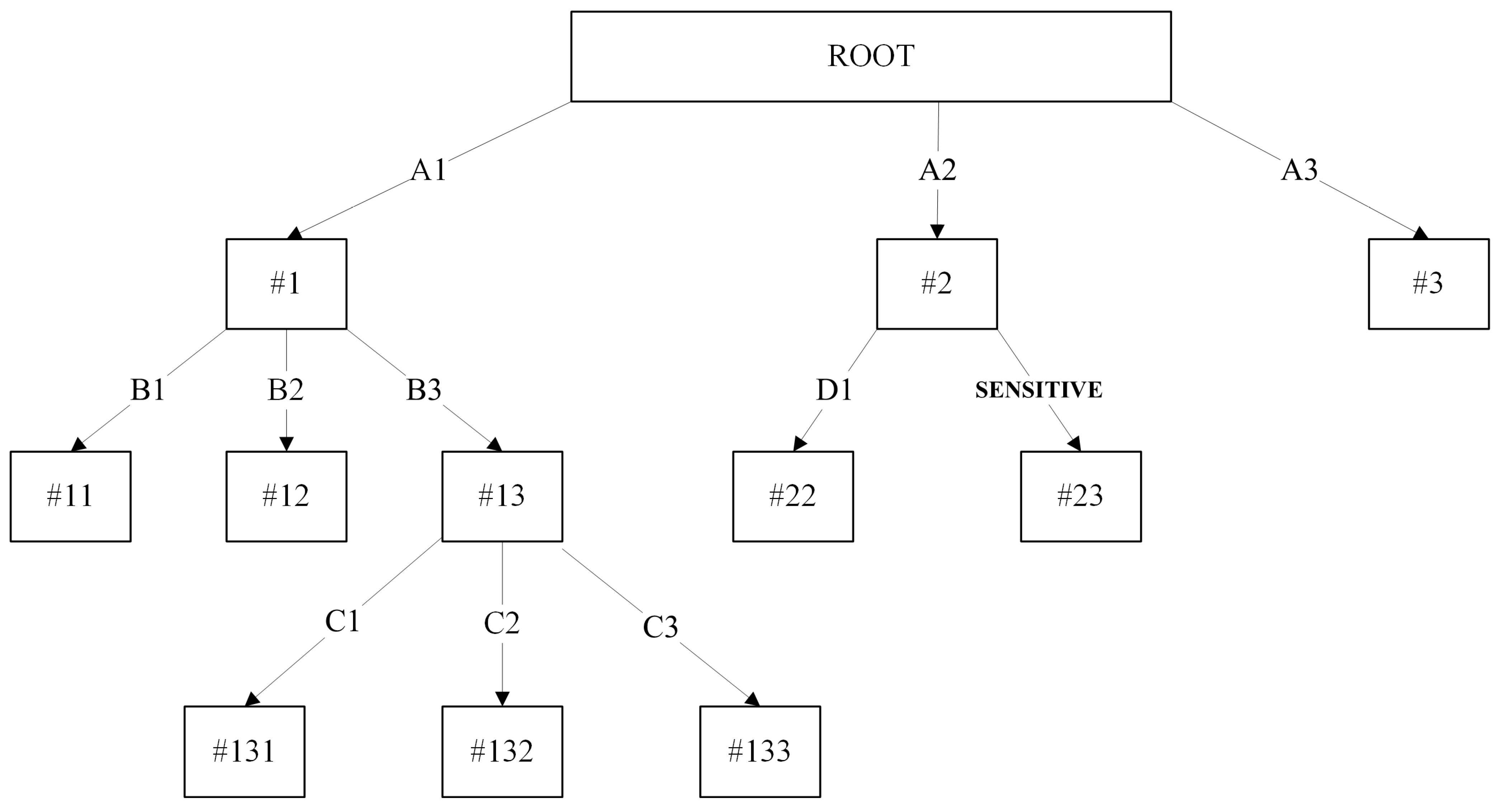

3.1. Sensitive Pruning

| Algorithm 1: Sensitive Pruning Algorithm |
|---|
| Input: |
| Tmax, unpruned decision tree |
| AS, sensitive attribute value |
| Output: |
| Pruned sensitive decision tree |
| Procedure: |
| Learning Procedure I: |
| 1. for all splits in node t, do |
| 2. Let ts be the child node of t reached through the current split |
| 3. Let A be the attribute value of the current split |
| 4. if (A == AS): |
| 5. Replace the value of A with “SENSITIVE” |
| 6. if (ts contains any child node): |
| 7. Replace ts with a leaf node |
| 8. endif |
| 9. endif |
| 10. endfor |
| Learning Procedure II: |
| 11. Let T = Tmax |
| 12. if T is not a leaf node: |
| 13. for all nodes t ∈ T, starting from the leaf nodes towards the root node, do |
| 14. Repeat steps 1–10 |
| 15. endfor |
| 16. endif |
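Algorithm 1 can be read as a post-order traversal: Learning Procedure II visits children before their parent, and Learning Procedure I inspects every split of the current node. The Python sketch below illustrates that reading on a toy in-memory tree; it is not the authors' Weka/J48 implementation. The `Node` class, its fields, the function name, and the toy attribute values are hypothetical, split values are compared as plain strings, and a collapsed node is assumed to keep the majority class of the training instances that reached it, which C4.5-style trees normally record at each node.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

SENSITIVE = "SENSITIVE"  # placeholder written over a masked split value


@dataclass
class Node:
    # Majority class of the training instances reaching this node (assumed to be
    # stored at every node, so a collapsed subtree can still predict a class).
    label: str
    attribute: Optional[str] = None  # split attribute; None marks a leaf
    branches: List[Tuple[str, "Node"]] = field(default_factory=list)  # (split value, child)

    def is_leaf(self) -> bool:
        return not self.branches

    def make_leaf(self) -> None:
        # Replace the whole subtree below this node with a single leaf.
        self.attribute = None
        self.branches = []

    def size(self) -> int:
        # Number of nodes (internal nodes and leaves) in this subtree.
        return 1 + sum(child.size() for _, child in self.branches)


def sensitive_prune(node: Node, sensitive_value: str) -> None:
    """Sketch of Algorithm 1 (sensitive pruning, SP)."""
    # Learning Procedure II: recurse first, so nodes are handled from the leaves toward the root.
    for _, child in node.branches:
        sensitive_prune(child, sensitive_value)
    # Learning Procedure I: examine every split of node t.
    for i, (value, child) in enumerate(node.branches):
        if value == sensitive_value:
            node.branches[i] = (SENSITIVE, child)  # step 5: hide the split value
            if not child.is_leaf():
                child.make_leaf()  # steps 6-7: collapse the child subtree into a leaf


if __name__ == "__main__":
    tree = Node("normal", "dst_ip", [
        ("192.168.1.10", Node("attack", "dport", [("22", Node("attack")), ("80", Node("normal"))])),
        ("10.0.0.5", Node("normal")),
    ])
    before = tree.size()
    sensitive_prune(tree, "192.168.1.10")
    print("pruned nodes:", before - tree.size())
    print("splits:", [value for value, _ in tree.branches])
```

The difference between `size()` before and after the call corresponds to a "#Pruned Nodes" count, since a collapsed subtree of n nodes leaves a single leaf behind.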

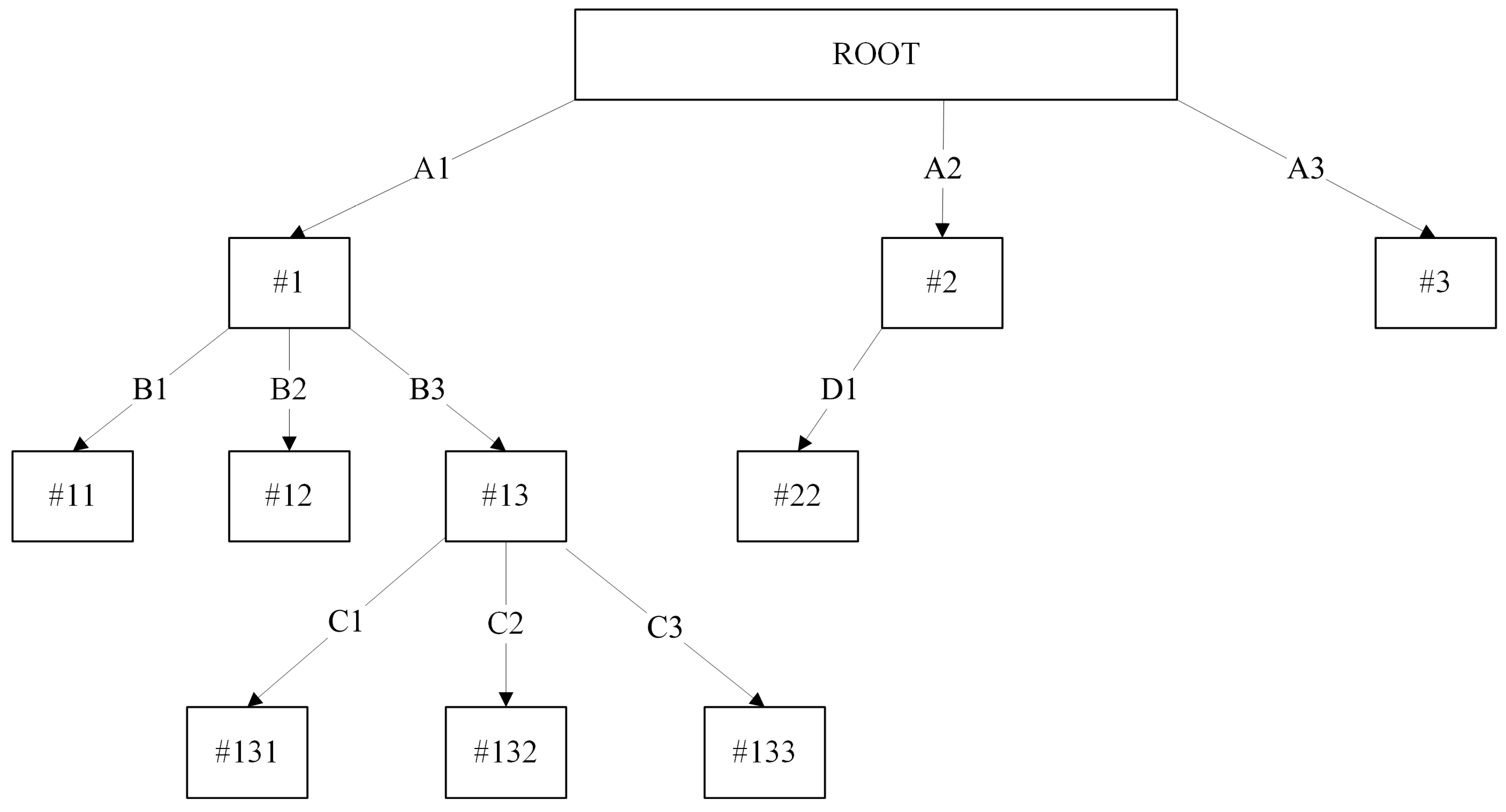

3.2. Optimistic Sensitive Pruning

| Algorithm 2: Optimistic Sensitive Pruning Algorithm |
|---|
| Input: |
| Tmax, unpruned decision tree |
| AS, sensitive attribute value |
| Output: |
| Pruned sensitive decision tree |
| Procedure: |
| Learning Procedure I: |
| 1. for all splits in node t, do |
| 2. Let ts be the child node of t reached through the current split |
| 3. Let A be the attribute value of the current split |
| 4. if (A == AS): |
| 5. Replace the value of A with “SENSITIVE” |
| 6. if (ts contains any child node): |
| 7. Replace ts with a leaf node |
| 8. endif |
| 9. if (the number of splits of t == 2): |
| 10. Replace t with a leaf node |
| 11. endif |
| 12. endif |
| 13. endfor |
| Learning Procedure II: |
| 14. Let T = Tmax |
| 15. if T is not a leaf node: |
| 16. for all nodes t ∈ T, starting from the leaf nodes towards the root node, do |
| 17. Repeat steps 1–13 |
| 18. endfor |
| 19. endif |
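Algorithm 2 extends the sensitive branch masking with steps 9–10: when the node t holding a sensitive split has exactly two splits, t itself is also turned into a leaf. One plausible motivation (our reading, not stated in the algorithm) is that with a two-way split the remaining branch would let a reader infer the masked value. The Python sketch below uses a hypothetical `Node` structure and function name, compares split values as strings, and assumes a collapsed node keeps the majority class of the training instances that reached it; it is illustrative only and not the authors' J48 code.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

SENSITIVE = "SENSITIVE"  # placeholder written over a masked split value


@dataclass
class Node:
    label: str  # majority class at this node (assumed stored at every node)
    attribute: Optional[str] = None  # None marks a leaf
    branches: List[Tuple[str, "Node"]] = field(default_factory=list)  # (split value, child)

    def make_leaf(self) -> None:
        self.attribute, self.branches = None, []

    def size(self) -> int:
        return 1 + sum(child.size() for _, child in self.branches)


def optimistic_sensitive_prune(node: Node, sensitive_value: str) -> None:
    """Sketch of Algorithm 2 (optimistic sensitive pruning, OSP)."""
    for _, child in node.branches:  # Learning Procedure II: children before parents
        optimistic_sensitive_prune(child, sensitive_value)
    for i, (value, child) in enumerate(node.branches):  # Learning Procedure I
        if value == sensitive_value:
            node.branches[i] = (SENSITIVE, child)  # step 5: hide the split value
            child.make_leaf()  # steps 6-7 (no effect if the child is already a leaf)
            if len(node.branches) == 2:
                # Steps 9-10: node t has exactly two splits, so t itself becomes a leaf.
                node.make_leaf()
                break


if __name__ == "__main__":
    binary = Node("normal", "dst_ip", [
        ("192.168.1.10", Node("attack", "dport", [("22", Node("attack")), ("80", Node("normal"))])),
        ("10.0.0.5", Node("normal")),
    ])
    optimistic_sensitive_prune(binary, "192.168.1.10")
    print(binary.size(), binary.label)  # the binary node is collapsed into one leaf
```

For nodes with three or more splits the function behaves like sensitive pruning: only the sensitive branch is masked and collapsed.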

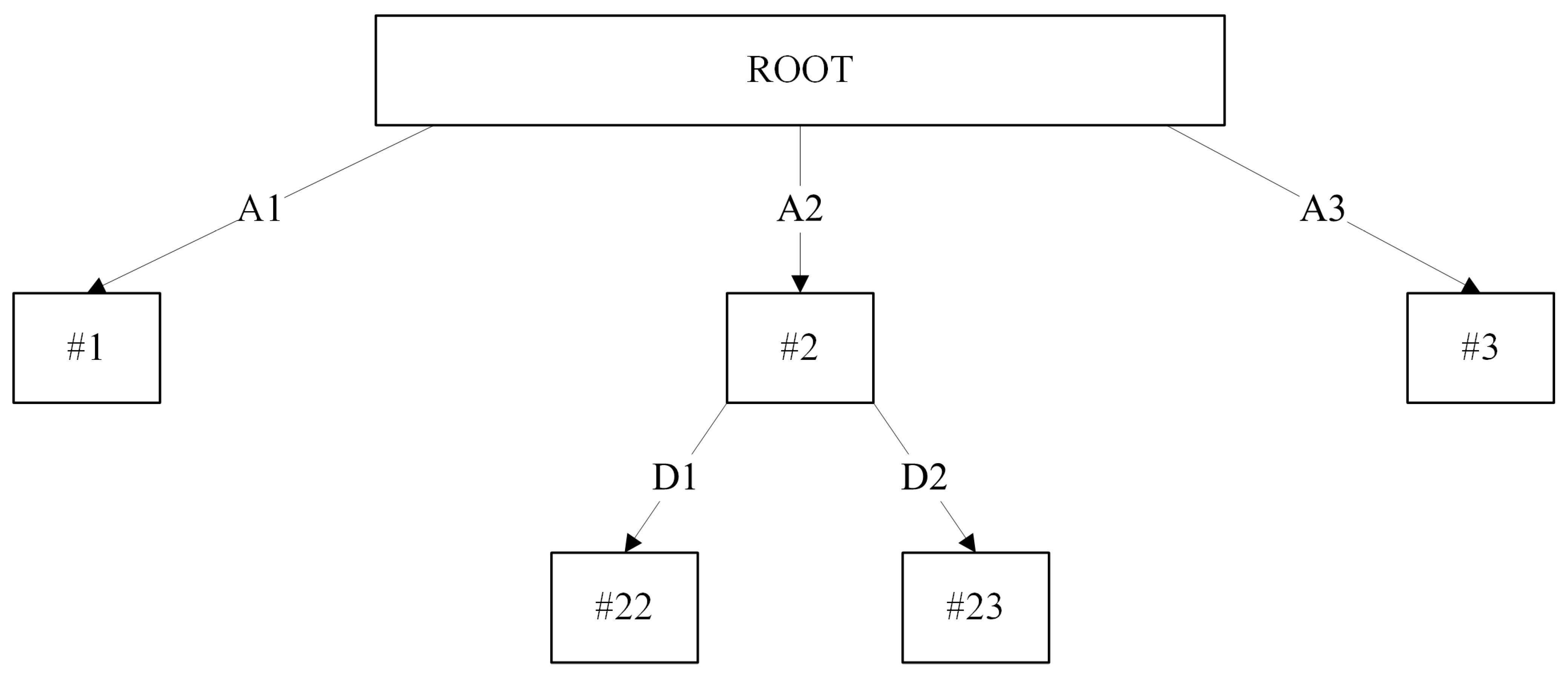

3.3. Pessimistic Sensitive Pruning

| Algorithm 3: Pessimistic Sensitive Pruning Algorithm |
|---|
| Input: |
| Tmax, unpruned decision tree |
| AS, sensitive attribute value |
| Output: |
| Pruned sensitive decision tree |
| Procedure: |
| Learning Procedure I: |
| 1. for all splits in node t, do |
| 2. Let ts be the child node of t reached through the current split |
| 3. Let A be the attribute value of the current split |
| 4. if (A == AS): |
| 5. Replace t with a leaf node |
| 6. endif |
| 7. endfor |
| Learning Procedure II: |
| 8. Let T = Tmax |
| 9. if T is not a leaf node: |
| 10. for all nodes t ∈ T, starting from the leaf nodes towards the root node, do |
| 11. Repeat steps 1–7 |
| 12. endfor |
| 13. endif |
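Algorithm 3 is the most aggressive variant: as soon as any split of node t tests the sensitive value AS, the whole node t, with all of its branches, is replaced by a leaf, rather than only the branch leading to the sensitive value. A minimal Python sketch of that control flow follows; the `Node` class, function name, and toy values are hypothetical, split values are compared as strings, and a collapsed node is assumed to keep its majority-class label.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Node:
    label: str  # majority class at this node (assumed stored at every node)
    attribute: Optional[str] = None  # None marks a leaf
    branches: List[Tuple[str, "Node"]] = field(default_factory=list)  # (split value, child)

    def make_leaf(self) -> None:
        self.attribute, self.branches = None, []

    def size(self) -> int:
        return 1 + sum(child.size() for _, child in self.branches)


def pessimistic_sensitive_prune(node: Node, sensitive_value: str) -> None:
    """Sketch of Algorithm 3 (pessimistic sensitive pruning, PSP)."""
    for _, child in node.branches:  # Learning Procedure II: children before parents
        pessimistic_sensitive_prune(child, sensitive_value)
    # Learning Procedure I: if any split of t tests the sensitive value, t becomes a leaf.
    if any(value == sensitive_value for value, _ in node.branches):
        node.make_leaf()


if __name__ == "__main__":
    tree = Node("normal", "proto", [
        ("tcp", Node("attack", "dst_ip", [("192.168.1.10", Node("attack")), ("10.0.0.5", Node("normal"))])),
        ("udp", Node("normal")),
    ])
    before = tree.size()
    pessimistic_sensitive_prune(tree, "192.168.1.10")
    print("pruned nodes:", before - tree.size())
```

Because t is removed together with its non-sensitive siblings, this variant is expected to prune more nodes than the branch-level variants, which is consistent with the larger #Pruned Nodes values reported for J48U-PSP and J48P-PSP in the result tables.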

3.4. Sensitive Values Considerations

3.5. Privacy Risk Metrics Considerations

3.6. GDPR-Compliant Model Sharing

4. Application and Motivation

5. Experiment

5.1. Datasets and Experimental Settings

5.1.1. GureKDDCup Dataset and Pre-Processing

5.1.2. UNSW-NB15 Dataset and Pre-Processing

5.1.3. CIDDS-001 Dataset and Pre-Processing

5.2. Types of Pruning, Evaluation Metrics, and Experimental Setup

5.3. Experimental Results

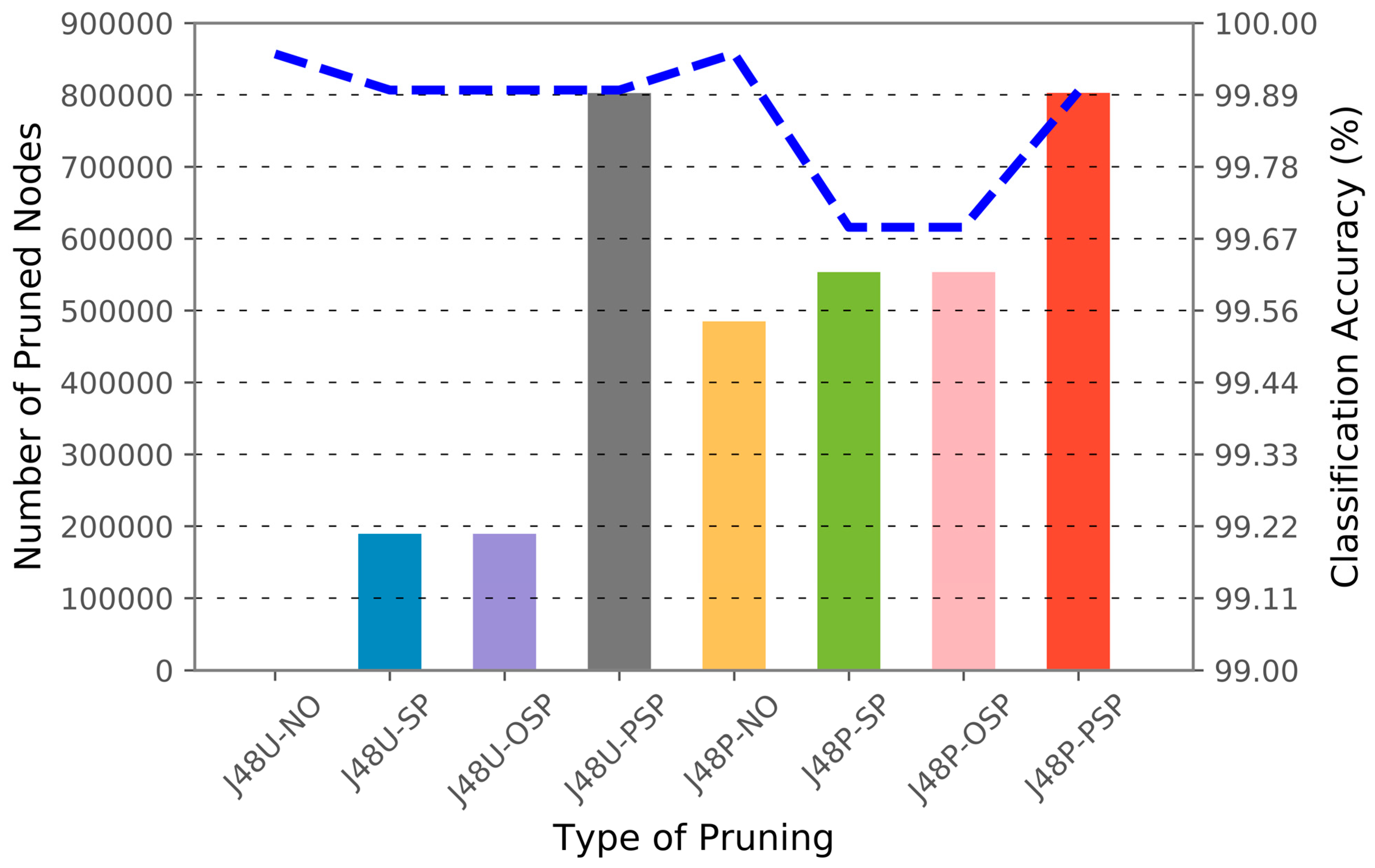

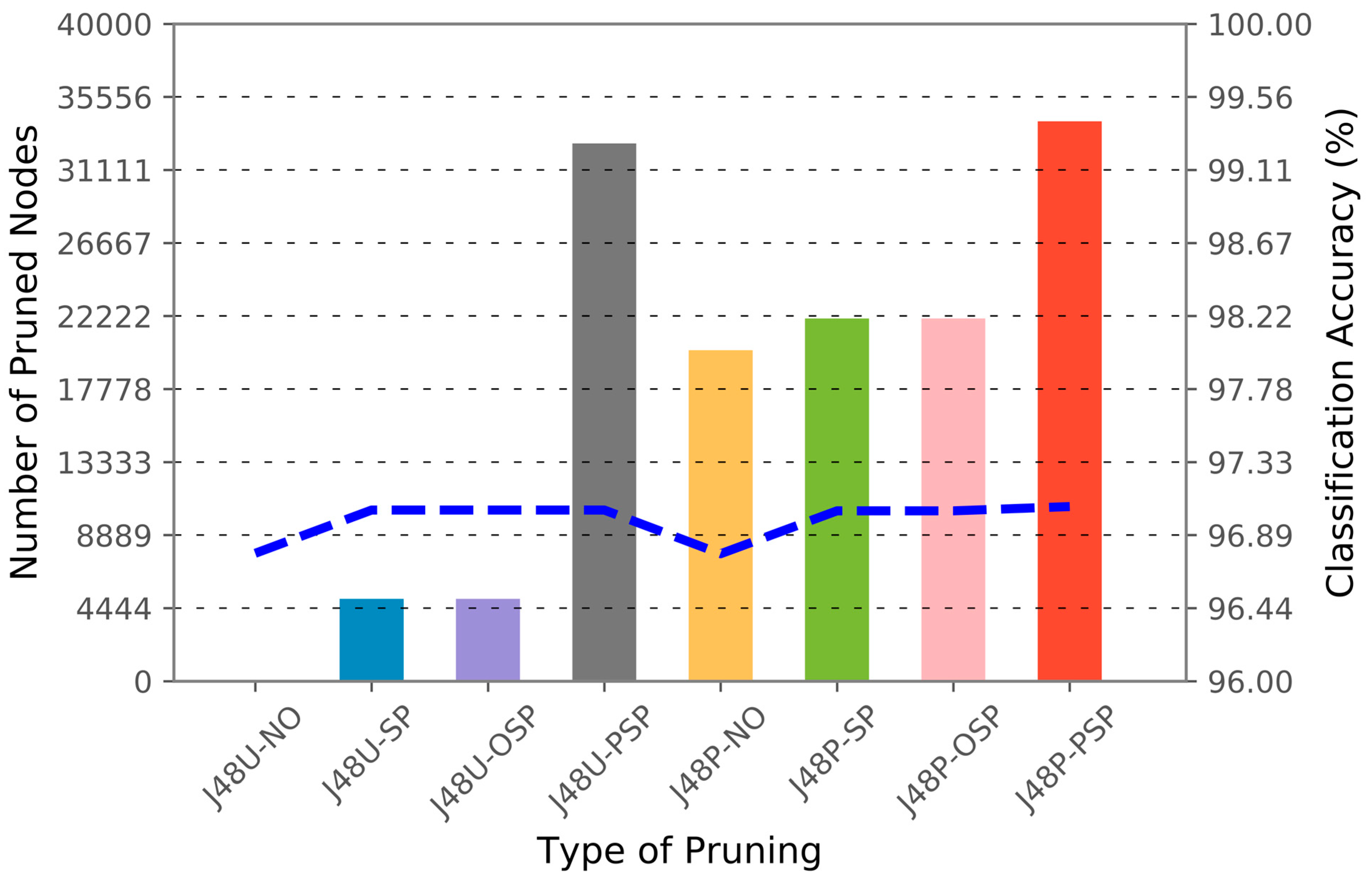

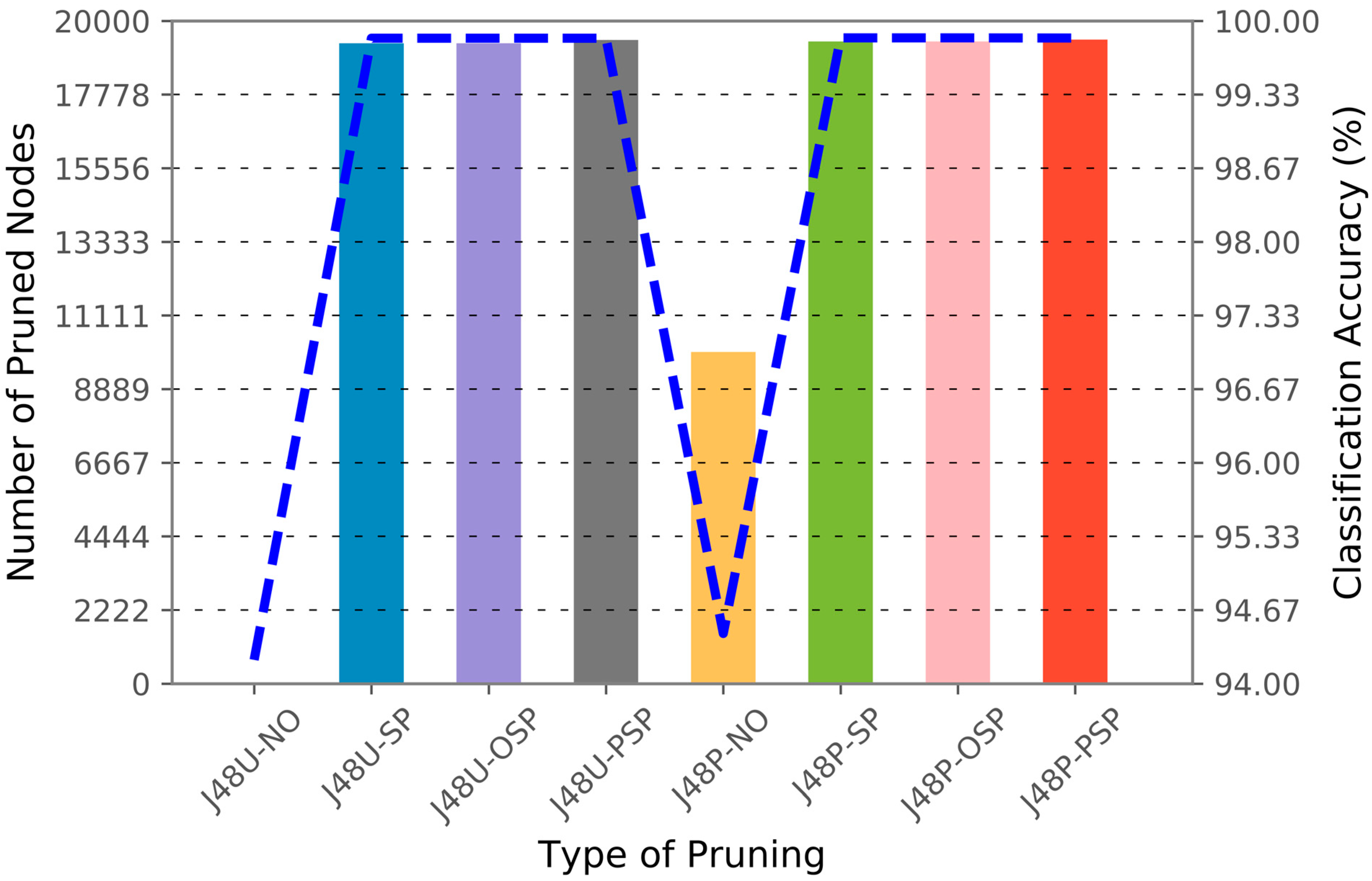

5.3.1. Performance Comparison of All Pruning Algorithms

5.3.2. Visibility of Sensitive Information in Decision Tree

5.3.3. Computational Complexity Comparison Against All Pruning Algorithms

5.3.4. Application of Anonymization Algorithm: Truncation and Bilateral Classification

5.3.5. Benchmarking Against Other Machine Learning Models

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AA | Adaptive Apriori |
| AAAoP-DT | Adaptive Apriori post-pruned Decision Tree |
| BMR | Bayes Minimum Risk |
| CARTs | Classification and Regression Trees |
| CCP | Cost–Complexity Pruning |
| CSP | Cost-Sensitive Pruning |
| CVP | Critical Value Pruning |
| DI | Depth–Impurity |
| EBP | Error-Based Pruning |
| IEEP | Improved Expected Error Pruning |
| IQN | Impurity Quality Node |
| KL | Kullback–Leibler |
| MEP | Minimum Error Pruning |
| NIDS | Network Intrusion Detection System |
| OPT | Optimal Pruning Algorithm |
| PEP | Pessimistic Error Pruning |
| PSO | Particle Swarm Optimization |
| REP | Reduced Error Pruning |
| ROC | Receiver Operating Characteristic |

References

- Fung, B.C.M.; Wang, K.; Chen, R.; Yu, P.S. Privacy-Preserving Data Publishing. ACM Comput. Surv. 2010, 42, 1–53.

- Nettleton, D. Chapter 18: Data Privacy and Privacy-Preserving Data Publishing. In Commercial Data Mining: Processing, Analysis and Modeling for Predictive Analytics Projects; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2014; pp. 217–228. ISBN 0124166024/9780124166028.

- Aggarwal, C.C.; Yu, P.S.-L. A General Survey of Privacy-Preserving Data Mining Models and Algorithms. In Privacy-Preserving Data Mining: Models and Algorithms; Springer: Boston, MA, USA, 2008; Volume 34, pp. 11–52.

- Aldeen, Y.A.A.S.; Salleh, M.; Razzaque, M.A. A Comprehensive Review on Privacy Preserving Data Mining. SpringerPlus 2015, 4, 694.

- Dhivakar, K.; Mohana, S. A Survey on Privacy Preservation Recent Approaches and Techniques. Int. J. Innov. Res. Comput. Commun. Eng. 2014, 2, 6559–6566.

- Li, X.-B.; Sarkar, S. Against Classification Attacks: A Decision Tree Pruning Approach to Privacy Protection in Data Mining. Oper. Res. 2009, 57, 1496–1509.

- Quinlan, J.R. Induction of Decision Trees. Mach. Learn. 1986, 1, 81–106.

- Quinlan, J.R. C4.5: Programs for Machine Learning; Morgan Kaufmann Publishers Inc.: San Mateo, CA, USA, 1993; ISBN 1-55860-238-0.

- Breiman, L.; Friedman, J.; Stone, C.J.; Olshen, R.A. Classification and Regression Trees; Taylor & Francis: Oxfordshire, UK, 1984.

- Chew, Y.J.; Ooi, S.Y.; Wong, K.-S.; Pang, Y.H. Decision Tree with Sensitive Pruning in Network-Based Intrusion Detection System. In Computational Science and Technology; Alfred, R., Lim, Y., Haviluddin, H., On, C.K., Eds.; Springer: Singapore, 2020; pp. 1–10.

- Martin, J.K. An Exact Probability Metric for Decision Tree Splitting and Stopping. Mach. Learn. 1997, 28, 257–291.

- Breslow, L.A.; Aha, D.W. Simplifying Decision Trees: A Survey. Knowl. Eng. Rev. 1997, 12, 1–40.

- Frank, E.; Witten, I.H. Reduced-Error Pruning with Significance Tests; Department of Computer Science, University of Waikato: Hamilton, New Zealand, 1999.

- Quinlan, J.R. Simplifying Decision Trees. Int. J. Man. Mach. Stud. 1987, 27, 221–234.

- Mingers, J. An Empirical Comparison of Selection Measures for Decision Tree Induction. Mach. Learn. 1989, 3, 319–342.

- Esposito, F.; Malerba, D.; Semeraro, G.; Kay, J. A Comparative Analysis of Methods for Pruning Decision Trees. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 476–491.

- Costa, V.G.; Pedreira, C.E. Recent Advances in Decision Trees: An Updated Survey. Artif. Intell. Rev. 2023, 56, 4765–4800.

- Harviainen, J.; Sommer, F.; Sorge, M.; Szeider, S. Optimal Decision Tree Pruning Revisited: Algorithms and Complexity. arXiv 2025, arXiv:2503.03576.

- Esposito, F.; Malerba, D.; Semeraro, G. Simplifying decision trees by pruning and grafting: New results (Extended abstract). In Machine Learning: ECML-95. ECML 1995, Lecture Notes in Computer Science; Lavrac, N., Wrobel, S., Eds.; Springer: Berlin/Heidelberg, Germany, 1995; Volume 912.

- Witten, I.H.; Frank, E.; Hall, M.A.; Christopher, J.P. Data Mining: Practical Machine Learning Tools and Techniques, 3rd ed.; Elsevier: Amsterdam, The Netherlands, 2011; ISBN 9780128042915.

- Jensen, D.; Schmill, M.D. Adjusting for Multiple Comparisons in Decision Tree Pruning. In Proceedings of the 3rd International Conference on Knowledge Discovery and Data Mining, Newport Beach, CA, USA, 14–17 August 1997; pp. 195–198.

- Cestnik, B.; Bratko, I. On Estimating Probabilities in Tree Pruning. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 1991; Volume 482, pp. 138–150.

- Watkins, C.J.C.H. Combining Cross-Validation and Search. In Proceedings of the 2nd European Conference on European Working Session on Learning, Bled, Yugoslavia, 1 May 1987; Sigma Press: Rawalpindi, Pakistan, 1987; pp. 79–87.

- Zhang, Y.; Chi, Z.X.; Wang, D.G. Decision Tree’s Pruning Algorithm Based on Deficient Data Sets. In Proceedings of the Sixth International Conference on Parallel and Distributed Computing Applications and Technologies (PDCAT’05), Dalian, China, 5–8 December 2005; Volume 2025, pp. 1030–1032.

- Mitu, M.M.; Arefin, S.; Saurav, Z.; Hasan, M.A.; Farid, D.M. Pruning-Based Ensemble Tree for Multi-Class Classification. In Proceedings of the 2024 6th International Conference on Electrical Engineering and Information & Communication Technology (ICEEICT), Mirpur, Dhaka, 2–4 May 2024; IEEE: Dhaka, Bangladesh, 2024; pp. 481–486.

- Gelfand, S.B.; Ravishankar, C.S.; Delp, E.J. An Iterative Growing and Pruning Algorithm for Classification Tree Design. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 163–174.

- Bohanec, M.; Bratko, I. Trading Accuracy for Simplicity in Decision Trees. Mach. Learn. 1994, 15, 223–250.

- Almuallim, H. An Efficient Algorithm for Optimal Pruning of Decision Trees. Artif. Intell. 1996, 83, 347–362.

- Lazebnik, T.; Bunimovich-Mendrazitsky, S. Decision Tree Post-Pruning without Loss of Accuracy Using the SAT-PP Algorithm with an Empirical Evaluation on Clinical Data. Data Knowl. Eng. 2023, 145, 102173.

- Bagriacik, M.; Otero, F. Fairness-Guided Pruning of Decision Trees. In Proceedings of the 2025 ACM Conference on Fairness, Accountability, and Transparency, Athens, Greece, 23–26 June 2025; Association for Computing Machinery: New York, NY, USA, 2025; pp. 1745–1756.

- Osei-Bryson, K.-M. Post-Pruning in Decision Tree Induction Using Multiple Performance Measures. Comput. Oper. Res. 2007, 34, 3331–3345.

- Fournier, D.; Crémilleux, B. A Quality Index for Decision Tree Pruning. Knowl.-Based Syst. 2002, 15, 37–43.

- Barrientos, F.; Sainz, G. Interpretable Knowledge Extraction from Emergency Call Data Based on Fuzzy Unsupervised Decision Tree. Knowl.-Based Syst. 2012, 25, 77–87.

- Wei, J.M.; Wang, S.Q.; You, J.P.; Wang, G.Y. RST in Decision Tree Pruning. In Proceedings of the Fourth International Conference on Fuzzy Systems and Knowledge Discovery (FSKD 2007), Haikou, China, 24–27 August 2007; Volume 3, pp. 213–217.

- Pawlak, Z. Rough Sets. Int. J. Comput. Inf. Sci. 1982, 11, 341–356.

- Wei, J.-M.; Wang, S.-Q.; Yu, G.; Gu, L.; Wang, G.-Y.; Yuan, X.-J. A Novel Method for Pruning Decision Trees. In Proceedings of the 2009 International Conference on Machine Learning and Cybernetics, Baoding, China, 12–15 July 2009; Volume 1, pp. 339–343.

- Osei-Bryson, K.-M. Post-Pruning in Regression Tree Induction: An Integrated Approach. Expert Syst. Appl. 2008, 34, 1481–1490.

- Wang, H.; Chen, B. Intrusion Detection System Based on Multi-Strategy Pruning Algorithm of the Decision Tree. In Proceedings of the 2013 IEEE International Conference on Grey Systems and Intelligent Services (GSIS), Macao, China, 15–17 November 2013; pp. 445–447.

- Knoll, U.; Nakhaeizadeh, G.; Tausend, B. Cost-Sensitive Pruning of Decision Trees. In Proceedings of the European Conference on Machine Learning, Catania, Italy, 6–8 April 1994; pp. 383–386.

- Bradley, A.; Lovell, B. Cost-Sensitive Decision Tree Pruning: Use of the ROC Curve. In Proceedings of the Eighth Australian Joint Conference on Artificial Intelligence, Canberra, Australia, 13–17 November 1995; pp. 1–8.

- Ting, K.M. Inducing Cost-Sensitive Trees via Instance Weighting. In Proceedings of the European Symposium on Principles of Data Mining and Knowledge Discovery, Nantes, France, 23–26 September 1998; pp. 139–147.

- Bradford, J.P.; Kunz, C.; Kohavi, R.; Brunk, C.; Brodley, C.E. Pruning Decision Trees with Misclassification Costs. In Machine Learning ECML-98, Proceedings of the 10th European Conference on Machine Learning, Chemnitz, Germany, 21–23 April 1998; Springer: Berlin/Heidelberg, Germany, 1998; Volume 1398, pp. 131–136.

- Chen, J.; Wang, X.; Zhai, J. Pruning Decision Tree Using Genetic Algorithms. In Proceedings of the 2009 International Conference on Artificial Intelligence and Computational Intelligence, Shanghai, China, 7–8 November 2009; Volume 3, pp. 244–248.

- Zhang, W.; Li, Y. A Post-Pruning Decision Tree Algorithm Based on Bayesian. In Proceedings of the 2013 International Conference on Computational and Information Sciences, Shiyang, China, 21–23 June 2013; pp. 988–991.

- Mehta, S.; Shukla, D. Optimization of C5.0 Classifier Using Bayesian Theory. In Proceedings of the 2015 International Conference on Computer, Communication and Control (IC4), Indore, India, 10–12 September 2015.

- Malik, A.J.; Khan, F.A. A Hybrid Technique Using Binary Particle Swarm Optimization and Decision Tree Pruning for Network Intrusion Detection. Clust. Comput. 2017, 21, 667–680.

- Sim, D.Y.Y.; Teh, C.S.; Ismail, A.I. Improved Boosted Decision Tree Algorithms by Adaptive Apriori and Post-Pruning for Predicting Obstructive Sleep Apnea. Adv. Sci. Lett. 2011, 4, 400–407.

- Ahmed, A.M.; Rizaner, A.; Ulusoy, A.H. A Novel Decision Tree Classification Based on Post-Pruning with Bayes Minimum Risk. PLoS ONE 2018, 13, e0194168.

- Bahnsen, A.C.; Stojanovic, A.; Aouada, D. Cost Sensitive Credit Card Fraud Detection Using Bayes Minimum Risk. In Proceedings of the 2013 12th International Conference on Machine Learning and Applications, Miami, FL, USA, 4–7 December 2013; Volume 1, pp. 333–338.

- Frank, E.; Witten, I.H. Using a Permutation Test for Attribute Selection in Decision Trees. In Proceedings of the 15th International Conference on Machine Learning, Madison, WI, USA, 24–27 July 1998; pp. 152–160.

- Crawford, S.L. Extensions to the CART Algorithm. Int. J. Man. Mach. Stud. 1989, 31, 197–217.

- Efron, B.; Tibshirani, R. Improvements on Cross-Validation: The .632+ Bootstrap Method. J. Am. Stat. Assoc. 1997, 92, 548–560.

- Scott, C. Tree Pruning with Subadditive Penalties. IEEE Trans. Signal Process. 2005, 53, 4518–4525.

- García-Moratilla, S.; Martínez-Muñoz, G.; Suárez, A. Evaluation of Decision Tree Pruning with Subadditive Penalties. In Intelligent Data Engineering and Automated Learning—IDEAL 2006, Proceedings of International Conference on Intelligent Data Engineering and Automated Learning, Burgos, Spain, 20–23 September 2006; Springer: Berlin/Heidelberg, Germany, 2006.

- Rissanen, J. Modeling by Shortest Data Description. Automatica 1978, 14, 465–471.

- Mehta, M.; Rissanen, J.; Agrawal, R. MDL-Based Decision Tree Pruning. In Proceedings of the First International Conference on Knowledge Discovery and Data Mining, Montreal, QC, Canada, 20–21 August 1995; AAAI Press: Washington, DC, USA, 1995; pp. 216–221.

- Sweeney, L. k-Anonymity: A Model for Protecting Privacy. Int. J. Uncertain. Fuzziness Knowl.-Based Syst. 2002, 10, 557–570.

- Pantoja, D.; Rodríguez, I.; Rubio, F.; Segura, C. Complexity Analysis and Practical Resolution of the Data Classification Problem with Private Characteristics. Complex. Intell. Syst. 2025, 11, 274.

- Patil, D.D.; Wadhai, V.M.; Gokhale, J.A. Evaluation of Decision Tree Pruning Algorithms for Complexity and Classification Accuracy. Int. J. Comput. Appl. 2010, 11, 975–8887.

- Tsai, C.F.; Hsu, Y.F.; Lin, C.Y.; Lin, W.Y. Intrusion Detection by Machine Learning: A Review. Expert Syst. Appl. 2009, 36, 11994–12000.

- Buczak, A.; Guven, E. A Survey of Data Mining and Machine Learning Methods for Cyber Security Intrusion Detection. IEEE Commun. Surv. Tutor. 2016, 18, 1153–1176.

- Mishra, P.; Varadharajan, V.; Tupakula, U.; Pilli, E.S. A Detailed Investigation and Analysis of Using Machine Learning Techniques for Intrusion Detection. IEEE Commun. Surv. Tutor. 2018, 21, 686–728.

- Sharkey, P.; Tian, H.W.; Zhang, W.N.; Xu, S.H. Privacy-Preserving Data Mining through Knowledge Model Sharing. In Proceedings of the International Workshop on Privacy, Security, and Trust in KDD, San Jose, CA, USA, 12 August 2007; Springer: Berlin/Heidelberg, Germany, 2007; pp. 97–115.

- Prasser, F.; Kohlmayer, F.; Kuhn, K.A. Efficient and Effective Pruning Strategies for Health Data De-Identification. BMC Med. Inform. Decis. Mak. 2016, 16, 49.

- Yurcik, W.; Woolam, C.; Hellings, G.; Khan, L.; Thuraisingham, B. Privacy/Analysis Tradeoffs in Sharing Anonymized Packet Traces: Single-Field Case. In Proceedings of the 2008 Third International Conference on Availability, Reliability and Security, Barcelona, Spain, 4–7 March 2008; pp. 237–244.

- Lakkaraju, K.; Slagell, A. Evaluating the Utility of Anonymized Network Traces for Intrusion Detection. In Proceedings of the 4th International Conference on Security and Privacy in Communication Networks, Istanbul, Turkey, 22–25 September 2008; ACM: New York, NY, USA, 2008; p. 17.

- SSH Port | SSH.COM. Available online: https://www.ssh.com/ssh/port (accessed on 8 September 2018).

- Zhang, J.; Borisov, N.; Yurcik, W. Outsourcing Security Analysis with Anonymized Logs. In Proceedings of the 2006 Securecomm and Workshops, Baltimore, MD, USA, 28 August–1 September 2006.

- Coull, S.E.; Wright, C.V.; Monrose, F.; Collins, M.P.; Reiter, M.K. Inferring Sensitive Information from Anonymized Network Traces. NDSS 2007, 7, 35–47.

- Riboni, D.; Villani, A.; Vitali, D.; Bettini, C.; Mancini, L.V. Obfuscation of Sensitive Data for Incremental Release of Network Flows. IEEE/ACM Trans. Netw. 2015, 23, 2372–2380.

- Yurcik, W.; Woolam, C.; Hellings, G.; Khan, L.; Thuraisingham, B. SCRUB-Tcpdump: A Multi-Level Packet Anonymizer Demonstrating Privacy/Analysis Tradeoffs. In Proceedings of the 2007 Third International Conference on Security and Privacy in Communications Networks and the Workshops—SecureComm 2007, Nice, France, 17–21 September 2007; pp. 49–56.

- Qardaji, W.; Li, N. Anonymizing Network Traces with Temporal Pseudonym Consistency. In Proceedings of the 2012 32nd International Conference on Distributed Computing Systems Workshops, Macau, China, 18–21 June 2012.

- Xu, J.; Fan, J.; Ammar, M.H.; Moon, S.B. Prefix-Preserving IP Address Anonymization: Measurement-Based Security Evaluation and a New Cryptography-Based Scheme. In Proceedings of the 10th IEEE International Conference on Network Protocols, Paris, France, 12–15 November 2002; Volume 46, pp. 280–289.

- Coull, S.E.; Wright, C.V.; Keromytis, A.D.; Monrose, F.; Reiter, M.K. Taming the Devil: Techniques for Evaluating Anonymized Network Data. In Proceedings of the Network and Distributed System Security Symposium, NDSS 2008, San Diego, CA, USA, 10–13 February 2008; pp. 125–135.

- Perona, I.; Arbelaitz, O.; Gurrutxaga, I.; Martin, J.I.; Muguerza, J.; Perez, J.M. Generation of the Database Gurekddcup. 2016. Available online: http://hdl.handle.net/10810/20608 (accessed on 3 August 2025).

- Perona, I.; Gurrutxaga, I.; Arbelaitz, O.; Martín, J.I.; Muguerza, J.; Ma Pérez, J. Service-Independent Payload Analysis to Improve Intrusion Detection in Network Traffic. In Proceedings of the 7th Australasian Data Mining Conference, Glenelg/Adelaide, SA, Australia, 27–28 November 2008; Volume 87, pp. 171–178.

- Moustafa, N.; Slay, J. UNSW-NB15: A Comprehensive Data Set for Network Intrusion Detection Systems. In Proceedings of the 2015 Military Communications and Information Systems Conference (MilCIS), Canberra, Australia, 10–12 November 2015; pp. 1–6.

- Ring, M.; Wunderlich, S.; Grüdl, D.; Landes, D.; Hotho, A. Flow-Based Benchmark Data Sets for Intrusion Detection. In Proceedings of the 16th European Conference on Cyber Warfare and Security, Dublin, Ireland, 29–30 June 2017; pp. 361–369.

- Stolfo, S.J.; Fan, W.; Lee, W.; Prodromidis, A.; Chan, P.K. Cost-Based Modeling for Fraud and Intrusion Detection: Results from the JAM Project. In Proceedings of the DARPA Information Survivability Conference and Exposition DISCEX’00, Hilton Head, SC, USA, 25–27 January 2000; IEEE Computer Society: Los Alamitos, CA, USA, 2000; Volume 2, pp. 130–144.

- Tavallaee, M.; Bagheri, E.; Lu, W.; Ghorbani, A.A. A Detailed Analysis of the KDD CUP 99 Data Set. In Proceedings of the 2009 IEEE Symposium on Computational Intelligence for Security and Defense Applications, Ottawa, ON, Canada, 8–10 July 2009; pp. 1–6.

- Nicholas, L.; Ooi, S.Y.; Pang, Y.H.; Hwang, S.O.; Tan, S.-Y. Study of Long Short-Term Memory in Flow-Based Network Intrusion Detection System. J. Intell. Fuzzy Syst. 2018, 35, 5947–5957.

- Bhuyan, M.H.; Bhattacharyya, D.K.; Kalita, J.K. Network Anomaly Detection: Methods, Systems and Tools. IEEE Commun. Surv. Tutor. 2014, 16, 303–336.

- Kohavi, R. A Study of Cross-Validation and Bootstrap for Accuracy Estimation and Model Selection. In Proceedings of the 14th International Joint Conference on Artificial Intelligence, Montreal, QC, Canada, 20–25 August 1995; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1995; Volume 2, pp. 1137–1143.

- Chew, Y.J.; Lee, N.; Ooi, S.Y.; Wong, K.-S.; Pang, Y.H. Benchmarking Full Version of GureKDDCup, UNSW-NB15, and CIDDS-001 NIDS Datasets Using Rolling-Origin Resampling. Inf. Secur. J. A Glob. Perspect. 2021, 31, 544–565.

- Ahmed, M.; Mahmood, A.N.; Hu, J. A Survey of Network Anomaly Detection Techniques. J. Netw. Comput. Appl. 2016, 60, 19–31.

- Catania, C.A.; Garino, C.G. Automatic Network Intrusion Detection: Current Techniques and Open Issues. Comput. Electr. Eng. 2012, 38, 1062–1072.

- Mivule, K. An Investigation Of Data Privacy And Utility Using Machine Learning As A Gauge. In Proceedings of the Doctoral Consortium, Richard Tapia Celebration of Diversity in Computing Conference, TAPIA, Seattle, WA, USA, 5–8 February 2014; Volume 3619387, pp. 17–33.

- Mivule, K.; Turner, C. A Comparative Analysis of Data Privacy and Utility Parameter Adjustment, Using Machine Learning Classification as a Gauge. Procedia Comput. Sci. 2013, 20, 414–419.

- Chew, Y.J.; Ooi, S.Y.; Wong, K.; Pang, Y.H.; Hwang, S.O. Evaluation of Black-Marker and Bilateral Classification with J48 Decision Tree in Anomaly Based Intrusion Detection System. J. Intell. Fuzzy Syst. 2018, 35, 5927–5937.

- Chew, Y.J.; Ooi, S.Y.; Wong, K.; Pang, Y.H. Privacy Preserving of IP Address Through Truncation Method in Network-Based Intrusion Detection System. In Proceedings of the 2019 8th International Conference on Software and Computer Application, Penang, Malaysia, 19–21 February 2019.

| Starting IP | Ending IP |

|---|---|

| 10.0.0.0 | 10.255.255.255 |

| 172.16.0.0 | 172.31.255.255 |

| 192.168.0.0 | 192.168.255.255 |
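The three ranges in the table above are the RFC 1918 private IPv4 blocks, i.e. 10.0.0.0/8, 172.16.0.0/12, and 192.168.0.0/16. A small Python sketch for testing whether an address falls in one of them, using the standard-library `ipaddress` module, is shown below; the function name is hypothetical, and the paper does not state which tool was used to separate private from public addresses. The ranges are listed explicitly because `IPv4Address.is_private` also returns True for other reserved blocks such as loopback and link-local addresses.

```python
import ipaddress

# The three private IPv4 ranges from the table, written in CIDR form.
PRIVATE_RANGES = [
    ipaddress.ip_network("10.0.0.0/8"),      # 10.0.0.0 - 10.255.255.255
    ipaddress.ip_network("172.16.0.0/12"),   # 172.16.0.0 - 172.31.255.255
    ipaddress.ip_network("192.168.0.0/16"),  # 192.168.0.0 - 192.168.255.255
]


def is_private_ipv4(address: str) -> bool:
    """Return True if the address lies inside one of the listed private ranges.

    Strings that are not valid IPv4 addresses (for example, symbolic or
    anonymized placeholders) return False instead of raising.
    """
    try:
        ip = ipaddress.IPv4Address(address)
    except ValueError:  # AddressValueError is a subclass of ValueError
        return False
    return any(ip in network for network in PRIVATE_RANGES)


if __name__ == "__main__":
    for addr in ["10.1.2.3", "172.31.255.255", "172.32.0.1", "192.168.0.1", "8.8.8.8", "DNS"]:
        print(addr, is_private_ipv4(addr))
```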

| Dataset | #Used Instances | Original Attributes | Dataset | #Used Instances |

|---|---|---|---|---|

| Full GureKDDCup | 2,759,494 | 48 | 36 | 2008 (2016) |

| Full UNSW-NB15 | 2,540,047 | 49 (raw) 48 (processed) | Full UNSW-NB15 | 2,540,047 |

| CIDDS-001 | 18,762,253 | 12 (raw) 16 (processed) | CIDDS-001 | 18,762,253 |

| Week | #Instances |

|---|---|

| 1 | 177,910 |

| 2 | 188,790 |

| 3 | 288,369 |

| 4 | 113,946 |

| 5 | 604,303 |

| 6 | 951,361 |

| 7 | 434,815 |

| total | 2,759,494 |

| Training Group | Testing Group |

|---|---|

| 1 | 2~7 |

| 1~2 | 3~7 |

| 1~3 | 4~7 |

| 1~4 | 5~7 |

| 1~5 | 6~7 |

| 1~6 | 7 |
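The training and testing groups above follow an expanding-window (rolling-origin) scheme: the k-th split trains on weeks 1 to k and tests on the remaining weeks k+1 to n. The Python sketch below generates these groups and, from the weekly counts in the GureKDDCup table, the number of training and testing instances of each split; the function names are hypothetical.

```python
from typing import List, Tuple

# Weekly instance counts of GureKDDCup, copied from the table above.
GUREKDDCUP_WEEKS = [177_910, 188_790, 288_369, 113_946, 604_303, 951_361, 434_815]


def rolling_origin_splits(n_weeks: int) -> List[Tuple[List[int], List[int]]]:
    """Expanding-window splits: train on weeks 1..k, test on weeks k+1..n_weeks."""
    return [
        (list(range(1, k + 1)), list(range(k + 1, n_weeks + 1)))
        for k in range(1, n_weeks)
    ]


def split_sizes(week_counts: List[int]) -> List[Tuple[int, int]]:
    """Number of training and testing instances for every rolling-origin split."""
    sizes = []
    for train, test in rolling_origin_splits(len(week_counts)):
        n_train = sum(week_counts[w - 1] for w in train)  # weeks are 1-indexed
        n_test = sum(week_counts[w - 1] for w in test)
        sizes.append((n_train, n_test))
    return sizes


if __name__ == "__main__":
    splits = rolling_origin_splits(len(GUREKDDCUP_WEEKS))
    for (train, test), (n_train, n_test) in zip(splits, split_sizes(GUREKDDCUP_WEEKS)):
        print(f"train weeks {train} ({n_train:,}) -> test weeks {test} ({n_test:,})")
```

Calling `rolling_origin_splits(4)` gives the three UNSW-NB15 groups, and `rolling_origin_splits(2)` the single CIDDS-001 group (train on week 1, test on week 2).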

| Attribute Name | Original Attribute Value | Modified Attribute Value |
|---|---|---|
| state | - | ? |
| service | - | ? |
| attack_cat | NaN | Normal |
| attack_cat | Backdoors | Backdoor |
| label | (ALL) | Drop |
| sport/dport | - | ? |
| sport/dport | 0xc0a8 | 49,320 |
| sport/dport | 0x000b | 11 |
| sport/dport | 0x000c | 12 |
| sport/dport | 0x20205321 | ? |
| sport/dport | 0xcc09 | 52,233 |
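The substitutions in the table above can be expressed as a per-record cleaning function. The Python sketch below is a minimal illustration, not the authors' pre-processing script: the column names follow the table (`state`, `service`, `attack_cat`, `label`, `sport`, `dport`) and may differ from the raw CSV header, records are assumed to be dictionaries of strings, and "?" is used because it is Weka's missing-value marker. Hexadecimal port strings are converted to base-10 integers (for example, 0xc0a8 = 49,320), and values above 65,535, such as 0x20205321, are treated as missing since they cannot be valid port numbers.

```python
from typing import Dict, Union

MISSING = "?"  # Weka's marker for a missing value


def convert_port(raw: str) -> Union[int, str]:
    """Convert a port field to an integer port, or to the missing-value marker."""
    value = raw.strip()
    if value in ("", "-"):
        return MISSING
    try:
        # Hexadecimal strings such as "0xc0a8" become base-10 integers.
        port = int(value, 16) if value.lower().startswith("0x") else int(value)
    except ValueError:
        return MISSING
    # Values outside the 16-bit port range (e.g. 0x20205321) cannot be ports.
    return port if 0 <= port <= 65535 else MISSING


def clean_record(record: Dict[str, str]) -> Dict[str, Union[int, str]]:
    """Apply the substitutions listed in the table to one raw record."""
    out: Dict[str, Union[int, str]] = dict(record)
    out.pop("label", None)  # the label attribute is dropped for all records
    for column in ("state", "service"):
        if column in out and str(out[column]).strip() == "-":
            out[column] = MISSING
    category = str(out.get("attack_cat", "")).strip()
    if category == "" or category.lower() == "nan":
        category = "Normal"  # empty/NaN category mapped to Normal, as in the table
    elif category == "Backdoors":
        category = "Backdoor"  # merge the two spellings of the same class
    out["attack_cat"] = category
    for column in ("sport", "dport"):
        if column in out:
            out[column] = convert_port(str(out[column]))
    return out
```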

| Week | #Instances |

|---|---|

| 1 | 700,001 |

| 2 | 700,001 |

| 3 | 700,001 |

| 4 | 440,044 |

| total | 2,540,047 |

| Training Group | Testing Group |

|---|---|

| 1 | 2~4 |

| 1~2 | 3~4 |

| 1~3 | 4 |

| Attribute Name | Original Attribute Value | Modified Attribute Value |
|---|---|---|
| Bytes | (M) | Multiply Bytes by 100,000 |
| Flows | (ALL) | DROP |
| Attacktype | --- | normal |
| Flags | APRSF | Flag_A, Flag_P, Flag_R, Flag_S, Flag_F |
| IP Address (Src IP Addr/Dst IP Addr) | DNS | 1000.1000.1000.1000 |
| IP Address (Src IP Addr/Dst IP Addr) | EXT_SERVER | 2000.2000.2000.2000 |
| IP Address (Src IP Addr/Dst IP Addr) | (Anonymized IP) | 3000.3000.3000.3000 |
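The CIDDS-001 substitutions can be sketched in the same way. In this illustration the column names follow the table and may differ from the raw CSV header, the raw `Flags` field is assumed to be a string in which each set flag appears as its letter (e.g. `.AP.SF`), and any address token that is neither `DNS`, `EXT_SERVER`, nor a parsable IP address is assumed to be an anonymized address. The multiplier for `M`-suffixed byte counts is taken as stated in the table; all function and constant names are hypothetical.

```python
import ipaddress
from typing import Dict, Union

M_FACTOR = 100_000  # multiplier for "M"-suffixed byte counts, as stated in the table
FLAG_LETTERS = "APRSF"
IP_PLACEHOLDERS = {"DNS": "1000.1000.1000.1000", "EXT_SERVER": "2000.2000.2000.2000"}
ANONYMIZED_PLACEHOLDER = "3000.3000.3000.3000"


def parse_bytes(raw: str) -> int:
    value = raw.strip()
    if value.endswith("M"):
        return round(float(value[:-1]) * M_FACTOR)
    return int(float(value))


def expand_flags(raw: str) -> Dict[str, int]:
    # One binary attribute per flag letter: 1 if the letter occurs in the flag string.
    return {f"Flag_{letter}": int(letter in raw) for letter in FLAG_LETTERS}


def map_ip(raw: str) -> str:
    value = raw.strip()
    if value in IP_PLACEHOLDERS:
        return IP_PLACEHOLDERS[value]
    try:
        ipaddress.ip_address(value)
        return value  # a regular IP address is kept unchanged
    except ValueError:
        return ANONYMIZED_PLACEHOLDER  # assumption: any other token is an anonymized address


def clean_flow(record: Dict[str, str]) -> Dict[str, Union[int, str]]:
    # Flows is dropped; Flags is replaced by the Flag_* attributes added below.
    out: Dict[str, Union[int, str]] = {
        key: value for key, value in record.items() if key not in ("Flows", "Flags")
    }
    out["Bytes"] = parse_bytes(record["Bytes"])
    out.update(expand_flags(record.get("Flags", "")))
    if record.get("Attacktype", "").strip() == "---":
        out["Attacktype"] = "normal"
    for column in ("Src IP Addr", "Dst IP Addr"):
        if column in record:
            out[column] = map_ip(record[column])
    return out
```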

| Week | #Instances |

|---|---|

| 1 | 8,451,520 |

| 2 | 10,310,733 |

| 3 | 6,349,783 |

| 4 | 6,175,897 |

| total | 31,287,933 |

| Training Group | Testing Group |

|---|---|

| 1 | 2 |

| Algorithm Name | Pruning Type | Technical Implementation |

|---|---|---|

| J48U-NO | No Prune | Default J48 Weka package pruning is disabled. |

| J48U-SP | Single | Standard sensitive pruning as described in Section 3.1 is applied. |

| J48U-OSP | Single | Optimistic sensitive pruning as described in Section 3.2 is applied. |

| J48U-PSP | Single | Pessimistic sensitive pruning as described in Section 3.3 is applied. |

| J48P-NO | Single | Default J48 Weka package pruning is applied. |

| J48P-SP | Hybrid | Default J48 Weka package pruning and standard sensitive pruning as described in Section 3.1 are applied. |

| J48P-OSP | Hybrid | Default J48 Weka package pruning and optimistic sensitive pruning as described in Section 3.2 are applied. |

| J48P-PSP | Hybrid | Default J48 Weka package pruning and pessimistic sensitive pruning as described in Section 3.3 are applied. |


Performance comparison of four pruning algorithms on classification accuracy (%), number of pruned nodes, and number of nodes in the final tree.

| Dataset | Train | Test | J48U-NO Accuracy (%) | J48U-NO #Pruned Nodes | J48U-NO #Final Nodes | J48U-SP Accuracy (%) | J48U-SP #Pruned Nodes | J48U-SP #Final Nodes | J48U-OSP Accuracy (%) | J48U-OSP #Pruned Nodes | J48U-OSP #Final Nodes | J48U-PSP Accuracy (%) | J48U-PSP #Pruned Nodes | J48U-PSP #Final Nodes |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GureKDDCup | 1 | 2~7 | 38.0276 | 0 | 1098 | 38.0294 | 56 | 1042 | 38.0294 | 56 | 1042 | 38.0294 | 1083 | 15 |
| GureKDDCup | 1~2 | 3~7 | 33.1902 | 0 | 8864 | 33.1782 | 122 | 8742 | 33.1782 | 122 | 8742 | 33.1782 | 8794 | 70 |
| GureKDDCup | 1~3 | 4~7 | 82.8676 | 0 | 52,296 | 82.8609 | 15,938 | 36,358 | 82.8609 | 15,938 | 36,358 | 82.8609 | 52,121 | 175 |
| GureKDDCup | 1~4 | 5~7 | 88.1449 | 0 | 220,366 | 88.1300 | 78,233 | 142,133 | 88.1300 | 78,233 | 142,133 | 88.1300 | 219,967 | 399 |
| GureKDDCup | 1~5 | 6~7 | 88.8453 | 0 | 471,472 | 88.8410 | 161,785 | 309,687 | 88.8410 | 161,785 | 309,687 | 88.8410 | 471,079 | 393 |
| GureKDDCup | 1~6 | 7 | 99.9522 | 0 | 803,218 | 99.8963 | 189,442 | 613,776 | 99.8963 | 189,442 | 613,776 | 99.8963 | 802,599 | 619 |
| UNSW-NB15 | 1 | 2~4 | 96.4373 | 0 | 8548 | 97.1296 | 792 | 7756 | 97.1296 | 792 | 7756 | 97.1296 | 6670 | 1878 |
| UNSW-NB15 | 1~2 | 3~4 | 96.5973 | 0 | 20,411 | 96.7678 | 2550 | 17,861 | 96.7678 | 2550 | 17,861 | 96.7678 | 16,116 | 4295 |
| UNSW-NB15 | 1~3 | 4 | 96.7778 | 0 | 38,928 | 97.0410 | 5007 | 33,921 | 97.0410 | 5007 | 33,921 | 97.0410 | 32,720 | 6208 |
| CIDDS-001 | 1 | 2 | 94.2148 | 0 | 19,557 | 99.8429 | 19,326 | 231 | 99.8429 | 19,326 | 231 | 99.8429 | 19,422 | 135 |
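In every row of the comparison table, #Pruned Nodes + #Final Nodes equals the size of the unpruned J48U-NO tree for the same split, so the relative reduction in tree size follows directly. A short Python check over a few rows copied from that table (the dictionary layout and function name are our own choices):

```python
from typing import Dict, Tuple

# (unpruned size, {algorithm: (#pruned nodes, #final nodes)}), copied from the table
ROWS: Dict[str, Tuple[int, Dict[str, Tuple[int, int]]]] = {
    "GureKDDCup 1 -> 2~7": (1098, {"J48U-SP": (56, 1042), "J48U-PSP": (1083, 15)}),
    "UNSW-NB15 1 -> 2~4": (8548, {"J48U-SP": (792, 7756), "J48U-PSP": (6670, 1878)}),
    "CIDDS-001 1 -> 2": (19557, {"J48U-SP": (19326, 231), "J48U-PSP": (19422, 135)}),
}


def reduction_percent(unpruned: int, pruned: int) -> float:
    """Share of the unpruned tree's nodes removed by pruning, in percent."""
    return 100.0 * pruned / unpruned


for row, (unpruned, results) in ROWS.items():
    for algorithm, (pruned, final) in results.items():
        assert pruned + final == unpruned, (row, algorithm)  # counts are consistent
        print(f"{row:22s} {algorithm:9s} size reduced by {reduction_percent(unpruned, pruned):5.1f}%")
```

For example, on CIDDS-001 sensitive pruning removes 19,326 of 19,557 nodes (about 98.8%), whereas on the first GureKDDCup split it removes only 56 of 1098 (about 5.1%).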

Performance comparison of four hybrid pruning algorithms on classification accuracy (%), number of pruned nodes, and number of nodes in the final tree.

| Dataset | Train | Test | J48P-NO Accuracy (%) | J48P-NO #Pruned Nodes | J48P-NO #Final Nodes | J48P-SP Accuracy (%) | J48P-SP #Pruned Nodes | J48P-SP #Final Nodes | J48P-OSP Accuracy (%) | J48P-OSP #Pruned Nodes | J48P-OSP #Final Nodes | J48P-PSP Accuracy (%) | J48P-PSP #Pruned Nodes | J48P-PSP #Final Nodes |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GureKDDCup | 1 | 2~7 | 38.0293 | 1093 | 5 | 38.0293 | 1093 | 5 | 38.0293 | 1093 | 5 | 38.0293 | 1093 | 5 |
| GureKDDCup | 1~2 | 3~7 | 33.1808 | 5874 | 2990 | 33.1786 | 5936 | 2928 | 33.1786 | 5936 | 2928 | 33.1786 | 8834 | 30 |
| GureKDDCup | 1~3 | 4~7 | 82.8830 | 32,681 | 19,615 | 82.8717 | 39,013 | 13,283 | 82.8717 | 39,013 | 13,283 | 82.7145 | 52,211 | 85 |
| GureKDDCup | 1~4 | 5~7 | 88.1692 | 117,944 | 102,422 | 88.1503 | 151,734 | 68,632 | 88.1503 | 151,734 | 68,632 | 88.1286 | 220,122 | 244 |
| GureKDDCup | 1~5 | 6~7 | 88.8412 | 295,568 | 175,904 | 88.8361 | 348,936 | 122,536 | 88.8361 | 348,936 | 122,536 | 88.8363 | 471,255 | 217 |
| GureKDDCup | 1~6 | 7 | 99.9526 | 484,956 | 318,262 | 99.6842 | 553,549 | 249,669 | 99.6842 | 553,549 | 249,669 | 99.8942 | 802,822 | 396 |
| UNSW-NB15 | 1 | 2~4 | 96.5079 | 4804 | 3744 | 97.1351 | 5080 | 3468 | 97.1351 | 5080 | 3468 | 97.1318 | 7122 | 1426 |
| UNSW-NB15 | 1~2 | 3~4 | 96.6146 | 11,649 | 8762 | 96.6977 | 12,462 | 7949 | 96.6977 | 12,462 | 7949 | 96.6983 | 17,337 | 3074 |
| UNSW-NB15 | 1~3 | 4 | 96.7751 | 20,141 | 18,787 | 97.0362 | 22,073 | 16,855 | 97.0362 | 22,073 | 16,855 | 97.0623 | 34,072 | 4856 |
| CIDDS-001 | 1 | 2 | 94.4537 | 10,006 | 9551 | 99.8459 | 19,378 | 179 | 99.8459 | 19,378 | 179 | 99.8445 | 19,432 | 125 |

Number of unique IP addresses.

| Dataset | Original Source IP | Original Destination IP | Private Source IP | Private Destination IP | Anonymized Source IP |
|---|---|---|---|---|---|
| GureKDDCup | 8483 | 21,018 | 45 | 4602 | 29 |
| UNSW-NB15 | 43 | 47 | 8 | 9 | 6 |
| CIDDS-001 | 38 | 790 | 34 | 785 | 6 |

Model evaluation computation time (s).

| Dataset | J48U-NO | J48U-SP | J48U-OSP | J48U-PSP | J48P-NO | J48P-SP | J48P-OSP | J48P-PSP |
|---|---|---|---|---|---|---|---|---|
| GureKDDCup * | 7.07 | 6.18 | 6.13 | 6.35 | 5.99 | 6.09 | 5.91 | 5.82 |
| UNSW-NB15 † | 1.30 | 1.39 | 1.39 | 1.44 | 1.38 | 1.37 | 1.43 | 1.38 |
| CIDDS-001 • | 57.60 | 14.62 | 15.24 | 14.49 | 54.41 | 15.44 | 14.58 | 13.91 |

Model building computation time (s)

| Dataset | J48U-NO | J48U-SP | J48U-OSP | J48U-PSP | J48P-NO | J48P-SP | J48P-OSP | J48P-PSP |
|---|---|---|---|---|---|---|---|---|
| GureKDDCup * | 858.47 | 844.21 | 892.66 | 902.75 | 941.60 | 987.43 | 1000.22 | 1003.23 |
| UNSW-NB15 † | 716.19 | 726.27 | 800.56 | 840.07 | 772.63 | 806.63 | 810.90 | 826.64 |
| CIDDS-001 • | 426.21 | 432.50 | 462.97 | 505.36 | 547.07 | 525.35 | 536.31 | 531.94 |

Performance comparison of four pruning algorithms (J48U) on classification accuracy (%), number of pruned nodes, and number of nodes in the final tree

| Train | Test | J48U-NO Acc. (%) | J48U-NO #Pruned | J48U-NO #Final | J48U-SP Acc. (%) | J48U-SP #Pruned | J48U-SP #Final | J48U-OSP Acc. (%) | J48U-OSP #Pruned | J48U-OSP #Final | J48U-PSP Acc. (%) | J48U-PSP #Pruned | J48U-PSP #Final |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GureKDDCup | | | | | | | | | | | | | |
| 1 | 2~7 | 85.6394 | 0 | 81 | 85.6394 | 10 | 71 | 85.6394 | 10 | 71 | 85.6394 | 68 | 13 |
| 1~2 | 3~7 | 84.6436 | 0 | 60 | 84.6440 | 6 | 54 | 84.6440 | 6 | 54 | 84.6440 | 29 | 31 |
| 1~3 | 4~7 | 87.6357 | 0 | 817 | 87.5664 | 199 | 618 | 87.5664 | 199 | 618 | 87.5664 | 651 | 166 |
| 1~4 | 5~7 | 89.1386 | 0 | 2059 | 89.1355 | 565 | 1494 | 89.1355 | 565 | 1494 | 89.1355 | 1763 | 296 |
| 1~5 | 6~7 | 92.3514 | 0 | 2599 | 92.3154 | 593 | 2006 | 92.3154 | 593 | 2006 | 92.3154 | 2206 | 393 |
| 1~6 | 6~7 | 99.9043 | 0 | 3057 | 99.8395 | 664 | 2393 | 99.8395 | 664 | 2393 | 99.8395 | 2534 | 523 |
| UNSW-NB15 | | | | | | | | | | | | | |
| 1 | 2~4 | 96.6075 | 0 | 5126 | 96.5761 | 10 | 5116 | 96.5761 | 10 | 5116 | 96.5761 | 573 | 4553 |
| 1~2 | 3~4 | 96.5706 | 0 | 11,410 | 96.5034 | 14 | 11,396 | 96.5034 | 14 | 11,396 | 96.5034 | 1436 | 9974 |
| 1~3 | 4 | 96.1983 | 0 | 18,939 | 96.7406 | 14 | 18,925 | 96.7406 | 14 | 18,925 | 96.7406 | 2317 | 16,622 |
| CIDDS-001 | | | | | | | | | | | | | |
| 1 | 2 | 89.7284 | 0 | 834 | 99.4486 | 603 | 231 | 99.4486 | 603 | 231 | 99.4486 | 695 | 139 |

Performance comparison of four hybrid pruning algorithms (J48P) on classification accuracy (%), number of pruned nodes, and number of nodes in the final tree

| Train | Test | J48P-NO Acc. (%) | J48P-NO #Pruned | J48P-NO #Final | J48P-SP Acc. (%) | J48P-SP #Pruned | J48P-SP #Final | J48P-OSP Acc. (%) | J48P-OSP #Pruned | J48P-OSP #Final | J48P-PSP Acc. (%) | J48P-PSP #Pruned | J48P-PSP #Final |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GureKDDCup | | | | | | | | | | | | | |
| 1 | 2~7 | 85.6394 | 76 | 5 | 85.6394 | 76 | 5 | 85.6394 | 76 | 5 | 85.6394 | 76 | 5 |
| 1~2 | 3~7 | 84.6445 | 30 | 30 | 84.6445 | 33 | 27 | 84.6445 | 33 | 27 | 84.6445 | 39 | 21 |
| 1~3 | 4~7 | 87.6289 | 433 | 384 | 87.5762 | 465 | 352 | 87.5762 | 465 | 352 | 87.4157 | 698 | 119 |
| 1~4 | 5~7 | 88.1952 | 1446 | 613 | 88.2147 | 1519 | 540 | 88.2147 | 1519 | 540 | 88.3048 | 1853 | 206 |
| 1~5 | 6~7 | 98.7124 | 1467 | 1132 | 98.6753 | 1469 | 1130 | 98.6753 | 1469 | 1130 | 98.6852 | 2304 | 295 |
| 1~6 | 6~7 | 98.7802 | 1832 | 1225 | 98.7668 | 2042 | 1015 | 98.7668 | 2042 | 1015 | 99.8565 | 2746 | 311 |
| UNSW-NB15 | | | | | | | | | | | | | |
| 1 | 2~4 | 96.6439 | 1287 | 3839 | 96.6146 | 1297 | 3829 | 96.6146 | 1297 | 3829 | 96.6146 | 1679 | 3447 |
| 1~2 | 3~4 | 96.6120 | 3499 | 7911 | 96.5437 | 3513 | 7897 | 96.5437 | 3513 | 7897 | 96.5437 | 4031 | 7379 |
| 1~3 | 4 | 96.2399 | 7097 | 11,842 | 96.7874 | 7109 | 11,830 | 96.7874 | 7109 | 11,830 | 96.7940 | 7767 | 11,172 |
| CIDDS-001 | | | | | | | | | | | | | |
| 1 | 2 | 89.7644 | 202 | 632 | 99.4484 | 724 | 110 | 99.4484 | 724 | 110 | 99.4492 | 771 | 63 |
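In both four-algorithm tables, #Pruned Nodes and #Final Nodes appear to add up to the #Final Nodes of J48U-NO in every row. This suggests that the pruned-node counts for all eight variants are measured against the same unpruned J48 tree. The short Python sketch below checks that reading on three rows transcribed from the tables. `ROWS` and `implied_unpruned_size` are illustrative names, and the check is an observation on the reported numbers rather than the authors' own counting procedure.

```python
# Hypothetical helper: checks whether #Pruned + #Final is the same for every
# algorithm in a row, i.e. whether both counts refer to one unpruned tree.
# Values are (#pruned, #final), transcribed from the J48U and J48P tables above.
ROWS = {
    "GureKDDCup train 1, test 2~7": {
        "J48U-NO": (0, 81), "J48U-SP": (10, 71), "J48U-OSP": (10, 71), "J48U-PSP": (68, 13),
        "J48P-NO": (76, 5), "J48P-SP": (76, 5), "J48P-OSP": (76, 5), "J48P-PSP": (76, 5),
    },
    "UNSW-NB15 train 1, test 2~4": {
        "J48U-NO": (0, 5126), "J48U-SP": (10, 5116), "J48U-OSP": (10, 5116), "J48U-PSP": (573, 4553),
        "J48P-NO": (1287, 3839), "J48P-SP": (1297, 3829), "J48P-OSP": (1297, 3829), "J48P-PSP": (1679, 3447),
    },
    "CIDDS-001 train 1, test 2": {
        "J48U-NO": (0, 834), "J48U-SP": (603, 231), "J48U-OSP": (603, 231), "J48U-PSP": (695, 139),
        "J48P-NO": (202, 632), "J48P-SP": (724, 110), "J48P-OSP": (724, 110), "J48P-PSP": (771, 63),
    },
}


def implied_unpruned_size(row):
    """Return the common value of #pruned + #final, or None if the algorithms disagree."""
    totals = {pruned + final for pruned, final in row.values()}
    return totals.pop() if len(totals) == 1 else None


for split, row in ROWS.items():
    print(f"{split}: {implied_unpruned_size(row)}")
```

Each row yields a single total (81, 5126 and 834), which equals the J48U-NO #Final value for that split.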

Performance comparison of eight pruning algorithms: change in classification accuracy (%) and number of final nodes between the original and modified datasets

| Train | Test | J48U-NO ΔAcc. (%) | J48U-NO Δ#Final | J48U-SP ΔAcc. (%) | J48U-SP Δ#Final | J48U-OSP ΔAcc. (%) | J48U-OSP Δ#Final | J48U-PSP ΔAcc. (%) | J48U-PSP Δ#Final | J48P-NO ΔAcc. (%) | J48P-NO Δ#Final | J48P-SP ΔAcc. (%) | J48P-SP Δ#Final | J48P-OSP ΔAcc. (%) | J48P-OSP Δ#Final | J48P-PSP ΔAcc. (%) | J48P-PSP Δ#Final |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GureKDDCup | | | | | | | | | | | | | | | | | |
| 1 | 2~7 | +47.61 | −1017 | +47.61 | −971 | +47.61 | −971 | +47.61 | −2 | +47.61 | 0 | +47.61 | 0 | +47.61 | 0 | +47.61 | 0 |
| 1~2 | 3~7 | +51.45 | −8804 | +51.47 | −8688 | +51.47 | −8688 | +51.47 | −39 | +51.46 | −2960 | +51.47 | −2901 | +51.47 | −2901 | +51.47 | −9 |
| 1~3 | 4~7 | +4.77 | −51,479 | +4.71 | −35,740 | +4.71 | −35,740 | +4.71 | −9 | +4.75 | −19,231 | +4.70 | −12,931 | +4.70 | −12,931 | +4.70 | +34 |
| 1~4 | 5~7 | +0.99 | −218,307 | +1.01 | −140,639 | +1.01 | −140,639 | +1.01 | −103 | +0.03 | −101,809 | +0.06 | −68,092 | +0.06 | −68,092 | +0.18 | −38 |
| 1~5 | 6~7 | +3.51 | −468,873 | +3.47 | −307,681 | +3.47 | −307,681 | +3.47 | 0 | +9.87 | −174,772 | +9.84 | −121,406 | +9.84 | −121,406 | +9.85 | −78 |
| 1~6 | 6~7 | −0.05 | −800,161 | −0.06 | −611,383 | −0.06 | −611,383 | −0.06 | −96 | −1.17 | −317,037 | −0.92 | −248,654 | −0.92 | −248,654 | −0.04 | −85 |
| UNSW-NB15 | | | | | | | | | | | | | | | | | |
| 1 | 2~4 | +0.17 | −3422 | −0.55 | −2640 | −0.55 | −2640 | −0.55 | +2675 | +0.14 | +95 | −0.52 | +361 | −0.52 | +361 | −0.52 | +2021 |
| 1~2 | 3~4 | −0.03 | −9001 | −0.26 | −6465 | −0.26 | −6465 | −0.26 | +5679 | 0.00 | −851 | −0.15 | −52 | −0.15 | −52 | −0.15 | +4305 |
| 1~3 | 4 | −0.58 | −19,989 | −0.30 | −14,996 | −0.30 | −14,996 | −0.30 | +10,414 | −0.54 | −6945 | −0.25 | −5025 | −0.25 | −5025 | −0.27 | +6316 |
| CIDDS-001 | | | | | | | | | | | | | | | | | |
| 1 | 2 | −4.49 | −18,723 | −0.39 | 0 | −0.39 | 0 | −0.39 | +4 | −4.69 | −8919 | −0.40 | −69 | −0.40 | −69 | −0.40 | −62 |
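For the rows checked here, the J48P columns of the change table can be reproduced by subtracting the values in the J48P results table listed before the unique-IP-address counts from the matching values in the four-hybrid-pruning table. The Python sketch below does this for the GureKDDCup "train 1~6" row and the CIDDS-001 row. `BEFORE`, `AFTER`, and `change_row` are illustrative names. The subtraction order is inferred from the numbers and is not stated in the tables.

```python
# Hypothetical reconstruction of the J48P columns of the change table.
# Each entry is (accuracy %, #final nodes), transcribed from the tables above.
# BEFORE: the J48P table listed before the unique-IP-address counts.
# AFTER:  the four-hybrid-pruning (J48P) table.
BEFORE = {
    "GureKDDCup 1~6": {"NO": (99.9526, 318_262), "SP": (99.6842, 249_669),
                       "OSP": (99.6842, 249_669), "PSP": (99.8942, 396)},
    "CIDDS-001 1": {"NO": (94.4537, 9551), "SP": (99.8459, 179),
                    "OSP": (99.8459, 179), "PSP": (99.8445, 125)},
}
AFTER = {
    "GureKDDCup 1~6": {"NO": (98.7802, 1225), "SP": (98.7668, 1015),
                       "OSP": (98.7668, 1015), "PSP": (99.8565, 311)},
    "CIDDS-001 1": {"NO": (89.7644, 632), "SP": (99.4484, 110),
                    "OSP": (99.4484, 110), "PSP": (99.4492, 63)},
}


def change_row(split):
    """Return {variant: (accuracy change rounded to 2 d.p., change in #final nodes)}."""
    result = {}
    for variant, (acc_before, nodes_before) in BEFORE[split].items():
        acc_after, nodes_after = AFTER[split][variant]
        result[variant] = (round(acc_after - acc_before, 2), nodes_after - nodes_before)
    return result


for split in BEFORE:
    print(split, change_row(split))
```

For example, J48P-NO on the GureKDDCup "train 1~6" row gives 98.7802 − 99.9526 ≈ −1.17 and 1225 − 318,262 = −317,037, matching the corresponding cells of the change table.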

Classification accuracies (%) for each dataset

| Machine Learning Model | GureKDDCup * | UNSW-NB15 † | CIDDS-001 • |
|---|---|---|---|
| ZeroR | 47.2845 | 79.7988 | 82.5870 |
| Random Tree | 99.3880 | 79.1657 | 78.9227 |
| REPTree | 97.6507 | 82.0959 | 71.5996 |
| Decision Stump | 95.7251 | 91.6120 | 85.5956 |
| AdaBoost | 95.7251 | 91.6120 | 96.8684 |
| BayesNet | 98.3662 | 94.3472 | 85.4093 |
| Naïve Bayes | 87.4997 | 88.9631 | 56.3338 |
| Random Forest | 99.5161 | 96.6649 | 84.6270 |
| SMO | 83.4074 | 94.0967 | 75.1466 |
| J48U-NO | 99.9522 | 96.7778 | 94.2148 |
| J48U-SP | 99.8963 | 97.0410 | 99.8429 |
| J48U-OSP | 99.8963 | 97.0410 | 99.8429 |
| J48U-PSP | 99.8963 | 97.0410 | 99.8429 |
| J48P-NO | 99.9526 | 96.7751 | 94.4537 |
| J48P-SP | 99.6842 | 97.0362 | 99.8459 |
| J48P-OSP | 99.6842 | 97.0362 | 99.8459 |
| J48P-PSP | 99.8942 | 97.0623 | 99.8445 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chew, Y.J.; Ooi, S.Y.; Pang, Y.H.; Lim, Z.Y. Decision Tree Pruning with Privacy-Preserving Strategies. Electronics 2025, 14, 3139. https://doi.org/10.3390/electronics14153139

Chew YJ, Ooi SY, Pang YH, Lim ZY. Decision Tree Pruning with Privacy-Preserving Strategies. Electronics. 2025; 14(15):3139. https://doi.org/10.3390/electronics14153139

Chicago/Turabian style: Chew, Yee Jian, Shih Yin Ooi, Ying Han Pang, and Zheng You Lim. 2025. "Decision Tree Pruning with Privacy-Preserving Strategies" Electronics 14, no. 15: 3139. https://doi.org/10.3390/electronics14153139

APA style: Chew, Y. J., Ooi, S. Y., Pang, Y. H., & Lim, Z. Y. (2025). Decision Tree Pruning with Privacy-Preserving Strategies. Electronics, 14(15), 3139. https://doi.org/10.3390/electronics14153139