A Multimodal Deep Learning Framework for Consistency-Aware Review Helpfulness Prediction

Abstract

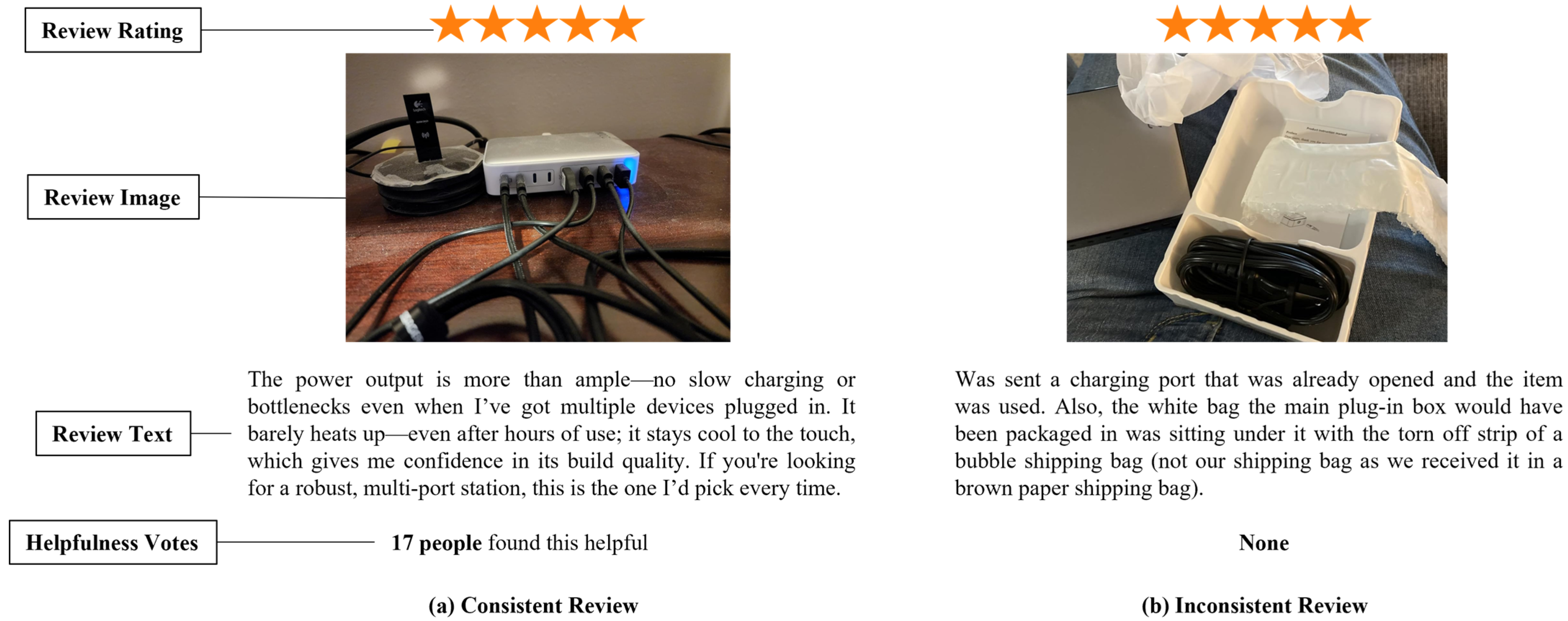

1. Introduction

- This study introduces CRCNet, which models not only the interaction between text and images but also the consistency between ratings and review content. It extends prior work by emphasizing the role of rating-content consistency in improving prediction performance.

- CRCNet applies a co-attention mechanism to capture the consistency between review content and ratings and uses a gated multimodal unit (GMU) to integrate text and image features. This goes beyond simple feature fusion by modeling relationships across modalities, which improves prediction performance.

- We conduct extensive experiments on a large-scale Amazon review dataset, comparing CRCNet against baseline models and validating the effectiveness of each proposed component. The results demonstrate that incorporating the GMU, multimodal integration, and the consistency between review content and rating significantly enhances prediction performance across product categories.
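The GMU-based fusion named in the contributions above can be illustrated with a minimal sketch. It assumes the standard gated multimodal unit formulation of Arevalo et al. [33], in which a learned gate `z` mixes the transformed text and image features. The feature dimensions, the random weights, and the helper names (`matvec`, `gmu`, `random_matrix`) are hypothetical, and this is not the authors' implementation.

```python
import math
import random

def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def gmu(x_text, x_image, W_t, W_v, W_z):
    """Gated multimodal unit (standard formulation, assumed here).

    h_t = tanh(W_t x_text), h_v = tanh(W_v x_image)
    z   = sigmoid(W_z [x_text; x_image])
    h   = z * h_t + (1 - z) * h_v
    """
    h_t = [math.tanh(a) for a in matvec(W_t, x_text)]
    h_v = [math.tanh(a) for a in matvec(W_v, x_image)]
    z = [sigmoid(a) for a in matvec(W_z, x_text + x_image)]
    return [zi * ht + (1.0 - zi) * hv for zi, ht, hv in zip(z, h_t, h_v)]

def random_matrix(rows, cols, rng):
    """Hypothetical stand-in for learned weights."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
x_text = [0.2, -0.1, 0.4]   # toy text feature vector (illustrative values)
x_image = [0.5, 0.3]        # toy image feature vector (illustrative values)
hidden = 4                  # hypothetical size of the fused representation

W_t = random_matrix(hidden, len(x_text), rng)
W_v = random_matrix(hidden, len(x_image), rng)
W_z = random_matrix(hidden, len(x_text) + len(x_image), rng)

fused = gmu(x_text, x_image, W_t, W_v, W_z)
print(fused)
```

Unlike concatenation or an element-wise product, the gate lets each fused dimension lean toward whichever modality is more informative for a given input.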

2. Related Works

2.1. Review Helpfulness Prediction

2.2. Multimodal Review Helpfulness Prediction

3. CRCNet Framework

3.1. Multimodal Representation Module

3.2. Rating Embedding Module

3.3. Semantic Consistency Module

3.4. Helpfulness Prediction Module

4. Experiments

4.1. Datasets

4.2. Evaluation Metrics
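
The result tables report MAE, MSE, RMSE, and MAPE. As a minimal sketch, assuming the standard definitions of these metrics and MAPE expressed in percent, they could be computed as follows. The function name `regression_metrics` and the sample values are illustrative and are not taken from the paper.

```python
import math

def regression_metrics(y_true, y_pred):
    """Return MAE, MSE, RMSE, and MAPE (in percent) for paired values.

    Assumes every target is non-zero, which MAPE requires.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [p - t for t, p in zip(y_true, y_pred)]
    n = len(errors)
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)
    mape = 100.0 * sum(abs(e / t) for e, t in zip(errors, y_true)) / n
    return {"MAE": mae, "MSE": mse, "RMSE": rmse, "MAPE": mape}

# Illustrative targets and predictions (not data from the paper).
y_true = [1.0, 2.0, 4.0]
y_pred = [1.5, 2.0, 3.0]
metrics = regression_metrics(y_true, y_pred)
print(metrics)
```

Lower values are better for all four metrics. RMSE is simply the square root of MSE, so the two always rank models in the same order.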

4.3. Baseline Models

- D-CNN [13]: A dual-layer CNN model designed to extract semantic features from review text. It applies convolutional kernels of sizes 2, 3, and 4 in the first layer to capture n-gram-level patterns, followed by a second convolutional layer that generates document-level representations. The final prediction is obtained by averaging the outputs from each kernel size, allowing the model to effectively capture local semantic patterns within reviews.

- LSTM [13]: A sequential model designed to capture long-range contextual dependencies in review text. Like the D-CNN model, it uses 50-dimensional GloVe embeddings as input, followed by an LSTM layer with 100 cells and a fully connected layer for prediction. A dropout rate of 0.09 is applied to reduce overfitting during training. In contrast to the D-CNN, which captures local semantic patterns, the LSTM captures the overall semantic flow.

- TNN [39]: A multi-channel CNN model that captures local semantic patterns from multiple perspectives. It uses 100-dimensional GloVe embeddings and applies three parallel 1D convolutional layers with kernel sizes of 3, 4, and 5, each with 100 filters. The max-pooled outputs are concatenated and passed through a fully connected layer. This model learns complementary semantic features from multiple n-gram perspectives, which contribute to effective review helpfulness prediction.

- DMAF [15]: A multimodal fusion model that emphasizes the most discriminative features within each modality through visual and semantic attention mechanisms. In addition, it incorporates a multimodal attention mechanism to integrate complementary multimodal features. The model adopts a deep intermediate fusion strategy to jointly learn modality-specific and unified representations.

- CS-IMD [8]: A multimodal review helpfulness prediction (MRHP) model that explicitly distinguishes between complementary and substitutive relationships between text and images. Multimodal features are extracted using pre-trained BERT and VGG-16 and then processed through attention-based modules designed to capture the relative importance of each modality component. By jointly optimizing complementation and substitution loss terms, this approach captures both shared and modality-specific contributions to review helpfulness prediction.

- MFRHP [27]: An MRHP model that incorporates both hand-crafted and deep features from text and images. For textual input, it uses review length, readability scores, and BERT embeddings; for visual input, it adopts pixel brightness and VGG-16 features. These are fused using a co-attention mechanism to capture multimodal interactions, thereby enhancing both prediction accuracy and interpretability.
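
The multi-channel design described for TNN (parallel 1D convolutions with kernel sizes 3, 4, and 5, max pooling, then concatenation and a fully connected layer) can be sketched in plain Python. This is a simplified illustration under stated assumptions: the tiny embedding dimension, the two filters per kernel size (the description above uses 100), the random weights, the omission of biases and non-linearities, and all function names are hypothetical and are not the baseline's actual implementation.

```python
import random

def conv_max_pool(seq, filters):
    """Slide each filter over the token sequence and keep its maximum response.

    seq     -- list of T embedding vectors, each of length d
    filters -- list of filters, each a k x d weight matrix (list of k rows)
    """
    pooled = []
    for filt in filters:
        k = len(filt)
        if len(seq) < k:
            raise ValueError("sequence is shorter than the kernel size")
        responses = []
        for start in range(len(seq) - k + 1):
            window = seq[start:start + k]
            responses.append(sum(w * x
                                 for row, vec in zip(filt, window)
                                 for w, x in zip(row, vec)))
        pooled.append(max(responses))  # max-over-time pooling
    return pooled

def multi_channel_features(seq, channels):
    """Concatenate the pooled outputs of every channel (one per kernel size)."""
    features = []
    for filters in channels:
        features.extend(conv_max_pool(seq, filters))
    return features

def random_filters(n_filters, kernel_size, emb_dim, rng):
    """Hypothetical stand-in for learned convolution weights."""
    return [[[rng.uniform(-1, 1) for _ in range(emb_dim)]
             for _ in range(kernel_size)]
            for _ in range(n_filters)]

rng = random.Random(0)
emb_dim, n_filters = 4, 2  # toy sizes for illustration only
review = [[rng.uniform(-1, 1) for _ in range(emb_dim)] for _ in range(8)]
channels = [random_filters(n_filters, k, emb_dim, rng) for k in (3, 4, 5)]

features = multi_channel_features(review, channels)

# Fully connected layer reduced to a single linear unit (bias omitted).
dense = [rng.uniform(-1, 1) for _ in features]
prediction = sum(w * f for w, f in zip(dense, features))
print(len(features), prediction)
```

Each kernel size responds to n-grams of a different length, so the concatenated vector carries one pooled feature per filter and per n-gram width.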

4.4. Hyperparameter Settings and Experimental Environment

5. Experimental Results and Discussions

5.1. Model Performance Comparison

5.2. Effectiveness Analysis of Consistency Modeling in RHP

5.3. Effectiveness Analysis of Multimodal Fusion Methods

5.4. Efficiency Analysis of the Rating Embedding Mechanism

5.5. Efficiency Analysis of the MRHP Model

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Li, Q.; Li, X.; Lee, B.; Kim, J. A hybrid CNN-based review helpfulness filtering model for improving e-commerce recommendation service. Appl. Sci. 2021, 11, 8613.

- Siering, M.; Muntermann, J.; Rajagopalan, B. Explaining and predicting online review helpfulness: The role of content and reviewer-related signals. Decis. Support Syst. 2018, 108, 1–12.

- Zheng, T.; Lin, Z.; Zhang, Y.; Jiao, Q.; Su, T.; Tan, H.; Fan, Z.; Xu, D.; Law, R. Revisiting review helpfulness prediction: An advanced deep learning model with multimodal input from Yelp. Int. J. Hosp. Manag. 2023, 114, 103579.

- Lee, M.; Kwon, W.; Back, K.-J. Artificial intelligence for hospitality big data analytics: Developing a prediction model of restaurant review helpfulness for customer decision-making. Int. J. Contemp. Hosp. Manag. 2021, 33, 2117–2136.

- Li, X.; Li, Q.; Kim, J. A Review Helpfulness Modeling Mechanism for Online E-commerce: Multi-Channel CNN End-to-End Approach. Appl. Artif. Intell. 2023, 37, 2166226.

- Kwon, W.; Lee, M.; Back, K.-J.; Lee, K.Y. Assessing restaurant review helpfulness through big data: Dual-process and social influence theory. J. Hosp. Tour. Technol. 2021, 12, 177–195.

- Moro, S.; Esmerado, J. An integrated model to explain online review helpfulness in hospitality. J. Hosp. Tour. Technol. 2021, 12, 239–253.

- Xiao, S.; Chen, G.; Zhang, C.; Li, X. Complementary or substitutive? A novel deep learning method to leverage text-image interactions for multimodal review helpfulness prediction. Expert Syst. Appl. 2022, 208, 118138.

- Baek, H.; Ahn, J.; Choi, Y. Helpfulness of online consumer reviews: Readers’ objectives and review cues. Int. J. Electron. Commer. 2012, 17, 99–126.

- Wang, S.; Qiu, J. Utilizing a feature-aware external memory network for helpfulness prediction in e-commerce reviews. Appl. Soft Comput. 2023, 148, 110923.

- Kim, S.-M.; Pantel, P.; Chklovski, T.; Pennacchiotti, M. Automatically assessing review helpfulness. In Proceedings of the 2006 Conference on Empirical Methods in Natural Language Processing, Sydney, Australia, 22–23 July 2006; pp. 423–430.

- Tsur, O.; Rappoport, A. Revrank: A fully unsupervised algorithm for selecting the most helpful book reviews. In Proceedings of the International AAAI Conference on Web and Social Media, San Jose, CA, USA, 17–20 May 2009; pp. 154–161.

- Mitra, S.; Jenamani, M. Helpfulness of online consumer reviews: A multi-perspective approach. Inf. Process. Manag. 2021, 58, 102538.

- Saumya, S.; Singh, J.P.; Dwivedi, Y.K. Predicting the helpfulness score of online reviews using convolutional neural network. Soft Comput. 2020, 24, 10989–11005.

- Huang, F.; Zhang, X.; Zhao, Z.; Xu, J.; Li, Z. Image–text sentiment analysis via deep multimodal attentive fusion. Knowl. Based Syst. 2019, 167, 26–37.

- Ren, G.; Diao, L.; Kim, J. DMFN: A disentangled multi-level fusion network for review helpfulness prediction. Expert Syst. Appl. 2023, 228, 120344.

- Mudambi, S.M.; Schuff, D. Research note: What makes a helpful online review? A study of customer reviews on Amazon.com. MIS Q. 2010, 34, 185–200.

- Li, Q.; Park, J.; Kim, J. Impact of information consistency in online reviews on consumer behavior in the e-commerce industry: A text mining approach. Data Technol. Appl. 2024, 58, 132–149.

- Aghakhani, N.; Oh, O.; Gregg, D.G.; Karimi, J. Online review consistency matters: An elaboration likelihood model perspective. Inf. Syst. Front. 2021, 23, 1287–1301.

- Li, X.; Li, Q.; Jeong, D.; Kim, J. A novel deep learning method to use feature complementarity for review helpfulness prediction. J. Hosp. Tour. Technol. 2024, 15, 534–555.

- Lee, S.; Choeh, J.Y. Predicting the helpfulness of online reviews using multilayer perceptron neural networks. Expert Syst. Appl. 2014, 41, 3041–3046.

- Krishnamoorthy, S. Linguistic features for review helpfulness prediction. Expert Syst. Appl. 2015, 42, 3751–3759.

- Hu, Y.-H.; Chen, K.; Lee, P.-J. The effect of user-controllable filters on the prediction of online hotel reviews. Inf. Manag. 2017, 54, 728–744.

- Malik, M.S.I. Predicting users’ review helpfulness: The role of significant review and reviewer characteristics. Soft Comput. 2020, 24, 13913–13928.

- Ma, Y.; Xiang, Z.; Du, Q.; Fan, W. Effects of user-provided photos on hotel review helpfulness: An analytical approach with deep leaning. Int. J. Hosp. Manag. 2018, 71, 120–131.

- Li, Y.; Xie, Y. Is a picture worth a thousand words? An empirical study of image content and social media engagement. J. Mark. Res. 2020, 57, 1–19.

- Ren, G.; Diao, L.; Guo, F.; Hong, T. A co-attention based multi-modal fusion network for review helpfulness prediction. Inf. Process. Manag. 2024, 61, 103573.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A robustly optimized BERT pretraining approach. arXiv 2019, arXiv:1907.11692.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Lu, S.; Li, Y.; Chen, Q.-G.; Xu, Z.; Luo, W.; Zhang, K.; Ye, H.-J. Ovis: Structural embedding alignment for multimodal large language model. arXiv 2024, arXiv:2405.20797.

- Chen, S.; Song, B.; Guo, J. Attention alignment multimodal LSTM for fine-gained common space learning. IEEE Access 2018, 6, 20195–20208.

- Liu, M.; Liu, L.; Cao, J.; Du, Q. Co-attention network with label embedding for text classification. Neurocomputing 2022, 471, 61–69.

- Arevalo, J.; Solorio, T.; Montes-y-Gómez, M.; González, F.A. Gated multimodal units for information fusion. arXiv 2017, arXiv:1702.01992.

- He, R.; McAuley, J. Ups and downs: Modeling the visual evolution of fashion trends with one-class collaborative filtering. In Proceedings of the 25th International Conference on World Wide Web, Montreal, QC, Canada, 11 April 2016; pp. 507–517.

- Ni, J.; Li, J.; McAuley, J. Justifying recommendations using distantly-labeled reviews and fine-grained aspects. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–19 November 2019; pp. 188–197.

- Lee, M.; Jeong, M.; Lee, J. Roles of negative emotions in customers’ perceived helpfulness of hotel reviews on a user-generated review website: A text mining approach. Int. J. Contemp. Hosp. Manag. 2017, 29, 762–783.

- Bilal, M.; Marjani, M.; Hashem, I.A.T.; Malik, N.; Lali, M.I.U.; Gani, A. Profiling reviewers’ social network strength and predicting the “Helpfulness” of online customer reviews. Electron. Commer. Res. Appl. 2021, 45, 101026.

- Zhang, Y.; Lin, Z. Predicting the helpfulness of online product reviews: A multilingual approach. Electron. Commer. Res. Appl. 2018, 27, 1–10.

- Olmedilla, M.; Martínez-Torres, M.R.; Toral, S. Prediction and modelling online reviews helpfulness using 1D Convolutional Neural Networks. Expert Syst. Appl. 2022, 198, 116787.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010.

- Lee, S.; Lee, S.; Baek, H. Does the dispersion of online review ratings affect review helpfulness? Comput. Hum. Behav. 2021, 117, 106670.

| Dataset | Component | Min | Max | Mean | Std. Dev. |
|---|---|---|---|---|---|
| Cell Phones and Accessories | Number of helpfulness votes | 1 | 4149 | 12.334 | 39.068 |
| Cell Phones and Accessories | Number of helpfulness votes (logarithm) | 1.099 | 8.820 | 2.106 | 0.991 |
| Electronics | Number of helpfulness votes | 1 | 6770 | 17.256 | 64.381 |
| Electronics | Number of helpfulness votes (logarithm) | 1.099 | 8.331 | 1.956 | 0.898 |

| Dataset | Model | MAE | MSE | RMSE | MAPE |
|---|---|---|---|---|---|
| Cell Phones and Accessories | D-CNN | 0.704 ± 0.002 | 0.792 ± 0.002 | 0.890 ± 0.001 | 40.483 ± 0.203 |
| Cell Phones and Accessories | LSTM | 0.706 ± 0.000 | 0.806 ± 0.000 | 0.898 ± 0.000 | 40.053 ± 0.061 |
| Cell Phones and Accessories | TNN | 0.697 ± 0.007 | 0.788 ± 0.008 | 0.888 ± 0.004 | 39.836 ± 0.816 |
| Cell Phones and Accessories | DMAF | 0.686 ± 0.001 | 0.786 ± 0.002 | 0.887 ± 0.001 | 38.110 ± 0.166 |
| Cell Phones and Accessories | CS-IMD | 0.683 ± 0.001 | 0.777 ± 0.000 | 0.882 ± 0.000 | 37.942 ± 0.081 |
| Cell Phones and Accessories | MFRHP | 0.677 ± 0.004 | 0.767 ± 0.008 | 0.876 ± 0.005 | 37.359 ± 0.287 |
| Cell Phones and Accessories | CRCNet | 0.675 ± 0.001 | 0.765 ± 0.001 | 0.875 ± 0.001 | 37.236 ± 0.163 |
| Electronics | D-CNN | 0.750 ± 0.003 | 0.926 ± 0.002 | 0.962 ± 0.001 | 40.375 ± 0.459 |
| Electronics | LSTM | 0.745 ± 0.005 | 0.930 ± 0.002 | 0.964 ± 0.001 | 39.255 ± 0.758 |
| Electronics | TNN | 0.752 ± 0.004 | 0.923 ± 0.010 | 0.961 ± 0.005 | 40.843 ± 0.745 |
| Electronics | DMAF | 0.738 ± 0.000 | 0.930 ± 0.000 | 0.964 ± 0.000 | 38.612 ± 0.043 |
| Electronics | CS-IMD | 0.730 ± 0.004 | 0.888 ± 0.001 | 0.943 ± 0.001 | 38.725 ± 0.598 |
| Electronics | MFRHP | 0.728 ± 0.004 | 0.883 ± 0.001 | 0.940 ± 0.001 | 38.562 ± 0.519 |
| Electronics | CRCNet | 0.720 ± 0.004 | 0.877 ± 0.002 | 0.936 ± 0.001 | 37.634 ± 0.605 |

| Dataset | Method | MAE | MSE | RMSE | MAPE |
|---|---|---|---|---|---|
| Cell Phones and Accessories | Concatenation | 0.698 ± 0.000 | 0.794 ± 0.000 | 0.891 ± 0.000 | 39.400 ± 0.006 |
| Cell Phones and Accessories | Element-wise product | 0.703 ± 0.000 | 0.792 ± 0.000 | 0.890 ± 0.000 | 40.335 ± 0.005 |
| Cell Phones and Accessories | GMU | 0.676 ± 0.001 | 0.765 ± 0.001 | 0.875 ± 0.001 | 37.279 ± 0.127 |
| Electronics | Concatenation | 0.733 ± 0.000 | 0.894 ± 0.000 | 0.946 ± 0.000 | 38.948 ± 0.001 |
| Electronics | Element-wise product | 0.739 ± 0.000 | 0.895 ± 0.000 | 0.946 ± 0.000 | 39.829 ± 0.003 |
| Electronics | GMU | 0.720 ± 0.004 | 0.877 ± 0.002 | 0.936 ± 0.001 | 37.610 ± 0.521 |

| Dataset | Model | MAE | MSE | RMSE | MAPE |
|---|---|---|---|---|---|
| Cell Phones and Accessories | CRCNet | 0.676 ± 0.001 | 0.765 ± 0.001 | 0.875 ± 0.001 | 37.279 ± 0.127 |
| Cell Phones and Accessories | w/o rating | 0.696 ± 0.001 | 0.819 ± 0.000 | 0.905 ± 0.000 | 38.297 ± 0.073 |
| Electronics | CRCNet | 0.720 ± 0.004 | 0.877 ± 0.002 | 0.936 ± 0.001 | 37.610 ± 0.521 |
| Electronics | w/o rating | 0.737 ± 0.001 | 0.901 ± 0.000 | 0.949 ± 0.000 | 39.267 ± 0.001 |

| Dataset | Model | MAE | MSE | RMSE | MAPE | Training Time (s) |
|---|---|---|---|---|---|---|
| Cell Phones and Accessories | CS-IMD | 0.683 ± 0.001 | 0.777 ± 0.000 | 0.882 ± 0.000 | 37.942 ± 0.081 | 4 |
| Cell Phones and Accessories | DMAF | 0.686 ± 0.001 | 0.786 ± 0.002 | 0.887 ± 0.001 | 38.110 ± 0.166 | 9 |
| Cell Phones and Accessories | MFRHP | 0.677 ± 0.004 | 0.767 ± 0.008 | 0.876 ± 0.005 | 37.359 ± 0.287 | 13 |
| Cell Phones and Accessories | CRCNet | 0.675 ± 0.001 | 0.765 ± 0.001 | 0.875 ± 0.001 | 37.236 ± 0.163 | 8 |
| Electronics | CS-IMD | 0.730 ± 0.004 | 0.888 ± 0.001 | 0.943 ± 0.001 | 38.725 ± 0.598 | 8 |
| Electronics | DMAF | 0.738 ± 0.000 | 0.930 ± 0.000 | 0.964 ± 0.000 | 38.612 ± 0.043 | 19 |
| Electronics | MFRHP | 0.728 ± 0.004 | 0.883 ± 0.001 | 0.940 ± 0.001 | 38.562 ± 0.519 | 34 |
| Electronics | CRCNet | 0.720 ± 0.004 | 0.877 ± 0.002 | 0.936 ± 0.001 | 37.634 ± 0.605 | 14 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Park, S.; Li, X.; Li, Q.; Kim, J. A Multimodal Deep Learning Framework for Consistency-Aware Review Helpfulness Prediction. Electronics 2025, 14, 3089. https://doi.org/10.3390/electronics14153089
