A Review of Spatial Positioning Methods Applied to Magnetic Climbing Robots

Abstract

1. Introduction

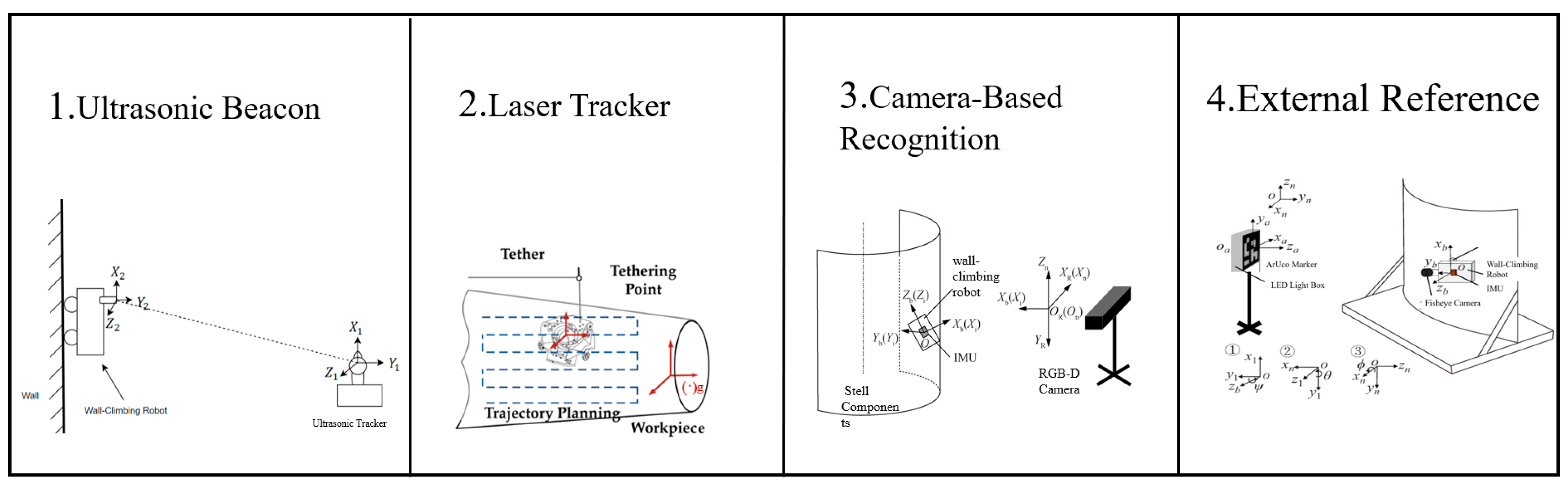

2. Signal-Sensor-Based Spatial Positioning Methods

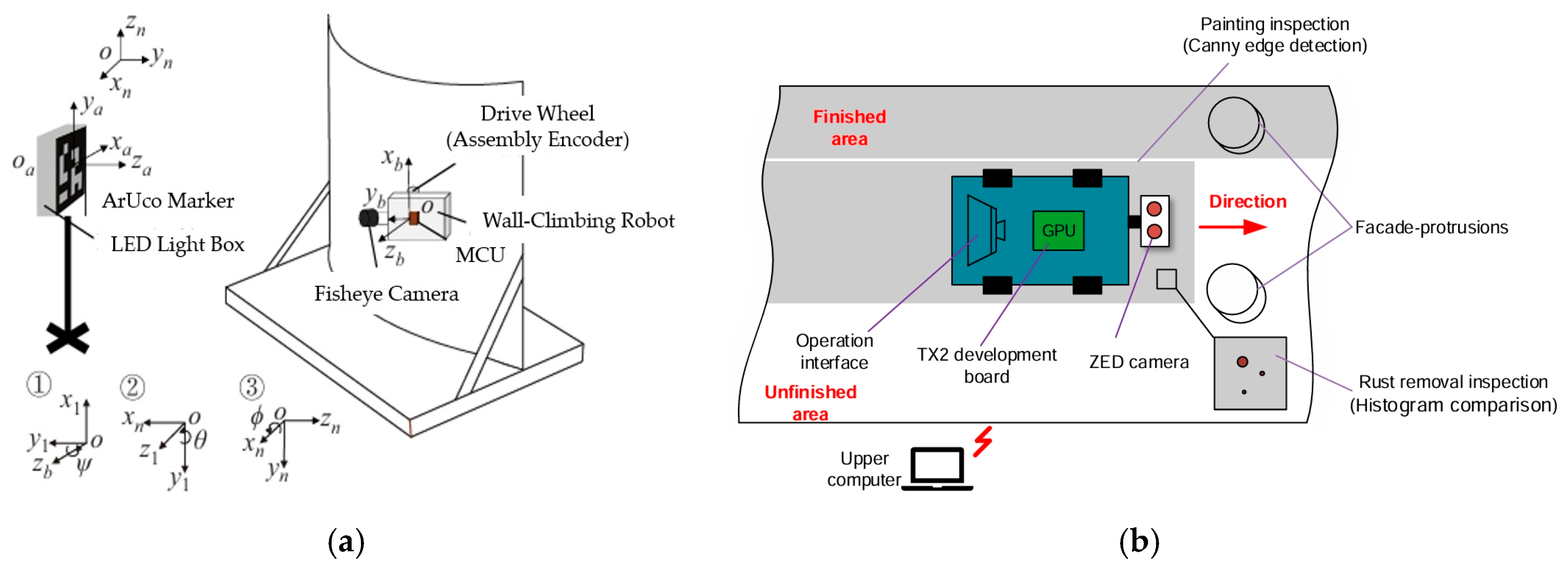

2.1. External Sensor-Based Spatial Positioning

2.2. Onboard Sensor-Based Spatial Positioning

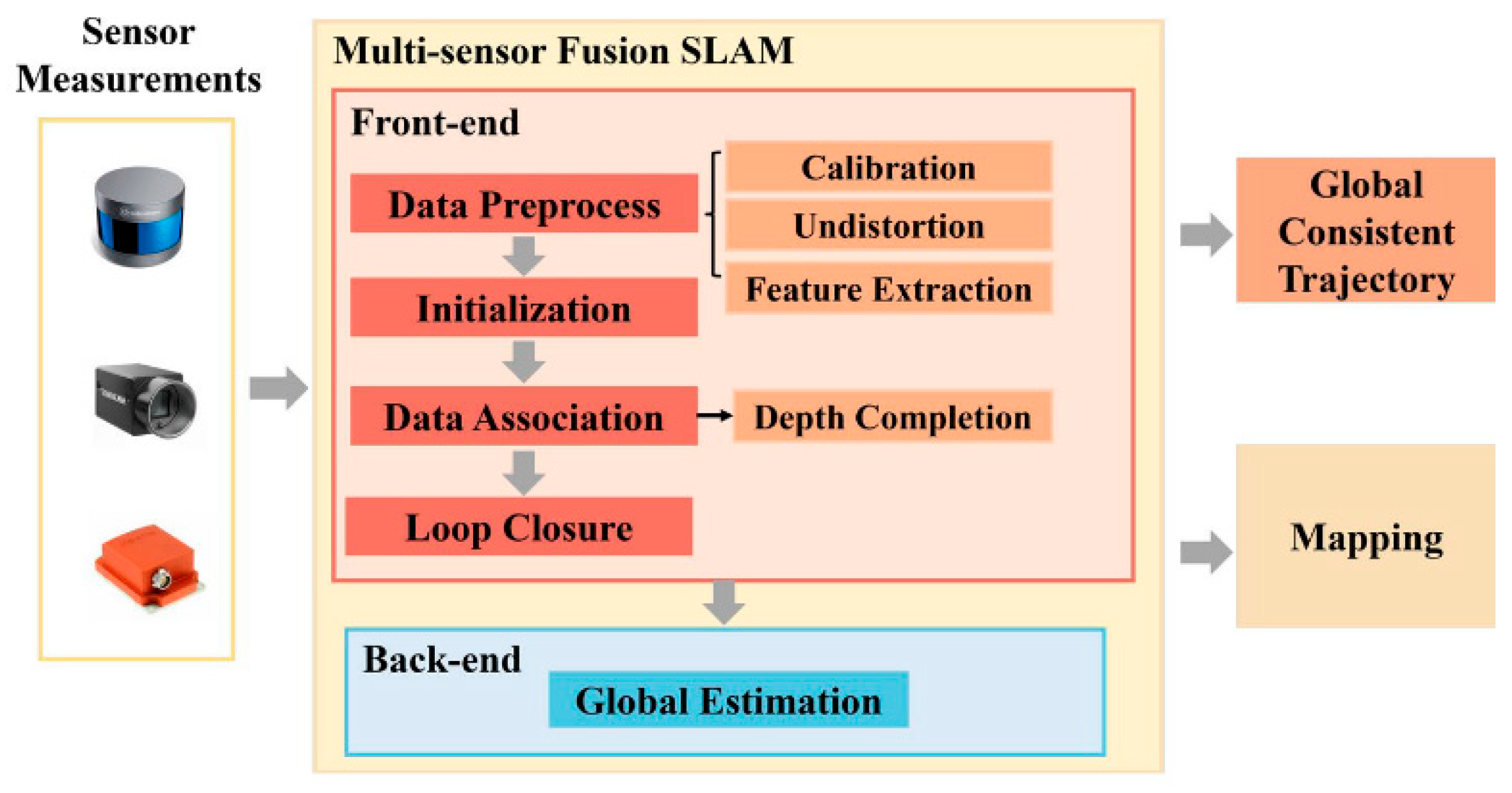

3. Multi-Sensor Fusion-Based Spatial Positioning Methods

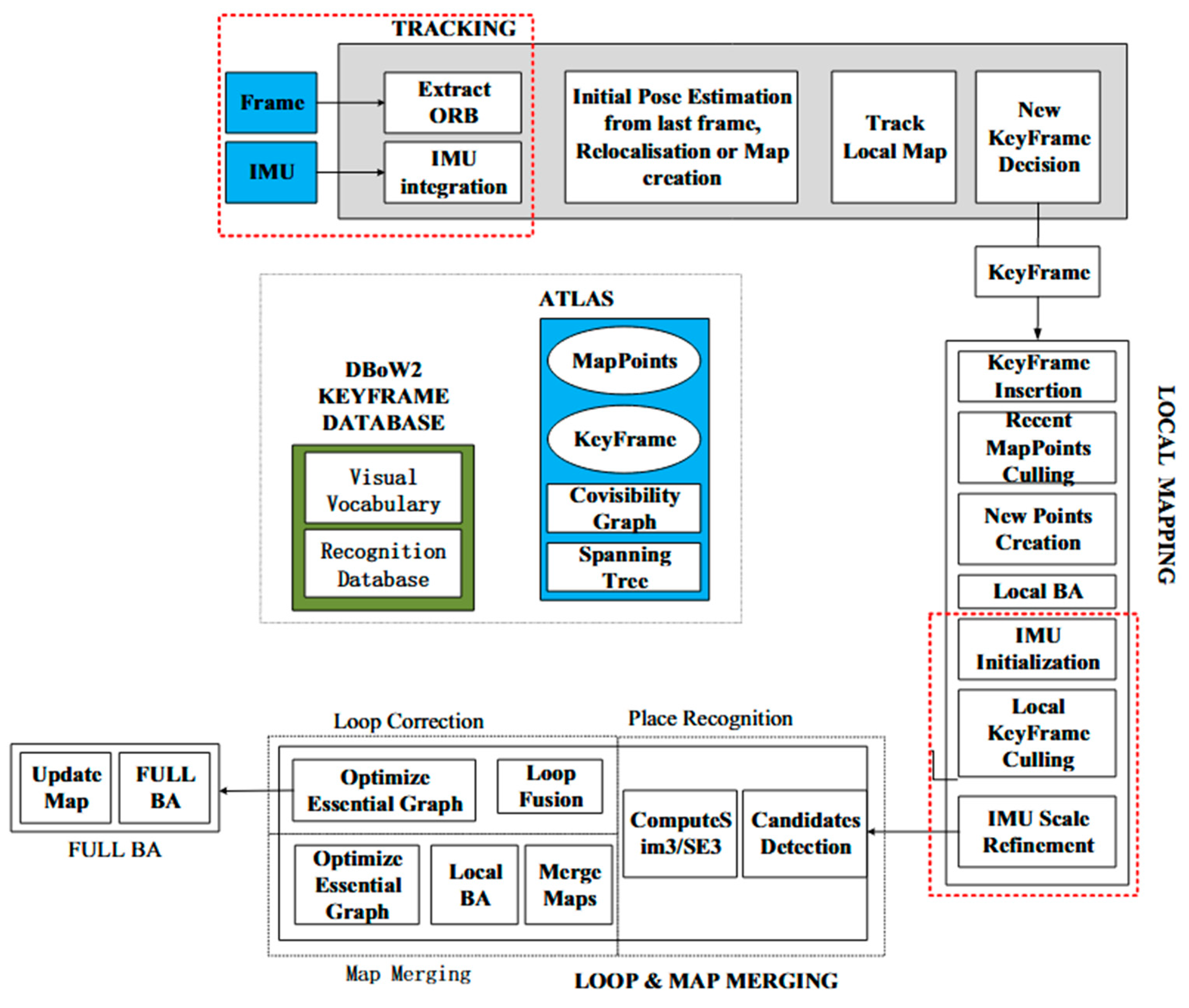

3.1. Multi-Sensor Fusion-Based SLAMs

3.2. Multi-Sensor Integrated Positioning Systems

3.3. Multi-Robot Cooperative Positioning

4. Spatial Positioning Reinforcement Methods

4.1. Filtering-Based Reinforcement Methods

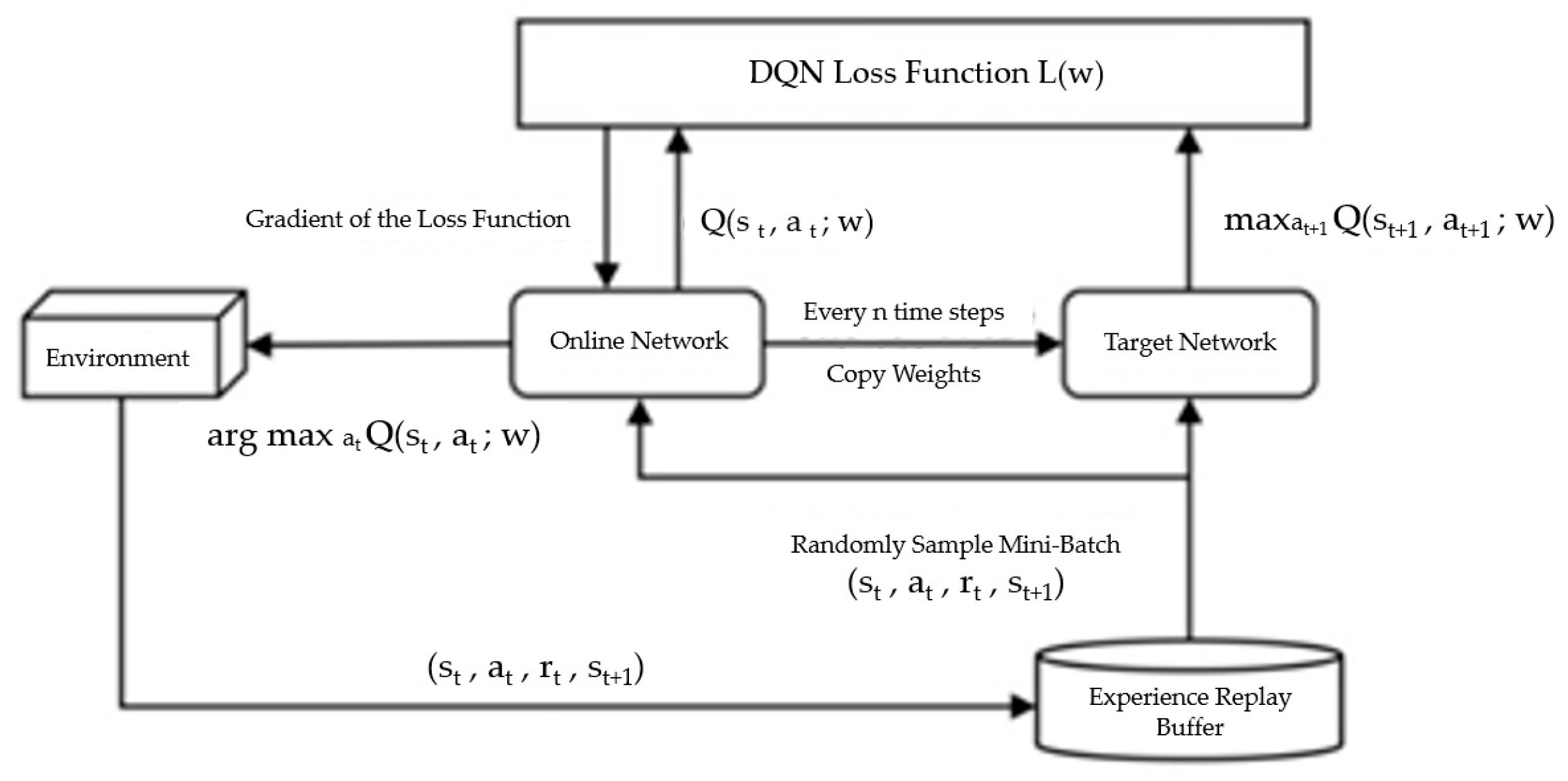

4.2. Deep Reinforcement Learning-Based Reinforcement Methods

- Spatial position prediction of climbing robots.

- Elimination of interference in robot positioning in dynamic environments, caused by factors such as surface texture, partial occlusion, and reflected light.

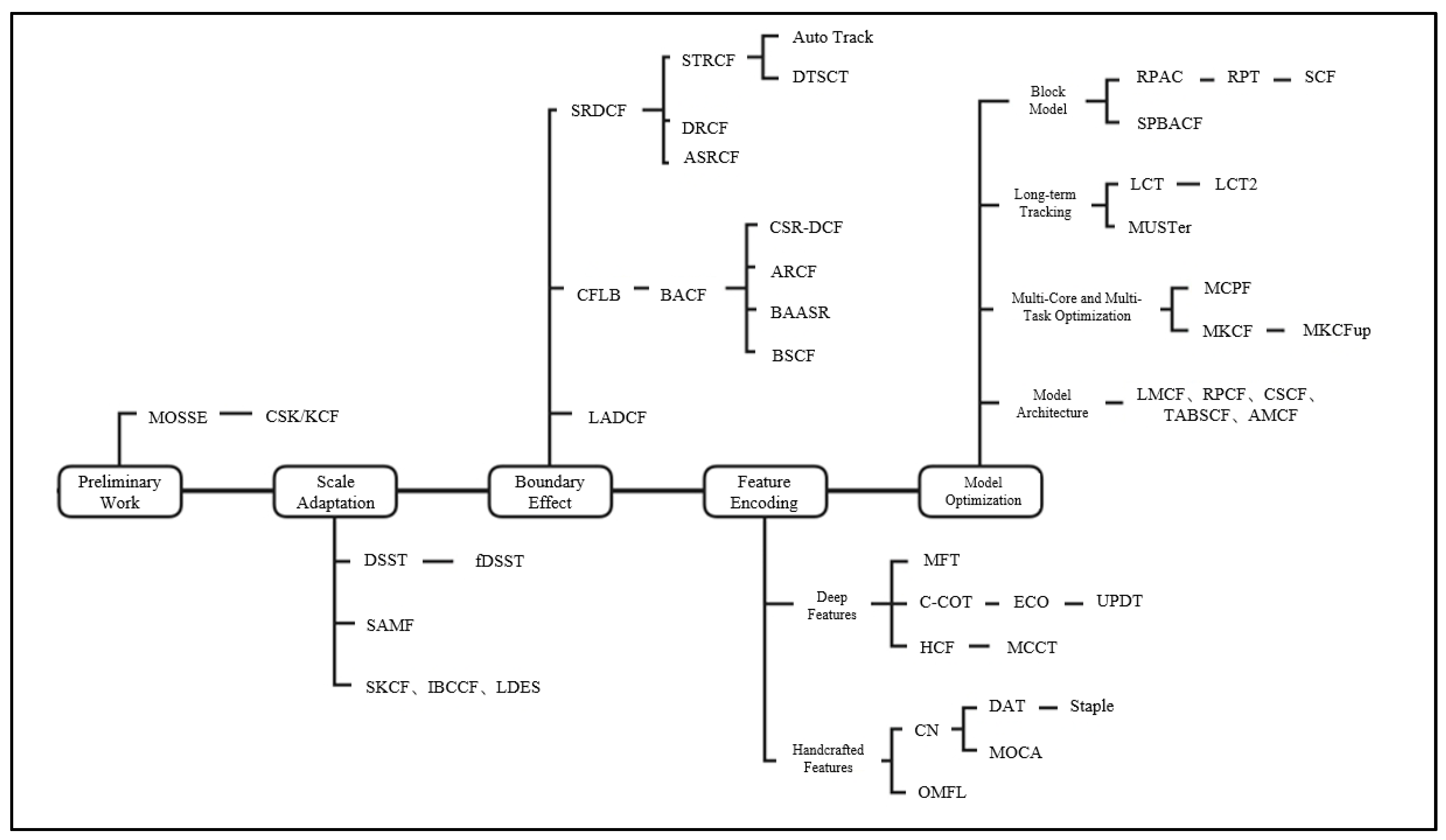

- Target recognition and tracking.

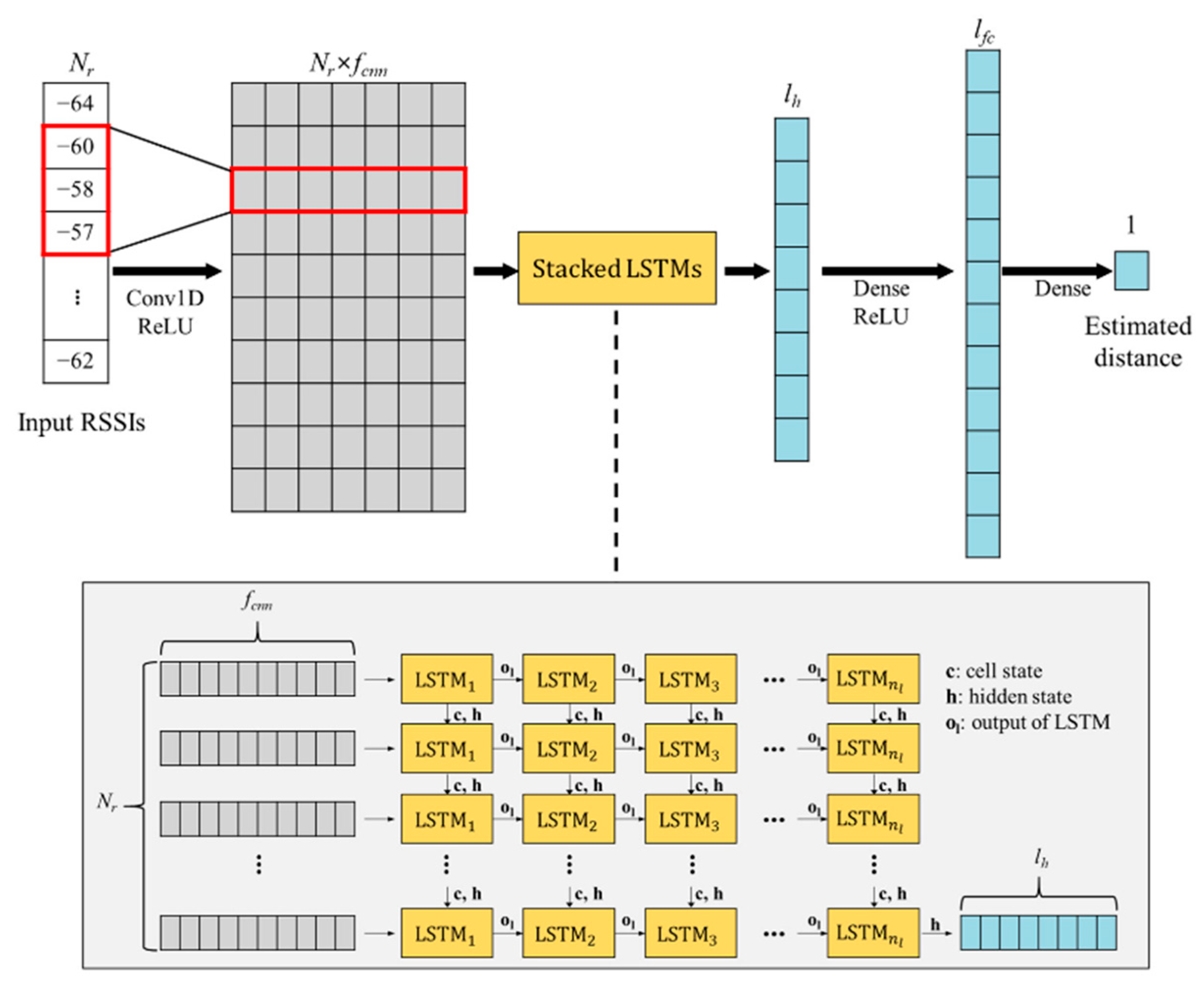

4.3. Neural Network-Based Reinforcement Methods

5. Challenges and Trends in Spatial Positioning

5.1. Challenges in Spatial Positioning

- Variations in surface shape and material properties: Differences in radiation properties, friction coefficients, and surface geometry [60] strongly affect the sensor data quality and positioning accuracy of magnetic climbing robots.

- Accumulated sensor errors: Positioning sensors such as IMUs suffer from error accumulation (drift), and the resulting loss of precision makes them unsuitable for long-duration or long-distance tasks [61].

- Interference from the dynamic environment: In high-altitude operations, positioning stability is often affected by wind, lighting conditions, and abnormal vibration.

- Constraints of the working environment: Magnetic climbing robots must frequently adjust their posture to adapt to complex geometries, so the camera’s viewpoint changes continuously.

- Energy consumption: The battery capacity of a magnetic climbing robot is limited, whereas positioning sensors, vision systems, and motion computing units typically draw considerable power. An appropriate balance must therefore be found among positioning accuracy, battery capacity, and system size.

- Limited computing resources: High-precision positioning and target recognition require substantial computing resources. However, magnetic climbing robots are designed to be lightweight, which makes it difficult to integrate high-performance computing platforms.
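To make the accumulated-error challenge concrete, the following Python sketch (an illustration, not a method from the reviewed works) double-integrates the readings of a hypothetical stationary IMU whose accelerometer reports a constant bias plus white noise. The bias (0.01 m/s²), noise level, 100 Hz sampling rate, and the function names `dead_reckon` and `simulate_drift` are assumptions chosen only for illustration. Because the bias is integrated twice, the position error grows roughly as 0.5·b·t², which is why IMU-only positioning degrades over long durations and distances.

```python
import random


def dead_reckon(accel, dt):
    """Integrate acceleration samples twice (explicit Euler) into positions."""
    velocity = 0.0
    position = 0.0
    positions = []
    for a in accel:
        velocity += a * dt          # first integration: velocity
        position += velocity * dt   # second integration: position
        positions.append(position)
    return positions


def simulate_drift(bias=0.01, noise_std=0.02, duration=60.0, dt=0.01, seed=0):
    """Position error of a stationary robot whose IMU reports bias + white noise.

    The true acceleration is zero, so any estimated displacement is pure error.
    bias and noise_std are in m/s^2, dt in s (all assumed, hypothetical values).
    """
    rng = random.Random(seed)
    n = int(round(duration / dt))
    readings = [bias + rng.gauss(0.0, noise_std) for _ in range(n)]
    return dead_reckon(readings, dt)


if __name__ == "__main__":
    dt = 0.01
    errors = simulate_drift(dt=dt)
    for seconds in (10, 30, 60):
        k = int(round(seconds / dt)) - 1  # sample index at time `seconds`
        print(f"t = {seconds:2d} s: position error {errors[k]:.2f} m "
              f"(bias-only estimate 0.5*b*t^2 = {0.5 * 0.01 * seconds ** 2:.2f} m)")
```

Doubling the elapsed time roughly quadruples the bias-induced error, so even a small, uncorrected bias dominates over long missions.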

5.2. Trends in Spatial Positioning

- Data-Processing Demands on Various Operation Surfaces: To cope with the impact of different operation surfaces on sensor data quality, appropriate sensor combinations should be selected according to the environmental conditions, guided by fusion models that balance accuracy, energy, and computational load. To enable real-time deployment, future research should focus on lightweight machine learning models and efficient data-processing pipelines suitable for onboard computing.

- Correction of Accumulated Sensor Errors: To enable long-duration and long-distance operations, filtering algorithms such as Kalman-type filters, particle filters, and correlation filters should be used to correct accumulated sensor errors.

- Handling Dynamic Environment Disturbances: Because dynamic environmental disturbances strongly affect the stability of sensor data in real applications, both anti-interference algorithms and mechanical design measures should be considered to counter them.

- Intelligent Energy Consumption Management: Because battery capacity is limited, energy efficiency is crucial for the sustained operation of positioning sensors and computing units. Low-power sensors are expected to be a major development trend, and dynamically adjusting sensor operating modes is an effective way to save energy.

- Multi-Robot Cooperation: Multi-robot cooperation helps expand the positioning range and improve task efficiency. Multi-robot cooperative SLAM algorithms enable map sharing and the collaborative optimization of position and posture parameters in large-scale or complex operating environments.

- Lightweight Algorithms: Image tracking should be integrated with spatial positioning to form lightweight algorithms with reduced computational complexity.

- Deployment of AI Models: More artificial intelligence models are expected to be applied to the localization of wall-climbing robots in the future.
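As a minimal illustration of filtering-based drift correction combined with dynamic sensor scheduling, the Python sketch below runs a scalar Kalman filter along a single wall axis. The prediction step uses a biased odometry/IMU velocity, and an absolute position fix (standing in for, e.g., an external camera or marker-based measurement) is fused only when the estimated variance exceeds a threshold, so the higher-power sensor is not sampled at every step. The class `ScalarKalman`, the function `run`, and all noise levels, the bias, and the threshold are hypothetical choices for illustration, not parameters taken from the reviewed studies.

```python
import random
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """1-D Kalman filter; the state is the robot's position along one wall axis (m)."""
    x: float = 0.0    # position estimate (m)
    p: float = 1e-4   # estimate variance (m^2)
    q: float = 1e-5   # process-noise variance added per step (m^2), assumed
    r: float = 4e-4   # variance of an absolute position fix (m^2), assumed

    def predict(self, velocity: float, dt: float) -> None:
        """Propagate the estimate with the measured (possibly biased) velocity."""
        self.x += velocity * dt
        self.p += self.q

    def update(self, z: float) -> None:
        """Fuse an absolute position measurement z (m)."""
        k = self.p / (self.p + self.r)  # Kalman gain
        self.x += k * (z - self.x)
        self.p *= 1.0 - k


def run(steps: int = 6000, dt: float = 0.01, true_v: float = 0.05,
        odo_bias: float = 0.005, odo_std: float = 0.01, fix_std: float = 0.02,
        var_threshold: float = 2e-3, seed: int = 1):
    """Return (dead-reckoning error, filtered error, absolute fixes used)."""
    rng = random.Random(seed)
    kf = ScalarKalman(r=fix_std ** 2)
    true_x = dead_x = 0.0
    fixes = 0
    for _ in range(steps):
        true_x += true_v * dt
        v_meas = true_v + odo_bias + rng.gauss(0.0, odo_std)  # biased velocity
        dead_x += v_meas * dt  # pure dead reckoning, kept for comparison
        kf.predict(v_meas, dt)
        # Assumed energy-saving policy: power the absolute sensor only when
        # the predicted variance exceeds the threshold.
        if kf.p > var_threshold:
            kf.update(true_x + rng.gauss(0.0, fix_std))
            fixes += 1
    return abs(dead_x - true_x), abs(kf.x - true_x), fixes


if __name__ == "__main__":
    dead_err, kf_err, fixes = run()
    print(f"dead reckoning error: {dead_err:.3f} m")
    print(f"Kalman filter error:  {kf_err:.3f} m using {fixes} fixes in 6000 steps")
```

Lowering `var_threshold` activates the absolute sensor more often and tightens the position bound at the cost of energy; raising it does the opposite. A practical climbing-robot system would typically estimate a full 3-D pose (for example, with an extended or error-state Kalman filter) rather than this single-axis toy.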

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Tao, B.; Gong, Z.; Ding, H. Climbing robots for manufacturing. Natl. Sci. Rev. 2023, 10, nwad042.

- Bridge, B.; Sattar, T.P.; Leon-Rodriguez, H.E. Climbing robot cell for fast and flexible manufacture of large scale structures. In CAD/CAM Robotics and Factories of the Future, 22nd International Conference; Narosa Publishing House: New Delhi, India, 2006; pp. 584–597.

- Tao, J.; Yubo, H.; Lirong, S.; Wenfei, F.; Weijun, Z. Design and Research of Segmented-Stepping Tower Climbing Robot. Mach. Des. Res. 2024, 40, 46–51.

- Jiang, A.; Zhan, Q.; Zhang, Y. Development of New Magnetic Adhesion Wall Climbing Robot. Mach. Build. Autom. 2018, 47, 146–148+161.

- Fang, Y.; Wang, S.; Bi, Q.; Cui, D.; Yan, C. Design and technical development of wall-climbing robots: A review. J. Bionic Eng. 2022, 19, 877–901.

- Schmidt, D.; Berns, K. Climbing robots for maintenance and inspections of vertical structures—A survey of design aspects and technologies. Robot. Auton. Syst. 2013, 61, 1288–1305.

- Wang, H. Research on Rose Recognition and Spatial Localization Based on Monocular Vision. Master’s Thesis, Wuhan Institute of Technology, Wuhan, China, 2024.

- Shuwen, H.; Keyu, G.; Xiangyu, S.; Feng, H.; Shijie, S.; Huansheng, S. Multi-target 3D visual grounding method based on monocular images. J. Comput. Appl. 2025; 1–11, in press.

- Yan, W. Research on Motion Control and Positioning Method of Wall-Climbing Robot. Master’s Thesis, Changzhou University, Changzhou, China, 2023.

- Shao, S. Research on Global Navigation System of Indoor Unmanned Vehicle Based on Ultrasonic Positioning. Master’s Thesis, Northeast Petroleum University, Daqing, China, 2023.

- Wu, J. Research on Indoor LiDAR Localization and Mapping Technology of Mobile Robot. Master’s Thesis, Shanghai Ocean University, Shanghai, China, 2024.

- Zhang, Y. Research on Positioning of High-Altitude Strong Magnetic Climbing Robots Based on Multi-Sensor Fusion. Master’s Thesis, Fujian University of Technology, Fuzhou, China, 2023.

- Fang, X.; Liu, J.; Chen, Y. Research on autonomous movement of the wall-climbing robot based on SLAM. Manuf. Autom. 2023, 45, 85–88.

- Zhao, M. Research on Visual Positioning and Path-Planning Algorithms for Mobile Robots. Master’s Thesis, Inner Mongolia University, Hohhot, China, 2024.

- Li, D. Optimization Design and Algorithm Research on Ultra Wide Band Indoor Positioning. Master’s Thesis, Shandong Jianzhu University, Jinan, China, 2024.

- Xu, S. Non-Line-of-Sight Identification and Error Compensation for Indoor Positioning Based on UWB. Master’s Thesis, Nanjing University of Information Science & Technology, Nanjing, China, 2024.

- Liu, M.; Yang, S.; Rathee, A.; Du, W. Orientation Estimation Piloted by Deep Reinforcement Learning. In Proceedings of the 2024 IEEE/ACM Ninth International Conference on Internet-of-Things Design and Implementation (IoTDI), Hong Kong, China, 13–16 May 2024; pp. 134–145.

- Zeng, F.; Wang, C.; Ge, S.S. A Survey on Visual Navigation for Artificial Agents with Deep Reinforcement Learning. IEEE Access 2020, 8, 135426–135442.

- Ouyang, G.; Abed-Meraim, K.; Ouyang, Z. Magnetic-Field-Based Indoor Positioning Using Temporal Convolutional Networks. Sensors 2023, 23, 1514.

- Li, A.; Tao, B.; Ding, H. Research Progress and Application of Magnetic Adhesion Wall-climbing Robots. Robot 2025, 47, 123–144.

- Enjikalayil Abdulkader, R.; Veerajagadheswar, P.; Htet Lin, N.; Kumaran, S.; Vishaal, S.R.; Mohan, R.E. Sparrow: A magnetic climbing robot for autonomous thickness measurement in ship hull maintenance. J. Mar. Sci. Eng. 2020, 8, 469.

- Wang, T.; Zhu, S.; Song, W.; Li, C.; Shi, H. Path planning for wall climbing robot in volume measurement of vertical tank. Robot 2023, 46, 36–44.

- Zhang, W.; Ding, Y.; Chen, Y.; Sun, Z. Autonomous positioning for wall climbing robots based on a combination of an external camera and a robot-mounted inertial measurement unit. J. Tsinghua Univ. (Sci. Technol.) 2022, 62, 1524–1531.

- Gu, Z.; Gong, Z.; Tao, B.; Yin, Z.; Ding, H. Global localization based on tether and visual-inertial odometry with adsorption constraints for climbing robots. IEEE Trans. Ind. Inform. 2023, 19, 6762–6772.

- Zhang, W.; Yang, Y.; Huang, T.; Sun, Z. ArUco-assisted autonomous localization method for wall climbing robots. Robot 2024, 46, 27–35, 44.

- Tache, F.; Pomerleau, F.; Caprari, G.; Siegwart, R.; Bosse, M.; Moser, R. Three dimensional localization for the Magne Bike inspection robot. J. Field Robot. 2011, 28, 180–203.

- Zhong, M.; Ma, Y.; Li, Z.; He, J.; Liu, Y. Facade protrusion recognition and operation-effect inspection methods based on binocular vision for wall-climbing robots. Appl. Sci. 2023, 13, 5721.

- Wang, Z.; Yan, B.; Dong, M.; Wang, J.; Sun, P. A localization method of wall-climbing robot based on LiDAR and improved AMCL. Chin. J. Sci. Instrum. 2022, 43, 220–227.

- He, J. Research on Visual Object Tracking method based on Discriminative Correlation Filter. Master’s Thesis, Guilin University of Electronic Technology, Guilin, China, 2023.

- Gao, Q.; Lu, K.; Ji, Y.; Liu, J.; Xu, L.; Wei, G. Survey on the Research of Multi-sensor Fusion SLAM. Mod. Radar 2024, 46, 29–39.

- Zhou, Z.; Zhang, C.; Li, C.; Zhang, Y.; Shi, Y.; Zhang, W. A tightly-coupled LIDAR-IMU SLAM method for quadruped robots. Meas. Control 2024, 57, 1004–1013.

- Liu, S.; Dong, N.; Mai, X. Research on Visual-Inertial Fusion SLAM Based on Mean Filtering Algorithm. In Proceedings of the 42nd Chinese Control Conference, Tianjin, China, 24–26 July 2023; pp. 392–397.

- Wang, C.; Wu, B.; Wang, H.; Zheng, H.; Wang, L. Indoor localization technology of SLAM based on binocular vision and IMU. In Proceedings of the 2022 4th International Conference on Robotics, Intelligent Control and Artificial Intelligence, Dongguan, China, 16–18 December 2022; pp. 441–446.

- Zhang, L.; Wu, X.; Gao, R. A multi-sensor fusion positioning approach for indoor mobile robot using factor graph. Measurement 2023, 216, 112926.

- Zhai, Y.; Zhang, S. A Novel LiDAR–IMU–Odometer Coupling Framework for Two-Wheeled Inverted Pendulum (TWIP) Robot Localization and Mapping with Nonholonomic Constraint Factors. Sensors 2022, 22, 4778.

- Liu, H.; Zhang, X.; Jiang, J. Spatiotemporal LiDAR-IMU-Camera Calibration: A Targetless and IMU-Centric Approach Based on Continuous-time Batch Optimization. In Proceedings of the 2022 34th Chinese Control and Decision Conference (CCDC), Hefei, China, 15–17 August 2022.

- Hou, L.; Xu, X.; Ito, T. An Optimization-Based IMU/LiDAR/Camera Co-calibration Method. In Proceedings of the 2022 7th International Conference on Robotics and Automation Engineering (ICRAE), Singapore, 18–20 November 2022; pp. 118–122.

- Liu, W.; Li, Z.; Sun, S.; Du, H.; Angel Sotelo, M. A novel motion-based online temporal calibration method for multi-rate sensors fusion. Inf. Fusion 2022, 88, 59–77.

- Cao, Y.; Li, M.; Svogor, I.; Wei, S.; Beltrame, G. Dynamic Range-Only Localization for Multi-Robot Systems. IEEE Access 2018, 6, 46527–46537.

- Varghese, G.; Reddy, T.G.C.; Menon, A.K. Multi-Robot System for Mapping and Localization. In Proceedings of the 2023 8th International Conference on Robotics and Automation Engineering (ICRAE), Singapore, 17–19 November 2023; pp. 79–84.

- Dai, Y.; Li, C.; Wang, D. Joint SLAM and Joint Search of Unmanned Car Cluster. In Proceedings of the 2023 38th Youth Academic Annual Conference of Chinese Association of Automation (YAC), Hefei, China, 27–29 August 2023; pp. 1015–1019.

- Zhuang, Y.; Sun, X.; Li, Y. Multi-sensor integrated navigation/positioning systems using data fusion: From analytics-based to learning-based approaches. Inf. Fusion 2023, 95, 62–90.

- Yang, Y. Research on Key Technologies for Aerial Ground Cooperative Navigation and Localization of Warehouse Inspection Robots. Master’s Thesis, Southwest University of Science and Technology, Mianyang, China, 2024.

- Xu, J. Research on Indoor Mobile Robot Navigation System. Master’s Thesis, Guangdong University of Technology, Guangzhou, China, 2022.

- Li, C. Research on the Combination of Siamese Network and Correlation Filter in Visual Tracking. Ph.D. Thesis, Zhejiang University, Hangzhou, China, 2021.

- Wang, M. Research on Visual Object Tracking Algorithm Based on Correlation Filtering. Master’s Thesis, Beijing Jiaotong University, Beijing, China, 2020.

- Wang, Z.; Liu, K.; Qin, Y.; Miao, B.; Tian, G.; Tang, T. Real Time Tracking Algorithm for High Speed Railway Catenary Support Device Based on Correlation Filtering and Saliency Detection. China Transp. Rev. 2024, 46, 98–105.

- Yu, J.; Zhang, L.; Zhang, K. Autonomous Avoidance Pedestrian Control Method for Indoor Mobile Robot. J. Chin. Comput. Syst. 2020, 41, 1776–1782.

- Zhang, P. Design and Implementation of Interactive Navigation System for Mobile Robot in Dynamic Environments. Master’s Thesis, Southeast University, Nanjing, China, 2021.

- Liu, Q. Research on Indoor Interactive Visual Navigation Based on Deep Reinforcement Learning. Master’s Thesis, Xiangtan University, Xiangtan, China, 2023.

- Sivaranjani, A.; Vinod, B. Artificial Potential Field Incorporated Deep-Q-Network Algorithm for Mobile Robot Path Prediction. Intell. Autom. Soft Comput. 2023, 35, 1135–1150.

- Wang, Y.; Peng, T.; Wang, W.; Luo, M. High-efficient view planning for surface inspection based on parallel deep reinforcement learning. Adv. Eng. Inform. 2023, 55, 101849.

- Zuo, G.; Tong, J.; Wang, Z.; Gong, D. A Graph-Based Deep Reinforcement Learning Approach to Grasping Fully Occluded Objects. Cogn. Comput. 2022, 15, 36–49.

- Jiang, W.; Xu, G.; Wang, Y. A Method for Autonomous Obstacle Avoidance and Target Tracking of Unmanned Aerial Vehicle. J. Astronaut. 2022, 43, 802–810.

- Wang, Z. Research on Target Tracking via Multiple-Feature Based on Deep Reinforcement Learning. Master’s Thesis, Liaoning Normal University, Dalian, China, 2022.

- Guo, H.; Xu, Y.; Ma, Y.; Xu, S.; Li, Z. Pursuit Path Planning for Multiple Unmanned Ground Vehicles Based on Deep Reinforcement Learning. Electronics 2023, 12, 4759.

- Wang, D. Research and Application of CNN-Based Visual Localization. Master’s Thesis, Shanghai University of Electric Power, Shanghai, China, 2024.

- Yoon, J.; Kim, H.; Lee, D. Indoor Positioning Method by CNN-LSTM of Continuous Received Signal Strength Indicator. Electronics 2024, 13, 4518.

- Wu, S.; Wang, X.; Zhang, L.; Xu, K.; Zhang, M.; Jin, S. Temporal convolutional neural network indoor UWB positioning method based on SimCLR-CIR-SC autonomous classification. J. Electron. Meas. Instrum. 2025, 39, 65–76.

- Xu, F.; Meng, F.; Jiang, Q.; Peng, G. Grappling claws for a robot to climb rough wall surfaces: Mechanical design, grasping algorithm, and experiments. Robot. Auton. Syst. 2020, 128, 103501.

- Zhang, J.; Singh, S. Visual-LiDAR odometry and mapping: Low-drift, robust, and fast. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 2174–2181.

- Zhou, X.; Wen, X.; Wang, Z.; Gao, Y.; Li, H.; Wang, Q.; Yang, T.; Lu, H.; Cao, Y.; Xu, C. Swarm of micro flying robots in the wild. Sci. Robot. 2022, 7, eabm5954.

- Mehrez, M.W.; Mann, G.K.I.; Gosine, R.G. An optimization based approach for relative localization and relative tracking control in multi-robot systems. J. Intell. Robot. Syst. 2017, 85, 385–408.

| Technology Category | Accuracy | Robustness | Real-Time Capability | Power Consumption |
|---|---|---|---|---|
| Single-Sensor Approaches | Medium: Limited by the accumulation of sensor errors | Low: Sensitive to environmental changes and interference | High: Low computational complexity | Low: Simple hardware, low power consumption |
| Multi-Sensor Fusion Strategies | High: Fusing multiple sensors improves positioning accuracy | High: Effectively compensates for the weaknesses of individual sensors | Medium: Fusion computations are complex but can be optimized for real-time performance | Medium: Multiple sensors and higher computational demand increase power usage |
| Filtering-Based Reinforcement Methods | Medium to High: Effective error correction in systems with Gaussian noise | Medium: Works well with Gaussian noise, but less so in complex noise environments | High: Filtering algorithms are computationally efficient | Low to Medium: Requires moderate processing power for filtering |
| Deep Reinforcement Learning-Based Methods | Relatively High: Can adapt to complex environments and optimize dynamically | High: Adapts to dynamic environments and nonlinear disturbances | Low to Medium: Training is computationally expensive, but inference can be optimized | High: Deep learning models require significant computational resources |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ru, H.; Sheng, M.; Qi, J.; Li, Z.; Cheng, L.; Zhang, J.; Xiao, J.; Gao, F.; Wang, B.; Jia, Q. A Review of Spatial Positioning Methods Applied to Magnetic Climbing Robots. Electronics 2025, 14, 3069. https://doi.org/10.3390/electronics14153069
